LSST Data Management Overview Jeff Kantor LSST Corporation

- Slides: 20

LSST Conceptual Design Review, September 17-20, 2007, Tucson, AZ

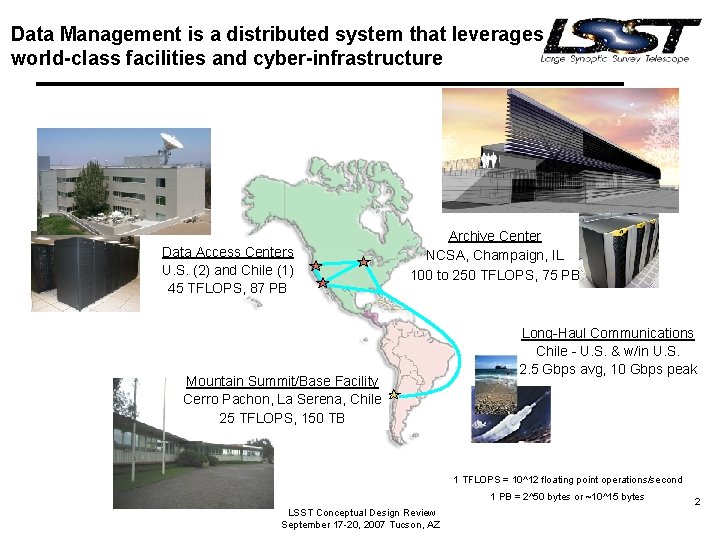

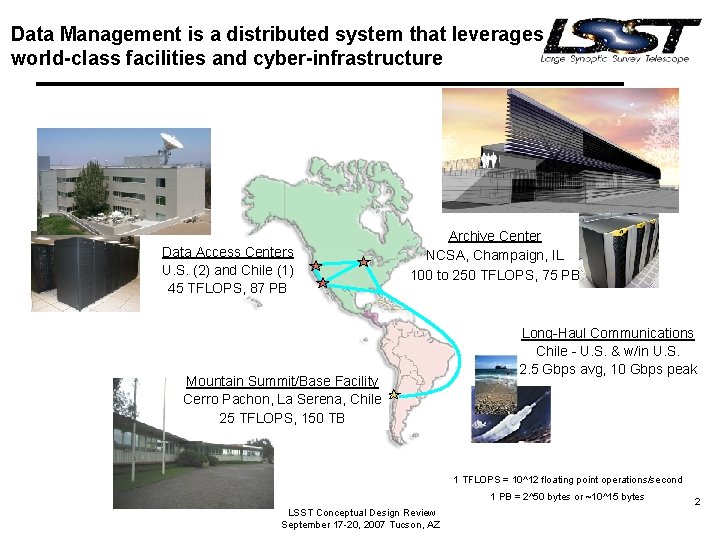

Data Management is a distributed system that leverages world-class facilities and cyber-infrastructure

- Data Access Centers: U.S. (2) and Chile (1); 45 TFLOPS, 87 PB
- Archive Center: NCSA, Champaign, IL; 100 to 250 TFLOPS, 75 PB
- Mountain Summit/Base Facility: Cerro Pachon, La Serena, Chile; 25 TFLOPS, 150 TB
- Long-Haul Communications: Chile - U.S. and within the U.S.; 2.5 Gbps average, 10 Gbps peak

1 TFLOPS = 10^12 floating point operations/second; 1 PB = 2^50 bytes, or ~10^15 bytes
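
The average and peak link rates above translate directly into daily transfer volumes. A quick sketch, assuming decimal gigabits (10^9 bits) for link rates as is usual for networks; it also shows how far apart the two petabyte definitions given above are:

```python
SECONDS_PER_DAY = 86_400

def bytes_per_day(gbit_per_s: float) -> float:
    """Bytes moved in 24 hours at a sustained rate given in decimal Gbit/s."""
    return gbit_per_s * 1e9 / 8 * SECONDS_PER_DAY

avg_daily = bytes_per_day(2.5)   # average long-haul rate from the slide
peak_daily = bytes_per_day(10)   # peak long-haul rate from the slide

# Binary vs decimal petabyte: 2^50 bytes is about 12.6% larger than 10^15 bytes.
pb_ratio = 2**50 / 10**15

print(f"avg:  {avg_daily / 1e12:.1f} TB/day")
print(f"peak: {peak_daily / 1e12:.1f} TB/day")
print(f"2^50 / 10^15 = {pb_ratio:.4f}")
```

At the 2.5 Gbps average this is roughly 27 TB per day.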

LSST Data Management provides a unique national resource for research & education

- Astronomy and astrophysics
  - Scale and depth of the LSST database are unprecedented in astronomy
    - Provides calibrated databases for frontier science
    - Breaks new ground with its combination of depth, width, and epochs/field
    - Enables science that cannot be anticipated today
- Cyber-infrastructure and computer science
  - Requires a multi-disciplinary approach to solving challenges
    - Massively parallel image data processing
    - Peta-scale data ingest and data access
    - Efficient scientific and quality analysis of peta-scale data

DM system complexity exists but overall is tractable

- Complexities we have to deal with in DM
  - Very high data volumes (transfer, ingest, and especially query)
  - Advances in scale of algorithms for photometry, astrometry, PSF estimation, moving object detection, and shape measurement of faint galaxies
  - Provenance recording and reprocessing
  - Evolution of algorithms and technology
- Complexities we DON'T have to deal with in DM
  - Tens of thousands of simultaneous users (e.g. online stores)
  - Fusion of remote sensing data from many sources (e.g. earthquake prediction systems)
  - Millisecond or faster time constraints (e.g. flight control systems)
  - Very deeply nested multi-level transactions (e.g. banking OLTP systems)
  - Severe operating environment-driven hardware limitations (e.g. spaceborne instruments)
  - Processing that is highly coupled across the entire data set with a large amount of inter-process communication (e.g. geophysics 3D Kirchhoff migration)

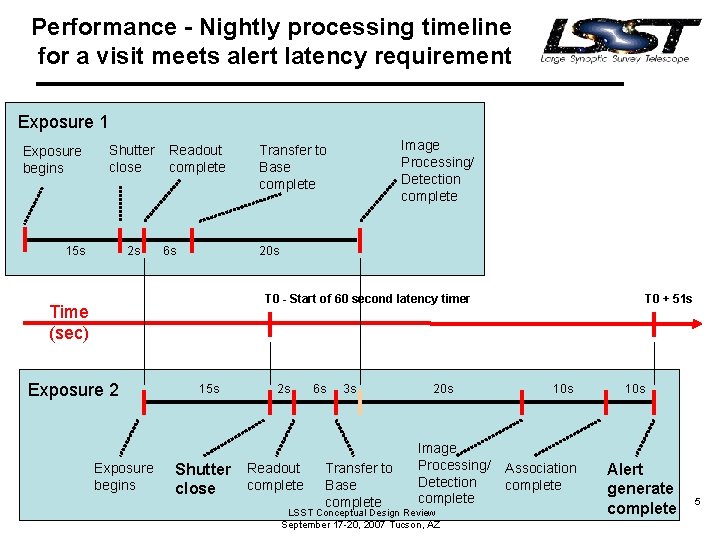

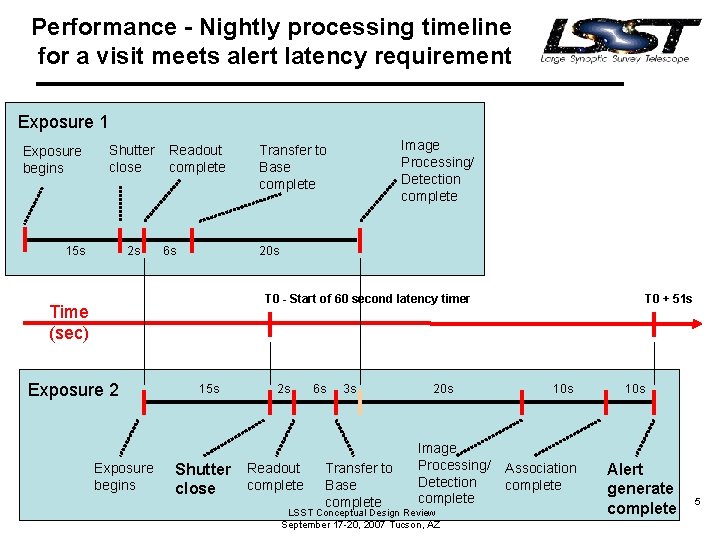

Performance - Nightly processing timeline for a visit meets alert latency requirement

[Timeline figure (time in seconds) for the two exposures of a visit. For each exposure: exposure begins; shutter closes after 15 s; readout completes 2 s later; transfer to the Base and image processing/detection follow. For the second exposure the figure shows 6 s and 3 s segments before transfer to the Base completes and 20 s more until image processing/detection completes, followed by association (10 s) and alert generation (10 s). T0 marks the start of the 60-second latency timer; alert generation completes at T0 + 51 s.]
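
One way to check the timeline against the 60-second requirement is to sum the durations after T0. The sketch below assumes T0 is the second exposure's shutter close and reads the figure's segments as 2 s readout, two transfer segments of 6 s and 3 s, 20 s image processing/detection, 10 s association, and 10 s alert generation. That mapping is an interpretation of the figure, not a breakdown stated on the slide, and the stage names are hypothetical labels.

```python
from typing import List, Tuple

LATENCY_BUDGET_S = 60.0

def check_latency(stages: List[Tuple[str, float]], budget_s: float = LATENCY_BUDGET_S):
    """Return cumulative completion times, whether the budget is met, and the margin."""
    elapsed = 0.0
    timeline = []
    for name, duration_s in stages:
        elapsed += duration_s
        timeline.append((name, elapsed))
    return timeline, elapsed <= budget_s, budget_s - elapsed

# Assumed reading of the post-T0 segments in the timeline figure.
stages = [
    ("readout", 2),
    ("transfer to Base (part 1)", 6),
    ("transfer to Base (part 2)", 3),
    ("image processing/detection", 20),
    ("association", 10),
    ("alert generation", 10),
]

timeline, meets_budget, margin_s = check_latency(stages)
for name, t in timeline:
    print(f"T0 + {t:4.0f} s  {name} complete")
print("meets 60 s requirement:", meets_budget, "| margin:", margin_s, "s")
```

Under this reading the segments sum to the 51 s shown in the figure, leaving 9 s of margin.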

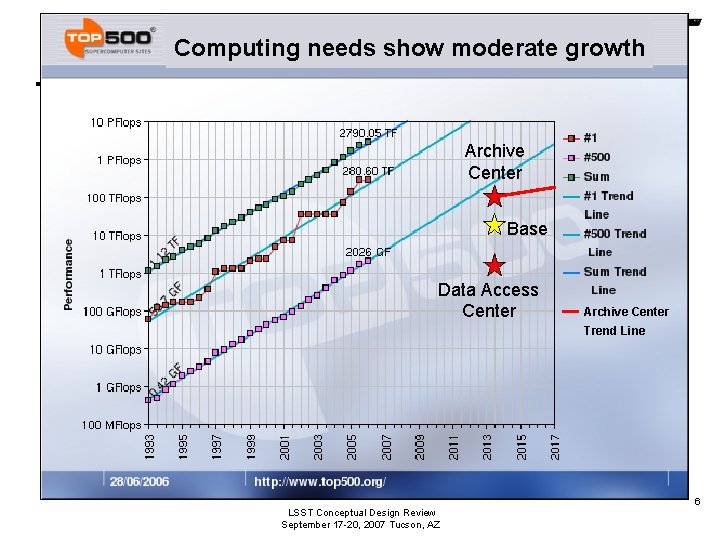

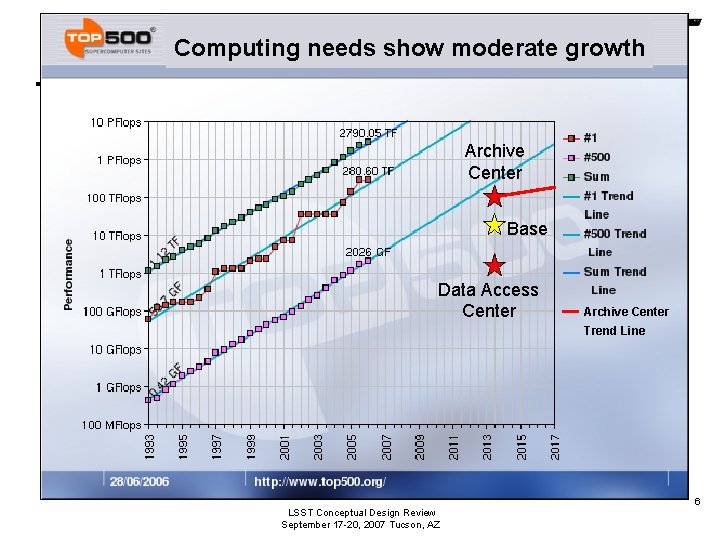

Computing needs show moderate growth

[Chart: computing needs for the Archive Center, Base, and Data Access Center, with an Archive Center trend line.]

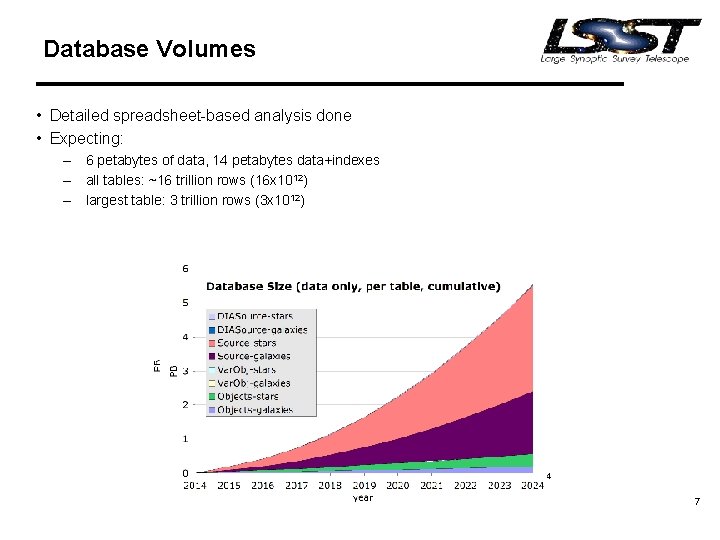

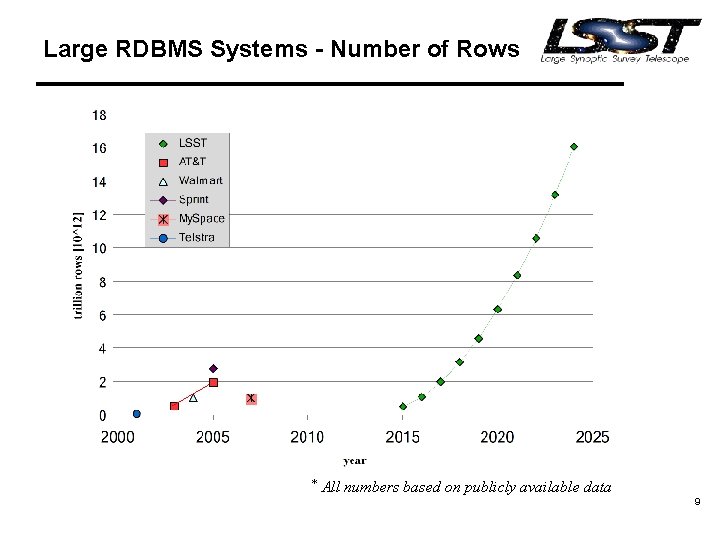

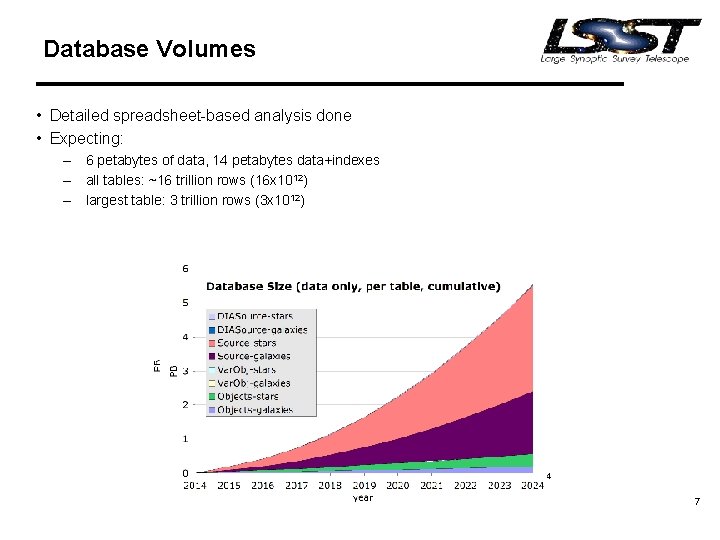

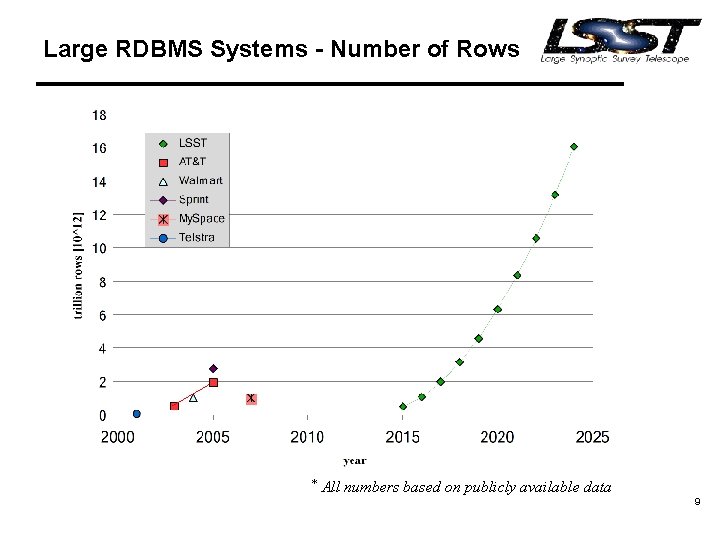

Database Volumes

- Detailed spreadsheet-based analysis done
- Expecting:
  - 6 petabytes of data, 14 petabytes of data plus indexes
  - all tables: ~16 trillion rows (16 x 10^12)
  - largest table: 3 trillion rows (3 x 10^12)
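
Two derived figures follow from the numbers above: index overhead and average row size. A back-of-the-envelope sketch, assuming decimal petabytes (10^15 bytes) and averaging over all tables, since the slide gives no per-table breakdown:

```python
PB = 10**15  # decimal petabyte, as an approximation

data_bytes = 6 * PB
data_plus_index_bytes = 14 * PB
total_rows = 16 * 10**12
largest_table_rows = 3 * 10**12

index_bytes = data_plus_index_bytes - data_bytes   # space taken by indexes
index_to_data = index_bytes / data_bytes           # index size relative to data
avg_row_bytes = data_bytes / total_rows            # mean over all tables
largest_share = largest_table_rows / total_rows    # fraction of all rows

print(f"indexes: {index_bytes / PB:.0f} PB ({index_to_data:.2f}x data)")
print(f"average row size: {avg_row_bytes:.0f} bytes")
print(f"largest table holds {largest_share:.0%} of all rows")
```

Indexes account for more space than the data itself (8 PB vs 6 PB), and the average row is a few hundred bytes.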

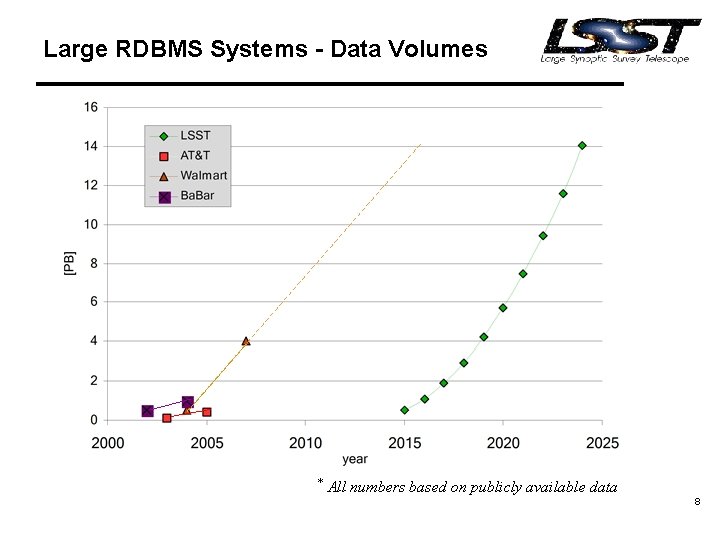

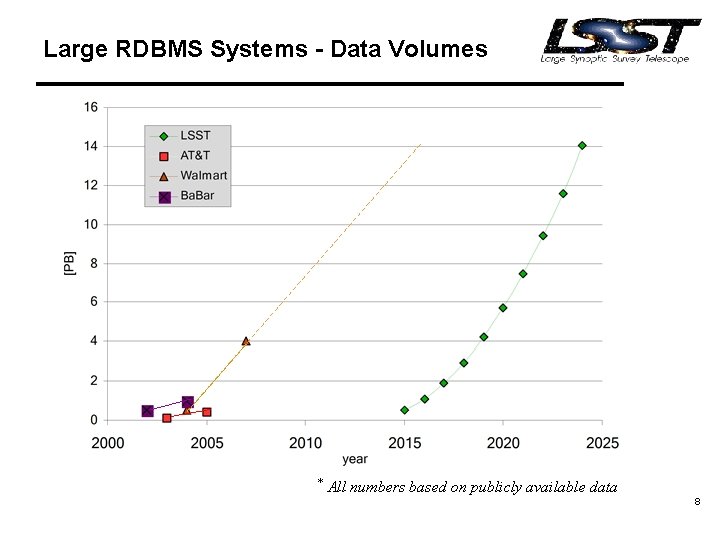

Large RDBMS Systems - Data Volumes

(All numbers based on publicly available data.)

Large RDBMS Systems - Number of Rows

(All numbers based on publicly available data.)

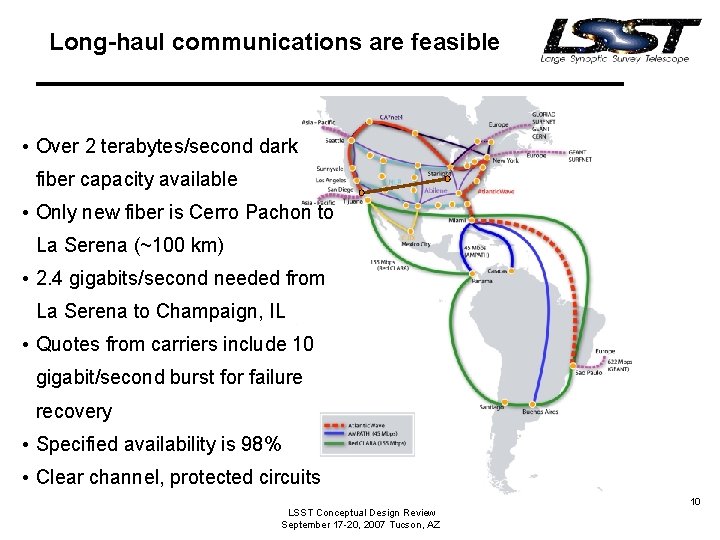

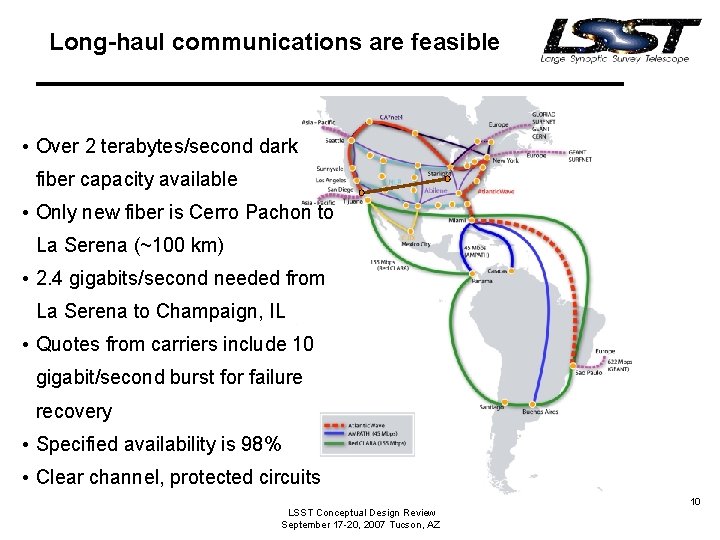

Long-haul communications are feasible

- Over 2 terabytes/second dark fiber capacity available
- Only new fiber is Cerro Pachon to La Serena (~100 km)
- 2.4 gigabits/second needed from La Serena to Champaign, IL
- Quotes from carriers include 10 gigabit/second burst for failure recovery
- Specified availability is 98%
- Clear channel, protected circuits
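
The 98% availability figure and the 10 Gbps burst can be related with a simple model: during an outage, data accumulates at the 2.4 Gbps steady rate; after service returns, new data still arrives at 2.4 Gbps, so only the headroom up to the 10 Gbps burst drains the backlog. A sketch under those assumptions, ignoring protocol overhead and assuming the burst rate is available for the whole recovery:

```python
HOURS_PER_YEAR = 365 * 24

def max_downtime_hours(availability: float) -> float:
    """Hours per year a link may be down and still meet the availability target."""
    return (1.0 - availability) * HOURS_PER_YEAR

def recovery_seconds(outage_s: float, steady_gbps: float = 2.4,
                     burst_gbps: float = 10.0) -> float:
    """Time to clear the backlog from an outage using the burst headroom."""
    if burst_gbps <= steady_gbps:
        raise ValueError("burst rate must exceed the steady rate to ever catch up")
    backlog_gbit = steady_gbps * outage_s        # data queued during the outage
    return backlog_gbit / (burst_gbps - steady_gbps)

print(f"98% availability allows ~{max_downtime_hours(0.98):.0f} h/year of downtime")
print(f"a 1-hour outage clears in ~{recovery_seconds(3600) / 60:.0f} min at 10 Gbps burst")
```

In this model the backlog from an outage of length T clears in about 0.32 T (2.4 / 7.6), which is why burst capacity well above the steady rate matters for recovery.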

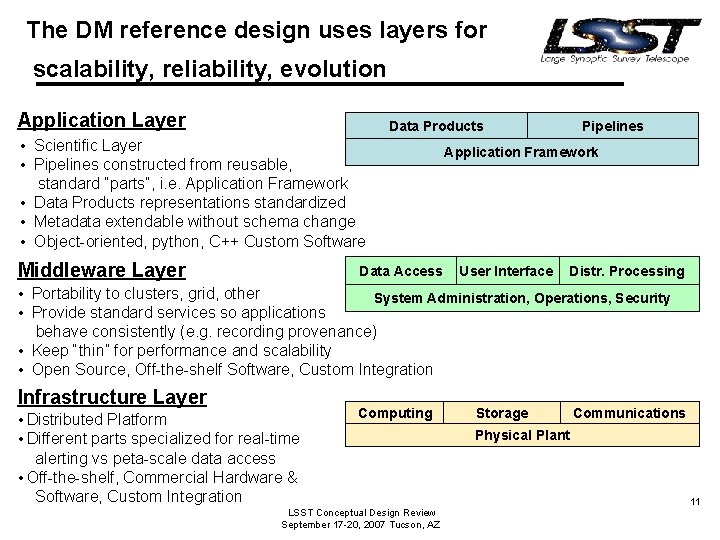

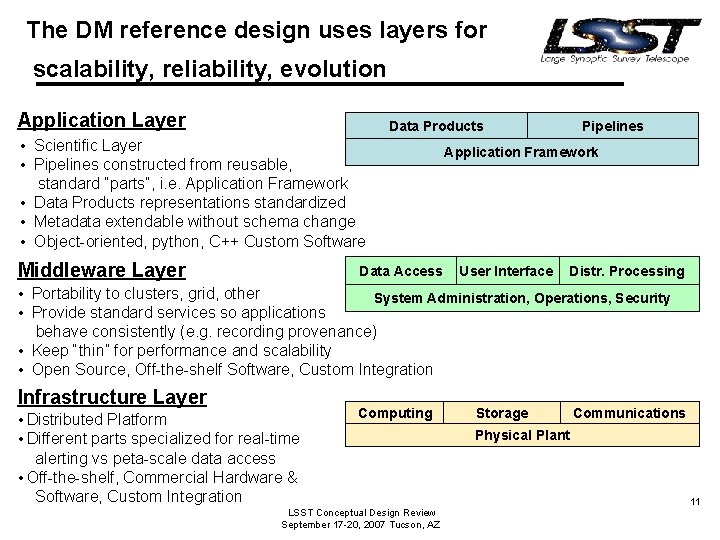

The DM reference design uses layers for scalability, reliability, and evolution

- Application Layer (Data Products, Pipelines, Application Framework)
  - Scientific layer
  - Pipelines constructed from reusable, standard “parts”, i.e. the Application Framework
  - Data product representations standardized
  - Metadata extendable without schema change
  - Object-oriented Python and C++ custom software
- Middleware Layer (Data Access, Distributed Processing, User Interface, System Administration, Operations, Security)
  - Portability to clusters, grid, and other platforms
  - Provides standard services so applications behave consistently (e.g. recording provenance)
  - Kept “thin” for performance and scalability
  - Open source and off-the-shelf software, custom integration
- Infrastructure Layer (Computing, Storage, Communications, Physical Plant)
  - Distributed platform
  - Different parts specialized for real-time alerting vs. peta-scale data access
  - Off-the-shelf commercial hardware and software, custom integration
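
The application-layer goal of metadata that is extendable without a schema change can be met in several ways. One common approach, shown here as a hypothetical sketch rather than the LSST schema, is an entity-attribute-value table: the standardized product columns stay fixed, and each new metadata keyword becomes a new row instead of a new column. It uses Python's built-in `sqlite3` module; the table, column, and product names are invented for illustration.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    # Fixed, standardized columns shared by every data product (hypothetical).
    db.execute("CREATE TABLE product (id TEXT PRIMARY KEY, type TEXT NOT NULL)")
    # Entity-attribute-value table: new metadata keys become rows, not columns.
    db.execute(
        "CREATE TABLE product_meta ("
        " product_id TEXT NOT NULL REFERENCES product(id),"
        " key TEXT NOT NULL, value TEXT,"
        " PRIMARY KEY (product_id, key))"
    )
    return db

def add_product(db: sqlite3.Connection, pid: str, ptype: str, **meta) -> None:
    db.execute("INSERT INTO product VALUES (?, ?)", (pid, ptype))
    db.executemany(
        "INSERT INTO product_meta VALUES (?, ?, ?)",
        [(pid, key, str(value)) for key, value in meta.items()],
    )

def get_meta(db: sqlite3.Connection, pid: str) -> dict:
    rows = db.execute(
        "SELECT key, value FROM product_meta WHERE product_id = ?", (pid,)
    )
    return dict(rows.fetchall())

db = make_db()
add_product(db, "visit1-ccd07", "CalibratedScienceImage", filter="r")
# A later processing version records a new keyword: no ALTER TABLE needed.
add_product(db, "visit2-ccd07", "CalibratedScienceImage", filter="g", seeing_arcsec=0.7)
print(get_meta(db, "visit2-ccd07"))
```

The trade-off is that values are stored untyped and metadata queries need extra lookups, so designs of this kind usually keep heavily queried attributes as ordinary columns.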

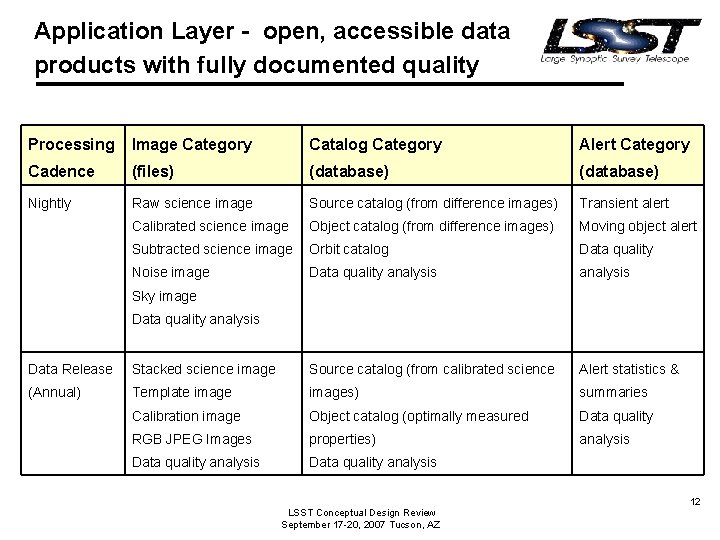

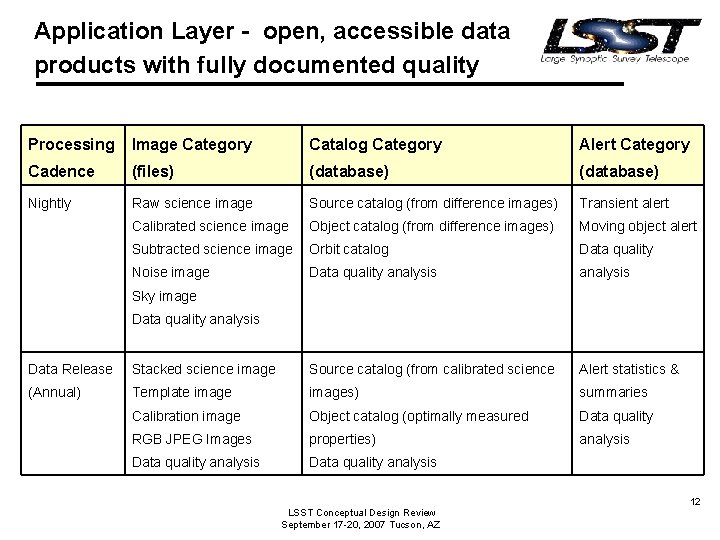

Application Layer - open, accessible data products with fully documented quality

| Processing Cadence | Image Category (files) | Catalog Category (database) | Alert Category |
|---|---|---|---|
| Nightly | Raw science image; Calibrated science image; Subtracted science image; Noise image; Sky image; Data quality analysis | Source catalog (from difference images); Object catalog (from difference images); Orbit catalog; Data quality analysis | Transient alert; Moving object alert; Data quality analysis |
| Data Release (Annual) | Stacked science image; Template; Calibration image; RGB JPEG Images; Data quality analysis | Source catalog (from calibrated science images); Object catalog (optimally measured properties) | Alert statistics & summaries; Data quality analysis |

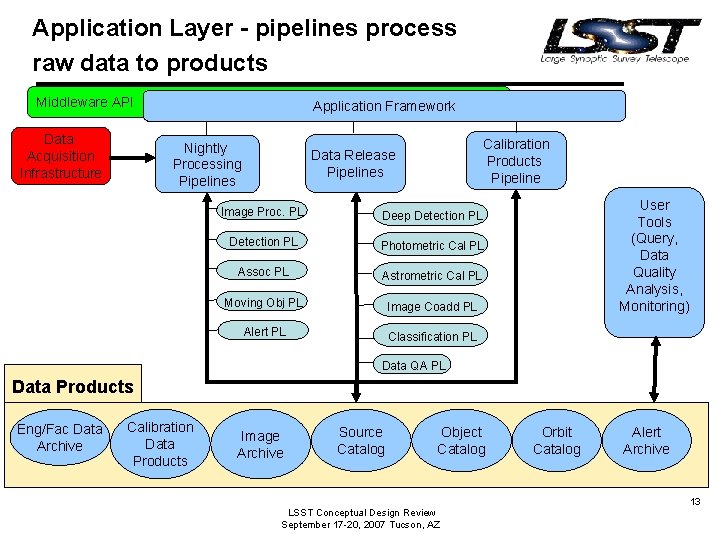

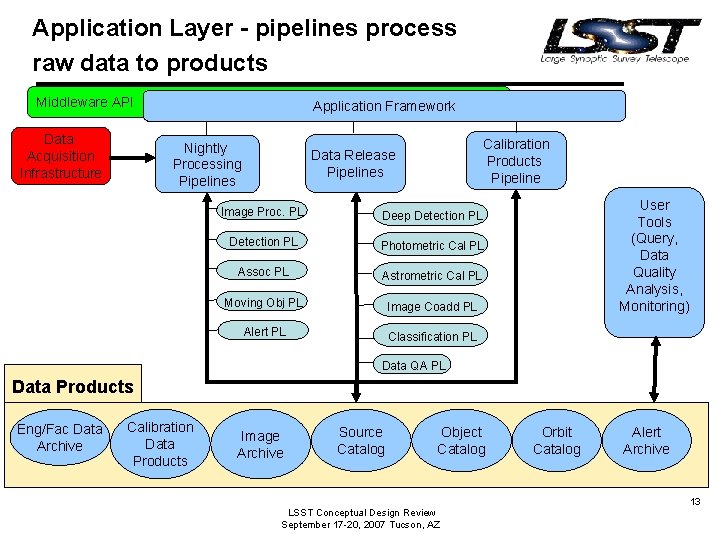

Application Layer - pipelines process raw data to products

[Diagram: application pipelines built on the Application Framework and Middleware API, above Data Acquisition and Infrastructure.]

- Nightly Processing Pipelines: Image Proc. PL, Assoc PL, Moving Obj PL, Alert PL
- Data Release Pipelines: Deep Detection PL, Photometric Cal PL, Astrometric Cal PL, Image Coadd PL, Classification PL
- Calibration Products Pipeline
- Data QA PL
- User Tools (Query, Data Quality Analysis, Monitoring)
- Data Products: Eng/Fac Data Archive, Calibration Data Products, Image Archive, Source Catalog, Object Catalog, Orbit Catalog, Alert Archive

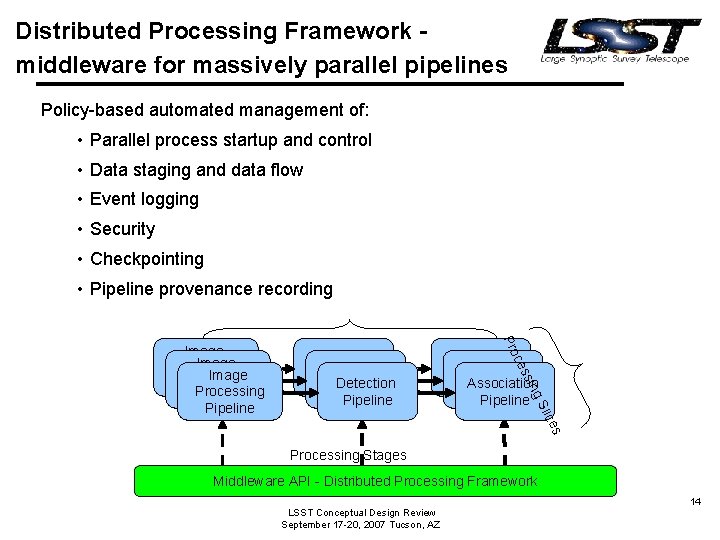

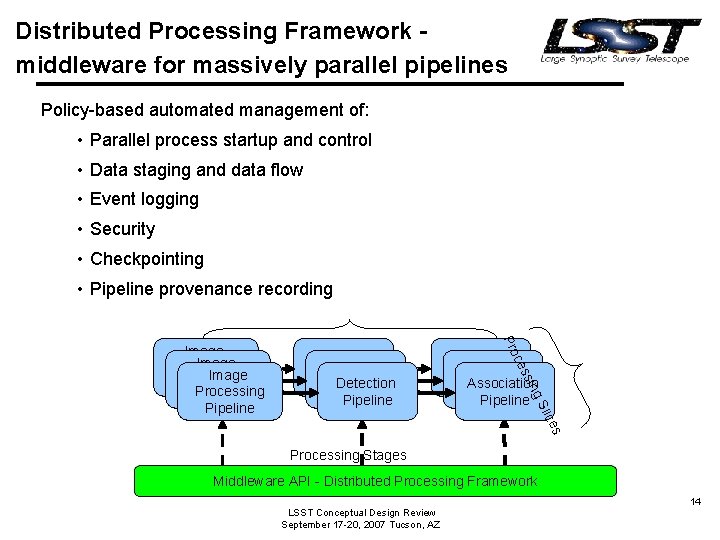

Distributed Processing Framework middleware for massively parallel pipelines

Policy-based automated management of:

- Parallel process startup and control
- Data staging and data flow
- Event logging
- Security
- Checkpointing
- Pipeline provenance recording

[Diagram: the Image Processing, Detection, and Association pipelines, each composed of processing stages executed across parallel processing slices, running on the Middleware API - Distributed Processing Framework.]
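
The stage/slice structure in the diagram can be sketched in a few lines: a pipeline is an ordered list of stages, each stage runs on every slice in parallel, and the framework (not the stage code) records provenance. This is a single-machine illustration using Python's `concurrent.futures`; the Data Challenge 1 pipeline harness was MPI-based, and the class, stage functions, and slice contents here are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Slice = Dict[str, Any]            # one independent unit of work, e.g. one CCD
Stage = Callable[[Slice], Slice]  # a processing step applied to every slice

@dataclass
class Pipeline:
    name: str
    stages: List[Stage]
    provenance: List[str] = field(default_factory=list)

    def run(self, slices: List[Slice], workers: int = 4) -> List[Slice]:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            for stage in self.stages:
                # All slices run this stage in parallel; pool.map keeps input
                # order, and list() waits until every slice has finished it.
                slices = list(pool.map(stage, slices))
                # Provenance is recorded by the framework, not by stage code.
                self.provenance.append(f"{self.name}:{stage.__name__}:{len(slices)} slices")
        return slices

def subtract_bias(s: Slice) -> Slice:
    return {**s, "pixels": [p - s["bias"] for p in s["pixels"]]}

def detect(s: Slice, threshold: int = 5) -> Slice:
    return {**s, "detections": [i for i, p in enumerate(s["pixels"]) if p > threshold]}

ccds = [{"ccd": i, "bias": 10, "pixels": [10, 12, 20, 9 + 4 * i]} for i in range(3)]
pipe = Pipeline("ImageProcessing", [subtract_bias, detect])
result = pipe.run(ccds)
for s in result:
    print(s["ccd"], s["detections"])
print(pipe.provenance)
```

Keeping provenance and parallelism in the framework lets stage authors write plain per-slice functions, which matches the slide's goal of automated, policy-based management.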

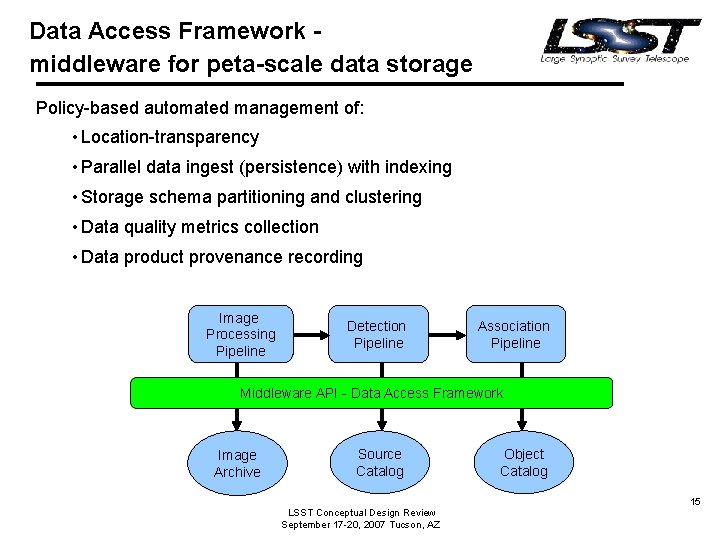

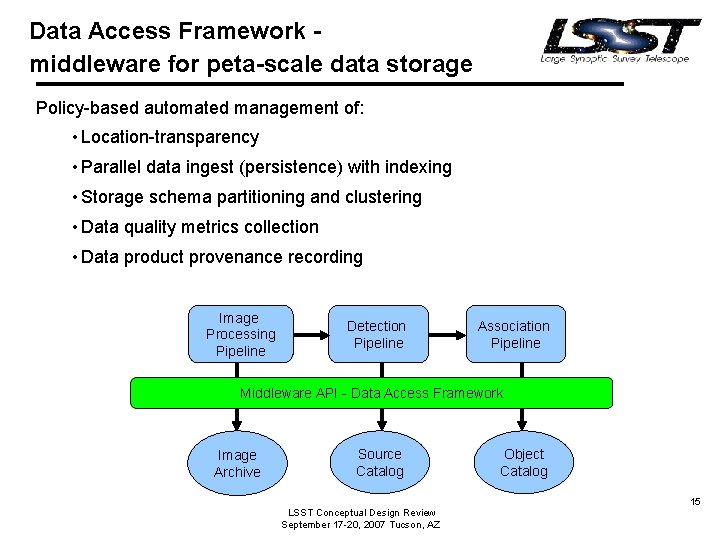

Data Access Framework middleware for peta-scale data storage

Policy-based automated management of:

- Location transparency
- Parallel data ingest (persistence) with indexing
- Storage schema partitioning and clustering
- Data quality metrics collection
- Data product provenance recording

[Diagram: the Image Processing, Detection, and Association pipelines access the Image Archive, Source Catalog, and Object Catalog through the Middleware API - Data Access Framework.]
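
Storage schema partitioning and location transparency can be illustrated with a simple spatial scheme: the sky is cut into declination stripes, each stripe into right-ascension chunks, and each chunk is assigned to a storage node. Callers ask for rows by position and never choose a node. The stripe and chunk counts and the modulo node assignment below are assumptions for illustration, not the LSST partitioning parameters.

```python
from collections import defaultdict
from typing import Dict, List

N_STRIPES = 18            # 10-degree declination stripes (assumed)
CHUNKS_PER_STRIPE = 36    # 10-degree RA chunks within each stripe (assumed)

def chunk_id(ra_deg: float, dec_deg: float) -> int:
    """Map a sky position to a chunk number in [0, N_STRIPES * CHUNKS_PER_STRIPE)."""
    stripe = min(int((dec_deg + 90.0) / (180.0 / N_STRIPES)), N_STRIPES - 1)
    chunk = min(int((ra_deg % 360.0) / (360.0 / CHUNKS_PER_STRIPE)), CHUNKS_PER_STRIPE - 1)
    return stripe * CHUNKS_PER_STRIPE + chunk

def node_for_chunk(cid: int, n_nodes: int) -> int:
    """Location lookup; callers never pick a node themselves."""
    return cid % n_nodes

def partition(rows: List[dict], n_nodes: int) -> Dict[int, List[dict]]:
    """Group rows by storage node, tagging each row with its chunk for clustering."""
    by_node: Dict[int, List[dict]] = defaultdict(list)
    for row in rows:
        cid = chunk_id(row["ra"], row["dec"])
        by_node[node_for_chunk(cid, n_nodes)].append({**row, "chunk": cid})
    return dict(by_node)

sources = [
    {"id": 1, "ra": 15.0, "dec": 0.0},
    {"id": 2, "ra": 16.0, "dec": 1.0},     # same chunk as id 1: stored together
    {"id": 3, "ra": 359.9, "dec": 89.9},
]
print(partition(sources, n_nodes=4))
```

Nearby objects land in the same chunk, so spatial queries touch few nodes; a real design would also need to handle objects near chunk boundaries.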

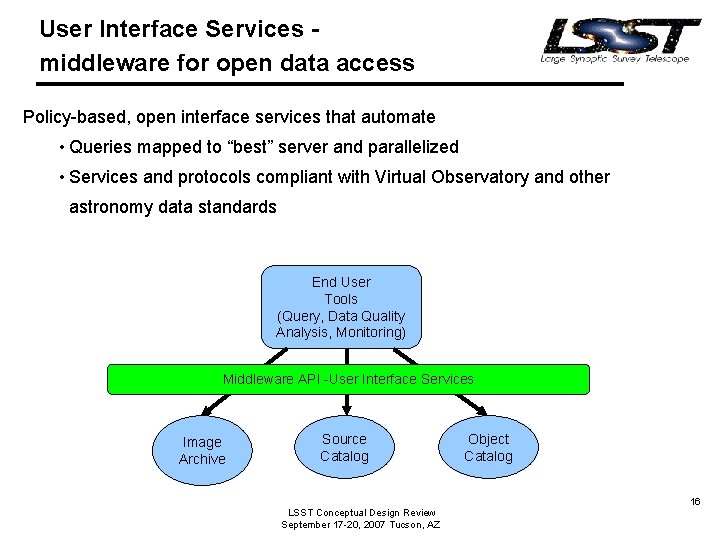

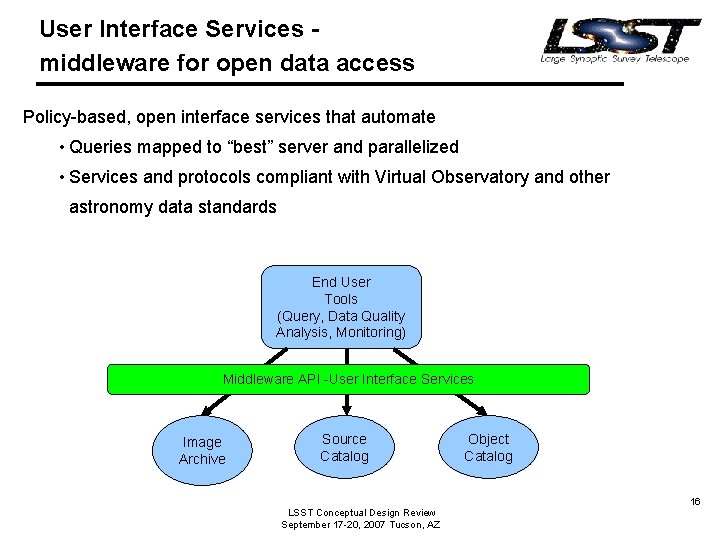

User Interface Services middleware for open data access

Policy-based, open interface services that automate:

- Mapping queries to the “best” server and parallelizing them
- Services and protocols compliant with Virtual Observatory and other astronomy data standards

[Diagram: End User Tools (Query, Data Quality Analysis, Monitoring) access the Image Archive, Source Catalog, and Object Catalog through the Middleware API - User Interface Services.]
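
A sketch of mapping a query to the “best” server and parallelizing it, under the assumption that “best” means the least-loaded server holding a replica of each data chunk the query touches. The replica map, load figures, and scan function are hypothetical; a real service would obtain them from the data access layer.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

def plan(chunks: List[int], replicas: Dict[int, List[str]],
         load: Dict[str, int]) -> Dict[str, List[int]]:
    """Assign each chunk to the least-loaded server that holds a copy of it."""
    load = dict(load)  # work on a copy so the caller's view is unchanged
    assignment: Dict[str, List[int]] = defaultdict(list)
    for c in chunks:
        # Ties are broken by server name so the plan is deterministic.
        best = min(replicas[c], key=lambda s: (load[s], s))
        assignment[best].append(c)
        load[best] += 1  # count the work just assigned
    return dict(assignment)

def run_query(chunks, replicas, load, scan: Callable[[str, List[int]], list]) -> list:
    """Scan each server's share of chunks in parallel and merge the results."""
    assignment = plan(chunks, replicas, load)
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(scan, server, cs) for server, cs in assignment.items()]
        merged = [row for f in futures for row in f.result()]
    return sorted(merged)

replicas = {1: ["a", "b"], 2: ["a", "b"], 3: ["b"]}
load = {"a": 0, "b": 1}
print(plan([1, 2, 3], replicas, load))
print(run_query([1, 2, 3], replicas, load, lambda server, cs: [(c, server) for c in cs]))
```

Updating the load as chunks are assigned spreads a large query across replicas instead of piling it onto whichever server was idle at the start.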

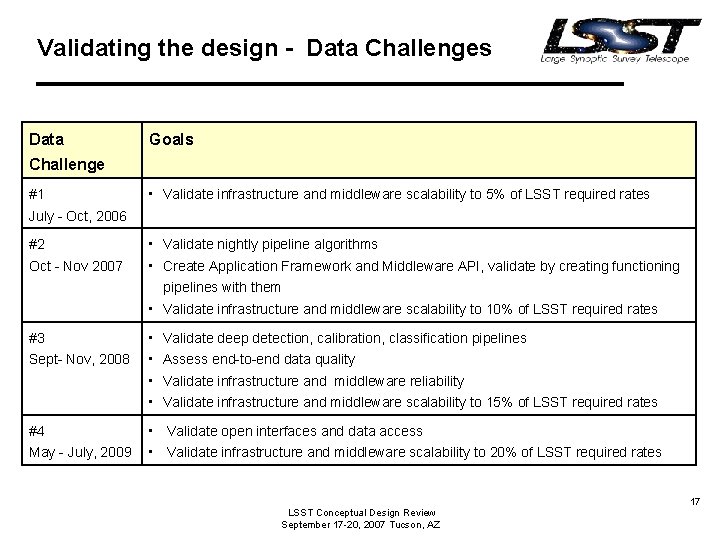

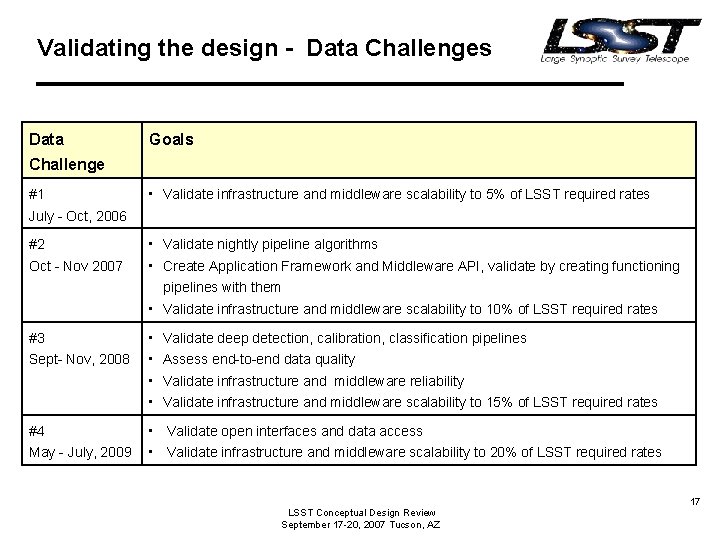

Validating the design - Data Challenges

- Data Challenge #1 (July - Oct, 2006)
  - Validate infrastructure and middleware scalability to 5% of LSST required rates
- Data Challenge #2 (Oct - Nov 2007)
  - Validate nightly pipeline algorithms
  - Create Application Framework and Middleware API; validate them by creating functioning pipelines with them
  - Validate infrastructure and middleware scalability to 10% of LSST required rates
- Data Challenge #3 (Sept - Nov, 2008)
  - Validate deep detection, calibration, and classification pipelines
  - Assess end-to-end data quality
  - Validate infrastructure and middleware reliability
  - Validate infrastructure and middleware scalability to 15% of LSST required rates
- Data Challenge #4 (May - July, 2009)
  - Validate open interfaces and data access
  - Validate infrastructure and middleware scalability to 20% of LSST required rates

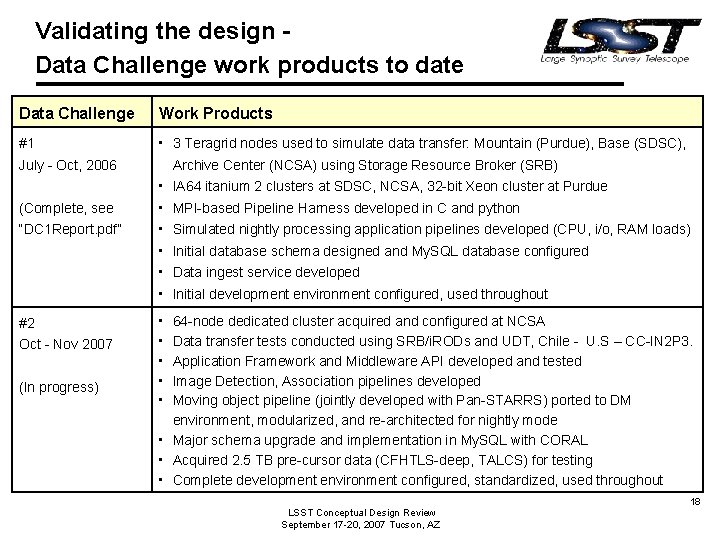

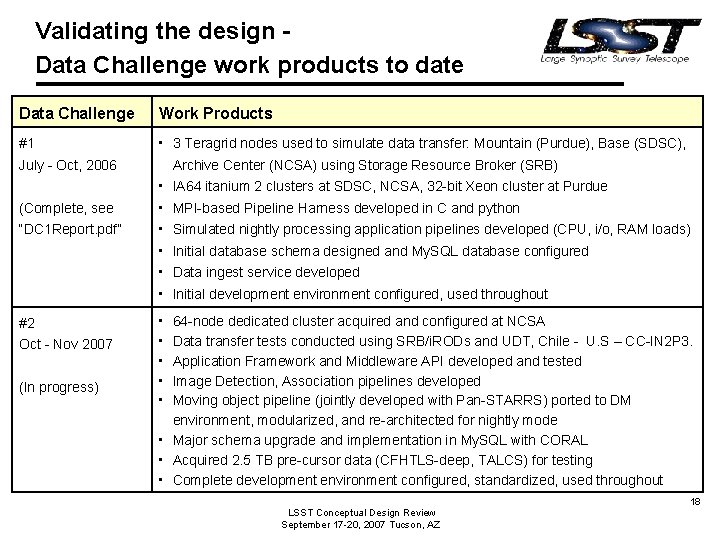

Validating the design - Data Challenge work products to date

- Data Challenge #1, July - Oct, 2006 (complete; see “DC 1 Report.pdf”)
  - 3 TeraGrid nodes used to simulate data transfer: Mountain (Purdue), Base (SDSC), Archive Center (NCSA), using Storage Resource Broker (SRB)
  - IA-64 Itanium 2 clusters at SDSC and NCSA, 32-bit Xeon cluster at Purdue
  - MPI-based Pipeline Harness developed in C and Python
  - Simulated nightly processing application pipelines developed (CPU, I/O, RAM loads)
  - Initial database schema designed and MySQL database configured
  - Data ingest service developed
  - Initial development environment configured, used throughout
- Data Challenge #2, Oct - Nov 2007 (in progress)
  - 64-node dedicated cluster acquired and configured at NCSA
  - Data transfer tests conducted using SRB/iRODS and UDT, Chile - U.S. - CC-IN2P3
  - Application Framework and Middleware API developed and tested
  - Image Detection, Association pipelines developed
  - Moving object pipeline (jointly developed with Pan-STARRS) ported to DM environment, modularized, and re-architected for nightly mode
  - Major schema upgrade and implementation in MySQL with CORAL
  - Acquired 2.5 TB precursor data (CFHTLS-deep, TALCS) for testing
  - Complete development environment configured, standardized, used throughout

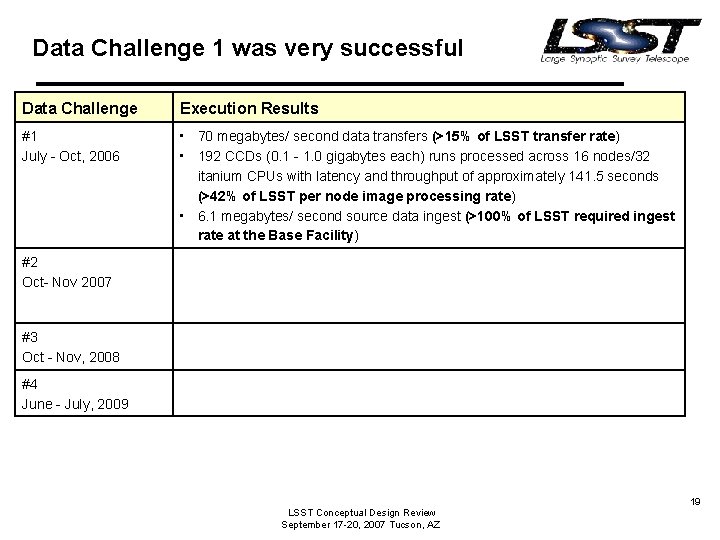

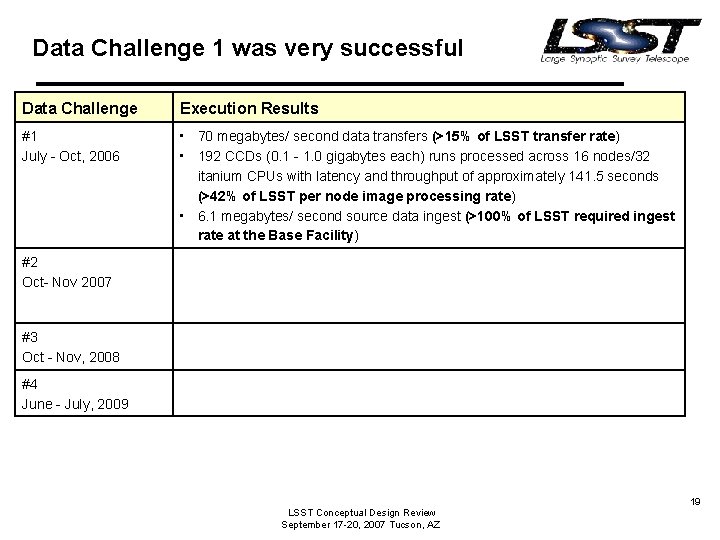

Data Challenge 1 was very successful

- Data Challenge #1, July - Oct, 2006: execution results
  - 70 megabytes/second data transfers (>15% of LSST transfer rate)
  - 192 CCDs (0.1 - 1.0 gigabytes each) processed across 16 nodes/32 Itanium CPUs with latency and throughput of approximately 141.5 seconds (>42% of LSST per-node image processing rate)
  - 6.1 megabytes/second source data ingest (>100% of LSST required ingest rate at the Base Facility)
- Data Challenge #2, Oct - Nov 2007
- Data Challenge #3, Oct - Nov, 2008
- Data Challenge #4, June - July, 2009
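
The transfer percentage above can be cross-checked against the network figures given earlier in the deck. A sketch, assuming the LSST reference transfer rate is either the 2.4 Gbps requirement or the 2.5 Gbps average, with decimal megabytes and gigabits; the slide does not say which reference it used:

```python
def percent_of_rate(measured_mbyte_s: float, reference_gbit_s: float) -> float:
    """Measured throughput (decimal MB/s) as a percentage of a link rate (Gbit/s)."""
    reference_mbyte_s = reference_gbit_s * 1000 / 8
    return 100.0 * measured_mbyte_s / reference_mbyte_s

for ref in (2.4, 2.5):
    print(f"70 MB/s vs {ref} Gbps: {percent_of_rate(70, ref):.1f}%")

# Image processing throughput implied by 192 CCDs in ~141.5 s on 16 nodes.
ccds, seconds, nodes = 192, 141.5, 16
print(f"{ccds / seconds:.2f} CCDs/s overall, {ccds / nodes:.0f} CCDs per node")
```

Either reference gives roughly 22-23%, consistent with the “>15%” claim.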

More information

- Contact person: Jeff Kantor (DM coordinator), jkantor@lsst.org