LSF introduction for LILAC cluster June 22 2017



Sveta Mazurkova, HPC system administrator, hpc.mskcc.org

Agenda

- LILAC computational resources
- LILAC LSF queues
- LSF CPU job submission
- LSF CPU/GPU job submission
- LSF job monitoring
- Useful LSF commands
- Questions

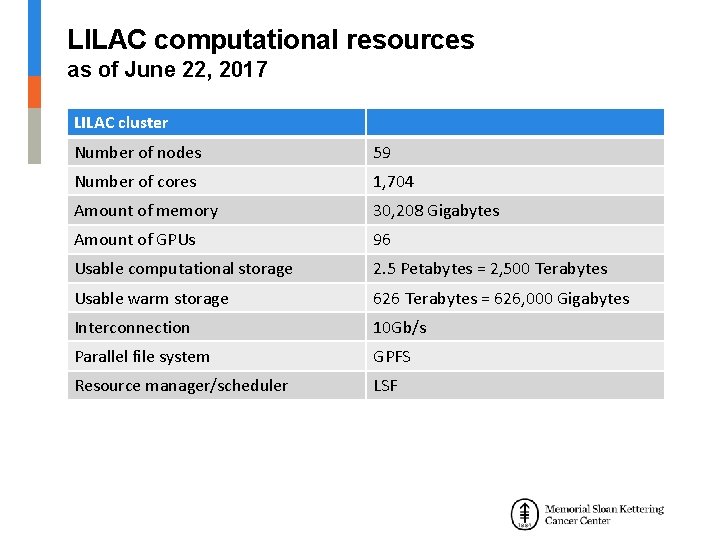

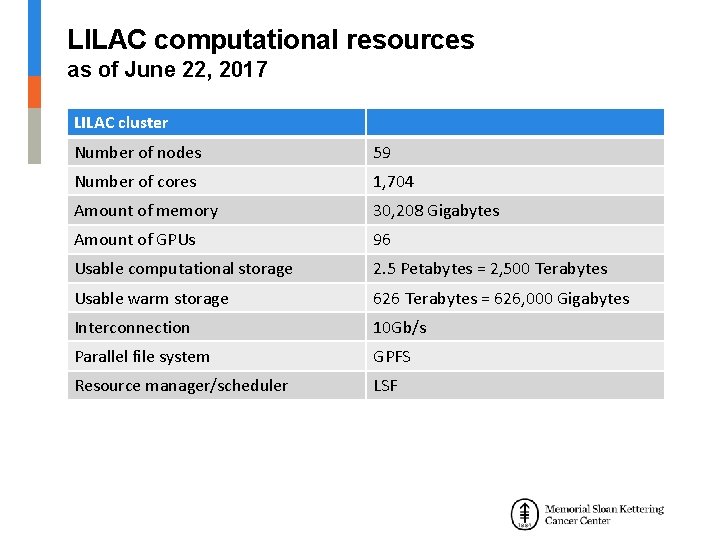

LILAC computational resources as of June 22, 2017

| LILAC cluster | |
| --- | --- |
| Number of nodes | 59 |
| Number of cores | 1,704 |
| Amount of memory | 30,208 Gigabytes |
| Number of GPUs | 96 |
| Usable computational storage | 2.5 Petabytes = 2,500 Terabytes |
| Usable warm storage | 626 Terabytes = 626,000 Gigabytes |
| Interconnection | 10 Gb/s |
| Parallel file system | GPFS |
| Resource manager/scheduler | LSF |

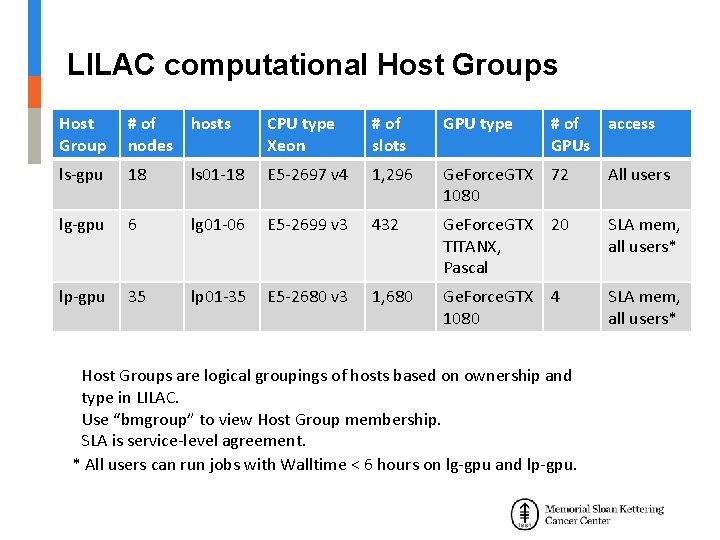

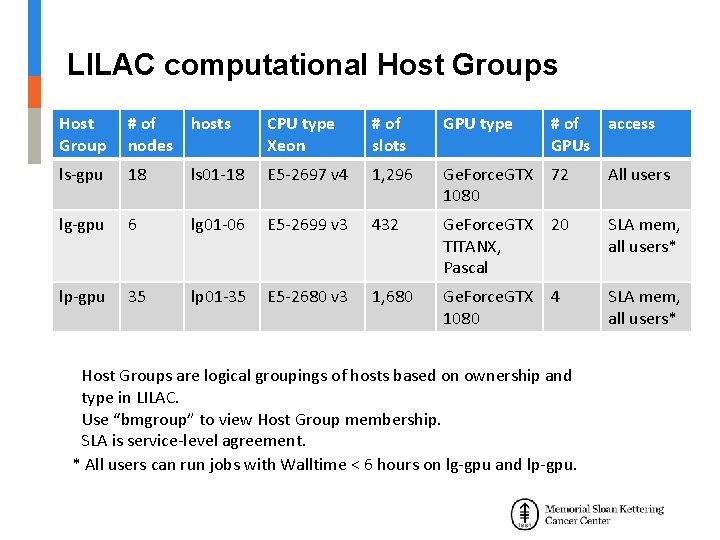

LILAC computational Host Groups

| Host Group | # of nodes | Hosts | CPU type (Xeon) | # of slots | GPU type | # of GPUs | Access |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ls-gpu | 18 | ls01-18 | E5-2697 v4 | 1,296 | GeForceGTX1080 | 72 | All users |
| lg-gpu | 6 | lg01-06 | E5-2699 v3 | 432 | GeForceGTX TITANX, Pascal | 20 | SLA mem, all users* |
| lp-gpu | 35 | lp01-35 | E5-2680 v3 | 1,680 | GeForceGTX1080 | 4 | SLA mem, all users* |

Host Groups are logical groupings of hosts based on ownership and type in LILAC. Use `bmgroup` to view Host Group membership. SLA is service-level agreement.

\* All users can run jobs with Walltime < 6 hours on lg-gpu and lp-gpu.
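The 6-hour rule for lg-gpu and lp-gpu can be checked before submitting. The following is a minimal sketch, not a LILAC tool: the function names `walltime_minutes` and `fits_shared_limit` are hypothetical, and it assumes the walltime is written in the `[hour:]minute` form accepted by `bsub -W`, without a host suffix.

```shell
#!/bin/sh
# Convert a bsub -W style walltime ("H:MM" or plain minutes) to minutes.
walltime_minutes() {
    echo "$1" | awk -F: '{ if (NF == 2) print $1 * 60 + $2; else print $1 + 0 }'
}

# Succeed only if the walltime is strictly below 6 hours (360 minutes),
# the limit the slide gives for non-SLA jobs on lg-gpu and lp-gpu.
fits_shared_limit() {
    [ "$(walltime_minutes "$1")" -lt 360 ]
}

for w in 4:00 6:00; do
    if fits_shared_limit "$w"; then
        echo "-W $w is under the 6-hour limit"
    else
        echo "-W $w is not under the 6-hour limit"
    fi
done
```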

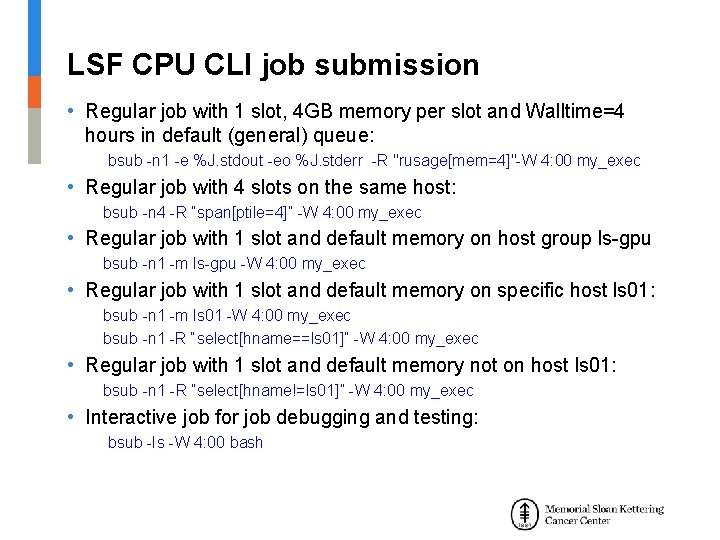

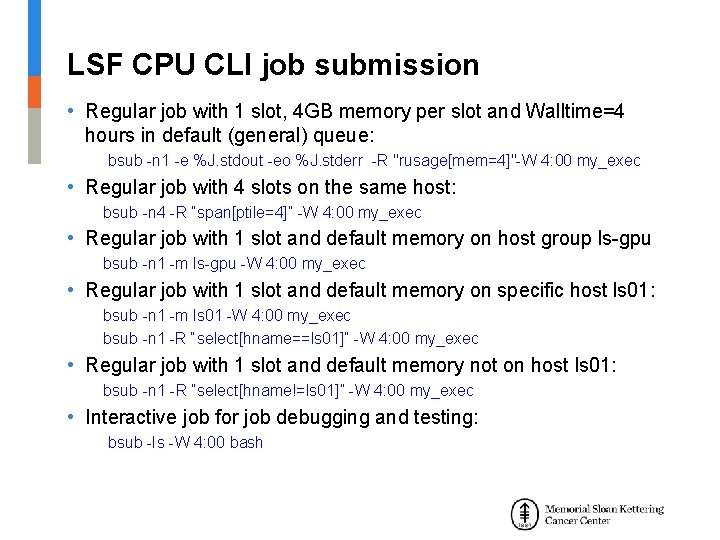

LILAC LSF queues

LILAC queues (list them with `bqueues`):

- general (default queue)
- gpushared
- wholenode

LSF uses cgroups to limit the CPU cores, number of GPUs, and memory that a job can use.

- All queues have default resource parameters for jobs: 1 CPU thread (`-n 1`), 2 GB of memory (`rusage[mem=2]`), and 1 hour of runtime (`-W 1:00`).
- To submit a job to LSF, use the `bsub` command.
- Options given to `bsub` override the default resource parameters.
- To check the queue configuration: `bqueues -l queue_name`
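To make the defaults concrete, here is a small dry-run sketch that prints the `bsub` command line equivalent to the queue defaults and shows how overrides change it. It only prints text and never calls LSF. The function `bsub_cmd` and the variables `SLOTS`, `MEM_GB`, and `WALL` are hypothetical names chosen for this illustration.

```shell
#!/bin/sh
# Print (do not run) a bsub command line, filling in the queue defaults
# from the slide: 1 slot, 2 GB of memory, 1 hour of walltime.
bsub_cmd() (
    slots="${SLOTS:-1}"
    mem="${MEM_GB:-2}"
    wall="${WALL:-1:00}"
    printf 'bsub -n %s -R "rusage[mem=%s]" -W %s %s\n' "$slots" "$mem" "$wall" "$*"
)

# Defaults only.
bsub_cmd my_exec
# Override memory and walltime.
MEM_GB=4 WALL=4:00 bsub_cmd my_exec
```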

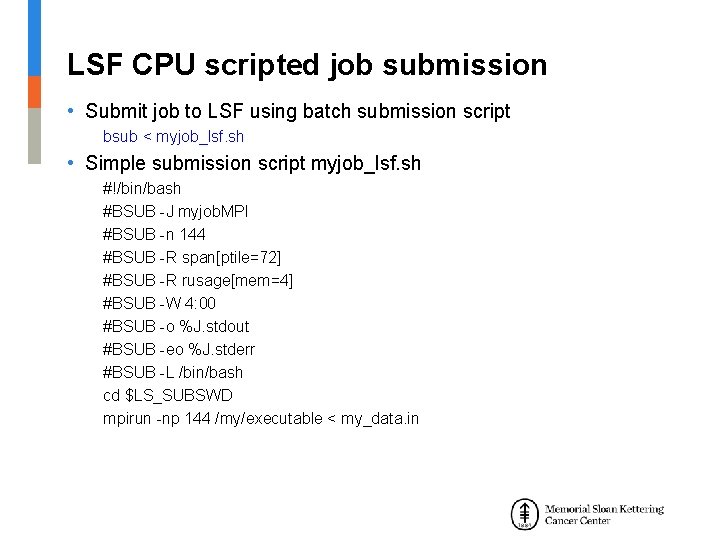

LSF CPU CLI job submission

- Regular job with 1 slot, 4 GB of memory per slot, and a Walltime of 4 hours in the default (general) queue:
  `bsub -n 1 -o %J.stdout -eo %J.stderr -R "rusage[mem=4]" -W 4:00 my_exec`
- Regular job with 4 slots on the same host:
  `bsub -n 4 -R "span[ptile=4]" -W 4:00 my_exec`
- Regular job with 1 slot and default memory on host group ls-gpu:
  `bsub -n 1 -m ls-gpu -W 4:00 my_exec`
- Regular job with 1 slot and default memory on the specific host ls01 (two equivalent forms):
  `bsub -n 1 -m ls01 -W 4:00 my_exec`
  `bsub -n 1 -R "select[hname==ls01]" -W 4:00 my_exec`
- Regular job with 1 slot and default memory on any host except ls01:
  `bsub -n 1 -R "select[hname!=ls01]" -W 4:00 my_exec`
- Interactive job for debugging and testing:
  `bsub -Is -W 4:00 bash`


LSF CPU CLI job submission (cont.)

- Array job:
  `bsub -J "array[1-100]" -n 1 my_exec`
- Array job with a slot limit that allows at most 10 jobs to run at any one time:
  `bsub -J "array[1-100%10]" -n 1 my_exec`
- Regular job that requests all CPUs and GPUs on one node:
  `bsub -n 72 -app wholenode_GTX1080 -q wholenode`
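Each element of an array job usually needs to know which element it is. LSF exports the element index in the `LSB_JOBINDEX` environment variable. The sketch below shows one way a per-element script might use it; the script name `array_task.sh`, the function `input_for_index`, and the `input_<index>.dat` file naming are assumptions made for illustration.

```shell
#!/bin/sh
# array_task.sh (hypothetical name): work for one element of an array job.

# Map an array index to that element's input file (hypothetical naming).
input_for_index() {
    echo "input_$1.dat"
}

# LSB_JOBINDEX is set by LSF inside array jobs; fall back to 1 so the
# script can also be tried outside LSF.
index="${LSB_JOBINDEX:-1}"
input_file="$(input_for_index "$index")"

echo "array element ${index} will read ${input_file}"
# my_exec < "${input_file}"   # the real work would go here
```

Under these assumptions, the script would be submitted with something like `bsub -J "array[1-100]" -n 1 ./array_task.sh`.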

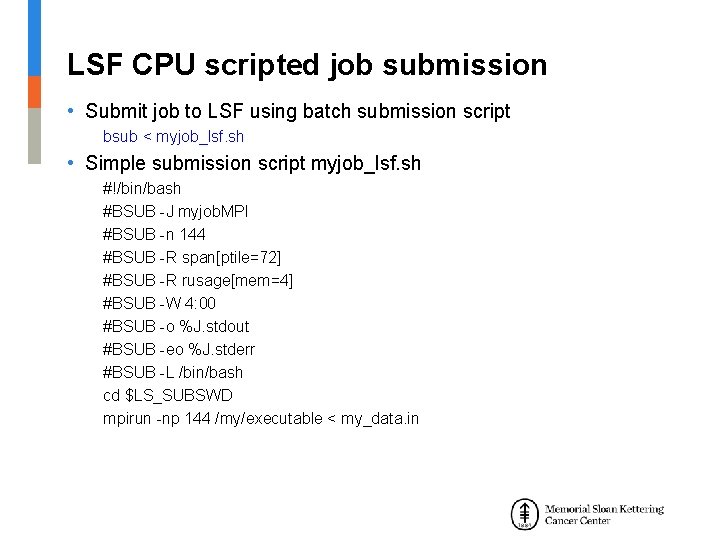

LSF CPU scripted job submission

Submit a job to LSF using a batch submission script: `bsub < myjob_lsf.sh`

Simple submission script myjob_lsf.sh:

    #!/bin/bash
    #BSUB -J myjob.MPI
    #BSUB -n 144
    #BSUB -R span[ptile=72]
    #BSUB -R rusage[mem=4]
    #BSUB -W 4:00
    #BSUB -o %J.stdout
    #BSUB -eo %J.stderr
    #BSUB -L /bin/bash

    # LS_SUBCWD is the directory the job was submitted from
    cd $LS_SUBCWD
    mpirun -np 144 /my/executable < my_data.in
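The script above asks for 144 slots at 72 slots per host, which works out to 2 hosts. A minimal sketch of that arithmetic, using a hypothetical helper named `hosts_needed` and rounding up for the case where the last host is only partly filled:

```shell
#!/bin/sh
# Number of hosts a job spans for a given slot count (-n) and
# slots-per-host value (span[ptile=...]), rounded up.
hosts_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

echo "-n 144 with ptile=72 spans $(hosts_needed 144 72) hosts"
echo "-n 4 with ptile=4 spans $(hosts_needed 4 4) host"
```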

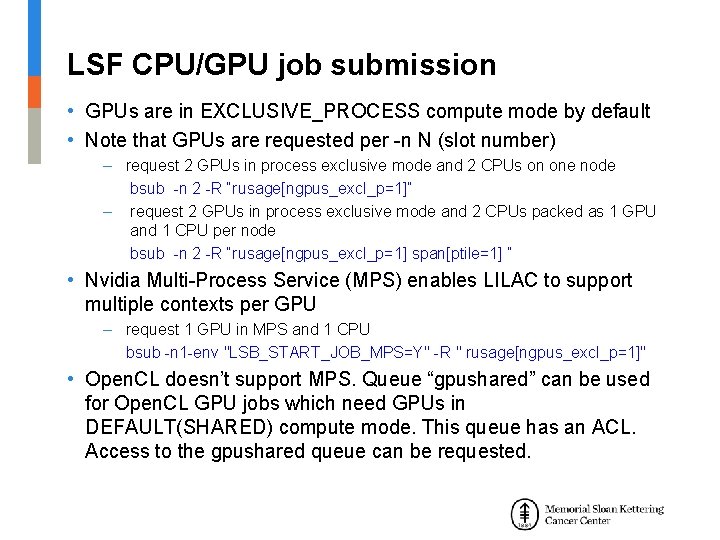

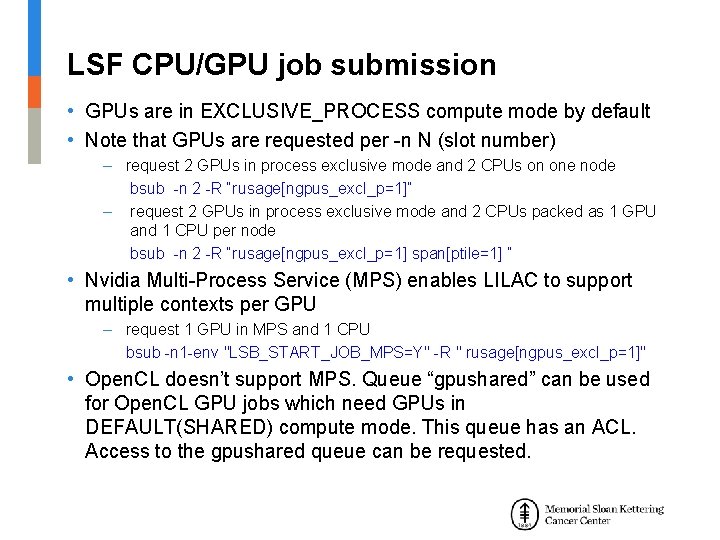

LSF CPU/GPU job submission

- GPUs are in EXCLUSIVE_PROCESS compute mode by default.
- Note that GPUs are requested per slot (`-n N`):
  - Request 2 GPUs in process-exclusive mode and 2 CPUs on one node:
    `bsub -n 2 -R "rusage[ngpus_excl_p=1]"`
  - Request 2 GPUs in process-exclusive mode and 2 CPUs, packed as 1 GPU and 1 CPU per node:
    `bsub -n 2 -R "rusage[ngpus_excl_p=1] span[ptile=1]"`
- Nvidia Multi-Process Service (MPS) enables LILAC to support multiple contexts per GPU:
  - Request 1 GPU in MPS and 1 CPU:
    `bsub -n 1 -env "LSB_START_JOB_MPS=Y" -R "rusage[ngpus_excl_p=1]"`
- OpenCL doesn't support MPS. The "gpushared" queue can be used for OpenCL GPU jobs that need GPUs in DEFAULT (SHARED) compute mode. This queue has an ACL; access to it can be requested.
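Because the GPU count in `rusage[ngpus_excl_p=...]` applies per slot, the total number of GPUs a job receives is the slot count multiplied by the per-slot value. A small sketch of that calculation, with `total_gpus` as a hypothetical helper name:

```shell
#!/bin/sh
# Total GPUs for a job: slots (-n) times GPUs per slot (ngpus_excl_p).
total_gpus() {
    echo $(( $1 * $2 ))
}

echo "-n 2 with ngpus_excl_p=1 requests $(total_gpus 2 1) GPUs"
echo "-n 1 with ngpus_excl_p=1 requests $(total_gpus 1 1) GPU"
```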

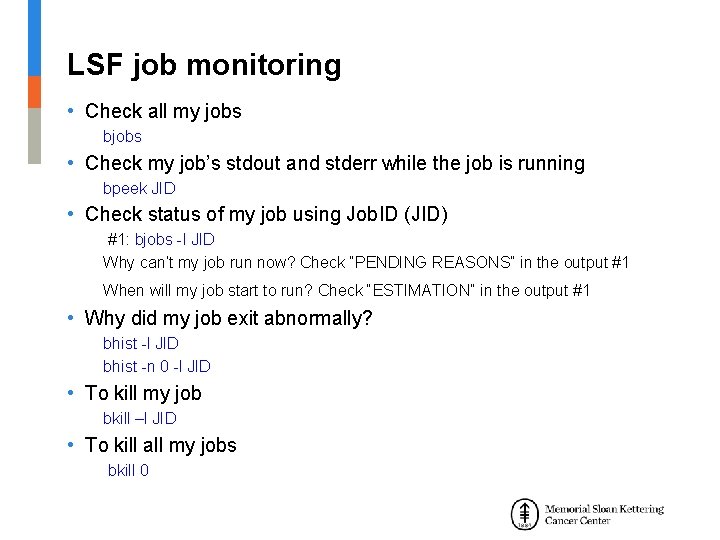

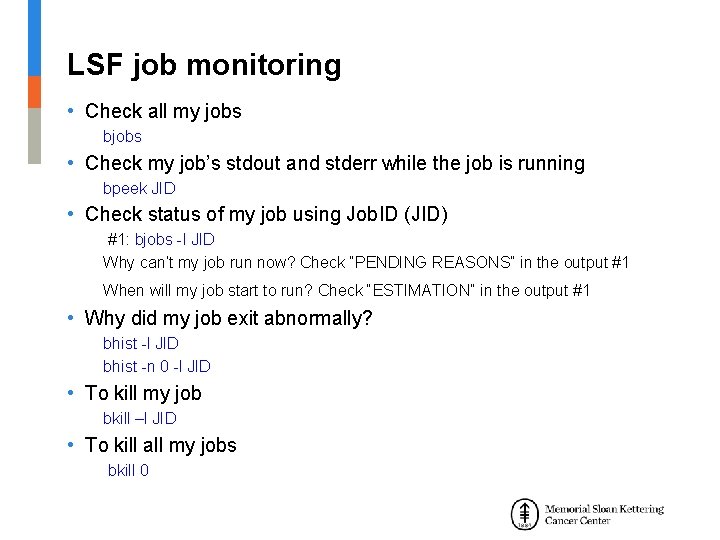

LSF CPU/GPU job submission (cont.)

Nodes on LILAC have different types of GPUs.

- Check the available GPU types on LILAC:
  `lsload -s | grep gpu_model`
- Check the available GPU types on a particular node:
  `lsload -l node_name`
- Check the current number of available GPUs on LILAC:
  `bhosts -R ngpus_excl_p`
- Request a particular GPU type:
  `bsub -n 1 -R "select[gpu_model0=='GeForceGTX1080' || gpu_model1=='GeForceGTX1080' || gpu_model2=='GeForceGTX1080' || gpu_model3=='GeForceGTX1080'] rusage[ngpus_excl_p=1]"`
- If all GPUs in a host group are the same type, you can request GPUs by host group:
  `bsub -n 1 -R "rusage[ngpus_excl_p=1]" -m ls-gpu`
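The long `select[...]` string for a GPU type repeats the same test once per `gpu_modelN` resource, so it can be generated rather than typed. The sketch below is one way to do that; `gpu_select` is a hypothetical helper, and the default of four devices per node is an assumption taken from the `gpu_model0` to `gpu_model3` names in the example above.

```shell
#!/bin/sh
# Build a select[] expression that matches a GPU model on any of the
# per-device resources gpu_model0 .. gpu_model<count-1>.
gpu_select() (
    model="$1"
    count="${2:-4}"   # assumed maximum number of GPUs per node
    expr=""
    i=0
    while [ "$i" -lt "$count" ]; do
        if [ -n "$expr" ]; then
            expr="${expr} || "
        fi
        expr="${expr}gpu_model${i}=='${model}'"
        i=$((i + 1))
    done
    echo "select[${expr}]"
)

# Print the resource string that would be passed to bsub -R.
echo "$(gpu_select GeForceGTX1080) rusage[ngpus_excl_p=1]"
```

The printed string could then be used as `bsub -n 1 -R "$(gpu_select GeForceGTX1080) rusage[ngpus_excl_p=1]" my_exec`.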

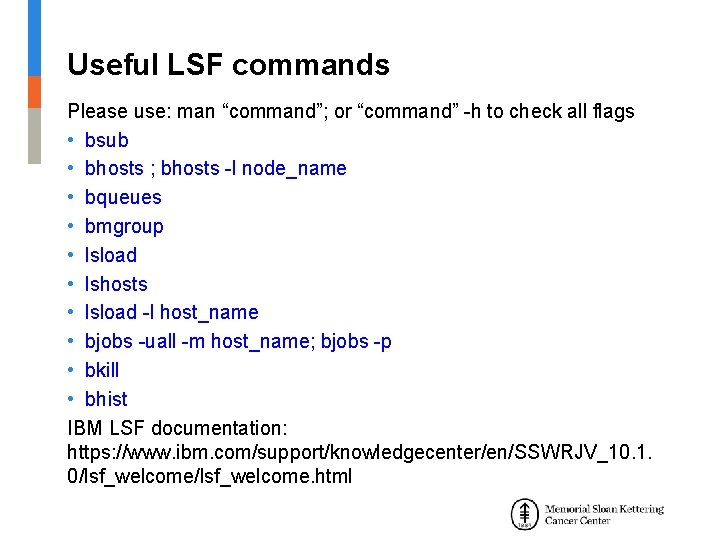

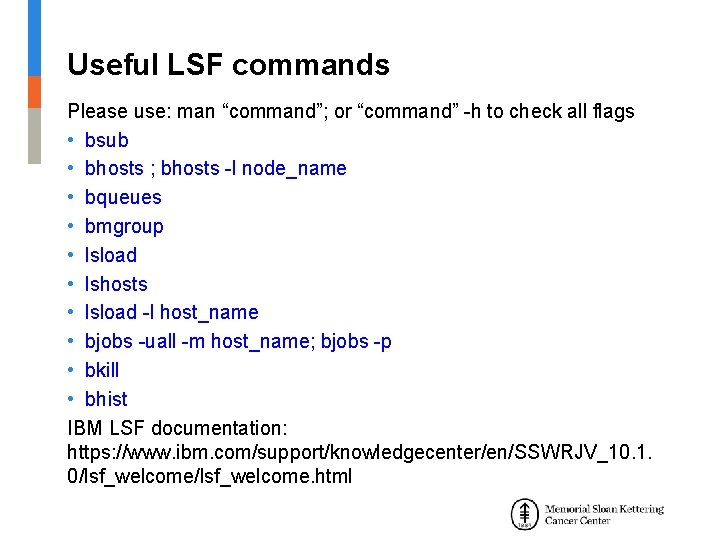

LSF job monitoring

- Check all my jobs: `bjobs`
- Check my job's stdout and stderr while the job is running: `bpeek JID`
- Check the status of my job by job ID (JID): `bjobs -l JID`
  - Why can't my job run now? Check "PENDING REASONS" in the output.
  - When will my job start to run? Check "ESTIMATION" in the output.
- Why did my job exit abnormally? `bhist -l JID`, or `bhist -n 0 -l JID` to search all event log files
- Kill my job: `bkill JID`
- Kill all my jobs: `bkill 0`

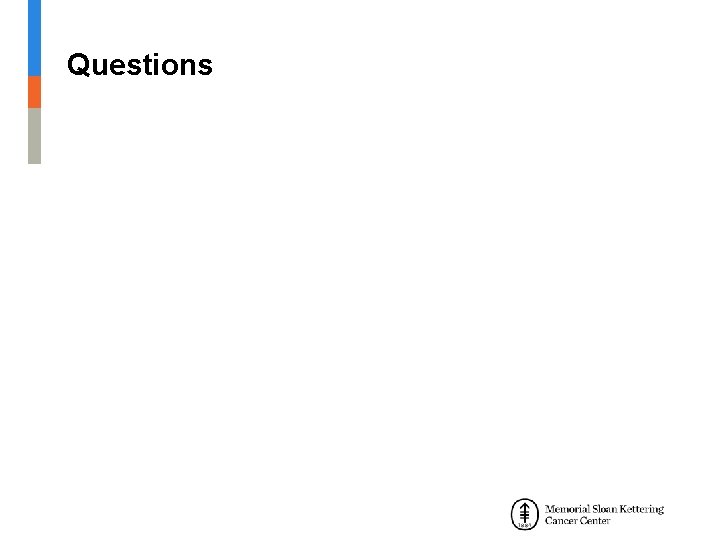

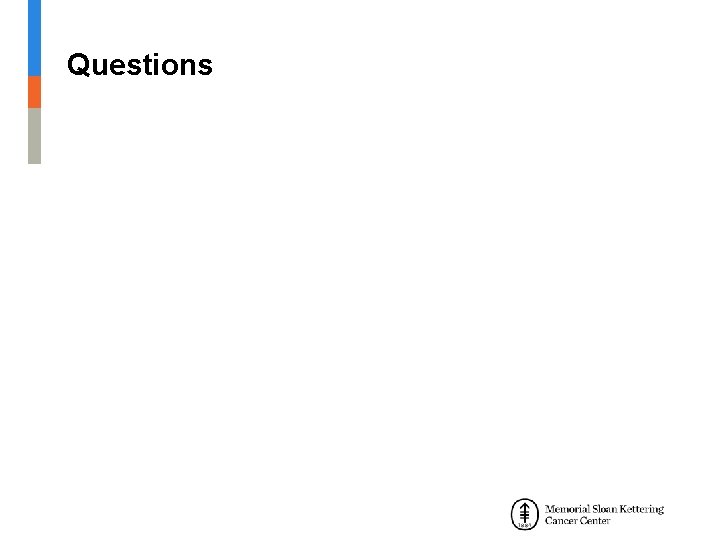

Useful LSF commands

Use `man command` or `command -h` to check all flags.

- `bsub`
- `bhosts`; `bhosts -l node_name`
- `bqueues`
- `bmgroup`
- `lsload`
- `lshosts`
- `lsload -l host_name`
- `bjobs -u all -m host_name`; `bjobs -p`
- `bkill`
- `bhist`

IBM LSF documentation: https://www.ibm.com/support/knowledgecenter/en/SSWRJV_10.1.0/lsf_welcome.html

Questions