LSA 352 Speech Recognition and Synthesis Dan Jurafsky


Lecture 7: Baum-Welch/Learning and Disfluencies

IP notice: some of these slides were derived from Andrew Ng’s CS 229 notes, as well as lecture notes from Chen, Picheny et al., and Bryan Pellom. I’ll try to accurately give credit on each slide.

LSA 352 Summer 2007

Outline for today
- Learning
  - EM for HMMs (the “Baum-Welch algorithm”)
  - Embedded training
  - Mixture Gaussians
- Disfluencies

The learning problem
- Baum-Welch = forward-backward algorithm (Baum 1972)
- It is a special case of the EM (Expectation-Maximization) algorithm (Dempster, Laird, Rubin)
- The algorithm lets us train the transition probabilities $A = \{a_{ij}\}$ and the emission probabilities $B = \{b_i(o_t)\}$ of the HMM

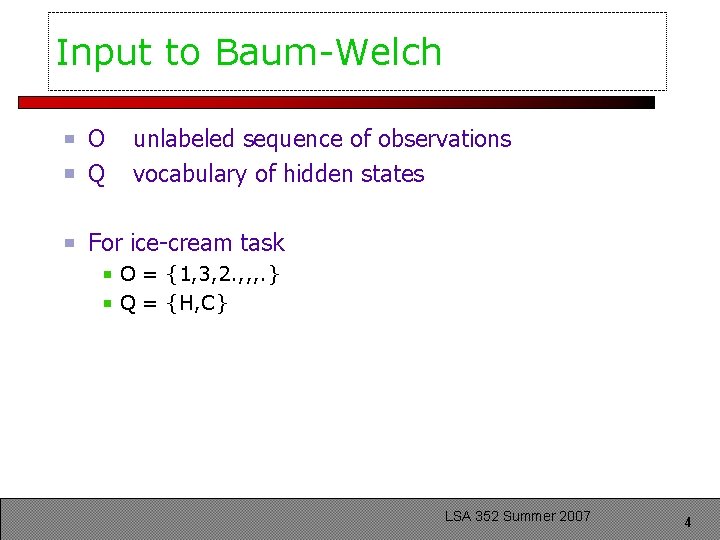

Input to Baum-Welch
- O: unlabeled sequence of observations
- Q: vocabulary of hidden states
- For the ice-cream task: O = {1, 3, 2, ...}, Q = {H, C}

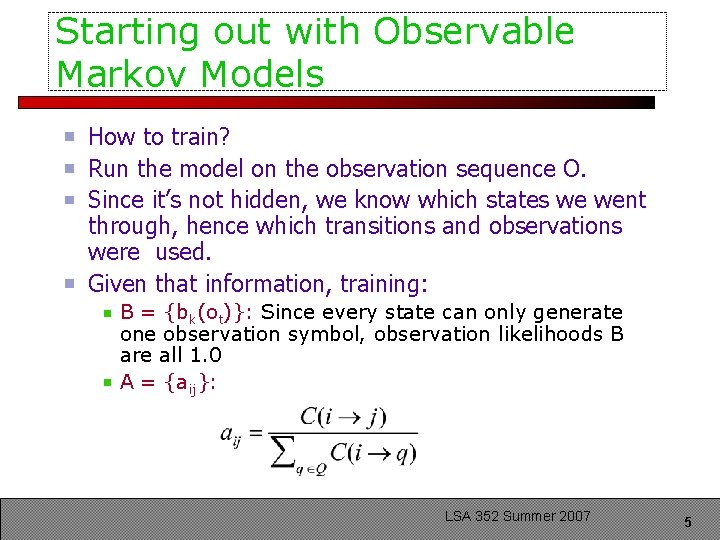

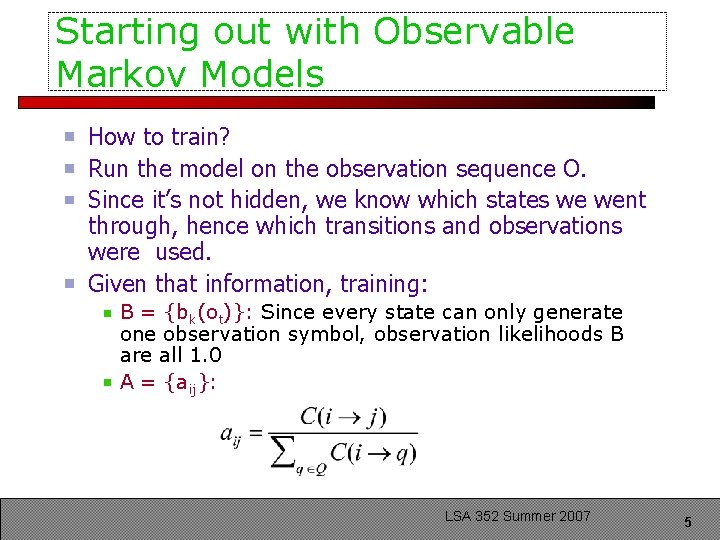

Starting out with observable Markov models
How do we train? Run the model on the observation sequence O. Since it’s not hidden, we know which states we went through, and hence which transitions and observations were used. Given that information, training is just counting:
- $B = \{b_k(o_t)\}$: since every state can generate only one observation symbol, the observation likelihoods in B are all 1.0.
- $A = \{a_{ij}\}$: $a_{ij} = \frac{C(i \to j)}{\sum_{q \in Q} C(i \to q)}$
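A minimal Python sketch of this counting for the fully observable case, assuming the observed state sequence is given as a list of labels (the function name and the example sequence are hypothetical):

```python
from collections import Counter


def estimate_transitions(states):
    """Maximum-likelihood a_ij for a fully observable Markov chain:
    a_ij = C(i -> j) / sum over q of C(i -> q)."""
    pair_counts = Counter(zip(states, states[1:]))   # C(i -> j)
    out_counts = Counter(states[:-1])                # sum_q C(i -> q)
    return {(i, j): c / out_counts[i] for (i, j), c in pair_counts.items()}


# Hypothetical hot (H) / cold (C) day sequence, made up for illustration.
a = estimate_transitions(["H", "H", "C", "H", "C", "C"])
print(a[("H", "C")])  # 2 of the 3 transitions out of H go to C
```

In an HMM these counts are not observable, which is exactly the gap Baum-Welch fills with expected counts.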

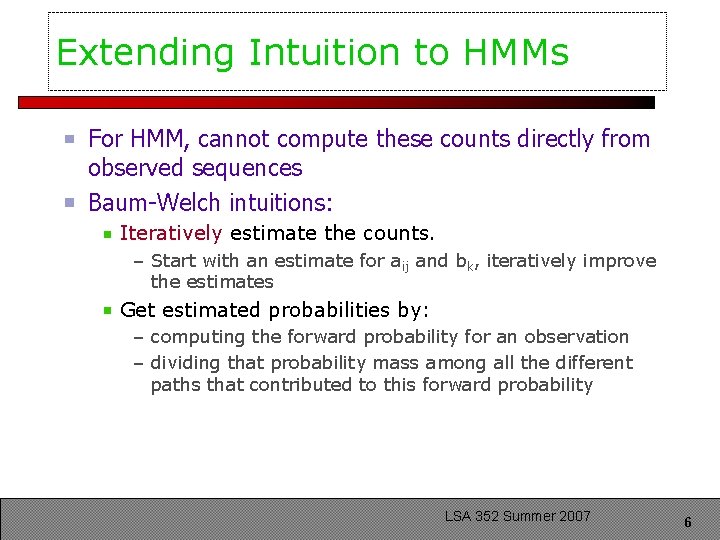

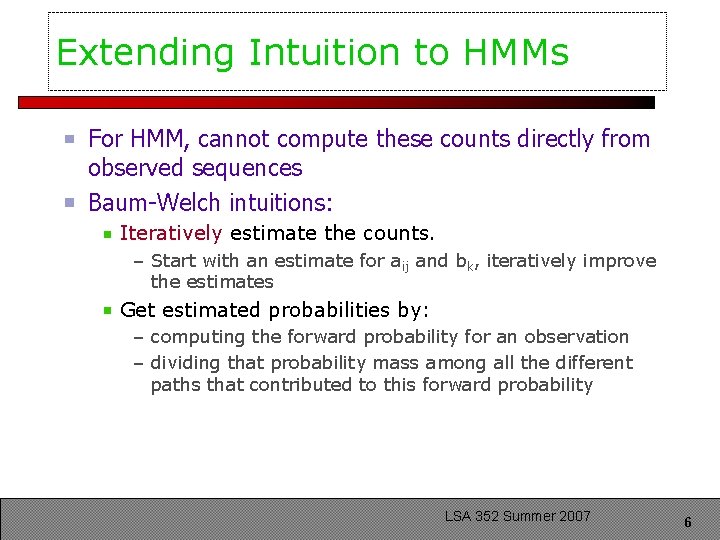

Extending the intuition to HMMs
For an HMM, we cannot compute these counts directly from the observed sequences.
Baum-Welch intuitions:
- Iteratively estimate the counts: start with an estimate for $a_{ij}$ and $b_k$, then iteratively improve the estimates.
- Get estimated probabilities by:
  - computing the forward probability for an observation
  - dividing that probability mass among all the different paths that contributed to this forward probability

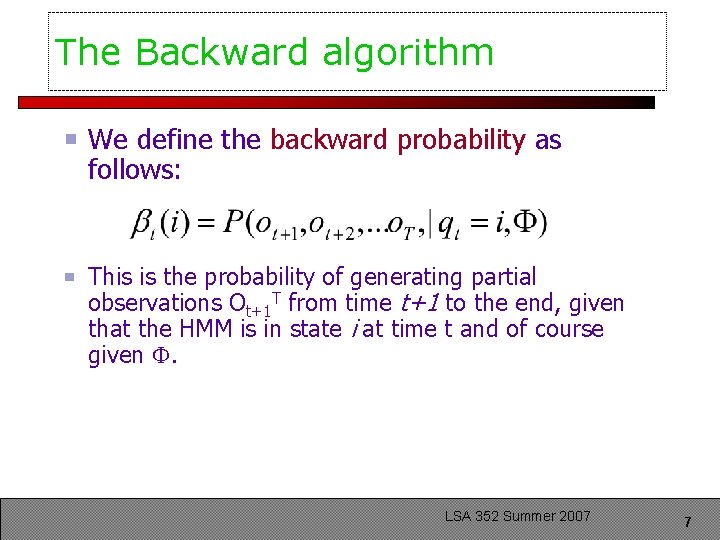

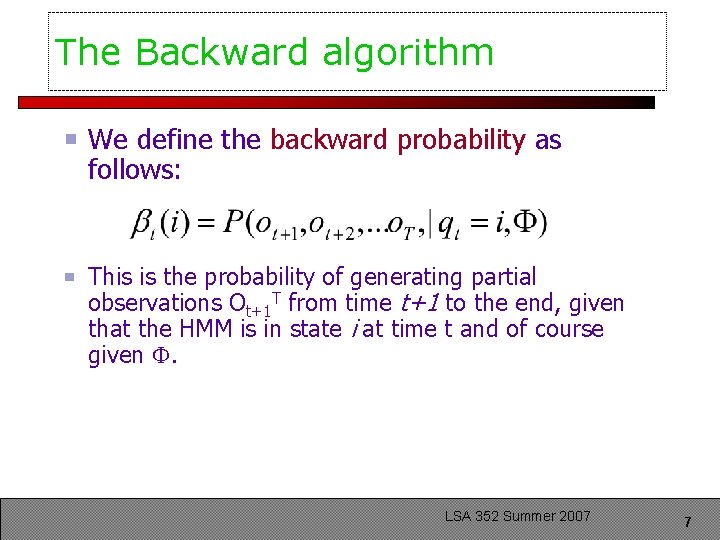

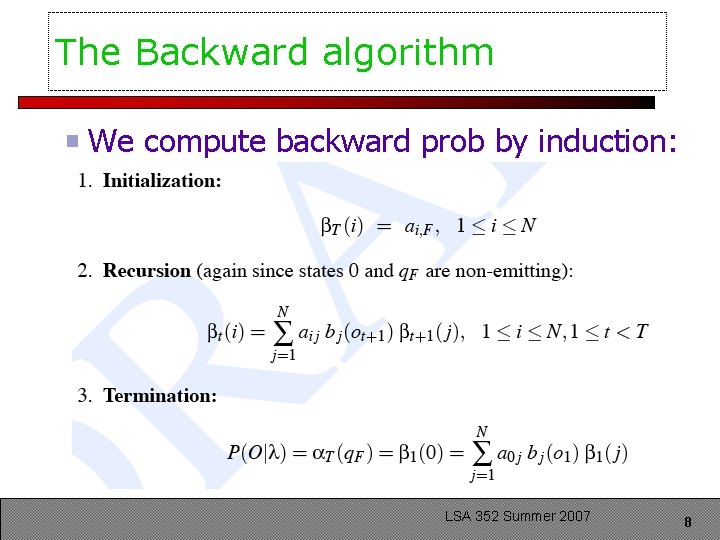

The backward algorithm
We define the backward probability as follows:
$\beta_t(i) = P(o_{t+1}, o_{t+2}, \ldots, o_T \mid q_t = i, \lambda)$
This is the probability of generating the partial observations $o_{t+1} \ldots o_T$ from time t+1 to the end, given that the HMM is in state i at time t and, of course, given the model $\lambda$.

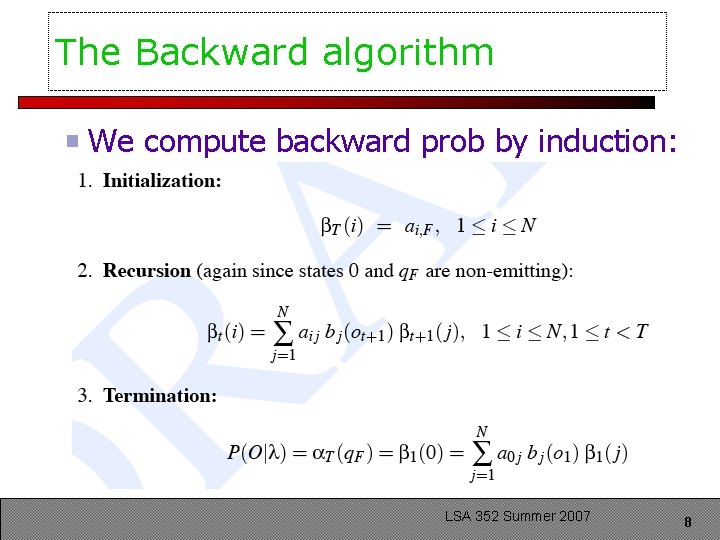

The backward algorithm
We compute the backward probability by induction:
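A sketch of the recursion in the Rabiner-style formulation, assuming an initial-state distribution $\pi$ rather than the non-emitting start and end states the slide figures may use:

$$
\begin{aligned}
\text{Initialization:}\quad & \beta_T(i) = 1, && 1 \le i \le N \\
\text{Induction:}\quad & \beta_t(i) = \sum_{j=1}^{N} a_{ij}\, b_j(o_{t+1})\, \beta_{t+1}(j), && t = T-1, \ldots, 1 \\
\text{Termination:}\quad & P(O \mid \lambda) = \sum_{j=1}^{N} \pi_j\, b_j(o_1)\, \beta_1(j)
\end{aligned}
$$

The termination step yields the same $P(O \mid \lambda)$ as the forward algorithm, which makes a handy sanity check.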

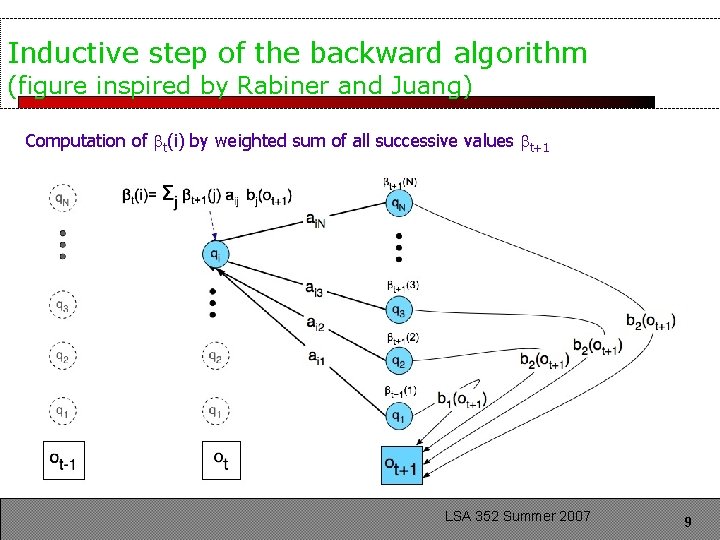

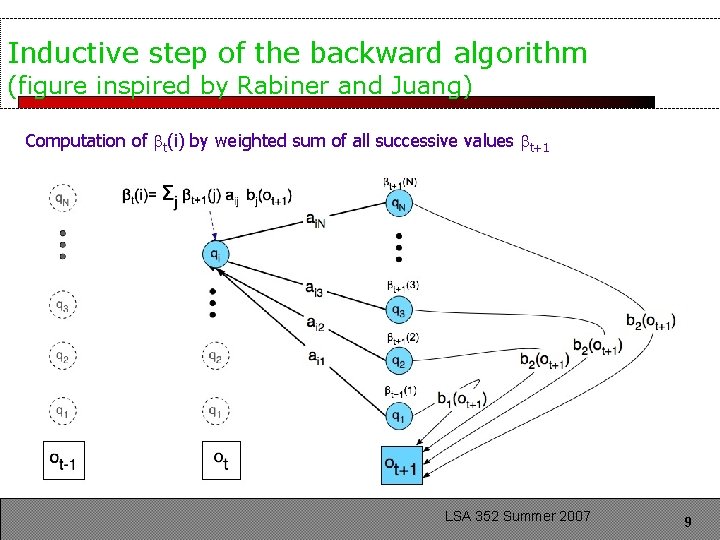

Inductive step of the backward algorithm (figure inspired by Rabiner and Juang): $\beta_t(i)$ is computed as a weighted sum of all the successive values $\beta_{t+1}(j)$.
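A minimal pure-Python sketch of the forward and backward passes, assuming the Rabiner-style setup with an initial distribution `pi` and hypothetical ice-cream parameters (state 0 = hot, state 1 = cold; observations are 1, 2 or 3 ice creams). It checks that both passes give the same $P(O \mid \lambda)$:

```python
def forward(pi, A, B, obs):
    """alpha[t][i] = P(o_1..o_t, q_t = i | lambda)."""
    N = len(pi)
    alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][o]
                      for j in range(N)])
    return alpha


def backward(A, B, obs):
    """beta[t][i] = P(o_{t+1}..o_T | q_t = i, lambda), filled right to left."""
    N, T = len(A), len(obs)
    beta = [[1.0] * N for _ in range(T)]           # initialization: beta_T(i) = 1
    for t in range(T - 2, -1, -1):                 # induction step
        beta[t] = [sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N))
                   for i in range(N)]
    return beta


# Hypothetical ice-cream HMM parameters, made up for illustration.
pi = [0.8, 0.2]
A = [[0.6, 0.4],
     [0.5, 0.5]]
B = [{1: 0.2, 2: 0.4, 3: 0.4},
     {1: 0.5, 2: 0.4, 3: 0.1}]
obs = [3, 1, 3]

alpha, beta = forward(pi, A, B, obs), backward(A, B, obs)
p_forward = sum(alpha[-1])
p_backward = sum(pi[j] * B[j][obs[0]] * beta[0][j] for j in range(len(pi)))
print(p_forward, p_backward)   # the two should agree up to rounding
```

More generally, $\sum_i \alpha_t(i)\beta_t(i) = P(O \mid \lambda)$ for every t, which is the identity the re-estimation steps rely on.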

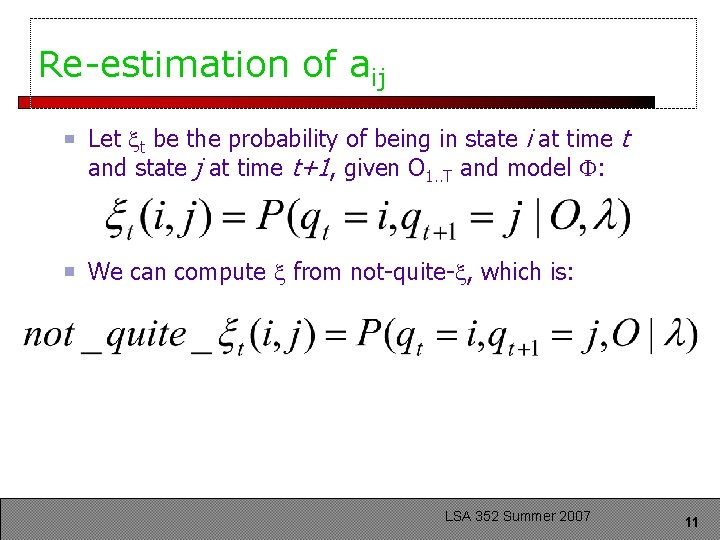

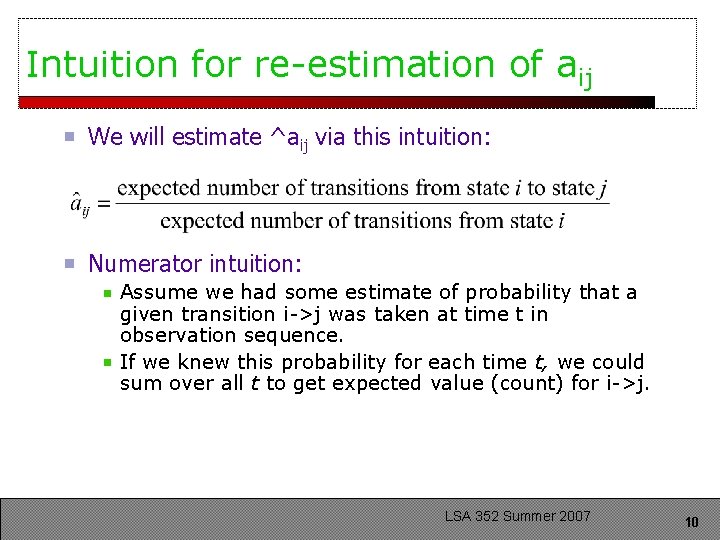

Intuition for re-estimation of $a_{ij}$
We will estimate $\hat{a}_{ij}$ via this intuition:
$\hat{a}_{ij} = \dfrac{\text{expected number of transitions from state } i \text{ to state } j}{\text{expected number of transitions from state } i}$
Numerator intuition: assume we had some estimate of the probability that a given transition i→j was taken at time t in the observation sequence. If we knew this probability for each time t, we could sum over all t to get the expected value (count) for i→j.

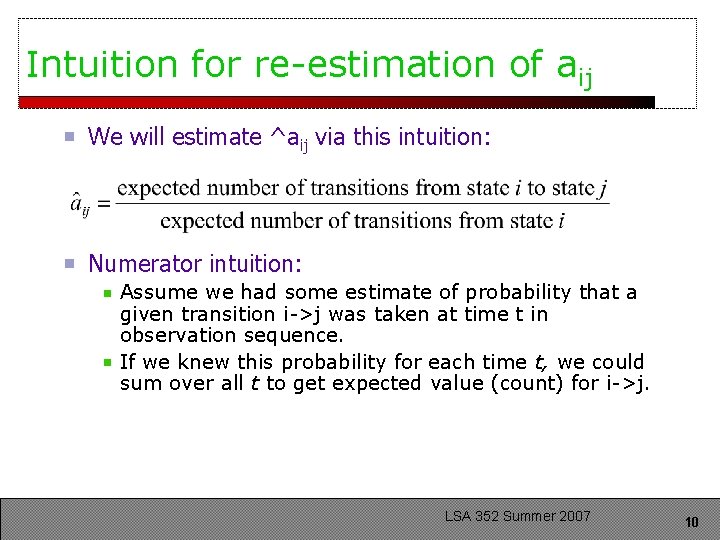

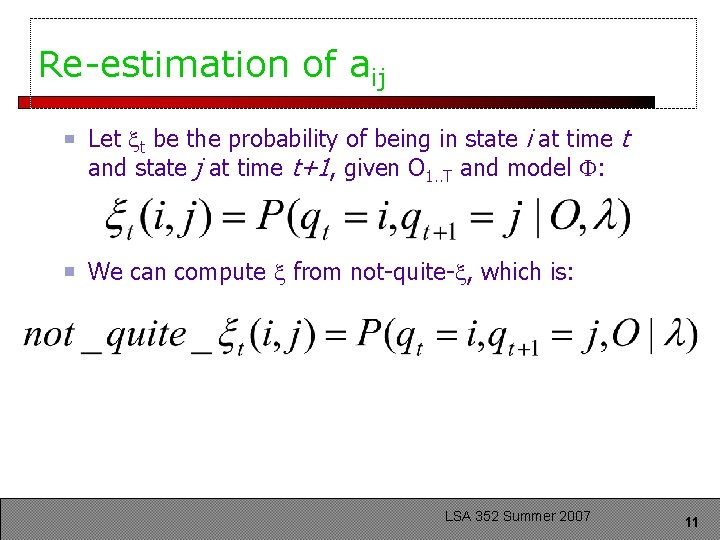

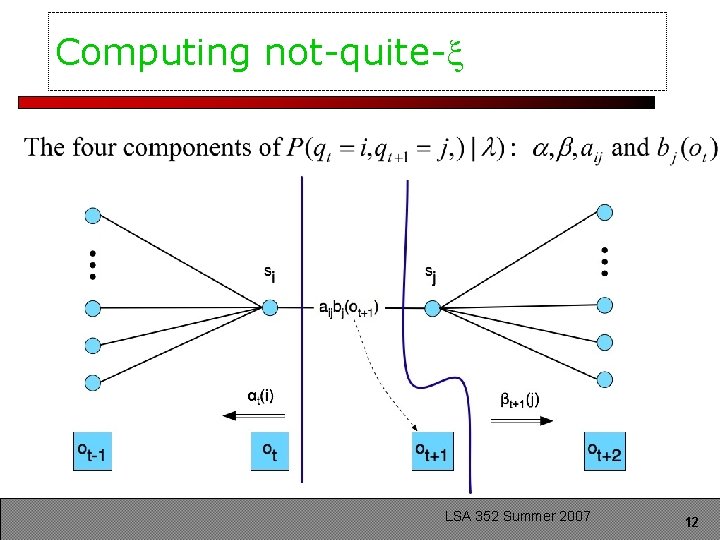

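Re-estimation of $a_{ij}$
Let $\xi_t(i,j)$ be the probability of being in state i at time t and state j at time t+1, given $O_{1..T}$ and the model $\lambda$:
$\xi_t(i,j) = P(q_t = i, q_{t+1} = j \mid O, \lambda)$
We can compute $\xi$ from not-quite-$\xi$, which is:
$\text{not-quite-}\xi_t(i,j) = P(q_t = i, q_{t+1} = j, O \mid \lambda)$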

Computing not-quite-$\xi$

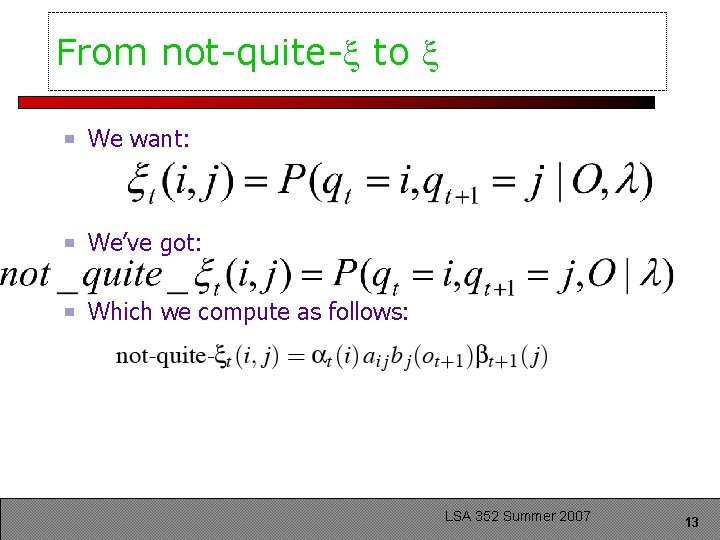

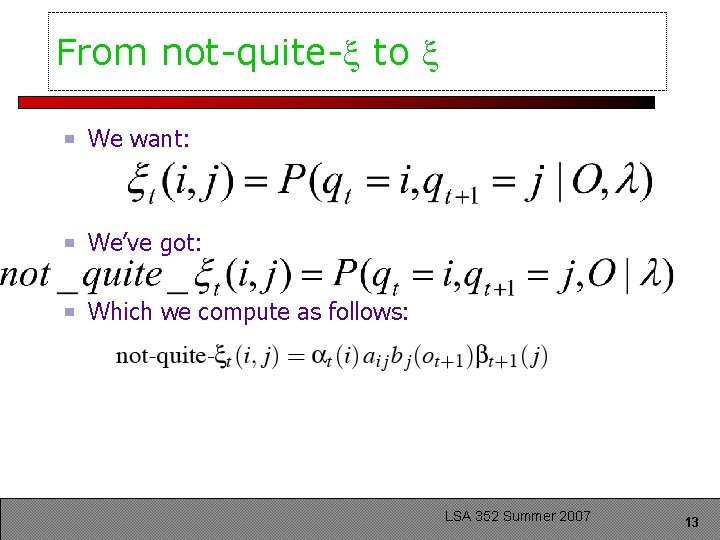

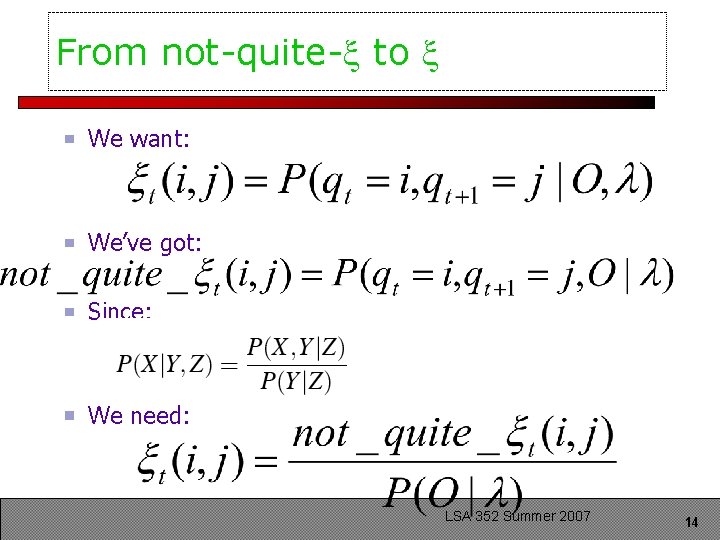

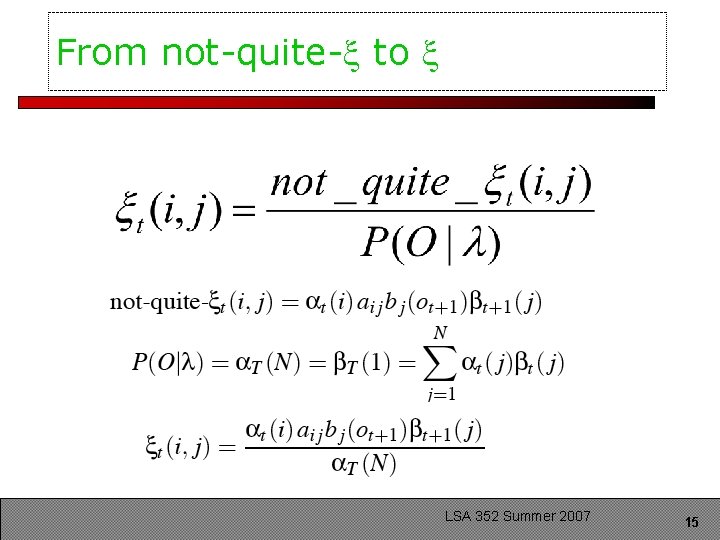

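From not-quite-$\xi$ to $\xi$
We want: $\xi_t(i,j) = P(q_t = i, q_{t+1} = j \mid O, \lambda)$
We’ve got: $\text{not-quite-}\xi_t(i,j) = P(q_t = i, q_{t+1} = j, O \mid \lambda)$
Which we compute as follows: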

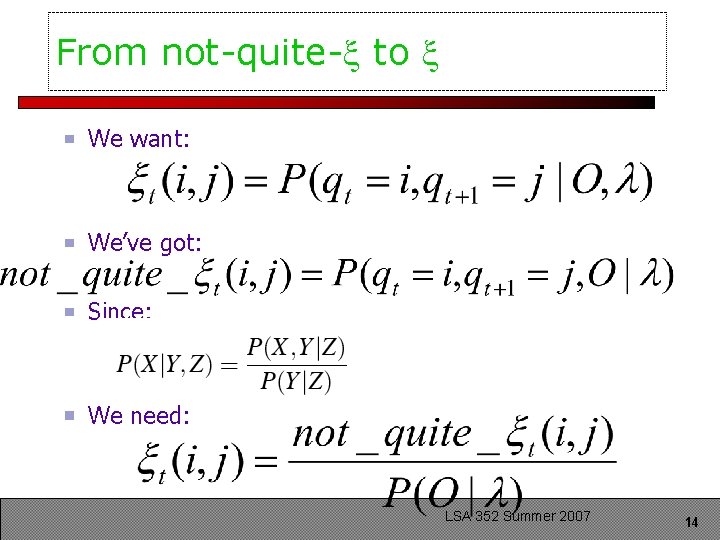

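From not-quite-$\xi$ to $\xi$
We want: $\xi_t(i,j)$. We’ve got: not-quite-$\xi_t(i,j)$.
Since $P(X \mid Y, Z) = \frac{P(X, Y \mid Z)}{P(Y \mid Z)}$, we need: $\xi_t(i,j) = \frac{\text{not-quite-}\xi_t(i,j)}{P(O \mid \lambda)}$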

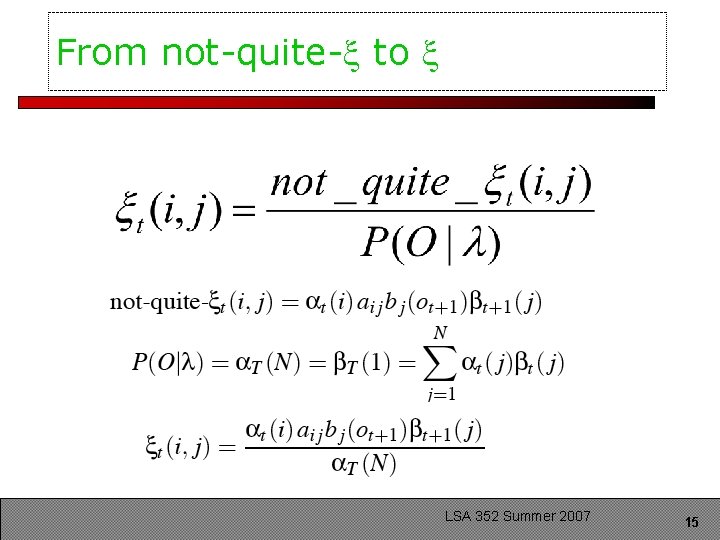

From not-quite-$\xi$ to $\xi$

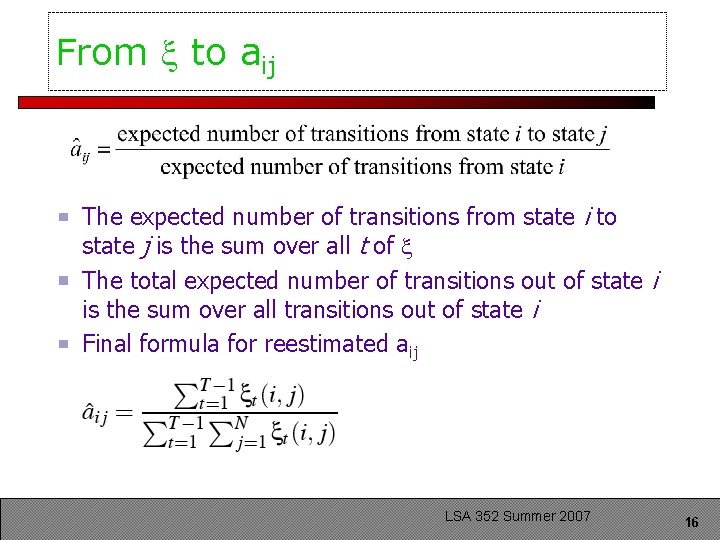

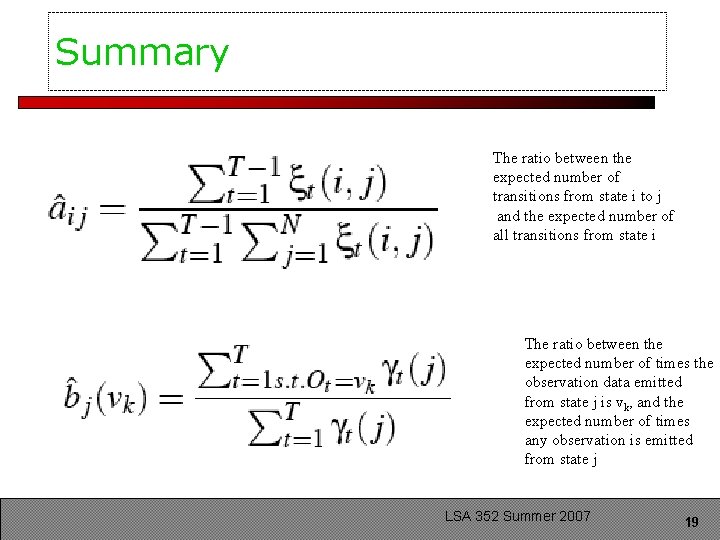

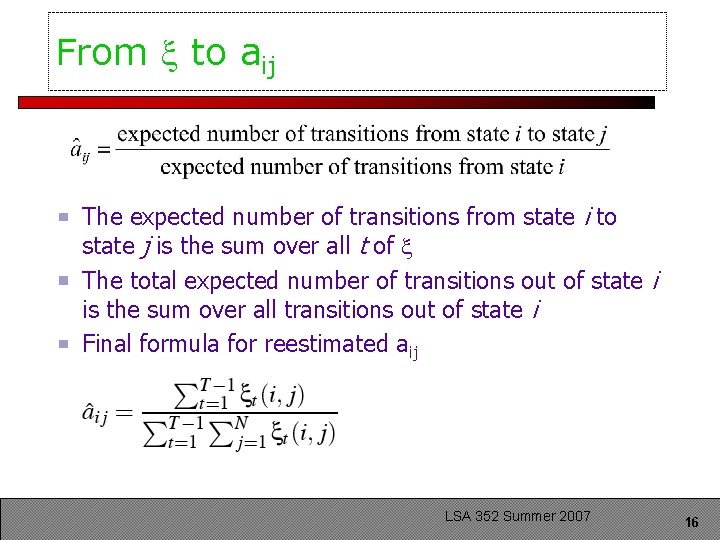

From $\xi$ to $a_{ij}$
- The expected number of transitions from state i to state j is the sum over all t of $\xi_t(i,j)$.
- The total expected number of transitions out of state i is the sum over all transitions out of state i.
- Final formula for the re-estimated $a_{ij}$:
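Written out, assuming $P(O \mid \lambda) = \sum_{j} \alpha_T(j)$ (no explicit final state), the chain from not-quite-$\xi$ to $\hat{a}_{ij}$ is:

$$
\begin{aligned}
\text{not-quite-}\xi_t(i,j) &= \alpha_t(i)\, a_{ij}\, b_j(o_{t+1})\, \beta_{t+1}(j) \\
\xi_t(i,j) &= \frac{\alpha_t(i)\, a_{ij}\, b_j(o_{t+1})\, \beta_{t+1}(j)}{P(O \mid \lambda)} \\
\hat{a}_{ij} &= \frac{\sum_{t=1}^{T-1} \xi_t(i,j)}{\sum_{t=1}^{T-1} \sum_{k=1}^{N} \xi_t(i,k)}
\end{aligned}
$$

The first line is the forward probability up to i, one step i→j that emits $o_{t+1}$, and the backward probability from j; dividing by $P(O \mid \lambda)$ turns the joint into a posterior.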

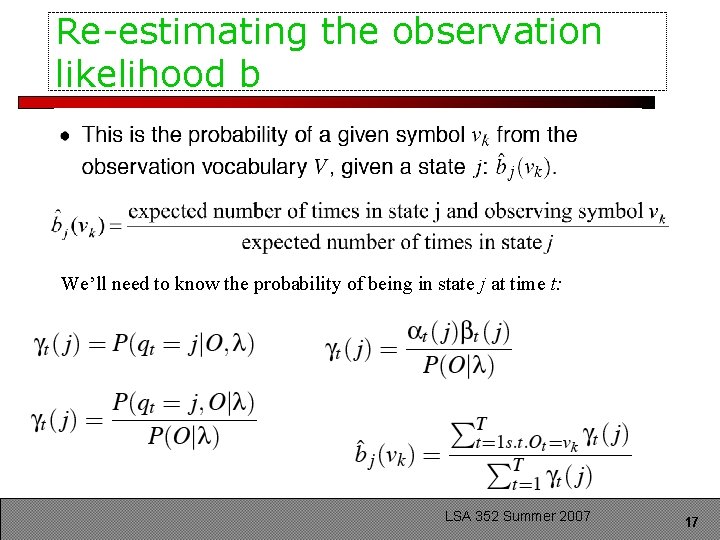

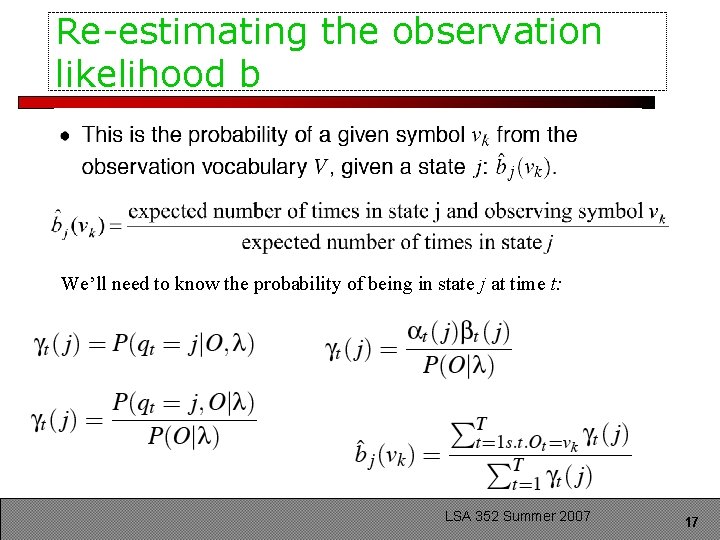

Re-estimating the observation likelihood b
We’ll need to know $\gamma_t(j)$, the probability of being in state j at time t:

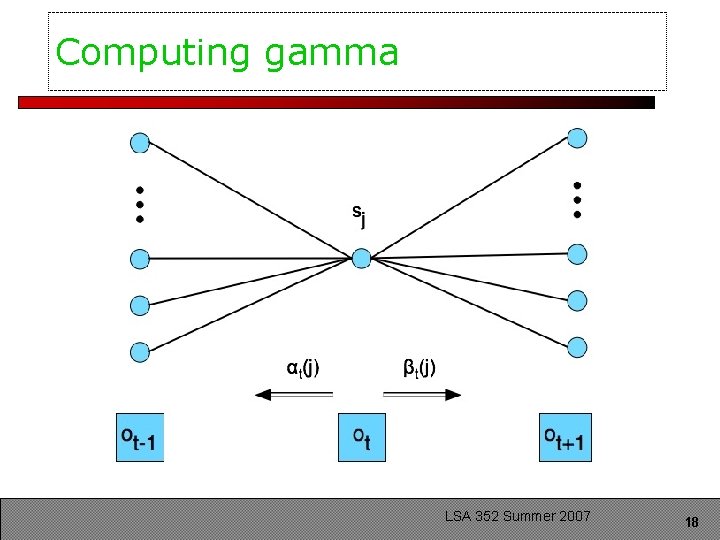

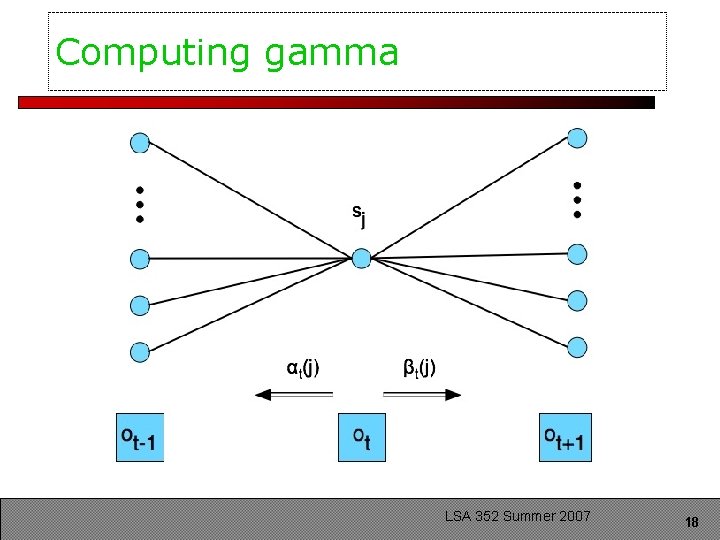

Computing gamma
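A sketch of $\gamma$ and the resulting emission update, assuming $P(O \mid \lambda) = \sum_j \alpha_T(j)$ (no explicit final state) and a discrete observation vocabulary $v_1, \ldots, v_K$:

$$
\begin{aligned}
\gamma_t(j) &= P(q_t = j \mid O, \lambda) = \frac{\alpha_t(j)\, \beta_t(j)}{P(O \mid \lambda)} \\
\hat{b}_j(v_k) &= \frac{\sum_{t=1,\ o_t = v_k}^{T} \gamma_t(j)}{\sum_{t=1}^{T} \gamma_t(j)}
\end{aligned}
$$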

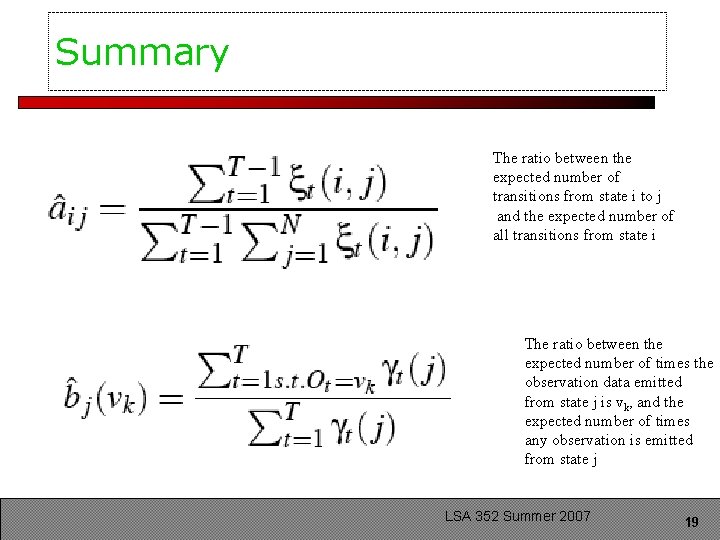

Summary
- $\hat{a}_{ij}$: the ratio between the expected number of transitions from state i to j and the expected number of all transitions from state i
- $\hat{b}_j(v_k)$: the ratio between the expected number of times the observation emitted from state j is $v_k$ and the expected number of times any observation is emitted from state j

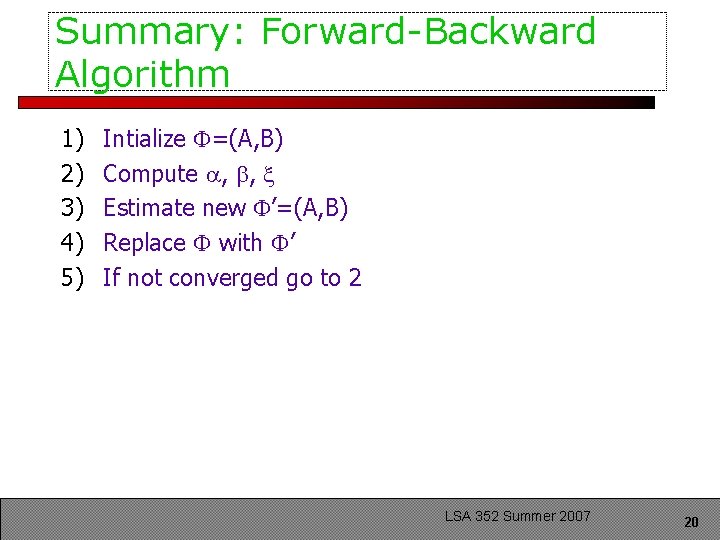

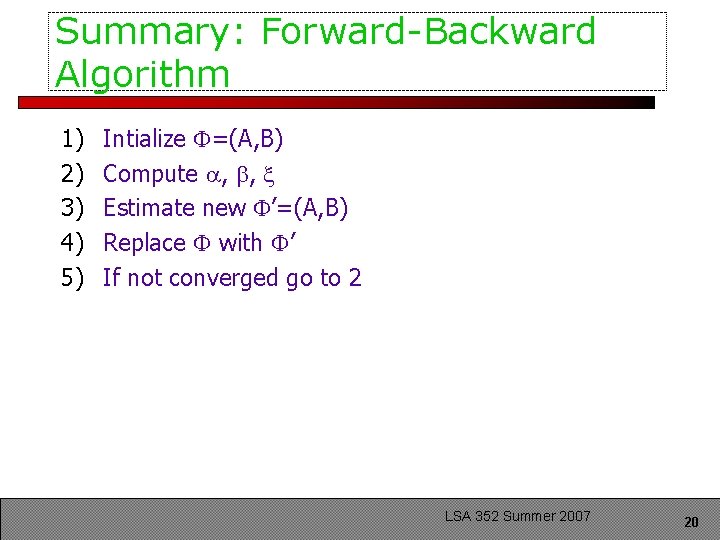

Summary: forward-backward algorithm
1) Initialize $\lambda = (A, B)$
2) Compute $\alpha$, $\beta$, $\xi$
3) Estimate new $\lambda' = (A, B)$
4) Replace $\lambda$ with $\lambda'$
5) If not converged, go to 2
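A compact pure-Python sketch of steps 1-5 for a discrete-observation HMM, assuming the Rabiner-style setup with an initial distribution `pi` (no non-emitting start/end states), plain probabilities rather than the log domain (acceptable only for short toy sequences), and hypothetical ice-cream data and starting values. Each call to `baum_welch_step` computes alpha, beta, gamma and xi, then returns lambda':

```python
import math


def forward(pi, A, B, obs):
    N = len(pi)
    alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][o] for j in range(N)])
    return alpha


def backward(A, B, obs):
    N, T = len(A), len(obs)
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N))
                   for i in range(N)]
    return beta


def baum_welch_step(pi, A, B, obs, vocab):
    """E-step (alpha, beta, gamma, xi) and M-step (new pi, A, B).
    Also returns log P(O | lambda) of the model *before* the update."""
    N, T = len(pi), len(obs)
    alpha, beta = forward(pi, A, B, obs), backward(A, B, obs)
    p_obs = sum(alpha[-1])
    # gamma[t][j]: probability of being in state j at time t
    gamma = [[alpha[t][j] * beta[t][j] / p_obs for j in range(N)] for t in range(T)]
    # xi[t][i][j]: probability of the transition i -> j between t and t+1
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / p_obs
            for j in range(N)] for i in range(N)] for t in range(T - 1)]
    new_pi = gamma[0][:]
    # expected i -> j transitions / expected transitions out of i
    new_A = [[sum(xi[t][i][j] for t in range(T - 1)) / sum(gamma[t][i] for t in range(T - 1))
              for j in range(N)] for i in range(N)]
    # expected times in j emitting v / expected times in j
    new_B = [{v: sum(gamma[t][j] for t in range(T) if obs[t] == v) / sum(gamma[t][j] for t in range(T))
              for v in vocab} for j in range(N)]
    return new_pi, new_A, new_B, math.log(p_obs)


def baum_welch(pi, A, B, obs, vocab, max_iter=100, tol=1e-6):
    """Iterate until the log-likelihood gain drops below tol."""
    history = []
    for _ in range(max_iter):
        pi, A, B, loglik = baum_welch_step(pi, A, B, obs, vocab)   # replace lambda with lambda'
        history.append(loglik)
        if len(history) > 1 and history[-1] - history[-2] < tol:  # converged?
            break
    return pi, A, B, history


# Hypothetical observation sequence and initial lambda (step 1).
obs = [3, 3, 2, 1, 1, 2, 3, 3, 1, 2]
pi0 = [0.5, 0.5]
A0 = [[0.6, 0.4], [0.4, 0.6]]
B0 = [{1: 0.2, 2: 0.3, 3: 0.5}, {1: 0.5, 2: 0.3, 3: 0.2}]
pi, A, B, history = baum_welch(pi0, A0, B0, obs, vocab=[1, 2, 3])
```

Because an EM iteration cannot decrease $P(O \mid \lambda)$, the recorded log-likelihoods should be non-decreasing; the result is still only a local maximum, as the caveats that follow point out.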

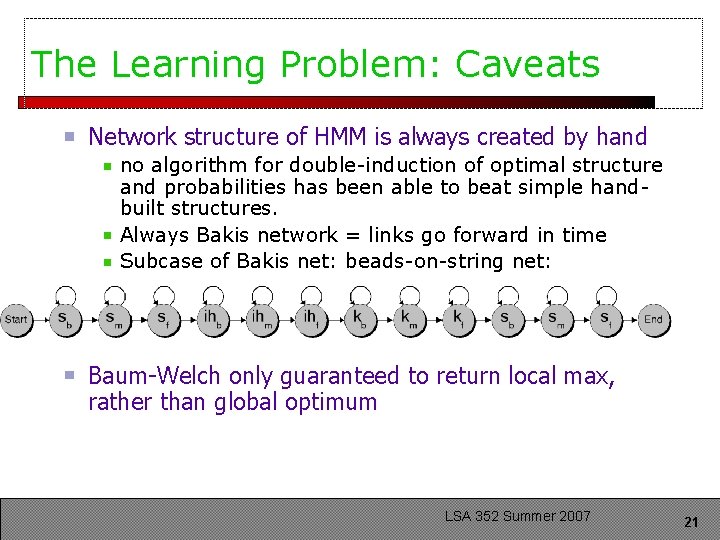

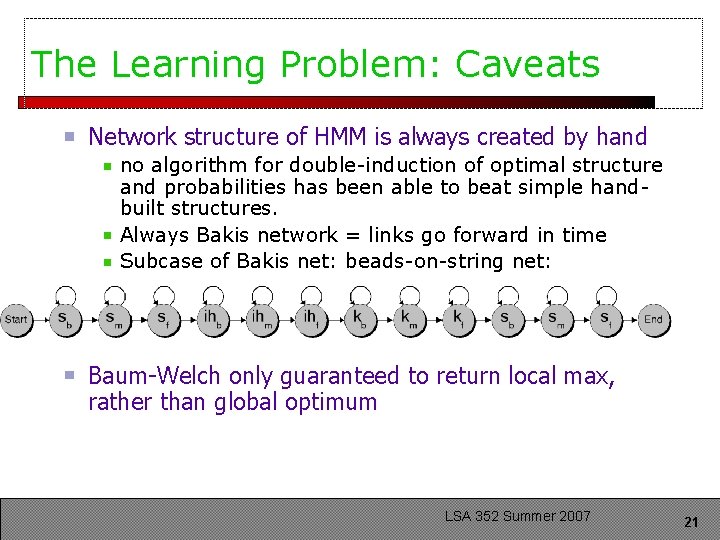

The learning problem: caveats
- The network structure of an HMM is always created by hand: no algorithm for double induction of the optimal structure and probabilities has been able to beat simple hand-built structures.
- Always a Bakis network: links go forward in time.
- Subcase of a Bakis net: the beads-on-a-string net.
- Baum-Welch is only guaranteed to return a local maximum, rather than the global optimum.

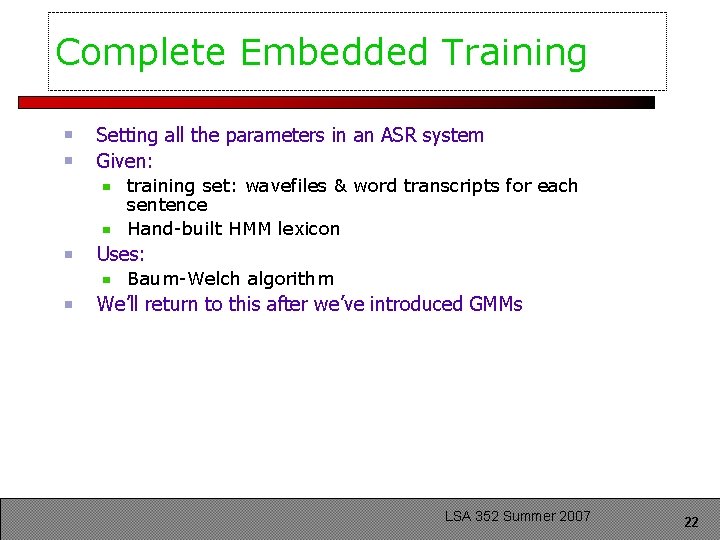

Complete embedded training
Setting all the parameters in an ASR system.
Given:
- a training set: wavefiles and word transcripts for each sentence
- a hand-built HMM lexicon
Uses: the Baum-Welch algorithm.
We’ll return to this after we’ve introduced GMMs.

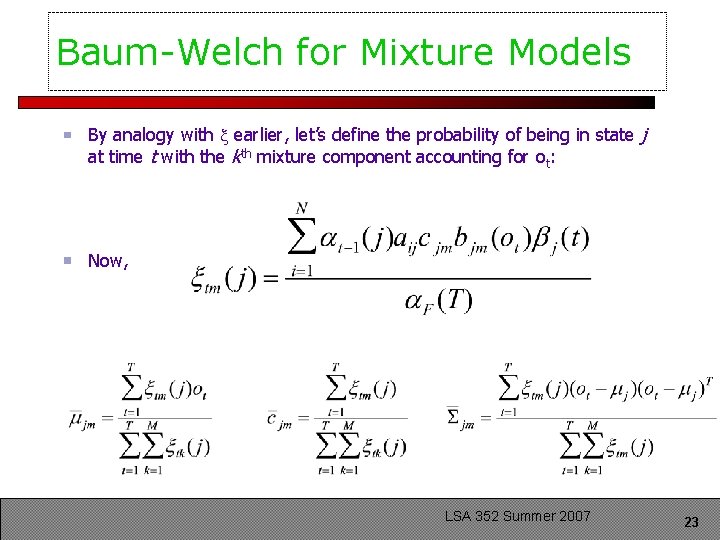

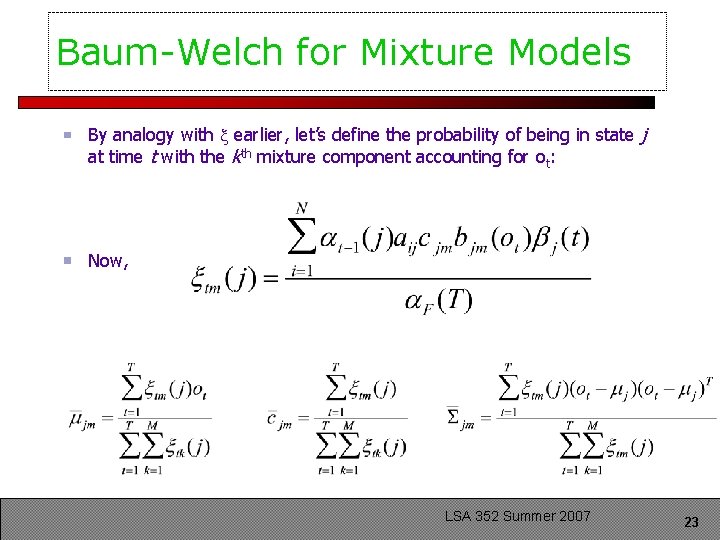

Baum-Welch for mixture models
By analogy with earlier, let’s define the probability of being in state j at time t with the kth mixture component accounting for $o_t$:
Now,
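For reference, here is the per-component occupancy and the resulting updates in Rabiner's formulation; this is an assumption about what the missing slide equations show, with mixture weights $c_{jk}$, means $\mu_{jk}$, covariances $\Sigma_{jk}$ and M components per state:

$$
\begin{aligned}
\gamma_t(j,k) &= \frac{\alpha_t(j)\,\beta_t(j)}{P(O \mid \lambda)} \cdot \frac{c_{jk}\, \mathcal{N}(o_t; \mu_{jk}, \Sigma_{jk})}{\sum_{m=1}^{M} c_{jm}\, \mathcal{N}(o_t; \mu_{jm}, \Sigma_{jm})} \\
\hat{c}_{jk} &= \frac{\sum_{t=1}^{T} \gamma_t(j,k)}{\sum_{t=1}^{T} \sum_{m=1}^{M} \gamma_t(j,m)} \\
\hat{\mu}_{jk} &= \frac{\sum_{t=1}^{T} \gamma_t(j,k)\, o_t}{\sum_{t=1}^{T} \gamma_t(j,k)} \\
\hat{\Sigma}_{jk} &= \frac{\sum_{t=1}^{T} \gamma_t(j,k)\,(o_t - \hat{\mu}_{jk})(o_t - \hat{\mu}_{jk})^{\top}}{\sum_{t=1}^{T} \gamma_t(j,k)}
\end{aligned}
$$

The first factor is the state occupancy $\gamma_t(j)$; the second splits it among the components in proportion to how well each explains $o_t$.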

How to train mixtures?
- Choose M (often 16; or tune M depending on the amount of training observations).
- Then use one of various splitting or clustering algorithms.
- One simple method for “splitting”:
  1) Compute the global mean $\mu$ and global variance $\sigma^2$
  2) Split into two Gaussians, with means $\mu \pm \epsilon$ (sometimes $\epsilon$ is $0.2\sigma$)
  3) Run forward-backward to retrain
  4) Go to 2 until we have 16 mixtures
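A minimal sketch of the splitting schedule for 1-D Gaussians, assuming each child gets half its parent's weight and the parent's variance, with $\epsilon = 0.2\sigma$; the retraining step is only marked by a comment, and the function names and data are hypothetical. Because every component is split each round, the count doubles, so this lands exactly on M only when M is a power of two (as with 16):

```python
import math
import statistics


def split_component(weight, mean, var, eps_scale=0.2):
    """Split one 1-D Gaussian into two with means mean -/+ eps, eps = eps_scale * sigma.
    Each child keeps the parent's variance and half its weight."""
    eps = eps_scale * math.sqrt(var)
    return [(weight / 2, mean - eps, var), (weight / 2, mean + eps, var)]


def grow_mixture(data, target_m=16, eps_scale=0.2):
    """Grow a mixture by repeated splitting, starting from the global mean and variance."""
    mixture = [(1.0, statistics.fmean(data), statistics.pvariance(data))]   # step 1
    while len(mixture) < target_m:
        mixture = [child for w, m, v in mixture
                   for child in split_component(w, m, v, eps_scale)]         # step 2
        # step 3 would go here: run forward-backward to retrain every component
    return mixture


mixture = grow_mixture([1.0, 2.0, 3.0, 4.0])   # hypothetical 1-D training frames
print(len(mixture))
```

Without the retraining step the components stay clustered around the global mean; it is the forward-backward passes between splits that spread them out to fit the data.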

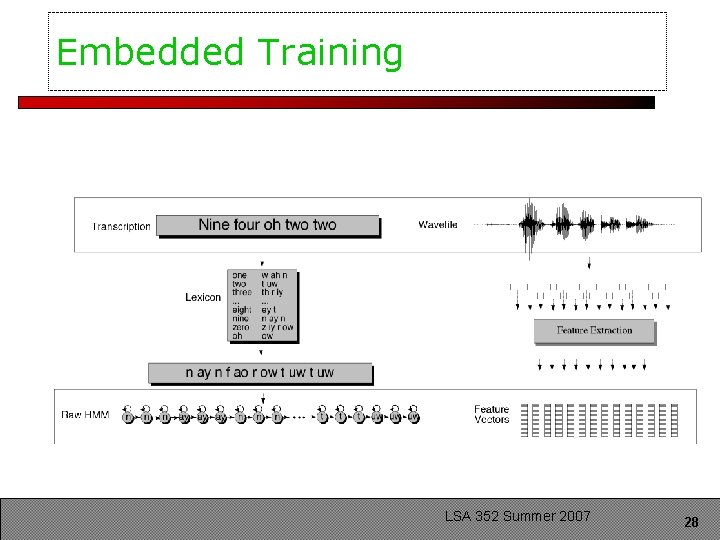

Embedded training
Components of a speech recognizer:
- Feature extraction: not statistical
- Language model: word transition probabilities, trained on some other corpus
- Acoustic model:
  - Pronunciation lexicon: the HMM structure for each word, built by hand
  - Observation likelihoods $b_j(o_t)$
  - Transition probabilities $a_{ij}$

Embedded training of the acoustic model
If we had hand-segmented and hand-labeled training data, with word and phone boundaries, we could just compute:
- B: the means and variances of all our triphone Gaussians
- A: the transition probabilities
and we’d be done! But we don’t have word and phone boundaries, nor phone labeling.

Embedded training
Instead:
- We’ll train each phone HMM embedded in an entire sentence.
- We’ll do word/phone segmentation and alignment automatically as part of the training process.

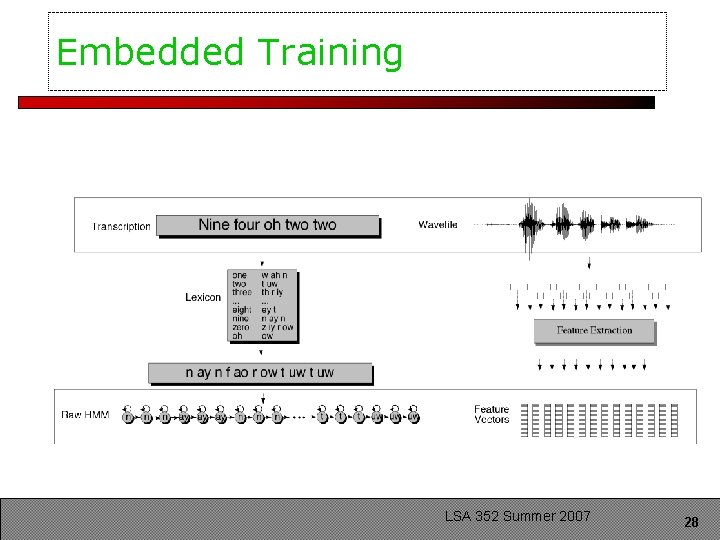

Embedded Training

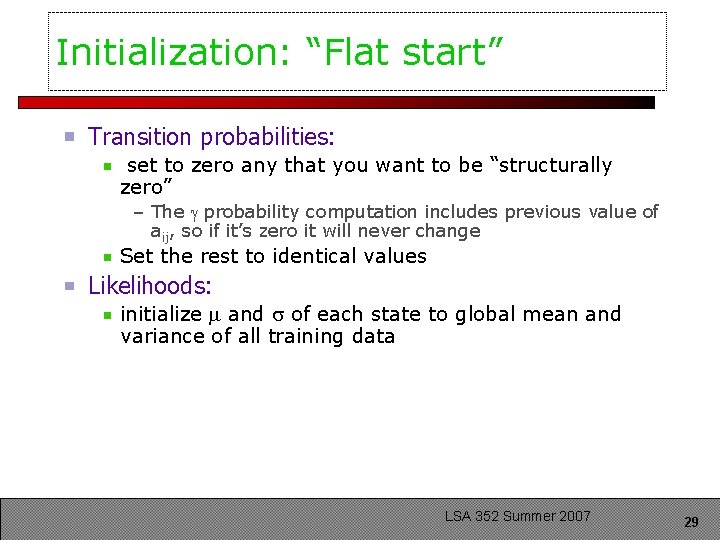

Initialization: “flat start”
- Transition probabilities: set to zero any that you want to be “structurally zero”. The probability computation includes the previous value of $a_{ij}$, so if it’s zero it will never change. Set the rest to identical values.
- Likelihoods: initialize $\mu$ and $\sigma^2$ of each state to the global mean and variance of all the training data.
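A minimal sketch of a flat start for 1-D Gaussian states, assuming the hand-built topology is given as a hypothetical boolean matrix of allowed transitions (the exit transition of the last state is ignored here):

```python
import statistics


def flat_start(allowed, frames):
    """allowed[i][j] is True if transition i -> j exists in the hand-built topology.
    Structurally-zero transitions get 0; the rest of each row share probability equally.
    Every state's Gaussian starts at the global mean and variance of the frames."""
    A = []
    for row in allowed:
        n = sum(row)                       # number of permitted outgoing transitions
        A.append([1.0 / n if ok else 0.0 for ok in row])
    mu, var = statistics.fmean(frames), statistics.pvariance(frames)
    B = [(mu, var) for _ in allowed]
    return A, B


# Hypothetical 3-state left-to-right (Bakis) phone HMM with self-loops.
bakis = [[True, True, False],
         [False, True, True],
         [False, False, True]]
A, B = flat_start(bakis, [0.0, 1.0, 2.0, 3.0])
```

Since each re-estimated $\hat{a}_{ij}$ is built from $\xi_t(i,j)$, which contains the factor $a_{ij}$, the zeros placed here survive every EM iteration.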

Embedded training
Now we have estimates for A and B, so we just run the EM algorithm:
- During each iteration, we compute forward and backward probabilities
- Use them to re-estimate A and B
- Run EM until convergence

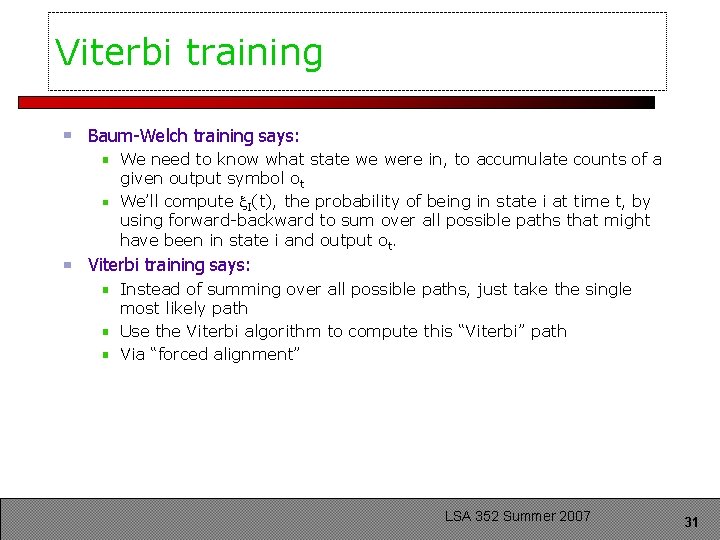

Viterbi training
Baum-Welch training says:
- We need to know what state we were in, to accumulate counts of a given output symbol $o_t$.
- We’ll compute $\gamma_t(i)$, the probability of being in state i at time t, by using forward-backward to sum over all possible paths that might have been in state i and output $o_t$.
Viterbi training says:
- Instead of summing over all possible paths, just take the single most likely path.
- Use the Viterbi algorithm to compute this “Viterbi” path, via “forced alignment”.

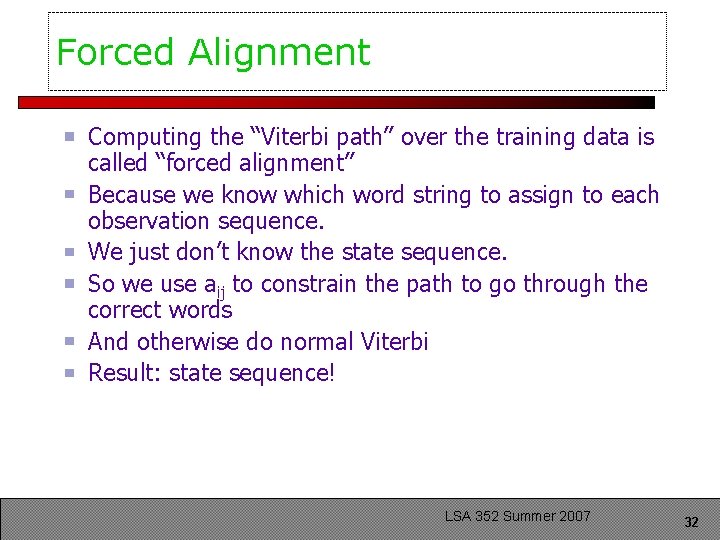

Forced alignment
Computing the “Viterbi path” over the training data is called “forced alignment”, because we know which word string to assign to each observation sequence; we just don’t know the state sequence. So we use $a_{ij}$ to constrain the path to go through the correct words, and otherwise do normal Viterbi. Result: a state sequence!
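A minimal sketch of forced alignment over a left-to-right chain of states (for example, the concatenated phone HMMs of the known transcript), assuming per-frame emission log-likelihoods are already computed and every state has the same self-loop and advance log-probabilities; the function and its inputs are hypothetical:

```python
import math


def forced_align(loglik, self_loop_logp, next_logp):
    """loglik[t][s] = log b_s(o_t) for frame t and chain state s.
    From state s the path may stay in s or advance to s+1 (the transcript fixes the chain).
    Returns the best state index per frame, starting in state 0 and ending in the last state."""
    T, S = len(loglik), len(loglik[0])
    delta = [[-math.inf] * S for _ in range(T)]   # best log score ending in s at t
    back = [[0] * S for _ in range(T)]            # predecessor state on that best path
    delta[0][0] = loglik[0][0]
    for t in range(1, T):
        for s in range(S):
            stay = delta[t - 1][s] + self_loop_logp
            move = delta[t - 1][s - 1] + next_logp if s > 0 else -math.inf
            if stay >= move:
                delta[t][s], back[t][s] = stay + loglik[t][s], s
            else:
                delta[t][s], back[t][s] = move + loglik[t][s], s - 1
    path = [S - 1]                                # must finish in the final state
    for t in range(T - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


# Hypothetical scores: frames 0-1 fit state 0, frames 2-3 state 1, frames 4-5 state 2.
loglik = [[0.0 if s == t // 2 else -5.0 for s in range(3)] for t in range(6)]
path = forced_align(loglik, math.log(0.5), math.log(0.5))
print(path)
```

This sketch assumes there are at least as many frames as states; otherwise the final state is unreachable.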

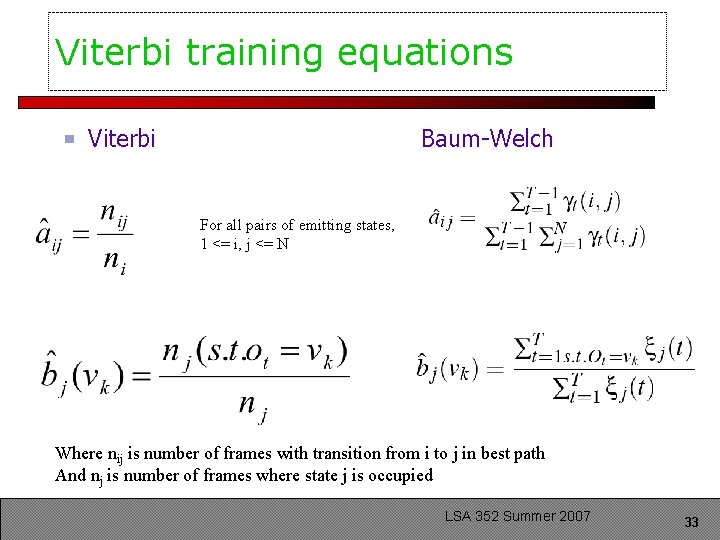

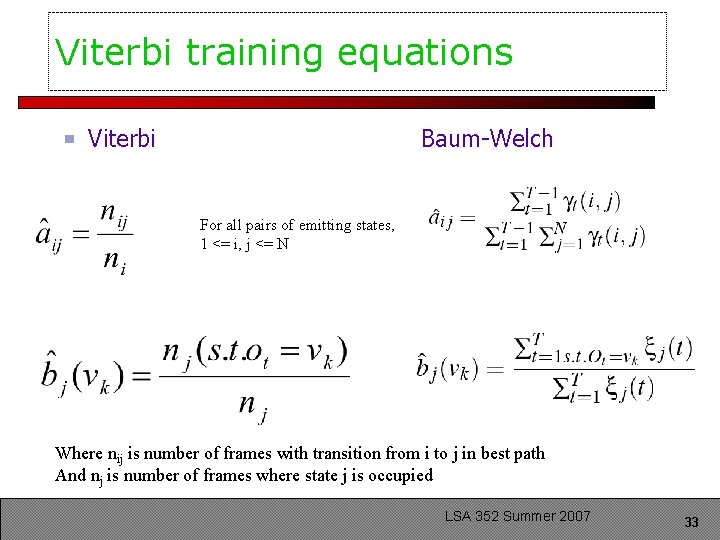

Viterbi training equations
Viterbi and Baum-Welch versions, for all pairs of emitting states, $1 \le i, j \le N$, where $n_{ij}$ is the number of frames with a transition from i to j in the best path, and $n_j$ is the number of frames where state j is occupied.

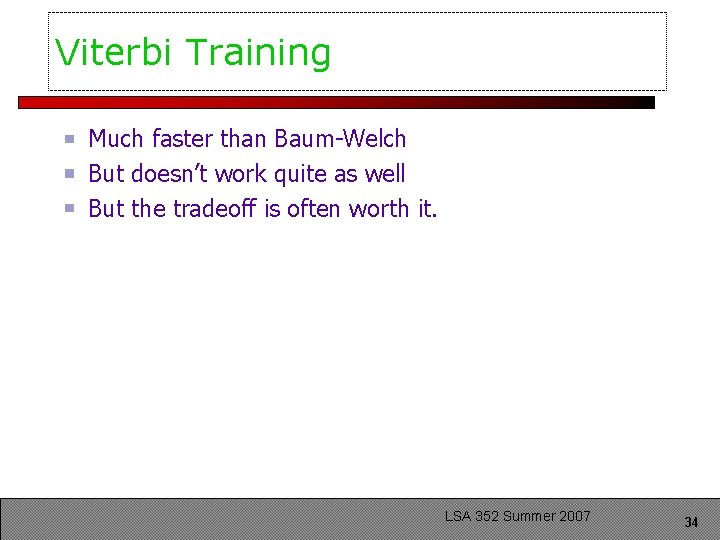

Viterbi training
- Much faster than Baum-Welch
- But doesn’t work quite as well
- But the tradeoff is often worth it

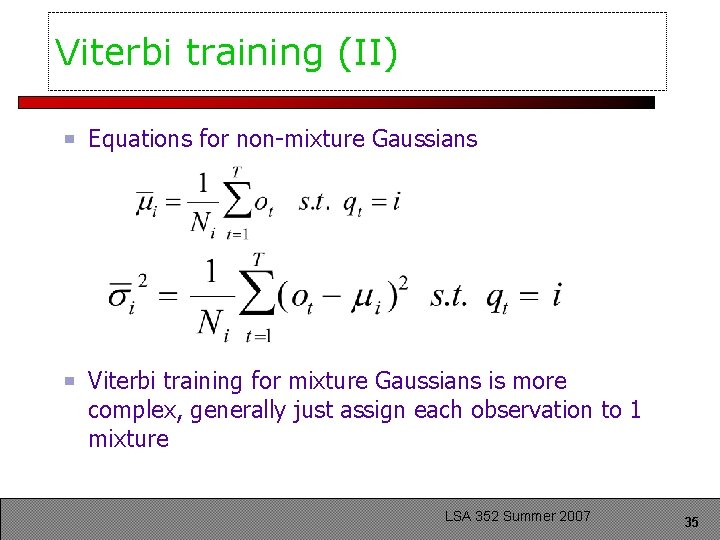

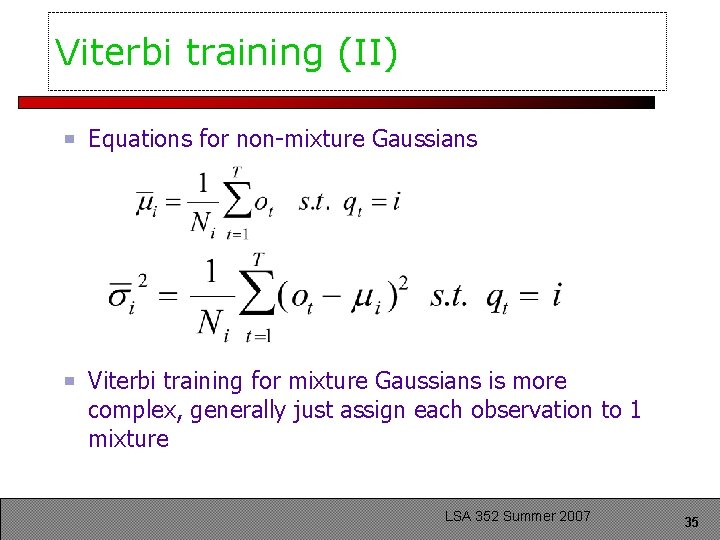

Viterbi training (II)
- Equations for non-mixture Gaussians
- Viterbi training for mixture Gaussians is more complex; generally we just assign each observation to one mixture component
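A minimal sketch of Viterbi-training re-estimation for single (non-mixture) 1-D Gaussians, assuming the forced-alignment path is already available as one state index per frame; names and data are hypothetical, and a real system would also apply a variance floor (a state seeing identical frames gets variance 0 here):

```python
from collections import Counter, defaultdict
import statistics


def viterbi_reestimate(path, frames):
    """path[t]: state assigned to frame t by forced alignment; frames[t]: 1-D feature.
    a_ij = n_ij / n_i, counting transitions along the single best path;
    each state's mean/variance come from the frames aligned to it."""
    n_ij = Counter(zip(path, path[1:]))   # frames with a transition i -> j
    n_i = Counter(path[:-1])              # frames in state i that have a successor
    A = {(i, j): c / n_i[i] for (i, j), c in n_ij.items()}
    assigned = defaultdict(list)
    for s, x in zip(path, frames):
        assigned[s].append(x)
    B = {s: (statistics.fmean(xs), statistics.pvariance(xs)) for s, xs in assigned.items()}
    return A, B


A, B = viterbi_reestimate([0, 0, 1, 1, 1, 2], [1.0, 3.0, 5.0, 5.0, 5.0, 9.0])
```

Compared with the Baum-Welch sketch, every fractional count $\gamma_t(j)$ is replaced by a hard 0 or 1, which is why this is faster but slightly less accurate.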

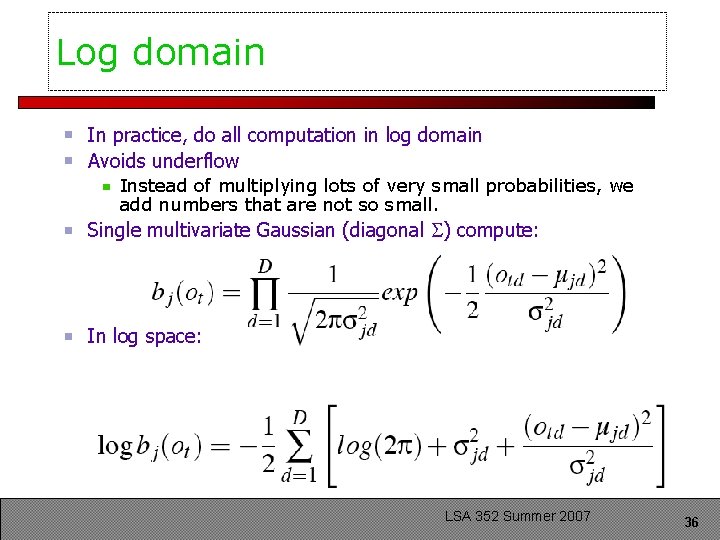

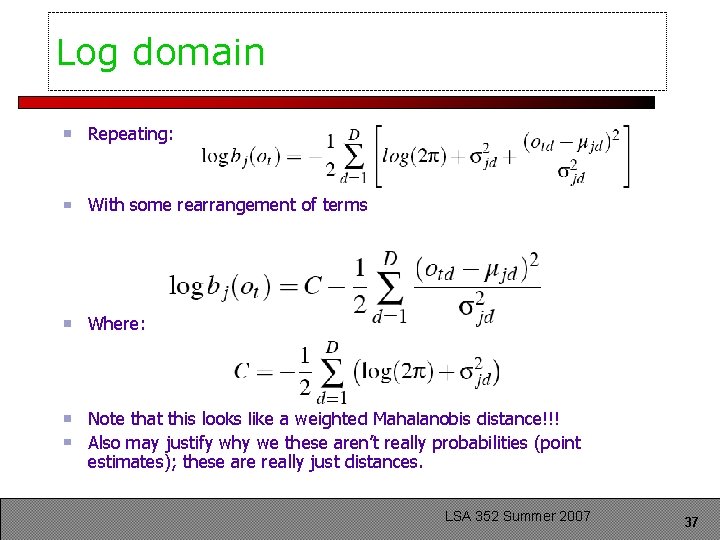

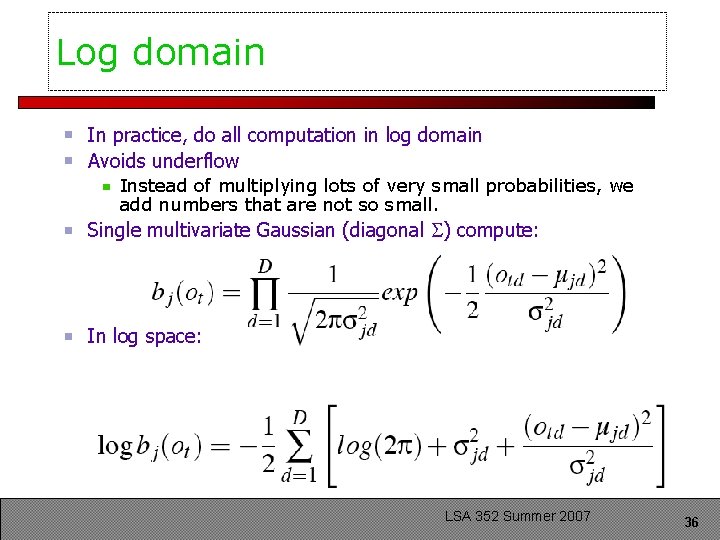

Log domain
In practice, do all computation in the log domain:
- Avoids underflow
- Instead of multiplying lots of very small probabilities, we add numbers that are not so small
For a single multivariate Gaussian (diagonal $\Sigma$), compute:
In log space:

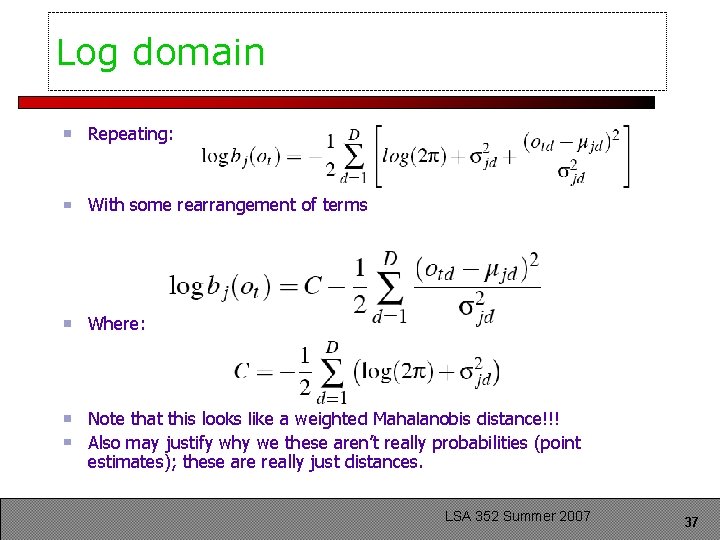

Log domain
Repeating:
With some rearrangement of terms:
Where:
Note that this looks like a weighted Mahalanobis distance! This may also justify why these aren’t really probabilities (they are point estimates); they are really just distances.
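A minimal sketch of the diagonal-covariance log-likelihood computed directly in the log domain, as a per-state constant minus half a variance-weighted squared distance (the function name is hypothetical):

```python
import math


def log_gaussian_diag(x, mean, var):
    """log N(x; mean, diag(var)) without ever forming the (tiny) density itself."""
    const = -0.5 * sum(math.log(2 * math.pi * v) for v in var)            # precomputable per state
    dist = sum((xi - mi) ** 2 / vi for xi, mi, vi in zip(x, mean, var))   # weighted squared distance
    return const - 0.5 * dist


far = log_gaussian_diag([40.0], [0.0], [1.0])
print(far, math.exp(far))   # finite log value, while exp() of it underflows to 0.0
```

The constant depends only on the state's variances, so a decoder can cache it and spend per-frame work only on the distance term.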

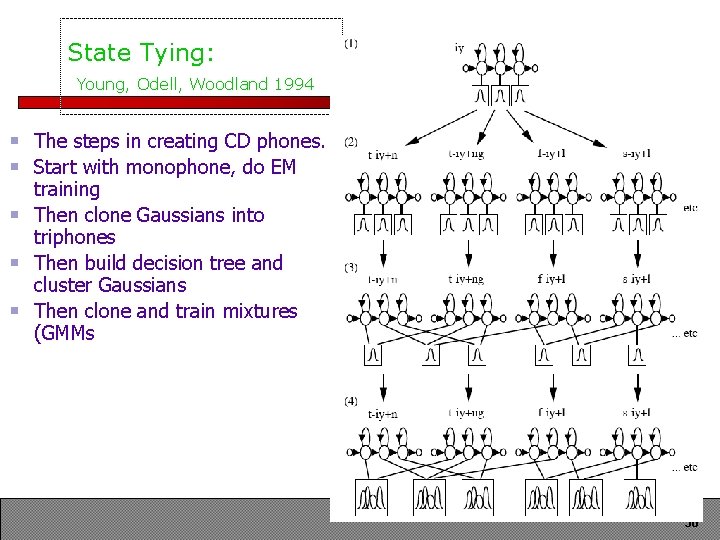

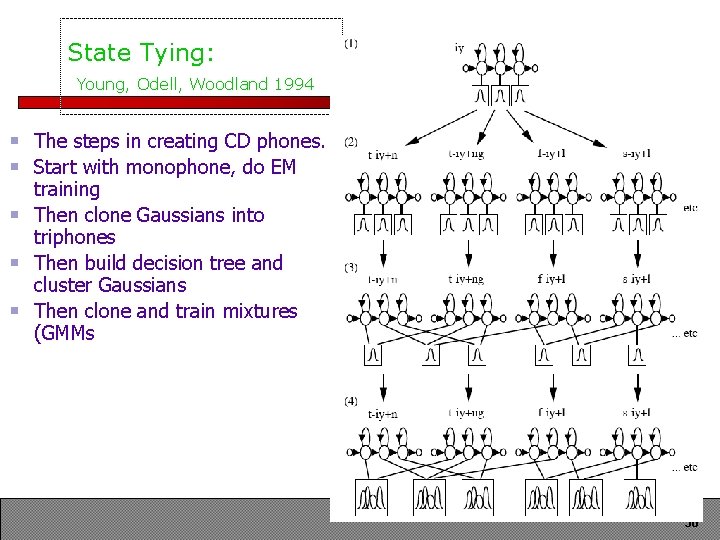

State tying: Young, Odell, Woodland 1994
The steps in creating CD phones:
- Start with monophones, do EM training
- Then clone Gaussians into triphones
- Then build a decision tree and cluster Gaussians
- Then clone and train mixtures (GMMs)

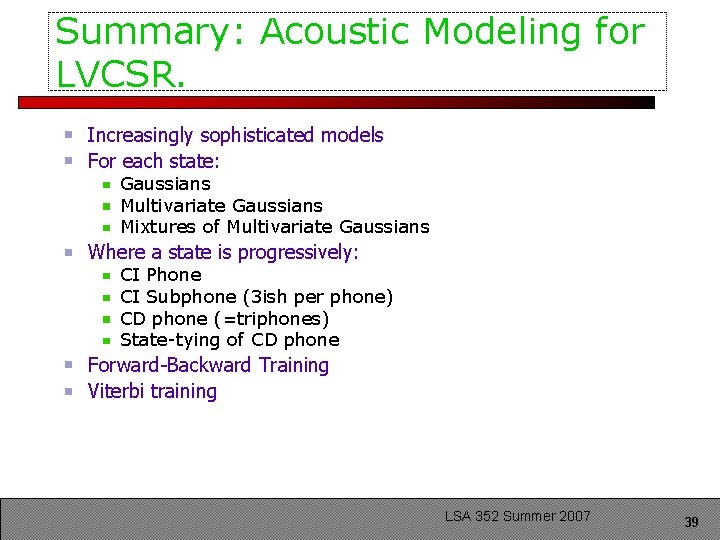

Summary: acoustic modeling for LVCSR
Increasingly sophisticated models.
For each state:
- Gaussians
- Multivariate Gaussians
- Mixtures of multivariate Gaussians
Where a state is progressively:
- CI phone
- CI subphone (3-ish per phone)
- CD phone (= triphones)
- State-tying of CD phones
Training:
- Forward-backward training
- Viterbi training

Outline
- Disfluencies
- Characteristics of disfluencies
- Detecting disfluencies
- MDE bakeoff
- Fragments

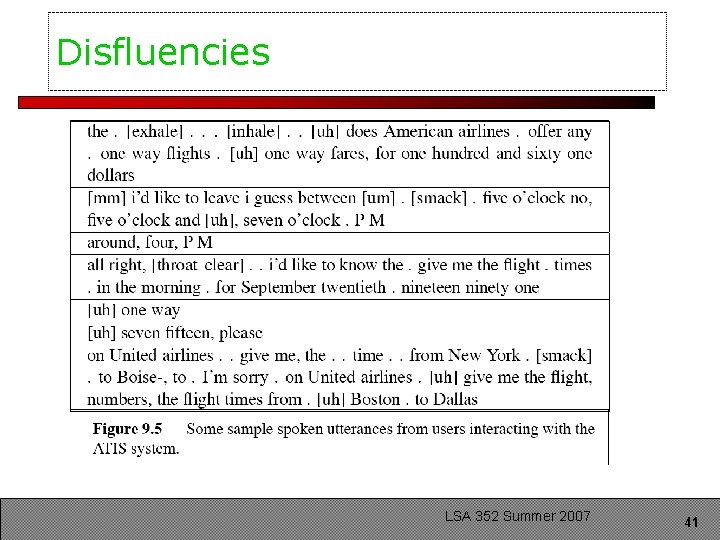

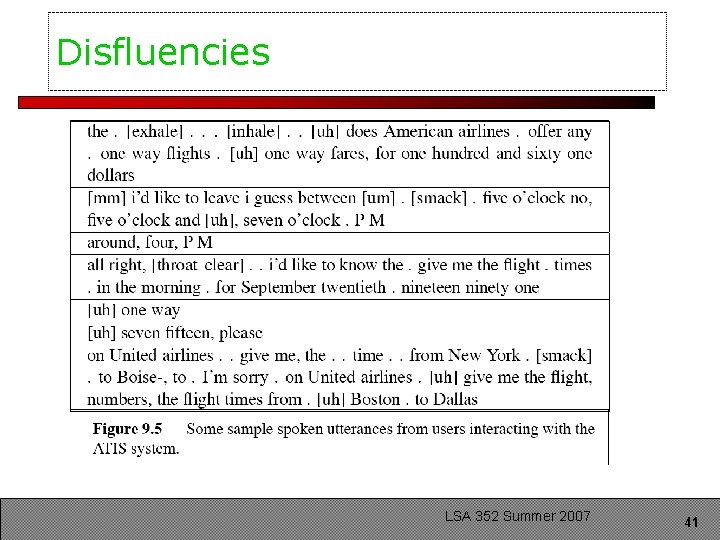

Disfluencies

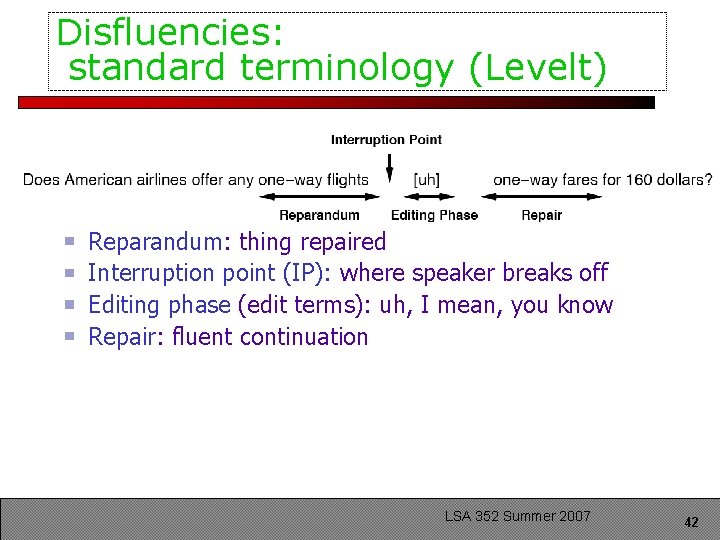

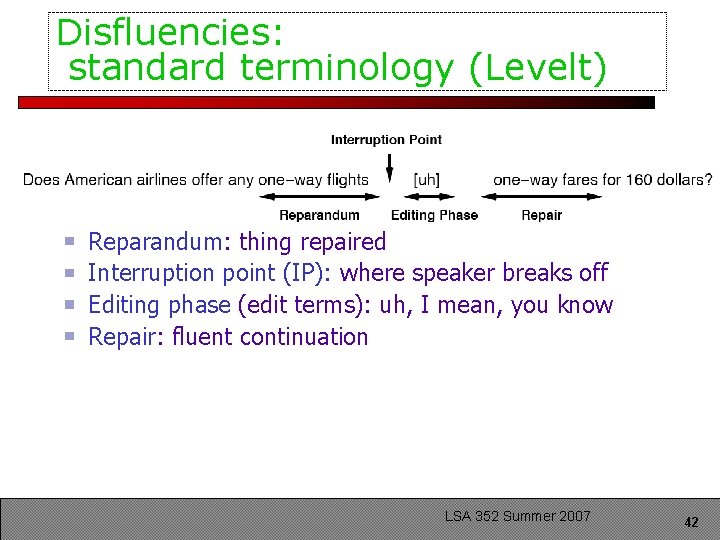

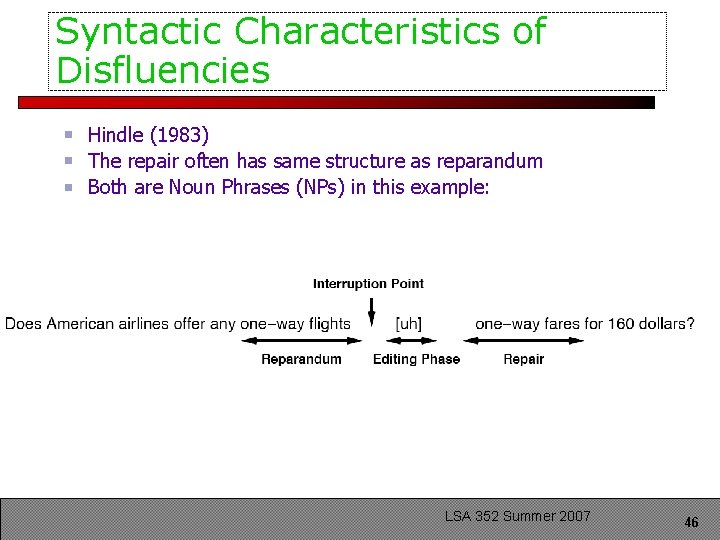

Disfluencies: standard terminology (Levelt)
- Reparandum: the thing repaired
- Interruption point (IP): where the speaker breaks off
- Editing phase (edit terms): uh, I mean, you know
- Repair: fluent continuation

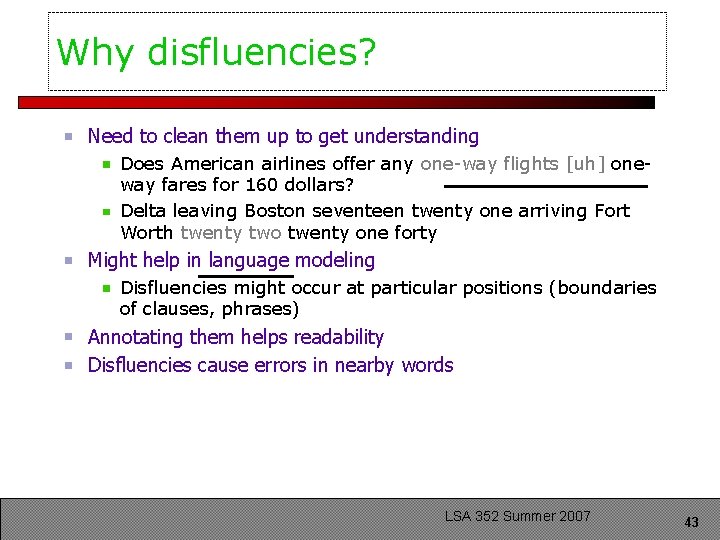

Why disfluencies?
- Need to clean them up to get understanding:
  - Does American Airlines offer any one-way flights [uh] one-way fares for 160 dollars?
  - Delta leaving Boston seventeen twenty one arriving Fort Worth twenty two twenty one forty
- Might help in language modeling: disfluencies might occur at particular positions (boundaries of clauses, phrases)
- Annotating them helps readability
- Disfluencies cause errors in nearby words

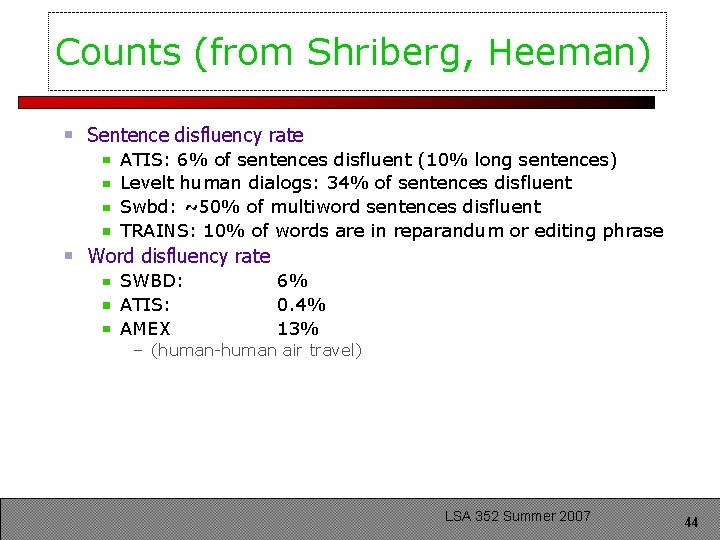

Counts (from Shriberg, Heeman)
Sentence disfluency rate:
- ATIS: 6% of sentences disfluent (10% of long sentences)
- Levelt human dialogs: 34% of sentences disfluent
- SWBD: ~50% of multiword sentences disfluent
- TRAINS: 10% of words are in a reparandum or editing phrase
Word disfluency rate:
- SWBD: 6%
- ATIS: 0.4%
- AMEX (human-human air travel): 13%

Prosodic characteristics of disfluencies
Nakatani and Hirschberg 1994:
- Fragments are good cues to disfluencies
- Prosody:
  - Pause duration is shorter in disfluent silence than in fluent silence
  - F0 increases from the end of the reparandum to the beginning of the repair, but only a minor change
  - Repair interval offsets have a minor prosodic phrase boundary, even in the middle of an NP:
    Show me all n- | round-trip flights | from Pittsburgh | to Atlanta

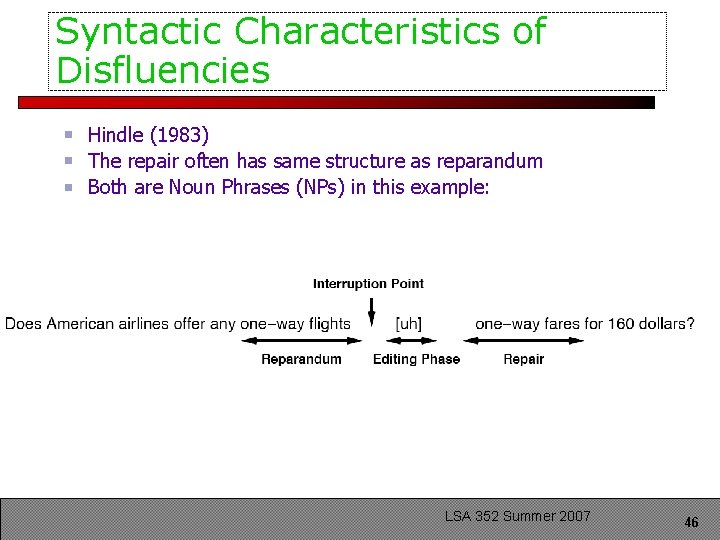

Syntactic characteristics of disfluencies
Hindle (1983):
- The repair often has the same structure as the reparandum
- Both are noun phrases (NPs) in this example:
- So if we could automatically find the IP, we could find and correct the reparandum!

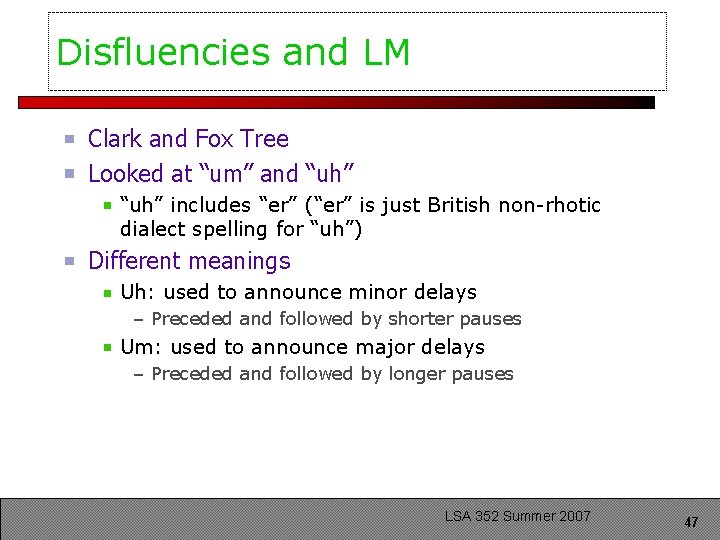

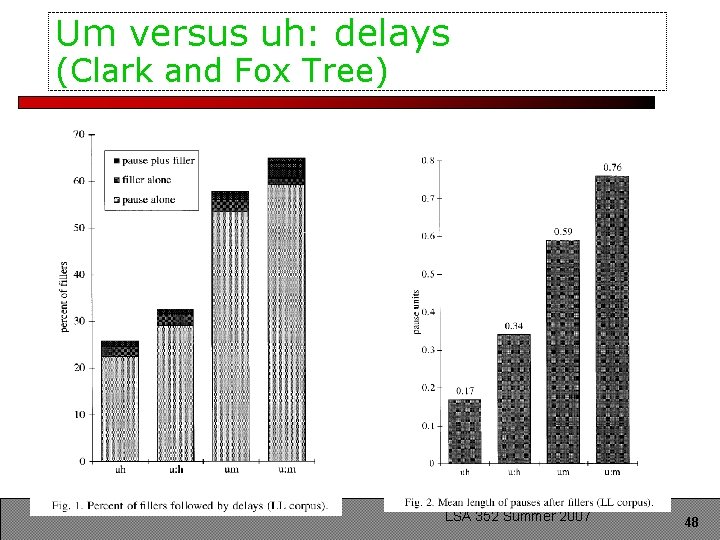

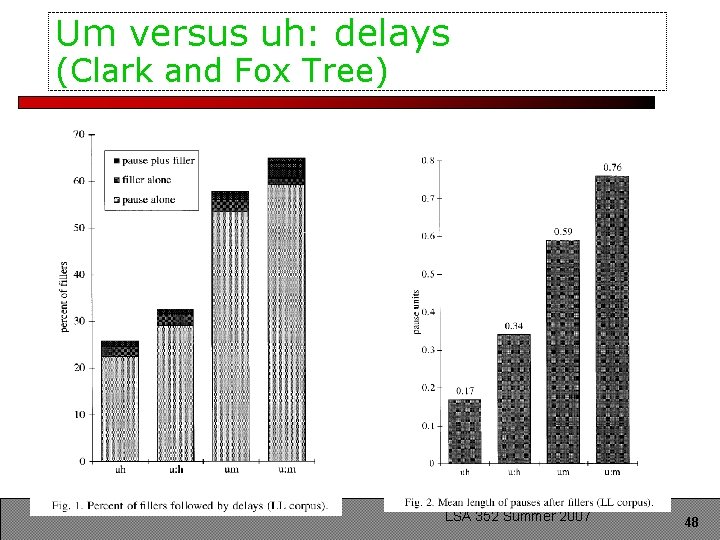

Disfluencies and LM
Clark and Fox Tree looked at “um” and “uh” (including “er”, which is just the British non-rhotic dialect spelling of “uh”). They have different meanings:
- Uh: used to announce minor delays; preceded and followed by shorter pauses
- Um: used to announce major delays; preceded and followed by longer pauses

Um versus uh: delays (Clark and Fox Tree)

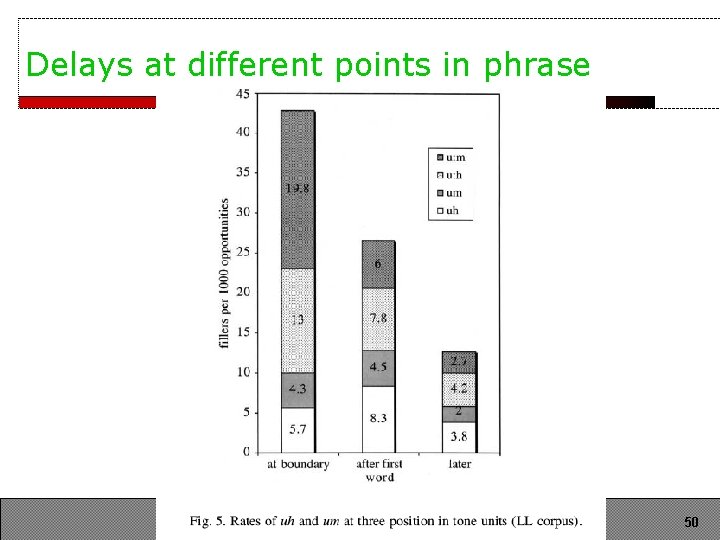

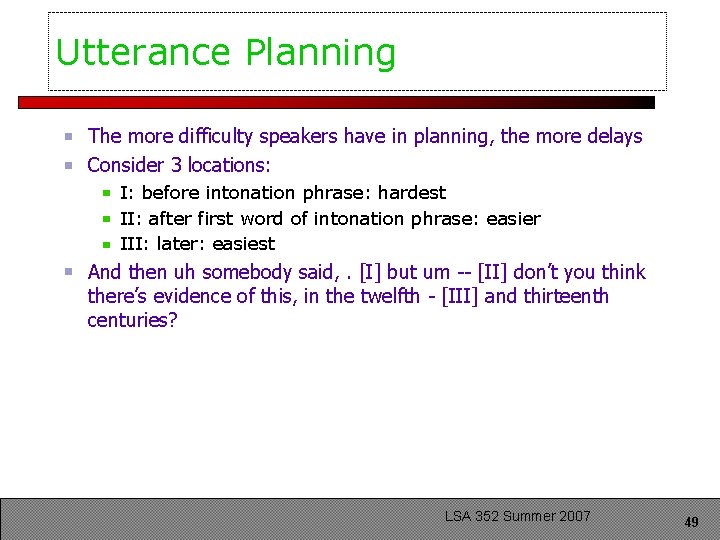

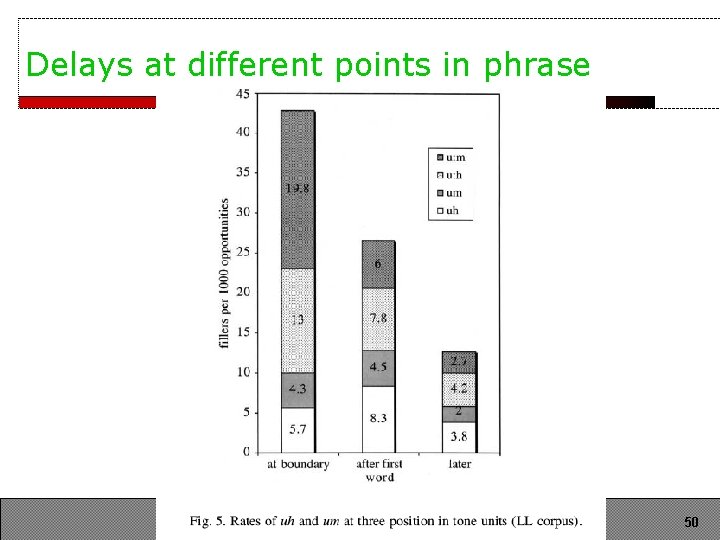

Utterance planning
The more difficulty speakers have in planning, the more delays. Consider 3 locations:
- I: before an intonation phrase: hardest
- II: after the first word of an intonation phrase: easier
- III: later: easiest
And then uh somebody said, . [I] but um -- [II] don’t you think there’s evidence of this, in the twelfth - [III] and thirteenth centuries?

Delays at different points in phrase

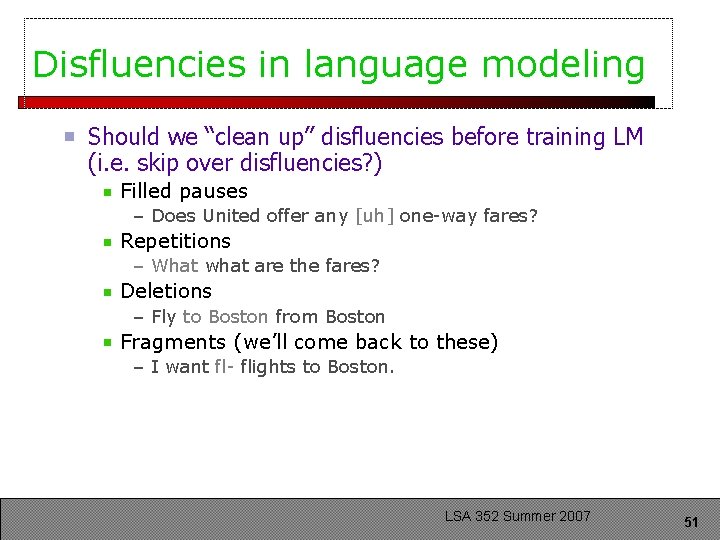

Disfluencies in language modeling
Should we “clean up” disfluencies before training the LM (i.e., skip over disfluencies)?
- Filled pauses: Does United offer any [uh] one-way fares?
- Repetitions: What what are the fares?
- Deletions: Fly to Boston from Boston
- Fragments (we’ll come back to these): I want fl- flights to Boston.

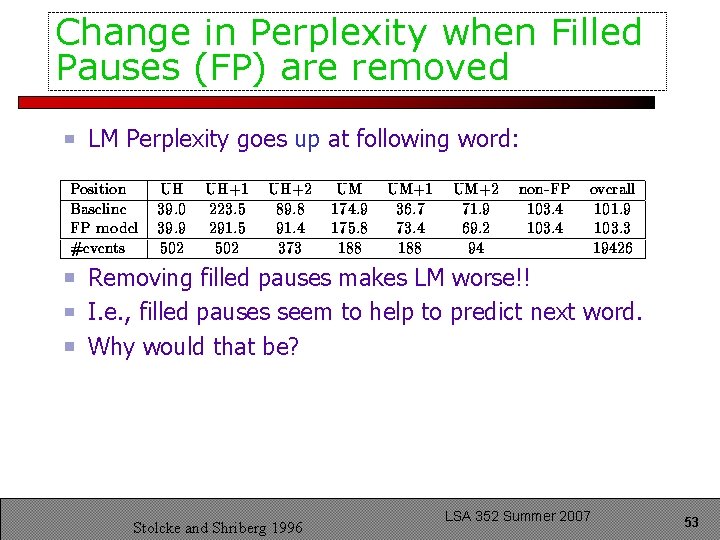

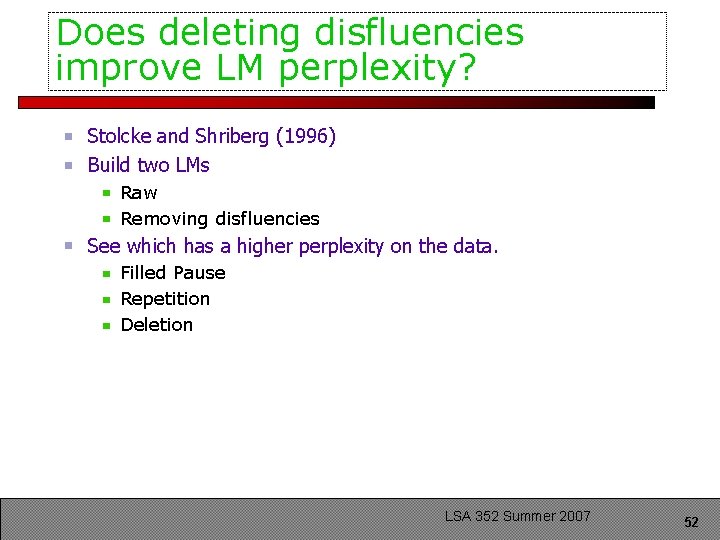

Does deleting disfluencies improve LM perplexity?
Stolcke and Shriberg (1996):
- Build two LMs: raw, and with disfluencies removed
- See which has a higher perplexity on the data
- Filled pause, repetition, deletion

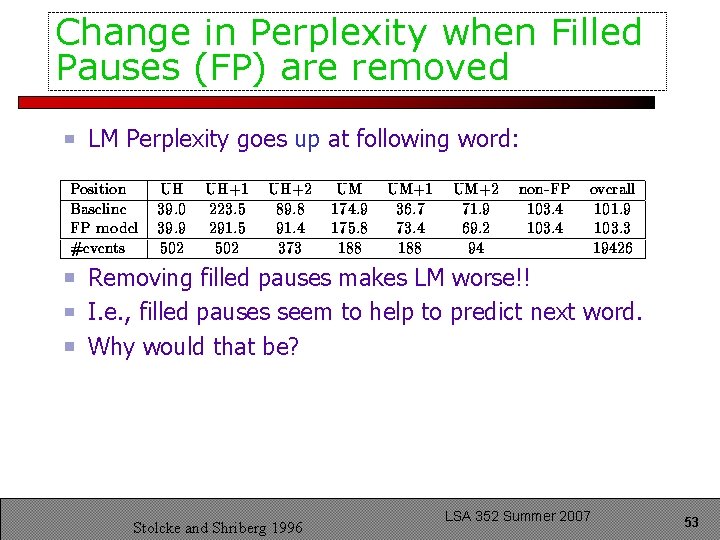

Change in perplexity when filled pauses (FP) are removed
LM perplexity goes up at the following word: removing filled pauses makes the LM worse! I.e., filled pauses seem to help predict the next word. Why would that be? (Stolcke and Shriberg 1996)

Filled pauses tend to occur at clause boundaries
- The word before an FP is the end of the previous clause; the word after is the start of a new clause
- Best not to delete FPs
- Example: Some of the different things we’re doing [uh] there’s not time to do it all
  - “there’s” is very likely to start a sentence
  - So P(there’s|uh) is a better estimate than P(there’s|doing)

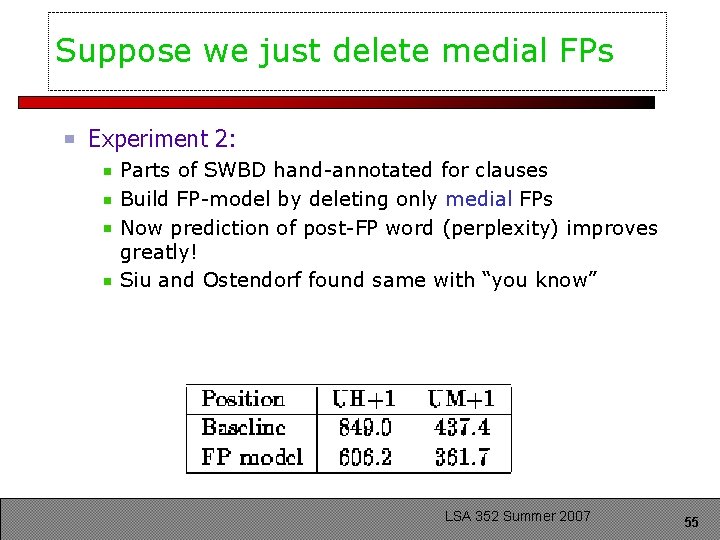

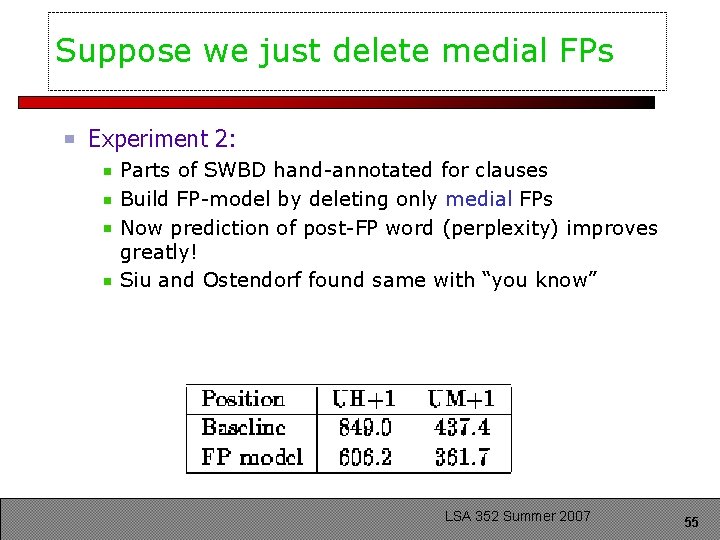

Suppose we just delete medial FPs
Experiment 2:
- Parts of SWBD hand-annotated for clauses
- Build an FP-model by deleting only medial FPs
- Now prediction of the post-FP word (perplexity) improves greatly!
- Siu and Ostendorf found the same with “you know”
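A minimal sketch of this preprocessing, assuming the hand-annotated clause boundaries appear in the token stream as a hypothetical `<c>` token and that only "uh" and "um" count as filled pauses: an FP is dropped only when it is not next to a clause boundary.

```python
FILLED_PAUSES = {"uh", "um"}
BOUNDARY = "<c>"   # hypothetical clause-boundary marker standing in for hand annotation


def delete_medial_fps(tokens):
    """Drop filled pauses inside a clause; keep those adjacent to a clause boundary."""
    out = []
    for k, w in enumerate(tokens):
        if w in FILLED_PAUSES:
            at_left_edge = k == 0 or tokens[k - 1] == BOUNDARY
            at_right_edge = k == len(tokens) - 1 or tokens[k + 1] == BOUNDARY
            if not (at_left_edge or at_right_edge):
                continue   # medial FP: delete it from the LM training text
        out.append(w)
    return out


tokens = "some of the different things we're doing <c> uh there's not um time".split()
print(delete_medial_fps(tokens))
```

The boundary-adjacent "uh" stays, so the LM can still learn that it predicts a clause-initial word such as "there's".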

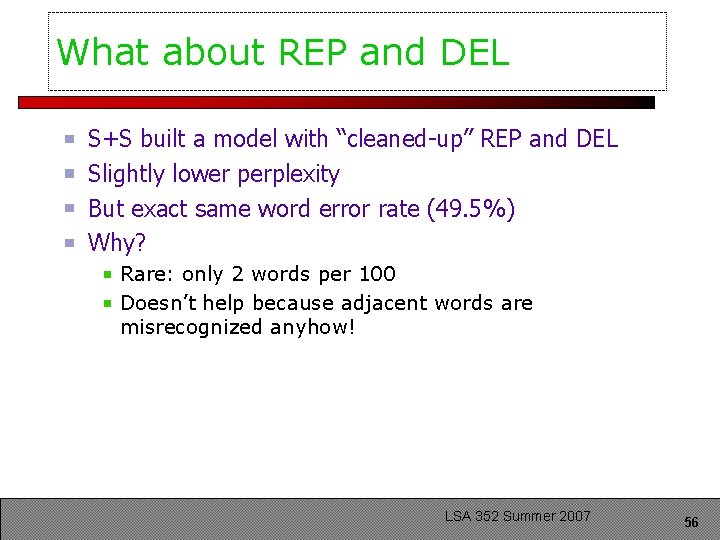

What about REP and DEL?
S+S built a model with “cleaned-up” REP and DEL:
- Slightly lower perplexity
- But exactly the same word error rate (49.5%)
Why?
- Rare: only 2 words per 100
- Doesn’t help because adjacent words are misrecognized anyhow!

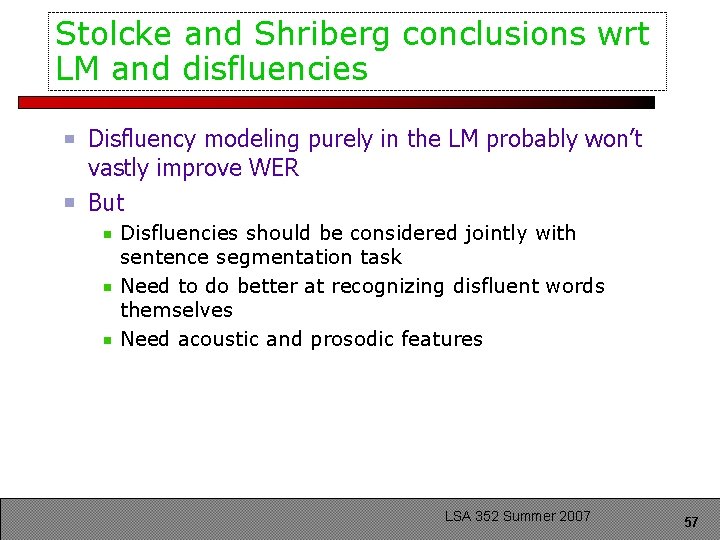

Stolcke and Shriberg conclusions with respect to LM and disfluencies
- Disfluency modeling purely in the LM probably won’t vastly improve WER
- But:
  - Disfluencies should be considered jointly with the sentence segmentation task
  - Need to do better at recognizing disfluent words themselves
  - Need acoustic and prosodic features

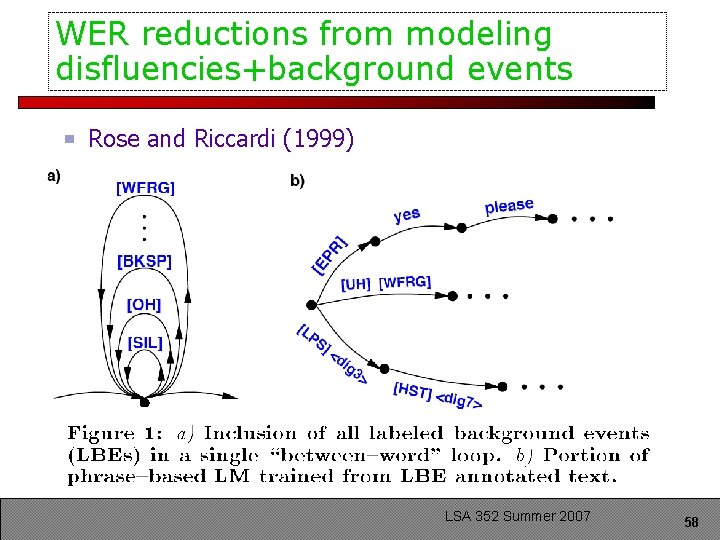

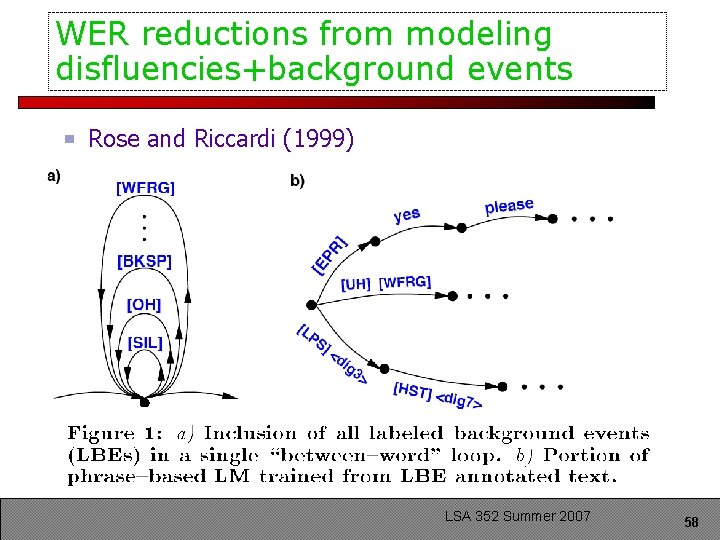

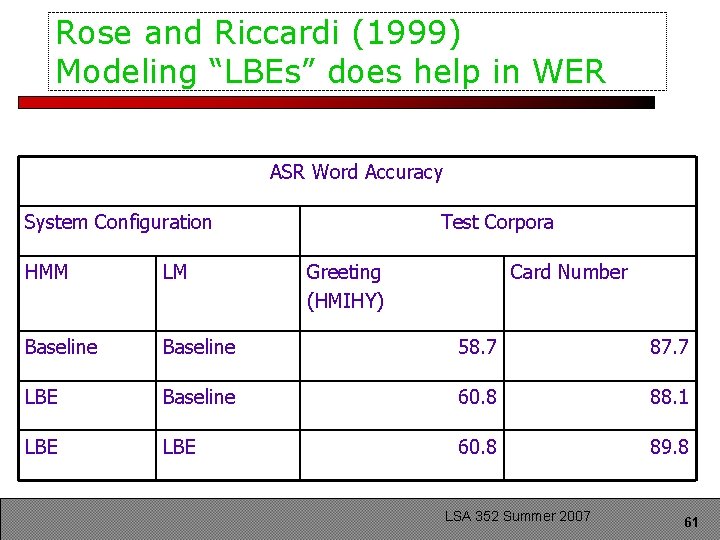

WER reductions from modeling disfluencies + background events
Rose and Riccardi (1999)

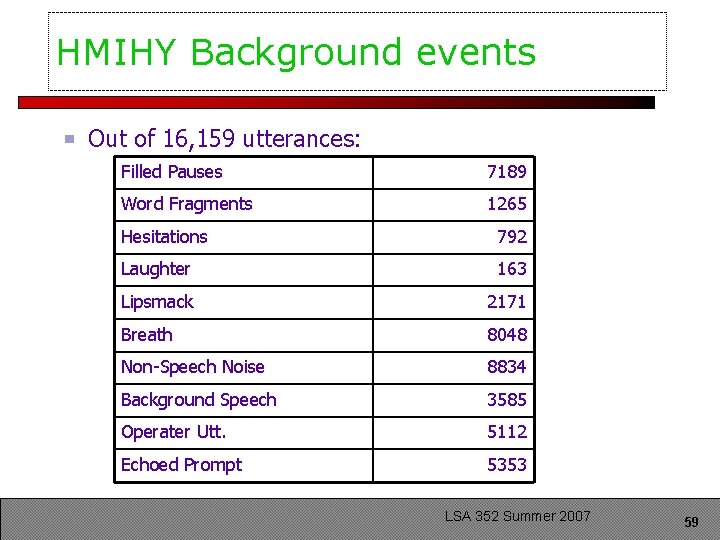

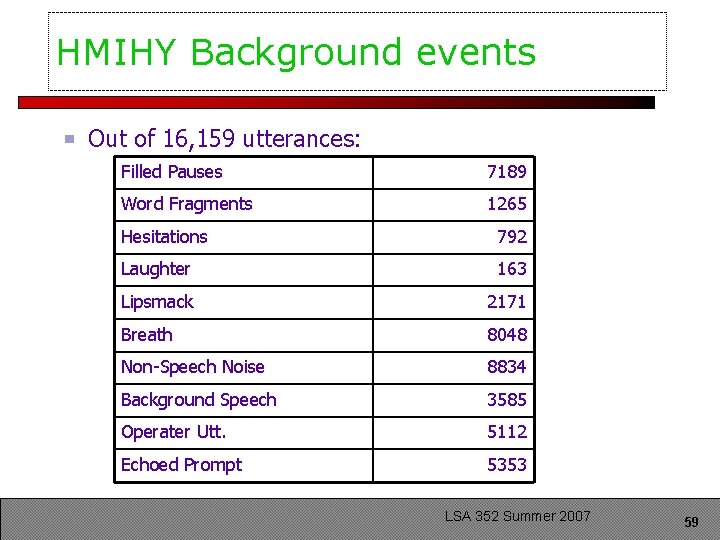

HMIHY background events
Out of 16,159 utterances:

| Event | Count |
|---|---|
| Filled pauses | 7189 |
| Word fragments | 1265 |
| Hesitations | 792 |
| Laughter | 163 |
| Lipsmack | 2171 |
| Breath | 8048 |
| Non-speech noise | 8834 |
| Background speech | 3585 |
| Operator utterance | 5112 |
| Echoed prompt | 5353 |

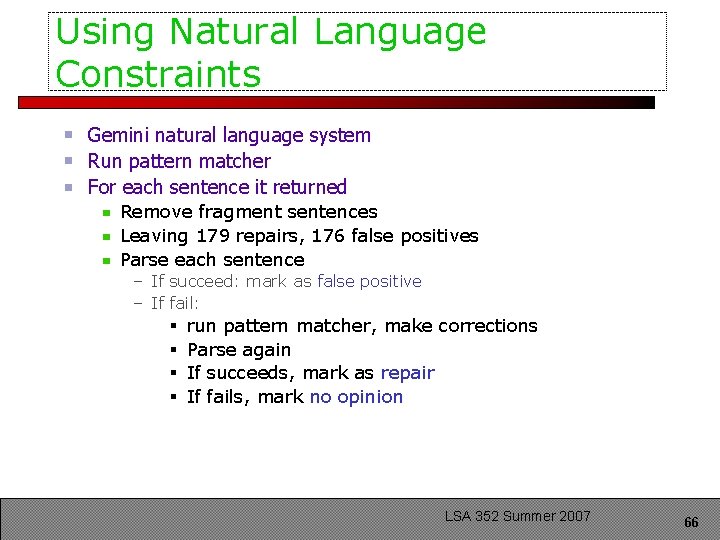

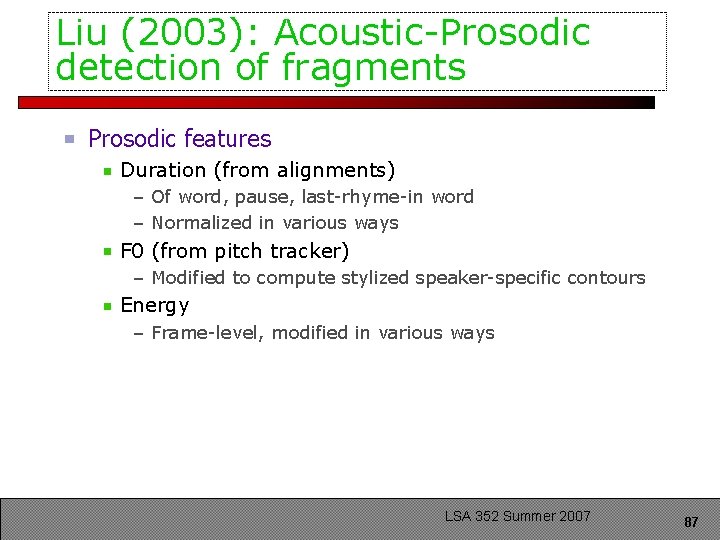

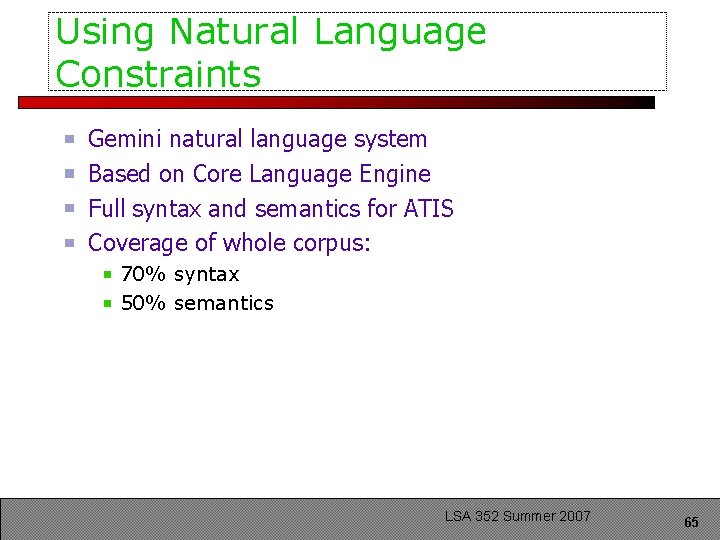

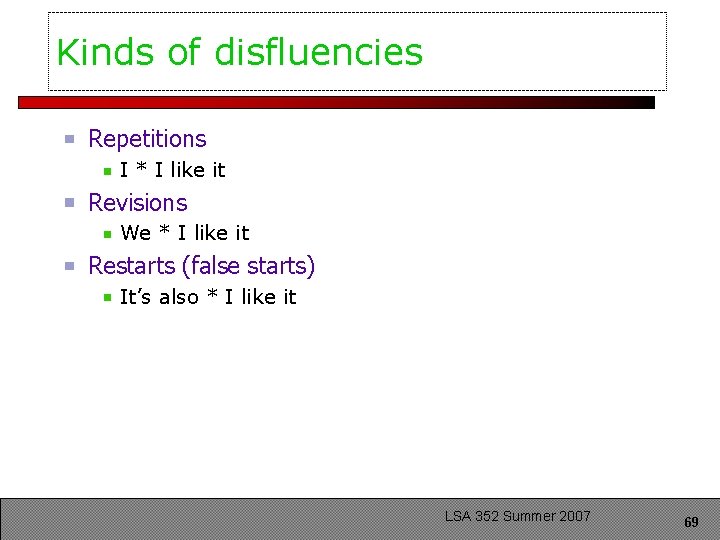

![Phrase-based LM: “I would like to make a collect call”, “a [wfrag]”, <dig 3>](https://slidetodoc.com/presentation_image/2ad9ce18b77484498dd8ef80a0e8e280/image-60.jpg)

Phrase-based LM “I would like to make a collect call” “a [wfrag]” <dig 3> [brth] <dig 3> “[brth] and I”

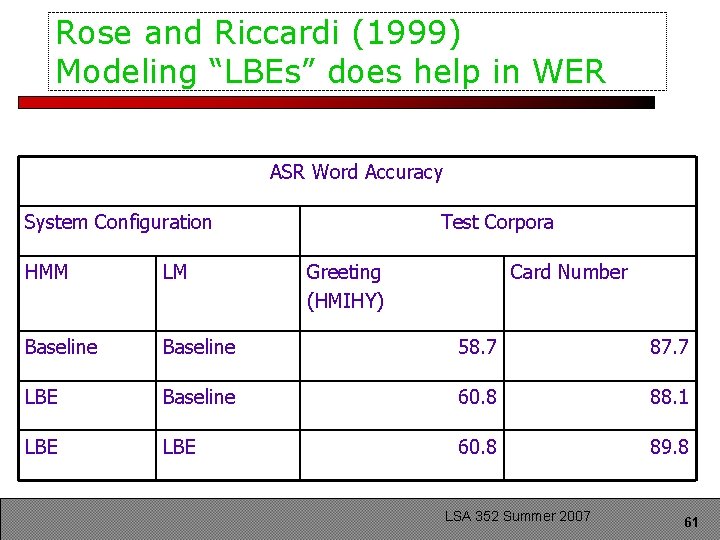

Rose and Riccardi (1999): modeling “LBEs” does help WER
ASR word accuracy (%) by system configuration and test corpus:

| HMM | LM | Greeting (HMIHY) | Card Number |
|---|---|---|---|
| Baseline | Baseline | 58.7 | 87.7 |
| LBE | Baseline | 60.8 | 88.1 |
| LBE | LBE | 60.8 | 89.8 |

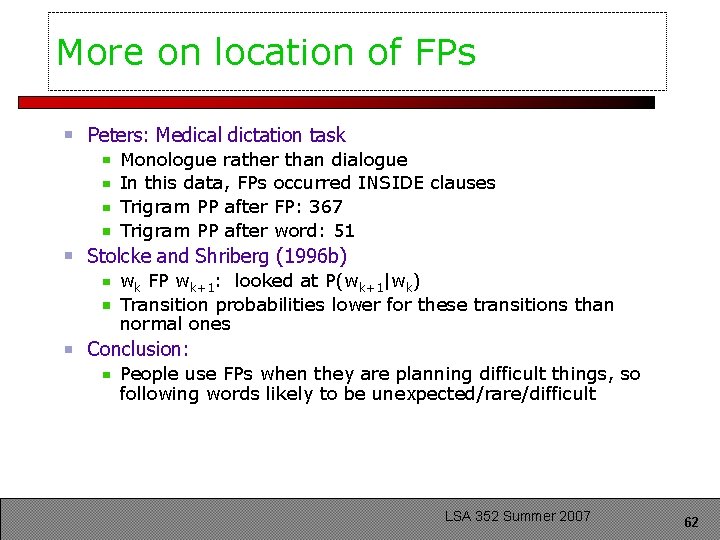

More on the location of FPs
Peters: medical dictation task
- Monologue rather than dialogue
- In this data, FPs occurred INSIDE clauses
- Trigram PP after FP: 367
- Trigram PP after word: 51
Stolcke and Shriberg (1996b):
- For $w_k$ FP $w_{k+1}$, looked at $P(w_{k+1} \mid w_k)$
- Transition probabilities are lower for these transitions than for normal ones
Conclusion: people use FPs when they are planning difficult things, so the following words are likely to be unexpected/rare/difficult.

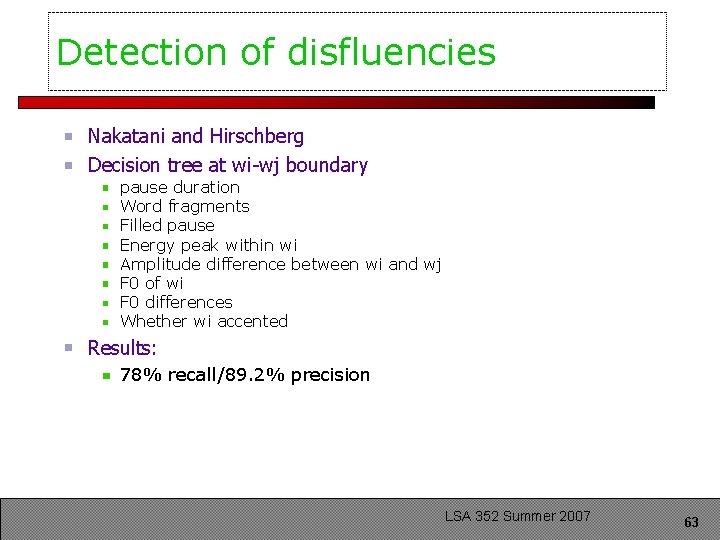

Detection of disfluencies
Nakatani and Hirschberg: decision tree at the $w_i$/$w_j$ boundary, using:
- pause duration
- word fragments
- filled pause
- energy peak within $w_i$
- amplitude difference between $w_i$ and $w_j$
- F0 of $w_i$
- F0 differences
- whether $w_i$ is accented
Results: 78% recall / 89.2% precision

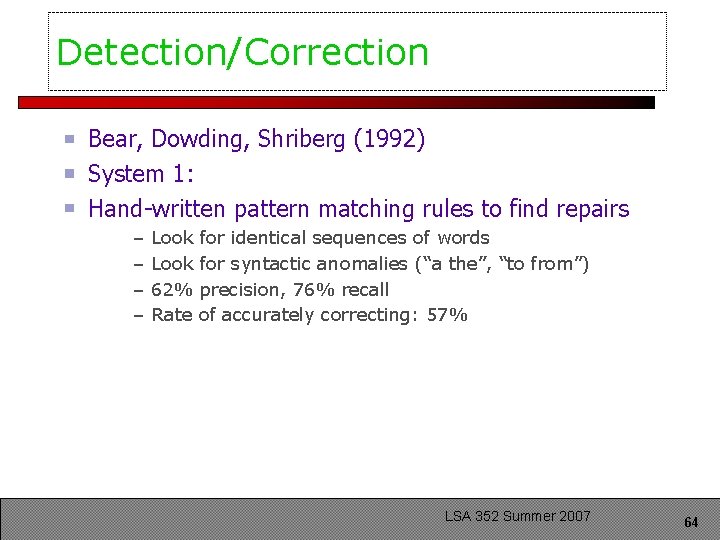

Detection/correction
Bear, Dowding, Shriberg (1992)
System 1: hand-written pattern-matching rules to find repairs:
- Look for identical sequences of words
- Look for syntactic anomalies (“a the”, “to from”)
- 62% precision, 76% recall
- Rate of accurately correcting: 57%
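A minimal sketch of the two kinds of pattern rules, assuming whitespace-tokenized input and using only the two anomalous word pairs named on the slide; the function name and the maximum repeat length are hypothetical:

```python
ANOMALOUS_BIGRAMS = {("a", "the"), ("to", "from")}   # only the slide's examples


def find_repair_candidates(words, max_len=3):
    """Return (start, length) spans where a word sequence is immediately repeated,
    and the positions i where (words[i], words[i+1]) is a syntactic anomaly."""
    repeats = []
    for n in range(max_len, 0, -1):                  # prefer longer repeats first
        for i in range(len(words) - 2 * n + 1):
            if words[i:i + n] == words[i + n:i + 2 * n]:
                repeats.append((i, n))
    anomalies = [i for i in range(len(words) - 1)
                 if (words[i], words[i + 1]) in ANOMALOUS_BIGRAMS]
    return repeats, anomalies


print(find_repair_candidates("what what are the fares".split()))
print(find_repair_candidates("fly to from boston".split()))
```

Rules this shallow also fire on fluent repeats ("that that"), which is one reason the precision above is only 62%.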

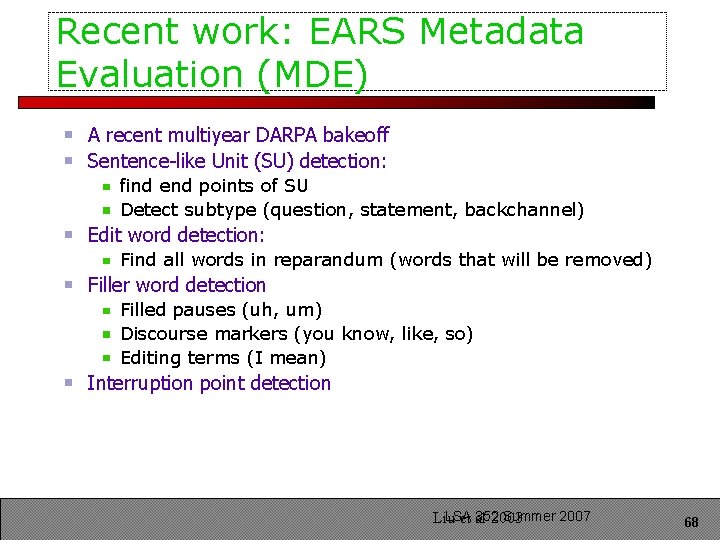

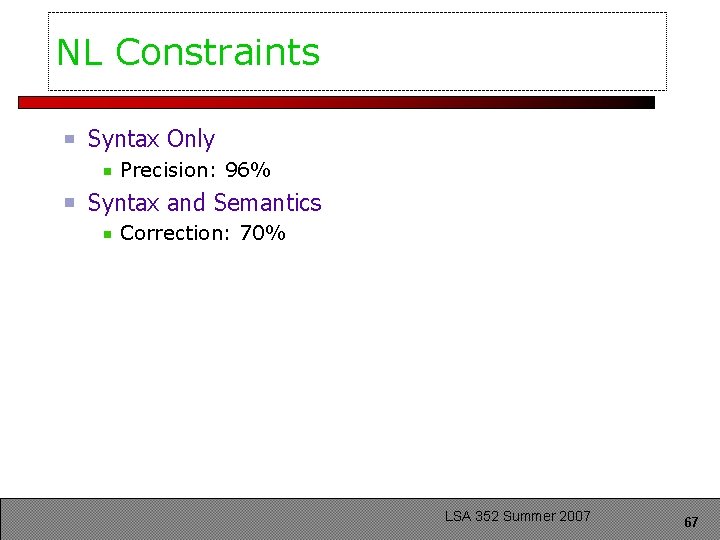

Using natural language constraints
Gemini natural language system:
- Based on the Core Language Engine
- Full syntax and semantics for ATIS
- Coverage of whole corpus: 70% syntax, 50% semantics

Using natural language constraints
Gemini natural language system:
- Run the pattern matcher
- For each sentence it returned, remove fragment sentences, leaving 179 repairs and 176 false positives
- Parse each sentence:
  - If it succeeds: mark as false positive
  - If it fails: run the pattern matcher, make corrections, and parse again. If that succeeds, mark as repair; if it fails, mark as no opinion

NL constraints
- Syntax only: precision 96%
- Syntax and semantics: correction 70%

Recent work: EARS Metadata Evaluation (MDE)
A recent multiyear DARPA bakeoff (Liu et al 2003):
- Sentence-like unit (SU) detection: find the end points of each SU; detect the subtype (question, statement, backchannel)
- Edit word detection: find all words in the reparandum (words that will be removed)
- Filler word detection:
  - Filled pauses (uh, um)
  - Discourse markers (you know, like, so)
  - Editing terms (I mean)
- Interruption point detection

Kinds of disfluencies
- Repetitions: I * I like it
- Revisions: We * I like it
- Restarts (false starts): It’s also * I like it

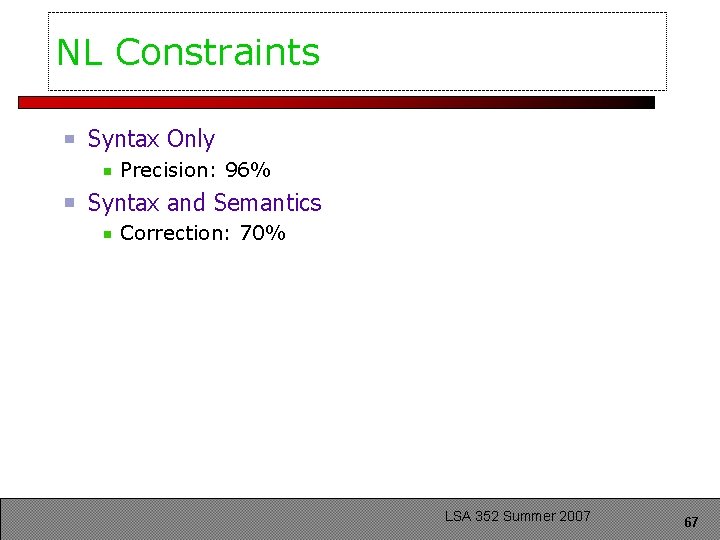

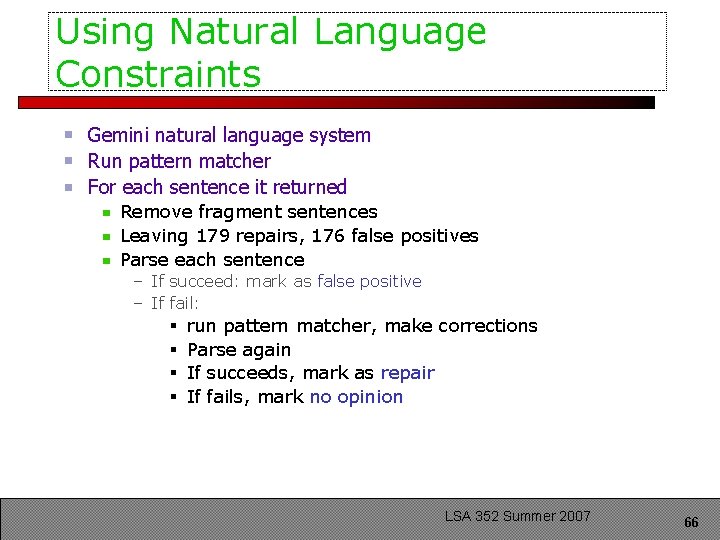

![MDE transcription conventions: ./ for statement SU boundaries, <> for fillers, [] for edit words](https://slidetodoc.com/presentation_image/2ad9ce18b77484498dd8ef80a0e8e280/image-70.jpg)

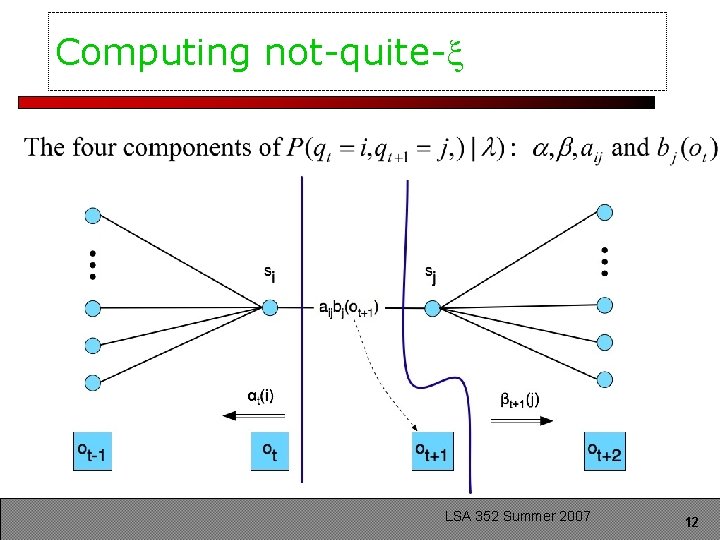

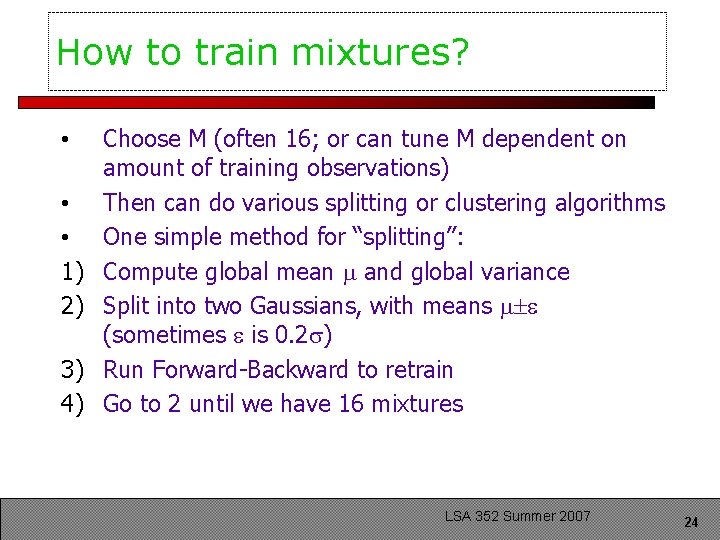

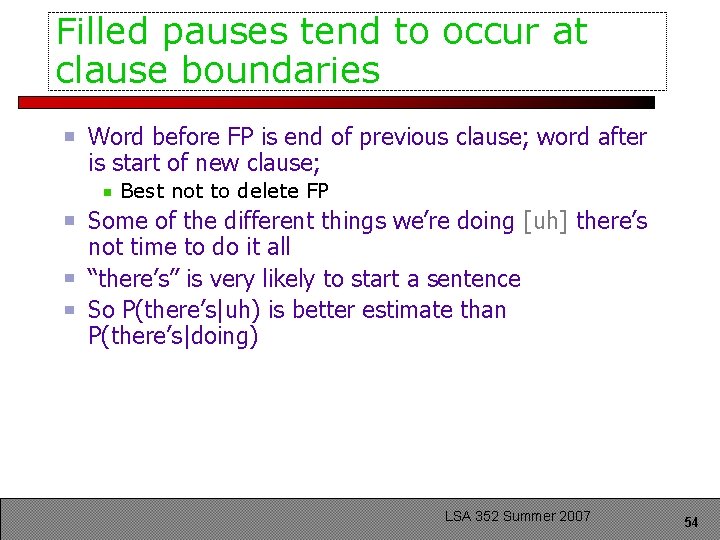

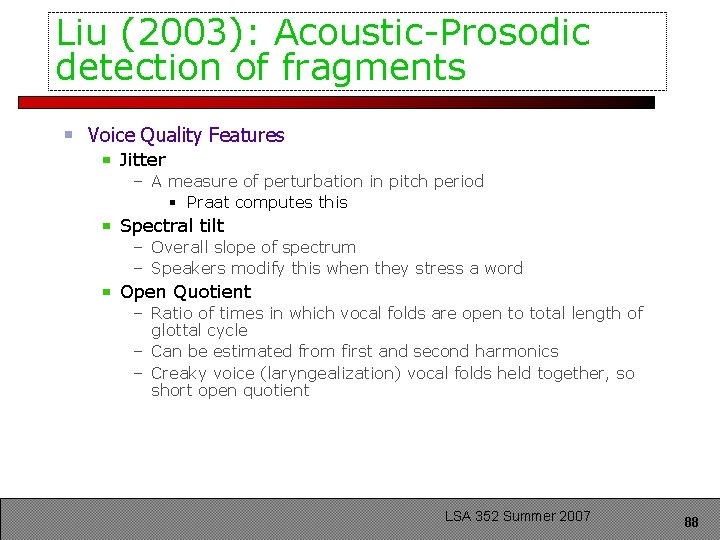

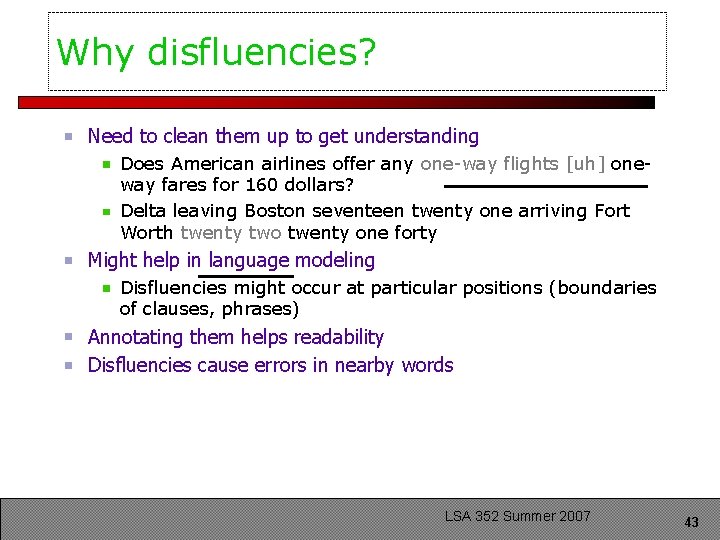

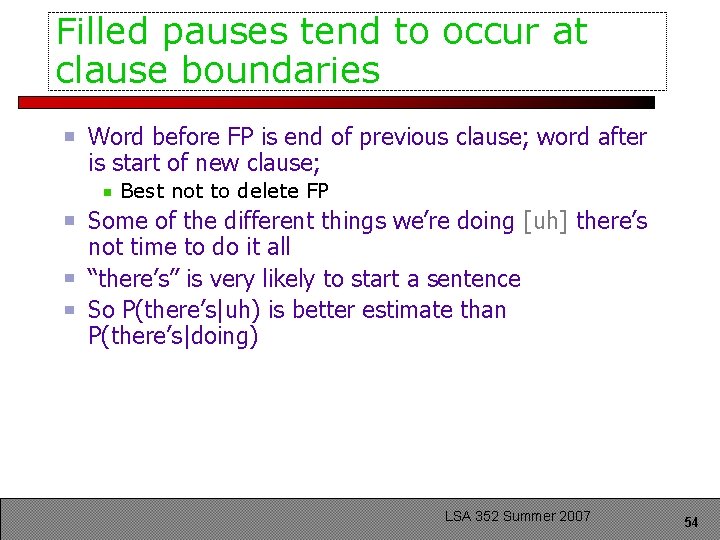

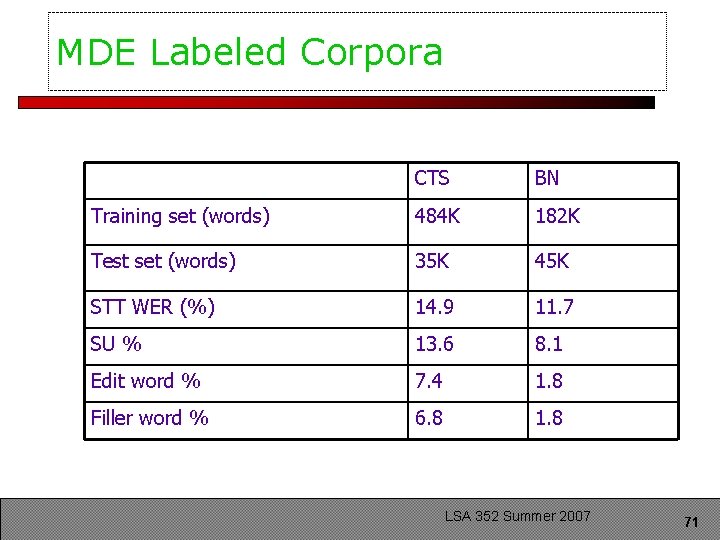

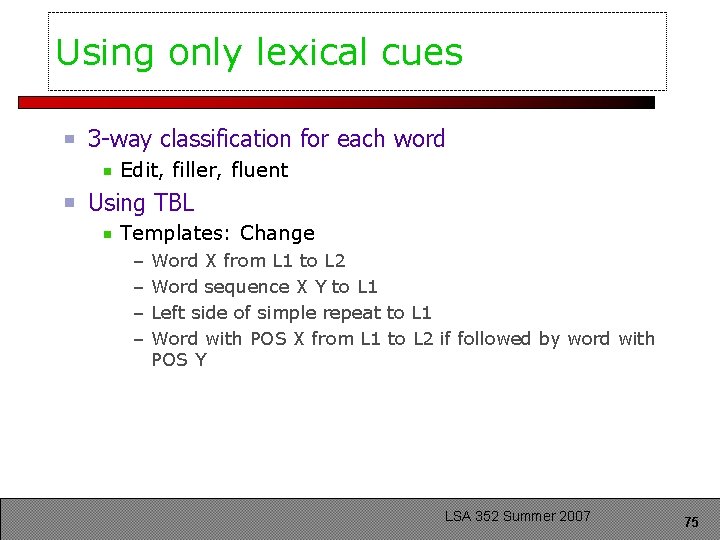

MDE transcription conventions:
- ./ for statement SU boundaries
- <> for fillers
- [] for edit words
- * for IP (interruption point) inside edits
Example:
And <uh> <you know> wash your clothes wherever you are. / and [ you ] * you really get used to the outdoors. /
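A minimal sketch of reading these conventions with regular expressions, assuming brackets are well-formed and not nested: it pulls out fillers and edit words, deletes them and the IP marks, and splits what remains into SUs at each "/" mark (the function name is hypothetical):

```python
import re


def clean_mde(text):
    """Return (SUs, fillers, edit regions) from an MDE-style annotated string."""
    fillers = [f.strip() for f in re.findall(r"<([^>]*)>", text)]
    edits = [e.strip() for e in re.findall(r"\[([^\]]*)\]", text)]
    cleaned = re.sub(r"<[^>]*>|\[[^\]]*\]|\*", " ", text)    # drop fillers, edits, IP marks
    sus = [" ".join(su.split()) for su in cleaned.split("/")]  # normalize whitespace per SU
    return [su for su in sus if su], fillers, edits


example = ("And <uh> <you know> wash your clothes wherever you are. / "
           "and [ you ] * you really get used to the outdoors. /")
sus, fillers, edits = clean_mde(example)
print(sus)
```

This yields the kind of "cleaned-up" text discussed earlier for LM training; a real MDE reader would also keep the SU type marks rather than just splitting on "/".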

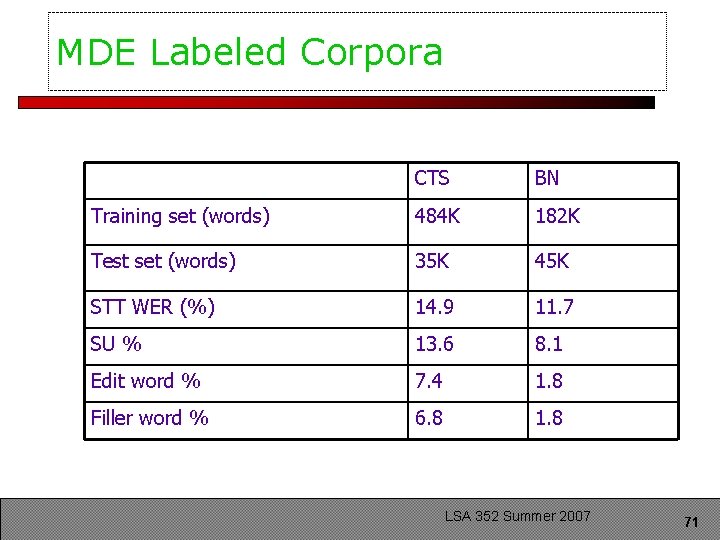

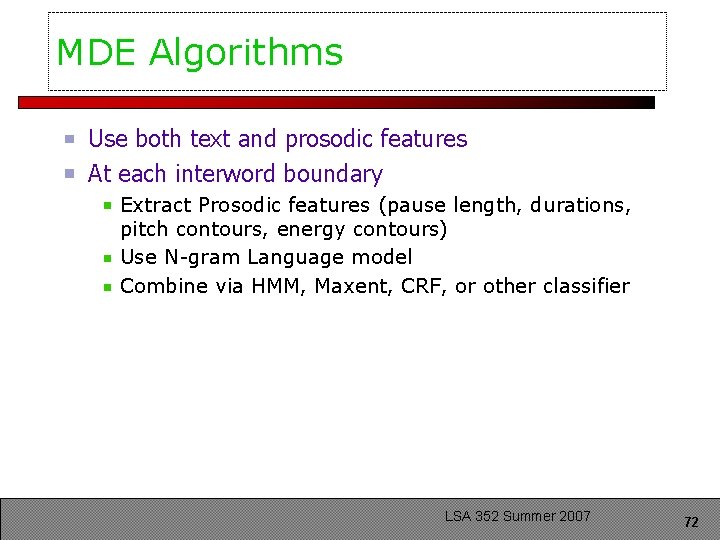

MDE labeled corpora

| | CTS | BN |
|---|---|---|
| Training set (words) | 484K | 182K |
| Test set (words) | 35K | 45K |
| STT WER (%) | 14.9 | 11.7 |
| SU % | 13.6 | 8.1 |
| Edit word % | 7.4 | 1.8 |
| Filler word % | 6.8 | 1.8 |

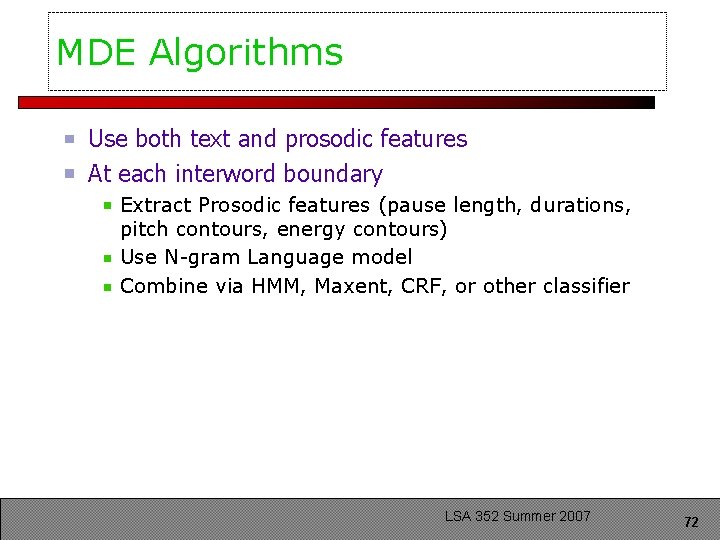

MDE algorithms
Use both text and prosodic features. At each interword boundary:
- Extract prosodic features (pause length, durations, pitch contours, energy contours)
- Use an N-gram language model
- Combine via an HMM, maxent, CRF, or other classifier
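A minimal sketch of the pause and duration features at each interword boundary, assuming word-level forced-alignment output as hypothetical (word, start, end) tuples in seconds; pitch and energy contours would need a pitch tracker and frame energies and are omitted:

```python
def boundary_features(alignment):
    """alignment: list of (word, start_sec, end_sec) in time order.
    Returns one feature dict per interword boundary."""
    feats = []
    for (w1, s1, e1), (w2, s2, e2) in zip(alignment, alignment[1:]):
        feats.append({
            "left": w1, "right": w2,
            "pause": max(0.0, s2 - e1),   # silence between the two words
            "left_dur": e1 - s1,          # duration of the word before the boundary
            "right_dur": e2 - s2,         # duration of the word after the boundary
        })
    return feats


feats = boundary_features([("and", 0.0, 0.2), ("you", 0.5, 0.6), ("you", 0.6, 0.75)])
```

Each dict would then be combined with N-gram LM scores for the same boundary and passed to whichever classifier is used.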

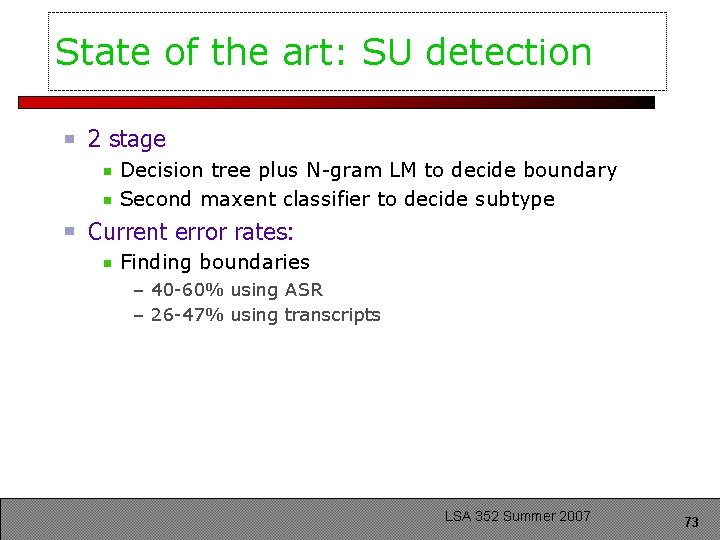

State of the art: SU detection
Two stages:
- Decision tree plus N-gram LM to decide the boundary
- Second maxent classifier to decide the subtype
Current error rates for finding boundaries:
- 40-60% using ASR
- 26-47% using transcripts

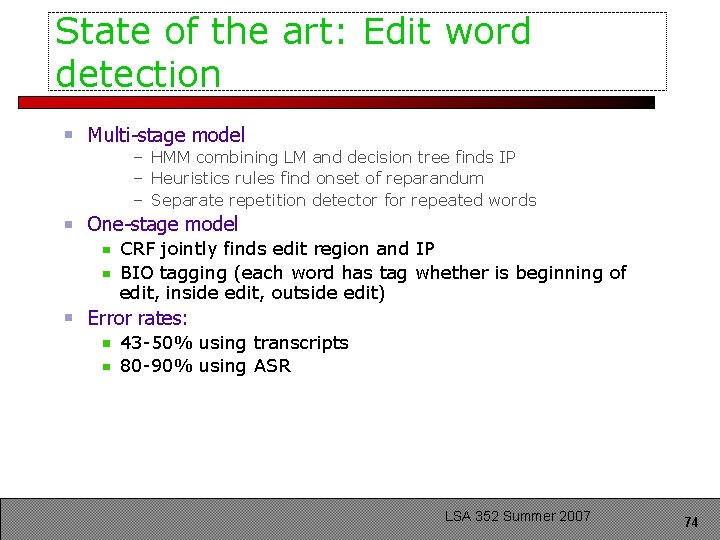

State of the art: edit word detection
Multi-stage model:
- HMM combining LM and decision tree finds the IP
- Heuristic rules find the onset of the reparandum
- Separate repetition detector for repeated words
One-stage model:
- CRF jointly finds the edit region and IP
- BIO tagging (each word has a tag saying whether it is at the beginning of an edit, inside an edit, or outside an edit)
Error rates:
- 43-50% using transcripts
- 80-90% using ASR
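A minimal sketch of turning MDE-style edit brackets into BIO training tags for such a one-stage tagger, assuming "[" and "]" are separate space-delimited tokens as in the MDE example and "*" marks the IP; the function name is hypothetical:

```python
def bio_tags(annotated):
    """Map an annotated string to (word, tag) pairs:
    B = first word of an edit region, I = later word inside it, O = outside any edit."""
    tagged, in_edit, first = [], False, False
    for tok in annotated.split():
        if tok == "[":
            in_edit, first = True, True
        elif tok == "]":
            in_edit = False
        elif tok == "*":
            continue                      # interruption point: a position, not a word
        else:
            tag = ("B" if first else "I") if in_edit else "O"
            first = False
            tagged.append((tok, tag))
    return tagged


print(bio_tags("[ it 's also ] * I like it"))
```

A CRF trained on such sequences predicts one tag per word; the IP is then implied by the end of each B/I run.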

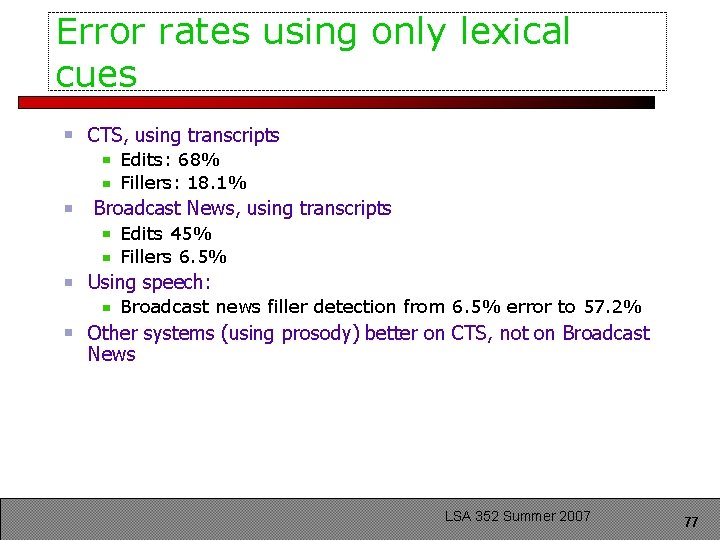

Using only lexical cues
3-way classification for each word: edit, filler, fluent
Using TBL (transformation-based learning). Templates: change
- word X from L1 to L2
- word sequence X Y to L1
- the left side of a simple repeat to L1
- a word with POS X from L1 to L2 if followed by a word with POS Y

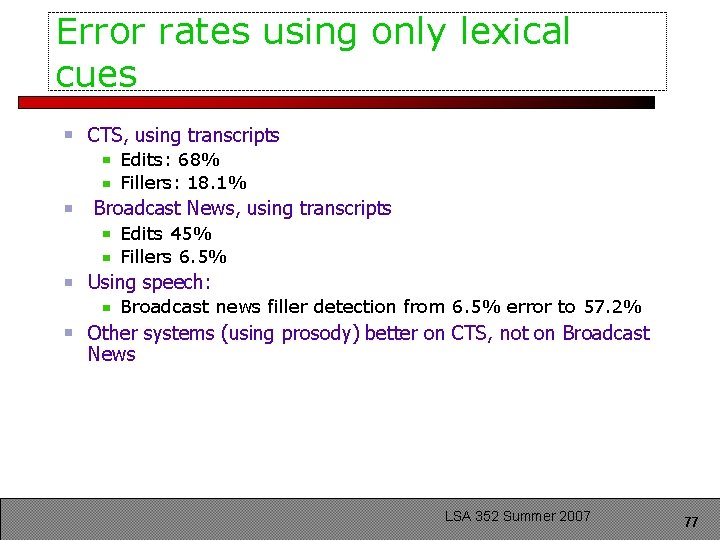

Rules learned
Label:
- all fluent filled pauses as fillers
- the left side of a simple repeat as an edit
- “you know” as fillers
- fluent “well”s as fillers
- fluent fragments as edits
- “I mean” as a filler
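A minimal hand-coded approximation of these learned rules (not TBL itself, which learns the rules and their order from data), assuming whitespace-tokenized words, "uh"/"um" as the filled pauses, and a trailing hyphen marking a fragment. Labeling every "well" as a filler is cruder than the learned rule and would mislabel a fluent "works well":

```python
FILLED_PAUSES = {"uh", "um"}
FILLER_PHRASES = {("you", "know"), ("i", "mean")}


def label_words(words):
    """Start with every word 'fluent', then apply the rules in order."""
    low = [w.lower() for w in words]
    labels = ["fluent"] * len(words)
    for k, w in enumerate(low):
        if w in FILLED_PAUSES or w == "well":
            labels[k] = "filler"
        elif w.endswith("-"):
            labels[k] = "edit"                        # word fragment
        elif k + 1 < len(low) and low[k + 1] == w:
            labels[k] = "edit"                        # left side of a simple repeat
    for k in range(len(low) - 1):
        if (low[k], low[k + 1]) in FILLER_PHRASES:
            labels[k] = labels[k + 1] = "filler"
    return labels


print(label_words("I I like uh well you know it".split()))
```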

Error rates using only lexical cues
CTS, using transcripts:
- Edits: 68%
- Fillers: 18.1%
Broadcast News, using transcripts:
- Edits: 45%
- Fillers: 6.5%
Using speech:
- Broadcast News filler detection goes from 6.5% error to 57.2%
Other systems (using prosody) are better on CTS, but not on Broadcast News.

Conclusions: lexical cues only
- Can do pretty well with only words (as long as the words are correct)
- Much harder to detect fillers and fragments from ASR output, since recognition of these is bad

Fragments
Incomplete or cut-off words:
- Leaving at seven fif- eight thirty
- uh, I, I d-, don't feel comfortable
- You know the fam-, well, the families
- I need to know, uh, how- how do you feel…
- Uh yeah, well, it- that’s right. And it-
How common:
- SWBD: around 0.7% of words are fragments (Liu 2003)
- ATIS: 60.2% of repairs contain fragments (6% of corpus sentences had at least 1 repair) (Bear et al 1992)
- Another ATIS corpus: 74% of all reparanda end in word fragments (Nakatani and Hirschberg 1994)

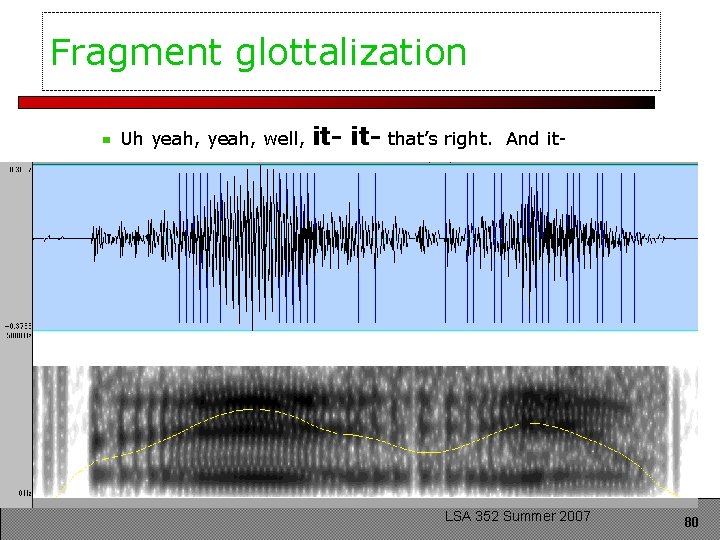

Fragment glottalization
Uh yeah, well, it- that’s right. And it-

Why fragments are important
- Frequent enough to be a problem:
  - Only 1% of words / 3% of sentences
  - But if we miss a fragment, we are likely to get the surrounding words wrong (word segmentation error)
  - So it could be 3 or more times worse (3% of words, 9% of sentences): a problem!
- Useful for finding other repairs:
  - In 40% of SRI-ATIS sentences containing fragments, the fragment occurred at the right edge of a long repair
  - 74% of ATT-ATIS reparanda ended in fragments
- Sometimes they are the only cue to a repair: “leaving at <seven> <fif-> eight thirty”

How fragments are dealt with in current ASR systems
- In training, throw out any sentences with fragments
- In test, get them wrong
- Probably get neighboring words wrong too!

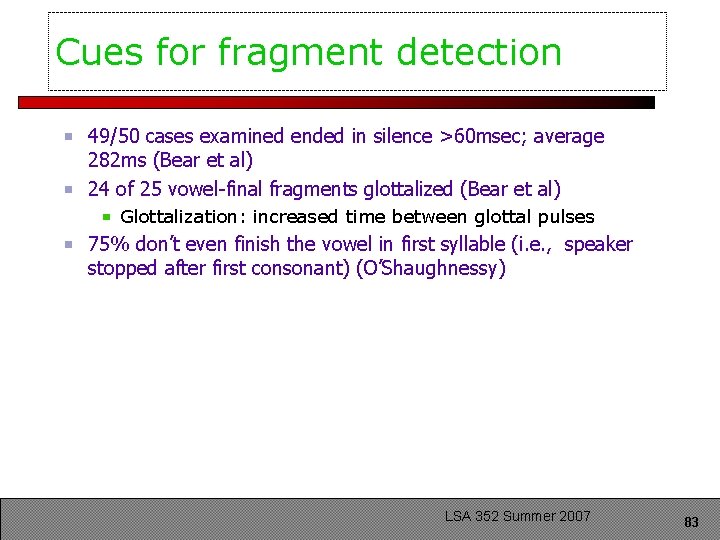

Cues for fragment detection
- 49/50 cases examined ended in silence >60 msec; average 282 msec (Bear et al)
- 24 of 25 vowel-final fragments were glottalized (Bear et al)
  - Glottalization: increased time between glottal pulses
- 75% don’t even finish the vowel in the first syllable (i.e., the speaker stopped after the first consonant) (O’Shaughnessy)

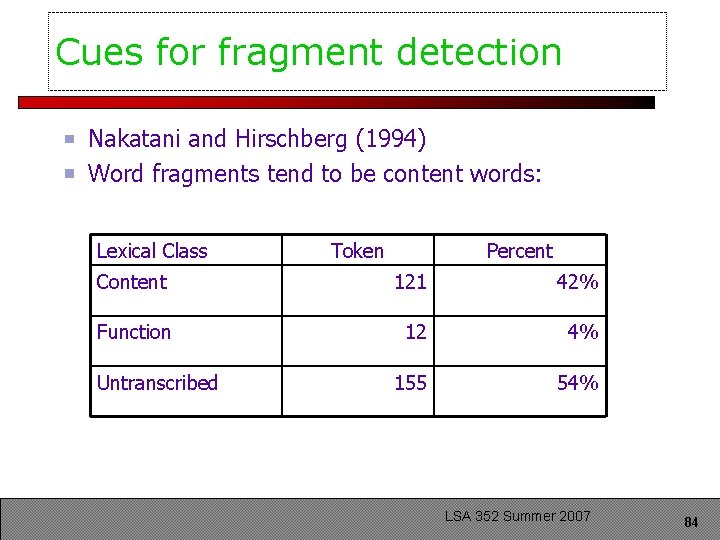

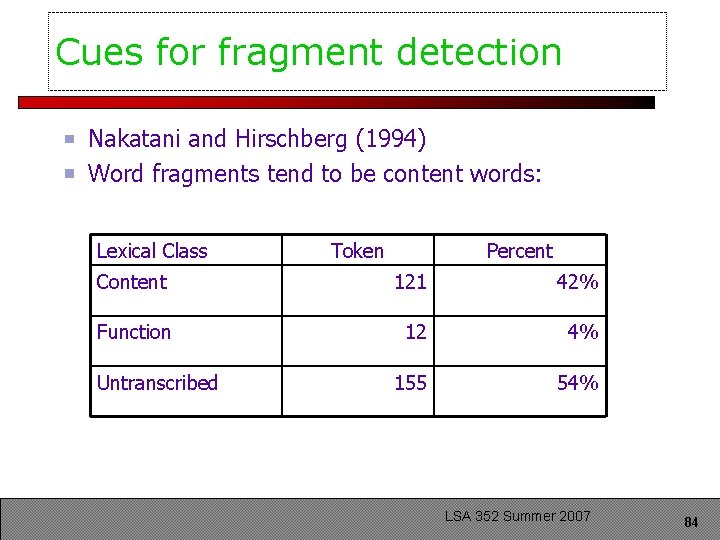

Cues for fragment detection
Nakatani and Hirschberg (1994): word fragments tend to be content words.

| Lexical class | Tokens | Percent |
|---|---|---|
| Content | 121 | 42% |
| Function | 12 | 4% |
| Untranscribed | 155 | 54% |

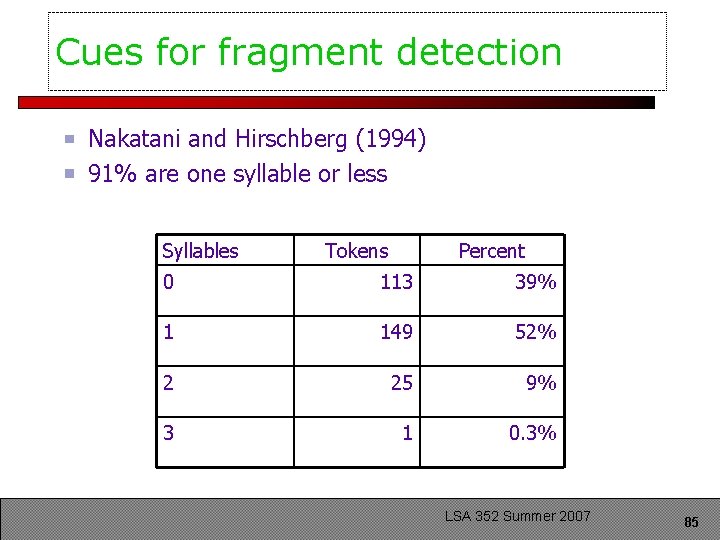

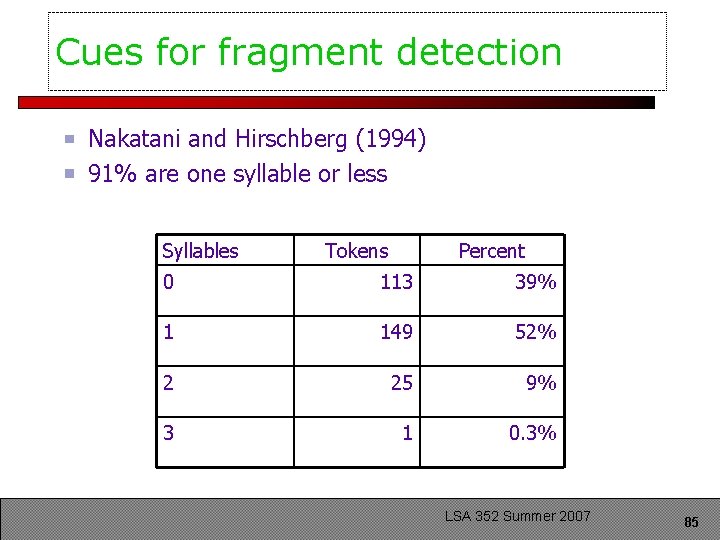

Cues for fragment detection
Nakatani and Hirschberg (1994): 91% are one syllable or less.

| Syllables | Tokens | Percent |
|---|---|---|
| 0 | 113 | 39% |
| 1 | 149 | 52% |
| 2 | 25 | 9% |
| 3 | 1 | 0.3% |

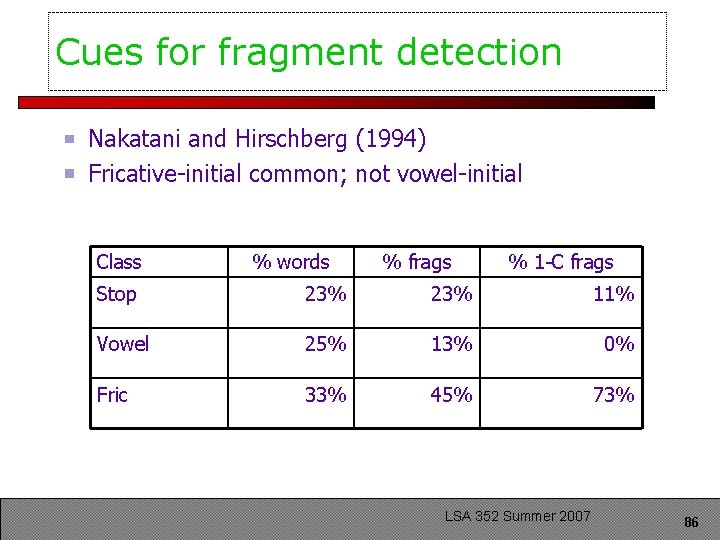

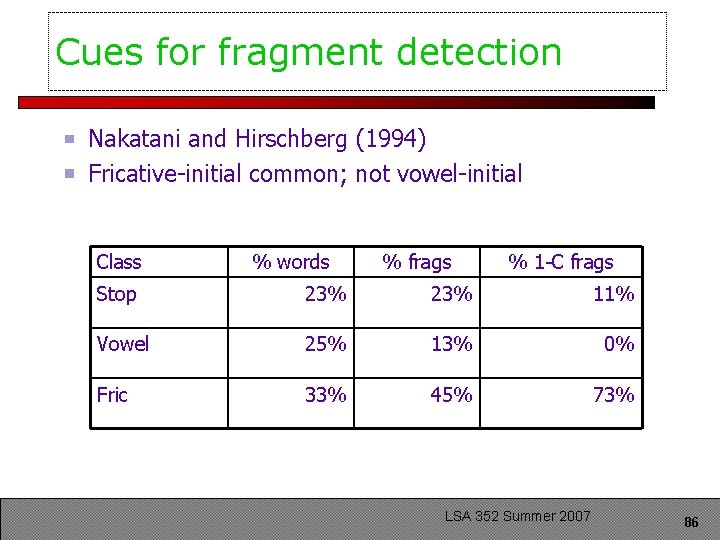

Cues for fragment detection
Nakatani and Hirschberg (1994): fricative-initial fragments are common; vowel-initial ones are not.

| Class | % words | % frags | % 1-C frags |
|---|---|---|---|
| Stop | 23% | 11% | |
| Vowel | 25% | 13% | 0% |
| Fric | 33% | 45% | 73% |

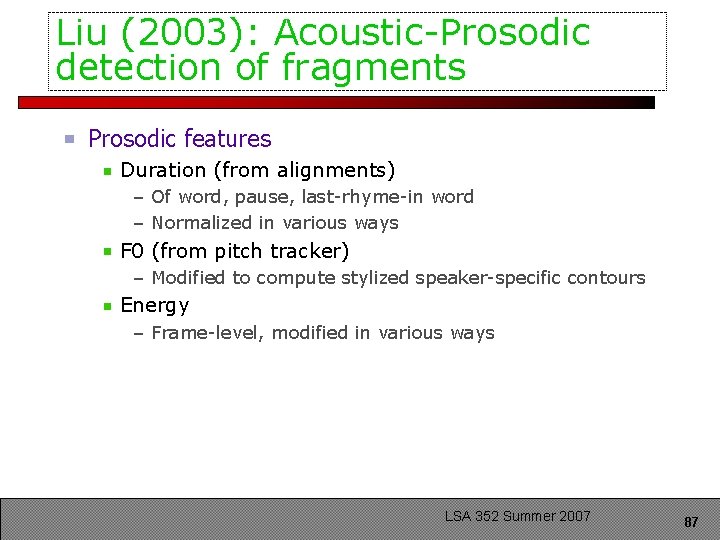

Liu (2003): acoustic-prosodic detection of fragments
Prosodic features:
- Duration (from alignments): of the word, the pause, and the last rhyme in the word; normalized in various ways
- F0 (from a pitch tracker): modified to compute stylized speaker-specific contours
- Energy: frame-level, modified in various ways

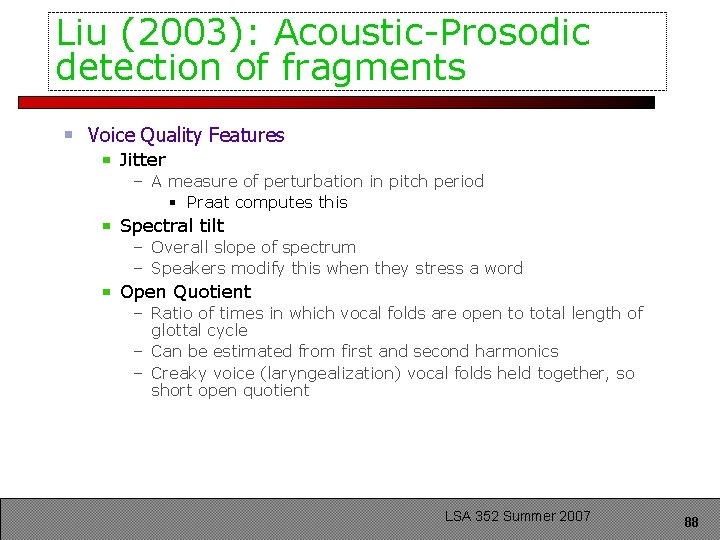

Liu (2003): acoustic-prosodic detection of fragments
Voice quality features:
- Jitter: a measure of perturbation in the pitch period (Praat computes this)
- Spectral tilt: the overall slope of the spectrum; speakers modify this when they stress a word
- Open quotient: the ratio of the time in which the vocal folds are open to the total length of the glottal cycle
  - Can be estimated from the first and second harmonics
  - Creaky voice (laryngealization): vocal folds held together, so a short open quotient
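A minimal sketch of local jitter, assuming the pitch periods (in seconds) have already been extracted by a pitch tracker. The definition used here, the mean absolute difference between consecutive periods divided by the mean period, follows Praat's "jitter (local)", but this sketch skips Praat's filtering of implausible periods and Praat offers other jitter variants as well:

```python
def local_jitter(periods):
    """Mean absolute difference of consecutive pitch periods / mean period."""
    diffs = [abs(b - a) for a, b in zip(periods, periods[1:])]
    return (sum(diffs) / len(diffs)) / (sum(periods) / len(periods))


print(local_jitter([0.010, 0.012, 0.010, 0.012]))   # irregular periods give high jitter
```

Glottalized fragment endings produce irregular glottal pulses, which is why jitter can act as a fragment cue.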

Liu (2003)
- Use Switchboard, 80%/20%
- Downsampled to 50% fragments, 50% words
- Generated forced alignments with gold transcripts
- Extract prosodic and voice quality features
- Train a decision tree

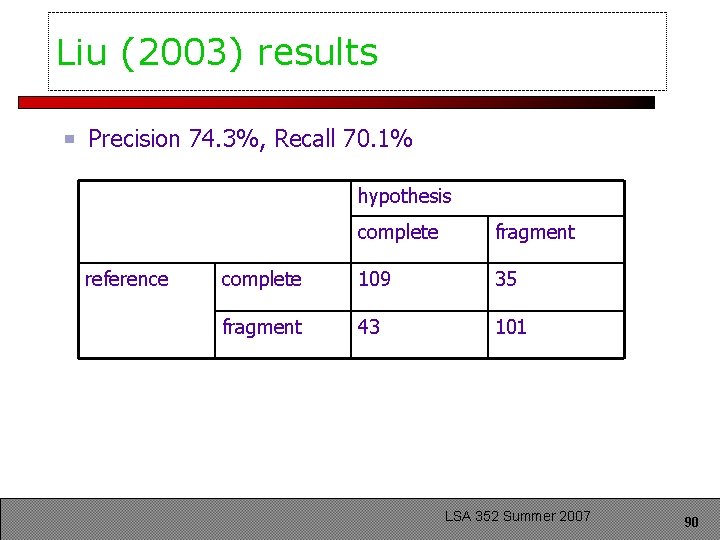

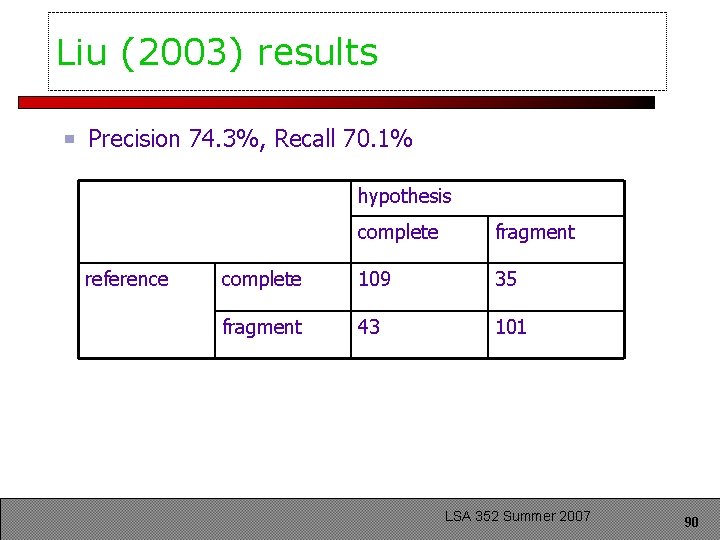

Liu (2003) results
Precision 74.3%, recall 70.1%

| Reference \ Hypothesis | complete | fragment |
|---|---|---|
| complete | 109 | 35 |
| fragment | 43 | 101 |
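A small check of these figures, assuming (as the numbers imply) that the rows of the confusion matrix are the reference labels and the columns the hypothesis labels:

```python
def precision_recall(matrix, positive="fragment"):
    """matrix[ref][hyp] holds counts; returns (precision, recall) for the positive class."""
    tp = matrix[positive][positive]
    predicted = sum(row[positive] for row in matrix.values())   # all hypothesized fragments
    actual = sum(matrix[positive].values())                     # all reference fragments
    return tp / predicted, tp / actual


liu = {"complete": {"complete": 109, "fragment": 35},
       "fragment": {"complete": 43, "fragment": 101}}
p, r = precision_recall(liu)
print(round(100 * p, 1), round(100 * r, 1))
```

That is, precision = 101 / (101 + 35) and recall = 101 / (101 + 43).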

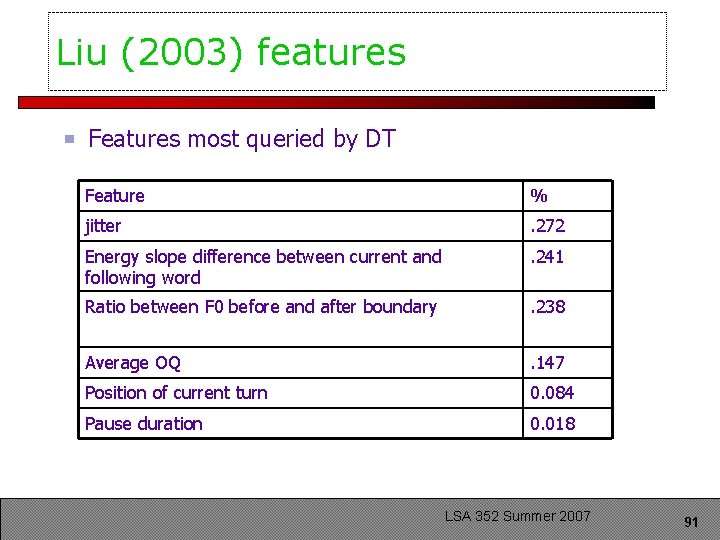

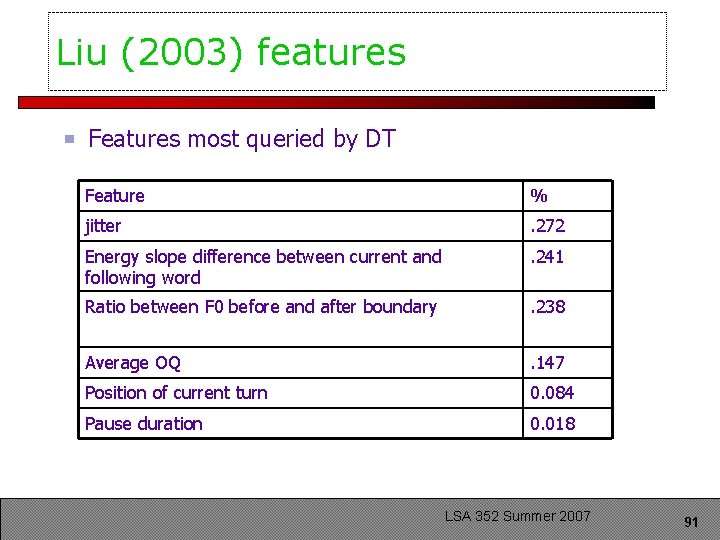

Liu (2003) features
Features most queried by the DT:

| Feature | Share of queries |
|---|---|
| Jitter | 0.272 |
| Energy slope difference between current and following word | 0.241 |
| Ratio between F0 before and after boundary | 0.238 |
| Average OQ | 0.147 |
| Position of current turn | 0.084 |
| Pause duration | 0.018 |

Liu (2003) conclusion
- Very preliminary work
- Fragment detection is a good problem that is understudied!

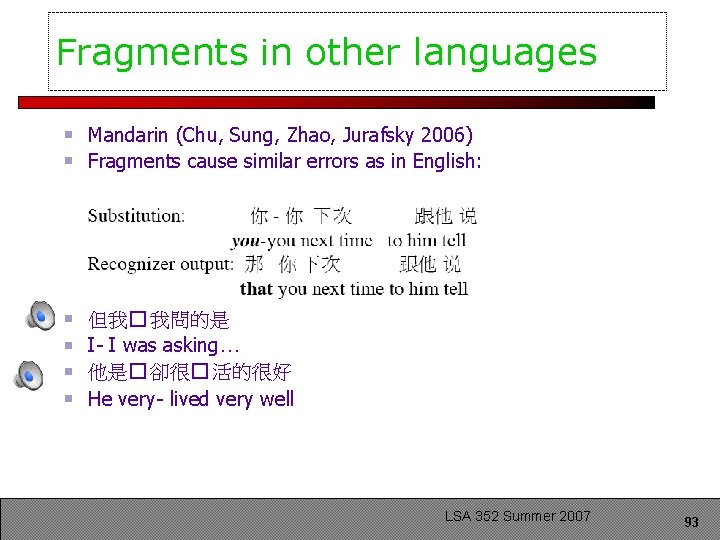

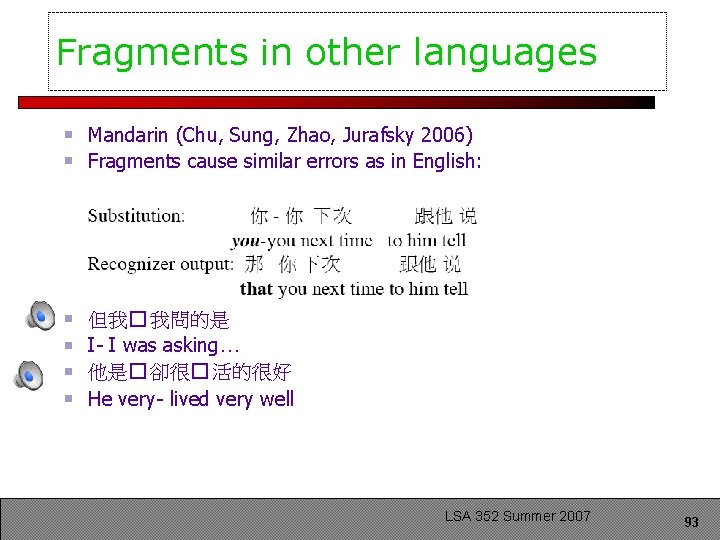

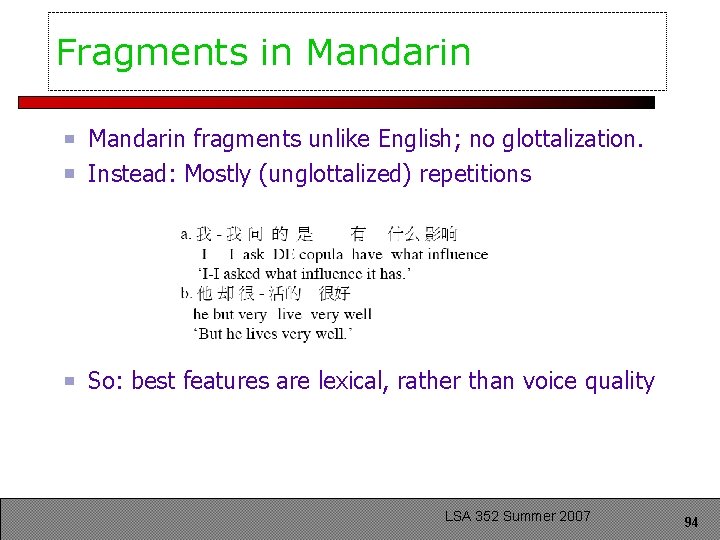

Fragments in other languages
Mandarin (Chu, Sung, Zhao, Jurafsky 2006): fragments cause similar errors as in English:
- 但我- 我問的是 (“I- I was asking…”)
- 他是- 卻很- 活的很好 (“He very- lived very well”)

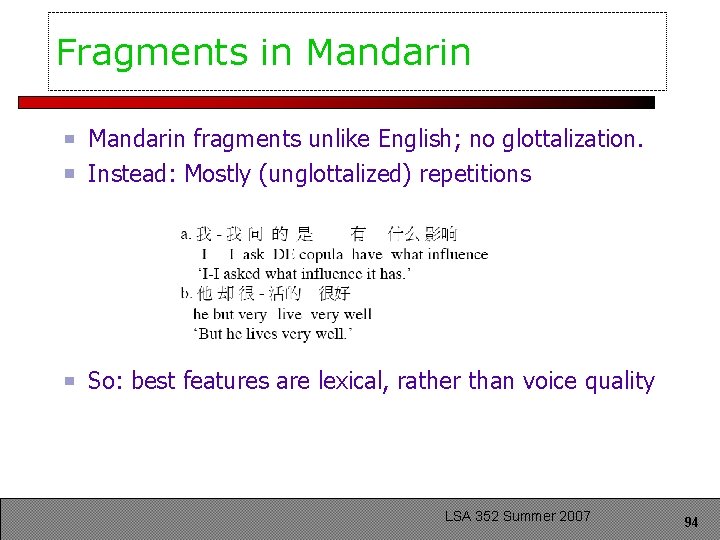

Fragments in Mandarin
Mandarin fragments are unlike English ones: there is no glottalization. Instead:
- They are mostly (unglottalized) repetitions
- So the best features are lexical, rather than voice quality

Summary
- Disfluencies
- Characteristics of disfluencies
- Detecting disfluencies
- MDE bakeoff
- Fragments