Location Privacy Protection based on Differential Privacy Strategy

Location Privacy Protection based on Differential Privacy Strategy for Big Data in Industrial Internet-of-Things • Published in: IEEE Transactions on Industrial Informatics • Henrique Potter

Overview • Privacy risks in IoT • Privacy protection techniques • k-anonymity • Differential Privacy • How to protect

Privacy risks in IoT

Privacy risks in IoT • Unauthorized access to private data • Data stored in remote storage • Personal devices • Inference of information from device/user profiling, messaging patterns and public data • Statistical and machine learning techniques

Privacy risks in IoT • Privacy leaks • From the Netflix Prize competition • Released 100 M ratings from 480 K users over 18 K movies • Claimed to have anonymized the data • 96% of users could be uniquely identified by cross-referencing the data with IMDb data (Narayanan & Shmatikov 2006)

Privacy risks in IoT • How to protect privacy against • Unauthorized access to private data • Inference of information from device/user profiling, messaging patterns and public data

Differential Privacy • Developed by Cynthia Dwork in 2006 • Formal definition of privacy • Offers a framework to develop privacy solutions • Constrained to aggregate data analysis • Averages • Profiling techniques • Machine Learning models etc.

Differential Privacy • Assumes that the attacker has maximum auxiliary information about the target

Differential Privacy - Scenario Example • Database to compute the average income of residents • Suppose you know that Bob is going to move • Run the algorithm A to compute the average before and after he moves • D = database state with Bob’s record • D’ = database state without Bob’s record
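
The scenario above is a differencing attack: anyone who sees the exact average before and after Bob leaves can solve for Bob's income. A minimal sketch, assuming made-up incomes and a hypothetical helper `average_income` standing in for A:

```python
def average_income(db):
    """Algorithm A: exact average income over the database."""
    return sum(db.values()) / len(db)

# Hypothetical data, for illustration only.
D = {"Alice": 52_000, "Bob": 75_000, "Carol": 61_000, "Dan": 48_000}
D_prime = {name: income for name, income in D.items() if name != "Bob"}

avg_with = average_income(D)            # A(D)
avg_without = average_income(D_prime)   # A(D')

# Total income of D minus total income of D' isolates Bob's record.
bob_income = avg_with * len(D) - avg_without * len(D_prime)
print(round(bob_income))
```

Two exact aggregate answers are enough to recover one individual's value, which is the leak differential privacy is meant to prevent.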

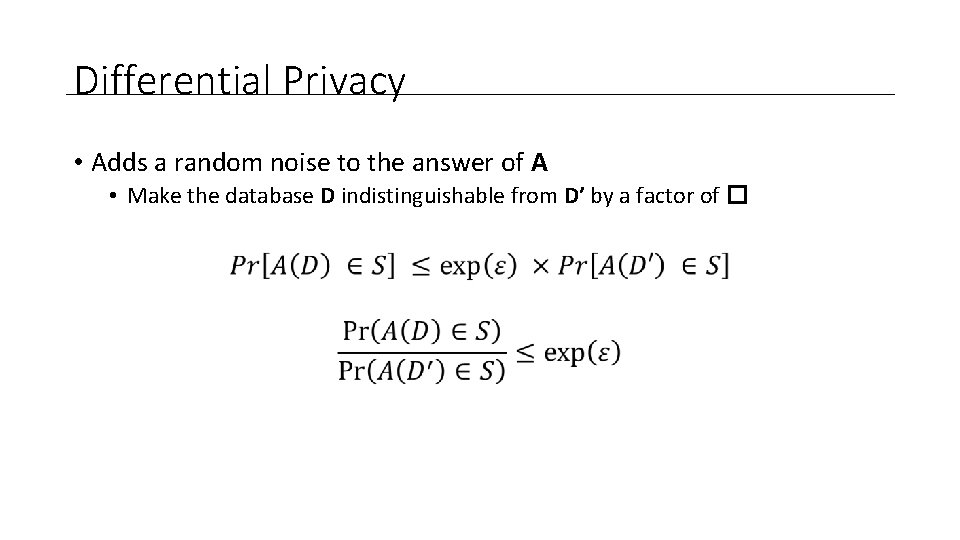

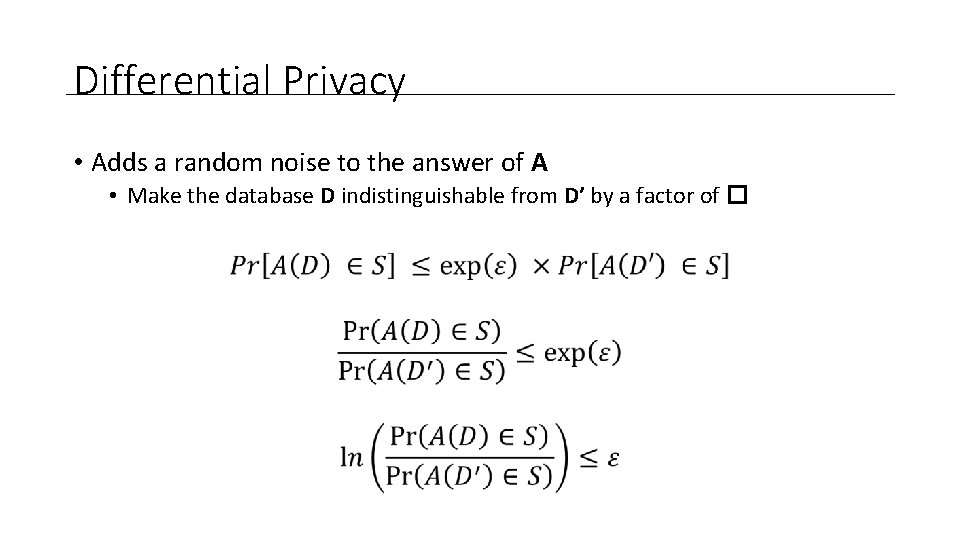

Differential Privacy • Adds random noise to the answer of A • Makes the database D indistinguishable from D’ by a factor of ε

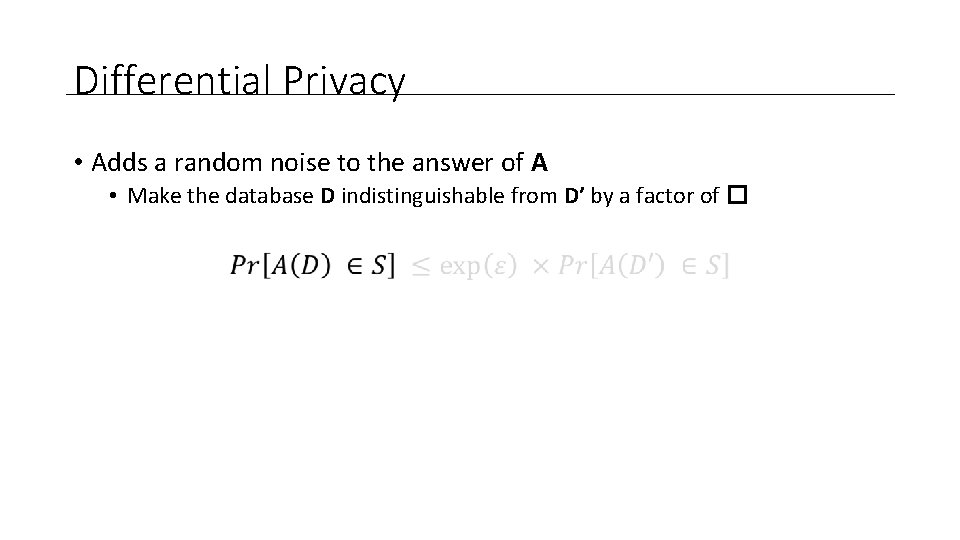

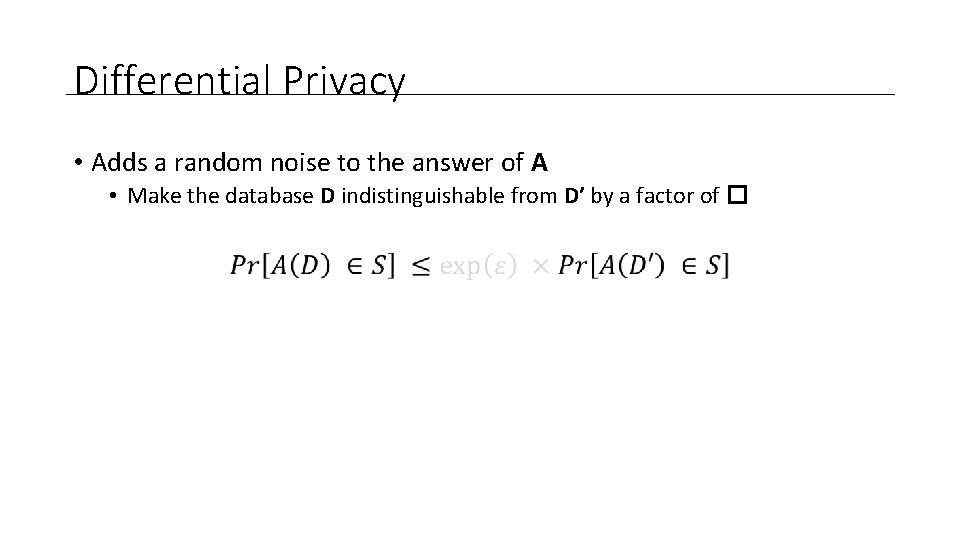

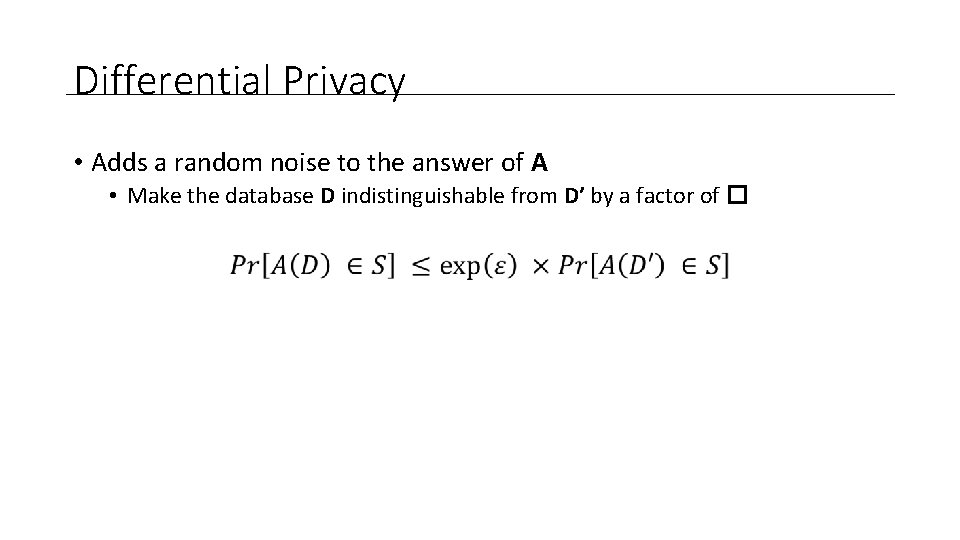

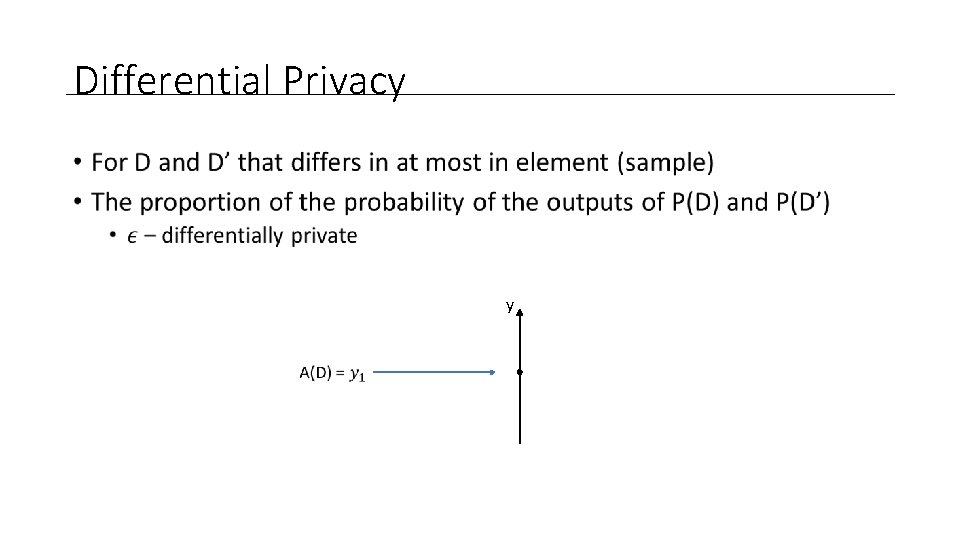

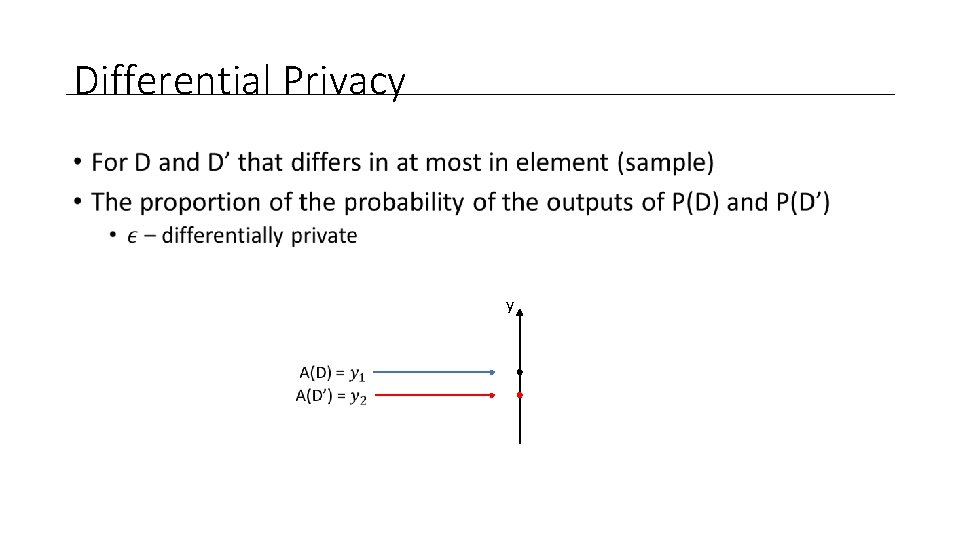

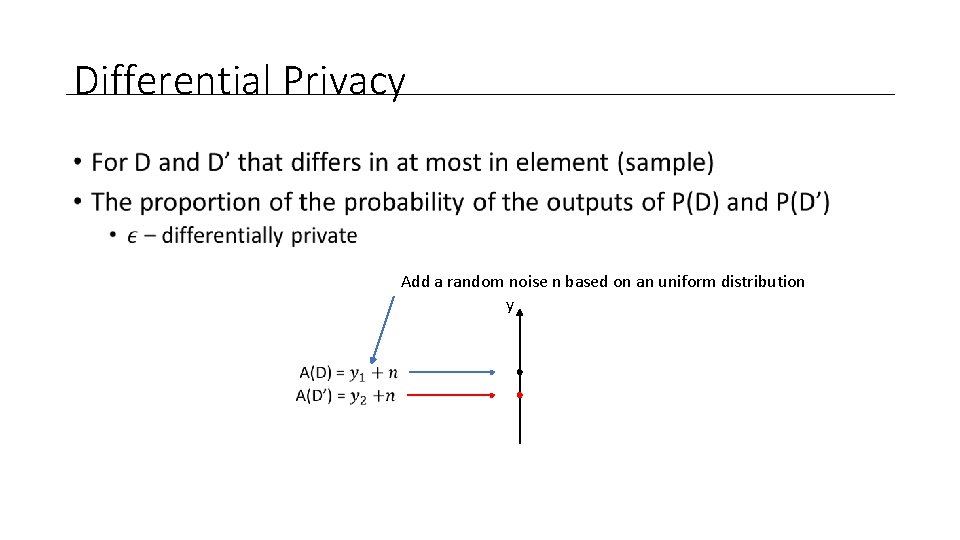

Differential Privacy • Adds random noise n drawn from a uniform distribution

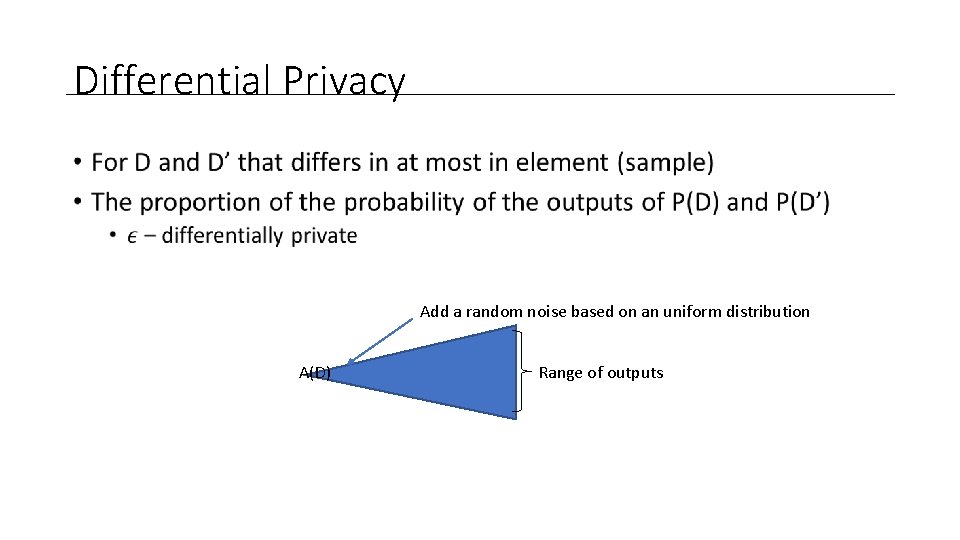

Differential Privacy • With the noise added, A(D) falls within a range of outputs

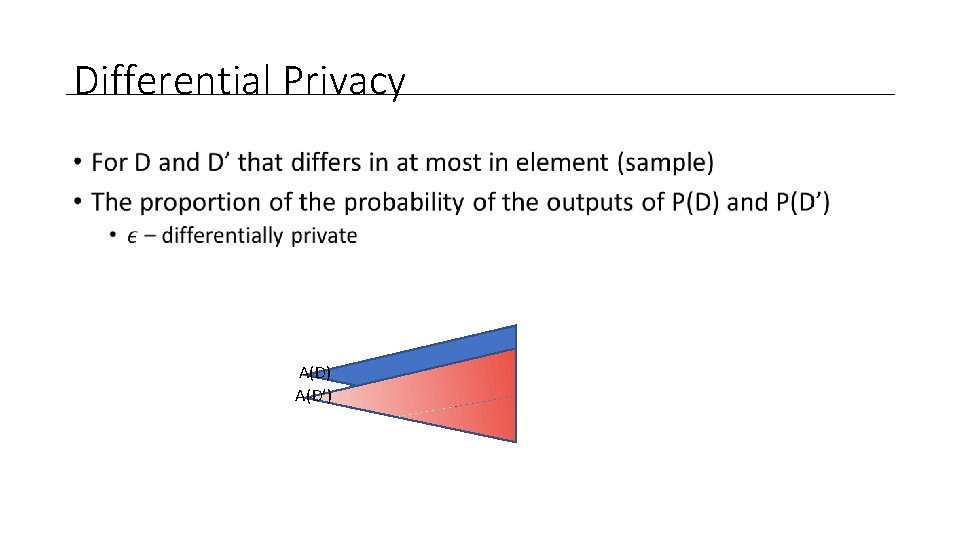

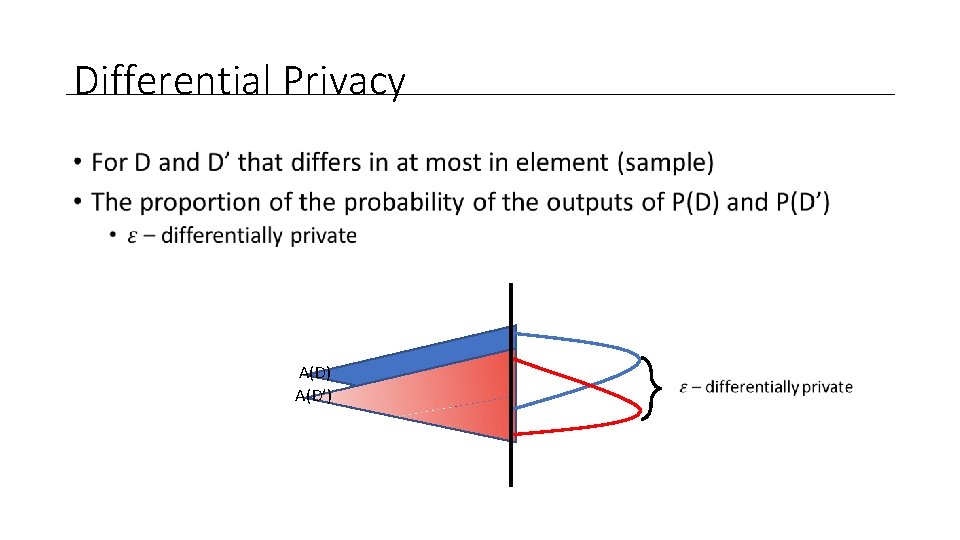

Differential Privacy • Ranges of outputs for A(D) and A(D’)
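
To make the overlapping output ranges concrete, here is a small sketch with uniform noise. The values of A(D), A(D') and the noise half-width are hypothetical, as is the helper `noisy_answer`:

```python
import random

def noisy_answer(true_value, width, rng):
    """Return the true answer plus uniform noise n in [-width, width]."""
    return true_value + rng.uniform(-width, width)

rng = random.Random(0)
a_d, a_d_prime = 59_000.0, 53_667.0   # hypothetical A(D) and A(D')
width = 10_000.0                      # hypothetical noise half-width

# Range of possible outputs for each database.
range_d = (a_d - width, a_d + width)
range_d_prime = (a_d_prime - width, a_d_prime + width)
overlap = (max(range_d[0], range_d_prime[0]), min(range_d[1], range_d_prime[1]))

y = noisy_answer(a_d, width, rng)
ambiguous = overlap[0] <= y <= overlap[1]   # True if y could come from either database
print(range_d, range_d_prime, overlap, ambiguous)
```

An output that lands outside the overlap could only have come from one of the two databases, which is one reason practical mechanisms use unbounded noise such as Laplace rather than uniform noise.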

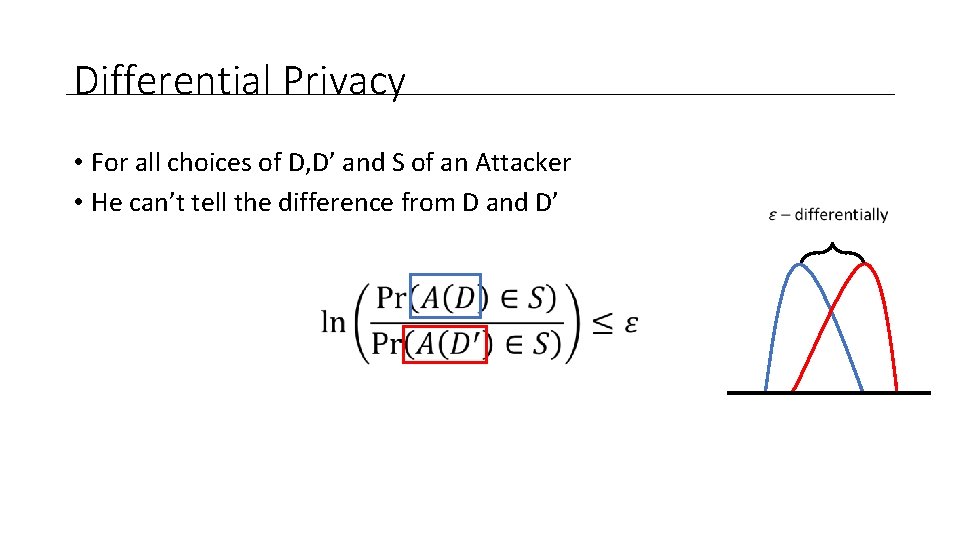

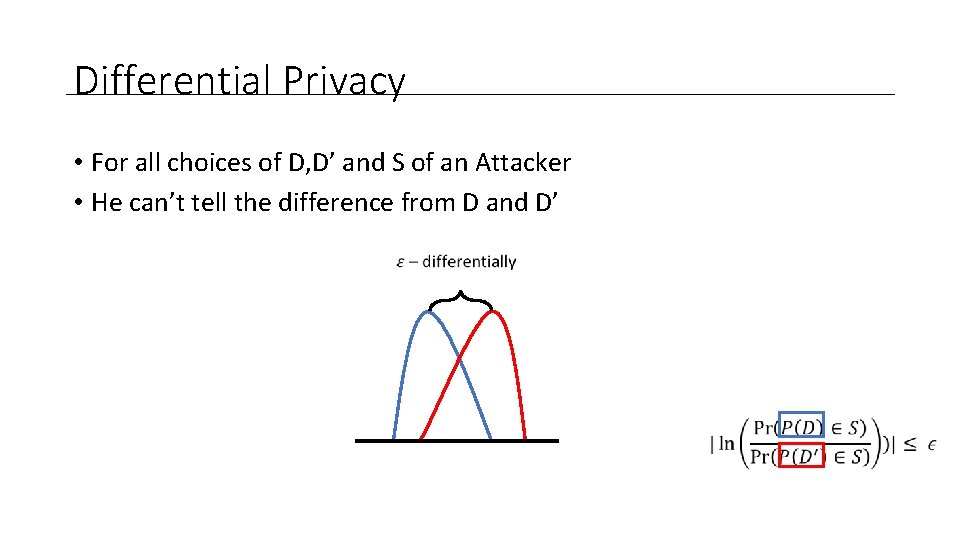

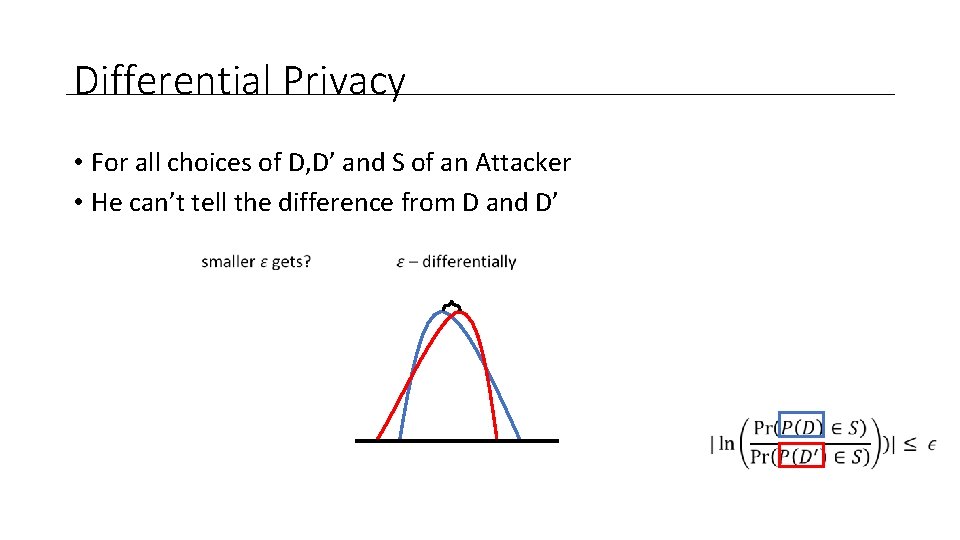

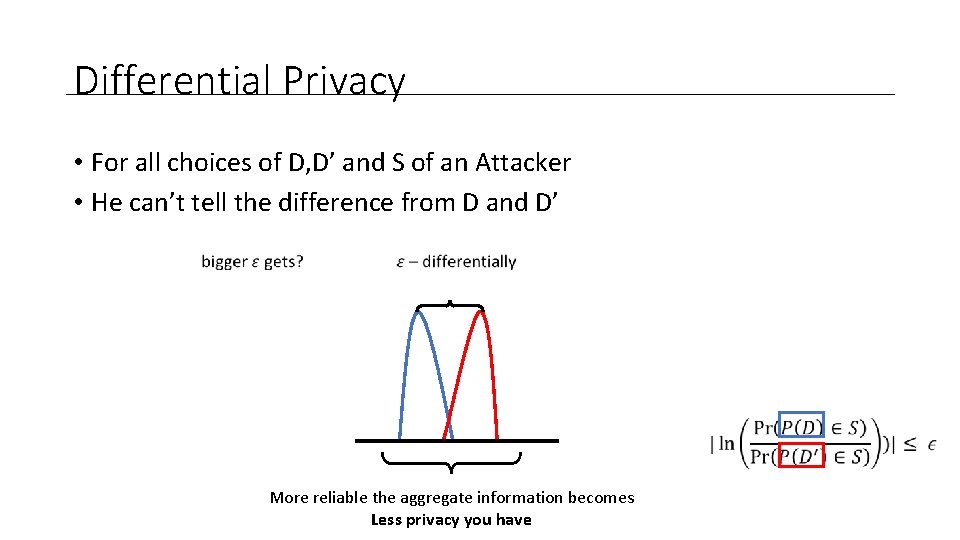

Differential Privacy • For all choices of D, D’ and S by an attacker • The attacker cannot tell D and D’ apart
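
The statement above matches the standard definition of ε-differential privacy from Dwork (2006). It is written out here on the assumption that this is what the slide's figure showed. A randomized algorithm A is ε-differentially private if, for all databases D and D' that differ in one record and for all sets of outputs S:

```latex
\Pr[A(D) \in S] \le e^{\varepsilon} \cdot \Pr[A(D') \in S]
```

For small ε the factor e^ε is close to 1, so the two output distributions are nearly the same and observing an output in S says little about whether the record was present.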

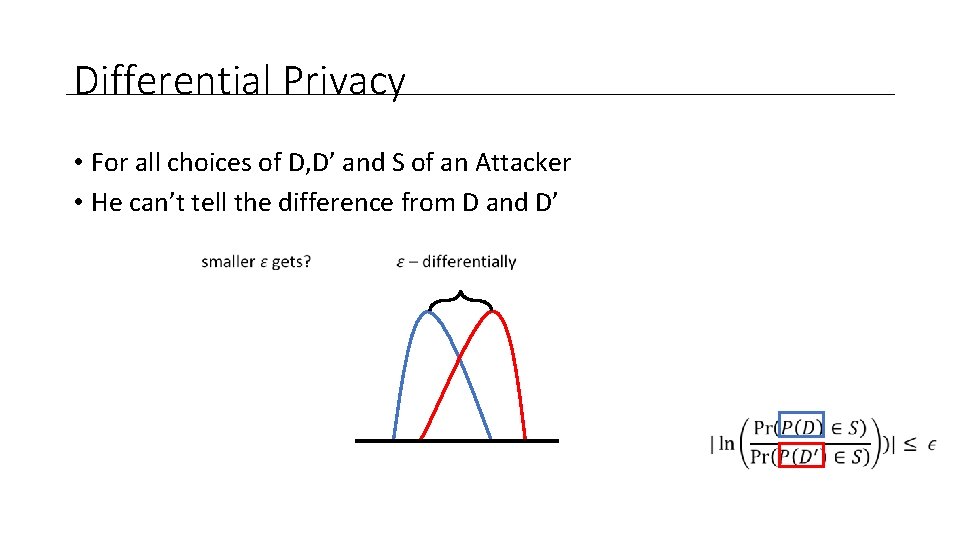

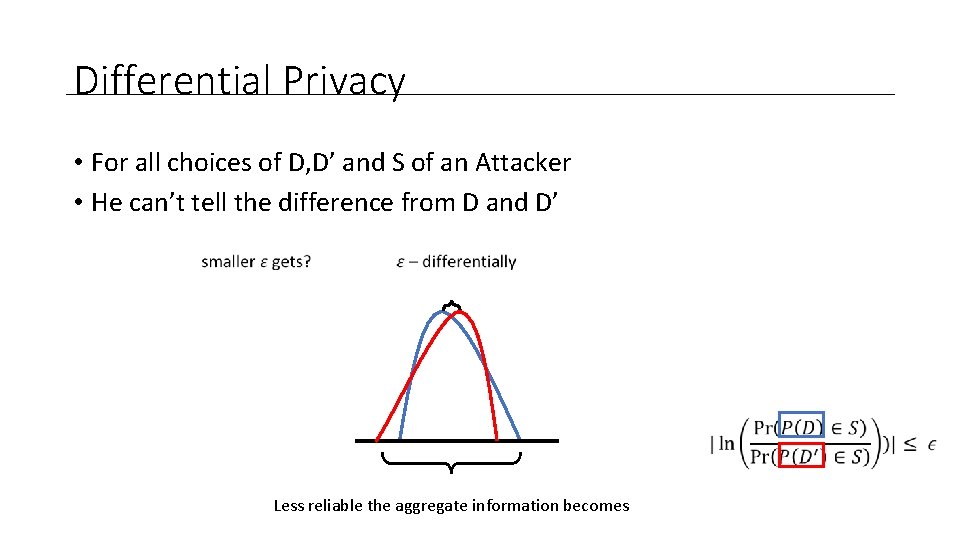

Differential Privacy • The more noise is added, the harder it is to tell D from D’ • The less reliable the aggregate information becomes

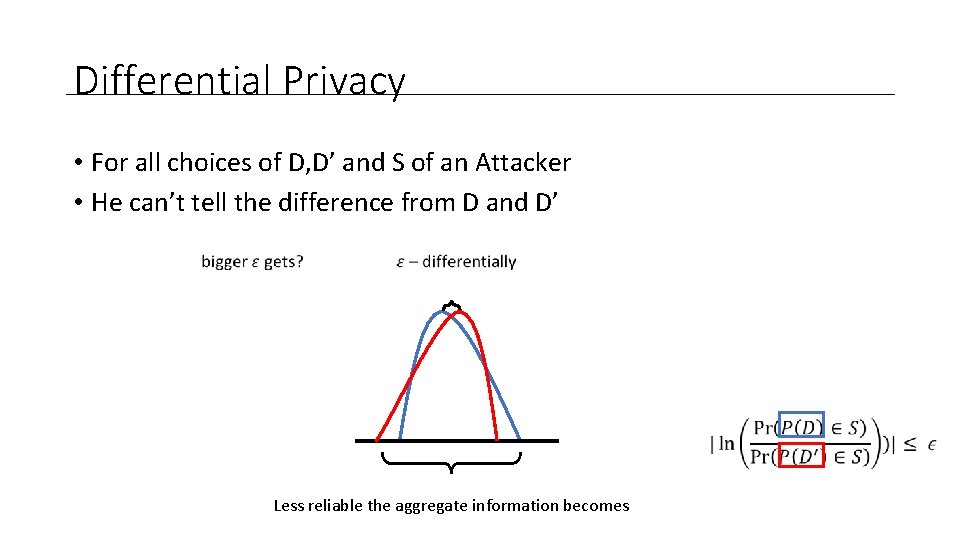

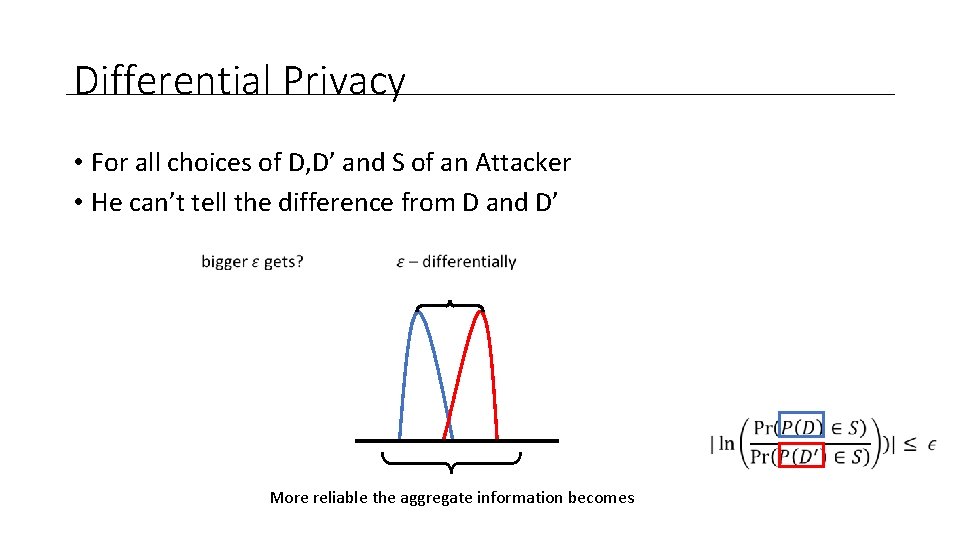

Differential Privacy • The less noise is added, the more reliable the aggregate information becomes • The less privacy you have
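
The privacy/utility trade-off can be seen numerically. This sketch assumes Laplace noise with scale sensitivity/ε, the mechanism named later in the slides. The function names and the chosen ε values are illustrative only:

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def mean_abs_error(epsilon, sensitivity=1.0, trials=20_000, seed=0):
    """Average |noise| when the noise scale is sensitivity / epsilon."""
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return sum(abs(laplace_noise(scale, rng)) for _ in range(trials)) / trials

# Smaller epsilon means stronger privacy and a larger expected error.
errors = {eps: mean_abs_error(eps) for eps in (0.1, 1.0, 10.0)}
for eps, err in errors.items():
    print(f"epsilon={eps:>4}: mean absolute error ~ {err:.2f}")
```

The expected absolute error of Laplace noise equals its scale, so dividing ε by ten makes the answer roughly ten times less accurate.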

Differential Privacy

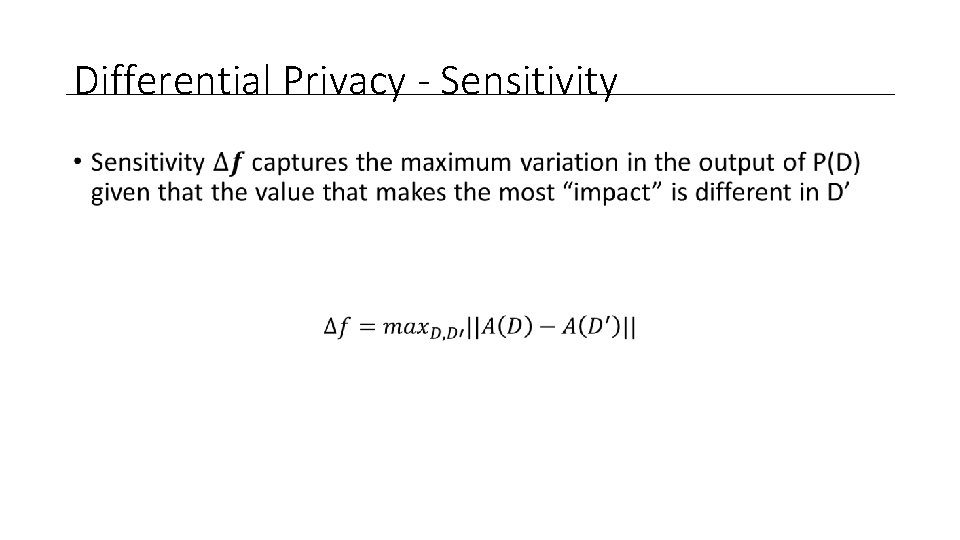

Differential Privacy - Sensitivity

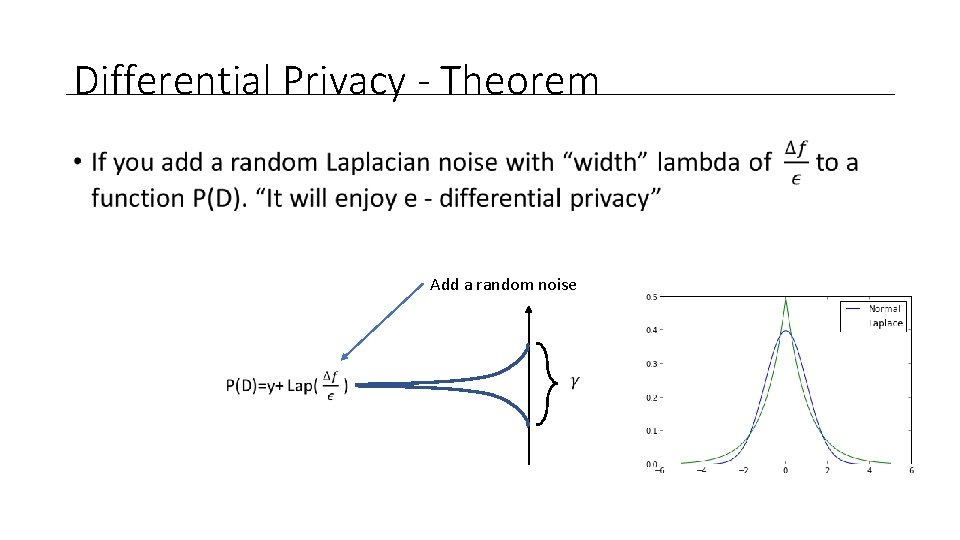
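
For reference, the usual definition of the (global) sensitivity of a query f, assumed here to be what the slide's formula showed, is the largest change in f caused by adding or removing one record:

```latex
\Delta f = \max_{D, D'} \lvert f(D) - f(D') \rvert
```

The maximum is taken over all pairs of databases D and D' that differ in one record. A counting query, for example, has Δf = 1, because one record changes the count by at most one.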

Differential Privacy - Theorem • Add random noise

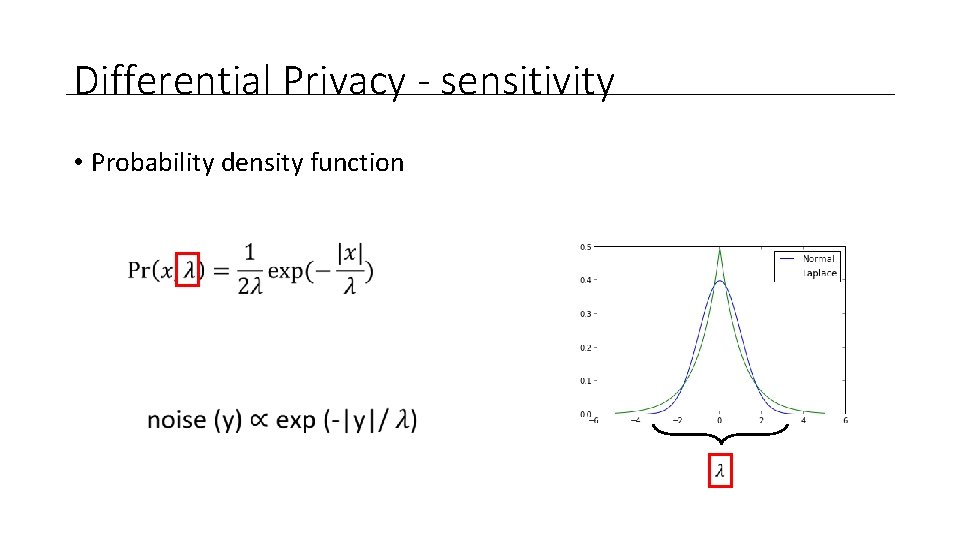
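
The theorem most likely meant here is the standard Laplace mechanism result. This is stated as an assumption, since the slide's formula is not recoverable. Adding Laplace noise with scale Δf/ε to f(D) gives ε-differential privacy:

```latex
A(D) = f(D) + n, \qquad n \sim \mathrm{Lap}\!\left(\tfrac{\Delta f}{\varepsilon}\right), \qquad
p(n) = \frac{\varepsilon}{2\,\Delta f} \exp\!\left(-\frac{\varepsilon\,\lvert n \rvert}{\Delta f}\right)
```

Here p(n) is the probability density function of the noise, Δf is the sensitivity of f, and ε is the privacy parameter.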

Differential Privacy - Mechanisms • Laplacian Mechanism • Adds Laplacian noise scaled to the sensitivity • Exponential Mechanism • Randomly selects elements, weighted by a scoring function, to participate in the aggregate analysis
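
A minimal sketch of the exponential mechanism in its textbook form, where a candidate is chosen with probability proportional to exp(ε · score / (2 · sensitivity)). The candidates, scores and parameter values are hypothetical and not taken from the paper:

```python
import math
import random

def exponential_mechanism(candidates, score, epsilon, sensitivity, rng):
    """Pick one candidate with probability proportional to
    exp(epsilon * score(c) / (2 * sensitivity))."""
    weights = [math.exp(epsilon * score(c) / (2 * sensitivity)) for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

# Hypothetical access counts per location; the score is the count itself.
access_counts = {"home": 40, "office": 35, "gym": 5, "cafe": 2}
rng = random.Random(0)
picks = [
    exponential_mechanism(list(access_counts), access_counts.get, 0.5, 1.0, rng)
    for _ in range(1000)
]
print({loc: picks.count(loc) for loc in access_counts})
```

High-scoring candidates dominate the selection, but every candidate keeps a non-zero probability, which is what makes the choice private.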

LPT-DP-K Algorithm • Designed for location data • Adds noise to locations in proportion to how frequently they are visited • Cannot add noise to all of the data, since the data define the position of something

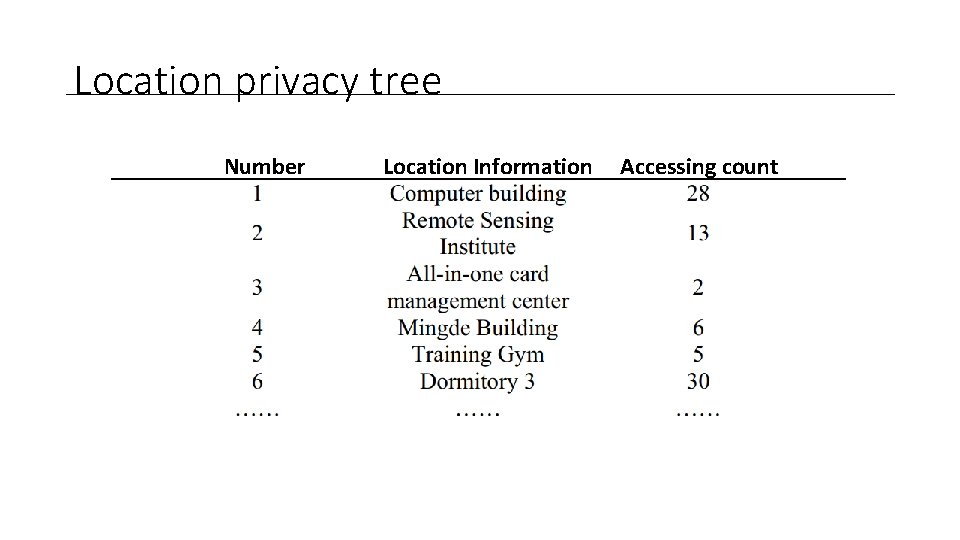

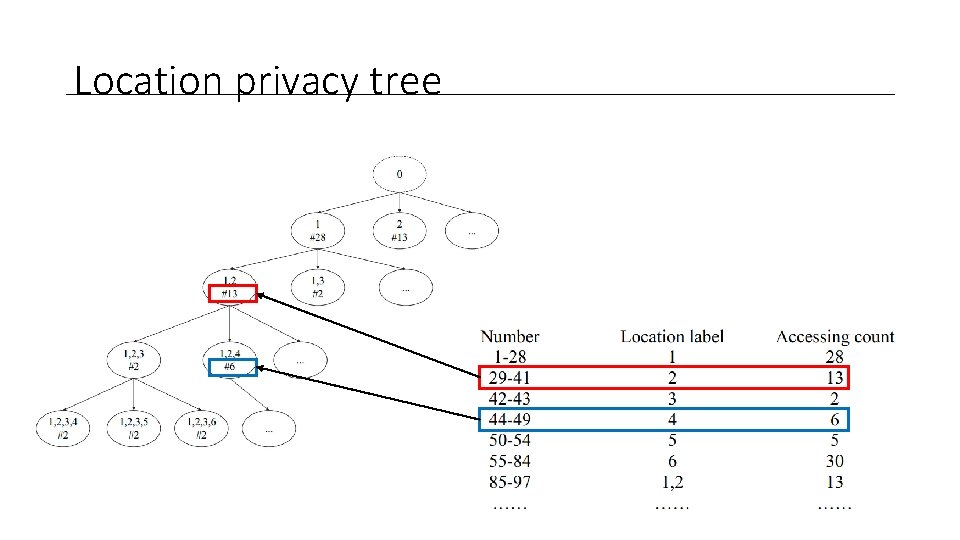

Location privacy tree • Record fields: number, location information, accessing count

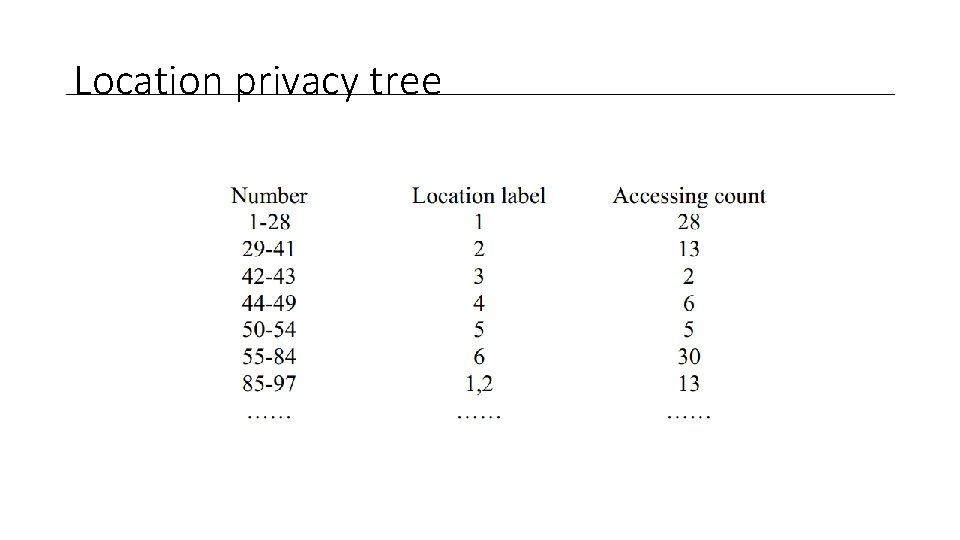

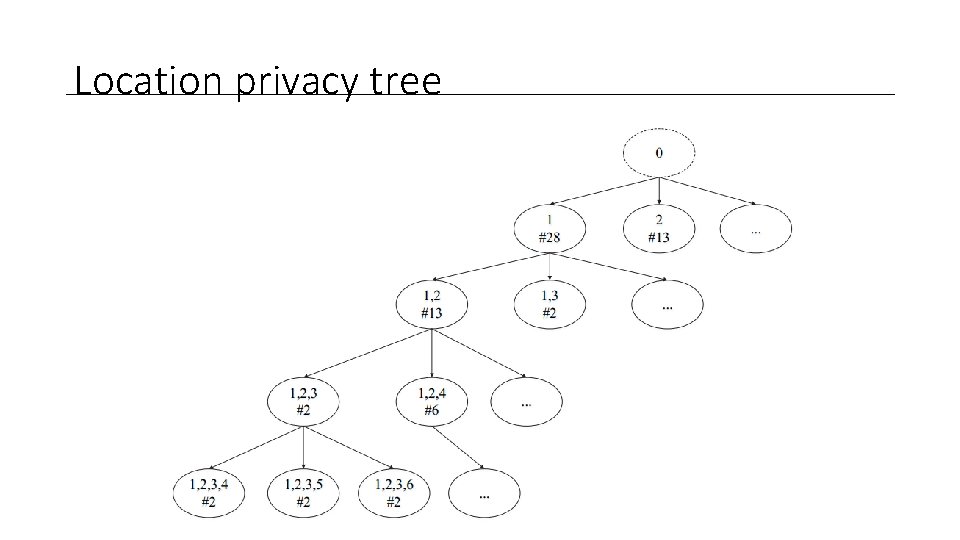

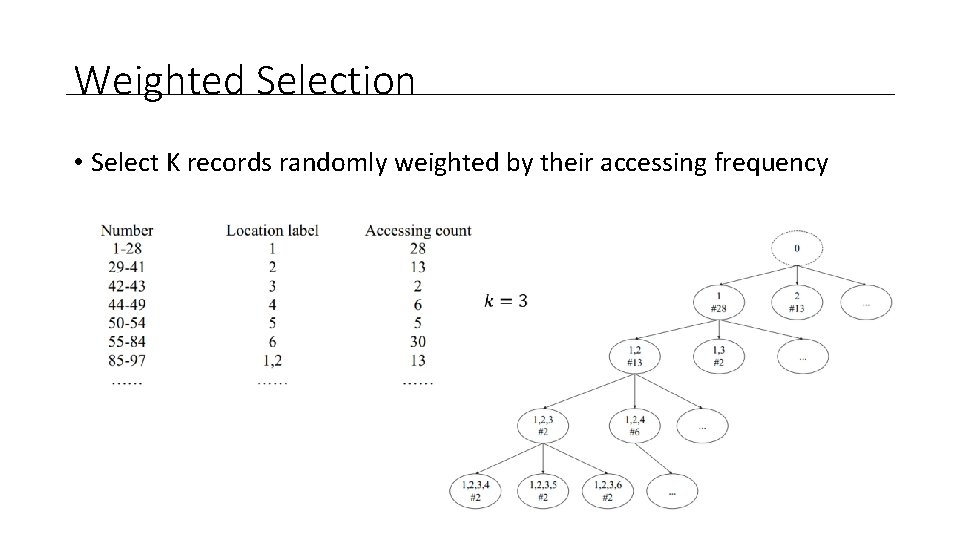

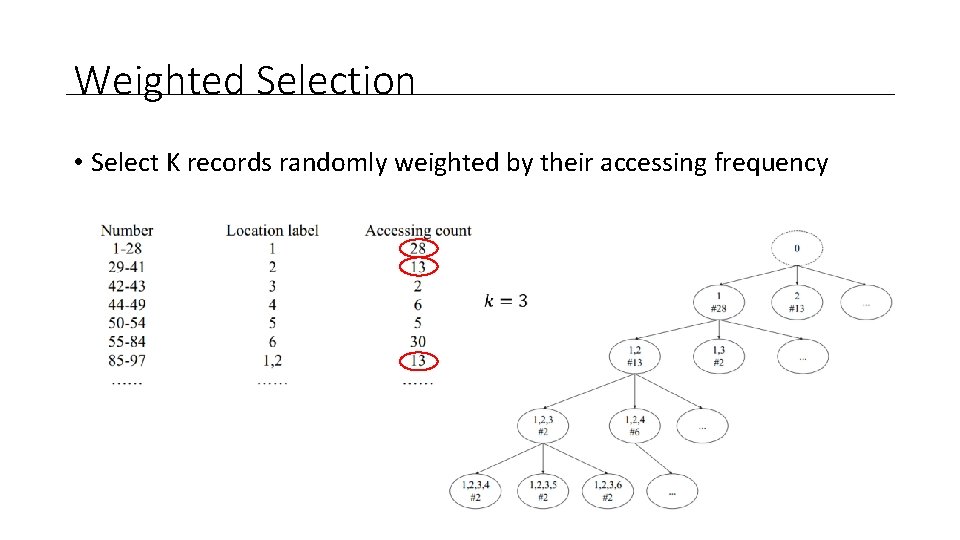

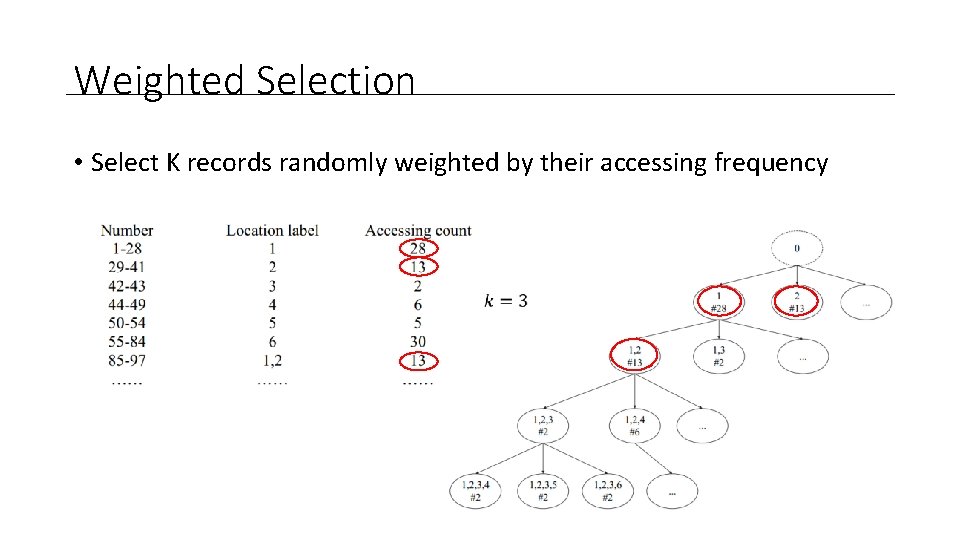

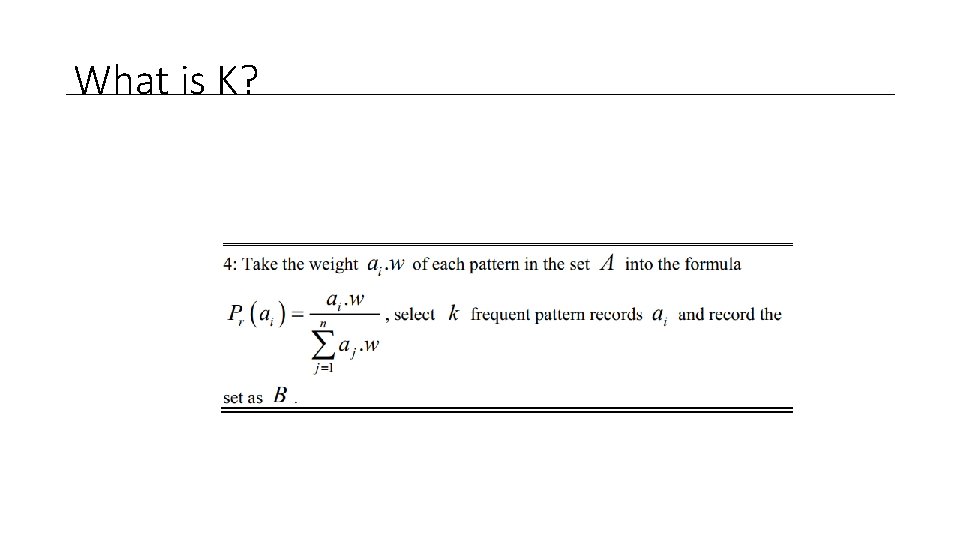

Weighted Selection • Selects K records at random, weighted by their accessing frequency
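
One way to sketch the weighted selection step. The slides do not say whether records are drawn with or without replacement or how the weights are normalized, so this example assumes sampling without replacement with the raw access count as the weight. The records and the helper `weighted_select` are hypothetical:

```python
import random

def weighted_select(records, k, rng):
    """Draw k distinct records; each draw is weighted by access count."""
    pool = list(records)
    chosen = []
    for _ in range(min(k, len(pool))):
        weights = [count for _, count in pool]
        idx = rng.choices(range(len(pool)), weights=weights, k=1)[0]
        chosen.append(pool.pop(idx))   # remove so a record is picked at most once
    return chosen

# Hypothetical (location, access count) records.
records = [("A", 50), ("B", 30), ("C", 15), ("D", 4), ("E", 1)]
selected = weighted_select(records, k=3, rng=random.Random(42))
print(selected)
```

Frequently accessed locations are the most likely to be selected, but rarely accessed ones can still appear.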

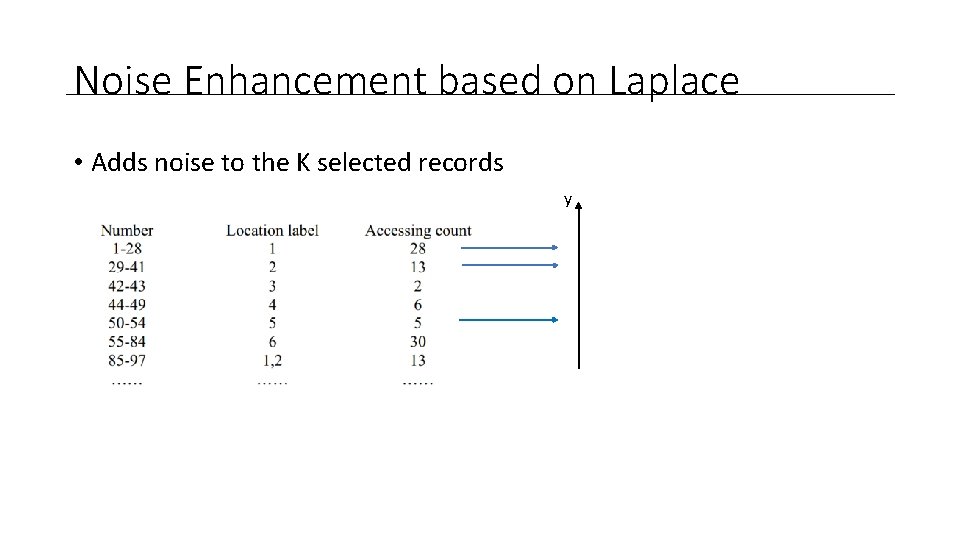

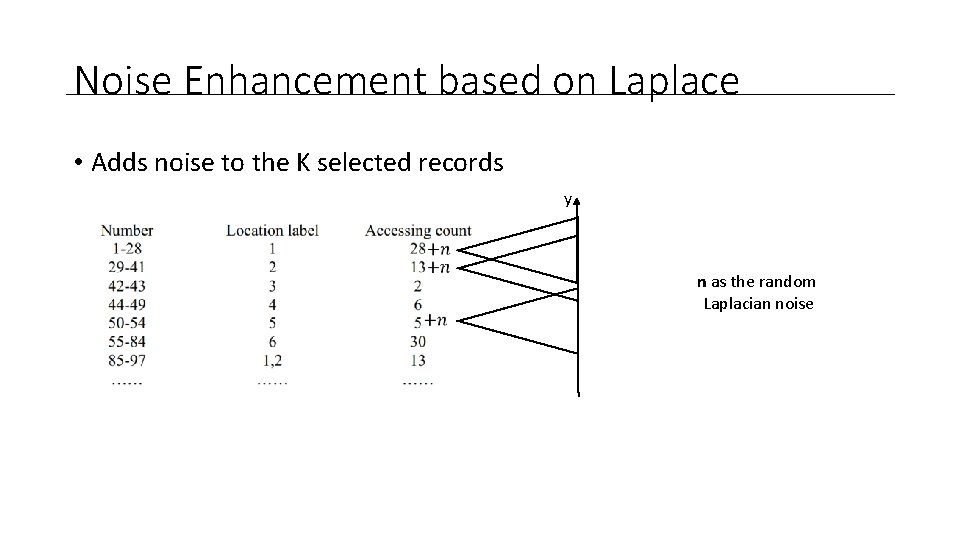

Noise Enhancement based on Laplace • Adds noise to the K selected records, with n as the random Laplacian noise
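
A sketch of the noise step, assuming the noise n is added to each selected record's access count and drawn from a Laplace distribution with scale sensitivity/ε. The records, parameter values and function names are illustrative and not taken from the paper:

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def add_noise(selected, epsilon, sensitivity, rng):
    """Return (location, noisy count) pairs, where noisy count = count + n."""
    scale = sensitivity / epsilon
    return [(loc, count + laplace_noise(scale, rng)) for loc, count in selected]

# Hypothetical K = 3 selected records and privacy parameter.
selected = [("A", 50), ("B", 30), ("C", 15)]
noisy = add_noise(selected, epsilon=1.0, sensitivity=1.0, rng=random.Random(7))
for (loc, count), (_, y) in zip(selected, noisy):
    print(f"{loc}: true={count}, noisy={y:.2f}")
```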

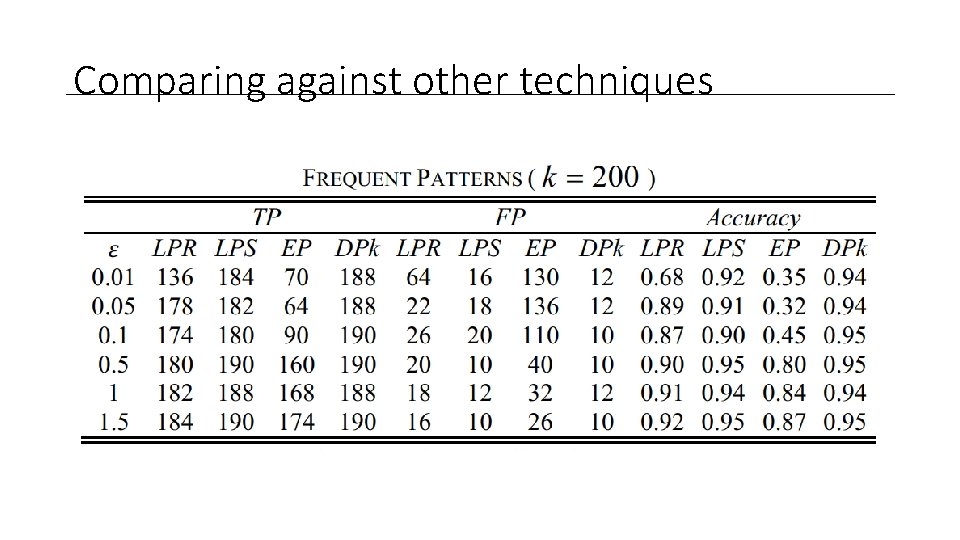

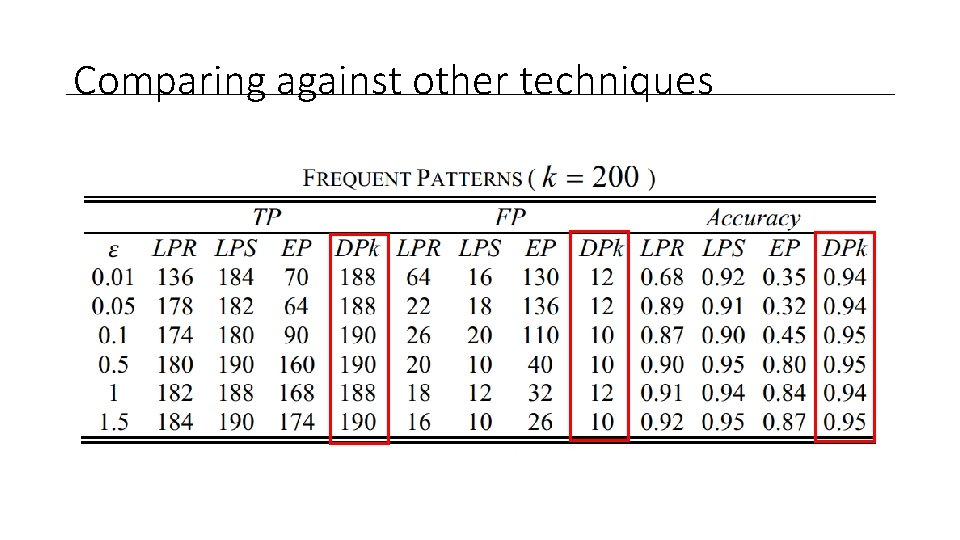

Measuring the utility • True Positive (TP) • Patterns present in both databases D and D’ • False Positive (FP) • Patterns that appear only in D’ • Accuracy • The ratio of what is unique in D’ to the total of D’
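
The counts above can be computed with set operations. This sketch assumes patterns are hashable tuples of location IDs and that D' is non-empty. It reports the ratio exactly as the slide words it, alongside TP / (TP + FP), a common alternative that is not taken from the slide:

```python
def utility(original_patterns, noisy_patterns):
    """Compare the pattern sets mined from D and D'."""
    original, noisy = set(original_patterns), set(noisy_patterns)
    tp = len(original & noisy)   # patterns found in both D and D'
    fp = len(noisy - original)   # patterns found only in D'
    return {
        "TP": tp,
        "FP": fp,
        "unique_ratio": fp / len(noisy),   # ratio as described on the slide
        "precision": tp / (tp + fp),       # common alternative, not from the slide
    }

# Hypothetical patterns (pairs of location IDs).
D = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]
D_prime = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
result = utility(D, D_prime)
print(result)
```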

Experimental Analysis • Check-in data set from Gowalla • A location-based social networking website where users share their locations by checking in

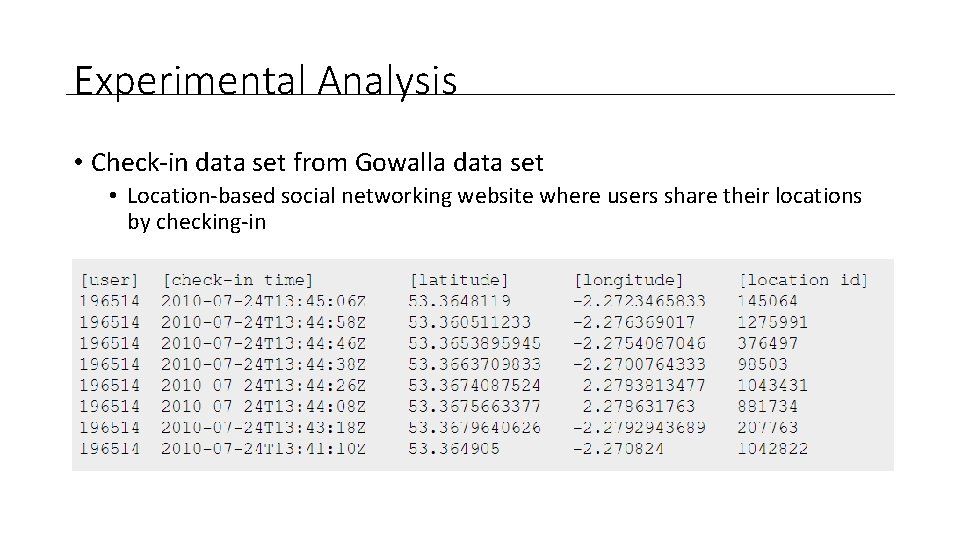

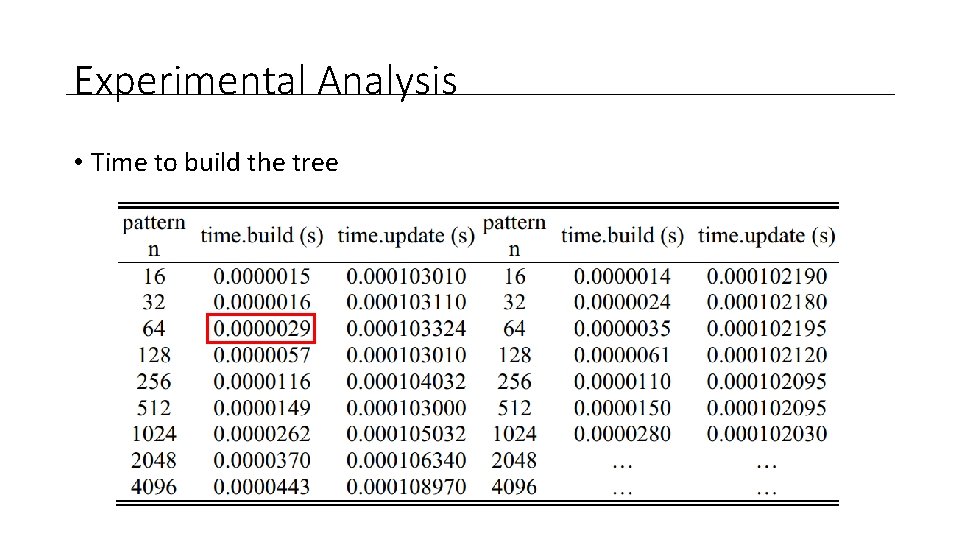

Experimental Analysis • Time to build the tree

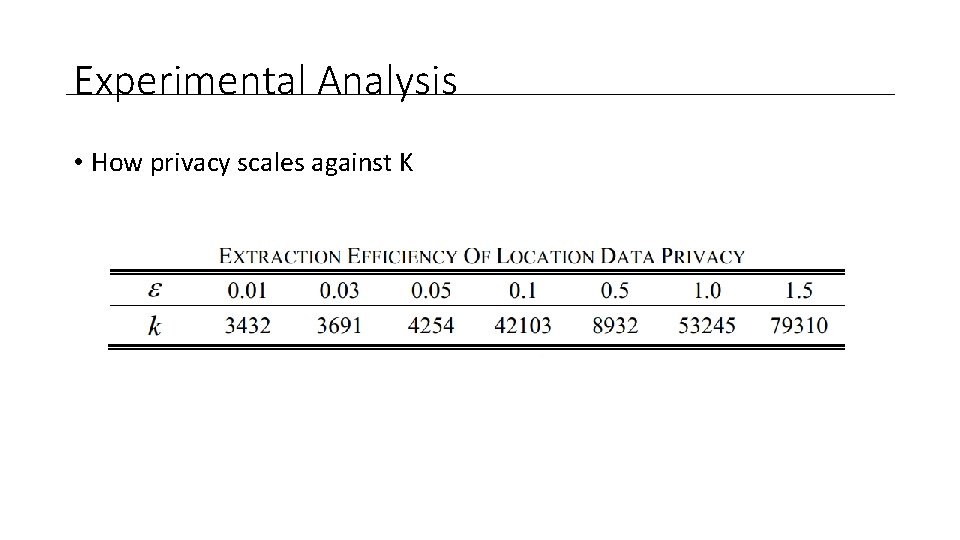

Experimental Analysis • How privacy scales with K

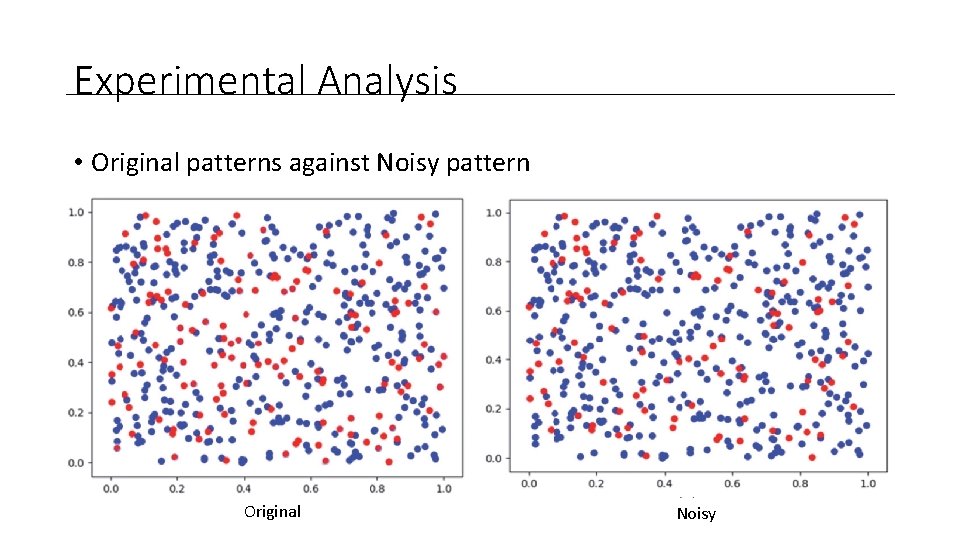

Experimental Analysis • Original patterns versus noisy patterns (panels: Original, Noisy)

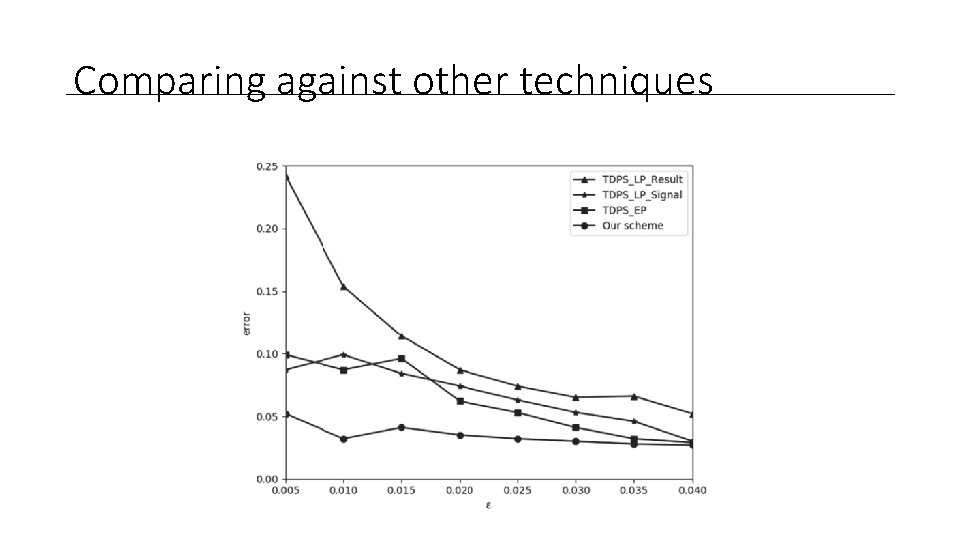

Comparing against other techniques

Remarks • This is not IoT • They are just using stored data • The tree grows exponentially in |D|

References • Dwork, C.: Differential privacy: a survey of results. In: Agrawal, M., Du, D., Duan, Z., Li, A. (eds.) TAMC 2008, pp. 1–19. Springer, Heidelberg (2008) • Dwork, C.: Differential Privacy. In: Bugliesi, M., Preneel, B., Sassone, V., Wegener, I. (eds.) ICALP 2006. LNCS, vol. 4052, pp. 1–12. Springer, Heidelberg (2006)

What is K?

Differential Privacy - sensitivity • Probability density function

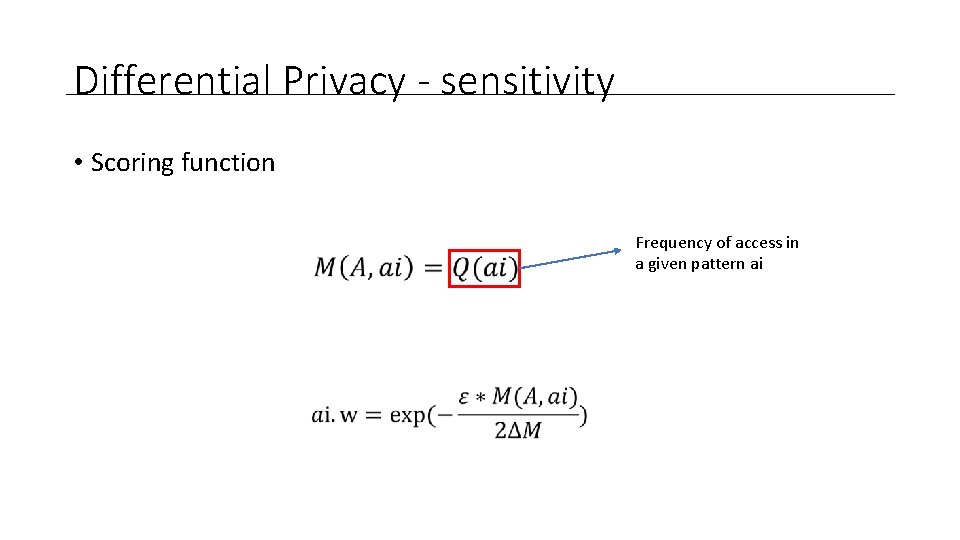

Differential Privacy - sensitivity • Scoring function • Frequency of access in a given pattern a_i
