Linux Synchronization

- Slides: 19

Linux Synchronization

Computer Science & Engineering Department, Arizona State University, Tempe, AZ 85287

Dr. Yann-Hang Lee, yhlee@asu.edu, (480) 727-7507

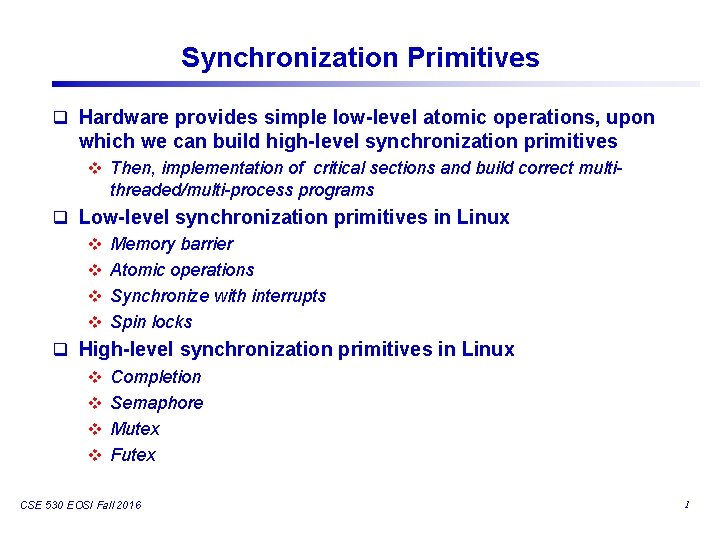

Synchronization Primitives
- Hardware provides simple low-level atomic operations, upon which we can build high-level synchronization primitives
  - These primitives are then used to implement critical sections and build correct multi-threaded/multi-process programs
- Low-level synchronization primitives in Linux
  - Memory barrier
  - Atomic operations
  - Synchronization with interrupts
  - Spin locks
- High-level synchronization primitives in Linux
  - Completion
  - Semaphore
  - Mutex
  - Futex

CSE 530 EOSI Fall 2016

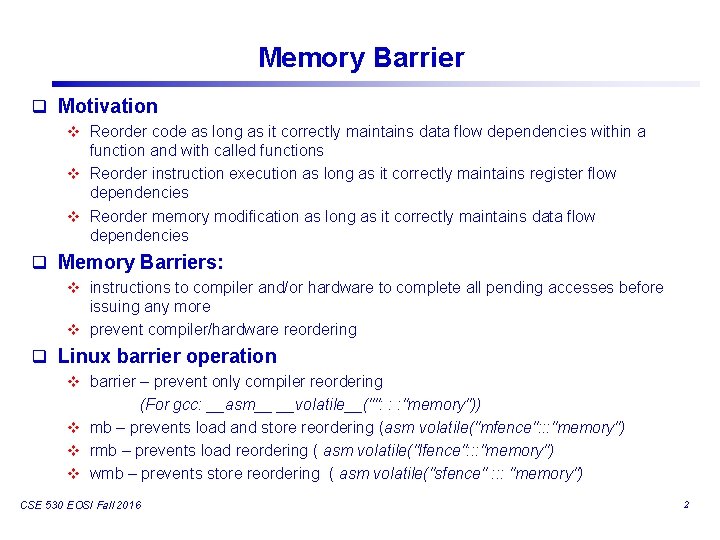

Memory Barrier
- Motivation: code and memory accesses can be reordered at several levels
  - The compiler may reorder code as long as it correctly maintains data-flow dependencies within a function and with called functions
  - The processor may reorder instruction execution as long as it correctly maintains register-flow dependencies
  - The memory system may reorder memory modifications as long as it correctly maintains data-flow dependencies
- Memory barriers
  - Instructions to the compiler and/or hardware to complete all pending accesses before issuing any more
  - Prevent compiler/hardware reordering
- Linux barrier operations (x86 implementations shown)
  - `barrier()` – prevents only compiler reordering (for gcc: `__asm__ __volatile__("" : : : "memory")`)
  - `mb()` – prevents load and store reordering (`asm volatile("mfence" : : : "memory")`)
  - `rmb()` – prevents load reordering (`asm volatile("lfence" : : : "memory")`)
  - `wmb()` – prevents store reordering (`asm volatile("sfence" : : : "memory")`)
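As a user-space illustration of the publish/consume pattern that `wmb()` and `rmb()` are typically paired for, the sketch below substitutes C11 `atomic_thread_fence()` for the kernel macros, which are not available outside the kernel. The names `payload`, `ready`, `publish`, `consume` and `run_barrier_demo` are hypothetical. The release fence roughly plays the writer-side role of `wmb()` and the acquire fence the reader-side role of `rmb()`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical shared state: a plain payload published through an atomic flag. */
static int payload;
static atomic_int ready;

/* Writer: store the data, issue a release fence (the role wmb() plays),
 * then set the flag. The fence keeps the payload store from being
 * reordered after the flag store. */
static void *publish(void *arg)
{
	(void)arg;
	payload = 42;
	atomic_thread_fence(memory_order_release);
	atomic_store_explicit(&ready, 1, memory_order_relaxed);
	return NULL;
}

/* Reader: wait for the flag, then issue an acquire fence (the role rmb()
 * plays) so the payload load cannot be performed before the flag load. */
static int consume(void)
{
	while (atomic_load_explicit(&ready, memory_order_relaxed) == 0)
		; /* spin until the writer publishes */
	atomic_thread_fence(memory_order_acquire);
	return payload;
}

/* Start one writer thread and consume its value on the calling thread. */
int run_barrier_demo(void)
{
	pthread_t writer;
	payload = 0;
	atomic_store(&ready, 0);
	int rc = pthread_create(&writer, NULL, publish, NULL);
	assert(rc == 0);
	(void)rc;
	int value = consume();
	pthread_join(writer, NULL);
	return value;
}
```

Without the two fences, the compiler or CPU would be free to make the flag visible before the payload, and the reader could see `ready == 1` but a stale `payload`.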

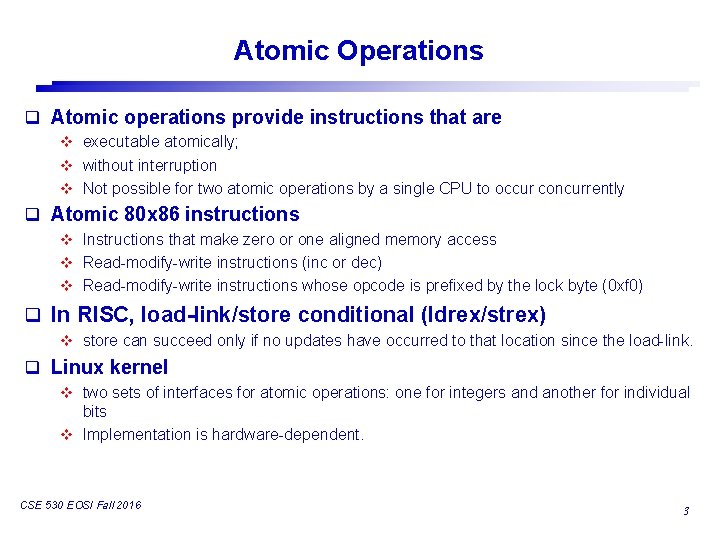

Atomic Operations
- Atomic operations are instructions that
  - execute atomically, without interruption
  - cannot overlap: two atomic operations by a single CPU cannot occur concurrently
- Atomic 80x86 instructions
  - Instructions that make zero or one aligned memory access
  - Read-modify-write instructions (such as `inc` or `dec`), which are atomic on a uniprocessor because no other processor can take the memory bus between the read and the write
  - Read-modify-write instructions whose opcode is prefixed by the `lock` byte (0xf0)
- On RISC processors: load-link/store-conditional (e.g., ARM `ldrex`/`strex`)
  - The store-conditional succeeds only if no updates have occurred to that location since the load-link
- Linux kernel
  - Two sets of interfaces for atomic operations: one for integers and another for individual bits
  - The implementation is hardware-dependent
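To illustrate the load-link/store-conditional retry idea in portable C, this sketch uses a C11 compare-and-swap loop instead. On ARMv7, compilers typically implement such a loop with an `ldrex`/`strex` pair, but that is a toolchain detail, not something the slide states. `add_return_retry` and `run_retry_demo` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical helper: atomically add `delta` to *v and return the new value.
 * Like an LL/SC sequence, the update commits only if *v still holds the value
 * that was read; otherwise the loop retries with the fresh value. */
static int add_return_retry(atomic_int *v, int delta)
{
	int old = atomic_load(v);
	/* On failure, compare_exchange_weak stores the current *v into `old`. */
	while (!atomic_compare_exchange_weak(v, &old, old + delta))
		;
	return old + delta;
}

static atomic_int shared;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++)
		add_return_retry(&shared, 1);
	return NULL;
}

/* Four threads each add 1 ten thousand times; no update is lost. */
int run_retry_demo(void)
{
	pthread_t t[4];
	atomic_store(&shared, 0);
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return atomic_load(&shared);
}
```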

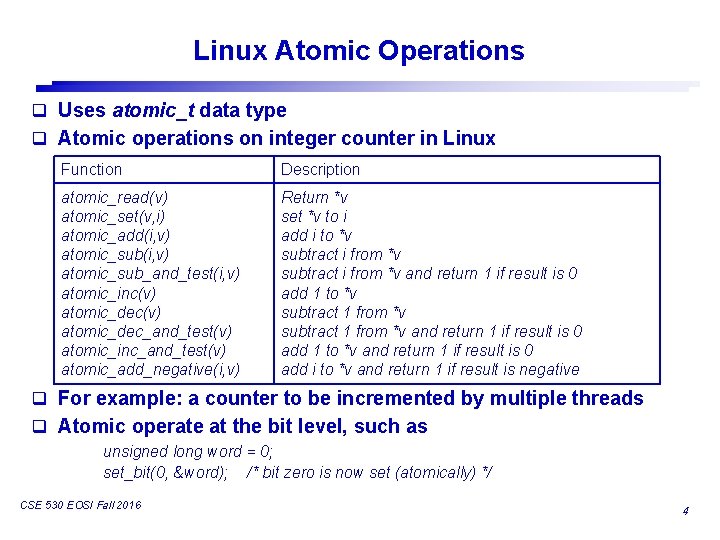

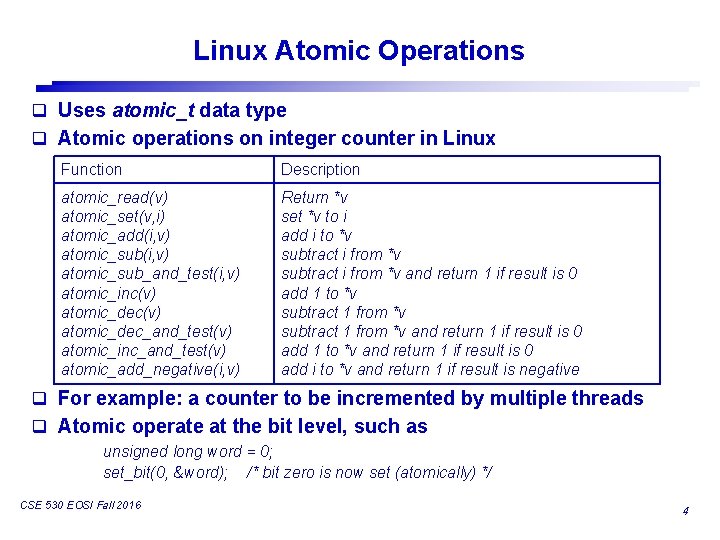

Linux Atomic Operations
- Uses the `atomic_t` data type
- Atomic operations on an integer counter in Linux:

| Function | Description |
|---|---|
| `atomic_read(v)` | Return `*v` |
| `atomic_set(v, i)` | Set `*v` to `i` |
| `atomic_add(i, v)` | Add `i` to `*v` |
| `atomic_sub_and_test(i, v)` | Subtract `i` from `*v` and return 1 if the result is 0 |
| `atomic_inc(v)` | Add 1 to `*v` |
| `atomic_dec_and_test(v)` | Subtract 1 from `*v` and return 1 if the result is 0 |
| `atomic_inc_and_test(v)` | Add 1 to `*v` and return 1 if the result is 0 |
| `atomic_add_negative(i, v)` | Add `i` to `*v` and return 1 if the result is negative |

- For example: a counter to be incremented by multiple threads
- Atomic operations also work at the bit level, such as:

```c
unsigned long word = 0;
set_bit(0, &word); /* bit zero is now set (atomically) */
```
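The kernel's `atomic_t` helpers are not callable from user space, so this sketch reimplements three of them with C11 `<stdatomic.h>` to show their semantics. The `my_` names and the demo functions are hypothetical, not kernel APIs. The reference-count helper shows the usual reason `atomic_dec_and_test()` exists: exactly one of several "put" operations observes zero.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* atomic_dec_and_test(v) analog: subtract 1, report whether the result is 0. */
static bool my_dec_and_test(atomic_int *v)
{
	/* atomic_fetch_sub returns the old value, so the new value is old - 1. */
	return atomic_fetch_sub(v, 1) - 1 == 0;
}

/* atomic_add_negative(i, v) analog: add i, report whether the result is negative. */
static bool my_add_negative(int i, atomic_int *v)
{
	return atomic_fetch_add(v, i) + i < 0;
}

/* set_bit(nr, addr) analog: atomically OR one bit into the word. */
static void my_set_bit(unsigned int nr, atomic_ulong *word)
{
	atomic_fetch_or(word, 1UL << nr);
}

/* Drop `refs` references; only the final drop sees 0, so exactly one caller
 * would free the object. Returns how many drops saw 0. */
int count_final_drops(int refs)
{
	atomic_int ref;
	atomic_init(&ref, refs);
	int finals = 0;
	for (int i = 0; i < refs; i++)
		if (my_dec_and_test(&ref))
			finals++;
	return finals;
}

/* Returns whether adding `i` to a counter that starts at `start` goes negative. */
bool goes_negative(int start, int i)
{
	atomic_int v;
	atomic_init(&v, start);
	return my_add_negative(i, &v);
}

/* Sets bits 0 and 3 in a zeroed word and returns the result. */
unsigned long set_bits_0_and_3(void)
{
	atomic_ulong word;
	atomic_init(&word, 0);
	my_set_bit(0, &word);
	my_set_bit(3, &word);
	return atomic_load(&word);
}
```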

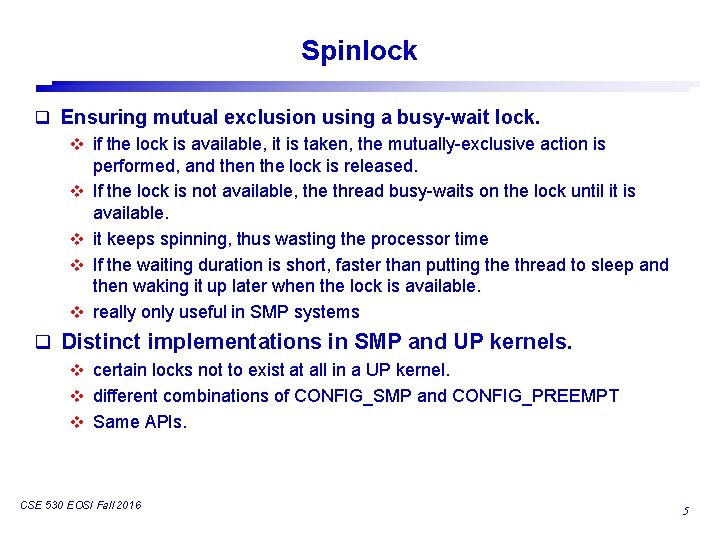

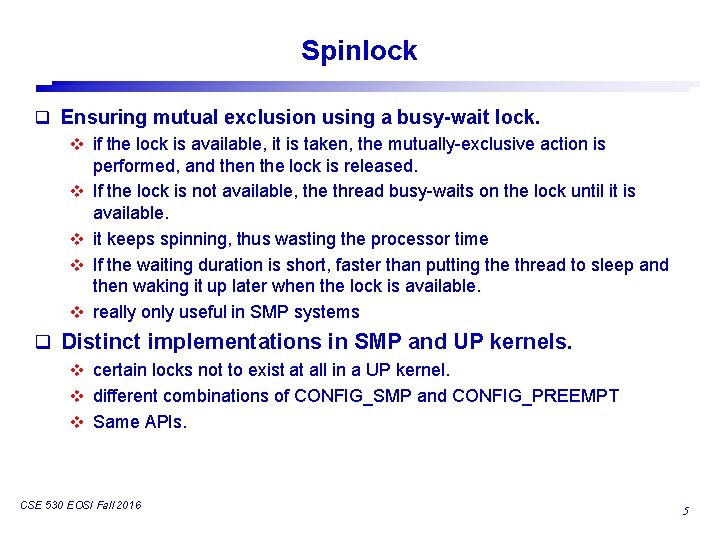

Spinlock
- Ensures mutual exclusion using a busy-wait lock
  - If the lock is available, it is taken, the mutually exclusive action is performed, and then the lock is released
  - If the lock is not available, the thread busy-waits (spins) on the lock until it becomes available
  - Spinning wastes processor time, but if the wait is short it is faster than putting the thread to sleep and waking it up later when the lock is available
  - Really only useful in SMP systems
- Distinct implementations in SMP and UP kernels
  - Certain locks do not exist at all in a UP kernel
  - The implementation depends on the combination of CONFIG_SMP and CONFIG_PREEMPT
  - The same APIs are used in every configuration
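The busy-wait behaviour described above can be sketched in user space with C11 `atomic_flag`. This is a minimal test-and-set lock with hypothetical names (`tas_spinlock_t`, `tas_lock`, `tas_unlock`), not the kernel's implementation; unlike the kernel, it cannot disable preemption or interrupts while the lock is held.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical minimal busy-wait lock built on atomic_flag (test-and-set). */
typedef struct {
	atomic_flag locked;
} tas_spinlock_t;

#define TAS_SPINLOCK_INIT { ATOMIC_FLAG_INIT }

static void tas_lock(tas_spinlock_t *l)
{
	/* test_and_set returns the previous value: true means someone holds it. */
	while (atomic_flag_test_and_set_explicit(&l->locked, memory_order_acquire))
		; /* busy-wait: burns CPU time until the holder releases */
}

static void tas_unlock(tas_spinlock_t *l)
{
	atomic_flag_clear_explicit(&l->locked, memory_order_release);
}

static tas_spinlock_t demo_lock = TAS_SPINLOCK_INIT;
static long counter; /* plain variable protected only by demo_lock */

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		tas_lock(&demo_lock);
		counter++; /* critical section */
		tas_unlock(&demo_lock);
	}
	return NULL;
}

/* Four threads increment the shared counter under the spinlock. */
long run_spinlock_demo(void)
{
	pthread_t t[4];
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```

A waiting thread keeps executing the test-and-set loop, which is the wasted processor time mentioned above; it only pays off when holders release quickly.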

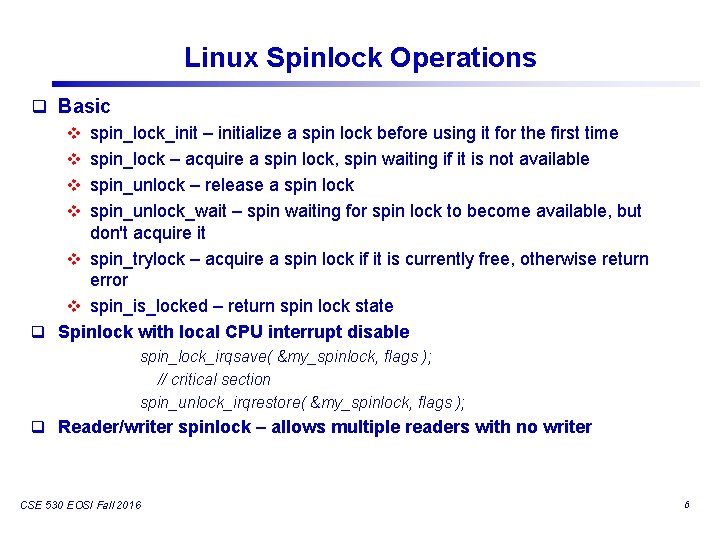

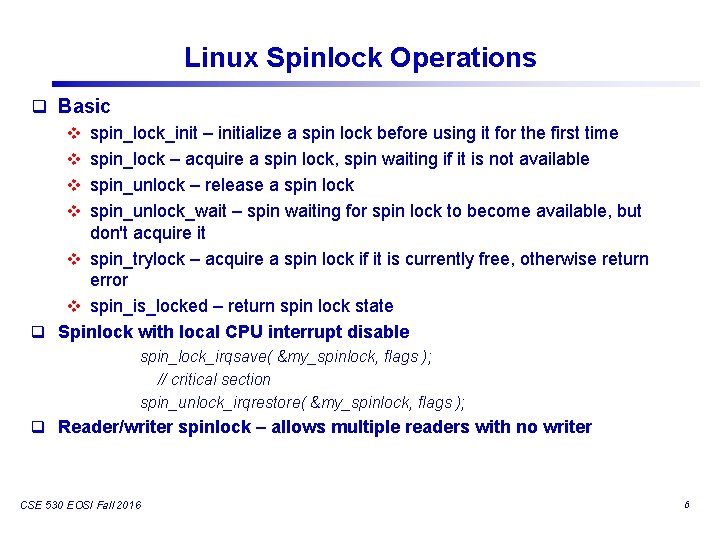

Linux Spinlock Operations
- Basic
  - `spin_lock_init` – initialize a spin lock before using it for the first time
  - `spin_lock` – acquire a spin lock, spinning if it is not available
  - `spin_unlock` – release a spin lock
  - `spin_unlock_wait` – spin until the spin lock becomes available, but don't acquire it
  - `spin_trylock` – acquire a spin lock if it is currently free; otherwise return 0 immediately instead of spinning
  - `spin_is_locked` – return the spin lock state
- Spinlock with local CPU interrupts disabled:

```c
spin_lock_irqsave(&my_spinlock, flags);
/* critical section */
spin_unlock_irqrestore(&my_spinlock, flags);
```

- Reader/writer spinlock – allows multiple concurrent readers as long as there is no writer
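A reader/writer spinlock can be sketched with a single atomic integer that counts readers and uses -1 for a writer. This is an illustrative user-space encoding with hypothetical names (`rw_spin_t`, `rw_read_trylock`, `rw_write_trylock`, ...), not the kernel's `rwlock_t` layout.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical reader/writer spinlock encoded in one atomic int:
 *   state > 0   : that many readers hold the lock
 *   state == 0  : free
 *   state == -1 : one writer holds the lock */
typedef struct {
	atomic_int state;
} rw_spin_t;

static inline bool rw_read_trylock(rw_spin_t *l)
{
	int s = atomic_load(&l->state);
	while (s >= 0) {
		/* Add one reader only if no writer slipped in since the load. */
		if (atomic_compare_exchange_weak(&l->state, &s, s + 1))
			return true;
		/* On failure `s` now holds the current state; re-check it. */
	}
	return false; /* a writer holds the lock */
}

static inline bool rw_write_trylock(rw_spin_t *l)
{
	int expected = 0; /* succeeds only with no readers and no writer */
	return atomic_compare_exchange_strong(&l->state, &expected, -1);
}

/* Blocking variants simply spin on the try operations. */
static inline void rw_read_lock(rw_spin_t *l)    { while (!rw_read_trylock(l)) {} }
static inline void rw_read_unlock(rw_spin_t *l)  { atomic_fetch_sub(&l->state, 1); }
static inline void rw_write_lock(rw_spin_t *l)   { while (!rw_write_trylock(l)) {} }
static inline void rw_write_unlock(rw_spin_t *l) { atomic_store(&l->state, 0); }

static rw_spin_t demo_rw; /* zero-initialized: free */
```

Because readers can keep entering while the count is positive, this simple design lets a steady stream of readers starve a writer.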

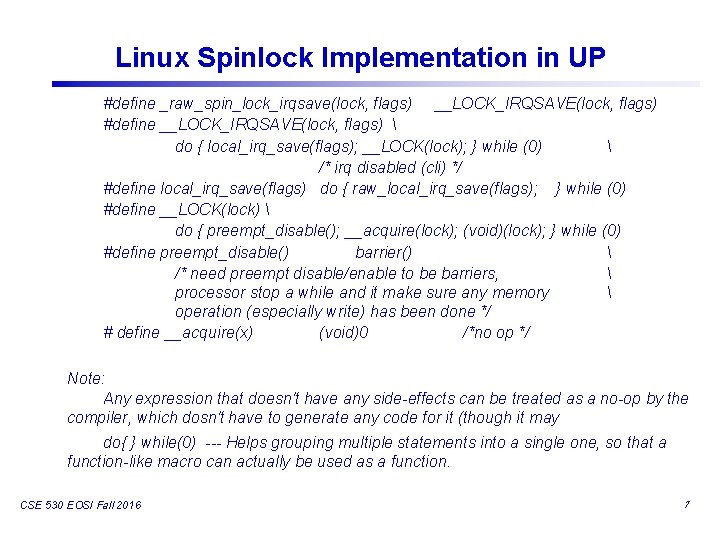

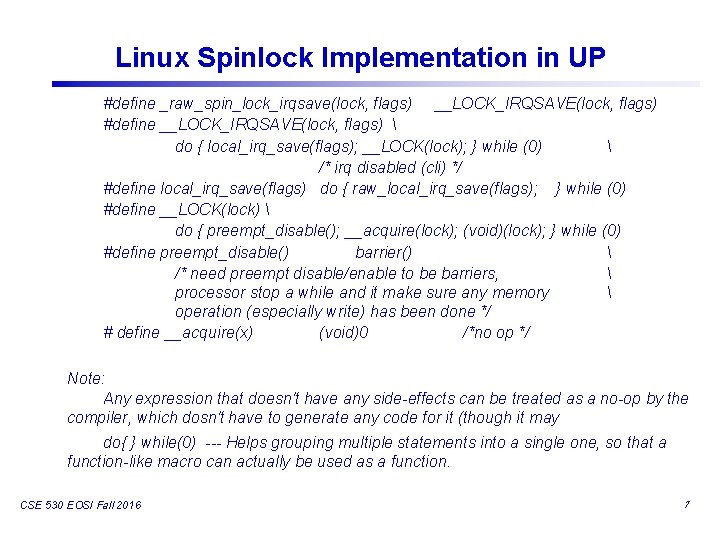

Linux Spinlock Implementation in UP

```c
#define _raw_spin_lock_irqsave(lock, flags)	__LOCK_IRQSAVE(lock, flags)

#define __LOCK_IRQSAVE(lock, flags) \
	do { local_irq_save(flags); __LOCK(lock); } while (0)	/* irq disabled (cli) */

#define local_irq_save(flags) \
	do { raw_local_irq_save(flags); } while (0)

#define __LOCK(lock) \
	do { preempt_disable(); __acquire(lock); (void)(lock); } while (0)

/* preempt disable/enable must act as (compiler) barriers so that memory
 * accesses inside the critical section are not moved outside it */
#define preempt_disable()	barrier()

# define __acquire(x)	(void)0	/* no-op */
```

Notes:
- Any expression that doesn't have any side effects can be treated as a no-op by the compiler, which doesn't have to generate any code for it (though it may).
- `do { } while (0)` groups multiple statements into a single statement, so that a function-like macro can actually be used like a function.
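The `do { } while (0)` note can be demonstrated directly. The sketch below uses two hypothetical macros, `BAD_LOCK()` and `GOOD_LOCK()`, that each expand to two statements (standing in for something like `__LOCK()`), and shows what goes wrong without the wrapper.

```c
#include <assert.h>

/* Hypothetical counters updated by the two macro variants below. */
static int acquired;
static int nesting;

/* Two statements with no grouping: only the first is governed by an `if`. */
#define BAD_LOCK()  nesting++; acquired++
/* Grouped with do { } while (0): expands to exactly one statement. */
#define GOOD_LOCK() do { nesting++; acquired++; } while (0)

/* GOOD_LOCK() is safe as an unbraced if-body, even with an else branch.
 * (BAD_LOCK() here would not compile: the else would have no matching if.) */
int good_lock_if(int take)
{
	acquired = 0;
	nesting = 0;
	if (take)
		GOOD_LOCK();
	else
		acquired = -1;
	return acquired;
}

/* With BAD_LOCK(), `acquired++` escapes the if and runs unconditionally. */
int bad_lock_if(int take)
{
	acquired = 0;
	nesting = 0;
	if (take)
		BAD_LOCK();
	return acquired;
}
```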

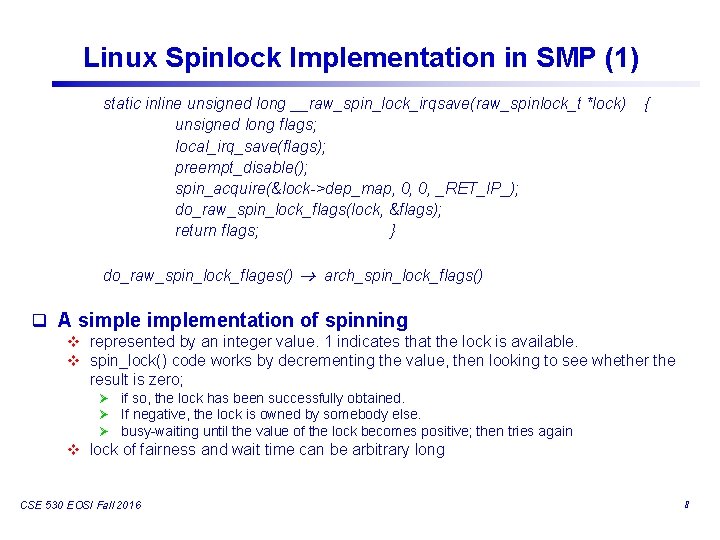

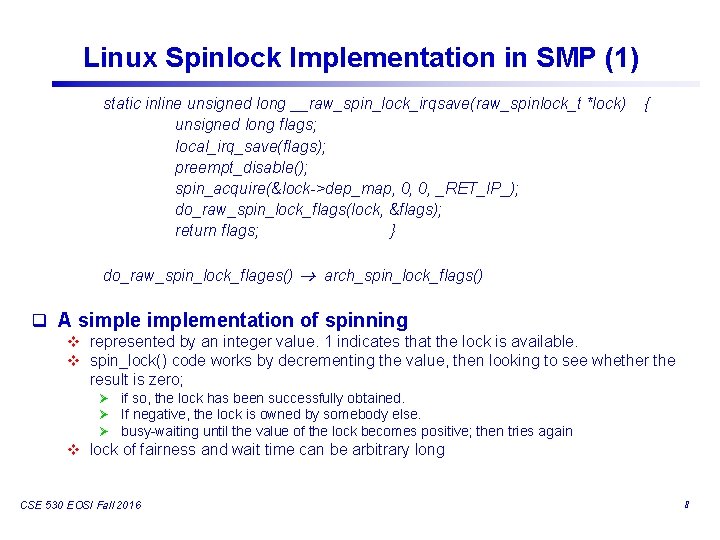

Linux Spinlock Implementation in SMP (1)

```c
static inline unsigned long __raw_spin_lock_irqsave(raw_spinlock_t *lock)
{
	unsigned long flags;

	local_irq_save(flags);
	preempt_disable();
	spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
	do_raw_spin_lock_flags(lock, &flags);
	return flags;
}
```

- `do_raw_spin_lock_flags()` calls `arch_spin_lock_flags()`
- A simple implementation of spinning
  - The lock is represented by an integer value; 1 indicates that the lock is available
  - The `spin_lock()` code works by decrementing the value and then checking whether the result is zero
    - If so, the lock has been successfully obtained
    - If negative, the lock is owned by somebody else: busy-wait until the value of the lock becomes positive, then try again
  - Lack of fairness: the wait time can be arbitrarily long
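The decrement-based scheme above can be modelled in user space with C11 atomics. The names (`dec_spinlock_t`, `dec_spin_lock`, `dec_spin_unlock`) are hypothetical, and the sketch assumes that release simply stores 1 back into the lock word; it mirrors the described logic rather than the kernel's assembly.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical model: 1 = free, 0 = held, negative = held and others tried. */
typedef struct {
	atomic_int slock;
} dec_spinlock_t;

static void dec_spin_lock(dec_spinlock_t *l)
{
	for (;;) {
		/* Decrement and check the result: 0 means we moved it from 1 to 0. */
		if (atomic_fetch_sub(&l->slock, 1) - 1 == 0)
			return;
		/* Owned by somebody else: busy-wait until the value becomes
		 * positive, then retry. Waiters are not ordered (unfair). */
		while (atomic_load(&l->slock) <= 0)
			;
	}
}

static void dec_spin_unlock(dec_spinlock_t *l)
{
	/* Assumed release: reset to 1, discarding waiters' failed decrements. */
	atomic_store(&l->slock, 1);
}

static dec_spinlock_t demo_lock;
static long counter;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		dec_spin_lock(&demo_lock);
		counter++;
		dec_spin_unlock(&demo_lock);
	}
	return NULL;
}

long run_dec_demo(void)
{
	pthread_t t[4];
	atomic_store(&demo_lock.slock, 1); /* start out available */
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```

Only a decrement that observes exactly 1 succeeds, and only the holder can put 1 back, so at most one thread is inside the critical section at a time.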

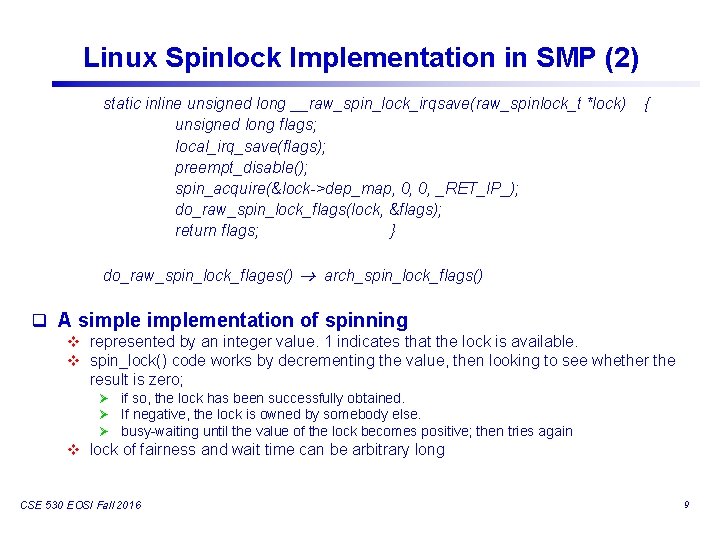

Linux Spinlock Implementation in SMP (2)

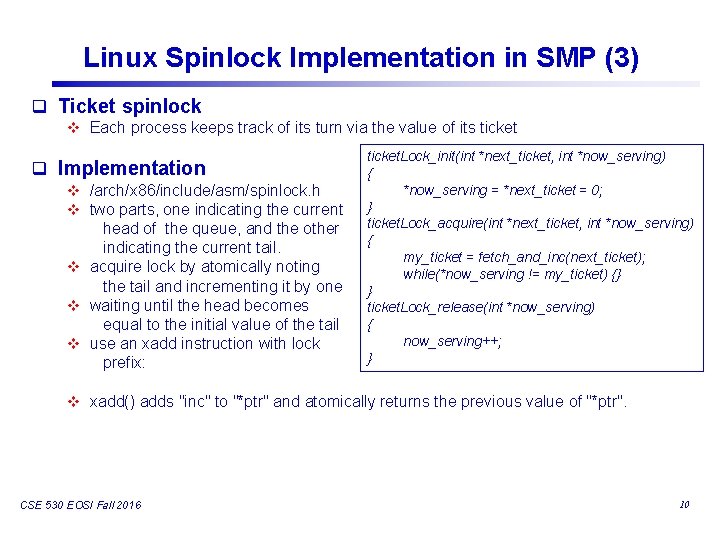

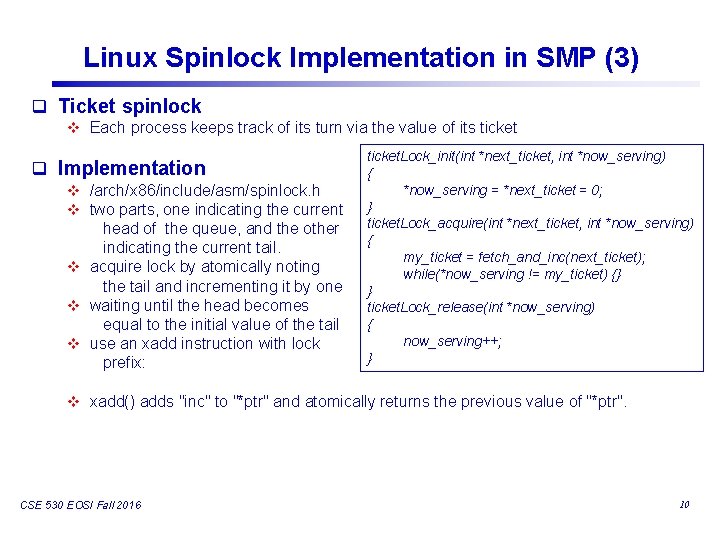

Linux Spinlock Implementation in SMP (3)
- Ticket spinlock
  - Each process keeps track of its turn via the value of its ticket
- Implementation
  - /arch/x86/include/asm/spinlock.h
  - The lock has two parts: one indicating the current head of the queue, and the other indicating the current tail
  - Acquire the lock by atomically noting the tail and incrementing it by one
  - Then wait until the head becomes equal to the noted value of the tail
  - Uses an `xadd` instruction with the `lock` prefix:

```c
void ticketLock_init(int *next_ticket, int *now_serving)
{
	*now_serving = *next_ticket = 0;
}

void ticketLock_acquire(int *next_ticket, int *now_serving)
{
	/* fetch_and_inc() stands for the atomic xadd step */
	int my_ticket = fetch_and_inc(next_ticket);

	/* *now_serving must be re-read on every iteration */
	while (*now_serving != my_ticket)
		;	/* spin until it is our turn */
}

void ticketLock_release(int *now_serving)
{
	(*now_serving)++;	/* serve the next ticket */
}
```

- `xadd()` adds `inc` to `*ptr` and atomically returns the previous value of `*ptr`
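Here is a runnable user-space version of the ticket-lock pseudocode using C11 atomics, where `atomic_fetch_add` plays the role of `fetch_and_inc`/`xadd`. All names are hypothetical. One deliberate deviation: waiters call `sched_yield()` while spinning, because a user thread (unlike a kernel spinlock holder running with preemption disabled) can be descheduled, and with strict FIFO hand-off a descheduled next-in-line thread would otherwise stall everyone queued behind it.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

/* Hypothetical ticket lock: next_ticket is the queue tail, now_serving the head. */
typedef struct {
	atomic_uint next_ticket;
	atomic_uint now_serving;
} ticket_lock_t;

static void ticket_lock_init(ticket_lock_t *l)
{
	atomic_store(&l->next_ticket, 0);
	atomic_store(&l->now_serving, 0);
}

static void ticket_lock_acquire(ticket_lock_t *l)
{
	/* The xadd step: take the current tail as our ticket and bump it. */
	unsigned int my_ticket = atomic_fetch_add(&l->next_ticket, 1);
	/* Wait until the head reaches our ticket: strict FIFO order. */
	while (atomic_load(&l->now_serving) != my_ticket)
		sched_yield(); /* let a descheduled next-in-line thread run */
}

static void ticket_lock_release(ticket_lock_t *l)
{
	/* Only the holder advances the head, handing the lock to the next ticket. */
	atomic_fetch_add(&l->now_serving, 1);
}

static ticket_lock_t demo_lock;
static long counter;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		ticket_lock_acquire(&demo_lock);
		counter++;
		ticket_lock_release(&demo_lock);
	}
	return NULL;
}

long run_ticket_demo(void)
{
	pthread_t t[4];
	ticket_lock_init(&demo_lock);
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```

Unsigned wrap-around of the ticket counters is harmless here because only equality is compared.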

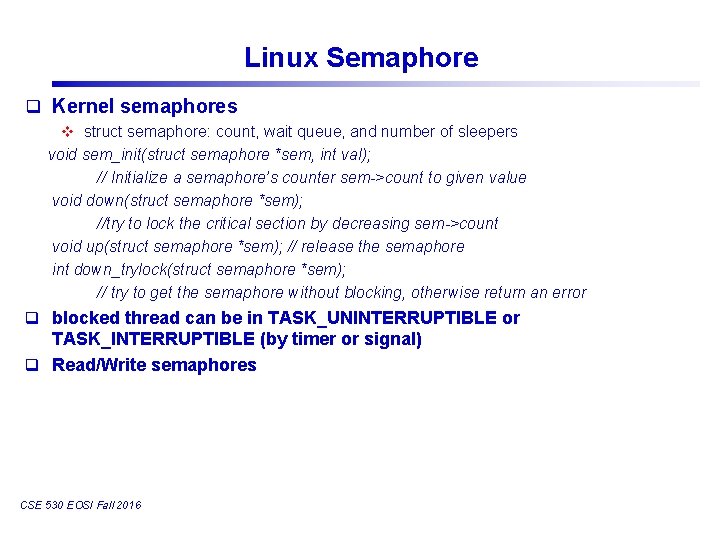

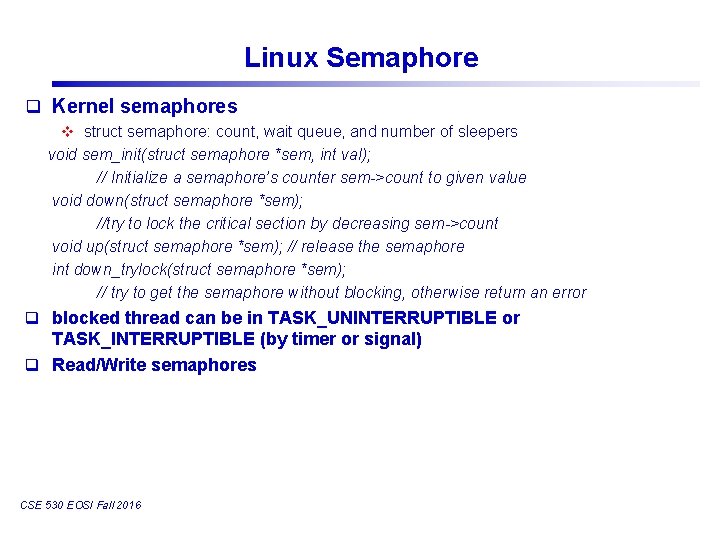

Linux Semaphore
- Kernel semaphores
  - `struct semaphore`: count, wait queue, and number of sleepers

```c
void sema_init(struct semaphore *sem, int val);	// initialize the semaphore's counter sem->count to the given value
void down(struct semaphore *sem);		// try to enter the critical section by decrementing sem->count; sleeps if unavailable
void up(struct semaphore *sem);			// release the semaphore
int down_trylock(struct semaphore *sem);	// try to get the semaphore without blocking; returns nonzero if unavailable
```

- A blocked thread can be in TASK_UNINTERRUPTIBLE or TASK_INTERRUPTIBLE (woken by a timer or signal)
- Read/write semaphores
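The `down()`/`up()`/`down_trylock()` contract can be mimicked in user space with a pthread mutex and condition variable standing in for the semaphore's internal lock and wait queue. The `ksem_*` names are hypothetical; this is a sketch of the semantics, not the kernel code.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical user-space counting semaphore: a count plus a wait queue. */
typedef struct {
	pthread_mutex_t lock;
	pthread_cond_t wait;
	unsigned int count;
} ksem_t;

static void ksem_init(ksem_t *s, unsigned int val)
{
	pthread_mutex_init(&s->lock, NULL);
	pthread_cond_init(&s->wait, NULL);
	s->count = val;
}

/* Like down(): sleep until the count is positive, then take one unit. */
static void ksem_down(ksem_t *s)
{
	pthread_mutex_lock(&s->lock);
	while (s->count == 0)
		pthread_cond_wait(&s->wait, &s->lock);
	s->count--;
	pthread_mutex_unlock(&s->lock);
}

/* Like down_trylock(): 0 on success, 1 if the caller would have to sleep. */
static int ksem_down_trylock(ksem_t *s)
{
	int ret = 1;
	pthread_mutex_lock(&s->lock);
	if (s->count > 0) {
		s->count--;
		ret = 0;
	}
	pthread_mutex_unlock(&s->lock);
	return ret;
}

/* Like up(): return one unit and wake one sleeper. */
static void ksem_up(ksem_t *s)
{
	pthread_mutex_lock(&s->lock);
	s->count++;
	pthread_cond_signal(&s->wait);
	pthread_mutex_unlock(&s->lock);
}

static ksem_t demo_sem, try_sem;
static int woke;

static void *sleeper(void *arg)
{
	(void)arg;
	ksem_down(&demo_sem); /* blocks until the main thread calls ksem_up() */
	woke = 1;
	return NULL;
}

/* A thread sleeps in down() until up() is called; returns 1 once it ran. */
int run_sem_demo(void)
{
	pthread_t t;
	ksem_init(&demo_sem, 0);
	woke = 0;
	int rc = pthread_create(&t, NULL, sleeper, NULL);
	assert(rc == 0);
	(void)rc;
	ksem_up(&demo_sem);
	pthread_join(t, NULL);
	return woke;
}
```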

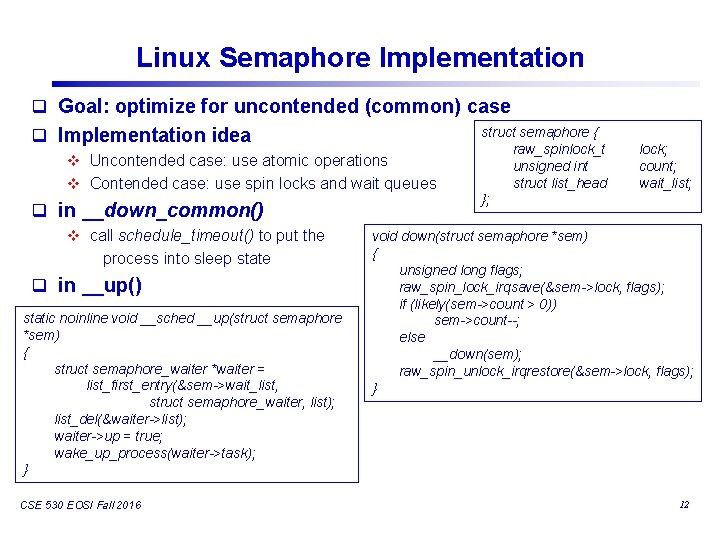

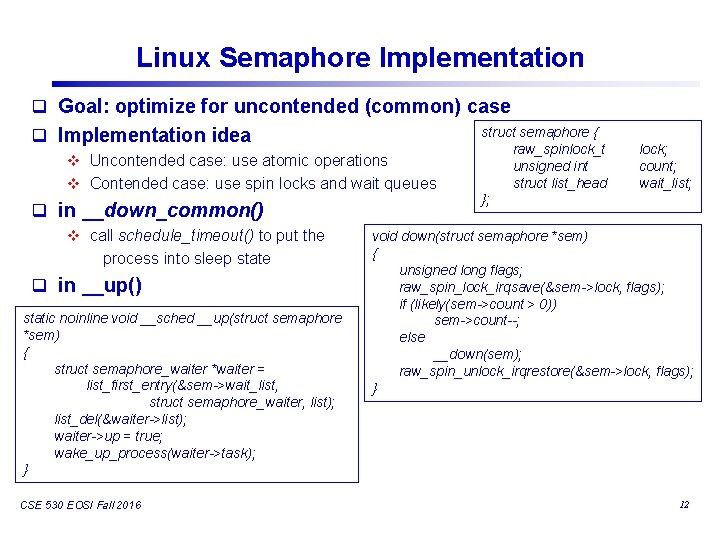

Linux Semaphore Implementation
- Goal: optimize for the uncontended (common) case
- Implementation idea
  - Uncontended case: use atomic operations
  - Contended case: use spin locks and wait queues
- In `__down_common()`
  - Call `schedule_timeout()` to put the process into the sleep state

```c
struct semaphore {
	raw_spinlock_t		lock;
	unsigned int		count;
	struct list_head	wait_list;
};

void down(struct semaphore *sem)
{
	unsigned long flags;

	raw_spin_lock_irqsave(&sem->lock, flags);
	if (likely(sem->count > 0))
		sem->count--;
	else
		__down(sem);
	raw_spin_unlock_irqrestore(&sem->lock, flags);
}
```

- In `__up()`:

```c
static noinline void __sched __up(struct semaphore *sem)
{
	struct semaphore_waiter *waiter = list_first_entry(&sem->wait_list,
						struct semaphore_waiter, list);
	list_del(&waiter->list);
	waiter->up = true;
	wake_up_process(waiter->task);
}
```
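The point of `waiter->up = true` in `__up()` is that the released unit is handed directly to the first waiter instead of going back into `count`, where a newcomer could grab it first. The single-threaded model below makes that logic checkable. It assumes (this is not shown above) that the `up()` wrapper increments `count` when `wait_list` is empty and calls `__up()` otherwise; all names are hypothetical and no real sleeping happens.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical single-threaded model of the count/wait_list logic.
 * Waiters are small integer task IDs kept in a FIFO ring. */
#define MAX_WAITERS 8

struct sem_model {
	unsigned int count;
	int wait_list[MAX_WAITERS]; /* FIFO of waiting task IDs */
	int head, tail;
};

/* down(): take a unit if count > 0, else queue the caller (the __down path).
 * Returns true if the caller got the semaphore immediately. */
static bool model_down(struct sem_model *s, int task)
{
	if (s->count > 0) {
		s->count--;
		return true;
	}
	assert(s->tail - s->head < MAX_WAITERS); /* model capacity limit */
	s->wait_list[s->tail++ % MAX_WAITERS] = task;
	return false;
}

/* up(): with no waiters, increment count; otherwise hand the unit straight
 * to the first waiter (the __up path) without touching count.
 * Returns the woken task ID, or -1 if nobody was waiting. */
static int model_up(struct sem_model *s)
{
	if (s->head == s->tail) {
		s->count++;
		return -1;
	}
	return s->wait_list[s->head++ % MAX_WAITERS];
}

static struct sem_model demo_model = { .count = 1 };
```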

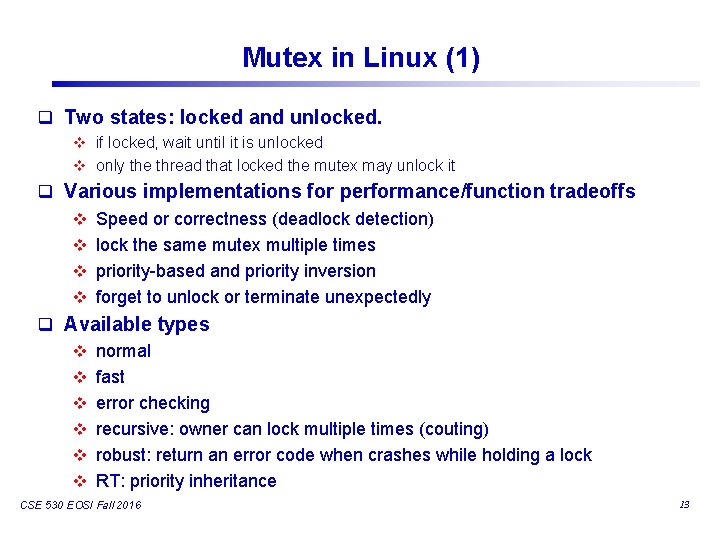

Mutex in Linux (1)
- Two states: locked and unlocked
  - If locked, wait until it is unlocked
  - Only the thread that locked the mutex may unlock it
- Various implementations make performance/functionality tradeoffs
  - Speed vs. correctness (deadlock detection)
  - Locking the same mutex multiple times
  - Priority-based scheduling and priority inversion
  - Forgetting to unlock, or terminating unexpectedly while holding the lock
- Available types
  - normal
  - fast
  - error checking
  - recursive: the owner can lock it multiple times (counting)
  - robust: if the owner dies while holding the lock, the next thread to lock it gets an error code
  - RT: priority inheritance
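Several of these mutex types are exposed to user space through POSIX mutex attributes. The sketch below uses the standard `PTHREAD_MUTEX_ERRORCHECK` and `PTHREAD_MUTEX_RECURSIVE` types; the helper functions (`make_mutex`, `errorcheck_relock`, `recursive_depth`) are hypothetical. An error-checking mutex reports relocking by its owner as `EDEADLK`, and a recursive mutex needs one unlock per lock.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* Create a mutex of the given POSIX type. */
static void make_mutex(pthread_mutex_t *m, int type)
{
	pthread_mutexattr_t attr;
	pthread_mutexattr_init(&attr);
	pthread_mutexattr_settype(&attr, type);
	pthread_mutex_init(m, &attr);
	pthread_mutexattr_destroy(&attr);
}

/* Error-checking mutex: relocking by the owner fails with EDEADLK instead
 * of deadlocking. Returns the result of the second lock attempt. */
int errorcheck_relock(void)
{
	pthread_mutex_t m;
	make_mutex(&m, PTHREAD_MUTEX_ERRORCHECK);
	pthread_mutex_lock(&m);
	int err = pthread_mutex_lock(&m);
	pthread_mutex_unlock(&m);
	pthread_mutex_destroy(&m);
	return err;
}

/* Recursive mutex: lock it `depth` times, then count how many unlocks
 * succeed before the owner no longer holds it (an extra unlock fails). */
int recursive_depth(int depth)
{
	pthread_mutex_t m;
	make_mutex(&m, PTHREAD_MUTEX_RECURSIVE);
	for (int i = 0; i < depth; i++)
		pthread_mutex_lock(&m);
	int unlocked = 0;
	while (pthread_mutex_unlock(&m) == 0)
		unlocked++;
	pthread_mutex_destroy(&m);
	return unlocked;
}
```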

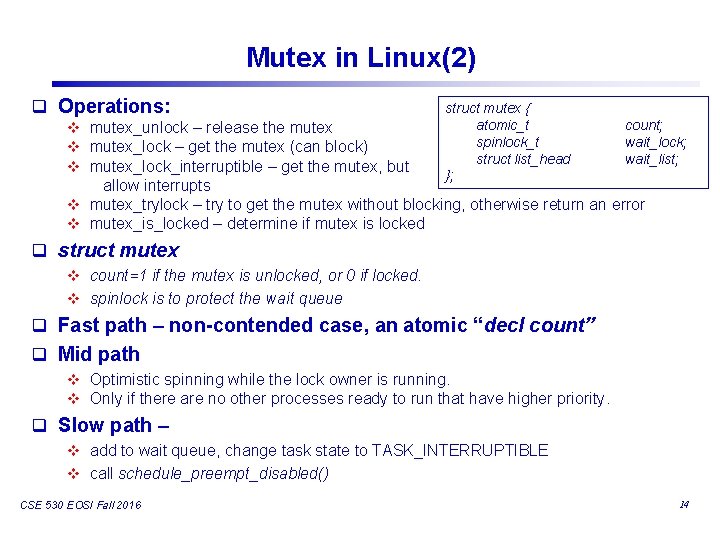

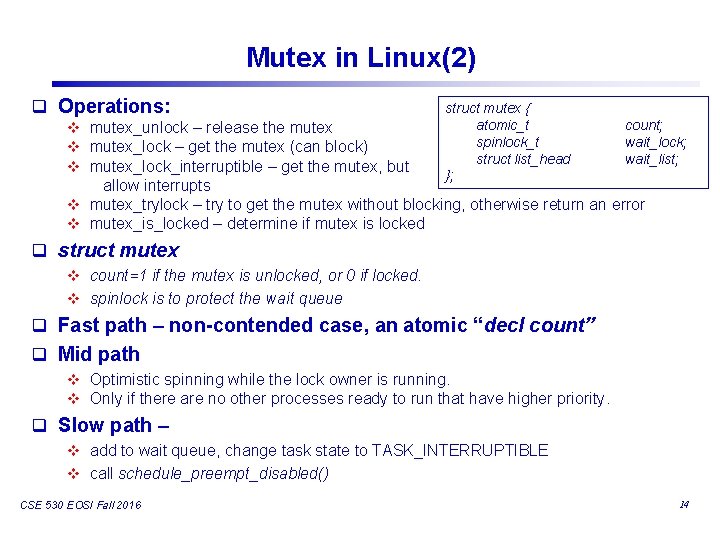

Mutex in Linux (2)
- Operations
  - `mutex_unlock` – release the mutex
  - `mutex_lock` – get the mutex (can block)
  - `mutex_lock_interruptible` – get the mutex, but allow the wait to be interrupted by a signal
  - `mutex_trylock` – try to get the mutex without blocking; returns 0 if it is not available
  - `mutex_is_locked` – determine whether the mutex is locked

```c
struct mutex {
	atomic_t		count;
	spinlock_t		wait_lock;
	struct list_head	wait_list;
};
```

- `struct mutex`
  - `count` is 1 if the mutex is unlocked, 0 if locked (negative: locked, with possible waiters)
  - The spinlock `wait_lock` protects the wait queue
- Fast path: in the non-contended case, an atomic `decl count`
- Mid path
  - Optimistic spinning while the lock owner is running
  - Only if there are no other processes ready to run that have higher priority
- Slow path
  - Add to the wait queue and change the task state (TASK_UNINTERRUPTIBLE, or TASK_INTERRUPTIBLE for `mutex_lock_interruptible`)
  - Call `schedule_preempt_disabled()`
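The fast/mid/slow path structure can be sketched in user space. This is a simplified model with hypothetical names: a compare-and-swap on `count` is the fast path, a bounded spin (`SPIN_LIMIT`, an arbitrary stand-in for the kernel's "spin while the owner is running" check) is the mid path, and a pthread condition variable plays the wait queue for the slow path.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

#define SPIN_LIMIT 100 /* hypothetical stand-in for "owner is still running" */

typedef struct {
	atomic_int count;           /* 1 = unlocked, 0 = locked */
	pthread_mutex_t wait_lock;  /* protects the wait queue */
	pthread_cond_t wait_queue;  /* where slow-path lockers sleep */
} hmutex_t;

static bool hm_trylock(hmutex_t *m)
{
	int expected = 1;
	return atomic_compare_exchange_strong(&m->count, &expected, 0);
}

static void hm_lock(hmutex_t *m)
{
	/* Fast path: uncontended 1 -> 0 with a single atomic operation. */
	if (hm_trylock(m))
		return;
	/* Mid path: spin briefly in case the owner releases soon. */
	for (int i = 0; i < SPIN_LIMIT; i++)
		if (atomic_load(&m->count) == 1 && hm_trylock(m))
			return;
	/* Slow path: sleep on the wait queue until the lock can be taken. */
	pthread_mutex_lock(&m->wait_lock);
	while (!hm_trylock(m))
		pthread_cond_wait(&m->wait_queue, &m->wait_lock);
	pthread_mutex_unlock(&m->wait_lock);
}

static void hm_unlock(hmutex_t *m)
{
	atomic_store(&m->count, 1);
	/* Signal under wait_lock so a slow-path locker cannot miss the wakeup
	 * between its failed trylock and its cond_wait. */
	pthread_mutex_lock(&m->wait_lock);
	pthread_cond_signal(&m->wait_queue);
	pthread_mutex_unlock(&m->wait_lock);
}

static hmutex_t demo_mutex = { 1, PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER };
static long counter;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		hm_lock(&demo_mutex);
		counter++;
		hm_unlock(&demo_mutex);
	}
	return NULL;
}

long run_hmutex_demo(void)
{
	pthread_t t[4];
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```

Unlike the kernel, which uses a negative `count` to record possible waiters, this sketch signals on every unlock because it does not track whether anyone is sleeping.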

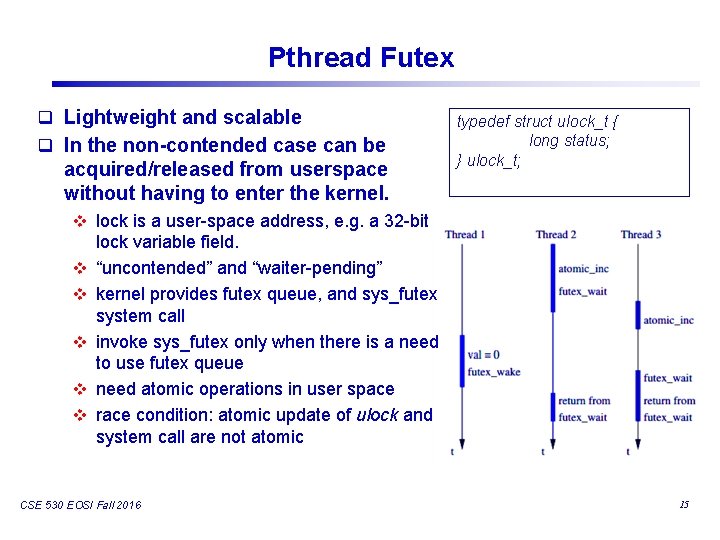

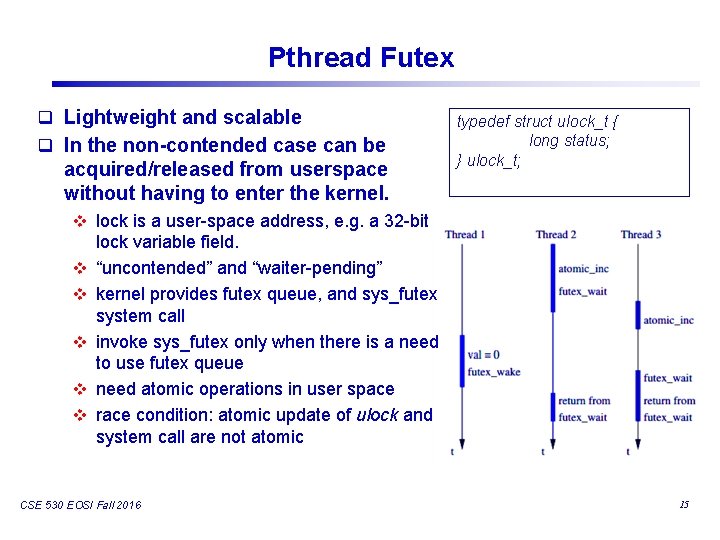

Pthread Futex
- Lightweight and scalable
- In the non-contended case, a futex can be acquired and released from user space without having to enter the kernel

```c
typedef struct ulock_t {
	long status;
} ulock_t;
```

- The lock is a user-space address, e.g., a 32-bit lock variable field
- Its states include "uncontended" and "waiter-pending"
- The kernel provides the futex queue and the `sys_futex` system call
- `sys_futex` is invoked only when there is a need to use the futex queue
- Atomic operations are needed in user space
- Race condition: the atomic update of the ulock and the system call are not atomic together
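The classic three-state futex lock (0 = unlocked, 1 = locked and uncontended, 2 = locked with waiters pending) shows how the two states above are used. This Linux-only sketch invokes the raw `futex` system call through `syscall(SYS_futex, ...)`; the helper names are hypothetical. It uses a 32-bit `atomic_int` as the lock word instead of the `long` above, because the futex word is 32 bits. The race noted above is handled by `FUTEX_WAIT`, which only sleeps if the word still holds the expected value.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <linux/futex.h>
#include <pthread.h>
#include <stdatomic.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Lock word: 0 = unlocked, 1 = locked (uncontended), 2 = locked, waiters pending. */
typedef struct {
	atomic_int status;
} ulock_t;

static void futex_wait(atomic_int *addr, int expected)
{
	/* Sleeps only if *addr still equals `expected`; the kernel performs the
	 * check and the enqueue atomically, closing the user/kernel race. */
	syscall(SYS_futex, (int *)addr, FUTEX_WAIT_PRIVATE, expected, NULL, NULL, 0);
}

static void futex_wake(atomic_int *addr, int nwake)
{
	syscall(SYS_futex, (int *)addr, FUTEX_WAKE_PRIVATE, nwake, NULL, NULL, 0);
}

static void ulock_lock(ulock_t *l)
{
	int c = 0;
	/* Fast path: 0 -> 1 entirely in user space, no system call. */
	if (atomic_compare_exchange_strong(&l->status, &c, 1))
		return;
	/* Contended: mark the lock waiter-pending (2) and sleep until the
	 * exchange observes 0, meaning we now own it (left at 2). */
	if (c != 2)
		c = atomic_exchange(&l->status, 2);
	while (c != 0) {
		futex_wait(&l->status, 2);
		c = atomic_exchange(&l->status, 2);
	}
}

static void ulock_unlock(ulock_t *l)
{
	/* Old value 1: nobody waiting, done without entering the kernel.
	 * Old value 2: clear the lock and wake one sleeper. */
	if (atomic_fetch_sub(&l->status, 1) != 1) {
		atomic_store(&l->status, 0);
		futex_wake(&l->status, 1);
	}
}

static ulock_t demo_lock;
static long counter;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		ulock_lock(&demo_lock);
		counter++;
		ulock_unlock(&demo_lock);
	}
	return NULL;
}

long run_futex_demo(void)
{
	pthread_t t[4];
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```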

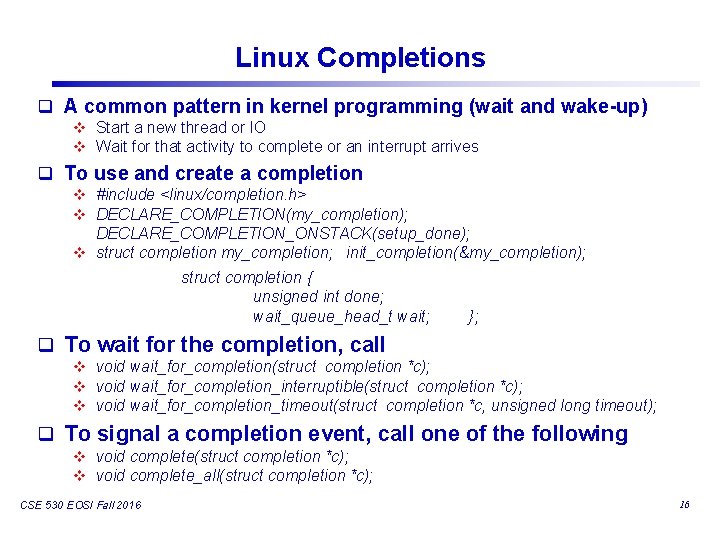

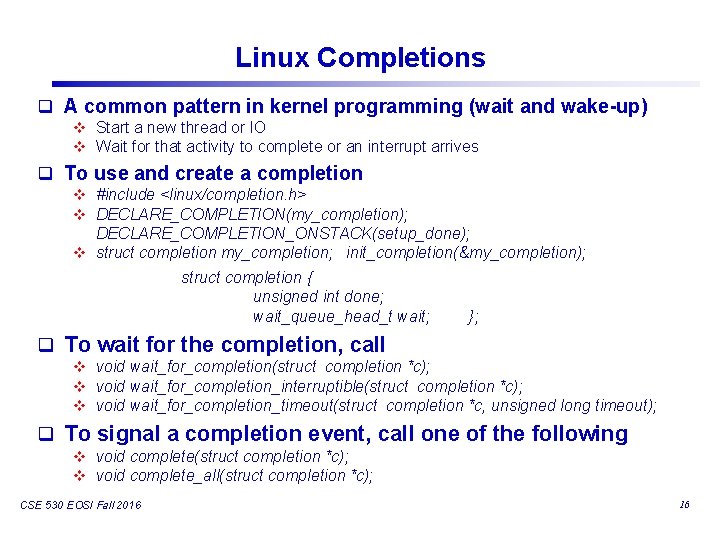

Linux Completions
- A common pattern in kernel programming (wait and wake-up)
  - Start a new thread or I/O
  - Wait for that activity to complete or for an interrupt to arrive
- To create and use a completion
  - `#include <linux/completion.h>`
  - Statically: `DECLARE_COMPLETION(my_completion);` or, on the stack, `DECLARE_COMPLETION_ONSTACK(setup_done);`
  - Dynamically: `struct completion my_completion; init_completion(&my_completion);`

```c
struct completion {
	unsigned int done;
	wait_queue_head_t wait;
};
```

- To wait for the completion, call
  - `void wait_for_completion(struct completion *c);`
  - `int wait_for_completion_interruptible(struct completion *c);`
  - `unsigned long wait_for_completion_timeout(struct completion *c, unsigned long timeout);`
- To signal a completion event, call one of the following
  - `void complete(struct completion *c);`
  - `void complete_all(struct completion *c);`
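Completion semantics can be approximated in user space with a `done` counter plus a condition variable. The `ucompletion` names are hypothetical, and the way `ucomplete_all()` saturates `done` at `UINT_MAX` is an assumption of this sketch, not a description of the kernel's code.

```c
#include <assert.h>
#include <limits.h>
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical analog of struct completion: `done` plus a wait queue. */
struct ucompletion {
	pthread_mutex_t lock;
	pthread_cond_t wait;
	unsigned int done;
};

static void init_ucompletion(struct ucompletion *c)
{
	pthread_mutex_init(&c->lock, NULL);
	pthread_cond_init(&c->wait, NULL);
	c->done = 0;
}

/* Like wait_for_completion(): sleep until done > 0, then consume one event.
 * UINT_MAX marks "complete_all" in this sketch and is never consumed. */
static void wait_for_ucompletion(struct ucompletion *c)
{
	pthread_mutex_lock(&c->lock);
	while (c->done == 0)
		pthread_cond_wait(&c->wait, &c->lock);
	if (c->done != UINT_MAX)
		c->done--;
	pthread_mutex_unlock(&c->lock);
}

/* Like complete(): record one event and wake one waiter. */
static void ucomplete(struct ucompletion *c)
{
	pthread_mutex_lock(&c->lock);
	if (c->done != UINT_MAX)
		c->done++;
	pthread_cond_signal(&c->wait);
	pthread_mutex_unlock(&c->lock);
}

/* Like complete_all(): release all current and future waiters. */
static void ucomplete_all(struct ucompletion *c)
{
	pthread_mutex_lock(&c->lock);
	c->done = UINT_MAX;
	pthread_cond_broadcast(&c->wait);
	pthread_mutex_unlock(&c->lock);
}

static struct ucompletion setup_done, all_done;
static int setup_result;
static atomic_int released;

static void *setup_thread(void *arg)
{
	(void)arg;
	setup_result = 42; /* the activity being waited for */
	ucomplete(&setup_done);
	return NULL;
}

static void *waiter(void *arg)
{
	(void)arg;
	wait_for_ucompletion(&all_done);
	atomic_fetch_add(&released, 1);
	return NULL;
}

/* One thread signals, the caller waits; returns the value it published. */
int run_completion_demo(void)
{
	pthread_t t;
	init_ucompletion(&setup_done);
	int rc = pthread_create(&t, NULL, setup_thread, NULL);
	assert(rc == 0);
	(void)rc;
	wait_for_ucompletion(&setup_done);
	pthread_join(t, NULL);
	return setup_result;
}

/* Three waiters are all released by a single complete_all(). */
int run_complete_all_demo(void)
{
	pthread_t t[3];
	init_ucompletion(&all_done);
	for (int i = 0; i < 3; i++) {
		int rc = pthread_create(&t[i], NULL, waiter, NULL);
		assert(rc == 0);
		(void)rc;
	}
	ucomplete_all(&all_done);
	for (int i = 0; i < 3; i++)
		pthread_join(t[i], NULL);
	return atomic_load(&released);
}
```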

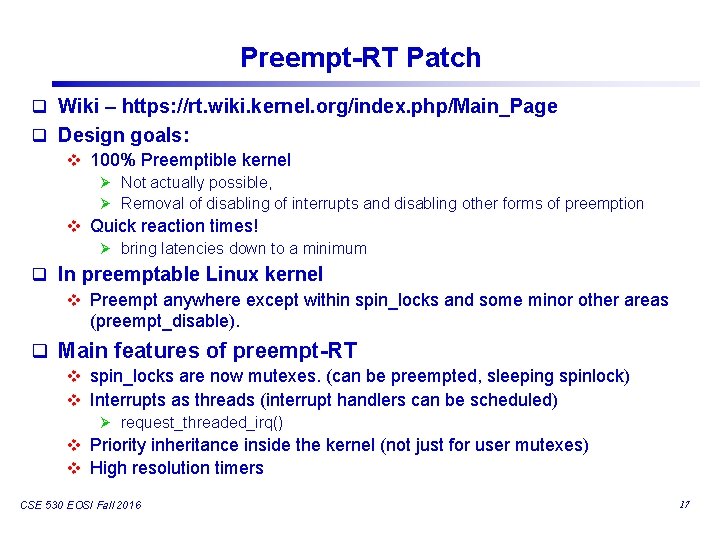

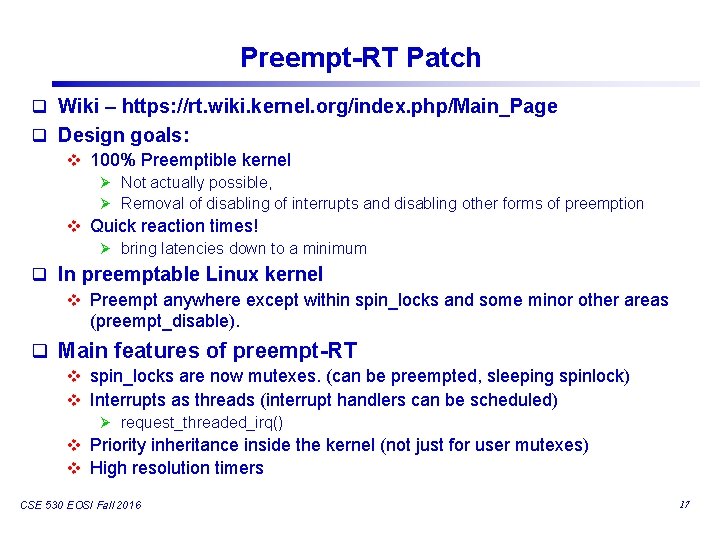

Preempt-RT Patch
- Wiki: https://rt.wiki.kernel.org/index.php/Main_Page
- Design goals
  - 100% preemptible kernel
    - Not actually possible
    - Removal of interrupt disabling and of other forms of preemption disabling
  - Quick reaction times
    - Bring latencies down to a minimum
- In the preemptible Linux kernel
  - Preemption can happen anywhere except within spin_locks and some other minor areas (preempt_disable)
- Main features of Preempt-RT
  - spin_locks become mutexes (sleeping spinlocks that can be preempted)
  - Interrupts as threads (interrupt handlers can be scheduled), e.g., `request_threaded_irq()`
  - Priority inheritance inside the kernel (not just for user mutexes)
  - High-resolution timers
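On the user-mutex side of that comparison, POSIX lets a program request priority inheritance for a pthread mutex through the `PTHREAD_PRIO_INHERIT` protocol attribute; on Linux such mutexes are backed by PI futexes. The helper names below (`init_pi_mutex`, `pi_protocol_roundtrip`, `pi_lock_cycle`) are hypothetical.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <pthread.h>

/* Initialize a mutex that uses the priority-inheritance protocol.
 * Returns 0 on success or an error number. */
static int init_pi_mutex(pthread_mutex_t *m)
{
	pthread_mutexattr_t attr;
	int err = pthread_mutexattr_init(&attr);
	if (err)
		return err;
	err = pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
	if (!err)
		err = pthread_mutex_init(m, &attr);
	pthread_mutexattr_destroy(&attr);
	return err;
}

/* Store PTHREAD_PRIO_INHERIT in an attribute object and read it back. */
int pi_protocol_roundtrip(void)
{
	pthread_mutexattr_t attr;
	int proto = -1;
	pthread_mutexattr_init(&attr);
	pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
	pthread_mutexattr_getprotocol(&attr, &proto);
	pthread_mutexattr_destroy(&attr);
	return proto;
}

/* Create a PI mutex, lock and unlock it once; returns 0 if all steps succeed. */
int pi_lock_cycle(void)
{
	pthread_mutex_t m;
	int err = init_pi_mutex(&m);
	if (err)
		return err;
	err = pthread_mutex_lock(&m);
	if (!err)
		err = pthread_mutex_unlock(&m);
	pthread_mutex_destroy(&m);
	return err;
}
```

While a thread holds such a mutex, a higher-priority thread blocking on it temporarily lends its priority to the holder, which is the same mechanism Preempt-RT applies to in-kernel locks.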

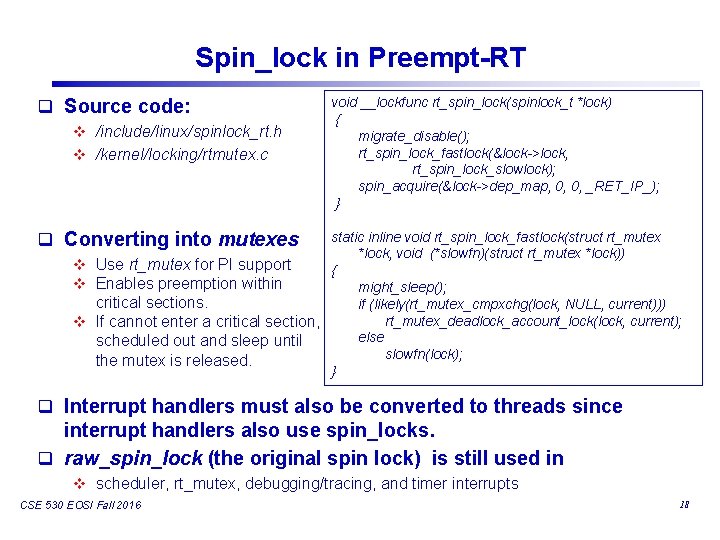

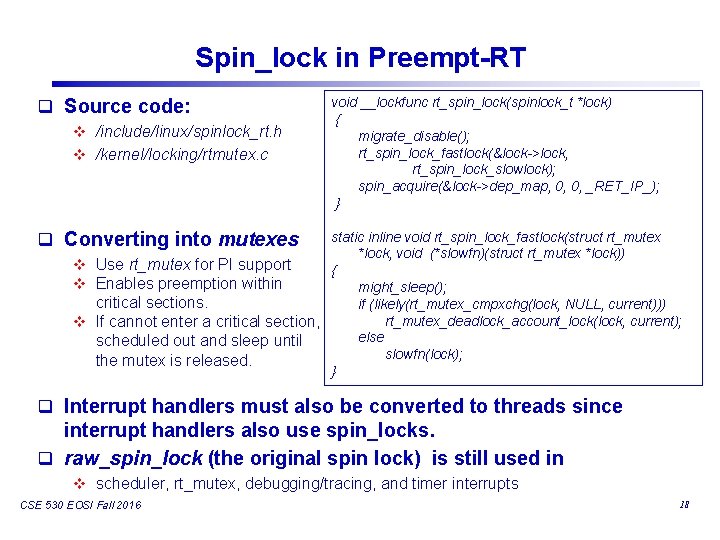

Spin_lock in Preempt-RT
- Source code
  - /include/linux/spinlock_rt.h
  - /kernel/locking/rtmutex.c

```c
void __lockfunc rt_spin_lock(spinlock_t *lock)
{
	migrate_disable();
	rt_spin_lock_fastlock(&lock->lock, rt_spin_lock_slowlock);
	spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
}

static inline void rt_spin_lock_fastlock(struct rt_mutex *lock,
					 void (*slowfn)(struct rt_mutex *lock))
{
	might_sleep();

	if (likely(rt_mutex_cmpxchg(lock, NULL, current)))
		rt_mutex_deadlock_account_lock(lock, current);
	else
		slowfn(lock);
}
```

- Converting into mutexes
  - Use rt_mutex for priority-inheritance (PI) support
  - Enables preemption within critical sections
  - If a task cannot enter a critical section, it is scheduled out and sleeps until the mutex is released
- Interrupt handlers must also be converted to threads, since interrupt handlers also use spin_locks
- raw_spin_lock (the original spin lock) is still used in the scheduler, rt_mutex, debugging/tracing, and timer interrupts
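The shape of `rt_spin_lock_fastlock()` (compare-and-exchange the owner from `NULL` to `current`, otherwise call `slowfn`) can be modelled in user space. In this hypothetical sketch the address of a thread-local variable stands in for `current`, and the slow path sleeps on a condition variable instead of spinning; it does not implement priority inheritance or anything else `rt_mutex` provides.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical owner-tracking lock shaped like rt_spin_lock_fastlock(). */
struct owner_lock {
	_Atomic(void *) owner;      /* NULL when free, else the owning thread */
	pthread_mutex_t wait_lock;  /* protects the sleepers below */
	pthread_cond_t waiters;
};

/* The address of a thread-local byte stands in for `current`. */
static _Thread_local char self_token;
#define CURRENT ((void *)&self_token)

static int owner_trylock(struct owner_lock *l)
{
	void *expected = NULL;
	return atomic_compare_exchange_strong(&l->owner, &expected, CURRENT);
}

/* Slow path: sleep (do not spin) until the owner field can be claimed. */
static void owner_slowlock(struct owner_lock *l)
{
	pthread_mutex_lock(&l->wait_lock);
	while (!owner_trylock(l))
		pthread_cond_wait(&l->waiters, &l->wait_lock);
	pthread_mutex_unlock(&l->wait_lock);
}

/* Fast path: cmpxchg owner NULL -> CURRENT, otherwise call slowfn. */
static void owner_fastlock(struct owner_lock *l,
			   void (*slowfn)(struct owner_lock *))
{
	if (!owner_trylock(l))
		slowfn(l);
}

static void owner_unlock(struct owner_lock *l)
{
	assert(atomic_load(&l->owner) == CURRENT); /* only the owner releases */
	atomic_store(&l->owner, NULL);
	pthread_mutex_lock(&l->wait_lock);
	pthread_cond_signal(&l->waiters);
	pthread_mutex_unlock(&l->wait_lock);
}

static struct owner_lock demo_lock = {
	NULL, PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER
};
static long counter;

static void *worker(void *arg)
{
	(void)arg;
	for (int i = 0; i < 10000; i++) {
		owner_fastlock(&demo_lock, owner_slowlock);
		counter++;
		owner_unlock(&demo_lock);
	}
	return NULL;
}

long run_owner_demo(void)
{
	pthread_t t[4];
	counter = 0;
	for (int i = 0; i < 4; i++) {
		int rc = pthread_create(&t[i], NULL, worker, NULL);
		assert(rc == 0);
		(void)rc;
	}
	for (int i = 0; i < 4; i++)
		pthread_join(t[i], NULL);
	return counter;
}
```

Recording the owner, rather than just a locked/unlocked bit, is what lets an rt_mutex know whose priority to boost; this sketch only shows the bookkeeping.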