
Linux Kernel Programming

許富皓

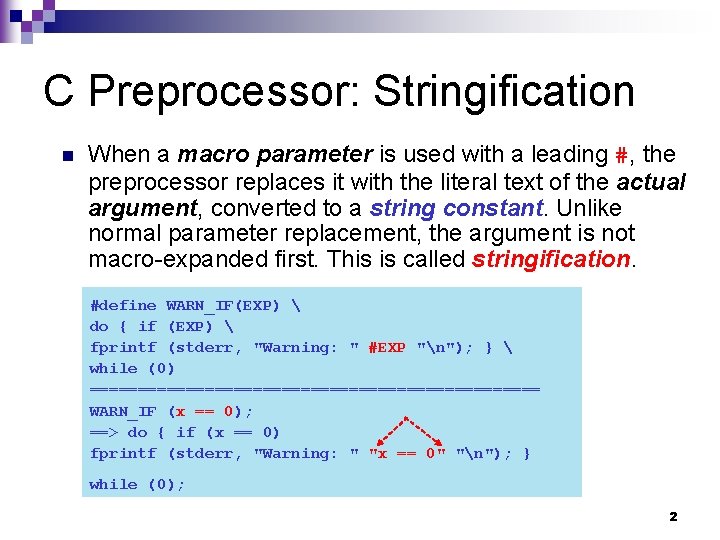

C Preprocessor: Stringification

When a macro parameter is used with a leading `#`, the preprocessor replaces it with the literal text of the actual argument, converted to a string constant. Unlike normal parameter replacement, the argument is not macro-expanded first. This is called stringification.

    #define WARN_IF(EXP) \
        do { if (EXP) fprintf (stderr, "Warning: " #EXP "\n"); } \
        while (0)

    WARN_IF (x == 0);
    ==> do { if (x == 0) fprintf (stderr, "Warning: " "x == 0" "\n"); } while (0);
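A minimal, compilable sketch of the same mechanism. `STR`, `XSTR`, `LIMIT`, and `WARN_IF_BUF` are hypothetical names introduced for illustration; the buffer variant exists only so the text produced by `#EXP` can be checked without reading stderr. The block also shows the usual two-level trick for stringifying a macro's expanded value.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* The slide's macro, with the "\n" escape restored. */
#define WARN_IF(EXP) \
    do { if (EXP) fprintf(stderr, "Warning: " #EXP "\n"); } while (0)

/* #x turns the argument into a string literal, exactly as written. */
#define STR(x) #x
/* Indirection: XSTR's argument is macro-expanded before STR sees it. */
#define XSTR(x) STR(x)

#define LIMIT 10   /* hypothetical macro used to show the difference */

/* Same idea as WARN_IF, but the message goes into a buffer.
 * Evaluates to 1 if the condition held (message written), else 0. */
#define WARN_IF_BUF(buf, size, EXP) \
    ((EXP) ? (snprintf((buf), (size), "%s", "Warning: " #EXP "\n"), 1) : 0)
```

For example, `STR(LIMIT)` yields `"LIMIT"` while `XSTR(LIMIT)` yields `"10"`, because an argument is fully macro-expanded before substitution unless it is used with `#` or `##`.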

Multiple Kernel Mode Stacks

- If the size of the thread_union structure is 8 KB, the Kernel Mode stack of the current process is used for every type of kernel control path: exceptions, interrupts, and deferrable functions.
- If the size of the thread_union structure is 4 KB, the kernel makes use of three types of Kernel Mode stacks: the exception stack, the hard IRQ stack, and the soft IRQ stack.

Exception Stack

- The exception stack is used when handling exceptions (including system calls).
- This is the stack contained in the per-process thread_union data structure; thus the kernel makes use of a different exception stack for each process in the system.

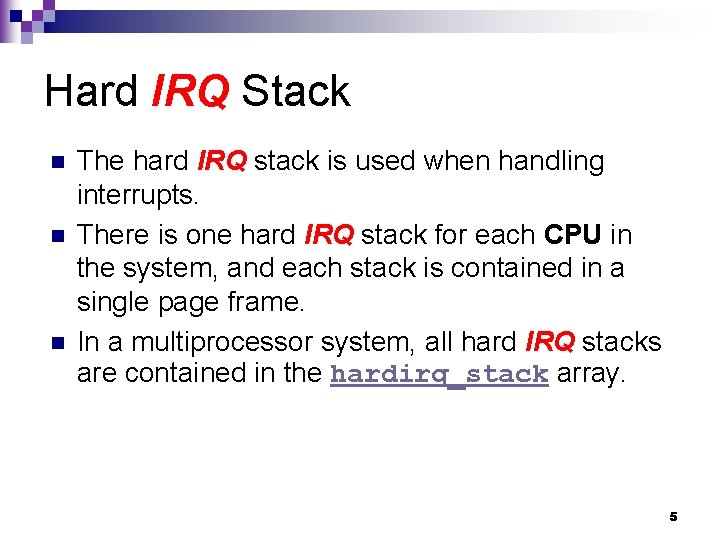

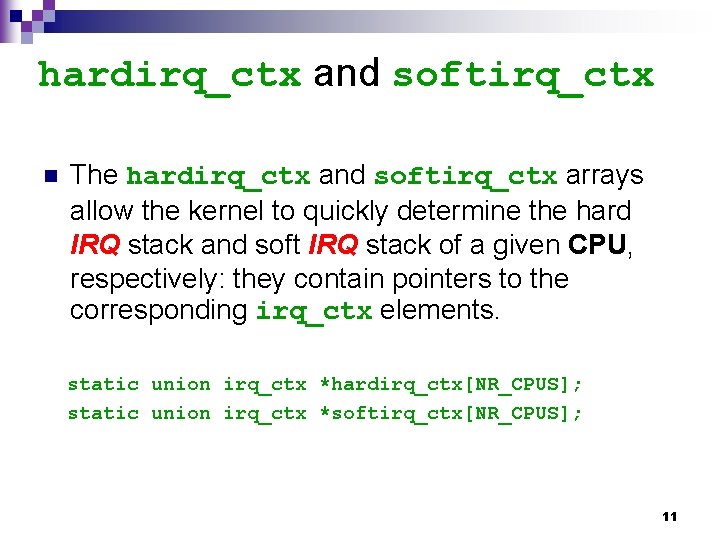

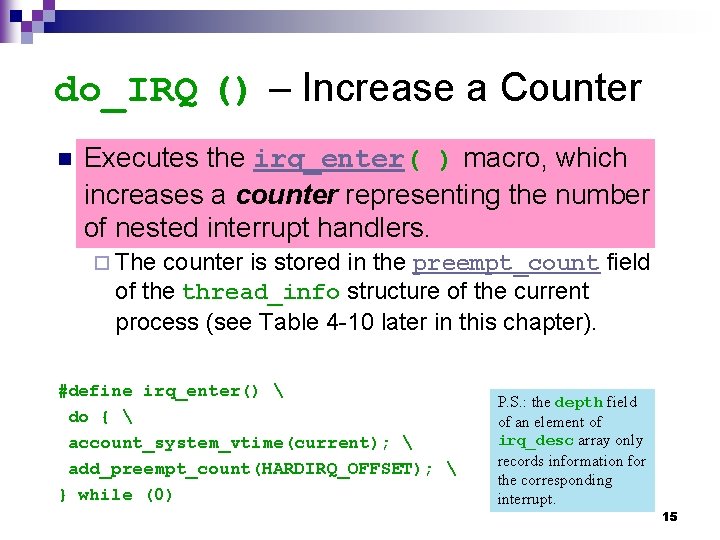

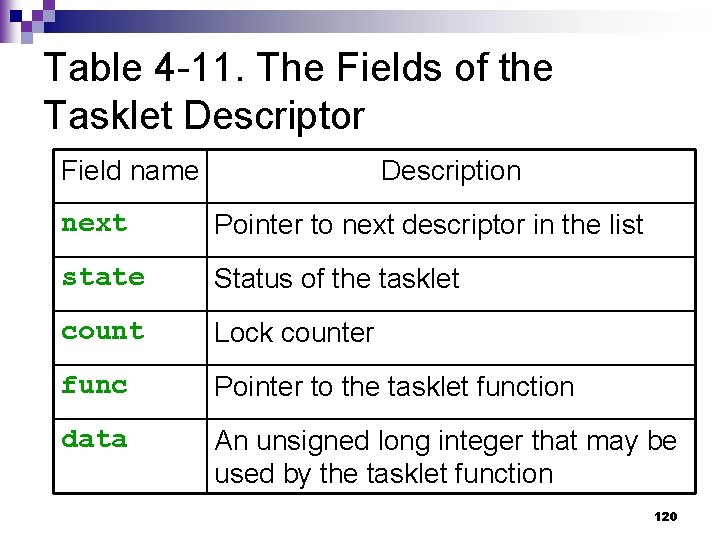

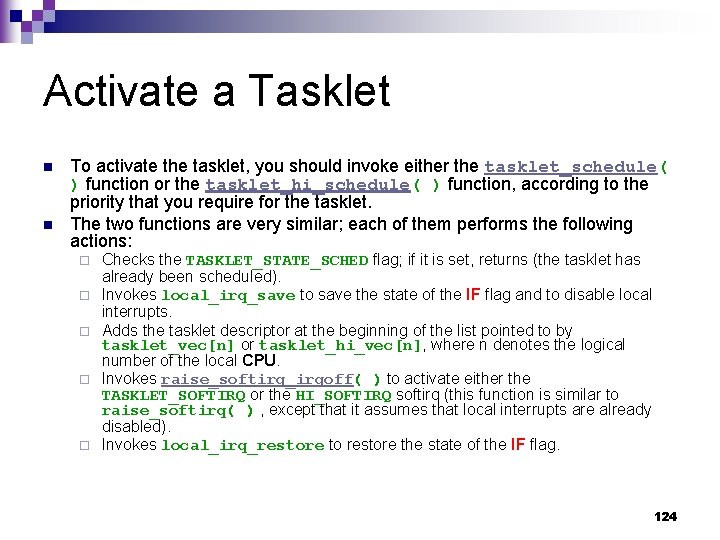

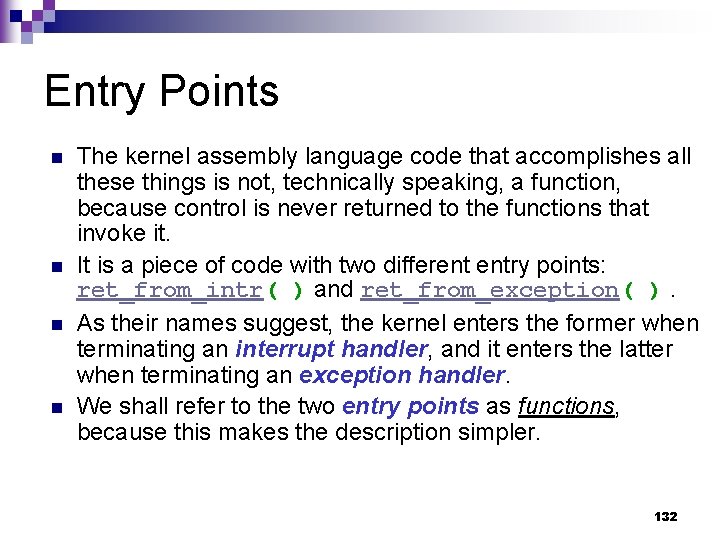

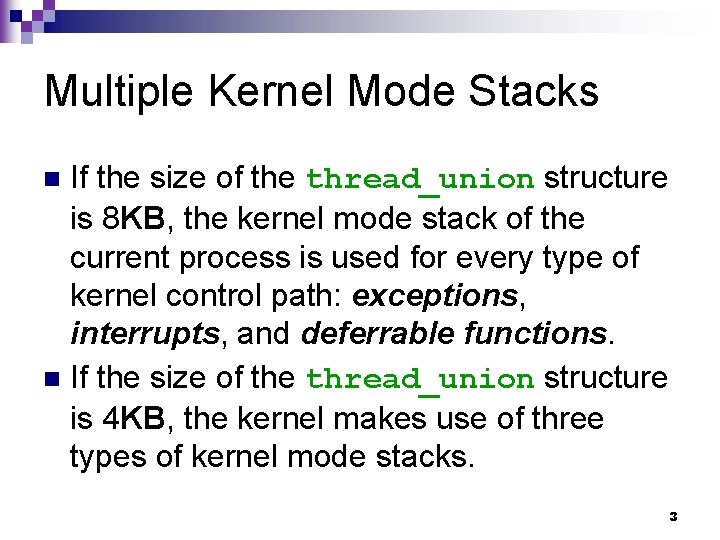

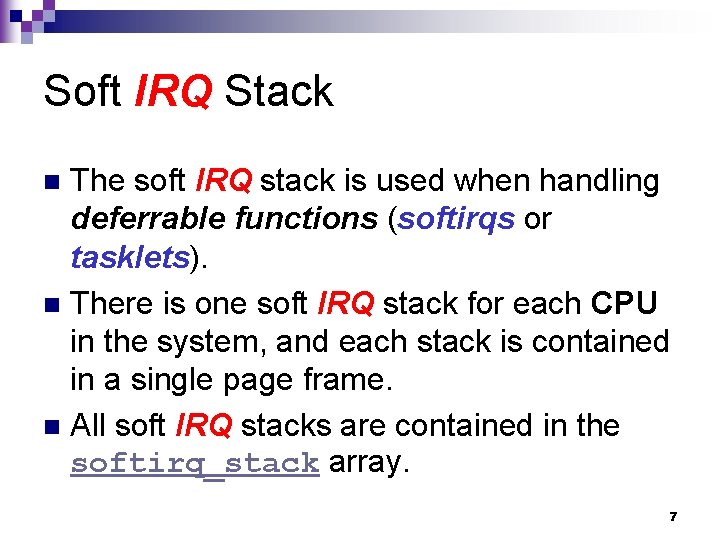

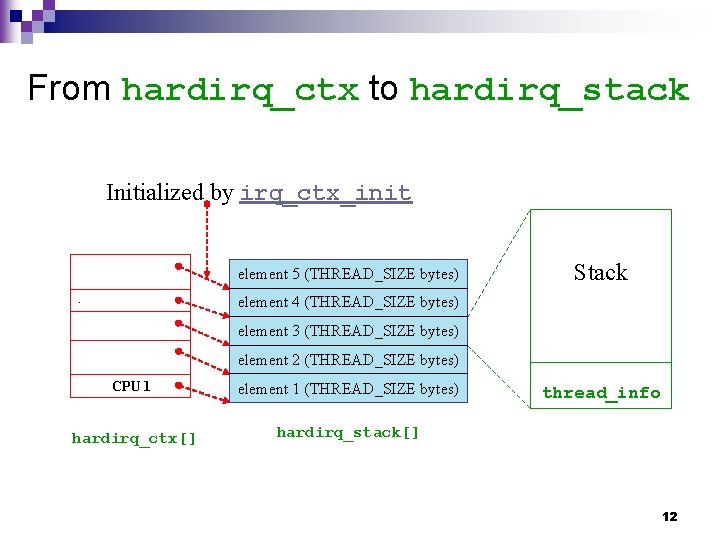

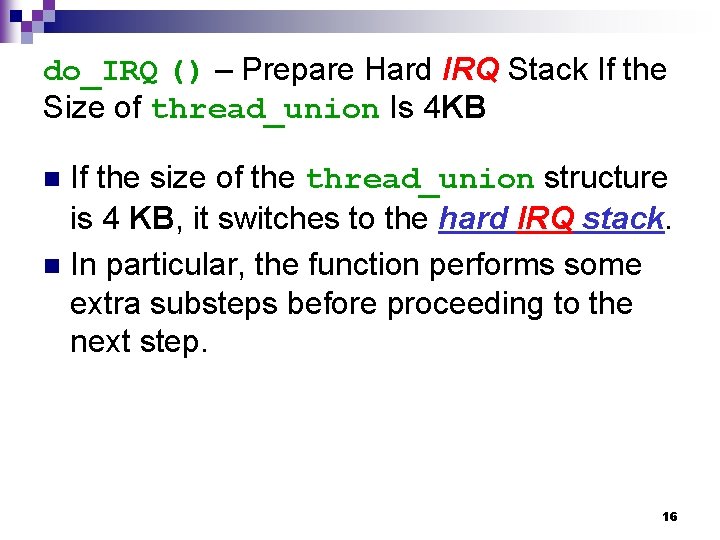

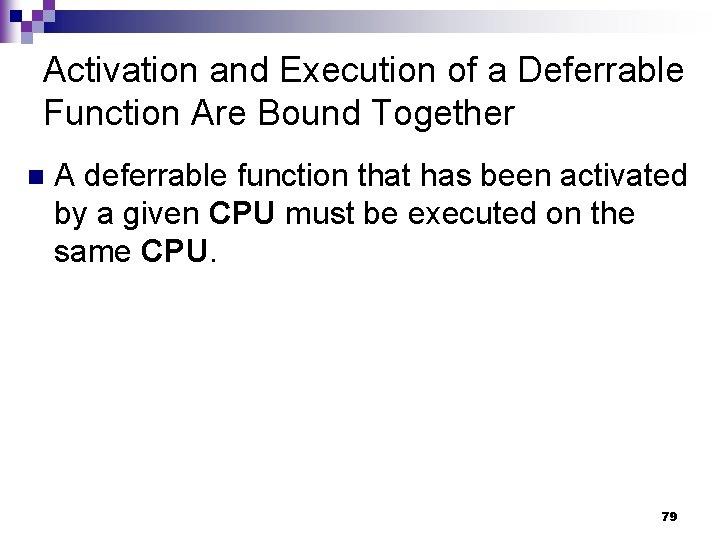

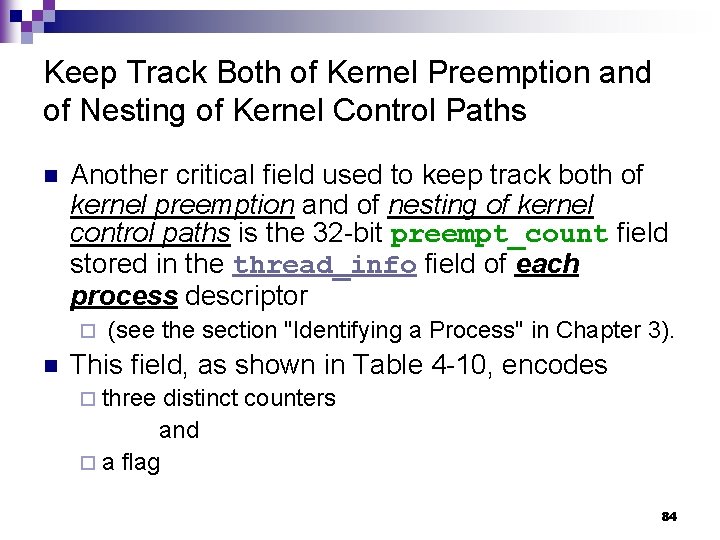

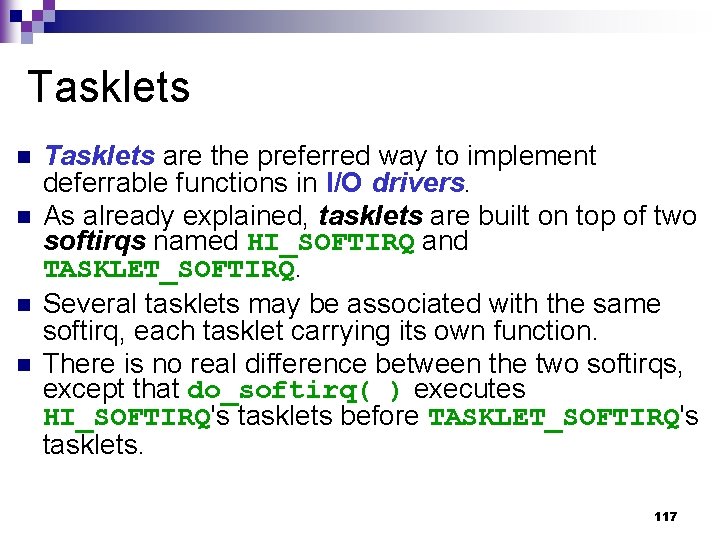

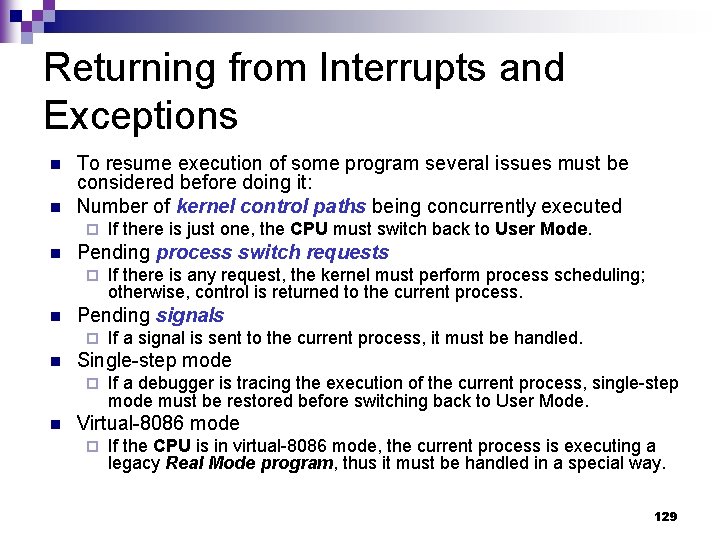

Hard IRQ Stack

- The hard IRQ stack is used when handling interrupts.
- There is one hard IRQ stack for each CPU in the system, and each stack is contained in a single page frame.
- In a multiprocessor system, all hard IRQ stacks are contained in the hardirq_stack array.

![Structure of Hard IRQ Stack](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-6.jpg)

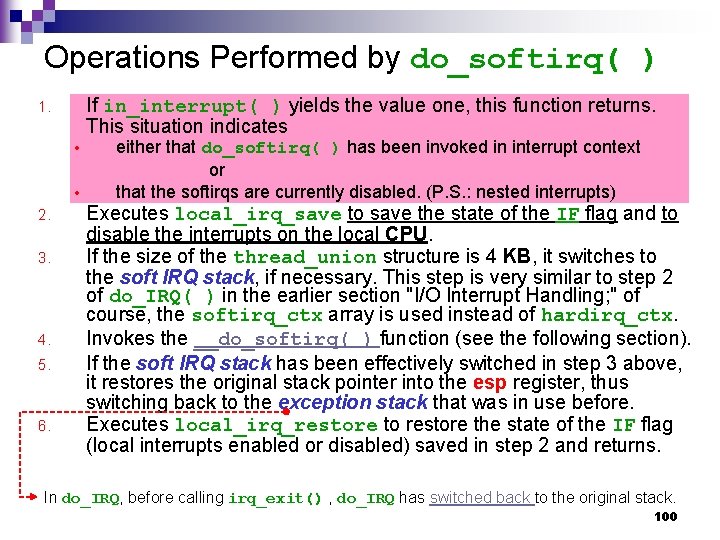

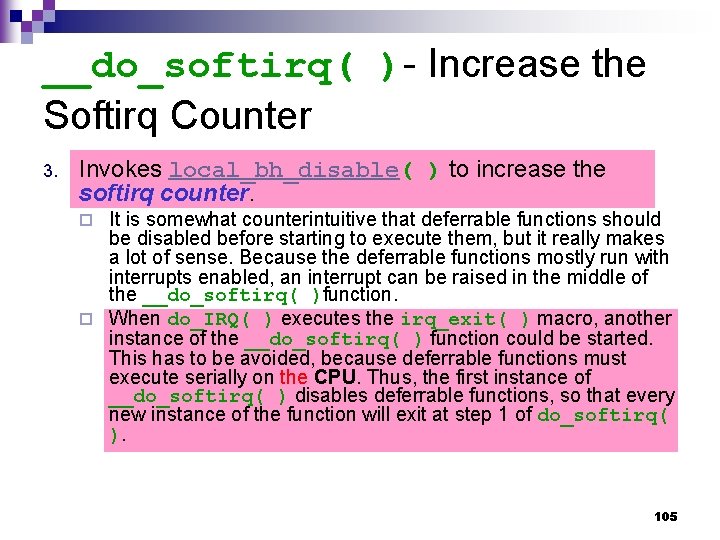

Structure of Hard IRQ Stack

    static char hardirq_stack[NR_CPUS * THREAD_SIZE]
            __attribute__((__aligned__(THREAD_SIZE)));

Figure: the hardirq_stack array drawn as consecutive elements (element 1 to element 5), each THREAD_SIZE bytes.

Each hardirq_stack array element is a union of type irq_ctx that spans a single page:

    union irq_ctx {
            struct thread_info tinfo;
            u32 stack[THREAD_SIZE/sizeof(u32)];
    };

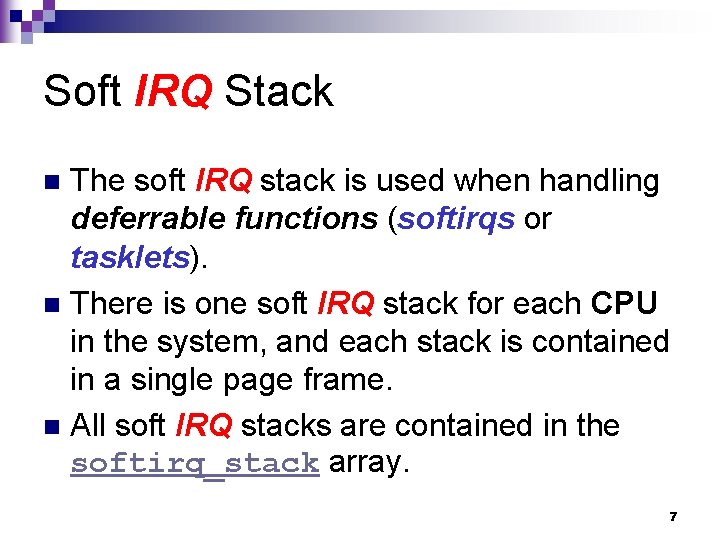

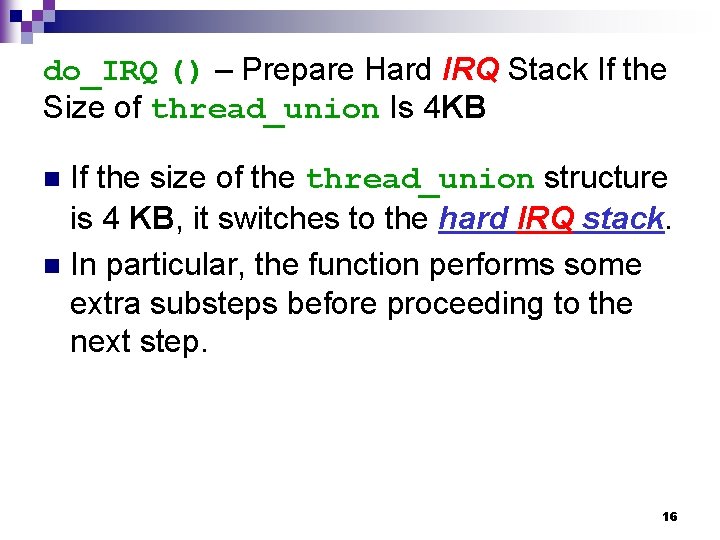

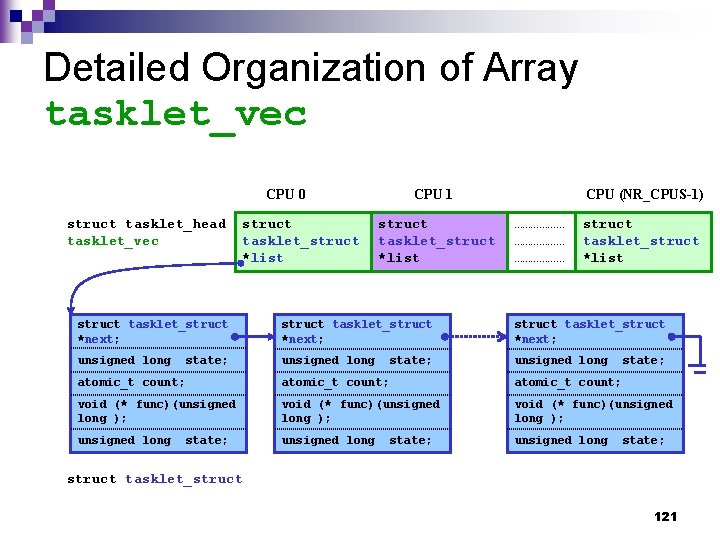

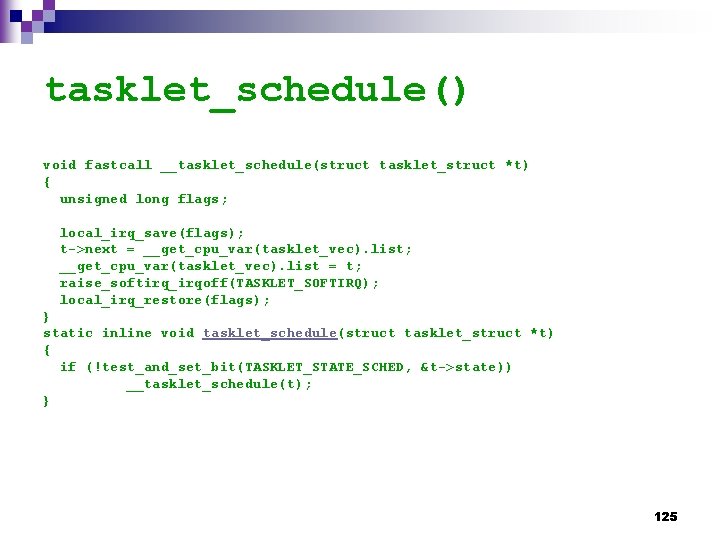

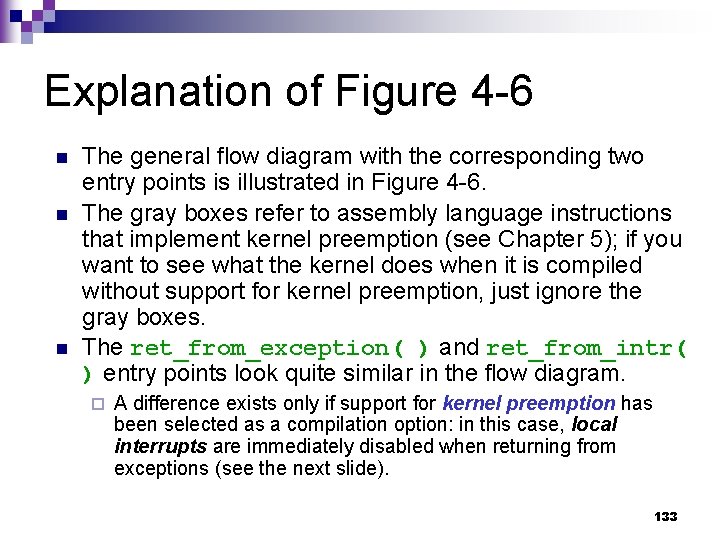

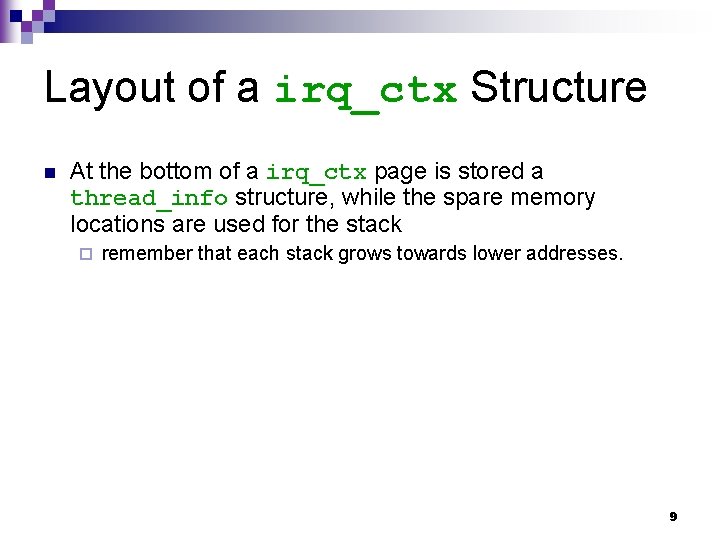

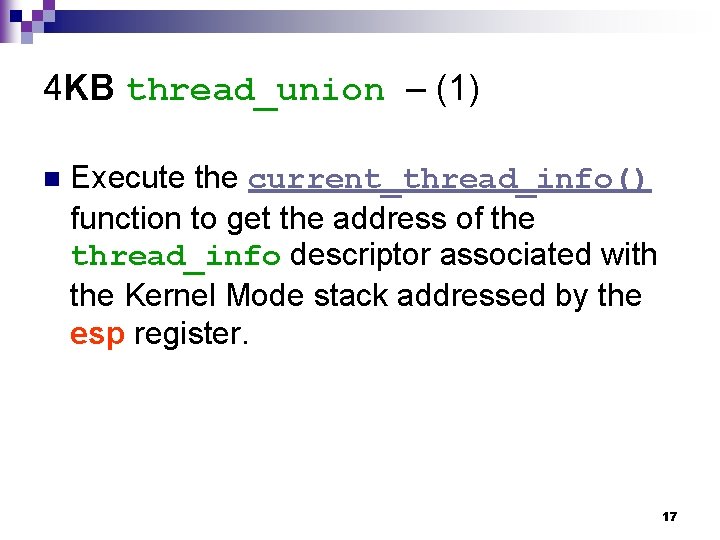

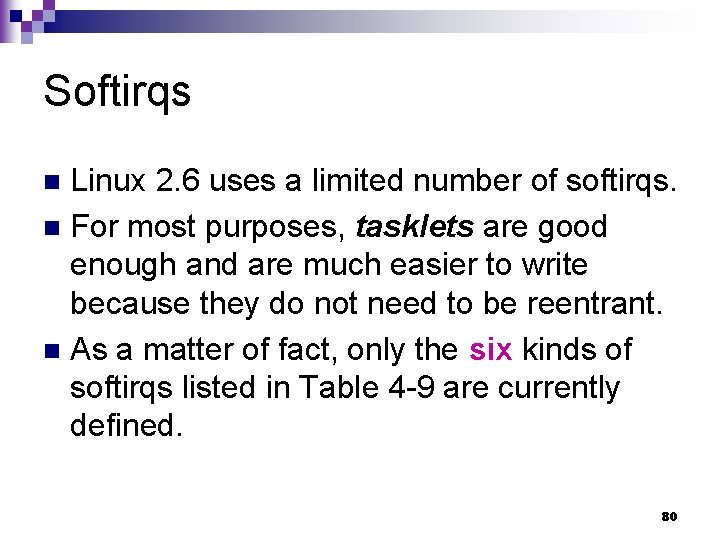

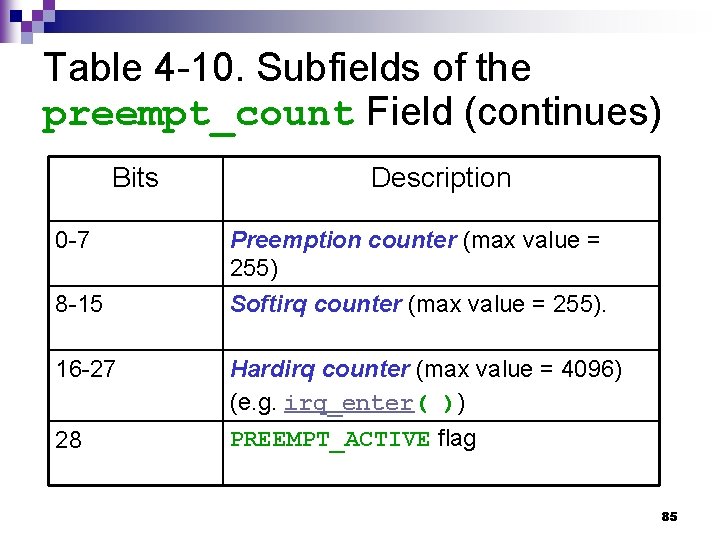

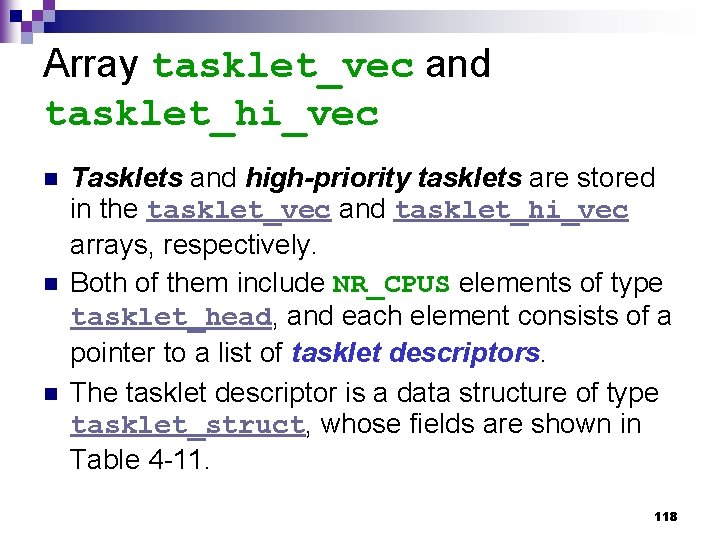

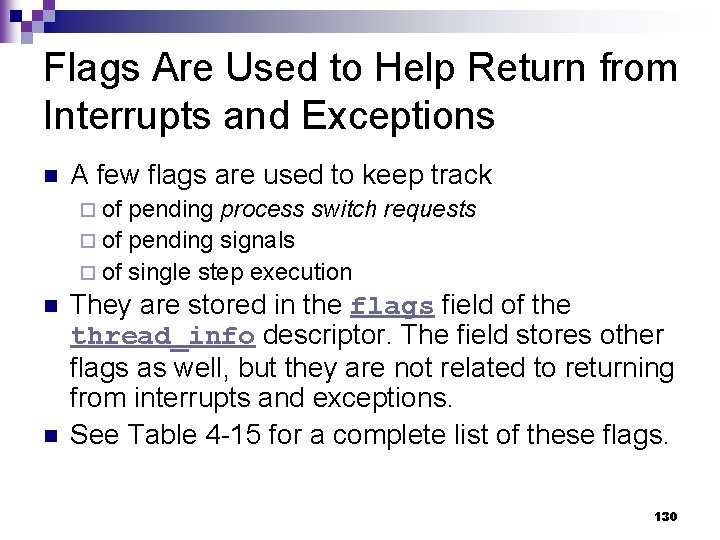

Soft IRQ Stack

- The soft IRQ stack is used when handling deferrable functions (softirqs or tasklets).
- There is one soft IRQ stack for each CPU in the system, and each stack is contained in a single page frame.
- All soft IRQ stacks are contained in the softirq_stack array.

![Structure of Soft IRQ Stack](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-8.jpg)

Structure of Soft IRQ Stack

    static char softirq_stack[NR_CPUS * THREAD_SIZE]
            __attribute__((__aligned__(THREAD_SIZE)));

Figure: the softirq_stack array drawn as consecutive elements (element 1 to element 5), each THREAD_SIZE bytes.

Each softirq_stack array element is a union of type irq_ctx that spans a single page:

    union irq_ctx {
            struct thread_info tinfo;
            u32 stack[THREAD_SIZE/sizeof(u32)];
    };

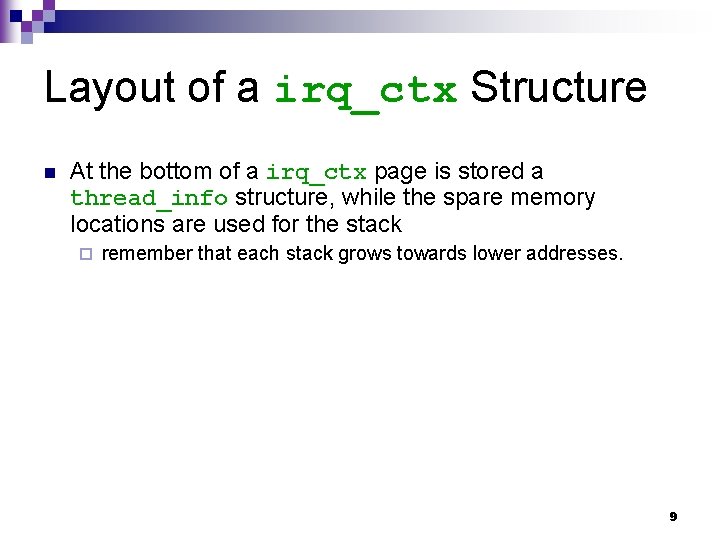

Layout of an irq_ctx Structure

- A thread_info structure is stored at the bottom of an irq_ctx page, while the spare memory locations are used for the stack.
  - Remember that each stack grows towards lower addresses.

Differences between Hard IRQ Stacks, Soft IRQ Stacks and Exception Stacks

Hard IRQ stacks and soft IRQ stacks are very similar to the exception stacks; the only difference is that in the former, the thread_info structure coupled with each stack is associated with a CPU rather than a process.

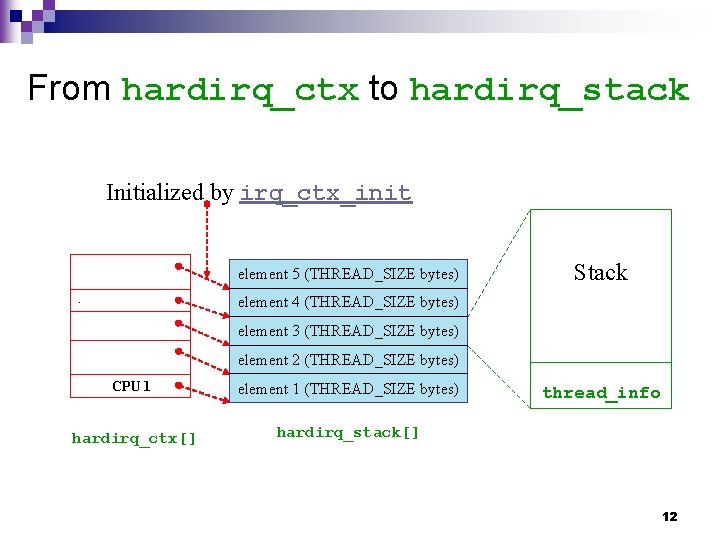

hardirq_ctx and softirq_ctx

The hardirq_ctx and softirq_ctx arrays allow the kernel to quickly determine the hard IRQ stack and soft IRQ stack of a given CPU, respectively: they contain pointers to the corresponding irq_ctx elements.

    static union irq_ctx *hardirq_ctx[NR_CPUS];
    static union irq_ctx *softirq_ctx[NR_CPUS];

From hardirq_ctx to hardirq_stack

Figure: each hardirq_ctx[] entry, initialized by irq_ctx_init(), points to one THREAD_SIZE-byte element of hardirq_stack[] (elements 1 to 5 are drawn, with the entry for CPU 1 as the example). The thread_info structure sits at the bottom of each element and the stack occupies the rest.
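The array layout and the pointer set-up can be imitated in user space. This is a sketch under stated assumptions, not kernel code: THREAD_SIZE is 4096, NR_CPUS is 4, thread_info is cut down to three fields, and `sketch_irq_ctx_init()` and `irq_stack_top()` are hypothetical helpers. The real irq_ctx_init() takes a CPU number and initializes more fields (for example preempt_count).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumptions for this sketch only. */
#define THREAD_SIZE 4096
#define NR_CPUS     4

/* Cut-down stand-in for the kernel's struct thread_info. */
struct thread_info {
    void *task;          /* process descriptor of the interrupted process */
    int   cpu;           /* CPU that owns this stack */
    void *previous_esp;  /* esp before switching to this stack */
};

/* thread_info occupies the lowest addresses; the stack grows down toward it. */
union irq_ctx {
    struct thread_info tinfo;
    uint32_t stack[THREAD_SIZE / sizeof(uint32_t)];
};

/* One THREAD_SIZE-aligned element per CPU, as on the slide. */
static char hardirq_stack[NR_CPUS * THREAD_SIZE]
    __attribute__((__aligned__(THREAD_SIZE)));

static union irq_ctx *hardirq_ctx[NR_CPUS];

/* Hypothetical helper, loosely modeled on irq_ctx_init(). */
static void sketch_irq_ctx_init(void)
{
    for (int cpu = 0; cpu < NR_CPUS; cpu++) {
        union irq_ctx *irqctx =
            (union irq_ctx *)&hardirq_stack[cpu * THREAD_SIZE];

        irqctx->tinfo.task = NULL;
        irqctx->tinfo.cpu = cpu;
        irqctx->tinfo.previous_esp = NULL;
        hardirq_ctx[cpu] = irqctx;
    }
}

/* Initial esp for a CPU's hard IRQ stack: the first byte past its element. */
static char *irq_stack_top(int cpu)
{
    return (char *)hardirq_ctx[cpu] + THREAD_SIZE;
}
```

Note that the top of CPU i's stack is exactly the base of CPU i+1's element, which is why each stack must never grow past its own thread_info.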

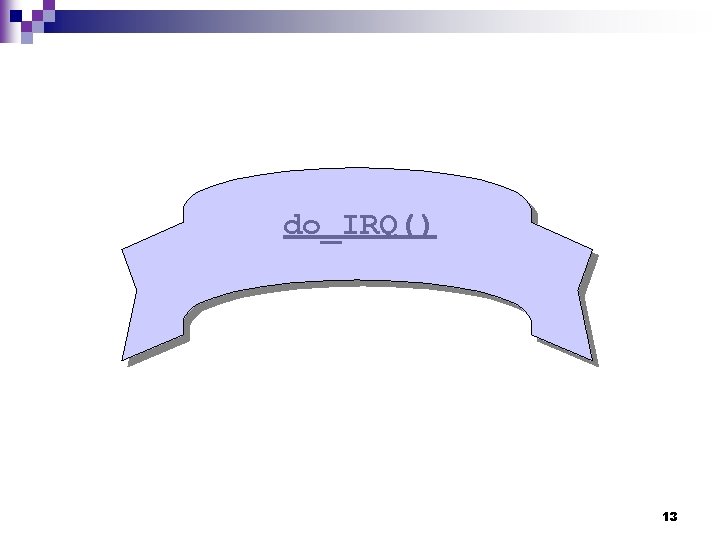

do_IRQ()

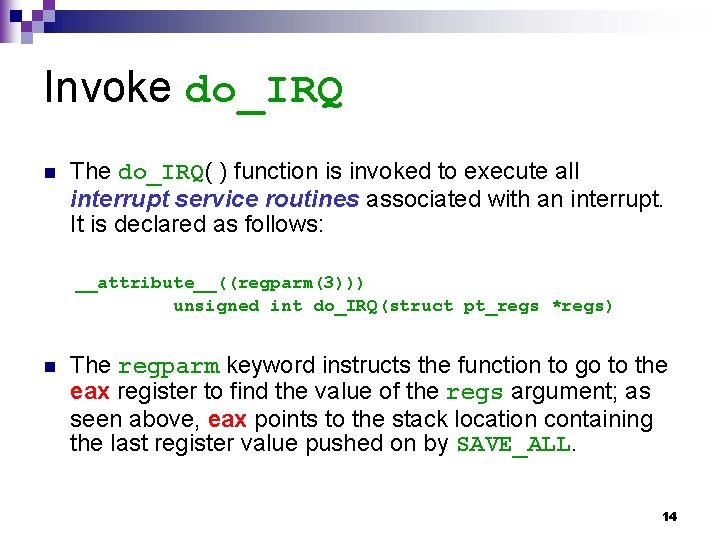

Invoke do_IRQ

The do_IRQ( ) function is invoked to execute all interrupt service routines associated with an interrupt. It is declared as follows:

    __attribute__((regparm(3))) unsigned int do_IRQ(struct pt_regs *regs)

The regparm keyword instructs the function to go to the eax register to find the value of the regs argument; as seen above, eax points to the stack location containing the last register value pushed on by SAVE_ALL.

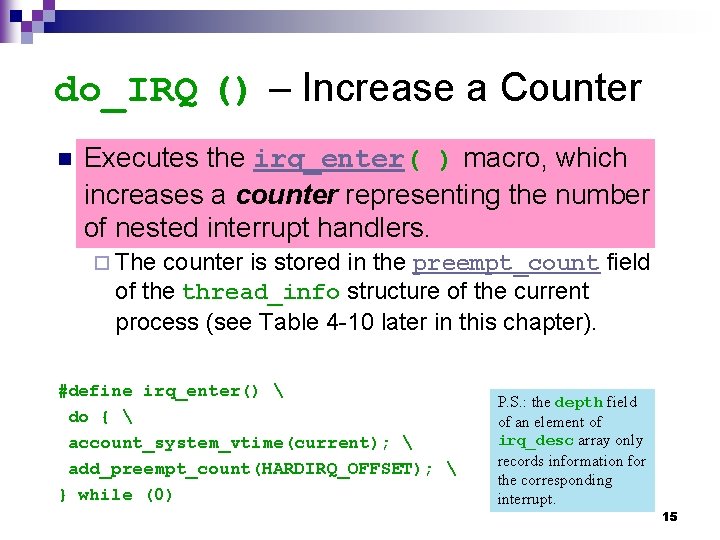

do_IRQ() – Increase a Counter

Executes the irq_enter( ) macro, which increases a counter representing the number of nested interrupt handlers. The counter is stored in the preempt_count field of the thread_info structure of the current process (see Table 4-10 later in this chapter).

    #define irq_enter()                             \
        do {                                        \
            account_system_vtime(current);          \
            add_preempt_count(HARDIRQ_OFFSET);      \
        } while (0)

P.S.: the depth field of an element of the irq_desc array only records information for the corresponding interrupt.

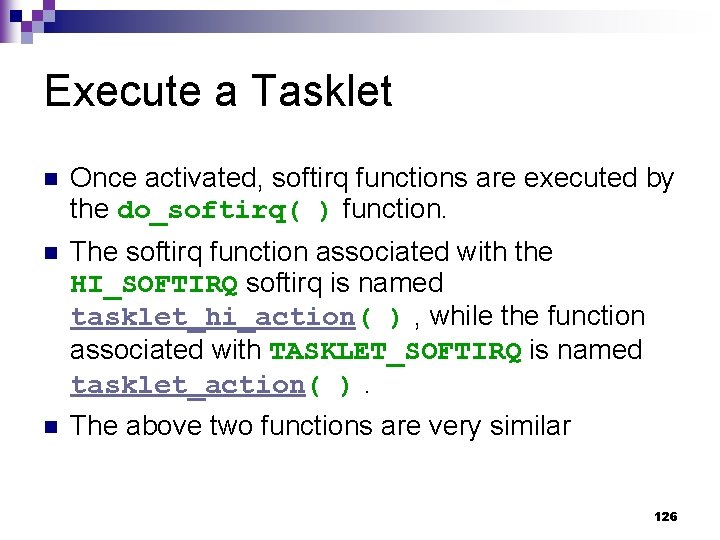

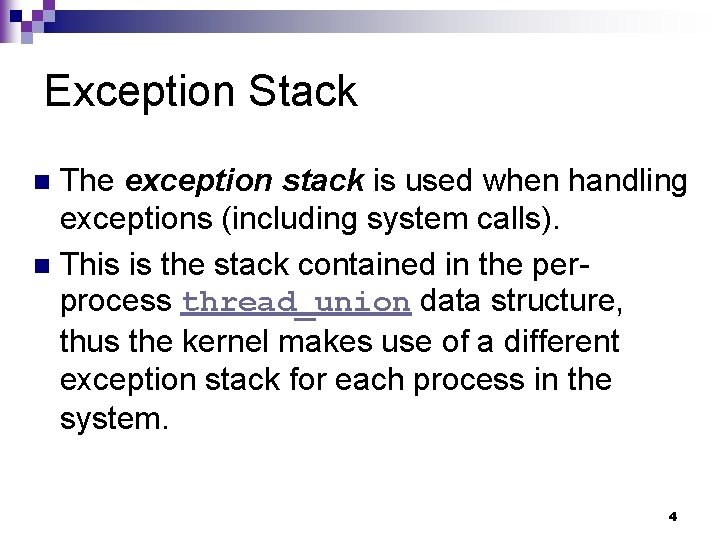

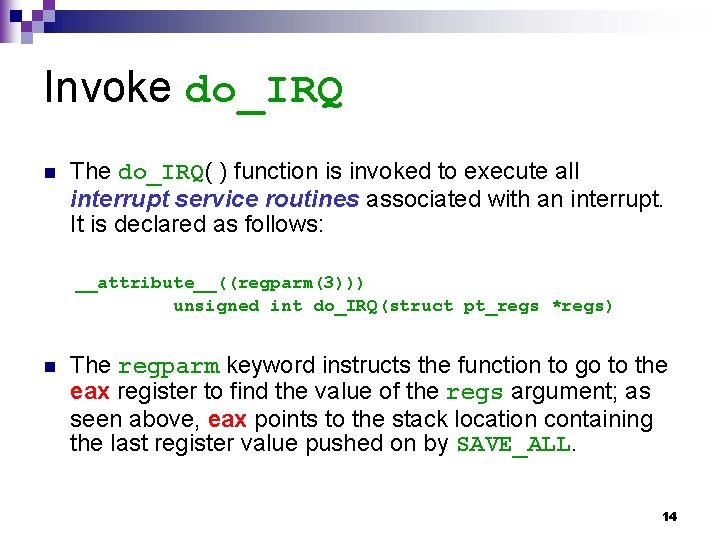

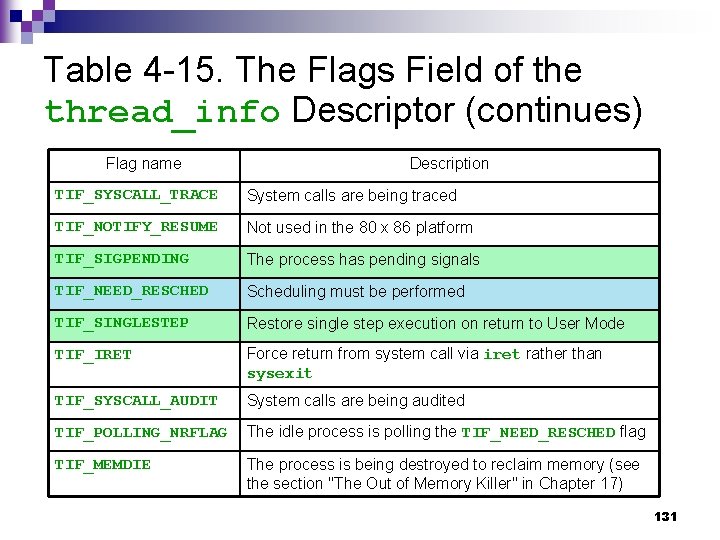

do_IRQ() – Prepare Hard IRQ Stack If the Size of thread_union Is 4 KB

- If the size of the thread_union structure is 4 KB, do_IRQ( ) switches to the hard IRQ stack.
- In particular, the function performs some extra substeps before proceeding to the next step.

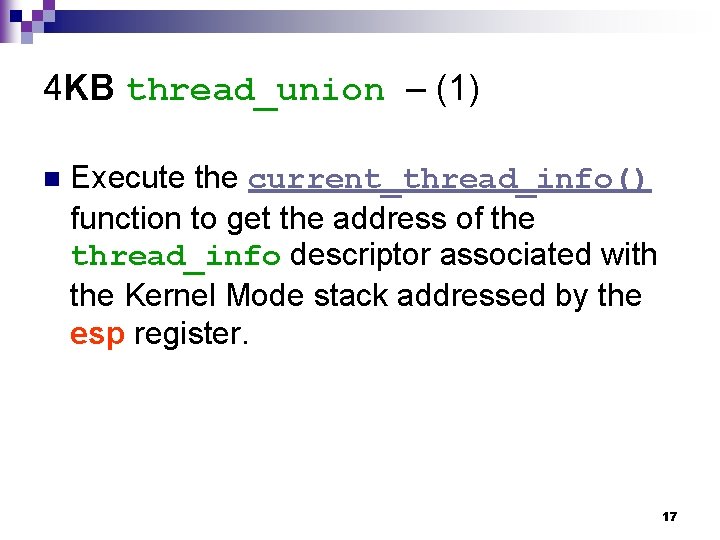

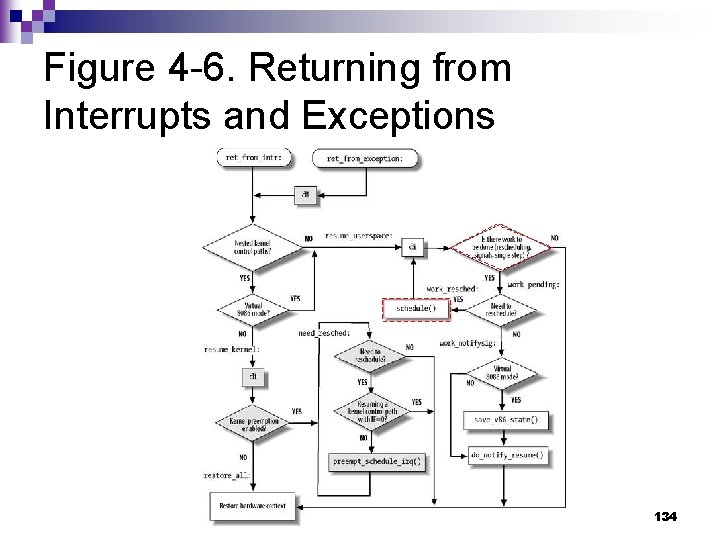

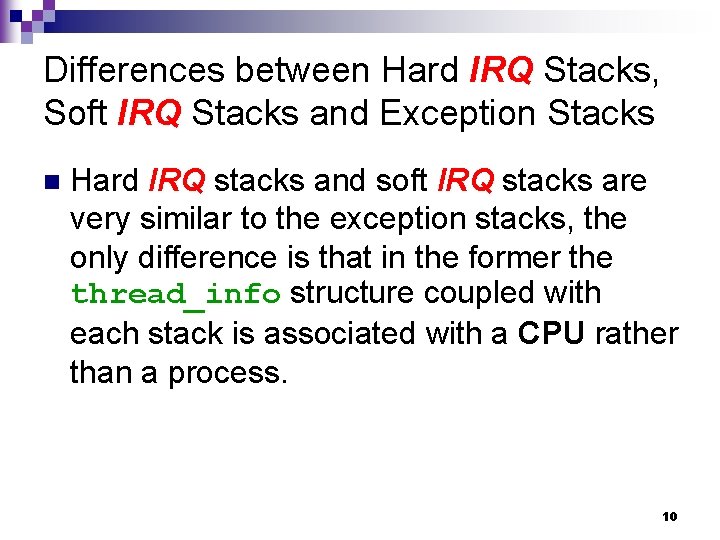

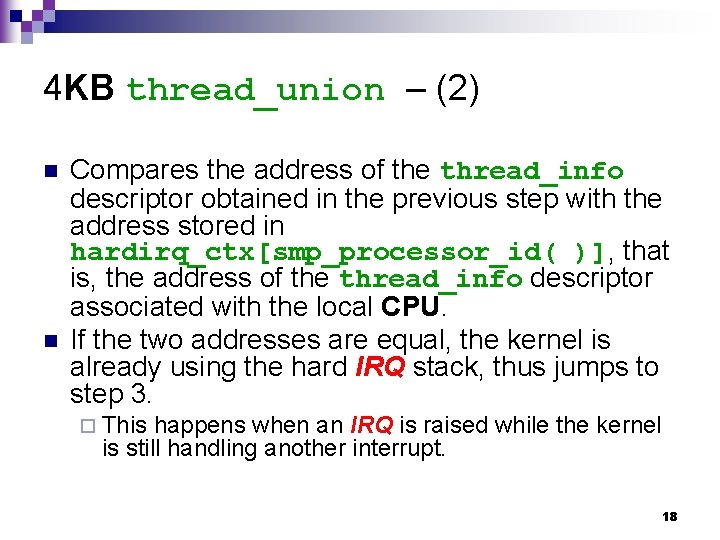

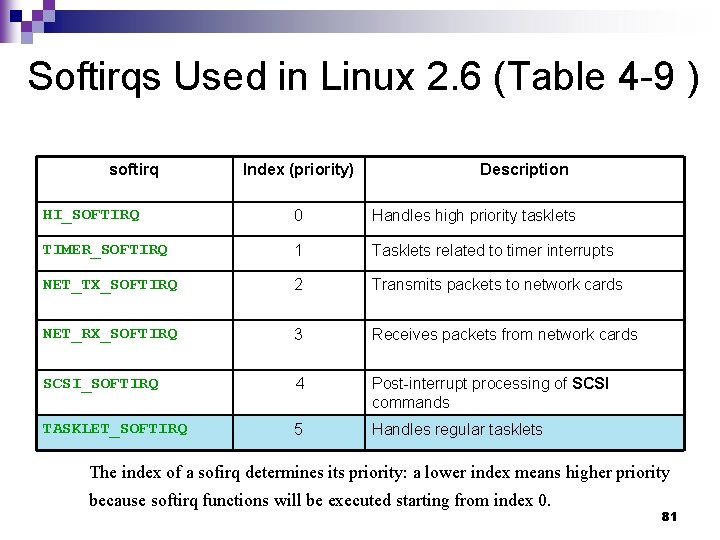

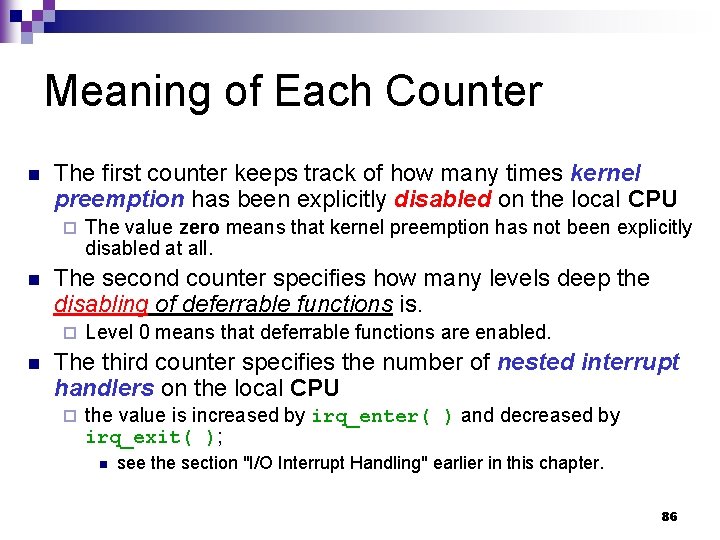

4 KB thread_union – (1)

Executes the current_thread_info() function to get the address of the thread_info descriptor associated with the Kernel Mode stack addressed by the esp register.
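Because every Kernel Mode stack is a THREAD_SIZE-aligned block with thread_info at its bottom, current_thread_info() reduces to clearing the low bits of esp. A user-space sketch assuming a 4 KB thread_union; `sketch_thread_info_from_sp()` is a hypothetical name, and the kernel performs the same masking on esp in inline assembly rather than on an integer argument.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 4096   /* assumption: 4 KB thread_union */

/* Cut-down stand-in; only its address matters here. */
struct thread_info {
    int cpu;
};

/* Hypothetical helper: round a stack address down to the start of its
 * THREAD_SIZE-aligned block, where thread_info lives. */
static struct thread_info *sketch_thread_info_from_sp(uintptr_t sp)
{
    return (struct thread_info *)(sp & ~((uintptr_t)THREAD_SIZE - 1));
}
```

Any address inside the block, near the top where the stack starts or just above thread_info, maps back to the same descriptor.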

4 KB thread_union – (2)

- Compares the address of the thread_info descriptor obtained in the previous step with the address stored in hardirq_ctx[smp_processor_id( )], that is, the address of the thread_info descriptor associated with the local CPU.
- If the two addresses are equal, the kernel is already using the hard IRQ stack, so it skips the remaining substeps and goes directly to invoking __do_IRQ( ).
  - This happens when an IRQ is raised while the kernel is still handling another interrupt.

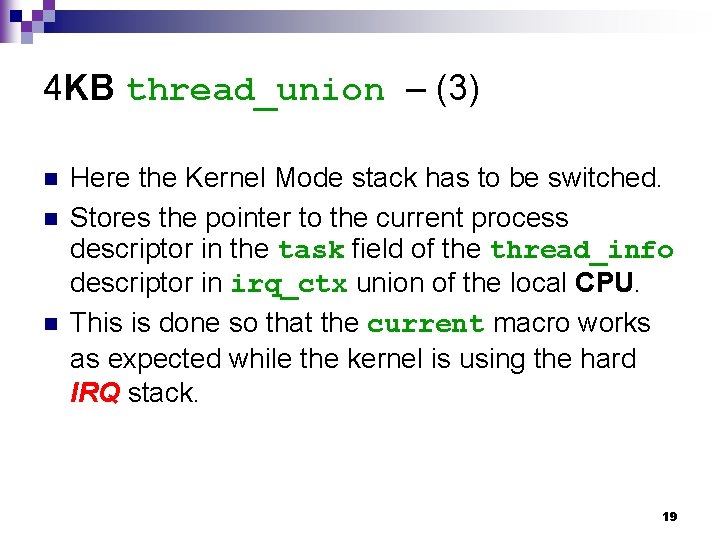

4 KB thread_union – (3)

- Here the Kernel Mode stack has to be switched.
- Stores the pointer to the current process descriptor in the task field of the thread_info descriptor in the irq_ctx union of the local CPU.
- This is done so that the current macro works as expected while the kernel is using the hard IRQ stack.

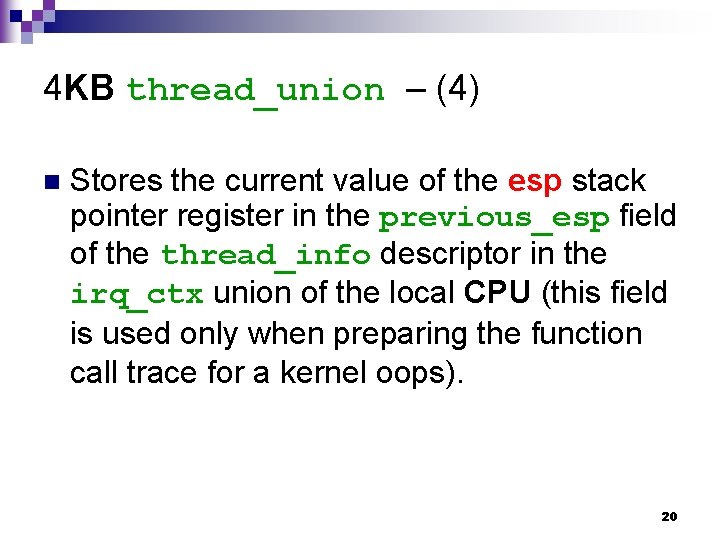

4 KB thread_union – (4)

Stores the current value of the esp stack pointer register in the previous_esp field of the thread_info descriptor in the irq_ctx union of the local CPU (this field is used only when preparing the function call trace for a kernel oops).

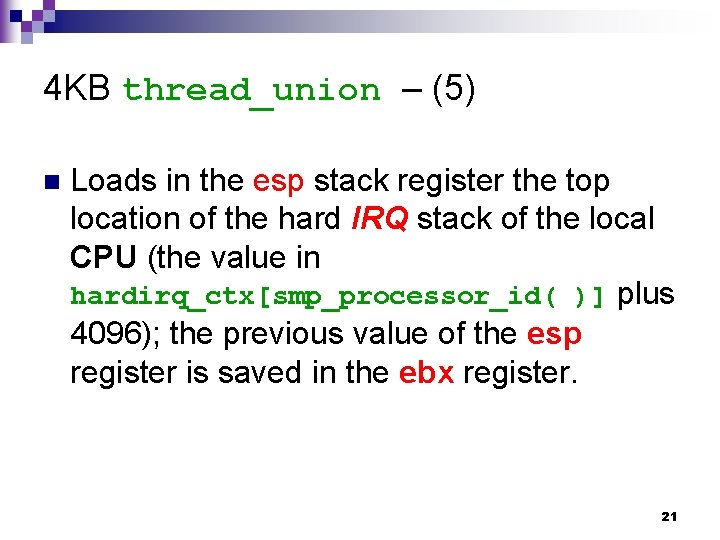

4 KB thread_union – (5)

Loads into the esp register the top location of the hard IRQ stack of the local CPU (the value in hardirq_ctx[smp_processor_id( )] plus 4096); the previous value of the esp register is saved in the ebx register.
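Substeps (1) to (5) can be condensed into one function. The sketch below is hypothetical: it works on integers instead of the real esp and ebx registers, assumes 4 KB stacks, and uses a two-field thread_info. It returns the stack pointer __do_IRQ( ) would run on and stores the value to restore afterwards in `*saved_esp`, which plays the role of ebx.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 4096   /* assumption: 4 KB stacks */

/* Cut-down thread_info: only the fields touched by substeps (3) and (4). */
struct thread_info {
    void *task;
    uintptr_t previous_esp;
};

/* Used here both for the per-process stack and for the hard IRQ stack. */
union irq_ctx {
    struct thread_info tinfo;
    unsigned char stack[THREAD_SIZE];
};

/* Hypothetical model of do_IRQ()'s stack selection. */
static uintptr_t sketch_pick_irq_stack(uintptr_t esp, union irq_ctx *irqctx,
                                       uintptr_t *saved_esp)
{
    /* (1) current_thread_info(): round esp down to a THREAD_SIZE boundary. */
    struct thread_info *curctx =
        (struct thread_info *)(esp & ~((uintptr_t)THREAD_SIZE - 1));

    *saved_esp = esp;                 /* "ebx": restored after __do_IRQ() */

    /* (2) Already on this CPU's hard IRQ stack (nested IRQ): no switch. */
    if (curctx == &irqctx->tinfo)
        return esp;

    /* (3) Keep `current` working on the new stack. */
    irqctx->tinfo.task = curctx->task;
    /* (4) Remember the old esp for oops call traces. */
    irqctx->tinfo.previous_esp = esp;
    /* (5) New esp: top of the hard IRQ stack. */
    return (uintptr_t)irqctx + THREAD_SIZE;
}
```

A second, nested call made with an esp inside the hard IRQ stack returns that esp unchanged, which corresponds to the "already using the hard IRQ stack" case in substep (2).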

![Per-CPU hard IRQ stack and per-process Kernel Mode exception stack](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-22.jpg)

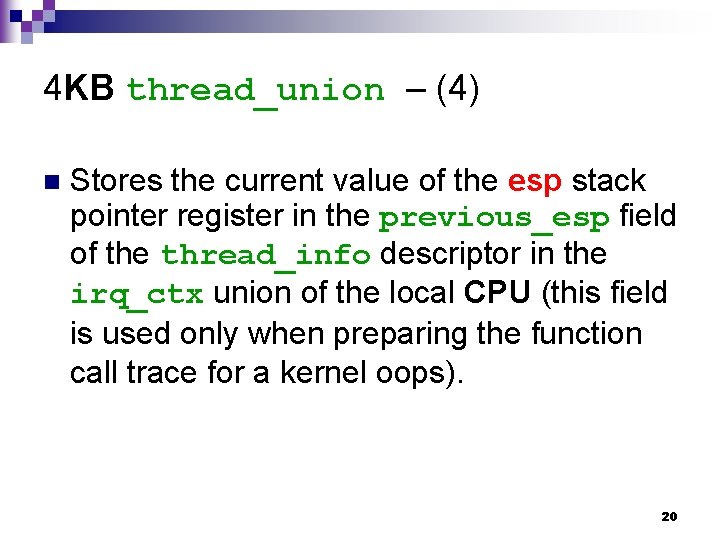

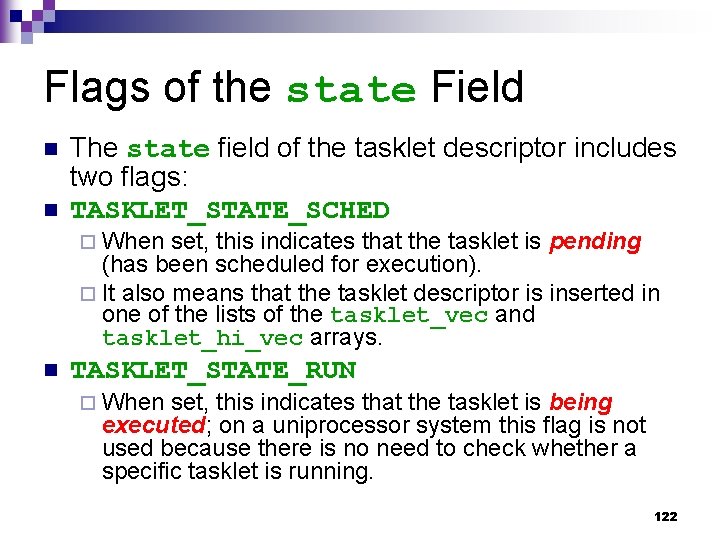

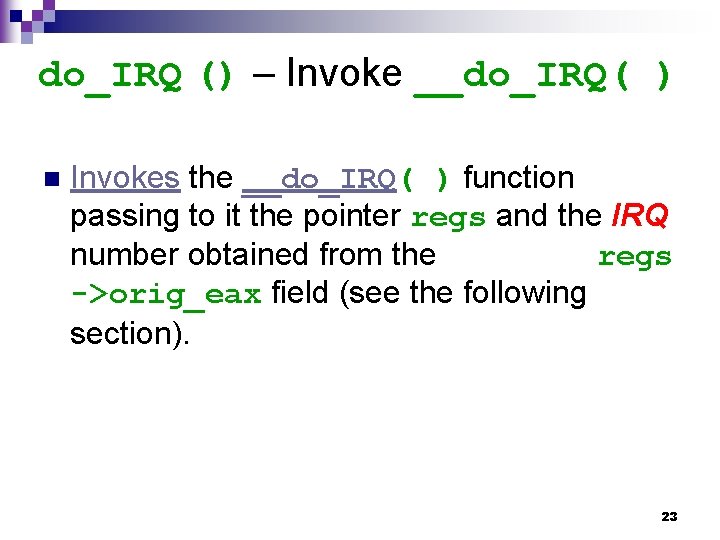

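Figure: the per-process Kernel Mode exception stack (saved ss, esp, eflags, cs, eip, $n-256, es, ds, eax, ebp, edi, esi, edx, ecx, ebx, with thread_info at its bottom and the process descriptor's thread.esp, thread.esp0, and thread.eip fields) next to the per-CPU hard IRQ stack hardirq_stack[i], whose thread_info is pointed to by hardirq_ctx[i] and holds the task and previous_esp fields; ebx keeps the old %esp.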

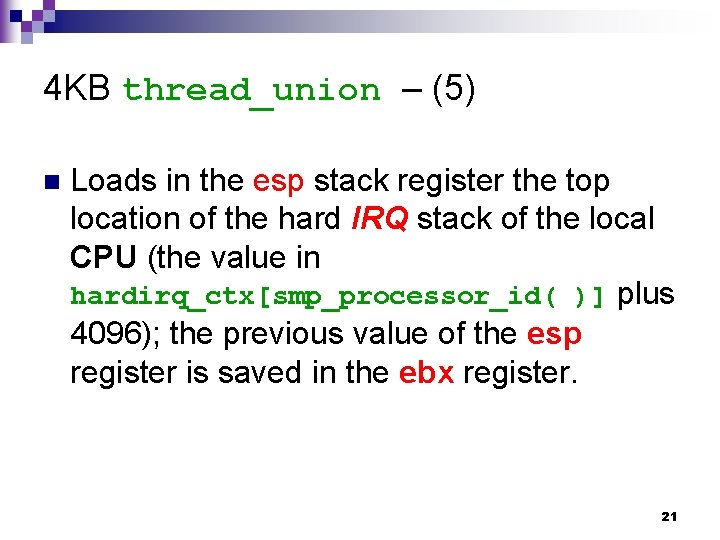

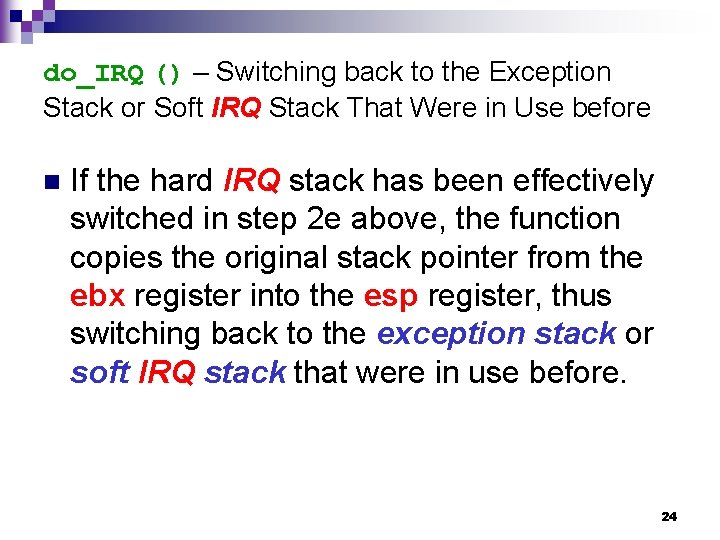

do_IRQ() – Invoke __do_IRQ( )

Invokes the __do_IRQ( ) function, passing to it the pointer regs and the IRQ number obtained from the regs->orig_eax field (see the following section).
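The IRQ number reaches do_IRQ( ) encoded in orig_eax: the entry stub for IRQ n pushes the value n-256. The kernel represents IRQs with negative numbers because positive values identify system calls, and do_IRQ( ) recovers the number with `regs->orig_eax & 0xff`. A minimal sketch of that arithmetic; both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* What the entry stub for IRQ n stores in orig_eax: n - 256, always
 * negative for n < 256, so it cannot be mistaken for a syscall number. */
static int32_t sketch_encode_orig_eax(unsigned int irq)
{
    return (int32_t)irq - 256;
}

/* What do_IRQ() does: keep the low 8 bits (the conversion to unsigned is
 * well defined and yields the two's-complement bit pattern). */
static unsigned int sketch_irq_from_orig_eax(int32_t orig_eax)
{
    return (uint32_t)orig_eax & 0xffu;
}
```

For IRQ 14, for instance, the stub stores -242 (0xffffff0e), and masking with 0xff gives back 14.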

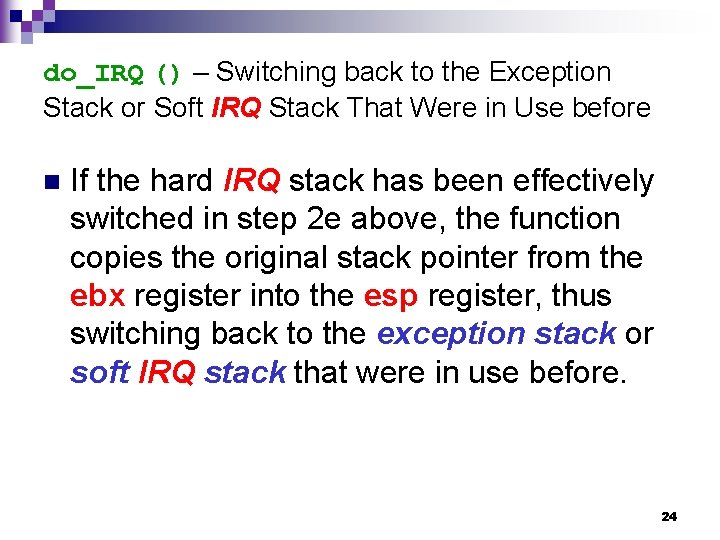

do_IRQ() – Switching Back to the Exception Stack or Soft IRQ Stack That Was in Use Before

If the hard IRQ stack has been effectively switched in substep (5) above, the function copies the original stack pointer from the ebx register into the esp register, thus switching back to the exception stack or soft IRQ stack that was in use before.

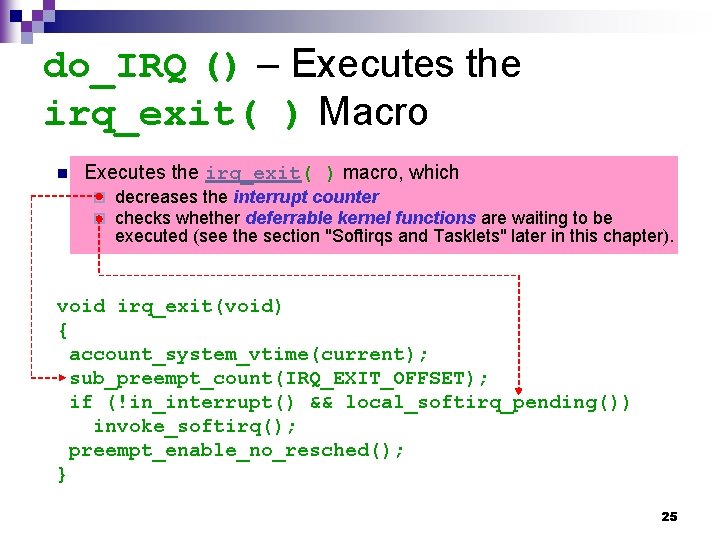

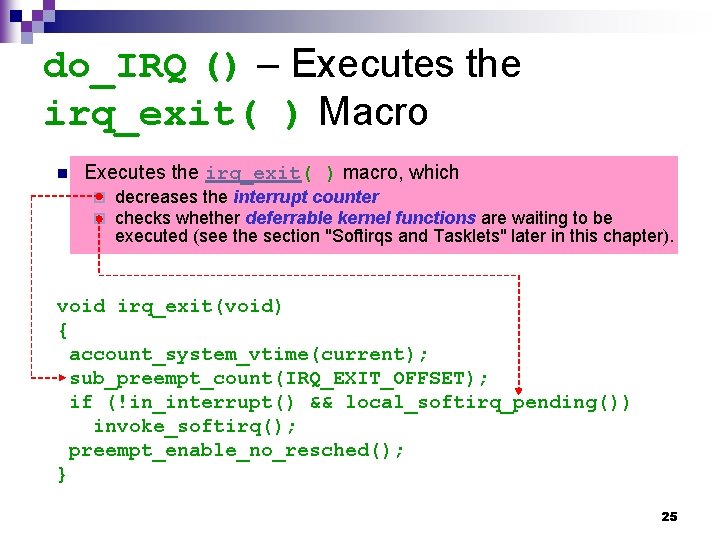

do_IRQ() – Execute irq_exit( )

Executes irq_exit( ), which decreases the interrupt counter and checks whether deferrable kernel functions are waiting to be executed (see the section "Softirqs and Tasklets" later in this chapter).

    void irq_exit(void)
    {
            account_system_vtime(current);
            sub_preempt_count(IRQ_EXIT_OFFSET);
            if (!in_interrupt() && local_softirq_pending())
                    invoke_softirq();
            preempt_enable_no_resched();
    }
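The decision inside irq_exit( ) can be modeled with a plain counter. Assumptions, also labeled in the code: the default bit layout from the in_interrupt() slide, a non-preemptible kernel (where IRQ_EXIT_OFFSET equals HARDIRQ_OFFSET), and a hypothetical `struct sketch_cpu` standing in for thread_info and the per-CPU pending mask. Clearing the pending mask replaces the real invoke_softirq( ).

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed default layout; IRQ_EXIT_OFFSET as in a non-preemptible kernel. */
#define SOFTIRQ_OFFSET  (1u << 8)
#define HARDIRQ_OFFSET  (1u << 16)
#define SOFTIRQ_MASK    0x0000ff00u
#define HARDIRQ_MASK    0x0fff0000u
#define IRQ_EXIT_OFFSET HARDIRQ_OFFSET

/* Hypothetical per-CPU state for the sketch. */
struct sketch_cpu {
    unsigned int preempt_count;
    unsigned int softirq_pending;  /* bitmask of raised softirqs */
    int softirq_runs;              /* times "invoke_softirq" ran */
};

static bool sketch_in_interrupt(const struct sketch_cpu *c)
{
    return (c->preempt_count & (HARDIRQ_MASK | SOFTIRQ_MASK)) != 0;
}

static void sketch_irq_enter(struct sketch_cpu *c)
{
    c->preempt_count += HARDIRQ_OFFSET;   /* one more nested handler */
}

static void sketch_irq_exit(struct sketch_cpu *c)
{
    c->preempt_count -= IRQ_EXIT_OFFSET;
    /* Only the outermost handler, outside softirq context, runs softirqs. */
    if (!sketch_in_interrupt(c) && c->softirq_pending) {
        c->softirq_runs++;
        c->softirq_pending = 0;           /* stand-in for invoke_softirq() */
    }
}
```

With two nested interrupts, softirqs raised inside them run only when the outer handler exits.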

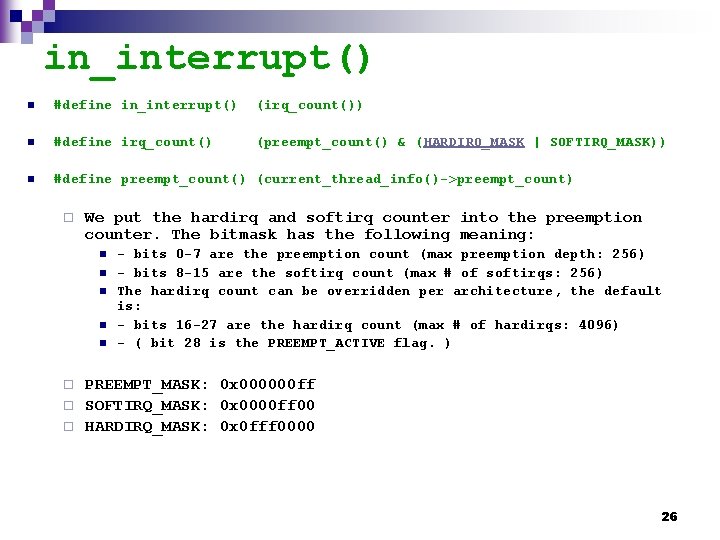

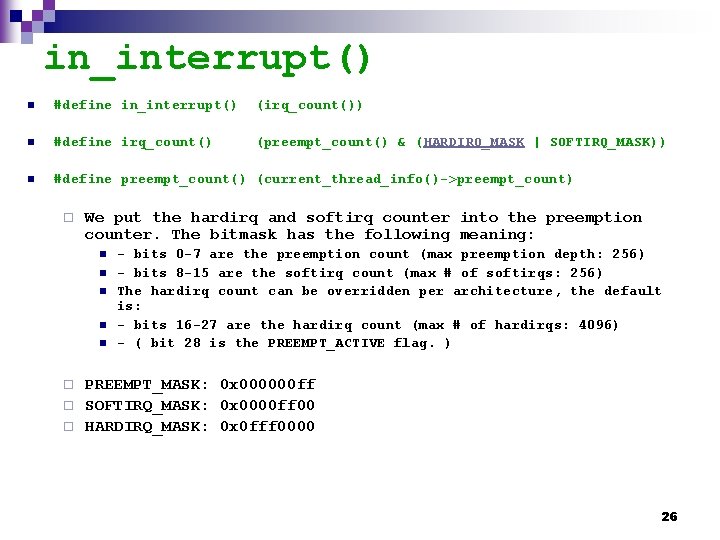

in_interrupt()

    #define in_interrupt()   (irq_count())
    #define irq_count()      (preempt_count() & (HARDIRQ_MASK | SOFTIRQ_MASK))
    #define preempt_count()  (current_thread_info()->preempt_count)

We put the hardirq and softirq counter into the preemption counter. The bitmask has the following meaning:

- bits 0-7 are the preemption count (max preemption depth: 256)
- bits 8-15 are the softirq count (max # of softirqs: 256)

The hardirq count can be overridden per architecture; the default is:

- bits 16-27 are the hardirq count (max # of hardirqs: 4096)
- (bit 28 is the PREEMPT_ACTIVE flag.)

The corresponding masks are:

- PREEMPT_MASK: 0x000000ff
- SOFTIRQ_MASK: 0x0000ff00
- HARDIRQ_MASK: 0x0fff0000
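The mask values follow from the bit widths. A sketch of the arithmetic, using the default widths 8, 8, and 12; the SKETCH_ prefix and the helper names are hypothetical, chosen so they do not clash with the kernel's own macros.

```c
#include <assert.h>

/* Default widths; the hardirq width may differ per architecture. */
#define PREEMPT_BITS 8
#define SOFTIRQ_BITS 8
#define HARDIRQ_BITS 12

#define PREEMPT_SHIFT 0
#define SOFTIRQ_SHIFT (PREEMPT_SHIFT + PREEMPT_BITS)
#define HARDIRQ_SHIFT (SOFTIRQ_SHIFT + SOFTIRQ_BITS)

/* n low-order one bits */
#define SKETCH_IRQ_MASK(n) ((1UL << (n)) - 1)

#define PREEMPT_MASK (SKETCH_IRQ_MASK(PREEMPT_BITS) << PREEMPT_SHIFT)
#define SOFTIRQ_MASK (SKETCH_IRQ_MASK(SOFTIRQ_BITS) << SOFTIRQ_SHIFT)
#define HARDIRQ_MASK (SKETCH_IRQ_MASK(HARDIRQ_BITS) << HARDIRQ_SHIFT)

/* Adding an OFFSET increments the corresponding field by one. */
#define PREEMPT_OFFSET (1UL << PREEMPT_SHIFT)
#define SOFTIRQ_OFFSET (1UL << SOFTIRQ_SHIFT)
#define HARDIRQ_OFFSET (1UL << HARDIRQ_SHIFT)

static unsigned long sketch_hardirq_depth(unsigned long pc)
{
    return (pc & HARDIRQ_MASK) >> HARDIRQ_SHIFT;
}

static unsigned long sketch_softirq_depth(unsigned long pc)
{
    return (pc & SOFTIRQ_MASK) >> SOFTIRQ_SHIFT;
}

/* Same test as in_interrupt(), applied to an explicit counter value. */
static int sketch_in_interrupt(unsigned long pc)
{
    return (pc & (HARDIRQ_MASK | SOFTIRQ_MASK)) != 0;
}
```

For example, two nested hard IRQs, one softirq level, and a preemption depth of 3 pack into 0x00020103.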

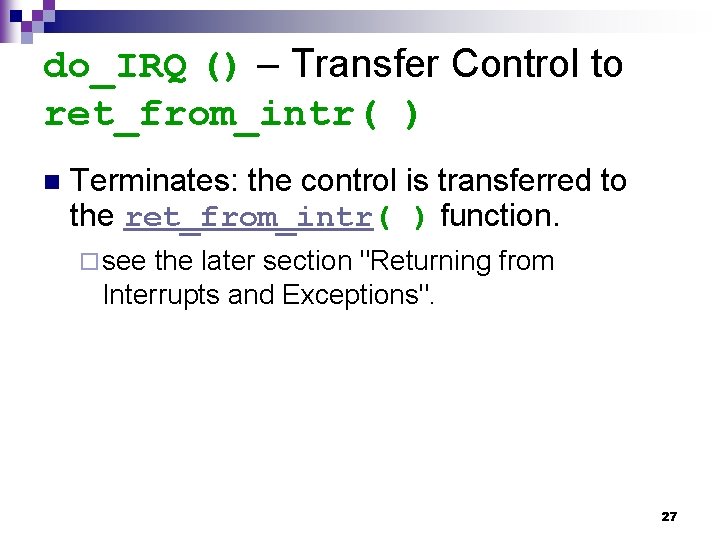

do_IRQ() – Transfer Control to ret_from_intr( )

Terminates: control is transferred to the ret_from_intr( ) function (see the later section "Returning from Interrupts and Exceptions").

__do_IRQ()

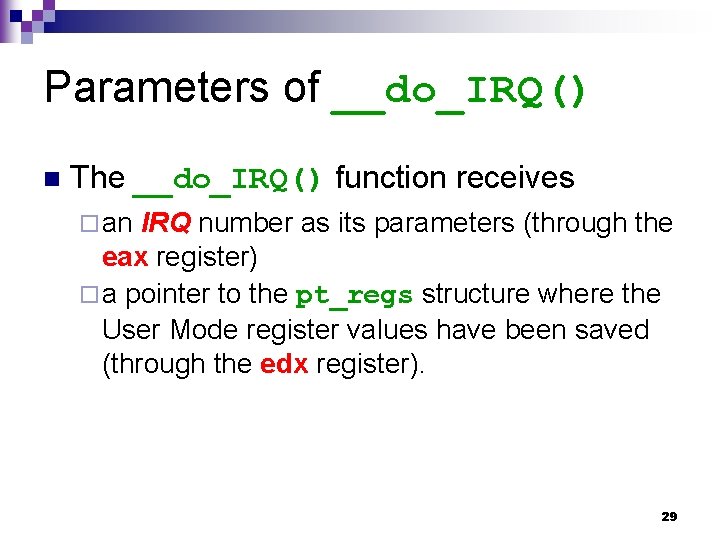

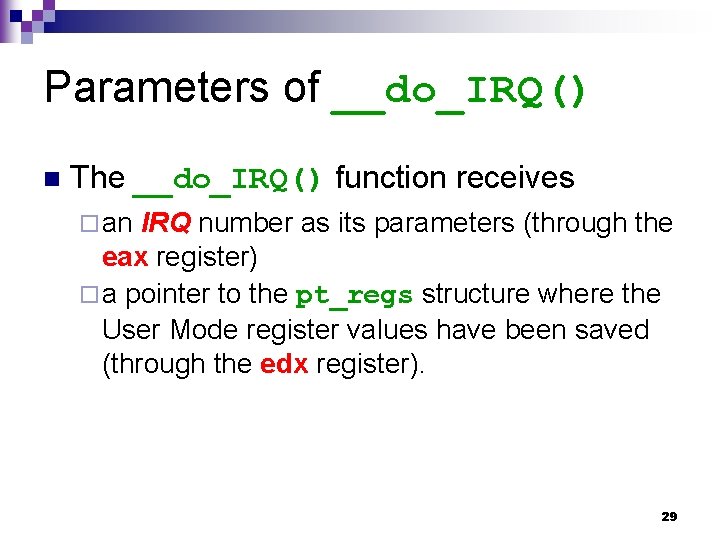

Parameters of __do_IRQ()

The __do_IRQ() function receives:

- an IRQ number as a parameter (through the eax register);
- a pointer to the pt_regs structure where the User Mode register values have been saved (through the edx register).

![Equivalent Code of __do_IRQ()](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-30.jpg)

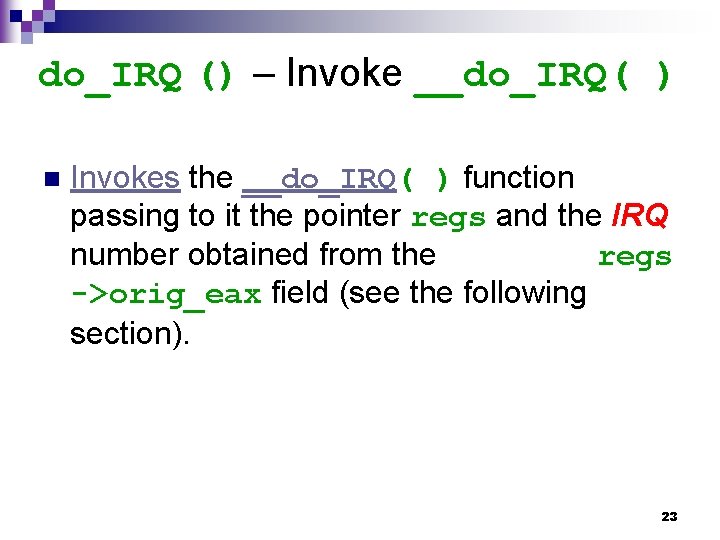

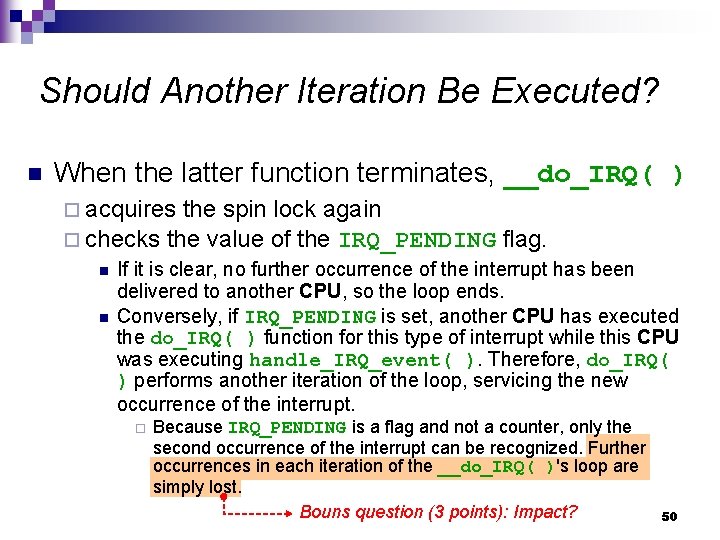

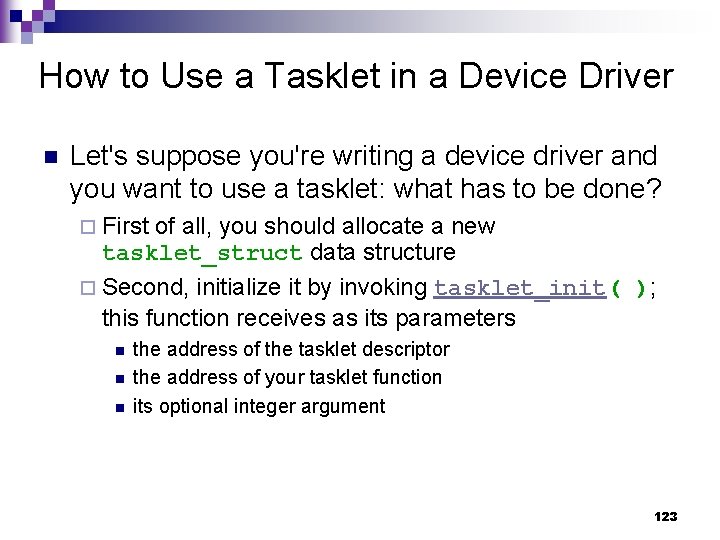

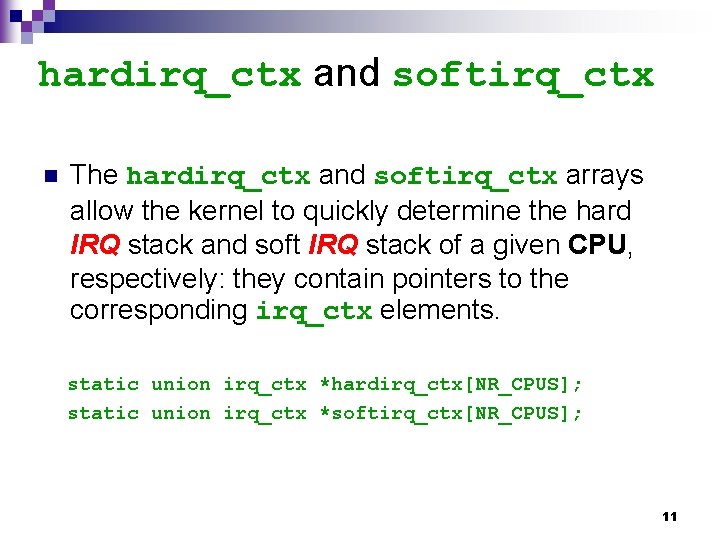

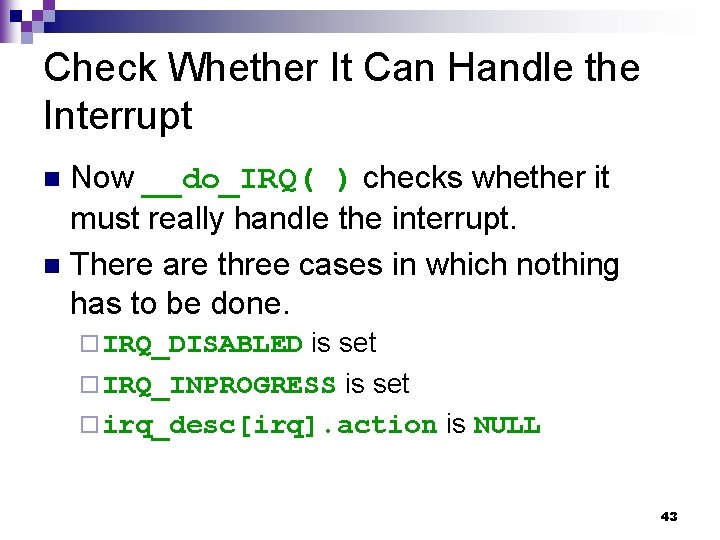

Equivalent Code of __do_IRQ()

    spin_lock(&(irq_desc[irq].lock));
    irq_desc[irq].handler->ack(irq);        /* e.g. set IMR of 8259A */
    irq_desc[irq].status &= ~(IRQ_REPLAY | IRQ_WAITING);
    irq_desc[irq].status |= IRQ_PENDING;
    if (!(irq_desc[irq].status & (IRQ_DISABLED | IRQ_INPROGRESS))
        && irq_desc[irq].action) {
            irq_desc[irq].status |= IRQ_INPROGRESS;
            do {
                    irq_desc[irq].status &= ~IRQ_PENDING;
                    spin_unlock(&(irq_desc[irq].lock));
                    handle_IRQ_event(irq, regs, irq_desc[irq].action);
                    spin_lock(&(irq_desc[irq].lock));
            } while (irq_desc[irq].status & IRQ_PENDING);
            irq_desc[irq].status &= ~IRQ_INPROGRESS;
    }
    irq_desc[irq].handler->end(irq);        /* e.g. clear IMR of 8259A */
    spin_unlock(&(irq_desc[irq].lock));

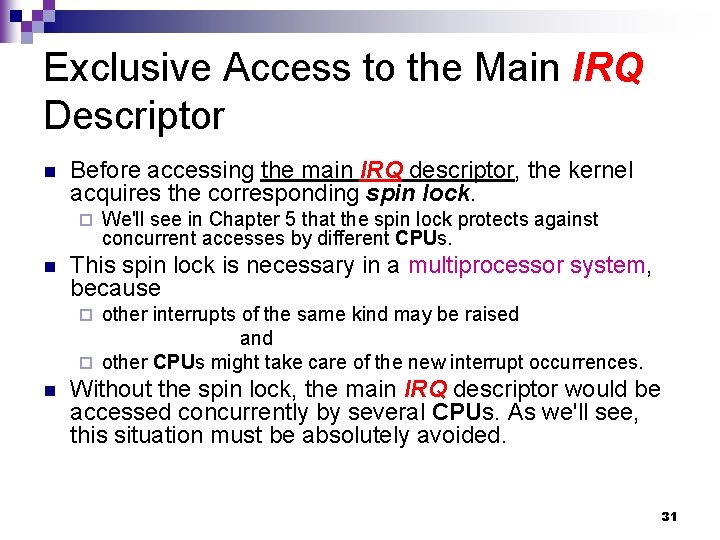

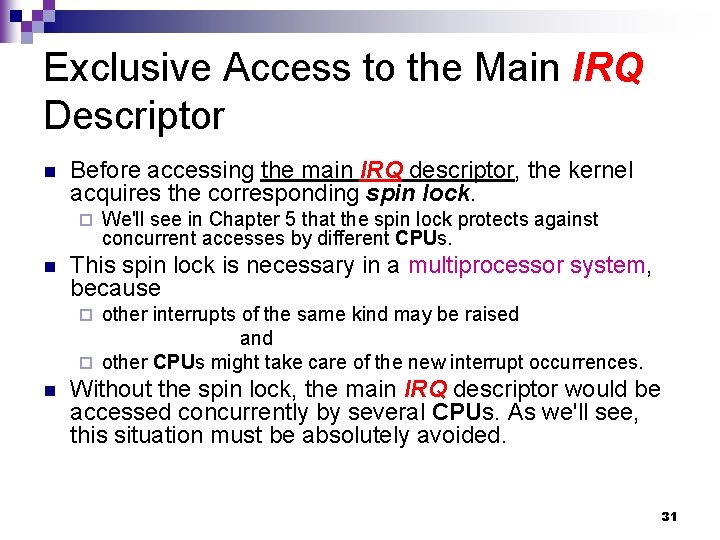

Exclusive Access to the Main IRQ Descriptor

- Before accessing the main IRQ descriptor, the kernel acquires the corresponding spin lock.
  - We'll see in Chapter 5 that the spin lock protects against concurrent accesses by different CPUs.
- This spin lock is necessary in a multiprocessor system, because
  - other interrupts of the same kind may be raised, and
  - other CPUs might take care of the new interrupt occurrences.
- Without the spin lock, the main IRQ descriptor would be accessed concurrently by several CPUs. As we'll see, this situation must be absolutely avoided.
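For readers new to spin locks, a user-space test-and-set lock built on C11 `atomic_flag` shows the basic idea: a CPU that wants the lock busy-waits until the current owner releases it. This is only an illustration of the concept; the kernel's spinlock_t has its own architecture-specific implementation, and all `sketch_` names are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

typedef struct {
    atomic_flag locked;
} sketch_spinlock_t;

#define SKETCH_SPINLOCK_INIT { ATOMIC_FLAG_INIT }

/* test_and_set returns the previous value: false means we now own it. */
static bool sketch_spin_trylock(sketch_spinlock_t *l)
{
    return !atomic_flag_test_and_set_explicit(&l->locked, memory_order_acquire);
}

static void sketch_spin_lock(sketch_spinlock_t *l)
{
    while (!sketch_spin_trylock(l))
        ;   /* busy-wait: another CPU holds the lock */
}

static void sketch_spin_unlock(sketch_spinlock_t *l)
{
    atomic_flag_clear_explicit(&l->locked, memory_order_release);
}
```

The acquire/release orderings make the descriptor updates done under the lock visible to the next CPU that takes it.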

![8259A Block Diagram [Intel]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-32.jpg)

8259A Block Diagram [Intel]

![PIC Registers [wiki]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-33.jpg)

PIC Registers [wiki]

- There are three registers: an Interrupt Mask Register (IMR), an Interrupt Request Register (IRR), and an In-Service Register (ISR).
- The IRR maintains a mask of the current interrupts that are pending acknowledgement.
- The ISR maintains a mask of the interrupts that are pending an EOI. (The IRR and the ISR thus track different events.)
- The IMR maintains a mask of interrupts that should not be sent an acknowledgement.

![EOI [wiki]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-34.jpg)

EOI [wiki]

- An End Of Interrupt (EOI) is a signal sent to a Programmable Interrupt Controller (PIC) to indicate the completion of interrupt processing for a given interrupt.
- An EOI is used to cause a PIC to clear the corresponding bit in the In-Service Register (ISR), and thus allow more interrupt requests of equal or lower priority to be generated by the PIC.

![8259A Interrupt Sequence [Intel]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-35.jpg)

8259A Interrupt Sequence [Intel]

1. One or more of the INTERRUPT REQUEST lines (IR7-IR0) are raised high, setting the corresponding IRR bit(s).
2. The 8259A evaluates these requests, and sends an INT to the CPU, if appropriate.
3. The CPU acknowledges the INT and responds with an INTA pulse.
4. Upon receiving an INTA from the CPU group, the highest priority ISR bit is set and the corresponding IRR bit is reset. The 8259A does not drive the Data Bus during this cycle.
5. …

![Fully Nested Mode [Intel]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-36.jpg)

Fully Nested Mode [Intel]

- This mode is entered after initialization unless another mode is programmed. The interrupt requests are ordered in priority from 0 through 7 (0 highest).
- When an interrupt is acknowledged, the highest priority request is determined and its vector placed on the bus. Additionally, a bit of the Interrupt Service Register (IS0-7) is set. This bit remains set until
  - the microprocessor issues an End of Interrupt (EOI) command immediately before returning from the service routine, or
  - if the AEOI (Automatic End of Interrupt) bit is set, the trailing edge of the last INTA.
- While the IS bit is set, all further interrupts of the same or lower priority are inhibited, while higher levels will generate an interrupt (which will be acknowledged only if the microprocessor's internal Interrupt enable flip-flop has been re-enabled through software).
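The fully nested priority rule can be modeled with three 8-bit masks, one bit per IR line. This is a toy sketch, not a device model: it merges the INT evaluation and the INTA cycle into one call, supports only the non-specific EOI, and ignores cascading, AEOI, and the other operating modes. Every name in it is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Bit i of each register corresponds to line IRi; IR0 is highest priority. */
struct sketch_pic {
    uint8_t irr;   /* requested, not yet acknowledged */
    uint8_t isr;   /* in service, waiting for EOI */
    uint8_t imr;   /* masked lines */
};

static void sketch_raise(struct sketch_pic *p, int line)
{
    p->irr |= (uint8_t)(1u << line);
}

/* Lowest-numbered set bit, i.e. highest priority, or -1 if none. */
static int sketch_highest(uint8_t bits)
{
    for (int i = 0; i < 8; i++)
        if (bits & (1u << i))
            return i;
    return -1;
}

/* INTA: returns the line whose vector goes on the bus, or -1. A request is
 * eligible only if unmasked and of strictly higher priority than every
 * line already in service. */
static int sketch_inta(struct sketch_pic *p)
{
    int req = sketch_highest((uint8_t)(p->irr & ~p->imr));
    int busy = sketch_highest(p->isr);

    if (req < 0 || (busy >= 0 && req >= busy))
        return -1;
    p->irr &= (uint8_t)~(1u << req);   /* IRR bit reset ...        */
    p->isr |= (uint8_t)(1u << req);    /* ... and IS bit set        */
    return req;
}

/* Non-specific EOI: clear the highest-priority in-service bit. */
static void sketch_eoi(struct sketch_pic *p)
{
    int busy = sketch_highest(p->isr);

    if (busy >= 0)
        p->isr &= (uint8_t)~(1u << busy);
}
```

While IR3 is in service, a request on IR5 waits but a request on IR1 nests on top of it, matching the "same or lower priority are inhibited" rule.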

![8259A Block Diagram [Intel]](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-37.jpg)

8259A Block Diagram [Intel]

Figure annotations: ACK; EOI. While the IS bit is set, all further interrupts of the same or lower priority are inhibited, while higher levels will generate an interrupt.

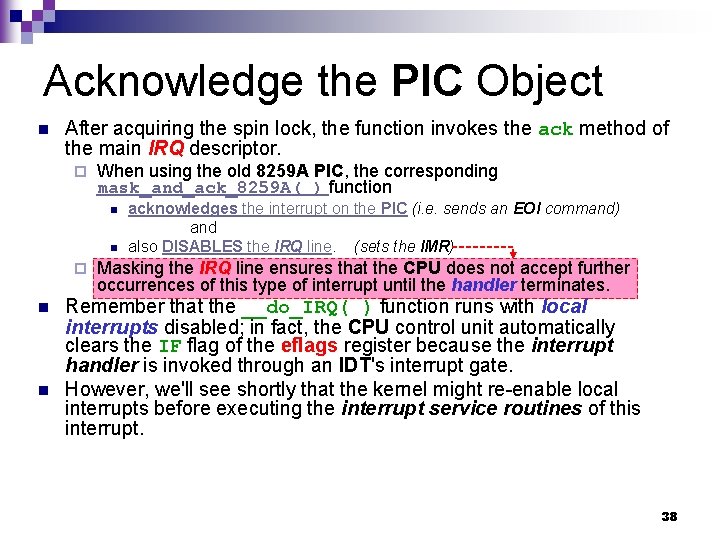

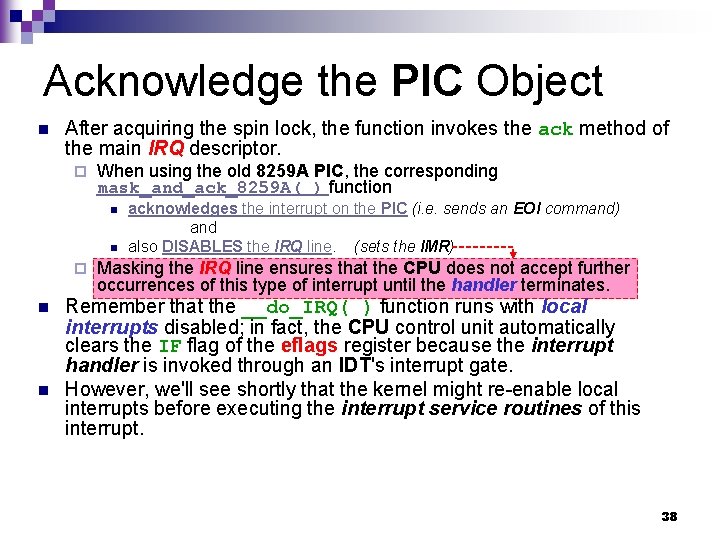

Acknowledge the PIC Object

- After acquiring the spin lock, the function invokes the ack method of the main IRQ descriptor.
  - When using the old 8259A PIC, the corresponding mask_and_ack_8259A( ) function
    - acknowledges the interrupt on the PIC (i.e., sends an EOI command), and
    - also DISABLES the IRQ line (sets the IMR).
  - Masking the IRQ line ensures that the CPU does not accept further occurrences of this type of interrupt until the handler terminates.
- Remember that the __do_IRQ( ) function runs with local interrupts disabled; in fact, the CPU control unit automatically clears the IF flag of the eflags register because the interrupt handler is invoked through an IDT's interrupt gate.
- However, we'll see shortly that the kernel might re-enable local interrupts before executing the interrupt service routines of this interrupt.
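The effect of the 8259A ack and end methods on the mask and in-service bits can be shown with a hypothetical sketch: `struct sketch_8259` replaces the I/O port writes done by mask_and_ack_8259A( ) and end_8259A_irq( ), only the master PIC (IRQ 0-7) is covered, and the "spurious or disabled" test of the end method is reduced to a single boolean.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Toy stand-in for the PIC state; the real code writes I/O ports. */
struct sketch_8259 {
    uint8_t imr;   /* interrupt mask register */
    uint8_t isr;   /* in-service register */
};

/* Like mask_and_ack_8259A(): mask the line, then send an EOI for it. */
static void sketch_mask_and_ack(struct sketch_8259 *p, unsigned int irq)
{
    assert(irq < 8);                    /* master PIC only in this sketch */
    p->imr |= (uint8_t)(1u << irq);     /* no new occurrences of this IRQ */
    p->isr &= (uint8_t)~(1u << irq);    /* EOI: other lines may interrupt */
}

/* Like end_8259A_irq(): unmask the line unless it must stay disabled. */
static void sketch_end(struct sketch_8259 *p, unsigned int irq, bool keep_masked)
{
    assert(irq < 8);
    if (!keep_masked)
        p->imr &= (uint8_t)~(1u << irq);
}
```

Because the EOI is sent immediately, the PIC can deliver other, lower-priority IRQs while this handler runs; only the masked line stays silent until the end method.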

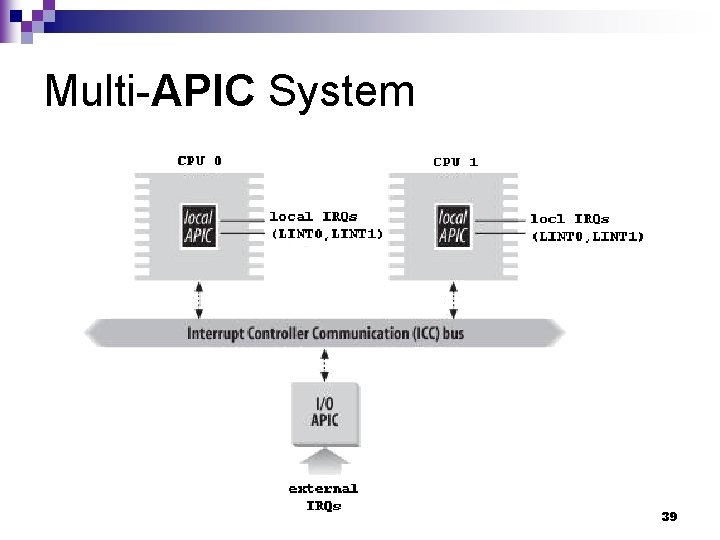

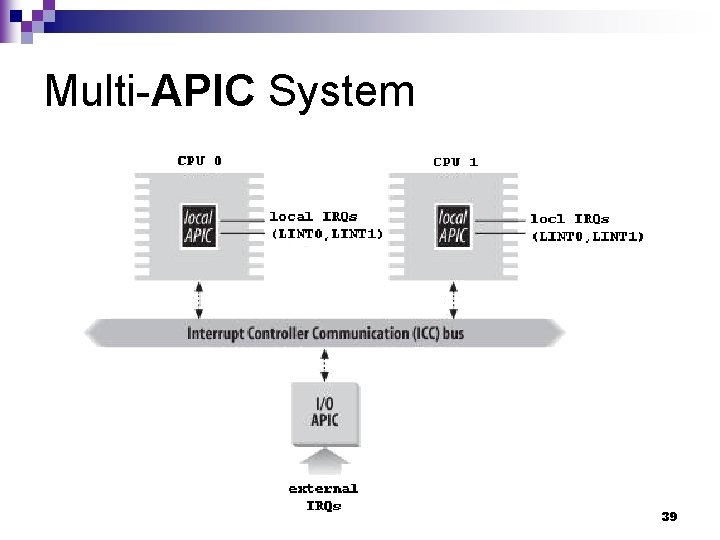

Multi-APIC System

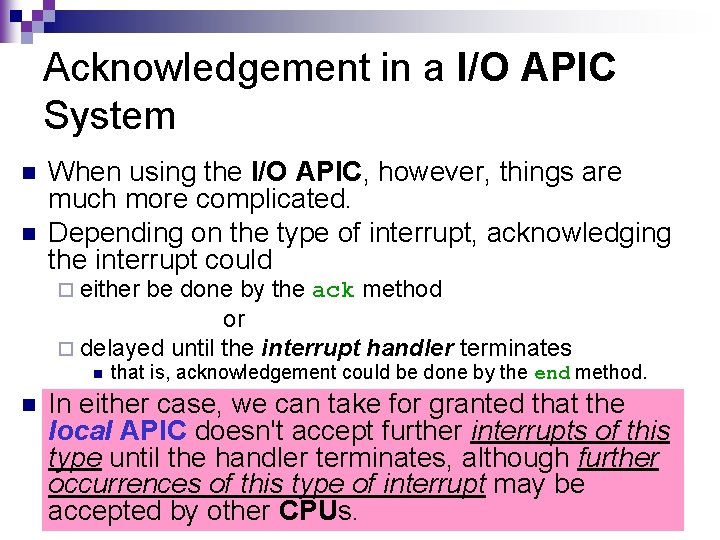

Acknowledgement in an I/O APIC System

- When using the I/O APIC, however, things are much more complicated.
- Depending on the type of interrupt, acknowledging the interrupt could
  - either be done by the ack method, or
  - be delayed until the interrupt handler terminates, that is, acknowledgement could be done by the end method.
- In either case, we can take for granted that the local APIC doesn't accept further interrupts of this type until the handler terminates, although further occurrences of this type of interrupt may be accepted by other CPUs.

![Equivalent Code of __do_IRQ()](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-41.jpg)


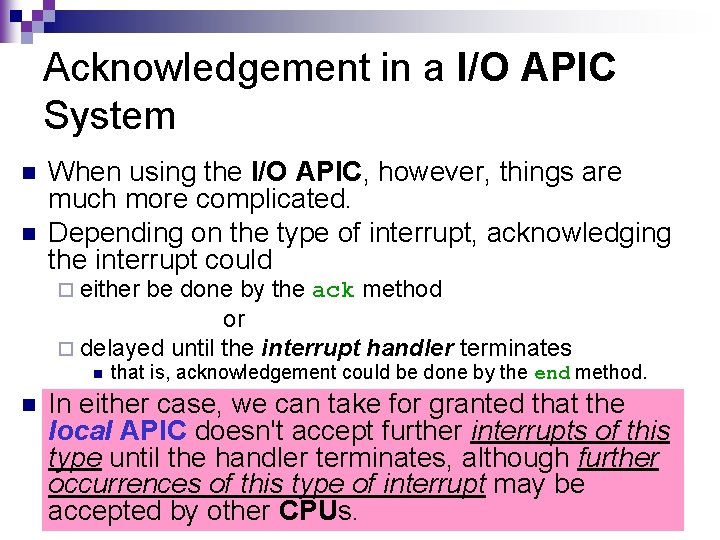

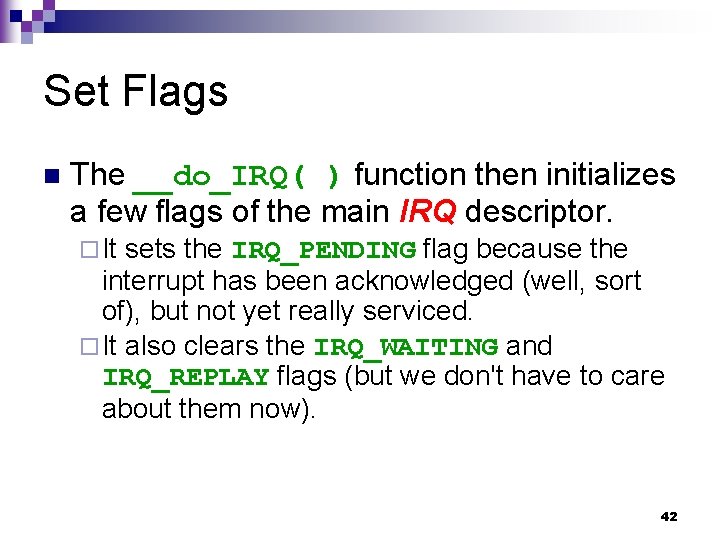

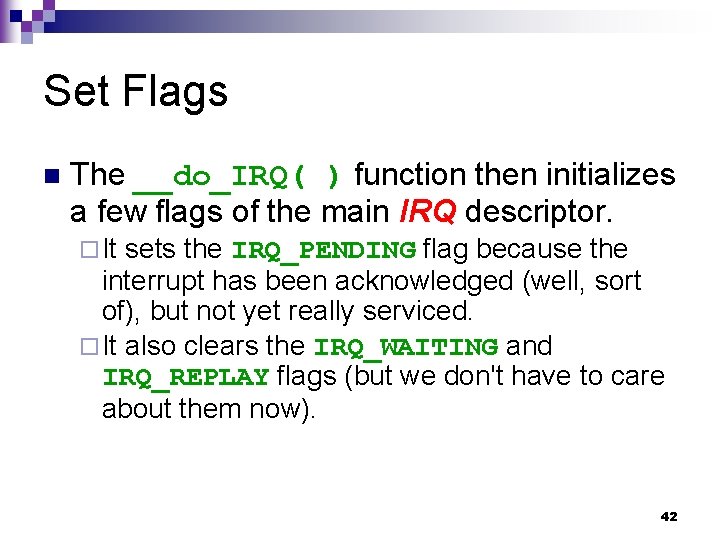

Set Flags

The __do_IRQ( ) function then initializes a few flags of the main IRQ descriptor.

- It sets the IRQ_PENDING flag because the interrupt has been acknowledged (well, sort of), but not yet really serviced.
- It also clears the IRQ_WAITING and IRQ_REPLAY flags (but we don't have to care about them now).

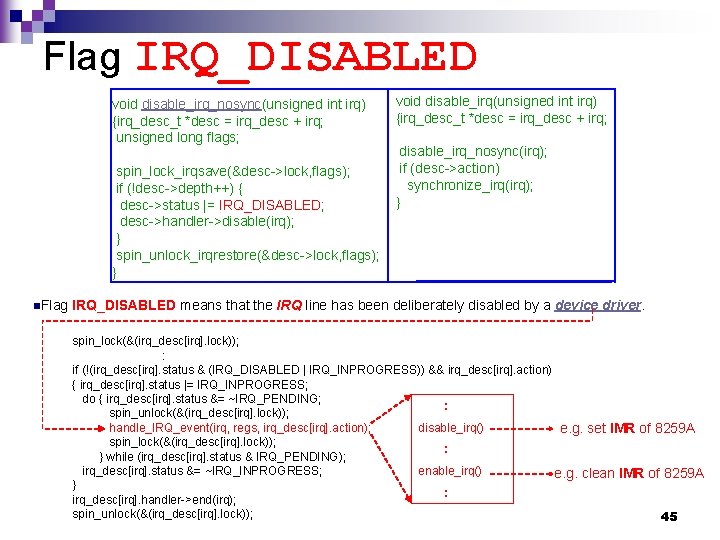

Check Whether It Can Handle the Interrupt Now

__do_IRQ( ) checks whether it must really handle the interrupt. There are three cases in which nothing has to be done:

- IRQ_DISABLED is set
- IRQ_INPROGRESS is set
- irq_desc[irq].action is NULL

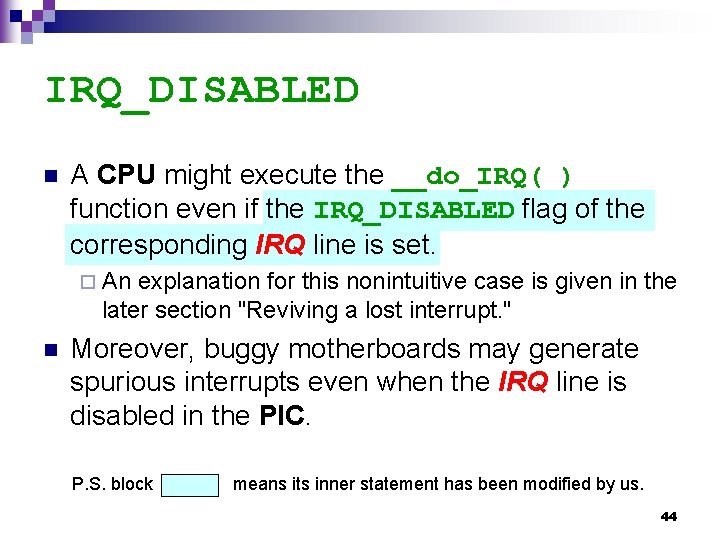

IRQ_DISABLED

- A CPU might execute the __do_IRQ( ) function even if the IRQ_DISABLED flag of the corresponding IRQ line is set.
  - An explanation for this nonintuitive case is given in the later section "Reviving a lost interrupt."
- Moreover, buggy motherboards may generate spurious interrupts even when the IRQ line is disabled in the PIC.

P.S.: a block in the figure means its inner statements have been modified by us.

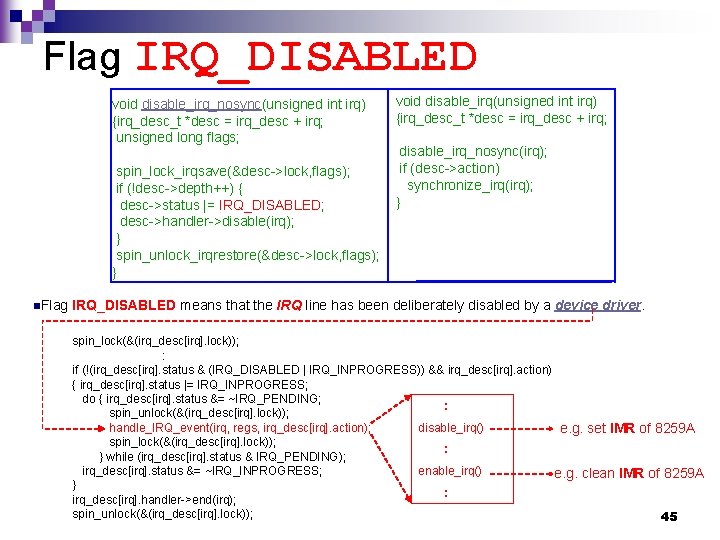

Flag IRQ_DISABLED

IRQ_DISABLED means that the IRQ line has been deliberately disabled by a device driver.

    void disable_irq_nosync(unsigned int irq)
    {
            irq_desc_t *desc = irq_desc + irq;
            unsigned long flags;

            spin_lock_irqsave(&desc->lock, flags);
            if (!desc->depth++) {
                    desc->status |= IRQ_DISABLED;
                    desc->handler->disable(irq);
            }
            spin_unlock_irqrestore(&desc->lock, flags);
    }

    void disable_irq(unsigned int irq)
    {
            irq_desc_t *desc = irq_desc + irq;

            disable_irq_nosync(irq);
            if (desc->action)
                    synchronize_irq(irq);
    }

Figure: the __do_IRQ() code (the IRQ_DISABLED/IRQ_INPROGRESS check, the loop around handle_IRQ_event(), and handler->end()) annotated with disable_irq() (e.g. set IMR of 8259A) and enable_irq() (e.g. clear IMR of 8259A) calls interleaved with its execution.
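The depth counter makes disable_irq( ) calls nest: only the first one masks the line, and only the matching last enable unmasks it. A single-threaded sketch with hypothetical names; the lock, synchronize_irq( ), and the real handler->disable/enable methods are replaced by a `line_masked` field, and the IRQ_DISABLED value is an assumption.

```c
#include <assert.h>

#define IRQ_DISABLED 0x2u   /* assumed value, for the sketch only */

struct sketch_desc {
    unsigned int status;
    unsigned int depth;      /* nested disable count */
    int line_masked;         /* stand-in for handler->disable()/enable() */
};

static void sketch_disable_irq(struct sketch_desc *d)
{
    if (!d->depth++) {       /* first disable: really mask the line */
        d->status |= IRQ_DISABLED;
        d->line_masked = 1;
    }
}

static void sketch_enable_irq(struct sketch_desc *d)
{
    assert(d->depth > 0);    /* an unbalanced enable is a driver bug */
    if (--d->depth == 0) {   /* last enable: unmask the line */
        d->status &= ~IRQ_DISABLED;
        d->line_masked = 0;
    }
}
```

Two drivers sharing a line can therefore each disable and re-enable it without one undoing the other's request.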

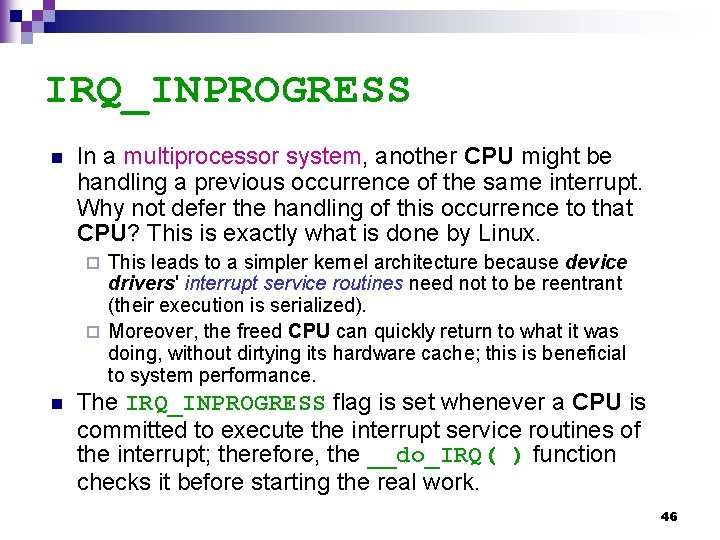

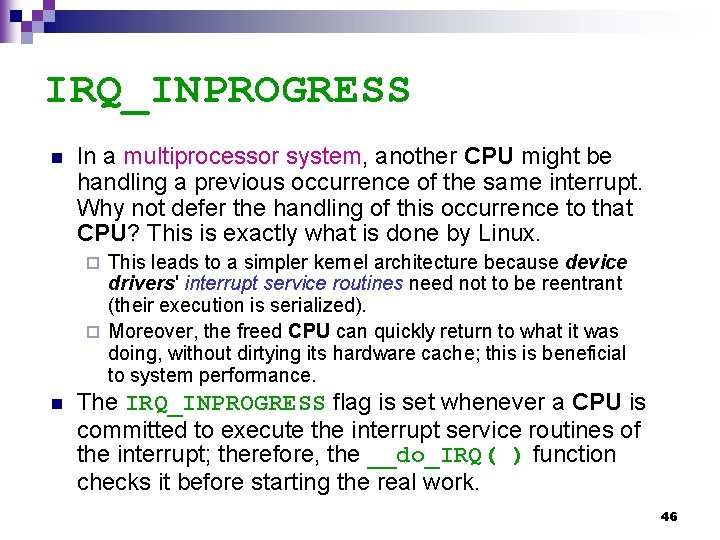

IRQ_INPROGRESS

- In a multiprocessor system, another CPU might be handling a previous occurrence of the same interrupt. Why not defer the handling of this occurrence to that CPU? This is exactly what is done by Linux.
  - This leads to a simpler kernel architecture because device drivers' interrupt service routines need not be reentrant (their execution is serialized).
  - Moreover, the freed CPU can quickly return to what it was doing, without dirtying its hardware cache; this is beneficial to system performance.
- The IRQ_INPROGRESS flag is set whenever a CPU is committed to execute the interrupt service routines of the interrupt; therefore, the __do_IRQ( ) function checks it before starting the real work.

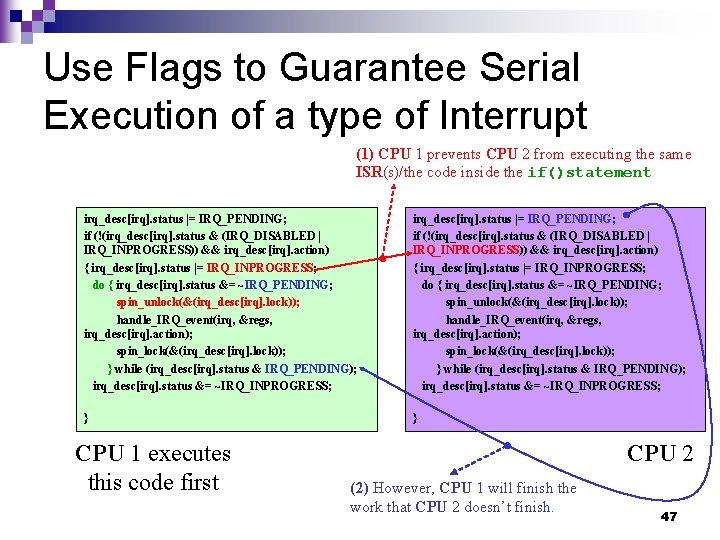

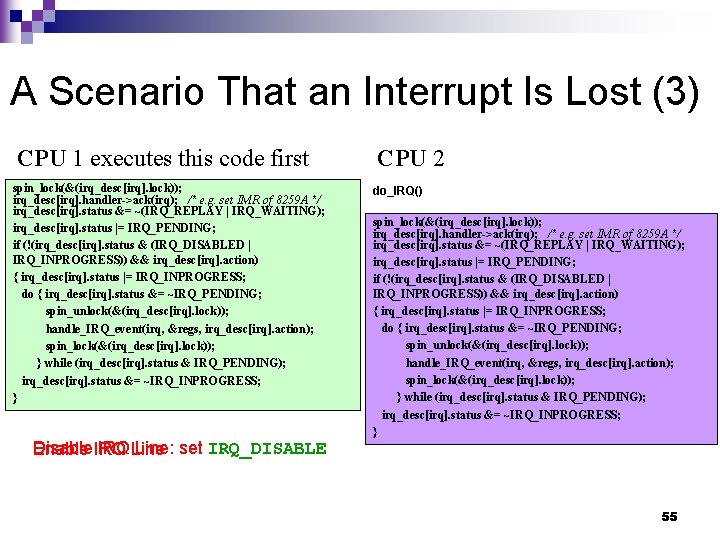

Use Flags to Guarantee Serial Execution of a Type of Interrupt

(1) CPU 1 prevents CPU 2 from executing the same ISR(s), that is, the code inside the if() statement. (2) However, CPU 1 will finish the work that CPU 2 doesn't finish. In the figure, CPU 1 executes this code first, and CPU 2 arrives while CPU 1 is inside it:

    irq_desc[irq].status |= IRQ_PENDING;
    if (!(irq_desc[irq].status & (IRQ_DISABLED | IRQ_INPROGRESS))
        && irq_desc[irq].action) {
            irq_desc[irq].status |= IRQ_INPROGRESS;
            do {
                    irq_desc[irq].status &= ~IRQ_PENDING;
                    spin_unlock(&(irq_desc[irq].lock));
                    handle_IRQ_event(irq, regs, irq_desc[irq].action);
                    spin_lock(&(irq_desc[irq].lock));
            } while (irq_desc[irq].status & IRQ_PENDING);
            irq_desc[irq].status &= ~IRQ_INPROGRESS;
    }

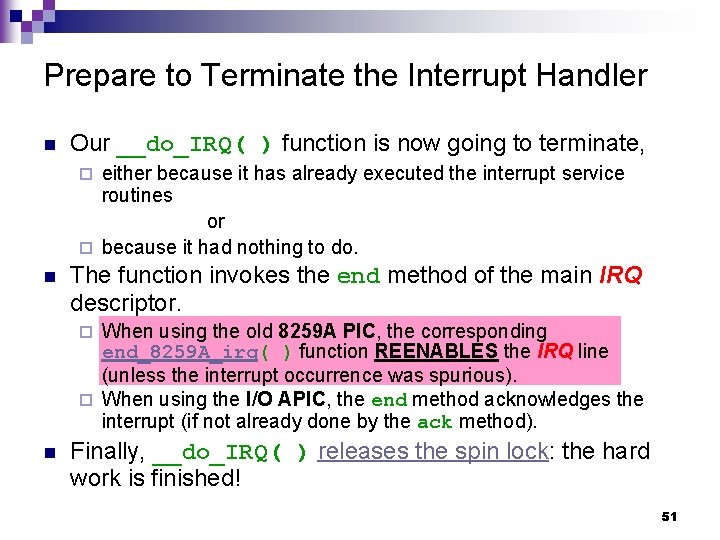

![irq_desc[irq].action](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-48.jpg)

irq_desc[irq].action

- This case occurs when there is no interrupt service routine associated with the interrupt.
- Normally, this happens only when the kernel is probing a hardware device.

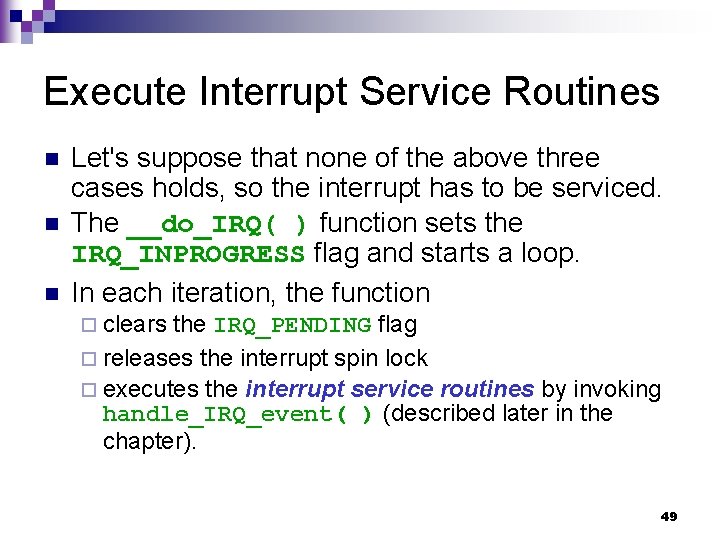

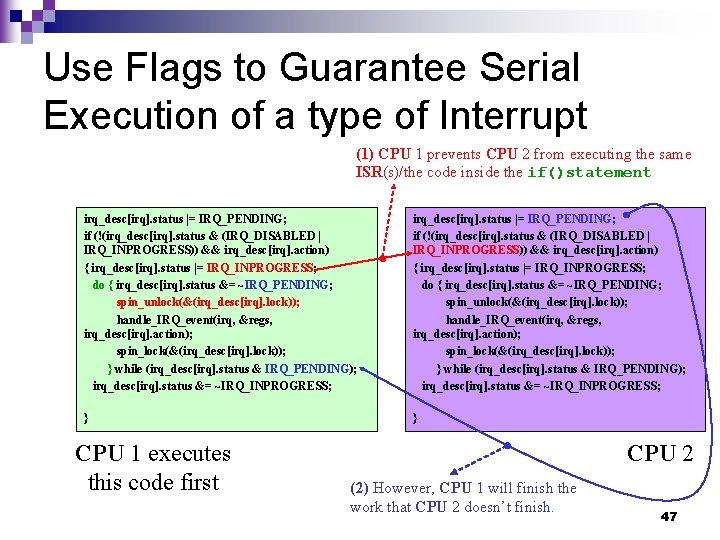

Execute Interrupt Service Routines

- Let's suppose that none of the above three cases holds, so the interrupt has to be serviced.
- The __do_IRQ( ) function sets the IRQ_INPROGRESS flag and starts a loop.
- In each iteration, the function
  - clears the IRQ_PENDING flag,
  - releases the interrupt spin lock, and
  - executes the interrupt service routines by invoking handle_IRQ_event( ) (described later in the chapter).

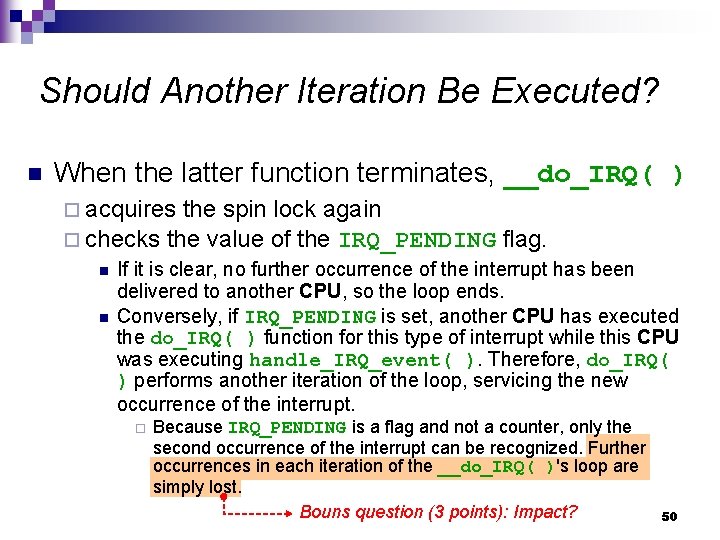

Should Another Iteration Be Executed?

- When the latter function terminates, __do_IRQ( )
  - acquires the spin lock again, and
  - checks the value of the IRQ_PENDING flag.
- If it is clear, no further occurrence of the interrupt has been delivered to another CPU, so the loop ends.
- Conversely, if IRQ_PENDING is set, another CPU has executed the do_IRQ( ) function for this type of interrupt while this CPU was executing handle_IRQ_event( ). Therefore, __do_IRQ( ) performs another iteration of the loop, servicing the new occurrence of the interrupt.
  - Because IRQ_PENDING is a flag and not a counter, only the second occurrence of the interrupt can be recognized. Further occurrences in each iteration of the __do_IRQ( )'s loop are simply lost.
- Bonus question (3 points): Impact?
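The interplay of IRQ_PENDING and IRQ_INPROGRESS can be reproduced without threads: a demo ISR calls a model of __do_IRQ( ) again while it runs, standing in for occurrences delivered to other CPUs. The flag values, the struct, and all function names are hypothetical, and locking and the ack/end methods are omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed flag values, for the sketch only. */
#define IRQ_INPROGRESS 0x1u
#define IRQ_DISABLED   0x2u
#define IRQ_PENDING    0x4u

struct sketch_desc {
    unsigned int status;
    void (*action)(struct sketch_desc *d);  /* NULL: no ISR registered */
    int serviced;                           /* times the ISRs ran */
};

/* Single-threaded model of the flag logic in __do_IRQ(). */
static void sketch_do_irq(struct sketch_desc *d)
{
    d->status |= IRQ_PENDING;
    if ((d->status & (IRQ_DISABLED | IRQ_INPROGRESS)) || d->action == NULL)
        return;                    /* another "CPU" (or nobody) handles it */
    d->status |= IRQ_INPROGRESS;
    do {
        d->status &= ~IRQ_PENDING;
        d->serviced++;
        d->action(d);              /* lock dropped: other arrivals possible */
    } while (d->status & IRQ_PENDING);
    d->status &= ~IRQ_INPROGRESS;
}

/* Demo ISR: while it runs, simulate `arrivals` occurrences on other CPUs. */
static int arrivals;

static void sketch_isr(struct sketch_desc *d)
{
    while (arrivals > 0) {
        arrivals--;
        sketch_do_irq(d);          /* sees IRQ_INPROGRESS, only sets PENDING */
    }
}
```

With three extra arrivals during one ISR run, `serviced` grows by two rather than four: the occurrences collapse into a single IRQ_PENDING bit, which is the loss described above.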

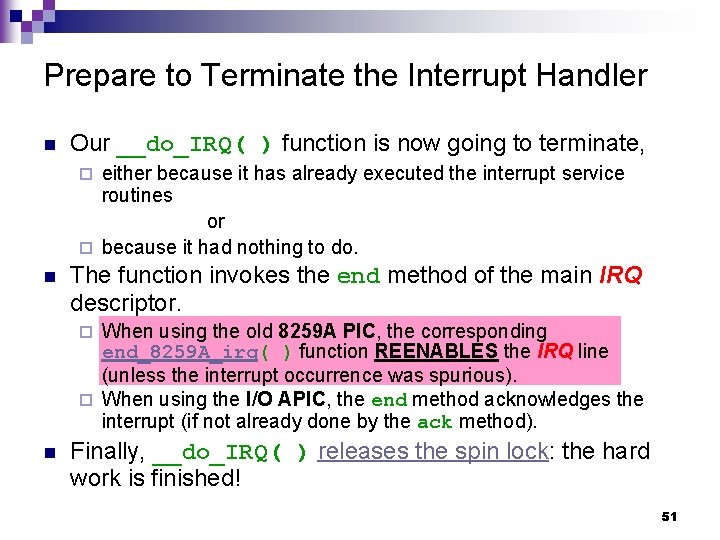

Prepare to Terminate the Interrupt Handler

- Our __do_IRQ( ) function is now going to terminate, either
  - because it has already executed the interrupt service routines, or
  - because it had nothing to do.
- The function invokes the end method of the main IRQ descriptor.
  - When using the old 8259A PIC, the corresponding end_8259A_irq( ) function REENABLES the IRQ line (unless the interrupt occurrence was spurious).
  - When using the I/O APIC, the end method acknowledges the interrupt (if not already done by the ack method).
- Finally, __do_IRQ( ) releases the spin lock: the hard work is finished!

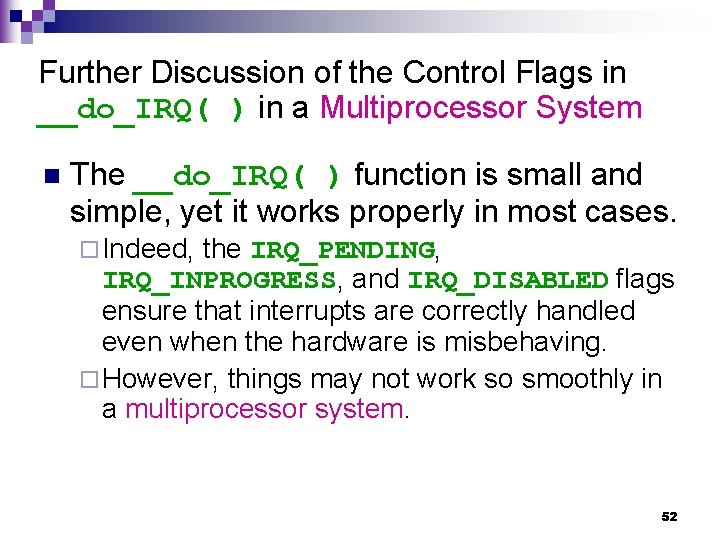

Further Discussion of the Control Flags in __do_IRQ( ) in a Multiprocessor System

- The __do_IRQ( ) function is small and simple, yet it works properly in most cases.
  - Indeed, the IRQ_PENDING, IRQ_INPROGRESS, and IRQ_DISABLED flags ensure that interrupts are correctly handled even when the hardware is misbehaving.
  - However, things may not work so smoothly in a multiprocessor system.

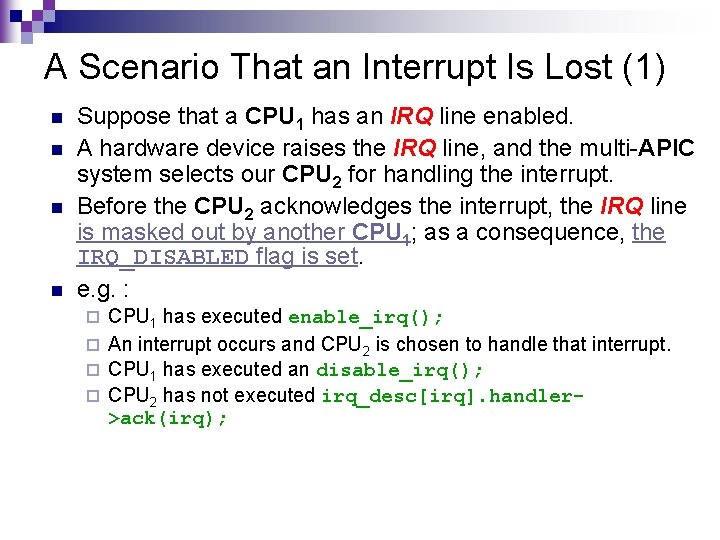

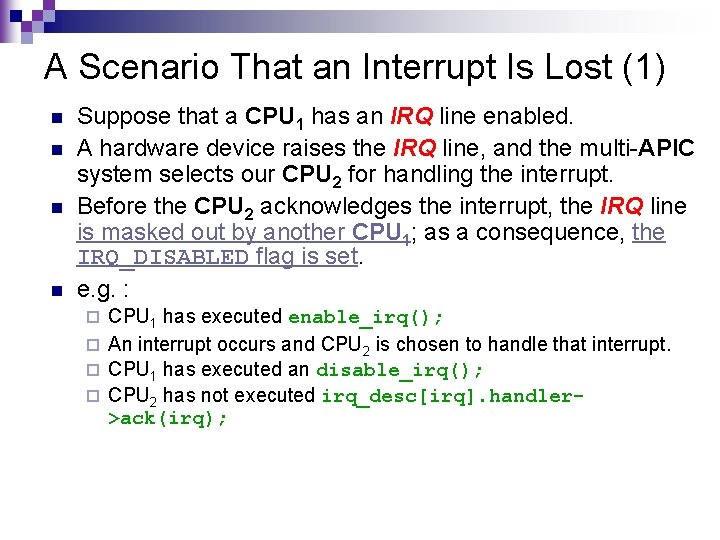

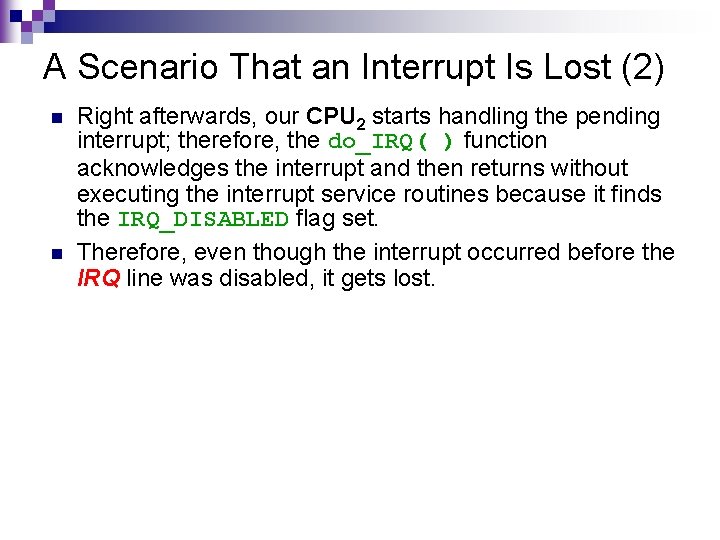

A Scenario That an Interrupt Is Lost (1)

- Suppose that CPU 1 has an IRQ line enabled. A hardware device raises the IRQ line, and the multi-APIC system selects CPU 2 for handling the interrupt.
- Before CPU 2 acknowledges the interrupt, the IRQ line is masked out by CPU 1; as a consequence, the IRQ_DISABLED flag is set.
- For example:
  - CPU 1 has executed enable_irq();
  - an interrupt occurs and CPU 2 is chosen to handle that interrupt;
  - CPU 1 executes disable_irq();
  - CPU 2 has not yet executed irq_desc[irq].handler->ack(irq).

A Scenario That an Interrupt Is Lost (2)

- Right afterwards, CPU 2 starts handling the pending interrupt; therefore, the __do_IRQ( ) function acknowledges the interrupt and then returns without executing the interrupt service routines because it finds the IRQ_DISABLED flag set.
- Therefore, even though the interrupt occurred before the IRQ line was disabled, it gets lost.

A Scenario That an Interrupt Is Lost (3)

Figure: the __do_IRQ() code below, drawn twice side by side for CPU 1 and CPU 2, with the annotations "CPU 1 executes this code first", "Disable IRQ Line: set IRQ_DISABLED", "Enable", and "CPU 2 do_IRQ()".

    spin_lock(&(irq_desc[irq].lock));
    irq_desc[irq].handler->ack(irq);        /* e.g. set IMR of 8259A */
    irq_desc[irq].status &= ~(IRQ_REPLAY | IRQ_WAITING);
    irq_desc[irq].status |= IRQ_PENDING;
    if (!(irq_desc[irq].status & (IRQ_DISABLED | IRQ_INPROGRESS))
        && irq_desc[irq].action) {
            irq_desc[irq].status |= IRQ_INPROGRESS;
            do {
                    irq_desc[irq].status &= ~IRQ_PENDING;
                    spin_unlock(&(irq_desc[irq].lock));
                    handle_IRQ_event(irq, regs, irq_desc[irq].action);
                    spin_lock(&(irq_desc[irq].lock));
            } while (irq_desc[irq].status & IRQ_PENDING);
            irq_desc[irq].status &= ~IRQ_INPROGRESS;
    }

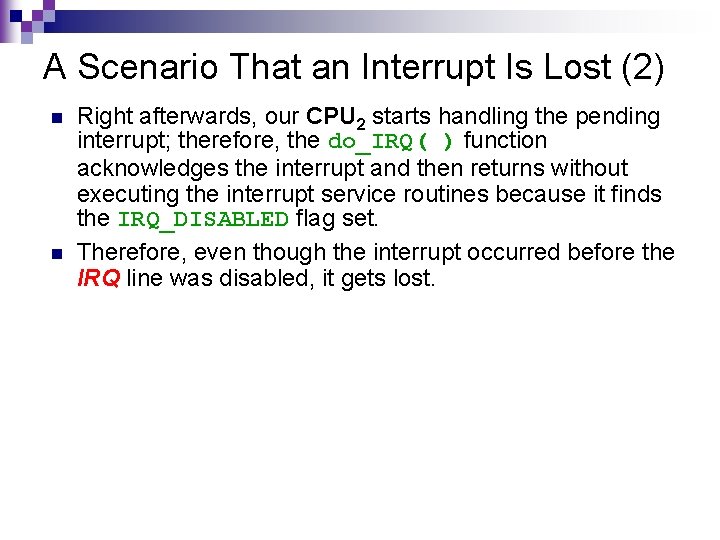

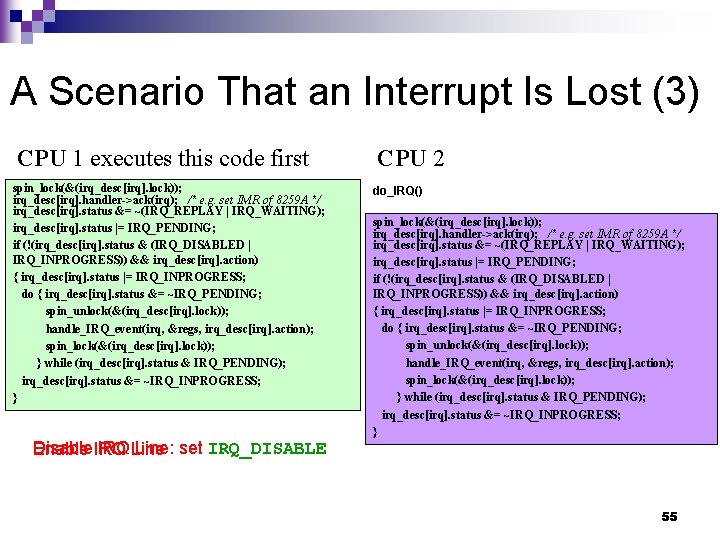

Code to Revive a Lost Interrupt

To cope with this scenario, the enable_irq() function, which the kernel uses to enable an IRQ line, first checks whether an interrupt has been lost. If so, the function forces the hardware to generate a new occurrence of the lost interrupt:

    spin_lock_irqsave(&(irq_desc[irq].lock), flags);
    if (--irq_desc[irq].depth == 0) {
        irq_desc[irq].status &= ~IRQ_DISABLED;
        if ((irq_desc[irq].status & (IRQ_PENDING | IRQ_REPLAY)) == IRQ_PENDING) {
            irq_desc[irq].status |= IRQ_REPLAY;
            hw_resend_irq(irq_desc[irq].handler, irq);
        }
        irq_desc[irq].handler->enable(irq);
    }
    spin_unlock_irqrestore(&(irq_desc[irq].lock), flags);

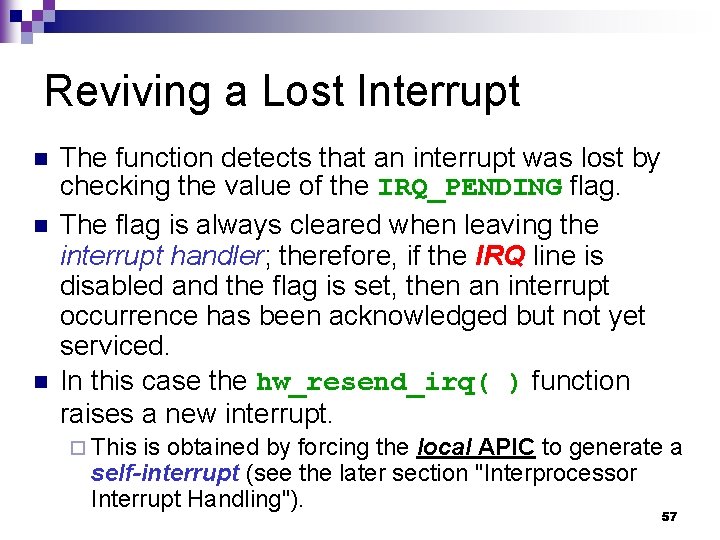

Reviving a Lost Interrupt

The function detects that an interrupt was lost by checking the value of the IRQ_PENDING flag. The flag is always cleared when leaving the interrupt handler; therefore, if the IRQ line is disabled and the flag is set, then an interrupt occurrence has been acknowledged but not yet serviced. In this case the hw_resend_irq() function raises a new interrupt. This is done by forcing the local APIC to generate a self-interrupt (see the later section "Interprocessor Interrupt Handling").

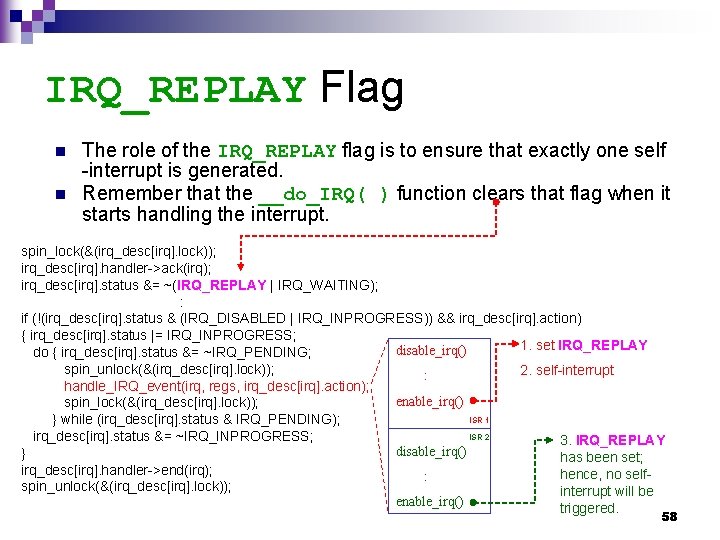

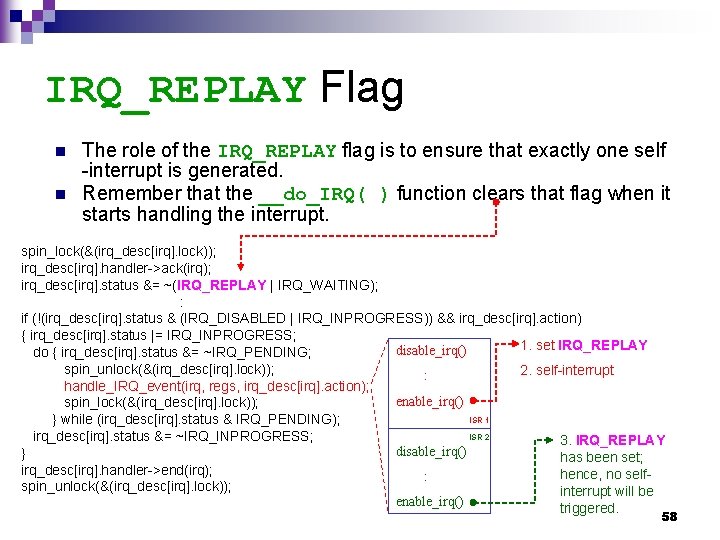

IRQ_REPLAY Flag

The role of the IRQ_REPLAY flag is to ensure that exactly one self-interrupt is generated. Remember that the __do_IRQ() function clears that flag when it starts handling the interrupt:

    spin_lock(&(irq_desc[irq].lock));
    irq_desc[irq].handler->ack(irq);
    irq_desc[irq].status &= ~(IRQ_REPLAY | IRQ_WAITING);
    ...
    if (!(irq_desc[irq].status & (IRQ_DISABLED | IRQ_INPROGRESS))
            && irq_desc[irq].action) {
        irq_desc[irq].status |= IRQ_INPROGRESS;
        do {
            irq_desc[irq].status &= ~IRQ_PENDING;
            spin_unlock(&(irq_desc[irq].lock));
            handle_IRQ_event(irq, regs, irq_desc[irq].action);   /* ISR 1, ISR 2, ... */
            spin_lock(&(irq_desc[irq].lock));
        } while (irq_desc[irq].status & IRQ_PENDING);
        irq_desc[irq].status &= ~IRQ_INPROGRESS;
    }
    irq_desc[irq].handler->end(irq);
    spin_unlock(&(irq_desc[irq].lock));

The diagram annotates disable_irq()/enable_irq() pairs with three steps:

1. enable_irq() finds the interrupt lost and sets IRQ_REPLAY.
2. It then triggers a self-interrupt.
3. If another disable_irq()/enable_irq() pair runs while IRQ_REPLAY is still set, no further self-interrupt is triggered.

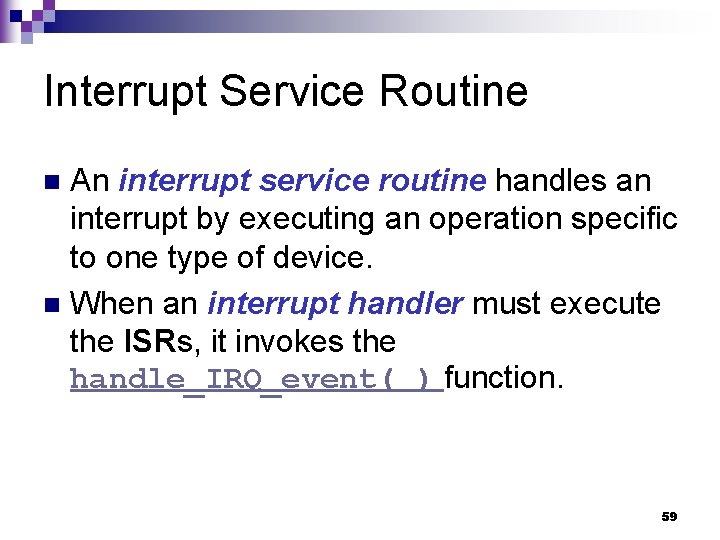

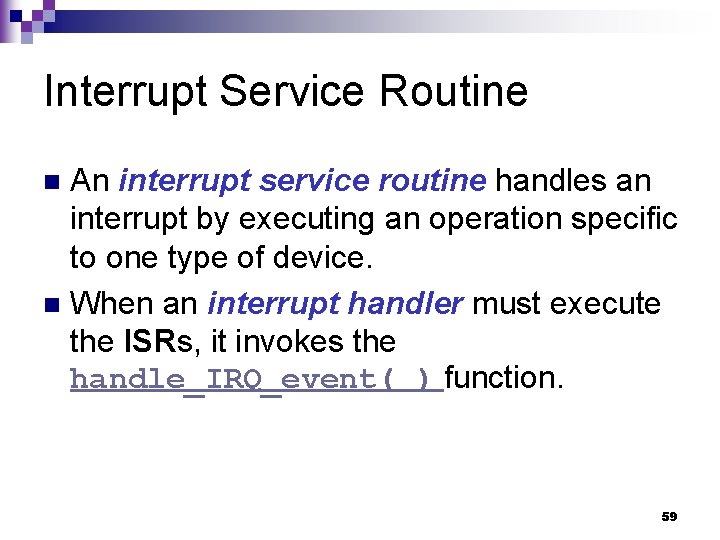

Interrupt Service Routine

An interrupt service routine handles an interrupt by executing an operation specific to one type of device. When an interrupt handler must execute the ISRs, it invokes the handle_IRQ_event() function.

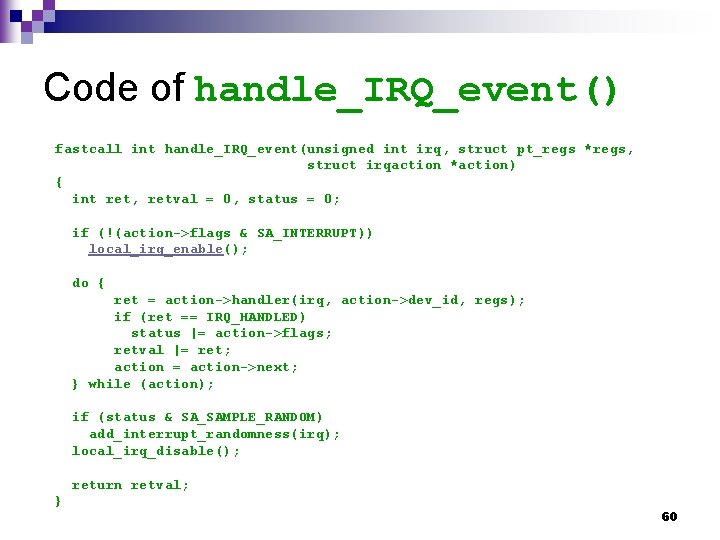

Code of handle_IRQ_event()

    fastcall int handle_IRQ_event(unsigned int irq, struct pt_regs *regs,
                                  struct irqaction *action)
    {
        int ret, retval = 0, status = 0;

        if (!(action->flags & SA_INTERRUPT))
            local_irq_enable();
        do {
            ret = action->handler(irq, action->dev_id, regs);
            if (ret == IRQ_HANDLED)
                status |= action->flags;
            retval |= ret;
            action = action->next;
        } while (action);
        if (status & SA_SAMPLE_RANDOM)
            add_interrupt_randomness(irq);
        local_irq_disable();
        return retval;
    }

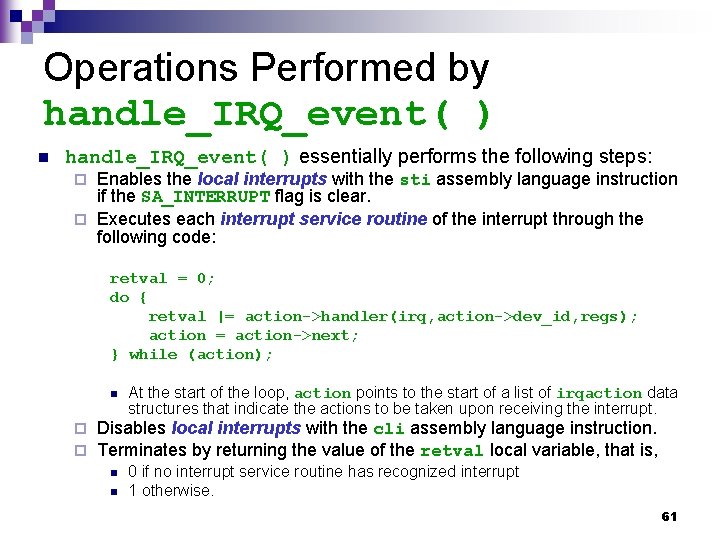

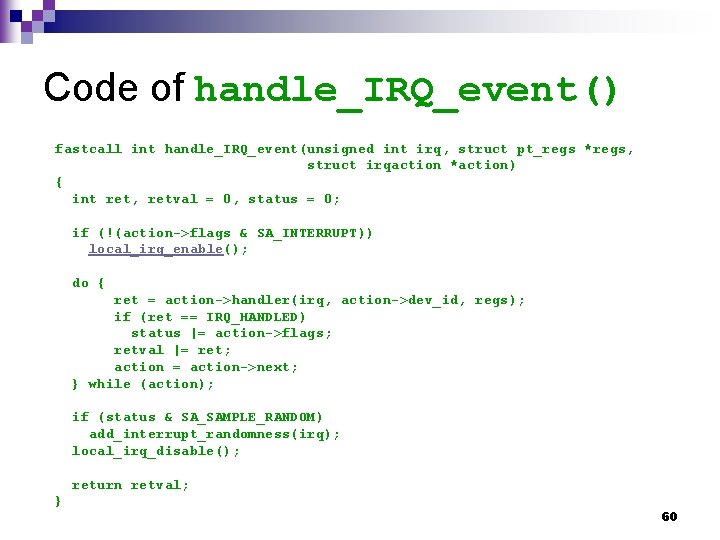

Operations Performed by handle_IRQ_event()

handle_IRQ_event() essentially performs the following steps:

1. Enables local interrupts with the sti assembly language instruction if the SA_INTERRUPT flag is clear.
2. Executes each interrupt service routine of the interrupt through the following code:

        retval = 0;
        do {
            retval |= action->handler(irq, action->dev_id, regs);
            action = action->next;
        } while (action);

   At the start of the loop, action points to the start of a list of irqaction data structures that indicate the actions to be taken upon receiving the interrupt.
3. Disables local interrupts with the cli assembly language instruction.
4. Terminates by returning the value of the retval local variable, that is, 0 if no interrupt service routine has recognized the interrupt, 1 otherwise.

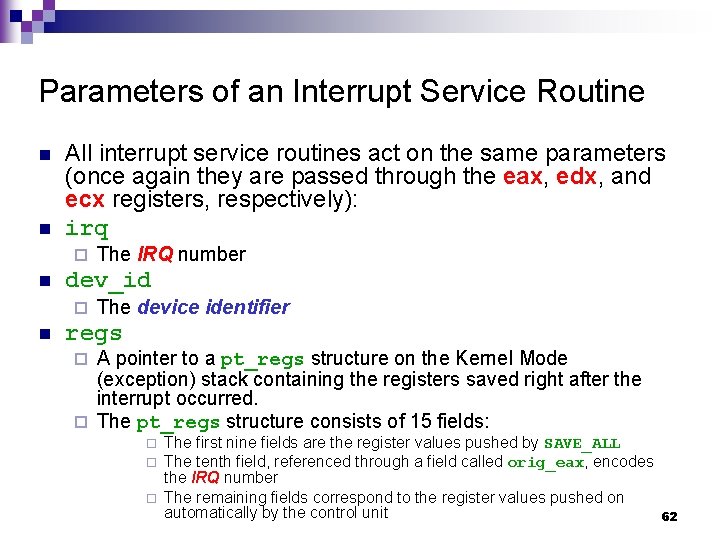

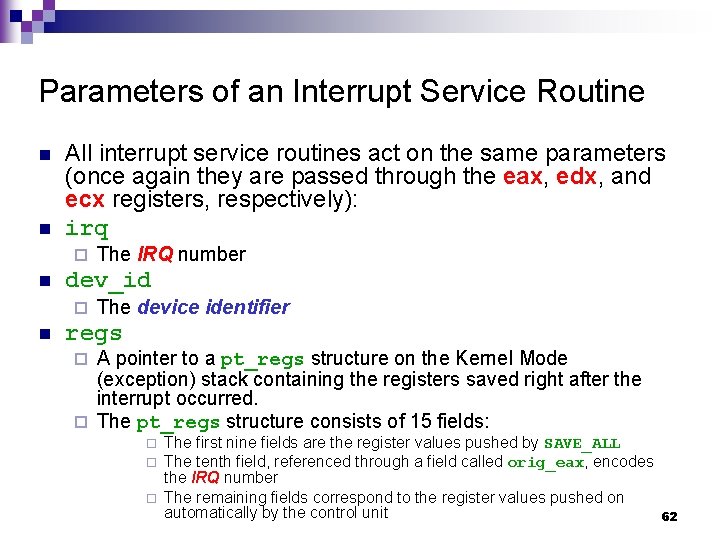

Parameters of an Interrupt Service Routine

All interrupt service routines act on the same parameters (once again they are passed through the eax, edx, and ecx registers, respectively):

- irq: the IRQ number.
- dev_id: the device identifier.
- regs: a pointer to a pt_regs structure on the Kernel Mode (exception) stack containing the registers saved right after the interrupt occurred. The pt_regs structure consists of 15 fields:
  - The first nine fields are the register values pushed by SAVE_ALL.
  - The tenth field, referenced through a field called orig_eax, encodes the IRQ number.
  - The remaining fields correspond to the register values pushed automatically by the control unit.

Functions of the Parameters

The first parameter allows a single ISR to handle several IRQ lines. The second one allows a single ISR to take care of several devices of the same type. The last one allows the ISR to access the execution context of the interrupted kernel control path. In practice, most ISRs do not use these parameters.

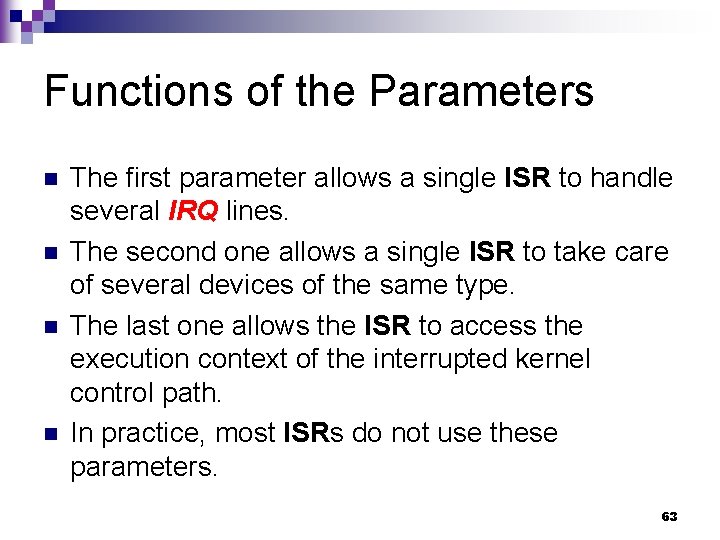

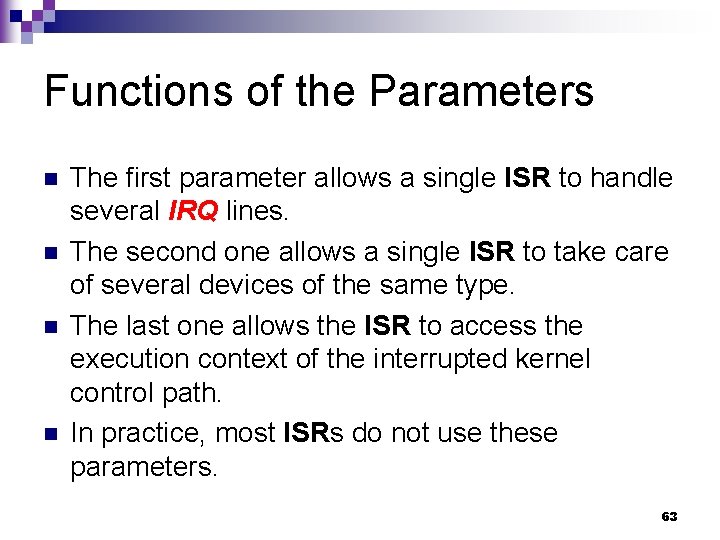

The Return Code of an ISR

Every interrupt service routine returns the value 1 if the interrupt has been effectively handled, that is, if the signal was raised by the hardware device handled by the interrupt service routine (and not by another device sharing the same IRQ). It returns the value 0 otherwise. This return code allows the kernel to update the counter of unexpected interrupts mentioned in the section "IRQ data structures" earlier in this chapter.

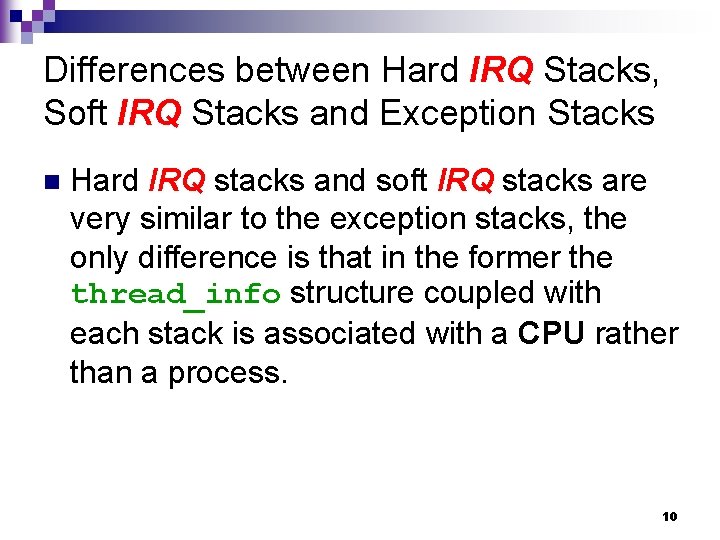

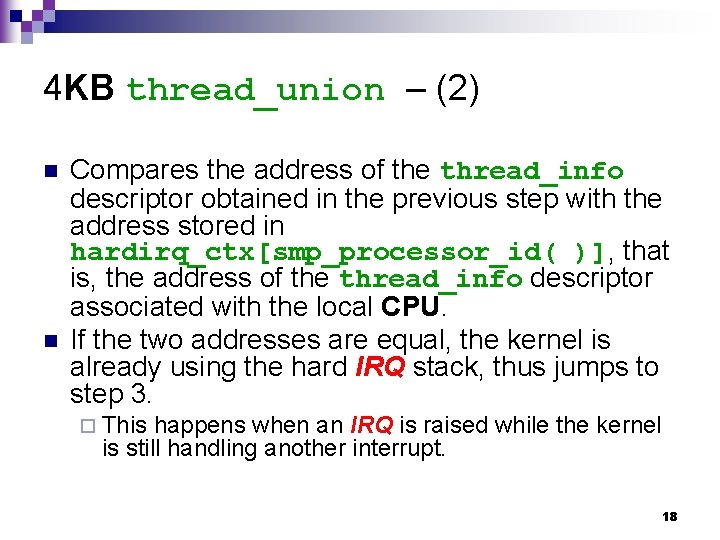

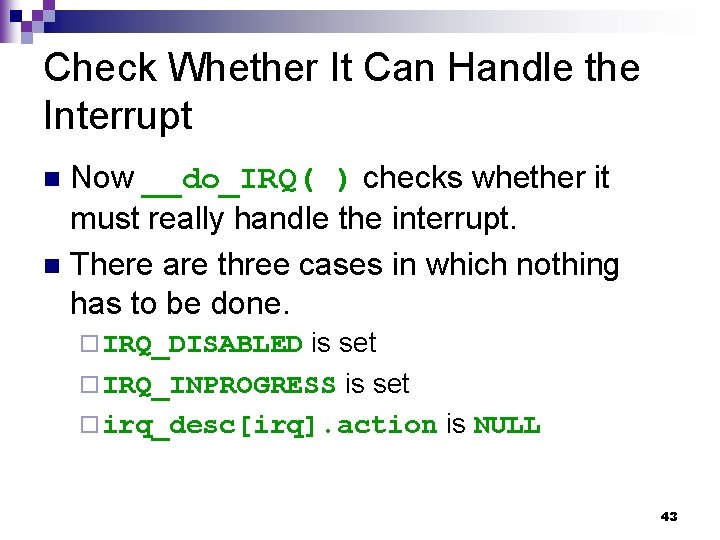

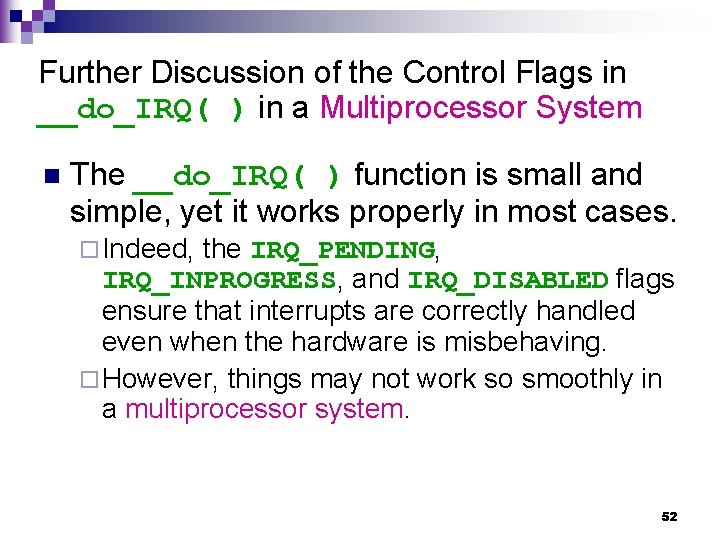

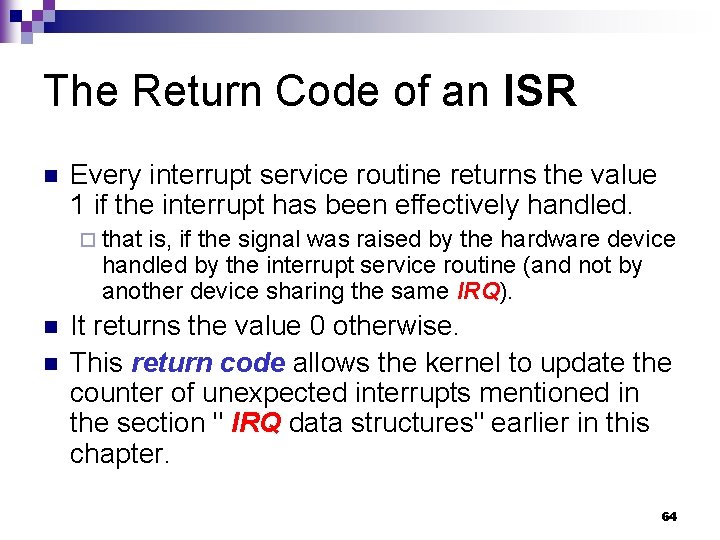

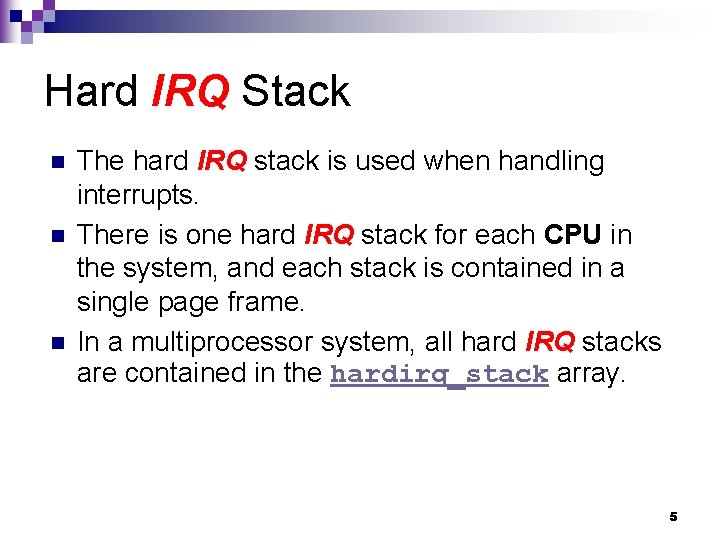

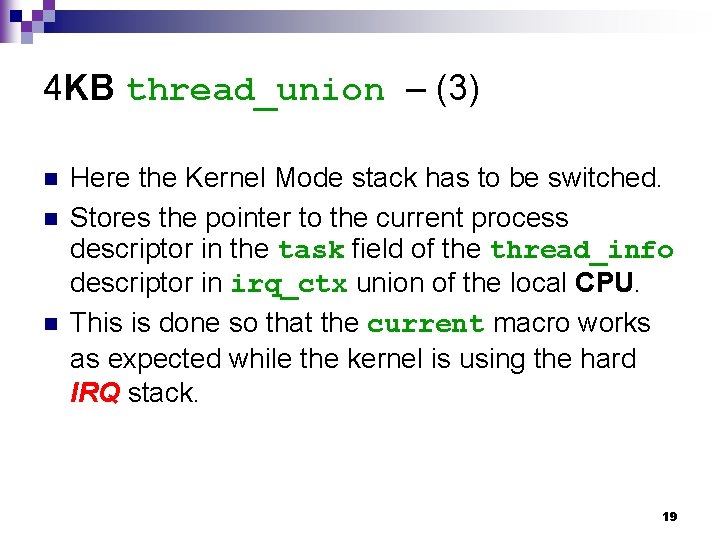

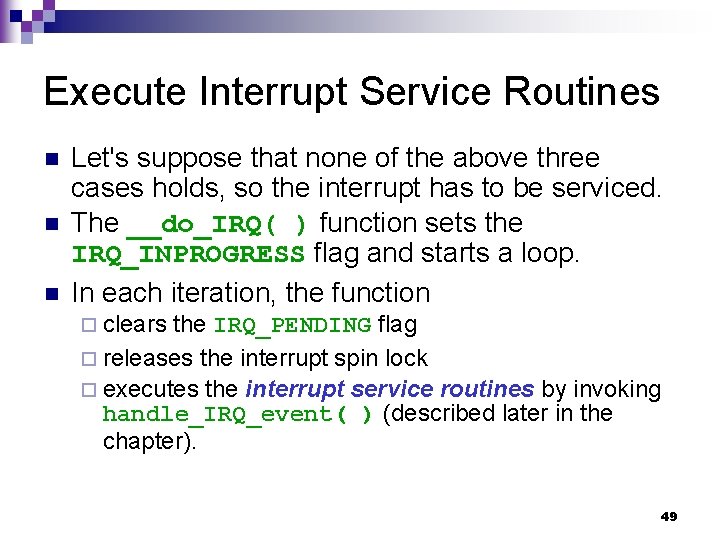

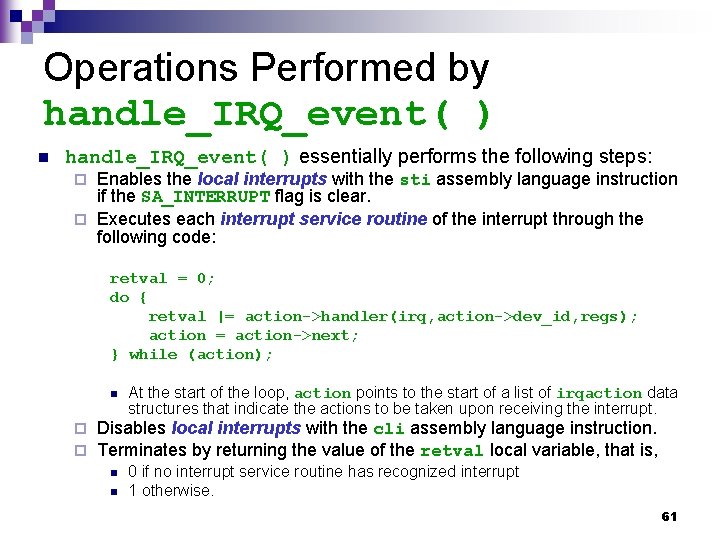

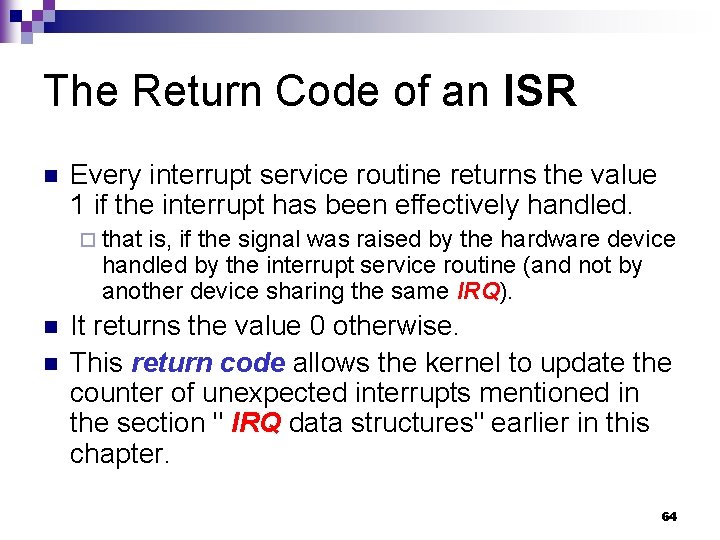

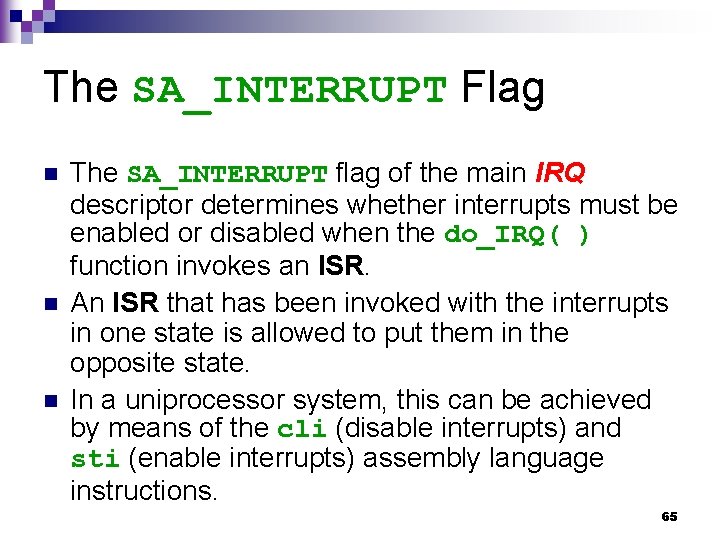

The SA_INTERRUPT Flag

The SA_INTERRUPT flag of the main IRQ descriptor determines whether interrupts must be enabled or disabled when the do_IRQ() function invokes an ISR. An ISR that has been invoked with the interrupts in one state is allowed to put them in the opposite state. In a uniprocessor system, this can be achieved by means of the cli (disable interrupts) and sti (enable interrupts) assembly language instructions.

![Switch on and off of Interrupts in doIRQ Interrupts from all IRQ lines spinlockirqdescirq Switch on and off of Interrupts in do_IRQ(): Interrupts from all IRQ lines spin_lock(&(irq_desc[irq].](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-66.jpg)

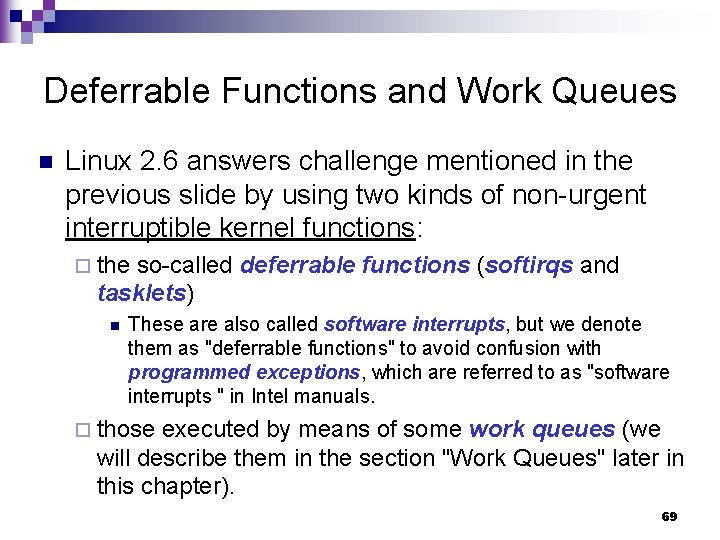

Switch on and off of Interrupts in do_IRQ()

    spin_lock(&(irq_desc[irq].lock));
    /* Interrupts from all IRQ lines are ignored by the CPU at this point. */
    irq_desc[irq].handler->ack(irq);
    /* Interrupts from the same IRQ line are disabled by the PIC after this statement. */
    irq_desc[irq].status &= ~(IRQ_REPLAY | IRQ_WAITING);
    irq_desc[irq].status |= IRQ_PENDING;
    if (!(irq_desc[irq].status & (IRQ_DISABLED | IRQ_INPROGRESS))
            && irq_desc[irq].action) {
        irq_desc[irq].status |= IRQ_INPROGRESS;
        do {
            irq_desc[irq].status &= ~IRQ_PENDING;
            spin_unlock(&(irq_desc[irq].lock));
            /* If the SA_INTERRUPT flag is clear in action->flags, interrupts from
               all other IRQ lines are allowed to reach the CPU within this function.
               After the function finishes, interrupts from all IRQ lines are
               ignored again. */
            handle_IRQ_event(irq, regs, irq_desc[irq].action);
            spin_lock(&(irq_desc[irq].lock));
        } while (irq_desc[irq].status & IRQ_PENDING);
        irq_desc[irq].status &= ~IRQ_INPROGRESS;
    }
    irq_desc[irq].handler->end(irq);
    /* An interrupt from the same IRQ line is allowed to be sent to the CPU
       after this statement. */
    spin_unlock(&(irq_desc[irq].lock));

Deferrable Functions

Small Kernel Response Time

We mentioned earlier in the section "Interrupt Handling" that several tasks among those executed by the kernel are not critical: they can be deferred for a long period of time, if necessary. Remember that the interrupt service routines of an interrupt handler are serialized, and often there should be NO occurrence of an interrupt until the corresponding interrupt handler has terminated. Conversely, the deferrable tasks can execute with all interrupts enabled. Taking them out of the interrupt handler helps keep kernel response time small. This is a very important property for many time-critical applications that expect their interrupt requests to be serviced in a few milliseconds.

Deferrable Functions and Work Queues

Linux 2.6 answers the challenge mentioned in the previous slide by using two kinds of non-urgent interruptible kernel functions:

- the so-called deferrable functions (softirqs and tasklets). These are also called software interrupts, but we denote them as "deferrable functions" to avoid confusion with programmed exceptions, which are referred to as "software interrupts" in Intel manuals.
- those executed by means of work queues (we will describe them in the section "Work Queues" later in this chapter).

Relationship between Softirqs and Tasklets

Softirqs and tasklets are strictly correlated, because tasklets are implemented on top of softirqs. As a matter of fact, the term "softirq," which appears in the kernel source code, often denotes both kinds of deferrable functions.

Interrupt Context

Interrupt context means that the kernel is currently executing either an interrupt handler or a deferrable function.

Properties of Softirqs

- Softirqs are statically allocated (i.e., defined at compile time).
- Softirqs can run concurrently on several CPUs, even if they are of the same type.
- Thus, softirqs are reentrant functions and must explicitly protect their data structures with spin locks.

Properties of Tasklets

- Tasklets can also be allocated and initialized at runtime (for instance, when loading a kernel module).
- Tasklets of the same type are always serialized: in other words, the same type of tasklet CANNOT be executed by two CPUs at the same time. However, tasklets of different types can be executed concurrently on several CPUs.
- Serializing the tasklet simplifies the life of device driver developers, because the tasklet function need NOT be reentrant.

Operations That Can Be Performed on Deferrable Functions

Generally speaking, four kinds of operations can be performed on deferrable functions:

- Initialization
- Activation
- Masking
- Execution

Initialization

Defines a new deferrable function. This operation is usually done when the kernel initializes itself or when a module is loaded.

Activation

Marks a deferrable function as "pending," to be run the next time the kernel schedules a round of executions of deferrable functions. Activation can be done at any time (even while handling interrupts).

Masking

Selectively disables a deferrable function so that it will not be executed by the kernel even if activated. We'll see in the section "Disabling and Enabling Deferrable Functions" in Chapter 5 that disabling deferrable functions is sometimes essential.

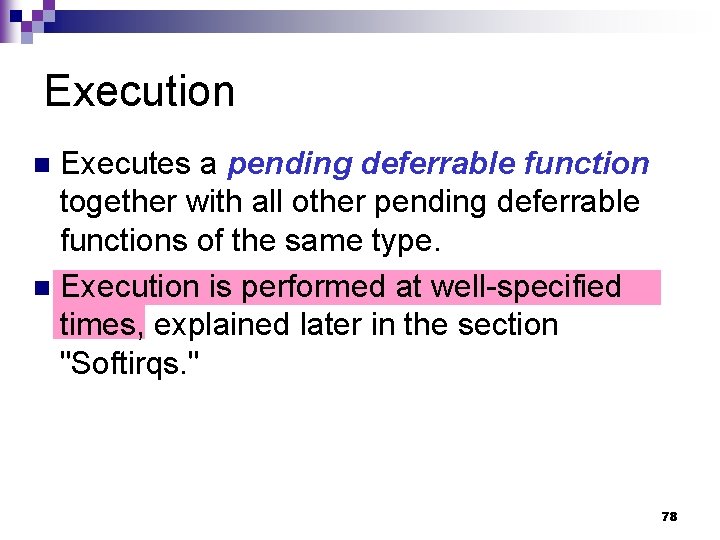

Execution

Executes a pending deferrable function together with all other pending deferrable functions of the same type. Execution is performed at well-specified times, explained later in the section "Softirqs."

Activation and Execution of a Deferrable Function Are Bound Together

A deferrable function that has been activated by a given CPU must be executed on the same CPU.

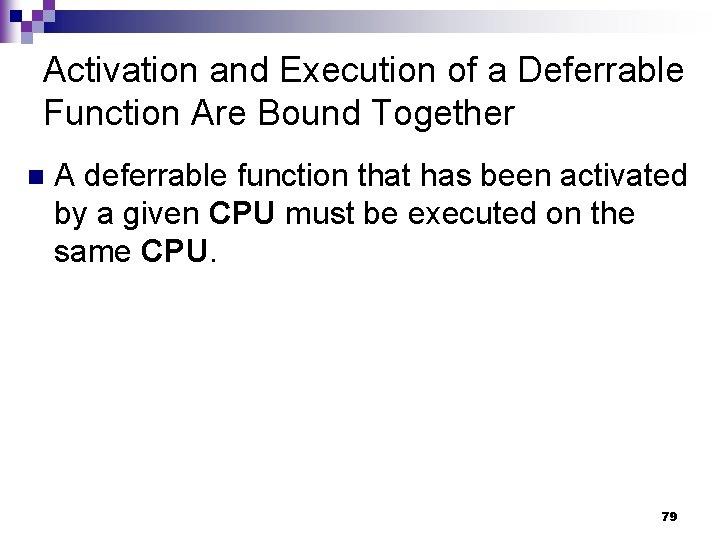

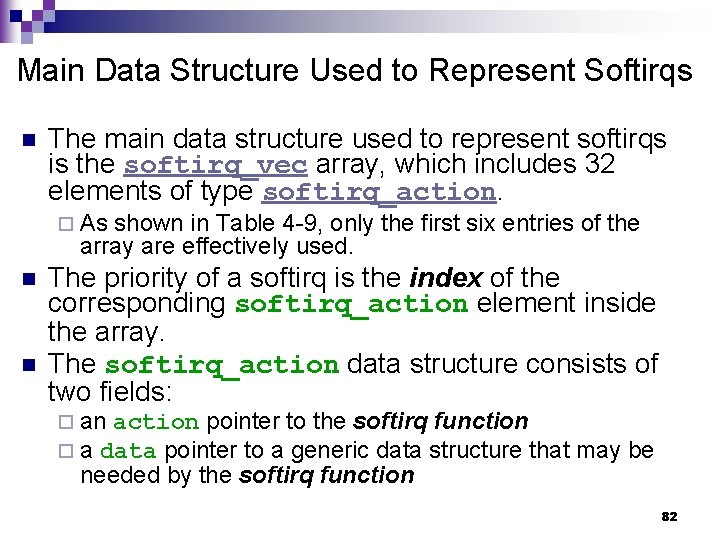

Softirqs

Linux 2.6 uses a limited number of softirqs. For most purposes, tasklets are good enough and are much easier to write because they do not need to be reentrant. As a matter of fact, only the six kinds of softirqs listed in Table 4-9 are currently defined.

Softirqs Used in Linux 2.6 (Table 4-9)

| Softirq | Index (priority) | Description |
|---|---|---|
| HI_SOFTIRQ | 0 | Handles high-priority tasklets |
| TIMER_SOFTIRQ | 1 | Tasklets related to timer interrupts |
| NET_TX_SOFTIRQ | 2 | Transmits packets to network cards |
| NET_RX_SOFTIRQ | 3 | Receives packets from network cards |
| SCSI_SOFTIRQ | 4 | Post-interrupt processing of SCSI commands |
| TASKLET_SOFTIRQ | 5 | Handles regular tasklets |

The index of a softirq determines its priority: a lower index means higher priority, because softirq functions are executed starting from index 0.

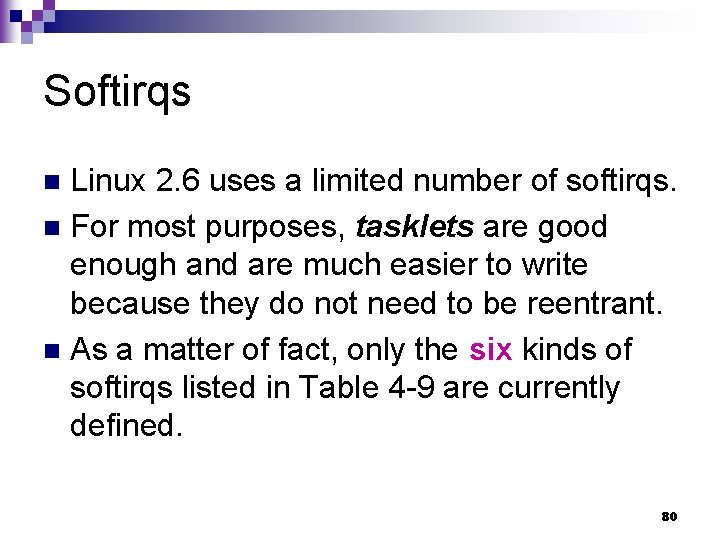

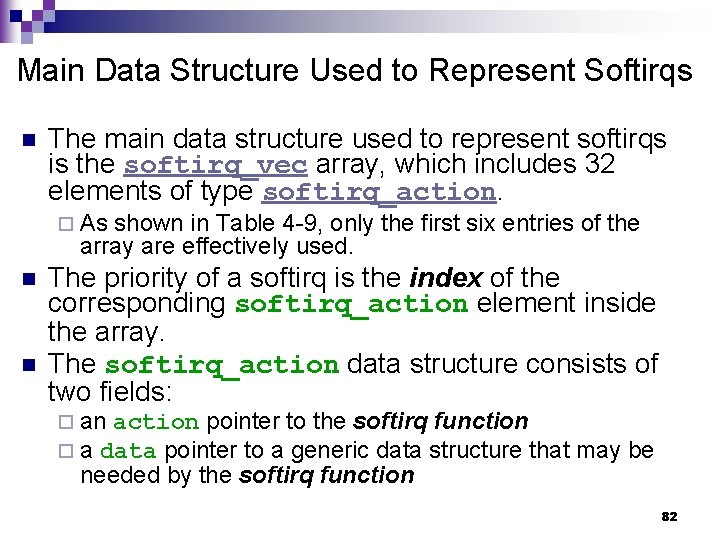

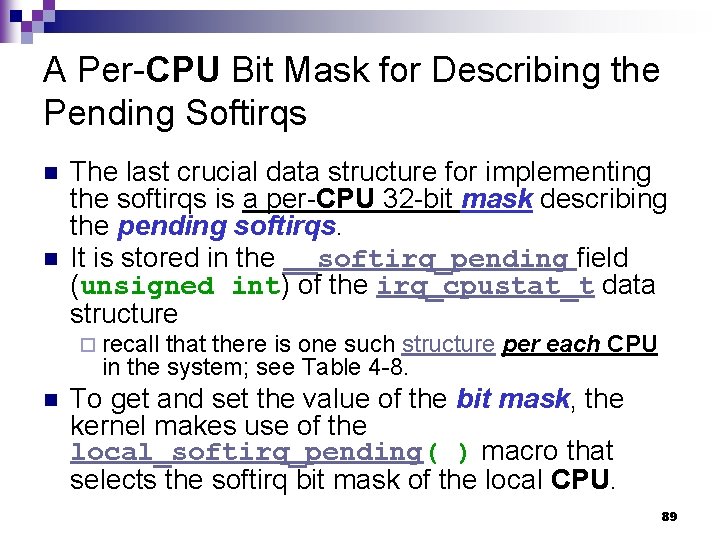

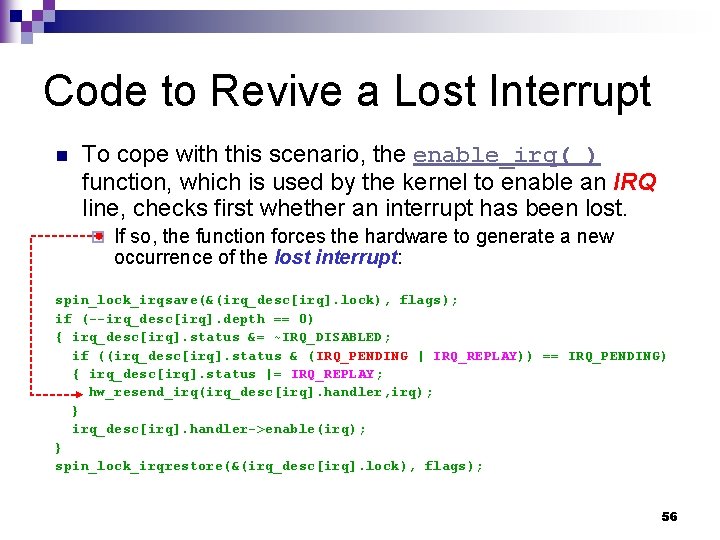

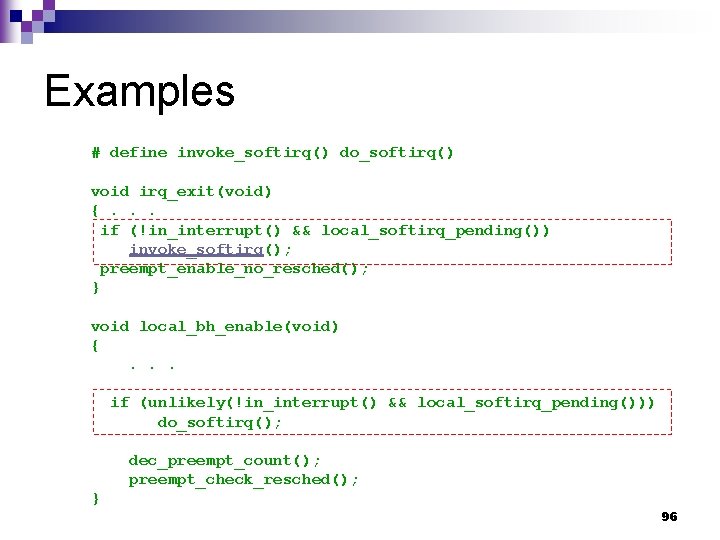

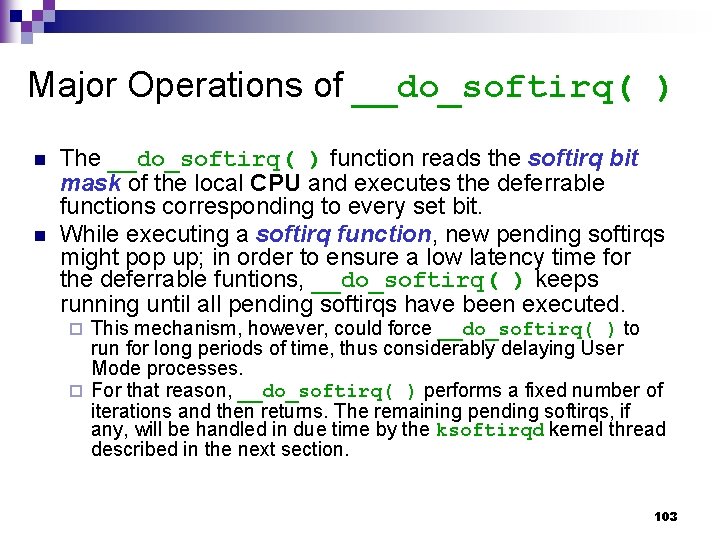

Main Data Structure Used to Represent Softirqs

The main data structure used to represent softirqs is the softirq_vec array, which includes 32 elements of type softirq_action. As shown in Table 4-9, only the first six entries of the array are effectively used. The priority of a softirq is the index of the corresponding softirq_action element inside the array. The softirq_action data structure consists of two fields:

- an action pointer to the softirq function
- a data pointer to a generic data structure that may be needed by the softirq function

Array softirq_vec and Type softirq_action

    static struct softirq_action softirq_vec[32] __cacheline_aligned_in_smp;

    struct softirq_action {
        void (*action)(struct softirq_action *);
        void *data;
    };

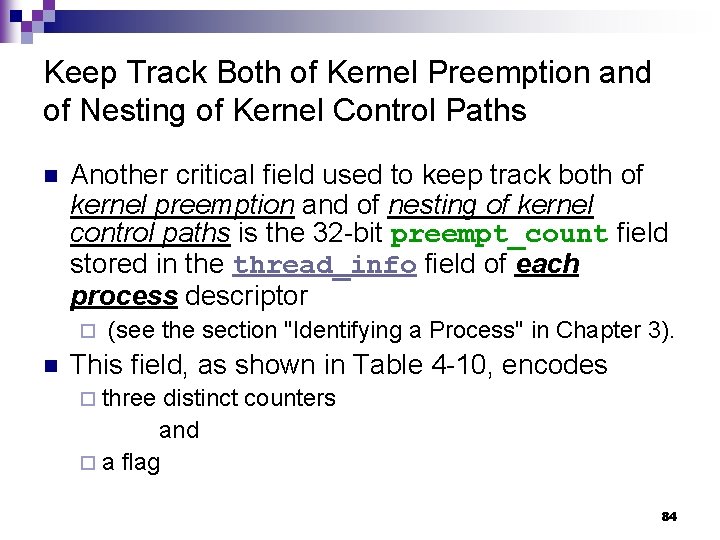

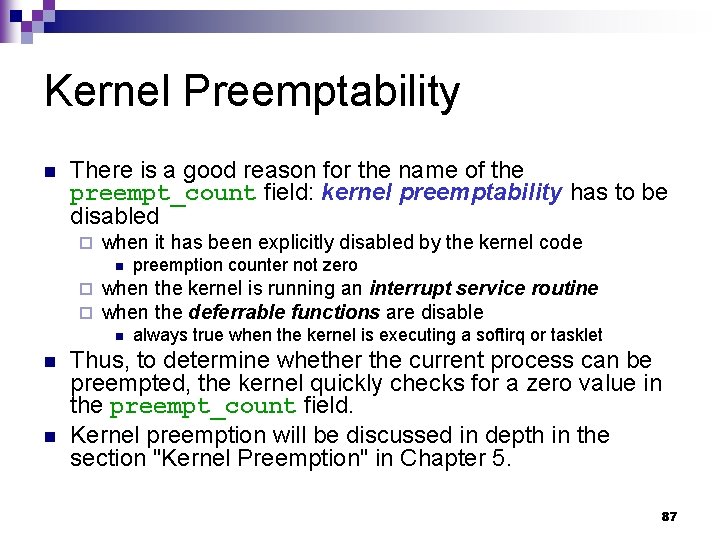

Keep Track Both of Kernel Preemption and of Nesting of Kernel Control Paths

Another critical field used to keep track both of kernel preemption and of nesting of kernel control paths is the 32-bit preempt_count field stored in the thread_info field of each process descriptor (see the section "Identifying a Process" in Chapter 3). As shown in Table 4-10, this field encodes three distinct counters and a flag.

Table 4-10. Subfields of the preempt_count Field

| Bits | Description |
|---|---|
| 0-7 | Preemption counter (max value = 255) |
| 8-15 | Softirq counter (max value = 255) |
| 16-27 | Hardirq counter (max value = 4095; increased, e.g., by irq_enter()) |
| 28 | PREEMPT_ACTIVE flag |

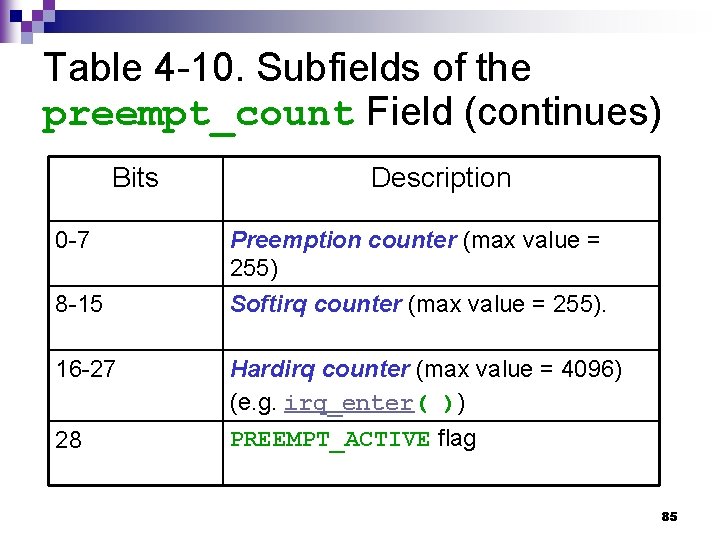

Meaning of Each Counter

- The first counter keeps track of how many times kernel preemption has been explicitly disabled on the local CPU. The value zero means that kernel preemption has not been explicitly disabled at all.
- The second counter specifies how many levels deep the disabling of deferrable functions is. Level 0 means that deferrable functions are enabled.
- The third counter specifies the number of nested interrupt handlers on the local CPU. The value is increased by irq_enter() and decreased by irq_exit(); see the section "I/O Interrupt Handling" earlier in this chapter.

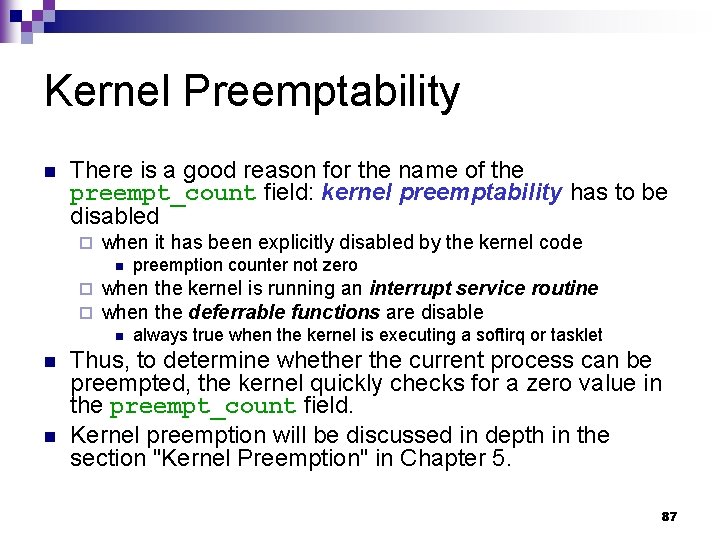

Kernel Preemptability

There is a good reason for the name of the preempt_count field: kernel preemptability has to be disabled

- when it has been explicitly disabled by the kernel code (the preemption counter is not zero);
- when the kernel is running an interrupt service routine;
- when the deferrable functions are disabled (always true when the kernel is executing a softirq or tasklet).

Thus, to determine whether the current process can be preempted, the kernel quickly checks for a zero value in the preempt_count field. Kernel preemption will be discussed in depth in the section "Kernel Preemption" in Chapter 5.

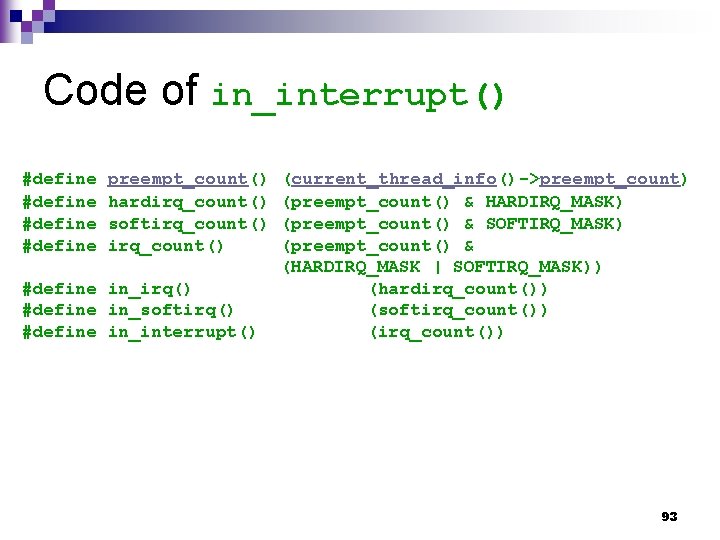

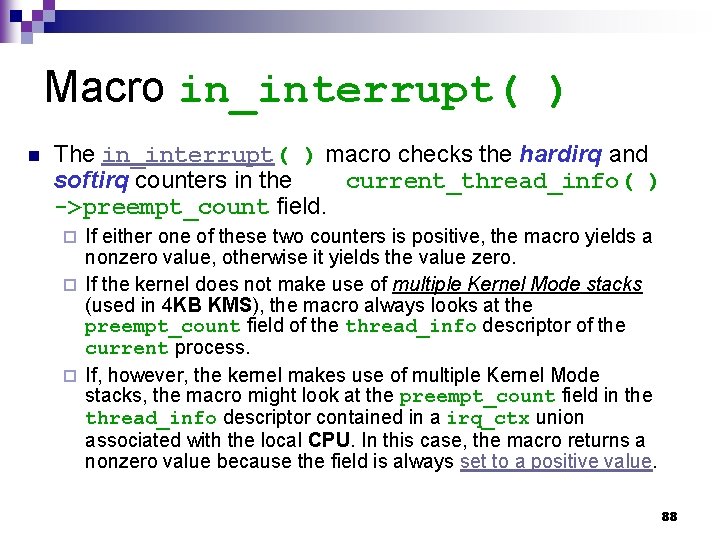

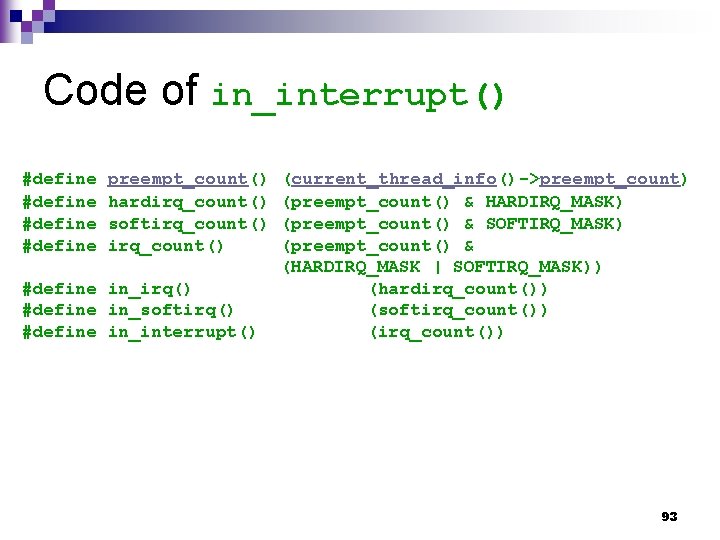

Macro in_interrupt()

The in_interrupt() macro checks the hardirq and softirq counters in the current_thread_info()->preempt_count field. If either of these two counters is positive, the macro yields a nonzero value; otherwise it yields zero.

- If the kernel does not make use of multiple Kernel Mode stacks (they are used when the thread_union is 4 KB), the macro always looks at the preempt_count field of the thread_info descriptor of the current process.
- If, however, the kernel makes use of multiple Kernel Mode stacks, the macro might look at the preempt_count field in the thread_info descriptor contained in an irq_ctx union associated with the local CPU. In this case, the macro returns a nonzero value because the field is always set to a positive value.

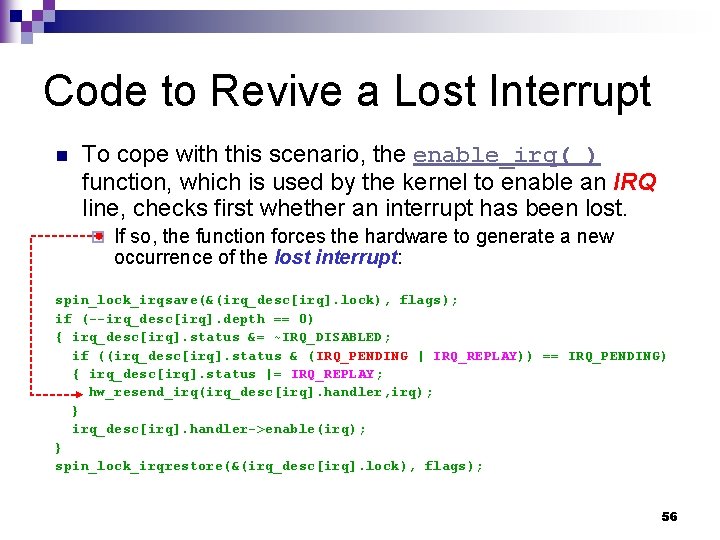

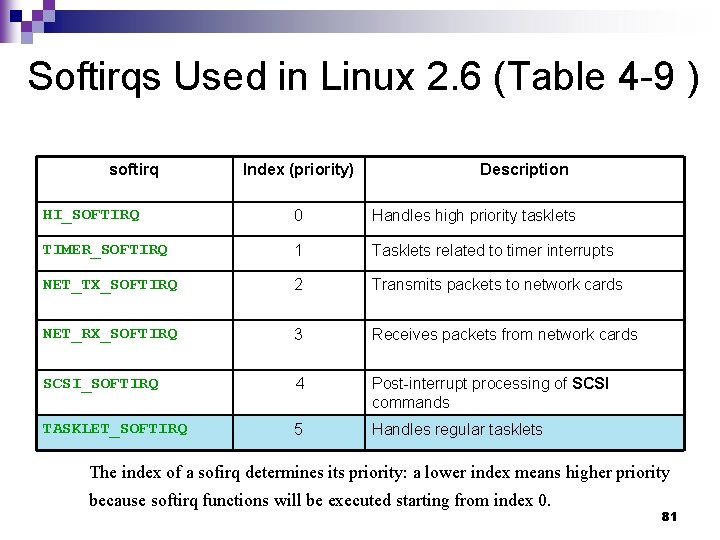

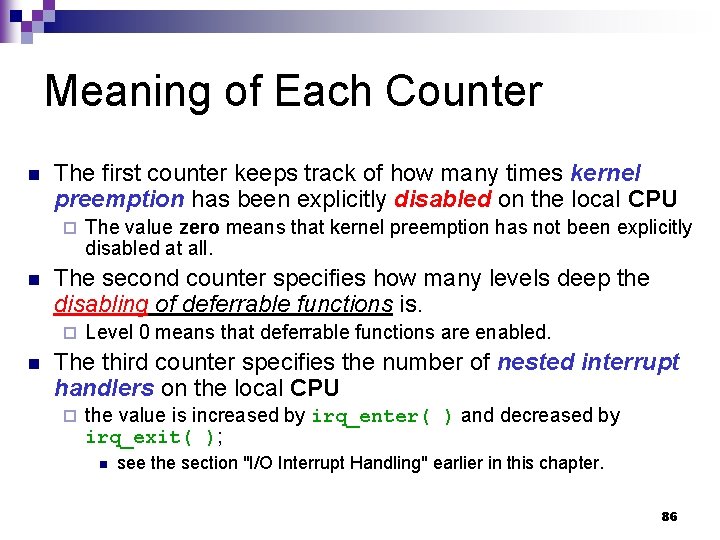

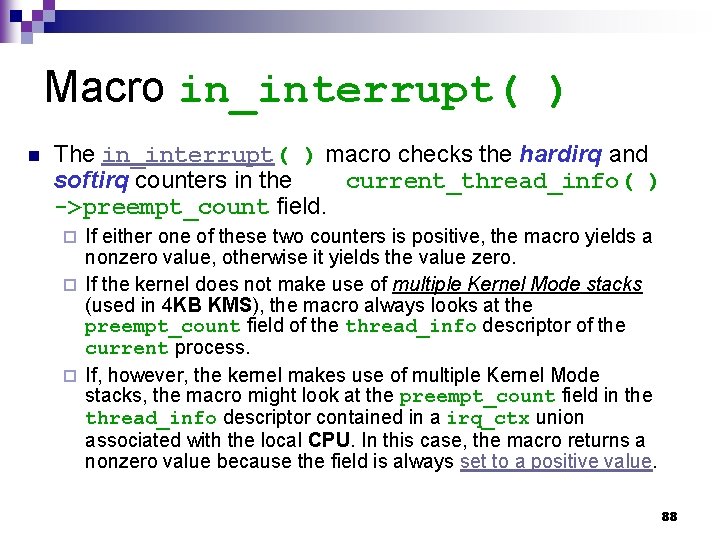

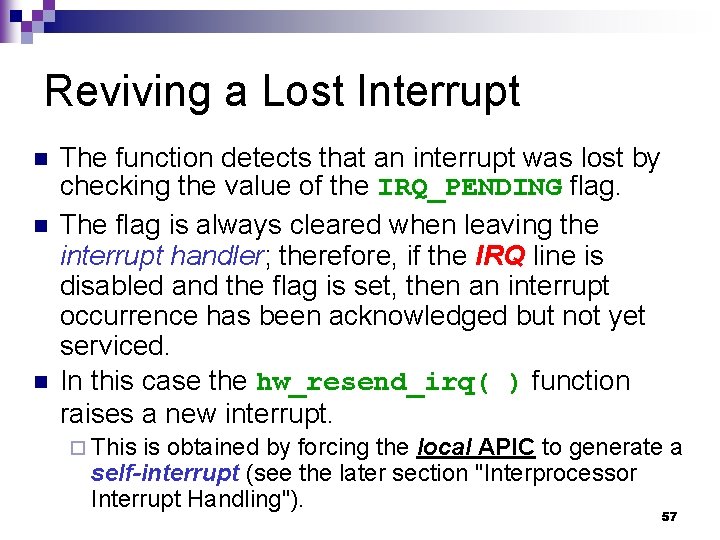

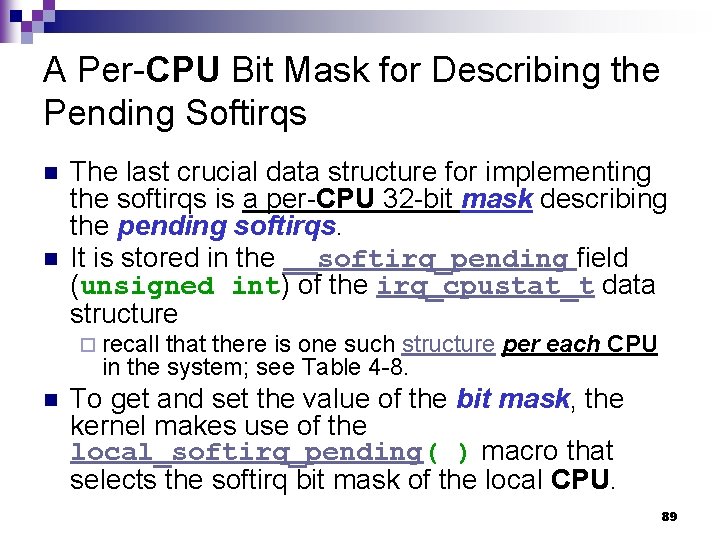

A Per-CPU Bit Mask for Describing the Pending Softirqs

The last crucial data structure for implementing the softirqs is a per-CPU 32-bit mask describing the pending softirqs. It is stored in the __softirq_pending field (unsigned int) of the irq_cpustat_t data structure; recall that there is one such structure per CPU in the system (see Table 4-8). To get and set the value of the bit mask, the kernel makes use of the local_softirq_pending() macro, which selects the softirq bit mask of the local CPU.

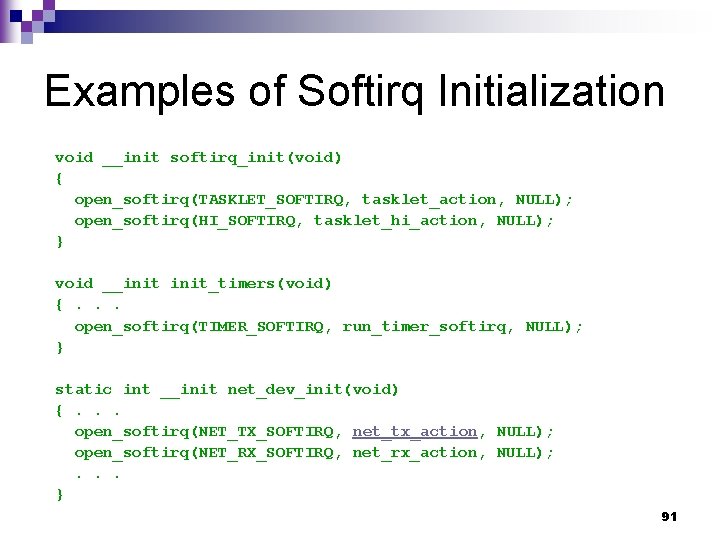

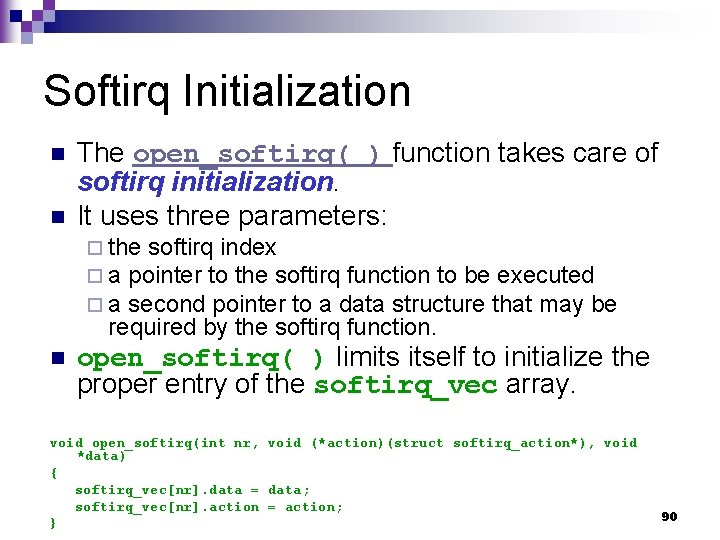

Softirq Initialization

The open_softirq() function takes care of softirq initialization. It uses three parameters:

- the softirq index
- a pointer to the softirq function to be executed
- a second pointer to a data structure that may be required by the softirq function

open_softirq() limits itself to initializing the proper entry of the softirq_vec array:

    void open_softirq(int nr, void (*action)(struct softirq_action *), void *data)
    {
        softirq_vec[nr].data = data;
        softirq_vec[nr].action = action;
    }

Examples of Softirq Initialization

    void __init softirq_init(void)
    {
        open_softirq(TASKLET_SOFTIRQ, tasklet_action, NULL);
        open_softirq(HI_SOFTIRQ, tasklet_hi_action, NULL);
    }

    void __init init_timers(void)
    {
        ...
        open_softirq(TIMER_SOFTIRQ, run_timer_softirq, NULL);
    }

    static int __init net_dev_init(void)
    {
        ...
        open_softirq(NET_TX_SOFTIRQ, net_tx_action, NULL);
        open_softirq(NET_RX_SOFTIRQ, net_rx_action, NULL);
        ...
    }

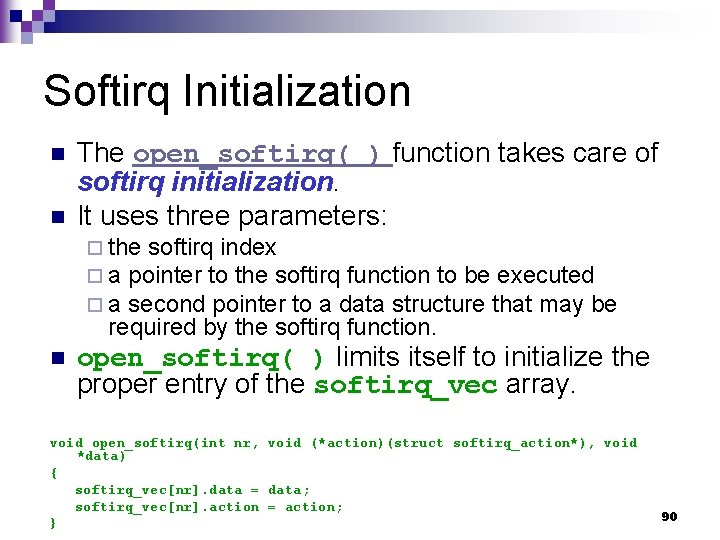

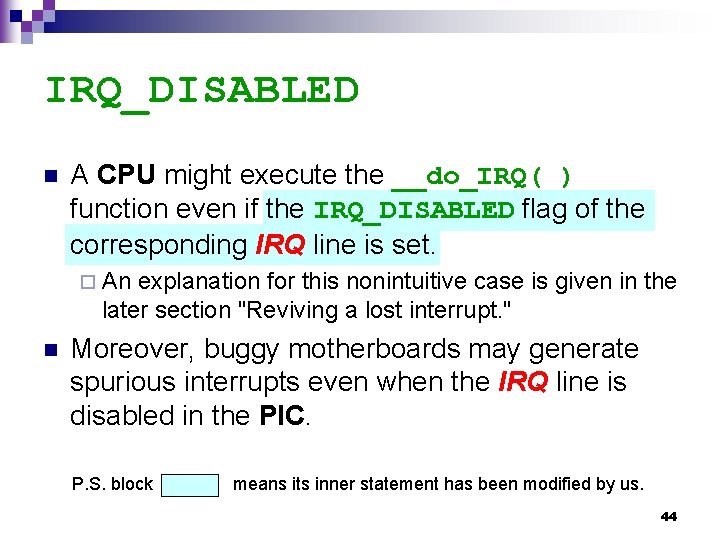

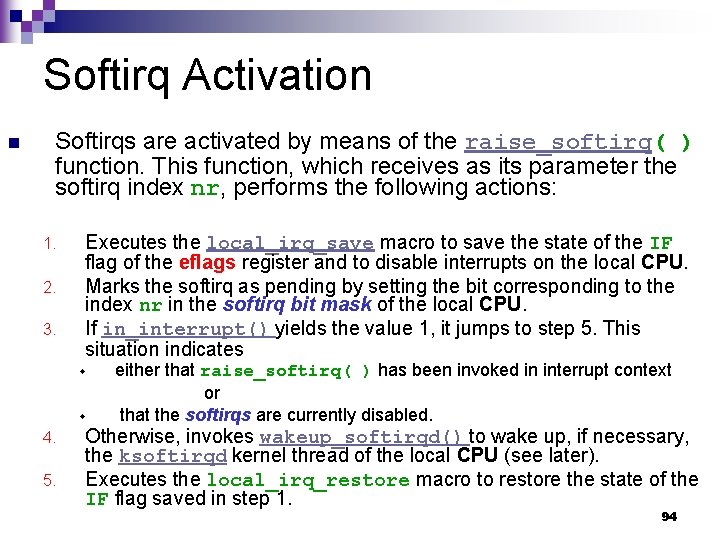

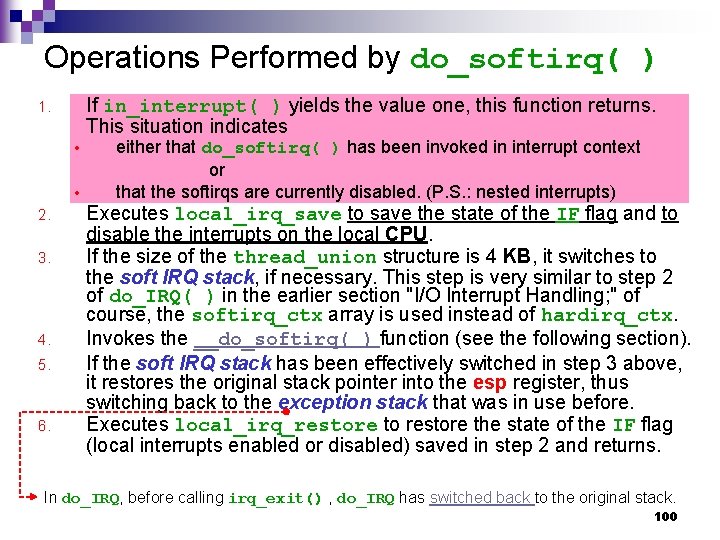

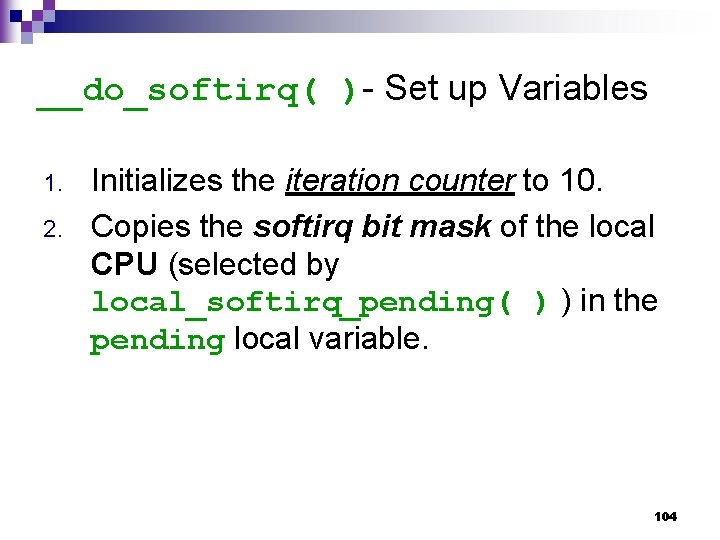

![Softirq ActivationRelated Code define IRQSTATcpu member irqstatcpu member define localsoftirqpending IRQSTATsmpprocessorid softirqpending define Softirq Activation-Related Code #define __IRQ_STAT(cpu, member) (irq_stat[cpu]. member) #define local_softirq_pending() __IRQ_STAT(smp_processor_id(), __softirq_pending) #define](https://slidetodoc.com/presentation_image_h2/d833b0ec652f6add411edc40615d0f66/image-92.jpg)

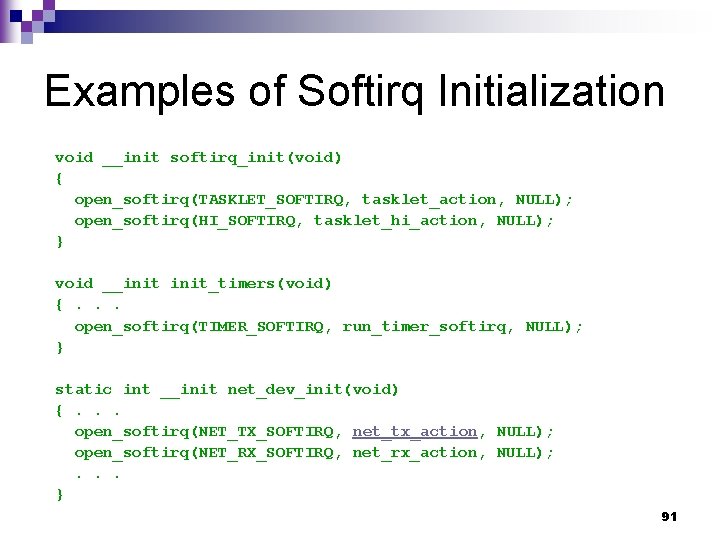

Softirq Activation-Related Code

    #define __IRQ_STAT(cpu, member) (irq_stat[cpu].member)
    #define local_softirq_pending() \
        __IRQ_STAT(smp_processor_id(), __softirq_pending)
    #define __raise_softirq_irqoff(nr) \
        do { local_softirq_pending() |= 1UL << (nr); } while (0)

    inline fastcall void raise_softirq_irqoff(unsigned int nr)
    {
        __raise_softirq_irqoff(nr);
        if (!in_interrupt())
            wakeup_softirqd();
    }

    void fastcall raise_softirq(unsigned int nr)
    {
        unsigned long flags;

        local_irq_save(flags);
        raise_softirq_irqoff(nr);
        local_irq_restore(flags);
    }

Code of in_interrupt()

    #define preempt_count() (current_thread_info()->preempt_count)
    #define hardirq_count() (preempt_count() & HARDIRQ_MASK)
    #define softirq_count() (preempt_count() & SOFTIRQ_MASK)
    #define irq_count()     (preempt_count() & (HARDIRQ_MASK | SOFTIRQ_MASK))
    #define in_irq()        (hardirq_count())
    #define in_softirq()    (softirq_count())
    #define in_interrupt()  (irq_count())

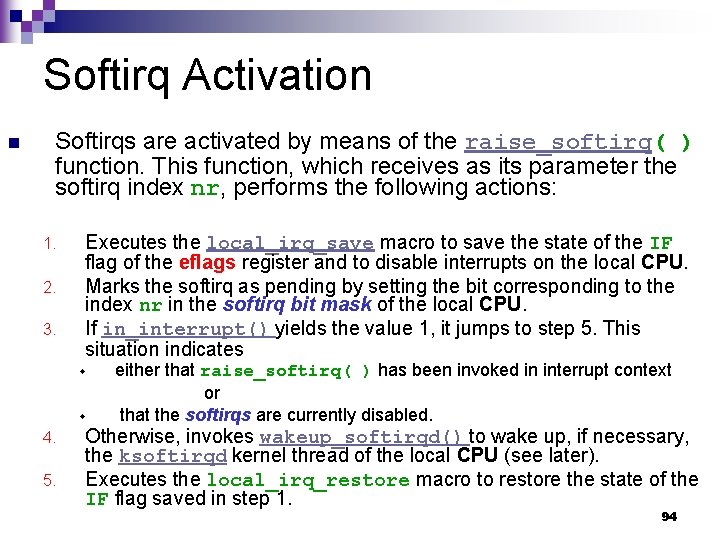

Softirq Activation

Softirqs are activated by means of the raise_softirq() function. This function, which receives as its parameter the softirq index nr, performs the following actions:

1. Executes the local_irq_save macro to save the state of the IF flag of the eflags register and to disable interrupts on the local CPU.
2. Marks the softirq as pending by setting the bit corresponding to the index nr in the softirq bit mask of the local CPU.
3. If in_interrupt() yields a nonzero value, it jumps to step 5. This situation indicates either that raise_softirq() has been invoked in interrupt context or that the softirqs are currently disabled.
4. Otherwise, invokes wakeup_softirqd() to wake up, if necessary, the ksoftirqd kernel thread of the local CPU (see later).
5. Executes the local_irq_restore macro to restore the state of the IF flag saved in step 1.

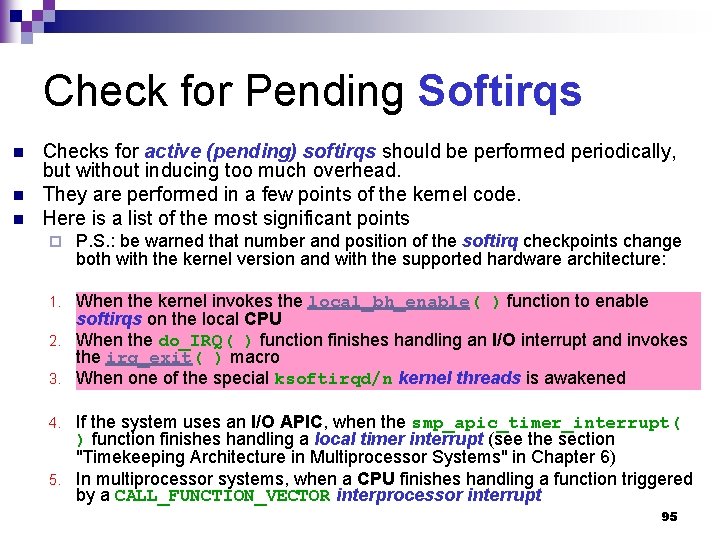

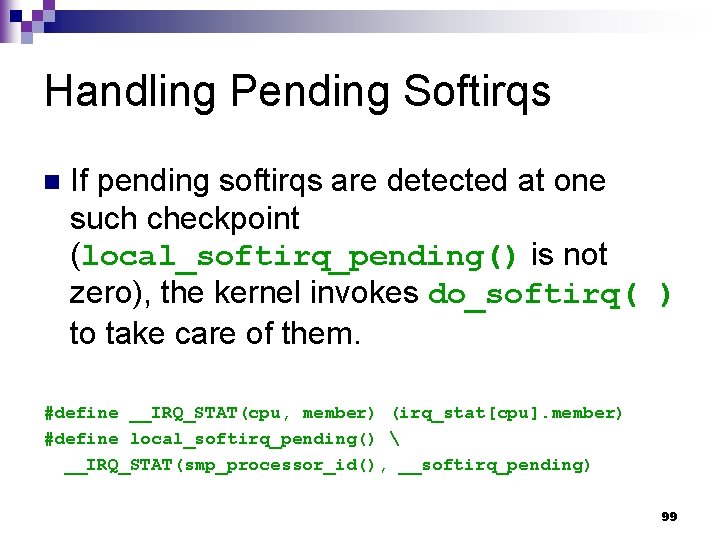

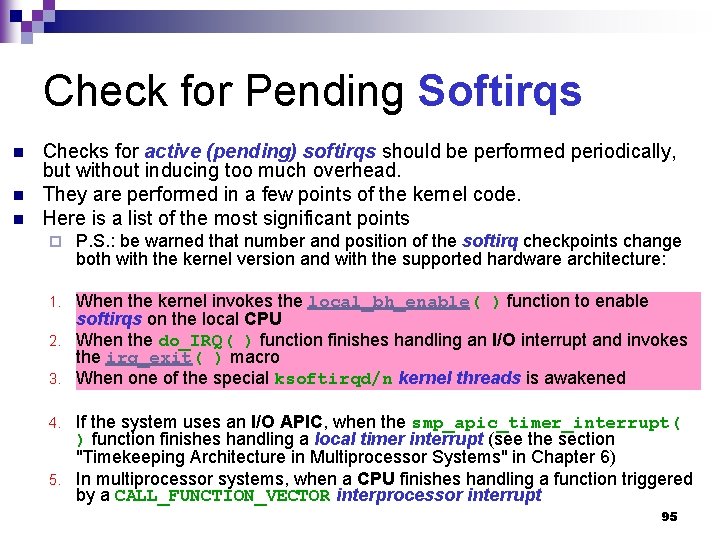

Check for Pending Softirqs

Checks for active (pending) softirqs should be performed periodically, but without inducing too much overhead. They are performed in a few points of the kernel code. Be warned that the number and position of the softirq checkpoints change both with the kernel version and with the supported hardware architecture. Here is a list of the most significant points:

1. When the kernel invokes the local_bh_enable() function to enable softirqs on the local CPU
2. When the do_IRQ() function finishes handling an I/O interrupt and invokes the irq_exit() macro
3. When one of the special ksoftirqd/n kernel threads is awakened
4. If the system uses an I/O APIC, when the smp_apic_timer_interrupt() function finishes handling a local timer interrupt (see the section "Timekeeping Architecture in Multiprocessor Systems" in Chapter 6)
5. In multiprocessor systems, when a CPU finishes handling a function triggered by a CALL_FUNCTION_VECTOR interprocessor interrupt

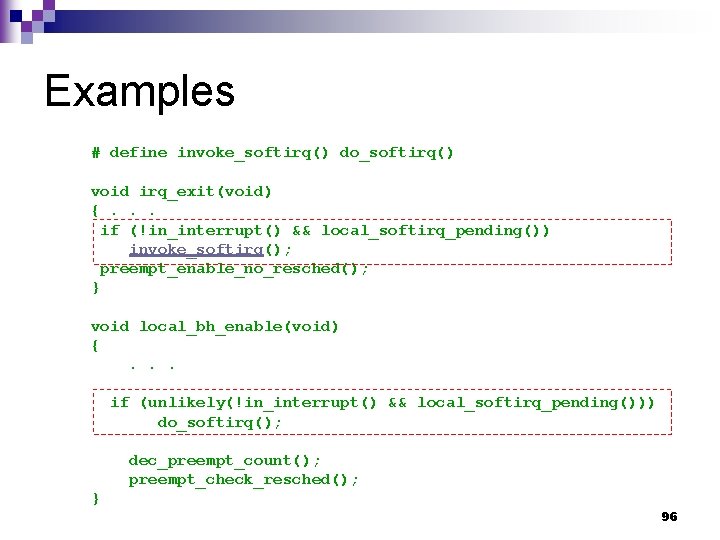

Examples

    #define invoke_softirq() do_softirq()

    void irq_exit(void)
    {
        ...
        if (!in_interrupt() && local_softirq_pending())
            invoke_softirq();
        preempt_enable_no_resched();
    }

    void local_bh_enable(void)
    {
        ...
        if (unlikely(!in_interrupt() && local_softirq_pending()))
            do_softirq();
        dec_preempt_count();
        preempt_check_resched();
    }

do_softirq()

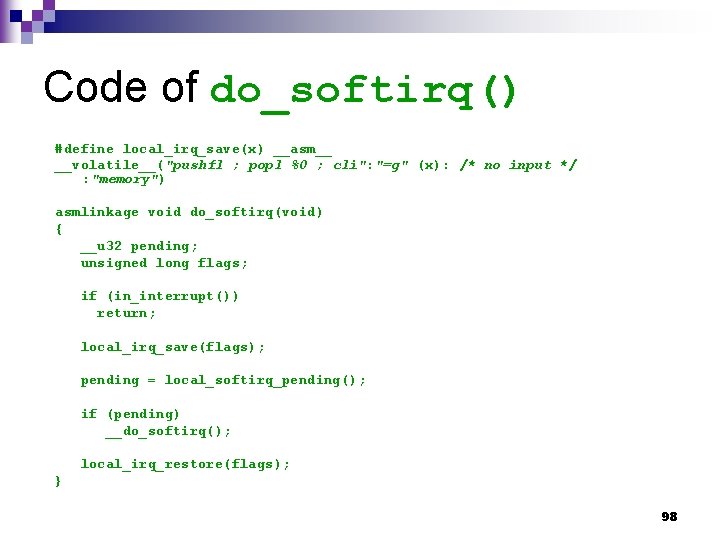

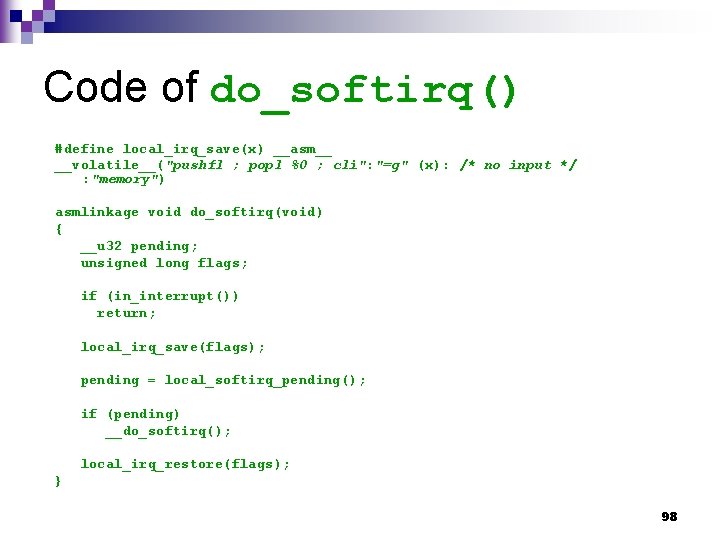

Code of do_softirq()

    #define local_irq_save(x) \
        __asm__ __volatile__("pushfl ; popl %0 ; cli" : "=g" (x) : /* no input */ : "memory")

    asmlinkage void do_softirq(void)
    {
        __u32 pending;
        unsigned long flags;

        if (in_interrupt())
            return;
        local_irq_save(flags);
        pending = local_softirq_pending();
        if (pending)
            __do_softirq();
        local_irq_restore(flags);
    }

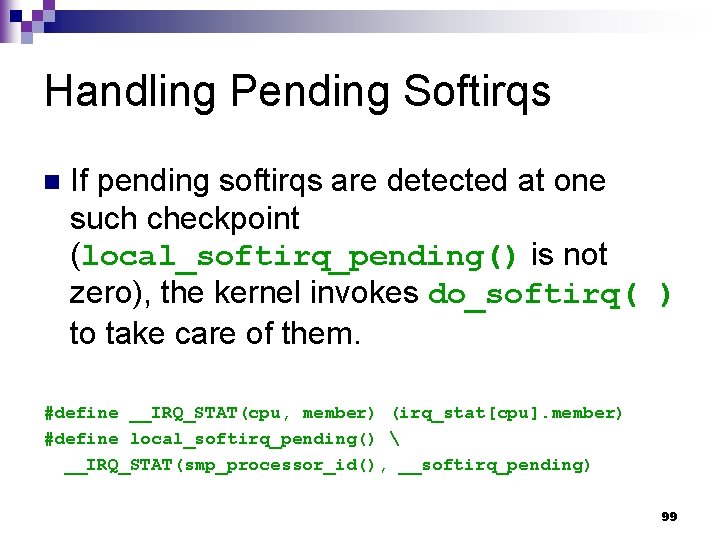

Handling Pending Softirqs

If pending softirqs are detected at one such checkpoint (local_softirq_pending() is not zero), the kernel invokes do_softirq() to take care of them.

    #define __IRQ_STAT(cpu, member) (irq_stat[cpu].member)
    #define local_softirq_pending() \
        __IRQ_STAT(smp_processor_id(), __softirq_pending)

Operations Performed by do_softirq()

1. If in_interrupt() yields a nonzero value, this function returns. This situation indicates either that do_softirq() has been invoked in interrupt context (for instance, from a nested interrupt) or that the softirqs are currently disabled.
2. Executes local_irq_save to save the state of the IF flag and to disable the interrupts on the local CPU.
3. If the size of the thread_union structure is 4 KB, it switches to the soft IRQ stack, if necessary. This step is very similar to step 2 of do_IRQ() in the earlier section "I/O Interrupt Handling"; of course, the softirq_ctx array is used instead of hardirq_ctx.
4. Invokes the __do_softirq() function (see the following section).
5. If the soft IRQ stack has been effectively switched in step 3 above, it restores the original stack pointer into the esp register, thus switching back to the exception stack that was in use before.
6. Executes local_irq_restore to restore the state of the IF flag (local interrupts enabled or disabled) saved in step 2 and returns.

Note that do_IRQ() has already switched back to the original stack before it calls irq_exit().

__do_softirq()

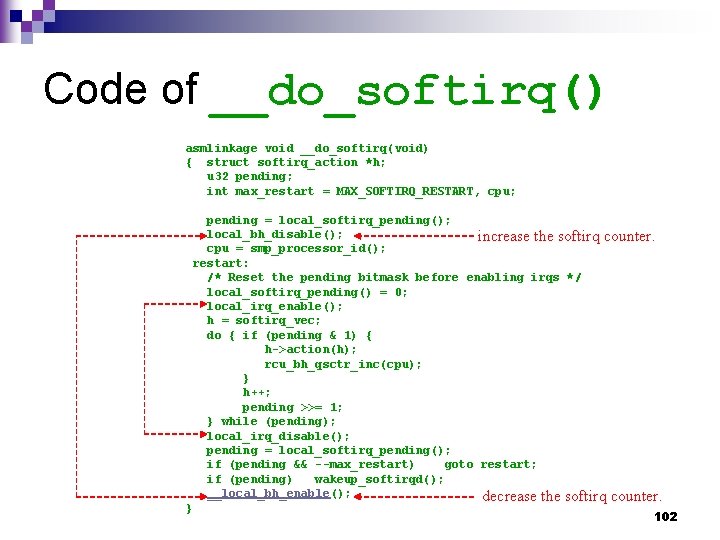

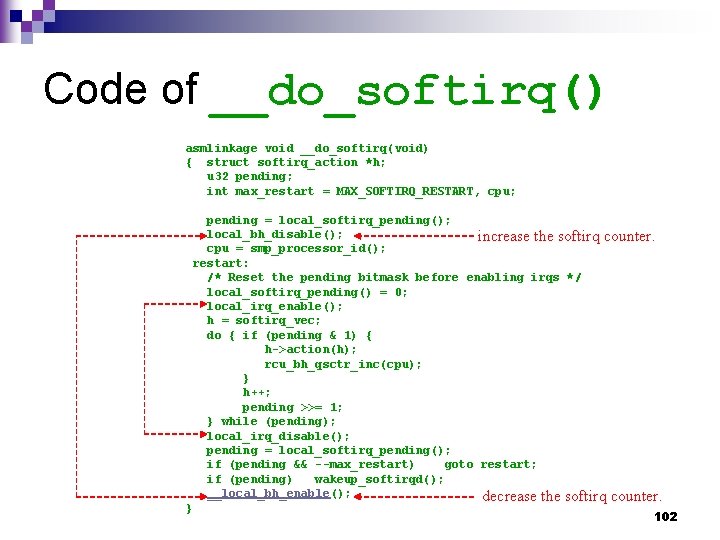

Code of __do_softirq()

    asmlinkage void __do_softirq(void)
    {
        struct softirq_action *h;
        u32 pending;
        int max_restart = MAX_SOFTIRQ_RESTART;
        int cpu;

        pending = local_softirq_pending();
        local_bh_disable();              /* increase the softirq counter */
        cpu = smp_processor_id();
    restart:
        /* Reset the pending bitmask before enabling irqs */
        local_softirq_pending() = 0;
        local_irq_enable();
        h = softirq_vec;
        do {
            if (pending & 1) {
                h->action(h);
                rcu_bh_qsctr_inc(cpu);
            }
            h++;
            pending >>= 1;
        } while (pending);
        local_irq_disable();
        pending = local_softirq_pending();
        if (pending && --max_restart)
            goto restart;
        if (pending)
            wakeup_softirqd();
        __local_bh_enable();             /* decrease the softirq counter */
    }

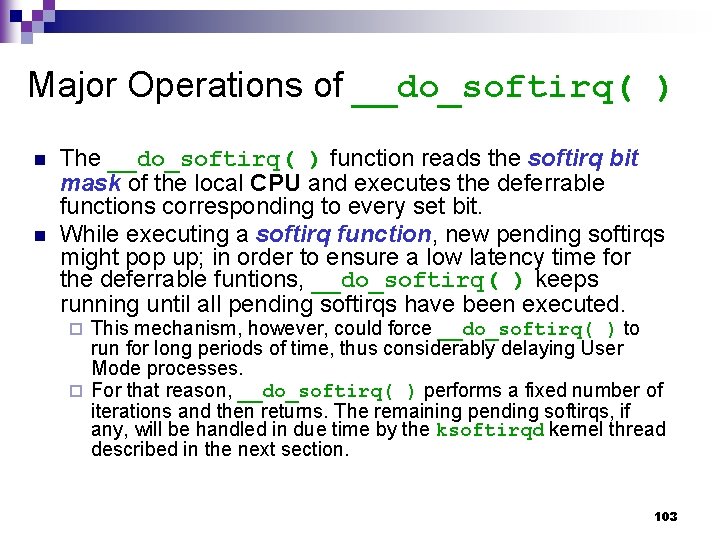

Major Operations of __do_softirq()

The __do_softirq() function reads the softirq bit mask of the local CPU and executes the deferrable functions corresponding to every set bit. While executing a softirq function, new pending softirqs might pop up; in order to ensure a low latency time for the deferrable functions, __do_softirq() keeps running until all pending softirqs have been executed. This mechanism, however, could force __do_softirq() to run for long periods of time, thus considerably delaying User Mode processes. For that reason, __do_softirq() performs a fixed number of iterations and then returns. The remaining pending softirqs, if any, will be handled in due time by the ksoftirqd kernel thread described in the next section.

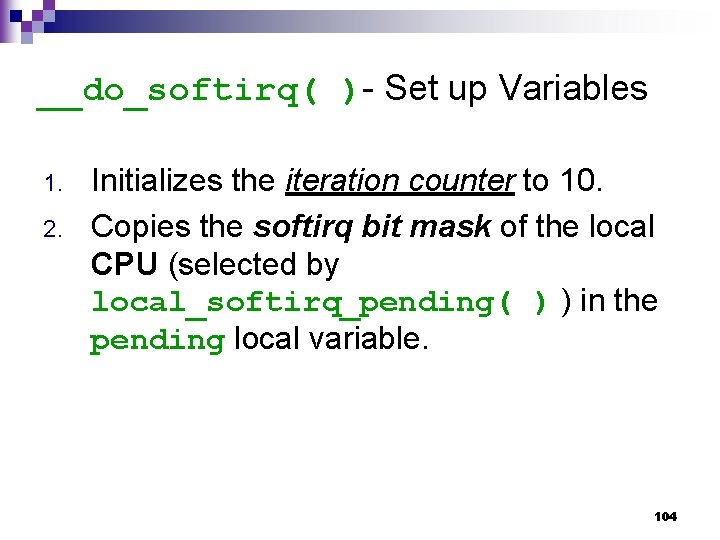

__do_softirq(): Set Up Variables

1. Initializes the iteration counter to 10.
2. Copies the softirq bit mask of the local CPU (selected by local_softirq_pending()) into the pending local variable.

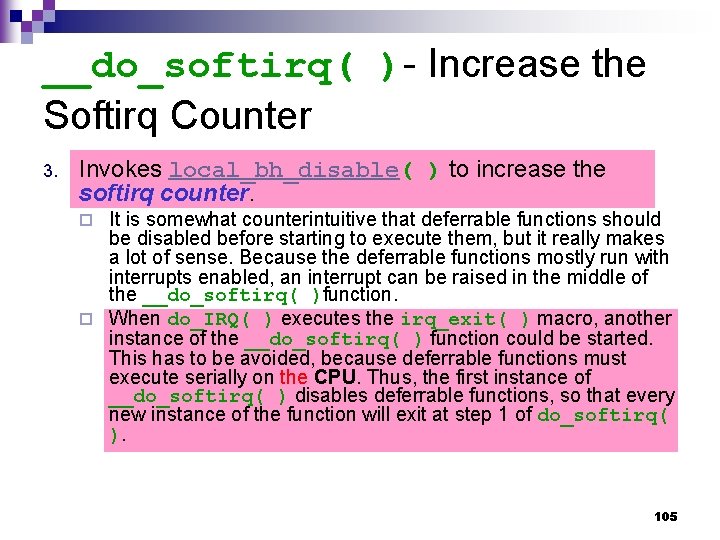

__do_softirq(): Increase the Softirq Counter

3. Invokes local_bh_disable() to increase the softirq counter.

It is somewhat counterintuitive that deferrable functions should be disabled before starting to execute them, but it really makes a lot of sense. Because the deferrable functions mostly run with interrupts enabled, an interrupt can be raised in the middle of the __do_softirq() function. When do_IRQ() executes the irq_exit() macro, another instance of the __do_softirq() function could be started. This has to be avoided, because deferrable functions must execute serially on the CPU. Thus, the first instance of __do_softirq() disables deferrable functions, so that every new instance of the function will exit at step 1 of do_softirq().

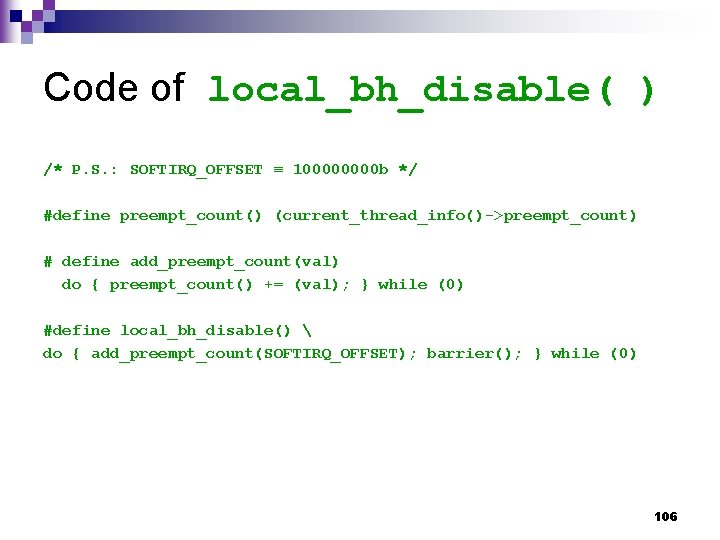

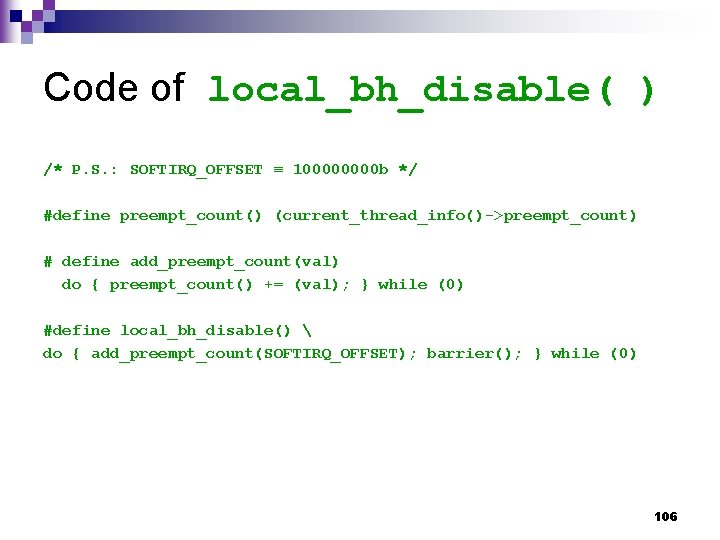

Code of local_bh_disable()

    /* Note: SOFTIRQ_OFFSET = 1 << 8 = 0x100, one unit of the softirq counter (bits 8-15) */
    #define preempt_count() (current_thread_info()->preempt_count)
    #define add_preempt_count(val) do { preempt_count() += (val); } while (0)
    #define local_bh_disable() \
        do { add_preempt_count(SOFTIRQ_OFFSET); barrier(); } while (0)

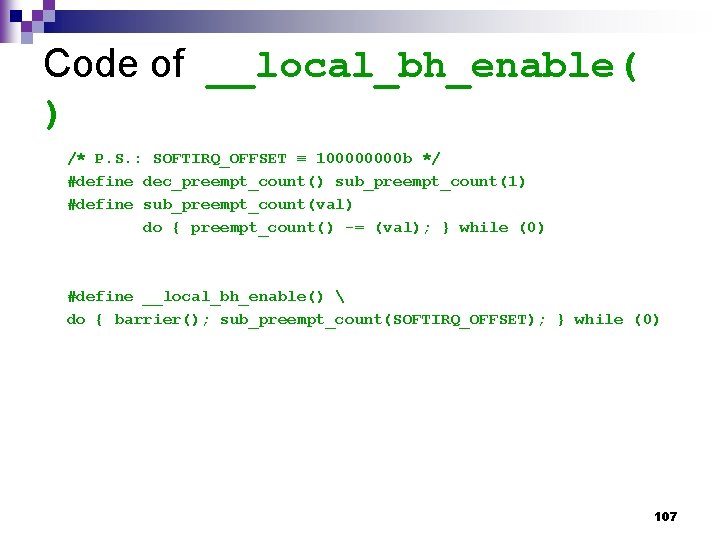

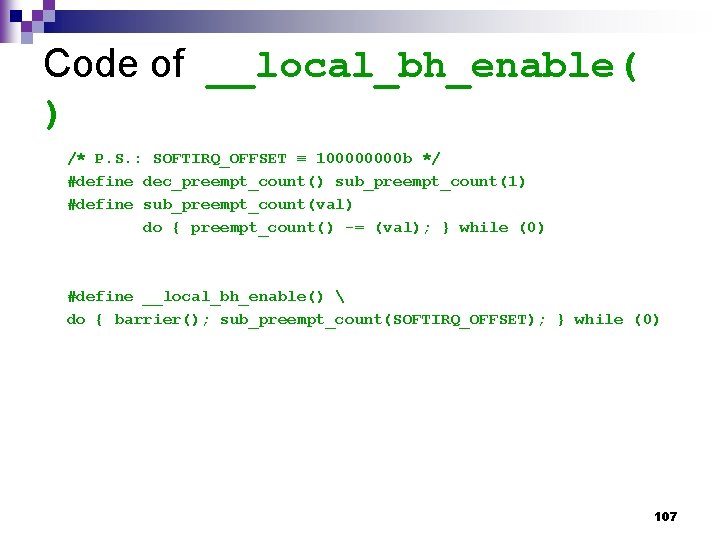

Code of __local_bh_enable()

    /* Note: SOFTIRQ_OFFSET = 1 << 8 = 0x100, one unit of the softirq counter (bits 8-15) */
    #define dec_preempt_count() sub_preempt_count(1)
    #define sub_preempt_count(val) do { preempt_count() -= (val); } while (0)
    #define __local_bh_enable() \
        do { barrier(); sub_preempt_count(SOFTIRQ_OFFSET); } while (0)

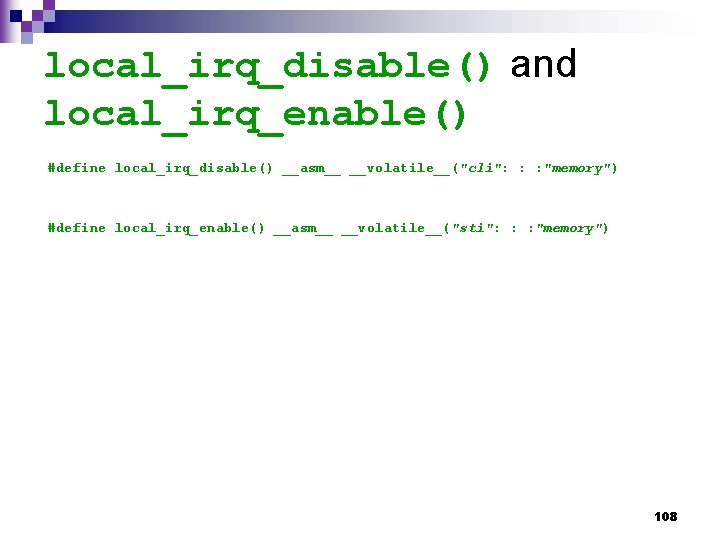

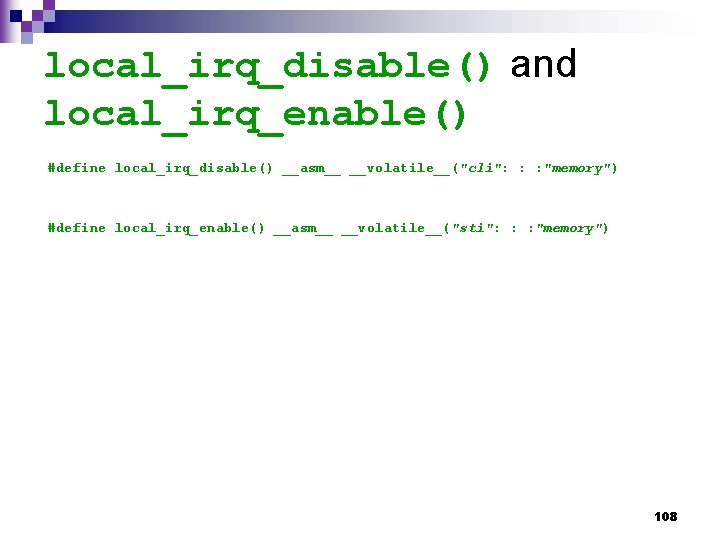

local_irq_disable() and local_irq_enable()

    #define local_irq_disable() __asm__ __volatile__("cli" : : : "memory")
    #define local_irq_enable()  __asm__ __volatile__("sti" : : : "memory")

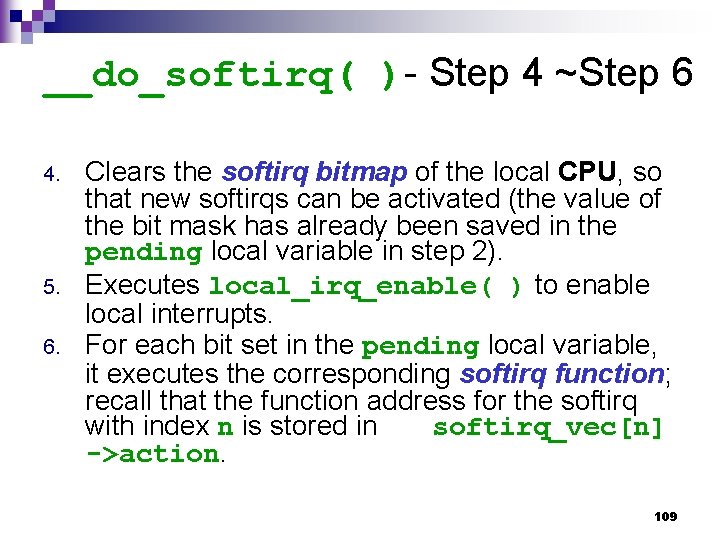

__do_softirq(): Step 4 to Step 6

4. Clears the softirq bitmap of the local CPU, so that new softirqs can be activated (the value of the bit mask has already been saved in the pending local variable in step 2).
5. Executes local_irq_enable() to enable local interrupts.
6. For each bit set in the pending local variable, it executes the corresponding softirq function; recall that the function address for the softirq with index n is stored in softirq_vec[n].action.

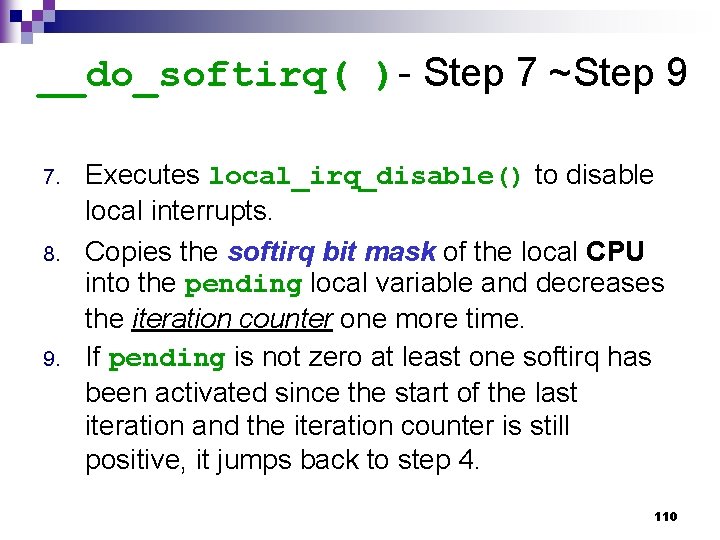

__do_softirq(): Step 7 to Step 9

7. Executes local_irq_disable() to disable local interrupts.
8. Copies the softirq bit mask of the local CPU into the pending local variable and decreases the iteration counter one more time.
9. If pending is not zero (at least one softirq has been activated since the start of the last iteration) and the iteration counter is still positive, it jumps back to step 4.

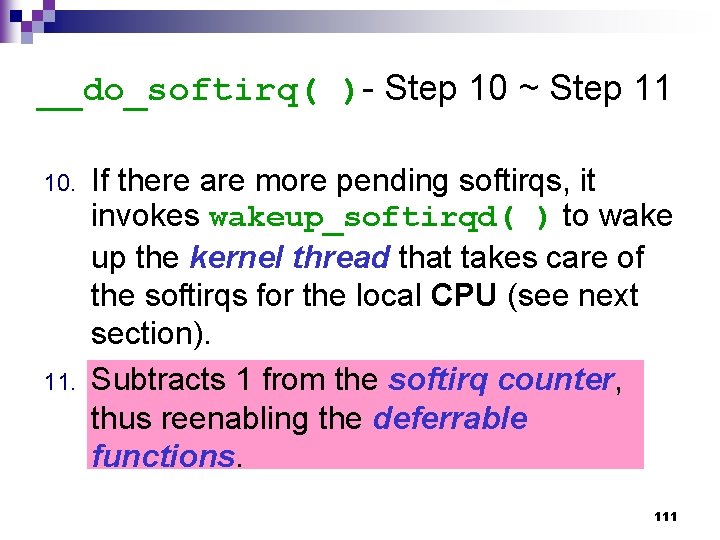

__do_softirq(): Step 10 to Step 11

10. If there are more pending softirqs, it invokes wakeup_softirqd() to wake up the kernel thread that takes care of the softirqs for the local CPU (see next section).
11. Subtracts 1 from the softirq counter, thus reenabling the deferrable functions.

ksoftirqd

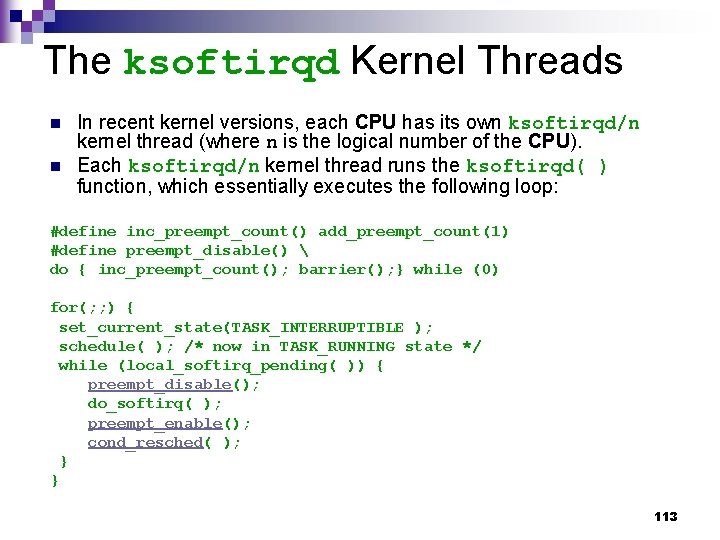

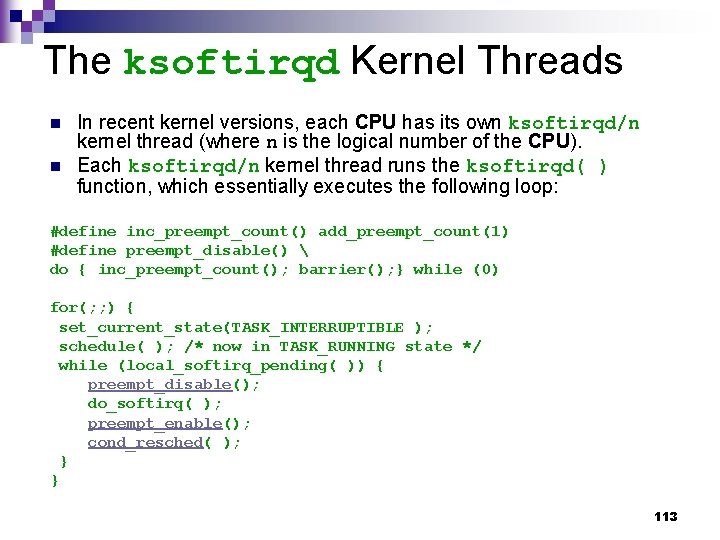

The ksoftirqd Kernel Threads

In recent kernel versions, each CPU has its own ksoftirqd/n kernel thread (where n is the logical number of the CPU). Each ksoftirqd/n kernel thread runs the ksoftirqd() function, which essentially executes the following loop:

    #define inc_preempt_count() add_preempt_count(1)
    #define preempt_disable() do { inc_preempt_count(); barrier(); } while (0)

    for (;;) {
        set_current_state(TASK_INTERRUPTIBLE);
        schedule();
        /* now in TASK_RUNNING state */
        while (local_softirq_pending()) {
            preempt_disable();
            do_softirq();
            preempt_enable();
            cond_resched();
        }
    }

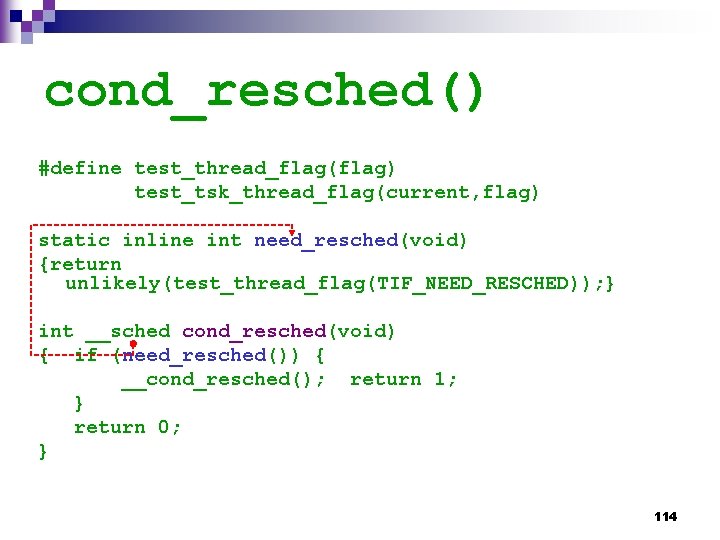

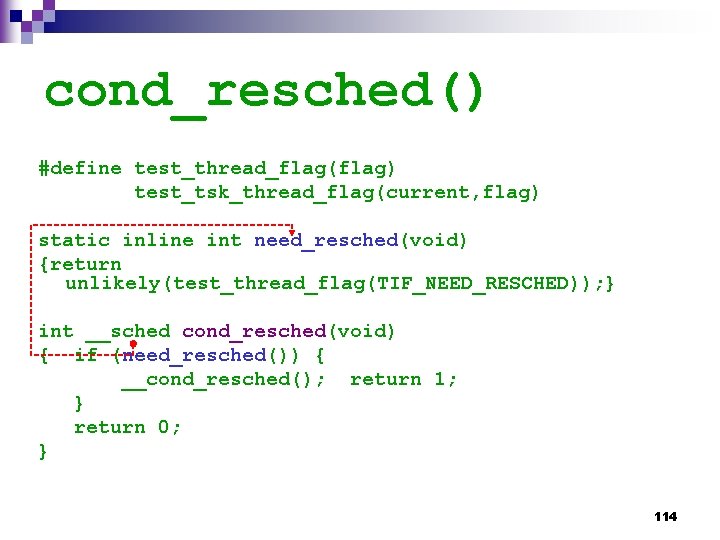

cond_resched()

    #define test_thread_flag(flag) test_tsk_thread_flag(current, flag)

    static inline int need_resched(void)
    {
        return unlikely(test_thread_flag(TIF_NEED_RESCHED));
    }

    int __sched cond_resched(void)
    {
        if (need_resched()) {
            __cond_resched();
            return 1;
        }
        return 0;
    }

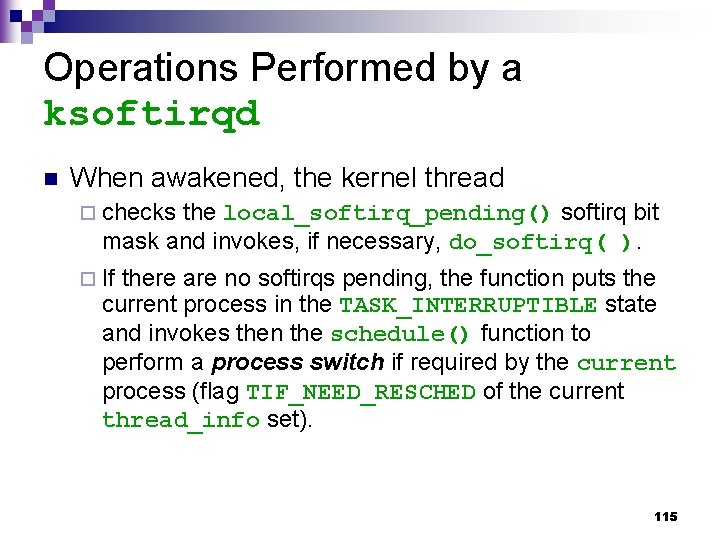

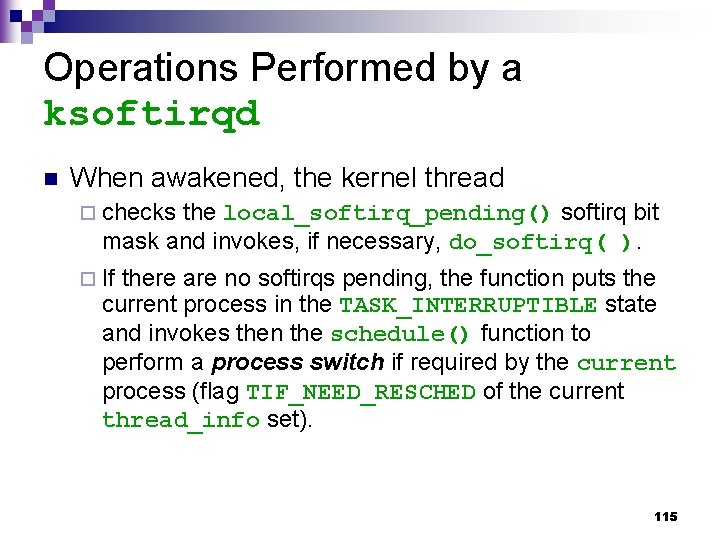

Operations Performed by a ksoftirqd

When awakened, the kernel thread checks the local_softirq_pending() softirq bit mask and invokes, if necessary, do_softirq(). Inside the loop, cond_resched() performs a process switch if the current process requires it (flag TIF_NEED_RESCHED of the current thread_info set). If there are no softirqs pending, the function puts the current process in the TASK_INTERRUPTIBLE state and invokes schedule(), so the thread sleeps until it is awakened again.

Tasklets

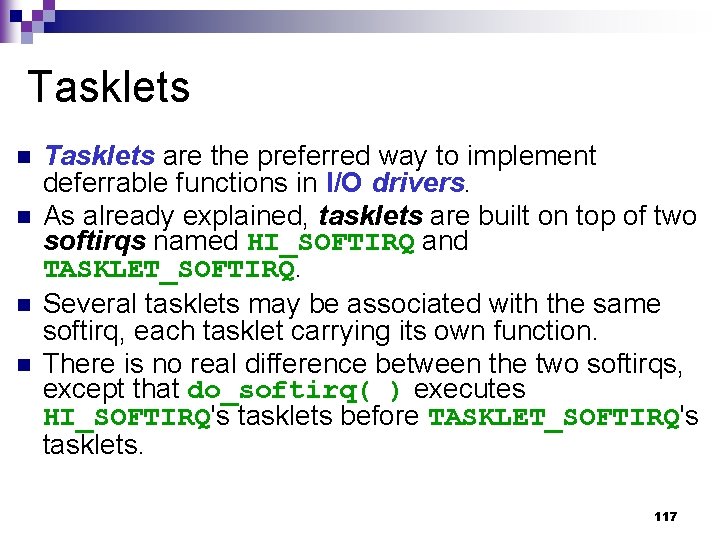

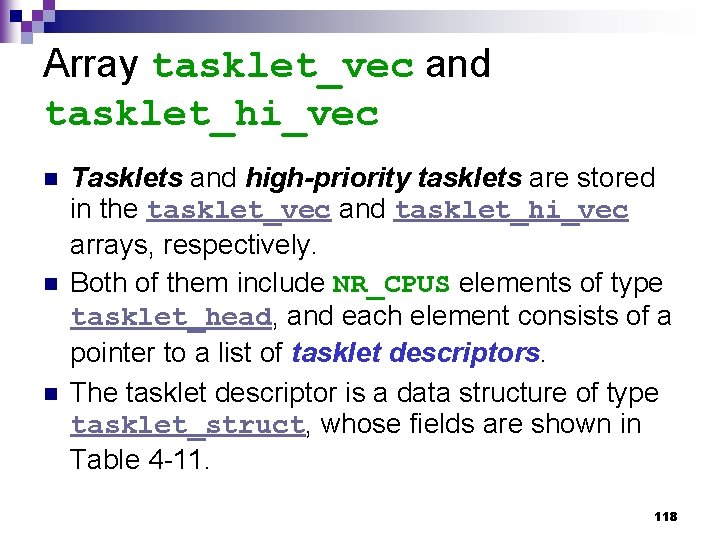

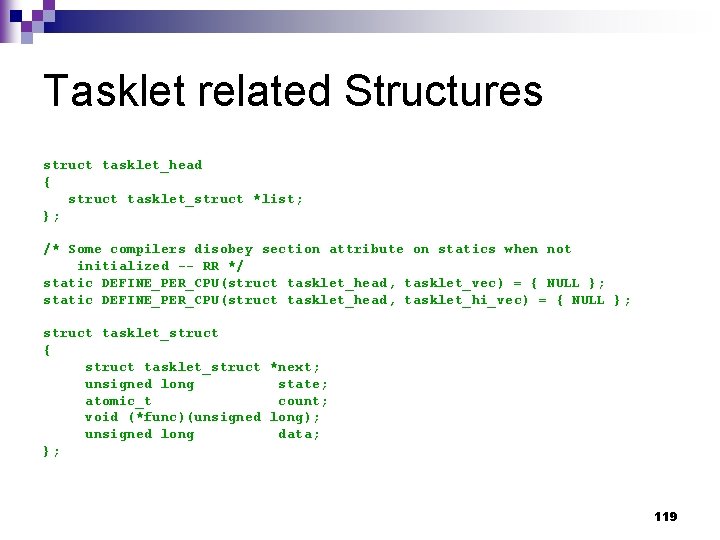

Tasklets

Tasklets are the preferred way to implement deferrable functions in I/O drivers. As already explained, tasklets are built on top of two softirqs named HI_SOFTIRQ and TASKLET_SOFTIRQ. Several tasklets may be associated with the same softirq, each tasklet carrying its own function. There is no real difference between the two softirqs, except that do_softirq() executes HI_SOFTIRQ's tasklets before TASKLET_SOFTIRQ's tasklets.

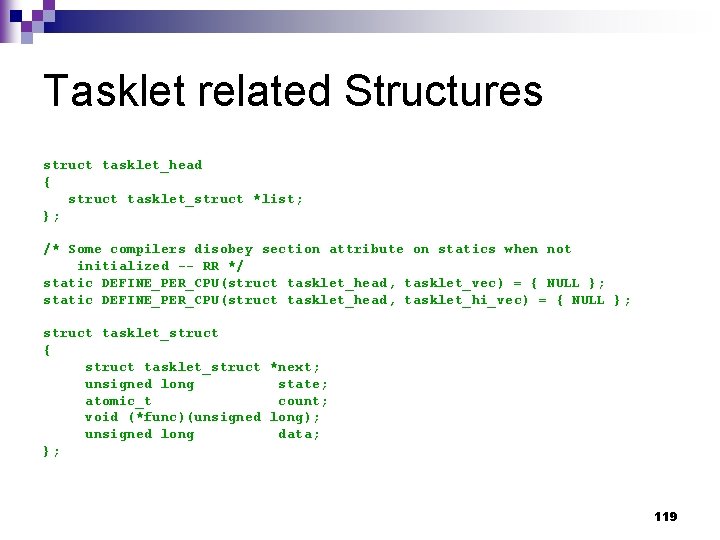

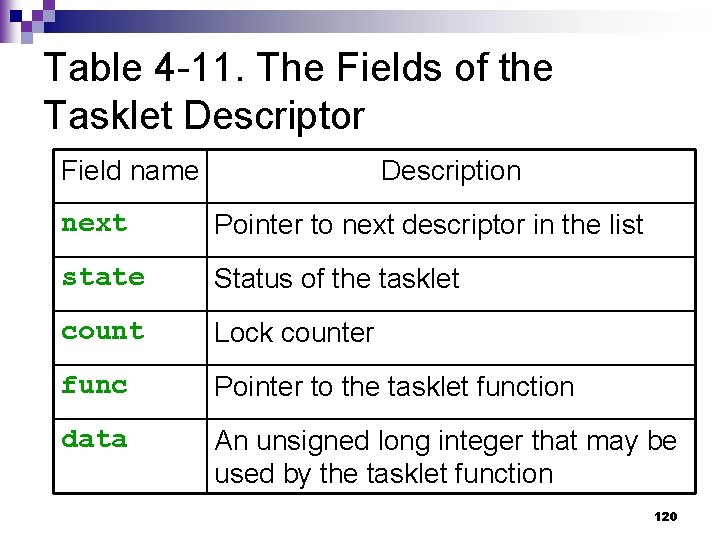

Array tasklet_vec and tasklet_hi_vec

Tasklets and high-priority tasklets are stored in the tasklet_vec and tasklet_hi_vec arrays, respectively. Both of them include NR_CPUS elements of type tasklet_head, and each element consists of a pointer to a list of tasklet descriptors. The tasklet descriptor is a data structure of type tasklet_struct, whose fields are shown in Table 4-11.

Tasklet-Related Structures

    struct tasklet_head {
        struct tasklet_struct *list;
    };

    /* Some compilers disobey section attribute on statics when not
       initialized -- RR */
    static DEFINE_PER_CPU(struct tasklet_head, tasklet_vec) = { NULL };
    static DEFINE_PER_CPU(struct tasklet_head, tasklet_hi_vec) = { NULL };

    struct tasklet_struct {
        struct tasklet_struct *next;
        unsigned long state;
        atomic_t count;
        void (*func)(unsigned long);
        unsigned long data;
    };

Table 4-11. The Fields of the Tasklet Descriptor

| Field name | Description |
|---|---|
| next | Pointer to next descriptor in the list |
| state | Status of the tasklet |
| count | Lock counter |
| func | Pointer to the tasklet function |
| data | An unsigned long integer that may be used by the tasklet function |

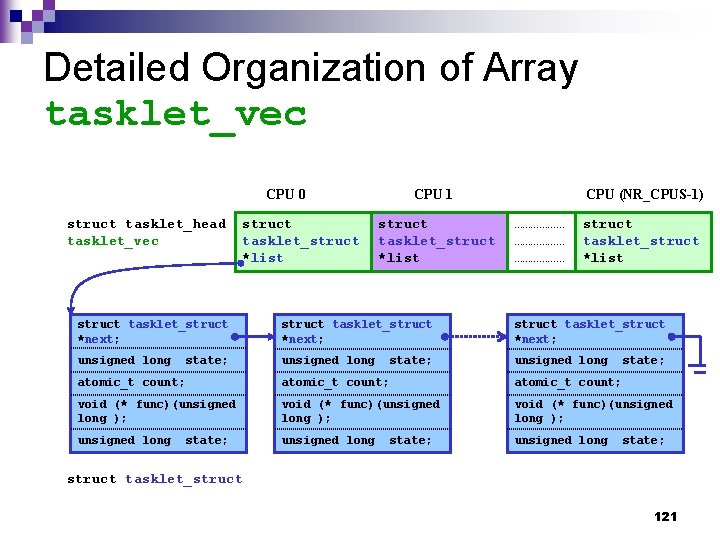

Detailed Organization of Array tasklet_vec

[Figure: tasklet_vec holds one struct tasklet_head per CPU (CPU 0 to CPU NR_CPUS-1); the list field of each head points to a chain of struct tasklet_struct descriptors (next, state, count, func, data) linked through next.]

Flags of the state Field

- The state field of the tasklet descriptor includes two flags:
  - TASKLET_STATE_SCHED
    - When set, this indicates that the tasklet is pending (has been scheduled for execution).
    - It also means that the tasklet descriptor is inserted in one of the lists of the tasklet_vec and tasklet_hi_vec arrays.
  - TASKLET_STATE_RUN
    - When set, this indicates that the tasklet is being executed; on a uniprocessor system this flag is not used because there is no need to check whether a specific tasklet is running.
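Both flags are bits of the same unsigned long and are changed with atomic bit operations. The sketch below models that in userspace with C11 atomics: test_and_set_bit_model() and clear_bit_model() are hypothetical stand-ins for the kernel's test_and_set_bit() and clear_bit(), and the trylock/unlock pair imitates how the RUN bit works as a per-tasklet lock. The bit numbers 0 and 1 match the 2.6 enum order, but treat them as an assumption.

```c
#include <assert.h>
#include <stdatomic.h>

/* Bit numbers of the two state flags (2.6 enum order, assumed here). */
enum { TASKLET_STATE_SCHED = 0, TASKLET_STATE_RUN = 1 };

/* Returns the old value of bit nr and sets it, atomically. */
static int test_and_set_bit_model(int nr, atomic_ulong *addr)
{
    unsigned long mask = 1UL << nr;
    return (atomic_fetch_or(addr, mask) & mask) != 0;
}

/* Clears bit nr atomically. */
static void clear_bit_model(int nr, atomic_ulong *addr)
{
    atomic_fetch_and(addr, ~(1UL << nr));
}

/* Succeeds only if RUN was clear, i.e. nobody else is running the tasklet. */
static int tasklet_trylock_model(atomic_ulong *state)
{
    return !test_and_set_bit_model(TASKLET_STATE_RUN, state);
}

static void tasklet_unlock_model(atomic_ulong *state)
{
    clear_bit_model(TASKLET_STATE_RUN, state);
}
```

Setting SCHED twice reports "already set" the second time, which is exactly how a repeated schedule request is filtered out.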

How to Use a Tasklet in a Device Driver

- Suppose you are writing a device driver and want to use a tasklet. What has to be done?
  - First, allocate a new tasklet_struct data structure.
  - Second, initialize it by invoking tasklet_init(); this function receives as its parameters:
    - the address of the tasklet descriptor
    - the address of your tasklet function
    - its optional integer argument
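Initialization essentially clears the descriptor and records the function and its argument. The userspace sketch below mirrors the fields that, as we read the 2.6 source, tasklet_init() sets; tasklet_model, tasklet_init_model(), struct my_device and my_tasklet_fn() are hypothetical names, and a plain int replaces atomic_t.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace model of the tasklet descriptor (fields as in Table 4-11). */
struct tasklet_model {
    struct tasklet_model *next;
    unsigned long state;
    int count;                        /* plain int instead of atomic_t */
    void (*func)(unsigned long);
    unsigned long data;
};

/* Clear link, state and counter, then store function and argument. */
static void tasklet_init_model(struct tasklet_model *t,
                               void (*func)(unsigned long),
                               unsigned long data)
{
    t->next = NULL;
    t->state = 0;
    t->count = 0;                     /* 0 means the tasklet is enabled */
    t->func = func;
    t->data = data;
}

/* Hypothetical driver side: per-device data reached through 'data'. */
struct my_device {
    int irqs_handled;
};

static void my_tasklet_fn(unsigned long data)
{
    struct my_device *dev = (struct my_device *)data;
    dev->irqs_handled++;
}
```

Passing a pointer to per-device data through the unsigned long argument is the usual idiom of that era; it relies on unsigned long being wide enough to hold a pointer, which holds on Linux platforms.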

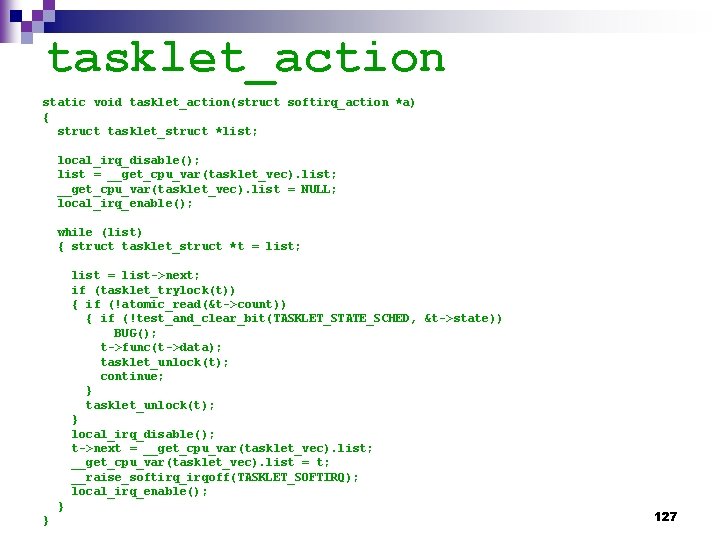

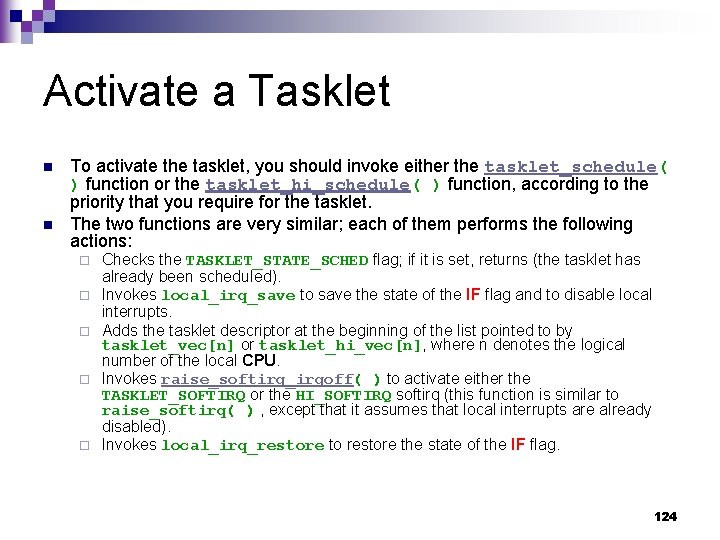

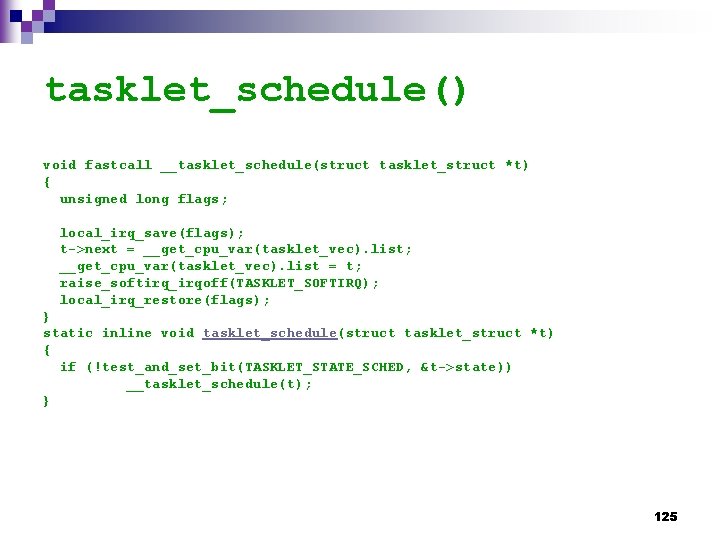

Activate a Tasklet

- To activate the tasklet, invoke either tasklet_schedule() or tasklet_hi_schedule(), according to the priority that you require for the tasklet.
- The two functions are very similar; each of them performs the following actions:
  - Atomically tests and sets the TASKLET_STATE_SCHED flag; if it was already set, returns (the tasklet has already been scheduled).
  - Invokes local_irq_save to save the state of the IF flag and to disable local interrupts.
  - Adds the tasklet descriptor at the beginning of the list pointed to by tasklet_vec[n] or tasklet_hi_vec[n], where n denotes the logical number of the local CPU.
  - Invokes raise_softirq_irqoff() to activate either the TASKLET_SOFTIRQ or the HI_SOFTIRQ softirq (this function is similar to raise_softirq(), except that it assumes that local interrupts are already disabled).
  - Invokes local_irq_restore to restore the state of the IF flag.

tasklet_schedule()

```c
void fastcall __tasklet_schedule(struct tasklet_struct *t)
{
	unsigned long flags;

	local_irq_save(flags);                      /* save IF, disable local interrupts */
	t->next = __get_cpu_var(tasklet_vec).list;  /* insert at the list head */
	__get_cpu_var(tasklet_vec).list = t;
	raise_softirq_irqoff(TASKLET_SOFTIRQ);
	local_irq_restore(flags);                   /* restore the saved IF flag */
}

static inline void tasklet_schedule(struct tasklet_struct *t)
{
	/* Queue the tasklet only if TASKLET_STATE_SCHED was not already set. */
	if (!test_and_set_bit(TASKLET_STATE_SCHED, &t->state))
		__tasklet_schedule(t);
}
```
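The effect of tasklet_schedule() on the per-CPU lists can be sketched in userspace. The model is single-threaded, so plain bit tests replace test_and_set_bit() and the local_irq_save()/local_irq_restore() pair is omitted; NR_CPUS_MODEL, tasklet_vec_model, tasklet_softirq_pending and tasklet_schedule_model() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define NR_CPUS_MODEL 4                 /* assumed CPU count for the model */
#define TASKLET_STATE_SCHED 0

struct tasklet_model {
    struct tasklet_model *next;
    unsigned long state;
};

struct tasklet_head_model {
    struct tasklet_model *list;
};

static struct tasklet_head_model tasklet_vec_model[NR_CPUS_MODEL];
static unsigned int tasklet_softirq_pending[NR_CPUS_MODEL];

static void tasklet_schedule_model(struct tasklet_model *t, int cpu)
{
    unsigned long sched = 1UL << TASKLET_STATE_SCHED;

    if (t->state & sched)
        return;                         /* already scheduled: nothing to do */
    t->state |= sched;

    /* Insert at the head of the local CPU's list. */
    t->next = tasklet_vec_model[cpu].list;
    tasklet_vec_model[cpu].list = t;
    tasklet_softirq_pending[cpu] = 1;   /* models raise_softirq_irqoff() */
}
```

Because insertion happens at the head, the most recently scheduled tasklet sits first in the list, and a second request for a tasklet that is still pending leaves the list unchanged.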

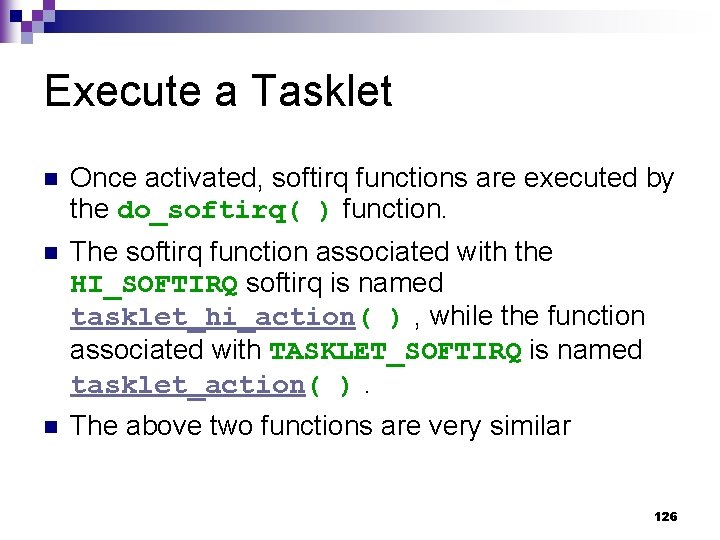

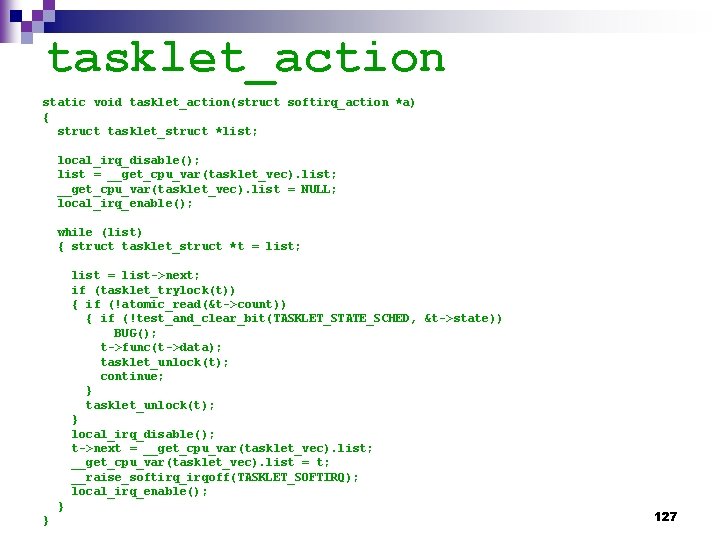

Execute a Tasklet

- Once activated, softirq functions are executed by the do_softirq() function.
- The softirq function associated with the HI_SOFTIRQ softirq is named tasklet_hi_action(), while the function associated with TASKLET_SOFTIRQ is named tasklet_action().
- The two functions are very similar.

tasklet_action

```c
static void tasklet_action(struct softirq_action *a)
{
	struct tasklet_struct *list;

	/* Detach this CPU's whole list with local interrupts disabled. */
	local_irq_disable();
	list = __get_cpu_var(tasklet_vec).list;
	__get_cpu_var(tasklet_vec).list = NULL;
	local_irq_enable();

	while (list) {
		struct tasklet_struct *t = list;

		list = list->next;

		/* Skip tasklets already running on another CPU (RUN flag set). */
		if (tasklet_trylock(t)) {
			/* count == 0 means the tasklet is enabled. */
			if (!atomic_read(&t->count)) {
				if (!test_and_clear_bit(TASKLET_STATE_SCHED, &t->state))
					BUG();
				t->func(t->data);
				tasklet_unlock(t);
				continue;
			}
			tasklet_unlock(t);
		}

		/* Busy or disabled: reinsert it and re-raise the softirq. */
		local_irq_disable();
		t->next = __get_cpu_var(tasklet_vec).list;
		__get_cpu_var(tasklet_vec).list = t;
		__raise_softirq_irqoff(TASKLET_SOFTIRQ);
		local_irq_enable();
	}
}
```
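The control flow of tasklet_action() can be exercised in a single-threaded userspace model. Plain bit operations replace the atomic ones, interrupt disabling is dropped, assert() plays the role of BUG(), and tasklet_model, tasklet_list, tasklet_softirq_raised, sample_handler() and tasklet_action_model() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define TASKLET_STATE_SCHED 0
#define TASKLET_STATE_RUN   1

struct tasklet_model {
    struct tasklet_model *next;
    unsigned long state;
    int count;                                /* nonzero means disabled */
    void (*func)(unsigned long);
    unsigned long data;
};

static struct tasklet_model *tasklet_list;    /* this CPU's tasklet_vec.list */
static int tasklet_softirq_raised;            /* models __raise_softirq_irqoff() */
static unsigned long handled_sum;             /* records sample_handler() calls */

static void sample_handler(unsigned long data) { handled_sum += data; }

static int trylock(struct tasklet_model *t)
{
    unsigned long run = 1UL << TASKLET_STATE_RUN;
    if (t->state & run)
        return 0;                             /* already running */
    t->state |= run;
    return 1;
}

static void unlock(struct tasklet_model *t)
{
    t->state &= ~(1UL << TASKLET_STATE_RUN);
}

static void tasklet_action_model(void)
{
    struct tasklet_model *list = tasklet_list;
    tasklet_list = NULL;                      /* detach the whole list */

    while (list) {
        struct tasklet_model *t = list;
        list = list->next;

        if (trylock(t)) {
            if (t->count == 0) {
                /* A queued tasklet must have SCHED set (BUG() in the kernel). */
                assert(t->state & (1UL << TASKLET_STATE_SCHED));
                t->state &= ~(1UL << TASKLET_STATE_SCHED);
                t->func(t->data);
                unlock(t);
                continue;
            }
            unlock(t);
        }
        /* Busy or disabled: put it back and re-raise the softirq. */
        t->next = tasklet_list;
        tasklet_list = t;
        tasklet_softirq_raised = 1;
    }
}
```

A disabled tasklet keeps its SCHED flag and is requeued, so it runs on a later pass once its count drops back to zero.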

Return from Interrupts and Exceptions

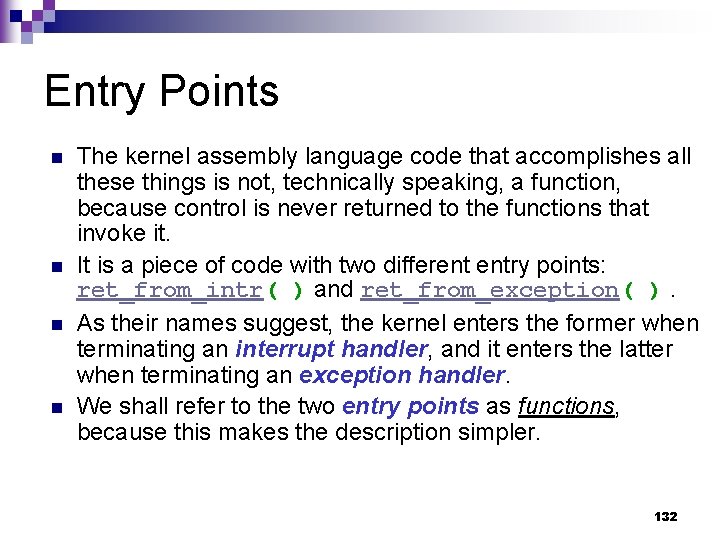

Returning from Interrupts and Exceptions

- Before execution of the interrupted program can resume, several issues must be considered:
  - Number of kernel control paths being concurrently executed
    - If there is just one, the CPU must switch back to User Mode.
  - Pending process switch requests
    - If there is any request, the kernel must perform process scheduling; otherwise, control is returned to the current process.
  - Pending signals
    - If a signal is sent to the current process, it must be handled.
  - Single-step mode
    - If a debugger is tracing the execution of the current process, single-step mode must be restored before switching back to User Mode.
  - Virtual-8086 mode
    - If the CPU is in virtual-8086 mode, the current process is executing a legacy Real Mode program, so it must be handled in a special way.

Flags Are Used to Help Return from Interrupts and Exceptions

- A few flags are used to keep track
  - of pending process switch requests
  - of pending signals
  - of single-step execution
- They are stored in the flags field of the thread_info descriptor.
- The field stores other flags as well, but they are not related to returning from interrupts and exceptions. See Table 4-15 for a complete list of these flags.

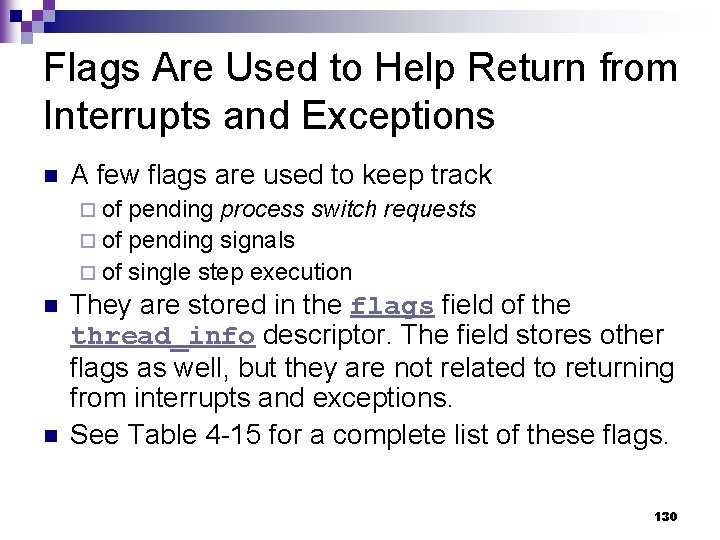

Table 4-15. The Flags Field of the thread_info Descriptor

| Flag name | Description |
|---|---|
| TIF_SYSCALL_TRACE | System calls are being traced |
| TIF_NOTIFY_RESUME | Not used in the 80x86 platform |
| TIF_SIGPENDING | The process has pending signals |
| TIF_NEED_RESCHED | Scheduling must be performed |
| TIF_SINGLESTEP | Restore single-step execution on return to User Mode |
| TIF_IRET | Force return from system call via iret rather than sysexit |
| TIF_SYSCALL_AUDIT | System calls are being audited |
| TIF_POLLING_NRFLAG | The idle process is polling the TIF_NEED_RESCHED flag |
| TIF_MEMDIE | The process is being destroyed to reclaim memory (see the section "The Out of Memory Killer" in Chapter 17) |
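Before returning to User Mode, the kernel keeps servicing rescheduling requests and pending signals until neither flag is set. The userspace sketch below shows that loop over the flags field; the bit positions are hypothetical (the real TIF_* values are architecture specific), the counters stand in for calls to schedule() and to the signal-handling code, and checking TIF_NEED_RESCHED before TIF_SIGPENDING reflects our reading of the 2.6 i386 return path.

```c
#include <assert.h>

/* Hypothetical bit positions for illustration only. */
enum {
    TIF_SIGPENDING   = 2,
    TIF_NEED_RESCHED = 3,
    TIF_SINGLESTEP   = 4,
};

#define FLAG(bit) (1UL << (bit))

static int schedule_calls;       /* stands in for calls to schedule() */
static int signal_deliveries;    /* stands in for signal handling */

/* Loop until no rescheduling request or pending signal remains.
 * Clearing a flag here models what schedule() or the signal code
 * would eventually do; other flags, such as TIF_SINGLESTEP, are
 * ignored by this model. */
static void return_to_user_model(unsigned long *flags)
{
    while (*flags & (FLAG(TIF_NEED_RESCHED) | FLAG(TIF_SIGPENDING))) {
        if (*flags & FLAG(TIF_NEED_RESCHED)) {
            schedule_calls++;
            *flags &= ~FLAG(TIF_NEED_RESCHED);
        } else {
            signal_deliveries++;
            *flags &= ~FLAG(TIF_SIGPENDING);
        }
    }
}
```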

Entry Points

- The kernel assembly language code that accomplishes all these things is not, technically speaking, a function, because control is never returned to the functions that invoke it.
- It is a piece of code with two different entry points: ret_from_intr() and ret_from_exception(). As their names suggest, the kernel enters the former when terminating an interrupt handler, and the latter when terminating an exception handler. We shall refer to the two entry points as functions, because this makes the description simpler.

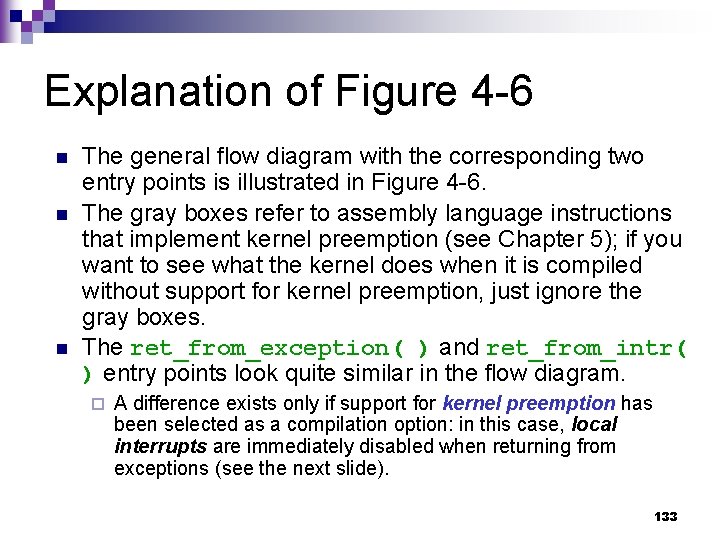

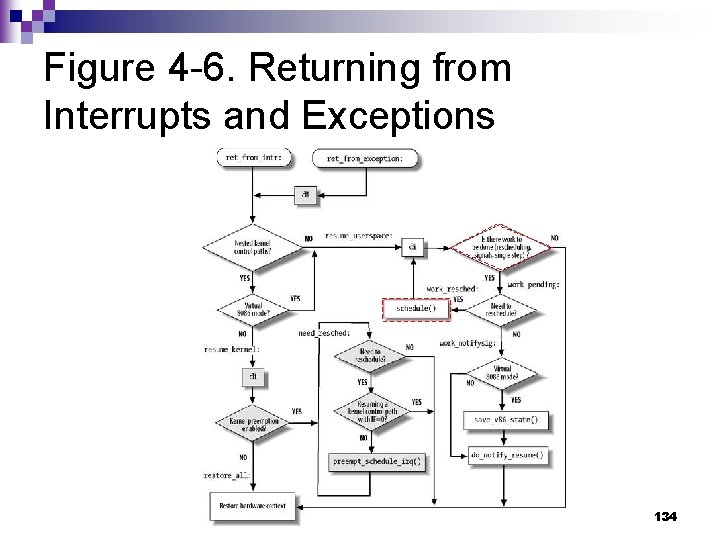

Explanation of Figure 4-6

- The general flow diagram with the corresponding two entry points is illustrated in Figure 4-6.
- The gray boxes refer to assembly language instructions that implement kernel preemption (see Chapter 5); if you want to see what the kernel does when it is compiled without support for kernel preemption, just ignore the gray boxes.
- The ret_from_exception() and ret_from_intr() entry points look quite similar in the flow diagram.
  - A difference exists only if support for kernel preemption has been selected as a compilation option: in this case, local interrupts are immediately disabled when returning from exceptions (see the next slide).
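The branch taken at the start of the flow diagram can be summarized as a decision function. This is a hedged userspace model based on our reading of the 2.6 i386 entry code, not the code itself: struct return_ctx, enum next_step and the *_model() functions are hypothetical, and preempt_kernel stands for the kernel-preemption compile option. Returning to User Mode (including virtual-8086 mode) leads to the flag checks; returning to Kernel Mode either goes straight back or, in a preemptive kernel, may invoke the scheduler first.

```c
#include <assert.h>
#include <stdbool.h>

enum next_step {
    RESUME_USERSPACE,   /* check thread flags, then return to User Mode */
    PREEMPT_SCHEDULE,   /* kernel preemption: run the scheduler first */
    RESTORE_ALL         /* return directly to the interrupted kernel path */
};

struct return_ctx {
    bool to_user_mode;       /* interrupted code ran in User Mode or vm86 */
    int  preempt_count;      /* nonzero: kernel preemption disabled */
    bool need_resched;       /* TIF_NEED_RESCHED is set */
    bool irqs_were_enabled;  /* IF flag in the saved eflags */
};

static enum next_step ret_from_intr_model(const struct return_ctx *c,
                                          bool preempt_kernel)
{
    if (c->to_user_mode)
        return RESUME_USERSPACE;
    /* Without kernel preemption, a return to Kernel Mode never reschedules. */
    if (preempt_kernel && c->preempt_count == 0 &&
        c->need_resched && c->irqs_were_enabled)
        return PREEMPT_SCHEDULE;
    return RESTORE_ALL;
}

/* In a preemptive kernel the real entry point first disables local
 * interrupts; this model has no interrupt state, so it falls through. */
static enum next_step ret_from_exception_model(const struct return_ctx *c,
                                               bool preempt_kernel)
{
    return ret_from_intr_model(c, preempt_kernel);
}
```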

Figure 4-6. Returning from Interrupts and Exceptions