Linux Kernel Development Chapter 15 The Page Cache

- Slides: 24

Linux Kernel Development Chapter 15. The Page Cache and Page Writeback Geum-Seo Koo 2007. 06. 05 - Tue. Operating System Lab. Love 05

Page Cache

- The goal of this cache
  - to minimize disk I/O by storing in physical memory data that would otherwise require disk access
- Why disk caches are beneficial
  - disk access is several orders of magnitude slower than memory access
  - data accessed once will, with high likelihood, be accessed again in the near future (temporal locality)
- Where the pages originate
  - from reads and writes of regular filesystem files, block device files, and memory-mapped files
- A cache of pages
  - contains entire pages from recently accessed files

Buffer Cache

- Individual disk blocks can also tie into the page cache, by way of block I/O buffers
- Buffer
  - the in-memory representation of a single physical disk block
  - maps pages in memory to disk blocks
- Buffer cache
  - reduces disk access during block I/O operations by both caching disk blocks and buffering block I/O operations until later
  - part of the page cache
- Temporal locality
  - access to a particular piece of data tends to be clustered in time
  - if data is cached on its first access, there is a high probability of a cache hit in the near future

Types of Operations

- Page cache
  - primarily populated by page I/O operations, such as read() and write()
  - caches page-size chunks of files
- Page I/O operations
  - manipulate entire pages of data at a time
  - entail operations on more than one disk block
- Block I/O operations
  - manipulate a single disk block at a time
  - a common example is reading and writing inodes
  - the bread() function performs a low-level read of a single block

The address_space Object

- A physical page
  - might comprise multiple noncontiguous physical blocks
- Noncontiguous nature of the blocks
  - it is not possible to index the data in the page cache using only a device name and block number
- The Linux page cache uses the address_space structure to identify pages in the page cache
- Defined in <linux/fs.h>
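
Because a page cannot be keyed by device and block number, a lookup key has to name the owning object and the page's position within it. The user-space sketch below illustrates that idea only; it is not kernel code. The `sketch_` names, the 4 KiB page size, and the struct layout are all assumptions made for illustration.

```c
#include <stdint.h>

/* Assumption for illustration: 4 KiB pages (a shift of 12 bits). */
#define SKETCH_PAGE_SHIFT 12
#define SKETCH_PAGE_SIZE  (1UL << SKETCH_PAGE_SHIFT)

/* Hypothetical stand-in for the owning object (an inode in the kernel). */
struct sketch_mapping {
    unsigned long owner_id;
};

/* A cache key: which object the page belongs to, and which page of it. */
struct sketch_page_key {
    const struct sketch_mapping *mapping;
    unsigned long index;            /* offset within the object, in pages */
};

/* Turn a byte offset within a file into a page-cache key. */
struct sketch_page_key sketch_key_for(const struct sketch_mapping *m,
                                      uint64_t file_offset)
{
    struct sketch_page_key key = {
        m, (unsigned long)(file_offset >> SKETCH_PAGE_SHIFT)
    };
    return key;
}

/* Number of disk blocks needed to back one page, for a given block size. */
unsigned long sketch_blocks_per_page(unsigned long block_size)
{
    return SKETCH_PAGE_SIZE / block_size;
}
```

With 1 KiB blocks, each page is backed by four blocks that need not be adjacent on disk, which is why the key is built from the owner and the page index rather than from a block number.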

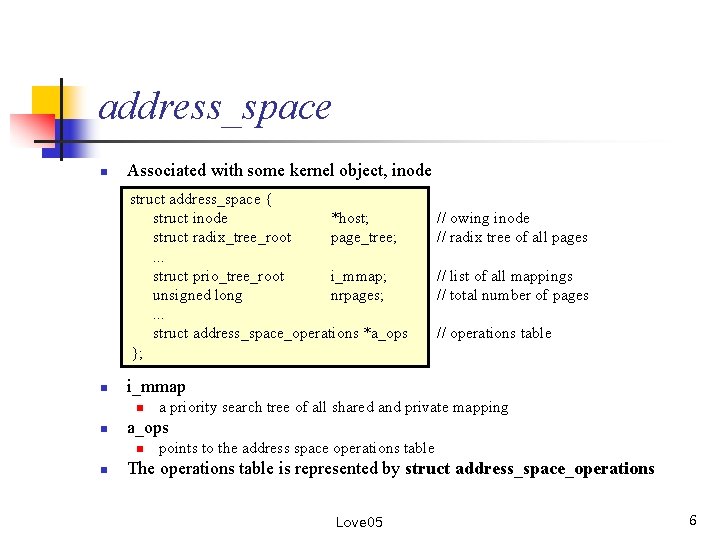

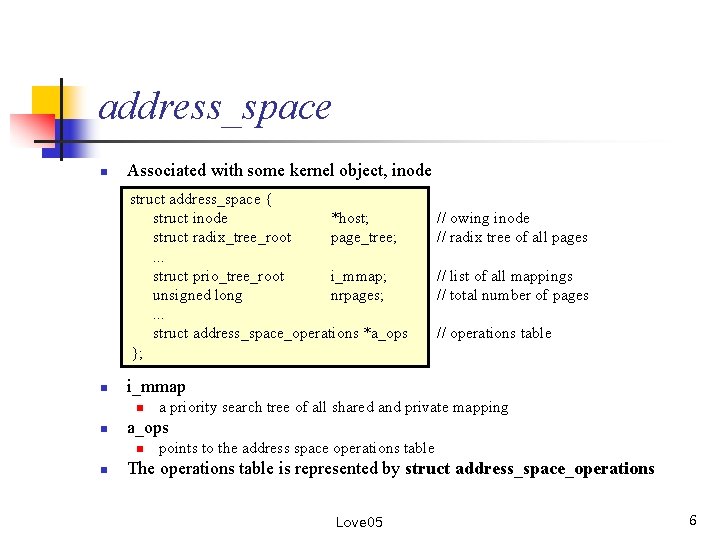

address_space

Associated with some kernel object, normally an inode:

    struct address_space {
            struct inode *host;                        /* owning inode */
            struct radix_tree_root page_tree;          /* radix tree of all pages */
            ...
            struct prio_tree_root i_mmap;              /* list of all mappings */
            unsigned long nrpages;                     /* total number of pages */
            ...
            struct address_space_operations *a_ops;    /* operations table */
    };

- i_mmap
  - a priority search tree of all shared and private mappings
- a_ops
  - points to the address space operations table
  - the operations table is represented by struct address_space_operations

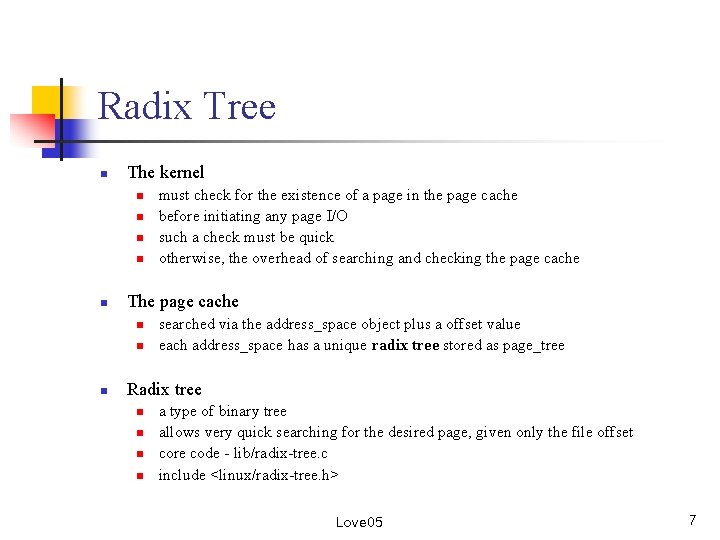

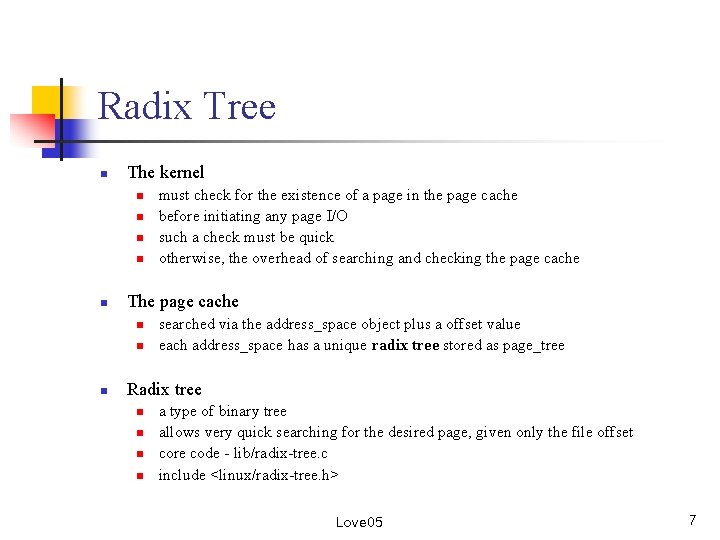

Radix Tree

- The kernel
  - must check for the existence of a page in the page cache before initiating any page I/O
  - such a check must be quick; otherwise the overhead of searching and checking the page cache could cancel out the benefit of the cache
- The page cache
  - searched via the address_space object plus an offset value
  - each address_space has a unique radix tree stored as page_tree
- Radix tree
  - a multiway tree keyed on the bits of the index (the book describes it loosely as a type of binary tree)
  - allows very quick searching for the desired page, given only the file offset
  - core code: lib/radix-tree.c
  - declarations: <linux/radix-tree.h>
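
The lookup described above can be sketched in user space as a small fixed-height radix tree keyed by page index. This is a simplified illustration, not the code in lib/radix-tree.c: the `sk_` names, the fixed height of three levels, and the 64 slots per node are assumptions chosen to keep the example short.

```c
#include <stdlib.h>

#define SK_SHIFT  6                      /* index bits consumed per level */
#define SK_SLOTS  (1UL << SK_SHIFT)      /* 64 slots per node */
#define SK_MASK   (SK_SLOTS - 1)
#define SK_LEVELS 3                      /* fixed height: indexes below 2^18 */

struct sk_node {
    void *slots[SK_SLOTS];               /* children, or items at the last level */
};

struct sk_tree {
    struct sk_node *root;
};

/* Store item under index. Returns 0 on success, -1 on error. */
int sk_insert(struct sk_tree *t, unsigned long index, void *item)
{
    if (index >> (SK_SHIFT * SK_LEVELS))
        return -1;                       /* index does not fit in this tree */
    if (!t->root && !(t->root = calloc(1, sizeof(*t->root))))
        return -1;

    struct sk_node *node = t->root;
    for (int level = SK_LEVELS - 1; level > 0; level--) {
        unsigned long slot = (index >> (level * SK_SHIFT)) & SK_MASK;
        if (!node->slots[slot]) {
            node->slots[slot] = calloc(1, sizeof(struct sk_node));
            if (!node->slots[slot])
                return -1;
        }
        node = node->slots[slot];
    }
    node->slots[index & SK_MASK] = item;
    return 0;
}

/* Return the item stored under index, or NULL if there is none. */
void *sk_lookup(const struct sk_tree *t, unsigned long index)
{
    if (index >> (SK_SHIFT * SK_LEVELS))
        return NULL;

    const struct sk_node *node = t->root;
    for (int level = SK_LEVELS - 1; level > 0 && node; level--)
        node = node->slots[(index >> (level * SK_SHIFT)) & SK_MASK];
    return node ? node->slots[index & SK_MASK] : NULL;
}
```

A lookup visits at most SK_LEVELS nodes regardless of how many pages are cached, and a miss is detected as soon as an empty slot is reached. The sketch never frees nodes, which a real implementation would have to do.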

The Old Page Hash Table

- Prior to the 2.6 kernel
  - the page cache was not searched via a radix tree
- Instead, a global hash
  - was maintained over all the pages in the system
- The hash returned a doubly linked list of entries that hash to the same given value

The Old Page Hash Table

- Four primary problems:
  - a single global lock protected the hash, causing lock contention
  - the hash was larger than necessary, because it contained all the pages
  - performance when the hash lookup failed was slower than desired
  - the hash consumed more memory
- The radix tree-based page cache in 2.6 solved these issues

The Buffer Cache

- Linux no longer has a distinct buffer cache
- In the 2.2 kernel, there were two separate disk caches
  - the page cache and the buffer cache
  - the two caches were not unified in the least
    - a disk block could exist in both caches simultaneously
    - extensive effort went into synchronizing the two cached copies, not to mention the wasted memory
- In the 2.4 Linux kernel, the two caches were unified
  - there is now one disk cache: the page cache

The pdflush Daemon

- Dirty page
  - data in the page cache is newer than the data on the backing store
- Dirty page writeback occurs in two situations:
  - when free memory shrinks below a specified threshold
  - when dirty data grows older than a specific threshold
- Writeback continues writing out data until two conditions are true:
  - the specified minimum number of pages has been written out
  - the amount of free memory is above the dirty_background_ratio threshold
- background_writeout() function: performs the writeback of dirty pages
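
The stopping rule above can be sketched as a small predicate. This is a user-space illustration that follows the wording of the slide, not the body of background_writeout(); the `wb_state` structure and its field names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical snapshot of the state a writeback pass looks at. */
struct wb_state {
    long pages_written;     /* pages written so far in this pass */
    long min_pages;         /* minimum number of pages the pass must write */
    long free_pages;        /* pages currently free */
    long total_pages;       /* total pages of memory */
    int  background_ratio;  /* threshold, as a percentage of total memory */
};

/* True while writeback should keep going: it stops only once BOTH the
 * minimum page count has been written AND free memory is above the
 * threshold. */
bool wb_should_continue(const struct wb_state *s)
{
    bool wrote_enough = s->pages_written >= s->min_pages;
    bool memory_ok =
        s->free_pages * 100 > s->total_pages * (long)s->background_ratio;

    return !(wrote_enough && memory_ok);
}
```

Requiring both conditions means a pass that has already written its minimum keeps going while memory is still tight, and a pass that starts with plenty of free memory still writes its minimum.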

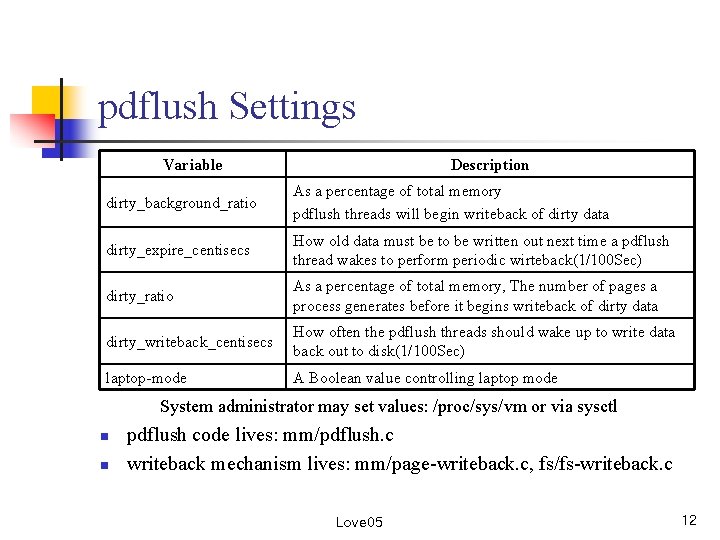

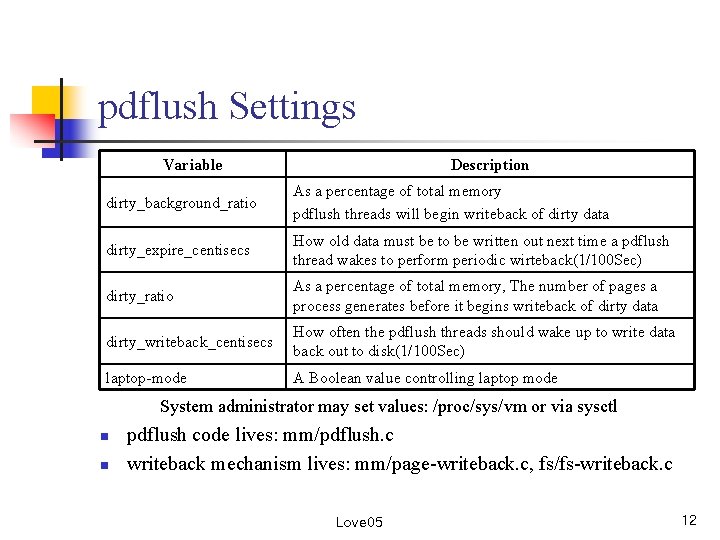

pdflush Settings

| Variable | Description |
| --- | --- |
| dirty_background_ratio | As a percentage of total memory, the number of pages at which the pdflush threads will begin writeback of dirty data |
| dirty_expire_centisecs | In hundredths of a second, how old data must be to be written out the next time a pdflush thread wakes to perform periodic writeback |
| dirty_ratio | As a percentage of total memory, the number of pages a process generates before it begins writeback of dirty data |
| dirty_writeback_centisecs | In hundredths of a second, how often the pdflush threads should wake up to write data back out to disk |
| laptop_mode | A Boolean value controlling laptop mode |

- System administrators may set these values in /proc/sys/vm or via sysctl
- The pdflush code lives in mm/pdflush.c
- The writeback mechanism lives in mm/page-writeback.c and fs/fs-writeback.c
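
As a rough illustration of how these settings are exposed, the user-space sketch below reads one integer tunable from /proc/sys/vm. The helper name `vm_read_tunable` is hypothetical, and the code assumes the tunable is a single decimal integer, as the variables in the table are.

```c
#include <stdio.h>

/* Read the integer tunable /proc/sys/vm/<name> into *value.
 * Returns 0 on success, -1 if the file is missing or unreadable. */
int vm_read_tunable(const char *name, long *value)
{
    char path[128];
    int n = snprintf(path, sizeof(path), "/proc/sys/vm/%s", name);
    if (n < 0 || (size_t)n >= sizeof(path))
        return -1;                    /* name too long for the buffer */

    FILE *f = fopen(path, "r");
    if (!f)
        return -1;                    /* no such tunable on this system */

    int ok = fscanf(f, "%ld", value) == 1;
    fclose(f);
    return ok ? 0 : -1;
}
```

For example, `vm_read_tunable("dirty_writeback_centisecs", &v)` would report the periodic wakeup interval in hundredths of a second on a system that provides that file. Writing a new value normally requires root privileges.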

Laptop Mode

- A special page writeback strategy
  - intended to optimize battery life by minimizing hard disk activity
  - allows hard drives to remain spun down as long as possible
  - configurable via /proc/sys/vm/laptop_mode
  - zero by default (laptop mode is disabled)
- A single change to page writeback behavior
  - in addition to performing writeback of dirty pages when they grow too old, pdflush also piggybacks off any other physical disk I/O, flushing all dirty buffers to disk
  - this takes advantage of the fact that the disk was just spun up

bdflush and kupdated

- The bdflush kernel thread
  - performed background writeback of dirty pages when available memory was low
  - a set of thresholds was maintained
  - awakened via wakeup_bdflush()
- Two main differences distinguish bdflush and pdflush
  - there was always exactly one bdflush daemon; the number of pdflush threads is dynamic
  - bdflush was buffer-based; pdflush is page-based
- kupdated
  - introduced to periodically write back dirty pages

Congestion Avoidance: Why We Have Multiple Threads

- Major flaws in the bdflush solution
  - it consisted of one thread
  - congestion was possible during heavy page writeback
  - the throughput of disks is a finite, and rather small, number
- pdflush solves this problem
  - by allowing multiple pdflush threads to exist
- The number of threads changes according to a simple algorithm
  - if all existing pdflush threads are busy for at least one second, a new pdflush thread is created (up to MAX_PDFLUSH_THREADS, 8 by default)
  - if a pdflush thread is asleep for more than a second, it is terminated (down to MIN_PDFLUSH_THREADS, 2 by default)
- Congestion avoidance
  - the threads actively try to write back pages whose queues are not congested
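
The thread-count rule can be written down as a single function. This is a user-space sketch of the rule as stated on the slide, not the code in mm/pdflush.c; the function name, the `PD_SKETCH_` constants, and the millisecond inputs are assumptions for illustration.

```c
/* Limits taken from the slide; the names here are local to the sketch. */
#define PD_SKETCH_MIN_THREADS 2
#define PD_SKETCH_MAX_THREADS 8

/* Given the current thread count, how long all threads have been busy,
 * and how long the longest-idle thread has slept (both in milliseconds),
 * return the thread count after applying the rule once. */
int pd_sketch_adjust_threads(int nr_threads,
                             unsigned all_busy_ms,
                             unsigned idle_ms)
{
    /* Every thread busy for at least a second: add one, up to the maximum. */
    if (all_busy_ms >= 1000 && nr_threads < PD_SKETCH_MAX_THREADS)
        return nr_threads + 1;

    /* A thread asleep for more than a second: drop it, down to the minimum. */
    if (idle_ms > 1000 && nr_threads > PD_SKETCH_MIN_THREADS)
        return nr_threads - 1;

    return nr_threads;
}
```

Starting from two threads under sustained load, repeated calls grow the pool by one thread per call until it reaches eight, and it shrinks back toward two once threads sit idle.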

Linux Kernel Development Chapter 16. Modules Geum-Seo Koo 2007. 06. 05 - Tue. Operating System Lab.

Modules

- Linux kernel
  - monolithic: the kernel runs in a single protection domain
  - modular: allows the dynamic insertion and removal of code from the kernel at run-time
- Module
  - related subroutines, data, and entry and exit points are grouped together in a single binary image, a loadable kernel object
- Support for modules
  - allows systems to have only a minimal base kernel image
  - provides easy removal and reloading of kernel code
  - facilitates debugging
  - allows loading of new drivers on demand (hotplugging)

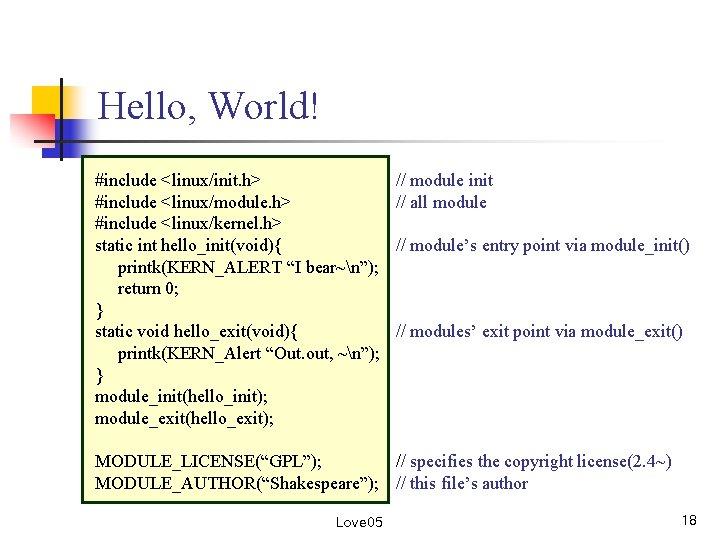

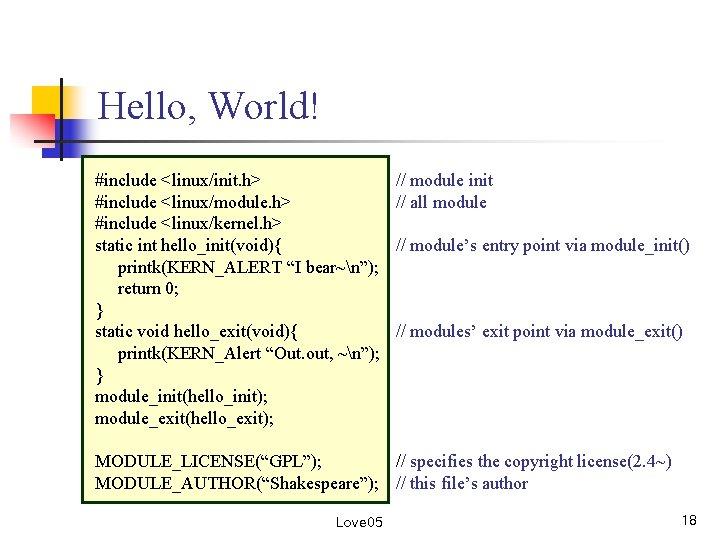

Hello, World!

    #include <linux/init.h>      /* module_init() and module_exit() */
    #include <linux/module.h>    /* needed by all modules */
    #include <linux/kernel.h>    /* printk() and KERN_ALERT */

    /* Module entry point, registered via module_init(). */
    static int hello_init(void)
    {
            printk(KERN_ALERT "I bear\n");
            return 0;
    }

    /* Module exit point, registered via module_exit(). */
    static void hello_exit(void)
    {
            printk(KERN_ALERT "Out, out,\n");
    }

    module_init(hello_init);
    module_exit(hello_exit);

    MODULE_LICENSE("GPL");           /* specifies the copyright license (2.4 and later) */
    MODULE_AUTHOR("Shakespeare");    /* this file's author */

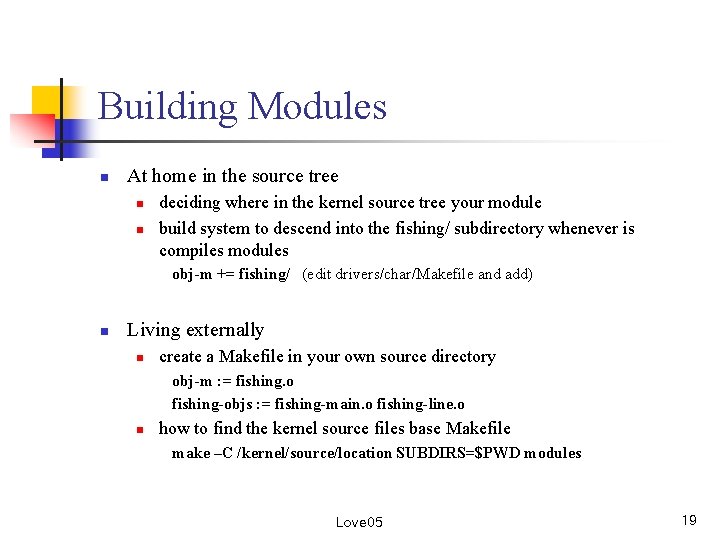

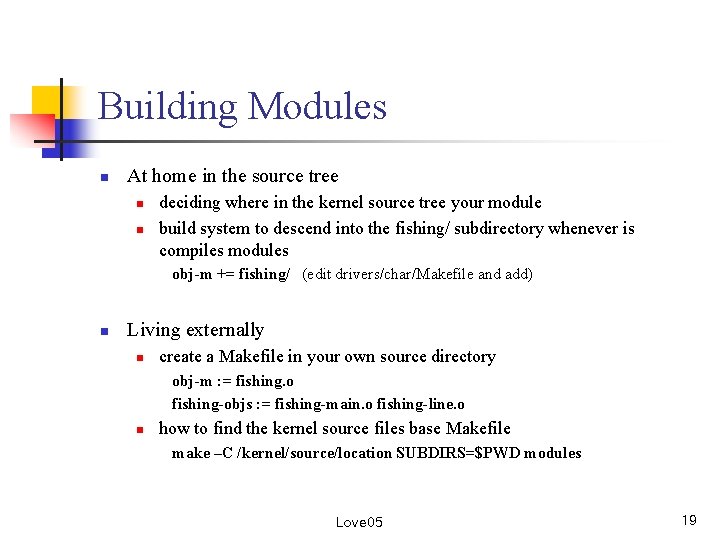

Building Modules

- At home in the source tree
  - decide where in the kernel source tree your module is to live
  - tell the build system to descend into the fishing/ subdirectory whenever it compiles modules
  - edit drivers/char/Makefile and add: `obj-m += fishing/`
- Living externally
  - create a Makefile in your own source directory:
    - `obj-m := fishing.o`
    - `fishing-objs := fishing-main.o fishing-line.o`
  - tell make how to find the kernel source files and base Makefile:
    - `make -C /kernel/source/location SUBDIRS=$PWD modules`

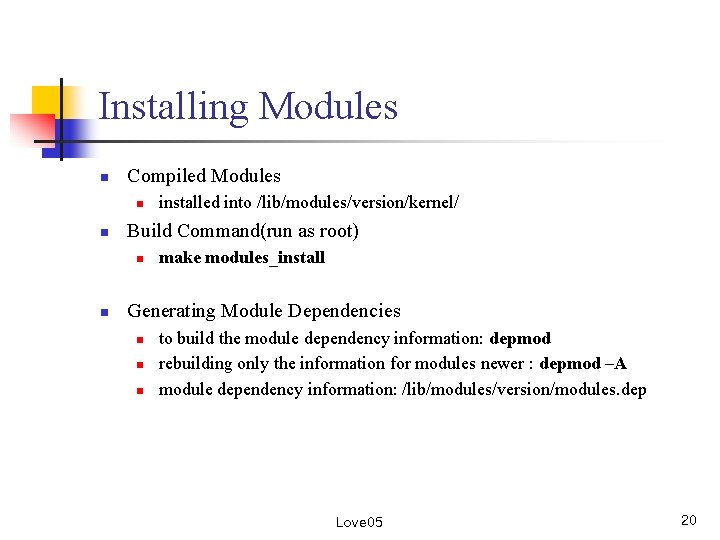

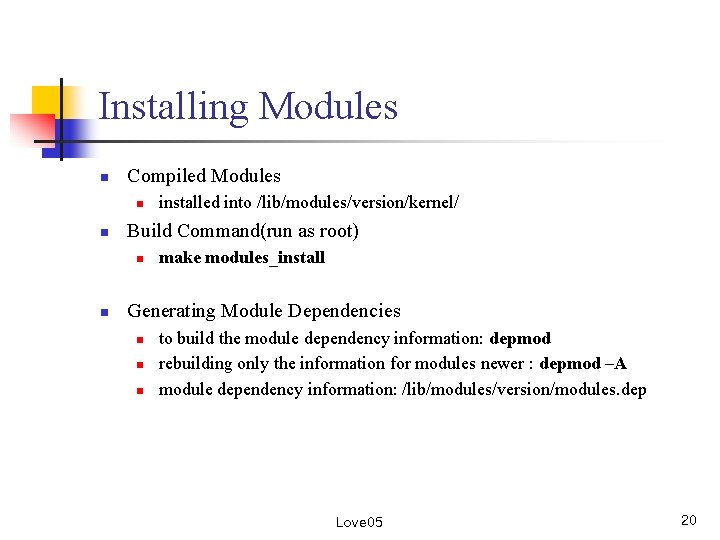

Installing Modules

- Compiled modules
  - installed into /lib/modules/version/kernel/
- Install command (run as root)
  - `make modules_install`
- Generating module dependencies
  - to build the module dependency information: `depmod`
  - to rebuild the information only for modules newer than the dependency information: `depmod -A`
  - the module dependency information is stored in /lib/modules/version/modules.dep

Loading Modules

- Load a module
  - ex) `insmod module`
- Remove a module
  - ex) `rmmod module`
- modprobe
  - provides dependency resolution, intelligent error checking, and reporting
  - ex) `modprobe module [module parameters]`
  - when removing, it also removes any modules on which the given module depends, if they are unused
  - ex) `modprobe -r modules`

Managing Configuration Options

- The "kbuild" system in the 2.6 kernel
  - adding new configuration options is very easy
  - add an entry to the Kconfig file
- If you created a new subdirectory and want a new Kconfig file
  - edit drivers/char/Kconfig and add: `source "drivers/char/fishing/Kconfig"`

Module Parameters

- Defining a module parameter
  - ex) `module_param(name, type, perm)`
  - name: both the parameter exposed to the user and the variable holding its value inside the module
  - type: the parameter's data type (byte, short, ushort, int, uint, long, charp, ...)
  - perm: permissions of the corresponding file in sysfs
- Having the kernel copy the string directly into a character array
  - `module_param_string(name, string, len, perm)`
- You can document your parameters
  - `MODULE_PARM_DESC()`
- These macros require the inclusion of <linux/moduleparam.h>

Exported Symbols

- When modules are loaded, they are dynamically linked into the kernel
- Dynamically linked binaries can call only into external functions that are explicitly exported
- EXPORT_SYMBOL() and EXPORT_SYMBOL_GPL()
  - functions that are exported are available for use by modules
  - functions that are not exported cannot be invoked from modules
  - the linking and invoking rules are much more stringent for modules
- The set of kernel symbols that are exported
  - known as the exported kernel interface, or even the kernel API