Linux Clustering & Storage Management

Peter J. Braam, CMU, Stelias Computing, Red Hat

Disclaimer
- Several people are involved:
  - Stephen Tweedie (Red Hat)
  - Michael Callahan (Stelias)
  - Larry McVoy (BitMover)
- Much of it is not new:
  - Digital had it all and documented it!
  - IBM/SGI... have similar stuff (no docs)

Content
- What is this cluster fuzz about?
- Linux cluster design
- Distributed lock manager
- Linux cluster file systems
- Lustre: the OBSD cluster file system

Cluster Fuzz

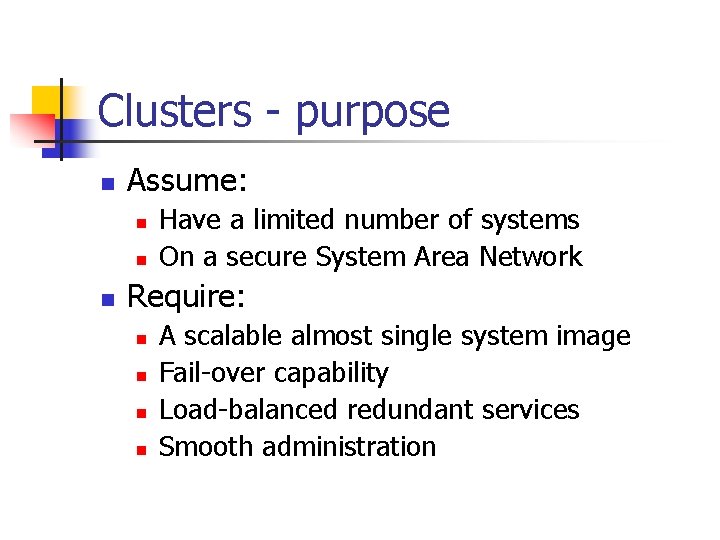

Clusters: purpose
- Assume:
  - A limited number of systems
  - On a secure System Area Network
- Require:
  - A scalable, almost single system image
  - Fail-over capability
  - Load-balanced redundant services
  - Smooth administration

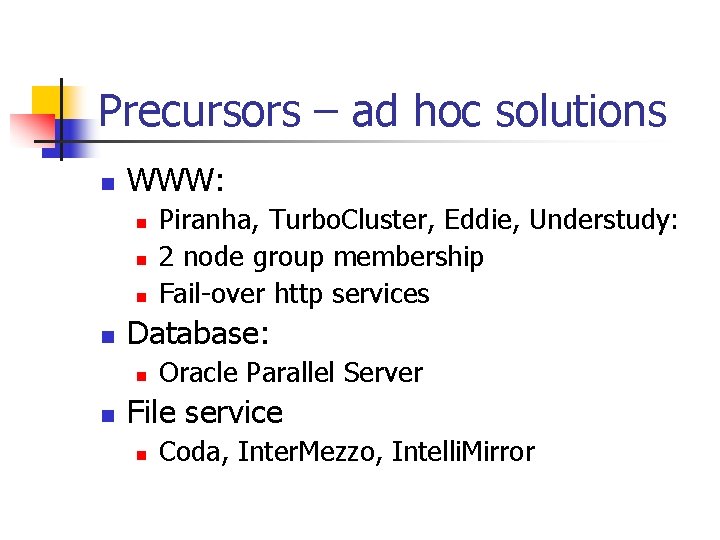

Precursors: ad hoc solutions
- WWW:
  - Piranha, TurboCluster, Eddie, Understudy: 2-node group membership
  - Fail-over HTTP services
- Database:
  - Oracle Parallel Server
- File service:
  - Coda, InterMezzo, IntelliMirror

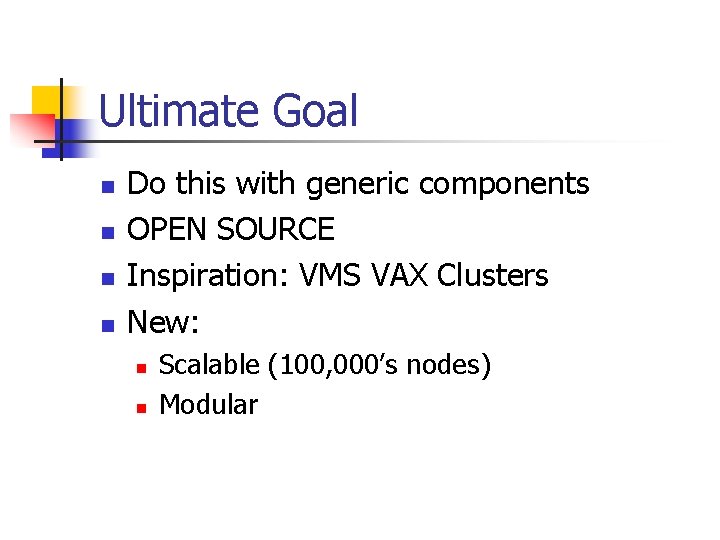

Ultimate goal
- Do this with generic components
- OPEN SOURCE
- Inspiration: VMS VAX Clusters
- New:
  - Scalable (100,000s of nodes)
  - Modular

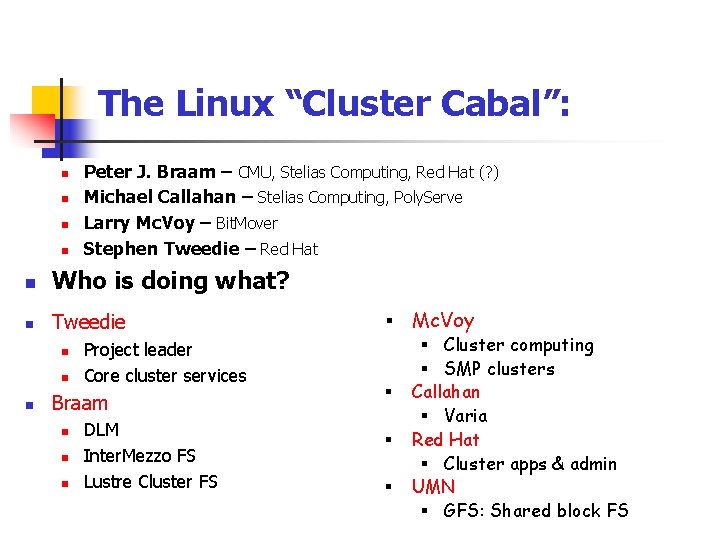

The Linux “Cluster Cabal”:
- Peter J. Braam: CMU, Stelias Computing, Red Hat (?)
- Michael Callahan: Stelias Computing, PolyServe
- Larry McVoy: BitMover
- Stephen Tweedie: Red Hat

Who is doing what?
- Tweedie: project leader; core cluster services
- Braam: DLM; InterMezzo FS; Lustre cluster FS
- McVoy: cluster computing; SMP clusters
- Callahan: varia
- Red Hat: cluster apps & admin
- UMN: GFS, a shared block FS

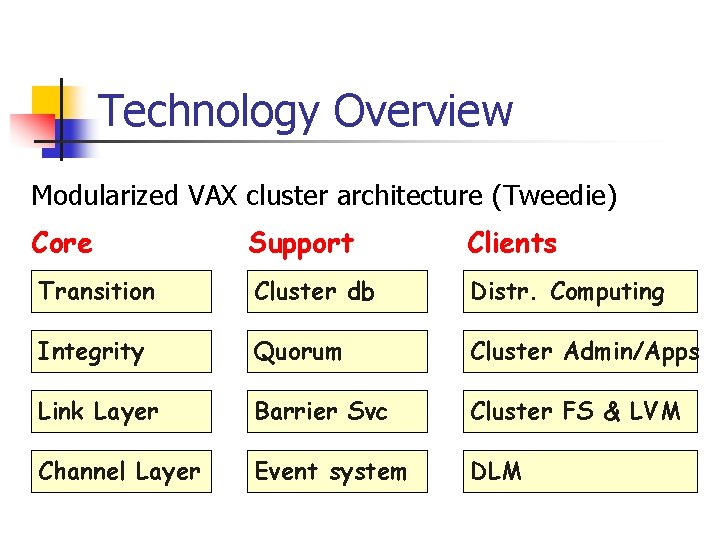

Technology overview: modularized VAX cluster architecture (Tweedie)
- Core: Transition, Integrity, Link Layer, Channel Layer
- Support: Cluster db, Quorum, Barrier Svc, Event system
- Clients: Distr. Computing, Cluster Admin/Apps, Cluster FS & LVM, DLM

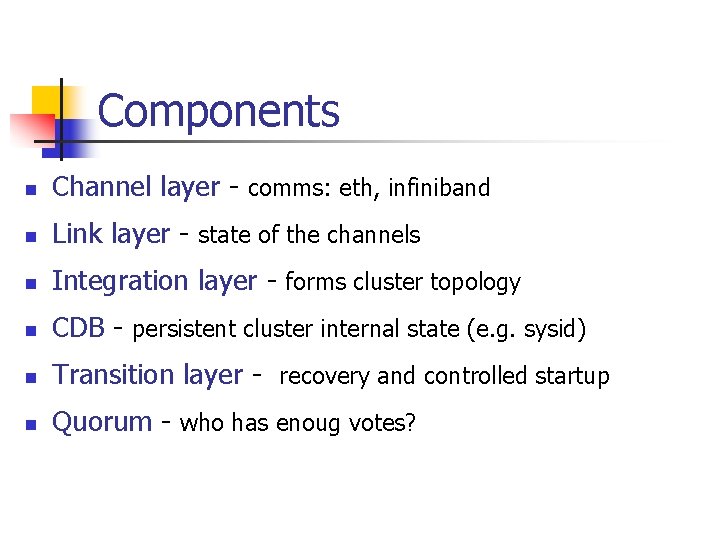

Components
- Channel layer: communications (Ethernet, InfiniBand)
- Link layer: state of the channels
- Integration layer: forms the cluster topology
- CDB: persistent cluster-internal state (e.g. sysid)
- Transition layer: recovery and controlled startup
- Quorum: who has enough votes?
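
The quorum question ("who has enough votes?") can be sketched as a strict-majority check. This is a minimal sketch under assumptions: the slide does not give the vote rule, so the strict majority of an `expected_votes` total and the names `quorum_threshold` and `has_quorum` are hypothetical.

```python
from typing import Dict, Iterable

def quorum_threshold(expected_votes: int) -> int:
    """Smallest vote total that is a strict majority of expected_votes (assumed rule)."""
    return expected_votes // 2 + 1

def has_quorum(votes: Dict[str, int], members: Iterable[str], expected_votes: int) -> bool:
    """True if the given, mutually connected members together hold a strict majority."""
    total = sum(votes.get(node, 0) for node in members)
    return total >= quorum_threshold(expected_votes)

# Hypothetical five-node cluster, one vote per node, split into two partitions.
votes = {"a": 1, "b": 1, "c": 1, "d": 1, "e": 1}
print(has_quorum(votes, ["a", "b", "c"], expected_votes=5))  # True
print(has_quorum(votes, ["d", "e"], expected_votes=5))       # False
```

With a strict majority, two disjoint partitions can never both hold quorum, so at most one side keeps running after a split.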

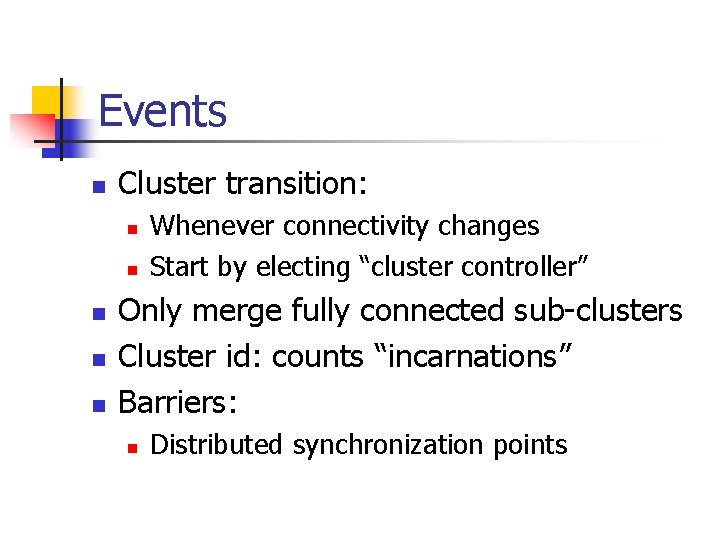

Events
- Cluster transition:
  - Happens whenever connectivity changes
  - Starts by electing a “cluster controller”
  - Only fully connected sub-clusters are merged
  - Cluster id: counts “incarnations”
- Barriers:
  - Distributed synchronization points
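
A cluster transition that merges two sub-clusters can be sketched as follows. This is a toy model, not the design on the slide: the election rule (lowest node name wins), the `SubCluster` record and the `merge` function are hypothetical. It shows the two rules stated above: a merge is refused unless every pair of nodes in the result is connected, and each successful transition starts a new incarnation.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set

Link = FrozenSet[str]

def link(a: str, b: str) -> Link:
    """An undirected, working connection between two nodes."""
    return frozenset((a, b))

@dataclass(frozen=True)
class SubCluster:
    members: FrozenSet[str]
    incarnation: int  # counts cluster transitions ("incarnations")
    controller: str   # node elected to drive the transition

def elect_controller(members: FrozenSet[str]) -> str:
    # Hypothetical election rule: the lowest node name wins.
    return min(members)

def fully_connected(members: FrozenSet[str], links: Set[Link]) -> bool:
    return all(link(a, b) in links for a in members for b in members if a < b)

def merge(x: SubCluster, y: SubCluster, links: Set[Link]) -> Optional[SubCluster]:
    """Merge two sub-clusters only if the result is fully connected."""
    combined = x.members | y.members
    if not fully_connected(combined, links):
        return None  # refuse: some pair of nodes cannot talk to each other
    return SubCluster(
        members=combined,
        incarnation=max(x.incarnation, y.incarnation) + 1,  # a new incarnation
        controller=elect_controller(combined),
    )

links = {link("n1", "n2"), link("n1", "n3"), link("n2", "n3")}
left = SubCluster(frozenset({"n2"}), incarnation=4, controller="n2")
right = SubCluster(frozenset({"n1", "n3"}), incarnation=7, controller="n1")
print(merge(left, right, links))
```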

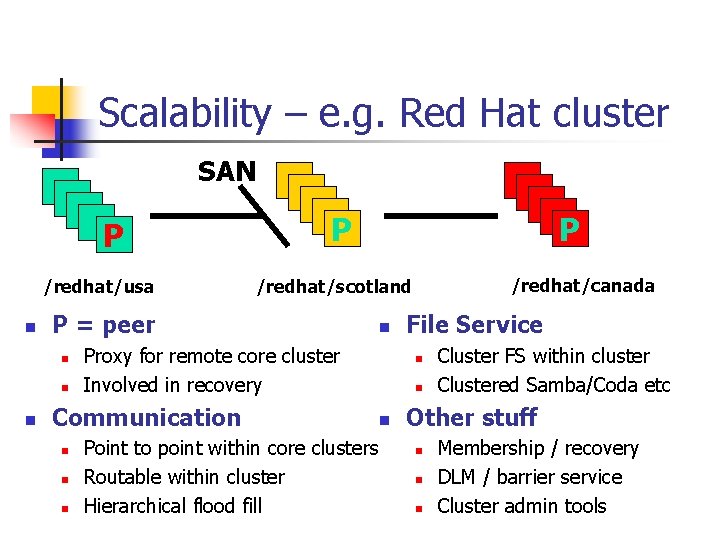

Scalability, e.g. a Red Hat cluster
- Diagram: a SAN and core clusters /redhat/usa, /redhat/canada and /redhat/scotland, linked through peers (P = peer)
- Peer:
  - Proxy for a remote core cluster
  - Involved in recovery
- Communication:
  - Point to point within core clusters
  - Routable within the cluster
  - Hierarchical flood fill
- File service:
  - Cluster FS within a cluster
  - Clustered Samba/Coda, etc.
- Other stuff:
  - Membership / recovery
  - DLM / barrier service
  - Cluster admin tools
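
Hierarchical flood fill can be pictured as two levels: point-to-point delivery inside a core cluster, and peers forwarding once to neighbouring core clusters. The sketch below is an assumption-laden illustration; the member names, the `peer_links` graph and the `hierarchical_flood` function are hypothetical, and only the cluster names come from the slide.

```python
from collections import deque
from typing import Dict, List, Tuple

def hierarchical_flood(origin: str,
                       core_members: Dict[str, List[str]],
                       peer_links: Dict[str, List[str]]) -> Tuple[List[str], int]:
    """Deliver a message to every node; return (delivery order, inter-cluster hops)."""
    delivered: List[str] = []
    seen = {origin}
    queue = deque([origin])
    hops = 0
    while queue:
        cluster = queue.popleft()
        # Inside a core cluster: point-to-point delivery to every member.
        delivered.extend(core_members[cluster])
        # Between core clusters: this cluster's peer forwards to each neighbour once.
        for neighbour in peer_links.get(cluster, []):
            if neighbour not in seen:
                seen.add(neighbour)
                hops += 1
                queue.append(neighbour)
    return delivered, hops

core_members = {
    "/redhat/usa": ["us1", "us2", "us3"],
    "/redhat/canada": ["ca1", "ca2"],
    "/redhat/scotland": ["sc1", "sc2"],
}
peer_links = {
    "/redhat/usa": ["/redhat/canada", "/redhat/scotland"],
    "/redhat/canada": ["/redhat/usa"],
    "/redhat/scotland": ["/redhat/usa", "/redhat/canada"],
}
nodes, hops = hierarchical_flood("/redhat/canada", core_members, peer_links)
print(nodes, hops)  # ['ca1', 'ca2', 'us1', 'us2', 'us3', 'sc1', 'sc2'] 2
```

The `seen` set keeps a message from circulating between peers, so each core cluster is entered exactly once.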

Distributed Lock Manager

Locks & resources
- Purpose: a generic, rich lock service
  - Will subsume “callbacks”, “leases”, etc.
- Lock resources: a resource database
  - Resources are organized in trees
  - High performance: the node that acquires a resource manages its tree
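
A resource database organized as trees, where a node that acquires a resource ends up managing its tree, might look like this. It is a minimal sketch assuming the first acquirer of a tree's root becomes its manager; `Resource`, `ResourceDatabase` and `acquire` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Resource:
    name: str
    children: Dict[str, "Resource"] = field(default_factory=dict)
    master: Optional[str] = None  # only meaningful on a tree's root

    def child(self, name: str) -> "Resource":
        return self.children.setdefault(name, Resource(name))

class ResourceDatabase:
    """Resources organized as trees; each tree is managed by one node."""

    def __init__(self) -> None:
        self.roots: Dict[str, Resource] = {}

    def acquire(self, node: str, path: List[str]) -> str:
        """Record that `node` locks the resource at `path`; return the tree's manager."""
        root = self.roots.setdefault(path[0], Resource(path[0]))
        if root.master is None:
            root.master = node  # assumed rule: the first acquirer manages the tree
        res = root
        for part in path[1:]:
            res = res.child(part)  # walk (and create) sub-resources under the root
        return root.master

db = ResourceDatabase()
print(db.acquire("A", ["fs1", "dir7", "inode42"]))  # A
print(db.acquire("B", ["fs1", "dir9"]))             # A: fs1's tree is already managed by A
print(db.acquire("B", ["fs2"]))                     # B
```

Managing a whole tree on one node means later locks on sub-resources (for example inodes in a directory) need no further lookup traffic.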

![Typical simple lock sequence](http://slidetodoc.com/presentation_image_h2/a50ae8dbc1c6a609c4d03ffae93f081e/image-15.jpg)

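Typical simple lock sequence (resource manager = Vec[hash(R)]; Sys A has a lock on R, Sys B needs a lock on R)
1. Sys B asks the resource manager: “Who has R?” Answer: Sys A.
2. Sys B to Sys A: “I want a lock on R.”
3. Sys A blocks B’s request and triggers the owning process.
4. The owning process releases the lock.
5. Sys A grants the lock to Sys B.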
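
The five steps above can be traced in a toy, single-process simulation. Assumptions: `zlib.crc32` stands in for `hash(R)` (it is stable across processes, unlike Python's `hash` on strings), the directory of owners is a plain dict rather than being spread across the managers, and the release happens synchronously. `LockCluster` and `request` are hypothetical names; this is not a real DLM.

```python
import zlib
from typing import Dict, List, Set

class LockCluster:
    """Toy simulation of the lock sequence on the slide (not a real DLM)."""

    def __init__(self, nodes: List[str]) -> None:
        self.vec = list(nodes)                  # Vec: one resource manager per slot
        self.held: Dict[str, Set[str]] = {n: set() for n in nodes}
        self.owner: Dict[str, str] = {}         # directory: resource -> holding node
        self.log: List[str] = []

    def resource_mgr(self, r: str) -> str:
        # Stable hash so every node computes the same manager for R.
        return self.vec[zlib.crc32(r.encode()) % len(self.vec)]

    def request(self, requester: str, r: str) -> None:
        mgr = self.resource_mgr(r)
        self.log.append(f"{requester} -> {mgr}: who has {r}?")
        holder = self.owner.get(r)
        if holder is None:
            self.log.append(f"{mgr} -> {requester}: nobody; granted")
        else:
            self.log.append(f"{mgr} -> {requester}: {holder}")
            self.log.append(f"{requester} -> {holder}: I want lock on {r}")
            self.log.append(f"{holder}: block request, trigger owning process")
            self.held[holder].discard(r)        # owning process releases the lock
            self.log.append(f"{holder} -> {requester}: grant lock on {r}")
        self.held[requester].add(r)
        self.owner[r] = requester

cluster = LockCluster(["A", "B", "C"])
cluster.request("A", "R")   # Sys A takes the lock first
cluster.request("B", "R")   # Sys B then needs it
print("\n".join(cluster.log))
```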

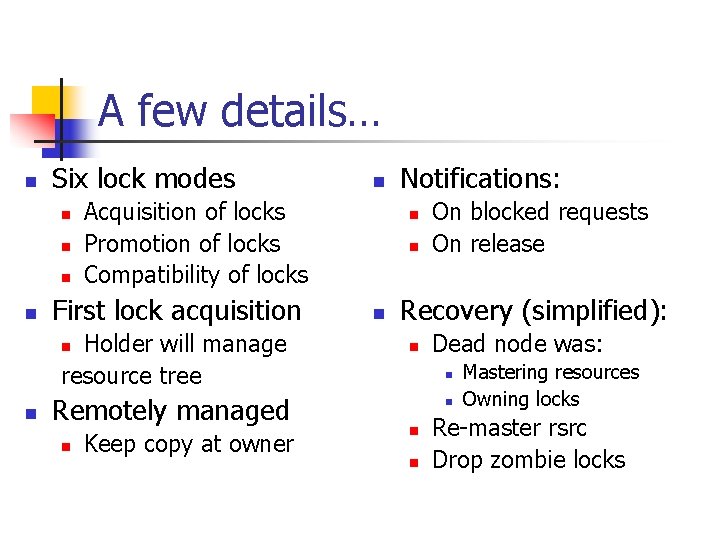

A few details…
- Six lock modes:
  - Acquisition of locks
  - Promotion of locks
  - Compatibility of locks
- First lock acquisition: the holder will manage the resource tree
- Remotely managed resources: keep a copy at the owner
- Notifications:
  - On blocked requests
  - On release
- Recovery (simplified), if the dead node was:
  - Mastering resources: re-master the resources
  - Owning locks: drop the zombie locks
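
Compatibility and promotion checks can be expressed as a lookup in a mode matrix. The slide does not name the six modes; this sketch assumes they are the classic VMS lock manager modes (null, concurrent read, concurrent write, protected read, protected write, exclusive) with their usual compatibility matrix. `can_grant` and `can_promote` are hypothetical names.

```python
from enum import Enum
from typing import Iterable

class Mode(Enum):
    NL = 0  # null
    CR = 1  # concurrent read
    CW = 2  # concurrent write
    PR = 3  # protected read
    PW = 4  # protected write
    EX = 5  # exclusive

# For each mode, the granted modes it can coexist with (VMS-style matrix, assumed).
COMPATIBLE = {
    Mode.NL: {Mode.NL, Mode.CR, Mode.CW, Mode.PR, Mode.PW, Mode.EX},
    Mode.CR: {Mode.NL, Mode.CR, Mode.CW, Mode.PR, Mode.PW},
    Mode.CW: {Mode.NL, Mode.CR, Mode.CW},
    Mode.PR: {Mode.NL, Mode.CR, Mode.PR},
    Mode.PW: {Mode.NL, Mode.CR},
    Mode.EX: {Mode.NL},
}

def can_grant(requested: Mode, granted: Iterable[Mode]) -> bool:
    """A new lock is grantable if it is compatible with every lock already granted."""
    return all(m in COMPATIBLE[requested] for m in granted)

def can_promote(new_mode: Mode, other_holders: Iterable[Mode]) -> bool:
    """Promotion ignores the requester's own current lock; only other holders matter."""
    return can_grant(new_mode, other_holders)

print(can_grant(Mode.PR, [Mode.PR, Mode.CR]))  # True: readers share
print(can_grant(Mode.PW, [Mode.PR]))           # False: a writer must wait for readers
```

When a request is not grantable, it is queued and the holders receive the “blocked request” notification listed above.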

Lustre file system
- Based on object storage
- Exploits the cluster infrastructure and DLM
- Cluster-wide Unix semantics

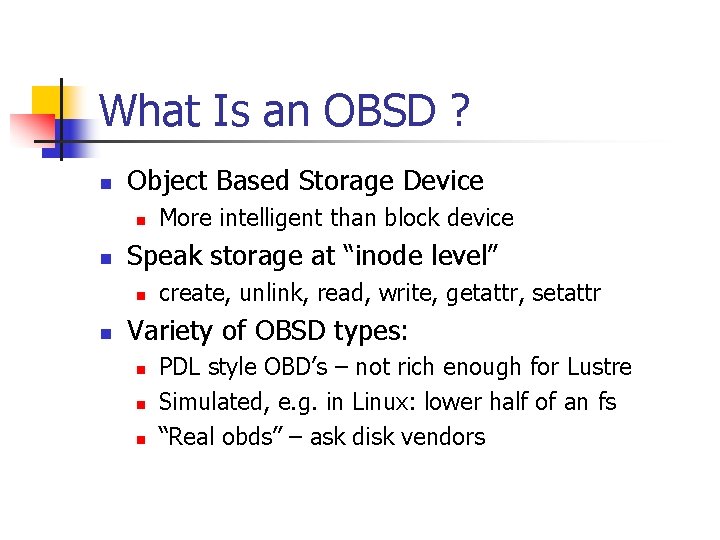

What is an OBSD?
- Object Based Storage Device
  - More intelligent than a block device
  - Speaks storage at the “inode level”: create, unlink, read, write, getattr, setattr
- Variety of OBSD types:
  - PDL-style OBDs: not rich enough for Lustre
  - Simulated, e.g. in Linux: the lower half of a file system
  - “Real OBDs”: ask the disk vendors
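
The “inode level” interface can be written down as six methods, with a simulated OBD behind it. This is a sketch: the method names follow the slide, but the signatures, the integer object ids, the attribute keys and the in-memory `MemoryOBD` are assumptions, not the Lustre OBD API.

```python
from abc import ABC, abstractmethod
from typing import Dict

class ObjectStorageDevice(ABC):
    """The six operations listed on the slide; signatures are assumed."""
    @abstractmethod
    def create(self) -> int: ...
    @abstractmethod
    def unlink(self, oid: int) -> None: ...
    @abstractmethod
    def read(self, oid: int, offset: int, length: int) -> bytes: ...
    @abstractmethod
    def write(self, oid: int, offset: int, data: bytes) -> int: ...
    @abstractmethod
    def getattr(self, oid: int) -> Dict[str, int]: ...
    @abstractmethod
    def setattr(self, oid: int, attrs: Dict[str, int]) -> None: ...

class MemoryOBD(ObjectStorageDevice):
    """Simulated OBD: objects are byte arrays; allocation is entirely internal."""
    def __init__(self) -> None:
        self._data: Dict[int, bytearray] = {}
        self._attrs: Dict[int, Dict[str, int]] = {}
        self._next = 1

    def create(self) -> int:
        oid, self._next = self._next, self._next + 1
        self._data[oid] = bytearray()
        self._attrs[oid] = {"size": 0, "mode": 0o644}
        return oid

    def unlink(self, oid: int) -> None:
        del self._data[oid], self._attrs[oid]

    def read(self, oid: int, offset: int, length: int) -> bytes:
        return bytes(self._data[oid][offset:offset + length])

    def write(self, oid: int, offset: int, data: bytes) -> int:
        buf = self._data[oid]
        if len(buf) < offset:
            buf.extend(b"\0" * (offset - len(buf)))  # a write past EOF leaves a zero-filled hole
        buf[offset:offset + len(data)] = data
        self._attrs[oid]["size"] = len(buf)
        return len(data)

    def getattr(self, oid: int) -> Dict[str, int]:
        return dict(self._attrs[oid])

    def setattr(self, oid: int, attrs: Dict[str, int]) -> None:
        self._attrs[oid].update(attrs)
```

A file system above this interface never sees blocks: it asks for an object, reads and writes byte ranges, and leaves allocation to the device.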

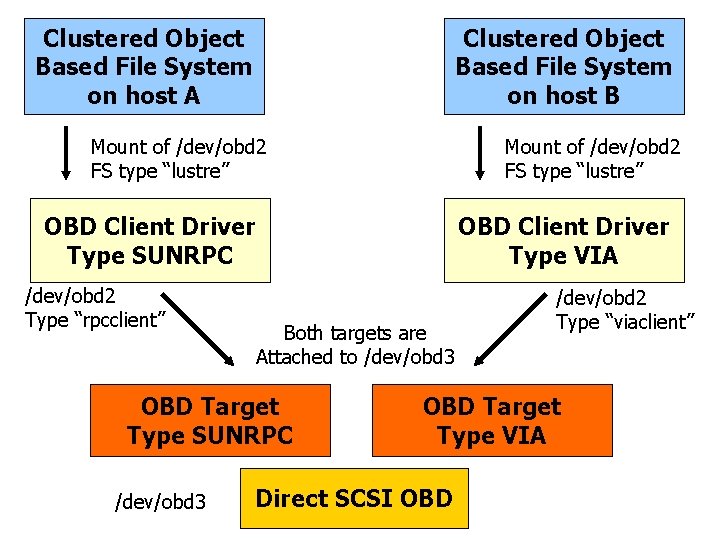

Components of OB storage
- Storage object device drivers:
  - Class drivers: attach a driver to an interface
    - Targets, clients: remote access
  - Direct drivers: manage physical storage
  - Logical drivers: storage management
- Object storage applications:
  - Object (cluster) file system: blockless
  - Specialized apps: caches, DBs, file servers

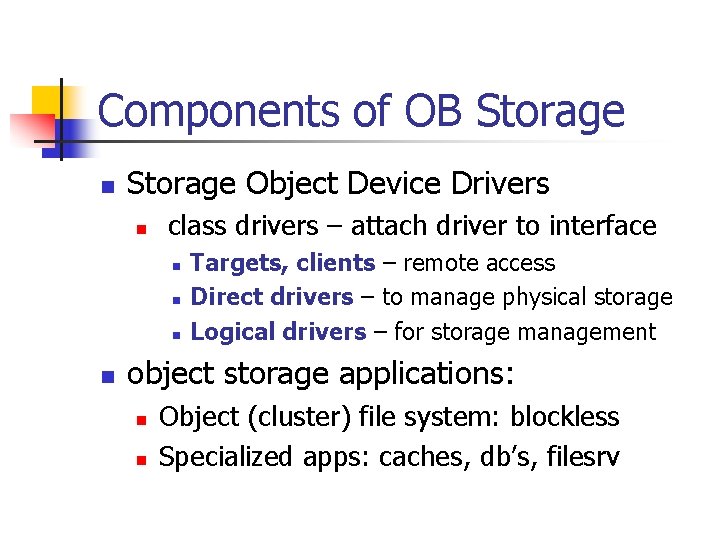

- Object Based Disk File System (OBDFS): /dev/obd1 mounted on /mnt/obd, type “obdfs”
  - Simulated ext2 direct OBD driver (obdext2): /dev/obd1 of type “ext2”, attached to /dev/hda2, an SBD (e.g. an IDE disk)
- Object Based Database: data on /dev/obd2
  - RAID 0 logical OBD driver (obdraid0): /dev/obd2 of type “raid0”, attached to /dev/obd3 and /dev/obd4
    - /dev/obd3: direct SCSI OBD
    - /dev/obd4: direct SCSI OBD
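
The attachments in this example form a small graph from logical devices down to storage. The sketch below records them as a table and resolves which devices ultimately back a given OBD. The device names and the “ext2”/“raid0” types come from the example; the “scsi” type name, the `ATTACHMENTS` table and `backing_devices` are hypothetical.

```python
from typing import Dict, List, Tuple

# device -> (driver type, devices it is attached to), following the example above.
ATTACHMENTS: Dict[str, Tuple[str, List[str]]] = {
    "/dev/obd1": ("ext2", ["/dev/hda2"]),
    "/dev/obd2": ("raid0", ["/dev/obd3", "/dev/obd4"]),
    "/dev/obd3": ("scsi", []),  # hypothetical type name for a direct SCSI OBD
    "/dev/obd4": ("scsi", []),
}

def backing_devices(dev: str) -> List[str]:
    """Follow attachments down to devices with nothing below them."""
    if dev not in ATTACHMENTS:
        return [dev]  # a plain block device such as /dev/hda2
    _, below = ATTACHMENTS[dev]
    if not below:
        return [dev]  # a direct OBD that manages physical storage itself
    result: List[str] = []
    for d in below:
        result.extend(backing_devices(d))
    return result

print(backing_devices("/dev/obd1"))  # ['/dev/hda2']
print(backing_devices("/dev/obd2"))  # ['/dev/obd3', '/dev/obd4']
```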

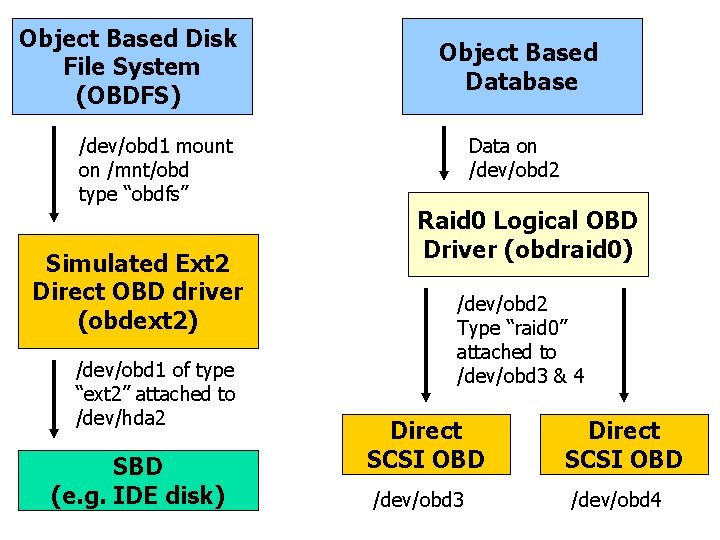

Clustered object based file system on hosts A and B
- Each host mounts /dev/obd2 with FS type “lustre”
- OBD client drivers: one of type SUNRPC (/dev/obd2 of type “rpcclient”) and one of type VIA (/dev/obd2 of type “viaclient”)
- OBD targets: one of type SUNRPC and one of type VIA; both targets are attached to /dev/obd3, a direct SCSI OBD

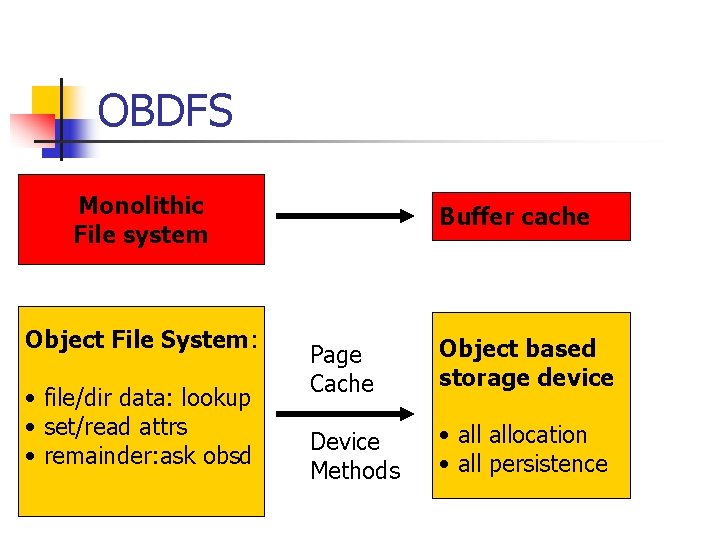

OBDFS vs. a monolithic file system
- Object file system:
  - File/dir data: lookup
  - Set/read attrs
  - Remainder: ask the OBSD
- Caches: buffer cache, page cache
- Object based storage device, device methods:
  - Allocation
  - All persistence

Why this is better…
- Clustering
- Storage management

Storage management
- Many problems become easier:
  - File system snapshots
  - Hot file migration
  - Hot resizing
  - RAID
  - Backup

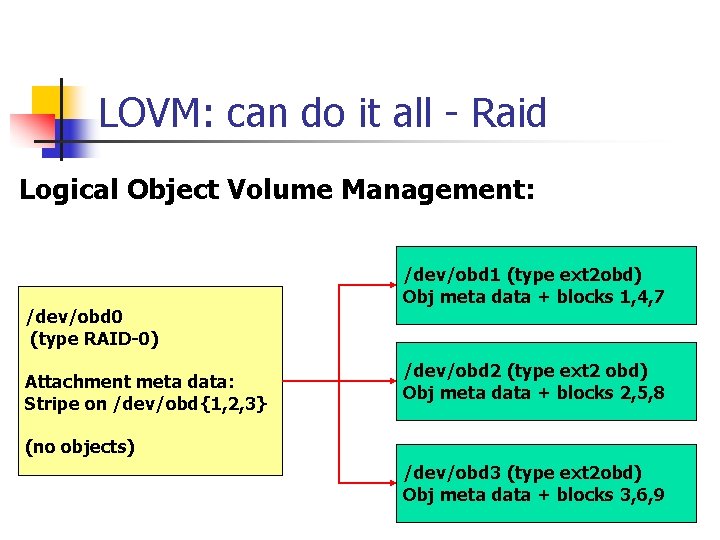

LOVM can do it all: RAID
Logical Object Volume Management:
- /dev/obd0 (type RAID-0): attachment metadata says “stripe on /dev/obd{1,2,3}”; holds no objects itself
- /dev/obd1 (type ext2 obd): object metadata + blocks 1, 4, 7
- /dev/obd2 (type ext2 obd): object metadata + blocks 2, 5, 8
- /dev/obd3 (type ext2 obd): object metadata + blocks 3, 6, 9
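
The block layout above is round-robin striping with a stripe unit of one block. A minimal sketch of the mapping, assuming 1-based block and device numbers and a one-block stripe unit; `stripe_location` and `blocks_on` are hypothetical names.

```python
from typing import List, Tuple

def stripe_location(block: int, ndevices: int) -> Tuple[int, int]:
    """Map a 1-based logical block to (1-based device, 1-based block on that device)."""
    i = block - 1
    return i % ndevices + 1, i // ndevices + 1

def blocks_on(device: int, ndevices: int, nblocks: int) -> List[int]:
    """All logical blocks in 1..nblocks that land on the given device."""
    return [b for b in range(1, nblocks + 1) if stripe_location(b, ndevices)[0] == device]

for dev in (1, 2, 3):
    print(f"/dev/obd{dev}:", blocks_on(dev, 3, 9))
# /dev/obd1: [1, 4, 7]
# /dev/obd2: [2, 5, 8]
# /dev/obd3: [3, 6, 9]
```

Because each member is itself an object device, the RAID-0 driver only stripes object data; each member keeps its own object metadata, as the layout shows.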

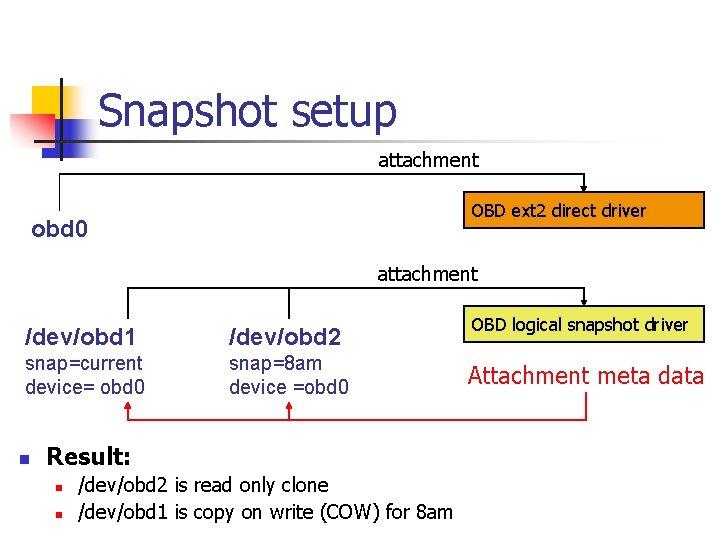

Snapshot setup
- OBD ext2 direct driver: obd0
- OBD logical snapshot driver, with attachment metadata:
  - /dev/obd1: snap=current, device=obd0
  - /dev/obd2: snap=8am, device=obd0
- Result:
  - /dev/obd2 is a read-only clone
  - /dev/obd1 is copy on write (COW) for 8am

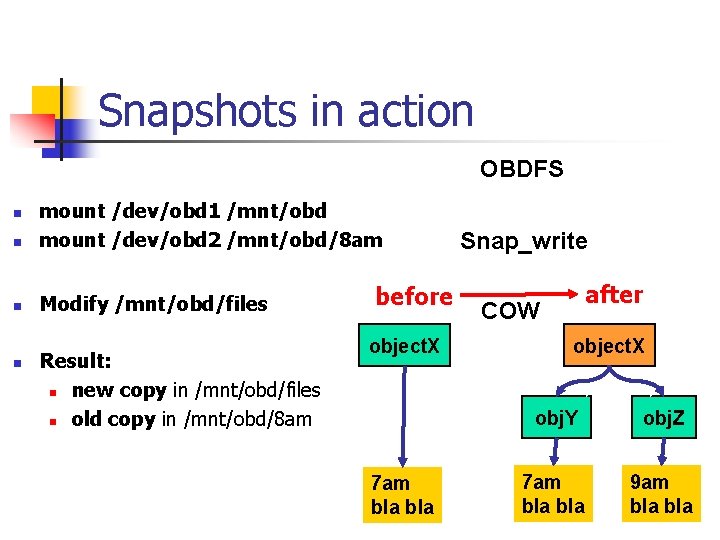

Snapshots in action (OBDFS)
- mount /dev/obd1 /mnt/obd
- mount /dev/obd2 /mnt/obd/8am
- Modify /mnt/obd/files
- Result:
  - New copy in /mnt/obd/files
  - Old copy in /mnt/obd/8am
- Diagram: before the snap_write, object X holds “7 am bla”; after the COW, the old “7 am bla” data and the new “9 am bla” data live in separate objects (X, Y and Z in the figure)
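
The copy-on-write behaviour can be modelled with a flat object store. This toy sketch assumes string object ids and contents; `SnapshotOBD`, `snapshot`, `write` and `read` are hypothetical names, not the logical snapshot driver's interface. On the first write to an object after a snapshot, the old version is preserved for that snapshot; objects that did not exist at snapshot time are recorded as absent.

```python
from typing import Dict, Optional

class SnapshotOBD:
    """Copy-on-write snapshots over a flat object store (toy model)."""

    def __init__(self) -> None:
        self.current: Dict[str, str] = {}
        # snapshot name -> {object id: content at snapshot time, None if absent}
        self.snaps: Dict[str, Dict[str, Optional[str]]] = {}

    def snapshot(self, name: str) -> None:
        self.snaps[name] = {}  # nothing copied yet: the snapshot shares all objects

    def write(self, oid: str, data: str) -> None:
        for saved in self.snaps.values():
            if oid not in saved:
                # First write since this snapshot: preserve the old version (COW).
                saved[oid] = self.current.get(oid)
        self.current[oid] = data

    def read(self, oid: str, snap: Optional[str] = None) -> Optional[str]:
        if snap is not None and oid in self.snaps[snap]:
            return self.snaps[snap][oid]
        return self.current.get(oid)  # unchanged since the snapshot: shared copy

obd = SnapshotOBD()
obd.write("X", "7 am bla")
obd.snapshot("8am")
obd.write("X", "9 am bla")
print(obd.read("X"))         # 9 am bla
print(obd.read("X", "8am"))  # 7 am bla
```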

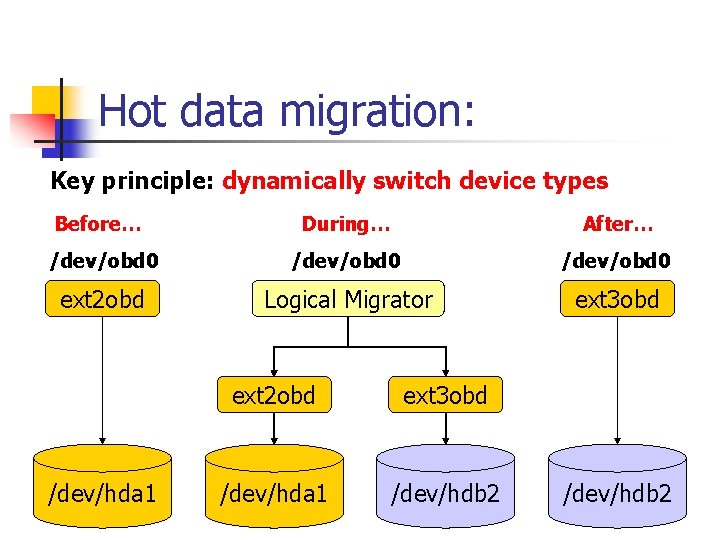

Hot data migration
- Key principle: dynamically switch device types
- Before: /dev/obd0 is an ext2 obd on /dev/hda1
- During: a logical migrator sits above the ext2 obd (/dev/hda1) and a new ext3 obd (/dev/hdb2)
- After: /dev/obd0 is an ext3 obd
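
A logical migrator can keep the device online while objects move between the old and new object stores. This is a sketch under assumptions: the stores are plain dicts, all new writes go to the new device, and a background `step` copies one remaining object at a time. `LogicalMigrator` and its methods are hypothetical names.

```python
from typing import Dict, List

class LogicalMigrator:
    """Sits above two object stores while objects move from `old` to `new`."""

    def __init__(self, old: Dict[str, bytes], new: Dict[str, bytes]) -> None:
        self.old, self.new = old, new
        self.pending: List[str] = list(old)  # objects not yet copied

    def read(self, oid: str) -> bytes:
        # Migrated (or newly written) objects live on the new device.
        return self.new[oid] if oid in self.new else self.old[oid]

    def write(self, oid: str, data: bytes) -> None:
        self.new[oid] = data       # all writes go to the new device
        self.old.pop(oid, None)    # any old copy is now stale

    def step(self) -> bool:
        """Copy one pending object in the background; True once migration is done."""
        while self.pending:
            oid = self.pending.pop()
            if oid in self.old:    # skip objects already rewritten via write()
                self.new[oid] = self.old.pop(oid)
                break
        return not self.pending

old = {"a": b"1", "b": b"2"}
new: Dict[str, bytes] = {}
m = LogicalMigrator(old, new)
m.write("a", b"10")   # a client write during migration
while not m.step():
    pass
print(new)  # {'a': b'10', 'b': b'2'}
```

Once `step` reports completion the old device holds nothing, and the migrator can be replaced by the new device alone.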

Lustre file system
- Lustre ~ Linux Cluster
- Object based cluster file system:
  - Based on OBSDs
  - Symmetric: no file manager
  - Cluster-wide Unix semantics: DLM
  - Journal recovery, etc.

Benefits of the Lustre design
- Space & object allocation:
  - Managed where it is needed!
- Consequences:
  - IBM (Devarakonda et al.): less traffic
  - Much simpler locking

Others…
- Coda: mobile use, server replication, security
- GFS: shared storage file system, logical volumes
- InterMezzo: smart “replicator”; exploits the disk file system
- Lustre: shared storage file system, likely best with smarter storage devices
- NFS

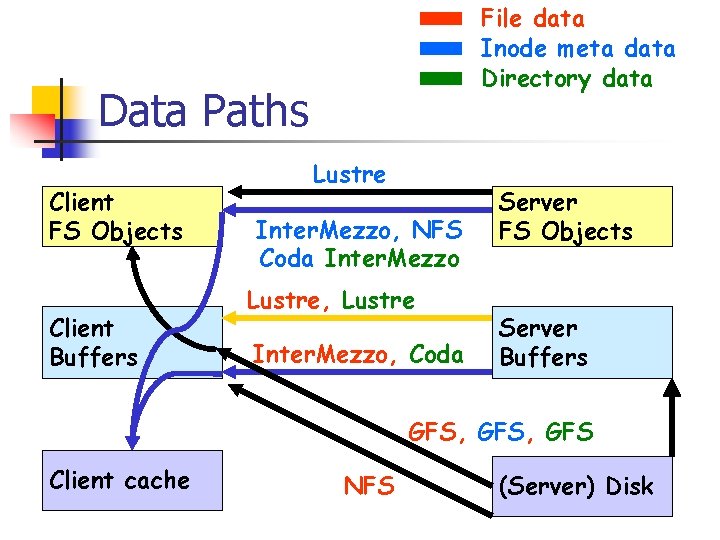

Diagram: data paths for file data, inode metadata and directory data through client FS objects, client buffers, server FS objects, server buffers and disk, compared for Lustre, InterMezzo, Coda, NFS (client cache, server) and GFS.

Conclusions
- Linux needs this stuff badly
- Relatively little literature:
  - Cluster file systems
  - DLMs
- Good opportunity to innovate
