Links as a Service: Guaranteed Tenant Isolation in the Shared Cloud

- Slides: 24

Links as a Service: Guaranteed Tenant Isolation in the Shared Cloud

Eitan Zahavi, Electrical Engineering, Technion; Mellanox Technologies

Joint work with: Isaac Keslassy (Technion), Alex Shpiner (Mellanox), Ori Rottenstreich (Princeton), Avinoam Kolodny (Technion)

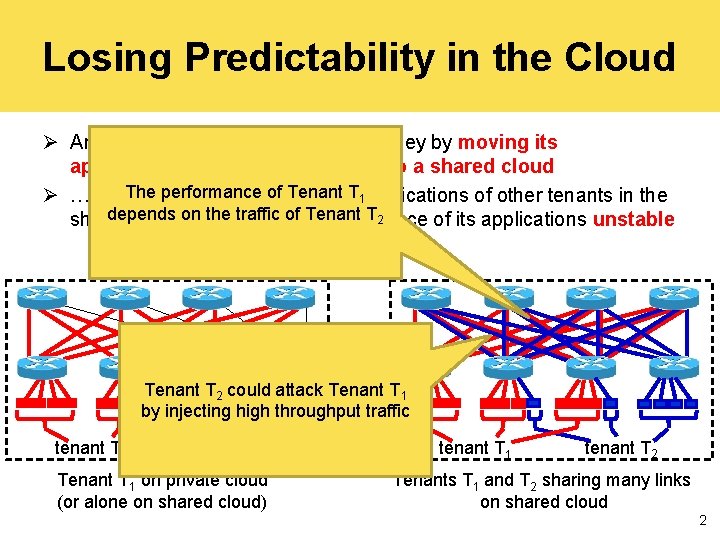

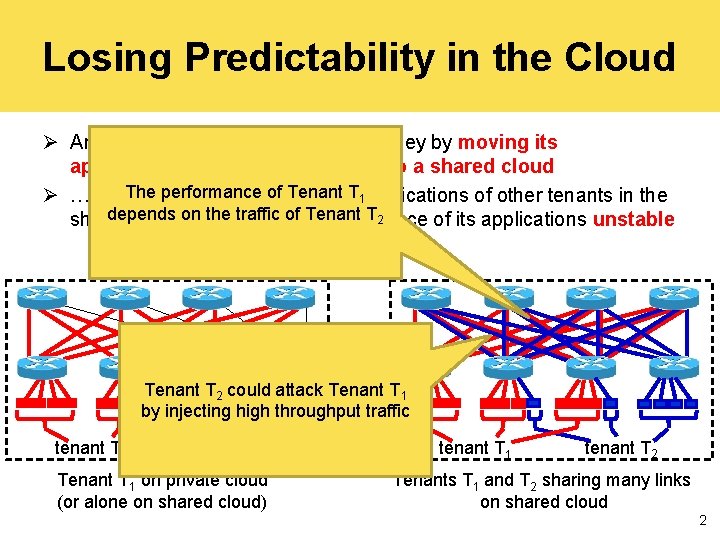

Losing Predictability in the Cloud

- An organization can save a lot of money by moving its applications from a private cloud to a shared cloud
- … but it often won't, because the applications of other tenants in the shared cloud can make the performance of its applications unstable
  - The performance of Tenant T1 depends on the traffic of Tenant T2
  - Tenant T2 could attack Tenant T1 by injecting high-throughput traffic

[Figure: Tenant T1 on a private cloud (or alone on a shared cloud) vs. Tenants T1 and T2 sharing many links on a shared cloud]

Sensitive Applications

- Applications that depend on the weakest link
  - MapReduce
  - Any Bulk Synchronous Parallel (BSP) program
  - Scientific computing, for example stencil applications
- Some mission-critical applications can't be late
  - Bank customer rollup: must complete overnight
  - Weather prediction: a new result every few hours
  - …
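To make the "weakest link" point concrete, here is a minimal sketch of one BSP superstep. It assumes, hypothetically, that every worker computes for the same time and then communicates before a global barrier; the function name and timings are illustrative only.

```python
def bsp_iteration_time(compute_ms, comm_ms):
    """One BSP superstep: each worker computes, communicates, then waits at a barrier.
    The barrier releases only when the slowest worker arrives."""
    return max(c + m for c, m in zip(compute_ms, comm_ms))


# Hypothetical superstep: 8 workers, 10 ms of compute and 1 ms of communication each.
compute = [10.0] * 8
comm = [1.0] * 8
baseline = bsp_iteration_time(compute, comm)

# One worker's traffic crosses a link shared with another tenant and takes 3x longer.
comm_contended = list(comm)
comm_contended[5] = 3.0
slowed = bsp_iteration_time(compute, comm_contended)

print(baseline, slowed)  # 11.0 13.0
```

A single slow flow stretches the iteration for every worker, which is why these applications are so sensitive to other tenants' traffic.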

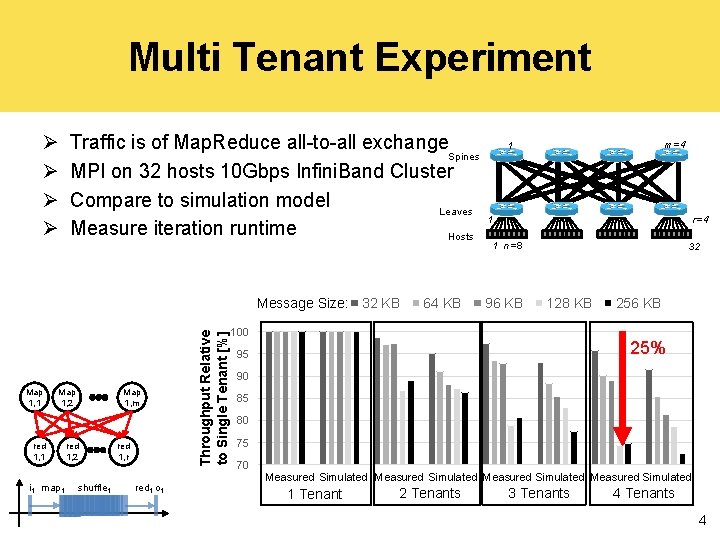

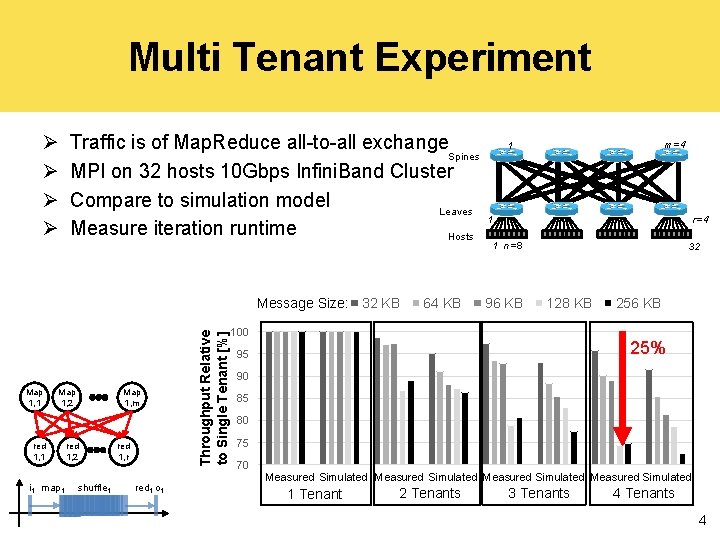

Multi Tenant Experiment

- Traffic is a MapReduce all-to-all exchange
- MPI on a 32-host, 10 Gbps InfiniBand cluster
- Measure the iteration runtime
- Compare to a simulation model

[Figure: fat tree (spines, leaves, hosts) and MapReduce job graph i1 → map1 (Map 1,1 … Map 1,m) → shuffle1 → red1 (red 1,1 … red 1,r) → o1, with m = 4, r = 4, n = 8. Plot: throughput relative to a single tenant [%] (75 to 100) for 1, 2 and 3 tenants, measured vs. simulated, for message sizes 32 KB, 64 KB, 96 KB, 128 KB and 256 KB; annotated drop of 25%]
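A minimal sketch of the traffic and metric in this experiment, assuming (hypothetically) that all 32 hosts belong to one tenant and that "throughput relative to single tenant" is the ratio of iteration runtimes, since every iteration moves the same amount of data. The helper names are illustrative, not from the authors' tools.

```python
from itertools import permutations


def all_to_all_flows(hosts):
    """Shuffle phase of an all-to-all exchange: every host sends to every other host."""
    return list(permutations(hosts, 2))


def relative_throughput_pct(single_tenant_runtime, multi_tenant_runtime):
    """Throughput relative to running alone, assuming each iteration moves the same data."""
    return 100.0 * single_tenant_runtime / multi_tenant_runtime


flows = all_to_all_flows(range(32))
print(len(flows))  # 992 (= 32 * 31)

# Hypothetical runtimes: an iteration that takes 25% longer when sharing the network.
print(relative_throughput_pct(1.0, 1.25))  # 80.0
```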

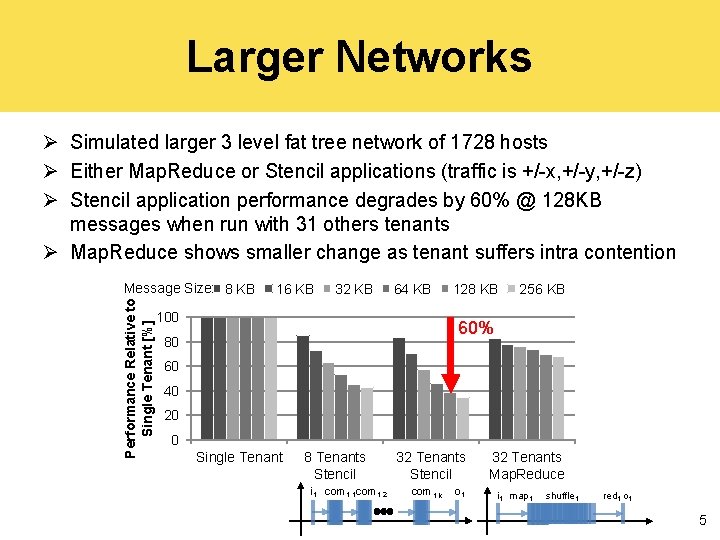

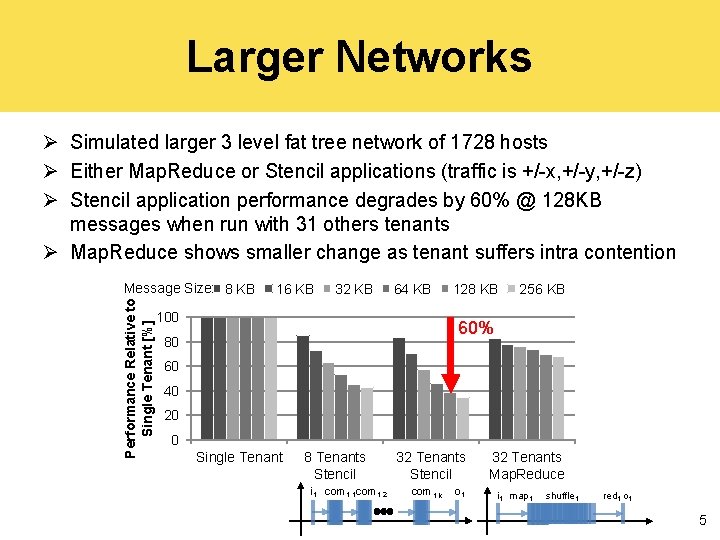

Larger Networks

- Simulated a larger 3-level fat tree network of 1728 hosts
- Either MapReduce or stencil applications (stencil traffic is ±x, ±y, ±z)
- Stencil application performance degrades by 60% at 128 KB messages when run with 31 other tenants
- MapReduce shows a smaller change, as the tenant already suffers from intra-tenant contention

[Figure: performance relative to a single tenant [%] (0 to 100) for message sizes 8 KB to 256 KB, comparing Single Tenant, 8 Tenants Stencil, 32 Tenants Stencil and 32 Tenants MapReduce; annotated drop of 60%. Job graphs: stencil i1 → com1,2 … com1,k → o1; MapReduce i1 → map1 → shuffle1 → red1 → o1]
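The ±x, ±y, ±z stencil pattern means each rank exchanges data with its six face neighbors every iteration. A minimal sketch of that neighbor computation, assuming a row-major rank numbering and periodic (wrap-around) boundaries; both are assumptions, since the slide does not specify the decomposition.

```python
def stencil_neighbors(rank, dims):
    """Ranks of the six ±x, ±y, ±z neighbors of `rank` in a 3D process grid.
    Assumes periodic (wrap-around) boundaries and row-major rank numbering."""
    nx, ny, nz = dims
    x, rem = divmod(rank, ny * nz)
    y, z = divmod(rem, nz)

    def to_rank(i, j, k):
        return ((i % nx) * ny + (j % ny)) * nz + (k % nz)

    return [
        to_rank(x + 1, y, z), to_rank(x - 1, y, z),  # +x, -x
        to_rank(x, y + 1, z), to_rank(x, y - 1, z),  # +y, -y
        to_rank(x, y, z + 1), to_rank(x, y, z - 1),  # +z, -z
    ]


# Hypothetical 4 x 4 x 4 grid of 64 ranks.
print(stencil_neighbors(0, (4, 4, 4)))  # [16, 48, 4, 12, 1, 3]
```

Unlike the all-to-all shuffle, this pattern is sparse and regular, so a stencil tenant has little internal contention and its slowdown comes mostly from other tenants.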

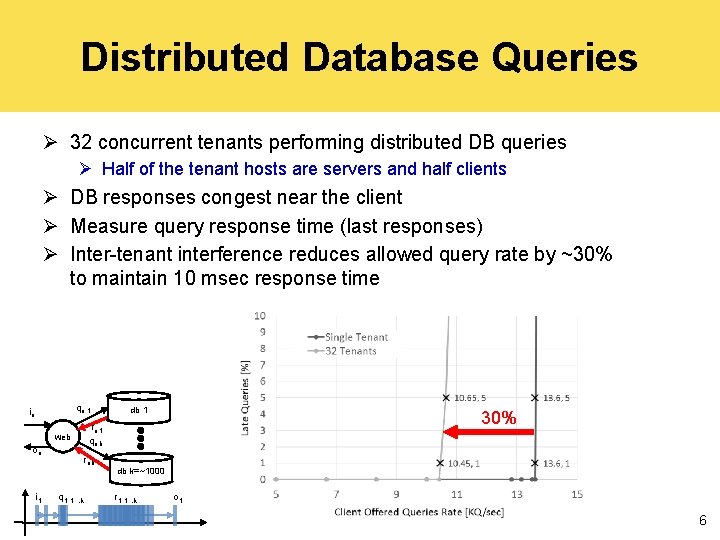

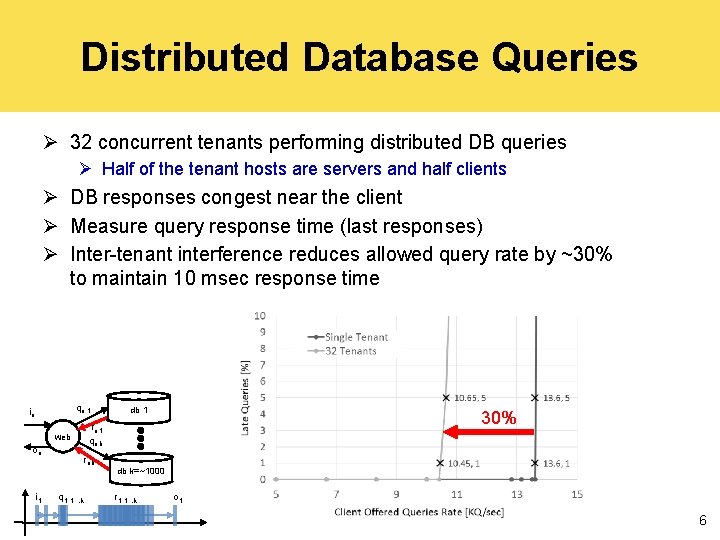

Distributed Database Queries

- 32 concurrent tenants performing distributed DB queries
- Half of the tenant hosts are servers and half are clients
- DB responses congest near the client
- Measure the query response time (time of the last response)
- Inter-tenant interference reduces the allowed query rate by ~30% to maintain a 10 msec response time

[Figure: query fan-out job graph (inputs i1 … in, web tier, DB servers db1 … dbk with k ≈ 1000, queries q1,1…k through qn,1…k, responses r1,1…k through rn,1…k, outputs o1 … on); plot annotated with a 30% drop]

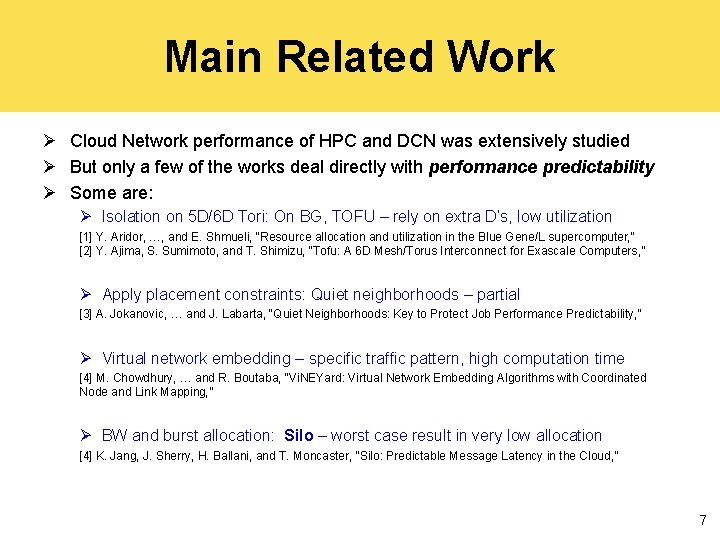

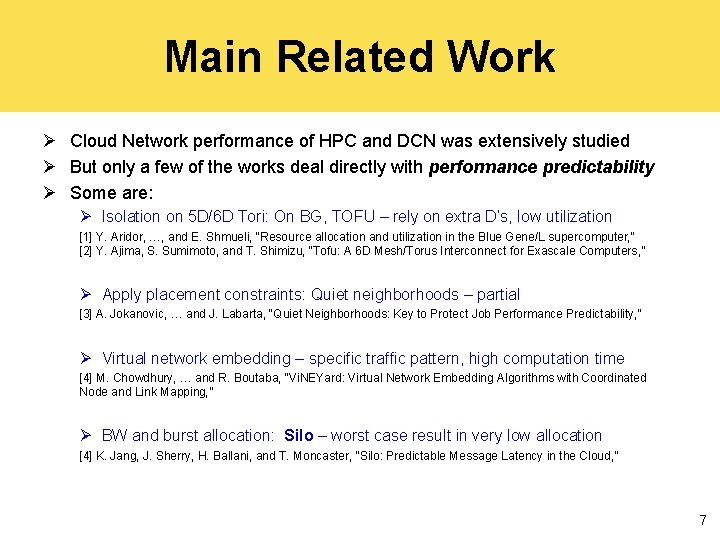

Main Related Work

- Cloud network performance of HPC and DCN has been extensively studied
- But only a few of these works deal directly with performance predictability
- Some are:
  - Isolation on 5D/6D tori (Blue Gene, Tofu): relies on the extra dimensions, low utilization [1], [2]
  - Applying placement constraints (Quiet Neighborhoods): partial [3]
  - Virtual network embedding: specific traffic pattern, high computation time [4]
  - Bandwidth and burst allocation (Silo): the worst case results in very low allocation [5]

- [1] Y. Aridor, …, and E. Shmueli, "Resource allocation and utilization in the Blue Gene/L supercomputer."
- [2] Y. Ajima, S. Sumimoto, and T. Shimizu, "Tofu: A 6D Mesh/Torus Interconnect for Exascale Computers."
- [3] A. Jokanovic, …, and J. Labarta, "Quiet Neighborhoods: Key to Protect Job Performance Predictability."
- [4] M. Chowdhury, …, and R. Boutaba, "ViNEYard: Virtual Network Embedding Algorithms with Coordinated Node and Link Mapping."
- [5] K. Jang, J. Sherry, H. Ballani, and T. Moncaster, "Silo: Predictable Message Latency in the Cloud."

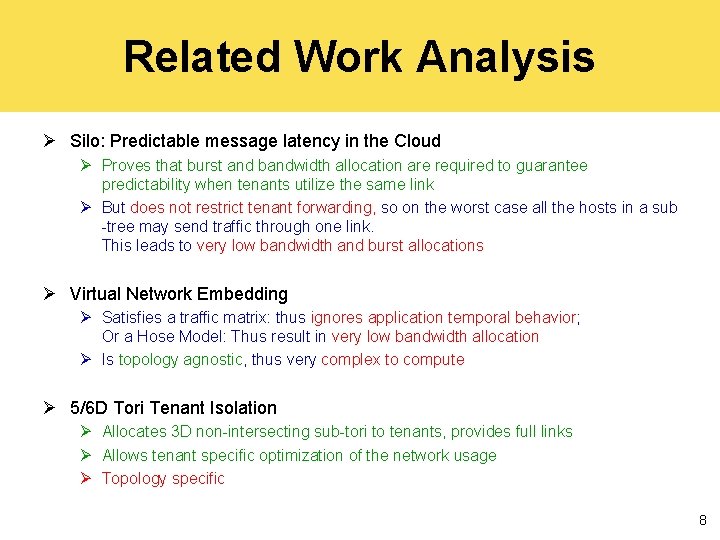

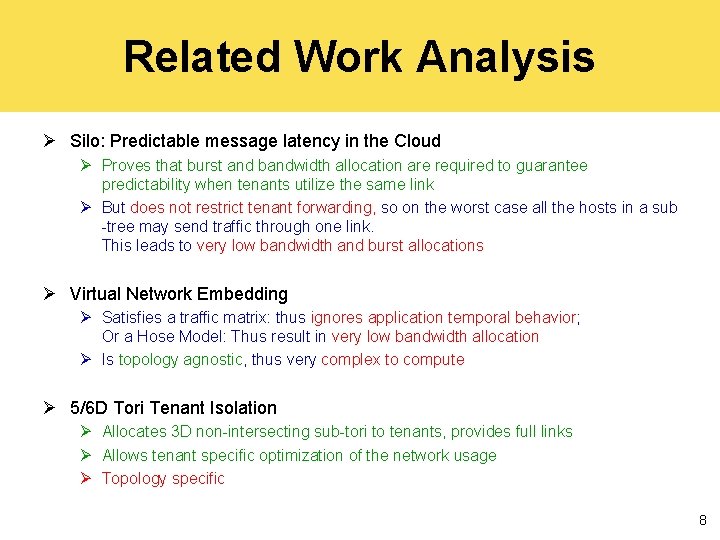

Related Work Analysis

- Silo: Predictable message latency in the cloud
  - Proves that burst and bandwidth allocation are required to guarantee predictability when tenants utilize the same link
  - But it does not restrict tenant forwarding, so in the worst case all the hosts in a sub-tree may send traffic through one link. This leads to very low bandwidth and burst allocations
- Virtual network embedding
  - Satisfies either a traffic matrix, and thus ignores the application's temporal behavior, or a hose model, and thus results in very low bandwidth allocation
  - Is topology agnostic, and thus very complex to compute
- 5D/6D tori tenant isolation
  - Allocates non-intersecting 3D sub-tori to tenants, providing full links
  - Allows tenant-specific optimization of network usage
  - Is topology specific

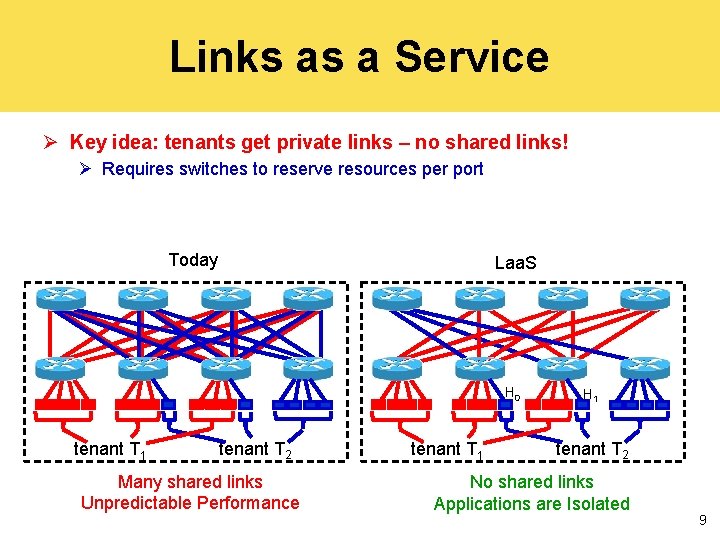

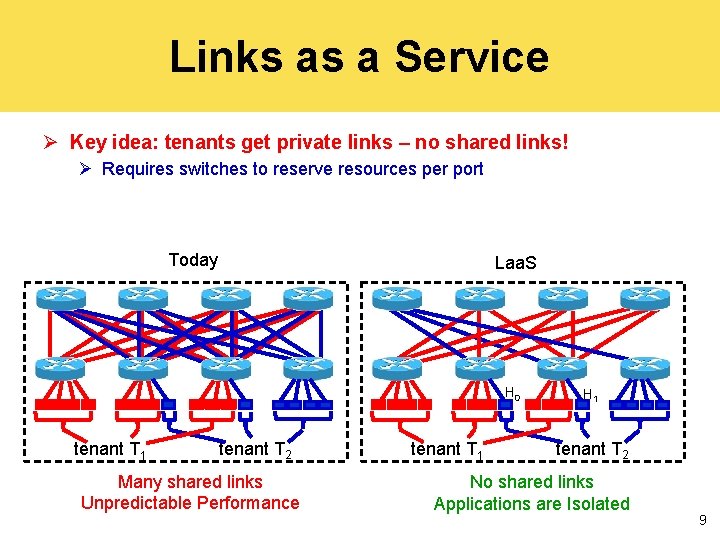

Links as a Service

- Key idea: tenants get private links, no shared links!
- Requires switches to reserve resources per port

[Figure: Today, tenants T1 and T2 share many links, giving unpredictable performance. With LaaS there are no shared links, so applications are isolated]
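The LaaS invariant can be stated as a simple check: no link may carry traffic of more than one tenant. A minimal sketch, assuming a hypothetical representation in which each tenant's routing is reduced to the set of links it forwards over, identified by (switch, port) pairs.

```python
from collections import defaultdict


def shared_links(routes):
    """routes maps each tenant to the links its traffic is forwarded over.
    Returns every link used by more than one tenant; LaaS requires this to be empty."""
    users = defaultdict(set)
    for tenant, links in routes.items():
        for link in links:
            users[link].add(tenant)
    return {link: sorted(ts) for link, ts in users.items() if len(ts) > 1}


# Hypothetical link IDs: (leaf switch, uplink port).
today = {"T1": [("L1", "up0"), ("L1", "up1")], "T2": [("L1", "up1"), ("L2", "up0")]}
laas = {"T1": [("L1", "up0")], "T2": [("L1", "up1"), ("L2", "up0")]}

print(shared_links(today))  # {('L1', 'up1'): ['T1', 'T2']}
print(shared_links(laas))   # {}
```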

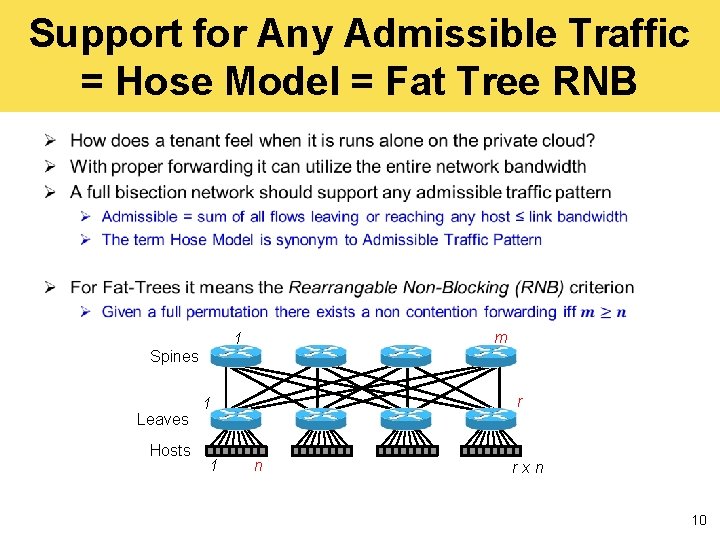

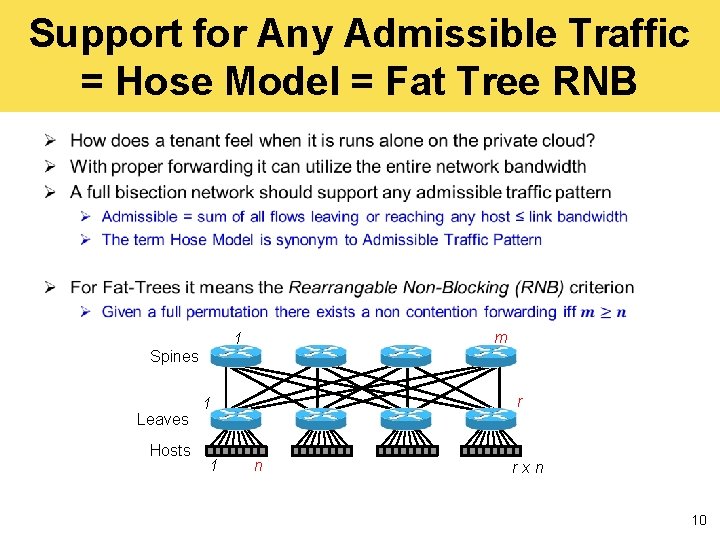

Support for Any Admissible Traffic = Hose Model = Fat Tree RNB (Rearrangeably Non-Blocking)

[Figure: two-level fat tree with spines 1 … m, leaves 1 … r and n hosts per leaf (r × n hosts in total)]
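Under the hose model, a traffic matrix is admissible when no host sends or receives more than its own link rate. A minimal sketch of that test, assuming unit-capacity host links and a dense matrix `tm[i][j]` of rates from host i to host j (the function name is illustrative).

```python
def is_admissible(tm, capacity=1.0):
    """Hose model: tm[i][j] is the rate from host i to host j. The matrix is admissible
    if every row sum (egress) and every column sum (ingress) is within the capacity."""
    n = len(tm)
    egress_ok = all(sum(tm[i]) <= capacity for i in range(n))
    ingress_ok = all(sum(tm[i][j] for i in range(n)) <= capacity for j in range(n))
    return egress_ok and ingress_ok


# A permutation (each host sends at full rate to exactly one other) is admissible.
perm = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
# Two hosts both sending at full rate to host 2 overload its downlink.
incast = [[0, 0, 1], [0, 0, 1], [0, 0, 0]]

print(is_admissible(perm), is_admissible(incast))  # True False
```

Supporting "any admissible traffic" therefore means the network must be able to carry every matrix that passes this test, not just the ones a tenant happens to generate.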

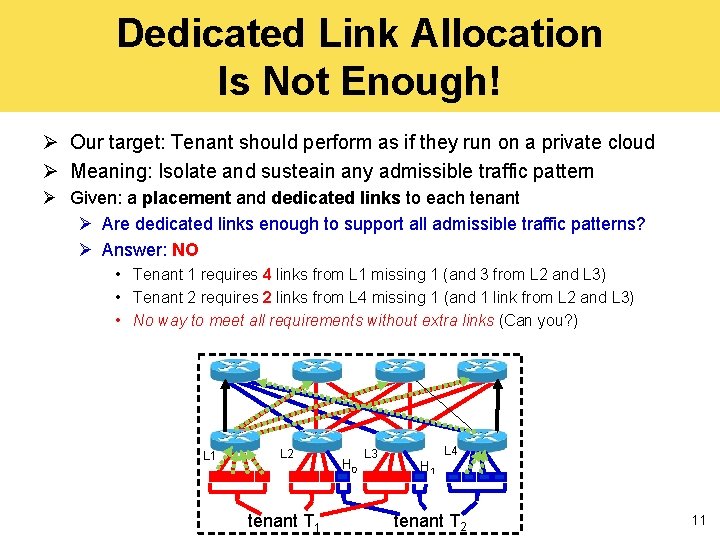

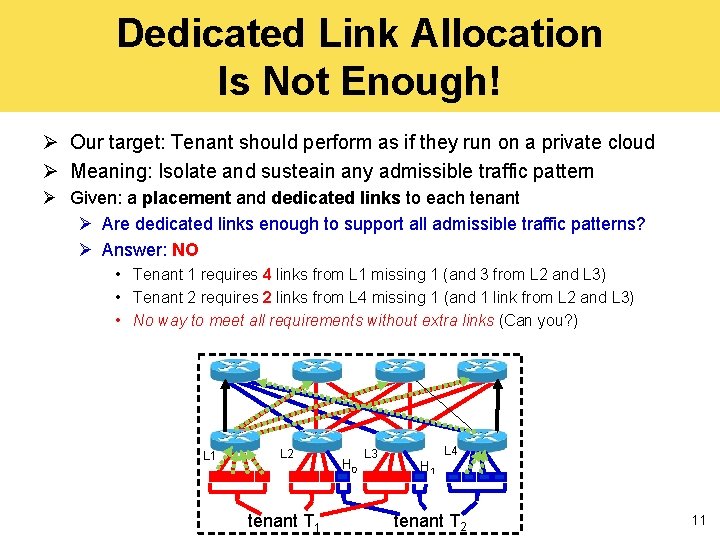

Dedicated Link Allocation Is Not Enough!

- Our target: tenants should perform as if they run on a private cloud
- Meaning: isolate and sustain any admissible traffic pattern
- Given: a placement and dedicated links for each tenant
- Are dedicated links enough to support all admissible traffic patterns?
- Answer: NO
  - Tenant 1 requires 4 links from L1 but is missing 1 (and 3 from L2 and L3)
  - Tenant 2 requires 2 links from L4 but is missing 1 (and 1 link from L2 and L3)
  - There is no way to meet all requirements without extra links (can you find one?)

[Figure: leaves L1 to L4 with hosts of tenant T1 and tenant T2]
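Where do per-leaf link requirements like these come from? Under the hose model with unit-rate host links, the traffic a tenant can push out of leaf l is bounded both by its N_l hosts on that leaf (each sends at most 1) and by its N − N_l hosts elsewhere (each receives at most 1), so the leaf needs min(N_l, N − N_l) dedicated uplinks. A minimal sketch of that count; the placements below are hypothetical, not the one drawn on the slide.

```python
def leaf_uplink_demand(hosts_per_leaf):
    """Dedicated uplinks each leaf needs so the tenant can sustain any hose-model
    traffic: min(hosts on the leaf, tenant hosts on all other leaves)."""
    total = sum(hosts_per_leaf.values())
    return {leaf: min(n, total - n) for leaf, n in hosts_per_leaf.items()}


# Hypothetical tenant placements (host counts per leaf switch).
print(leaf_uplink_demand({"L1": 4, "L2": 3, "L3": 3}))  # {'L1': 4, 'L2': 3, 'L3': 3}
print(leaf_uplink_demand({"L1": 6, "L2": 1, "L3": 1}))  # {'L1': 2, 'L2': 1, 'L3': 1}
```

This only counts links at the leaf level; it says nothing about whether the spines can supply matching links for every tenant at once, which is where the example on the slide fails.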

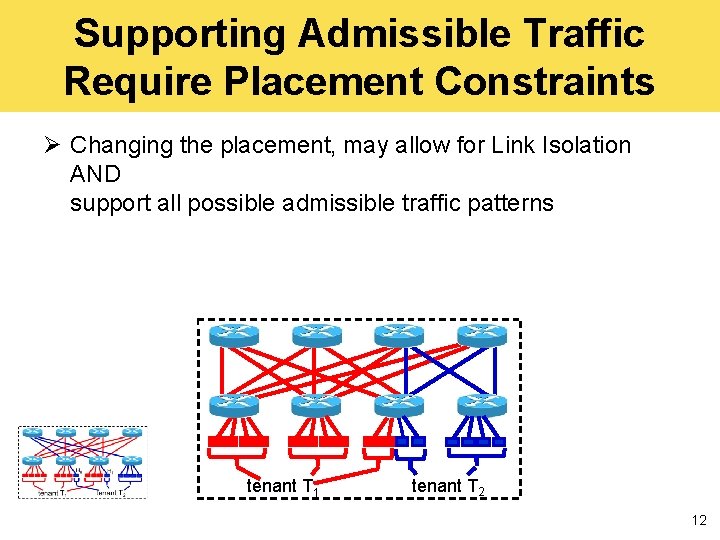

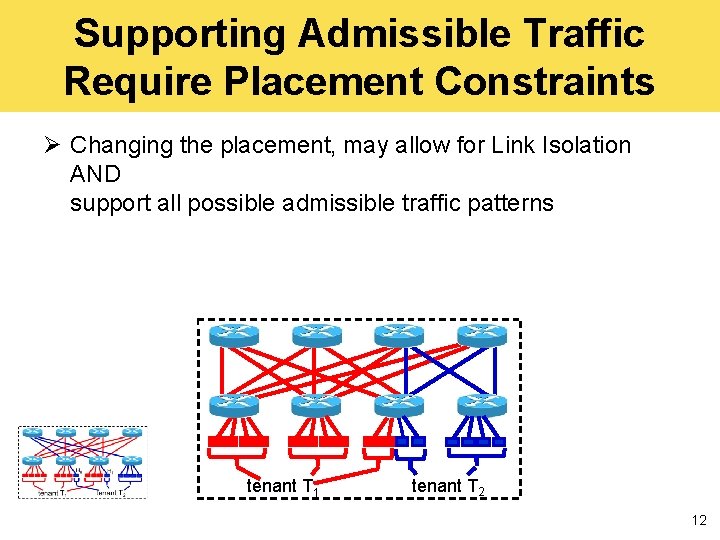

Supporting Admissible Traffic Requires Placement Constraints

- Changing the placement may allow for link isolation AND support for all possible admissible traffic patterns

[Figure: an alternative placement of tenants T1 and T2]

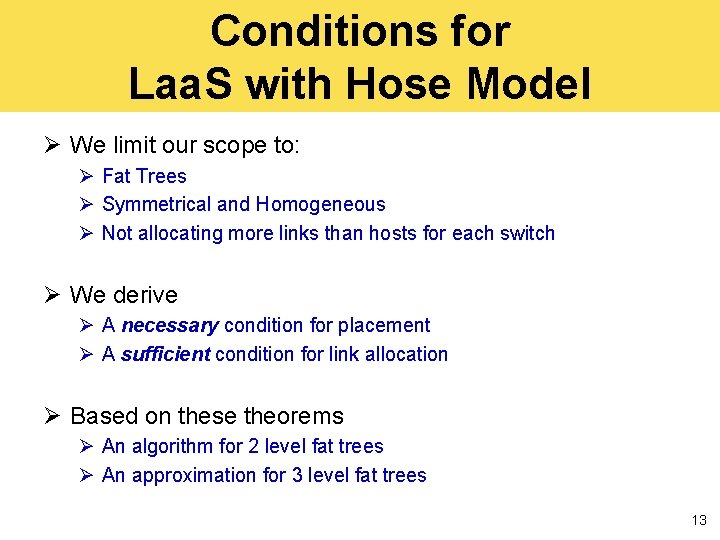

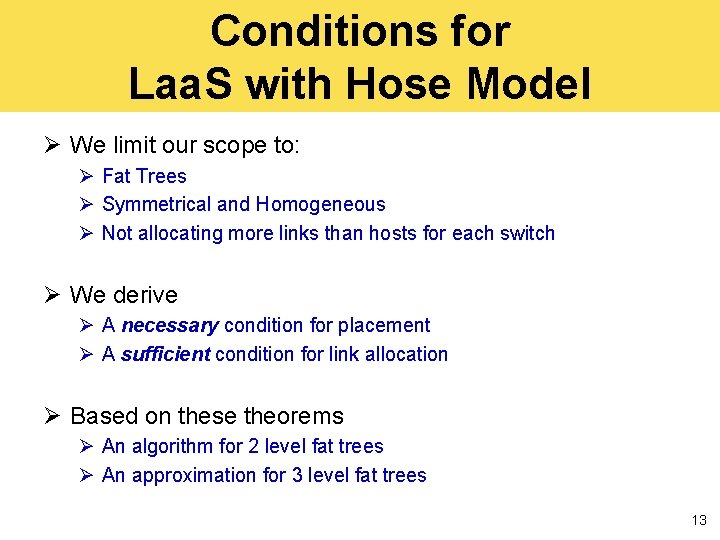

Conditions for LaaS with the Hose Model

- We limit our scope to:
  - Fat trees
  - Symmetrical and homogeneous
  - Not allocating more links than hosts for each switch
- We derive:
  - A necessary condition for placement
  - A sufficient condition for link allocation
- Based on these theorems:
  - An algorithm for 2-level fat trees
  - An approximation for 3-level fat trees

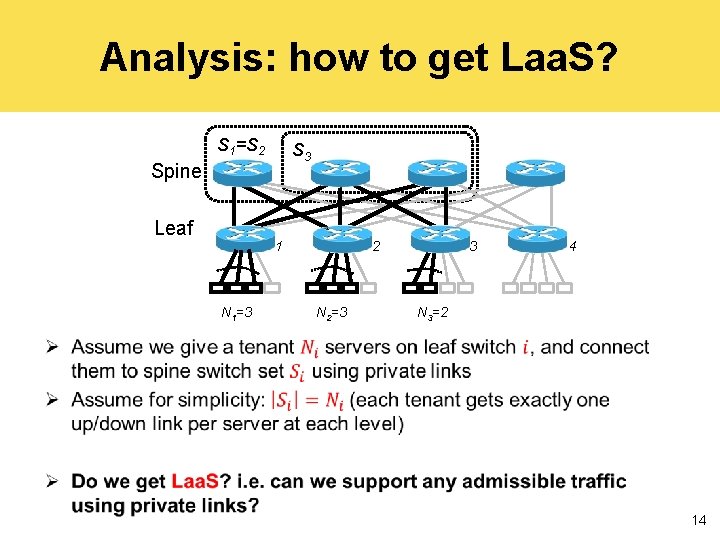

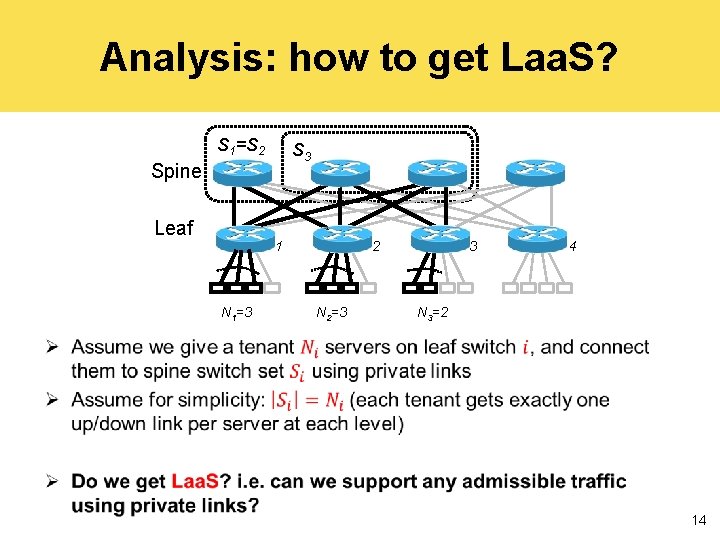

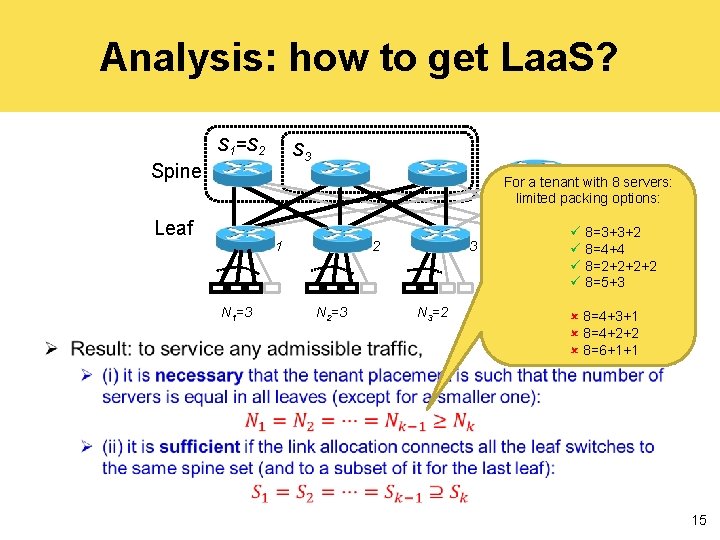

Analysis: how to get LaaS?

[Figure: spine sets S1 = S2 and S3 above leaves 1 to 4; per-leaf tenant host counts N1 = 3, N2 = 3 and N3 = 2]

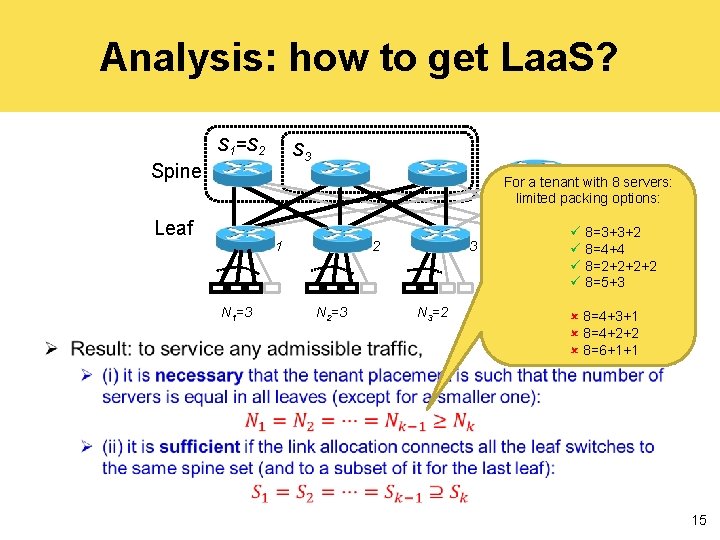

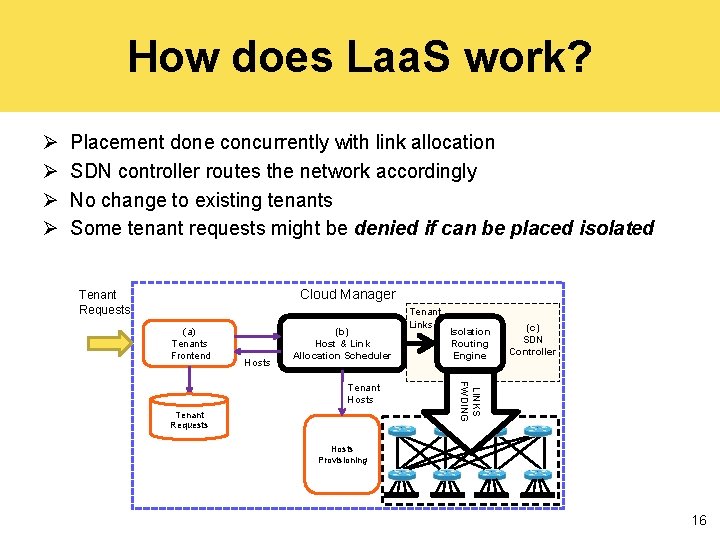

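Analysis: how to get LaaS?

- For a tenant with 8 servers there are limited packing options:
  - 8 = 3 + 3 + 2
  - 8 = 4 + 4
  - 8 = 2 + 2 + 2 + 2
  - 8 = 5 + 3
  - 8 = 4 + 3 + 1
  - 8 = 4 + 2 + 2
  - 8 = 6 + 1 + 1

[Figure: spine sets S1 = S2 and S3 above leaves 1 to 4; per-leaf tenant host counts N1 = 3, N2 = 3 and N3 = 2]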
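Each packing above is an integer partition of the tenant size into per-leaf host counts. A minimal sketch that enumerates the candidates, assuming a hypothetical cap of `max_per_leaf` tenant hosts on any one leaf; the LaaS placement and link-allocation conditions that decide which candidates are actually valid are not reproduced here.

```python
def packings(size, max_per_leaf):
    """Yield every way to spread `size` tenant hosts over leaves, as non-increasing
    per-leaf counts with at most `max_per_leaf` hosts on any one leaf."""
    if size == 0:
        yield ()
        return
    for first in range(min(size, max_per_leaf), 0, -1):
        # Later leaves get at most `first` hosts, so each packing is listed once.
        for rest in packings(size - first, first):
            yield (first,) + rest


options = list(packings(8, 4))
print(len(options))  # 15
print(options[:3])   # [(4, 4), (4, 3, 1), (4, 2, 2)]
```

Even before any link constraint is applied the candidate list is short, which is what makes searching over packings practical.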

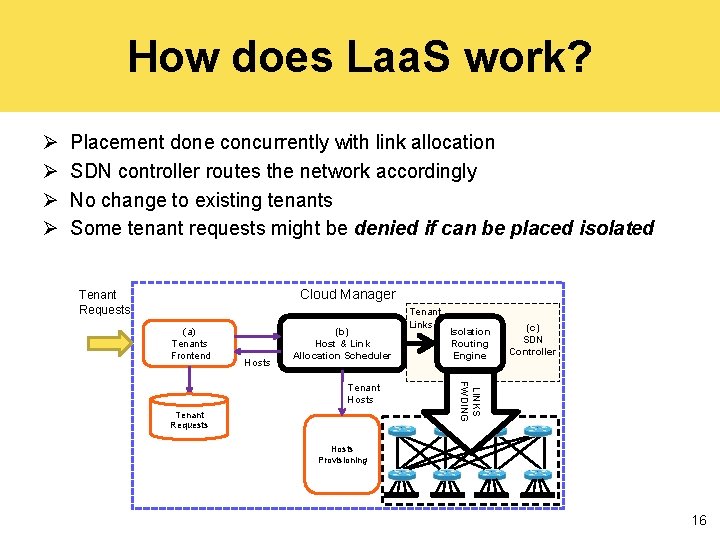

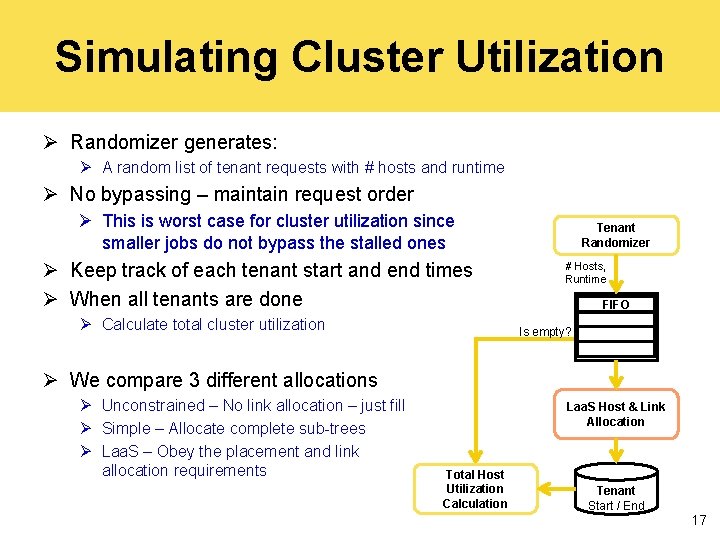

How does LaaS work?

- Placement is done concurrently with link allocation
- The SDN controller routes the network accordingly
- No change to existing tenants
- Some tenant requests might be denied if they cannot be placed in isolation

[Figure: Cloud Manager with (a) Tenants Frontend receiving tenant requests, (b) Host & Link Allocation Scheduler producing tenant hosts and tenant links, and (c) SDN Controller with an Isolation Routing Engine (LINKS, FWDING); tenant hosts go to Hosts Provisioning]

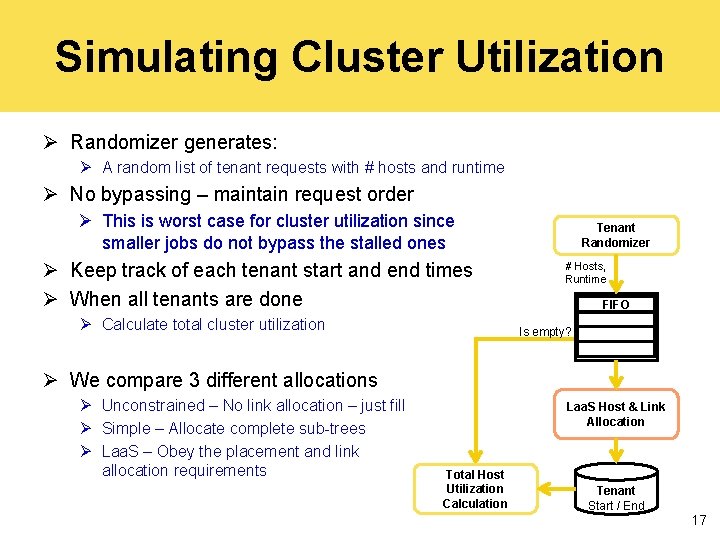

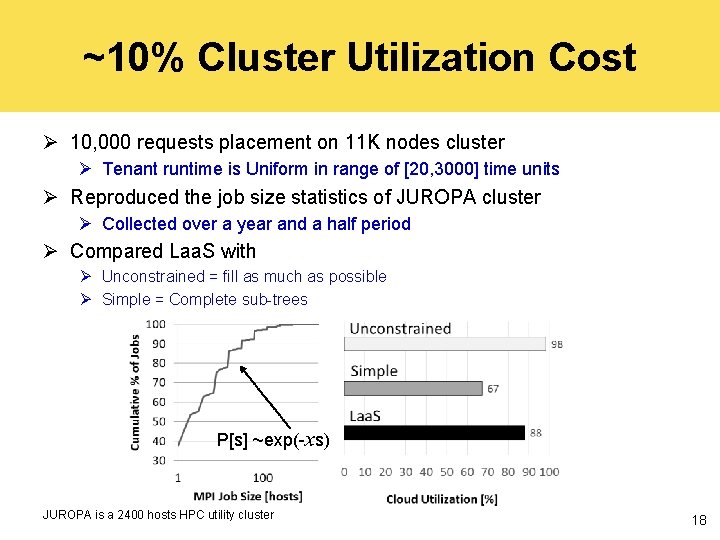

Simulating Cluster Utilization

- A randomizer generates a random list of tenant requests, each with a number of hosts and a runtime
- No bypassing: the request order is maintained
  - This is the worst case for cluster utilization, since smaller jobs do not bypass the stalled ones
- Keep track of each tenant's start and end times
- When all tenants are done, calculate the total cluster utilization
- We compare 3 different allocations:
  - Unconstrained: no link allocation, just fill
  - Simple: allocate complete sub-trees
  - LaaS: obey the placement and link allocation requirements

[Figure: Tenant Randomizer (# hosts, runtime) → FIFO (is empty?) → LaaS Host & Link Allocation → Tenant Start / End → Total Host Utilization Calculation]
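The simulation loop described above can be sketched as follows. This is a minimal sketch of the *Unconstrained* policy only (a request just needs enough free hosts); the Simple and LaaS allocators would replace the free-host test with their own placement rules. It assumes all requests are queued at time 0 and defines utilization as busy host-time divided by cluster size times makespan.

```python
import heapq


def simulate_fifo(requests, cluster_hosts):
    """Strict FIFO (no bypassing) cluster simulation with an unconstrained allocator:
    a request only needs enough free hosts. requests is a list of (hosts, runtime)
    pairs, all queued at time 0. Returns busy host-time / (cluster_hosts * makespan)."""
    now, free, busy = 0.0, cluster_hosts, 0.0
    running = []  # min-heap of (end_time, hosts) for started tenants
    for hosts, runtime in requests:
        if hosts > cluster_hosts:
            raise ValueError("request larger than the cluster")
        # Head-of-line blocking: wait for tenants to finish until this request fits.
        while free < hosts:
            end, released = heapq.heappop(running)
            now = max(now, end)  # never start before an earlier request started
            free += released
        free -= hosts
        heapq.heappush(running, (now + runtime, hosts))  # starts at now, ends at now + runtime
        busy += hosts * runtime
    makespan = max((end for end, _ in running), default=0.0)
    return busy / (cluster_hosts * makespan) if makespan else 0.0


# Hypothetical 4-host cluster; the 1-host job may not bypass the 2-host job.
print(simulate_fifo([(3, 10), (2, 10), (1, 10)], cluster_hosts=4))  # 0.75
```

In the example, one host sits idle for the first 10 time units because the small job is not allowed to jump the queue, which is exactly the worst-case behavior the slide describes.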

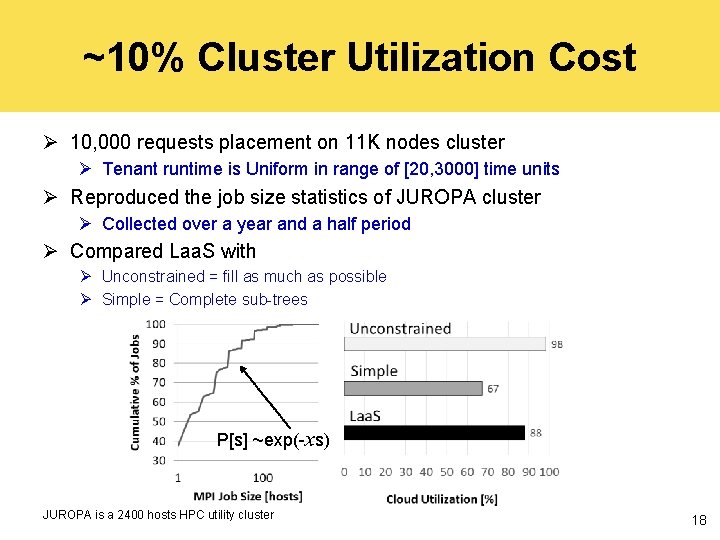

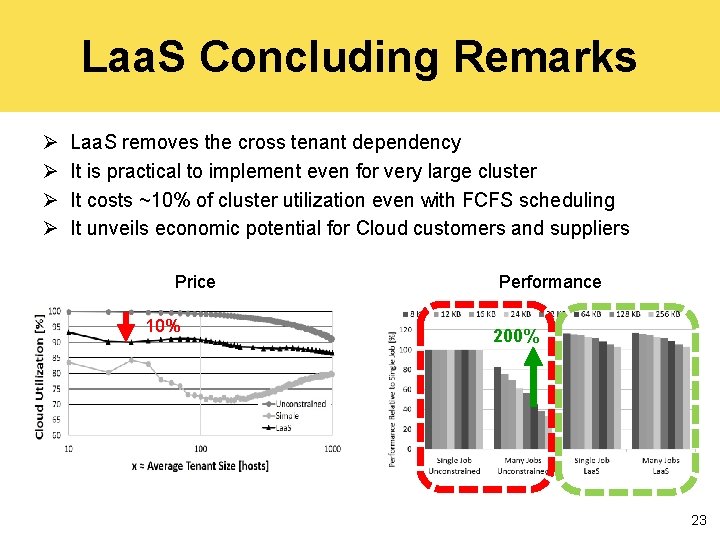

~10% Cluster Utilization Cost

- Placement of 10,000 requests on an 11K-node cluster
- Tenant runtime is uniform in the range [20, 3000] time units
- Reproduced the job size statistics of the JUROPA cluster
  - Collected over a period of a year and a half
  - JUROPA is a 2400-host HPC utility cluster
  - Job size distribution: P[s] ~ exp(-xs)
- Compared LaaS with:
  - Unconstrained: fill as much as possible
  - Simple: complete sub-trees

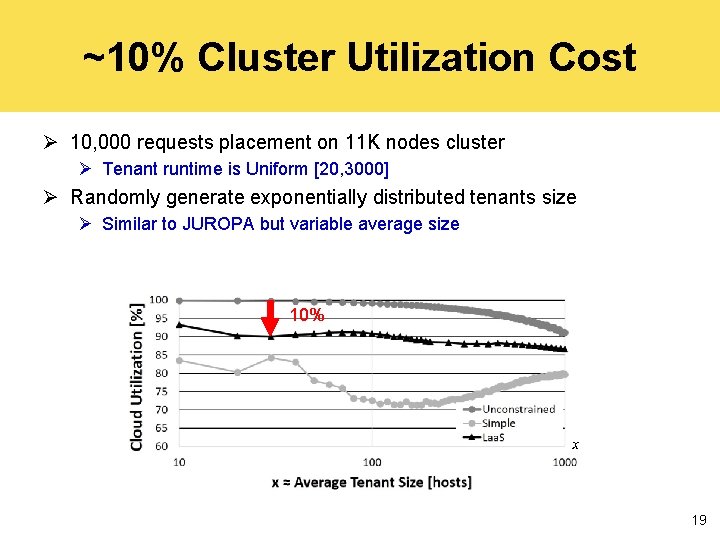

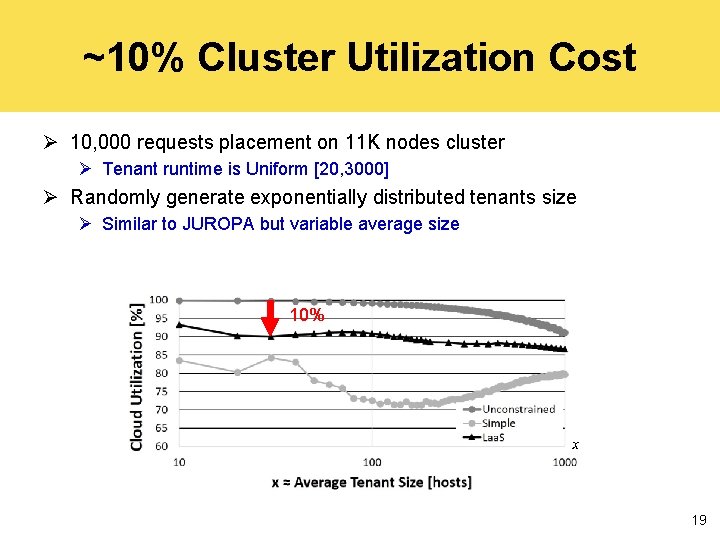

~10% Cluster Utilization Cost

- Placement of 10,000 requests on an 11K-node cluster
- Tenant runtime is uniform in [20, 3000]
- Randomly generate exponentially distributed tenant sizes
- Similar to JUROPA, but with a variable average size

[Figure: utilization cost as a function of x, annotated ~10%]
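A minimal sketch of such a request randomizer, using Python's `random.Random.expovariate` for the size and `uniform` for the runtime. The rounding-up and clipping of sizes to [1, max_size], the seed, and the mean size of 64 are assumptions for illustration; for a continuous exponential with density proportional to exp(-xs), the mean is 1/x.

```python
import math
import random


def random_requests(count, mean_size, max_size, seed=0):
    """Hypothetical request generator: tenant size is exponentially distributed with the
    given mean (rounded up and clipped to [1, max_size]); runtime is Uniform[20, 3000]."""
    rng = random.Random(seed)
    requests = []
    for _ in range(count):
        size = math.ceil(rng.expovariate(1.0 / mean_size))
        size = min(max_size, max(1, size))
        runtime = rng.uniform(20, 3000)
        requests.append((size, runtime))
    return requests


# 10,000 requests for an ~11K-host cluster; the mean size of 64 is an arbitrary choice.
reqs = random_requests(10_000, mean_size=64, max_size=11_000)
```

Sweeping `mean_size` corresponds to varying the average tenant size, as on the slide; the resulting list is in arrival order and can be fed to a FIFO simulation of the kind described earlier.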

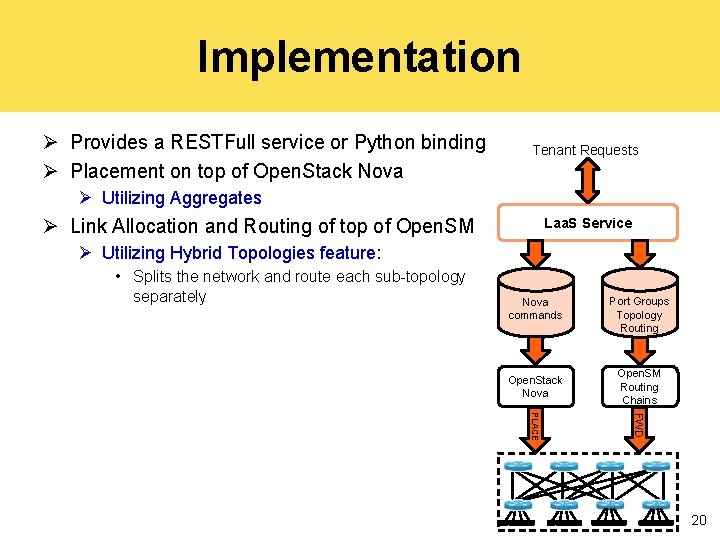

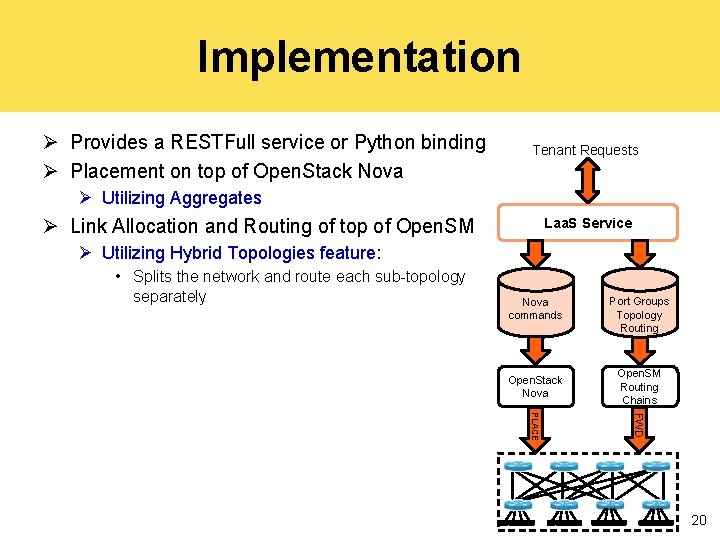

Implementation

- Provides a RESTful service or a Python binding
- Placement on top of OpenStack Nova
  - Utilizing Aggregates
- Link allocation and routing on top of OpenSM
  - Utilizing the Hybrid Topologies feature, which splits the network and routes each sub-topology separately

[Figure: Tenant Requests → LaaS Service, which issues Nova commands (PLACE) to OpenStack Nova and port groups, topology and routing chains (FWD) to OpenSM routing]

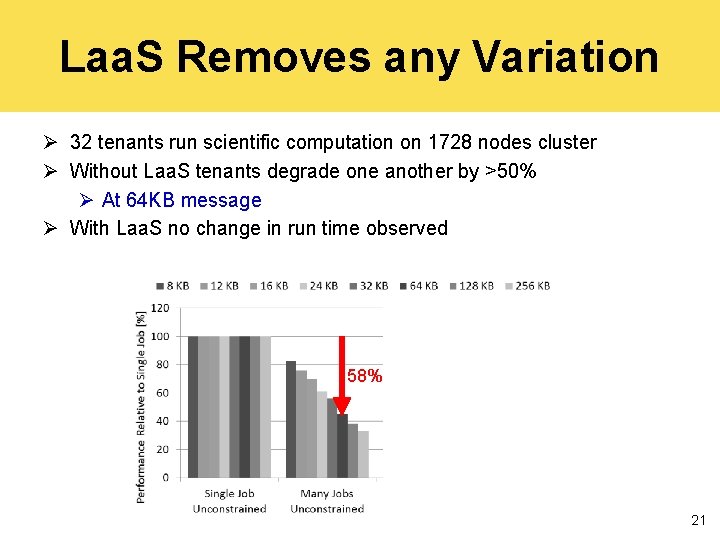

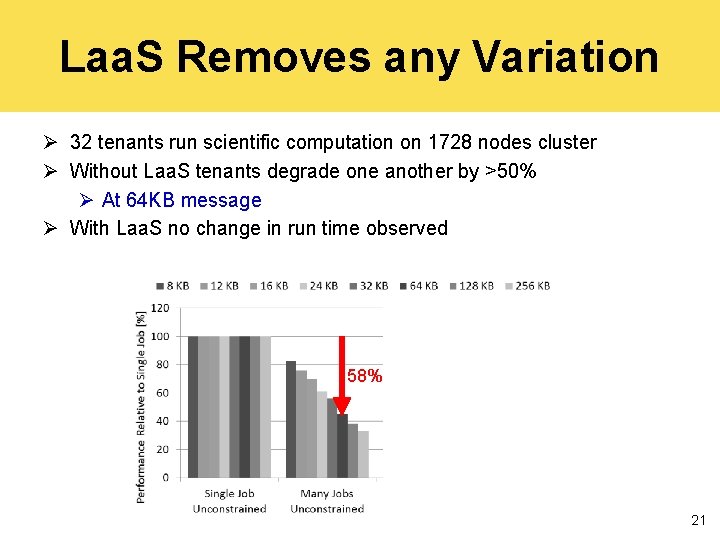

LaaS Removes Any Variation

- 32 tenants run scientific computations on a 1728-node cluster
- Without LaaS, tenants degrade one another by >50%
  - At 64 KB messages
- With LaaS, no change in run time was observed

[Figure: run time comparison annotated with a 58% degradation]

Enhancements

- Slimmed fat trees (where bandwidth is reduced closer to the roots)
  - Fully described by our work
- A mixed bare-metal and shared-resources environment
  - Via pre-allocation of a large “virtual tenant”
  - Requires TDMA-like allocation of link and switch resources to tenants
- Heterogeneous clusters, where node selection should minimize cost and adhere to node capability constraints
  - Requires ordering the search and multiple iterations

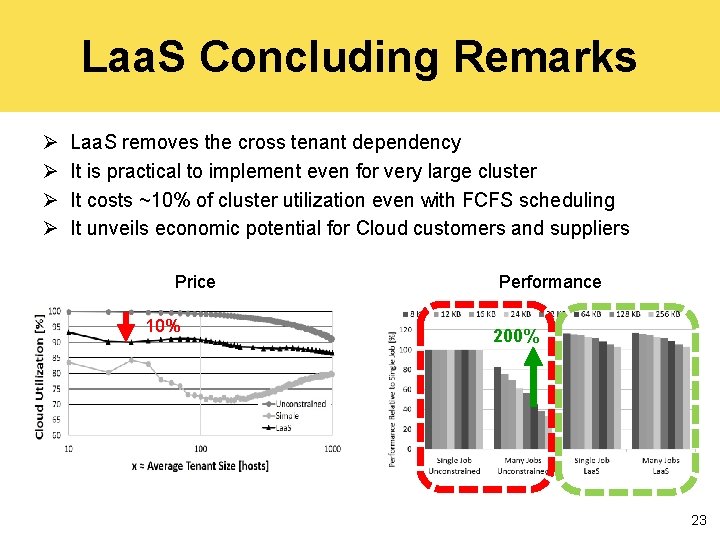

LaaS Concluding Remarks

- LaaS removes the cross-tenant dependency
- It is practical to implement even for very large clusters
- It costs ~10% of cluster utilization, even with FCFS scheduling
- It unveils economic potential for cloud customers and suppliers

[Figure: Price: 10%; Performance: 200%]

Questions