
LING 138/238 SYMBSYS 138
Intro to Computer Speech and Language Processing
Lecture 6: Part of Speech Tagging (II)
October 14, 2004
Neal Snider
Thanks to Dan Jurafsky, Jim Martin, Dekang Lin, and Bonnie Dorr for some of the examples and details in these slides!
3/5/2021 LING 138/238 Autumn 2004

Week 3: Part of Speech tagging
• Part of speech tagging
  – Parts of speech
  – What’s POS tagging good for anyhow?
  – Tag sets
  – Rule-based tagging
  – Statistical tagging
  – “TBL” tagging

Rule-based tagging
• Start with a dictionary
• Assign all possible tags to words from the dictionary
• Write rules by hand to selectively remove tags
• Leaving the correct tag for each word.

3 methods for POS tagging
1. Rule-based tagging
  – (ENGTWOL)
2. Stochastic (=Probabilistic) tagging
  – HMM (Hidden Markov Model) tagging
3. Transformation-based tagging
  – Brill tagger

Statistical Tagging
• Based on probability theory
• Today we’ll go over a few basic ideas of probability theory
• Then we’ll do HMM and TBL tagging.

Probability and part of speech tags
• What’s the probability of drawing a 2 from a deck of 52 cards with four 2s?
• What’s the probability of a random word (from a random dictionary page) being a verb?

Probability and part of speech tags
• What’s the probability of a random word (from a random dictionary page) being a verb?
• P(V) = (# of ways to get a verb) / (all words). How to compute each of these:
  – All words = just count all the words in the dictionary
  – # of ways to get a verb: number of words which are verbs!
• If a dictionary has 50,000 entries, and 10,000 are verbs, P(V) is 10000/50000 = 1/5 = .20

Probability and Independent Events
• What’s the probability of picking two verbs randomly from the dictionary?
• Events are independent, so multiply probs
  – P(w1=V, w2=V) = P(V) × P(V) = 1/5 × 1/5 = 0.04
• What if events are not independent?
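The arithmetic on the last two slides can be checked with a few lines of Python. This is only a sketch: the counts are the dictionary figures quoted above, the variable names are illustrative, and the two draws are assumed to be made with replacement so that they really are independent.

```python
from fractions import Fraction

# Counts from the dictionary example: 50,000 entries, 10,000 of them verbs.
total_entries = 50_000
verb_entries = 10_000

# P(V): relative frequency of verbs among all entries.
p_verb = Fraction(verb_entries, total_entries)

# Two independent draws (assumed: with replacement), so the probabilities multiply.
p_two_verbs = p_verb * p_verb

print(p_verb, float(p_verb))            # 1/5 0.2
print(p_two_verbs, float(p_two_verbs))  # 1/25 0.04
```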

Conditional Probability
• Written P(A|B).
• Let’s say A is “it’s raining”.
• Let’s say P(A) in drought-stricken California is .01
• Let’s say B is “it was sunny ten minutes ago”
• P(A|B) means “what is the probability of it raining now if it was sunny 10 minutes ago”
• P(A|B) is probably way less than P(A)
• Let’s say P(A|B) is .0001

Conditional Probability and Tags
• P(Verb) is the probability of a randomly selected word being a verb.
• P(Verb|race) is “what’s the probability of a word being a verb given that it’s the word ‘race’?”
• Race can be a noun or a verb.
• It’s more likely to be a noun.
• P(Verb|race) can be estimated by looking at some corpus and saying “out of all the times we saw ‘race’, how many were verbs?”
• In Brown corpus, P(Noun|race) = 96/98 = .98, so P(Verb|race) = 2/98 = .02
• How to calculate for a tag sequence, say P(NN|DT)?
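Both kinds of conditional probability on this slide are relative frequencies over a tagged corpus. A minimal sketch, assuming a tiny made-up corpus (the data and function names below are hypothetical, not Brown corpus figures):

```python
from collections import Counter

# Toy tagged corpus (hypothetical data, NOT the Brown corpus).
tagged_words = [
    ("the", "DT"), ("race", "NN"), ("was", "VBD"), ("close", "JJ"),
    ("they", "PRP"), ("race", "VB"), ("every", "DT"), ("race", "NN"),
    ("the", "DT"), ("race", "NN"),
]

def p_tag_given_word(tag, word, corpus):
    """Estimate P(tag | word) as count(word, tag) / count(word)."""
    word_count = sum(1 for w, _ in corpus if w == word)
    if word_count == 0:
        raise ValueError(f"{word!r} does not occur in the corpus")
    pair_count = sum(1 for w, t in corpus if w == word and t == tag)
    return pair_count / word_count

def p_tag_given_prev_tag(tag, prev_tag, corpus):
    """Estimate P(tag | prev_tag) as count(prev_tag, tag) / count(prev_tag as first of a pair)."""
    tags = [t for _, t in corpus]
    bigrams = Counter(zip(tags, tags[1:]))
    prev_count = sum(c for (p, _), c in bigrams.items() if p == prev_tag)
    if prev_count == 0:
        raise ValueError(f"{prev_tag!r} never precedes another tag")
    return bigrams[(prev_tag, tag)] / prev_count

print(p_tag_given_word("NN", "race", tagged_words))    # 0.75
print(p_tag_given_word("VB", "race", tagged_words))    # 0.25
print(p_tag_given_prev_tag("NN", "DT", tagged_words))  # 1.0
```

The toy corpus is treated as one flat sequence, so tag pairs that straddle a sentence boundary are counted too; a real estimate would normally count within sentences.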

Stochastic Tagging
• Based on probability of certain tag occurring given various possibilities
• Necessitates a training corpus
• No probabilities for words not in corpus.
• Training corpus may be too different from test corpus.

Stochastic Tagging (cont.)
• Simple Method: Choose most frequent tag in training text for each word!
  – Result: 90% accuracy
  – Why?
  – Baseline: Others will do better
  – HMM is an example
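The most-frequent-tag baseline can be sketched in a few lines. The training data below is a toy example, and tagging unseen words as NN is an assumption made here for illustration, not something the slide specifies.

```python
from collections import Counter, defaultdict

def train_baseline(tagged_corpus):
    """Map each word to the tag it carries most often in the training data."""
    counts = defaultdict(Counter)
    for word, tag in tagged_corpus:
        counts[word][tag] += 1
    return {word: tag_counts.most_common(1)[0][0]
            for word, tag_counts in counts.items()}

def tag_baseline(words, model, default_tag="NN"):
    """Tag each word on its own, ignoring context.

    Words missing from the model get default_tag (an assumption of this sketch).
    """
    return [(w, model.get(w, default_tag)) for w in words]

# Toy training data (hypothetical).
train = [("the", "DT"), ("race", "NN"), ("to", "TO"),
         ("race", "VB"), ("the", "DT"), ("race", "NN")]
model = train_baseline(train)
print(tag_baseline(["to", "race", "tomorrow"], model))
# [('to', 'TO'), ('race', 'NN'), ('tomorrow', 'NN')]
```

Because the baseline never looks at neighbouring tags, it tags "race" as NN even after "to"; this is the kind of error that context-sensitive taggers are meant to fix.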

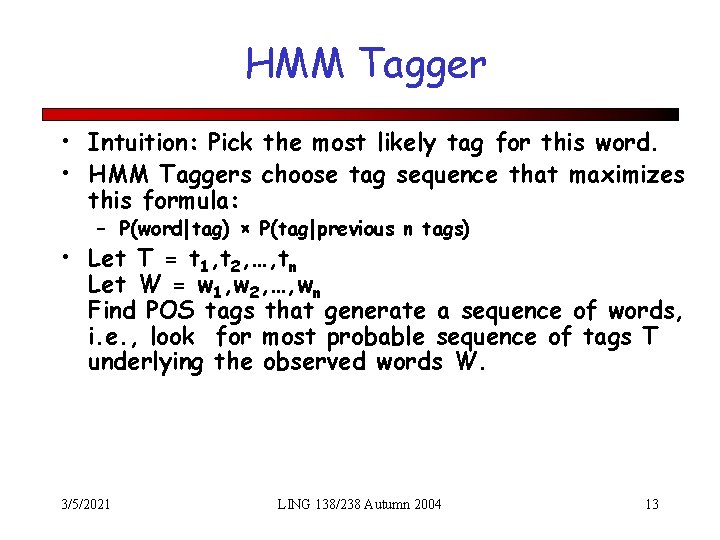

HMM Tagger
• Intuition: Pick the most likely tag for this word.
• HMM Taggers choose tag sequence that maximizes this formula:
  – P(word|tag) × P(tag|previous n tags)
• Let $T = t_1, t_2, \dots, t_n$
• Let $W = w_1, w_2, \dots, w_n$
• Find POS tags that generate a sequence of words, i.e., look for most probable sequence of tags T underlying the observed words W.

HMM Tagger
• $\hat{T} = \arg\max_T P(T \mid W)$
• $= \arg\max_T P(W \mid T)\,P(T)$ (Bayes Rule; $P(W)$ is the same for every $T$, so it can be dropped)
• $= \arg\max_T P(w_1 \dots w_n \mid t_1 \dots t_n)\,P(t_1 \dots t_n)$
• Remember, we are trying to find the sequence T that will maximize P(T|W), so this equation is calculated over the whole sentence.

HMM Tagger
• Assume word is dependent only on its own POS tag: it is independent of the others around it
• $\arg\max_T \,[P(w_1 \mid t_1)\,P(w_2 \mid t_2) \dots P(w_n \mid t_n)]\,[P(t_1)\,P(t_2 \mid t_1) \dots P(t_n \mid t_{n-1})]$

Bigram HMM Tagger
• Also assume that the probability of a tag is dependent only on the previous tag
• For each word and possible tag, need to calculate the score $P(w_i \mid t_i)\,P(t_i \mid t_{i-1})$
• Then multiply these scores over the whole sentence for each candidate sequence of tags

Bigram HMM Tagger
• How do we compute $P(t_i \mid t_{i-1})$?
  – $c(t_{i-1}, t_i) / c(t_{i-1})$
• How do we compute $P(w_i \mid t_i)$?
  – $c(w_i, t_i) / c(t_i)$
• How do we compute the most probable tag sequence?
  – Viterbi algorithm
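The two count ratios above translate directly into code. The sketch below is one possible reading: the function name, the `<s>` sentence-start pseudo-tag, and the toy sentences are all assumptions made for illustration, and no smoothing is applied.

```python
from collections import Counter

def estimate_hmm(tagged_sentences, start="<s>"):
    """Relative-frequency estimates for a bigram HMM tagger.

    Returns (trans, emit) where
      trans[(prev_tag, tag)] = c(prev_tag, tag) / c(prev_tag)
      emit[(word, tag)]      = c(word, tag) / c(tag)
    The start pseudo-tag is an assumption of this sketch.
    """
    tag_count = Counter()
    bigram_count = Counter()
    emit_count = Counter()
    for sentence in tagged_sentences:
        prev = start
        tag_count[start] += 1
        for word, tag in sentence:
            bigram_count[(prev, tag)] += 1
            emit_count[(word, tag)] += 1
            tag_count[tag] += 1
            prev = tag
    trans = {(p, t): c / tag_count[p] for (p, t), c in bigram_count.items()}
    emit = {(w, t): c / tag_count[t] for (w, t), c in emit_count.items()}
    return trans, emit

# Toy training sentences (hypothetical).
sentences = [
    [("to", "TO"), ("race", "VB")],
    [("the", "DT"), ("race", "NN")],
    [("to", "TO"), ("the", "DT"), ("race", "NN")],
]
trans, emit = estimate_hmm(sentences)
print(trans[("TO", "VB")])   # 0.5
print(emit[("race", "NN")])  # 1.0
```

Any (tag, tag) or (word, tag) pair that never occurs in training is simply absent from the dictionaries, which is the "no probabilities for words not in corpus" problem noted earlier.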

An Example
• Secretariat/NNP is/VBZ expected/VBN to/TO race/VB tomorrow/NN
• People/NNS continue/VBP to/TO inquire/VB the/DT reason/NN for/IN the/DT race/NN for/IN outer/JJ space/NN
• to/TO race/??? the/DT race/???
• $t_i = \arg\max_j P(t_j \mid t_{i-1})\,P(w_i \mid t_j)$
  – i = position of the word in the sequence, j = index over the possible tags
  – max[P(VB|TO)P(race|VB), P(NN|TO)P(race|NN)]
• Brown:
  – P(NN|TO) × P(race|NN) = .021 × .00041 ≈ .0000086
  – P(VB|TO) × P(race|VB) = .34 × .00003 ≈ .00001
• The VB product is larger, so race is tagged VB after to/TO.
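The comparison on this slide is just two multiplications and a max. A small sketch using the four Brown corpus probabilities quoted above (the dictionary and variable names are illustrative):

```python
# Probabilities quoted on the slide (Brown corpus estimates).
p_tag_given_to = {"NN": 0.021, "VB": 0.34}          # P(tag | TO)
p_race_given_tag = {"NN": 0.00041, "VB": 0.00003}   # P(race | tag)

# Score each candidate tag for "race" following a TO tag.
scores = {tag: p_tag_given_to[tag] * p_race_given_tag[tag]
          for tag in p_tag_given_to}
best_tag = max(scores, key=scores.get)

for tag, score in scores.items():
    print(f"P({tag}|TO) * P(race|{tag}) = {score:.2e}")
print("best tag for 'race' after TO:", best_tag)
```

Even though "race" is far more often a noun, the high probability of a verb following TO makes VB the better choice in this context.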

Viterbi Algorithm
[Figure: Viterbi diagram with state labels S1, S2, S3, S5]
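Since the Viterbi slide is only a diagram, here is a minimal sketch of the algorithm for a bigram HMM tagger. It is an illustration under stated assumptions, not the lecture's own implementation: the `<s>` start pseudo-tag, the dictionary layout, and the values P(TO|<s>) = 1.0 and P(to|TO) = 1.0 in the example are made up; only the four "race" probabilities come from the earlier example slide. Missing table entries are treated as probability 0.

```python
def viterbi(words, tags, trans, emit, start="<s>"):
    """Return the most probable tag sequence for words under a bigram HMM.

    trans[(prev_tag, tag)] = P(tag | prev_tag)
    emit[(word, tag)]      = P(word | tag)
    Missing entries count as 0 (no smoothing in this sketch).
    """
    if not words:
        return []
    # best[i][t]: probability of the best tag path for words[:i+1] ending in t.
    best = [{t: trans.get((start, t), 0.0) * emit.get((words[0], t), 0.0)
             for t in tags}]
    back = [{}]  # back[i][t]: previous tag on that best path
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            prev, score = max(
                ((p, best[i - 1][p] * trans.get((p, t), 0.0)) for p in tags),
                key=lambda pair: pair[1],
            )
            best[i][t] = score * emit.get((words[i], t), 0.0)
            back[i][t] = prev
    # Follow back-pointers from the best final tag.
    path = [max(tags, key=lambda t: best[-1][t])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]

tags = ["TO", "VB", "NN"]
trans = {("<s>", "TO"): 1.0, ("TO", "VB"): 0.34, ("TO", "NN"): 0.021}
emit = {("to", "TO"): 1.0, ("race", "VB"): 0.00003, ("race", "NN"): 0.00041}
print(viterbi(["to", "race"], tags, trans, emit))  # ['TO', 'VB']
```

On real sentences the products become very small, so implementations usually add log probabilities instead of multiplying raw ones.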

Transformation-Based Tagging (Brill Tagging)
• Combination of rule-based and stochastic tagging methodologies
  – Like rule-based because rules are used to specify tags in a certain environment
  – Like stochastic approach because machine learning is used, with tagged corpus as input
• Input:
  – tagged corpus
  – dictionary (with most frequent tags)

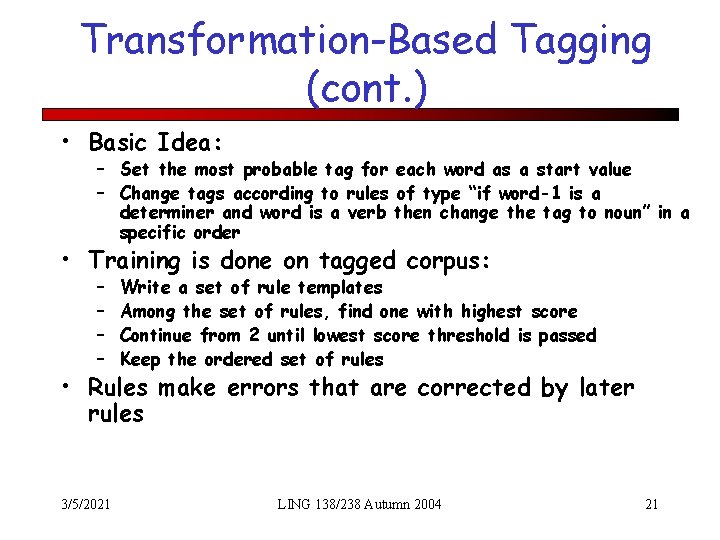

Transformation-Based Tagging (cont.)
• Basic Idea:
  – Set the most probable tag for each word as a start value
  – Change tags according to rules of type “if word-1 is a determiner and word is a verb then change the tag to noun” in a specific order
• Training is done on tagged corpus:
  1. Write a set of rule templates
  2. Among the set of rules, find one with highest score
  3. Continue from 2 until lowest score threshold is passed
  4. Keep the ordered set of rules
• Rules make errors that are corrected by later rules

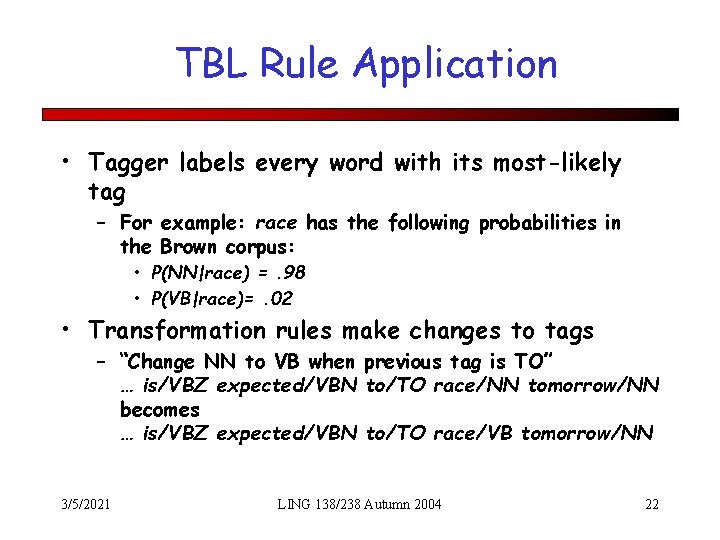

TBL Rule Application
• Tagger labels every word with its most-likely tag
  – For example: race has the following probabilities in the Brown corpus:
    • P(NN|race) = .98
    • P(VB|race) = .02
• Transformation rules make changes to tags
  – “Change NN to VB when previous tag is TO”
    … is/VBZ expected/VBN to/TO race/NN tomorrow/NN
    becomes
    … is/VBZ expected/VBN to/TO race/VB tomorrow/NN
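Applying a single transformation of this kind is a simple pass over the tagged sentence. A minimal sketch, assuming a hypothetical helper `apply_rule` that handles only the "previous tag" environment:

```python
def apply_rule(tagged, from_tag, to_tag, prev_tag):
    """Change from_tag to to_tag wherever the preceding word's tag is prev_tag.

    The pass runs left to right on a copy, so a changed tag is what the
    next position sees as its 'previous tag'.
    """
    result = list(tagged)
    for i in range(1, len(result)):
        word, tag = result[i]
        if tag == from_tag and result[i - 1][1] == prev_tag:
            result[i] = (word, to_tag)
    return result

# Initial most-likely tags from the slide: "race" starts out as NN.
sentence = [("is", "VBZ"), ("expected", "VBN"), ("to", "TO"),
            ("race", "NN"), ("tomorrow", "NN")]

# "Change NN to VB when previous tag is TO"
print(apply_rule(sentence, "NN", "VB", "TO"))
# [('is', 'VBZ'), ('expected', 'VBN'), ('to', 'TO'), ('race', 'VB'), ('tomorrow', 'NN')]
```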

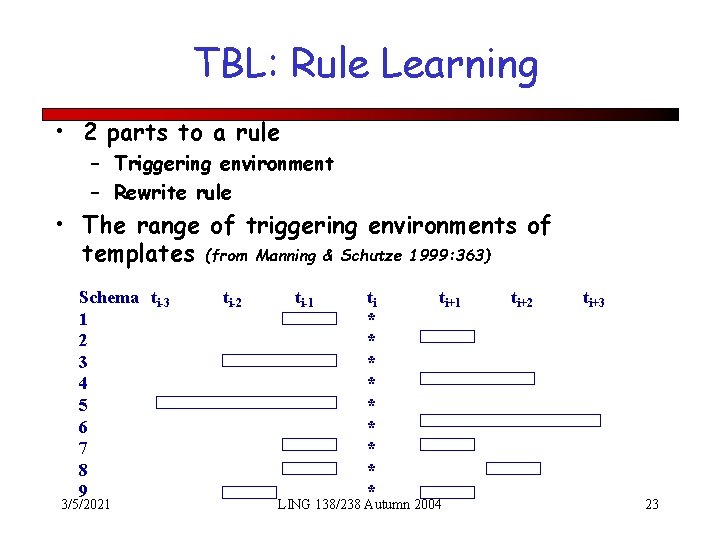

TBL: Rule Learning
• 2 parts to a rule
  – Triggering environment
  – Rewrite rule
• The range of triggering environments of templates (from Manning & Schütze 1999: 363)
[Table: schemas 1-9 (rows) against tag positions $t_{i-3}$, $t_{i-2}$, $t_{i-1}$, $t_i$, $t_{i+1}$, $t_{i+2}$, $t_{i+3}$ (columns); asterisks appear in the $t_i$ column]

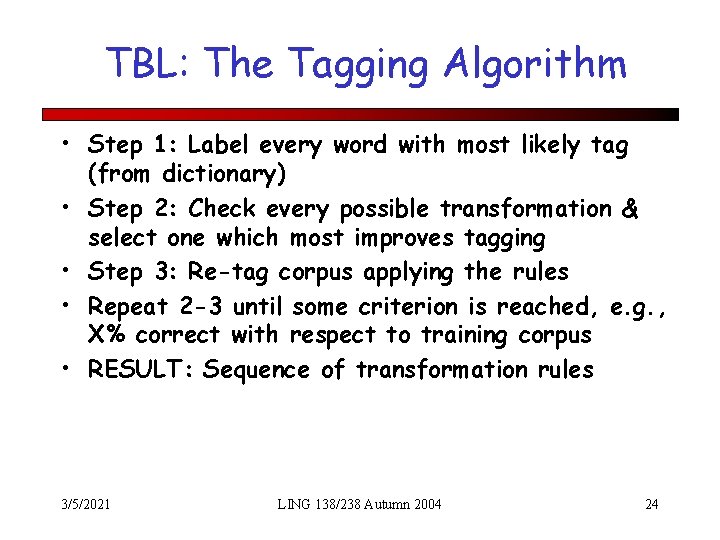

TBL: The Tagging Algorithm
• Step 1: Label every word with most likely tag (from dictionary)
• Step 2: Check every possible transformation & select one which most improves tagging
• Step 3: Re-tag corpus applying the rules
• Repeat 2-3 until some criterion is reached, e.g., X% correct with respect to training corpus
• RESULT: Sequence of transformation rules
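The loop in steps 1-3 can be sketched as a greedy search. The code below is a deliberately reduced illustration, not Brill's implementation: it supports a single hypothetical template ("change X to Y when the previous tag is Z"), scores a rule by the net number of errors it removes, and stops when no rule helps or after `max_rules` rules.

```python
def apply_to_tags(tags, rule):
    """Apply one rule (from_tag, to_tag, prev_tag) left to right on a copy."""
    from_tag, to_tag, prev_tag = rule
    out = list(tags)
    for i in range(1, len(out)):
        if out[i] == from_tag and out[i - 1] == prev_tag:
            out[i] = to_tag
    return out

def count_errors(tags, gold):
    """Number of positions where the current tag differs from the gold tag."""
    return sum(1 for t, g in zip(tags, gold) if t != g)

def learn_rules(initial_tags, gold_tags, max_rules=10):
    """Greedily pick the rule that most reduces errors, re-tag, and repeat."""
    current = list(initial_tags)          # Step 1 output: most-likely tags
    tagset = sorted(set(initial_tags) | set(gold_tags))
    rules = []
    for _ in range(max_rules):
        base_errors = count_errors(current, gold_tags)
        best_rule, best_gain = None, 0
        # Step 2: try every instantiation of the single template.
        for from_tag in tagset:
            for to_tag in tagset:
                if from_tag == to_tag:
                    continue
                for prev_tag in tagset:
                    rule = (from_tag, to_tag, prev_tag)
                    gain = base_errors - count_errors(
                        apply_to_tags(current, rule), gold_tags)
                    if gain > best_gain:
                        best_rule, best_gain = rule, gain
        if best_rule is None:             # stopping criterion: no rule helps
            break
        current = apply_to_tags(current, best_rule)  # Step 3: re-tag
        rules.append(best_rule)
    return rules

# Toy data for "to race the race" (hypothetical).
initial = ["TO", "NN", "DT", "NN"]   # most-likely tags
gold = ["TO", "VB", "DT", "NN"]      # hand-corrected tags
print(learn_rules(initial, gold))    # [('NN', 'VB', 'TO')]
```

The returned list is ordered: at tagging time the rules would be applied in the same sequence in which they were learned.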

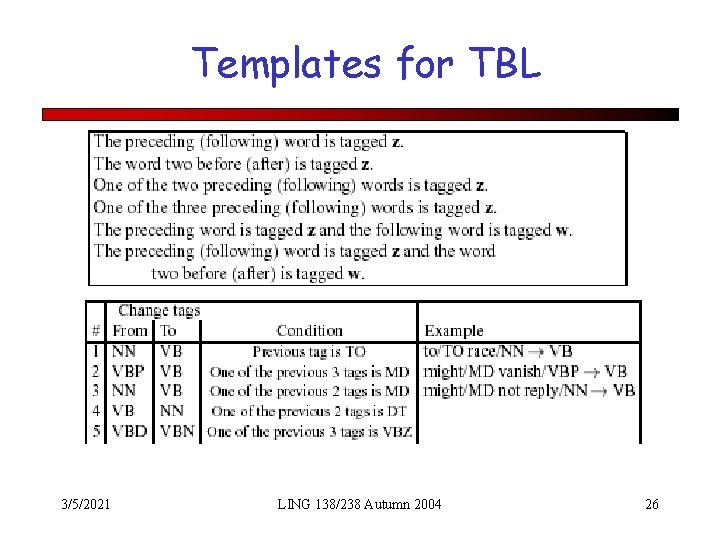

TBL: Rule Learning (cont.)
• Problem: Could apply transformations ad infinitum!
• Constrain the set of transformations with “templates”:
  – Replace tag X with tag Y, provided tag Z or word Z’ appears in some position
• Rules are learned in ordered sequence
• Rules may interact.
• Rules are compact and can be inspected by humans

Templates for TBL

TBL: Problems
• First 100 rules achieve 96.8% accuracy
• First 200 rules achieve 97.0% accuracy
• Execution Speed: TBL tagger is slower than HMM approach
• Learning Speed: Brill’s implementation takes over a day (600k tokens)
• BUT …
  (1) Learns small number of simple, non-stochastic rules
  (2) Can be made to work faster with FST
  (3) Best performing algorithm on unknown words

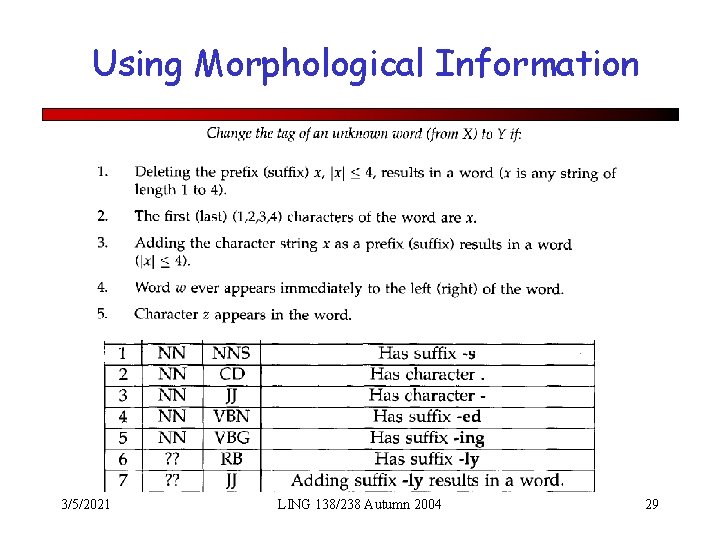

Tagging Unknown Words
• New words added to (newspaper) language 20+ per month
• Plus many proper names …
• Increases error rates by 1-2%
• Method 1: assume they are nouns
• Method 2: assume the unknown words have a probability distribution similar to words only occurring once in the training set.
• Method 3: Use morphological information, e.g., words ending with -ed tend to be tagged VBN.
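Methods 1 and 3 combine naturally into a fallback chain. A minimal sketch, assuming a hypothetical `guess_tag` helper and a toy lexicon; only the -ed heuristic and the noun default come from the slide.

```python
def guess_tag(word, known_tags):
    """Return a tag for word, falling back to simple guesses for unknown words.

    known_tags maps known words to their most likely tag (toy lexicon here).
    """
    if word in known_tags:
        return known_tags[word]
    # Method 3: morphological cue; words ending in -ed tend to be VBN.
    if word.endswith("ed"):
        return "VBN"
    # Method 1: otherwise assume the unknown word is a noun.
    return "NN"

lexicon = {"the": "DT", "race": "NN"}   # hypothetical lexicon
print(guess_tag("race", lexicon))       # NN  (known word)
print(guess_tag("googled", lexicon))    # VBN (unknown, ends in -ed)
print(guess_tag("blog", lexicon))       # NN  (unknown, default)
```

A single suffix rule is crude (it would also tag "bed" as VBN); it is shown only to make the order of the fallbacks concrete.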

Using Morphological Information

Evaluation
• The result is compared with a manually coded “Gold Standard”
  – Typically accuracy reaches 96-97%
  – This may be compared with result for a baseline tagger (one that uses no context).
• Important: 100% is impossible even for human annotators.
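Tagging accuracy is the fraction of tokens whose predicted tag matches the gold standard. A minimal sketch with made-up tag sequences (the function name and data are illustrative):

```python
def accuracy(predicted, gold):
    """Fraction of positions where the predicted tag equals the gold tag."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("cannot score an empty sequence")
    correct = sum(1 for p, g in zip(predicted, gold) if p == g)
    return correct / len(gold)

# Gold tags for "Secretariat is expected to race tomorrow".
gold_tags = ["NNP", "VBZ", "VBN", "TO", "VB", "NN"]
# A context-free baseline that tags "race" with its most frequent tag, NN.
baseline_tags = ["NNP", "VBZ", "VBN", "TO", "NN", "NN"]

print(accuracy(baseline_tags, gold_tags))  # 5 of 6 tokens correct
print(accuracy(gold_tags, gold_tags))      # 1.0
```

Reporting the baseline score alongside the tagger's score shows how much of the accuracy actually comes from using context.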
