Linear regression with one variable: Model representation (Machine Learning, Andrew Ng)

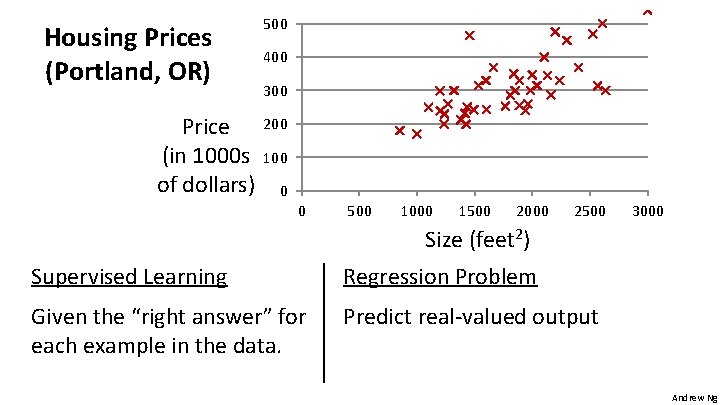

Housing Prices (Portland, OR)

[Plot: price in thousands of dollars (0 to 500) against size in feet² (0 to 3000).]

- Supervised learning: given the “right answer” for each example in the data.
- Regression problem: predict real-valued output.

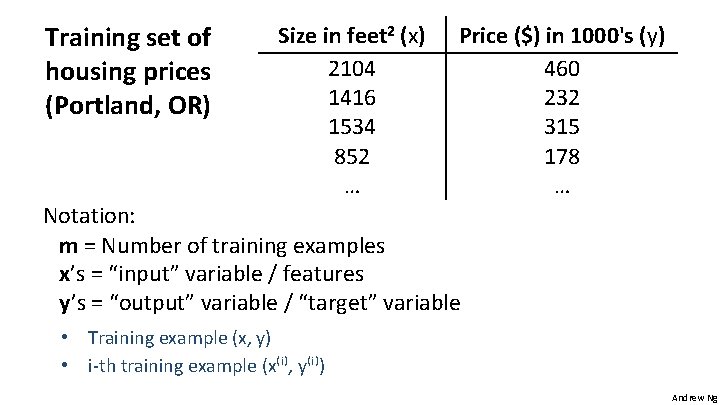

Training set of housing prices (Portland, OR)

| Size in feet² (x) | Price in thousands of dollars (y) |
| --- | --- |
| 2104 | 460 |
| 1416 | 232 |
| 1534 | 315 |
| 852 | 178 |
| … | … |

Notation:

- m = number of training examples
- x's = “input” variable / features
- y's = “output” variable / “target” variable
- $(x, y)$ = one training example
- $(x^{(i)}, y^{(i)})$ = the i-th training example

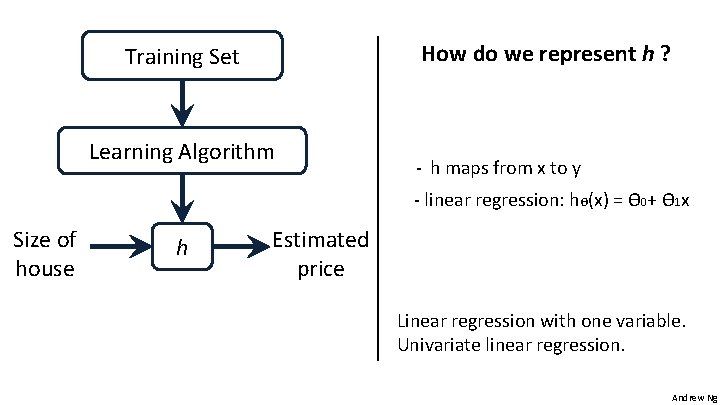

How do we represent h?

Training set → learning algorithm → h. Size of house → h → estimated price.

- h maps from x to y
- linear regression: $h_\theta(x) = \theta_0 + \theta_1 x$

Linear regression with one variable is also called univariate linear regression.
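As a minimal sketch of the hypothesis, the function below evaluates $h_\theta(x) = \theta_0 + \theta_1 x$ on the house sizes from the training set. The function name `hypothesis` and the parameter values `theta0 = 50.0` and `theta1 = 0.2` are illustrative assumptions, not fitted values from the slides.

```python
def hypothesis(theta0: float, theta1: float, x: float) -> float:
    """Univariate linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


# Sizes in square feet, taken from the training set on the slides.
sizes = [2104, 1416, 1534, 852]

# Hypothetical parameters chosen only for illustration (not fitted).
theta0, theta1 = 50.0, 0.2

# Estimated prices in thousands of dollars under these assumed parameters.
estimates = [hypothesis(theta0, theta1, x) for x in sizes]
```

Different choices of `theta0` and `theta1` give different straight lines, which is why the next step is deciding how to choose them.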

Linear regression with one variable: Cost function

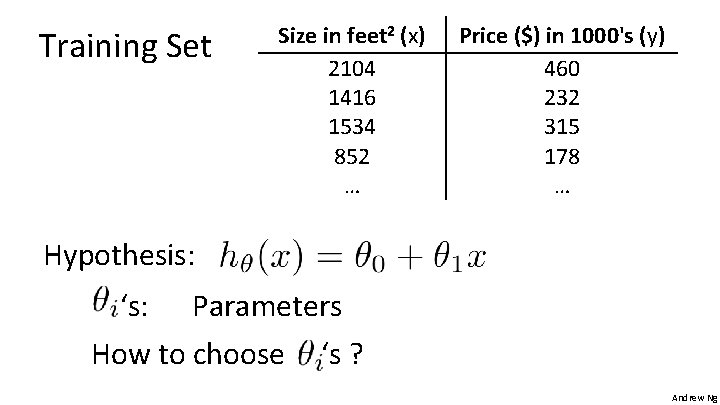

Training set: size in feet² (x) 2104, 1416, 1534, 852, … with price in thousands of dollars (y) 460, 232, 315, 178, …

Hypothesis: $h_\theta(x) = \theta_0 + \theta_1 x$

$\theta_i$'s: parameters

How to choose the $\theta_i$'s?

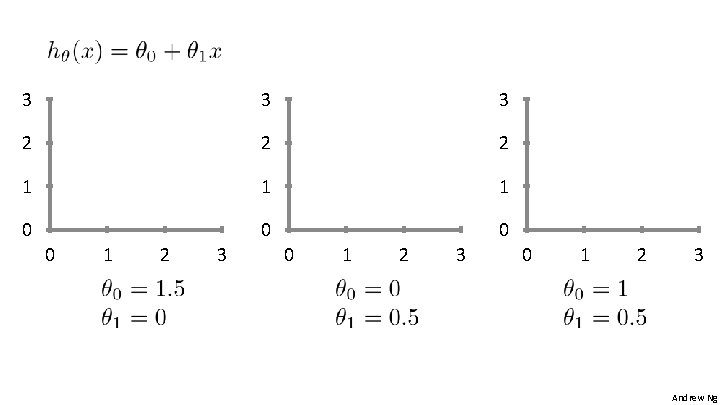

[Three plots of $h_\theta(x)$ for different parameter values; both axes run from 0 to 3.]

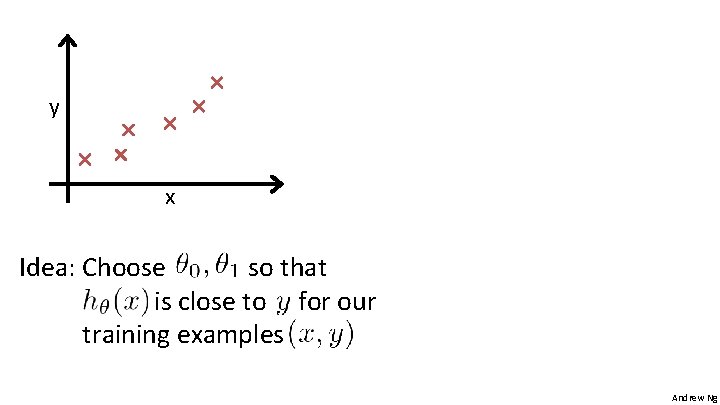

Idea: choose $\theta_0, \theta_1$ so that $h_\theta(x)$ is close to $y$ for our training examples $(x, y)$.

Linear regression with one variable: Cost function intuition I

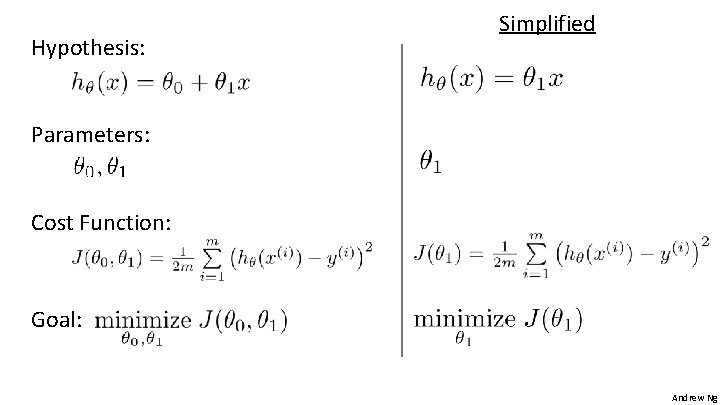

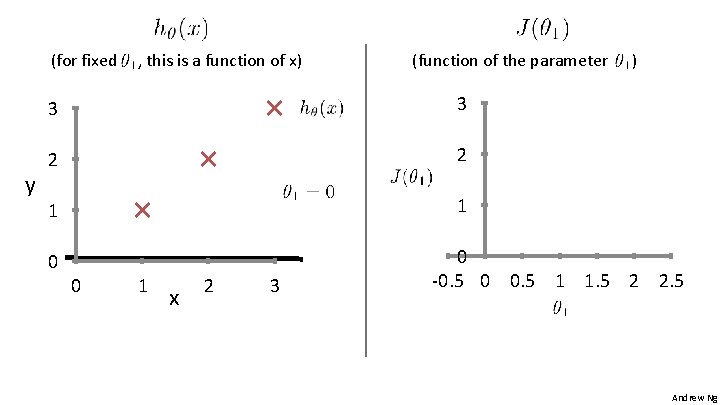

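- Hypothesis: $h_\theta(x) = \theta_0 + \theta_1 x$. Simplified: $h_\theta(x) = \theta_1 x$.
- Parameters: $\theta_0, \theta_1$. Simplified: $\theta_1$.
- Cost function: $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)^2$. Simplified: $J(\theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \bigl(\theta_1 x^{(i)} - y^{(i)}\bigr)^2$.
- Goal: $\min_{\theta_0, \theta_1} J(\theta_0, \theta_1)$. Simplified: $\min_{\theta_1} J(\theta_1)$.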

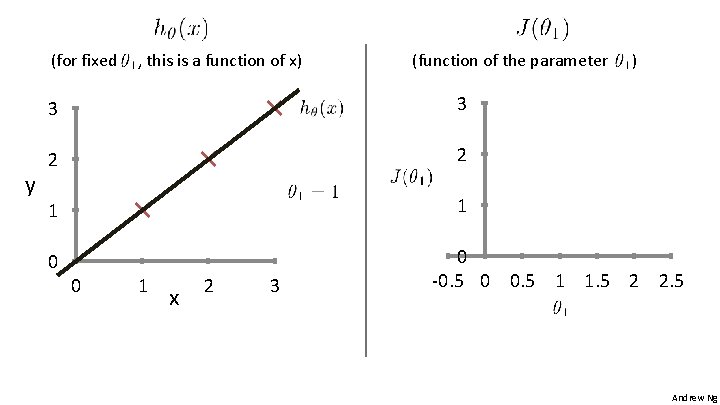

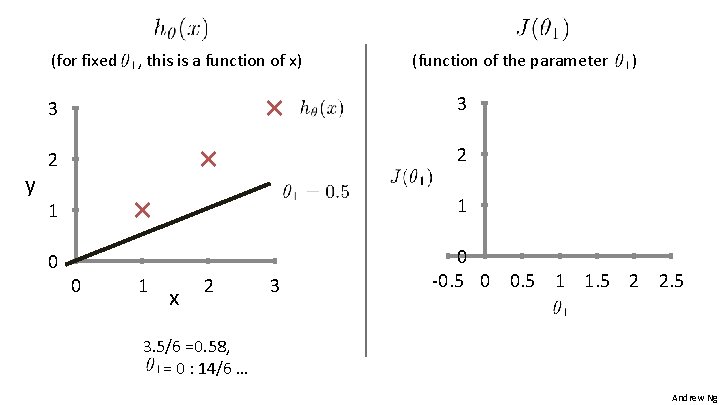

$h_\theta(x)$ (for fixed $\theta_1$, this is a function of $x$) and $J(\theta_1)$ (function of the parameter $\theta_1$).

[Plots: $h_\theta(x)$ against $x$, both axes from 0 to 3; $J(\theta_1)$ against $\theta_1$, from -0.5 to 2.5.]

Worked values: $\theta_1 = 0.5$: $J(0.5) = 3.5/6 \approx 0.58$; $\theta_1 = 0$: $J(0) = 14/6$; …
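The worked values 3.5/6 and 14/6 can be checked with a short sketch of the simplified cost. It assumes the squared-error cost with a $\frac{1}{2m}$ factor and a toy training set of three points on the line $y = x$, namely (1, 1), (2, 2), (3, 3). The slide text does not list these points explicitly, but they are consistent with the values above. The function name `cost` is hypothetical.

```python
def cost(theta1, xs, ys):
    """Squared-error cost J(theta1) for the simplified hypothesis h(x) = theta1 * x."""
    m = len(xs)
    return sum((theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


# Assumed toy training set: three points on the line y = x.
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]

j_at_1 = cost(1.0, xs, ys)     # the line passes through every point
j_at_half = cost(0.5, xs, ys)  # compare with 3.5 / 6
j_at_0 = cost(0.0, xs, ys)     # compare with 14 / 6
```

Evaluating `cost` over a range of `theta1` values traces out the bowl-shaped curve of $J(\theta_1)$, with its minimum at $\theta_1 = 1$ for this data.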

Linear regression with one variable: Cost function intuition II

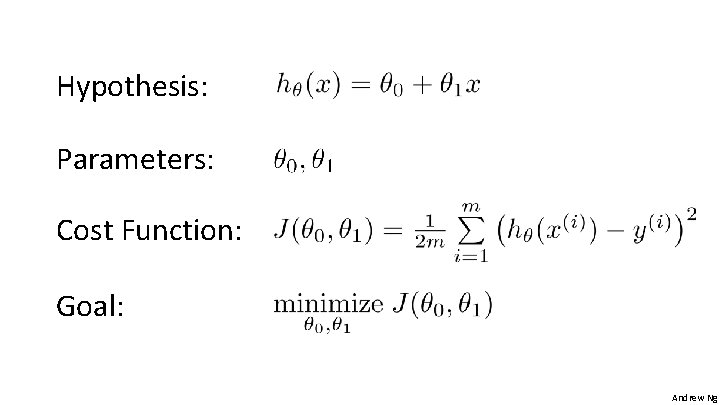

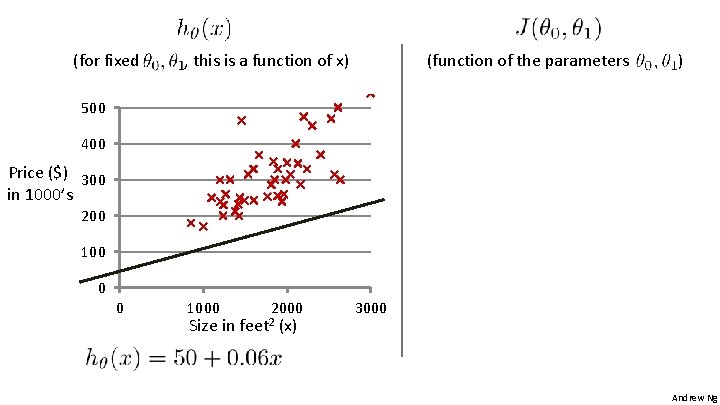

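- Hypothesis: $h_\theta(x) = \theta_0 + \theta_1 x$
- Parameters: $\theta_0, \theta_1$
- Cost function: $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)^2$
- Goal: $\min_{\theta_0, \theta_1} J(\theta_0, \theta_1)$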
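To make the two-parameter cost concrete, the sketch below evaluates a squared-error cost $J(\theta_0, \theta_1)$ with a $\frac{1}{2m}$ factor on the four housing examples listed earlier in the slides. The two candidate parameter pairs are arbitrary assumptions picked for comparison, and `cost` is a hypothetical helper name.

```python
def cost(theta0, theta1, xs, ys):
    """Squared-error cost J(theta0, theta1) = 1/(2m) * sum((h(x) - y)^2)."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        prediction = theta0 + theta1 * x
        total += (prediction - y) ** 2
    return total / (2 * m)


# The four training examples shown on the slides (size in feet^2, price in $1000s).
sizes = [2104, 1416, 1534, 852]
prices = [460, 232, 315, 178]

# Two hypothetical candidate lines through the origin, for comparison only.
j_steeper = cost(0.0, 0.2, sizes, prices)
j_flatter = cost(0.0, 0.1, sizes, prices)
```

On these four points the steeper candidate gives the smaller cost, so by the criterion above it is the better of the two fits.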

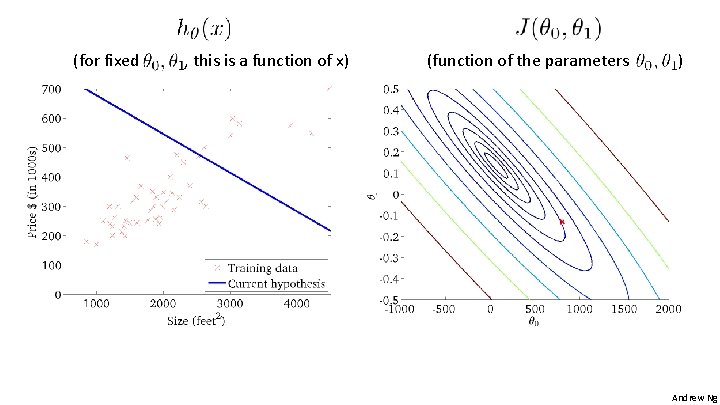

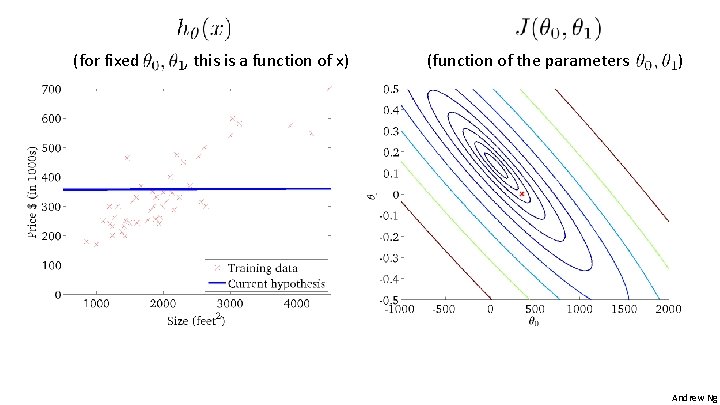

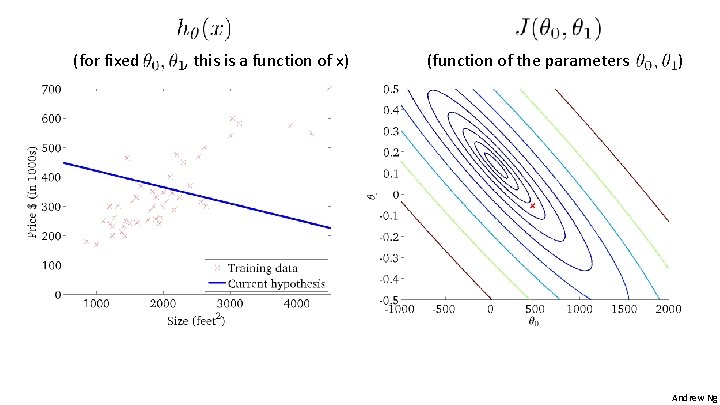

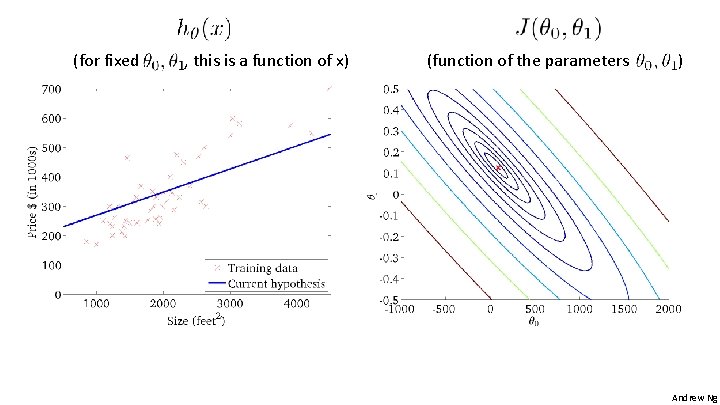

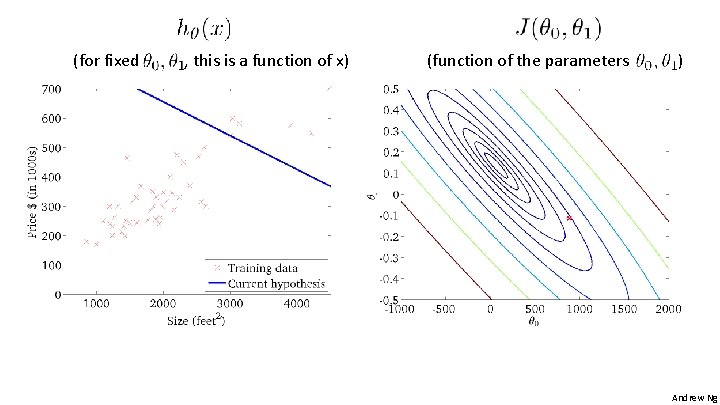

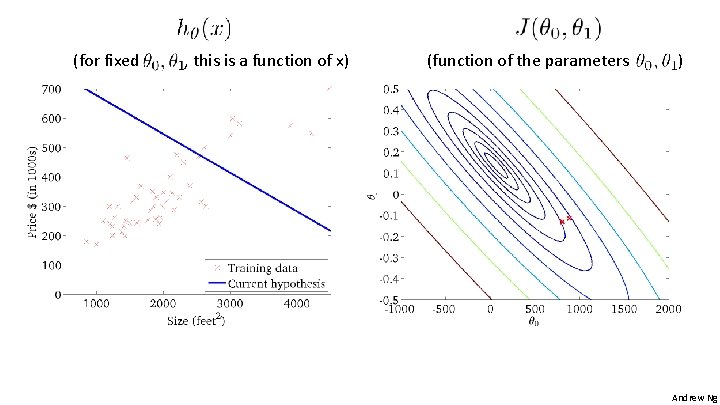

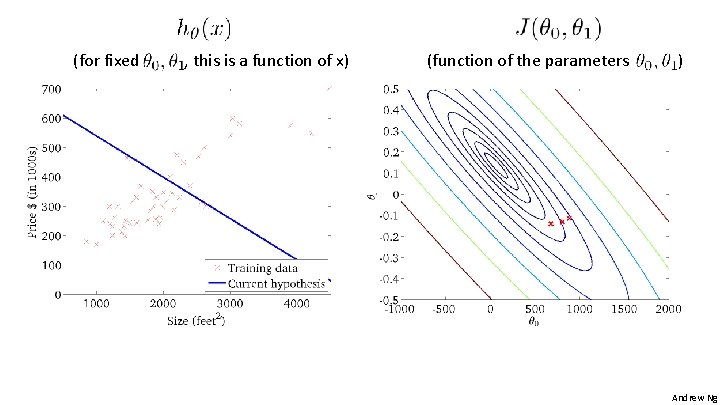

$h_\theta(x)$ (for fixed $\theta_0, \theta_1$, this is a function of $x$) and $J(\theta_0, \theta_1)$ (function of the parameters $\theta_0, \theta_1$).

[Plot: price in thousands of dollars (0 to 500) against size in feet² (x, 0 to 3000).]

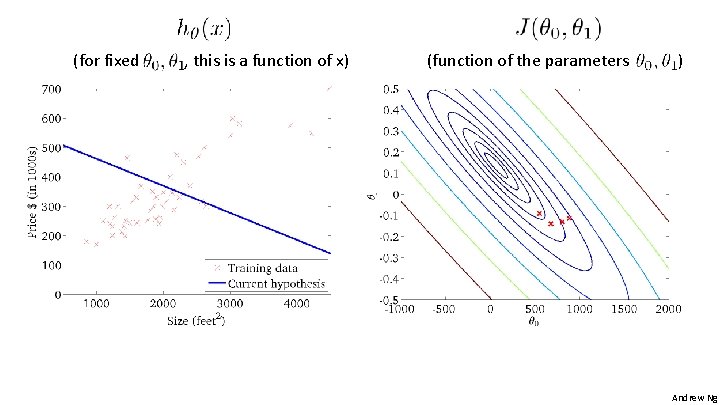

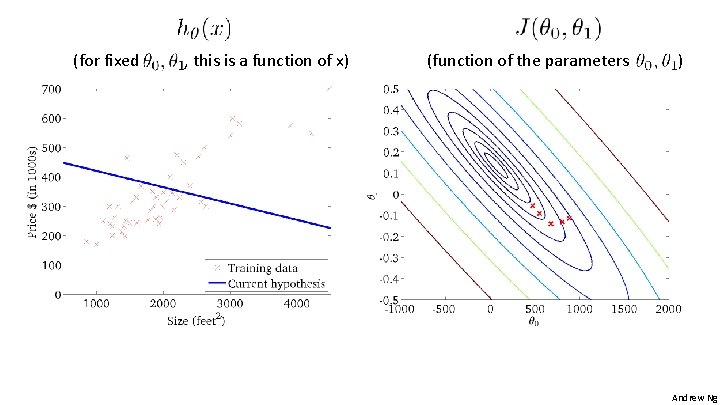

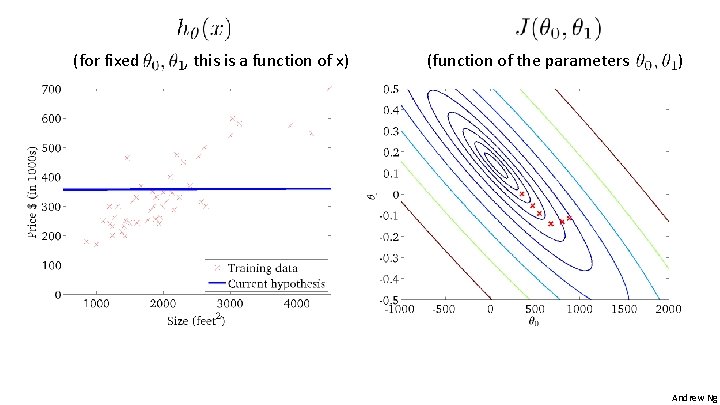

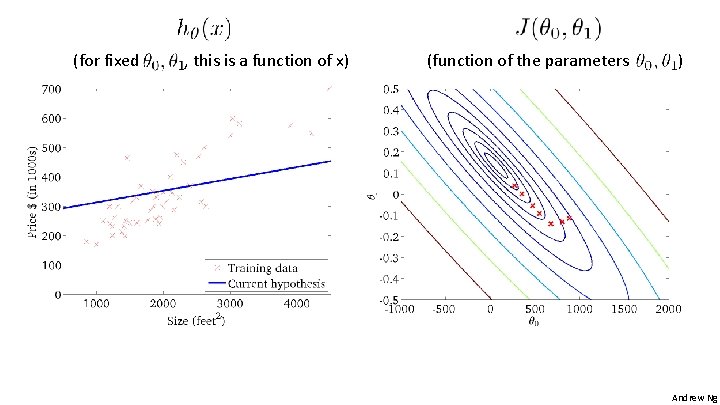

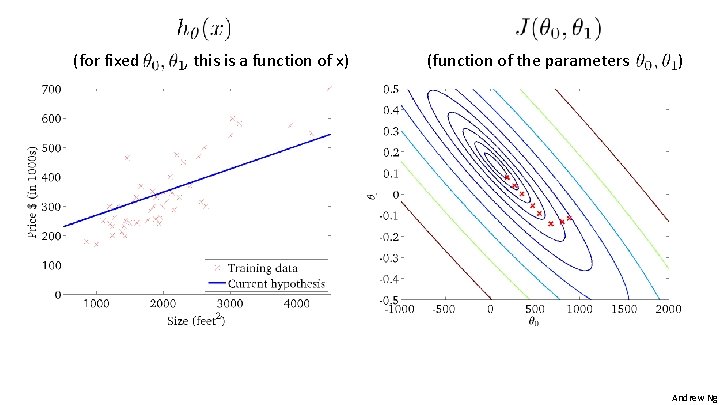

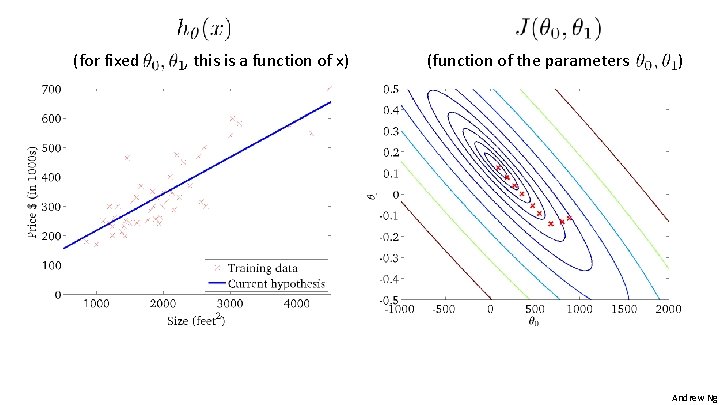

Linear regression with one variable: Gradient descent

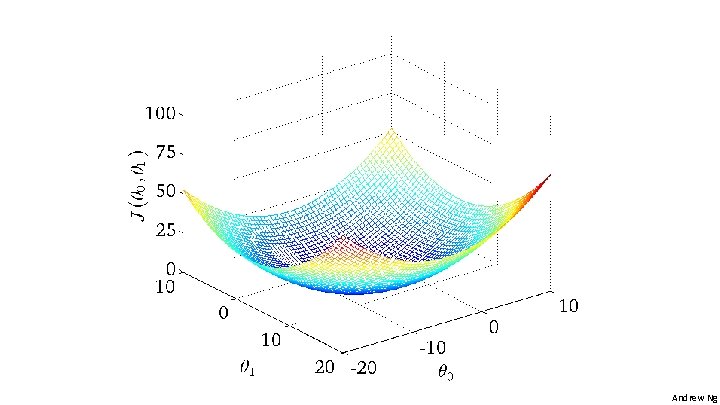

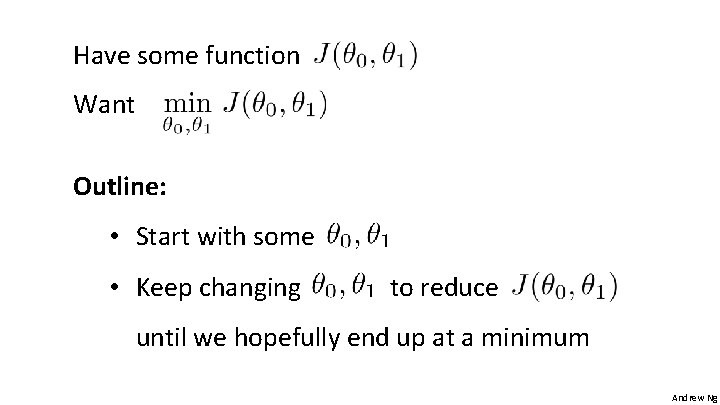

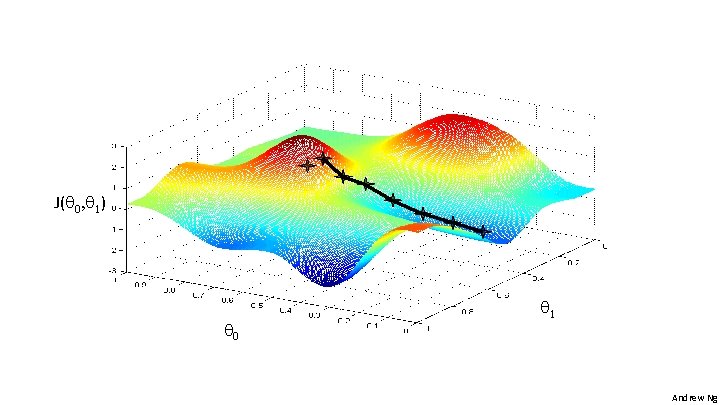

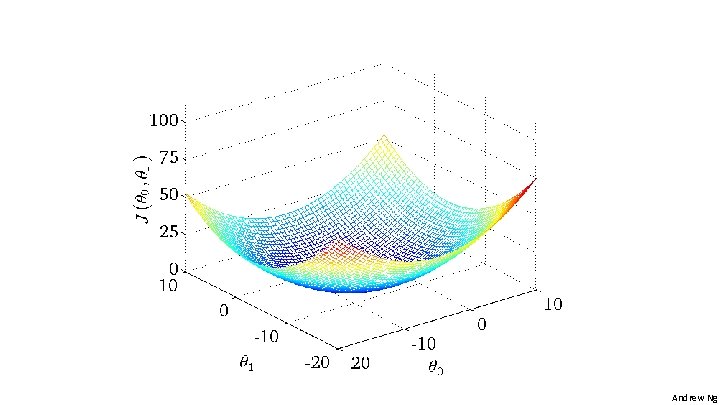

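Have some function $J(\theta_0, \theta_1)$.

Want $\min_{\theta_0, \theta_1} J(\theta_0, \theta_1)$.

Outline:

- Start with some $\theta_0, \theta_1$
- Keep changing $\theta_0, \theta_1$ to reduce $J(\theta_0, \theta_1)$ until we hopefully end up at a minimum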

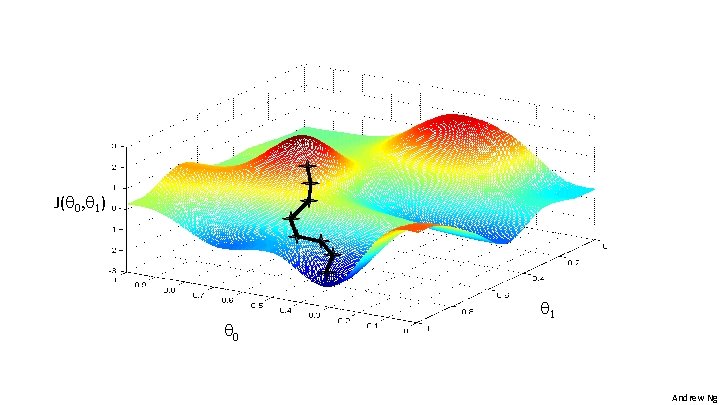

[Plot of $J(\theta_0, \theta_1)$ against $\theta_0$ and $\theta_1$.]

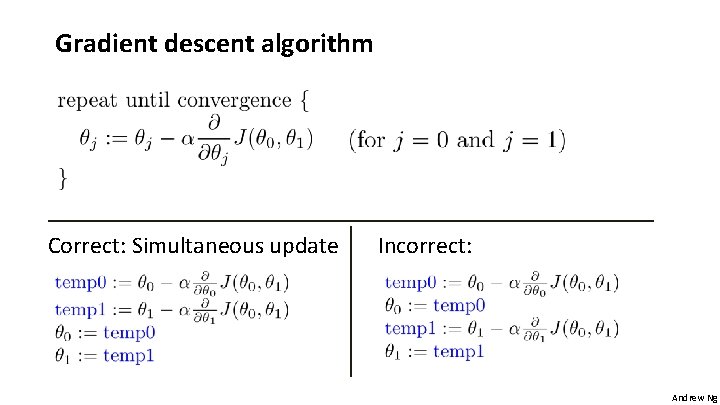

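Gradient descent algorithm

Repeat until convergence: $\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)$ (for $j = 0$ and $j = 1$), where $\alpha$ is the learning rate.

Correct (simultaneous update), in this order:

- $\text{temp0} := \theta_0 - \alpha \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1)$
- $\text{temp1} := \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1)$
- $\theta_0 := \text{temp0}$
- $\theta_1 := \text{temp1}$

Incorrect, in this order:

- $\text{temp0} := \theta_0 - \alpha \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1)$
- $\theta_0 := \text{temp0}$
- $\text{temp1} := \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1)$
- $\theta_1 := \text{temp1}$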
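The difference between the simultaneous and the incorrect update order can be seen in a small sketch. The cost $J(\theta_0, \theta_1) = \theta_0^2 + \theta_0 \theta_1 + \theta_1^2$ used here is an assumption chosen only because its partial derivatives couple the two parameters; it does not come from the slides, and all function names are hypothetical.

```python
def grad(theta0, theta1):
    """Partial derivatives of the assumed cost J = theta0**2 + theta0*theta1 + theta1**2."""
    return 2 * theta0 + theta1, theta0 + 2 * theta1


def step_simultaneous(theta0, theta1, alpha):
    # Both partial derivatives are evaluated at the old (theta0, theta1).
    d0, d1 = grad(theta0, theta1)
    temp0 = theta0 - alpha * d0
    temp1 = theta1 - alpha * d1
    return temp0, temp1


def step_sequential(theta0, theta1, alpha):
    # Incorrect variant: theta0 is overwritten before theta1's derivative is computed.
    theta0 = theta0 - alpha * grad(theta0, theta1)[0]
    theta1 = theta1 - alpha * grad(theta0, theta1)[1]
    return theta0, theta1


correct = step_simultaneous(1.0, 1.0, 0.1)
incorrect = step_sequential(1.0, 1.0, 0.1)
```

Starting from (1, 1), the two variants already disagree on $\theta_1$ after one step, because the sequential version computes the second derivative at a point that mixes a new $\theta_0$ with an old $\theta_1$.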

Linear regression with one variable: Gradient descent intuition

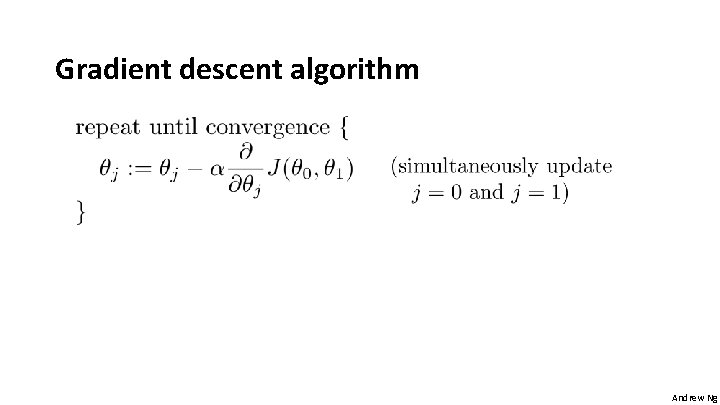

Gradient descent algorithm, with a single parameter for intuition: $\theta_1 := \theta_1 - \alpha \frac{d}{d\theta_1} J(\theta_1)$

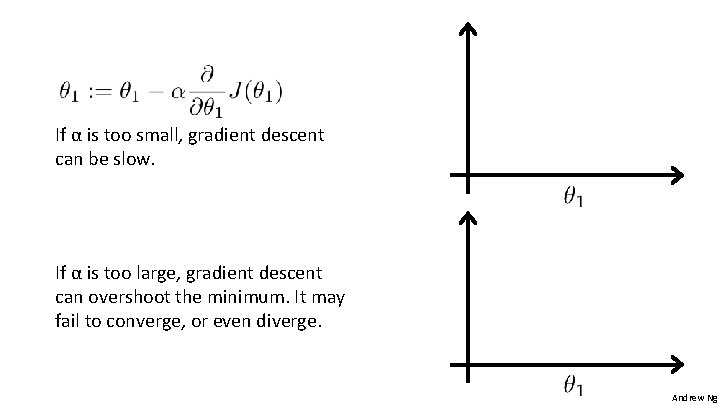

If α is too small, gradient descent can be slow. If α is too large, gradient descent can overshoot the minimum. It may fail to converge, or even diverge.
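A rough illustration of both failure modes, assuming the one-parameter cost $J(\theta) = \theta^2$ (not from the slides) so that the derivative is simply $2\theta$. The function name `run`, the starting point, and the three learning rates are arbitrary choices for the sketch.

```python
def run(alpha, theta=1.0, steps=50):
    """Run gradient descent on the assumed cost J(theta) = theta**2."""
    for _ in range(steps):
        theta = theta - alpha * 2 * theta  # dJ/dtheta = 2 * theta
    return theta


slow = run(alpha=0.001)    # barely moves away from the starting point
good = run(alpha=0.1)      # ends close to the minimum at 0
diverged = run(alpha=1.1)  # every step overshoots, so |theta| keeps growing
```

For this cost each step multiplies $\theta$ by $1 - 2\alpha$, so the iterates shrink only when that factor has magnitude below 1.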

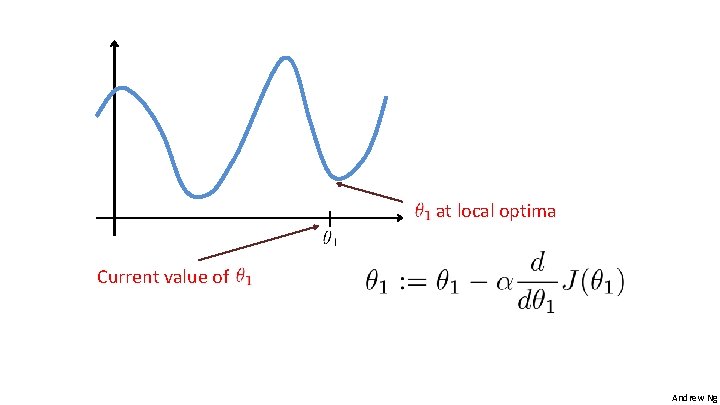

Suppose the current value of $\theta_1$ is at a local optimum. The derivative there is zero, so the update leaves $\theta_1$ unchanged.

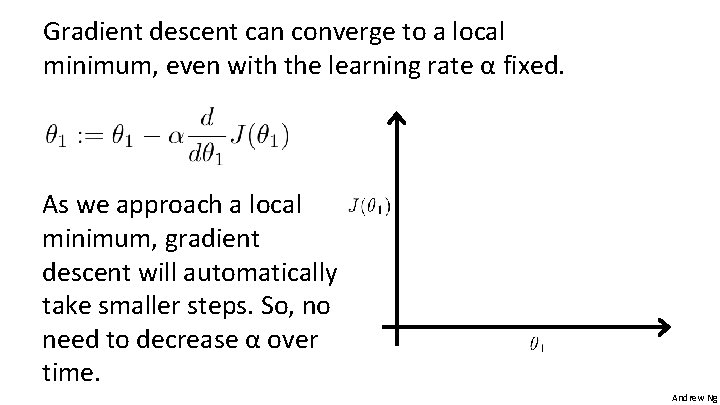

Gradient descent can converge to a local minimum, even with the learning rate α fixed. As we approach a local minimum, gradient descent will automatically take smaller steps. So, no need to decrease α over time.
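The shrinking step size can be observed directly. This sketch again assumes the cost $J(\theta) = \theta^2$ and a fixed learning rate of 0.1, and records the magnitude of each update; `step_sizes` is a hypothetical helper.

```python
def step_sizes(alpha=0.1, theta=4.0, steps=10):
    """Record |alpha * dJ/dtheta| at each step for the assumed cost J(theta) = theta**2."""
    sizes = []
    for _ in range(steps):
        update = alpha * 2 * theta  # alpha stays fixed; only the derivative changes
        sizes.append(abs(update))
        theta -= update
    return sizes


sizes = step_sizes()
```

The recorded magnitudes decrease from one step to the next even though `alpha` never changes, because the derivative itself gets smaller as $\theta$ approaches the minimum.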

Linear regression with one variable: Gradient descent for linear regression

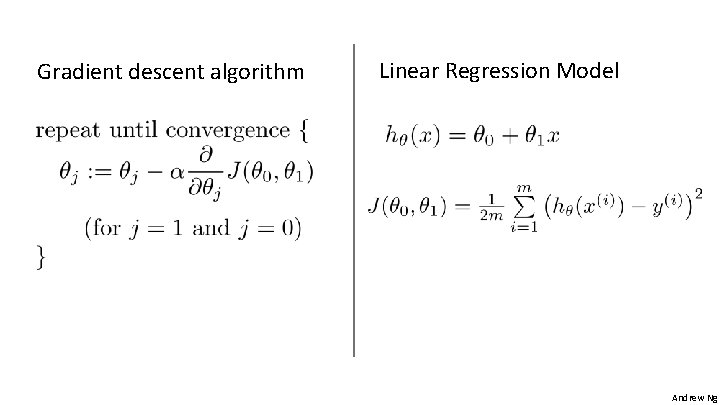

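Gradient descent algorithm: repeat until convergence $\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)$ (for $j = 0$ and $j = 1$).

Linear regression model: $h_\theta(x) = \theta_0 + \theta_1 x$, with $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)^2$.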
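Applying gradient descent to the linear regression model requires the partial derivatives of $J$. A sketch of that step, assuming the squared-error cost $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)^2$ with $h_\theta(x) = \theta_0 + \theta_1 x$:

```latex
\begin{aligned}
\frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)
  &= \frac{\partial}{\partial \theta_j} \, \frac{1}{2m} \sum_{i=1}^{m}
     \bigl(\theta_0 + \theta_1 x^{(i)} - y^{(i)}\bigr)^2 \\
j = 0: \quad \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1)
  &= \frac{1}{m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr) \\
j = 1: \quad \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1)
  &= \frac{1}{m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr) \, x^{(i)}
\end{aligned}
```

The factor of 2 from differentiating the square cancels the $\frac{1}{2}$ in the cost, which is the usual reason for including that $\frac{1}{2}$.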

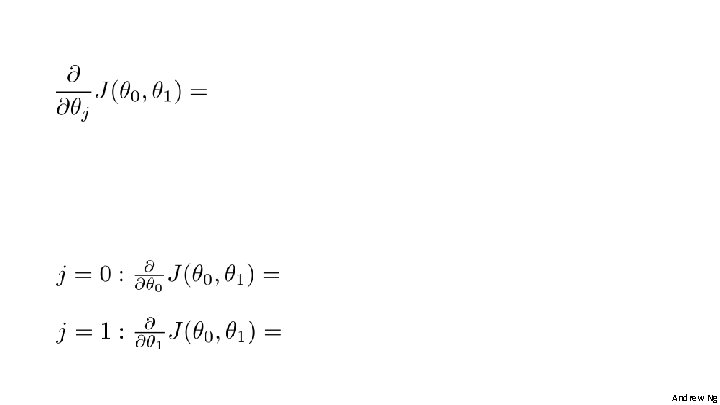

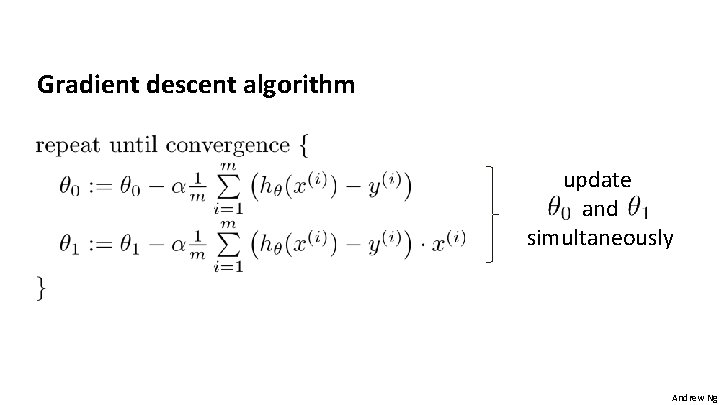

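Gradient descent algorithm

Repeat until convergence:

- $\theta_0 := \theta_0 - \alpha \frac{1}{m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)$
- $\theta_1 := \theta_1 - \alpha \frac{1}{m} \sum_{i=1}^{m} \bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr) \, x^{(i)}$

Update $\theta_0$ and $\theta_1$ simultaneously.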
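Put together, gradient descent for univariate linear regression might look like the sketch below. It assumes the squared-error cost with a $\frac{1}{2m}$ factor, a toy data set on the line $y = x$, a starting point of (0, 0), and a fixed iteration count in place of a convergence test. The name `batch_gradient_descent` and its default arguments are hypothetical.

```python
def batch_gradient_descent(xs, ys, alpha=0.1, iterations=5000):
    """Fit h(x) = theta0 + theta1 * x by gradient descent on the squared-error cost."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iterations):
        # Each step sums the prediction errors over all m training examples.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients were computed from the old parameters.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Assumed toy data on the line y = x; the fit should approach theta0 = 0, theta1 = 1.
theta0, theta1 = batch_gradient_descent([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
```

The learning rate of 0.1 suits this toy data only. On the housing data, where sizes are in the thousands, the same rate would diverge and a much smaller α would be needed.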

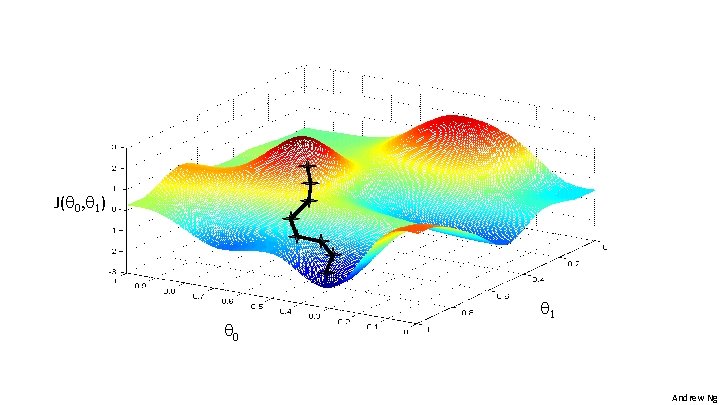

[Plot of $J(\theta_0, \theta_1)$ against $\theta_0$ and $\theta_1$.]

$h_\theta(x)$ (for fixed $\theta_0, \theta_1$, this is a function of $x$) and $J(\theta_0, \theta_1)$ (function of the parameters $\theta_0, \theta_1$).

“Batch” gradient descent. “Batch”: each step of gradient descent uses all the training examples.

- Slides: 49