Linear Regression Least Squares Method: the Meaning of $r^2$

- Slides: 14

Linear Regression Least Squares Method: the Meaning of $r^2$

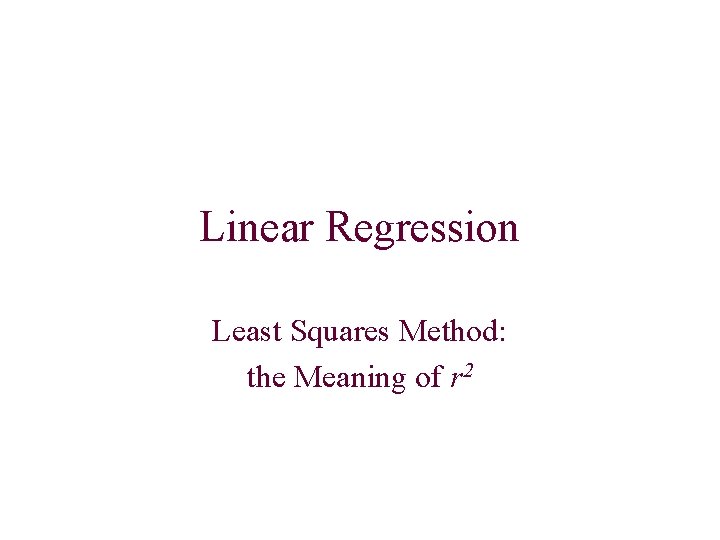

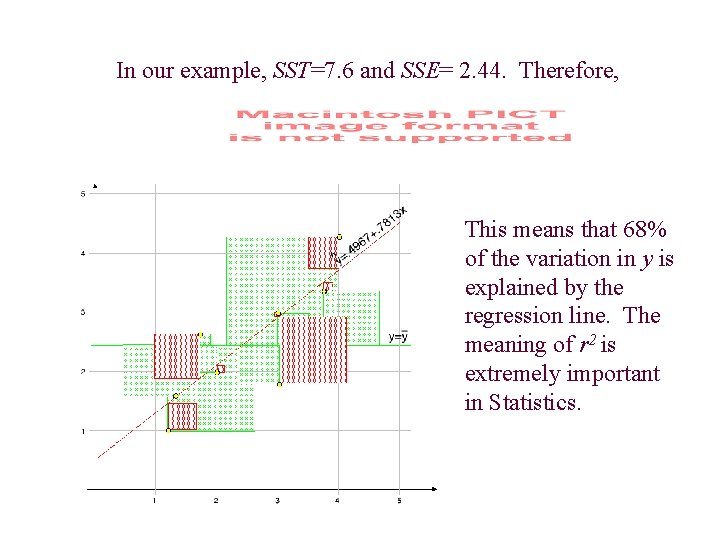

We are given the following ordered pairs: (1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2), (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2). They are shown in the scatterplot below:

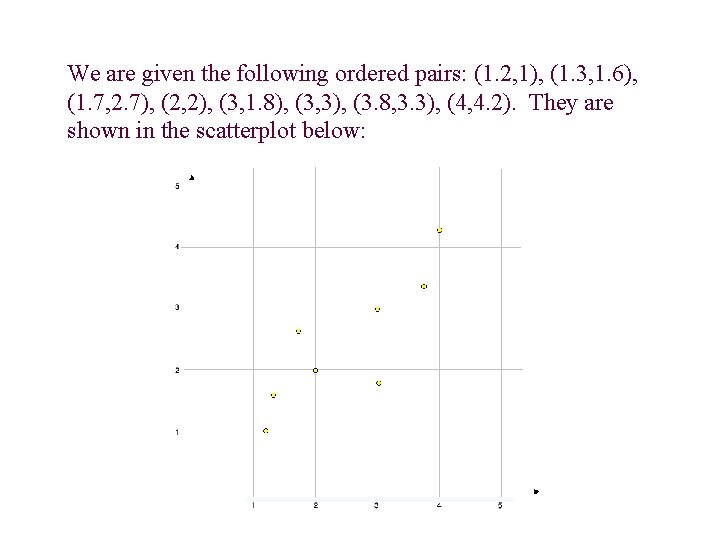

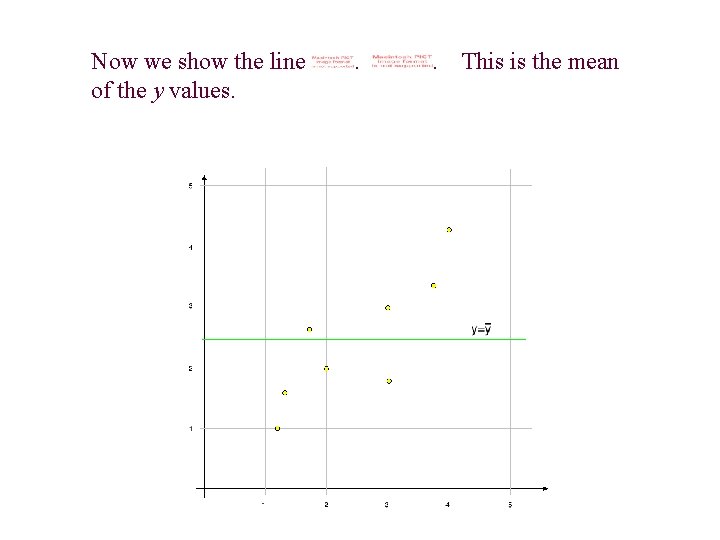

Now we show the horizontal line at the mean of the y values.
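As a quick check, the height of that line can be computed directly from the data. This is a minimal sketch in Python; the names `points`, `ys`, and `y_mean` are illustrative and do not come from the slides.

```python
# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

ys = [y for _, y in points]

# The mean of the y values is the height of the horizontal line.
y_mean = sum(ys) / len(ys)
print(round(y_mean, 2))  # 2.45
```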

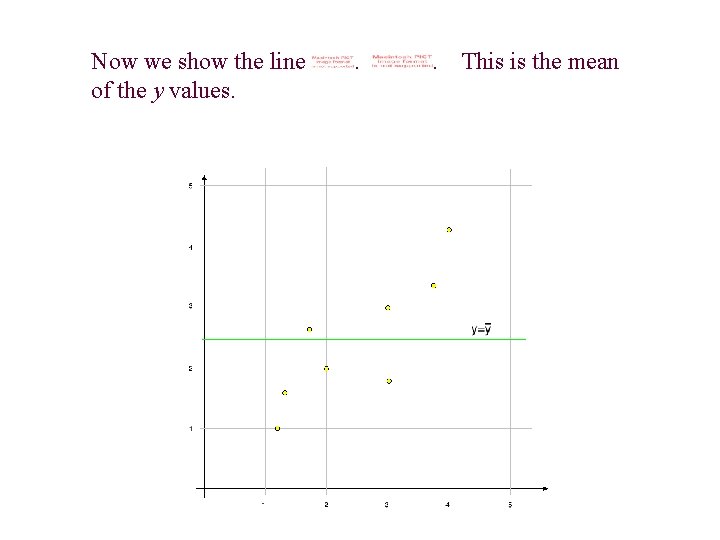

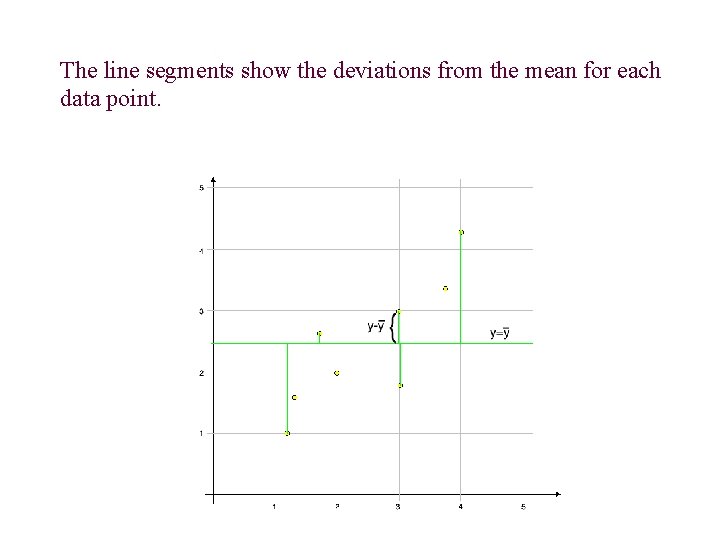

The line segments show the deviations from the mean for each data point.

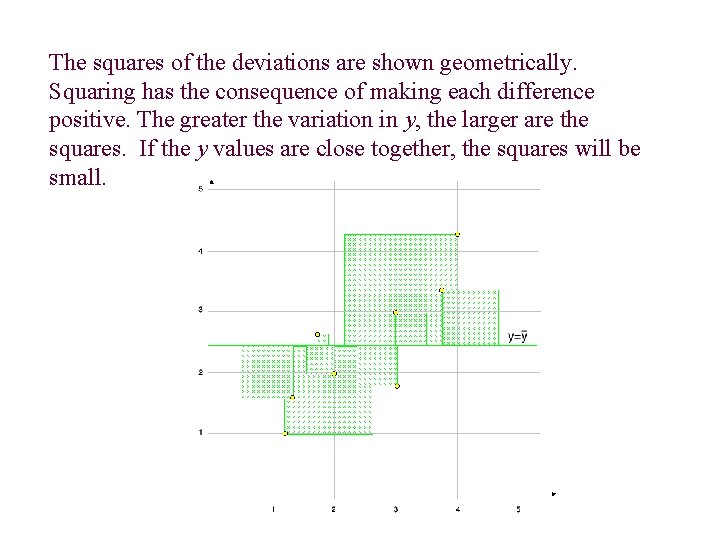

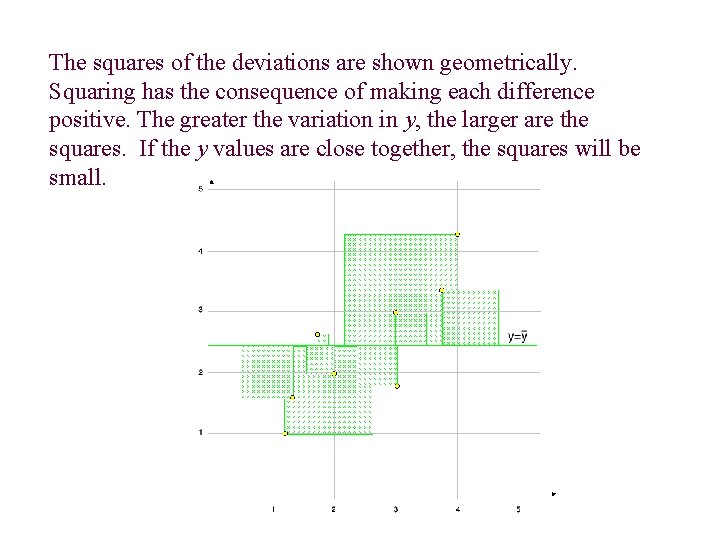

The squares of the deviations are shown geometrically. Squaring has the consequence of making each difference positive. The greater the variation in y, the larger are the squares. If the y values are close together, the squares will be small.

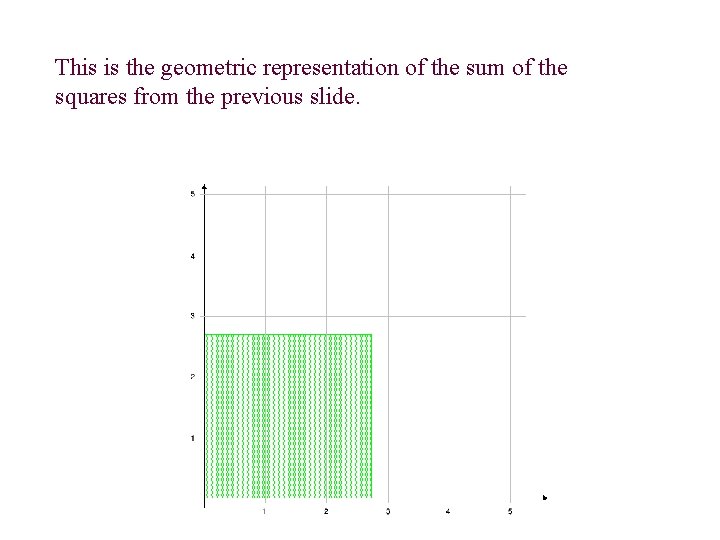

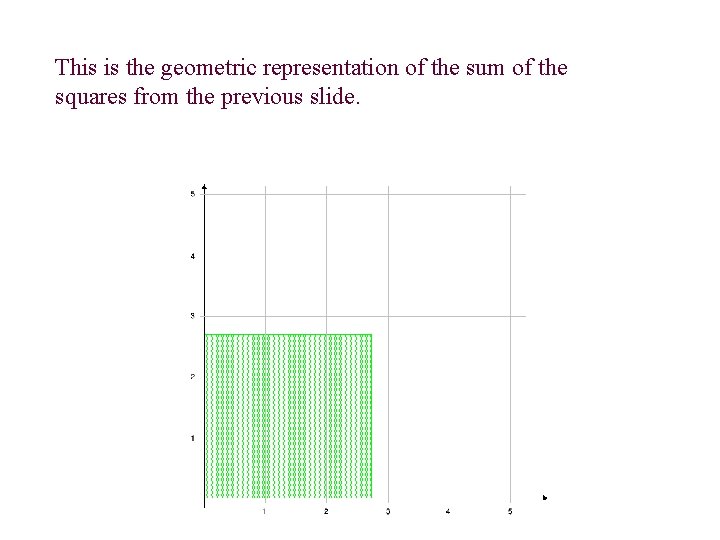

This is the geometric representation of the sum of the squares from the previous slide.
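The same sum can be computed numerically. The sketch below, in Python with illustrative variable names, forms each deviation from the mean, squares it, and adds the squares; the total is the area of the large square.

```python
# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

ys = [y for _, y in points]
y_mean = sum(ys) / len(ys)

# Deviation of each y value from the mean (some positive, some negative).
deviations = [y - y_mean for y in ys]

# Squaring removes the sign; the sum is the total area of the squares.
sum_sq_dev = sum(d ** 2 for d in deviations)
print(round(sum_sq_dev, 2))  # 7.6
```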

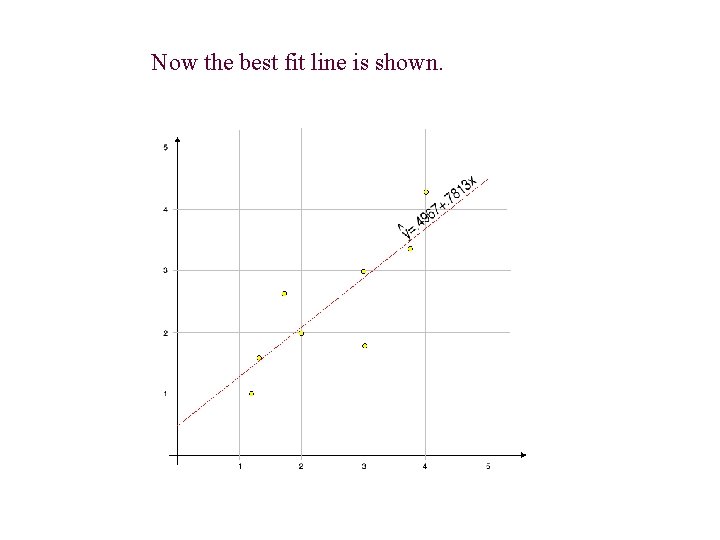

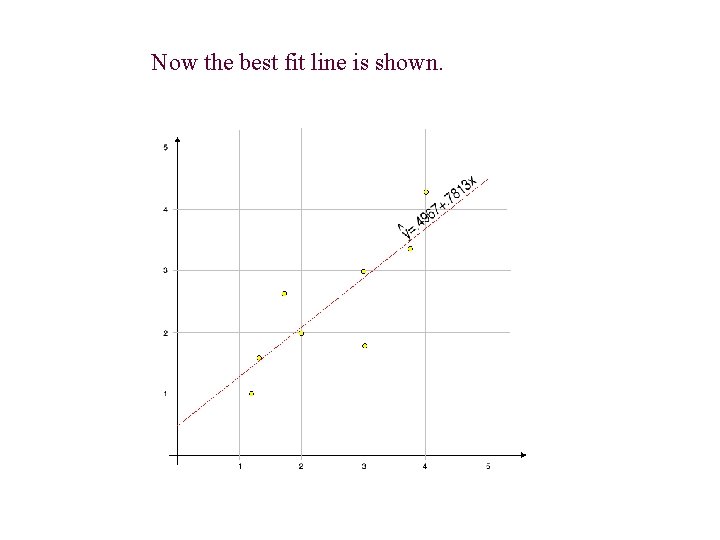

Now the best fit line is shown.

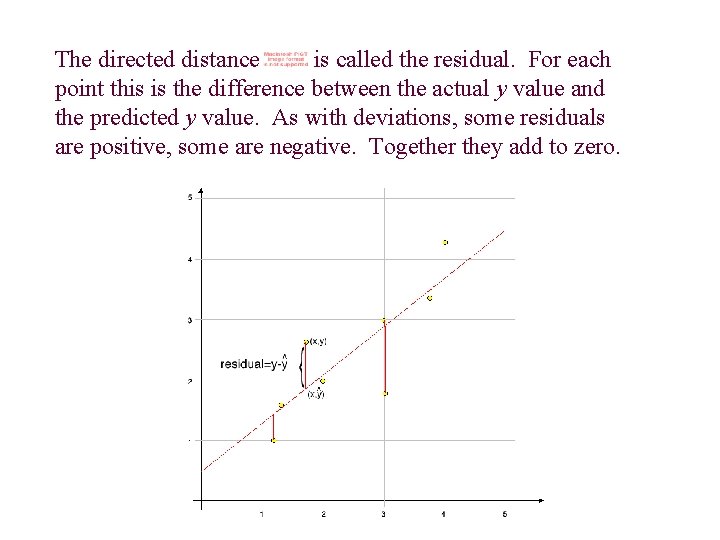

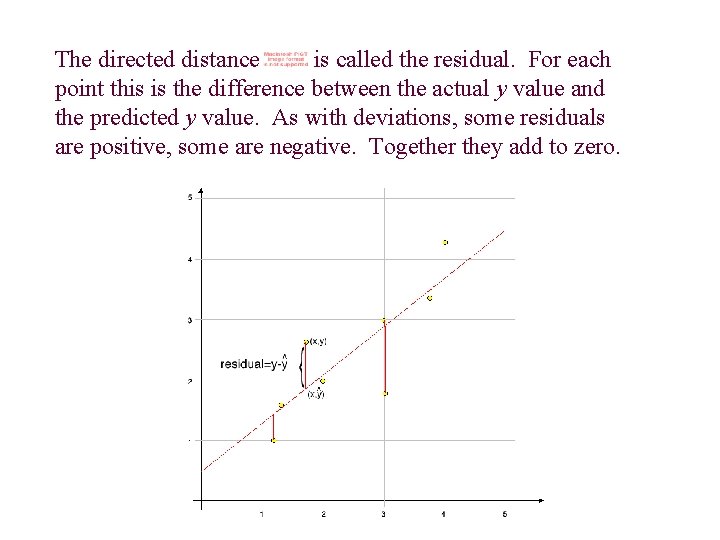

The directed vertical distance from each data point to the line is called the residual. For each point, this is the difference between the actual y value and the predicted y value. As with deviations, some residuals are positive and some are negative. For the least squares line, they add to zero.
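The residuals can be computed once a line is fitted. The slides do not state how the line is obtained, so this sketch assumes the standard closed-form least squares formulas for slope and intercept; all variable names are illustrative.

```python
# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

n = len(points)
x_mean = sum(x for x, _ in points) / n
y_mean = sum(y for _, y in points) / n

# Assumed formulas (standard, but not given in the slides):
# slope = S_xy / S_xx, intercept = y_mean - slope * x_mean.
s_xy = sum((x - x_mean) * (y - y_mean) for x, y in points)
s_xx = sum((x - x_mean) ** 2 for x, _ in points)
slope = s_xy / s_xx
intercept = y_mean - slope * x_mean

# Residual = actual y minus predicted y.
residuals = [y - (intercept + slope * x) for x, y in points]

has_positive = any(r > 0 for r in residuals)
has_negative = any(r < 0 for r in residuals)
print(has_positive, has_negative)        # True True
print(abs(sum(residuals)) < 1e-9)        # True
```

With these data the fitted slope is roughly 0.78 and the intercept roughly 0.50.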

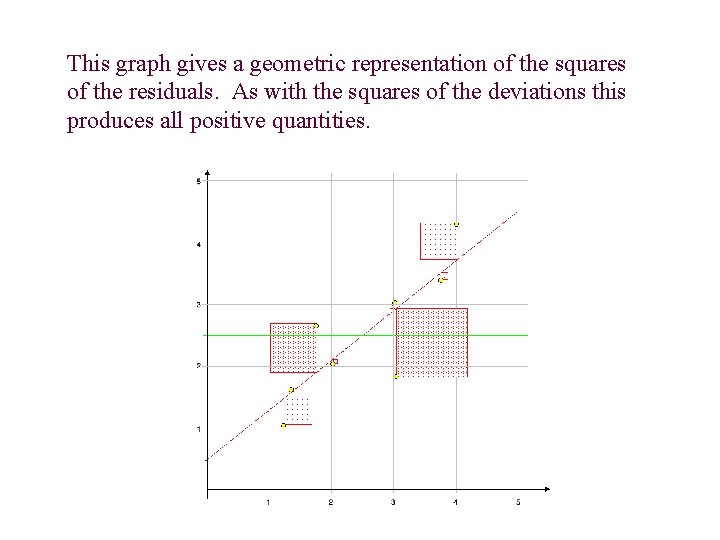

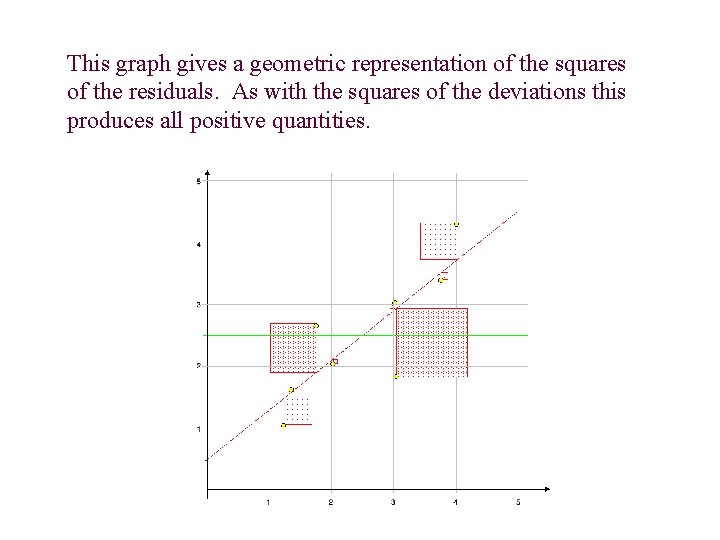

This graph gives a geometric representation of the squares of the residuals. As with the squares of the deviations, squaring makes every quantity nonnegative.

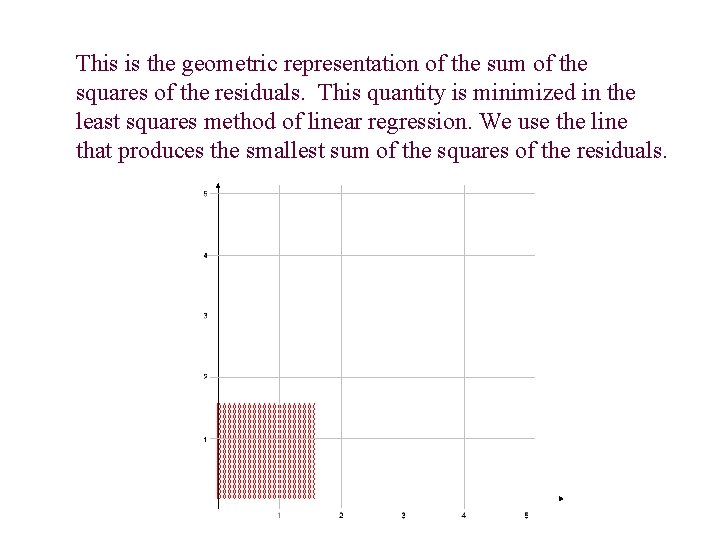

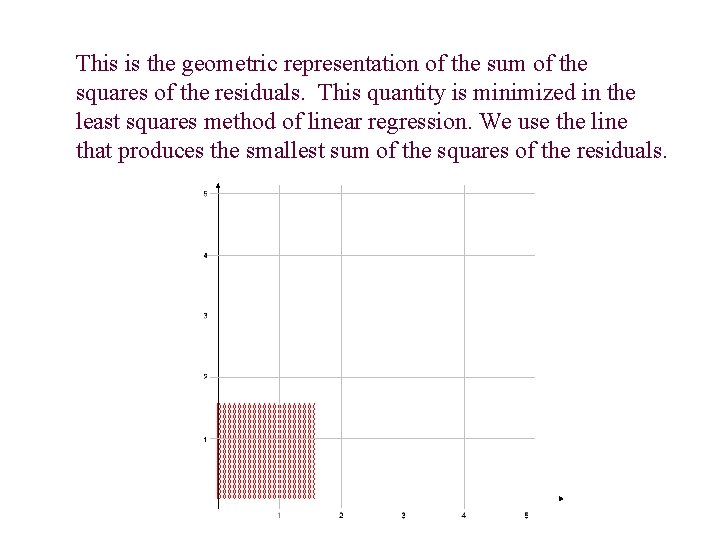

This is the geometric representation of the sum of the squares of the residuals. This quantity is minimized in the least squares method of linear regression. We use the line that produces the smallest sum of the squares of the residuals.
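One way to see the minimization is to compare the fitted line with nearby lines. The sketch below assumes the standard closed-form least squares formulas (not given in the slides) and a hypothetical helper `sum_sq_residuals`; it shows that nudging the slope or intercept only increases the sum.

```python
# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

def sum_sq_residuals(slope, intercept):
    """Sum of squared residuals for the line y = intercept + slope * x."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in points)

n = len(points)
x_mean = sum(x for x, _ in points) / n
y_mean = sum(y for _, y in points) / n

# Assumed closed-form least squares estimates.
s_xy = sum((x - x_mean) * (y - y_mean) for x, y in points)
s_xx = sum((x - x_mean) ** 2 for x, _ in points)
slope = s_xy / s_xx
intercept = y_mean - slope * x_mean

best = sum_sq_residuals(slope, intercept)
print(round(best, 2))  # 2.44

# Any nearby line gives a larger sum of squared residuals.
nudges = [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
worse = [sum_sq_residuals(slope + ds, intercept + di) for ds, di in nudges]
print(all(w > best for w in worse))  # True
```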

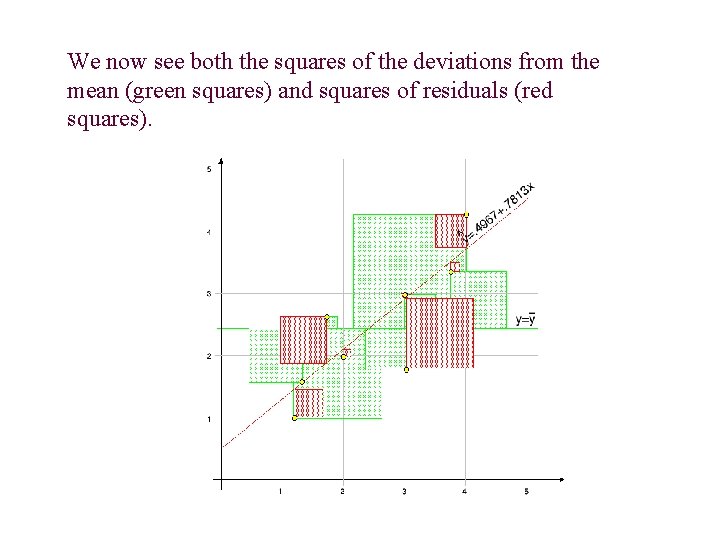

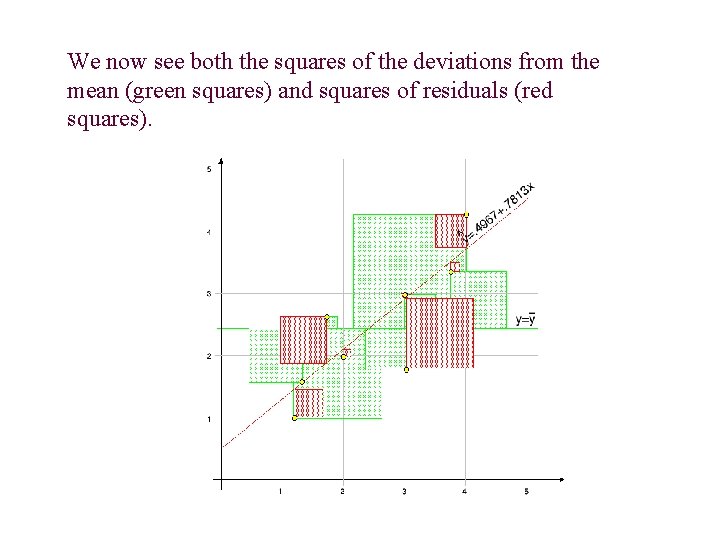

We now see both the squares of the deviations from the mean (green squares) and squares of residuals (red squares).

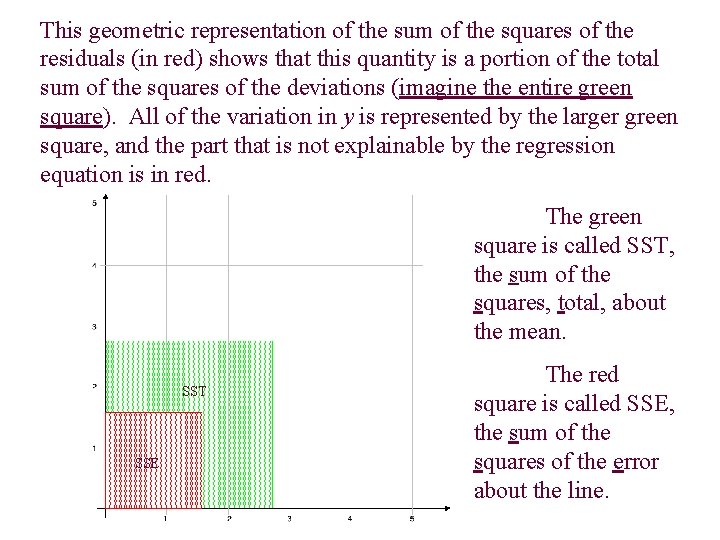

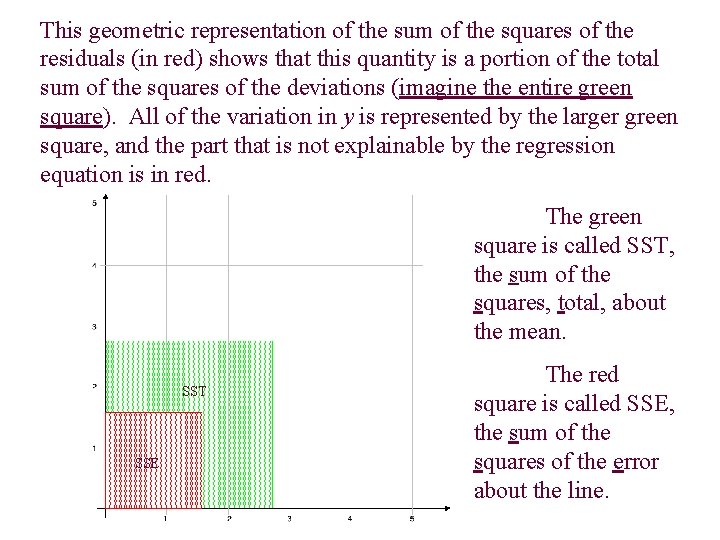

This geometric representation of the sum of the squares of the residuals (in red) shows that this quantity is a portion of the total sum of the squares of the deviations (imagine the entire green square). All of the variation in y is represented by the larger green square, and the part that is not explained by the regression equation is in red. The green square is called SST, the total sum of squares about the mean. The red square is called SSE, the sum of the squares of the error about the line.
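The "portion" picture can be checked numerically: the green area splits into the red area plus an explained part. In the sketch below, the name `ssr` for the explained part is an assumption (the slides name only SST and SSE), and the line is fitted with the standard closed-form formulas.

```python
# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

n = len(points)
x_mean = sum(x for x, _ in points) / n
y_mean = sum(y for _, y in points) / n

# Assumed closed-form least squares estimates.
s_xy = sum((x - x_mean) * (y - y_mean) for x, y in points)
s_xx = sum((x - x_mean) ** 2 for x, _ in points)
slope = s_xy / s_xx
intercept = y_mean - slope * x_mean

predicted = [intercept + slope * x for x, _ in points]
ys = [y for _, y in points]

sst = sum((y - y_mean) ** 2 for y in ys)                  # green square
sse = sum((y - p) ** 2 for y, p in zip(ys, predicted))    # red square
ssr = sum((p - y_mean) ** 2 for p in predicted)           # explained part

print(round(sst, 2), round(sse, 2))       # 7.6 2.44
print(abs(sst - (sse + ssr)) < 1e-9)      # True
```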

If we now measure the quantities SSE and SST, we can make a useful calculation: the coefficient of determination, or $r^2$. Recall that SST is the total sum of the squares of the deviations about the mean value of y, and SSE is the sum of the squares of the errors (residuals) about the line. N.B. This $r^2$ is the square of the correlation coefficient.
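The note about the correlation coefficient can be verified on the example data. This sketch assumes the usual definition $r^2 = 1 - \mathrm{SSE}/\mathrm{SST}$ and the Pearson correlation formula, neither of which is spelled out in the slide text; variable names are illustrative.

```python
import math

# Data points from the slides, as (x, y) pairs.
points = [(1.2, 1), (1.3, 1.6), (1.7, 2.7), (2, 2),
          (3, 1.8), (3, 3), (3.8, 3.3), (4, 4.2)]

n = len(points)
x_mean = sum(x for x, _ in points) / n
y_mean = sum(y for _, y in points) / n

s_xy = sum((x - x_mean) * (y - y_mean) for x, y in points)
s_xx = sum((x - x_mean) ** 2 for x, _ in points)
s_yy = sum((y - y_mean) ** 2 for _, y in points)  # this is SST

# Pearson correlation coefficient.
r = s_xy / math.sqrt(s_xx * s_yy)

# Coefficient of determination from SSE and SST (assumed definition).
slope = s_xy / s_xx
intercept = y_mean - slope * x_mean
sse = sum((y - (intercept + slope * x)) ** 2 for x, y in points)
r_squared = 1 - sse / s_yy

print(round(r_squared, 2))                 # 0.68
print(abs(r ** 2 - r_squared) < 1e-9)      # True
```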

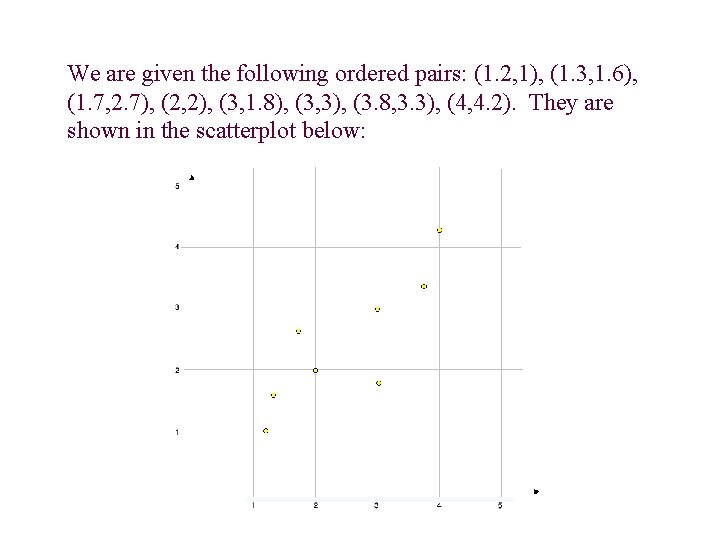

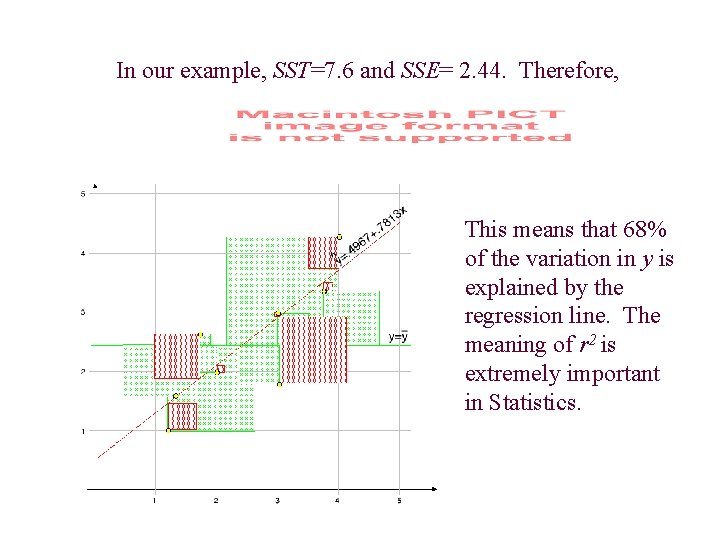

In our example, SST = 7.6 and SSE = 2.44. Therefore,

$$r^2 = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}} = 1 - \frac{2.44}{7.6} \approx 0.68$$

This means that 68% of the variation in y is explained by the regression line. The meaning of $r^2$ is extremely important in statistics.