Linear Prediction

- Slides: 11


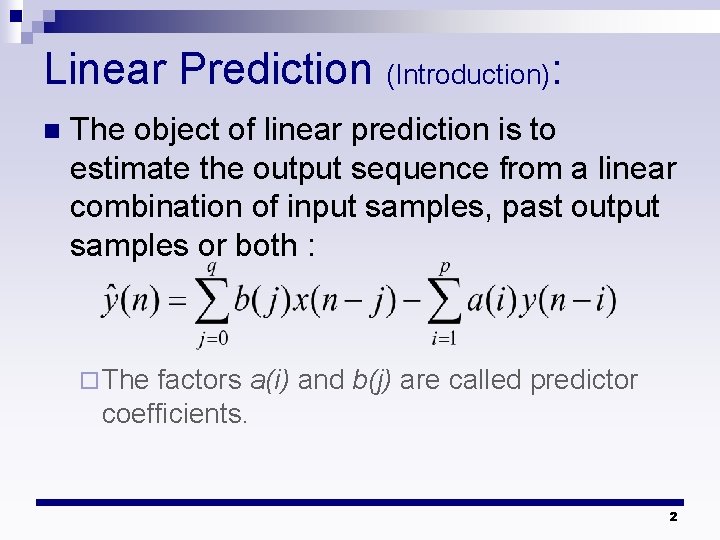

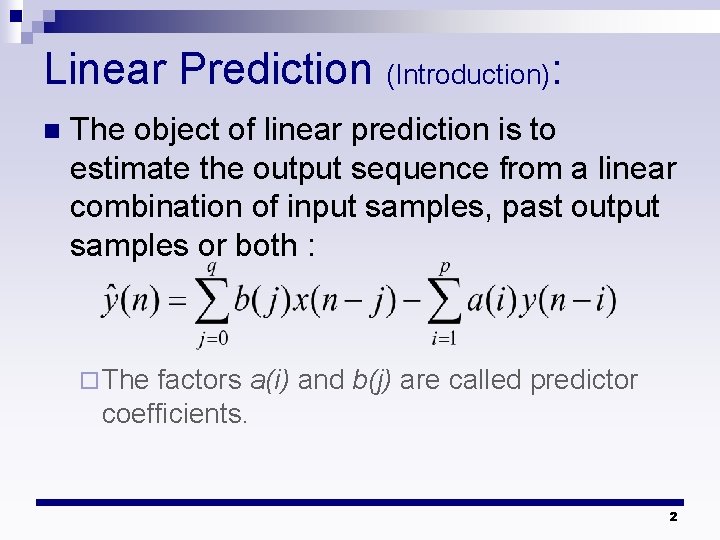

Linear Prediction (Introduction):
- The object of linear prediction is to estimate the output sequence from a linear combination of input samples, past output samples, or both:
  $$\hat{y}(n) = \sum_{j=0}^{q} b(j)\,x(n-j) + \sum_{i=1}^{p} a(i)\,y(n-i)$$
  - The factors a(i) and b(j) are called predictor coefficients.
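A minimal NumPy sketch of the predictor above, assuming samples before n = 0 are zero. The function name `lp_predict` and the argument layout (`a` holds a(1)…a(p), `b` holds b(0)…b(q)) are illustrative choices, not part of the slides.

```python
import numpy as np

def lp_predict(x, y, a, b):
    """y_hat(n) = sum_{j=0}^{q} b[j] x(n-j) + sum_{i=1}^{p} a[i-1] y(n-i).

    Samples with negative index are treated as zero (hypothetical convention).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    y_hat = np.zeros(len(y))
    for n in range(len(y)):
        # Input part: weighted input samples x(n), x(n-1), ..., x(n-q)
        for j, bj in enumerate(b):
            if n - j >= 0:
                y_hat[n] += bj * x[n - j]
        # Output part: weighted past outputs y(n-1), ..., y(n-p)
        for i, ai in enumerate(a, start=1):
            if n - i >= 0:
                y_hat[n] += ai * y[n - i]
    return y_hat
```

If y(n) really obeys y(n) = 0.5 y(n-1) + x(n) + 0.2 x(n-1), the prediction reproduces it (up to rounding): `lp_predict([1, 0, 0, 0], [1, 0.7, 0.35, 0.175], a=[0.5], b=[1.0, 0.2])` gives back the four samples of y.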

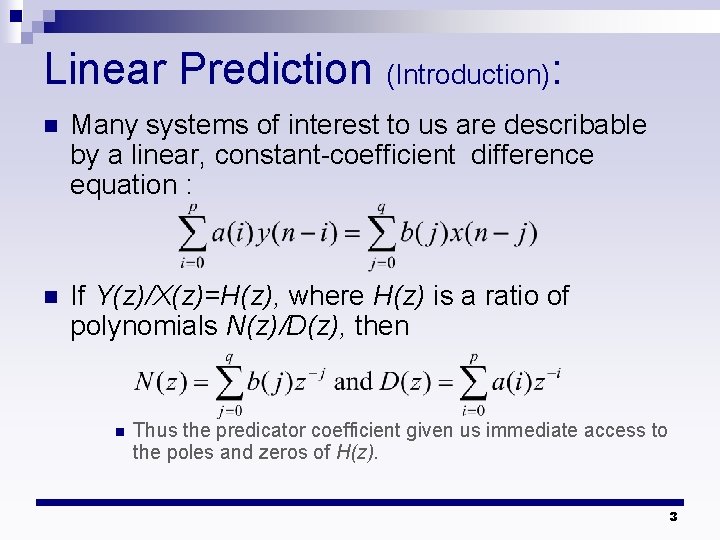

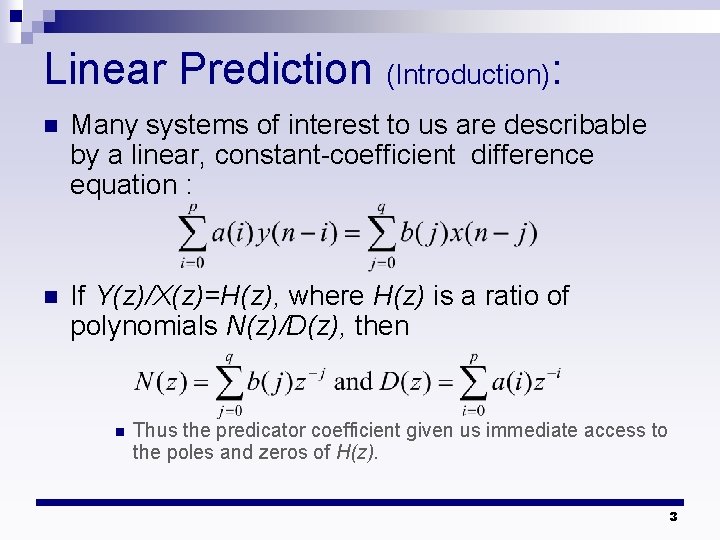

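Linear Prediction (Introduction):
- Many systems of interest to us can be described by a linear, constant-coefficient difference equation:
  $$y(n) = \sum_{i=1}^{p} a(i)\,y(n-i) + \sum_{j=0}^{q} b(j)\,x(n-j)$$
- If Y(z)/X(z) = H(z), where H(z) is a ratio of polynomials N(z)/D(z), then
  $$N(z) = \sum_{j=0}^{q} b(j)\,z^{-j}, \qquad D(z) = 1 - \sum_{i=1}^{p} a(i)\,z^{-i}$$
- Thus the predictor coefficients give us immediate access to the poles and zeros of H(z): the zeros are the roots of N(z) and the poles are the roots of D(z).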
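The sketch below recovers zeros and poles from the predictor coefficients with NumPy's `np.roots`, assuming the sign convention D(z) = 1 - Σ a(i) z^{-i} used above. Multiplying N(z) by z^q and D(z) by z^p gives ordinary polynomials with the same nonzero roots; any extra poles or zeros at z = 0 (when p ≠ q) are ignored. The name `zeros_and_poles` is hypothetical.

```python
import numpy as np

def zeros_and_poles(a, b):
    """Return (zeros, poles) of H(z) = N(z)/D(z), where
    N(z) = sum_j b[j] z^-j and D(z) = 1 - sum_i a[i-1] z^-i."""
    num = np.asarray(b, dtype=float)                        # z^q N(z), highest power first
    den = np.concatenate(([1.0], -np.asarray(a, dtype=float)))  # z^p D(z)
    return np.roots(num), np.roots(den)

# Usage: a two-coefficient all-pole resonator a = [2 r cos(theta), -r^2]
# has the complex-conjugate pole pair r * exp(+/- j theta).
r, theta = 0.9, np.pi / 4
zeros, poles = zeros_and_poles(a=[2 * r * np.cos(theta), -r**2], b=[1.0])
```

With b = [1.0], N(z) is a constant, so `zeros` is empty and `poles` holds the conjugate pair at radius 0.9 and angle ±π/4.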

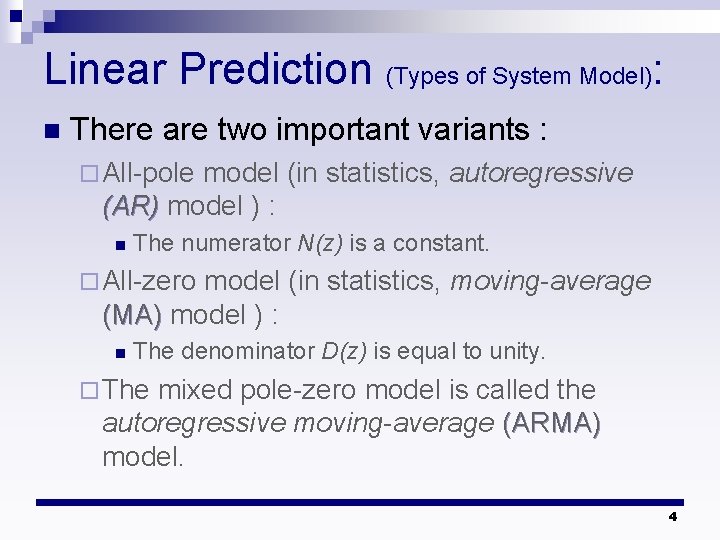

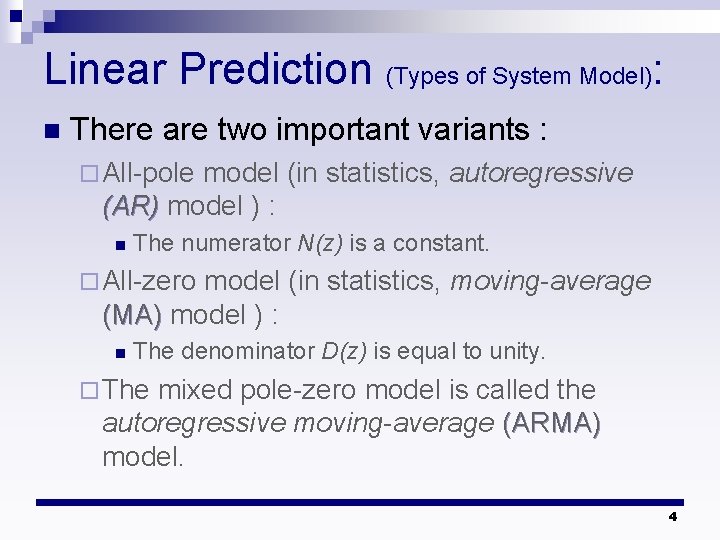

Linear Prediction (Types of System Model):
- There are two important variants:
  - All-pole model (in statistics, the autoregressive (AR) model): the numerator N(z) is a constant.
  - All-zero model (in statistics, the moving-average (MA) model): the denominator D(z) is equal to unity.
- The mixed pole-zero model is called the autoregressive moving-average (ARMA) model.
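To see the practical difference between the two variants, this NumPy sketch computes impulse responses directly from the difference equation (the helper name `impulse_response` is hypothetical). The all-zero (MA) response is just the b(j) followed by zeros; the all-pole (AR) response decays geometrically but has infinite length.

```python
import numpy as np

def impulse_response(a, b, n_samples):
    """h(n) for y(n) = sum_i a[i-1] y(n-i) + sum_j b[j] x(n-j), with x a unit impulse."""
    h = np.zeros(n_samples)
    for n in range(n_samples):
        # x(n-j) = 1 only when j = n, so the input part contributes b[n]
        acc = b[n] if n < len(b) else 0.0
        for i, ai in enumerate(a, start=1):
            if n - i >= 0:
                acc += ai * h[n - i]
        h[n] = acc
    return h

# All-pole (AR): N(z) constant, a single pole at z = 0.5
h_ar = impulse_response(a=[0.5], b=[1.0], n_samples=6)
# All-zero (MA): D(z) = 1, finite response of length q + 1 = 3
h_ma = impulse_response(a=[], b=[1.0, 0.4, 0.2], n_samples=6)
```

An ARMA model is obtained by passing both a nonempty `a` and a `b` with more than one entry.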

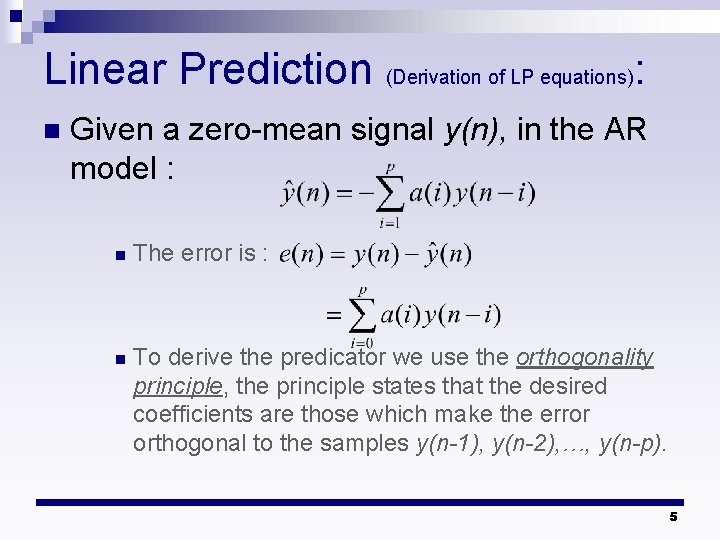

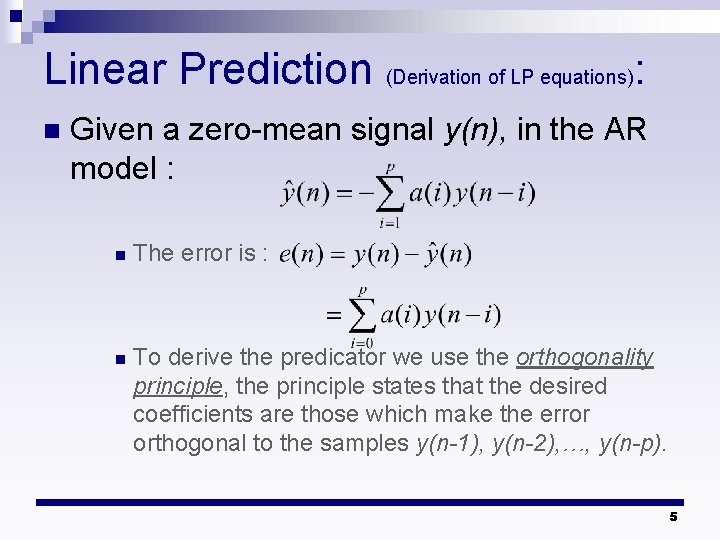

Linear Prediction (Derivation of LP equations):
- Given a zero-mean signal y(n), in the AR model:
  $$\hat{y}(n) = \sum_{i=1}^{p} a(i)\,y(n-i)$$
- The error is:
  $$e(n) = y(n) - \hat{y}(n) = y(n) - \sum_{i=1}^{p} a(i)\,y(n-i)$$
- To derive the predictor we use the orthogonality principle, which states that the desired coefficients are those that make the error orthogonal to the samples y(n-1), y(n-2), …, y(n-p).
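The error e(n) is the output of the FIR inverse filter 1 - A(z), with coefficients [1, -a(1), …, -a(p)], applied to y(n). A minimal NumPy sketch, assuming y(n) = 0 for n < 0; `prediction_error` is a hypothetical name.

```python
import numpy as np

def prediction_error(y, a):
    """e(n) = y(n) - sum_{i=1}^{p} a[i-1] y(n-i), with y(n) = 0 for n < 0."""
    inverse_filter = np.concatenate(([1.0], -np.asarray(a, dtype=float)))
    # Full convolution, truncated to len(y) outputs (causal filtering of y)
    return np.convolve(y, inverse_filter)[: len(y)]
```

For example, y = [1, 0.5, 0.25, 0.125] is perfectly predicted by a(1) = 0.5 after the first sample, so the error is [1, 0, 0, 0].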

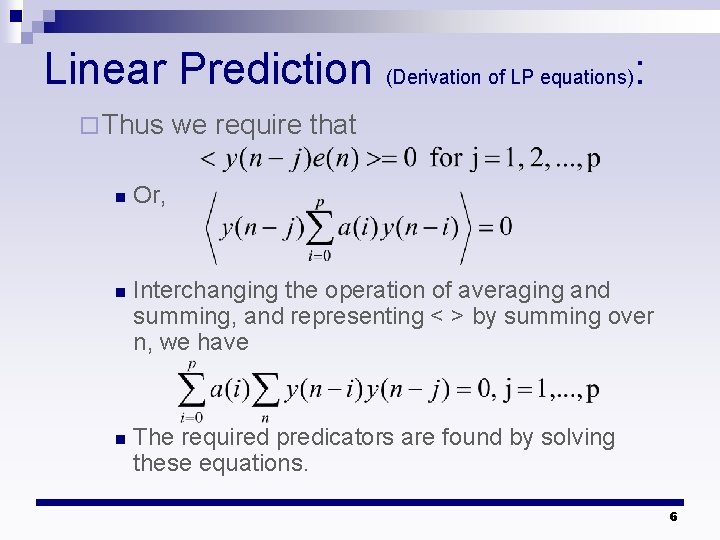

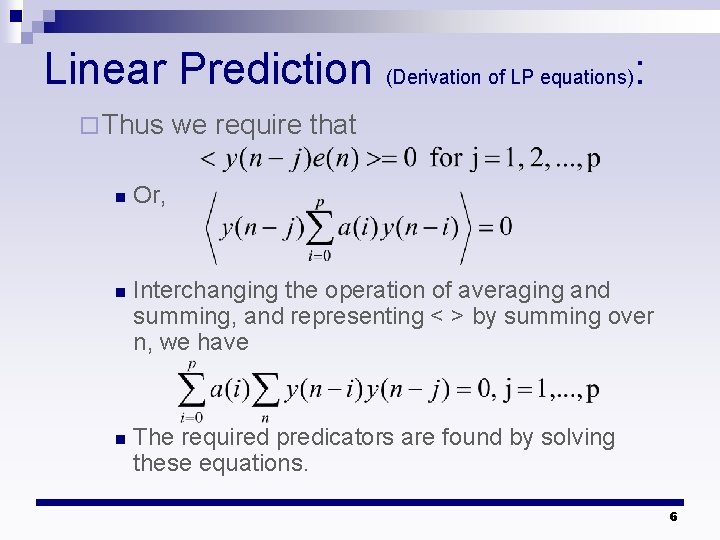

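Linear Prediction (Derivation of LP equations):
- Thus we require that
  $$\langle e(n)\,y(n-j) \rangle = 0, \qquad j = 1, 2, \ldots, p$$
- Or,
  $$\Big\langle \Big( y(n) - \sum_{i=1}^{p} a(i)\,y(n-i) \Big)\, y(n-j) \Big\rangle = 0, \qquad j = 1, \ldots, p$$
- Interchanging the operations of averaging and summing, and representing ⟨ ⟩ by summing over n, we have
  $$\sum_{i=1}^{p} a(i) \sum_{n} y(n-i)\,y(n-j) = \sum_{n} y(n)\,y(n-j), \qquad j = 1, \ldots, p$$
- The required predictor coefficients are found by solving these p linear equations.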
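One way to set up and solve these equations in NumPy is sketched below. It assumes the sum over n runs only over n = p, …, N-1, so every y(n-i) falls inside the N available samples; this range is usually called the covariance method and is an assumption, not something the slide specifies. The name `lp_normal_equations` and the synthetic AR(2) signal are illustrative.

```python
import numpy as np

def lp_normal_equations(y, p):
    """Solve sum_i a(i) phi(i, j) = phi(0, j) for j = 1..p, where
    phi(i, j) = sum_n y(n - i) y(n - j) and n runs over p..N-1 (assumed range)."""
    y = np.asarray(y, dtype=float)
    n = np.arange(p, len(y))            # every y(n - i), i <= p, lies inside the data

    def phi(i, j):
        return np.dot(y[n - i], y[n - j])

    # Row j-1 holds equation j; column i-1 multiplies a(i).
    Phi = np.array([[phi(i, j) for i in range(1, p + 1)] for j in range(1, p + 1)])
    rhs = np.array([phi(0, j) for j in range(1, p + 1)])
    return np.linalg.solve(Phi, rhs)

# Usage: synthetic AR(2) signal y(n) = 1.3 y(n-1) - 0.4 y(n-2) + w(n), w white noise.
rng = np.random.default_rng(0)
w = rng.standard_normal(2000)
y = np.zeros_like(w)
for k in range(len(w)):
    y[k] = w[k] + (1.3 * y[k - 1] if k >= 1 else 0.0) - (0.4 * y[k - 2] if k >= 2 else 0.0)

a = lp_normal_equations(y, p=2)         # estimates of the generating values 1.3 and -0.4

# Orthogonality check over the same range of n: sum_n e(n) y(n-j) is ~0 for j = 1, 2.
n = np.arange(2, len(y))
e = y[n] - a[0] * y[n - 1] - a[1] * y[n - 2]
residual = [np.dot(e, y[n - j]) for j in (1, 2)]
```

The `residual` values are zero up to rounding because each normal equation is exactly the statement Σ_n e(n) y(n-j) = 0 over the chosen range.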

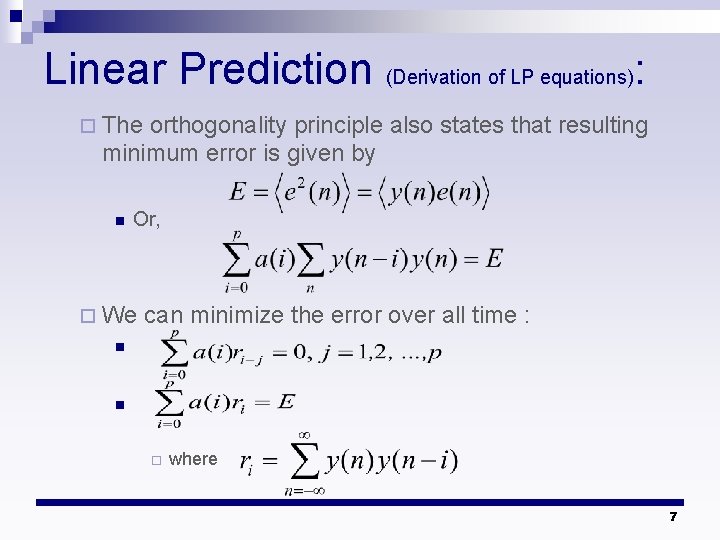

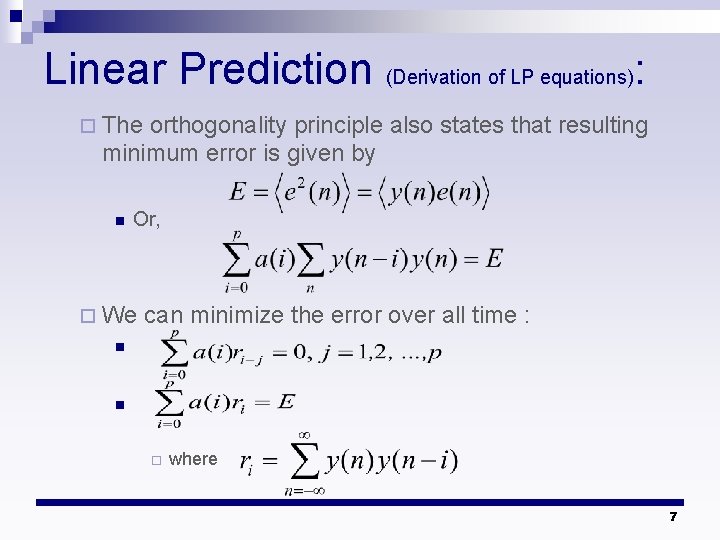

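Linear Prediction (Derivation of LP equations):
- The orthogonality principle also states that the resulting minimum error is given by
  $$E = \langle e^2(n) \rangle = \langle e(n)\,y(n) \rangle$$
- Or,
  $$E = \sum_{n} y^2(n) - \sum_{i=1}^{p} a(i) \sum_{n} y(n)\,y(n-i)$$
- We can minimize the error over all time (summing over -∞ < n < ∞), which gives
  $$\sum_{i=1}^{p} a(i)\,R(j-i) = R(j), \qquad j = 1, \ldots, p$$
  $$E = R(0) - \sum_{i=1}^{p} a(i)\,R(i)$$
  - where R(j) is the autocorrelation of y(n), an even function of j:
  $$R(j) = \sum_{n=-\infty}^{\infty} y(n)\,y(n-j)$$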
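Minimizing over all time with y(n) taken as zero outside the N available samples (often called the autocorrelation method) turns the equations into a Toeplitz system in R(|j - i|). A NumPy sketch under that assumption, with hypothetical names `autocorr` and `lp_autocorrelation`; the usage lines check numerically that E = R(0) - Σ a(i) R(i) equals the total squared error Σ e²(n) over all time.

```python
import numpy as np

def autocorr(y, max_lag):
    """R(j) = sum_n y(n) y(n - j) for j = 0..max_lag, with y = 0 outside the data."""
    y = np.asarray(y, dtype=float)
    return np.array([np.dot(y[j:], y[: len(y) - j]) for j in range(max_lag + 1)])

def lp_autocorrelation(y, p):
    """Solve sum_i a(i) R(|j - i|) = R(j), j = 1..p; return (a, E)."""
    R = autocorr(y, p)
    T = np.array([[R[abs(j - i)] for i in range(p)] for j in range(p)])  # Toeplitz
    a = np.linalg.solve(T, R[1 : p + 1])
    E = R[0] - np.dot(a, R[1 : p + 1])   # minimum error
    return a, E

# Usage on a random test signal (illustrative data only).
rng = np.random.default_rng(1)
y = rng.standard_normal(500)
a, E = lp_autocorrelation(y, p=4)
e = np.convolve(y, np.concatenate(([1.0], -a)))  # full convolution: e(n) over all time
total_sq_error = np.dot(e, e)                     # equals E up to rounding
```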

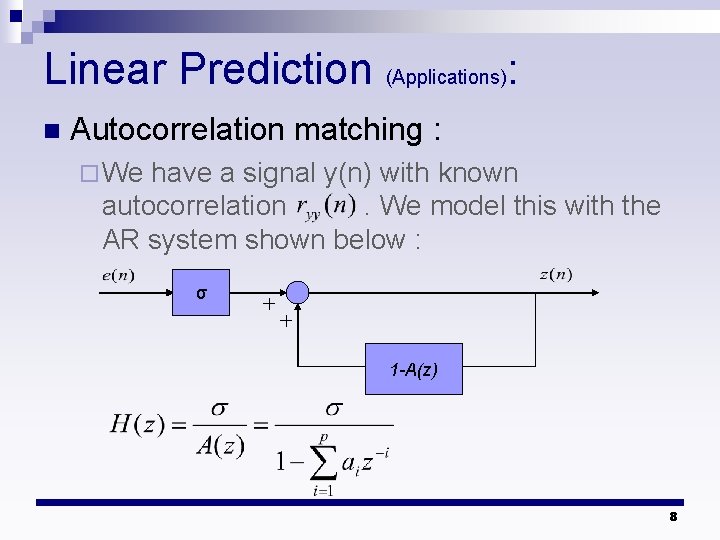

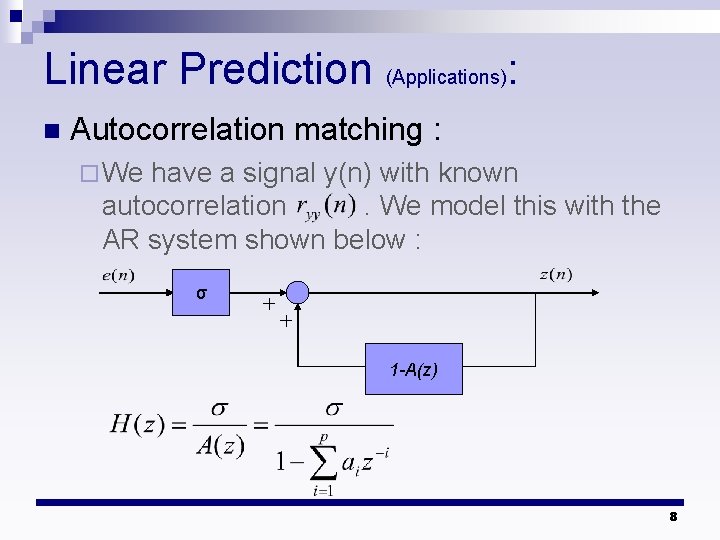

Linear Prediction (Applications):
- Autocorrelation matching:
  - We have a signal y(n) with known autocorrelation. We model this with the AR system shown below, whose transfer function is
  $$H(z) = \frac{\sigma}{1 - A(z)}, \qquad A(z) = \sum_{i=1}^{p} a(i)\,z^{-i}$$
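The name "autocorrelation matching" refers to a property of this fit: if the gain is chosen as σ² = E (the minimum error from the derivation), the impulse response h(n) of σ/(1 - A(z)) has the same autocorrelation as y(n) at lags 0, …, p. The NumPy sketch below checks this numerically. The gain choice σ² = E, the synthetic AR(2) signal, and the helper names are assumptions for illustration; h(n) is truncated to a finite length, so agreement is only up to a negligible truncation error.

```python
import numpy as np

def autocorr(y, max_lag):
    """R(j) = sum_n y(n) y(n - j), j = 0..max_lag, with y = 0 outside the data."""
    y = np.asarray(y, dtype=float)
    return np.array([np.dot(y[j:], y[: len(y) - j]) for j in range(max_lag + 1)])

def fit_all_pole(R, p):
    """Solve sum_i a(i) R(|j - i|) = R(j), j = 1..p; return (a, sigma) with sigma^2 = E."""
    T = np.array([[R[abs(j - i)] for i in range(p)] for j in range(p)])
    a = np.linalg.solve(T, R[1 : p + 1])
    E = R[0] - np.dot(a, R[1 : p + 1])       # minimum prediction error
    return a, np.sqrt(E)

def impulse_response(a, sigma, n_samples):
    """h(n) = sigma * delta(n) + sum_i a(i) h(n - i)."""
    h = np.zeros(n_samples)
    for n in range(n_samples):
        past = sum(a[i - 1] * h[n - i] for i in range(1, len(a) + 1) if n - i >= 0)
        h[n] = (sigma if n == 0 else 0.0) + past
    return h

# Usage: fit an order-4 model to a synthetic AR(2) signal and compare autocorrelations.
rng = np.random.default_rng(2)
w = rng.standard_normal(1000)
y = np.zeros_like(w)
for k in range(len(w)):
    y[k] = w[k] + (1.3 * y[k - 1] if k >= 1 else 0.0) - (0.4 * y[k - 2] if k >= 2 else 0.0)

p = 4
R = autocorr(y, p)
a, sigma = fit_all_pole(R, p)
h = impulse_response(a, sigma, 3000)   # truncated; the fitted model is stable, so h(n) decays
R_model = autocorr(h, p)               # agrees with R[0..p] up to truncation and rounding
```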

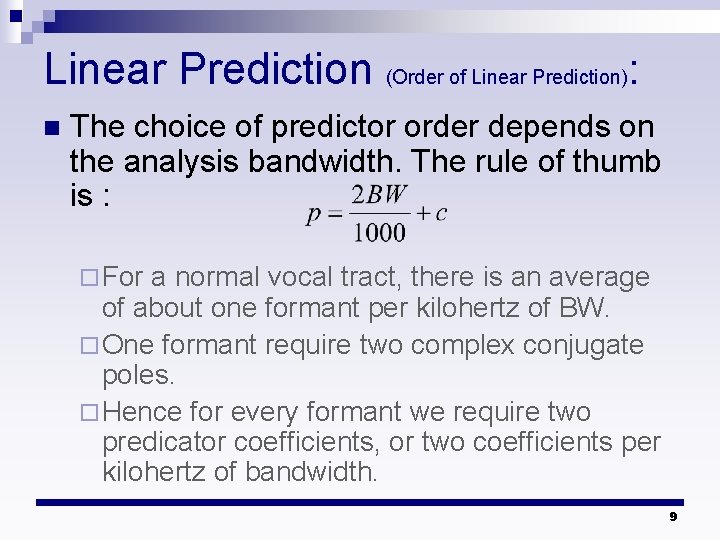

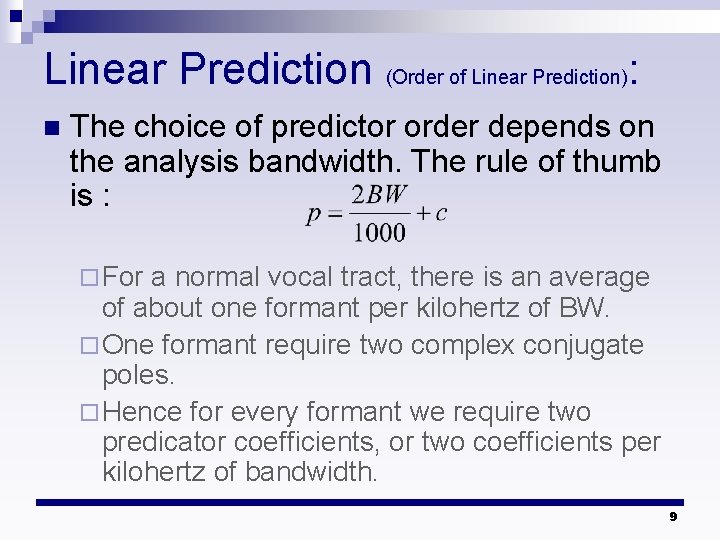

Linear Prediction (Order of Linear Prediction):
- The choice of predictor order depends on the analysis bandwidth. The rule of thumb is:
  - For a normal vocal tract, there is an average of about one formant per kilohertz of bandwidth.
  - One formant requires two complex-conjugate poles.
  - Hence for every formant we require two predictor coefficients, or two coefficients per kilohertz of bandwidth.
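Applied to a sampled signal, and assuming the analysis bandwidth is the full Nyquist band fs/2, the rule of thumb gives the order directly. A small Python sketch (the function name `lp_order` is hypothetical): at fs = 8 kHz the bandwidth is 4 kHz, about 4 formants, hence p = 8.

```python
def lp_order(sample_rate_hz):
    """Predictor order from the rule of thumb: about one formant per kHz of
    bandwidth and two coefficients (one complex-conjugate pole pair) per formant.
    Assumes the analysis bandwidth is the Nyquist band, sample_rate_hz / 2."""
    bandwidth_khz = sample_rate_hz / 2 / 1000
    n_formants = round(bandwidth_khz)   # ~1 formant per kHz
    return 2 * n_formants               # 2 poles (coefficients) per formant

for fs in (8000, 10000, 16000):
    print(fs, lp_order(fs))             # prints 8000 8, then 10000 10, then 16000 16
```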

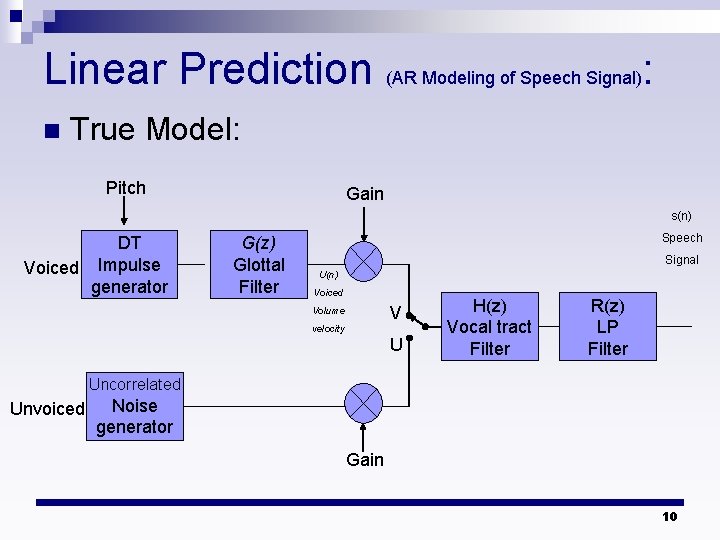

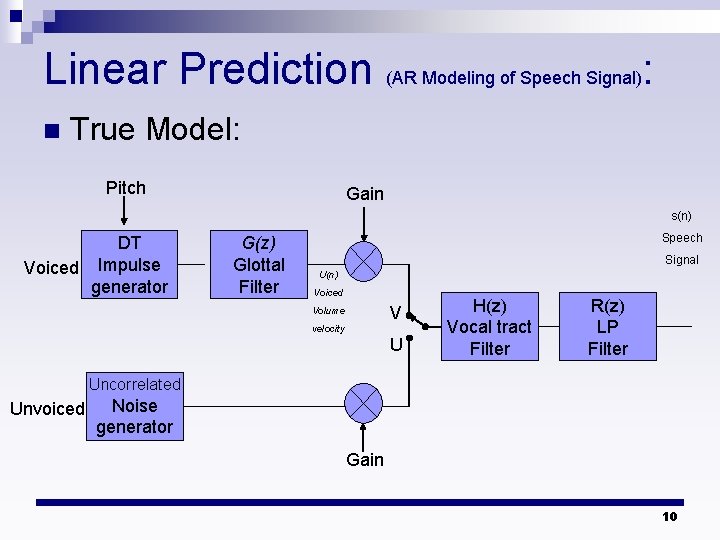

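Linear Prediction (AR Modeling of Speech Signal):
- True model (block diagram): excitation from either a DT impulse generator (voiced, set by the pitch) or an uncorrelated noise generator (unvoiced), chosen by a V/U switch and scaled by a gain; glottal filter G(z); volume velocity U(n); vocal tract filter H(z); filter R(z) (labelled "LP Filter" in the figure); output speech signal s(n).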

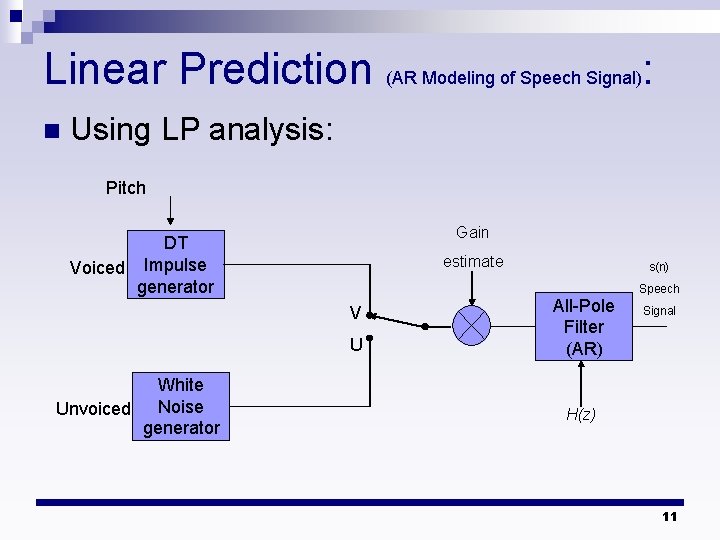

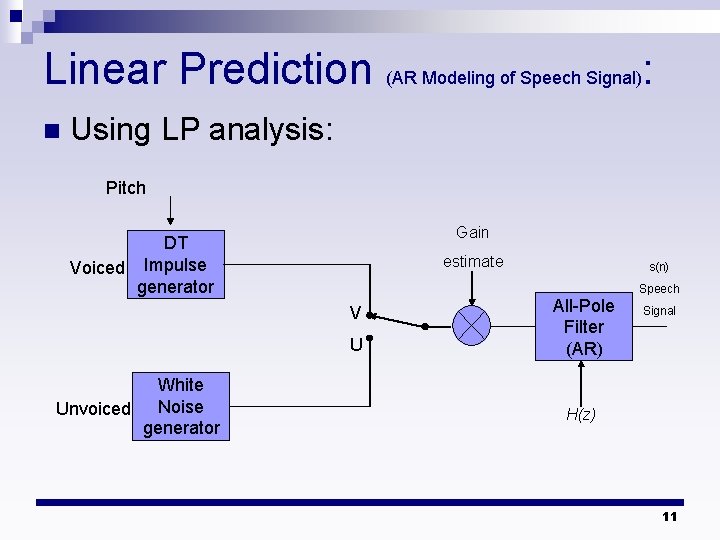

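Linear Prediction (AR Modeling of Speech Signal):
- Using LP analysis (block diagram): the excitation is either a DT impulse generator (voiced, driven by the pitch estimate) or a white noise generator (unvoiced), chosen by a V/U switch and scaled by a gain; it drives a single all-pole (AR) filter H(z), whose output is the speech signal s(n).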
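The LP synthesis model can be sketched in a few lines of NumPy: a unit impulse train (voiced) or white Gaussian noise (unvoiced), scaled by a gain g, drives the all-pole filter s(n) = g·u(n) + Σ a(i) s(n-i). The function `synthesize`, its parameter names, and the choice of Gaussian noise are illustrative assumptions; `pitch_period` is measured in samples.

```python
import numpy as np

def synthesize(a, gain, n_samples, voiced, pitch_period=None, seed=0):
    """s(n) = gain * u(n) + sum_i a(i) s(n - i), where u(n) is a unit impulse
    train with period `pitch_period` samples (voiced) or white Gaussian noise (unvoiced)."""
    if voiced:
        if pitch_period is None or pitch_period < 1:
            raise ValueError("voiced excitation needs a positive pitch_period (in samples)")
        u = np.zeros(n_samples)
        u[::pitch_period] = 1.0                                      # DT impulse generator
    else:
        u = np.random.default_rng(seed).standard_normal(n_samples)  # white noise generator
    s = np.zeros(n_samples)
    for n in range(n_samples):
        past = sum(a[i - 1] * s[n - i] for i in range(1, len(a) + 1) if n - i >= 0)
        s[n] = gain * u[n] + past                                    # all-pole (AR) filter H(z)
    return s

# Usage: one resonance (conjugate pole pair at radius 0.9, angle pi/4).
a = [2 * 0.9 * np.cos(np.pi / 4), -0.81]
voiced_frame = synthesize(a, gain=1.0, n_samples=400, voiced=True, pitch_period=80)
unvoiced_frame = synthesize(a, gain=0.1, n_samples=400, voiced=False)
```

At an 8 kHz sampling rate, a `pitch_period` of 80 samples corresponds to a 100 Hz pitch.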