Linear Model

- Slides: 50

Linear Model, Ying Shen, SSE, Tongji University, Sep. 2016

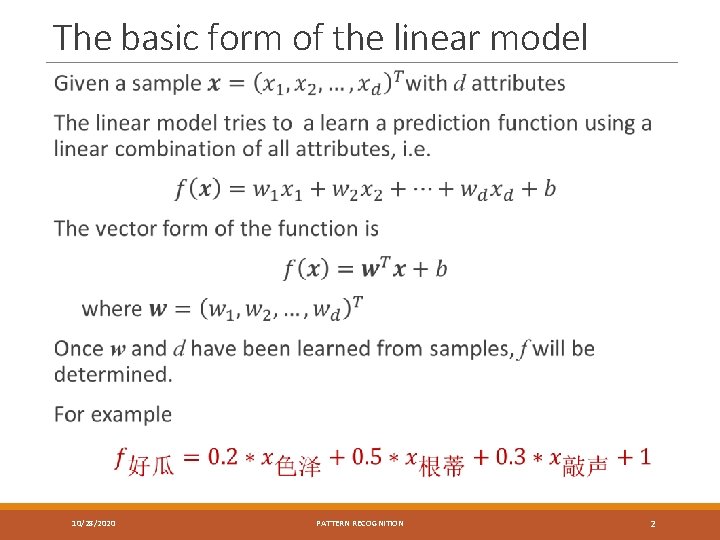

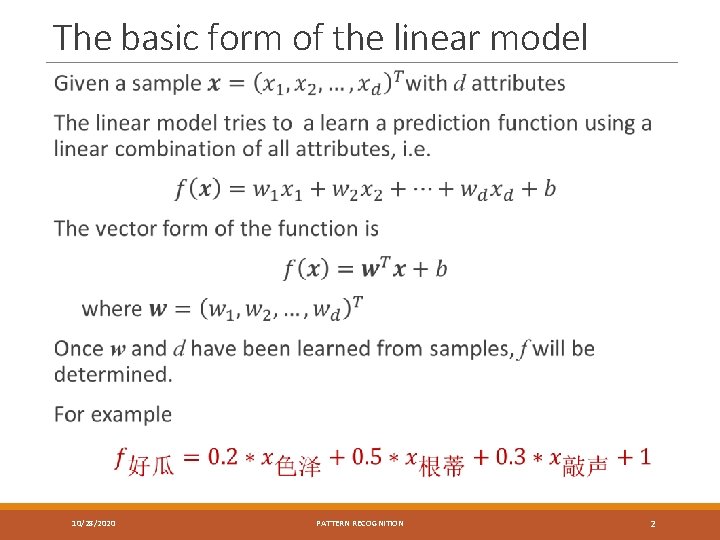

The basic form of the linear model 10/28/2020 PATTERN RECOGNITION 2

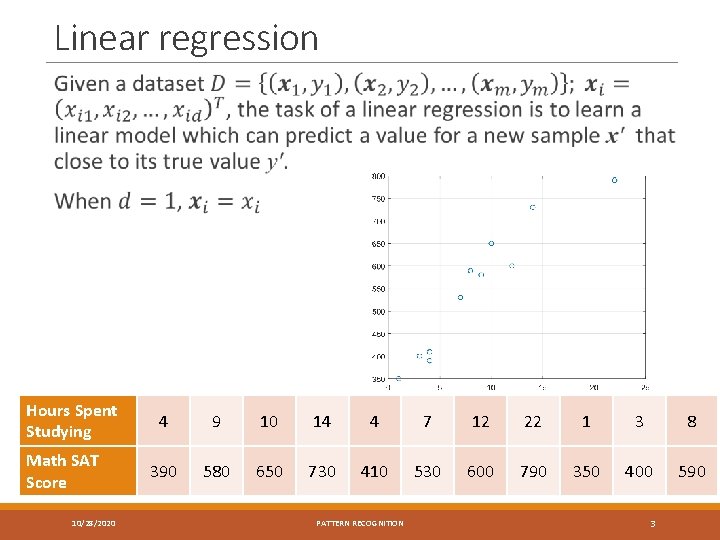

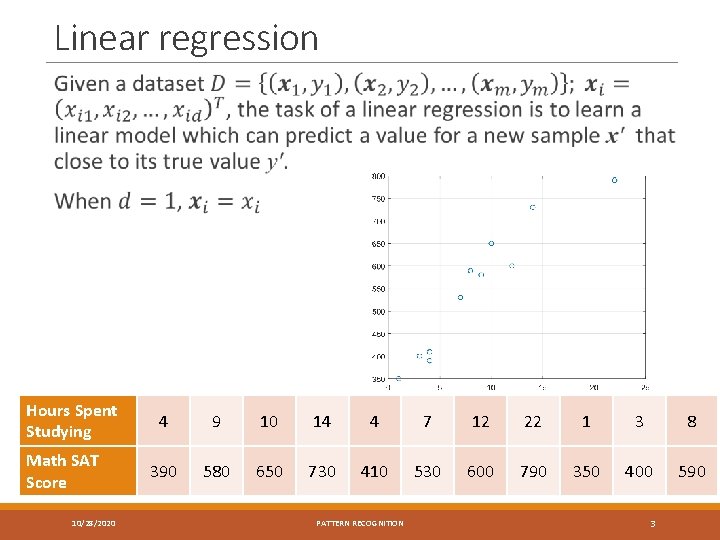

Linear regression

| Hours Spent Studying | Math SAT Score |
| --- | --- |
| 4 | 390 |
| 9 | 580 |
| 10 | 650 |
| 14 | 730 |
| 4 | 410 |
| 7 | 530 |
| 12 | 600 |
| 22 | 790 |
| 1 | 350 |
| 3 | 400 |
| 8 | 590 |

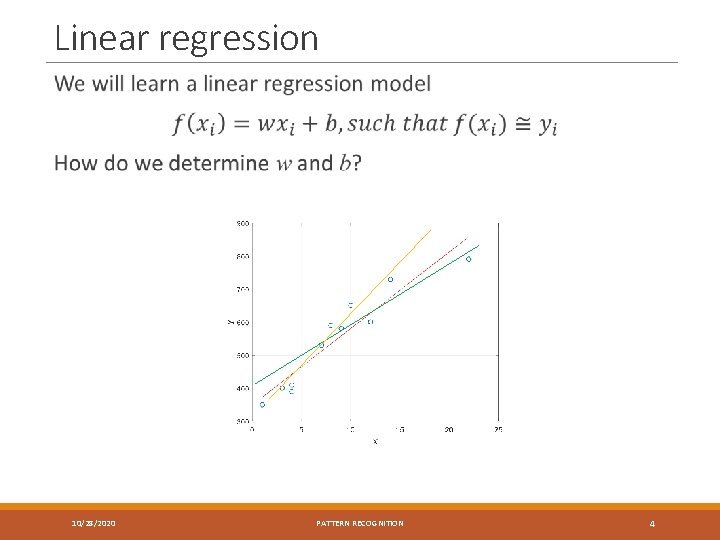

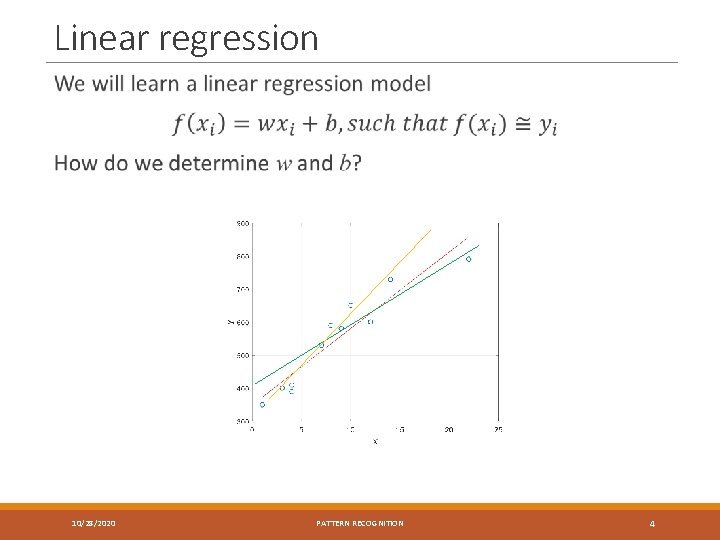

Linear regression

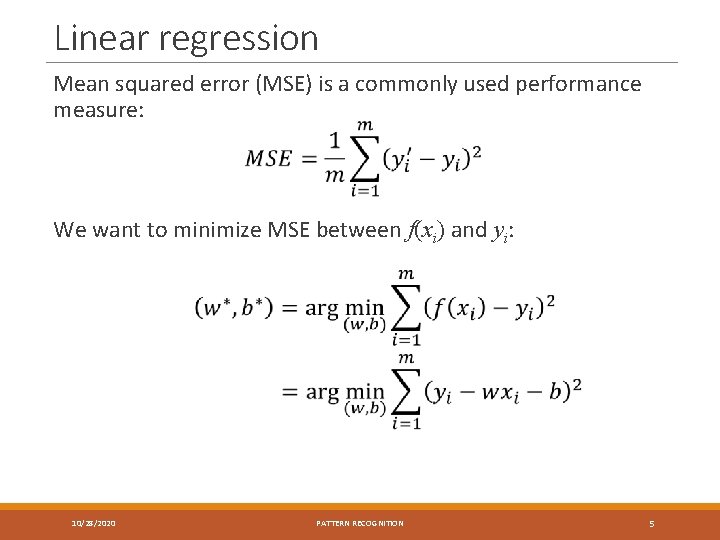

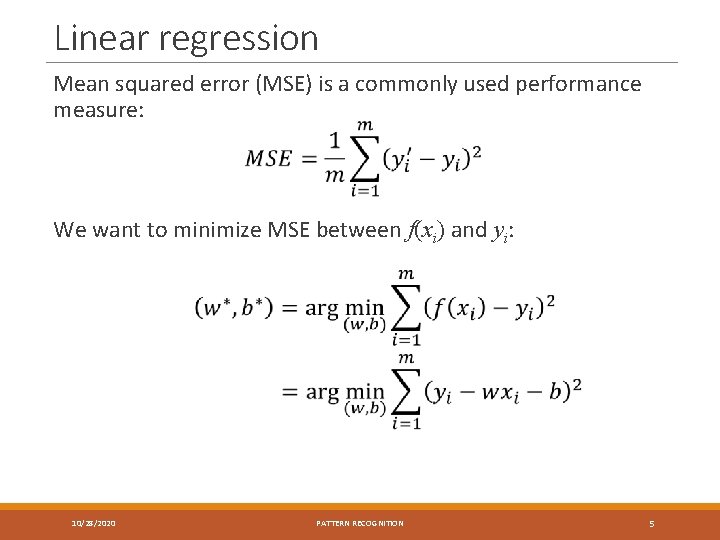

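Linear regression

Mean squared error (MSE) is a commonly used performance measure. For $m$ samples:

$$E = \frac{1}{m}\sum_{i=1}^{m}\big(f(x_i) - y_i\big)^2$$

We want to minimize the MSE between $f(x_i)$ and $y_i$:

$$(w^*, b^*) = \arg\min_{(w,b)} \sum_{i=1}^{m}\big(f(x_i) - y_i\big)^2$$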

Linear regression

Determining the fitting model by minimizing the MSE is called the least squares method. In a linear regression problem, the least squares method finds the line for which the sum of squared distances between the samples and the line, measured along the $y$ axis, is smallest.

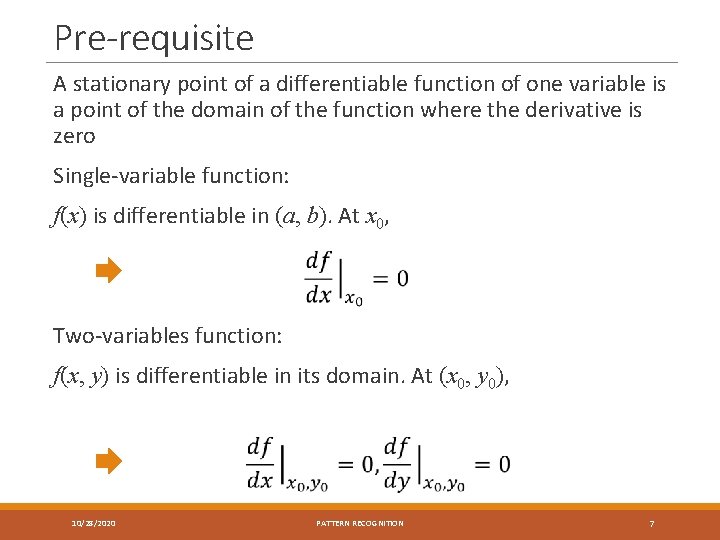

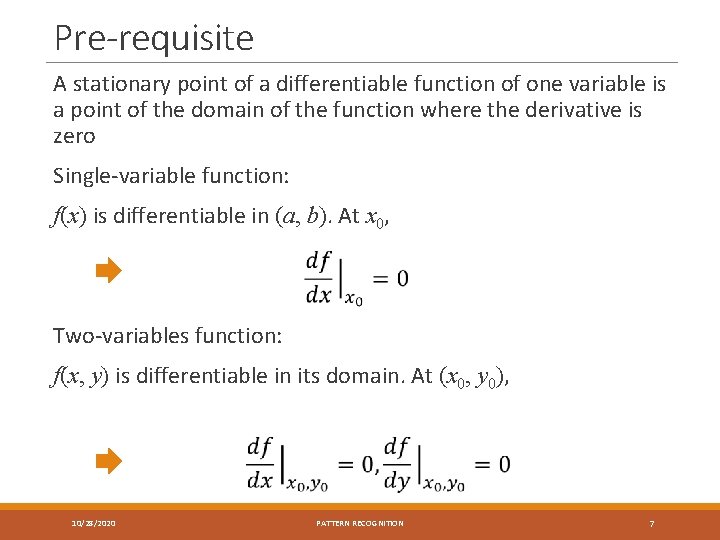
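The least squares fit can be tried on the hours and SAT scores listed on the earlier "Linear regression" slide. The following is a minimal sketch, assuming the i-th hour value pairs with the i-th score and using the standard closed-form solution for a single input variable; the function names `fit_line` and `mse` are illustrative.

```python
# Hours studied and Math SAT scores from the "Linear regression" slide.
# Pairing the i-th hour with the i-th score is an assumption about the slide layout.
hours = [4, 9, 10, 14, 4, 7, 12, 22, 1, 3, 8]
scores = [390, 580, 650, 730, 410, 530, 600, 790, 350, 400, 590]


def fit_line(xs, ys):
    """Closed-form least squares fit of f(x) = w * x + b."""
    m = len(xs)
    x_mean = sum(xs) / m
    # w = sum(y_i * (x_i - x_mean)) / (sum(x_i^2) - (sum(x_i))^2 / m)
    numerator = sum(y * (x - x_mean) for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) - sum(xs) ** 2 / m
    w = numerator / denominator
    # b = mean of (y_i - w * x_i)
    b = sum(y - w * x for x, y in zip(xs, ys)) / m
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error between predictions f(x_i) and targets y_i."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


w, b = fit_line(hours, scores)
print(f"f(x) = {w:.2f} * x + {b:.2f}, MSE = {mse(hours, scores, w, b):.1f}")
```

Moving `w` or `b` away from the fitted values should only increase the value returned by `mse`, which is a quick way to sanity-check the fit.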

Pre-requisite

A stationary point of a differentiable function of one variable is a point in the domain of the function where the derivative is zero.

- Single-variable function: $f(x)$ is differentiable in $(a, b)$. At a stationary point $x_0$, $f'(x_0) = 0$.
- Two-variable function: $f(x, y)$ is differentiable in its domain. At a stationary point $(x_0, y_0)$, $\partial f/\partial x = 0$ and $\partial f/\partial y = 0$.

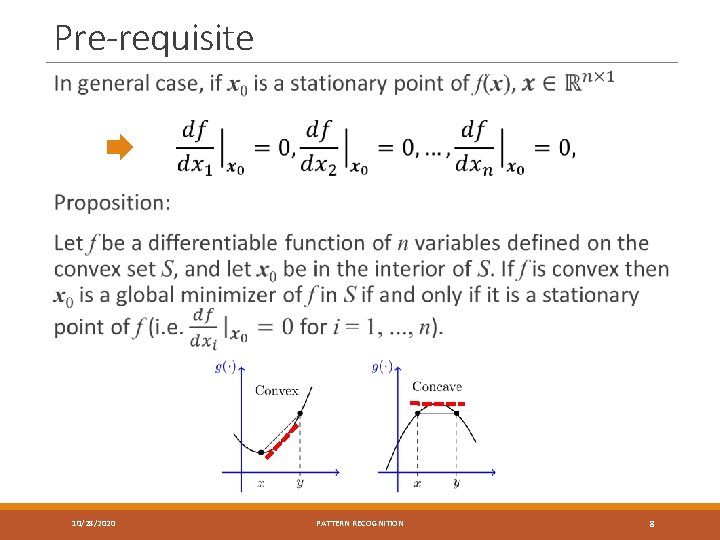

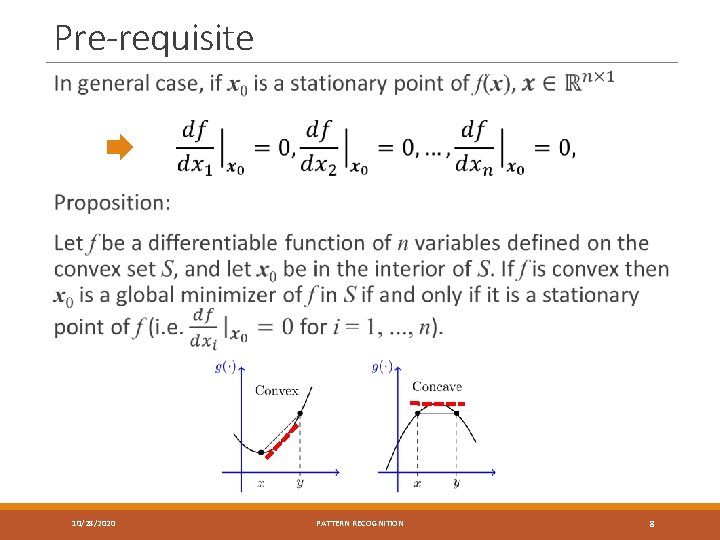
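As an illustration of the two-variable condition, it can be applied to the squared error of a line $f(x) = wx + b$. This is a sketch of the standard derivation; the symbols $E$, $m$ and $\bar{x}$ are notation assumed here rather than taken from the slides.

$$E(w, b) = \sum_{i=1}^{m} (y_i - w x_i - b)^2$$

$$\frac{\partial E}{\partial w} = 2\Big(w \sum_{i=1}^{m} x_i^2 - \sum_{i=1}^{m} (y_i - b)\,x_i\Big) = 0, \qquad \frac{\partial E}{\partial b} = 2\Big(m b - \sum_{i=1}^{m} (y_i - w x_i)\Big) = 0$$

Solving the two equations together, with $\bar{x} = \frac{1}{m}\sum_{i=1}^{m} x_i$, gives

$$w = \frac{\sum_{i=1}^{m} y_i (x_i - \bar{x})}{\sum_{i=1}^{m} x_i^2 - \frac{1}{m}\big(\sum_{i=1}^{m} x_i\big)^2}, \qquad b = \frac{1}{m}\sum_{i=1}^{m} (y_i - w x_i)$$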

Pre-requisite

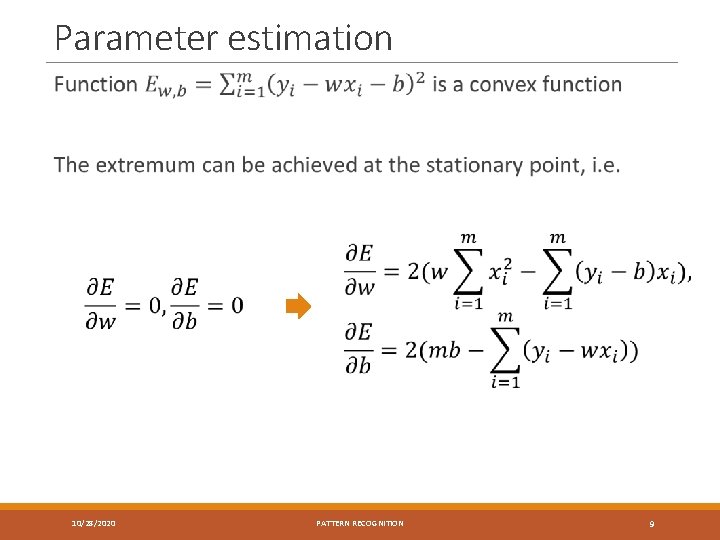

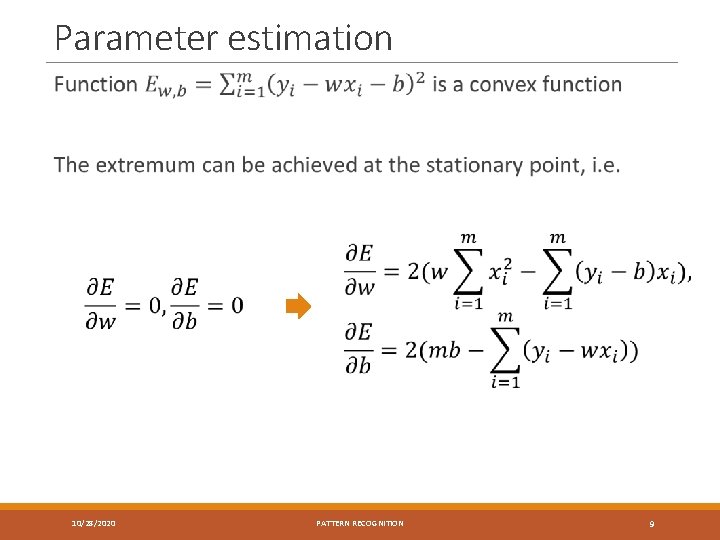

Parameter estimation

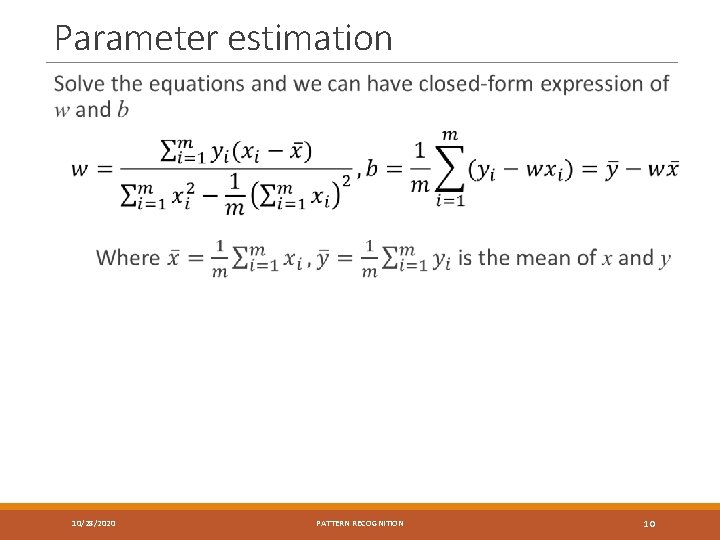

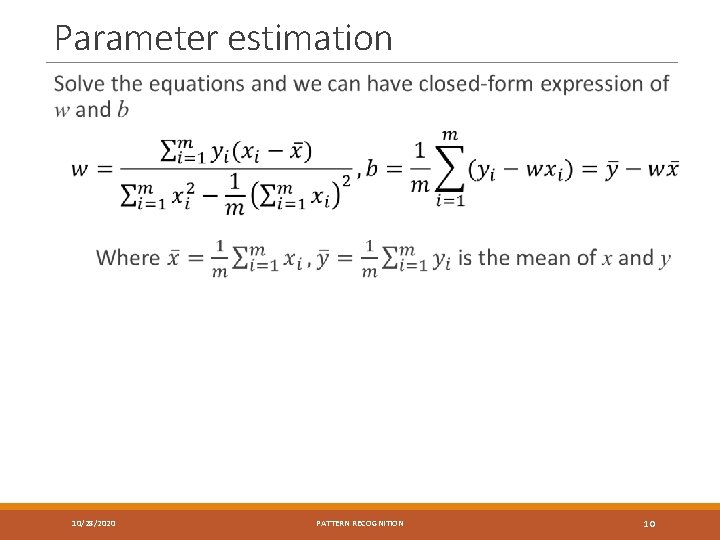

Parameter estimation

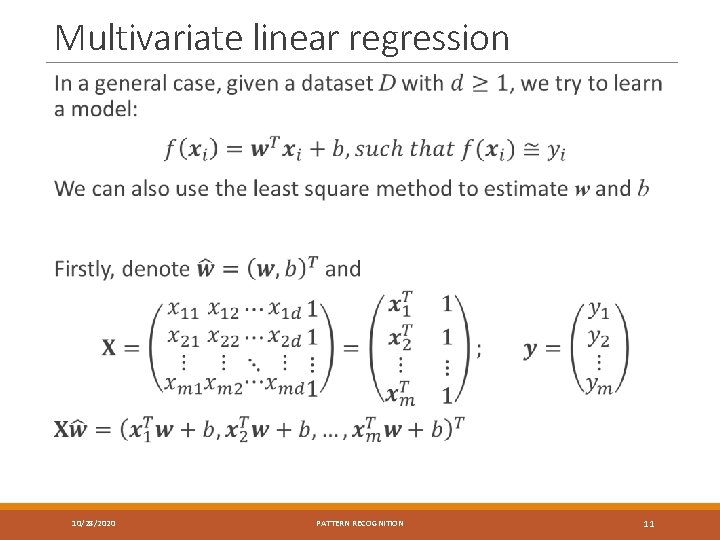

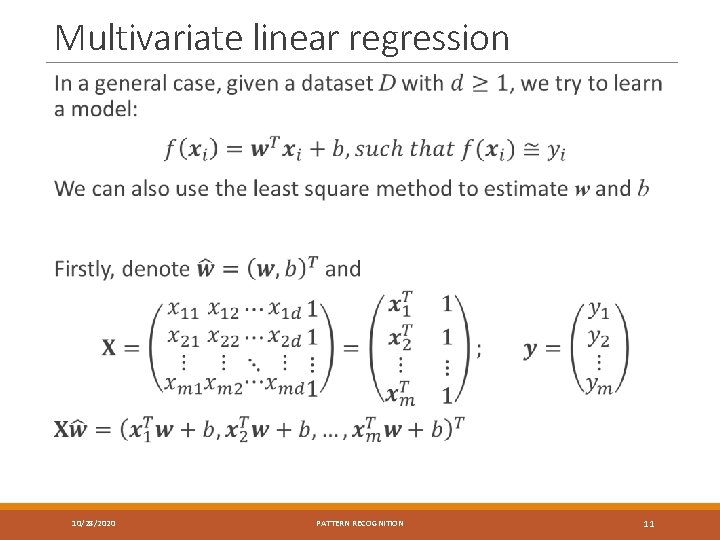

Multivariate linear regression

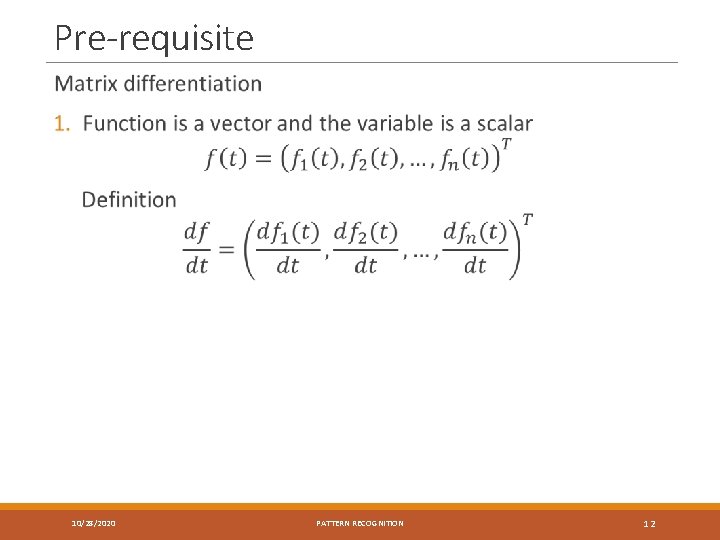

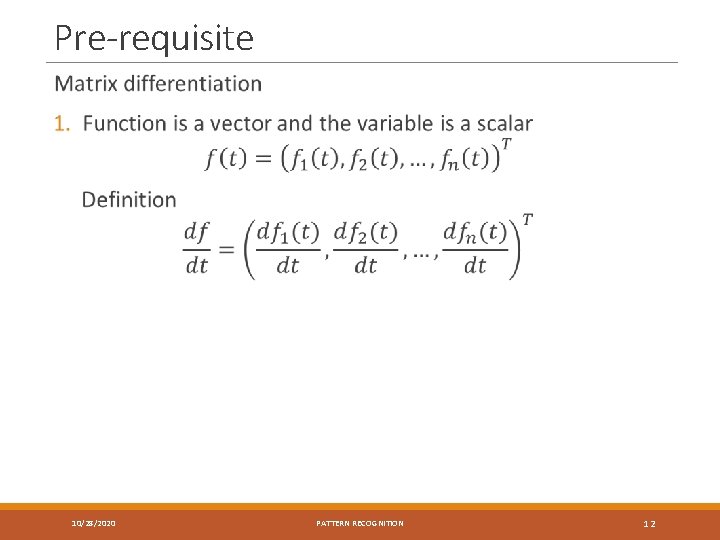

Pre-requisite

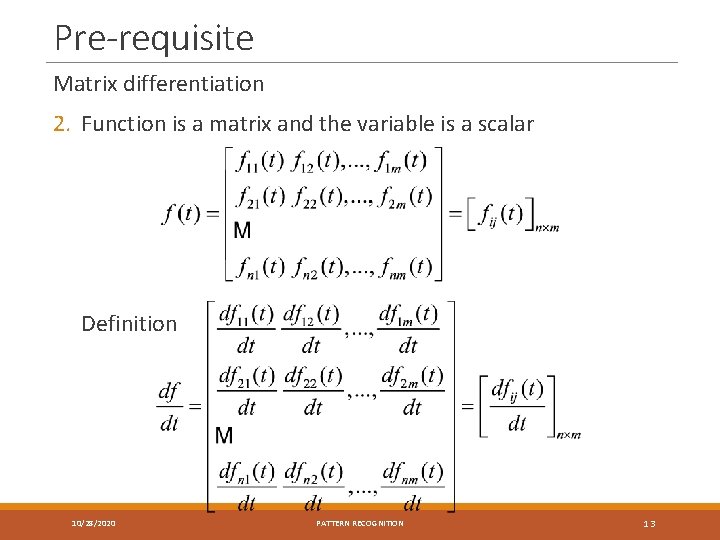

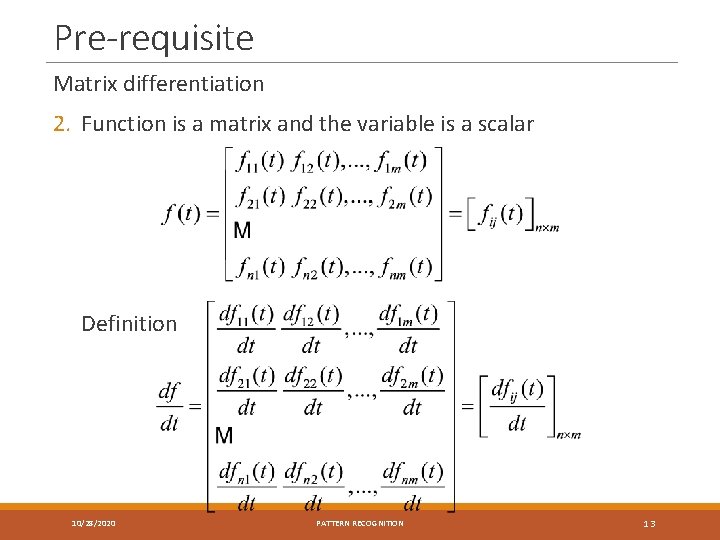

Pre-requisite: Matrix differentiation

2\. Function is a matrix and the variable is a scalar. Definition:

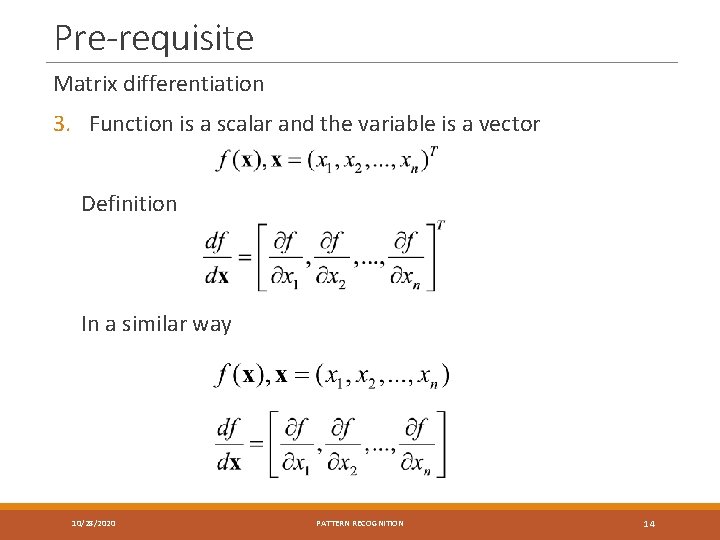

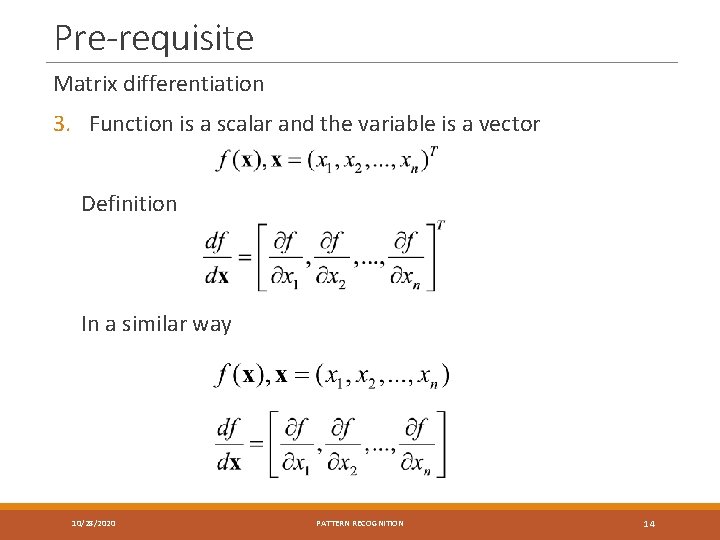

Pre-requisite: Matrix differentiation

3\. Function is a scalar and the variable is a vector. Definition (and, in a similar way):

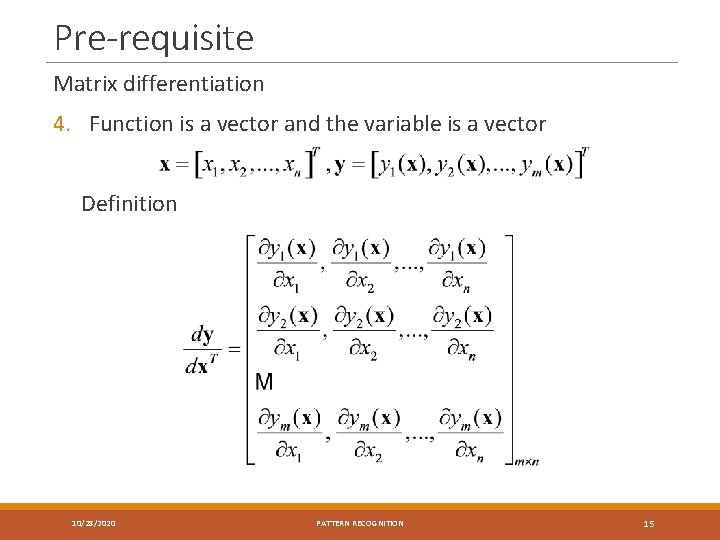

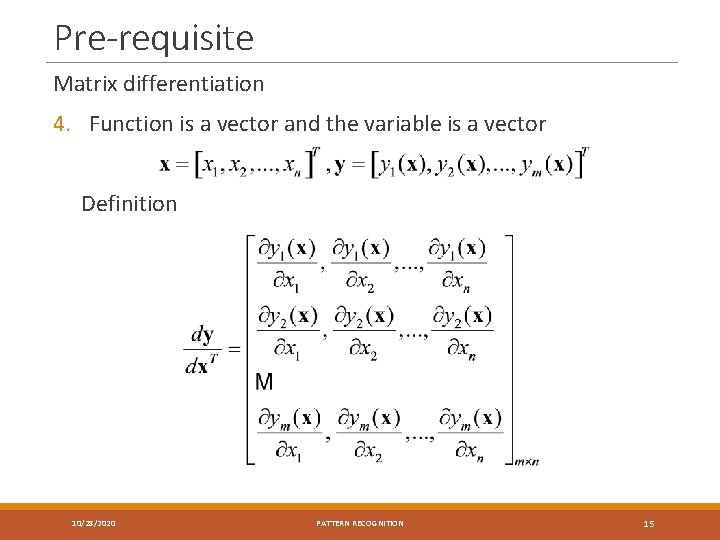

Pre-requisite: Matrix differentiation

4\. Function is a vector and the variable is a vector. Definition:

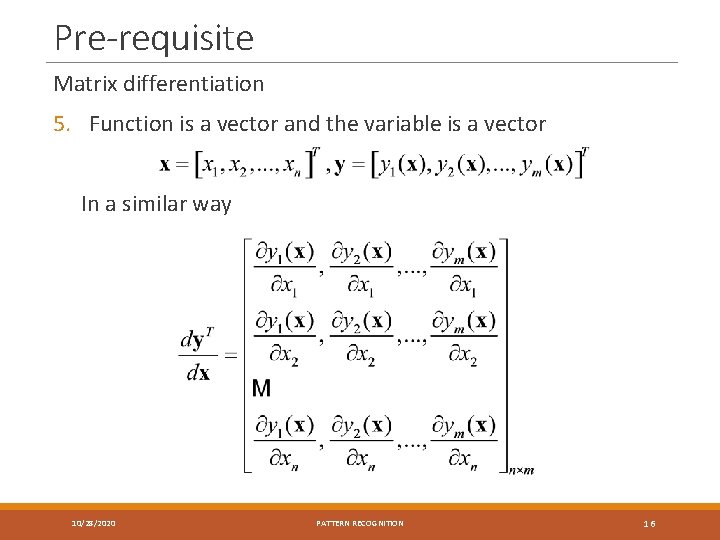

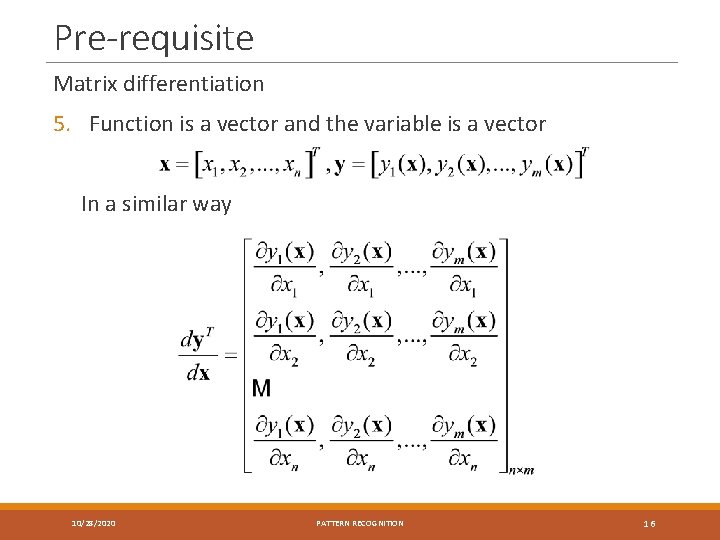

Pre-requisite: Matrix differentiation

5\. Function is a vector and the variable is a vector. In a similar way:

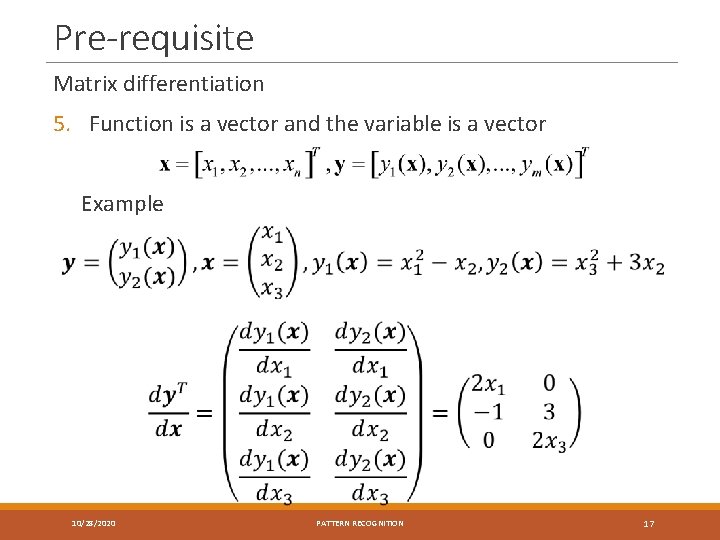

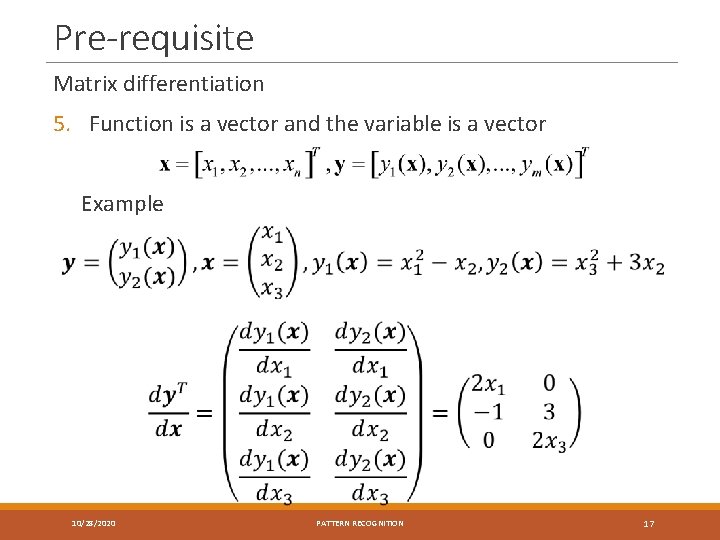

Pre-requisite: Matrix differentiation

5\. Function is a vector and the variable is a vector. Example:

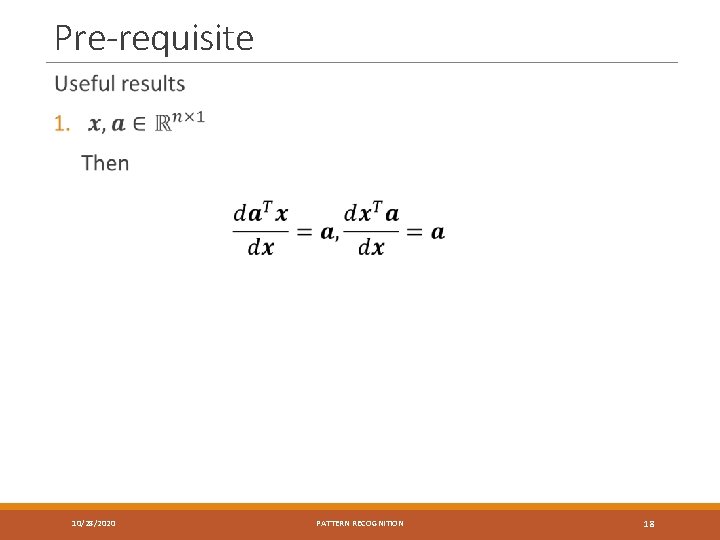

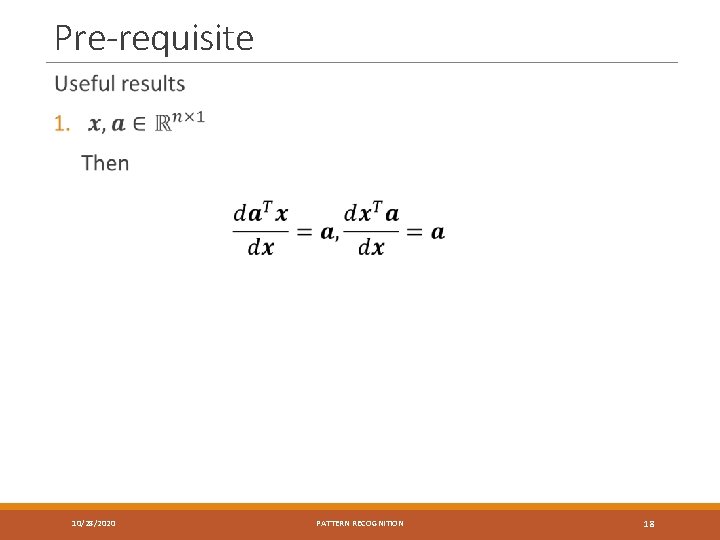

Pre-requisite

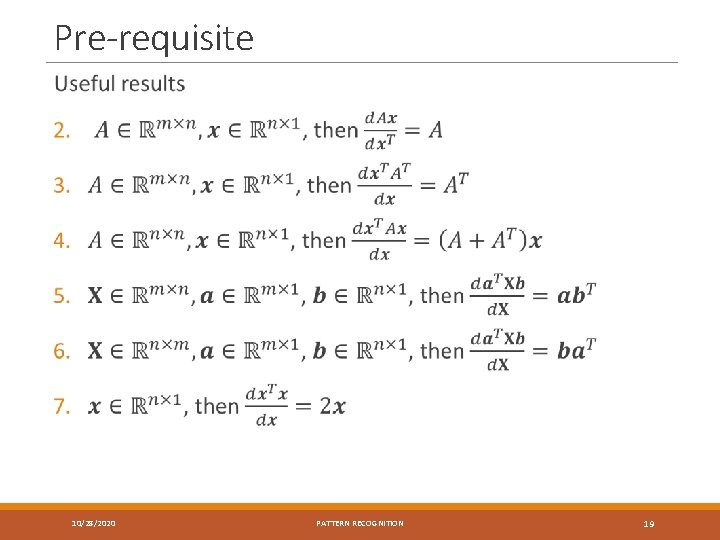

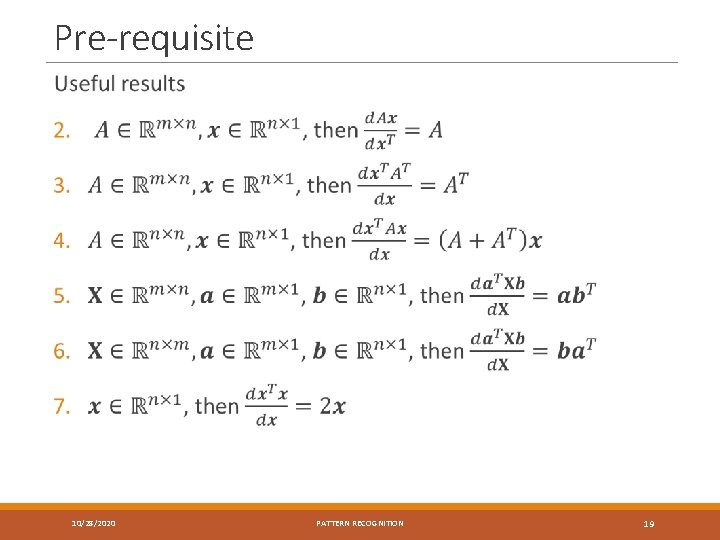

Pre-requisite

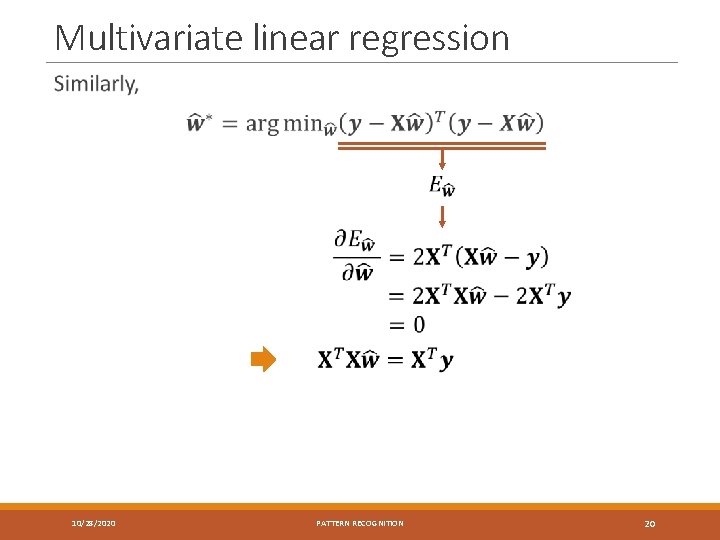

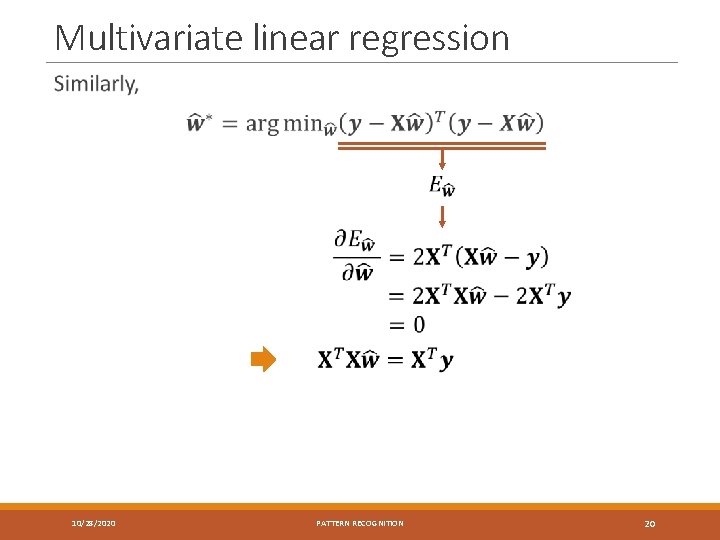

Multivariate linear regression

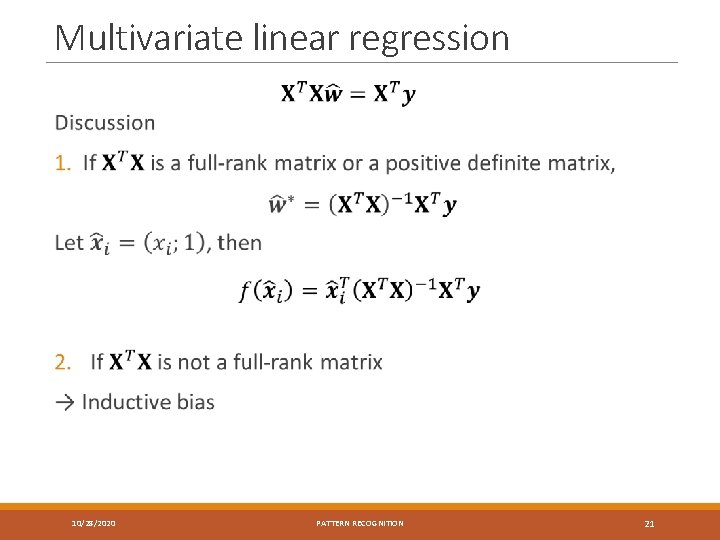

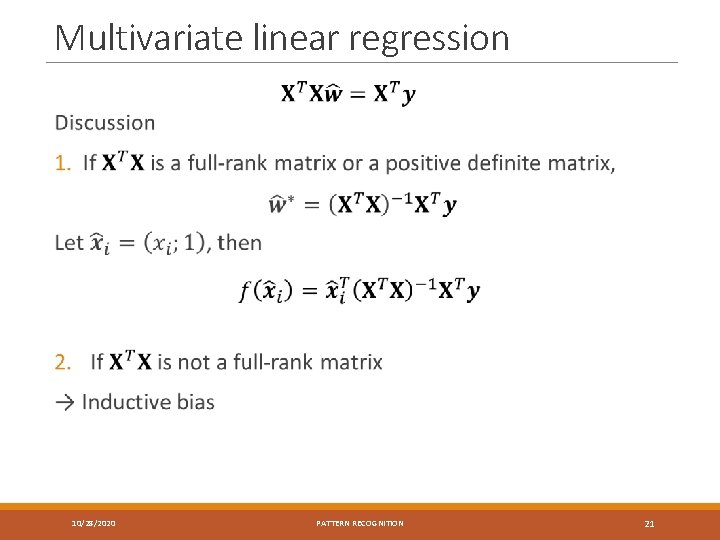

Multivariate linear regression

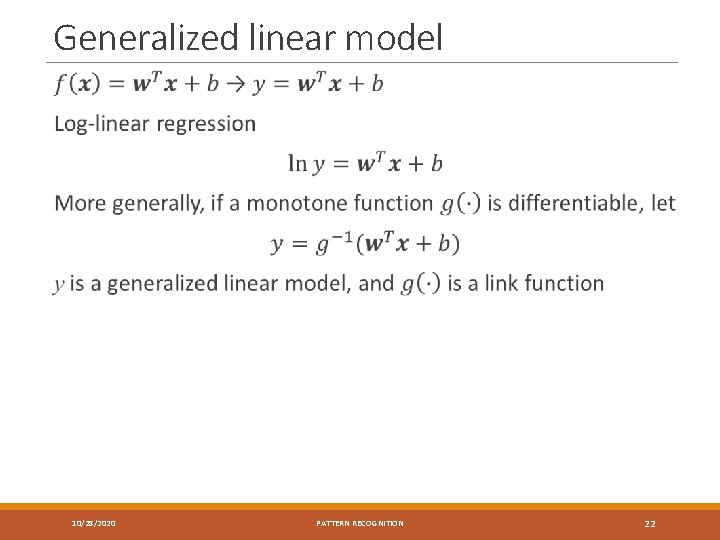

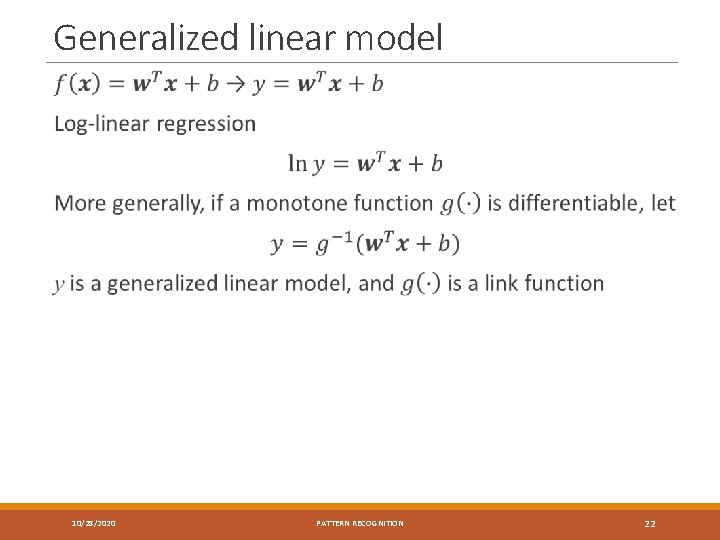

Generalized linear model

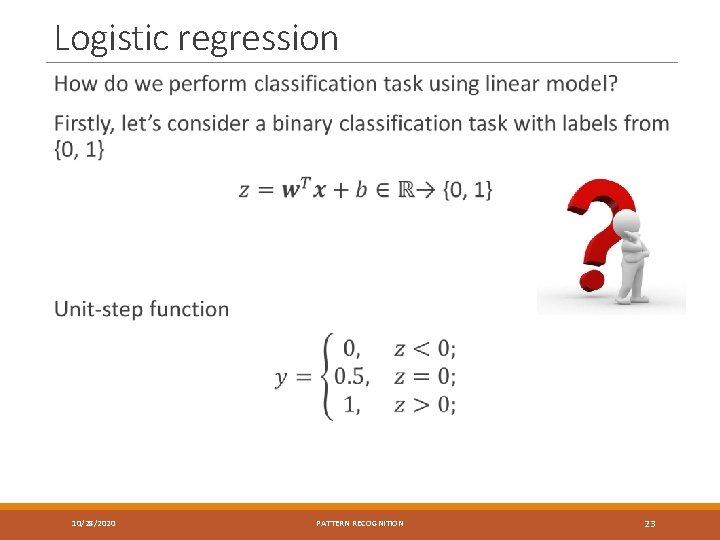

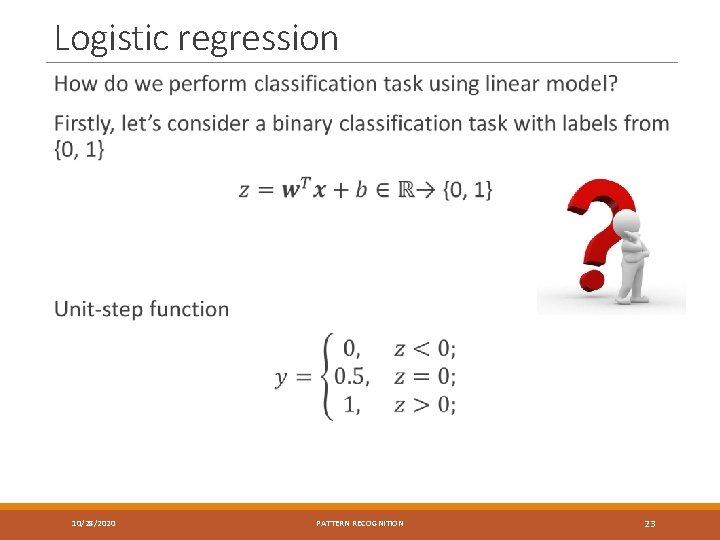

Logistic regression

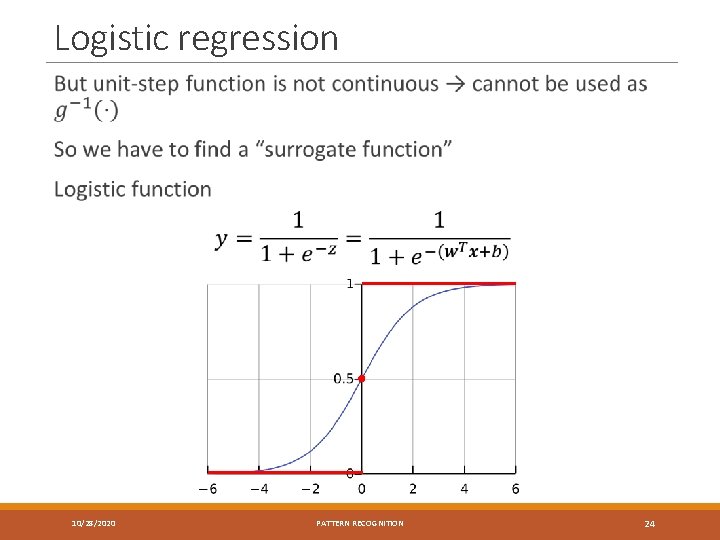

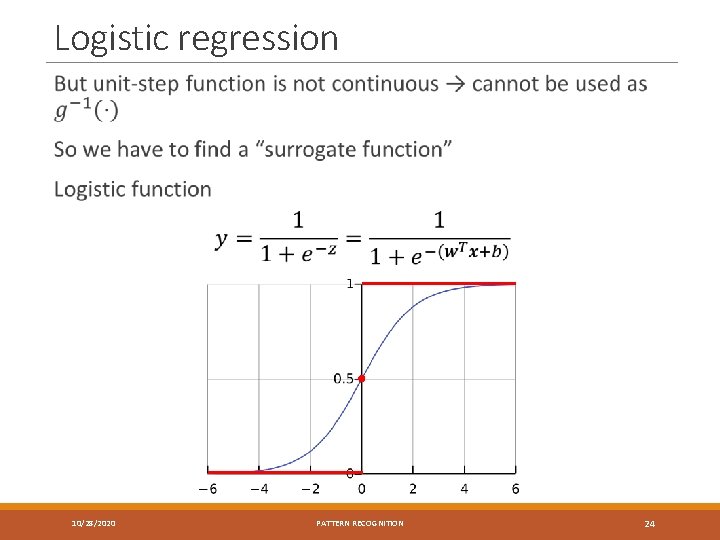

Logistic regression

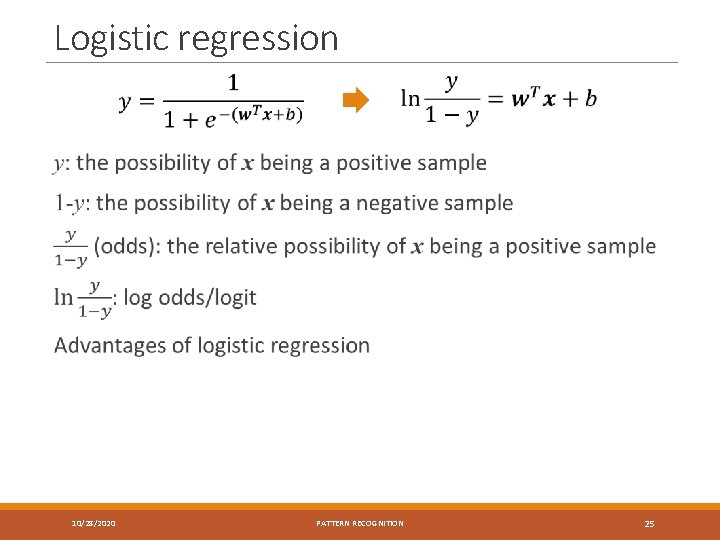

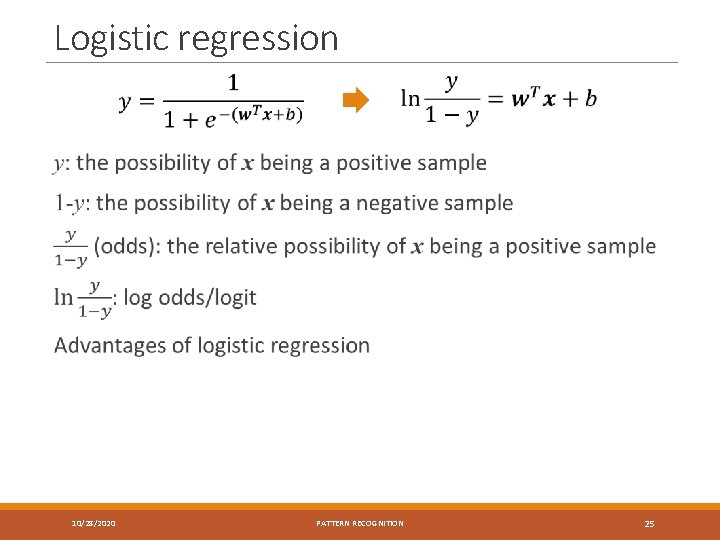

Logistic regression

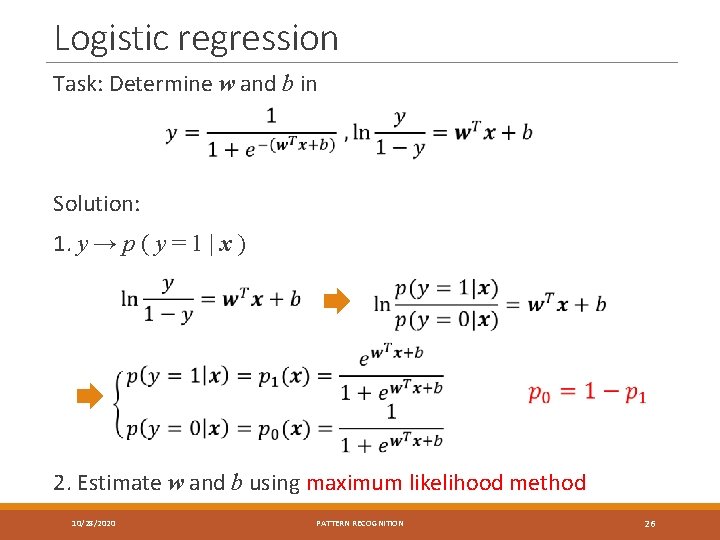

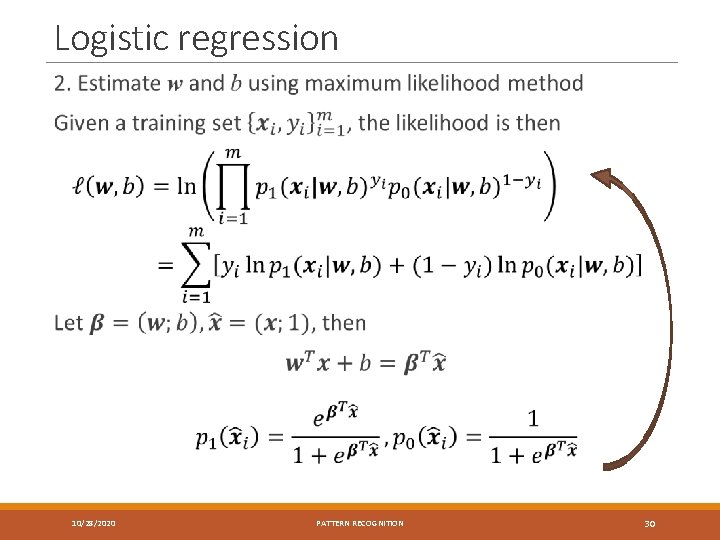

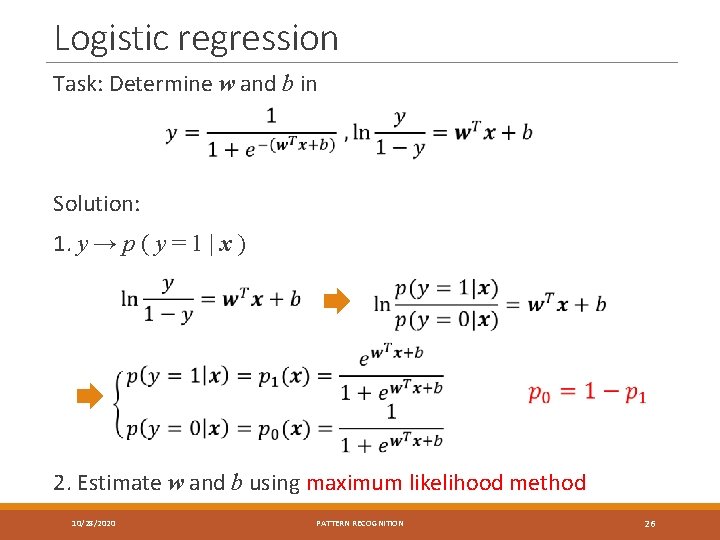

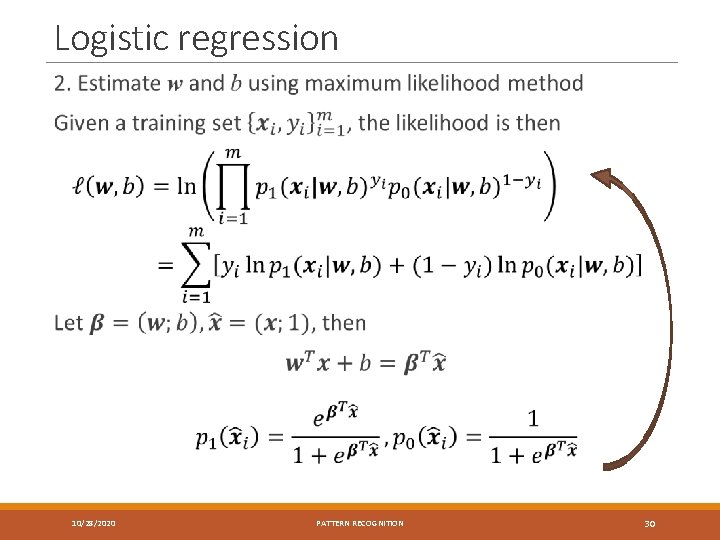

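Logistic regression

Task: Determine $\mathbf{w}$ and $b$ in the logistic model $y = \dfrac{1}{1 + e^{-(\mathbf{w}^T\mathbf{x} + b)}}$.

Solution:

1. Interpret $y$ as a posterior probability: $y \rightarrow p(y = 1 \mid \mathbf{x})$.
2. Estimate $\mathbf{w}$ and $b$ using the maximum likelihood method.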

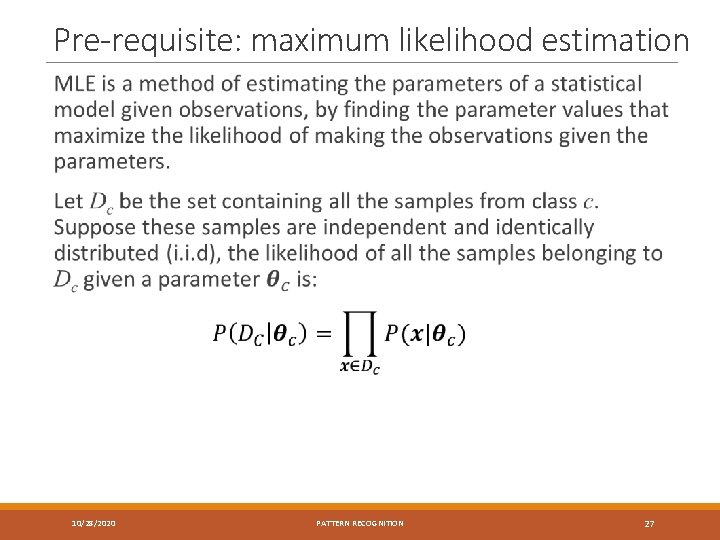

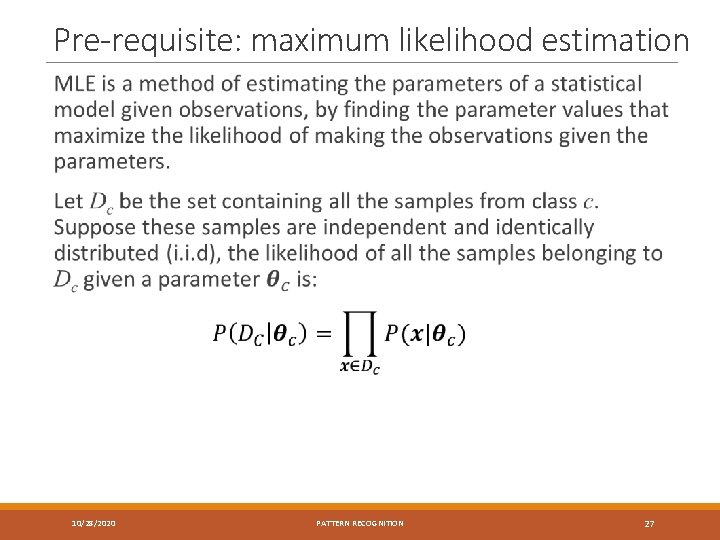
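The quantity maximized in step 2 can be written out directly. The following is a minimal sketch, assuming labels in {0, 1} and the logistic form of $p(y = 1 \mid \mathbf{x})$; the function names and the toy data are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_likelihood(w, b, xs, ys):
    """Sum of log p(y_i | x_i; w, b) for labels y_i in {0, 1}."""
    total = 0.0
    for x, y in zip(xs, ys):
        # p(y = 1 | x) under the logistic model
        p1 = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        total += math.log(p1 if y == 1 else 1.0 - p1)
    return total


# Hypothetical one-dimensional toy data: small x tends to class 0, large x to class 1.
xs_toy = [[0.5], [1.0], [3.0], [4.0]]
ys_toy = [0, 0, 1, 1]

ll_flat = log_likelihood([0.0], 0.0, xs_toy, ys_toy)   # every p(y=1|x) equals 0.5
ll_good = log_likelihood([2.0], -4.0, xs_toy, ys_toy)  # boundary placed at x = 2
print(ll_flat, ll_good)
```

Parameters that separate the toy classes better yield a larger log-likelihood, which is what the maximum likelihood estimate exploits.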

Pre-requisite: maximum likelihood estimation

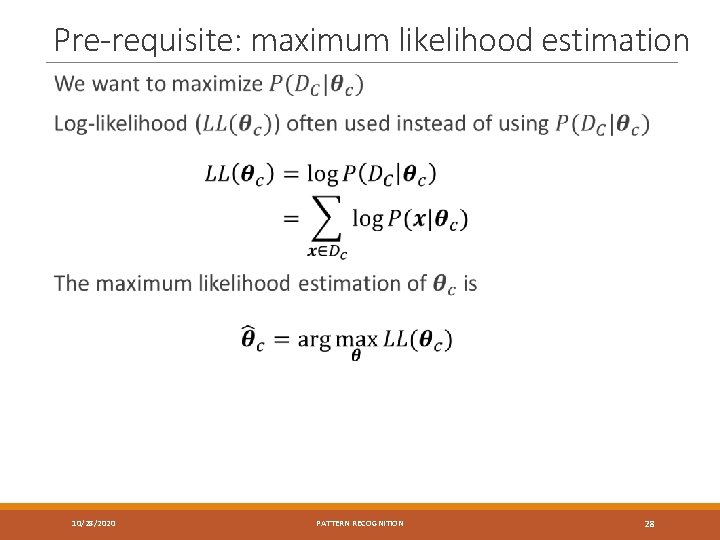

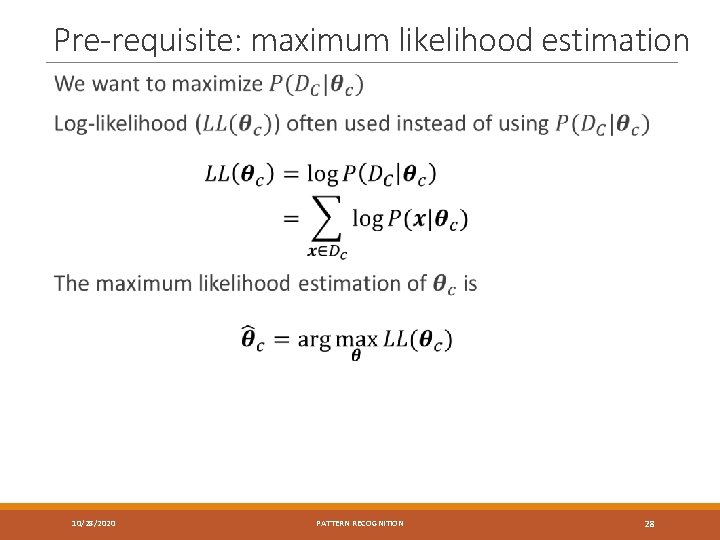

Pre-requisite: maximum likelihood estimation

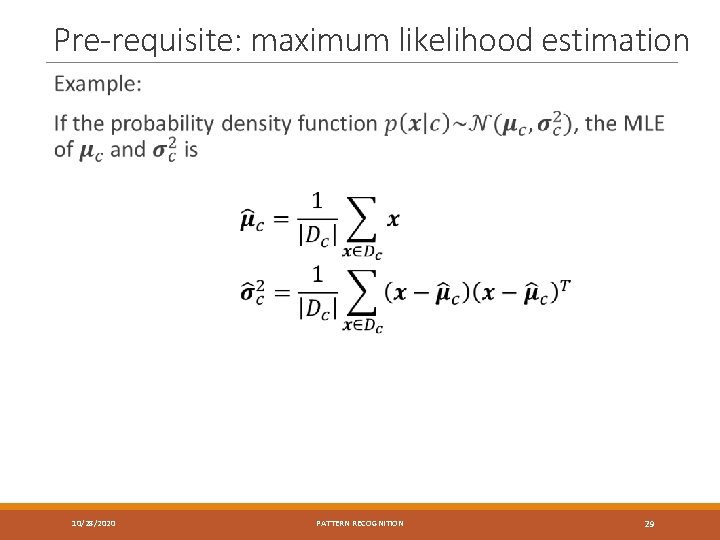

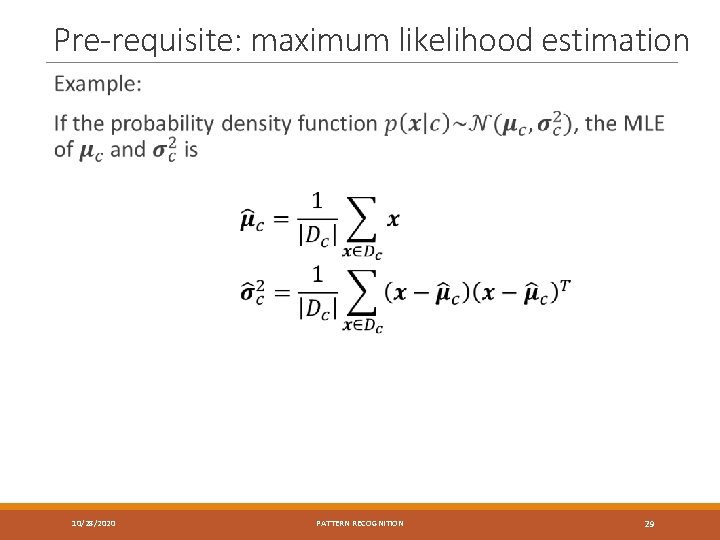

Pre-requisite: maximum likelihood estimation

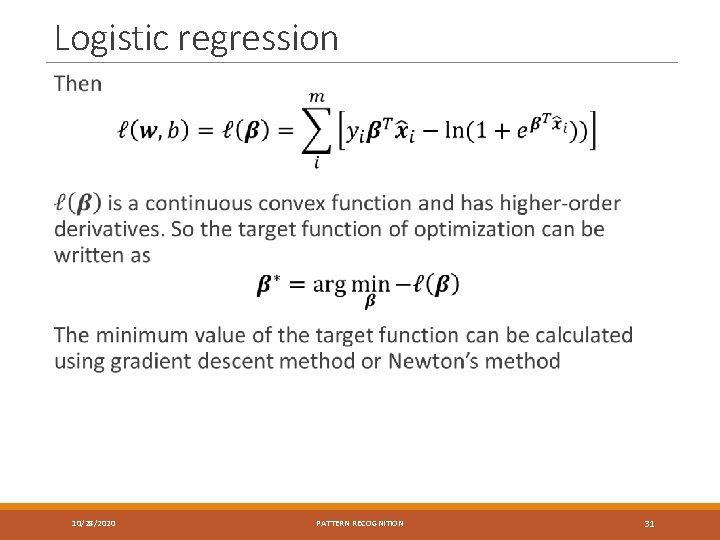

Logistic regression

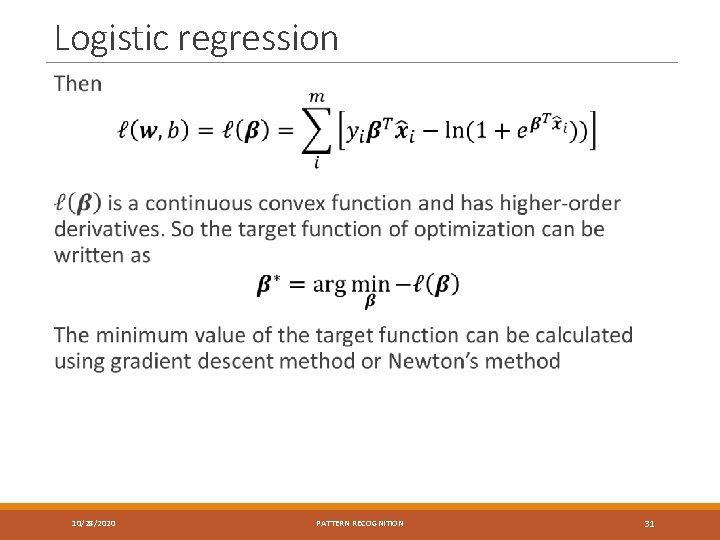

Logistic regression

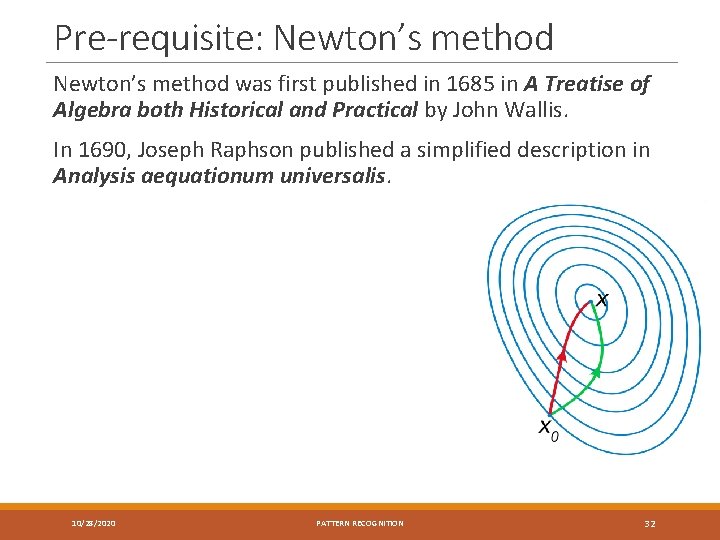

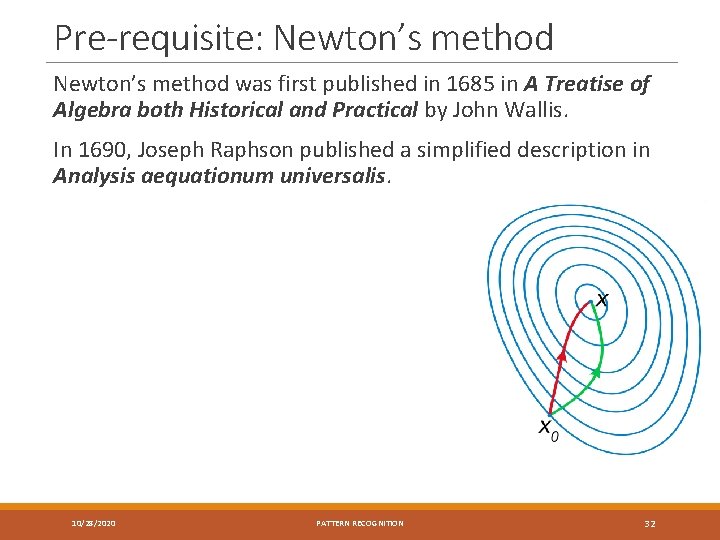

Pre-requisite: Newton’s method

Newton’s method was first published in 1685 in A Treatise of Algebra both Historical and Practical by John Wallis. In 1690, Joseph Raphson published a simplified description in Analysis aequationum universalis.

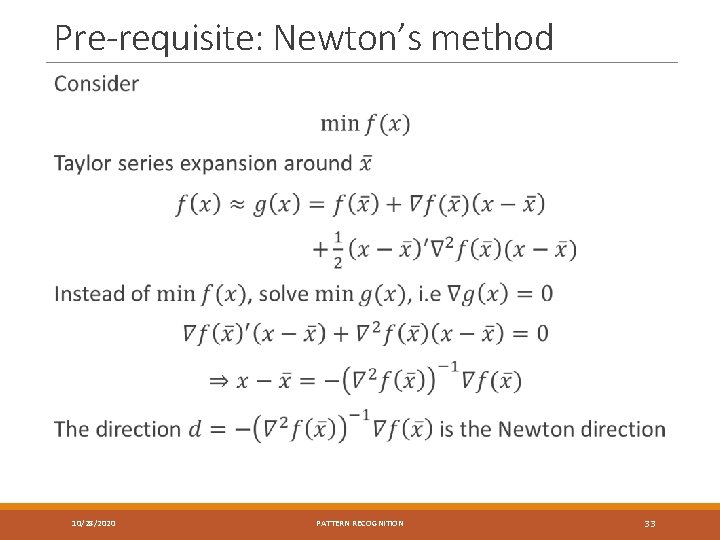

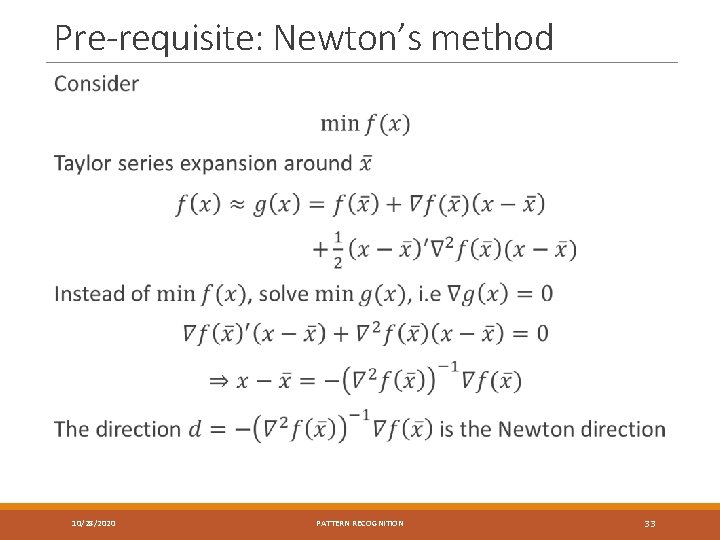

Pre-requisite: Newton’s method

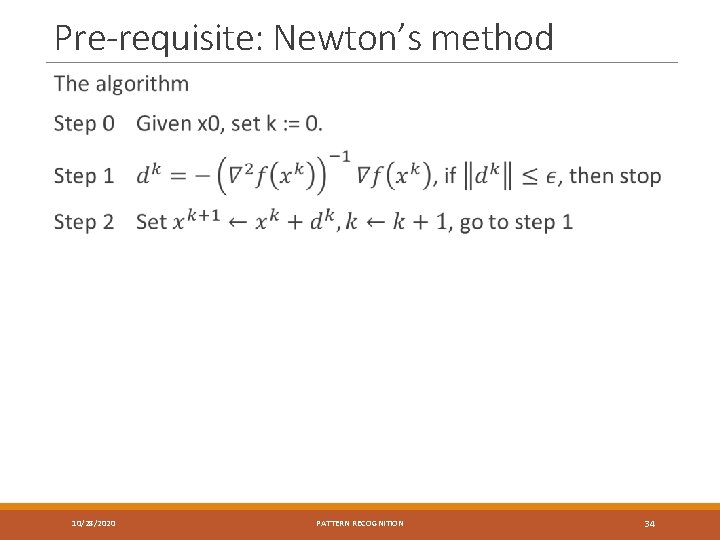

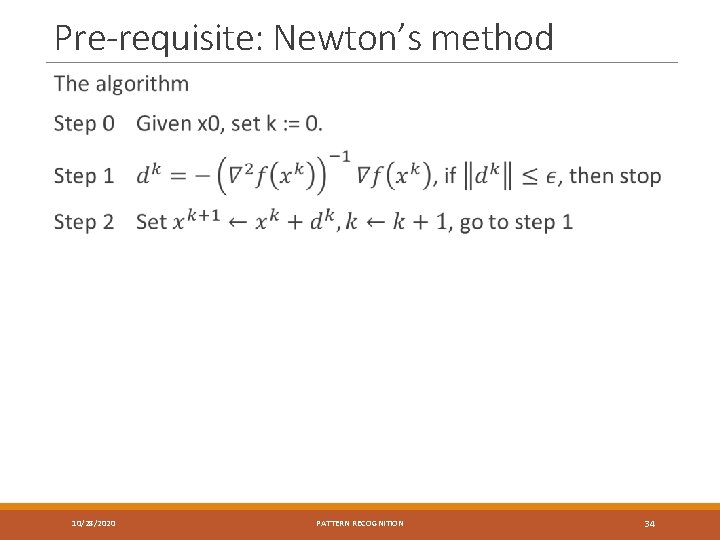

Pre-requisite: Newton’s method

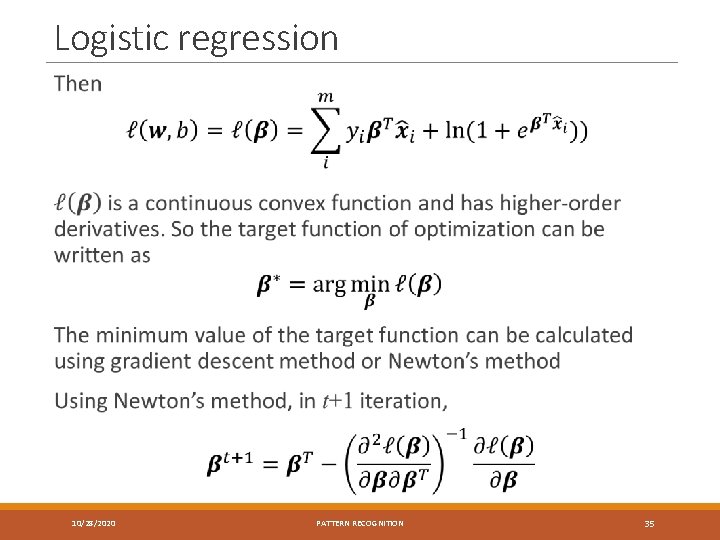

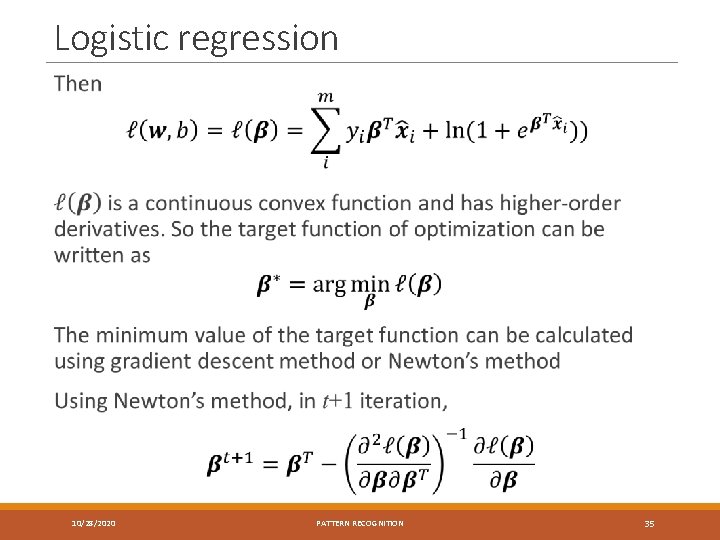
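A one-variable version of Newton’s iteration is short enough to write out. The following is a minimal sketch of the root-finding form $x \leftarrow x - f(x)/f'(x)$; the function name `newton`, the stopping rule, and the example function are assumptions made for illustration.

```python
def newton(f, df, x0, tol=1e-10, max_iter=50):
    """Iterate x <- x - f(x) / f'(x) until the step is smaller than tol."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            break
    return x


# Hypothetical example: the positive root of f(x) = x^2 - 2, which is sqrt(2).
root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(root)
```

To minimize a function rather than find a root, the same iteration is applied to its derivative, so `f` becomes the first derivative and `df` the second derivative.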

Logistic regression

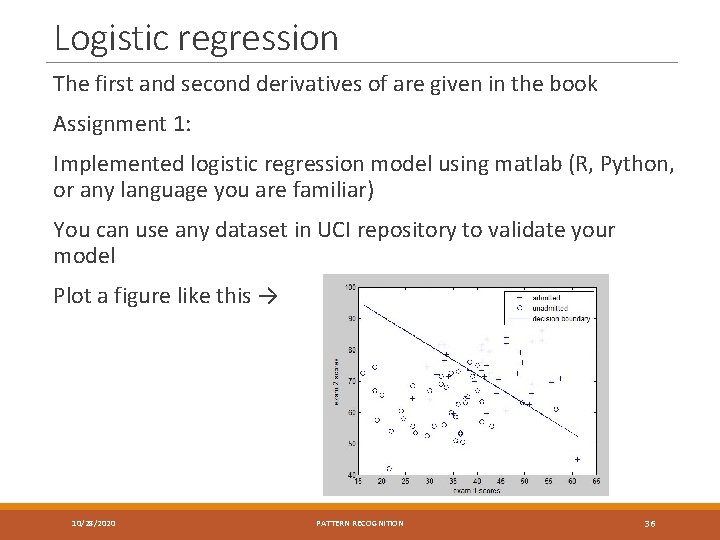

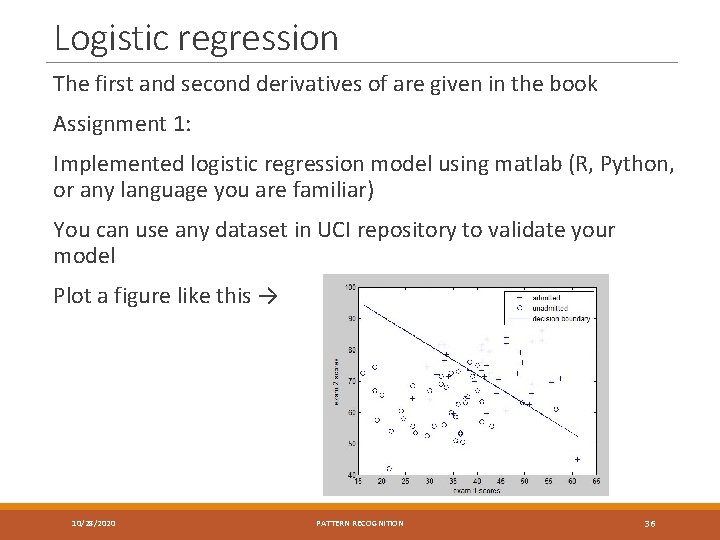

Logistic regression

The first and second derivatives of the log-likelihood objective are given in the book.

Assignment 1:

- Implement a logistic regression model using MATLAB (or R, Python, or any language you are familiar with).
- You can use any dataset from the UCI repository to validate your model.
- Plot a figure like this →

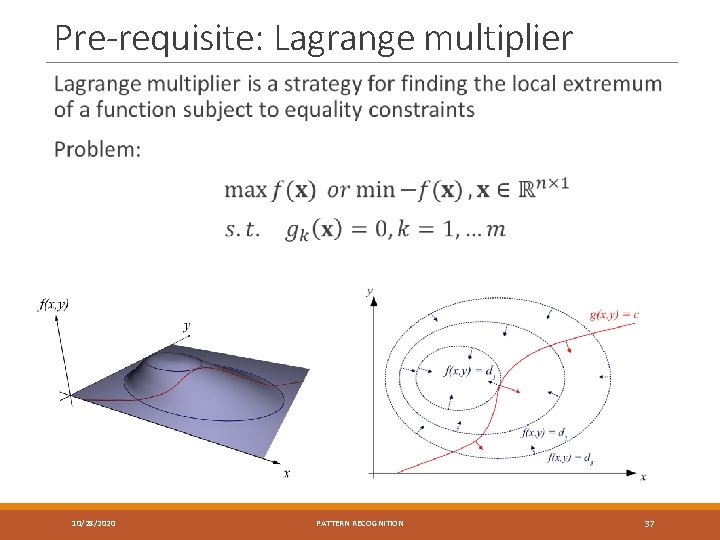

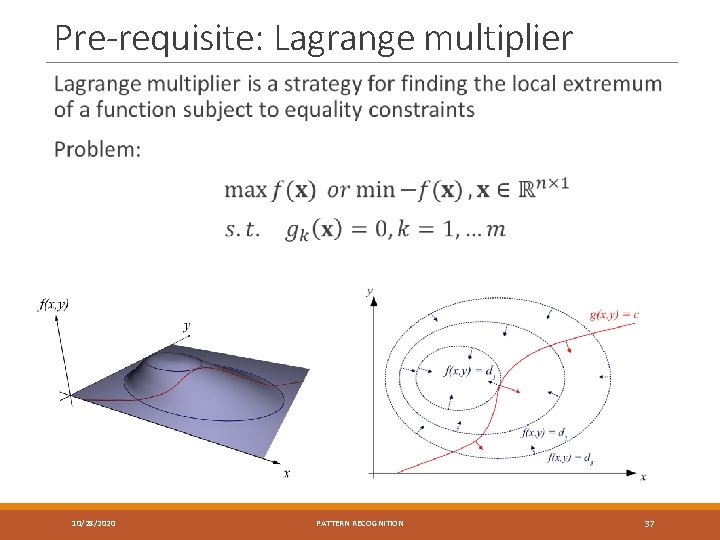
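How the first and second derivatives enter the fit can be shown on a deliberately small case. The following is a rough sketch, assuming a single scalar feature plus a bias so that the Hessian is 2x2 and can be inverted by hand; the function name `fit_logistic_newton` and the toy data are hypothetical, and a real solution to the assignment would handle vector-valued features and a UCI dataset.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic_newton(xs, ys, n_iter=25):
    """Fit p(y=1|x) = sigmoid(w*x + b) for scalar x by Newton's method."""
    w, b = 0.0, 0.0
    for _ in range(n_iter):
        # Gradient (gw, gb) and Hessian entries of the negative log-likelihood.
        gw = gb = hww = hwb = hbb = 0.0
        for x, y in zip(xs, ys):
            p1 = sigmoid(w * x + b)
            gw -= x * (y - p1)
            gb -= y - p1
            s = p1 * (1.0 - p1)
            hww += x * x * s
            hwb += x * s
            hbb += s
        det = hww * hbb - hwb * hwb
        # Newton step: (w, b) <- (w, b) - H^{-1} g, using the explicit 2x2 inverse.
        dw = (hbb * gw - hwb * gb) / det
        db = (hww * gb - hwb * gw) / det
        w, b = w - dw, b - db
        if abs(dw) + abs(db) < 1e-10:
            break
    return w, b


# Hypothetical toy data with overlapping classes, so the estimate stays finite.
xs_demo = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys_demo = [0, 0, 0, 1, 0, 1, 1, 1]
w_hat, b_hat = fit_logistic_newton(xs_demo, ys_demo)
print(w_hat, b_hat, "decision boundary at x =", -b_hat / w_hat)
```

The toy classes overlap on purpose: on perfectly separable data the likelihood has no finite maximizer and the iteration would keep growing `w`.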

Pre-requisite: Lagrange multiplier

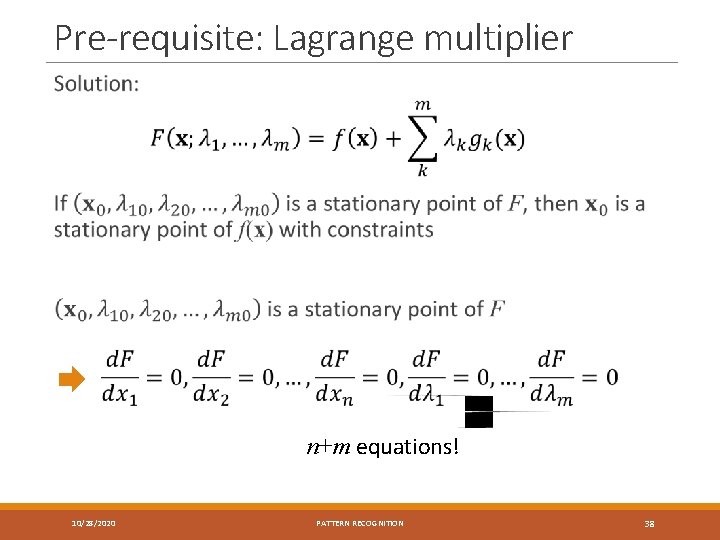

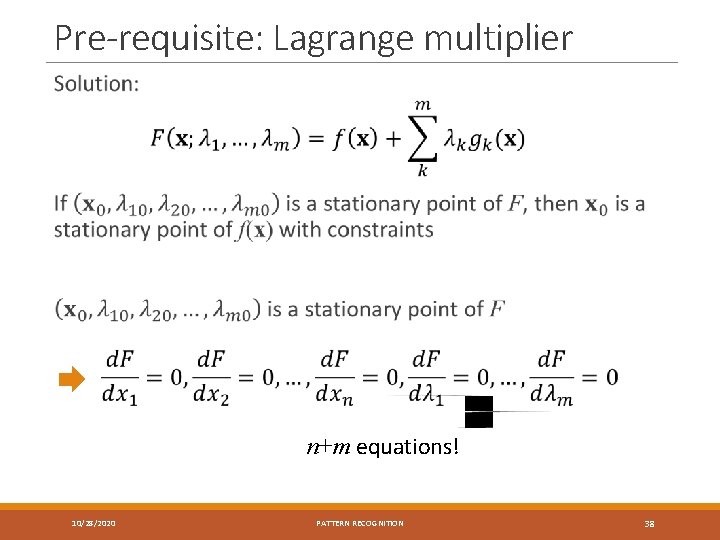

Pre-requisite: Lagrange multiplier

$n + m$ equations!

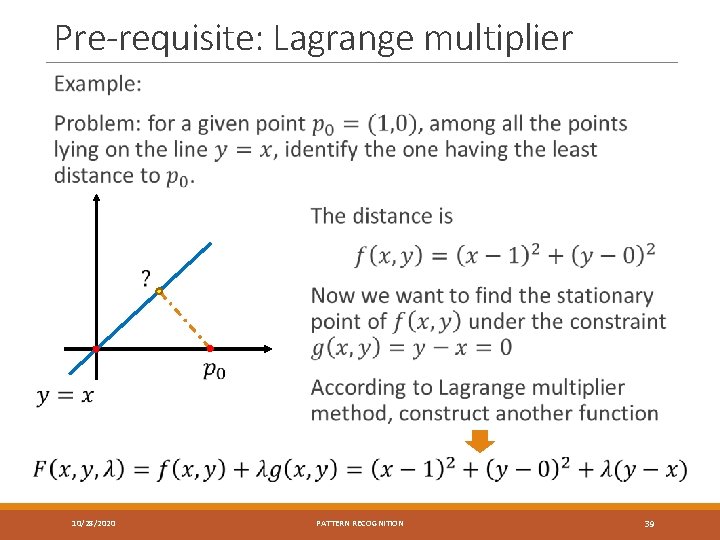

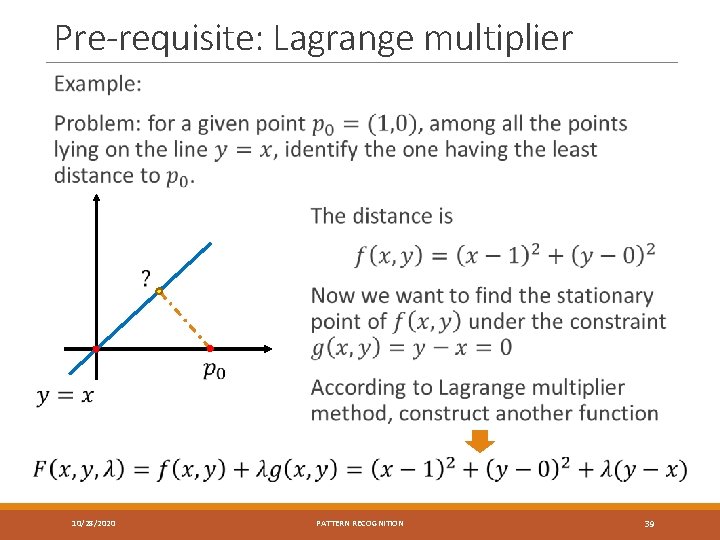

Pre-requisite: Lagrange multiplier

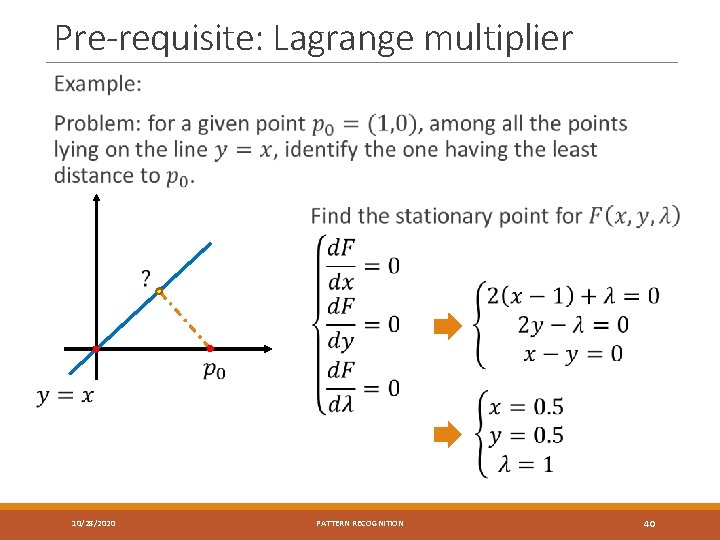

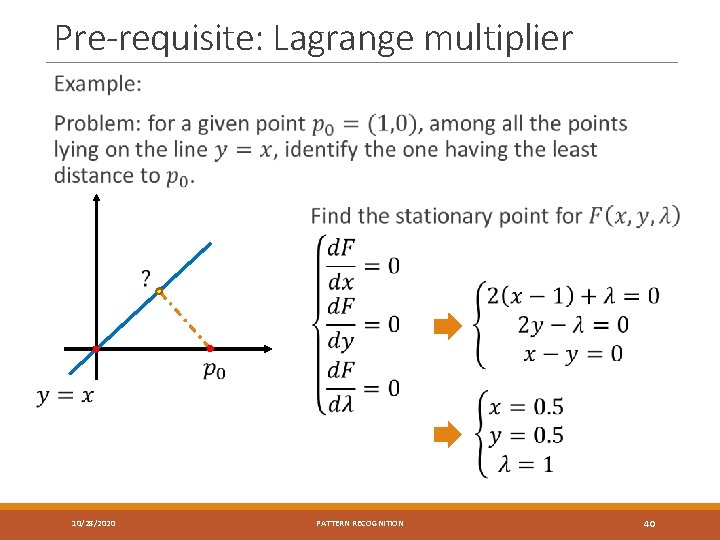

Pre-requisite: Lagrange multiplier

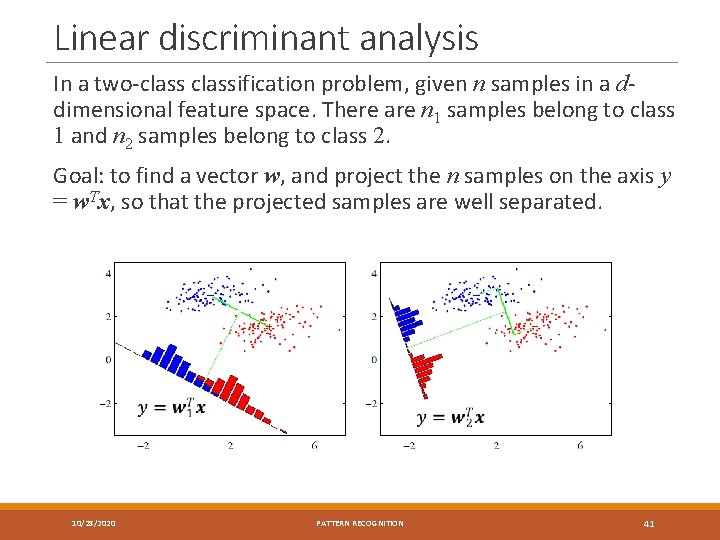

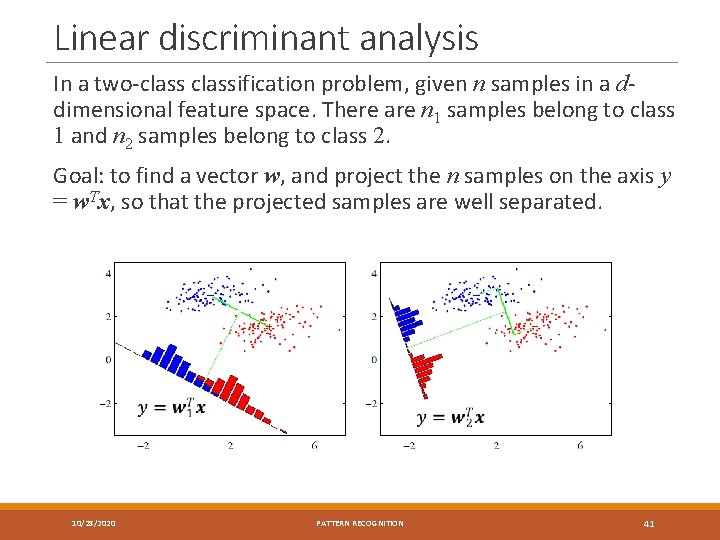

Linear discriminant analysis

In a two-class classification problem, we are given $n$ samples in a $d$-dimensional feature space: $n_1$ samples belong to class 1 and $n_2$ samples belong to class 2.

Goal: find a vector $\mathbf{w}$ and project the $n$ samples onto the axis $y = \mathbf{w}^T\mathbf{x}$ so that the projected samples are well separated.

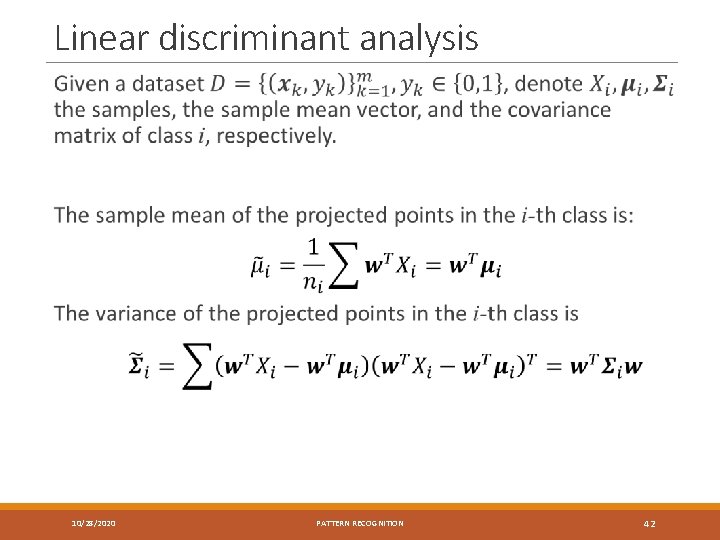

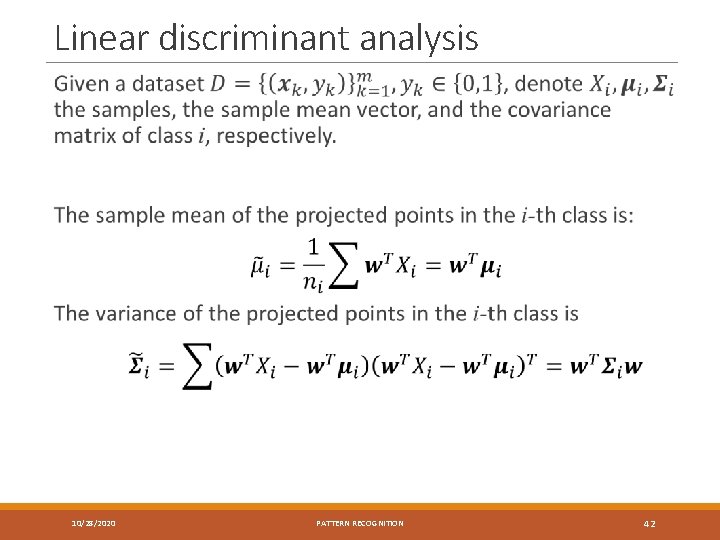
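The goal above can be tried on a handful of 2-D points. The following is a minimal sketch, assuming the standard Fisher solution $\mathbf{w} \propto \mathbf{S}_w^{-1}(\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2)$ with $\mathbf{S}_w$ the within-class scatter matrix; the helper names and the sample points are hypothetical.

```python
def mean(points):
    """Component-wise mean of a list of 2-D points."""
    n = len(points)
    return [sum(p[k] for p in points) / n for k in range(2)]


def scatter(points, mu):
    """2x2 scatter matrix: sum of (x - mu)(x - mu)^T over the points."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mu[0], p[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def lda_direction(class1, class2):
    """Direction w proportional to S_w^{-1} (mu1 - mu2) for 2-D samples."""
    mu1, mu2 = mean(class1), mean(class2)
    s1, s2 = scatter(class1, mu1), scatter(class2, mu2)
    sw = [[s1[i][j] + s2[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    diff = [mu1[0] - mu2[0], mu1[1] - mu2[1]]
    # Multiply the explicit 2x2 inverse of S_w by (mu1 - mu2).
    return [
        (sw[1][1] * diff[0] - sw[0][1] * diff[1]) / det,
        (sw[0][0] * diff[1] - sw[1][0] * diff[0]) / det,
    ]


# Hypothetical sample points for the two classes.
class1 = [[4, 2], [2, 4], [2, 3], [3, 6], [4, 4]]
class2 = [[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]]

w = lda_direction(class1, class2)
proj1 = [w[0] * x[0] + w[1] * x[1] for x in class1]  # y = w^T x for class 1
proj2 = [w[0] * x[0] + w[1] * x[1] for x in class2]  # y = w^T x for class 2
print(w, min(proj1), max(proj2))
```

For these points every class 1 projection lies above every class 2 projection, so a single threshold on $y$ separates the two classes.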

Linear discriminant analysis

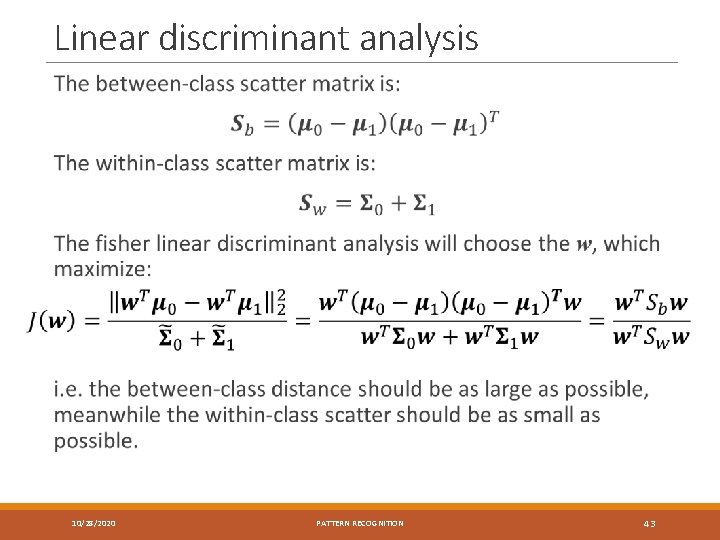

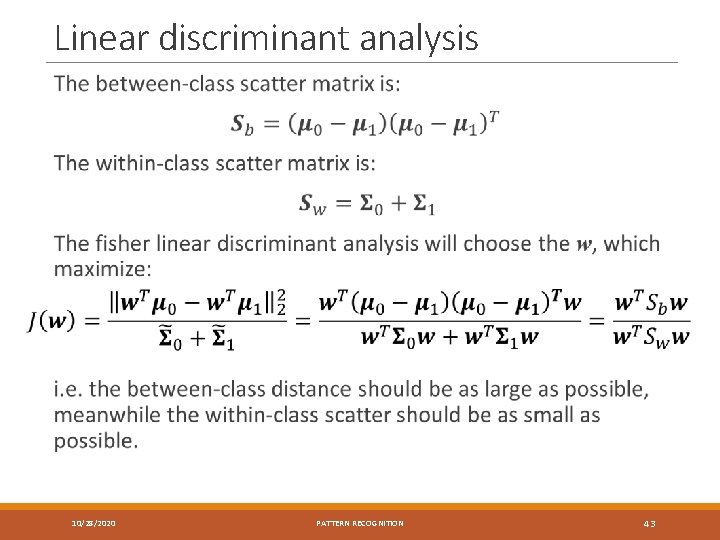

Linear discriminant analysis

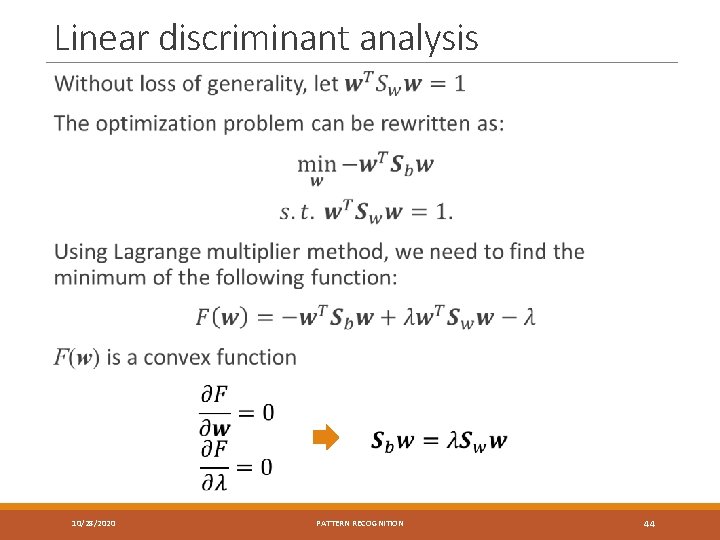

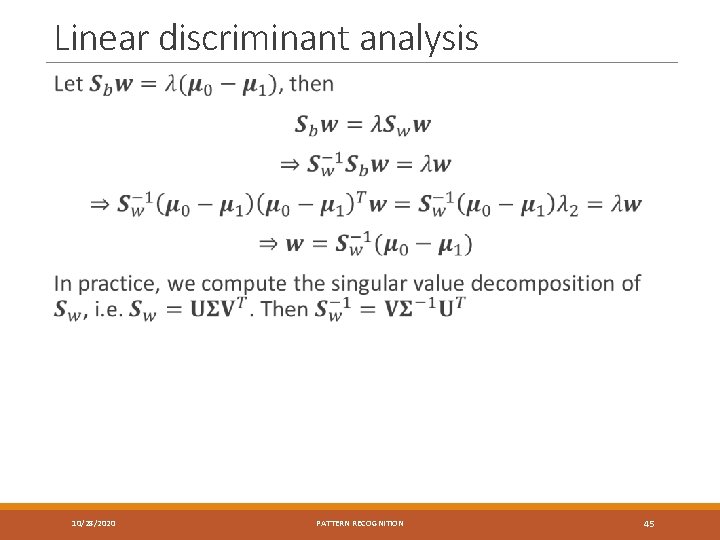

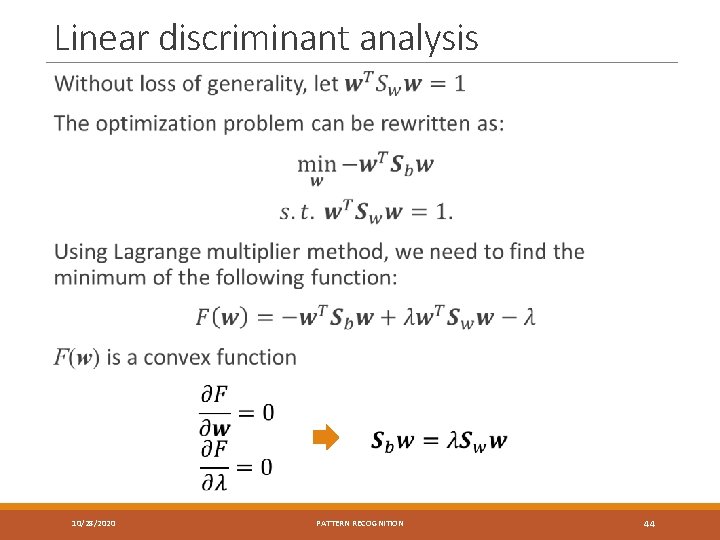

Linear discriminant analysis

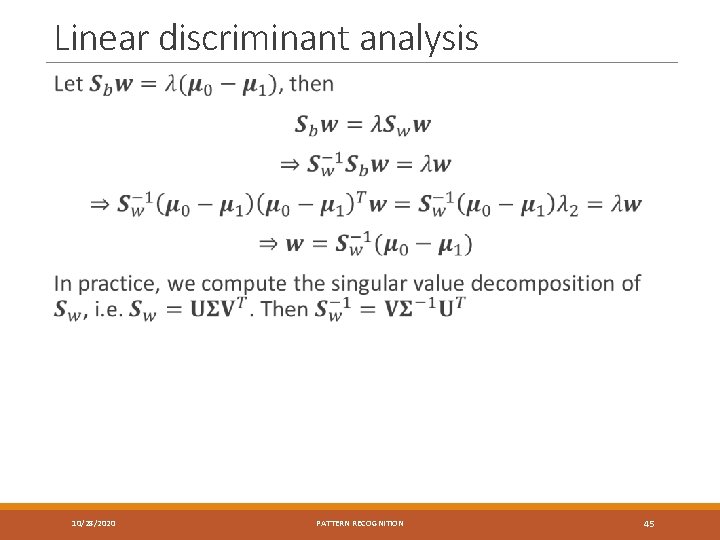

Linear discriminant analysis

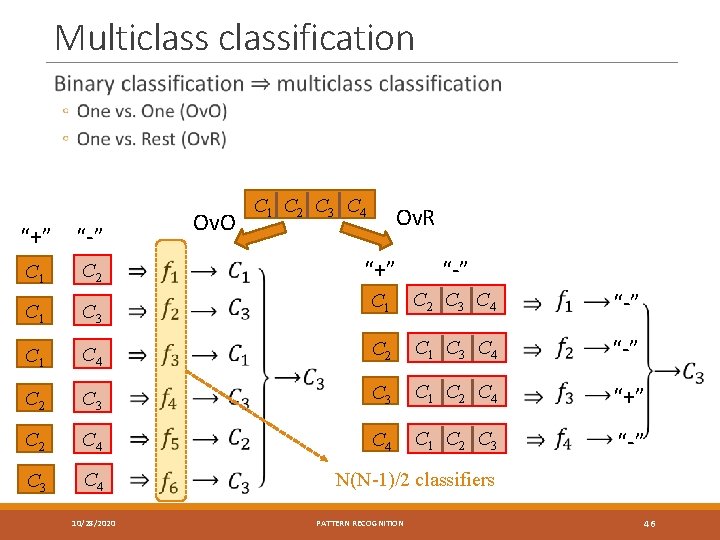

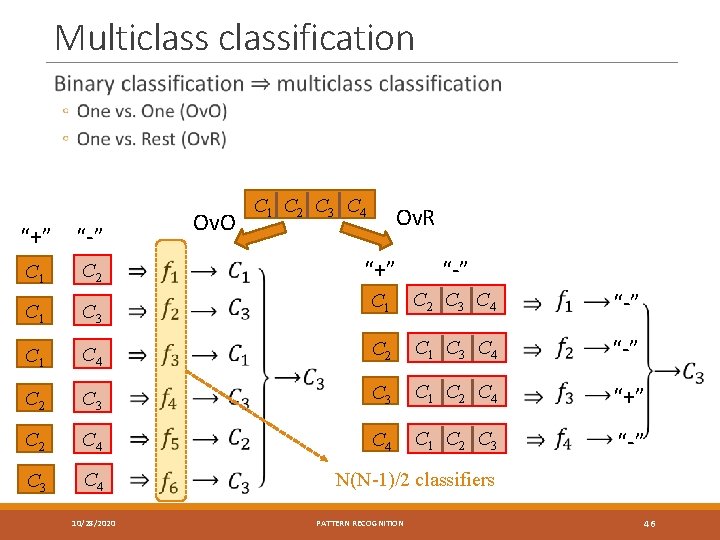

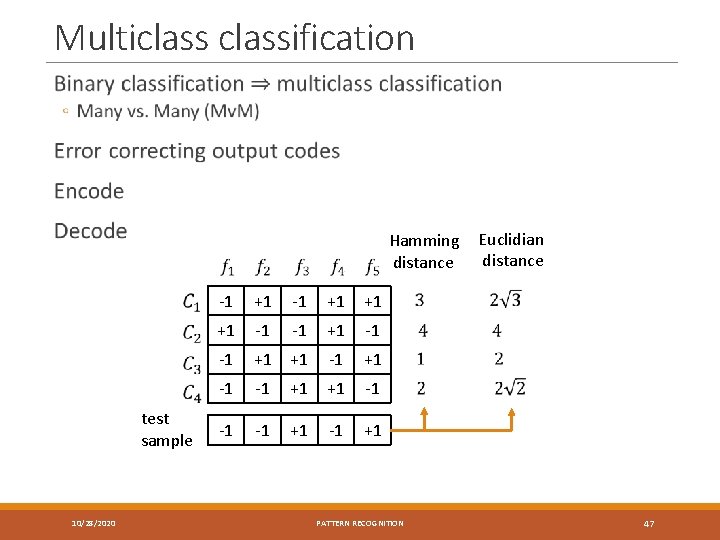

Multiclassification

Data set: C1, C2, C3, C4

OvO (one vs. one), $N(N-1)/2$ classifiers:

| "+" | "-" |
| --- | --- |
| C1 | C2 |
| C1 | C3 |
| C1 | C4 |
| C2 | C3 |
| C2 | C4 |
| C3 | C4 |

OvR (one vs. rest):

| "+" | "-" | Prediction |
| --- | --- | --- |
| C1 | C2, C3, C4 | "-" |
| C2 | C1, C3, C4 | "-" |
| C3 | C1, C2, C4 | "+" |
| C4 | C1, C2, C3 | "-" |

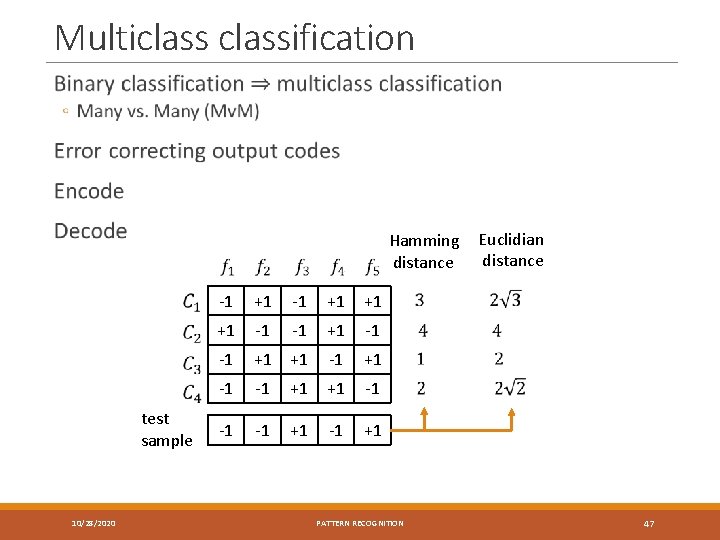
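The two decompositions can be enumerated for the four classes on this slide. The following is a small sketch, assuming majority voting as the rule for combining the pairwise (OvO) classifiers; the votes for the test sample are made up for illustration.

```python
from collections import Counter
from itertools import combinations

classes = ["C1", "C2", "C3", "C4"]

# OvO: one binary classifier per unordered pair of classes, N(N-1)/2 in total.
ovo_pairs = list(combinations(classes, 2))

# OvR: one binary classifier per class (that class against all the others).
ovr_tasks = [(c, [o for o in classes if o != c]) for c in classes]


def ovo_predict(pair_winners):
    """Majority vote over the winners reported by the pairwise classifiers."""
    return Counter(pair_winners).most_common(1)[0][0]


# Hypothetical winners of the six pairwise classifiers for one test sample,
# listed in the same order as ovo_pairs.
votes = ["C1", "C3", "C1", "C3", "C2", "C3"]

print(len(ovo_pairs), "OvO classifiers,", len(ovr_tasks), "OvR classifiers")
print("OvO prediction:", ovo_predict(votes))
```

With OvR, a sample is typically assigned to the single class whose classifier answers "+", as C3 does in the slide.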

Multiclassification

(Figure: class codewords with entries +1 and -1 are compared with the codeword of the test sample using the Hamming distance and the Euclidean distance.)

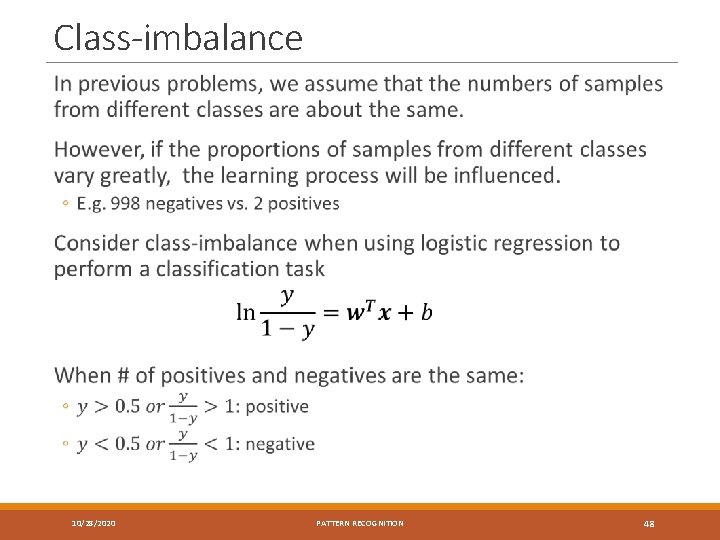

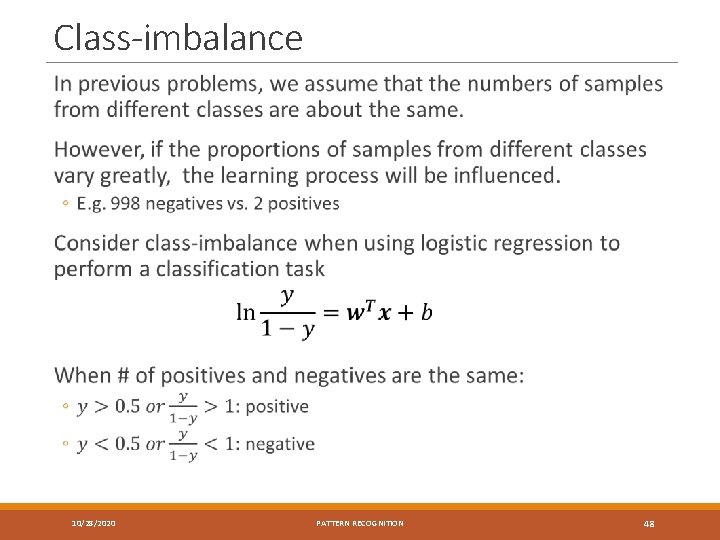
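Decoding by distance can be sketched in a few lines. The code matrix and test codeword below are illustrative assumptions, since the values on the slide could not be recovered reliably; each row is the codeword of one class and each column corresponds to one binary classifier.

```python
import math

# Illustrative code matrix (an assumption, not recovered from the slide).
codes = {
    "C1": [-1, +1, -1, +1, +1],
    "C2": [+1, -1, -1, +1, -1],
    "C3": [-1, +1, +1, -1, +1],
    "C4": [-1, -1, +1, +1, -1],
}

# Hypothetical outputs of the five binary classifiers for one test sample.
test_code = [-1, -1, +1, -1, +1]


def hamming(a, b):
    """Number of positions where the two codewords differ."""
    return sum(1 for u, v in zip(a, b) if u != v)


def euclidean(a, b):
    """Euclidean distance between the two codewords."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def decode(code, codebook, distance):
    """Return the class whose codeword is closest to the predicted code."""
    return min(codebook, key=lambda c: distance(codebook[c], code))


print(decode(test_code, codes, hamming), decode(test_code, codes, euclidean))
```

For codewords with entries ±1 the squared Euclidean distance is four times the Hamming distance, so both measures select the same class here.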

Class-imbalance

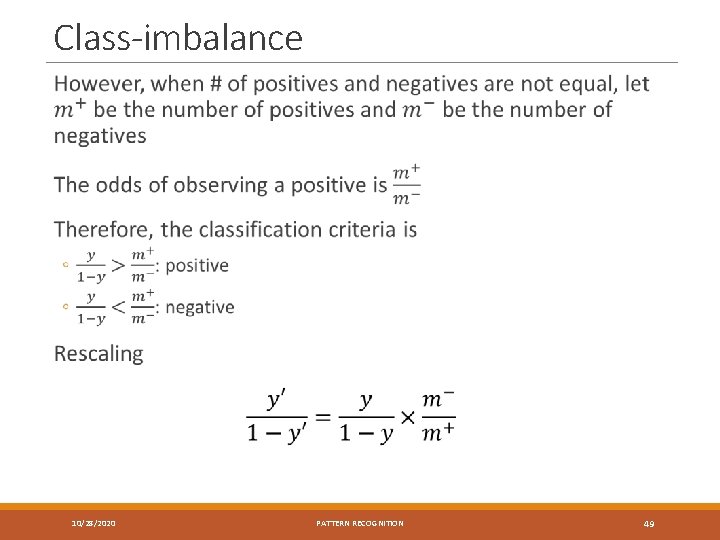

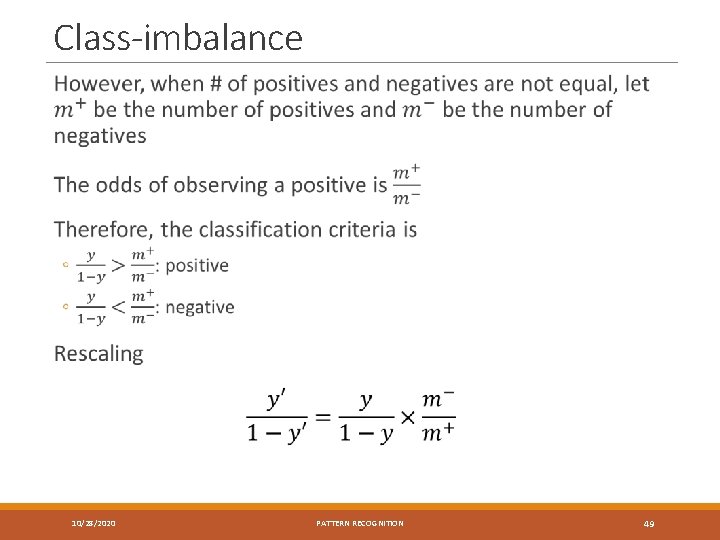

Class-imbalance

Class-imbalance

- Undersampling
- Oversampling
- Threshold-moving
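The three strategies can be sketched in their simplest forms. The following assumes random dropping for undersampling, random duplication for oversampling, and a threshold-moving rule that predicts positive when the odds $p/(1-p)$ exceed the class ratio $n_{pos}/n_{neg}$; all function names are hypothetical, and practical methods are usually more elaborate.

```python
import random


def undersample(majority, minority, rng):
    """Randomly drop majority samples until both classes have the same size."""
    return rng.sample(majority, len(minority)), list(minority)


def oversample(majority, minority, rng):
    """Randomly duplicate minority samples until both classes have the same size."""
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return list(majority), list(minority) + extra


def threshold_moving_predict(p_pos, n_pos, n_neg):
    """Predict positive when p/(1-p) > n_pos/n_neg (written without division)."""
    return p_pos * n_neg > (1.0 - p_pos) * n_pos


rng = random.Random(0)
negatives = list(range(90))      # hypothetical majority-class sample ids
positives = list(range(90, 100)) # hypothetical minority-class sample ids

neg_u, pos_u = undersample(negatives, positives, rng)
neg_o, pos_o = oversample(negatives, positives, rng)
print(len(neg_u), len(pos_u), len(neg_o), len(pos_o))

# With 10 positives and 90 negatives, a score of 0.3 already counts as positive.
print(threshold_moving_predict(0.3, n_pos=10, n_neg=90))
```

Threshold-moving leaves the training set untouched and only changes the decision rule, whereas the two sampling strategies change the data the classifier is trained on.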