Linear Discriminant Feature Extraction for Speech Recognition

Hung-Shin Lee
Master Student, Spoken Language Processing Lab
National Taiwan Normal University
2008/08/14

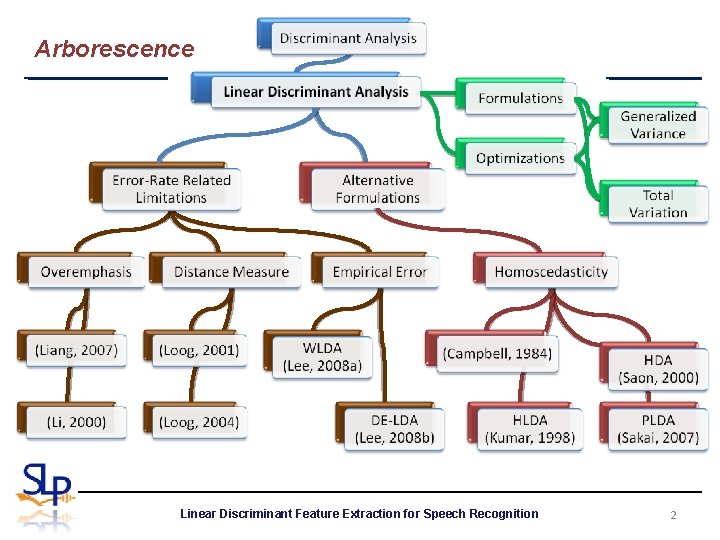

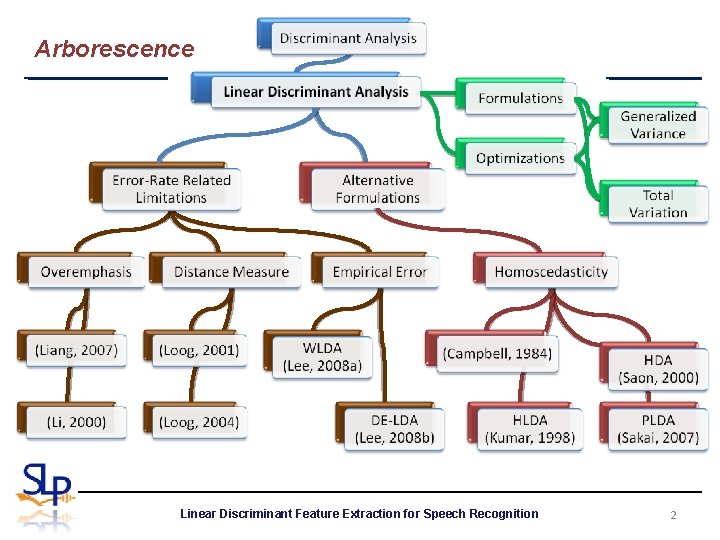

Arborescence

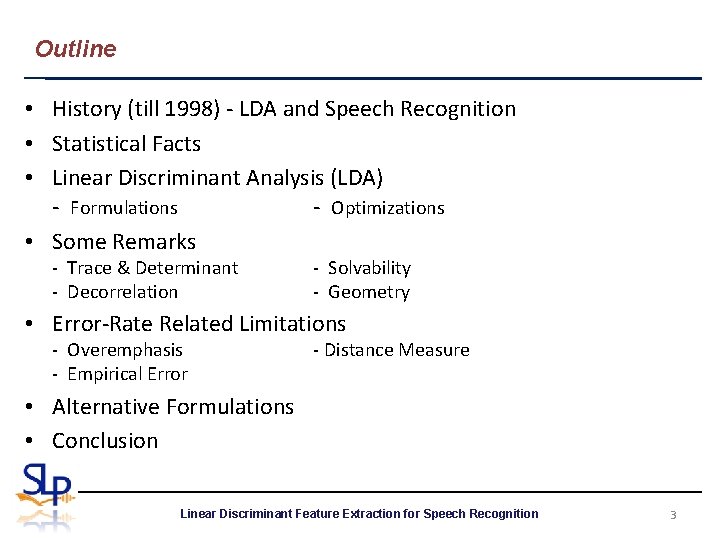

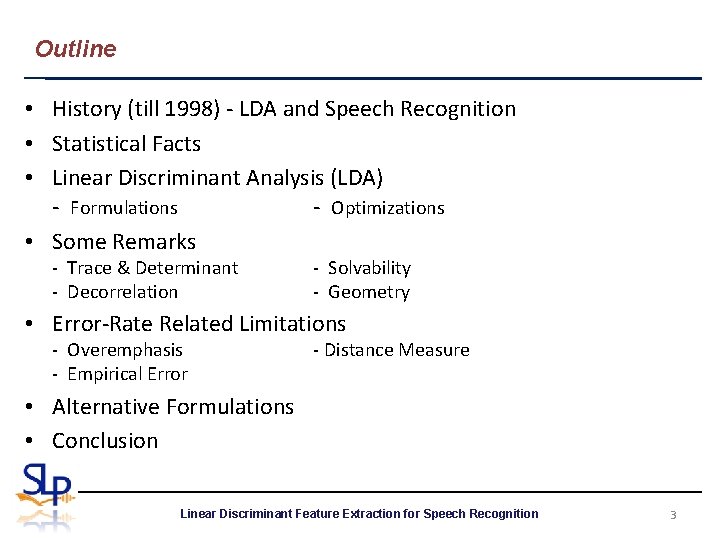

Outline

• History (till 1998)
  – LDA and Speech Recognition
• Statistical Facts
• Linear Discriminant Analysis (LDA)
  – Formulations
  – Optimizations
• Some Remarks
  – Trace & Determinant
  – Decorrelation
  – Solvability
  – Geometry
• Error-Rate Related Limitations
  – Overemphasis
  – Empirical Error
  – Distance Measure
• Alternative Formulations
• Conclusion

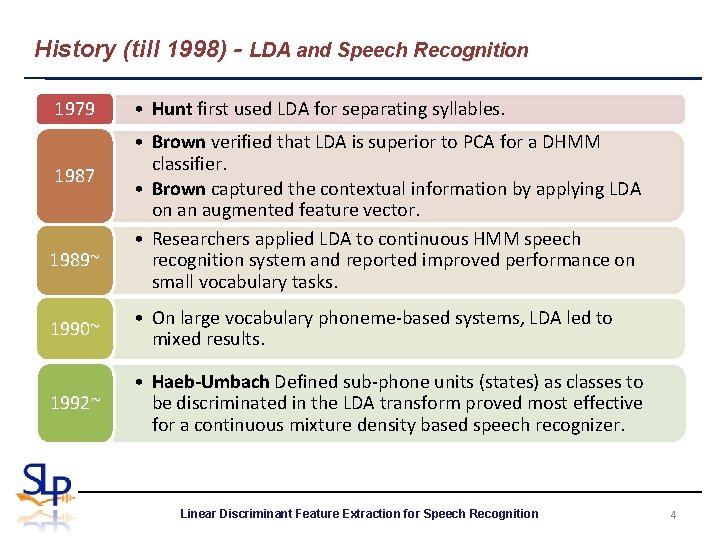

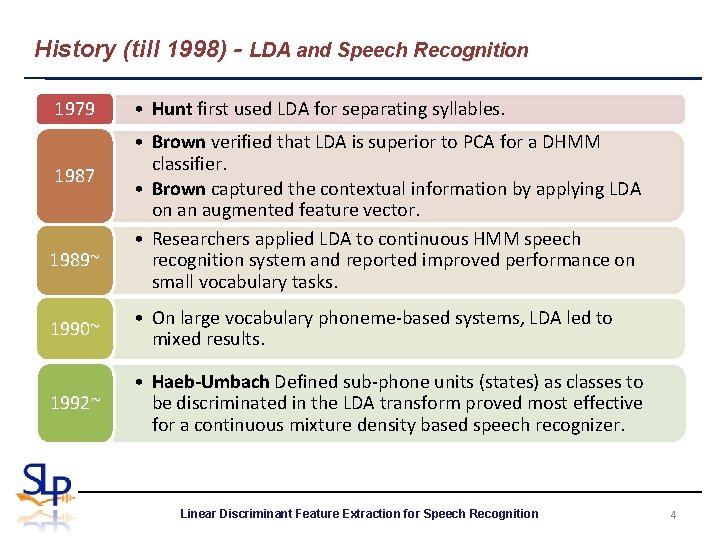

History (till 1998) - LDA and Speech Recognition

• 1979: Hunt first used LDA for separating syllables.
• 1987: Brown verified that LDA is superior to PCA for a DHMM classifier. Brown also captured contextual information by applying LDA to an augmented feature vector.
• 1989~: Researchers applied LDA to continuous HMM speech recognition systems and reported improved performance on small-vocabulary tasks.
• 1990~: On large-vocabulary phoneme-based systems, LDA led to mixed results.
• 1992~: Haeb-Umbach showed that defining sub-phone units (states) as the classes to be discriminated by the LDA transform was most effective for a continuous mixture-density-based speech recognizer.

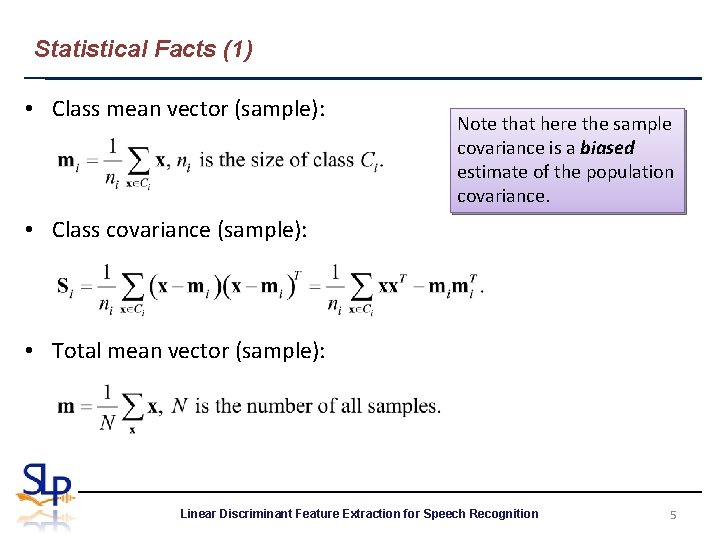

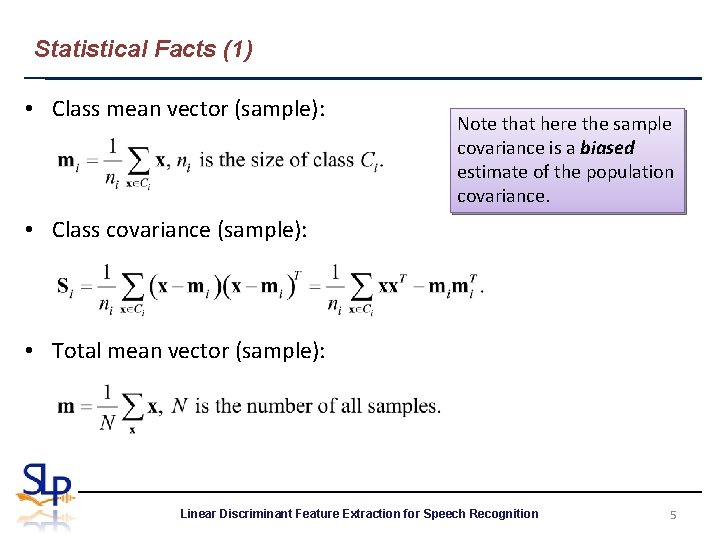

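Statistical Facts (1)

• Class mean vector (sample): $m_i = \frac{1}{n_i} \sum_{x \in C_i} x$
• Class covariance (sample): $\Sigma_i = \frac{1}{n_i} \sum_{x \in C_i} (x - m_i)(x - m_i)^T$
  Note that here the sample covariance is a biased estimate of the population covariance.
• Total mean vector (sample): $m = \frac{1}{N} \sum_{i=1}^{C} n_i m_i$

Here $n_i$ is the number of samples in class $C_i$, $C$ is the number of classes, and $N = \sum_{i=1}^{C} n_i$.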

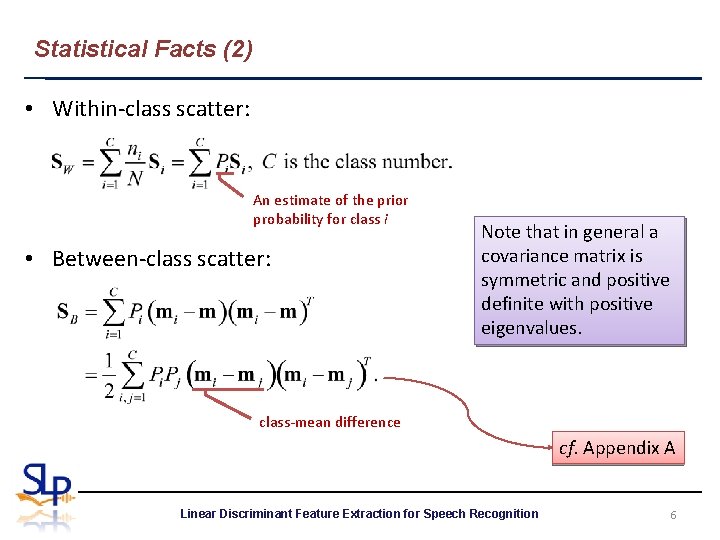

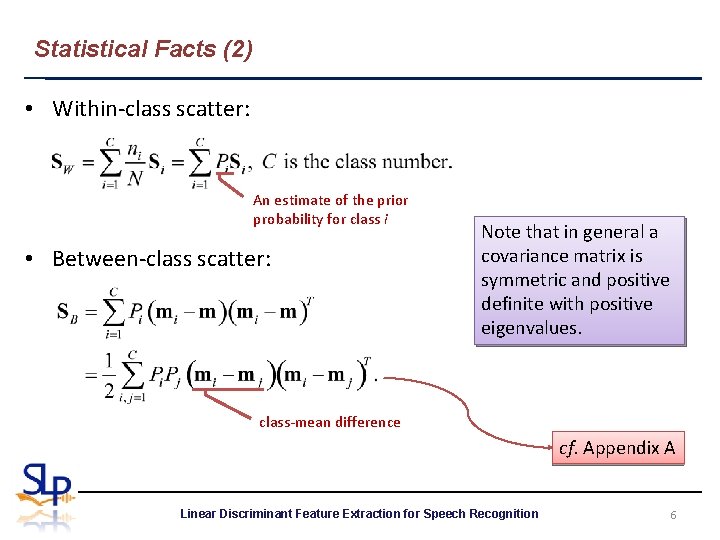

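Statistical Facts (2)

• Within-class scatter: $S_W = \sum_{i=1}^{C} \frac{n_i}{N} \Sigma_i$, where $n_i / N$ is an estimate of the prior probability for class $i$.
• Between-class scatter: $S_B = \sum_{i=1}^{C} \frac{n_i}{N} (m_i - m)(m_i - m)^T$. It can equivalently be written in terms of the class-mean differences $m_i - m_j$ (cf. Appendix A).

Note that in general a covariance matrix is symmetric and positive semidefinite; when it is nonsingular, it is positive definite with strictly positive eigenvalues.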

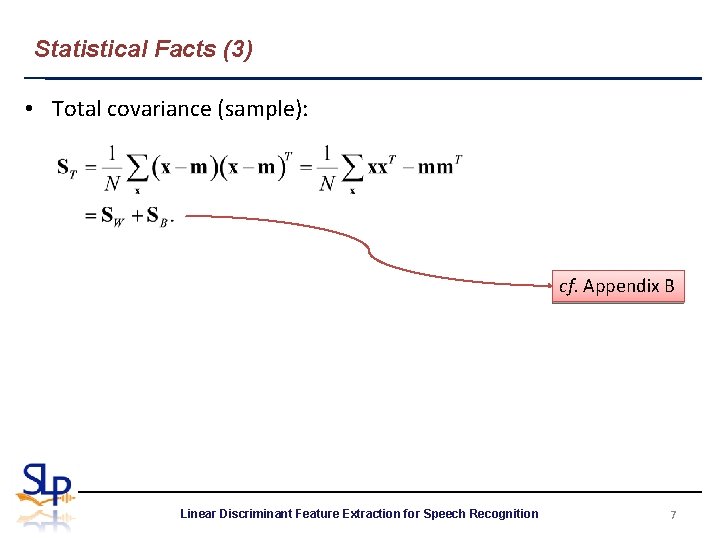

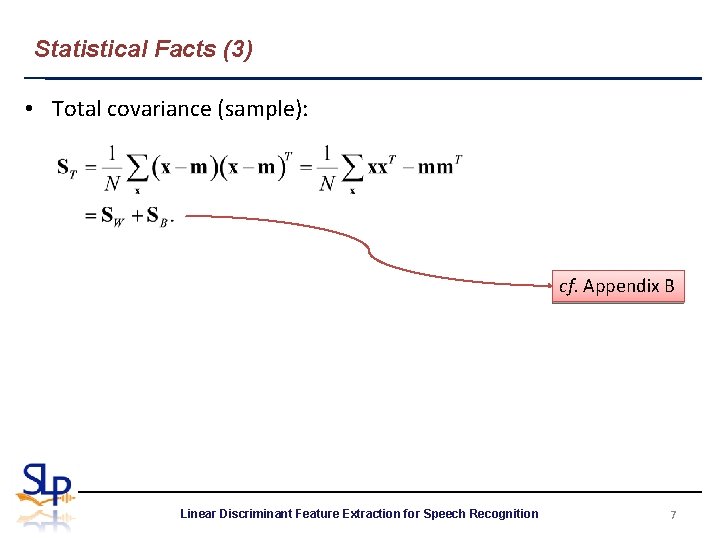

Statistical Facts (3)

• Total covariance (sample): $S_T = \frac{1}{N} \sum_{x} (x - m)(x - m)^T = S_W + S_B$ (cf. Appendix B)
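The three slides of statistical facts can be checked numerically. The following is a minimal sketch, assuming the biased (divide-by-count) estimates noted above and priors estimated as class frequencies; the function name `scatter_matrices` and the synthetic three-class data are illustrative assumptions, not part of the original slides.

```python
import numpy as np

def scatter_matrices(X, y):
    """Return (S_W, S_B, S_T) from samples X (N x n) and integer labels y."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    N, n = X.shape
    m = X.mean(axis=0)                                  # total mean vector
    S_W = np.zeros((n, n))
    S_B = np.zeros((n, n))
    for c in np.unique(y):
        Xc = X[y == c]
        prior = Xc.shape[0] / N                         # estimated prior n_i / N
        m_c = Xc.mean(axis=0)                           # class mean vector
        centered = Xc - m_c
        Sigma_c = centered.T @ centered / Xc.shape[0]   # biased class covariance
        S_W += prior * Sigma_c
        S_B += prior * np.outer(m_c - m, m_c - m)
    centered_all = X - m
    S_T = centered_all.T @ centered_all / N             # biased total covariance
    return S_W, S_B, S_T

# Synthetic data (hypothetical): three Gaussian classes in three dimensions.
rng = np.random.default_rng(0)
class_means = np.array([[0.0, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 3.0, 1.0]])
X = np.vstack([rng.normal(loc=mu, scale=1.0, size=(50, 3)) for mu in class_means])
y = np.repeat(np.arange(3), 50)

S_W, S_B, S_T = scatter_matrices(X, y)
print(np.allclose(S_T, S_W + S_B))   # checks the decomposition S_T = S_W + S_B
```

With unbiased (divide-by-count-minus-one) estimates the decomposition would hold only approximately, which is one reason to keep the biased form here.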

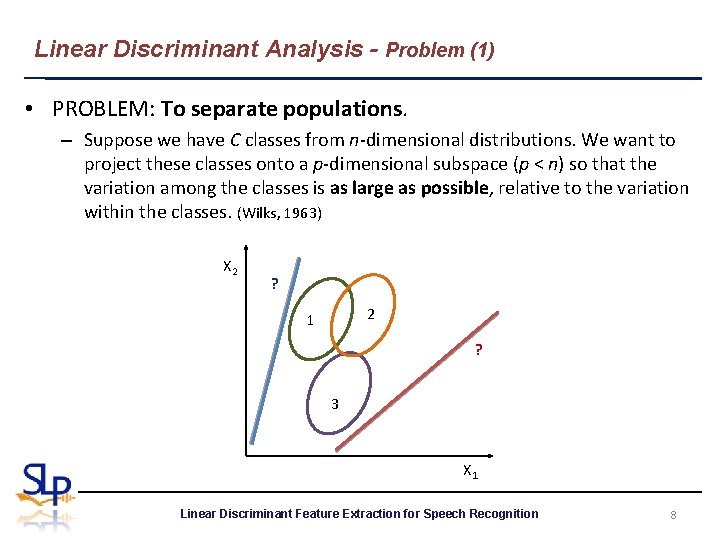

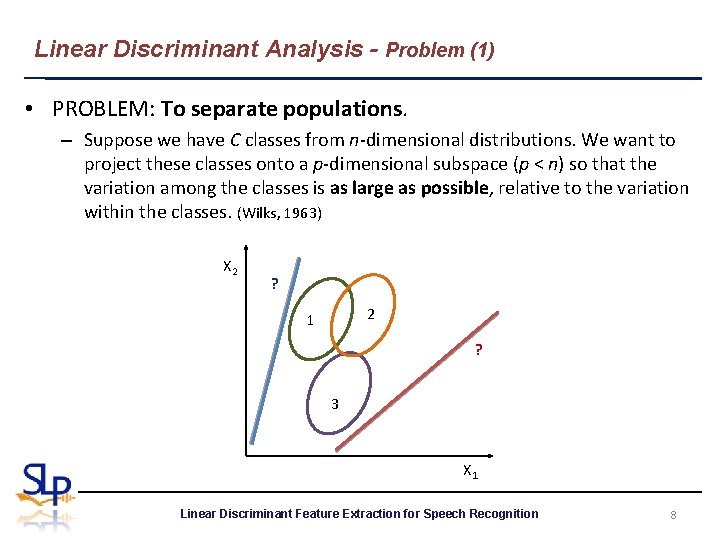

Linear Discriminant Analysis - Problem (1)

• PROBLEM: To separate populations.
  – Suppose we have C classes from n-dimensional distributions. We want to project these classes onto a p-dimensional subspace (p < n) so that the variation among the classes is as large as possible, relative to the variation within the classes. (Wilks, 1963)

[Figure: classes 1, 2, and 3 plotted on axes X1 and X2.]

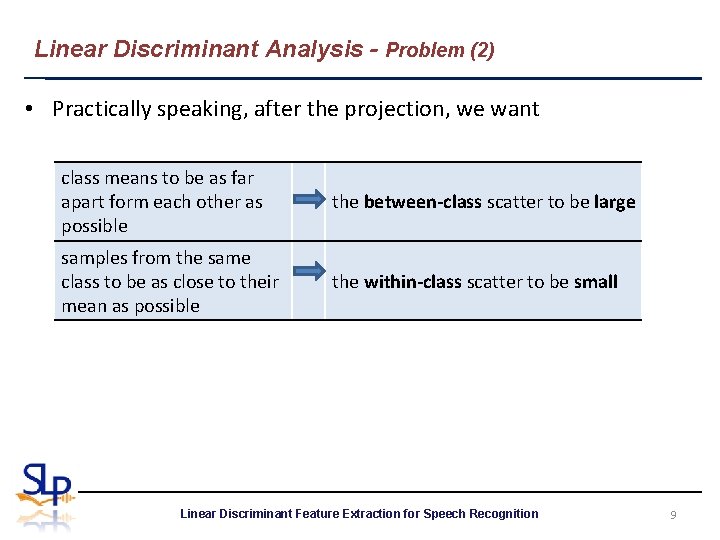

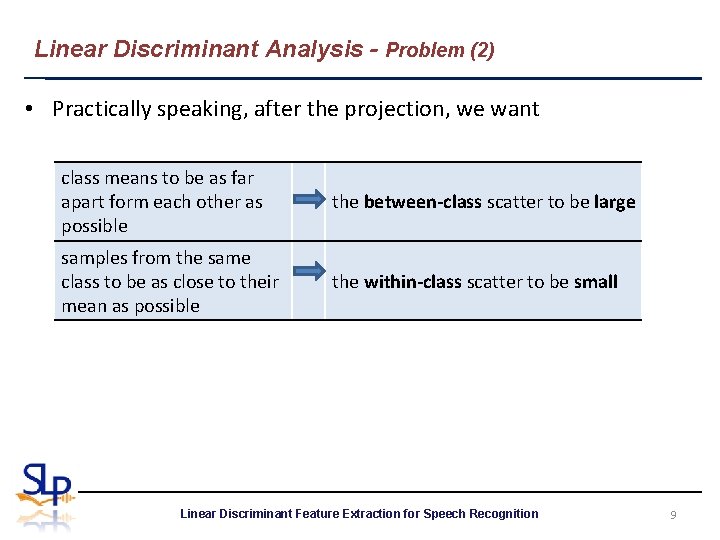

Linear Discriminant Analysis - Problem (2)

• Practically speaking, after the projection, we want
  – class means to be as far apart from each other as possible, that is, the between-class scatter to be large;
  – samples from the same class to be as close to their mean as possible, that is, the within-class scatter to be small.

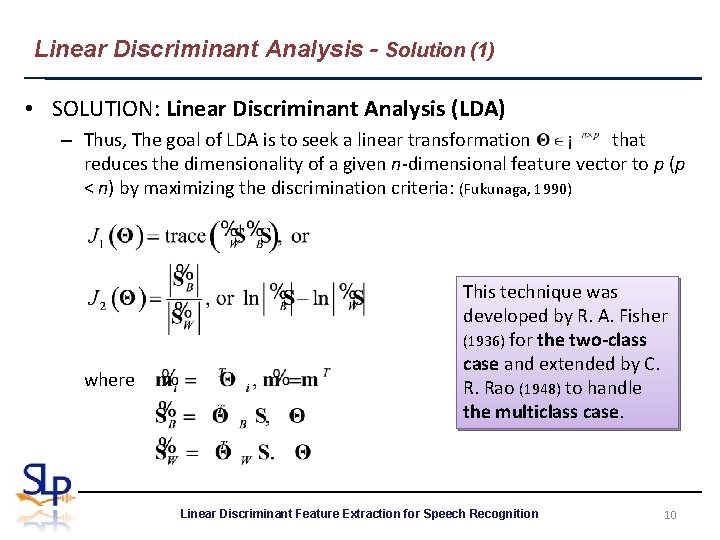

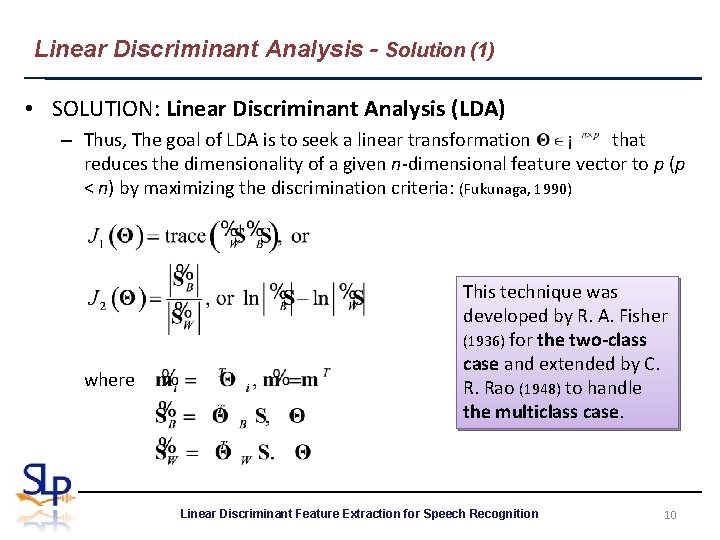

Linear Discriminant Analysis - Solution (1)

• SOLUTION: Linear Discriminant Analysis (LDA)
  – The goal of LDA is to seek a linear transformation $\Theta \in \mathbb{R}^{n \times p}$ that reduces the dimensionality of a given n-dimensional feature vector to p (p < n) by maximizing one of the discrimination criteria (Fukunaga, 1990):
    $J(\Theta) = \mathrm{tr}\left( (\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta) \right)$ or $J(\Theta) = \frac{|\Theta^T S_B \Theta|}{|\Theta^T S_W \Theta|}$,
    where $S_W$ and $S_B$ are the within-class and between-class scatter matrices.

This technique was developed by R. A. Fisher (1936) for the two-class case and extended by C. R. Rao (1948) to handle the multiclass case.

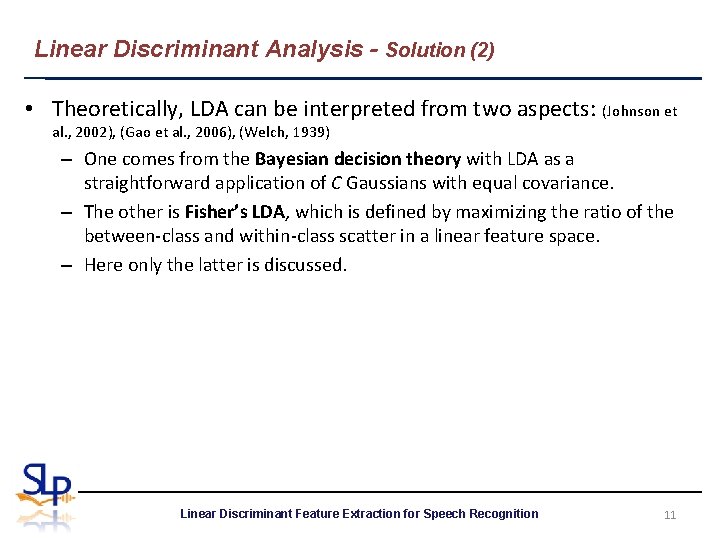

Linear Discriminant Analysis - Solution (2)

• Theoretically, LDA can be interpreted from two aspects: (Johnson et al., 2002), (Gao et al., 2006), (Welch, 1939)
  – One comes from Bayesian decision theory, with LDA as a straightforward application of C Gaussians with equal covariance.
  – The other is Fisher's LDA, which is defined by maximizing the ratio of the between-class to the within-class scatter in a linear feature space.
  – Here only the latter is discussed.

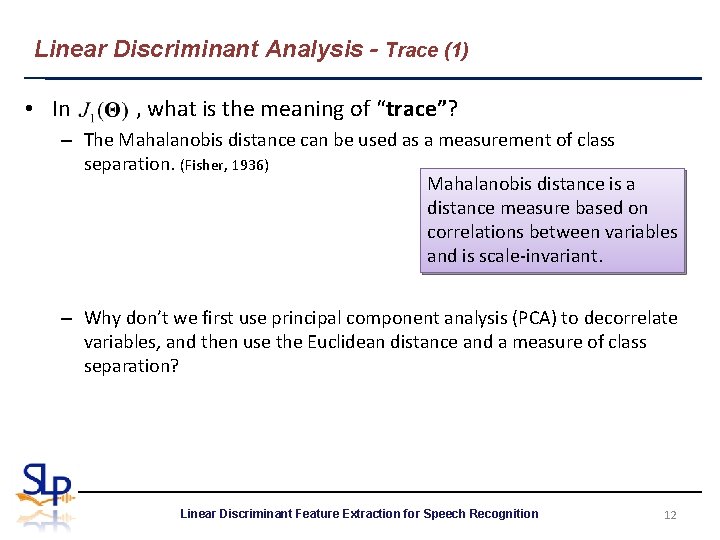

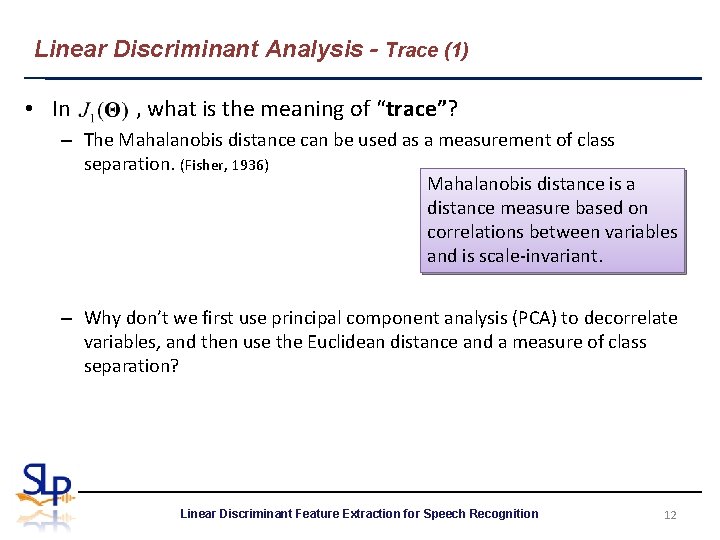

Linear Discriminant Analysis - Trace (1)

• In $\mathrm{tr}((\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta))$, what is the meaning of "trace"?
  – The Mahalanobis distance can be used as a measurement of class separation. (Fisher, 1936)
    The Mahalanobis distance is a distance measure based on correlations between variables and is scale-invariant.
  – Why don't we first use principal component analysis (PCA) to decorrelate the variables, and then use the Euclidean distance as a measure of class separation?

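Linear Discriminant Analysis - Trace (2)

• In $\mathrm{tr}((\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta))$, what is the meaning of "trace"? (cont.)
  – Assume all classes share the same covariance $S_W$. The Mahalanobis distance between $m_i$ and $m_j$ is $\Delta_{ij} = \sqrt{(m_i - m_j)^T S_W^{-1} (m_i - m_j)}$.
  – The prior-weighted average of $\Delta_{ij}^2$, the square of the Mahalanobis distance, over all class pairs is $\sum_{i<j} \frac{n_i}{N} \frac{n_j}{N} \Delta_{ij}^2 = \mathrm{tr}(S_W^{-1} S_B)$.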
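The link between the trace and the Mahalanobis distance can be verified on a small example. This is a sketch under the shared-covariance assumption stated above; the priors, class means, and the matrix used for `S_W` are made-up values chosen only so that the check is self-contained.

```python
import numpy as np

rng = np.random.default_rng(1)
C, n = 4, 3
priors = np.array([0.1, 0.2, 0.3, 0.4])      # hypothetical class priors, sum to 1
means = rng.normal(size=(C, n))              # hypothetical class means
G = rng.normal(size=(n, n))
S_W = G @ G.T + n * np.eye(n)                # any symmetric positive definite matrix

# Between-class scatter built from deviations around the total mean.
m = priors @ means
S_B = sum(w * np.outer(mu - m, mu - m) for w, mu in zip(priors, means))

S_W_inv = np.linalg.inv(S_W)
trace_value = np.trace(S_W_inv @ S_B)

# Prior-weighted sum of squared Mahalanobis distances over all class pairs.
pairwise_value = 0.0
for i in range(C):
    for j in range(i + 1, C):
        d = means[i] - means[j]
        pairwise_value += priors[i] * priors[j] * (d @ S_W_inv @ d)

print(np.isclose(trace_value, pairwise_value))
```

The agreement relies on rewriting the between-class scatter in terms of class-mean differences, which is the identity the slides defer to Appendix A.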

Linear Discriminant Analysis - Determinant (1)

• In $\frac{|\Theta^T S_B \Theta|}{|\Theta^T S_W \Theta|}$, what is the meaning of "determinant"?
  – The determinant is the product of the eigenvalues, and hence is the product of the "variances" in the principal directions, thereby measuring the square of the hyperellipsoidal scattering volume. (Duda et al., 2001)
  – The determinant of a nonsingular covariance matrix can also be viewed as the generalized variance. (Wilks, 1963)
  – The volume of space occupied by the cloud of data points is proportional to the square root of the generalized variance.

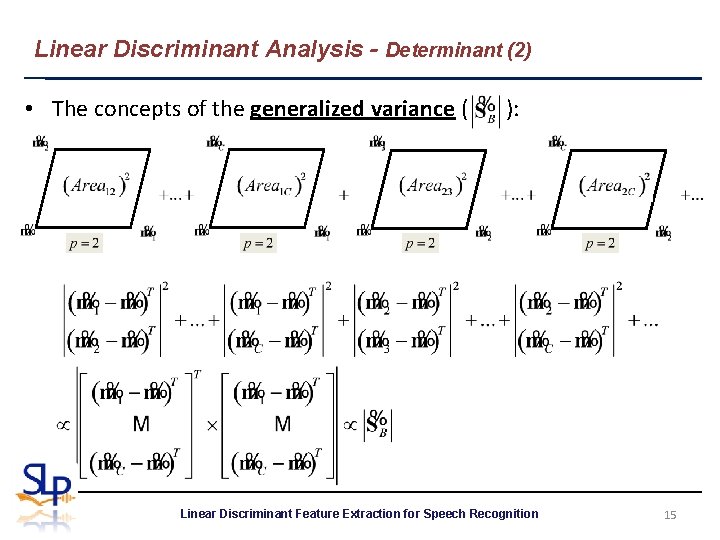

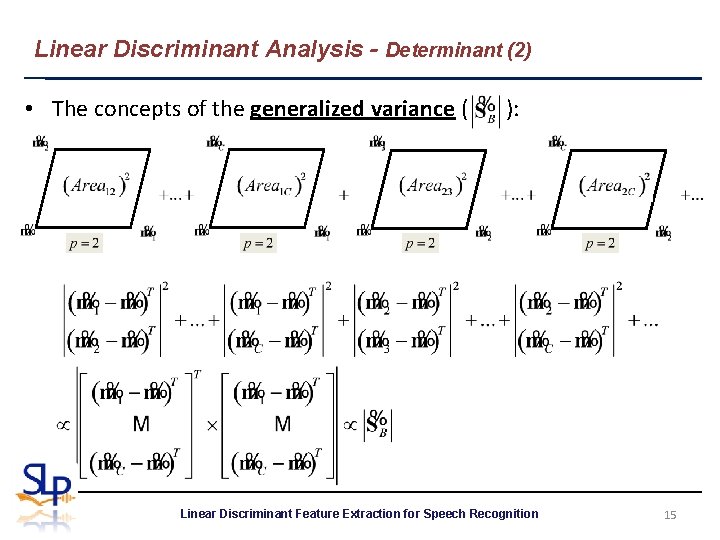

Linear Discriminant Analysis - Determinant (2)

• The concepts of the generalized variance ($|\Sigma|$):

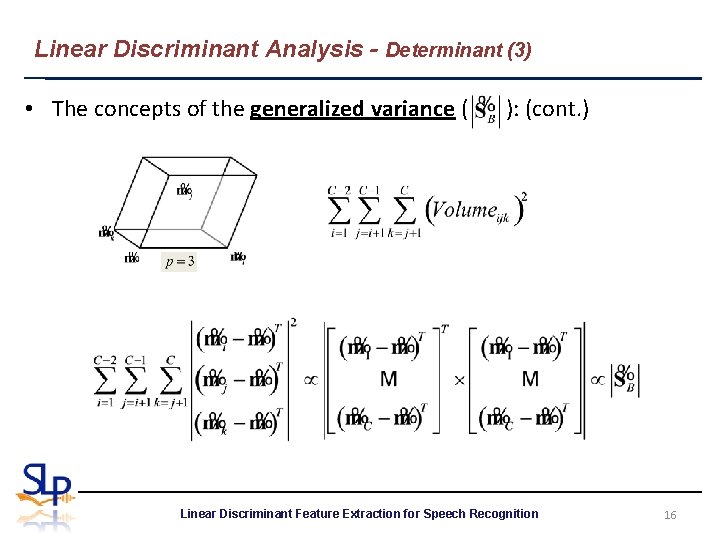

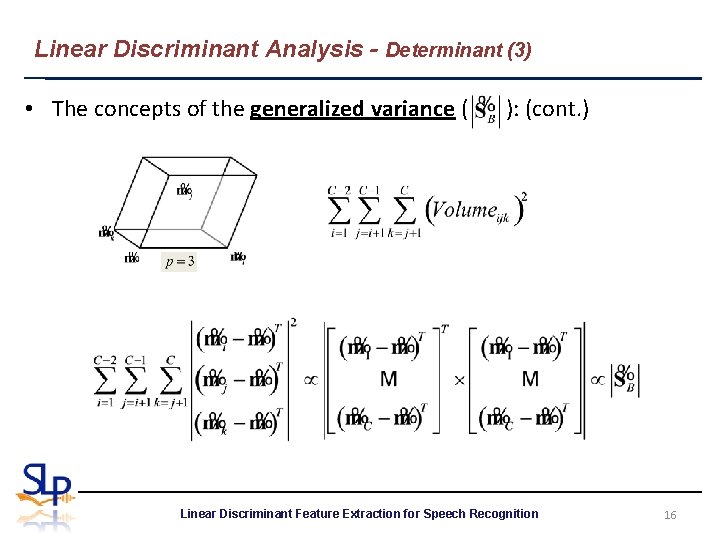

Linear Discriminant Analysis - Determinant (3)

• The concepts of the generalized variance ($|\Sigma|$): (cont.)

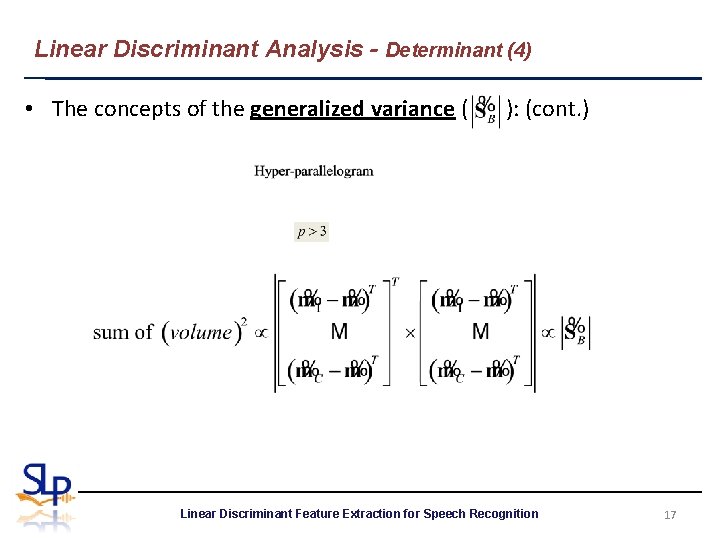

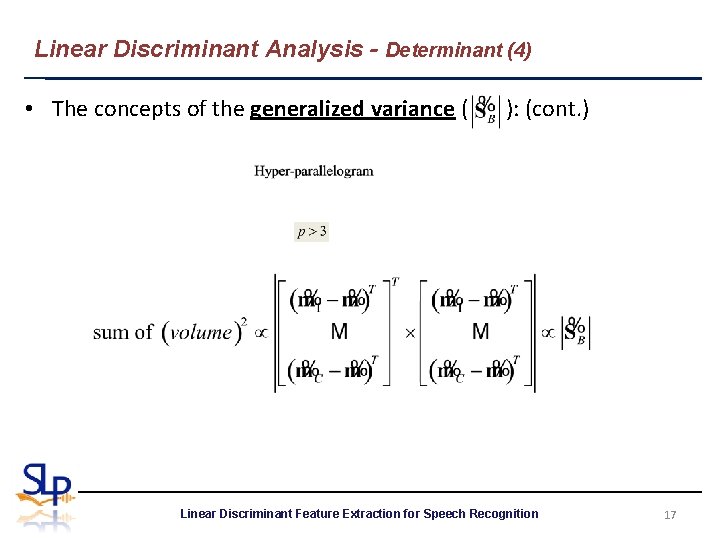

Linear Discriminant Analysis - Determinant (4)

• The concepts of the generalized variance ($|\Sigma|$): (cont.)

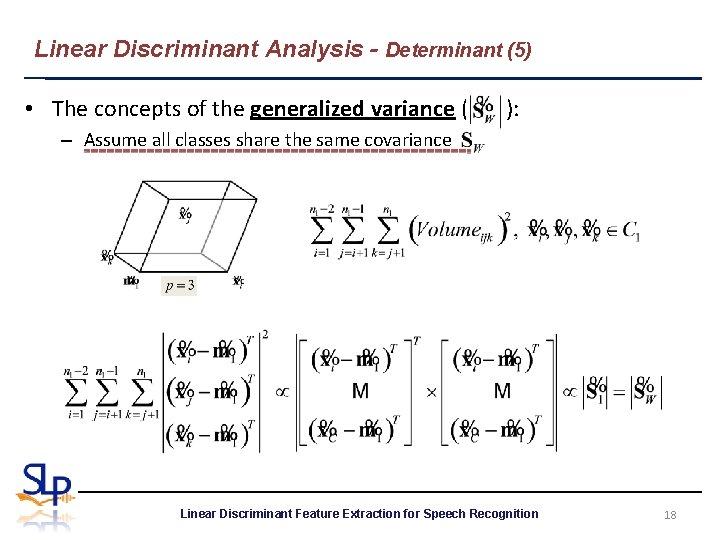

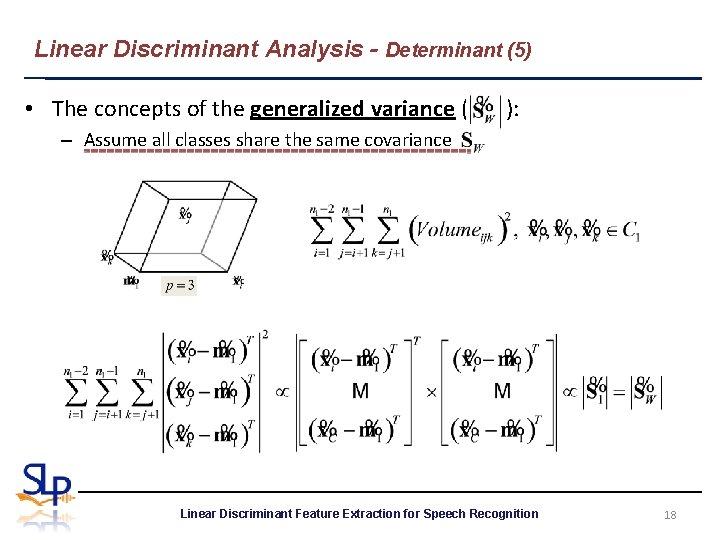

Linear Discriminant Analysis - Determinant (5)

• The concepts of the generalized variance ($|\Sigma|$):
  – Assume all classes share the same covariance.

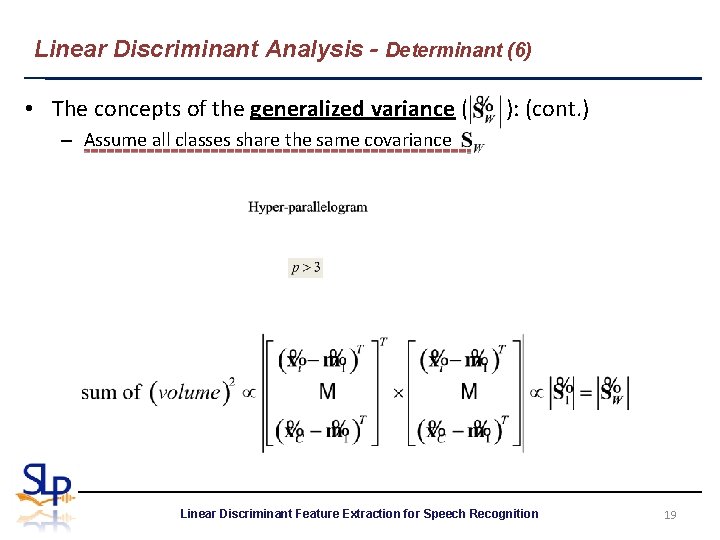

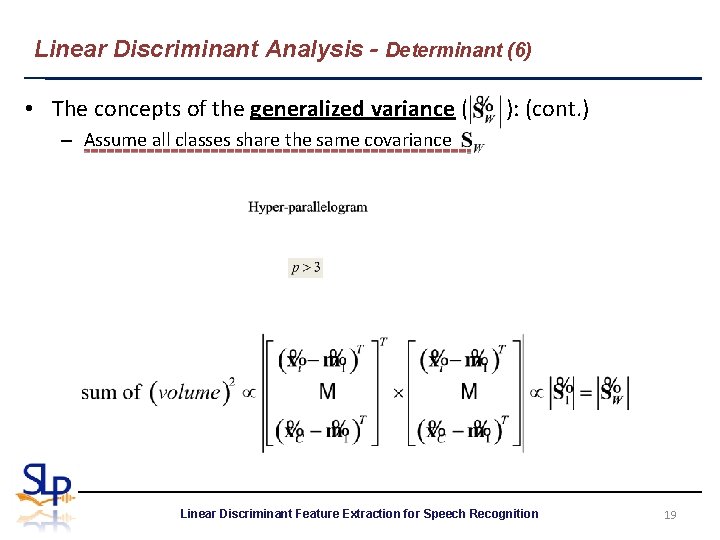

Linear Discriminant Analysis - Determinant (6)

• The concepts of the generalized variance ($|\Sigma|$): (cont.)
  – Assume all classes share the same covariance.

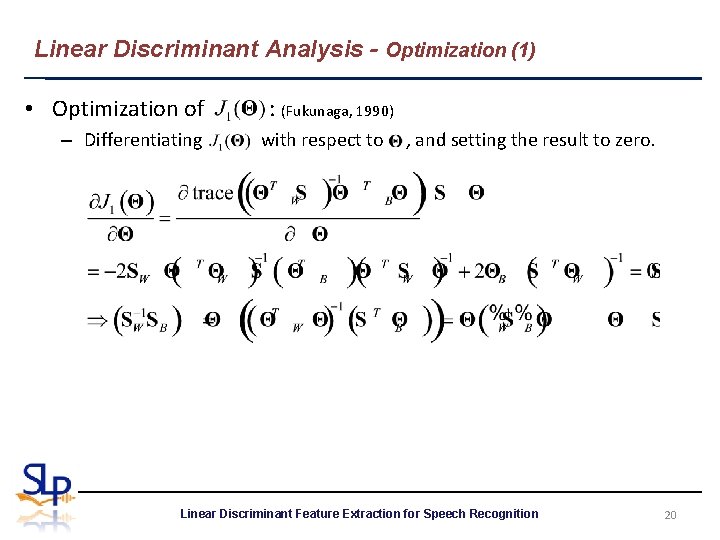

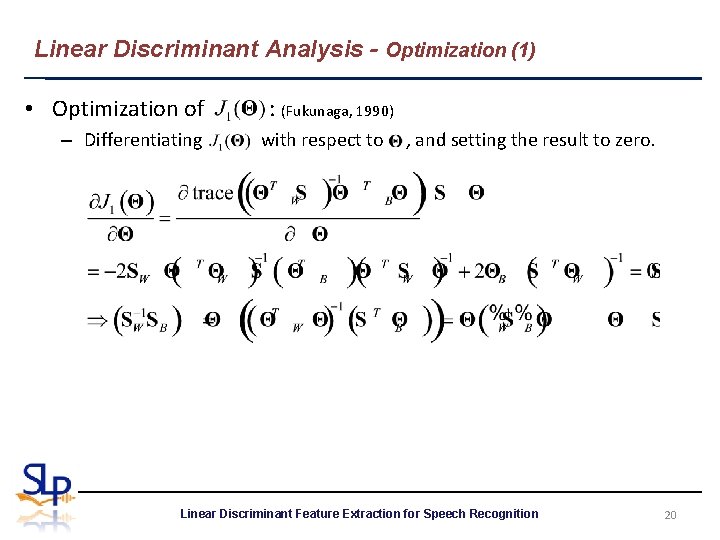

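Linear Discriminant Analysis - Optimization (1)

• Optimization of the trace criterion $J(\Theta) = \mathrm{tr}((\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta))$: (Fukunaga, 1990)
  – Differentiating $J(\Theta)$ with respect to $\Theta$ and setting the result to zero gives $S_W^{-1} S_B \Theta = \Theta (\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta)$.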

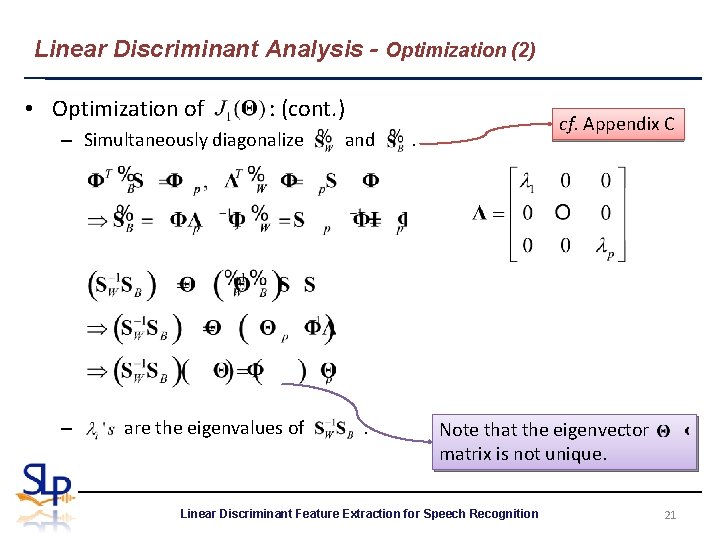

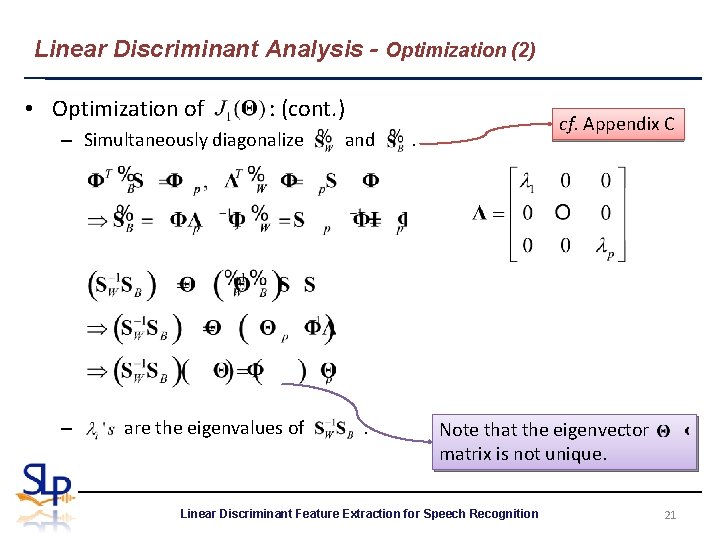

Linear Discriminant Analysis - Optimization (2)

• Optimization of the trace criterion: (cont.)
  – Simultaneously diagonalize $\Theta^T S_W \Theta$ and $\Theta^T S_B \Theta$ (cf. Appendix C).
  – The resulting diagonal entries $\lambda_i$ are the eigenvalues of $(\Theta^T S_W \Theta)^{-1} (\Theta^T S_B \Theta)$ and of $S_W^{-1} S_B$.

Note that the eigenvector matrix is not unique.

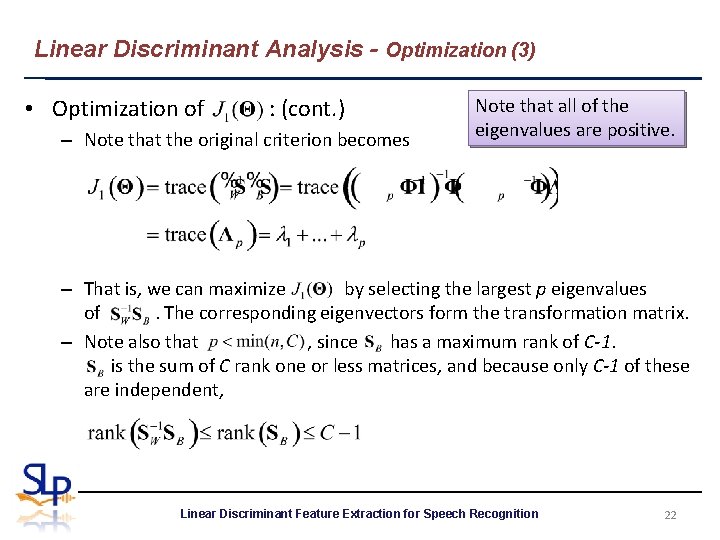

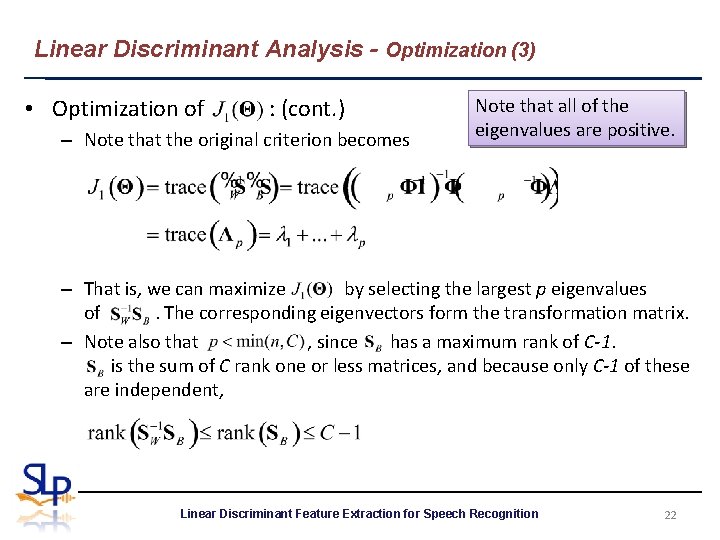

Linear Discriminant Analysis - Optimization (3)

• Optimization of the trace criterion: (cont.)
  – Note that the original criterion becomes $J(\Theta) = \sum_{i=1}^{p} \lambda_i$, where all of the eigenvalues are non-negative.
  – That is, we can maximize $J(\Theta)$ by selecting the largest p eigenvalues of $S_W^{-1} S_B$. The corresponding eigenvectors form the transformation matrix.
  – Note also that $p \le C - 1$, since $S_B$ has a maximum rank of C-1: $S_B$ is the sum of C matrices of rank one or less, and only C-1 of these are independent.

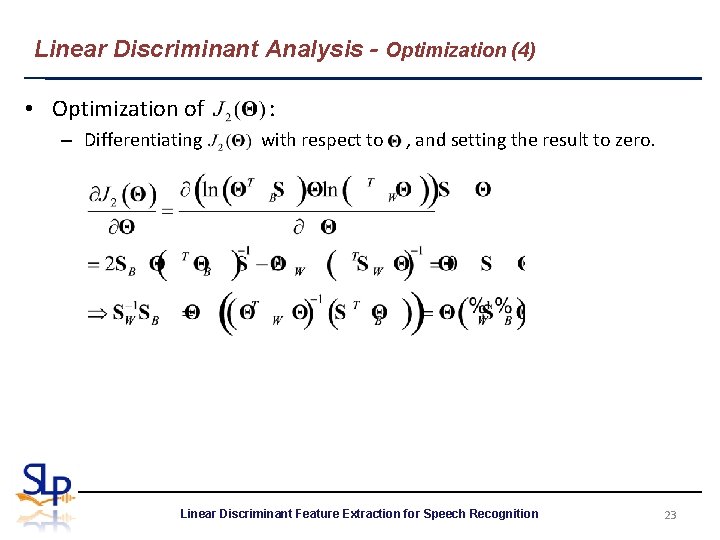

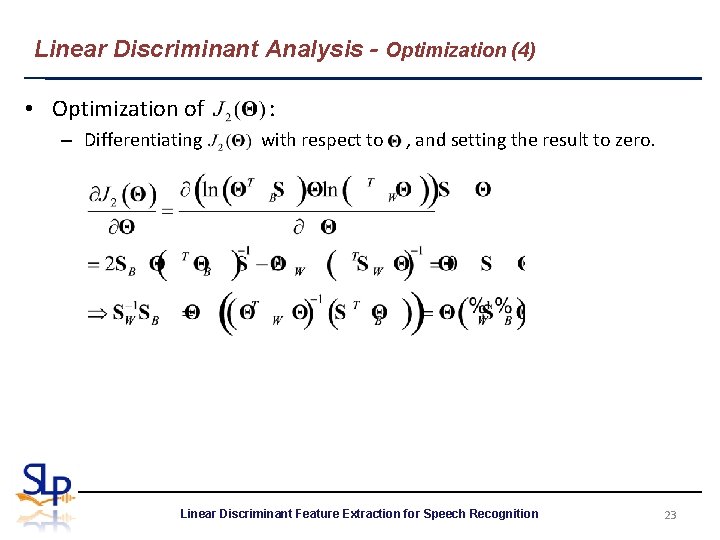

Linear Discriminant Analysis - Optimization (4)

• Optimization of the determinant criterion $J(\Theta) = \frac{|\Theta^T S_B \Theta|}{|\Theta^T S_W \Theta|}$:
  – Differentiate $J(\Theta)$ with respect to $\Theta$, and set the result to zero.

Linear Discriminant Analysis - Optimization (5)

• Optimization of the determinant criterion: (cont.)
  – We can see that the procedure is similar to that of the trace criterion. Note that all of the selected eigenvalues are positive.
  – That is, we can maximize the criterion by selecting the largest p eigenvalues of $S_W^{-1} S_B$. The corresponding eigenvectors form the transformation matrix.
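The optimization slides reduce both criteria to an eigen-analysis of $S_W^{-1} S_B$. A minimal sketch of that step follows, assuming $S_W$ is nonsingular; the helper name `lda_by_eigen` and the randomly generated scatter matrices are assumptions made for illustration.

```python
import numpy as np

def lda_by_eigen(S_W, S_B, p):
    """Return the p largest eigenvalues of S_W^{-1} S_B and their eigenvectors."""
    M = np.linalg.solve(S_W, S_B)            # S_W^{-1} S_B without an explicit inverse
    evals, evecs = np.linalg.eig(M)
    # The eigenvalues are real in theory; discard round-off imaginary parts.
    evals, evecs = evals.real, evecs.real
    order = np.argsort(evals)[::-1][:p]
    return evals[order], evecs[:, order]

# Hypothetical problem: C = 3 classes in n = 4 dimensions.
rng = np.random.default_rng(2)
C, n = 3, 4
priors = np.full(C, 1.0 / C)
means = rng.normal(size=(C, n))
G = rng.normal(size=(n, n))
S_W = G @ G.T + n * np.eye(n)
m = priors @ means
S_B = sum(w * np.outer(mu - m, mu - m) for w, mu in zip(priors, means))

evals, Theta = lda_by_eigen(S_W, S_B, p=C - 1)
print(np.linalg.matrix_rank(S_B))            # rank of S_B, bounded by C - 1
print(evals)                                 # the C - 1 retained eigenvalues
```

Because $S_W^{-1} S_B$ is not symmetric, a general eigen-solver is used here; the two-stage whitening view discussed later in the slides avoids this by working with symmetric matrices only.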

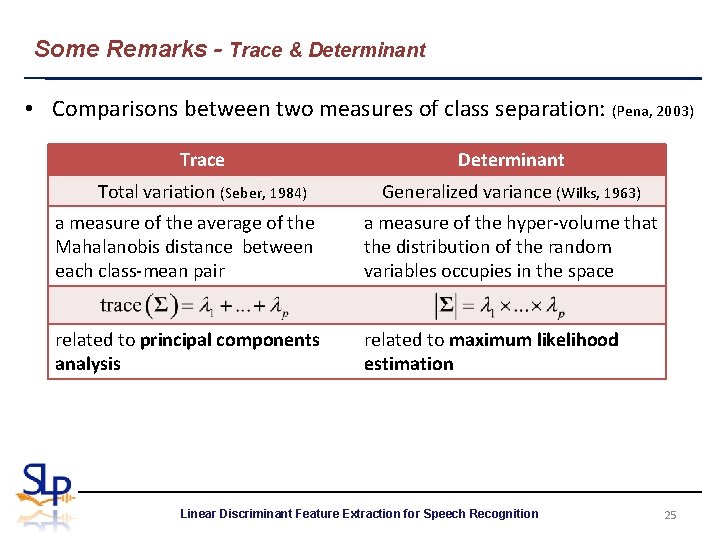

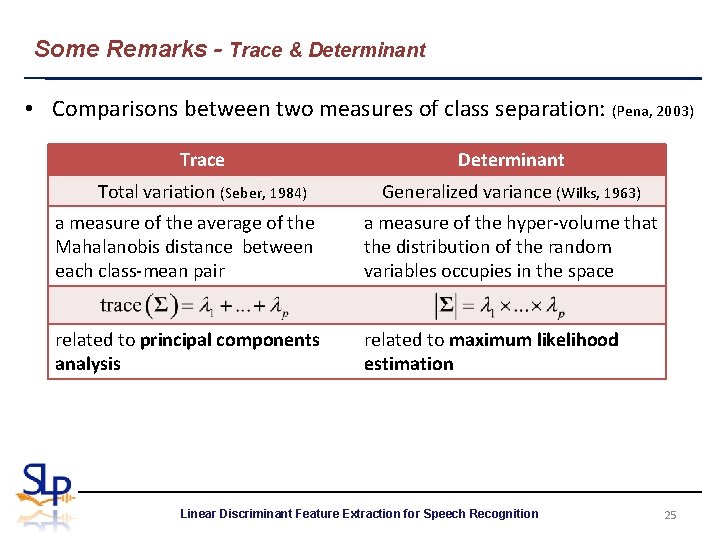

Some Remarks - Trace & Determinant

• Comparisons between two measures of class separation: (Pena, 2003)

| | Trace | Determinant |
|---|---|---|
| Name | Total variation (Seber, 1984) | Generalized variance (Wilks, 1963) |
| Meaning | A measure of the average of the Mahalanobis distance between each class-mean pair | A measure of the hyper-volume that the distribution of the random variables occupies in the space |
| Related to | Principal components analysis | Maximum likelihood estimation |

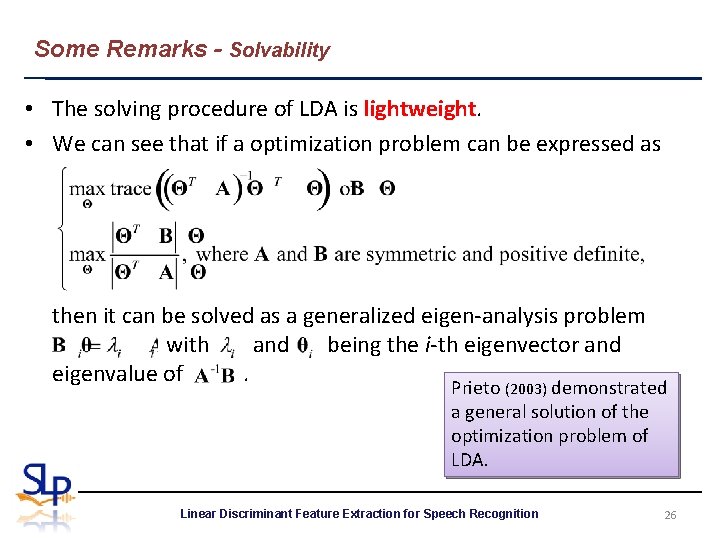

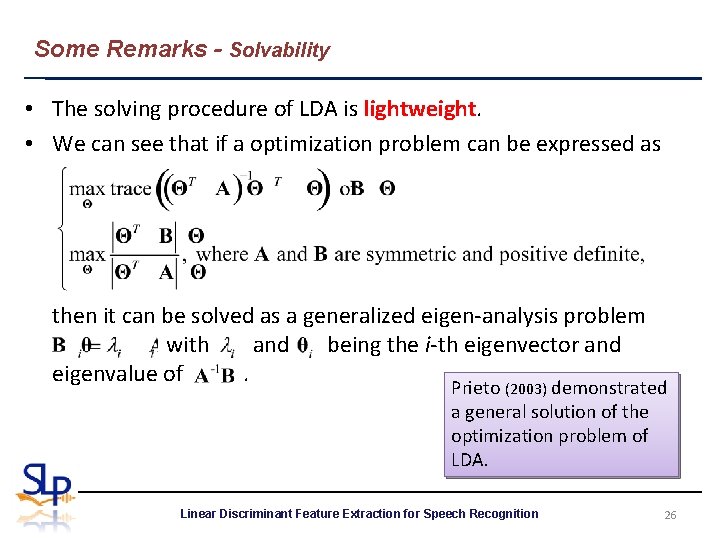

Some Remarks - Solvability

• The solving procedure of LDA is lightweight.
• We can see that if an optimization problem can be expressed as $\max_{\Theta} \mathrm{tr}((\Theta^T A \Theta)^{-1} (\Theta^T B \Theta))$ for symmetric matrices $A$ and $B$ with $A$ nonsingular, then it can be solved as a generalized eigen-analysis problem $B \theta_i = \lambda_i A \theta_i$, with $\theta_i$ and $\lambda_i$ being the i-th eigenvector and eigenvalue of $A^{-1} B$.

Prieto (2003) demonstrated a general solution of the optimization problem of LDA.

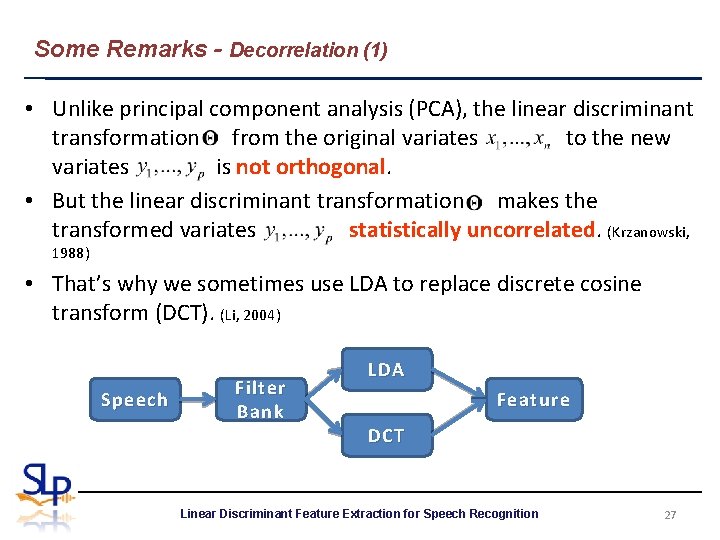

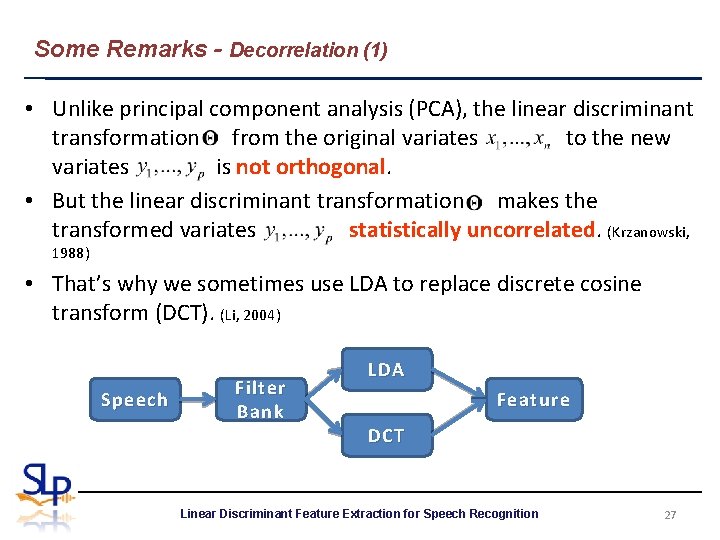

Some Remarks - Decorrelation (1)

• Unlike principal component analysis (PCA), the linear discriminant transformation from the original variates to the new variates is not orthogonal.
• But the linear discriminant transformation makes the transformed variates statistically uncorrelated. (Krzanowski, 1988)
• That's why we sometimes use LDA to replace the discrete cosine transform (DCT). (Li, 2004)

[Figure: Speech → Filter Bank → LDA (in place of DCT) → Feature.]

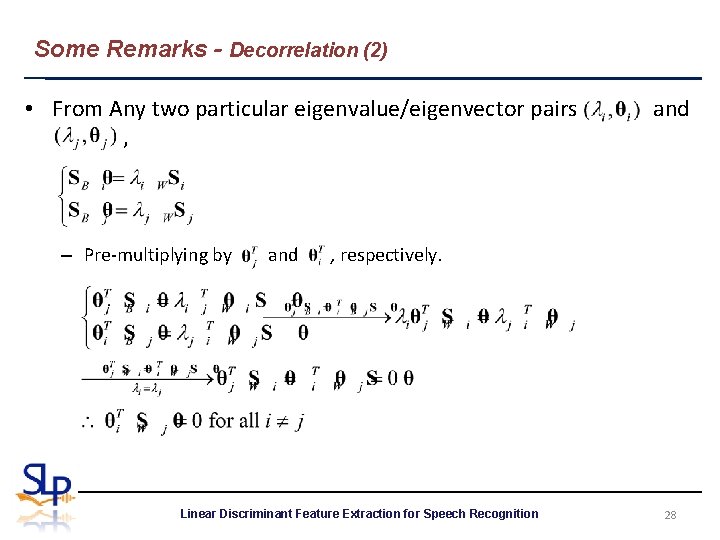

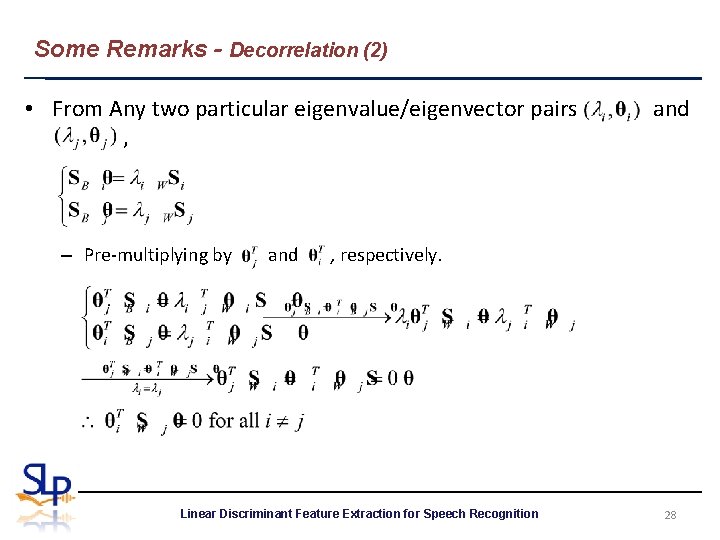

Some Remarks - Decorrelation (2)

• From $S_B \theta_i = \lambda_i S_W \theta_i$, take any two particular eigenvalue/eigenvector pairs $(\lambda_i, \theta_i)$ and $(\lambda_j, \theta_j)$:
  – Pre-multiply the two eigen-equations by $\theta_j^T$ and $\theta_i^T$, respectively.
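The pre-multiplication step can be written out in full. The derivation below is a reconstruction under the usual assumptions that $S_W$ and $S_B$ are symmetric and that the two eigenvalues are distinct; it is offered as a sketch of the standard argument rather than a copy of the slide's own equations.

```latex
\begin{align*}
S_B \theta_i &= \lambda_i S_W \theta_i, &
S_B \theta_j &= \lambda_j S_W \theta_j \\
\theta_j^T S_B \theta_i &= \lambda_i \, \theta_j^T S_W \theta_i, &
\theta_i^T S_B \theta_j &= \lambda_j \, \theta_i^T S_W \theta_j
\end{align*}
% By symmetry, \theta_j^T S_B \theta_i = \theta_i^T S_B \theta_j
% and \theta_j^T S_W \theta_i = \theta_i^T S_W \theta_j, so subtracting gives
\begin{align*}
0 &= (\lambda_i - \lambda_j) \, \theta_i^T S_W \theta_j \\
\lambda_i \neq \lambda_j \;&\Rightarrow\; \theta_i^T S_W \theta_j = 0
\;\Rightarrow\; \theta_i^T S_B \theta_j = 0
\end{align*}
```

In words, distinct discriminant directions are uncorrelated with respect to both the within-class and the between-class scatter, even though they are not orthogonal in the Euclidean sense.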

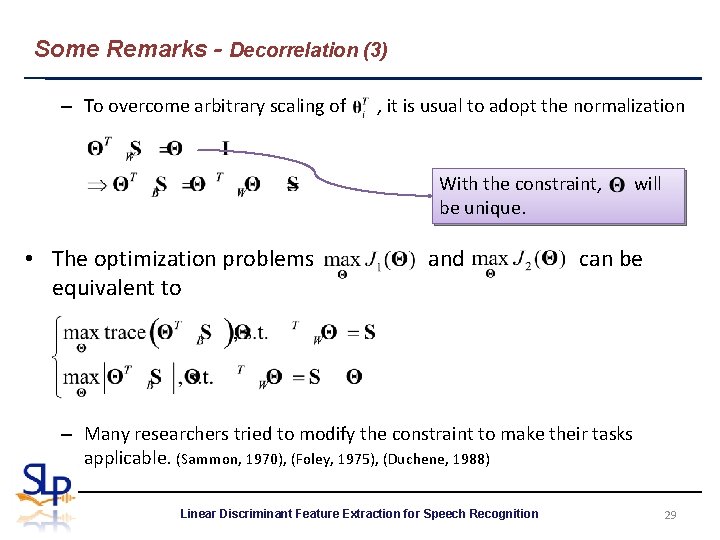

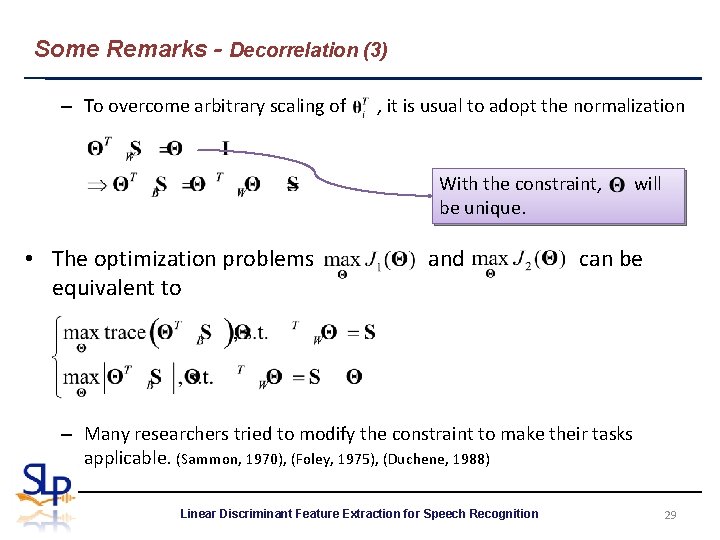

Some Remarks - Decorrelation (3)

  – To overcome the arbitrary scaling of $\theta_i$, it is usual to adopt the normalization $\Theta^T S_W \Theta = I$. With the constraint, $\Theta$ will be unique.
• The optimization problems can then be restated equivalently as maximizing $\mathrm{tr}(\Theta^T S_B \Theta)$ subject to $\Theta^T S_W \Theta = I$, and maximizing $|\Theta^T S_B \Theta|$ subject to $\Theta^T S_W \Theta = I$.
  – Many researchers tried to modify the constraint to make their tasks applicable. (Sammon, 1970), (Foley, 1975), (Duchene, 1988)

Some Remarks - Decorrelation (4)

• Thus, the variates produced by the LDA transformation are uncorrelated both within and between classes, and are scaled to have unit variance within classes.
• With the constraint, we can give LDA a geometrical meaning.

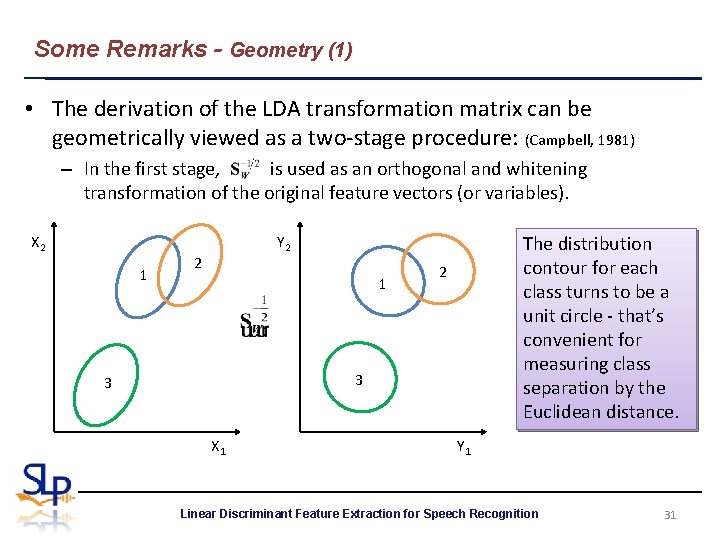

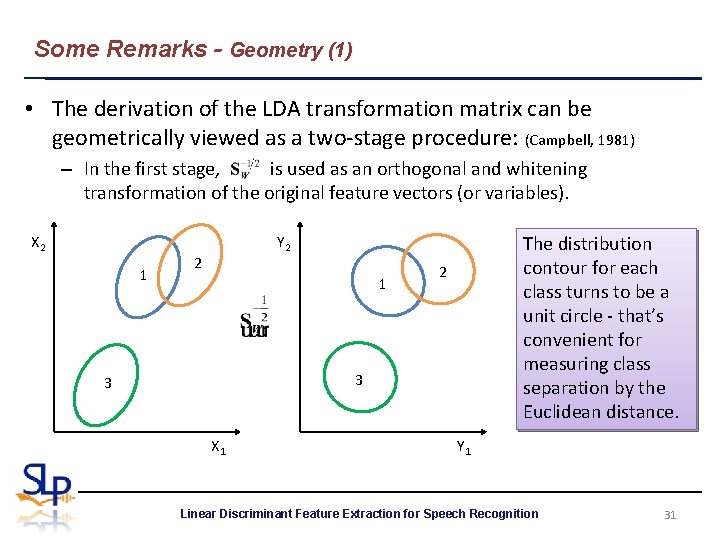

Some Remarks - Geometry (1)

• The derivation of the LDA transformation matrix can be geometrically viewed as a two-stage procedure: (Campbell, 1981)
  – In the first stage, a matrix that whitens $S_W$ (such as $S_W^{-1/2}$) is used as an orthogonal and whitening transformation of the original feature vectors (or variables).
  – The distribution contour for each class becomes a unit circle, which is convenient for measuring class separation by the Euclidean distance.

[Figure: classes 1, 2, and 3 on the original axes X1 and X2, and on the whitened axes Y1 and Y2.]

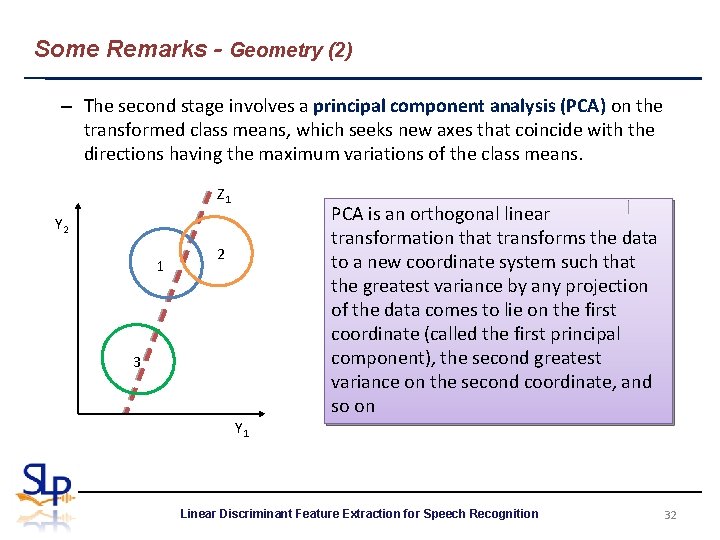

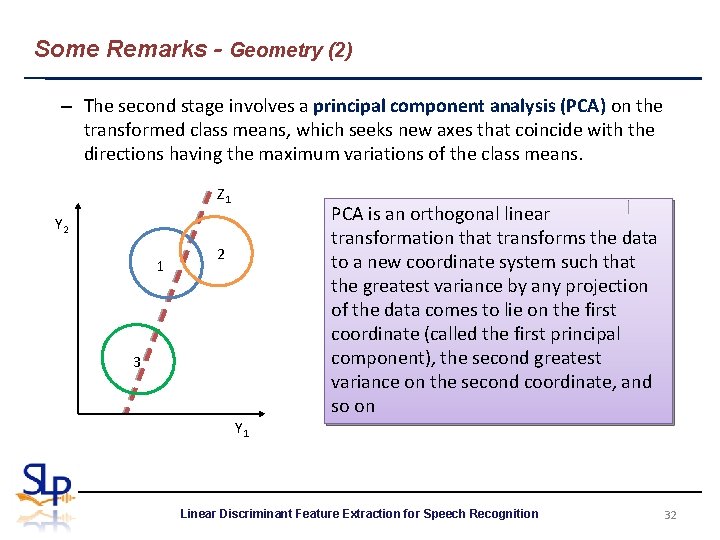

Some Remarks - Geometry (2)

  – The second stage involves a principal component analysis (PCA) on the transformed class means, which seeks new axes that coincide with the directions having the maximum variations of the class means.

PCA is an orthogonal linear transformation that transforms the data to a new coordinate system such that the greatest variance by any projection of the data comes to lie on the first coordinate (called the first principal component), the second greatest variance on the second coordinate, and so on.

[Figure: classes 1, 2, and 3 on the whitened axes Y1 and Y2, with the principal axis Z1.]

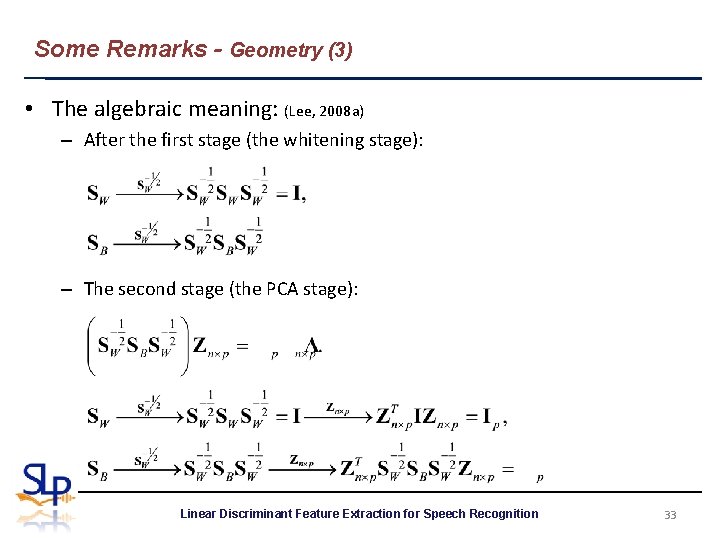

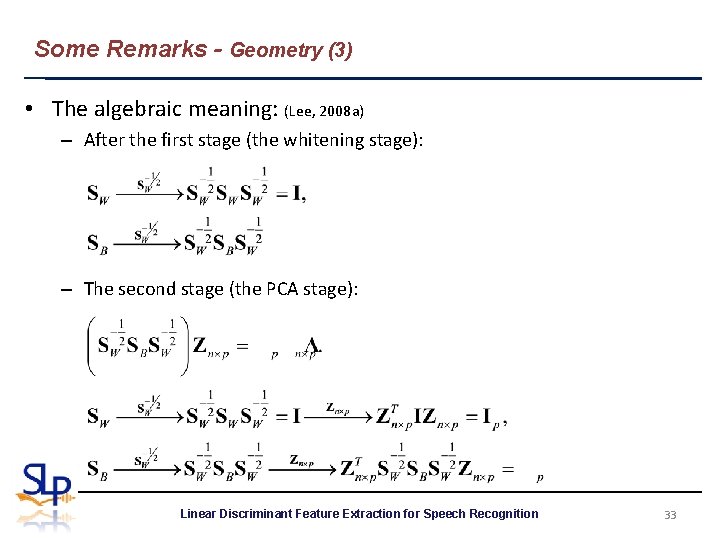

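Some Remarks - Geometry (3)

• The algebraic meaning: (Lee, 2008a)
  – After the first stage (the whitening stage): the within-class scatter becomes the identity matrix, and the between-class scatter becomes $S_W^{-1/2} S_B S_W^{-1/2}$.
  – The second stage (the PCA stage): the whitened between-class scatter is diagonalized by its eigenvectors.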

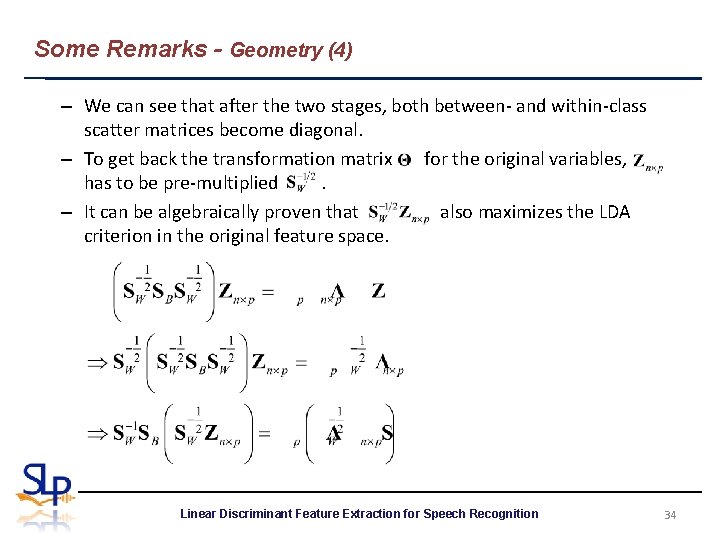

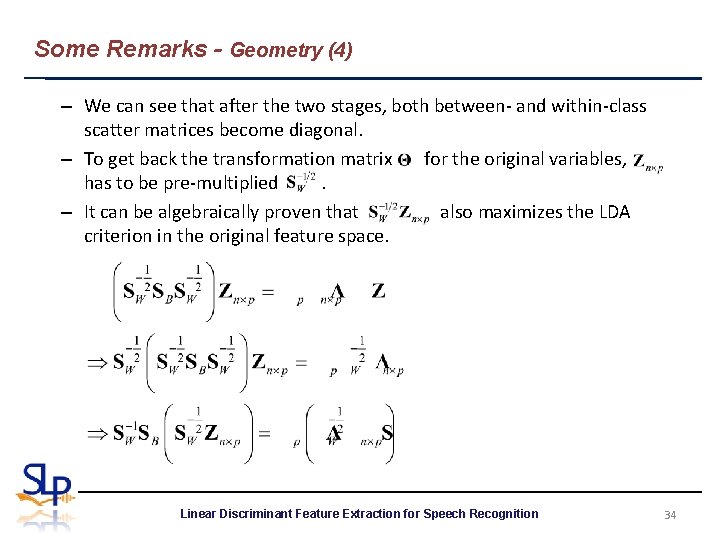

Some Remarks - Geometry (4)

  – We can see that after the two stages, both between- and within-class scatter matrices become diagonal.
  – To get back the transformation matrix for the original variables, the eigenvector matrix from the PCA stage has to be pre-multiplied by the whitening matrix.
  – It can be algebraically proven that the resulting matrix also maximizes the LDA criterion in the original feature space.

Some Remarks - Geometry (5)

• Thus, we can give an alternative algorithm for deriving a unique LDA transformation.

Algorithm I. An alternative procedure of LDA
1. Find matrix $B$, which is made up of the eigenvectors corresponding to the p largest eigenvalues of $S_W^{-1/2} S_B S_W^{-1/2}$.
2. Derive $\Theta = S_W^{-1/2} B$.

• According to the geometric analysis of LDA, at least two possible directions are offered to further generalize LDA:
  – To obtain more effective estimates of the within-class scatter.
  – To modify the between-class scatter for better class discrimination.
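The two-stage view (whitening, then PCA on the whitened between-class scatter) can be sketched as follows. The symmetric inverse square root is one possible choice of whitening matrix and is an assumption here, as are the function name `lda_two_stage` and the random test matrices.

```python
import numpy as np

def lda_two_stage(S_W, S_B, p):
    """Whitening stage followed by a PCA stage; returns (Theta, eigenvalues)."""
    # Stage 1: symmetric whitening matrix W = S_W^{-1/2}, so that W S_W W = I.
    w_vals, w_vecs = np.linalg.eigh(S_W)
    W = (w_vecs / np.sqrt(w_vals)) @ w_vecs.T
    # Stage 2: eigenvectors of the whitened between-class scatter.
    b_vals, b_vecs = np.linalg.eigh(W @ S_B @ W)
    order = np.argsort(b_vals)[::-1][:p]
    B = b_vecs[:, order]
    # Map back to the original variables by pre-multiplying with W.
    return W @ B, b_vals[order]

# Hypothetical problem: C = 3 classes in n = 4 dimensions.
rng = np.random.default_rng(3)
C, n = 3, 4
priors = np.full(C, 1.0 / C)
means = rng.normal(size=(C, n))
G = rng.normal(size=(n, n))
S_W = G @ G.T + n * np.eye(n)
m = priors @ means
S_B = sum(w * np.outer(mu - m, mu - m) for w, mu in zip(priors, means))

Theta, lams = lda_two_stage(S_W, S_B, p=C - 1)
print(np.round(Theta.T @ S_W @ Theta, 6))    # projected within-class scatter
print(np.round(Theta.T @ S_B @ Theta, 6))    # projected between-class scatter
```

Both stages use a symmetric eigen-solver, and the result satisfies the unit within-class variance normalization by construction, so no separate rescaling step is needed.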

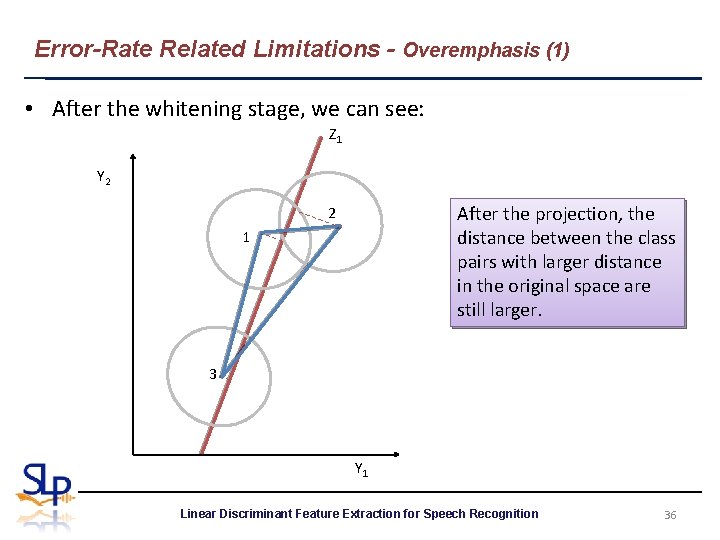

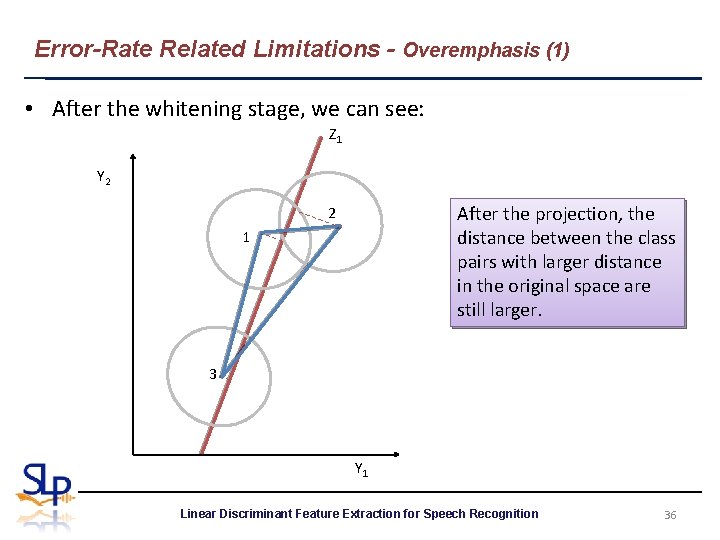

Error-Rate Related Limitations - Overemphasis (1)

• After the whitening stage, we can see that, after the projection, the class pairs that were farther apart in the original space are still farther apart.

[Figure: classes 1, 2, and 3 on the whitened axes Y1 and Y2, with the principal axis Z1.]

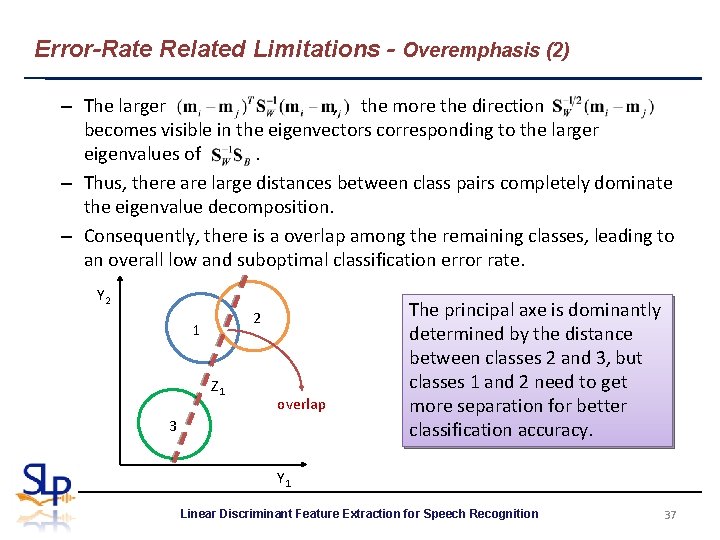

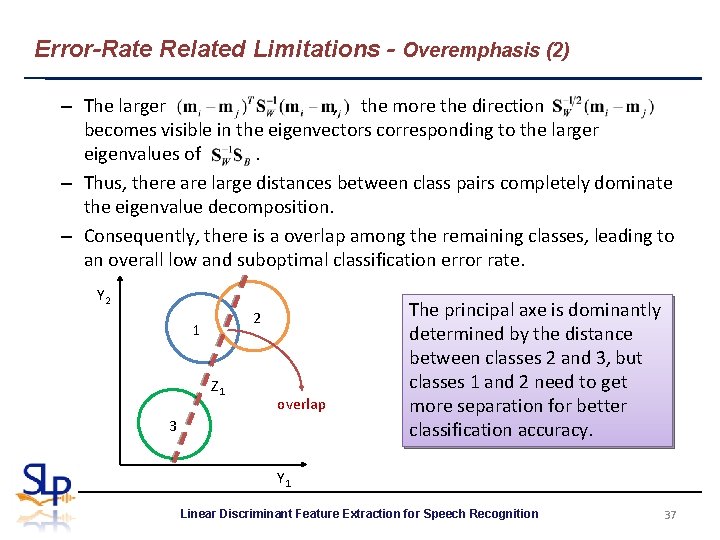

Error-Rate Related Limitations - Overemphasis (2)

  – The larger the distance between the whitened means of two classes, the more the direction between them becomes visible in the eigenvectors corresponding to the larger eigenvalues of the whitened between-class scatter.
  – Thus, class pairs with large distances completely dominate the eigenvalue decomposition.
  – Consequently, there is an overlap among the remaining classes, leading to an overall suboptimal classification error rate.

The principal axis is dominantly determined by the distance between classes 2 and 3, but classes 1 and 2 need more separation for better classification accuracy.

[Figure: classes 1, 2, and 3 on the whitened axes Y1 and Y2; classes 1 and 2 overlap when projected onto the principal axis Z1.]

Error-Rate Related Limitations - Overemphasis (3)

• To alleviate the overemphasis of the influence of classes that are already well separated, some weighting-based approaches were proposed.
• These modify the LDA criterion by replacing $S_B$ with the following weighted form: $S_B^{w} = \sum_{i<j} \frac{n_i}{N} \frac{n_j}{N} \, w(\Delta_{ij}) \, (m_i - m_j)(m_i - m_j)^T$, where $w(\cdot)$ is the weighting function and $\Delta_{ij}$ is the Mahalanobis distance between the means of classes $i$ and $j$.

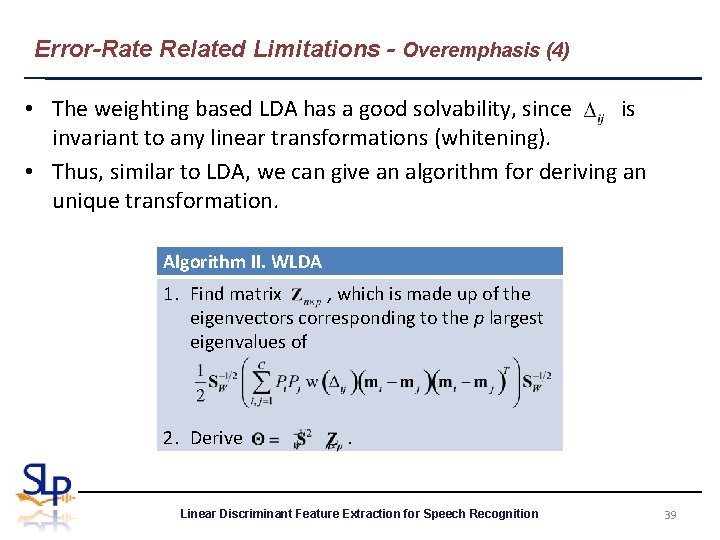

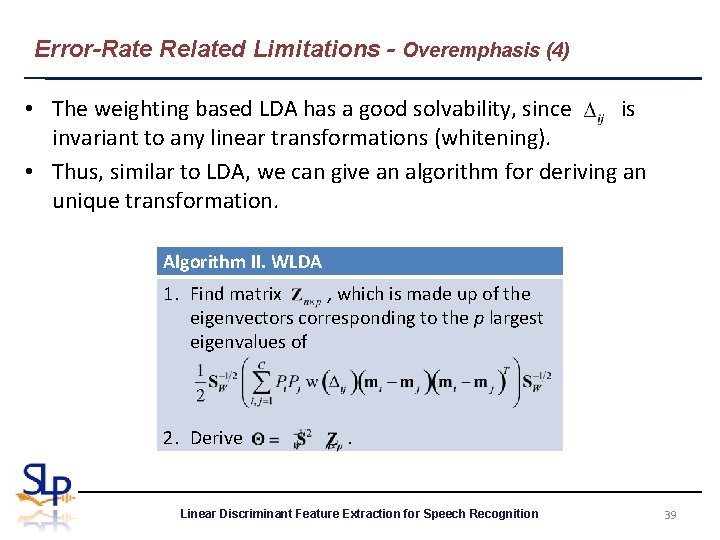

Error-Rate Related Limitations - Overemphasis (4)

• The weighting-based LDA has good solvability, since the Mahalanobis distance $\Delta_{ij}$ is invariant to any linear transformation (such as whitening).
• Thus, similar to LDA, we can give an algorithm for deriving a unique transformation.

Algorithm II. WLDA
1. Find matrix $B$, which is made up of the eigenvectors corresponding to the p largest eigenvalues of $S_W^{-1/2} S_B^{w} S_W^{-1/2}$, where $S_B^{w}$ is the weighted between-class scatter.
2. Derive $\Theta = S_W^{-1/2} B$.

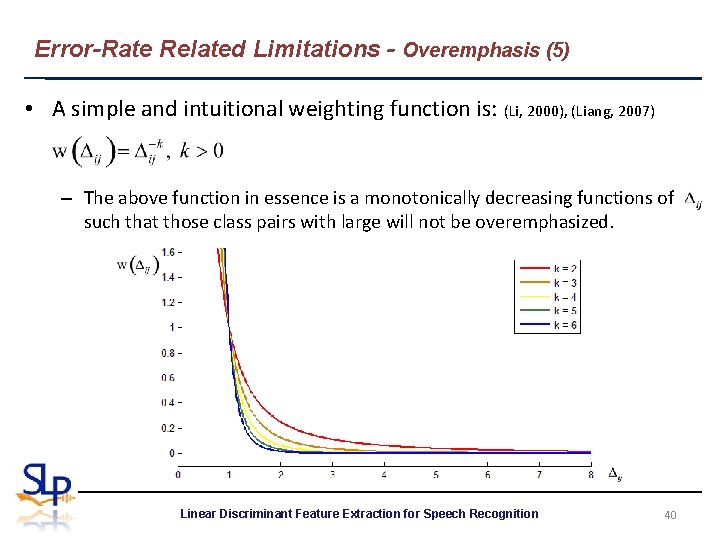

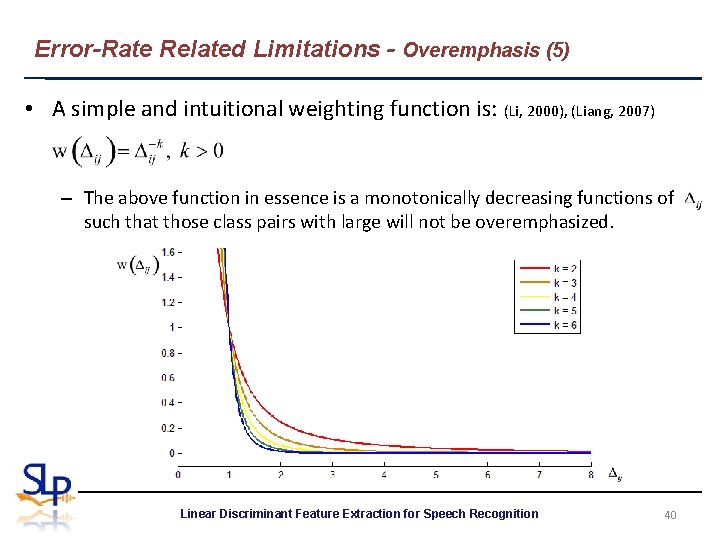

Error-Rate Related Limitations - Overemphasis (5)

• A simple and intuitive weighting function is given by (Li, 2000) and (Liang, 2007).
  – That function is in essence a monotonically decreasing function of the Mahalanobis distance $\Delta_{ij}$, so that class pairs with a large $\Delta_{ij}$ will not be overemphasized.
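A weighted pairwise between-class scatter of the kind described on these slides can be sketched as below. The inverse-power weight used in the example is a hypothetical stand-in for a monotonically decreasing function and is not claimed to be the formula of Li (2000) or Liang (2007); `weighted_between_scatter` and the test data are likewise illustrative.

```python
import numpy as np

def weighted_between_scatter(means, priors, S_W, weight):
    """Sum of prior-weighted, distance-weighted outer products over class pairs."""
    C, n = means.shape
    S_W_inv = np.linalg.inv(S_W)
    S_Bw = np.zeros((n, n))
    for i in range(C):
        for j in range(i + 1, C):
            d = means[i] - means[j]
            delta = np.sqrt(d @ S_W_inv @ d)           # Mahalanobis distance
            S_Bw += priors[i] * priors[j] * weight(delta) * np.outer(d, d)
    return S_Bw

def inverse_power_weight(delta, k=2.0):
    """Hypothetical decreasing weight: large distances contribute less."""
    return delta ** (-k)

rng = np.random.default_rng(4)
C, n = 4, 3
priors = np.array([0.25, 0.25, 0.25, 0.25])
means = rng.normal(size=(C, n)) * 3.0
G = rng.normal(size=(n, n))
S_W = G @ G.T + n * np.eye(n)

# With a constant weight of 1 the pairwise form reduces to the ordinary S_B.
m = priors @ means
S_B = sum(w * np.outer(mu - m, mu - m) for w, mu in zip(priors, means))
S_B_unit = weighted_between_scatter(means, priors, S_W, lambda delta: 1.0)
S_B_weighted = weighted_between_scatter(means, priors, S_W, inverse_power_weight)
print(np.allclose(S_B_unit, S_B))
```

The weighted matrix can then be passed to the same whitening-plus-eigenvector procedure as the unweighted one, which is what keeps the approach computationally simple.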

Error-Rate Related Limitations - Distance Measure (1)

• As a distance-measure based approach, LDA tries to maximize the Mahalanobis distance between each pair of class means.
• LDA is not directly associated with the classification error.
• But LDA is optimal for classification in a Bayesian sense under the following conditions:
  – The problem has two classes.
  – The classes are normally distributed with equal covariance.

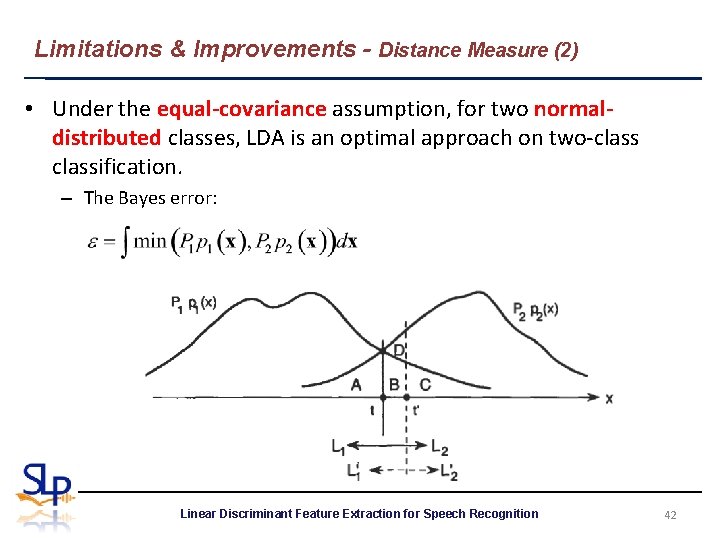

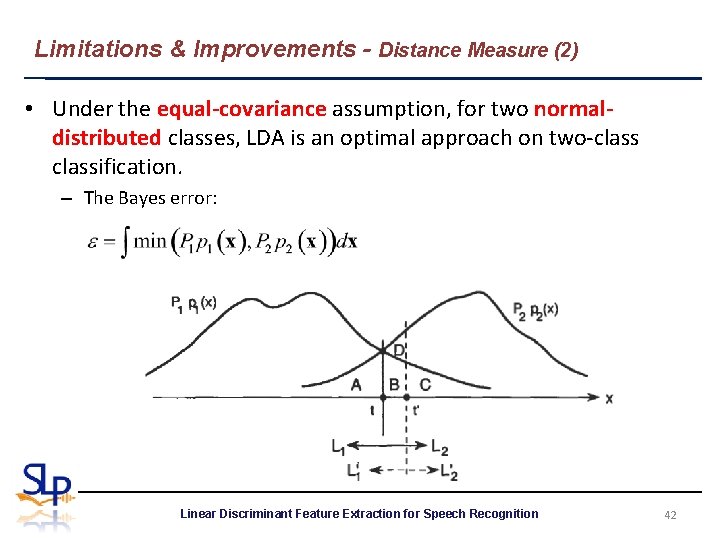

Error-Rate Related Limitations - Distance Measure (2)

• Under the equal-covariance assumption, for two normally distributed classes, LDA is an optimal approach to two-class classification.
  – The Bayes error:

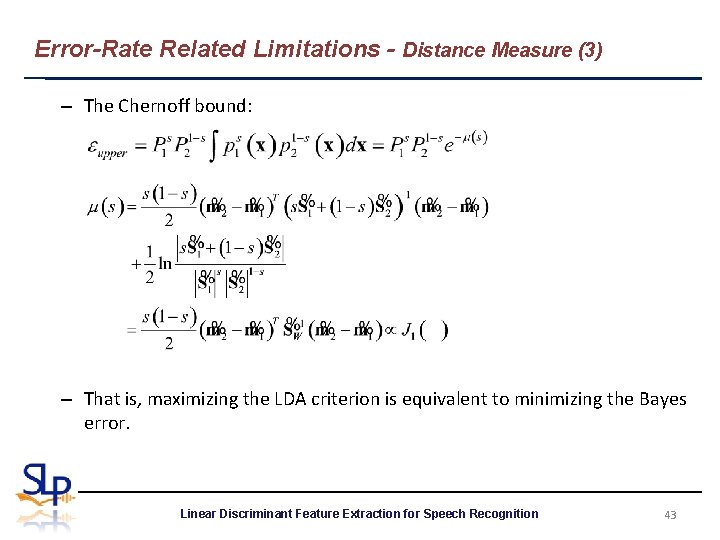

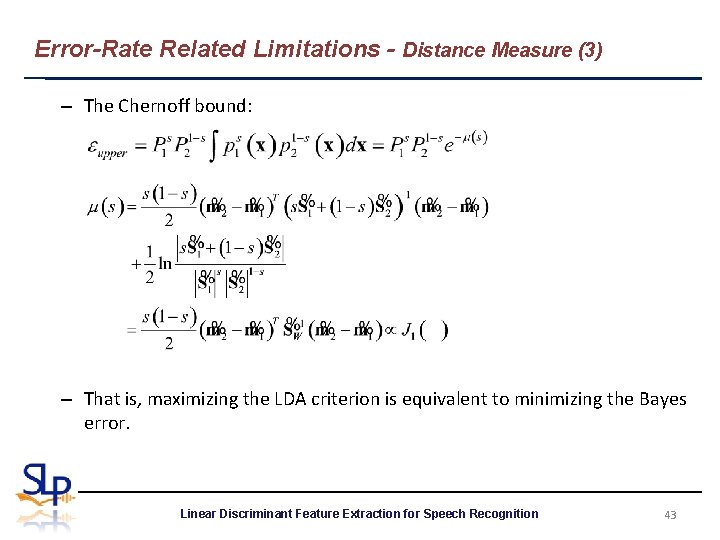

Error-Rate Related Limitations - Distance Measure (3)

  – The Chernoff bound:
  – That is, under these conditions, maximizing the LDA criterion is equivalent to minimizing the Bayes error.
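For two Gaussian classes with a shared covariance, both the Bayes error and a Chernoff-type bound depend on the data only through the Mahalanobis distance between the class means. The sketch below assumes equal priors for the exact error and uses the Bhattacharyya bound (the Chernoff bound at s = 1/2); these are the standard textbook expressions and are not copied from the slide images.

```python
import math

def bayes_error_equal_priors(delta):
    """Bayes error for two equal-covariance Gaussians with equal priors.

    Equals the standard normal CDF evaluated at -delta / 2, where delta is
    the Mahalanobis distance between the two class means.
    """
    return 0.5 * math.erfc(delta / (2.0 * math.sqrt(2.0)))

def bhattacharyya_bound(delta, p1=0.5, p2=0.5):
    """Upper bound on the Bayes error (Chernoff bound with s = 1/2)."""
    return math.sqrt(p1 * p2) * math.exp(-delta ** 2 / 8.0)

for delta in (0.5, 1.0, 2.0, 4.0):
    print(delta, bayes_error_equal_priors(delta), bhattacharyya_bound(delta))
```

Both quantities fall as the distance grows, which is the sense in which increasing the Mahalanobis distance lowers the error in the two-class, equal-covariance case.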

Error-Rate Related Limitations - Distance Measure (4)

• For multi-class classification, LDA is suboptimal.
  – The multi-class classification error rates have not been fully investigated.
  – Geometrically speaking, after the whitening stage, even if the equal-covariance assumption is satisfied, LDA cannot guarantee a smaller (not necessarily minimum) overlap among all classes.
• Loog (2001) proposed a new criterion, the approximate pairwise accuracy criterion (aPAC), to solve this problem.
  – aPAC is derived from an attempt to approximate the Bayes error for pairs of classes.
  – aPAC still retains the equal-covariance assumption of LDA, and simultaneously retains the computational simplicity of LDA.

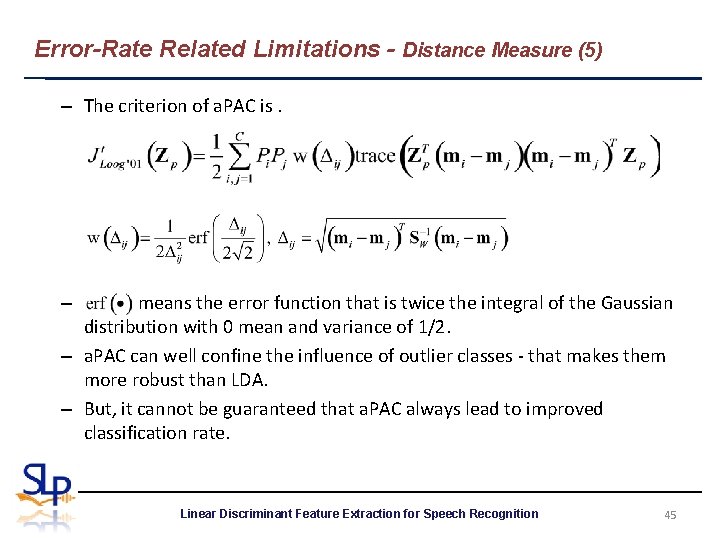

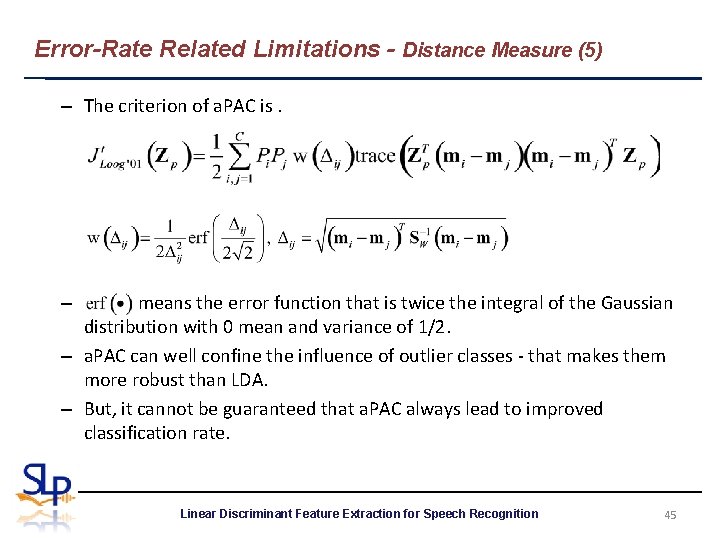

Error-Rate Related Limitations - Distance Measure (5)

  – The aPAC criterion uses the error function erf, which is twice the integral of the Gaussian distribution with mean 0 and variance 1/2.
  – aPAC can confine the influence of outlier classes well, which makes it more robust than LDA.
  – But it cannot be guaranteed that aPAC always leads to an improved classification rate.

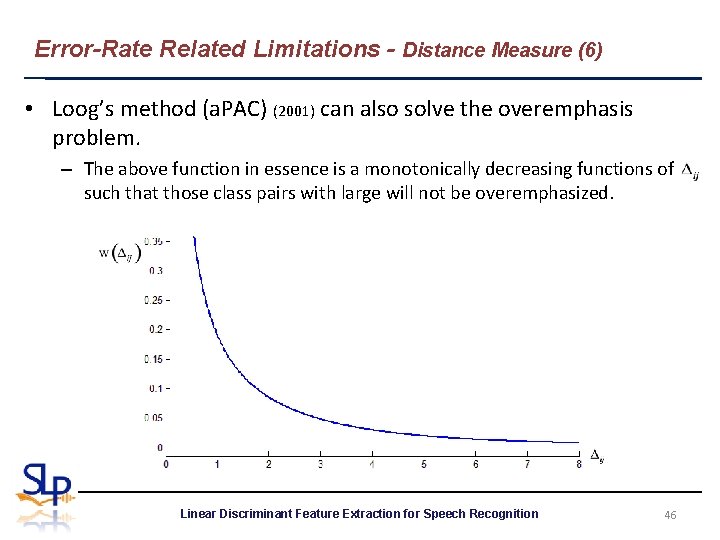

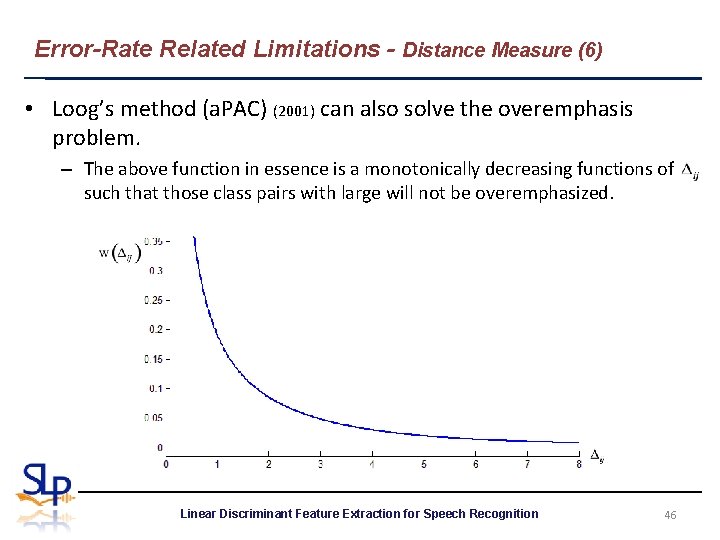

Error-Rate Related Limitations - Distance Measure (6)

• Loog's method (aPAC) (2001) can also solve the overemphasis problem.
  – Its weighting function is in essence a monotonically decreasing function of the Mahalanobis distance $\Delta_{ij}$, so that class pairs with a large $\Delta_{ij}$ will not be overemphasized.
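The decreasing behaviour of an erf-based pairwise weight can be illustrated numerically. The form used below, erf(Δ / (2√2)) / (2Δ²), is the weighting commonly attributed to Loog et al. (2001); since the slide's own formula is not preserved in the text, treat it as an assumption, along with the function name `apac_weight`.

```python
import math

def apac_weight(delta):
    """Assumed aPAC-style weight for a class pair at Mahalanobis distance delta."""
    return math.erf(delta / (2.0 * math.sqrt(2.0))) / (2.0 * delta ** 2)

# Weights shrink as the class pair moves farther apart.
for delta in (0.5, 1.0, 2.0, 4.0):
    print(delta, apac_weight(delta))
```

A function of this kind could be supplied as the weighting function in the weighted between-class scatter, so that already well-separated class pairs contribute little to the eigen-analysis.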

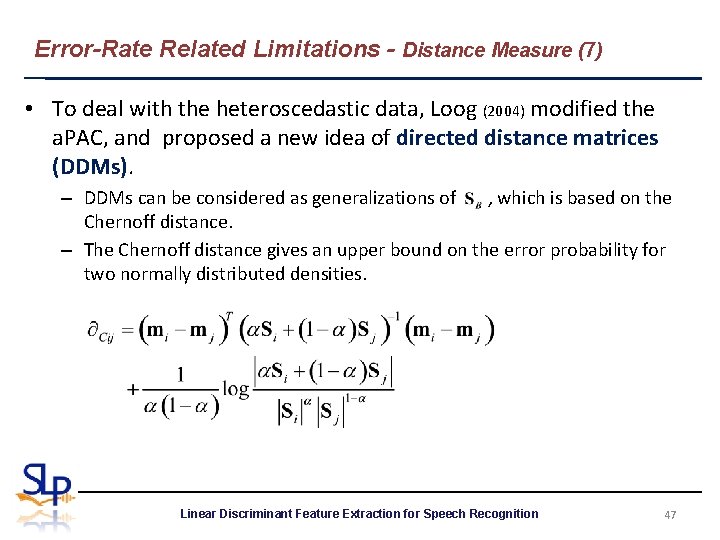

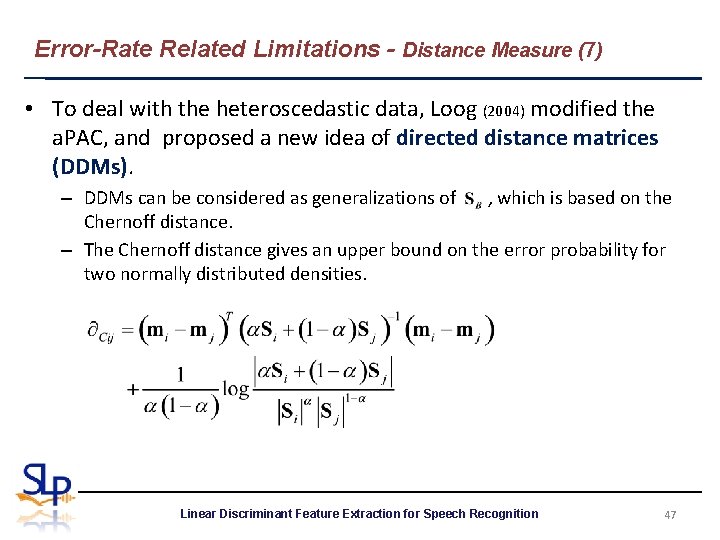

Error-Rate Related Limitations - Distance Measure (7)

• To deal with heteroscedastic data, Loog (2004) modified aPAC and proposed a new idea, directed distance matrices (DDMs).
  – DDMs, which are based on the Chernoff distance, can be considered as generalizations of the between-class scatter.
  – The Chernoff distance gives an upper bound on the error probability for two normally distributed densities.

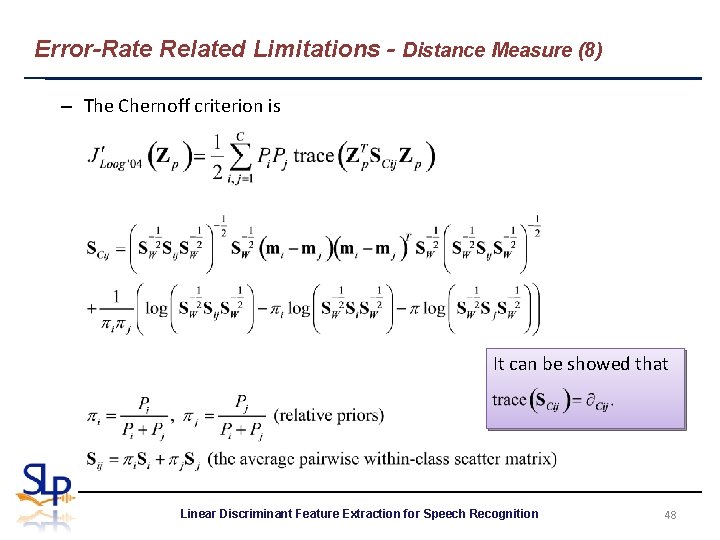

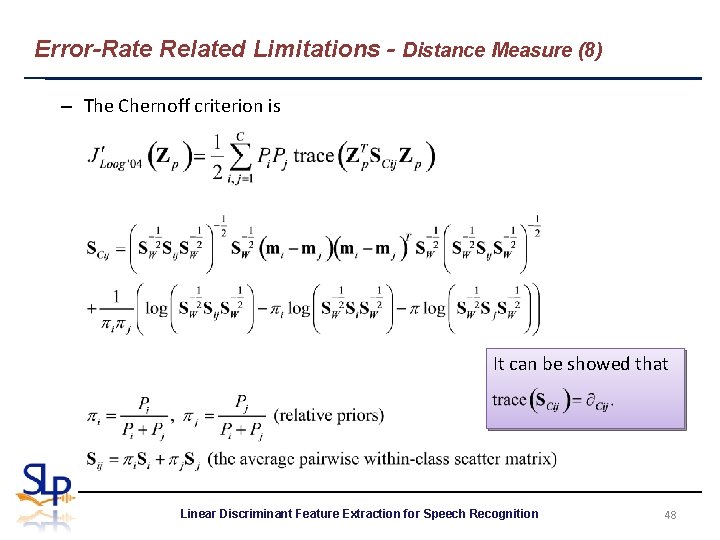

Error-Rate Related Limitations - Distance Measure (8)

  – The Chernoff criterion is:
  – It can be shown that:

Error-Rate Related Limitations - Distance Measure (9)

• Although Loog's method (2004) skillfully transformed the distance-measure based approach into a classification-accuracy based one, it suffers from some limitations.
  – It is not distribution-free.
  – Similar to Loog's method (2001), it approximates the theoretical C-class Bayes error by the sum of the two-class errors, which is an upper bound on the C-class error.
  – Not all classifiers are completely designed as Bayesian contextual ones.

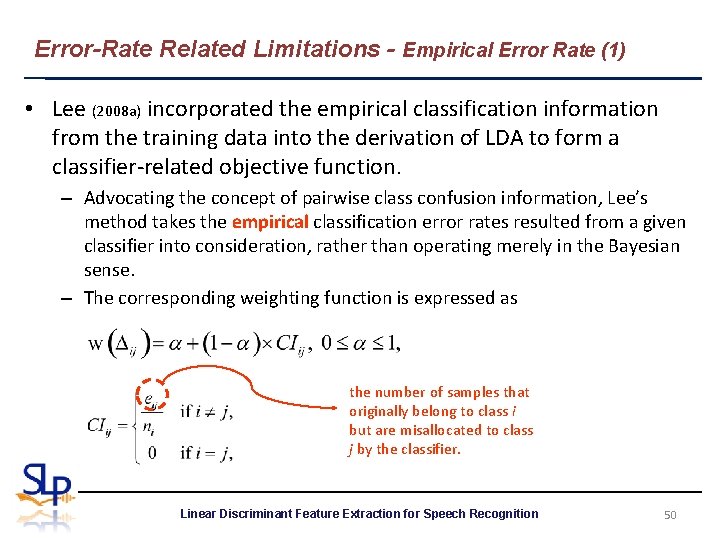

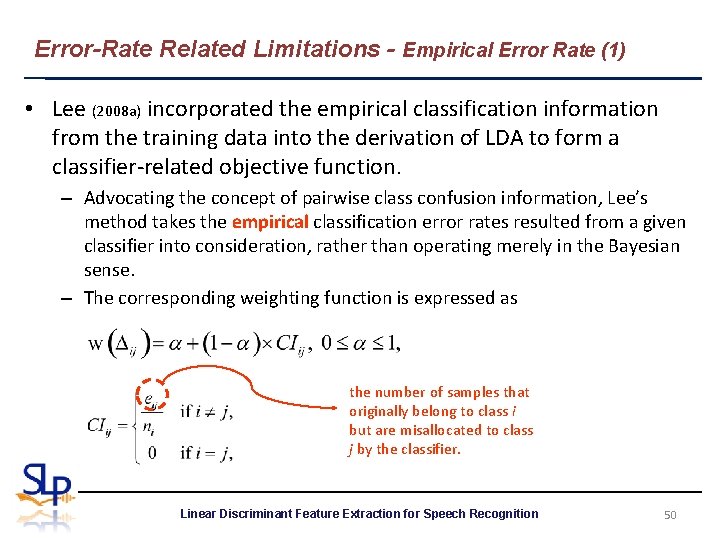

Error-Rate Related Limitations - Empirical Error Rate (1)

• Lee (2008a) incorporated the empirical classification information from the training data into the derivation of LDA to form a classifier-related objective function.
  – Advocating the concept of pairwise class confusion information, Lee's method takes the empirical classification error rates resulting from a given classifier into consideration, rather than operating merely in the Bayesian sense.
  – The corresponding weighting function is expressed in terms of the number of samples that originally belong to class i but are misallocated to class j by the classifier.

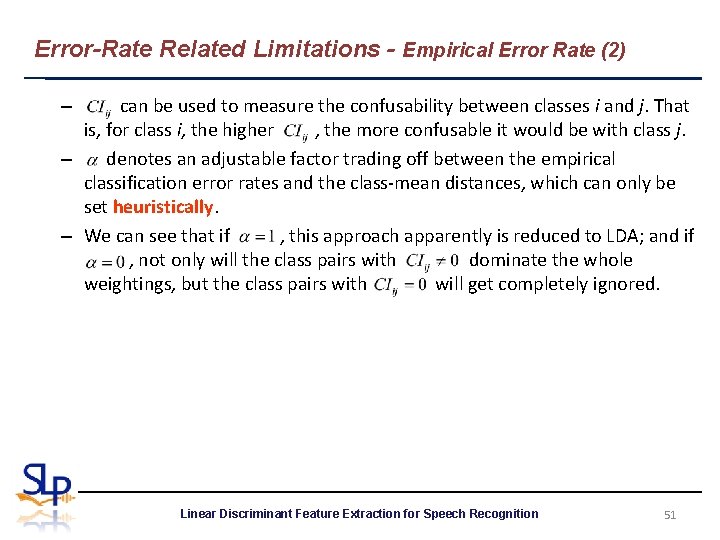

Error-Rate Related Limitations - Empirical Error Rate (2)

  – The number of misallocated samples can be used to measure the confusability between classes i and j. That is, for class i, the higher this number, the more confusable it is with class j.
  – An adjustable factor trades off between the empirical classification error rates and the class-mean distances; it can only be set heuristically.
  – At one extreme setting of this factor, the approach reduces to LDA; at the other extreme, some class pairs dominate the whole weighting while the remaining class pairs are completely ignored.

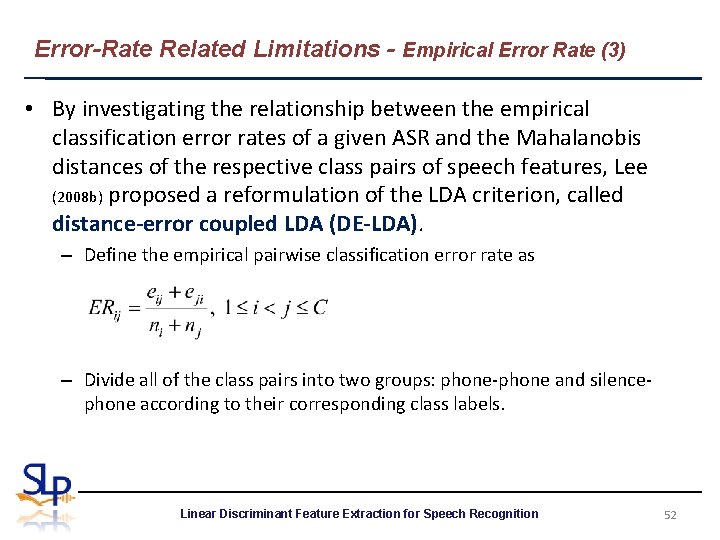

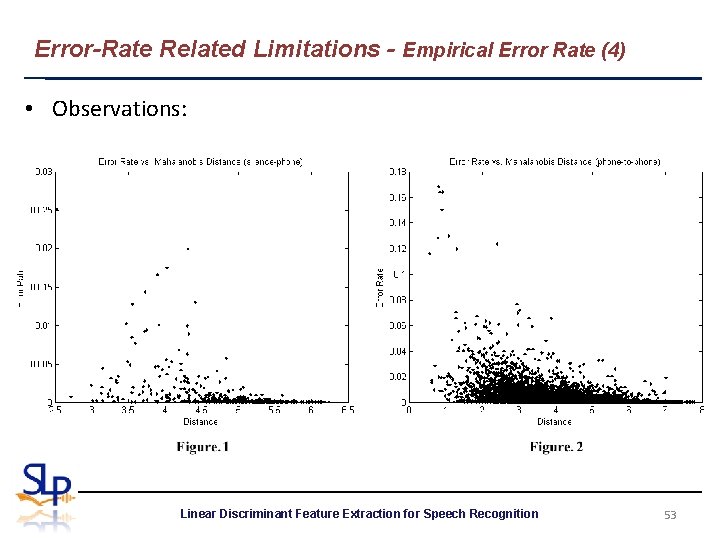

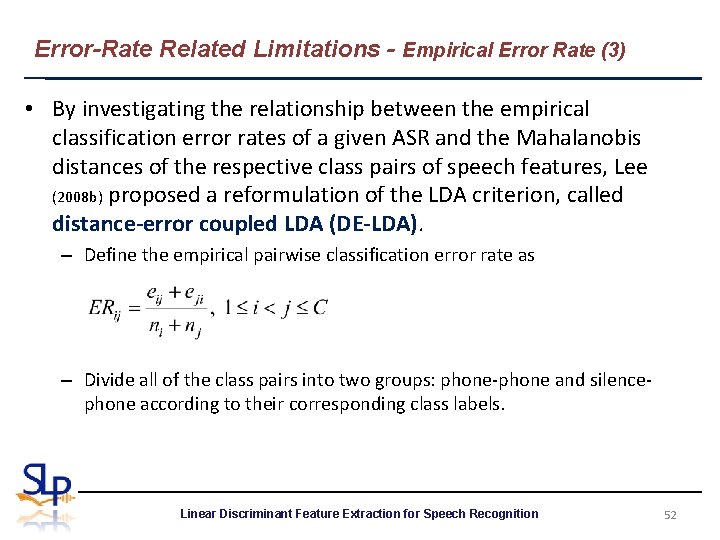

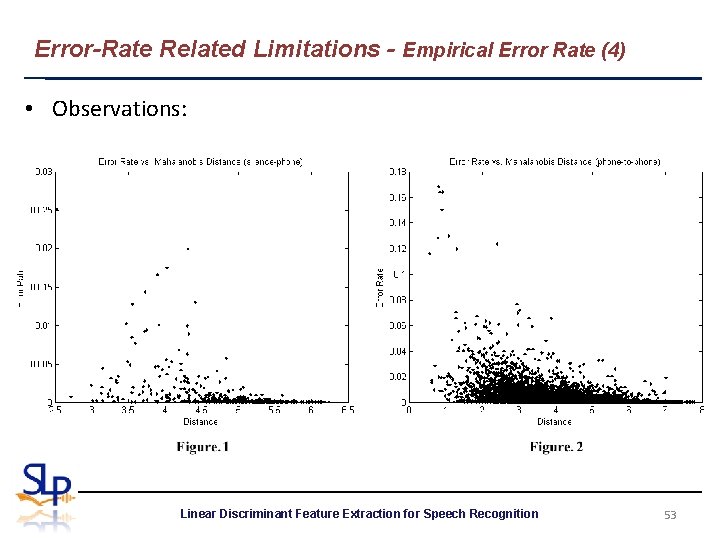

Error-Rate Related Limitations - Empirical Error Rate (3)

• By investigating the relationship between the empirical classification error rates of a given ASR system and the Mahalanobis distances of the respective class pairs of speech features, Lee (2008b) proposed a reformulation of the LDA criterion, called distance-error coupled LDA (DE-LDA).
  – Define the empirical pairwise classification error rate for each class pair.
  – Divide all of the class pairs into two groups, phone-phone and silence-phone, according to their corresponding class labels.

Error-Rate Related Limitations - Empirical Error Rate (4)

• Observations:

Error-Rate Related Limitations - Empirical Error Rate (5)

• Observations: (cont.)
  – The error rates of most of the class pairs in the silence-phone group are much lower than those in the phone-phone group.
  – The correlation between the two variables, i.e., the distance and the error rate, is less pronounced in the silence-phone group than in the phone-phone group.
  – In Fig. 2, we can roughly depict the relationship between these two variables: class pairs with shorter distances tend to have higher error rates; class pairs with larger distances are likely to have lower error rates.
  – Such a phenomenon, to some extent, conforms to our expectation: the statistics of class pairs with shorter distances need to be emphasized, while those of the class pairs with larger distances should be deemphasized when deriving the LDA-based feature transformation matrix.

Error-Rate Related Limitations - Empirical Error Rate (6)

• Observations: (cont.)
  – It is reasonable to disregard the contributions of the class pairs in the silence-phone group to the LDA derivation, due to their irregularities in the distance-error distribution and their smaller influence on the overall error rates.
• Data-fitting:
  – Use a data-fitting (or regression) scheme to find a function of the Mahalanobis distance that can approximate the relationship between the empirical pairwise classification error rate and the corresponding Mahalanobis distance.

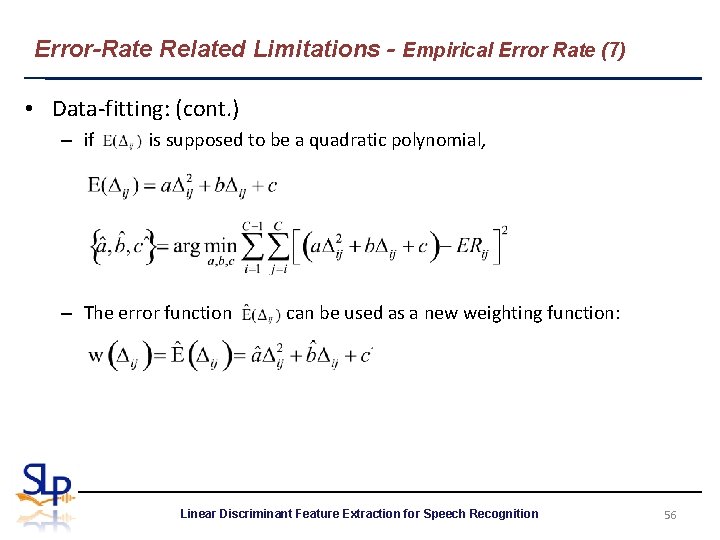

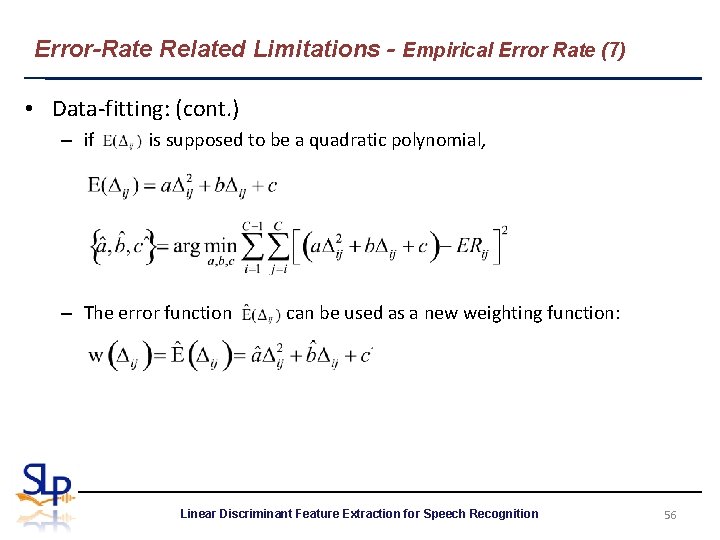

Error-Rate Related Limitations - Empirical Error Rate (7)

• Data-fitting: (cont.)
  – The fitted function may, for example, be supposed to be a quadratic polynomial of the Mahalanobis distance.
  – The fitted error function can be used as a new weighting function.

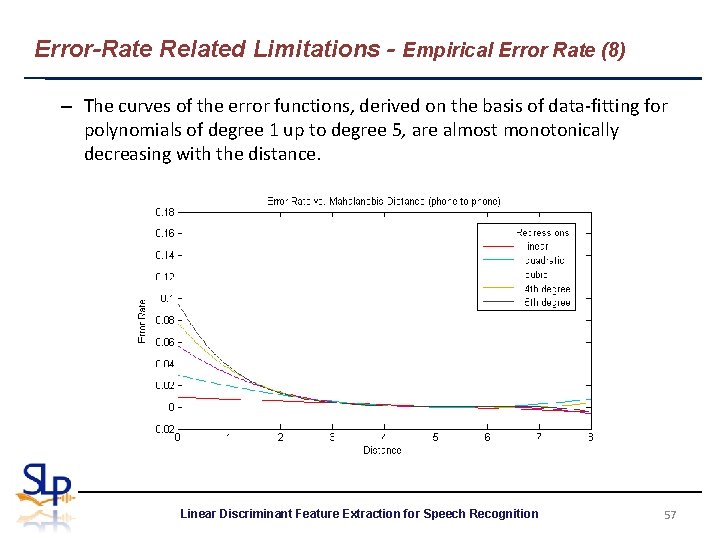

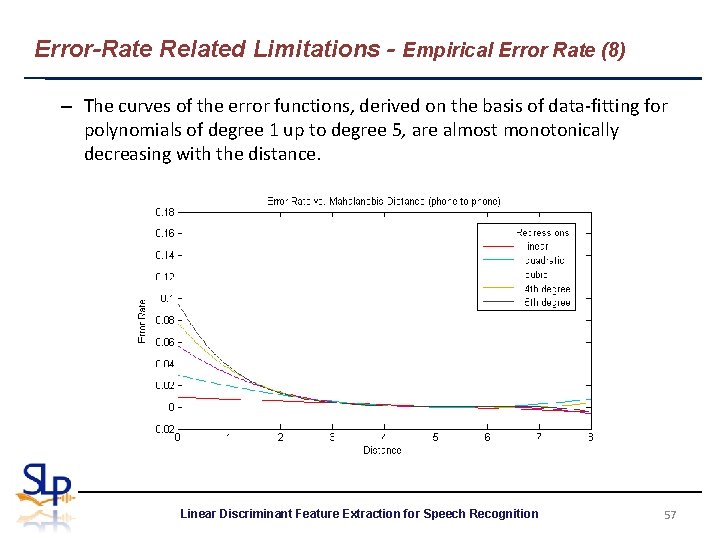

Error-Rate Related Limitations - Empirical Error Rate (8)

  – The curves of the error functions, derived on the basis of data-fitting for polynomials of degree 1 up to degree 5, are almost monotonically decreasing with the distance.
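The data-fitting step can be sketched with an ordinary least-squares polynomial fit. The distance and error-rate values below are synthetic placeholders generated for illustration, not measurements from the experiments described in the slides, and clipping negative fitted values to zero is an added assumption so that the result can serve as a weight.

```python
import numpy as np

# Synthetic (hypothetical) class-pair data: error rate falls with distance.
rng = np.random.default_rng(0)
distance = np.linspace(1.0, 6.0, 40)
error_rate = 0.4 * np.exp(-0.6 * distance) + rng.normal(scale=0.005, size=distance.size)

# Fit a quadratic polynomial of the distance to the pairwise error rates.
coeffs = np.polyfit(distance, error_rate, deg=2)
error_fn = np.poly1d(coeffs)

# Use the fitted error function as a weighting function (clipped at zero).
weights = np.clip(error_fn(distance), 0.0, None)
print(coeffs)
```

Changing `deg` reproduces the comparison across polynomial degrees; higher degrees follow the data more closely but are less certain to remain monotonic outside the fitted range.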

Alternative Formulations - Homoscedasticity (1)

• From the formulations of the LDA criteria, we can see that LDA assumes that all classes share the same covariance, and that the common covariance is $S_W$. (But LDA does not make any distribution assumptions.)
• If the assumption is not satisfied, LDA will not perform well.

[Figure: two panels showing classes 1, 2, and 3 on axes X1 and X2.]

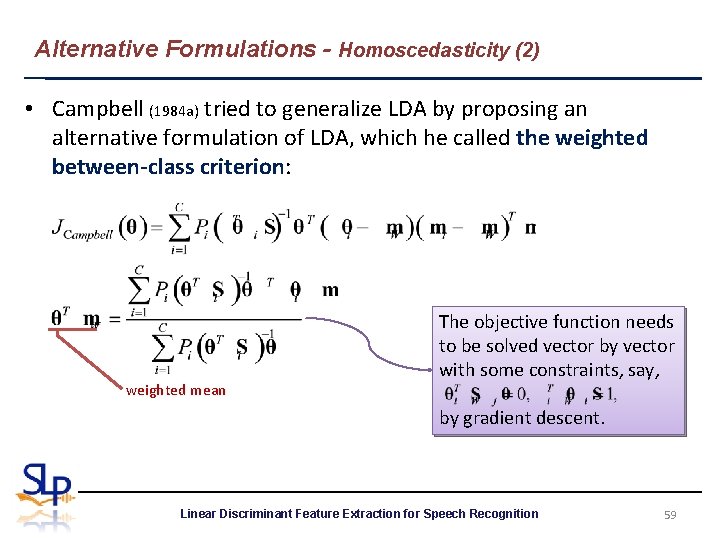

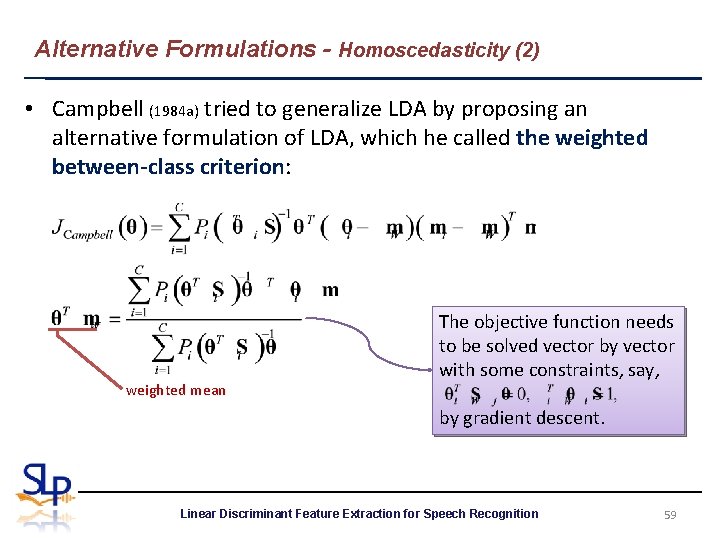

Alternative Formulations - Homoscedasticity (2)

• Campbell (1984a) tried to generalize LDA by proposing an alternative formulation of LDA, which he called the weighted between-class criterion; it is built around a weighted mean.

The objective function needs to be solved vector by vector with some constraints, say, by gradient descent.

Alternative Formulations - Homoscedasticity (3)

• Similarly, to generalize the determinant criterion, Saon (2000) proposed a new criterion that considers the individual covariances of the classes in the objective function, named Heteroscedastic Discriminant Analysis (HDA).
  – HDA is distribution-free, and its solution is not unique.
  – HDA can be interpreted as a constrained ML projection, the constraint being given by the maximization of the projected between-class scatter volume.

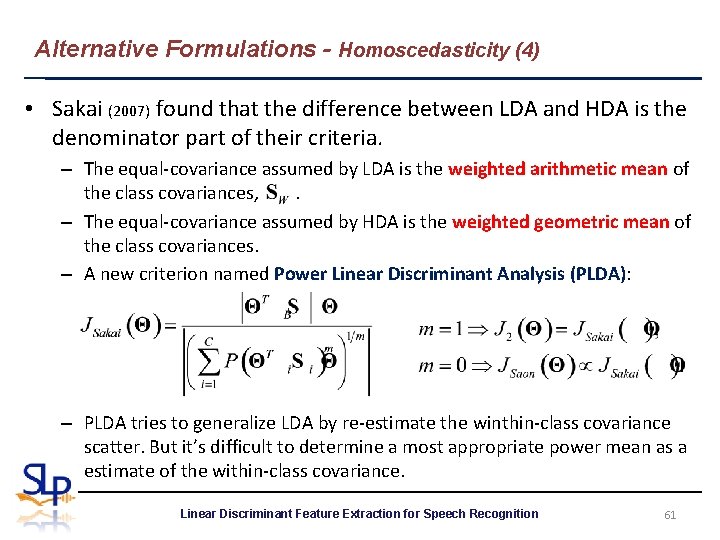

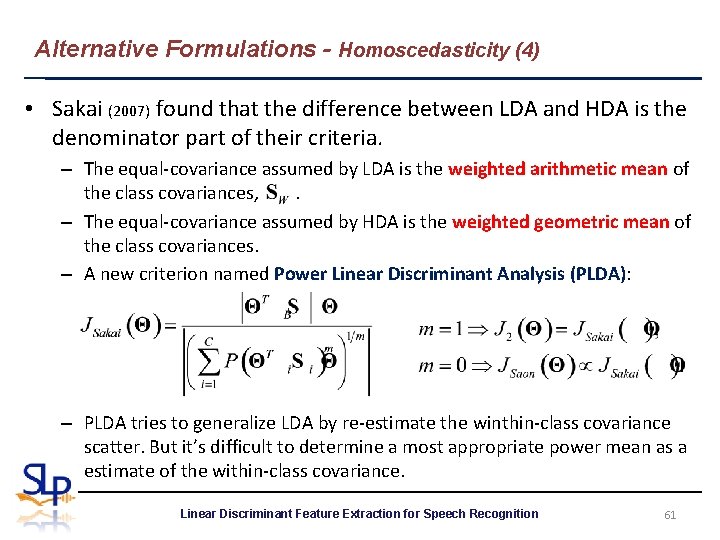

Alternative Formulations - Homoscedasticity (4)

• Sakai (2007) found that the difference between LDA and HDA is the denominator part of their criteria.
  – The common covariance assumed by LDA is the weighted arithmetic mean of the class covariances, $S_W$.
  – The common covariance assumed by HDA is the weighted geometric mean of the class covariances.
  – This leads to a new criterion named Power Linear Discriminant Analysis (PLDA).
  – PLDA tries to generalize LDA by re-estimating the within-class scatter. But it is difficult to determine the most appropriate power mean as an estimate of the within-class covariance.
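The idea of a power mean of class covariances can be illustrated with a small sketch. It assumes the usual matrix form of a weighted power mean, with the weighted sum of the m-th matrix powers raised to the power 1/m; the exact form used in PLDA is not shown in the slide text, so `power_mean_covariance` and the example matrices are assumptions.

```python
import numpy as np

def spd_power(S, exponent):
    """Power of a symmetric positive definite matrix via its eigen-decomposition."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs * vals ** exponent) @ vecs.T

def power_mean_covariance(covs, priors, m):
    """Weighted power mean of covariance matrices for a nonzero exponent m."""
    acc = sum(w * spd_power(S, m) for w, S in zip(priors, covs))
    return spd_power(acc, 1.0 / m)

# Hypothetical class covariances (diagonal, so the limits are easy to read off).
covs = [np.diag([4.0, 1.0]), np.diag([1.0, 9.0])]
priors = [0.5, 0.5]

arithmetic = power_mean_covariance(covs, priors, 1.0)        # m = 1
near_geometric = power_mean_covariance(covs, priors, 1e-6)   # m close to 0
print(arithmetic)
print(near_geometric)
```

With m = 1 the result is the weighted arithmetic mean, and as m approaches 0 it approaches a geometric mean (exactly so for commuting matrices such as these), which mirrors the LDA and HDA cases contrasted above.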

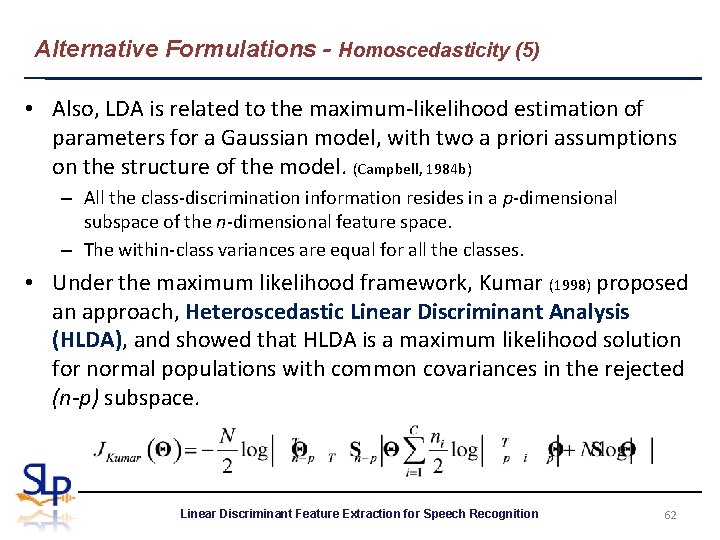

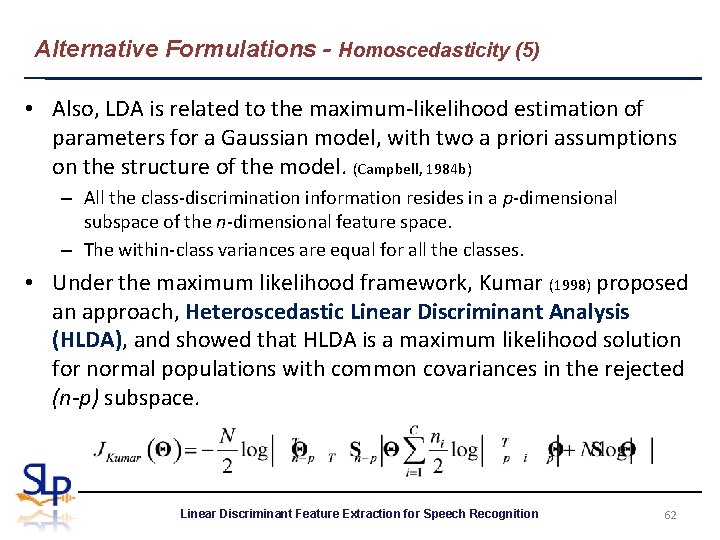

Alternative Formulations - Homoscedasticity (5)

• Also, LDA is related to the maximum-likelihood estimation of parameters for a Gaussian model, with two a priori assumptions on the structure of the model. (Campbell, 1984b)
  – All the class-discrimination information resides in a p-dimensional subspace of the n-dimensional feature space.
  – The within-class variances are equal for all the classes.
• Under the maximum likelihood framework, Kumar (1998) proposed an approach, Heteroscedastic Linear Discriminant Analysis (HLDA), and showed that HLDA is a maximum likelihood solution for normal populations with common covariances in the rejected (n-p)-dimensional subspace.

Alternative Formulations - Homoscedasticity (6)

• Some Remarks
  – From PLDA, we can see that HDA does not seem to successfully relax the equal-covariance assumption of LDA.
  – PLDA also implicitly showed that HDA does not necessarily outperform LDA.
  – LDA, HDA, and PLDA have no distribution assumption, but HLDA does.
  – The difference between HDA and HLDA lies in that HDA tries to maximize the between-class separation in the projected (p-dim.) space, but HLDA tries to minimize the between-class separation in the rejected ((n-p)-dim.) space.

Conclusions

• Linear discriminant analysis (LDA) is a simple approach with lightweight solvability. So far, it has been shown that LDA can lead to consistent performance improvements for small-vocabulary recognition tasks and mixed results on large-vocabulary applications. (Haeb-Umbach, 1992)
• We roughly unveiled the relationship between the front-end processing by LDA and the back-end recognition by the recognizer, and verified that increasing class separability is indeed helpful to speech recognition.

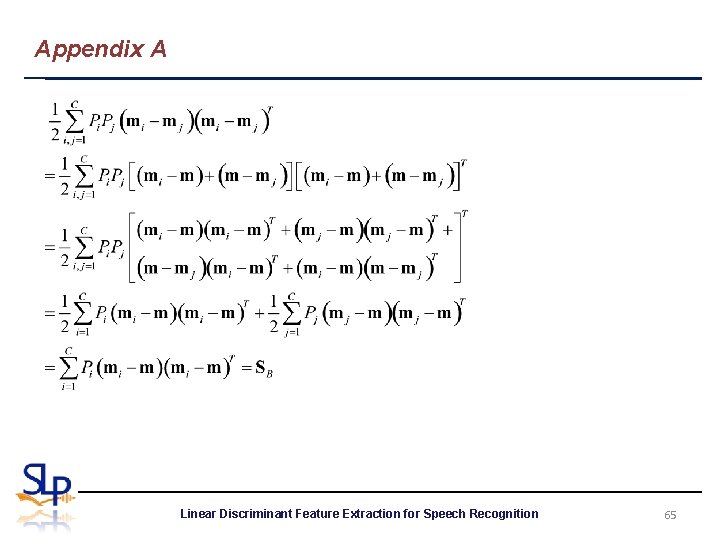

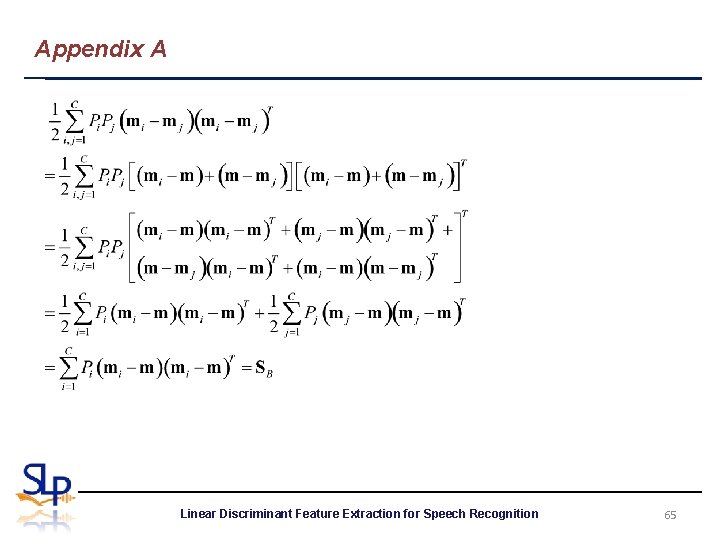

Appendix A

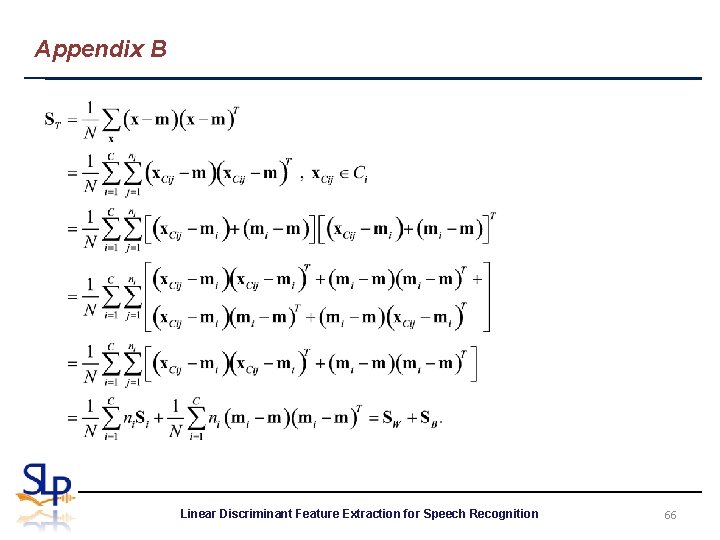

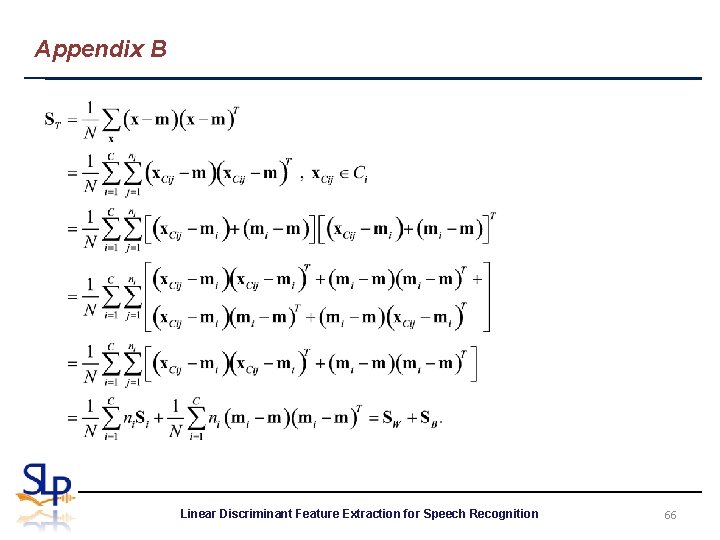

Appendix B

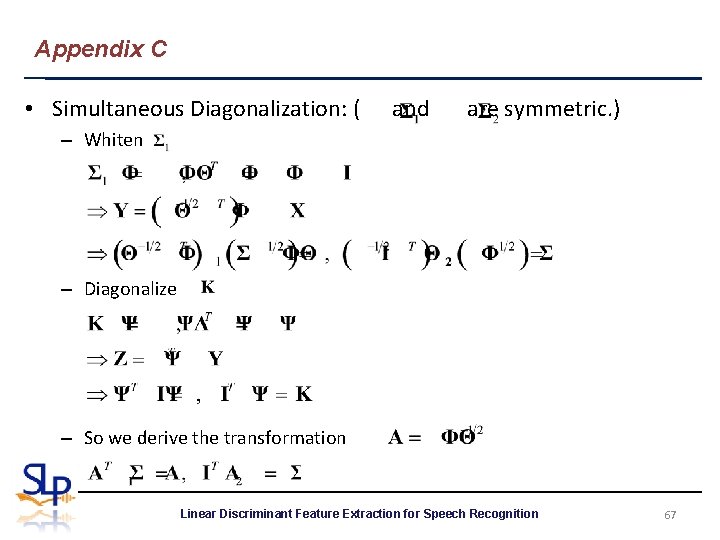

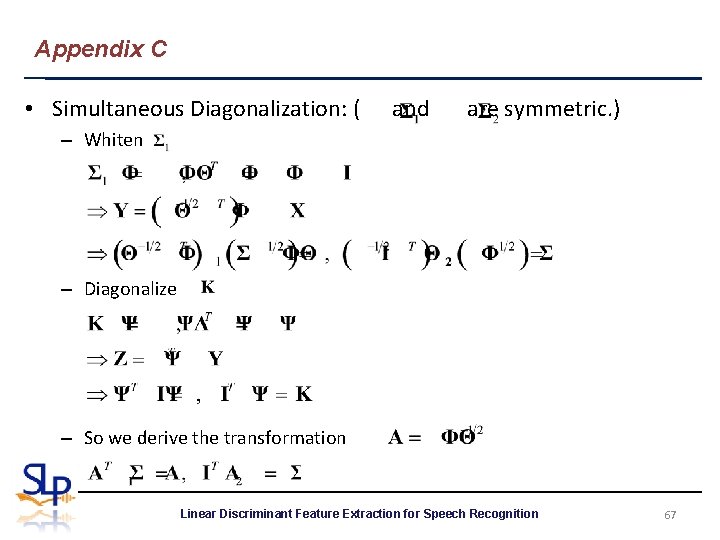

Appendix C

• Simultaneous Diagonalization: ($S_W$ and $S_B$ are symmetric.)
  – Whiten $S_W$.
  – Diagonalize the whitened $S_B$.
  – So we derive the transformation as the product of the whitening matrix and the diagonalizing eigenvector matrix.

References (1)

• M. Hunt, “A Statistical Approach to Metrics for Word and Syllable Recognition,” 98th Meeting of the Acoustical Society of America, 1979.
• P. F. Brown, “The Acoustic-Modeling Problem in Automatic Speech Recognition,” Ph.D. Thesis, Carnegie Mellon University, 1987.
• R. Haeb-Umbach et al., “Linear Discriminant Analysis for Improved Large Vocabulary Continuous Speech Recognition,” ICASSP, 1992.
• K. Fukunaga, Introduction to Statistical Pattern Recognition, Academic Press, New York, 1990.
• R. A. Fisher, “The Use of Multiple Measurements in Taxonomic Problems,” Annals of Eugenics, vol. 7, 1936.
• C. R. Rao, “The Utilization of Multiple Measurements in Problems of Biological Classification,” J. Royal Statistical Soc., Series B, vol. 10, 1948.
• R. A. Johnson et al., Applied Multivariate Statistical Analysis, Prentice Hall, 5th Ed., 2002.

References (2)

• H. Gao et al., “Why Direct LDA is not equivalent to LDA,” Pattern Recognition, vol. 39, 2006.
• B. L. Welch, “Note on Discriminant Functions,” Biometrika, vol. 31, 1939.
• R. O. Duda et al., Pattern Classification, John Wiley and Sons, New York, 2001.
• G. A. F. Seber, Multivariate Observations, John Wiley, New York, 1984.
• D. Pena et al., “Descriptive Measures of Multivariate Scatter and Linear Dependence,” Journal of Multivariate Analysis, vol. 85, issue 2, 2003.
• R. E. Prieto, “A General Solution to the Maximization of the Multidimensional Generalized Rayleigh Quotient Used in Linear Discriminant Analysis for Signal Classification,” ICASSP, 2003.
• W. J. Krzanowski, Principles of Multivariate Analysis - A User’s Perspective, Oxford Press, 1988.
• X. B. Li, “Dimensionality Reduction Using MCE-Optimized LDA Transformation,” ICASSP, 2004.

References (3)

• J. W. Sammon, “An Optimal Discriminant Plane,” IEEE Trans. on Computers, 1970.
• D. H. Foley et al., “An Optimal Set of Discriminant Vectors,” IEEE Trans. on Computers, 1975.
• J. Duchene et al., “An Optimal Transformation for Discriminant and Principal Component Analysis,” IEEE Trans. on PAMI, 1988.
• N. A. Campbell et al., “The Geometry of Canonical Variate Analysis,” Syst. Zool., 30(3), 1981.
• H. S. Lee and Berlin Chen, “Linear Discriminant Feature Extraction Using Weighted Classification Confusion Information,” INTERSPEECH, 2008.
• N. A. Campbell, “Canonical Variate Analysis with Unequal Covariance Matrices: Generalizations of the Usual Solution,” Mathematical Geology, 16(2), 1984.
• R. Saon et al., “Maximum Likelihood Discriminant Feature Spaces,” ICASSP, 2000.

References (4)

• N. A. Campbell, “Canonical Variate Analysis - A General Model Formulation,” Australian Journal of Statistics, vol. 26, 1984.
• N. Kumar et al., “Heteroscedastic Discriminant Analysis and Reduced Rank HMMs for Improved Speech Recognition,” Speech Communication, vol. 26, 1998.
• M. Sakai et al., “Generalization of Linear Discriminant Analysis Used in Segmental Unit Input HMM for Speech Recognition,” ICASSP, 2007.
• Y. Li et al., “Weighted Pairwise Scatter to Improve Linear Discriminant Analysis,” ICSLP, 2000.
• Y. Liang, “Uncorrelated Linear Discriminant Analysis Based on Weighted Pairwise Fisher Criterion,” Pattern Recognition, vol. 40, 2007.
• M. Loog et al., “Multiclass Linear Dimension Reduction by Weighted Pairwise Fisher Criteria,” IEEE Trans. on PAMI, 2001.
• M. Loog et al., “Linear Dimensionality Reduction via a Heteroscedastic Extension of LDA,” IEEE Trans. on PAMI, 2004.

References (5)

• H. S. Lee and Berlin Chen, “Improved Linear Discriminant Analysis Considering Empirical Pairwise Classification Error Rates,” ISCSLP, 2008, submitted.