LIN 6932 Topics in Computational Linguistics Hana Filip


• Parsing with context-free grammars

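Grammar Equivalence and Chomsky Normal Form • Weak equivalence: two grammars generate the same set of strings • Strong equivalence: two grammars generate the same set of strings and assign the same phrase structure to each sentence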

Grammar Equivalence and Chomsky Normal Form (CNF) Many proofs in the field of formal languages and computability make use of Chomsky Normal Form. There are algorithms that decide whether a given string can be generated by a given grammar and that rely on Chomsky Normal Form, e.g., the CYK (Cocke-Younger-Kasami) algorithm.

Grammar Equivalence and Chomsky Normal Form (CNF) • Chomsky Normal Form (CNF) is one of the most basic normal forms (roughly: in the context of computing and rewriting systems, a form that cannot be further reduced to a simpler form). In CNF each production (rewriting rule) has the form A → B C or A → α, where – A, B and C are nonterminal symbols – α is a terminal symbol (i.e., a symbol that represents a constant value) – productions (rewriting rules) are expansive: throughout the derivation of a string, each string of terminals and nonterminals is always either the same length as the previous such string or one element longer

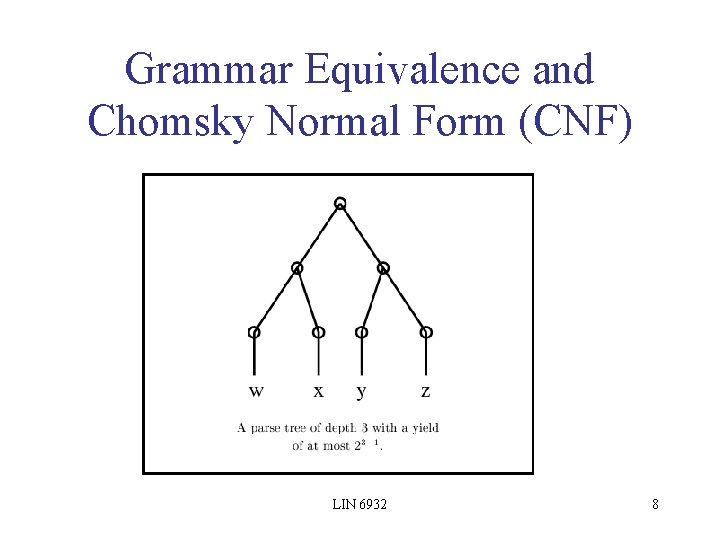

Grammar Equivalence and Chomsky Normal Form (CNF) • For grammars in Chomsky Normal Form the parse tree is always a binary tree. • We can talk about the relationship between: – the depth of the parse tree, and – the length of its yield.

Grammar Equivalence and Chomsky Normal Form (CNF) • If a parse tree for a word string w is generated by a grammar in CNF and the longest path in the parse tree has length at most $i$ (counting edges), then the length of w is at most $2^{i-1}$.
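A short induction sketch of this bound, assuming path length is counted in edges (so the one-rule tree A → a has path length 1):

$$
\begin{aligned}
i = 1:&\quad \text{the tree is } A \to a,\ \text{so } |w| = 1 = 2^{0}.\\
i > 1:&\quad \text{if the root uses } A \to a,\ |w| = 1 \le 2^{i-1};\ \text{otherwise it uses } A \to B\,C,\\
&\quad \text{the subtrees for } B \text{ and } C \text{ have longest paths of length at most } i-1,\ \text{so}\\
&\quad |w| = |w_B| + |w_C| \le 2^{i-2} + 2^{i-2} = 2^{i-1}.
\end{aligned}
$$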

Grammar Equivalence and Chomsky Normal Form (CNF)

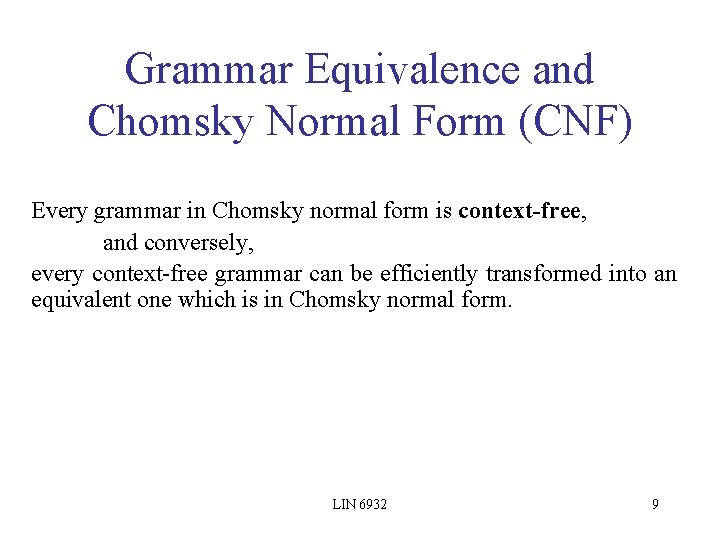

Grammar Equivalence and Chomsky Normal Form (CNF) Every grammar in Chomsky Normal Form is context-free, and conversely, every context-free grammar whose language does not contain the empty string can be efficiently transformed into a weakly equivalent grammar in Chomsky Normal Form.
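One step of that transformation, splitting right-hand sides longer than two into chains of binary rules, can be sketched as follows. This is an illustration under stated assumptions, not a complete CNF conversion: ε-rules, unit productions such as S → VP, and terminals inside long rules are not handled, and the fresh names `X1`, `X2`, … are hypothetical and assumed not to clash with existing symbols.

```python
from itertools import count

Rule = tuple[str, tuple[str, ...]]

def binarize(rules: list[Rule]) -> list[Rule]:
    """Replace each rule A -> X1 X2 ... Xn (n > 2) by a chain of binary rules.
    Only this one step of CNF conversion is performed."""
    fresh = count(1)
    out: list[Rule] = []
    for lhs, rhs in rules:
        while len(rhs) > 2:
            new_sym = f"X{next(fresh)}"  # hypothetical naming scheme for new nonterminals
            out.append((lhs, (rhs[0], new_sym)))
            lhs, rhs = new_sym, rhs[1:]
        out.append((lhs, rhs))
    return out

print(binarize([("VP", ("V", "NP", "PP", "PP"))]))
# [('VP', ('V', 'X1')), ('X1', ('NP', 'X2')), ('X2', ('PP', 'PP'))]
```

The result is weakly equivalent to the input: it generates the same strings, and the original trees can be recovered by collapsing the X nodes.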

Grammar Equivalence and Chomsky Normal Form (CNF)

CFG for Fragment of English: G0

Parse Tree for ‘Book that flight’ using G0

FSA and Syntactic Parsing with CFGs (see previous lecture: the types of formal grammar in the Chomsky hierarchy, the class of languages each generates, and the type of automaton that recognizes each class) CFG rule: NP → (Det) Adj* N
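Because NP → (Det) Adj* N contains no recursion, the set of POS sequences it describes is a regular language, so a finite-state automaton over POS tags can recognize it. A minimal sketch, assuming the tag names Det, Adj and N and a hypothetical hand-built transition table:

```python
# DFA for the POS pattern (Det) Adj* N.
# q0: start; q1: after Det or after one or more Adj; q2: accepting (after N).
TRANSITIONS = {
    ("q0", "Det"): "q1",
    ("q0", "Adj"): "q1",
    ("q0", "N"): "q2",
    ("q1", "Adj"): "q1",
    ("q1", "N"): "q2",
}
ACCEPTING = {"q2"}

def accepts_np(tags: list[str]) -> bool:
    state = "q0"
    for tag in tags:
        state = TRANSITIONS.get((state, tag))
        if state is None:  # no transition defined: reject
            return False
    return state in ACCEPTING

print(accepts_np(["Det", "Adj", "Adj", "N"]))  # True
print(accepts_np(["Det", "Det", "N"]))         # False
```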

Parsing as a Search Problem • parsing (linguistics: syntax analysis) is the process of analyzing a sequence of tokens to determine its grammatical structure with respect to a given formal grammar.

Parsing as a Search Problem • Searching FSAs – Finding the right path through the automaton – Search space defined by structure of FSA • Searching CFGs – Finding the right parse tree among all possible parse trees – Search space defined by the grammar • Constraints provided by – the input sentence and – the automaton or grammar

Two Search Strategies How can we use G0 to assign the correct parse tree(s) to a given string of words? • Constraints provided by – the input sentence and – the automaton or grammar These give rise to two search strategies: • Top-Down (Hypothesis-Directed) Search – Search for a tree starting from S until all input words are covered. • Bottom-Up (Data-Directed) Search – Start with the words and build upwards toward S

Two Search Strategies Search strategies and epistemology (the study of knowledge and justified belief; philosophy of science) • Top-Down (Hypothesis-Directed) Search – Search for a tree starting from S until all input words are covered – Rationalist tradition: emphasizes the use of prior knowledge • Bottom-Up (Data-Directed) Search – Start with the words and build upwards toward S – Empiricist tradition: emphasizes the data The rationalist vs. empiricist controversy concerns the extent to which we depend on sense experience in our effort to gain knowledge

Top-Down Parser • Builds from the root S node down to the leaves • Assuming we build all trees in parallel: – Find all trees with root S – Next, expand all constituents in these trees/rules – Continue until the leaves are part-of-speech (POS) categories – Reject candidate trees whose leaves fail to match the POS of the input string • Top-Down: Rationalist Tradition – Expectation- or theory-driven – Goal: build a tree for the input starting with S

Top-Down Search Space for G0

Bottom-Up Parsing • The earliest known parsing algorithm (suggested by Yngve 1955) • The parser begins with the words of the input and builds up trees, applying G0 rules whose right-hand sides match • Book that flight: ‘Book’ is ambiguous (N Det N vs. V Det N) – The parse continues until an S root node is reached or no further node expansion is possible • Bottom-Up: Empiricist Tradition – Data-driven – Primary consideration: the lowest sub-trees of the final tree must hook up with the words in the input.

Expanding Bottom-Up Search Space for ‘Book that flight’

Comparing Top-Down and Bottom-Up • Top-Down parsers: never explore illegal parses (e.g., parses that can’t form an S), but waste time on trees that can never match the input • Bottom-Up parsers: never explore trees inconsistent with the input, but waste time exploring illegal parses (no S root) • For both: how to explore the search space? – Pursue all parses in parallel or …? – Which node to expand next? – Which rule to apply next?

A Possible Top-Down Parsing Strategy • Depth-first search: – start at the root (selecting some node as the root in the graph case) and expand as far as possible until you reach a state (tree) inconsistent with the input, then backtrack to the most recent unexplored state (tree) • Which node to expand? – Leftmost • Which grammar rule to use? – Order in the grammar

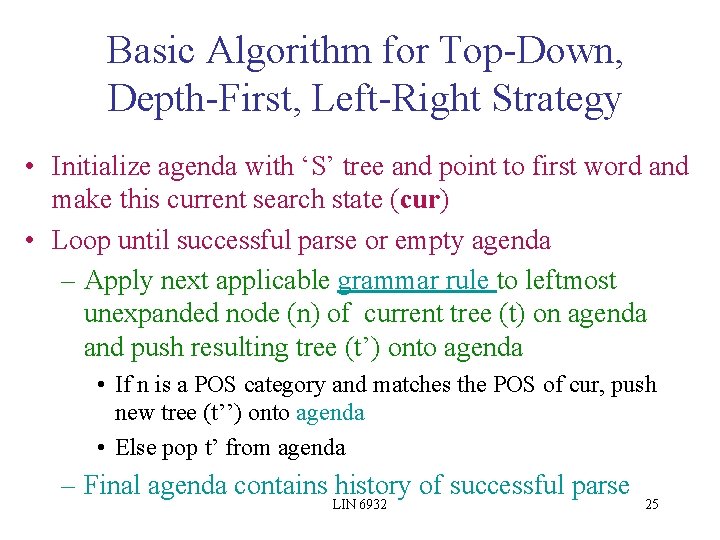

Basic Algorithm for Top-Down, Depth-First, Left-Right Strategy • Initialize the agenda with the ‘S’ tree, point to the first word, and make this the current search state (cur) • Loop until a successful parse is found or the agenda is empty – Apply the next applicable grammar rule to the leftmost unexpanded node (n) of the current tree (t) on the agenda and push the resulting tree (t’) onto the agenda • If n is a POS category and matches the POS of cur, push the new tree (t’’) onto the agenda • Else pop t’ from the agenda – The final agenda contains the history of the successful parse
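The loop above can be sketched as a small recognizer. Assumptions: the grammar and lexicon below are a hypothetical toy fragment in the spirit of G0 (not the slide's grammar), and each agenda entry stores only the still-unexpanded frontier of a tree plus the index of the next input word, so the sketch recognizes a sentence rather than returning its tree. The agenda is a stack, which gives depth-first search; rules are tried in grammar order on the leftmost unexpanded node.

```python
# Hypothetical toy grammar and lexicon in the spirit of G0.
GRAMMAR = {
    "S": [["NP", "VP"], ["Aux", "NP", "VP"], ["VP"]],
    "NP": [["Det", "Nom"], ["PropN"]],
    "Nom": [["N"], ["N", "Nom"]],
    "VP": [["V"], ["V", "NP"]],
}
LEXICON = {
    "book": {"N", "V"}, "that": {"Det"}, "flight": {"N"},
    "does": {"Aux"}, "this": {"Det"}, "include": {"V"},
    "a": {"Det"}, "meal": {"N"},
}

def top_down_recognize(words: list[str]) -> bool:
    """Depth-first, left-to-right top-down search with backtracking.
    Each agenda item is (symbols still to expand, index of next word)."""
    agenda = [(("S",), 0)]  # stack: the most recently pushed item is explored first
    while agenda:
        symbols, i = agenda.pop()
        if not symbols:
            if i == len(words):
                return True  # every word covered and nothing left to expand
            continue  # tree covers only a prefix of the input: backtrack
        first, rest = symbols[0], symbols[1:]
        if first in GRAMMAR:
            # Push in reverse so the first rule in grammar order is popped first.
            for rhs in reversed(GRAMMAR[first]):
                agenda.append((tuple(rhs) + rest, i))
        elif i < len(words) and first in LEXICON.get(words[i], set()):
            agenda.append((rest, i + 1))  # POS matches the current word: advance
        # otherwise the POS does not match: the state is dropped (backtracking)
    return False

print(top_down_recognize("does this flight include a meal".split()))  # True
```

Because no rule here begins with its own left-hand side, the search terminates; adding a left-recursive rule such as NP → NP PP would make it loop, which is the left-recursion problem taken up later in the lecture.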

Example: Does this flight include a meal?

Example continued …

Augmenting Top-Down Parsing with Bottom-Up Filtering • We saw: Top-Down, depth-first, L-to-R parsing – Expands non-terminals along the tree’s left edge down to the leftmost leaf of the tree – Moves on to expand down to the next leftmost leaf… • In a successful parse, the current input word will be the first word in the derivation of the unexpanded node that the parser is currently processing • So … look ahead to the left corner of the tree – B is a left corner of A if A ⇒* B α (for some possibly empty string α) – Build a table with the left corners of all non-terminals in the grammar and consult it before applying a rule

Left Corners Pre-compute all POS that can serve as the leftmost POS in the derivations of each non-terminal category

Left-Corner Table for G0

| Category | Left Corners |
|---|---|
| S | NP, Det, PropN, Aux, V |
| NP | Det, PropN |
| Nom | N |
| VP | V |
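The table can be computed rather than written by hand. The sketch below iterates to a fixed point over a hypothetical toy version of G0 and, following the definition on the previous slide, collects only POS left corners (so NP, a phrasal left corner of S, does not appear). It assumes there are no ε-rules, so only the first symbol of each right-hand side matters.

```python
# Hypothetical toy grammar in the spirit of G0; POS symbols are listed explicitly.
GRAMMAR = {
    "S": [["NP", "VP"], ["Aux", "NP", "VP"], ["VP"]],
    "NP": [["Det", "Nom"], ["PropN"]],
    "Nom": [["N"], ["N", "Nom"]],
    "VP": [["V"], ["V", "NP"]],
}
POS = {"Det", "PropN", "Aux", "N", "V"}

def left_corner_pos(grammar: dict) -> dict:
    """Map each non-terminal A to the POS tags B with A =>* B alpha."""
    corners = {a: set() for a in grammar}
    changed = True
    while changed:  # repeat until no set grows; also safe for left-recursive rules
        changed = False
        for lhs, expansions in grammar.items():
            for rhs in expansions:
                first = rhs[0]
                new = {first} if first in POS else corners.get(first, set())
                if not new <= corners[lhs]:
                    corners[lhs] |= new
                    changed = True
    return corners

lc = left_corner_pos(GRAMMAR)
print(sorted(lc["S"]))  # ['Aux', 'Det', 'PropN', 'V']
```

A top-down parser can then skip any expansion of a category A when the POS of the current word is not in `lc[A]`.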

Summing Up Parsing Strategies • Parsing is a search problem which may be implemented with many search strategies • Top-Down vs. Bottom-Up Parsers – Both generate too many useless trees – Combine the two to avoid over-generation: Top-Down Parsing with Bottom-Up look-ahead • Left-corner table provides more efficient lookahead – Pre-compute all POS that can serve as the leftmost POS in the derivations of each non-terminal category

Three Critical Problems in Parsing • Left Recursion • Ambiguity • Repeated Parsing of Sub-trees

Left Recursion • A long-standing issue for algorithms that process context-free grammars (CFGs) in a "top-down", left-to-right fashion is that left recursion can lead to nontermination, i.e., an infinite loop. • Direct left recursion happens when a rule's right-hand side begins with its own left-hand side, so the rule calls itself before anything else. Examples: NP → NP PP, NP → NP and NP, VP → VP PP, S → S and S • Indirect left recursion: Example: NP → Det Nominal, Det → NP ’s

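Left Recursion • Indirect Left Recursion: Example: NP → Det Nominal, Det → NP ’s, so expanding the leftmost node gives NP ⇒ Det Nominal ⇒ NP ’s Nominal ⇒ …, and a top-down parser returns to NP without consuming any input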

Solutions to Left Recursion • Don't use recursive rules • Rule ordering • Limit depth of recursion in parsing to some analytically or empirically set limit • Don't use top-down parsing

Solution: Grammar Rewriting • Rewrite a left-recursive grammar to a weakly equivalent one which is not left-recursive. • How? – By hand (ick) or … – Automatically

Solution: Grammar Rewriting I saw the man on the hill with a telescope. (I: N, saw: V, the man: NP, on the hill: PP, with a telescope: PP) • NP: noun phrase • PP: prepositional phrase • Phrase: characterized by its head (N, V, P) • Ambiguous: 5 possible parses

Solution: Grammar Rewriting I saw the man on the hill with a telescope. Parse tree (1)

Solution: Grammar Rewriting I saw the man on the hill with a telescope. Parse tree (2)

Solution: Grammar Rewriting I saw the man on the hill with a telescope. Parse tree (3)

Solution: Grammar Rewriting I saw the man on the hill with the telescope… NP → NP PP (recursive), NP → N PP (nonrecursive), NP → N …becomes… NP → N NP’, NP’ → PP NP’, NP’ → ε • Not so obvious what these rules mean…
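This rewrite follows the standard scheme for removing immediate left recursion: A → A α | β becomes A → β A’ and A’ → α A’ | ε. A minimal sketch, assuming right-hand sides are tuples of symbols, ε is the empty tuple, and the primed name for the new nonterminal is hypothetical and unused elsewhere. Indirect left recursion (NP → Det Nominal, Det → NP ’s) would first need the nonterminals to be ordered and substituted, which this sketch does not do.

```python
Rule = tuple[str, ...]

def remove_immediate_left_recursion(lhs: str, expansions: list[Rule]) -> dict[str, list[Rule]]:
    """A -> A a1 | ... | A am | b1 | ... | bn  becomes
       A  -> b1 A' | ... | bn A'
       A' -> a1 A' | ... | am A' | epsilon   (epsilon is the empty tuple)."""
    recursive = [rhs[1:] for rhs in expansions if rhs and rhs[0] == lhs]
    others = [rhs for rhs in expansions if not rhs or rhs[0] != lhs]
    if not recursive:
        return {lhs: expansions}
    new = lhs + "'"  # hypothetical fresh symbol, assumed not to be used elsewhere
    return {
        lhs: [beta + (new,) for beta in others],
        new: [alpha + (new,) for alpha in recursive] + [()],
    }

print(remove_immediate_left_recursion("NP", [("NP", "PP"), ("N", "PP"), ("N",)]))
```

Applied to the three NP rules, it yields NP → N PP NP’ | N NP’ and NP’ → PP NP’ | ε. The slide's shorter version omits NP → N PP NP’, which is harmless because N NP’ already derives N PP NP’ via NP’ → PP NP’.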

Rule Ordering • Bad: – NP → NP PP – NP → Det N • Rule ordering: non-recursive rules first – First: NP → Det N – Then: NP → NP PP

Depth Bound • Set an arbitrary bound • Set an analytically derived bound • Run tests and derive reasonable bound empirically

Ambiguity • Lexical Ambiguity – Leads to hypotheses that are locally reasonable but eventually lead nowhere – “Book that flight” • Structural Ambiguity – Leads to multiple parses for the same input

Lexical Ambiguity: Word Sense Disambiguation (WSD) as Text Categorization • Each sense of an ambiguous word is treated as a category. – “play” (verb) • play-game • play-instrument • play-role – “pen” (noun) • writing-instrument • enclosure • Treat the current sentence (or the preceding and current sentences) as a document to be classified. – “play”: • play-game: “John played soccer in the stadium on Friday.” • play-instrument: “John played guitar in the band on Friday.” • play-role: “John played Hamlet in theater on Friday.” – “pen”: • writing-instrument: “John wrote the letter with a pen in New York.” • enclosure: “John put the dog in the pen in New York.”
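Any text classifier can fill this role. As one possible choice (our assumption, not necessarily the method used in the course), the sketch below trains a multinomial Naive Bayes model with add-one smoothing on the three “play” sentences above, treating each sentence as a bag of words. The helper names `tokenize`, `train` and `classify` are our own.

```python
import math
import re
from collections import Counter, defaultdict

# Training data taken from the slide: (sense label, context sentence).
PLAY_EXAMPLES = [
    ("play-game", "John played soccer in the stadium on Friday."),
    ("play-instrument", "John played guitar in the band on Friday."),
    ("play-role", "John played Hamlet in theater on Friday."),
]

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())

def train(examples):
    """Collect the counts a multinomial Naive Bayes classifier needs."""
    sense_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for sense, text in examples:
        sense_counts[sense] += 1
        for word in tokenize(text):
            word_counts[sense][word] += 1
            vocab.add(word)
    return sense_counts, word_counts, vocab

def classify(model, text: str) -> str:
    """Return the sense maximizing log P(sense) + sum of log P(word | sense)."""
    sense_counts, word_counts, vocab = model
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        n_words = sum(word_counts[sense].values())
        score = math.log(count / total)
        for word in tokenize(text):
            # Add-one (Laplace) smoothing over the training vocabulary.
            score += math.log((word_counts[sense][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

model = train(PLAY_EXAMPLES)
print(classify(model, "John played guitar on Saturday"))  # play-instrument
```

With one training sentence per sense this is only a toy; a realistic system would estimate the counts from a sense-tagged corpus.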

Structural ambiguity • Multiple legal structures – Attachment (e.g., I saw a man on a hill with a telescope) – Coordination (e.g., younger cats and dogs) – NP bracketing (e.g., Spanish language teachers)

Two Parse Trees for Ambiguous Sentence

Humor and Ambiguity • Many jokes rely on the ambiguity of language: – Groucho Marx: One morning I shot an elephant in my pajamas. How he got into my pajamas, I’ll never know. – She criticized my apartment, so I knocked her flat. – Noah took all of the animals on the ark in pairs. Except the worms, they came in apples. – Policeman to little boy: “We are looking for a thief with a bicycle.” Little boy: “Wouldn’t you be better off using your eyes?” – Why is the teacher wearing sunglasses? Because the class is so bright.

Ambiguity is Explosive • Ambiguities compound to generate enormous numbers of possible interpretations. • In English, a sentence ending in n prepositional phrases has at least $2^n$ syntactic interpretations. – “I saw the man with the telescope”: 2 parses – “I saw the man on the hill with the telescope.”: 5 parses – “I saw the man on the hill in Texas with the telescope”: 14 parses – “I saw the man on the hill in Texas with the telescope at noon.”: 42 parses
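The counts listed above (2, 5, 14, 42) coincide with the Catalan numbers: a sentence ending in n PPs gets C(n+1) parses in these examples, where C(k) = (2k)! / ((k+1)! k!), a sequence that grows faster than 2^n. A quick check (`catalan` is our own helper name):

```python
from math import comb

def catalan(k: int) -> int:
    """k-th Catalan number: C(2k, k) / (k + 1)."""
    return comb(2 * k, k) // (k + 1)

# Number of parses for a sentence ending in n PPs, matching the counts above.
for n_pps in range(1, 5):
    print(n_pps, catalan(n_pps + 1))
# 1 2
# 2 5
# 3 14
# 4 42
```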

What’s the solution? Return all possible parses and disambiguate using “other methods”

Summing Up • Parsing is a search problem which may be implemented with many control strategies – Top-Down or Bottom-Up approaches each have problems • Combining the two solves some but not all issues – Left recursion – Syntactic ambiguity • Next time: Making use of statistical information about syntactic constituents

Dynamic Programming • Create a table of solutions to sub-problems (e.g., subtrees) as the parse proceeds • Look up subtrees for each constituent rather than reparsing • Since all parses are implicitly stored, all are available for later disambiguation • Examples: Cocke-Younger-Kasami (CYK) (1960s), Graham-Harrison-Ruzzo (GHR) (1980) and Earley (1970) algorithms
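As an illustration of the table-filling idea, here is a minimal CYK recognizer. Assumptions: the grammar must already be in CNF, and the tiny grammar below is a hypothetical CNF rendering loosely based on G0, in which the unit production S → VP has been folded into S → V NP. Cell `table[i][j]` holds every nonterminal that derives words i..j−1, and each cell is computed once from smaller cells rather than re-parsed.

```python
from itertools import product

# Hypothetical CNF grammar loosely based on G0.
BINARY = {  # (B, C) -> nonterminals A with a rule A -> B C
    ("NP", "VP"): {"S"},
    ("V", "NP"): {"S", "VP"},
    ("Det", "N"): {"NP"},
}
LEXICAL = {  # word -> nonterminals A with a rule A -> word
    "book": {"V", "N"},
    "that": {"Det"},
    "flight": {"N"},
}

def cyk_recognize(words: list[str]) -> bool:
    n = len(words)
    # table[i][j] = set of nonterminals deriving words[i:j]
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        table[i][i + 1] = set(LEXICAL.get(word, ()))
    for span in range(2, n + 1):          # fill shorter spans first
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):     # every split point
                for b, c in product(table[i][k], table[k][j]):
                    table[i][j] |= BINARY.get((b, c), set())
    return "S" in table[0][n]

print(cyk_recognize("book that flight".split()))  # True
```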

Earley Algorithm • Jay Earley (1970) • A type of chart parser that uses dynamic programming to do parallel top-down search • Can parse all context-free languages • Dot notation – Given a production A → B C D, where B, C, and D are symbols in the grammar (terminals or nonterminals), the notation A → B • C D represents a condition in which B has already been parsed and the sequence C D is expected.

Earley Algorithm • A left-to-right pass fills out a chart with N+1 entries (state sets) for an input of N words – Think of chart entries as sitting between the words of the input string, keeping track of the states of the parse at these positions: 0 Book 1 that 2 flight 3 – For each word position, the chart contains a set of states representing all partial parse trees generated to date, e.g., chart[0] contains all partial parse trees generated at the beginning of the sentence

Chart Entries Represent three types of constituents: • completed constituents: We keep track of what we have built with what we call complete edges in the chart, noting where a constituent starts and where it stops. For the lexical complete edges in 0 Book 1 that 2 flight 3 we want: Verb [0, 1], Det [1, 2], Noun [2, 3] • in-progress constituents • predicted constituents: We keep track of what we are looking for with incomplete edges, saying what we are looking for and where it starts (we don't know where it ends yet). We start out looking for an S at 0: S [0]

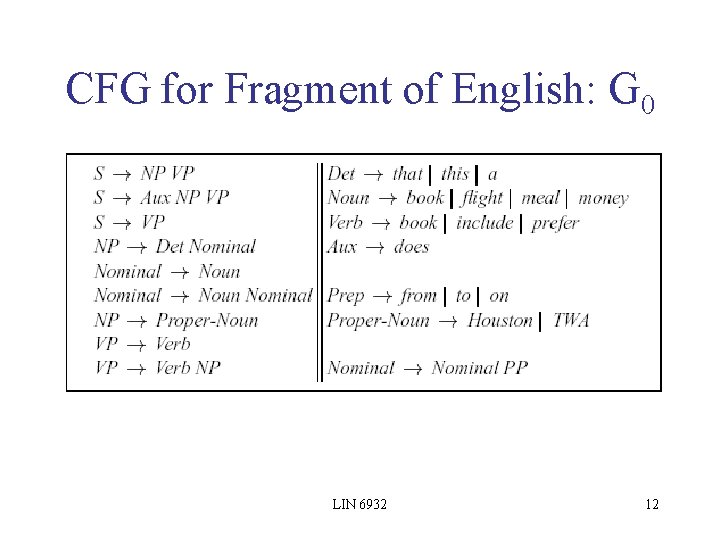

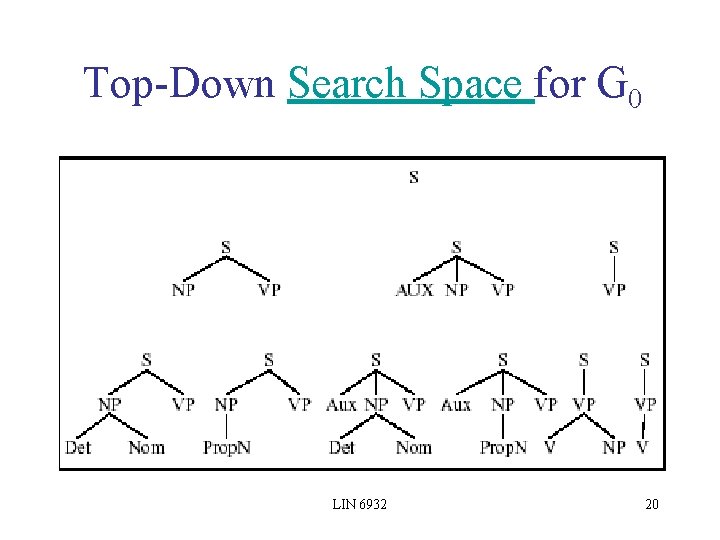

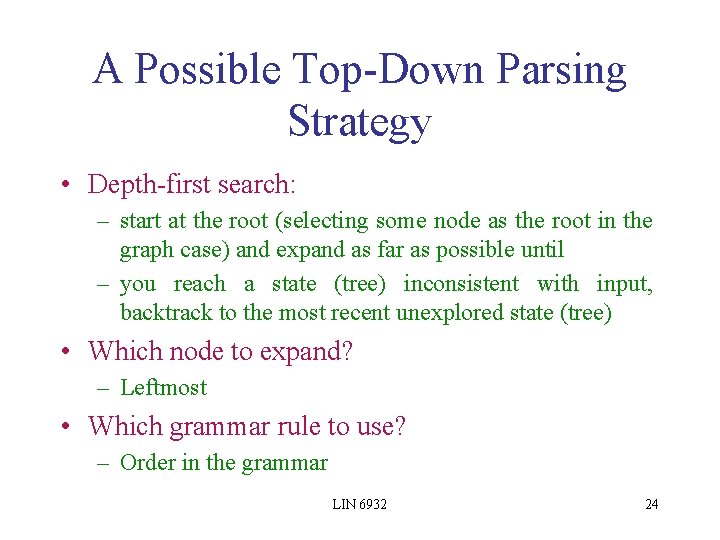

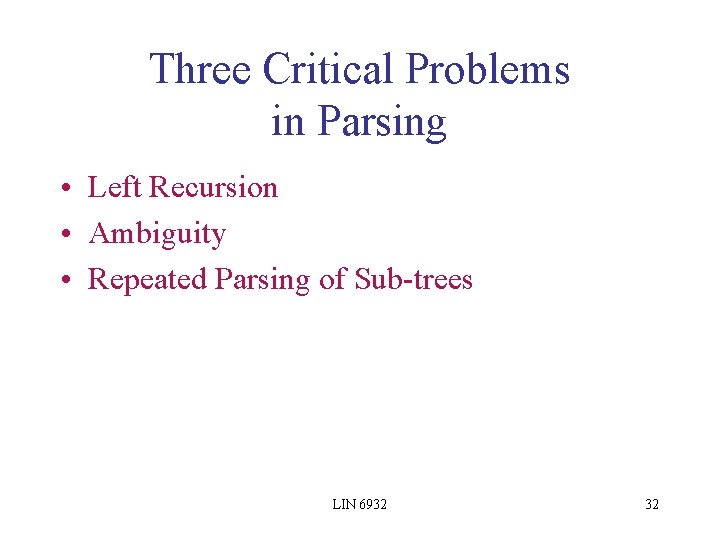

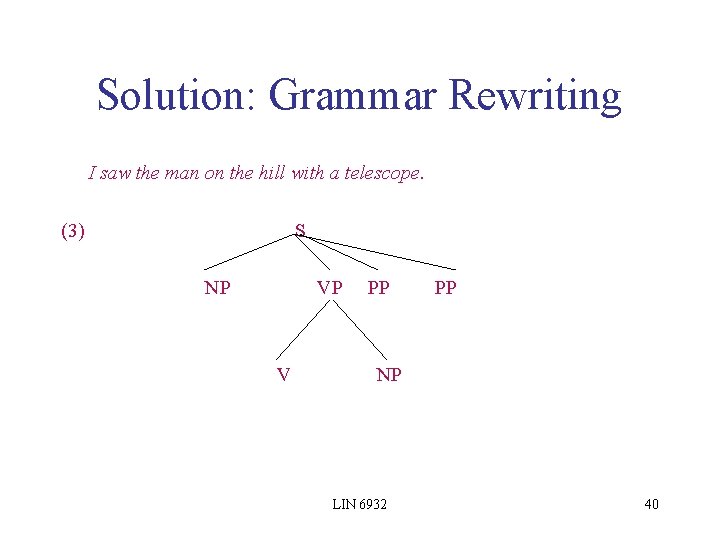

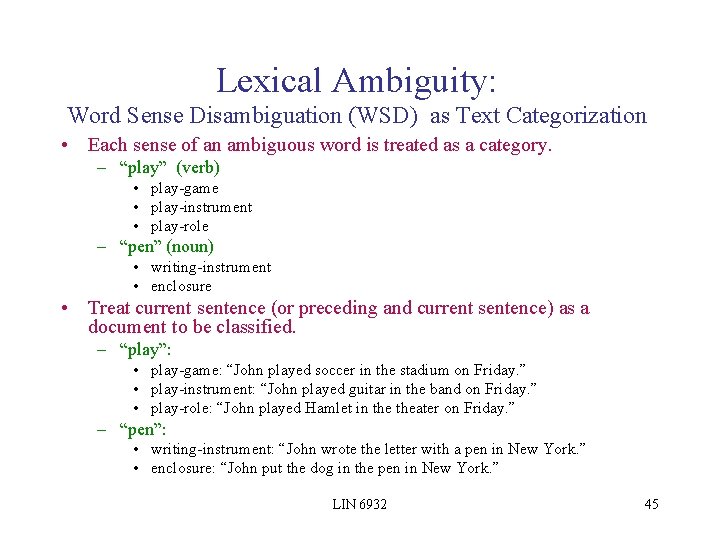

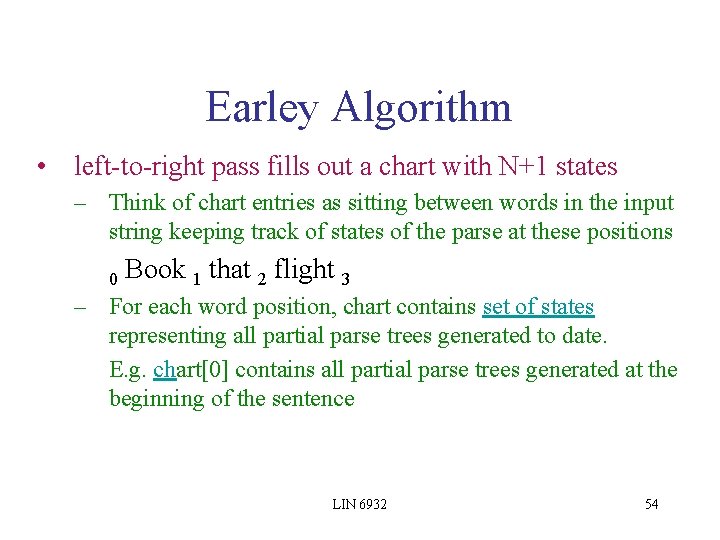

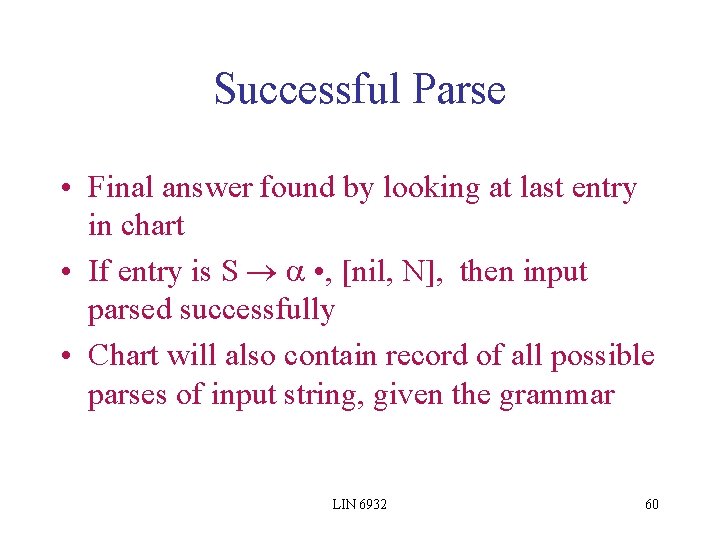

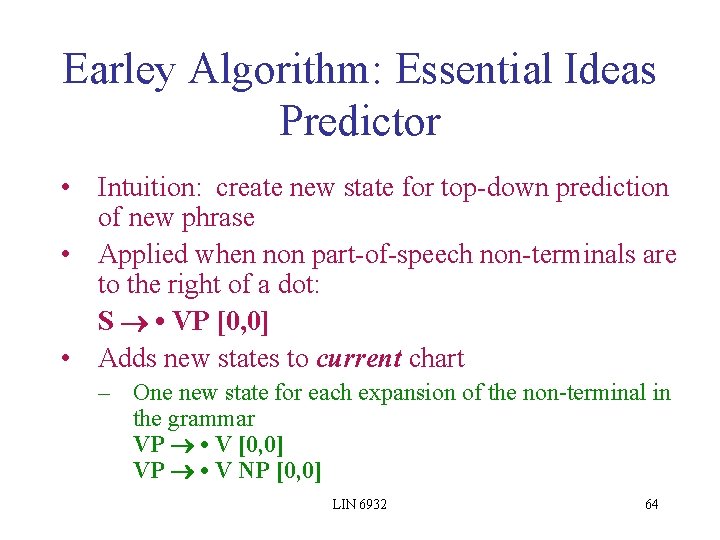

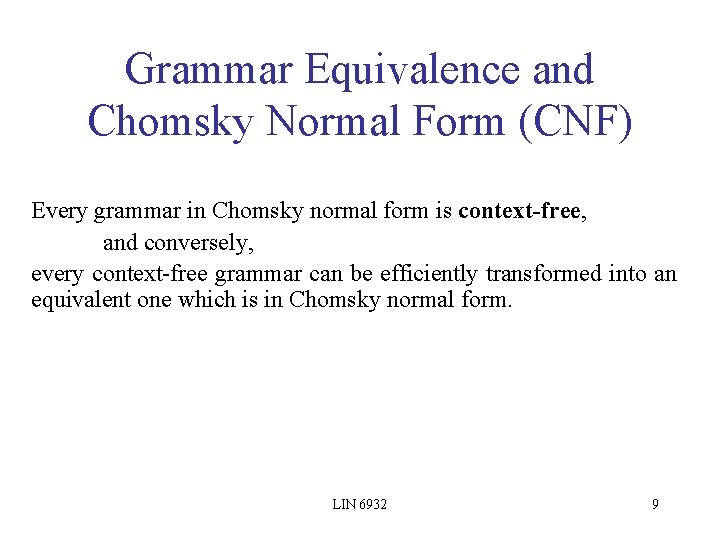

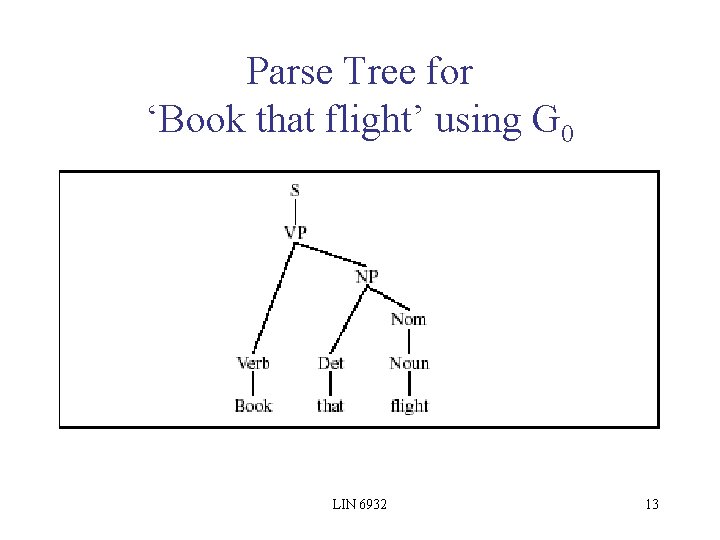

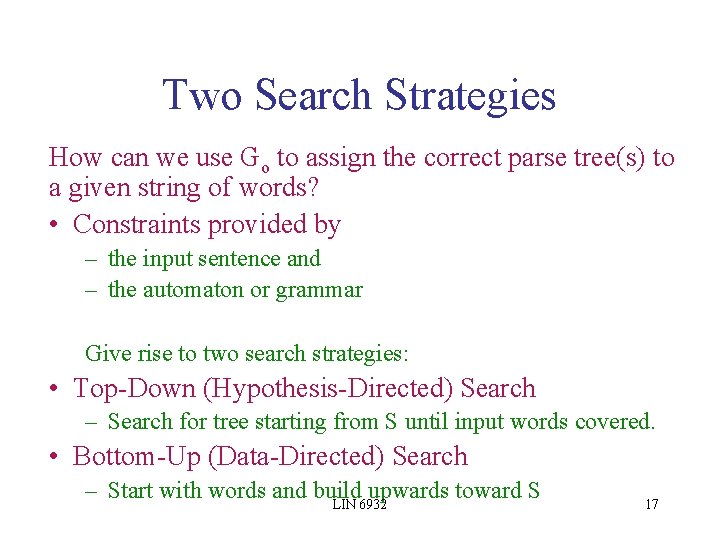

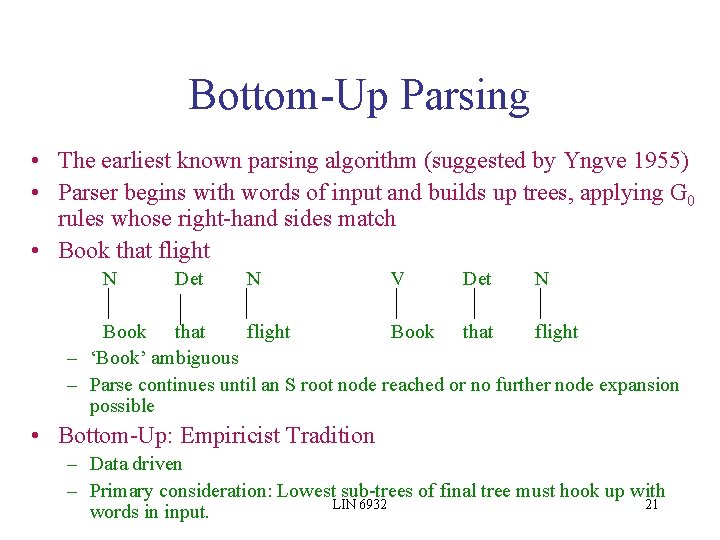

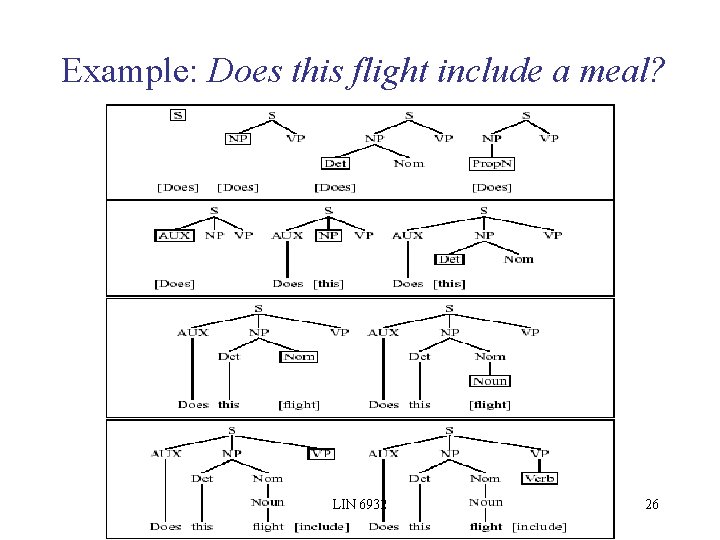

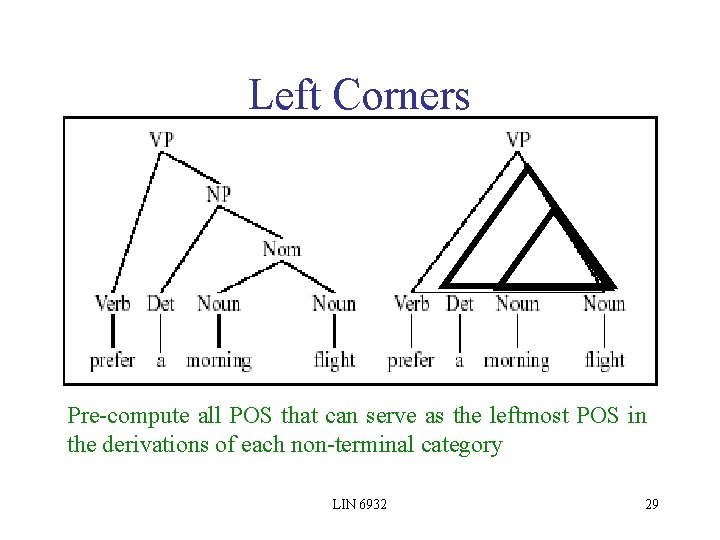

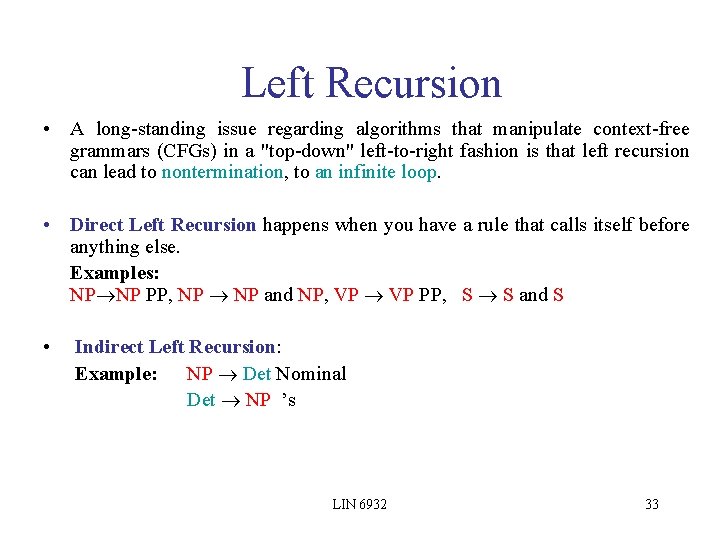

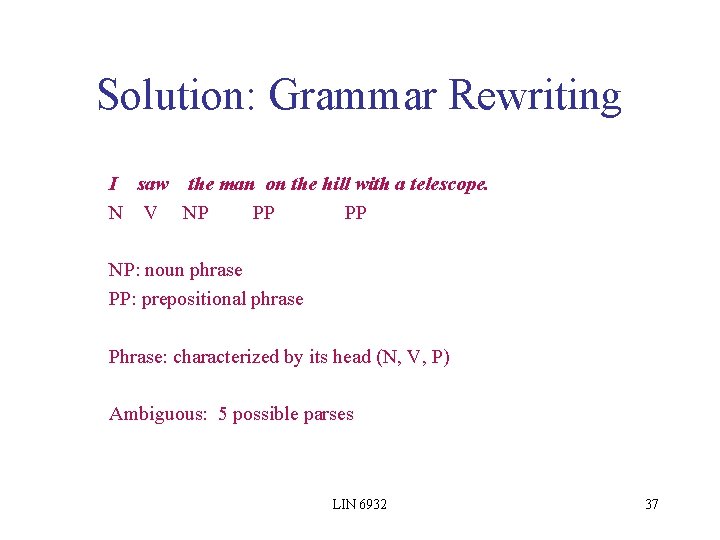

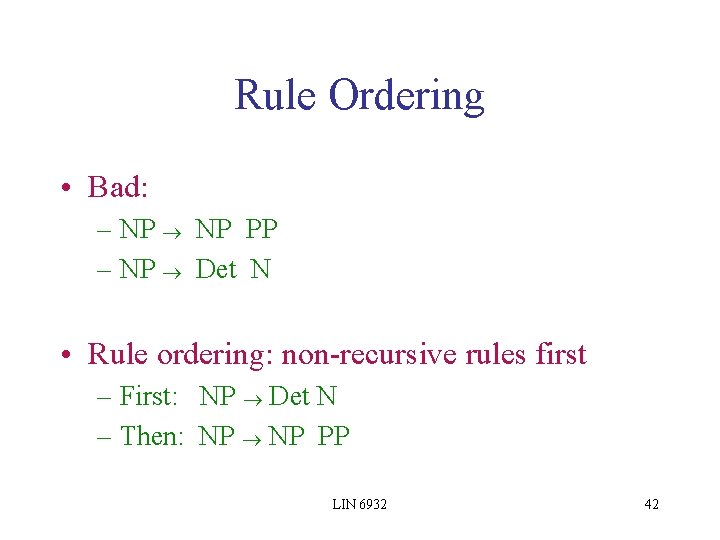

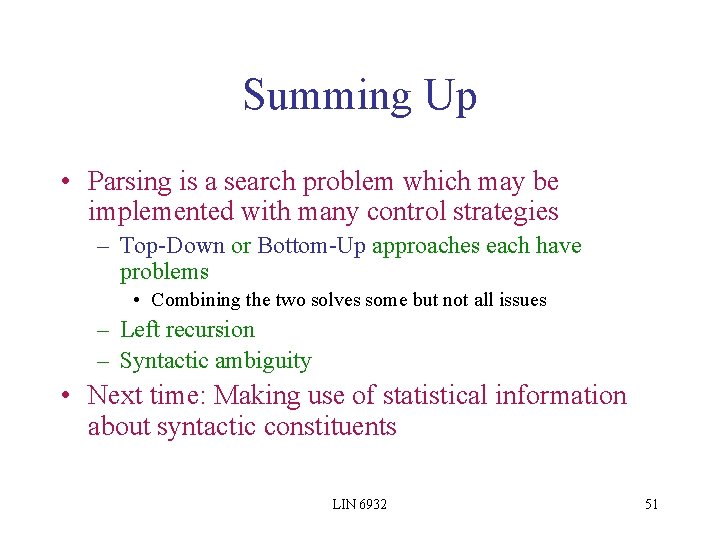

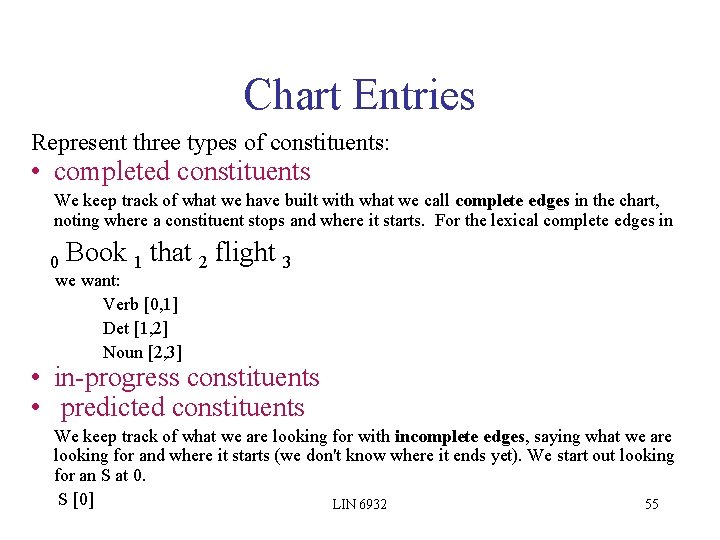

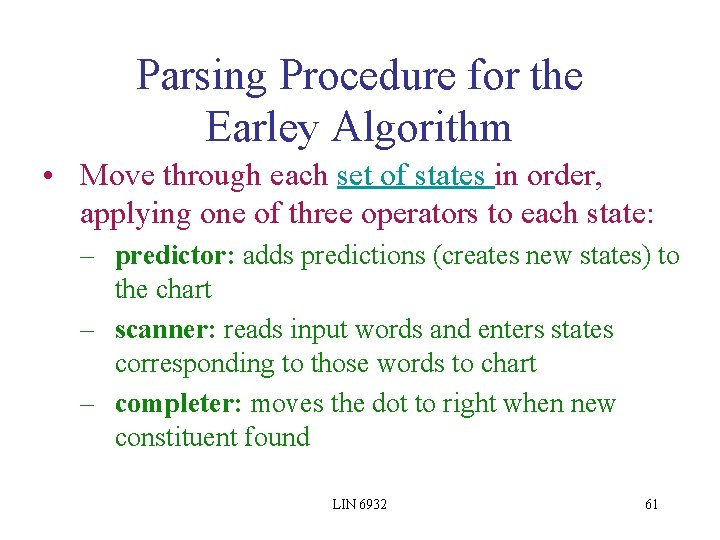

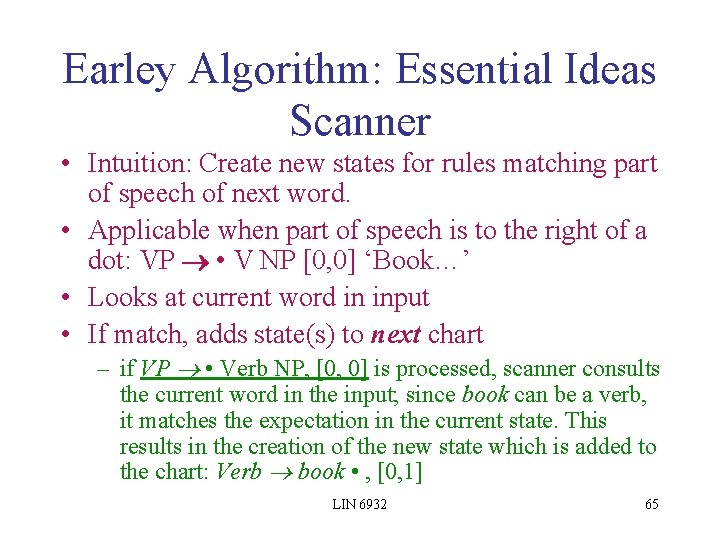

Progress in parse represented by Dotted Rules • The position of • indicates the progress made in recognizing a grammar rule • 0 Book 1 that 2 flight 3: S → • VP, [0, 0] (predicted); NP → Det • Nom, [1, 2] (in progress); VP → V NP •, [0, 3] (completed) • [x, y] tells us what portion of the input is spanned so far by this rule • Each state si: <dotted rule>, [<back pointer>, <current position>]
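A dotted-rule state can be represented directly as a small record. The sketch below is one possible encoding (the class and field names are our own): `dot` counts how many right-hand-side symbols have been recognized, `start` is the slides' back pointer (where the constituent begins), and `end` is the current position.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    """One Earley state: a dotted rule plus the span [start, end] covered so far."""
    lhs: str
    rhs: tuple[str, ...]
    dot: int    # number of rhs symbols already recognized
    start: int  # where the constituent begins (the back pointer)
    end: int    # current position reached in the input

    def is_complete(self) -> bool:
        return self.dot == len(self.rhs)

    def next_symbol(self):
        return None if self.is_complete() else self.rhs[self.dot]

    def __str__(self) -> str:
        body = list(self.rhs)
        body.insert(self.dot, "•")
        return f"{self.lhs} → {' '.join(body)}, [{self.start}, {self.end}]"

print(State("S", ("VP",), 0, 0, 0))          # S → • VP, [0, 0]
print(State("NP", ("Det", "Nom"), 1, 1, 2))  # NP → Det • Nom, [1, 2]
print(State("VP", ("V", "NP"), 2, 0, 3))     # VP → V NP •, [0, 3]
```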

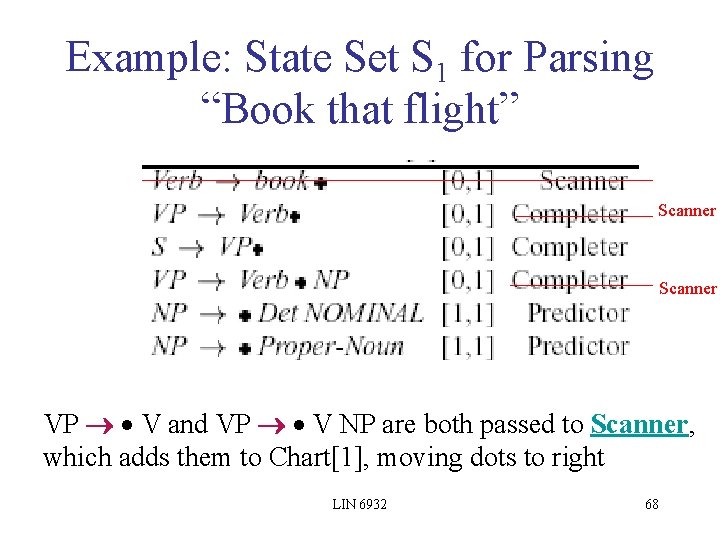

![0 Book 1 that 2 flight 3 S VP 0 0 First 0 Book 1 that 2 flight 3 S • VP, [0, 0] – First](https://slidetodoc.com/presentation_image/3ab82308dc765b2b9b06f8ce4ba27bc8/image-57.jpg)

0 Book 1 that 2 flight 3 S → • VP, [0, 0] – The first 0 means the S constituent begins at the start of the input – The second 0 means the dot is there too – So this is a top-down prediction NP → Det • Nom, [1, 2] – the NP begins at position 1 – the dot is at position 2 – so Det has been successfully parsed – Nom is predicted next

0 Book 1 that 2 flight 3 (continued) VP → V NP •, [0, 3] – Successful VP parse of the entire input

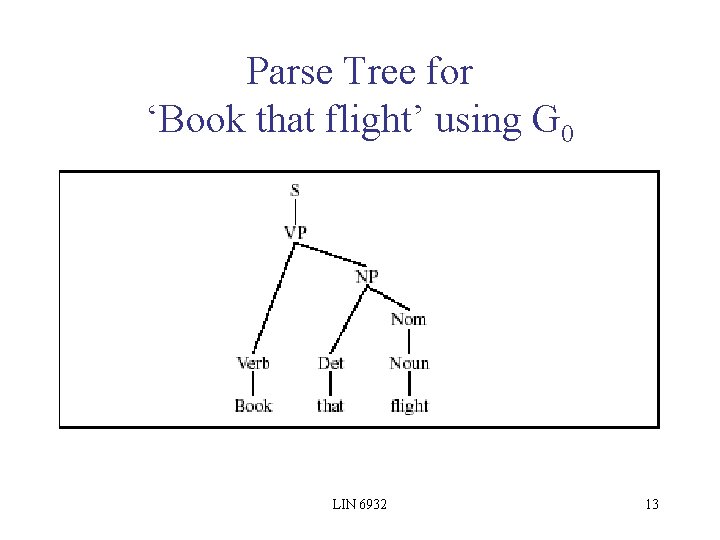

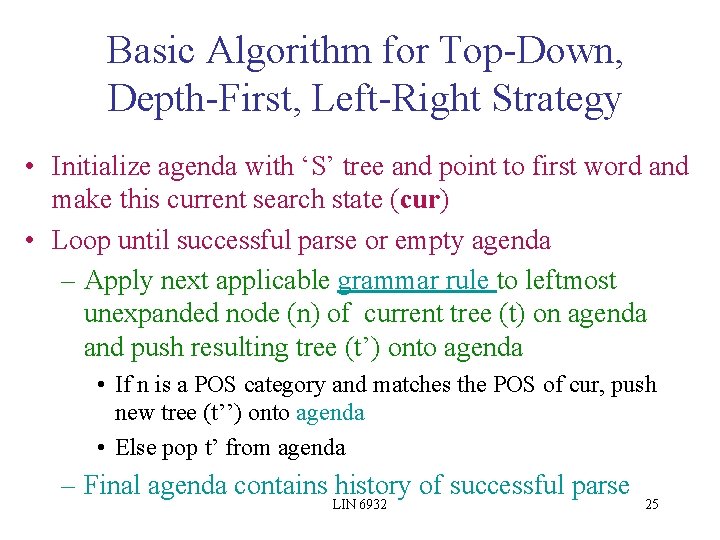

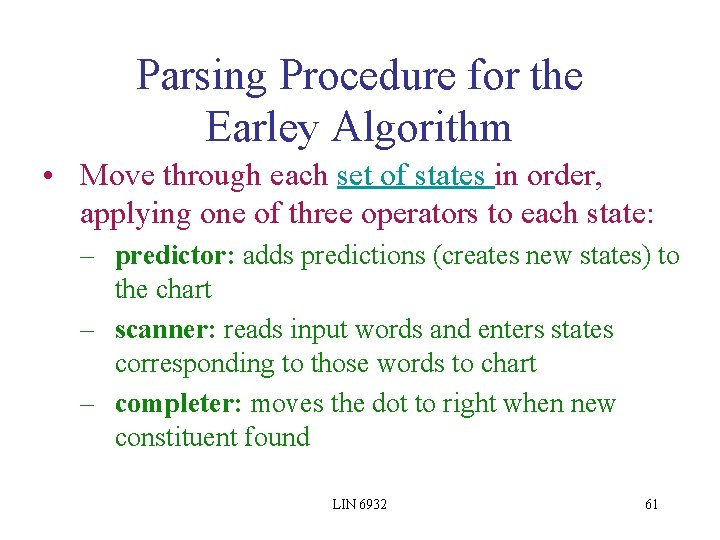

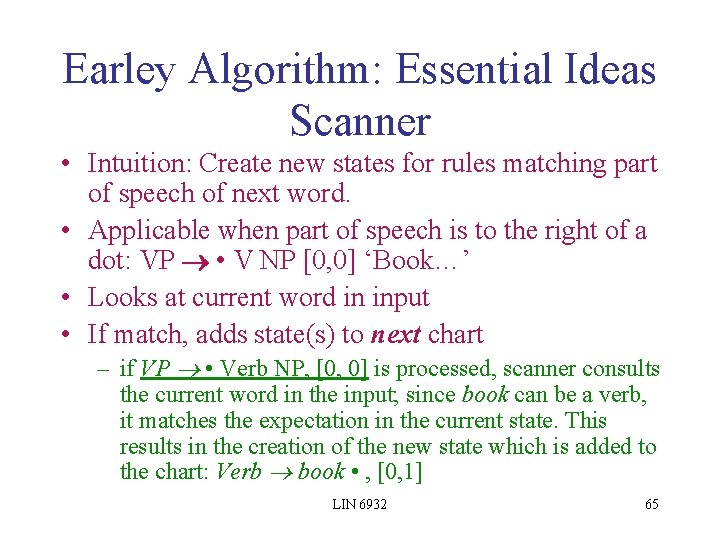

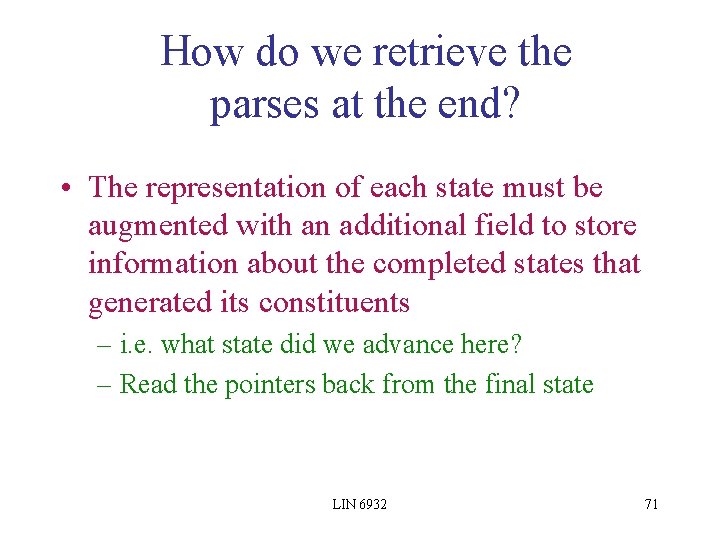

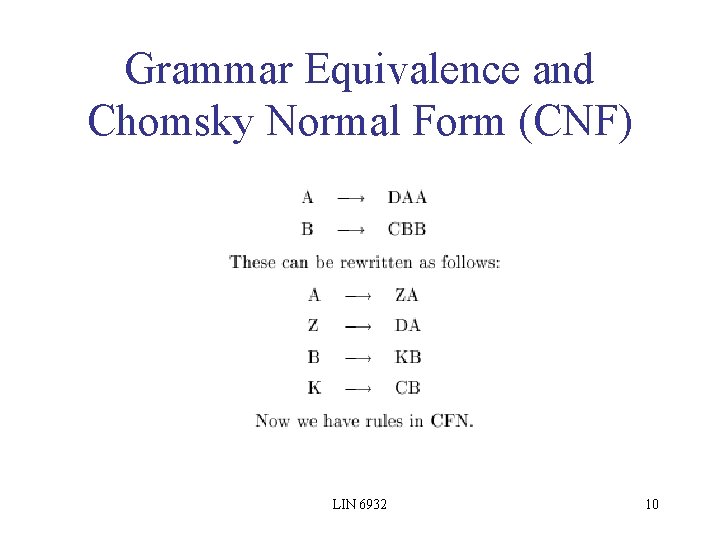

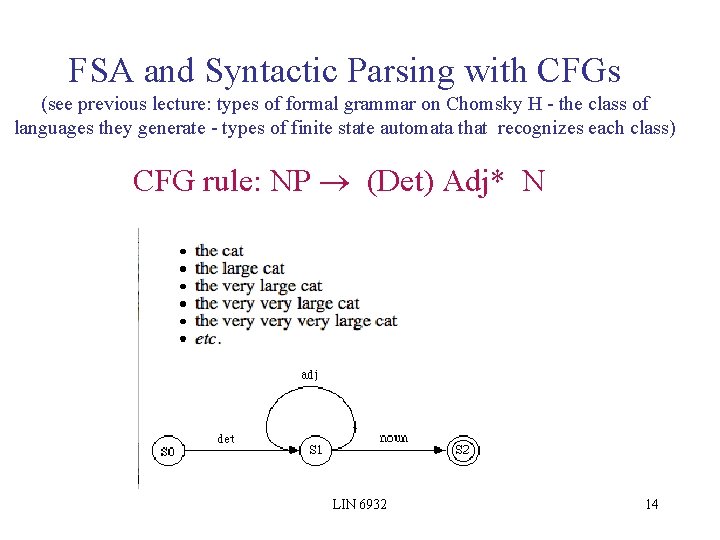

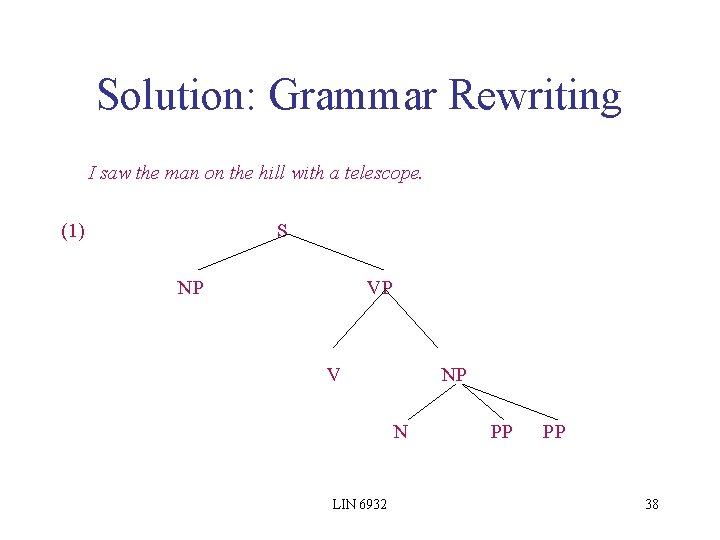

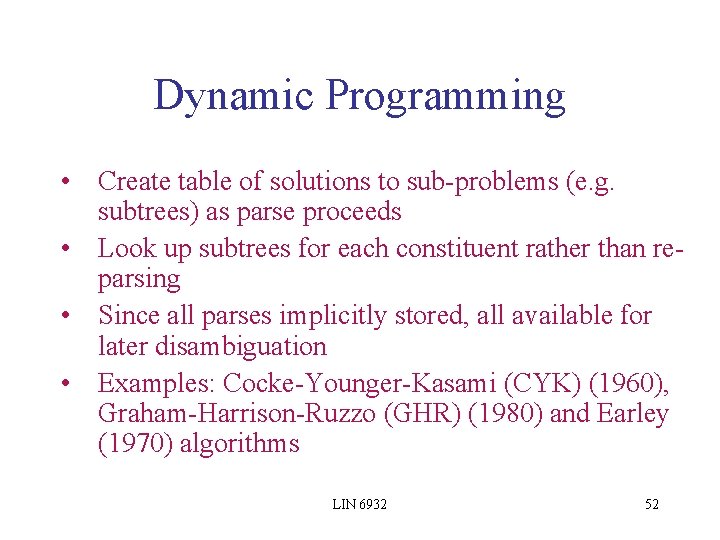

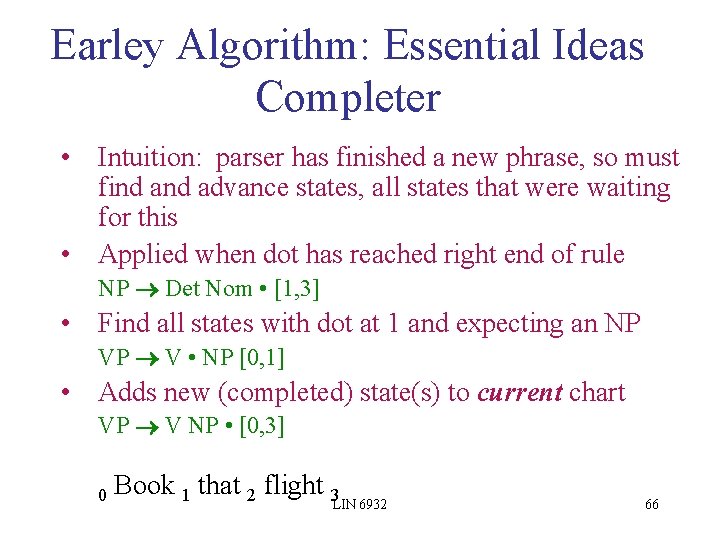

![Some Earley edges parser states 1 S NP VP 0 0 Incomplete Some Earley edges (parser states) 1. S => • NP VP [0, 0]: Incomplete.](https://slidetodoc.com/presentation_image/3ab82308dc765b2b9b06f8ce4ba27bc8/image-59.jpg)

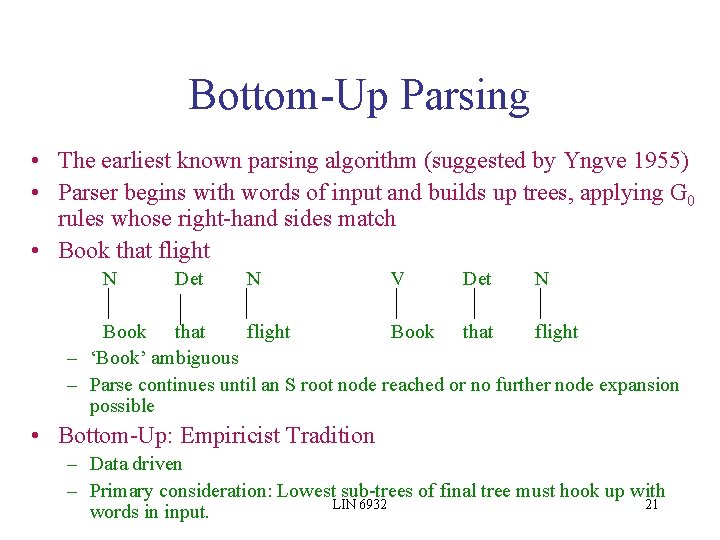

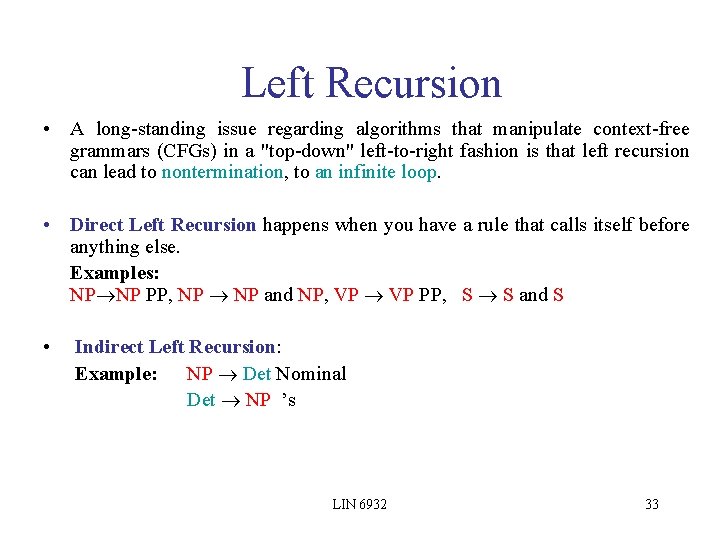

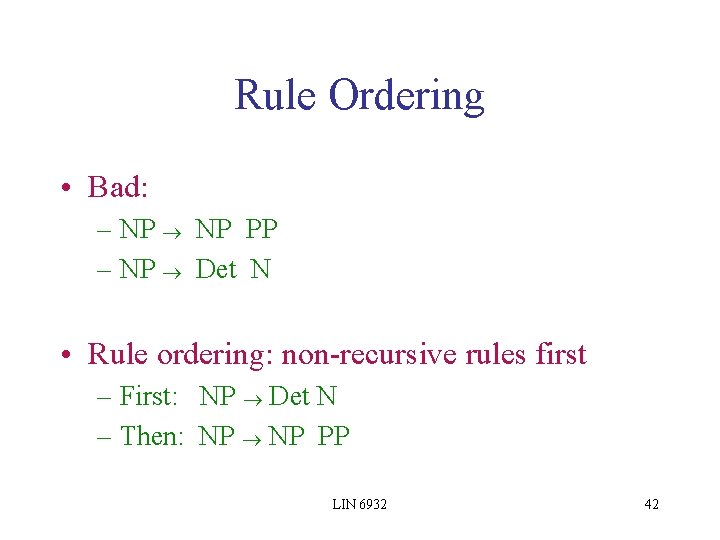

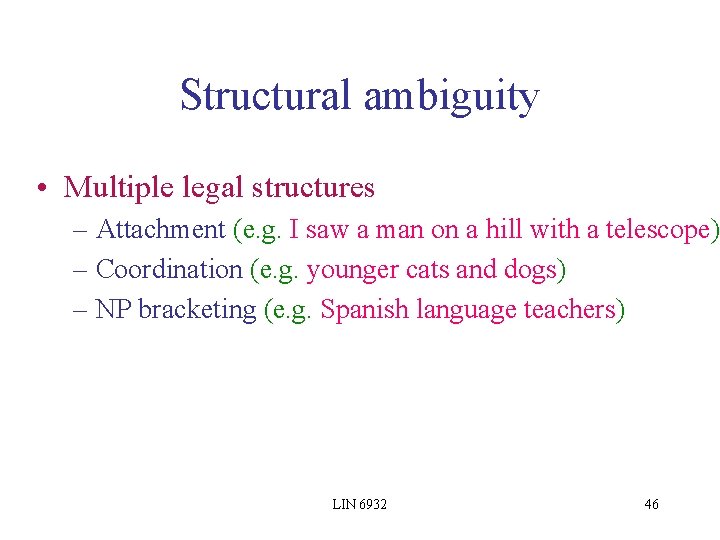

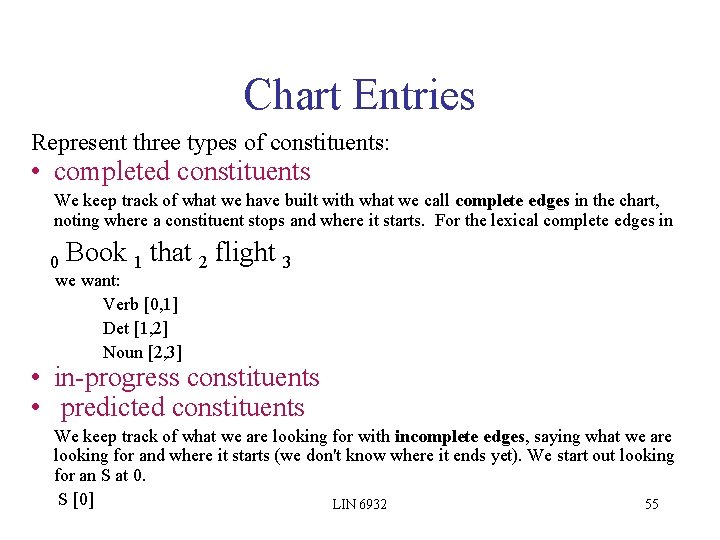

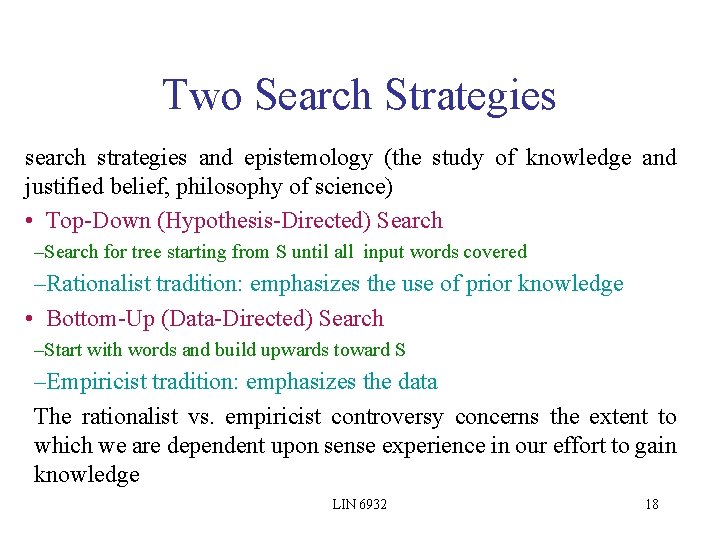

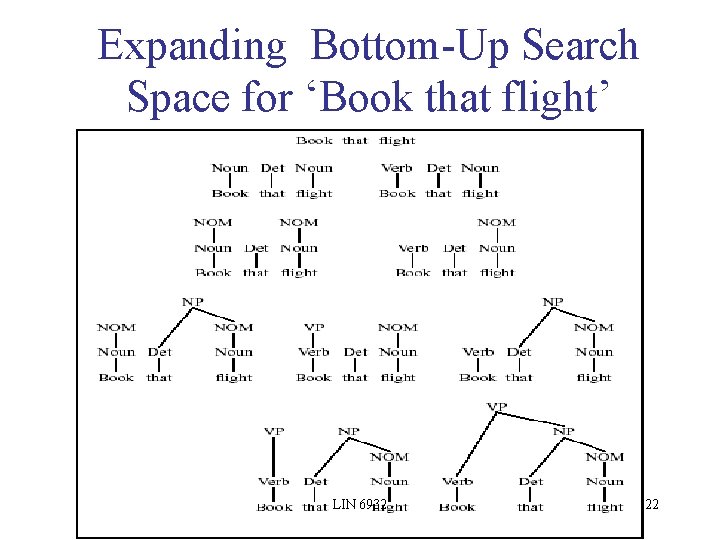

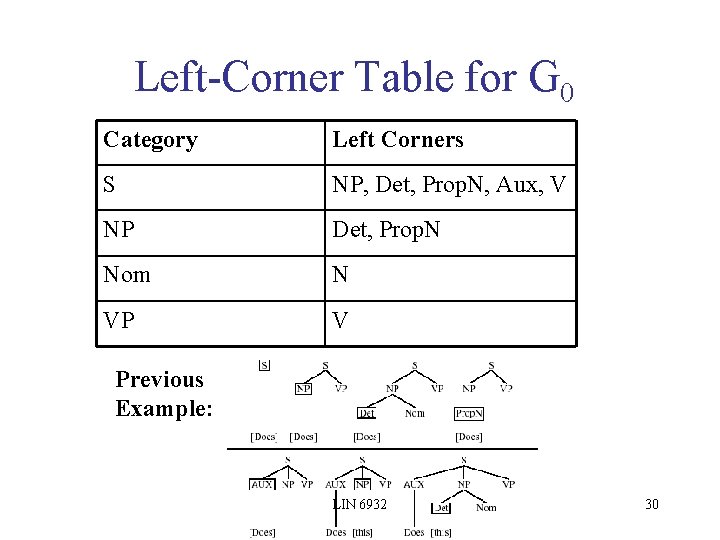

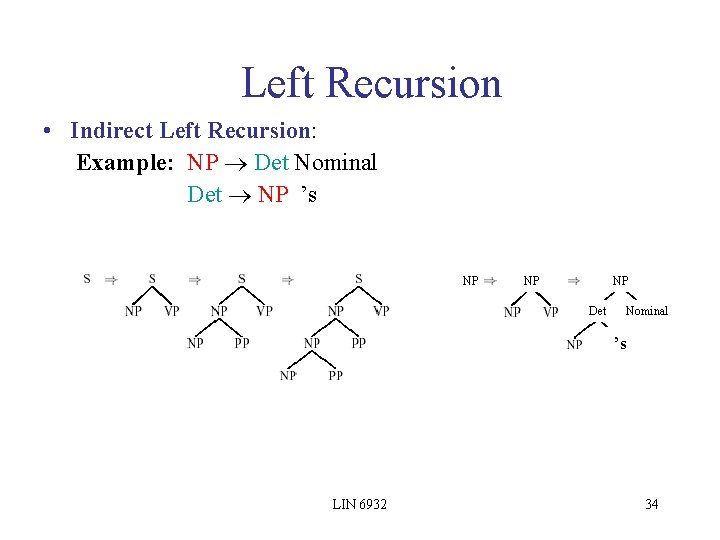

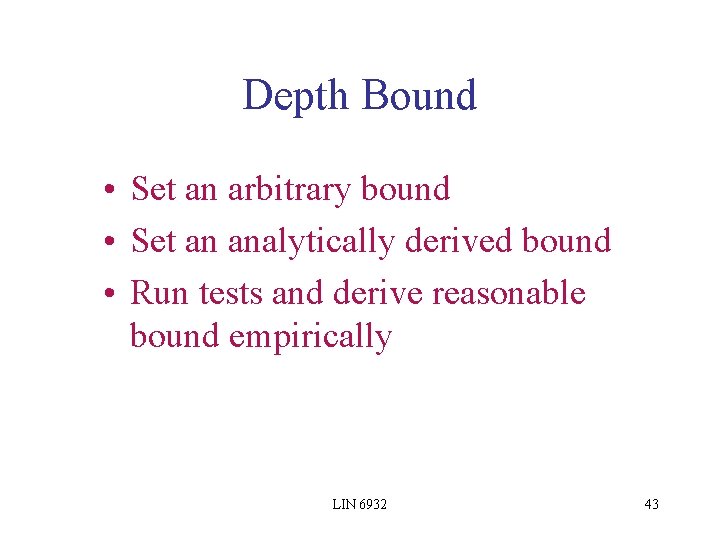

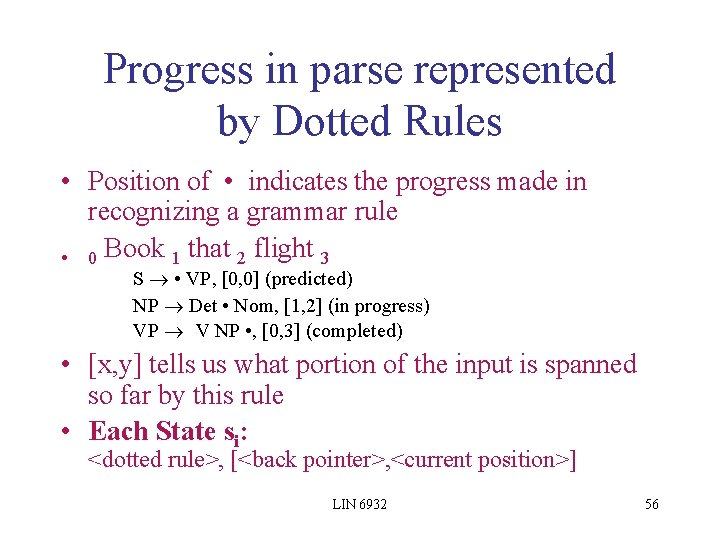

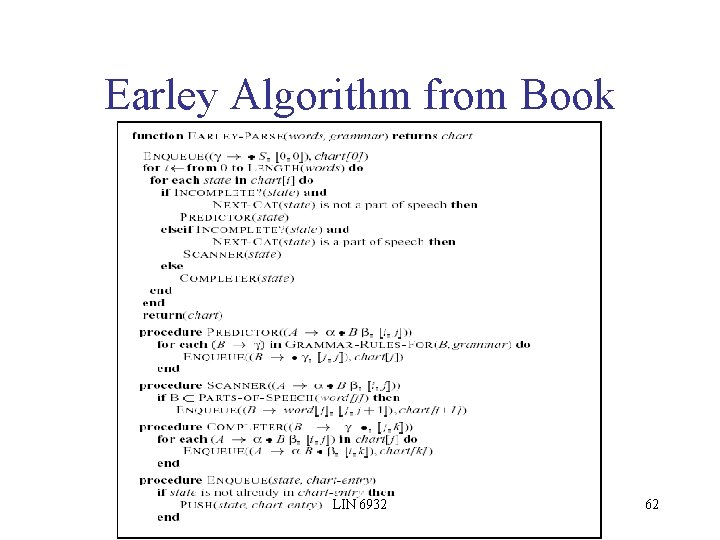

Some Earley edges (parser states) 1. S => • NP VP [0, 0]: Incomplete. We're trying to build an S that starts at 0 using the NP VP rule. We've found nothing yet (the dot is in first position); we're currently looking for an NP that starts at 0. 2. S => NP • VP [0, 1]: Incomplete. We're trying to build an S that starts at 0 using the NP VP rule. We've found an NP spanning [0, 1] (the dot is in second position); we're currently looking for a VP that starts at 1. 3. S => NP VP • [0, 3]: Complete. We've succeeded in building an S that starts at 0 using the NP VP rule. It ends at 3 (the dot is in the last position), so we are no longer looking for anything.

Successful Parse • The final answer is found by looking at the last entry in the chart • If that entry contains S •, [nil, N], the input was parsed successfully • The chart will also contain a record of all possible parses of the input string, given the grammar

Parsing Procedure for the Earley Algorithm • Move through each set of states in order, applying one of three operators to each state: – predictor: adds predictions (creates new states) to the chart – scanner: reads input words and enters states corresponding to those words into the chart – completer: moves the dot to the right when a new constituent is found

Earley Algorithm from Book

Earley Algorithm: Essential Ideas Initialize • To look for an S at 0, we add the following incomplete edge at 0, which uses a dummy category gamma: • gamma => • S [0, 0]

Earley Algorithm: Essential Ideas Predictor • Intuition: create a new state for the top-down prediction of a new phrase • Applied when a non-part-of-speech non-terminal is to the right of the dot: S → • VP [0, 0] • Adds new states to the current chart – One new state for each expansion of the non-terminal in the grammar: VP → • V [0, 0], VP → • V NP [0, 0]

Earley Algorithm: Essential Ideas Scanner • Intuition: create new states for rules matching the part of speech of the next word. • Applicable when a part of speech is to the right of the dot: VP → • V NP [0, 0] ‘Book…’ • Looks at the current word in the input • If it matches, adds state(s) to the next chart entry – If VP → • Verb NP, [0, 0] is processed, the scanner consults the current word in the input; since book can be a verb, it matches the expectation in the current state. This results in the creation of a new state, which is added to the chart: Verb → book •, [0, 1]

Earley Algorithm: Essential Ideas Completer • Intuition: the parser has finished a new phrase, so it must find and advance all states that were waiting for this phrase • Applied when the dot has reached the right end of a rule: NP → Det Nom • [1, 3] • Find all states ending at position 1 that are expecting an NP: VP → V • NP [0, 1] • Adds the advanced state(s) to the current chart: VP → V NP • [0, 3] 0 Book 1 that 2 flight 3
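Putting the three operators together gives a compact recognizer. This is a sketch under stated assumptions, not the textbook's pseudocode verbatim: the toy grammar and lexicon are hypothetical, there are no ε-rules (which lets the completer read an already finished chart entry), states are tuples `(lhs, rhs, dot, start)` stored in `chart[end]`, and, as in the Scanner description above, scanning a word adds a POS → word • state to the next chart entry.

```python
# Hypothetical toy grammar in the spirit of G0 (no epsilon rules).
GRAMMAR = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "NP": [("Det", "Nom"), ("PropN",)],
    "Nom": [("N",), ("N", "Nom")],
    "VP": [("V",), ("V", "NP")],
}
POS = {"Det", "PropN", "Aux", "N", "V"}
LEXICON = {"book": {"N", "V"}, "that": {"Det"}, "flight": {"N"}}

def earley_recognize(words: list[str]) -> bool:
    # chart[i] holds states (lhs, rhs, dot, start) whose current position is i.
    chart = [[] for _ in range(len(words) + 1)]

    def enqueue(state, i):
        if state not in chart[i]:  # never add the same state twice
            chart[i].append(state)

    enqueue(("γ", ("S",), 0, 0), 0)  # dummy start state: γ → • S, [0, 0]
    for i in range(len(words) + 1):
        k = 0
        while k < len(chart[i]):  # chart[i] can grow while we process it
            lhs, rhs, dot, start = chart[i][k]
            k += 1
            if dot < len(rhs) and rhs[dot] not in POS:
                # Predictor: one new state per expansion of the next non-terminal.
                for expansion in GRAMMAR[rhs[dot]]:
                    enqueue((rhs[dot], expansion, 0, i), i)
            elif dot < len(rhs):
                # Scanner: if the current word can have this POS, add POS → word • to chart[i+1].
                if i < len(words) and rhs[dot] in LEXICON.get(words[i], set()):
                    enqueue((rhs[dot], (words[i],), 1, i), i + 1)
            else:
                # Completer: advance every state in chart[start] that was waiting for lhs.
                for w_lhs, w_rhs, w_dot, w_start in chart[start]:
                    if w_dot < len(w_rhs) and w_rhs[w_dot] == lhs:
                        enqueue((w_lhs, w_rhs, w_dot + 1, w_start), i)
    return ("γ", ("S",), 1, 0) in chart[len(words)]

print(earley_recognize("book that flight".split()))  # True
```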

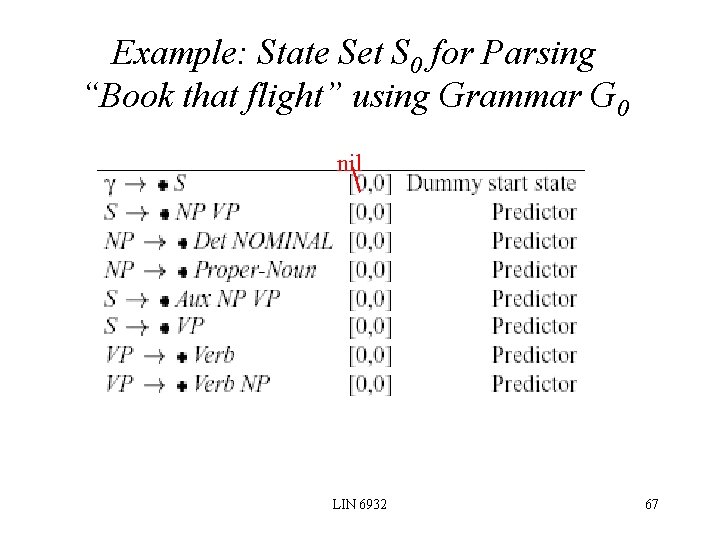

Example: State Set S0 for Parsing “Book that flight” using Grammar G0

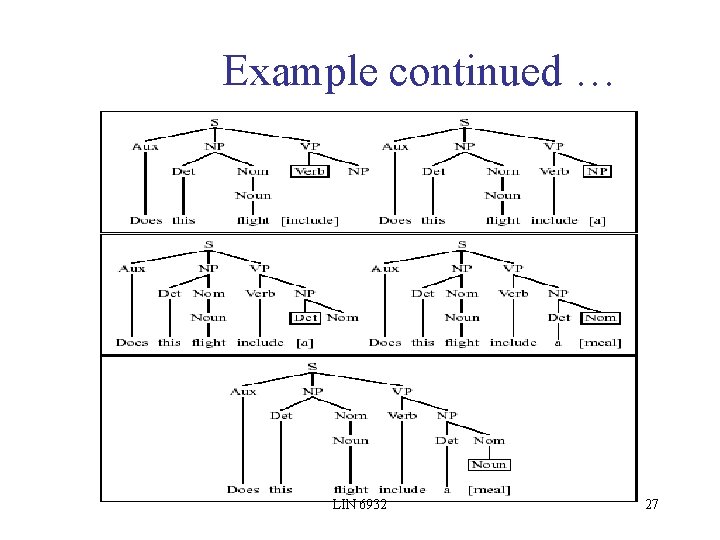

Example: State Set S1 for Parsing “Book that flight” Scanner: VP → • V and VP → • V NP are both passed to the Scanner, which adds them to Chart[1] with the dot moved to the right: VP → V •, VP → V • NP

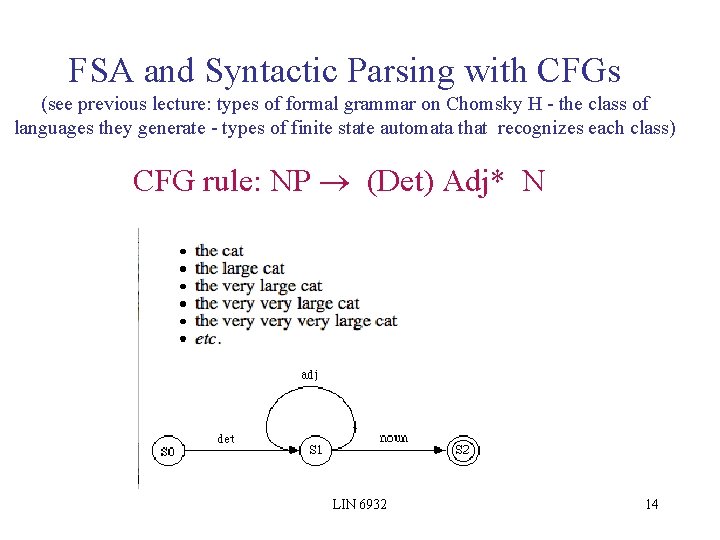

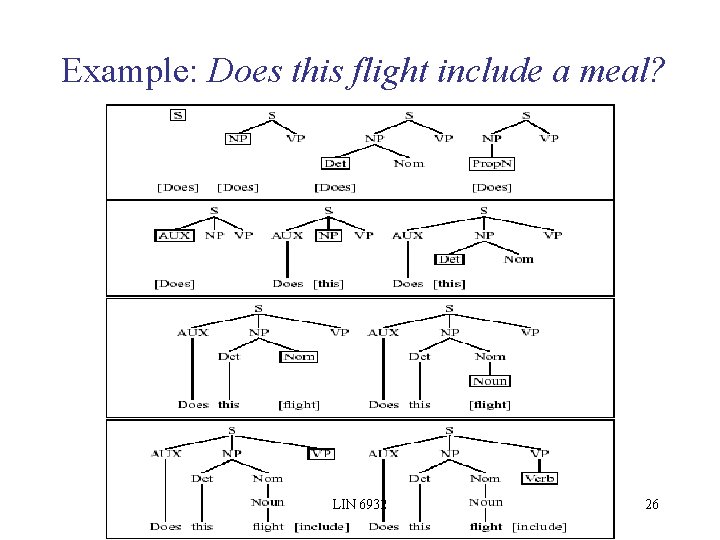

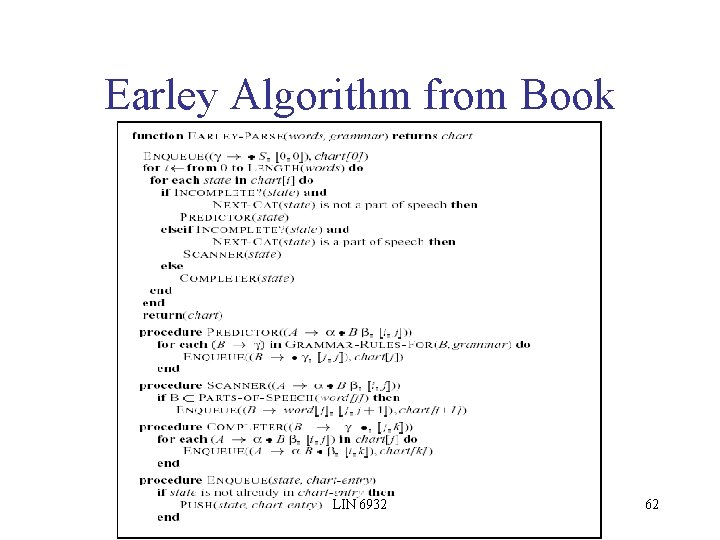

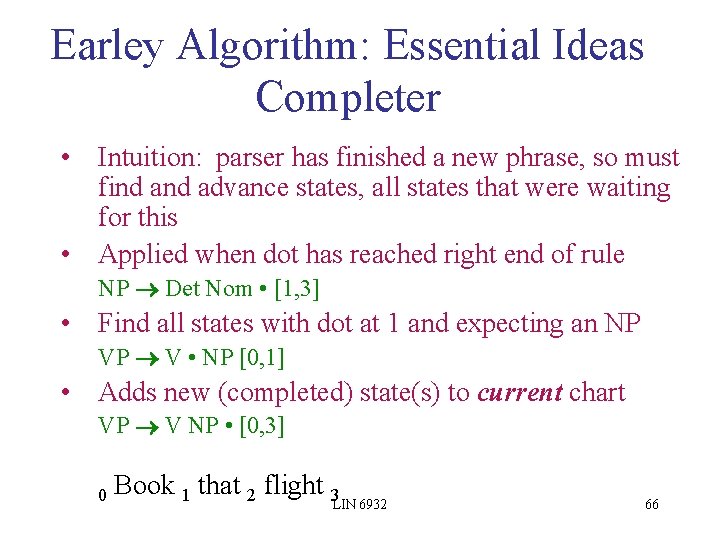

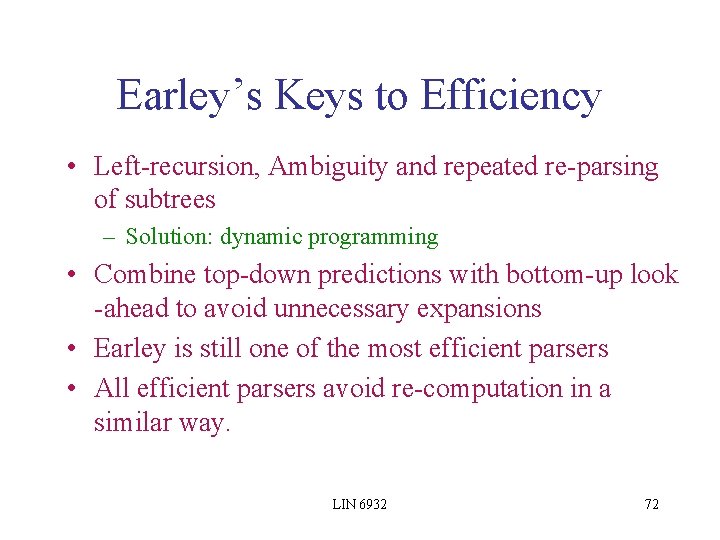

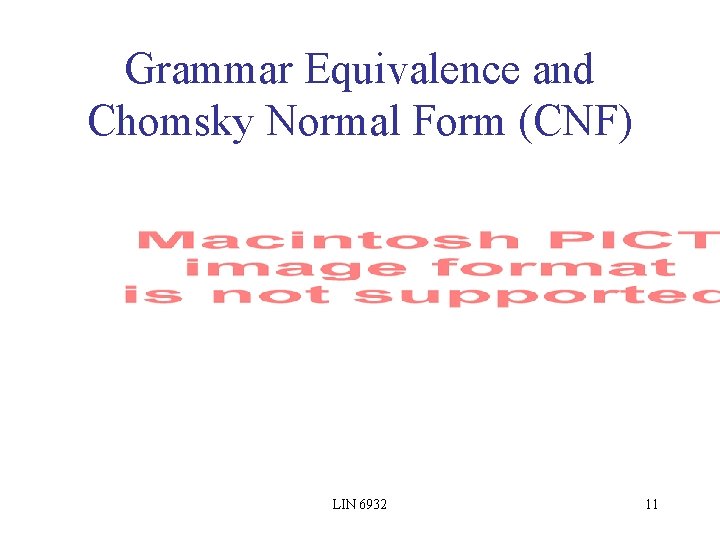

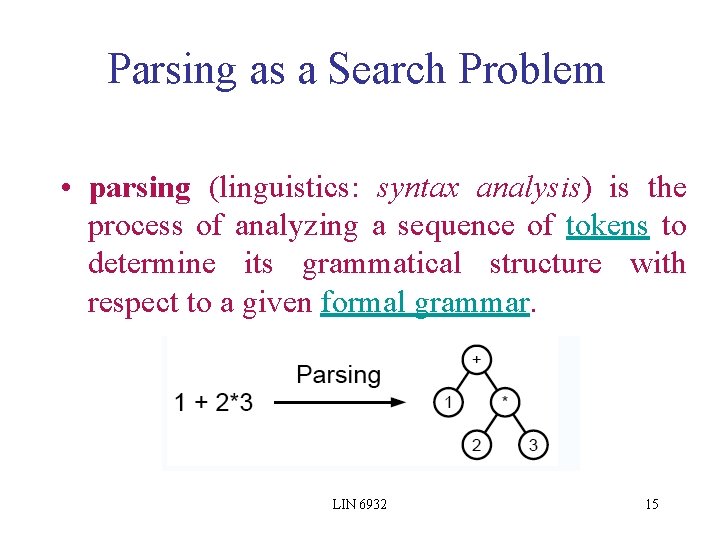

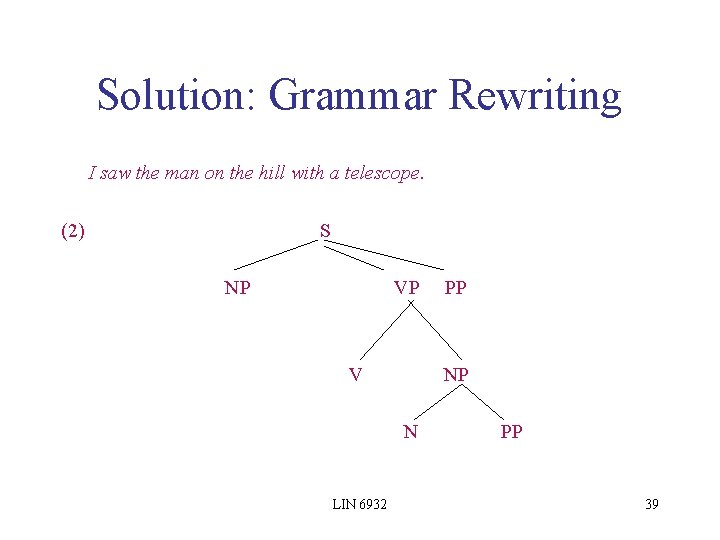

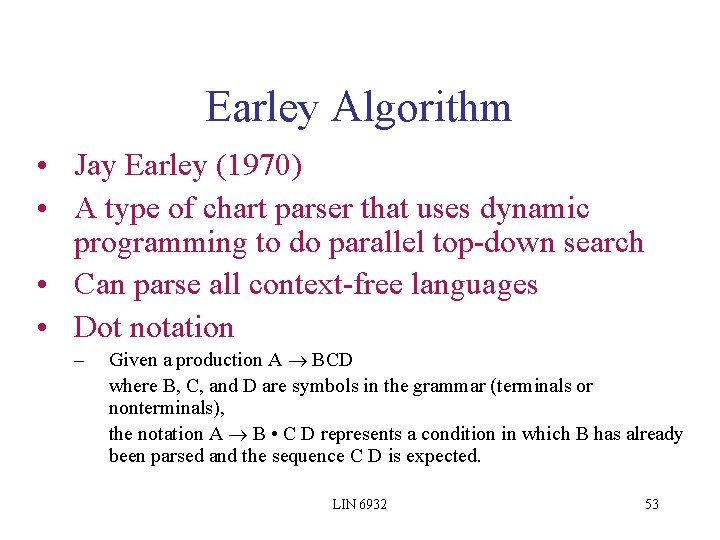

![Last Two States Scanner γS LIN 6932 nil 3 Completer 69 Last Two States Scanner γ→S . LIN 6932 [nil, 3] Completer 69](https://slidetodoc.com/presentation_image/3ab82308dc765b2b9b06f8ce4ba27bc8/image-69.jpg)

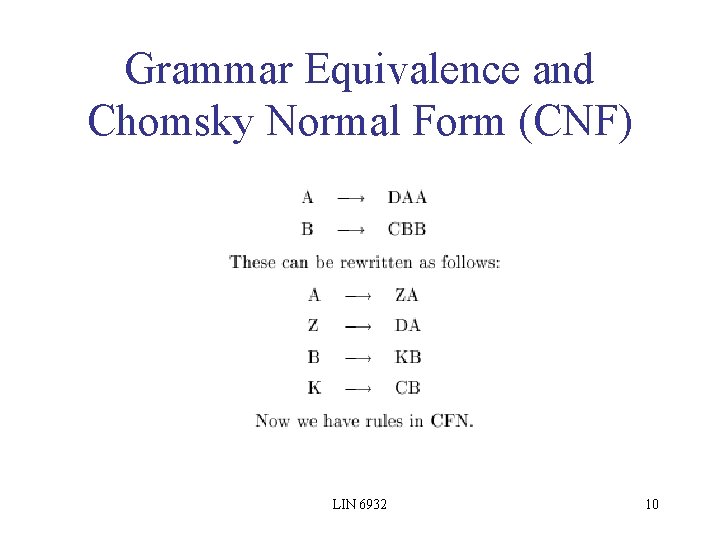

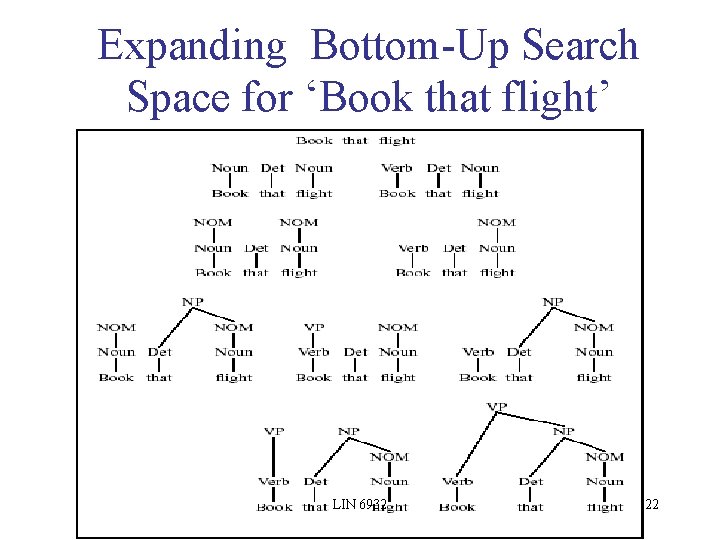

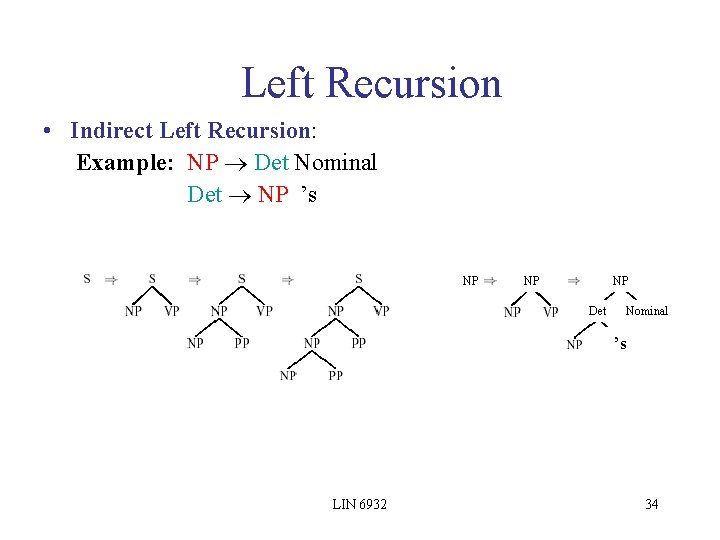

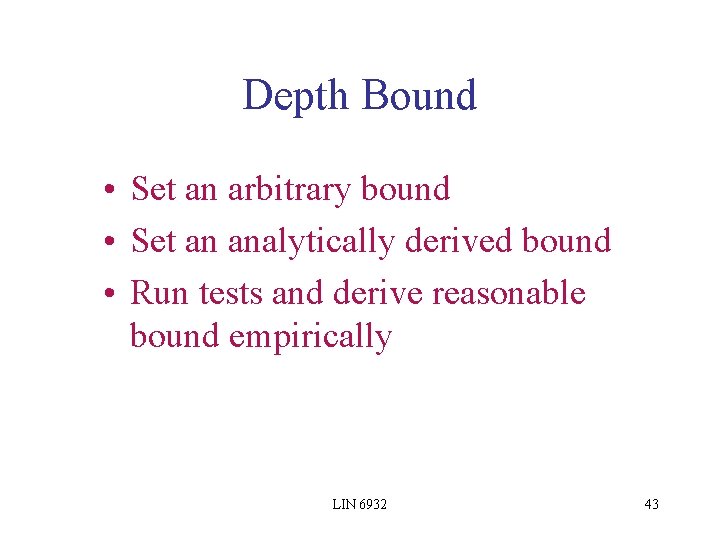

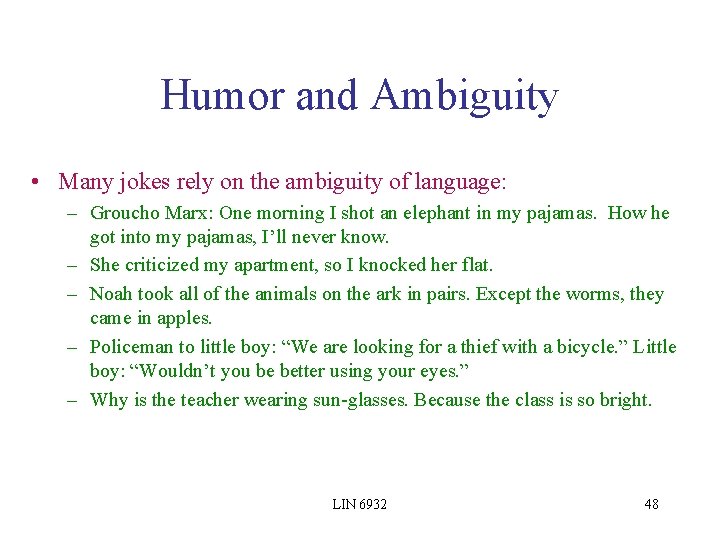

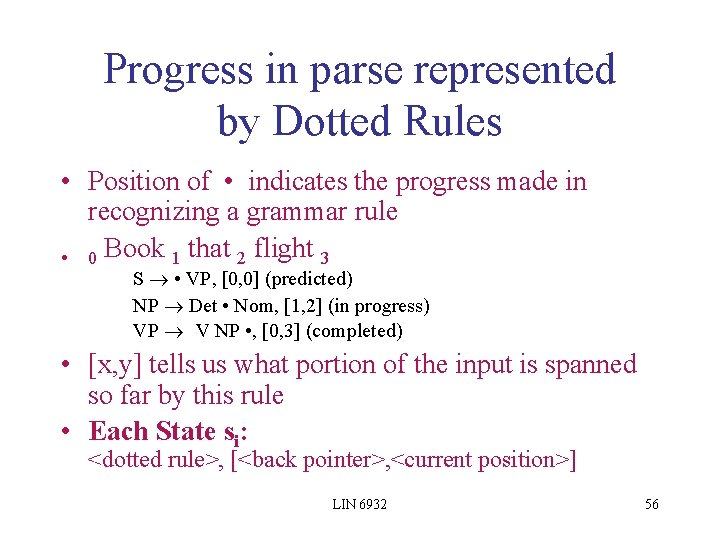

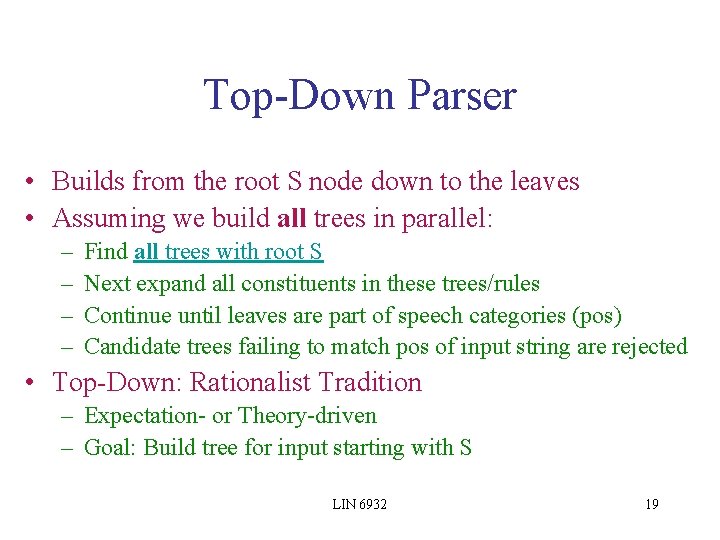

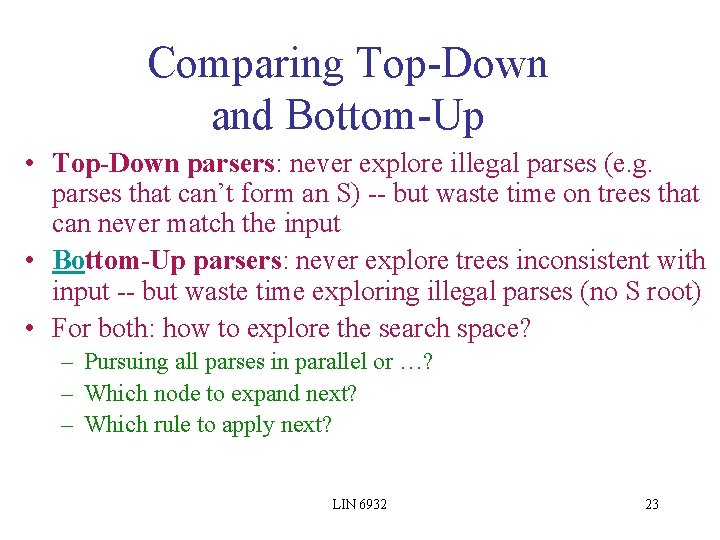

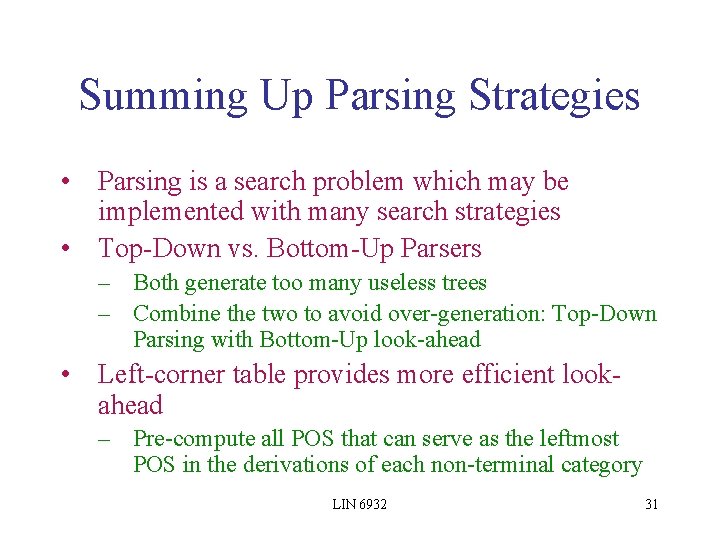

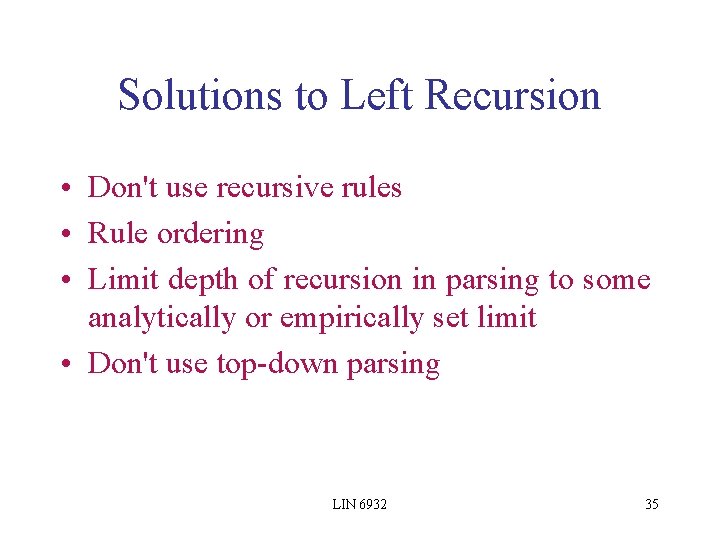

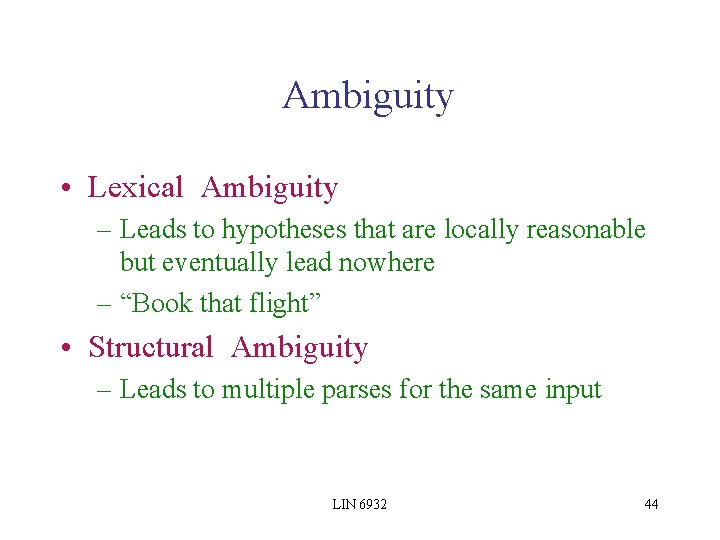

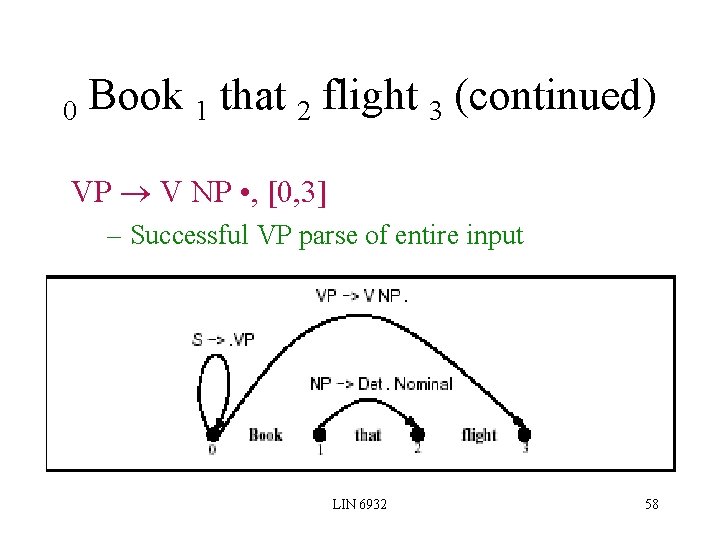

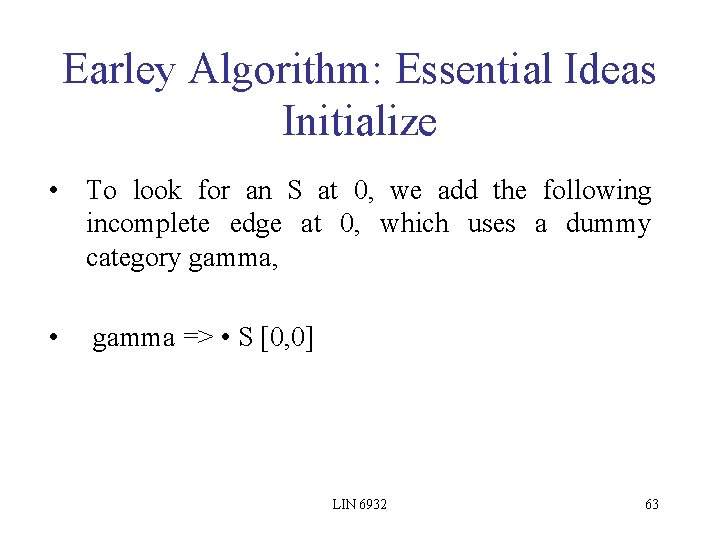

Last Two States (Scanner, Completer): γ → S •, [nil, 3]

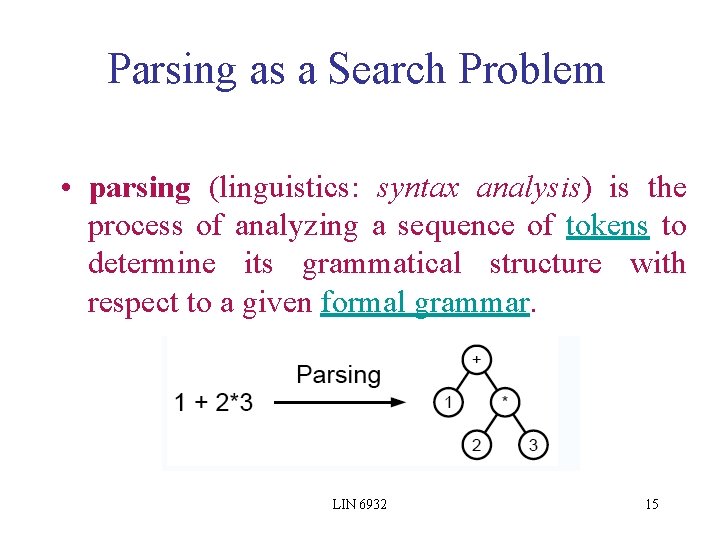

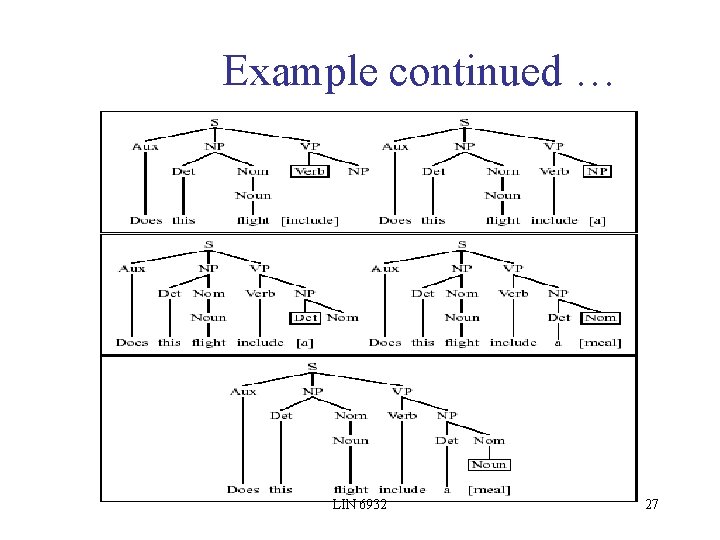

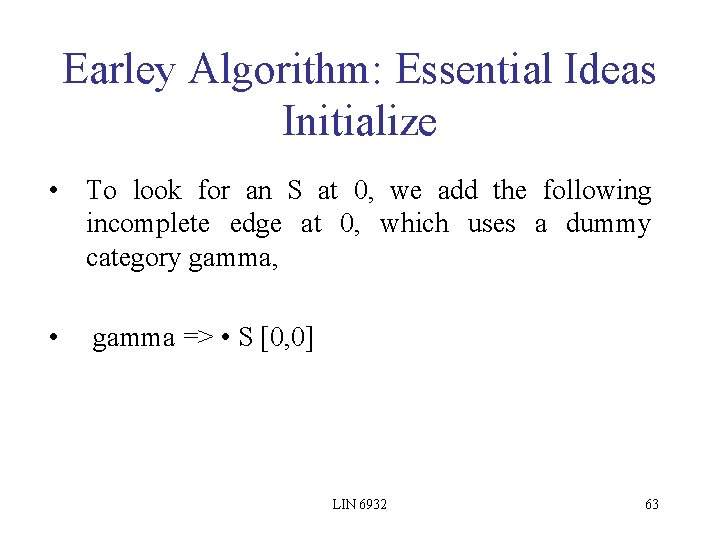

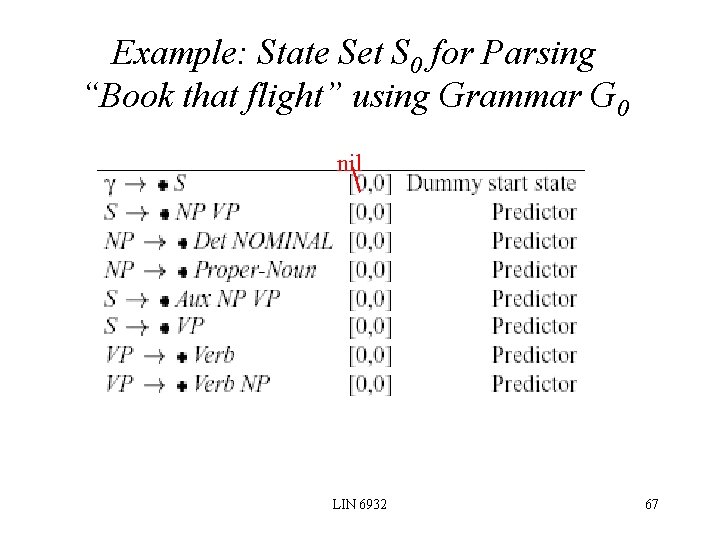

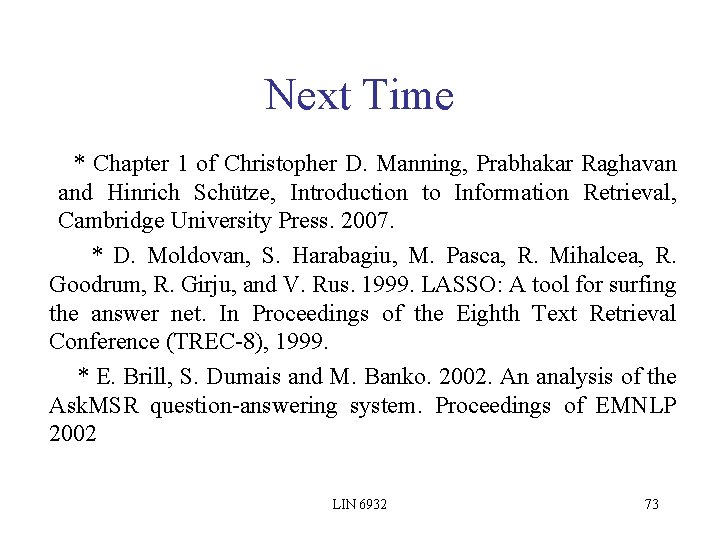

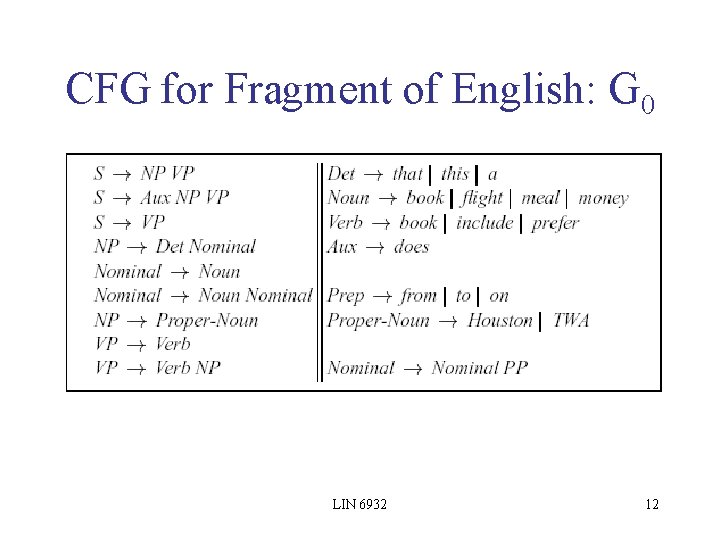

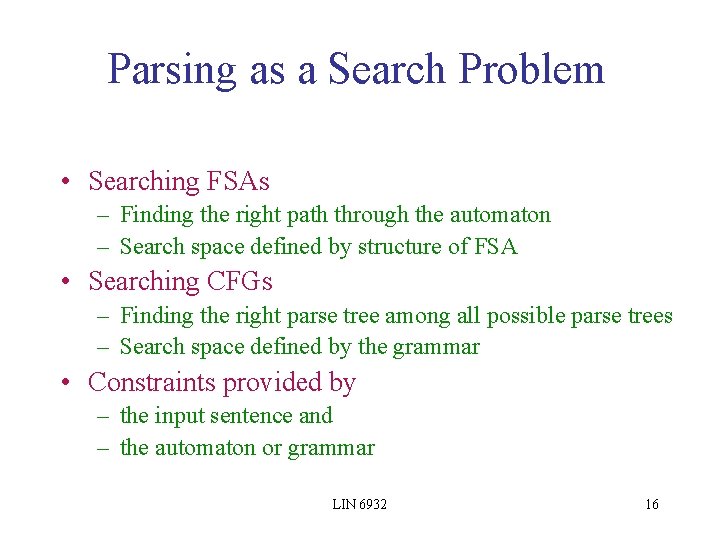

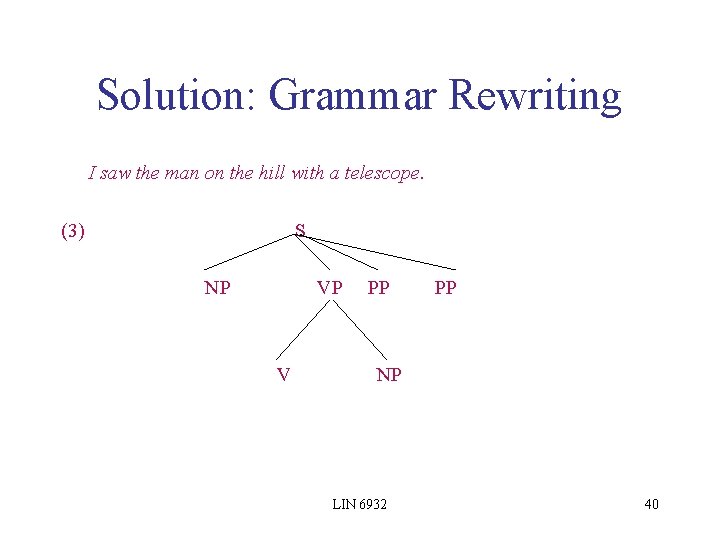

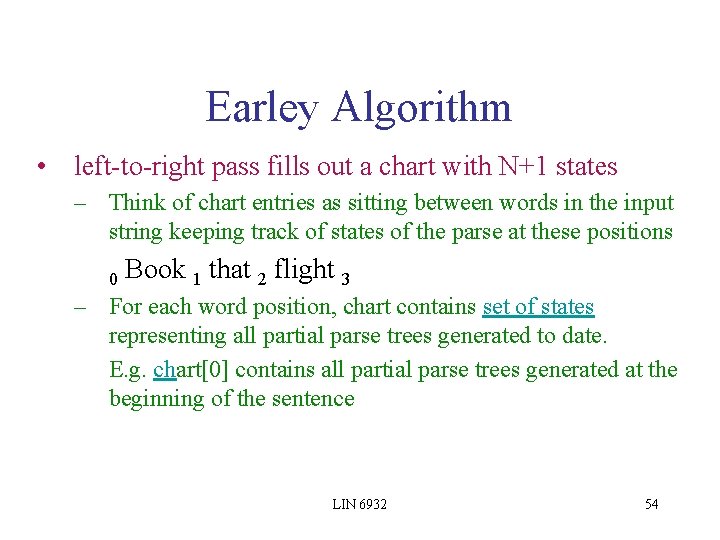

![Error Handling Valid sentences will leave the state S nil N What Error Handling Valid sentences will leave the state S , [nil, N] • What](https://slidetodoc.com/presentation_image/3ab82308dc765b2b9b06f8ce4ba27bc8/image-70.jpg)

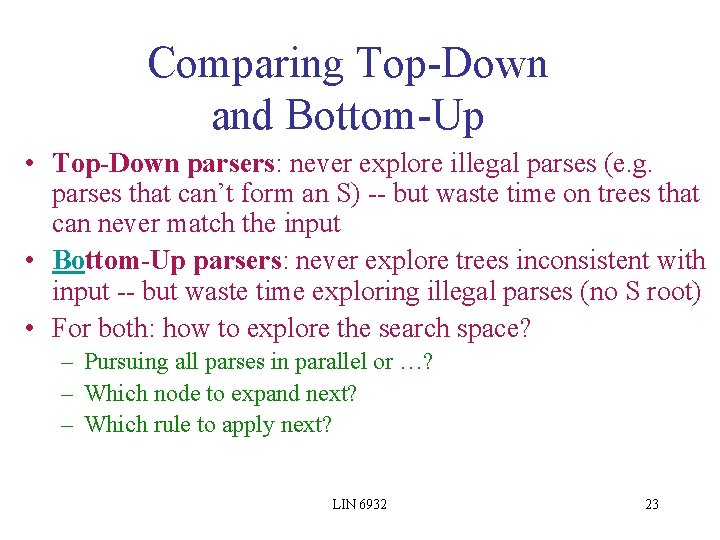

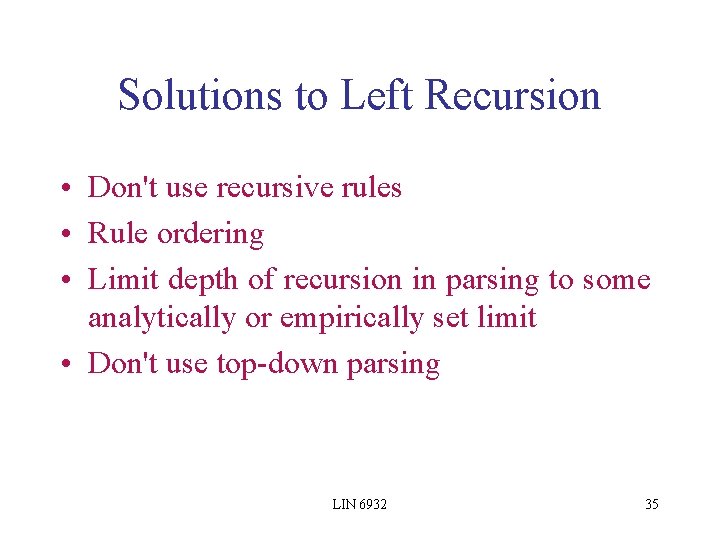

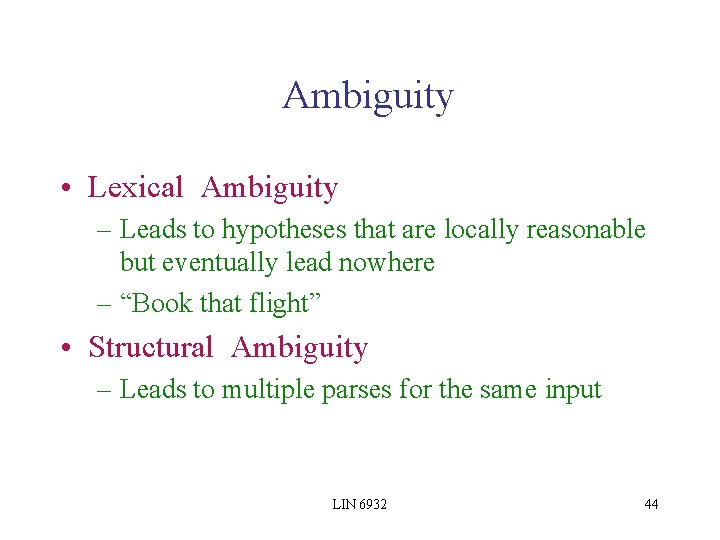

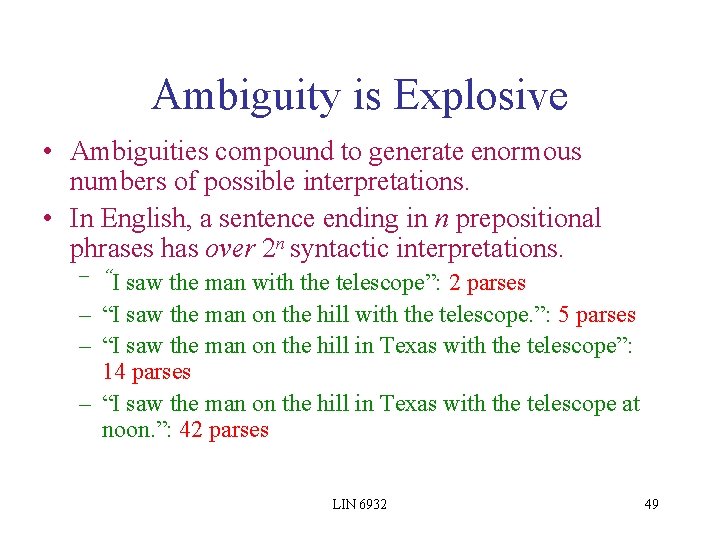

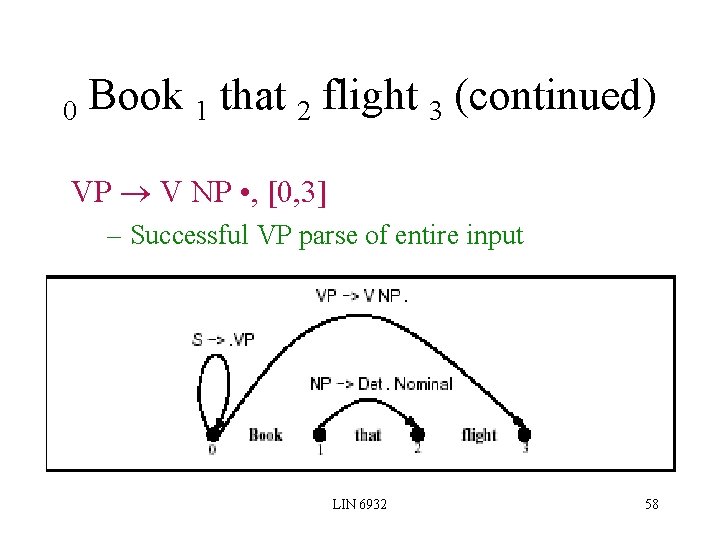

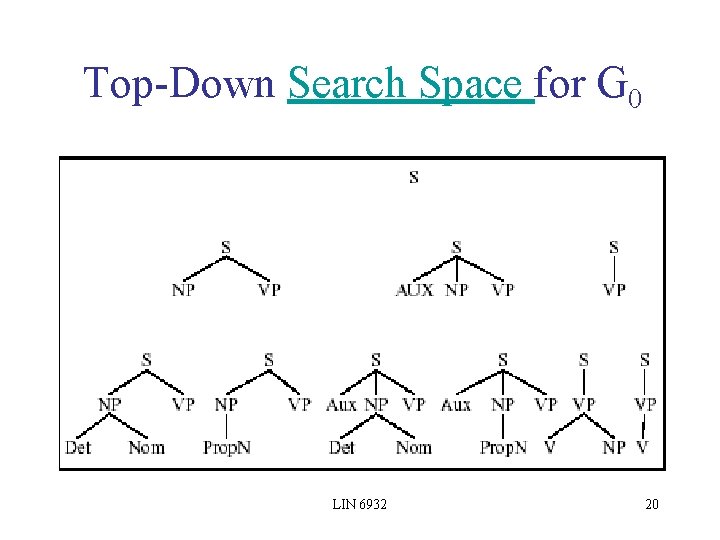

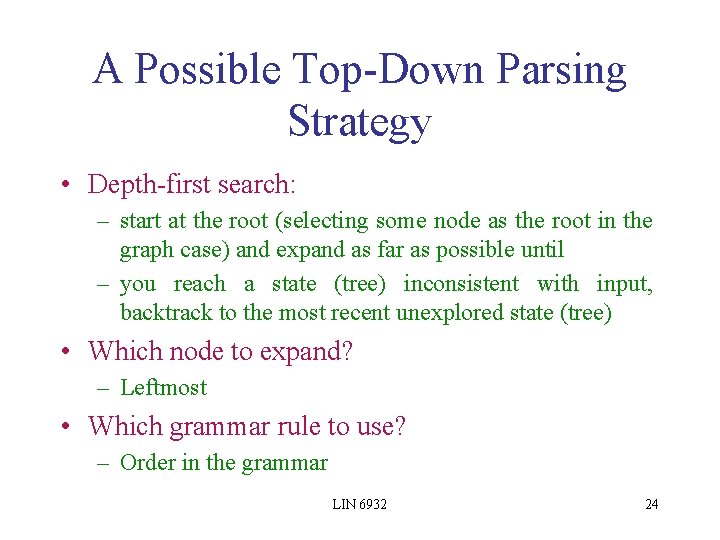

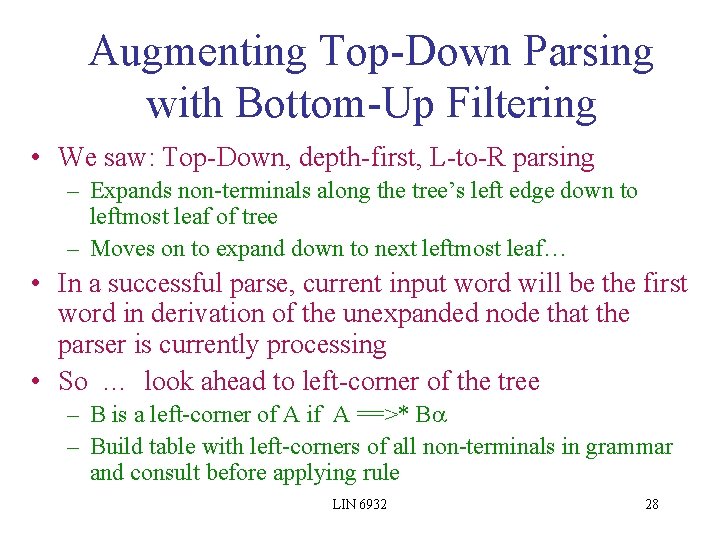

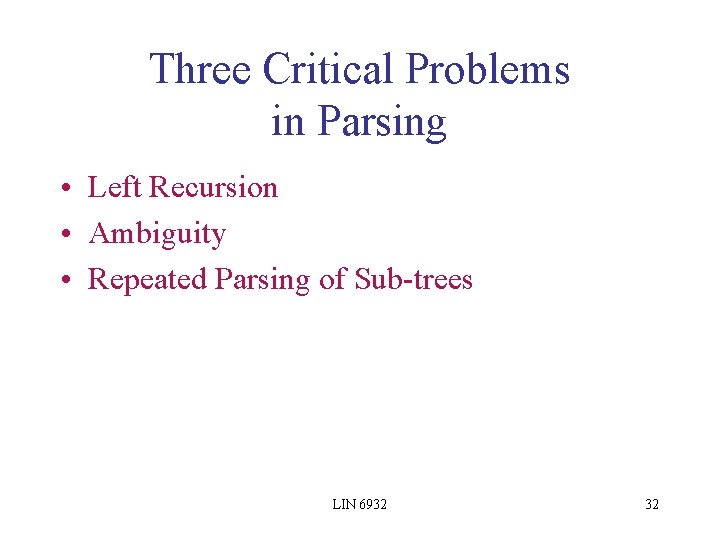

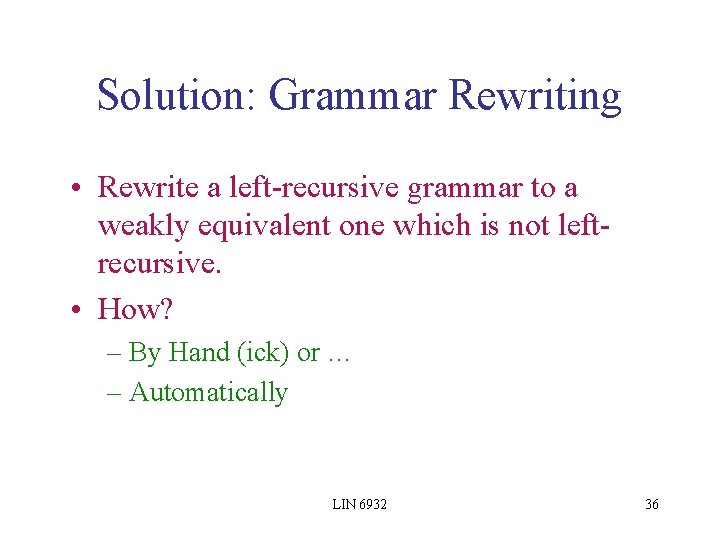

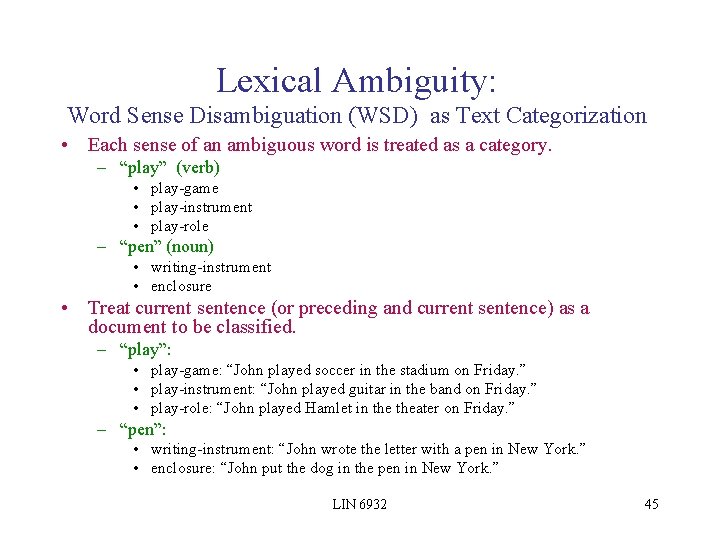

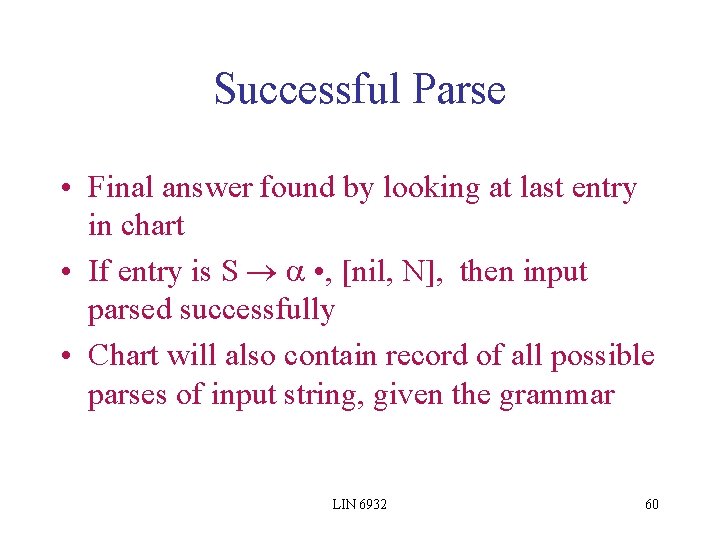

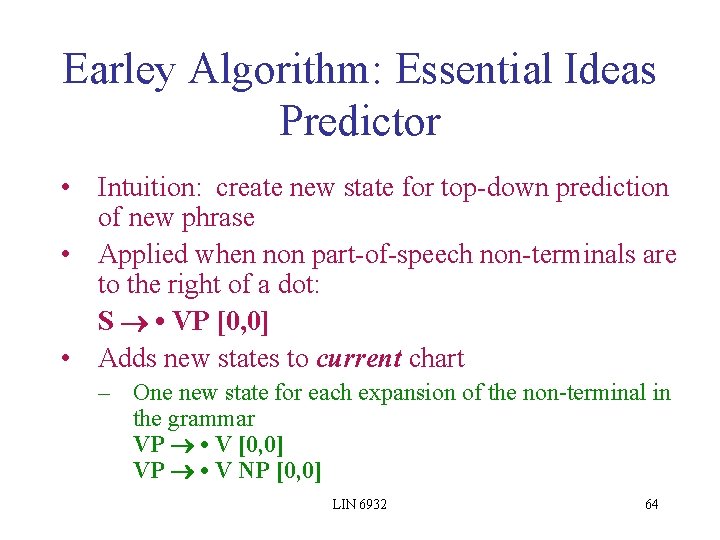

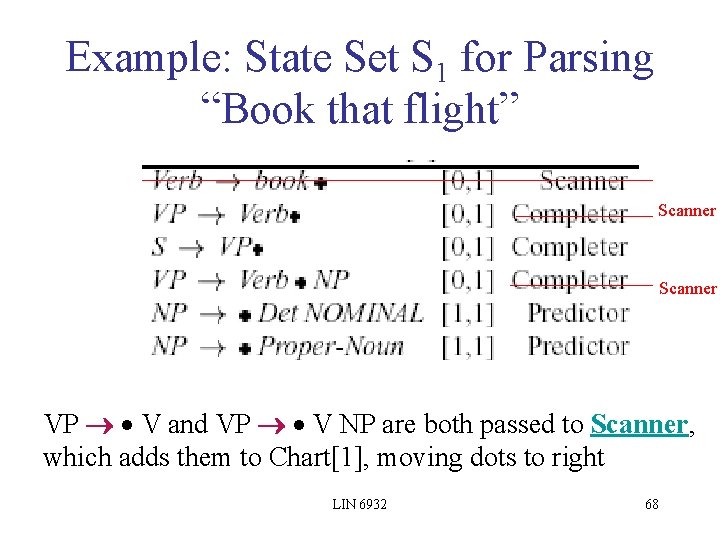

Error Handling • Valid sentences will leave the state S •, [nil, N] • What happens when we look at the contents of the last table column and don't find an S rule? – Is it a total loss? No. – The chart contains every constituent and combination of constituents possible for the input given the grammar • Also useful for partial parsing or shallow parsing, as used in information extraction

How do we retrieve the parses at the end? • The representation of each state must be augmented with an additional field to store information about the completed states that generated its constituents – i.e., what state did we advance here? – Read the pointers back from the final state
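One simple way to add this field is to let each state carry a tuple of the completed states that advanced its dot, then read those pointers back from the final γ → S • state. The sketch below rests on assumptions worth stating: the toy grammar is hypothetical, there are no ε-rules or unit cycles, and because the back pointers are part of each state's identity, differently derived states are kept apart, so every parse is enumerated (which can be exponential for highly ambiguous input; practical parsers share structure instead). The names `earley_parse` and `to_tree` are our own.

```python
# Hypothetical toy grammar in the spirit of G0 (no epsilon rules, no unit cycles).
GRAMMAR = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "NP": [("Det", "Nom"), ("PropN",)],
    "Nom": [("N",), ("N", "Nom")],
    "VP": [("V",), ("V", "NP")],
}
POS = {"Det", "PropN", "Aux", "N", "V"}
LEXICON = {"book": {"N", "V"}, "that": {"Det"}, "flight": {"N"}}

def earley_parse(words: list[str]) -> list[str]:
    """States are (lhs, rhs, dot, start, kids); kids are back pointers to the
    completed states that advanced the dot."""
    chart = [[] for _ in range(len(words) + 1)]

    def enqueue(state, i):
        if state not in chart[i]:
            chart[i].append(state)

    enqueue(("γ", ("S",), 0, 0, ()), 0)
    for i in range(len(words) + 1):
        k = 0
        while k < len(chart[i]):
            state = chart[i][k]
            lhs, rhs, dot, start, _ = state
            k += 1
            if dot < len(rhs) and rhs[dot] not in POS:      # Predictor
                for expansion in GRAMMAR[rhs[dot]]:
                    enqueue((rhs[dot], expansion, 0, i, ()), i)
            elif dot < len(rhs):                             # Scanner
                if i < len(words) and rhs[dot] in LEXICON.get(words[i], set()):
                    enqueue((rhs[dot], (words[i],), 1, i, ()), i + 1)
            else:                                            # Completer
                for w_lhs, w_rhs, w_dot, w_start, w_kids in chart[start]:
                    if w_dot < len(w_rhs) and w_rhs[w_dot] == lhs:
                        # Back pointer: remember which completed state advanced the dot.
                        enqueue((w_lhs, w_rhs, w_dot + 1, w_start, w_kids + (state,)), i)
    # Read the pointers back from every final γ → S • state spanning the input.
    finals = [s for s in chart[-1] if s[0] == "γ" and s[2] == 1]
    return [to_tree(s[4][0]) for s in finals]

def to_tree(state) -> str:
    """Follow back pointers from a completed state to a bracketed tree."""
    lhs, rhs, _, _, kids = state
    if not kids:  # a scanned state POS → word • has no children
        return f"({lhs} {rhs[0]})"
    return f"({lhs} " + " ".join(to_tree(kid) for kid in kids) + ")"

print(earley_parse("book that flight".split()))
# ['(S (VP (V book) (NP (Det that) (Nom (N flight)))))']
```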

Earley’s Keys to Efficiency • Left recursion, ambiguity and repeated re-parsing of subtrees – Solution: dynamic programming • Combine top-down predictions with bottom-up look-ahead to avoid unnecessary expansions • Earley is still one of the most efficient general context-free parsers • All efficient parsers avoid re-computation in a similar way.

Next Time
* Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze. 2007. Introduction to Information Retrieval, Chapter 1. Cambridge University Press.
* D. Moldovan, S. Harabagiu, M. Pasca, R. Mihalcea, R. Goodrum, R. Girju, and V. Rus. 1999. LASSO: A tool for surfing the answer net. In Proceedings of the Eighth Text Retrieval Conference (TREC-8).
* E. Brill, S. Dumais and M. Banko. 2002. An analysis of the AskMSR question-answering system. In Proceedings of EMNLP 2002.
