
Light-weight Contexts: An OS Abstraction for Safety and Performance Landon Cox February 19, 2018

What is a process? • Informal • A program in execution • Running code + things it can read/write • Process ≠ program • Formal • ≥ 1 threads in their own address space • (soon threads will share an address space)

Parts of a process • Thread • Sequence of executing instructions • Active: does things • Address space • Data the process uses as it runs • Passive: acted upon by threads

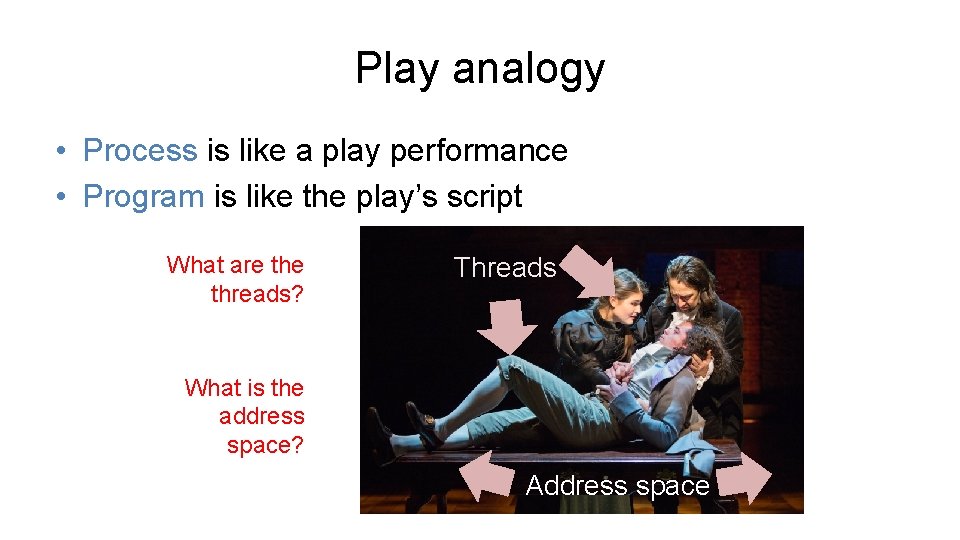

Play analogy • Process is like a play performance • Program is like the play’s script • What are threads? • What is the address space?

Threads that aren’t running • What is a non-running thread? • thread = “sequence of executing instructions” • non-running thread = “paused execution” • Must save thread’s private state • To re-run, re-load private state • Want thread to start where it left off

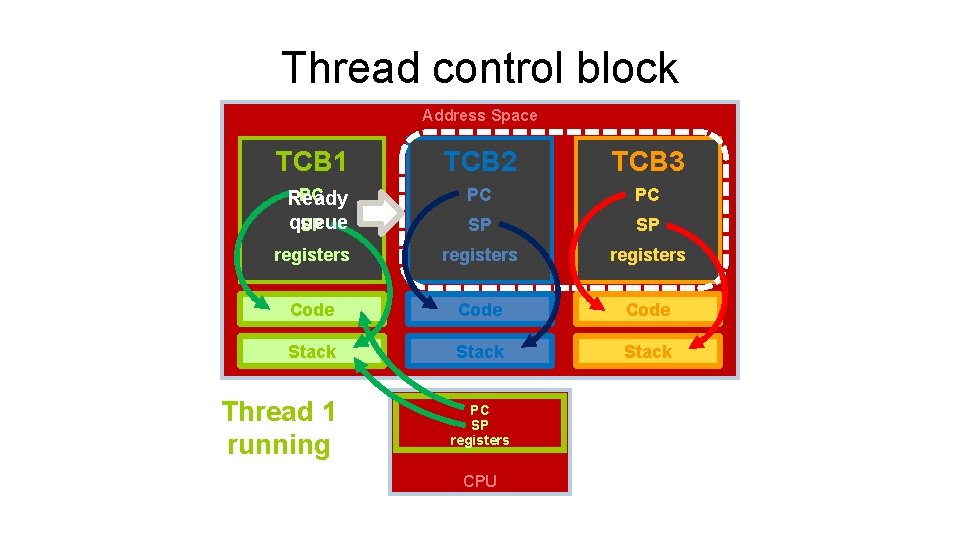

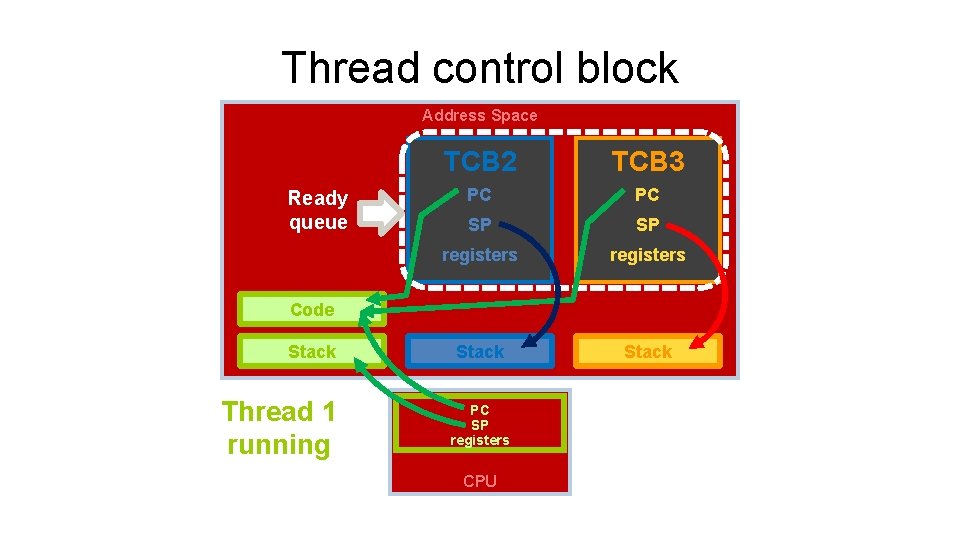

Thread control block (TCB) • What needs to access threads’ private data? • The CPU • This info is stored in the PC, SP, other registers • The OS needs pointers to non-running threads’ data • Thread control block (TCB) • Container for non-running threads’ private data • Values of PC, code, SP, stack, registers
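A minimal sketch of the idea, assuming a simplified CPU with a PC, an SP, and eight general-purpose registers. The names `struct cpu`, `struct tcb`, `tcb_save`, and `tcb_load` are hypothetical, and a real kernel would save and restore actual hardware registers in assembly rather than copy a struct.

```c
#include <stdint.h>
#include <string.h>

#define NUM_REGS 8  /* assumed register count, for illustration only */

/* Simulated CPU state: what a running thread keeps in hardware. */
struct cpu {
    uintptr_t pc;
    uintptr_t sp;
    uintptr_t regs[NUM_REGS];
};

/* Thread control block: holds the same state while the thread is not running. */
struct tcb {
    uintptr_t pc;
    uintptr_t sp;
    uintptr_t regs[NUM_REGS];
};

/* Pause a thread: copy its private state out of the CPU into its TCB. */
void tcb_save(struct tcb *t, const struct cpu *c)
{
    t->pc = c->pc;
    t->sp = c->sp;
    memcpy(t->regs, c->regs, sizeof t->regs);
}

/* Resume a thread: copy its private state from the TCB back into the CPU. */
void tcb_load(const struct tcb *t, struct cpu *c)
{
    c->pc = t->pc;
    c->sp = t->sp;
    memcpy(c->regs, t->regs, sizeof c->regs);
}
```

Saving and then loading the same TCB leaves the simulated CPU exactly where the thread left off, which is the property the slide asks for.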

Thread control block • Diagram: an address space holding code and stacks; TCB 1, TCB 2, and TCB 3 each store a PC, SP, and registers; a ready queue; thread 1 is running, so its PC, SP, and registers are in the CPU

Thread control block • Diagram: TCB 2 and TCB 3 wait on the ready queue; thread 1 is running, with its PC, SP, and registers loaded in the CPU

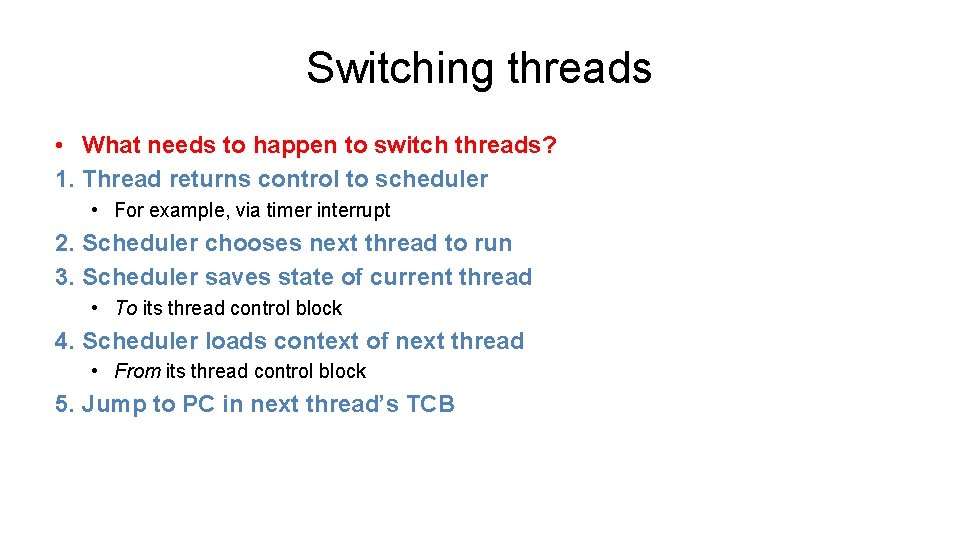

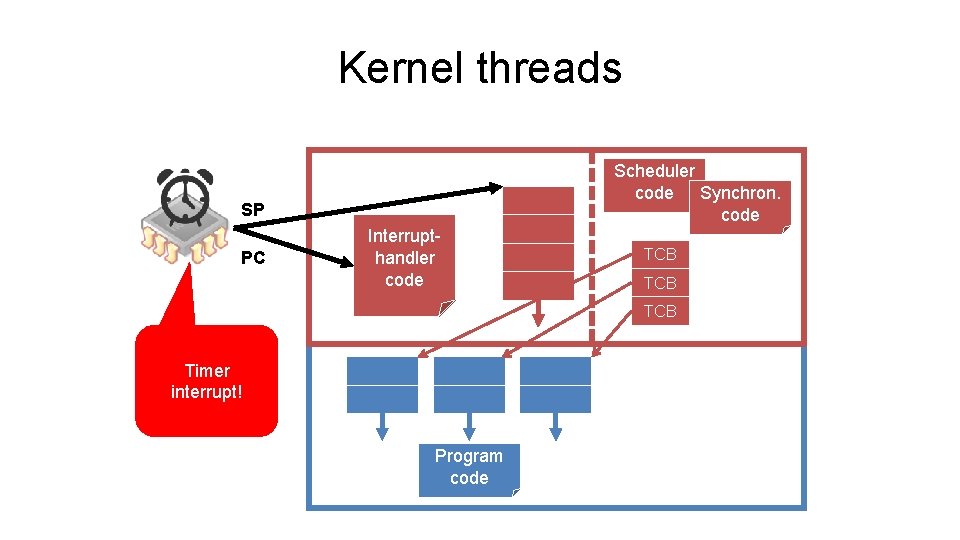

Switching threads • What needs to happen to switch threads?
1. Thread returns control to scheduler (for example, via timer interrupt)
2. Scheduler chooses next thread to run
3. Scheduler saves state of current thread to its thread control block
4. Scheduler loads context of next thread from its thread control block
5. Jump to PC in next thread’s TCB
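Steps 2 through 5 can be sketched as a round-robin scheduler over a simulated CPU. All names here (`struct context`, `struct tcb`, `struct scheduler`, `sched_add`, `sched_switch`) are hypothetical, the CPU is just a struct, and "jumping" to the next PC is modeled by loading it into that struct; a real scheduler would do this in assembly.

```c
#include <stddef.h>
#include <stdint.h>

/* Simulated register state (assumption: PC and SP are enough for the sketch). */
struct context {
    uintptr_t pc;
    uintptr_t sp;
};

struct tcb {
    int id;
    struct context ctx;   /* saved state while the thread is not running */
    struct tcb *next;     /* link in the ready queue */
};

struct scheduler {
    struct context cpu;   /* state of whatever is running now */
    struct tcb *running;
    struct tcb *head, *tail;  /* FIFO ready queue */
};

static void enqueue(struct scheduler *s, struct tcb *t)
{
    t->next = NULL;
    if (s->tail != NULL)
        s->tail->next = t;
    else
        s->head = t;
    s->tail = t;
}

static struct tcb *dequeue(struct scheduler *s)
{
    struct tcb *t = s->head;
    if (t != NULL) {
        s->head = t->next;
        if (s->head == NULL)
            s->tail = NULL;
        t->next = NULL;
    }
    return t;
}

/* Make a thread eligible to run. */
void sched_add(struct scheduler *s, struct tcb *t)
{
    enqueue(s, t);
}

/* Called once control has returned to the scheduler (step 1). */
struct tcb *sched_switch(struct scheduler *s)
{
    struct tcb *next = dequeue(s);      /* step 2: choose next thread */
    if (next == NULL)
        return s->running;              /* nothing else is ready */
    if (s->running != NULL) {
        s->running->ctx = s->cpu;       /* step 3: save current state to its TCB */
        enqueue(s, s->running);
    }
    s->cpu = next->ctx;                 /* steps 4 and 5: load context, "jump" to its PC */
    s->running = next;
    return next;
}
```

With two threads on the queue, repeated calls to `sched_switch` alternate between them, and each thread resumes at the PC it had when it was switched out.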

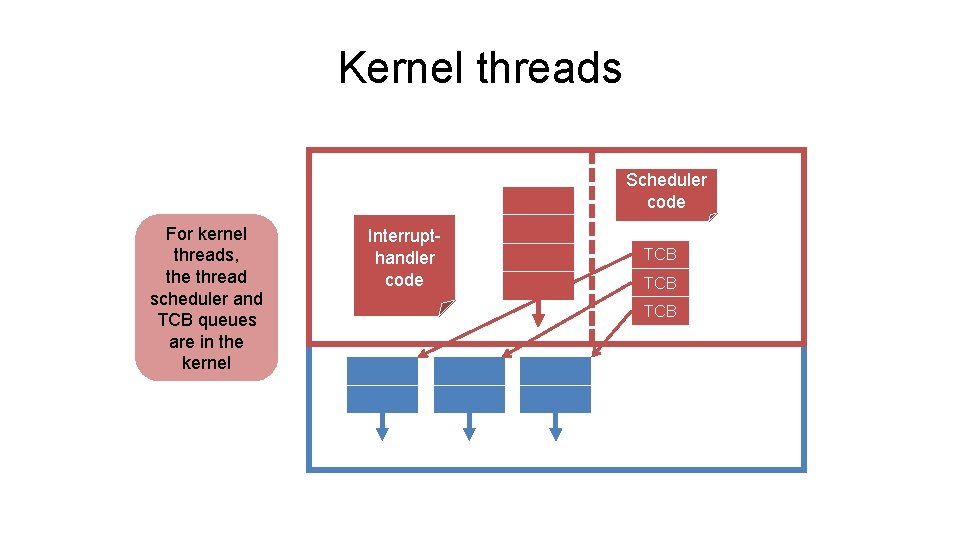

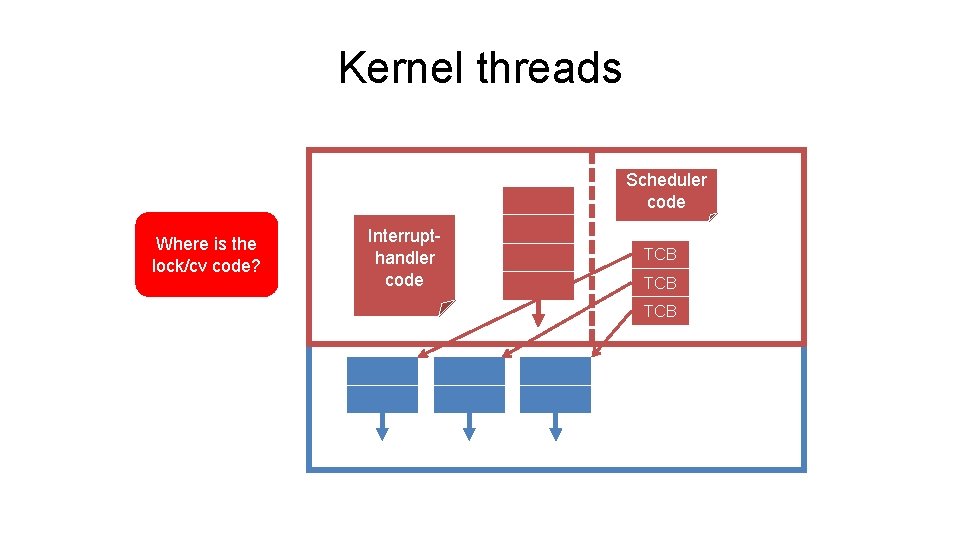

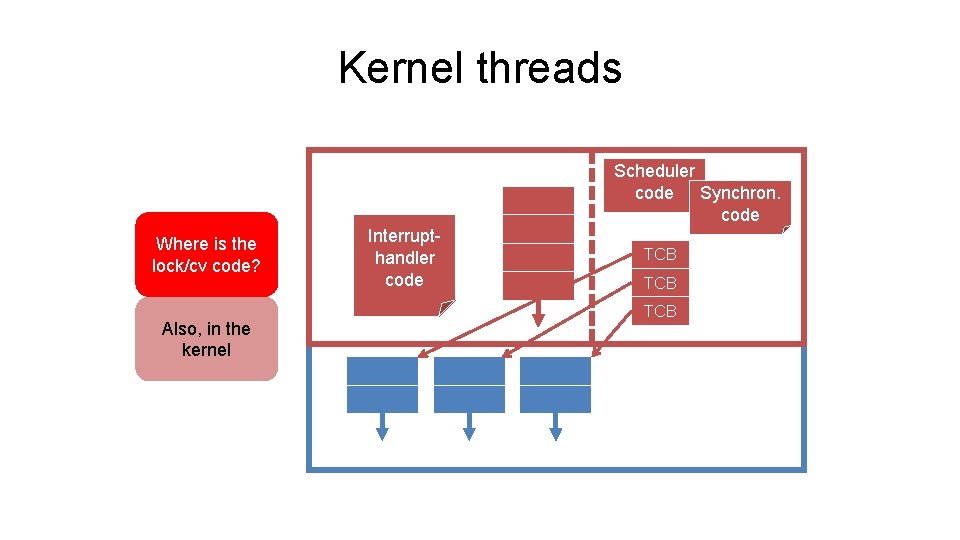

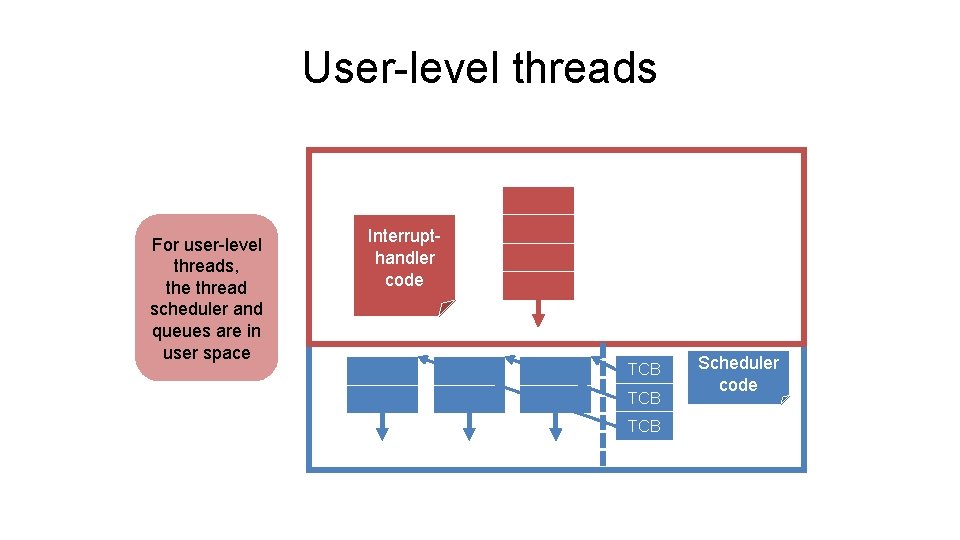

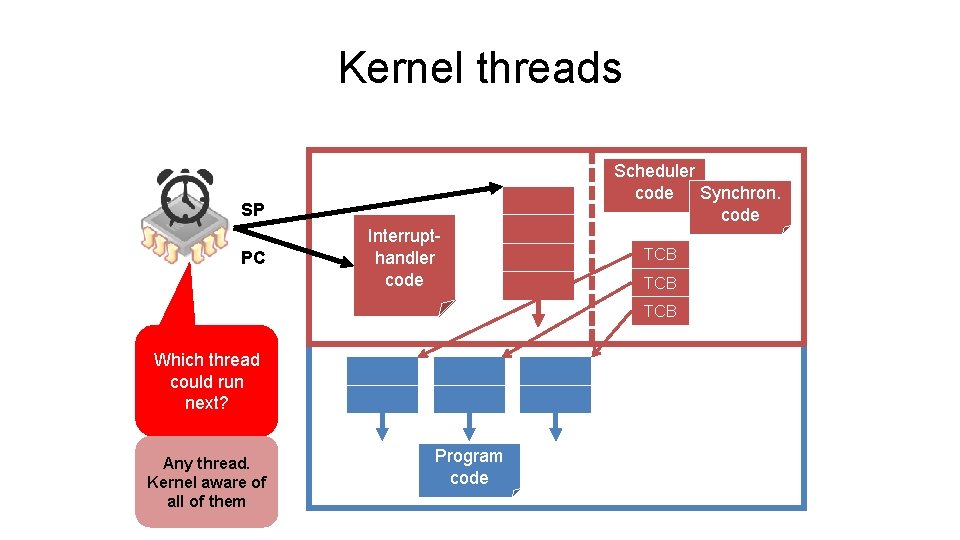

Kernel vs user threads • Kernel threads • Scheduler and queues reside in the kernel • User threads • Scheduler and queues reside in user space

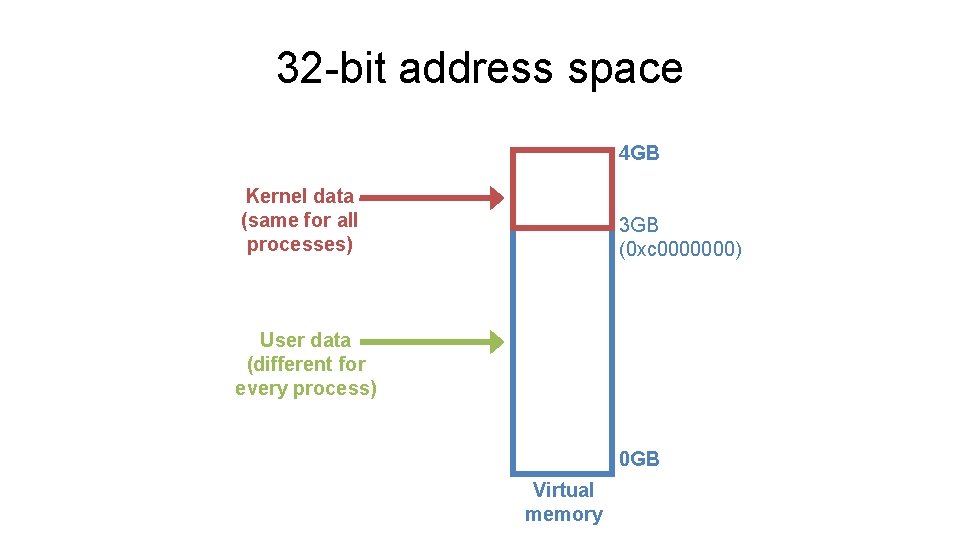

32-bit virtual address space • 3 GB (0xc0000000) to 4 GB: kernel data (same for all processes) • 0 GB to 3 GB: user data (different for every process)

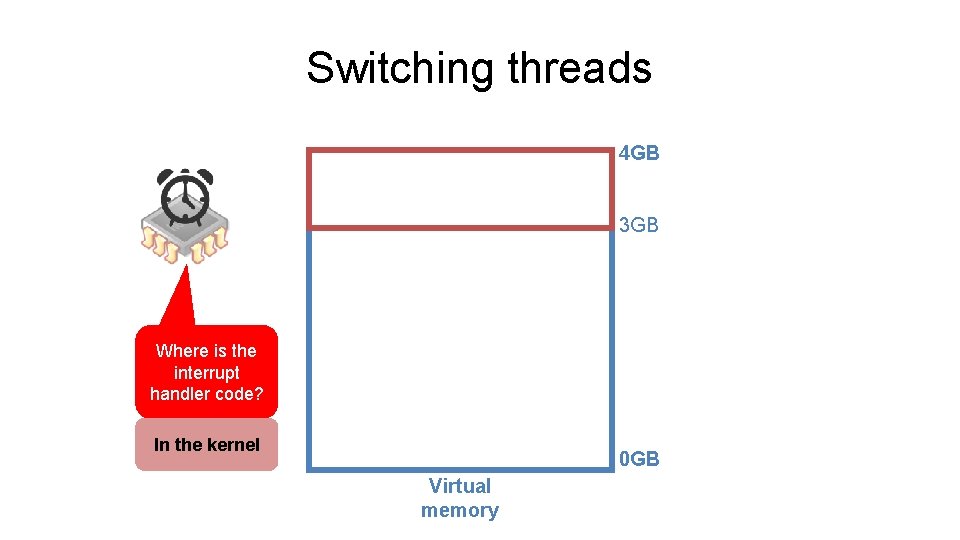

Switching threads 4 GB 3 GB Where is the interrupt handler code? In the kernel 0 GB Virtual memory

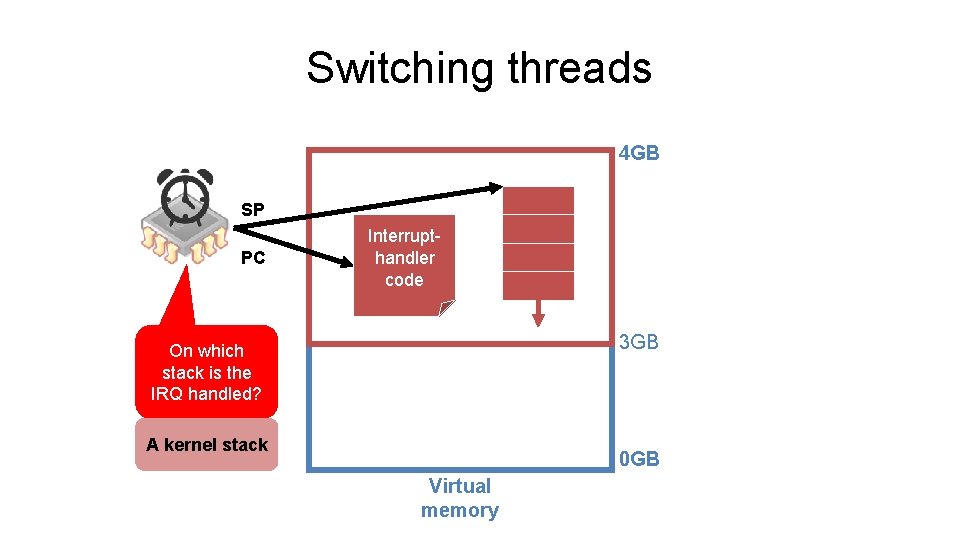

Switching threads 4 GB SP PC Interrupthandler code 3 GB On which stack is the IRQ handled? A kernel stack 0 GB Virtual memory

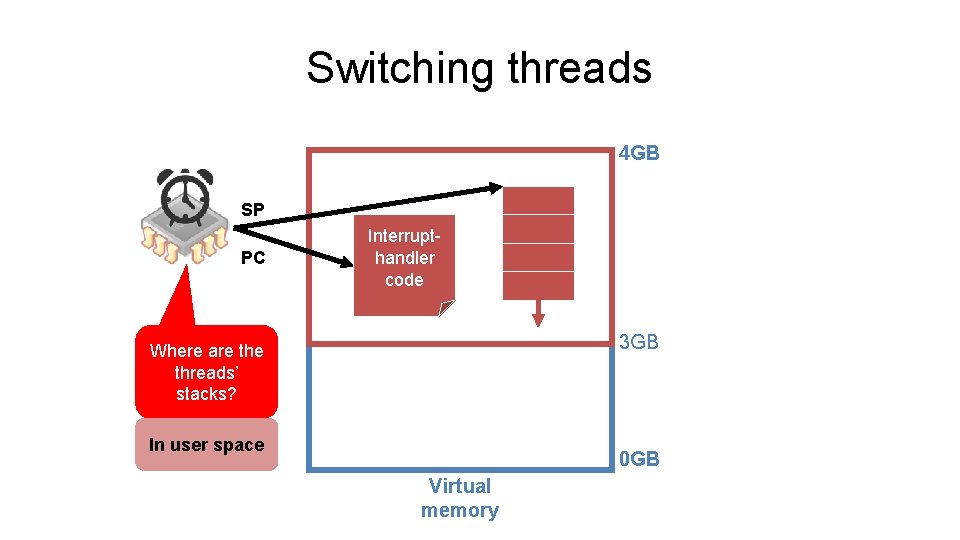

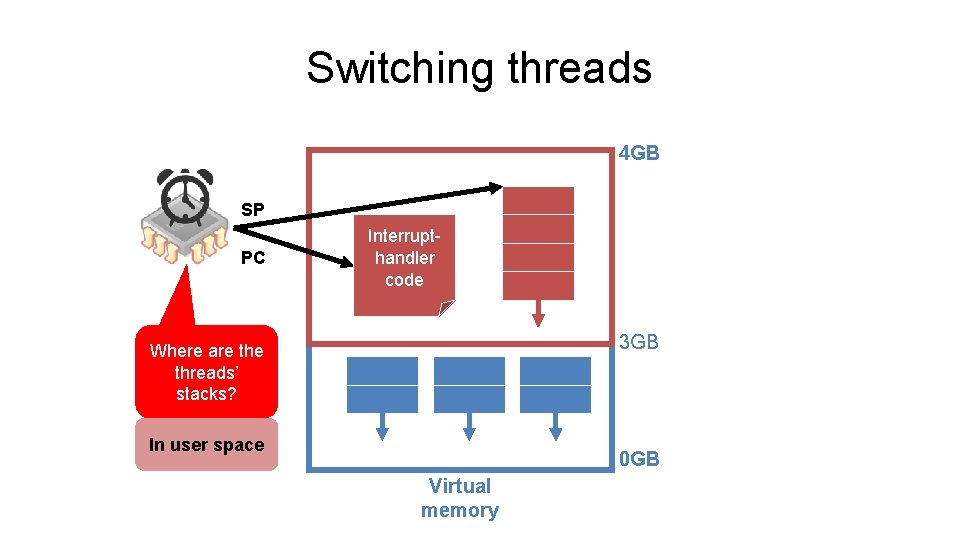

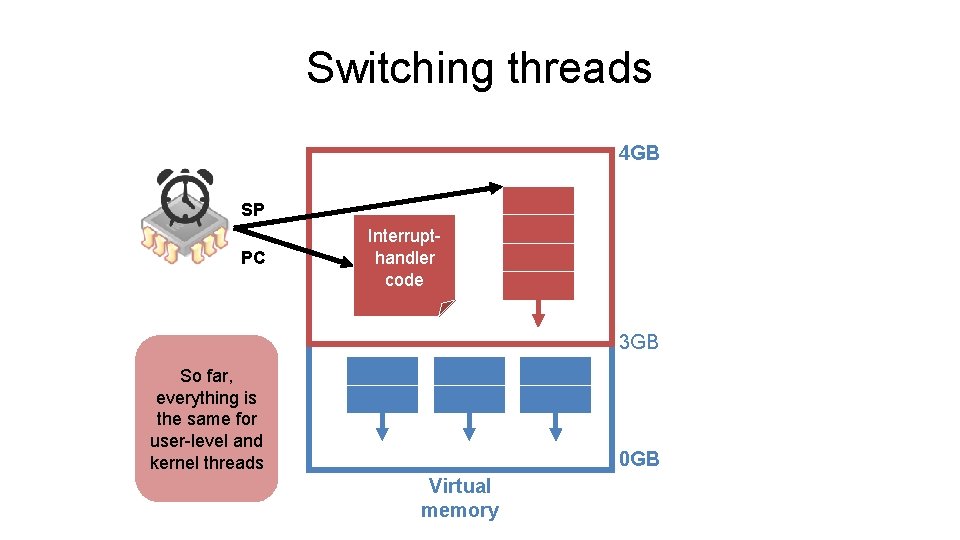

Switching threads 4 GB SP PC Interrupthandler code 3 GB Where are threads’ stacks? In user space 0 GB Virtual memory

Switching threads 4 GB SP PC Interrupthandler code 3 GB Where are threads’ stacks? In user space 0 GB Virtual memory

Switching threads 4 GB SP PC Interrupthandler code 3 GB So far, everything is the same for user-level and kernel threads 0 GB Virtual memory

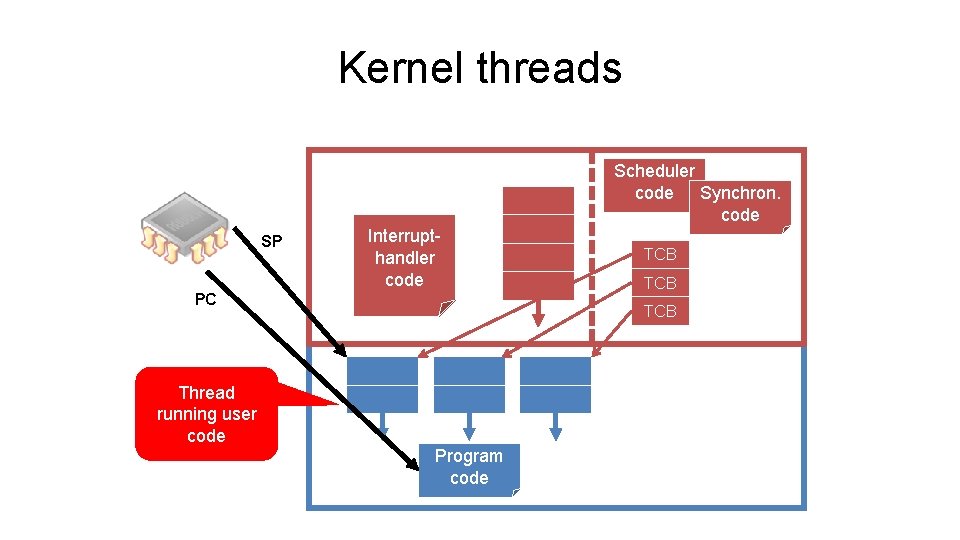

Kernel threads Scheduler code For kernel threads, the thread scheduler and TCB queues are in the kernel Handler Interruptcode handler code TCB TCB

Kernel threads Scheduler code Where is the lock/cv code? Handler Interruptcode handler code TCB TCB

Kernel threads Where is the lock/cv code? Also, in the kernel Handler Interruptcode handler code Scheduler code Synchron. code TCB TCB

User-level threads For user-level threads, the thread scheduler and queues are in user space Handler Interruptcode handler code TCB TCB Scheduler code

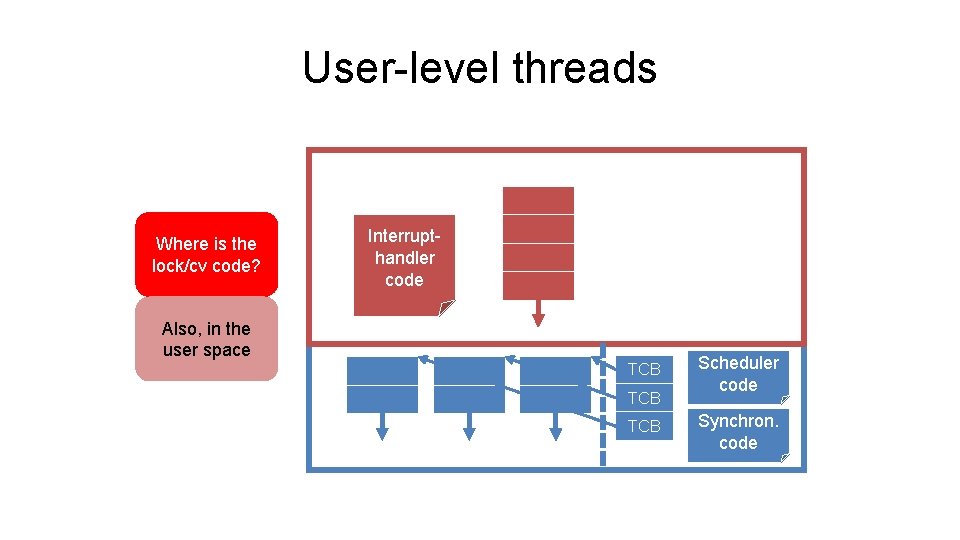

User-level threads Where is the lock/cv code? Handler Interruptcode handler code Also, in the user space TCB TCB Scheduler code Synchron. code

Switching to a new user-level thread

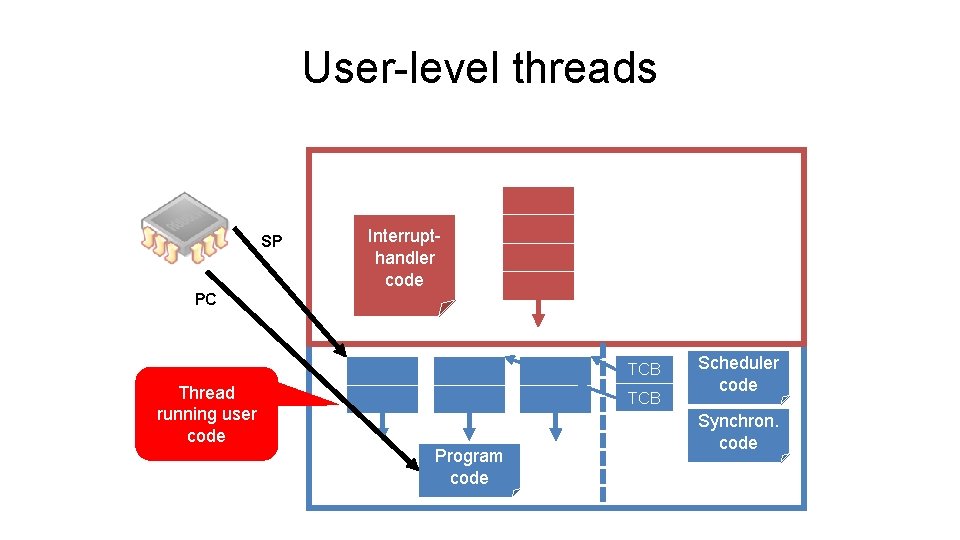

User-level threads SP Handler Interruptcode handler code PC TCB Thread running user code TCB Program code Scheduler code Synchron. code

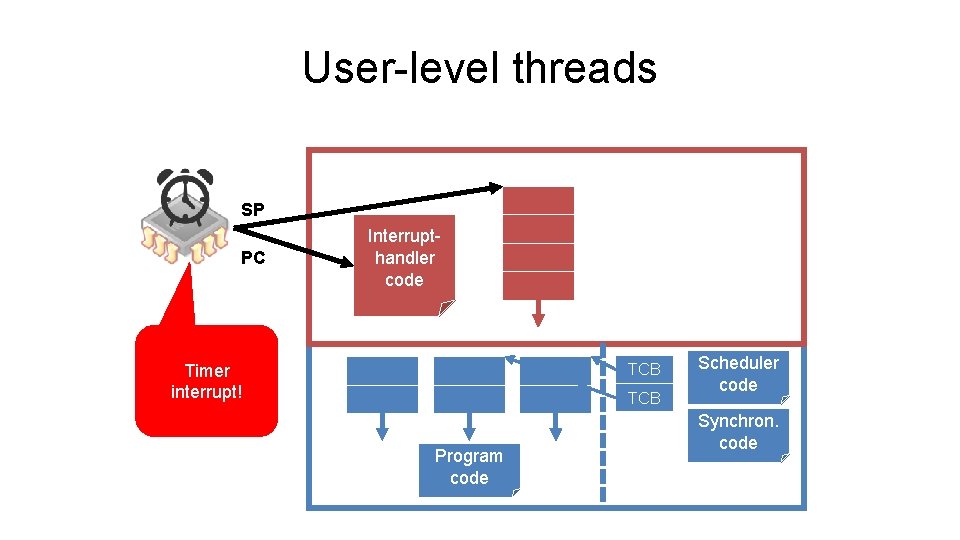

User-level threads SP PC Handler Interruptcode handler code Timer interrupt! TCB Program code Scheduler code Synchron. code

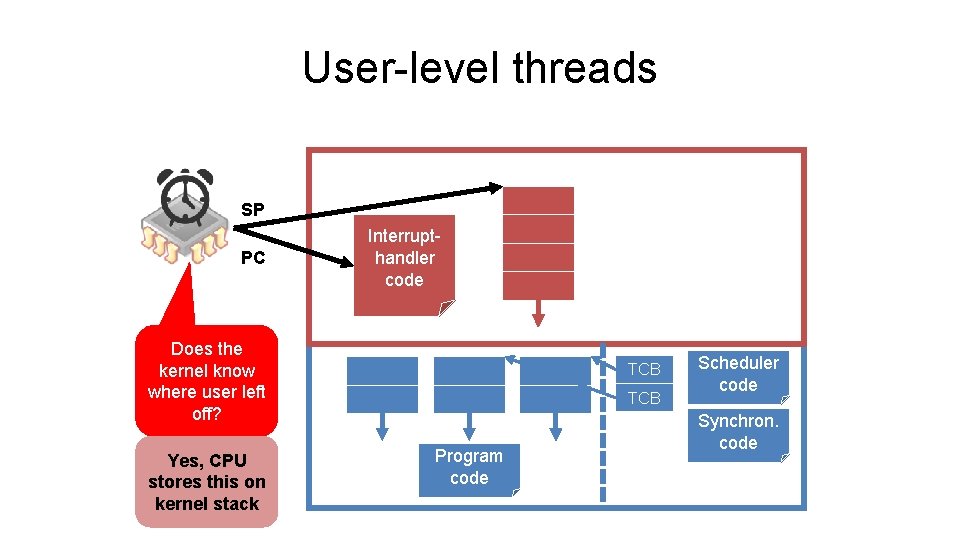

User-level threads SP PC Handler Interruptcode handler code Does the kernel know where user left off? Yes, CPU stores this on kernel stack TCB Program code Scheduler code Synchron. code

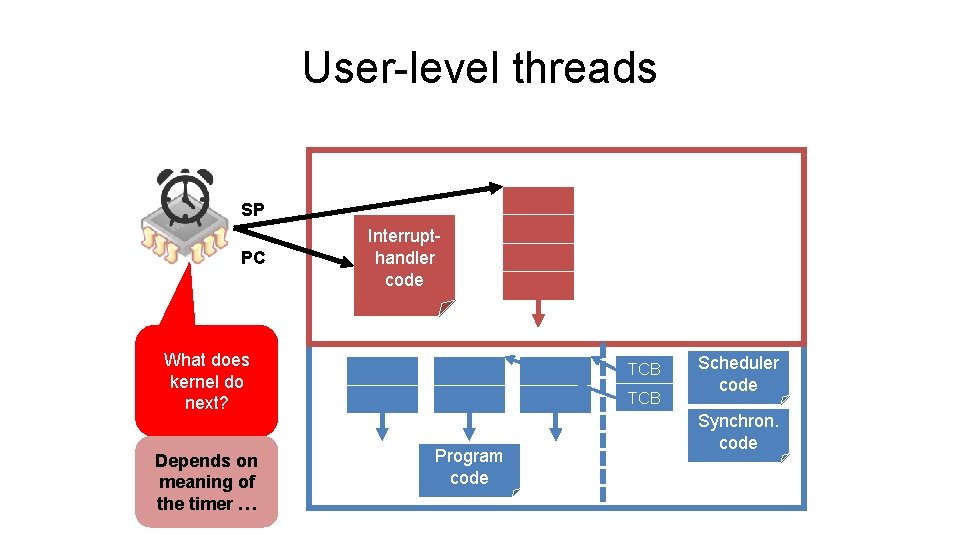

User-level threads SP PC Handler Interruptcode handler code What does kernel do next? Depends on meaning of the timer … TCB Program code Scheduler code Synchron. code

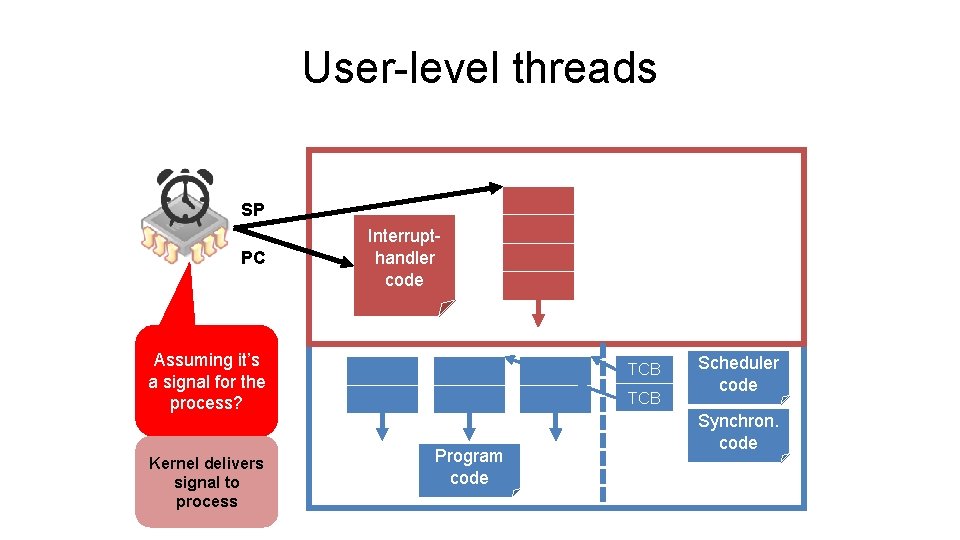

User-level threads SP PC Handler Interruptcode handler code Assuming it’s a signal for the process? Kernel delivers signal to process TCB Program code Scheduler code Synchron. code

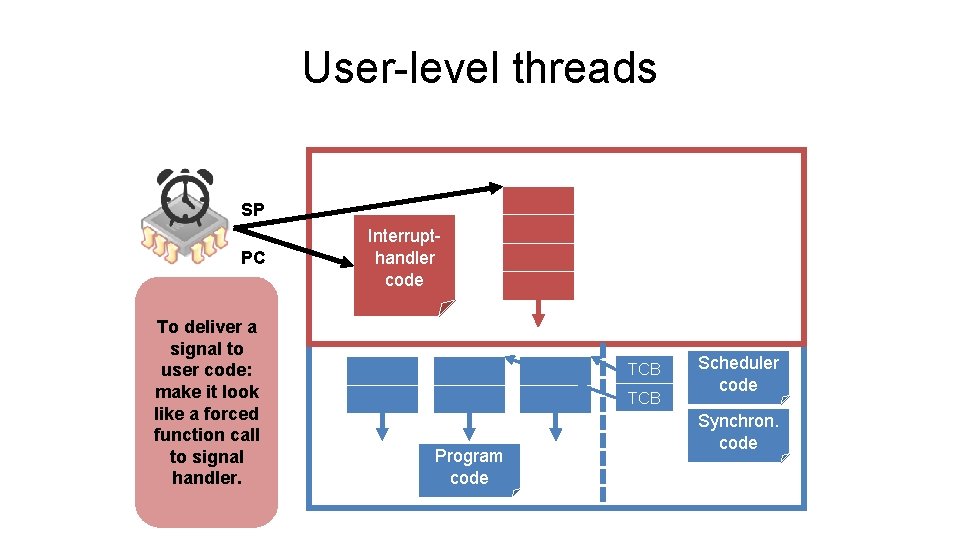

User-level threads SP PC To deliver a signal to user code: make it look like a forced function call to signal handler. Handler Interruptcode handler code TCB Program code Scheduler code Synchron. code

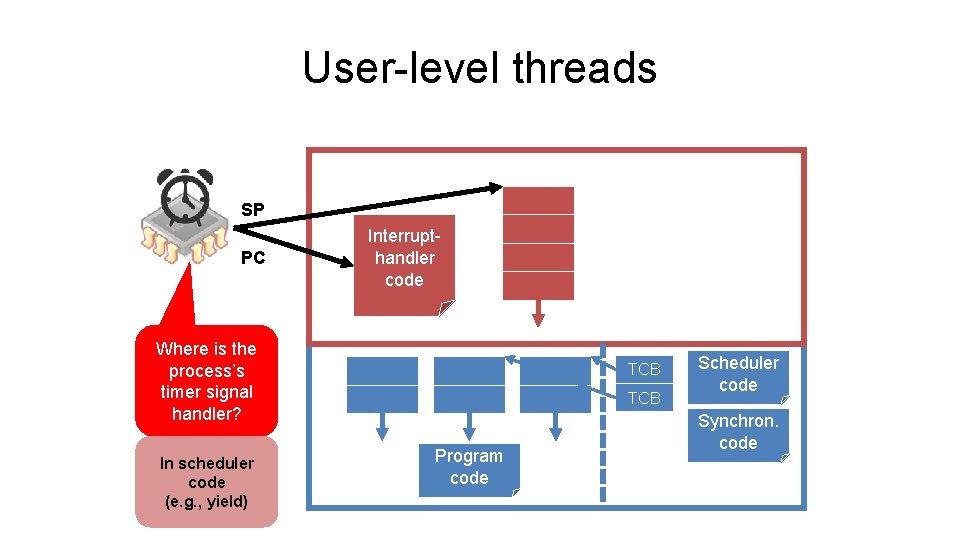

User-level threads SP PC Handler Interruptcode handler code Where is the process’s timer signal handler? In scheduler code (e. g. , yield) TCB Program code Scheduler code Synchron. code

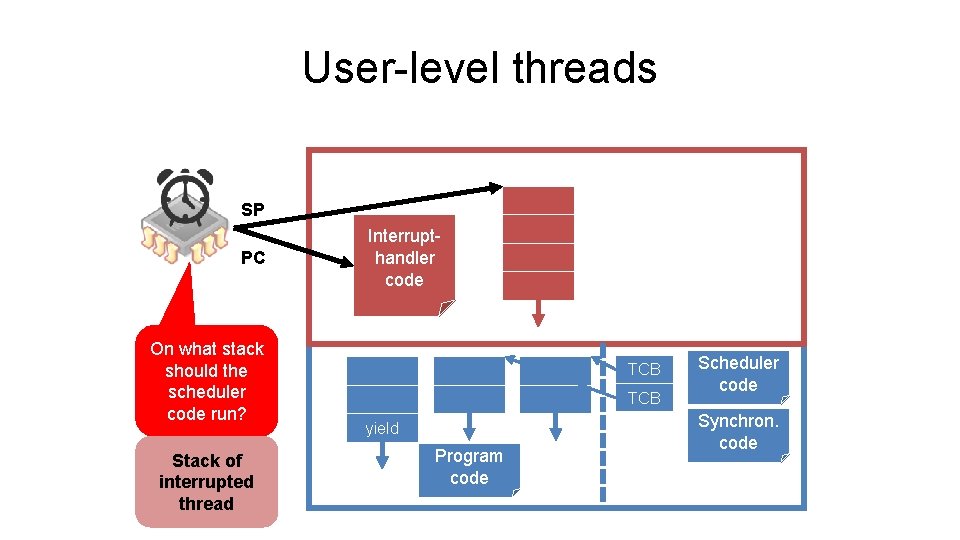

User-level threads SP PC On what stack should the scheduler code run? Stack of interrupted thread Handler Interruptcode handler code TCB yield Program code Scheduler code Synchron. code

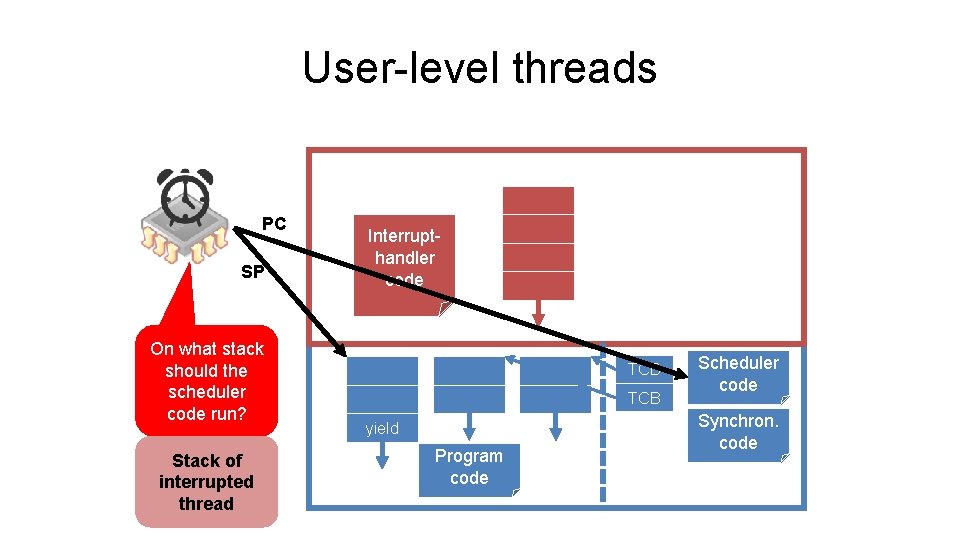

User-level threads PC SP On what stack should the scheduler code run? Stack of interrupted thread Handler Interruptcode handler code TCB yield Program code Scheduler code Synchron. code

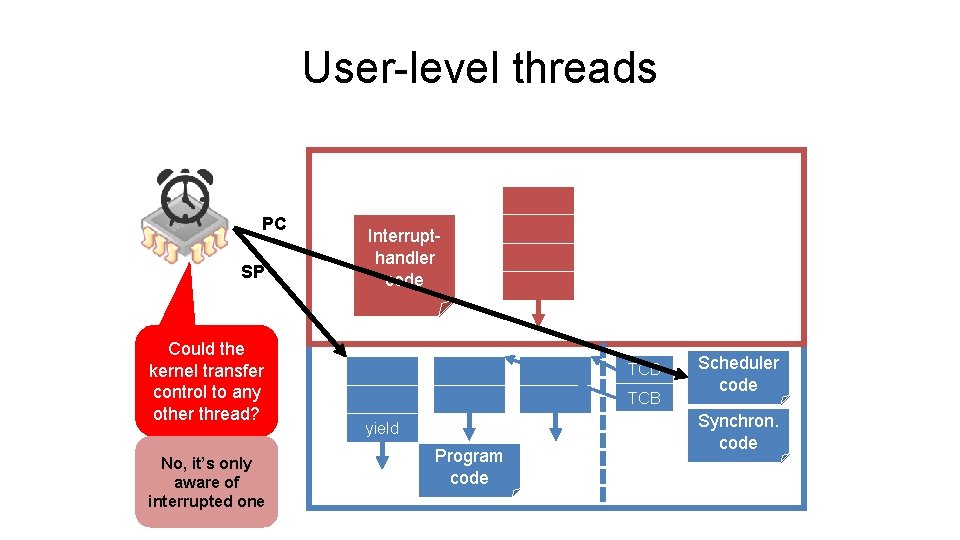

User-level threads PC SP Could the kernel transfer control to any other thread? No, it’s only aware of interrupted one Handler Interruptcode handler code TCB yield Program code Scheduler code Synchron. code

Switching to a new kernel thread

Kernel threads • Thread running user code

Kernel threads • Timer interrupt!

Kernel threads • Which thread could run next? Any thread: the kernel is aware of all of them

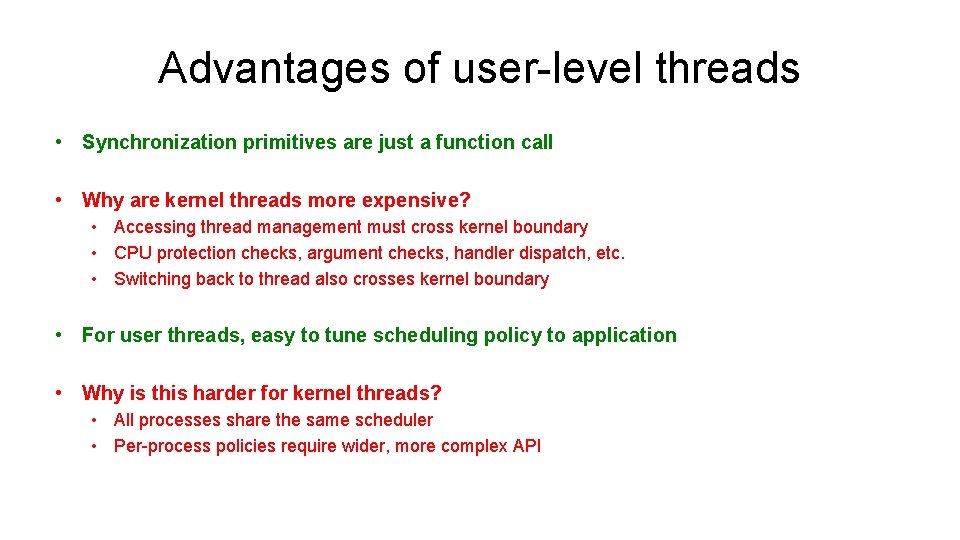

Advantages of user-level threads • Synchronization primitives are just a function call • Why are kernel threads more expensive? • Accessing thread management must cross kernel boundary • CPU protection checks, argument checks, handler dispatch, etc. • Switching back to thread also crosses kernel boundary • For user threads, easy to tune scheduling policy to application • Why is this harder for kernel threads? • All processes share the same scheduler • Per-process policies require wider, more complex API
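The point that a user-level switch is driven by ordinary function calls can be illustrated with the POSIX `ucontext` routines (`getcontext`, `makecontext`, `swapcontext`), which some C libraries still provide. This is a sketch, not a thread library: `worker`, `run_demo`, `record`, and the `trace` array are names invented for illustration, and the 64 KB stack size is an arbitrary assumption.

```c
#include <ucontext.h>

#define STACK_SIZE (64 * 1024)  /* assumed stack size for the worker */

static ucontext_t main_ctx, worker_ctx;
static char worker_stack[STACK_SIZE];
static int trace[8];
static int trace_len;

/* Record the order in which the two contexts run. */
static void record(int v)
{
    if (trace_len < 8)
        trace[trace_len++] = v;
}

static void worker(void)
{
    record(1);
    swapcontext(&worker_ctx, &main_ctx);  /* yield: save our state, resume main */
    record(3);
    /* Returning from worker resumes uc_link, which is main_ctx. */
}

/* Returns the number of trace entries, or -1 if the context cannot be set up. */
int run_demo(void)
{
    trace_len = 0;
    if (getcontext(&worker_ctx) != 0)
        return -1;
    worker_ctx.uc_stack.ss_sp = worker_stack;   /* the worker gets its own stack */
    worker_ctx.uc_stack.ss_size = sizeof worker_stack;
    worker_ctx.uc_link = &main_ctx;             /* where to go when worker returns */
    makecontext(&worker_ctx, worker, 0);

    swapcontext(&main_ctx, &worker_ctx);  /* run worker until it yields */
    record(2);
    swapcontext(&main_ctx, &worker_ctx);  /* resume worker where it left off */
    record(4);
    return trace_len;
}
```

The choice of which context runs next is made entirely by the program, which is what makes it easy to tune the scheduling policy to the application.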

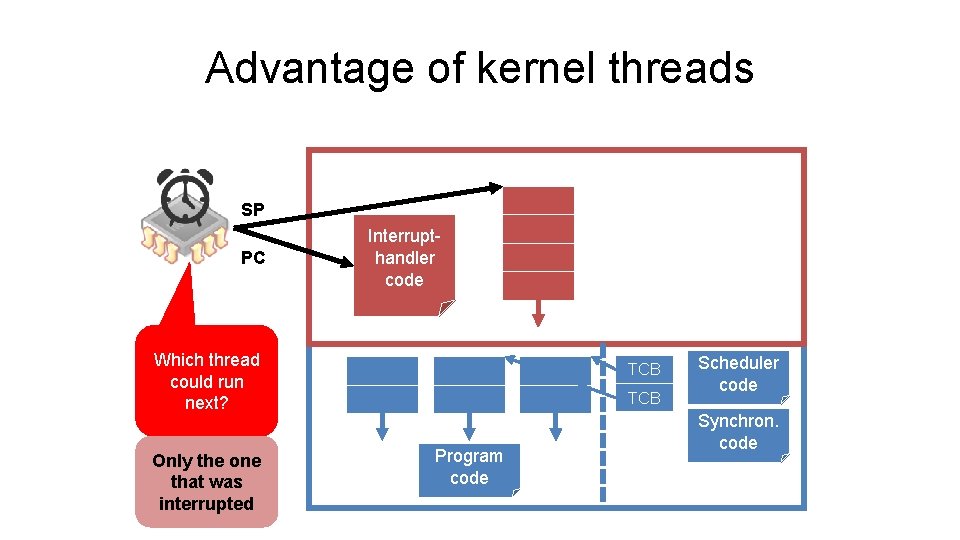

Advantage of kernel threads SP PC Handler Interruptcode handler code Which thread could run next? Only the one that was interrupted TCB Program code Scheduler code Synchron. code

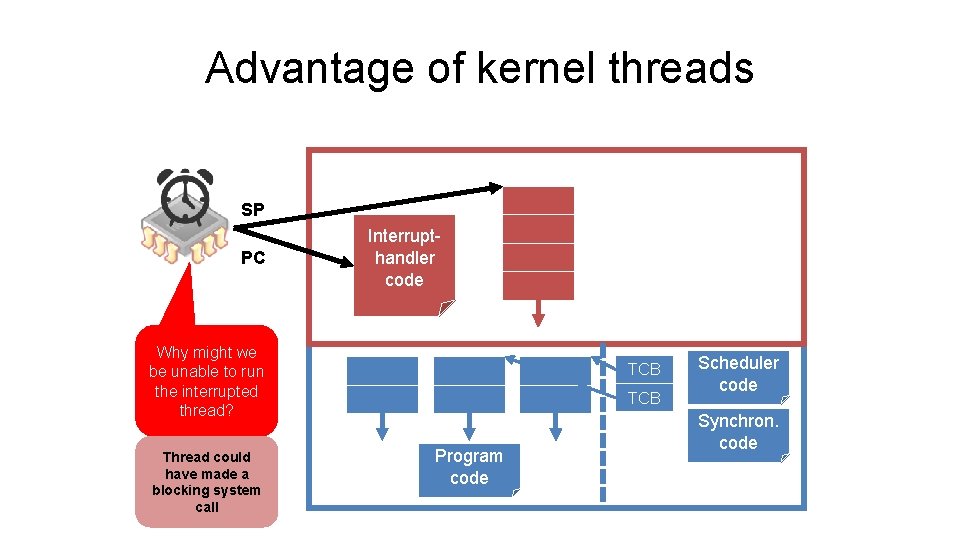

Advantage of kernel threads SP PC Handler Interruptcode handler code Why might we be unable to run the interrupted thread? Thread could have made a blocking system call TCB Program code Scheduler code Synchron. code

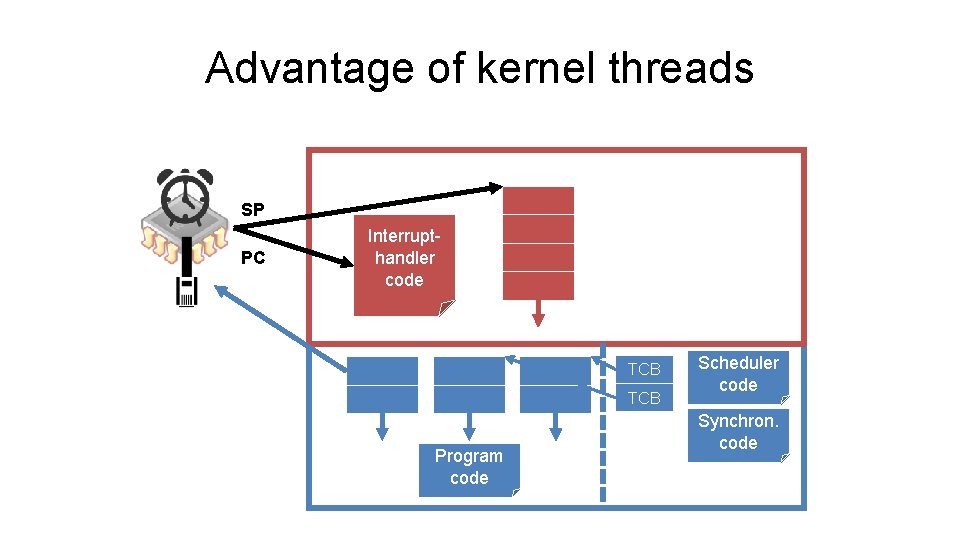

Advantage of kernel threads SP PC Handler Interruptcode handler code TCB Program code Scheduler code Synchron. code

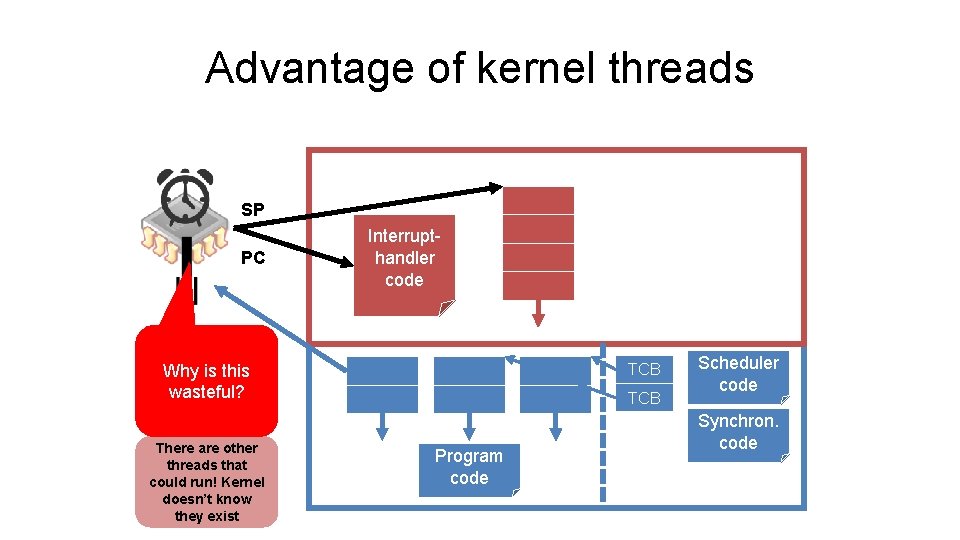

Advantage of kernel threads SP PC Handler Interruptcode handler code Why is this wasteful? There are other threads that could run! Kernel doesn’t know they exist TCB Program code Scheduler code Synchron. code

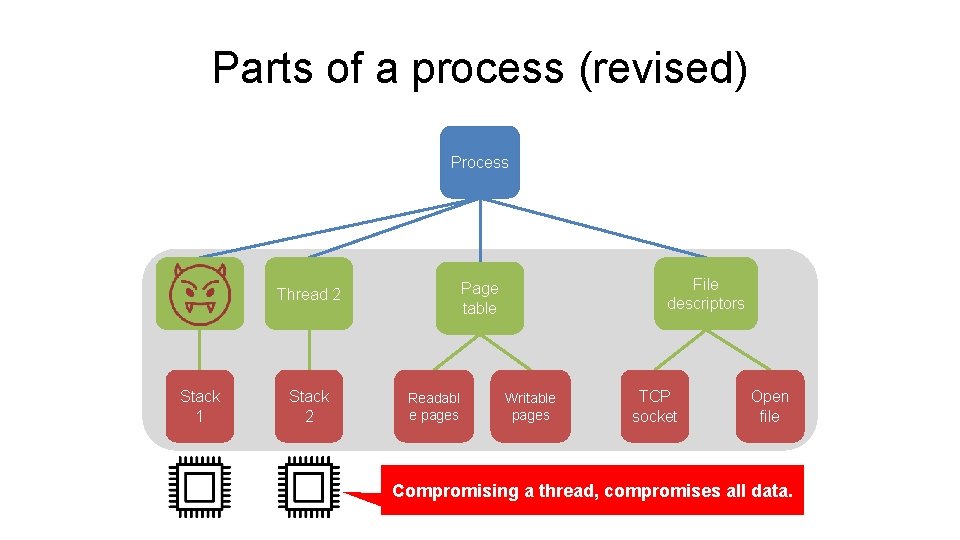

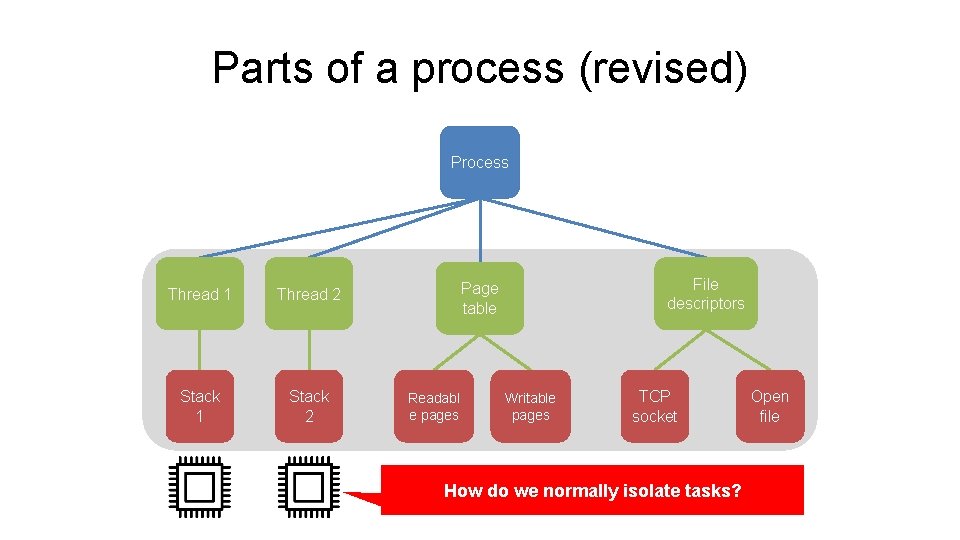

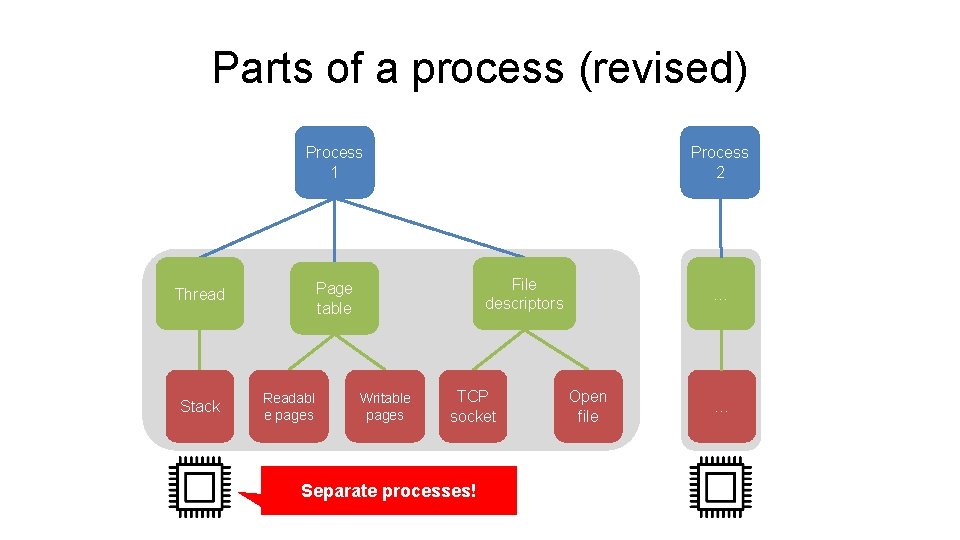

Parts of a process (revised) • Thread • Sequence of executing instructions • Active: does things • Address space • Data the process uses as it runs • Passive: acted upon by threads • File descriptors • Communication channels (e.g., files, sockets, pipes) • Passive: read-from/written-to by threads

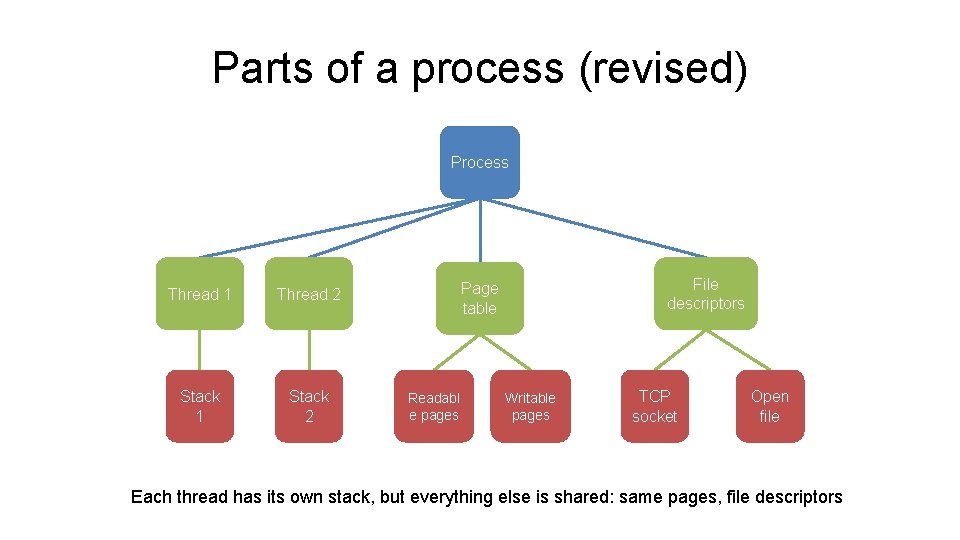

Parts of a process (revised) Process Thread 1 Thread 2 Stack 1 Stack 2 File descriptors Page table Readabl e pages Writable pages TCP socket Open file Each thread has its own stack, but everything else is shared: same pages, file descriptors

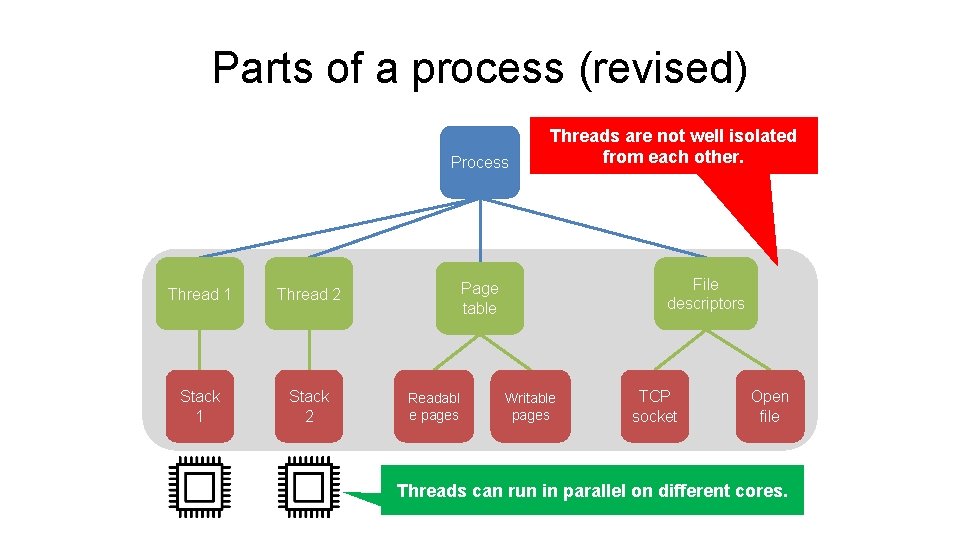

Parts of a process (revised) Process Thread 1 Thread 2 Stack 1 Stack 2 Threads are not well isolated from each other. File descriptors Page table Readabl e pages Writable pages TCP socket Open file Threads can run in parallel on different cores.

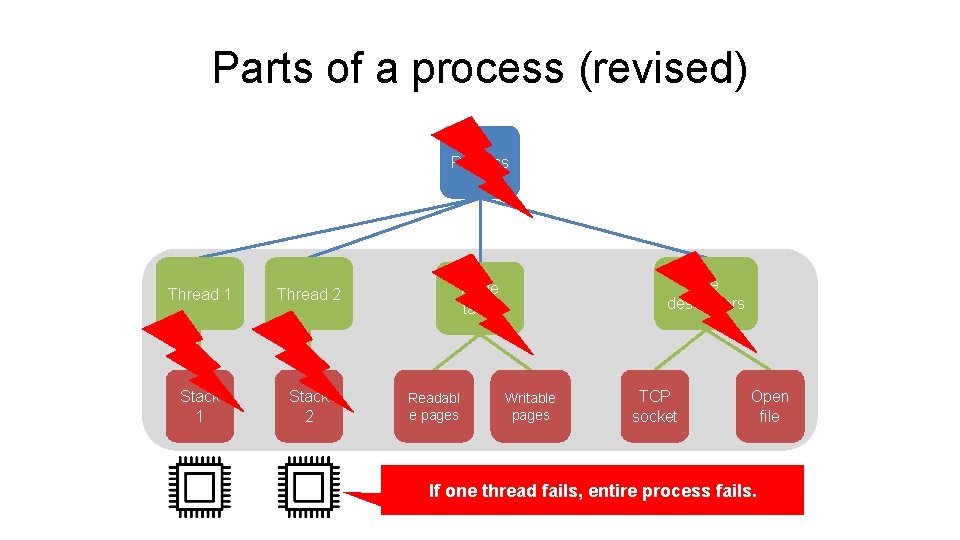

Parts of a process (revised) Process Thread 1 Thread 2 Stack 1 Stack 2 File descriptors Page table Readabl e pages Writable pages TCP socket Open file If one thread fails, entire process fails.

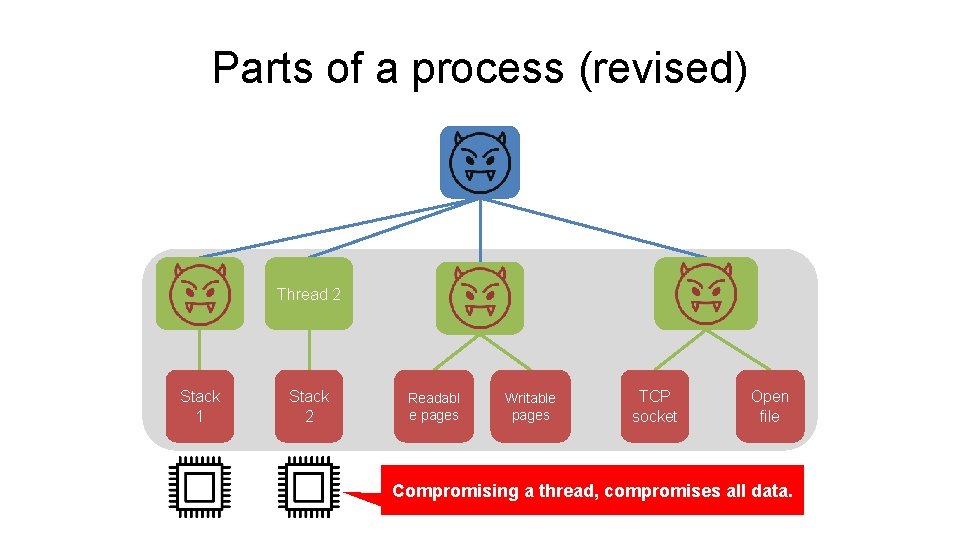

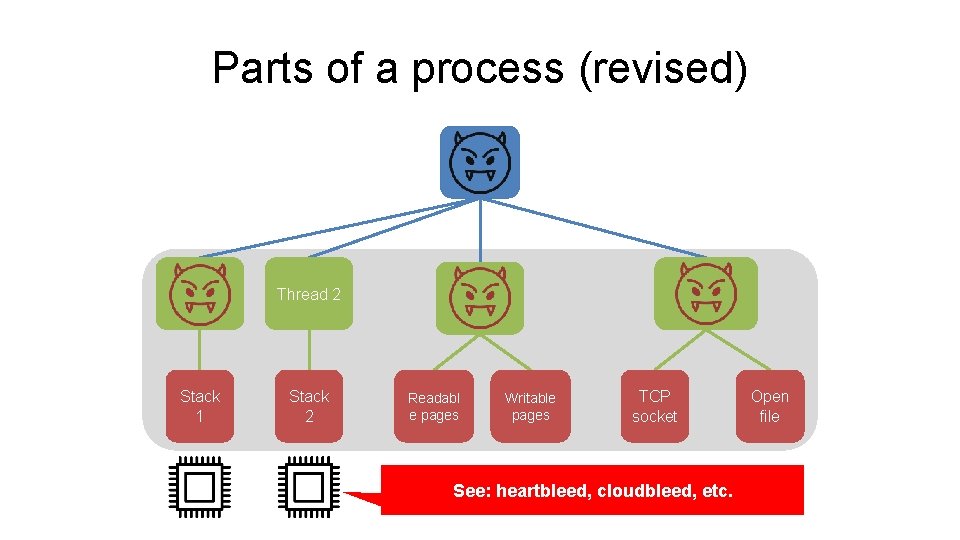

Parts of a process (revised) • Compromising a thread compromises all data: stacks, readable and writable pages, TCP socket, open file • See: Heartbleed, Cloudbleed, etc.

Heartbleed

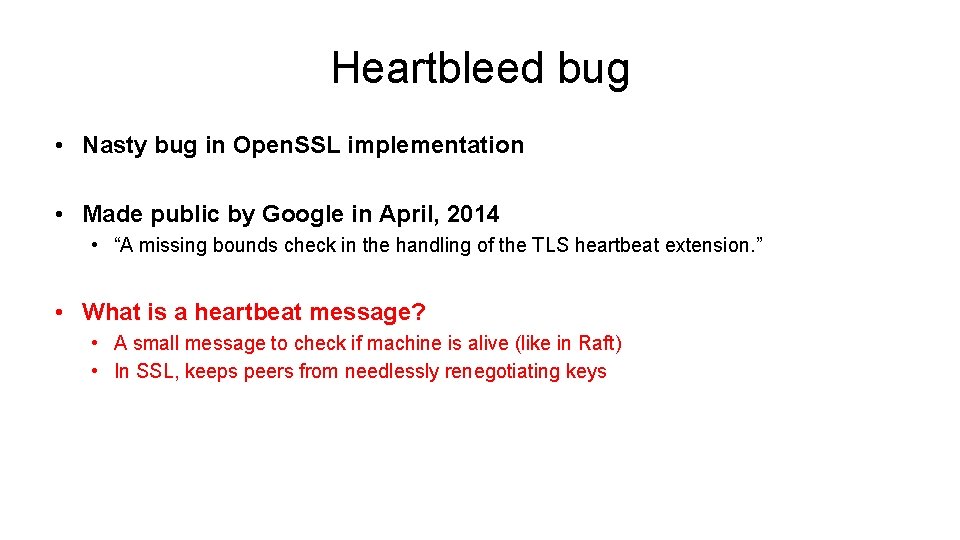

Heartbleed bug • Nasty bug in the OpenSSL implementation • Reported by Google’s security team and made public in April 2014 • “A missing bounds check in the handling of the TLS heartbeat extension.” • What is a heartbeat message? • A small message to check if machine is alive (like in Raft) • In SSL, keeps peers from needlessly renegotiating keys

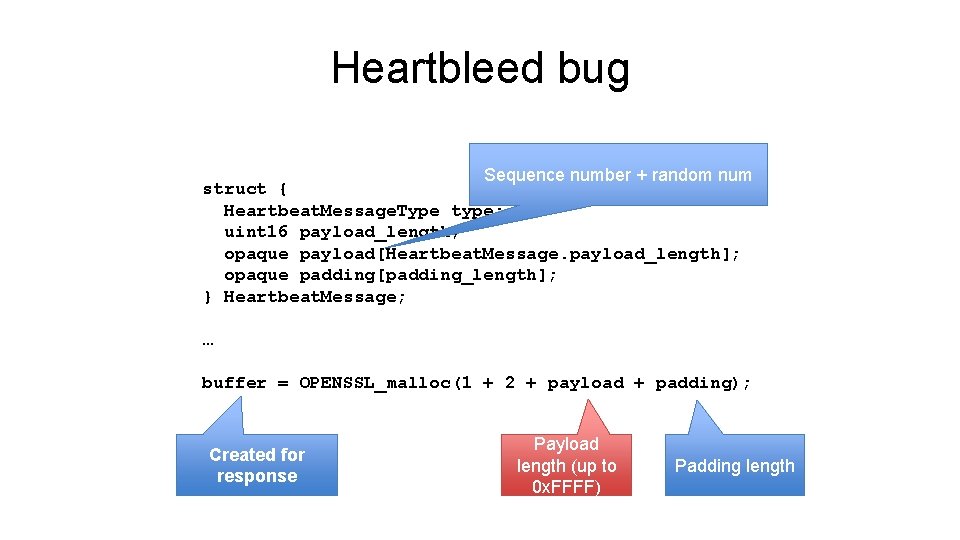

Heartbleed bug • Heartbeat message format: struct { HeartbeatMessageType type; uint16 payload_length; opaque payload[HeartbeatMessage.payload_length]; opaque padding[padding_length]; } HeartbeatMessage; • payload: sequence number + random number • payload_length: payload length (up to 0xFFFF) • padding_length: padding length • Buffer created for the response: buffer = OPENSSL_malloc(1 + 2 + payload + padding);
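The missing check can be sketched as follows. This is an illustrative reconstruction, not OpenSSL's code: `hb_build_response` and the `HB_*` constants are hypothetical names, the record is assumed to be laid out as in the struct above (1-byte type, 2-byte big-endian length, payload, at least 16 bytes of padding), and the padding is zero-filled here where a real implementation would use random bytes.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#define HB_HEADER_LEN  3   /* 1-byte type + 2-byte payload_length */
#define HB_MIN_PADDING 16  /* minimum padding length */
#define HB_RESPONSE    2   /* heartbeat_response message type */

/*
 * Build a heartbeat response that echoes the request payload.
 * Returns a malloc'd buffer and sets *out_len, or returns NULL if the
 * request is malformed or allocation fails.
 */
unsigned char *hb_build_response(const unsigned char *rec, size_t rec_len,
                                 size_t *out_len)
{
    if (rec_len < HB_HEADER_LEN + HB_MIN_PADDING)
        return NULL;  /* too short to be a valid heartbeat message */

    /* payload_length is claimed by the sender and must not be trusted. */
    size_t payload = ((size_t)rec[1] << 8) | rec[2];

    /* The bounds check that was missing: the claimed payload must fit
     * inside the bytes that were actually received. */
    if (HB_HEADER_LEN + payload + HB_MIN_PADDING > rec_len)
        return NULL;

    size_t len = HB_HEADER_LEN + payload + HB_MIN_PADDING;
    unsigned char *buf = malloc(len);
    if (buf == NULL)
        return NULL;

    buf[0] = HB_RESPONSE;
    buf[1] = rec[1];
    buf[2] = rec[2];
    memcpy(buf + HB_HEADER_LEN, rec + HB_HEADER_LEN, payload);
    memset(buf + HB_HEADER_LEN + payload, 0, HB_MIN_PADDING);  /* zero padding: a simplification */
    *out_len = len;
    return buf;
}
```

Without the second check, a request that claims a 0xFFFF-byte payload but carries only a few bytes would cause `memcpy` to read far past the received record and echo neighboring memory back to the sender.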

Heartbleed bug

Parts of a process (revised) • How do we normally isolate tasks?

Parts of a process (revised) • Separate processes! • Diagram: process 1, process 2, …, each with its own thread, stack, file descriptors (TCP socket, open file, …), and page table (readable pages, writable pages)

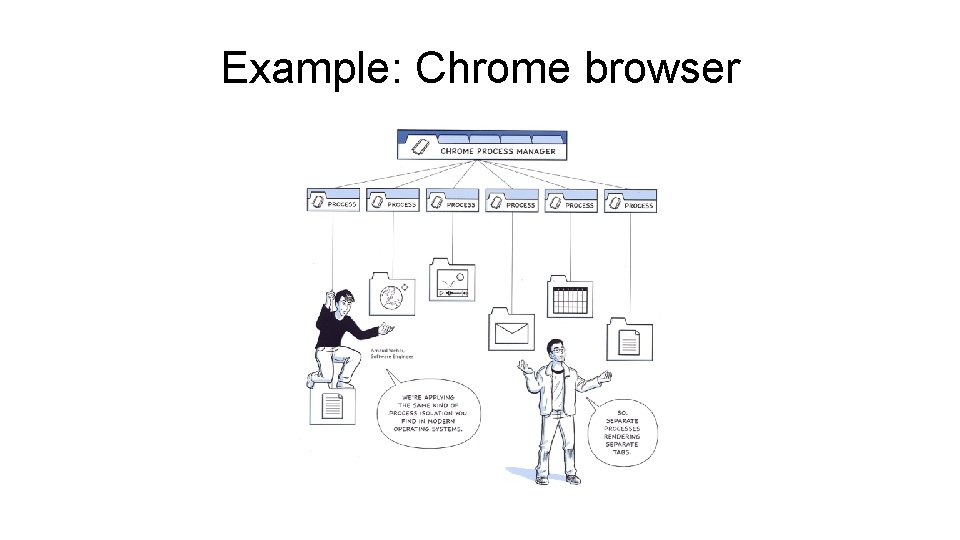

Example: Chrome browser

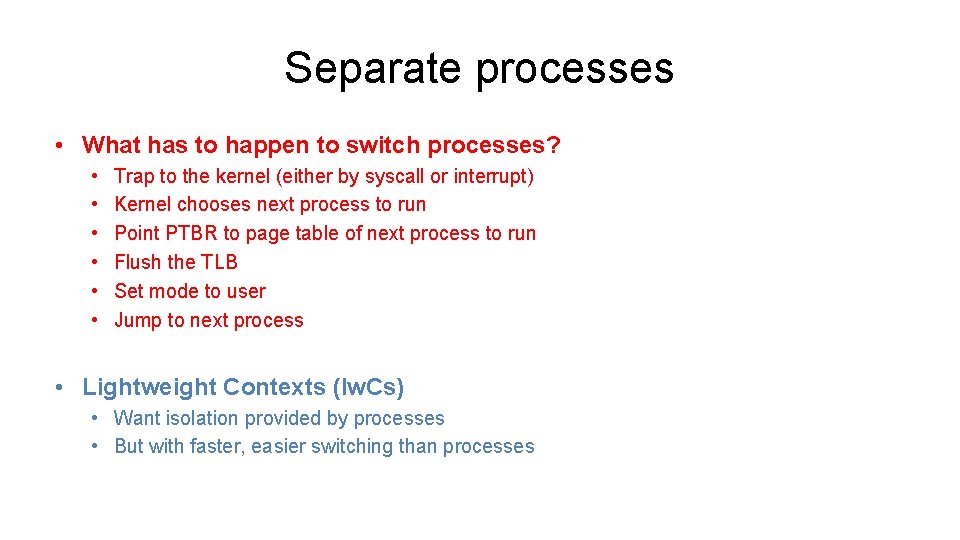

Separate processes • What has to happen to switch processes? • Trap to the kernel (either by syscall or interrupt) • Kernel chooses next process to run • Point PTBR to page table of next process to run • Flush the TLB • Set mode to user • Jump to next process • Lightweight Contexts (lwCs) • Want isolation provided by processes • But with faster, easier switching than processes

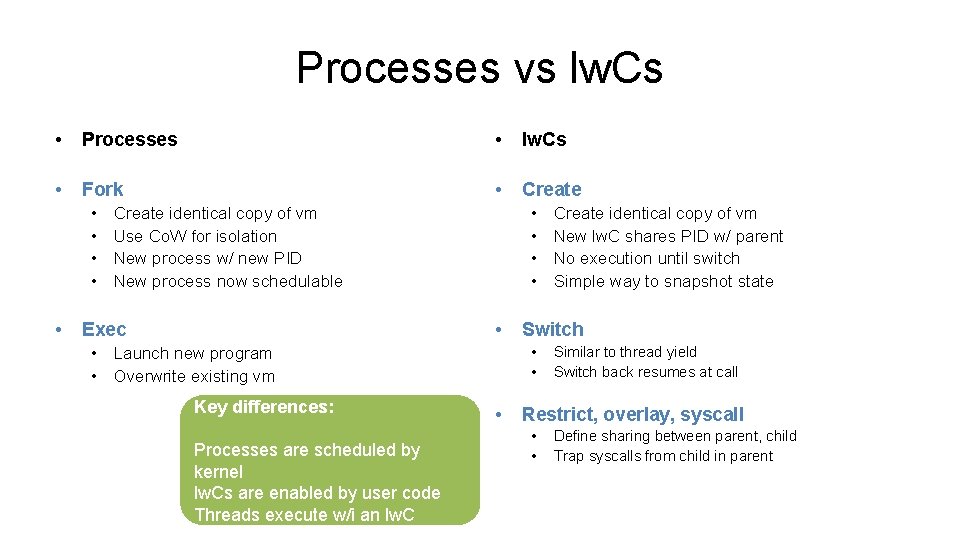

Processes vs lwCs
• Processes • Fork: create identical copy of vm; use CoW for isolation; new process w/ new PID; new process now schedulable • Exec: launch new program; overwrite existing vm
• lwCs • Create: create identical copy of vm; new lwC shares PID w/ parent; no execution until switch; simple way to snapshot state • Switch: similar to thread yield; switch back resumes at call • Restrict, overlay, syscall: define sharing between parent, child; trap syscalls from child in parent
• Key differences • Processes are scheduled by kernel • lwCs are enabled by user code • Threads execute w/i an lwC

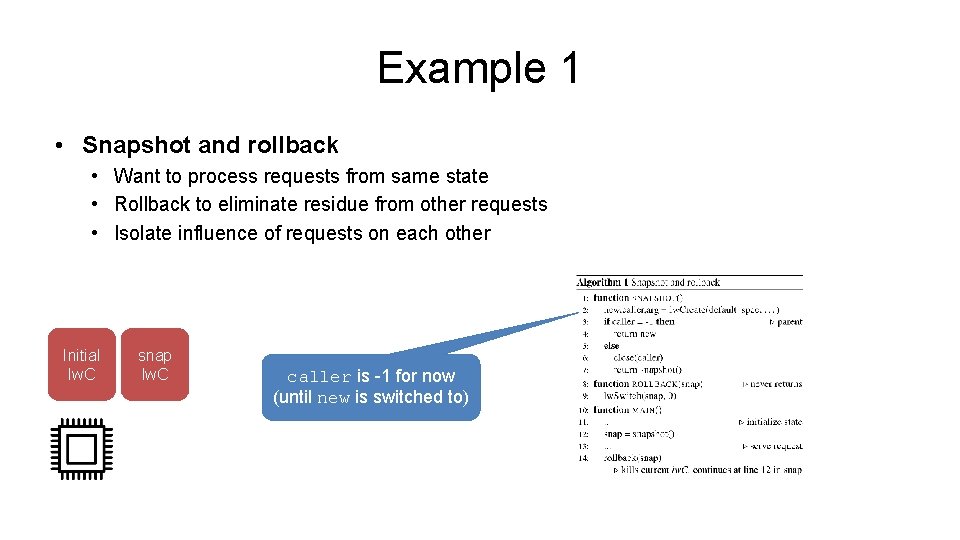

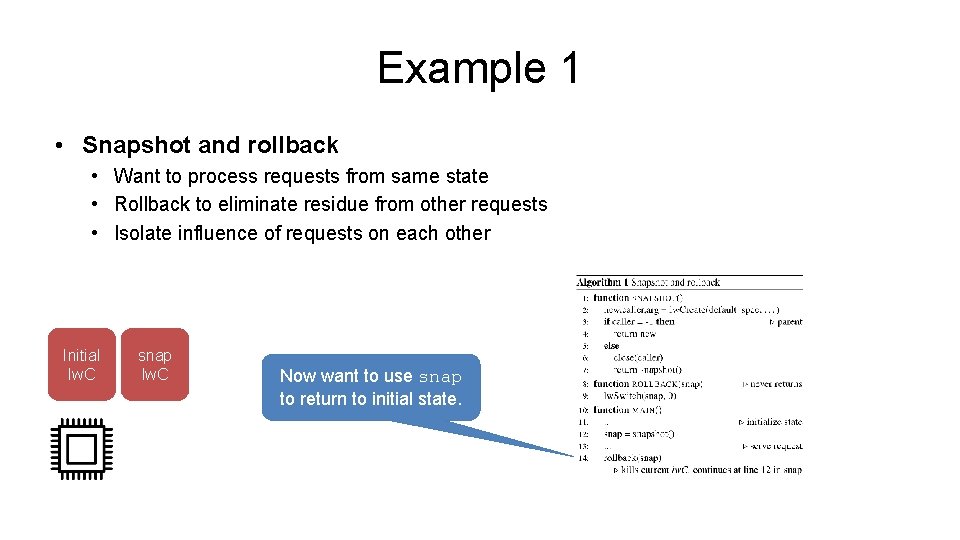

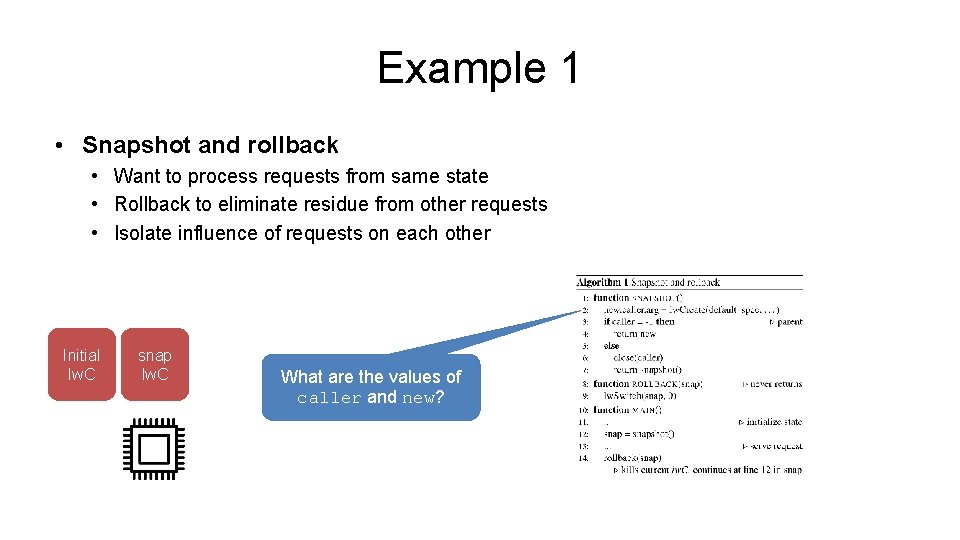

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other • Diagram: initial lwC • Process starts in main

Example 1 • Diagram: initial lwC • Server initializes its state

Example 1 • Diagram: initial lwC • Server creates a snapshot

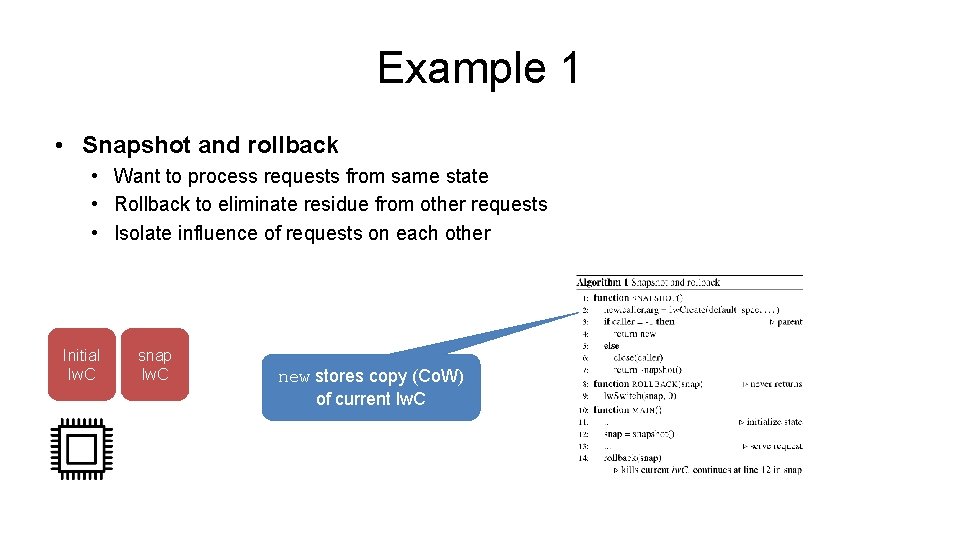

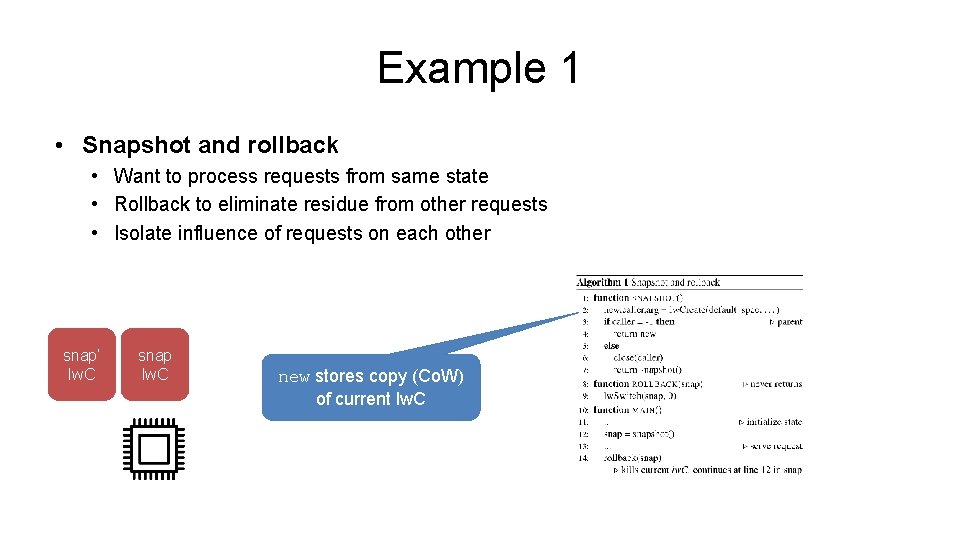

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C new stores copy (Co. W) of current lw. C

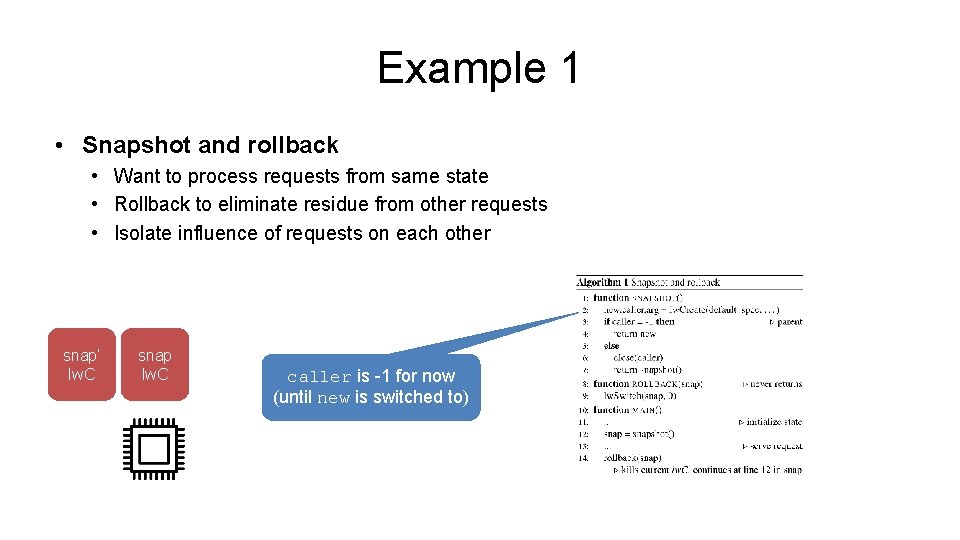

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C caller is -1 for now (until new is switched to)

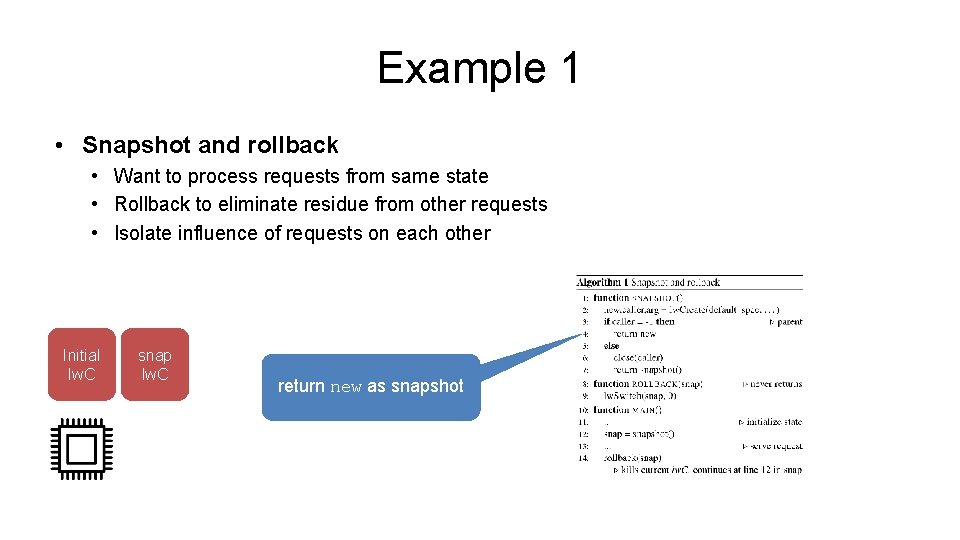

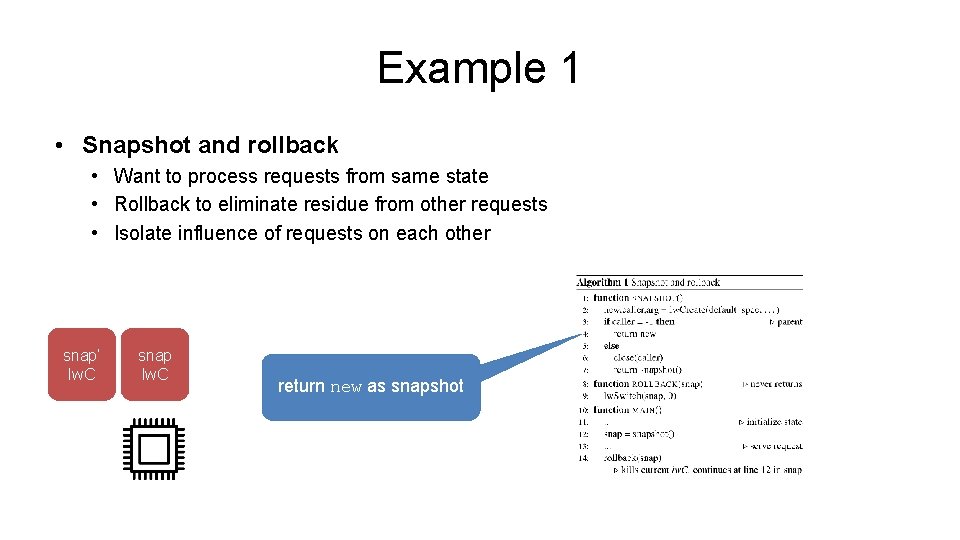

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C return new as snapshot

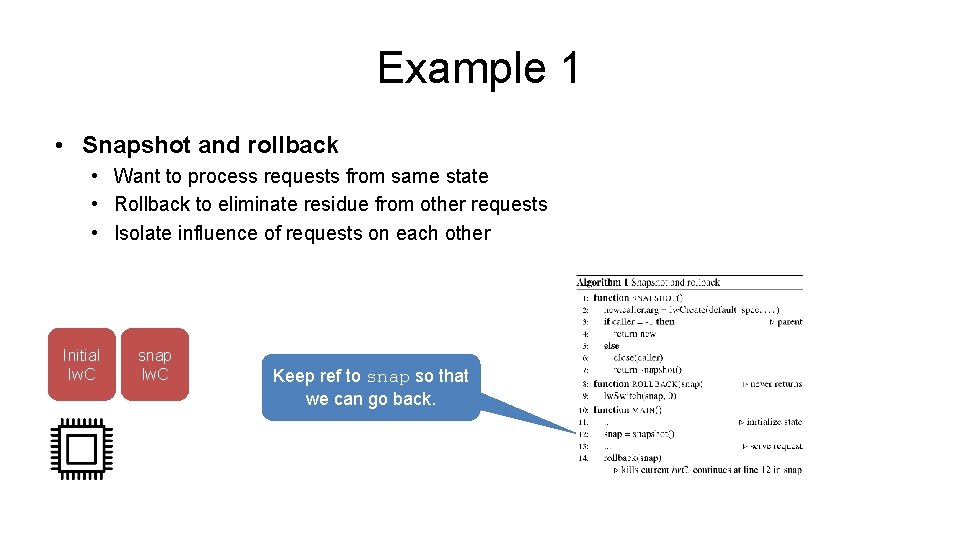

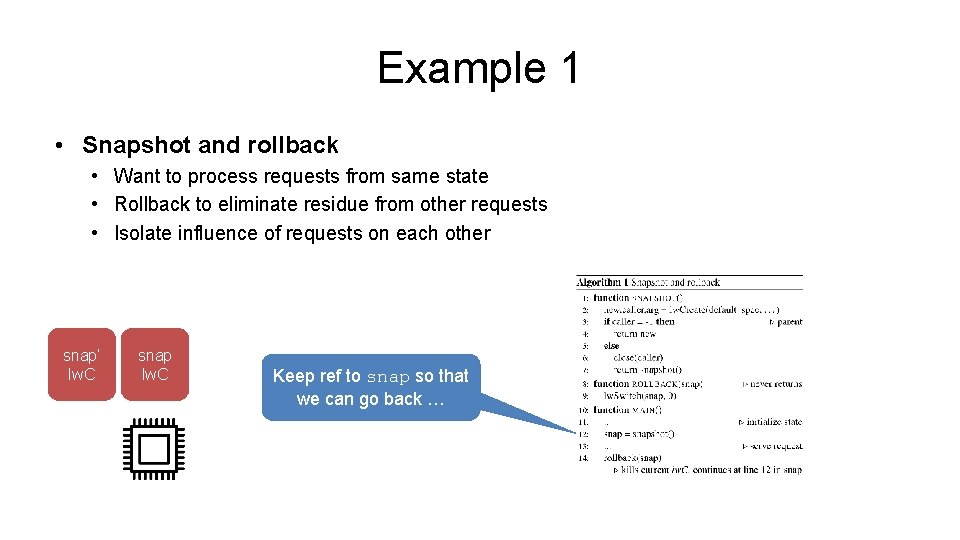

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C Keep ref to snap so that we can go back.

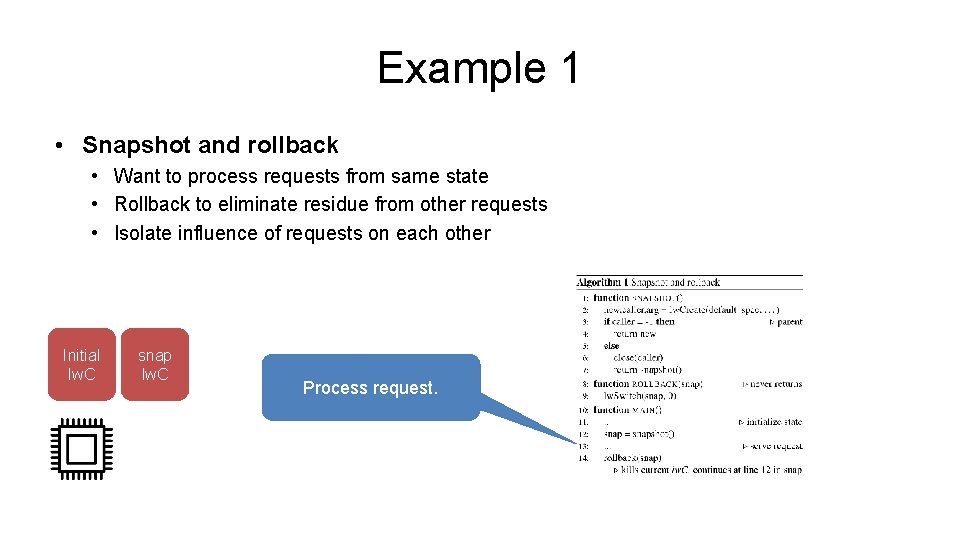

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C Process request.

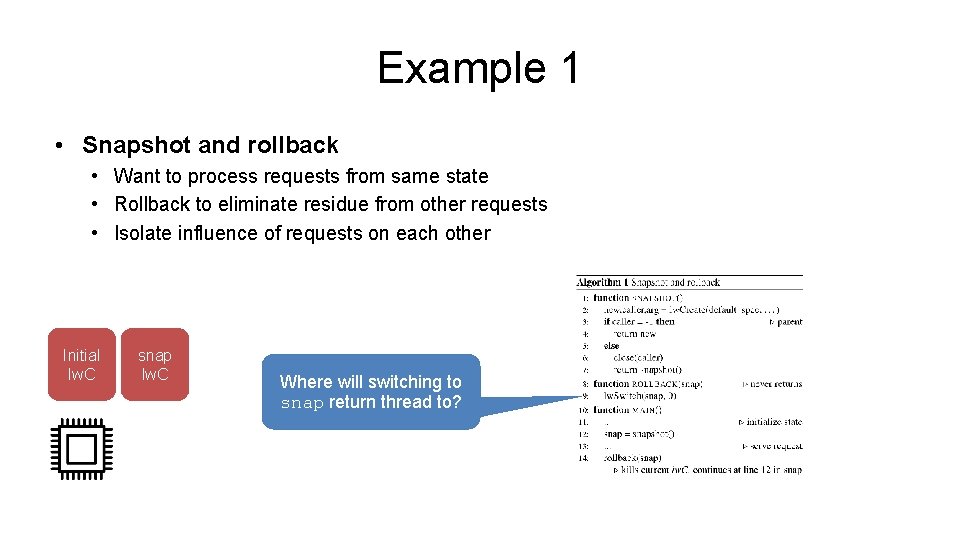

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C Now want to use snap to return to initial state.

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C Where will switching to snap return thread to?

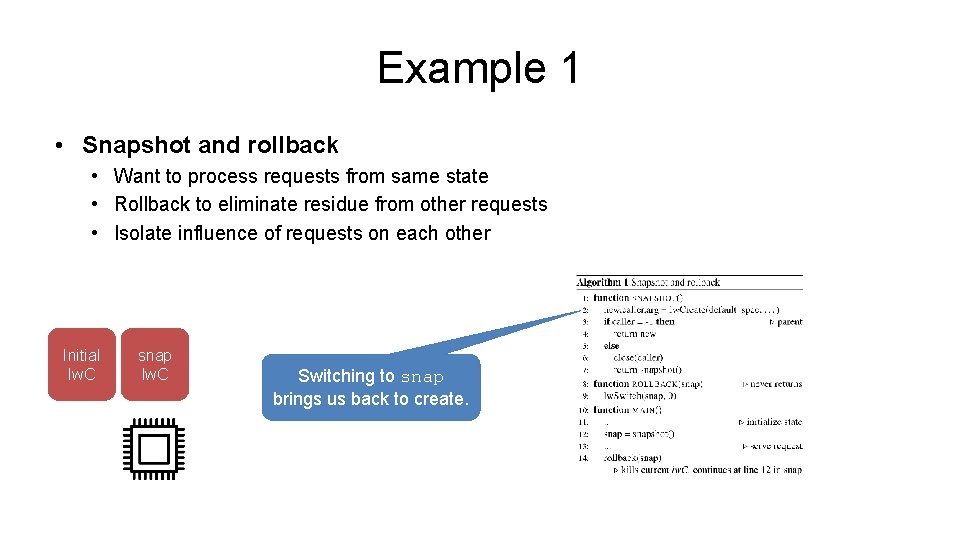

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C Switching to snap brings us back to create.

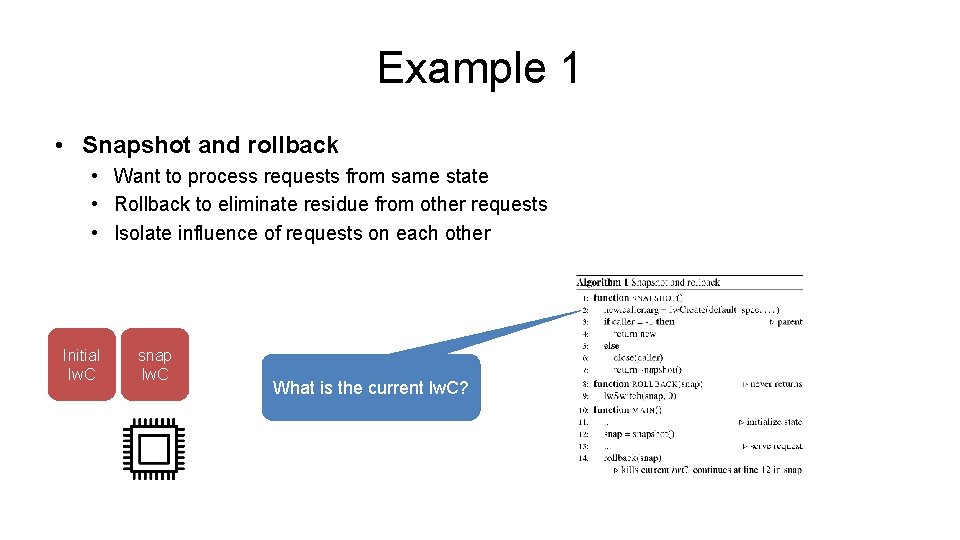

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C What is the current lw. C?

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other Initial lw. C snap lw. C What are the values of caller and new?

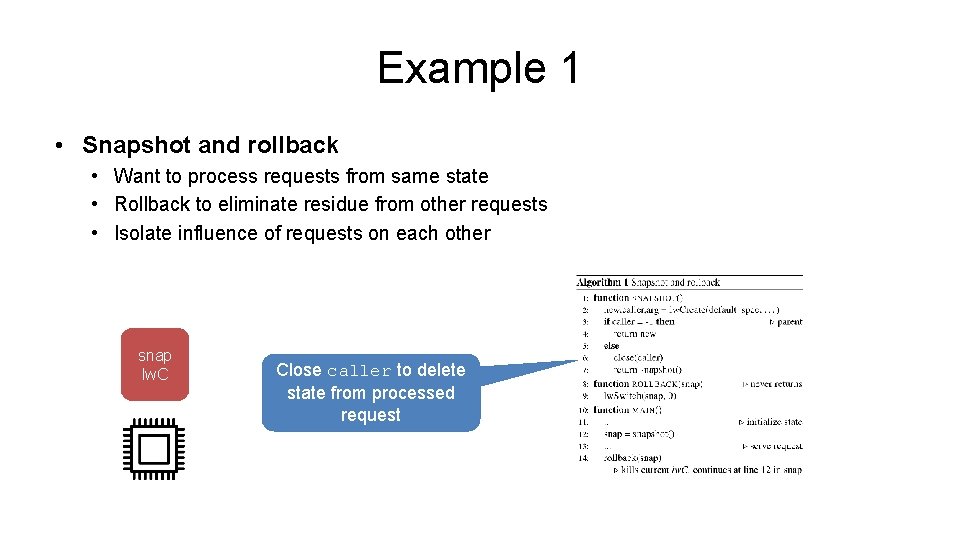

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap lw. C Close caller to delete state from processed request

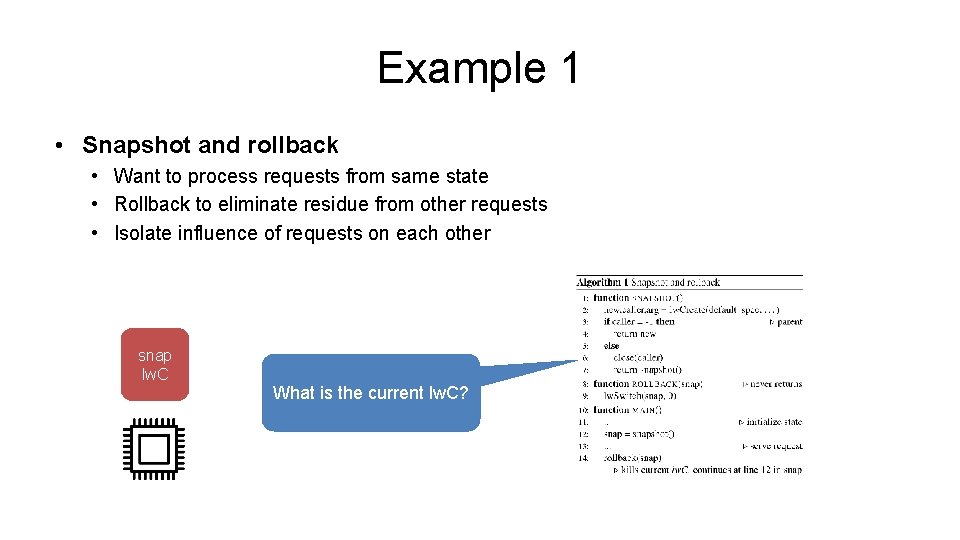

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap lw. C What is the current lw. C?

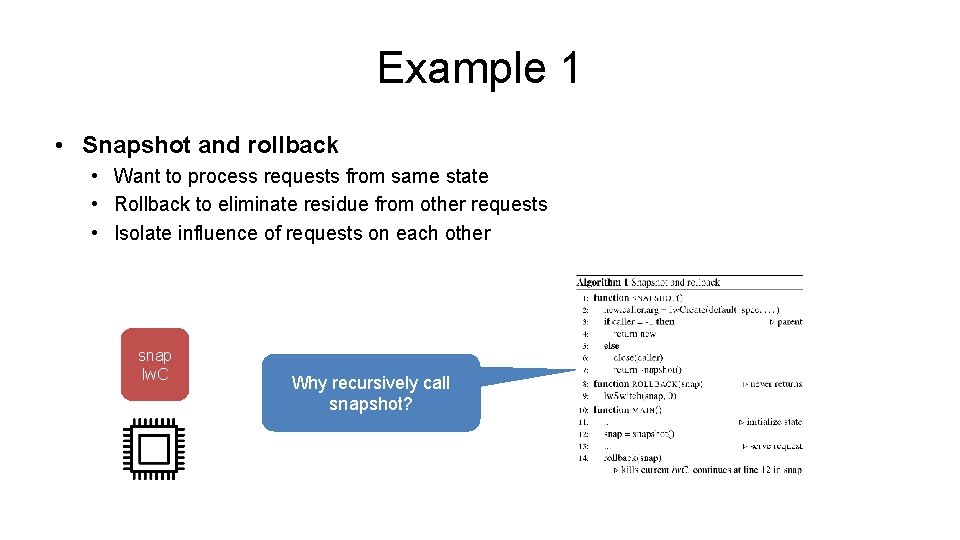

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap lw. C Why recursively call snapshot?

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap’ lw. C snap lw. C new stores copy (Co. W) of current lw. C

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap’ lw. C snap lw. C caller is -1 for now (until new is switched to)

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap’ lw. C snap lw. C return new as snapshot

Example 1 • Snapshot and rollback • Want to process requests from same state • Rollback to eliminate residue from other requests • Isolate influence of requests on each other snap’ lw. C snap lw. C Keep ref to snap so that we can go back …
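The snapshot-and-rollback pattern can be approximated on a stock POSIX system with `fork`, which the processes-vs-lwCs comparison describes as creating an identical copy-on-write copy of the address space. The sketch below is an analogy under that assumption, not the lwC API: `struct server_state`, `handle_request`, and `serve_isolated` are hypothetical names, and each request pays for creating and reaping a whole process.

```c
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical server state; once initialized it plays the role of the snapshot. */
struct server_state {
    int requests_seen;
    char scratch[64];
};

/* Hypothetical request handler that leaves residue behind in the state. */
static int handle_request(struct server_state *s, const char *req)
{
    size_t i = 0;
    s->requests_seen++;
    for (; req[i] != '\0' && i < sizeof s->scratch - 1; i++)
        s->scratch[i] = req[i];
    s->scratch[i] = '\0';
    return s->requests_seen;
}

/*
 * Handle one request in a forked child. The child works on a copy-on-write
 * copy of the parent's memory, so its writes never reach the parent:
 * "rollback" is simply the child exiting.
 * Returns the handler's result (truncated to 8 bits by the exit status),
 * or -1 on error.
 */
int serve_isolated(struct server_state *s, const char *req)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        int r = handle_request(s, req);
        _exit(r & 0xff);  /* discard the child's copy of the state */
    }
    int status = 0;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

Every request sees `requests_seen == 0` on entry, just as every request in the example starts from the state captured in snap.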

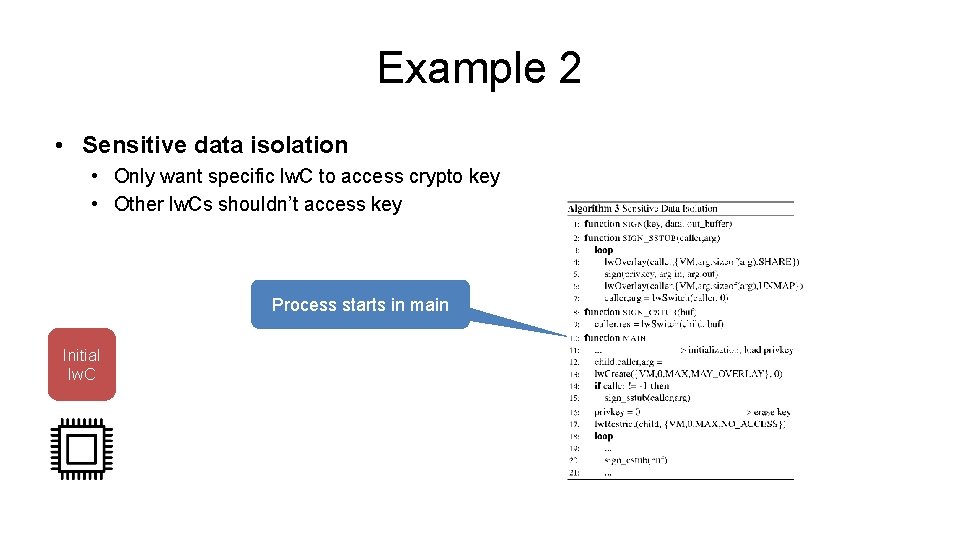

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Process starts in main Initial lw. C

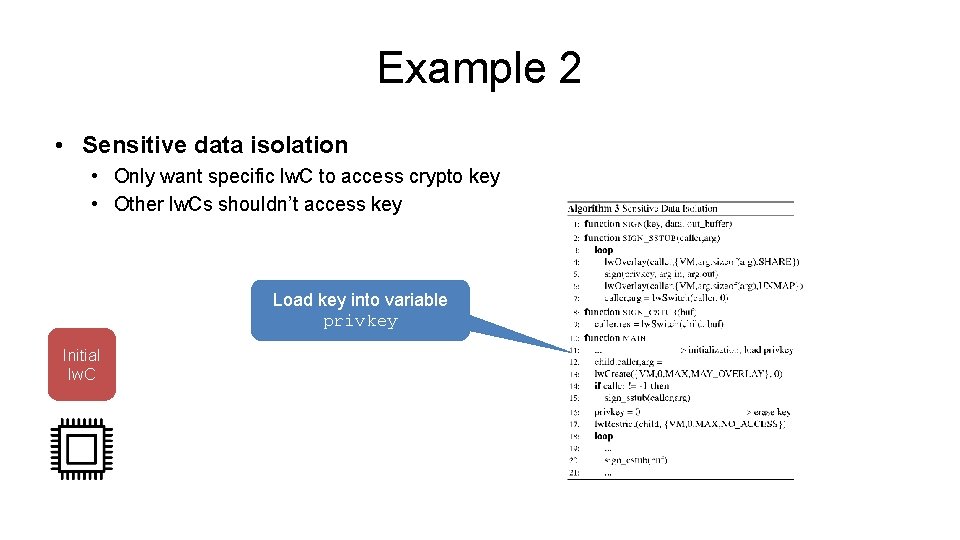

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Load key into variable privkey Initial lw. C

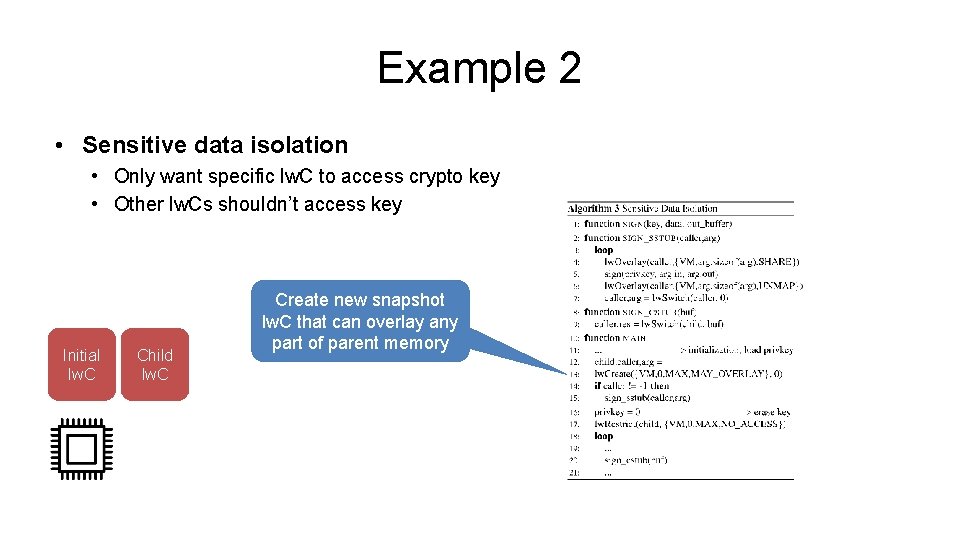

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Create new snapshot lw. C that can overlay any part of parent memory

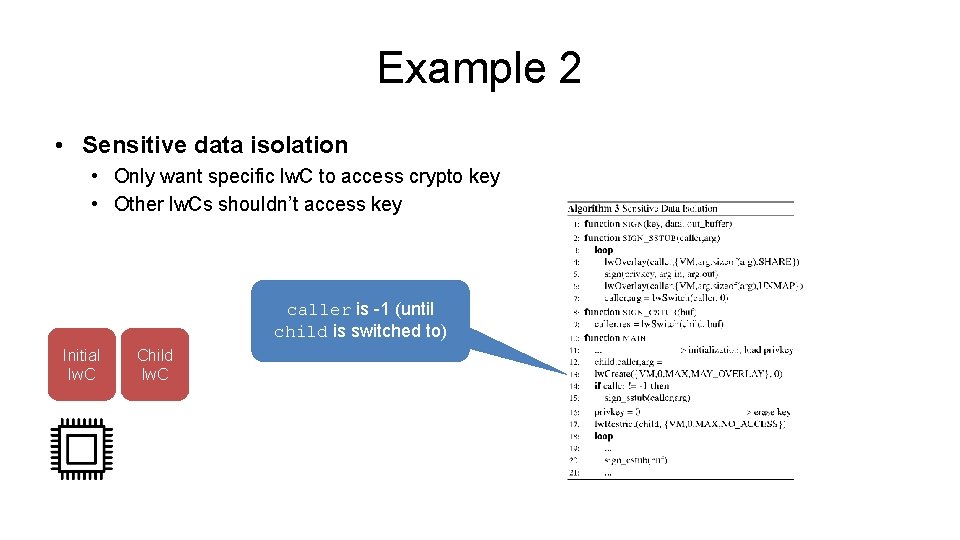

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key caller is -1 (until child is switched to) Initial lw. C Child lw. C

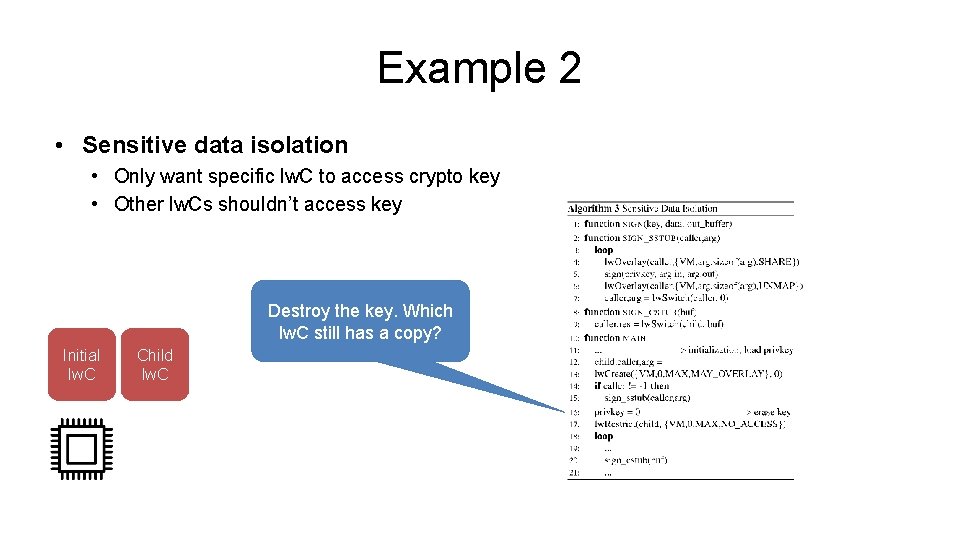

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Destroy the key. Which lw. C still has a copy? Initial lw. C Child lw. C

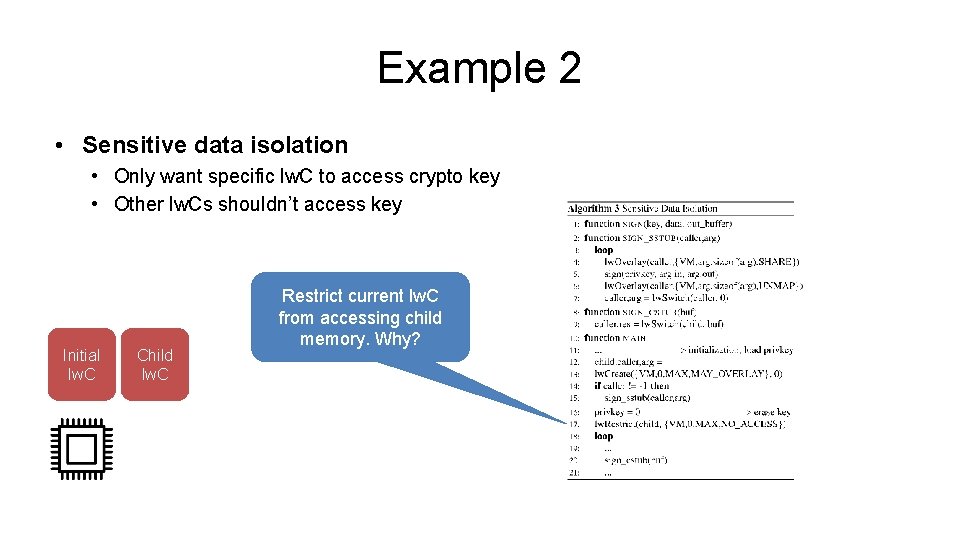

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Restrict current lw. C from accessing child memory. Why?

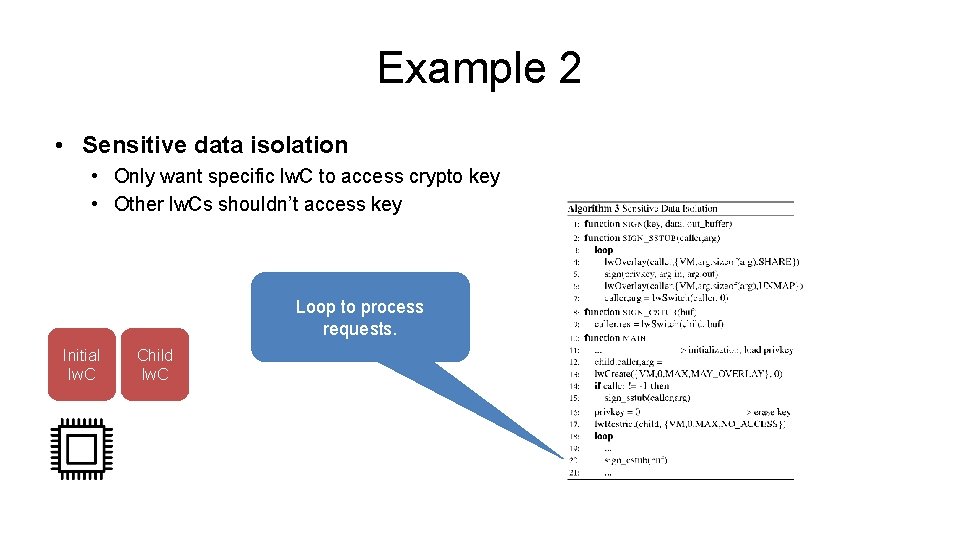

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Loop to process requests. Initial lw. C Child lw. C

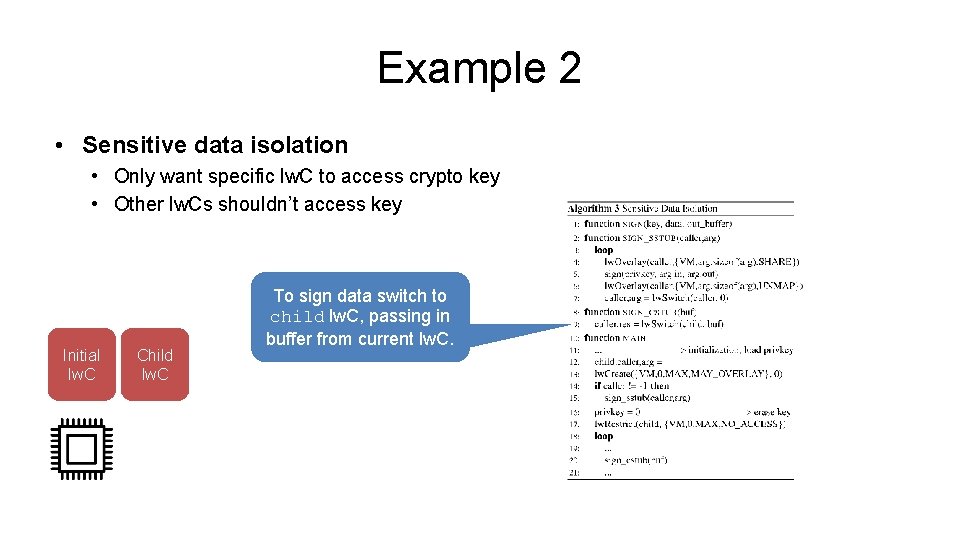

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C To sign data switch to child lw. C, passing in buffer from current lw. C.

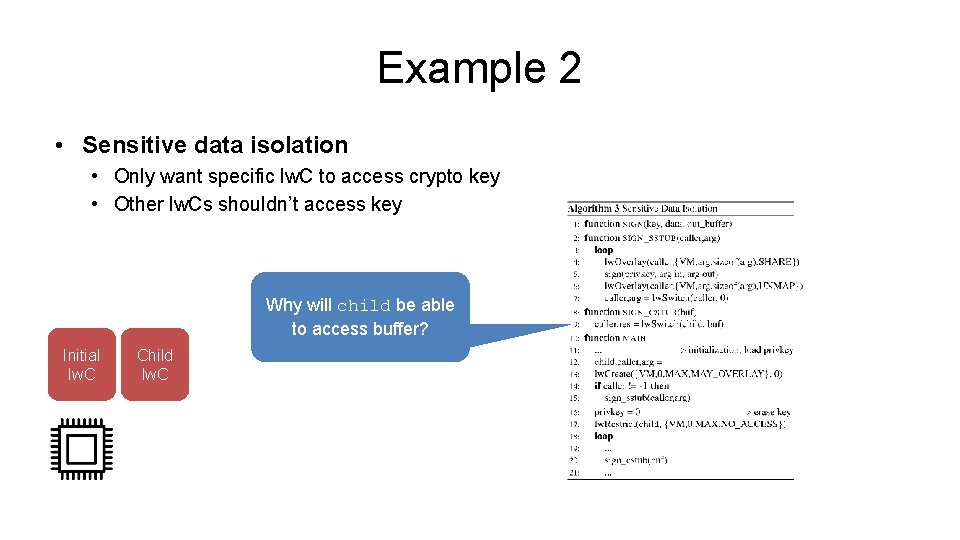

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Why will child be able to access buffer? Initial lw. C Child lw. C

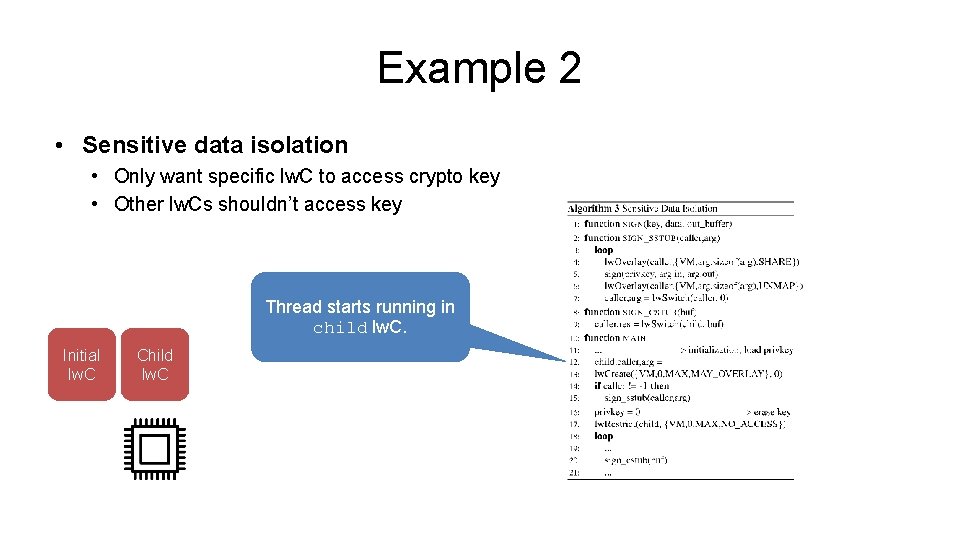

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Thread starts running in child lw. C. Initial lw. C Child lw. C

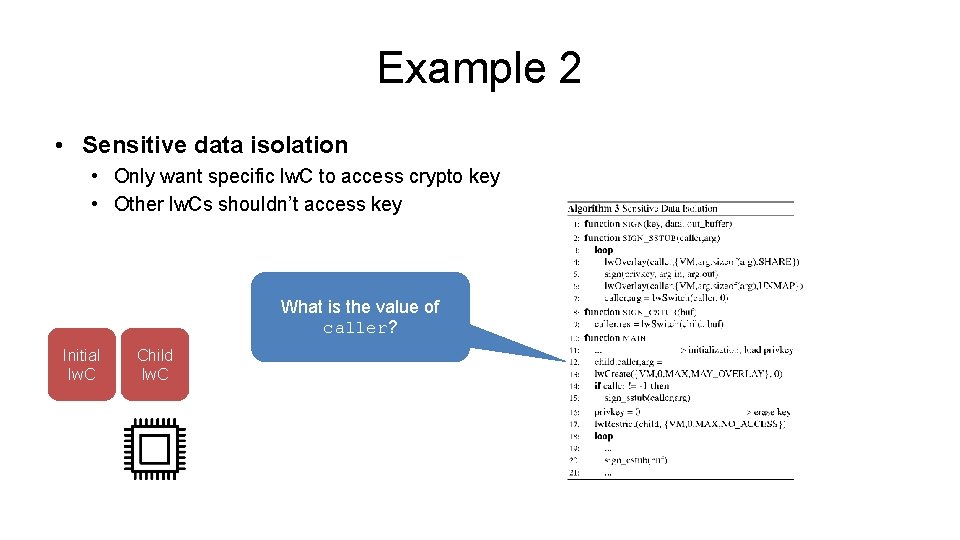

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key What is the value of caller? Initial lw. C Child lw. C

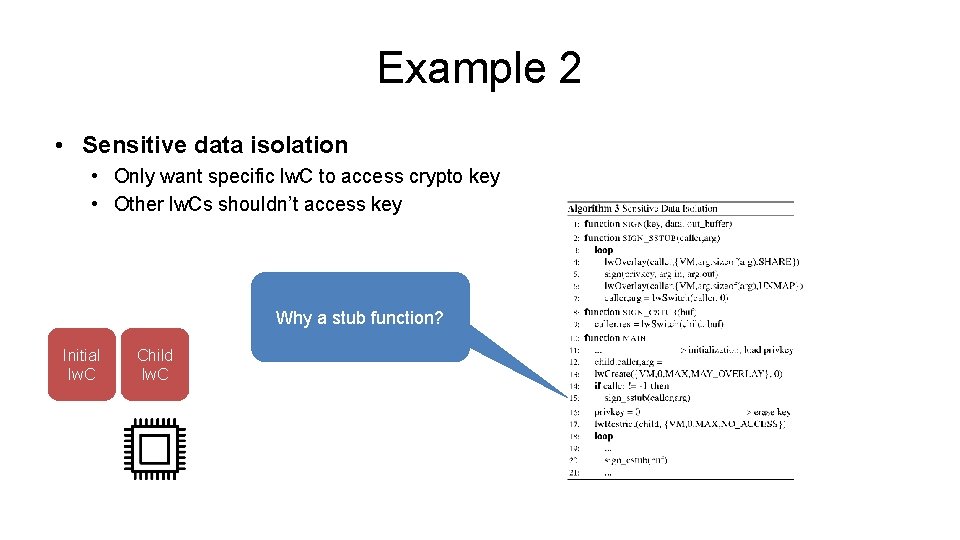

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Why a stub function? Initial lw. C Child lw. C

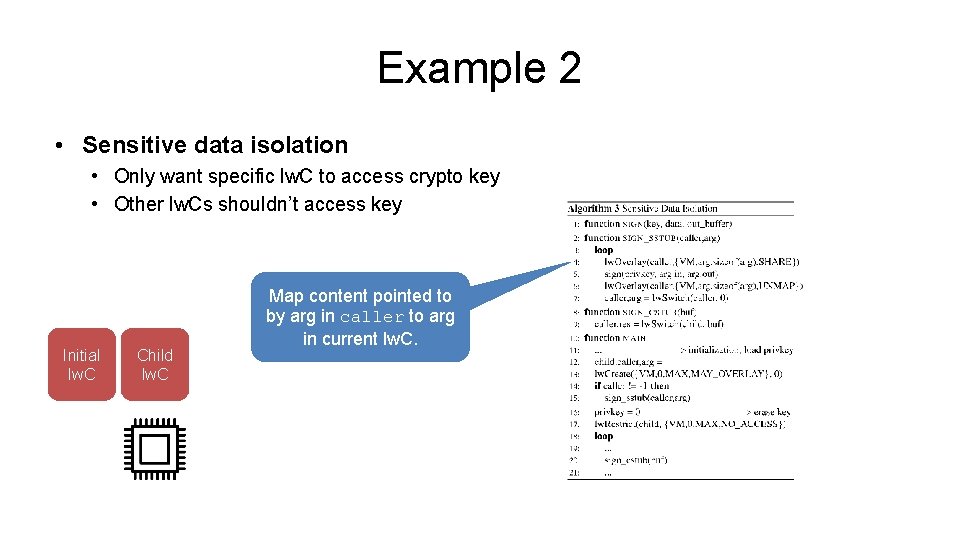

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Map content pointed to by arg in caller to arg in current lw. C.

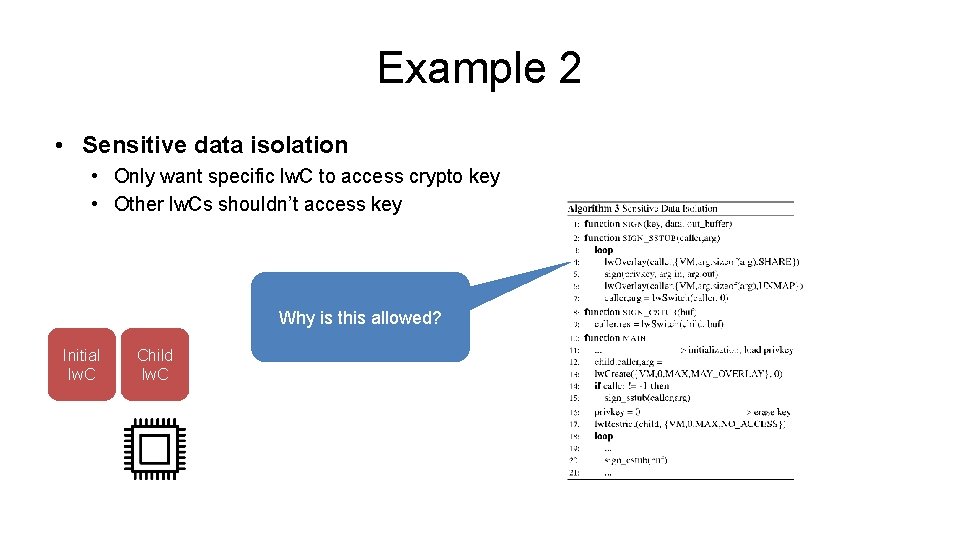

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Why is this allowed? Initial lw. C Child lw. C

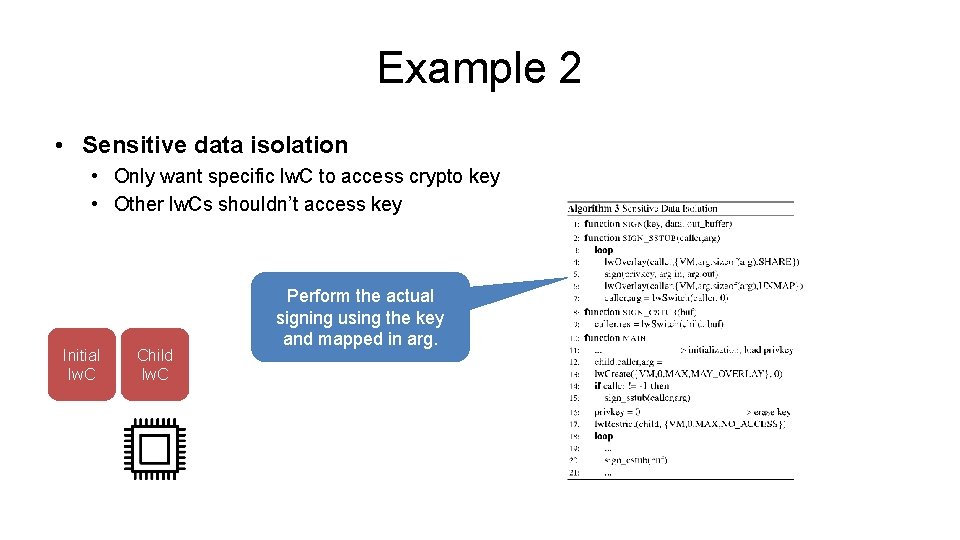

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Perform the actual signing using the key and mapped in arg.

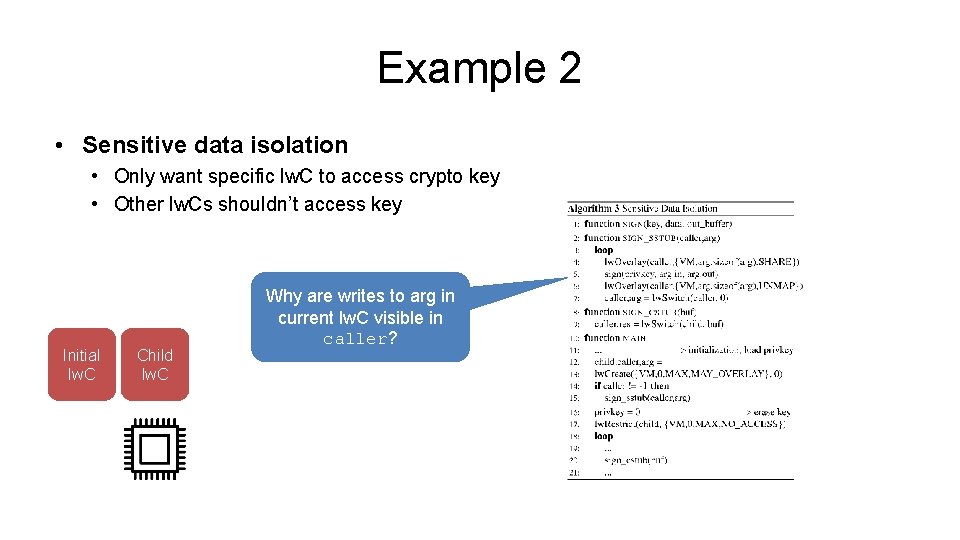

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Why are writes to arg in current lw. C visible in caller?

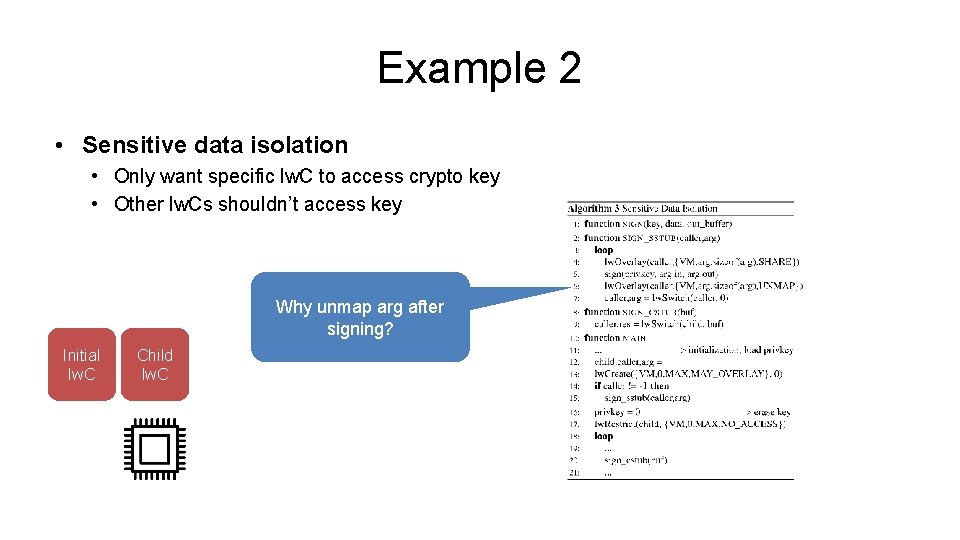

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Why unmap arg after signing? Initial lw. C Child lw. C

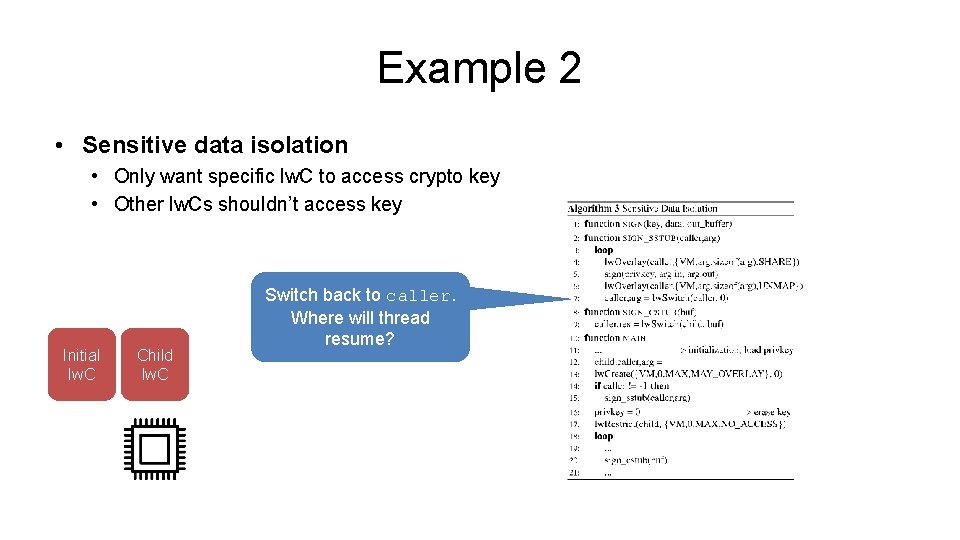

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Switch back to caller. Where will thread resume?

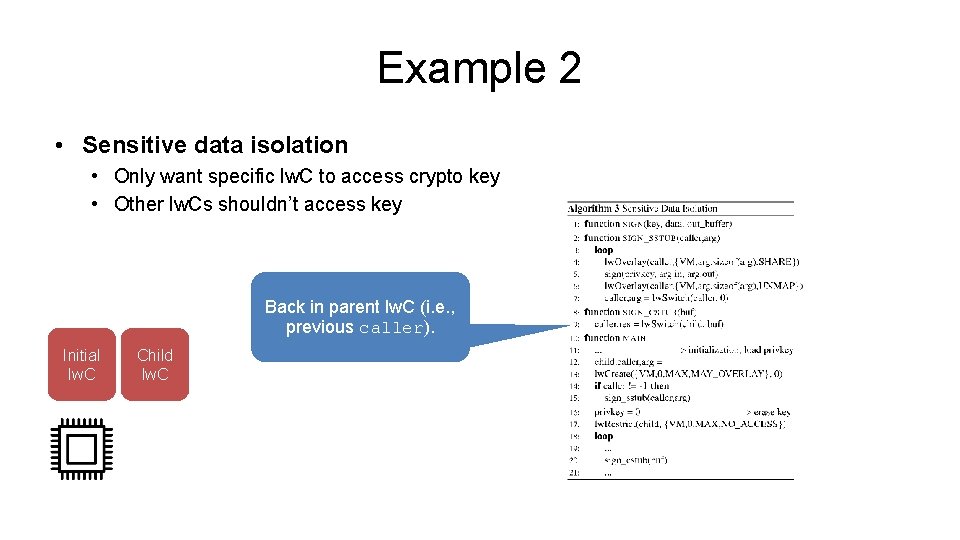

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Back in parent lw. C (i. e. , previous caller). Initial lw. C Child lw. C

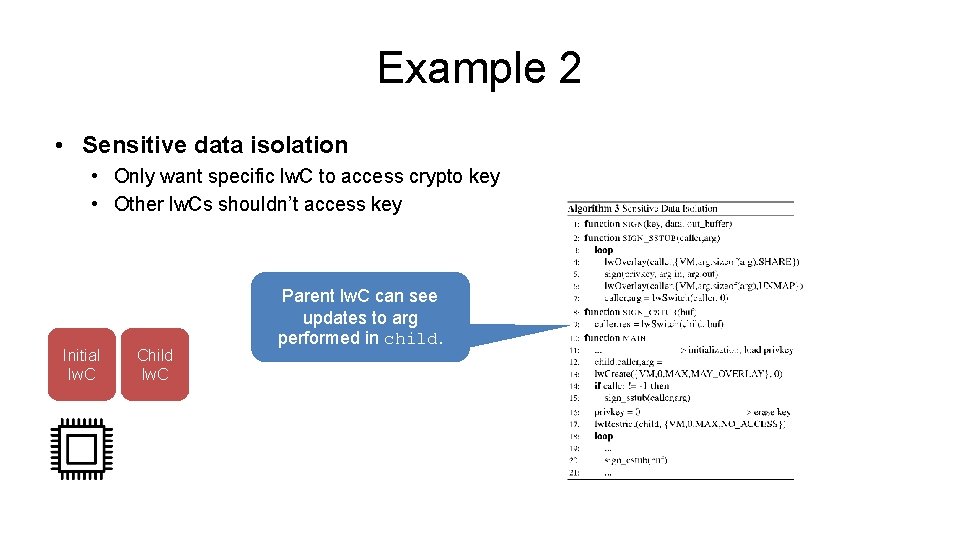

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C Parent lw. C can see updates to arg performed in child.

Example 2 • Sensitive data isolation • Only want specific lw. C to access crypto key • Other lw. Cs shouldn’t access key Initial lw. C Child lw. C With data signed, process request, loop back for more …

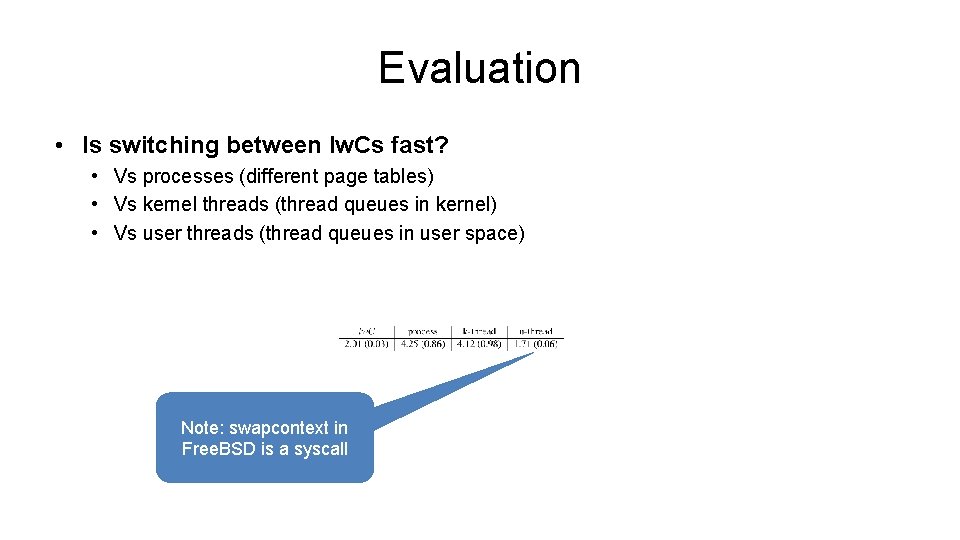

Evaluation • Is switching between lwCs fast? • Vs processes (different page tables) • Vs kernel threads (thread queues in kernel) • Vs user threads (thread queues in user space) • What is true for each approach (on FreeBSD)?

Evaluation • Note: swapcontext in FreeBSD is a syscall

Evaluation • Note: swapcontext in Linux is not a syscall

Evaluation • On Linux this number is 6% of lwC …

Evaluation • Why are processes and k-threads twice as slow as lwCs?

Evaluation • lwCs avoid: scheduling overhead (go right to next lwC)
