LHC requirements for GRID middleware

F. Carminati, P. Cerello, C. Grandi, O. Smirnova, J. Templon, E. Van Herwijnen

CHEP 2003, La Jolla, March 24-28, 2003

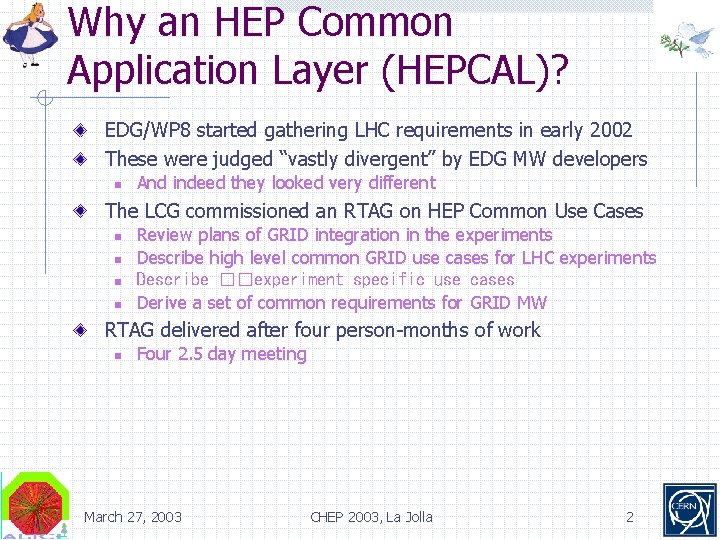

Why an HEP Common Application Layer (HEPCAL)?

- EDG/WP8 started gathering LHC requirements in early 2002
- These were judged “vastly divergent” by EDG MW developers
  - And indeed they looked very different
- The LCG commissioned an RTAG on HEP Common Use Cases
  - Review plans of GRID integration in the experiments
  - Describe high-level common GRID use cases for LHC experiments
  - Describe experiment-specific use cases
  - Derive a set of common requirements for GRID MW
- RTAG delivered after four person-months of work
  - Four 2.5-day meetings

March 27, 2003 CHEP 2003, La Jolla

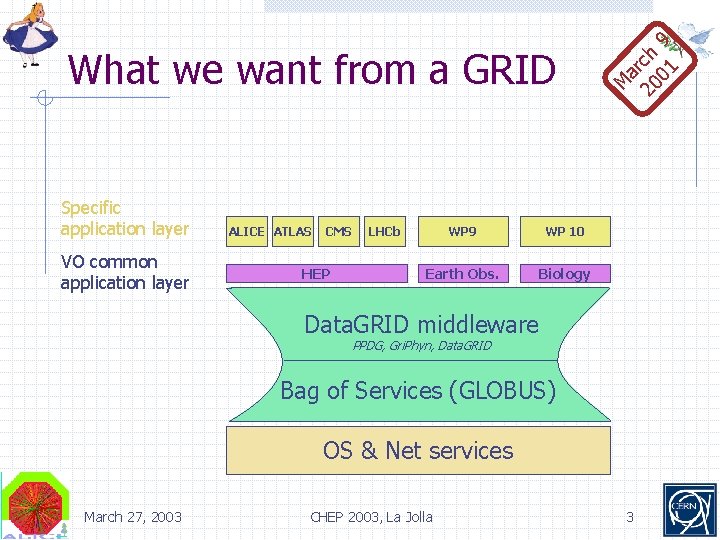

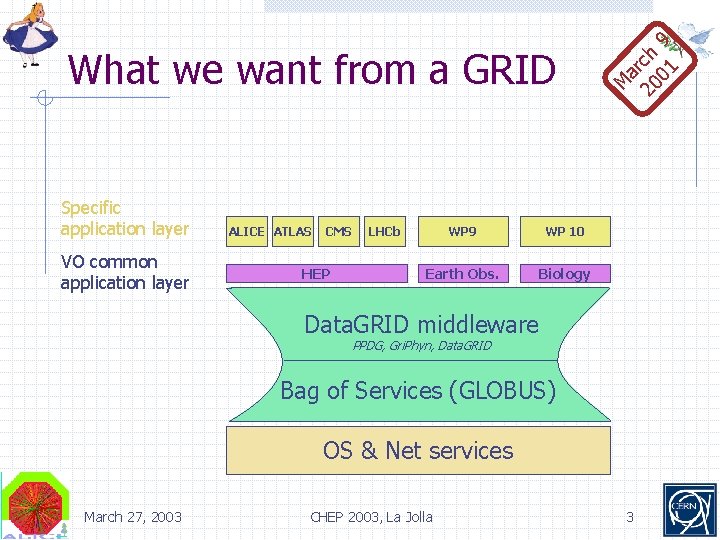

What we want from a GRID

Layered diagram, top to bottom:

- Specific application layer: ALICE, ATLAS, CMS, LHCb, WP9, WP10
- VO common application layer: HEP, Earth Obs., Biology
- DataGRID middleware: PPDG, GriPhyN, DataGRID
- Bag of Services (GLOBUS)
- OS & Net services

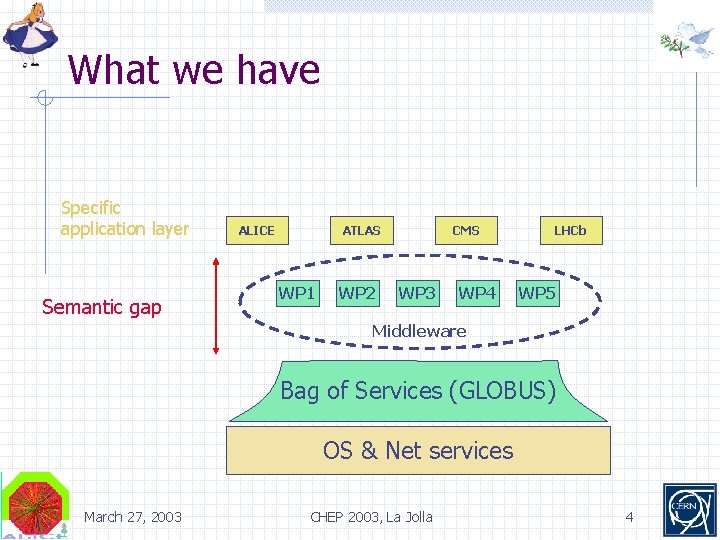

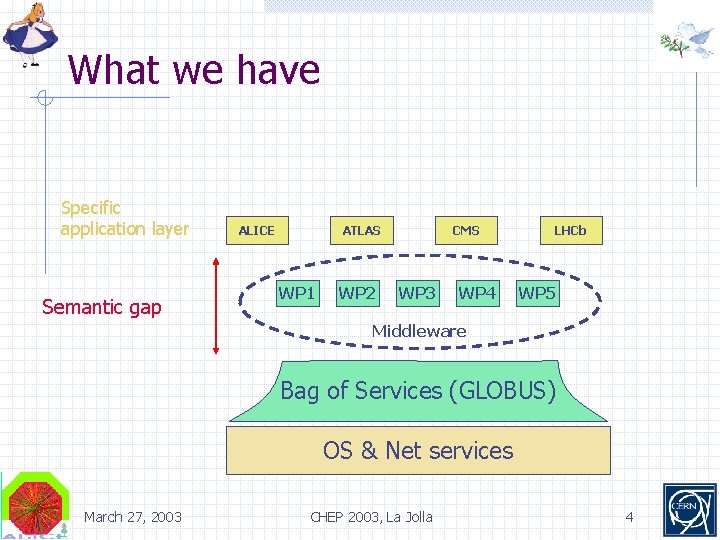

What we have

Layered diagram, top to bottom:

- Specific application layer: ALICE, ATLAS, CMS, LHCb
- Semantic gap
- Middleware: WP1, WP2, WP3, WP4, WP5
- Bag of Services (GLOBUS)
- OS & Net services

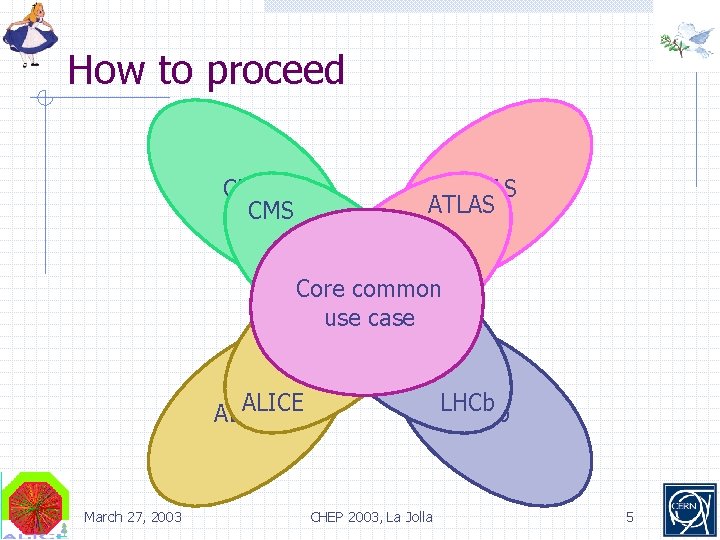

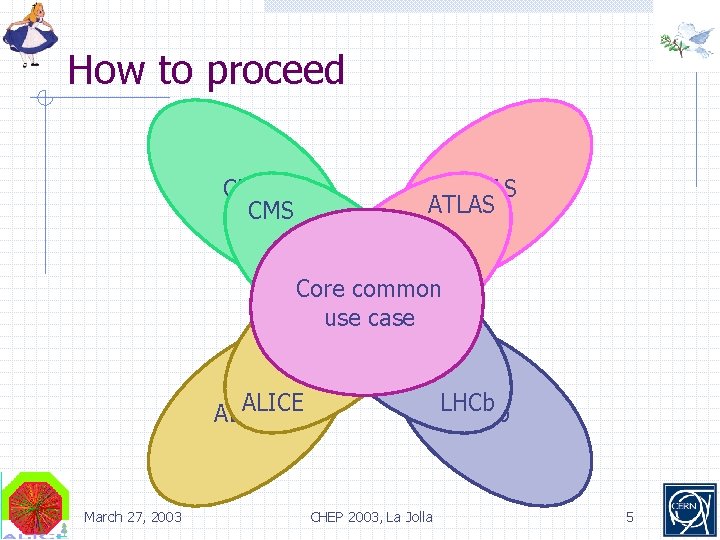

How to proceed

Diagram: ALICE, ATLAS, CMS and LHCb arranged around a “Core common use case”.

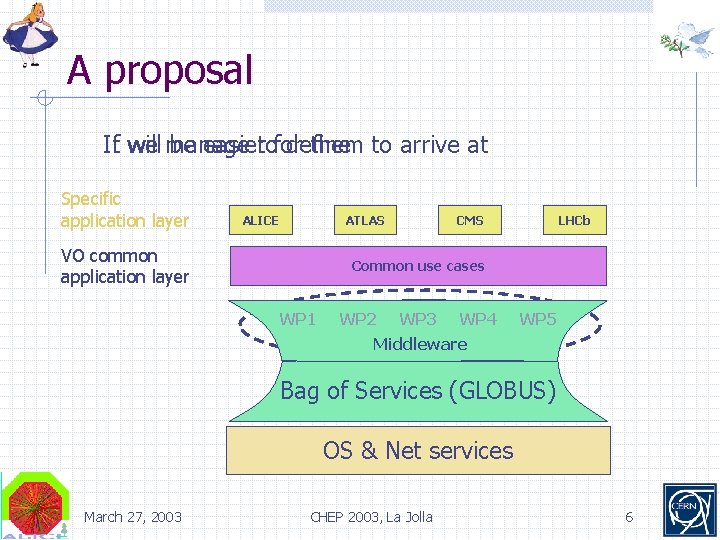

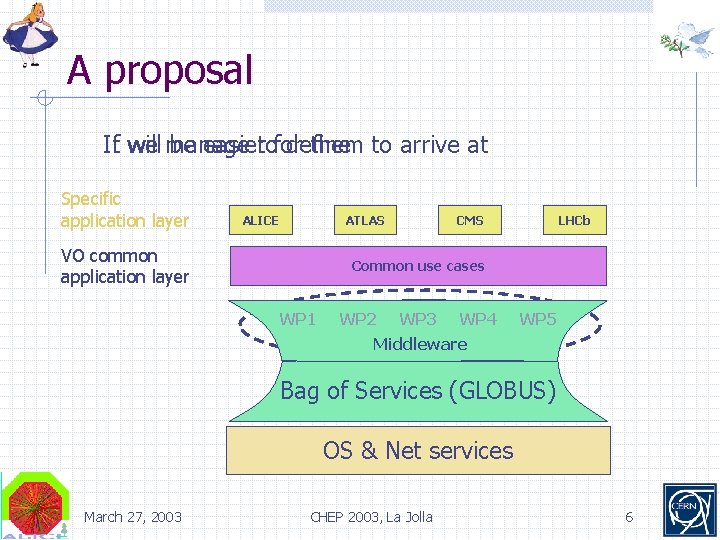

A proposal

Diagram annotations: “If we manage to define” and “It will be easier for them to arrive at”.

Layered diagram, top to bottom:

- Specific application layer: ALICE, ATLAS, CMS, LHCb
- VO common application layer: Common use cases
- Middleware (DataGRID middleware): WP1, WP2, WP3, WP4, WP5
- Bag of Services (GLOBUS)
- OS & Net services

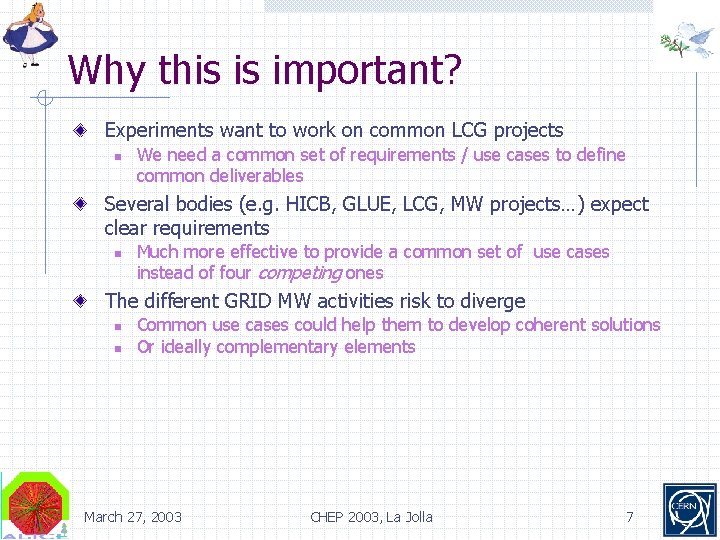

Why is this important?

- Experiments want to work on common LCG projects
  - We need a common set of requirements / use cases to define common deliverables
- Several bodies (e.g. HICB, GLUE, LCG, MW projects…) expect clear requirements
  - Much more effective to provide a common set of use cases instead of four competing ones
- The different GRID MW activities risk diverging
  - Common use cases could help them to develop coherent solutions
  - Or ideally complementary elements

Rules of the game

- As much as you may like Harry Potter, he is not a good excuse!
- If you cannot explain it to your mother-in-law, you did not understand it yourself
- If your only argument is “why not” or “we need it”, go back and think again
- Say what you want, not how you think it must be done -- STOP short of architecture

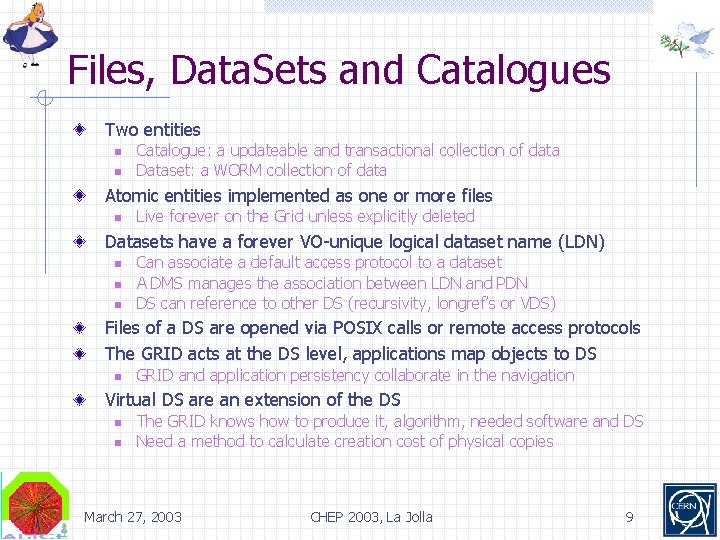

Files, DataSets and Catalogues

- Two entities
  - Catalogue: an updateable and transactional collection of data
  - Dataset: a WORM (write once, read many) collection of data
- Atomic entities implemented as one or more files
  - Live forever on the Grid unless explicitly deleted
- Datasets have a permanent, VO-unique logical dataset name (LDN)
  - Can associate a default access protocol to a dataset
  - A DMS manages the association between LDN and PDN
  - DS can reference other DS (recursivity, longrefs or VDS)
- Files of a DS are opened via POSIX calls or remote access protocols
- The GRID acts at the DS level, applications map objects to DS
  - GRID and application persistency collaborate in the navigation
- Virtual DS are an extension of the DS
  - The GRID knows how to produce it: algorithm, needed software and DS
  - Need a method to calculate creation cost of physical copies
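The association between an LDN and its physical copies can be illustrated with a minimal sketch, assuming a flat text file stands in for the DMS catalogue. The names `DMS_CATALOGUE`, `dms_register` and `dms_resolve`, the `lfn:` prefix and the storage element host names are illustrative assumptions; none of them comes from HEPCAL or from any middleware.

```sh
#!/bin/sh
# Hypothetical stand-in for the DMS catalogue: one line per physical copy,
# tab-separated as LDN, storage element (SE), physical name (PDN).
DMS_CATALOGUE=${DMS_CATALOGUE:-$(mktemp)}

# Record that a physical copy of dataset LDN exists on a given SE.
# $1 = LDN, $2 = SE, $3 = PDN
dms_register() {
    printf '%s\t%s\t%s\n' "$1" "$2" "$3" >> "$DMS_CATALOGUE"
}

# Print the PDN of one physical copy of an LDN, preferring the copy on
# the requested SE when there is one. Fails if the LDN is unknown.
# $1 = LDN, $2 = preferred SE (optional)
dms_resolve() {
    awk -F '\t' -v ldn="$1" -v se="$2" '
        $1 == ldn && first == "" { first = $3 }
        $1 == ldn && $2 == se && pick == "" { pick = $3 }
        END {
            if (pick != "") print pick
            else if (first != "") print first
            else exit 1
        }' "$DMS_CATALOGUE"
}

# Example: two copies of the same dataset on two (made-up) SEs.
dms_register lfn:run1 se1.example.org /data/run1.a
dms_register lfn:run1 se2.example.org /data/run1.b
dms_resolve lfn:run1 se2.example.org
```

The application only ever names the LDN; which physical copy is returned is the catalogue's decision, which is the separation the slide asks for.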

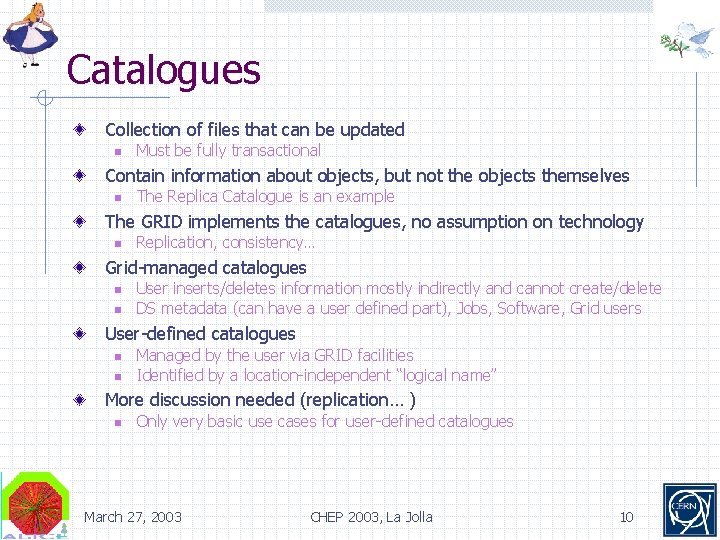

Catalogues

- Collection of files that can be updated
  - Must be fully transactional
- Contain information about objects, but not the objects themselves
  - The Replica Catalogue is an example
- The GRID implements the catalogues, no assumption on technology
  - Replication, consistency…
- Grid-managed catalogues
  - User inserts/deletes information mostly indirectly and cannot create/delete them
  - DS metadata (can have a user-defined part), Jobs, Software, Grid users
- User-defined catalogues
  - Managed by the user via GRID facilities
  - Identified by a location-independent “logical name”
- More discussion needed (replication…)
  - Only very basic use cases for user-defined catalogues

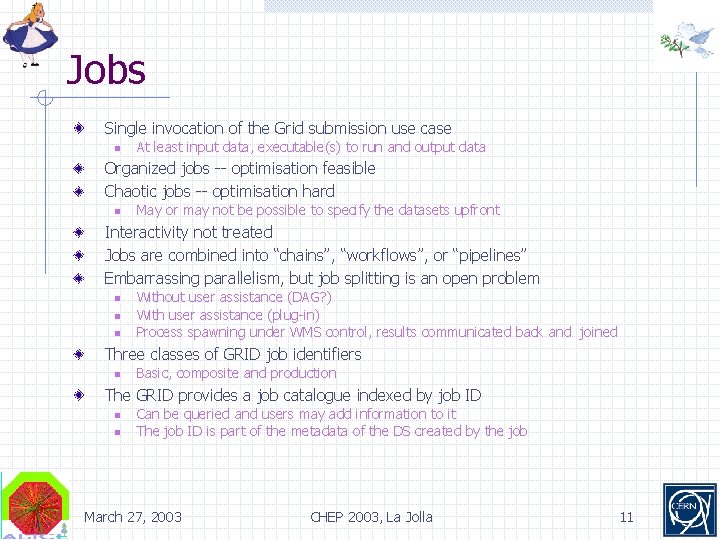

Jobs

- Single invocation of the Grid submission use case
  - At least input data, executable(s) to run and output data
- Organized jobs -- optimisation feasible
- Chaotic jobs -- optimisation hard
  - May or may not be possible to specify the datasets upfront
- Interactivity not treated
- Jobs are combined into “chains”, “workflows”, or “pipelines”
- Embarrassing parallelism, but job splitting is an open problem
  - Without user assistance (DAG?)
  - With user assistance (plug-in)
  - Process spawning under WMS control, results communicated back and joined
- Three classes of GRID job identifiers
  - Basic, composite and production
- The GRID provides a job catalogue indexed by job ID
  - Can be queried and users may add information to it
  - The job ID is part of the metadata of the DS created by the job
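User-assisted splitting could look like the following sketch, assuming the user's plug-in does nothing more than partition the list of input LDNs into fixed-size chunks. The function `split_job` and the `PARENT.N` naming of sub-jobs are hypothetical; the slides leave the actual splitting mechanism open.

```sh
#!/bin/sh
# Hypothetical splitting plug-in: emit one line per sub-job, made of a
# sub-job ID derived from the parent ID followed by the LDNs it processes.
# $1 = parent job ID, $2 = number of datasets per sub-job, rest = input LDNs
split_job() {
    parent=$1
    chunk=$2
    shift 2
    n=0
    sub=1
    line=
    for ldn in "$@"; do
        line="${line:+$line }$ldn"
        n=$((n + 1))
        if [ "$n" -eq "$chunk" ]; then
            printf '%s.%d %s\n' "$parent" "$sub" "$line"
            sub=$((sub + 1))
            n=0
            line=
        fi
    done
    # Emit the last, possibly shorter, chunk.
    if [ -n "$line" ]; then
        printf '%s.%d %s\n' "$parent" "$sub" "$line"
    fi
    return 0
}

# Example: three input datasets, at most two per sub-job.
split_job job42 2 lfn:a lfn:b lfn:c
```

Each output line could then be submitted as a basic job, with the parent ID playing the role of the composite job identifier under which results are joined.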

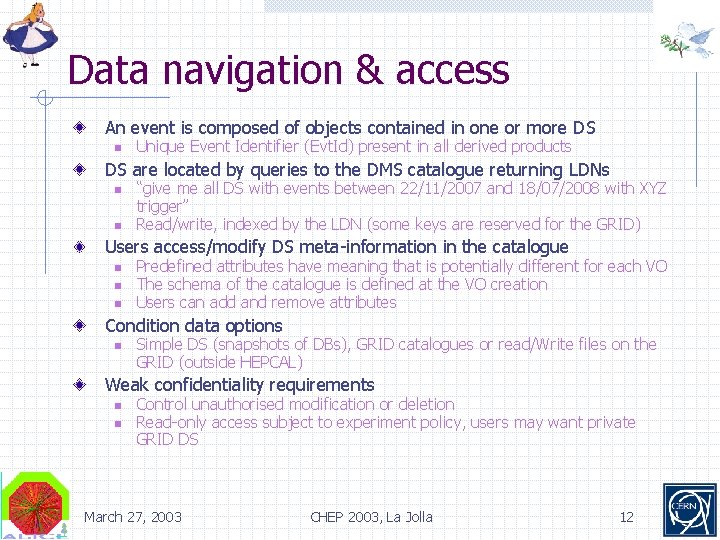

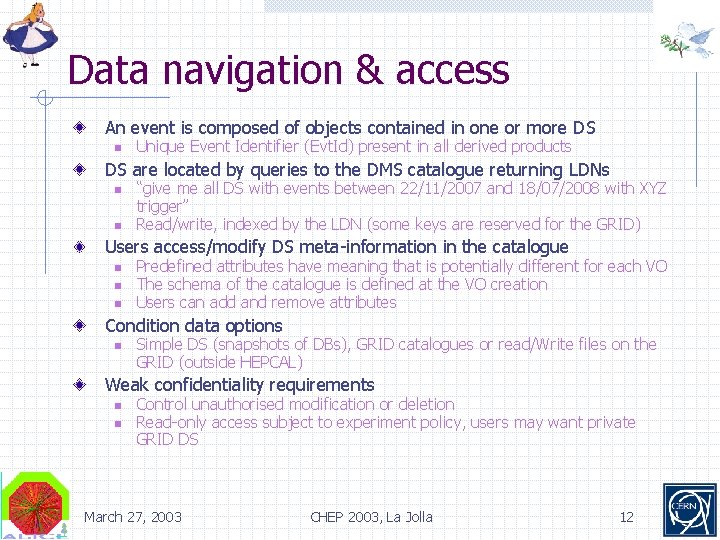

Data navigation & access

- An event is composed of objects contained in one or more DS
  - Unique Event Identifier (EvtId) present in all derived products
- DS are located by queries to the DMS catalogue returning LDNs
  - “give me all DS with events between 22/11/2007 and 18/07/2008 with XYZ trigger”
  - Read/write, indexed by the LDN (some keys are reserved for the GRID)
- Users access/modify DS meta-information in the catalogue
  - Predefined attributes have meaning that is potentially different for each VO
  - The schema of the catalogue is defined at the VO creation
  - Users can add and remove attributes
- Condition data options
  - Simple DS (snapshots of DBs), GRID catalogues or read/write files on the GRID (outside HEPCAL)
- Weak confidentiality requirements
  - Control unauthorised modification or deletion
  - Read-only access subject to experiment policy, users may want private GRID DS
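The example query above can be sketched as a filter over a metadata file, assuming one whitespace-separated line per dataset with the LDN, the dates of its first and last event in ISO form, and a trigger name. This layout, the function `dms_query` and the sample values are assumptions made for illustration; the real catalogue schema is defined per VO.

```sh
#!/bin/sh
# Hypothetical metadata query: print the LDN of every dataset whose event
# date range overlaps [from, to] and whose trigger matches.
# $1 = metadata file, $2 = from (YYYY-MM-DD), $3 = to (YYYY-MM-DD), $4 = trigger
dms_query() {
    awk -v from="$2" -v to="$3" -v trig="$4" '
        # Appending "" forces string comparison; ISO dates sort correctly as text.
        ($3 "") >= (from "") && ($2 "") <= (to "") && $4 == trig { print $1 }
    ' "$1"
}

# Example metadata: LDN, first event date, last event date, trigger.
meta=$(mktemp)
printf '%s\n' \
    'lfn:ds1 2007-10-01 2007-12-01 XYZ' \
    'lfn:ds2 2008-08-01 2008-09-01 XYZ' \
    'lfn:ds3 2008-01-01 2008-02-01 ABC' > "$meta"

# "All DS with events between 22/11/2007 and 18/07/2008 with XYZ trigger"
dms_query "$meta" 2007-11-22 2008-07-18 XYZ
```

The query returns LDNs only; turning an LDN into a file that can be opened remains a separate step handled by the DMS.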

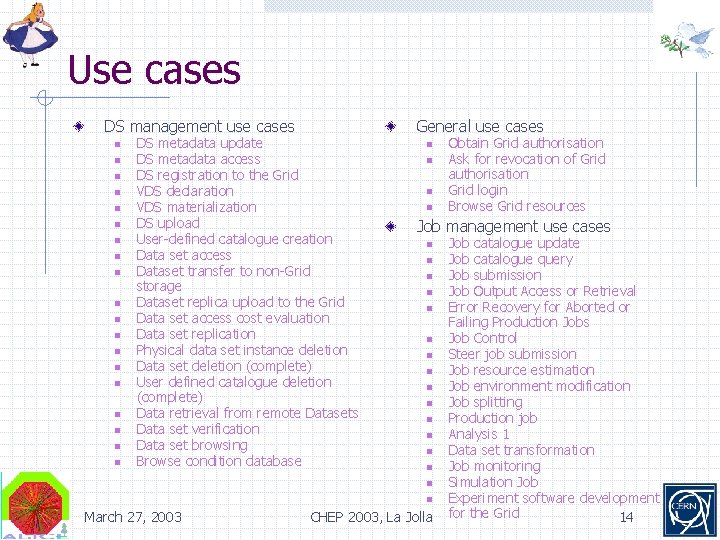

Use cases

- Presented in rigorous (?) tabular description
  - Easy to translate to a formal language such as UML
- To be implemented by a “single call”
  - From the command shell, C++ API or Web portal

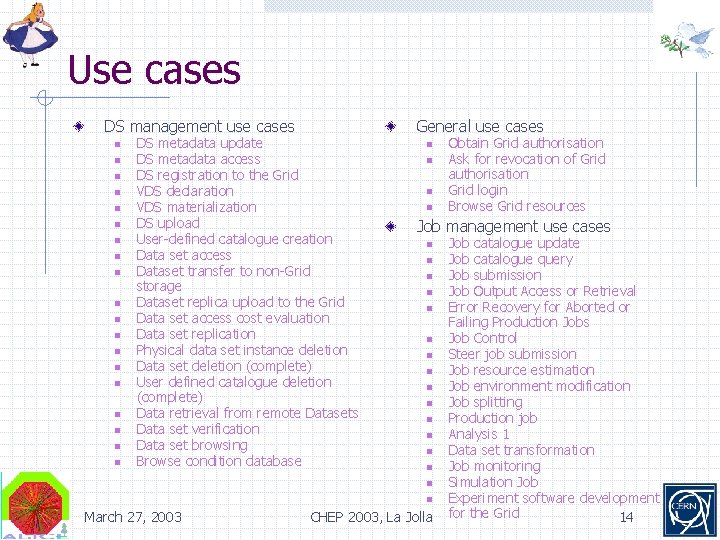

Use cases

- DS management use cases
  - DS metadata update
  - DS metadata access
  - DS registration to the Grid
  - VDS declaration
  - VDS materialization
  - DS upload
  - User-defined catalogue creation
  - Data set access
  - Dataset transfer to non-Grid storage
  - Dataset replica upload to the Grid
  - Data set access cost evaluation
  - Data set replication
  - Physical data set instance deletion
  - Data set deletion (complete)
  - User defined catalogue deletion (complete)
  - Data retrieval from remote Datasets
  - Data set verification
  - Data set browsing
  - Browse condition database
- General use cases
  - Obtain Grid authorisation
  - Ask for revocation of Grid authorisation
  - Grid login
  - Browse Grid resources
- Job management use cases
  - Job catalogue update
  - Job catalogue query
  - Job submission
  - Job Output Access or Retrieval
  - Error Recovery for Aborted or Failing Production Jobs
  - Job Control
  - Steer job submission
  - Job resource estimation
  - Job environment modification
  - Job splitting
  - Production job
  - Analysis 1
  - Data set transformation
  - Job monitoring
  - Simulation Job
  - Experiment software development for the Grid

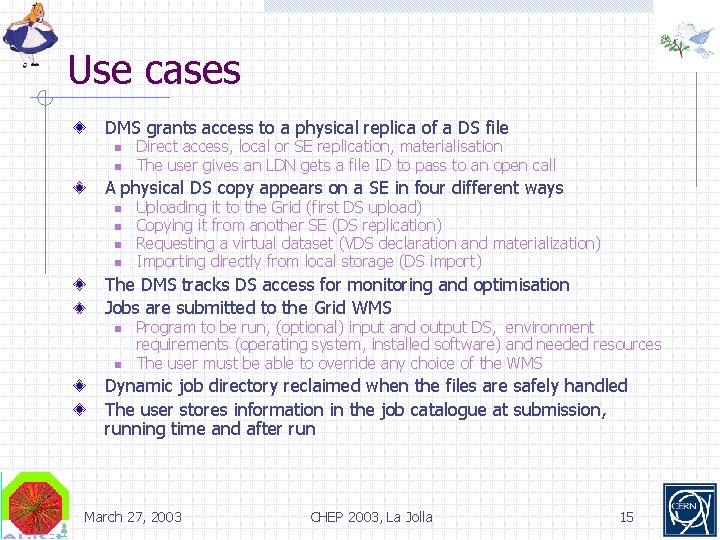

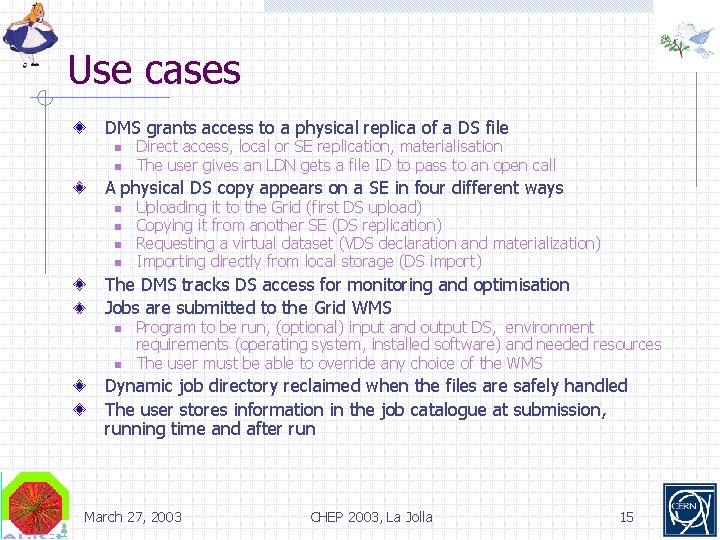

Use cases

- DMS grants access to a physical replica of a DS file
  - Direct access, local or SE replication, materialisation
  - The user gives an LDN and gets a file ID to pass to an open call
- A physical DS copy appears on a SE in four different ways
  - Uploading it to the Grid (first DS upload)
  - Copying it from another SE (DS replication)
  - Requesting a virtual dataset (VDS declaration and materialization)
  - Importing directly from local storage (DS import)
- The DMS tracks DS access for monitoring and optimisation
- Jobs are submitted to the Grid WMS
  - Program to be run, (optional) input and output DS, environment requirements (operating system, installed software) and needed resources
  - The user must be able to override any choice of the WMS
- Dynamic job directory reclaimed when the files are safely handled
- The user stores information in the job catalogue at submission, running time and after run
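The information a job submission carries can be sketched as a small option parser that emits a key=value job description. The function `describe_job`, its option letters and the output keys are hypothetical and do not correspond to any WMS command; only the list of fields (program, optional input and output DS, operating system, needed resources) follows the slide.

```sh
#!/bin/sh
# Hypothetical job description builder.
# -p program (required), -i input LDN (repeatable), -o output LDN (repeatable),
# -s operating system requirement, -c estimated CPU hours
describe_job() {
    OPTIND=1
    program=
    inputs=
    outputs=
    os=
    cpu=
    while getopts ":p:i:o:s:c:" opt; do
        case $opt in
            p ) program=$OPTARG ;;
            i ) inputs="${inputs:+$inputs,}$OPTARG" ;;
            o ) outputs="${outputs:+$outputs,}$OPTARG" ;;
            s ) os=$OPTARG ;;
            c ) cpu=$OPTARG ;;
            * ) echo "describe_job: bad option" >&2; return 2 ;;
        esac
    done
    # The program is the only mandatory field; input and output DS are optional.
    if [ -z "$program" ]; then
        echo "describe_job: -p program is required" >&2
        return 1
    fi
    printf 'program=%s\ninput_ds=%s\noutput_ds=%s\nos=%s\ncpu_hours=%s\n' \
        "$program" "$inputs" "$outputs" "$os" "$cpu"
}

# Example: a job reading two (made-up) datasets and writing one.
describe_job -p reco.sh -i lfn:raw1 -i lfn:raw2 -o lfn:esd1 -s linux -c 12
```

Keeping the description declarative leaves scheduling to the WMS, while fields the user does set act as the overrides the slide requires.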

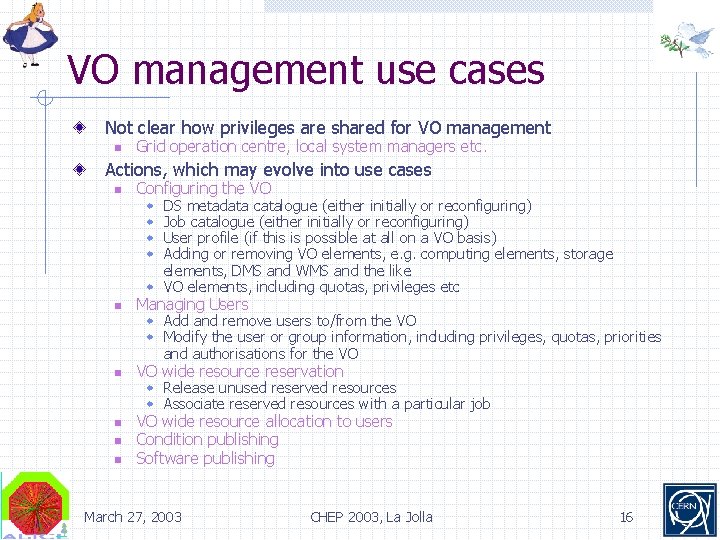

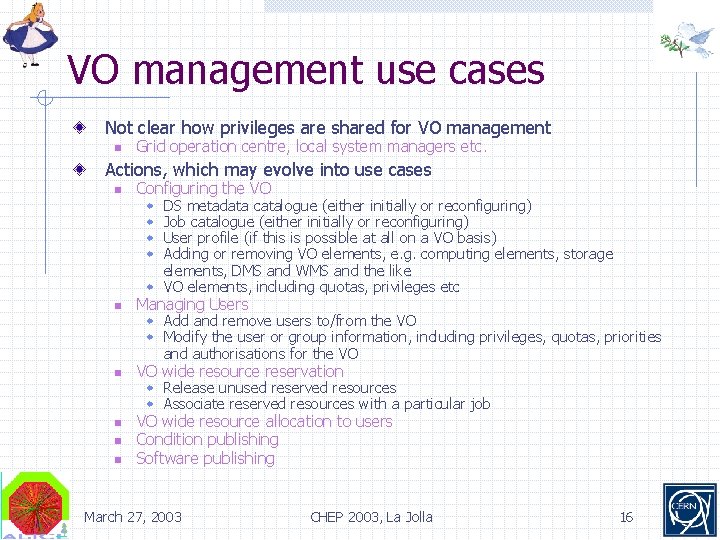

VO management use cases

- Not clear how privileges are shared for VO management
  - Grid operation centre, local system managers etc.
- Actions, which may evolve into use cases
  - Configuring the VO
    - DS metadata catalogue (either initially or reconfiguring)
    - Job catalogue (either initially or reconfiguring)
    - User profile (if this is possible at all on a VO basis)
    - Adding or removing VO elements, e.g. computing elements, storage elements, DMS and WMS and the like
    - VO elements, including quotas, privileges etc.
  - Managing users
    - Add and remove users to/from the VO
    - Modify the user or group information, including privileges, quotas, priorities and authorisations for the VO
  - VO-wide resource reservation
    - Release unused reserved resources
    - Associate reserved resources with a particular job
  - VO-wide resource allocation to users
  - Condition publishing
  - Software publishing

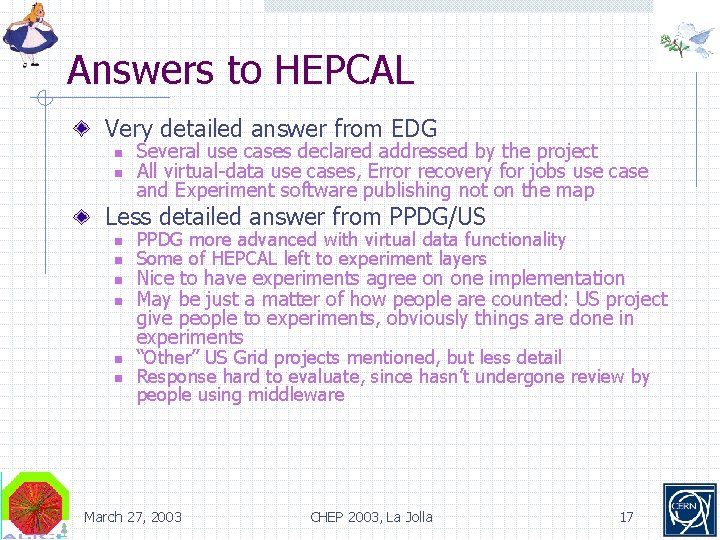

Answers to HEPCAL

- Very detailed answer from EDG
  - Several use cases declared addressed by the project
  - All virtual-data use cases, the “Error recovery for jobs” use case and “Experiment software publishing” are not on the map
- Less detailed answer from PPDG/US
  - PPDG more advanced with virtual data functionality
  - Some of HEPCAL left to experiment layers
    - Nice to have experiments agree on one implementation
    - May be just a matter of how people are counted: US projects give people to experiments, so obviously things are done in experiments
  - “Other” US Grid projects mentioned, but in less detail
- Response hard to evaluate, since it hasn’t undergone review by people using the middleware

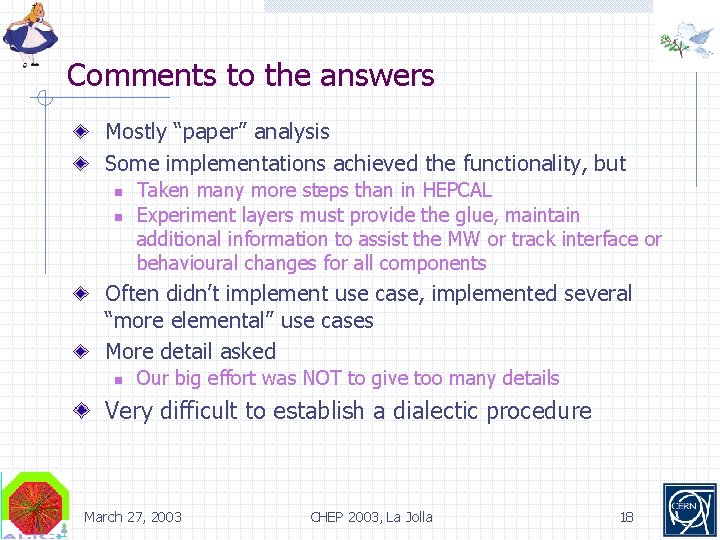

Comments to the answers

- Mostly “paper” analysis
- Some implementations achieved the functionality, but
  - They took many more steps than in HEPCAL
  - Experiment layers must provide the glue, maintain additional information to assist the MW or track interface or behavioural changes for all components
- Often they didn’t implement the use case, but implemented several “more elemental” use cases
- More detail asked
  - Our big effort was NOT to give too many details
- Very difficult to establish a dialectic procedure

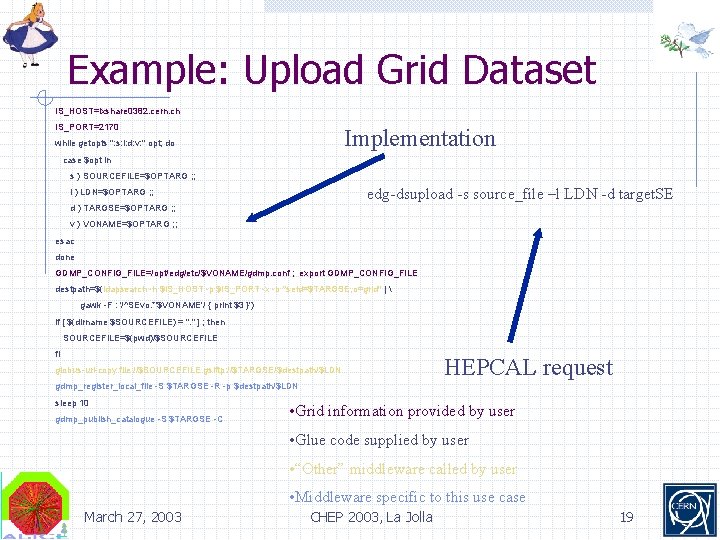

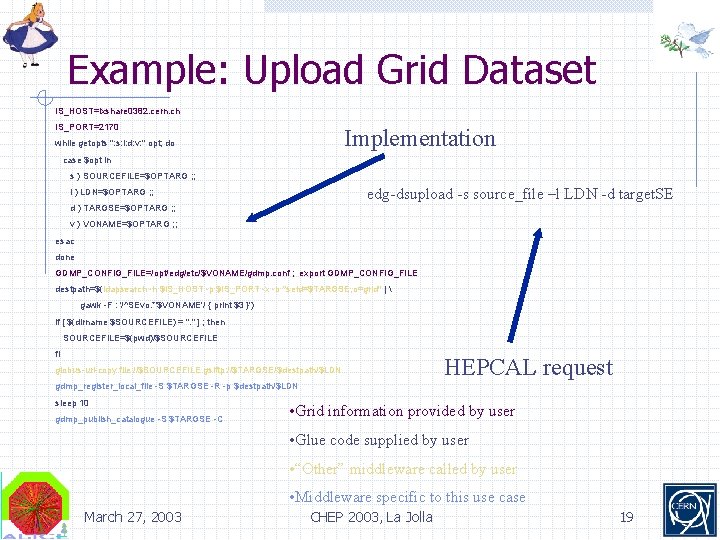

Example: Upload Grid Dataset

HEPCAL request:

    edg-dsupload -s source_file -l LDN -d target.SE

Implementation:

    IS_HOST=lxshare0382.cern.ch
    IS_PORT=2170
    while getopts ":s:l:d:v:" opt; do
      case $opt in
        s ) SOURCEFILE=$OPTARG ;;
        l ) LDN=$OPTARG ;;
        d ) TARGSE=$OPTARG ;;
        v ) VONAME=$OPTARG ;;
      esac
    done
    GDMP_CONFIG_FILE=/opt/edg/etc/$VONAME/gdmp.conf ; export GDMP_CONFIG_FILE
    destpath=$(ldapsearch -h $IS_HOST -p $IS_PORT -x -b "seId=$TARGSE,o=grid" | gawk -F : '/^SEvo.*'$VONAME'/ { print $3 }')
    if [ $(dirname $SOURCEFILE) = "." ] ; then
      SOURCEFILE=$(pwd)/$SOURCEFILE
    fi
    globus-url-copy file://$SOURCEFILE gsiftp://$TARGSE/$destpath/$LDN
    gdmp_register_local_file -S $TARGSE -R -p $destpath/$LDN
    sleep 10
    gdmp_publish_catalogue -S $TARGSE -C

Legend:

- Grid information provided by user
- Glue code supplied by user
- “Other” middleware called by user
- Middleware specific to this use case

GAG

- A Grid Application Group has been set up by LCG to follow up on HEPCAL
  - Semi-permanent and reporting to LCG
- Both US and EU representatives from experiments and GRID projects
- HEPCAL II already scheduled before summer
- Discussion on the production of test jobs / code fragments / examples to validate against use cases

Conclusion

- Very interesting and productive work
  - 320 Google hits (with moderate filtering)!
- It prompted a constructive dialogue with MW projects
  - And between US and EU projects
- It provides a solid base to develop a GRID architecture
  - Largely used by EDG ATF
- It proves that common meaningful requirements can be produced by different experiments
- The dialogue with the MW projects has to continue, but it is very labor intensive