LESSON 4.1. MULTIPLE LINEAR REGRESSION (Design and Data Analysis in Psychology II)

- Slides: 32

LESSON 4.1. MULTIPLE LINEAR REGRESSION

Design and Data Analysis in Psychology II

Salvador Chacón Moscoso, Susana Sanduvete Chaves

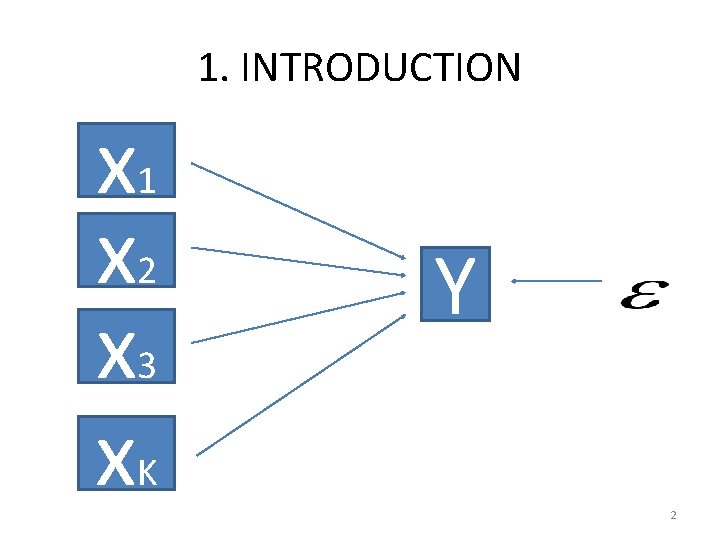

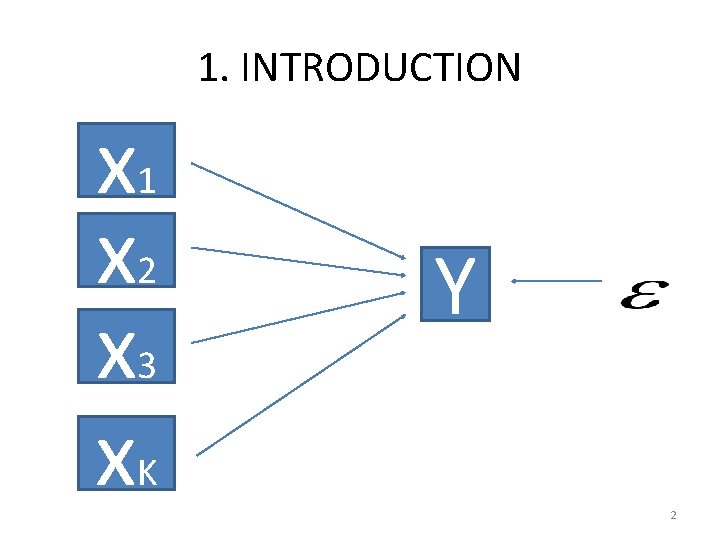

1. INTRODUCTION

[Diagram: several predictors X1, X2, X3, …, Xk, all pointing to a single criterion Y]

1. INTRODUCTION
• Model components:
  – More than one independent variable (X), which can be:
    • Qualitative.
    • Quantitative.
  – A quantitative dependent variable (Y).
  – Example:
    • X1: educational level.
    • X2: economic level.
    • X3: personality characteristics.
    • X4: gender.
    • Y: drug dependence level.

1. INTRODUCTION
• Reasons for extending the simple linear regression model:
  – Human behavior is complex, so multiple regression is more realistic.
  – It increases statistical power (the probability of correctly rejecting a false null hypothesis).

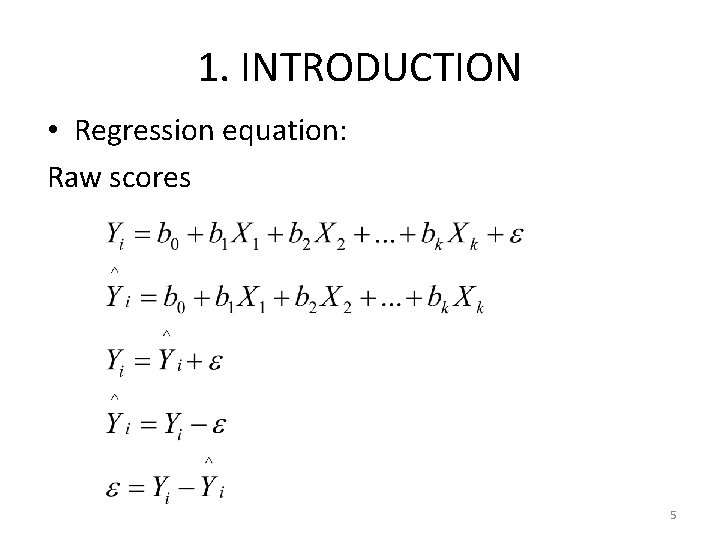

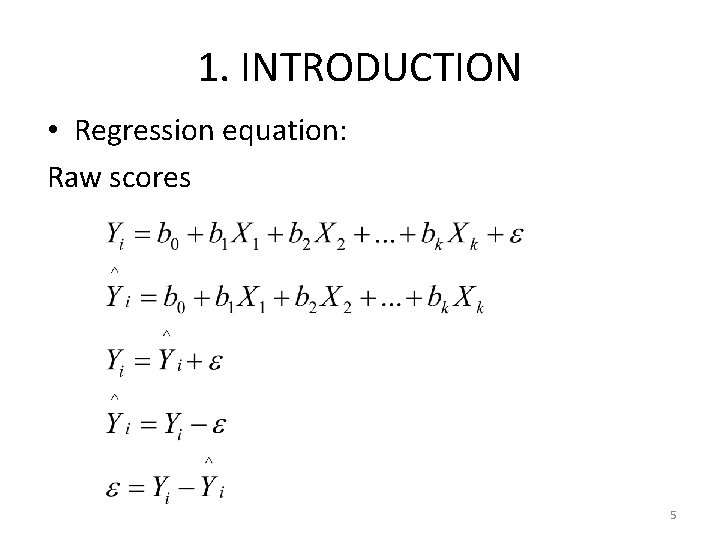

1. INTRODUCTION
• Regression equation in raw scores:

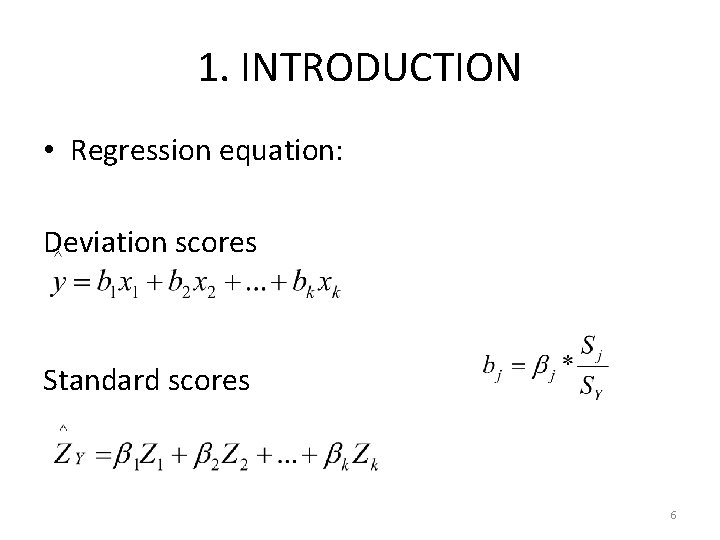

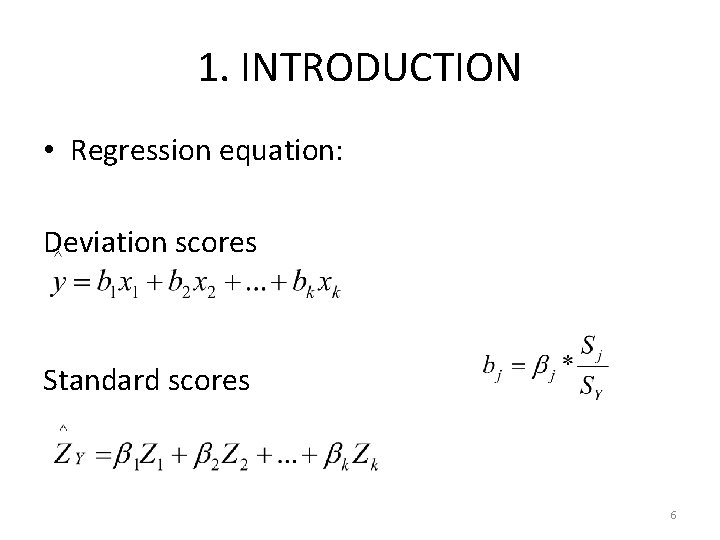
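The raw-score equation predicts Y' = b0 + b1X1 + b2X2 + … + bkXk. As a minimal sketch for the two-predictor case, assuming a small hypothetical data set, the slopes can be obtained from the least-squares normal equations written in deviation scores (solved here with Cramer's rule), and the constant then follows from the means: b0 = mean(Y) − b1·mean(X1) − b2·mean(X2). The helper name `fit_two_predictors` is illustrative only; in practice the coefficients come from SPSS or a statistics library.

```python
from statistics import fmean


def fit_two_predictors(x1, x2, y):
    """Hypothetical helper: least-squares b0, b1, b2 for Y' = b0 + b1*X1 + b2*X2."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    # Deviation scores: x = X - mean(X), y = Y - mean(Y)
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    # Normal equations: s11*b1 + s12*b2 = s1y and s12*b1 + s22*b2 = s2y
    det = s11 * s22 - s12 * s12  # zero if X1 and X2 correlate perfectly
    b1 = (s1y * s22 - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2  # constant obtained from the means
    return b0, b1, b2


# Hypothetical scores generated exactly from Y = 2 + 3*X1 - 1*X2
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [3, 7, 7, 11, 11, 15]

b0, b1, b2 = fit_two_predictors(x1, x2, y)
print(round(b0, 6), round(b1, 6), round(b2, 6))  # 2.0 3.0 -1.0
```

Because the hypothetical scores lie exactly on a plane, the fit recovers the generating coefficients; with real data the same formulas give the best-fitting plane.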

1. INTRODUCTION
• Regression equation in deviation scores and in standard scores:

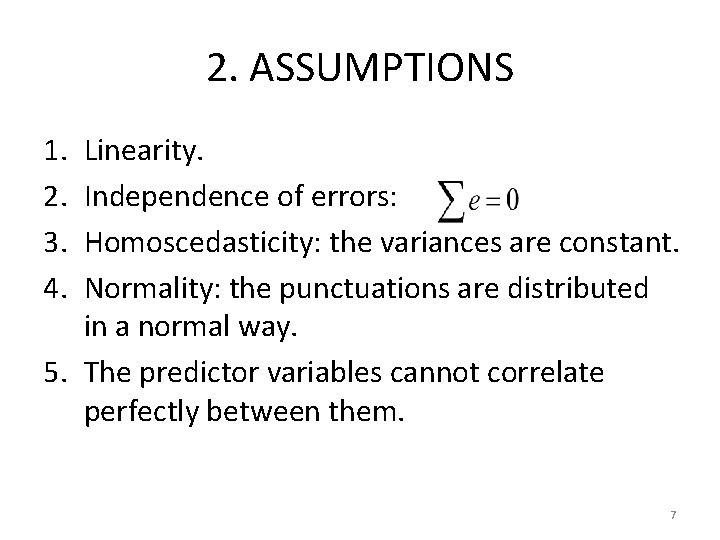

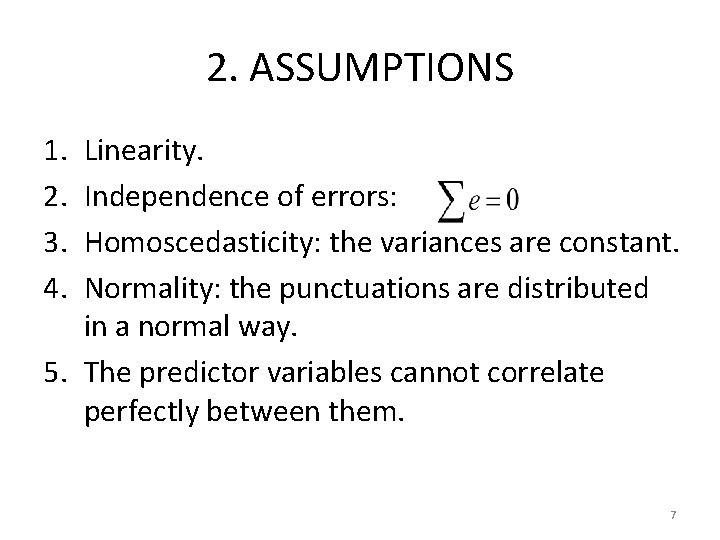
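The three forms of the equation give the same predictions on different scales. A sketch, assuming hypothetical data generated exactly from Y = 2 + 3X1 − X2 so that the raw coefficients are known: the deviation-score form drops the constant (y' = Y' − mean(Y)), and the standard-score form uses the standardized slopes βj = bj·sXj / sY, also without a constant.

```python
from statistics import fmean, stdev

# Hypothetical scores generated exactly from Y = 2 + 3*X1 - 1*X2,
# so the raw-score coefficients are known without fitting.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [2 + 3 * a - b for a, b in zip(x1, x2)]
B0, B1, B2 = 2.0, 3.0, -1.0

M1, M2, MY = fmean(x1), fmean(x2), fmean(y)
S1, S2, SY = stdev(x1), stdev(x2), stdev(y)

# Standardized slopes: beta_j = b_j * s_Xj / s_Y
BETA1 = B1 * S1 / SY
BETA2 = B2 * S2 / SY


def three_forms(xa, xb):
    """Prediction for one case in raw, deviation and standard scores."""
    raw = B0 + B1 * xa + B2 * xb                            # Y'
    dev = B1 * (xa - M1) + B2 * (xb - M2)                   # y' = Y' - mean(Y)
    std = BETA1 * (xa - M1) / S1 + BETA2 * (xb - M2) / S2   # Z'_Y
    return raw, dev, std


for xa, xb in zip(x1, x2):
    raw, dev, std = three_forms(xa, xb)
    print(f"Y'={raw:6.2f}  y'={dev:6.2f}  Z'={std:6.3f}")
```

For every case, y' equals Y' minus the mean of Y, and Z'_Y equals y' divided by sY.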

2. ASSUMPTIONS
1. Linearity.
2. Independence of errors.
3. Homoscedasticity: the variances of the errors are constant.
4. Normality: the scores are normally distributed.
5. The predictor variables cannot correlate perfectly with one another.

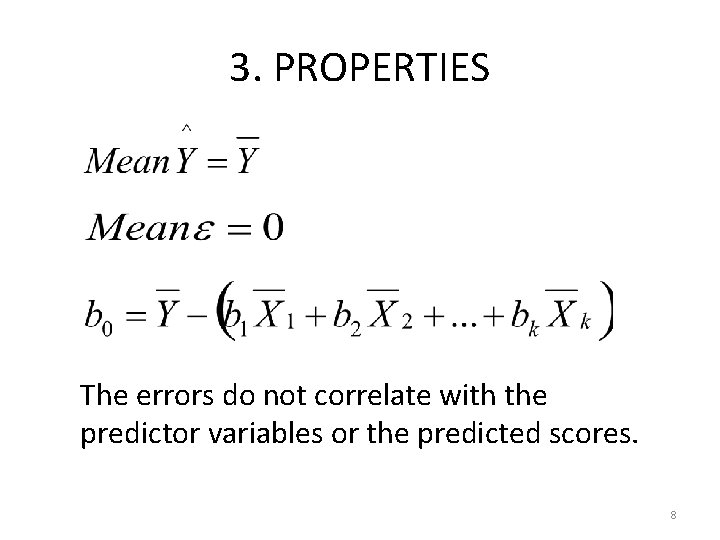

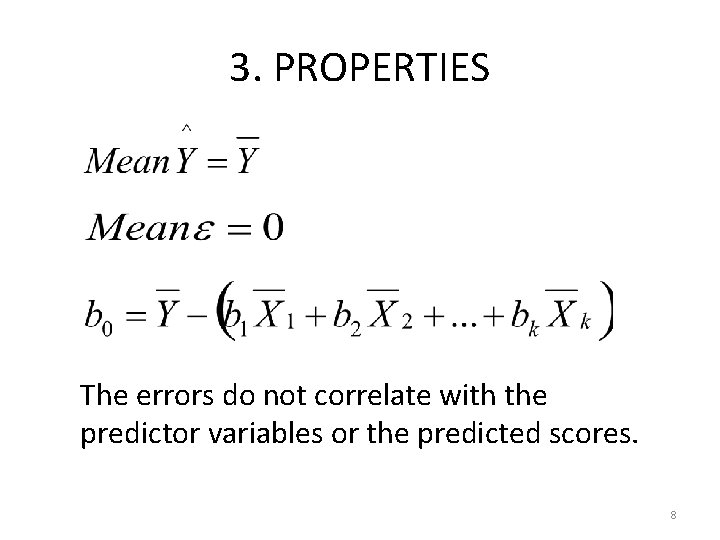
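Assumption 5 can be checked directly in the two-predictor case: the determinant of the deviation-score normal equations equals Σx1²·Σx2²·(1 − r12²), which is zero exactly when |r12| = 1, so the slopes cannot be computed. A sketch with a hypothetical helper; the relative tolerance is an arbitrary choice for floating-point data.

```python
from statistics import fmean


def perfectly_collinear(x1, x2, tol=1e-12):
    """Hypothetical check: True when X1 and X2 correlate perfectly (|r12| = 1)."""
    m1, m2 = fmean(x1), fmean(x2)
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    # Determinant = s11 * s22 * (1 - r12^2); zero means no unique solution
    return abs(s11 * s22 - s12 * s12) <= tol * s11 * s22


print(perfectly_collinear([1, 2, 3, 4], [3, 5, 7, 9]))  # X2 = 2*X1 + 1
print(perfectly_collinear([1, 2, 3, 4, 5, 6], [2, 1, 4, 3, 6, 5]))
```

The first pair violates assumption 5 (X2 is an exact linear function of X1); the second pair does not.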

3. PROPERTIES

The errors do not correlate with the predictor variables or with the predicted scores.

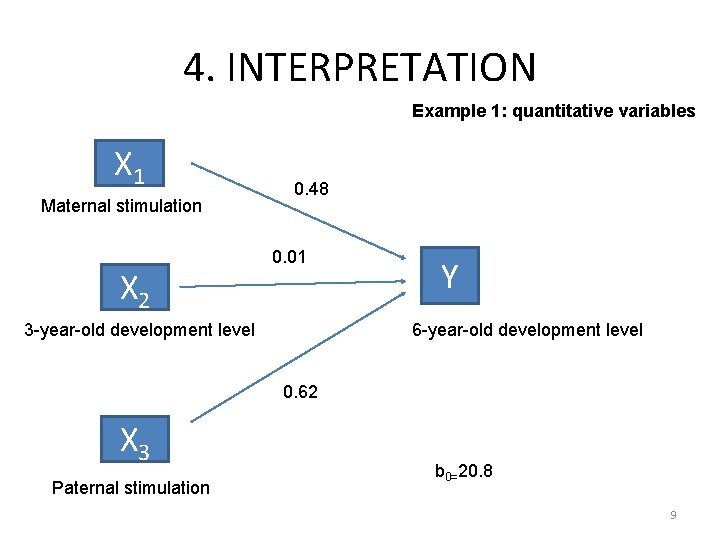

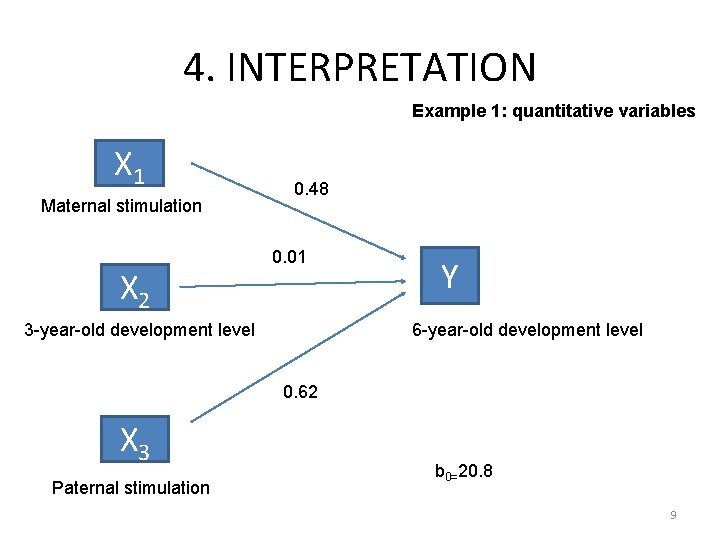
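This property can be verified numerically. A sketch under stated assumptions (hypothetical scores, two predictors, a least-squares fit that includes a constant), using an illustrative helper `fit_two_predictors`:

```python
from statistics import fmean


def fit_two_predictors(x1, x2, y):
    """Hypothetical helper: least-squares b0, b1, b2 for Y' = b0 + b1*X1 + b2*X2."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    b1 = (s1y * s22 - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Hypothetical scores (not an exact linear function, so errors are non-zero)
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [3, 8, 6, 12, 10, 15]

b0, b1, b2 = fit_two_predictors(x1, x2, y)
pred = [b0 + b1 * a + b2 * b for a, b in zip(x1, x2)]
errors = [obs - p for obs, p in zip(y, pred)]

# The errors sum to zero, so each cross-product below equals N times a
# covariance; all are zero (up to rounding), hence the correlations are zero.
print(round(sum(errors), 10))
print(round(sum(e * a for e, a in zip(errors, x1)), 10))
print(round(sum(e * b for e, b in zip(errors, x2)), 10))
print(round(sum(e * p for e, p in zip(errors, pred)), 10))
```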

4. INTERPRETATION

Example 1: quantitative variables

[Diagram: X1 = maternal stimulation, X2 = development level at 3 years and X3 = paternal stimulation, all pointing to Y = development level at 6 years; slopes shown: 0.48, 0.01 and 0.62; b0 = 20.8]

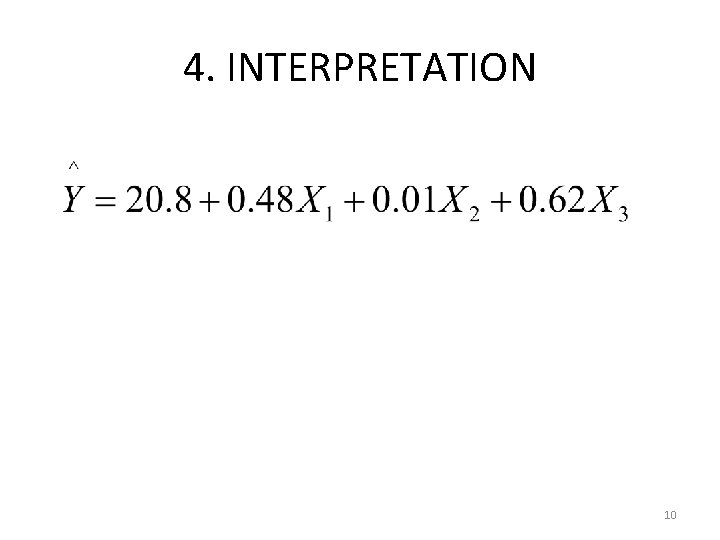

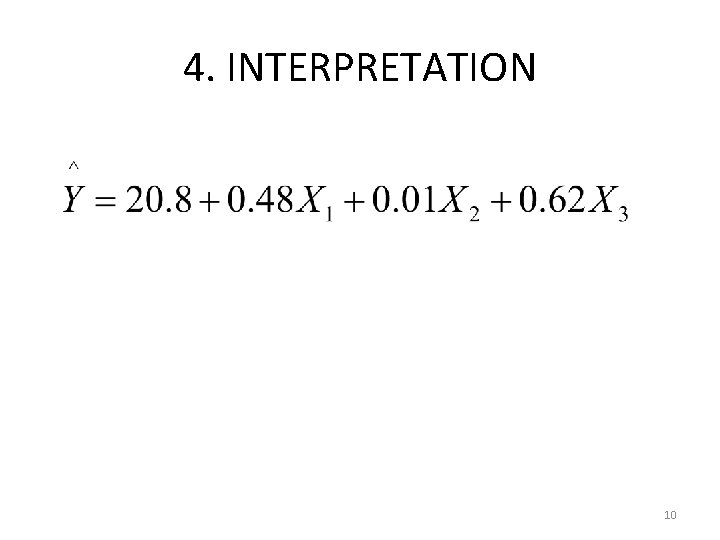

4. INTERPRETATION

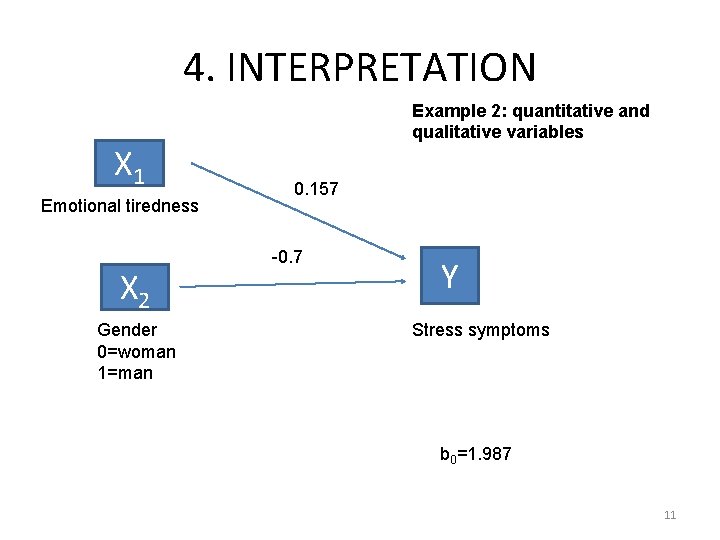

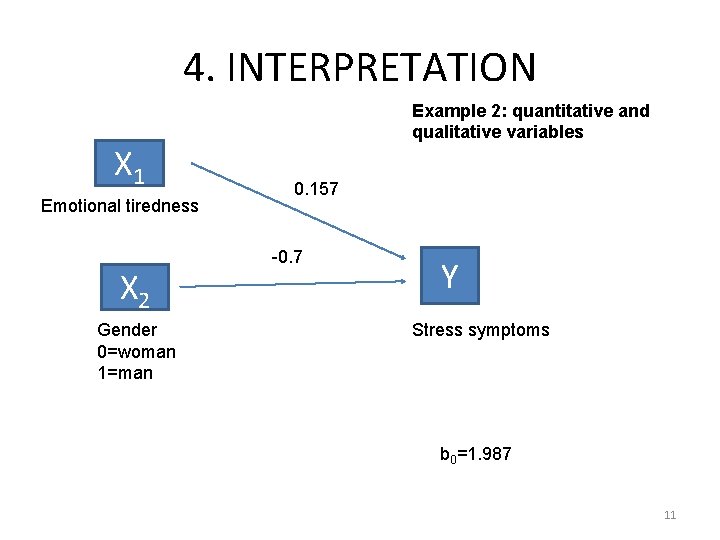

4. INTERPRETATION

Example 2: quantitative and qualitative variables

[Diagram: X1 = emotional tiredness and X2 = gender (0 = woman, 1 = man), both pointing to Y = stress symptoms; slopes shown: 0.157 and -0.7; b0 = 1.987]

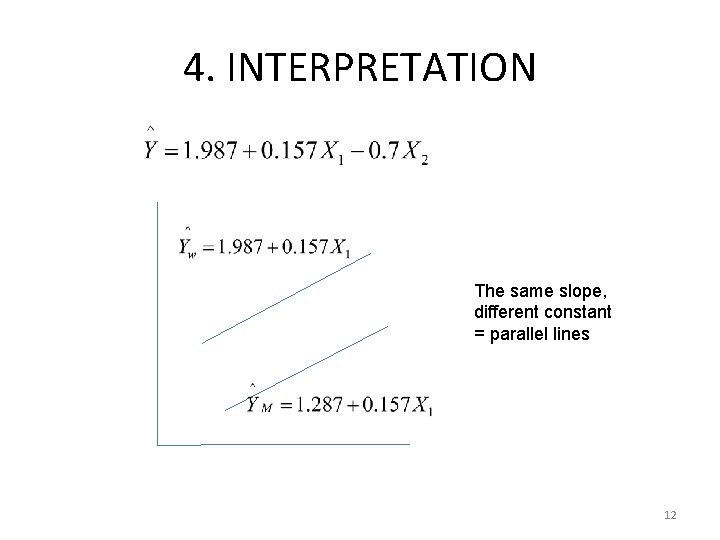

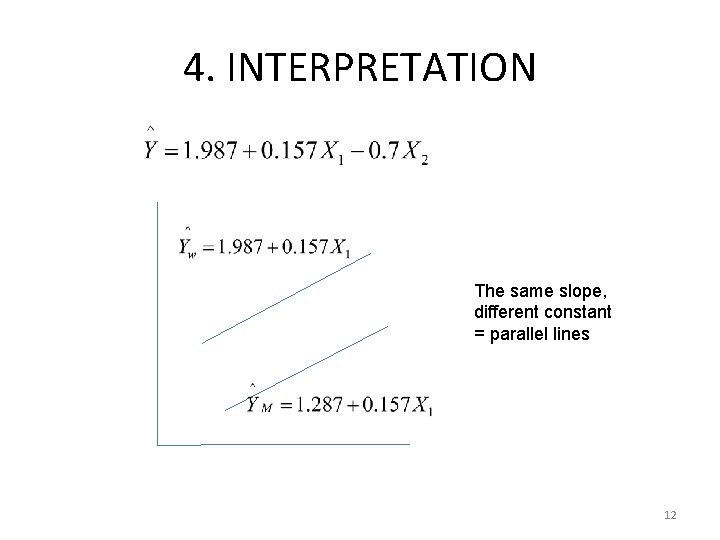

4. INTERPRETATION

Same slope, different constant: the regression lines for women and men are parallel.

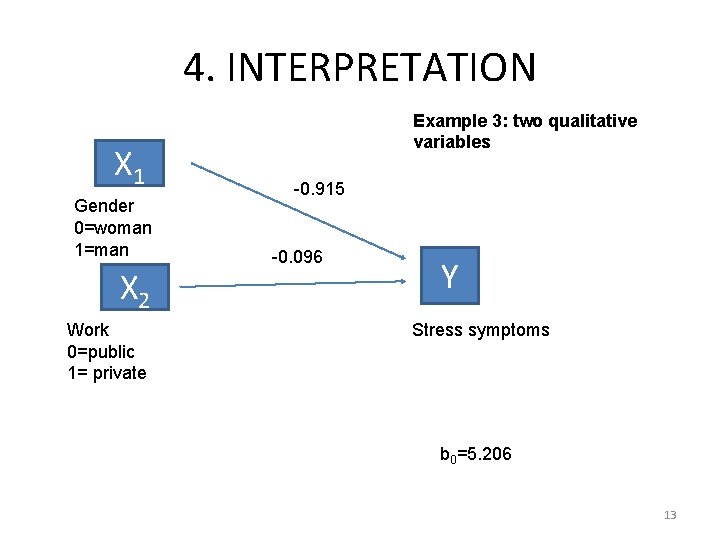

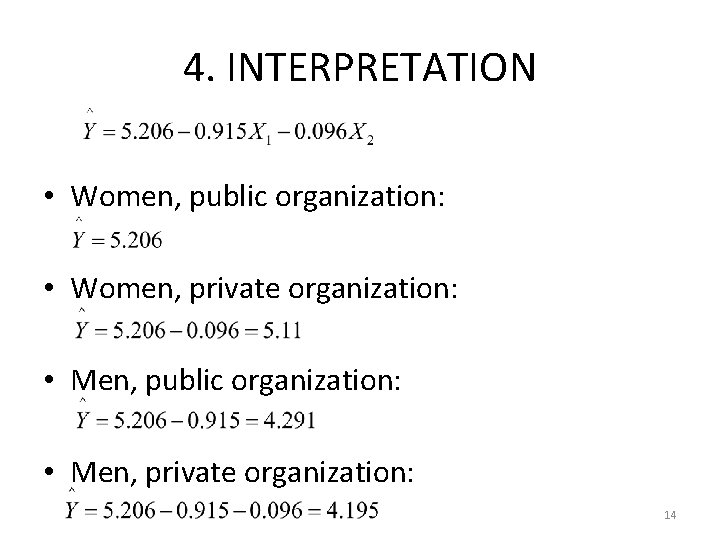

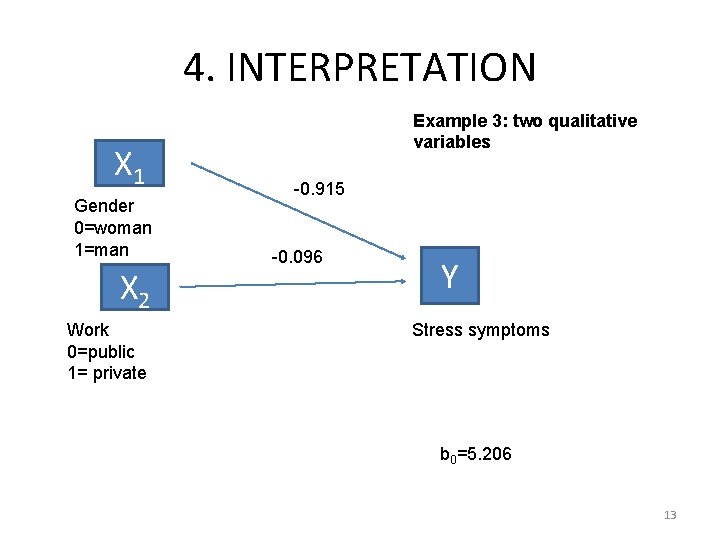
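A sketch of Example 2, assuming (as the order of the labels in the diagram suggests) that 0.157 is the slope of emotional tiredness and -0.7 the slope of gender. Substituting the dummy code turns the single equation into one line per gender: both lines share the slope of emotional tiredness, and their constants differ by the gender slope.

```python
# Assumed mapping of the Example 2 coefficients (b1: emotional tiredness, b2: gender)
B0 = 1.987
B_TIREDNESS = 0.157  # X1: emotional tiredness
B_GENDER = -0.7      # X2: gender (0 = woman, 1 = man)


def predicted_stress(tiredness, gender):
    """Y' = b0 + b1*X1 + b2*X2 for one person."""
    return B0 + B_TIREDNESS * tiredness + B_GENDER * gender


# Women (X2 = 0): Y' = 1.987 + 0.157*X1
# Men   (X2 = 1): Y' = (1.987 - 0.7) + 0.157*X1 = 1.287 + 0.157*X1
for t in (0, 10, 20):
    w, m = predicted_stress(t, 0), predicted_stress(t, 1)
    print(t, round(w, 3), round(m, 3), round(m - w, 3))
```

Under this assumption, at any level of emotional tiredness men are predicted 0.7 points fewer stress symptoms than women.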

4. INTERPRETATION

Example 3: two qualitative variables

[Diagram: X1 = gender (0 = woman, 1 = man) and X2 = work (0 = public, 1 = private), both pointing to Y = stress symptoms; slopes shown: -0.915 and -0.096; b0 = 5.206]

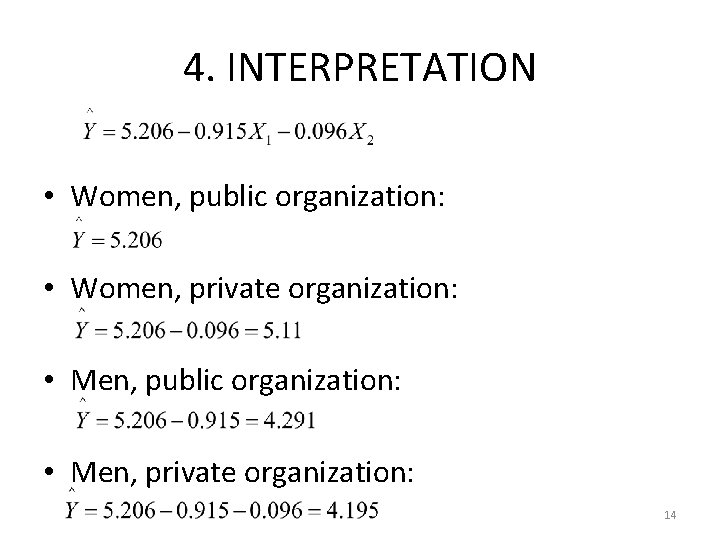

4. INTERPRETATION
• Women, public organization:
• Women, private organization:
• Men, public organization:
• Men, private organization:

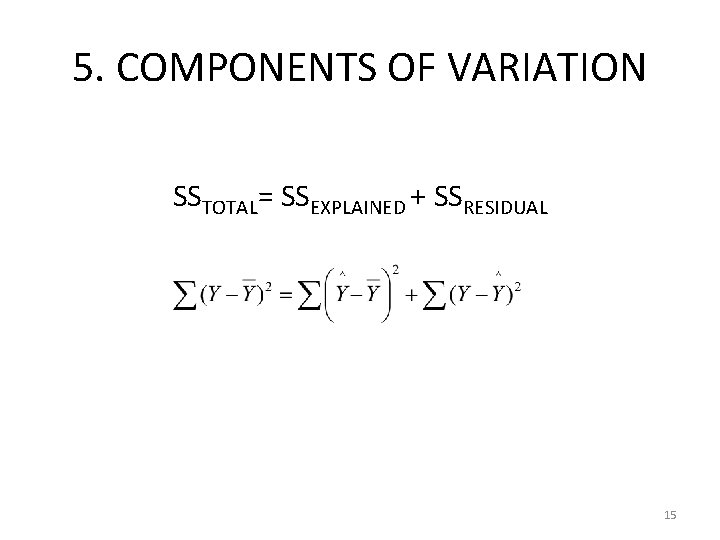

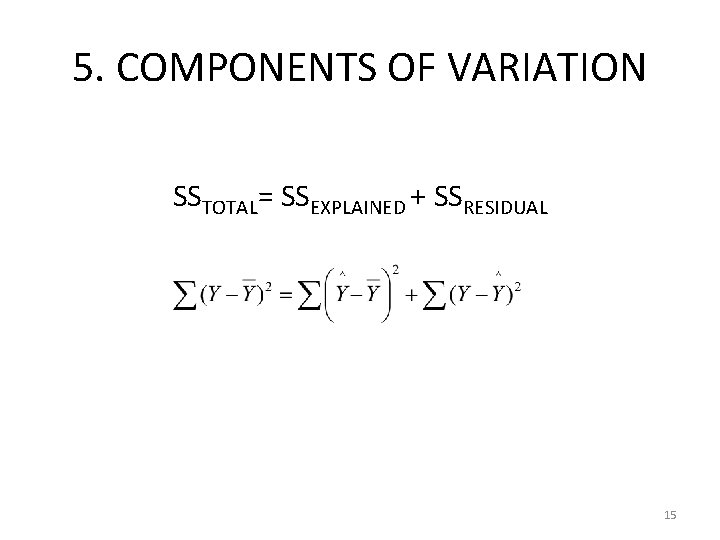
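Example 3 contains only dummy predictors, so the equation yields one predicted score per combination of groups. A sketch assuming, from the order of the labels in the diagram, b1 = -0.915 for gender and b2 = -0.096 for work sector:

```python
# Assumed mapping of the Example 3 coefficients (b1: gender, b2: work sector)
B0 = 5.206
B_GENDER = -0.915  # X1: gender (0 = woman, 1 = man)
B_WORK = -0.096    # X2: work (0 = public, 1 = private)


def predicted_stress(gender, work):
    """Y' = b0 + b1*X1 + b2*X2 for one combination of groups."""
    return B0 + B_GENDER * gender + B_WORK * work


groups = {
    "Women, public organization": (0, 0),
    "Women, private organization": (0, 1),
    "Men, public organization": (1, 0),
    "Men, private organization": (1, 1),
}
for label, (g, w) in groups.items():
    print(f"{label}: {predicted_stress(g, w):.3f}")
```

Under that assumption the predictions are 5.206 (women, public), 5.110 (women, private), 4.291 (men, public) and 4.195 (men, private): the constant is the prediction for the reference group (women in public organizations), and each slope adds its amount when the corresponding dummy equals 1.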

5. COMPONENTS OF VARIATION

$SS_{TOTAL} = SS_{EXPLAINED} + SS_{RESIDUAL}$

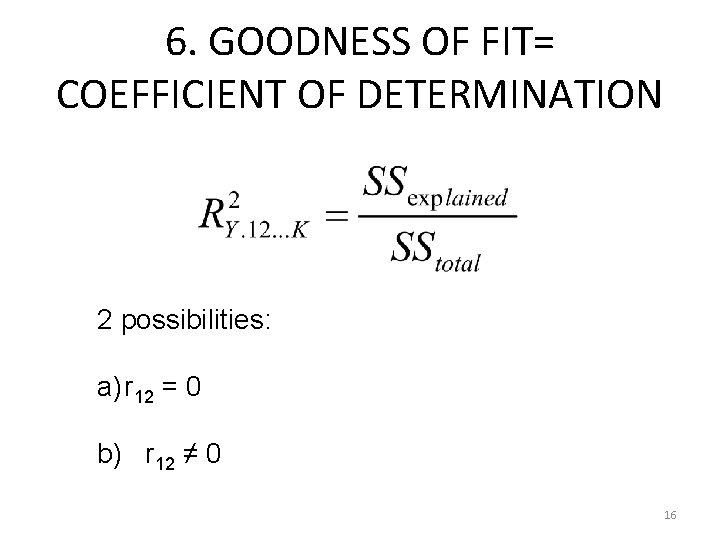

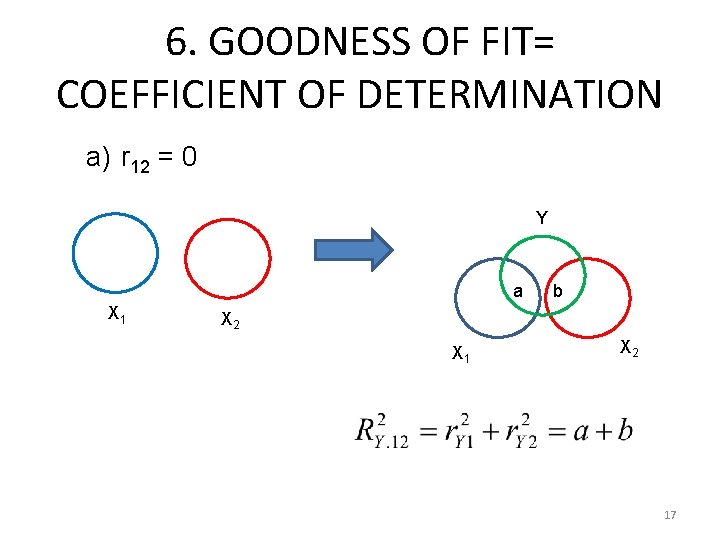

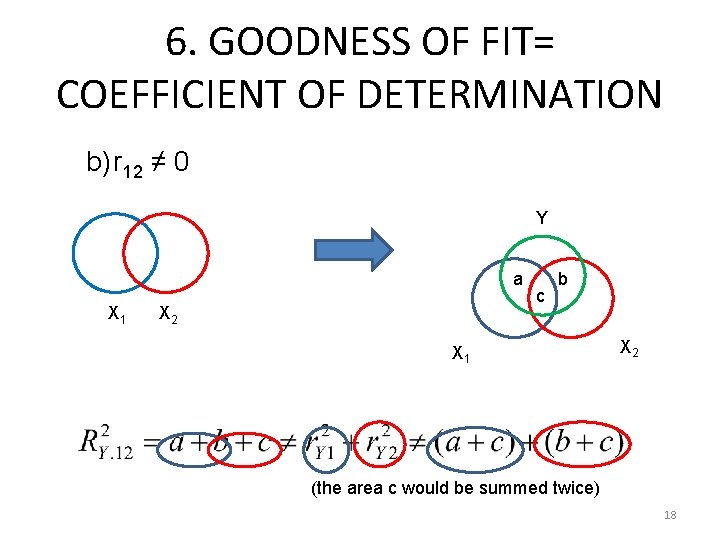

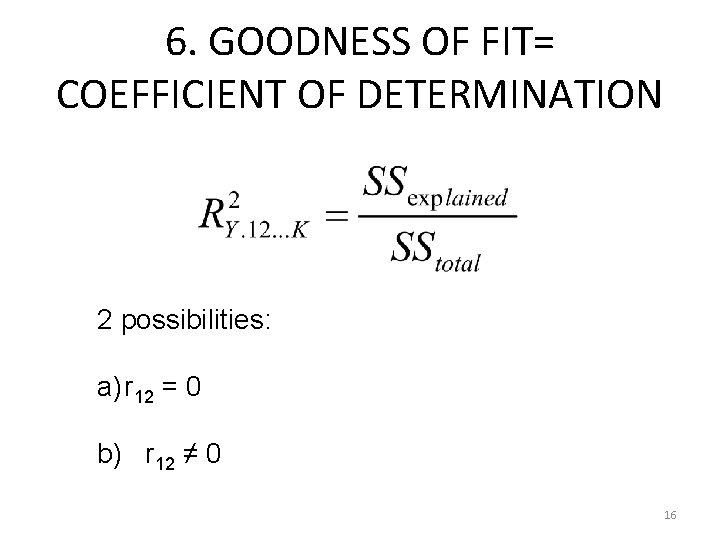

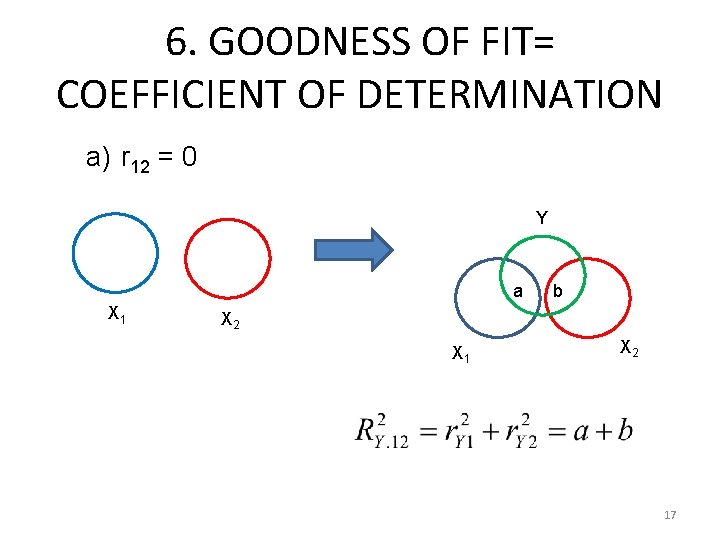

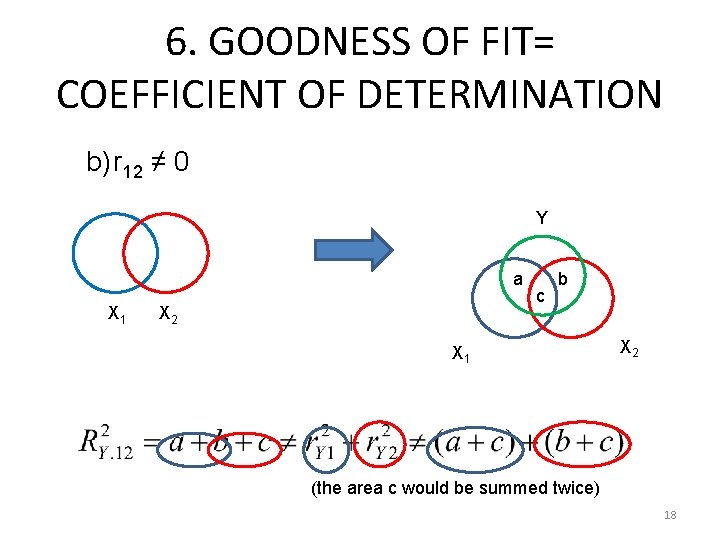
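The decomposition holds for any least-squares fit that includes a constant. A sketch with hypothetical scores and an illustrative two-predictor helper, which also gives the proportion of explained variability, R² = SS_EXPLAINED / SS_TOTAL:

```python
from statistics import fmean


def fit_two_predictors(x1, x2, y):
    """Hypothetical helper: least-squares b0, b1, b2 for Y' = b0 + b1*X1 + b2*X2."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    b1 = (s1y * s22 - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Hypothetical scores
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [3, 8, 6, 12, 10, 15]

b0, b1, b2 = fit_two_predictors(x1, x2, y)
pred = [b0 + b1 * a + b2 * b for a, b in zip(x1, x2)]
my = fmean(y)

ss_total = sum((v - my) ** 2 for v in y)                  # SS_TOTAL
ss_explained = sum((p - my) ** 2 for p in pred)           # SS_EXPLAINED
ss_residual = sum((v - p) ** 2 for v, p in zip(y, pred))  # SS_RESIDUAL
r2 = ss_explained / ss_total                              # coefficient of determination

print(f"{ss_total:.4f} = {ss_explained:.4f} + {ss_residual:.4f}; R^2 = {r2:.4f}")
```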

6. GOODNESS OF FIT: COEFFICIENT OF DETERMINATION

Two possibilities, depending on the correlation $r_{12}$ between the predictors X1 and X2:
a) $r_{12} = 0$
b) $r_{12} \neq 0$

6. GOODNESS OF FIT: COEFFICIENT OF DETERMINATION

a) $r_{12} = 0$

[Venn diagram: Y overlaps X1 (area a) and X2 (area b); X1 and X2 do not overlap]

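6. GOODNESS OF FIT: COEFFICIENT OF DETERMINATION

b) $r_{12} \neq 0$

[Venn diagram: Y overlaps X1 (area a) and X2 (area b), and X1 and X2 also overlap each other inside Y (area c)]

Adding the two squared correlations would count area c twice.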

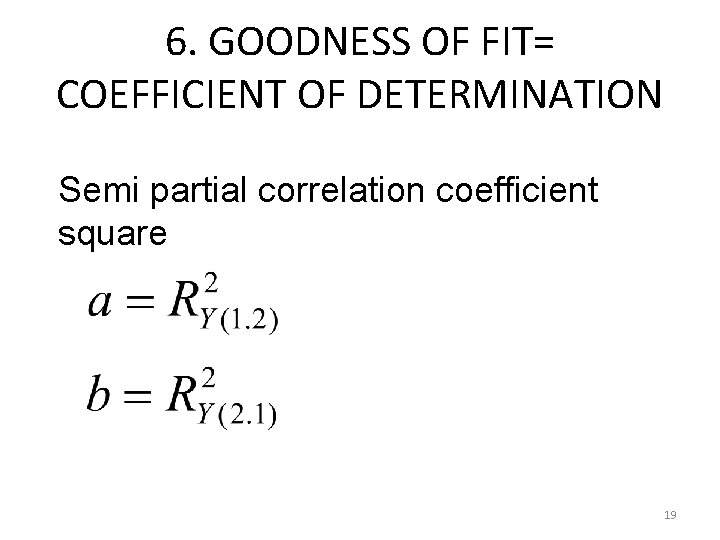

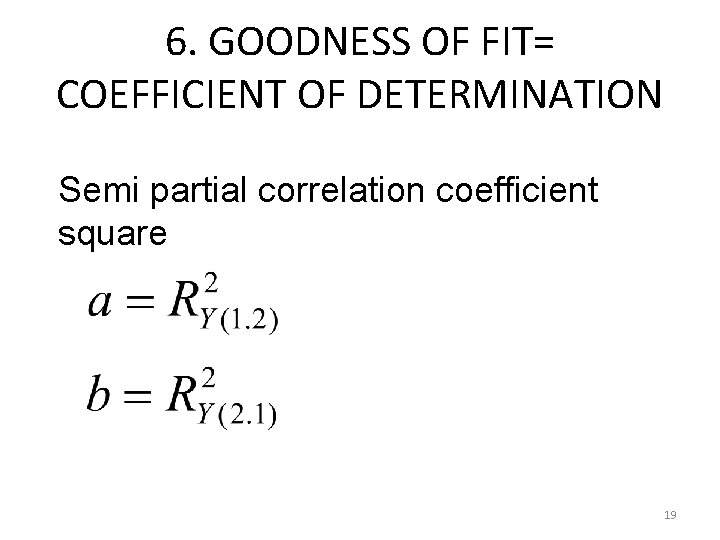
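For two predictors, R² can be written in terms of the three Pearson correlations as R² = (r²Y1 + r²Y2 − 2·rY1·rY2·r12) / (1 − r²12). A sketch with hypothetical correlations and an illustrative function name, covering both cases: with r12 = 0 the formula reduces to the sum of the squared correlations; with r12 ≠ 0 the plain sum no longer equals R².

```python
def r2_two_predictors(r_y1, r_y2, r_12):
    """Hypothetical helper: R^2 for two predictors from the three Pearson correlations."""
    return (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)


# a) r12 = 0: R^2 equals r_Y1^2 + r_Y2^2 (areas a + b)
print(r2_two_predictors(0.5, 0.4, 0.0), 0.5**2 + 0.4**2)

# b) r12 != 0: the plain sum counts the shared area c twice
print(r2_two_predictors(0.5, 0.5, 0.5), 0.5**2 + 0.5**2)
```

With rY1 = rY2 = r12 = 0.5, the plain sum is 0.5 while R² is 1/3; the difference, 1/6, corresponds to the doubly counted area c.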

6. GOODNESS OF FIT: COEFFICIENT OF DETERMINATION

Squared semipartial correlation coefficient

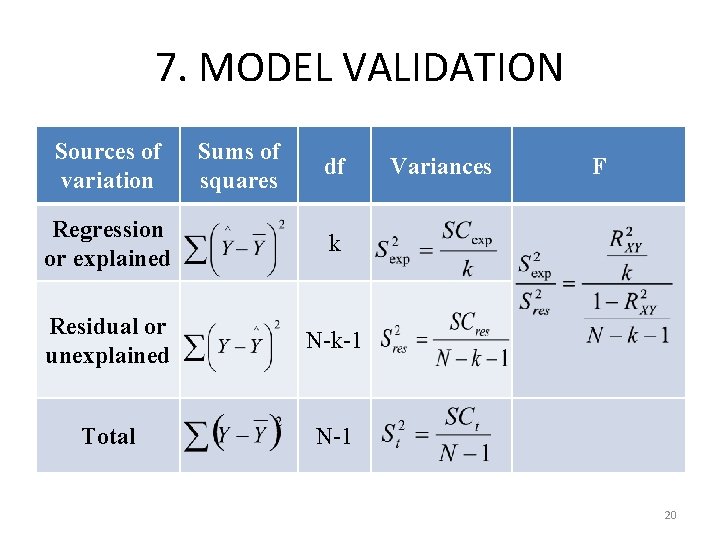

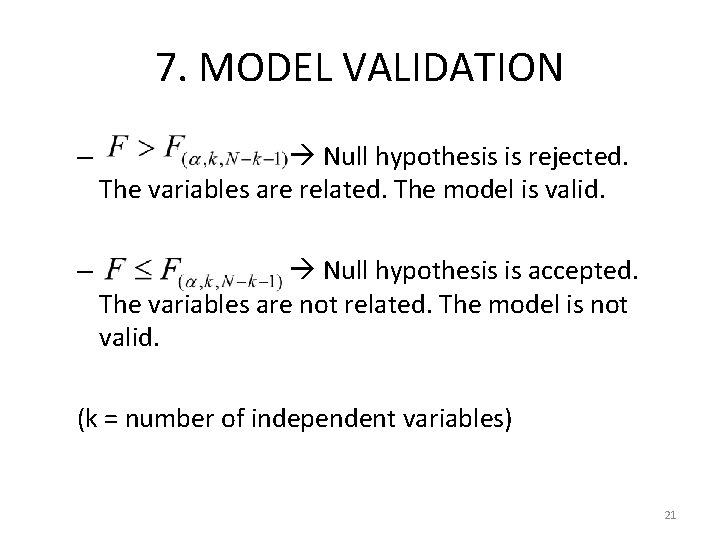

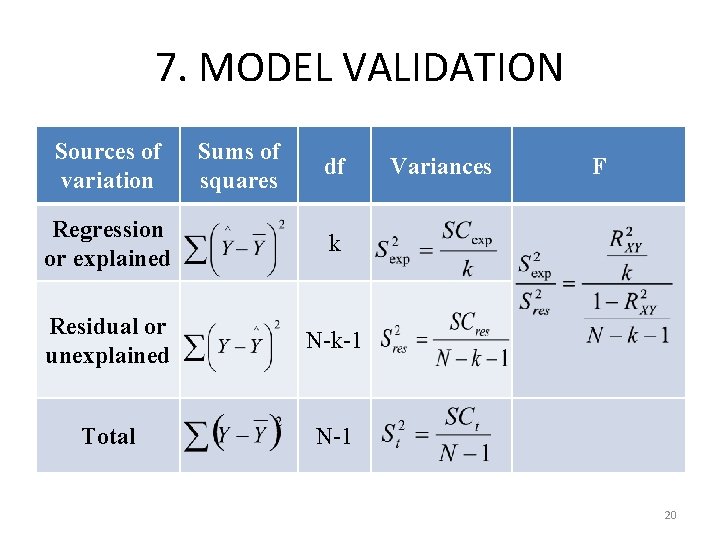

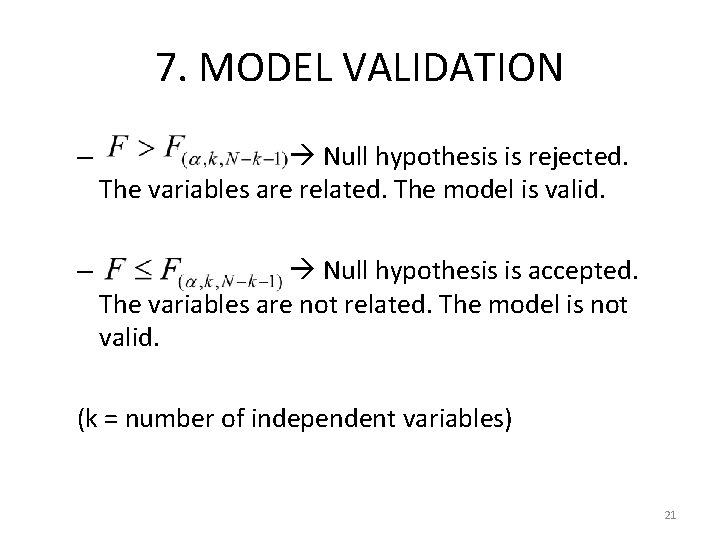
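Counting area c only once amounts to adding to r²Y1 the squared semipartial correlation of X2, that is, the correlation of Y with the part of X2 not shared with X1: sr2 = (rY2 − rY1·r12) / √(1 − r²12). A sketch with hypothetical correlations and an illustrative function name:

```python
import math


def semipartial_r(r_y2, r_y1, r_12):
    """Correlation of Y with X2 after removing from X2 the part explained by X1."""
    return (r_y2 - r_y1 * r_12) / math.sqrt(1 - r_12**2)


r_y1, r_y2, r_12 = 0.5, 0.5, 0.5  # hypothetical correlations
sr2_sq = semipartial_r(r_y2, r_y1, r_12) ** 2
r2 = r_y1**2 + sr2_sq  # R^2 = r_Y1^2 + sr_2^2, so area c is counted once
print(round(sr2_sq, 4), round(r2, 4))
```

The result, R² = 1/3, is the coefficient of determination of the two-predictor model for these correlations; the squared semipartial (1/12) is the extra variability X2 explains beyond X1.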

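7. MODEL VALIDATION

| Source of variation | Sum of squares | df | Variance | F |
|---|---|---|---|---|
| Regression or explained | $SS_{EXPLAINED}$ | k | $SS_{EXPLAINED}/k$ | explained variance / residual variance |
| Residual or unexplained | $SS_{RESIDUAL}$ | N-k-1 | $SS_{RESIDUAL}/(N-k-1)$ | |
| Total | $SS_{TOTAL}$ | N-1 | | |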
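The table can be filled in mechanically once the sums of squares are known. A sketch with a hypothetical helper `anova_table`, checked against the family-cohesion example in this lesson, where N − 1 = 80 gives N = 81, and k = 2:

```python
def anova_table(ss_explained, ss_residual, n, k):
    """Hypothetical helper: degrees of freedom, variances and F for the regression ANOVA."""
    df_explained, df_residual = k, n - k - 1
    var_explained = ss_explained / df_explained
    var_residual = ss_residual / df_residual
    return {
        "df": (df_explained, df_residual, n - 1),
        "variances": (var_explained, var_residual),
        "F": var_explained / var_residual,
    }


table = anova_table(436.580, 1142.852, n=81, k=2)
print(table["df"], [round(v, 3) for v in table["variances"]], round(table["F"], 3))
```

This reproduces the degrees of freedom (2, 78, 80), the variances (218.290 and 14.652) and F = 14.898 reported in that example.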

7. MODEL VALIDATION
– The null hypothesis is rejected: the variables are related, and the model is valid.
– The null hypothesis is not rejected: there is no evidence that the variables are related, and the model is not valid.
(k = number of independent variables)

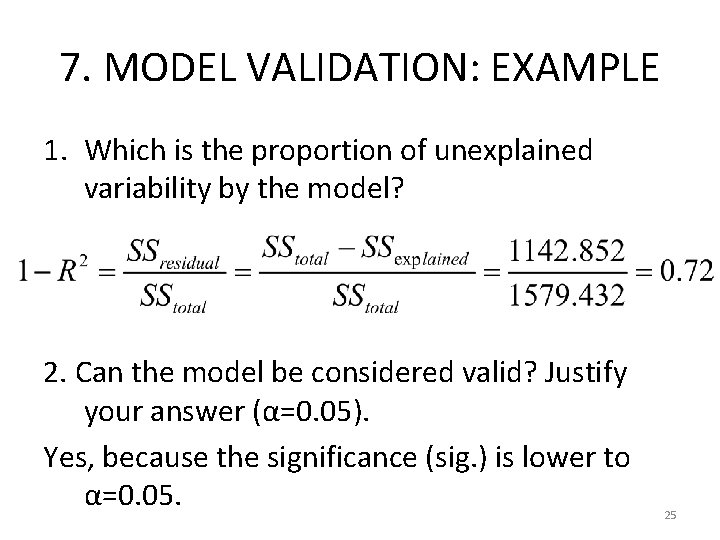
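Statistical software reports Sig. as the probability, under the null hypothesis, of an F at least as large as the observed one. As a minimal sketch, assuming no statistics library is available, the upper tail of the F distribution can be computed from the regularized incomplete beta function, evaluated with the continued-fraction (modified Lentz) method described in Numerical Recipes. This is a swapped-in numerical technique, not necessarily how SPSS computes Sig.; the function names are hypothetical.

```python
import math


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction
        aa = m * (b - m) * x / ((a - 1.0 + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction
        aa = -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_upper_tail(f_obs, df1, df2):
    """P(F > f_obs) for an F(df1, df2) variable: the Sig. of the model F test."""
    x = df2 / (df2 + df1 * f_obs)
    return reg_inc_beta(df2 / 2.0, df1 / 2.0, x)


# Family-cohesion example: F = 14.898 with k = 2 and N - k - 1 = 78
sig = f_upper_tail(14.898, 2, 78)
alpha = 0.05
print(f"Sig. = {sig:.6f} ->", "reject H0" if sig < alpha else "do not reject H0")
```

The probability is far below 0.001, which software displays as 0.000, so the null hypothesis is rejected at α = 0.05.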

7. MODEL VALIDATION: EXAMPLE
• A linear regression equation was estimated to study the possible relationship between the level of family cohesion (Y) and the variables gender (X1) and time spent working outside the home (X2). Some of the most relevant results were the following:

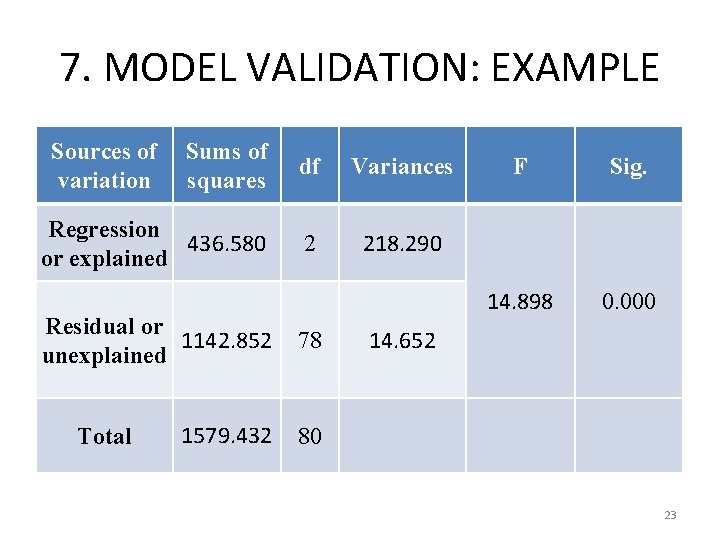

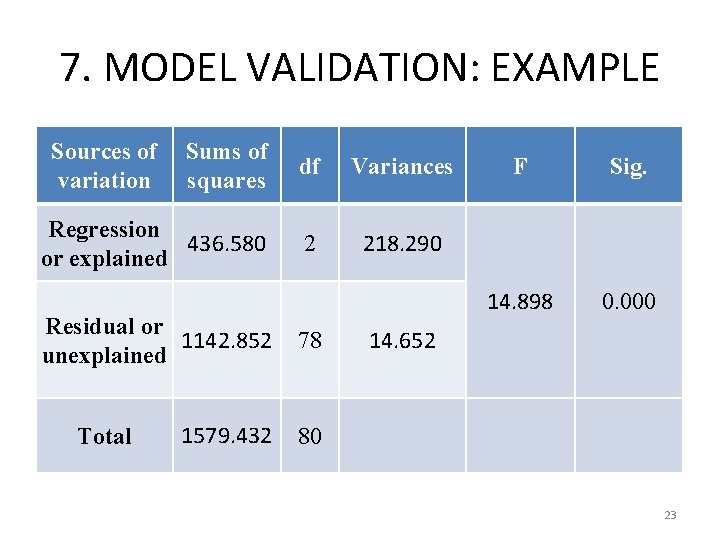

7. MODEL VALIDATION: EXAMPLE

| Source of variation | Sum of squares | df | Variance | F | Sig. |
|---|---|---|---|---|---|
| Regression or explained | 436.580 | 2 | 218.290 | 14.898 | 0.000 |
| Residual or unexplained | 1142.852 | 78 | 14.652 | | |
| Total | 1579.432 | 80 | | | |

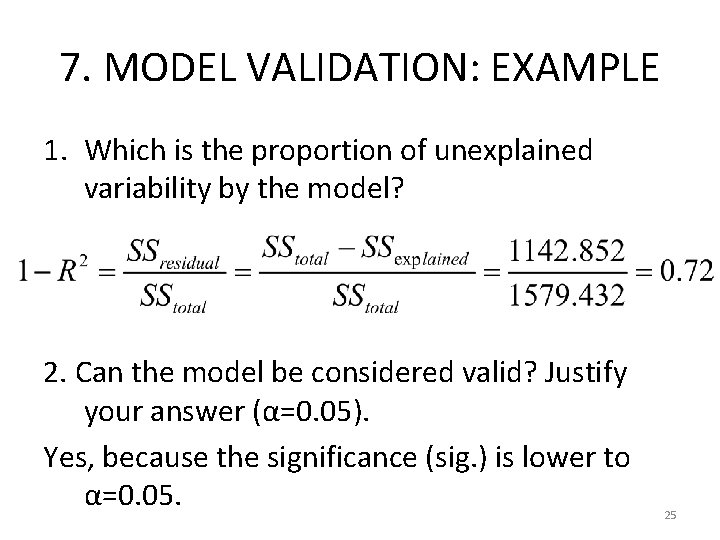


7. MODEL VALIDATION: EXAMPLE
1. What proportion of the variability is not explained by the model?
2. Can the model be considered valid? Justify your answer (α = 0.05).
Yes, because the significance (Sig. = 0.000) is lower than α = 0.05.

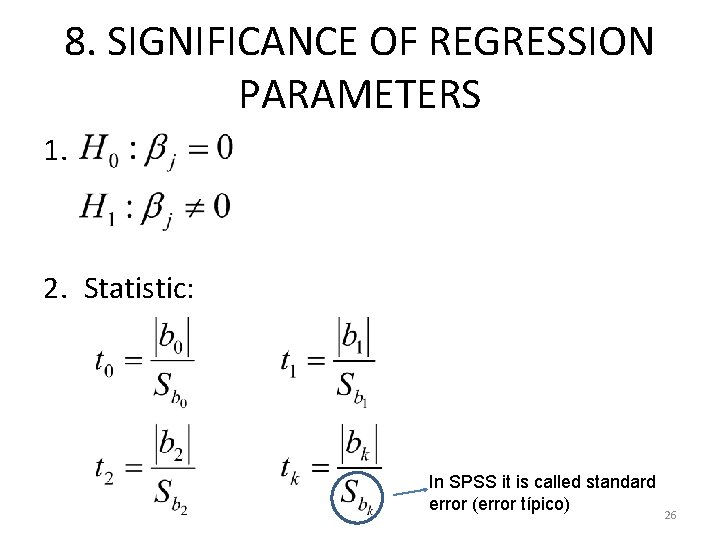

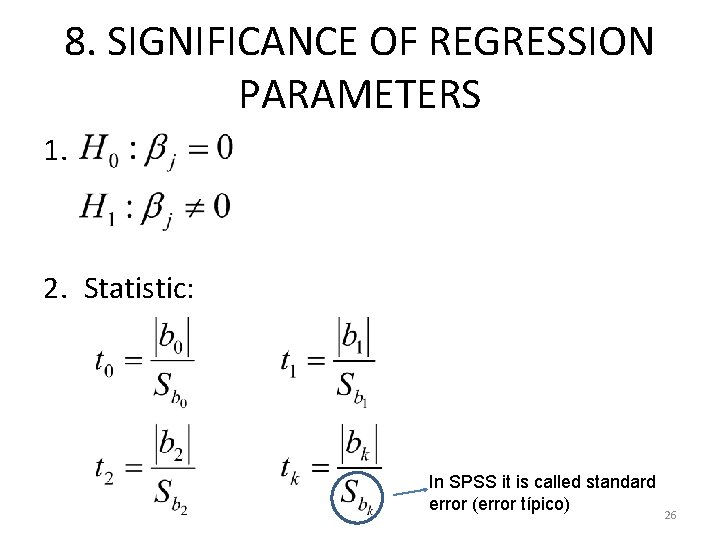
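Question 1 follows directly from the sums of squares in the example table:

```latex
1 - R^2 = \frac{SS_{RESIDUAL}}{SS_{TOTAL}} = \frac{1142.852}{1579.432} \approx 0.724
\qquad
R^2 = \frac{SS_{EXPLAINED}}{SS_{TOTAL}} = \frac{436.580}{1579.432} \approx 0.276
```

About 72.4% of the variability in family cohesion is not explained by gender and time spent working outside the home.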

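8. SIGNIFICANCE OF REGRESSION PARAMETERS
1. Hypotheses.
2. Statistic. The standard error of each coefficient, which appears in the denominator, is labeled "standard error" in SPSS ("error típico" in the Spanish version).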

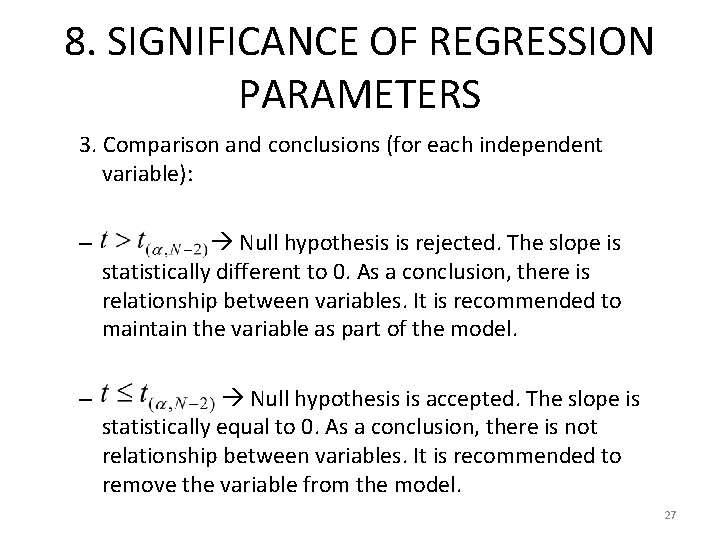

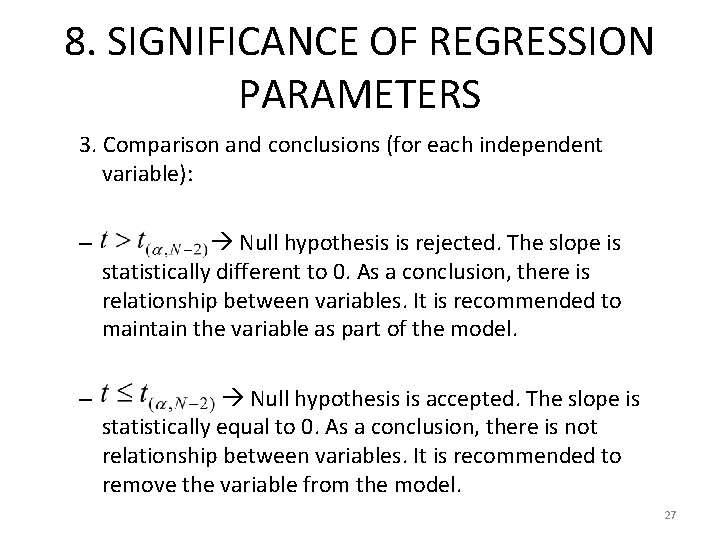
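In the usual form of this test (stated here as an assumption, since the slides give the formulas as images), the null hypothesis is that the population slope of Xj equals 0, and each estimated coefficient is divided by its standard error. The symbol $S_{b_j}$ is used here for that standard error:

```latex
t = \frac{b_j}{S_{b_j}}, \qquad df = N - k - 1
```

|t| is then compared with the critical value of Student's t with N − k − 1 degrees of freedom or, equivalently, Sig. is compared with α.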

8. SIGNIFICANCE OF REGRESSION PARAMETERS
3. Comparison and conclusions (for each independent variable):
– The null hypothesis is rejected: the slope is statistically different from 0, so there is a relationship between the variables. It is recommended to keep the variable in the model.
– The null hypothesis is not rejected: the slope is not statistically different from 0, so there is no evidence of a relationship between the variables. It is recommended to remove the variable from the model.

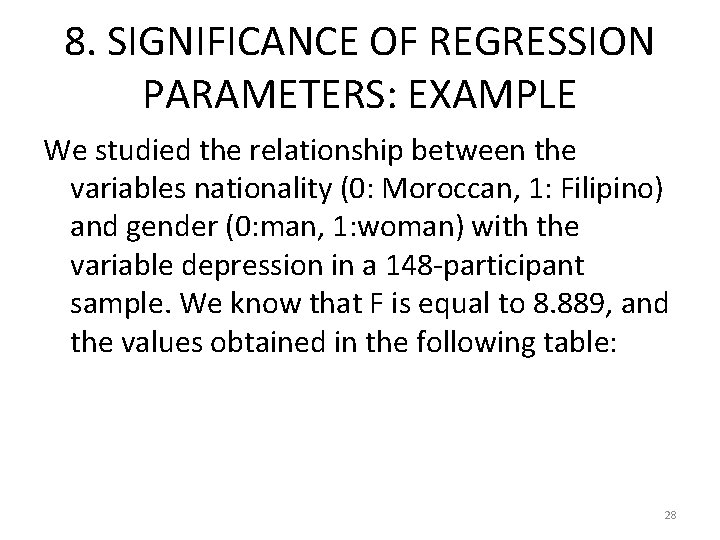

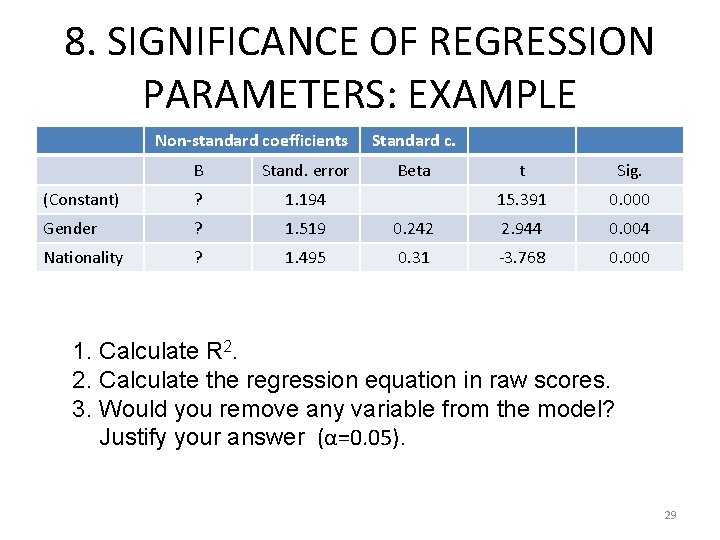

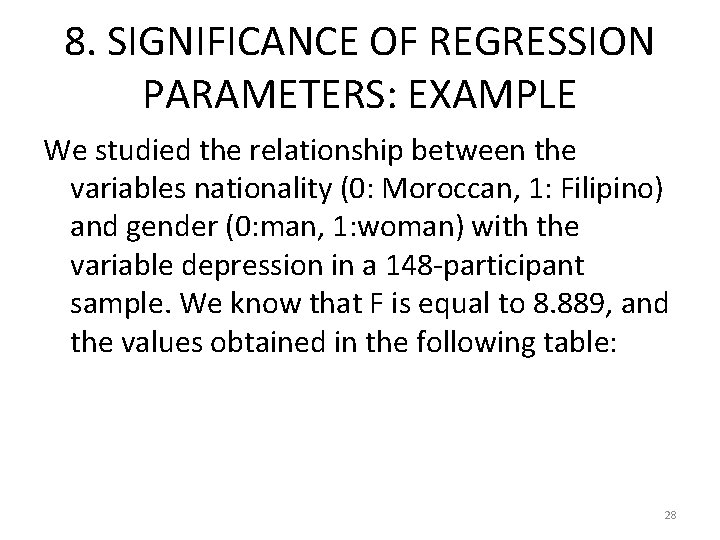

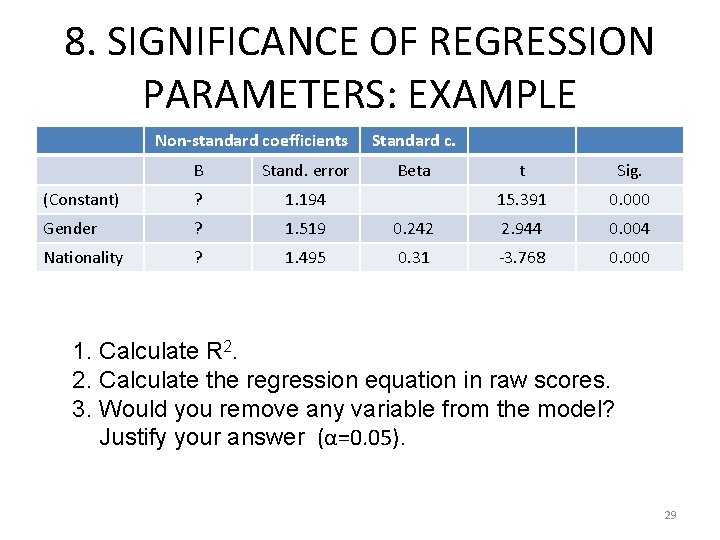
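The decision rule amounts to comparing each slope's Sig. with α. A minimal sketch with hypothetical predictor names and Sig. values:

```python
def predictors_to_remove(sig_by_predictor, alpha=0.05):
    """Hypothetical helper: predictors whose slope is not significant (Sig. >= alpha)."""
    return [name for name, sig in sig_by_predictor.items() if sig >= alpha]


# Hypothetical Sig. values for three predictors
sig_values = {"X1": 0.012, "X2": 0.347, "X3": 0.000}
print(predictors_to_remove(sig_values))  # ['X2']
```

Here only X2 fails to reach significance, so it would be the candidate for removal; X1 and X3 stay in the model.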

8. SIGNIFICANCE OF REGRESSION PARAMETERS: EXAMPLE

We studied the relationship of nationality (0: Moroccan, 1: Filipino) and gender (0: man, 1: woman) with depression in a sample of 148 participants. F is equal to 8.889, and the remaining values are shown in the following table:

8. SIGNIFICANCE OF REGRESSION PARAMETERS: EXAMPLE

| | B (non-standardized) | Std. error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | ? | 1.194 | | 15.391 | 0.000 |
| Gender | ? | 1.519 | 0.242 | 2.944 | 0.004 |
| Nationality | ? | 1.495 | -0.31 | -3.768 | 0.000 |

1. Calculate $R^2$.
2. Calculate the regression equation in raw scores.
3. Would you remove any variable from the model? Justify your answer (α = 0.05).

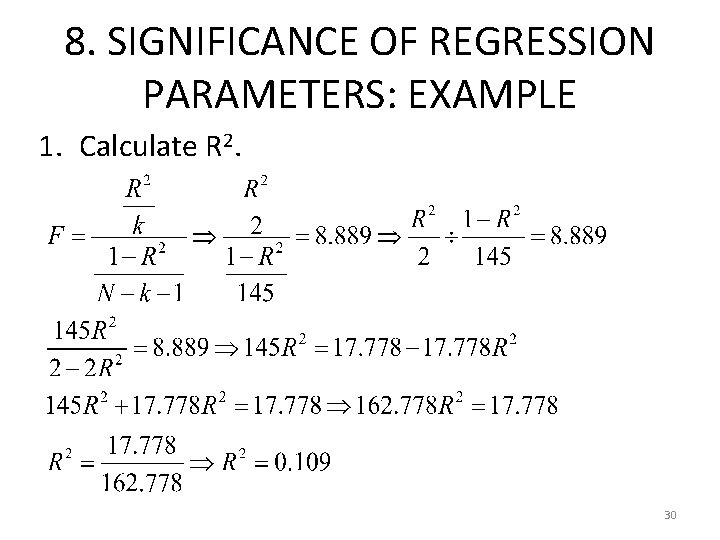

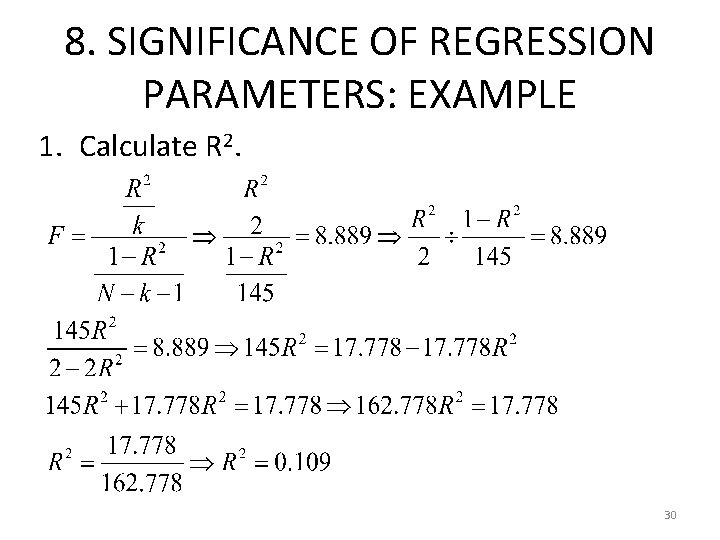

8. SIGNIFICANCE OF REGRESSION PARAMETERS: EXAMPLE
1. Calculate $R^2$.

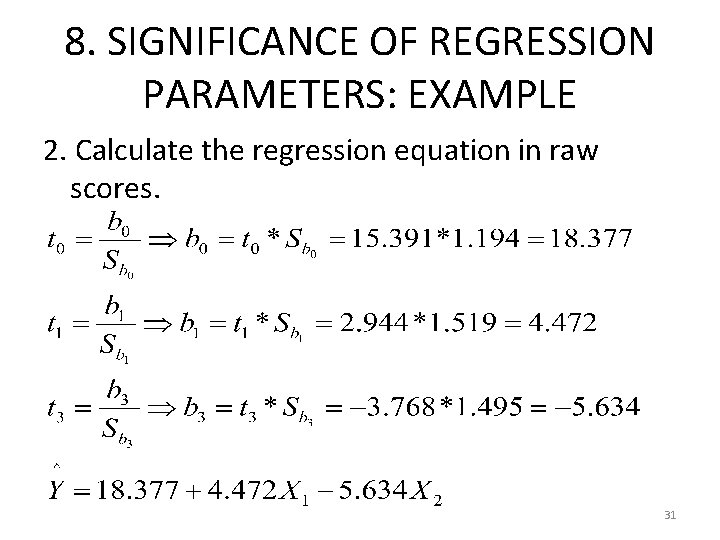

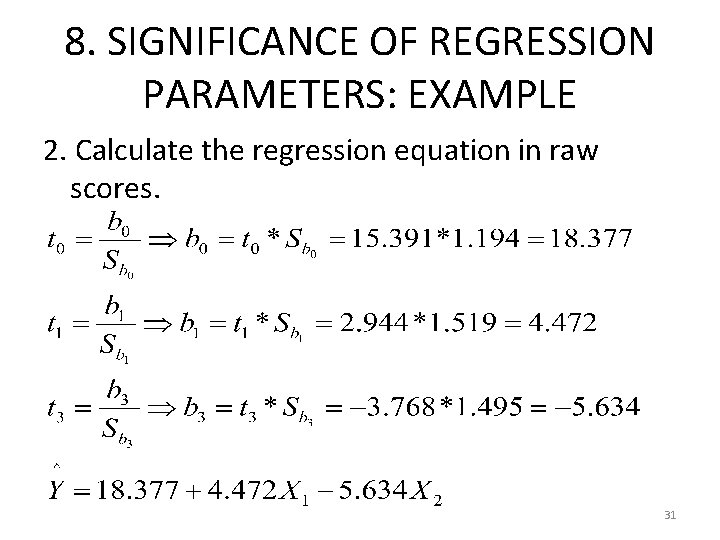
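Because F = (R²/k) / ((1 − R²)/(N − k − 1)), solving for R² gives R² = kF / (kF + N − k − 1). A sketch applying this to the example (N = 148, k = 2, F = 8.889); the helper name is illustrative:

```python
def r2_from_f(f_obs, n, k):
    """Hypothetical helper: R^2 recovered from the model F statistic."""
    return k * f_obs / (k * f_obs + n - k - 1)


r2 = r2_from_f(8.889, n=148, k=2)
print(round(r2, 4))
```

This gives R² ≈ 0.109, so about 10.9% of the variability in depression is explained by gender and nationality.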

8. SIGNIFICANCE OF REGRESSION PARAMETERS: EXAMPLE
2. Calculate the regression equation in raw scores.
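Since each t in the table is B divided by its standard error, the missing B values can be recovered as B = t × standard error. A sketch using the table values; t and the standard errors are rounded, so the recovered B values are approximate:

```python
# t and standard error for each parameter, taken from the coefficients table
rows = {
    "(Constant)": (15.391, 1.194),
    "Gender": (2.944, 1.519),
    "Nationality": (-3.768, 1.495),
}

# t = B / standard error, so B = t * standard error
b = {name: t * se for name, (t, se) in rows.items()}
for name, value in b.items():
    print(f"{name}: B = {value:.3f}")

print(f"Y' = {b['(Constant)']:.3f} + {b['Gender']:.3f}*Gender "
      f"{b['Nationality']:+.3f}*Nationality")
```

This gives approximately Y' = 18.377 + 4.472·Gender − 5.633·Nationality: women score about 4.47 points higher in depression than men, and Filipino participants about 5.63 points lower than Moroccan participants, holding the other variable constant.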

8. SIGNIFICANCE OF REGRESSION PARAMETERS: EXAMPLE
3. Would you remove any variable from the model? Justify your answer (α = 0.05).
No, because the t statistics of all three parameters have significance values (Sig.) lower than α = 0.05.