Lesson 1: Introduction to Apache Spark
BUILDING APPLICATIONS WITH APACHE SPARK

Learning Objectives

At the end of this lesson, you will be able to:
- List the benefits of using Apache Spark
- Load data into Apache Spark
- Explore data in Apache Spark

What is Apache Spark?

Apache Spark is a fast, in-memory cluster computing platform.
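The snippet below is a minimal sketch of what "in-memory" means in practice. It marks an RDD with `cache()`. After the first action computes the RDD, later actions can reuse the cached partitions instead of recomputing them.

The sketch assumes Spark 2.x or later (for `SparkSession`) running in local mode. The application name and the numbers are illustrative only.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("cache-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Illustrative data: the integers 1 to 1000 spread across local partitions.
val numbers = sc.parallelize(1 to 1000)

// Mark the RDD for in-memory storage (cache() is persist(StorageLevel.MEMORY_ONLY)).
numbers.cache()

// The first action computes the partitions and keeps them in memory...
val total = numbers.sum()

// ...and this second action reads the cached partitions instead of recomputing them.
val evenCount = numbers.filter(_ % 2 == 0).count()

println(s"storage level: ${numbers.getStorageLevel}, sum: $total, evens: $evenCount")
```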

Who uses Spark?

Data scientists:
- Interactive data analysis
- Data exploration
- Machine learning

Developers:
- Productization of ML models
- Data processing applications

Benefits of Using Spark
1. Speed
2. Ease of development
3. Unified stack
4. Deployment flexibility

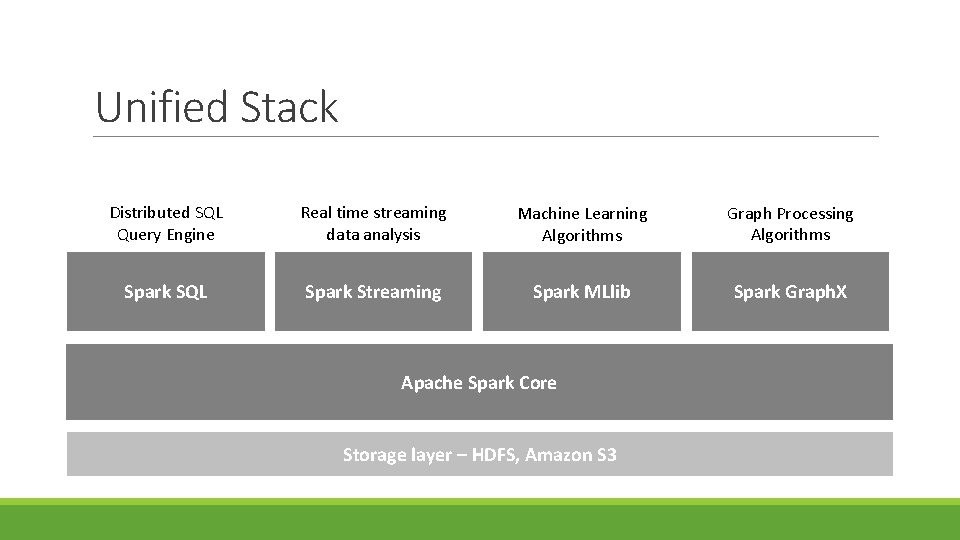

Unified Stack

- Spark SQL: distributed SQL query engine
- Spark Streaming: real-time streaming data analysis
- Spark MLlib: machine learning algorithms
- Spark GraphX: graph processing algorithms

All four libraries run on Apache Spark Core, which sits on a storage layer such as HDFS or Amazon S3.
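To illustrate the unified stack, the sketch below uses two libraries in one program on a single engine:
- Spark Core, through an RDD.
- Spark SQL, through a DataFrame queried with SQL.

It assumes Spark 2.x or later. The three (category, district) pairs come from the sample records shown later in this lesson. The view name `incidents` is hypothetical.

```scala
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("unified-stack-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Spark Core: an RDD of (category, district) pairs.
val pairs = spark.sparkContext.parallelize(Seq(
  ("WARRANTS", "NORTHERN"),
  ("OTHER OFFENSES", "NORTHERN"),
  ("FRAUD", "CENTRAL")
))

// Spark SQL: the same data as a DataFrame, registered as a temporary view.
val incidents = pairs.toDF("Category", "PdDistrict")
incidents.createOrReplaceTempView("incidents")

// One SQL query over data that started life as an RDD.
val perDistrict = spark.sql(
  "SELECT PdDistrict, COUNT(*) AS n FROM incidents GROUP BY PdDistrict ORDER BY n DESC")
perDistrict.show()
```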

Example
- Data sources: dashboards, IoT, apps, web services
- Stream processing: Spark Streaming
- Machine learning: Spark MLlib
- Queries: Spark SQL
- Data storage: HDFS, Amazon S3, etc.
- Enterprise data warehouse: query/advanced analytics


Load Data into Spark

Data sources: any storage source supported by Hadoop, for example:
- Local file system
- Hadoop Distributed File System (HDFS)
- Amazon S3

Data formats:
- Text files, CSV files, and JSON files
- SequenceFiles
- Structured data
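The sketch below loads the same file two ways: through the RDD API and through the DataFrame reader. It assumes Spark 2.x or later.

To stay runnable anywhere, it writes a small CSV file to a temporary directory and reads it through a `file://` URI. The file's three columns are abbreviated from the SFPD sample in this lesson. On a cluster, the same calls take `hdfs://` paths, or `s3a://` paths when the Hadoop S3A connector is available. `spark.read.json` is the JSON counterpart of `spark.read.csv`.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("load-sketch").master("local[*]").getOrCreate()

// Write a tiny CSV file (header plus two abbreviated sample rows) to a temporary directory.
val dir = Files.createTempDirectory("sfpd")
val csvPath = dir.resolve("sfpd.csv")
Files.write(csvPath,
  """IncidentNum,Category,PdDistrict
    |150418191,WARRANTS,NORTHERN
    |150418191,OTHER OFFENSES,NORTHERN
    |""".stripMargin.getBytes(StandardCharsets.UTF_8))

// The URI scheme selects the storage layer; only file:// (local) is exercised here.
val uri = csvPath.toUri.toString

// Text file -> RDD[String], one element per line (including the header line).
val lines = spark.sparkContext.textFile(uri)

// CSV file -> DataFrame, using the first line as column names.
val csvDf = spark.read.option("header", "true").csv(uri)
csvDf.show()
```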

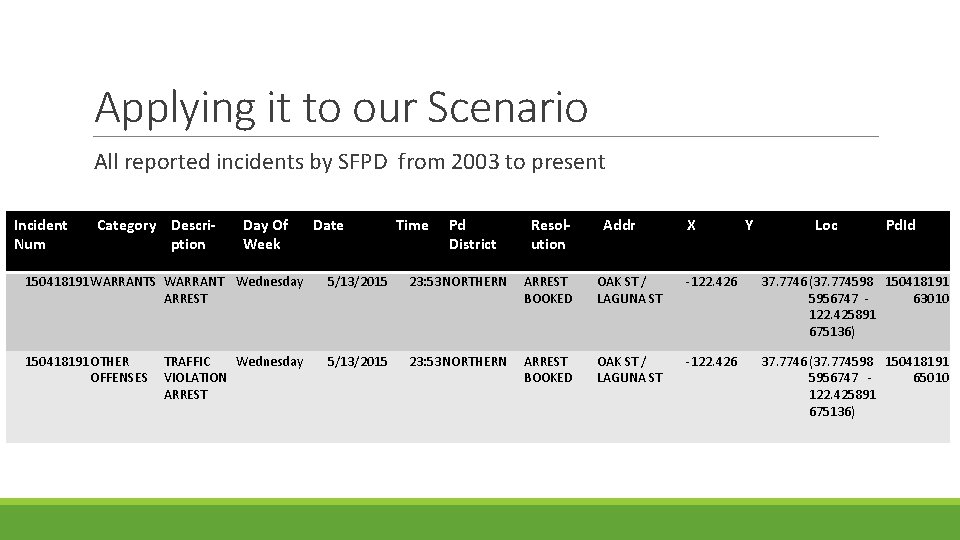

Applying It to Our Scenario

The dataset contains all incidents reported by the San Francisco Police Department (SFPD) from 2003 to the present. Two sample records:

| IncidentNum | Category | Description | DayOfWeek | Date | Time | PdDistrict | Resolution | Address | X | Y | Location | PdId |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 150418191 | WARRANTS | WARRANT ARREST | Wednesday | 5/13/2015 | 23:53 | NORTHERN | ARREST, BOOKED | OAK ST / LAGUNA ST | -122.426 | 37.7746 | (37.7745985956747, -122.425891675136) | 15041819163010 |
| 150418191 | OTHER OFFENSES | TRAFFIC VIOLATION ARREST | Wednesday | 5/13/2015 | 23:53 | NORTHERN | ARREST, BOOKED | OAK ST / LAGUNA ST | -122.426 | 37.7746 | (37.7745985956747, -122.425891675136) | 15041819165010 |

What do we want from this data?
- Which areas are most dangerous?
- Can we predict a resolution?

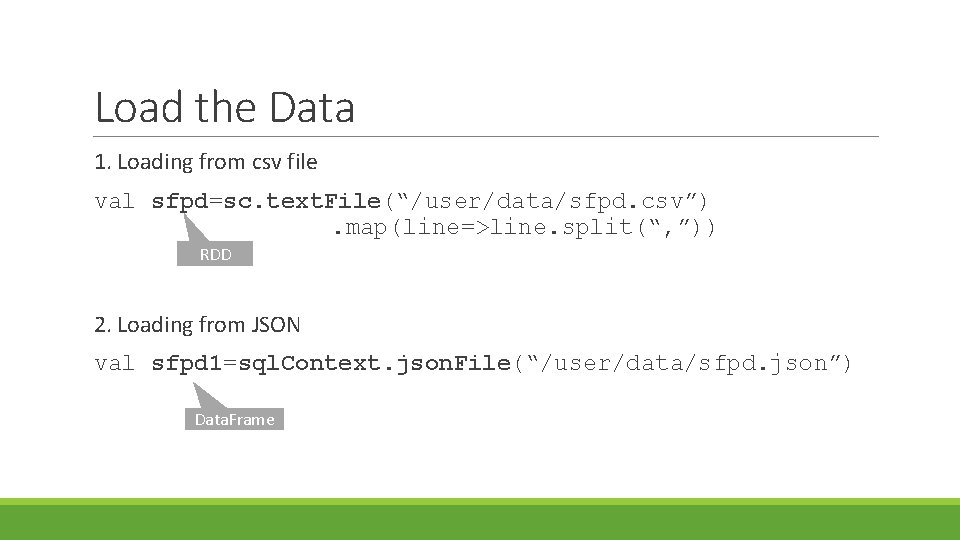

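Load the Data

1. Loading from a CSV file returns an RDD in which each line is split into an array of fields:
   `val sfpd = sc.textFile("/user/data/sfpd.csv").map(line => line.split(","))`
2. Loading from a JSON file returns a DataFrame:
   `val sfpd1 = sqlContext.read.json("/user/data/sfpd.json")`
   Older Spark 1.x code writes this as `sqlContext.jsonFile("/user/data/sfpd.json")`; that method was deprecated in Spark 1.4 in favor of `read.json`.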
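The RDD line above splits on every comma, which is only safe when no field contains a comma. The `Descript` value in the FRAUD record shown later in this lesson (`CREDIT CARD, THEFT BY USE OF`) does contain one. The sketch below therefore compares the naive split with Spark's CSV reader on a one-line file.

It assumes Spark 2.x or later. It also assumes that such a field is double-quoted in the CSV. That is the usual CSV convention, but the lesson does not state it for `sfpd.csv`.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("csv-split-sketch").master("local[*]").getOrCreate()

// One record with a quoted field that contains a comma (assumed quoting convention).
val path = Files.createTempFile("sfpd", ".csv")
Files.write(path,
  "150446227,FRAUD,\"CREDIT CARD, THEFT BY USE OF\",CENTRAL\n".getBytes(StandardCharsets.UTF_8))
val uri = path.toUri.toString

// Naive approach: split each line on every comma, ignoring quotes.
val naive = spark.sparkContext.textFile(uri).map(line => line.split(","))

// The DataFrame CSV reader honors double quotes, so the description stays one field.
val parsed = spark.read.csv(uri)
parsed.show(false)
```

With the naive split, the description is broken into two fields. With the CSV reader, it stays a single value.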


Explore the Data

1. What does the data look like in Spark?
2. What is the total number of reported incidents?
3. How many categories are there?
4. What are the categories?
5. What is the total number of burglaries?
6. How many districts are there?
7. What are the districts?
8. Which five districts have the most resolutions?
9. What are the top ten addresses with the most incidents?
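Questions 6 to 9 are not worked through on the following slides. The sketch below shows one possible way to answer them with DataFrame operations. Its assumptions:
- Spark 2.x or later.
- Three records from this lesson stand in for the full `sfpd1` DataFrame.
- Question 8 is read as "districts with the most incidents whose Resolution is not NONE". This is an interpretation, not the lesson's definition.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.desc

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("explore-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Three records from this lesson, reduced to the columns these questions need.
val sfpd1 = Seq(
  ("WARRANTS", "NORTHERN", "ARREST, BOOKED", "OAK ST / LAGUNA ST"),
  ("OTHER OFFENSES", "NORTHERN", "ARREST, BOOKED", "OAK ST / LAGUNA ST"),
  ("FRAUD", "CENTRAL", "NONE", "300 Block of POWELL ST")
).toDF("Category", "PdDistrict", "Resolution", "Address")

// Questions 6 and 7: the distinct districts (count() gives how many there are).
val districts = sfpd1.select("PdDistrict").distinct()

// Question 8 (assumed reading): districts ranked by incidents with a Resolution other than NONE.
val topResolvedDistricts = sfpd1.filter($"Resolution" =!= "NONE")
  .groupBy("PdDistrict").count()
  .orderBy(desc("count"))
  .limit(5)

// Question 9: addresses ranked by number of incidents.
val topAddresses = sfpd1.groupBy("Address").count().orderBy(desc("count")).limit(10)
topAddresses.show(false)
```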

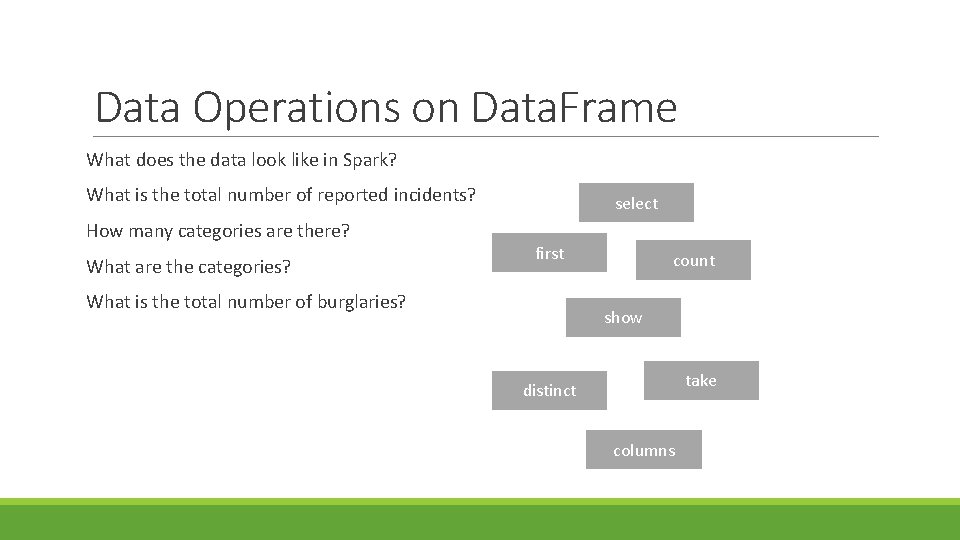

Data Operations on DataFrames

The first five questions can be answered with a small set of DataFrame operations: `select`, `first`, `count`, `show`, `take`, `distinct`, and `columns`. These questions cover:
- What the data looks like in Spark
- The total number of reported incidents
- How many categories there are
- What the categories are
- The total number of burglaries

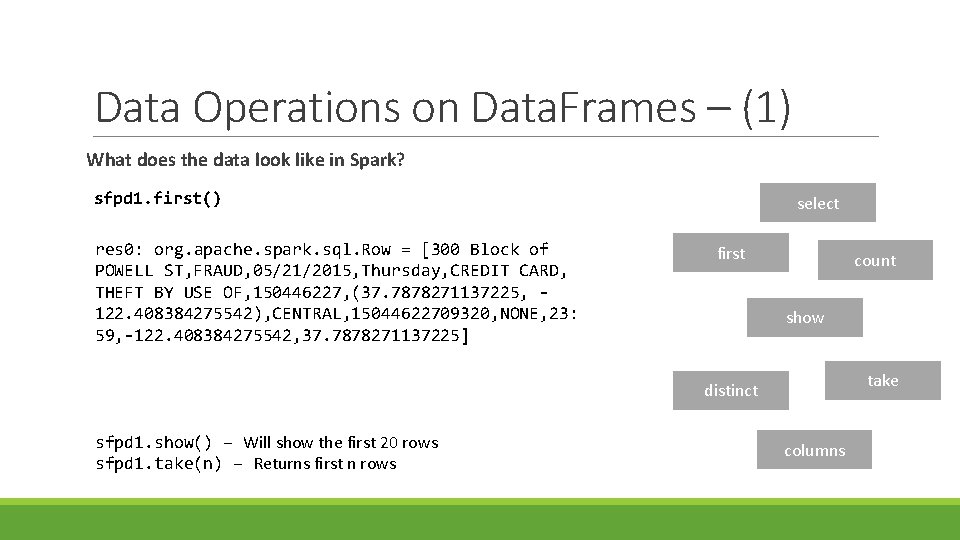

Data Operations on DataFrames (1): What does the data look like in Spark?

`sfpd1.first()` returns the first row:

`res0: org.apache.spark.sql.Row = [300 Block of POWELL ST, FRAUD, 05/21/2015, Thursday, CREDIT CARD, THEFT BY USE OF, 150446227, (37.7878271137225, 122.408384275542), CENTRAL, 15044622709320, NONE, 23:59, -122.408384275542, 37.7878271137225]`

- `sfpd1.show()` displays the first 20 rows.
- `sfpd1.take(n)` returns the first n rows.
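The sketch below shows `first`, `take`, and `show` on an in-memory DataFrame. It is built from three records in this lesson, trimmed to three columns, and assumes Spark 2.x or later.

Rows built from a local `Seq` keep their order, so the first row is predictable here. For a large file, add `orderBy` if a specific row order matters.

```scala
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("first-take-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Three records from this lesson, trimmed to three columns.
val sfpd1 = Seq(
  (150418191L, "WARRANTS", "NORTHERN"),
  (150418191L, "OTHER OFFENSES", "NORTHERN"),
  (150446227L, "FRAUD", "CENTRAL")
).toDF("IncidentNum", "Category", "PdDistrict")

val firstRow = sfpd1.first()  // a single org.apache.spark.sql.Row
val firstTwo = sfpd1.take(2)  // Array[Row] with at most 2 elements
sfpd1.show()                  // prints up to 20 rows as a table
```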

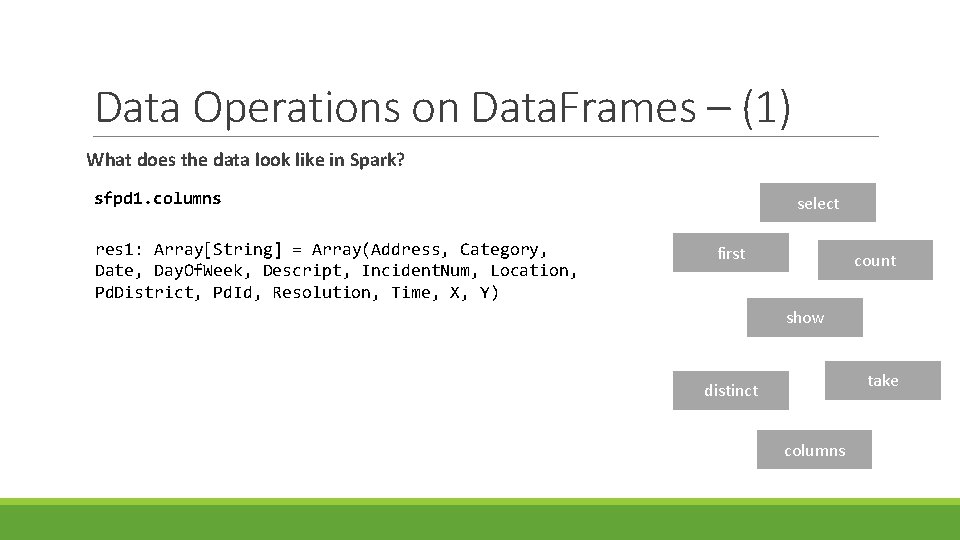

Data Operations on DataFrames (1): What does the data look like in Spark?

`sfpd1.columns` returns the column names:

`res1: Array[String] = Array(Address, Category, Date, DayOfWeek, Descript, IncidentNum, Location, PdDistrict, PdId, Resolution, Time, X, Y)`
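Besides `columns`, a DataFrame's schema records each column's type. The sketch below shows three ways to inspect it:
- `columns` lists the column names.
- `printSchema()` prints each column's name, type, and nullability.
- `schema` looks up a single field's type by name.

It assumes Spark 2.x or later and uses one record from this lesson with five of its columns. The Scala types chosen for those columns are assumptions. A DataFrame read from `sfpd.json` may type them differently.

```scala
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("schema-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// One record from this lesson, reduced to five columns (types chosen for illustration).
val sfpd1 = Seq(
  ("300 Block of POWELL ST", "FRAUD", 150446227L, -122.408384275542, 37.7878271137225)
).toDF("Address", "Category", "IncidentNum", "X", "Y")

// Column names, in DataFrame order.
val names: Array[String] = sfpd1.columns

// Tree view of the schema: name, type, and nullability of each column.
sfpd1.printSchema()

// Type of individual columns, looked up by name in the schema.
val incidentNumType = sfpd1.schema("IncidentNum").dataType
val xType = sfpd1.schema("X").dataType
```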

Data Operations on DataFrames (2): What is the total number of reported incidents?

`sfpd1.count()`

`res3: Long = 769454`

Data Operations on DataFrames (3): How many categories (incident types) are there?

`sfpd1.select("Category").distinct.count()`

`res3: Long = 39`
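The lesson counts categories with `select`, `distinct`, and `count`. The sketch below compares that approach with the `countDistinct` aggregate function from `org.apache.spark.sql.functions`. It assumes Spark 2.x or later and uses three records from this lesson, which contain three categories and two districts.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.countDistinct

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("distinct-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Category and district of three records from this lesson.
val sfpd1 = Seq(
  ("WARRANTS", "NORTHERN"),
  ("OTHER OFFENSES", "NORTHERN"),
  ("FRAUD", "CENTRAL")
).toDF("Category", "PdDistrict")

// The lesson's approach: project one column, drop duplicate rows, count what is left.
val nCategories = sfpd1.select("Category").distinct().count()

// Aggregate form: a one-row result holding the number of distinct values.
val nDistricts = sfpd1.agg(countDistinct("PdDistrict")).first().getLong(0)
```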

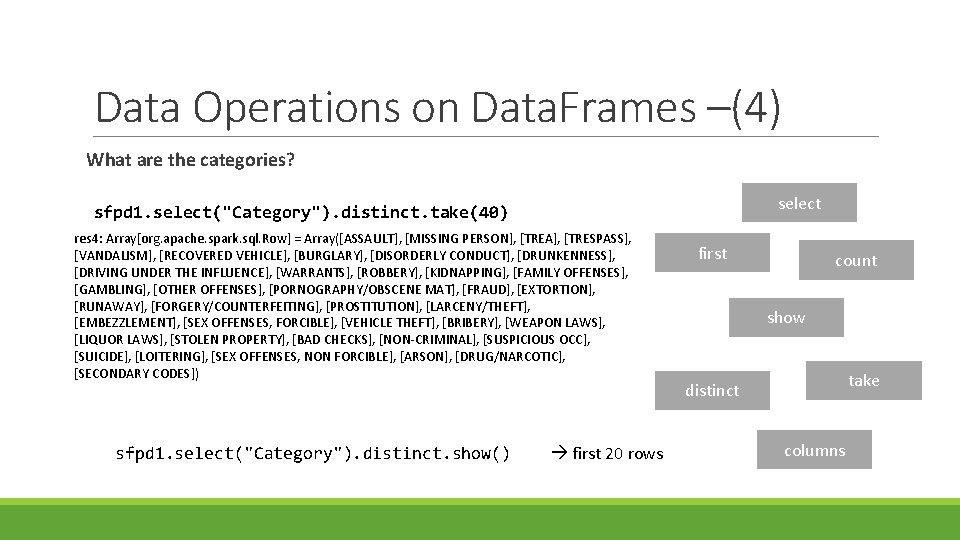

Data Operations on DataFrames (4): What are the categories?

`sfpd1.select("Category").distinct.take(40)`

`res4: Array[org.apache.spark.sql.Row] = Array([ASSAULT], [MISSING PERSON], [TREA], [TRESPASS], [VANDALISM], [RECOVERED VEHICLE], [BURGLARY], [DISORDERLY CONDUCT], [DRUNKENNESS], [DRIVING UNDER THE INFLUENCE], [WARRANTS], [ROBBERY], [KIDNAPPING], [FAMILY OFFENSES], [GAMBLING], [OTHER OFFENSES], [PORNOGRAPHY/OBSCENE MAT], [FRAUD], [EXTORTION], [RUNAWAY], [FORGERY/COUNTERFEITING], [PROSTITUTION], [LARCENY/THEFT], [EMBEZZLEMENT], [SEX OFFENSES, FORCIBLE], [VEHICLE THEFT], [BRIBERY], [WEAPON LAWS], [LIQUOR LAWS], [STOLEN PROPERTY], [BAD CHECKS], [NON-CRIMINAL], [SUSPICIOUS OCC], [SUICIDE], [LOITERING], [SEX OFFENSES, NON FORCIBLE], [ARSON], [DRUG/NARCOTIC], [SECONDARY CODES])`

`sfpd1.select("Category").distinct.show()` displays the first 20 rows.
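Two details make the output above harder to read:
- `distinct` returns rows in no particular order.
- `show()` truncates strings longer than 20 characters, for example `SEX OFFENSES, NON FORCIBLE`.

The sketch below works around both. It sorts the distinct categories, collects them as plain strings, and prints them without truncation. It assumes Spark 2.x or later and uses three sample categories from this lesson.

```scala
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("categories-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Three categories from this lesson's sample records.
val sfpd1 = Seq("WARRANTS", "OTHER OFFENSES", "FRAUD").toDF("Category")

// Distinct categories, sorted alphabetically, collected to the driver as Array[String].
val categories: Array[String] = sfpd1.select("Category").distinct()
  .orderBy("Category")
  .collect()
  .map(_.getString(0))

// Print up to 50 rows without truncating long values.
sfpd1.select("Category").distinct().orderBy("Category").show(50, false)
```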

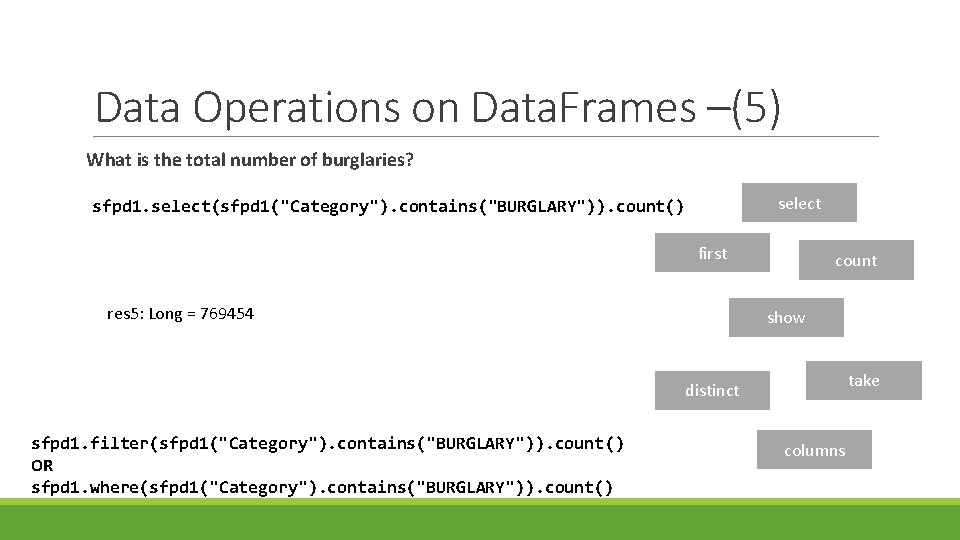

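Data Operations on DataFrames (5): What is the total number of burglaries?

`sfpd1.select(sfpd1("Category").contains("BURGLARY")).count()`

`res5: Long = 769454`

This is the total number of incidents, not the number of burglaries. `select` turns each row into a single Boolean value, so `count()` still counts every row. To count only burglaries, keep the matching rows with `filter`, or with its alias `where`:

`sfpd1.filter(sfpd1("Category").contains("BURGLARY")).count()`

`sfpd1.where(sfpd1("Category").contains("BURGLARY")).count()`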
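The `select` call on this slide returns the total row count (769454) rather than the number of burglaries. The sketch below reproduces that behavior on three records from this lesson. Because the sample has no BURGLARY row, it counts WARRANTS instead.

It assumes Spark 2.x or later. It also shows `===` for an exact match, since `contains` matches substrings.

```scala
import org.apache.spark.sql.SparkSession

// Local-mode session for illustration; in spark-shell, getOrCreate() returns the existing one.
val spark = SparkSession.builder().appName("filter-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Category and district of three records from this lesson.
val sfpd1 = Seq(
  ("WARRANTS", "NORTHERN"),
  ("OTHER OFFENSES", "NORTHERN"),
  ("FRAUD", "CENTRAL")
).toDF("Category", "PdDistrict")

// select() maps every row to one Boolean, so the row count is unchanged.
val viaSelect = sfpd1.select(sfpd1("Category").contains("WARRANTS")).count()

// filter() and where() keep only the rows where the condition is true.
val viaFilter = sfpd1.filter(sfpd1("Category").contains("WARRANTS")).count()
val viaWhere = sfpd1.where(sfpd1("Category").contains("WARRANTS")).count()

// Exact string equality instead of a substring match.
val exact = sfpd1.filter(sfpd1("Category") === "WARRANTS").count()
```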

ACTIVITY 1
- Load data into Apache Spark
- Apply data operations to explore the data

Summary
- The benefits of using Apache Spark: speed, ease of development, unified stack, and deployment flexibility
- Load data into Spark: various sources and various formats
- Explore data in Spark: data operations
