Lesson 05 Concurrency Mutual Exclusion Synchronization Multiple Processes

- Slides: 20

Lesson 05 Concurrency: Mutual Exclusion & Synchronization

Multiple Processes

- Operating system design is concerned with the management of processes and threads:
  - Multiprogramming: management of multiple processes within a uniprocessor system.
  - Multiprocessing: management of multiple processes within a multiprocessor system.
  - Distributed processing: management of multiple processes executing on multiple, distributed computer systems (e.g., clusters).

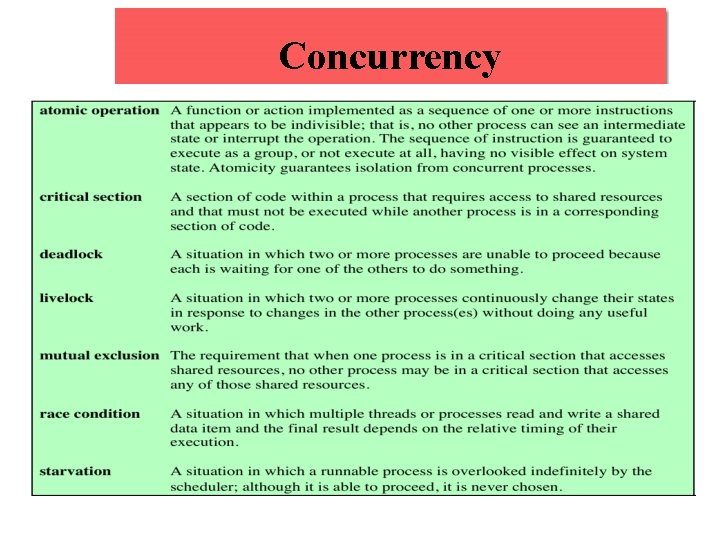

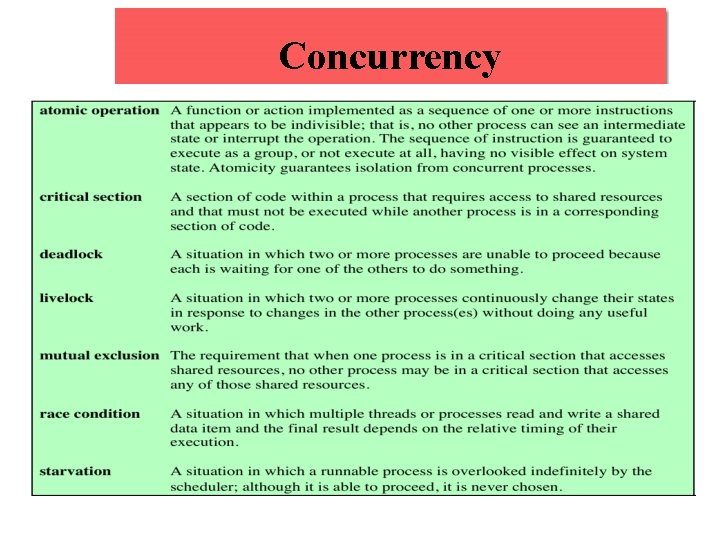

Concurrency

- Concurrency occurs when two or more separate execution flows are able to run simultaneously.
- Resource allocation management:
  - If a process requests and is allocated a resource but is then suspended, handing that same resource to another process can disturb optimal performance.
  - Solution: an allocated resource may be locked to one process until that process completes, but this may lead to deadlock.

Concurrency

- Concurrency arises in three different contexts:
  - Multiple applications: in multiprogramming, processing time is shared dynamically among a number of active applications.
  - Structured applications: applications designed as a set of concurrent processes.
  - Operating system structure: the operating system itself is implemented as a set of processes and threads.

Concurrency

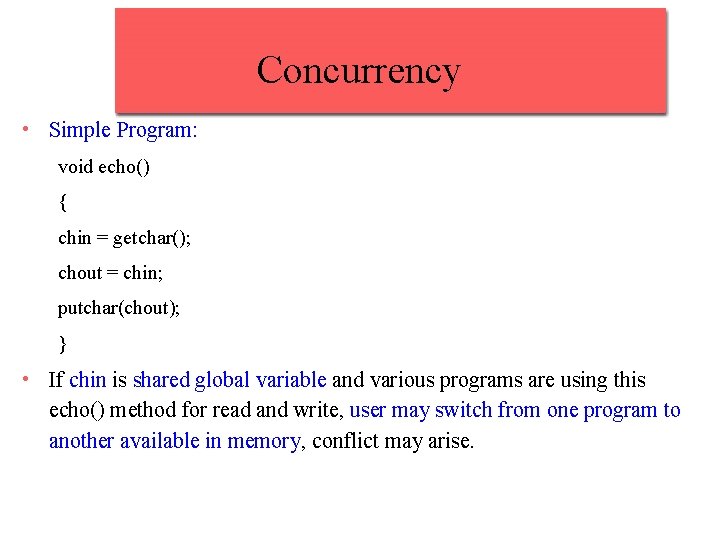

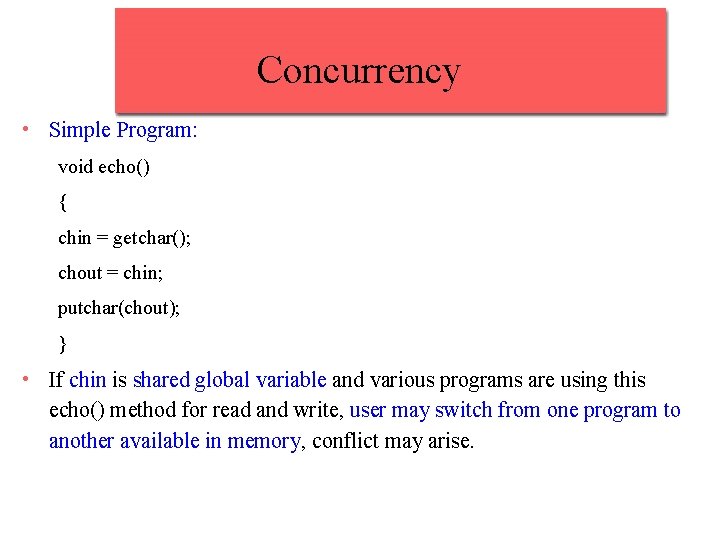

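Concurrency

- Simple program:

```c
#include <stdio.h>

/* Shared global variables used by every caller of echo(). */
int chin, chout;

/* Reads one character from the keyboard and echoes it to the screen. */
void echo(void)
{
    chin = getchar();
    chout = chin;
    putchar(chout);
}
```

- If chin is a shared global variable and several programs use this echo() procedure to read and write, a user may switch from one program to another while both are in memory, and a conflict can arise. For example, P1 reads x into chin and is interrupted; P2 then runs echo() to completion with y, overwriting chin; when P1 resumes, it displays y. The character x is lost and y is displayed twice.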
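The lost-character scenario can be replayed deterministically, without real threads, by splitting echo() into its three statements and calling them in a fixed order. This is a minimal sketch: the step functions (`read_step`, `copy_step`, `write_step`), the `screen` buffer, and the fixed input characters standing in for getchar() are all hypothetical, chosen only so that the interleaving is reproducible.

```c
#include <stddef.h>

/* Shared global variables, as in echo(). */
static char chin, chout;

/* Hypothetical output "device": each write step appends one character. */
static char screen[8];
static size_t screen_len;

/* The three statements of echo(), split so that a "scheduler" can
   interleave them. read_step stands in for chin = getchar(). */
static void read_step(char input) { chin = input; }
static void copy_step(void)       { chout = chin; }
static void write_step(void)
{
    screen[screen_len++] = chout;
    screen[screen_len] = '\0';
}

static void clear_screen(void)
{
    screen_len = 0;
    screen[0] = '\0';
}

/* P1 reads 'x' and is preempted; P2 runs all of echo() with 'y';
   P1 then resumes with chin already overwritten. */
const char *replay_interleaved(void)
{
    clear_screen();
    read_step('x');                             /* P1: chin = 'x', preempted */
    read_step('y'); copy_step(); write_step();  /* P2: complete echo() on 'y' */
    copy_step(); write_step();                  /* P1 resumes: chin is 'y' now */
    return screen;
}

/* The same two calls, run one after the other (mutually exclusive). */
const char *replay_serial(void)
{
    clear_screen();
    read_step('x'); copy_step(); write_step();  /* P1 completes echo() */
    read_step('y'); copy_step(); write_step();  /* then P2 */
    return screen;
}
```

`replay_interleaved()` produces `"yy"` while `replay_serial()` produces `"xy"`; the only difference is whether the statements of one call may be interleaved with those of another.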

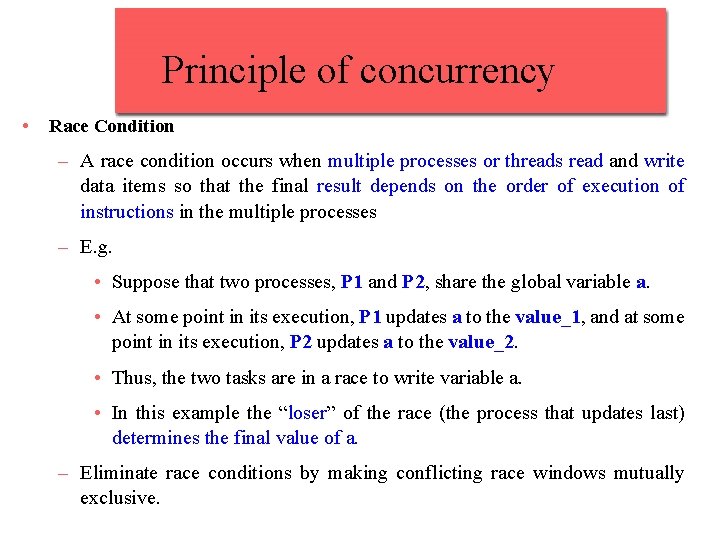

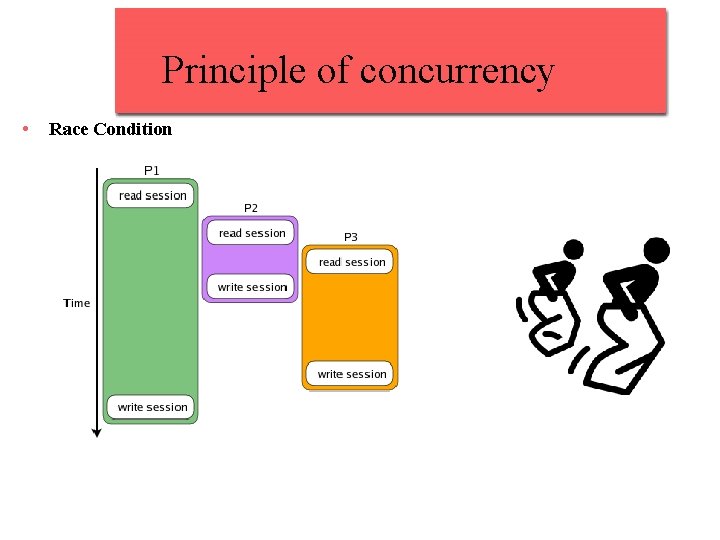

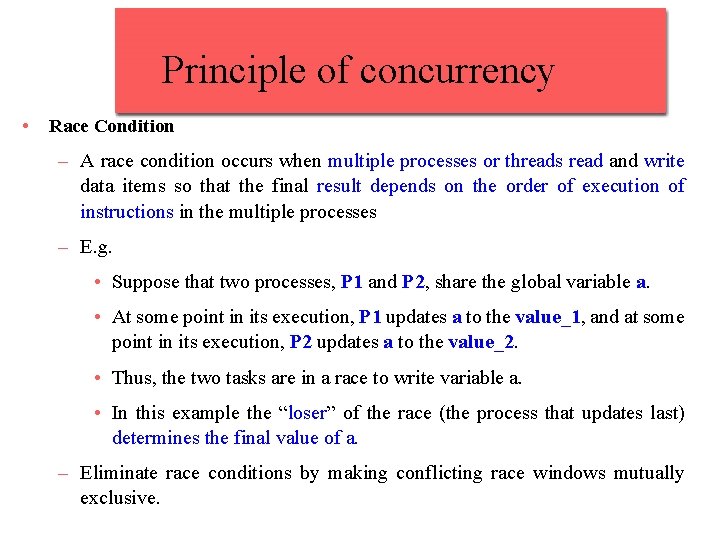

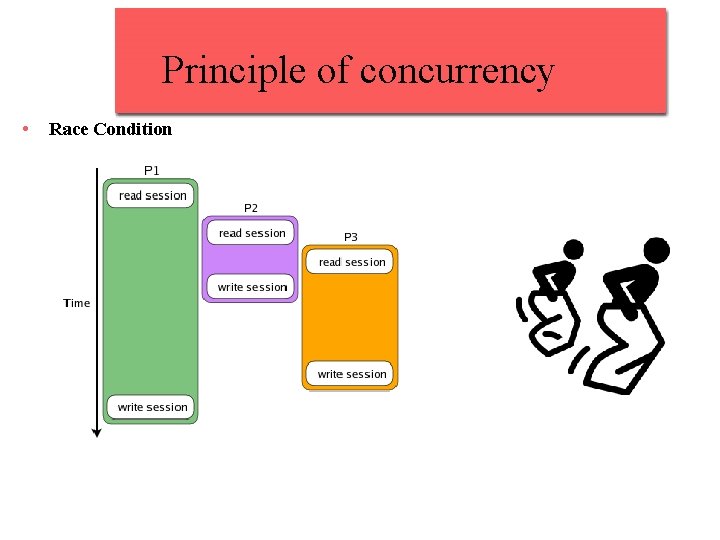

Principle of concurrency

- Race condition:
  - A race condition occurs when multiple processes or threads read and write shared data items so that the final result depends on the order in which their instructions execute.
  - Example:
    - Suppose that two processes, P1 and P2, share the global variable a.
    - At some point in its execution, P1 updates a to value_1, and at some point in its execution, P2 updates a to value_2.
    - The two tasks are thus in a race to write variable a.
    - In this example the “loser” of the race (the process that updates last) determines the final value of a.
  - Race conditions are eliminated by making the conflicting race windows mutually exclusive.
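A common concrete form of this race is the lost update, where each process reads a, adds to it, and writes it back. The sketch below models that read-modify-write with a private "register" per process; the `proc` struct and the `rmw_*` helpers are hypothetical, and the schedules are hard-coded rather than produced by a real scheduler.

```c
#include <stdbool.h>

/* Shared global variable a, as in the example. */
static int a;

/* Hypothetical process state: a private register and the amount it adds. */
typedef struct { int reg; int amount; } proc;

static void rmw_load(proc *p)  { p->reg = a; }          /* read a */
static void rmw_add(proc *p)   { p->reg += p->amount; } /* modify privately */
static void rmw_store(proc *p) { a = p->reg; }          /* write a back */

/* P1 (adds 1) runs to completion before P2 (adds 2) starts. */
int run_serial(int initial)
{
    proc p1 = {0, 1}, p2 = {0, 2};
    a = initial;
    rmw_load(&p1); rmw_add(&p1); rmw_store(&p1);
    rmw_load(&p2); rmw_add(&p2); rmw_store(&p2);
    return a;
}

/* Both processes read a before either writes it back; whichever stores
   last (the "loser") overwrites the other's update. */
int run_interleaved(int initial, bool p1_stores_last)
{
    proc p1 = {0, 1}, p2 = {0, 2};
    a = initial;
    rmw_load(&p1); rmw_load(&p2);  /* both read the same old value */
    rmw_add(&p1);  rmw_add(&p2);
    if (p1_stores_last) {
        rmw_store(&p2); rmw_store(&p1);
    } else {
        rmw_store(&p1); rmw_store(&p2);
    }
    return a;
}
```

Starting from 0, the serial schedule gives 3, while the interleaved schedule gives 2 or 1 depending on which process stores last, so only the last writer's update survives.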

Principle of concurrency • Race Condition

Principle of concurrency: Operating System Design Concerns for Concurrency

- Responsibilities of the OS in handling concurrency:
  - Keep track of the various processes, using process control blocks (PCBs).
  - Allocate and deallocate resources for each active process.
  - Protect each process's data and resources against interference by other processes.
  - Avoid races: ensure that the functioning and output of each process are independent of its processing speed relative to other processes.

Process Interaction

Processes interact according to the degree to which they are aware of each other:

1. Processes unaware of each other:
   - Independent processes that are not intended to work together, e.g., multiprogramming of multiple independent processes.
   - Although the processes are not working together, the OS must still handle their competition for resources. For example, two independent applications may both want to access the same disk, file, or printer.

Process Interaction

2. Processes indirectly aware of each other:
   - These processes are not necessarily aware of each other by their process IDs, but they share access to some object, such as an I/O buffer.
3. Processes directly aware of each other:
   - These processes can communicate with each other by process ID and are designed to work jointly on some activity.

Principle of concurrency: Control Problems

1. Mutual exclusion
   - Critical section: the part of a program that uses a critical resource; only one program at a time is allowed in it.
   - Critical resource: a resource demanded by multiple processes that can be used by only one of them at a time.
2. Deadlock
   - Occurs when a resource required by process P1 is held by P2 while a resource required by P2 is held by P1, so neither can proceed.
3. Starvation
   - Occurs when a process that is ready to execute is overlooked by the scheduler for an indefinitely long period.
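The deadlock condition above is a cycle in a "waits-for" relation between processes. A minimal sketch of checking for such a cycle, assuming each blocked process waits on exactly one other process (the `waits_for` array encoding and the function name `on_wait_cycle` are hypothetical):

```c
#include <stdbool.h>

/* waits_for[i] = index of the process holding the resource that process i
   needs, or -1 if process i is not blocked. In this simplified model each
   process waits for at most one other process. */
bool on_wait_cycle(const int waits_for[], int n, int start)
{
    int current = start;
    /* Follow the chain of waits for at most n steps. */
    for (int steps = 0; steps < n; steps++) {
        current = waits_for[current];
        if (current == -1)
            return false;  /* chain ends at a process that can run */
        if (current == start)
            return true;   /* back at the start: start is in a cycle */
    }
    return false;          /* start waits on a cycle it is not part of */
}
```

With P1 and P2 stored at indices 0 and 1, the two-process case from the text is `waits_for = {1, 0}`, and both processes are reported as being on a cycle.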

Mutual Exclusion

- Requirements:
  - Only one process at a time is allowed in the critical section for a resource.
  - Fast execution: a process must not be denied access to a critical section when no other process is using it.
  - Time limit: a process remains inside its critical section for a finite time only.
  - No deadlock or starvation: a process requesting access to a critical section must not be delayed indefinitely.
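The slides do not say how the one-process-at-a-time requirement is enforced. One common hardware-supported approach, swapped in here for illustration, is a spin lock built on an atomic test-and-set instruction, which C11 exposes through `atomic_flag`. The `spinlock` type and the `spin_*` function names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Clear flag = critical section free; set flag = critical section occupied. */
typedef struct { atomic_flag held; } spinlock;

void spin_init(spinlock *l)
{
    atomic_flag_clear(&l->held);
}

/* Returns true if the caller entered the critical section. */
bool spin_try_lock(spinlock *l)
{
    /* test_and_set returns the previous value: false means it was free. */
    return !atomic_flag_test_and_set_explicit(&l->held, memory_order_acquire);
}

/* Busy-waits until the critical section is free, then enters it. */
void spin_lock(spinlock *l)
{
    while (!spin_try_lock(l))
        ; /* spin */
}

/* Leaves the critical section so another process may enter. */
void spin_unlock(spinlock *l)
{
    atomic_flag_clear_explicit(&l->held, memory_order_release);
}
```

This sketch enforces mutual exclusion, but a plain spin lock does not by itself meet the no-starvation requirement (a waiting process may keep losing the race for the flag), and busy waiting consumes processor time.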

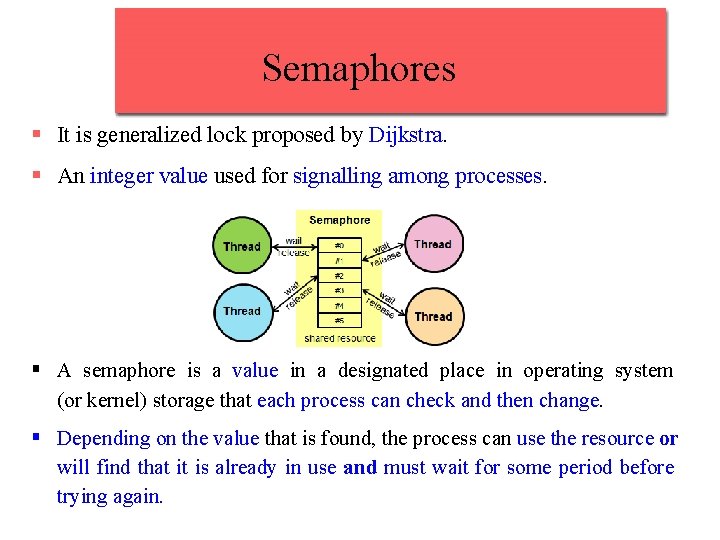

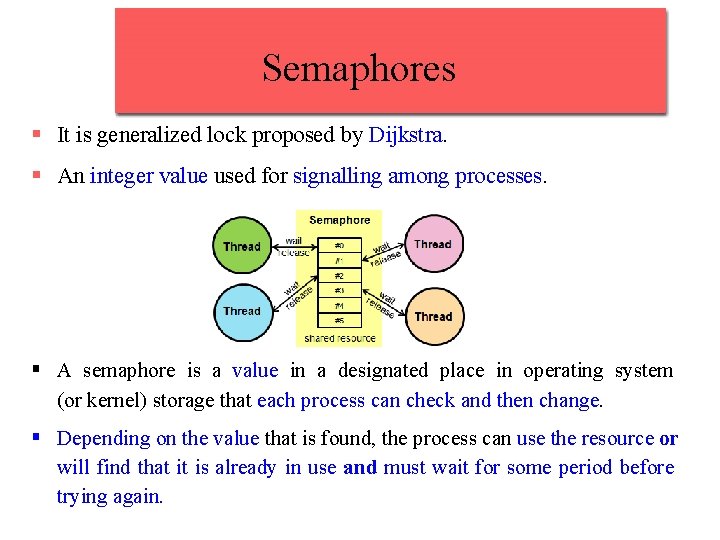

Semaphores

- A semaphore is a generalized lock proposed by Dijkstra.
- It is an integer value used for signalling among processes.
- A semaphore is a value in a designated place in operating system (or kernel) storage that each process can check and then change.
- Depending on the value it finds, a process can either use the resource or find that the resource is already in use and must wait for some period before trying again.

Semaphore

- Semaphore operations:
  - Acquire resource (decrement):
    - When a process wants to use a resource, it performs a wait operation on the semaphore, which decrements the semaphore if a resource is free; otherwise the process is blocked until a resource is allocated to it.
  - Release (increment):
    - When a process releases a resource, it performs a signal operation on the semaphore, which increments it.
    - The released resource is then allocated to another process that was waiting for it.
  - No resource available (zero):
    - When the semaphore count reaches 0, all the resources are in use.
    - Any process that wants to use a resource is blocked until the count becomes greater than 0.
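The three cases above can be modelled in a few lines. This is a single-threaded sketch, not a real kernel semaphore: "blocking" is represented by returning `false` and recording the process id in a FIFO queue, and a signal hands the released resource directly to the first waiter. The `semaphore` struct, the `sem_model_*` names, and the `MAX_WAITERS` limit are all hypothetical.

```c
#include <stdbool.h>

#define MAX_WAITERS 16  /* assumed upper bound on blocked processes */

/* count = number of free resources; blocked pids wait in a FIFO ring. */
typedef struct {
    int count;
    int queue[MAX_WAITERS];
    int head, len;
} semaphore;

void sem_model_init(semaphore *s, int resources)
{
    s->count = resources;
    s->head = 0;
    s->len = 0;
}

/* wait (acquire): true if process pid got a resource, false if it was
   blocked and queued. Assumes fewer than MAX_WAITERS are already queued. */
bool sem_model_wait(semaphore *s, int pid)
{
    if (s->count > 0) {
        s->count--;  /* take one free resource */
        return true;
    }
    /* count == 0: every resource is in use, so the caller blocks. */
    s->queue[(s->head + s->len) % MAX_WAITERS] = pid;
    s->len++;
    return false;
}

/* signal (release): returns the pid that was unblocked and given the
   released resource, or -1 if no process was waiting. */
int sem_model_signal(semaphore *s)
{
    if (s->len > 0) {
        int pid = s->queue[s->head];
        s->head = (s->head + 1) % MAX_WAITERS;
        s->len--;
        return pid;  /* resource passes straight to the waiter */
    }
    s->count++;      /* no waiter: the resource becomes free */
    return -1;
}
```

Some textbook definitions instead let the count go negative, with its magnitude equal to the number of blocked processes; this sketch follows the description above, in which the count stops at 0.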

Semaphore

- The main purpose of a semaphore is to avoid race conditions.
- Semaphores are divided into two categories, according to the order in which blocked processes are removed from the queue:
  1. Strong semaphores: use FIFO order for removal from the queue.
  2. Weak semaphores: do not specify the order of removal from the queue; for example, removal may be based on priority.
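The two categories differ only in how the next process is chosen from the blocked queue. A sketch of both policies, assuming each waiting process records its arrival order and a priority (the `waiter` struct, the larger-is-more-important priority convention, and the function names are hypothetical):

```c
/* A blocked process as seen by the semaphore's queue. */
typedef struct {
    int pid;
    int arrival;   /* order in which it blocked (smaller = earlier) */
    int priority;  /* larger = more important (assumed convention) */
} waiter;

/* Strong semaphore: FIFO, so the process blocked longest is released first.
   Returns the index in w[] of the process to unblock; n must be > 0. */
int pick_strong(const waiter w[], int n)
{
    int best = 0;
    for (int i = 1; i < n; i++)
        if (w[i].arrival < w[best].arrival)
            best = i;
    return best;
}

/* Weak semaphore: the order is unspecified; this sketch picks the
   highest-priority waiter regardless of how long others have waited. */
int pick_weak_by_priority(const waiter w[], int n)
{
    int best = 0;
    for (int i = 1; i < n; i++)
        if (w[i].priority > w[best].priority)
            best = i;
    return best;
}
```

Because FIFO removal eventually serves every waiter, strong semaphores guarantee freedom from starvation; a priority-based weak semaphore can leave a low-priority process waiting indefinitely.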

Semaphore

- Binary semaphores:
  - These semaphores allow only one process at a time to access the shared resource.
  - Binary semaphores are restricted to only two values:
    - 0 or 1
    - Locked or unlocked
    - Available or unavailable
  - On some systems they are also known as mutex locks, because they provide mutual exclusion on those systems.
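A binary semaphore differs from a counting one in that a signal never raises the value above 1. A single-threaded sketch of that behaviour, where blocking is modelled by queueing the process id and returning `false` (the `binary_semaphore` struct, the `bsem_*` names, and the `MAX_WAITERS` limit are hypothetical):

```c
#include <stdbool.h>

#define MAX_WAITERS 16  /* assumed upper bound on blocked processes */

typedef struct {
    int value;               /* 1 = unlocked/available, 0 = locked */
    int queue[MAX_WAITERS];  /* blocked pids in FIFO order */
    int head, len;
} binary_semaphore;

void bsem_init(binary_semaphore *s, int value)
{
    s->value = value ? 1 : 0;  /* only 0 or 1 is allowed */
    s->head = 0;
    s->len = 0;
}

/* wait: true if pid entered, false if it was blocked and queued.
   Assumes fewer than MAX_WAITERS are already queued. */
bool bsem_wait(binary_semaphore *s, int pid)
{
    if (s->value == 1) {
        s->value = 0;  /* lock it */
        return true;
    }
    s->queue[(s->head + s->len) % MAX_WAITERS] = pid;
    s->len++;
    return false;
}

/* signal: returns the pid that was unblocked, or -1 if none was waiting. */
int bsem_signal(binary_semaphore *s)
{
    if (s->len > 0) {
        int pid = s->queue[s->head];
        s->head = (s->head + 1) % MAX_WAITERS;
        s->len--;
        return pid;  /* value stays 0: the waiter now holds the lock */
    }
    s->value = 1;    /* set, not incremented: it never exceeds 1 */
    return -1;
}
```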

Semaphore

- Applications of semaphores:
  - Solve the race condition problem.
  - Control proper access to resources.
- Disadvantages of semaphores:
  - If semaphores are not initialized properly or their operations are used incorrectly, they can cause the following problems:
    - Mutual exclusion violation
    - Deadlock
    - Starvation
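Deadlock from incorrect use is easy to produce: if two processes each wait on the same two binary semaphores S and Q but in opposite orders, one unlucky interleaving leaves both blocked. This sketch replays that interleaving with a hypothetical non-blocking `try_wait` standing in for wait(), and compares it with the same processes both acquiring S before Q.

```c
#include <stdbool.h>

/* Hypothetical minimal binary semaphore: 1 = available, 0 = taken. */
typedef struct { int value; } bsem;

/* Models wait(): succeeds only if the value is 1; false means the
   caller would block at this point. */
static bool try_wait(bsem *s)
{
    if (s->value == 1) {
        s->value = 0;
        return true;
    }
    return false;
}

/* P0: wait(S); wait(Q);   P1: wait(Q); wait(S);
   Replays P0 wait(S), P1 wait(Q), P0 wait(Q), P1 wait(S).
   Returns true if both processes end up blocked. */
bool misordered_waits_deadlock(void)
{
    bsem S = {1}, Q = {1};
    bool p0_got_s = try_wait(&S);  /* P0 gets S */
    bool p1_got_q = try_wait(&Q);  /* P1 gets Q */
    bool p0_got_q = try_wait(&Q);  /* P0 needs Q, held by P1: blocks */
    bool p1_got_s = try_wait(&S);  /* P1 needs S, held by P0: blocks */
    return p0_got_s && p1_got_q && !p0_got_q && !p1_got_s;
}

/* Both processes run wait(S); wait(Q); with the same kind of interleaving. */
bool ordered_waits_deadlock(void)
{
    bsem S = {1}, Q = {1};
    bool p0_got_s = try_wait(&S);  /* P0 gets S */
    bool p1_got_s = try_wait(&S);  /* P1 blocks on S while holding nothing */
    bool p0_got_q = try_wait(&Q);  /* P0 gets Q and can finish */
    bool p0_blocked = !(p0_got_s && p0_got_q);
    bool p1_blocked = !p1_got_s;
    return p0_blocked && p1_blocked;
}
```

Acquiring semaphores in one agreed order, as in `ordered_waits_deadlock()`, is a common way to rule out this particular deadlock.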

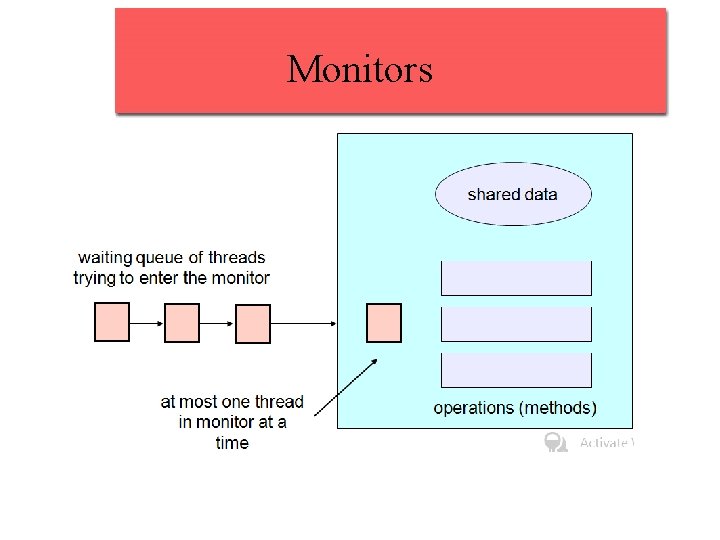

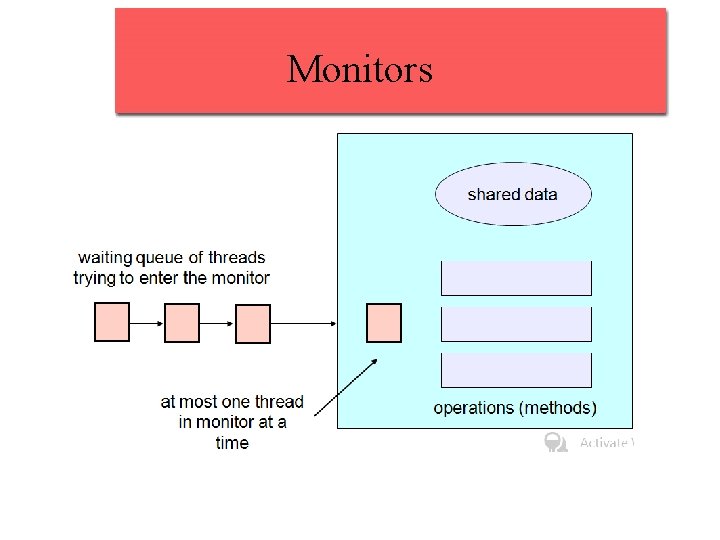

Monitors

- A monitor lock can be placed on any object, at any granularity.
  - E.g., one lock for all linked lists, one lock per linked list, or one lock per element of a linked list.
- If a second thread tries to execute a monitor procedure while another thread is inside the monitor, it blocks until the first has left the monitor.
- Supports data encapsulation: no process can directly access a monitor's local variables.
- Supports mutual exclusion: only one process can be inside a monitor at any time.
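C has no built-in monitor construct, so this sketch emulates one: the monitor's local variable is a `static` file-scope variable reachable only through the monitor procedures, and every procedure holds one POSIX mutex for its whole body, so only one thread can be inside at a time. The bank-balance example and the `monitor_*` names are hypothetical.

```c
#include <pthread.h>

/* The monitor lock: held for the whole body of every monitor procedure. */
static pthread_mutex_t monitor_lock = PTHREAD_MUTEX_INITIALIZER;

/* Monitor-local data: `static` keeps it private to this file, so other
   code can reach it only through the procedures below. */
static int balance;

void monitor_deposit(int amount)
{
    pthread_mutex_lock(&monitor_lock);    /* enter: other callers block here */
    balance += amount;
    pthread_mutex_unlock(&monitor_lock);  /* leave the monitor */
}

/* Returns 1 if the withdrawal succeeded, 0 if the balance was too low. */
int monitor_withdraw(int amount)
{
    int ok = 0;
    pthread_mutex_lock(&monitor_lock);
    if (balance >= amount) {
        balance -= amount;
        ok = 1;
    }
    pthread_mutex_unlock(&monitor_lock);
    return ok;
}

int monitor_balance(void)
{
    pthread_mutex_lock(&monitor_lock);
    int b = balance;
    pthread_mutex_unlock(&monitor_lock);
    return b;
}
```

A full monitor also provides condition variables so that a thread can wait inside the monitor for some condition; with POSIX threads these would be `pthread_cond_t` objects used together with the same mutex. They are omitted here.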

Monitors