Lending a Hand: Sign Language Machine Translation, Sara Morrissey

- Slides: 19

Lending a Hand: Sign Language Machine Translation. Sara Morrissey, NCLT Seminar Series, 21st June 2006

Overview
- Introduction - What, why, how…?
- Out with the old… - SL corpora; the system
- …in with the new - *new and improved*
- Lost in Translation - Evaluation issues
- Conclusion

Introduction
Q: WHAT? A: Sign language (SL)
- A visually articulated language
- Linguistic phenomena prevalent in SLs:
  - Classifiers
  - Non-manual features (NMFs)
  - Discourse mapping and use of signing space

Introduction (2)
Q: WHY? A: (a) to improve communication; (b) to extend the application of example-based MT (EBMT).
Q: HOW? A: Our approach:
- Annotated SL corpora
- EBMT employing the Marker Hypothesis (Green, 1979)

Introduction (3)
Other approaches:
1. Transfer - Grieve-Smith, 1999; Marshall & Sáfár, 2002; Sáfár & Marshall, 2002; Van Zijl & Barker, 2003
2. Interlingua - Veale et al., 1998; Zhao et al., 2000
3. Multi-path - Huenerfauth, 2004, 2005
4. Statistical - Bauer et al., 1999; Bungeroth & Ney, 2004, 2005, 2006

Out with the old… Corpora
- SL corpora are difficult to find
- ECHO project corpora for Nederlandse Gebarentaal (NGT, Sign Language of the Netherlands):
  - 40 minutes of video data
  - 5 Aesop's fables signed by two signers, plus SL poetry
  - Combined corpus of 561 sentences

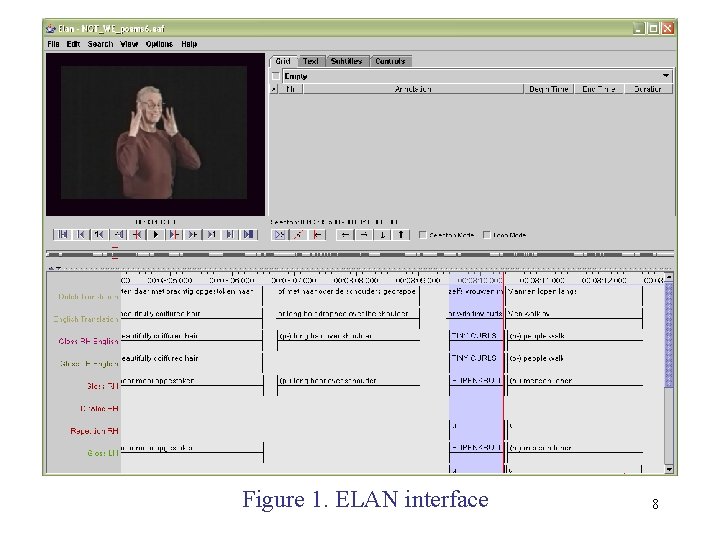

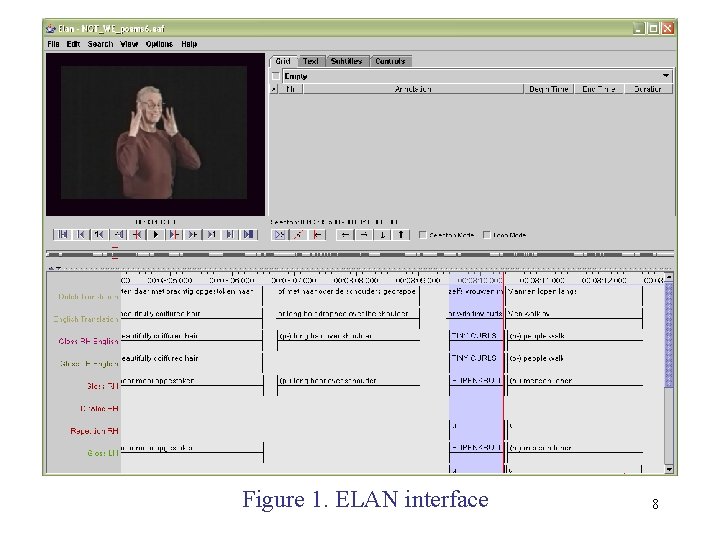

Out with the old… Annotation
Why annotate?
- No formal written form exists for SLs
- Annotation provides a linguistic description, including NMFs
- It can include translations, making the corpus bilingual or trilingual
- Timing information is present for chunking and aligning
ELAN annotation toolkit:
- Graphical user interface displaying videos and annotations simultaneously (Fig. 1)
- Time-aligned and non-time-aligned annotations, including NMF description, repetition notation and notes on indexing and role

Figure 1. ELAN interface

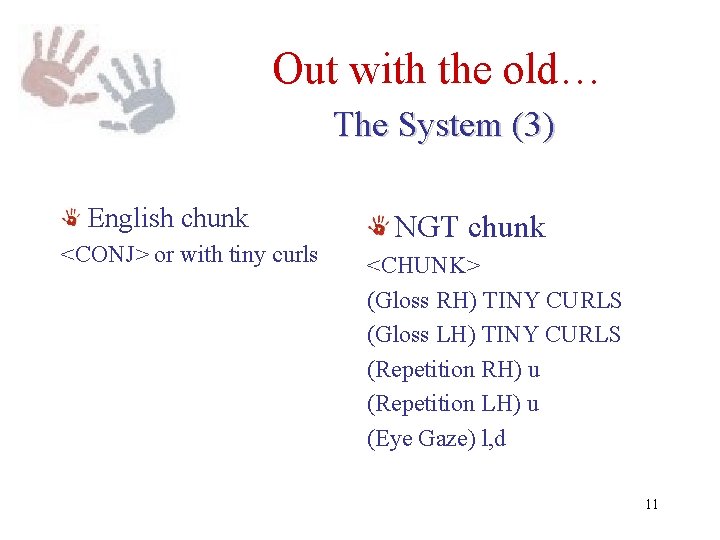
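ELAN stores its annotations in XML `.eaf` files. As a minimal sketch, the Python below reads only the time-aligned annotations (`ALIGNABLE_ANNOTATION` elements), resolving each one's start and end through the `TIME_ORDER` slots. Non-time-aligned `REF_ANNOTATION`s, which point at a parent annotation via `ANNOTATION_REF`, are ignored here. The class and function names are hypothetical, and the tier name and timings in the sample are made up for illustration.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Annotation:
    tier: str
    start_ms: Optional[int]  # None if the time slot carries no TIME_VALUE
    end_ms: Optional[int]
    value: str


def read_aligned_annotations(eaf_xml: str) -> List[Annotation]:
    """Collect time-aligned annotations from an ELAN .eaf document (sketch)."""
    root = ET.fromstring(eaf_xml)
    # Map each TIME_SLOT_ID to its millisecond value; TIME_VALUE is optional.
    slots = {}
    for ts in root.iter("TIME_SLOT"):
        value = ts.get("TIME_VALUE")
        slots[ts.get("TIME_SLOT_ID")] = int(value) if value is not None else None
    result = []
    for tier in root.iter("TIER"):
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            result.append(
                Annotation(
                    tier=tier.get("TIER_ID"),
                    start_ms=slots.get(ann.get("TIME_SLOT_REF1")),
                    end_ms=slots.get(ann.get("TIME_SLOT_REF2")),
                    value=(ann.findtext("ANNOTATION_VALUE") or "").strip(),
                )
            )
    return result


# Made-up fragment in the .eaf layout; real files also carry a HEADER and type info.
SAMPLE = """<ANNOTATION_DOCUMENT>
  <TIME_ORDER>
    <TIME_SLOT TIME_SLOT_ID="ts1" TIME_VALUE="1200"/>
    <TIME_SLOT TIME_SLOT_ID="ts2" TIME_VALUE="1850"/>
  </TIME_ORDER>
  <TIER TIER_ID="Gloss RH">
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a1" TIME_SLOT_REF1="ts1" TIME_SLOT_REF2="ts2">
        <ANNOTATION_VALUE>TINY CURLS</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
  </TIER>
</ANNOTATION_DOCUMENT>"""

print(read_aligned_annotations(SAMPLE))
```

Working from the time slots rather than document order is what makes it possible to group annotations across tiers by when they occur.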

Out with the old… The System
Segmentation using the ‘Marker Hypothesis’ (MH) (Green, 1979):
- Analogous to the system of Way & Gough (2003) and Gough & Way (2004a/b)
- Segments spoken-language sentences according to a set of closed-class words
- Chunks start with closed-class words and usually encapsulate a concept or an attribute of a concept, forming concept chunks, e.g. <CONJ> or with tiny curls
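As a rough illustration of marker-based segmentation, the Python sketch below opens a new chunk at each closed-class (marker) word and tags the chunk with that word's category. It only does so once the current chunk already contains a non-marker word, so that runs such as "or with" stay together. The marker lists are a tiny hypothetical sample, not the lexicon used by the system described, and punctuation is not handled.

```python
from typing import Dict, List, Optional, Set, Tuple

# Tiny hypothetical marker lexicon: closed-class words grouped by category.
MARKERS: Dict[str, Set[str]] = {
    "DET": {"the", "a", "an", "this", "these"},
    "PREP": {"with", "in", "on", "at", "to", "of", "from", "for"},
    "CONJ": {"and", "or", "but"},
    "PRON": {"i", "you", "he", "she", "it", "we", "they"},
}


def marker_tag(word: str) -> Optional[str]:
    """Return the marker category of a word, or None for a non-marker word."""
    for tag, words in MARKERS.items():
        if word in words:
            return tag
    return None


def segment(sentence: str) -> List[Tuple[str, List[str]]]:
    """Split a sentence into marker-headed chunks, e.g. ('CONJ', ['or', 'with', ...])."""
    chunks: List[Tuple[str, List[str]]] = []
    for word in sentence.lower().split():
        tag = marker_tag(word)
        has_content = bool(chunks) and any(marker_tag(w) is None for w in chunks[-1][1])
        if tag is not None and (not chunks or has_content):
            # A marker word opens a new chunk once the previous one holds a content word.
            chunks.append((tag, [word]))
        elif chunks:
            chunks[-1][1].append(word)
        else:
            # The sentence starts with a non-marker word: open an untagged chunk.
            chunks.append(("NONE", [word]))
    return chunks


for tag, words in segment("or with tiny curls"):
    print(f"<{tag}> {' '.join(words)}")  # prints: <CONJ> or with tiny curls
```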

Out with the old… The System (2)
- The MH is not suitable for the SL side of the corpus, because closed-class marker items are sparse in SLs
- Instead, the NGT gloss tier is segmented according to the time spans of its annotations; the remaining annotations with the same time span are grouped with these gloss-tier segments, forming concept chunks similar to the English marker chunks
- Despite the different methods, both sides yield potentially alignable concept chunks

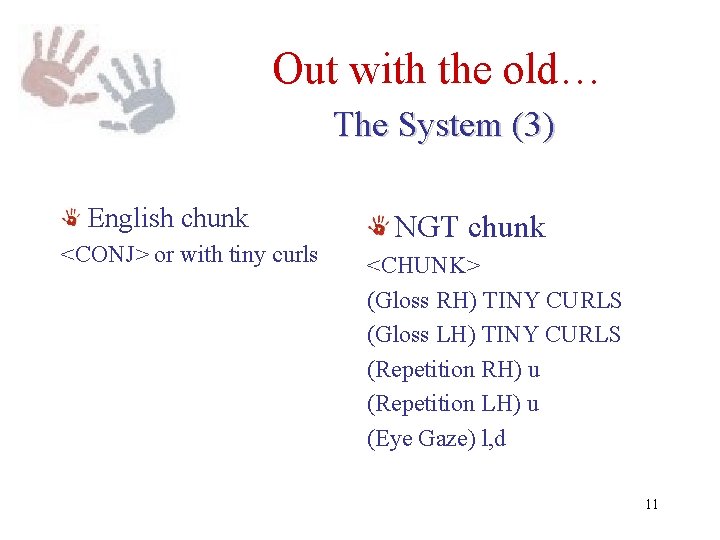
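The time-span grouping on the NGT side can be sketched in Python as follows. This is a minimal illustration under stated assumptions: annotations have already been extracted as (tier, start, end, value) records, "same time span" is read as "overlapping the gloss segment", and the driving tier name `Gloss RH`, the `chunk_by_gloss` helper and all timings are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Ann:
    tier: str
    start: int  # milliseconds
    end: int
    value: str


def chunk_by_gloss(anns: List[Ann], gloss_tier: str = "Gloss RH") -> List[List[Ann]]:
    """One chunk per gloss-tier annotation, plus any other-tier annotation overlapping it."""
    glosses = sorted((a for a in anns if a.tier == gloss_tier), key=lambda a: a.start)
    others = [a for a in anns if a.tier != gloss_tier]
    chunks = []
    for g in glosses:
        # Two intervals overlap if each starts before the other ends.
        overlapping = [a for a in others if a.start < g.end and a.end > g.start]
        chunks.append([g] + overlapping)
    return chunks


# Made-up timings; tier labels mirror the example NGT chunk in this section.
SAMPLE = [
    Ann("Gloss RH", 1200, 1850, "TINY CURLS"),
    Ann("Gloss LH", 1200, 1850, "TINY CURLS"),
    Ann("Repetition RH", 1200, 1850, "u"),
    Ann("Eye Gaze", 1100, 1900, "l, d"),
    Ann("Gloss RH", 2000, 2400, "NEXT-SIGN"),  # hypothetical following sign
]

for chunk in chunk_by_gloss(SAMPLE):
    print("<CHUNK> " + " ".join(f"({a.tier}) {a.value}" for a in chunk))
```

With overlap rather than exact equality, an annotation spanning two gloss segments lands in both chunks, which a real system might need to resolve.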

Out with the old… The System (3)
English chunk: <CONJ> or with tiny curls
NGT chunk: <CHUNK>

| Tier | Annotation |
|---|---|
| Gloss RH | TINY CURLS |
| Gloss LH | TINY CURLS |
| Repetition RH | u |
| Repetition LH | u |
| Eye Gaze | l, d |

Out with the old… The System (4)
Translation proceeds in three stages:
1. Search for an exact sentence match in the aligned bilingual corpus
2. Use the MH to segment the input and search for matching or closely matching chunks on the English side of the aligned corpus
3. Look up individual words in the bilingual lexicon

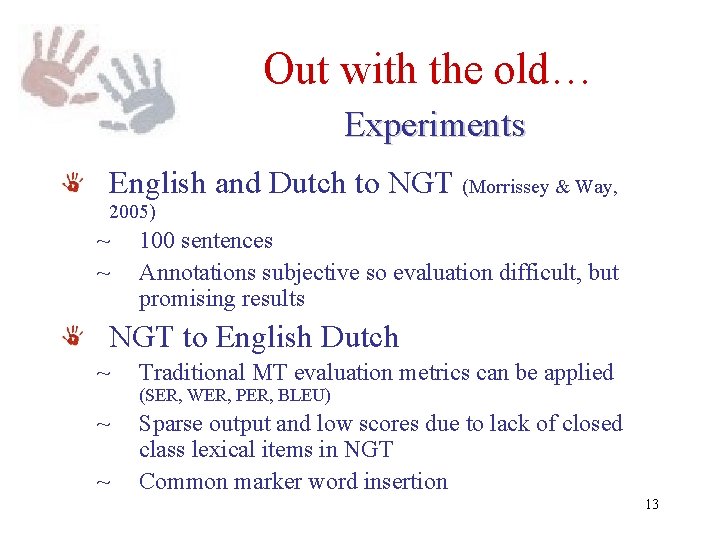
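The three-stage lookup can be sketched as a fallback cascade. In this Python sketch the aligned corpus is reduced to hypothetical dictionaries (sentence pairs, chunk pairs, a word lexicon), the MH segmenter is passed in as a function, and "close match" is approximated with `difflib.get_close_matches`, a character-level similarity that stands in for whatever matching the system actually uses. Apart from TINY CURLS, the gloss strings in the toy data are made up.

```python
import difflib
from typing import Callable, Dict, List


def translate(
    sentence: str,
    sentence_pairs: Dict[str, str],
    chunk_pairs: Dict[str, str],
    lexicon: Dict[str, str],
    segment: Callable[[str], List[str]],
    cutoff: float = 0.8,
) -> str:
    # Stage 1: exact match on the whole sentence.
    if sentence in sentence_pairs:
        return sentence_pairs[sentence]
    output: List[str] = []
    for chunk in segment(sentence):
        # Stage 2: exact, then close, match against the English side of the chunk pairs.
        if chunk in chunk_pairs:
            output.append(chunk_pairs[chunk])
            continue
        close = difflib.get_close_matches(chunk, list(chunk_pairs), n=1, cutoff=cutoff)
        if close:
            output.append(chunk_pairs[close[0]])
            continue
        # Stage 3: word-by-word lexicon lookup; unknown words pass through unchanged.
        output.extend(lexicon.get(word, word) for word in chunk.split())
    return " ".join(output)


def split_chunks(sentence: str) -> List[str]:
    # Hypothetical stand-in for MH segmentation: chunks are pre-marked with " | ".
    return sentence.split(" | ")


# Hypothetical toy data.
SENTENCES = {"the mouse promised to help": "MOUSE PROMISE HELP"}
CHUNKS = {"or with tiny curls": "TINY CURLS"}
LEXICON = {"mouse": "MOUSE"}

print(translate("or with tiny curl | mouse", SENTENCES, CHUNKS, LEXICON, split_chunks))
```

Here "or with tiny curl" misses the exact chunk lookup but clears the similarity cutoff against "or with tiny curls", while "mouse" falls through to the lexicon.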

Out with the old… Experiments
- English and Dutch to NGT (Morrissey & Way, 2005)
  - 100 sentences
  - Annotations are subjective, so evaluation is difficult, but results are promising
- NGT to English and Dutch
  - Traditional MT evaluation metrics (SER, WER, PER, BLEU) can be applied
  - Sparse output and low scores, due to the lack of closed-class lexical items in NGT
  - Common marker word insertion

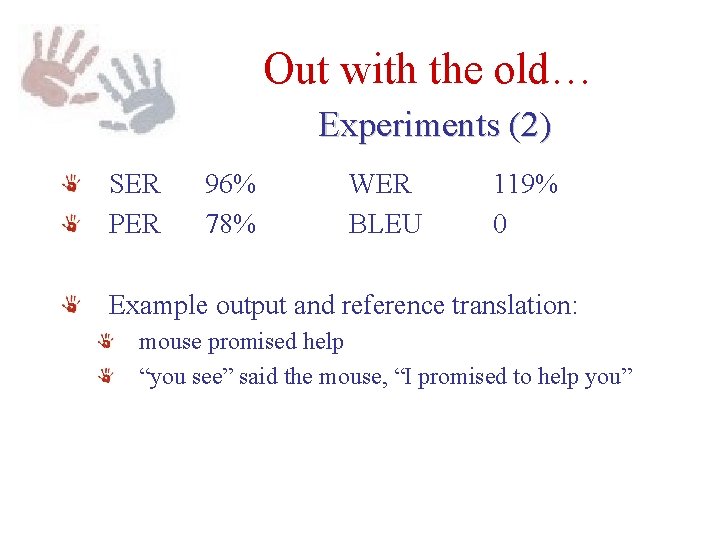

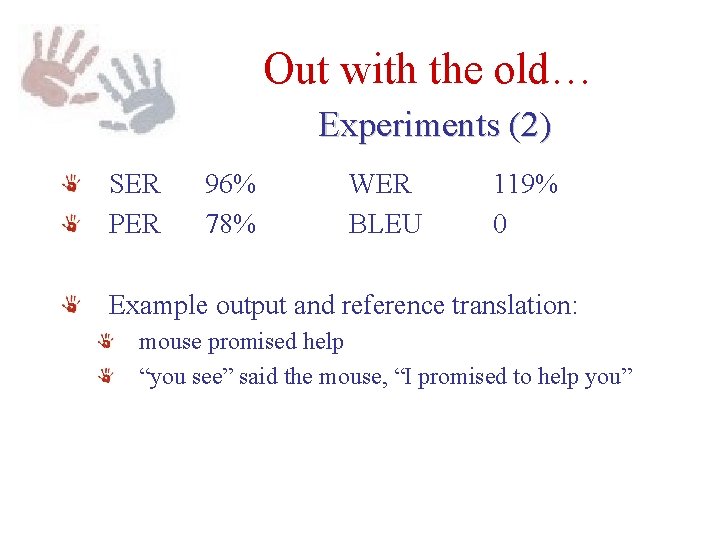

Out with the old… Experiments (2)

| SER | PER | WER | BLEU |
|---|---|---|---|
| 96% | 78% | 119% | 0 |

Example output and reference translation:
- Output: mouse promised help
- Reference: “you see” said the mouse, “I promised to help you”
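For reference, the word-level metrics can be computed as below, using their common definitions: WER is word-level edit distance divided by reference length; PER ignores word order and counts only bag-of-words matches; SER is the share of sentences that are not exact matches. This Python sketch scores single sentences, whereas corpus-level figures such as those reported above normally pool edit counts and reference lengths over the test set. Lower-casing and dropping punctuation are assumptions about tokenisation.

```python
import re
from collections import Counter
from typing import List


def tokens(text: str) -> List[str]:
    # Lower-case and keep only letters/apostrophes, dropping quotes and commas.
    return re.findall(r"[a-z']+", text.lower())


def edit_distance(hyp: List[str], ref: List[str]) -> int:
    # Levenshtein distance over words (substitutions, insertions, deletions).
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, 1):
        cur = [i]
        for j, r in enumerate(ref, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (h != r)))
        prev = cur
    return prev[-1]


def wer(hyp: str, ref: str) -> float:
    return edit_distance(tokens(hyp), tokens(ref)) / len(tokens(ref))


def per(hyp: str, ref: str) -> float:
    h, r = tokens(hyp), tokens(ref)
    matches = sum((Counter(h) & Counter(r)).values())
    # Words in excess of the reference length are penalised as errors.
    return 1 - (matches - max(0, len(h) - len(r))) / len(r)


def ser(hyps: List[str], refs: List[str]) -> float:
    return sum(tokens(h) != tokens(r) for h, r in zip(hyps, refs)) / len(refs)


out = "mouse promised help"
ref = "“you see” said the mouse, “I promised to help you”"
print(f"WER={wer(out, ref):.2f} PER={per(out, ref):.2f} SER={ser([out], [ref]):.2f}")
# prints: WER=0.70 PER=0.70 SER=1.00
```

For this pair the output's three words all occur in the reference in order, so WER counts 7 deletions out of 10 reference words. WER can exceed 100%, as in the table, because insertions are also counted against the reference length: an output longer than its reference can accumulate more errors than the reference has words. Unsmoothed sentence-level BLEU is 0 here, since no bigram of the output occurs in the reference.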

…in with the new
- New corpus: ~1400 sentences (SunDial and ATIS corpora)
  - Flight information queries
  - Signed video version in ISL (Irish Sign Language)
  - Homespun annotation, designed with the end product in view
- New system: OpenLab

Lost in Translation - Evaluation issues
Mainstream evaluation techniques:
1. Rely on exact text matching
2. Do not recognise synonyms, syntactic structure or semantics
3. Have no gold standard for SLs
Other possible evaluation metrics:
1. Number of content words / number of words in the reference translation
2. Evaluation of syntactic or semantic relations
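The content-word metric can be read in more than one way. One minimal reading, sketched in Python below, counts the output's content words that also occur in the reference (clipped by their reference counts) and divides by the number of words in the reference. The function-word list is a small hypothetical stand-in for a proper closed-class lexicon.

```python
import re
from collections import Counter

# Small hypothetical closed-class list; anything not in it counts as a content word.
FUNCTION_WORDS = {
    "the", "a", "an", "to", "of", "in", "on", "at", "with", "for",
    "and", "or", "but", "i", "you", "he", "she", "it", "we", "they",
}


def content_word_score(hyp: str, ref: str) -> float:
    """Matched content words in the output divided by the number of reference words."""
    h = re.findall(r"[a-z']+", hyp.lower())
    r = re.findall(r"[a-z']+", ref.lower())
    ref_counts = Counter(r)
    hyp_content = Counter(w for w in h if w not in FUNCTION_WORDS)
    # Clip each content word at its reference count so repeats are not over-rewarded.
    matched = sum(min(n, ref_counts[w]) for w, n in hyp_content.items())
    return matched / len(r)


print(content_word_score("mouse promised help",
                         "“you see” said the mouse, “I promised to help you”"))  # prints: 0.3
```

Unlike exact n-gram matching, this rewards output that carries the reference's content words, which suits sparse SL-derived output lacking closed-class items; the example still scores only 0.3 because the denominator counts the reference's function words too.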

Conclusions
- Basic system
- Corpus problems: a larger corpus, such as the ISL corpus now in creation, gives more scope for matches; annotations are subjective
- EBMT caters for some SL linguistic phenomena
- Evaluation metrics are unsuitable for oral <-> non-oral translation

Future Work
- Adding NMF information
- Manual analysis
- Language model to improve output
- Suitable evaluation metrics
- Review of other writing systems for SLs
- Avatar…

Thank You. Questions?