Lecture on High Performance Processor Architecture CS 05162

- Slides: 53

Lecture on High Performance Processor Architecture (CS 05162)
DLP Architecture Case Study: Stream Processor
Xu Guang, xuguang5@mail.ustc.edu.cn
Fall 2007
University of Science and Technology of China, Department of Computer Science and Technology
CS of USTC

Discussion Outline
- Motivation
- Related work
- Imagine
- Conclusion
- TPA-PD
- Future work

2021/10/17 CS of USTC 2

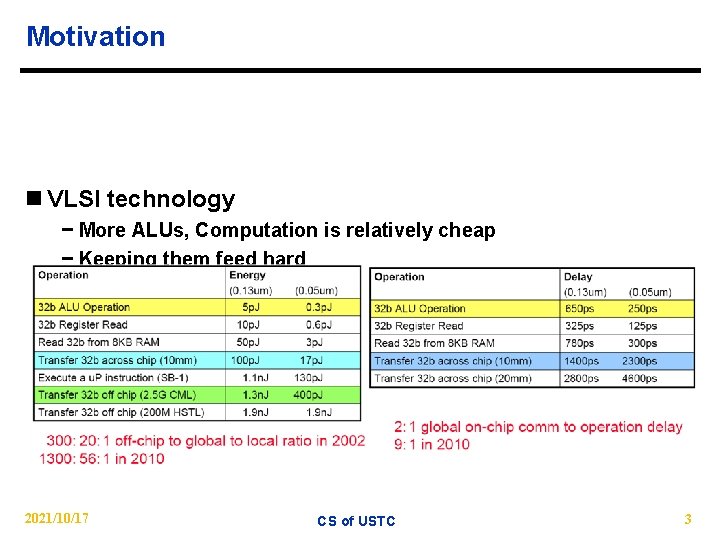

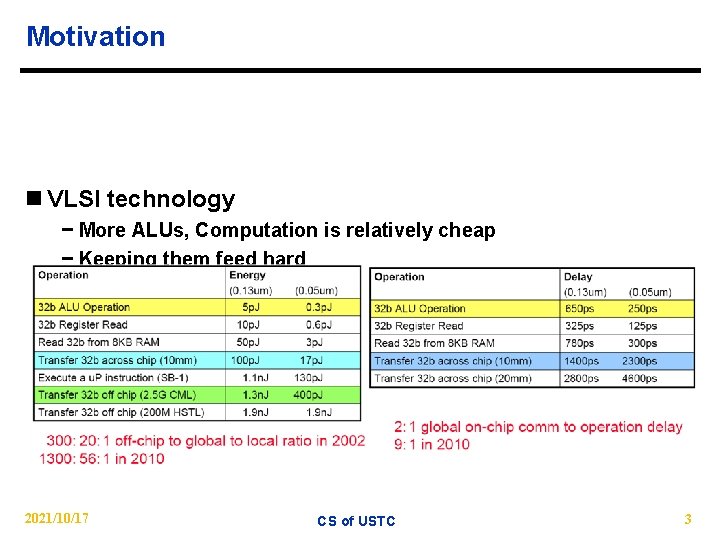

Motivation
- VLSI technology
  - More ALUs: computation is relatively cheap
  - Keeping them fed is hard
  - The problem is bandwidth
    - Energy
    - Delay

Motivation
- Data-level parallel (DLP) applications
  - Media applications
    - Real-time graphics
    - Signal processing
    - Video processing
  - Scientific computing
- Application characteristics
  - Compute-intensive
  - Parallelism
  - Locality

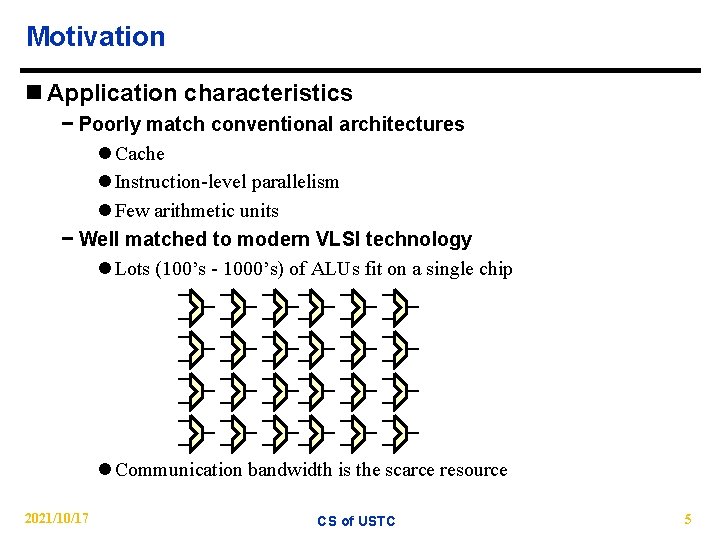

Motivation
- Application characteristics
  - Poorly matched to conventional architectures built around:
    - Caches
    - Instruction-level parallelism
    - Few arithmetic units
  - Well matched to modern VLSI technology
    - Hundreds to thousands of ALUs fit on a single chip
    - Communication bandwidth is the scarce resource

Related work
- Vector processors
  - Large set of temporary values
- DSP
  - Signal register file
- GPU
  - Special-purpose
- General-purpose processor
  - ILP
  - Cache

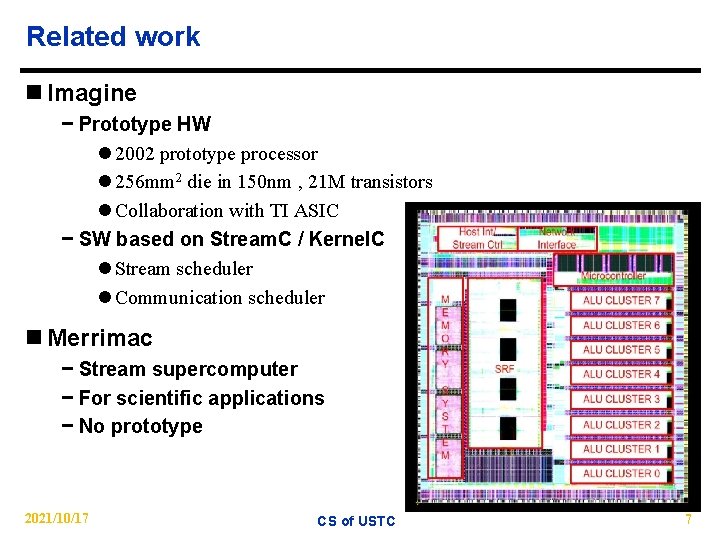

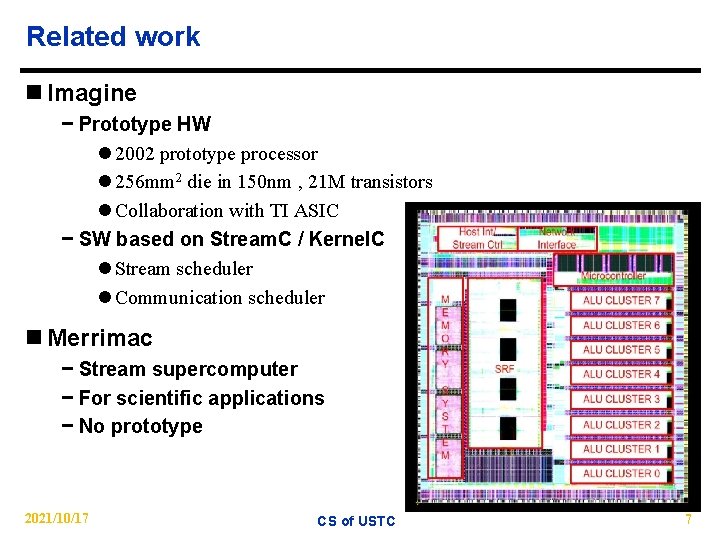

Related work
- Imagine
  - Prototype hardware
    - 2002 prototype processor
    - 256 mm² die in 150 nm, 21 M transistors
    - Collaboration with TI ASIC
  - Software based on StreamC / KernelC
    - Stream scheduler
    - Communication scheduler
- Merrimac
  - Stream supercomputer
  - For scientific applications
  - No prototype

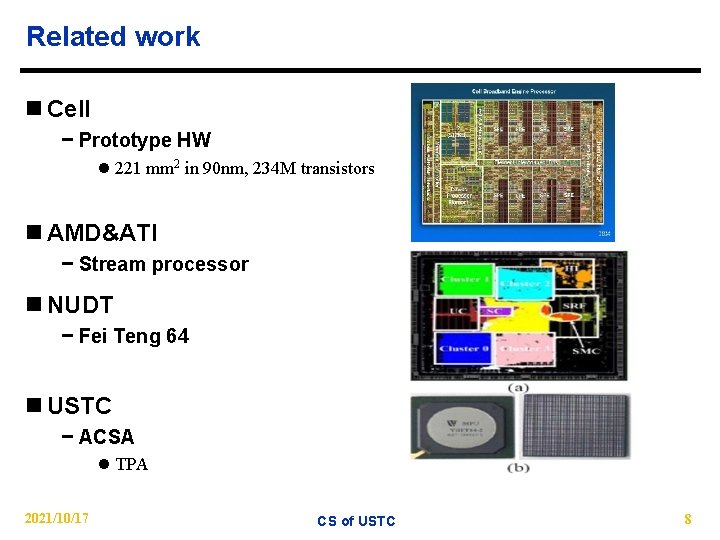

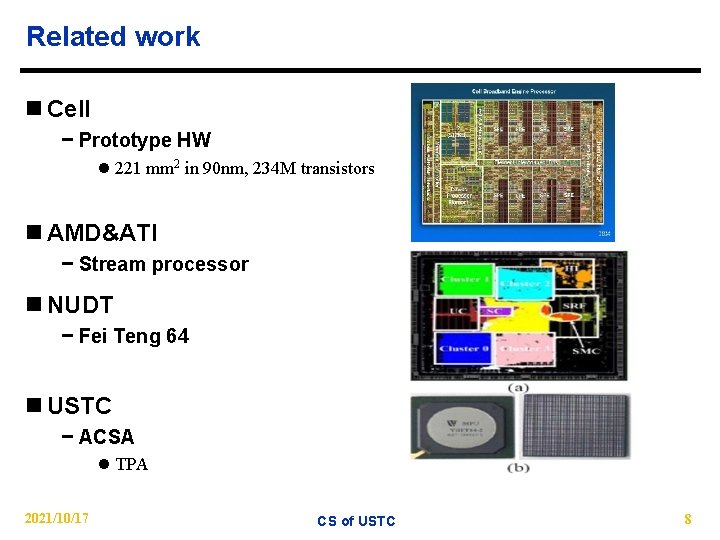

Related work
- Cell
  - Prototype hardware
    - 221 mm² in 90 nm, 234 M transistors
- AMD & ATI
  - Stream processor
- NUDT
  - Fei Teng 64
- USTC
  - ACSA
    - TPA

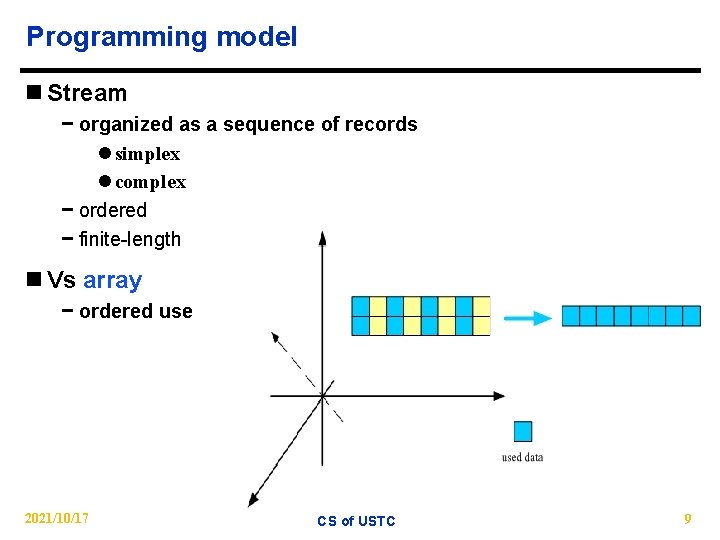

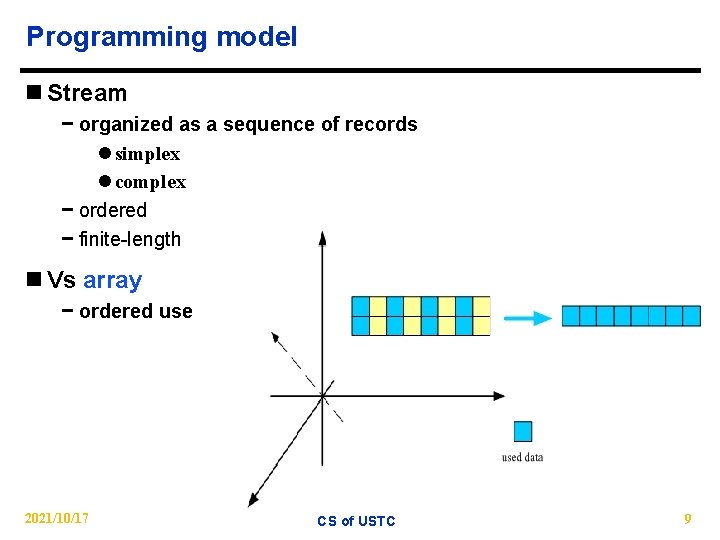

Programming model
- Stream
  - Organized as a sequence of records
    - Simple
    - Complex
  - Ordered
  - Finite-length
- Vs. array
  - Elements are used in order

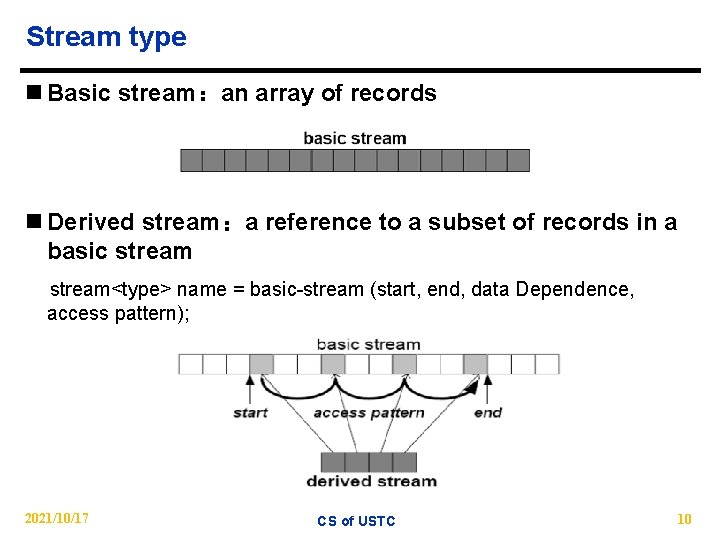

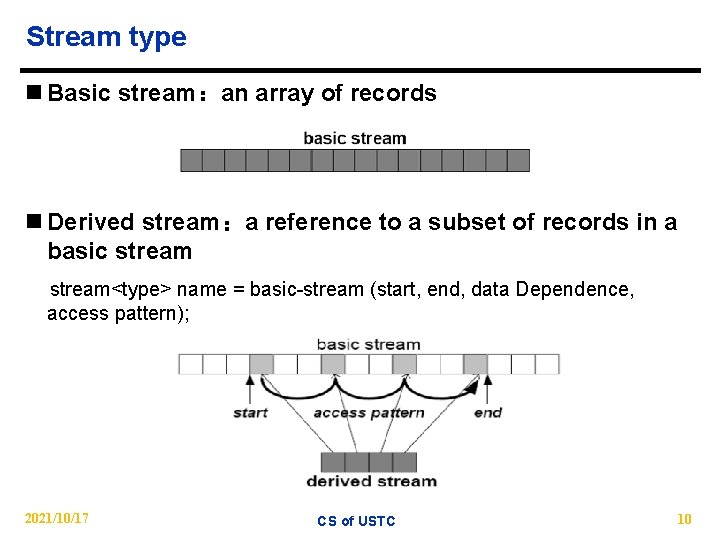

Stream type
- Basic stream: an array of records
- Derived stream: a reference to a subset of records in a basic stream
  - `<type> name = basic-stream(start, end, dataDependence, accessPattern);`

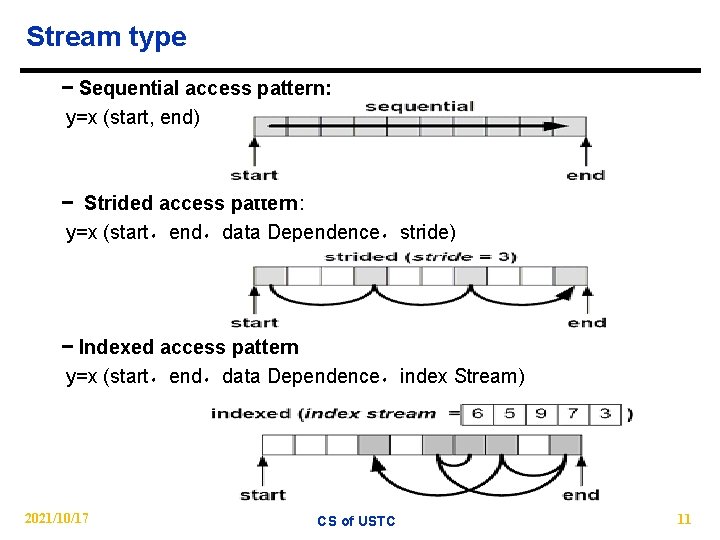

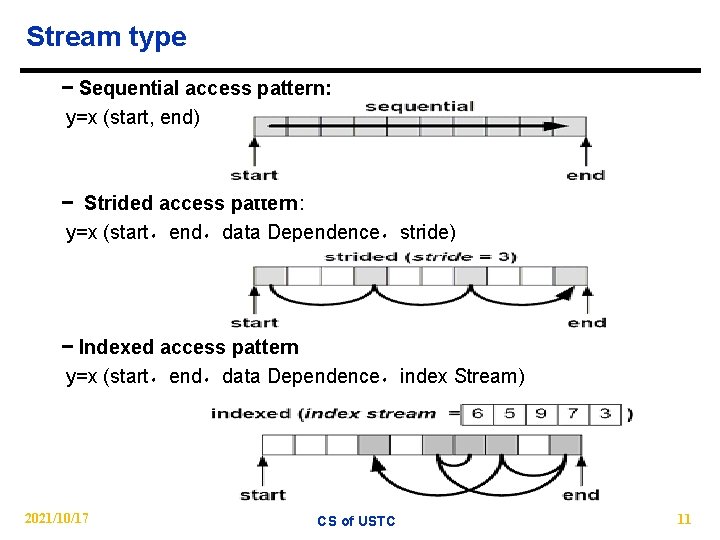

Stream type
- Sequential access pattern: `y = x(start, end)`
- Strided access pattern: `y = x(start, end, dataDependence, stride)`
- Indexed access pattern: `y = x(start, end, dataDependence, indexStream)`
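To make the three access patterns concrete, here is a minimal Python sketch that derives a new stream from a basic stream `x` held as a list. It is an illustration under stated assumptions, not the StreamC API: the function names are hypothetical, `end` is taken as exclusive, index-stream entries are assumed to be offsets from `start`, and the `dataDependence` argument is omitted.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sequential(x: Sequence[T], start: int, end: int) -> List[T]:
    """Sequential pattern: records start .. end-1 (end assumed exclusive)."""
    return list(x[start:end])


def strided(x: Sequence[T], start: int, end: int, stride: int) -> List[T]:
    """Strided pattern: every `stride`-th record in [start, end)."""
    if stride <= 0:
        raise ValueError("stride must be positive")
    return list(x[start:end:stride])


def indexed(x: Sequence[T], start: int, end: int,
            index_stream: Sequence[int]) -> List[T]:
    """Indexed (gather) pattern: assumes each index is an offset from `start`
    and must stay inside [start, end)."""
    out: List[T] = []
    for i in index_stream:
        pos = start + i
        if not start <= pos < end:
            raise IndexError(f"index {i} falls outside [{start}, {end})")
        out.append(x[pos])
    return out


x = list(range(10, 20))             # basic stream: records 10..19
print(sequential(x, 2, 5))          # [12, 13, 14]
print(strided(x, 0, 10, 3))         # [10, 13, 16, 19]
print(indexed(x, 4, 8, [3, 0, 2]))  # [17, 14, 16]
```

The indexed case is a gather: the index stream decides which records appear and in what order, which is why it takes a second stream as an argument.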

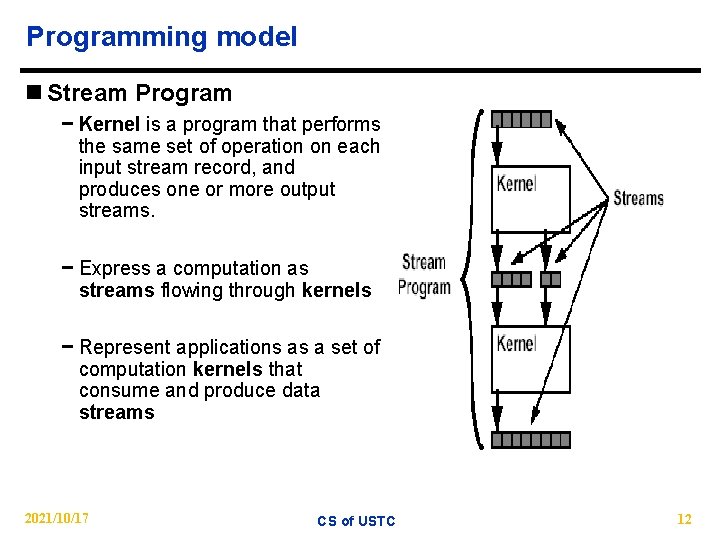

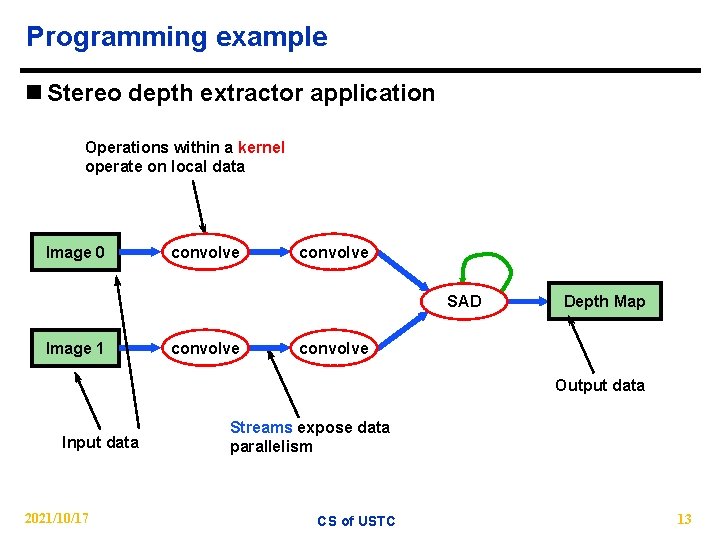

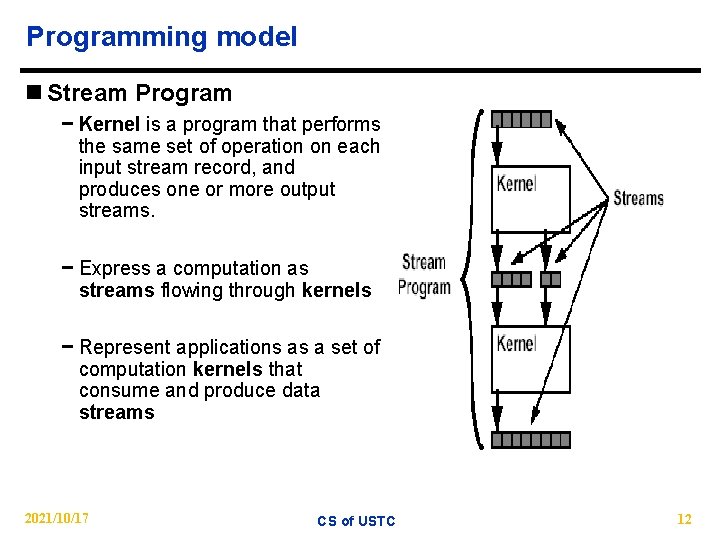

Programming model
- Stream program
  - A kernel is a program that performs the same set of operations on each input stream record and produces one or more output streams.
  - Express a computation as streams flowing through kernels.
  - Represent applications as a set of computation kernels that consume and produce data streams.
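The kernel definition can be sketched in plain Python: one function body is applied to every record of the input stream, and each record is routed to one of two output streams. The record type and the `scale_and_split` kernel are hypothetical examples, not KernelC.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # hypothetical record type: (x, y)


def scale_and_split(points: List[Point]) -> Tuple[List[Point], List[Point]]:
    """Hypothetical kernel: runs the same operations on every input record
    and produces two output streams."""
    right: List[Point] = []
    left: List[Point] = []
    for x, y in points:                  # one iteration per input record
        scaled = (2.0 * x, 2.0 * y)      # identical work for every record
        if x >= 0:
            right.append(scaled)         # output stream 1
        else:
            left.append(scaled)          # output stream 2
    return right, left


right, left = scale_and_split([(1.0, 2.0), (-1.0, 0.0), (0.0, 3.0)])
print(right)  # [(2.0, 4.0), (0.0, 6.0)]
print(left)   # [(-2.0, 0.0)]
```

No iteration reads another record's temporaries, so the iterations are independent; that independence is the data parallelism a stream processor can spread across its clusters.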

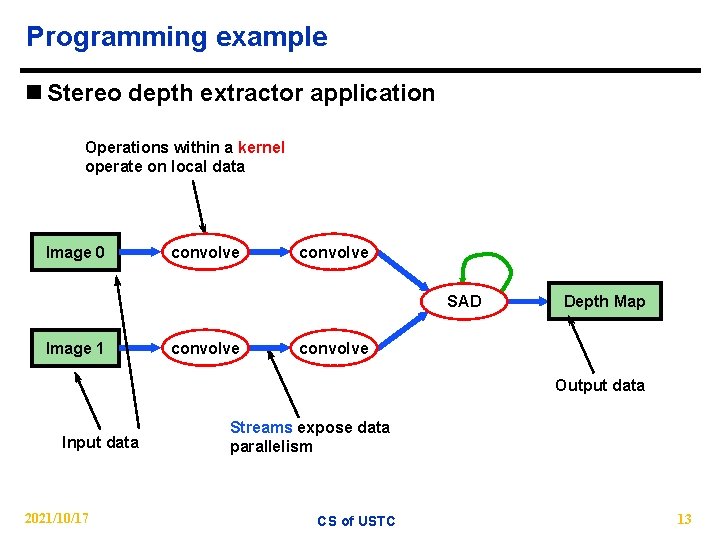

Programming example
- Stereo depth extractor application
  - Input data: Image 0 and Image 1, each filtered by convolve kernels
  - The SAD kernel compares the filtered images and produces the output data, a Depth Map
  - Operations within a kernel operate on local data
  - Streams expose data parallelism
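A heavily simplified version of this pipeline can be written as two kernels chained by streams. The sketch below works on single image rows, uses one filter per image instead of the slide's chain of convolutions, and outputs a disparity per pixel (depth is inversely proportional to disparity). The kernel names, the 3-tap filter and the window size are all assumptions for illustration.

```python
from typing import List, Sequence


def convolve(row: Sequence[float], taps: Sequence[float]) -> List[float]:
    """Convolve kernel: 1-D convolution over the 'valid' region of a row."""
    k = len(taps)
    return [sum(taps[k - 1 - j] * row[i + j] for j in range(k))
            for i in range(len(row) - k + 1)]


def sad(left: Sequence[float], right: Sequence[float],
        max_disp: int, win: int) -> List[int]:
    """SAD kernel: for each pixel, pick the disparity d whose window of
    absolute differences |left[i+t] - right[i+t-d]| has the smallest sum."""
    disparities: List[int] = []
    for i in range(max_disp, len(left) - win + 1):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost = sum(abs(left[i + t] - right[i + t - d]) for t in range(win))
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


def depth_pipeline(row0: Sequence[float], row1: Sequence[float],
                   taps: Sequence[float], max_disp: int, win: int) -> List[int]:
    """Stream program: image rows -> convolve -> SAD -> disparity map."""
    return sad(convolve(row0, taps), convolve(row1, taps), max_disp, win)


row0 = [j * j for j in range(12)]
row1 = row0[2:] + [0, 0]  # the same scene shifted by two pixels
print(depth_pipeline(row0, row1, [1, 2, 1], max_disp=3, win=3))  # [2, 2, 2, 2, 2]
```

The convolve outputs feed SAD directly without a round trip through a separate array in the program, which is the producer-consumer relationship between kernels that the stream model makes explicit.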

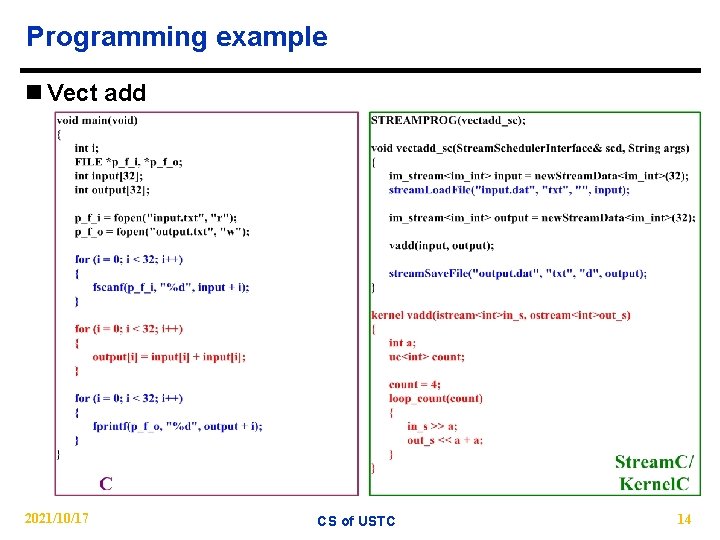

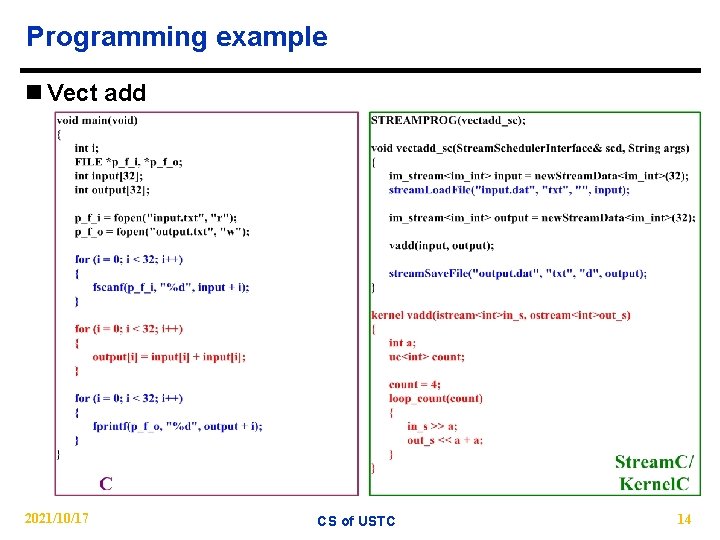

Programming example
- Vector add (vadd)

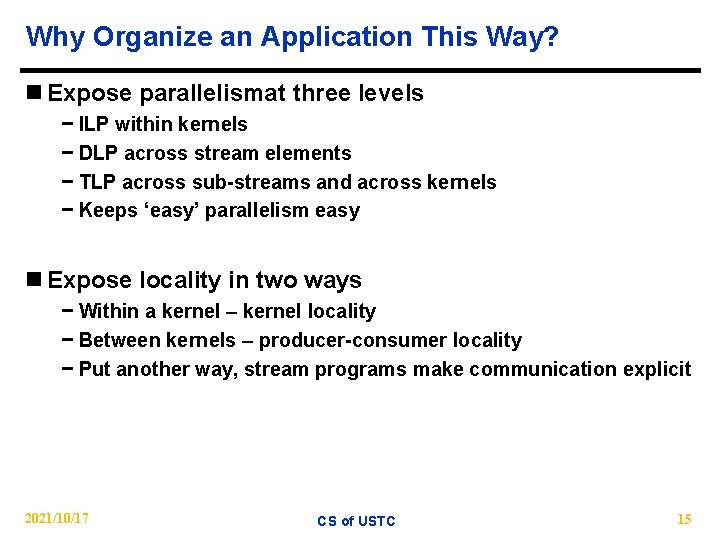

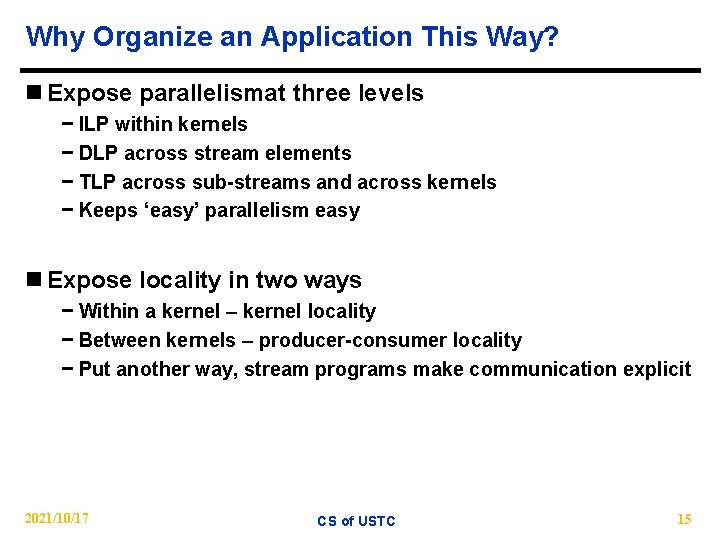

Why Organize an Application This Way?
- Expose parallelism at three levels
  - ILP within kernels
  - DLP across stream elements
  - TLP across sub-streams and across kernels
  - Keeps ‘easy’ parallelism easy
- Expose locality in two ways
  - Within a kernel: kernel locality
  - Between kernels: producer-consumer locality
  - Put another way, stream programs make communication explicit

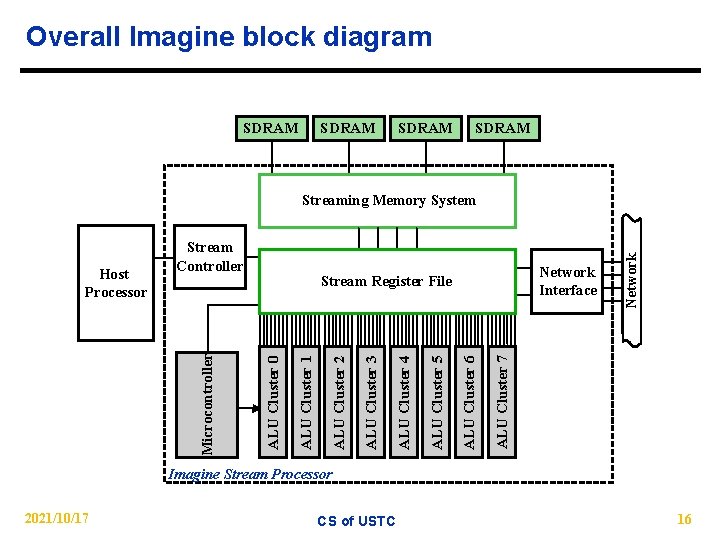

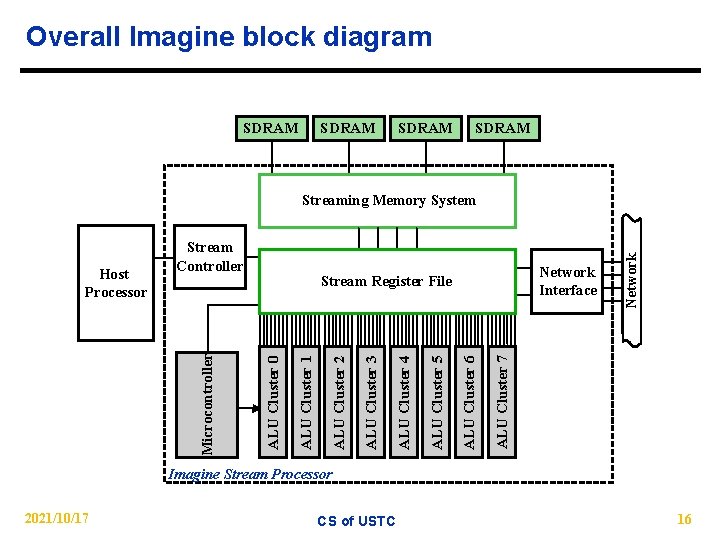

Overall Imagine block diagram
- Figure: Imagine Stream Processor. Components: host processor; stream controller; microcontroller; stream register file; ALU clusters 0 to 7; streaming memory system with SDRAM; network interface to the network.

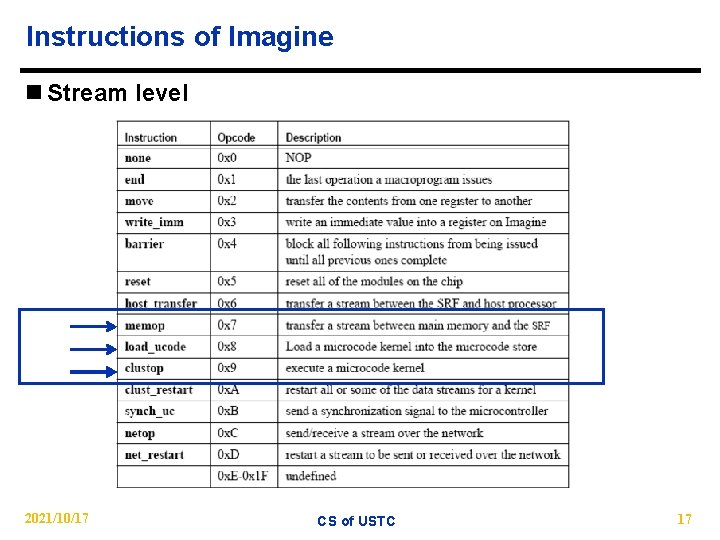

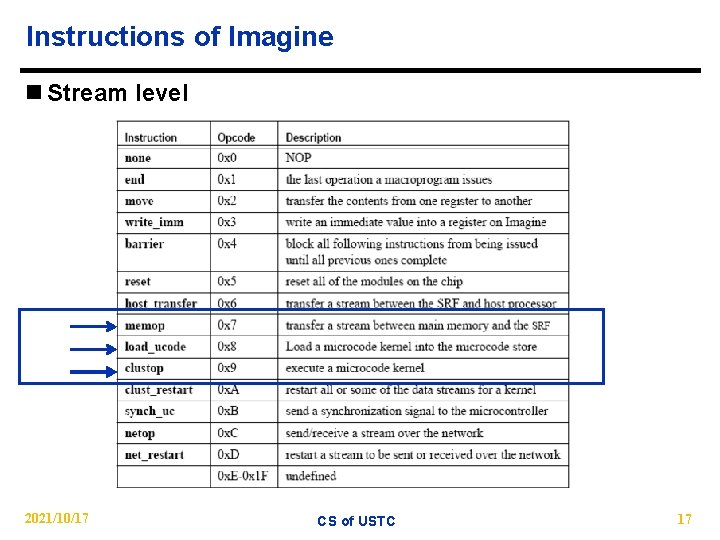

Instructions of Imagine
- Stream level

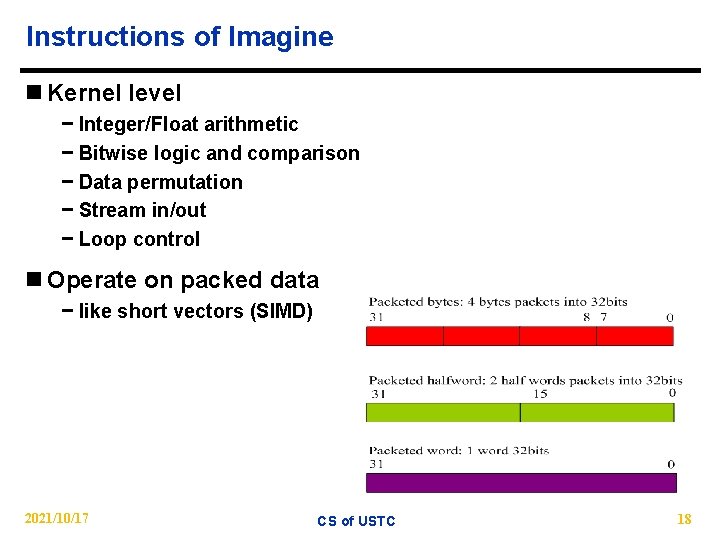

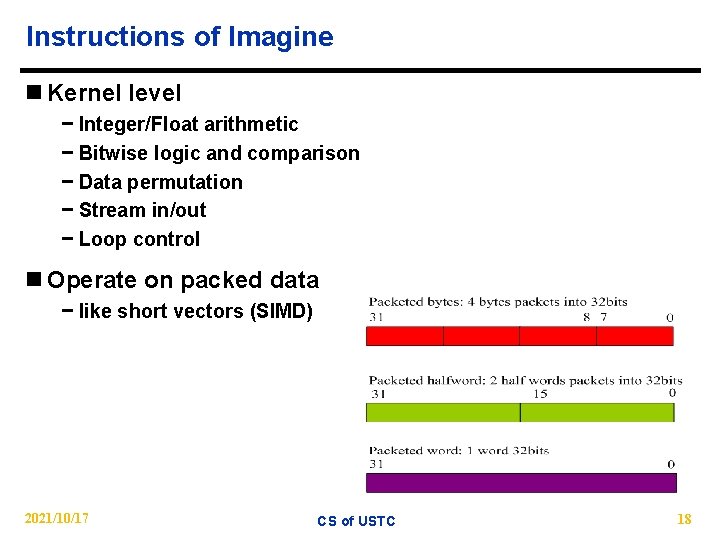

Instructions of Imagine
- Kernel level
  - Integer/float arithmetic
  - Bitwise logic and comparison
  - Data permutation
  - Stream in/out
  - Loop control
- Operate on packed data
  - Like short vectors (SIMD)
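Operating on packed data means one 32-bit word carries several narrower values that are processed by the same instruction. A minimal sketch, assuming two unsigned 16-bit lanes per 32-bit word with wraparound on overflow; the slide does not specify Imagine's exact packed formats or overflow behavior, and the helper names are hypothetical.

```python
from typing import Tuple

MASK16 = 0xFFFF


def pack16(hi: int, lo: int) -> int:
    """Pack two unsigned 16-bit lanes into one 32-bit word."""
    return ((hi & MASK16) << 16) | (lo & MASK16)


def unpack16(word: int) -> Tuple[int, int]:
    """Split a 32-bit word back into its (hi, lo) 16-bit lanes."""
    return (word >> 16) & MASK16, word & MASK16


def packed_add16(a: int, b: int) -> int:
    """SIMD-style add: each 16-bit lane is added independently and wraps
    around, so no carry crosses from the low lane into the high lane."""
    a_hi, a_lo = unpack16(a)
    b_hi, b_lo = unpack16(b)
    return pack16((a_hi + b_hi) & MASK16, (a_lo + b_lo) & MASK16)


a = pack16(1, 0xFFFF)
b = pack16(2, 1)
print(unpack16(packed_add16(a, b)))  # (3, 0): lanes stay independent
print(hex((a + b) & 0xFFFFFFFF))     # 0x40000: a plain 32-bit add leaks the carry
```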

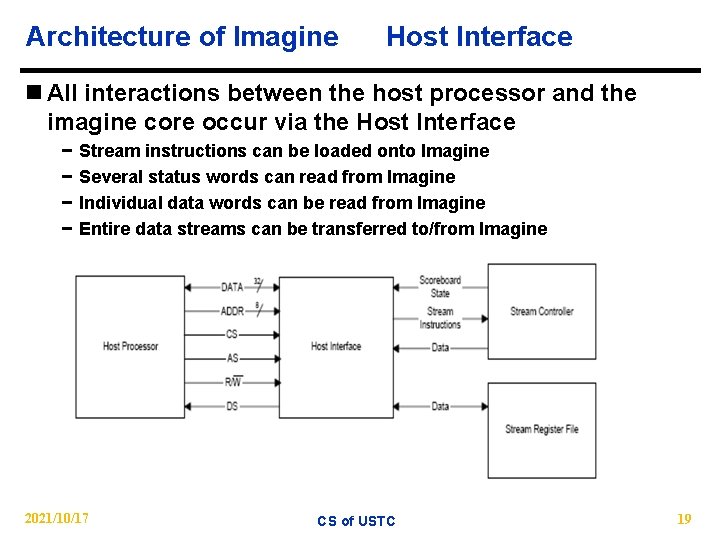

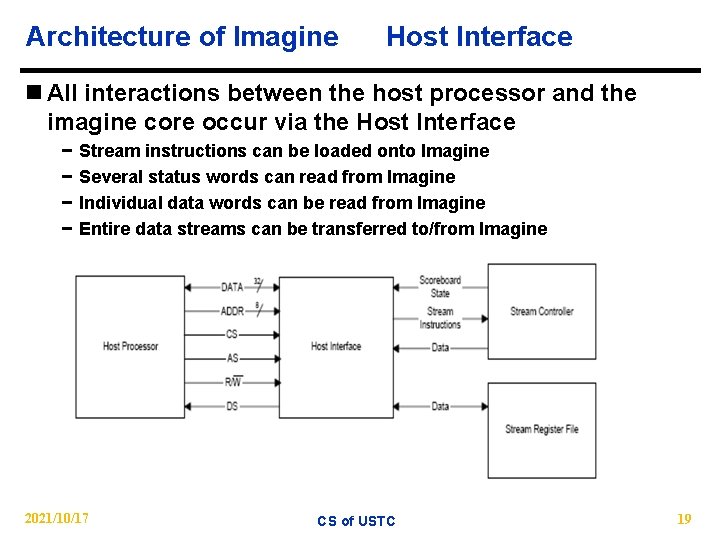

Architecture of Imagine: Host Interface
- All interactions between the host processor and the Imagine core occur via the host interface:
  - Stream instructions can be loaded onto Imagine
  - Several status words can be read from Imagine
  - Individual data words can be read from Imagine
  - Entire data streams can be transferred to/from Imagine

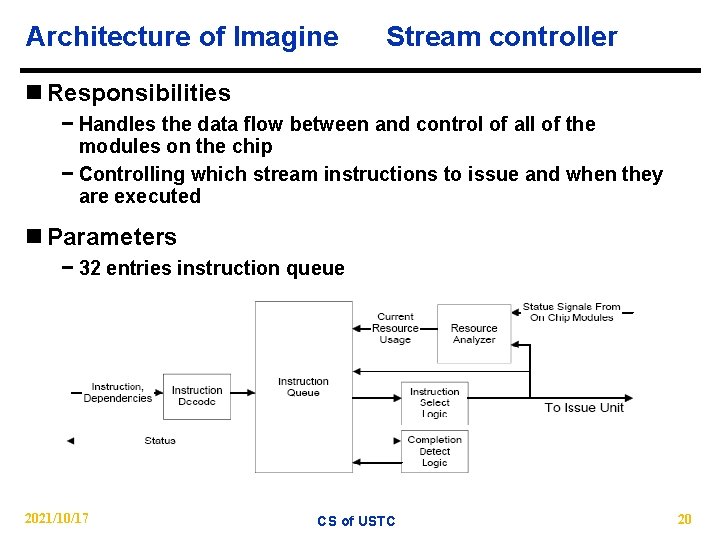

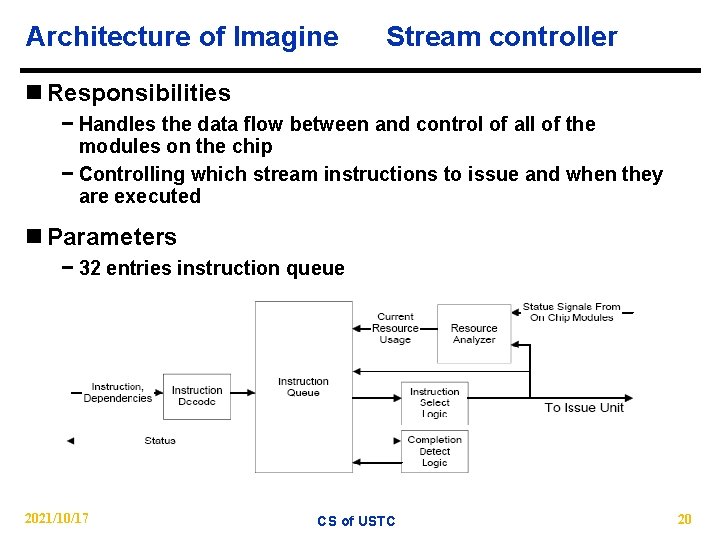

Architecture of Imagine: Stream Controller
- Responsibilities
  - Handles the data flow between, and the control of, all modules on the chip
  - Controls which stream instructions to issue and when they are executed
- Parameters
  - 32-entry instruction queue
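One way to picture the stream controller's job is a bounded queue from which instructions are issued once their dependences allow. The sketch below is a simplified model, not Imagine's actual issue logic: it keeps the slide's 32-entry queue, but the unit names, the one-instruction-per-unit rule and the stream-name dependence check are assumptions.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

QUEUE_ENTRIES = 32  # instruction queue size from the slide


@dataclass
class StreamInst:
    name: str
    unit: str               # hypothetical unit name, e.g. "mem" or "cluster"
    reads: FrozenSet[str]   # streams read
    writes: FrozenSet[str]  # streams written


def conflicts(older: StreamInst, younger: StreamInst) -> bool:
    """True if `younger` must wait for `older` (RAW, WAR or WAW on a stream)."""
    return bool(older.writes & younger.reads
                or older.reads & younger.writes
                or older.writes & younger.writes)


class StreamController:
    def __init__(self) -> None:
        self.queue: List[StreamInst] = []
        self.in_flight: List[StreamInst] = []

    def enqueue(self, inst: StreamInst) -> bool:
        """Accept an instruction from the host; refuse it if the queue is full."""
        if len(self.queue) >= QUEUE_ENTRIES:
            return False
        self.queue.append(inst)
        return True

    def issue(self) -> Optional[StreamInst]:
        """Issue the oldest queued instruction whose unit is idle (assumed:
        one instruction per unit) and that conflicts with no in-flight or
        older queued instruction."""
        busy = {i.unit for i in self.in_flight}
        for pos, inst in enumerate(self.queue):
            if inst.unit in busy:
                continue
            if any(conflicts(o, inst) for o in self.in_flight + self.queue[:pos]):
                continue
            self.in_flight.append(self.queue.pop(pos))
            return inst
        return None

    def complete(self, inst: StreamInst) -> None:
        """Called when a unit finishes an instruction."""
        self.in_flight.remove(inst)
```

For example, with `load a`, `load b` and a `vadd` that reads both streams, this model issues `load a`, issues `load b` once the memory unit is free again, and holds `vadd` until both loads have completed.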

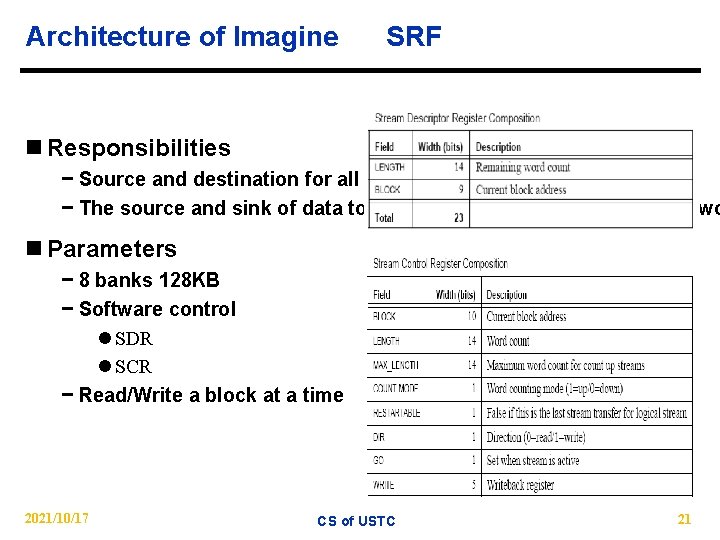

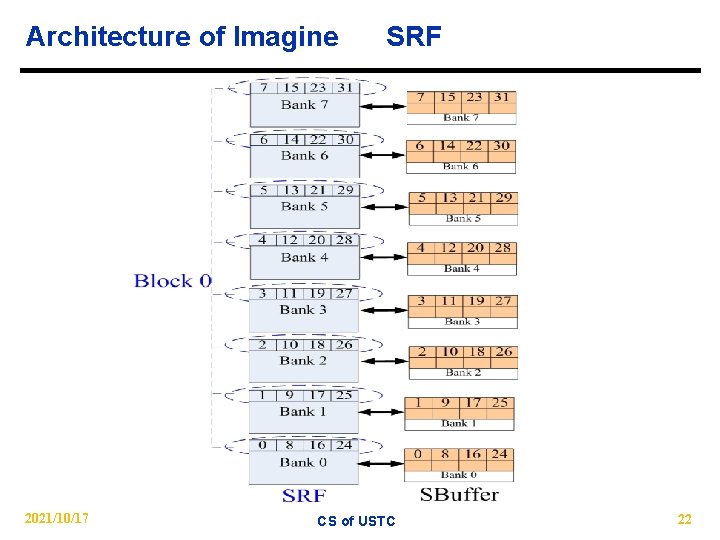

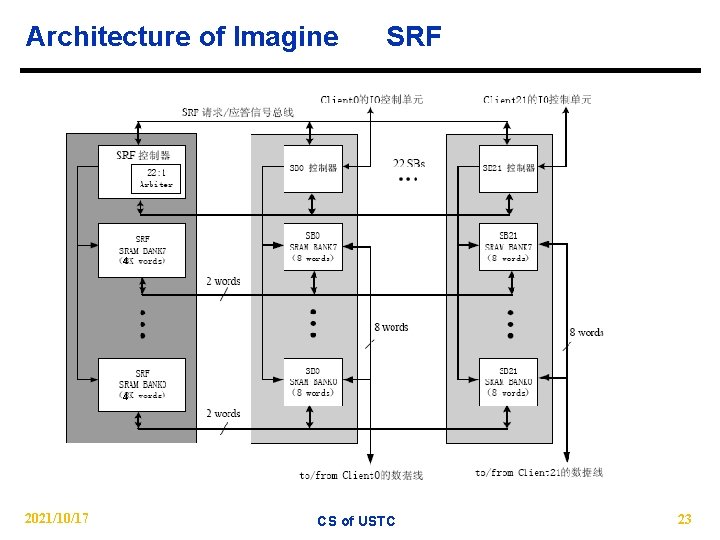

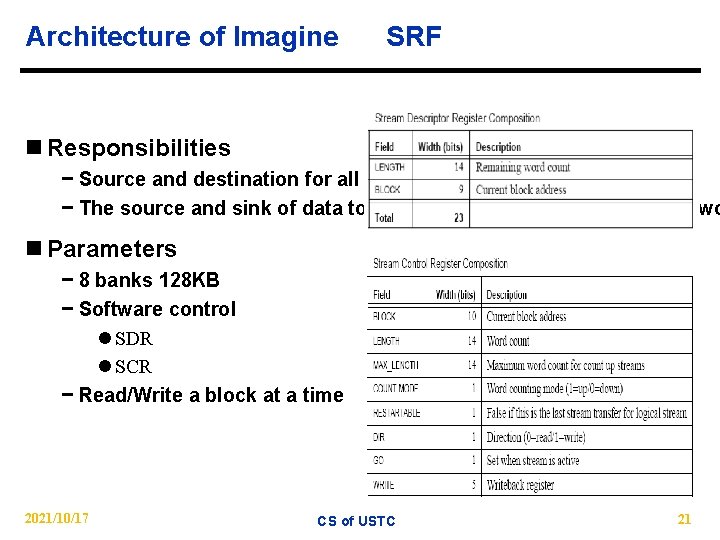

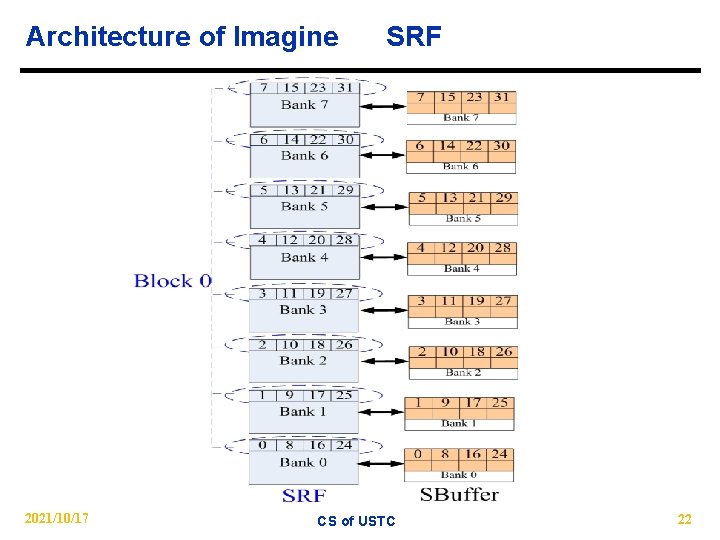

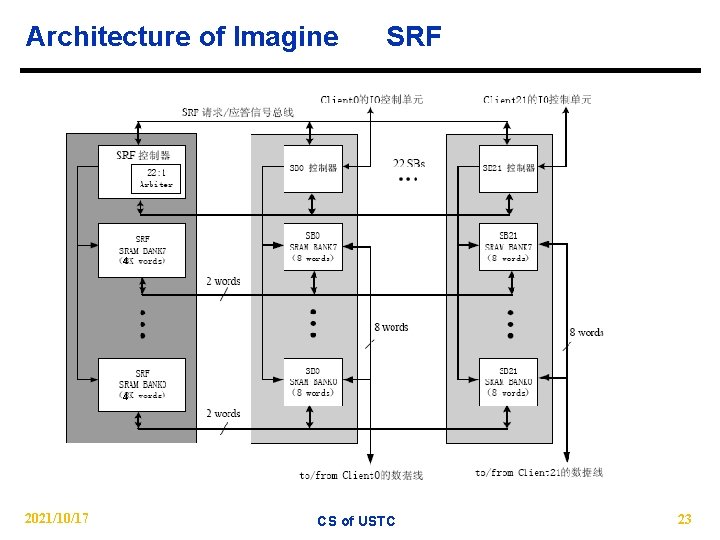

Architecture of Imagine: SRF
- Responsibilities
  - Source and destination for all memory operations
  - Source and sink of data for the arithmetic clusters and the network
- Parameters
  - 8 banks, 128 KB
  - Software-controlled
    - SDR
    - SCR
  - Reads/writes one block at a time
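The slide's parameters fix the size of each bank: 128 KB split over 8 banks is 16 KB, or 4096 32-bit words, per bank. The toy model below adds a stream descriptor (a hypothetical stand-in for an SDR) and block reads; the word-interleaved bank mapping and the one-word-per-bank block are assumptions, not documented Imagine behavior.

```python
from dataclasses import dataclass
from typing import List, Tuple

SRF_BYTES = 128 * 1024  # from the slide
NUM_BANKS = 8           # from the slide
WORD_BYTES = 4          # 32-bit words
WORDS_PER_BANK = SRF_BYTES // NUM_BANKS // WORD_BYTES  # 4096


@dataclass
class StreamDescriptor:
    """Hypothetical stream descriptor: where a stream lives in the SRF."""
    start: int   # first word address
    length: int  # number of words


class SRF:
    def __init__(self) -> None:
        self.banks = [[0] * WORDS_PER_BANK for _ in range(NUM_BANKS)]

    @staticmethod
    def locate(addr: int) -> Tuple[int, int]:
        """Assumed word-interleaved mapping: address -> (bank, row)."""
        return addr % NUM_BANKS, addr // NUM_BANKS

    def write_word(self, addr: int, value: int) -> None:
        bank, row = self.locate(addr)
        self.banks[bank][row] = value

    def read_word(self, addr: int) -> int:
        bank, row = self.locate(addr)
        return self.banks[bank][row]

    def read_block(self, sd: StreamDescriptor, block: int) -> List[int]:
        """Read block `block` of a stream: up to NUM_BANKS consecutive words,
        which under the assumed mapping touch each bank at most once."""
        first = sd.start + block * NUM_BANKS
        last = min(first + NUM_BANKS, sd.start + sd.length)
        return [self.read_word(a) for a in range(first, last)]
```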

Architecture of Imagine: SRF

Architecture of Imagine: SRF

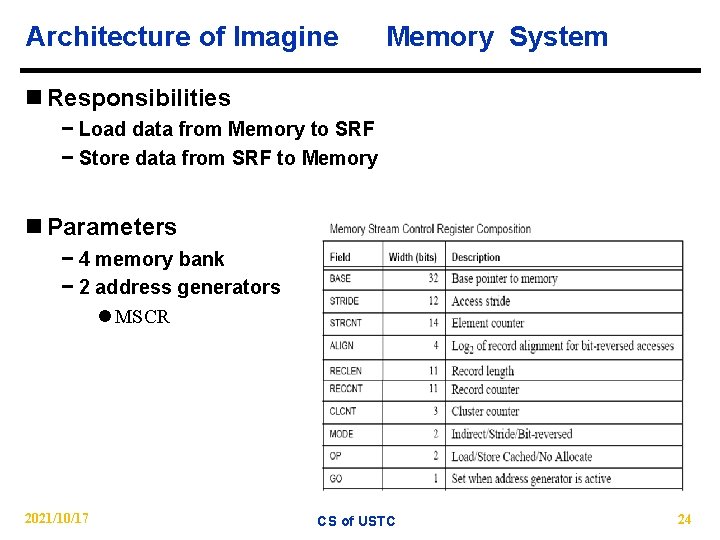

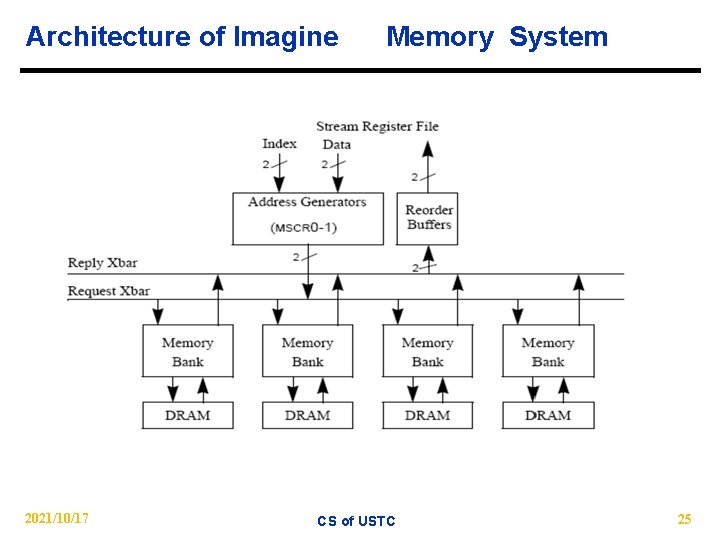

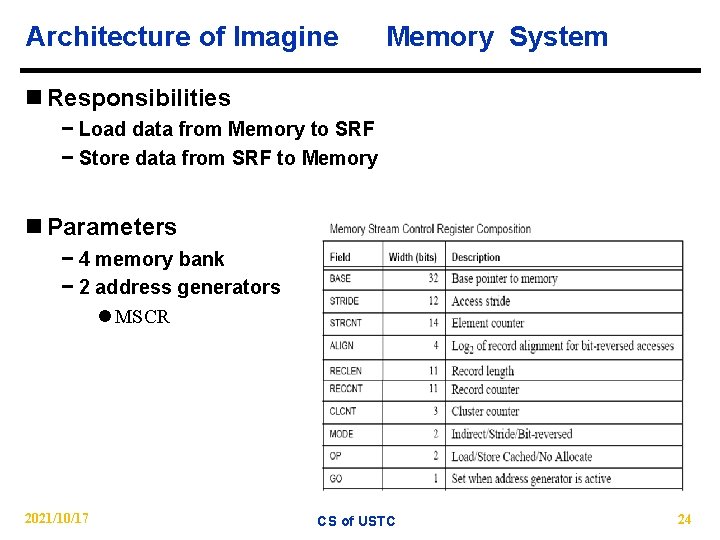

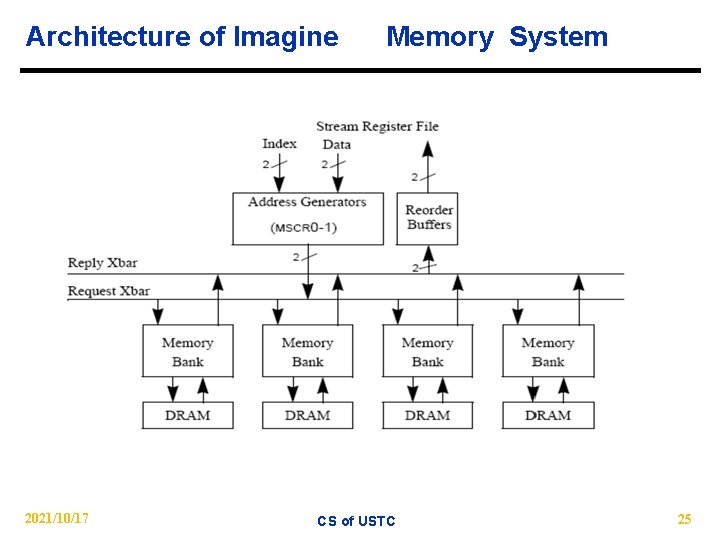

Architecture of Imagine: Memory System
- Responsibilities
  - Load data from memory to the SRF
  - Store data from the SRF to memory
- Parameters
  - 4 memory banks
  - 2 address generators
    - MSCR
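The memory system pairs address generators with a small number of banks, so the access pattern decides how evenly the banks are used. A minimal sketch, assuming word-interleaved bank selection (`address mod 4`); the function names are hypothetical and the MSCR-driven address generators themselves are not modeled.

```python
from collections import Counter
from typing import List, Sequence

NUM_MEM_BANKS = 4  # from the slide


def strided_addresses(base: int, stride: int, count: int) -> List[int]:
    """Addresses an address generator would emit for a strided stream."""
    return [base + i * stride for i in range(count)]


def indexed_addresses(base: int, indices: Sequence[int]) -> List[int]:
    """Addresses an address generator would emit for an indexed (gather) stream."""
    return [base + i for i in indices]


def bank_of(addr: int) -> int:
    """Assumed word-interleaved mapping of addresses to banks."""
    return addr % NUM_MEM_BANKS


def bank_histogram(addrs: Sequence[int]) -> Counter:
    """How many accesses each bank receives."""
    return Counter(bank_of(a) for a in addrs)


print(bank_histogram(strided_addresses(0, 1, 8)))  # spread over all 4 banks
print(bank_histogram(strided_addresses(0, 4, 8)))  # all 8 accesses hit bank 0
```

Under this mapping, unit stride spreads eight accesses two per bank, while a stride equal to the bank count sends every access to the same bank, which would serialize them.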

Architecture of Imagine: Memory System

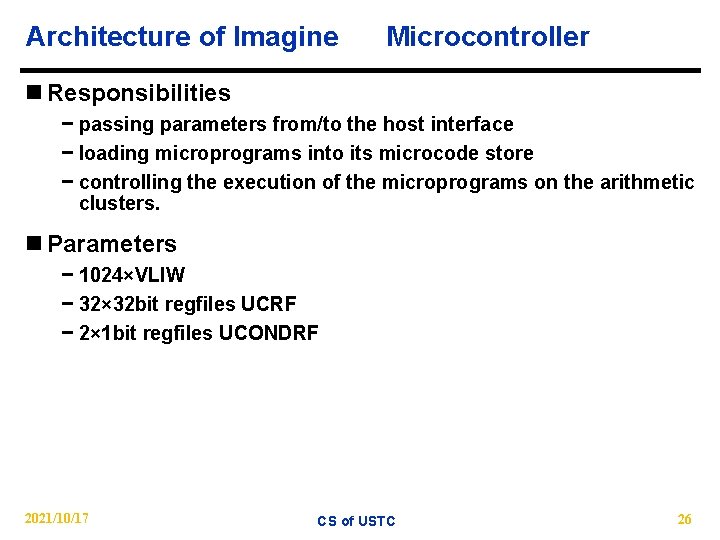

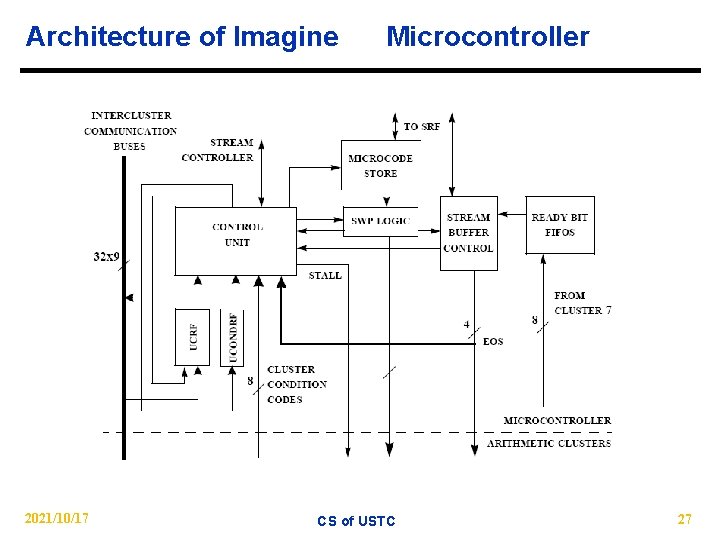

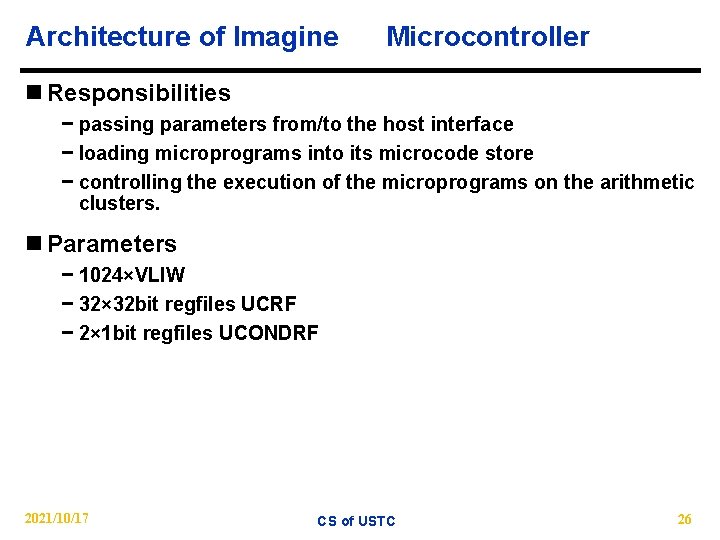

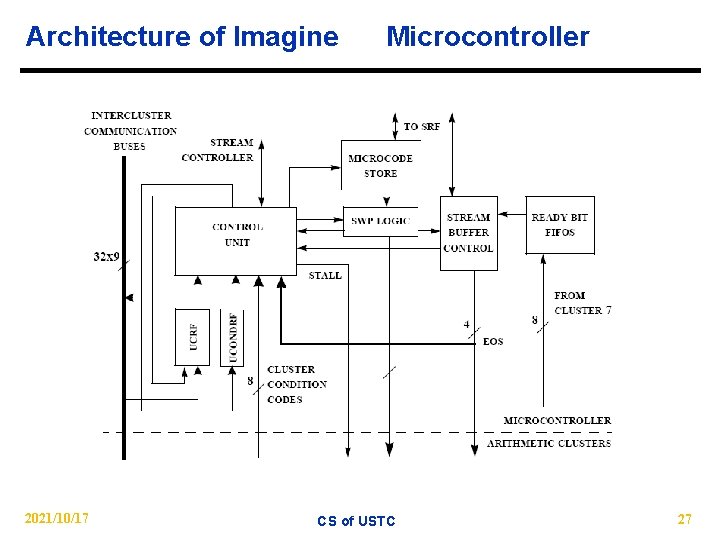

Architecture of Imagine: Microcontroller
- Responsibilities
  - Passing parameters from/to the host interface
  - Loading microprograms into its microcode store
  - Controlling the execution of the microprograms on the arithmetic clusters
- Parameters
  - Microcode store: 1024 × VLIW instructions
  - UCRF: 32 × 32-bit register file
  - UCONDRF: 2 × 1-bit register file

Architecture of Imagine: Microcontroller

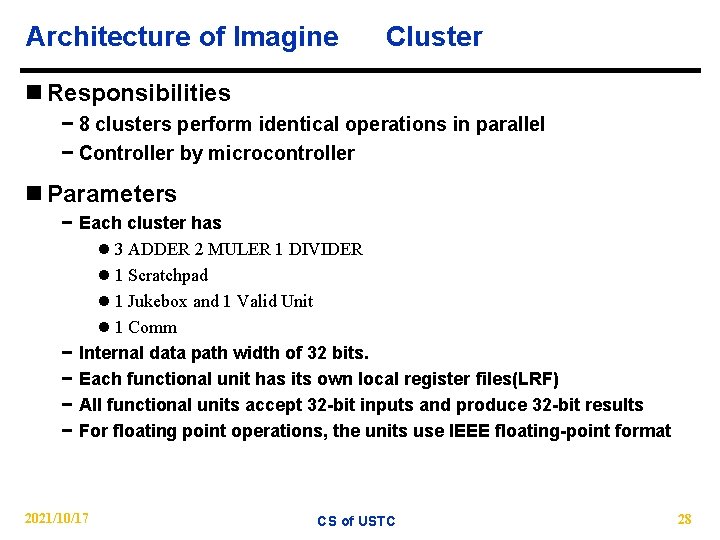

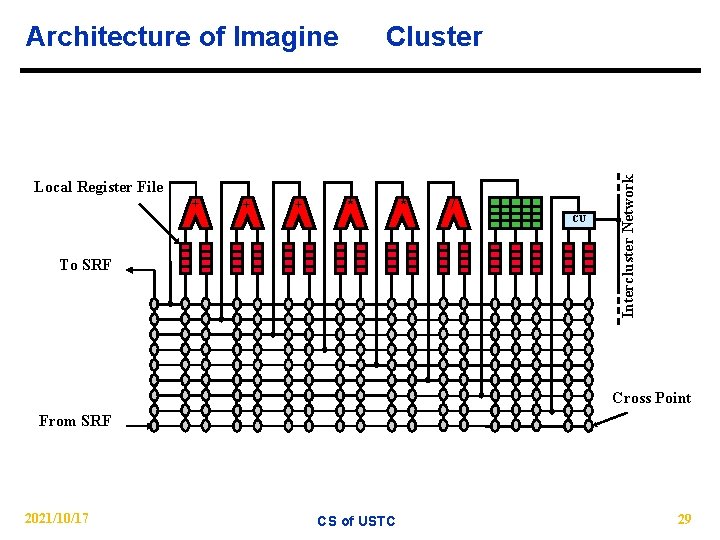

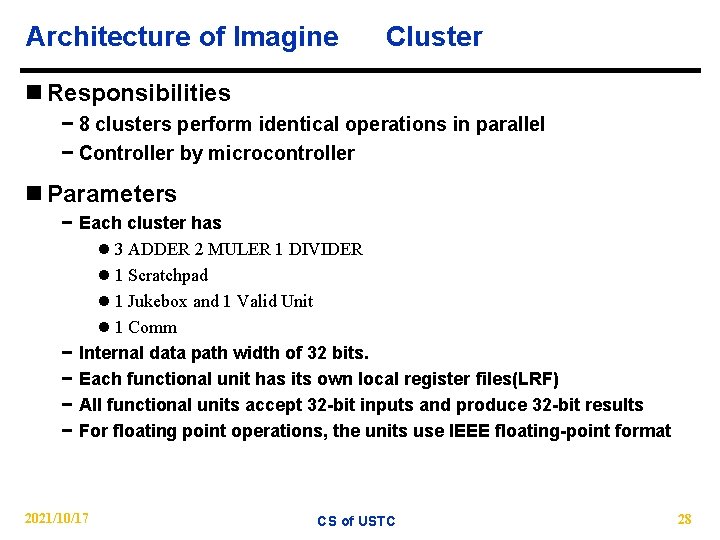

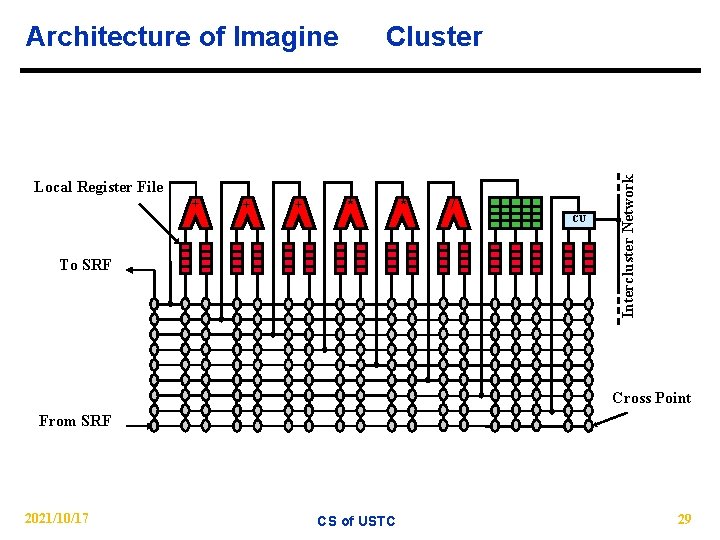

Architecture of Imagine: Cluster
- Responsibilities
  - 8 clusters perform identical operations in parallel
  - Controlled by the microcontroller
- Parameters
  - Each cluster has:
    - 3 adders, 2 multipliers, 1 divider
    - 1 scratchpad
    - 1 Jukebox and 1 Valid Unit
    - 1 communication unit (Comm)
  - Internal data path width of 32 bits
  - Each functional unit has its own local register files (LRF)
  - All functional units accept 32-bit inputs and produce 32-bit results
  - For floating-point operations, the units use IEEE floating-point format
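The per-cluster unit counts give the chip's arithmetic width directly. Counting only the adders, multipliers and divider:

$$
N_{\text{arith}} = \underbrace{8}_{\text{clusters}} \times \left(\underbrace{3}_{\text{adders}} + \underbrace{2}_{\text{multipliers}} + \underbrace{1}_{\text{divider}}\right) = 8 \times 6 = 48
$$

This is an upper bound on arithmetic operations started per cycle, not a measured rate: it assumes every unit can accept a new operation each cycle, which need not hold for every unit (the divider in particular).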

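Architecture of Imagine: Cluster
- Figure: cluster datapath. Labeled parts: local register files; three adders (+); two multipliers (*); a divider (/); a communication unit (CU); cross point; intercluster network; ports from and to the SRF.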

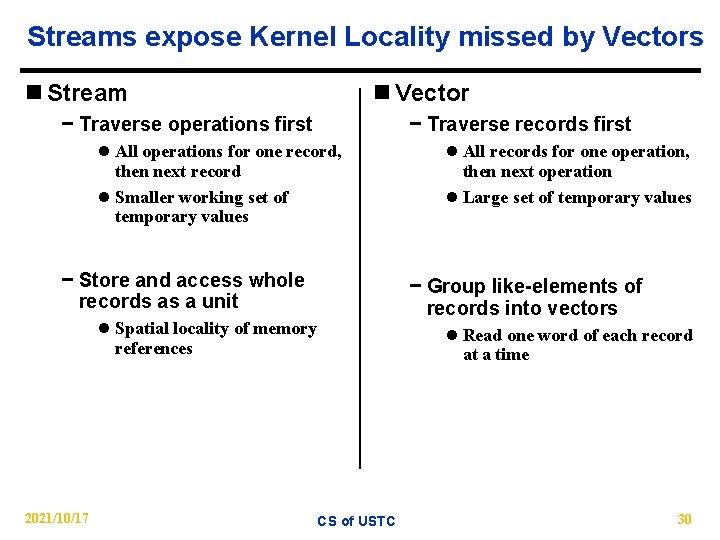

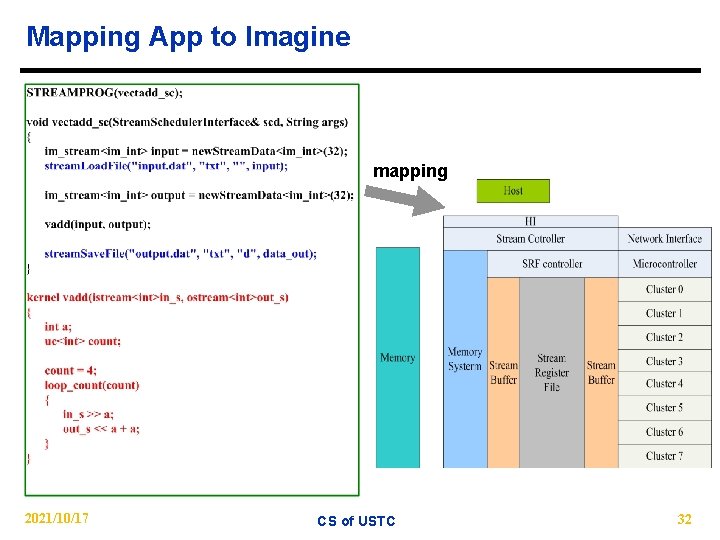

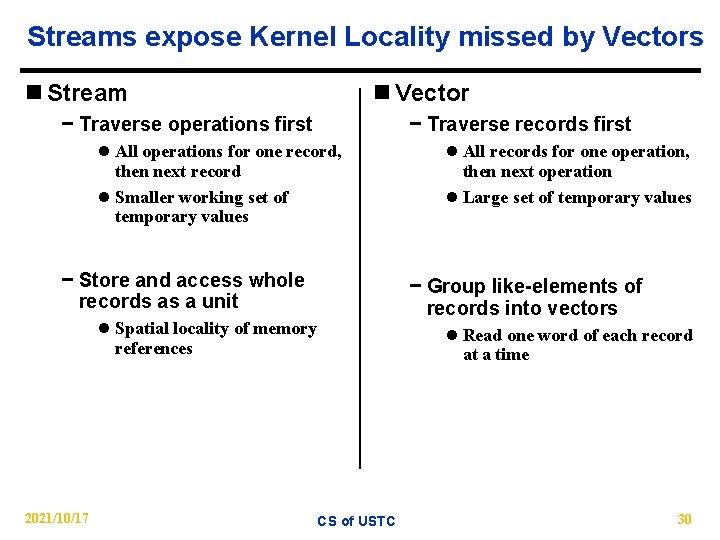

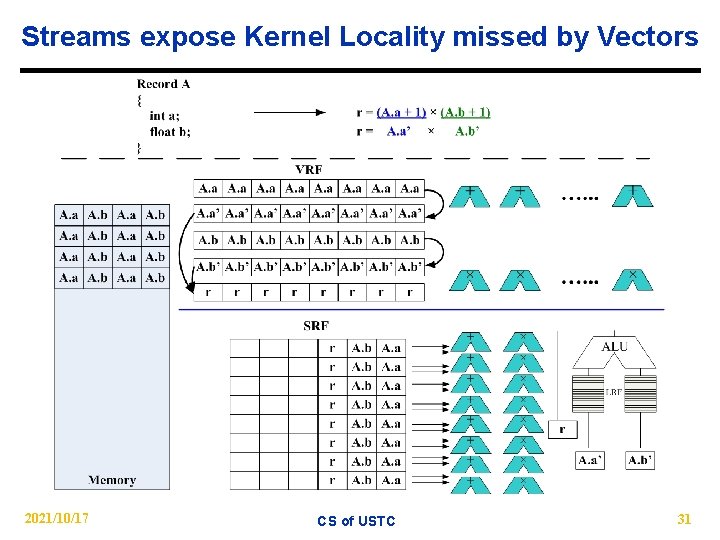

Streams expose Kernel Locality missed by Vectors
- Stream
  - Traverse operations first
    - All operations for one record, then next record
    - Smaller working set of temporary values
  - Store and access whole records as a unit
    - Spatial locality of memory references
- Vector
  - Traverse records first
    - All records for one operation, then next operation
    - Large set of temporary values
  - Group like-elements of records into vectors
    - Read one word of each record at a time
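The difference in traversal order is easiest to see on a tiny computation over records `(x, y, z)`. Both functions below compute `(x*y + z)**2` for every record; the record layout and the computation are hypothetical. The stream version keeps two scalar temporaries per record, while the vector version materializes temporaries as long as the input.

```python
from typing import List, Tuple

Record = Tuple[float, float, float]  # hypothetical record: (x, y, z)


def stream_order(records: List[Record]) -> List[float]:
    """Operations first: all operations for one record, then the next record.
    The live temporaries are two scalars, reused for every record."""
    out: List[float] = []
    for x, y, z in records:  # whole record accessed as a unit
        t1 = x * y
        t2 = t1 + z
        out.append(t2 * t2)
    return out


def vector_order(records: List[Record]) -> List[float]:
    """Records first: one operation over all records, then the next operation.
    Each temporary is a vector as long as the input."""
    xs = [r[0] for r in records]  # like elements grouped into vectors
    ys = [r[1] for r in records]
    zs = [r[2] for r in records]
    t1 = [x * y for x, y in zip(xs, ys)]  # n temporaries
    t2 = [a + z for a, z in zip(t1, zs)]  # n more temporaries
    return [v * v for v in t2]
```

For n records the vector version holds at least 2n temporaries at once against 2 for the stream version; that smaller working set is the kernel locality this slide refers to.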

Streams expose Kernel Locality missed by Vectors

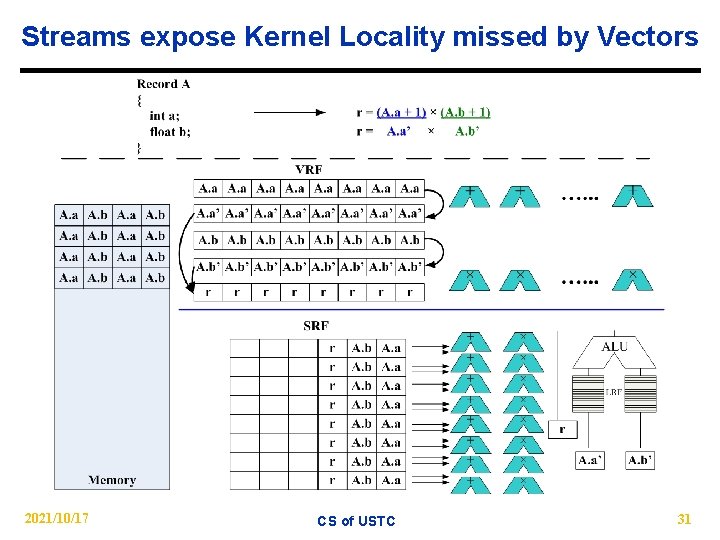

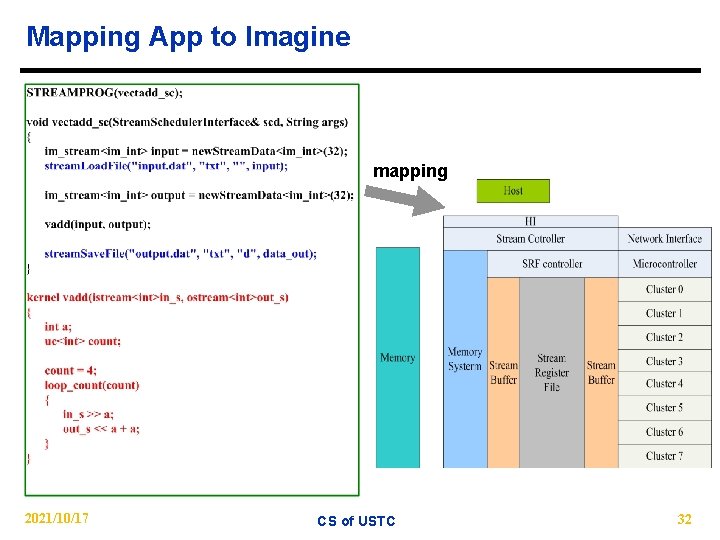

Mapping App to Imagine
- Mapping

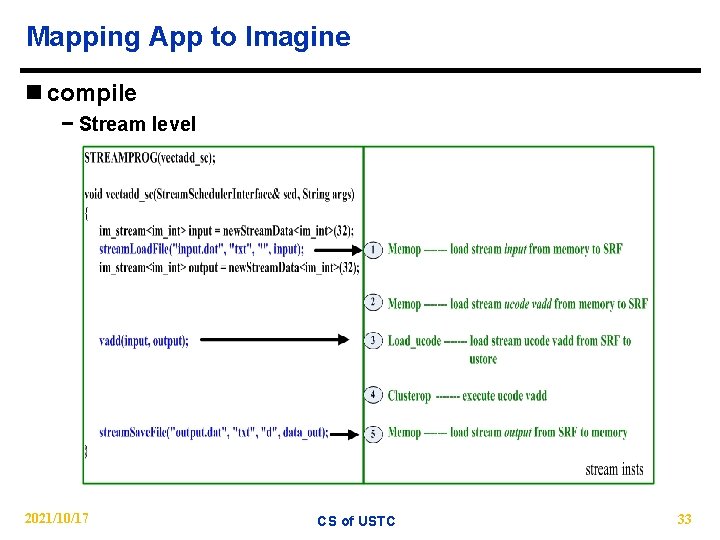

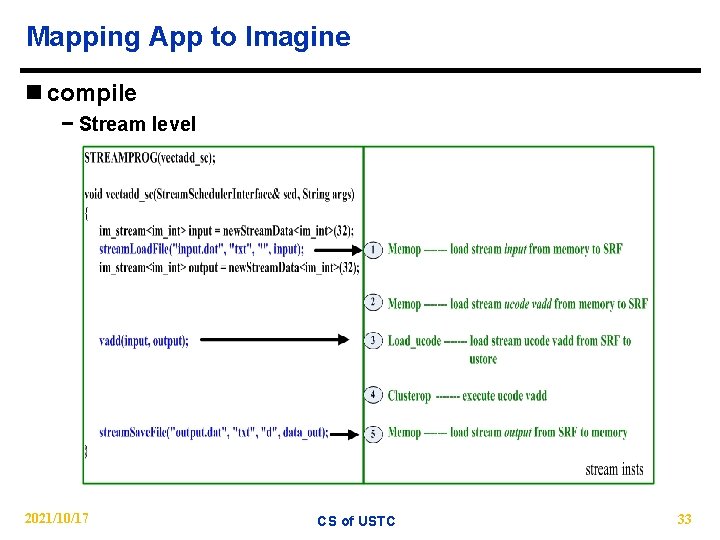

Mapping App to Imagine
- Compile
  - Stream level

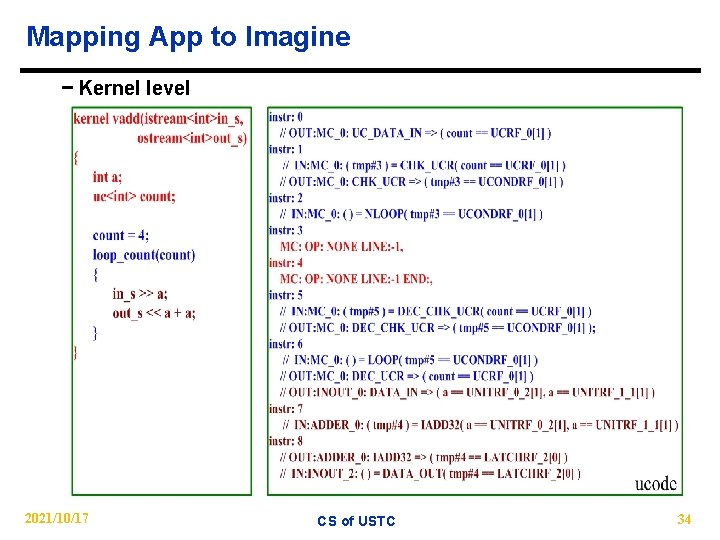

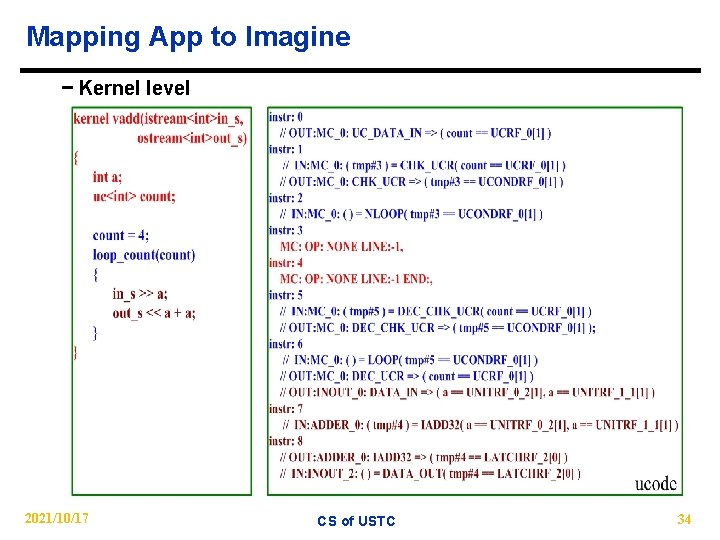

Mapping App to Imagine
- Compile
  - Kernel level

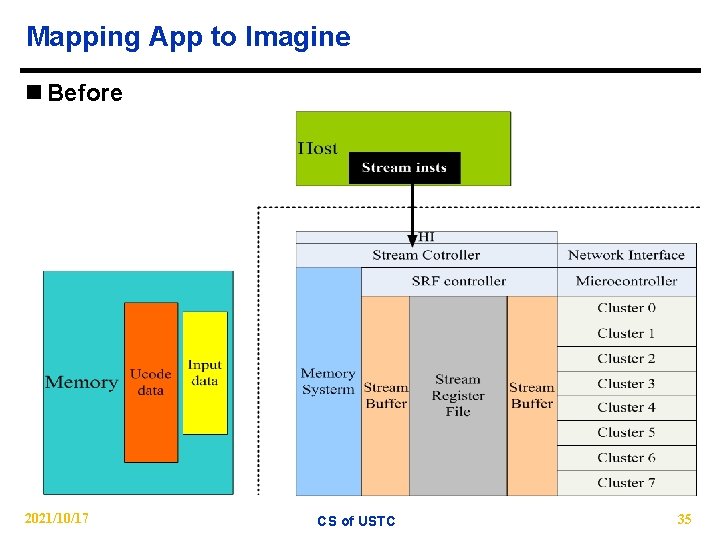

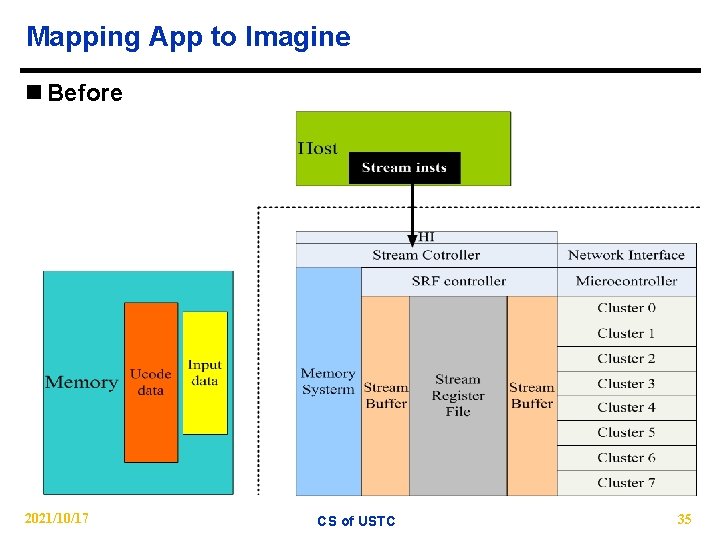

Mapping App to Imagine
- Before

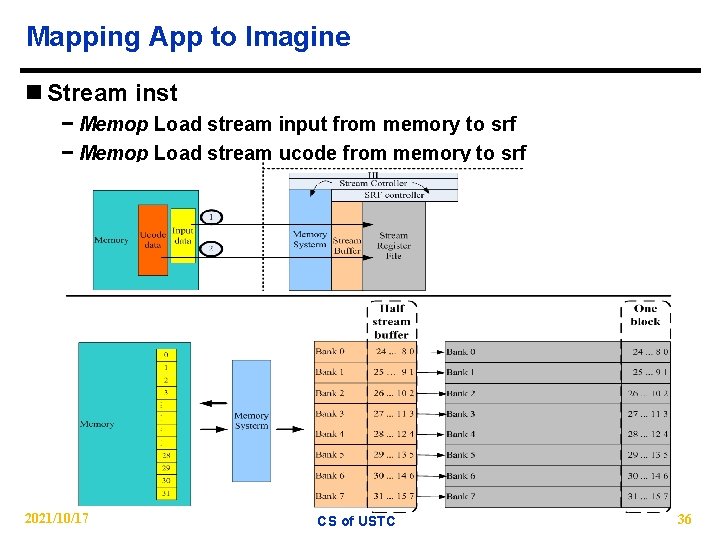

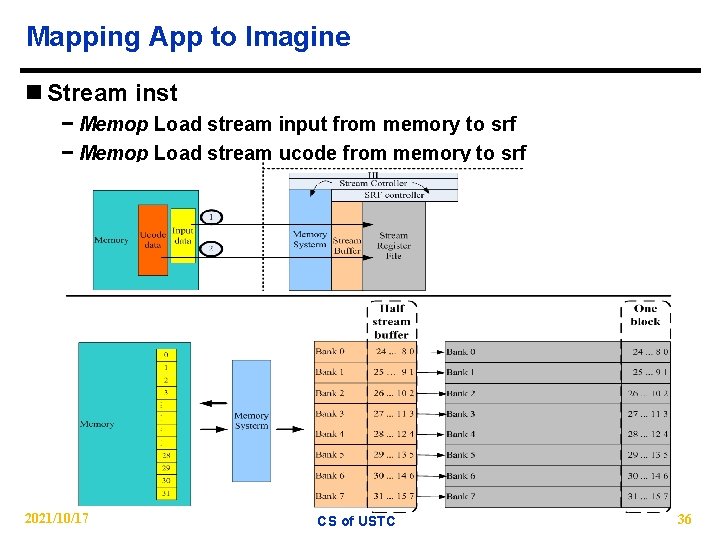

Mapping App to Imagine
- Stream instructions
  - Memop: load the input stream from memory to the SRF
  - Memop: load the ucode stream from memory to the SRF

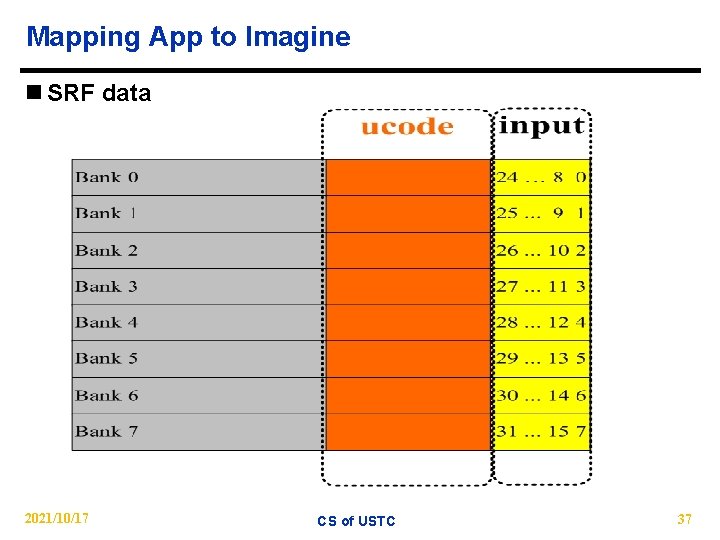

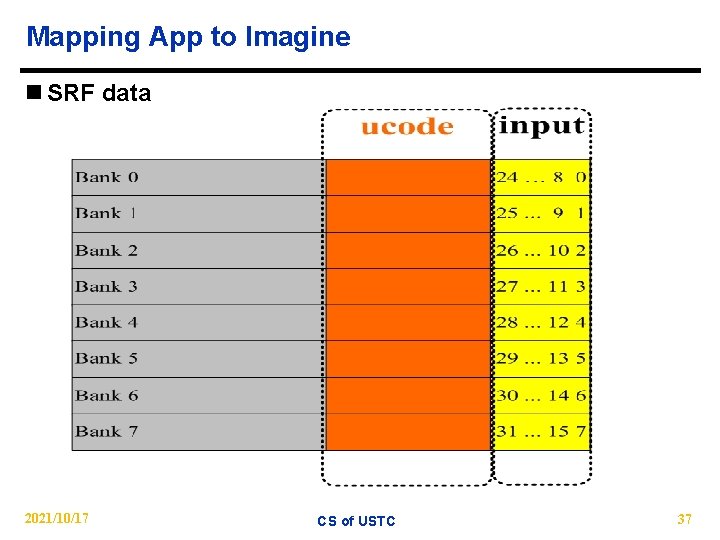

Mapping App to Imagine
- SRF data

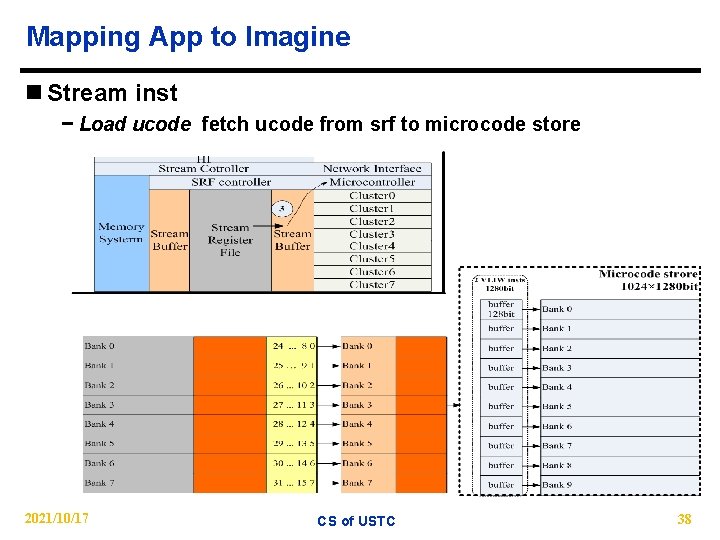

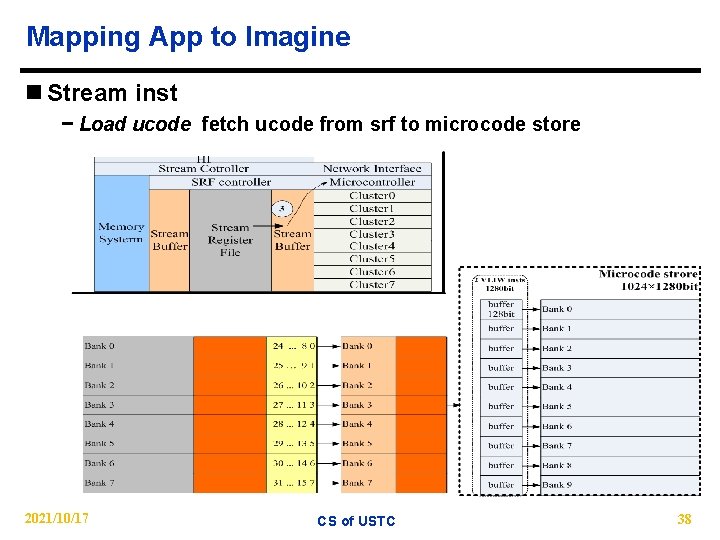

Mapping App to Imagine
- Stream instruction
  - Load ucode: fetch the ucode from the SRF into the microcode store

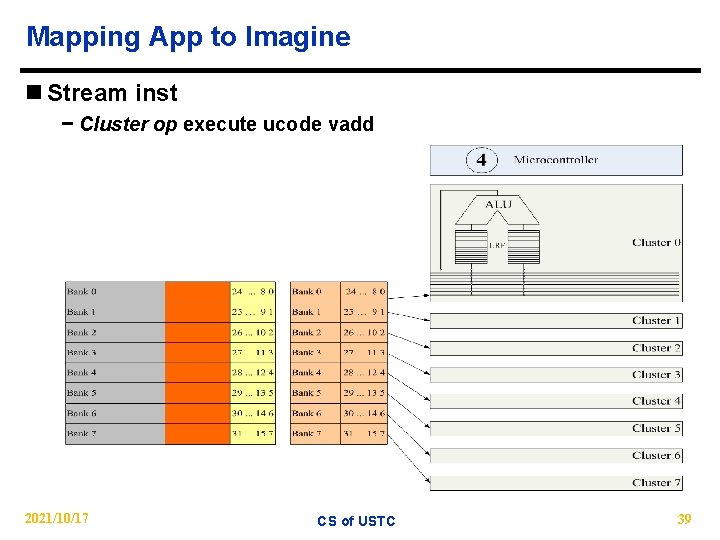

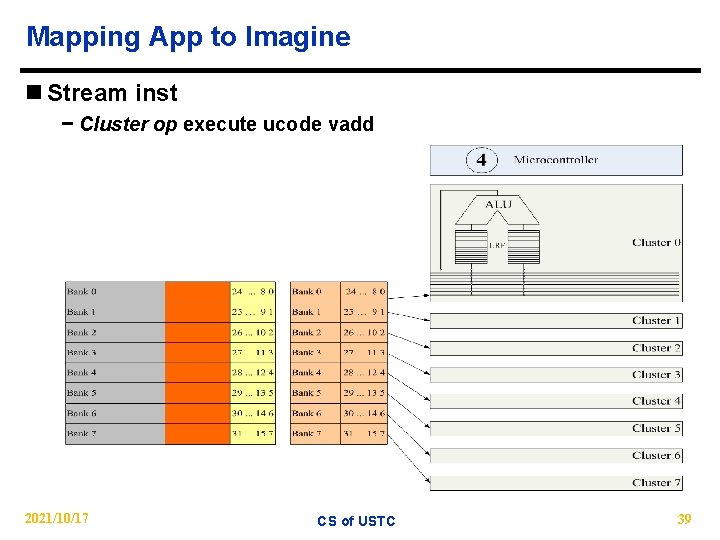

Mapping App to Imagine
- Stream instruction
  - Cluster op: execute the vadd ucode

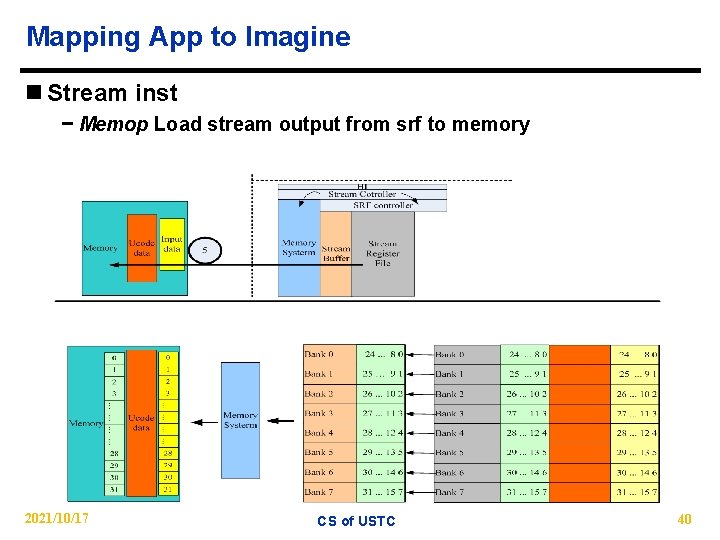

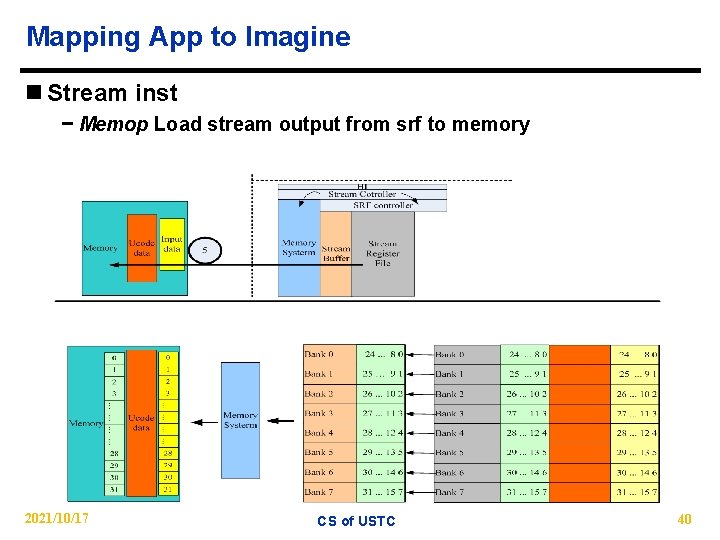

Mapping App to Imagine
- Stream instruction
  - Memop: store the output stream from the SRF to memory
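The vadd walk-through is a sequence of four kinds of stream instruction: memop loads, a ucode load, a cluster op and a memop store. The toy interpreter below replays that sequence with Python dictionaries standing in for memory, the SRF and the microcode store. The instruction names, the two input streams `a` and `b`, and the `KERNELS` table (a stand-in for compiled microcode) are all hypothetical.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical stand-in for compiled kernel microcode: name -> function.
KERNELS: Dict[str, Callable[..., List[int]]] = {
    "vadd": lambda a, b: [x + y for x, y in zip(a, b)],
}


def run(program: List[Tuple[Any, ...]], memory: Dict[str, Any]) -> Dict[str, Any]:
    srf: Dict[str, Any] = {}
    ucode_store: Optional[str] = None
    for op, *args in program:
        if op == "memop_load":      # memory -> SRF
            (name,) = args
            srf[name] = memory[name]
        elif op == "load_ucode":    # SRF -> microcode store
            (name,) = args
            ucode_store = srf[name]
        elif op == "cluster_op":    # run the loaded microcode on SRF streams
            inputs, output = args
            if ucode_store is None:
                raise RuntimeError("no microcode loaded")
            srf[output] = KERNELS[ucode_store](*(srf[s] for s in inputs))
        elif op == "memop_store":   # SRF -> memory
            (name,) = args
            memory[name] = srf[name]
        else:
            raise ValueError(f"unknown stream instruction {op!r}")
    return memory


program = [
    ("memop_load", "a"),
    ("memop_load", "b"),
    ("memop_load", "vadd_ucode"),
    ("load_ucode", "vadd_ucode"),
    ("cluster_op", ("a", "b"), "c"),
    ("memop_store", "c"),
]
memory = {"a": [1, 2, 3], "b": [10, 20, 30], "vadd_ucode": "vadd"}
print(run(program, memory)["c"])  # [11, 22, 33]
```

The kernel only ever touches SRF streams; memory is reached exclusively through the memop instructions, mirroring the SRF's role as the source and destination of all memory operations.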

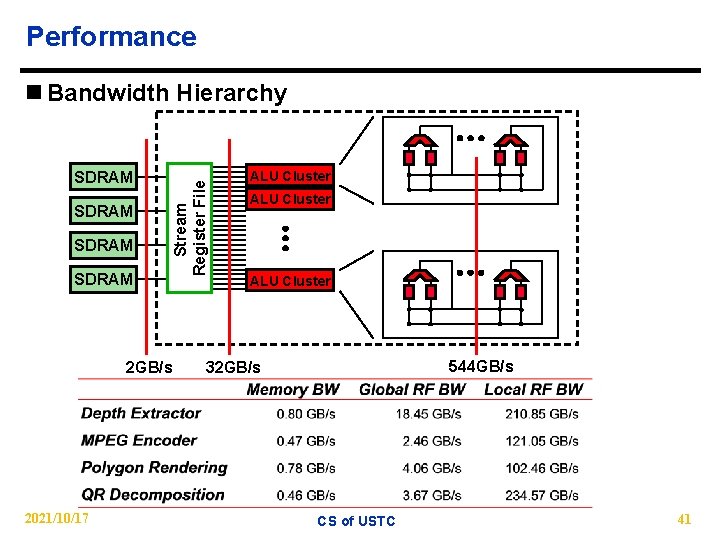

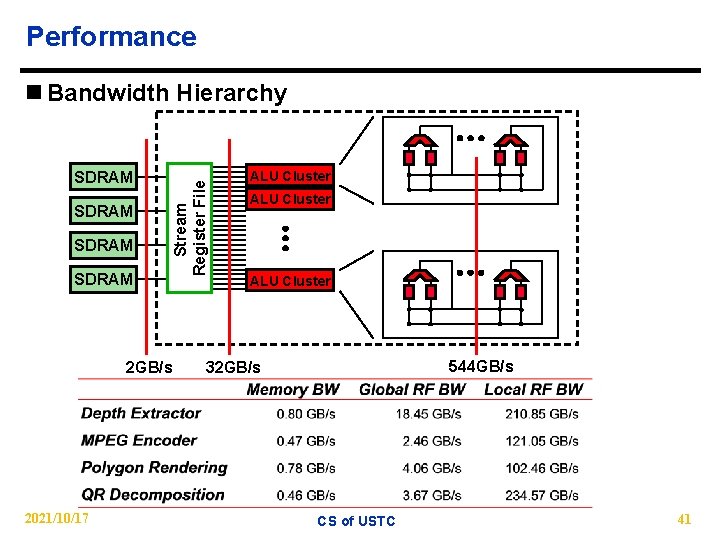

Performance
- Bandwidth hierarchy
  - SDRAM to Stream Register File: 2 GB/s
  - Stream Register File to ALU clusters: 32 GB/s
  - Within the ALU clusters: 544 GB/s
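Reading the three figures as 2 GB/s (SDRAM), 32 GB/s (SRF) and 544 GB/s (local register files inside the ALU clusters), their ratios show how steep the hierarchy is:

$$
\frac{32\ \text{GB/s}}{2\ \text{GB/s}} = 16, \qquad
\frac{544\ \text{GB/s}}{32\ \text{GB/s}} = 17, \qquad
\frac{544\ \text{GB/s}}{2\ \text{GB/s}} = 272
$$

Roughly, to use the full cluster bandwidth a program needs on the order of 17 local-register accesses per SRF word and about 16 SRF accesses per word brought in from SDRAM, which is why kernel locality and producer-consumer locality both matter.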

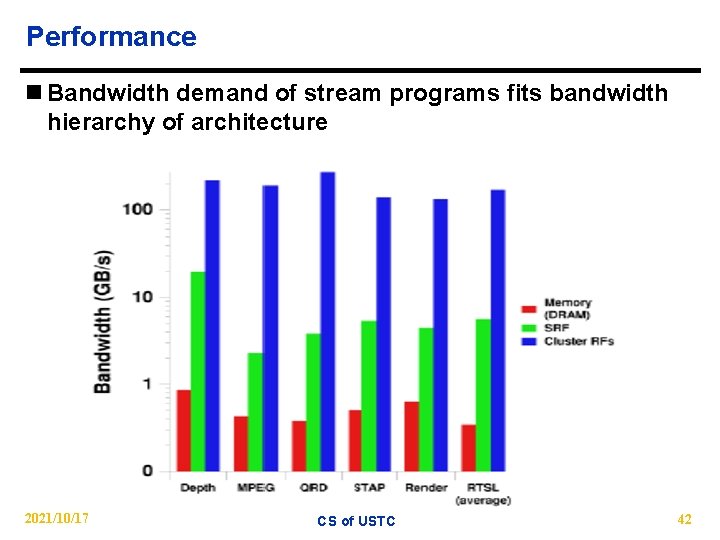

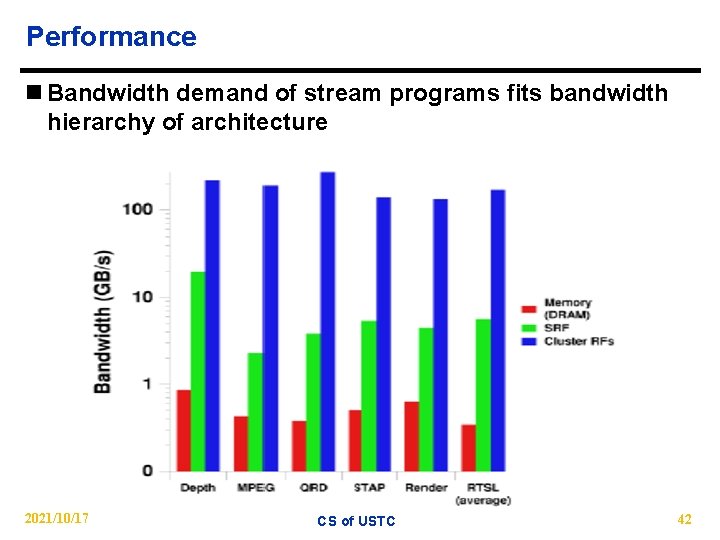

Performance
- The bandwidth demand of stream programs fits the bandwidth hierarchy of the architecture

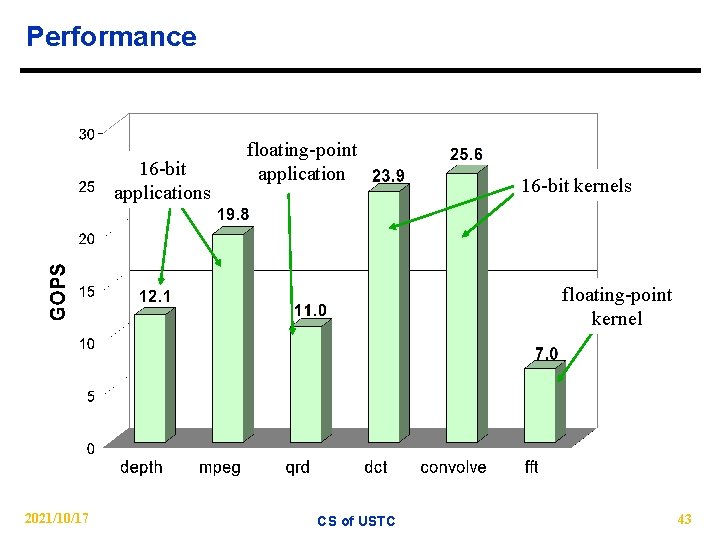

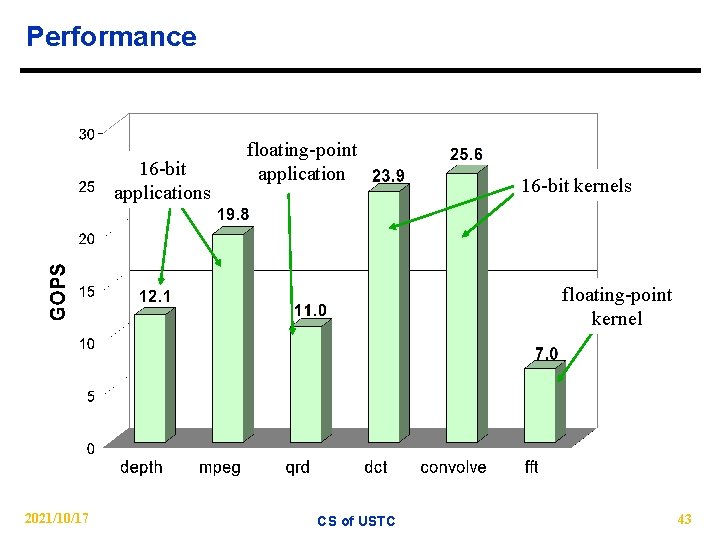

Performance
- Figure panels: 16-bit applications; floating-point application; 16-bit kernels; floating-point kernel

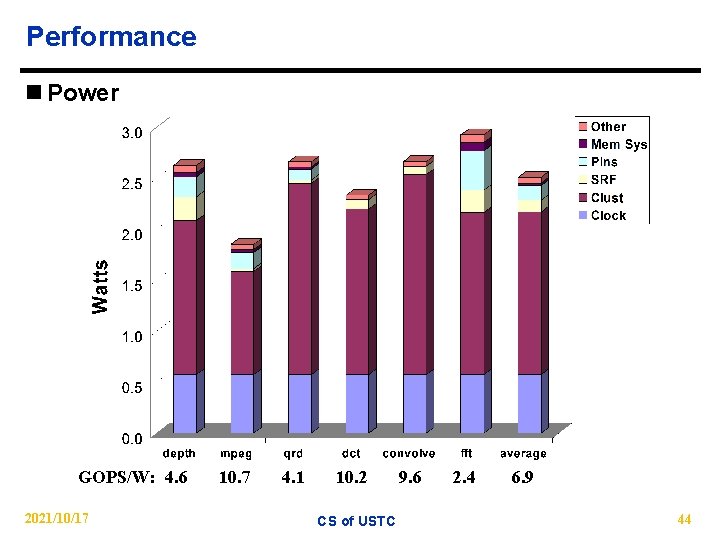

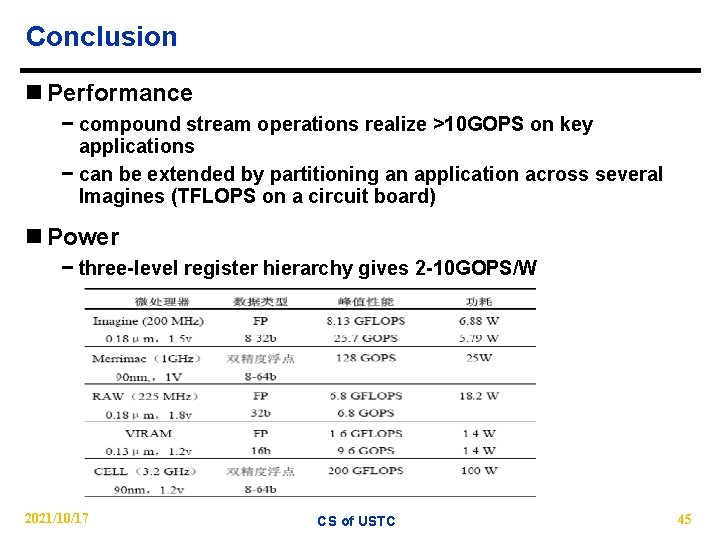

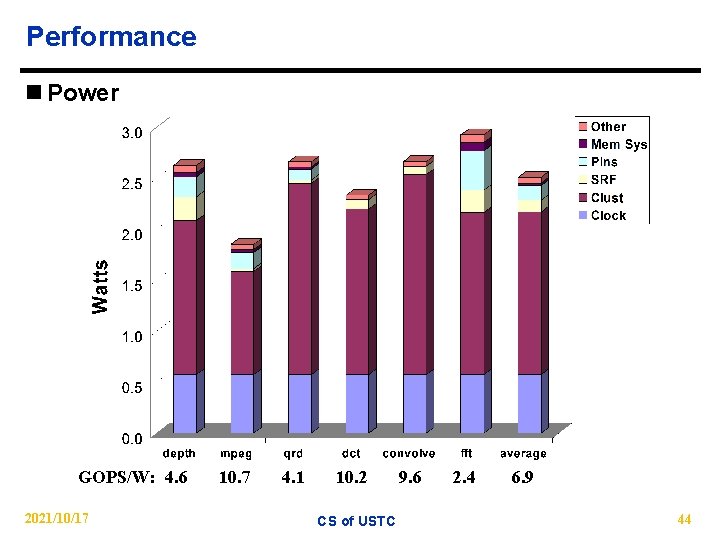

Performance
- Power efficiency (GOPS/W): 4.6, 10.7, 4.1, 10.2, 9.6, 2.4, 6.9

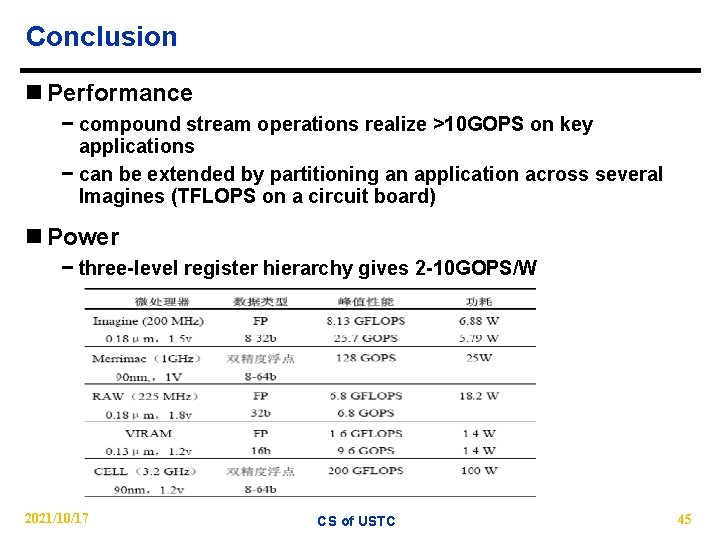

Conclusion
- Performance
  - Compound stream operations realize >10 GOPS on key applications
  - Can be extended by partitioning an application across several Imagines (TFLOPS on a circuit board)
- Power
  - The three-level register hierarchy gives 2 to 10 GOPS/W

Conclusion
- Disadvantage
  - Programming model
    - Applications must be rewritten
    - Programmers need to know details of the hardware

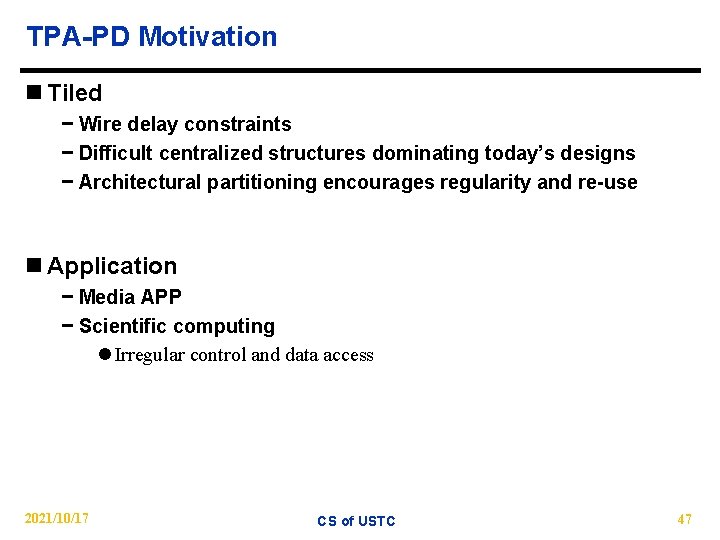

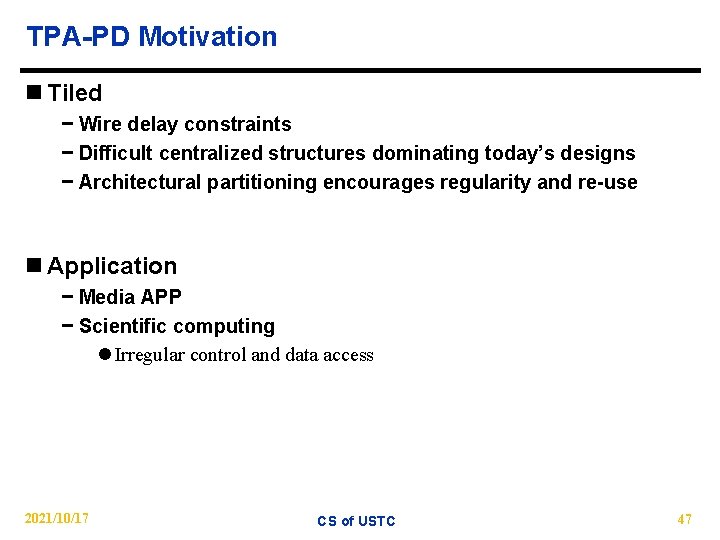

TPA-PD Motivation
- Tiled
  - Wire delay constraints
  - Centralized structures, which dominate today’s designs, are difficult to build
  - Architectural partitioning encourages regularity and re-use
- Application
  - Media applications
  - Scientific computing
    - Irregular control and data access

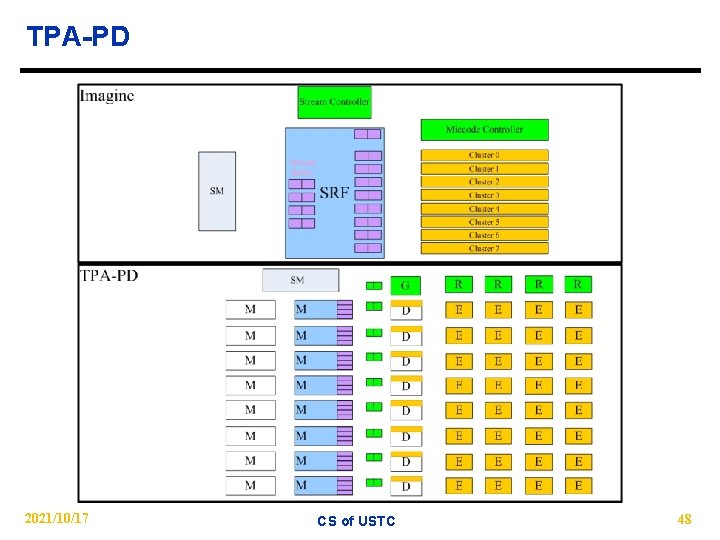

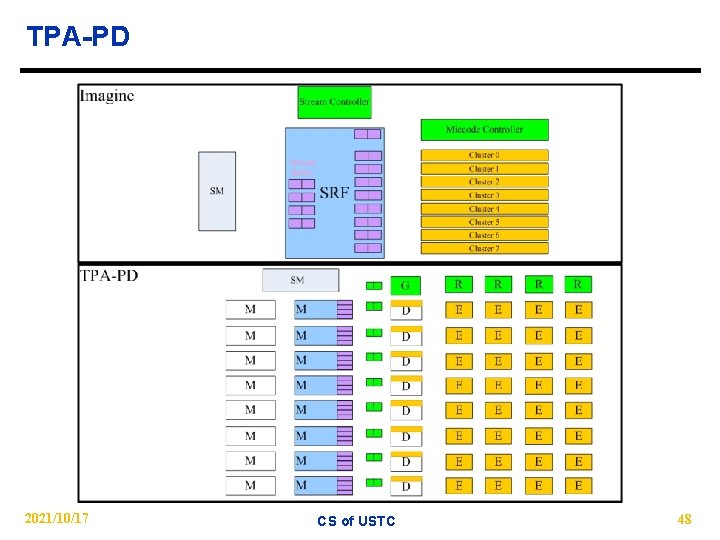

TPA-PD

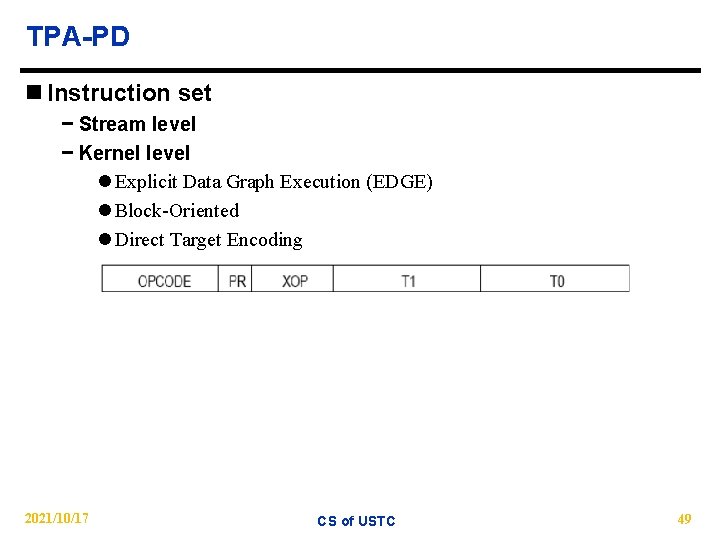

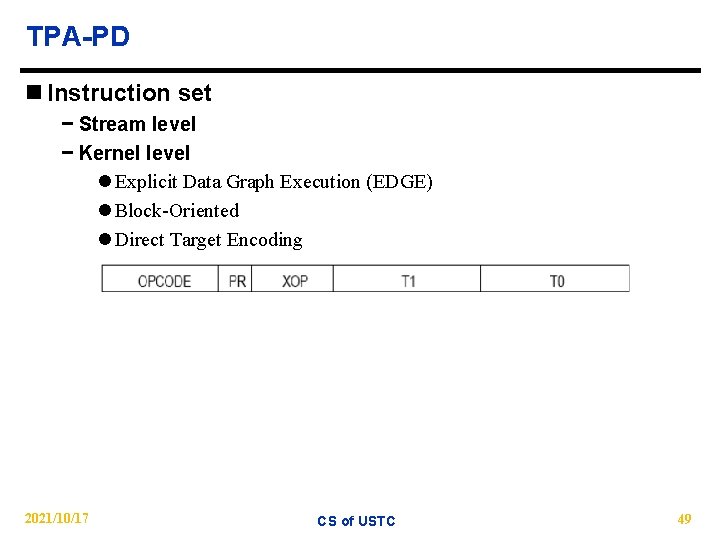

TPA-PD
- Instruction set
  - Stream level
  - Kernel level
    - Explicit Data Graph Execution (EDGE)
    - Block-oriented
    - Direct target encoding
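In an EDGE instruction set with direct target encoding, a producer instruction names the consumer instructions (and operand slots) that receive its result, so a block executes in dataflow order instead of communicating through a shared register file. The interpreter below is a generic illustration of that idea, not the TPA-PD encoding: the opcodes, the `(consumer, slot)` target format and the `out` instruction are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Target = Tuple[int, int]  # (consumer instruction index, operand slot)


@dataclass
class Inst:
    op: str                # hypothetical opcodes: "const", "add", "mul", "out"
    arity: int             # number of operands the instruction waits for
    targets: List[Target]  # consumers that receive this instruction's result
    imm: int = 0           # immediate value for "const"


def run_block(block: List[Inst]) -> Dict[int, int]:
    """Fire each instruction once all its operands have arrived, and send its
    result straight to the consumers named in its target list."""
    operands: Dict[int, Dict[int, int]] = {i: {} for i in range(len(block))}
    ready = [i for i, inst in enumerate(block) if inst.arity == 0]
    outputs: Dict[int, int] = {}
    while ready:
        i = ready.pop()
        inst = block[i]
        args = [operands[i][s] for s in range(inst.arity)]
        if inst.op == "const":
            value = inst.imm
        elif inst.op == "add":
            value = args[0] + args[1]
        elif inst.op == "mul":
            value = args[0] * args[1]
        elif inst.op == "out":
            outputs[i] = args[0]  # block output leaves the dataflow graph
            continue
        else:
            raise ValueError(f"unknown opcode {inst.op!r}")
        for consumer, slot in inst.targets:  # direct target encoding
            operands[consumer][slot] = value
            if len(operands[consumer]) == block[consumer].arity:
                ready.append(consumer)
    return outputs


# (2 + 3) * 4 as one block: no instruction names a source register.
block = [
    Inst("const", 0, [(2, 0)], imm=2),  # 0
    Inst("const", 0, [(2, 1)], imm=3),  # 1
    Inst("add", 2, [(4, 0)]),           # 2
    Inst("const", 0, [(4, 1)], imm=4),  # 3
    Inst("mul", 2, [(5, 0)]),           # 4
    Inst("out", 1, []),                 # 5
]
print(run_block(block))  # {5: 20}
```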

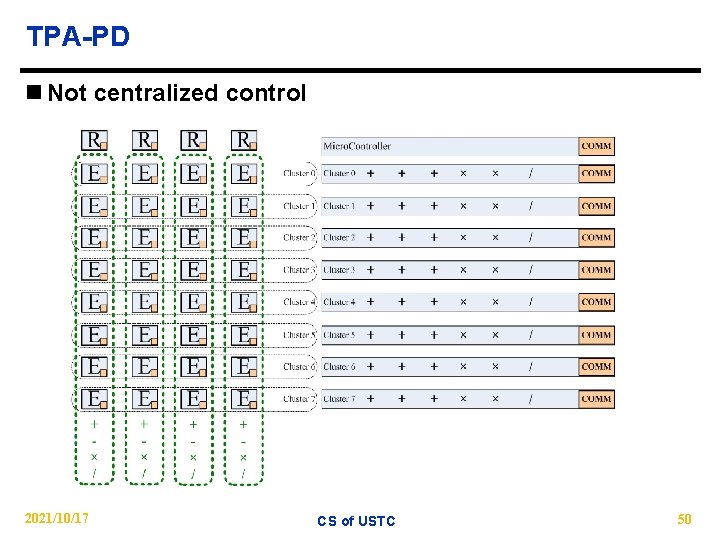

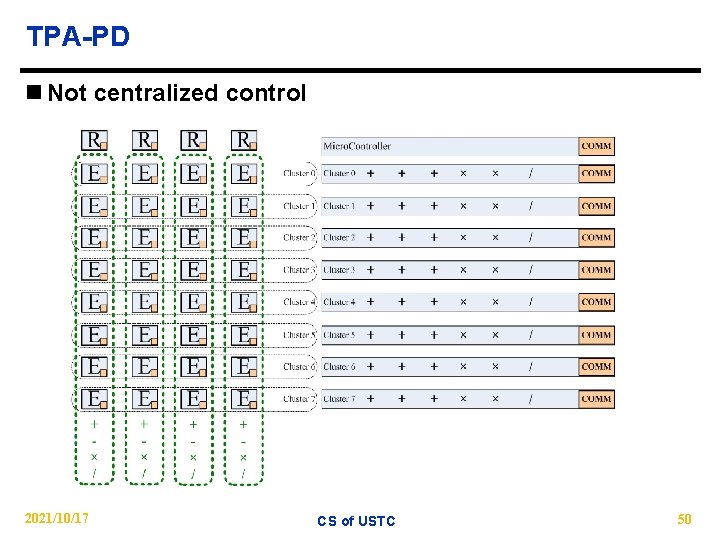

TPA-PD
- No centralized control

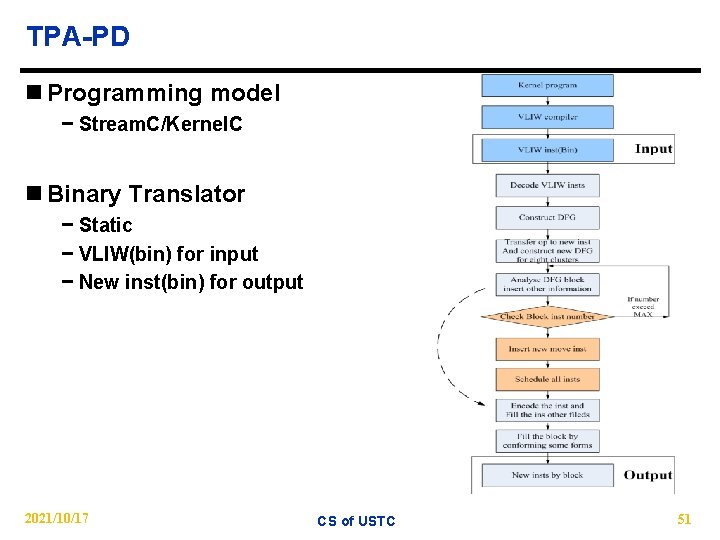

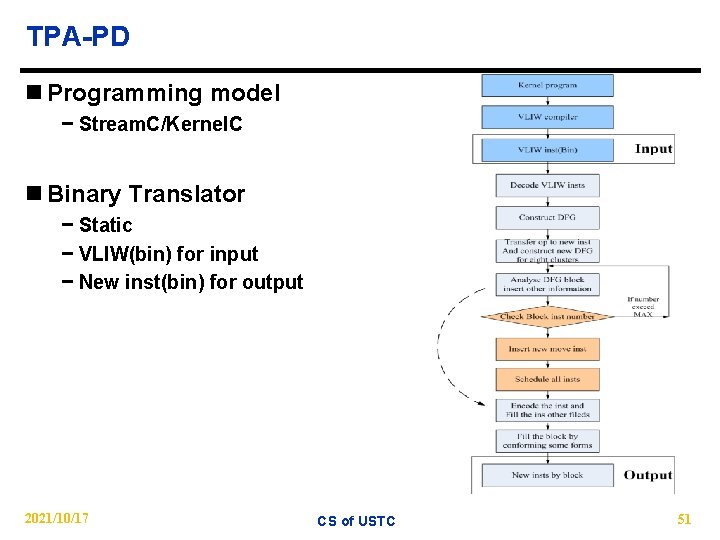

TPA-PD
- Programming model
  - StreamC/KernelC
- Binary translator
  - Static
  - Input: VLIW binary
  - Output: binary in the new instruction set

Future work
- TPA-PD
  - Architecture
  - Instruction set
  - C simulator
  - RTL simulator

Thank you