Lecture on High Performance Processor Architecture CS 05162

- Slides: 44

Lecture on High Performance Processor Architecture (CS 05162)

Value Prediction and Instruction Reuse

An Hong (han@ustc.edu.cn)

Fall 2007

University of Science and Technology of China, Department of Computer Science and Technology

CS of USTC AN Hong

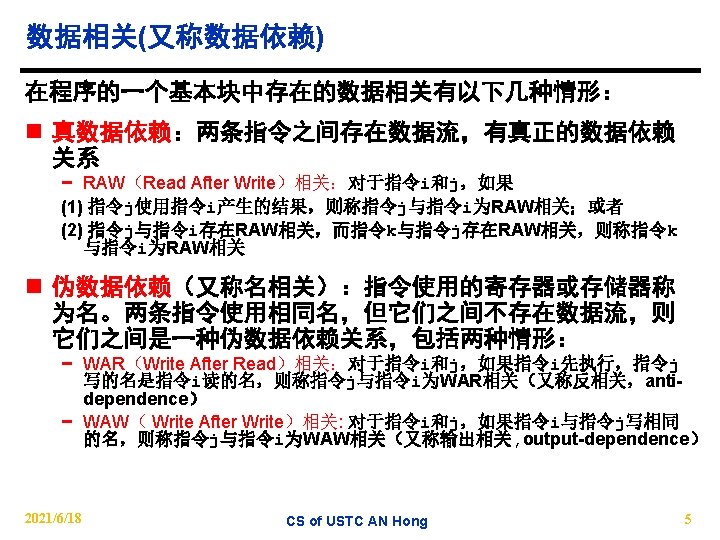

Outline

- What Are Data Hazards, and How Are They Solved?
- What Makes Data Speculation Possible?
- Value Prediction (VP)
- Instruction Reuse (IR)

2021/6/18 CS of USTC AN Hong

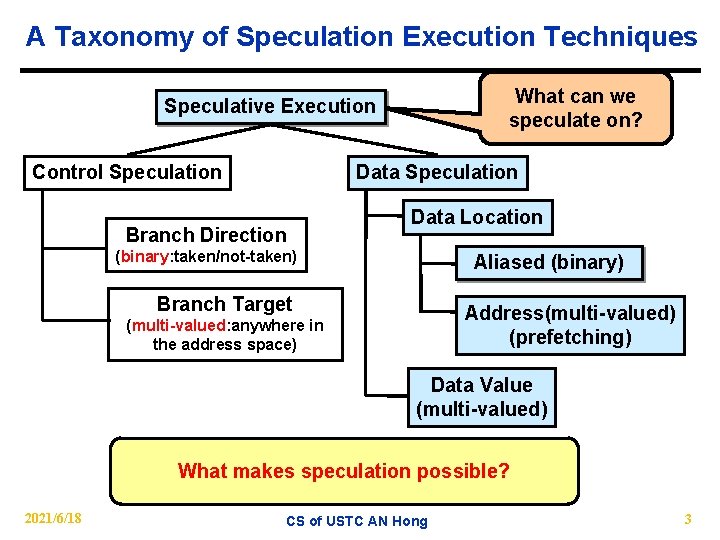

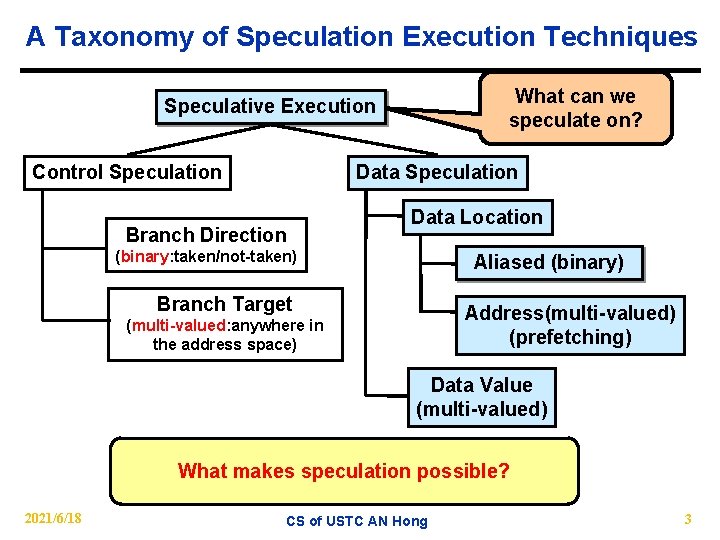

A Taxonomy of Speculative Execution Techniques

What can we speculate on?

- Speculative Execution
  - Control Speculation
    - Branch Direction (binary: taken/not-taken)
    - Branch Target (multi-valued: anywhere in the address space)
  - Data Speculation
    - Data Location
      - Aliased (binary)
      - Address (multi-valued) (prefetching)
    - Data Value (multi-valued)

What makes speculation possible?

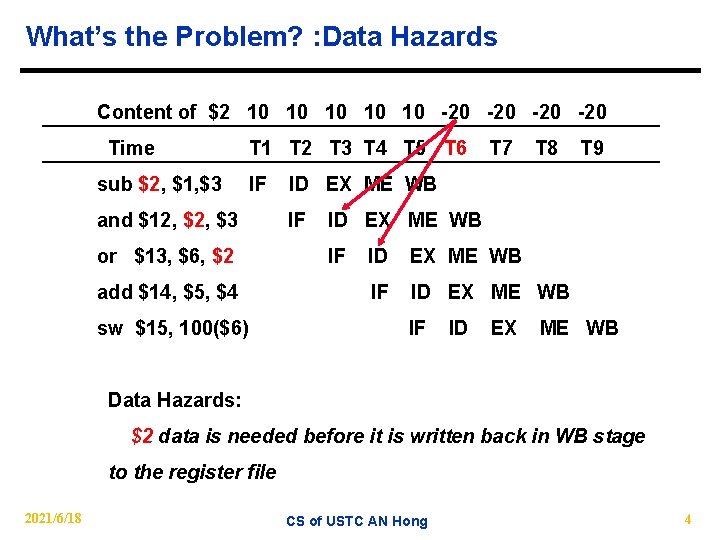

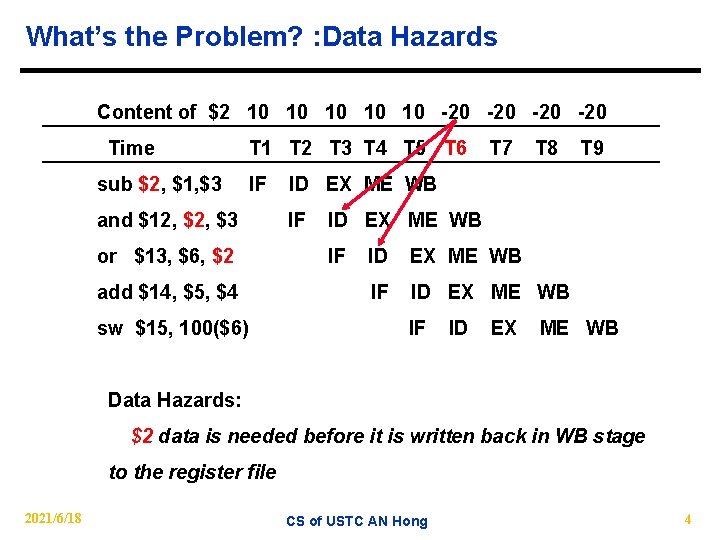

What’s the Problem? Data Hazards

Content of $2 (from the timing diagram): 10 10 10 -20 -20

Instruction sequence, shown over cycles T1 to T9 through the stages IF, ID, EX, ME, WB:

- sub $2, $1, $3
- and $12, $3
- or $13, $6, $2
- add $14, $5, $4
- sw $15, 100($6)

Data hazard: the value of $2 is needed before it is written back to the register file in the WB stage.

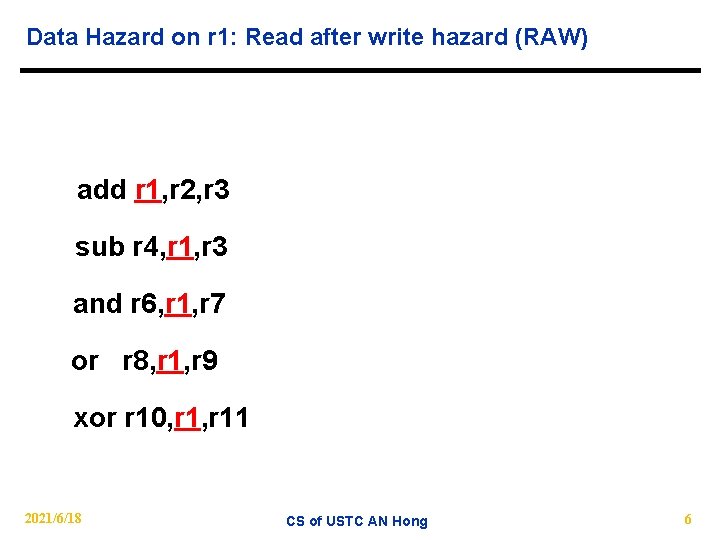

Data Hazard on r1: Read After Write (RAW) Hazard

- add r1, r2, r3
- sub r4, r1, r3
- and r6, r1, r7
- or r8, r1, r9
- xor r10, r11

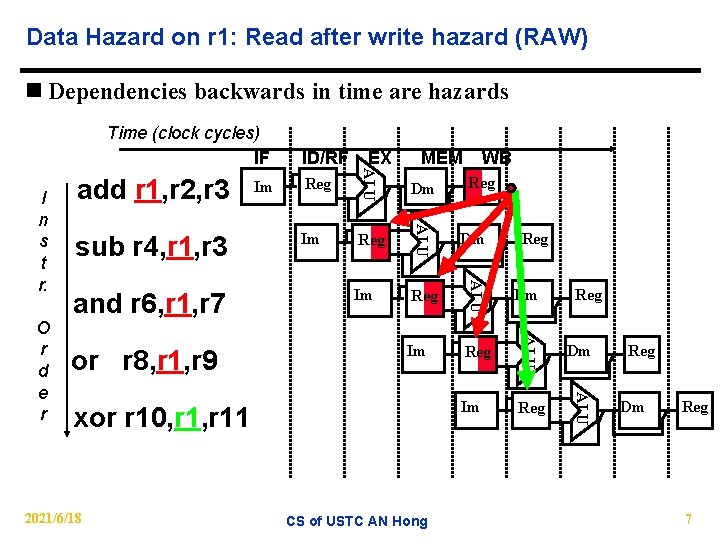

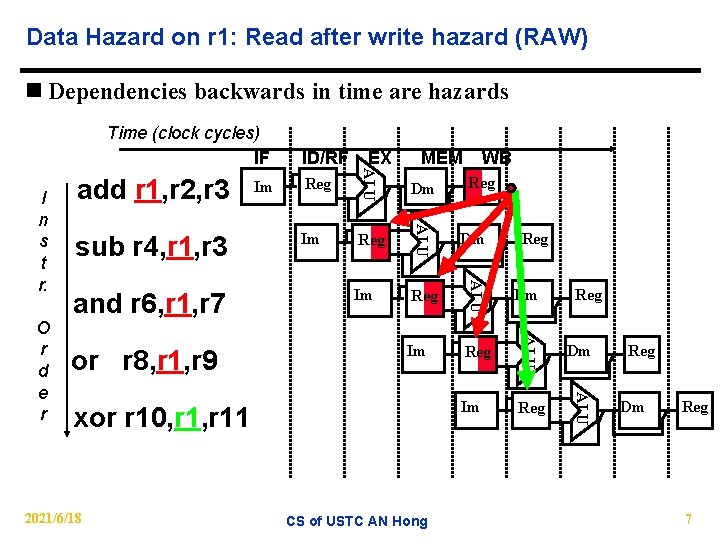

Data Hazard on r1: Read After Write (RAW) Hazard

- Dependencies backwards in time are hazards

[Pipeline diagram: time (clock cycles) across the stages IF, ID/RF, EX, MEM, WB; instructions in program order: add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r11]

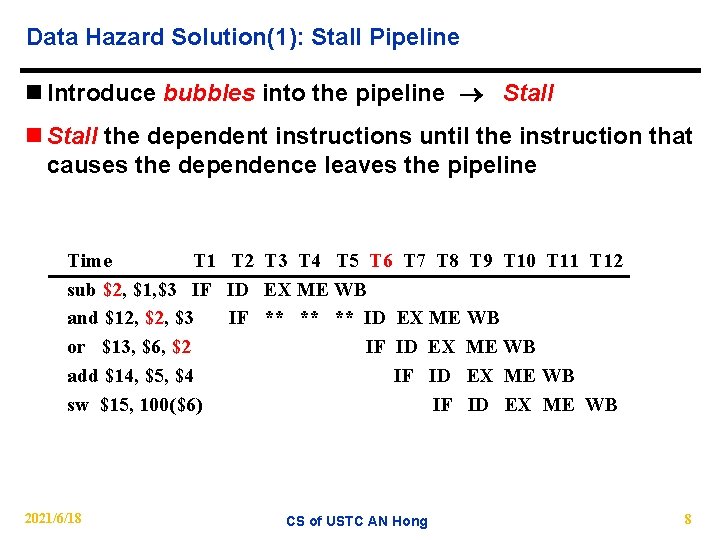

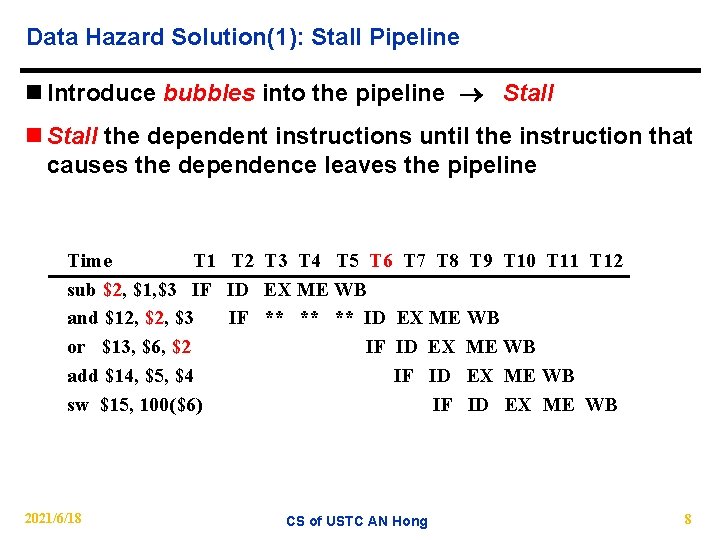

Data Hazard Solution (1): Stall Pipeline

- Introduce bubbles into the pipeline
- Stall the dependent instructions until the instruction that causes the dependence leaves the pipeline

Timing over cycles T1 to T12 (** marks a stall bubble):

- sub $2, $1, $3: IF ID EX ME WB
- and $12, $3: IF ** ** ** ID EX ME WB
- or $13, $6, $2: IF ID EX ME WB
- add $14, $5, $4: IF ID EX ME WB
- sw $15, 100($6): IF ID EX ME WB

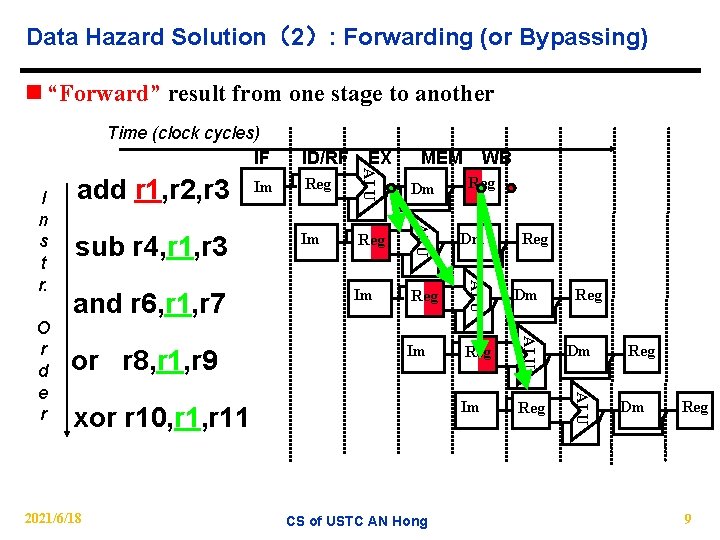

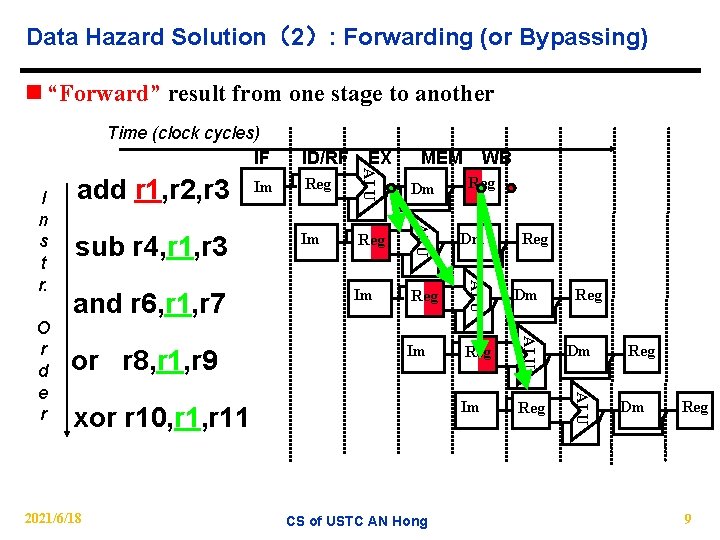

Data Hazard Solution (2): Forwarding (or Bypassing)

- “Forward” the result from one stage to another

[Pipeline diagram: time (clock cycles) across the stages IF, ID/RF, EX, MEM, WB; instructions in program order: add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r11]

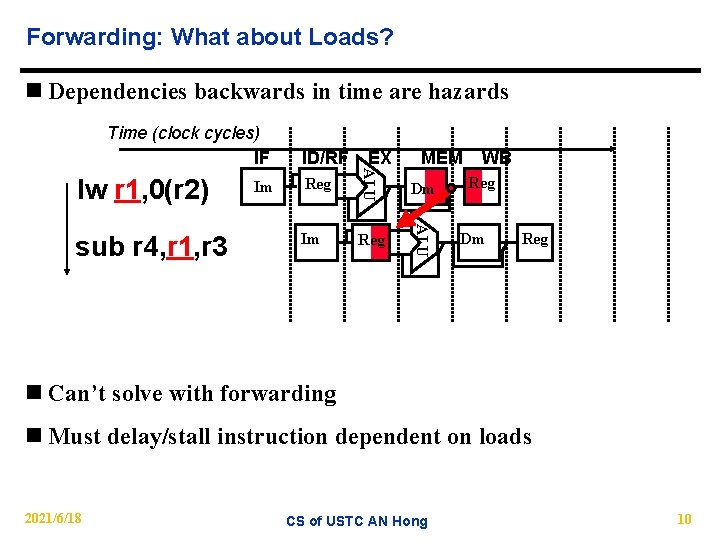

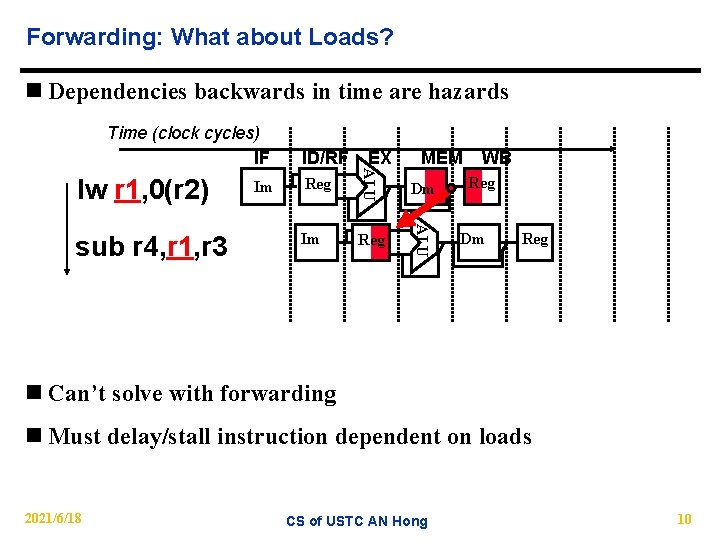

Forwarding: What about Loads?

- Dependencies backwards in time are hazards
- Can’t be solved with forwarding
- Must delay/stall the instruction that depends on the load

[Pipeline diagram: time (clock cycles) across the stages IF, ID/RF, EX, MEM, WB; instructions: lw r1, 0(r2); sub r4, r1, r3]

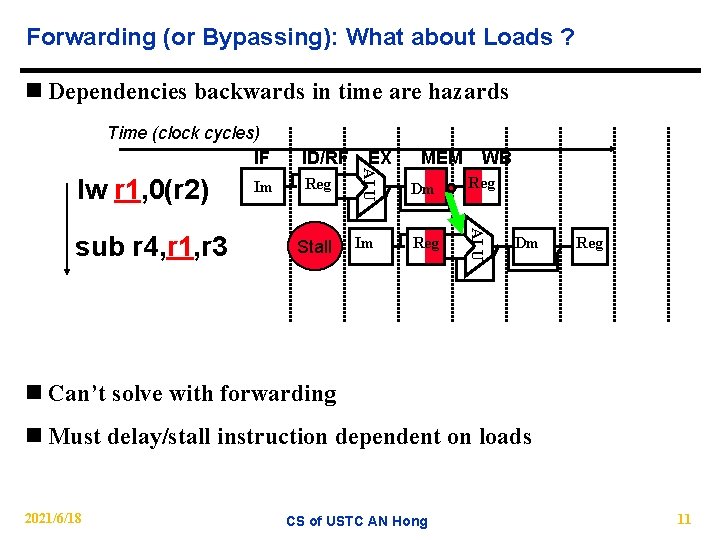

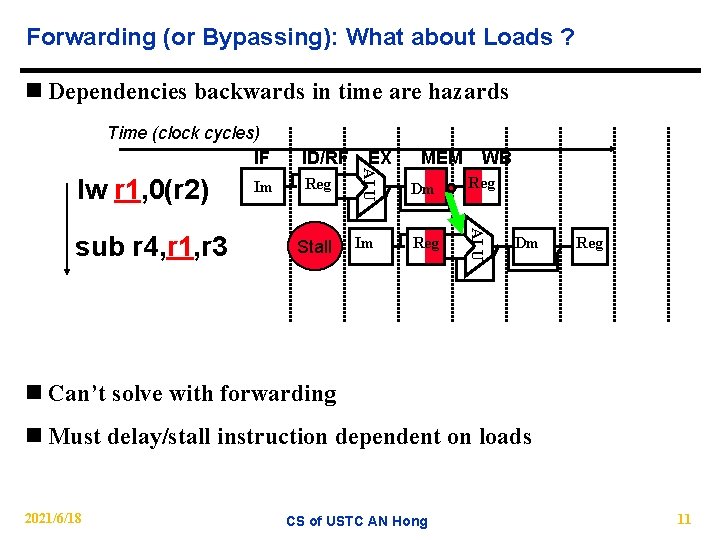

Forwarding (or Bypassing): What about Loads?

- Dependencies backwards in time are hazards
- Can’t be solved with forwarding
- Must delay/stall the instruction that depends on the load

[Pipeline diagram: time (clock cycles) across the stages IF, ID/RF, EX, MEM, WB; instructions: lw r1, 0(r2); sub r4, r1, r3, with a stall inserted before the dependent sub]
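The load-use case above can be counted mechanically. The following is a minimal sketch, assuming a classic 5-stage pipeline with full forwarding in which only a load followed immediately by a consumer costs one bubble. The function name `load_use_stalls` and the tuple encoding of instructions are hypothetical, chosen for illustration only.

```python
def load_use_stalls(program):
    """Count bubbles in an assumed 5-stage pipeline with full forwarding.

    program: list of (opcode, dest, sources) tuples in program order.
    Only a load whose result is read by the very next instruction
    forces a one-cycle stall; ALU results are assumed to be forwarded.
    """
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        opcode, dest, _ = prev
        if opcode == "lw" and dest in cur[2]:
            stalls += 1
    return stalls


# The lw/sub pair from the slide, and an ALU pair for contrast.
load_pair = [("lw", "r1", ("r2",)), ("sub", "r4", ("r1", "r3"))]
alu_pair = [("add", "r1", ("r2", "r3")), ("sub", "r4", ("r1", "r3"))]
print(load_use_stalls(load_pair), load_use_stalls(alu_pair))
```

Under these assumptions the load pair needs one bubble and the ALU pair needs none, which matches the point of the slide: forwarding alone cannot hide the load latency.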

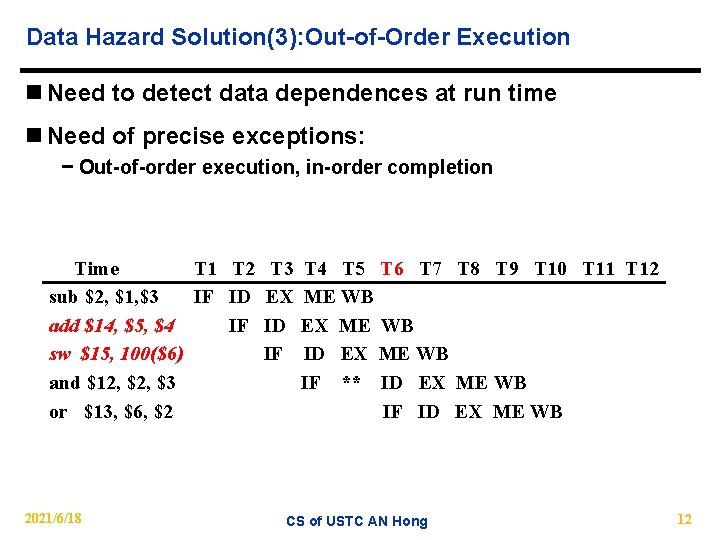

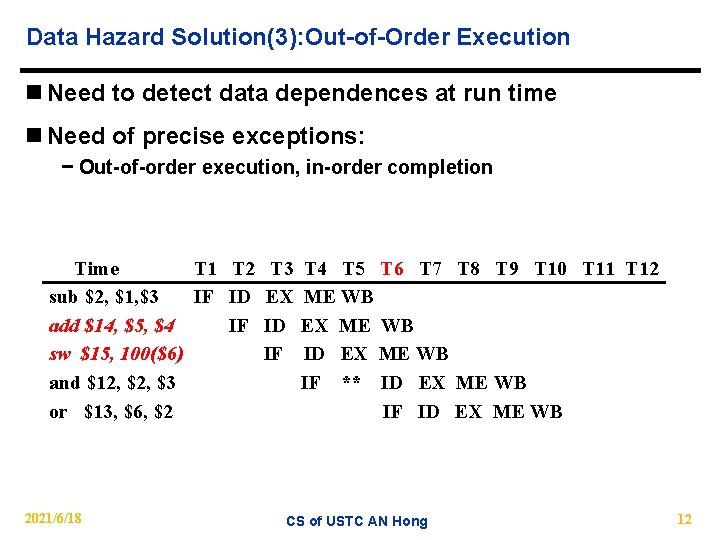

Data Hazard Solution (3): Out-of-Order Execution

- Need to detect data dependences at run time
- Need for precise exceptions:
  - Out-of-order execution, in-order completion

Timing over cycles T1 to T12 (** marks a stall bubble), with independent instructions moved ahead of the dependent ones:

- sub $2, $1, $3: IF ID EX ME WB
- add $14, $5, $4: IF ID EX ME WB
- sw $15, 100($6): IF ID EX ME WB
- and $12, $3: IF ** ID EX ME WB
- or $13, $6, $2: IF ID EX ME WB

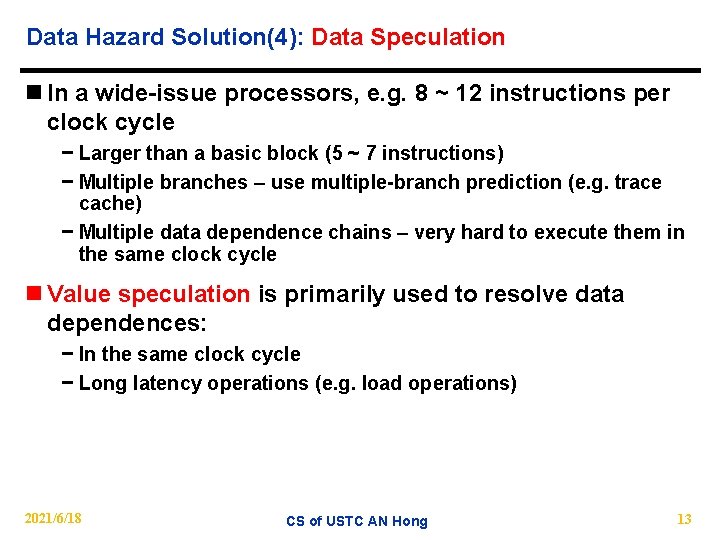

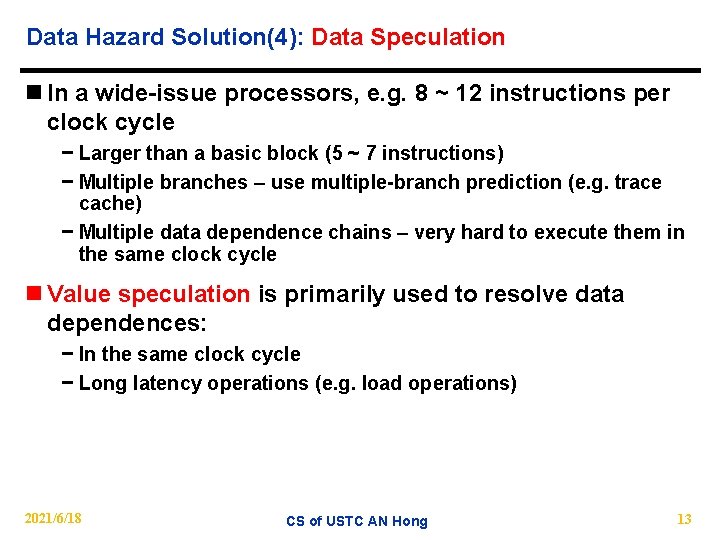

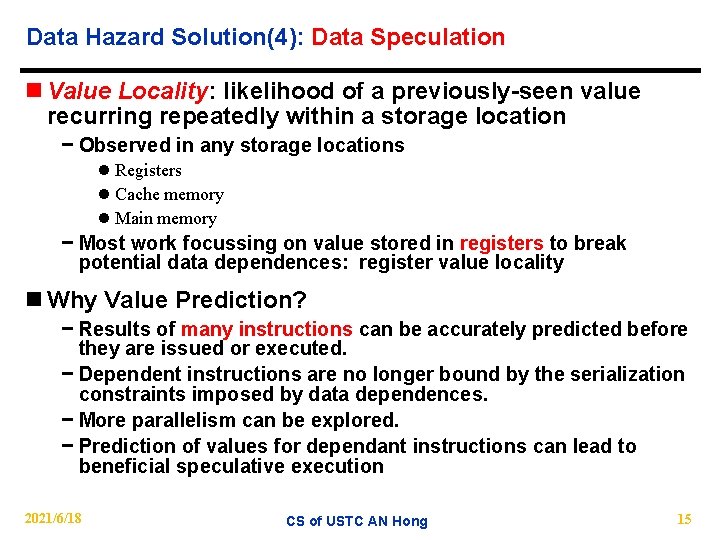

Data Hazard Solution (4): Data Speculation

- In wide-issue processors (e.g. 8 to 12 instructions per clock cycle):
  - The issue width is larger than a basic block (5 to 7 instructions)
  - Multiple branches: use multiple-branch prediction (e.g. trace cache)
  - Multiple data dependence chains: very hard to execute them in the same clock cycle
- Value speculation is primarily used to resolve data dependences:
  - In the same clock cycle
  - Across long-latency operations (e.g. load operations)

Data Hazard Solution (4): Data Speculation

- Why is speculation useful?
  - Speculation lets all of these instructions run in parallel on a superscalar machine:
    - addq $3 $1 $2
    - addq $4 $3 $1
    - addq $5 $3 $2
- What is value prediction?
  - Predict the values produced by instructions before they are executed
  - Compare:
    - Branch prediction
      - eliminates control dependences
      - predicted data take just two values (taken or not taken)
    - Value prediction
      - eliminates data dependences
      - predicted data are drawn from a much larger range of values
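To make the three-instruction example concrete, here is a minimal sketch of a dataflow scheduler. It assumes an idealized machine with unlimited issue width and unit latency, and it assumes the first operand of each addq is the destination. The function name `schedule` and the `predicted` parameter are hypothetical.

```python
def schedule(instrs, predicted=frozenset()):
    """Return the earliest issue cycle of each instruction.

    instrs: list of (dest, src1, src2) tuples in program order.
    predicted: registers whose values are supplied by a value
    predictor, so consumers do not wait for the producer.
    Assumes unlimited issue width and one-cycle latency.
    """
    ready = {}   # register -> cycle in which its value becomes available
    cycles = []
    for dest, *srcs in instrs:
        start = max((ready.get(s, 0) for s in srcs if s not in predicted),
                    default=0)
        cycles.append(start)
        ready[dest] = start + 1
    return cycles


prog = [("$3", "$1", "$2"), ("$4", "$3", "$1"), ("$5", "$3", "$2")]
print(schedule(prog))                     # the two consumers wait for $3
print(schedule(prog, predicted={"$3"}))   # all three issue together
```

Without prediction the two consumers of $3 issue one cycle after the producer; with $3 predicted, all three instructions can issue in the same cycle, subject to later verification.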

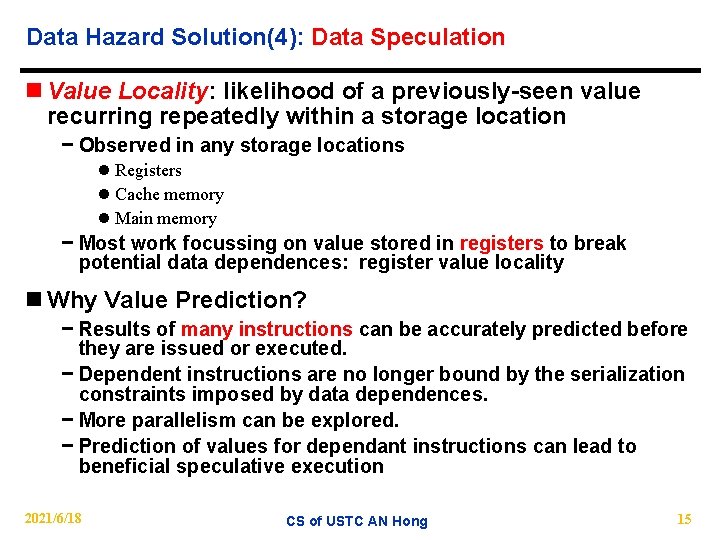

Data Hazard Solution (4): Data Speculation

- Value locality: the likelihood of a previously seen value recurring repeatedly within a storage location
  - Observed in any storage location
    - Registers
    - Cache memory
    - Main memory
  - Most work focuses on values stored in registers, to break potential data dependences: register value locality
- Why value prediction?
  - Results of many instructions can be accurately predicted before they are issued or executed.
  - Dependent instructions are no longer bound by the serialization constraints imposed by data dependences.
  - More parallelism can be exploited.
  - Predicting values for dependent instructions can lead to beneficial speculative execution.

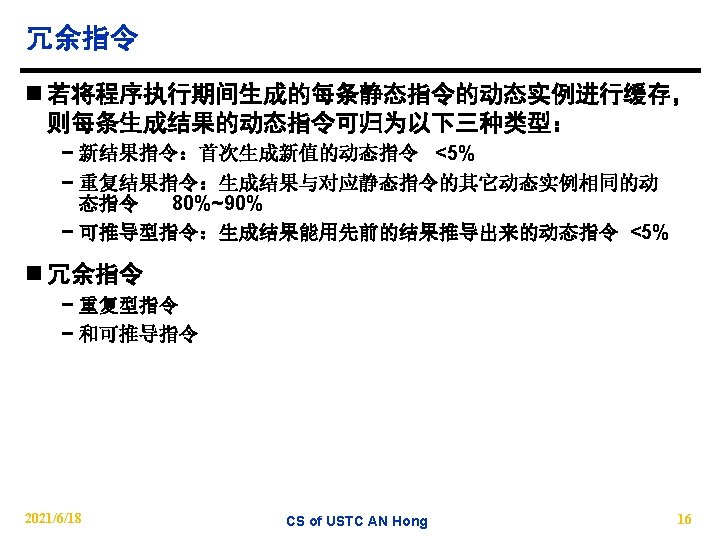

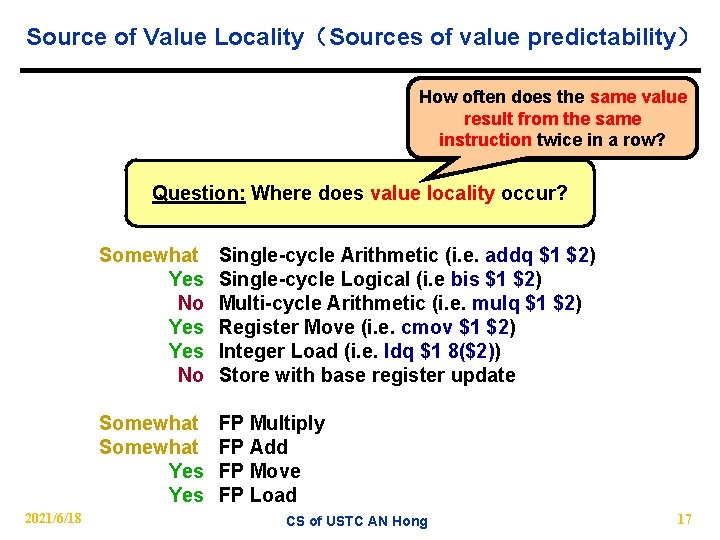

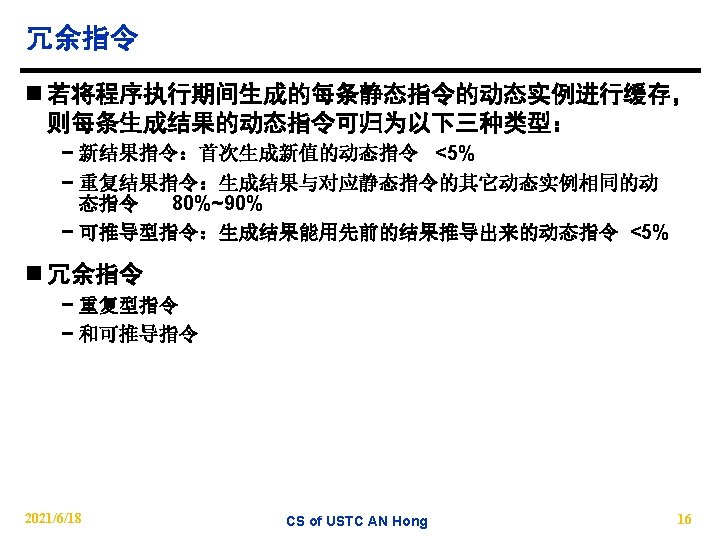

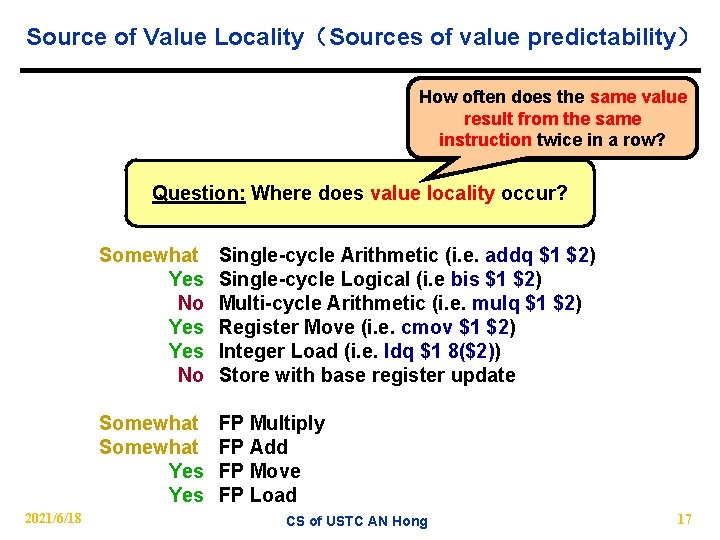

Sources of Value Locality (Sources of Value Predictability)

How often does the same value result from the same instruction twice in a row?

Question: Where does value locality occur? (Answer scale on the slide: Yes / Somewhat / No)

- Single-cycle Arithmetic (e.g. addq $1 $2)
- Single-cycle Logical (e.g. bis $1 $2)
- Multi-cycle Arithmetic (e.g. mulq $1 $2)
- Register Move (e.g. cmov $1 $2)
- Integer Load (e.g. ldq $1 8($2))
- Store with base register update
- FP Multiply
- FP Add
- FP Move
- FP Load

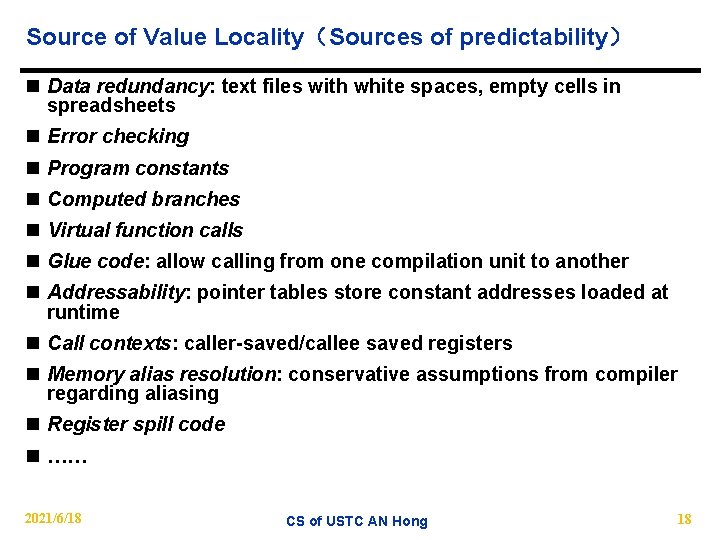

Sources of Value Locality (Sources of Predictability)

- Data redundancy: text files with white space, empty cells in spreadsheets
- Error checking
- Program constants
- Computed branches
- Virtual function calls
- Glue code: allows calling from one compilation unit to another
- Addressability: pointer tables store constant addresses loaded at runtime
- Call contexts: caller-saved/callee-saved registers
- Memory alias resolution: conservative assumptions from the compiler regarding aliasing
- Register spill code
- ……

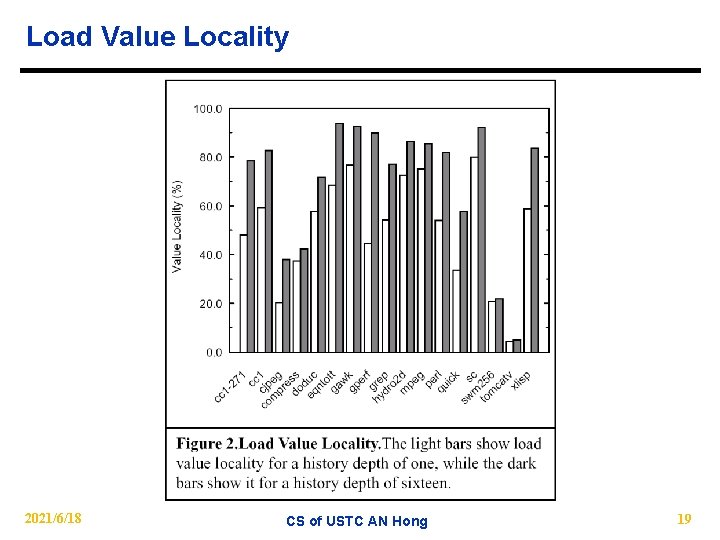

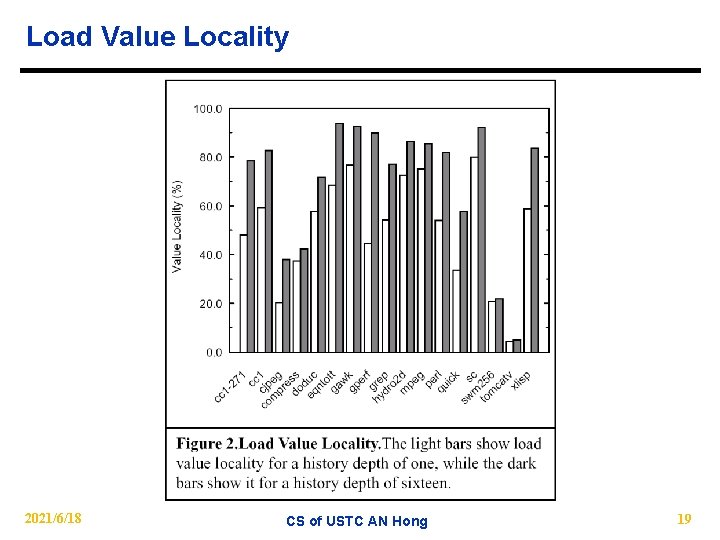

Load Value Locality

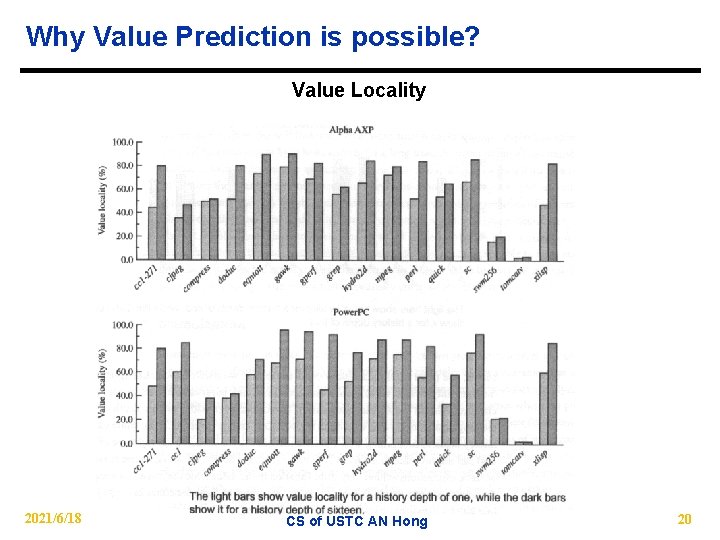

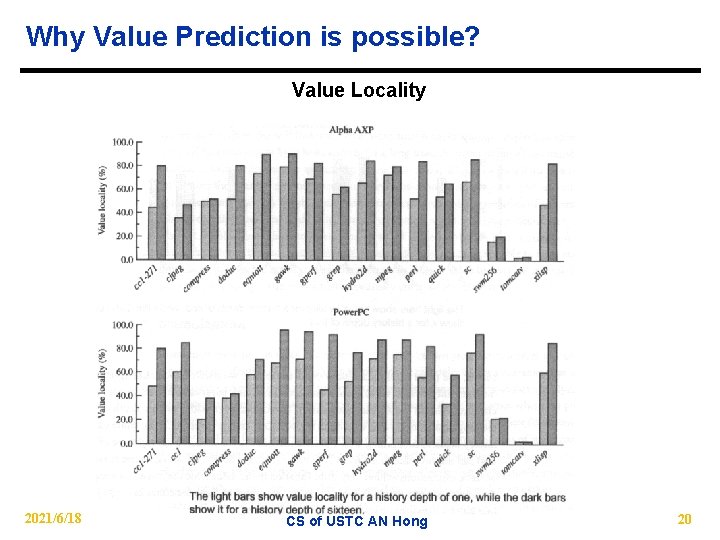

Why Is Value Prediction Possible? Value Locality

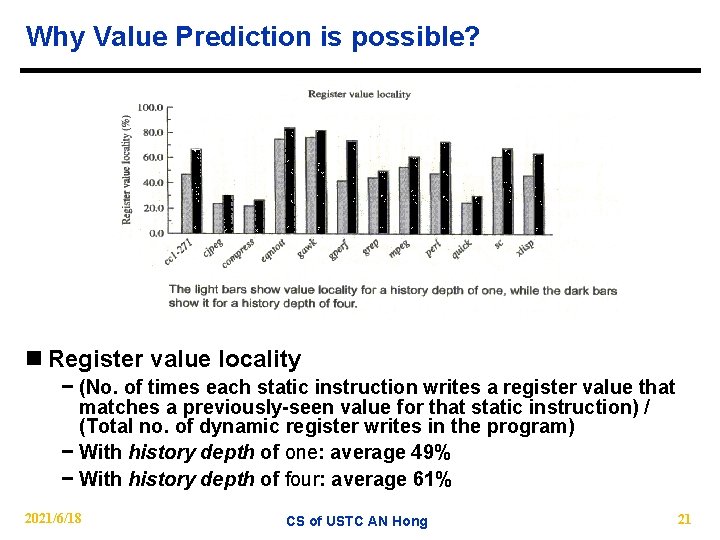

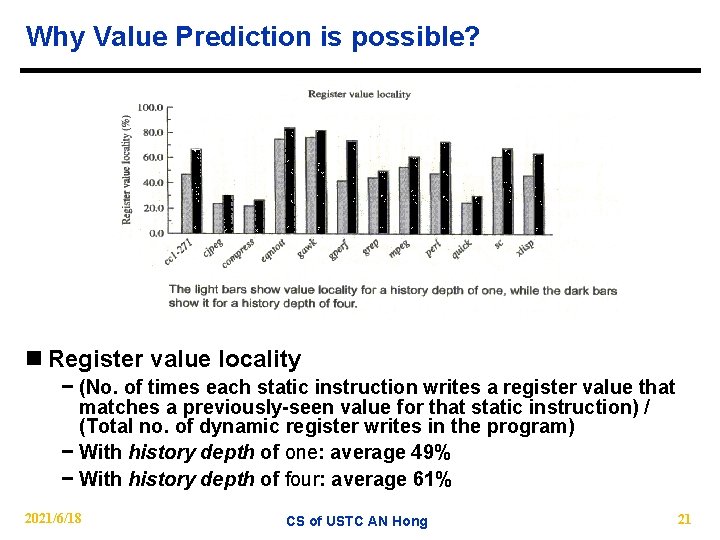

Why Is Value Prediction Possible?

- Register value locality
  - Defined as (number of times each static instruction writes a register value that matches a previously seen value for that static instruction) / (total number of dynamic register writes in the program)
  - With a history depth of one: average 49%
  - With a history depth of four: average 61%
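The ratio above can be measured on a trace. The sketch below is one possible reading of the definition: it assumes the trace is a sequence of (PC, value) pairs, one per dynamic register write, and that "history depth" means the number of most recently seen distinct values kept per static instruction. The function name `register_value_locality` is hypothetical.

```python
from collections import defaultdict, deque


def register_value_locality(trace, depth=1):
    """Fraction of dynamic register writes whose value matches one of
    the last `depth` distinct values written by the same static
    instruction (identified by its PC).

    trace: iterable of (pc, value) pairs, one per dynamic register write.
    """
    history = defaultdict(lambda: deque(maxlen=depth))
    hits = total = 0
    for pc, value in trace:
        seen = history[pc]
        if value in seen:
            hits += 1
            seen.remove(value)   # re-appended below as most recent
        seen.append(value)       # a full deque drops its oldest value
        total += 1
    return hits / total if total else 0.0


trace = [(0x10, 5), (0x10, 5), (0x10, 7), (0x10, 5), (0x14, 1), (0x14, 2)]
print(register_value_locality(trace, depth=1))
print(register_value_locality(trace, depth=4))
```

On this toy trace a deeper history captures the return of the value 5 after an intervening 7, so the measured locality rises with depth, in the same direction as the 49% and 61% averages quoted on the slide.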

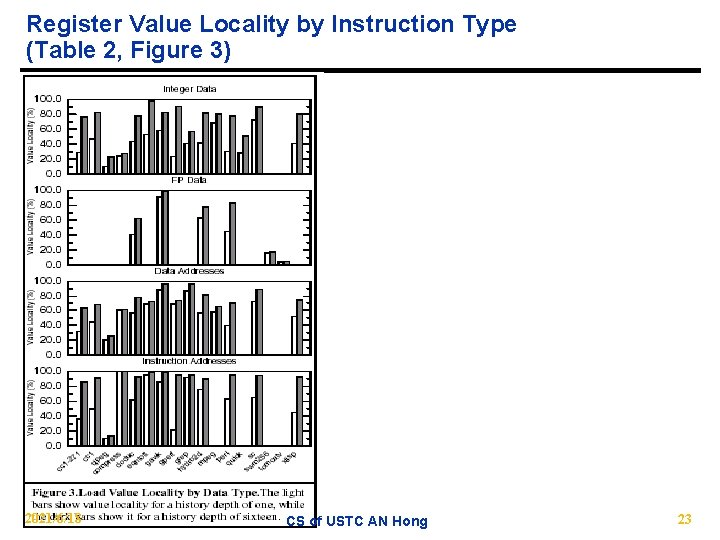

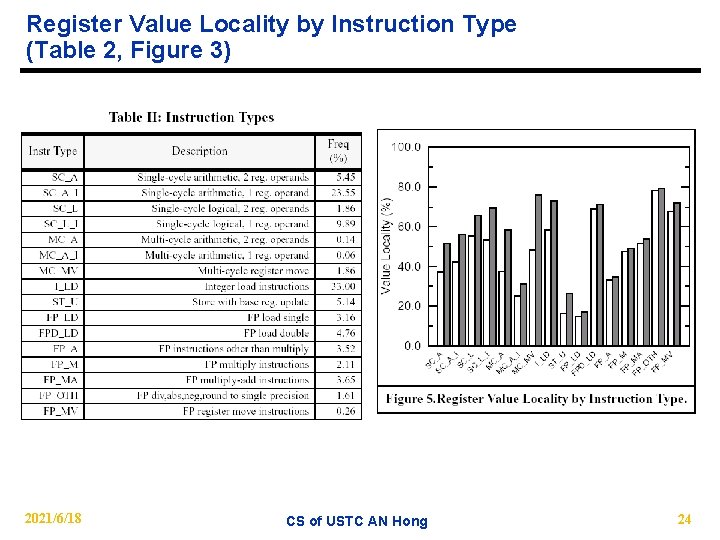

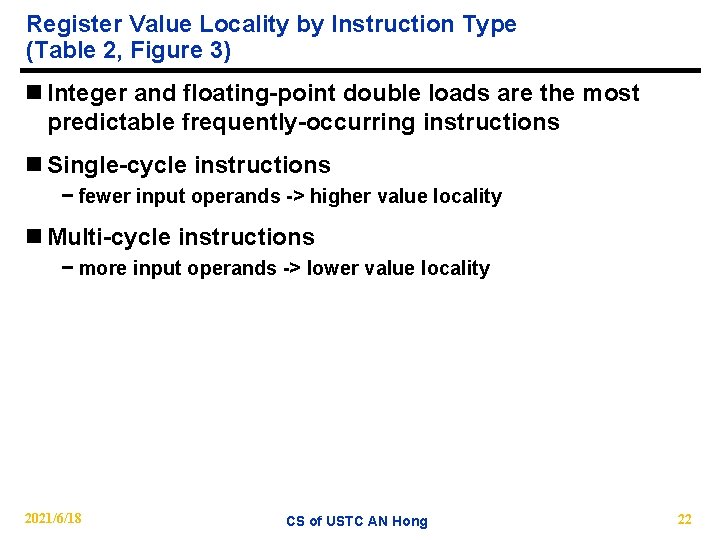

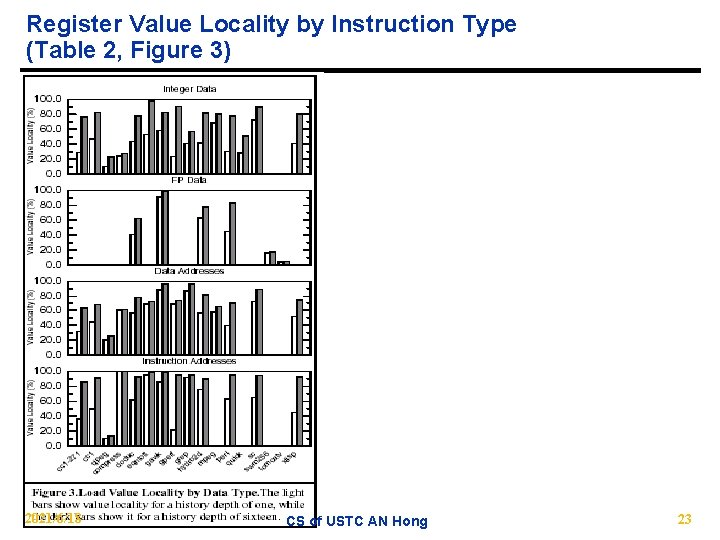

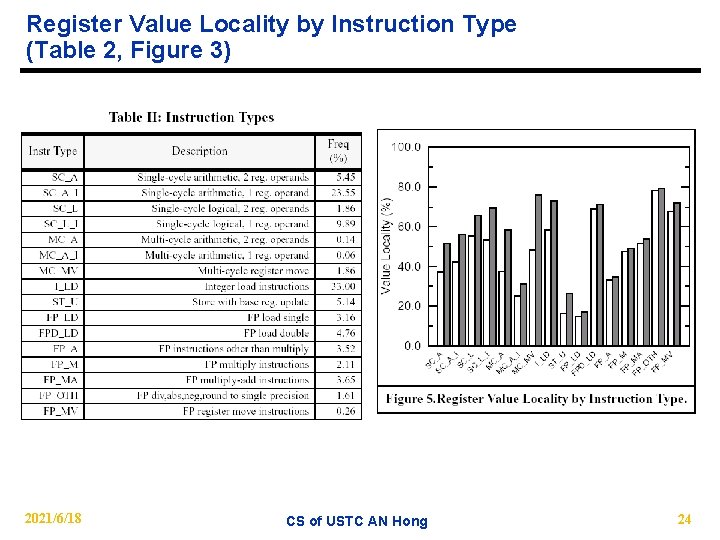

Register Value Locality by Instruction Type (Table 2, Figure 3)

- Integer and floating-point double loads are the most predictable frequently occurring instructions
- Single-cycle instructions
  - fewer input operands -> higher value locality
- Multi-cycle instructions
  - more input operands -> lower value locality

Register Value Locality by Instruction Type (Table 2, Figure 3)

Register Value Locality by Instruction Type (Table 2, Figure 3)

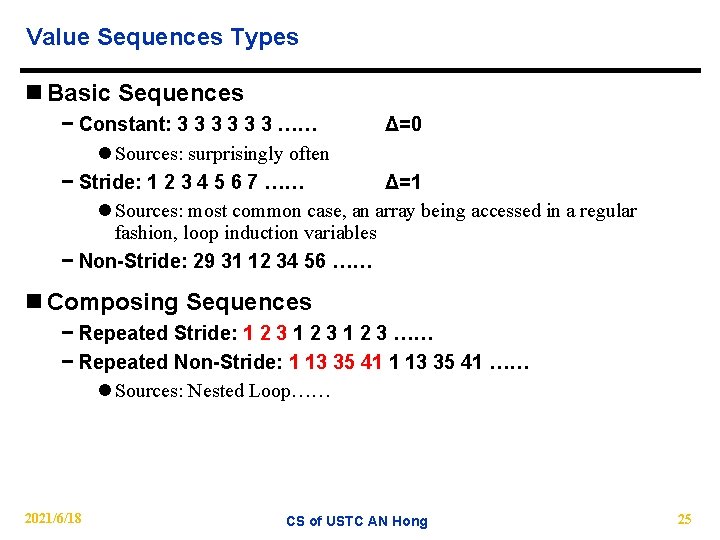

Value Sequence Types

- Basic sequences
  - Constant: 3 3 3 …… (Δ=0)
    - Sources: occurs surprisingly often
  - Stride: 1 2 3 4 5 6 7 …… (Δ=1)
    - Sources: the most common case; an array accessed in a regular fashion, loop induction variables
  - Non-stride: 29 31 12 34 56 ……
- Composed sequences
  - Repeated stride: 1 2 3 ……
  - Repeated non-stride: 1 13 35 41 ……
    - Sources: nested loops ……

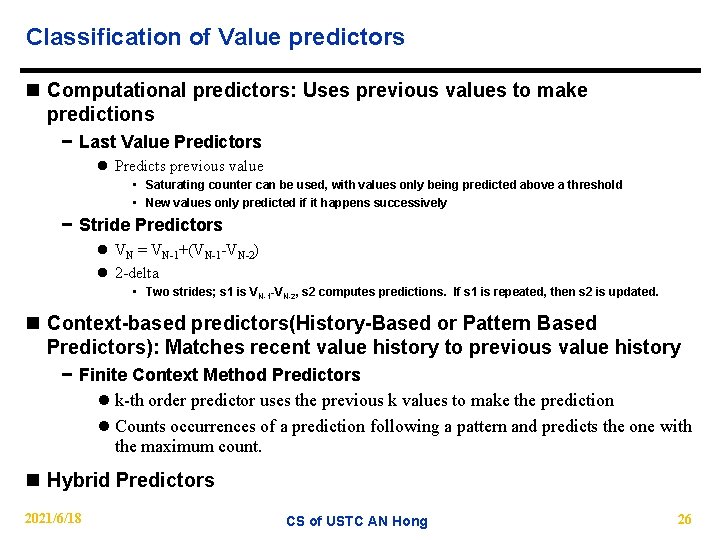

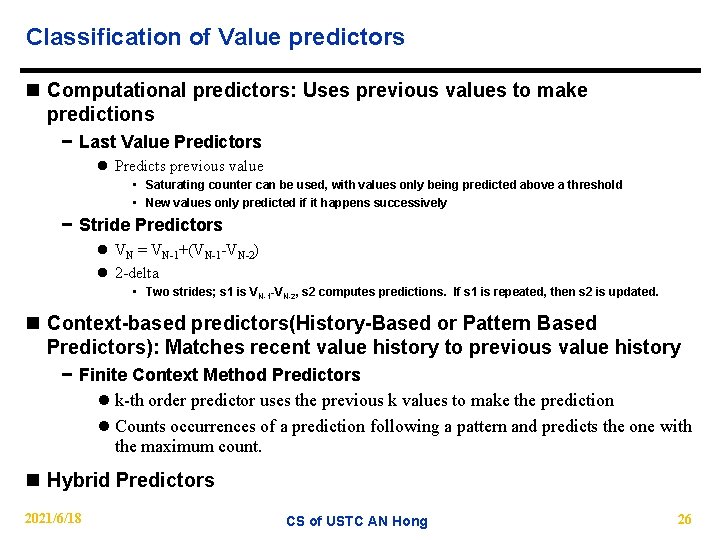

Classification of Value Predictors

- Computational predictors: use previous values to compute a prediction
  - Last-value predictors
    - Predict the previous value
      - A saturating counter can be used, so that values are predicted only above a confidence threshold
      - A new value is predicted only once it has occurred in succession
  - Stride predictors
    - $V_N = V_{N-1} + (V_{N-1} - V_{N-2})$
    - 2-delta
      - Two strides: s1 is $V_{N-1} - V_{N-2}$, and s2 is used to compute predictions. If s1 is repeated, then s2 is updated.
- Context-based predictors (history-based or pattern-based predictors): match the recent value history against previous value history
  - Finite Context Method (FCM) predictors
    - A k-th order predictor uses the previous k values to make the prediction
    - It counts the occurrences of each value that follows a pattern and predicts the one with the maximum count
- Hybrid predictors
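The predictor classes above can be sketched in a few lines each. This is a minimal illustration for a single static instruction, not a hardware design: tables, tags, and confidence counters are left out, and the class names, the `accuracy` helper, and the choice to return `None` when no prediction is available are all assumptions made for the example.

```python
from collections import Counter, defaultdict


class LastValuePredictor:
    def __init__(self):
        self.last = None

    def predict(self):
        return self.last

    def update(self, value):
        self.last = value


class StridePredictor:
    """Predicts V[N] = V[N-1] + (V[N-1] - V[N-2])."""

    def __init__(self):
        self.last, self.stride = None, 0

    def predict(self):
        return None if self.last is None else self.last + self.stride

    def update(self, value):
        if self.last is not None:
            self.stride = value - self.last
        self.last = value


class TwoDeltaPredictor:
    """s1 tracks the latest stride; s2 predicts and changes only
    after the same stride has been seen twice in a row."""

    def __init__(self):
        self.last, self.s1, self.s2 = None, 0, 0

    def predict(self):
        return None if self.last is None else self.last + self.s2

    def update(self, value):
        if self.last is not None:
            stride = value - self.last
            if stride == self.s1:
                self.s2 = stride
            self.s1 = stride
        self.last = value


class FCMPredictor:
    """Order-k finite context method: predict the value that most
    often followed the last k values."""

    def __init__(self, order=2):
        self.order = order
        self.history = ()
        self.counts = defaultdict(Counter)

    def predict(self):
        followers = self.counts.get(self.history)
        if len(self.history) < self.order or not followers:
            return None
        return followers.most_common(1)[0][0]

    def update(self, value):
        if len(self.history) == self.order:
            self.counts[self.history][value] += 1
        self.history = (self.history + (value,))[-self.order:]


def accuracy(predictor, values):
    """Fraction of values predicted correctly, predicting before each update."""
    correct = 0
    for v in values:
        correct += predictor.predict() == v
        predictor.update(v)
    return correct / len(values)


repeated_stride = [1, 2, 3] * 3
for p in (StridePredictor(), TwoDeltaPredictor(), FCMPredictor(order=2)):
    print(type(p).__name__, accuracy(p, repeated_stride))
```

On the repeated-stride sequence the plain stride predictor is thrown off twice per wrap-around, the 2-delta variant only once, and the order-2 FCM predictor becomes exact once it has seen one full period.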

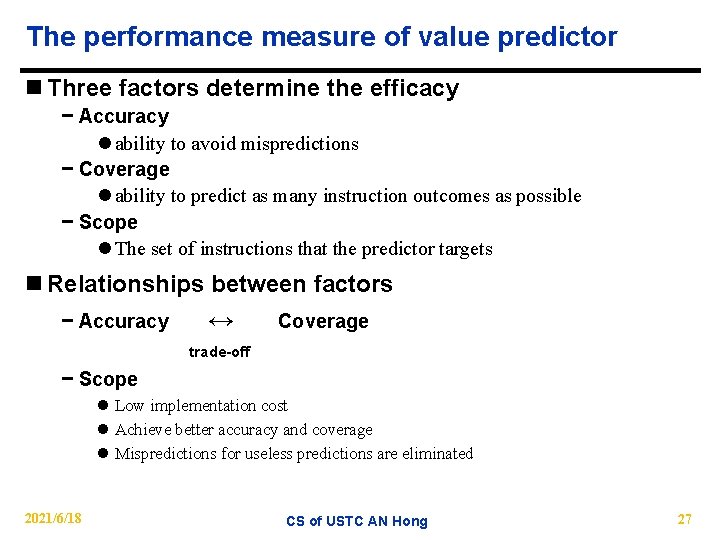

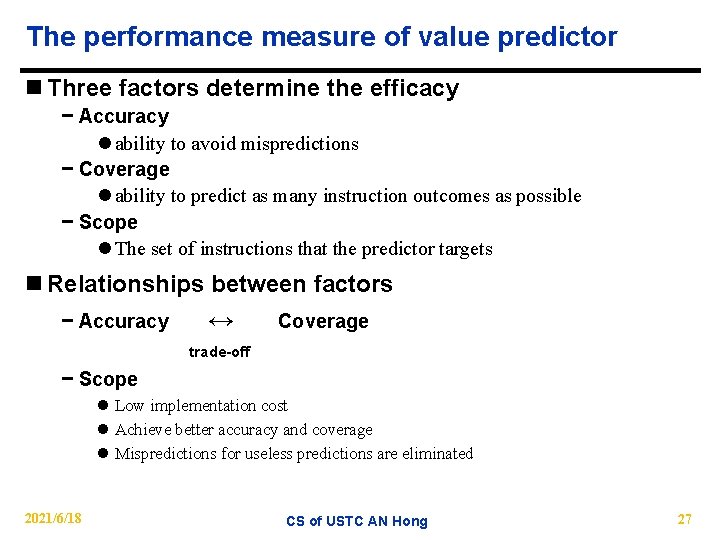

Performance Measures of a Value Predictor

- Three factors determine the efficacy
  - Accuracy
    - the ability to avoid mispredictions
  - Coverage
    - the ability to predict as many instruction outcomes as possible
  - Scope
    - the set of instructions that the predictor targets
- Relationships between the factors
  - Accuracy ↔ coverage trade-off
  - Scope (benefits of restricting it)
    - Low implementation cost
    - Better accuracy and coverage can be achieved
    - Mispredictions for useless predictions are eliminated
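One way to put numbers on accuracy and coverage is sketched below. The exact formulas are an assumption, since the slide gives only qualitative definitions: here accuracy is correct predictions over predictions made, and coverage is predictions made over in-scope instructions. The function name `accuracy_and_coverage` and the outcome encoding are hypothetical.

```python
def accuracy_and_coverage(outcomes):
    """Compute (accuracy, coverage) for a predictor.

    outcomes: one entry per in-scope dynamic instruction:
      True  -> a prediction was made and was correct
      False -> a prediction was made and was wrong
      None  -> no prediction was made
    Assumed definitions: accuracy = correct / predicted,
    coverage = predicted / in-scope instructions.
    """
    predicted = [o for o in outcomes if o is not None]
    correct = sum(1 for o in predicted if o)
    accuracy = correct / len(predicted) if predicted else 0.0
    coverage = len(predicted) / len(outcomes) if outcomes else 0.0
    return accuracy, coverage


outcomes = [True, True, False, None, None, True, None, True]
print(accuracy_and_coverage(outcomes))
```

A predictor that declines to predict more often (more `None` entries) lowers coverage but can raise accuracy, which is the trade-off named on the slide.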

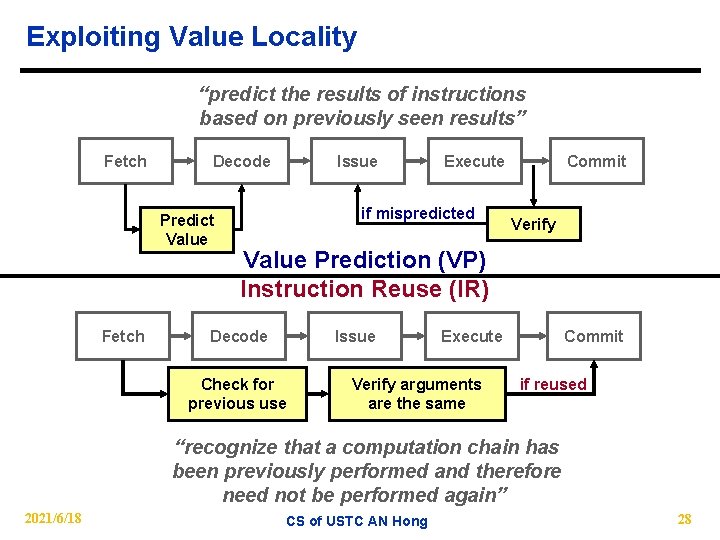

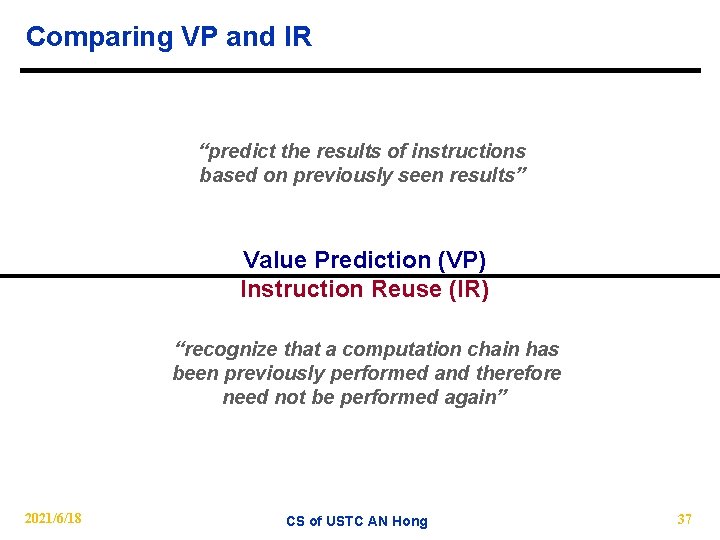

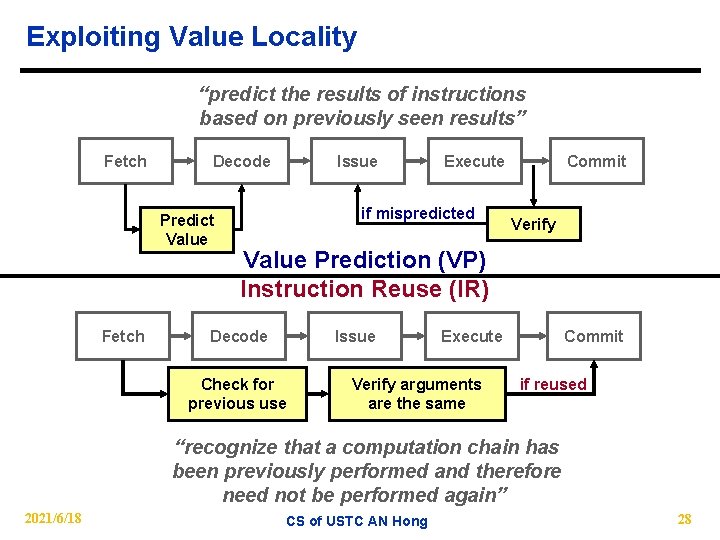

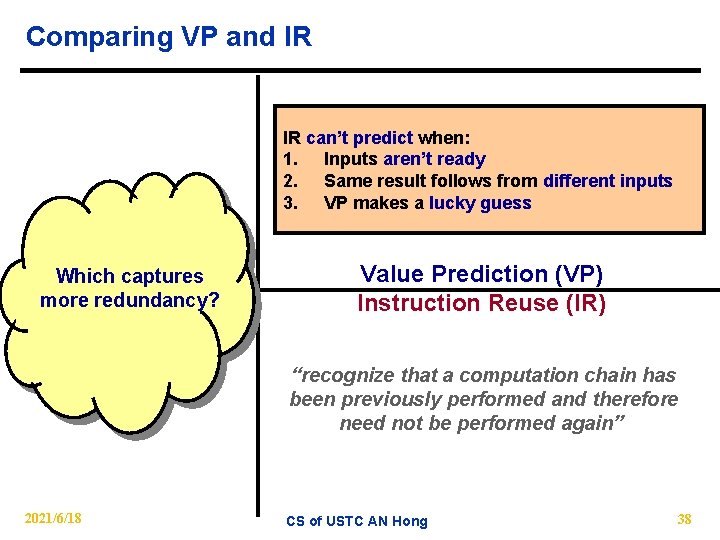

Exploiting Value Locality

- Value Prediction (VP): “predict the results of instructions based on previously seen results”
  - Pipeline: Fetch, Decode, Issue, Execute, Commit, with “Predict Value” alongside the front end and “Verify” after execution (re-execute if mispredicted)
- Instruction Reuse (IR): “recognize that a computation chain has been previously performed and therefore need not be performed again”
  - Pipeline: Fetch, Decode, Issue, Execute, Commit, with “Check for previous use” and “Verify arguments are the same” alongside the front end (go straight to Commit if reused)

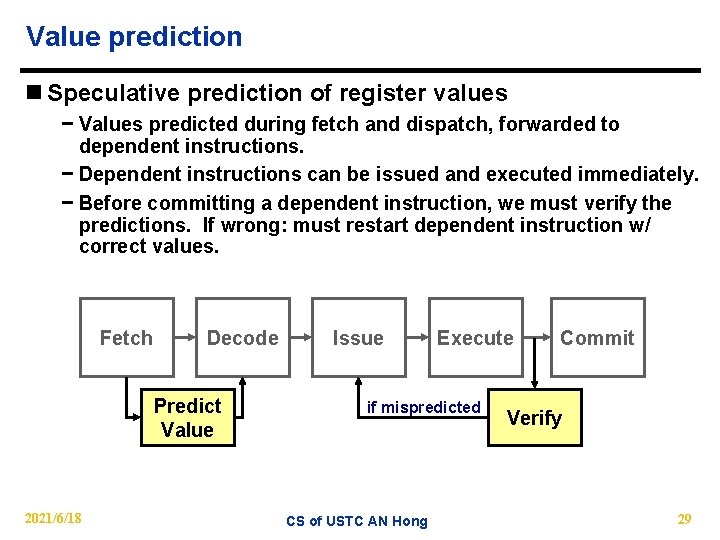

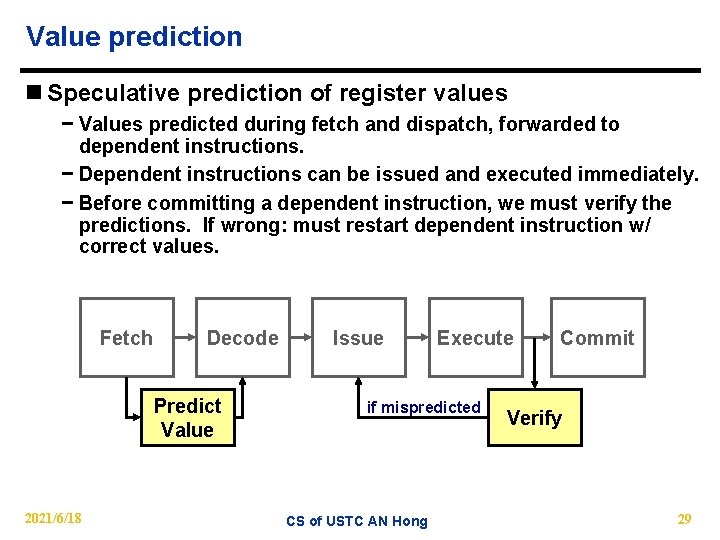

Value Prediction

- Speculative prediction of register values
  - Values are predicted during fetch and dispatch, then forwarded to dependent instructions.
  - Dependent instructions can be issued and executed immediately.
  - Before committing a dependent instruction, we must verify the predictions. If a prediction is wrong, the dependent instruction must restart with the correct values.

[Pipeline diagram: Fetch, Decode, Issue, Execute, Commit, with Predict Value and Verify; re-execute if mispredicted]

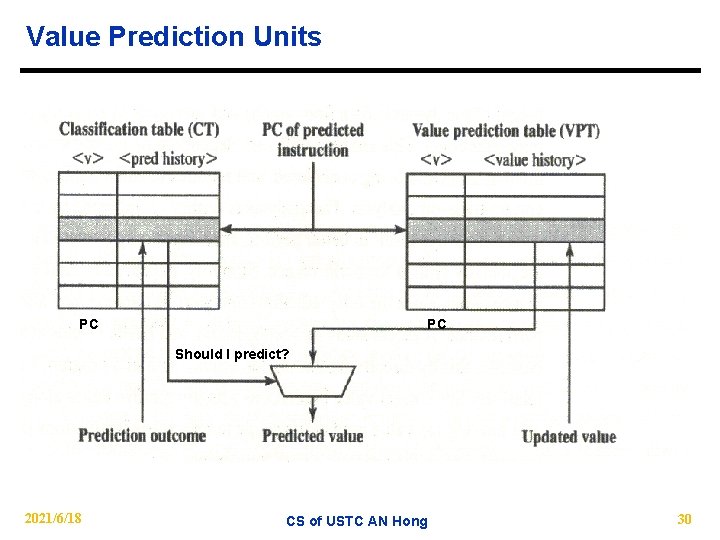

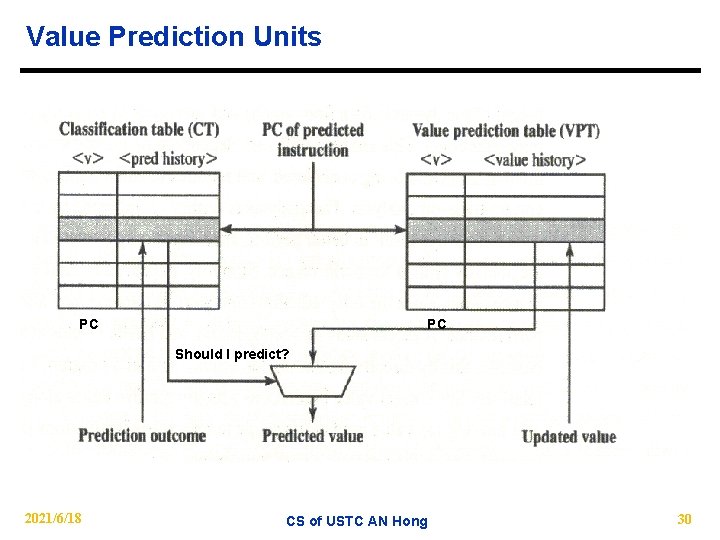

Value Prediction Units

[Figure: two structures, each indexed by the PC; one of them answers “Should I predict?”]

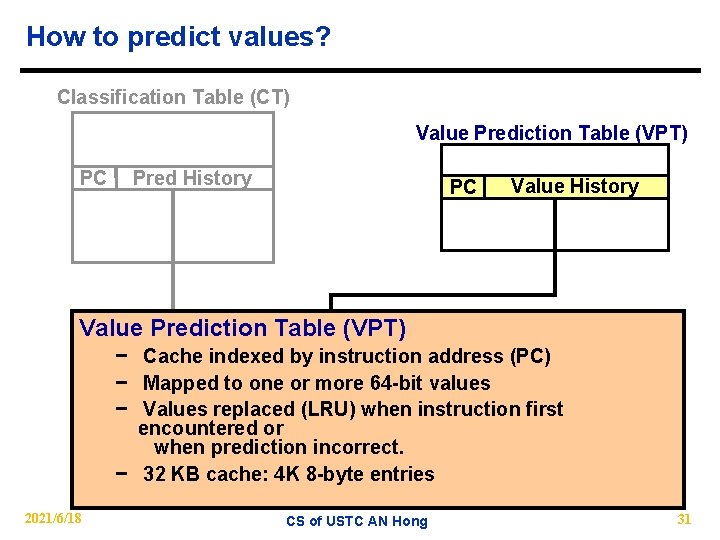

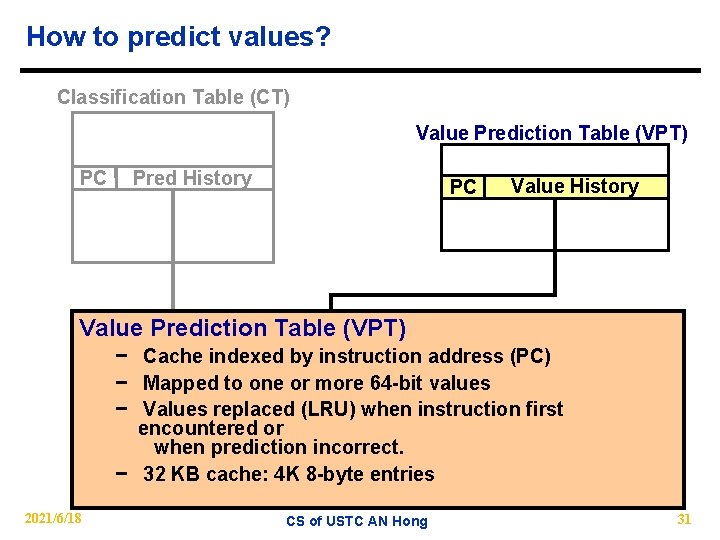

How to Predict Values?

[Figure: the PC indexes a Classification Table (CT), which holds prediction history, and a Value Prediction Table (VPT), which holds value history; together they produce the prediction]

Value Prediction Table (VPT)

- Cache indexed by instruction address (PC)
- Each entry maps to one or more 64-bit values
- Values are replaced (LRU) when an instruction is first encountered or when a prediction is incorrect
- 32 KB cache: 4K 8-byte entries

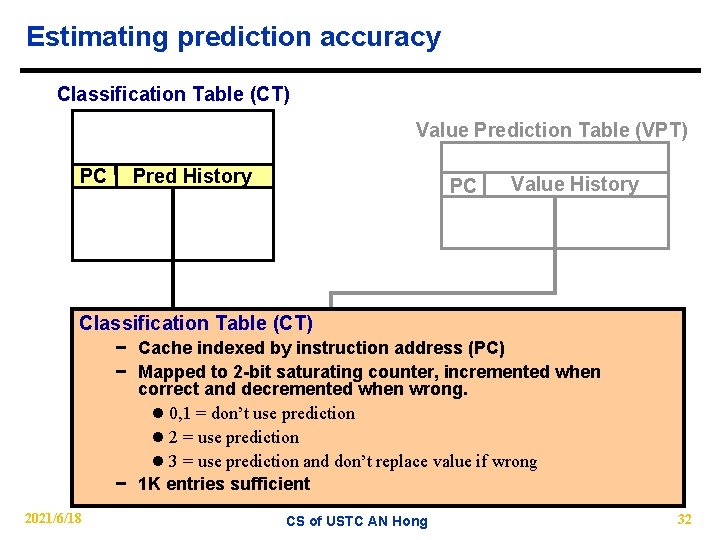

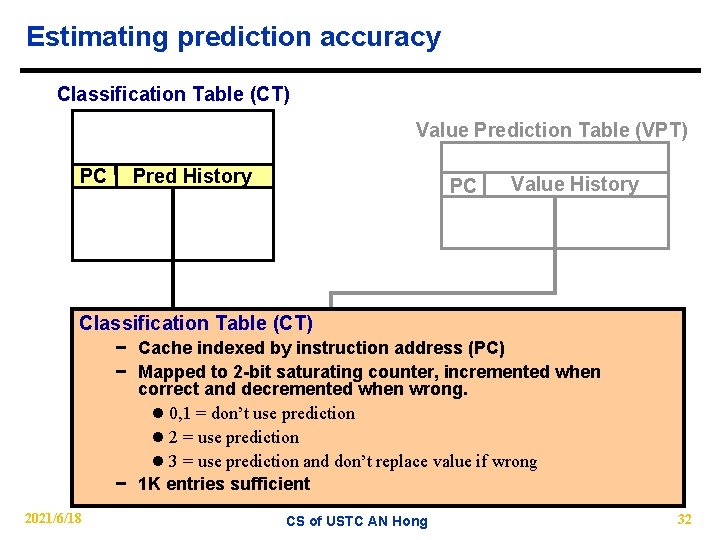

Estimating Prediction Accuracy

[Figure: the PC indexes a Classification Table (CT), which holds prediction history, and a Value Prediction Table (VPT), which holds value history; together they produce the predicted value]

Classification Table (CT)

- Cache indexed by instruction address (PC)
- Each entry maps to a 2-bit saturating counter, incremented when the prediction is correct and decremented when it is wrong
  - 0, 1 = don’t use prediction
  - 2 = use prediction
  - 3 = use prediction and don’t replace value if wrong
- 1K entries are sufficient
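The interplay of the two tables can be sketched as follows. This is a simplified model under stated assumptions: both tables are direct-mapped and untagged, each VPT entry holds a single value (history depth one, so no LRU choice arises), and instructions are assumed to be 4 bytes wide for indexing. The class name `ValuePredictionUnit` and its method names are hypothetical.

```python
class ValuePredictionUnit:
    """Sketch of a VPT guarded by a CT of 2-bit saturating counters."""

    def __init__(self, vpt_entries=4096, ct_entries=1024):
        self.vpt = [None] * vpt_entries   # one stored value per entry
        self.ct = [0] * ct_entries        # counter states 0..3

    @staticmethod
    def _index(pc, size):
        return (pc >> 2) % size           # assumes 4-byte instructions

    def predict(self, pc):
        """Return the predicted value, or None if the CT says not to predict."""
        value = self.vpt[self._index(pc, len(self.vpt))]
        counter = self.ct[self._index(pc, len(self.ct))]
        if value is None or counter < 2:  # states 0 and 1: don't use
            return None
        return value

    def update(self, pc, actual):
        """Train both tables with the computed result."""
        v = self._index(pc, len(self.vpt))
        c = self._index(pc, len(self.ct))
        stored, counter = self.vpt[v], self.ct[c]
        if stored == actual:
            self.ct[c] = min(3, counter + 1)
        else:
            self.ct[c] = max(0, counter - 1)
            # State 3 means "don't replace value if wrong".
            if stored is None or counter < 3:
                self.vpt[v] = actual


unit = ValuePredictionUnit()
pc = 0x1000
for _ in range(3):
    unit.update(pc, 42)
print(unit.predict(pc))   # counter has reached the "use prediction" state
```

The counter provides hysteresis: a value must repeat before it is used, and a single wrong outcome in state 3 lowers confidence without evicting the stored value.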

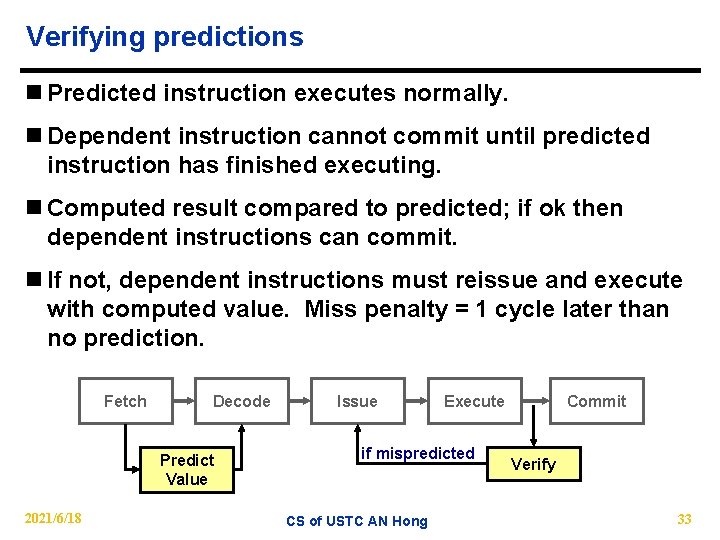

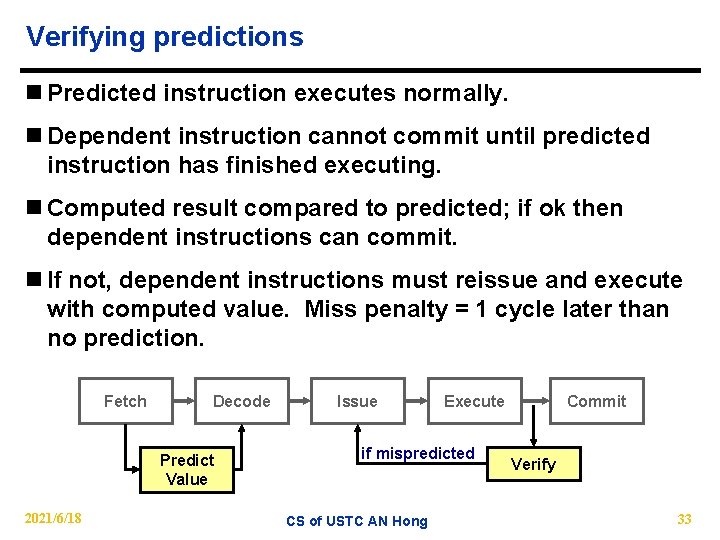

Verifying Predictions

- The predicted instruction executes normally.
- A dependent instruction cannot commit until the predicted instruction has finished executing.
- The computed result is compared to the predicted one; if they match, dependent instructions can commit.
- If not, dependent instructions must reissue and execute with the computed value. Miss penalty = 1 cycle later than with no prediction.

[Pipeline diagram: Fetch, Decode, Issue, Execute, Commit, with Predict Value and Verify; re-execute if mispredicted]
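The verification rules above can be turned into a small timing model. This is a deliberately simplified sketch: it assumes the producer and its dependent both become ready to issue at cycle 0, ignores structural hazards, and takes the one-cycle miss penalty directly from the slide. The function name `dependent_finish_cycle` and its parameters are hypothetical.

```python
def dependent_finish_cycle(producer_latency, dependent_latency,
                           predicted, computed):
    """Cycle at which a dependent instruction can finish.

    predicted: value supplied by the predictor, or None for no prediction.
    computed:  value the producer actually computes.
    """
    if predicted is None:
        # No speculation: wait for the producer, then execute.
        return producer_latency + dependent_latency
    if predicted == computed:
        # Executed in parallel, but may not commit before the
        # producer has finished and the prediction is verified.
        return max(producer_latency, dependent_latency)
    # Mispredicted: reissue with the computed value, one cycle
    # later than the no-prediction case.
    return producer_latency + dependent_latency + 1


print(dependent_finish_cycle(3, 1, None, 5))  # no prediction
print(dependent_finish_cycle(3, 1, 5, 5))     # correct prediction
print(dependent_finish_cycle(3, 1, 4, 5))     # misprediction
```

In this model a correct prediction hides the dependent's latency behind the producer, while a misprediction costs exactly one cycle more than not predicting at all.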

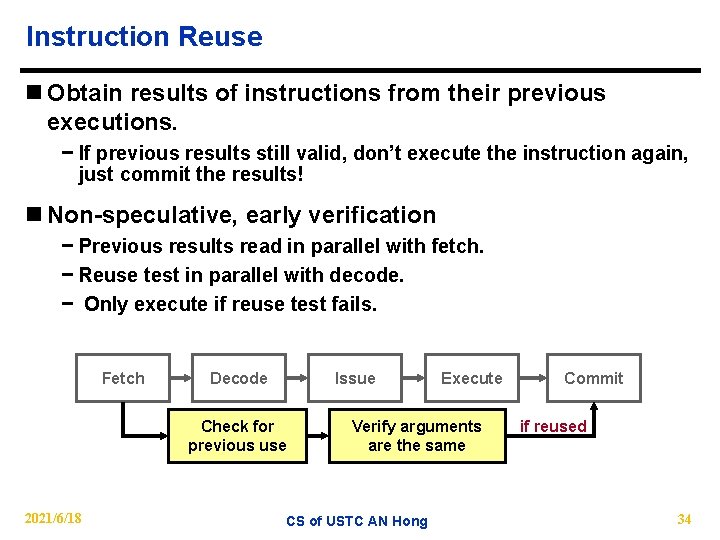

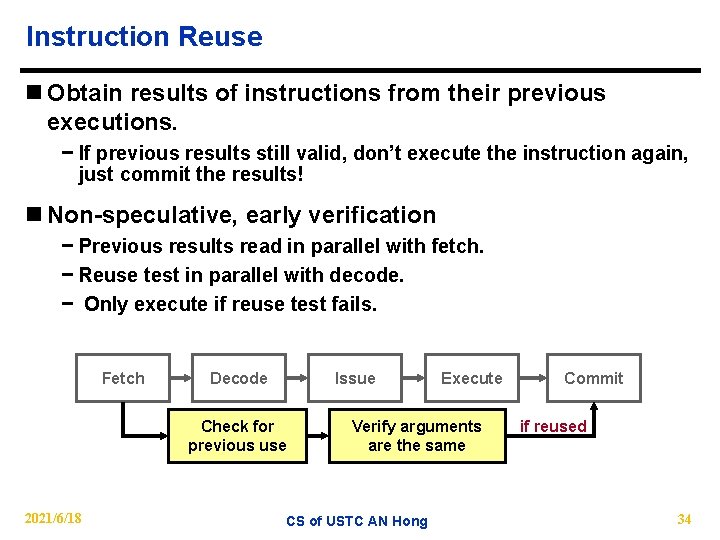

Instruction Reuse

- Obtain the results of instructions from their previous executions.
  - If the previous results are still valid, don’t execute the instruction again; just commit the results!
- Non-speculative, early verification
  - Previous results are read in parallel with fetch.
  - The reuse test runs in parallel with decode.
  - Only execute if the reuse test fails.

[Pipeline diagram: Fetch, Decode, Issue, Execute, Commit, with “Check for previous use” and “Verify arguments are the same”; go straight to Commit if reused]

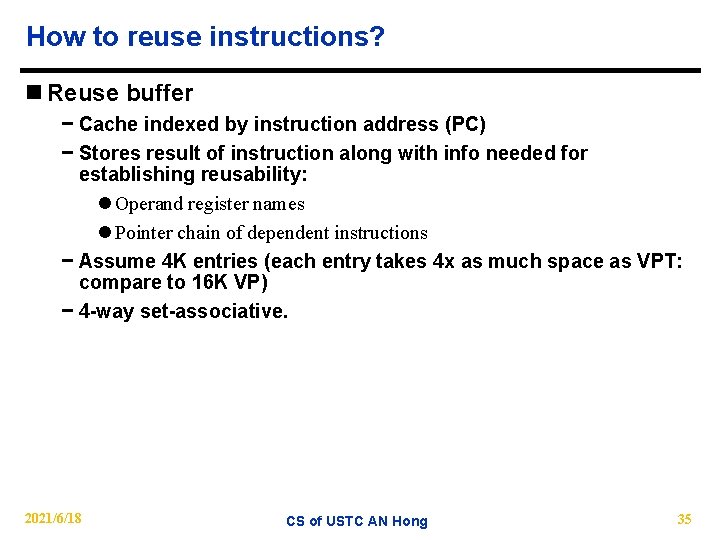

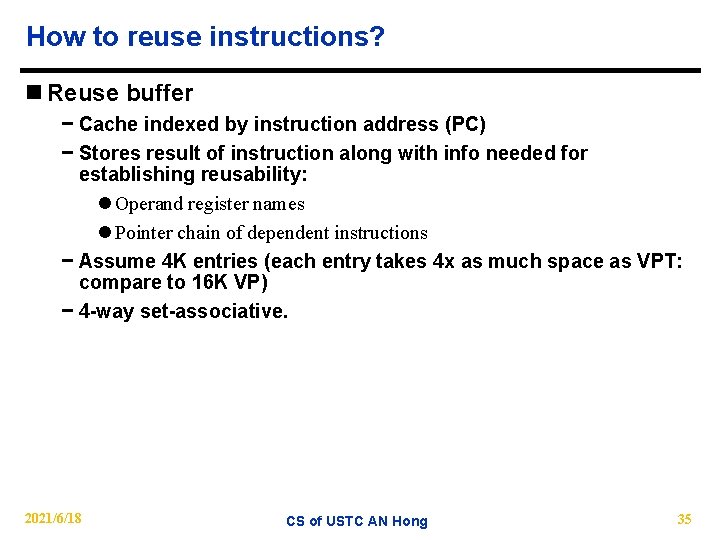

How to Reuse Instructions?

- Reuse buffer
  - Cache indexed by instruction address (PC)
  - Stores the result of an instruction along with the information needed to establish reusability:
    - Operand register names
    - Pointer chain of dependent instructions
  - Assume 4K entries (each entry takes 4x as much space as a VPT entry, so compare against a 16K-entry VPT)
  - 4-way set-associative

Reuse Scheme

- Dependent chain of results (each entry points to the previous instruction in the chain)
  - An entry is reusable if the entries on which it depends have been reused (can’t reuse out of order).
  - Start of chain: reusable if the “valid” bit is set; invalidated when its operand registers are overwritten.
  - Special handling of loads and stores.
- An instruction will not be reused if:
  - Its inputs are not ready for the reuse test (decode stage)
  - Its operand registers are different
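The valid-bit part of the scheme can be sketched as below. This is a reduced model, stated as an assumption: it keeps only operand register names and a valid bit per entry, omits the dependence-chain pointers and the special handling of loads and stores, and models every instruction as an add. The names `ReuseBuffer`, `run`, and the instruction tuple layout are hypothetical.

```python
class ReuseBuffer:
    """PC-indexed buffer of results with operand names and a valid bit."""

    def __init__(self):
        self.entries = {}   # pc -> {"srcs": tuple, "result": int, "valid": bool}

    def lookup(self, pc, srcs):
        """Reuse test: stored result, or None if the instruction must execute."""
        e = self.entries.get(pc)
        if e is not None and e["valid"] and e["srcs"] == tuple(srcs):
            return e["result"]
        return None

    def record(self, pc, srcs, result):
        self.entries[pc] = {"srcs": tuple(srcs), "result": result, "valid": True}

    def register_written(self, reg):
        """Invalidate every entry that reads the overwritten register."""
        for e in self.entries.values():
            if reg in e["srcs"]:
                e["valid"] = False


def run(program, regs, rb):
    """Execute (pc, dest, src1, src2) adds; return how many were reused."""
    reused = 0
    for pc, dest, a, b in program:
        result = rb.lookup(pc, (a, b))
        if result is None:
            result = regs[a] + regs[b]       # reuse test failed: execute
            rb.record(pc, (a, b), result)
        else:
            reused += 1                      # commit the stored result
        rb.register_written(dest)            # dest is overwritten either way
        regs[dest] = result
    return reused


regs = {"r1": 0, "r2": 2, "r3": 3, "r4": 0}
program = [(0, "r1", "r2", "r3"), (4, "r4", "r1", "r2")]
rb = ReuseBuffer()
print(run(program, regs, rb), run(program, regs, rb))
```

On the second pass only the first instruction is reused: writing r1 invalidates the entry of its consumer even though the value is unchanged. Recovering that lost reuse is what the dependence-chain pointers on the slide are for.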

Comparing VP and IR

- Value Prediction (VP): “predict the results of instructions based on previously seen results”
- Instruction Reuse (IR): “recognize that a computation chain has been previously performed and therefore need not be performed again”

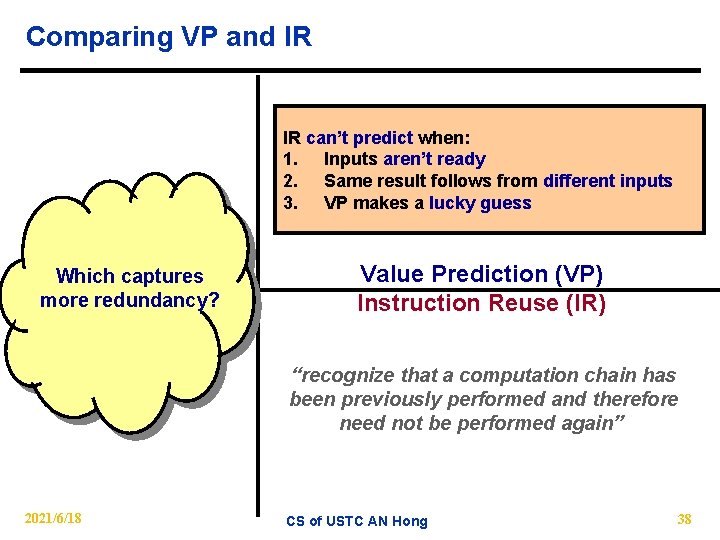

Comparing VP and IR

Which captures more redundancy?

IR can’t predict when:

1. Inputs aren’t ready
2. The same result follows from different inputs
3. VP makes a lucky guess

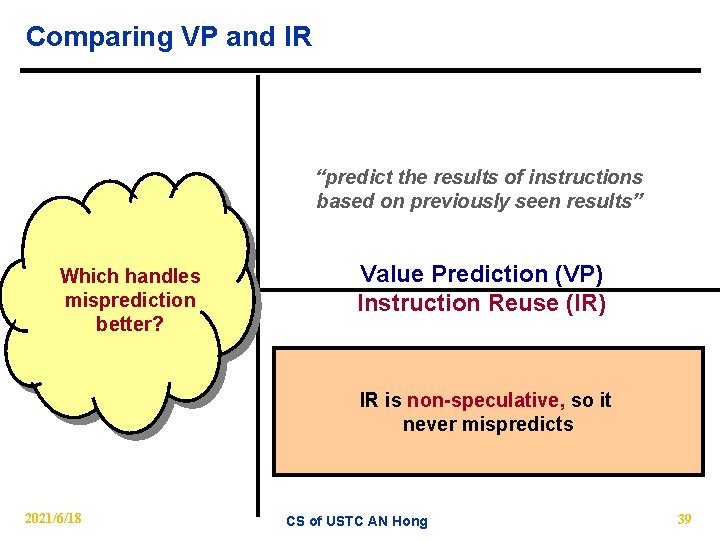

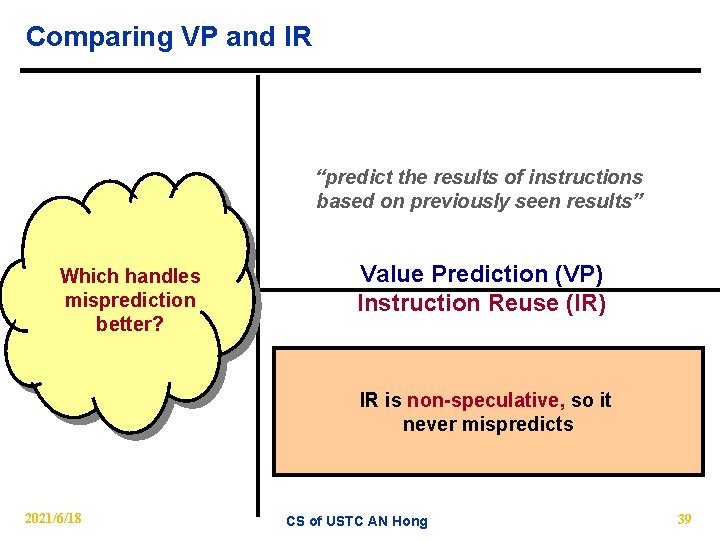

Comparing VP and IR

Which handles misprediction better?

IR is non-speculative, so it never mispredicts.

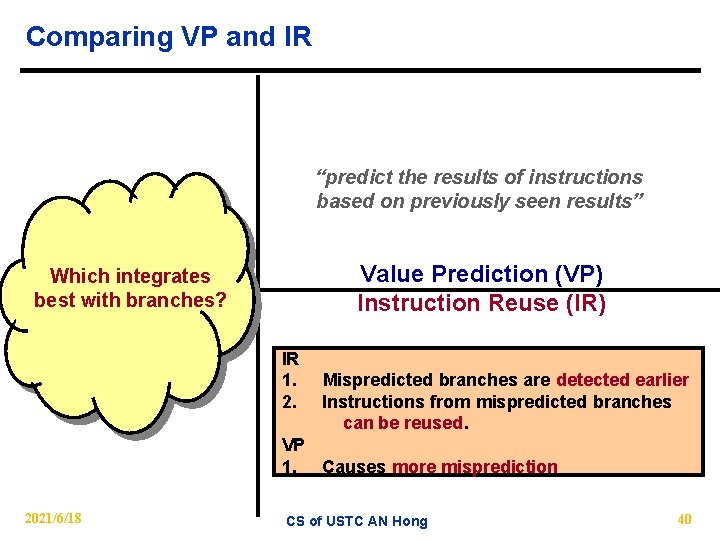

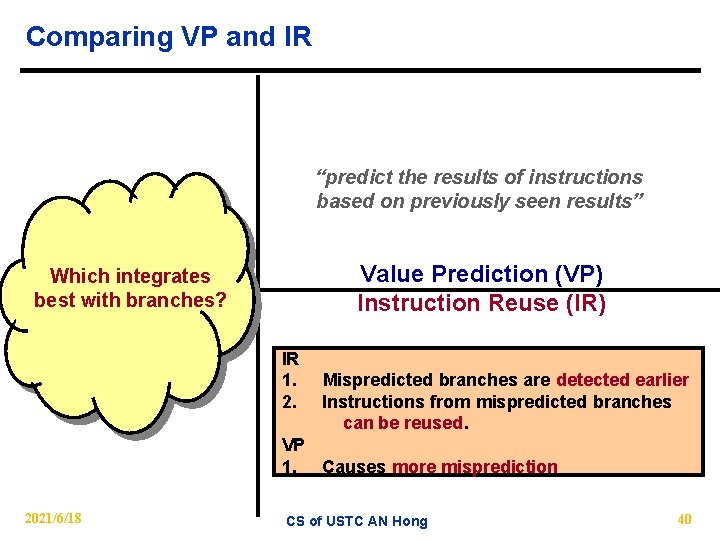

Comparing VP and IR

Which integrates best with branches?

IR:

1. Mispredicted branches are detected earlier.
2. Instructions from mispredicted branches can be reused.

VP:

1. Causes more mispredictions.

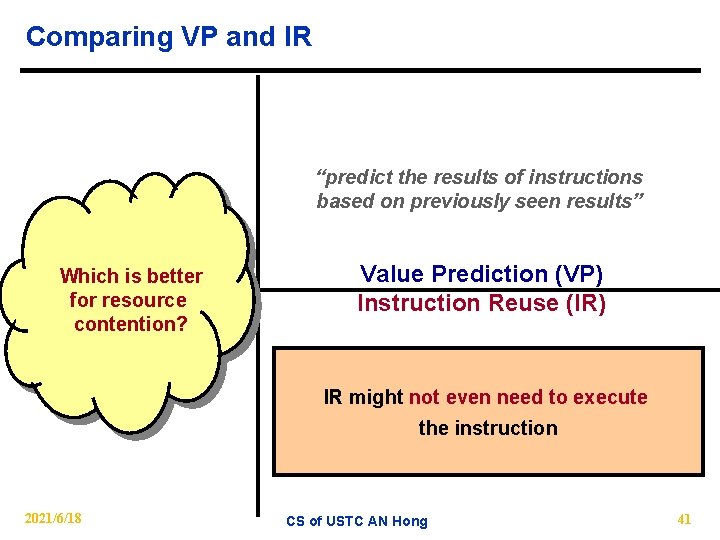

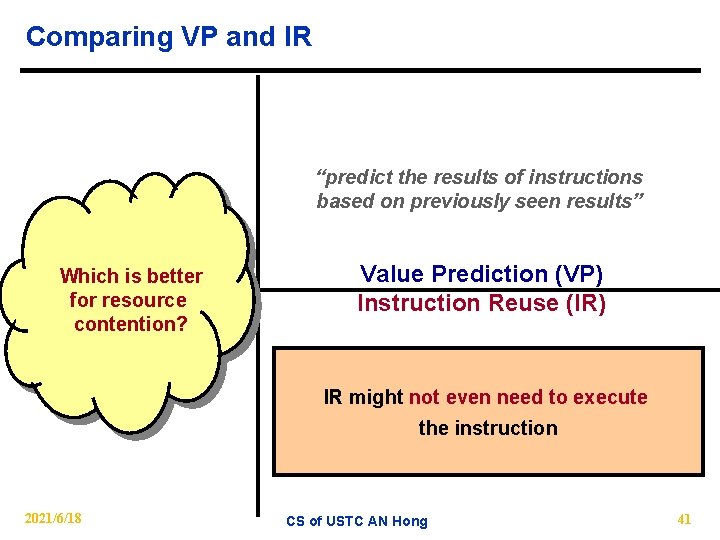

Comparing VP and IR

Which is better for resource contention?

IR might not even need to execute the instruction.

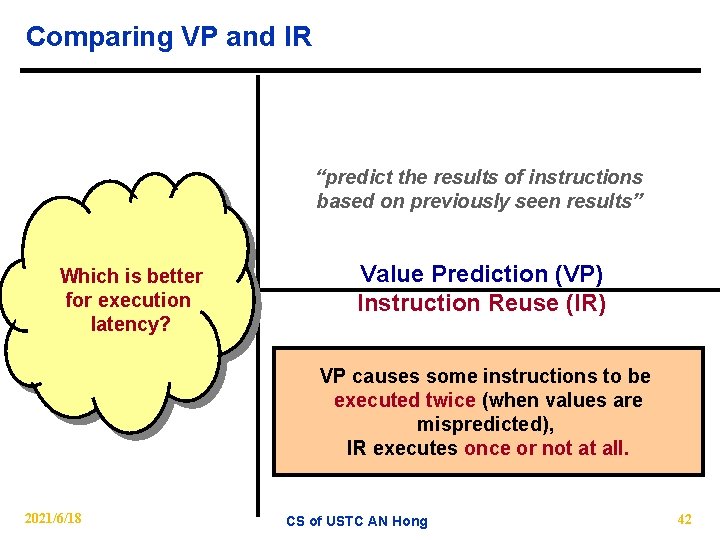

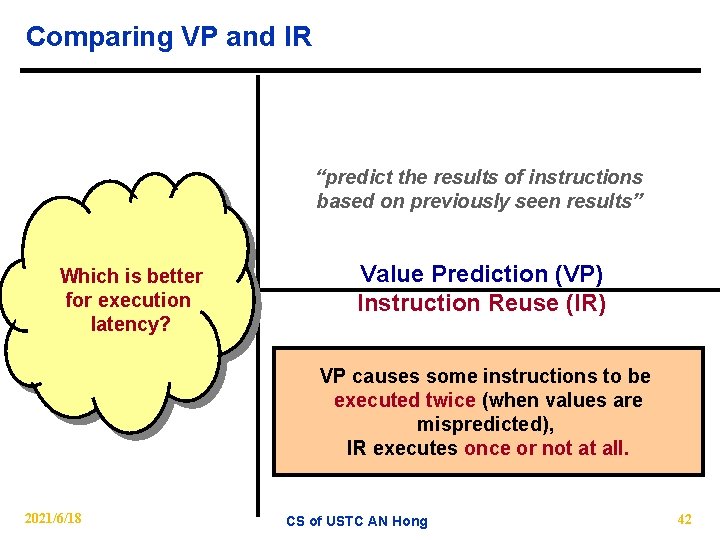

Comparing VP and IR

Which is better for execution latency?

VP causes some instructions to be executed twice (when values are mispredicted); IR executes an instruction once or not at all.

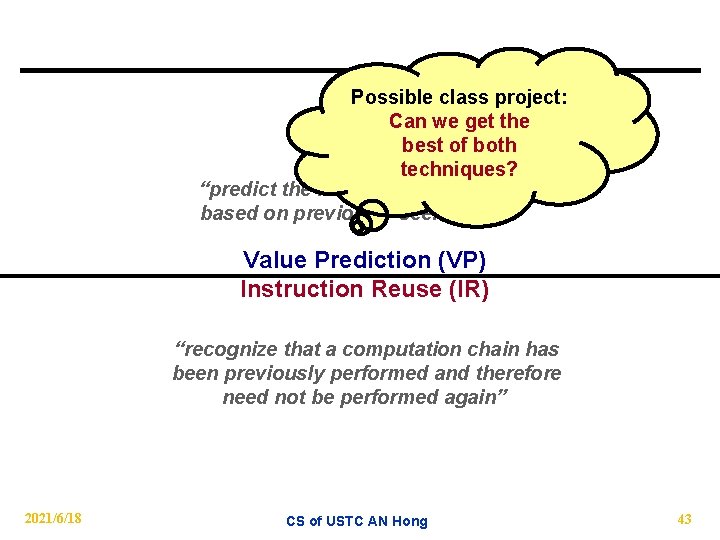

Possible class project: Can we get the best of both techniques?

- Value Prediction (VP): “predict the results of instructions based on previously seen results”
- Instruction Reuse (IR): “recognize that a computation chain has been previously performed and therefore need not be performed again”

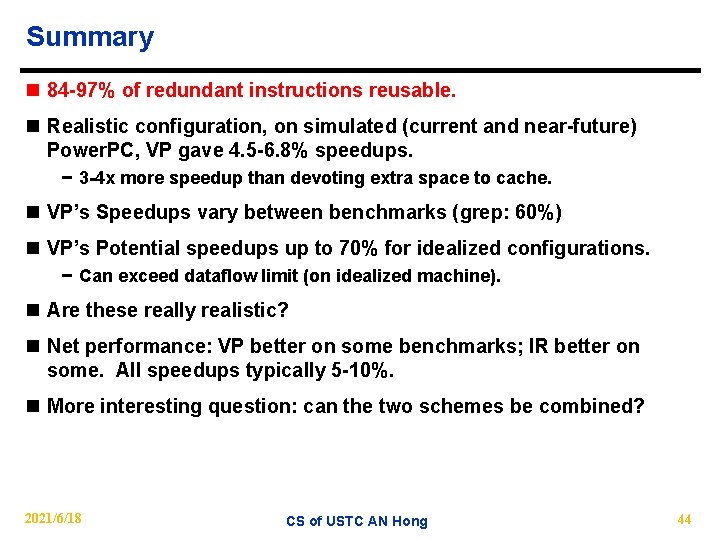

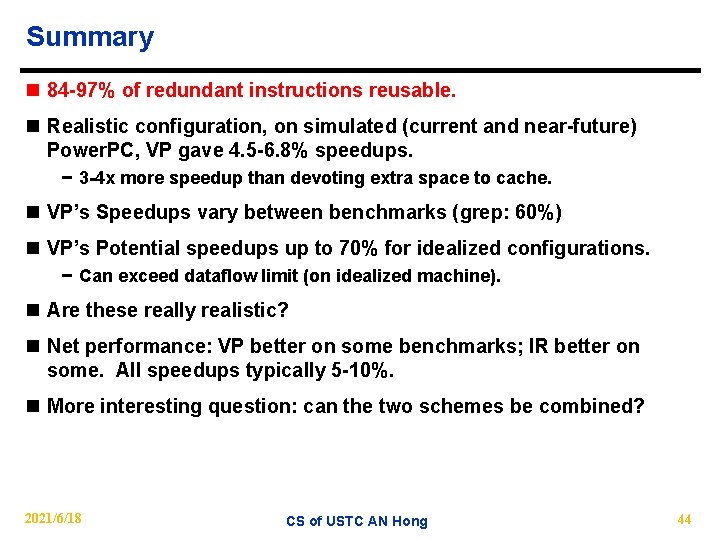

Summary

- 84-97% of redundant instructions are reusable.
- In a realistic configuration, on simulated (current and near-future) PowerPC, VP gave 4.5-6.8% speedups.
  - 3-4x more speedup than devoting the extra space to cache.
- VP’s speedups vary between benchmarks (grep: 60%).
- VP’s potential speedups reach up to 70% for idealized configurations.
  - Can exceed the dataflow limit (on an idealized machine).
- Are these really realistic?
- Net performance: VP is better on some benchmarks; IR is better on others. All speedups are typically 5-10%.
- More interesting question: can the two schemes be combined?