Lecture on High Performance Processor Architecture CS 05162

![Vector Code Example: C code and scalar code for (i=0; i<64; i++) C[i]=A[i]+B[i];](https://slidetodoc.com/presentation_image_h2/77afb5afd3f9ab3ddf2f5bf9aee7d063/image-20.jpg)

![Automatic Code Vectorization: for (i=0; i < N; i++) C[i] = A[i] + B[i];](https://slidetodoc.com/presentation_image_h2/77afb5afd3f9ab3ddf2f5bf9aee7d063/image-21.jpg)

- Slides: 87

Lecture on High Performance Processor Architecture (CS 05162): Introduction to Data-Level Parallel Architecture

An Hong, han@ustc.edu.cn, Fall 2007
University of Science and Technology of China, Department of Computer Science and Technology

USTC CS AN Hong

Outline

- Basic Concepts
- Roots: Vector Supercomputers
- Vector Architectures of the Future
  - High-end supercomputers
  - High-performance microprocessors for multimedia
- Stream Processor Architecture

2021/6/17 USTC CS AN Hong 2

DLP vs. ILP (superscalar and VLIW)

- ILP (instruction-level parallelism): different operations (e.g., one load, one add, one multiply, and one divide) execute in the same cycle
  - Each operation is performed in a different execution unit
  - General-purpose computing
  - Superscalar, VLIW, DSP (VLIW)
- DLP (data-level parallelism): the same arithmetic/logical operation is performed on multiple data elements
  - Typically, the execution unit is wide (64-bit or 128-bit) and holds multiple data elements, e.g., four 16-bit elements in a 64-bit execution unit
  - Multimedia, scientific computing
  - Vector, SIMD, SPMD

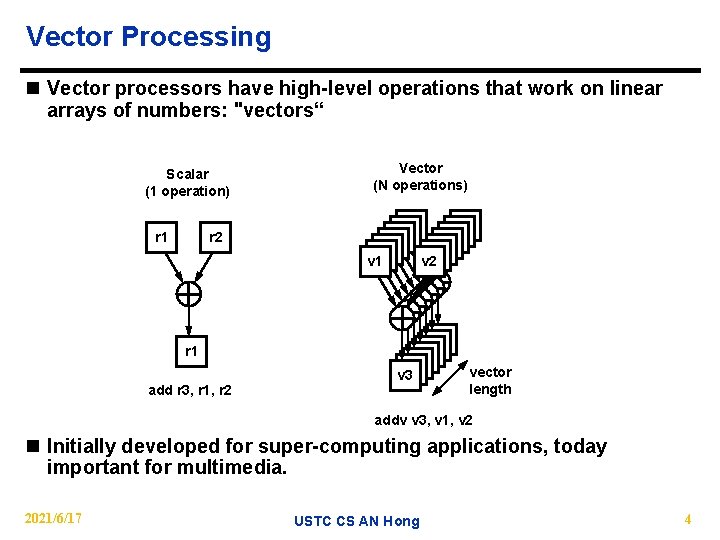

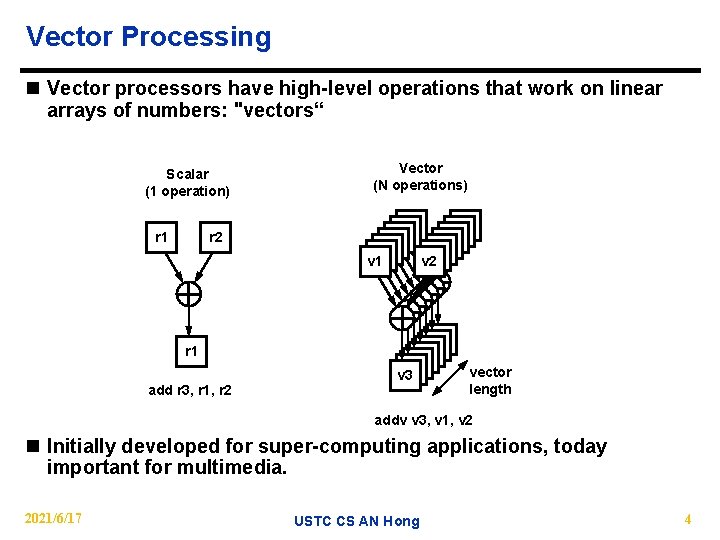

Vector Processing

- Vector processors have high-level operations that work on linear arrays of numbers: "vectors"
  - Scalar (1 operation): `add r3, r1, r2` computes r3 = r1 + r2
  - Vector (N operations): `addv v3, v1, v2` computes v3[i] = v1[i] + v2[i] for every element i up to the vector length
- Initially developed for supercomputing applications; today important for multimedia

Roots: Vector Supercomputers

Supercomputers

- In the 1970s-80s, supercomputer = vector machine
- Definition of a supercomputer:
  - Fastest machine in the world at a given task
  - A device to turn a compute-bound problem into an I/O-bound problem
  - Any machine costing $30M+
  - Any machine designed by Seymour Cray
- The CDC 6600 (Cray, 1964) is regarded as the first supercomputer (Control Data Corporation)

Supercomputer Applications

- Typical application areas
  - Military research (nuclear weapons, cryptography)
  - Scientific research
  - Weather forecasting
  - Oil exploration
  - Industrial design (car crash simulation)
  - ...
- All involve huge computations on large data sets

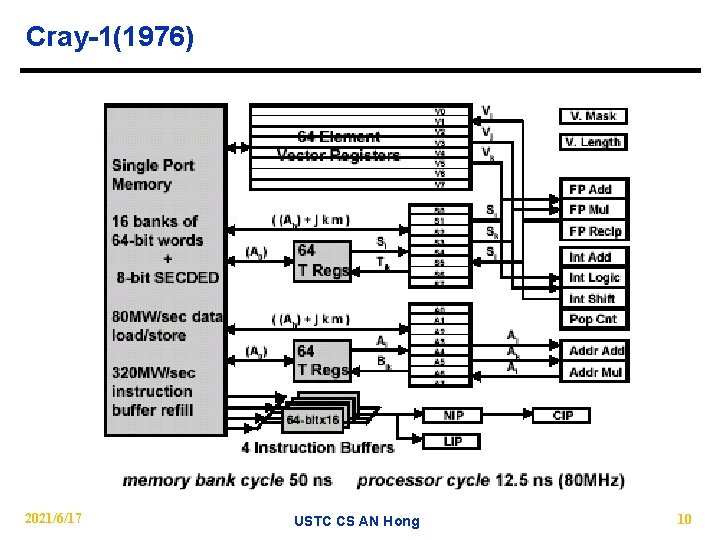

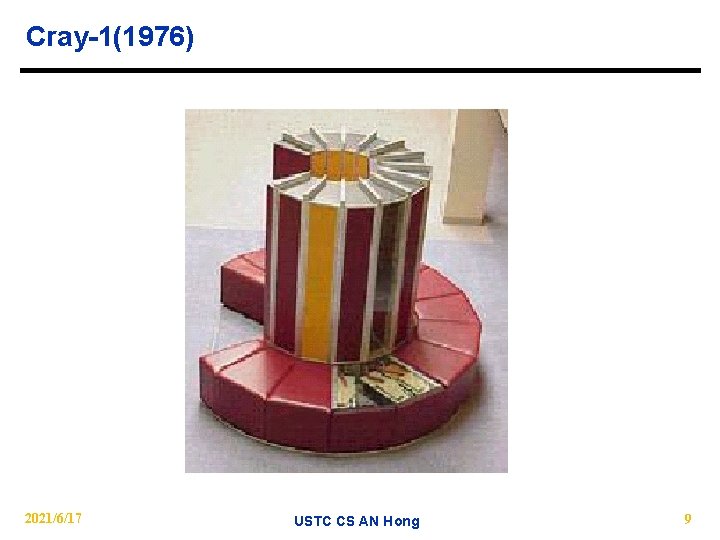

Vector Supercomputers (Epitomized by Cray-1, 1976)

- Scalar Unit + Vector Extensions
  - Load/Store Architecture
  - Vector Registers
  - Vector Instructions
  - Hardwired Control
  - Highly Pipelined Functional Units
  - Interleaved Memory System
  - No Data Caches
  - No Virtual Memory

Cray-1 (1976)

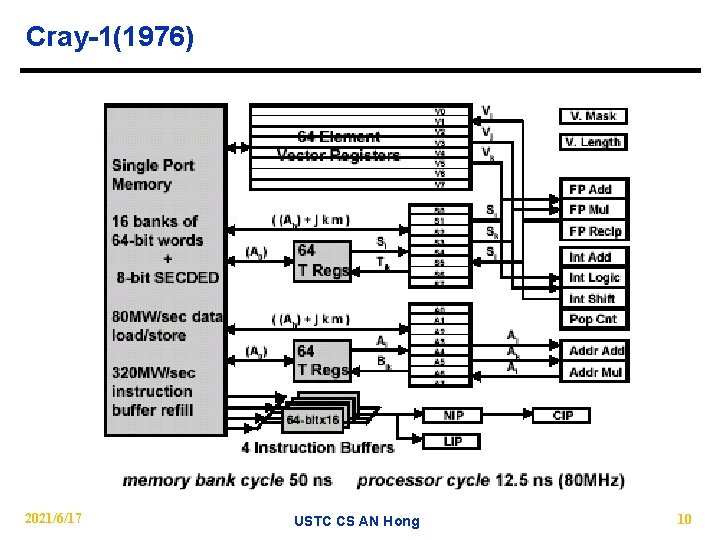

Cray-1 (1976)

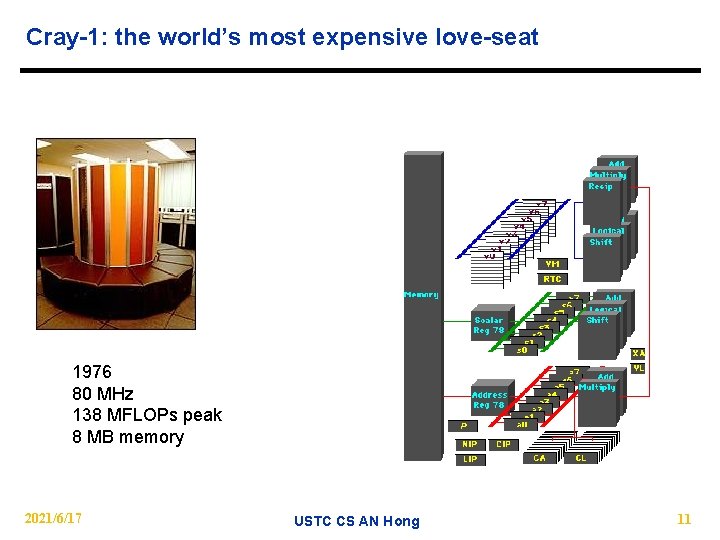

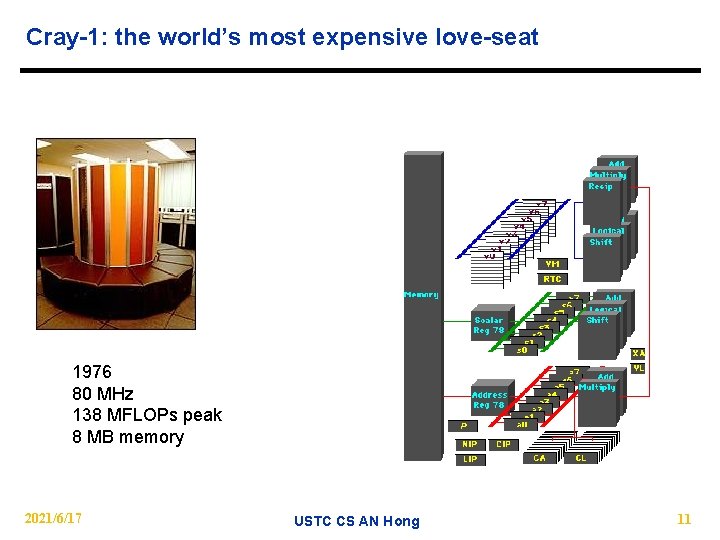

Cray-1: the world's most expensive love-seat

- 1976
- 80 MHz
- 138 MFLOPS peak
- 8 MB memory

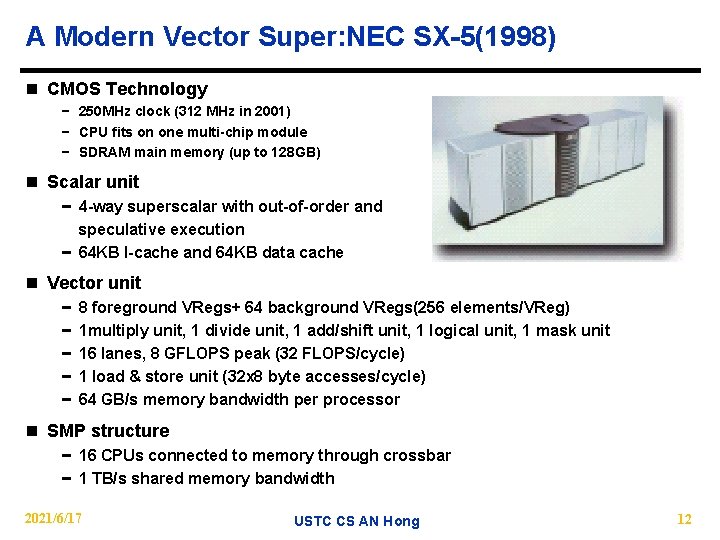

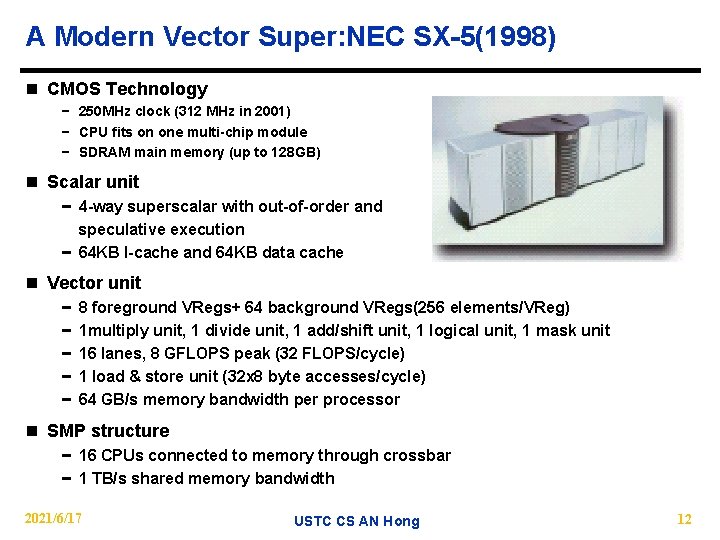

A Modern Vector Super: NEC SX-5 (1998)

- CMOS technology
  - 250 MHz clock (312 MHz in 2001)
  - CPU fits on one multi-chip module
  - SDRAM main memory (up to 128 GB)
- Scalar unit
  - 4-way superscalar with out-of-order and speculative execution
  - 64 KB I-cache and 64 KB data cache
- Vector unit
  - 8 foreground VRegs + 64 background VRegs (256 elements/VReg)
  - 1 multiply unit, 1 divide unit, 1 add/shift unit, 1 logical unit, 1 mask unit
  - 16 lanes, 8 GFLOPS peak (32 FLOPS/cycle)
  - 1 load & store unit (32 x 8-byte accesses/cycle)
  - 64 GB/s memory bandwidth per processor
- SMP structure
  - 16 CPUs connected to memory through a crossbar
  - 1 TB/s shared memory bandwidth
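
A quick consistency check on the SX-5 peak figures, using only numbers from the slide (1998 clock of 250 MHz):

$$
\begin{aligned}
\text{peak FP rate} &= 32\ \text{FLOP/cycle} \times 250\ \text{MHz} = 8\ \text{GFLOPS} \\
\text{memory bandwidth per CPU} &= 32 \times 8\ \text{B/cycle} \times 250\ \text{MHz} = 64\ \text{GB/s} \\
\text{aggregate SMP bandwidth} &= 16\ \text{CPUs} \times 64\ \text{GB/s} = 1024\ \text{GB/s} \approx 1\ \text{TB/s}
\end{aligned}
$$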

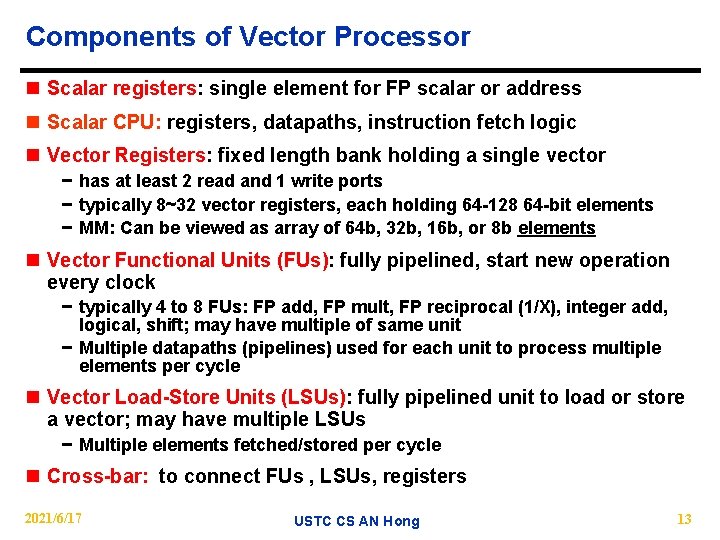

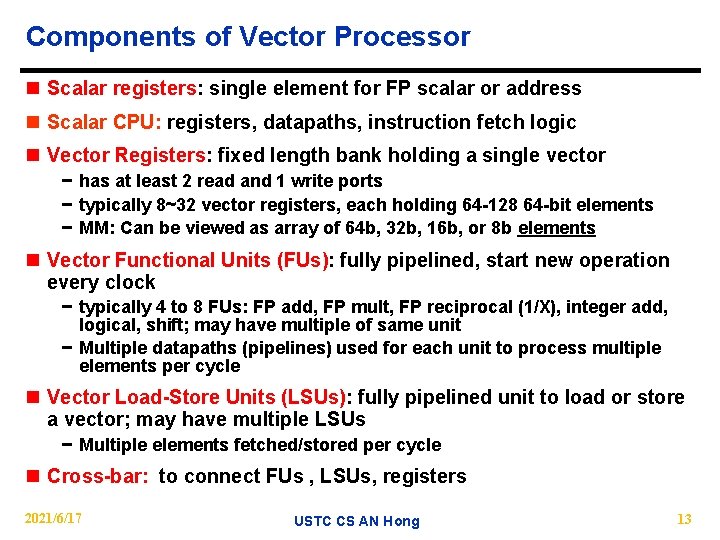

Components of Vector Processor

- Scalar registers: single element for an FP scalar or an address
- Scalar CPU: registers, datapaths, instruction fetch logic
- Vector registers: fixed-length bank holding a single vector
  - at least 2 read ports and 1 write port
  - typically 8-32 vector registers, each holding 64-128 64-bit elements
  - for multimedia (MM): can be viewed as an array of 64b, 32b, 16b, or 8b elements
- Vector functional units (FUs): fully pipelined, start a new operation every clock
  - typically 4 to 8 FUs: FP add, FP mult, FP reciprocal (1/X), integer add, logical, shift; may have multiples of the same unit
  - multiple datapaths (pipelines) per unit to process multiple elements per cycle
- Vector load-store units (LSUs): fully pipelined units to load or store a vector; may have multiple LSUs
  - multiple elements fetched/stored per cycle
- Crossbar: connects FUs, LSUs, and registers

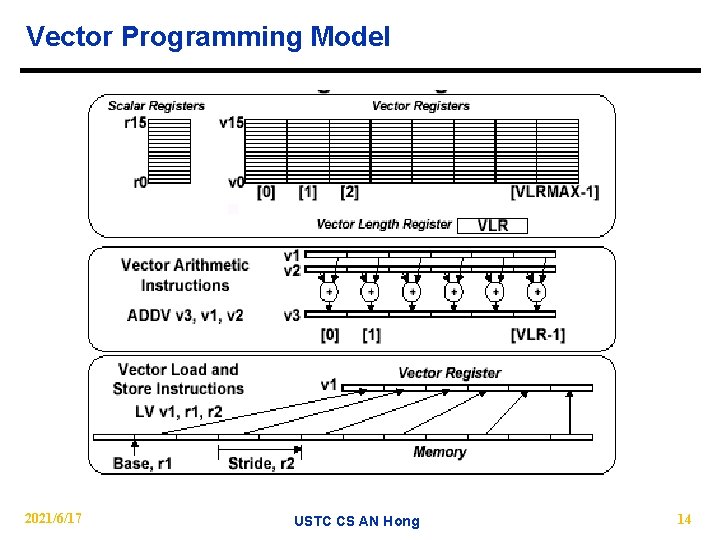

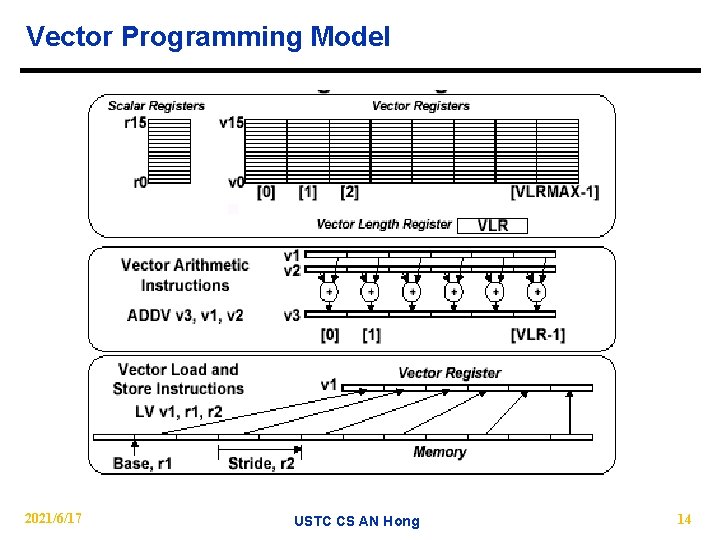

Vector Programming Model

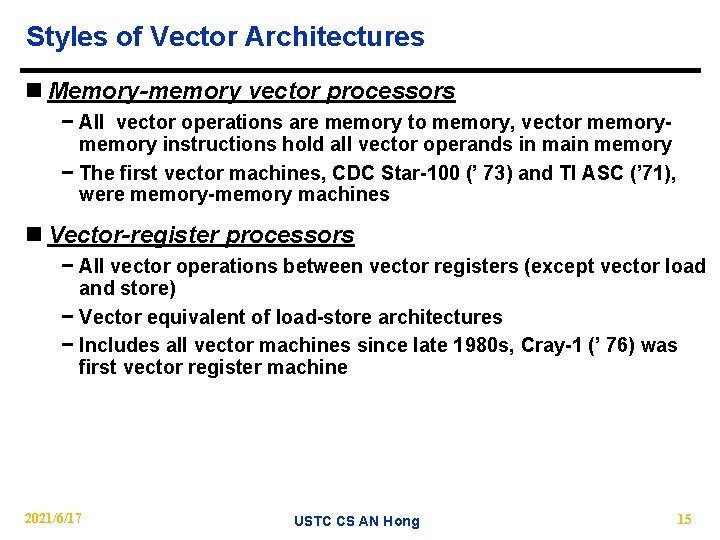

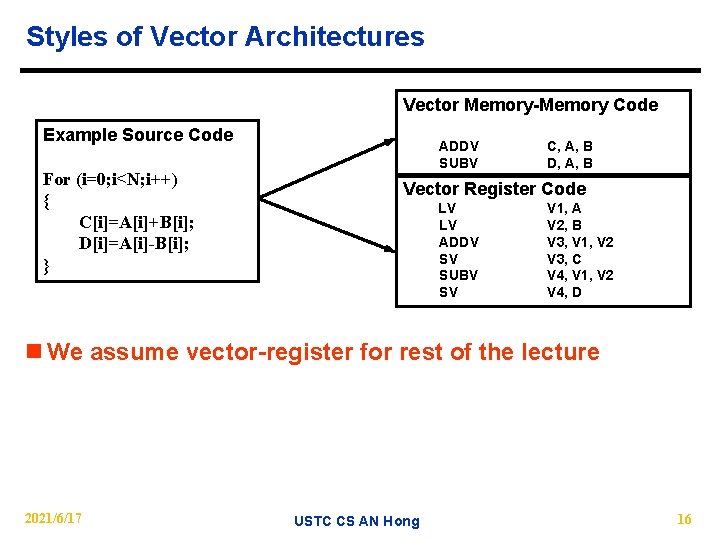

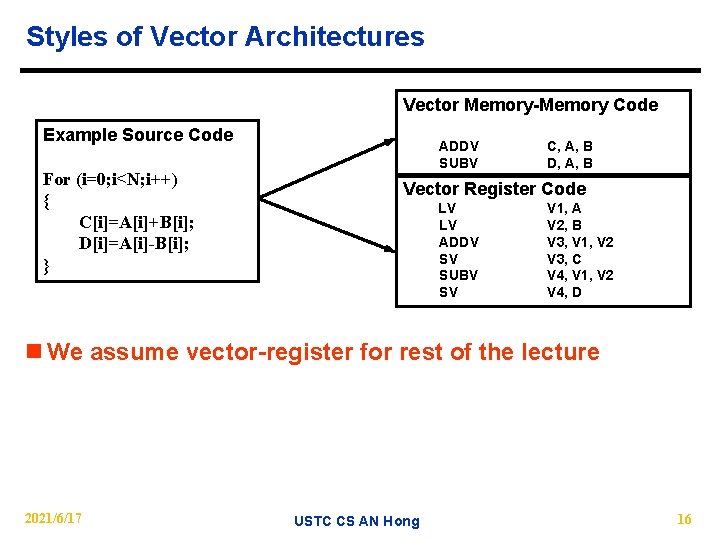

Styles of Vector Architectures

- Memory-memory vector processors
  - All vector operations are memory to memory: vector instructions take all their vector operands from, and write results to, main memory
  - The first vector machines, CDC STAR-100 ('73) and TI ASC ('71), were memory-memory machines
- Vector-register processors
  - All vector operations are between vector registers (except vector load and store)
  - The vector equivalent of load-store architectures
  - Includes all vector machines since the late 1980s; the Cray-1 ('76) was the first vector-register machine

Styles of Vector Architectures: Code Example

Source code:

```c
for (i = 0; i < N; i++) {
    C[i] = A[i] + B[i];
    D[i] = A[i] - B[i];
}
```

Vector memory-memory code:

```
ADDV C, A, B
SUBV D, A, B
```

Vector register code:

```
LV   V1, A
LV   V2, B
ADDV V3, V1, V2
SV   V3, C
SUBV V4, V1, V2
SV   V4, D
```

- We assume a vector-register architecture for the rest of the lecture

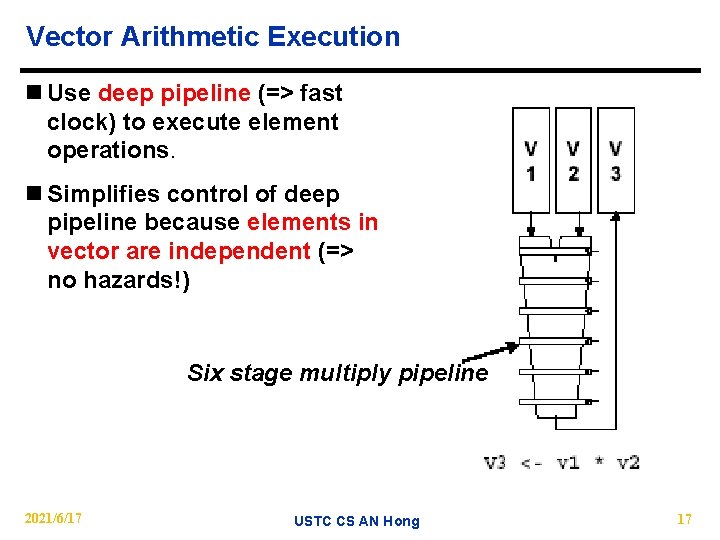

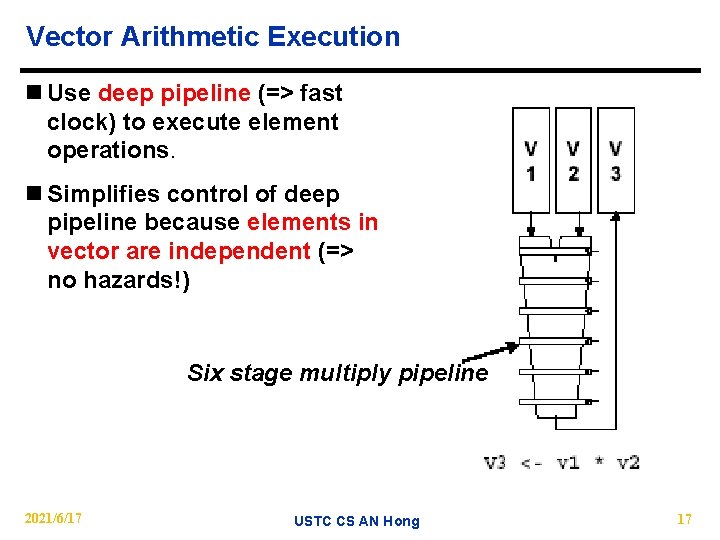

Vector Arithmetic Execution

- Use a deep pipeline (=> fast clock) to execute element operations
- Control of the deep pipeline is simple because the elements of a vector are independent (=> no hazards between them!)

Figure: six-stage multiply pipeline

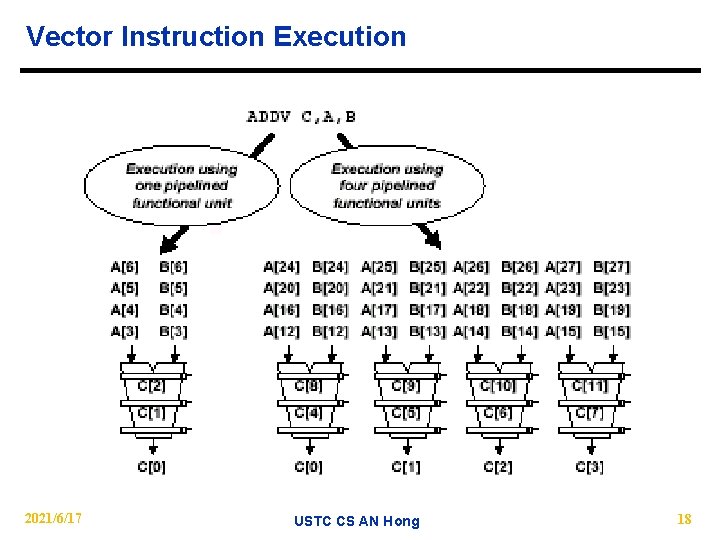

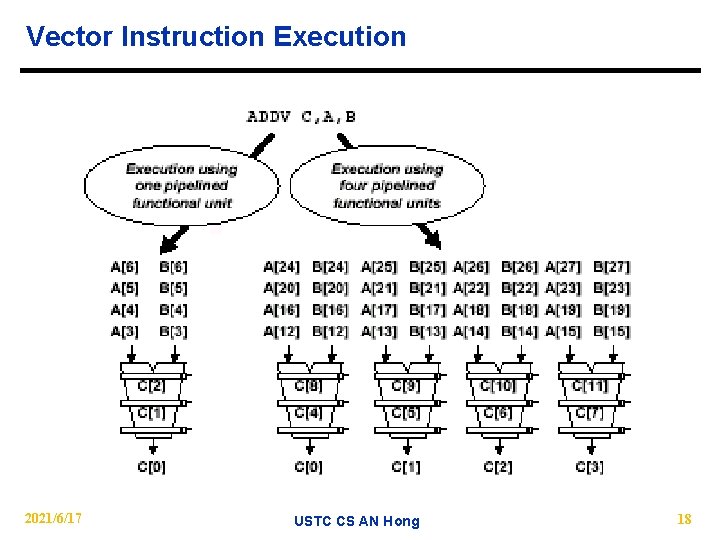

Vector Instruction Execution

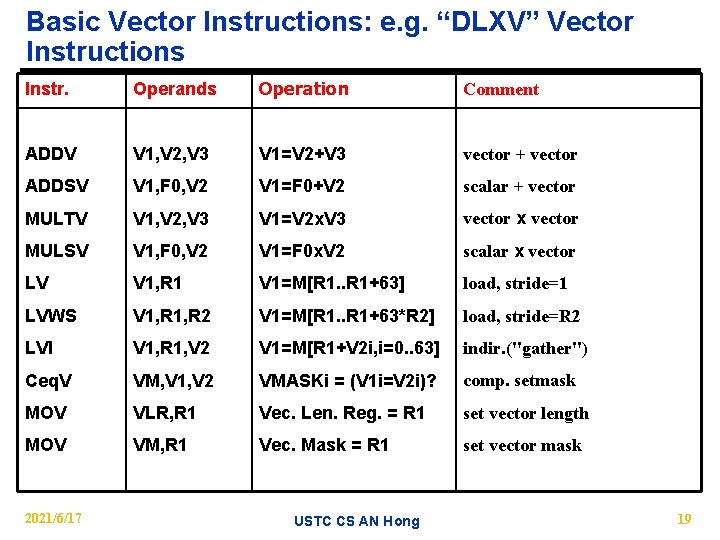

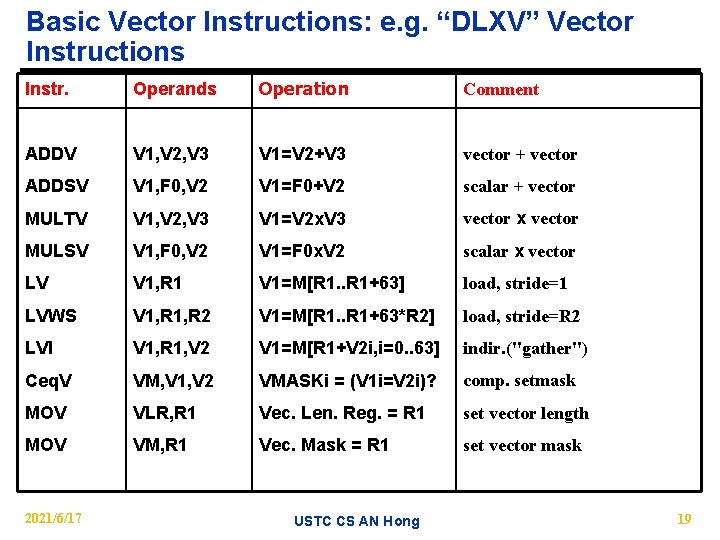

Basic Vector Instructions: e.g., DLXV Vector Instructions

| Instr. | Operands | Operation | Comment |
|---|---|---|---|
| ADDV | V1, V2, V3 | V1 = V2 + V3 | vector + vector |
| ADDSV | V1, F0, V2 | V1 = F0 + V2 | scalar + vector |
| MULTV | V1, V2, V3 | V1 = V2 x V3 | vector x vector |
| MULSV | V1, F0, V2 | V1 = F0 x V2 | scalar x vector |
| LV | V1, R1 | V1 = M[R1..R1+63] | load, stride = 1 |
| LVWS | V1, (R1, R2) | V1 = M[R1..R1+63*R2] | load, stride = R2 |
| LVI | V1, (R1+V2) | V1 = M[R1+V2i], i = 0..63 | indirect ("gather") |
| CeqV | VM, V1, V2 | VMASKi = (V1i = V2i)? | compare, set mask |
| MOV | VLR, R1 | Vec. Len. Reg. = R1 | set vector length |
| MOV | VM, R1 | Vec. Mask = R1 | set vector mask |


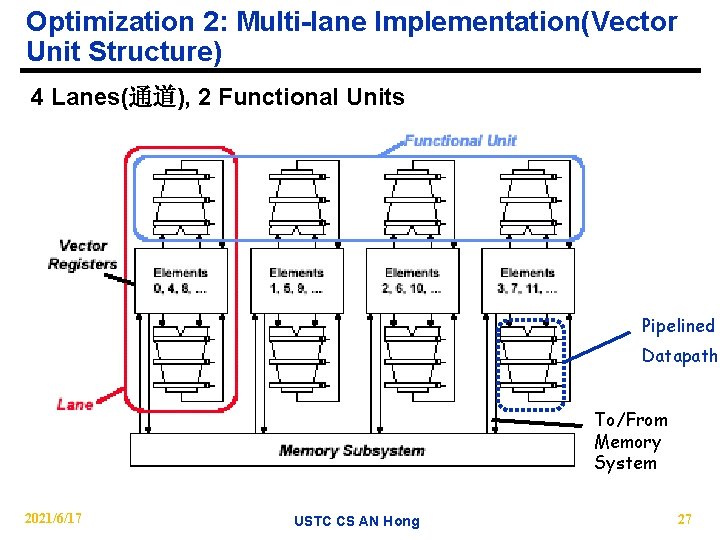

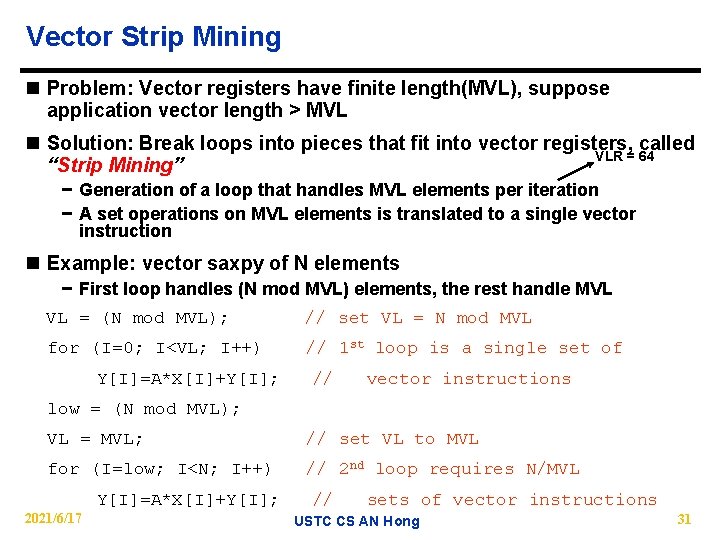

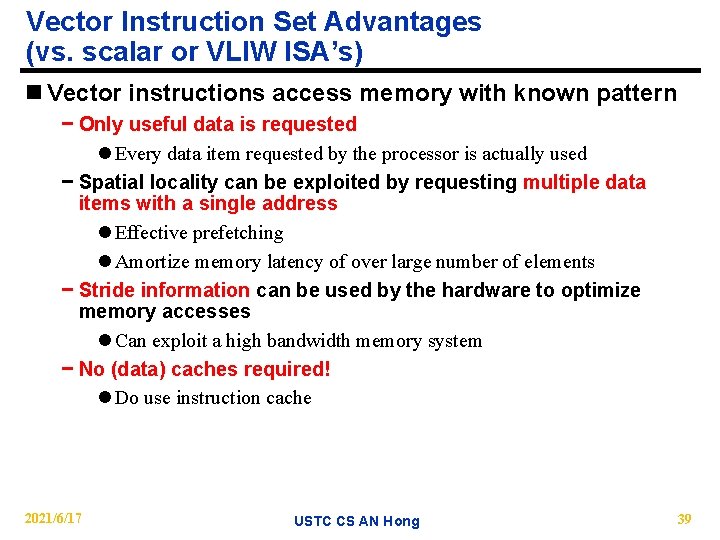

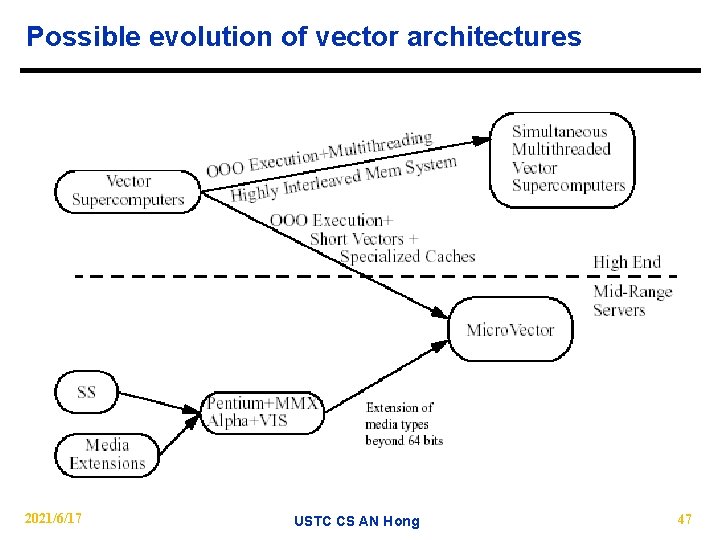

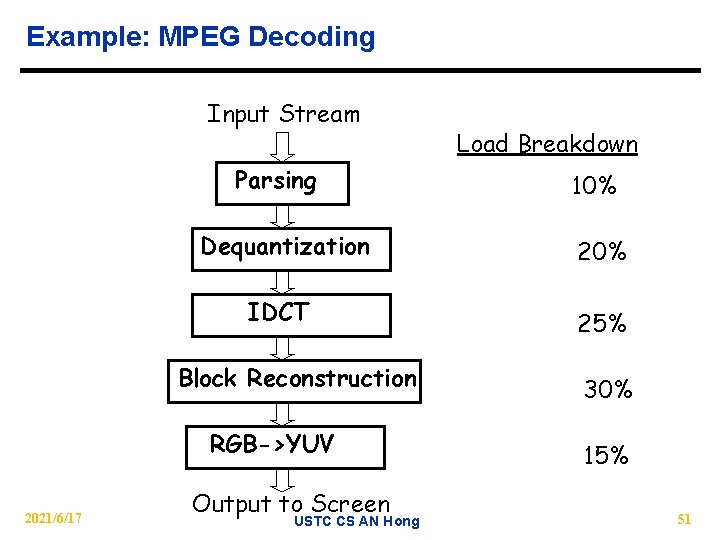

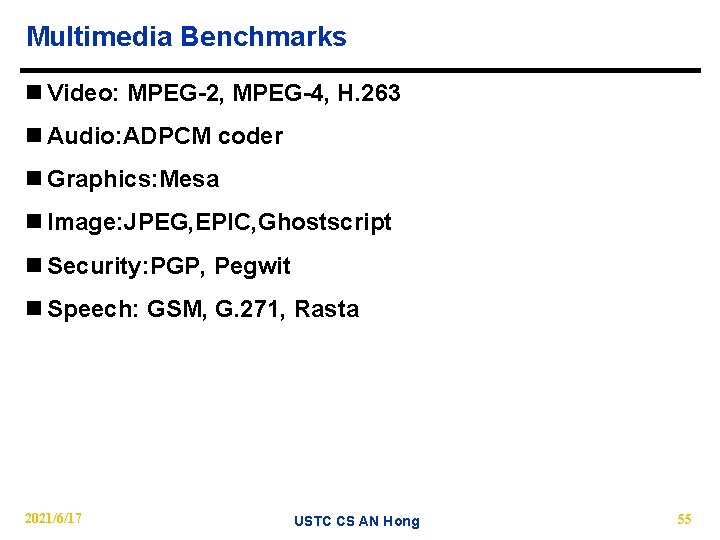

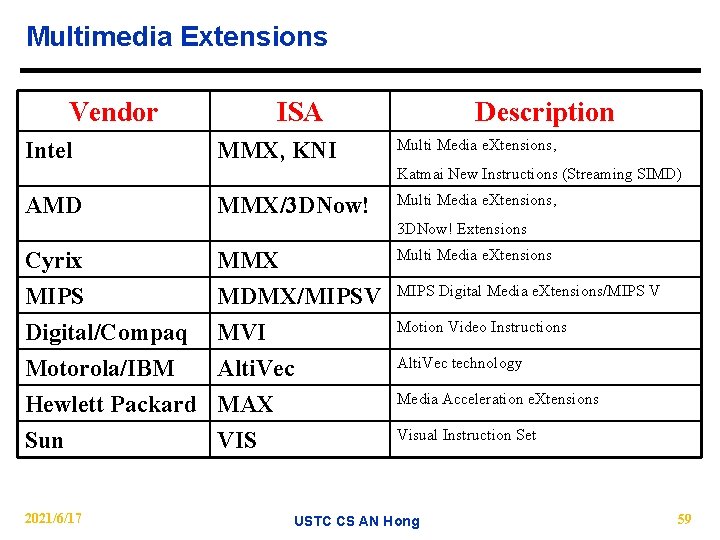

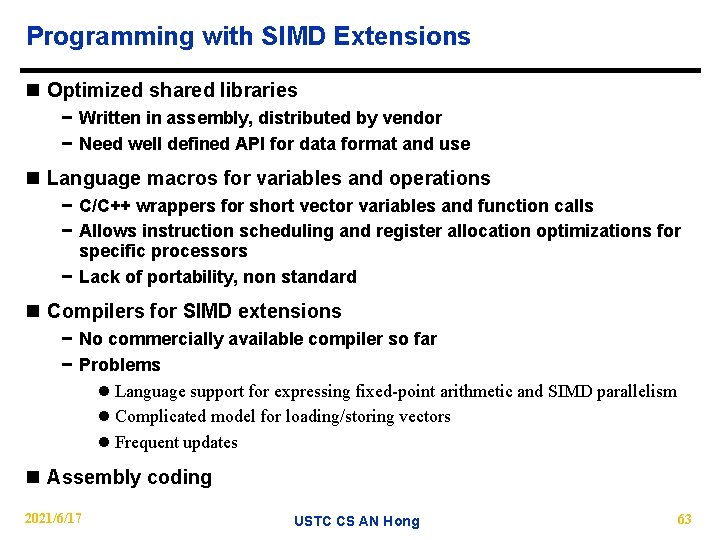

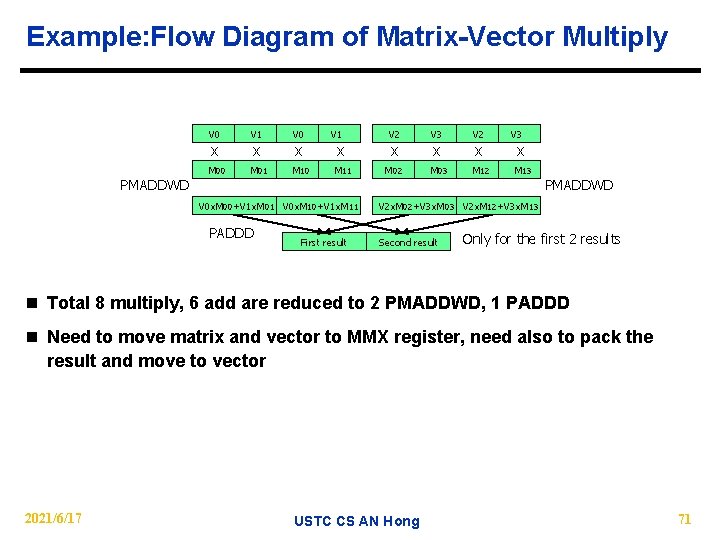

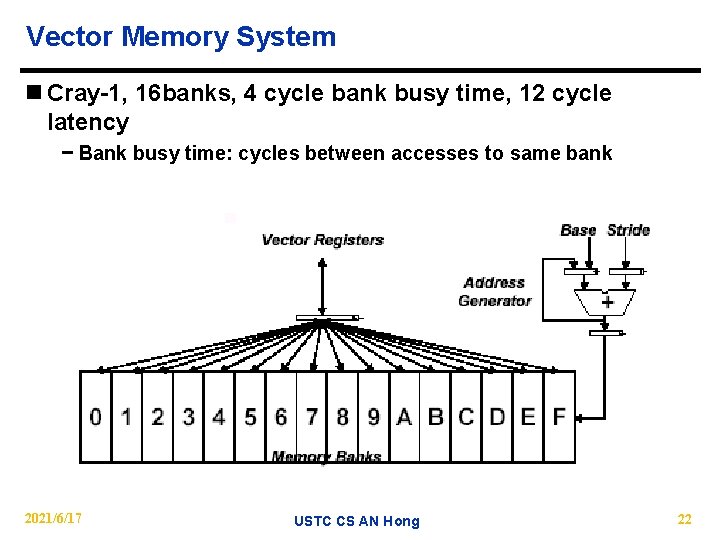

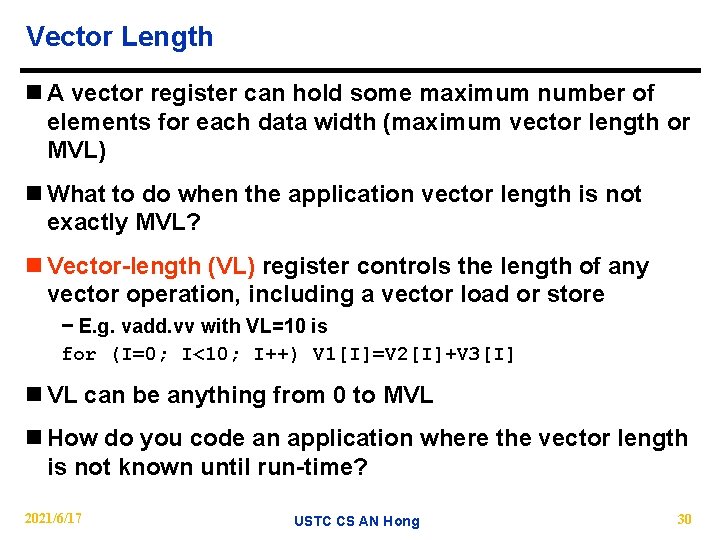

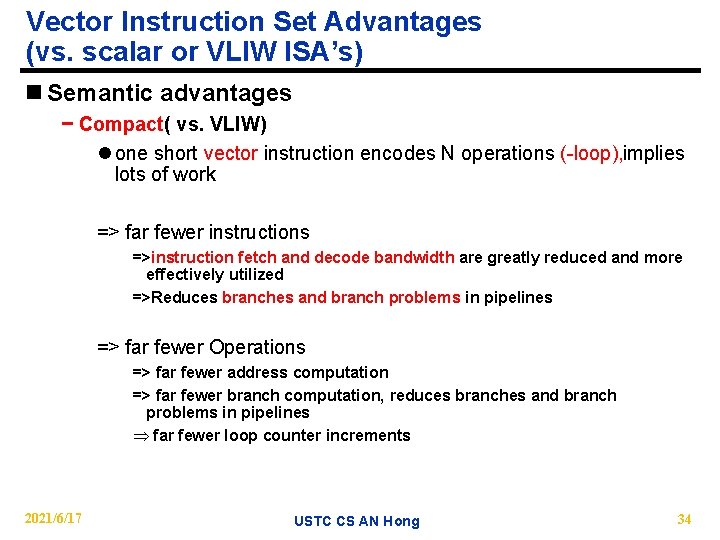

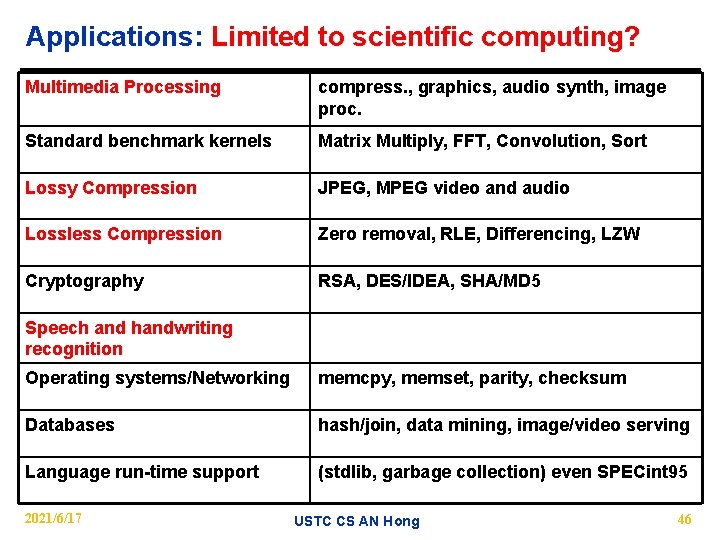

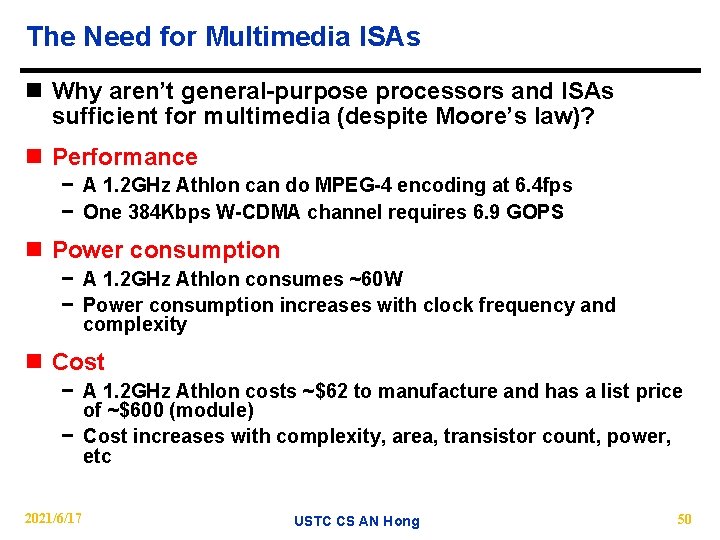

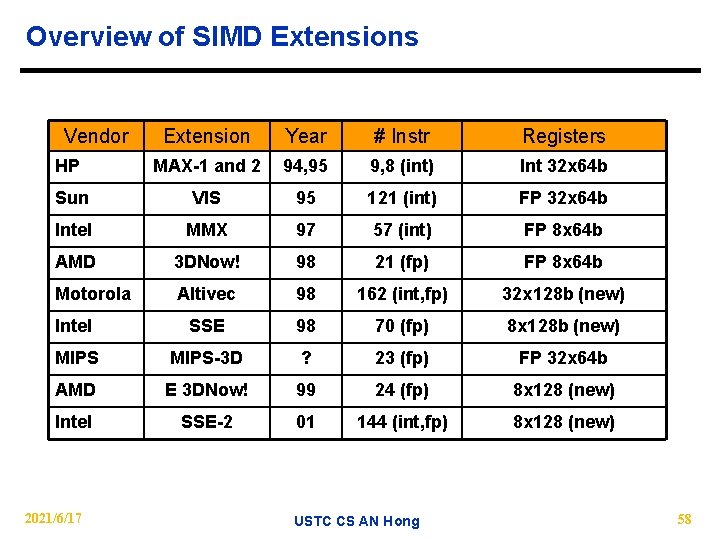

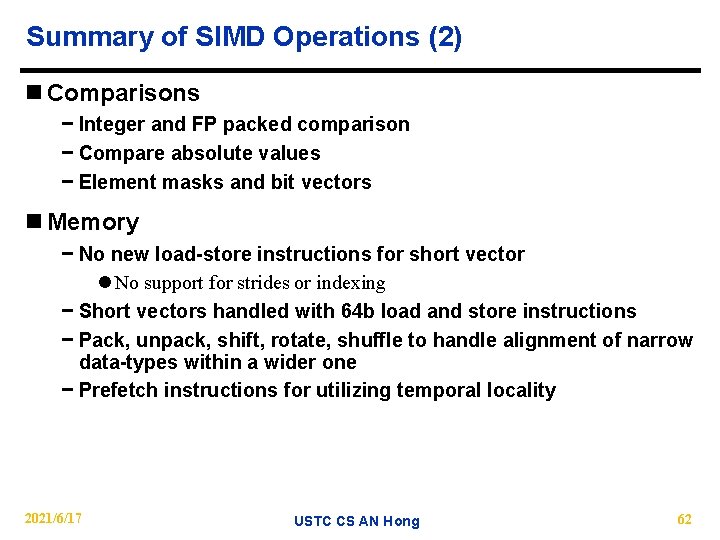

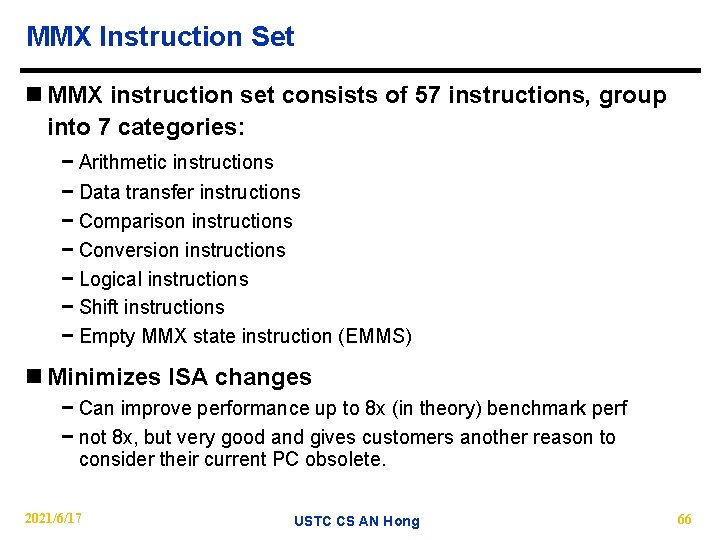

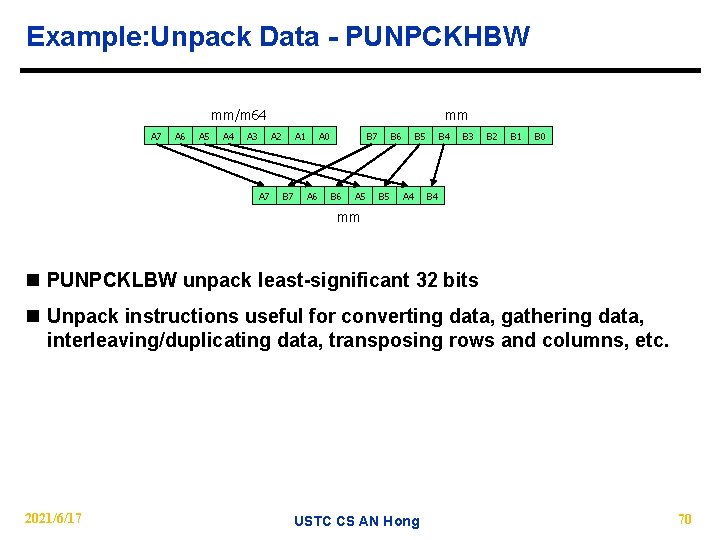

Vector Code Example

C code:

```c
for (i = 0; i < 64; i++)
    C[i] = A[i] + B[i];
```

Scalar code:

```
        LI     R4, 64
loop:   L.D    F0, 0(R1)
        L.D    F2, 0(R2)
        ADD.D  F4, F2, F0
        S.D    F4, 0(R3)
        DADDIU R1, 8
        DADDIU R2, 8
        DADDIU R3, 8
        DSUBIU R4, 1
        BNEZ   R4, loop
```

Vector code:

```
        LI     VLR, 64
        LV     V1, R1
        LV     V2, R2
        ADDV.D V3, V1, V2
        SV     V3, R3
```
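
Counting dynamic instructions makes the compactness concrete, assuming the scalar loop body from `L.D` through `BNEZ` is nine instructions and `LI` executes once outside the loop:

$$
N_{\text{scalar}} = 1 + 9 \times 64 = 577, \qquad N_{\text{vector}} = 5, \qquad \frac{577}{5} \approx 115
$$

So the vector version fetches and decodes roughly 115 times fewer instructions for the same 64 additions.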


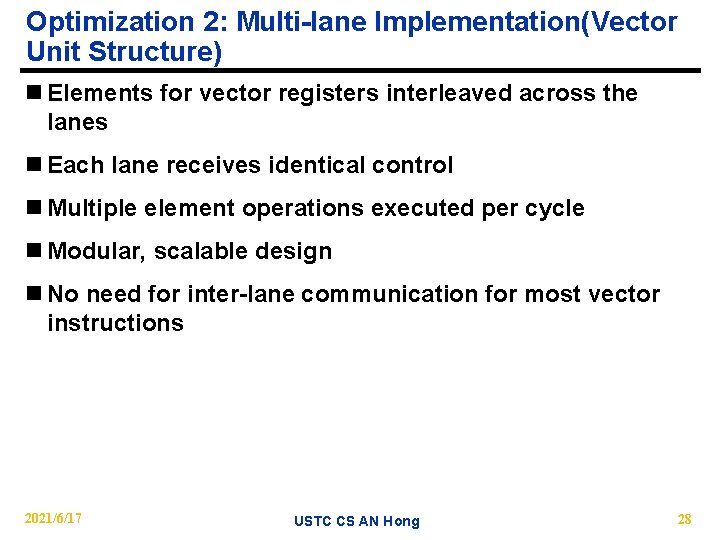

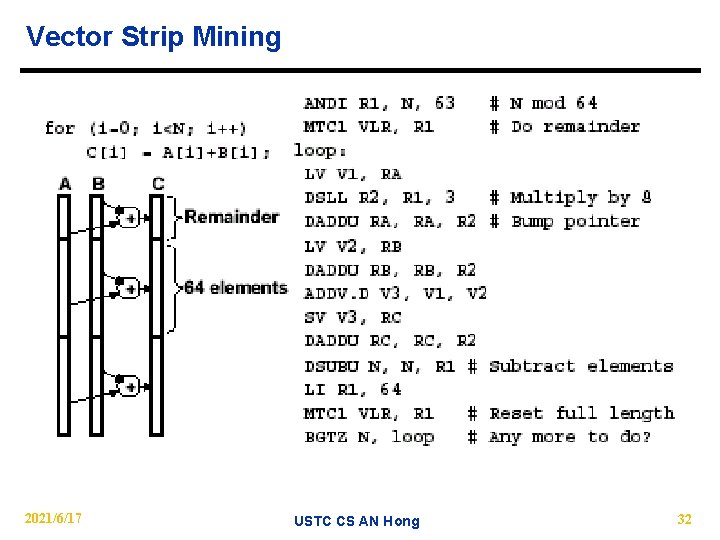

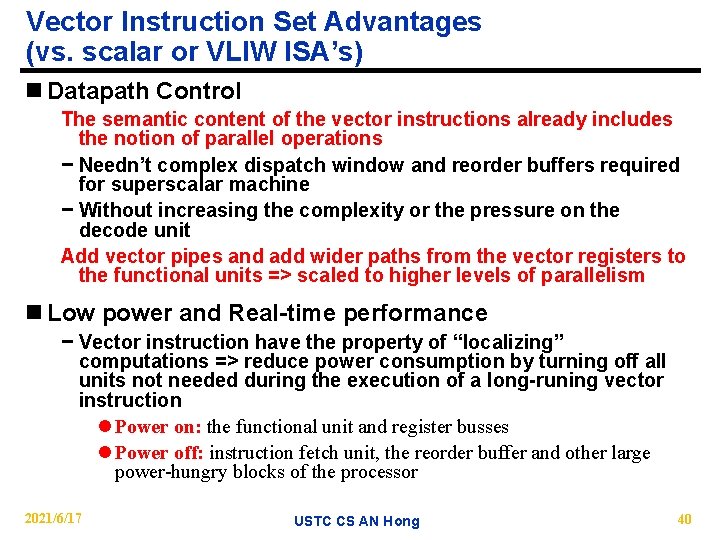

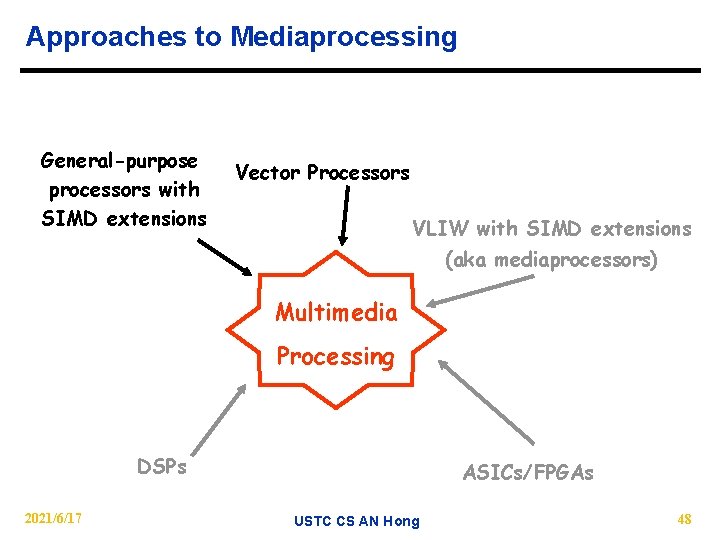

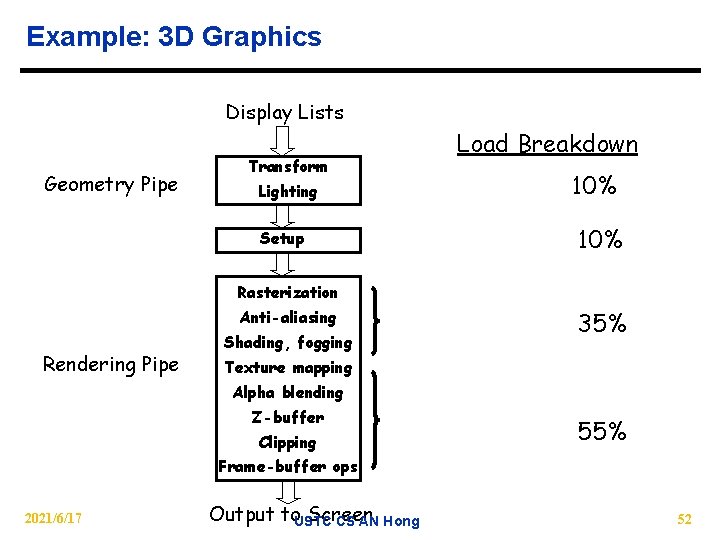

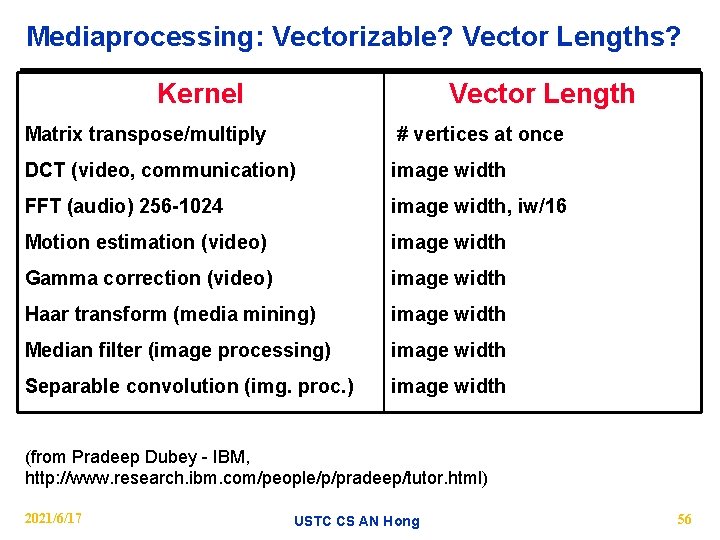

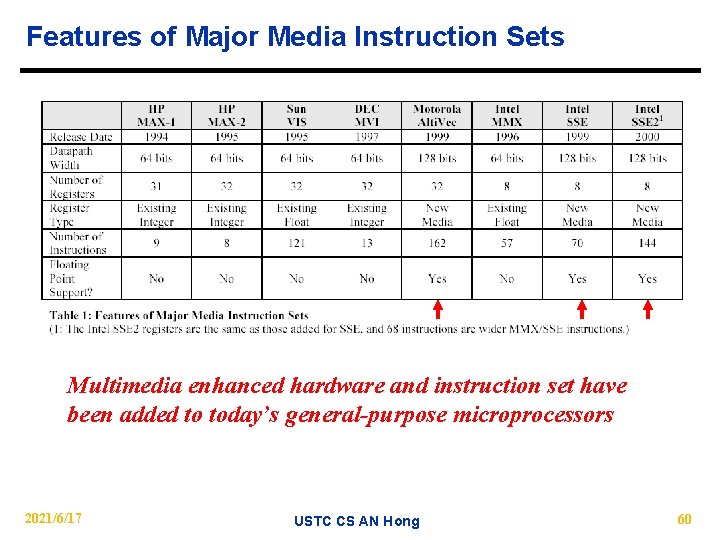

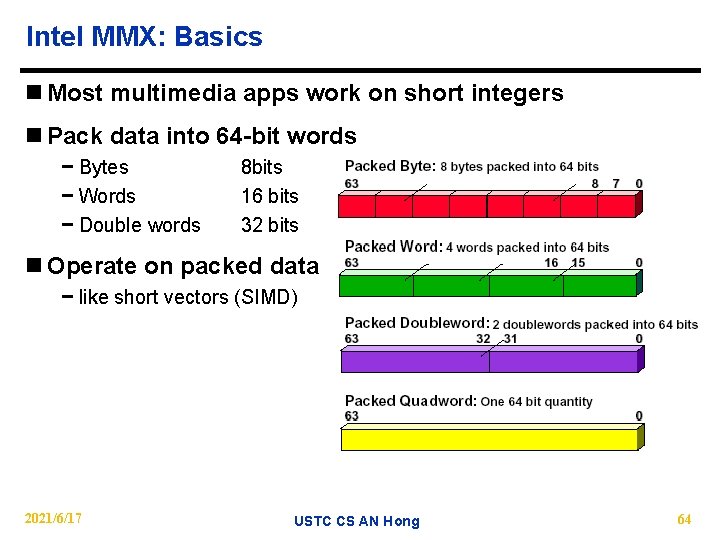

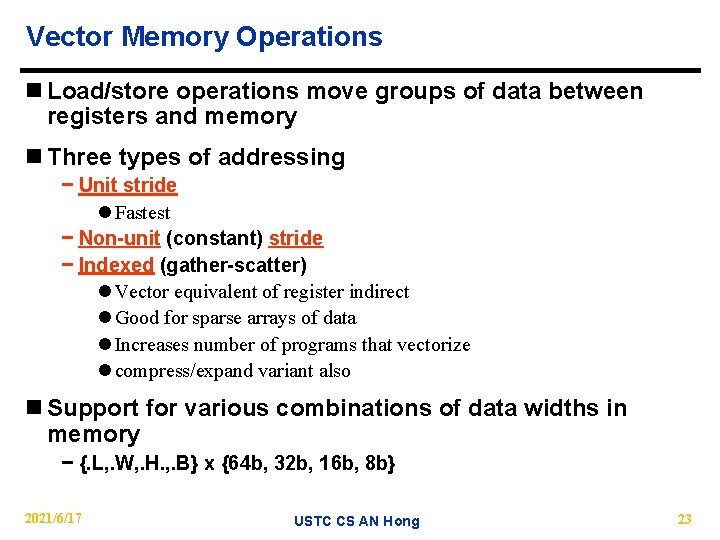

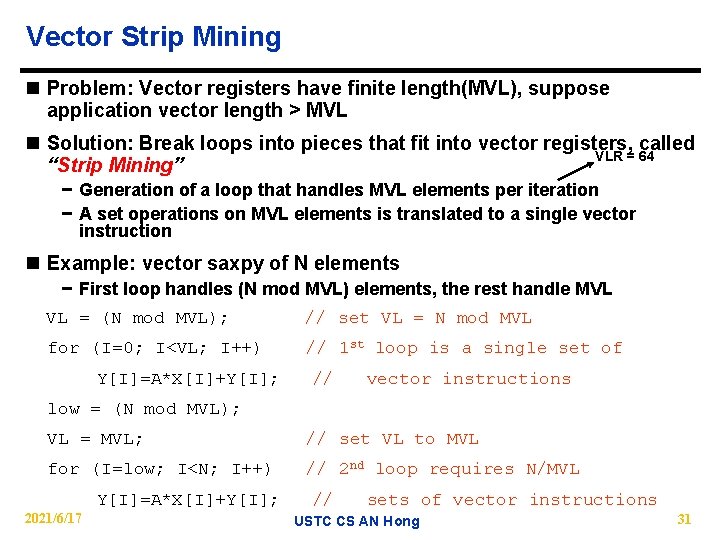

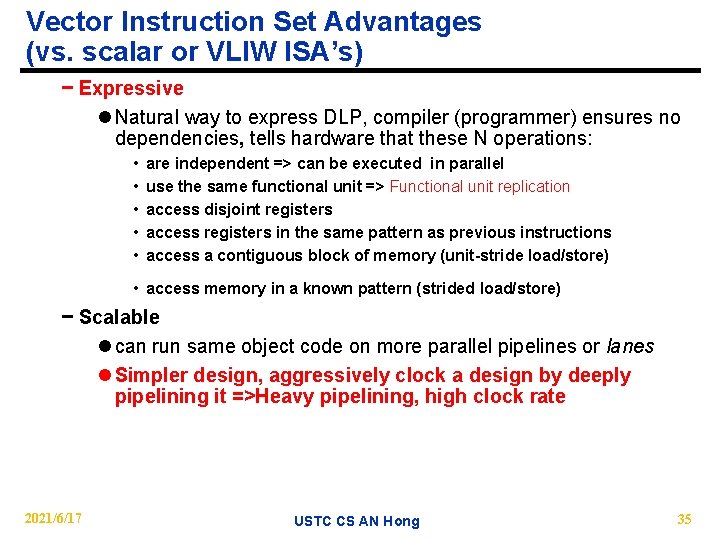

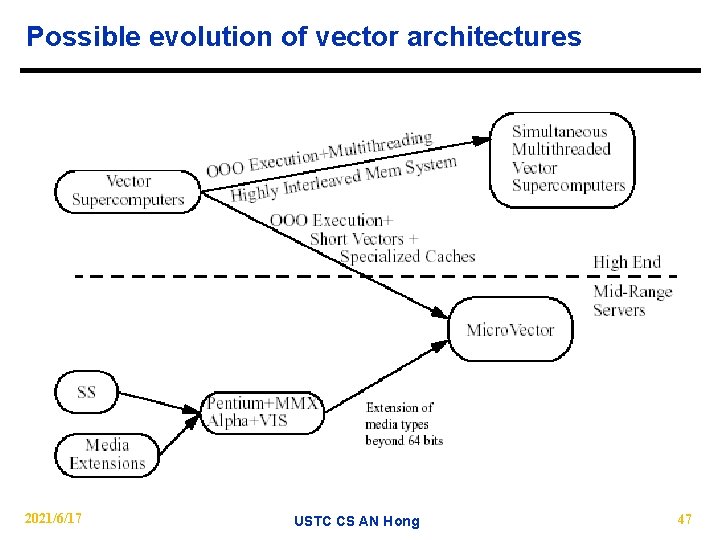

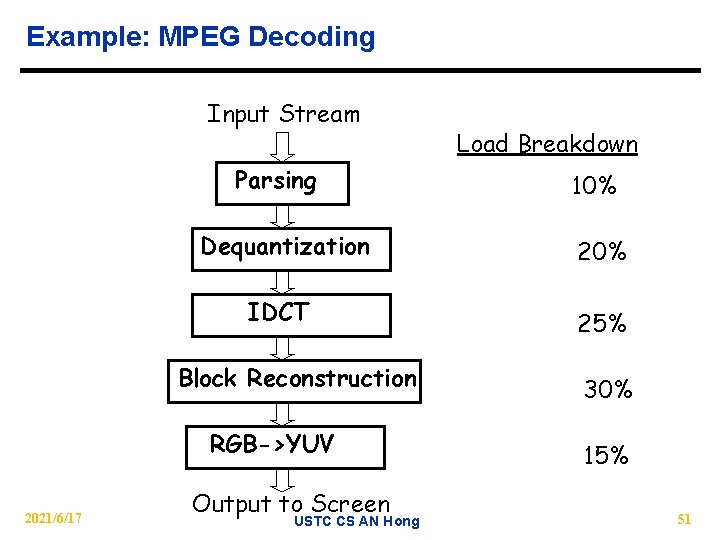

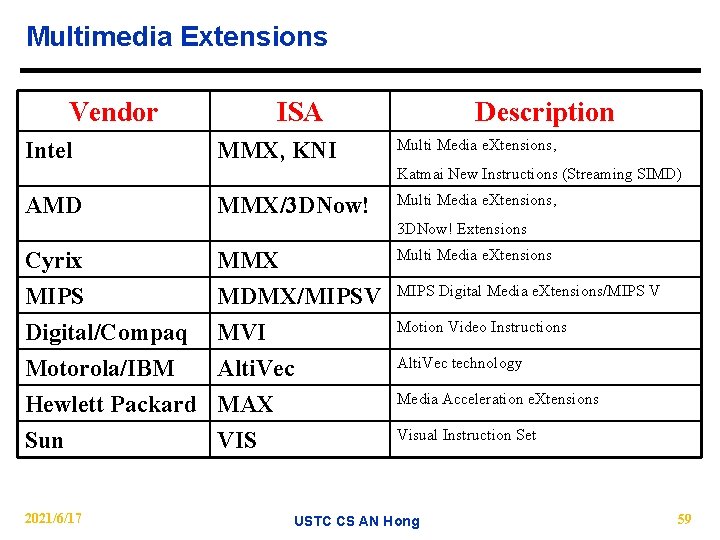

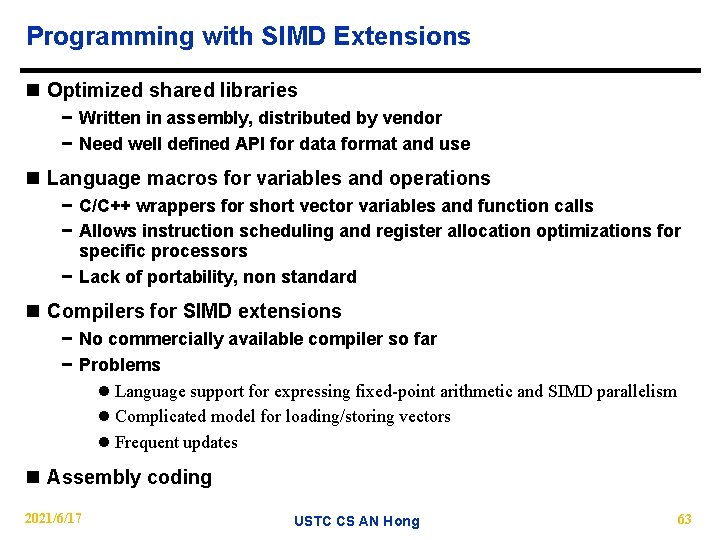

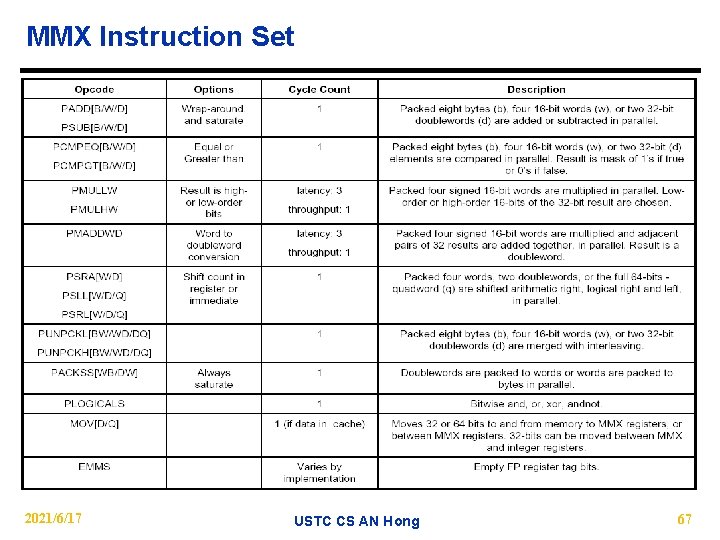

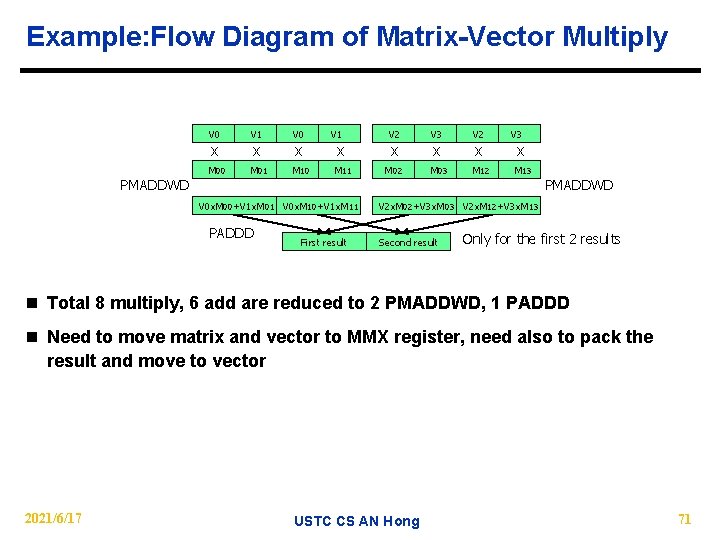

Automatic Code Vectorization

```c
for (i = 0; i < N; i++)
    C[i] = A[i] + B[i];
```

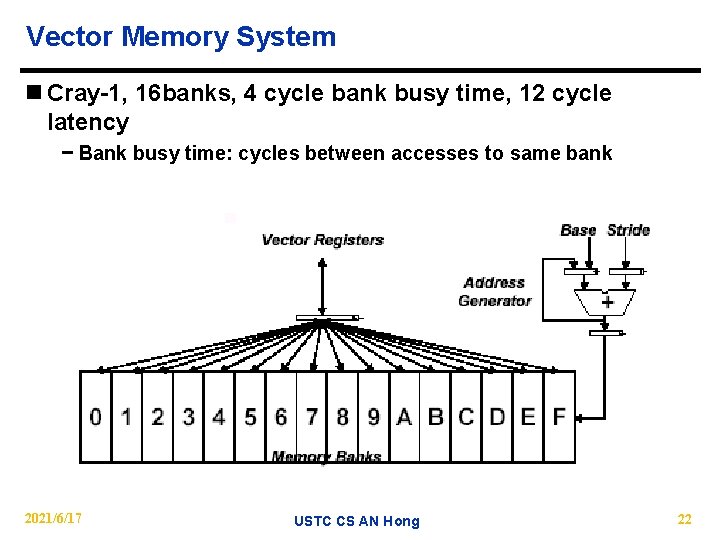

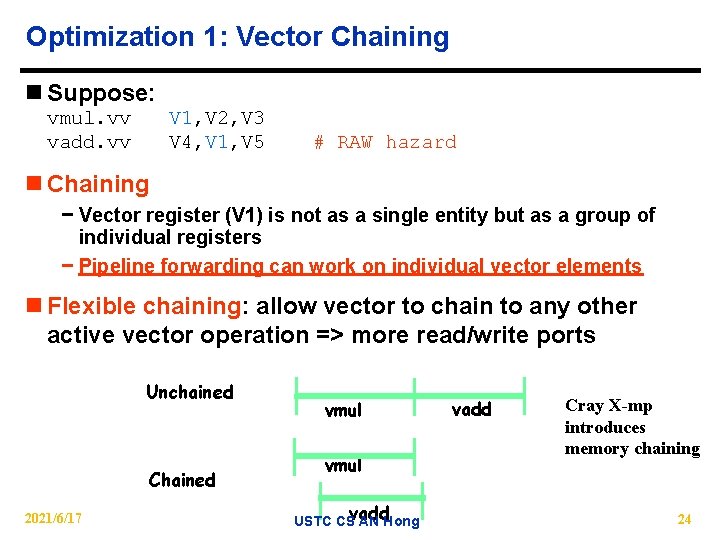

Vector Memory System

- Cray-1: 16 banks, 4-cycle bank busy time, 12-cycle latency
  - Bank busy time: cycles between accesses to the same bank

Vector Memory Operations

- Load/store operations move groups of data between registers and memory
- Three types of addressing
  - Unit stride
    - Fastest
  - Non-unit (constant) stride
  - Indexed (gather-scatter)
    - Vector equivalent of register indirect
    - Good for sparse arrays of data
    - Increases the number of programs that vectorize
    - A compress/expand variant also exists
- Support for various combinations of data widths in memory
  - {.L, .W, .H, .B} x {64b, 32b, 16b, 8b}

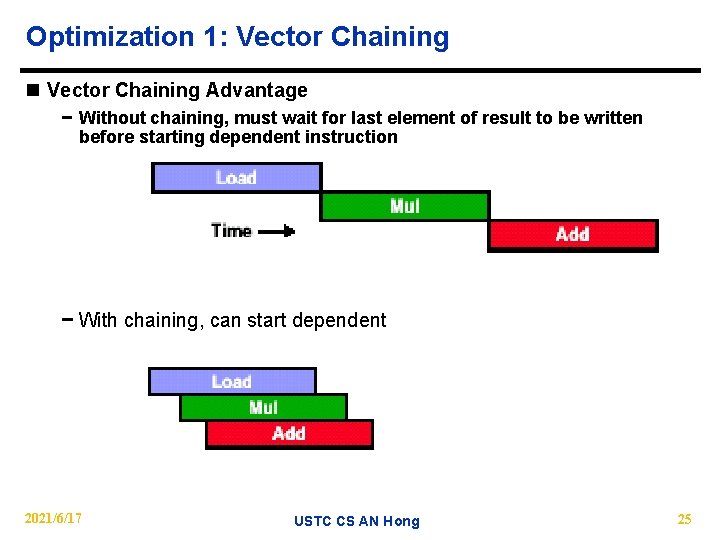

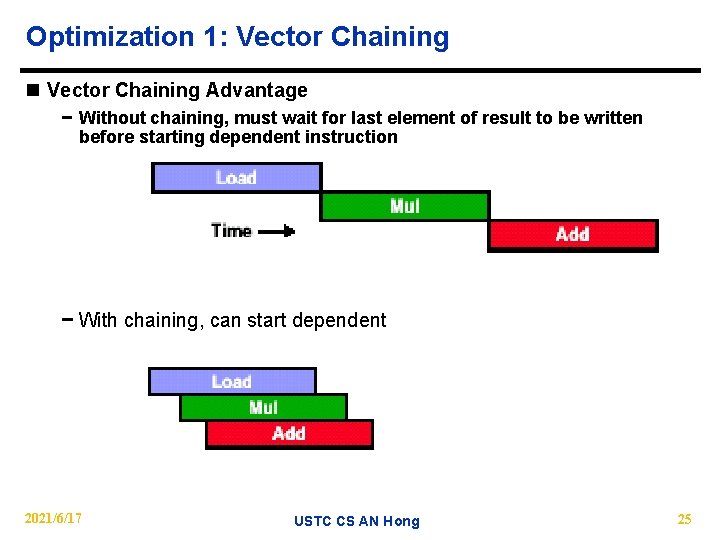

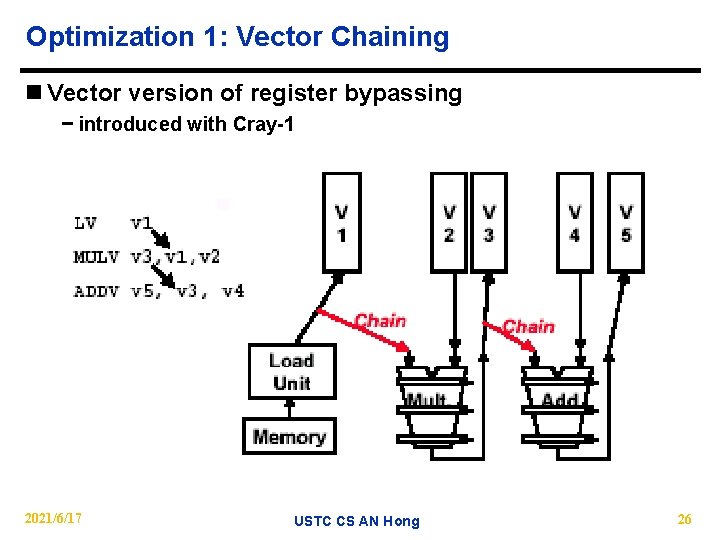

Optimization 1: Vector Chaining

- Suppose:

```
vmul.vv V1, V2, V3
vadd.vv V4, V1, V5   # RAW hazard
```

- Chaining
  - Vector register V1 is treated not as a single entity but as a group of individual registers
  - Pipeline forwarding can then work on individual vector elements
- Flexible chaining: allow a vector to chain to any other active vector operation => more read/write ports

Figure: unchained vs. chained execution of vmul and vadd

- The Cray X-MP introduced memory chaining

Optimization 1: Vector Chaining

- Vector chaining advantage
  - Without chaining, must wait for the last element of the result to be written before starting the dependent instruction
  - With chaining, can start the dependent instruction as soon as the first result element appears

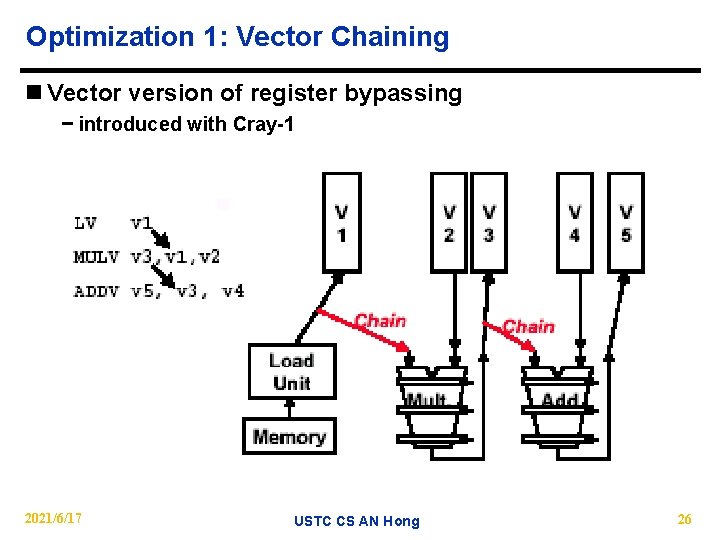

Optimization 1: Vector Chaining

- Vector version of register bypassing
  - Introduced with the Cray-1

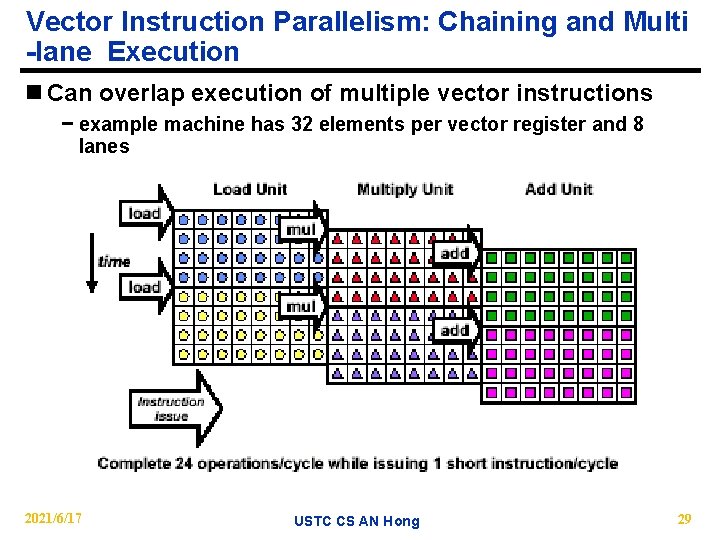

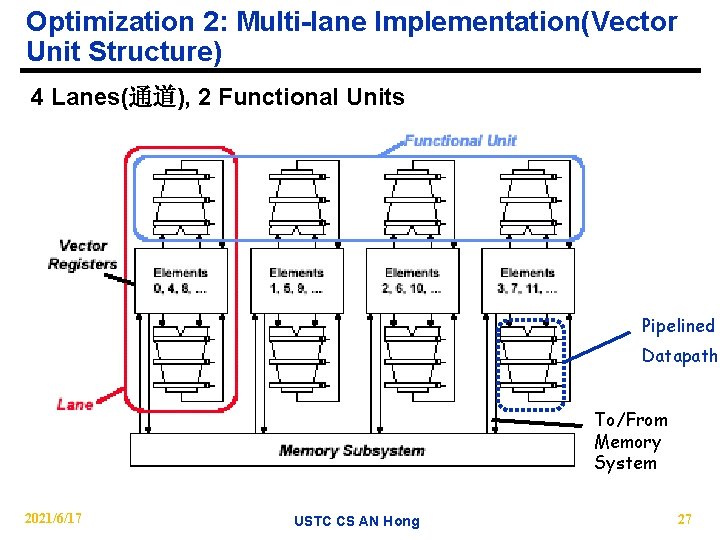

Optimization 2: Multi-lane Implementation (Vector Unit Structure)

Figure: 4 lanes, 2 functional units, pipelined datapath, to/from memory system

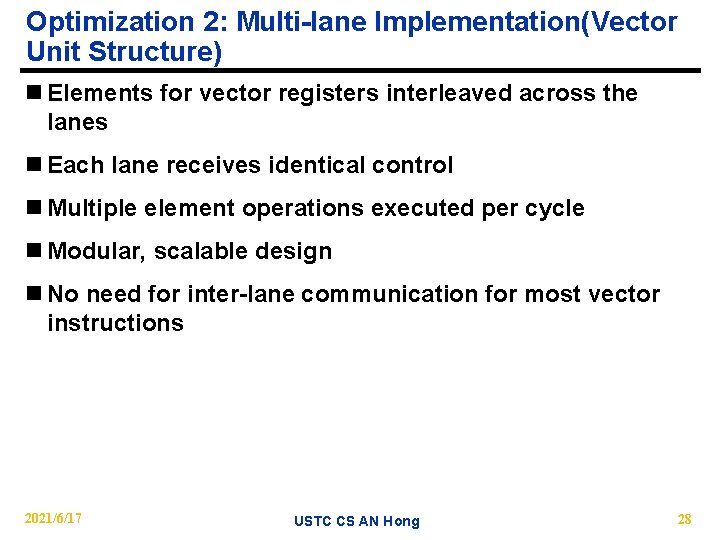

Optimization 2: Multi-lane Implementation (Vector Unit Structure)

- Elements of each vector register are interleaved across the lanes
- Each lane receives identical control
- Multiple element operations are executed per cycle
- Modular, scalable design
- No inter-lane communication needed for most vector instructions

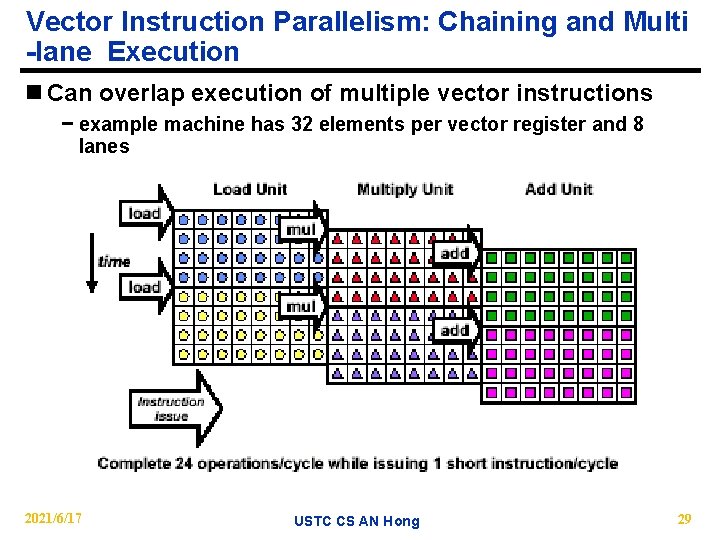

Vector Instruction Parallelism: Chaining and Multi-lane Execution

- Can overlap execution of multiple vector instructions
  - The example machine has 32 elements per vector register and 8 lanes

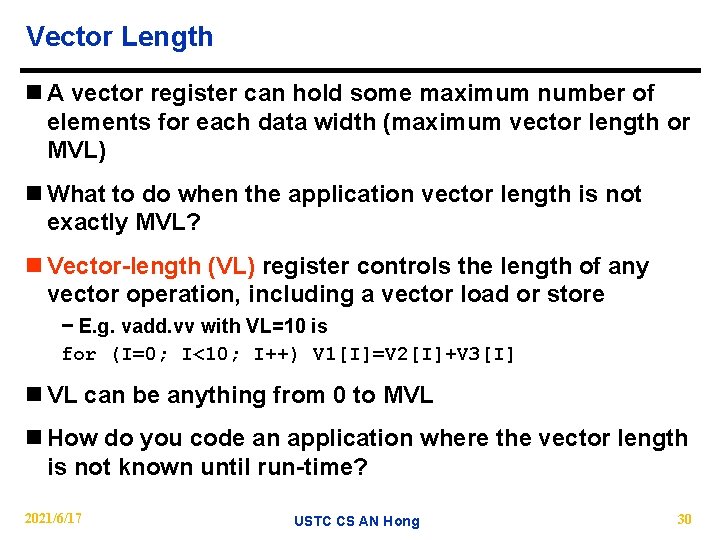

Vector Length

- A vector register can hold some maximum number of elements for each data width (maximum vector length, MVL)
- What to do when the application vector length is not exactly MVL?
- The vector-length (VL) register controls the length of any vector operation, including a vector load or store
  - E.g., `vadd.vv` with VL = 10 is `for (I=0; I<10; I++) V1[I] = V2[I] + V3[I];`
- VL can be anything from 0 to MVL
- How do you code an application where the vector length is not known until run time?

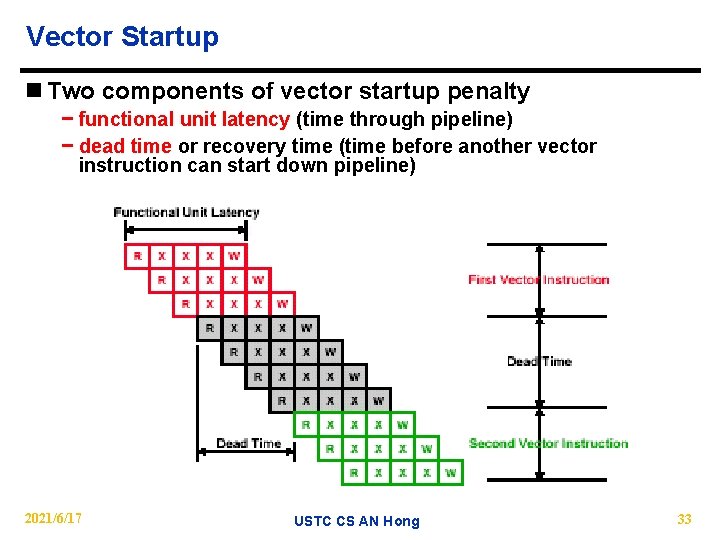

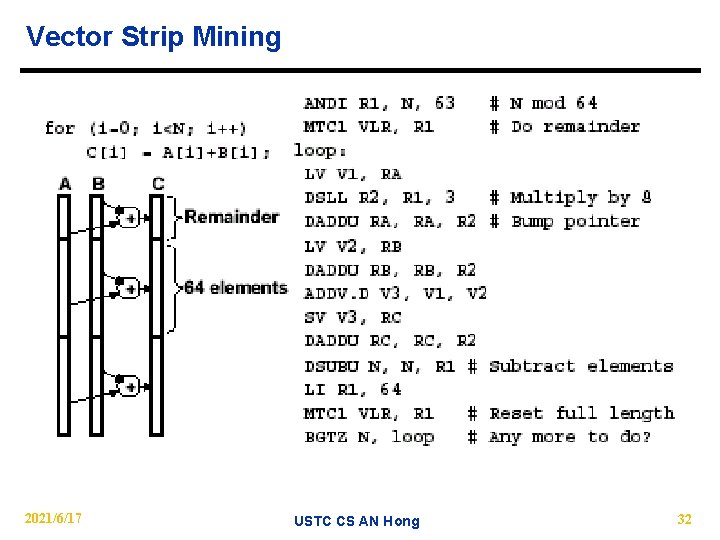

Vector Strip Mining

- Problem: vector registers have finite length (MVL); suppose the application vector length > MVL
- Solution: break loops into pieces that fit into vector registers ("strip mining")
  - Generate a loop that handles MVL elements per iteration
  - A set of operations on MVL elements is translated into a single vector instruction
- Example: vector SAXPY of N elements
  - The first strip handles (N mod MVL) elements; each remaining strip handles MVL elements

```c
low = 0;
VL = N % MVL;                          /* first strip: the odd-sized piece */
for (j = 0; j <= N / MVL; j++) {       /* then N/MVL strips of length MVL */
    for (I = low; I < low + VL; I++)   /* one set of vector instructions of length VL */
        Y[I] = A * X[I] + Y[I];
    low += VL;
    VL = MVL;                          /* all later strips use the full length */
}
```

Vector Strip Mining

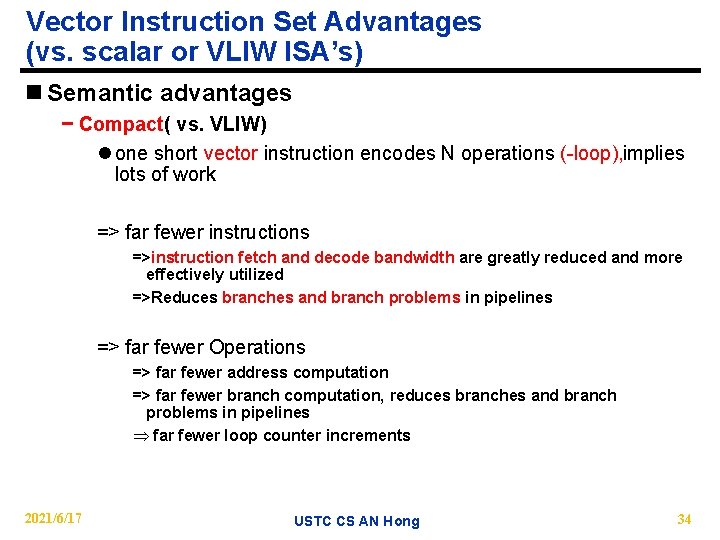

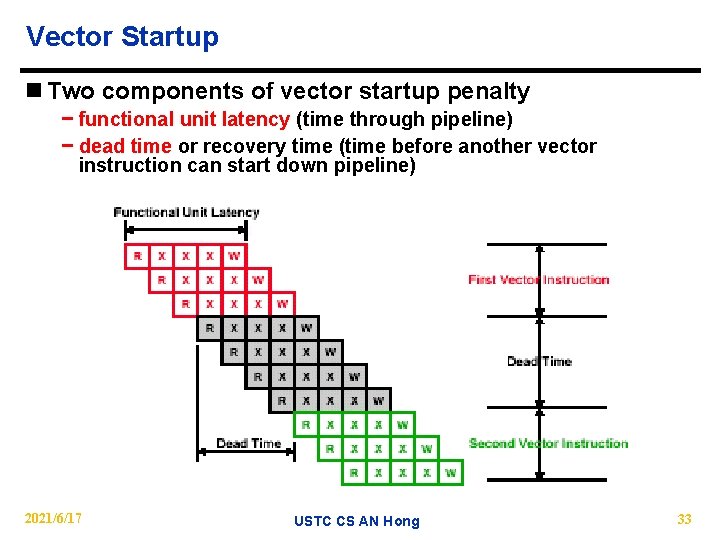

Vector Startup

- Two components of the vector startup penalty
  - Functional-unit latency (time through the pipeline)
  - Dead time or recovery time (time before another vector instruction can start down the pipeline)
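
A first-order model (an assumption for illustration, not from the slide) shows why the two components matter differently. For one lane, functional-unit latency $L$, dead time $D$, and vector length $n$:

$$
T_{\text{one instr}}(n) \approx L + n, \qquad
\text{utilization of a unit running back-to-back instructions} \approx \frac{n}{n + D}
$$

With an assumed $D = 4$ cycles, $n = 64$ gives $64/68 \approx 0.94$, while $n = 8$ gives $8/12 \approx 0.67$: dead time mostly hurts short vectors, whereas the latency $L$ is paid once per instruction before its first result appears.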

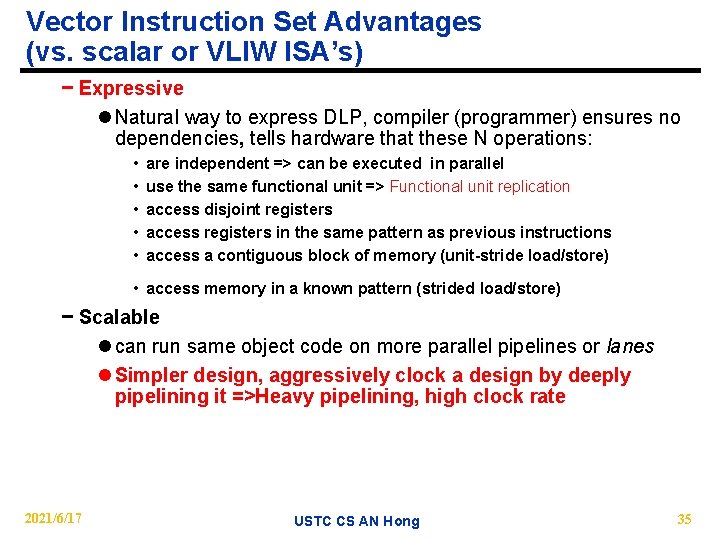

Vector Instruction Set Advantages (vs. scalar or VLIW ISAs)

- Semantic advantages
  - Compact (vs. VLIW)
    - One short vector instruction encodes N operations (a whole loop), implying lots of work
    - => far fewer instructions => instruction fetch and decode bandwidth is greatly reduced and more effectively utilized
    - => far fewer loop-overhead operations: fewer address computations, fewer loop-counter increments, and fewer branches, which reduces branch problems in pipelines

Vector Instruction Set Advantages (vs. scalar or VLIW ISAs)

- Expressive
  - A natural way to express DLP: the compiler (or programmer) ensures there are no dependencies, and the instruction tells the hardware that its N operations:
    - are independent => can be executed in parallel
    - use the same functional unit => functional-unit replication
    - access disjoint registers
    - access registers in the same pattern as previous instructions
    - access a contiguous block of memory (unit-stride load/store)
    - access memory in a known pattern (strided load/store)
- Scalable
  - The same object code can run on more parallel pipelines or lanes
  - Simpler design: the design can be clocked aggressively by pipelining it deeply => heavy pipelining, high clock rate

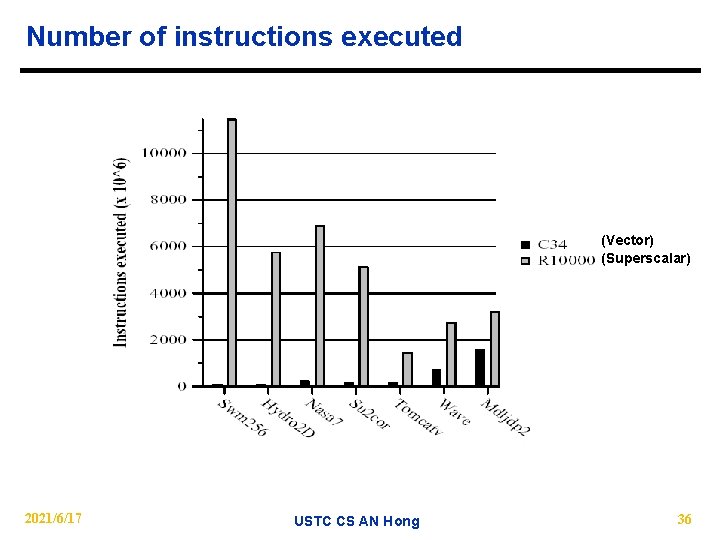

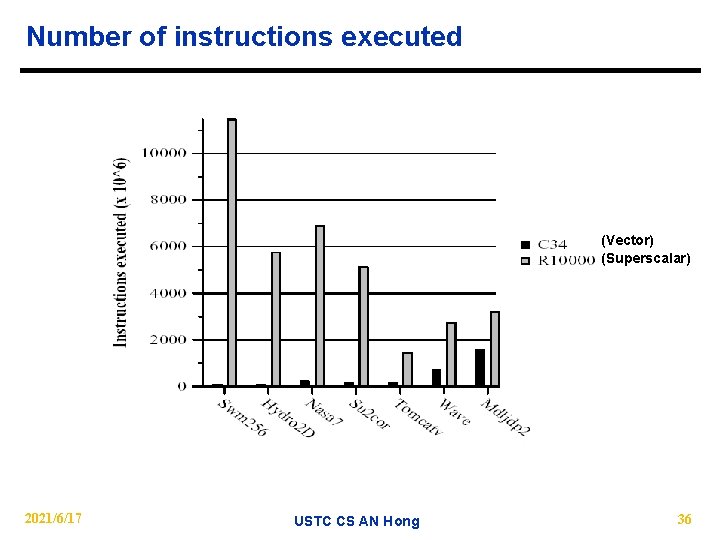

Number of Instructions Executed

Figure: instructions executed, vector vs. superscalar

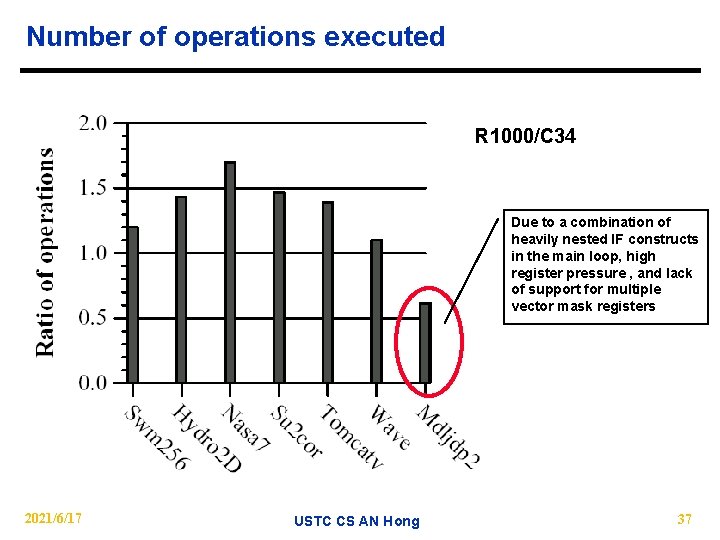

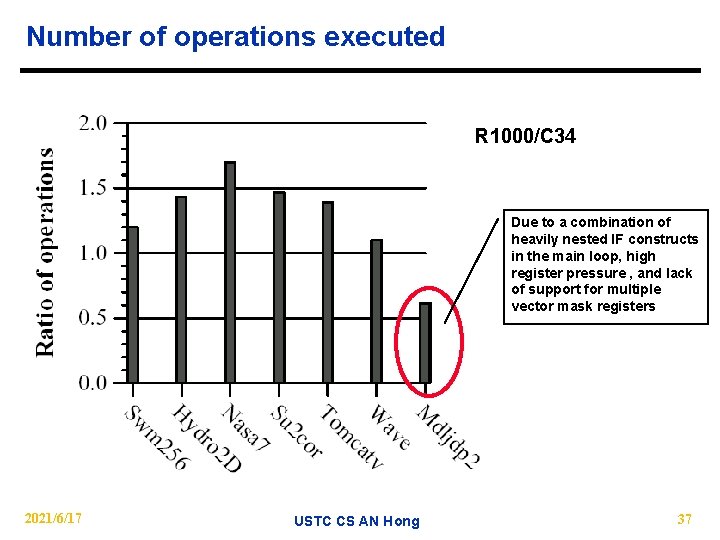

Number of Operations Executed

Figure: operations executed, R1000 vs. C34. Annotation on the figure: "Due to a combination of heavily nested IF constructs in the main loop, high register pressure, and lack of support for multiple vector mask registers."

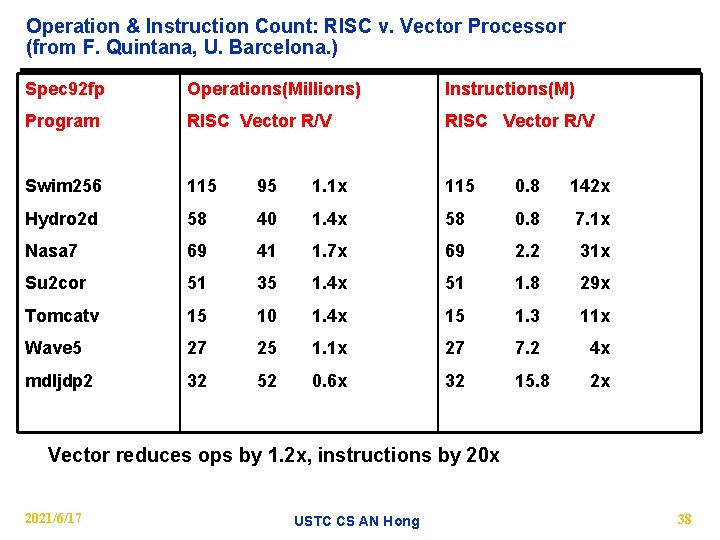

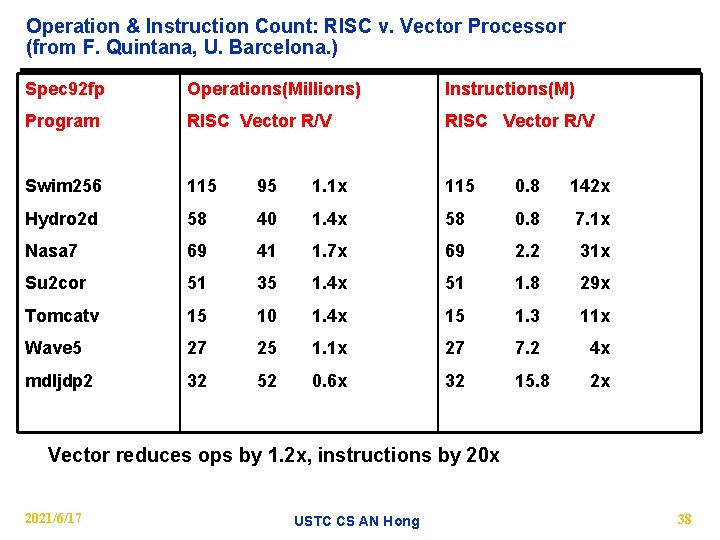

Operation & Instruction Count: RISC vs. Vector Processor (from F. Quintana, U. Barcelona)

| SPEC92fp program | Ops RISC (M) | Ops Vector (M) | Ops R/V | Instr. RISC (M) | Instr. Vector (M) | Instr. R/V |
|---|---|---|---|---|---|---|
| swim256 | 115 | 95 | 1.1x | 115 | 0.8 | 142x |
| hydro2d | 58 | 40 | 1.4x | 58 | 0.8 | 71x |
| nasa7 | 69 | 41 | 1.7x | 69 | 2.2 | 31x |
| su2cor | 51 | 35 | 1.4x | 51 | 1.8 | 29x |
| tomcatv | 15 | 10 | 1.4x | 15 | 1.3 | 11x |
| wave5 | 27 | 25 | 1.1x | 27 | 7.2 | 4x |
| mdljdp2 | 32 | 52 | 0.6x | 32 | 15.8 | 2x |

- Vector reduces ops by 1.2x, instructions by 20x

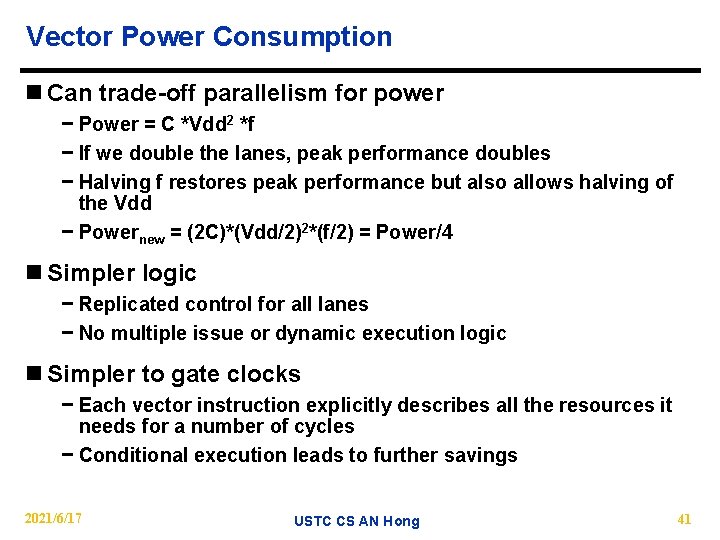

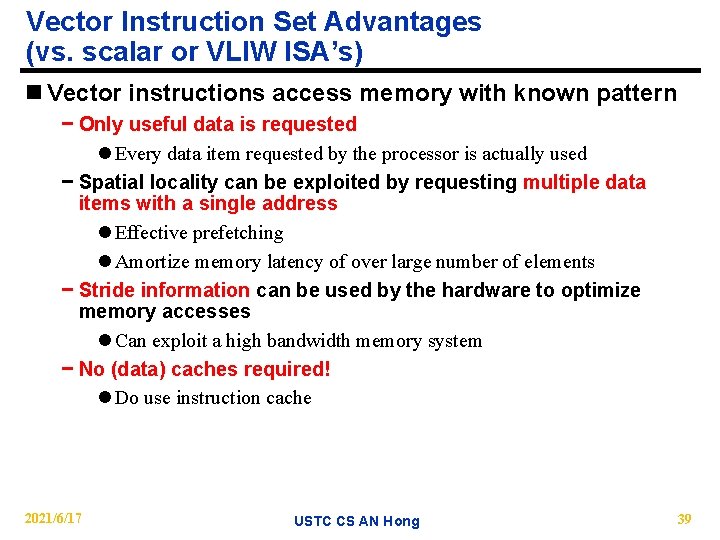

Vector Instruction Set Advantages (vs. scalar or VLIW ISAs)

- Vector instructions access memory with a known pattern
  - Only useful data is requested
    - Every data item requested by the processor is actually used
  - Spatial locality can be exploited by requesting multiple data items with a single address
    - Effective prefetching
    - Memory latency is amortized over a large number of elements
  - Stride information can be used by the hardware to optimize memory accesses
    - Can exploit a high-bandwidth memory system
  - No (data) caches required!
    - An instruction cache is still used

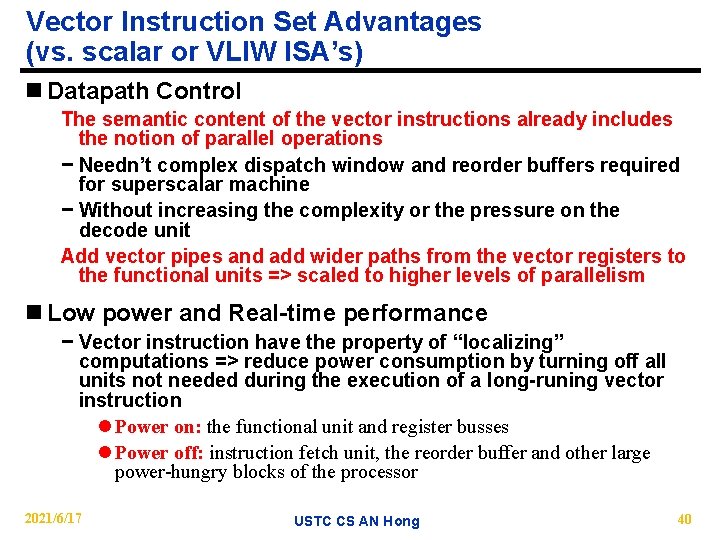

Vector Instruction Set Advantages (vs. scalar or VLIW ISAs)

- Datapath control: the semantics of vector instructions already include the notion of parallel operations
  - No need for the complex dispatch window and reorder buffer required by a superscalar machine
  - Scales to higher levels of parallelism by adding vector pipes and wider paths from the vector registers to the functional units, without increasing the complexity of, or the pressure on, the decode unit
- Low power and real-time performance
  - Vector instructions "localize" computation => power can be reduced by turning off all units not needed during the execution of a long-running vector instruction
    - Powered on: the functional units and register buses
    - Powered off: the instruction fetch unit, the reorder buffer, and other large power-hungry blocks of the processor

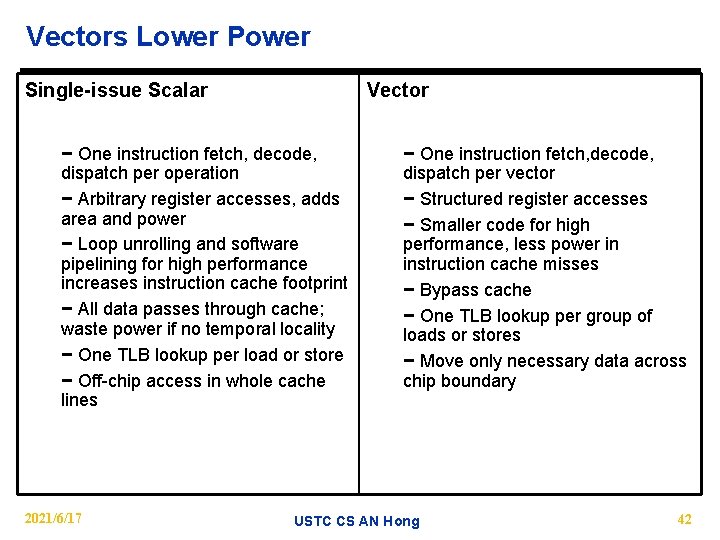

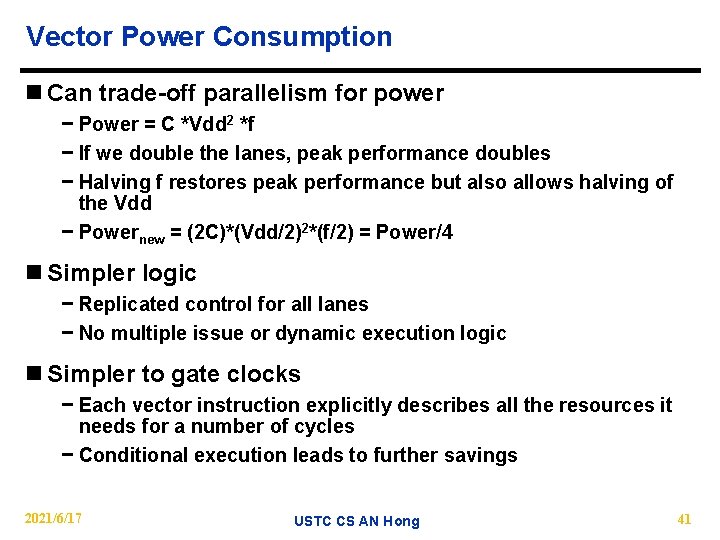

Vector Power Consumption

- Can trade off parallelism for power
  - $P = C \cdot V_{dd}^2 \cdot f$
  - If we double the lanes, peak performance doubles
  - Halving $f$ restores the original peak performance and also allows $V_{dd}$ to be halved
  - $P_{new} = (2C) \cdot (V_{dd}/2)^2 \cdot (f/2) = P/4$
- Simpler logic
  - Replicated control for all lanes
  - No multiple-issue or dynamic-execution logic
- Simpler to gate clocks
  - Each vector instruction explicitly describes all the resources it needs for a number of cycles
  - Conditional execution leads to further savings

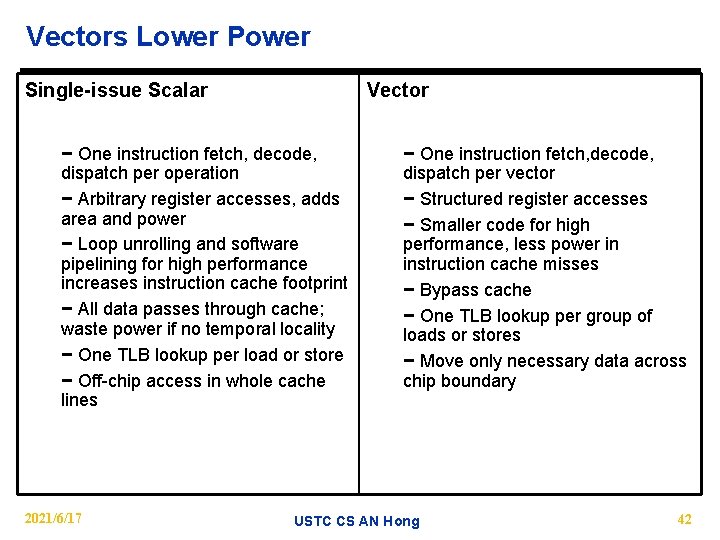

Vectors Lower Power

| Single-issue scalar | Vector |
|---|---|
| One instruction fetch, decode, dispatch per operation | One instruction fetch, decode, dispatch per vector |
| Arbitrary register accesses add area and power | Structured register accesses |
| Loop unrolling and software pipelining for high performance increase the instruction cache footprint | Smaller code for high performance, less power spent on instruction cache misses |
| All data passes through the cache; wastes power if there is no temporal locality | Bypass cache |
| One TLB lookup per load or store | One TLB lookup per group of loads or stores |
| Off-chip access in whole cache lines | Move only necessary data across the chip boundary |

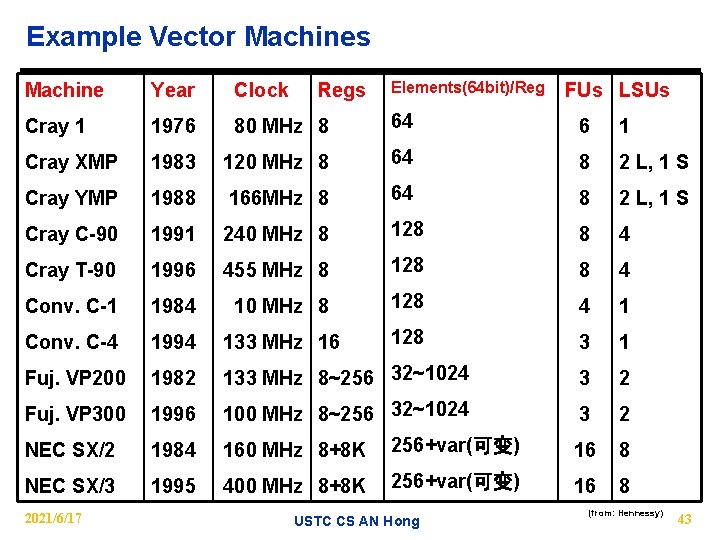

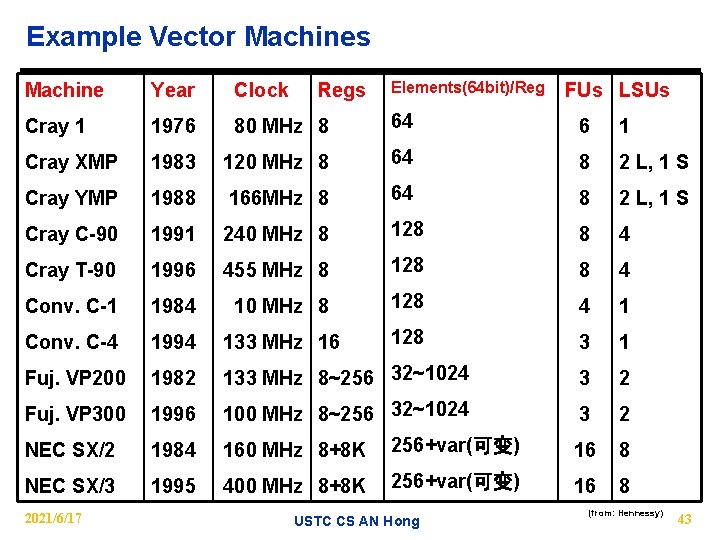

Example Vector Machines (from: Hennessy)

| Machine | Year | Clock | Regs | Elements (64-bit)/Reg | FUs | LSUs |
|---|---|---|---|---|---|---|
| Cray-1 | 1976 | 80 MHz | 8 | 64 | 6 | 1 |
| Cray X-MP | 1983 | 120 MHz | 8 | 64 | 8 | 2 L, 1 S |
| Cray Y-MP | 1988 | 166 MHz | 8 | 64 | 8 | 2 L, 1 S |
| Cray C-90 | 1991 | 240 MHz | 8 | 128 | 8 | 4 |
| Cray T-90 | 1996 | 455 MHz | 8 | 128 | 8 | 4 |
| Convex C-1 | 1984 | 10 MHz | 8 | 128 | 4 | 1 |
| Convex C-4 | 1994 | 133 MHz | 16 | 128 | 3 | 1 |
| Fujitsu VP200 | 1982 | 133 MHz | 8-256 | 32-1024 | 3 | 2 |
| Fujitsu VP300 | 1996 | 100 MHz | 8-256 | 32-1024 | 3 | 2 |
| NEC SX/2 | 1984 | 160 MHz | 8+8K | 256+var (variable) | 16 | 8 |
| NEC SX/3 | 1995 | 400 MHz | 8+8K | 256+var (variable) | 16 | 8 |

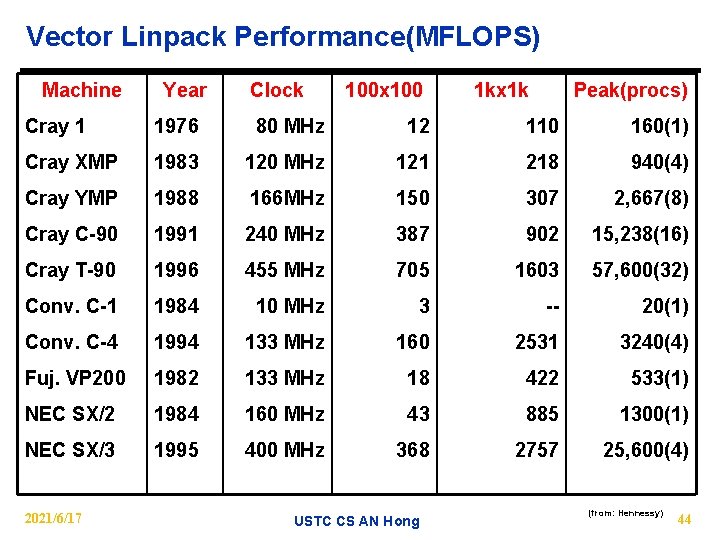

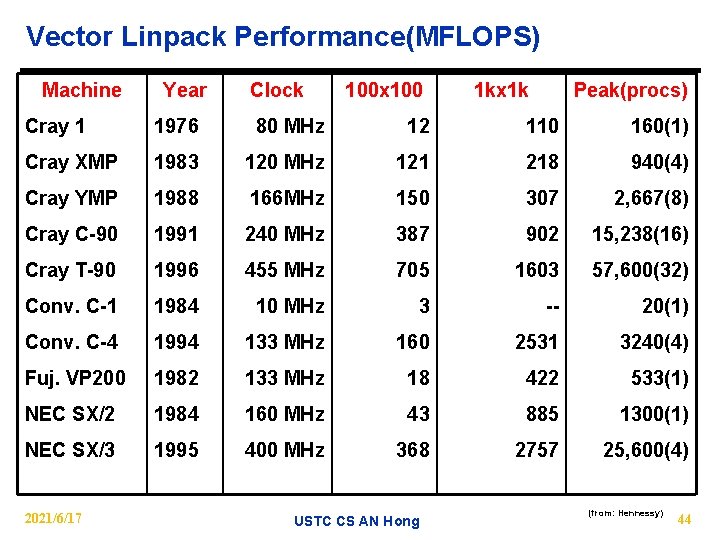

Vector Linpack Performance (MFLOPS) (from: Hennessy)

| Machine | Year | Clock | 100 x 100 | 1k x 1k | Peak (procs) |
|---|---|---|---|---|---|
| Cray-1 | 1976 | 80 MHz | 12 | 110 | 160 (1) |
| Cray X-MP | 1983 | 120 MHz | 121 | 218 | 940 (4) |
| Cray Y-MP | 1988 | 166 MHz | 150 | 307 | 2,667 (8) |
| Cray C-90 | 1991 | 240 MHz | 387 | 902 | 15,238 (16) |
| Cray T-90 | 1996 | 455 MHz | 705 | 1603 | 57,600 (32) |
| Convex C-1 | 1984 | 10 MHz | 3 | -- | 20 (1) |
| Convex C-4 | 1994 | 133 MHz | 160 | 2531 | 3240 (4) |
| Fujitsu VP200 | 1982 | 133 MHz | 18 | 422 | 533 (1) |
| NEC SX/2 | 1984 | 160 MHz | 43 | 885 | 1300 (1) |
| NEC SX/3 | 1995 | 400 MHz | 368 | 2757 | 25,600 (4) |

Vector Architectures of the Future: High-Performance Microprocessors for Multimedia

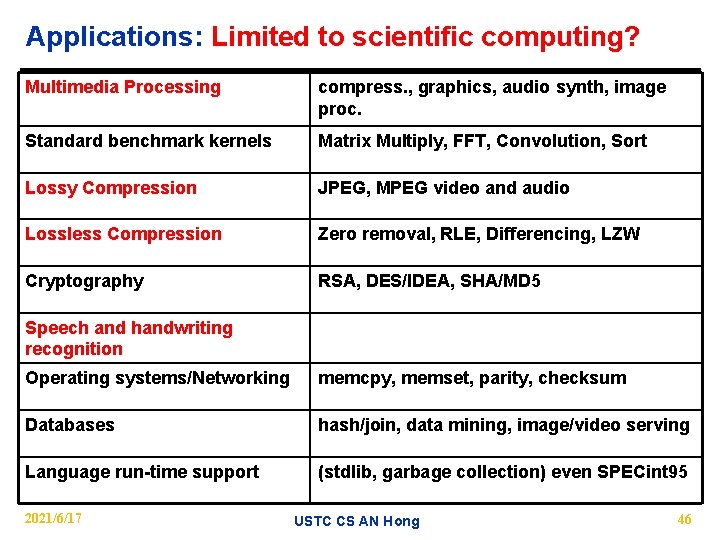

Applications: Limited to Scientific Computing?

- Multimedia processing (compression, graphics, audio synthesis, image processing)
- Standard benchmark kernels (matrix multiply, FFT, convolution, sort)
- Lossy compression (JPEG, MPEG video and audio)
- Lossless compression (zero removal, RLE, differencing, LZW)
- Cryptography (RSA, DES/IDEA, SHA/MD5)
- Speech and handwriting recognition
- Operating systems/networking (memcpy, memset, parity, checksum)
- Databases (hash/join, data mining, image/video serving)
- Language run-time support (stdlib, garbage collection)
- Even SPECint95

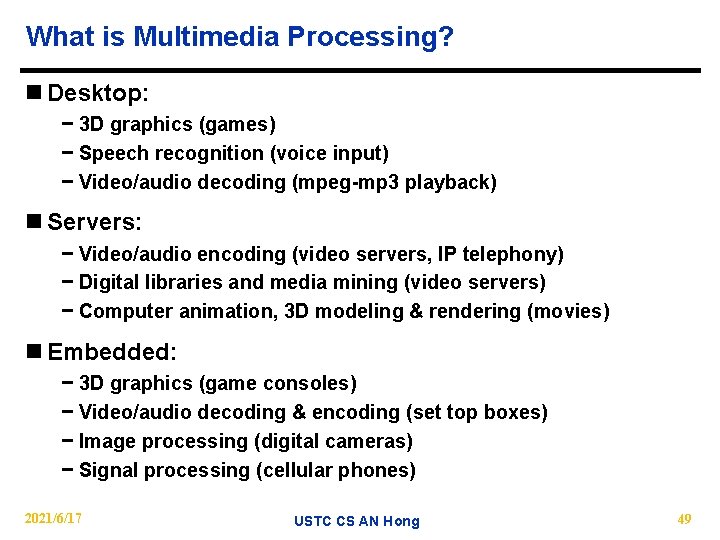

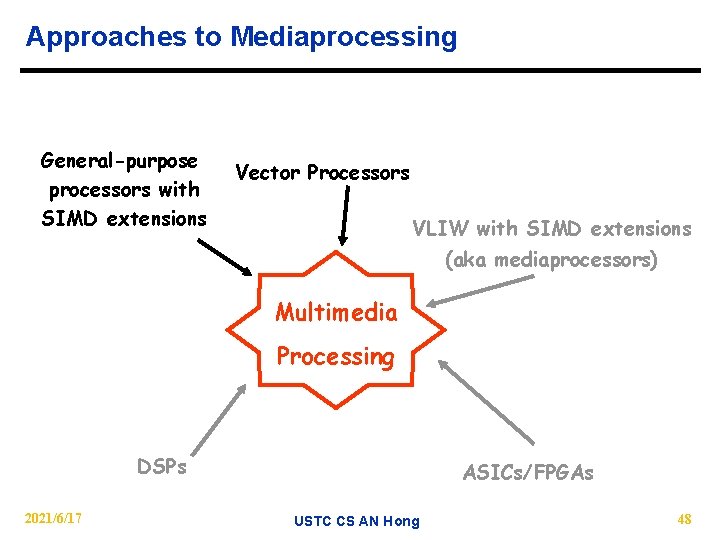

Possible evolution of vector architectures

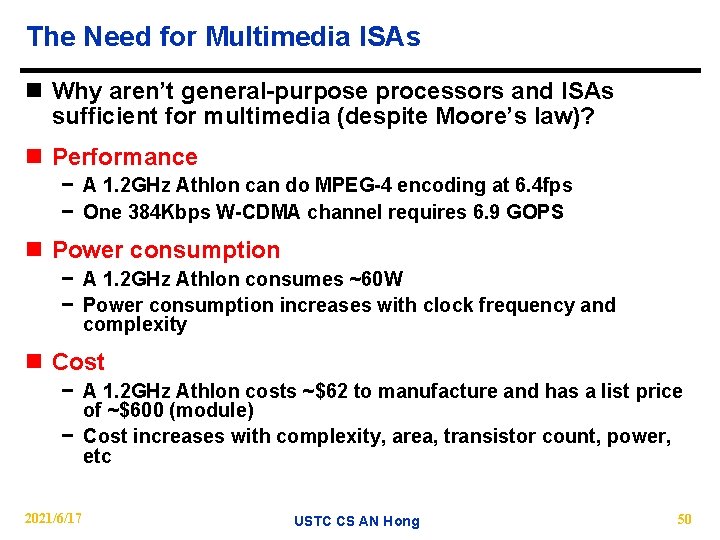

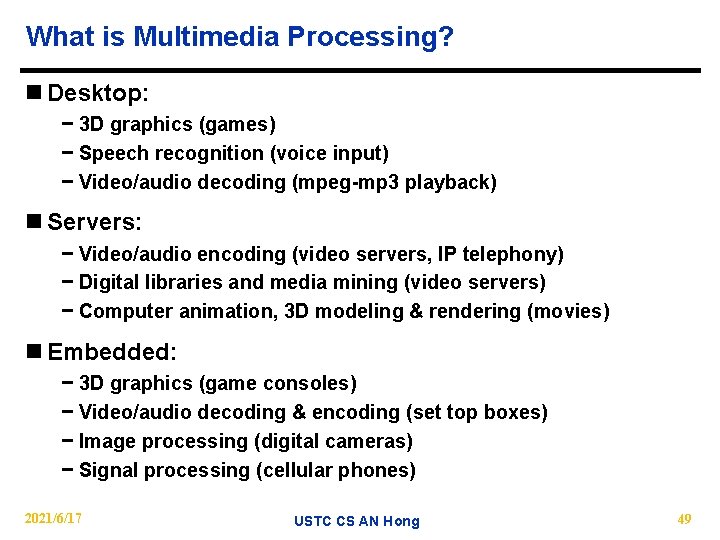

Approaches to Mediaprocessing

Figure: approaches to multimedia processing

- General-purpose processors with SIMD extensions
- Vector processors
- VLIW with SIMD extensions (aka mediaprocessors)
- DSPs
- ASICs/FPGAs

What is Multimedia Processing?

- Desktop:
  - 3D graphics (games)
  - Speech recognition (voice input)
  - Video/audio decoding (MPEG/MP3 playback)
- Servers:
  - Video/audio encoding (video servers, IP telephony)
  - Digital libraries and media mining (video servers)
  - Computer animation, 3D modeling & rendering (movies)
- Embedded:
  - 3D graphics (game consoles)
  - Video/audio decoding & encoding (set-top boxes)
  - Image processing (digital cameras)
  - Signal processing (cellular phones)

The Need for Multimedia ISAs

- Why aren't general-purpose processors and ISAs sufficient for multimedia (despite Moore's law)?
- Performance
  - A 1.2 GHz Athlon can do MPEG-4 encoding at 6.4 fps
  - One 384 Kbps W-CDMA channel requires 6.9 GOPS
- Power consumption
  - A 1.2 GHz Athlon consumes ~60 W
  - Power consumption increases with clock frequency and complexity
- Cost
  - A 1.2 GHz Athlon costs ~$62 to manufacture and has a list price of ~$600 (module)
  - Cost increases with complexity, area, transistor count, power, etc.

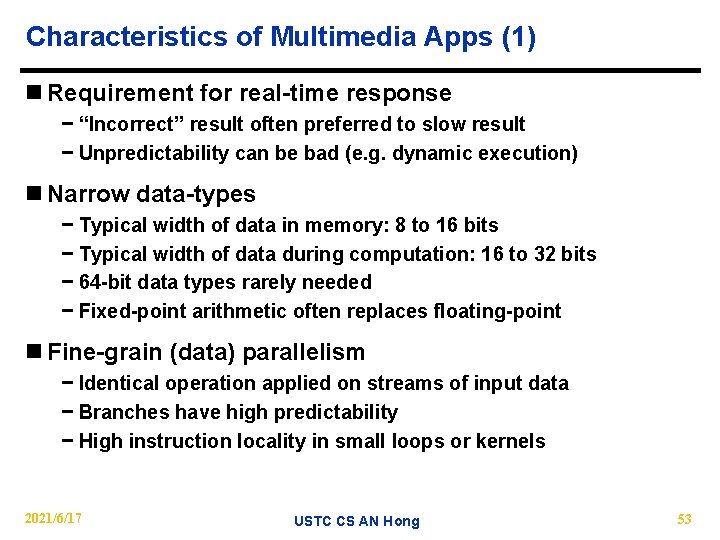

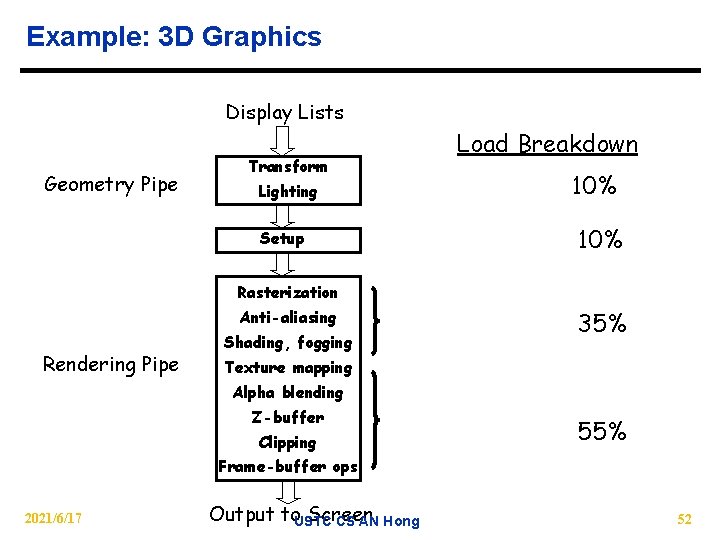

Example: MPEG Decoding

Pipeline from input stream to output to screen, with load breakdown:

- Parsing: 10%
- Dequantization: 20%
- IDCT: 25%
- Block reconstruction: 30%
- RGB->YUV: 15%

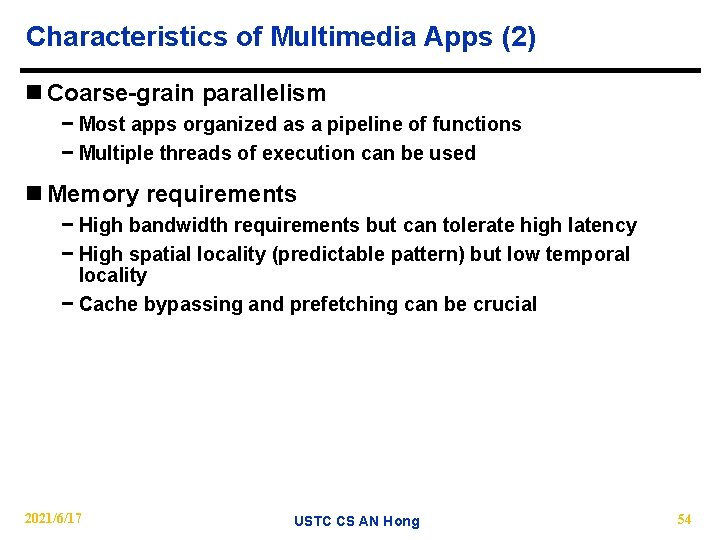

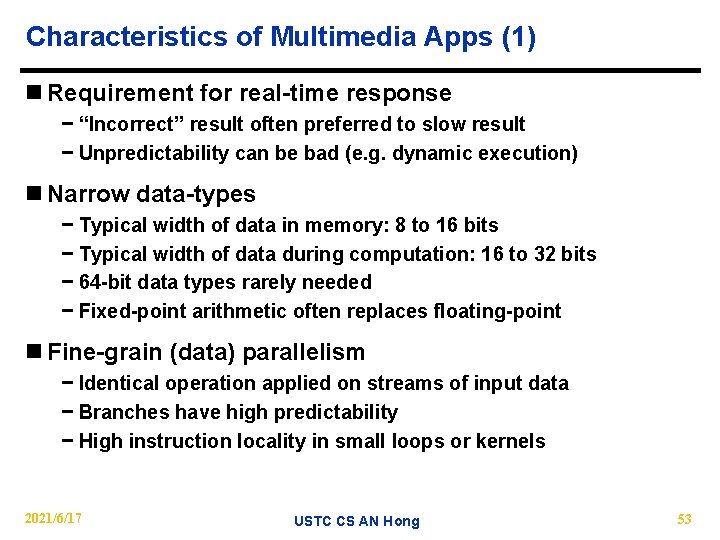

Example: 3D Graphics

Pipeline from display lists to output to screen, with load breakdown:

- Display lists: 10%
- Geometry pipe (transform, lighting, setup, clipping): 35%
- Rendering pipe (rasterization, anti-aliasing, shading, fogging, texture mapping, alpha blending, Z-buffer, frame-buffer ops): 55%

Characteristics of Multimedia Apps (1)

- Requirement for real-time response
  - An "incorrect" result is often preferred to a slow result
  - Unpredictability can be bad (e.g., dynamic execution)
- Narrow data types
  - Typical width of data in memory: 8 to 16 bits
  - Typical width of data during computation: 16 to 32 bits
  - 64-bit data types rarely needed
  - Fixed-point arithmetic often replaces floating-point
- Fine-grain (data) parallelism
  - Identical operation applied on streams of input data
  - Branches have high predictability
  - High instruction locality in small loops or kernels

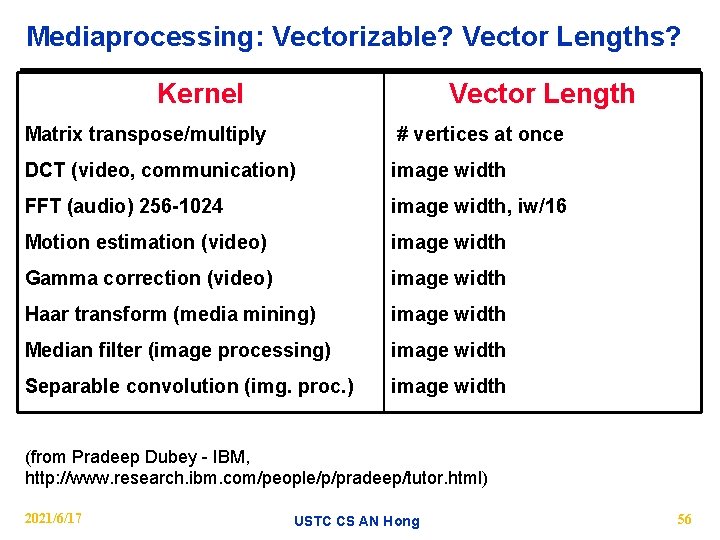

Characteristics of Multimedia Apps (2)

- Coarse-grain parallelism
  - Most apps are organized as a pipeline of functions
  - Multiple threads of execution can be used
- Memory requirements
  - High bandwidth requirements, but can tolerate high latency
  - High spatial locality (predictable pattern) but low temporal locality
  - Cache bypassing and prefetching can be crucial

Multimedia Benchmarks

- Video: MPEG-2, MPEG-4, H.263
- Audio: ADPCM coder
- Graphics: Mesa
- Image: JPEG, EPIC, Ghostscript
- Security: PGP, Pegwit
- Speech: GSM, G.721, Rasta

Mediaprocessing: Vectorizable? Vector Lengths?

| Kernel | Vector length |
|---|---|
| Matrix transpose/multiply | # vertices at once |
| DCT (video, communication) | image width |
| FFT (audio) | 256-1024 |
| Motion estimation (video) | image width, iw/16 |
| Gamma correction (video) | image width |
| Haar transform (media mining) | image width |
| Median filter (image processing) | image width |
| Separable convolution (img. proc.) | image width |

(from Pradeep Dubey, IBM, http://www.research.ibm.com/people/p/pradeep/tutor.html)

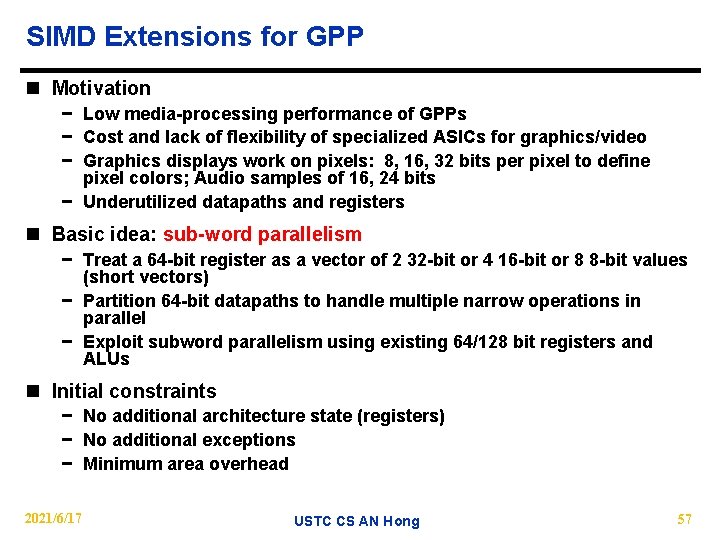

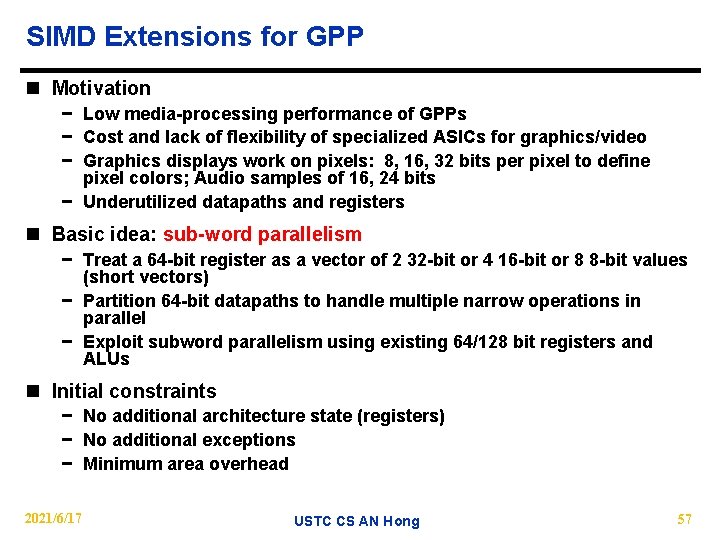

SIMD Extensions for GPP

- Motivation
  - Low media-processing performance of GPPs
  - Cost and lack of flexibility of specialized ASICs for graphics/video
  - Graphics displays work on pixels: 8, 16, or 32 bits per pixel define pixel colors; audio samples are 16 or 24 bits
  - Underutilized datapaths and registers
- Basic idea: sub-word parallelism
  - Treat a 64-bit register as a vector of 2 32-bit, 4 16-bit, or 8 8-bit values (short vectors)
  - Partition 64-bit datapaths to handle multiple narrow operations in parallel
  - Exploit sub-word parallelism using existing 64/128-bit registers and ALUs
- Initial constraints
  - No additional architectural state (registers)
  - No additional exceptions
  - Minimum area overhead

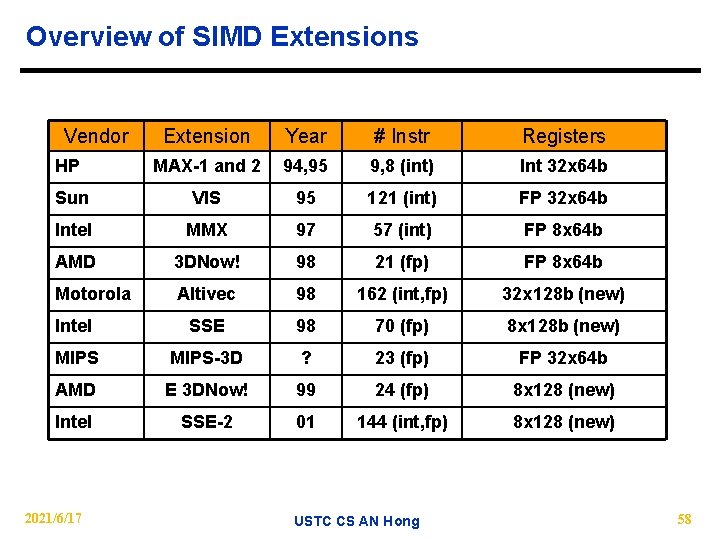

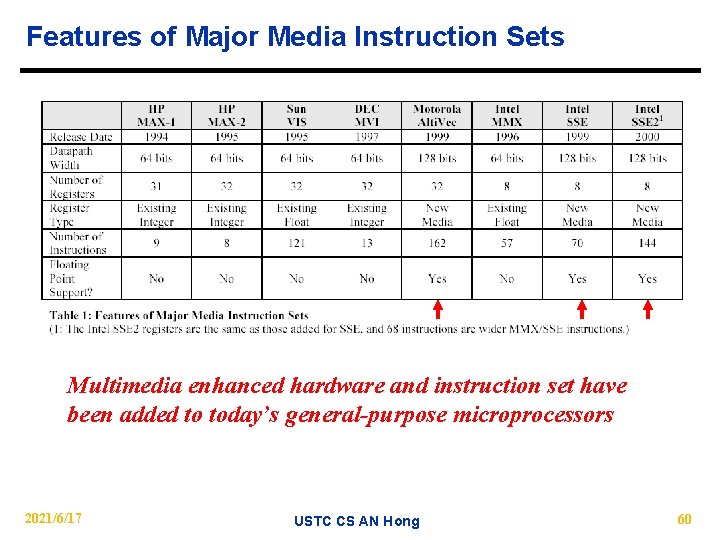

Overview of SIMD Extensions

| Vendor | Extension | Year | # Instr | Registers |
|---|---|---|---|---|
| HP | MAX-1 and 2 | 94, 95 | 9, 8 (int) | Int 32 x 64b |
| Sun | VIS | 95 | 121 (int) | FP 32 x 64b |
| Intel | MMX | 97 | 57 (int) | FP 8 x 64b |
| AMD | 3DNow! | 98 | 21 (fp) | FP 8 x 64b |
| Motorola | AltiVec | 98 | 162 (int, fp) | 32 x 128b (new) |
| Intel | SSE | 98 | 70 (fp) | 8 x 128b (new) |
| MIPS | MIPS-3D | ? | 23 (fp) | FP 32 x 64b |
| AMD | E 3DNow! | 99 | 24 (fp) | 8 x 128 (new) |
| Intel | SSE-2 | 01 | 144 (int, fp) | 8 x 128 (new) |

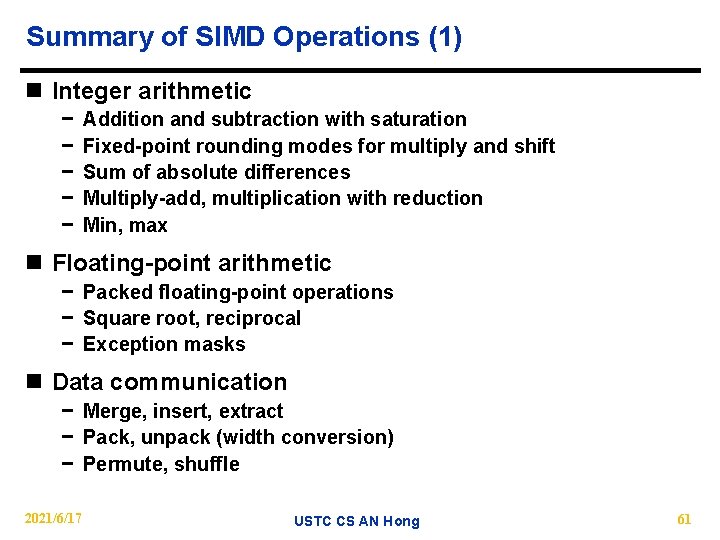

Multimedia Extensions

| Vendor | ISA | Description |
|---|---|---|
| Intel | MMX, KNI | MultiMedia eXtensions, Katmai New Instructions (Streaming SIMD) |
| AMD | MMX/3DNow! | MultiMedia eXtensions, 3DNow! Extensions |
| Cyrix | MMX | MultiMedia eXtensions |
| MIPS | MDMX/MIPS V | MIPS Digital Media eXtensions/MIPS V |
| Digital/Compaq | MVI | Motion Video Instructions |
| Motorola/IBM | AltiVec | AltiVec technology |
| Hewlett Packard | MAX | Media Acceleration eXtensions |
| Sun | VIS | Visual Instruction Set |

Features of Major Media Instruction Sets

- Multimedia-enhanced hardware and instructions have been added to today's general-purpose microprocessors

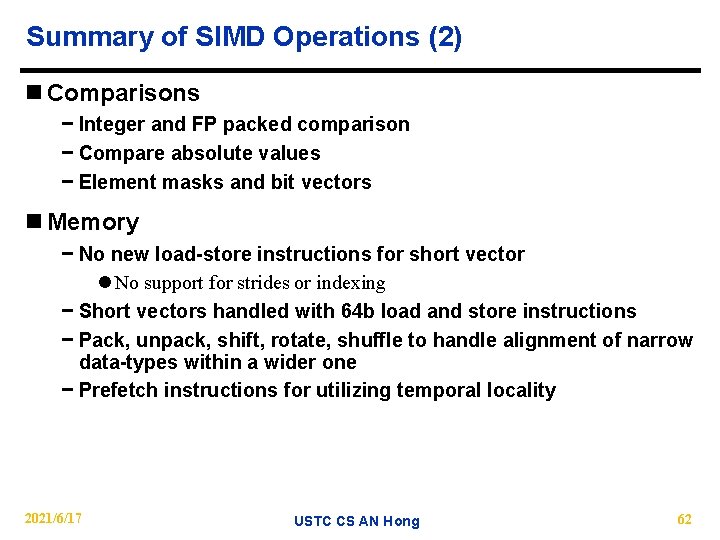

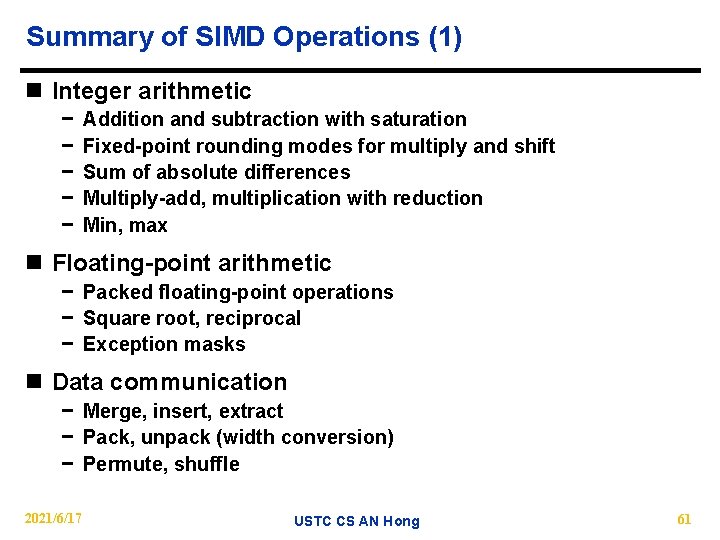

Summary of SIMD Operations (1)

- Integer arithmetic
  - Addition and subtraction with saturation
  - Fixed-point rounding modes for multiply and shift
  - Sum of absolute differences
  - Multiply-add, multiplication with reduction
  - Min, max
- Floating-point arithmetic
  - Packed floating-point operations
  - Square root, reciprocal
  - Exception masks
- Data communication
  - Merge, insert, extract
  - Pack, unpack (width conversion)
  - Permute, shuffle

Summary of SIMD Operations (2)

- Comparisons
  - Integer and FP packed comparison
  - Compare absolute values
  - Element masks and bit vectors
- Memory
  - No new load-store instructions for short vectors
    - No support for strides or indexing
  - Short vectors handled with 64b load and store instructions
  - Pack, unpack, shift, rotate, shuffle to handle alignment of narrow data types within a wider one
  - Prefetch instructions for utilizing temporal locality

Programming with SIMD Extensions

- Optimized shared libraries
  - Written in assembly, distributed by vendor
  - Need a well-defined API for data format and use
- Language macros for variables and operations
  - C/C++ wrappers for short vector variables and function calls
  - Allows instruction scheduling and register allocation optimizations for specific processors
  - Lack of portability, non-standard
- Compilers for SIMD extensions
  - No commercially available compiler so far
  - Problems
    - Language support for expressing fixed-point arithmetic and SIMD parallelism
    - Complicated model for loading/storing vectors
    - Frequent updates
- Assembly coding

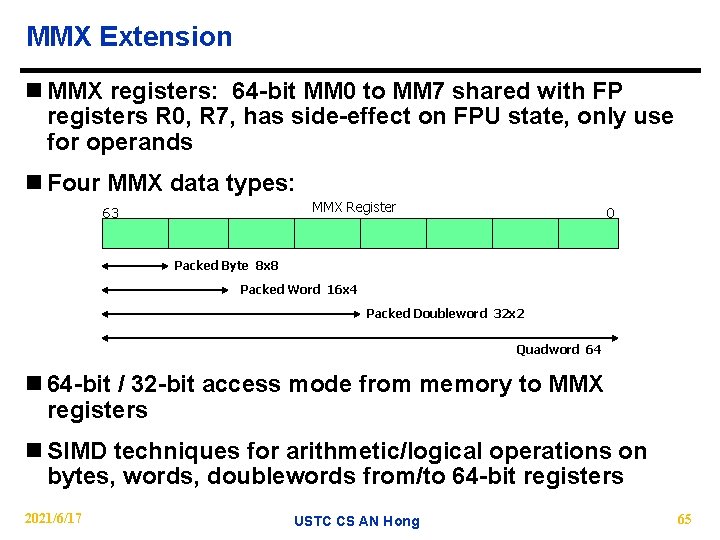

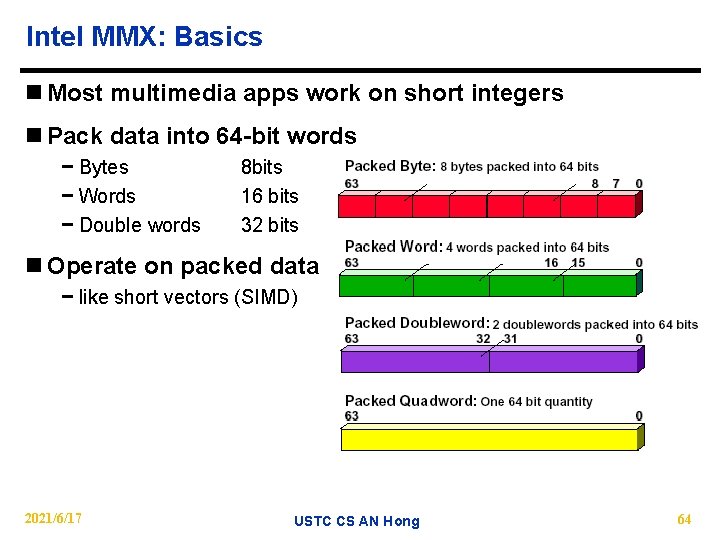

Intel MMX: Basics

- Most multimedia apps work on short integers
- Pack data into 64-bit words
  - Bytes: 8 bits
  - Words: 16 bits
  - Double words: 32 bits
- Operate on packed data
  - like short vectors (SIMD)

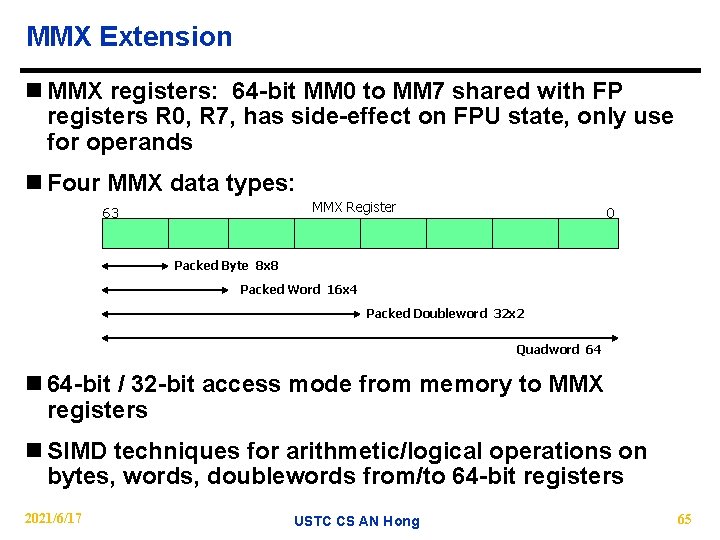

MMX Extension

- MMX registers: 64-bit MM0 to MM7, shared with the FP registers R0-R7; using them has side effects on the FPU state; they hold operands only
- Four MMX data types in a 64-bit MMX register (bits 63..0):
  - Packed byte: 8 x 8 bits
  - Packed word: 4 x 16 bits
  - Packed doubleword: 2 x 32 bits
  - Quadword: 1 x 64 bits
- 64-bit/32-bit access modes from memory to MMX registers
- SIMD arithmetic/logical operations on bytes, words, and doublewords in 64-bit registers

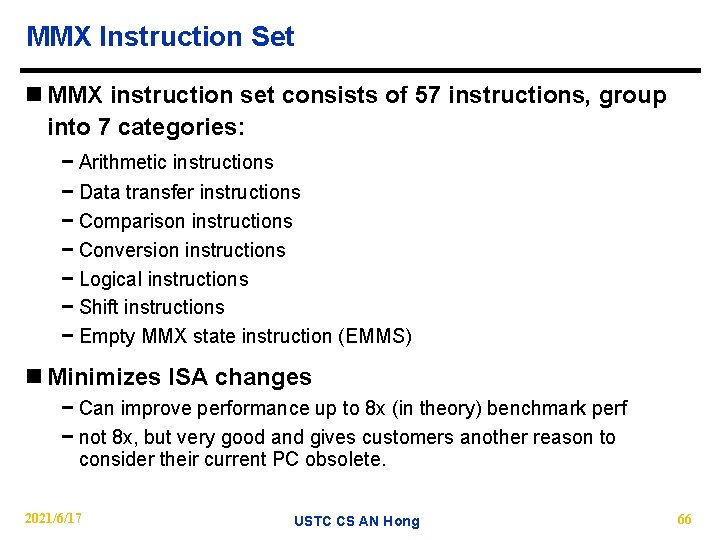

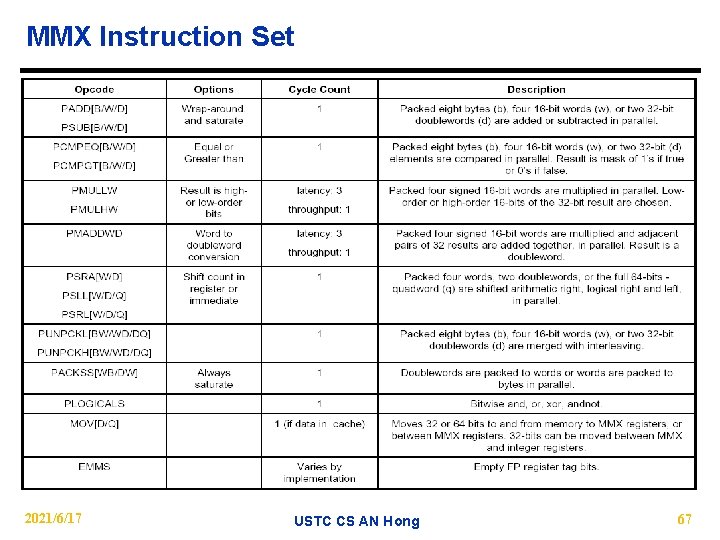

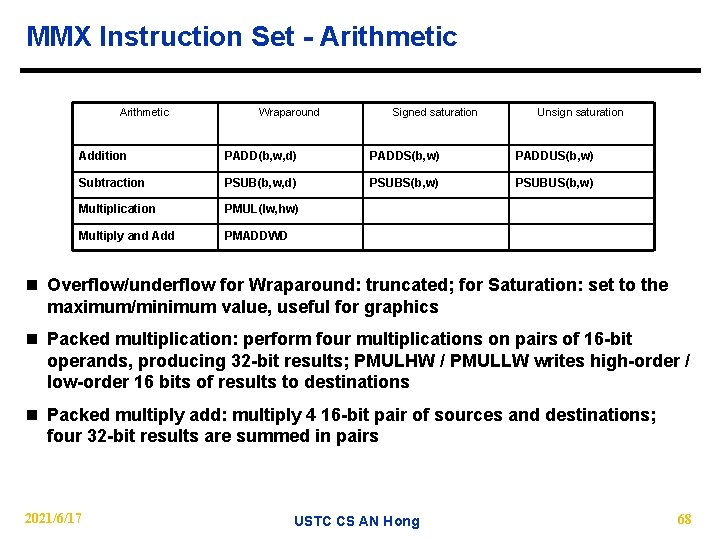

MMX Instruction Set

- The MMX instruction set consists of 57 instructions, grouped into 7 categories:
  - Arithmetic instructions
  - Data transfer instructions
  - Comparison instructions
  - Conversion instructions
  - Logical instructions
  - Shift instructions
  - Empty MMX state instruction (EMMS)
- Minimizes ISA changes
  - Can improve performance by up to 8x (in theory)
  - Benchmark performance is not 8x, but very good, and gives customers another reason to consider their current PC obsolete

MMX Instruction Set

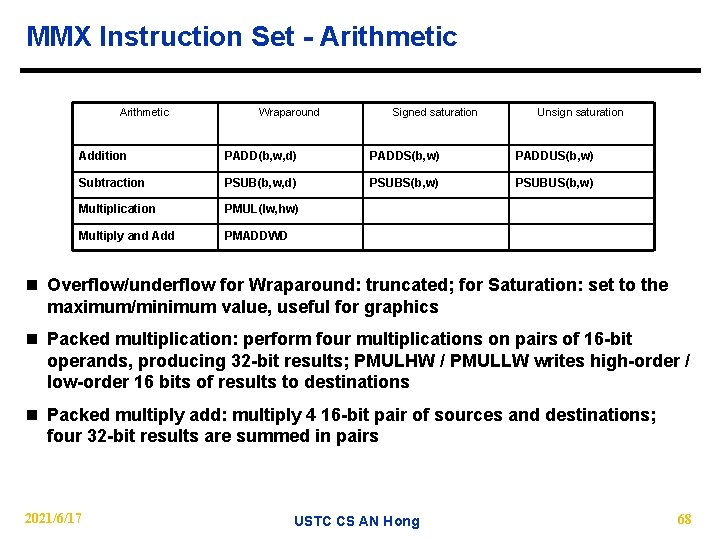

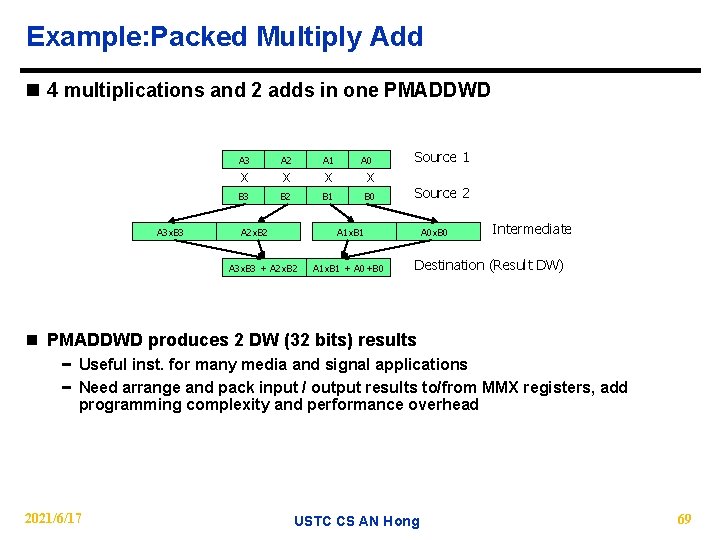

MMX Instruction Set - Arithmetic

| | Wraparound | Signed saturation | Unsigned saturation |
|---|---|---|---|
| Addition | PADD(B, W, D) | PADDS(B, W) | PADDUS(B, W) |
| Subtraction | PSUB(B, W, D) | PSUBS(B, W) | PSUBUS(B, W) |
| Multiplication | PMULLW, PMULHW | | |
| Multiply and add | PMADDWD | | |

- Overflow/underflow: with wraparound, the result is truncated; with saturation, it is clamped to the maximum/minimum representable value (useful for graphics)
- Packed multiplication: four multiplications on pairs of 16-bit operands, producing 32-bit products; PMULHW/PMULLW write the high-order/low-order 16 bits of each product to the destination
- Packed multiply-add: multiplies four pairs of 16-bit source and destination elements; the four 32-bit products are summed in pairs, giving two 32-bit results

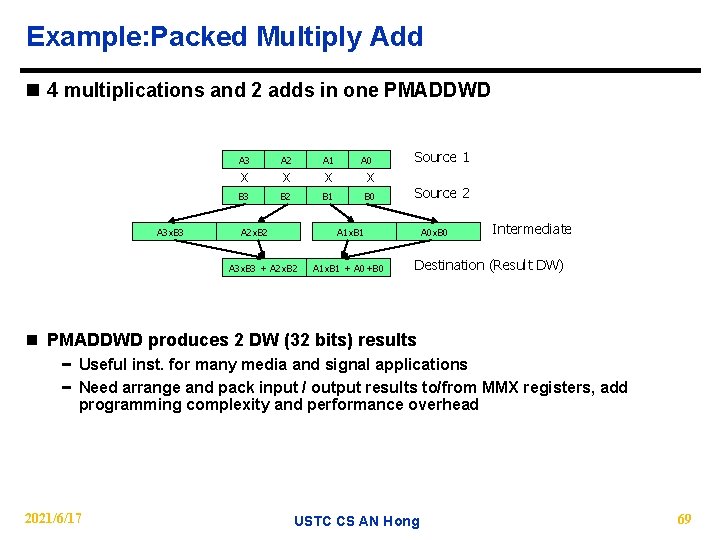

Example: Packed Multiply-Add

- 4 multiplications and 2 additions in one PMADDWD:
  - Source 1: A3 A2 A1 A0; Source 2: B3 B2 B1 B0
  - Intermediate: A3xB3, A2xB2, A1xB1, A0xB0
  - Destination (result doublewords): A3xB3 + A2xB2, A1xB1 + A0xB0
- PMADDWD produces two doubleword (32-bit) results
  - A useful instruction for many media and signal-processing applications
  - Inputs must be arranged and packed into MMX registers, and results unpacked from them, which adds programming complexity and performance overhead

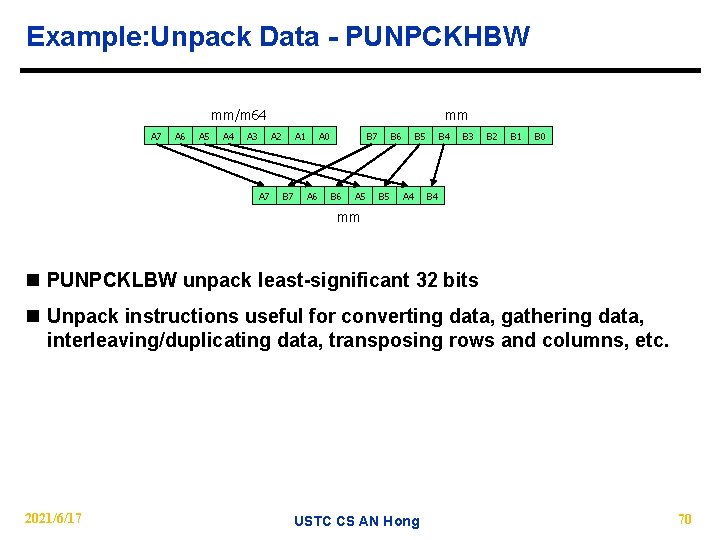

Example: Unpack Data - PUNPCKHBW mm, mm/m64

- Source (mm/m64): A7 A6 A5 A4 A3 A2 A1 A0; destination (mm): B7 B6 B5 B4 B3 B2 B1 B0
- Result in mm: A7 B7 A6 B6 A5 B5 A4 B4 (the high-order halves interleaved)
- PUNPCKLBW unpacks (interleaves) the least-significant 32 bits instead
- Unpack instructions are useful for converting data, gathering data, interleaving/duplicating data, transposing rows and columns, etc.

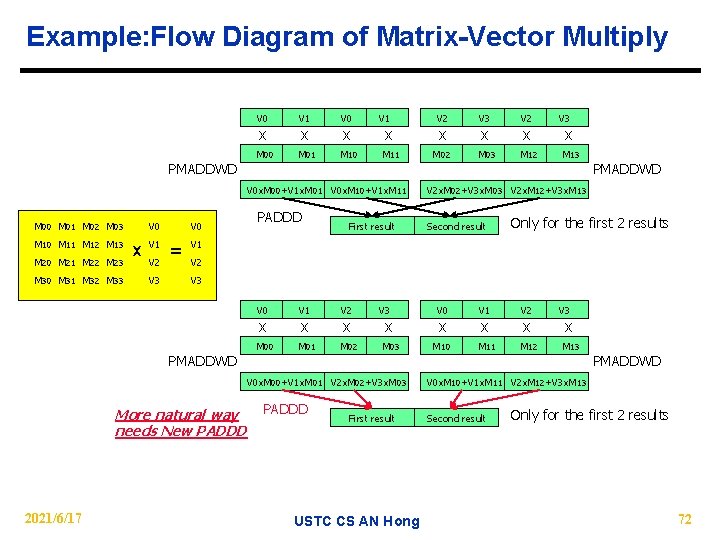

Example: Flow Diagram of Matrix-Vector Multiply

- First PMADDWD: (V0, V1, V0, V1) x (M00, M01, M10, M11) gives V0xM00 + V1xM01 and V0xM10 + V1xM11
- Second PMADDWD: (V2, V3, V2, V3) x (M02, M03, M12, M13) gives V2xM02 + V3xM03 and V2xM12 + V3xM13
- PADDD of the two registers gives the first and second results (only the first 2 results are shown)
- In total, 8 multiplies and 6 adds are reduced to 2 PMADDWD and 1 PADDD
- The matrix and vector must be moved into MMX registers, and the result must be packed and moved back to the vector

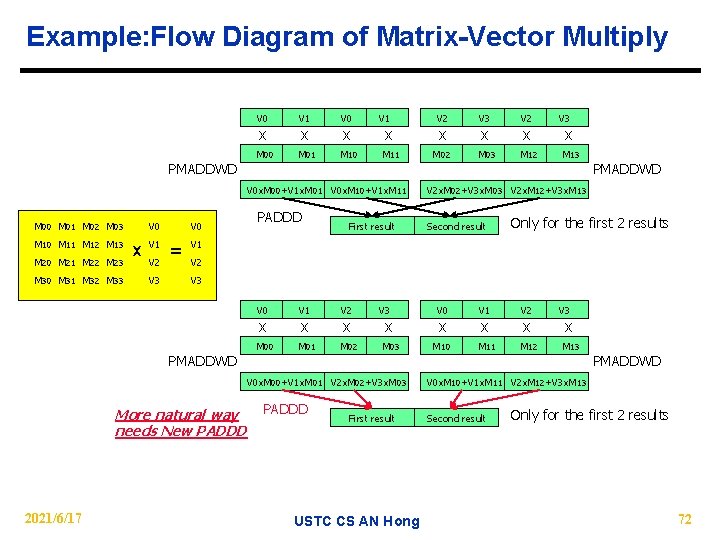

Example: Flow Diagram of Matrix-Vector Multiply

- The 4x4 matrix (rows M00-M03, M10-M13, M20-M23, M30-M33) is multiplied by the vector (V0, V1, V2, V3)
- Scheme above: PMADDWD on duplicated vector pairs (V0 V1 V0 V1 with M00 M01 M10 M11, and V2 V3 V2 V3 with M02 M03 M12 M13), then PADDD gives the first two results
- A more natural way: PMADDWD of the whole vector (V0 V1 V2 V3) with a matrix row (M00 M01 M02 M03) gives V0xM00 + V1xM01 and V2xM02 + V3xM03 in one register (likewise V0xM10 + V1xM11 and V2xM12 + V3xM13 for the next row); adding the two halves of each register to get the first and second results needs a new kind of PADDD

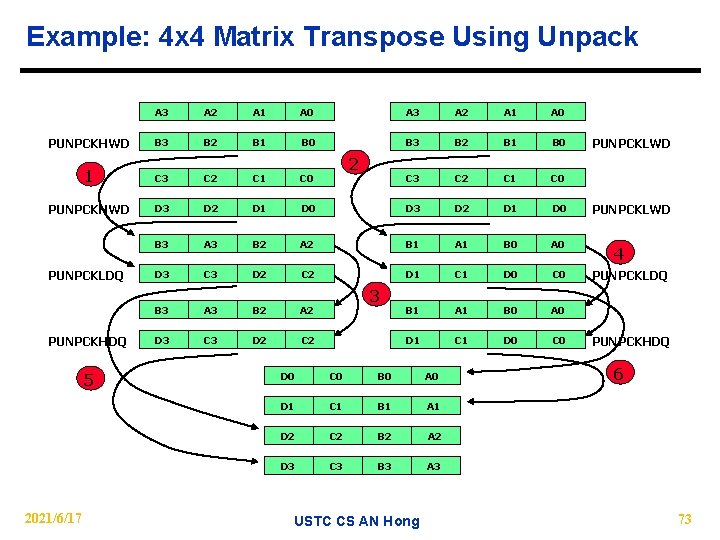

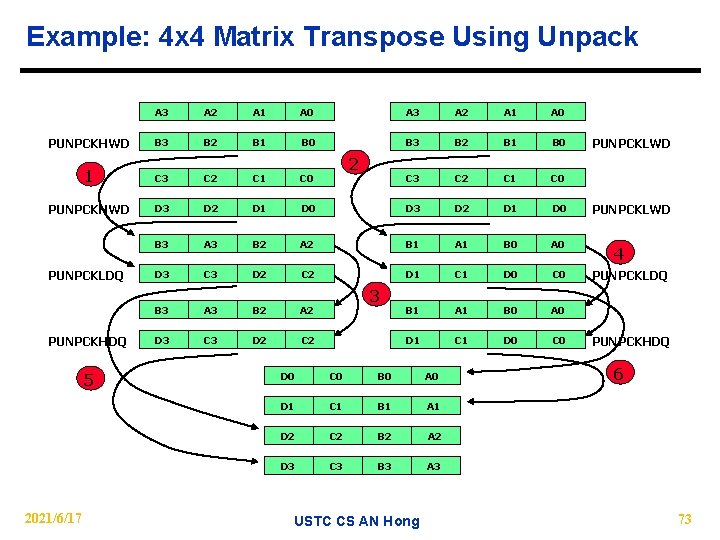

Example: 4 × 4 Matrix Transpose Using Unpack

[Figure: the rows A3 A2 A1 A0, B3 B2 B1 B0, C3 C2 C1 C0 and D3 D2 D1 D0 are first interleaved pairwise with PUNPCKLWD and PUNPCKHWD, giving B1 A1 B0 A0, B3 A3 B2 A2, D1 C1 D0 C0 and D3 C3 D2 C2; PUNPCKLDQ and PUNPCKHDQ then combine these into the columns D0 C0 B0 A0, D1 C1 B1 A1, D2 C2 B2 A2 and D3 C3 B3 A3]
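The transpose can be traced with emulated unpack instructions. This sketch models an MMX register as four 16-bit lanes (`w[0]` least significant); the type and function names are hypothetical, and the register copies that destructive two-operand MMX instructions would need are not modeled.

```c
#include <stdint.h>

/* Hypothetical model of a 64-bit MMX register as four 16-bit lanes. */
typedef struct { uint16_t w[4]; } mm64;

/* PUNPCKLWD: interleave the low two words of a and b. */
static mm64 punpcklwd(mm64 a, mm64 b) { mm64 r = {{ a.w[0], b.w[0], a.w[1], b.w[1] }}; return r; }
/* PUNPCKHWD: interleave the high two words of a and b. */
static mm64 punpckhwd(mm64 a, mm64 b) { mm64 r = {{ a.w[2], b.w[2], a.w[3], b.w[3] }}; return r; }
/* PUNPCKLDQ: low doubleword of a, then low doubleword of b. */
static mm64 punpckldq(mm64 a, mm64 b) { mm64 r = {{ a.w[0], a.w[1], b.w[0], b.w[1] }}; return r; }
/* PUNPCKHDQ: high doubleword of a, then high doubleword of b. */
static mm64 punpckhdq(mm64 a, mm64 b) { mm64 r = {{ a.w[2], a.w[3], b.w[2], b.w[3] }}; return r; }

/* Transpose: row[0..3] hold A, B, C, D; col[j] receives (Aj, Bj, Cj, Dj). */
void transpose4x4(const mm64 row[4], mm64 col[4]) {
    mm64 ab_lo = punpcklwd(row[0], row[1]); /* A0 B0 A1 B1 */
    mm64 ab_hi = punpckhwd(row[0], row[1]); /* A2 B2 A3 B3 */
    mm64 cd_lo = punpcklwd(row[2], row[3]); /* C0 D0 C1 D1 */
    mm64 cd_hi = punpckhwd(row[2], row[3]); /* C2 D2 C3 D3 */
    col[0] = punpckldq(ab_lo, cd_lo);       /* A0 B0 C0 D0 */
    col[1] = punpckhdq(ab_lo, cd_lo);       /* A1 B1 C1 D1 */
    col[2] = punpckldq(ab_hi, cd_hi);       /* A2 B2 C2 D2 */
    col[3] = punpckhdq(ab_hi, cd_hi);       /* A3 B3 C3 D3 */
}
```

Eight unpack operations (four word-level, four doubleword-level) replace sixteen scalar element moves.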

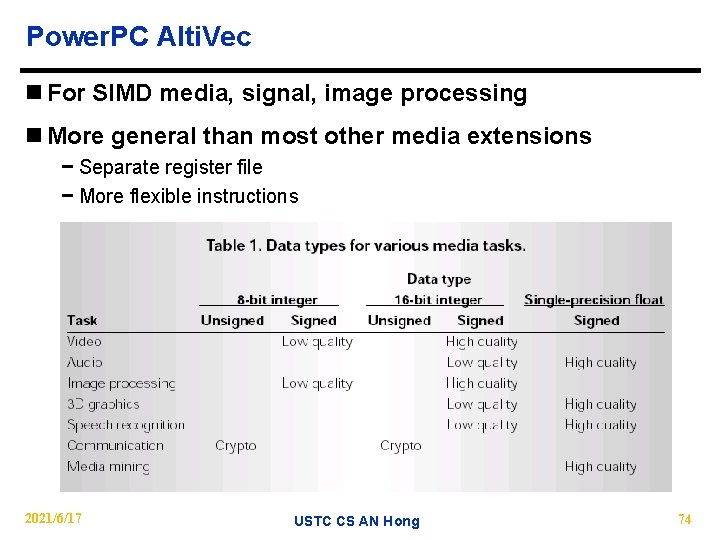

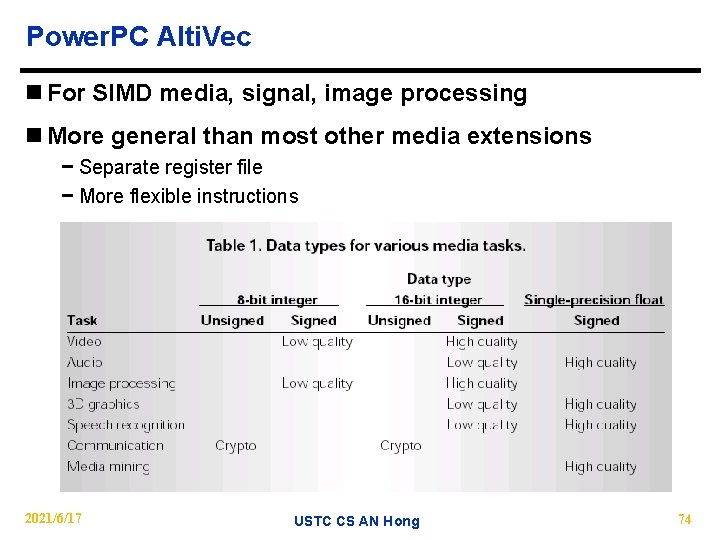

PowerPC AltiVec
- For SIMD media, signal and image processing
- More general than most other media extensions
  - Separate register file
  - More flexible instructions

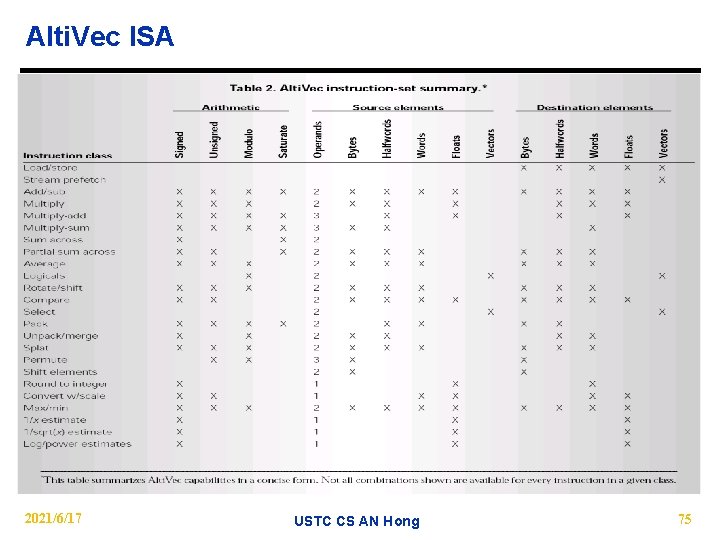

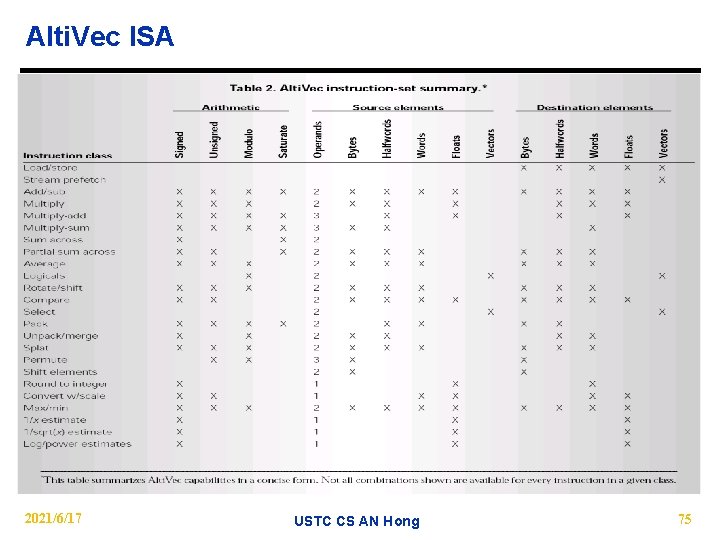

AltiVec ISA

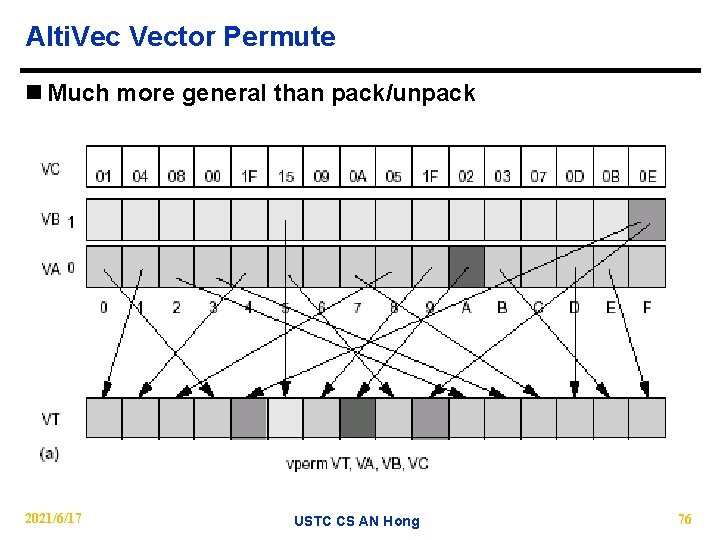

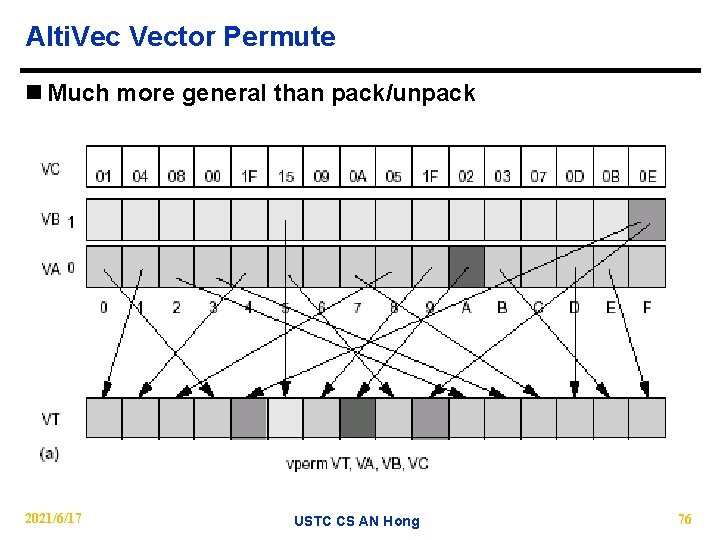

AltiVec Vector Permute
- Much more general than pack/unpack
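To see why permute subsumes pack/unpack, here is a plain-C emulation of AltiVec `vperm` semantics: each result byte is selected from the 32-byte concatenation of the two source registers by the low 5 bits of the corresponding control byte. The function name is hypothetical, and bytes are numbered in AltiVec (big-endian) element order.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical emulation of vperm vD, vA, vB, vC:
   vd[i] = byte (vc[i] & 0x1F) of the 32-byte concatenation va || vb. */
void vperm_emulated(const uint8_t va[16], const uint8_t vb[16],
                    const uint8_t vc[16], uint8_t vd[16]) {
    uint8_t tmp[16]; /* lets vd alias one of the inputs */
    for (int i = 0; i < 16; i++) {
        unsigned idx = vc[i] & 0x1Fu; /* only the low 5 bits select */
        tmp[i] = (idx < 16) ? va[idx] : vb[idx - 16];
    }
    memcpy(vd, tmp, sizeof tmp);
}
```

A control vector of {0, 16, 1, 17, ..., 7, 23} reproduces a byte interleave like an unpack, while {15, 14, ..., 0} reverses the bytes; neither pattern needs a dedicated instruction.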

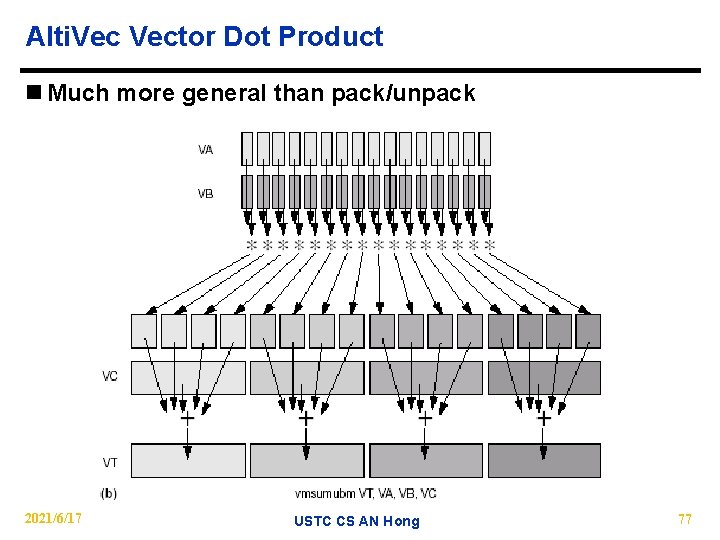

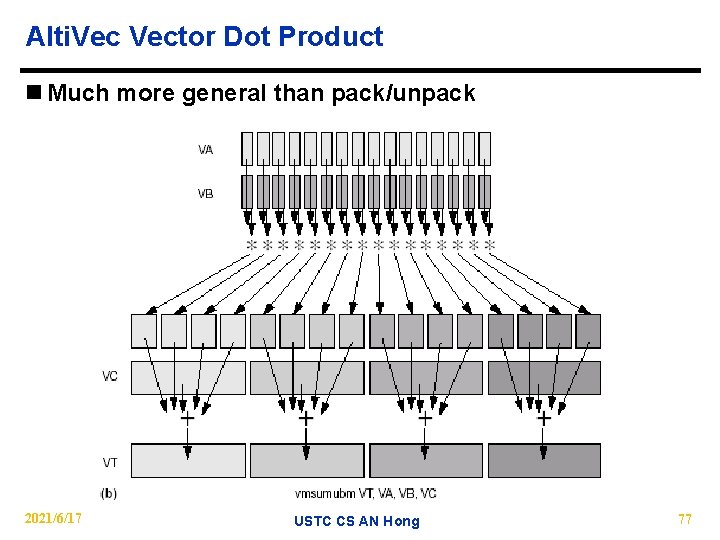

AltiVec Vector Dot Product
- Much more general than pack/unpack
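A dot product on AltiVec can be built from multiply-sum instructions such as `vmsumshm` (multiply-sum signed halfword, modulo), which multiplies eight halfword pairs, sums the products in pairs, and adds each pair-sum to an accumulator word. The sketch below emulates that style in plain C; the names are hypothetical, and `n` is assumed to be a multiple of 8.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical emulation of one multiply-sum step (vmsumshm-like):
   acc[i] += a[2i]*b[2i] + a[2i+1]*b[2i+1], modulo 2^32. */
static void msum_shm(const int16_t a[8], const int16_t b[8], uint32_t acc[4]) {
    for (int i = 0; i < 4; i++) {
        int32_t p0 = (int32_t)a[2 * i] * b[2 * i];
        int32_t p1 = (int32_t)a[2 * i + 1] * b[2 * i + 1];
        acc[i] += (uint32_t)p0 + (uint32_t)p1; /* unsigned: wraps, no UB */
    }
}

/* Dot product of n 16-bit elements; n is assumed to be a multiple of 8. */
int32_t dot_i16(const int16_t *a, const int16_t *b, size_t n) {
    uint32_t acc[4] = {0, 0, 0, 0};
    for (size_t k = 0; k < n; k += 8)
        msum_shm(a + k, b + k, acc);
    /* Final reduction across the four word lanes. Converting back to
       int32_t is implementation-defined if the sum exceeds INT32_MAX. */
    return (int32_t)(acc[0] + acc[1] + acc[2] + acc[3]);
}
```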

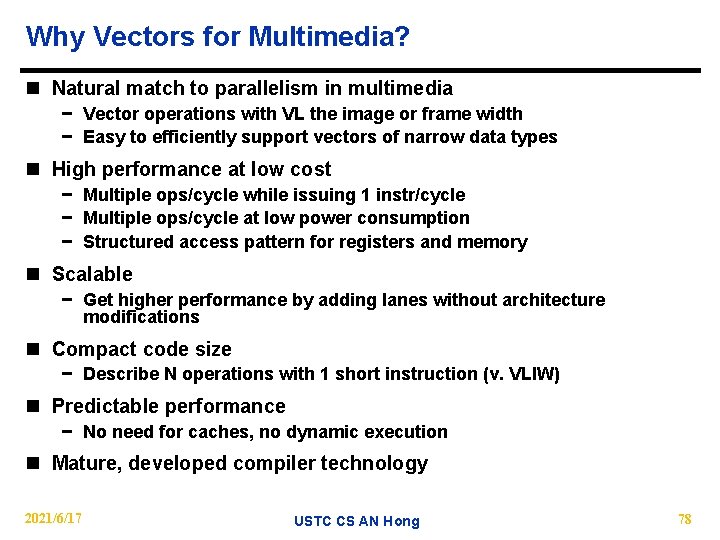

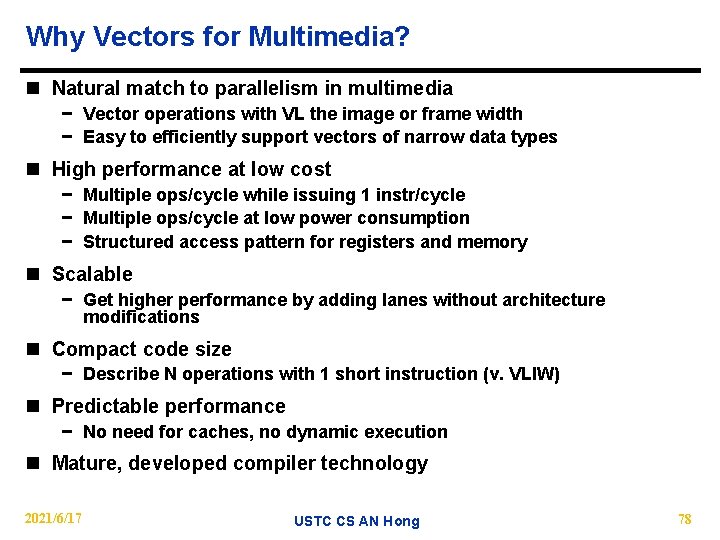

Why Vectors for Multimedia?
- Natural match to parallelism in multimedia
  - Vector operations with VL equal to the image or frame width
  - Easy to support vectors of narrow data types efficiently
- High performance at low cost
  - Multiple operations per cycle while issuing one instruction per cycle
  - Multiple operations per cycle at low power consumption
  - Structured access pattern for registers and memory
- Scalable
  - Higher performance by adding lanes, without architecture modifications
- Compact code size
  - Describes N operations with one short instruction (vs. VLIW)
- Predictable performance
  - No need for caches, no dynamic execution
- Mature, well-developed compiler technology
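Matching VL to the image width usually means strip-mining: a row is processed in chunks of at most the maximum vector length (MVL), with a shorter VL for the last chunk. In this sketch `MVL` = 64 is a hypothetical value, each inner loop stands in for one vector instruction, and pixel sums simply wrap modulo 256.

```c
#include <stddef.h>
#include <stdint.h>

#define MVL 64 /* hypothetical maximum vector length (elements per vector register) */

/* Strip-mined addition of two pixel rows; returns the number of strips,
   i.e. how many times a vector add would be issued. */
size_t add_rows(const uint8_t *a, const uint8_t *b, uint8_t *c, size_t width) {
    size_t strips = 0;
    for (size_t start = 0; start < width; start += MVL) {
        /* Set VL: a full MVL, or the remainder for the final strip. */
        size_t vl = (width - start < MVL) ? width - start : MVL;
        /* One vector add of vl elements. */
        for (size_t i = 0; i < vl; i++)
            c[start + i] = (uint8_t)(a[start + i] + b[start + i]);
        strips++;
    }
    return strips;
}
```

For a QCIF row of 176 pixels this issues three strips (64 + 64 + 48 elements).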

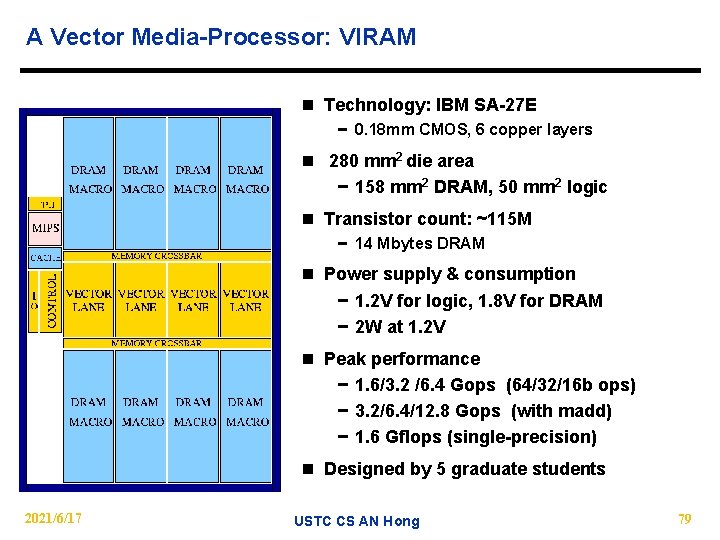

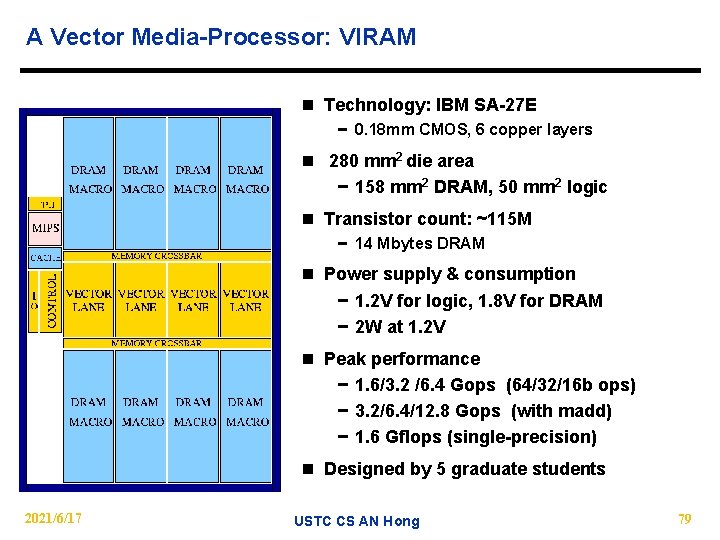

A Vector Media-Processor: VIRAM
- Technology: IBM SA-27E
  - 0.18 µm CMOS, 6 copper layers
- 280 mm² die area
  - 158 mm² DRAM, 50 mm² logic
- Transistor count: ~115 M
  - 14 MB DRAM
- Power supply and consumption
  - 1.2 V for logic, 1.8 V for DRAM
  - 2 W at 1.2 V
- Peak performance
  - 1.6/3.2/6.4 Gops (64/32/16-bit ops)
  - 3.2/6.4/12.8 Gops (with multiply-add)
  - 1.6 Gflops (single-precision)
- Designed by 5 graduate students

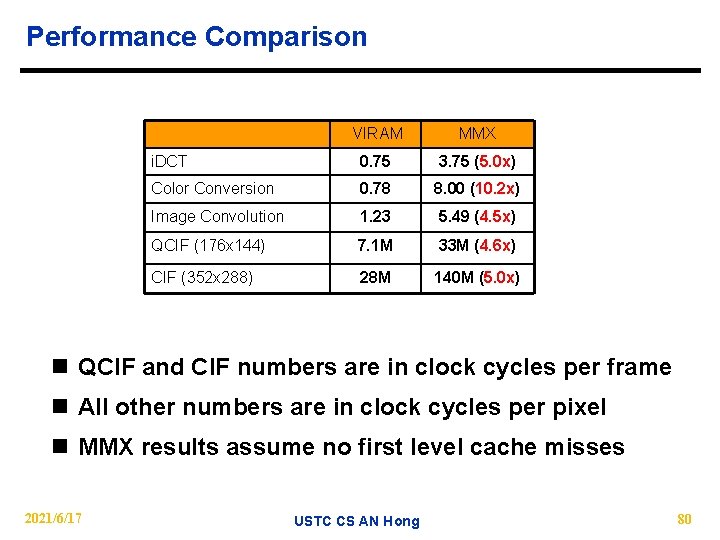

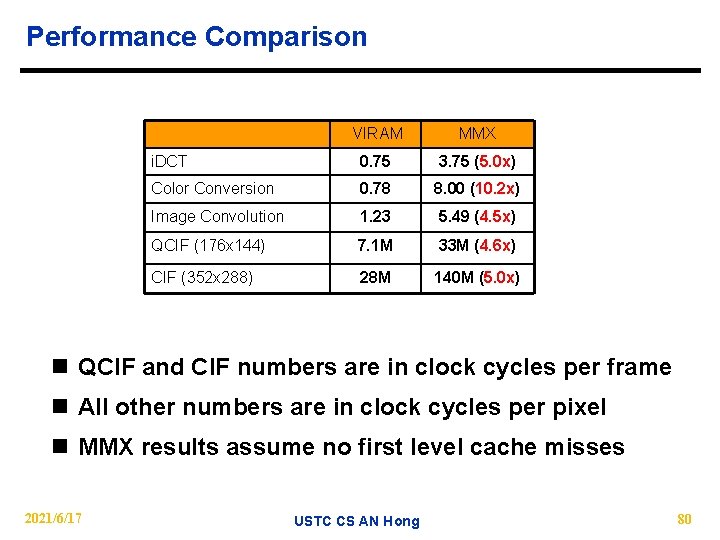

Performance Comparison

| Benchmark | VIRAM | MMX |
|---|---|---|
| iDCT | 0.75 | 3.75 (5.0x) |
| Color Conversion | 0.78 | 8.00 (10.2x) |
| Image Convolution | 1.23 | 5.49 (4.5x) |
| QCIF (176 × 144) | 7.1 M | 33 M (4.6x) |
| CIF (352 × 288) | 28 M | 140 M (5.0x) |

- QCIF and CIF numbers are in clock cycles per frame
- All other numbers are in clock cycles per pixel
- Parenthesized factors give the MMX cycle count relative to VIRAM
- MMX results assume no first-level cache misses

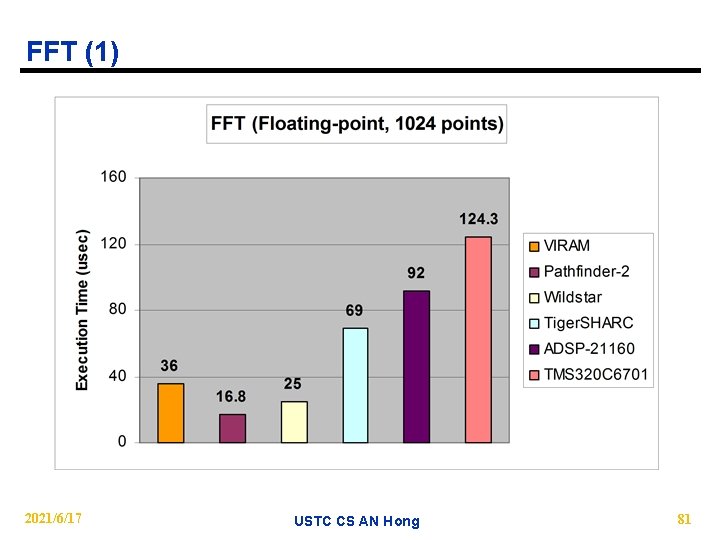

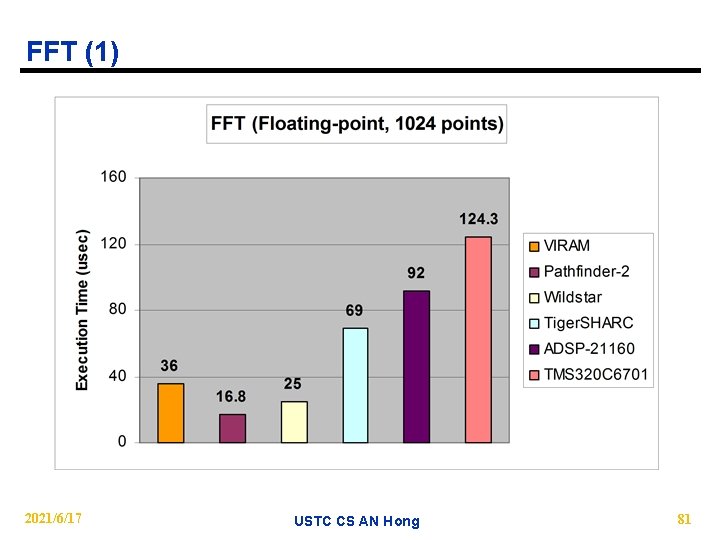

FFT (1)

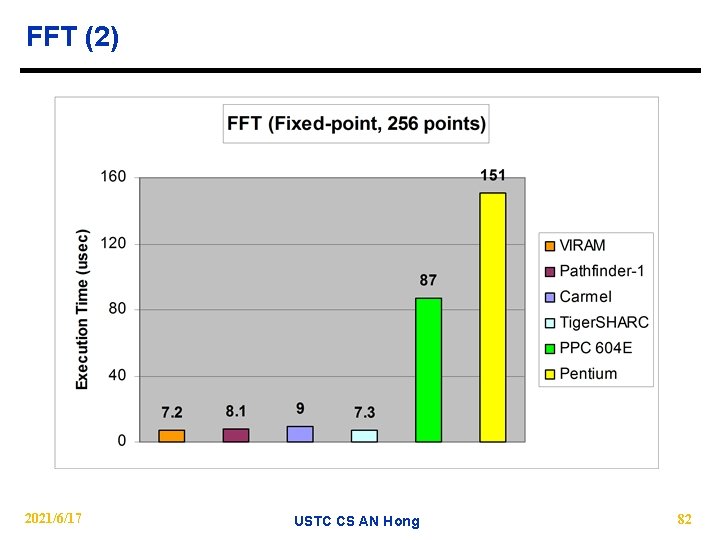

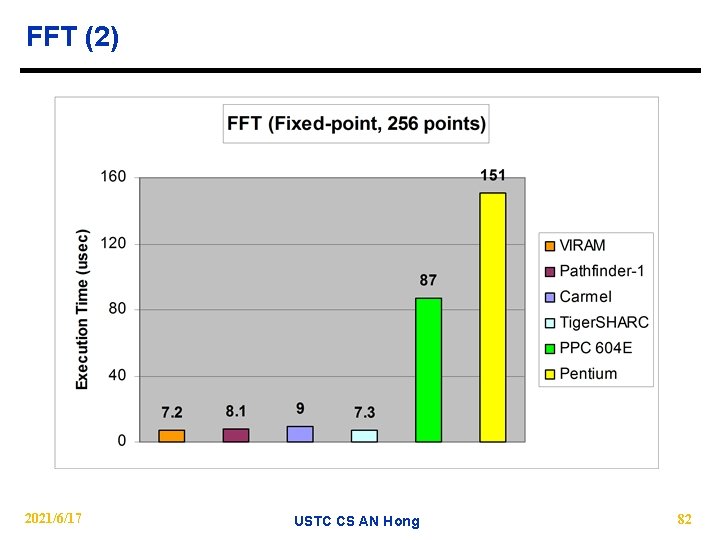

FFT (2)

SIMD Summary
- Narrow vector extensions for general-purpose processors (GPPs)
  - 64-bit or 128-bit registers treated as vectors of 32-bit, 16-bit, and 8-bit elements
- Based on sub-word parallelism and partitioned datapaths
- Instructions
  - Packed fixed- and floating-point, multiply-add, reductions
  - Pack, unpack, permutations
  - Limited memory support
- 2x to 4x performance improvement over the base architecture
  - Limited by memory bandwidth
- Difficult to use (no compilers)
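Sub-word parallelism can even be imitated in ordinary integer registers. This sketch treats a 64-bit word as four 16-bit lanes and adds them lane by lane with per-lane wraparound, the effect of a partitioned 16-bit add such as MMX PADDW; the masking blocks carries at lane boundaries, which is what a partitioned datapath does in hardware. The function name is hypothetical.

```c
#include <stdint.h>

/* Partitioned add of four 16-bit lanes packed into a 64-bit word.
   Carries are not allowed to cross from one lane into the next. */
uint64_t paddw_swar(uint64_t x, uint64_t y) {
    const uint64_t H = 0x8000800080008000ULL; /* top bit of each lane */
    /* Add the low 15 bits of every lane; a carry can reach bit 15 of a
       lane but never the next lane. */
    uint64_t low = (x & ~H) + (y & ~H);
    /* Top bit of each lane = x15 ^ y15 ^ carry-in, with carry-out dropped. */
    return low ^ ((x ^ y) & H);
}
```

For example, adding lanes 0xFFFF and 0x0001 yields 0x0000 in that lane, and the neighboring lane is unaffected; a plain 64-bit add would have propagated the carry.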

Vector Summary
- Alternative model for explicitly expressing data parallelism
- If code is vectorizable, vector hardware is simpler, more power-efficient, and offers a better real-time model than out-of-order machines with SIMD support
- Design issues include
  - number of lanes
  - number of functional units
  - number of vector registers
  - length of vector registers
  - exception handling
  - conditional operations
- Will the popularity of multimedia revive vector architectures?
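Conditional operations are typically handled with a vector mask: a vector compare writes a mask register, and the following operation only updates elements whose mask bit is set. This C sketch models that two-step pattern for `if (a[i] > 0) c[i] = a[i] + b[i]`; `MVL` and the function name are hypothetical, and the per-element `if` stands in for hardware predication, which would not branch.

```c
#include <stddef.h>
#include <stdint.h>

#define MVL 64 /* hypothetical maximum vector length */

/* Masked vector add: elements whose mask is 0 keep their old value in c. */
void masked_add(const int32_t *a, const int32_t *b, int32_t *c, size_t n) {
    uint8_t mask[MVL]; /* models the vector mask register */
    for (size_t start = 0; start < n; start += MVL) {
        size_t vl = (n - start < MVL) ? n - start : MVL;
        for (size_t i = 0; i < vl; i++)  /* vector compare -> mask */
            mask[i] = a[start + i] > 0;
        for (size_t i = 0; i < vl; i++)  /* add executed under the mask */
            if (mask[i])
                c[start + i] = a[start + i] + b[start + i];
    }
}
```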

Vector Advantages
- Easy to get high performance
  - Compact: describes N operations with one short instruction (vs. VLIW)
  - Predictable (real-time) performance vs. statistical performance (caches)
- Scalable
  - Higher performance as more hardware resources become available
- Much easier for hardware
  - More powerful instructions, more predictable memory accesses, fewer hazards, fewer branches, fewer mispredicted branches, ...
- Mature, well-developed compiler technology
- Alternative model accommodates long memory latency
  - Doesn't rely on caches as out-of-order superscalar/VLIW designs do => no data cache!
- Multimedia ready: choose N × 64-bit, 2N × 32-bit, 4N × 16-bit, or 8N × 8-bit elements

Vector Pitfalls
- Concentrating on peak performance and ignoring startup overhead
  - e.g., N_V (the vector length at which vector mode becomes faster than scalar) > 100 on the CDC STAR-100
- Increasing vector performance without comparable increases in scalar performance (Amdahl's Law)
  - Failure of a Cray competitor from his former company
- Good processor vector performance without providing good memory bandwidth
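Why startup overhead matters can be made concrete with a simple linear timing model, an assumption rather than anything stated on the slide: vector time is startup + n × t_vec cycles and scalar time is n × t_scalar cycles. N_V is then the smallest n with startup + n × t_vec < n × t_scalar, i.e. n > startup / (t_scalar − t_vec). The function name and parameters are hypothetical.

```c
/* Break-even vector length N_V under a linear timing model (an assumption):
     vector time  = startup + n * t_vec      (cycles)
     scalar time  = n * t_scalar             (cycles)
   Returns the smallest n for which vector mode is strictly faster,
   or -1 if it never is (t_scalar <= t_vec). startup must be >= 0. */
long break_even_length(long startup, long t_vec, long t_scalar) {
    if (t_scalar <= t_vec)
        return -1;
    /* startup + n*t_vec < n*t_scalar  <=>  n > startup / (t_scalar - t_vec) */
    return startup / (t_scalar - t_vec) + 1;
}
```

With illustrative numbers (a 100-cycle startup, 1 cycle per element in vector mode, 2 cycles per element in scalar mode), N_V is 101; short vectors never amortize the startup.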

Issues
- In future applications, what percentage of computation is vectorizable?
- Is vector a good match for new applications such as multimedia, DSP, and what else?
- Do the parallelism and small data granularity of signal and media processing allow SIMD to be exploited on existing hardware?
- Why is the speed-up of multimedia extensions limited?
  - Due to limited hardware parallelism
- What is the impact of complicated data-manipulation instructions that pack/unpack data to/from media registers?
  - Is performance scalable in multimedia extensions, as it is in vector machines?
- In multimedia extensions, assembly-level programming is difficult; how can this be solved?
- What is the future approach for media processors?
  - General purpose with media enhancements?
  - Special-purpose coprocessor?