Lecture 9: Embedded DSP Processor Papers

Embedded Computing Systems
Michael Schulte
Contains copyrighted material. Do not distribute.

Papers
- M. J. Schulte, J. Glossner, S. Jinturkar, M. Moudgill, S. Mamidi, and S. Vassiliadis, "A Low-Power Multithreaded Processor for Software Defined Radio," Journal of VLSI Signal Processing Systems, vol. 43, no. 2/3, pp. 143-159, June 2006.
- M. Woh, S. Seo, S. Mahlke, T. Mudge, C. Chakrabarti, and K. Flautner, "AnySP: Anytime Anywhere Anyway Signal Processing," Proceedings of the 36th International Symposium on Computer Architecture, pp. 128-139, June 2009.

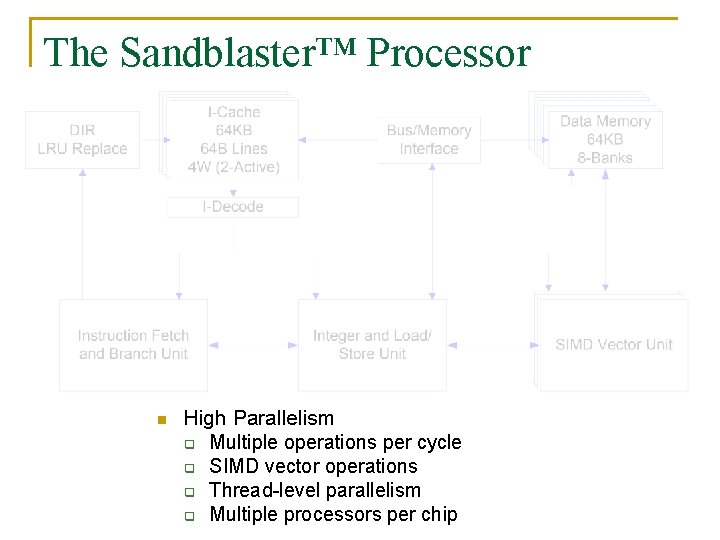

The Sandblaster™ Processor
- High parallelism
  - Multiple operations per cycle
  - SIMD vector operations
  - Thread-level parallelism
  - Multiple processors per chip

High-Performance Compound Instructions
- Example: L0: lvu %vr0, %r3, 8 || vmulreds %ac0, %vr0, %ac0 || loop %lc0, L0
  - lvu (load vector update): four 16-bit loads + address update
  - vmulreds (vector multiply and reduce): four 16-bit saturating multiplies + four 32-bit saturating adds
  - loop: decrement, compare against zero, and branch
- Throughput
  - 3 complex operations/cycle
  - 16 RISC-ops/cycle
  - 4 MACs/cycle
- 20-tap finite impulse response (FIR) filter
  - 3.92 MACs/cycle sustained
  - ~16 RISC-ops/cycle sustained
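The 16 RISC-ops/cycle figure can be reproduced by adding up the primitive operations that the slide attributes to each part of the compound instruction. The sketch below is only a tally under that assumption; the dictionary layout and function names are illustrative and do not come from the Sandblaster toolchain.

```python
# Primitive operations per compound-instruction slot, as listed on the slide.
# The grouping into a dictionary is an illustrative assumption.
COMPOUND = {
    "lvu": {"16-bit loads": 4, "address update": 1},
    "vmulreds": {"16-bit saturating multiplies": 4, "32-bit saturating adds": 4},
    "loop": {"decrement": 1, "compare": 1, "branch": 1},
}


def risc_ops_per_cycle(compound):
    """Total primitive (RISC-like) operations issued by one compound instruction."""
    return sum(sum(parts.values()) for parts in compound.values())


def macs_per_cycle(compound):
    """Each multiply in vmulreds is paired with an add, giving one MAC per multiply."""
    return compound["vmulreds"]["16-bit saturating multiplies"]


print(len(COMPOUND))                 # 3 complex operations per cycle
print(risc_ops_per_cycle(COMPOUND))  # 16
print(macs_per_cycle(COMPOUND))      # 4
```

Under this counting, the sustained 3.92 MACs/cycle reported for the 20-tap FIR filter is 98% of the 4 MACs/cycle peak.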

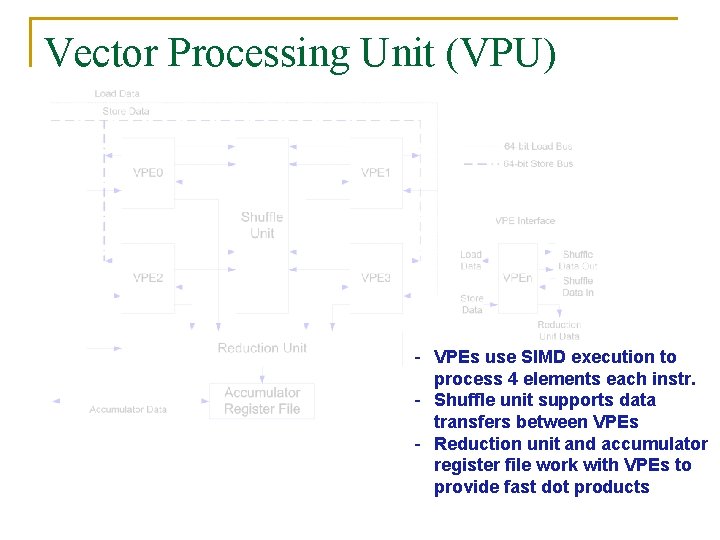

Vector Processing Unit (VPU)
- VPEs use SIMD execution to process 4 elements per instruction
- Shuffle unit supports data transfers between VPEs
- Reduction unit and accumulator register file work with the VPEs to provide fast dot products

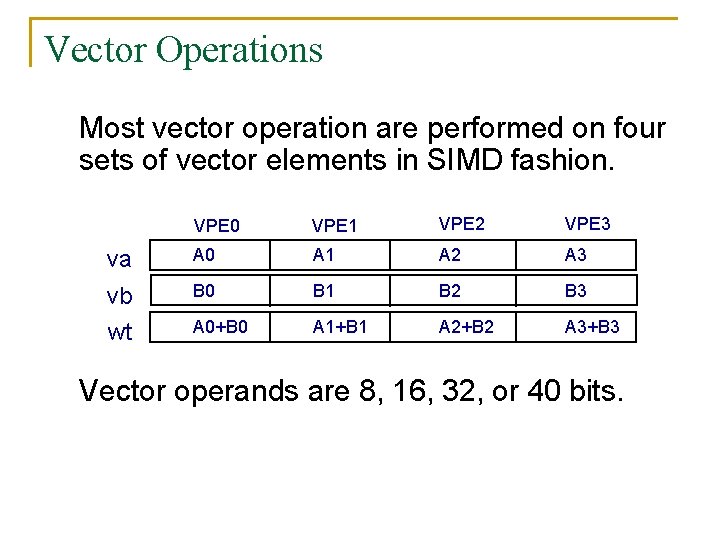

Vector Operations
Most vector operations are performed on four sets of vector elements in SIMD fashion.

| Register | VPE 0 | VPE 1 | VPE 2 | VPE 3 |
|---|---|---|---|---|
| va | A0 | A1 | A2 | A3 |
| vb | B0 | B1 | B2 | B3 |
| wt | A0+B0 | A1+B1 | A2+B2 | A3+B3 |

Vector operands are 8, 16, 32, or 40 bits.

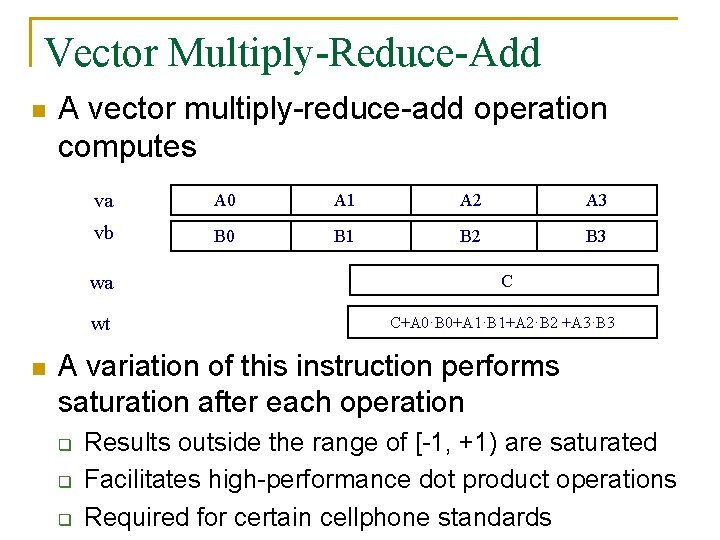

Vector Multiply-Reduce-Add
- A vector multiply-reduce-add operation takes va = (A0, A1, A2, A3), vb = (B0, B1, B2, B3), and accumulator wa = C, and computes wt = C + A0·B0 + A1·B1 + A2·B2 + A3·B3
- A variation of this instruction performs saturation after each operation
  - Results outside the range [-1, +1) are saturated
  - Facilitates high-performance dot product operations
  - Required for certain cellphone standards
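A minimal sketch of the saturating variant follows. It assumes 16-bit Q15 fractional inputs and a 32-bit Q31 accumulator, with saturation applied after every multiply and every add, in the style of bit-exact fixed-point speech-codec arithmetic. The function names and the Q-format choice are assumptions made for illustration; the slide only states that results outside [-1, +1) are saturated.

```python
INT32_MAX = 2**31 - 1   # largest Q31 value, just below +1.0
INT32_MIN = -(2**31)    # Q31 representation of -1.0


def sat32(x):
    """Clamp an integer to the signed 32-bit (Q31) range."""
    return max(INT32_MIN, min(INT32_MAX, x))


def vmulreds_sat(acc, va, vb):
    """Saturating multiply-reduce-add: acc + sum(va[i] * vb[i]).

    acc is Q31; va and vb hold Q15 values (assumed formats).
    Saturation is applied after each multiply and each add.
    """
    for a, b in zip(va, vb):
        prod = sat32((a * b) << 1)  # Q15 x Q15 -> Q31; only -1 * -1 overflows
        acc = sat32(acc + prod)
    return acc


half = 1 << 14  # 0.5 in Q15
print(vmulreds_sat(0, [half, 0, 0, 0], [half, 0, 0, 0]) == 1 << 29)  # True: 0.25 in Q31
print(vmulreds_sat(0, [half] * 4, [half] * 4) == INT32_MAX)          # True: 4 * 0.25 saturates
```

The second call shows why the saturation matters: four products of 0.25 sum to exactly 1.0, which is outside [-1, +1), so the accumulator clamps to the largest representable value instead of wrapping around.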

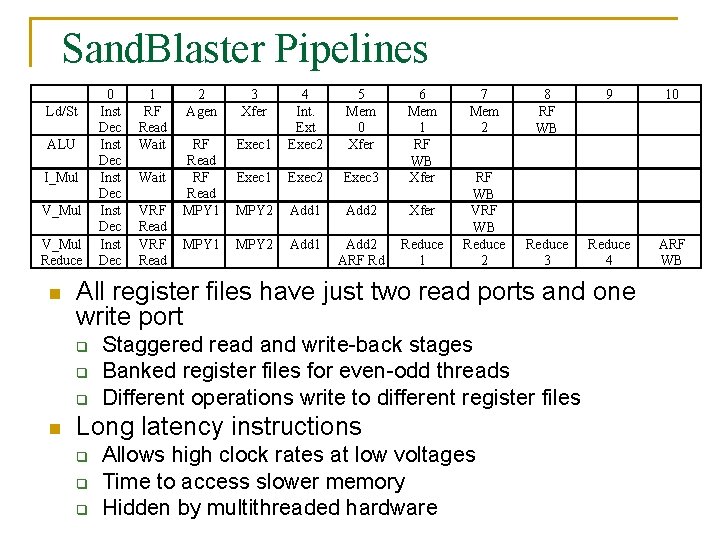

Sandblaster Pipelines
Stage sequences as recoverable from the pipeline diagram (stages are numbered 0 to 10 on the slide; the exact cycle alignment between pipelines is not fully recoverable from the extraction):
- Ld/St: Inst Dec, RF Read, Agen, Xfer, Int. Ext, Mem 0, Mem 1, Mem 2, RF WB
- ALU: Inst Dec, Wait, RF Read, Exec 1, Exec 2, Xfer, RF WB
- I_Mul: Inst Dec, Wait, RF Read, Exec 1, Exec 2, Exec 3, Xfer, RF WB
- V_Mul: Inst Dec, VRF Read, MPY 1, MPY 2, Add 1, Add 2, Xfer, VRF WB
- Reduce: MPY 1, MPY 2, Add 1, Add 2, ARF Rd, Reduce 1, Reduce 2, Reduce 3, Reduce 4, ARF WB

- All register files have just two read ports and one write port
  - Staggered read and write-back stages
  - Banked register files for even-odd threads
  - Different operations write to different register files
- Long latency instructions
  - Allows high clock rates at low voltages
  - Time to access slower memory
  - Hidden by multithreaded hardware

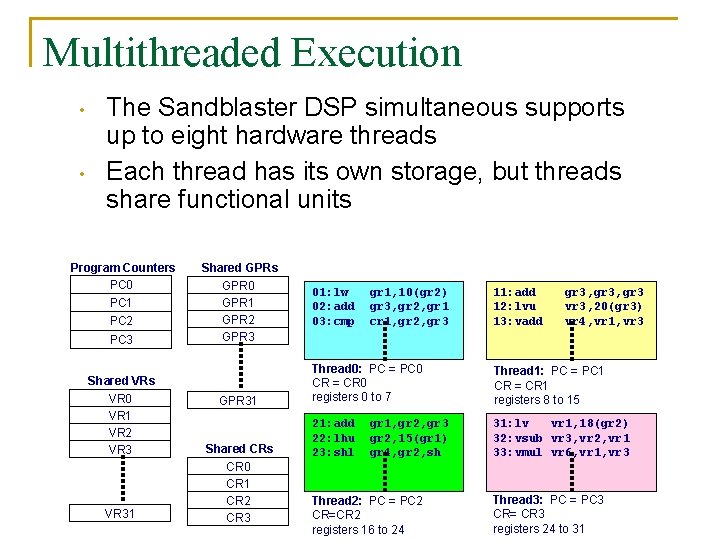

Multithreaded Execution
- The Sandblaster DSP simultaneously supports up to eight hardware threads
- Each thread has its own storage, but threads share functional units

[Figure: four threads mapped onto shared register files: program counters PC0 to PC3, shared VRs VR0 to VR31, shared GPRs GPR0 to GPR31, shared CRs CR0 to CR3.]

Thread 0: PC = PC0, CR = CR0, registers 0 to 7
    01: lw gr1, 10(gr2)
    02: add gr3, gr2, gr1
    03: cmp cr1, gr2, gr3

Thread 1: PC = PC1, CR = CR1, registers 8 to 15
    11: add gr3, gr3
    12: lvu vr3, 20(gr3)
    13: vadd vr4, vr1, vr3

Thread 2: PC = PC2, CR = CR2, registers 16 to 23
    21: add gr1, gr2, gr3
    22: lhu gr2, 15(gr1)
    23: shl gr4, gr2, sh

Thread 3: PC = PC3, CR = CR3, registers 24 to 31
    31: lv vr1, 18(gr2)
    32: vsub vr3, vr2, vr1
    33: vmul vr6, vr1, vr3

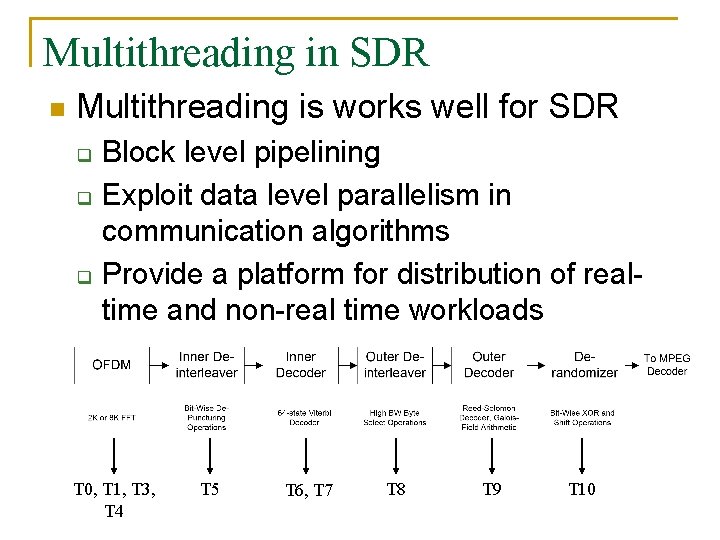

Multithreading in SDR
- Multithreading works well for SDR
  - Block-level pipelining
  - Exploits data-level parallelism in communication algorithms
  - Provides a platform for distributing real-time and non-real-time workloads

[Figure labels: T0, T1, T3, T4, T5, T6, T7, T8, T9, T10]

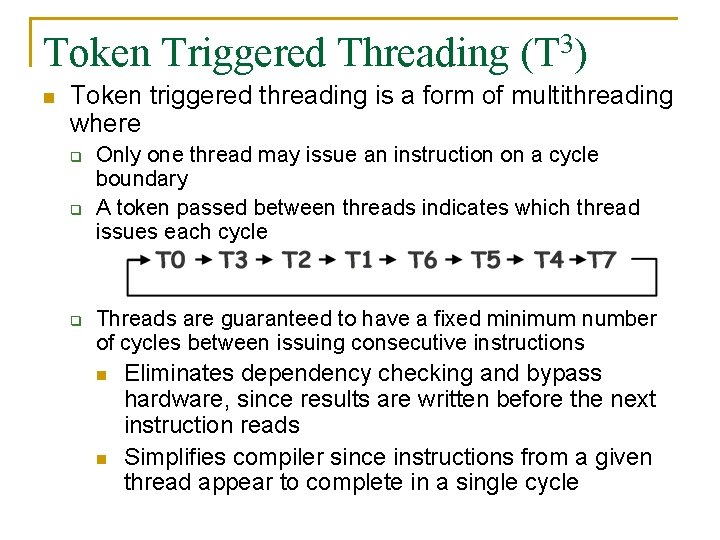

Token Triggered Threading (T3)
- Token triggered threading is a form of multithreading where
  - Only one thread may issue an instruction on a cycle boundary
  - A token passed between threads indicates which thread issues each cycle
  - Threads are guaranteed to have a fixed minimum number of cycles between issuing consecutive instructions
- Eliminates dependency checking and bypass hardware, since results are written before the next instruction reads
- Simplifies the compiler, since instructions from a given thread appear to complete in a single cycle
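The issue rule can be sketched as a token that moves to the next thread every cycle. The model below assumes a plain round-robin order over eight threads; the slides do not specify the actual token order, and the function names are illustrative.

```python
def token_schedule(num_threads, num_cycles):
    """Thread holding the token (and therefore issuing) on each cycle.

    Assumes a simple round-robin token order, which is an illustrative choice.
    """
    return [cycle % num_threads for cycle in range(num_cycles)]


def min_issue_gap(schedule, thread):
    """Smallest number of cycles between consecutive issues of one thread."""
    cycles = [cycle for cycle, owner in enumerate(schedule) if owner == thread]
    return min(later - earlier for earlier, later in zip(cycles, cycles[1:]))


schedule = token_schedule(num_threads=8, num_cycles=24)
print(schedule[:10])               # [0, 1, 2, 3, 4, 5, 6, 7, 0, 1]
print(min_issue_gap(schedule, 3))  # 8
```

With eight threads, every thread sees a gap of eight cycles between its own instructions, which is the fixed minimum spacing that the pipeline design relies on.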

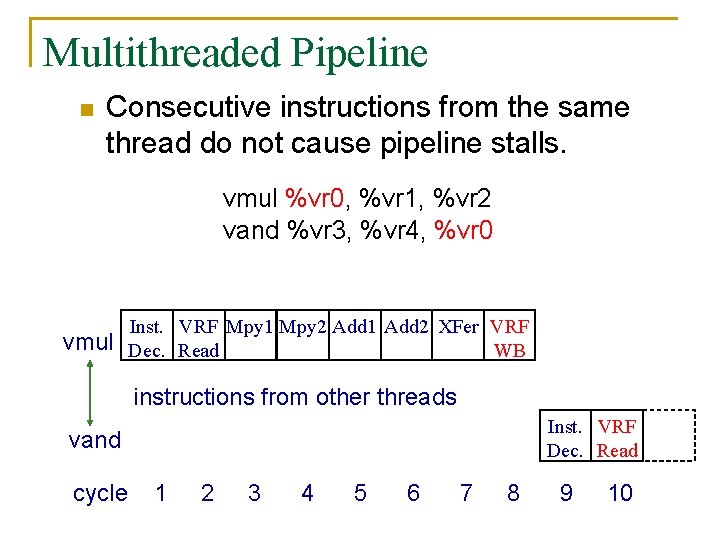

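Multithreaded Pipeline
- Consecutive instructions from the same thread do not cause pipeline stalls.

Example: vmul %vr0, %vr1, %vr2 followed by vand %vr3, %vr4, %vr0 from the same thread.

| Cycle | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| vmul | Inst. Dec. | VRF Read | Mpy 1 | Mpy 2 | Add 1 | Add 2 | XFer | VRF WB | | |
| vand | | | | | | | | | Inst. Dec. | VRF Read |

Instructions from other threads issue in the cycles between vmul and vand.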
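The timing argument can be checked with a small model: the dependent vand reads the vector register file only after the vmul from the same thread has written it back. The stage names follow the slide; the one-stage-per-cycle timing, the rule that a read must come strictly after the write, and the function names are assumptions of this sketch.

```python
# Stage order for the vector multiply pipeline, as named on the slide.
VMUL_STAGES = ["Inst Dec", "VRF Read", "Mpy1", "Mpy2", "Add1", "Add2", "XFer", "VRF WB"]
# Only the first two stages of vand matter for the read-after-write check.
VAND_STAGES = ["Inst Dec", "VRF Read"]


def stage_cycle(issue_cycle, stages, name):
    """Cycle in which an instruction issued at issue_cycle occupies a stage."""
    return issue_cycle + stages.index(name)


def raw_hazard(issue_gap, producer_stages, consumer_stages):
    """True if the consumer reads the register before the producer has written it.

    issue_gap is the number of cycles between two instructions of one thread.
    """
    producer_issue = 1
    consumer_issue = producer_issue + issue_gap
    write_cycle = stage_cycle(producer_issue, producer_stages, "VRF WB")
    read_cycle = stage_cycle(consumer_issue, consumer_stages, "VRF Read")
    return read_cycle <= write_cycle


print(raw_hazard(8, VMUL_STAGES, VAND_STAGES))  # False: write in cycle 8, read in cycle 10
print(raw_hazard(1, VMUL_STAGES, VAND_STAGES))  # True: back-to-back issue would need interlocks
```

With an issue gap of eight cycles the write-back always lands first, so no interlock or bypass logic is needed for this pair.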

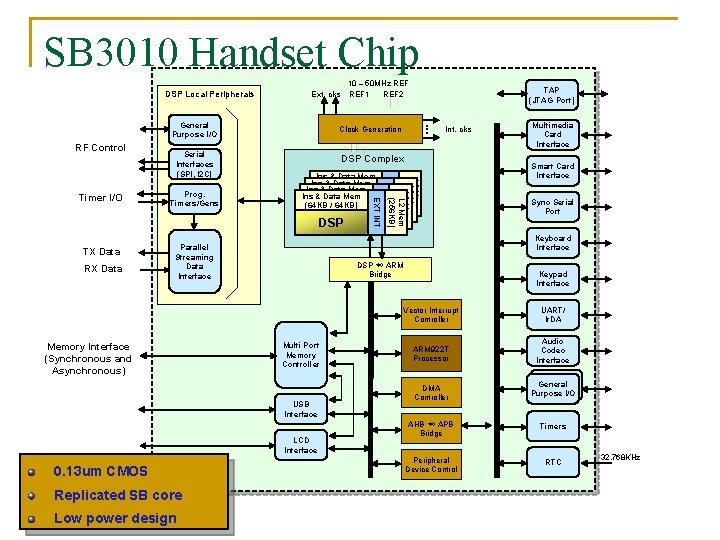

SB 3010 Handset Chip
[Block diagram. Labels recoverable from the extraction:]
- Replicated SB core, low power design, 0.13 um CMOS
- DSP complex: replicated DSP cores, each with Ins & Data Mem (64 KB / 64 KB); L2 Mem (256 KB); DSP ARM Bridge
- DSP local peripherals: General Purpose I/O, RF Control, TX Data, RX Data, Prog. Timers/Gens, Parallel Streaming Data Interface, Serial Interfaces (SPI, I2C), Timer I/O, TAP (JTAG Port)
- Clocks: 10–50 MHz REF, Ext. clks (REF 1, REF 2), Int. clks, Clock Generation, RTC 32.768 kHz
- ARM subsystem: ARM922T Processor, Multi Port Memory Controller, Memory Interface (Synchronous and Asynchronous), Vector Interrupt Controller, DMA Controller, AHB APB Bridge, Timers, Peripheral Device Control, EXT INT
- Peripherals: LCD Interface, Multimedia Card Interface, Smart Card Interface, Sync Serial Port, Keyboard Interface, Keypad Interface, USB Interface, UART/IrDA, Audio Codec Interface, General Purpose I/O

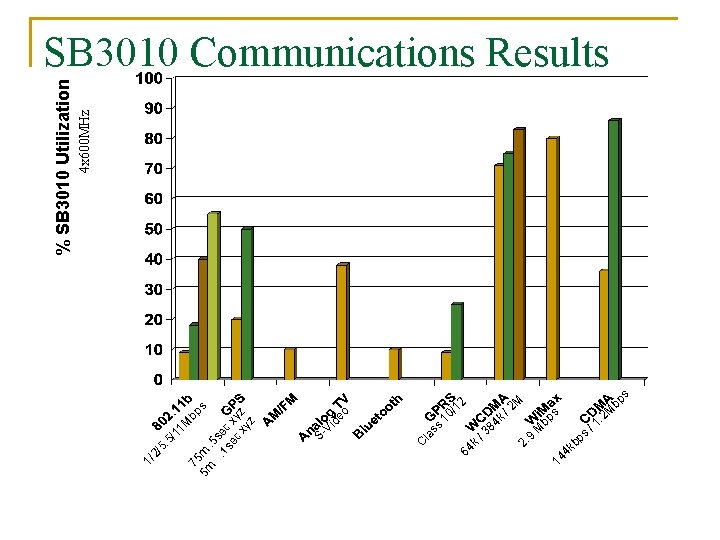

SB 3010 Communications Results
[Figure: bar chart of % SB3010 utilization (4 x 600 MHz) across communication and video workloads; the workload labels are not recoverable from the extraction.]

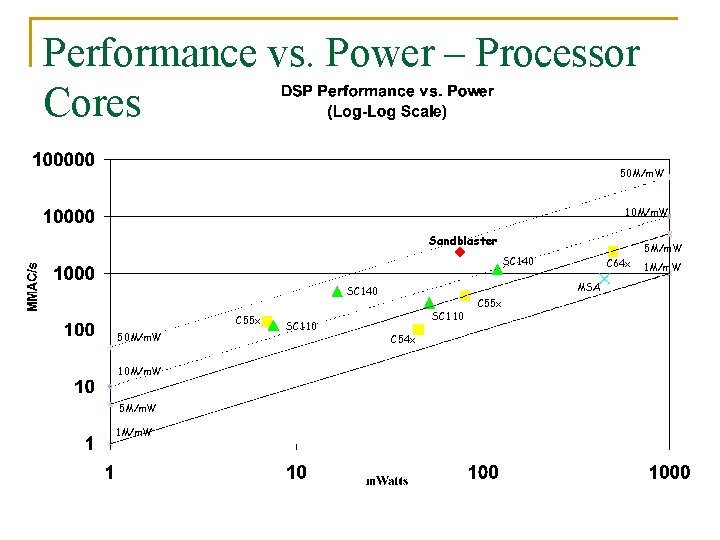

Performance vs. Power – Processor Cores
[Figure: performance versus power plot with constant-efficiency lines at 50, 10, 5, and 1 M/mW. Cores plotted: Sandblaster, SC140, SC110, MSA, C64x, C55x, C54x.]

Questions
- What types of parallelism are available in the Sandblaster Processor?
- Why is multithreading useful in wireless communication applications?
- What advantages and disadvantages does Token Triggered Threading have compared to Simultaneous Multithreading?
- What are some potential limitations of the Sandblaster Architecture?

AnySP: Anytime Anywhere Anyway Signal Processing
Mark Woh (1), Sangwon Seo (1), Scott Mahlke (1), Trevor Mudge (1), Chaitali Chakrabarti (2), Krisztian Flautner (3)
(1) University of Michigan – ACAL, (2) Arizona State University, (3) ARM, Ltd.
Portions of these slides have been modified for use in ECE 902. Do not distribute.

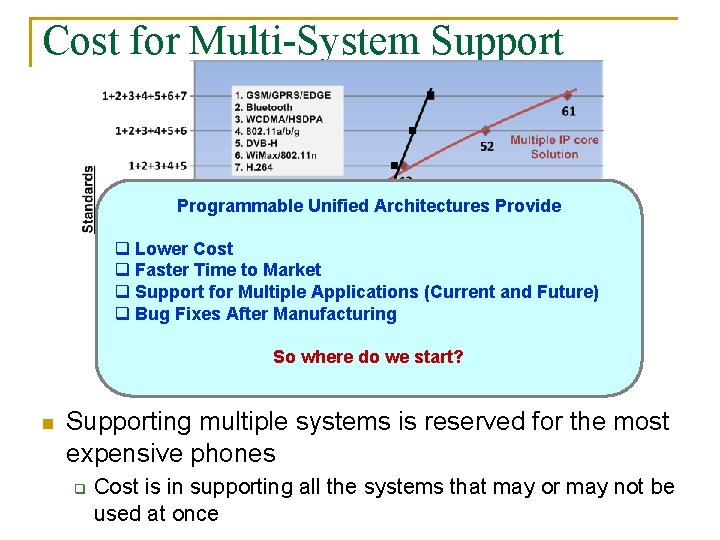

Cost for Multi-System Support
- Supporting multiple systems is reserved for the most expensive phones
  - Cost is in supporting all the systems that may or may not be used at once
- Programmable unified architectures provide
  - Lower cost
  - Faster time to market
  - Support for multiple applications (current and future)
  - Bug fixes after manufacturing
- So where do we start?

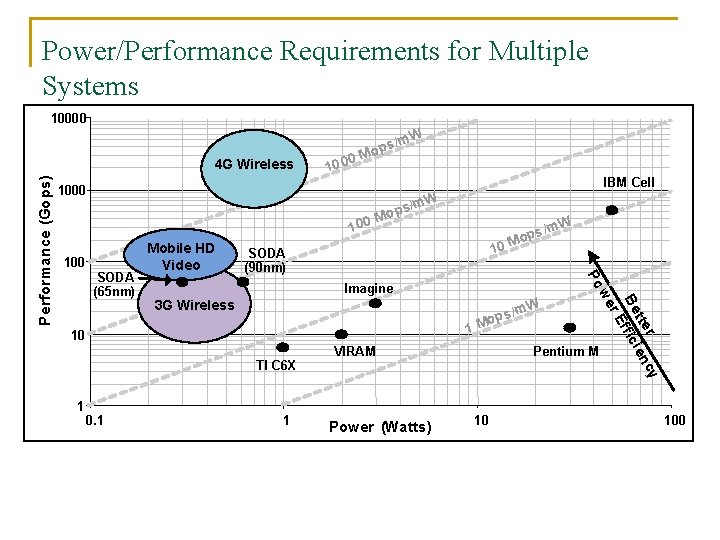

Power/Performance Requirements for Multiple Systems
[Figure: performance (Gops) versus power (Watts) with constant Mops/mW efficiency lines and an arrow toward better power efficiency. Application targets: 3G Wireless, 4G Wireless, Mobile HD Video. Processors plotted: SODA (65 nm), SODA (90 nm), IBM Cell, Imagine, VIRAM, TI C6X, Pentium M.]
- Different applications have different power/performance characteristics!
- We need to design keeping each application in mind! (Not GPP but Domain Specific Processor)

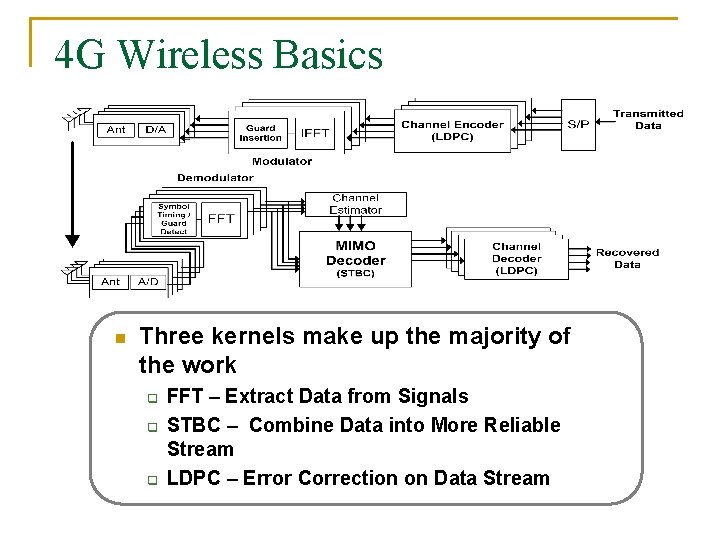

4G Wireless Basics
- Three kernels make up the majority of the work
  - FFT: extract data from signals
  - STBC: combine data into a more reliable stream
  - LDPC: error correction on the data stream

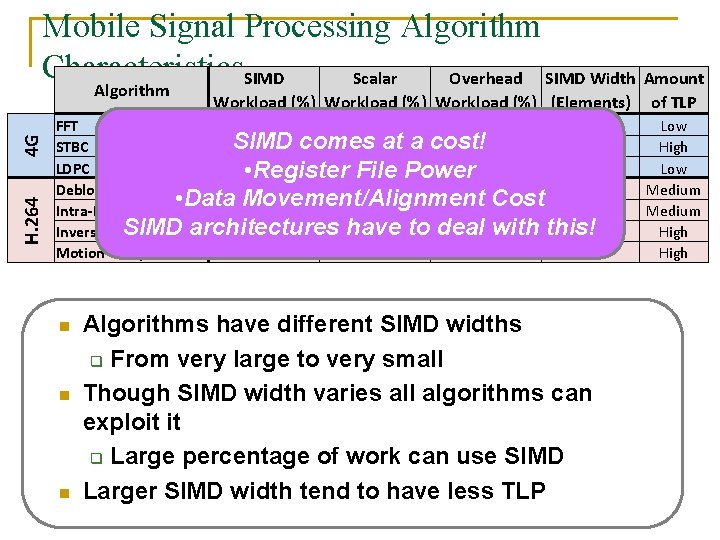

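Mobile Signal Processing Algorithm Characteristics

| Standard | Algorithm | SIMD Workload (%) | Scalar Workload (%) | Overhead Workload (%) | SIMD Width (Elements) |
|---|---|---|---|---|---|
| 4G | FFT | 75 | 5 | 20 | 1024 |
| 4G | STBC | 81 | 5 | 14 | 4 |
| 4G | LDPC | 49 | 18 | 33 | 96 |
| H.264 | Deblocking Filter | 72 | 13 | 15 | 8 |
| H.264 | Intra-Prediction | 85 | 5 | 10 | 16 |
| H.264 | Inverse Transform | 80 | 5 | 15 | 8 |
| H.264 | Motion Compensation | 75 | 5 | 10 | 8 |

The slide also has an "Amount of TLP" column; the extraction preserves only the values Low, High, Low, Medium, High, without a reliable row assignment.

- Algorithms have different SIMD widths
  - From very large to very small
- Though SIMD width varies, all algorithms can exploit it
  - A large percentage of the work can use SIMD
- Algorithms with larger SIMD widths tend to have less TLP
- SIMD comes at a cost!
  - Register file power
  - Data movement/alignment cost
  - SIMD architectures have to deal with this!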

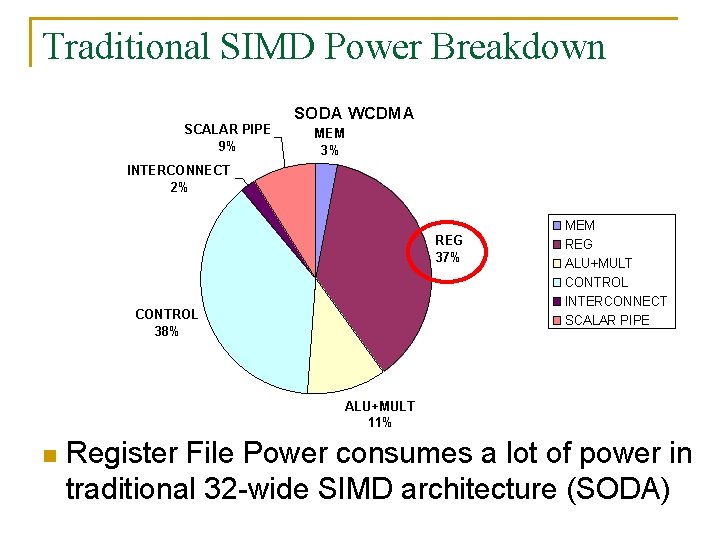

Traditional SIMD Power Breakdown
[Figure: pie chart of SODA power running WCDMA: CONTROL 38%, REG 37%, ALU+MULT 11%, SCALAR PIPE 9%, MEM 3%, INTERCONNECT 2%.]
- The register file consumes a large share of the power in a traditional 32-wide SIMD architecture (SODA)

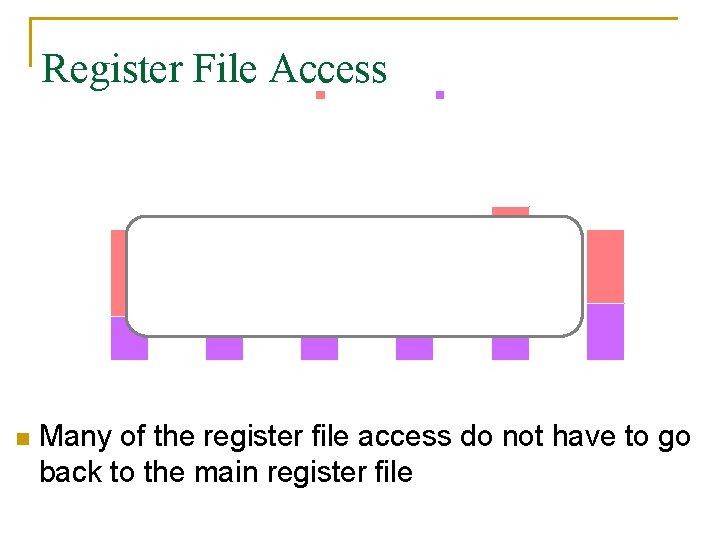

Register File Access
[Figure: stacked bars (0% to 100%) of register file accesses, with Bypass Read and Bypass Write portions, for FFT, STBC, LDPC, Deblocking Filter, Intra-Prediction, and Inverse Transform.]
- Many register file accesses do not have to go back to the main register file
- Lots of power wasted on unneeded register file access!

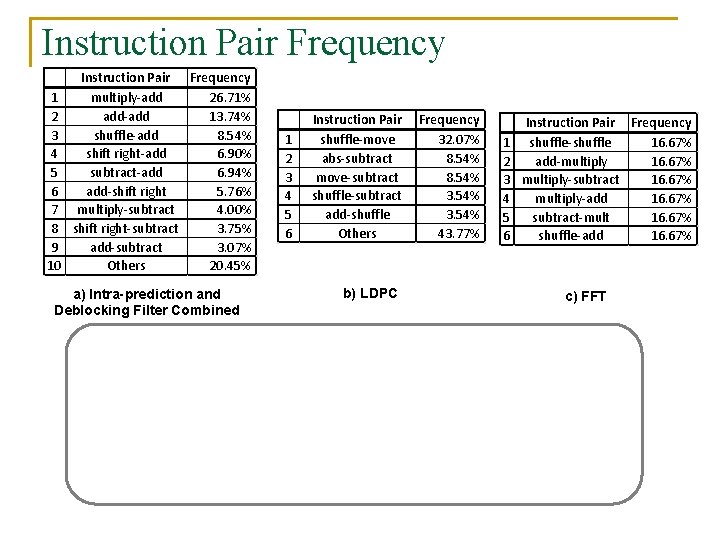

Instruction Pair Frequency

a) Intra-prediction and Deblocking Filter Combined

| Rank | Instruction Pair | Frequency |
|---|---|---|
| 1 | multiply-add | 26.71% |
| 2 | add-add | 13.74% |
| 3 | shuffle-add | 8.54% |
| 4 | shift right-add | 6.90% |
| 5 | subtract-add | 6.94% |
| 6 | add-shift right | 5.76% |
| 7 | multiply-subtract | 4.00% |
| 8 | shift right-subtract | 3.75% |
| 9 | add-subtract | 3.07% |
| 10 | Others | 20.45% |

b) LDPC

Instruction pairs in rank order: shuffle-move, abs-subtract, move-subtract, shuffle-subtract, add-shuffle, Others. The extraction preserves only four frequency values for these six rows: 32.07%, 8.54%, 3.54%, 43.77%.

c) FFT

| Rank | Instruction Pair | Frequency |
|---|---|---|
| 1 | shuffle-shuffle | 16.67% |
| 2 | add-multiply | 16.67% |
| 3 | multiply-subtract | 16.67% |
| 4 | multiply-add | 16.67% |
| 5 | subtract-mult | 16.67% |
| 6 | shuffle-add | 16.67% |

- As with the Multiply-Accumulate (MAC) instruction, there is opportunity to fuse other instructions
- A few instruction pairs (3-5) make up the majority of all instruction pairs!
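The claim that a handful of pairs cover most of the work can be checked directly against the frequencies listed for the combined intra-prediction and deblocking filter kernels. The sketch below sorts the named pairs and accumulates their frequencies; the function name and the 50% threshold are illustrative choices, and the "Others" bucket is excluded because it is not a single fusable pair.

```python
# Frequencies (%) for intra-prediction and deblocking filter combined, from the slide.
PAIRS = [
    ("multiply-add", 26.71),
    ("add-add", 13.74),
    ("shuffle-add", 8.54),
    ("shift right-add", 6.90),
    ("subtract-add", 6.94),
    ("add-shift right", 5.76),
    ("multiply-subtract", 4.00),
    ("shift right-subtract", 3.75),
    ("add-subtract", 3.07),
]


def pairs_needed(pairs, target_percent):
    """Number of most-frequent pairs needed to reach target_percent coverage.

    Returns (count, covered_percent), or None if the target is never reached.
    """
    covered = 0.0
    ranked = sorted(pairs, key=lambda item: item[1], reverse=True)
    for count, (_, frequency) in enumerate(ranked, start=1):
        covered += frequency
        if covered >= target_percent:
            return count, covered
    return None


count, covered = pairs_needed(PAIRS, 50.0)
print(count, round(covered, 2))  # 4 55.93
```

Four fused pairs already cover more than half of all instruction pairs in these kernels, which is consistent with the "3-5 pairs" observation on the slide.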

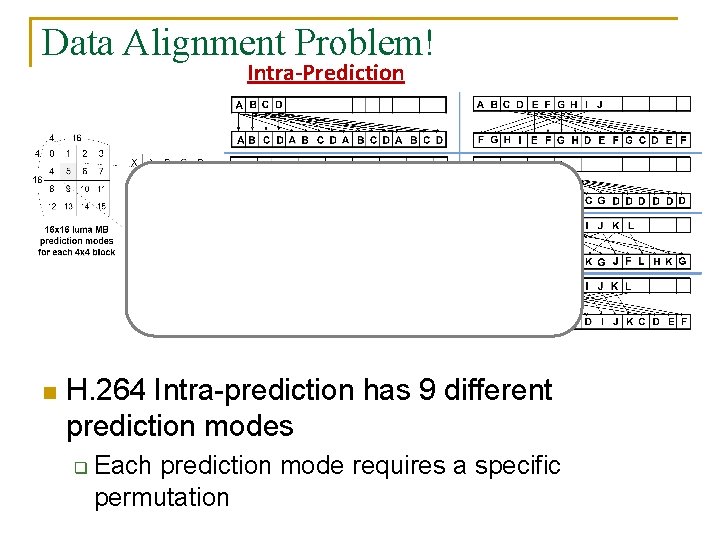

Data Alignment Problem! (Intra-Prediction)
- H.264 intra-prediction has 9 different prediction modes
  - Each prediction mode requires a specific permutation
- Traditional SIMD machines take too long or cost too much to do this
- Good news: a small, fixed number of patterns per kernel

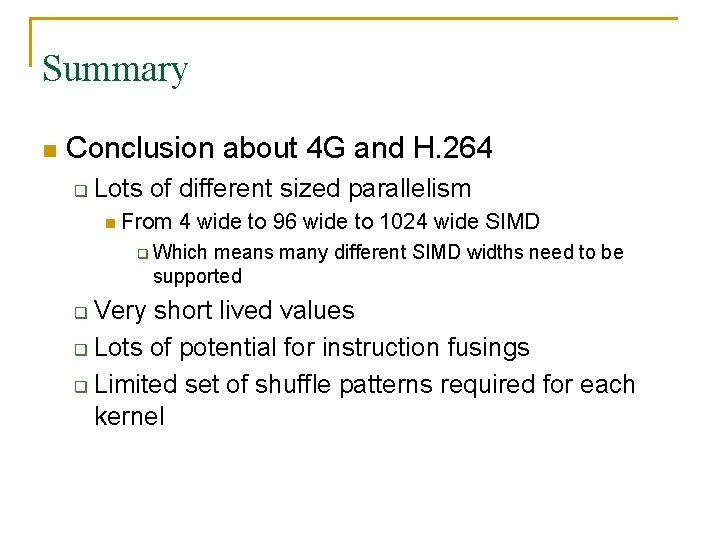

Summary
- Conclusions about 4G and H.264
  - Lots of different sized parallelism
    - From 4-wide to 96-wide to 1024-wide SIMD
    - Which means many different SIMD widths need to be supported
  - Very short-lived values
  - Lots of potential for instruction fusing
  - Limited set of shuffle patterns required for each kernel

Traditional SIMD Architectures
32-Wide SIMD with Simple Shuffle Network

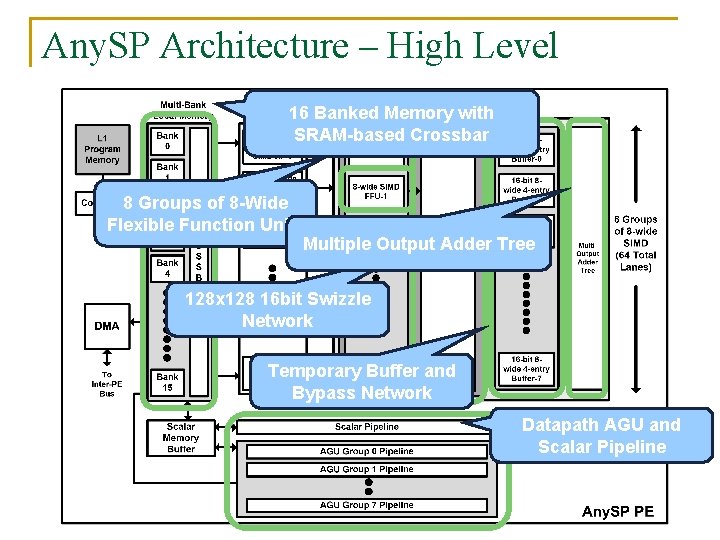

AnySP Architecture – High Level
- 16 Banked Memory with SRAM-based Crossbar
- 8 Groups of 8-Wide Flexible Function Units
- Multiple Output Adder Tree
- 128x128 16-bit Swizzle Network
- Temporary Buffer and Bypass Network
- Datapath
- AGU and Scalar Pipeline

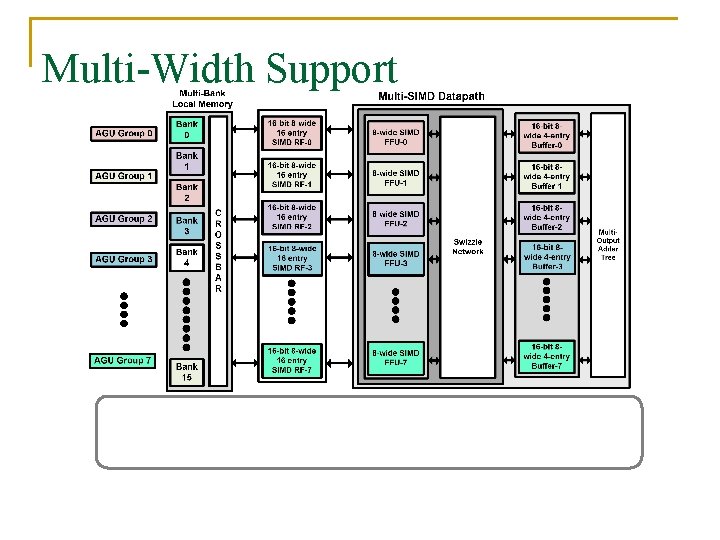

Multi-Width Support
- Normal 64-wide SIMD mode: all lanes share one AGU
- Each 8-wide SIMD group works on different memory locations of the same 8-wide code, using AGU offsets
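One way to picture the two modes is as two address-generation patterns over 8 groups of 8 lanes. The sketch below is a simplified model only: unit-stride element addressing, a contiguous 64-element vector in wide mode, and the per-group offset list are all assumptions made for illustration and do not describe the actual AnySP AGU.

```python
GROUPS = 8           # 8 groups of 8-wide functional units (from the slides)
LANES_PER_GROUP = 8


def lane_addresses(base, group_offsets=None):
    """Element address seen by every lane, one row per 8-wide group.

    group_offsets=None models 64-wide mode (one shared address stream).
    Otherwise each group adds its own offset, so all groups run the same
    8-wide code on different data. Both layouts are illustrative assumptions.
    """
    rows = []
    for group in range(GROUPS):
        if group_offsets is None:
            start = base + group * LANES_PER_GROUP
        else:
            start = base + group_offsets[group]
        rows.append([start + lane for lane in range(LANES_PER_GROUP)])
    return rows


wide = lane_addresses(0)
multi = lane_addresses(0, group_offsets=[0, 100, 200, 300, 400, 500, 600, 700])
print(wide[1][:3])   # [8, 9, 10]
print(multi[1][:3])  # [100, 101, 102]
```

In the second call the eight groups could, for example, each process a separate 8-wide block, which is how narrow-SIMD kernels would keep a 64-lane datapath busy under this model.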

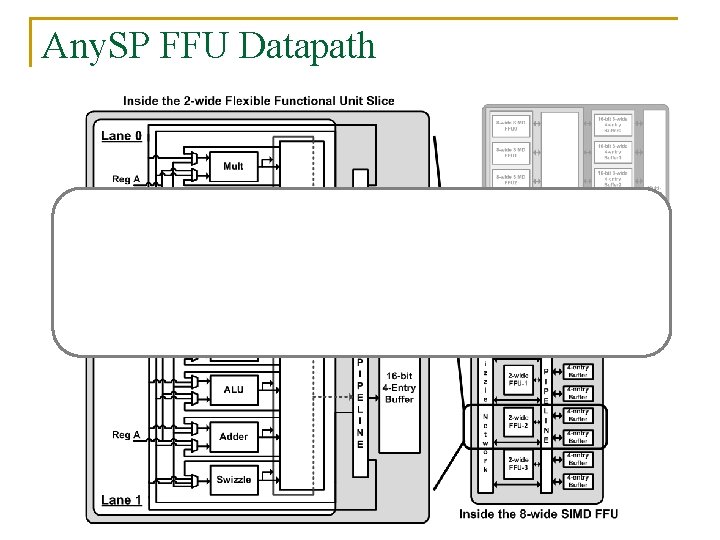

AnySP FFU Datapath
The Flexible Functional Unit allows us to
1. Exploit pipeline parallelism by joining two lanes together
2. Handle register bypass and the temporary buffer
3. Join multiple pipelines to process deeper subgraphs
4. Fuse instruction pairs

Simulation Environment
- Traditional SIMD architecture comparison
  - SODA at 90 nm technology
- AnySP
  - Synthesized at 90 nm TSMC
  - Power, timing, and area numbers were extracted
- Performance and power for each kernel were generated using synthesized data on an in-house simulator

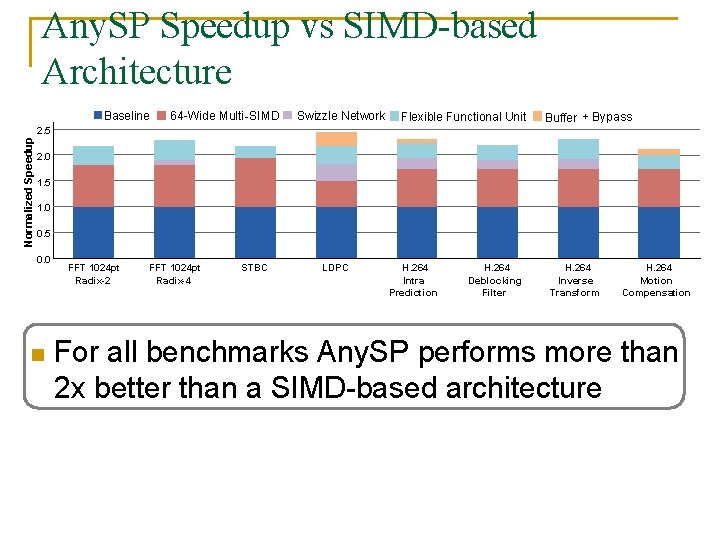

AnySP Speedup vs SIMD-based Architecture
[Figure: normalized speedup (0.0 to 2.5) over the baseline, broken down by contribution: 64-Wide Multi-SIMD, Swizzle Network, Flexible Functional Unit, Buffer + Bypass. Benchmarks: FFT 1024pt Radix-2, FFT 1024pt Radix-4, STBC, LDPC, H.264 Intra Prediction, H.264 Deblocking Filter, H.264 Inverse Transform, H.264 Motion Compensation.]
- For all benchmarks AnySP performs more than 2x better than a SIMD-based architecture

AnySP Energy-Delay vs SIMD-based Architecture
[Figure: normalized energy-delay (0.0 to 1.0) of AnySP relative to the SIMD-based architecture. Benchmarks: FFT 1024pt Radix-2, FFT 1024pt Radix-4, STBC, LDPC, H.264 Intra Prediction, H.264 Deblocking Filter, H.264 Inverse Transform, H.264 Motion Compensation.]
- More importantly, energy efficiency is much better!

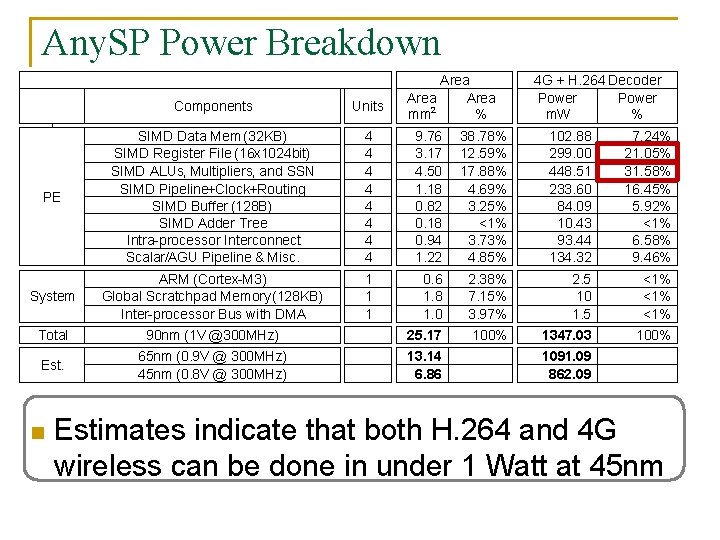

AnySP Power Breakdown (4G + H.264 Decoder)

| Group | Component | Units | Area (mm²) | Area % | Power (mW) | Power % |
|---|---|---|---|---|---|---|
| PE | SIMD Data Mem (32 KB) | 4 | 9.76 | 38.78% | 102.88 | 7.24% |
| PE | SIMD Register File (16 x 1024 bit) | 4 | 3.17 | 12.59% | 299.00 | 21.05% |
| PE | SIMD ALUs, Multipliers, and SSN | 4 | 4.50 | 17.88% | 448.51 | 31.58% |
| PE | SIMD Pipeline+Clock+Routing | 4 | 1.18 | 4.69% | 233.60 | 16.45% |
| PE | SIMD Buffer (128 B) | 4 | 0.82 | 3.25% | 84.09 | 5.92% |
| PE | SIMD Adder Tree | 4 | 0.18 | <1% | 10.43 | <1% |
| PE | Intra-processor Interconnect | 4 | 0.94 | 3.73% | 93.44 | 6.58% |
| PE | Scalar/AGU Pipeline & Misc. | 4 | 1.22 | 4.85% | 134.32 | 9.46% |
| System | ARM (Cortex-M3) | 1 | 0.6 | 2.38% | 2.5 | <1% |
| System | Global Scratchpad Memory (128 KB) | 1 | 1.8 | 7.15% | 10 | <1% |
| System | Inter-processor Bus with DMA | 1 | 1.0 | 3.97% | 1.5 | <1% |
| Total Est. | 90 nm (1 V @ 300 MHz) | | 25.17 | 100% | 1347.03 | 100% |
| Total Est. | 65 nm (0.9 V @ 300 MHz) | | 13.14 | | 1091.09 | |
| Total Est. | 45 nm (0.8 V @ 300 MHz) | | 6.86 | | 862.09 | |

- Estimates indicate that both H.264 and 4G wireless can be done in under 1 Watt at 45 nm
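The area figures on this slide can be cross-checked with a few lines of arithmetic: the component areas should add up to the 90 nm total, and each share should match the listed percentage. The dictionary below copies the slide's numbers; the variable and function names are illustrative.

```python
# Component areas in mm^2 at 90 nm, copied from the slide.
AREA_MM2 = {
    "SIMD Data Mem (32 KB)": 9.76,
    "SIMD Register File (16 x 1024 bit)": 3.17,
    "SIMD ALUs, Multipliers, and SSN": 4.50,
    "SIMD Pipeline+Clock+Routing": 1.18,
    "SIMD Buffer (128 B)": 0.82,
    "SIMD Adder Tree": 0.18,
    "Intra-processor Interconnect": 0.94,
    "Scalar/AGU Pipeline & Misc.": 1.22,
    "ARM (Cortex-M3)": 0.6,
    "Global Scratchpad Memory (128 KB)": 1.8,
    "Inter-processor Bus with DMA": 1.0,
}

# Total estimated power in mW per technology node, copied from the slide.
TOTAL_POWER_MW = {"90 nm": 1347.03, "65 nm": 1091.09, "45 nm": 862.09}

total_area = sum(AREA_MM2.values())


def area_share(name):
    """Percentage of the total 90 nm area taken by one component."""
    return 100.0 * AREA_MM2[name] / total_area


under_one_watt = [node for node, mw in TOTAL_POWER_MW.items() if mw < 1000.0]

print(round(total_area, 2))                           # 25.17
print(round(area_share("SIMD Data Mem (32 KB)"), 2))  # 38.78
print(under_one_watt)                                 # ['45 nm']
```

The SIMD data memory alone accounts for well over a third of the area, while only the 45 nm estimate falls below the 1 Watt budget.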

Questions
- What are some key characteristics of wireless communication and HD video algorithms?
- What aspects of AnySP are designed to exploit these characteristics?
- How does the AnySP architecture differ from the Sandblaster architecture?
- What are some potential limitations of the AnySP architecture?
