Lecture 8: TCP and Congestion Control (ITCS 6166/8166)



Lecture 8: TCP and Congestion Control. ITCS 6166/8166 091, Spring 2007. Jamie Payton, Department of Computer Science, University of North Carolina at Charlotte. February 5, 2007.

Slides adapted from: congestion slides for Computer Networks: A Systems Approach (Peterson and Davie) and Chapter 3 slides for Computer Networking: A Top-Down Approach Featuring the Internet (Kurose and Ross).

Announcements

- The textbook is on reserve in the library
- Homework 2 will be assigned on Wednesday
  - Due: Feb. 14

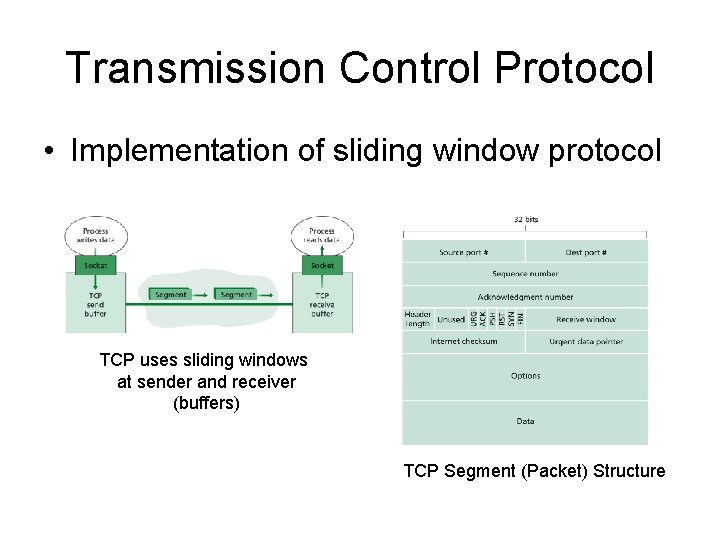

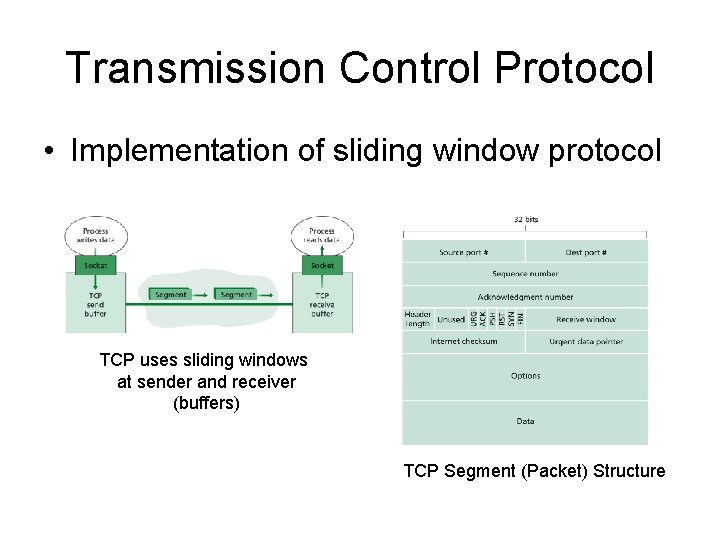

Transmission Control Protocol

- An implementation of the sliding window protocol
- TCP uses sliding windows (buffers) at both the sender and the receiver

TCP Segment (Packet) Structure

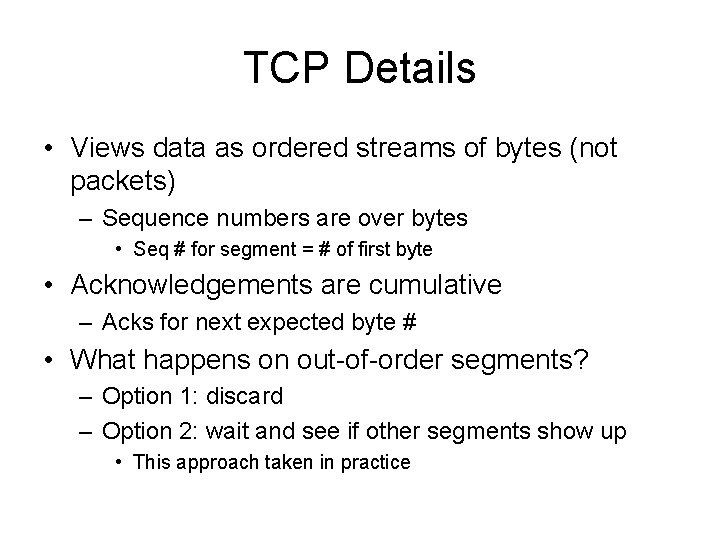

TCP Details

- Views data as an ordered stream of bytes (not packets)
  - Sequence numbers count bytes
    - The sequence number of a segment is the number of its first byte
- Acknowledgements are cumulative
  - An ACK carries the number of the next expected byte
- What happens when segments arrive out of order?
  - Option 1: discard them
  - Option 2: buffer them and wait to see whether the missing segments show up
    - This is the approach taken in practice

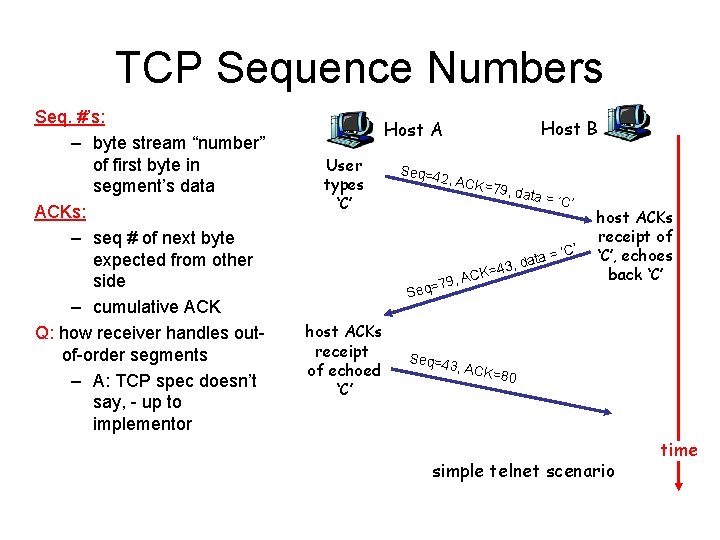

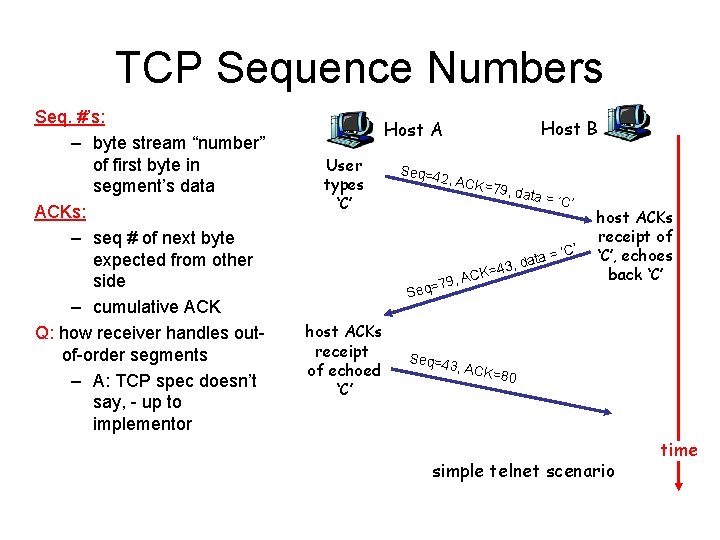

TCP Sequence Numbers

- Seq. #'s: byte-stream "number" of the first byte in the segment's data
- ACKs: sequence number of the next byte expected from the other side (cumulative ACK)
- Q: How does the receiver handle out-of-order segments?
  - A: The TCP spec doesn't say; it is up to the implementor.

[Figure: simple telnet scenario, time running downward. The user at Host A types 'C'; A sends Seq=42, ACK=79, data='C'. Host B ACKs receipt of 'C' and echoes it back with Seq=79, ACK=43, data='C'. Host A ACKs receipt of the echoed 'C' with Seq=43, ACK=80.]
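The telnet exchange can be replayed in a few lines to show how byte-based sequence numbers and cumulative ACKs relate: the ACK a host sends is the sequence number of the segment it just received plus that segment's length. This is a minimal sketch; the `Segment` class and `next_ack` helper are hypothetical names introduced for illustration, and the starting numbers 42 and 79 are taken from the figure.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    seq: int      # number of the first byte carried in this segment
    ack: int      # next byte expected from the other side (cumulative)
    data: bytes


def next_ack(seg: Segment) -> int:
    """Cumulative ACK a receiver sends after accepting `seg` in order."""
    return seg.seq + len(seg.data)


# Host A's next byte is 42, Host B's next byte is 79 (values from the figure).
a_to_b = Segment(seq=42, ack=79, data=b"C")                  # user types 'C'
b_to_a = Segment(seq=79, ack=next_ack(a_to_b), data=b"C")    # B ACKs and echoes 'C'
a_final = Segment(seq=a_to_b.seq + len(a_to_b.data),         # A ACKs the echo
                  ack=next_ack(b_to_a), data=b"")

print(b_to_a.ack, a_final.seq, a_final.ack)  # 43 43 80
```

Note that the final ACK-only segment carries no data, so it does not consume a sequence number.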

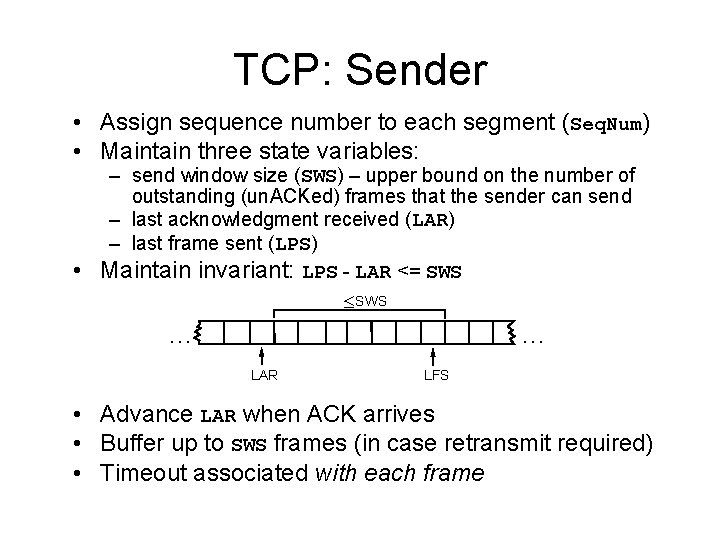

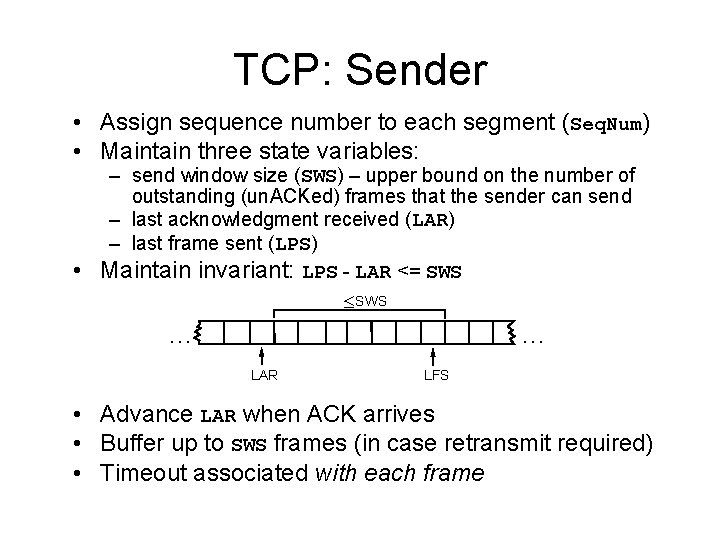

TCP: Sender

- Assign a sequence number (SeqNum) to each segment
- Maintain three state variables:
  - send window size (SWS): upper bound on the number of outstanding (unACKed) frames the sender can transmit
  - last acknowledgment received (LAR)
  - last frame sent (LFS)
- Maintain the invariant: LFS - LAR <= SWS

[Figure: sender window of at most SWS frames, bounded by LAR and LFS]

- Advance LAR when an ACK arrives
- Buffer up to SWS frames (in case a retransmission is required)
- Associate a timeout with each frame
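The sender-side bookkeeping can be sketched as a small class that enforces LFS - LAR <= SWS and keeps unACKed frames buffered for possible retransmission. This is a frame-granularity sketch, not TCP's byte-granularity implementation; it assumes frames are numbered from 1 and that an ACK for frame n acknowledges every frame up to and including n. The class and method names are hypothetical, and per-frame timeouts are not modeled.

```python
class SlidingWindowSender:
    """Sender state from the slide: SWS, LAR, LFS (frame granularity).

    Assumptions: frames numbered from 1; ACK n covers frames 1..n.
    """

    def __init__(self, sws: int):
        self.sws = sws
        self.lar = 0       # last acknowledgment received (nothing ACKed yet)
        self.lfs = 0       # last frame sent (nothing sent yet)
        self.buffer = {}   # seq -> payload, kept until ACKed for retransmission

    def can_send(self) -> bool:
        # Sending one more frame must keep LFS - LAR <= SWS.
        return self.lfs - self.lar < self.sws

    def send(self, payload) -> int:
        if not self.can_send():
            raise RuntimeError("window full: LFS - LAR would exceed SWS")
        self.lfs += 1
        self.buffer[self.lfs] = payload
        return self.lfs

    def on_ack(self, ack: int) -> None:
        # Cumulative ACK: advance LAR and free every newly acknowledged frame.
        ack = min(ack, self.lfs)
        if ack > self.lar:
            for seq in range(self.lar + 1, ack + 1):
                self.buffer.pop(seq, None)
            self.lar = ack


s = SlidingWindowSender(sws=3)
for p in "abc":
    s.send(p)
print(s.can_send())                      # False: 3 frames outstanding
s.on_ack(2)
print(s.lar, s.can_send(), sorted(s.buffer))  # 2 True [3]
```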

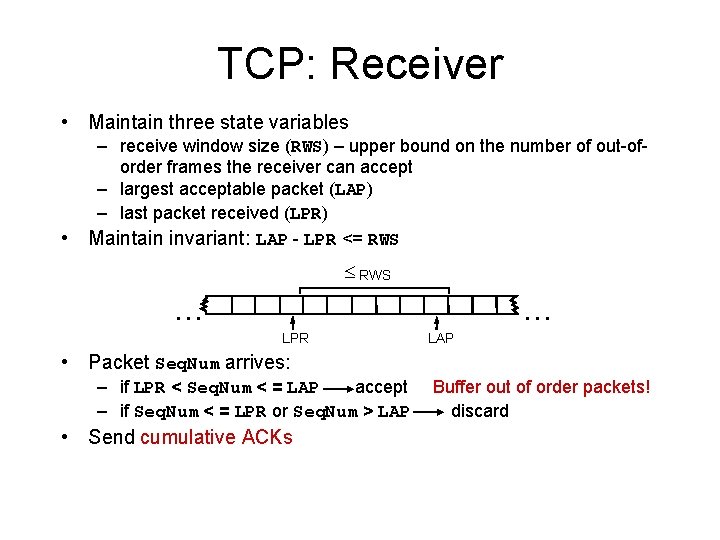

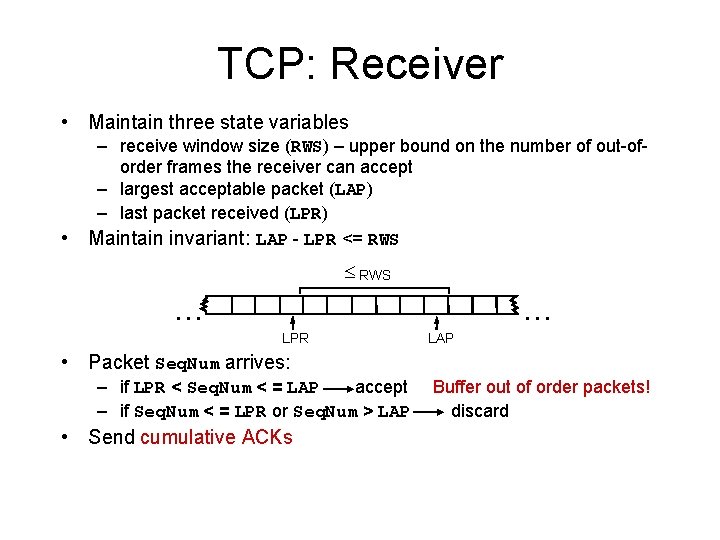

TCP: Receiver

- Maintain three state variables:
  - receive window size (RWS): upper bound on the number of out-of-order frames the receiver can accept
  - largest acceptable packet (LAP)
  - last packet received (LPR)
- Maintain the invariant: LAP - LPR <= RWS

[Figure: receiver window of at most RWS packets, bounded by LPR and LAP]

- When a packet with sequence number SeqNum arrives:
  - if LPR < SeqNum <= LAP: accept it (buffer out-of-order packets!)
  - if SeqNum <= LPR or SeqNum > LAP: discard it
- Send cumulative ACKs
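The receiver rules can be sketched the same way: accept anything in (LPR, LAP], buffer it if it is out of order, slide LPR forward over contiguous packets, and always answer with a cumulative ACK. This sketch assumes packet numbering from 1, treats LPR as the highest packet received in order, and derives LAP as LPR + RWS so the invariant holds automatically; the names are hypothetical.

```python
class SlidingWindowReceiver:
    """Receiver state from the slide: RWS, LPR, LAP (packet granularity)."""

    def __init__(self, rws: int):
        self.rws = rws
        self.lpr = 0            # last packet received in order (assumption: numbering from 1)
        self.buffered = set()   # accepted packets waiting for an earlier gap to fill

    @property
    def lap(self) -> int:
        # Largest acceptable packet; keeps LAP - LPR <= RWS.
        return self.lpr + self.rws

    def on_packet(self, seq: int) -> int:
        """Accept or discard `seq`, then return the cumulative ACK (highest in-order packet)."""
        if self.lpr < seq <= self.lap:
            self.buffered.add(seq)
            # Slide LPR forward over any packets that are now contiguous.
            while self.lpr + 1 in self.buffered:
                self.lpr += 1
                self.buffered.remove(self.lpr)
        # Otherwise (seq <= LPR or seq > LAP) the packet is discarded.
        return self.lpr


r = SlidingWindowReceiver(rws=4)
print(r.on_packet(2))  # 0: packet 2 buffered, packet 1 still missing
print(r.on_packet(1))  # 2: gap filled, ACK covers packets 1 and 2
print(r.on_packet(7))  # 2: 7 > LAP (6), discarded
```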

![TCP ACK generation (RFC 1122, RFC 2581)](https://slidetodoc.com/presentation_image/4ea2225beac71e1ddc9df000ea2f0a13/image-8.jpg)

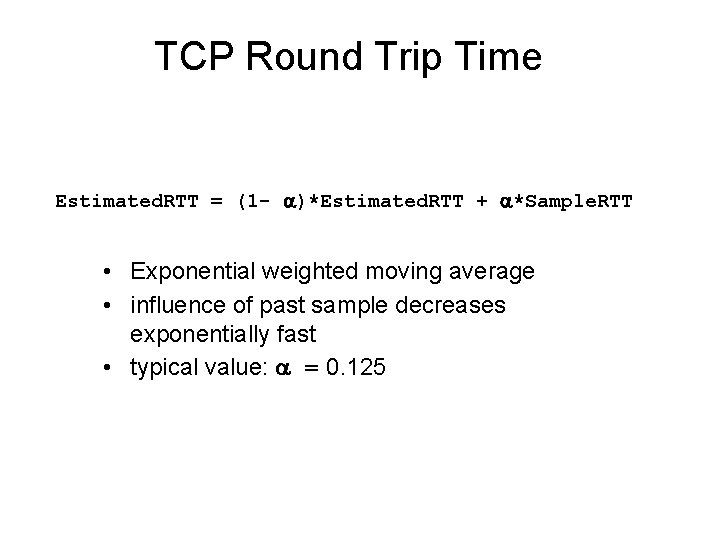

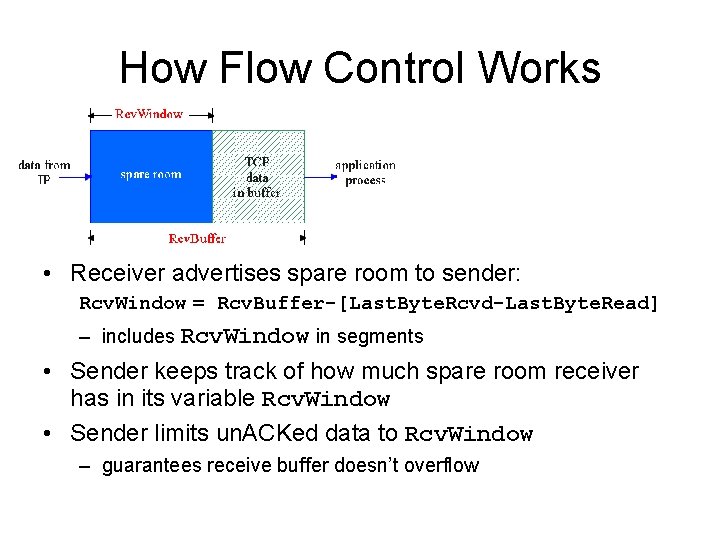

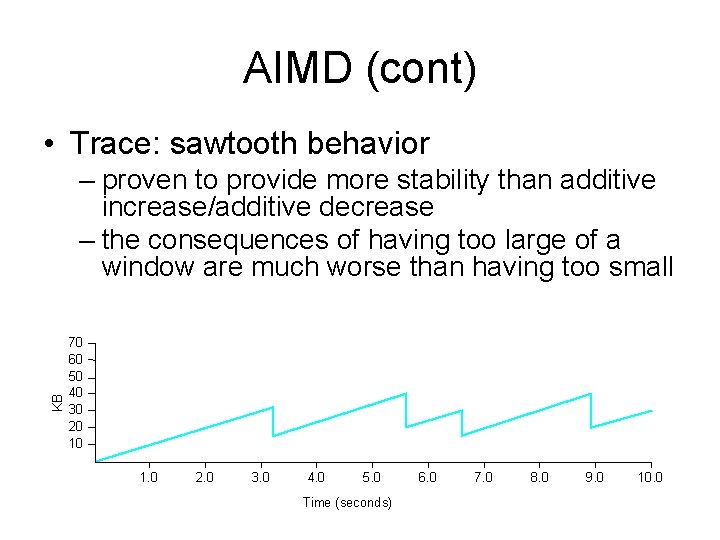

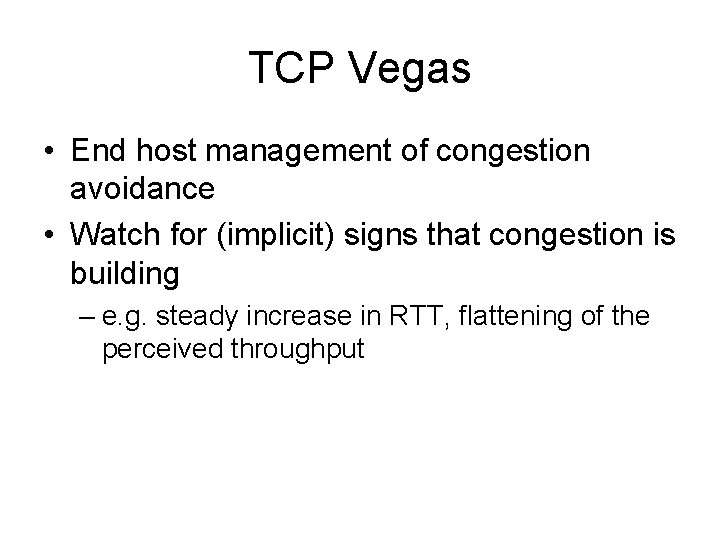

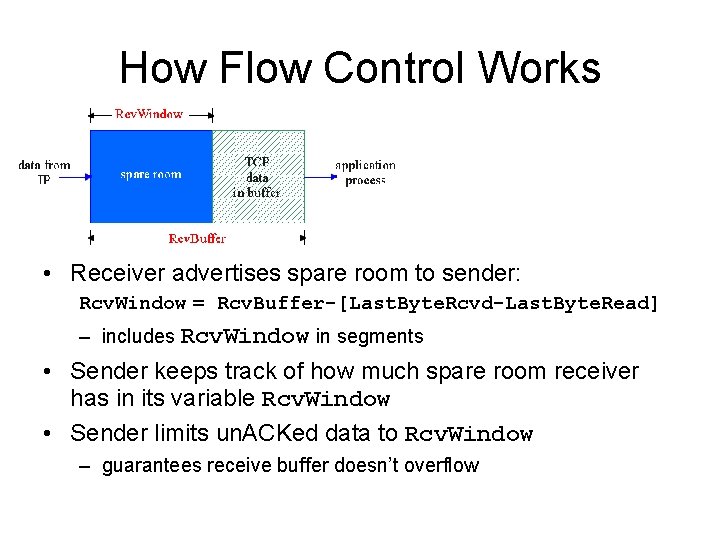

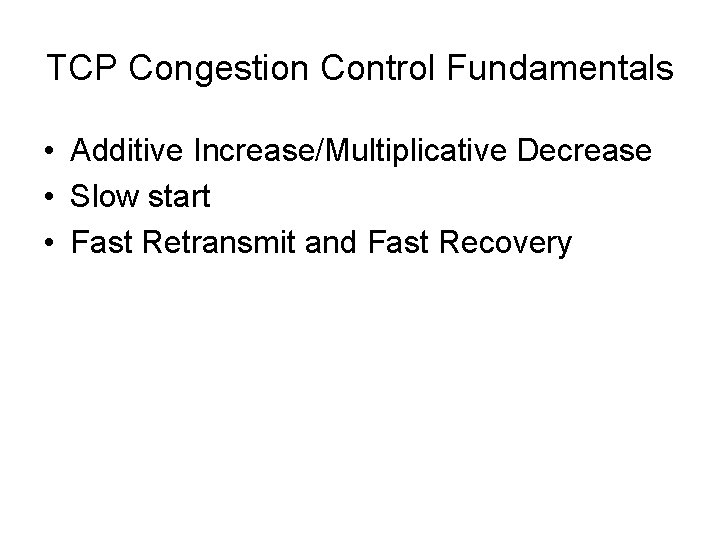

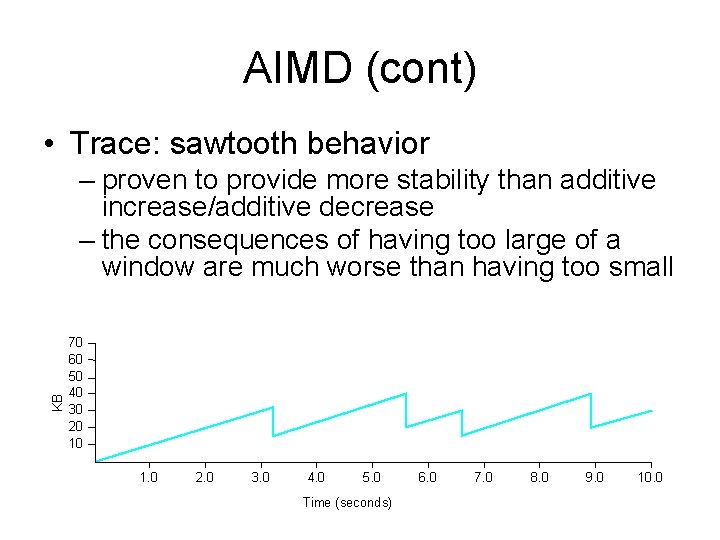

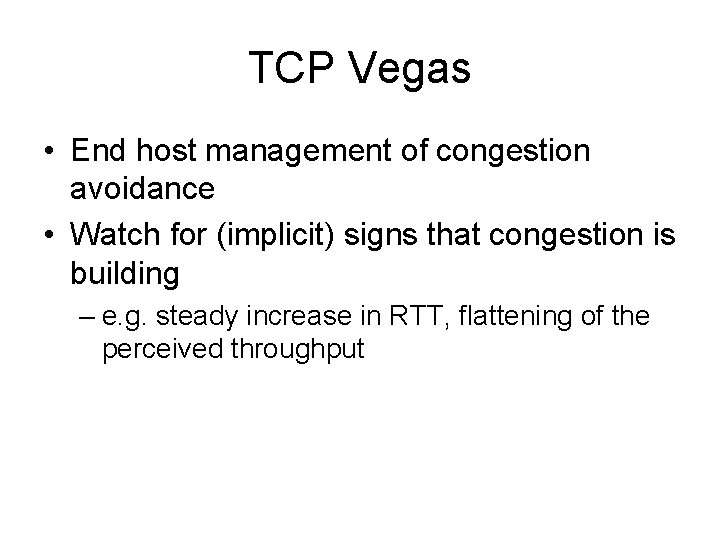

TCP ACK generation [RFC 1122, RFC 2581]

| Event at receiver | TCP receiver action |
|---|---|
| Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed | Delayed ACK. Wait up to 500 ms for the next segment. If no next segment arrives, send ACK |
| Arrival of in-order segment with expected seq #. One other segment has ACK pending | Immediately send a single cumulative ACK, ACKing both in-order segments |
| Arrival of out-of-order segment with higher-than-expected seq #. Gap detected | Immediately send a duplicate ACK, indicating the seq # of the next expected byte |
| Arrival of segment that partially or completely fills the gap | Immediately send ACK, provided that the segment starts at the lower end of the gap |
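The table can be read as a decision function over the receiver's state. The sketch below encodes the four rows; the function name, parameter names, and enum are hypothetical. The case of a segment below the expected sequence number (old, already-received data) is not in the table, and the sketch simply ACKs it immediately as an assumption.

```python
from enum import Enum


class AckAction(Enum):
    DELAYED_ACK = "wait up to 500 ms for the next segment, then ACK"
    CUMULATIVE_ACK = "immediately send one cumulative ACK for both segments"
    DUPLICATE_ACK = "immediately re-ACK the next expected byte"
    IMMEDIATE_ACK = "immediately ACK"


def ack_action(seg_seq: int, expected_seq: int, ack_pending: bool, gap_exists: bool) -> AckAction:
    """Pick a receiver action following the RFC 1122/2581 table above.

    seg_seq: first byte number of the arriving segment
    expected_seq: next in-order byte the receiver expects
    ack_pending: an earlier in-order segment is still waiting for a delayed ACK
    gap_exists: the receiver is holding out-of-order data above expected_seq
    """
    if seg_seq > expected_seq:
        # Higher than expected: a gap is detected (or still exists).
        return AckAction.DUPLICATE_ACK
    if seg_seq == expected_seq and gap_exists:
        # Starts at the lower end of the gap and partially or completely fills it.
        return AckAction.IMMEDIATE_ACK
    if seg_seq == expected_seq:
        return AckAction.CUMULATIVE_ACK if ack_pending else AckAction.DELAYED_ACK
    # seg_seq < expected_seq: not covered by the table; ACK at once (assumption).
    return AckAction.IMMEDIATE_ACK
```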

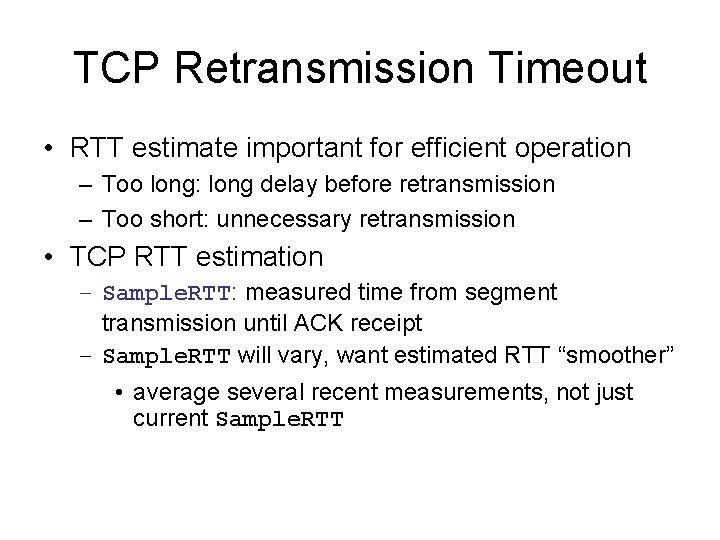

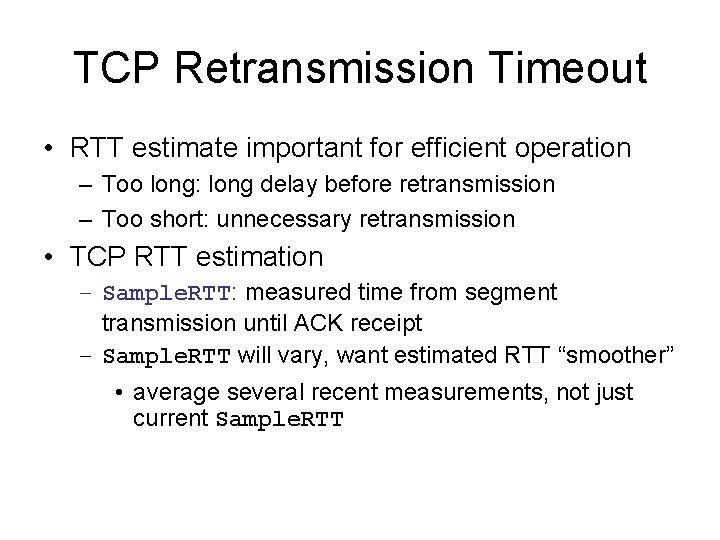

TCP Retransmission Timeout

- The timeout is set from an RTT estimate, which is important for efficient operation
  - Timeout too long: long delay before retransmission
  - Timeout too short: unnecessary retransmissions
- TCP RTT estimation
  - SampleRTT: measured time from segment transmission until ACK receipt
  - SampleRTT will vary; we want the estimated RTT to be "smoother"
    - Average several recent measurements, not just the current SampleRTT

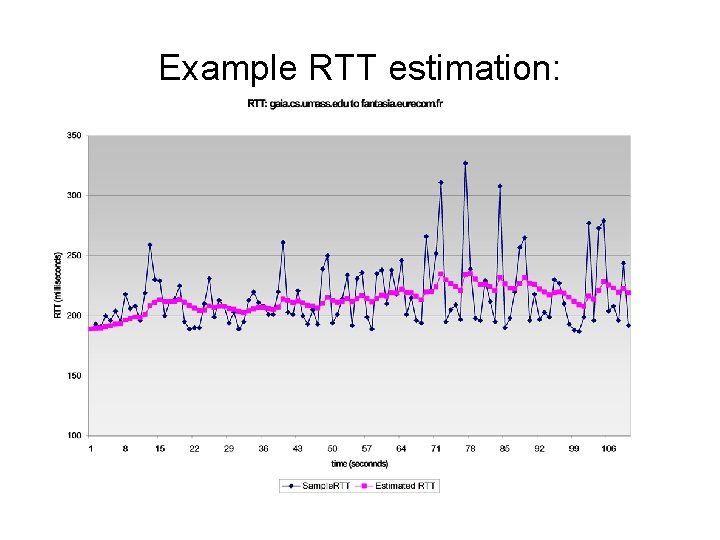

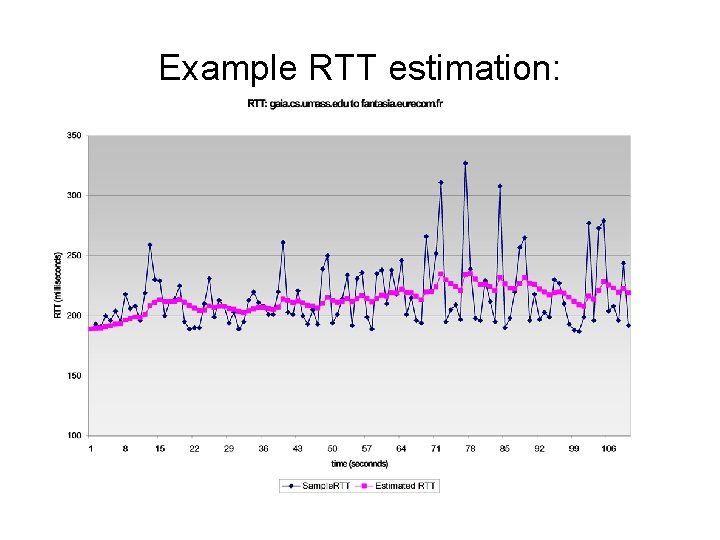

TCP Round Trip Time

$$\text{EstimatedRTT} = (1-\alpha)\cdot\text{EstimatedRTT} + \alpha\cdot\text{SampleRTT}$$

- Exponentially weighted moving average
- The influence of a past sample decreases exponentially fast
- Typical value: $\alpha = 0.125$
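The moving average is a one-line update. The sketch below applies it to three made-up samples (an assumed 100 ms starting estimate and a single 180 ms spike) to show how little one outlier moves the estimate: the weight on a sample k updates in the past is $\alpha(1-\alpha)^k$. The function name is hypothetical.

```python
def update_estimated_rtt(estimated_rtt: float, sample_rtt: float, alpha: float = 0.125) -> float:
    """EWMA: EstimatedRTT = (1 - alpha) * EstimatedRTT + alpha * SampleRTT."""
    return (1 - alpha) * estimated_rtt + alpha * sample_rtt


est = 100.0  # ms; assumed initial estimate
for sample in [100.0, 180.0, 100.0]:  # hypothetical SampleRTT values in ms
    est = update_estimated_rtt(est, sample)
    print(est)
# 100.0, then 110.0 (the 180 ms spike adds only 10 ms), then 108.75
```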

Example RTT estimation:

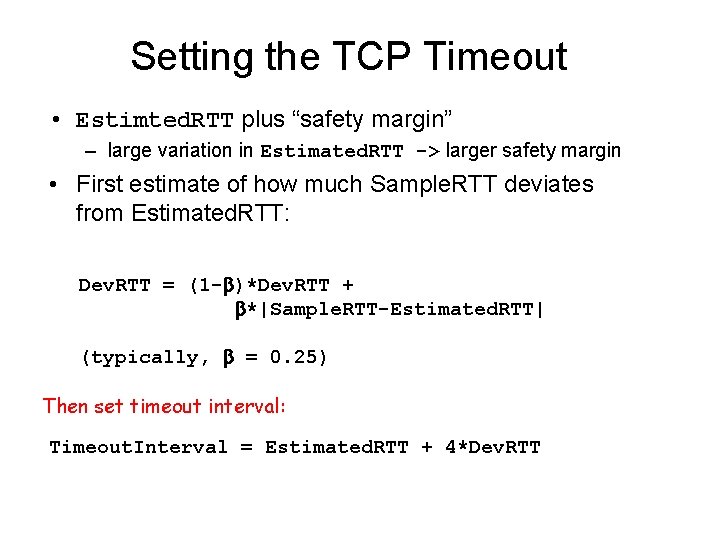

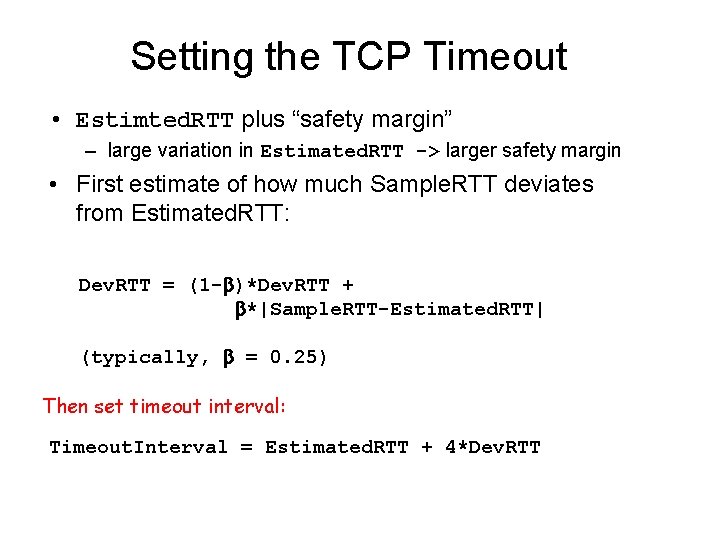

Setting the TCP Timeout

- Timeout = EstimatedRTT plus a "safety margin"
  - Large variation in EstimatedRTT -> larger safety margin

First, estimate how much SampleRTT deviates from EstimatedRTT (typically, $\beta = 0.25$):

$$\text{DevRTT} = (1-\beta)\cdot\text{DevRTT} + \beta\cdot\lvert\text{SampleRTT} - \text{EstimatedRTT}\rvert$$

Then set the timeout interval:

$$\text{TimeoutInterval} = \text{EstimatedRTT} + 4\cdot\text{DevRTT}$$
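Putting the two averages together gives a small estimator. The slides do not fix an update order or an initial value, so this sketch borrows both from RFC 6298: DevRTT is updated with the EstimatedRTT from before the new sample, and the first sample R initializes EstimatedRTT = R and DevRTT = R/2. It omits RFC 6298's clock-granularity term and 1-second minimum. The class name is hypothetical.

```python
class RttEstimator:
    """EstimatedRTT/DevRTT tracker producing TimeoutInterval (times in ms)."""

    def __init__(self, first_sample: float, alpha: float = 0.125, beta: float = 0.25):
        self.alpha, self.beta = alpha, beta
        # Initialization borrowed from RFC 6298 (an assumption, not from the slides).
        self.estimated_rtt = first_sample
        self.dev_rtt = first_sample / 2

    def update(self, sample_rtt: float) -> None:
        # DevRTT uses the EstimatedRTT from *before* this sample (RFC 6298 order).
        self.dev_rtt = ((1 - self.beta) * self.dev_rtt
                        + self.beta * abs(sample_rtt - self.estimated_rtt))
        self.estimated_rtt = (1 - self.alpha) * self.estimated_rtt + self.alpha * sample_rtt

    def timeout_interval(self) -> float:
        return self.estimated_rtt + 4 * self.dev_rtt


est = RttEstimator(first_sample=100.0)
print(est.timeout_interval())   # 300.0
est.update(100.0)
print(est.timeout_interval())   # 250.0
est.update(100.0)
print(est.timeout_interval())   # 212.5
```

With steady samples the deviation term decays, so the safety margin shrinks toward EstimatedRTT; a burst of variable samples would widen it again.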

Flow Control

- What happens if the receiving process is slow and the sending process is fast?
- TCP provides flow control
  - The sender won't overflow the receiver's buffer by transmitting too much, too fast

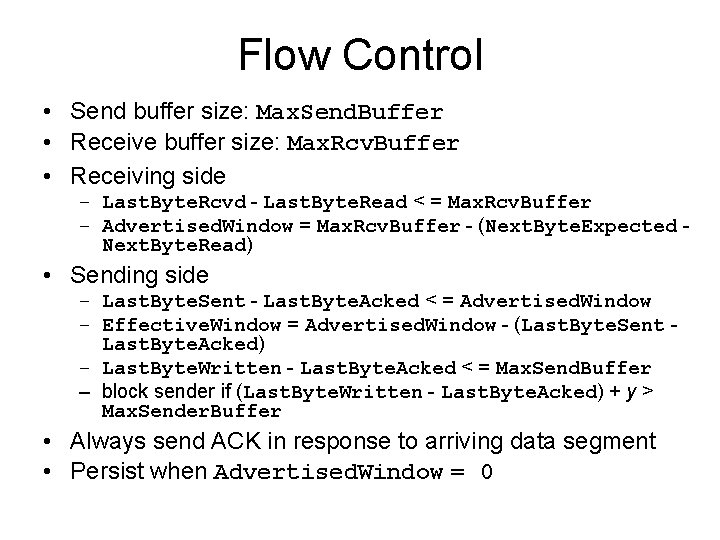

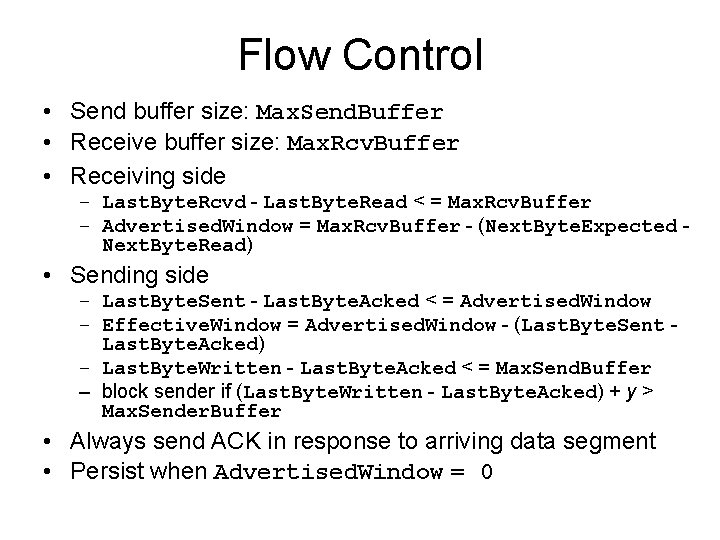

How Flow Control Works

- The receiver advertises its spare room to the sender:
  - RcvWindow = RcvBuffer - [LastByteRcvd - LastByteRead]
  - It includes RcvWindow in the segments it sends
- The sender keeps track of how much spare room the receiver has in its variable RcvWindow
- The sender limits unACKed data to RcvWindow
  - This guarantees that the receive buffer doesn't overflow

Flow Control

- Send buffer size: MaxSendBuffer
- Receive buffer size: MaxRcvBuffer
- Receiving side
  - LastByteRcvd - LastByteRead <= MaxRcvBuffer
  - AdvertisedWindow = MaxRcvBuffer - (NextByteExpected - NextByteRead)
- Sending side
  - LastByteSent - LastByteAcked <= AdvertisedWindow
  - EffectiveWindow = AdvertisedWindow - (LastByteSent - LastByteAcked)
  - LastByteWritten - LastByteAcked <= MaxSendBuffer
  - Block the sender if (LastByteWritten - LastByteAcked) + y > MaxSendBuffer, where y is the number of bytes the application is trying to write
- Always send an ACK in response to an arriving data segment
- Persist when AdvertisedWindow = 0: periodically send a 1-byte probe segment so the sender learns when the window reopens
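The three flow-control formulas translate directly into functions. This is a sketch with hypothetical function names and made-up byte counts; clamping EffectiveWindow at zero is a choice made here (the formula alone can go negative if the advertised window shrinks below the data already in flight).

```python
def advertised_window(max_rcv_buffer: int, next_byte_expected: int, next_byte_read: int) -> int:
    # Spare room at the receiver: buffer size minus bytes received but not yet read.
    return max_rcv_buffer - (next_byte_expected - next_byte_read)


def effective_window(adv_window: int, last_byte_sent: int, last_byte_acked: int) -> int:
    # How much more the sender may put in flight (clamped at 0 as a design choice).
    return max(0, adv_window - (last_byte_sent - last_byte_acked))


def must_block_writer(last_byte_written: int, last_byte_acked: int, y: int,
                      max_send_buffer: int) -> bool:
    # Block the application if writing y more bytes would overflow the send buffer.
    return (last_byte_written - last_byte_acked) + y > max_send_buffer


# Receiver: 4096-byte buffer, 2000 bytes received but not yet read by the application.
print(advertised_window(4096, next_byte_expected=3001, next_byte_read=1001))  # 2096
# Sender: 1500 bytes in flight against that advertisement.
print(effective_window(2096, last_byte_sent=2500, last_byte_acked=1000))      # 596
```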

Congestion Control

Congestion:

- Informally: "too many sources sending too much data too fast for the network to handle"
- Different from flow control!
- Manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queueing in router buffers)
- A top-10 problem in computer network research!

TCP Congestion Control

- Idea
  - Assumes a best-effort network (FIFO or FQ routers); each source determines the network capacity for itself
  - Uses implicit feedback
  - ACKs pace transmission (self-clocking)
- Challenges
  - determining the available capacity in the first place
  - adjusting to changes in the available capacity

TCP Congestion Control Fundamentals

- Additive Increase/Multiplicative Decrease
- Slow start
- Fast Retransmit and Fast Recovery

Additive Increase/Multiplicative Decrease

- Objective: adjust to changes in the available capacity
- New state variable per connection: CongestionWindow
  - Counterpart to flow control's advertised window
  - Limits how much data the source has in transit
  - MaxWin = MIN(CongestionWindow, AdvertisedWindow)
  - EffWin = MaxWin - (LastByteSent - LastByteAcked)
- Idea:
  - increase CongestionWindow when congestion goes down
  - decrease CongestionWindow when congestion goes up
- Now EffectiveWindow includes both flow control and congestion control
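The combined limit is the flow-control formula with the advertised window replaced by the smaller of the two windows, so whichever of the receiver or the network is more constrained wins. A minimal sketch with a hypothetical function name and made-up byte counts (the clamp at zero is again a choice made here):

```python
def eff_win(congestion_window: int, advertised_window: int,
            last_byte_sent: int, last_byte_acked: int) -> int:
    max_win = min(congestion_window, advertised_window)  # MaxWin
    in_flight = last_byte_sent - last_byte_acked
    return max(0, max_win - in_flight)                   # EffWin, clamped at 0


# Network-limited: CongestionWindow (8000) is smaller than AdvertisedWindow (20000).
print(eff_win(8000, 20000, last_byte_sent=15000, last_byte_acked=10000))  # 3000
# Receiver-limited: AdvertisedWindow (4000) is already exceeded by 5000 bytes in flight.
print(eff_win(8000, 4000, last_byte_sent=15000, last_byte_acked=10000))   # 0
```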

AIMD (cont)

- Question: how does the source determine whether or not the network is congested?
- Answer: a timeout occurs
  - A timeout signals that a packet was lost
  - Packets are seldom lost due to transmission errors
  - So a lost packet implies congestion

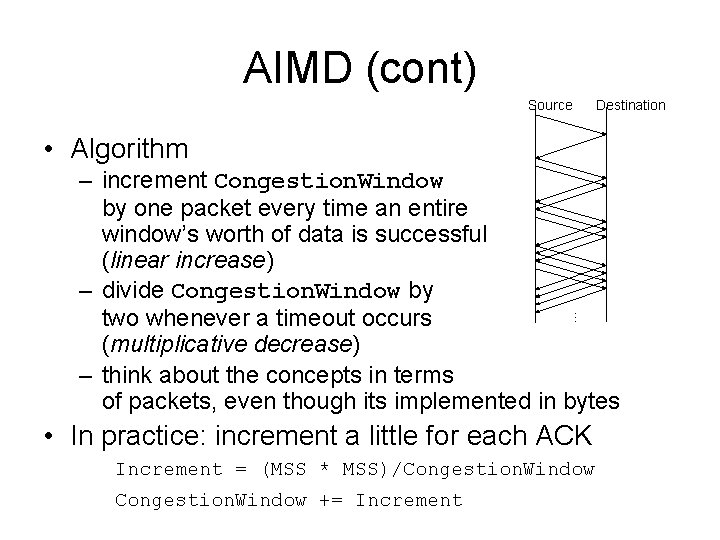

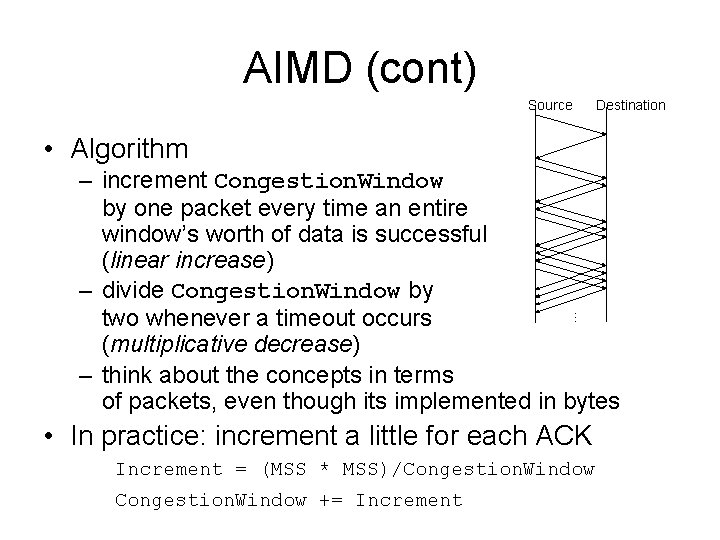

AIMD (cont)

[Figure: packets exchanged between Source and Destination]

- Algorithm:
  - increment CongestionWindow by one packet every time an entire window's worth of data is successfully acknowledged (linear increase)
  - divide CongestionWindow by two whenever a timeout occurs (multiplicative decrease)
  - think about these concepts in terms of packets, even though TCP implements them in bytes
- In practice: increment a little for each ACK
  - Increment = MSS × (MSS / CongestionWindow)
  - CongestionWindow += Increment
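The per-ACK increment spreads one MSS of growth across a window's worth of ACKs. The sketch below assumes an MSS of 1460 bytes and a starting window of 10 segments; it also keeps the window from dropping below one segment on timeout, which is an assumption not stated on the slide. Function names are hypothetical.

```python
MSS = 1460  # bytes; assumed maximum segment size


def on_ack(cwnd: float) -> float:
    # Additive increase: Increment = MSS * (MSS / CongestionWindow) per ACK.
    return cwnd + MSS * (MSS / cwnd)


def on_timeout(cwnd: float) -> float:
    # Multiplicative decrease: halve, but keep at least one segment (assumption).
    return max(MSS, cwnd / 2)


cwnd = 10 * MSS
for _ in range(10):  # one window's worth of ACKs (10 segments)
    cwnd = on_ack(cwnd)
print(round(cwnd / MSS, 2))  # about 10.96 segments
```

The result is slightly less than one full MSS of growth, because the window grows as the ACKs arrive and each later increment is computed against a larger CongestionWindow.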

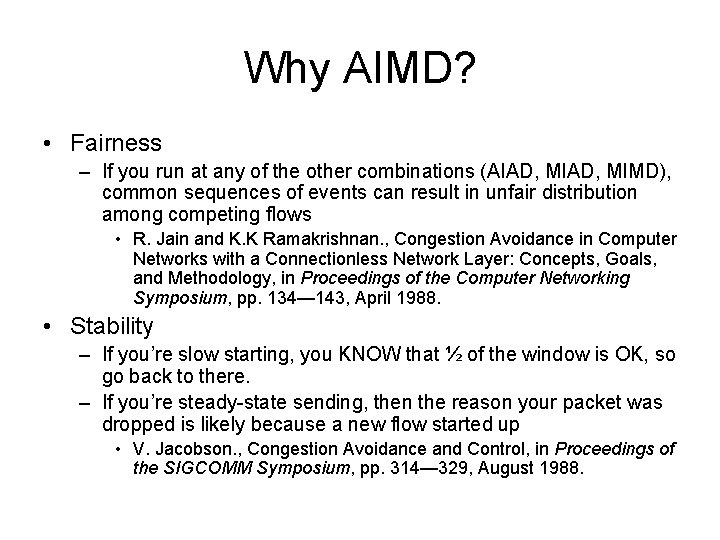

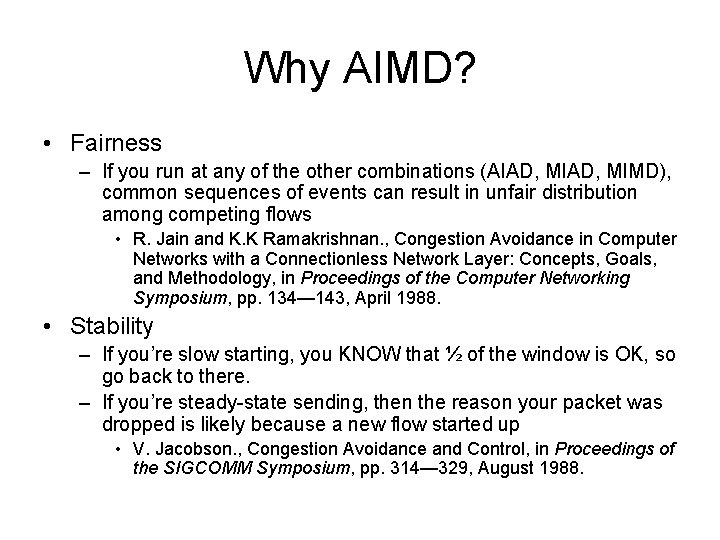

AIMD (cont)

- Trace: sawtooth behavior
  - proven to provide more stability than additive increase/additive decrease
  - the consequences of having too large a window are much worse than those of having too small a window

[Figure: CongestionWindow (KB, 0 to 70) vs. time (seconds, 0 to 10), showing the sawtooth]

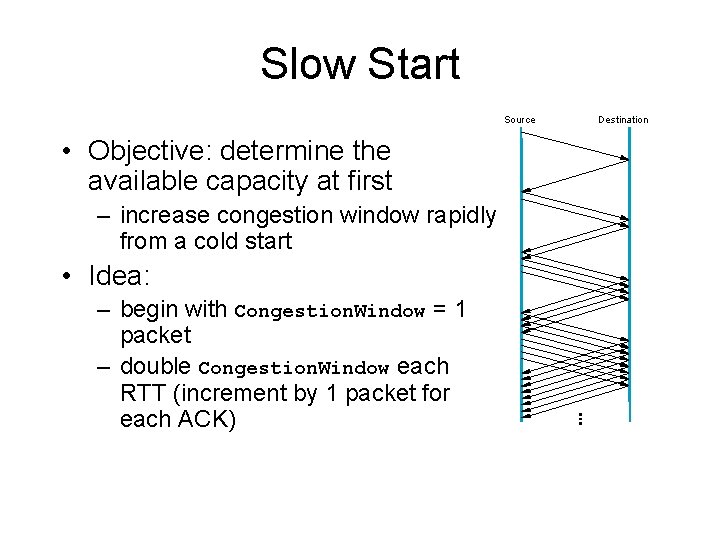

Why AIMD?

- Fairness
  - If you run at any of the other combinations (AIAD, MIMD), common sequences of events can result in an unfair distribution among competing flows
  - R. Jain and K. K. Ramakrishnan, "Congestion Avoidance in Computer Networks with a Connectionless Network Layer: Concepts, Goals, and Methodology," in Proceedings of the Computer Networking Symposium, pp. 134-143, April 1988.
- Stability
  - If you're in slow start, you KNOW that ½ of the window is OK, so go back to there
  - If you're sending in steady state, then the reason your packet was dropped is likely that a new flow started up
  - V. Jacobson, "Congestion Avoidance and Control," in Proceedings of the SIGCOMM Symposium, pp. 314-329, August 1988.

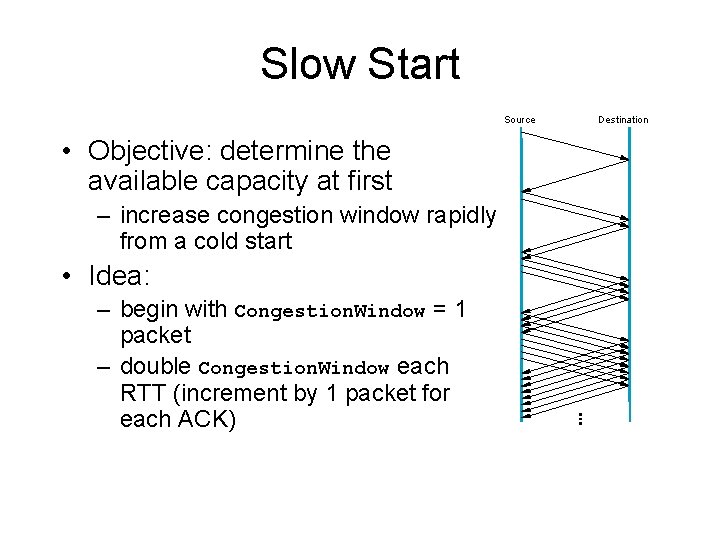

Slow Start

- Objective: determine the available capacity at first
  - increase the congestion window rapidly from a cold start
- Idea:
  - begin with CongestionWindow = 1 packet
  - double CongestionWindow each RTT (increment by 1 packet for each ACK)

[Figure: packets exchanged between Source and Destination during slow start]

Slow Start (cont.)

- Why "slow"?
  - It starts slowly in comparison to immediately filling the advertised window
  - It prevents routers from having to handle bursts of initial traffic

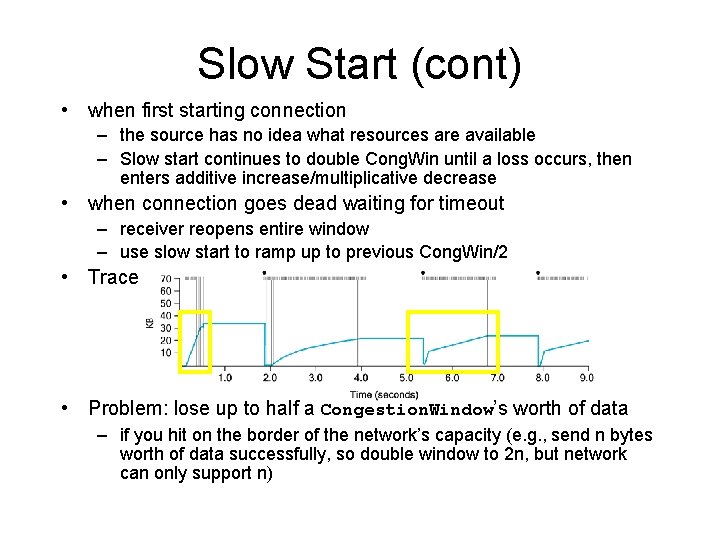

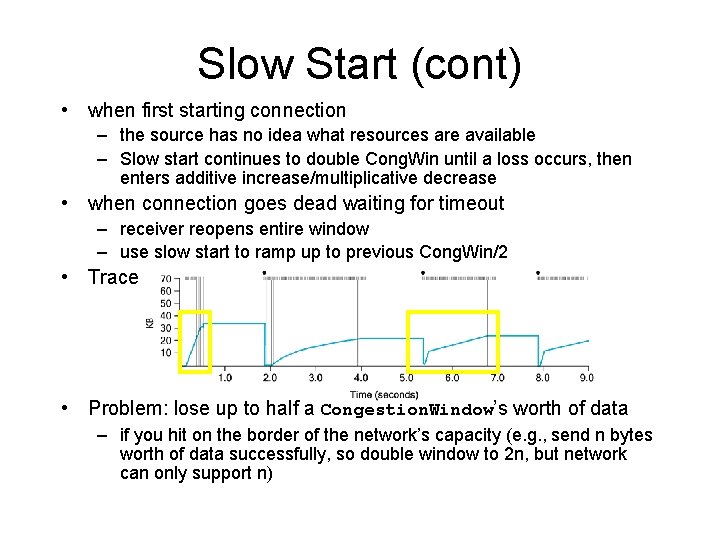

Slow Start (cont)

- When first starting a connection
  - the source has no idea what resources are available
  - slow start continues to double CongWin until a loss occurs, then enters additive increase/multiplicative decrease
- When the connection goes dead waiting for a timeout
  - the receiver reopens the entire window
  - use slow start to ramp up to the previous CongWin/2
- Trace
- Problem: can lose up to half a CongestionWindow's worth of data
  - if you hit the border of the network's capacity (e.g., you send n bytes' worth of data successfully, so you double the window to 2n, but the network can only support n)
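The interaction between slow start and additive increase can be traced one RTT at a time. This sketch works in packets, not bytes, and makes several assumptions not fixed by the slides: the threshold starts effectively infinite (so slow start runs until the first loss), each loss is detected by a timeout at the end of the given RTT, a timeout sets the threshold to half the current window and restarts from 1 packet, and doubling is capped at the threshold. The function name and parameters are hypothetical.

```python
def cwnd_trace(rtts: int, initial_threshold: int, timeouts: set) -> list:
    """Per-RTT CongestionWindow (packets) under slow start followed by AIMD."""
    cwnd, threshold = 1, initial_threshold
    trace = []
    for rtt in range(rtts):
        trace.append(cwnd)
        if rtt in timeouts:
            threshold = max(cwnd // 2, 1)     # remember half the window that failed
            cwnd = 1                          # restart with slow start
        elif cwnd < threshold:
            cwnd = min(cwnd * 2, threshold)   # slow start: double each RTT
        else:
            cwnd += 1                         # additive increase: +1 packet per RTT
    return trace


print(cwnd_trace(10, initial_threshold=10**9, timeouts={4}))
# [1, 2, 4, 8, 16, 1, 2, 4, 8, 9]
```

In this hypothetical run, if the network could only carry about 8 packets per RTT, the doubling from 8 to 16 is exactly the overshoot the slide warns about: up to half of that window can be lost.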

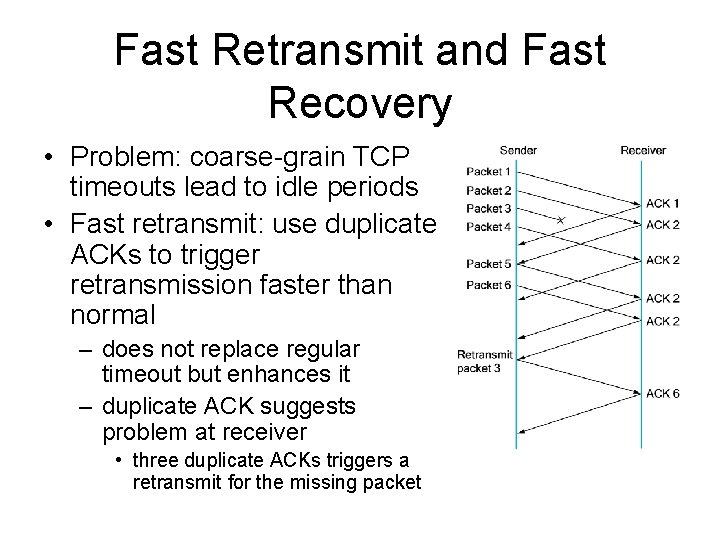

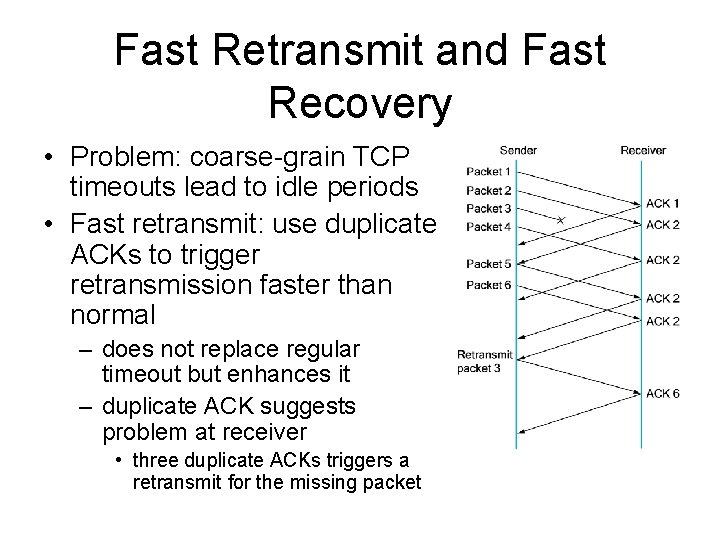

Fast Retransmit and Fast Recovery

- Problem: coarse-grained TCP timeouts lead to idle periods
- Fast retransmit: use duplicate ACKs to trigger retransmission sooner than the regular timeout would
  - does not replace the regular timeout but enhances it
  - a duplicate ACK means the receiver got a segment out of order, suggesting an earlier segment was lost
- Three duplicate ACKs trigger a retransmission of the missing packet

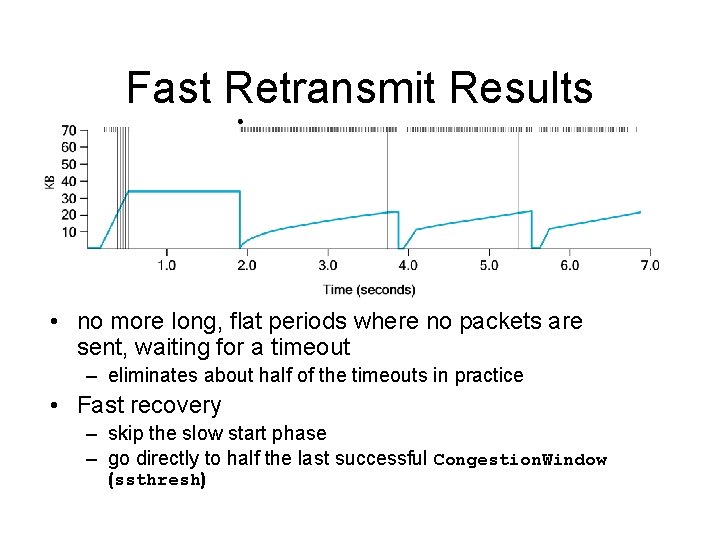

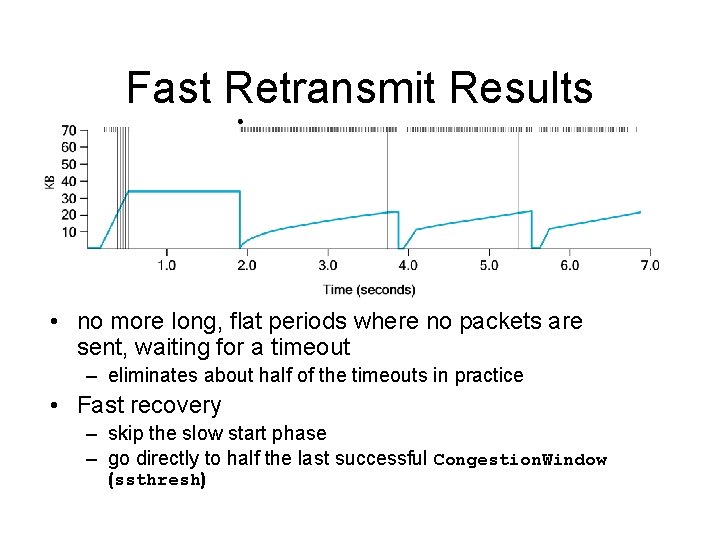

Fast Retransmit Results

- No more long, flat periods where no packets are sent while waiting for a timeout
  - eliminates about half of the timeouts in practice
- Fast recovery
  - skip the slow start phase
  - go directly to half the last successful CongestionWindow (ssthresh)
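Fast retransmit and the simplified fast recovery from the slide fit in a small duplicate-ACK counter. This sketch counts windows in packets, uses a hypothetical class name, and follows the slide's description only: on the third duplicate ACK it resends the segment starting at the duplicated ACK number and sets CongestionWindow to ssthresh = half the current window. It deliberately omits the temporary window inflation that the full algorithm in RFC 5681 performs during recovery.

```python
DUP_ACK_THRESHOLD = 3  # three duplicate ACKs trigger a retransmit


class FastRetransmitSender:
    def __init__(self, cwnd: int):
        self.cwnd = cwnd          # congestion window in packets (simplification)
        self.ssthresh = None
        self.last_ack = None
        self.dup_acks = 0
        self.retransmitted = []   # sequence numbers resent by fast retransmit

    def on_ack(self, ack: int) -> None:
        if self.last_ack is None or ack > self.last_ack:
            # New data acknowledged: reset the duplicate counter.
            self.last_ack = ack
            self.dup_acks = 0
        elif ack == self.last_ack:
            self.dup_acks += 1
            if self.dup_acks == DUP_ACK_THRESHOLD:
                # The ACK number is the first byte of the missing segment.
                self.retransmitted.append(ack)
                self.ssthresh = max(self.cwnd // 2, 1)
                self.cwnd = self.ssthresh   # fast recovery: skip slow start
        # ack < last_ack: stale ACK, ignored.


s = FastRetransmitSender(cwnd=16)
for ack in [1001, 2001, 2001, 2001, 2001]:
    s.on_ack(ack)
print(s.retransmitted, s.cwnd)  # [2001] 8
```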

Congestion Avoidance

- TCP's strategy
  - control congestion once it happens
  - repeatedly increase the load in an effort to find the point at which congestion occurs, and then back off
    - i.e., cause congestion in order to control it
- Alternative strategy
  - predict when congestion is about to happen
  - reduce the rate before packets start being discarded
  - call this congestion avoidance, instead of congestion control

TCP Vegas

- End-host management of congestion avoidance
- Watch for (implicit) signs that congestion is building
  - e.g., a steady increase in RTT, or a flattening of the perceived throughput

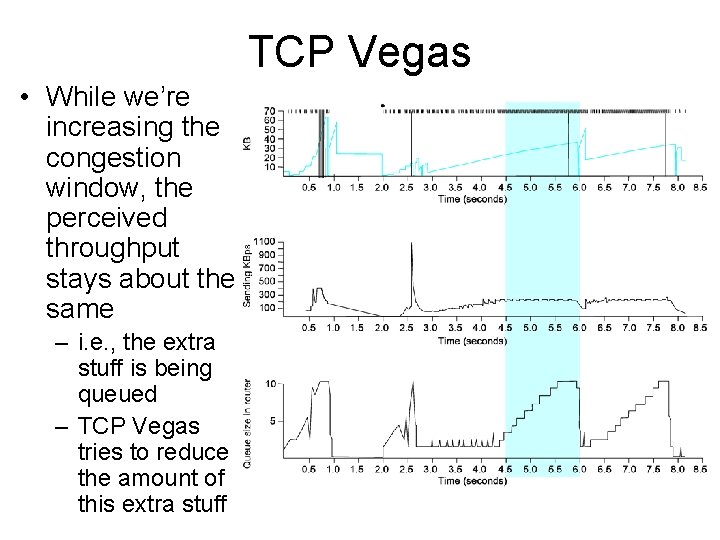

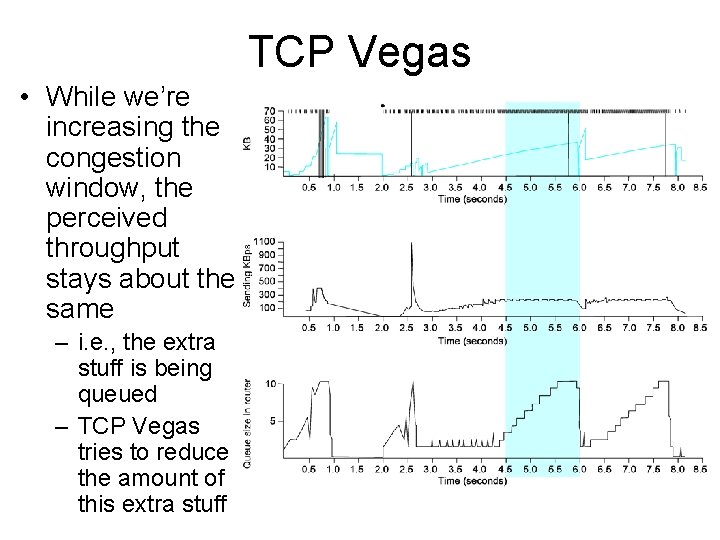

TCP Vegas

- While we're increasing the congestion window, the perceived throughput stays about the same
  - i.e., the extra data is being queued
  - TCP Vegas tries to reduce the amount of this extra queued data

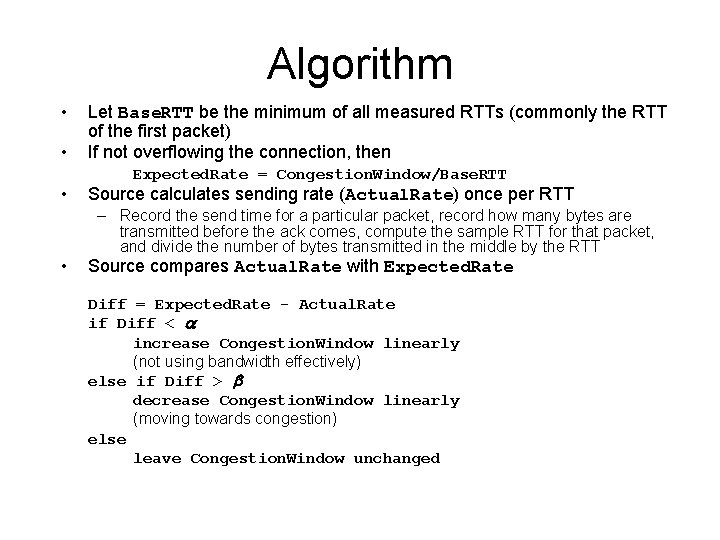

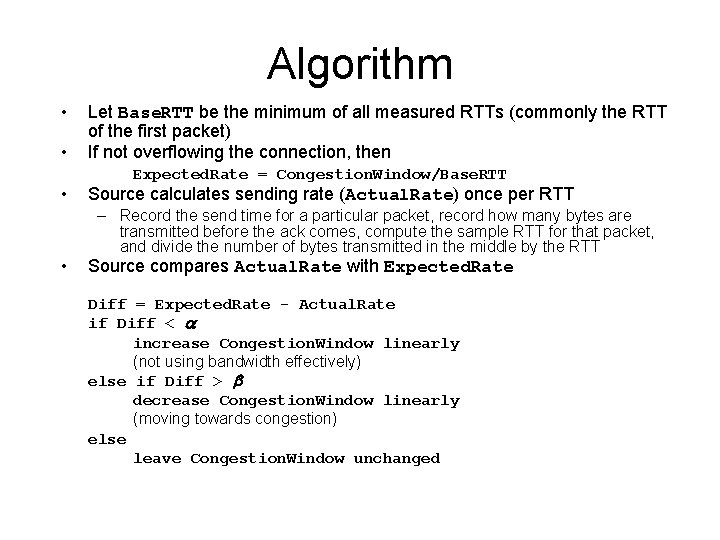

Algorithm

- Let BaseRTT be the minimum of all measured RTTs (commonly the RTT of the first packet)
- If not overflowing the connection, then ExpectedRate = CongestionWindow / BaseRTT
- The source calculates its sending rate (ActualRate) once per RTT
  - Record the send time for a particular packet, record how many bytes are transmitted before its ACK comes back, compute the sample RTT for that packet, and divide the number of bytes transmitted in between by that RTT
- The source compares ActualRate with ExpectedRate:
  - Diff = ExpectedRate - ActualRate
  - if Diff < a: increase CongestionWindow linearly (not using bandwidth effectively)
  - else if Diff > b: decrease CongestionWindow linearly (moving towards congestion)
  - else: leave CongestionWindow unchanged

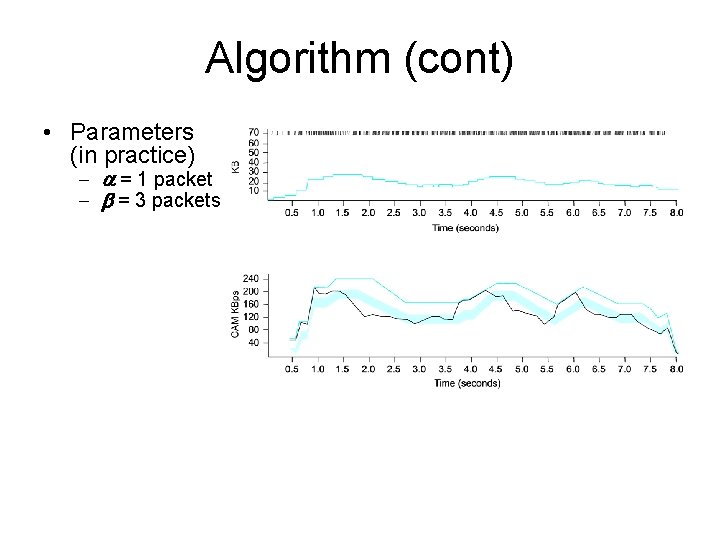

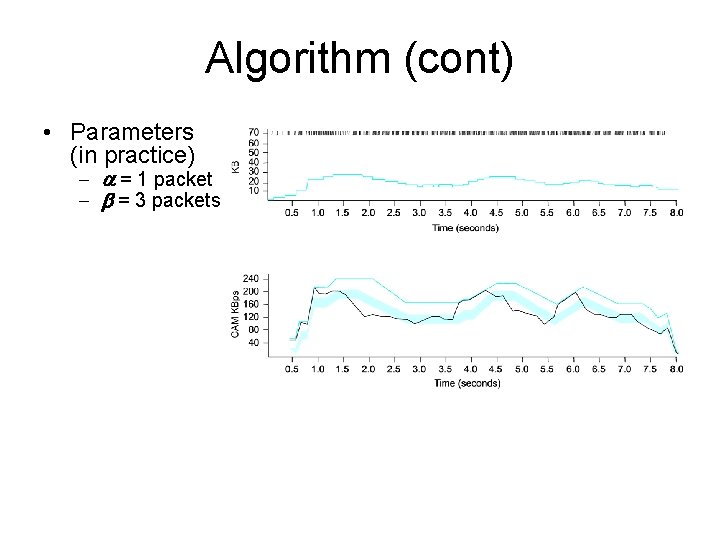

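Algorithm (cont)

- Parameters (in practice)
  - a = 1 packet
  - b = 3 packets
  - Because a and b are counted in packets, Diff is converted to extra packets queued in the network (Diff × BaseRTT) before the comparison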
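One Vegas adjustment step can be sketched as follows. Since a and b are given in packets, this sketch measures the window in packets and the rates in packets per second, then multiplies Diff by BaseRTT to get the estimated number of extra packets sitting in queues before comparing it with a and b. The function name, the one-packet step size, and the example numbers (a 125 ms BaseRTT and a 10-packet window) are assumptions for illustration.

```python
def vegas_adjust(cwnd: float, base_rtt: float, actual_rate: float,
                 a: float = 1.0, b: float = 3.0) -> float:
    """One per-RTT TCP Vegas step.

    cwnd in packets, base_rtt in seconds, actual_rate in packets/second.
    """
    expected_rate = cwnd / base_rtt          # rate if nothing were queued
    diff = expected_rate - actual_rate       # Diff = ExpectedRate - ActualRate
    extra_packets = diff * base_rtt          # packets estimated to be queued in the network
    if extra_packets < a:
        return cwnd + 1                      # not using bandwidth effectively
    if extra_packets > b:
        return cwnd - 1                      # moving towards congestion
    return cwnd                              # within [a, b]: leave unchanged


# BaseRTT = 125 ms and cwnd = 10 packets, so ExpectedRate = 80 packets/s.
print(vegas_adjust(10, 0.125, actual_rate=76))  # 11: only 0.5 packets queued
print(vegas_adjust(10, 0.125, actual_rate=64))  # 10: 2 packets queued
print(vegas_adjust(10, 0.125, actual_rate=40))  # 9: 5 packets queued
```

Unlike AIMD, both directions of adjustment here are linear, which matches the slide's "increase/decrease CongestionWindow linearly".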