Lecture 8 Generative Adversarial Network l GAN was

![KL (Kullback-Leibler) divergence l Discrete: DKL(P||Q) = Σi. P(i)log[P(i)/Q(i)] l Continuous: ∞ DKL(P||Q) = KL (Kullback-Leibler) divergence l Discrete: DKL(P||Q) = Σi. P(i)log[P(i)/Q(i)] l Continuous: ∞ DKL(P||Q) =](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-28.jpg)

![max. DV(G, D) V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] max. D V(G, max. DV(G, D) V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] max. D V(G,](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-35.jpg)

![Summary: V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Generator G, Discriminator D Summary: V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Generator G, Discriminator D](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-36.jpg)

![In practice … V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Given G, In practice … V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Given G,](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-39.jpg)

![Discriminator 1 0 GAN implementation estimation V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] Discriminator 1 0 GAN implementation estimation V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))]](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-47.jpg)

- Slides: 62

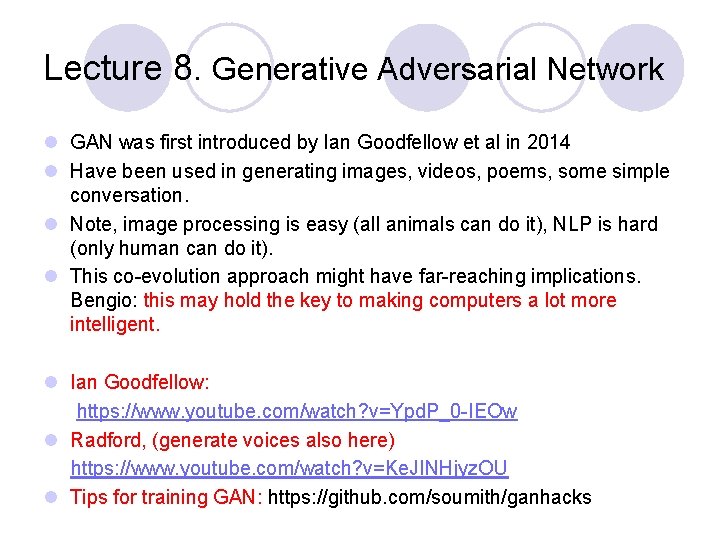

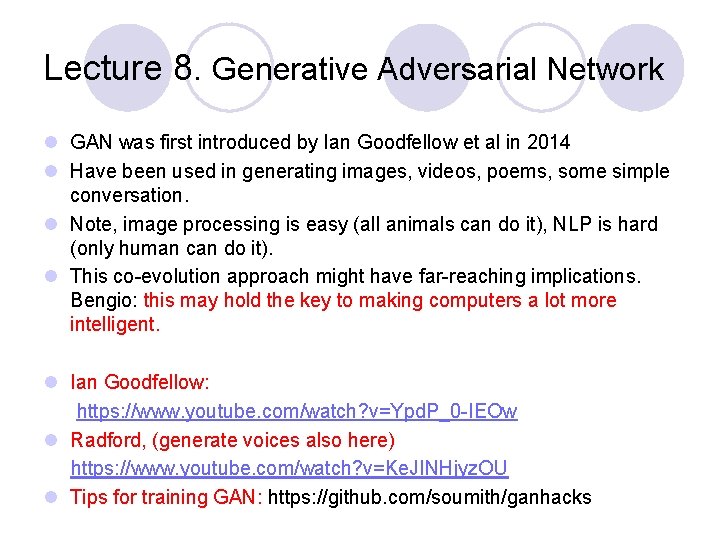

Lecture 8. Generative Adversarial Network l GAN was first introduced by Ian Goodfellow et al in 2014 l Have been used in generating images, videos, poems, some simple conversation. l Note, image processing is easy (all animals can do it), NLP is hard (only human can do it). l This co-evolution approach might have far-reaching implications. Bengio: this may hold the key to making computers a lot more intelligent. l Ian Goodfellow: https: //www. youtube. com/watch? v=Ypd. P_0 -IEOw l Radford, (generate voices also here) https: //www. youtube. com/watch? v=Ke. JINHjyz. OU l Tips for training GAN: https: //github. com/soumith/ganhacks

Autoencoder As close as possible code NN Encoder code Randomly generate a vector as code NN Decoder Image ?

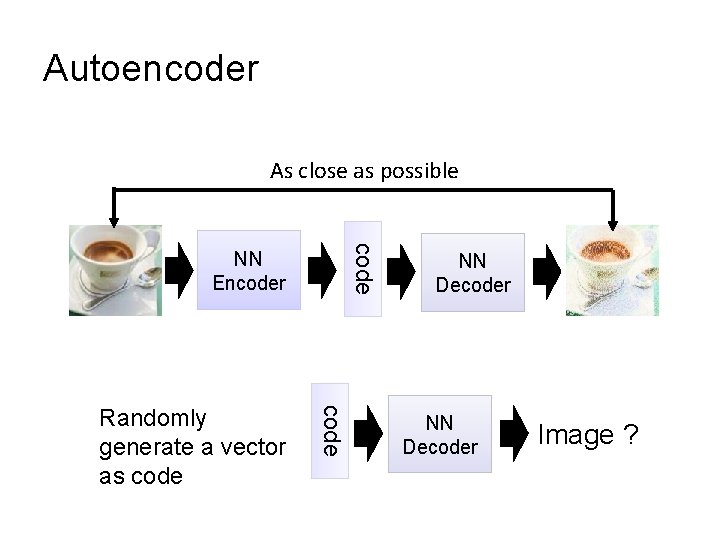

Autoencoder with 3 fully connected layers Training: model. fit(X, X) Cost function: Σk=1. . N (xk – x’k)2 Large small, learn to compress

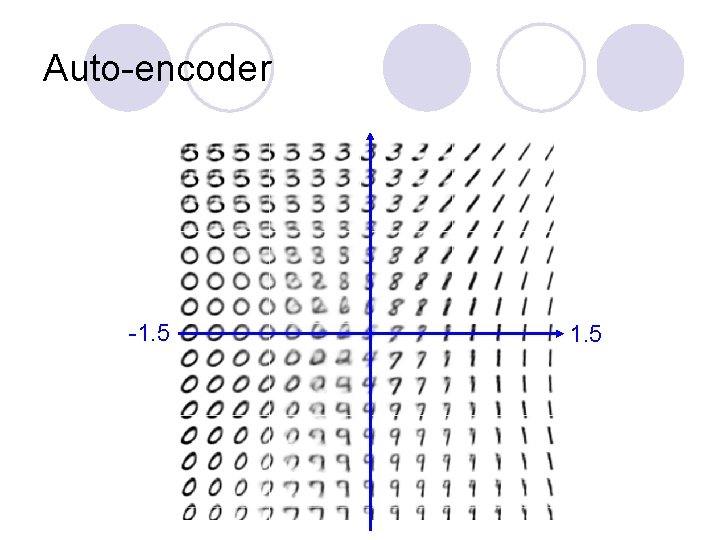

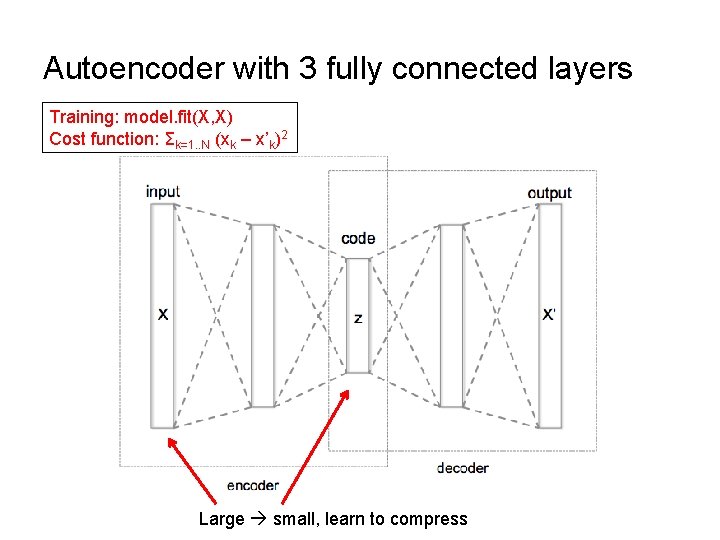

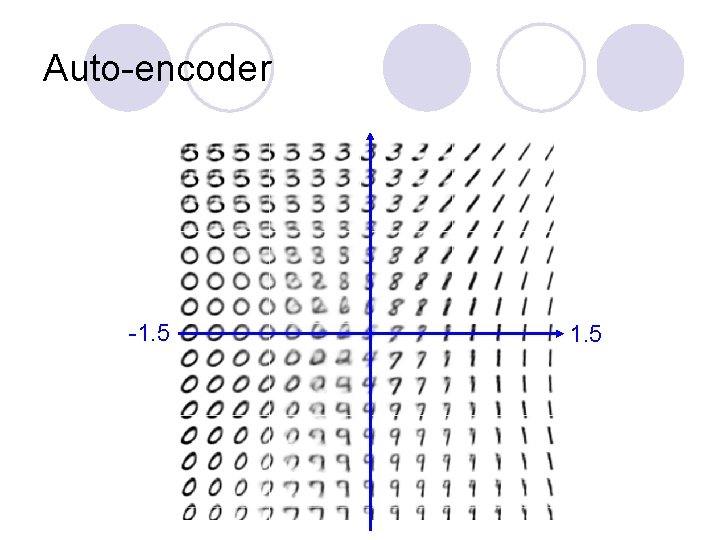

Auto-encoder 2 D code NN Decoder -1. 5 NN Decoder

Auto-encoder -1. 5

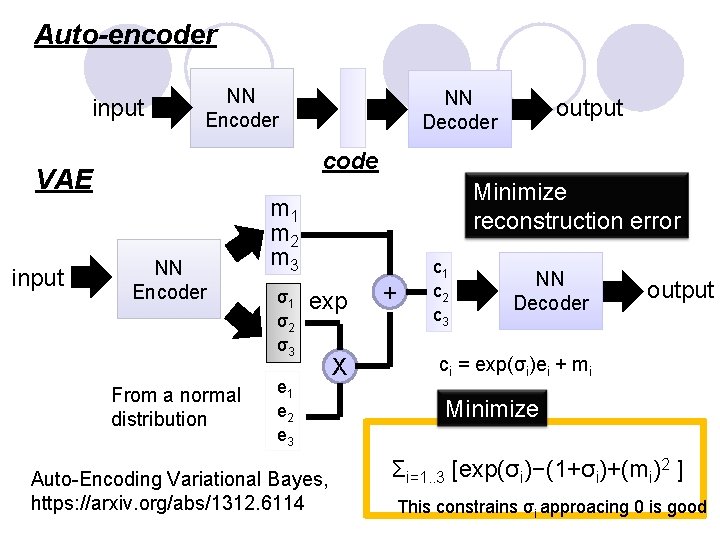

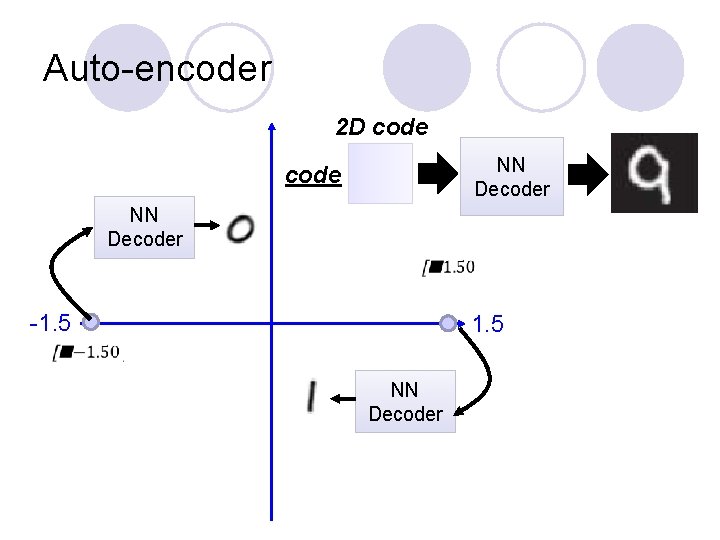

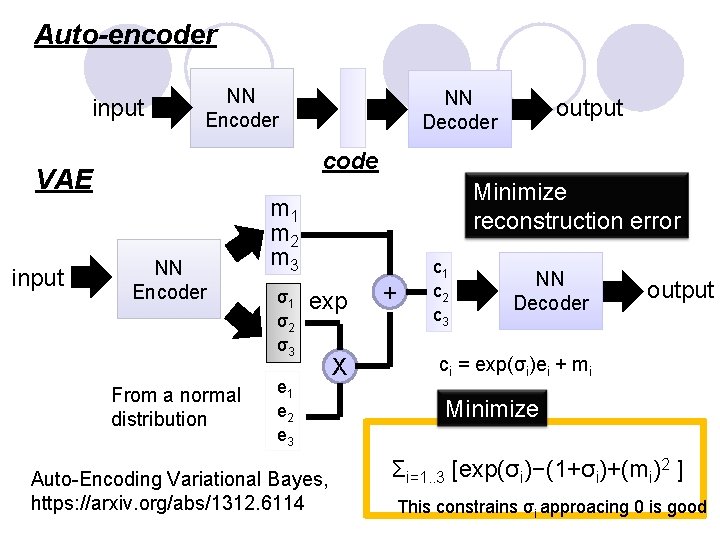

Auto-encoder input NN Encoder output code VAE input NN Decoder NN Encoder From a normal distribution Minimize reconstruction error m 1 m 2 m 3 σ1 σ2 σ3 e 1 e 2 e 3 exp X Auto-Encoding Variational Bayes, https: //arxiv. org/abs/1312. 6114 + c 1 c 2 c 3 NN Decoder output ci = exp(σi)ei + mi Minimize Σi=1. . 3 [exp(σi)−(1+σi)+(mi)2 ] This constrains σi approacing 0 is good

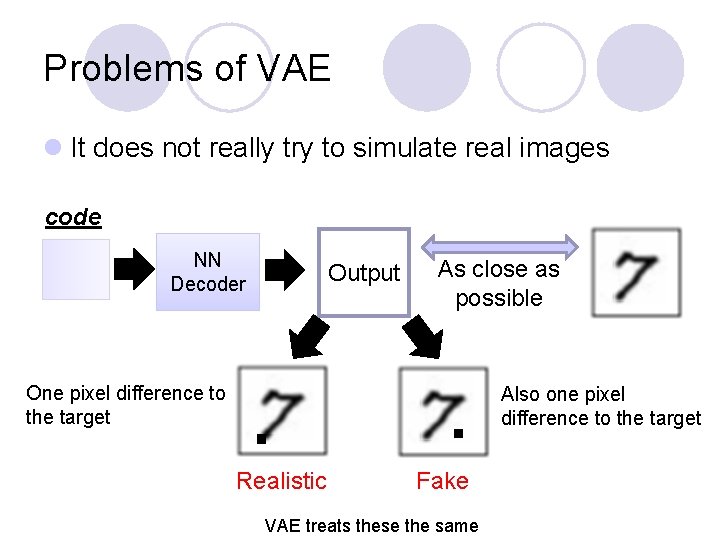

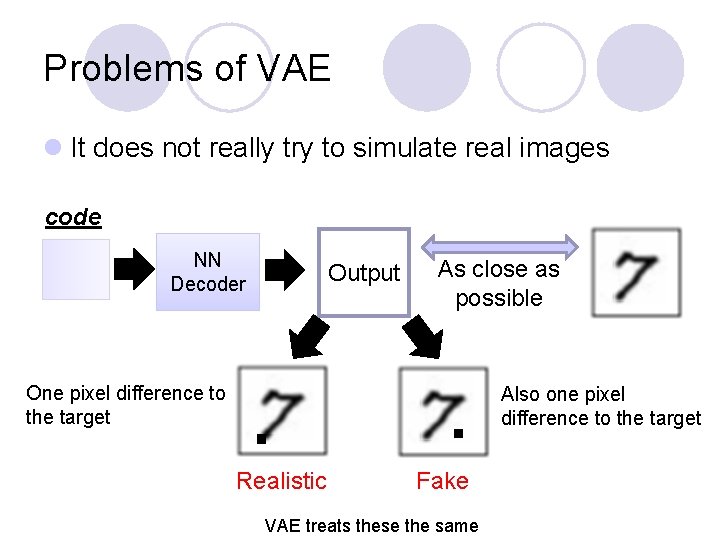

Problems of VAE l It does not really try to simulate real images code NN Decoder Output As close as possible One pixel difference to the target Also one pixel difference to the target Realistic Fake VAE treats these the same

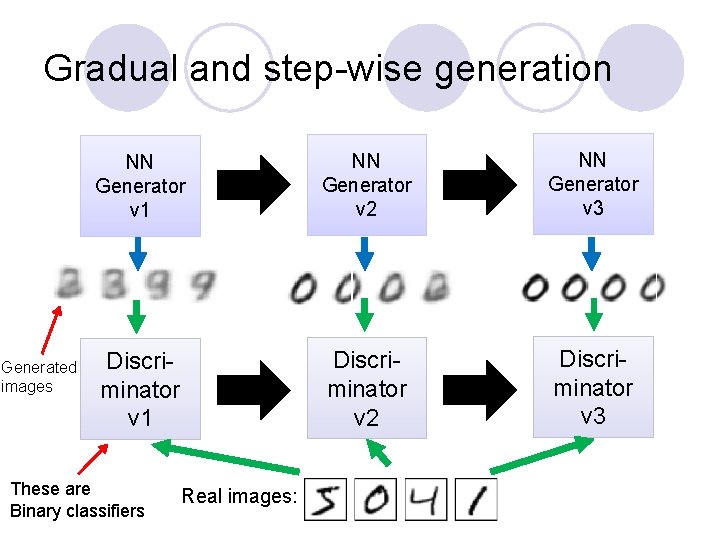

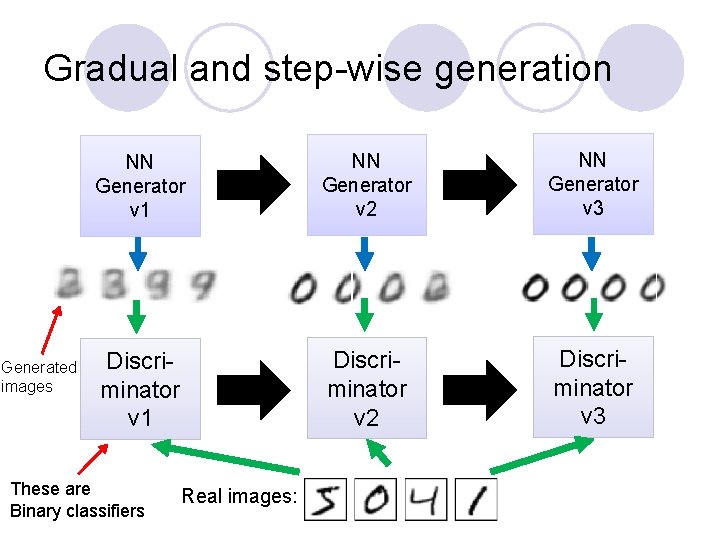

Gradual and step-wise generation Generated images NN Generator v 1 NN Generator v 2 NN Generator v 3 Discriminator v 1 Discriminator v 2 Discriminator v 3 These are Binary classifiers Real images:

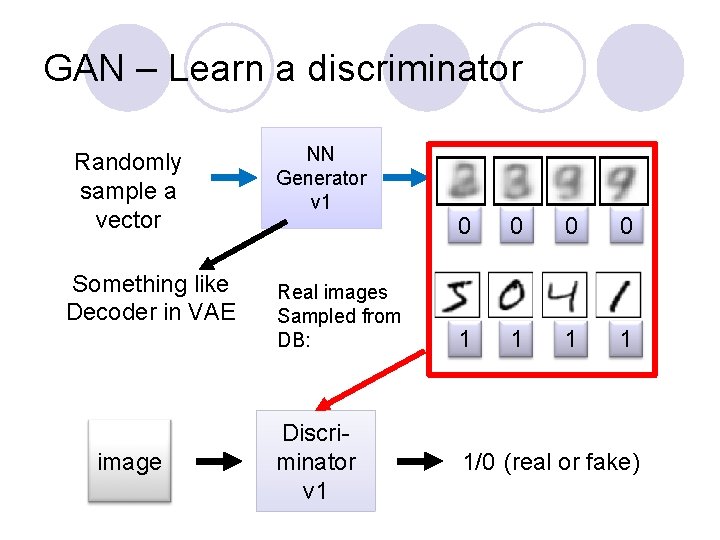

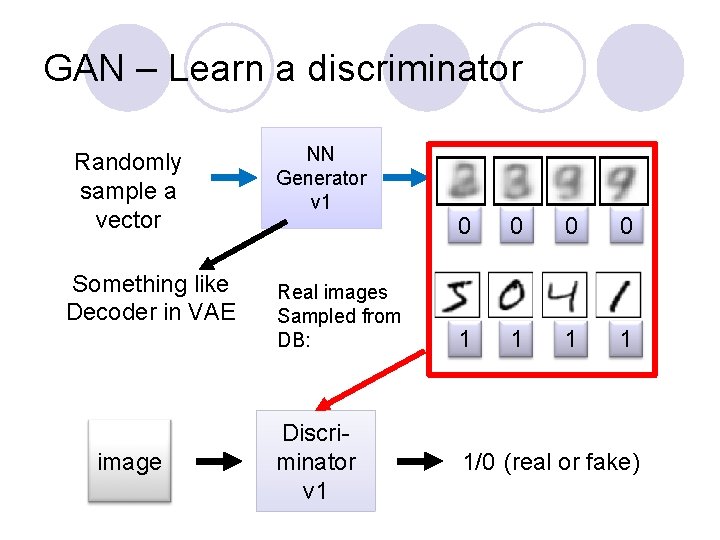

GAN – Learn a discriminator Randomly sample a vector Something like Decoder in VAE image NN Generator v 1 Real images Sampled from DB: Discriminator v 1 0 0 1 1 1/0 (real or fake)

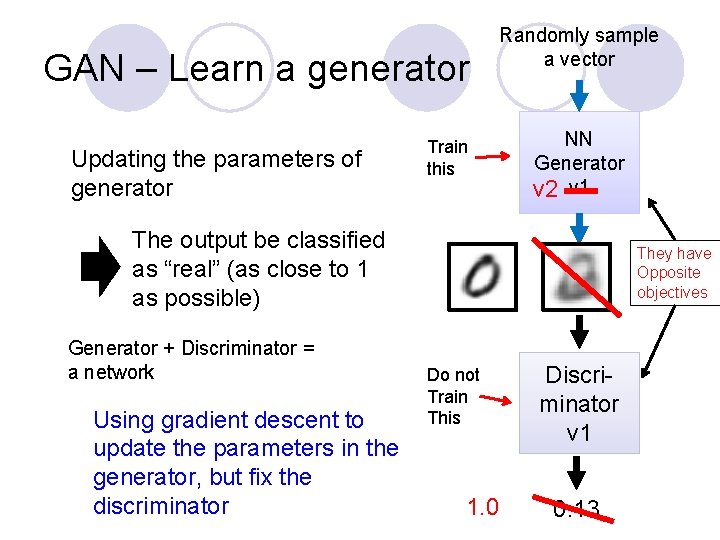

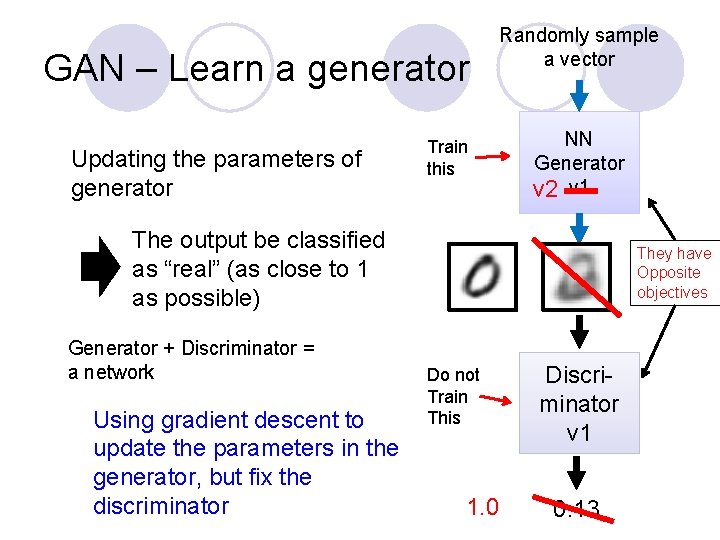

GAN – Learn a generator Updating the parameters of generator Randomly sample a vector Train this NN Generator v 2 v 1 The output be classified as “real” (as close to 1 as possible) Generator + Discriminator = a network Using gradient descent to update the parameters in the generator, but fix the discriminator They have Opposite objectives Do not Train This 1. 0 Discriminator v 1 0. 13

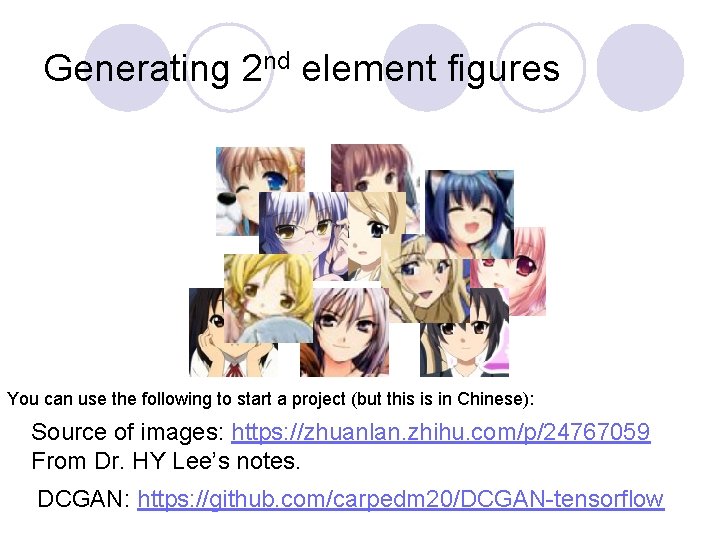

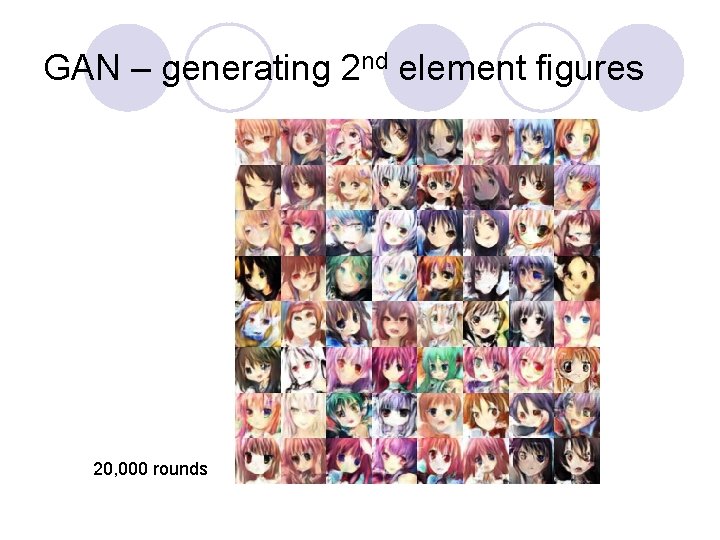

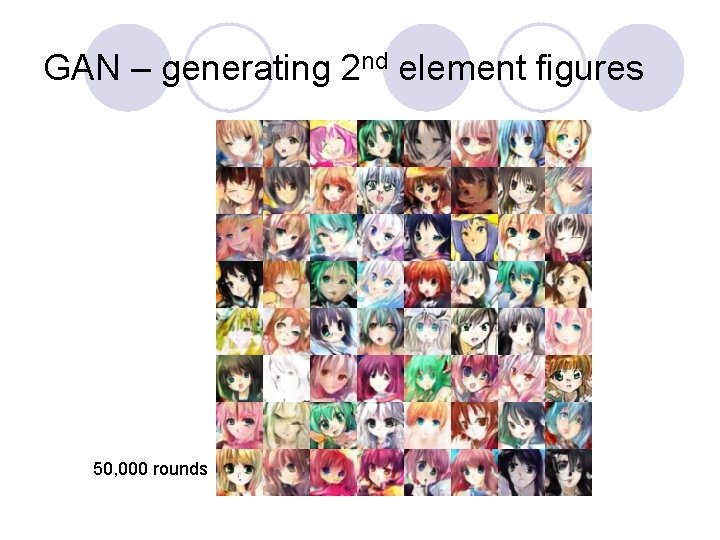

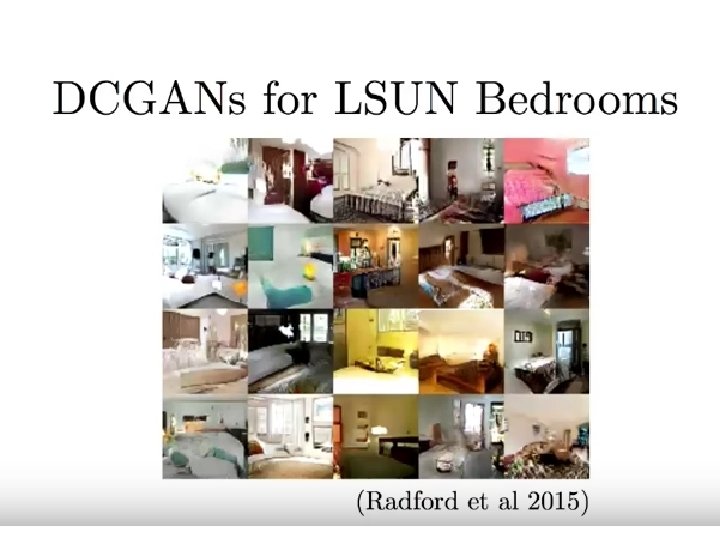

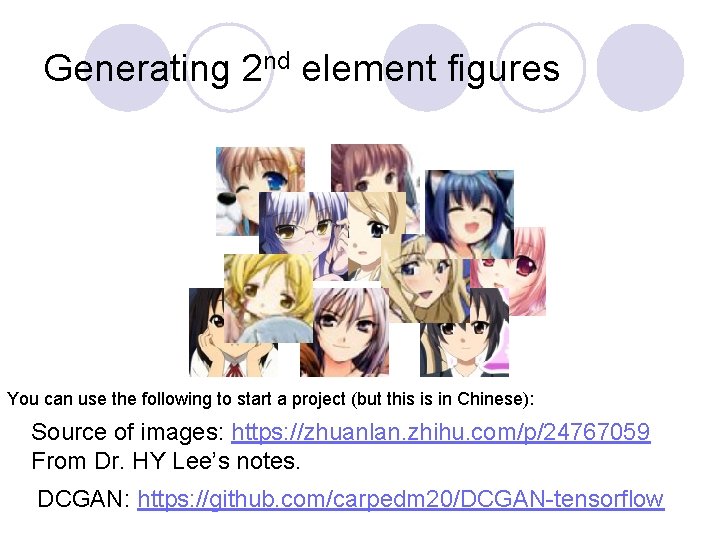

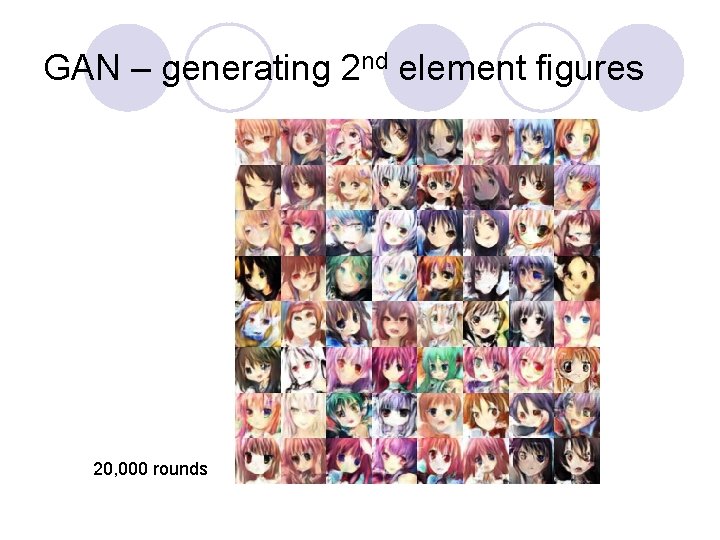

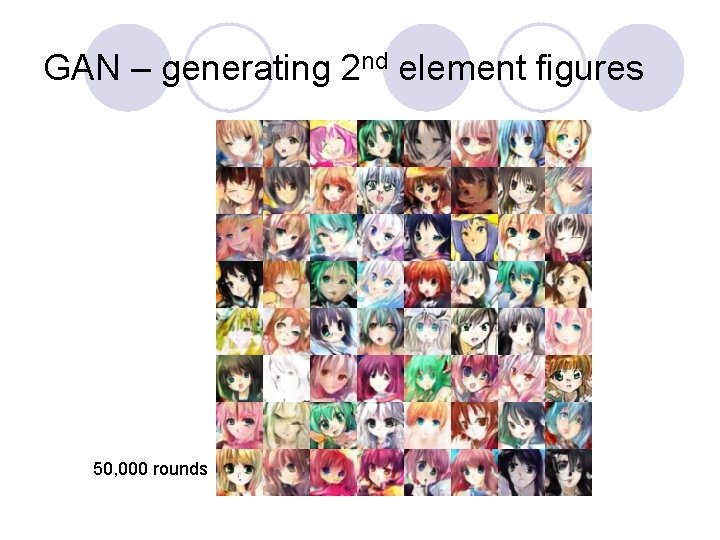

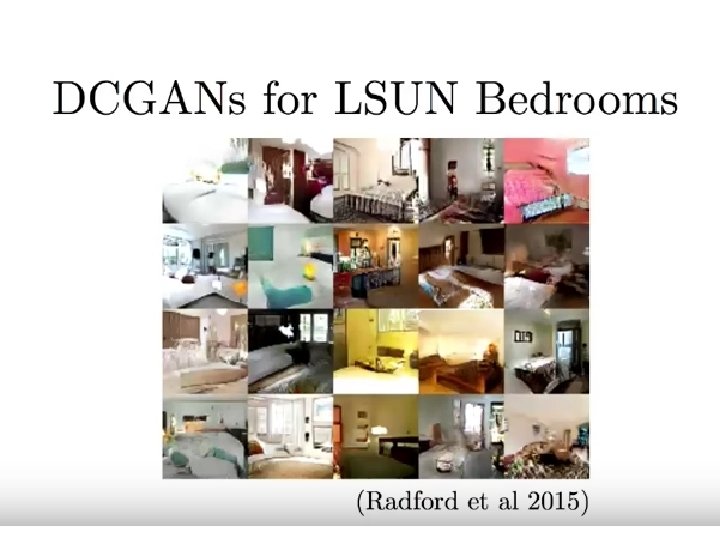

Generating 2 nd element figures You can use the following to start a project (but this is in Chinese): Source of images: https: //zhuanlan. zhihu. com/p/24767059 From Dr. HY Lee’s notes. DCGAN: https: //github. com/carpedm 20/DCGAN-tensorflow

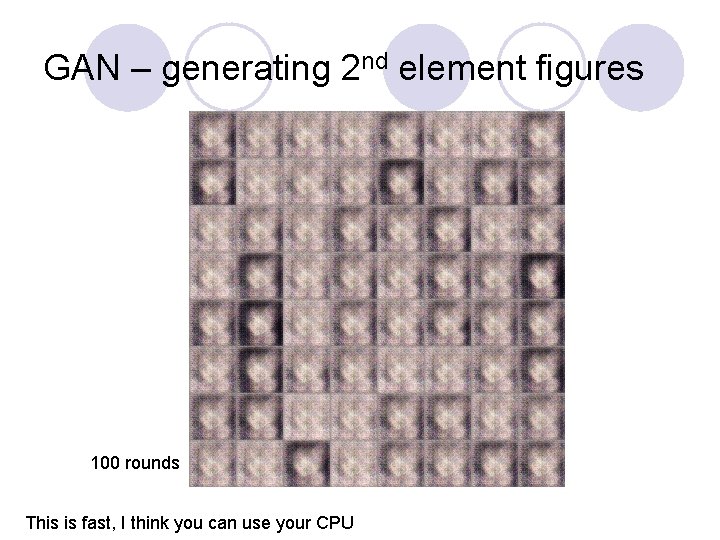

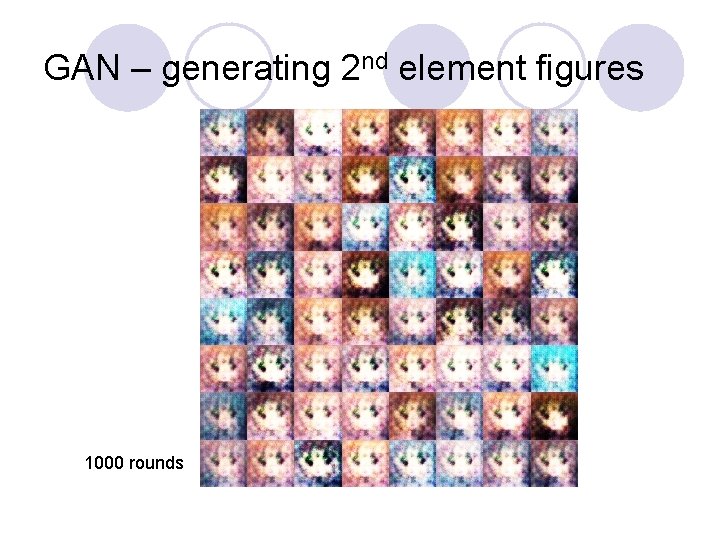

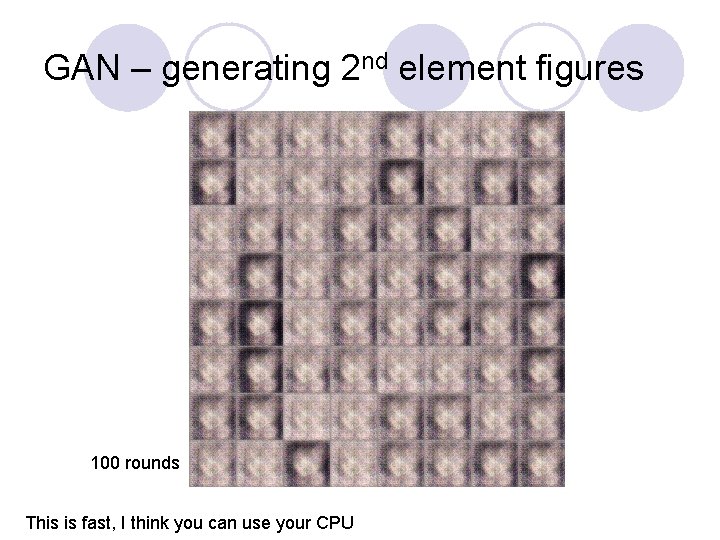

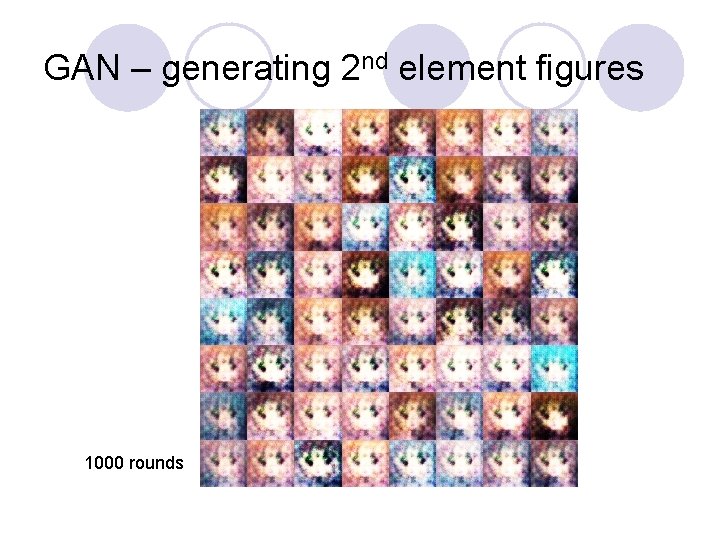

GAN – generating 2 nd element figures 100 rounds This is fast, I think you can use your CPU

GAN – generating 2 nd element figures 1000 rounds

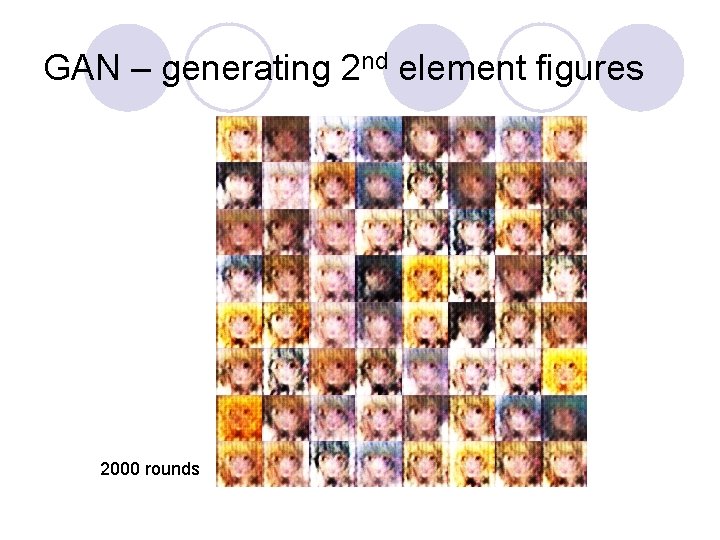

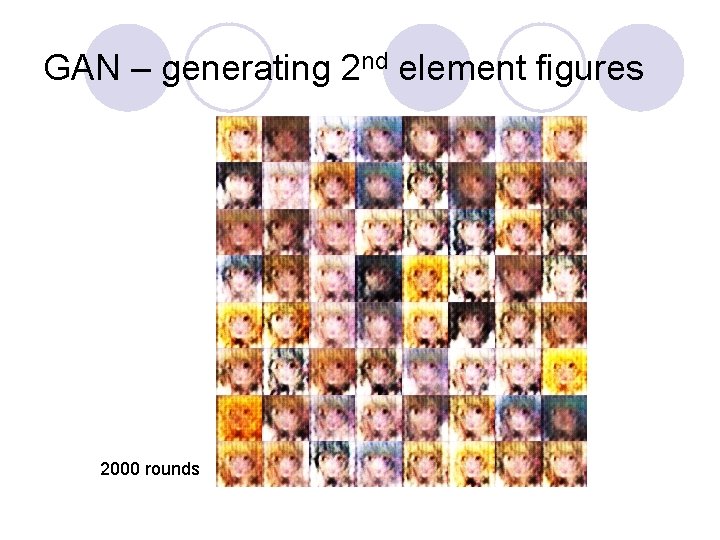

GAN – generating 2 nd element figures 2000 rounds

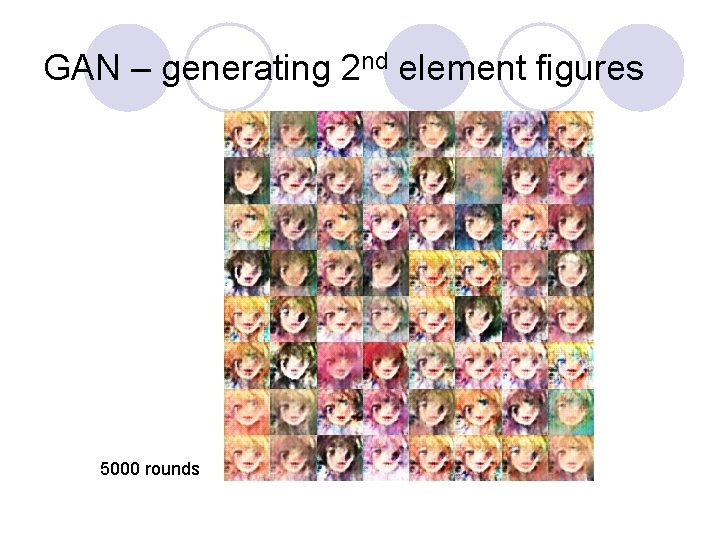

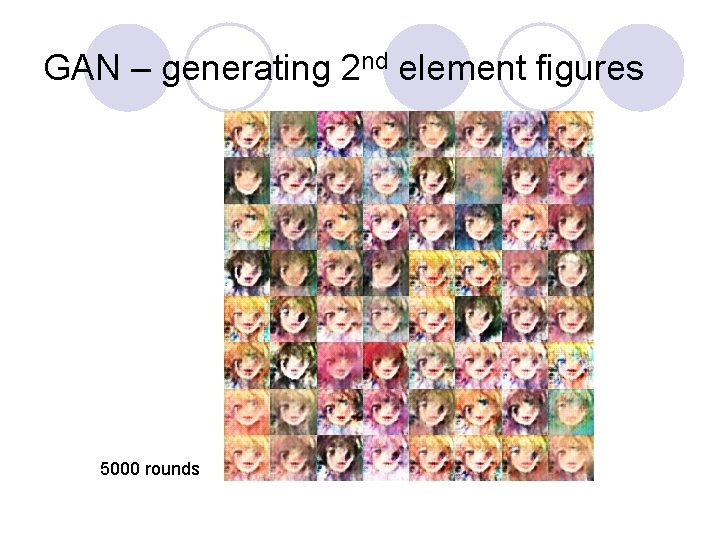

GAN – generating 2 nd element figures 5000 rounds

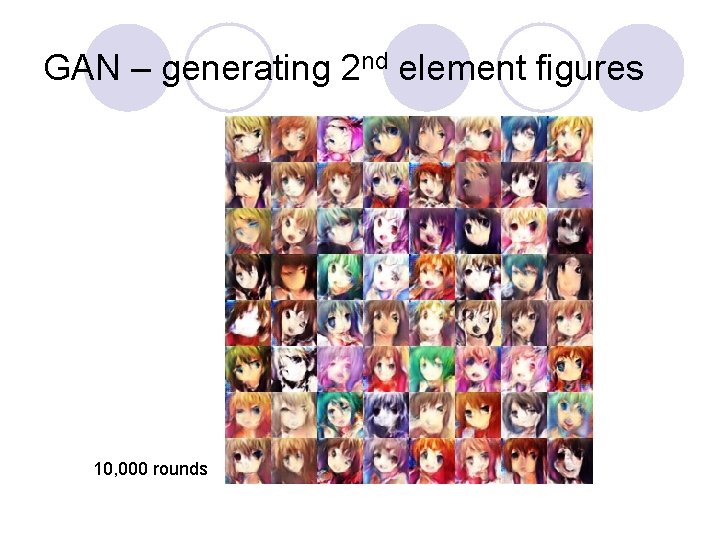

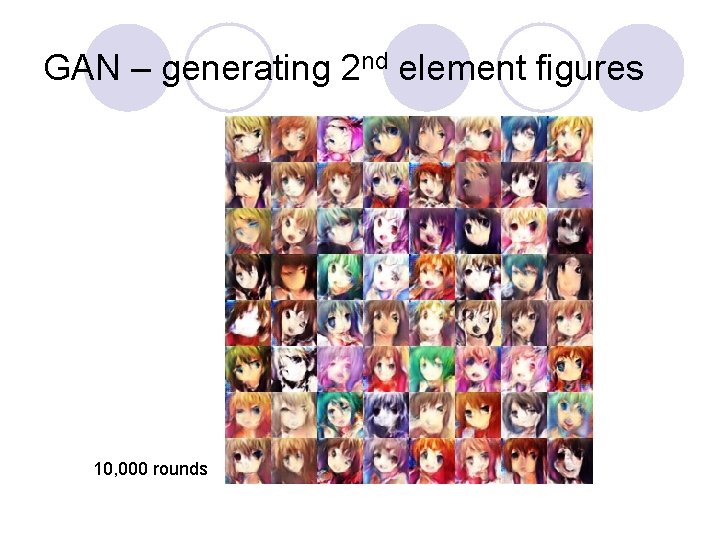

GAN – generating 2 nd element figures 10, 000 rounds

GAN – generating 2 nd element figures 20, 000 rounds

GAN – generating 2 nd element figures 50, 000 rounds

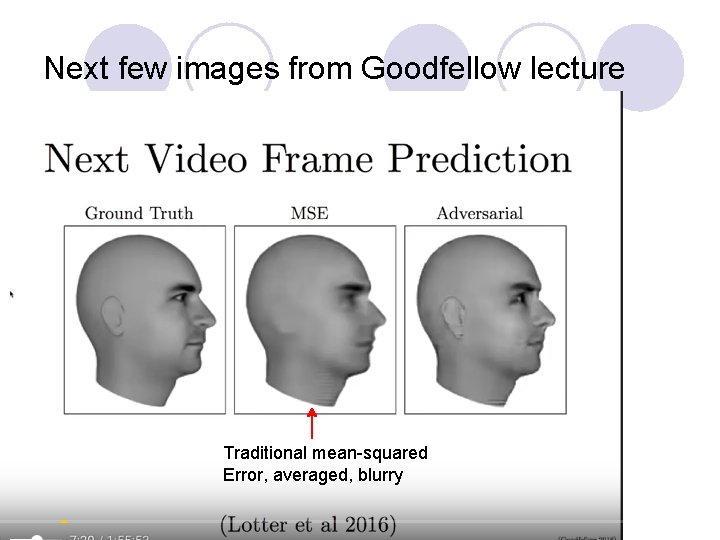

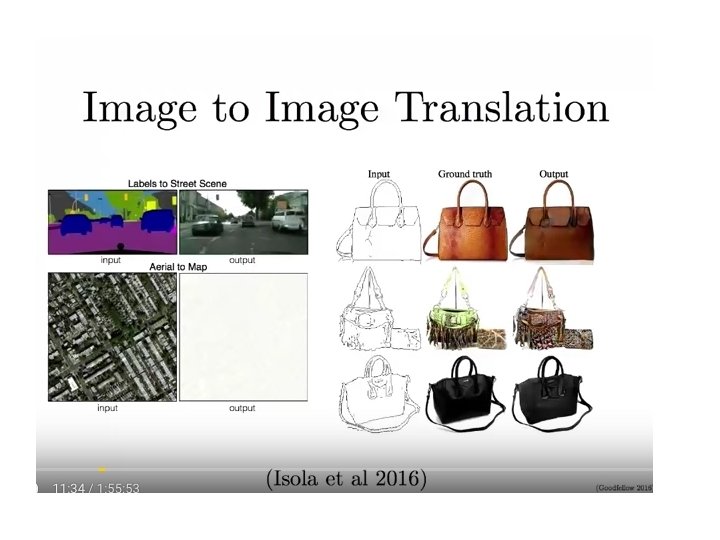

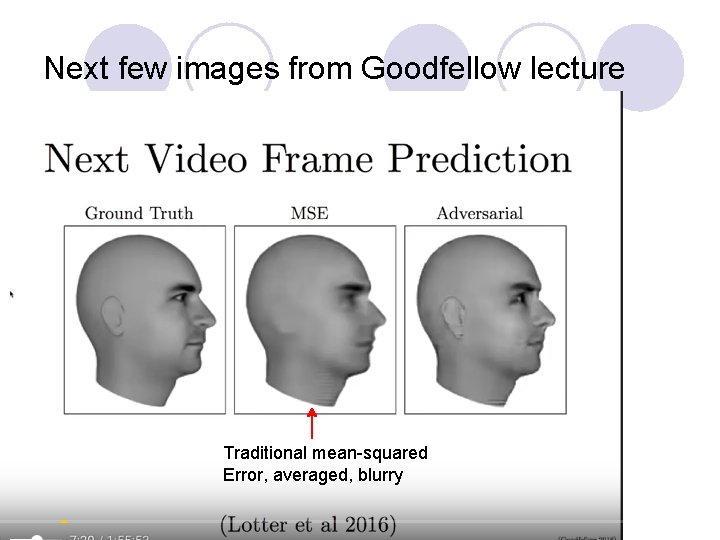

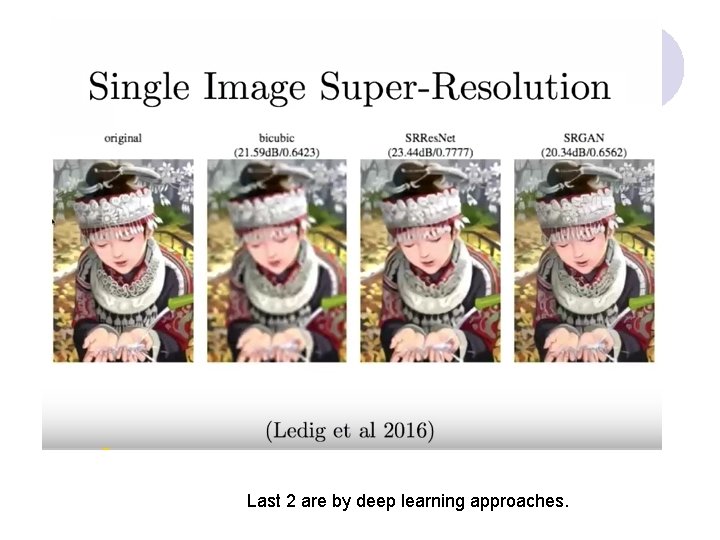

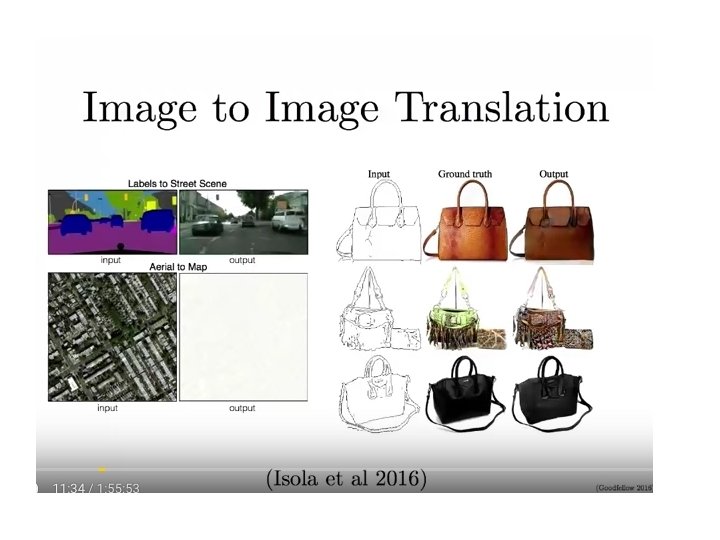

Next few images from Goodfellow lecture Traditional mean-squared Error, averaged, blurry

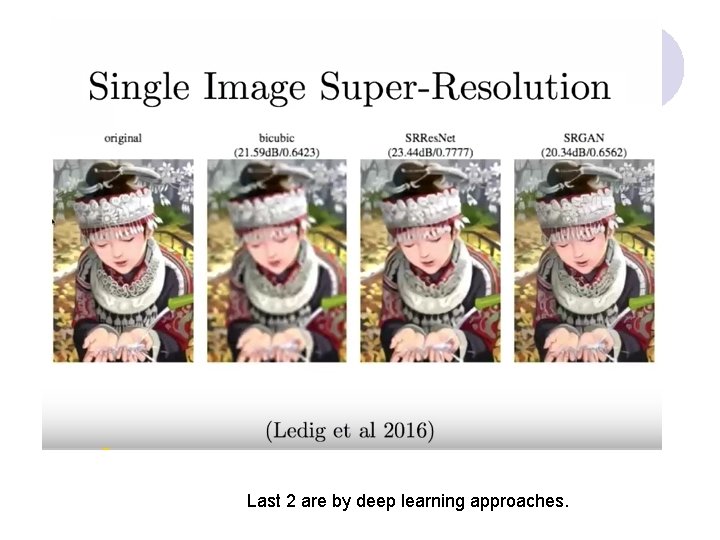

Last 2 are by deep learning approaches.

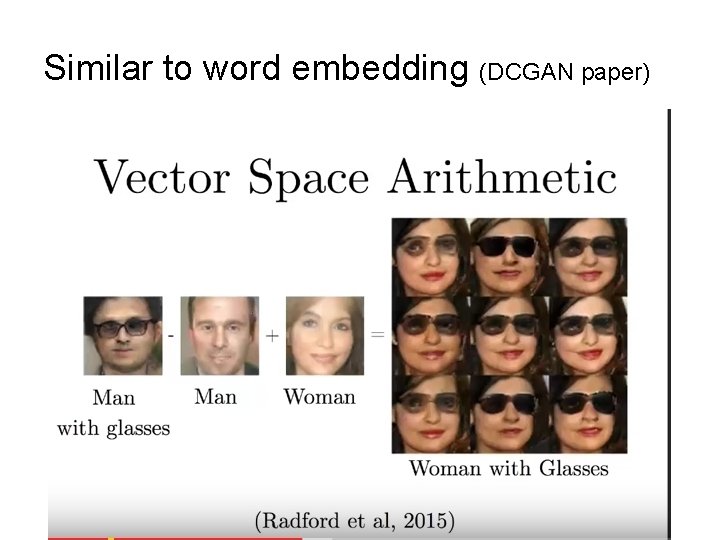

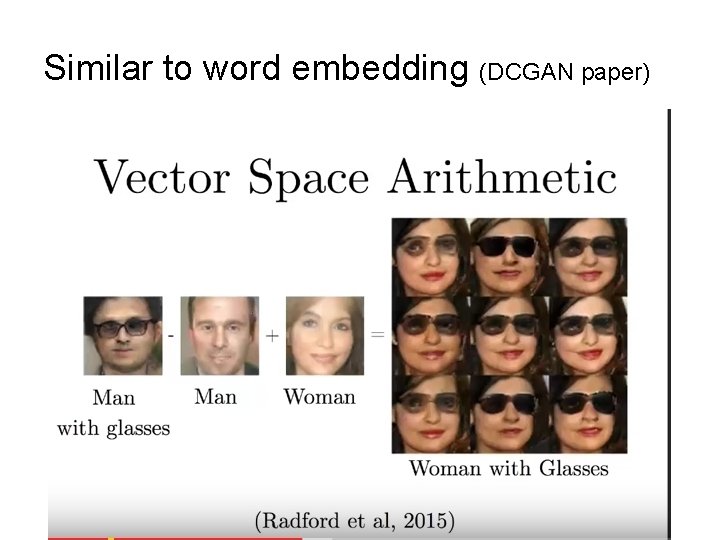

Similar to word embedding (DCGAN paper)

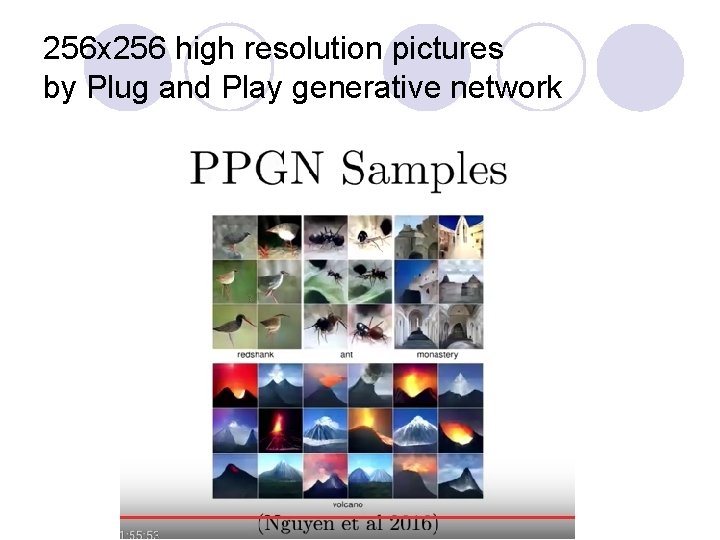

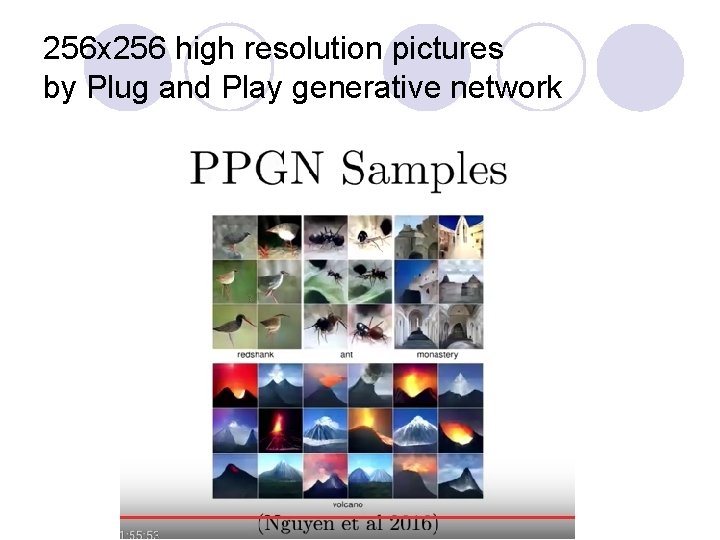

256 x 256 high resolution pictures by Plug and Play generative network

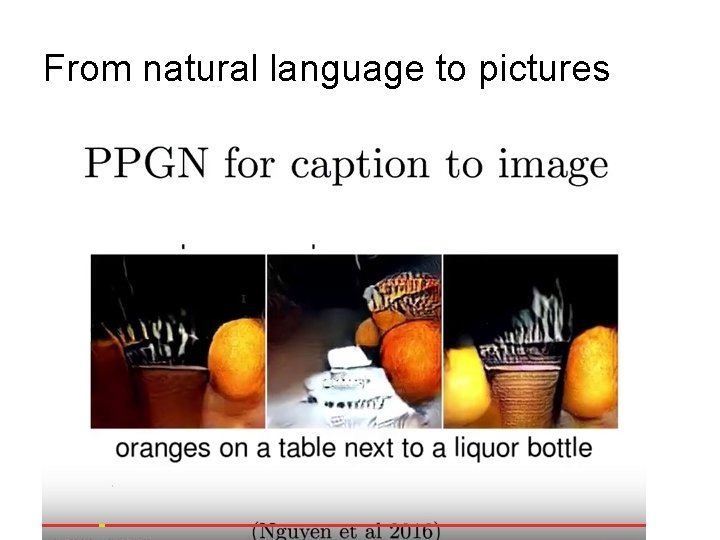

From natural language to pictures

Deriving GAN l During the rest of this lecture, we will go thru the original ideas and derive GAN. l I will avoid the continuous case and stick to simple explanations.

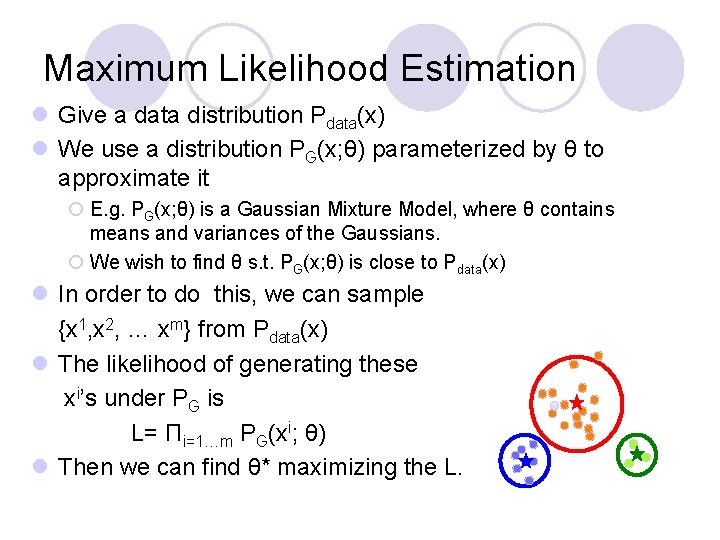

Maximum Likelihood Estimation l Give a data distribution Pdata(x) l We use a distribution PG(x; θ) parameterized by θ to approximate it ¡ E. g. PG(x; θ) is a Gaussian Mixture Model, where θ contains means and variances of the Gaussians. ¡ We wish to find θ s. t. PG(x; θ) is close to Pdata(x) l In order to do this, we can sample {x 1, x 2, … xm} from Pdata(x) l The likelihood of generating these xi’s under PG is L= Πi=1…m PG(xi; θ) l Then we can find θ* maximizing the L.

![KL KullbackLeibler divergence l Discrete DKLPQ Σi PilogPiQi l Continuous DKLPQ KL (Kullback-Leibler) divergence l Discrete: DKL(P||Q) = Σi. P(i)log[P(i)/Q(i)] l Continuous: ∞ DKL(P||Q) =](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-28.jpg)

KL (Kullback-Leibler) divergence l Discrete: DKL(P||Q) = Σi. P(i)log[P(i)/Q(i)] l Continuous: ∞ DKL(P||Q) = p(x)log [p(x)/q(x)] −∞ l Explanations: Entropy: - Σi. P(i)log. P(i) - expected code length (also optimal) Cross Entropy: - Σi. P(i)log Q(i) – expected coding length using optimal code for Q DKL= Σi. P(i)log[P(i)/Q(i)] = Σi. P(i)[log. P(i) – log. Q(i)], extra bits JSD(P||Q) = ½ DKL(P||M)+ ½ DKL(Q||M), M= ½ (P+Q), symmetric KL * JSD = Jensen-Shannon Divergency

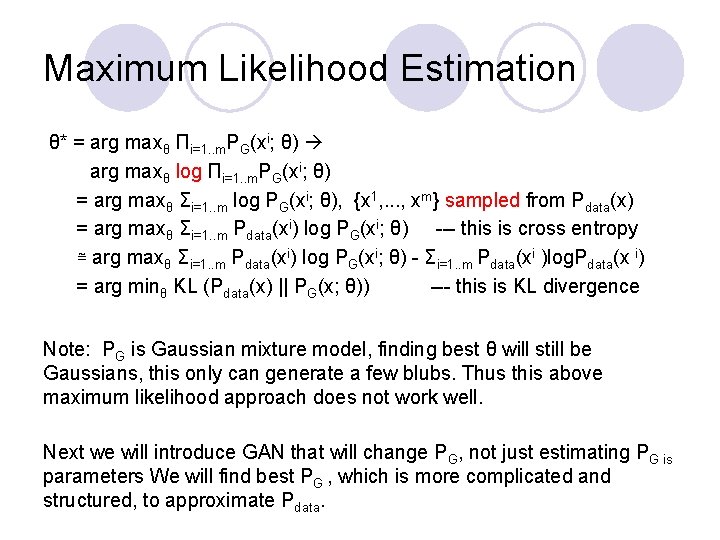

Maximum Likelihood Estimation θ* = arg maxθ Πi=1. . m. PG(xi; θ) i arg maxθ log Πi=1. . m. PG(x ; θ) = arg maxθ Σi=1. . m log PG(xi; θ), {x 1, . . . , xm} sampled from Pdata(x) = arg maxθ Σi=1. . m Pdata(xi) log PG(xi; θ) --- this is cross entropy ≅ arg maxθ Σi=1. . m Pdata(xi) log PG(xi; θ) - Σi=1. . m Pdata(xi )log. Pdata(x i) = arg minθ KL (Pdata(x) || PG(x; θ)) --- this is KL divergence Note: PG is Gaussian mixture model, finding best θ will still be Gaussians, this only can generate a few blubs. Thus this above maximum likelihood approach does not work well. Next we will introduce GAN that will change PG, not just estimating PG is parameters We will find best PG , which is more complicated and structured, to approximate Pdata.

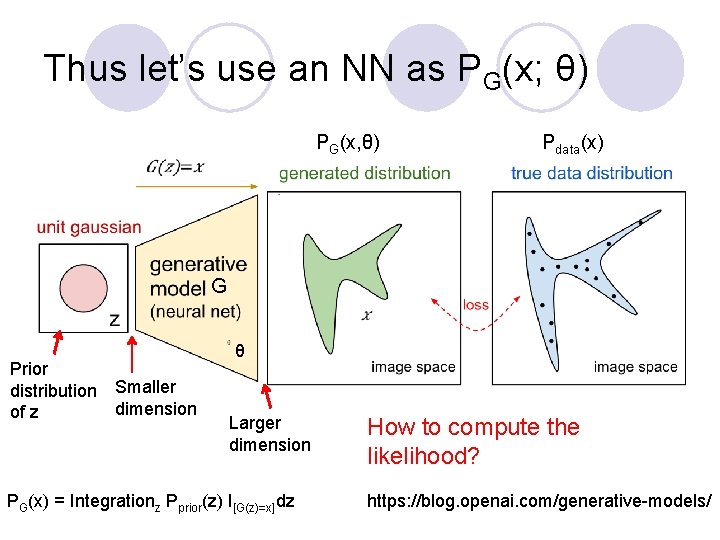

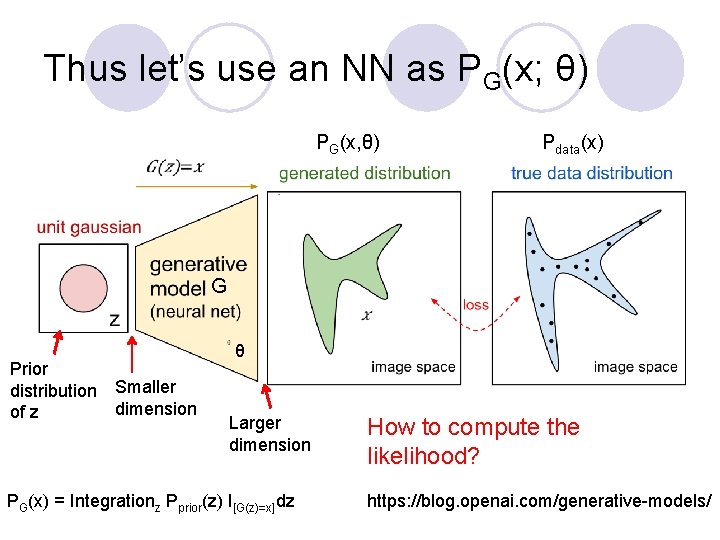

Thus let’s use an NN as PG(x; θ) PG(x, θ) Pdata(x) G Prior distribution Smaller dimension of z θ Larger dimension PG(x) = Integrationz Pprior(z) I[G(z)=x]dz How to compute the likelihood? https: //blog. openai. com/generative-models/

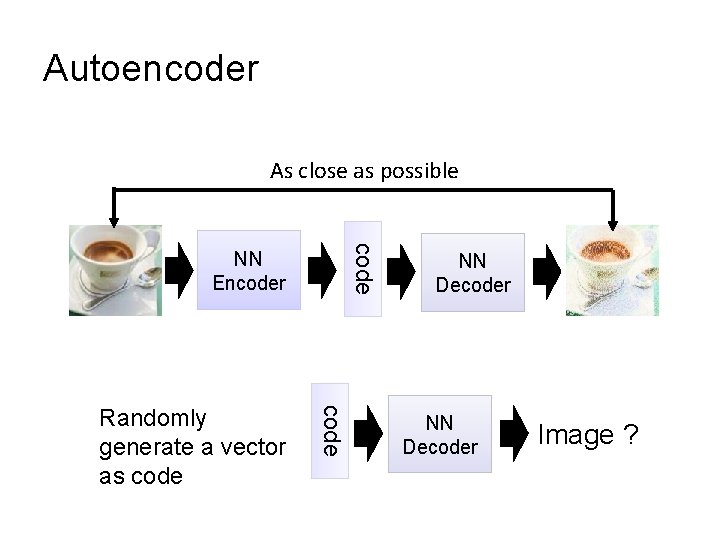

Basic Idea of GAN l Generator G Hard to learn PG by maximum likelihood ¡ G is a function, input z, output x ¡ Given a prior distribution Pprior(z), a probability distribution PG(x) is defined by function G l Discriminator D ¡ D is a function, input x, output scalar ¡ Evaluate the “difference” between PG(x) and Pdata(x) l In order for D to find difference between Pdata from PG, we need a cost function V(G, D): G*=arg min. Gmax. DV(G, D) Note, we are changing distribution G, not just update its parameters (as in the max likelihood case).

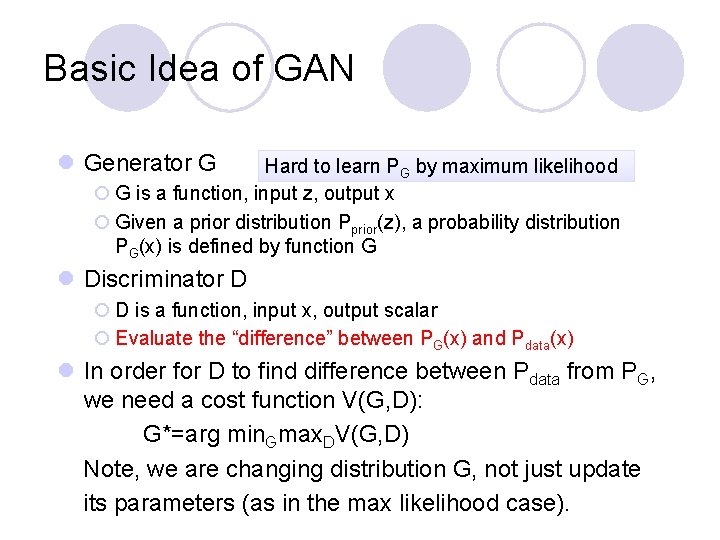

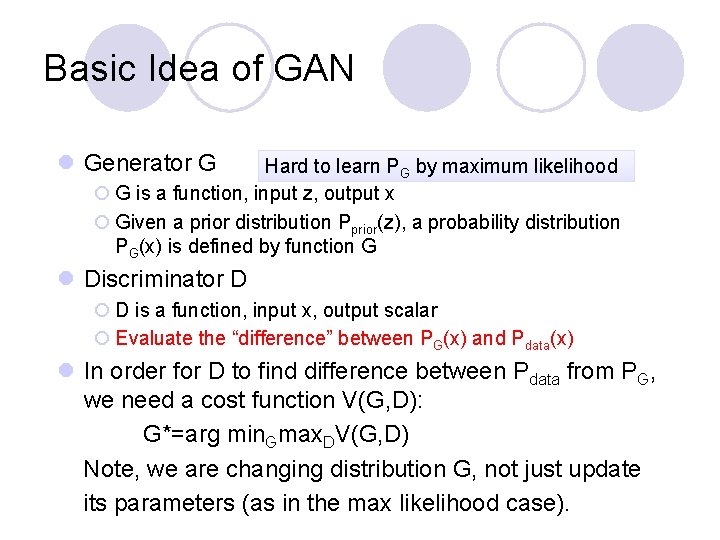

Basic Idea G* = arg min. Gmax. D V(G, D) Pick JSD function: V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] Given a generator G, max. DV(G, D) evaluates the “difference” between PG and Pdata Pick the G s. t. PG is most similar to Pdata V(G 1, D) G 1 V(G 3, D) V(G 2, D) G 2 G 3

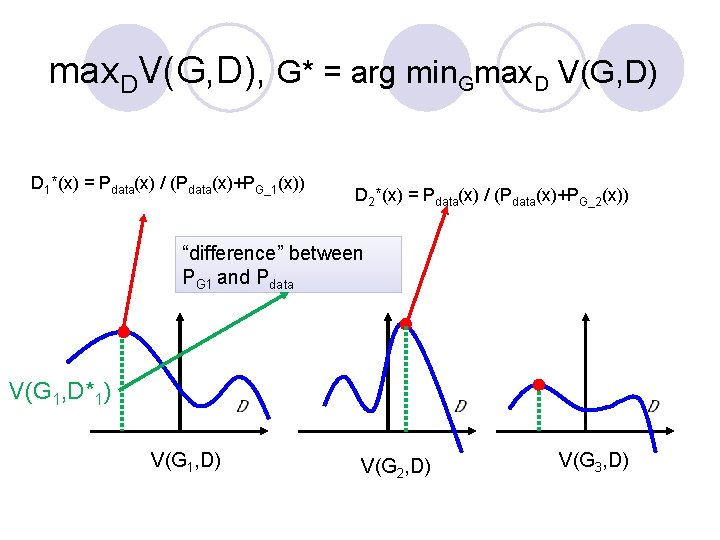

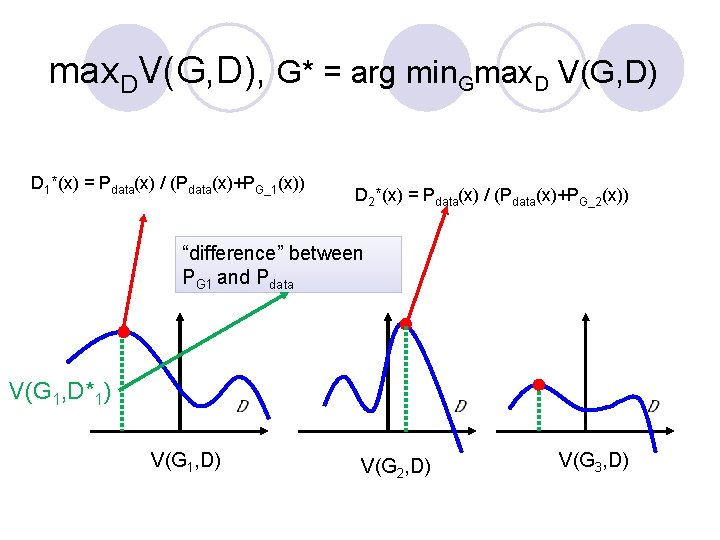

Max. DV(G, D), G*=arg min. Gmax. DV(G, D) l Given G, what is the optimal D* maximizing V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] = Σ [ Pdata(x) log D(x) + PG(x) log(1 -D(x) ] Thus: D*(x) = Pdata(x) / (Pdata(x)+PG(x)) Assuming D(x) can have any value here l Given x, the optimal D* maximizing is: f(D) = alog. D + blog(1 -D) D*=a/(a+b)

max. DV(G, D), G* = arg min. Gmax. D V(G, D) D 1*(x) = Pdata(x) / (Pdata(x)+PG_1(x)) D 2*(x) = Pdata(x) / (Pdata(x)+PG_2(x)) “difference” between PG 1 and Pdata V(G 1, D*1) V(G 1, D) V(G 2, D) V(G 3, D)

![max DVG D V ExPdata log Dx ExPGlog1 Dx max D VG max. DV(G, D) V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] max. D V(G,](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-35.jpg)

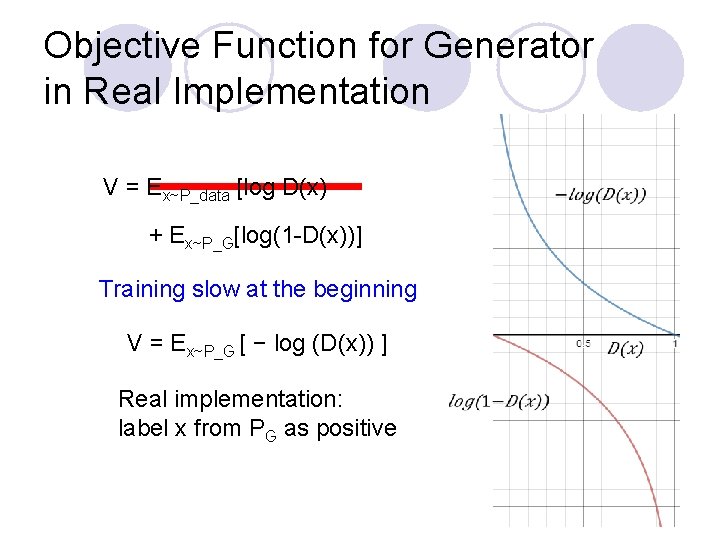

max. DV(G, D) V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] max. D V(G, D) = V(G, D*), where D*(x) = Pdata / (Pdata + PG), and 1 -D*(x) = P G / (Pdata + PG) = Ex~P_data log D*(x) + Ex~P_G log (1 -D*(x)) ≈ Σ [ Pdata (x) log D*(x) + PG(x) log (1 -D*(x)) ] = -2 log 2 + 2 JSD(Pdata || PG ), JSD(P||Q) = Jensen-Shannon divergence = ½ DKL(P||M)+ ½ DKL(Q||M) where M= ½ (P+Q). DKL(P||Q) = Σ P(x) log P(x) /Q(x)

![Summary V ExPdata log Dx ExPGlog1 Dx l Generator G Discriminator D Summary: V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Generator G, Discriminator D](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-36.jpg)

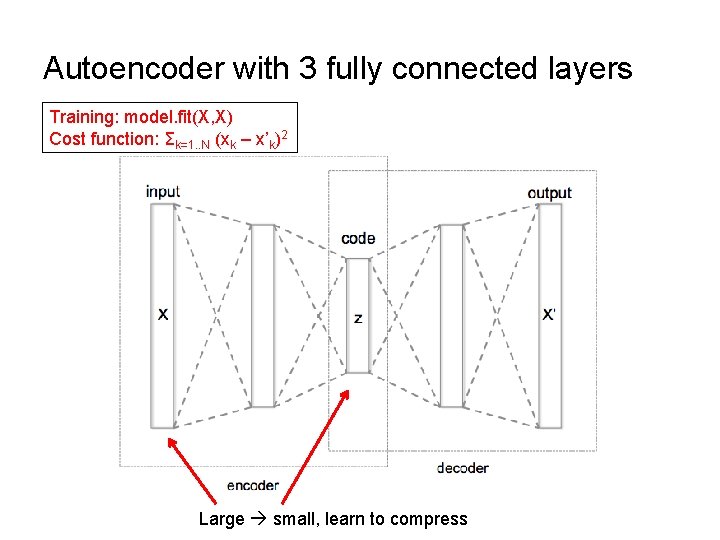

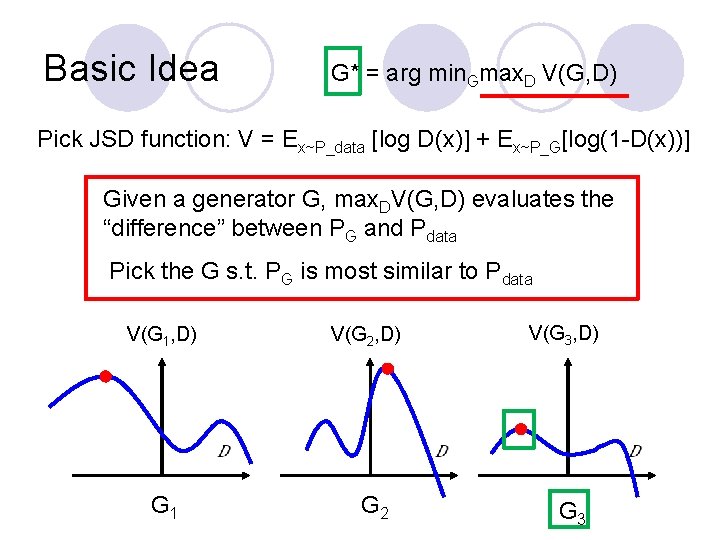

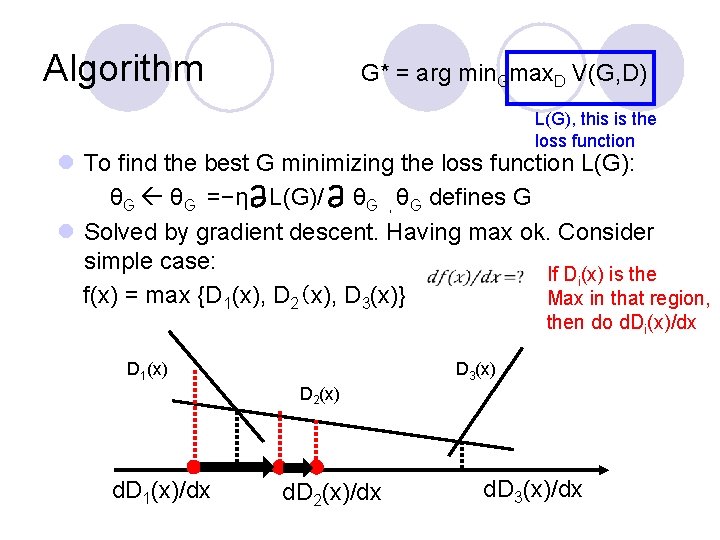

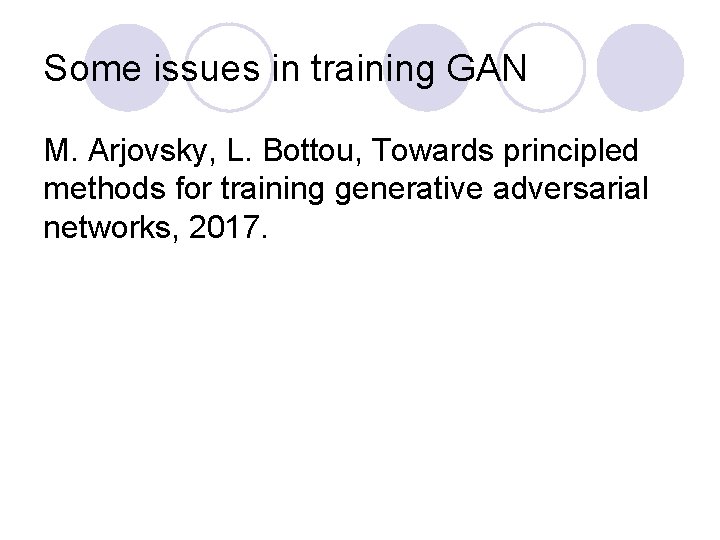

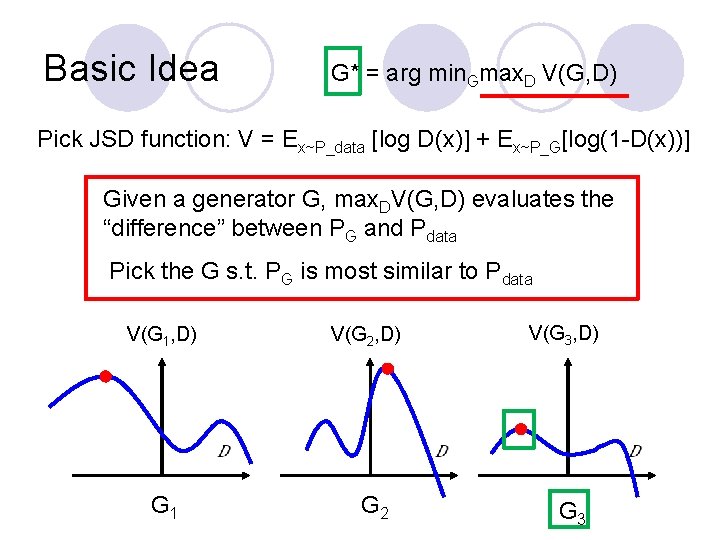

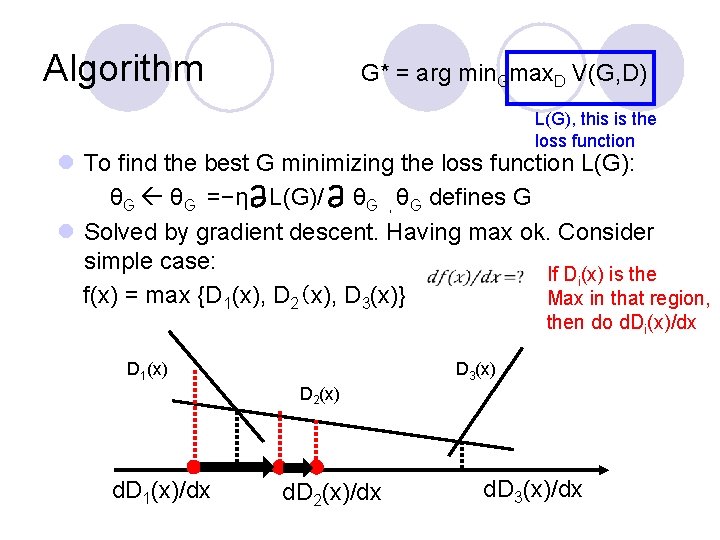

Summary: V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Generator G, Discriminator D l Looking for G* such that G* = arg min. Gmax. D V(G, D) l Given G, max. D V(G, D) = -2 log 2 + 2 JSD(Pdata(x) || PG(x)) l What is the optimal G? It is G that makes JSD smallest = 0: PG(x) = Pdata (x)

Algorithm G* = arg min. Gmax. D V(G, D) L(G), this is the loss function l To find the best G minimizing the loss function L(G): θG =−η L(G)/ θG , θG defines G l Solved by gradient descent. Having max ok. Consider simple case: If Di(x) is the f(x) = max {D 1(x), D 2(x), D 3(x)} Max in that region, then do d. Di(x)/dx D 1(x) D 3(x) D 2(x) d. D 1(x)/dx d. D 2(x)/dx d. D 3(x)/dx

Algorithm G* = arg min. Gmax. D V(G, D) L(G) l Given G 0 l Find D*0 maximizing V(G 0, D) V(G 0, D 0*) is the JS divergence between Pdata(x) and PG 0(x) l θG −η ΔV(G, D 0*) / θG Obtaining G 1 (decrease JSD) l Find D 1* maximizing V(G 1, D) V(G 1, D 1*) is the JS divergence between Pdata(x) and PG 1(x) l θG −η ΔV(G, D 1*) / θG Obtaining G 2 (decrease JSD) l And so on …

![In practice V ExPdata log Dx ExPGlog1 Dx l Given G In practice … V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Given G,](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-39.jpg)

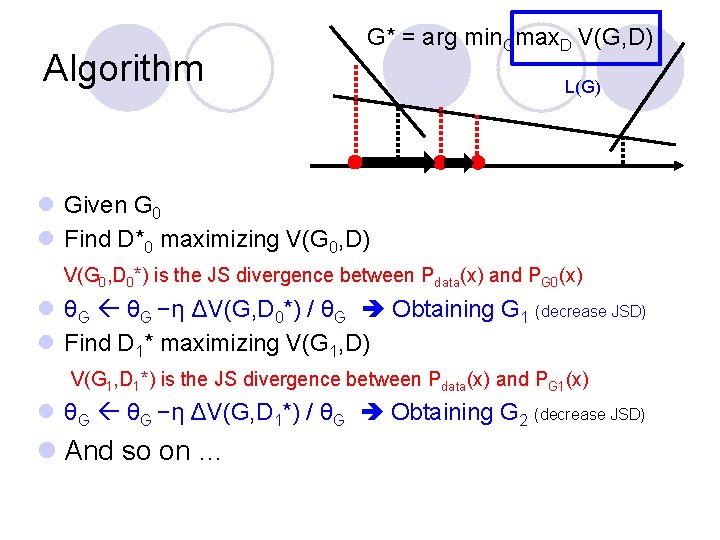

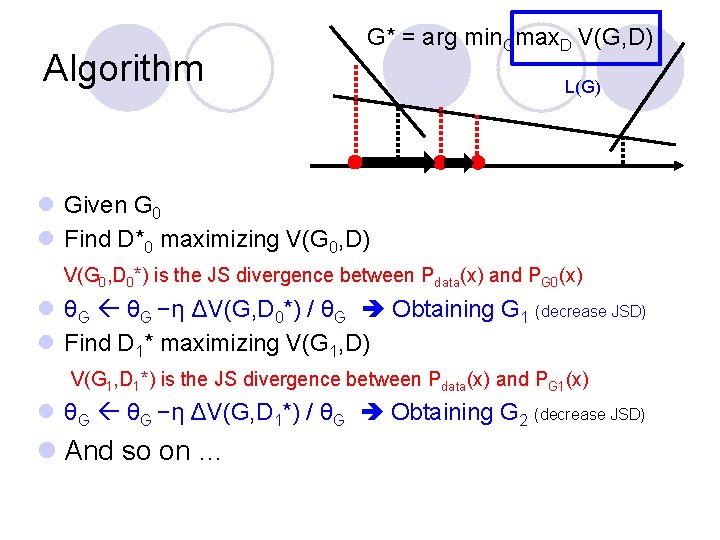

In practice … V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] l Given G, how to compute max. DV(G, D)? ¡Sample {x 1, … , xm} from Pdata ¡Sample {x*1, … , x*m} from generator PG Maximize: V’ = 1/m Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i)) Positive example D must accept Negative example D must reject This is what a Binary Classifier do Output is D(x) Minimize Cross-entropy If x is a positive example Minimize –log D(x) If x is a negative example Minimize –log(1 -D(x))

Binary Classifier Output is f(x) Minimize Cross-entropy If x is a positive example Minimize –log f(x) If x is a negative example Minimize –log(1 -f(x)) D is a binary classifier (can be deep) with parameters θd {x 1, x 2, … xm} from Pdata (x) Positive examples {x*1, x*2, … x*m} from PG(x) Negative examples Minimize L = - V’ or Maximize V’ = Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i))

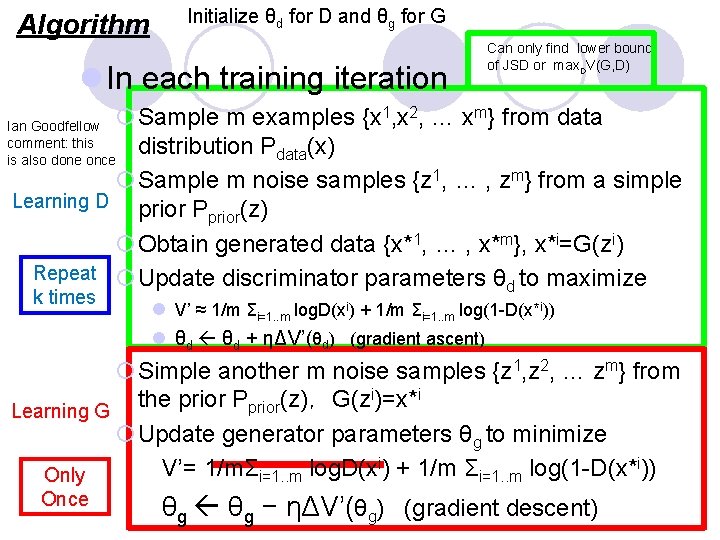

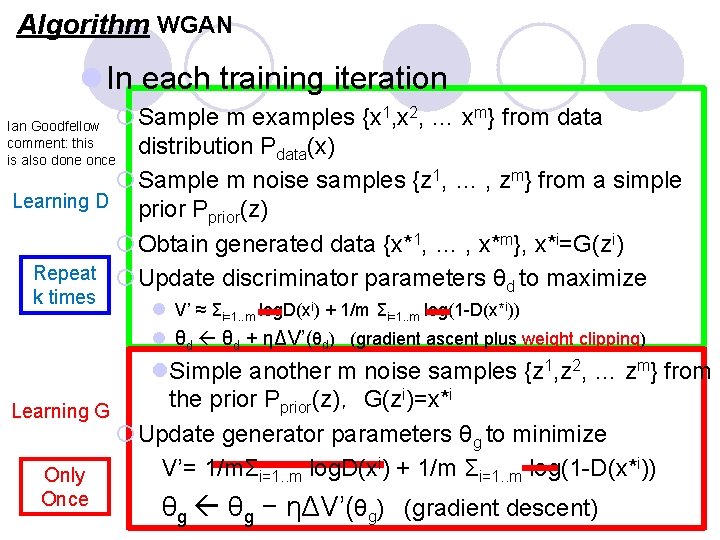

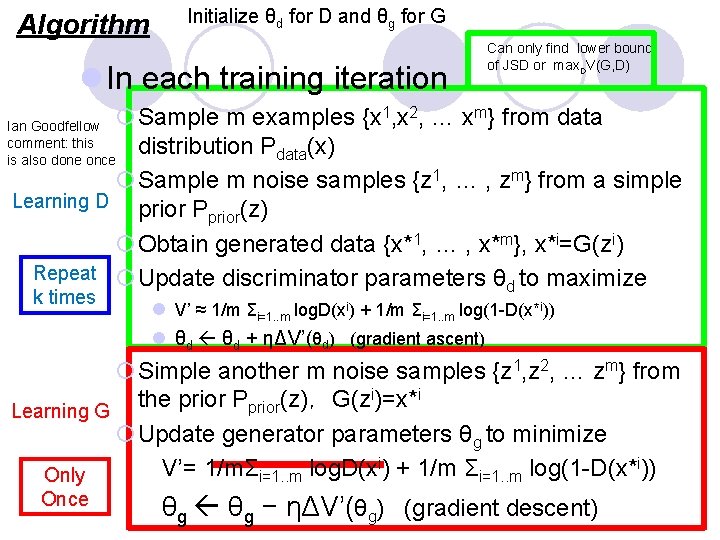

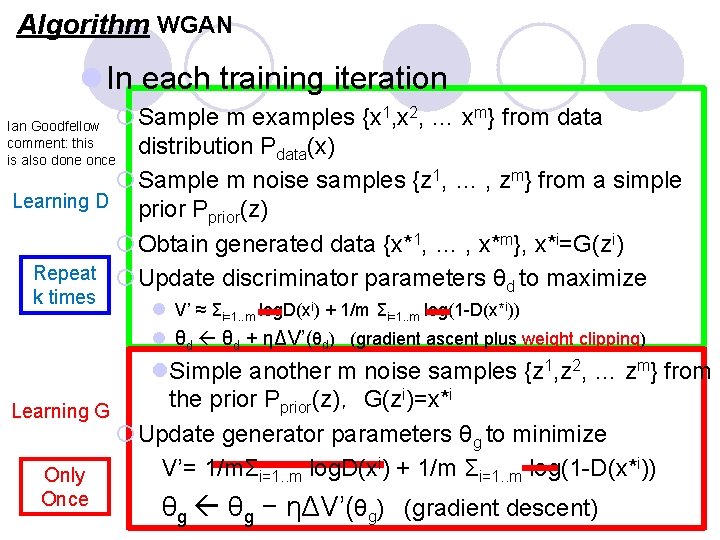

Algorithm Initialize θd for D and θg for G l In each training iteration Can only find lower bound of JSD or max. DV(G, D) ¡Sample m examples {x 1, x 2, … xm} from data distribution Pdata(x) ¡Sample m noise samples {z 1, … , zm} from a simple Learning D prior P prior(z) ¡Obtain generated data {x*1, … , x*m}, x*i=G(zi) Repeat ¡ Update discriminator parameters θ to maximize d Ian Goodfellow comment: this is also done once k times l V’ ≈ 1/m Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i)) l θd + ηΔV’(θd) (gradient ascent) ¡Simple another m noise samples {z 1, z 2, … zm} from i)=x*i the prior P (z),G(z prior Learning G ¡Update generator parameters θg to minimize i i Only V’= 1/mΣi=1. . m log. D(x ) + 1/m Σi=1. . m log(1 -D(x* )) Once θg − ηΔV’(θg) (gradient descent)

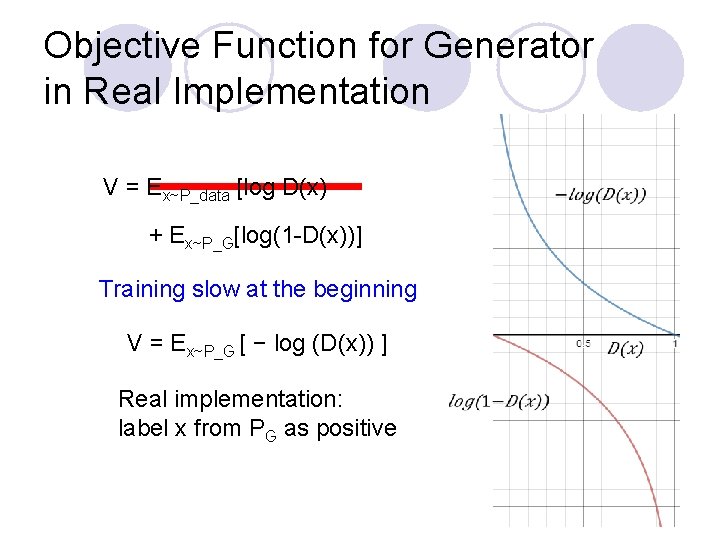

Objective Function for Generator in Real Implementation V = Ex~P_data [log D(x) + Ex~P_G[log(1 -D(x))] Training slow at the beginning V = Ex~P_G [ − log (D(x)) ] Real implementation: label x from PG as positive

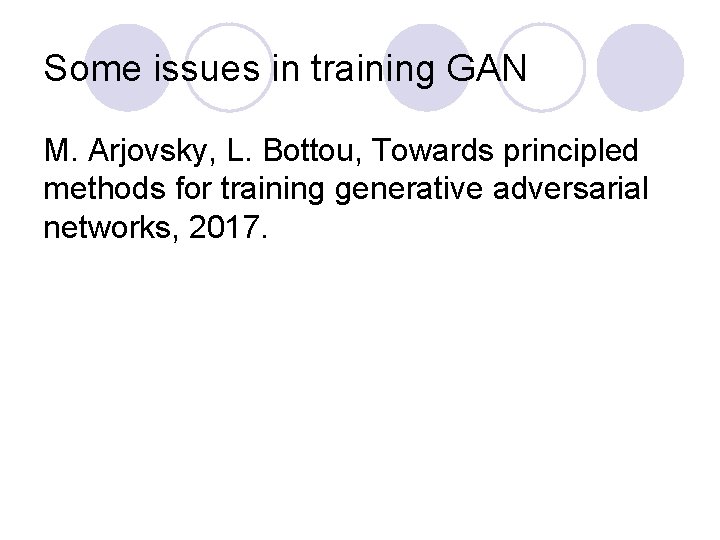

Some issues in training GAN M. Arjovsky, L. Bottou, Towards principled methods for training generative adversarial networks, 2017.

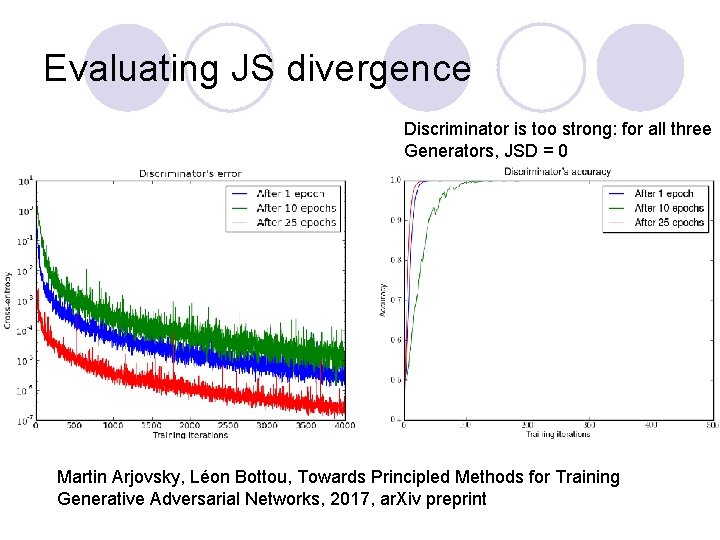

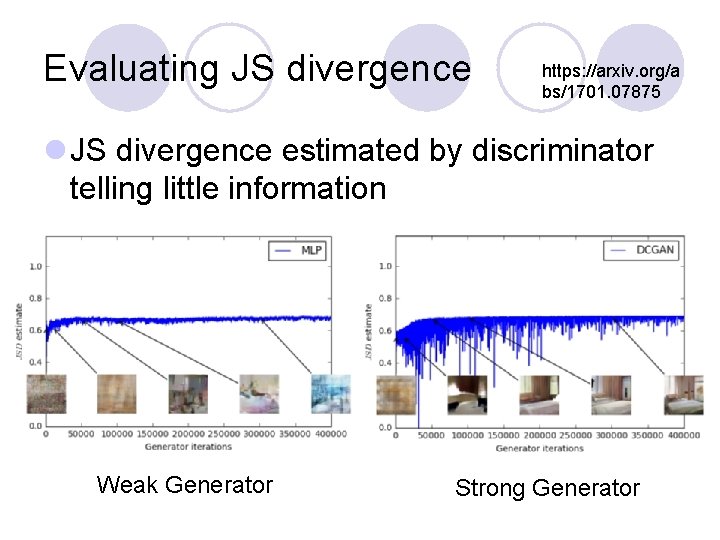

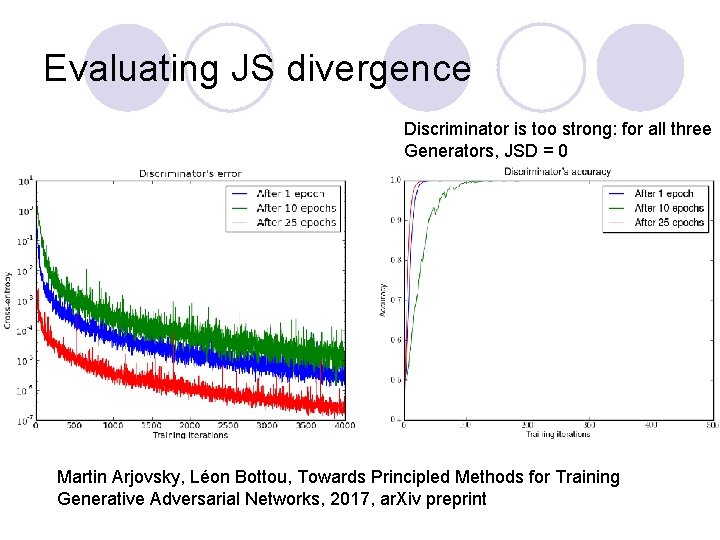

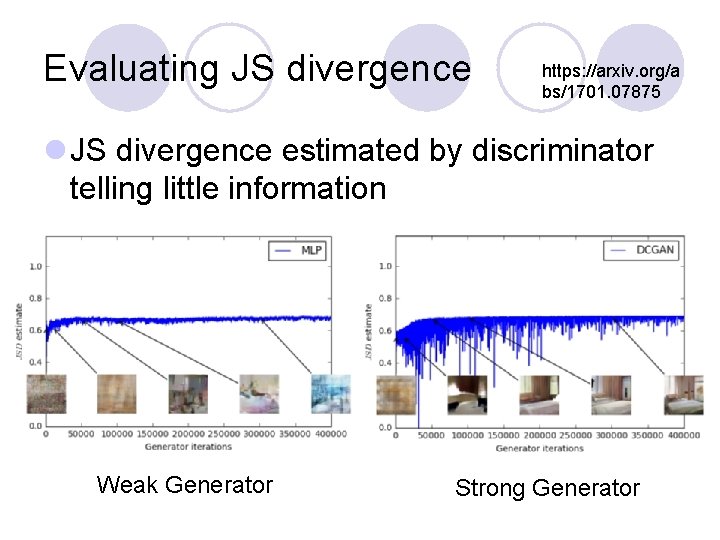

Evaluating JS divergence Discriminator is too strong: for all three Generators, JSD = 0 Martin Arjovsky, Léon Bottou, Towards Principled Methods for Training Generative Adversarial Networks, 2017, ar. Xiv preprint

Evaluating JS divergence https: //arxiv. org/a bs/1701. 07875 l JS divergence estimated by discriminator telling little information Weak Generator Strong Generator

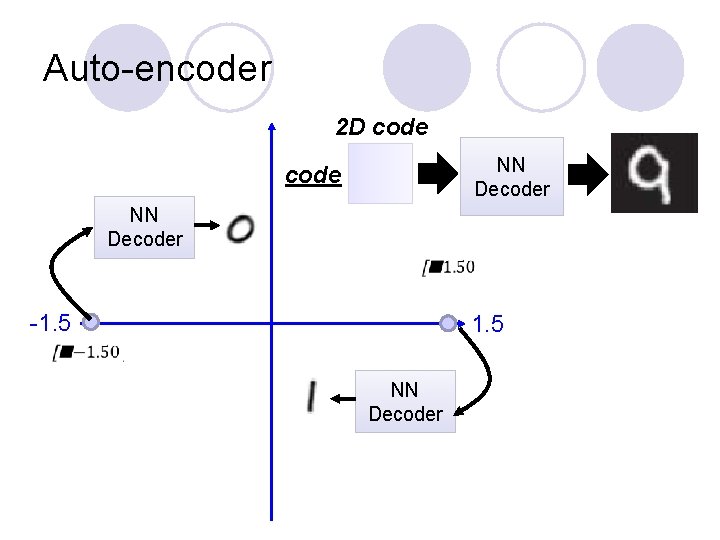

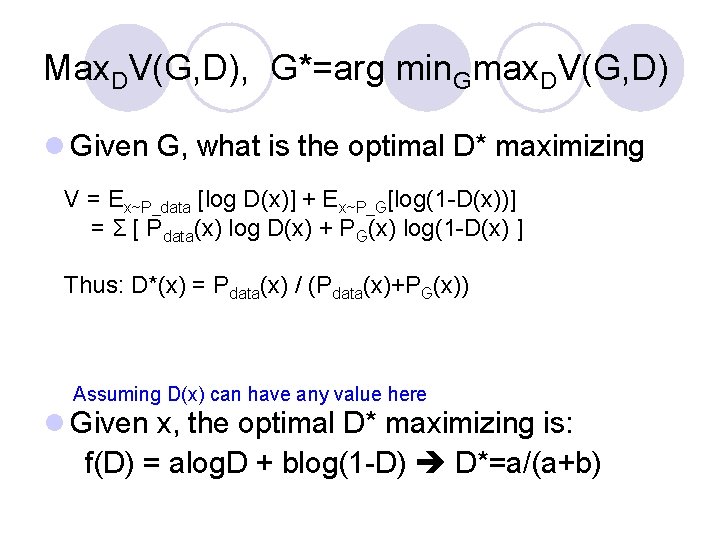

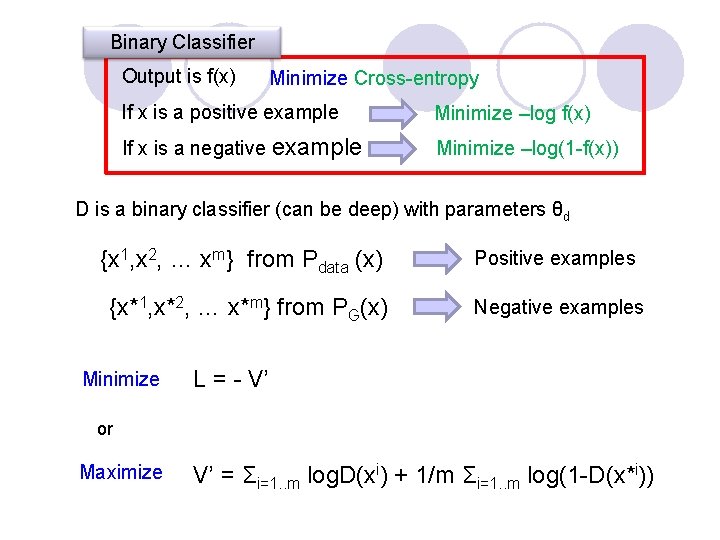

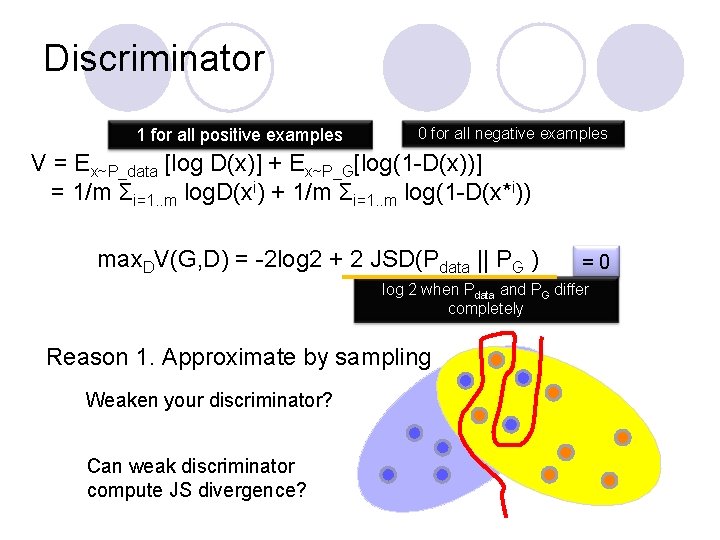

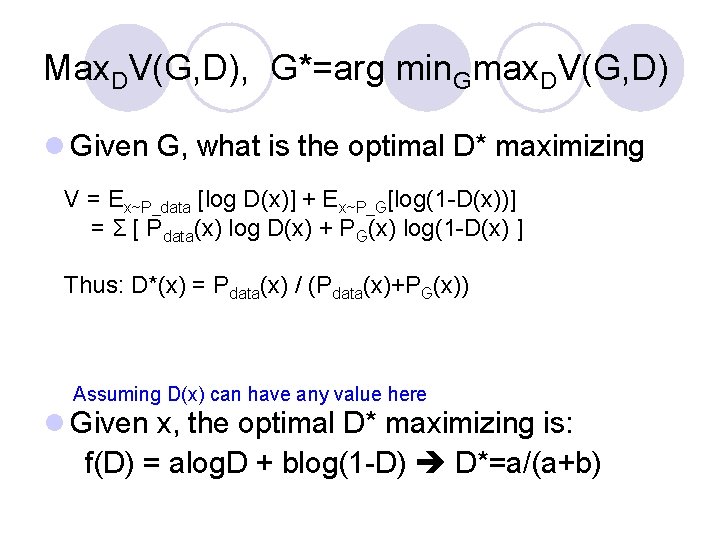

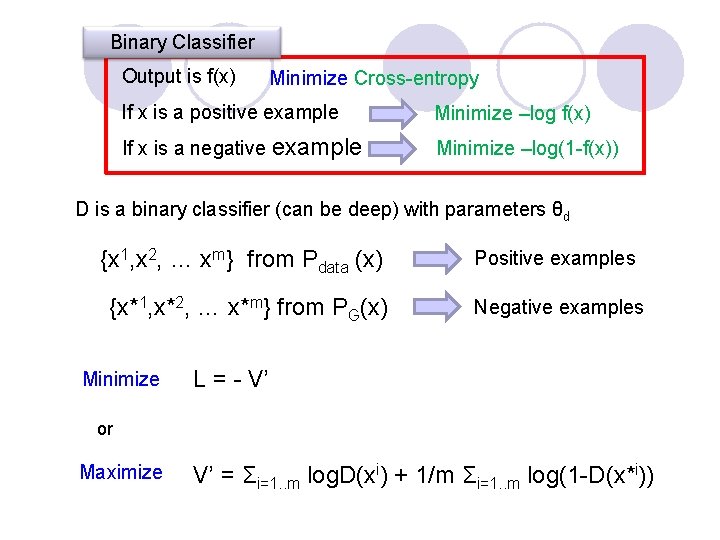

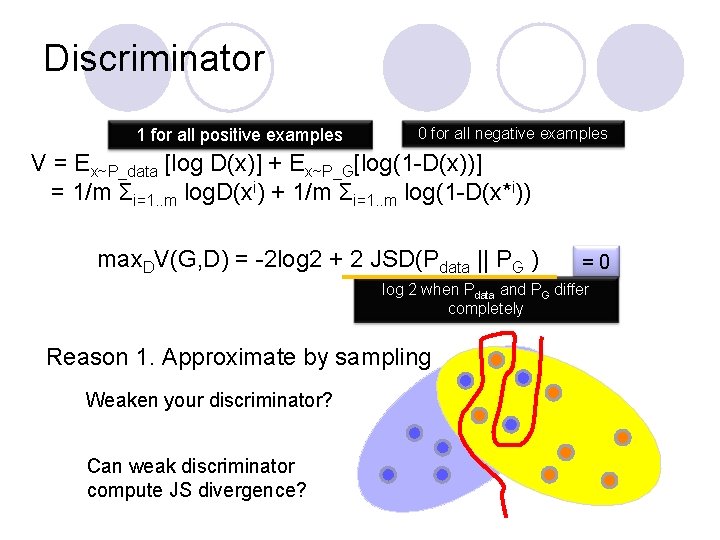

Discriminator 1 for all positive examples 0 for all negative examples V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] = 1/m Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i)) max. DV(G, D) = -2 log 2 + 2 JSD(Pdata || PG ) = 0 log 2 when Pdata and PG differ completely Reason 1. Approximate by sampling Weaken your discriminator? Can weak discriminator compute JS divergence?

![Discriminator 1 0 GAN implementation estimation V ExPdata log Dx ExPGlog1 Dx Discriminator 1 0 GAN implementation estimation V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))]](https://slidetodoc.com/presentation_image/5ca6c4d2d8b9452c72b4a57bbea7c1ff/image-47.jpg)

Discriminator 1 0 GAN implementation estimation V = Ex~P_data [log D(x)] + Ex~P_G[log(1 -D(x))] = 1/m Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i)) ≈ 0 max. DV(G, D) = -2 log 2 + 2 JSD(Pdata || PG ) = 0 Theoretical estimation Reason 2. the nature of data Pdata(x) and PG(x) have very little overlap in high dimensional space log 2

Evolution http: //www. guokr. com/post/773890/ Better

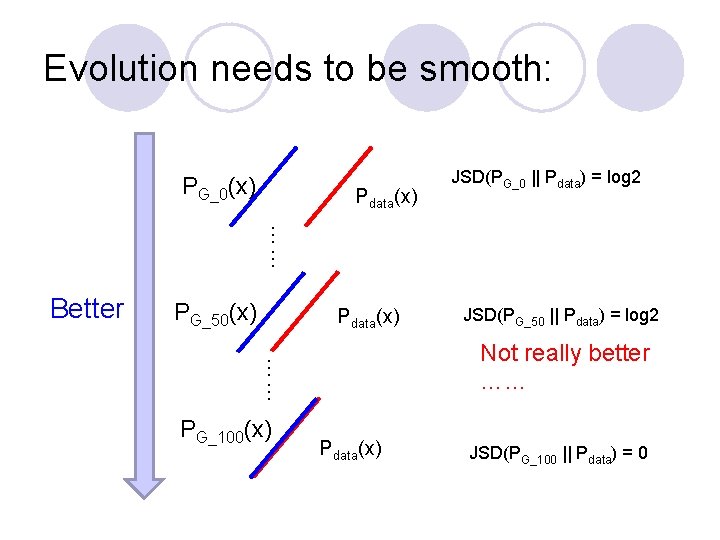

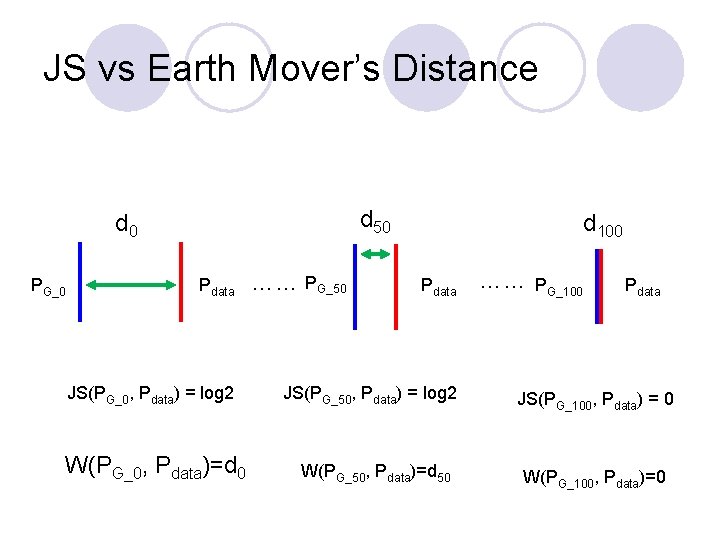

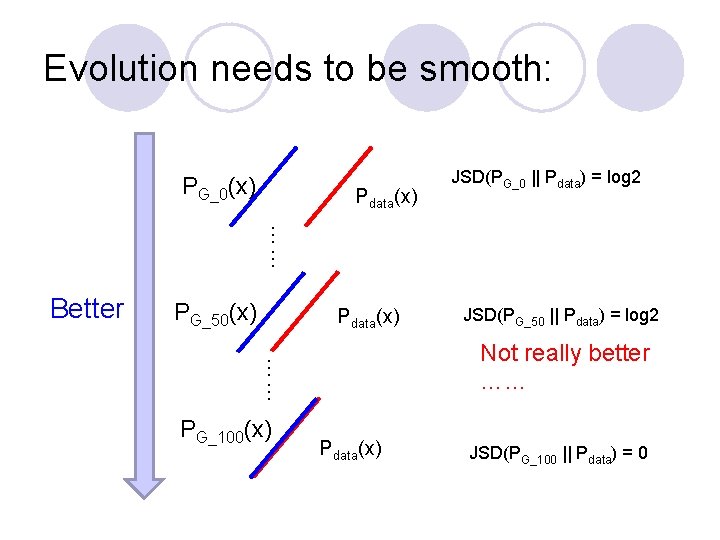

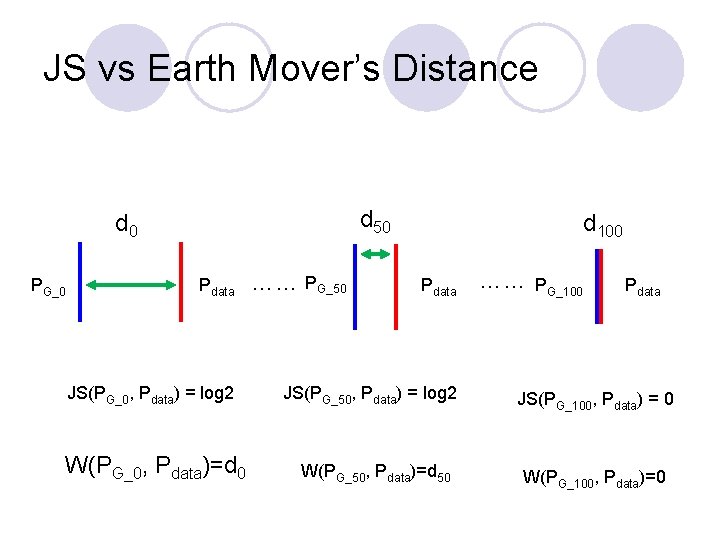

Evolution needs to be smooth: PG_0(x) Pdata(x) JSD(PG_0 || Pdata) = log 2 …… Better PG_50(x) Pdata(x) Not really better …… …… PG_100(x) JSD(PG_50 || Pdata) = log 2 Pdata(x) JSD(PG_100 || Pdata) = 0

One simple solution: add noise l Add some artificial noise to the inputs of discriminator l Make the labels noisy for the discriminator Discriminator cannot perfectly separate real and generated data Pdata(x) and PG(x) have some overlap Noises need to decay over time

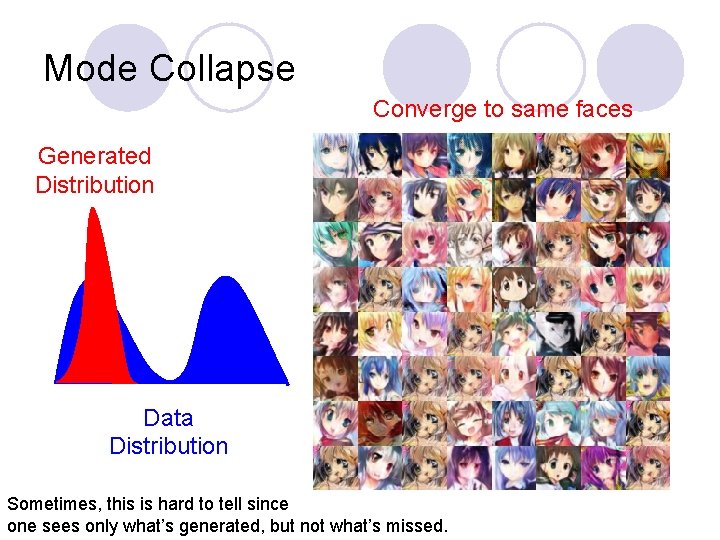

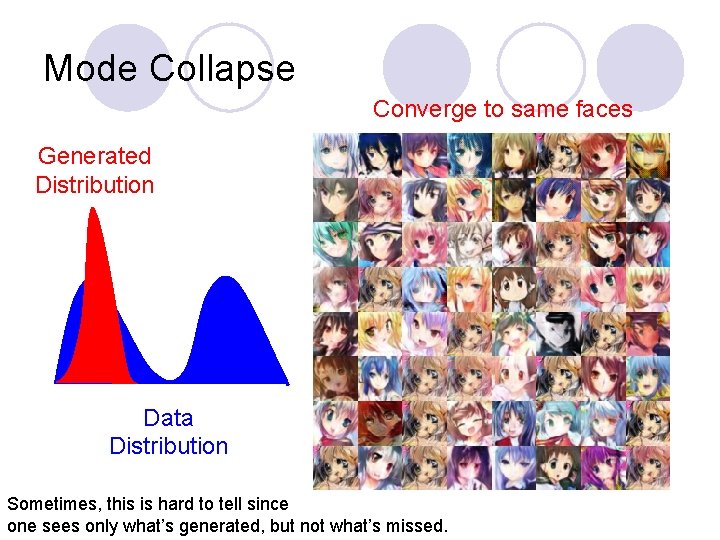

Mode Collapse Converge to same faces Generated Distribution Data Distribution Sometimes, this is hard to tell since one sees only what’s generated, but not what’s missed.

Mode Collapse Example 8 Gaussian distributions: What we want … In reality … Pdata

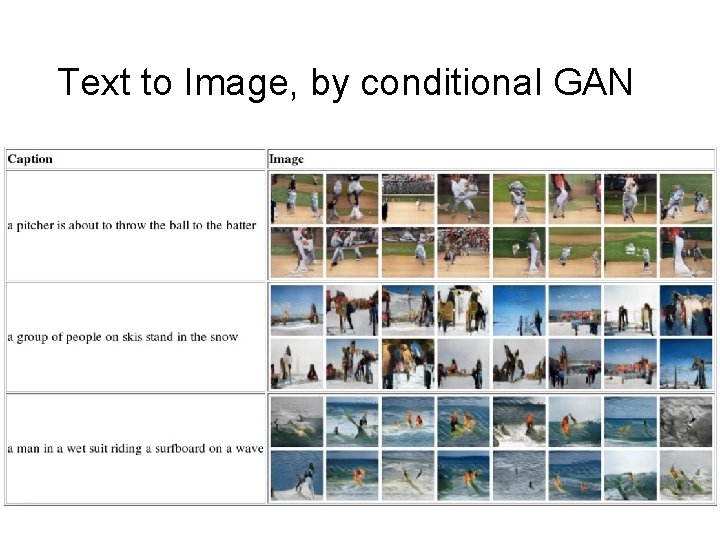

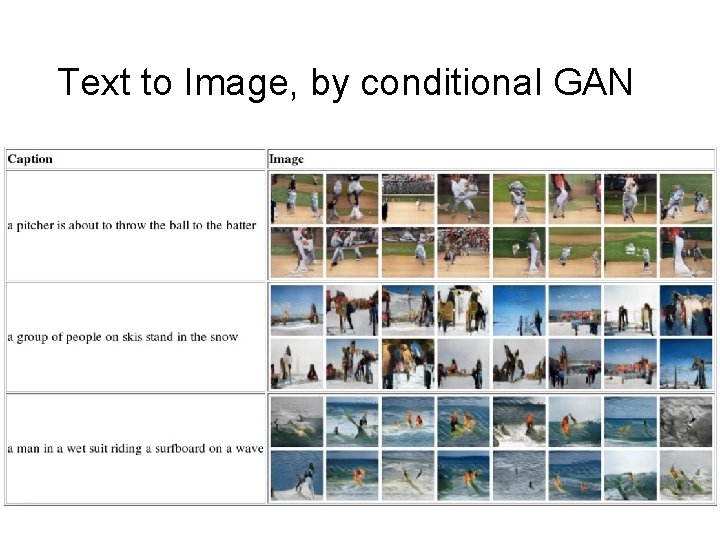

Text to Image, by conditional GAN

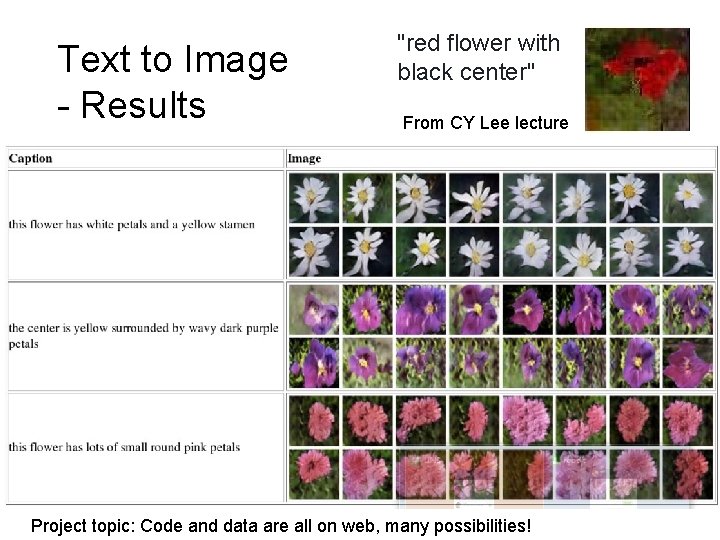

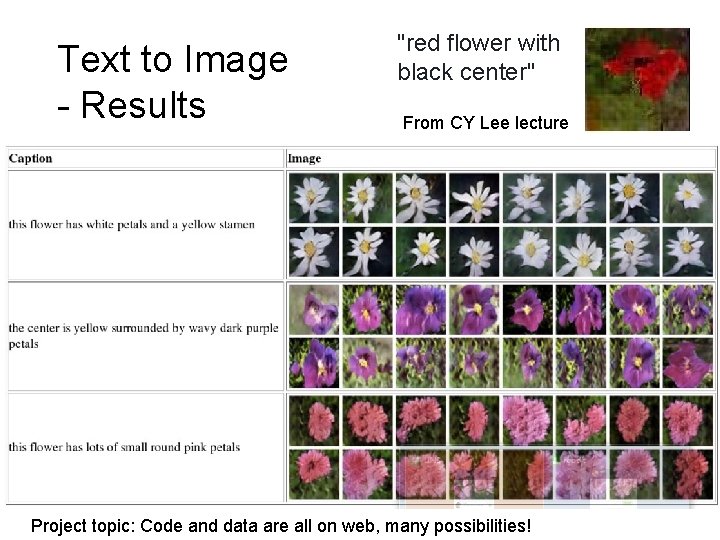

Text to Image - Results "red flower with black center" From CY Lee lecture Project topic: Code and data are all on web, many possibilities!

Algorithm WGAN l In each training iteration ¡Sample m examples {x 1, x 2, … xm} from data distribution Pdata(x) ¡Sample m noise samples {z 1, … , zm} from a simple Learning D prior P prior(z) ¡Obtain generated data {x*1, … , x*m}, x*i=G(zi) Repeat ¡ Update discriminator parameters θ to maximize d Ian Goodfellow comment: this is also done once k times l V’ ≈ Σi=1. . m log. D(xi) + 1/m Σi=1. . m log(1 -D(x*i)) l θd + ηΔV’(θd) (gradient ascent plus weight clipping) l. Simple another m noise samples {z 1, z 2, … zm} from i)=x*i the prior P (z),G(z prior Learning G ¡Update generator parameters θg to minimize i i Only V’= 1/mΣi=1. . m log. D(x ) + 1/m Σi=1. . m log(1 -D(x* )) Once θg − ηΔV’(θg) (gradient descent)

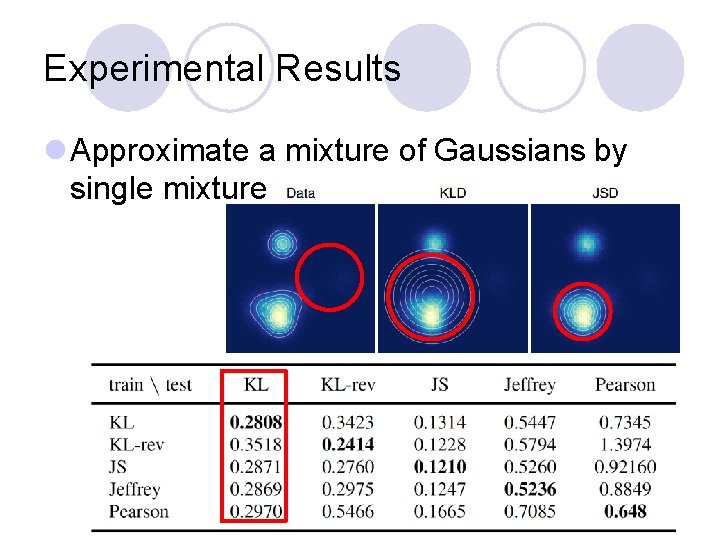

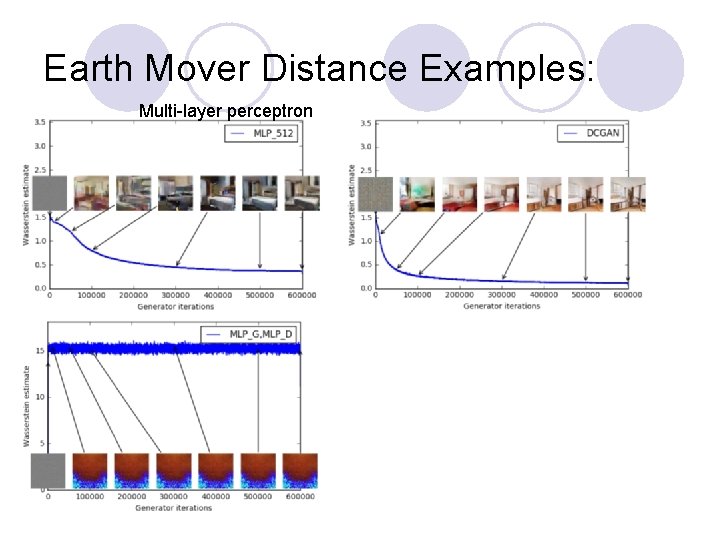

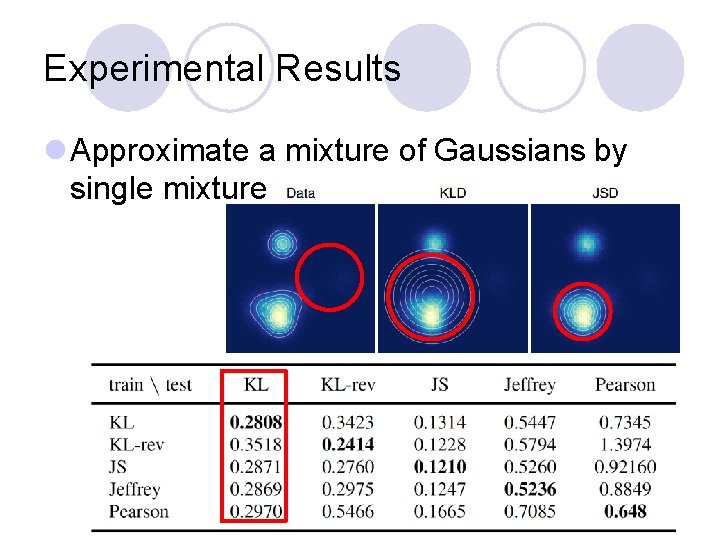

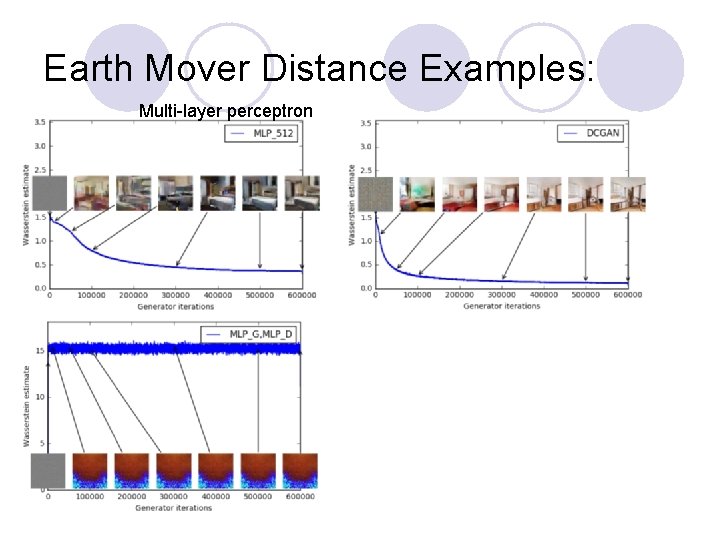

Experimental Results l Approximate a mixture of Gaussians by single mixture

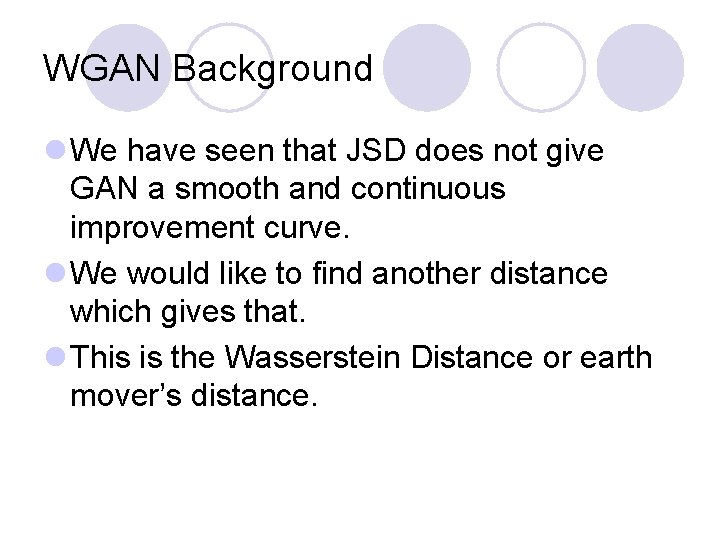

WGAN Background l We have seen that JSD does not give GAN a smooth and continuous improvement curve. l We would like to find another distance which gives that. l This is the Wasserstein Distance or earth mover’s distance.

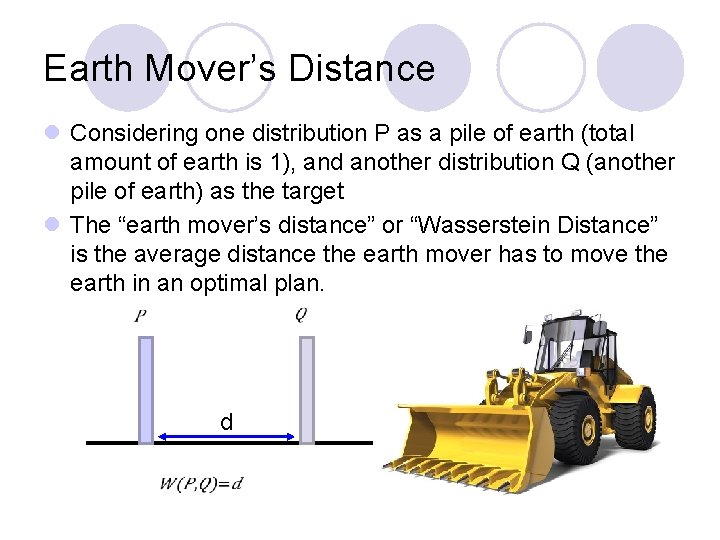

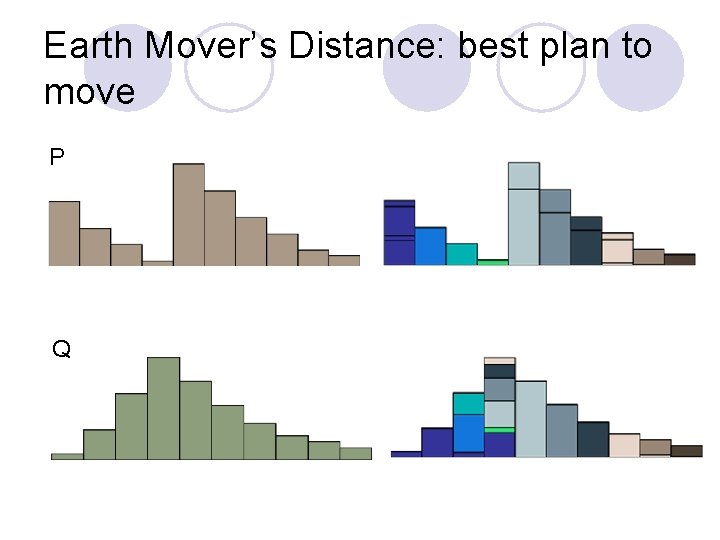

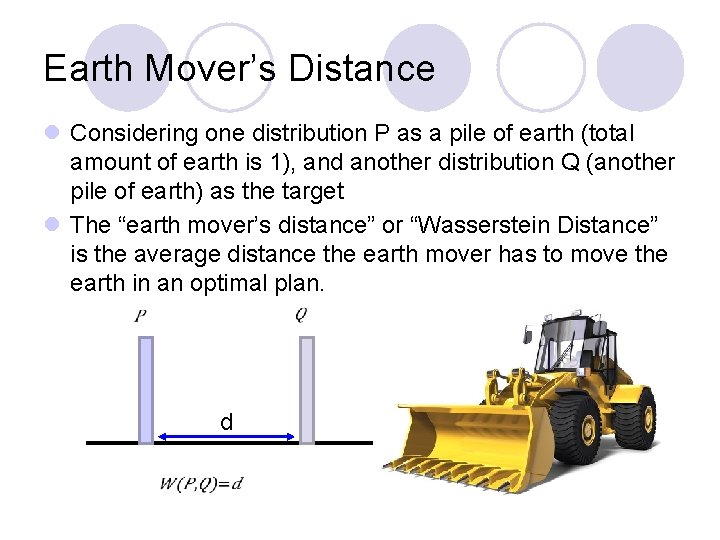

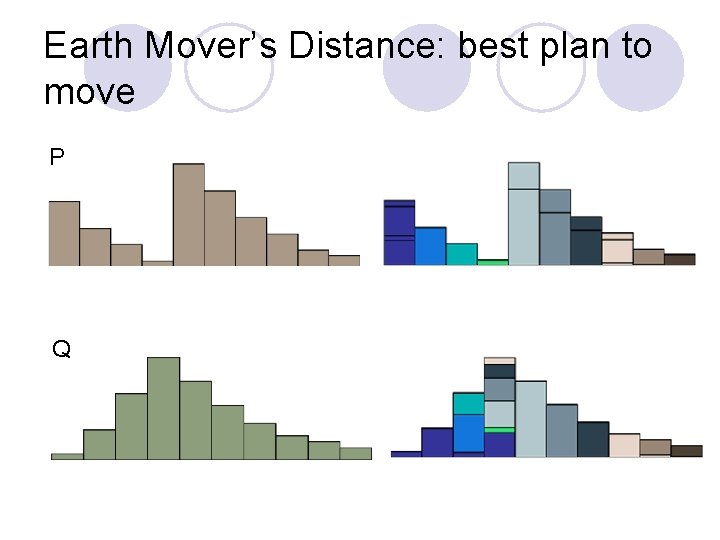

Earth Mover’s Distance l Considering one distribution P as a pile of earth (total amount of earth is 1), and another distribution Q (another pile of earth) as the target l The “earth mover’s distance” or “Wasserstein Distance” is the average distance the earth mover has to move the earth in an optimal plan. d

Earth Mover’s Distance: best plan to move P Q

JS vs Earth Mover’s Distance d 50 Pdata JS(PG_0, Pdata) = log 2 W(PG_0, Pdata)=d 0 …… PG_0 PG_50 d 100 Pdata JS(PG_50, Pdata) = log 2 W(PG_50, Pdata)=d 50 …… d 0 PG_100 Pdata JS(PG_100, Pdata) = 0 W(PG_100, Pdata)=0

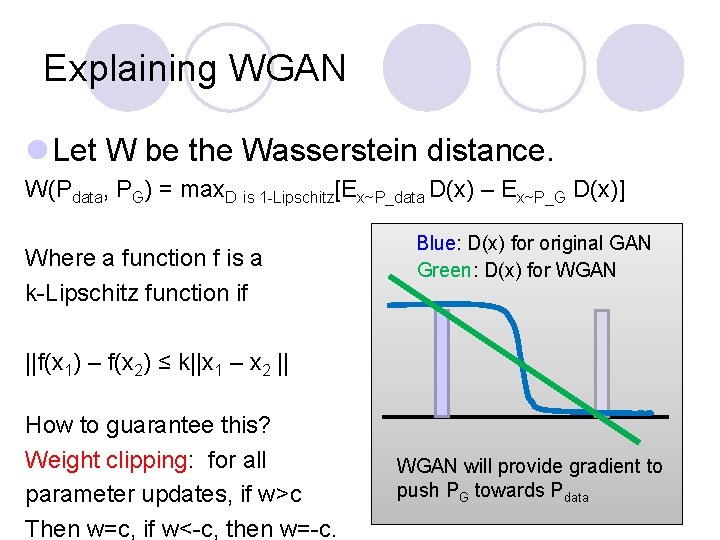

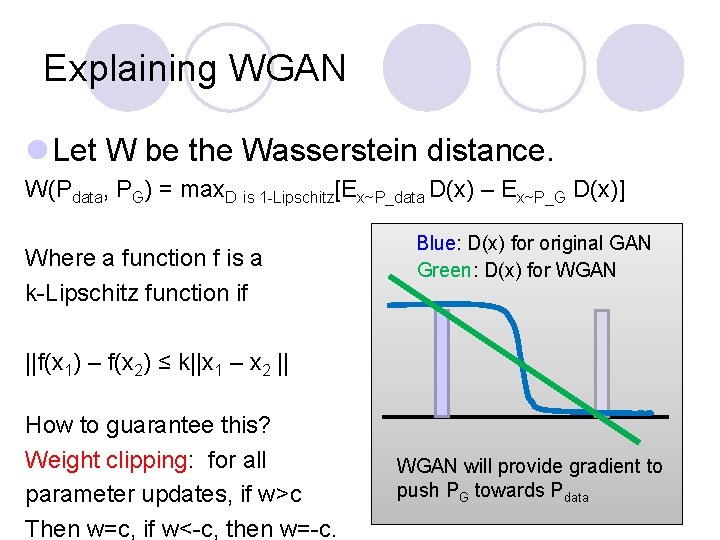

Explaining WGAN l Let W be the Wasserstein distance. W(Pdata, PG) = max. D is 1 -Lipschitz[Ex~P_data D(x) – Ex~P_G D(x)] Where a function f is a k-Lipschitz function if Blue: D(x) for original GAN Green: D(x) for WGAN ||f(x 1) – f(x 2) ≤ k||x 1 – x 2 || How to guarantee this? Weight clipping: for all parameter updates, if w>c Then w=c, if w<-c, then w=-c. WGAN will provide gradient to push PG towards Pdata

Earth Mover Distance Examples: Multi-layer perceptron