Lecture 6: Recurrent Neural Networks, Alireza Akhavan Pour



Lecture 6: Recurrent Neural Networks. Alireza Akhavan Pour, CLASS.VISION, SRTTU. Monday, 30 Mehr 1397.

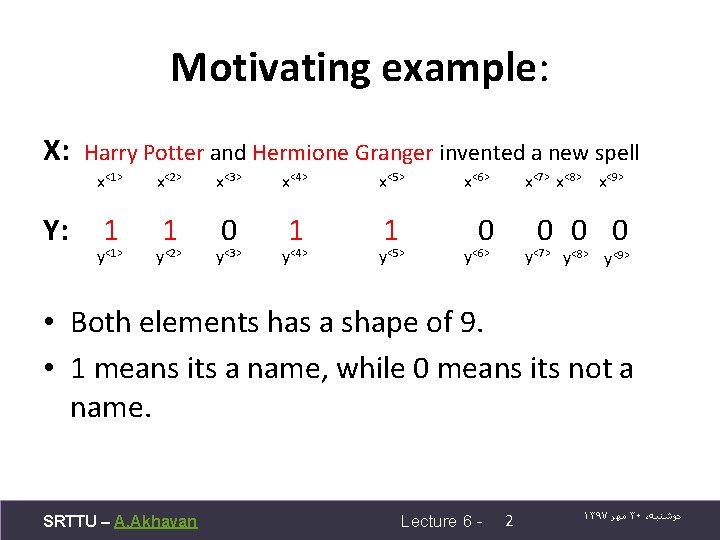

Motivating example:

| t | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| X: x<t> | Harry | Potter | and | Hermione | Granger | invented | a | new | spell |
| Y: y<t> | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 |

- Both X and Y have length 9.
- 1 means the word is part of a name; 0 means it is not.

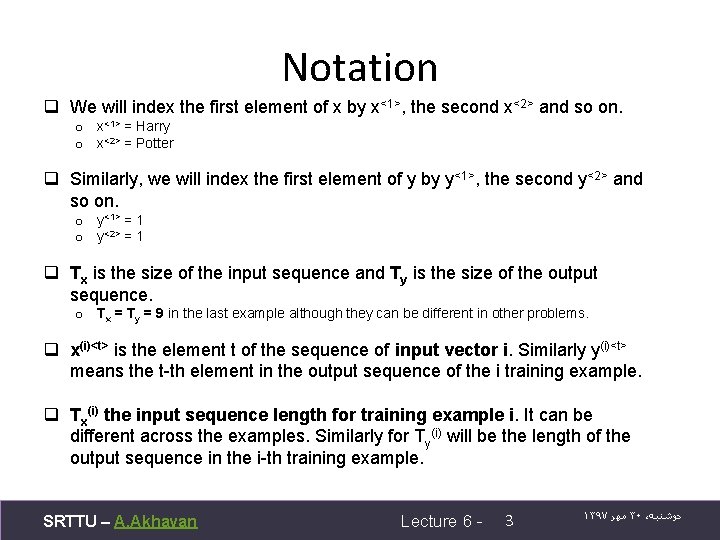

Notation

- We index the elements of x as x<1>, x<2>, and so on: x<1> = Harry, x<2> = Potter.
- Similarly, we index the elements of y as y<1>, y<2>, and so on: y<1> = 1, y<2> = 1.
- Tx is the length of the input sequence and Ty is the length of the output sequence. In the previous example Tx = Ty = 9, but they can differ in other problems.
- x(i)<t> is the t-th element of the input sequence of training example i. Similarly, y(i)<t> is the t-th element of the output sequence of training example i.
- Tx(i) is the input sequence length of training example i; it can differ across examples. Similarly, Ty(i) is the output sequence length of training example i.
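The indexing conventions above can be mirrored in a few lines of Python. This is a minimal sketch on a hypothetical two-example dataset (the second sentence is borrowed from the language-model slides, with made-up all-zero labels); the helper names `x_at`, `T_x` and `T_y` are illustrative, not from the lecture. Because the lecture counts both examples and time steps from 1 while Python lists are 0-based, x(i)<t> is stored at `X[i - 1][t - 1]`.

```python
# Hypothetical toy dataset: X[i - 1] holds x(i), Y[i - 1] holds y(i).
X = [
    "Harry Potter and Hermione Granger invented a new spell".split(),
    "Cats average 15 hours of sleep a day".split(),  # illustrative second example
]
Y = [
    [1, 1, 0, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],  # made-up labels: no names in this sentence
]

def x_at(i, t):
    """Return x(i)<t>: time step t of training example i (both 1-based)."""
    return X[i - 1][t - 1]

def T_x(i):
    """Input sequence length Tx(i) of training example i (1-based)."""
    return len(X[i - 1])

def T_y(i):
    """Output sequence length Ty(i) of training example i (1-based)."""
    return len(Y[i - 1])

print(x_at(1, 1), x_at(1, 2))  # Harry Potter
print(T_x(1), T_y(1))          # 9 9
print(T_x(2), T_y(2))          # 8 8  (lengths differ across examples)
```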

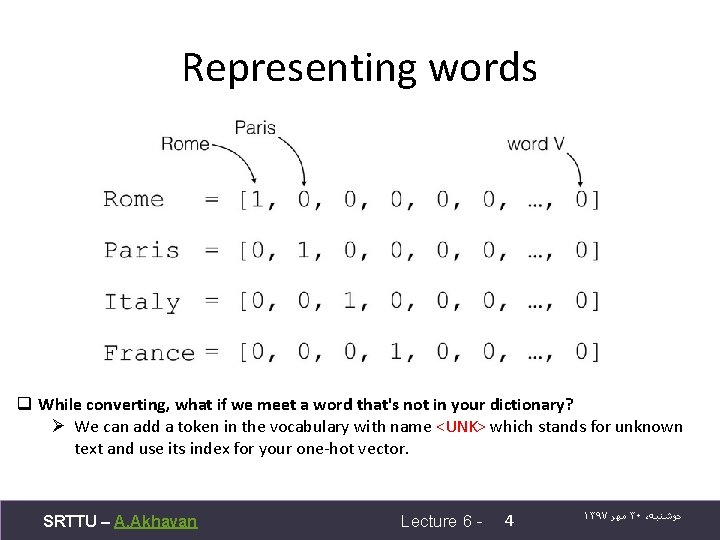

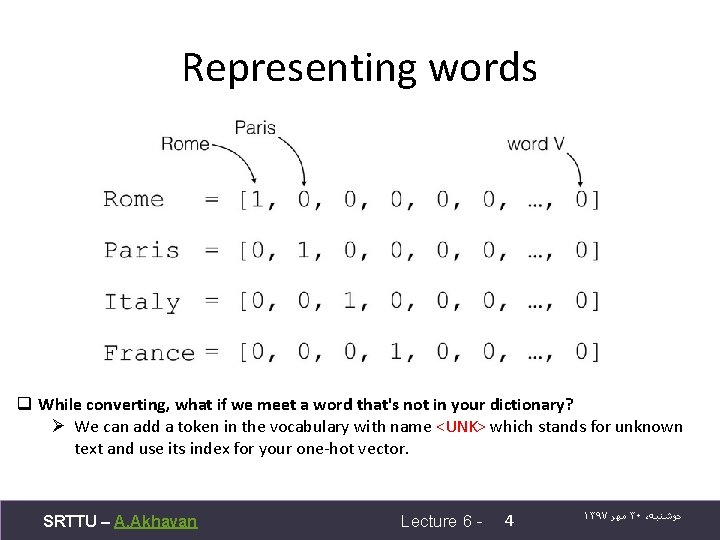

Representing words

- While converting words to one-hot vectors, what if we meet a word that is not in the dictionary (vocabulary)?
- We can add a token named <UNK> (unknown word) to the vocabulary and use its index for that word's one-hot vector.
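A minimal sketch of one-hot encoding with an <UNK> fallback, assuming a hypothetical nine-entry vocabulary (a real one would be far larger) and lowercasing as the only normalization. Any word missing from `word_to_index` is mapped to the <UNK> index.

```python
import numpy as np

# Hypothetical tiny vocabulary; the last entry is the unknown-word token.
vocab = ["a", "and", "harry", "hermione", "invented", "new", "potter", "spell", "<UNK>"]
word_to_index = {w: i for i, w in enumerate(vocab)}

def one_hot(word):
    """Return a one-hot column vector of shape (len(vocab), 1).
    Words not in the vocabulary fall back to the <UNK> index."""
    idx = word_to_index.get(word.lower(), word_to_index["<UNK>"])
    vec = np.zeros((len(vocab), 1))
    vec[idx, 0] = 1.0
    return vec

v = one_hot("Granger")  # "granger" is not in the vocabulary
print(int(np.argmax(v)) == word_to_index["<UNK>"])  # True
```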

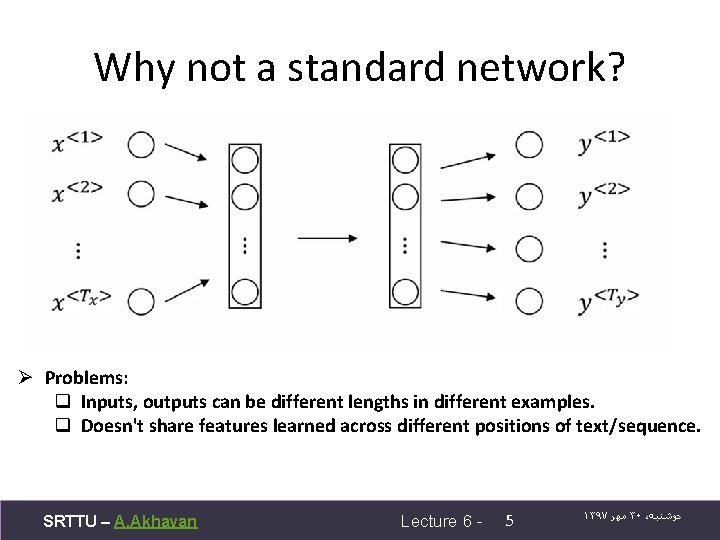

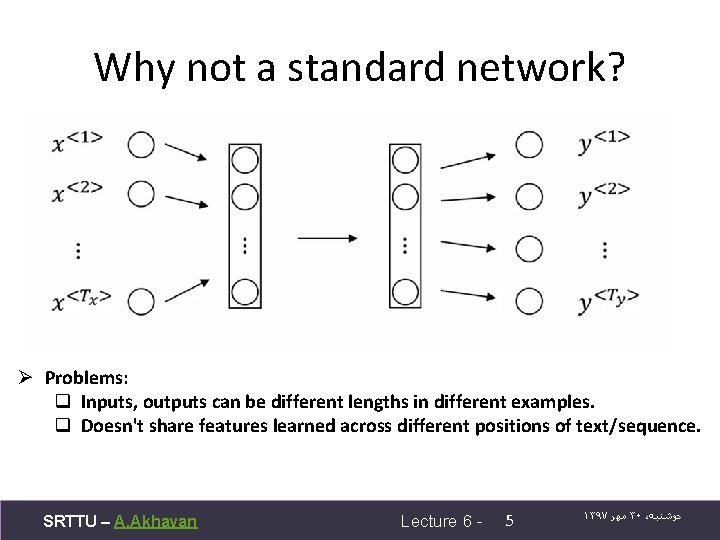

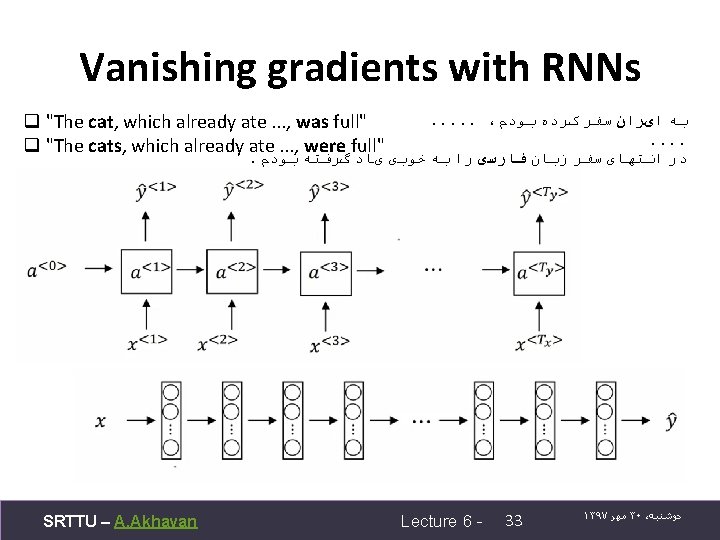

Why not a standard network?

Problems:

- Inputs and outputs can have different lengths in different examples.
- A standard network doesn't share features learned across different positions of the text/sequence.

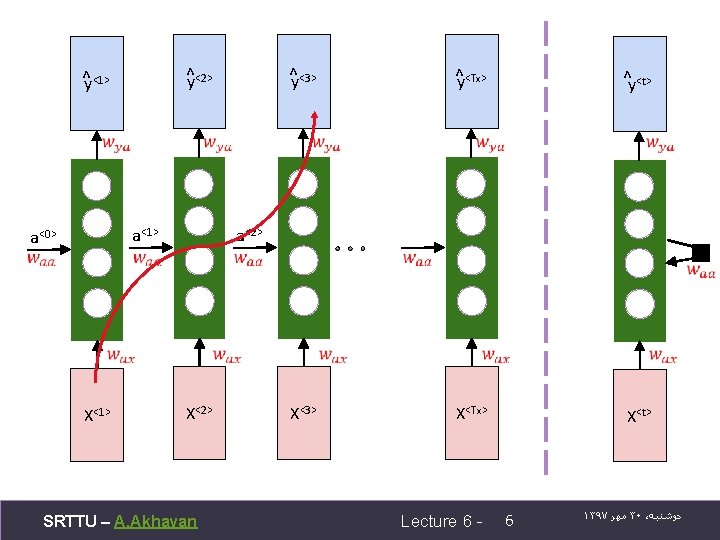

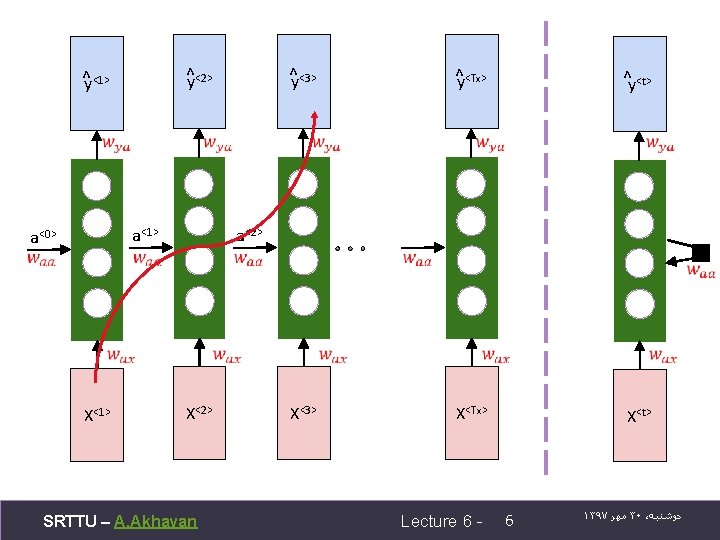

Figure: an RNN unrolled over time, starting from a<0>, with inputs x<1>, x<2>, x<3>, …, x<Tx>, activations a<1>, a<2>, … passed from one step to the next, and outputs ŷ<1>, ŷ<2>, ŷ<3>, …, ŷ<Tx>.

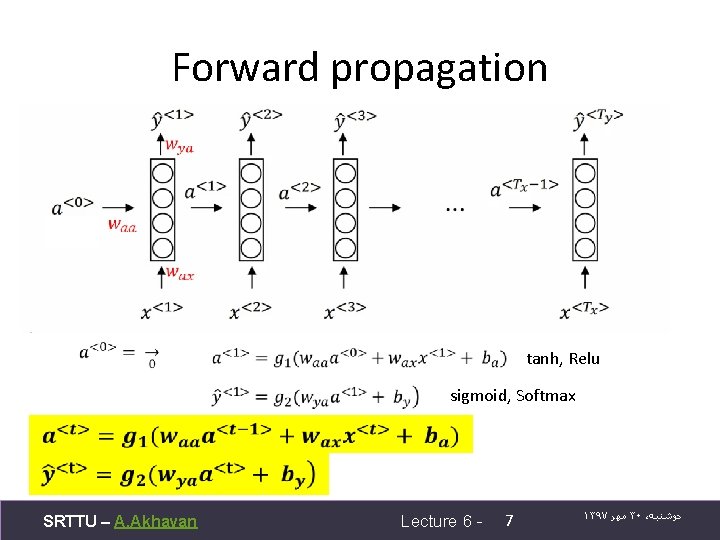

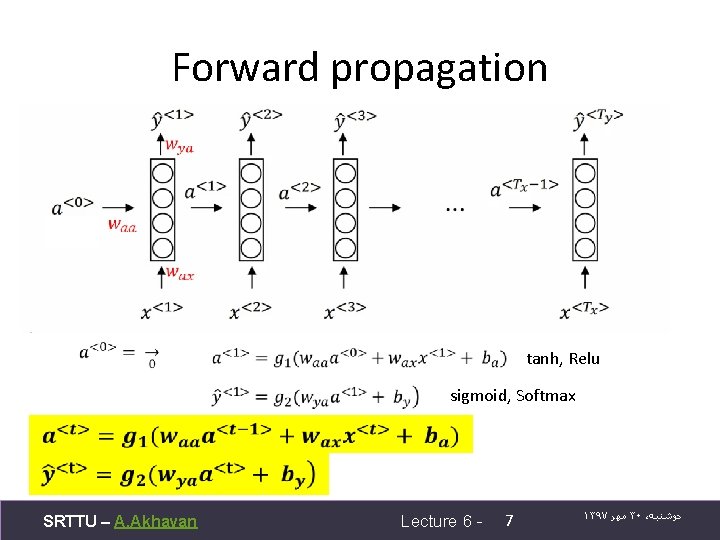

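Forward propagation

Starting from a<0> (usually a vector of zeros), each time step computes:

$$a^{\langle t \rangle} = g_1\left(W_{aa}\, a^{\langle t-1 \rangle} + W_{ax}\, x^{\langle t \rangle} + b_a\right), \qquad g_1 = \tanh \text{ or ReLU}$$

$$\hat{y}^{\langle t \rangle} = g_2\left(W_{ya}\, a^{\langle t \rangle} + b_y\right), \qquad g_2 = \text{sigmoid (binary output) or softmax (multi-class output)}$$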
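The forward pass can be sketched in NumPy as follows. This is a minimal illustration, assuming tanh for g1 and softmax for g2, column-vector inputs, and small random weights; the sizes `n_a`, `n_x`, `n_y` and the function names are hypothetical. The key point is that the same Waa, Wax, Wya, ba, by are reused at every time step.

```python
import numpy as np

def softmax(z):
    # Subtract the column max for numerical stability.
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def rnn_cell_forward(x_t, a_prev, Waa, Wax, Wya, ba, by):
    """One time step: tanh for the hidden state, softmax for the output."""
    a_t = np.tanh(Waa @ a_prev + Wax @ x_t + ba)
    y_hat_t = softmax(Wya @ a_t + by)
    return a_t, y_hat_t

def rnn_forward(xs, a0, params):
    """Run the cell over x<1>, ..., x<Tx>, sharing the same weights at every step."""
    a = a0
    activations, outputs = [], []
    for x_t in xs:
        a, y_hat = rnn_cell_forward(x_t, a, *params)
        activations.append(a)
        outputs.append(y_hat)
    return activations, outputs

# Hypothetical sizes: n_a hidden units, n_x input features, n_y output classes.
rng = np.random.default_rng(0)
n_a, n_x, n_y, T_x = 5, 3, 2, 4
params = (
    rng.standard_normal((n_a, n_a)) * 0.1,  # Waa
    rng.standard_normal((n_a, n_x)) * 0.1,  # Wax
    rng.standard_normal((n_y, n_a)) * 0.1,  # Wya
    np.zeros((n_a, 1)),                     # ba
    np.zeros((n_y, 1)),                     # by
)
xs = [rng.standard_normal((n_x, 1)) for _ in range(T_x)]
a0 = np.zeros((n_a, 1))
activations, outputs = rnn_forward(xs, a0, params)
print(len(outputs), outputs[0].shape)  # 4 (2, 1)
```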

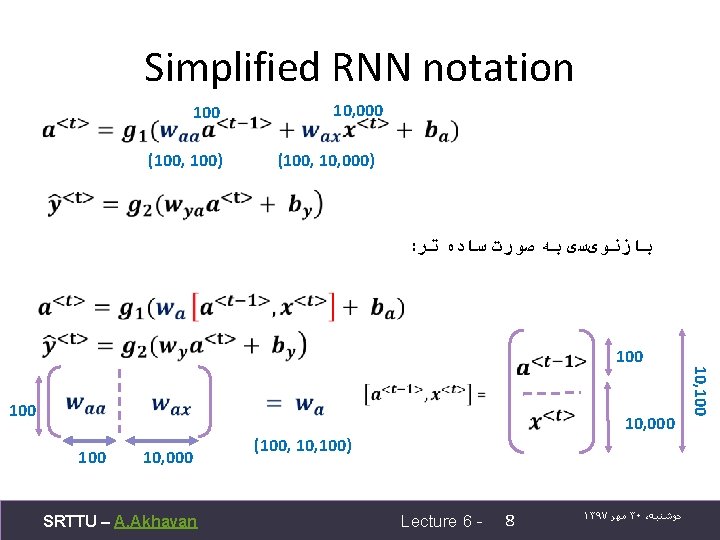

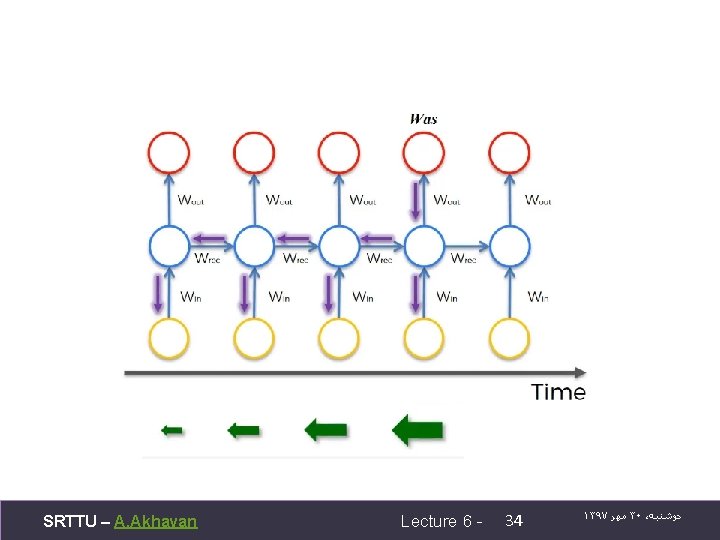

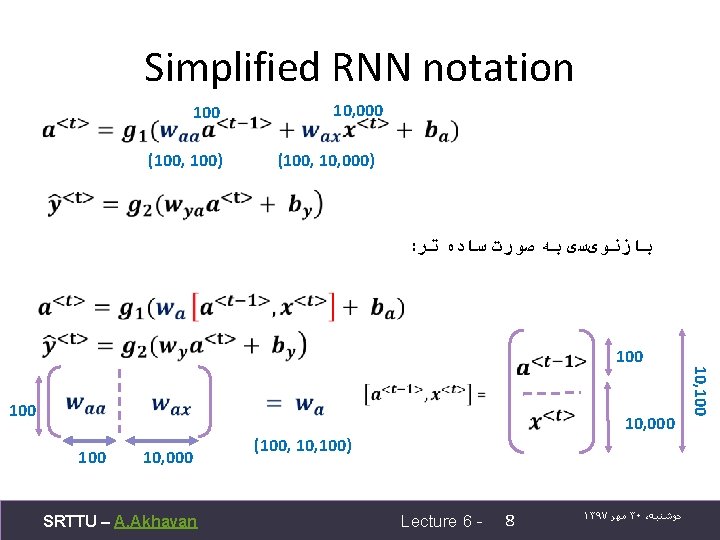

Simplified RNN notation

Example with 100 hidden units and inputs x<t> of size 10,000: Waa has shape (100, 100) and Wax has shape (100, 10,000). Rewritten in a simpler form, Wa = [Waa | Wax] has shape (100, 10,100), and [a<t-1>, x<t>] has shape (10,100, 1).

![Simplified RNN notation](https://slidetodoc.com/presentation_image_h2/722737b382092d6cd2c529bd5456675b/image-9.jpg)

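Simplified RNN notation

- Wa is Waa and Wax stacked horizontally.
- [a<t-1>, x<t>] is a<t-1> and x<t> stacked vertically.
- Wa shape: (number of hidden neurons, number of hidden neurons + nx).
- [a<t-1>, x<t>] shape: (number of hidden neurons + nx, 1).

With this notation the hidden-state update becomes, where g is the hidden activation (e.g., tanh):

$$a^{\langle t \rangle} = g\left(W_a\,[a^{\langle t-1 \rangle}, x^{\langle t \rangle}] + b_a\right)$$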
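A quick NumPy check that the compact form is just a rearrangement: stacking Waa and Wax horizontally (`np.hstack`) and a<t-1> and x<t> vertically (`np.vstack`) gives the same pre-activation as the two separate products. The sizes follow the slide's example (100 hidden units, 10,000 inputs); the random weights, the one-hot position 42, and tanh as g are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n_a, n_x = 100, 10_000                  # sizes from the slide's example
Waa = rng.standard_normal((n_a, n_a)) * 0.01
Wax = rng.standard_normal((n_a, n_x)) * 0.01
ba = rng.standard_normal((n_a, 1)) * 0.01
a_prev = rng.standard_normal((n_a, 1))
x_t = np.zeros((n_x, 1))
x_t[42] = 1.0                           # arbitrary one-hot input

Wa = np.hstack([Waa, Wax])              # (100, 10100): stacked horizontally
stacked = np.vstack([a_prev, x_t])      # (10100, 1): stacked vertically

a_separate = np.tanh(Waa @ a_prev + Wax @ x_t + ba)
a_compact = np.tanh(Wa @ stacked + ba)

print(Wa.shape, stacked.shape)              # (100, 10100) (10100, 1)
print(np.allclose(a_separate, a_compact))   # True
```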

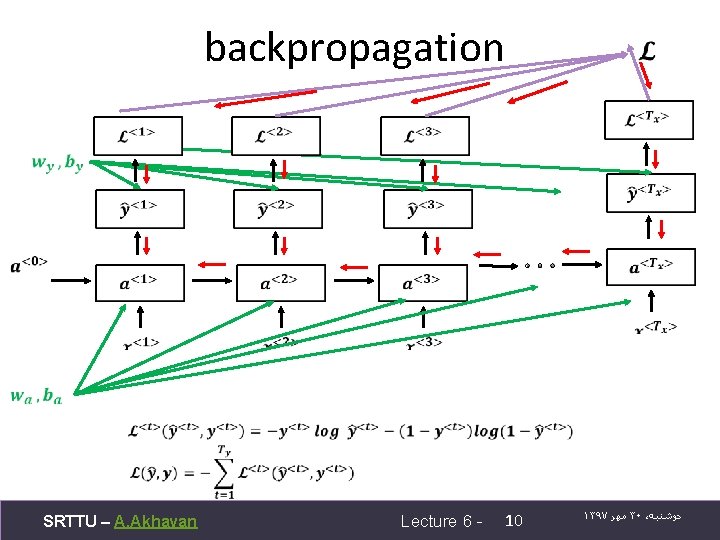

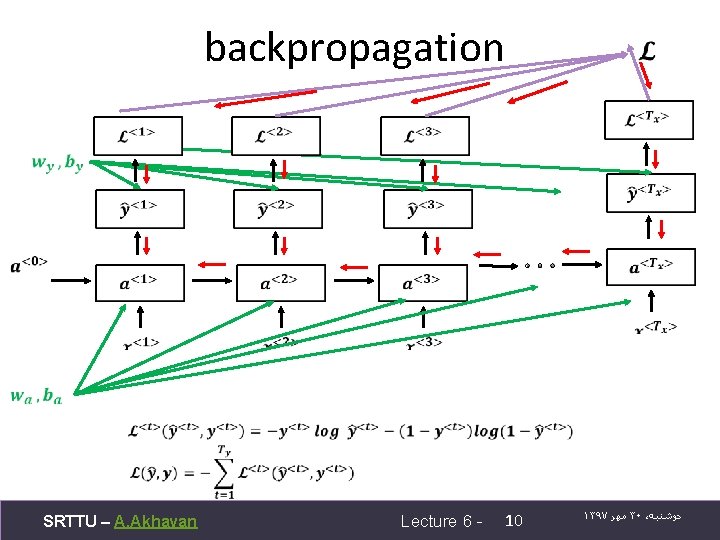

Backpropagation (through time)
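Backpropagation in an RNN differentiates a single loss that is summed over all time steps, so gradients flow backward through the unrolled network from step Tx to step 1. As a hedged sketch, assuming the binary name-tagging task from the motivating example, a common per-step loss is the binary cross-entropy L<t>(ŷ<t>, y<t>) = -y<t> log ŷ<t> - (1 - y<t>) log(1 - ŷ<t>), and the total loss is L = Σ_t L<t>. The predictions below are made up; in practice an automatic-differentiation framework computes the gradients of this total loss.

```python
import numpy as np

def step_loss(y_hat_t, y_t):
    """Binary cross-entropy at one time step (y_t is 0 or 1)."""
    eps = 1e-12  # guard against log(0)
    return -(y_t * np.log(y_hat_t + eps) + (1 - y_t) * np.log(1 - y_hat_t + eps))

def sequence_loss(y_hats, ys):
    """Total loss L = sum over t of L<t>(y_hat<t>, y<t>)."""
    return sum(step_loss(p, y) for p, y in zip(y_hats, ys))

ys = [1, 1, 0, 1, 1, 0, 0, 0, 0]                         # labels for the Harry Potter sentence
y_hats = [0.9, 0.8, 0.1, 0.7, 0.9, 0.2, 0.1, 0.1, 0.05]  # made-up model predictions
print(float(sequence_loss(y_hats, ys)))
```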

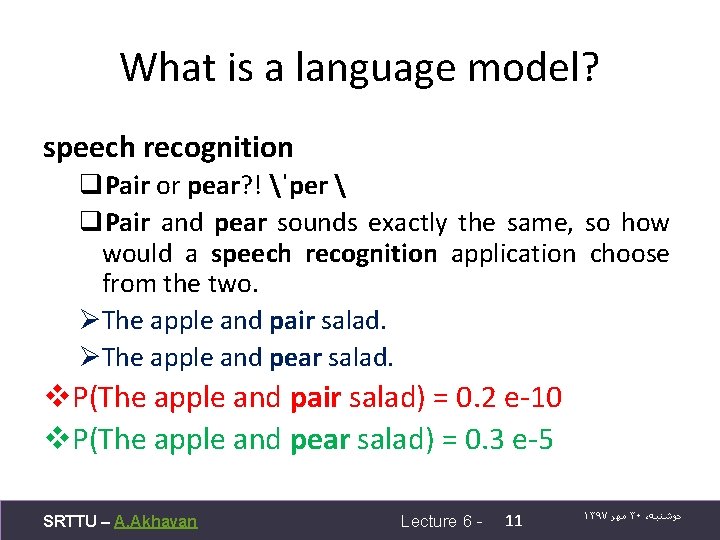

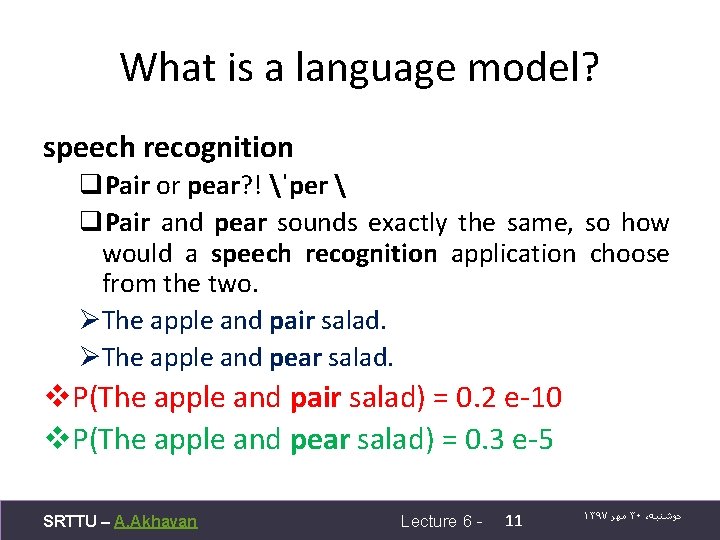

What is a language model?

Example: speech recognition.

- Pair or pear? Both are pronounced /ˈper/.
- Since "pair" and "pear" sound exactly the same, how would a speech recognition application choose between them?
  - The apple and pair salad.
  - The apple and pear salad.
- A language model assigns a probability to each sentence:
  - P(The apple and pair salad) = 0.2 × 10^-10
  - P(The apple and pear salad) = 0.3 × 10^-5

  so the application chooses the far more probable second sentence.

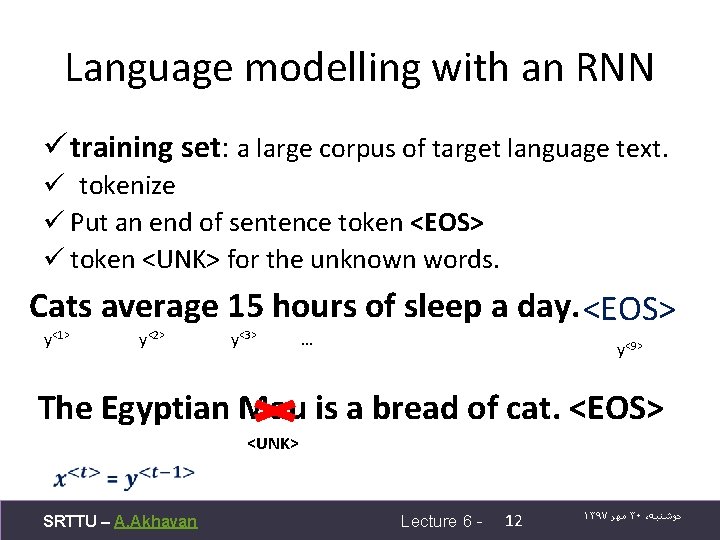

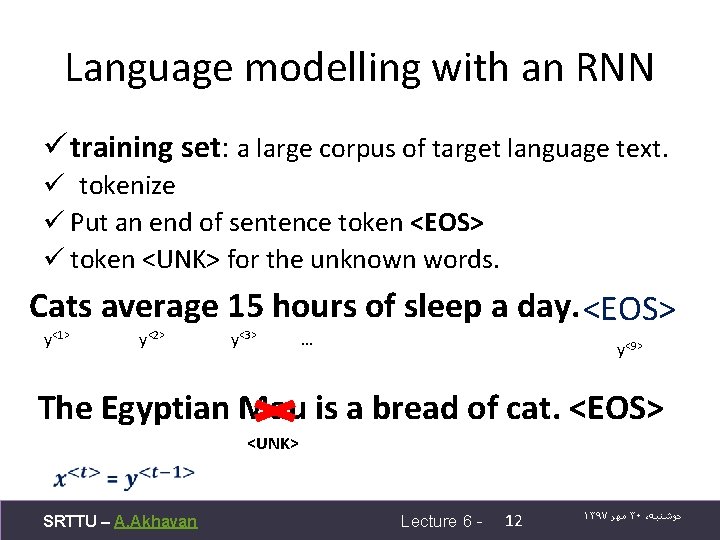

Language modelling with an RNN

- Training set: a large corpus of text in the target language.
- Tokenize the text.
- Append an end-of-sentence token <EOS> to each sentence.
- Replace words that are not in the vocabulary with the token <UNK>.

Examples:

- "Cats average 15 hours of sleep a day. <EOS>" gives the targets y<1>, y<2>, y<3>, …, y<9>.
- "The Egyptian Mau is a breed of cat. <EOS>", where "Mau" is not in the vocabulary and becomes <UNK>.
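The preprocessing steps can be sketched as below. This is a minimal illustration, assuming lowercasing, a simple regex split that drops punctuation (keeping "." as its own token is another valid choice), and a hypothetical small vocabulary; the name `tokenize` is illustrative.

```python
import re

def tokenize(sentence, vocab):
    """Lowercase, split into words (dropping punctuation), replace
    out-of-vocabulary words with <UNK>, and append <EOS>."""
    words = re.findall(r"[a-z0-9]+", sentence.lower())
    tokens = [w if w in vocab else "<UNK>" for w in words]
    return tokens + ["<EOS>"]

# Hypothetical vocabulary; a real one might hold the 10,000 most common words.
vocab = {"cats", "average", "15", "hours", "of", "sleep", "a", "day",
         "the", "egyptian", "is", "breed", "cat"}

print(tokenize("Cats average 15 hours of sleep a day.", vocab))
# ['cats', 'average', '15', 'hours', 'of', 'sleep', 'a', 'day', '<EOS>']
print(tokenize("The Egyptian Mau is a breed of cat.", vocab))
# ['the', 'egyptian', '<UNK>', 'is', 'a', 'breed', 'of', 'cat', '<EOS>']
```

The first sentence yields exactly nine targets, y<1> through y<9>, matching the slide.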

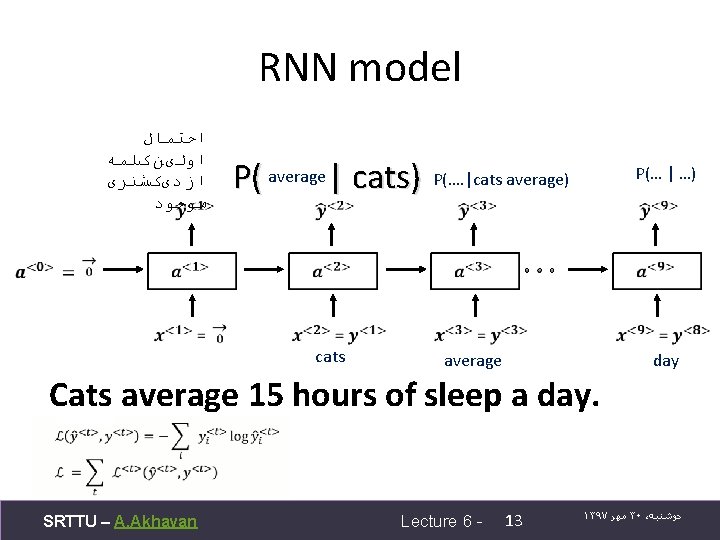

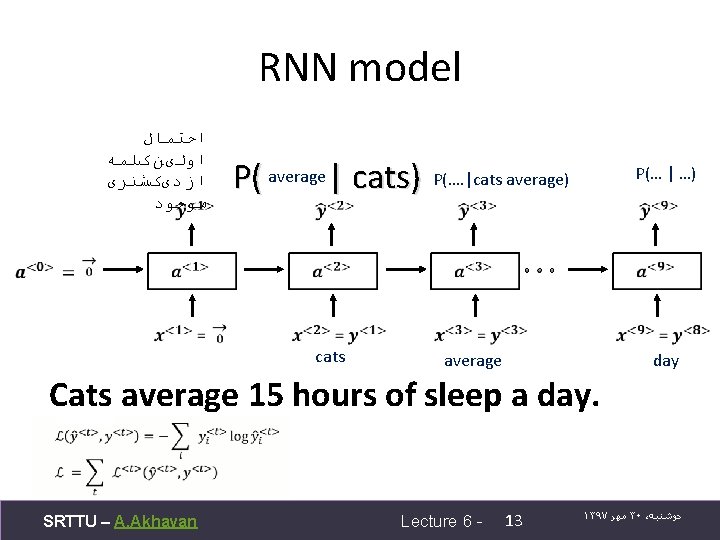

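RNN model

For the sentence "Cats average 15 hours of sleep a day.":

- At step 1, ŷ<1> gives the probability of each word in the dictionary being the first word (e.g., P(cats)).
- At step 2, the model is fed "cats" and outputs P(average | cats).
- At step 3, it is fed "average" and outputs P(… | cats average).
- Each later step outputs P(… | …) given all previous words, up to the end of the sentence ("day").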
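Multiplying these per-step outputs gives the probability of the whole sentence by the chain rule, P(y<1>, …, y<Ty>) = P(y<1>) · P(y<2> | y<1>) · P(y<3> | y<1>, y<2>) · …. With one-hot targets, the per-step softmax cross-entropy reduces to -log of the probability assigned to the correct word, so the total training loss is -log P(sentence). A minimal sketch with made-up conditional probabilities (`sentence_log_prob` is a hypothetical helper):

```python
import numpy as np

def sentence_log_prob(step_probs):
    """step_probs[t] is the probability the model gave, at step t + 1, to the word
    that actually came next: P(y<1>), P(y<2> | y<1>), P(y<3> | y<1>, y<2>), ...
    Summing logs avoids numerical underflow of the raw product."""
    return float(np.sum(np.log(step_probs)))

# Made-up conditional probabilities for "cats average 15 hours of sleep a day <EOS>"
step_probs = [0.01, 0.05, 0.02, 0.3, 0.6, 0.4, 0.5, 0.7, 0.9]
log_p = sentence_log_prob(step_probs)
loss = -log_p  # sum over t of the per-step cross-entropy
print(np.exp(log_p), loss)
```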

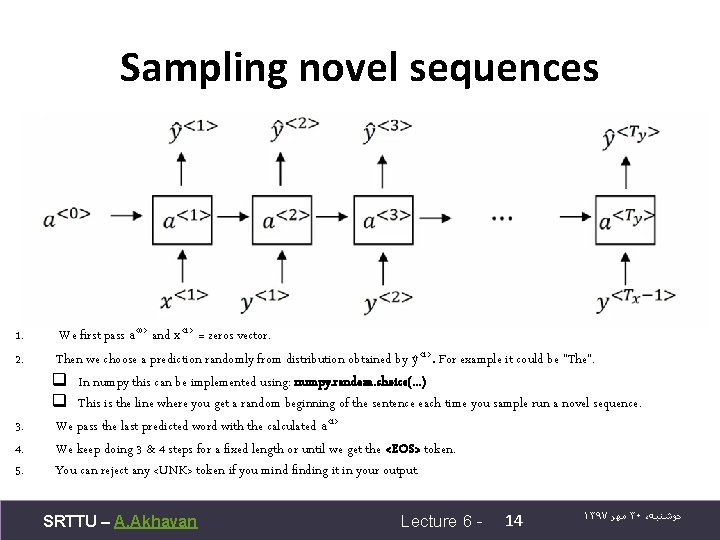

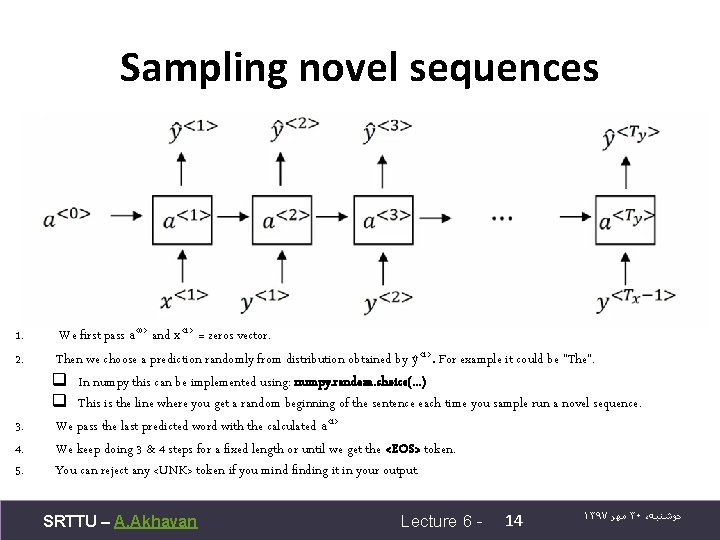

Sampling novel sequences

1. Pass a<0> and x<1> as zero vectors.
2. Choose a prediction at random from the distribution given by ŷ<1>; for example, it could be "The".
   - In NumPy this can be implemented with numpy.random.choice(...).
   - This is the step that gives a different random beginning of the sentence each time you sample a novel sequence.
3. Pass the last sampled word as the next input, together with the computed activation (a<1> at the first step).
4. Repeat steps 2 and 3 for a fixed length or until the <EOS> token is generated.
5. You can reject any <UNK> token if you don't want it to appear in your output.
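The sampling loop can be sketched in NumPy as follows, assuming a tanh/softmax RNN whose weights `(Waa, Wax, Wya, ba, by)` come from a trained word-level language model and whose input is a one-hot vector over the same vocabulary. The function name, the toy vocabulary, and the random untrained weights in the usage lines are hypothetical. It uses `rng.choice`, the `Generator` counterpart of `numpy.random.choice`, and implements the <UNK> rejection of step 5 by zeroing that probability before drawing.

```python
import numpy as np

def sample(params, vocab, max_len=20, reject_unk=True, seed=None):
    """Sample a word sequence from an RNN language model.
    params = (Waa, Wax, Wya, ba, by); vocab must contain "<EOS>" and "<UNK>"."""
    Waa, Wax, Wya, ba, by = params
    rng = np.random.default_rng(seed)
    n_x = Wax.shape[1]
    eos, unk = vocab.index("<EOS>"), vocab.index("<UNK>")
    a = np.zeros((Waa.shape[0], 1))  # step 1: a<0> = zeros
    x = np.zeros((n_x, 1))           # step 1: x<1> = zeros
    words = []
    while len(words) < max_len:      # step 4: fixed maximum length ...
        a = np.tanh(Waa @ a + Wax @ x + ba)
        z = Wya @ a + by
        p = np.exp(z - z.max())
        p = (p / p.sum()).ravel()    # softmax: y_hat<t>
        if reject_unk:               # step 5: never emit <UNK>
            p[unk] = 0.0
            p /= p.sum()
        idx = rng.choice(len(vocab), p=p)  # step 2: random draw from y_hat<t>
        if idx == eos:               # step 4: ... or stop at <EOS>
            break
        words.append(vocab[idx])
        x = np.zeros((n_x, 1))       # step 3: feed the sampled word back in
        x[idx] = 1.0
    return words

# Usage with a toy vocabulary and random (untrained) weights: output is gibberish.
vocab = ["the", "cats", "sleep", "a", "day", "<UNK>", "<EOS>"]
rng = np.random.default_rng(0)
n_a, n_v = 8, len(vocab)
params = (rng.standard_normal((n_a, n_a)) * 0.5, rng.standard_normal((n_a, n_v)) * 0.5,
          rng.standard_normal((n_v, n_a)) * 0.5, np.zeros((n_a, 1)), np.zeros((n_v, 1)))
print(sample(params, vocab, seed=1))
```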

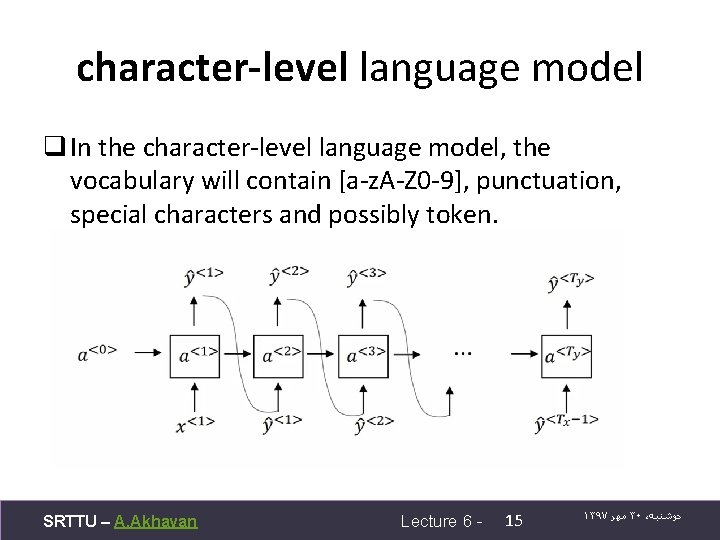

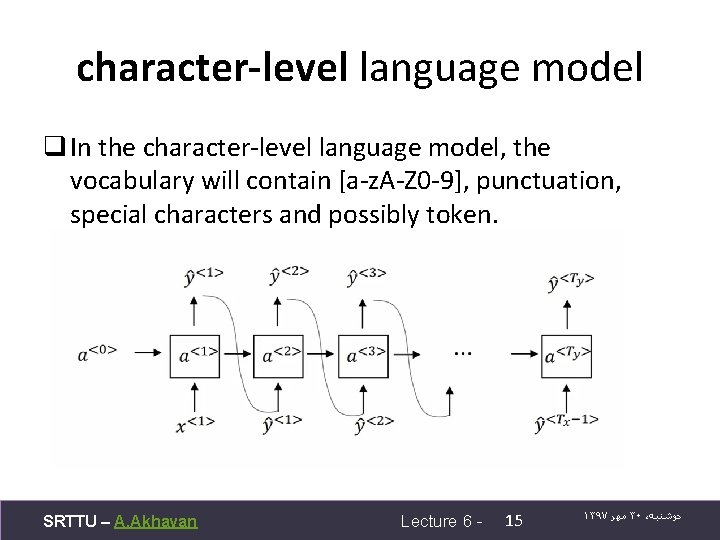

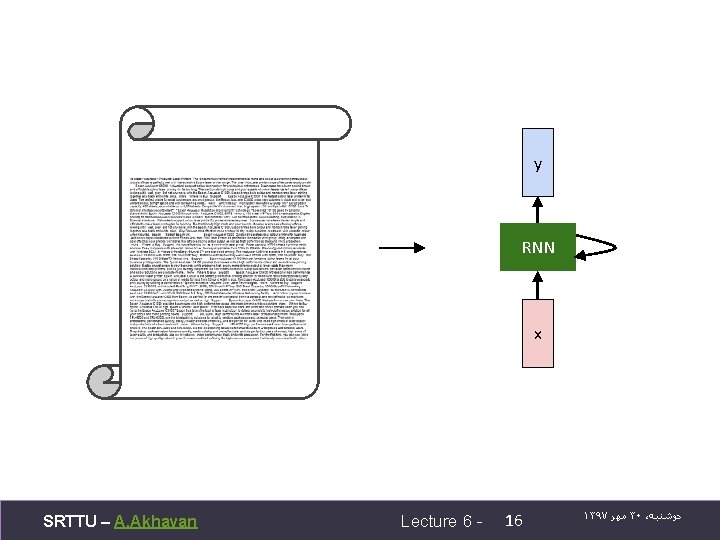

Character-level language model

- In a character-level language model, the vocabulary contains [a-zA-Z0-9], punctuation, special characters, and possibly the <EOS> token.
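A minimal sketch of a character vocabulary and the encode/decode mapping, assuming one possible character set (letters, digits, ASCII punctuation, space and newline); the exact set, and whether to add an end token, is a design choice. Characters outside the set are simply skipped here, which is an assumption; mapping them to an unknown token would also work.

```python
import string

# One possible character vocabulary (an assumption, not the lecture's exact set).
chars = list(string.ascii_lowercase + string.ascii_uppercase + string.digits
             + string.punctuation + " \n")
char_to_index = {c: i for i, c in enumerate(chars)}

def encode(text):
    """Map each character to its index; characters outside the vocabulary are skipped."""
    return [char_to_index[c] for c in text if c in char_to_index]

def decode(indices):
    """Map indices back to a string."""
    return "".join(chars[i] for i in indices)

ids = encode("Cats average 15 hours of sleep a day.")
print(len(chars))   # 96
print(decode(ids))  # Cats average 15 hours of sleep a day.
```

Compared with a word-level model, this vocabulary is tiny and never needs <UNK> for ordinary text, at the cost of much longer sequences.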

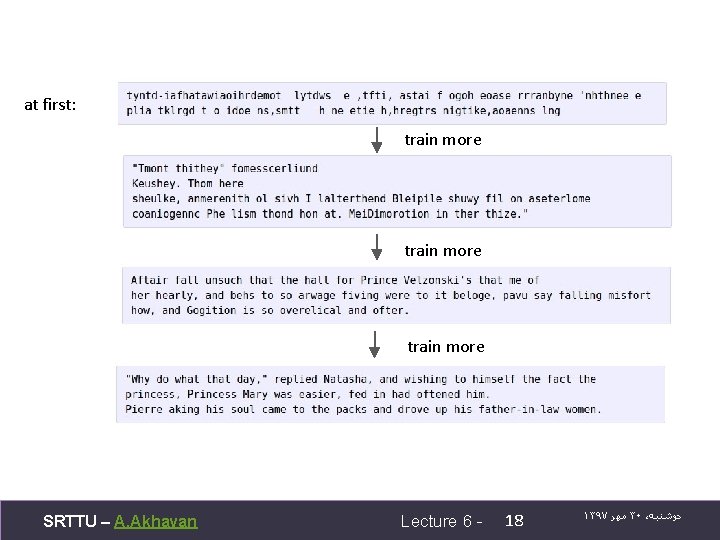

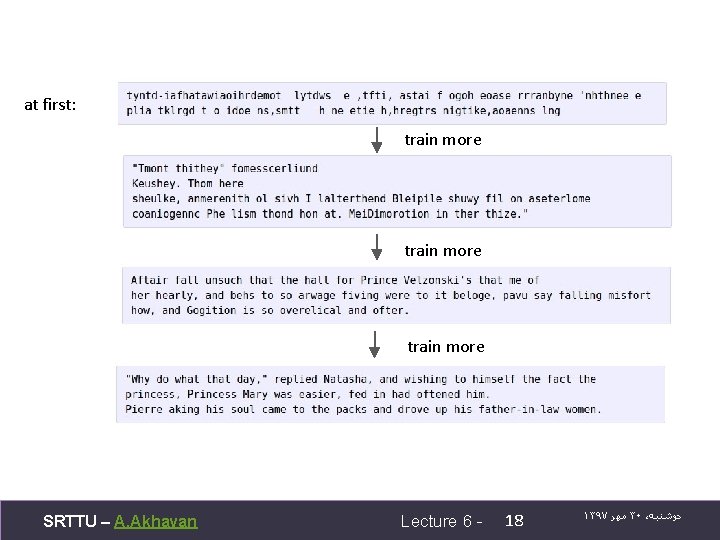

Samples from the model as training progresses: at first, then after training more.

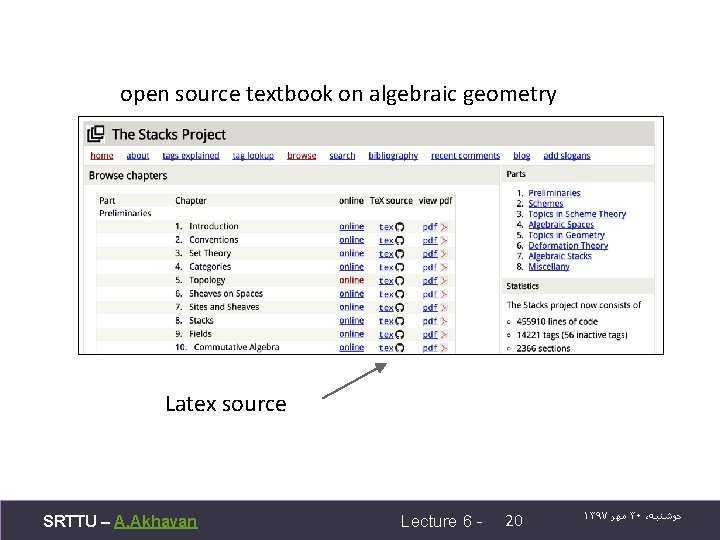

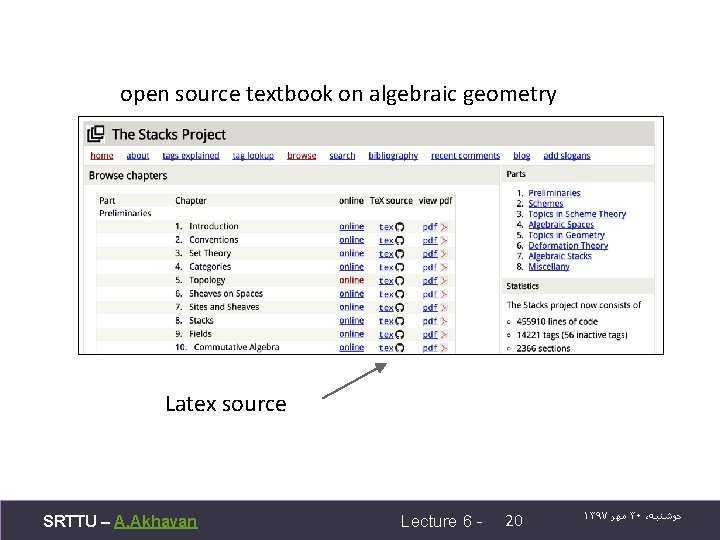

Training on the LaTeX source of an open-source textbook on algebraic geometry.

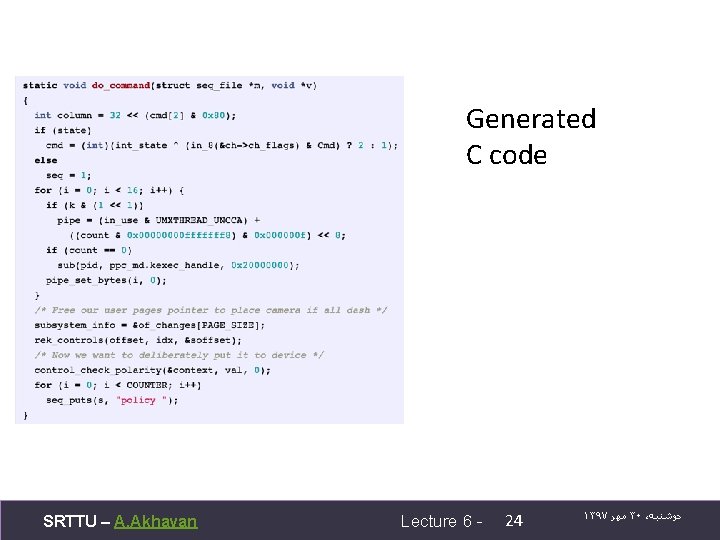

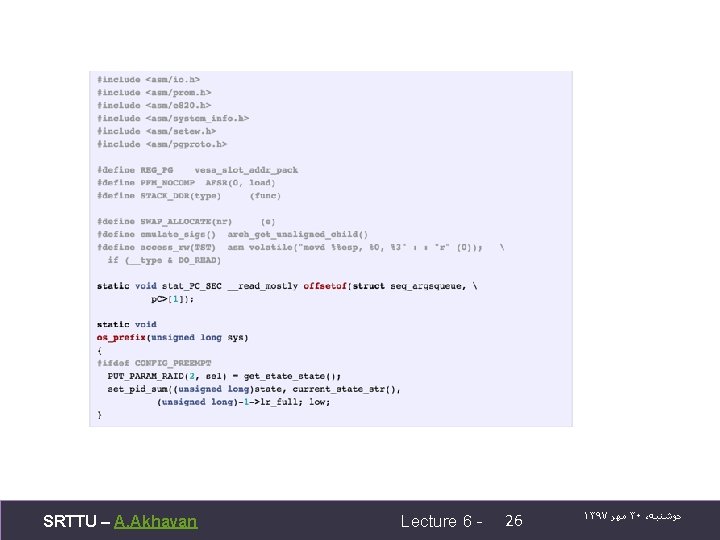

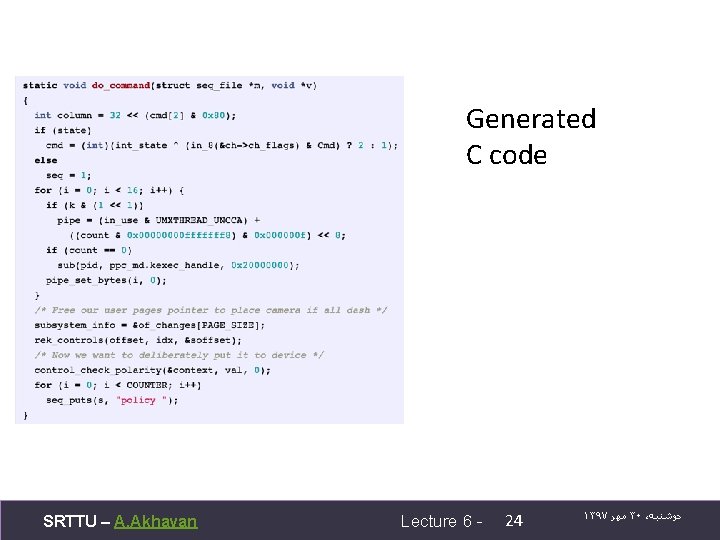

Generated C code

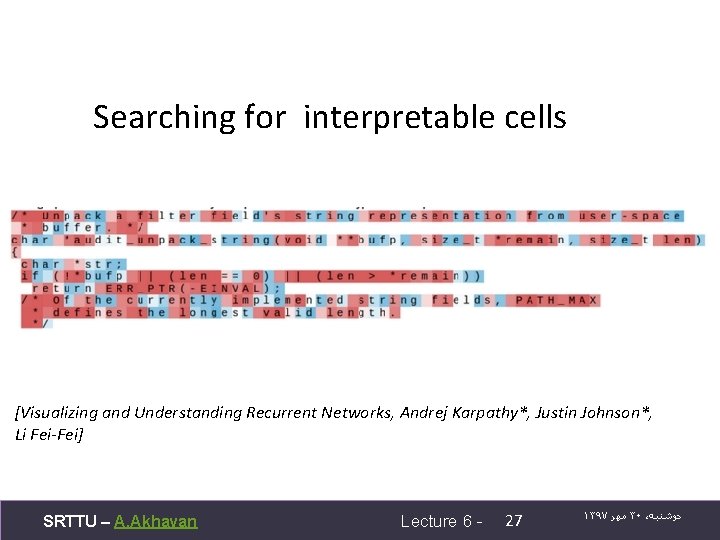

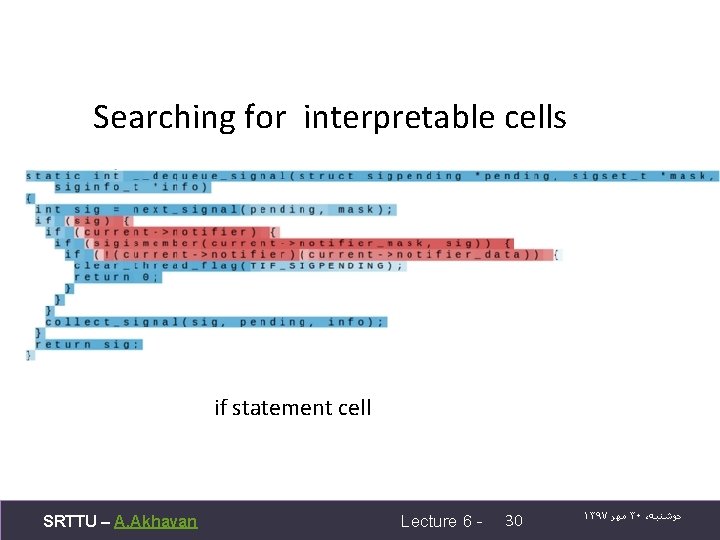

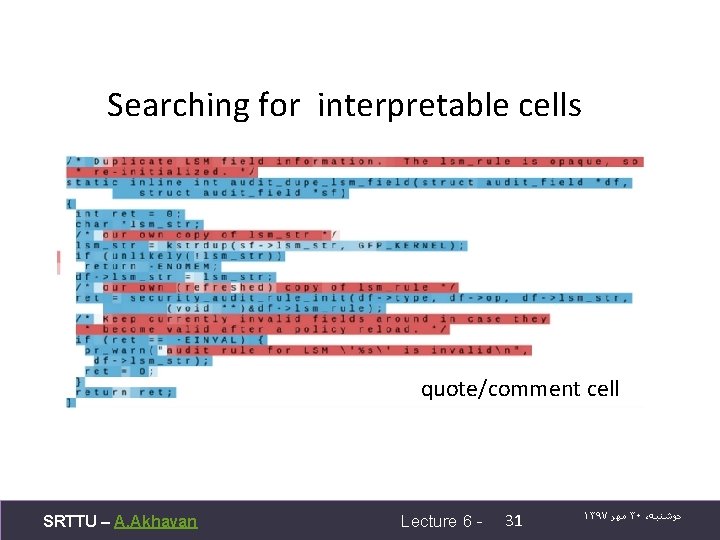

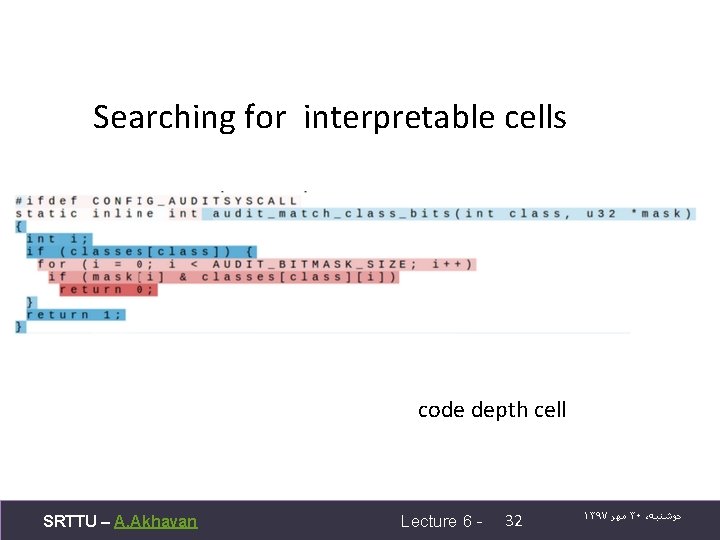

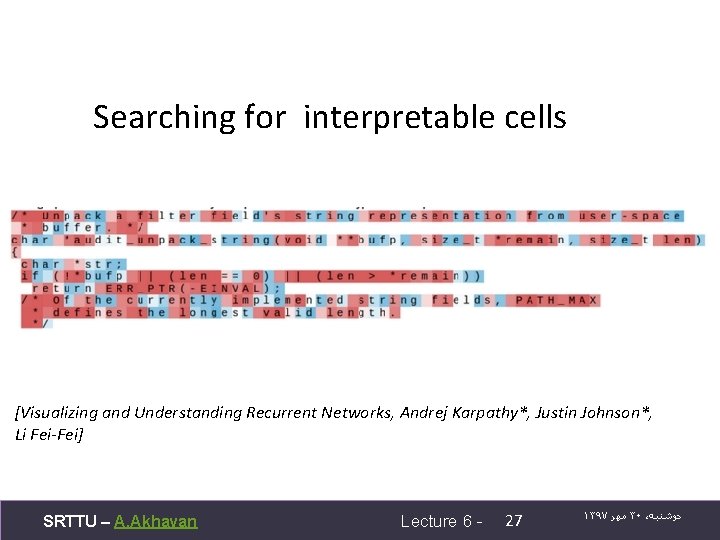

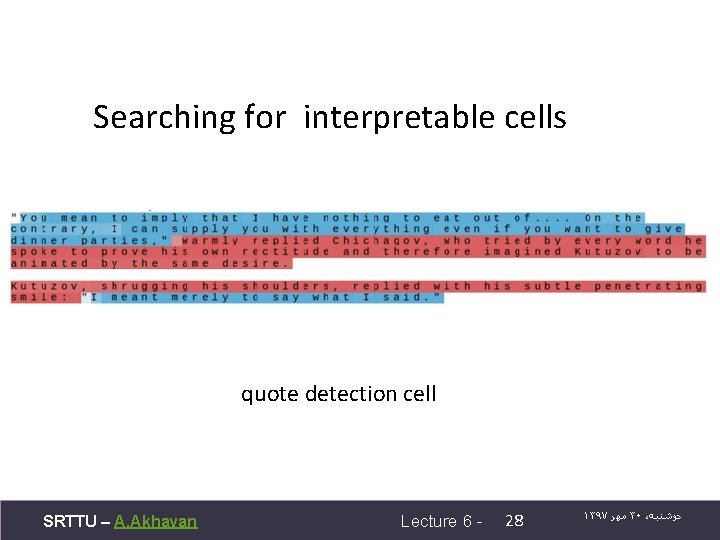

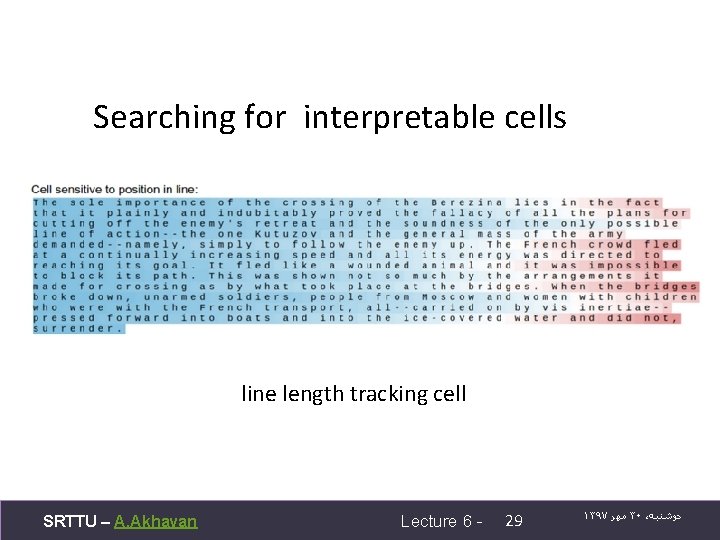

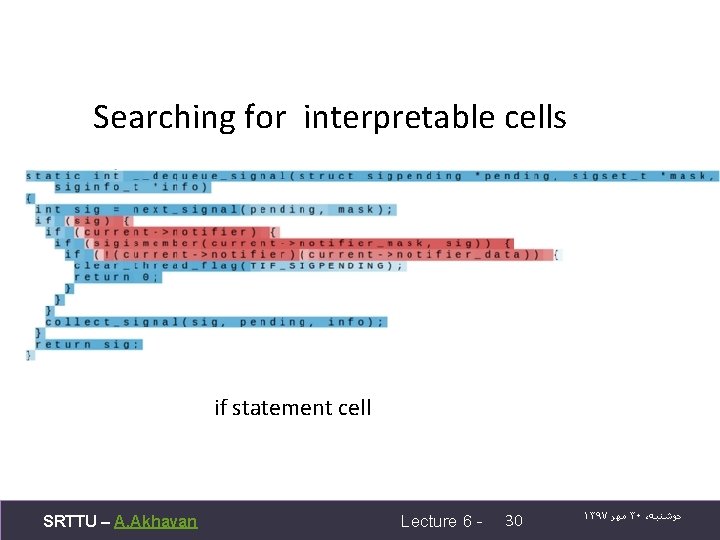

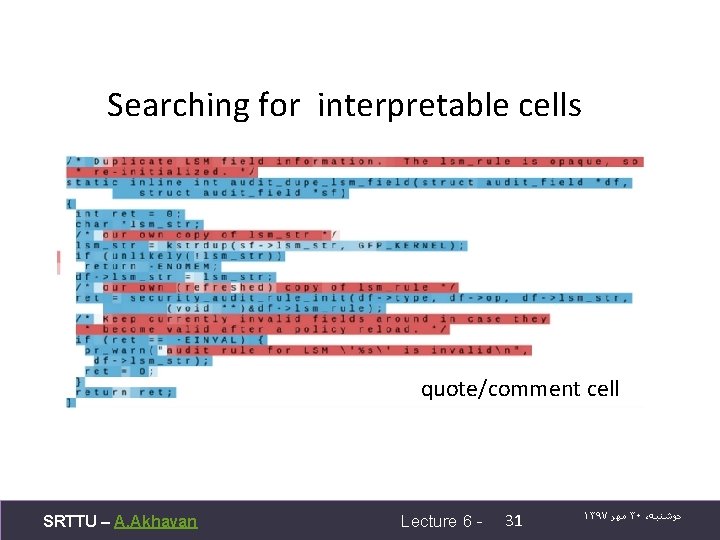

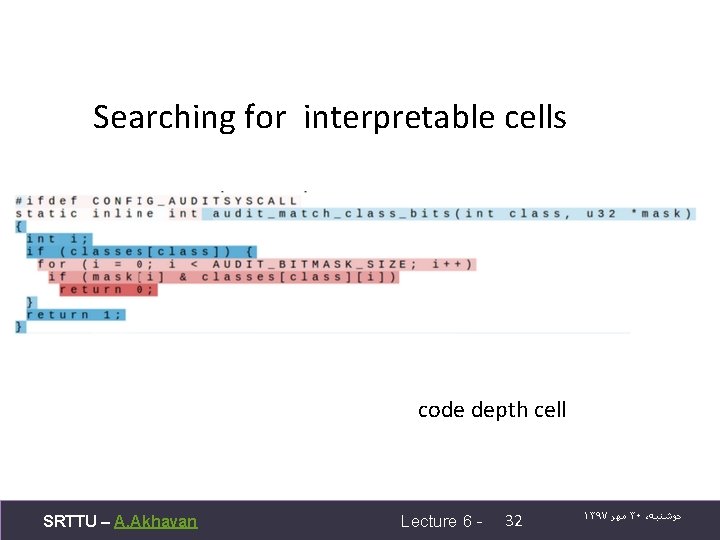

Searching for interpretable cells [Visualizing and Understanding Recurrent Networks, Andrej Karpathy*, Justin Johnson*, Li Fei-Fei]

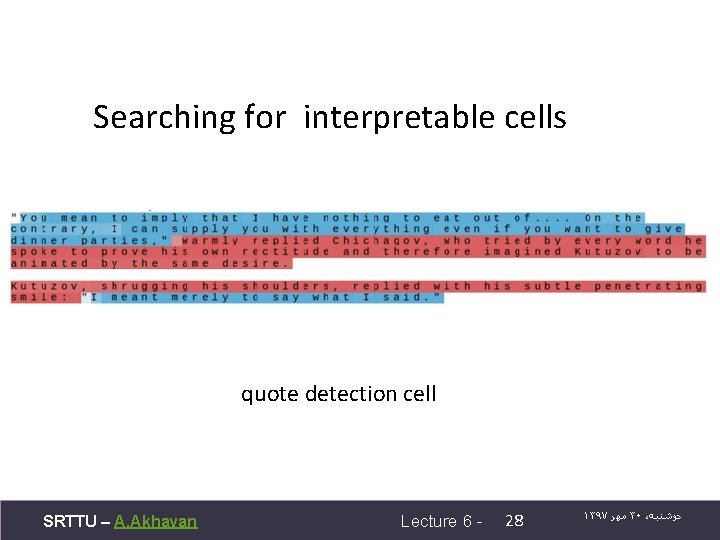

Searching for interpretable cells: quote detection cell

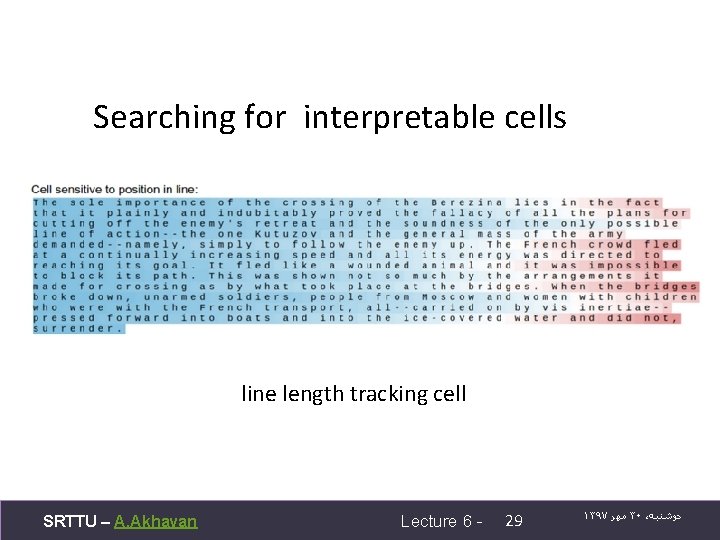

Searching for interpretable cells: line length tracking cell

Searching for interpretable cells: if statement cell

Searching for interpretable cells: quote/comment cell

Searching for interpretable cells: code depth cell
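One simple way to hunt for a cell like the quote detector is to record the hidden-state activations while the RNN reads some text, build a 0/1 feature per character (for example, "inside quotes"), and look for the unit whose activation correlates most strongly with it. This correlation search is an illustrative assumption, not necessarily the procedure used in the cited paper, and the function name and synthetic data below are hypothetical: a "quote unit" is planted at index 7 among random units to check that the search recovers it.

```python
import numpy as np

def find_feature_cell(activations, feature):
    """activations: (T, n_units) hidden-state values recorded over T characters.
    feature: (T,) 0/1 indicator, e.g. 1 while the character is inside quotes.
    Returns the unit whose activation has the largest absolute Pearson
    correlation with the feature, together with that correlation."""
    a = activations - activations.mean(axis=0)
    f = feature - feature.mean()
    denom = np.sqrt((a ** 2).sum(axis=0) * (f ** 2).sum()) + 1e-12
    corr = (a * f[:, None]).sum(axis=0) / denom
    best = int(np.argmax(np.abs(corr)))
    return best, float(corr[best])

# Synthetic check: plant a quote-detection unit among random ones.
text = 'he said "hello there" and left'
inside = np.zeros(len(text))
in_quote = False
for t, ch in enumerate(text):
    if ch == '"':
        in_quote = not in_quote
    inside[t] = float(in_quote)

rng = np.random.default_rng(0)
acts = rng.uniform(-1, 1, size=(len(text), 16))
acts[:, 7] = np.where(inside == 1, 0.9, -0.9) + rng.normal(0, 0.05, len(text))
print(find_feature_cell(acts, inside))  # expected: unit 7 with a correlation near 1
```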

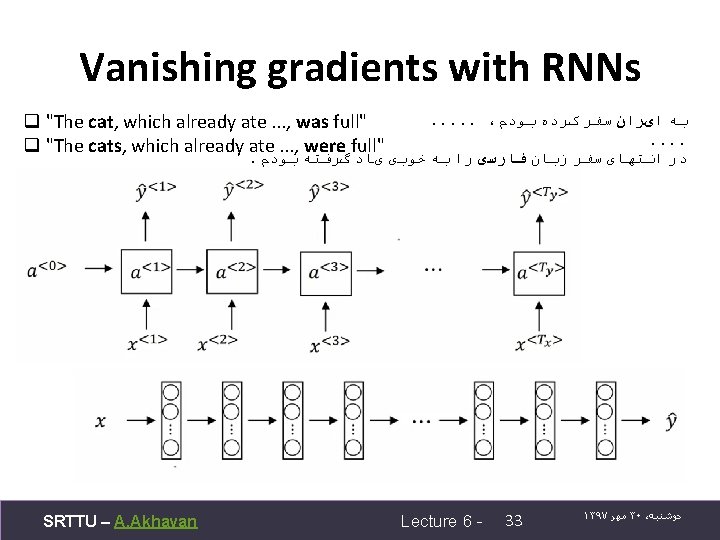

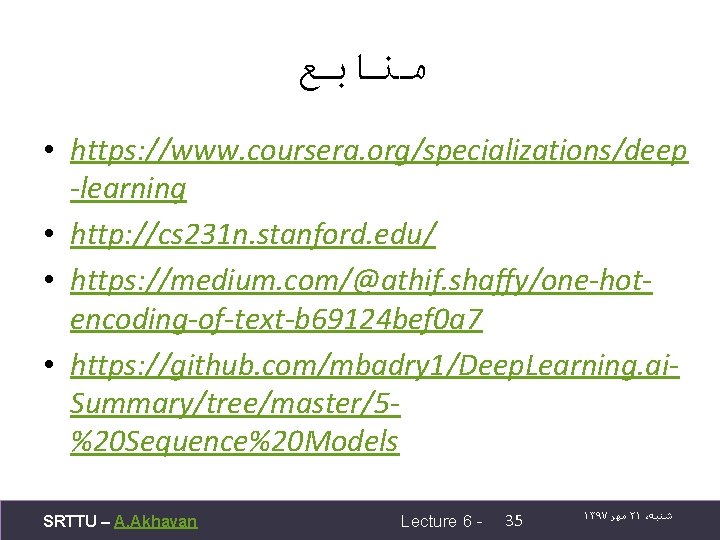

References

- https://www.coursera.org/specializations/deep-learning
- http://cs231n.stanford.edu/
- https://medium.com/@athif.shaffy/one-hot-encoding-of-text-b69124bef0a7
- https://github.com/mbadry1/DeepLearning.ai-Summary/tree/master/5%20Sequence%20Models