LECTURE 6: MULTIAGENT INTERACTIONS (An Introduction to MultiAgent Systems)

- Slides: 61

An Introduction to MultiAgent Systems, http://www.csc.liv.ac.uk/~mjw/pubs/imas
- Chapter 11 in the Second Edition
- Chapter 6 in the First Edition

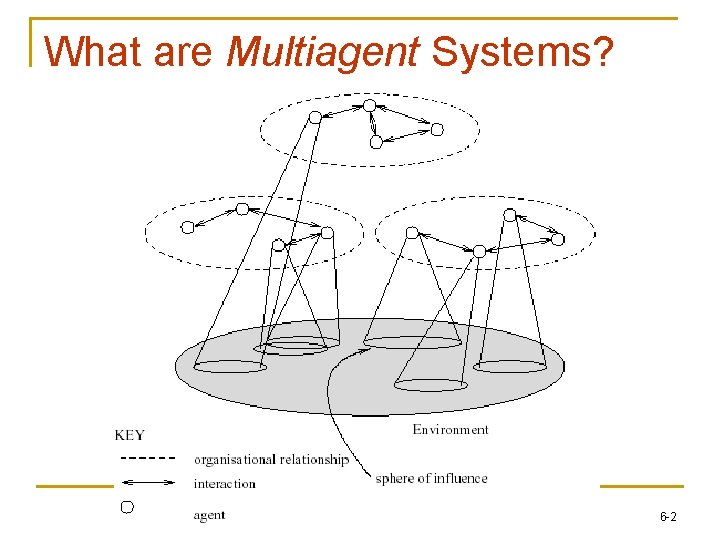

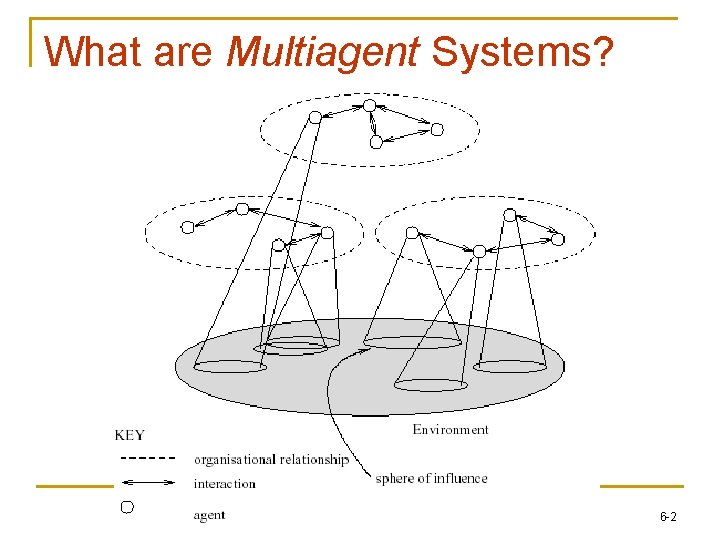

What are Multiagent Systems?

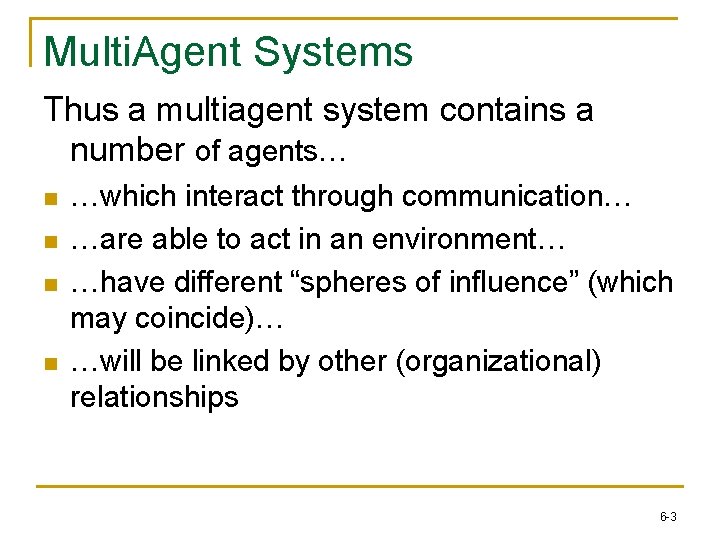

MultiAgent Systems
Thus a multiagent system contains a number of agents…
- …which interact through communication…
- …are able to act in an environment…
- …have different “spheres of influence” (which may coincide)…
- …will be linked by other (organizational) relationships

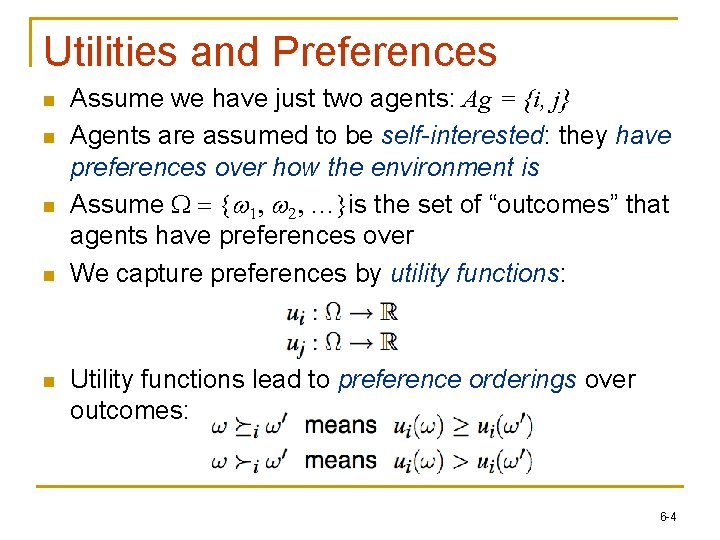

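Utilities and Preferences
- Assume we have just two agents: $Ag = \{i, j\}$
- Agents are assumed to be self-interested: they have preferences over how the environment is
- Assume $\Omega = \{\omega_1, \omega_2, \ldots\}$ is the set of “outcomes” that agents have preferences over
- We capture preferences by utility functions: $u_i : \Omega \to \mathbb{R}$ and $u_j : \Omega \to \mathbb{R}$
- Utility functions lead to preference orderings over outcomes: $\omega \succeq_i \omega'$ means $u_i(\omega) \geq u_i(\omega')$, and $\omega \succ_i \omega'$ means $u_i(\omega) > u_i(\omega')$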
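To make the link between utility functions and preference orderings concrete, here is a minimal Python sketch. The outcome labels `w1` to `w4` (standing in for ω1 to ω4) and every utility value are hypothetical and were chosen only for illustration.

```python
# Hypothetical outcomes and utilities for agents i and j (illustrative values only).
outcomes = ["w1", "w2", "w3", "w4"]
u_i = {"w1": 1, "w2": 1, "w3": 4, "w4": 4}
u_j = {"w1": 1, "w2": 4, "w3": 1, "w4": 4}


def weakly_prefers(u, a, b):
    """a is at least as good as b for the agent whose utility function is u."""
    return u[a] >= u[b]


def strictly_prefers(u, a, b):
    """a is strictly better than b for the agent whose utility function is u."""
    return u[a] > u[b]


def ranking(u):
    """Outcomes from most to least preferred (ties keep their original order)."""
    return sorted(outcomes, key=lambda w: u[w], reverse=True)


print(ranking(u_i))  # ['w3', 'w4', 'w1', 'w2']
```

Ties in utility, such as `w1` and `w2` for agent i, show up as weak preference in both directions and strict preference in neither.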

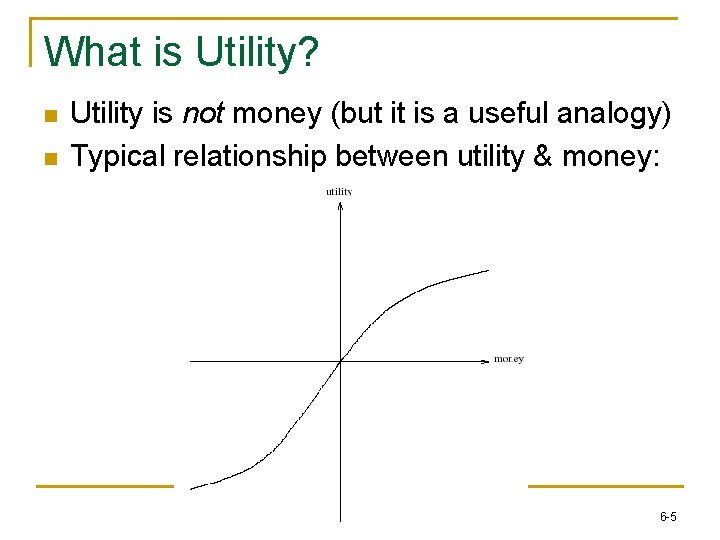

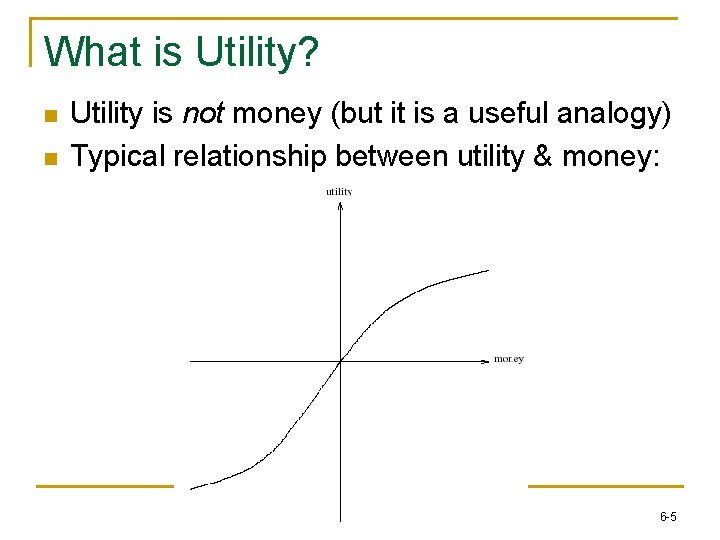

What is Utility?
- Utility is not money (but it is a useful analogy)
- Typical relationship between utility & money:

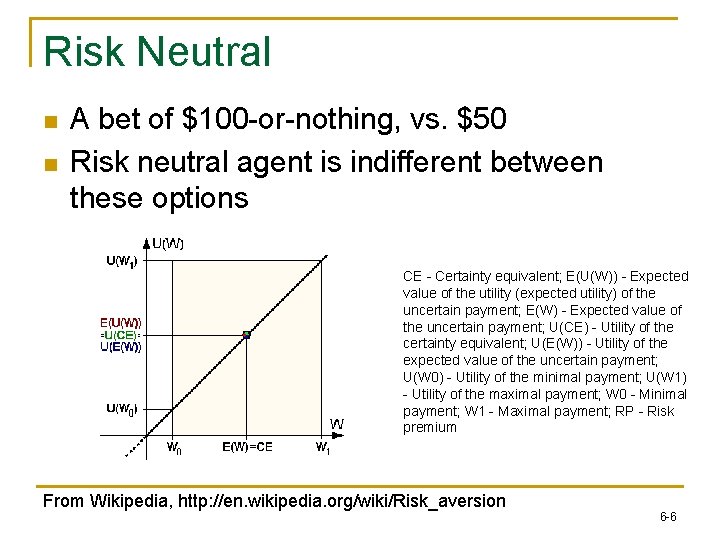

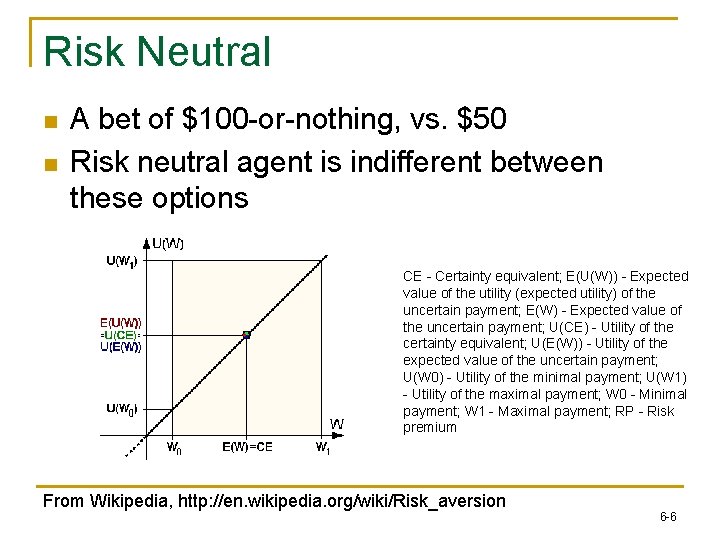

Risk Neutral
- A bet of $100-or-nothing (even odds), vs. a sure $50
- A risk neutral agent is indifferent between these options

Figure legend: CE - Certainty equivalent; E(U(W)) - Expected value of the utility (expected utility) of the uncertain payment; E(W) - Expected value of the uncertain payment; U(CE) - Utility of the certainty equivalent; U(E(W)) - Utility of the expected value of the uncertain payment; U(W0) - Utility of the minimal payment; U(W1) - Utility of the maximal payment; W0 - Minimal payment; W1 - Maximal payment; RP - Risk premium. From Wikipedia, http://en.wikipedia.org/wiki/Risk_aversion

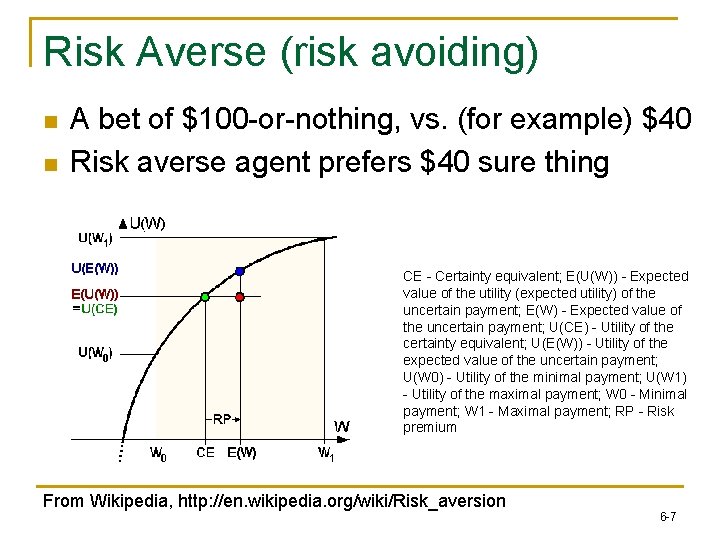

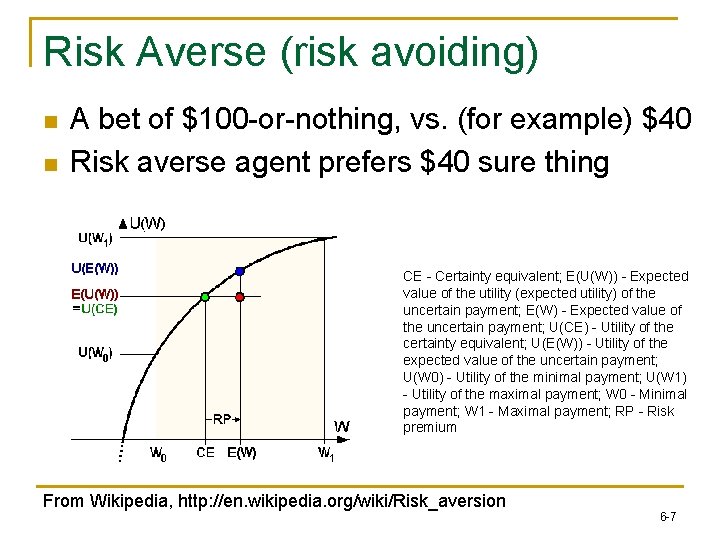

Risk Averse (risk avoiding)
- A bet of $100-or-nothing (even odds), vs. (for example) a sure $40
- A risk averse agent prefers the sure $40

Figure legend as for the risk neutral case. From Wikipedia, http://en.wikipedia.org/wiki/Risk_aversion

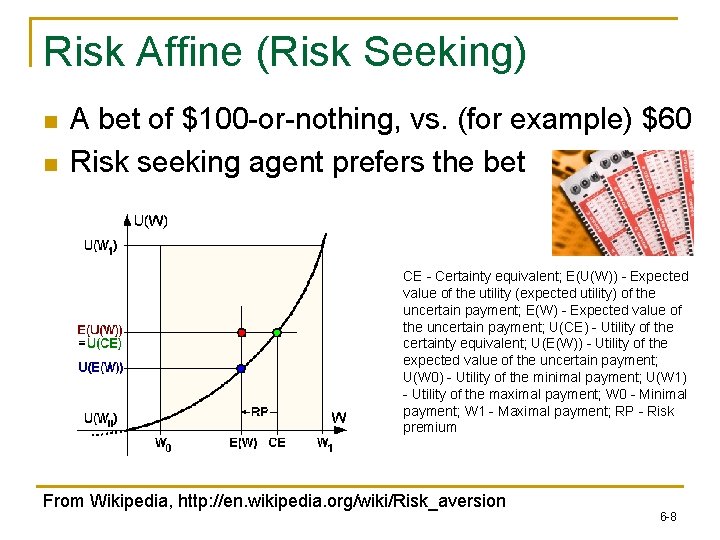

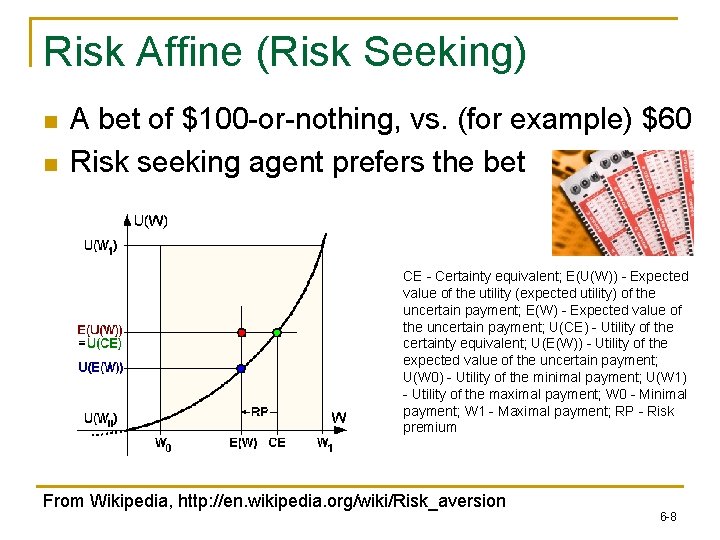

Risk Affine (Risk Seeking)
- A bet of $100-or-nothing (even odds), vs. (for example) a sure $60
- A risk seeking agent prefers the bet

Figure legend as for the risk neutral case. From Wikipedia, http://en.wikipedia.org/wiki/Risk_aversion
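The three risk attitudes can be reproduced numerically by comparing the bet's certainty equivalent with the sure amount on offer. The sketch below is minimal and rests on two assumptions: the bet pays $100 or $0 with equal probability, and the three utility functions (linear, square root, square) are illustrative choices, not functions taken from the slides.

```python
import math

# Assumed lottery: $100 with probability 0.5, otherwise $0.
BET = [(100.0, 0.5), (0.0, 0.5)]

# Illustrative utility functions and their inverses (assumptions, not from the slides):
# linear = risk neutral, concave = risk averse, convex = risk seeking.
UTILITIES = {
    "neutral": (lambda w: w, lambda u: u),
    "averse": (math.sqrt, lambda u: u ** 2),
    "seeking": (lambda w: w ** 2, math.sqrt),
}


def certainty_equivalent(kind, lottery=BET):
    """The sure amount whose utility equals the lottery's expected utility."""
    u, u_inverse = UTILITIES[kind]
    expected_utility = sum(p * u(w) for w, p in lottery)
    return u_inverse(expected_utility)


for kind in UTILITIES:
    print(kind, round(certainty_equivalent(kind), 2))
# prints: neutral 50.0 / averse 25.0 / seeking 70.71
```

Under these assumptions the square-root agent values the bet at only $25, so it takes the sure $40. The squared-utility agent values the bet at about $70.71, which is more than $60, so it takes the bet.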

Human Approaches to “Utility”
- Behavioral economists have studied how human attitudes toward utility contradict classical economic notions of utility (and of rationality, i.e., utility maximization)
- These issues also matter for agents that need to interact with people in natural ways (or for multiagent systems that include people as some of the agents)

Kahneman (and Tversky)
- Scenario 1: You go to a concert with $200 in your wallet and a ticket to the concert that cost $100. When you get there, you discover that you have lost a $100 bill from your wallet (so you have $100 remaining, and the ticket). Do you go into the concert?

Kahneman (and Tversky)
- Scenario 2: You go to a concert with $200 in your wallet and a ticket to the concert that cost $100. When you get there, you discover that you have lost the ticket from your wallet (so you have $200 remaining, and no ticket). Do you buy another ticket and go into the concert?

Kahneman (and Tversky)
- People remember the end of painful events more vividly
- Scenario 1: a hand is put into very cold (painfully cold) water for 2 minutes, then pulled out.
- Scenario 2: a hand is put into very cold (painfully cold) water for 2 minutes, then the water is heated for 1 minute (lessening the pain), and the hand is then pulled out.
- People prefer the second scenario, even though the pain it involves is a superset of the pain in the first

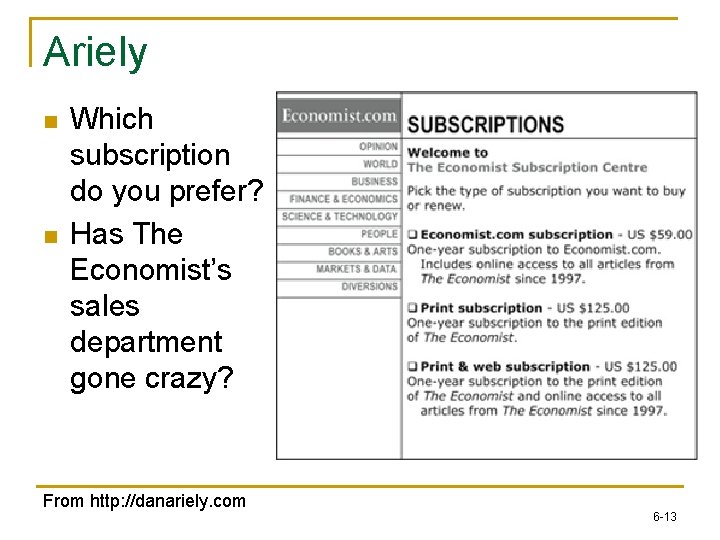

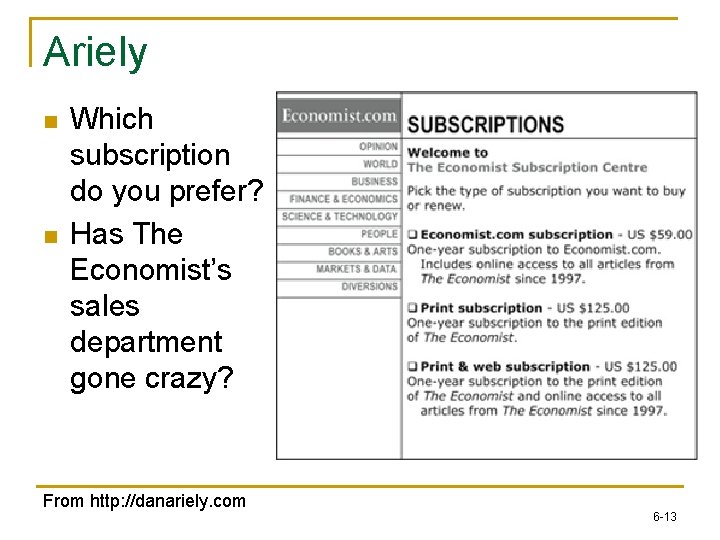

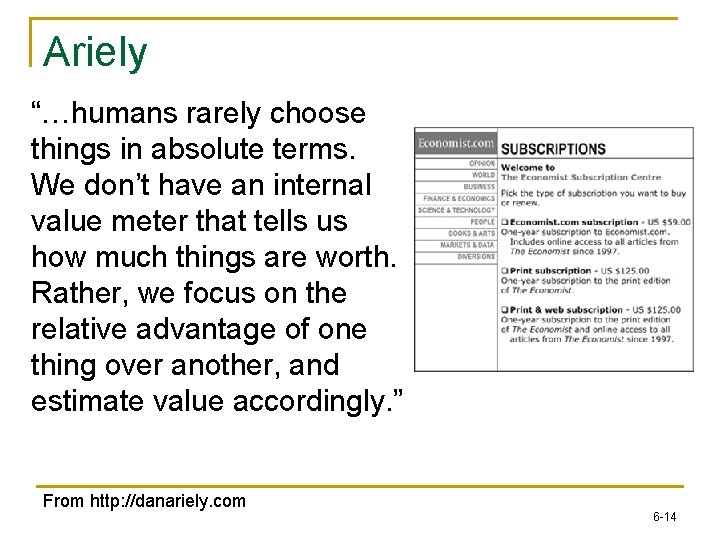

Ariely
- Which subscription do you prefer?
- Has The Economist’s sales department gone crazy?
From http://danariely.com

Ariely
“…humans rarely choose things in absolute terms. We don’t have an internal value meter that tells us how much things are worth. Rather, we focus on the relative advantage of one thing over another, and estimate value accordingly.”
From http://danariely.com

Ariely
- Ask a stranger to help unload a sofa from a truck, for free: many agree
- Ask a stranger to help unload a sofa from a truck, for $1: most do not
- The second scenario seems to provide the utility of the first, plus more, though “obviously”, it doesn’t

Ultimatum Game
- Two players
- Player 1 is given $100 and told to offer Player 2 some of it. If Player 2 accepts, they divide the $100 according to the offer; if Player 2 does not accept, they both get nothing
- Player 1 offers Player 2 $1. Does Player 2 accept? Is it rational not to accept?

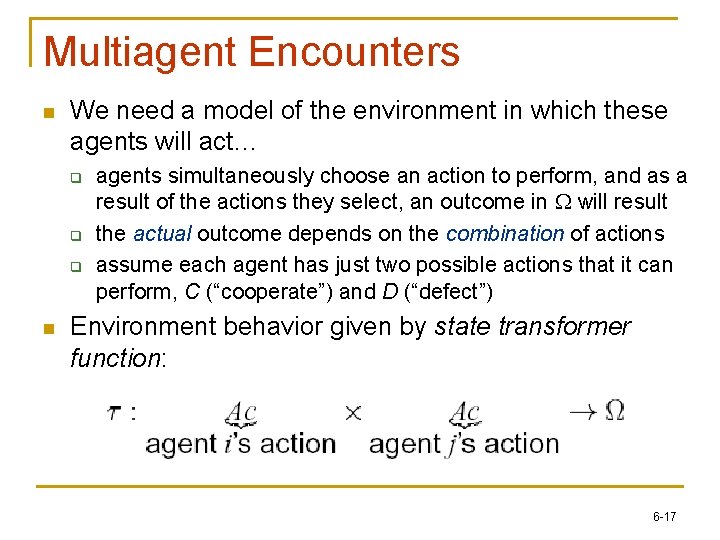

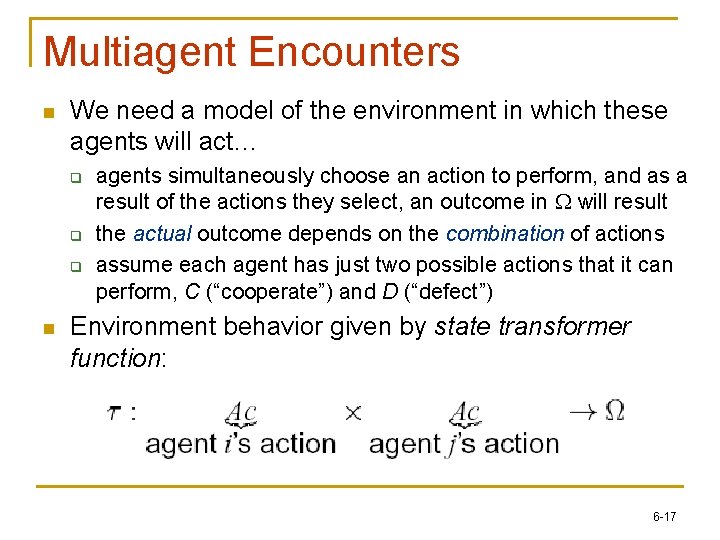

Multiagent Encounters
- We need a model of the environment in which these agents will act…
  - agents simultaneously choose an action to perform, and as a result of the actions they select, an outcome in $\Omega$ will result
  - the actual outcome depends on the combination of actions
  - assume each agent has just two possible actions that it can perform, C (“cooperate”) and D (“defect”)
- Environment behavior is given by a state transformer function:

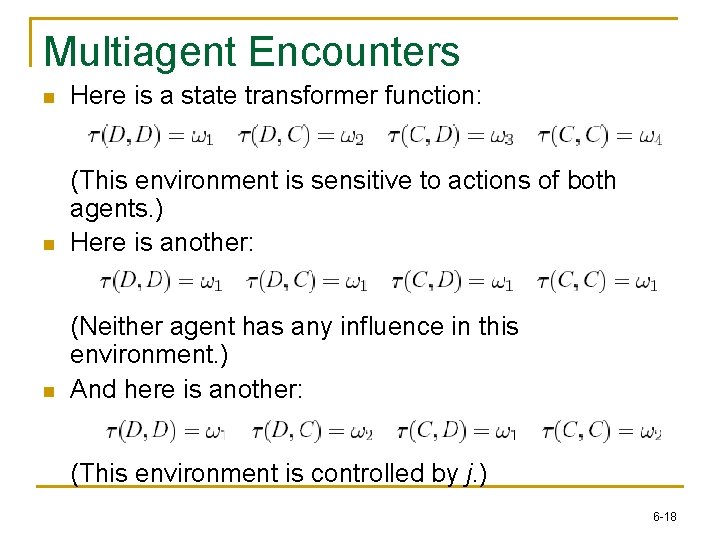

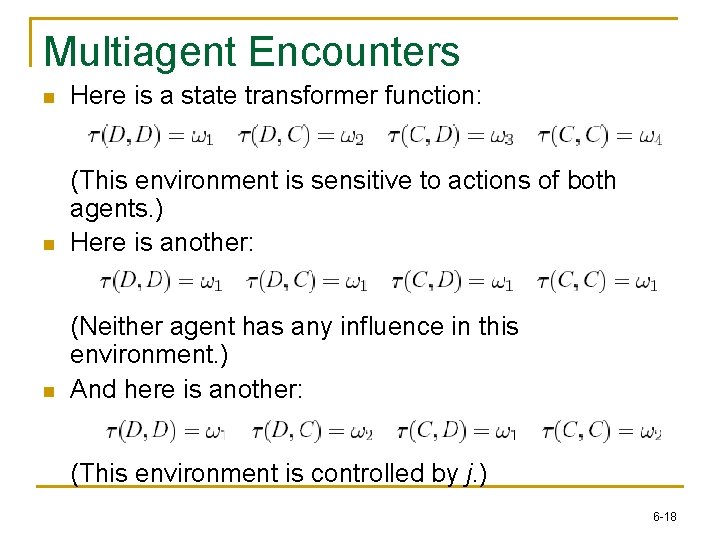

Multiagent Encounters
- Here is a state transformer function: (This environment is sensitive to the actions of both agents.)
- Here is another: (Neither agent has any influence in this environment.)
- And here is another: (This environment is controlled by j.)
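The three kinds of environment can be told apart mechanically. An agent has influence if changing only its own action can change the outcome. The Python sketch below encodes one transformer of each kind. The specific outcome assignments are assumptions, since the slide gives its examples only as images.

```python
from itertools import product

ACTIONS = ("C", "D")

# Each state transformer maps (i's action, j's action) -> outcome.
# The outcome assignments are illustrative assumptions.
tau_both = {("D", "D"): "w1", ("D", "C"): "w2", ("C", "D"): "w3", ("C", "C"): "w4"}
tau_none = {profile: "w1" for profile in product(ACTIONS, repeat=2)}
tau_j = {("D", "D"): "w1", ("D", "C"): "w2", ("C", "D"): "w1", ("C", "C"): "w2"}


def flip(action):
    """The other action."""
    return "D" if action == "C" else "C"


def influences(tau, agent):
    """True if changing only `agent`'s action (0 = i, 1 = j) can change the outcome."""
    for profile in product(ACTIONS, repeat=2):
        changed = list(profile)
        changed[agent] = flip(changed[agent])
        if tau[profile] != tau[tuple(changed)]:
            return True
    return False


for name, tau in [("both", tau_both), ("neither", tau_none), ("j only", tau_j)]:
    print(name, influences(tau, 0), influences(tau, 1))
```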

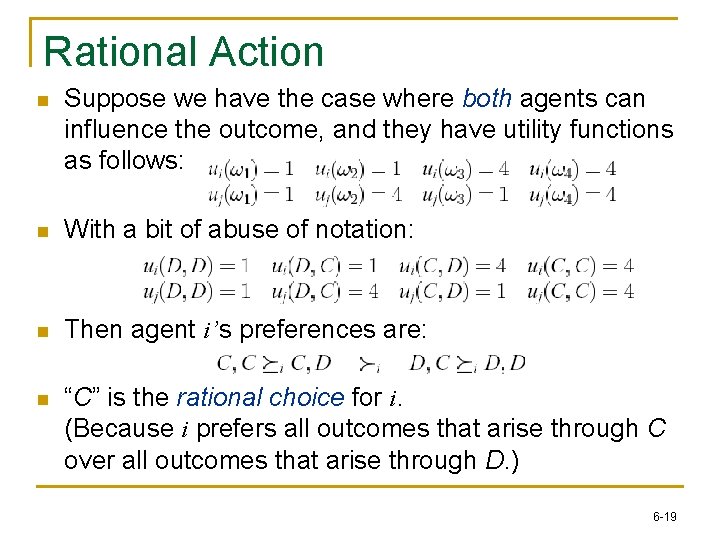

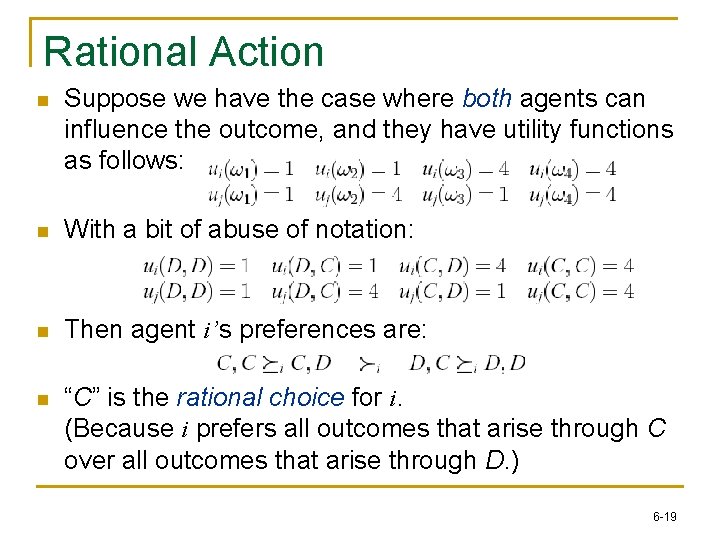

Rational Action
- Suppose we have the case where both agents can influence the outcome, and they have utility functions as follows:
- With a bit of abuse of notation:
- Then agent i’s preferences are:
- “C” is the rational choice for i (because i prefers all outcomes that arise through C over all outcomes that arise through D).
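The argument for C can be checked directly: if the worst outcome reachable through C beats the best outcome reachable through D, then C is rational whatever j does. This is a sketch only. The transformer and i's utilities below are assumed values chosen to fit the slide's conclusion, because the slide's own values appear only in its images.

```python
ACTIONS = ("C", "D")

# Illustrative assumptions: (i's action, j's action) -> outcome, and i's utility per outcome.
tau = {("D", "D"): "w1", ("D", "C"): "w2", ("C", "D"): "w3", ("C", "C"): "w4"}
u_i = {"w1": 1, "w2": 1, "w3": 4, "w4": 4}


def u_i_of(a_i, a_j):
    """The slide's 'abuse of notation': i's utility written directly over action pairs."""
    return u_i[tau[(a_i, a_j)]]


def always_better(a, b):
    """True if every outcome reachable by playing a beats every outcome reachable by b."""
    worst_with_a = min(u_i_of(a, a_j) for a_j in ACTIONS)
    best_with_b = max(u_i_of(b, a_j) for a_j in ACTIONS)
    return worst_with_a > best_with_b


print(always_better("C", "D"))  # True: C is the rational choice for i
```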

An Aside on “Rationality”
- The term “rational” is often used imprecisely, as a synonym of “reasonable”
- But “rational” is also used precisely, to mean “utility maximizer”, given the alternatives
- Since humans’ utility functions are difficult to elicit, one way of determining them is to see what people choose (under the assumption that they are rational)
- Behavioral economists can then show forms of behavior that seem “irrational”

“Rational” in Common Usage
- The New York Times, April 26, 2012, “Defense Minister Adds to Israel’s Recent Mix of Messages on Iran”, by Jodi Rudore
- “General Gantz described the Iranian government as ‘very rational.’ Mr. Netanyahu had told CNN on Tuesday that he would not count ‘on Iran’s rational behavior.’”
- “Mr. Barak said he thought it unlikely that the sanctions would succeed and that he did not see Iran as ‘rational in the Western sense of the word, meaning people seeking a status quo and the outlines of a solution to problems in a peaceful manner.’”
- “Dore Gold…said the apparent disagreement on rationality could be explained: ‘The Iranians have irrational goals, which they may try and advance in a rational way.’”

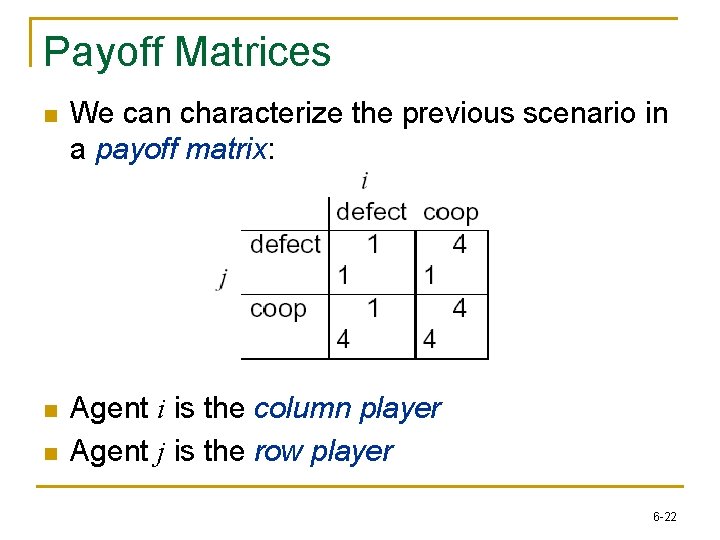

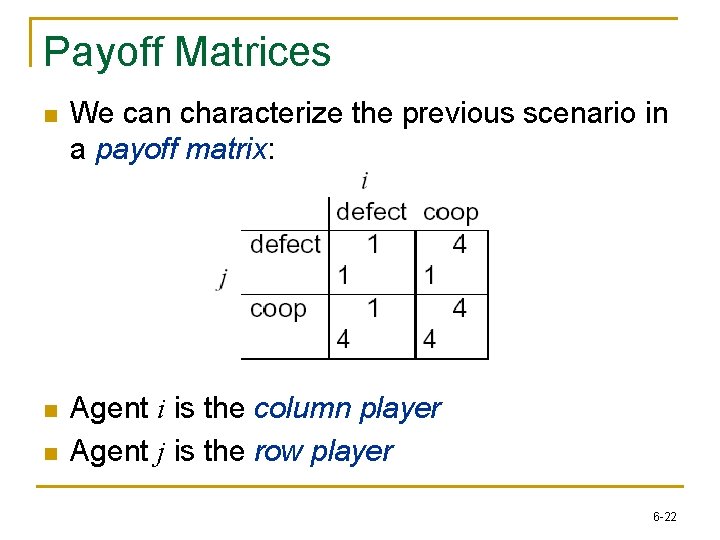

Payoff Matrices
- We can characterize the previous scenario in a payoff matrix:
- Agent i is the column player; agent j is the row player

Solution Concepts
- How will a rational agent behave in any given scenario?
- Answered in solution concepts:
  - dominant strategy
  - Nash equilibrium strategy
  - Pareto optimal strategies
  - strategies that maximize social welfare

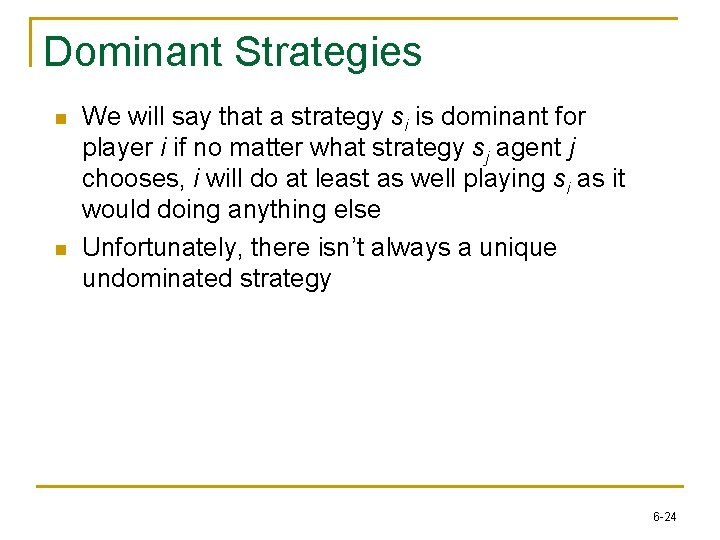

Dominant Strategies
- We will say that a strategy $s_i$ is dominant for player i if, no matter what strategy $s_j$ agent j chooses, i will do at least as well playing $s_i$ as it would doing anything else
- Unfortunately, there isn’t always a unique undominated strategy
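The definition translates directly into a check over every opponent action and every alternative. The sketch below uses the prisoner's dilemma payoffs given later in the lecture (sucker 1, mutual defection 2, mutual cooperation 3, temptation 4) and the Assurance Game table. The encoding, with keys (i's action, j's action) mapping to (u_i, u_j), is an assumption of this sketch.

```python
ACTIONS = ("C", "D")

# Payoffs as (i's action, j's action) -> (u_i, u_j).
# Prisoner's dilemma values as given later in the lecture.
PD = {("C", "C"): (3, 3), ("C", "D"): (1, 4), ("D", "C"): (4, 1), ("D", "D"): (2, 2)}
# Assurance Game values from the slides (row player = i).
ASSURANCE = {("C", "C"): (4, 4), ("C", "D"): (1, 2), ("D", "C"): (2, 1), ("D", "D"): (3, 3)}


def payoff(game, player, own, other):
    """Utility to `player` (0 = i, 1 = j) when it plays `own` and the other agent plays `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return game[profile][player]


def dominant_strategies(game, player):
    """Strategies that do at least as well as every alternative, whatever the other agent does."""
    return [
        s for s in ACTIONS
        if all(payoff(game, player, s, t) >= payoff(game, player, alt, t)
               for t in ACTIONS for alt in ACTIONS)
    ]


print(dominant_strategies(PD, 0), dominant_strategies(PD, 1))  # ['D'] ['D']
print(dominant_strategies(ASSURANCE, 0))  # []
```

The empty list for the Assurance Game shows the "unfortunately" case: neither action is dominant there.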

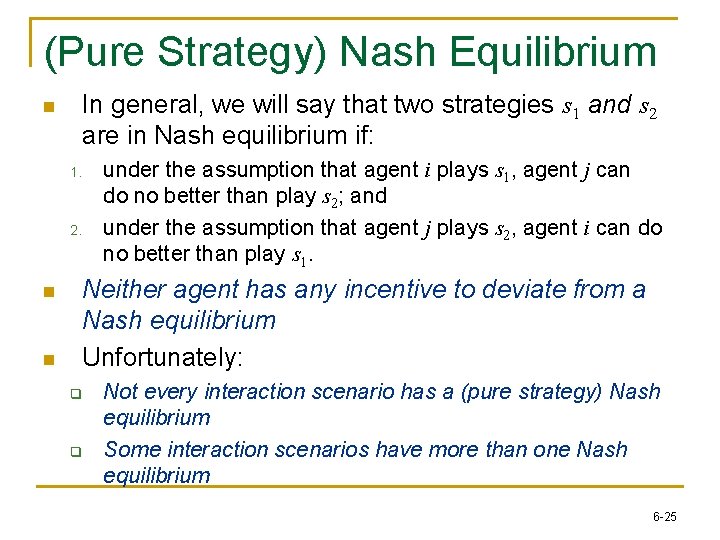

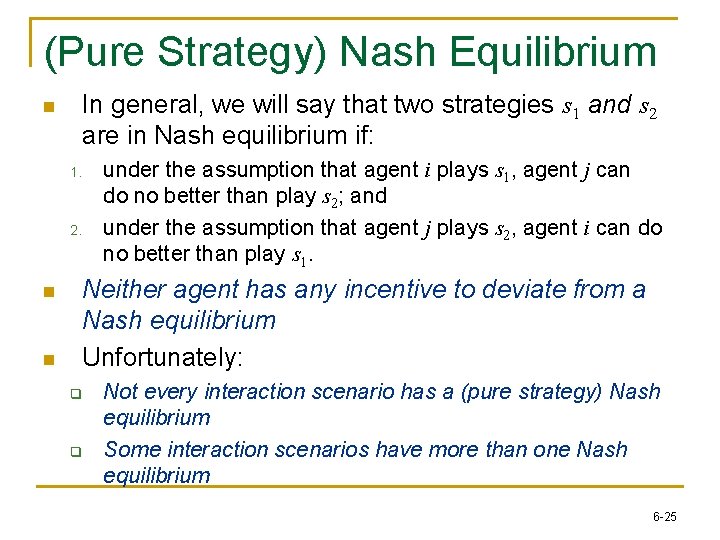

(Pure Strategy) Nash Equilibrium
In general, we will say that two strategies $s_1$ and $s_2$ are in Nash equilibrium if:
1. under the assumption that agent i plays $s_1$, agent j can do no better than play $s_2$; and
2. under the assumption that agent j plays $s_2$, agent i can do no better than play $s_1$.
- Neither agent has any incentive to deviate from a Nash equilibrium
- Unfortunately:
  - Not every interaction scenario has a (pure strategy) Nash equilibrium
  - Some interaction scenarios have more than one Nash equilibrium

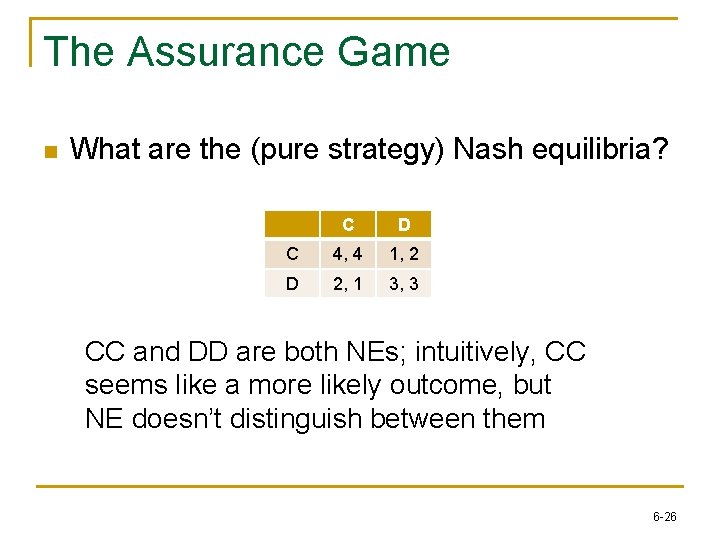

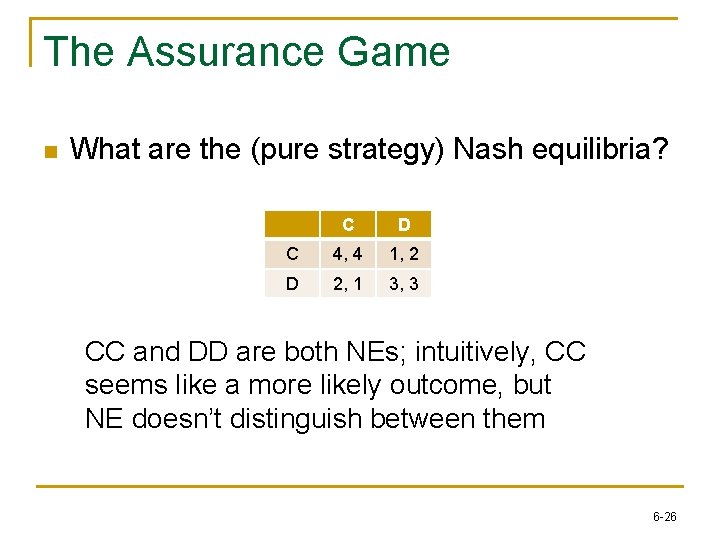

The Assurance Game
- What are the (pure strategy) Nash equilibria?

|   | C | D |
|---|---|---|
| C | 4, 4 | 1, 2 |
| D | 2, 1 | 3, 3 |

- CC and DD are both NEs; intuitively, CC seems like a more likely outcome, but NE doesn’t distinguish between them
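Pure strategy Nash equilibria can be found by brute force. For each profile, check that neither player gains by changing only its own action. This is a minimal sketch on the Assurance Game matrix above, assuming the first number in each cell is the row player's payoff.

```python
from itertools import product

ACTIONS = ("C", "D")
# Assurance Game from the slide: (row action, column action) -> (row payoff, column payoff).
ASSURANCE = {("C", "C"): (4, 4), ("C", "D"): (1, 2), ("D", "C"): (2, 1), ("D", "D"): (3, 3)}


def pure_nash_equilibria(game):
    """Profiles where neither player gains by a unilateral change of action."""
    equilibria = []
    for r, c in product(ACTIONS, repeat=2):
        row_ok = all(game[(r, c)][0] >= game[(alt, c)][0] for alt in ACTIONS)
        col_ok = all(game[(r, c)][1] >= game[(r, alt)][1] for alt in ACTIONS)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


print(pure_nash_equilibria(ASSURANCE))  # [('C', 'C'), ('D', 'D')]
```

Both equilibria survive the check, which is exactly the slide's point that NE alone does not pick between them.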

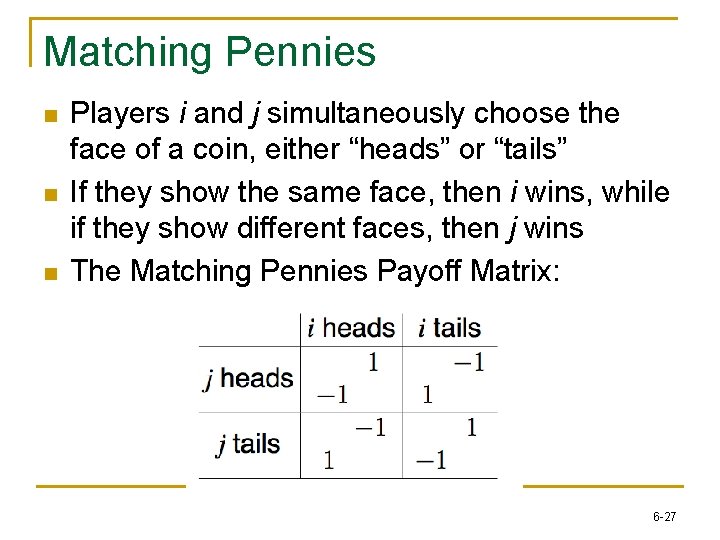

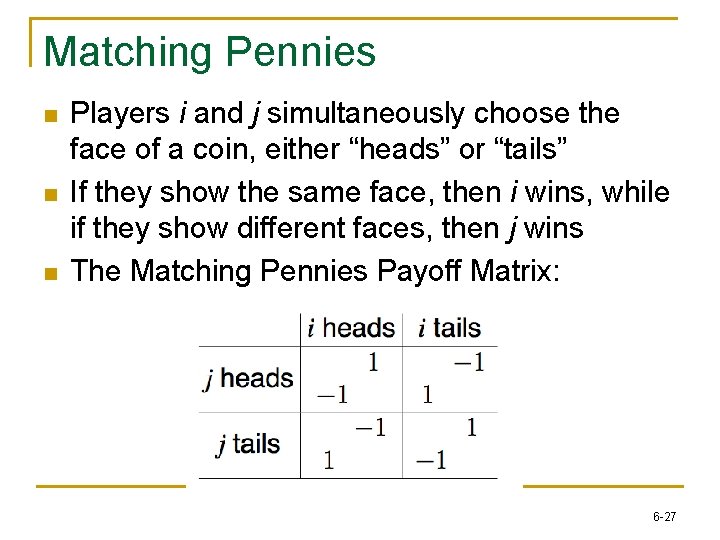

Matching Pennies
- Players i and j simultaneously choose the face of a coin, either “heads” or “tails”
- If they show the same face, then i wins, while if they show different faces, then j wins
- The Matching Pennies Payoff Matrix:

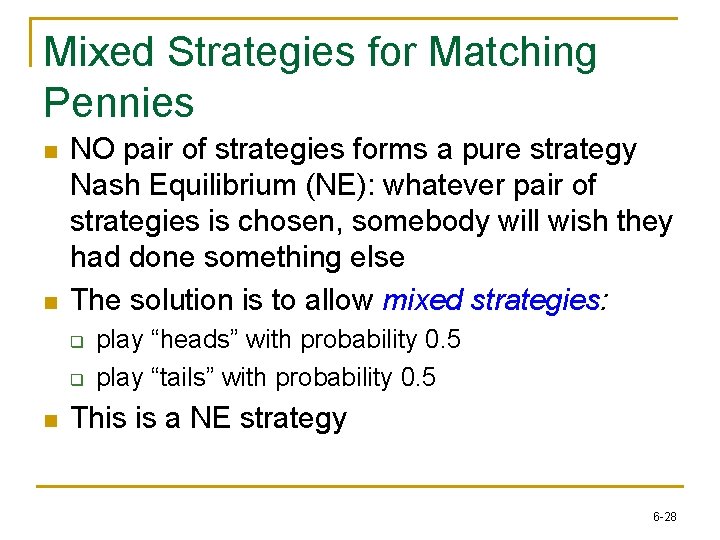

Mixed Strategies for Matching Pennies
- NO pair of strategies forms a pure strategy Nash equilibrium (NE): whatever pair of strategies is chosen, somebody will wish they had done something else
- The solution is to allow mixed strategies:
  - play “heads” with probability 0.5
  - play “tails” with probability 0.5
- This is a NE strategy
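The reason 50/50 is an equilibrium is that it leaves the opponent indifferent. Against a 50/50 mix, both faces earn the same expected payoff, so no deviation can do better. A sketch with exact fractions, assuming the usual ±1 payoffs (win = 1, loss = -1) for the matrix shown on the slide:

```python
from fractions import Fraction

FACES = ("H", "T")
# Matching Pennies: (i's face, j's face) -> (u_i, u_j); i wins on a match, j otherwise.
MP = {("H", "H"): (1, -1), ("H", "T"): (-1, 1), ("T", "H"): (-1, 1), ("T", "T"): (1, -1)}
HALF = {"H": Fraction(1, 2), "T": Fraction(1, 2)}


def i_payoff(face, j_mix):
    """i's expected payoff from a pure face against j's mixed strategy."""
    return sum(p * MP[(face, f)][0] for f, p in j_mix.items())


def j_payoff(face, i_mix):
    """j's expected payoff from a pure face against i's mixed strategy."""
    return sum(p * MP[(f, face)][1] for f, p in i_mix.items())


# Against a 50/50 opponent both faces earn the same (zero), so no deviation,
# pure or mixed, can do better: the pair of 50/50 strategies is a Nash equilibrium.
print([i_payoff(f, HALF) for f in FACES], [j_payoff(f, HALF) for f in FACES])
```

If j drifted to, say, 60% heads, i's pure "heads" would earn 1/5 per round. That is why any other mix gives the opponent something to exploit.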

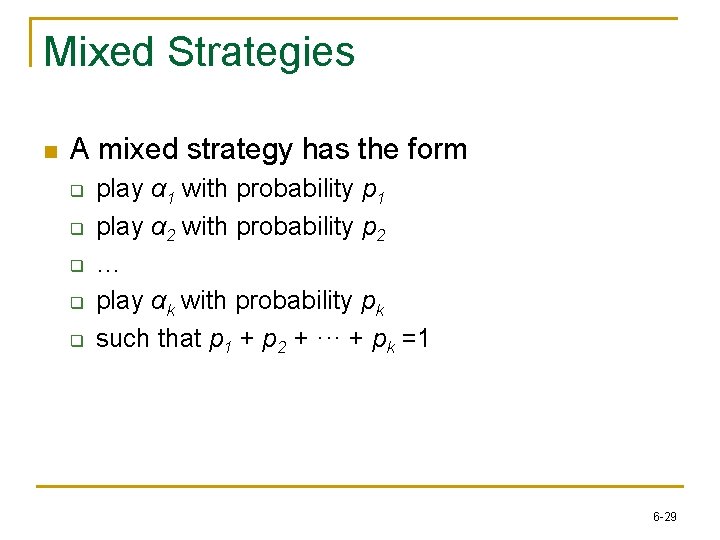

Mixed Strategies
- A mixed strategy has the form
  - play $\alpha_1$ with probability $p_1$
  - play $\alpha_2$ with probability $p_2$
  - …
  - play $\alpha_k$ with probability $p_k$
- such that $p_1 + p_2 + \cdots + p_k = 1$

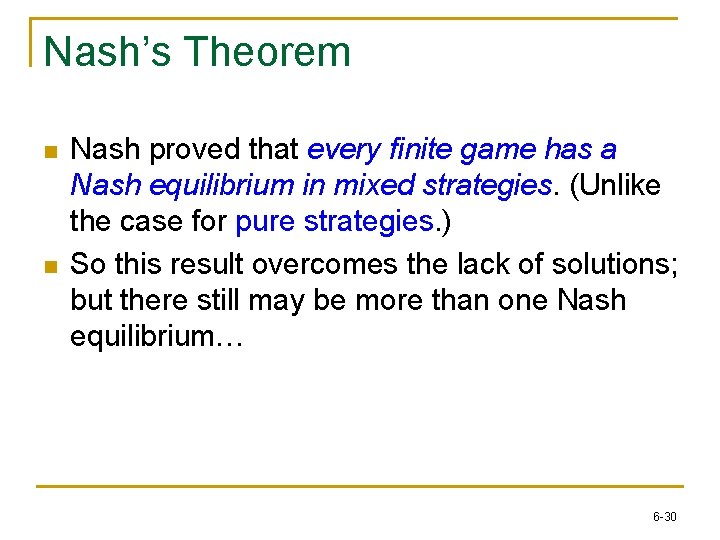

Nash’s Theorem
- Nash proved that every finite game has a Nash equilibrium in mixed strategies (unlike the case for pure strategies).
- So this result overcomes the lack of solutions; but there may still be more than one Nash equilibrium…

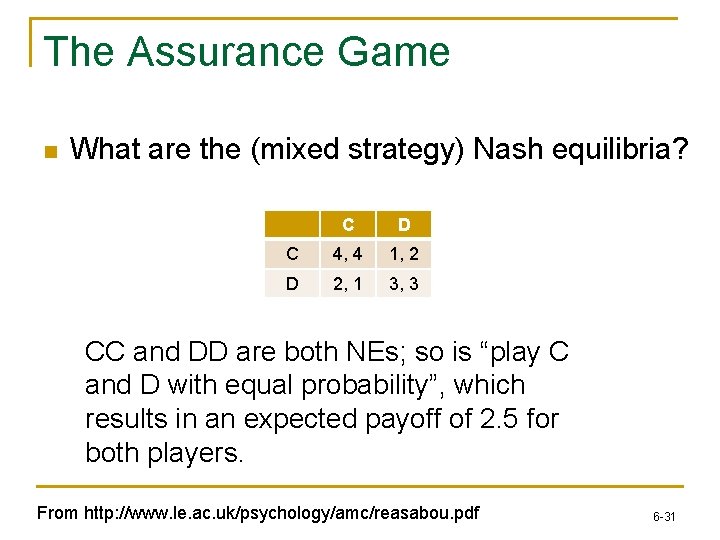

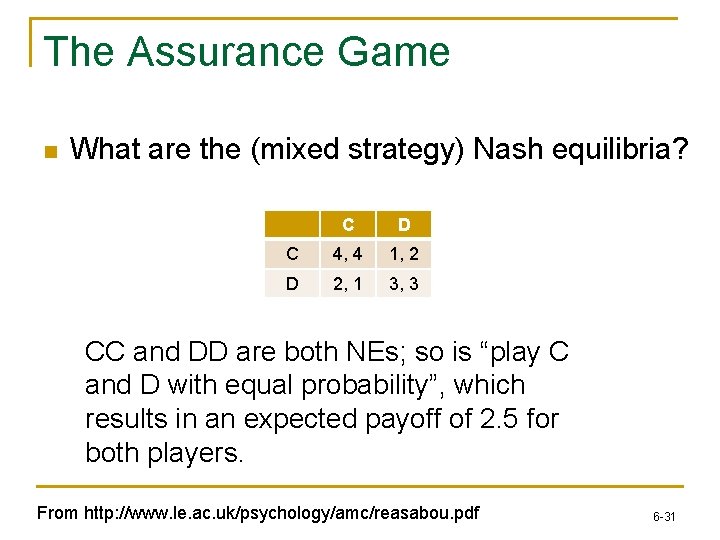

The Assurance Game
- What are the (mixed strategy) Nash equilibria?

|   | C | D |
|---|---|---|
| C | 4, 4 | 1, 2 |
| D | 2, 1 | 3, 3 |

- CC and DD are both NEs; so is “play C and D with equal probability”, which results in an expected payoff of 2.5 for both players.
From http://www.le.ac.uk/psychology/amc/reasabou.pdf
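The 50/50 equilibrium can be derived rather than guessed. In a fully mixed equilibrium of a 2x2 game, each player mixes so that the other is indifferent between its two actions. The sketch below solves those two linear conditions with exact fractions. It assumes row payoff first in each cell and that a fully mixed equilibrium exists (non-zero denominators).

```python
from fractions import Fraction

# Assurance Game: (row action, column action) -> (row payoff, column payoff).
GAME = {("C", "C"): (4, 4), ("C", "D"): (1, 2), ("D", "C"): (2, 1), ("D", "D"): (3, 3)}


def fully_mixed_equilibrium(game):
    """Mixed NE of a 2x2 game from the indifference conditions.

    Returns (p, q) with p = P(row plays C) and q = P(column plays C).
    Assumes both denominators are non-zero (a fully mixed equilibrium exists).
    """
    def a(r, c):  # row player's payoff
        return Fraction(game[(r, c)][0])

    def b(r, c):  # column player's payoff
        return Fraction(game[(r, c)][1])

    # q makes the row player indifferent: q*a(C,C) + (1-q)*a(C,D) = q*a(D,C) + (1-q)*a(D,D)
    q = (a("D", "D") - a("C", "D")) / (a("C", "C") - a("C", "D") - a("D", "C") + a("D", "D"))
    # p makes the column player indifferent between its two actions.
    p = (b("D", "D") - b("D", "C")) / (b("C", "C") - b("D", "C") - b("C", "D") + b("D", "D"))
    return p, q


def expected_payoffs(game, p, q):
    """Expected (row, column) payoffs when both players mix independently."""
    prob = {("C", "C"): p * q, ("C", "D"): p * (1 - q),
            ("D", "C"): (1 - p) * q, ("D", "D"): (1 - p) * (1 - q)}
    return tuple(sum(prob[k] * game[k][n] for k in game) for n in (0, 1))


p, q = fully_mixed_equilibrium(GAME)
print(p, q, expected_payoffs(GAME, p, q))
```

For this game both probabilities come out as 1/2, and each player's expected payoff is 5/2 = 2.5, matching the slide.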

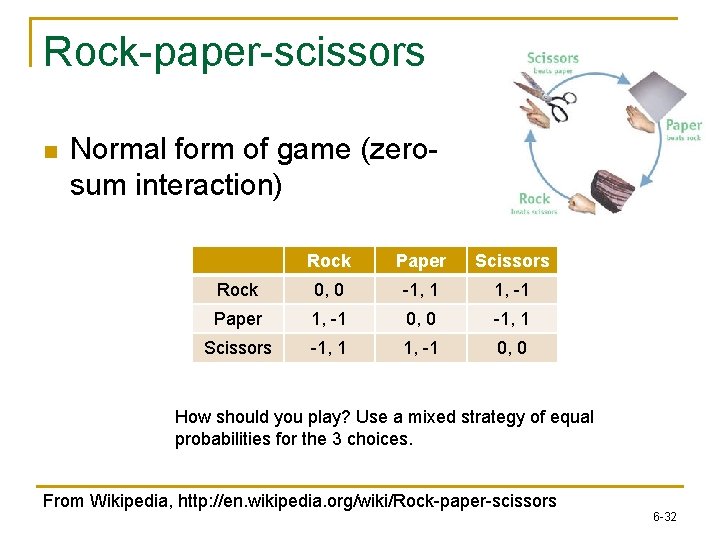

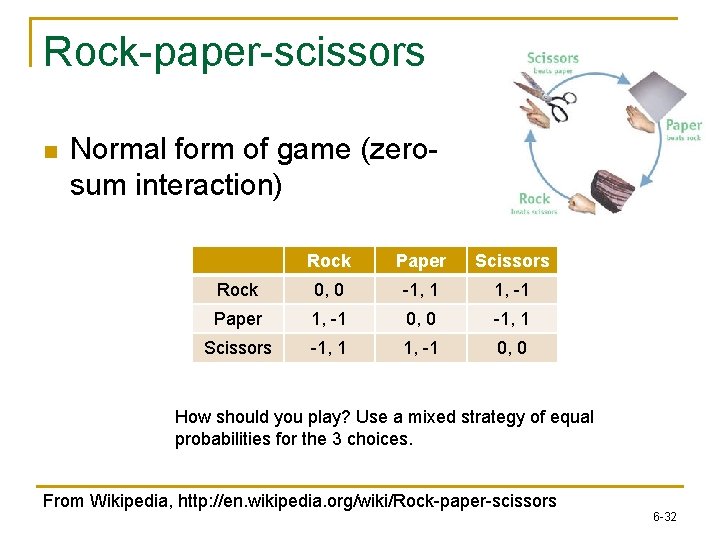

Rock-paper-scissors
- Normal form of the game (zero-sum interaction):

|   | Rock | Paper | Scissors |
|---|---|---|---|
| Rock | 0, 0 | -1, 1 | 1, -1 |
| Paper | 1, -1 | 0, 0 | -1, 1 |
| Scissors | -1, 1 | 1, -1 | 0, 0 |

- How should you play? Use a mixed strategy of equal probabilities for the 3 choices.
From Wikipedia, http://en.wikipedia.org/wiki/Rock-paper-scissors

So why do these web sites (and books) exist? Hint: your opponent is not an idealized rational agent, or even a computer.

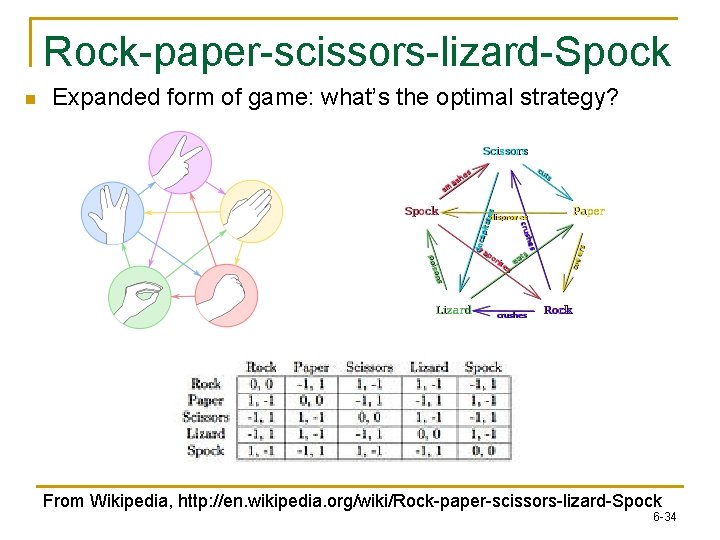

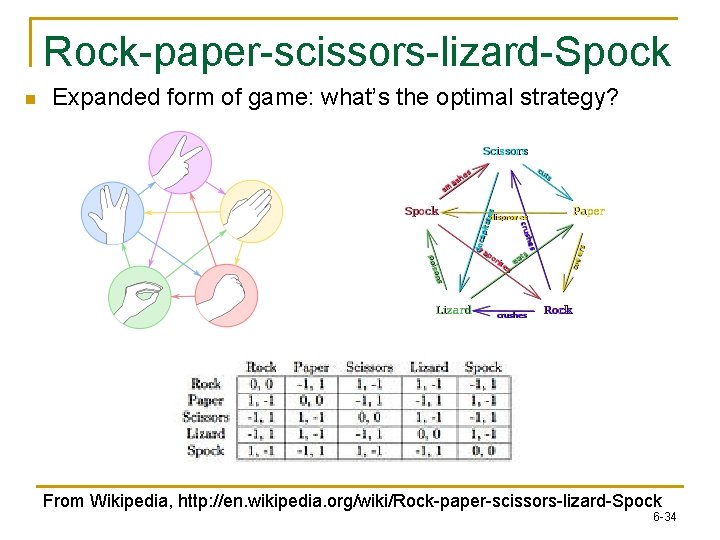

Rock-paper-scissors-lizard-Spock
- Expanded form of the game: what’s the optimal strategy?
From Wikipedia, http://en.wikipedia.org/wiki/Rock-paper-scissors-lizard-Spock
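One way to see why the uniform mix works in both games: every move beats exactly as many moves as it loses to. Against a uniform opponent every pure move therefore earns 0, and no deviation can do better. The sketch below checks this for rock-paper-scissors and for the expanded game. The "beats" relation for lizard and Spock follows the standard published rules.

```python
from fractions import Fraction

# beats[x] = the set of moves that x defeats.
RPS = {"rock": {"scissors"}, "paper": {"rock"}, "scissors": {"paper"}}
RPSLS = {
    "rock": {"scissors", "lizard"},
    "paper": {"rock", "spock"},
    "scissors": {"paper", "lizard"},
    "lizard": {"spock", "paper"},
    "spock": {"scissors", "rock"},
}


def payoff(beats, mine, theirs):
    """Zero-sum payoff to the player choosing `mine`: +1 win, -1 loss, 0 draw."""
    if theirs in beats[mine]:
        return 1
    if mine in beats[theirs]:
        return -1
    return 0


def value_vs_uniform(beats, move):
    """Expected payoff of a pure move against an opponent who mixes uniformly."""
    p = Fraction(1, len(beats))
    return sum(p * payoff(beats, move, other) for other in beats)


for game in (RPS, RPSLS):
    print({m: value_vs_uniform(game, m) for m in game})  # every value is 0
```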

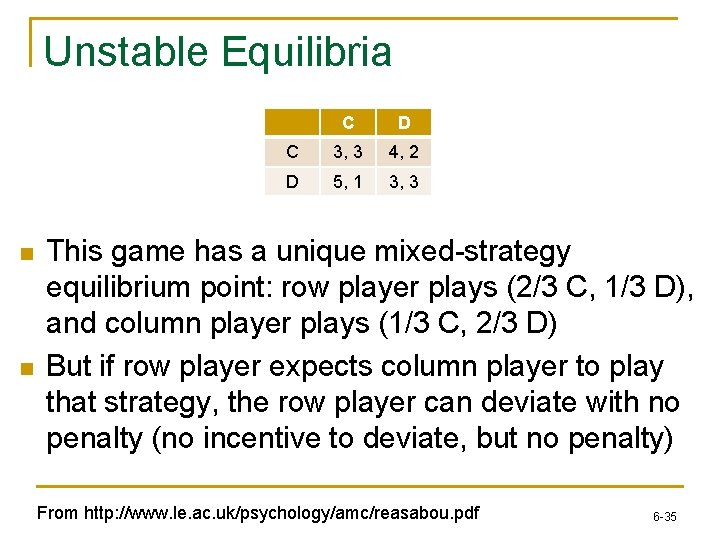

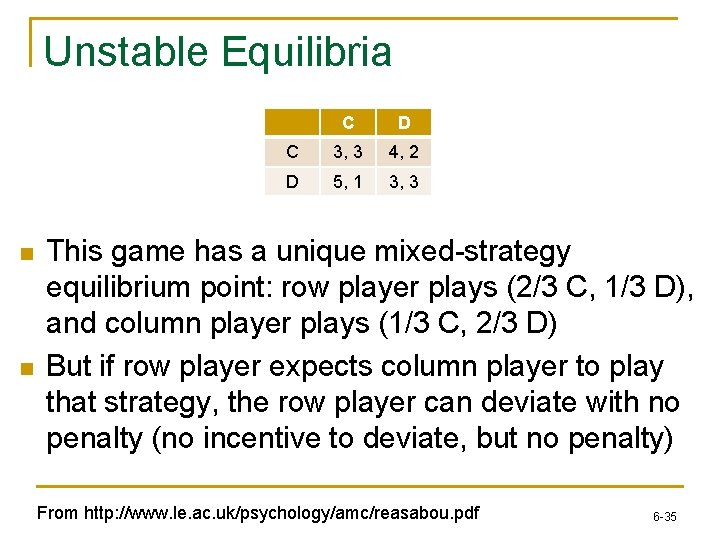

Unstable Equilibria

|   | C | D |
|---|---|---|
| C | 3, 3 | 4, 2 |
| D | 5, 1 | 3, 3 |

- This game has a unique mixed-strategy equilibrium point: the row player plays (2/3 C, 1/3 D), and the column player plays (1/3 C, 2/3 D)
- But if the row player expects the column player to play that strategy, the row player can deviate with no penalty (there is no incentive to deviate, but no penalty either)
From http://www.le.ac.uk/psychology/amc/reasabou.pdf
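The slide's numbers can be checked exactly with fractions. The sketch reads the payoffs from the slide's matrix (row payoff first). It confirms that each equilibrium mix leaves the other player exactly indifferent, which is why deviating costs nothing. It also shows that an actual deviation breaks the column player's indifference.

```python
from fractions import Fraction

# Game from the slide: (row action, column action) -> (row payoff, column payoff).
GAME = {("C", "C"): (3, 3), ("C", "D"): (4, 2), ("D", "C"): (5, 1), ("D", "D"): (3, 3)}
ROW_EQ = {"C": Fraction(2, 3), "D": Fraction(1, 3)}
COL_EQ = {"C": Fraction(1, 3), "D": Fraction(2, 3)}


def pure(action):
    """A pure strategy written as a degenerate mixed strategy."""
    return {"C": Fraction(int(action == "C")), "D": Fraction(int(action == "D"))}


def expected(row_mix, col_mix, player):
    """Expected payoff to `player` (0 = row, 1 = column) under independent mixing."""
    return sum(row_mix[r] * col_mix[c] * GAME[(r, c)][player]
               for r in row_mix for c in col_mix)


# Against the column player's equilibrium mix, the row player gets 11/3 whatever it does.
for action in ("C", "D"):
    print("row plays", action, "->", expected(pure(action), COL_EQ, 0))

# But if the row player actually switches to pure D, the column player now strictly
# prefers D (3 vs 1), so the equilibrium is not self-reinforcing.
print("column vs row's pure D:", expected(pure("D"), pure("C"), 1), expected(pure("D"), pure("D"), 1))
```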

Strong Nash Equilibrium
- A Nash equilibrium in which no coalition can cooperatively deviate in a way that benefits all of its members, assuming that non-members’ actions are fixed
- Defined in terms of all possible coalitional deviations, rather than all possible unilateral deviations
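With only two players, the coalitions are {row}, {column} and {row, column}. A strong Nash check is therefore an ordinary Nash check plus one test: no joint deviation may make both players strictly better off. The sketch below is a two-player simplification, applied to the lecture's prisoner's dilemma and Assurance Game payoffs.

```python
from itertools import product

ACTIONS = ("C", "D")
# (row action, column action) -> (row payoff, column payoff), values from the lecture.
PD = {("C", "C"): (3, 3), ("C", "D"): (1, 4), ("D", "C"): (4, 1), ("D", "D"): (2, 2)}
ASSURANCE = {("C", "C"): (4, 4), ("C", "D"): (1, 2), ("D", "C"): (2, 1), ("D", "D"): (3, 3)}


def is_nash(game, r, c):
    """No unilateral deviation (coalitions {row} or {column}) is profitable."""
    return (all(game[(r, c)][0] >= game[(a, c)][0] for a in ACTIONS)
            and all(game[(r, c)][1] >= game[(r, a)][1] for a in ACTIONS))


def is_strong_nash(game, r, c):
    """Nash, and the two-player coalition has no joint deviation that helps both members.

    With two players the only coalitions are {row}, {column} (handled by is_nash)
    and {row, column}, so only joint deviations remain to be checked.
    """
    here = game[(r, c)]
    return is_nash(game, r, c) and not any(
        game[p][0] > here[0] and game[p][1] > here[1] for p in product(ACTIONS, repeat=2)
    )


print(is_strong_nash(PD, "D", "D"), is_strong_nash(ASSURANCE, "C", "C"))  # False True
```

Mutual defection in the prisoner's dilemma is a Nash equilibrium but not a strong one, because both players would jointly prefer (C, C).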

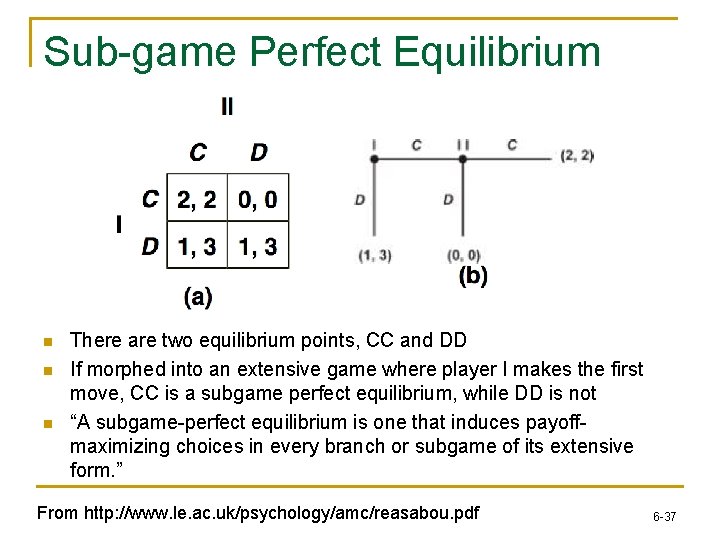

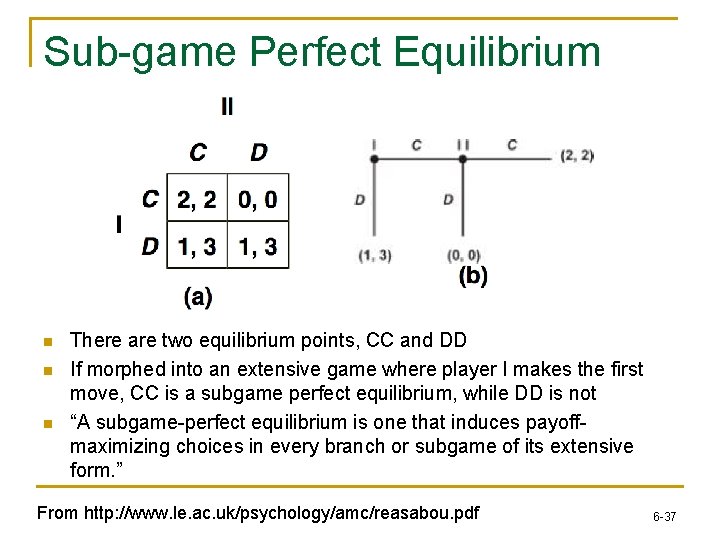

Sub-game Perfect Equilibrium
- There are two equilibrium points, CC and DD
- If the game is turned into an extensive game in which player I makes the first move, CC is a subgame perfect equilibrium, while DD is not
- “A subgame-perfect equilibrium is one that induces payoff-maximizing choices in every branch or subgame of its extensive form.”
From http://www.le.ac.uk/psychology/amc/reasabou.pdf
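Subgame perfection can be computed by backward induction. First solve player II's choice after each possible first move, then let player I choose knowing those replies. The sketch assumes the game on this slide is the Assurance Game from the earlier slides. That is an assumption: the slide's own matrix appears only as an image.

```python
ACTIONS = ("C", "D")
# Assumed game: the Assurance Game from earlier slides.
# (player I's action, player II's action) -> (I's payoff, II's payoff).
GAME = {("C", "C"): (4, 4), ("C", "D"): (1, 2), ("D", "C"): (2, 1), ("D", "D"): (3, 3)}


def backward_induction(game):
    """Player I moves first; player II sees the move and then replies."""
    # In each subgame (after I's move), II chooses its payoff-maximizing reply.
    reply = {}
    for first in ACTIONS:
        reply[first] = max(ACTIONS, key=lambda second: game[(first, second)][1])
    # I anticipates those replies and chooses the first move that is best for it.
    opening = max(ACTIONS, key=lambda first: game[(first, reply[first])][0])
    return (opening, reply[opening]), reply


outcome, replies = backward_induction(GAME)
print(outcome, replies)  # ('C', 'C') {'C': 'C', 'D': 'D'}
```

Under this assumption, DD fails the test. Sustaining it requires II to answer C with D, which is not payoff-maximizing (2 < 4) in the subgame after I plays C.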

Pareto Optimality
- An outcome is said to be Pareto optimal (or Pareto efficient) if there is no other outcome that makes one agent better off without making another agent worse off
- If an outcome is Pareto optimal, then at least one agent will be reluctant to move away from it (because this agent will be worse off)

Pareto Optimality
- If an outcome $\omega$ is not Pareto optimal, then there is another outcome $\omega'$ that makes everyone at least as happy as $\omega$ does, and someone strictly happier
- “Reasonable” agents would agree to move to $\omega'$ in this case. (Even if I don’t directly benefit from $\omega'$, you can benefit without me suffering.)
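Pareto optimality only compares utility vectors, so it can be checked without reference to strategies. The sketch below treats each outcome as a vector (u_i, u_j) and keeps those that no other outcome Pareto-dominates. The Assurance Game values come from the slides.

```python
def pareto_dominates(x, y):
    """Utility vector x Pareto-dominates y: nobody is worse off and somebody is better off."""
    return all(a >= b for a, b in zip(x, y)) and any(a > b for a, b in zip(x, y))


def pareto_optimal(outcomes):
    """Names of the outcomes that no other outcome Pareto-dominates."""
    return {name for name, u in outcomes.items()
            if not any(pareto_dominates(v, u) for v in outcomes.values())}


# Utility vectors (u_i, u_j) per outcome; Assurance Game values from the slides.
ASSURANCE = {"CC": (4, 4), "CD": (1, 2), "DC": (2, 1), "DD": (3, 3)}
print(sorted(pareto_optimal(ASSURANCE)))  # ['CC']
```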

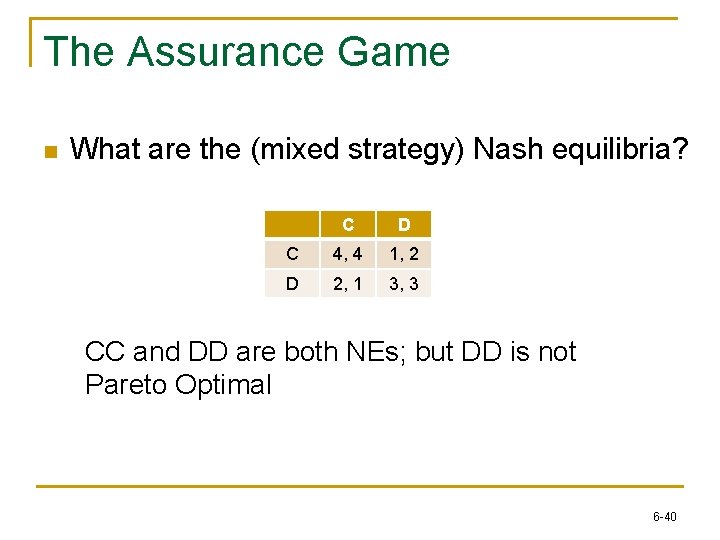

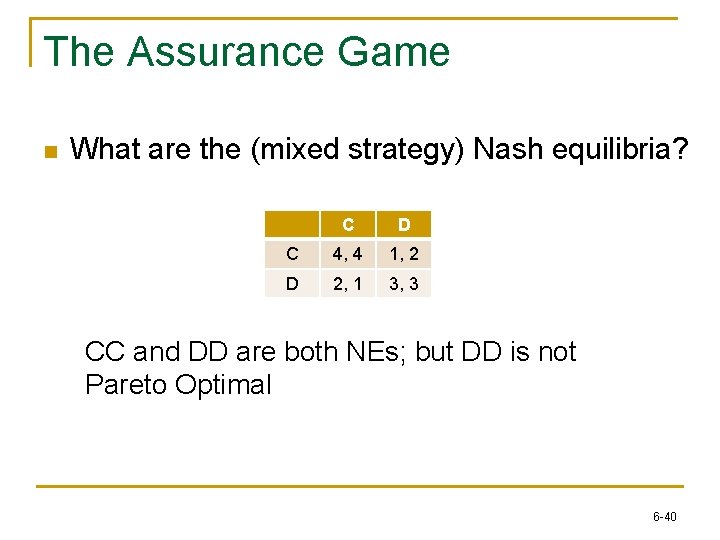

The Assurance Game
- What are the (mixed strategy) Nash equilibria?

|   | C | D |
|---|---|---|
| C | 4, 4 | 1, 2 |
| D | 2, 1 | 3, 3 |

- CC and DD are both NEs; but DD is not Pareto optimal

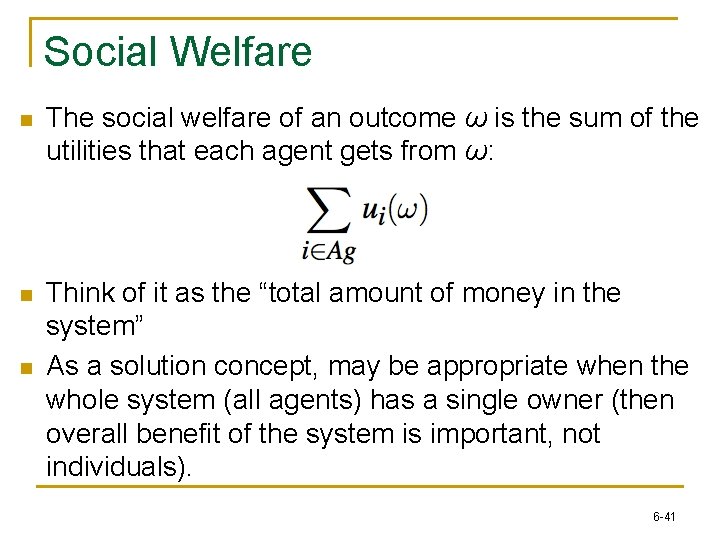

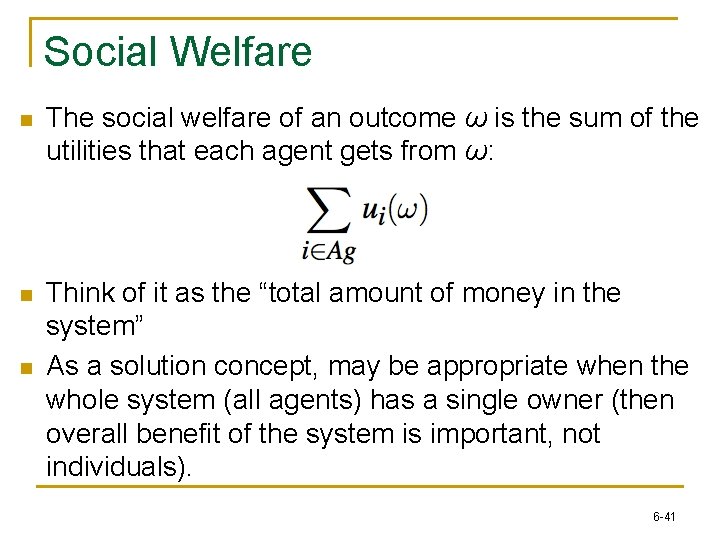

Social Welfare
- The social welfare of an outcome $\omega$ is the sum of the utilities that each agent gets from $\omega$: $\sum_{i \in Ag} u_i(\omega)$
- Think of it as the “total amount of money in the system”
- As a solution concept, it may be appropriate when the whole system (all agents) has a single owner (then the overall benefit of the system is important, not that of individuals).

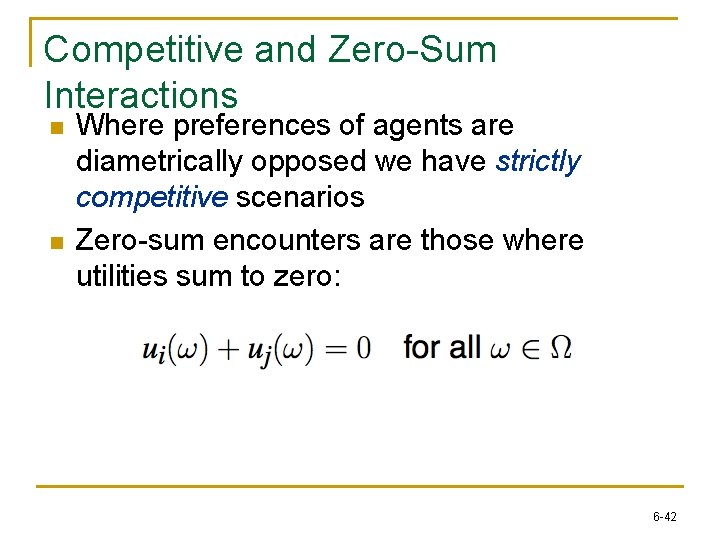

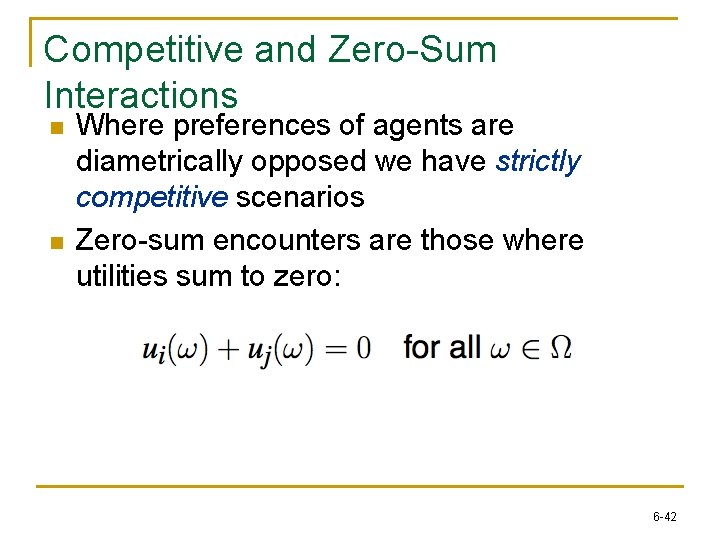

Competitive and Zero-Sum Interactions
- Where preferences of agents are diametrically opposed, we have strictly competitive scenarios
- Zero-sum encounters are those where utilities sum to zero: $u_i(\omega) + u_j(\omega) = 0$ for all $\omega \in \Omega$

Competitive and Zero-Sum Interactions
- Zero-sum encounters are bad news: for me to get positive utility, you have to get negative utility. The best outcome for me is the worst for you.
- Zero-sum encounters in real life are very rare … but people tend to act in many scenarios as if they were zero-sum

The Prisoner’s Dilemma
- Two men are collectively charged with a crime and held in separate cells, with no way of meeting or communicating. They are told that:
  - if one confesses and the other does not, the confessor will be freed, and the other will be jailed for three years
  - if both confess, then each will be jailed for two years
- Both prisoners know that if neither confesses, then they will each be jailed for one year

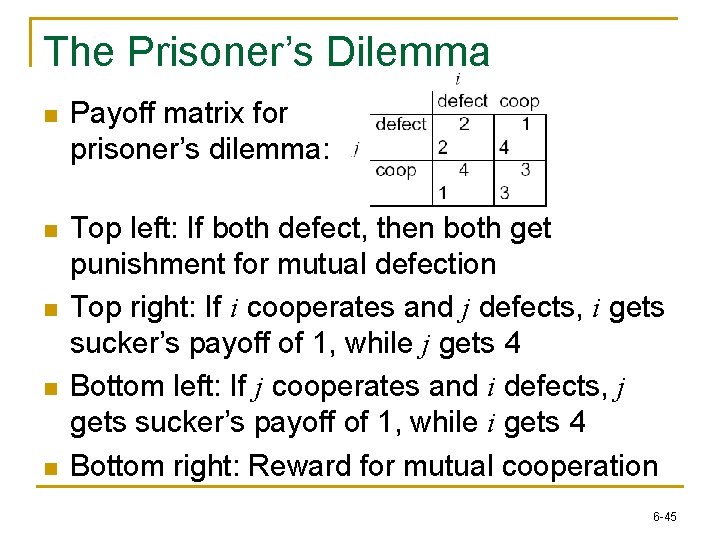

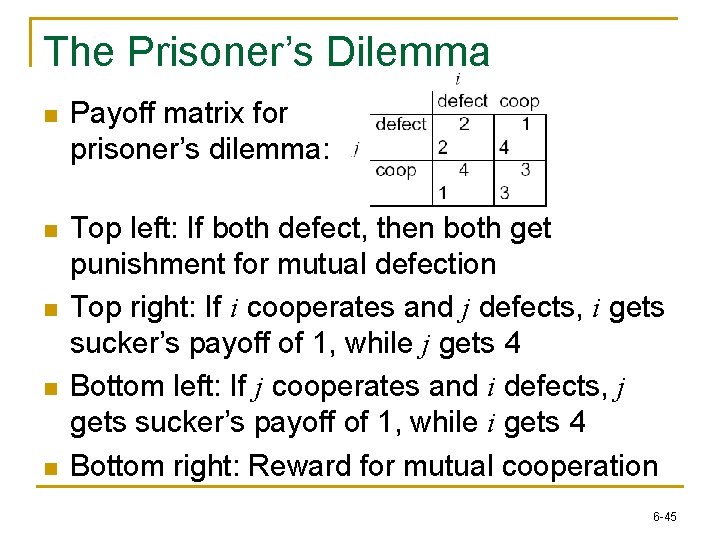

The Prisoner’s Dilemma
- Payoff matrix for prisoner’s dilemma:
- Top left: If both defect, then both get punishment for mutual defection
- Top right: If i cooperates and j defects, i gets sucker’s payoff of 1, while j gets 4
- Bottom left: If j cooperates and i defects, j gets sucker’s payoff of 1, while i gets 4
- Bottom right: Reward for mutual cooperation

What Should You Do?
- The individually rational action is to defect
- This guarantees a payoff of no worse than 2, whereas cooperating guarantees only a payoff of at least 1 (the sucker’s payoff)
- So defection is the best response to all possible strategies: both agents defect, and get payoff = 2
- But intuition says this is not the best outcome: surely they should both cooperate and each get a payoff of 3.

Solution Concepts
- D is a dominant strategy
- (D, D) is the only Nash equilibrium
- All outcomes except (D, D) are Pareto optimal
- (C, C) maximizes social welfare
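All four claims can be verified mechanically from the payoffs described on the previous slides (sucker 1, mutual defection 2, mutual cooperation 3, temptation 4). A self-contained sketch; the dictionary encoding of the matrix is this sketch's own choice.

```python
from itertools import product

ACTIONS = ("C", "D")
PROFILES = list(product(ACTIONS, repeat=2))
# Prisoner's dilemma payoffs from the slides: (i's action, j's action) -> (u_i, u_j).
PD = {("C", "C"): (3, 3), ("C", "D"): (1, 4), ("D", "C"): (4, 1), ("D", "D"): (2, 2)}


def dominant(game, player):
    """Actions that are at least as good as any alternative against every opponent action."""
    def u(own, other):
        return game[(own, other) if player == 0 else (other, own)][player]
    return [s for s in ACTIONS
            if all(u(s, t) >= u(alt, t) for t in ACTIONS for alt in ACTIONS)]


def nash(game):
    """Pure strategy Nash equilibria: no profitable unilateral deviation."""
    return [(r, c) for r, c in PROFILES
            if all(game[(r, c)][0] >= game[(a, c)][0] for a in ACTIONS)
            and all(game[(r, c)][1] >= game[(r, a)][1] for a in ACTIONS)]


def pareto_optimal(game):
    """Profiles whose payoff vector no other profile Pareto-dominates."""
    def dominated(x):
        return any(all(a >= b for a, b in zip(game[y], game[x])) and game[y] != game[x]
                   for y in PROFILES)
    return [p for p in PROFILES if not dominated(p)]


def max_social_welfare(game):
    """Profiles that maximize the sum of the agents' utilities."""
    best = max(sum(game[p]) for p in PROFILES)
    return [p for p in PROFILES if sum(game[p]) == best]


print(dominant(PD, 0), dominant(PD, 1))  # ['D'] ['D']
print(nash(PD))                          # [('D', 'D')]
print(pareto_optimal(PD))                # [('C', 'C'), ('C', 'D'), ('D', 'C')]
print(max_social_welfare(PD))            # [('C', 'C')]
```

The tension of the dilemma is visible in the output: the unique equilibrium (D, D) is the one outcome that is not Pareto optimal.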

The Prisoner’s Dilemma
- This apparent paradox is the fundamental problem of multi-agent interactions. It appears to imply that cooperation will not occur in societies of self-interested agents.
- Real world examples:
  - nuclear arms reduction (“why don’t I keep mine...”)
  - free rider systems: public transport;
  - in the UK and Israel: television licenses.
- The prisoner’s dilemma is ubiquitous.
- Can we recover cooperation?

Arguments for Recovering Cooperation
- Conclusions that some have drawn from this analysis:
  - the game theory notion of rational action is wrong
  - somehow the dilemma is being formulated wrongly
- Arguments to recover cooperation:
  - We are not all Machiavelli
  - The other prisoner is my twin
  - Program equilibria and mediators
  - The shadow of the future…

Program Equilibria
- The strategy you really want to play in the prisoner’s dilemma is: I’ll cooperate if he will
- Program equilibria provide one way of enabling this
- Each agent submits a program strategy to a mediator, which jointly executes the strategies. Crucially, strategies can be conditioned on the strategies of the others

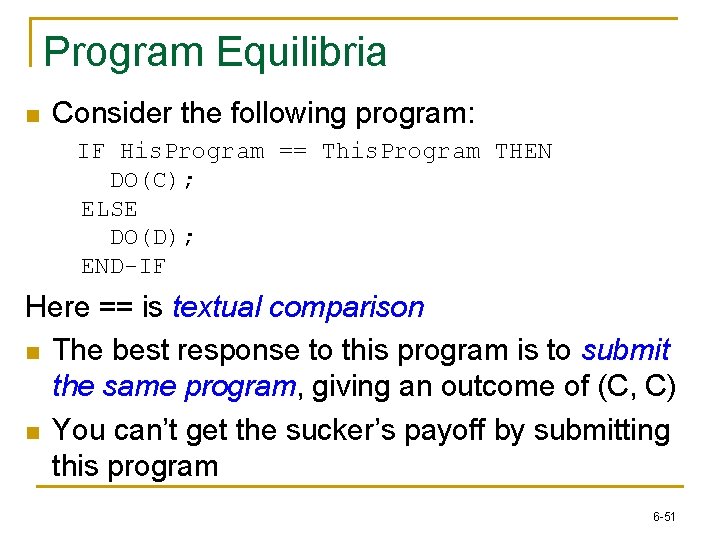

Program Equilibria
- Consider the following program: `IF HisProgram == ThisProgram THEN DO(C); ELSE DO(D); END-IF`, where == is textual comparison
- The best response to this program is to submit the same program, giving an outcome of (C, C)
- You can’t get the sucker’s payoff by submitting this program
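The mediator idea can be simulated by giving each program the other's source text before it chooses an action. The `Program` class, the `mediator` function and the `ALL_D`/`ALL_C` programs are hypothetical illustrations, not an existing API. The `MIRROR` program encodes the slide's "cooperate only against a textually identical program" rule. Payoffs are the lecture's prisoner's dilemma values.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Program:
    """A submitted strategy: its source text plus a rule that may read the opponent's text."""
    source: str
    decide: Callable[[str, str], str]  # (own source, opponent source) -> "C" or "D"


# The slide's program: cooperate only if the other program is textually identical.
MIRROR = Program(
    "IF HisProgram == ThisProgram THEN DO(C); ELSE DO(D); END-IF",
    lambda mine, his: "C" if his == mine else "D",
)
ALL_D = Program("DO(D)", lambda mine, his: "D")
ALL_C = Program("DO(C)", lambda mine, his: "C")

PD = {("C", "C"): (3, 3), ("C", "D"): (1, 4), ("D", "C"): (4, 1), ("D", "D"): (2, 2)}


def mediator(p1, p2):
    """Hypothetical mediator: shows each program the other's source, then plays the actions."""
    actions = (p1.decide(p1.source, p2.source), p2.decide(p2.source, p1.source))
    return actions, PD[actions]


print(mediator(MIRROR, MIRROR))  # (('C', 'C'), (3, 3))
print(mediator(MIRROR, ALL_D))   # (('D', 'D'), (2, 2))
```

Against `MIRROR`, submitting `MIRROR` earns 3, `ALL_D` earns 2 and `ALL_C` earns 1, so copying the program is the best response among these candidates, and `MIRROR` itself never receives the sucker's payoff.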

The Iterated Prisoner’s Dilemma
- One answer: play the game more than once
- If you know you will be meeting your opponent again, then the incentive to defect appears to evaporate
- Cooperation is the rational choice in the infinitely repeated prisoner’s dilemma

Backwards Induction
- But…suppose you both know that you will play the game exactly n times
- On round n − 1 (the last round, counting from round 0), you have an incentive to defect, to gain that extra bit of payoff…
- But this makes round n − 2 the last “real” round, and so you have an incentive to defect there, too.
- This is the backwards induction problem.
- Playing the prisoner’s dilemma with a fixed, finite, pre-determined, commonly known number of rounds, defection is the best strategy
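The unravelling argument can be written as a loop that runs from the last round back to the first. The key step is that play in the already-solved later rounds does not depend on the current round. It therefore adds the same amount to every current option, and each round reduces to a one-shot prisoner's dilemma. This is a sketch using the lecture's stage payoffs; the explicit `later` term is included only to make that step visible.

```python
# Stage payoffs of the prisoner's dilemma from the slides: (my action, other's action) -> mine.
STAGE = {("C", "C"): 3, ("C", "D"): 1, ("D", "C"): 4, ("D", "D"): 2}
ACTIONS = ("C", "D")


def backward_induction(n_rounds):
    """Equilibrium play in an n-round PD whose length is commonly known (rounds 0 .. n-1).

    Working from round n-1 backwards: play in later rounds is already pinned down and does
    not depend on the current round, so it adds the same amount to every option here.
    Each round is therefore a one-shot PD, where D is best against either opponent action.
    """
    plan = {}
    later = 0  # payoff from the rounds already solved (identical for every current option)
    for t in reversed(range(n_rounds)):
        best = [a for a in ACTIONS
                if all(STAGE[(a, b)] + later >= STAGE[(alt, b)] + later
                       for b in ACTIONS for alt in ACTIONS)]
        plan[t] = best[0]
        later += STAGE[(plan[t], plan[t])]  # both players reason the same way
    return [plan[t] for t in range(n_rounds)], later


print(backward_induction(3))  # (['D', 'D', 'D'], 6)
```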

Axelrod’s Tournament
- Suppose you play iterated prisoner’s dilemma against a range of opponents…
- What strategy should you choose, so as to maximize your overall payoff?
- Axelrod (1984) investigated this problem, with a computer tournament for programs playing the prisoner’s dilemma

Strategies in Axelrod’s Tournament
- ALLD: “Always defect”: the hawk strategy
- TIT-FOR-TAT:
  1. On round u = 0, cooperate
  2. On round u > 0, do what your opponent did on round u − 1
- TESTER: On the 1st round, defect. If the opponent retaliated, then play TIT-FOR-TAT. Otherwise intersperse cooperation and defection.
- JOSS: As TIT-FOR-TAT, except periodically defect

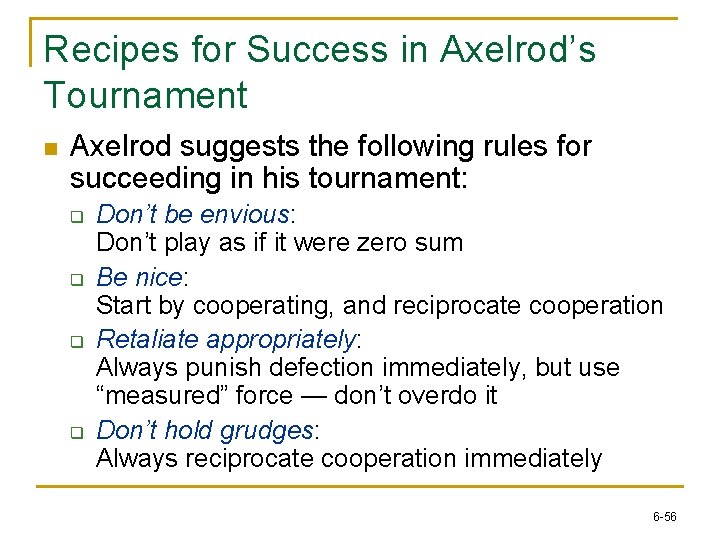

Recipes for Success in Axelrod’s Tournament
- Axelrod suggests the following rules for succeeding in his tournament:
  - Don’t be envious: Don’t play as if it were zero-sum
  - Be nice: Start by cooperating, and reciprocate cooperation
  - Retaliate appropriately: Always punish defection immediately, but use “measured” force; don’t overdo it
  - Don’t hold grudges: Always reciprocate cooperation immediately

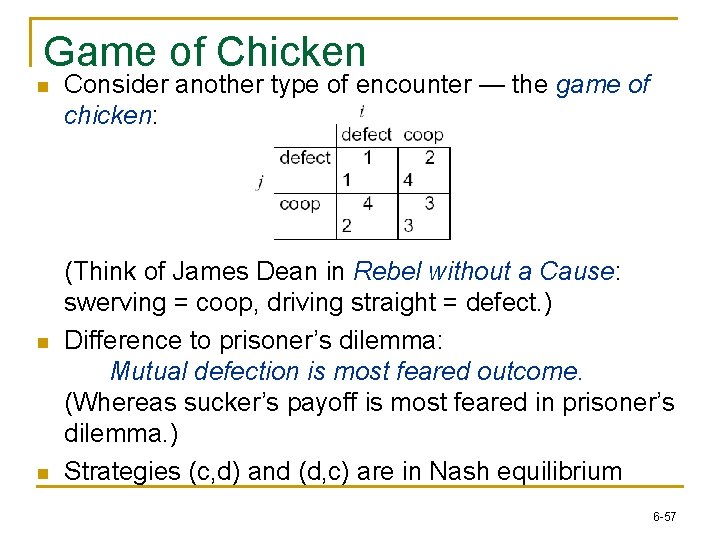

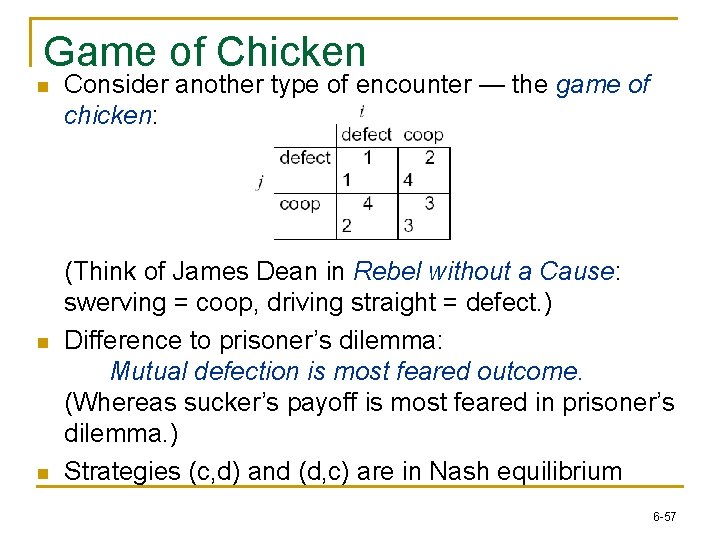

Game of Chicken
- Consider another type of encounter, the game of chicken: (Think of James Dean in Rebel Without a Cause: swerving = cooperate, driving straight = defect.)
- Difference from the prisoner’s dilemma: mutual defection is the most feared outcome (whereas the sucker’s payoff is most feared in the prisoner’s dilemma).
- Strategies (C, D) and (D, C) are in Nash equilibrium

Solution Concepts
- There is no dominant strategy (in our sense)
- Strategy pairs (C, D) and (D, C) are Nash equilibria
- All outcomes except (D, D) are Pareto optimal
- All outcomes except (D, D) maximize social welfare

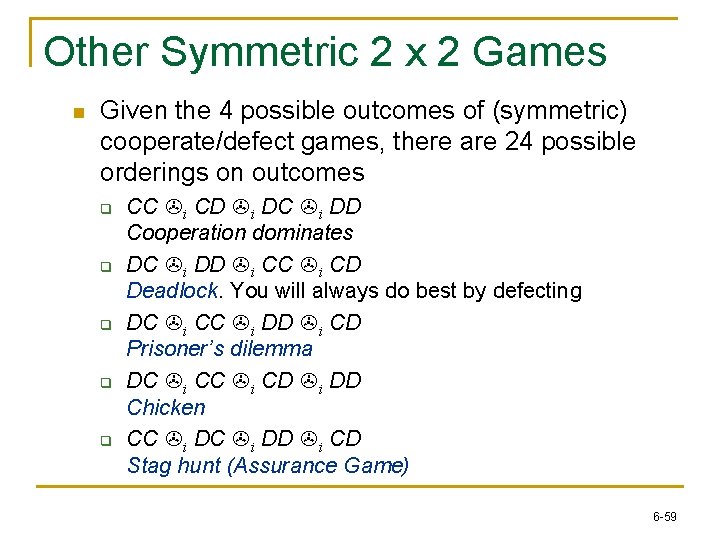

Other Symmetric 2 x 2 Games
- Given the 4 possible outcomes of (symmetric) cooperate/defect games, there are 24 possible orderings on outcomes
  - CC >i CD >i DC >i DD: Cooperation dominates
  - DC >i DD >i CC >i CD: Deadlock. You will always do best by defecting
  - DC >i CC >i DD >i CD: Prisoner’s dilemma
  - DC >i CD >i DD: Chicken
  - CC >i DD >i CD: Stag hunt (Assurance Game)
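The figure of 24 is simply the number of permutations of the four outcomes (4! = 24). Some of the named games can be characterized by whether one action is dominant. For example, D is dominant exactly when DC is ranked above CC and DD above CD. The sketch below enumerates all orderings and applies that test. It uses only the Cooperation-dominates, Deadlock and Prisoner's-dilemma orderings, and the dominance test is an illustration rather than a classification taken from the slides.

```python
from itertools import permutations

OUTCOMES = ("CC", "CD", "DC", "DD")  # my action first, the other agent's action second

# Orderings from the slide (most preferred first).
NAMED = {
    ("CC", "CD", "DC", "DD"): "Cooperation dominates",
    ("DC", "DD", "CC", "CD"): "Deadlock",
    ("DC", "CC", "DD", "CD"): "Prisoner's dilemma",
}


def prefers(order, x, y):
    """x is ranked above y in the strict ordering `order`."""
    return order.index(x) < order.index(y)


def dominant_action(order):
    """'D' if defecting is better whatever the other agent does, 'C' likewise, else None."""
    if prefers(order, "DC", "CC") and prefers(order, "DD", "CD"):
        return "D"
    if prefers(order, "CC", "DC") and prefers(order, "CD", "DD"):
        return "C"
    return None


orderings = list(permutations(OUTCOMES))
print(len(orderings))  # 24
for order, name in NAMED.items():
    print(name, dominant_action(order))
```

Since the two comparisons involve disjoint pairs of outcomes, a quarter of the 24 orderings (6) make D dominant and another 6 make C dominant.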

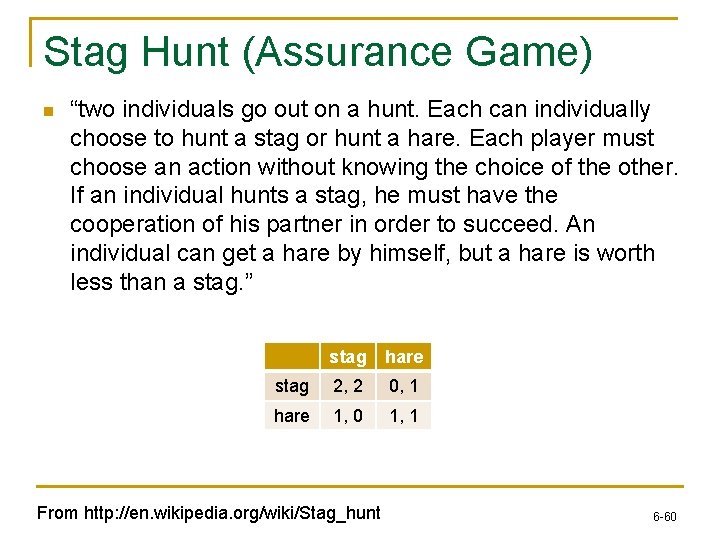

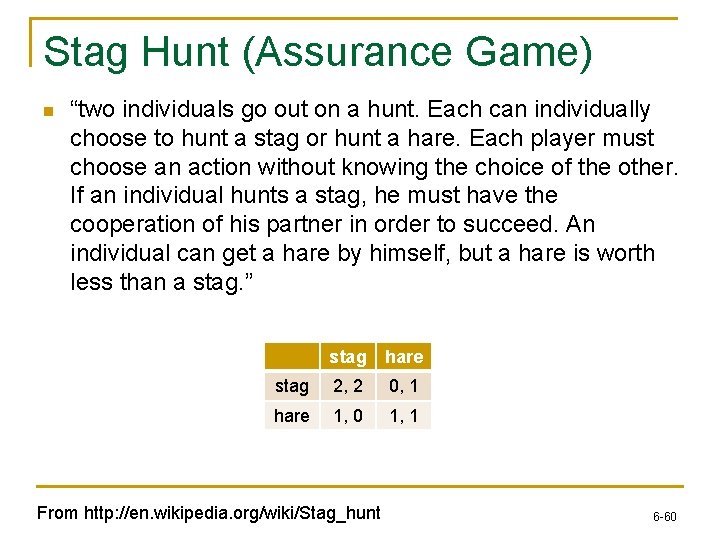

Stag Hunt (Assurance Game)
- “two individuals go out on a hunt. Each can individually choose to hunt a stag or hunt a hare. Each player must choose an action without knowing the choice of the other. If an individual hunts a stag, he must have the cooperation of his partner in order to succeed. An individual can get a hare by himself, but a hare is worth less than a stag.”

|   | stag | hare |
|---|---|---|
| stag | 2, 2 | 0, 1 |
| hare | 1, 0 | 1, 1 |

From http://en.wikipedia.org/wiki/Stag_hunt

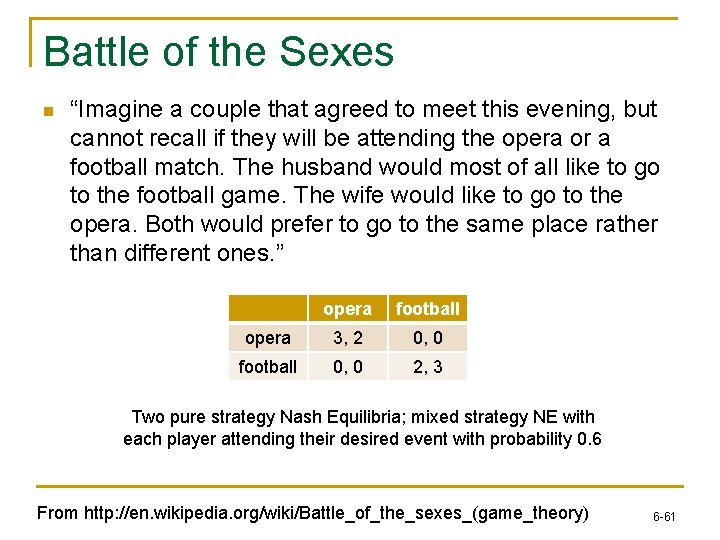

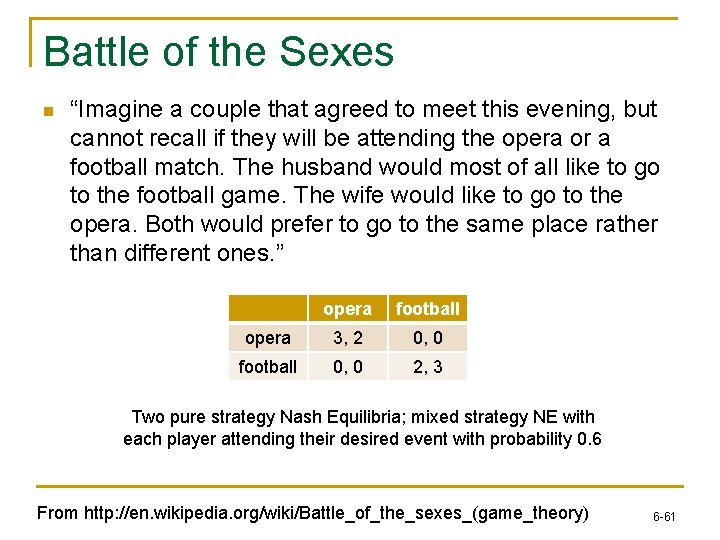

Battle of the Sexes
- “Imagine a couple that agreed to meet this evening, but cannot recall if they will be attending the opera or a football match. The husband would most of all like to go to the football game. The wife would like to go to the opera. Both would prefer to go to the same place rather than different ones.”

|   | opera | football |
|---|---|---|
| opera | 3, 2 | 0, 0 |
| football | 0, 0 | 2, 3 |

- Two pure strategy Nash equilibria; a mixed strategy NE with each player attending their desired event with probability 0.6
From http://en.wikipedia.org/wiki/Battle_of_the_sexes_(game_theory)
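The 0.6 figure can be checked by confirming that, against the other's mix, each player is indifferent between opera and football. The sketch reads payoffs from the table above, with the row player's payoff first: the row player prefers opera and the column player prefers football.

```python
from fractions import Fraction

# Battle of the Sexes from the slide: (row choice, column choice) -> (row payoff, column payoff).
# The row player's favourite is opera ("O"); the column player's is football ("F").
GAME = {("O", "O"): (3, 2), ("O", "F"): (0, 0), ("F", "O"): (0, 0), ("F", "F"): (2, 3)}
ROW_MIX = {"O": Fraction(3, 5), "F": Fraction(2, 5)}  # row plays its favourite with prob. 0.6
COL_MIX = {"O": Fraction(2, 5), "F": Fraction(3, 5)}  # column plays its favourite with prob. 0.6


def row_value(choice):
    """Row player's expected payoff from a pure choice against COL_MIX."""
    return sum(p * GAME[(choice, c)][0] for c, p in COL_MIX.items())


def col_value(choice):
    """Column player's expected payoff from a pure choice against ROW_MIX."""
    return sum(p * GAME[(r, choice)][1] for r, p in ROW_MIX.items())


# Each player is indifferent between opera and football given the other's mix,
# so neither can gain by deviating: the pair of mixes is a Nash equilibrium.
print([row_value(x) for x in "OF"], [col_value(x) for x in "OF"])
```

Each player's expected payoff in this mixed equilibrium is 6/5 = 1.2. That is lower than the 2 either would get at its less-preferred pure equilibrium, because the couple often ends up in different places.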