Lecture 6: Memory Management

- Slides: 26


Virtual Memory Approaches

- Time Sharing: one process uses RAM at a time
- Static Relocation: statically rewrite code before it runs
- Base: add a base to the virtual address to get the physical address
- Base+Bounds: also check that the physical address is in range
- Segmentation: many base+bounds pairs
- Paging: divide memory into small, fixed-size page frames
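The base and base+bounds schemes can be sketched in a few lines. This is a minimal illustration, not the slides' own code: the function name and the choice to treat `bounds` as the size of the address space (so the check is done on the virtual address, which is equivalent to checking the physical address against `[base, base + bounds)`) are assumptions.

```python
def translate_base_bounds(vaddr: int, base: int, bounds: int) -> int:
    """Relocate a virtual address with base+bounds.

    Assumption: `bounds` is the size in bytes of the process's address space.
    """
    if not 0 <= vaddr < bounds:
        # Real hardware would raise a fault and trap into the OS.
        raise MemoryError(f"address {vaddr:#x} is out of bounds")
    return base + vaddr


# Hypothetical values: a 16 KB address space relocated to physical 16 KB.
print(hex(translate_base_bounds(0x2900, base=0x4000, bounds=0x4000)))
```

Segmentation repeats the same check with one base+bounds pair per segment rather than one pair per process.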

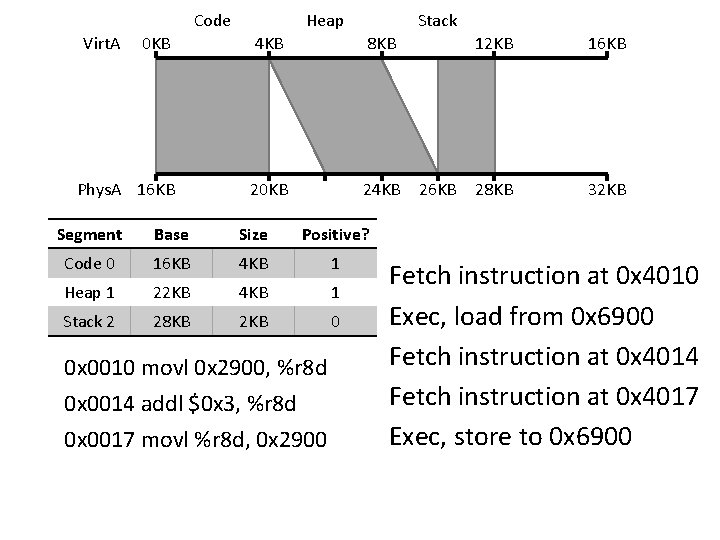

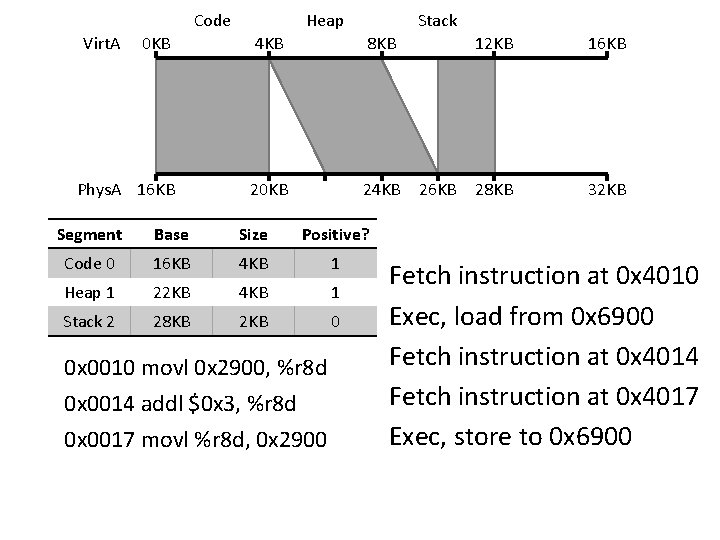

Figure: layout of virtual address space A (code, heap, stack) and of physical memory; axis labels run from 0 KB to 32 KB.

| Segment | Base | Size | Positive? |
|---|---|---|---|
| Code (0) | 16 KB | 4 KB | 1 |
| Heap (1) | 22 KB | 4 KB | 1 |
| Stack (2) | 28 KB | 2 KB | 0 |

Instructions:

    0x0010 movl 0x2900, %r8d
    0x0014 addl $0x3, %r8d
    0x0017 movl %r8d, 0x2900

Memory accesses:

1. Fetch instruction at 0x4010
2. Exec, load from 0x6900
3. Fetch instruction at 0x4014
4. Fetch instruction at 0x4017
5. Exec, store to 0x6900

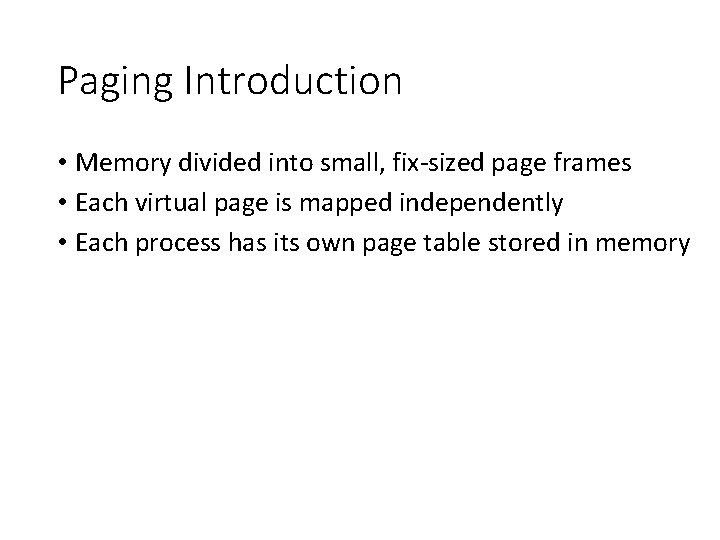

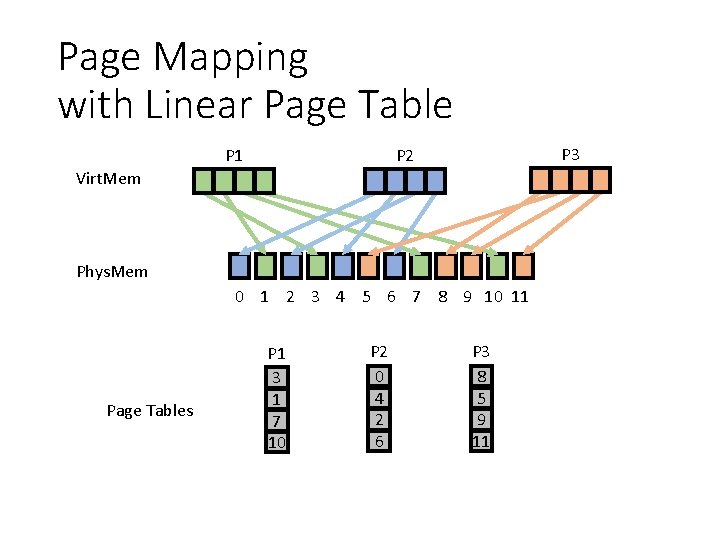

Paging Introduction

- Memory is divided into small, fixed-size page frames
- Each virtual page is mapped independently
- Each process has its own page table, stored in memory

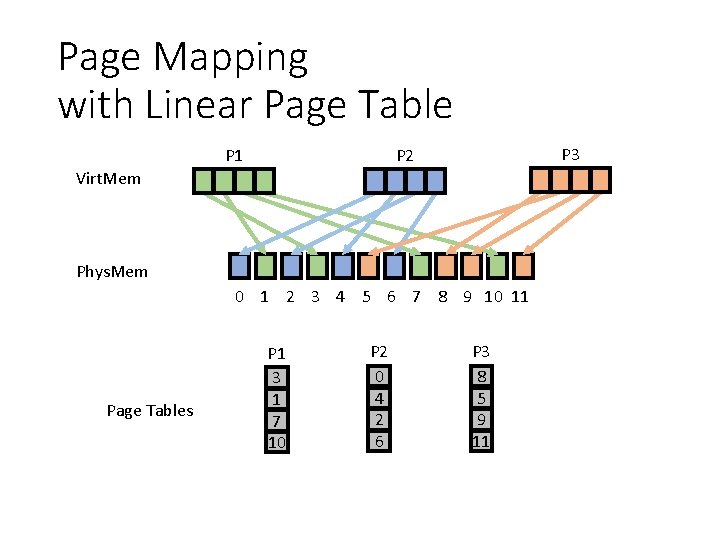

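Page Mapping with Linear Page Table

Figure: the virtual memory of P1, P2, and P3 mapped onto physical frames 0 to 11.

Page tables (VPN → PFN):

| VPN | P1 | P2 | P3 |
|---|---|---|---|
| 0 | 3 | 0 | 8 |
| 1 | 1 | 4 | 5 |
| 2 | 7 | 2 | 9 |
| 3 | 10 | 6 | 11 |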

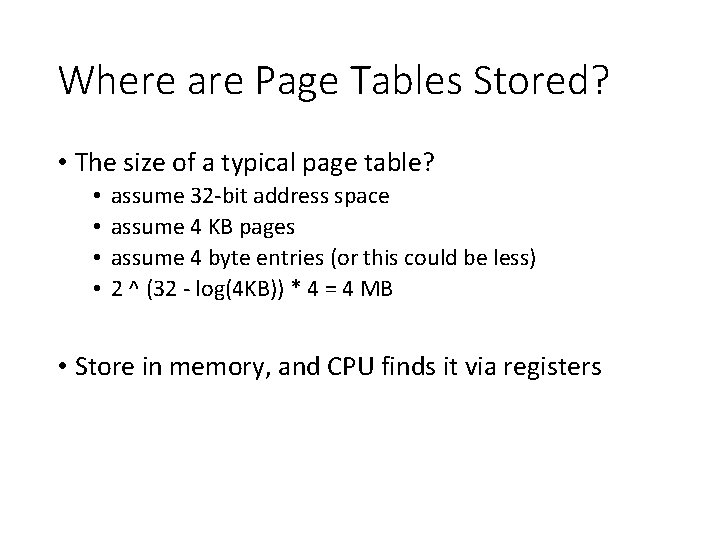

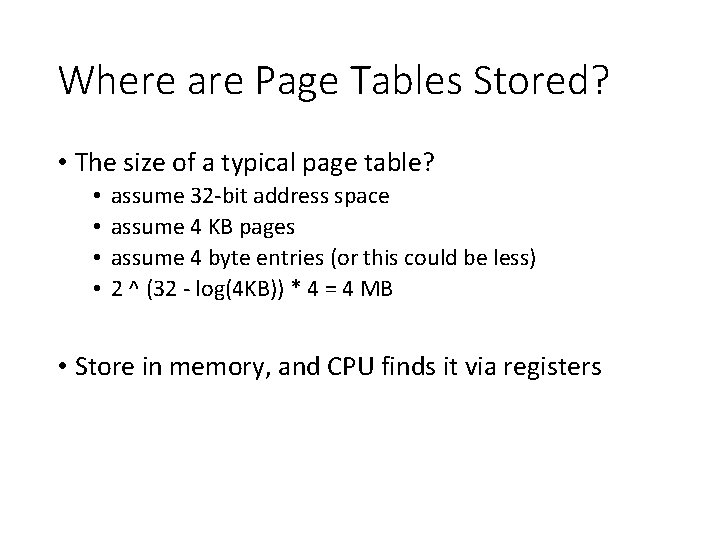

Where are Page Tables Stored?

- The size of a typical page table?
  - assume a 32-bit address space
  - assume 4 KB pages
  - assume 4-byte entries (or this could be less)
  - $2^{32 - \log_2(4\,\mathrm{KB})} \times 4\,\mathrm{B} = 2^{20} \times 4\,\mathrm{B} = 4\,\mathrm{MB}$
- Store in memory, and the CPU finds it via registers
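The size estimate above can be checked with a short calculation. The function name and parameters below are illustrative, and the sketch assumes the page size is a power of two.

```python
def linear_page_table_bytes(addr_bits: int, page_bytes: int, pte_bytes: int) -> int:
    """Size of a linear page table with one entry per virtual page."""
    offset_bits = page_bytes.bit_length() - 1    # log2(page size) for a power of two
    num_pages = 1 << (addr_bits - offset_bits)   # 2^(address bits - offset bits)
    return num_pages * pte_bytes


size = linear_page_table_bytes(addr_bits=32, page_bytes=4096, pte_bytes=4)
print(size // 2**20, "MB per process")
```

Because each process has its own page table, this cost is paid once per process, which is the "too big" problem the lecture returns to.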

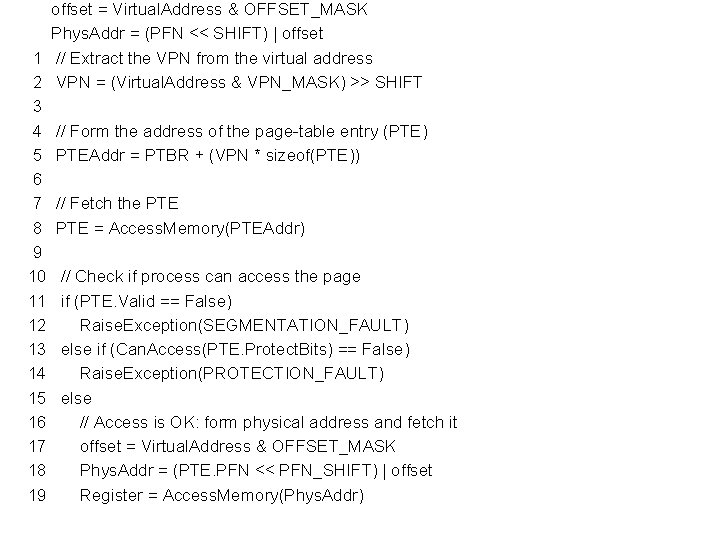

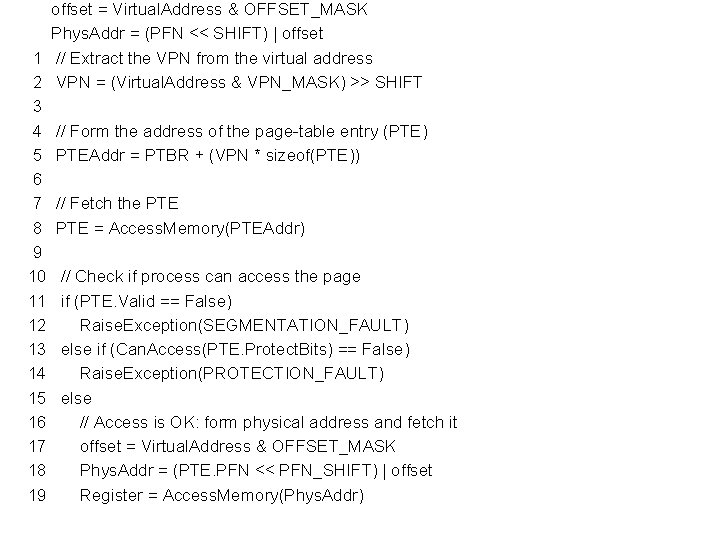

    offset   = VirtualAddress & OFFSET_MASK
    PhysAddr = (PFN << SHIFT) | offset

    // Extract the VPN from the virtual address
    VPN = (VirtualAddress & VPN_MASK) >> SHIFT

    // Form the address of the page-table entry (PTE)
    PTEAddr = PTBR + (VPN * sizeof(PTE))

    // Fetch the PTE
    PTE = AccessMemory(PTEAddr)

    // Check if process can access the page
    if (PTE.Valid == False)
        RaiseException(SEGMENTATION_FAULT)
    else if (CanAccess(PTE.ProtectBits) == False)
        RaiseException(PROTECTION_FAULT)
    else
        // Access is OK: form physical address and fetch it
        offset   = VirtualAddress & OFFSET_MASK
        PhysAddr = (PTE.PFN << PFN_SHIFT) | offset
        Register = AccessMemory(PhysAddr)
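A runnable sketch of the same translation logic may help. It assumes 4 KB pages, models the page table as a Python list indexed by VPN, and reduces the protection bits to a single hypothetical `writable` flag; the class and function names are illustrative.

```python
from dataclasses import dataclass

SHIFT = 12                       # assumed 4 KB pages
OFFSET_MASK = (1 << SHIFT) - 1


@dataclass
class PTE:
    pfn: int
    valid: bool = True
    writable: bool = True        # stand-in for the protection bits


class SegmentationFault(Exception):
    pass


class ProtectionFault(Exception):
    pass


def translate(page_table: list, vaddr: int, write: bool = False) -> int:
    """Translate a virtual address using a linear page table."""
    vpn = vaddr >> SHIFT
    if vpn >= len(page_table) or not page_table[vpn].valid:
        raise SegmentationFault(hex(vaddr))
    pte = page_table[vpn]
    if write and not pte.writable:
        raise ProtectionFault(hex(vaddr))
    return (pte.pfn << SHIFT) | (vaddr & OFFSET_MASK)


# Hypothetical four-page address space.
example_table = [PTE(3), PTE(1), PTE(7, writable=False), PTE(0, valid=False)]
print(hex(translate(example_table, 0x0010)))
```

In hardware the list lookup is itself a memory access at `PTBR + VPN * sizeof(PTE)`, which is why each reference costs an extra load.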

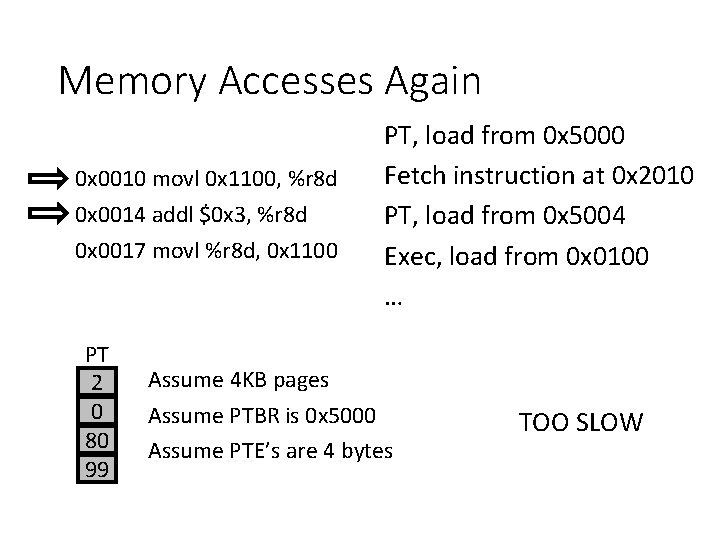

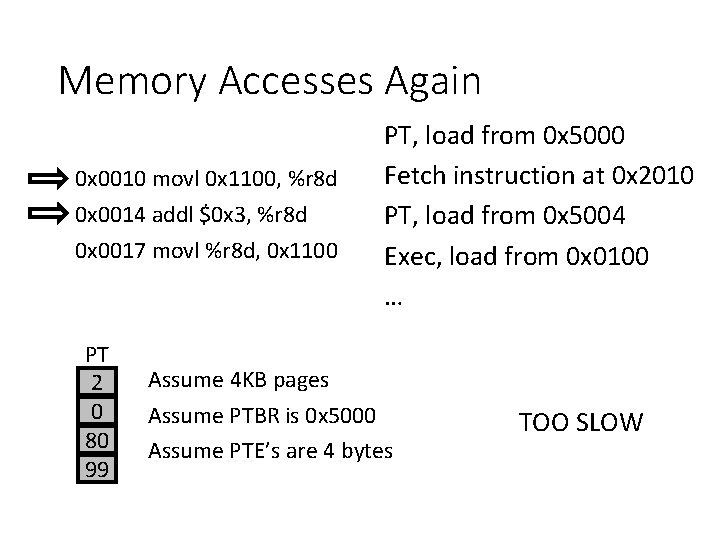

Memory Accesses Again

    0x0010 movl 0x1100, %r8d
    0x0014 addl $0x3, %r8d
    0x0017 movl %r8d, 0x1100

- Assume 4 KB pages
- Assume PTBR is 0x5000
- Assume PTEs are 4 bytes

Page table (PT) entries, in VPN order: 2, 0, 80, 99

Memory accesses:

1. PT, load from 0x5000
2. Fetch instruction at 0x2010
3. PT, load from 0x5004
4. Exec, load from 0x0100
5. …

TOO SLOW: every memory reference needs an extra load from the page table.
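The trace can be reproduced with a small simulation. This sketch reads the slide's page table as VPN 0 → PFN 2, VPN 1 → PFN 0, VPN 2 → PFN 80, VPN 3 → PFN 99, which is an interpretation of the figure; the accesses after the fourth one are extrapolated with the same rule rather than taken from the slide.

```python
PAGE_SHIFT = 12                            # 4 KB pages
OFFSET_MASK = (1 << PAGE_SHIFT) - 1
PTBR = 0x5000                              # page-table base register
PTE_SIZE = 4                               # bytes per page-table entry
PAGE_TABLE = {0: 2, 1: 0, 2: 80, 3: 99}    # VPN -> PFN (assumed reading)


def access(vaddr: int, kind: str, trace: list) -> int:
    """Record the page-table load, then the actual memory access."""
    vpn = vaddr >> PAGE_SHIFT
    pte_addr = PTBR + vpn * PTE_SIZE
    trace.append(f"PT, load from {pte_addr:#06x}")
    paddr = (PAGE_TABLE[vpn] << PAGE_SHIFT) | (vaddr & OFFSET_MASK)
    trace.append(f"{kind} {paddr:#06x}")
    return paddr


def run() -> list:
    trace = []
    access(0x0010, "Fetch instruction at", trace)   # movl 0x1100, %r8d
    access(0x1100, "Exec, load from", trace)
    access(0x0014, "Fetch instruction at", trace)   # addl $0x3, %r8d
    access(0x0017, "Fetch instruction at", trace)   # movl %r8d, 0x1100
    access(0x1100, "Exec, store to", trace)
    return trace


for line in run():
    print(line)
```

Five references from the program become ten memory accesses, so a plain linear page table doubles memory traffic.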

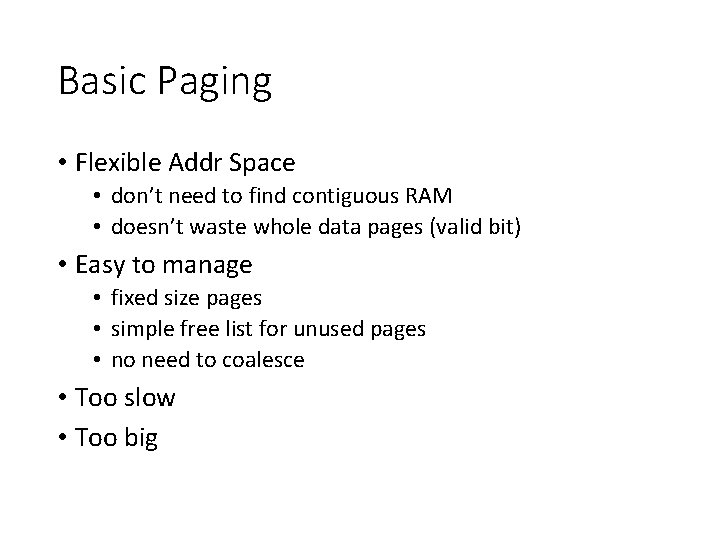

Basic Paging

- Flexible address space
  - don’t need to find contiguous RAM
  - doesn’t waste whole data pages (valid bit)
- Easy to manage
  - fixed-size pages
  - simple free list for unused pages
  - no need to coalesce
- Too slow
- Too big

Translation Steps

H/W: for each memory reference:

1. extract VPN (virtual page number) from VA (virtual address)
2. calculate address of PTE (page table entry)
3. fetch PTE
4. extract PFN (page frame number)
5. build PA (physical address)
6. fetch PA to register

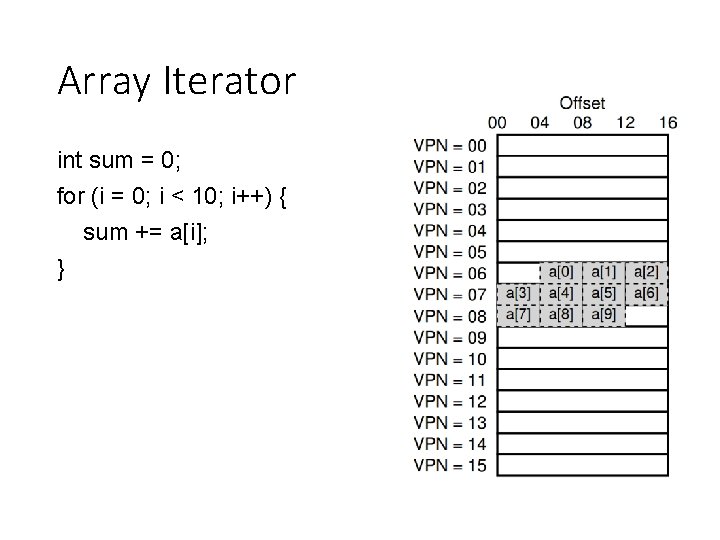

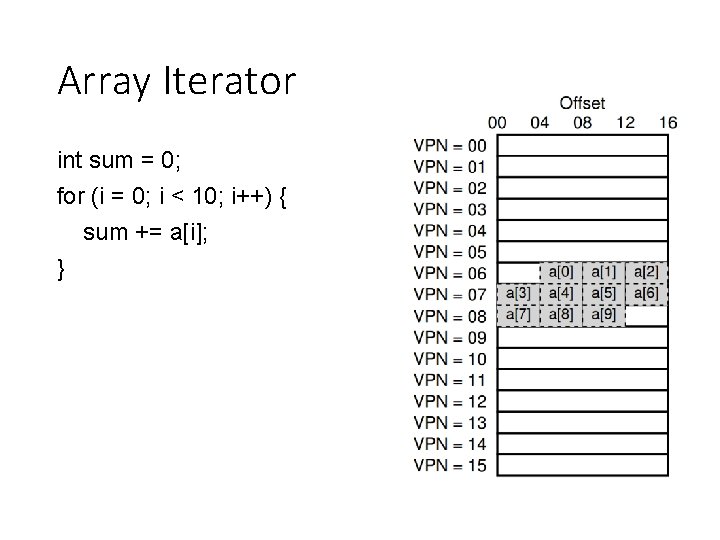

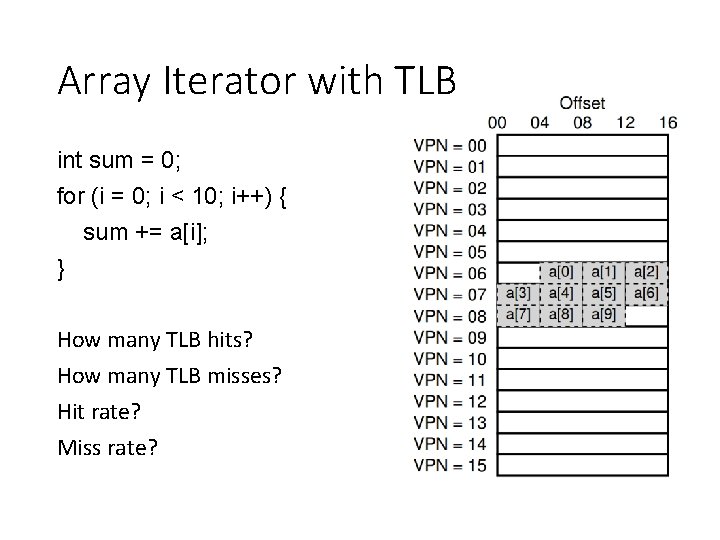

Array Iterator

    int sum = 0;
    for (i = 0; i < 10; i++) {
        sum += a[i];
    }

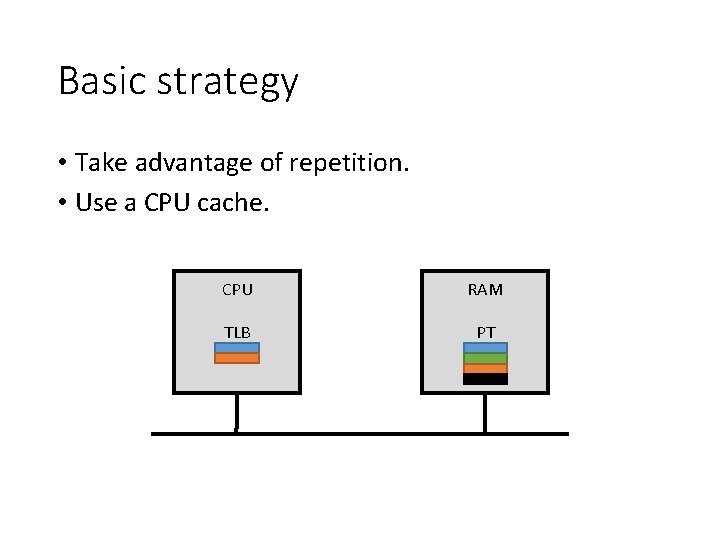

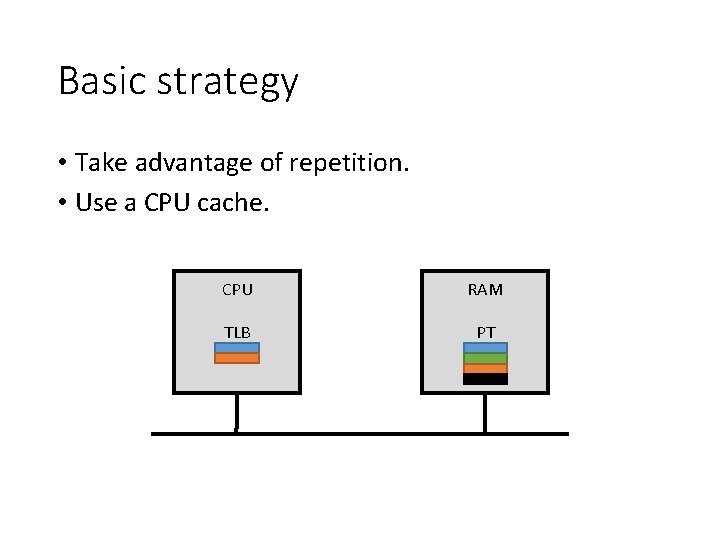

Basic strategy

- Take advantage of repetition.
- Use a CPU cache.

Figure: the CPU holds the TLB; RAM holds the page table (PT).

TLB Cache Type

- Fully associative: entries can go anywhere
  - most common for TLBs
  - must store whole key/value in cache
  - search all in parallel
- There are other general cache types

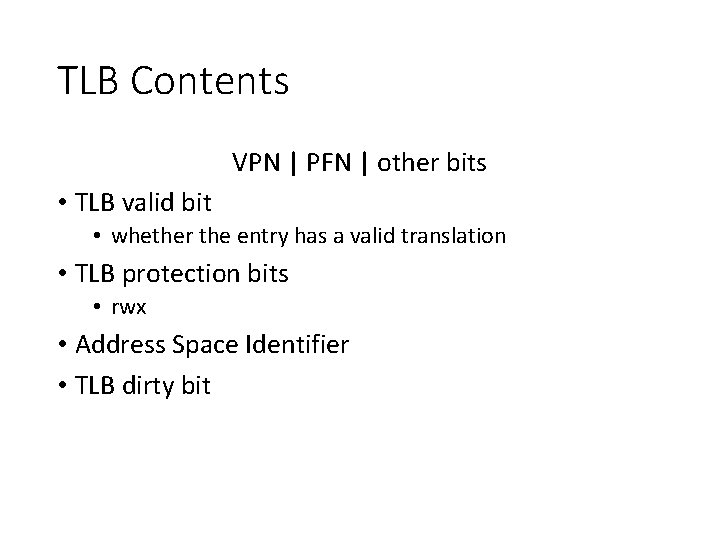

TLB Contents

VPN | PFN | other bits

- TLB valid bit
  - whether the entry has a valid translation
- TLB protection bits
  - rwx
- Address Space Identifier
- TLB dirty bit

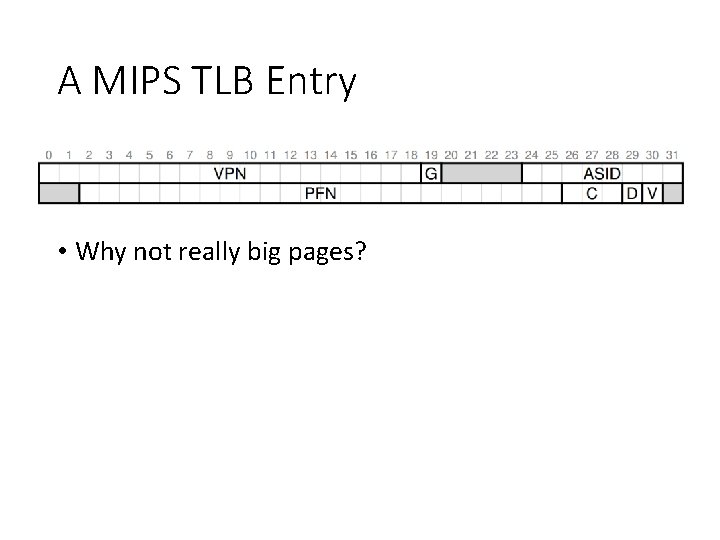

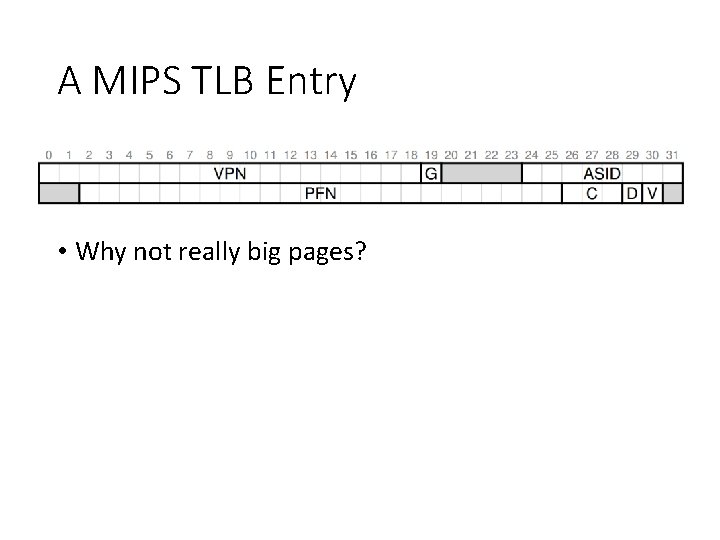

A MIPS TLB Entry

- Why not really big pages?

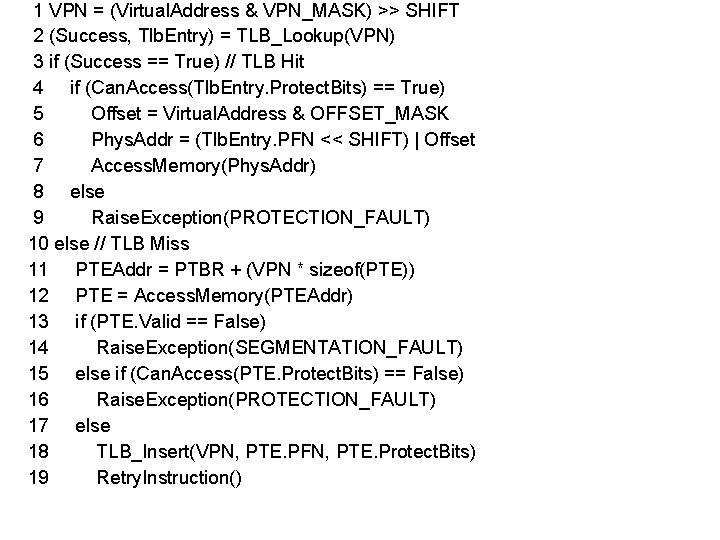

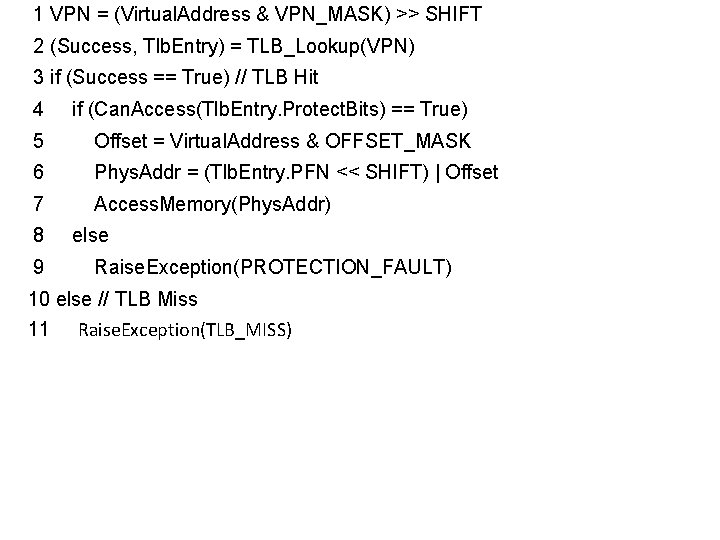

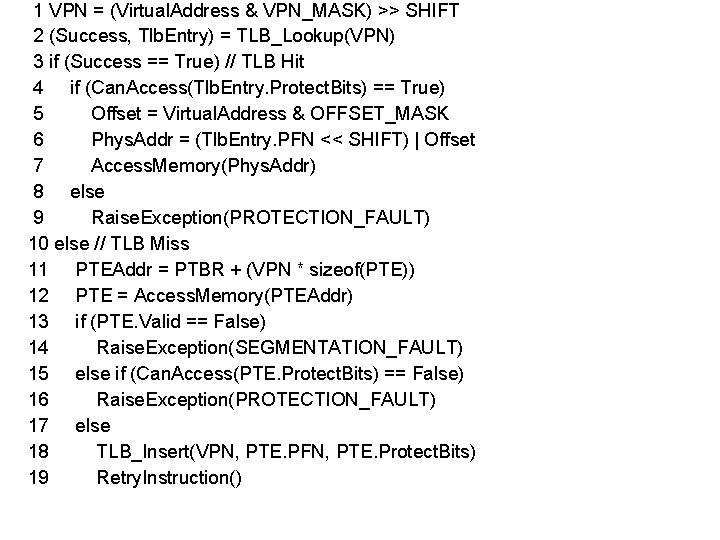

    VPN = (VirtualAddress & VPN_MASK) >> SHIFT
    (Success, TlbEntry) = TLB_Lookup(VPN)
    if (Success == True)    // TLB Hit
        if (CanAccess(TlbEntry.ProtectBits) == True)
            Offset   = VirtualAddress & OFFSET_MASK
            PhysAddr = (TlbEntry.PFN << SHIFT) | Offset
            AccessMemory(PhysAddr)
        else
            RaiseException(PROTECTION_FAULT)
    else                    // TLB Miss
        PTEAddr = PTBR + (VPN * sizeof(PTE))
        PTE = AccessMemory(PTEAddr)
        if (PTE.Valid == False)
            RaiseException(SEGMENTATION_FAULT)
        else if (CanAccess(PTE.ProtectBits) == False)
            RaiseException(PROTECTION_FAULT)
        else
            TLB_Insert(VPN, PTE.PFN, PTE.ProtectBits)
            RetryInstruction()
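The hit and miss paths can be sketched as a small class. This is a simplification under stated assumptions: 4 KB pages, a TLB modeled as an unbounded dictionary (so nothing is ever evicted), no protection bits, and the retry after `TLB_Insert` folded into the same call. The class name `Mmu` and its counters are illustrative.

```python
SHIFT = 12                        # assumed 4 KB pages
OFFSET_MASK = (1 << SHIFT) - 1


class Mmu:
    def __init__(self, page_table: dict):
        self.page_table = page_table   # VPN -> PFN; a missing VPN is invalid
        self.tlb = {}                  # VPN -> PFN, filled on misses
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr: int) -> int:
        vpn = vaddr >> SHIFT
        if vpn in self.tlb:            # TLB hit: no page-table access needed
            self.hits += 1
        else:                          # TLB miss: walk the page table
            self.misses += 1
            if vpn not in self.page_table:
                raise MemoryError("segmentation fault")
            self.tlb[vpn] = self.page_table[vpn]   # TLB_Insert, then retry
        return (self.tlb[vpn] << SHIFT) | (vaddr & OFFSET_MASK)


mmu = Mmu({0: 3, 1: 1})
first = mmu.translate(0x1004)     # miss: loads the translation for VPN 1
second = mmu.translate(0x1008)    # hit: same page
print(hex(first), hex(second), mmu.hits, mmu.misses)
```

Only the miss path touches the page table, so repeated accesses to the same page avoid the extra memory load.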

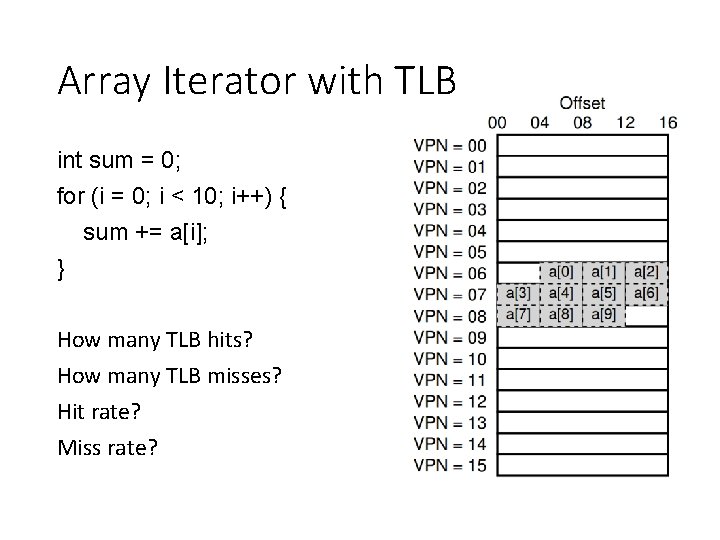

Array Iterator with TLB

    int sum = 0;
    for (i = 0; i < 10; i++) {
        sum += a[i];
    }

- How many TLB hits?
- How many TLB misses?
- Hit rate? Miss rate?
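The answers depend on details the slide leaves open, so the sketch below takes them as parameters: the virtual address of `a[0]`, the element size, and the page size are all assumptions. It counts only the accesses to `a[i]` (not instruction fetches or accesses to `sum` and `i`) and assumes the TLB starts empty and never evicts.

```python
def count_tlb(start_addr: int, n: int, elem_size: int, page_size: int):
    """Return (hits, misses) for a sequential scan of n array elements."""
    cached = set()                 # VPNs already in the TLB
    hits = misses = 0
    for i in range(n):
        vpn = (start_addr + i * elem_size) // page_size
        if vpn in cached:
            hits += 1
        else:
            misses += 1
            cached.add(vpn)
    return hits, misses


# Hypothetical setups: tiny 16-byte pages, then 4 KB pages.
for start, page in ((100, 16), (0x1000, 4096)):
    hits, misses = count_tlb(start, n=10, elem_size=4, page_size=page)
    print(f"page={page}: hits={hits} misses={misses} hit rate={hits / 10:.0%}")
```

With larger pages more elements share one translation, so the first touch of each page is the only miss.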

Reasoning about TLB

- Workload: the series of loads/stores (memory accesses) a program issues
- TLB: chooses which entries to store in the CPU
- Metric: performance (i.e., hit rate)

TLB Workloads

- Spatial locality
  - Sequential array accesses can almost always hit in the TLB, and so are very fast!
- Temporal locality
- What pattern would be slow?
  - highly random, with no repeat accesses

TLB Replacement Policies

- LRU: evict the least-recently-used entry when a TLB slot is needed
- Random: randomly choose entries to evict
- When is each better?
- Sometimes random is better than a “smart” policy!
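One workload where random beats LRU is a loop over one more page than the TLB can hold. The sketch below compares the two policies on that pattern; the function names, the 4-entry TLB, and the fixed seed are illustrative assumptions.

```python
import random
from collections import OrderedDict


def lru_hits(accesses, capacity: int) -> int:
    """Count hits for a TLB that evicts the least-recently-used VPN."""
    tlb = OrderedDict()
    hits = 0
    for vpn in accesses:
        if vpn in tlb:
            hits += 1
            tlb.move_to_end(vpn)          # mark as most recently used
        else:
            if len(tlb) >= capacity:
                tlb.popitem(last=False)   # evict the least recently used
            tlb[vpn] = True
    return hits


def random_hits(accesses, capacity: int, seed: int = 0) -> int:
    """Count hits for a TLB that evicts a randomly chosen slot."""
    rng = random.Random(seed)
    tlb = []
    hits = 0
    for vpn in accesses:
        if vpn in tlb:
            hits += 1
        elif len(tlb) >= capacity:
            tlb[rng.randrange(capacity)] = vpn
        else:
            tlb.append(vpn)
    return hits


# Loop over 5 pages, 200 times, with a 4-entry TLB.
looping = [vpn for _ in range(200) for vpn in range(5)]
print("LRU hits:", lru_hits(looping, 4))
print("Random hits:", random_hits(looping, 4))
```

LRU always evicts exactly the page that will be needed next in this loop, so it never hits, while random eviction keeps some useful entries by chance. When the working set fits in the TLB, both policies hit on everything after the first pass.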

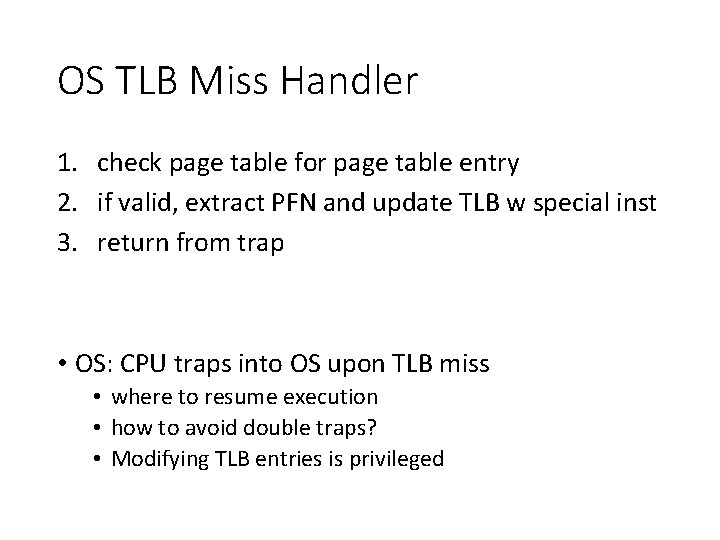

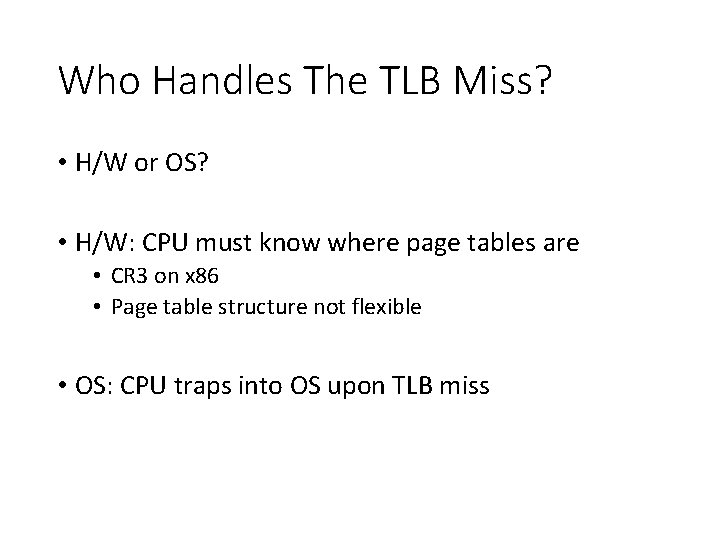

Who Handles The TLB Miss?

- H/W or OS?
- H/W: CPU must know where page tables are
  - CR3 on x86
  - Page table structure not flexible
- OS: CPU traps into OS upon TLB miss

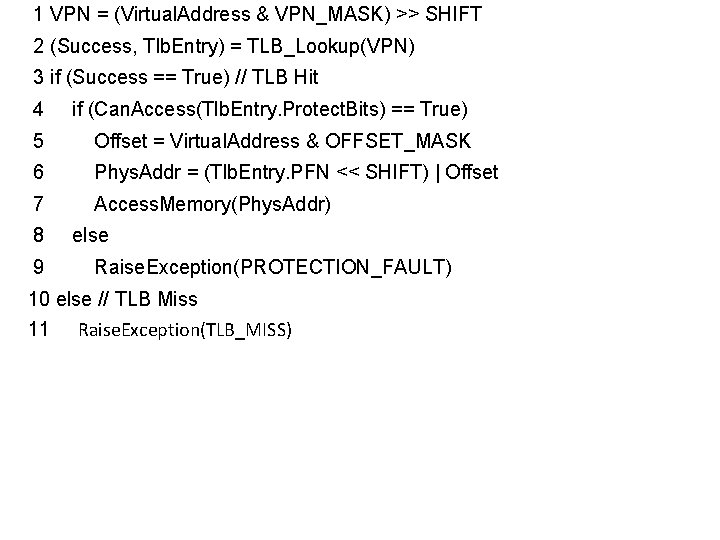

    VPN = (VirtualAddress & VPN_MASK) >> SHIFT
    (Success, TlbEntry) = TLB_Lookup(VPN)
    if (Success == True)    // TLB Hit
        if (CanAccess(TlbEntry.ProtectBits) == True)
            Offset   = VirtualAddress & OFFSET_MASK
            PhysAddr = (TlbEntry.PFN << SHIFT) | Offset
            AccessMemory(PhysAddr)
        else
            RaiseException(PROTECTION_FAULT)
    else                    // TLB Miss
        RaiseException(TLB_MISS)

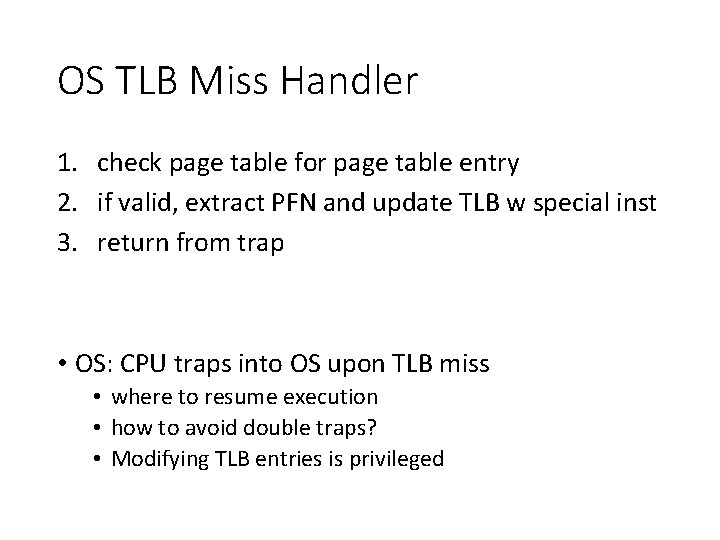

OS TLB Miss Handler

1. check page table for page table entry
2. if valid, extract PFN and update TLB with a special instruction
3. return from trap

- OS: CPU traps into OS upon TLB miss
  - where to resume execution?
  - how to avoid double traps?
- Modifying TLB entries is privileged

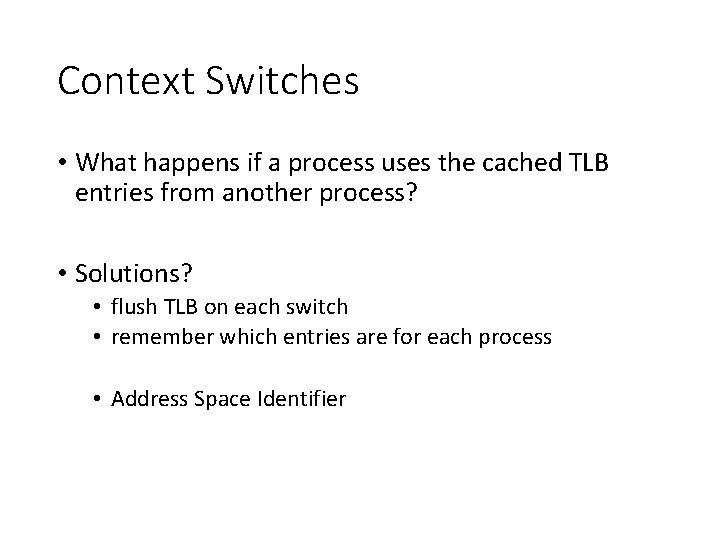

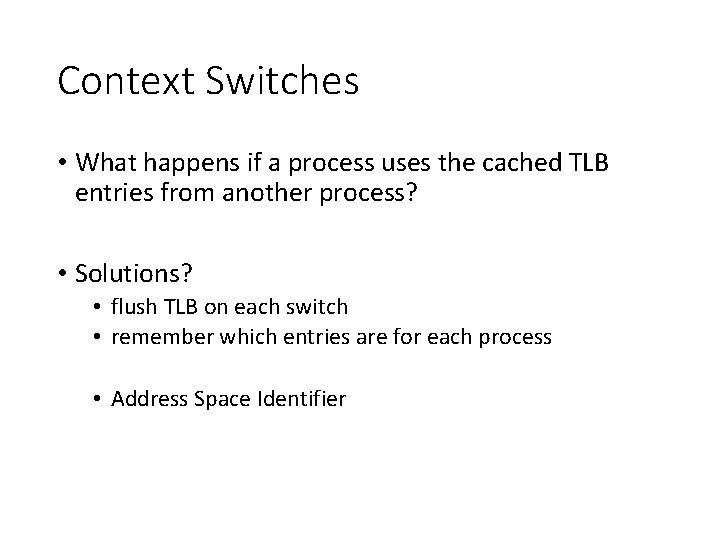

Context Switches

- What happens if a process uses the cached TLB entries from another process?
- Solutions?
  - flush TLB on each switch
  - remember which entries are for each process
    - Address Space Identifier

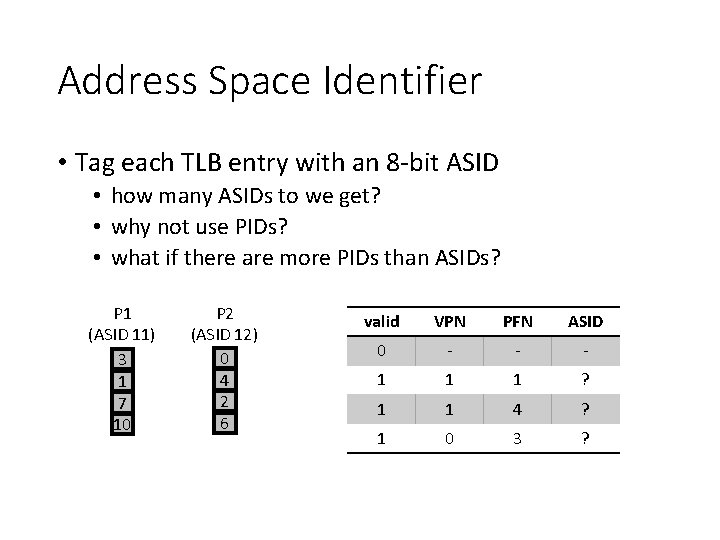

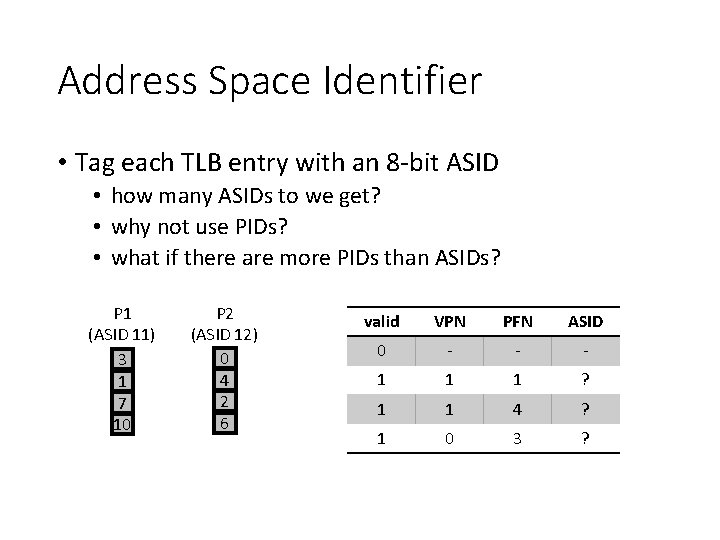

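Address Space Identifier

- Tag each TLB entry with an 8-bit ASID
  - how many ASIDs do we get?
  - why not use PIDs?
  - what if there are more PIDs than ASIDs?

Page tables (VPN → PFN):

| VPN | P1 (ASID 11) | P2 (ASID 12) |
|---|---|---|
| 0 | 3 | 0 |
| 1 | 1 | 4 |
| 2 | 7 | 2 |
| 3 | 10 | 6 |

TLB:

| valid | VPN | PFN | ASID |
|---|---|---|---|
| 0 | - | - | - |
| 1 | 1 | 1 | ? |
| 1 | 1 | 4 | ? |
| 1 | 0 | 3 | ? |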
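A tagged TLB can be sketched by making the lookup key the pair (ASID, VPN) rather than the VPN alone. The class and method names are hypothetical, and the mappings are taken from the P1 and P2 page tables on the slide.

```python
ASID_BITS = 8
NUM_ASIDS = 1 << ASID_BITS        # an 8-bit tag gives 256 distinct ASIDs


class TaggedTlb:
    def __init__(self):
        self.entries = {}         # (asid, vpn) -> pfn

    def insert(self, asid: int, vpn: int, pfn: int) -> None:
        if not 0 <= asid < NUM_ASIDS:
            raise ValueError("ASID does not fit in the tag")
        self.entries[(asid, vpn)] = pfn

    def lookup(self, asid: int, vpn: int):
        """Return the PFN, or None on a miss for this address space."""
        return self.entries.get((asid, vpn))


tlb = TaggedTlb()
tlb.insert(asid=11, vpn=1, pfn=1)   # P1: VPN 1 -> PFN 1
tlb.insert(asid=12, vpn=1, pfn=4)   # P2: VPN 1 -> PFN 4
tlb.insert(asid=11, vpn=0, pfn=3)   # P1: VPN 0 -> PFN 3

print(tlb.lookup(11, 1), tlb.lookup(12, 1), tlb.lookup(12, 0))
```

Because the same VPN can sit in the TLB once per address space, a context switch only has to change the current ASID instead of flushing every entry.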

Next time: solving the “too big” problem