
Lecture 6: Boolean to Vector. Principles of Information Retrieval. Prof. Ray Larson, University of California, Berkeley, School of Information. Tuesday and Thursday 10:30 am - 12:00 pm, Spring 2007. http://courses.ischool.berkeley.edu/i240/s07 IS 240 – Spring 2007, 2007.02.01 - SLIDE 1

Today • IR Models • The Boolean Model • Boolean implementation issues

Review • IR Models • Extended Boolean

IR Models • Set Theoretic Models – Boolean – Fuzzy – Extended Boolean • Vector Models (Algebraic) • Probabilistic Models (probabilistic)

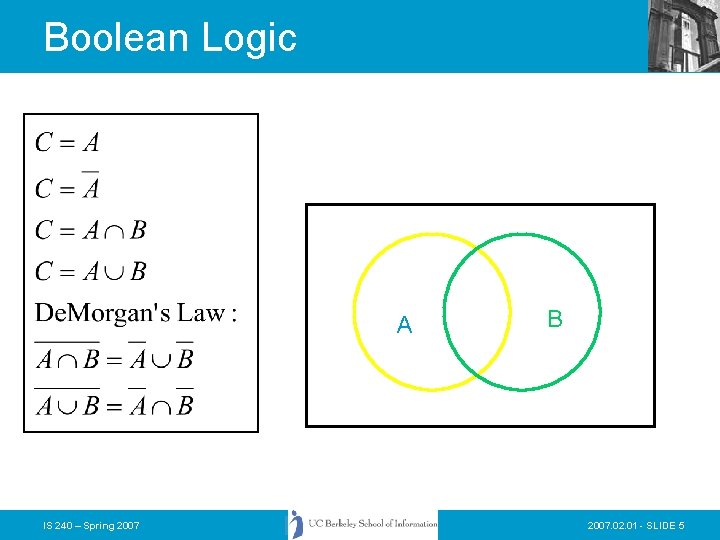

Boolean Logic (figure: sets A and B)

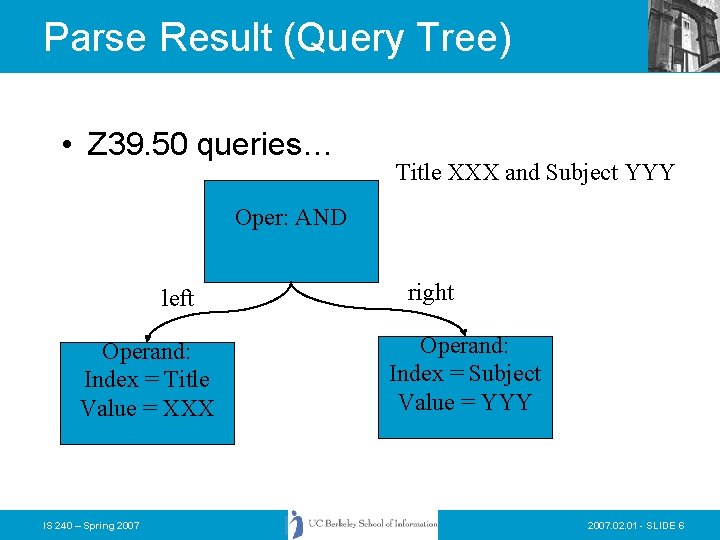

Parse Result (Query Tree) • Z39.50 queries… Title XXX and Subject YYY (tree: Oper: AND; left Operand: Index = Title, Value = XXX; right Operand: Index = Subject, Value = YYY)

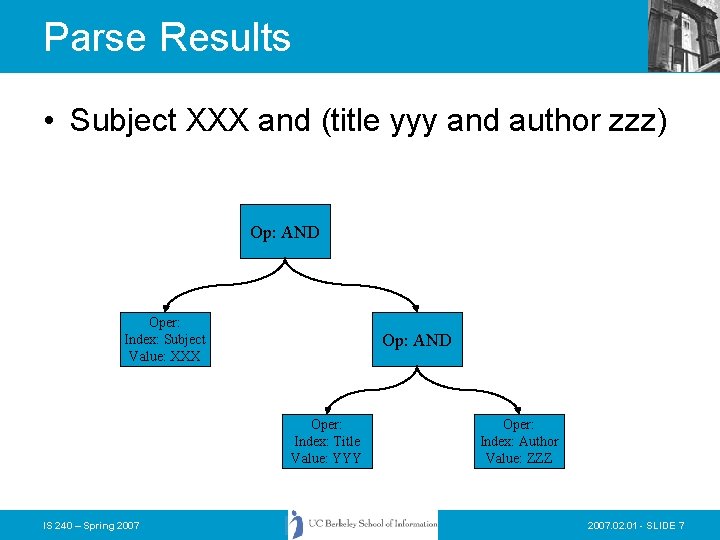

Parse Results • Subject XXX and (title yyy and author zzz) (tree: Op: AND; left Oper: Index = Subject, Value = XXX; right Op: AND, with Oper: Index = Title, Value = YYY and Oper: Index = Author, Value = ZZZ)
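A minimal sketch of evaluating such a query tree bottom-up, assuming a separate lookup table per index that maps each value to a set of record IDs. The classes `Leaf` and `And`, the function `evaluate`, and the data are hypothetical; this is not the Z39.50 wire format:

```python
from dataclasses import dataclass
from typing import Dict, Set, Union

# Hypothetical index layout: index name -> term value -> set of record IDs
Indexes = Dict[str, Dict[str, Set[int]]]

@dataclass
class Leaf:
    index: str  # e.g. "Title", "Subject", "Author"
    value: str  # e.g. "XXX"

@dataclass
class And:
    left: "Node"
    right: "Node"

Node = Union[Leaf, And]

def evaluate(node: Node, indexes: Indexes) -> Set[int]:
    """Recursively evaluate a Boolean query tree from the leaves up."""
    if isinstance(node, Leaf):
        # An unknown index or value simply matches no records
        return indexes.get(node.index, {}).get(node.value, set())
    # AND node: intersect the results of both operands
    return evaluate(node.left, indexes) & evaluate(node.right, indexes)

# Subject XXX and (title yyy and author zzz)
query = And(Leaf("Subject", "XXX"), And(Leaf("Title", "YYY"), Leaf("Author", "ZZZ")))
indexes = {
    "Subject": {"XXX": {1, 2, 3}},
    "Title": {"YYY": {2, 3, 4}},
    "Author": {"ZZZ": {3, 5}},
}
print(evaluate(query, indexes))  # {3}
```

Adding `Or` and `AndNot` node types would follow the same pattern with `|` and `-` on the sets.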

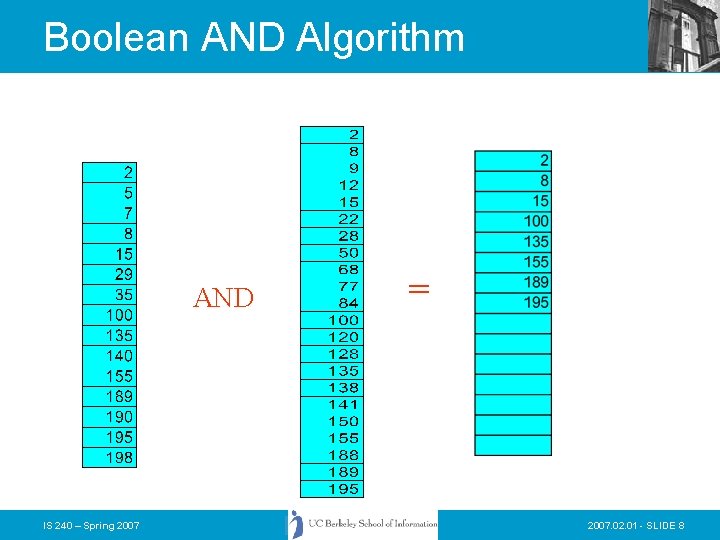

Boolean AND Algorithm (figure: two lists combined with AND = result)
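A minimal sketch of the usual linear-merge AND, assuming each postings list is a sorted list of unique document IDs (the function name `intersect` is hypothetical). It runs in time proportional to the combined length of the two lists:

```python
from typing import List

def intersect(p1: List[int], p2: List[int]) -> List[int]:
    """AND: walk two sorted postings lists in step, keeping shared IDs."""
    i, j, result = 0, 0, []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            result.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1  # advance whichever list has the smaller ID
        else:
            j += 1
    return result

print(intersect([1, 3, 5, 7, 9], [2, 3, 4, 9]))  # [3, 9]
```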

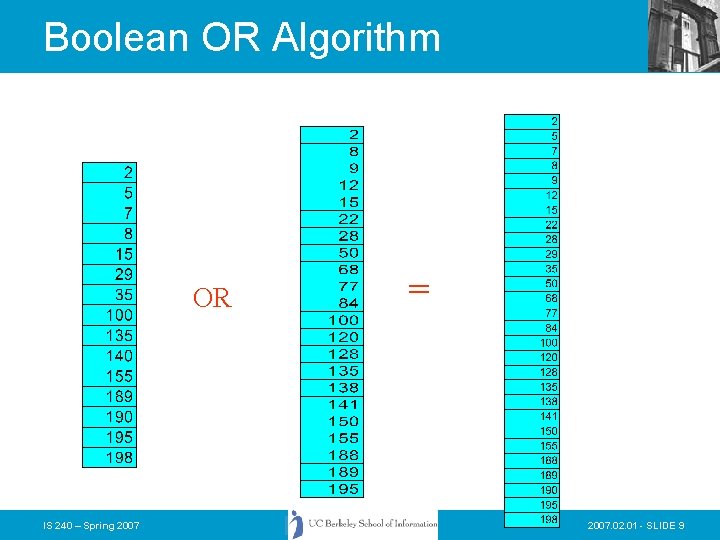

Boolean OR Algorithm (figure: two lists combined with OR = result)
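A matching sketch for OR, under the same assumption of sorted, duplicate-free postings lists (the name `union` is hypothetical): every ID from either list is emitted once, in ascending order.

```python
from typing import List

def union(p1: List[int], p2: List[int]) -> List[int]:
    """OR: merge two sorted postings lists, emitting each ID once."""
    i, j, result = 0, 0, []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            result.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            result.append(p1[i])
            i += 1
        else:
            result.append(p2[j])
            j += 1
    # One list is exhausted; copy whatever remains of the other
    result.extend(p1[i:])
    result.extend(p2[j:])
    return result

print(union([1, 3, 5], [2, 3, 6, 8]))  # [1, 2, 3, 5, 6, 8]
```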

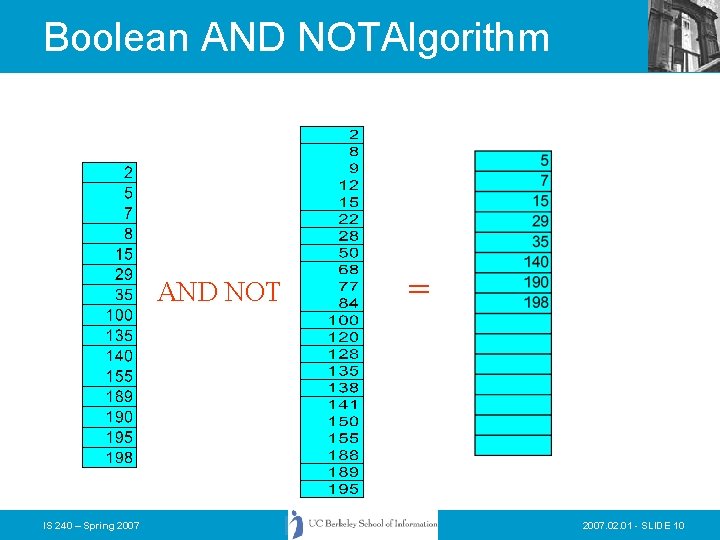

Boolean AND NOT Algorithm (figure: two lists combined with AND NOT = result)
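A sketch of AND NOT under the same sorted-postings assumption (the name `and_not` is hypothetical): keep each ID from the first list that is absent from the second, scanning each list only once.

```python
from typing import List

def and_not(p1: List[int], p2: List[int]) -> List[int]:
    """AND NOT: keep IDs in p1 that do not appear in p2."""
    i, j, result = 0, 0, []
    while i < len(p1):
        # Skip p2 entries smaller than the current p1 ID
        while j < len(p2) and p2[j] < p1[i]:
            j += 1
        if j == len(p2) or p2[j] != p1[i]:
            result.append(p1[i])
        i += 1
    return result

print(and_not([1, 3, 5, 7], [3, 4, 7]))  # [1, 5]
```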

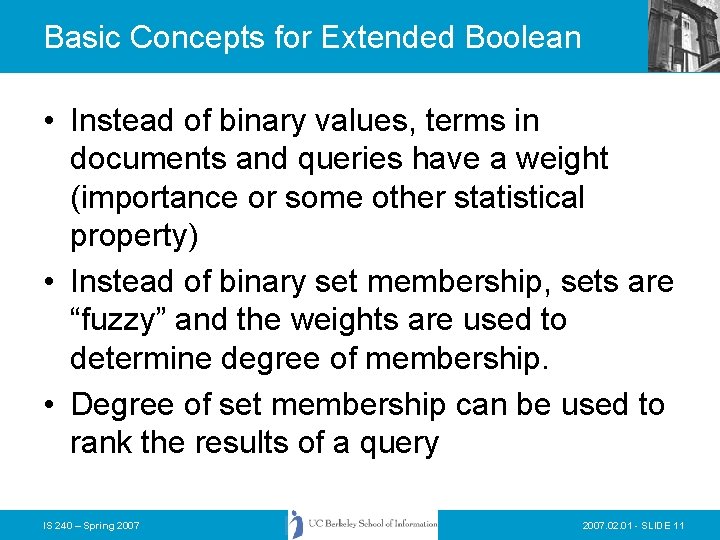

Basic Concepts for Extended Boolean • Instead of binary values, terms in documents and queries have a weight (importance or some other statistical property) • Instead of binary set membership, sets are “fuzzy” and the weights are used to determine degree of membership. • Degree of set membership can be used to rank the results of a query

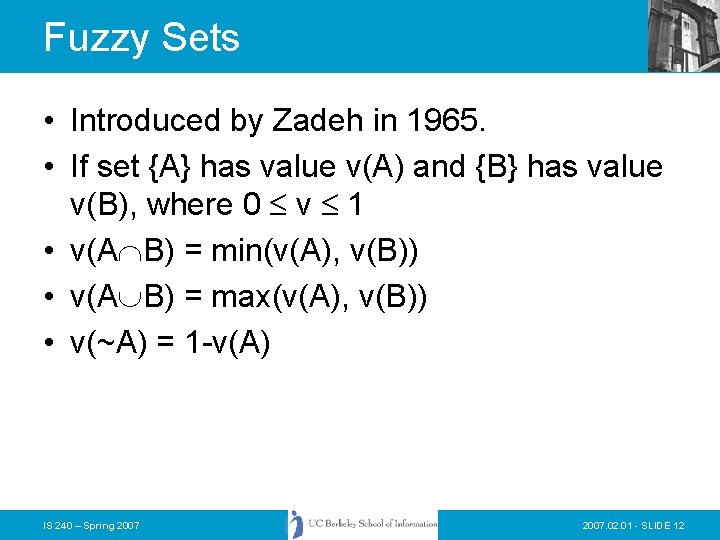

Fuzzy Sets • Introduced by Zadeh in 1965. • If set {A} has value $v(A)$ and {B} has value $v(B)$, where $0 \le v \le 1$ • $v(A \wedge B) = \min(v(A), v(B))$ • $v(A \vee B) = \max(v(A), v(B))$ • $v(\neg A) = 1 - v(A)$
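These three rules translate directly into code. A minimal sketch, assuming membership values are floats in [0, 1]; the function names and the document scores are hypothetical. Ranking by the resulting degree of membership is what the extended Boolean slide describes:

```python
from typing import Dict, Tuple

def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)

def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)

def fuzzy_not(a: float) -> float:
    return 1.0 - a

# Hypothetical membership degrees of three documents in fuzzy sets A and B
docs: Dict[str, Tuple[float, float]] = {"d1": (0.75, 0.5), "d2": (0.25, 0.0), "d3": (1.0, 1.0)}

# Score each document for the query A AND NOT B, then rank by degree
scores = {d: fuzzy_and(a, fuzzy_not(b)) for d, (a, b) in docs.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores)   # {'d1': 0.5, 'd2': 0.25, 'd3': 0.0}
print(ranking)  # ['d1', 'd2', 'd3']
```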

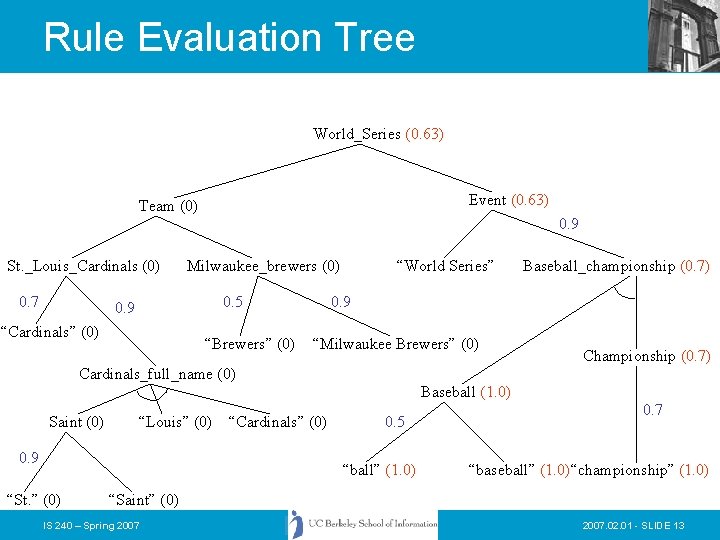

Rule Evaluation Tree (figure: a rule tree for the concept World_Series, with evaluated scores in parentheses and rule weights such as 0.9, 0.7, and 0.5 on the links; scores include World_Series (0.63), Event (0.63), Baseball_championship (0.7), Championship (0.7), Baseball (1.0), “baseball” (1.0), “ball” (1.0), and “championship” (1.0), while the Team branch, with St._Louis_Cardinals, Milwaukee_brewers, Cardinals_full_name, and evidence words such as “Cardinals”, “Brewers”, “Milwaukee Brewers”, “Saint”, “St.”, and “Louis”, scores 0)

Boolean Limitations • Advantages – simple queries are easy to understand – relatively easy to implement • Disadvantages – difficult to specify what is wanted, particularly in complex situations (e.g., RUBRIC queries) – too much returned, or too little – ordering not well determined in traditional Boolean – ordering may be problematic in extended Boolean (Robertson’s critique) – weighting is based only on the query, or else some undefined weighting scheme must be used for the documents

Lecture Overview • Statistical Properties of Text – Zipf Distribution – Statistical Dependence • Indexing and Inverted Files • Vector Representation • Term Weights. Credit for some of the slides in this lecture goes to Marti Hearst.

Lecture Overview • Statistical Properties of Text – Zipf Distribution – Statistical Dependence • Indexing and Inverted Files • Vector Representation • Term Weights • Vector Matching

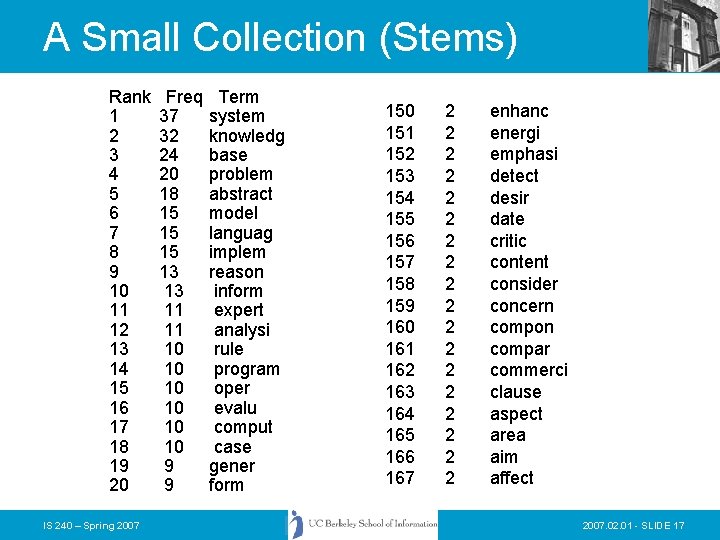

A Small Collection (Stems) • Ranks 1–20, most frequent first: system, knowledg, base, problem, abstract, model, languag, implem, reason, inform, expert, analysi, rule, program, oper, evalu, comput, case, gener, form • Frequencies listed for the top ranks: 37, 32, 24, 20, 18, 15, 15, 15, 13, 13, 11, 11, 10, 10, 10, 9, 9 • Ranks 150–167: enhanc, energi, emphasi, detect, desir, date, critic, content, consider, concern, compon, compar, commerci, clause, aspect, area, aim, affect (frequencies listed: 2, 2, 2, 2, 2)

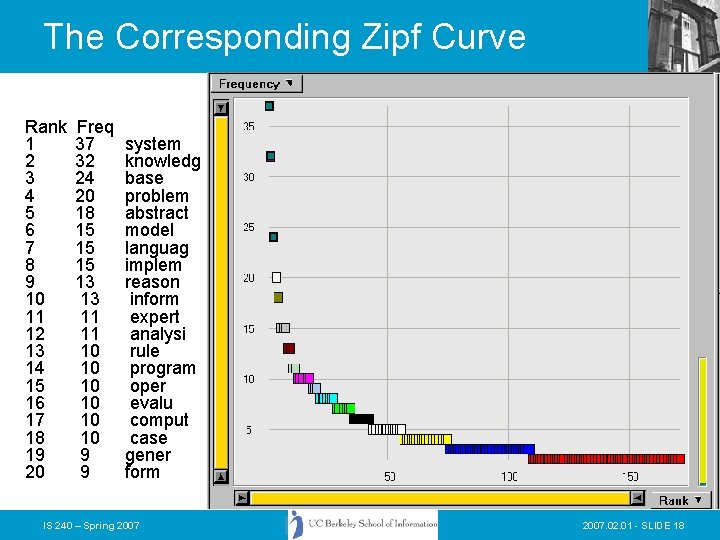

The Corresponding Zipf Curve (figure: frequency plotted against rank for the 20 most frequent stems of the small collection)

Zipf Distribution • The Important Points: – A few elements occur very frequently – A medium number of elements have medium frequency – Many elements occur very infrequently
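A minimal sketch of building a rank/frequency table like the one for the small collection, assuming plain whitespace tokenization and no stemming (`rank_frequency` is a hypothetical helper). Even this toy text shows one very frequent word and a tail of words seen once:

```python
from collections import Counter
from typing import Iterable, List, Tuple

def rank_frequency(tokens: Iterable[str]) -> List[Tuple[int, str, int]]:
    """Return (rank, term, frequency) rows, most frequent first."""
    counts = Counter(tokens)
    # Sort by descending frequency; break ties alphabetically for stable output
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(rank, term, freq) for rank, (term, freq) in enumerate(ordered, start=1)]

text = "the cat sat on the mat and the dog sat on the log"
for row in rank_frequency(text.split()):
    print(row)
# (1, 'the', 4), (2, 'on', 2), (3, 'sat', 2), then five terms with frequency 1
```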

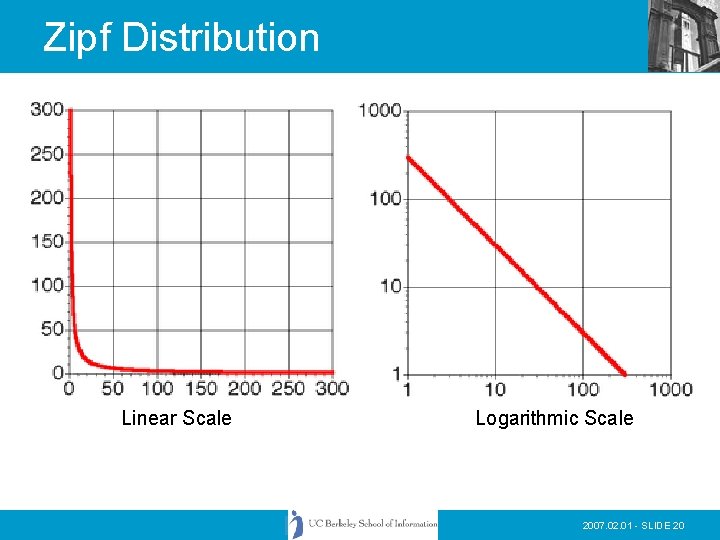

Zipf Distribution (figure: the same distribution on a linear scale and on a logarithmic scale)
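Zipf's law is commonly stated as frequency falling off inversely with rank. Taking logarithms shows why a curve that is sharply skewed on a linear scale becomes roughly a straight line on a logarithmic scale. The symbols are introduced here, not taken from the slide: $r$ is rank, $f(r)$ the frequency of the term at rank $r$, $C$ a collection-dependent constant, and $\alpha$ an exponent that is close to 1 for English text.

```latex
f(r) \approx \frac{C}{r^{\alpha}}
\quad\Longrightarrow\quad
\log f(r) \approx \log C - \alpha \log r
```

On log-log axes the slope of the line is $-\alpha$.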

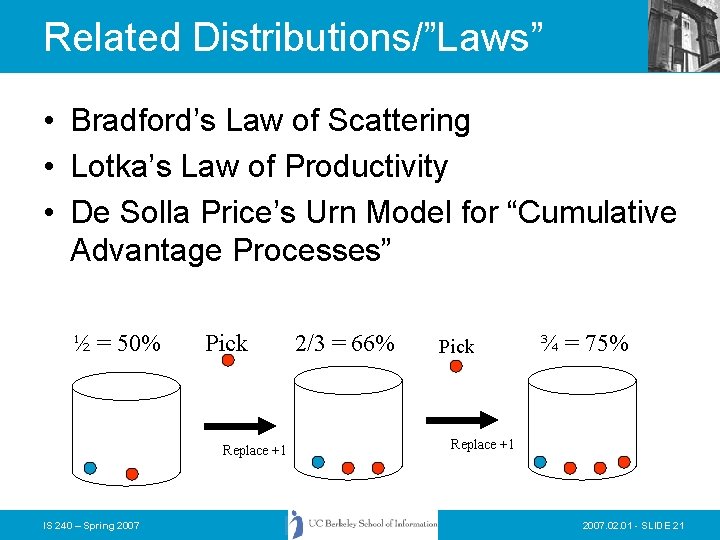

Related Distributions/“Laws” • Bradford’s Law of Scattering • Lotka’s Law of Productivity • De Solla Price’s Urn Model for “Cumulative Advantage Processes” (figure: ½ = 50% → pick, replace +1 → 2/3 ≈ 67% → pick, replace +1 → ¾ = 75%)
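The urn figure can be reproduced exactly with fractions. A sketch, assuming the urn starts with one ball of each of two colours; `urn_probabilities` follows the figure's sequence when the same colour keeps being drawn, and `simulate_urn` draws at random so that early luck compounds, which is the cumulative-advantage effect. Both names are hypothetical:

```python
import random
from fractions import Fraction
from typing import List, Tuple

def urn_probabilities(picks: int) -> List[Fraction]:
    """Chance of drawing the favoured colour before and after each 'pick, replace +1'.

    Assumes one ball of each of two colours at the start, and that the
    favoured colour is the one drawn every time.
    """
    favoured, total = 1, 2
    probs = [Fraction(favoured, total)]
    for _ in range(picks):
        favoured += 1  # drawn ball goes back plus one extra of the same colour
        total += 1
        probs.append(Fraction(favoured, total))
    return probs

def simulate_urn(draws: int, seed: int = 0) -> Tuple[int, int]:
    """Random draws from a two-colour urn; each draw adds one ball of its colour."""
    rng = random.Random(seed)
    counts = [1, 1]
    for _ in range(draws):
        colour = 0 if rng.random() < counts[0] / sum(counts) else 1
        counts[colour] += 1
    return counts[0], counts[1]

print([str(p) for p in urn_probabilities(2)])  # ['1/2', '2/3', '3/4']
print(simulate_urn(1000, seed=42))  # the final split depends on the seed
```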

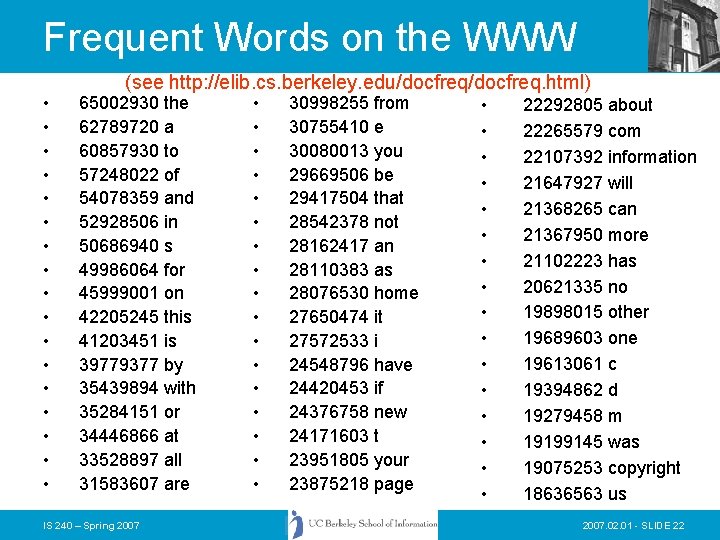

Frequent Words on the WWW (see http://elib.cs.berkeley.edu/docfreq.html)

| Count | Word | Count | Word | Count | Word |
|---|---|---|---|---|---|
| 65002930 | the | 30998255 | from | 22292805 | about |
| 62789720 | a | 30755410 | e | 22265579 | com |
| 60857930 | to | 30080013 | you | 22107392 | information |
| 57248022 | of | 29669506 | be | 21647927 | will |
| 54078359 | and | 29417504 | that | 21368265 | can |
| 52928506 | in | 28542378 | not | 21367950 | more |
| 50686940 | s | 28162417 | an | 21102223 | has |
| 49986064 | for | 28110383 | as | 20621335 | no |
| 45999001 | on | 28076530 | home | 19898015 | other |
| 42205245 | this | 27650474 | it | 19689603 | one |
| 41203451 | is | 27572533 | i | 19613061 | c |
| 39779377 | by | 24548796 | have | 19394862 | d |
| 35439894 | with | 24420453 | if | 19279458 | m |
| 35284151 | or | 24376758 | new | 19199145 | was |
| 34446866 | at | 24171603 | t | 19075253 | copyright |
| 33528897 | all | 23951805 | your | 18636563 | us |
| 31583607 | are | 23875218 | page | | |

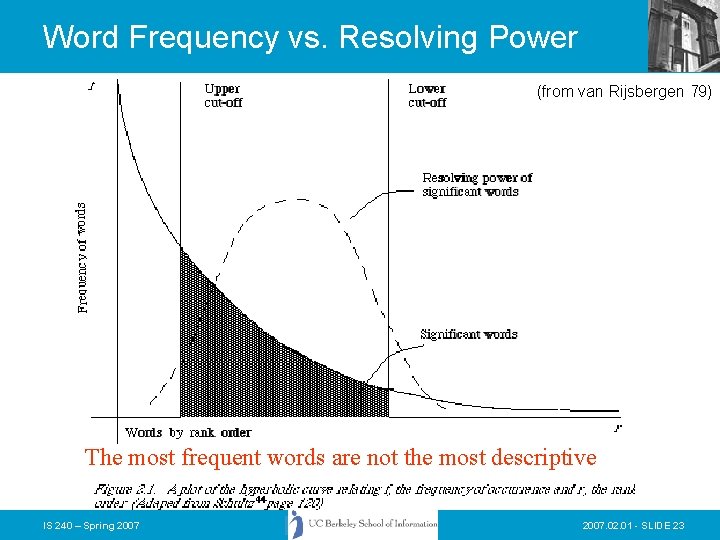

Word Frequency vs. Resolving Power (from van Rijsbergen 79) • The most frequent words are not the most descriptive

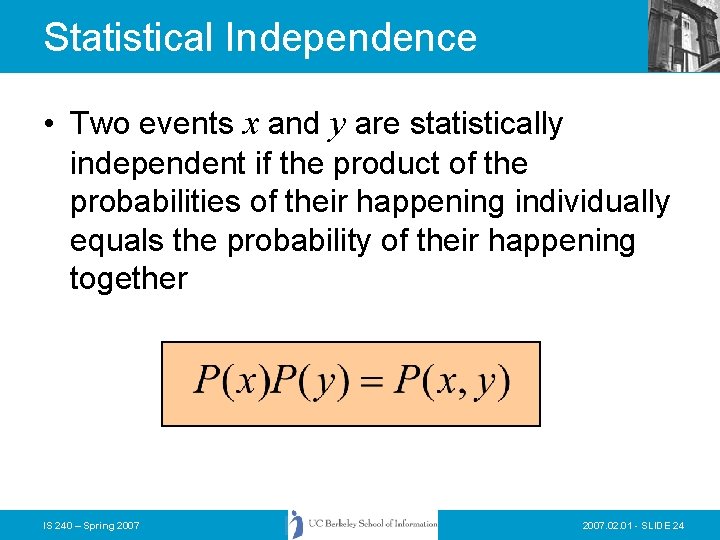

Statistical Independence • Two events x and y are statistically independent if the probability of their happening together equals the product of the probabilities of their happening individually: $P(x, y) = P(x)\,P(y)$

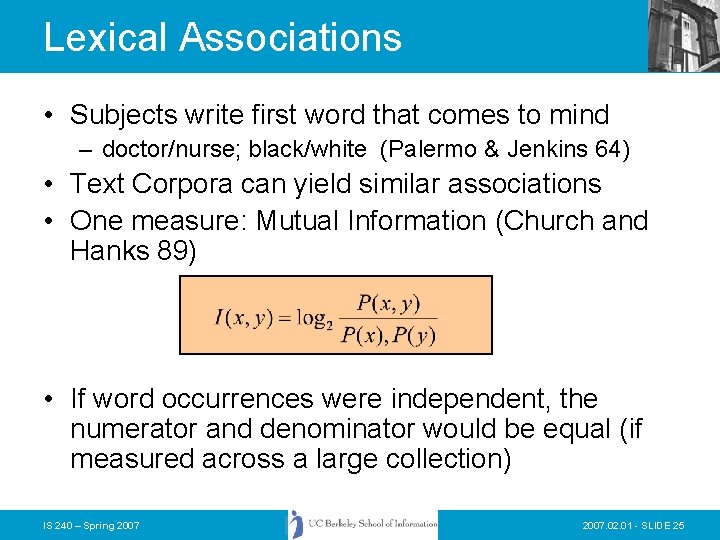

Lexical Associations • Subjects write the first word that comes to mind – doctor/nurse; black/white (Palermo & Jenkins 64) • Text corpora can yield similar associations • One measure: Mutual Information (Church and Hanks 89), $I(x, y) = \log_2 \frac{P(x, y)}{P(x)\,P(y)}$ • If word occurrences were independent, the numerator and denominator would be equal (if measured across a large collection)
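A small sketch of the association ratio from raw counts, assuming probabilities are estimated as count / N (Church and Hanks also restrict co-occurrence to a window of nearby words, which is left out here). The helper name and counts are hypothetical; a score near 0 means the pair co-occurs about as often as independence predicts:

```python
import math

def association_ratio(f_xy: int, f_x: int, f_y: int, n: int) -> float:
    """log2( P(x,y) / (P(x) P(y)) ) with every probability estimated as count / n.

    The n's partly cancel, leaving log2( n * f_xy / (f_x * f_y) ).
    """
    if f_xy == 0:
        return float("-inf")  # the pair never co-occurs
    return math.log2(n * f_xy / (f_x * f_y))

print(association_ratio(10, 100, 100, 1000))  # 0.0 (exactly what independence predicts)
print(association_ratio(40, 100, 100, 1000))  # 2.0 (four times more often than chance)
```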

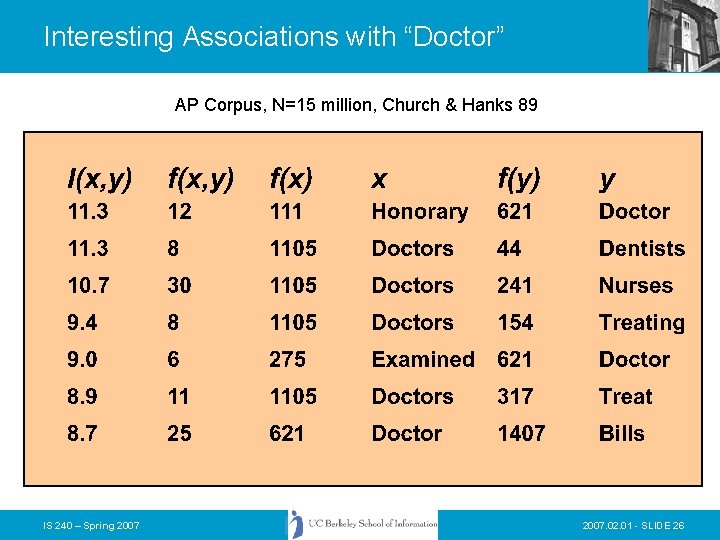

Interesting Associations with “Doctor” (AP Corpus, N=15 million, Church & Hanks 89)

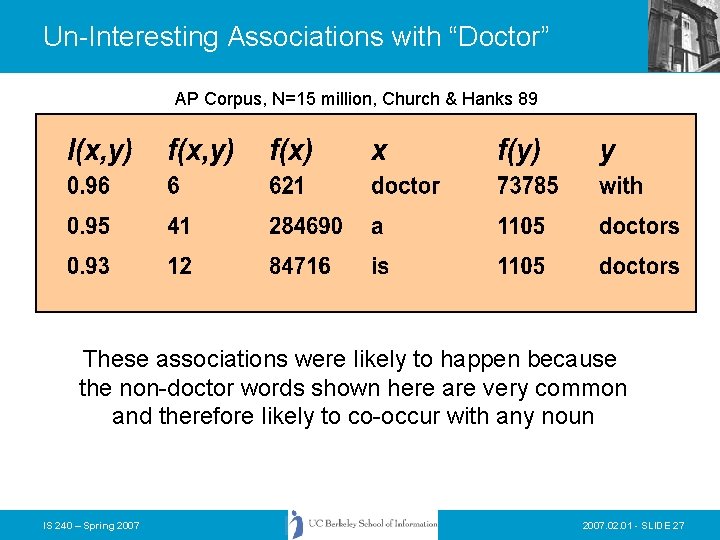

Un-Interesting Associations with “Doctor” (AP Corpus, N=15 million, Church & Hanks 89) • These associations were likely to happen because the non-doctor words shown here are very common and therefore likely to co-occur with any noun

Content Analysis Summary • Content Analysis: transforming raw text into more computationally useful forms • Words in text collections exhibit interesting statistical properties – Word frequencies have a Zipf distribution – Word co-occurrences exhibit dependencies


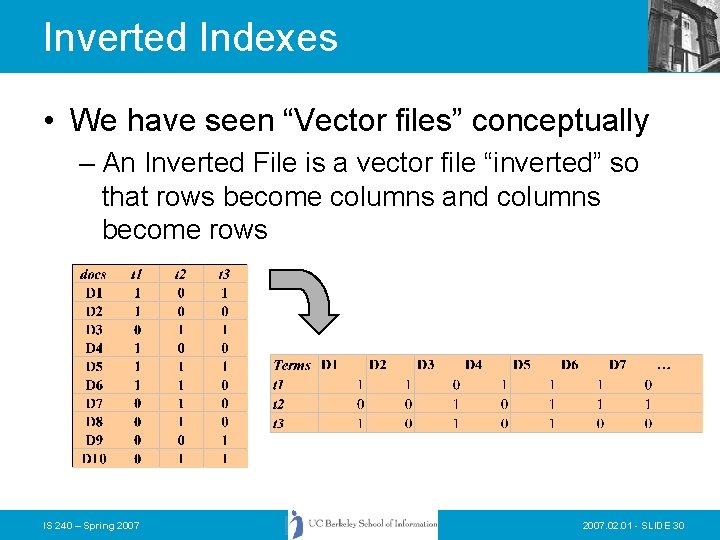

Inverted Indexes • We have seen “Vector files” conceptually – An Inverted File is a vector file “inverted” so that rows become columns and columns become rows
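A sketch of the inversion itself, assuming the vector file is held as a mapping from document ID to its terms. The helper `invert` and the term `now` are hypothetical; `time` and `dark` follow the query example later in this lecture:

```python
from typing import Dict, List

def invert(doc_terms: Dict[int, List[str]]) -> Dict[str, List[int]]:
    """Turn document -> terms rows into term -> documents rows."""
    term_docs: Dict[str, List[int]] = {}
    for doc_id in sorted(doc_terms):             # sorted IDs give sorted postings
        for term in sorted(set(doc_terms[doc_id])):
            term_docs.setdefault(term, []).append(doc_id)
    return term_docs

# Hypothetical vector file: one row of terms per document
vector_file = {1: ["now", "time"], 2: ["dark", "time"]}
print(invert(vector_file))  # {'now': [1], 'time': [1, 2], 'dark': [2]}
```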

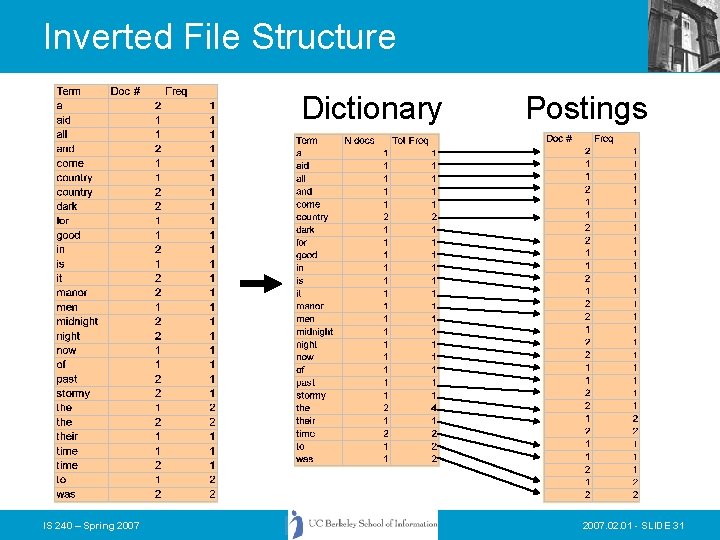

Inverted File Structure (figure: Dictionary file pointing into a Postings file)

Inverted Indexes • Permit fast search for individual terms • For each term, you get a list consisting of: – Document ID – Frequency of term in doc (optional) – Position of term in doc (optional) • These lists can be used to solve Boolean queries: • country -> d1, d2 • manor -> d2 • country AND manor -> d2 • Also used for statistical ranking algorithms
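A minimal sketch of such an index carrying both optional fields, frequency and positions, and of answering country AND manor from it. The documents and helper names are hypothetical; a production index would also compress postings and merge the sorted lists rather than build sets:

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One posting: (document ID, frequency of term in doc, positions of term in doc)
Posting = Tuple[int, int, List[int]]

def build_index(docs: Dict[int, str]) -> Dict[str, List[Posting]]:
    """Map each term to its postings list, ordered by document ID."""
    positions: Dict[str, Dict[int, List[int]]] = defaultdict(dict)
    for doc_id in sorted(docs):  # visit docs in ID order so postings come out sorted
        for pos, term in enumerate(docs[doc_id].lower().split()):
            positions[term].setdefault(doc_id, []).append(pos)
    return {
        term: [(doc_id, len(pos_list), pos_list) for doc_id, pos_list in per_doc.items()]
        for term, per_doc in positions.items()
    }

def boolean_and(index: Dict[str, List[Posting]], t1: str, t2: str) -> List[int]:
    """Document IDs whose postings contain both terms."""
    docs1 = {doc_id for doc_id, _, _ in index.get(t1, [])}
    docs2 = {doc_id for doc_id, _, _ in index.get(t2, [])}
    return sorted(docs1 & docs2)

docs = {1: "a country house", 2: "the manor house in the country"}
index = build_index(docs)
print(index["country"])                        # [(1, 1, [1]), (2, 1, [5])]
print(index["the"])                            # [(2, 2, [0, 4])]
print(boolean_and(index, "country", "manor"))  # [2]
```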

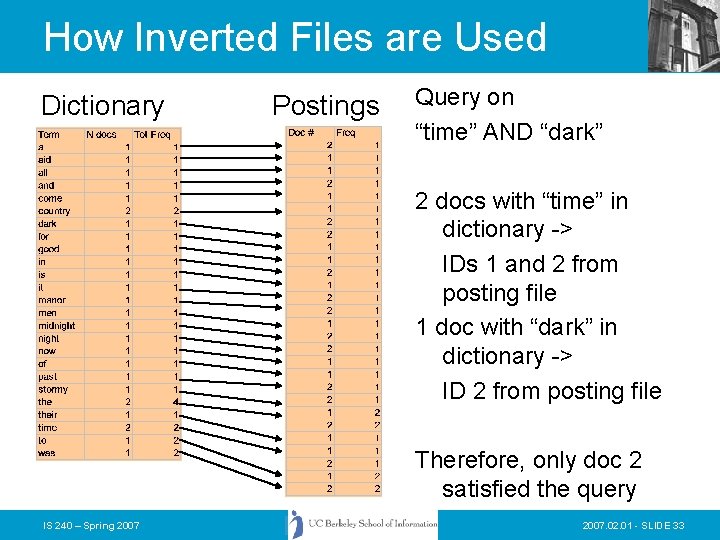

How Inverted Files are Used (figure: Dictionary and Postings) • Query on “time” AND “dark” • 2 docs with “time” in dictionary -> IDs 1 and 2 from posting file • 1 doc with “dark” in dictionary -> ID 2 from posting file • Therefore, only doc 2 satisfies the query

Lecture Overview • Review – Boolean Searching – Content Analysis • Statistical Properties of Text – Zipf Distribution – Statistical Dependence • Indexing and Inverted Files • Vector Representation • Term Weights • Vector Matching

Document Vectors • Documents are represented as “bags of words” • Represented as vectors when used computationally – A vector is like an array of floating-point numbers – Has direction and magnitude – Each vector holds a place for every term in the collection – Therefore, most vectors are sparse
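A sketch of a sparse bag-of-words vector, assuming whitespace tokenization and lowercasing (`bag_of_words` is a hypothetical helper). Storing only the non-zero entries in a dict avoids reserving a slot for every term in a hypothetical 50,000-term vocabulary:

```python
from collections import Counter
from typing import Dict

def bag_of_words(text: str) -> Dict[str, int]:
    """Sparse document vector: only terms that actually occur get an entry."""
    return dict(Counter(text.lower().split()))

vocabulary_size = 50_000  # hypothetical number of terms in the collection
vec = bag_of_words("Nova galaxy nova heat nova")
print(vec)  # {'nova': 3, 'galaxy': 1, 'heat': 1}
print(f"{len(vec)} of {vocabulary_size} positions are non-zero")  # 3 of 50000 ...
```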

Vector Space Model • Documents are represented as vectors in term space – Terms are usually stems – Documents are represented by binary or weighted vectors of terms • Queries are represented the same way as documents • Query and document weights are based on the length and direction of their vectors • A vector distance measure between the query and documents is used to rank retrieved documents
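The slide leaves the distance measure open. A sketch using cosine similarity, one common choice (an assumption here, not fixed by the slide), over sparse term -> weight vectors; the documents, weights, and helper names are hypothetical:

```python
import math
from typing import Dict, List, Tuple

Vector = Dict[str, float]  # sparse vector: term -> weight

def cosine(q: Vector, d: Vector) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is all zeros)."""
    dot = sum(w * d.get(term, 0.0) for term, w in q.items())
    norm_q = math.sqrt(sum(w * w for w in q.values()))
    norm_d = math.sqrt(sum(w * w for w in d.values()))
    if norm_q == 0 or norm_d == 0:
        return 0.0
    return dot / (norm_q * norm_d)

def rank(query: Vector, docs: Dict[str, Vector]) -> List[Tuple[str, float]]:
    """Score every document against the query, best match first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical weighted document vectors
docs = {
    "astronomy": {"star": 4, "galaxy": 2},
    "movie_stars": {"star": 3, "film": 4},
    "mammals": {"diet": 5, "fur": 2},
}
query = {"star": 1, "galaxy": 1}  # the query is just another vector
for doc_id, score in rank(query, docs):
    print(f"{doc_id}: {score:.3f}")
# astronomy: 0.949, movie_stars: 0.424, mammals: 0.000
```

Cosine ignores vector length and compares only direction, so a long document is not favoured merely for repeating terms.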

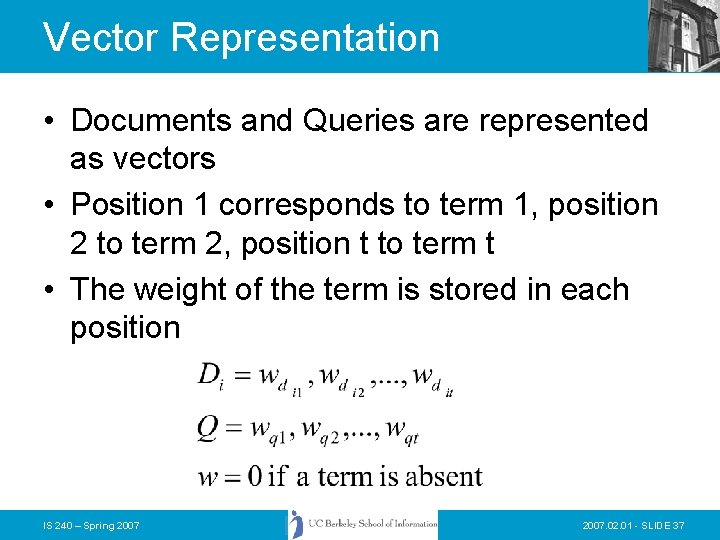

Vector Representation • Documents and Queries are represented as vectors • Position 1 corresponds to term 1, position 2 to term 2, …, position t to term t • The weight of the term is stored in each position

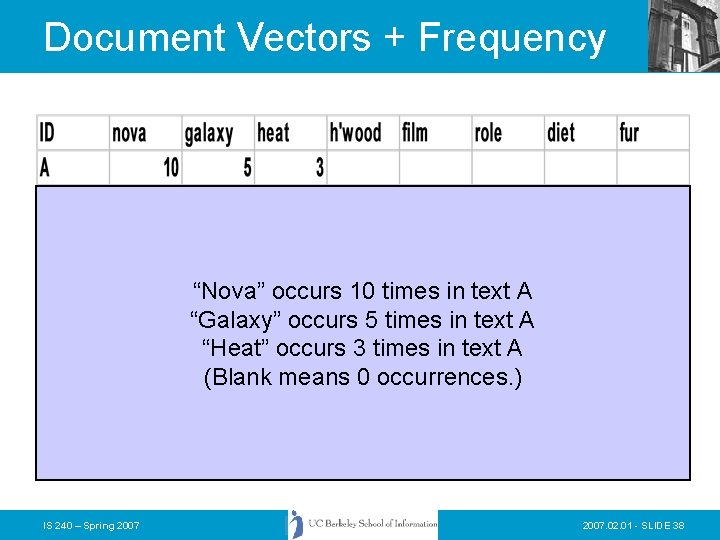

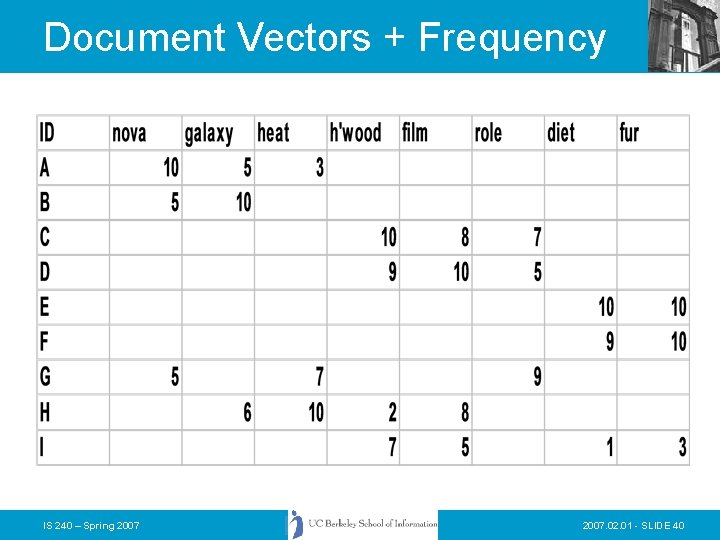

Document Vectors + Frequency • “Nova” occurs 10 times in text A • “Galaxy” occurs 5 times in text A • “Heat” occurs 3 times in text A • (Blank means 0 occurrences.)

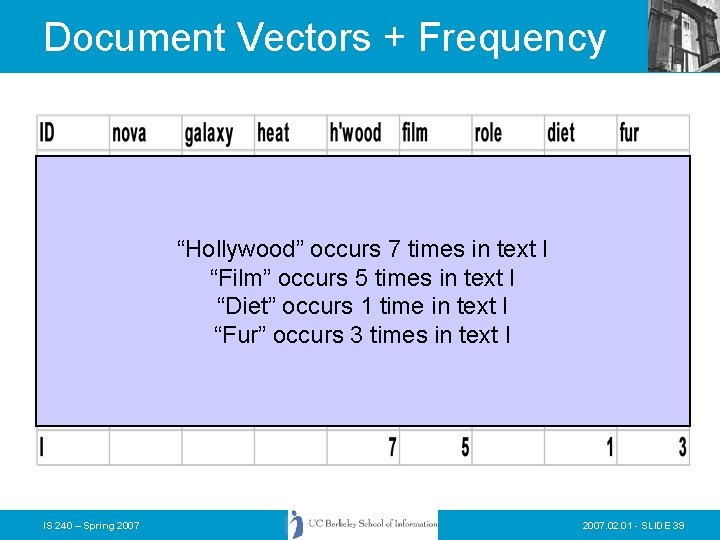

Document Vectors + Frequency • “Hollywood” occurs 7 times in text I • “Film” occurs 5 times in text I • “Diet” occurs 1 time in text I • “Fur” occurs 3 times in text I
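The two example documents can be laid out as dense vectors, one position per term. A sketch, assuming a vocabulary of just these seven terms, lowercased, in this order (`to_vector` is a hypothetical helper). Because the texts share no terms, their dot product is zero:

```python
from typing import Dict, List

# Assumed term order: position 0 holds term 1, position 1 holds term 2, ...
vocabulary = ["nova", "galaxy", "heat", "hollywood", "film", "diet", "fur"]

def to_vector(counts: Dict[str, int], vocab: List[str]) -> List[int]:
    """Dense vector: one slot per vocabulary term, 0 where the term is absent."""
    return [counts.get(term, 0) for term in vocab]

text_a = to_vector({"nova": 10, "galaxy": 5, "heat": 3}, vocabulary)
text_i = to_vector({"hollywood": 7, "film": 5, "diet": 1, "fur": 3}, vocabulary)
print(text_a)  # [10, 5, 3, 0, 0, 0, 0]
print(text_i)  # [0, 0, 0, 7, 5, 1, 3]
# No term is shared, so the dot product (the raw overlap) is zero
print(sum(a * b for a, b in zip(text_a, text_i)))  # 0
```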

Document Vectors + Frequency

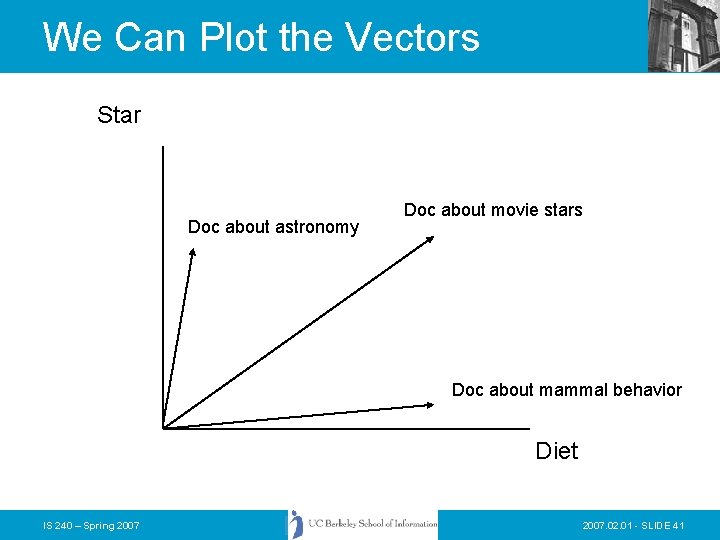

We Can Plot the Vectors (figure: axes “Star” and “Diet”, with a doc about astronomy, a doc about movie stars, and a doc about mammal behavior)

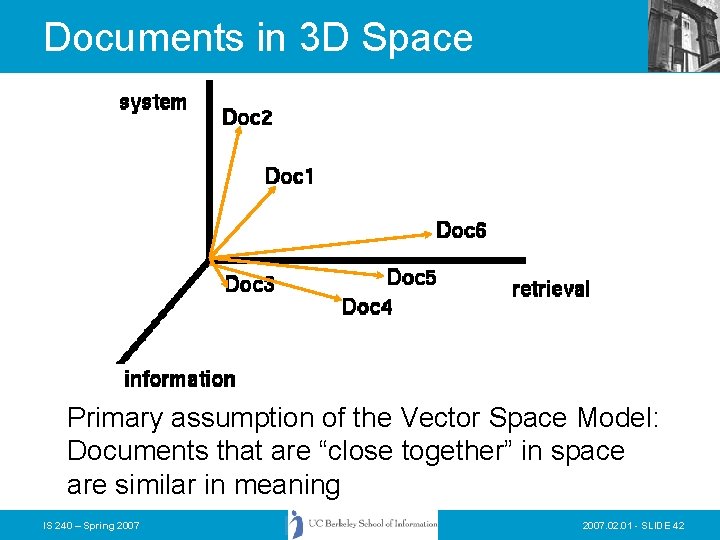

Documents in 3D Space • Primary assumption of the Vector Space Model: documents that are “close together” in space are similar in meaning

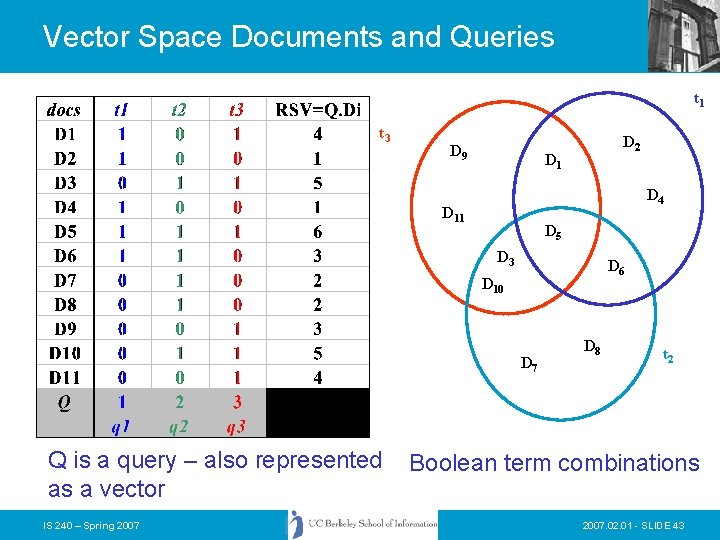

Vector Space Documents and Queries (figure: documents D1–D11 and a query Q plotted against term axes t1, t2, t3; Q is a query, also represented as a vector; regions labelled “Boolean term combinations”)

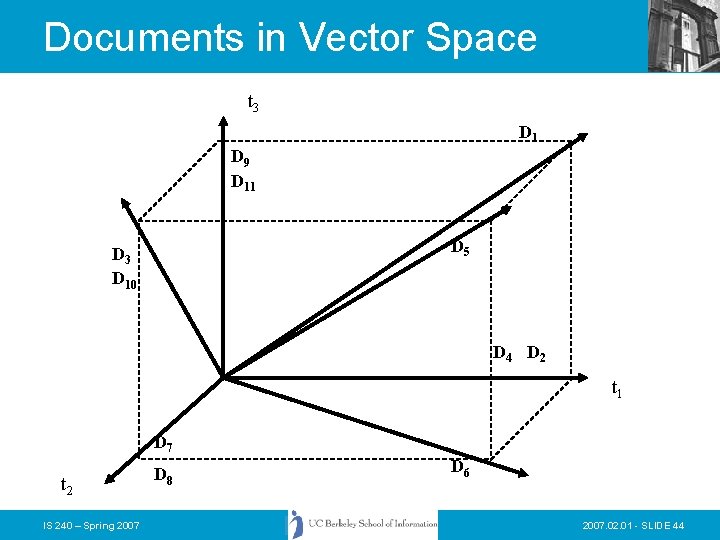

Documents in Vector Space (figure: documents D1–D11 plotted against term axes t1, t2, t3)

Document Space has High Dimensionality • What happens beyond 2 or 3 dimensions? • Similarity still has to do with how many tokens are shared in common. • More terms -> harder to understand which subsets of words are shared among similar documents. • We will look in detail at ranking methods • Approaches to handling high dimensionality: Clustering and LSI (later)


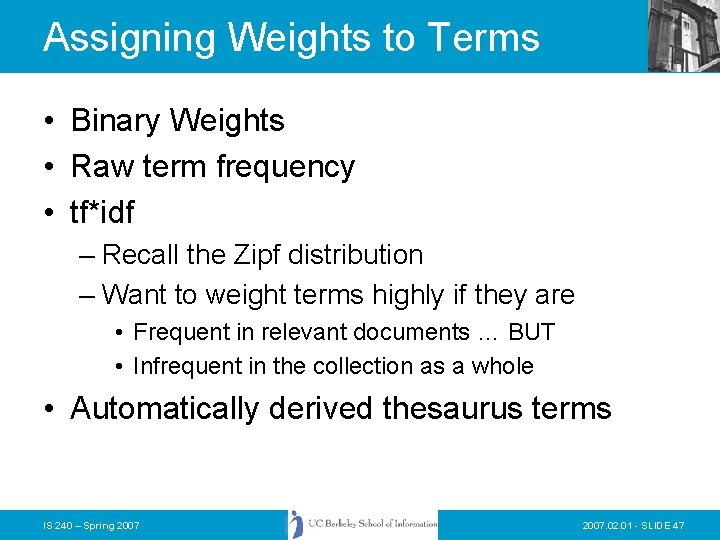

Assigning Weights to Terms • Binary Weights • Raw term frequency • tf*idf – Recall the Zipf distribution – Want to weight terms highly if they are • Frequent in relevant documents … BUT • Infrequent in the collection as a whole • Automatically derived thesaurus terms

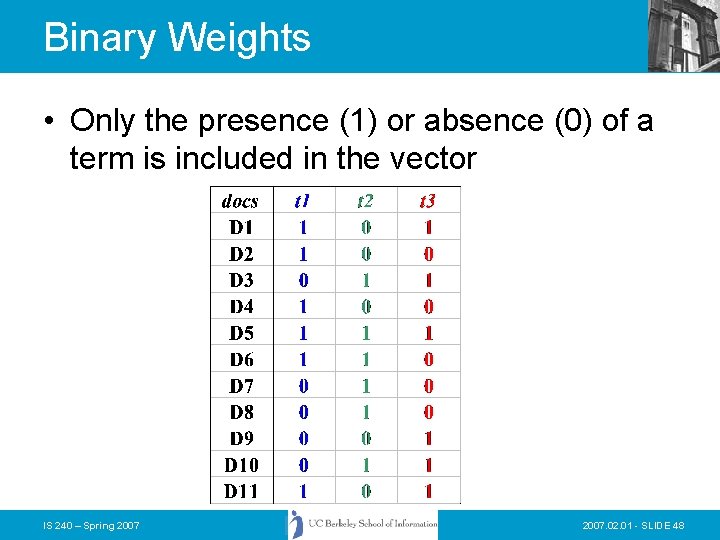

Binary Weights • Only the presence (1) or absence (0) of a term is included in the vector

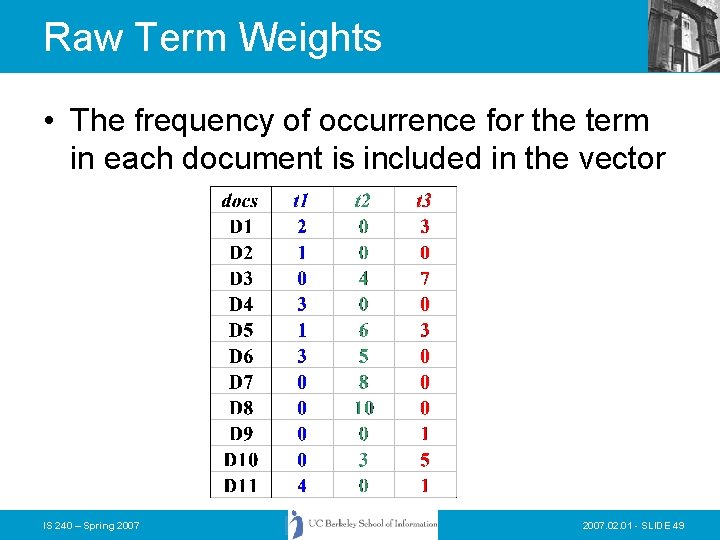

Raw Term Weights • The frequency of occurrence for the term in each document is included in the vector
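A sketch contrasting binary and raw term weights on the same document, assuming whitespace tokens and a fixed, hypothetical vocabulary order (both helper names are hypothetical):

```python
from collections import Counter
from typing import List

def binary_vector(tokens: List[str], vocab: List[str]) -> List[int]:
    """1 if the term occurs at all, else 0."""
    present = set(tokens)
    return [1 if term in present else 0 for term in vocab]

def raw_tf_vector(tokens: List[str], vocab: List[str]) -> List[int]:
    """Number of occurrences of each term."""
    counts = Counter(tokens)
    return [counts[term] for term in vocab]  # Counter yields 0 for missing terms

vocab = ["nova", "galaxy", "heat", "film"]
tokens = "nova galaxy nova heat nova galaxy".split()
print(binary_vector(tokens, vocab))  # [1, 1, 1, 0]
print(raw_tf_vector(tokens, vocab))  # [3, 2, 1, 0]
```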

Assigning Weights • tf*idf measure: – Term frequency (tf) – Inverse document frequency (idf) • A way to deal with some of the problems of the Zipf distribution • Goal: Assign a tf*idf weight to each term in each document

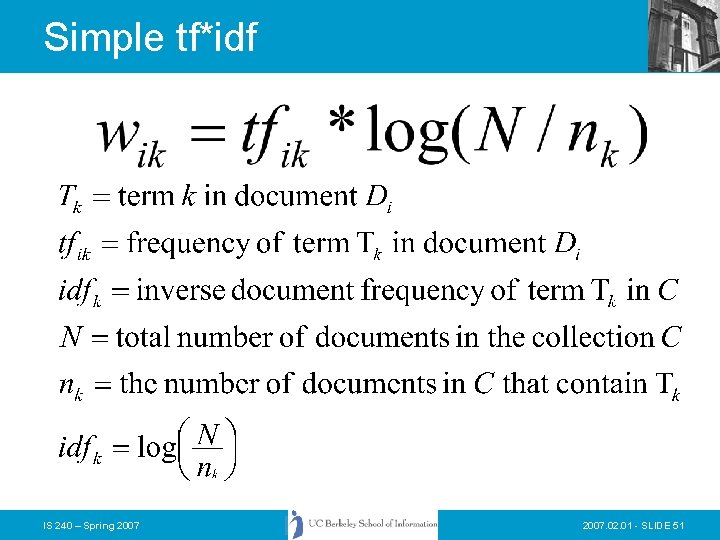

Simple tf*idf
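The formula for this slide is in a figure that did not survive extraction. One standard statement of simple tf*idf, given as the common textbook form rather than a transcription of the slide:

```latex
w_{ik} = tf_{ik} \times \log\!\left(\frac{N}{n_k}\right)
```

Here $w_{ik}$ is the weight of term $T_k$ in document $D_i$, $tf_{ik}$ is the frequency of $T_k$ in $D_i$, $N$ is the number of documents in the collection, and $n_k$ is the number of documents that contain $T_k$; the factor $\log(N/n_k)$ is the idf of $T_k$.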

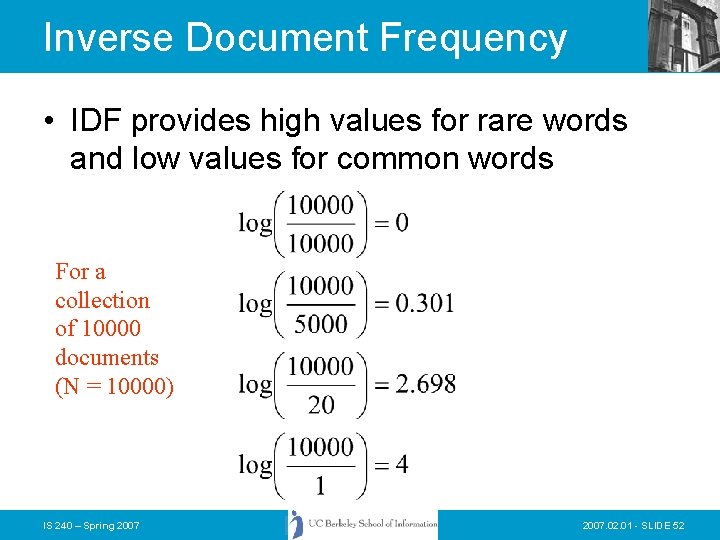

Inverse Document Frequency • IDF provides high values for rare words and low values for common words • For a collection of 10000 documents (N = 10000)
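A sketch of idf and tf*idf, assuming a base-10 logarithm (the base only rescales the weights) and N = 10000 as on the slide. The document frequencies chosen below are illustrative, not the slide's own table, and the helper names are hypothetical:

```python
import math

def idf(n_docs: int, doc_freq: int) -> float:
    """log10(N / n_k): 0 for a term in every document, larger for rarer terms."""
    return math.log10(n_docs / doc_freq)

def tf_idf(tf: int, n_docs: int, doc_freq: int) -> float:
    """Weight of a term occurring tf times in a document."""
    return tf * idf(n_docs, doc_freq)

N = 10_000
for n_k in (10_000, 5_000, 20, 1):
    print(n_k, round(idf(N, n_k), 3))
# 10000 0.0
# 5000 0.301
# 20 2.699
# 1 4.0
```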

Non-Boolean IR • Need to measure some similarity between the query and the document • The basic notion is that documents that are somehow similar to a query are likely to be relevant responses for that query • We will revisit this notion and see how the Language Modelling approach to IR has taken it to a new level

Non-Boolean? • To measure similarity we… – need to consider the characteristics of the document and the query – make the assumption that similarity of language use between the query and the document implies similarity of topic and hence potential relevance.

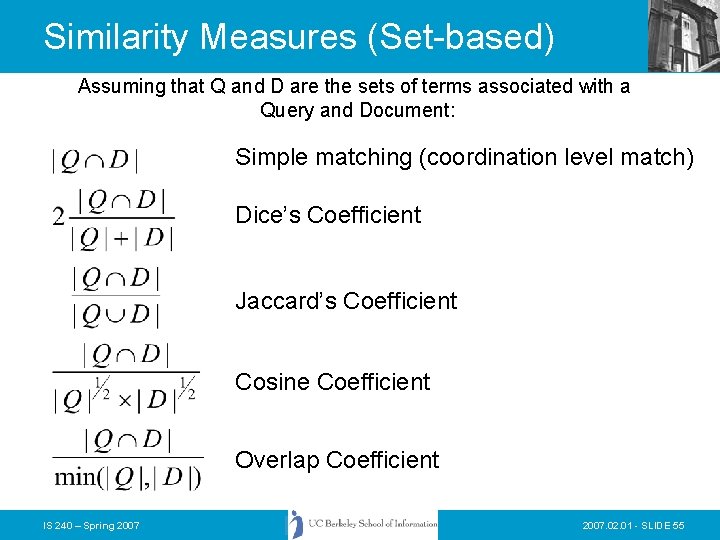

Similarity Measures (Set-based) • Assuming that Q and D are the sets of terms associated with a Query and Document: – Simple matching (coordination level match) – Dice’s Coefficient – Jaccard’s Coefficient – Cosine Coefficient – Overlap Coefficient
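The coefficient formulas are in a figure that did not survive extraction. A sketch of their standard set-based forms, assuming non-empty term sets; the function names and example sets are hypothetical:

```python
import math
from typing import Set

def simple_match(q: Set[str], d: Set[str]) -> int:
    return len(q & d)  # coordination level match: |Q ∩ D|

def dice(q: Set[str], d: Set[str]) -> float:
    return 2 * len(q & d) / (len(q) + len(d))

def jaccard(q: Set[str], d: Set[str]) -> float:
    return len(q & d) / len(q | d)

def cosine_set(q: Set[str], d: Set[str]) -> float:
    return len(q & d) / math.sqrt(len(q) * len(d))

def overlap(q: Set[str], d: Set[str]) -> float:
    return len(q & d) / min(len(q), len(d))

Q = {"fuzzy", "boolean", "retrieval"}
D = {"boolean", "retrieval", "model", "vector"}
print(simple_match(Q, D), dice(Q, D), jaccard(Q, D), overlap(Q, D))
# 2 0.5714285714285714 0.4 0.6666666666666666
```

All but simple matching normalize the overlap count by set size, so long documents do not win merely by containing many terms.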

What form should these take? • Each of the queries and documents might be considered as: – A set of terms (Boolean approach) • “index terms” • “words”, stems, etc. – Some other form?

Weighting schemes • We have seen something of – Binary – Raw term weights – TF*IDF • There are many other possibilities – IDF alone – Normalized term frequency – etc.
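Normalized term frequency has several variants. A sketch of one common variant, dividing each count by the largest count in the same document (the helper name is hypothetical):

```python
from collections import Counter
from typing import Dict, List

def max_normalized_tf(tokens: List[str]) -> Dict[str, float]:
    """Divide each raw count by the count of the document's most frequent term."""
    counts = Counter(tokens)
    if not counts:
        return {}
    peak = max(counts.values())
    return {term: count / peak for term, count in counts.items()}

print(max_normalized_tf("nova nova nova nova galaxy galaxy heat".split()))
# {'nova': 1.0, 'galaxy': 0.5, 'heat': 0.25}
```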

Next Week • More on Vector Space • Probabilistic Models and Retrieval
