Lecture 5: Verification Tools

- Slides: 23

Lecture 5 - Verification Tools

- Automation improves the efficiency and reliability of the verification process.
- Some tools, such as a simulator, are essential. Others automate tedious tasks and increase confidence in the outcome.
- It is not necessary to use all the tools.

EE 694 v-Verification-Lect 5

Lecture Overview

- Will look at the other tools used in verification
  - Third-party models
  - Hardware modelers
  - Waveform viewers
  - Code coverage tools
  - Verification languages
  - Revision control
  - Configuration management
  - Issue tracking
  - Metrics

Third-Party Models

- Many designs use off-the-shelf parts.
- To verify such a design, you must obtain a model of these parts.
- Often the model must come from a third party.
- Most third-party models are provided as compiled binary models.
- Why buy third-party models?
  - Engineering resources
  - Quality (especially in the area of system timing)

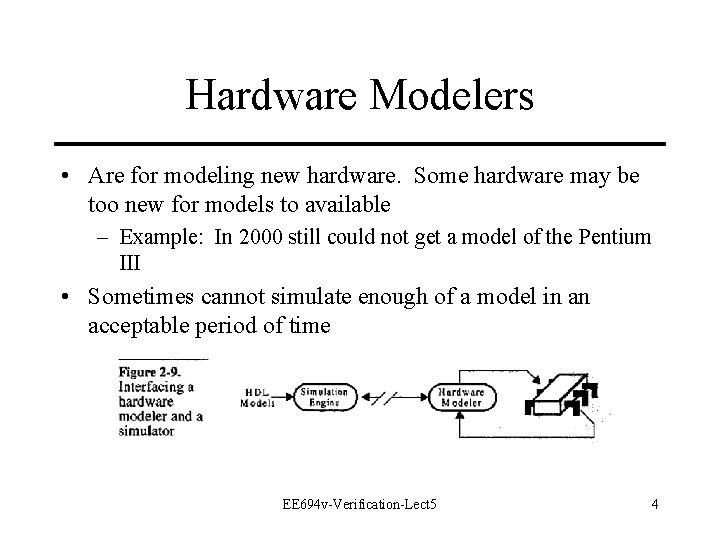

Hardware Modelers

- Are for modeling new hardware. Some hardware may be too new for models to be available.
  - Example: in 2000 a model of the Pentium III was still not available.
- Sometimes enough of a model cannot be simulated in an acceptable period of time.

Hardware Modelers (cont.)

- Hardware modeler features
  - A small box, connected to the network, that contains a real copy of the physical chip
  - The rest of the HDL model provides inputs to the chip and returns the chip's outputs to your model

Waveform Viewers

- Let you view transitions on multiple signals over time
- The most common of the verification tools
- Waveforms can be saved in a trace file
- In verification
  - Need to know the expected output and every point where the simulated output is not as expected
    - Both the signal value and the signal timing
  - Use the testbench to compare the model output with the expected output
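The comparison a testbench performs can be sketched in a few lines of Python, used here only for illustration since a real testbench would be written in an HDL or a verification language. The trace format (a list of `(time, value)` transitions) and the names `value_at` and `compare_traces` are assumptions made for this sketch, not part of any simulator.

```python
from bisect import bisect_right

def value_at(trace, t):
    """Return the signal value at time t, given (time, value) transitions sorted by time."""
    times = [time for time, _ in trace]
    i = bisect_right(times, t) - 1
    return trace[i][1] if i >= 0 else None  # None: signal not driven yet

def compare_traces(expected, actual):
    """Return (time, expected, actual) for every transition time where the traces differ."""
    check_times = sorted({t for t, _ in expected} | {t for t, _ in actual})
    mismatches = []
    for t in check_times:
        e, a = value_at(expected, t), value_at(actual, t)
        if e != a:
            mismatches.append((t, e, a))
    return mismatches

expected = [(0, 0), (10, 1), (20, 0)]
late = [(0, 0), (12, 1), (20, 0)]    # correct values, rising edge 2 time units late
wrong = [(0, 0), (10, 1), (20, 1)]   # correct timing, wrong final value

print(compare_traces(expected, expected))  # []
print(compare_traces(expected, late))      # [(10, 1, 0)]
print(compare_traces(expected, wrong))     # [(20, 0, 1)]
```

Checking at the transition times of both traces is what lets the sketch flag a timing error (the late edge) as well as a value error.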

Code Coverage

- A technique that has been used in software engineering for years.
- By covering all statements adequately, the chance of a false positive (a bad design that tests good) is reduced.
- You can never be 100% certain that the design under verification is correct. Code coverage increases confidence.
- Some tools use the file I/O features of the language; others rely on special features built into the simulator to report coverage statistics.

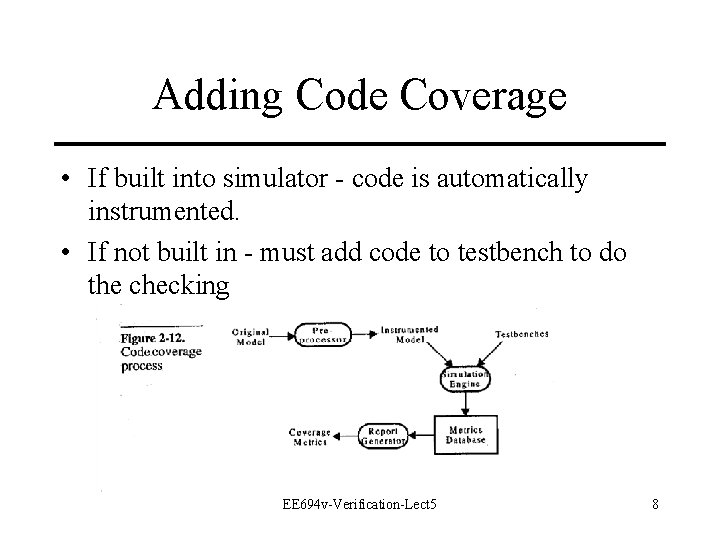

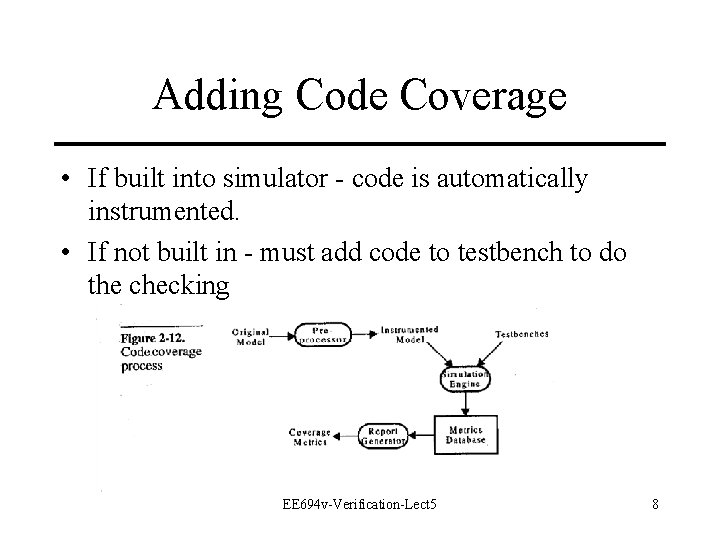

Adding Code Coverage

- If built into the simulator, the code is instrumented automatically.
- If not built in, you must add code to the testbench to do the checking.
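When coverage is not built in, the instrumentation has to be added by hand. The following is a minimal Python sketch of that idea, not how any particular simulator does it: the toy `counter_model`, the block labels in `ALL_BLOCKS`, and the `hits` counter are all hypothetical names introduced for illustration.

```python
from collections import Counter

hits = Counter()  # block label -> number of times the block executed

ALL_BLOCKS = {"reset", "load", "count", "hold"}  # hypothetical block labels

def counter_model(state, reset, load, enable, data):
    """Toy behavioral model of a 4-bit loadable counter, instrumented by hand."""
    if reset:
        hits["reset"] += 1
        return 0
    if load:
        hits["load"] += 1
        return data
    if enable:
        hits["count"] += 1
        return (state + 1) % 16
    hits["hold"] += 1
    return state

# A small testbench that never asserts `load`.
state = counter_model(0, reset=True, load=False, enable=False, data=0)
for _ in range(3):
    state = counter_model(state, reset=False, load=False, enable=True, data=0)
state = counter_model(state, reset=False, load=False, enable=False, data=0)

missed = sorted(ALL_BLOCKS - set(hits))
coverage = 100.0 * (len(ALL_BLOCKS) - len(missed)) / len(ALL_BLOCKS)
print(dict(hits))  # {'reset': 1, 'count': 3, 'hold': 1}
print(f"block coverage: {coverage:.0f}%, never executed: {missed}")
```

The report shows both the execution count per block and the block that was never exercised, which is the question the coverage metric is meant to raise.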

Code Coverage

- The objective is to determine whether you have overlooked exercising some code in the model
  - If so, you must also ask why that code is present
- Coverage metrics can be generated after running a testbench
- Metrics measure coverage of
  - statements
  - possible paths through the code
  - expressions

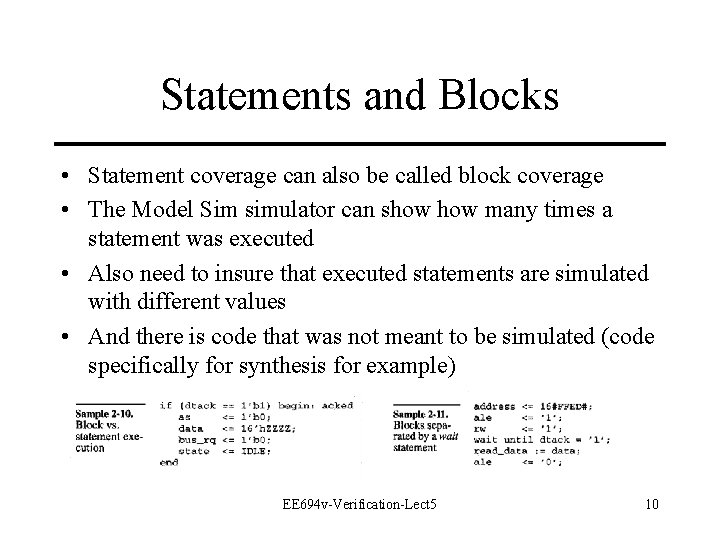

Statements and Blocks

- Statement coverage can also be called block coverage
- The ModelSim simulator can show how many times a statement was executed
- Also need to ensure that executed statements are simulated with different values
- Some code is not meant to be simulated (code written specifically for synthesis, for example)

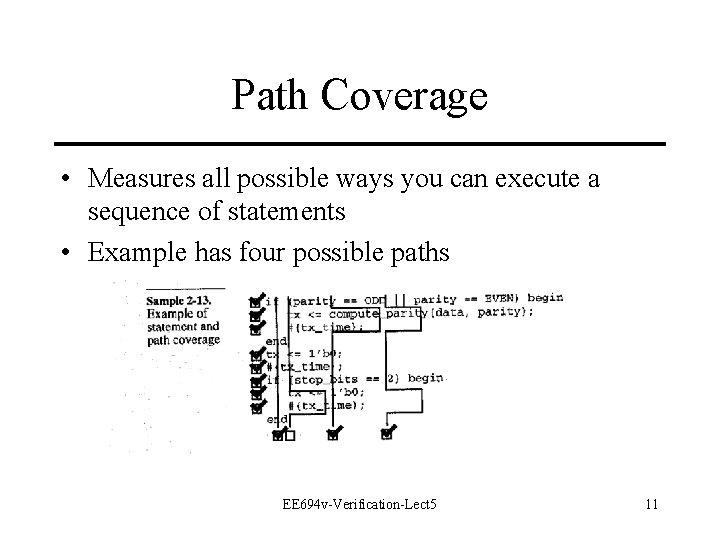

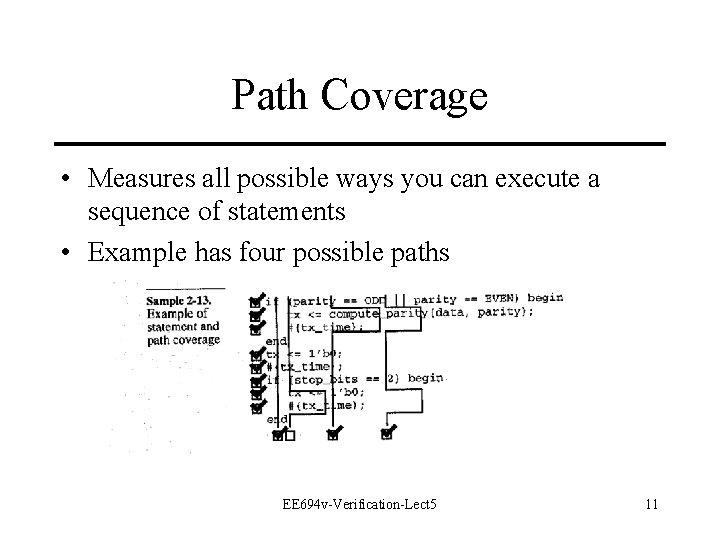

Path Coverage

- Measures all possible ways you can execute a sequence of statements
- Example has four possible paths

Path Coverage Goal

- The goal is to take all possible paths through the code
- It is possible to have 100% statement coverage but less than 100% path coverage
- The number of possible paths can be very large, so keep the number of paths as small as possible
- Obtaining 100% path coverage for a model of even moderate complexity is very difficult
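The gap between statement coverage and path coverage can be shown with a small Python sketch. The `model` function, with two independent decisions in sequence, and the statement labels `S1` to `S4` are made up for illustration; they are not the example from the slide figure.

```python
from itertools import product

def model(a, b, trace):
    """Two independent decisions in sequence; `trace` records each statement executed."""
    if a:
        trace.append("S1")
    else:
        trace.append("S2")
    if b:
        trace.append("S3")
    else:
        trace.append("S4")

def run(tests):
    """Run the model on each (a, b) test and collect the statements and paths covered."""
    statements, paths = set(), set()
    for a, b in tests:
        trace = []
        model(a, b, trace)
        statements.update(trace)
        paths.add(tuple(trace))
    return statements, paths

all_statements, all_paths = run(product([False, True], repeat=2))  # exhaustive: 4 paths
statements, paths = run([(True, True), (False, False)])            # only two tests

print(f"statement coverage: {100 * len(statements) // len(all_statements)}%")  # 100%
print(f"path coverage: {100 * len(paths) // len(all_paths)}%")                 # 50%
```

Two tests are enough to execute every statement, yet they take only two of the four paths. Each additional independent decision in sequence doubles the number of paths, which is why the count grows so quickly.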

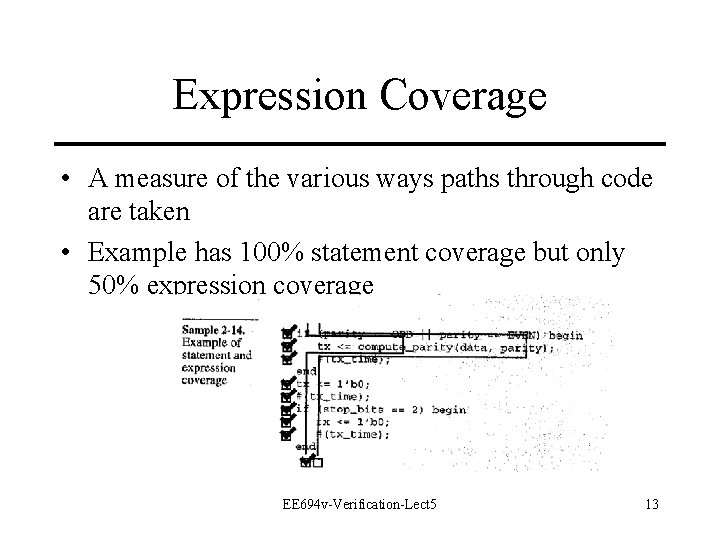

Expression Coverage

- Measures the various ways in which paths through the code are taken, for example which term of a condition caused a branch
- Example has 100% statement coverage but only 50% expression coverage
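A situation like this can be reproduced in a short Python sketch. The decision `a or b` and the scoring rule (a term counts as covered once it alone made the decision true) are simplifying assumptions for illustration; coverage tools define expression coverage in their own, more detailed ways.

```python
def run(tests):
    """Exercise the decision `a or b` and record branch and term coverage."""
    branches, responsible = set(), set()
    for a, b in tests:
        branches.add("then" if (a or b) else "else")
        if a and not b:
            responsible.add("a")  # `a` alone made the decision true
        if b and not a:
            responsible.add("b")  # `b` alone made the decision true
    return branches, responsible

branches, responsible = run([(True, False), (False, False)])

statement_cov = 100 * len(branches) // 2      # two branches: then, else
expression_cov = 100 * len(responsible) // 2  # two terms: a, b

print(f"statement coverage: {statement_cov}%")    # 100%
print(f"expression coverage: {expression_cov}%")  # 50%
```

Both branches execute, so every statement is covered, but the tests never show that `b` on its own can trigger the branch.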

100% Code Coverage

- What do 100% path and 100% expression coverage mean?
  - Not much! They only indicate how thoroughly the verification suite exercises the code, not the quality of the verification suite.
  - They say nothing about the correctness of the code.
- Coverage results can help identify corner cases not exercised
- Coverage is an additional indicator of the completeness of the job
  - A code coverage value can indicate that the job is not complete

Verification Languages

- Verilog and VHDL were designed as design languages
- Verification languages are designed for verification
  - e/Specman from Verisity
  - VERA from Synopsys
  - RAVE from Chronology
- Even with a verification language you still need to
  - plan the verification
  - design the verification strategy and the verification architecture
  - create stimulus
  - determine the expected response
  - compare the actual response with the expected response

Revision Control

- Need to ensure that the model verified is the model used for implementation
- Managing an HDL-based hardware project is similar to managing a software project
- Requires a source control management system
- Such systems keep the latest version of a file and a history of previous versions, along with the changes made in each version

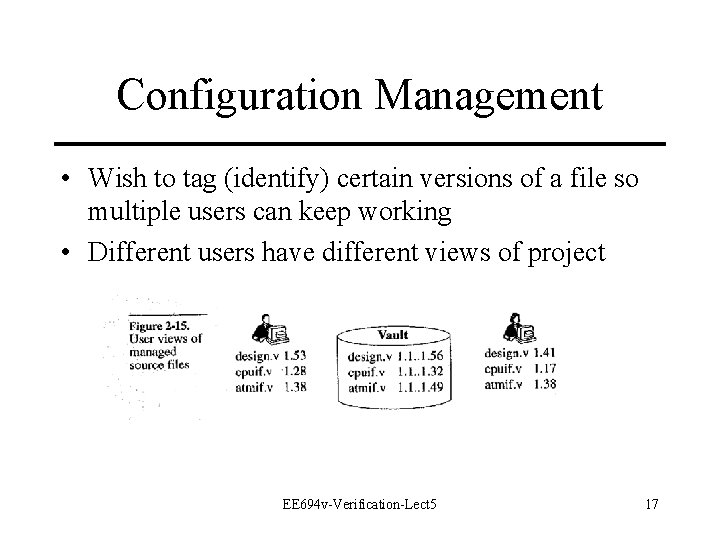

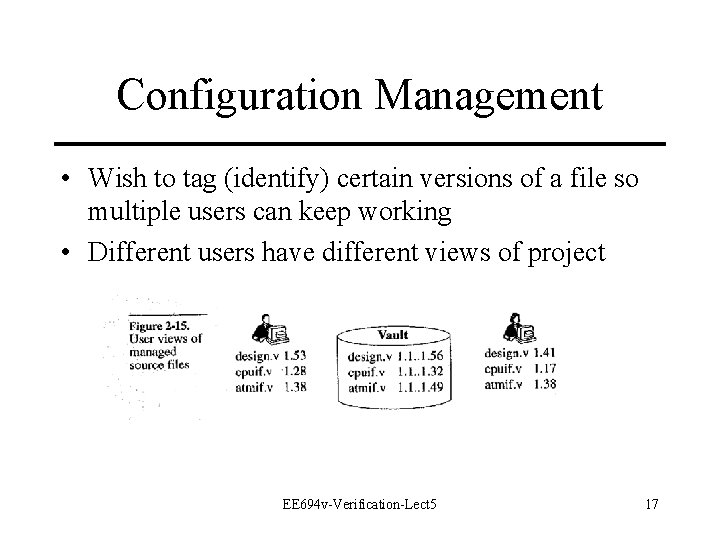

Configuration Management

- Tag (identify) certain versions of a file so that multiple users can keep working
- Different users have different views of the project

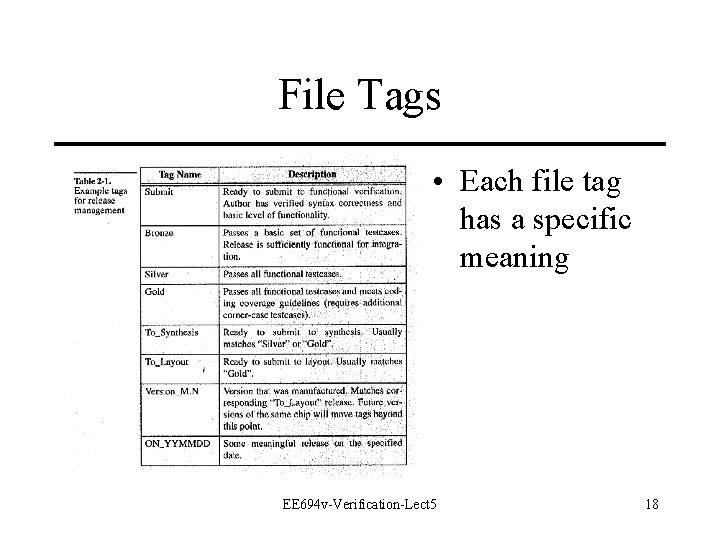

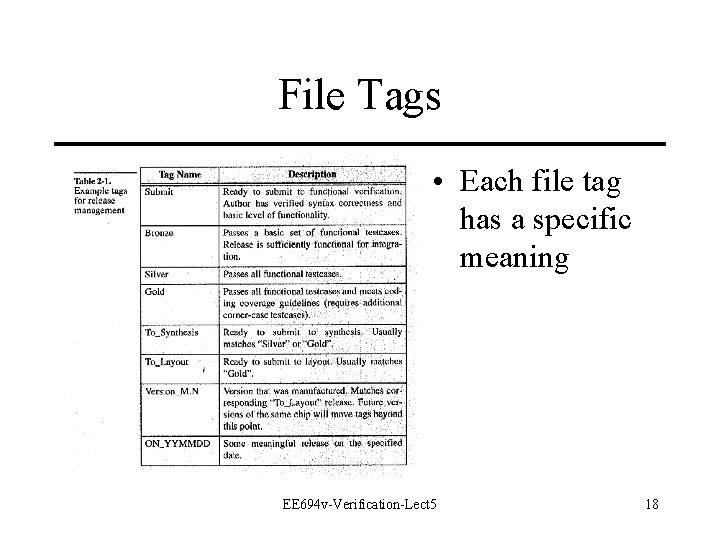

File Tags

- Each file tag has a specific meaning
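One way to picture tags and views is as plain lookup tables. The Python sketch below is an assumption-laden illustration, not the data model of any real revision control tool: the file names, revision numbers, the `verified` tag, and the `view` function are all invented.

```python
# Revision history per file, oldest first (file names and revisions are made up).
history = {
    "alu.vhd": ["1.1", "1.2", "1.3"],
    "alu_tb.vhd": ["1.1", "1.2"],
}

# A tag names one revision of each file that belongs together.
tags = {
    "verified": {"alu.vhd": "1.2", "alu_tb.vhd": "1.1"},
}

def view(tag=None):
    """Return {file: revision} for a tag, or the latest revision of every file if no tag is given."""
    if tag is None:
        return {name: revisions[-1] for name, revisions in history.items()}
    return dict(tags[tag])

print(view())            # {'alu.vhd': '1.3', 'alu_tb.vhd': '1.2'}
print(view("verified"))  # {'alu.vhd': '1.2', 'alu_tb.vhd': '1.1'}
```

A designer working on new changes would use the untagged view, while someone implementing the design would use the `verified` view, so both can keep working on the same files.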

Issue Tracking

- It is normal and expected to find functional irregularities in complex systems
- Worry if you don't! Bugs will be found!
- An issue is anything that can affect the functionality of the design
  - Bugs found during execution of the testbench
  - Ambiguities or incompleteness in the specifications
  - A new and relevant testcase
  - Errors found at any stage
- An issue must be tracked if a bad design could be manufactured were it not tracked

Issue Tracking Systems

- The Grapevine
  - Casual conversation between members of a design team in which issues are discussed
  - No one has clear responsibility for the solution
  - System does not maintain a history
- The Post-it System
  - The yellow stickies are used to post issues
  - Ownership of issues is tenuous at best
  - No ability to prioritize issues
  - System does not maintain a history

Issue Tracking Systems (cont.)

- The Procedural System
  - Issues are formally reported
  - Outstanding issues are reviewed and resolved during team meetings
  - This system consumes a lot of meeting time
- Computerized Systems
  - Issues are seen through to resolution
  - Can send periodic reminders until resolved
  - History of the action(s) taken to resolve an issue is archived
  - Problem is that these systems can require a significant effort to use

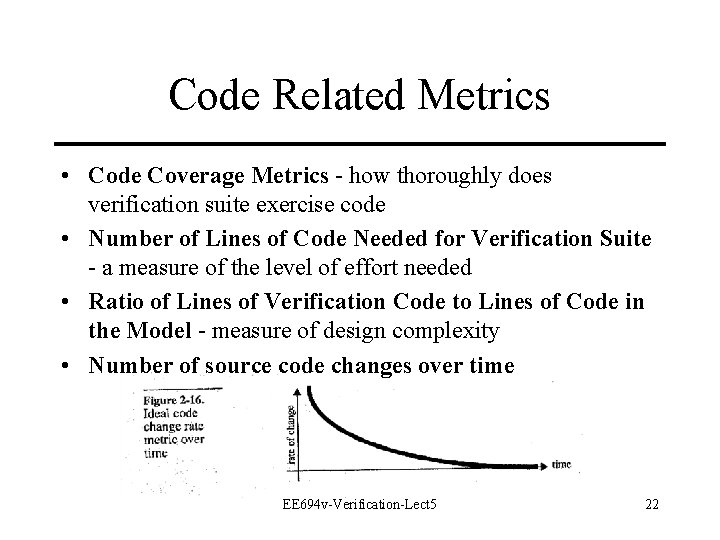

Code Related Metrics

- Code coverage metrics: how thoroughly the verification suite exercises the code
- Number of lines of code needed for the verification suite: a measure of the level of effort needed
- Ratio of lines of verification code to lines of code in the model: a measure of design complexity
- Number of source code changes over time
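The line-count metrics are simple to compute. The Python sketch below assumes that only non-blank, non-comment lines count and that comments start with the VHDL `--` prefix; the tiny `and2` model and its testbench are invented solely to have something to count.

```python
def count_code_lines(source, comment_prefix="--"):
    """Count lines that are neither blank nor pure comments."""
    return sum(
        1
        for line in source.splitlines()
        if line.strip() and not line.strip().startswith(comment_prefix)
    )

model_src = """\
-- 2-input AND gate
entity and2 is
  port (a, b : in bit; y : out bit);
end entity;
architecture rtl of and2 is
begin
  y <= a and b;
end architecture;
"""

tb_src = """\
-- testbench for and2
entity and2_tb is
end entity;

architecture test of and2_tb is
  signal a, b, y : bit;
begin
  dut : entity work.and2 port map (a => a, b => b, y => y);
  stimulus : process
  begin
    a <= '0'; b <= '0'; wait for 10 ns;
    assert y = '0' report "0 and 0 failed";
    a <= '1'; b <= '1'; wait for 10 ns;
    assert y = '1' report "1 and 1 failed";
    wait;
  end process;
end architecture;
"""

model_lines = count_code_lines(model_src)
tb_lines = count_code_lines(tb_src)
print(f"model: {model_lines} lines, verification: {tb_lines} lines")
print(f"verification-to-model ratio: {tb_lines / model_lines:.2f}")
```

Even for this trivial gate the verification code is about twice the size of the model, and the testbench shown checks only two of the four input combinations.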

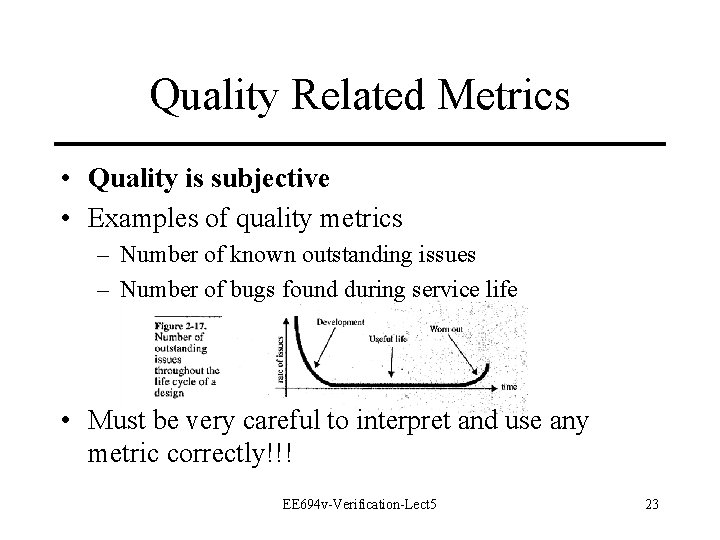

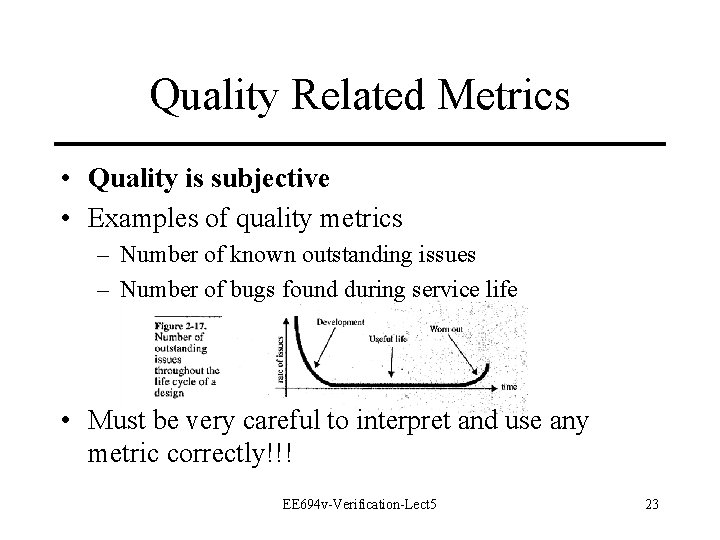

Quality Related Metrics

- Quality is subjective
- Examples of quality metrics
  - Number of known outstanding issues
  - Number of bugs found during service life
- Must be very careful to interpret and use any metric correctly!