Lecture 5: Memory Performance

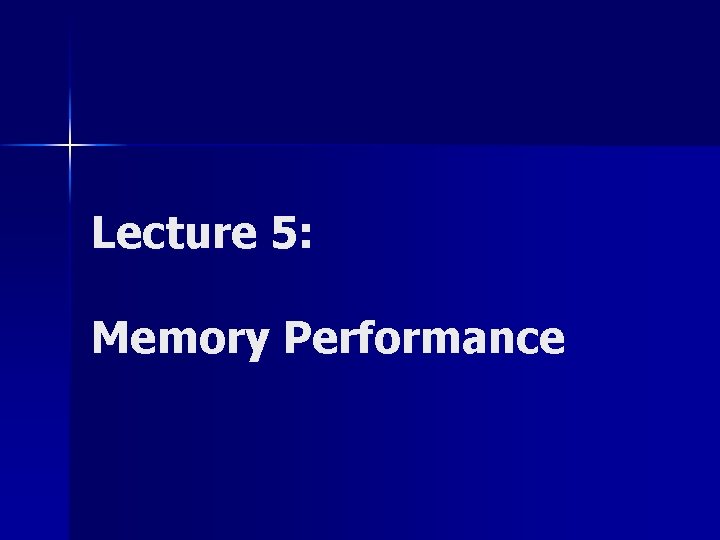

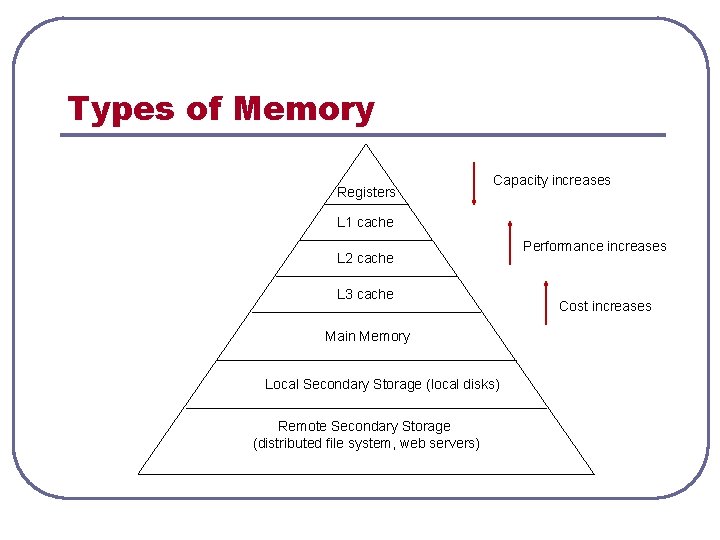

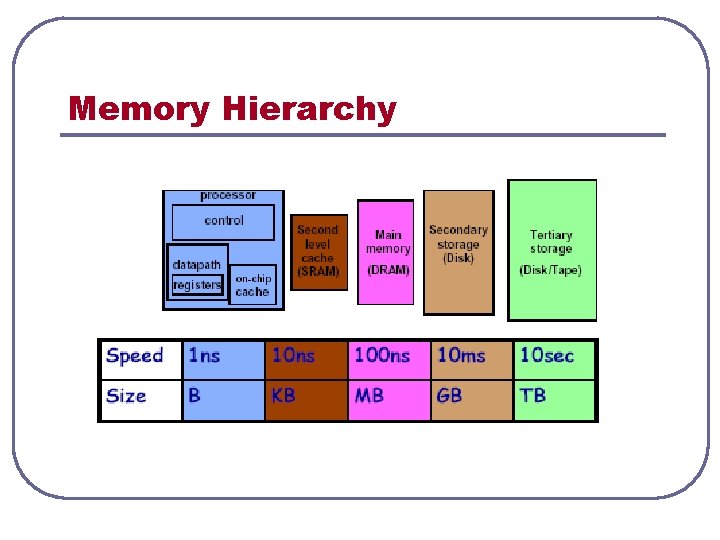

Types of Memory

- Registers
- L1 cache
- L2 cache
- L3 cache
- Main memory
- Local secondary storage (local disks)
- Remote secondary storage (distributed file systems, web servers)

Moving down the list, capacity increases; moving up, performance and cost (per byte) increase.

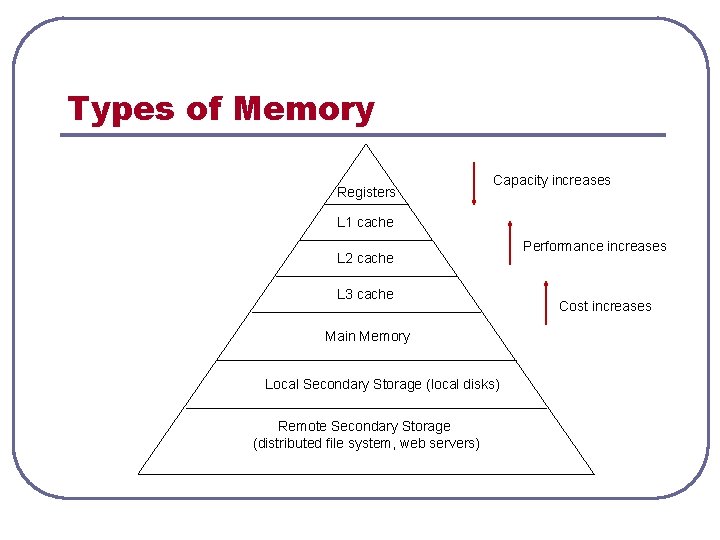

Memory Hierarchy

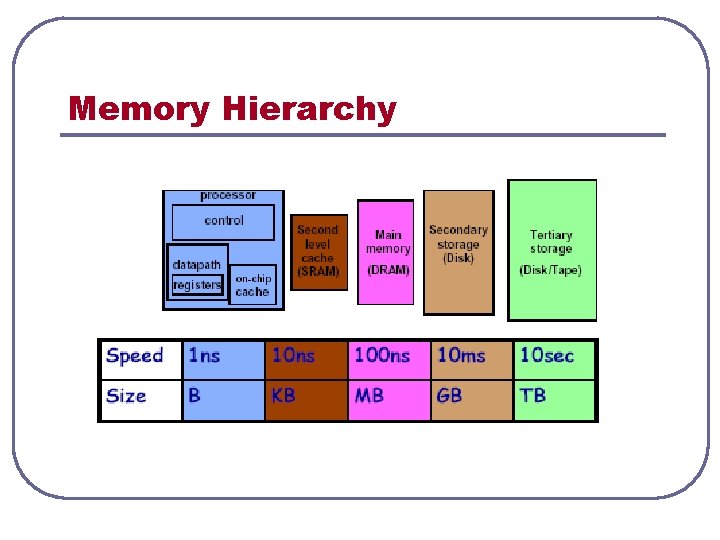

Random Access Memory (RAM)

- DRAM (Dynamic RAM)
  - Must be refreshed periodically
  - 1 transistor per bit
  - Unavailable while it is being refreshed
  - Slower, less expensive
  - Used for main memory
- SRAM (Static RAM)
  - Does not require periodic refreshes
  - 5-6 transistors per bit
  - Faster and more complex, more expensive
  - Used for cache memory

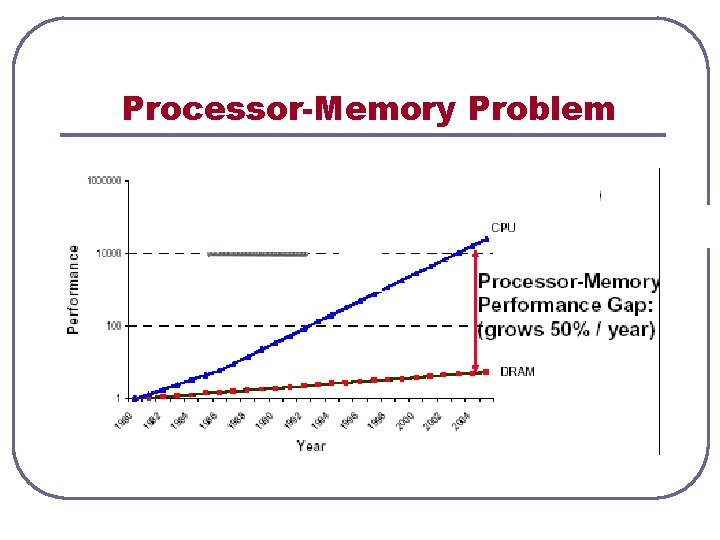

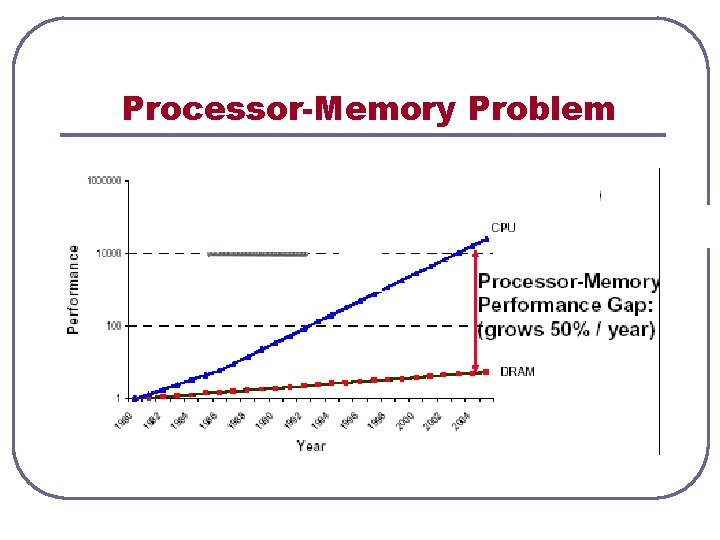

Processor-Memory Problem

Processor-Memory Problem

- Processors issue instructions roughly every nanosecond.
- DRAM can be accessed roughly every 100 nanoseconds.
- The gap is growing:
  - processors are getting faster by 60% per year
  - DRAM is getting faster by 7% per year
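Taking the two growth rates at face value and assuming they stay constant, the gap compounds by their ratio every year. A rough worked estimate:

$$
\frac{1.60}{1.07} \approx 1.50 \text{ per year}, \qquad
t_{2\times} = \frac{\ln 2}{\ln(1.60/1.07)} \approx \frac{0.693}{0.402} \approx 1.7 \text{ years}, \qquad
\left(\frac{1.60}{1.07}\right)^{10} \approx 56
$$

Under that assumption the processor-memory gap roughly doubles every 1.7 years and widens about 56-fold over a decade.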

Cache Memory

Cache mapping strategies:

- Direct mapping
- Associative mapping
- Set-associative mapping

Locality of Reference

The principle of locality is the tendency of a program to reference data items that are near other recently referenced data items, or that were recently referenced themselves. Programs with good locality tend to run faster than programs with poor locality.

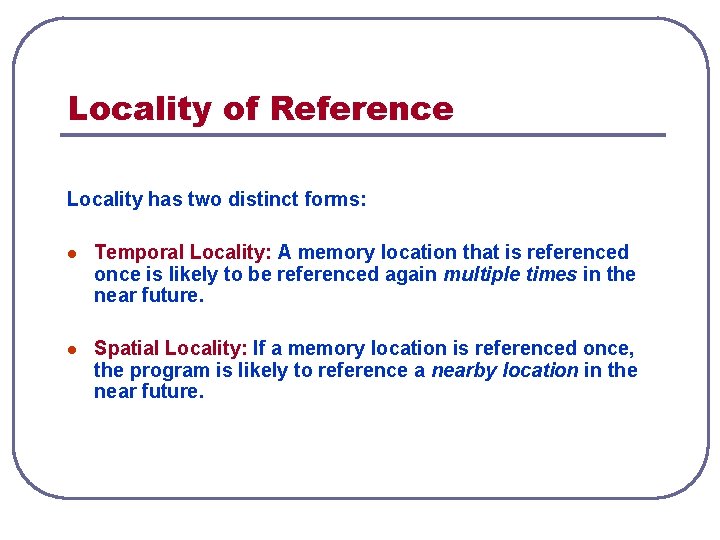

Locality of Reference

Locality has two distinct forms:

- Temporal locality: a memory location that is referenced once is likely to be referenced again multiple times in the near future.
- Spatial locality: if a memory location is referenced once, the program is likely to reference a nearby location in the near future.

![Locality of Reference Ex: Stride-1 reference pattern, sumvec](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-10.jpg)

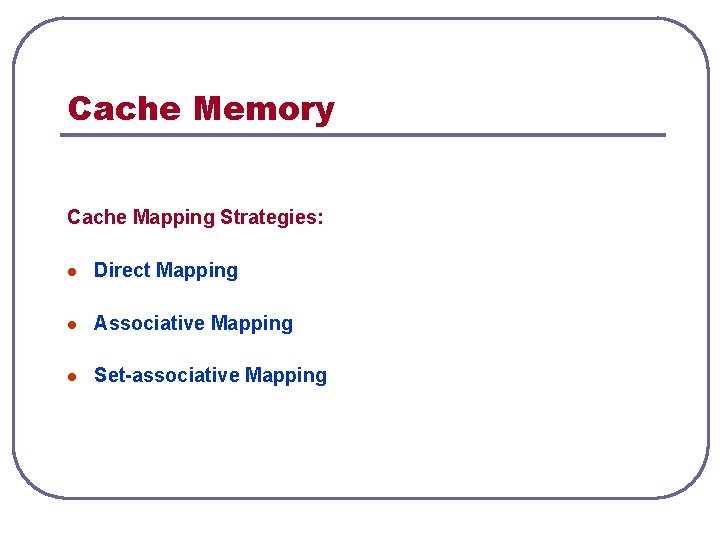

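Locality of Reference Ex: Stride-1 reference pattern

```c
int sumvec(int v[N])   /* N is a compile-time constant */
{
    int i, sum = 0;
    for (i = 0; i < N; i++)
        sum += v[i];
    return sum;
}
```

- Good spatial locality: v is read with stride 1.
- Poor temporal locality for v: each element is read only once.
- Only one of the two forms is good for v, but sum and i are reused on every iteration, so overall the function has good locality.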

![Locality of Reference Ex: Stride-1 reference pattern, sumarrayrows](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-11.jpg)

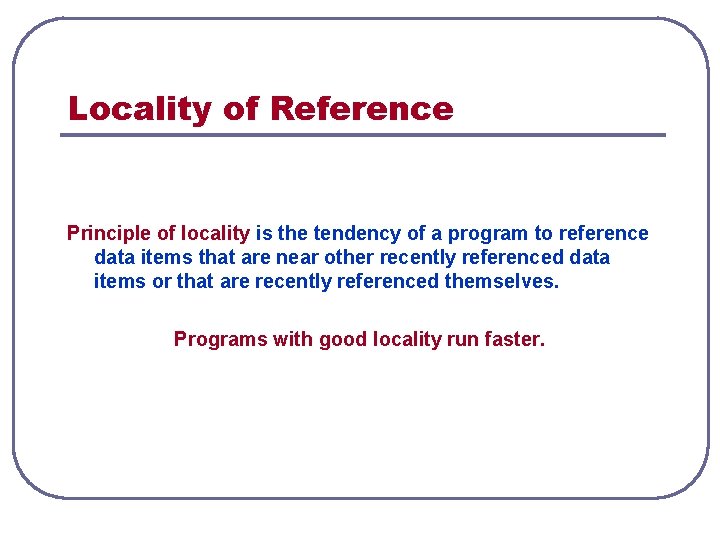

Locality of Reference Ex: Stride-1 reference pattern

```c
int sumarrayrows(int a[M][N])   /* M and N are compile-time constants */
{
    int i, j, sum = 0;
    for (i = 0; i < M; i++)
        for (j = 0; j < N; j++)
            sum += a[i][j];
    return sum;
}
```

- Row-major access: a[0][0], a[0][1], a[0][2], …, a[1][0], a[1][1], a[1][2], …
- Order of elements in memory: a[0][0], a[0][1], a[0][2], …, a[1][0], a[1][1], a[1][2], …
- Good spatial locality: the access order matches the memory order.

![Locality of Reference Ex: Stride-N reference pattern, sumarraycols](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-12.jpg)

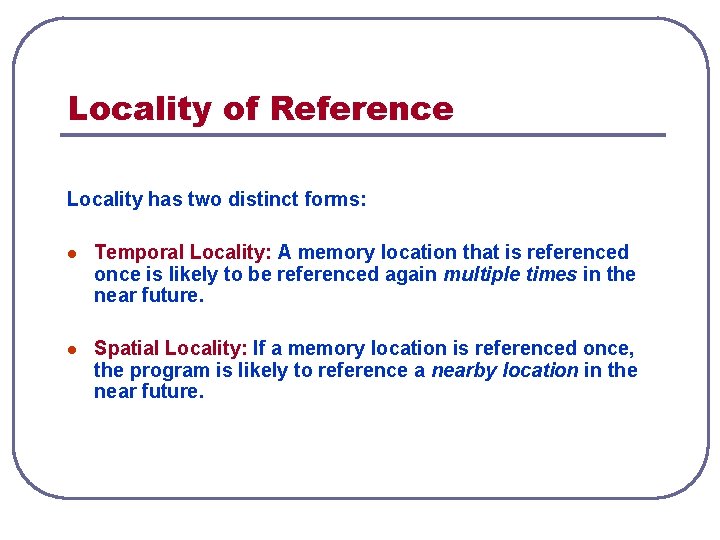

Locality of Reference Ex: Stride-N reference pattern

```c
int sumarraycols(int a[M][N])   /* M and N are compile-time constants */
{
    int i, j, sum = 0;
    for (j = 0; j < N; j++)
        for (i = 0; i < M; i++)
            sum += a[i][j];
    return sum;
}
```

- Column-major access: a[0][0], a[1][0], a[2][0], …, a[0][1], a[1][1], a[2][1], …
- Order of elements in memory: a[0][0], a[0][1], a[0][2], …, a[1][0], a[1][1], a[1][2], …
- Poor spatial locality: consecutive accesses are N elements apart.

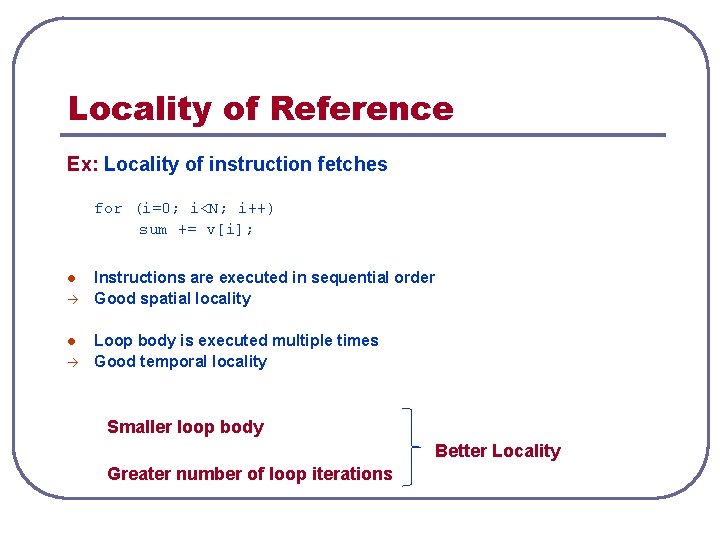

Locality of Reference Ex: Locality of instruction fetches

```c
for (i = 0; i < N; i++)
    sum += v[i];
```

- Instructions are executed in sequential order: good spatial locality.
- The loop body is executed multiple times: good temporal locality.
- A smaller loop body and a greater number of loop iterations give better locality.

![Locality of Reference Ex: Locality of references, clear1/clear2/clear3](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-14.jpg)

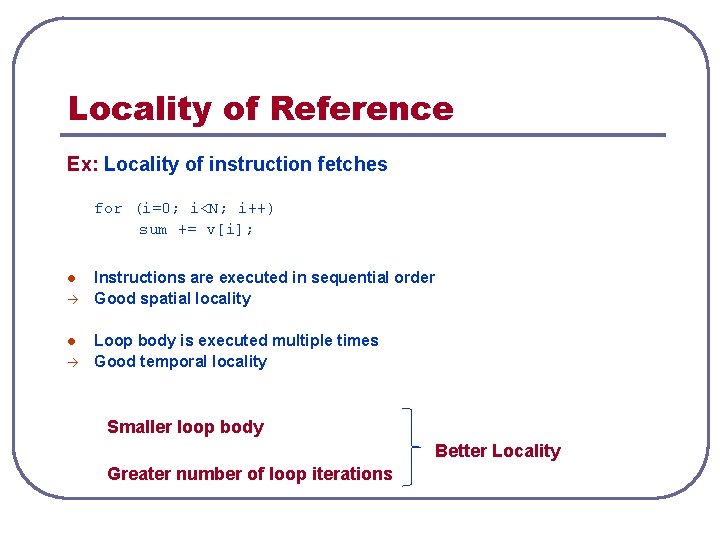

Locality of Reference Ex: Locality of references

```c
#define N 1000

typedef struct {
    int vel[3];
    int acc[3];
} point;

point p[N];

/* Good spatial locality: writes walk through memory in order */
void clear1(point *p, int n)
{
    int i, j;
    for (i = 0; i < n; i++) {
        for (j = 0; j < 3; j++)
            p[i].vel[j] = 0;
        for (j = 0; j < 3; j++)
            p[i].acc[j] = 0;
    }
}

/* Worse than clear1: alternates between vel and acc within each point */
void clear2(point *p, int n)
{
    int i, j;
    for (i = 0; i < n; i++)
        for (j = 0; j < 3; j++) {
            p[i].vel[j] = 0;
            p[i].acc[j] = 0;
        }
}

/* The worst: the inner loops jump a whole struct (sizeof(point)) per write */
void clear3(point *p, int n)
{
    int i, j;
    for (j = 0; j < 3; j++) {
        for (i = 0; i < n; i++)
            p[i].vel[j] = 0;
        for (i = 0; i < n; i++)
            p[i].acc[j] = 0;
    }
}
```

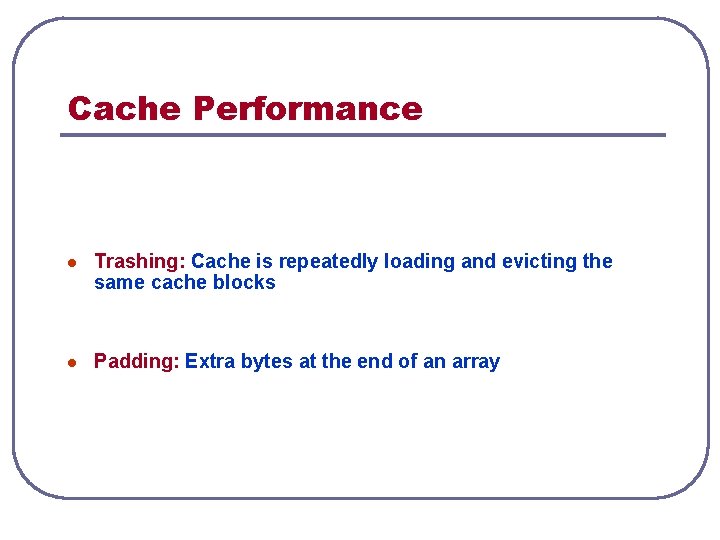

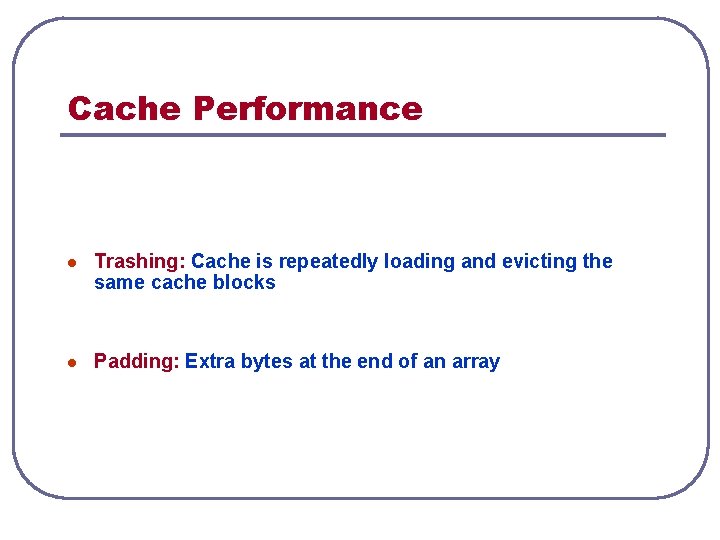

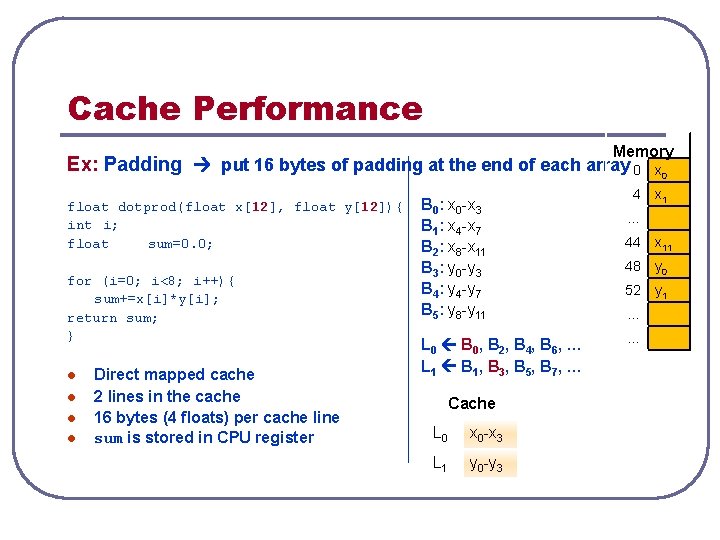

Cache Performance

- Thrashing: the cache repeatedly loads and evicts the same cache blocks.
- Padding: extra bytes placed at the end of an array, so that arrays used together map to different cache lines.

![Cache Performance Memory Ex: Cache thrashing, dotprod](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-16.jpg)

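Cache Performance Memory Ex: Cache thrashing

```c
float dotprod(float x[8], float y[8])
{
    int i;
    float sum = 0.0f;
    for (i = 0; i < 8; i++)
        sum += x[i] * y[i];
    return sum;
}
```

The code has good spatial locality, but how does it behave in this cache?

- Direct-mapped cache
- 2 lines in the cache
- 16 bytes (4 floats) per cache line
- sum is stored in a CPU register

Memory layout (byte address: element): 0: x[0], 4: x[1], …, 32: y[0], 36: y[1], …

- Blocks: B0 = x[0]-x[3], B1 = x[4]-x[7], B2 = y[0]-y[3], B3 = y[4]-y[7]
- Line L0 holds B0, B2, B4, B6, …; line L1 holds B1, B3, B5, B7, …

x[i] and y[i] always map to the same cache line, so L0 alternates between x[0]-x[3] and y[0]-y[3] (and L1 between x[4]-x[7] and y[4]-y[7]). Each load evicts the block the next load needs, and every reference misses.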

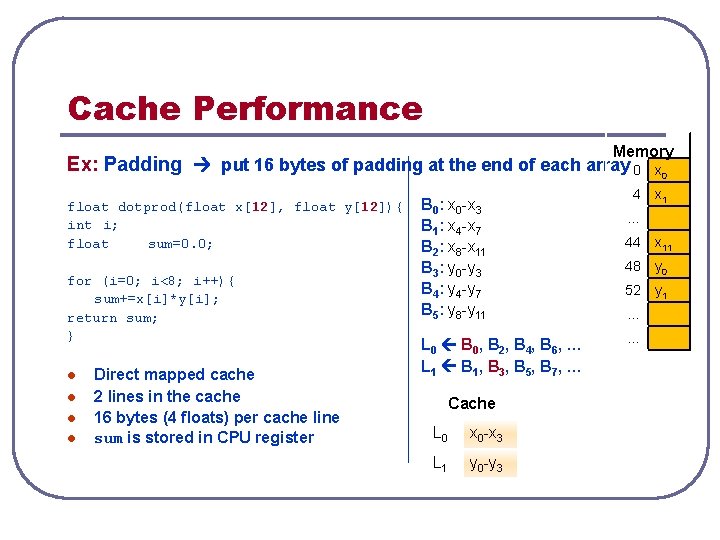

Cache Performance Memory Ex: Padding

Put 16 bytes of padding at the end of each array:

```c
float dotprod(float x[12], float y[12])   /* 8 elements used + 4 floats (16 bytes) of padding */
{
    int i;
    float sum = 0.0f;
    for (i = 0; i < 8; i++)
        sum += x[i] * y[i];
    return sum;
}
```

- Direct-mapped cache, 2 lines, 16 bytes (4 floats) per cache line; sum is stored in a CPU register
- Memory layout (byte address: element): 0: x[0], 4: x[1], …, 44: x[11], 48: y[0], 52: y[1], …
- Blocks: B0 = x[0]-x[3], B1 = x[4]-x[7], B2 = x[8]-x[11], B3 = y[0]-y[3], B4 = y[4]-y[7], B5 = y[8]-y[11]
- Line L0 holds B0, B2, B4, B6, …; line L1 holds B1, B3, B5, B7, …

Now x[0]-x[3] sits in L0 while y[0]-y[3] sits in L1, so x[i] and y[i] no longer evict each other.

Cache Performance

Writing cache-friendly code:

1. Focus on the inner loops, where most of the computations and memory accesses occur.
2. Maximize spatial locality by reading data sequentially with stride 1.
   - The stride-1 reference pattern is good because data is stored in caches as contiguous blocks.
3. Maximize temporal locality by using data as often as possible once it has been read from memory.
   - Repeated references to local variables (e.g., sum, i, j) are good because the compiler can keep them in the register file.

![Virtual Memory: strided access x[i*stride+j] with stride=516](https://slidetodoc.com/presentation_image_h/88122b204102c74d745e97fa87f7e6c7/image-19.jpg)

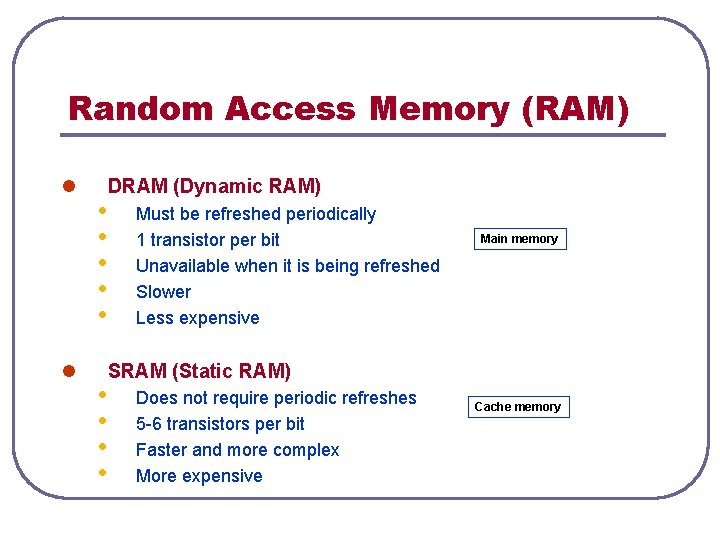

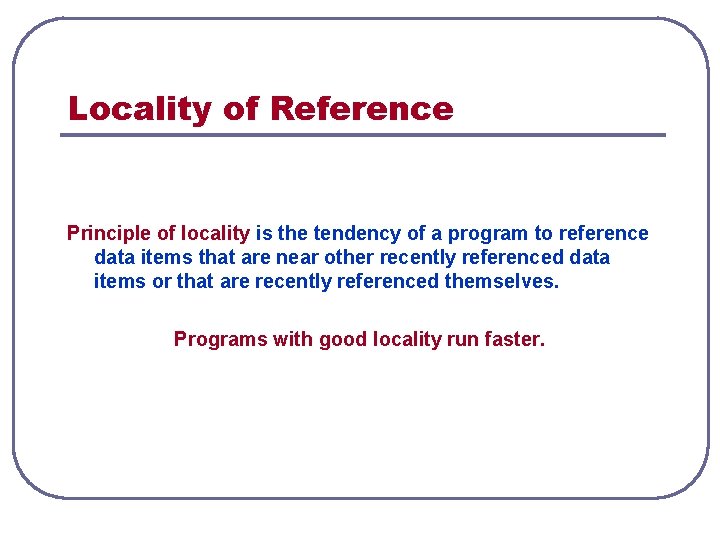

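Virtual Memory

```c
extern double x[];   /* large array of doubles */
int stride = 516;    /* in elements */

for (j = 0; j < jmax; j++)
    for (i = 0; i < imax; i++)
        x[i*stride + j] = 1.1 + x[i*stride + j];
```

- stride = 516 elements; byte stride = 516 × 8 = 4128 bytes
- Access order: x[0], x[516], x[1032], …, x[1], x[517], x[1033], …

| Page size | Problem size 0.5 MB (imax = jmax = 256) | Problem size 4 MB (imax = 256, jmax = 2048) |
|---|---|---|
| 4 KB = 512 × 8 bytes (x[0]-x[511] per page) | 163.4 ns | 224.5 ns |
| 16 KB = 2048 × 8 bytes (x[0]-x[2047] per page) | 4.9 ns | 81.9 ns |
| 4 MB | 4.9 ns | 23.9 ns |

Because the byte stride (4128 bytes) is larger than a 4 KB page, every access in the inner loop lands on a different page, so page translations (TLB entries) are rarely reused. Larger pages cover more of the accessed elements, and the times drop.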

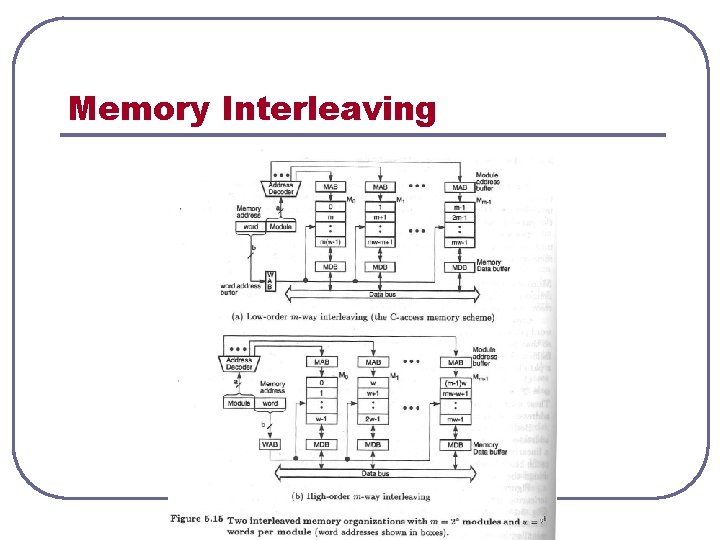

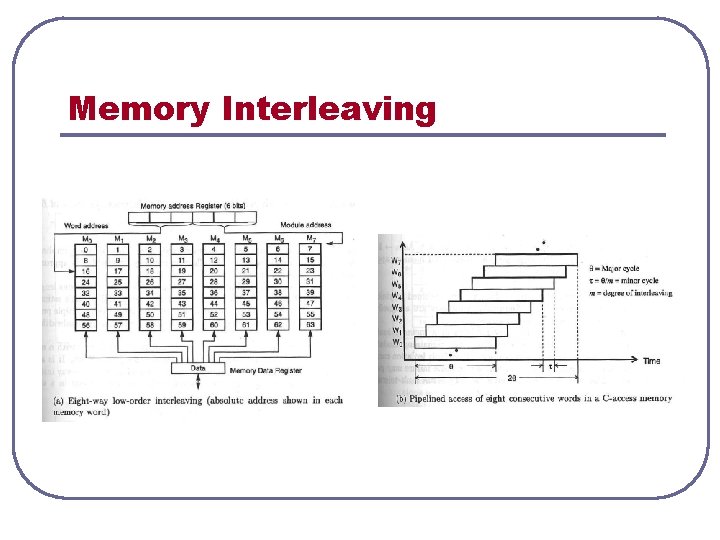

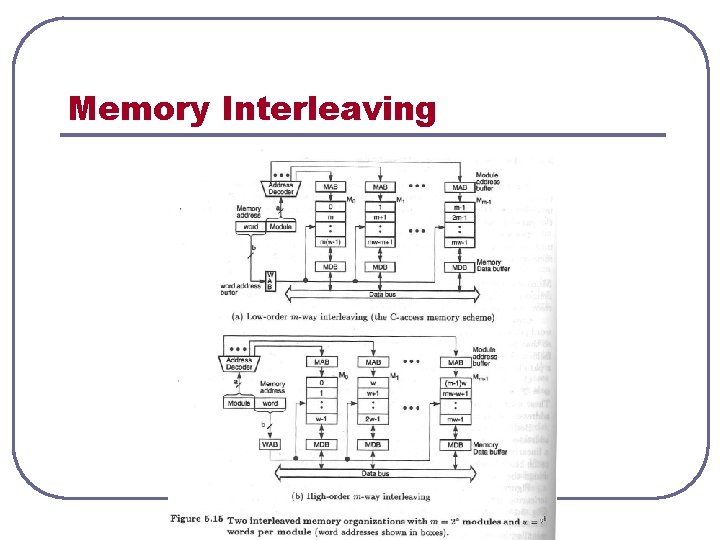

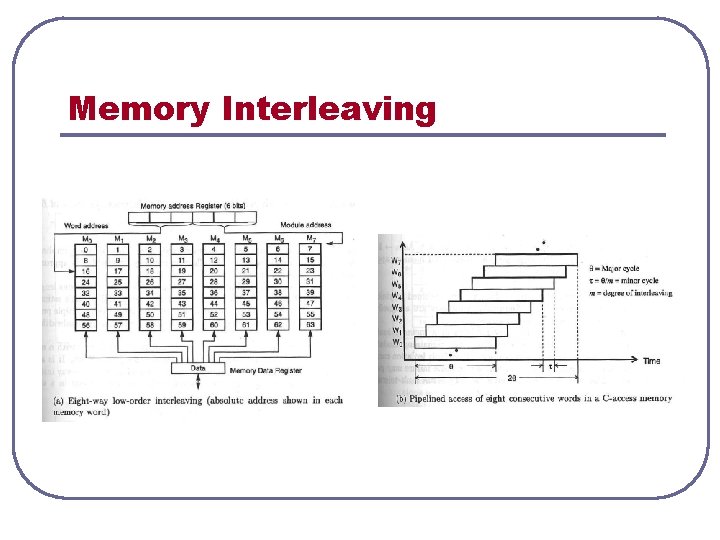

Memory Interleaving

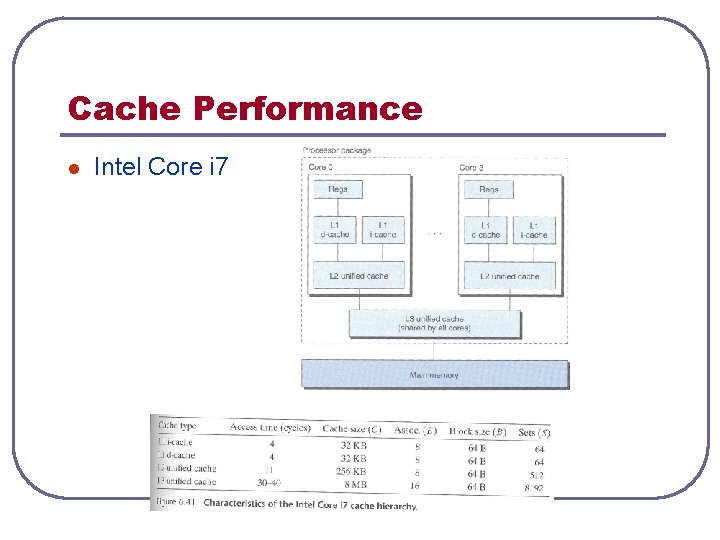

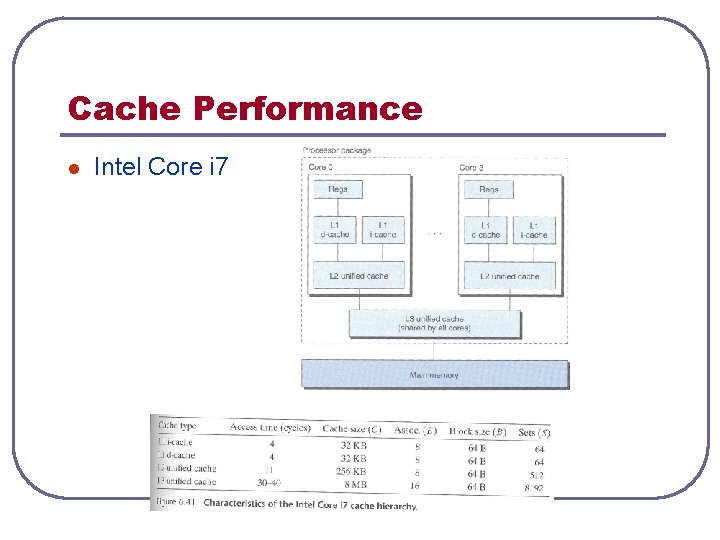

Cache Performance

- Intel Core i7

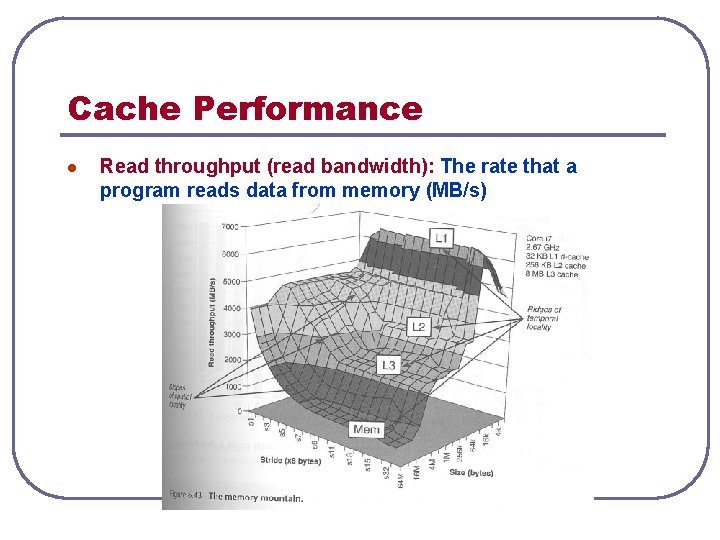

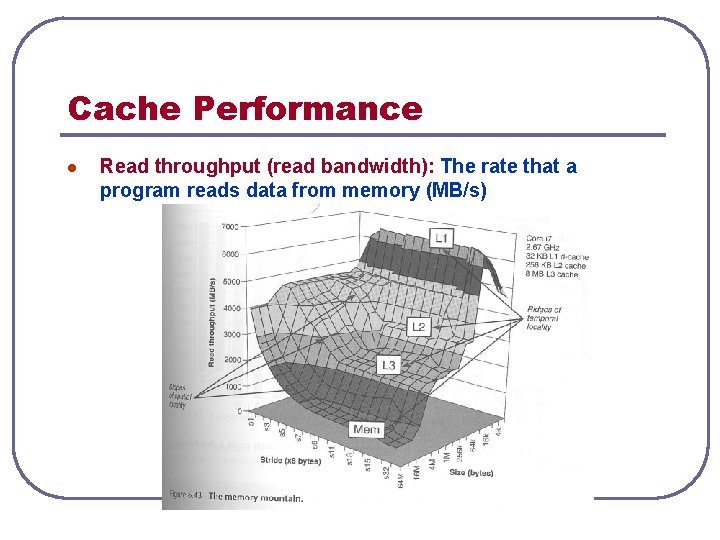

Cache Performance

- Read throughput (read bandwidth): the rate at which a program reads data from memory, in MB/s.

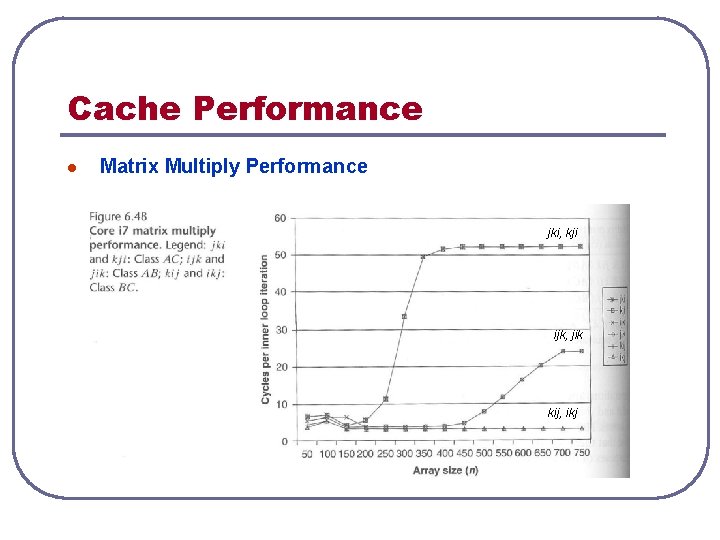

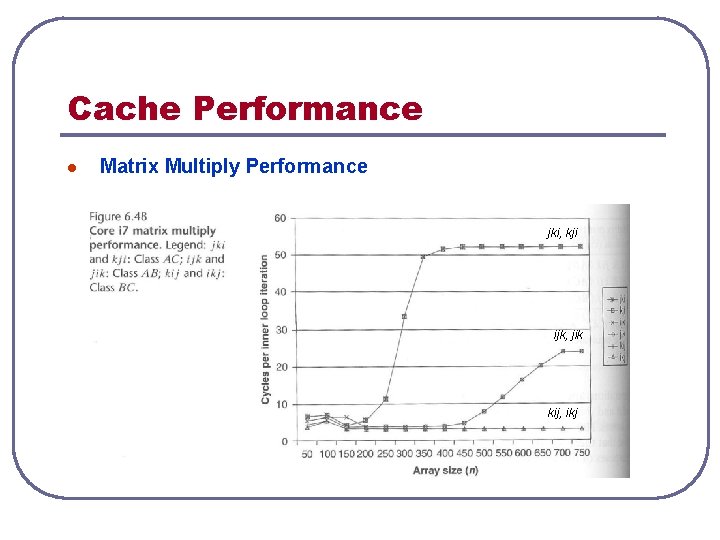

Cache Performance

- Matrix multiply performance for the six loop orderings, grouped in pairs: jki and kji; ijk and jik; kij and ikj