
Lecture 4.2 Memory Hierarchy: Cache Basics

Learning Objectives
- Given a 32-bit address, figure out the corresponding block address
- Given a block address, find the index of the cache line it maps to
- Given a 32-bit address and a multi-word cache line, identify the particular location inside the cache line the word maps to
- Given a sequence of memory requests, decide hit/miss for each request
- Given the cache configuration, calculate the total number of bits to implement the cache
- Describe the behaviors of write-through cache and write-back cache for write hit or write miss

Coverage
- Textbook Chapter 5.3

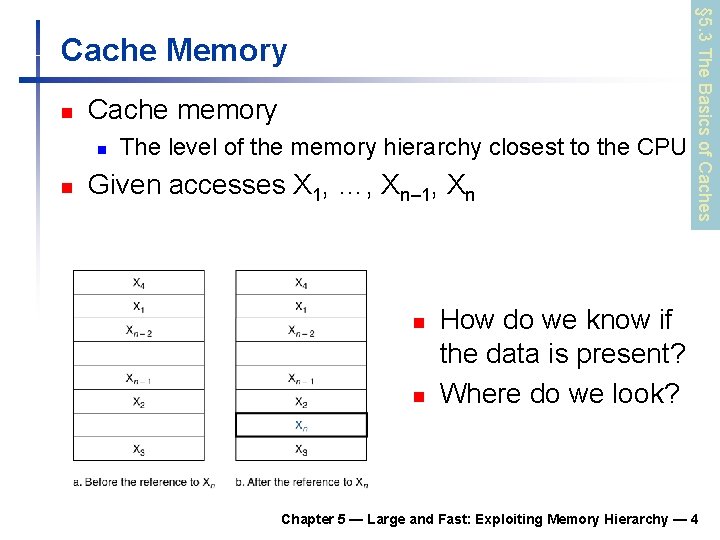

Cache Memory (§5.3 The Basics of Caches)
- Cache memory
  - The level of the memory hierarchy closest to the CPU
- Given accesses X1, …, Xn-1, Xn
  - How do we know if the data is present?
  - Where do we look?

Chapter 5, Large and Fast: Exploiting Memory Hierarchy

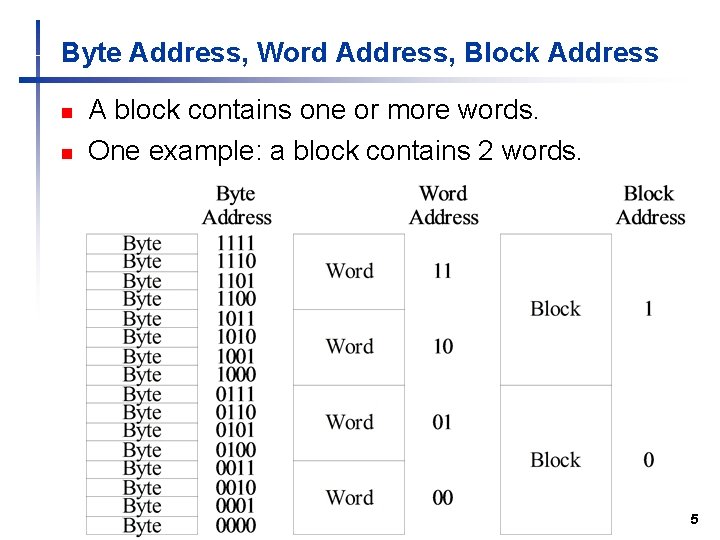

Byte Address, Word Address, Block Address
- A block contains one or more words.
- One example: a block contains 2 words.
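The relationship between the three kinds of address can be sketched in a few lines. This is a minimal illustration, assuming 4-byte (32-bit) words and the 2-word blocks from the example above; the function names and the sample address 44 are hypothetical.

```python
BYTES_PER_WORD = 4  # assumption: 32-bit words


def word_address(byte_addr: int) -> int:
    """Drop the byte-within-word bits to get the word address."""
    return byte_addr // BYTES_PER_WORD


def block_address(byte_addr: int, words_per_block: int) -> int:
    """Drop the word-within-block bits to get the block address."""
    return word_address(byte_addr) // words_per_block


byte_addr = 44  # hypothetical sample address
print(word_address(byte_addr))        # 11
print(block_address(byte_addr, 2))    # 5
```

With 2-word blocks, word addresses 10 and 11 share block address 5, so both words live in the same cache block.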

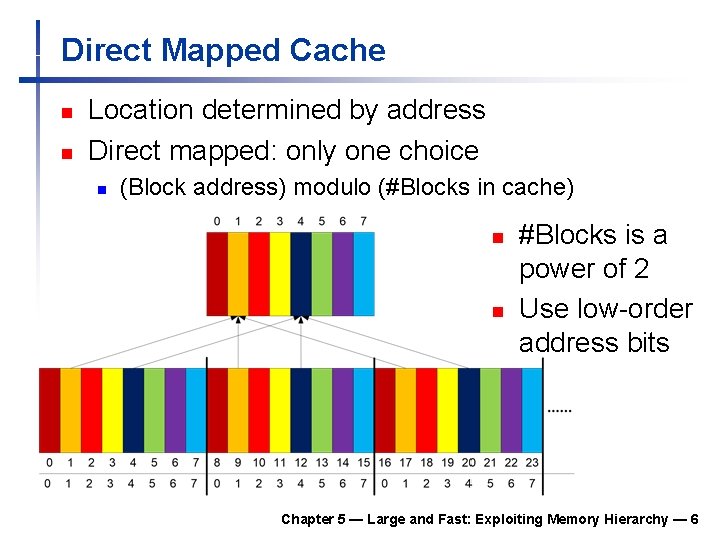

Direct Mapped Cache
- Location determined by address
- Direct mapped: only one choice
  - (Block address) modulo (#Blocks in cache)
- #Blocks is a power of 2
  - Use low-order address bits
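Because the number of blocks is a power of 2, the modulo operation is the same as keeping the low-order bits of the block address. A small sketch of that equivalence follows; the function name `cache_index` and the sample block addresses are illustrative only.

```python
def cache_index(block_addr: int, num_blocks: int) -> int:
    """Index of the cache line a block maps to in a direct-mapped cache."""
    if num_blocks <= 0 or num_blocks & (num_blocks - 1) != 0:
        raise ValueError("num_blocks must be a power of 2")
    # Masking with (num_blocks - 1) keeps the low-order log2(num_blocks) bits.
    return block_addr & (num_blocks - 1)


# The mask and the modulo agree for every sample block address.
for block_addr in (1, 9, 22, 29):
    assert cache_index(block_addr, 8) == block_addr % 8

print(cache_index(22, 8))  # 6, i.e. binary 10110 -> low three bits 110
```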

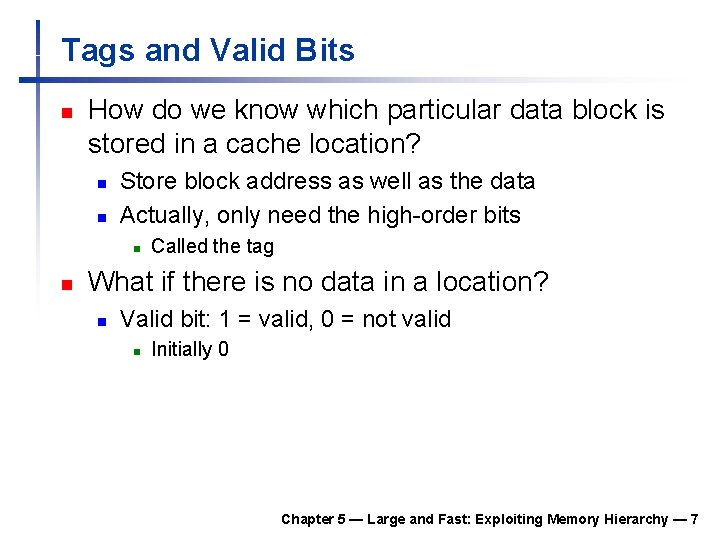

Tags and Valid Bits
- How do we know which particular data block is stored in a cache location?
  - Store block address as well as the data
  - Actually, only need the high-order bits
  - Called the tag
- What if there is no data in a location?
  - Valid bit: 1 = valid, 0 = not valid
  - Initially 0

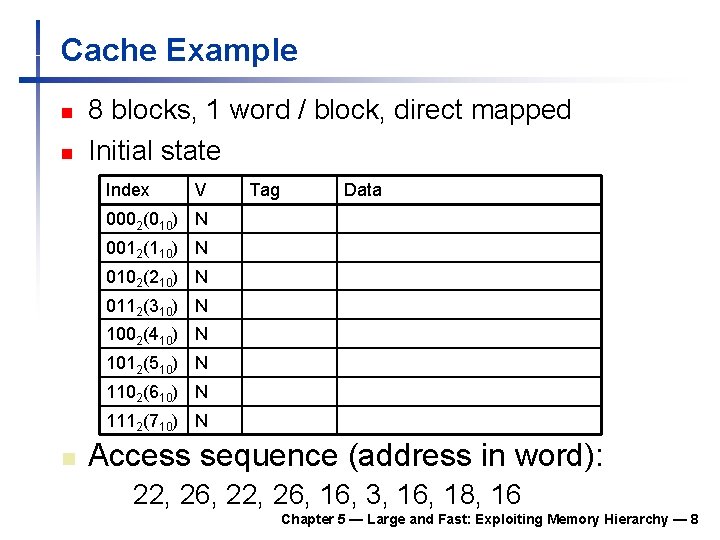

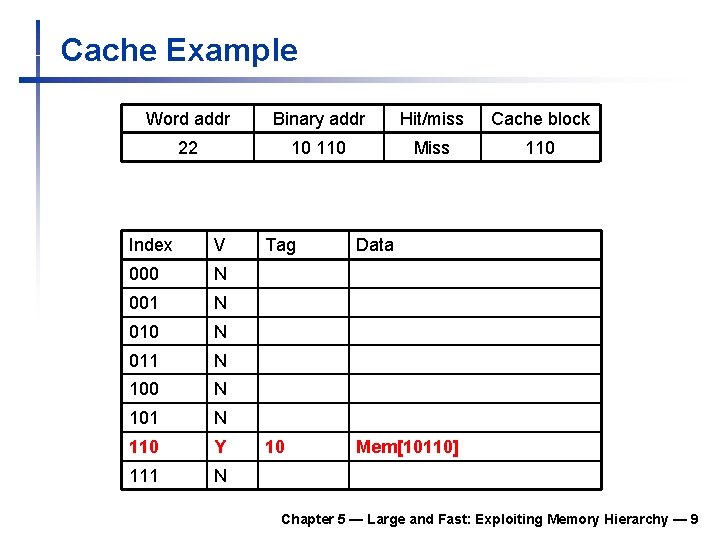

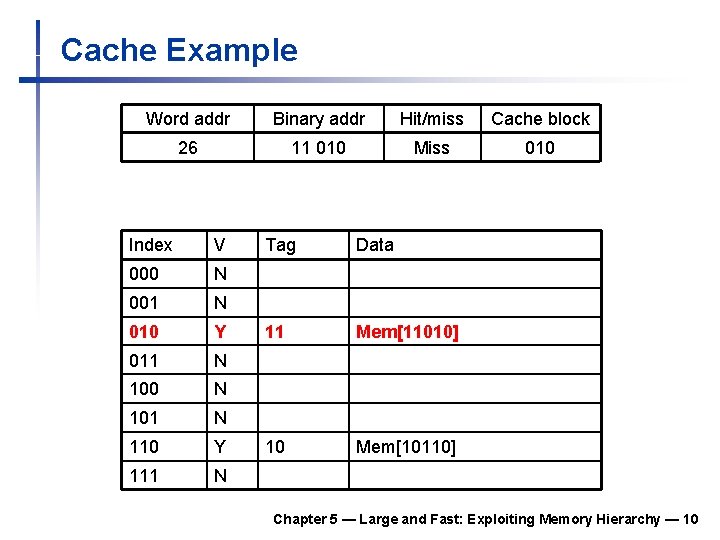

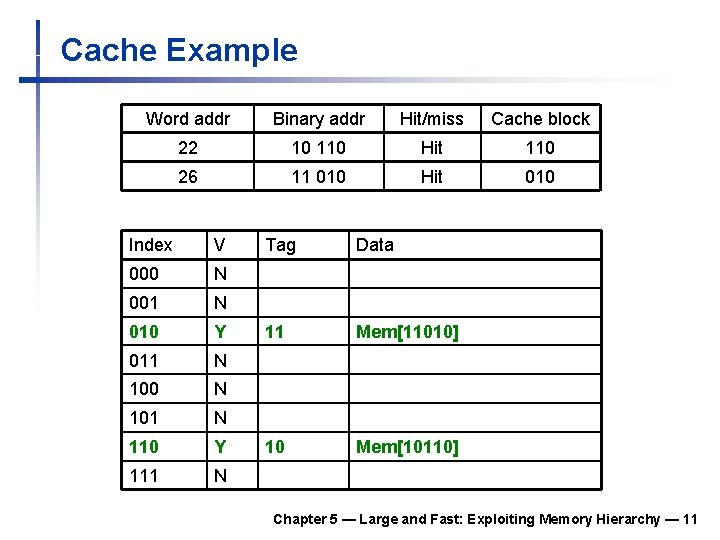

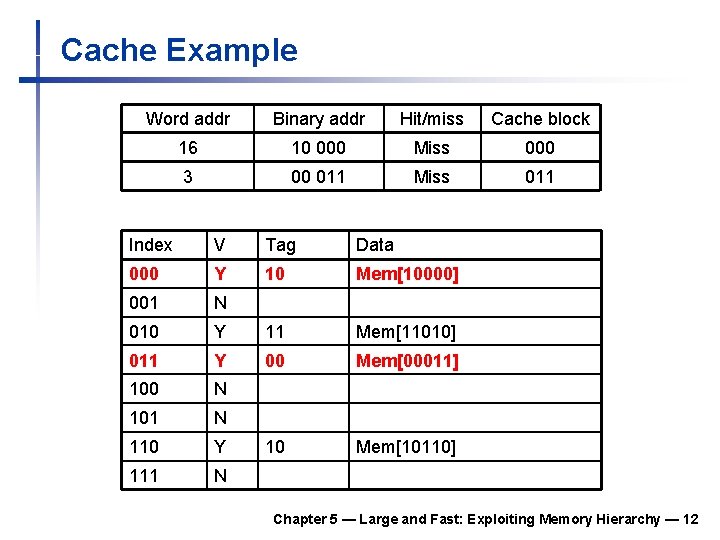

Cache Example
- 8 blocks, 1 word / block, direct mapped
- Initial state

| Index | V | Tag | Data |
|---|---|---|---|
| 000₂ (0₁₀) | N | | |
| 001₂ (1₁₀) | N | | |
| 010₂ (2₁₀) | N | | |
| 011₂ (3₁₀) | N | | |
| 100₂ (4₁₀) | N | | |
| 101₂ (5₁₀) | N | | |
| 110₂ (6₁₀) | N | | |
| 111₂ (7₁₀) | N | | |

- Access sequence (address in word): 22, 26, 22, 26, 16, 3, 16, 18, 16

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 22 | 10 110 | Miss | 110 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | N | | |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 26 | 11 010 | Miss | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 22 | 10 110 | Hit | 110 |
| 26 | 11 010 | Hit | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 16 | 10 000 | Miss | 000 |
| 3 | 00 011 | Miss | 011 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | Y | 10 | Mem[10000] |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | Y | 00 | Mem[00011] |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

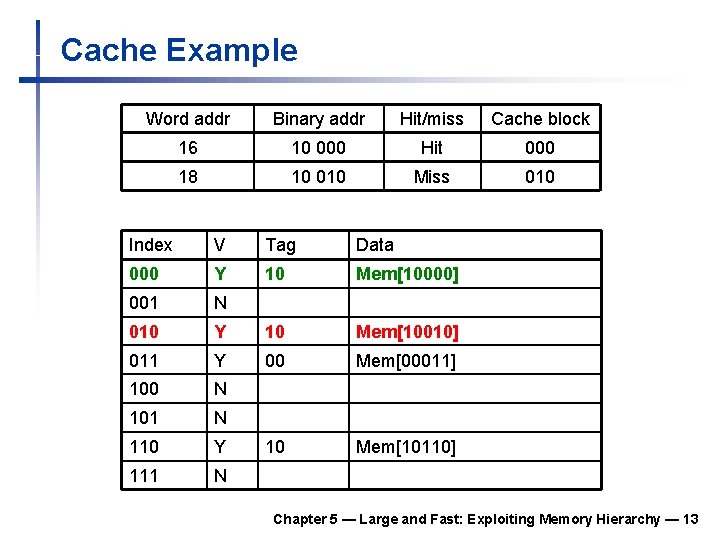

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 16 | 10 000 | Hit | 000 |
| 18 | 10 010 | Miss | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | Y | 10 | Mem[10000] |
| 001 | N | | |
| 010 | Y | 10 | Mem[10010] |
| 011 | Y | 00 | Mem[00011] |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |
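The hit/miss decisions in the example slides can be replayed with a short simulation. This is a sketch rather than a hardware model: it tracks only the valid bit and tag of each of the 8 one-word blocks, and the function name `simulate_direct_mapped` is an illustrative choice. The word addresses are the ones stepped through on the slides (22, 26, 22, 26, 16, 3, 16, 18).

```python
def simulate_direct_mapped(word_addrs, num_blocks=8):
    """Return 'hit' or 'miss' for each word address (1 word per block)."""
    valid = [False] * num_blocks
    tags = [0] * num_blocks
    results = []
    for addr in word_addrs:
        index = addr % num_blocks   # low-order bits select the cache line
        tag = addr // num_blocks    # remaining high-order bits are the tag
        if valid[index] and tags[index] == tag:
            results.append("hit")
        else:
            results.append("miss")
            valid[index] = True     # fill (or replace) the line on a miss
            tags[index] = tag
    return results


sequence = [22, 26, 22, 26, 16, 3, 16, 18]
for addr, outcome in zip(sequence, simulate_direct_mapped(sequence)):
    print(f"{addr:2d} ({addr:05b}): {outcome}")
```

The last access (18) misses even though line 010 is valid, because the stored tag 11 (from address 26) differs from 18's tag 10; the line is then replaced.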

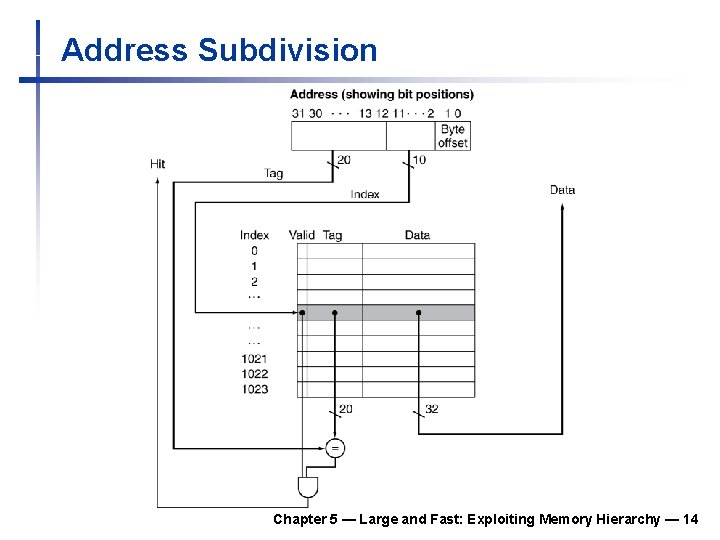

Address Subdivision

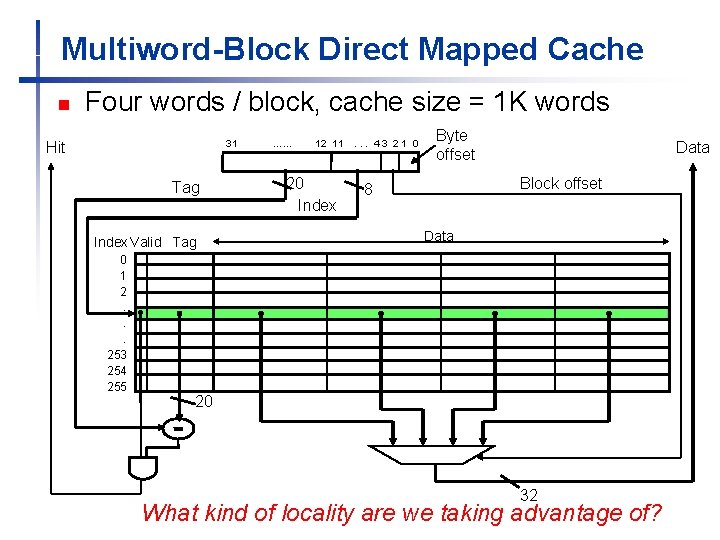

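Multiword-Block Direct Mapped Cache
- Four words / block, cache size = 1 K words
- What kind of locality are we taking advantage of?

[Figure: the 32-bit address is split into a 20-bit tag (bits 31-12), an 8-bit index (bits 11-4), a 2-bit block offset (bits 3-2), and a 2-bit byte offset (bits 1-0). The cache has 256 entries (0-255), each holding a valid bit, a 20-bit tag, and four data words. Outputs: Hit and a 32-bit Data word.]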
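For this configuration (four words per block, 1 K words, hence 256 blocks), the address fields can be extracted with shifts and masks. The sketch below assumes a 32-bit byte address; `split_address` and the sample address `0x12345678` are hypothetical.

```python
def split_address(addr: int):
    """Split a 32-bit byte address into (tag, index, block offset, byte offset)."""
    byte_offset = addr & 0x3            # bits 1-0: byte within the word
    block_offset = (addr >> 2) & 0x3    # bits 3-2: word within the 4-word block
    index = (addr >> 4) & 0xFF          # bits 11-4: one of 256 cache entries
    tag = (addr >> 12) & 0xFFFFF        # bits 31-12: 20-bit tag
    return tag, index, block_offset, byte_offset


tag, index, block_offset, byte_offset = split_address(0x12345678)
print(hex(tag), hex(index), block_offset, byte_offset)  # 0x12345 0x67 2 0
```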

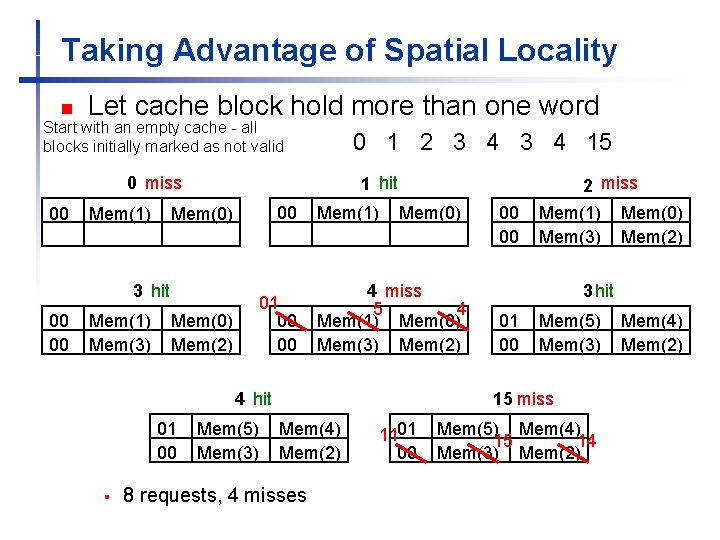

Taking Advantage of Spatial Locality
- Let cache block hold more than one word
- Access sequence (word addresses): 0, 1, 2, 3, 4, 3, 4, 15
- Start with an empty cache: all blocks initially marked as not valid

| Word addr | Hit/miss |
|---|---|
| 0 | miss |
| 1 | hit |
| 2 | miss |
| 3 | hit |
| 4 | miss |
| 3 | hit |
| 4 | hit |
| 15 | miss |

- 8 requests, 4 misses

[Figure: cache contents (tag and Mem(...) words) after each access]
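The miss count can be checked with a small simulation. The cache geometry is not spelled out in the surviving slide text, so the sketch below assumes a direct-mapped cache with 2 blocks of 2 words each, which reproduces the 4 misses; the function name and both default parameters are assumptions.

```python
def simulate_multiword(word_addrs, num_blocks=2, words_per_block=2):
    """Hit/miss per word address for a direct-mapped cache with multiword blocks."""
    valid = [False] * num_blocks
    tags = [0] * num_blocks
    results = []
    for addr in word_addrs:
        block_addr = addr // words_per_block   # which memory block holds the word
        index = block_addr % num_blocks        # cache line the block maps to
        tag = block_addr // num_blocks
        if valid[index] and tags[index] == tag:
            results.append("hit")
        else:
            results.append("miss")             # the whole block is fetched
            valid[index] = True
            tags[index] = tag
    return results


sequence = [0, 1, 2, 3, 4, 3, 4, 15]
outcomes = simulate_multiword(sequence)
print(list(zip(sequence, outcomes)))
print(outcomes.count("miss"), "misses out of", len(sequence), "requests")
```

Accesses 1 and 3 hit only because their neighbours 0 and 2 already brought in the containing block, which is the spatial-locality benefit of multiword blocks.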

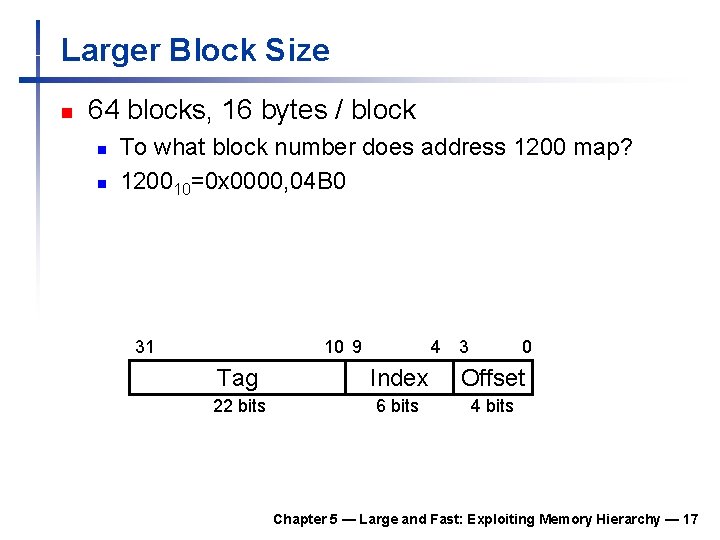

Larger Block Size
- 64 blocks, 16 bytes / block
  - To what block number does address 1200 map?
- 1200₁₀ = 0x000004B0

| Bits | 31-10 | 9-4 | 3-0 |
|---|---|---|---|
| Field | Tag | Index | Offset |
| Width | 22 bits | 6 bits | 4 bits |
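The question can be worked out directly: divide the byte address by the block size to get the block address, then take it modulo the number of blocks. A brief sketch, with illustrative constant and variable names:

```python
NUM_BLOCKS = 64
BYTES_PER_BLOCK = 16

addr = 1200                                # 0x000004B0
block_addr = addr // BYTES_PER_BLOCK       # 1200 / 16 = 75
block_number = block_addr % NUM_BLOCKS     # 75 mod 64 = 11
tag = block_addr // NUM_BLOCKS             # remaining high-order bits = 1
offset = addr % BYTES_PER_BLOCK            # byte within the block = 0

print(block_addr, block_number, tag, offset)  # 75 11 1 0

# The same fields fall out of the bit layout: index = bits 9-4, offset = bits 3-0.
assert block_number == (addr >> 4) & 0x3F
assert offset == addr & 0xF
```

So address 1200 maps to block number 11.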

Block Size Considerations
- Larger blocks should reduce miss rate
  - Due to spatial locality
- In a fixed-sized cache
  - Larger blocks ⇒ fewer of them
  - More competition ⇒ increased miss rate
- Larger miss penalty
  - Can override benefit of reduced miss rate
  - Early restart and critical-word-first can help

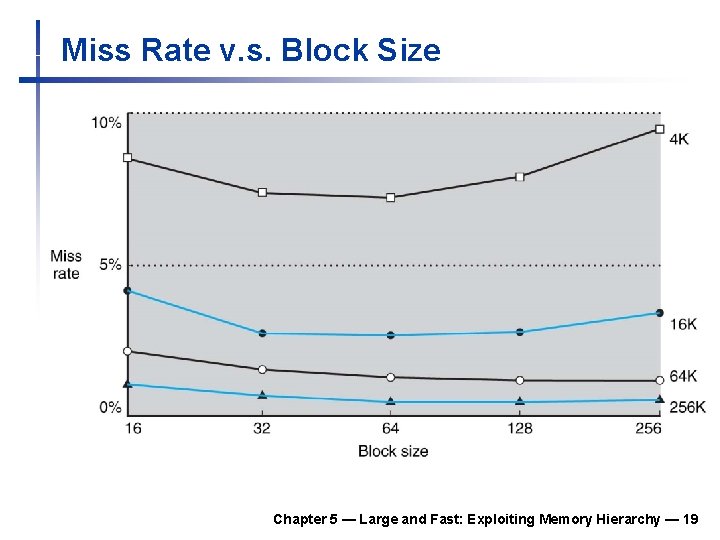

Miss Rate vs. Block Size

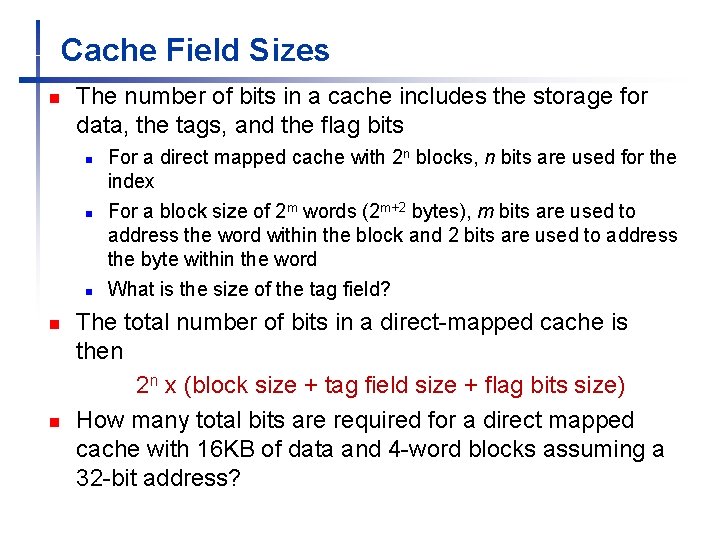

Cache Field Sizes
- The number of bits in a cache includes the storage for data, the tags, and the flag bits
  - For a direct mapped cache with $2^n$ blocks, $n$ bits are used for the index
  - For a block size of $2^m$ words ($2^{m+2}$ bytes), $m$ bits are used to address the word within the block and 2 bits are used to address the byte within the word
  - What is the size of the tag field?
- The total number of bits in a direct-mapped cache is then $2^n \times$ (block size + tag field size + flag bits size)
- How many total bits are required for a direct mapped cache with 16 KB of data and 4-word blocks assuming a 32-bit address?
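With a 32-bit address, the tag takes whatever is left after the index and offsets: $32 - (n + m + 2)$ bits. The sketch below applies the formula to the 16 KB question, assuming 1 KB = 1024 bytes, 4-byte words, and a single flag bit (the valid bit) per block; a write-back cache would add a dirty bit. The function name and parameters are illustrative.

```python
def total_cache_bits(data_bytes, words_per_block, address_bits=32, flag_bits=1):
    """Total storage bits of a direct-mapped cache (data + tag + flag bits)."""
    bytes_per_block = words_per_block * 4           # assumption: 4-byte words
    num_blocks = data_bytes // bytes_per_block      # 2**n blocks
    n = num_blocks.bit_length() - 1                 # index bits
    m = words_per_block.bit_length() - 1            # word-within-block bits
    tag_bits = address_bits - (n + m + 2)           # 2 bits for byte within word
    block_bits = bytes_per_block * 8
    return num_blocks * (block_bits + tag_bits + flag_bits)


bits = total_cache_bits(16 * 1024, words_per_block=4)
print(bits)              # 150528 = 2**10 * (128 + 18 + 1)
print(bits / 8 / 1024)   # 18.375 KiB of storage for 16 KiB of data
```

Here $n = 10$ and $m = 2$, so the tag is 18 bits and each of the 1024 blocks needs 147 bits.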

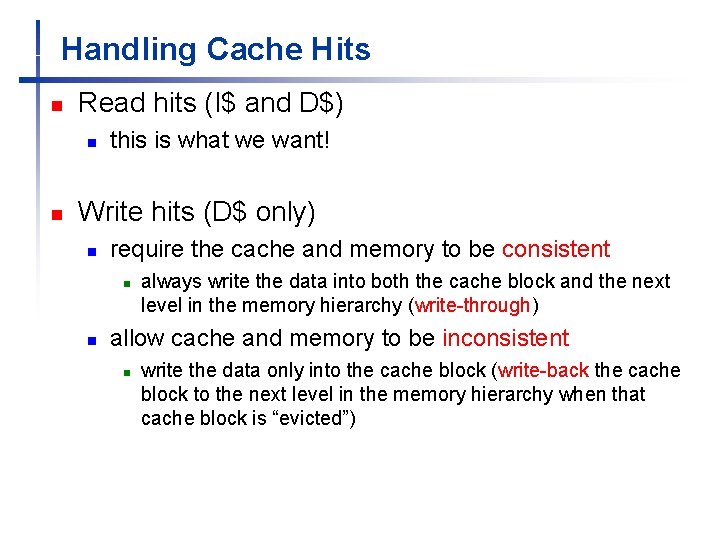

Handling Cache Hits
- Read hits (I$ and D$)
  - this is what we want!
- Write hits (D$ only)
  - require the cache and memory to be consistent
    - always write the data into both the cache block and the next level in the memory hierarchy (write-through)
  - allow cache and memory to be inconsistent
    - write the data only into the cache block (write-back the cache block to the next level in the memory hierarchy when that cache block is "evicted")

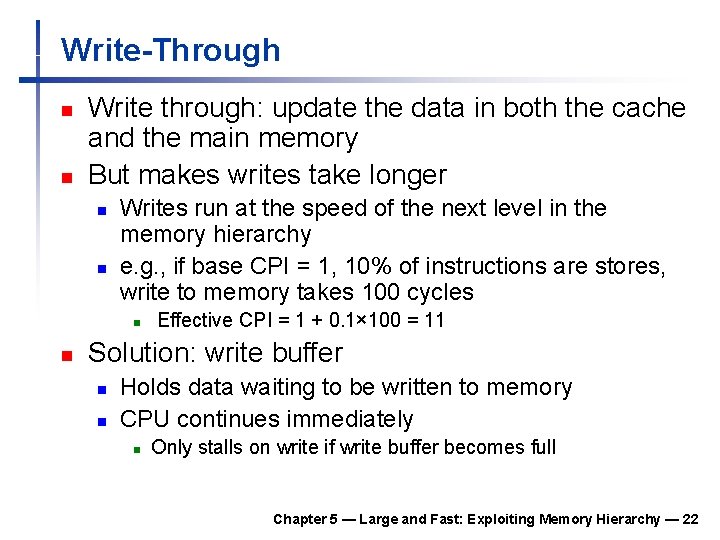

Write-Through
- Write through: update the data in both the cache and the main memory
- But makes writes take longer
  - Writes run at the speed of the next level in the memory hierarchy
  - e.g., if base CPI = 1, 10% of instructions are stores, write to memory takes 100 cycles
    - Effective CPI = 1 + 0.1 × 100 = 11
- Solution: write buffer
  - Holds data waiting to be written to memory
  - CPU continues immediately
    - Only stalls on write if write buffer becomes full
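The effective CPI figure follows from adding the average write stall per instruction to the base CPI. A minimal sketch of that arithmetic, assuming no write buffer so every store stalls for the full memory write; the function name is illustrative.

```python
def effective_cpi(base_cpi, store_fraction, write_cycles):
    """Base CPI plus the average stall cycles contributed by stores."""
    return base_cpi + store_fraction * write_cycles


cpi = effective_cpi(base_cpi=1, store_fraction=0.10, write_cycles=100)
print(f"{cpi:.1f}")  # 11.0
```

An 11x slowdown from a 10% store mix is why the write buffer on the slide matters.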

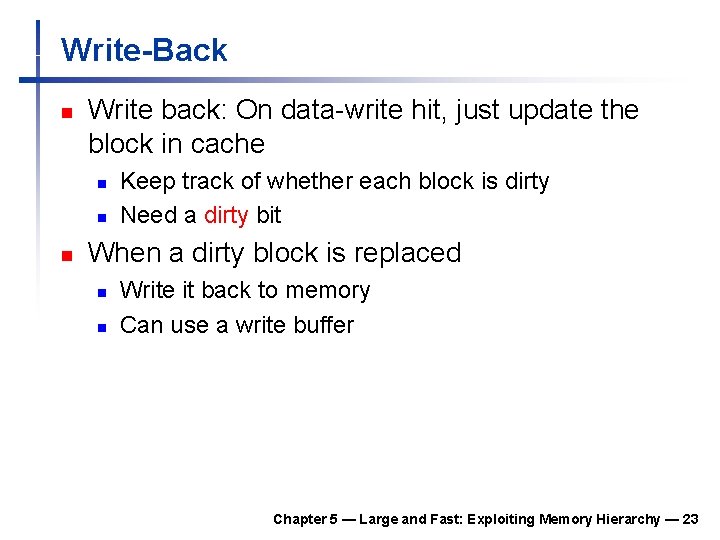

Write-Back
- Write back: On data-write hit, just update the block in cache
  - Keep track of whether each block is dirty
  - Need a dirty bit
- When a dirty block is replaced
  - Write it back to memory
  - Can use a write buffer
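The dirty-bit bookkeeping can be sketched as follows. This toy model assumes a direct-mapped cache with one-word blocks and write allocate on a write miss; it counts only how many blocks are written back to memory. The class name `WriteBackCache` and its fields are hypothetical.

```python
class WriteBackCache:
    """Toy direct-mapped write-back cache that counts memory write-backs."""

    def __init__(self, num_blocks):
        self.num_blocks = num_blocks
        self.valid = [False] * num_blocks
        self.dirty = [False] * num_blocks
        self.tags = [0] * num_blocks
        self.memory_writes = 0

    def write(self, block_addr):
        """Write to a block; return True on a hit, False on a miss."""
        index = block_addr % self.num_blocks
        tag = block_addr // self.num_blocks
        hit = self.valid[index] and self.tags[index] == tag
        if not hit:
            # Assumption: write allocate. A dirty victim is written back first.
            if self.valid[index] and self.dirty[index]:
                self.memory_writes += 1
            self.valid[index] = True
            self.tags[index] = tag
        self.dirty[index] = True   # the cached copy now differs from memory
        return hit


cache = WriteBackCache(num_blocks=8)
for block in (6, 6, 6, 14):        # 14 maps to the same line as 6
    cache.write(block)
print(cache.memory_writes)          # 1
```

Four stores cause a single memory write here, when the dirty block 6 is replaced by block 14; a write-through cache would have written to memory on all four.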

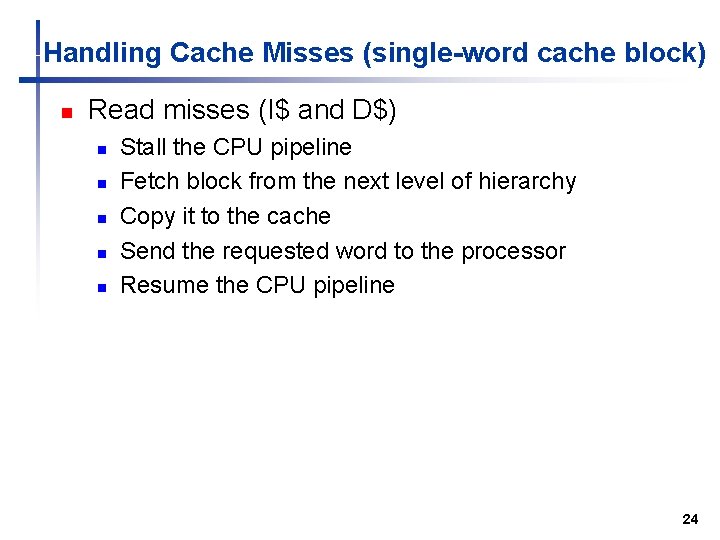

Handling Cache Misses (single-word cache block)
- Read misses (I$ and D$)
  - Stall the CPU pipeline
  - Fetch block from the next level of hierarchy
  - Copy it to the cache
  - Send the requested word to the processor
  - Resume the CPU pipeline

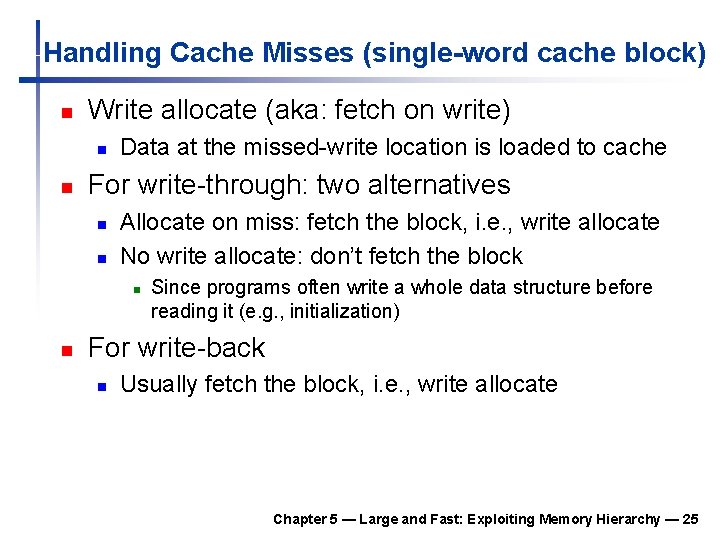

Handling Cache Misses (single-word cache block)
- Write allocate (aka: fetch on write)
  - Data at the missed-write location is loaded to cache
- For write-through: two alternatives
  - Allocate on miss: fetch the block, i.e., write allocate
  - No write allocate: don't fetch the block
    - Since programs often write a whole data structure before reading it (e.g., initialization)
- For write-back
  - Usually fetch the block, i.e., write allocate

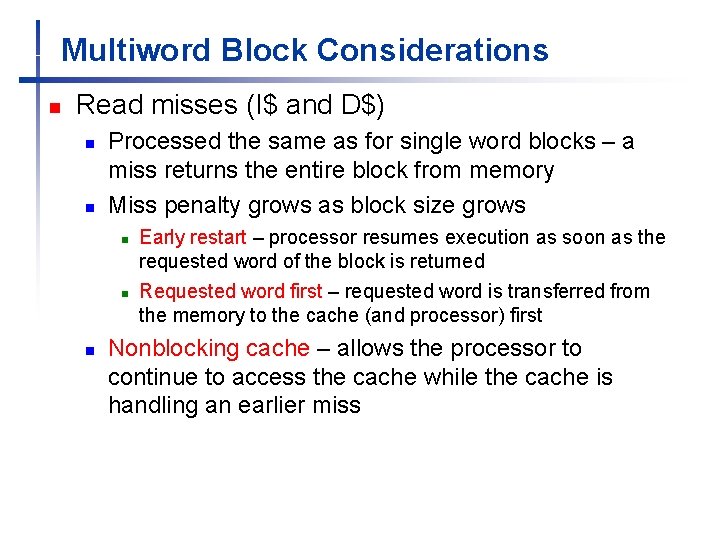

Multiword Block Considerations
- Read misses (I$ and D$)
  - Processed the same as for single word blocks: a miss returns the entire block from memory
  - Miss penalty grows as block size grows
    - Early restart: processor resumes execution as soon as the requested word of the block is returned
    - Requested word first: requested word is transferred from the memory to the cache (and processor) first
    - Nonblocking cache: allows the processor to continue to access the cache while the cache is handling an earlier miss

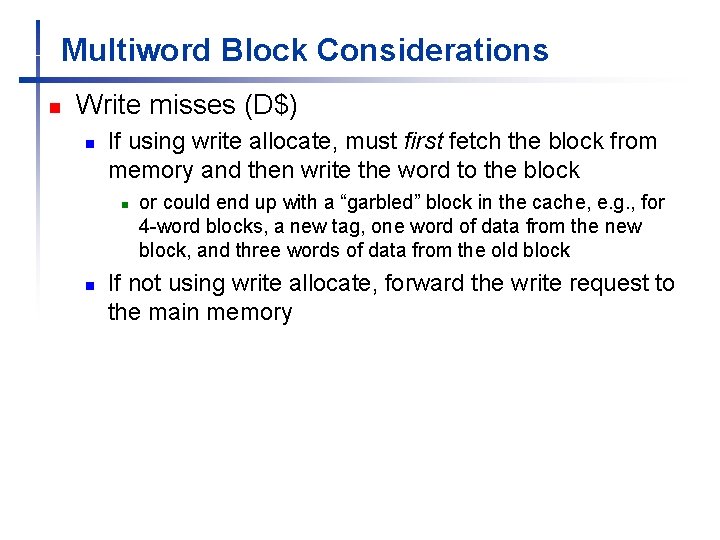

Multiword Block Considerations
- Write misses (D$)
  - If using write allocate, must first fetch the block from memory and then write the word to the block
    - or could end up with a "garbled" block in the cache, e.g., for 4-word blocks, a new tag, one word of data from the new block, and three words of data from the old block
  - If not using write allocate, forward the write request to the main memory

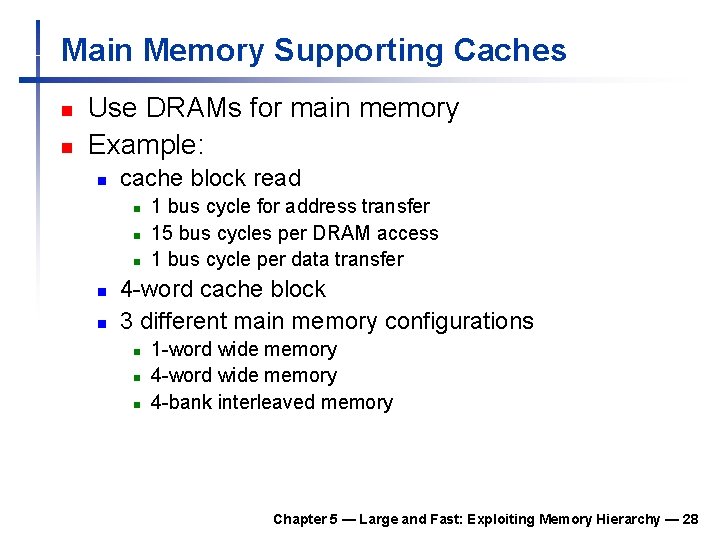

Main Memory Supporting Caches
- Use DRAMs for main memory
- Example: cache block read
  - 1 bus cycle for address transfer
  - 15 bus cycles per DRAM access
  - 1 bus cycle per data transfer
  - 4-word cache block
- 3 different main memory configurations
  - 1-word wide memory
  - 4-word wide memory
  - 4-bank interleaved memory

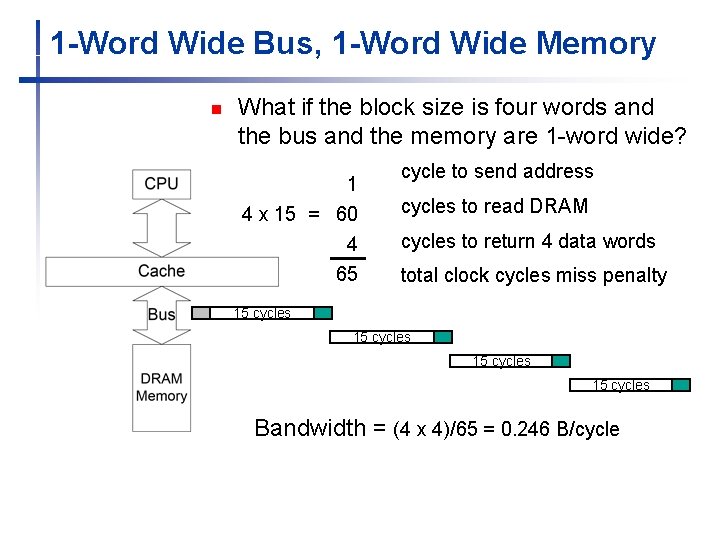


1-Word Wide Bus, 1-Word Wide Memory
- What if the block size is four words and the bus and the memory are 1-word wide?
  - 1 cycle to send address
  - 4 × 15 = 60 cycles to read DRAM
  - 4 cycles to return 4 data words
  - 65 total clock cycles miss penalty
- Bandwidth = (4 × 4)/65 = 0.246 B/cycle

[Figure: timing diagram; each DRAM access takes 15 cycles]

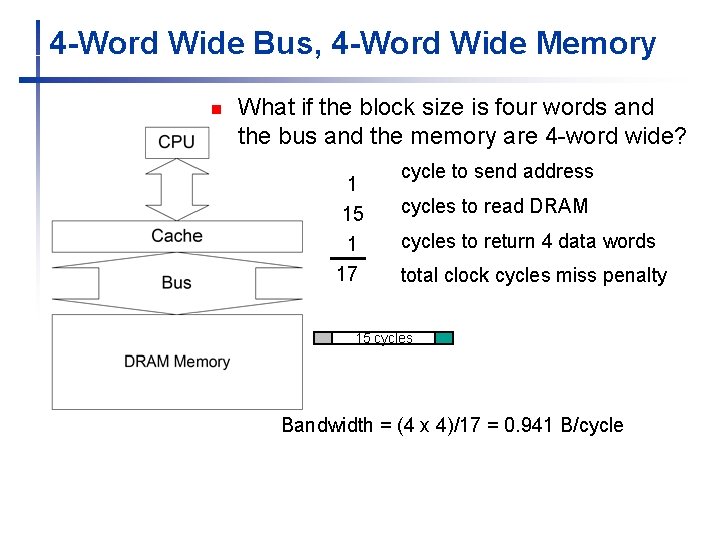


4-Word Wide Bus, 4-Word Wide Memory
- What if the block size is four words and the bus and the memory are 4-word wide?
  - 1 cycle to send address
  - 15 cycles to read DRAM
  - 1 cycle to return 4 data words
  - 17 total clock cycles miss penalty
- Bandwidth = (4 × 4)/17 = 0.941 B/cycle

[Figure: timing diagram; the single DRAM access takes 15 cycles]

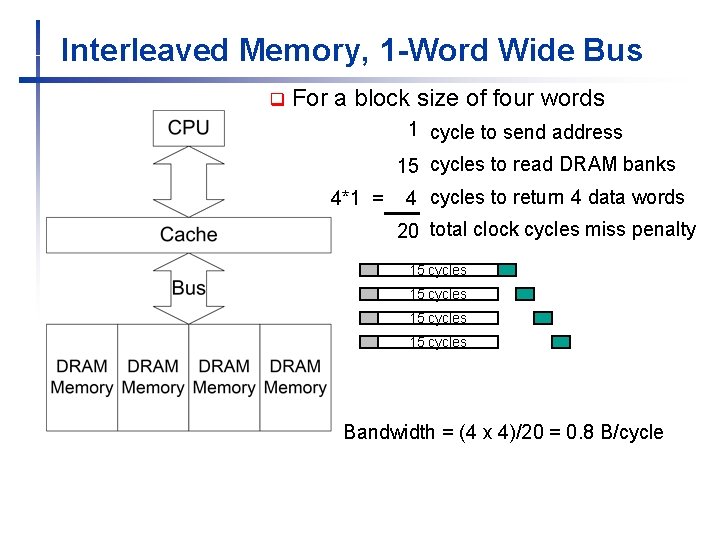


Interleaved Memory, 1-Word Wide Bus
- For a block size of four words
  - 1 cycle to send address
  - 15 cycles to read DRAM banks
  - 4 × 1 = 4 cycles to return 4 data words
  - 20 total clock cycles miss penalty
- Bandwidth = (4 × 4)/20 = 0.8 B/cycle

[Figure: timing diagram; the four banks are read in parallel in 15 cycles]
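The three miss penalties differ only in how many sequential DRAM accesses and bus transfers a 4-word block needs. The sketch below recomputes them from the example's cycle counts, assuming 4-byte words; the constant and function names are illustrative.

```python
ADDRESS_CYCLES = 1     # bus cycles to send the address
DRAM_CYCLES = 15       # bus cycles per DRAM access
TRANSFER_CYCLES = 1    # bus cycles per data transfer
WORDS_PER_BLOCK = 4
BYTES_PER_WORD = 4     # assumption: 32-bit words


def miss_penalty(dram_accesses, transfers):
    """Cycles to fetch one block given sequential DRAM accesses and bus transfers."""
    return ADDRESS_CYCLES + dram_accesses * DRAM_CYCLES + transfers * TRANSFER_CYCLES


def bandwidth(penalty):
    """Bytes delivered per cycle during a block fetch."""
    return WORDS_PER_BLOCK * BYTES_PER_WORD / penalty


configs = {
    "1-word wide memory": miss_penalty(dram_accesses=4, transfers=4),
    "4-word wide memory": miss_penalty(dram_accesses=1, transfers=1),
    "4-bank interleaved": miss_penalty(dram_accesses=1, transfers=4),
}
for name, penalty in configs.items():
    print(f"{name}: {penalty} cycles, {bandwidth(penalty):.3f} B/cycle")
```

Interleaving overlaps the four DRAM accesses but still sends one word per bus cycle, so it recovers most of the wide-memory benefit while keeping the 1-word bus.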
