
Lecture 3 Morphology: Parsing Words CS 4705

What is morphology?
• The study of how words are composed from smaller, meaning-bearing units (morphemes)
  – Stems: children, undoubtedly
  – Affixes (prefixes, suffixes, circumfixes, infixes)
    • Immaterial
    • Trying
    • Gesagt
    • Absobl**dylutely
  – Concatenative vs. non-concatenative (e.g. Arabic root-and-pattern) morphological systems

Morphology Helps Define Word Classes
• AKA morphological classes, parts-of-speech
• Closed vs. open (function vs. content) class words
  – Pronoun, preposition, conjunction, determiner, …
  – Noun, verb, adjective, …

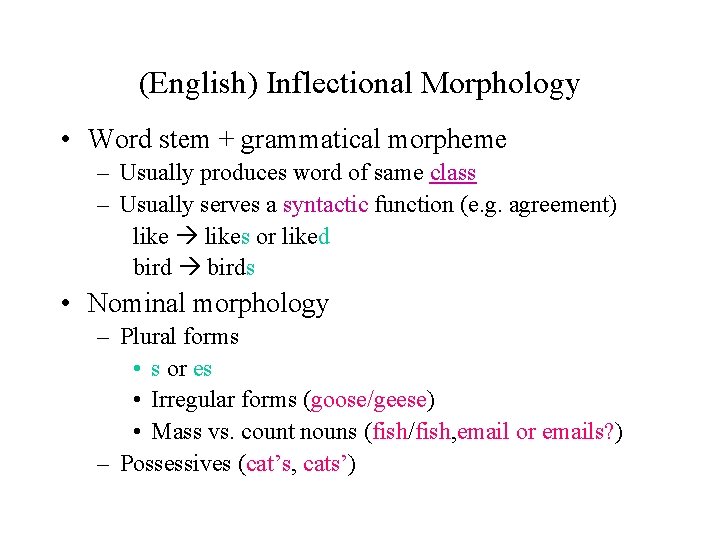

(English) Inflectional Morphology
• Word stem + grammatical morpheme
  – Usually produces a word of the same class
  – Usually serves a syntactic function (e.g. agreement): like → likes or liked; bird → birds
• Nominal morphology
  – Plural forms
    • -s or -es
    • Irregular forms (goose/geese)
    • Mass vs. count nouns (fish/fish; email or emails?)
  – Possessives (cat’s, cats’)

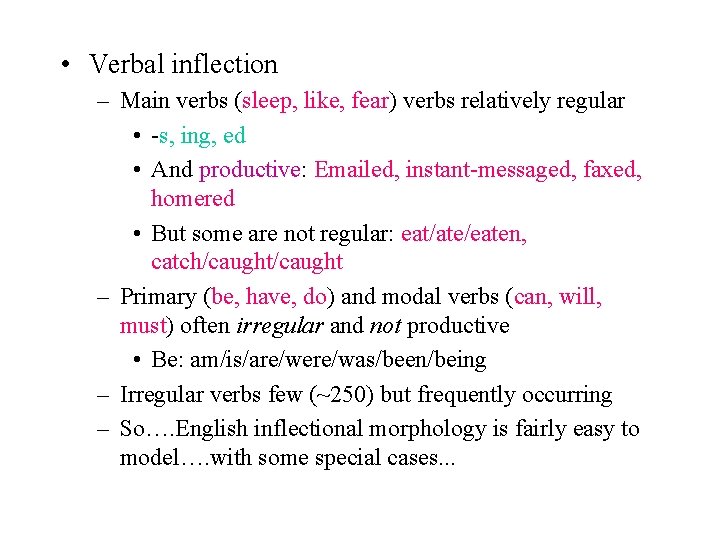

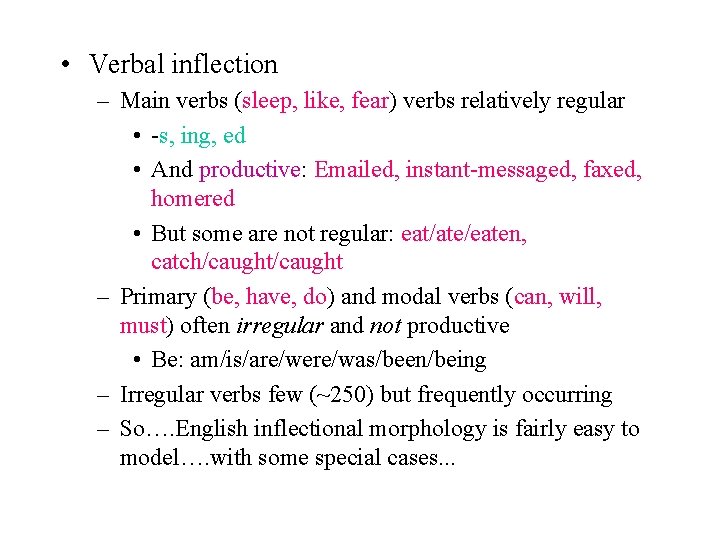

• Verbal inflection
  – Main verbs (sleep, like, fear) are relatively regular
    • -s, -ing, -ed
    • And productive: emailed, instant-messaged, faxed, homered
    • But some are not regular: eat/ate/eaten, catch/caught
  – Primary (be, have, do) and modal verbs (can, will, must) are often irregular and not productive
    • Be: am/is/are/were/was/been/being
  – Irregular verbs are few (~250) but occur frequently
  – So English inflectional morphology is fairly easy to model, with some special cases
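The inflectional patterns above fit in a few lines of Python. This is a minimal illustration, not a full model. The exception tables (`IRREGULAR_PLURALS`, `IRREGULAR_VERBS`) and the feature labels are hypothetical stand-ins for a real lexicon. The sketch does not handle y-stems (city → cities) or consonant doubling (beg → begging). `sleep` appears in the exception table because its past tense is slept.

```python
# Hypothetical exception tables; a real lexicon would list all of the
# roughly 250 irregular verbs and many more irregular nouns.
IRREGULAR_PLURALS = {"goose": "geese", "child": "children", "fish": "fish"}
IRREGULAR_VERBS = {  # verb -> (past, past participle)
    "eat": ("ate", "eaten"),
    "catch": ("caught", "caught"),
    "sleep": ("slept", "slept"),
}
SIBILANT_ENDINGS = ("s", "z", "x", "ch", "sh")


def pluralize(noun: str) -> str:
    """Irregular lookup first, else -es after a sibilant, else -s."""
    if noun in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[noun]
    return noun + ("es" if noun.endswith(SIBILANT_ENDINGS) else "s")


def possessive(form: str, is_plural: bool) -> str:
    """cat -> cat's; plural cats -> cats' (plurals in -s take a bare apostrophe)."""
    if is_plural and form.endswith("s"):
        return form + "'"
    return form + "'s"


def inflect_verb(verb: str, feature: str) -> str:
    """feature is '3sg', 'ing', 'past' or 'pastpart' (labels invented for this sketch)."""
    if feature == "3sg":
        return verb + ("es" if verb.endswith(SIBILANT_ENDINGS) else "s")
    if feature == "ing":
        # Drop a silent final e (like -> liking) but keep -ee (see -> seeing).
        stem = verb[:-1] if verb.endswith("e") and not verb.endswith("ee") else verb
        return stem + "ing"
    if feature in ("past", "pastpart"):
        if verb in IRREGULAR_VERBS:
            past, participle = IRREGULAR_VERBS[verb]
            return past if feature == "past" else participle
        return verb + ("d" if verb.endswith("e") else "ed")
    raise ValueError(f"unknown feature: {feature}")
```

For example, pluralize("fox") gives "foxes" and inflect_verb("fax", "past") gives "faxed". Irregular forms come only from the lookup table, which mirrors the point that the irregular verbs simply have to be listed.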

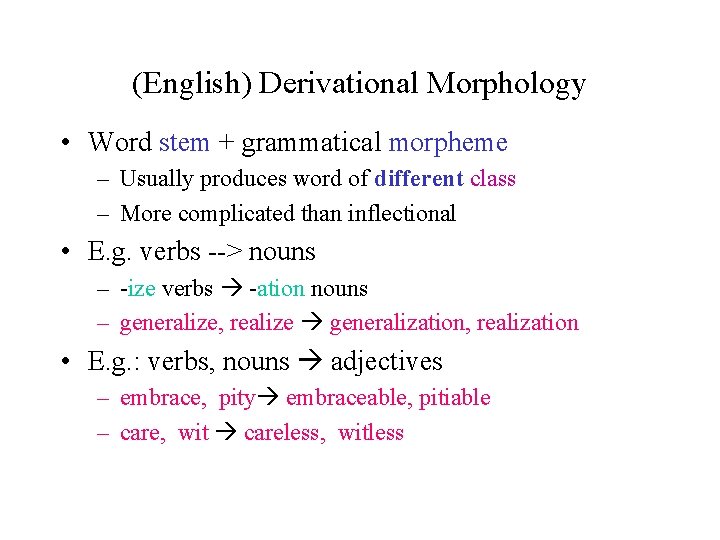

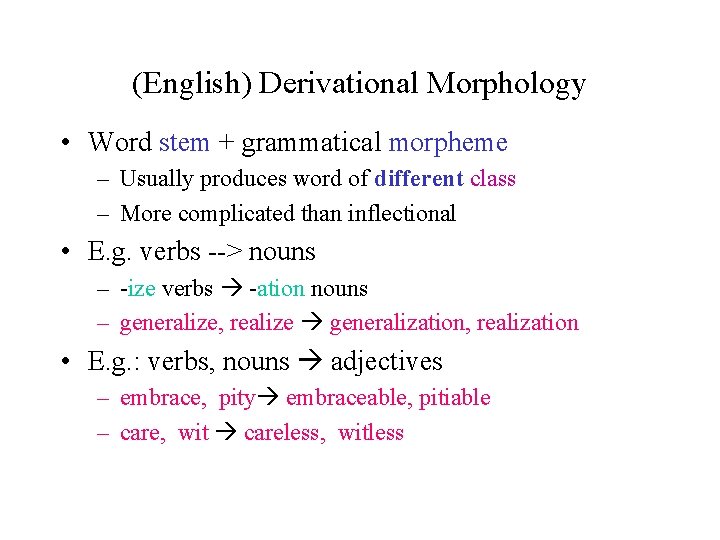

(English) Derivational Morphology
• Word stem + grammatical morpheme
  – Usually produces a word of a different class
  – More complicated than inflectional morphology
• E.g. verbs → nouns
  – -ize verbs → -ation nouns
  – generalize, realize → generalization, realization
• E.g. verbs, nouns → adjectives
  – embrace, pity → embraceable, pitiable
  – care, wit → careless, witless

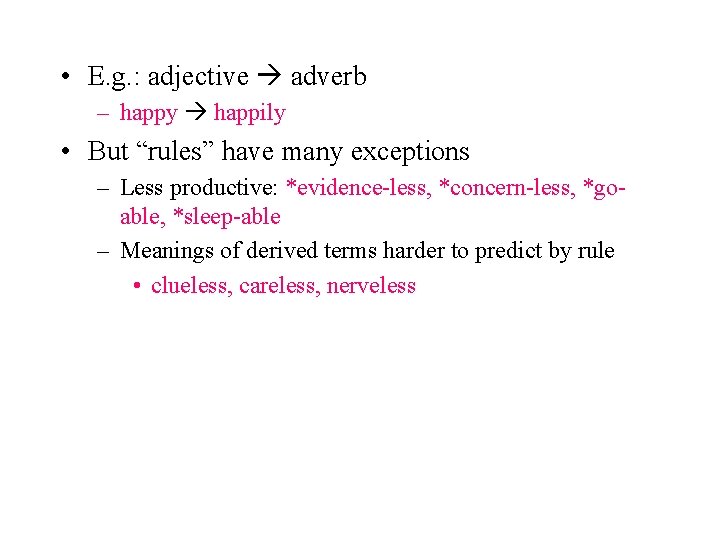

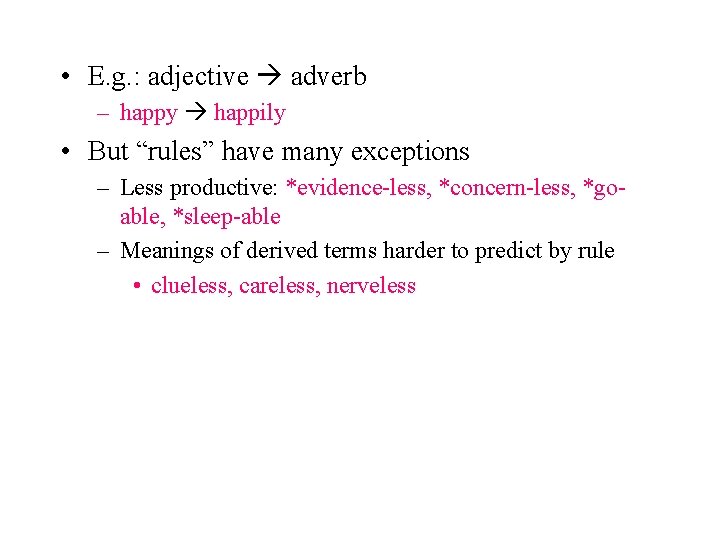

• E.g. adjective → adverb
  – happy → happily
• But “rules” have many exceptions
  – Less productive: *evidence-less, *concern-less, *goable, *sleep-able
  – Meanings of derived terms are harder to predict by rule
    • clueless, careless, nerveless
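Here is a sketch of suffix-based derivation. It assumes a hypothetical `derive(stem, suffix)` helper with three simplified spelling adjustments: drop the final e of -ize before -ation, change consonant + y to i, and otherwise concatenate. Nothing in these rules blocks the starred forms above, which shows why derivational “rules” overgenerate.

```python
VOWELS = "aeiou"


def derive(stem: str, suffix: str) -> str:
    """Attach a derivational suffix with a few simplified spelling adjustments."""
    if suffix == "-ation":
        if not stem.endswith("ize"):
            raise ValueError("this sketch only handles -ize verbs")
        return stem[:-1] + "ation"  # generalize -> generalization
    if suffix in ("-able", "-ly"):
        bare = suffix[1:]
        # Consonant + y becomes i: pity -> pitiable, happy -> happily.
        if len(stem) > 1 and stem.endswith("y") and stem[-2] not in VOWELS:
            return stem[:-1] + "i" + bare
        return stem + bare  # embrace -> embraceable, clear -> clearly
    if suffix == "-less":
        return stem + "less"  # care -> careless, wit -> witless
    raise ValueError(f"unsupported suffix: {suffix}")
```

The rules happily produce derive("sleep", "-able") == "sleepable". Ruling that out needs lexical information that the spelling rules do not have.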

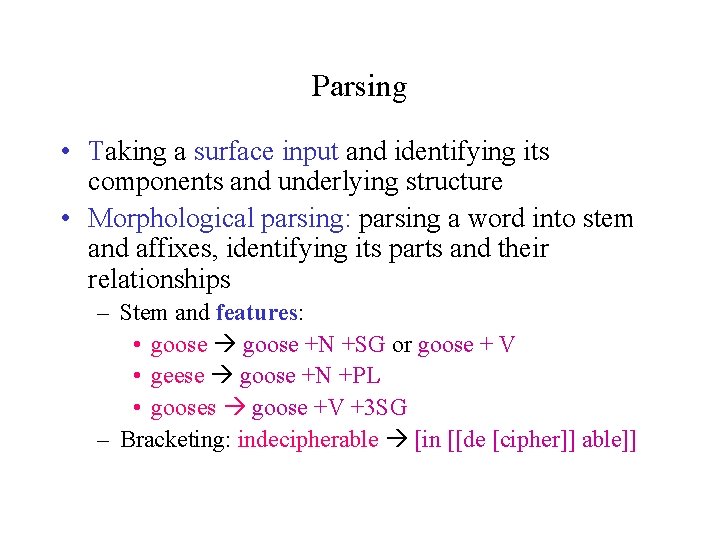

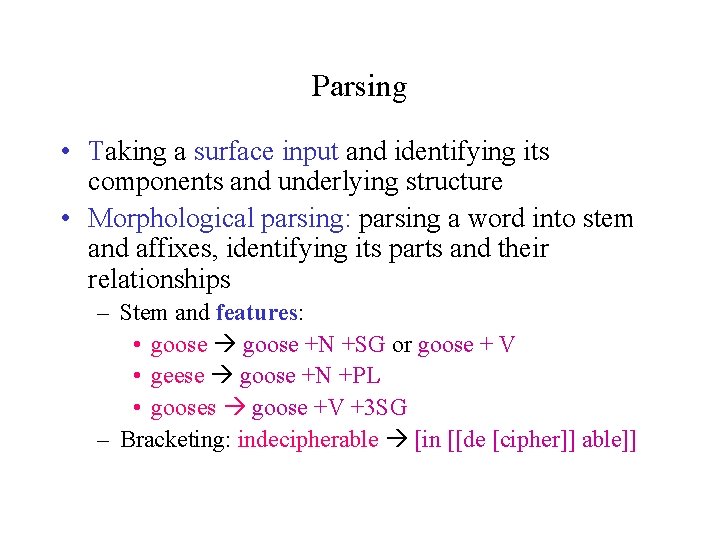

Parsing
• Taking a surface input and identifying its components and underlying structure
• Morphological parsing: parsing a word into stem and affixes, identifying its parts and their relationships
  – Stem and features:
    • goose → goose +N +SG or goose +V
    • geese → goose +N +PL
    • gooses → goose +V +3SG
  – Bracketing: indecipherable → [in [[de [cipher]] able]]
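The toy analyzer below produces the stem-and-feature output shown above. It assumes a hypothetical three-word lexicon and a single irregular plural. The hand-written string checks are purely illustrative; the rest of the lecture replaces them with finite-state machinery.

```python
from typing import List

# Hypothetical mini-lexicon: stem -> parts of speech it can take.
LEXICON = {"goose": {"N", "V"}, "cat": {"N"}, "like": {"V"}}
IRREGULAR_PLURALS = {"geese": "goose"}  # surface plural -> stem
# Nouns with irregular plurals never take regular -s as nouns.
IRREGULAR_PLURAL_STEMS = set(IRREGULAR_PLURALS.values())


def analyze(word: str) -> List[str]:
    """Return every stem + feature analysis of a word (empty list if none)."""
    analyses = []
    if word in IRREGULAR_PLURALS:
        analyses.append(f"{IRREGULAR_PLURALS[word]} +N +PL")
    for pos in sorted(LEXICON.get(word, ())):
        analyses.append(f"{word} +N +SG" if pos == "N" else f"{word} +V")
    stem = word[:-1]
    if word.endswith("s") and stem in LEXICON:
        if "N" in LEXICON[stem] and stem not in IRREGULAR_PLURAL_STEMS:
            analyses.append(f"{stem} +N +PL")
        if "V" in LEXICON[stem]:
            analyses.append(f"{stem} +V +3SG")
    return analyses
```

An empty result, as for "muncheble", doubles as a spell-check signal.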

Why parse words?
• For spell-checking
  – Is muncheble a legal word?
• To identify a word’s part of speech (POS)
  – For sentence parsing, for machine translation, …
• To identify a word’s stem
  – For information retrieval
• Why not just list all word forms in a lexicon?

How do people represent words?
• Hypotheses:
  – Full listing hypothesis: words are listed
  – Minimum redundancy hypothesis: morphemes are listed
• Experimental evidence:
  – Priming experiments (Does seeing/hearing one word facilitate recognition of another?) suggest neither
  – Regularly inflected forms prime the stem, but derived forms do not
  – But spoken derived words can prime stems if they are semantically close (e.g. government/govern but not department/depart)

• Speech errors suggest affixes must be represented separately in the mental lexicon
  – e.g. “easy enoughly”

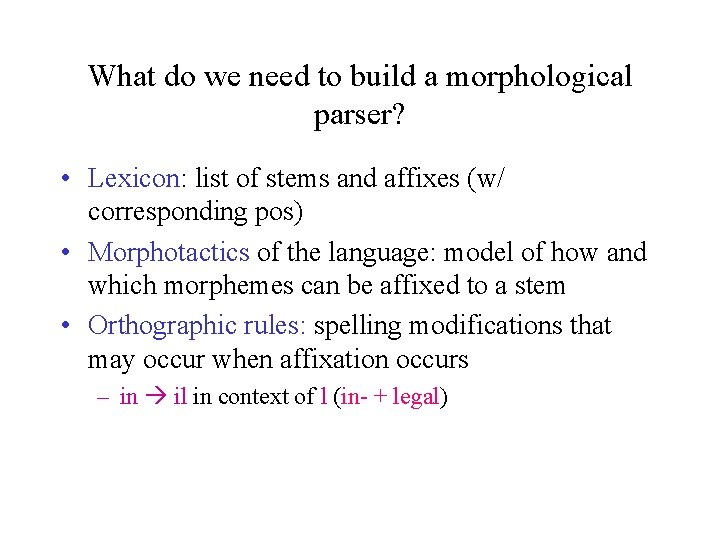

What do we need to build a morphological parser?
• Lexicon: list of stems and affixes (with corresponding POS)
• Morphotactics of the language: model of how and which morphemes can be affixed to a stem
• Orthographic rules: spelling modifications that may occur when affixation occurs
  – in → il in the context of l (in- + legal → illegal)
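The three components can be kept as separate pieces, as in this sketch. The lexicon entries, the `PREFIX_ATTACHES_TO` table, and the function names are hypothetical. Only the single in- → il- rule from the slide is implemented.

```python
# Hypothetical mini-lexicon: stem -> part of speech.
LEXICON = {"legal": "Adj", "decent": "Adj", "active": "Adj", "cat": "N"}
# Morphotactics: the part of speech each prefix may attach to.
PREFIX_ATTACHES_TO = {"in-": "Adj"}


def orthographic_rule(prefix: str, stem: str) -> str:
    """in- surfaces as il- before l (in- + legal -> illegal); otherwise concatenate."""
    bare = prefix.rstrip("-")
    if bare == "in" and stem.startswith("l"):
        return "il" + stem
    return bare + stem


def attach_prefix(prefix: str, stem: str) -> str:
    """Lexicon lookup, then a morphotactic check, then the spelling rule."""
    if stem not in LEXICON:
        raise KeyError(f"{stem!r} is not in the lexicon")
    if PREFIX_ATTACHES_TO.get(prefix) != LEXICON[stem]:
        raise ValueError(f"{prefix} does not attach to {LEXICON[stem]} stems")
    return orthographic_rule(prefix, stem)
```

Keeping the spelling rule separate from the morphotactics means that "incat" is rejected for grammatical reasons before any spelling is attempted.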

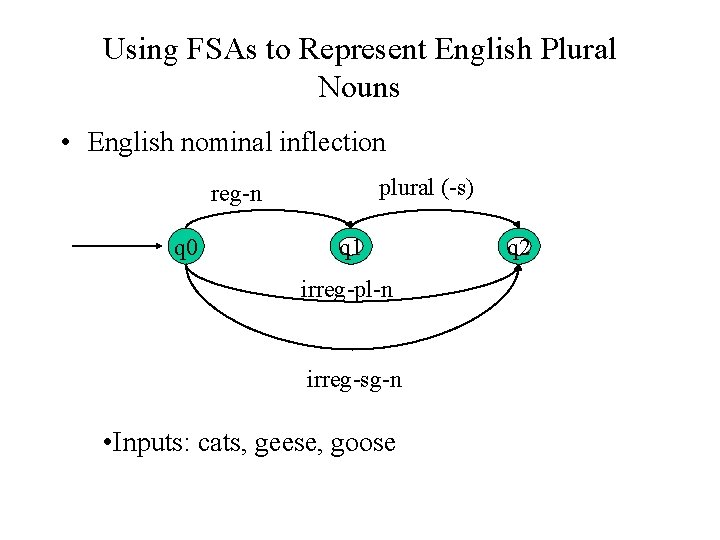

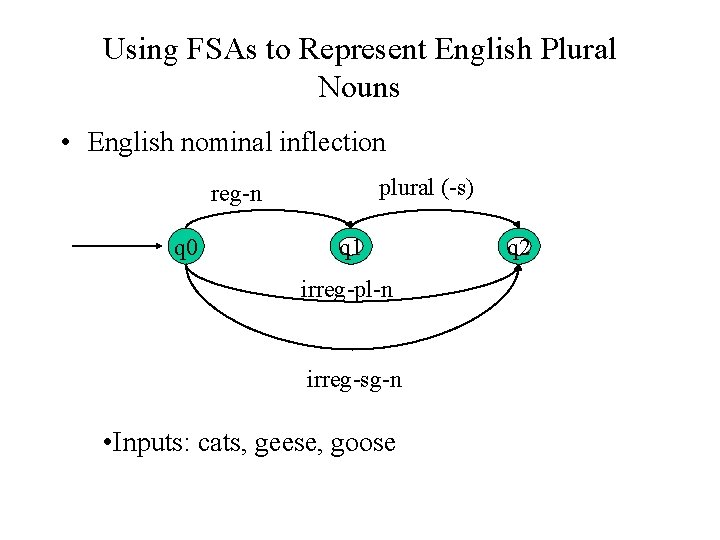

Using FSAs to Represent English Plural Nouns
• English nominal inflection
  [Figure: FSA with states q0, q1, q2; arcs q0 → q1 on reg-n, q1 → q2 on plural (-s), and q0 → q2 on irreg-pl-n and on irreg-sg-n]
• Inputs: cats, geese, goose

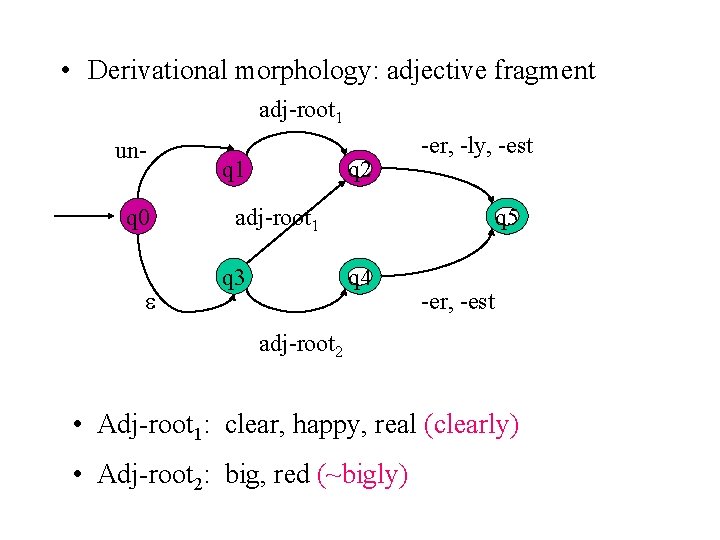

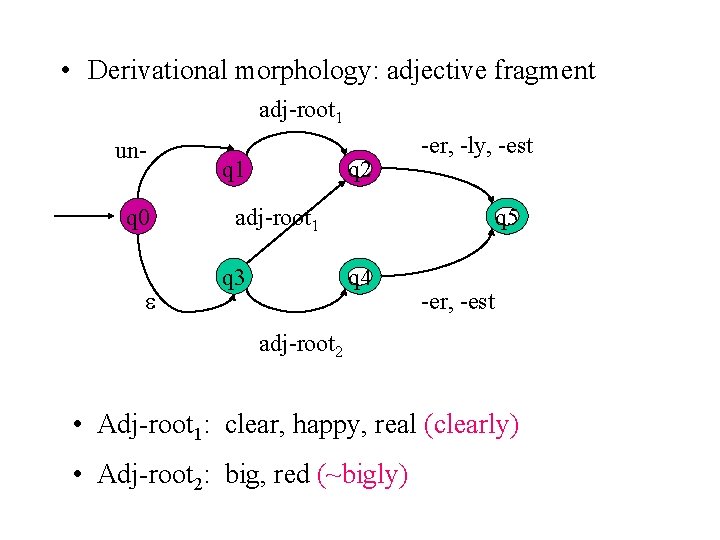

• Derivational morphology: adjective fragment
  [Figure: FSA with states q0–q5; arcs labeled un-, adj-root1, adj-root2, -er/-ly/-est (following adj-root1) and -er/-est (following adj-root2)]
• Adj-root1: clear, happy, real (clearly)
• Adj-root2: big, red (*bigly)
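Both automata above can be written as transition tables over morpheme classes rather than letters. This sketch rests on several assumptions. The sub-lexicon members are hypothetical. State q1 of the noun FSA is assumed to be final, so a bare cat is accepted. The adjective topology (optional un- before adj-root1 only, -ly only after adj-root1) is inferred from the arc labels and the clearly / *bigly contrast. Spelling changes such as fox + -s → foxes are deliberately ignored; those belong to the orthographic rules.

```python
from typing import Dict, List, Set, Tuple

Delta = Dict[Tuple[str, str], str]


def accepts(delta: Delta, finals: Set[str], symbols: List[str]) -> bool:
    """Run a deterministic FSA from q0 over a sequence of morpheme-class symbols."""
    state = "q0"
    for sym in symbols:
        if (state, sym) not in delta:
            return False
        state = delta[(state, sym)]
    return state in finals


# Nominal inflection; q1 is assumed final so that bare regular nouns (cat) pass.
NOUN_DELTA: Delta = {
    ("q0", "reg-n"): "q1",
    ("q1", "plural-s"): "q2",
    ("q0", "irreg-pl-n"): "q2",
    ("q0", "irreg-sg-n"): "q2",
}
NOUN_FINALS = {"q1", "q2"}
REG_N, IRREG_SG_N, IRREG_PL_N = {"cat", "bird"}, {"goose"}, {"geese"}  # hypothetical


def noun_readings(word: str) -> List[List[str]]:
    """Every way to read a word as a sequence of noun morpheme classes."""
    readings = []
    for cls, sublexicon in (("reg-n", REG_N), ("irreg-sg-n", IRREG_SG_N),
                            ("irreg-pl-n", IRREG_PL_N)):
        if word in sublexicon:
            readings.append([cls])
    if word.endswith("s") and word[:-1] in REG_N:
        readings.append(["reg-n", "plural-s"])
    return readings


def is_noun(word: str) -> bool:
    return any(accepts(NOUN_DELTA, NOUN_FINALS, r) for r in noun_readings(word))


# Adjective fragment (assumed topology): optional un- before adj-root1 only.
ADJ_DELTA: Delta = {
    ("q0", "un-"): "q1",
    ("q0", "adj-root1"): "q2",
    ("q1", "adj-root1"): "q2",
    ("q2", "-er"): "q5", ("q2", "-est"): "q5", ("q2", "-ly"): "q5",
    ("q0", "adj-root2"): "q3",
    ("q3", "-er"): "q4", ("q3", "-est"): "q4",
}
ADJ_FINALS = {"q2", "q3", "q4", "q5"}
```

With these tables, gooses and geeses are rejected because no path through the noun FSA consumes them. For adjectives, un- + adj-root1 + -ly (unhappily) is accepted, while adj-root2 + -ly (*bigly) is not.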

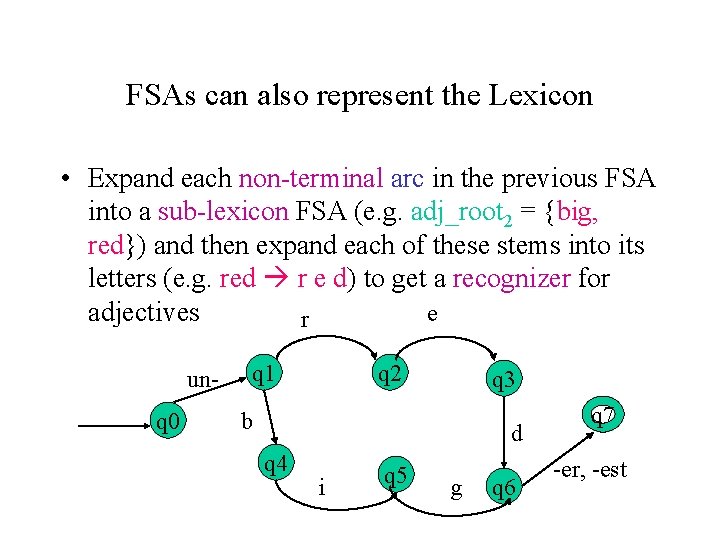

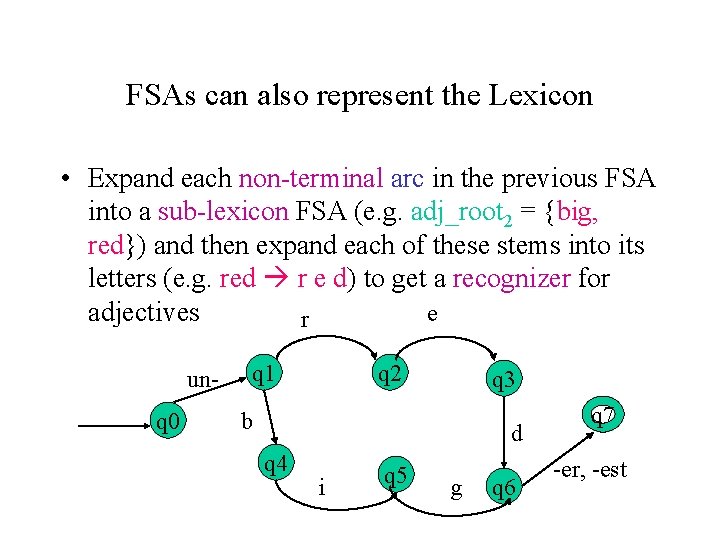

FSAs can also represent the Lexicon
• Expand each non-terminal arc in the previous FSA into a sub-lexicon FSA (e.g. adj-root2 = {big, red}), then expand each of these stems into its letters (e.g. red → r e d) to get a recognizer for adjectives
  [Figure: letter-level FSA with states q0–q7, an un- arc, letter arcs spelling b i g and r e d, and a final -er, -est arc]
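Expanding stems into letter arcs amounts to building a trie. The sketch below assumes a hypothetical `build_letter_fsa` helper. It omits the un- prefix and treats -er and -est as single suffix symbols, as the figure does, so spelling changes like big → bigger are not modeled.

```python
from typing import Dict, Iterable, List, Set, Tuple


def build_letter_fsa(stems: Iterable[str], suffixes: Iterable[str]):
    """Expand each stem into letter arcs (a trie rooted at state 0); every
    stem-final state then gets one arc per suffix symbol to a shared end state."""
    suffixes = list(suffixes)
    delta: Dict[Tuple[int, str], int] = {}
    stem_ends: List[int] = []
    n_states = 1  # state 0 is the start state
    for stem in stems:
        state = 0
        for letter in stem:
            if (state, letter) not in delta:
                delta[(state, letter)] = n_states
                n_states += 1
            state = delta[(state, letter)]
        stem_ends.append(state)
    suffix_state = n_states
    for end in stem_ends:
        for suffix in suffixes:
            delta[(end, suffix)] = suffix_state
    finals: Set[int] = set(stem_ends) | {suffix_state}
    return delta, finals


def accepts(delta, finals, symbols: List[str]) -> bool:
    """Follow one arc per symbol from state 0; accept if we end in a final state."""
    state = 0
    for sym in symbols:
        if (state, sym) not in delta:
            return False
        state = delta[(state, sym)]
    return state in finals
```

For instance, with delta, finals = build_letter_fsa(["big", "red"], ["-er", "-est"]), the input list("red") + ["-er"] is accepted and list("bi") is rejected.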

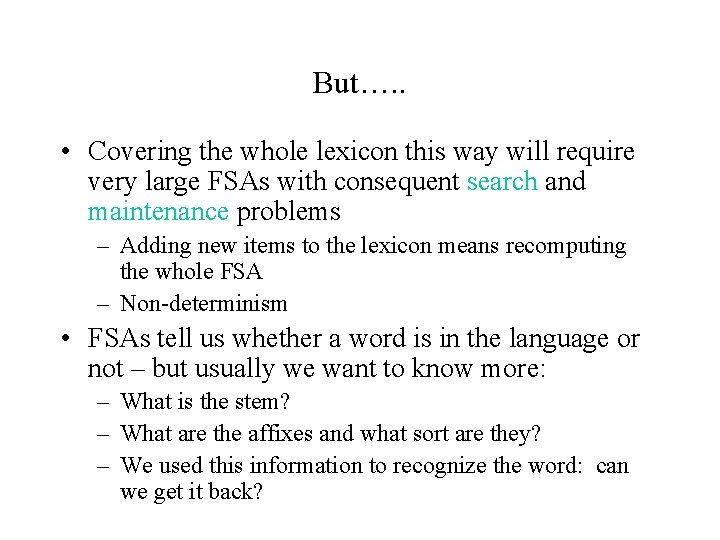

But…
• Covering the whole lexicon this way will require very large FSAs, with consequent search and maintenance problems
  – Adding new items to the lexicon means recomputing the whole FSA
  – Non-determinism
• FSAs tell us whether a word is in the language or not, but usually we want to know more:
  – What is the stem?
  – What are the affixes, and what sort are they?
  – We used this information to recognize the word: can we get it back?

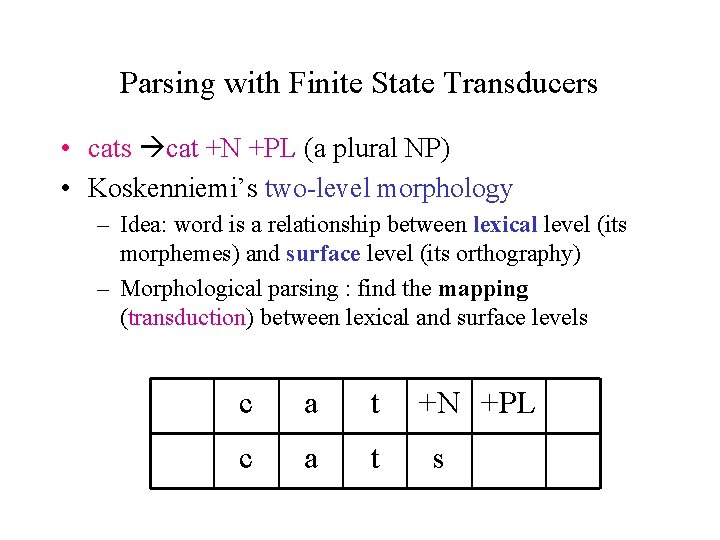

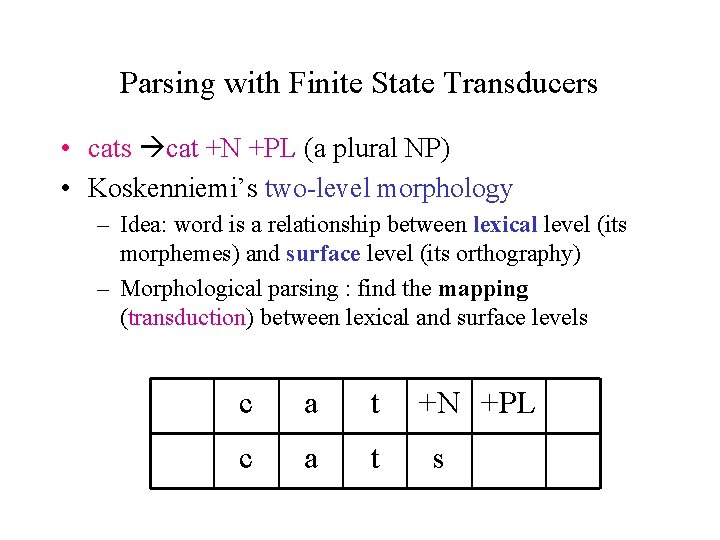

Parsing with Finite State Transducers
• cats → cat +N +PL (a plural NP)
• Koskenniemi’s two-level morphology
  – Idea: a word is a relationship between the lexical level (its morphemes) and the surface level (its orthography)
  – Morphological parsing: find the mapping (transduction) between the lexical and surface levels
    Lexical: c a t +N +PL
    Surface: c a t s
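The cats ↔ cat +N +PL correspondence can be encoded as a small transducer whose arcs carry lexical:surface pairs. In this sketch the arc list and function name are hypothetical, and "" stands for an empty symbol. Reading the lexical side generates surface forms; reading the surface side parses them. Only the single stem cat is covered.

```python
from typing import List, Tuple

# Arcs are (source, lexical symbol, surface symbol, target); "" is epsilon.
ARCS = [
    (0, "c", "c", 1), (1, "a", "a", 2), (2, "t", "t", 3),
    (3, "+N", "", 4),
    (4, "+SG", "", 5), (4, "+PL", "s", 5),
]
START, FINALS = 0, {5}


def transduce(tokens: List[str], reading: str) -> List[Tuple[str, ...]]:
    """reading='lexical' generates surface forms; reading='surface' parses them.
    Assumes no cycles of epsilon arcs on the side being read."""
    inp, outp = (1, 2) if reading == "lexical" else (2, 1)
    results = []
    stack = [(START, 0, ())]
    while stack:
        state, i, out = stack.pop()
        if i == len(tokens) and state in FINALS:
            results.append(out)
        for arc in ARCS:
            if arc[0] != state:
                continue
            read, write = arc[inp], arc[outp]
            emitted = out + ((write,) if write else ())
            if read == "":  # epsilon on the input side: move without consuming
                stack.append((arc[3], i, emitted))
            elif i < len(tokens) and tokens[i] == read:
                stack.append((arc[3], i + 1, emitted))
    return results
```

The same arc list serves both directions. Only the choice of which pair component counts as input changes, which is the core idea behind the two-level approach.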

Finite State Transducers can represent this mapping
• FSTs map between one set of symbols and another using an FSA whose alphabet is composed of pairs of symbols from the input and output alphabets
• In general, FSTs can be used as
  – Translators (Hello:Ciao)
  – Parsers/generators (Hello:How may I help you?)
  – As well as for Kimmo-style morphological parsing

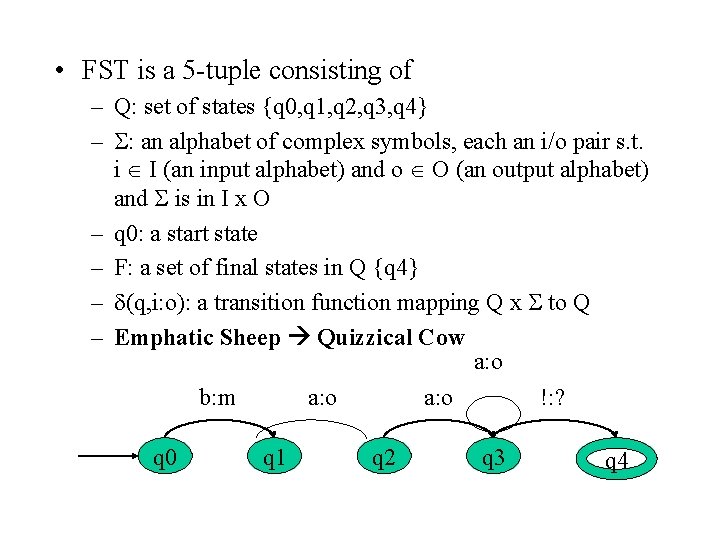

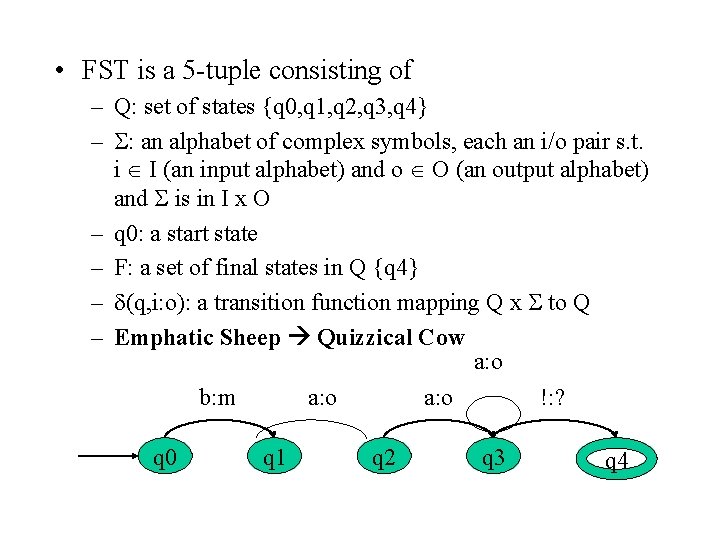

• An FST is a 5-tuple consisting of
  – $Q$: a set of states, e.g. $\{q_0, q_1, q_2, q_3, q_4\}$
  – $\Sigma$: an alphabet of complex symbols, each an i/o pair $i\!:\!o$ such that $i \in I$ (an input alphabet) and $o \in O$ (an output alphabet), so $\Sigma \subseteq I \times O$
  – $q_0$: a start state
  – $F$: a set of final states in $Q$, e.g. $\{q_4\}$
  – $\delta(q, i\!:\!o)$: a transition function mapping $Q \times \Sigma$ to $Q$
• Example: Emphatic Sheep → Quizzical Cow
  [Figure: FST with states q0–q4 and arcs labeled b:m, a:o, a:o, !:?]
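Here the 5-tuple is written out literally for the sheep → cow example. The self-loop on a:o at q3 is an assumption, since the figure's arcs are only partly recoverable. It lets baa+! map to moo+?.

```python
# The five components of the tuple, written out explicitly.
Q = {"q0", "q1", "q2", "q3", "q4"}
SIGMA = {("b", "m"), ("a", "o"), ("!", "?")}  # i:o pairs, a subset of I x O
START = "q0"
F = {"q4"}
DELTA = {  # delta: Q x Sigma -> Q
    ("q0", ("b", "m")): "q1",
    ("q1", ("a", "o")): "q2",
    ("q2", ("a", "o")): "q3",
    ("q3", ("a", "o")): "q3",  # assumed self-loop, so baa+! is accepted
    ("q3", ("!", "?")): "q4",
}
# Sanity check: every transition stays inside Q and uses a symbol from SIGMA.
assert all(s in Q and t in Q and p in SIGMA for (s, p), t in DELTA.items())


def transduce(word: str):
    """Map an input string to its output string, or return None if rejected."""
    state, out = START, []
    for ch in word:
        # In this FST each input symbol belongs to exactly one pair in SIGMA.
        pair = next((p for p in SIGMA if p[0] == ch), None)
        if pair is None or (state, pair) not in DELTA:
            return None
        state = DELTA[(state, pair)]
        out.append(pair[1])
    return "".join(out) if state in F else None
```

Because every input symbol here has a single output partner, the transducer is deterministic. Each accepted sheep utterance maps to exactly one cow utterance.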

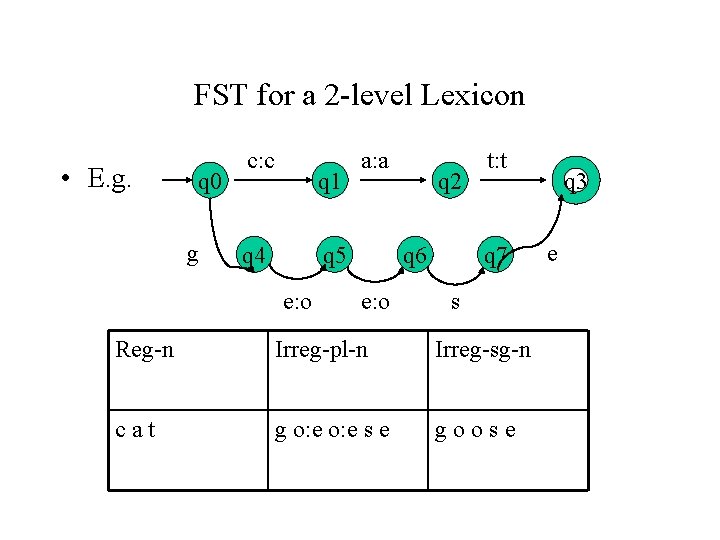

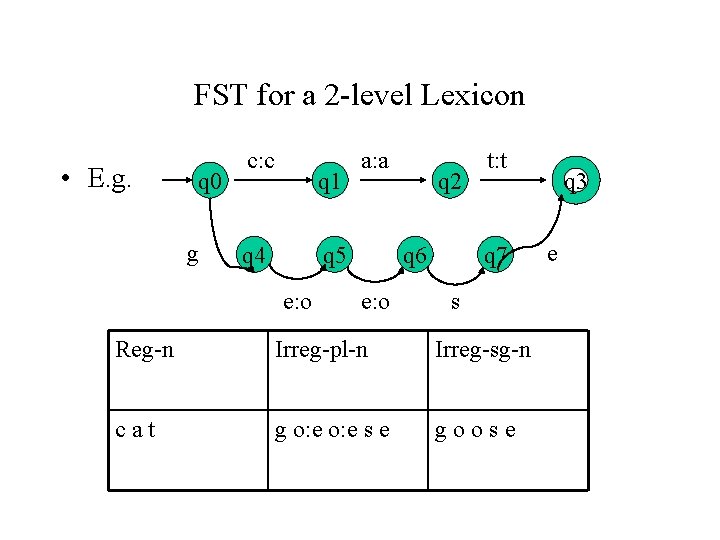

FST for a 2-level Lexicon
• E.g.
  [Figure: FST with states q0–q7 and three paths from q0: Reg-n “cat” (c:c a:a t:t); Irreg-sg-n “goose” (g o o s e); Irreg-pl-n pairing goose with geese via arcs that pair o with e]
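The sketch below models the two-level lexicon as pair-labelled paths, with each pair written lexical:surface. The state numbers are this sketch's own, and each word gets its own unshared path for simplicity. Reading the surface side recovers the lexical stem and the sub-lexicon it came from.

```python
from typing import List, Tuple

# Arcs are (source, lexical, surface, target), written lexical:surface.
ARCS = [
    # Reg-n: cat (identity pairs)
    (0, "c", "c", 1), (1, "a", "a", 2), (2, "t", "t", 3),
    # Irreg-sg-n: goose (identity pairs)
    (0, "g", "g", 4), (4, "o", "o", 5), (5, "o", "o", 6), (6, "s", "s", 7), (7, "e", "e", 8),
    # Irreg-pl-n: lexical goose realized on the surface as geese via o:e pairs
    (0, "g", "g", 9), (9, "o", "e", 10), (10, "o", "e", 11), (11, "s", "s", 12), (12, "e", "e", 13),
]
FINAL_CLASS = {3: "Reg-n", 8: "Irreg-sg-n", 13: "Irreg-pl-n"}


def parse(surface: str) -> List[Tuple[str, str]]:
    """Read the surface side; return (lexical form, sub-lexicon class) for each path."""
    results = []
    stack = [(0, 0, "")]
    while stack:
        state, i, lexical = stack.pop()
        if i == len(surface):
            if state in FINAL_CLASS:
                results.append((lexical, FINAL_CLASS[state]))
            continue
        for src, lex, surf, dst in ARCS:
            if src == state and surf == surface[i]:
                stack.append((dst, i + 1, lexical + lex))
    return results
```

Both g paths are tried for geese and goose. Only the one whose surface letters match survives, so the class label tells the next stage whether the noun is plural.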

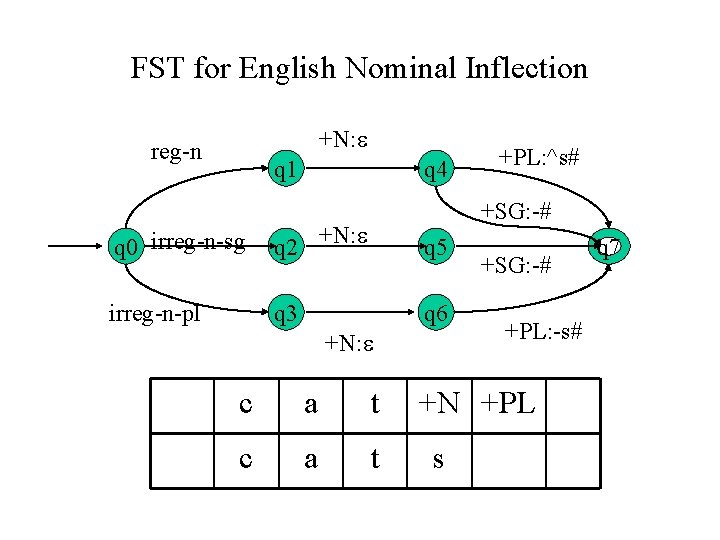

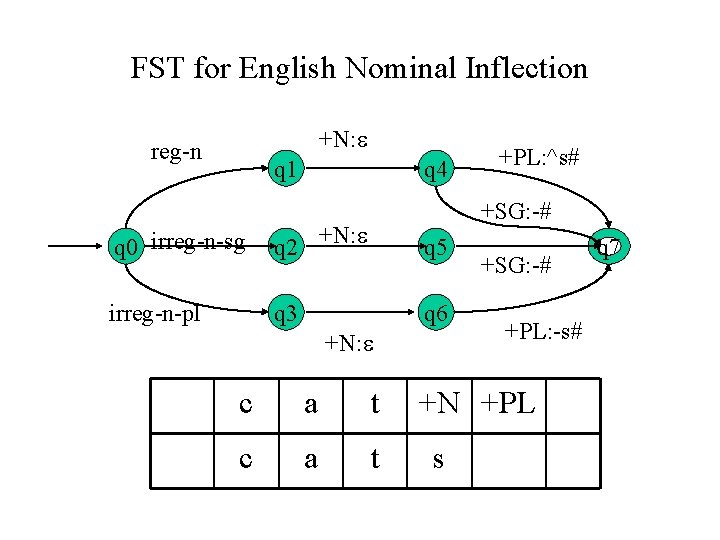

FST for English Nominal Inflection
  [Figure: FST with states q0–q7; arcs from q0 for reg-n, irreg-n-sg, and irreg-n-pl stems, each followed by a +N arc and then +SG or +PL arcs (e.g. +PL:^s# after reg-n); example: c a t +N +PL → c a t s]
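This sketch attaches the feature arcs to sub-lexicon arcs and writes an intermediate tape. It assumes ^ marks a morpheme boundary and # a word boundary, following the usual two-level convention; the figure's labels are only partly recoverable. The sub-lexicon contents are hypothetical.

```python
from typing import List

# Hypothetical sub-lexicons: lexical stem -> surface stem.
REG_N = {"cat": "cat", "fox": "fox"}
IRREG_N_SG = {"goose": "goose", "mouse": "mouse"}
IRREG_N_PL = {"goose": "geese", "mouse": "mice"}

# Arcs are (source, lexical, intermediate, target); "" writes nothing.
ARCS = (
    [(0, lex, surf, 1) for lex, surf in REG_N.items()]
    + [(0, lex, surf, 2) for lex, surf in IRREG_N_SG.items()]
    + [(0, lex, surf, 3) for lex, surf in IRREG_N_PL.items()]
    + [
        (1, "+N", "", 4), (4, "+SG", "#", 7), (4, "+PL", "^s#", 7),
        (2, "+N", "", 5), (5, "+SG", "#", 7),
        (3, "+N", "", 6), (6, "+PL", "#", 7),
    ]
)
FINALS = {7}


def generate(tokens: List[str]) -> List[str]:
    """Map a lexical token sequence (stem, +N, +SG/+PL) to intermediate-tape strings."""
    results = []
    stack = [(0, 0, "")]
    while stack:
        state, i, out = stack.pop()
        if i == len(tokens):
            if state in FINALS:
                results.append(out)
            continue
        for src, lex, inter, dst in ARCS:
            if src == state and lex == tokens[i]:
                stack.append((dst, i + 1, out + inter))
    return results
```

For example, generate(["fox", "+N", "+PL"]) yields "fox^s#". Turning that into the surface form foxes is left to the orthographic rules.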

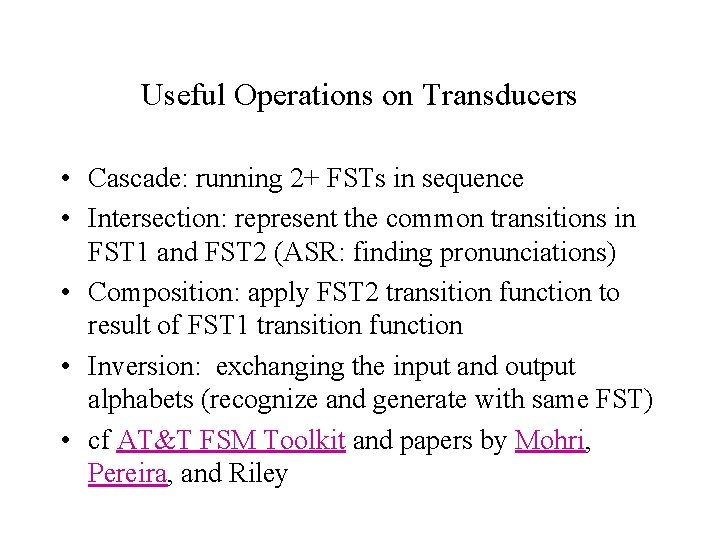

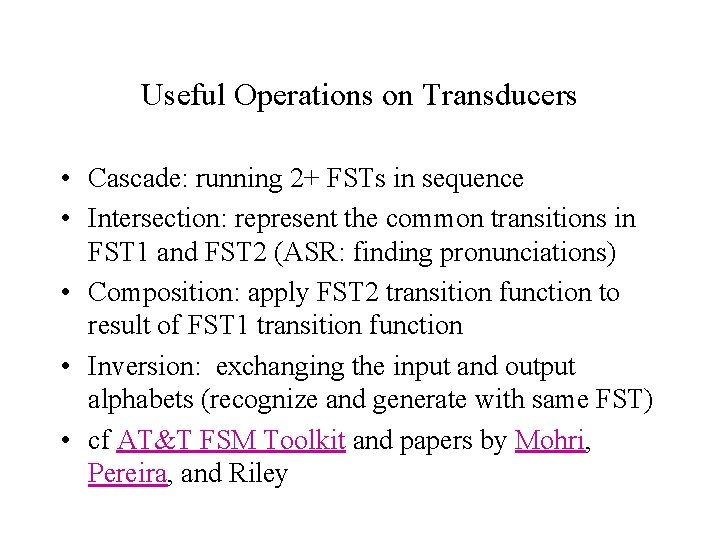

Useful Operations on Transducers
• Cascade: running 2+ FSTs in sequence
• Intersection: represent the common transitions in FST1 and FST2 (ASR: finding pronunciations)
• Composition: apply the FST2 transition function to the result of the FST1 transition function
• Inversion: exchange the input and output alphabets (recognize and generate with the same FST)
• Cf. the AT&T FSM Toolkit and papers by Mohri, Pereira, and Riley
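Inversion and composition take only a few lines for epsilon-free transducers, which is the simplifying assumption made here. Intersection and epsilon handling are omitted. The second transducer, `SHOUT`, is a hypothetical example chosen only so that the composition has a visible effect.

```python
from typing import List, Set, Tuple

# An epsilon-free FST: (start, finals, arcs), each arc (source, input, output, target).
Arc = Tuple[object, str, str, object]
FST = Tuple[object, Set[object], List[Arc]]


def invert(fst: FST) -> FST:
    """Swap input and output on every arc."""
    start, finals, arcs = fst
    return start, finals, [(s, out, inp, t) for (s, inp, out, t) in arcs]


def compose(f1: FST, f2: FST) -> FST:
    """Product construction: f1's output symbol must equal f2's input symbol."""
    (s1, finals1, arcs1), (s2, finals2, arcs2) = f1, f2
    arcs = [((p, q), a, c, (p2, q2))
            for (p, a, b, p2) in arcs1
            for (q, b2, c, q2) in arcs2
            if b == b2]
    finals = {(p, q) for p in finals1 for q in finals2}
    return (s1, s2), finals, arcs


def run(fst: FST, tokens: str) -> List[str]:
    """All outputs for an input string (tracks every nondeterministic path)."""
    start, finals, arcs = fst
    configs = [(start, "")]
    for tok in tokens:
        configs = [(t, out + o) for (state, out) in configs
                   for (s, i, o, t) in arcs if s == state and i == tok]
    return [out for state, out in configs if state in finals]


SHEEP_TO_COW: FST = ("q0", {"q4"}, [
    ("q0", "b", "m", "q1"), ("q1", "a", "o", "q2"), ("q2", "a", "o", "q3"),
    ("q3", "a", "o", "q3"), ("q3", "!", "?", "q4"),
])
# Hypothetical second FST: capitalizes the cow's output and turns ? into !.
SHOUT: FST = ("r0", {"r0"}, [
    ("r0", "m", "M", "r0"), ("r0", "o", "O", "r0"), ("r0", "?", "!", "r0"),
])
```

With these definitions, run(invert(SHEEP_TO_COW), "moo?") recognizes in the reverse direction and returns ["baa!"]. The call run(compose(SHEEP_TO_COW, SHOUT), "baa!") behaves like the cascade of the two machines and returns ["MOO!"].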

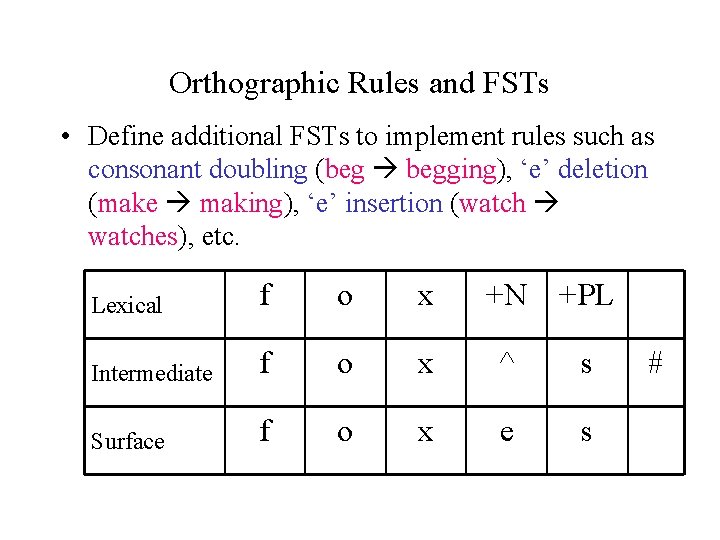

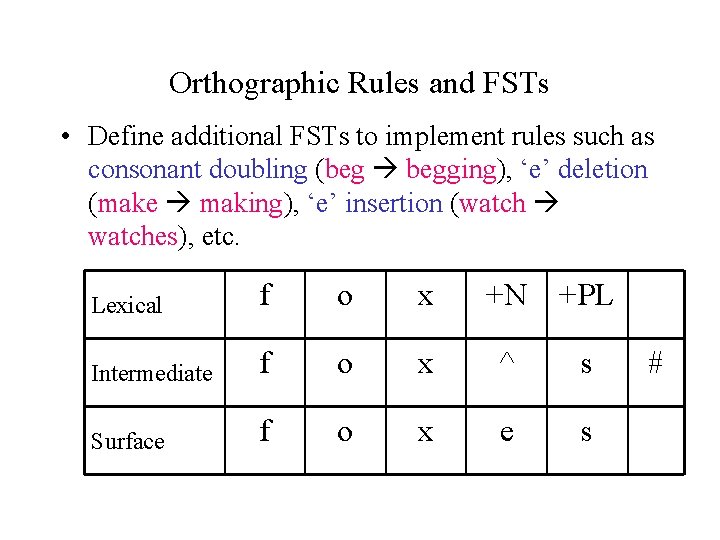

Orthographic Rules and FSTs
• Define additional FSTs to implement rules such as consonant doubling (beg → begging), ‘e’ deletion (make → making), ‘e’ insertion (watch → watches), etc.
  Lexical:      f o x +N +PL
  Intermediate: f o x ^ s #
  Surface:      f o x e s
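The e-insertion and e-deletion rules can be mimicked as ordered rewrites on the intermediate tape. Note the swapped-in technique: this sketch uses Python regular-expression substitutions rather than FSTs. Consonant doubling is left out.

```python
import re


def e_insertion(tape: str) -> str:
    """Insert e after x, s, z, ch or sh before a ^s# plural suffix (fox^s# -> fox^es#)."""
    return re.sub(r"(ch|sh|[xsz])\^(?=s#)", r"\1^e", tape)


def e_deletion(tape: str) -> str:
    """Delete a stem-final e (not -ee) before ^ing# (make^ing# -> mak^ing#)."""
    return re.sub(r"([^e])e\^(?=ing#)", r"\1^", tape)


def to_surface(tape: str) -> str:
    """Apply the spelling rules, then erase the ^ and # boundary markers."""
    return e_deletion(e_insertion(tape)).replace("^", "").replace("#", "")
```

The boundary markers are what make the rules safe. The e is inserted only where a morpheme boundary meets the plural -s, so a stem-internal "xs" is never touched.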

Porter Stemmer
• Used for tasks in which you only care about the stem
  – IR, modeling the given/new distinction, topic detection, document similarity
• Rewrite rules (e.g. misunderstanding → misunderstand → …)
• Not perfect, but sometimes it doesn’t matter too much
• Fast and easy
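Porter’s algorithm is a sequence of such rewrite steps. The sketch below implements only its first step (1a, plural endings). The later steps, including the measure-based conditions that remove -ing, are omitted. For real use, a complete implementation such as NLTK’s `PorterStemmer` is available.

```python
# Porter's step 1a: ordered (suffix, replacement) rules, longest suffix first.
STEP_1A = [("sses", "ss"), ("ies", "i"), ("ss", "ss"), ("s", "")]


def porter_step_1a(word: str) -> str:
    """Apply the first rule whose suffix matches; later Porter steps are omitted."""
    for suffix, replacement in STEP_1A:
        if word.endswith(suffix):
            return word[: len(word) - len(suffix)] + replacement
    return word
```

porter_step_1a("ponies") returns "poni", which is not a word. For IR this often doesn’t matter, as long as related forms reduce to the same string.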

Summing Up
• FSTs provide a useful tool for implementing a standard model of morphological analysis, Kimmo’s two-level morphology
• But for many tasks (e.g. IR) much simpler approaches are still widely used, e.g. the rule-based Porter Stemmer
• Next time:
  – Read Ch 4
  – Read over HW 1 and ask questions now