

- Slides: 21

Lecture 25: Advanced Data Prefetching Techniques

Prefetching and data prefetching overview, stride prefetching, Markov prefetching, precomputation-based prefetching

Zhao Zhang, CPRE 585 Fall 2003

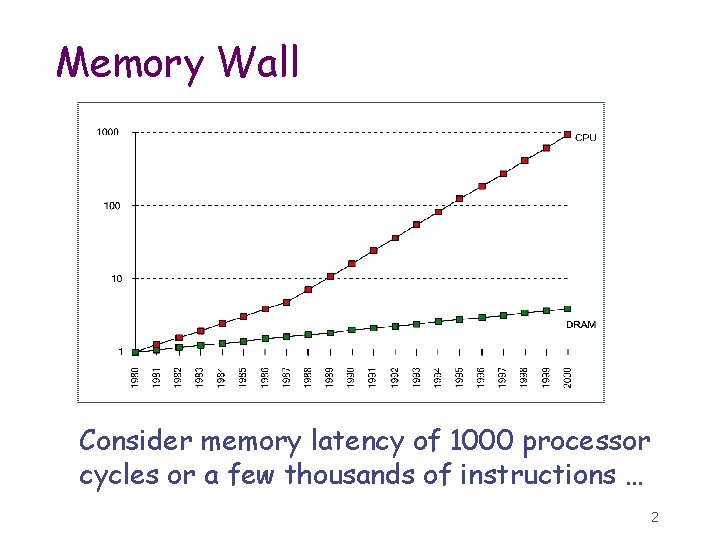

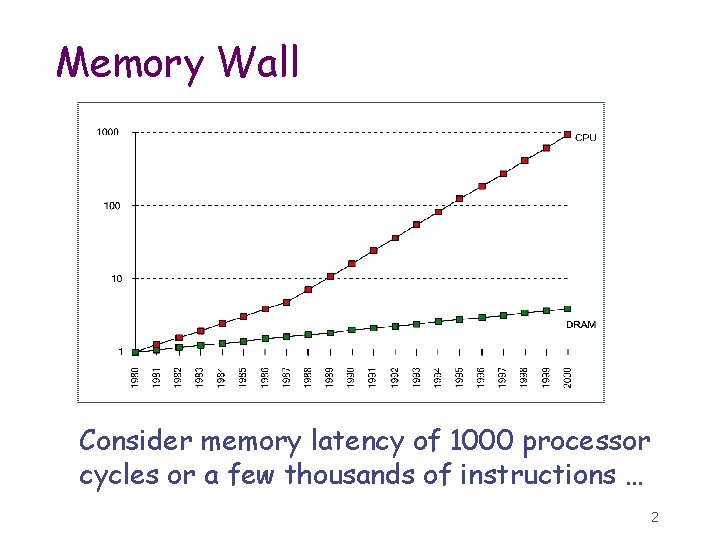

Memory Wall

Consider a memory latency of 1000 processor cycles, or a few thousand instructions …

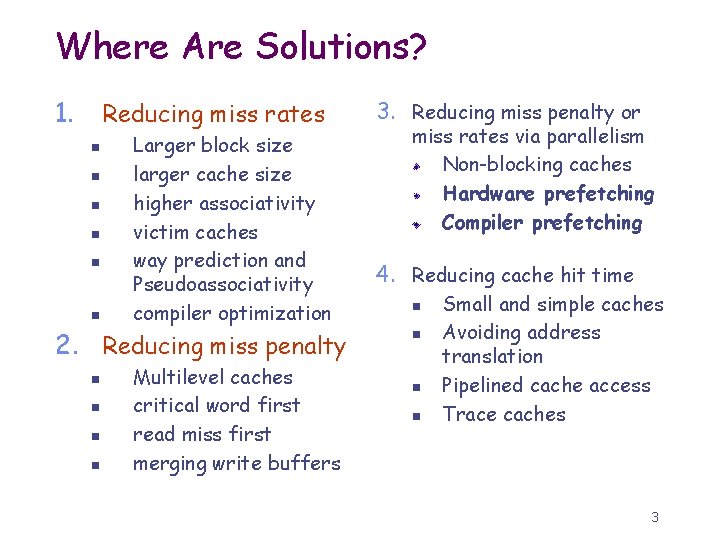

Where Are Solutions?

1. Reducing miss rates
   - Larger block size
   - Larger cache size
   - Higher associativity
   - Victim caches
   - Way prediction and pseudo-associativity
   - Compiler optimization
2. Reducing miss penalty
   - Multilevel caches
   - Critical word first
   - Read miss first
   - Merging write buffers
3. Reducing miss penalty or miss rates via parallelism
   - Non-blocking caches
   - Hardware prefetching
   - Compiler prefetching
4. Reducing cache hit time
   - Small and simple caches
   - Avoiding address translation
   - Pipelined cache access
   - Trace caches

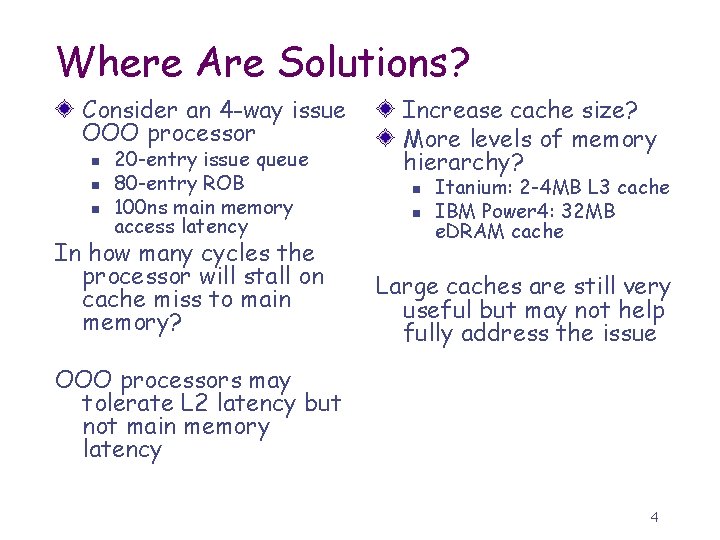

Where Are Solutions?

Consider a 4-way issue OOO processor with:

- 20-entry issue queue
- 80-entry ROB
- 100 ns main memory access latency

For how many cycles will the processor stall on a cache miss to main memory?

Increase cache size? More levels of memory hierarchy?

- Itanium: 2-4 MB L3 cache
- IBM Power4: 32 MB eDRAM cache

Large caches are still very useful, but may not fully address the issue.

OOO processors may tolerate L2 latency but not main memory latency.
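The stall question above can be estimated with a back-of-the-envelope model. The sketch below is only an illustration under stated assumptions: the slide gives no clock frequency, so the clock rate is a parameter, and the model assumes the processor keeps dispatching at full issue width until the ROB fills and then stalls until the miss returns. It ignores the 20-entry issue queue, which may fill earlier when later instructions depend on the missing load. The function name `stall_cycles` is hypothetical.

```c
#include <assert.h>

/*
 * Rough estimate of stall cycles for one miss to main memory.
 * Assumed model: the missing load blocks commit, the front end keeps
 * dispatching at issue_width instructions per cycle until the ROB is full,
 * and the processor then stalls until the data returns.
 * clock_mhz is an assumption; the slide does not give a frequency.
 */
long stall_cycles(long latency_ns, long clock_mhz, long rob_entries, long issue_width)
{
    assert(latency_ns > 0 && clock_mhz > 0 && rob_entries > 0 && issue_width > 0);

    /* Memory latency expressed in processor cycles. */
    long miss_cycles = latency_ns * clock_mhz / 1000;

    /* Cycles of useful dispatch before the ROB is full (rounded up). */
    long fill_cycles = (rob_entries + issue_width - 1) / issue_width;

    return miss_cycles > fill_cycles ? miss_cycles - fill_cycles : 0;
}
```

With an assumed 2 GHz clock, `stall_cycles(100, 2000, 80, 4)` gives 200 cycles of latency, of which only 20 are hidden by filling the ROB, leaving about 180 stall cycles.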

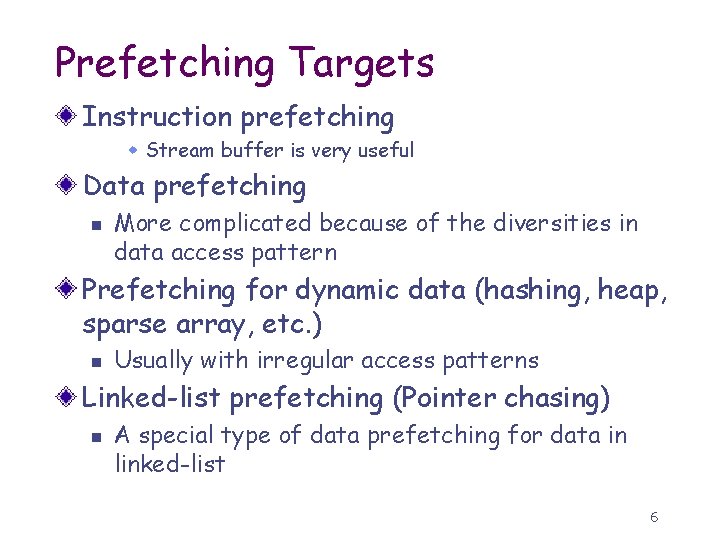

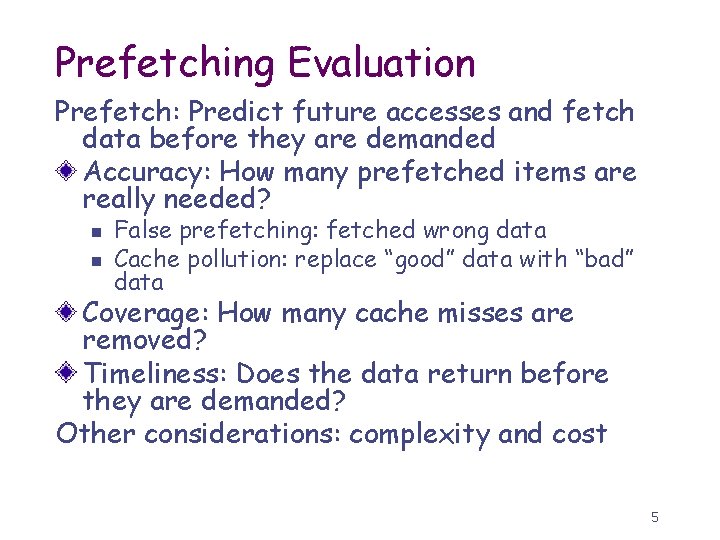

Prefetching Evaluation

Prefetch: predict future accesses and fetch data before they are demanded.

- Accuracy: how many prefetched items are really needed?
  - False prefetching: fetched wrong data
  - Cache pollution: replaces “good” data with “bad” data
- Coverage: how many cache misses are removed?
- Timeliness: does the data return before it is demanded?
- Other considerations: complexity and cost
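The three metrics can be written as simple ratios over simulation counters. The sketch below uses one common set of definitions, which is an assumption here since the slide states the metrics only as questions; the struct `PrefetchStats` and its field names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical counters collected by a cache simulator. */
typedef struct {
    unsigned long prefetches_issued;   /* all prefetches sent to memory */
    unsigned long prefetches_used;     /* prefetched blocks later referenced on demand */
    unsigned long prefetches_on_time;  /* used prefetches that arrived before the demand access */
    unsigned long baseline_misses;     /* misses without prefetching */
    unsigned long remaining_misses;    /* misses with prefetching enabled */
} PrefetchStats;

/* Accuracy: fraction of issued prefetches that were actually needed. */
double prefetch_accuracy(const PrefetchStats *s)
{
    assert(s != NULL && s->prefetches_issued > 0);
    return (double)s->prefetches_used / (double)s->prefetches_issued;
}

/* Coverage: fraction of baseline misses removed by prefetching. */
double prefetch_coverage(const PrefetchStats *s)
{
    assert(s != NULL && s->baseline_misses > 0);
    assert(s->remaining_misses <= s->baseline_misses);
    return (double)(s->baseline_misses - s->remaining_misses) / (double)s->baseline_misses;
}

/* Timeliness: fraction of useful prefetches that returned before the demand access. */
double prefetch_timeliness(const PrefetchStats *s)
{
    assert(s != NULL && s->prefetches_used > 0);
    return (double)s->prefetches_on_time / (double)s->prefetches_used;
}
```

For example, 100 issued prefetches of which 50 are used and 25 arrive on time, with misses dropping from 200 to 150, give an accuracy of 0.5, a coverage of 0.25, and a timeliness of 0.5.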

Prefetching Targets

- Instruction prefetching
  - Stream buffer is very useful
- Data prefetching
  - More complicated because of the diversity of data access patterns
- Prefetching for dynamic data (hashing, heap, sparse array, etc.)
  - Usually with irregular access patterns
- Linked-list prefetching (pointer chasing)
  - A special type of data prefetching for data in linked lists

Prefetching Implementations

- Sequential and stride prefetching
  - Tagged prefetching
  - Simple stream buffer
  - Stride prefetching
- Correlation-based prefetching
  - Markov prefetching
  - Dead-block correlating prefetching
- Precomputation-based
  - Keep running programs on cache misses; or
  - Use separate hardware for prefetching; or
  - Use compiler-generated threads on multithreaded processors
- Other considerations
  - Predict on miss addresses or reference addresses?
  - Prefetch into the cache or a temporary buffer?
  - Demand-based or decoupled prefetching?

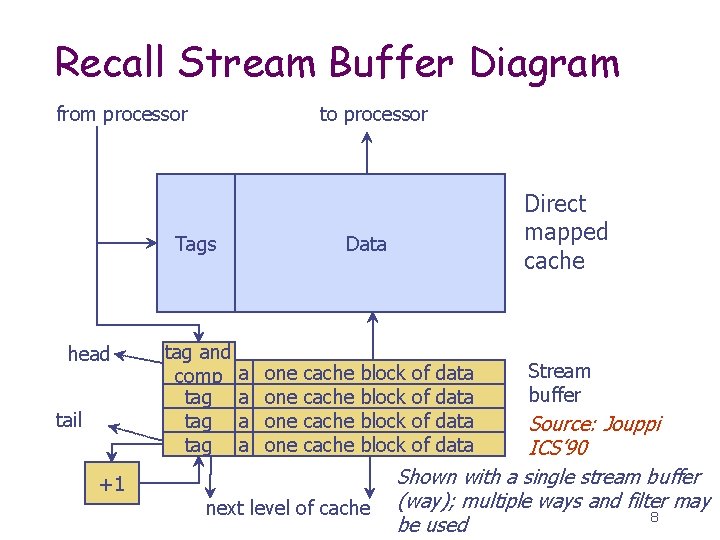

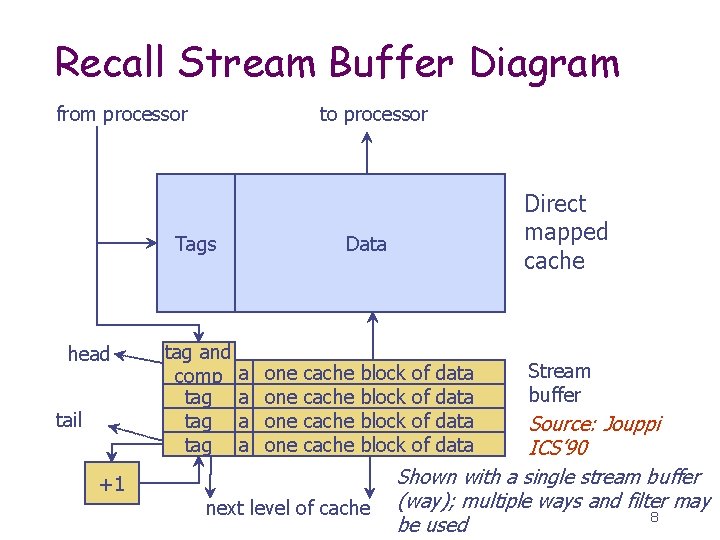

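Recall Stream Buffer Diagram

[Figure: a direct-mapped cache (tags and data) sits between the processor and the next level of cache, with a stream buffer beside it. Each stream buffer entry holds a tag and one cache block of data; the head entry has a tag comparator, and a +1 incrementer generates the next block address fetched into the tail.]

Source: Jouppi, ISCA ’90. Shown with a single stream buffer (way); multiple ways and a filter may be used.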
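As a reminder of the mechanism, here is a minimal sketch of a single stream buffer that tracks block addresses only. It is an illustration under assumptions, not Jouppi's exact design: the depth `SB_DEPTH`, the flush-on-mismatch policy, and all names are hypothetical, and prefetch latency is not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SB_DEPTH 4  /* assumed number of entries */

typedef struct {
    uint64_t block[SB_DEPTH]; /* block addresses held in the FIFO */
    int      head;            /* index of the oldest entry */
    bool     valid;           /* false until the first allocation */
    uint64_t next_block;      /* next sequential block to prefetch (+1) */
} StreamBuffer;

void sb_init(StreamBuffer *sb)
{
    assert(sb != NULL);
    sb->head = 0;
    sb->valid = false;
    sb->next_block = 0;
}

/* Flush the buffer and start prefetching the blocks after miss_block. */
static void sb_restart(StreamBuffer *sb, uint64_t miss_block)
{
    for (int i = 0; i < SB_DEPTH; i++)
        sb->block[i] = miss_block + 1 + (uint64_t)i;
    sb->head = 0;
    sb->valid = true;
    sb->next_block = miss_block + 1 + SB_DEPTH;
}

/* Called on a cache miss; returns true if the head of the buffer supplies the block. */
bool sb_on_cache_miss(StreamBuffer *sb, uint64_t miss_block)
{
    assert(sb != NULL);
    if (sb->valid && sb->block[sb->head] == miss_block) {
        /* Hit: the block moves to the cache and the freed slot becomes the new tail. */
        sb->block[sb->head] = sb->next_block++;
        sb->head = (sb->head + 1) % SB_DEPTH;
        return true;
    }
    sb_restart(sb, miss_block);
    return false;
}
```

In this sketch, a miss to block 100 followed by misses to blocks 101 and 102 hits in the buffer on the second and third access, while a descending sequence such as 50, 49 never hits, because only the next sequential blocks are prefetched.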

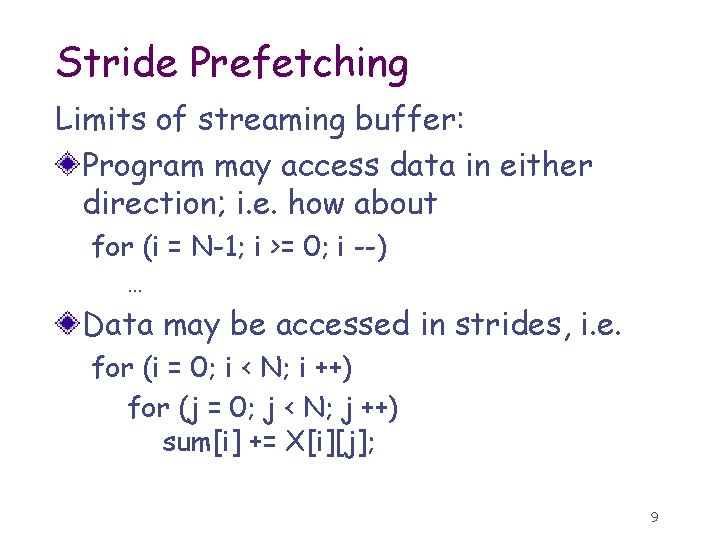

Stride Prefetching

Limits of stream buffers:

- A program may access data in either direction, e.g. `for (i = N-1; i >= 0; i--) …`
- Data may be accessed in strides, e.g. `for (i = 0; i < N; i++) for (j = 0; j < N; j++) sum[i] += X[j][i];`

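Stride Prefetching Diagram

[Figure: the PC of a load indexes the reference prediction table. Each entry holds an instruction tag, previous address, stride, and state; an adder combines the address with the stride to form the prefetch address.]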

![Stride Prefetching Example](https://slidetodoc.com/presentation_image_h/06057a6eedce57689bc4673ab62ec8de/image-11.jpg)

Stride Prefetching Example

`float a[100][100], b[100][100], c[100][100];`

`for (i = 0; i < 100; i++) for (j = 0; j < 100; j++) for (k = 0; k < 100; k++) a[i][j] += b[i][k] * c[k][j];`

Reference prediction table contents after each of the first three iterations of the inner loop:

| Iteration | tag | addr | stride | state |
|---|---|---|---|---|
| 1 | Load b | 20000 | 0 | init |
| 1 | Load c | 30000 | 0 | init |
| 1 | Load a | 30000 | 0 | init |
| 2 | Load b | 20004 | 4 | trans. |
| 2 | Load c | 30400 | 400 | trans. |
| 2 | Load a | 30000 | 0 | steady |
| 3 | Load b | 20008 | 4 | steady |
| 3 | Load c | 30800 | 400 | steady |
| 3 | Load a | 30000 | 0 | steady |
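The state changes in this example can be reproduced with a small reference prediction table model. The sketch below is a simplified illustration: it uses a four-state update rule that is commonly described for such tables, and the `no-pred` state, the table size, the indexing by `pc >> 2`, and the choice to prefetch only in the steady state with a nonzero stride are all assumptions beyond what the slide shows. The load PCs used with it are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { RPT_INIT, RPT_TRANSIENT, RPT_STEADY, RPT_NO_PRED } RptState;

typedef struct {
    bool     valid;
    uint64_t tag;        /* PC of the load instruction */
    uint64_t prev_addr;  /* last effective address seen */
    int64_t  stride;     /* last observed address difference */
    RptState state;
} RptEntry;

#define RPT_ENTRIES 64   /* assumed table size */

typedef struct { RptEntry e[RPT_ENTRIES]; } Rpt;

void rpt_init(Rpt *t)
{
    assert(t != NULL);
    for (int i = 0; i < RPT_ENTRIES; i++)
        t->e[i].valid = false;
}

/* Update the table for one load; returns true and sets *prefetch_addr
 * when this simplified policy would issue a prefetch. */
bool rpt_access(Rpt *t, uint64_t pc, uint64_t addr, uint64_t *prefetch_addr)
{
    assert(t != NULL && prefetch_addr != NULL);
    RptEntry *e = &t->e[(pc >> 2) % RPT_ENTRIES];

    if (!e->valid || e->tag != pc) {          /* first reference: allocate */
        e->valid = true;
        e->tag = pc;
        e->prev_addr = addr;
        e->stride = 0;
        e->state = RPT_INIT;
        return false;
    }

    bool correct = (addr == e->prev_addr + (uint64_t)e->stride);
    int64_t new_stride = (int64_t)(addr - e->prev_addr);

    switch (e->state) {
    case RPT_INIT:
        if (correct) e->state = RPT_STEADY;
        else { e->state = RPT_TRANSIENT; e->stride = new_stride; }
        break;
    case RPT_TRANSIENT:
        if (correct) e->state = RPT_STEADY;
        else { e->state = RPT_NO_PRED; e->stride = new_stride; }
        break;
    case RPT_STEADY:
        if (!correct) e->state = RPT_INIT;    /* keep the old stride */
        break;
    case RPT_NO_PRED:
        if (correct) e->state = RPT_TRANSIENT;
        else e->stride = new_stride;
        break;
    }
    e->prev_addr = addr;

    if (e->state == RPT_STEADY && e->stride != 0) {
        *prefetch_addr = addr + (uint64_t)e->stride;
        return true;
    }
    return false;
}
```

Feeding the addresses 20000, 20004, 20008 for the load of `b` moves its entry through init, transient, and steady, and the third access requests a prefetch of 20012. The load of `a` reaches steady on its second access with stride 0, so no prefetch is issued for it.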

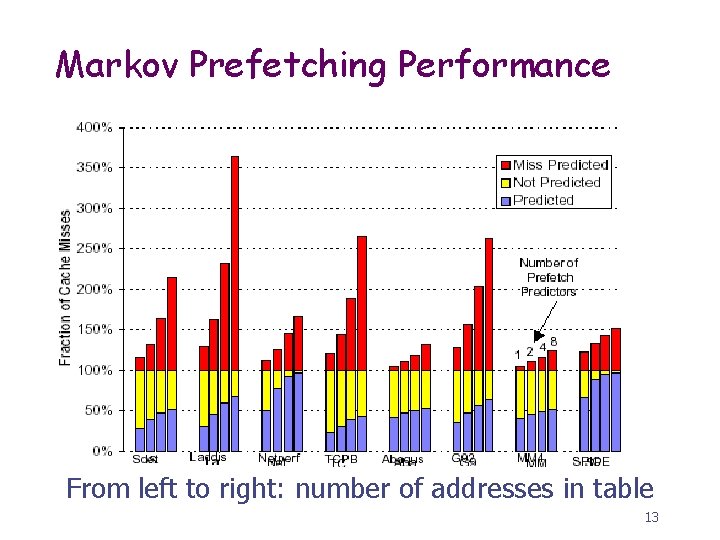

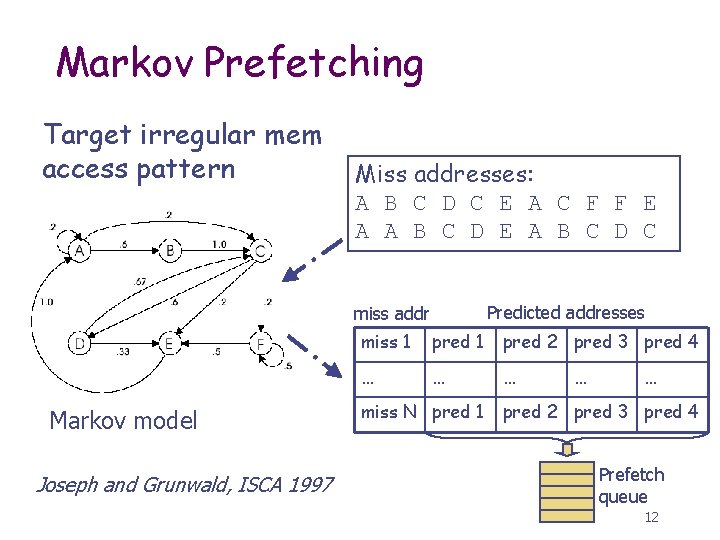

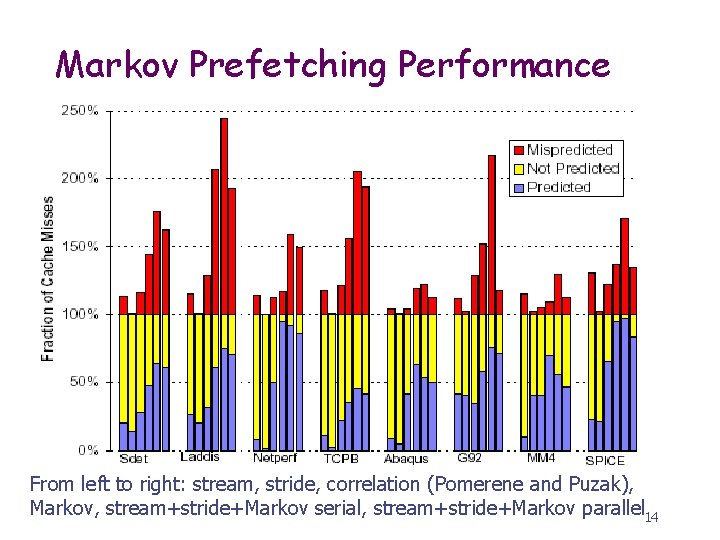

Markov Prefetching

Targets irregular memory access patterns.

Miss addresses: A B C D C E A C F F E A A B C D E A B C D C

[Figure: the current miss address indexes a Markov model table. Each row holds a miss address (miss 1 … miss N) and up to four predicted next addresses (pred 1 … pred 4); the predicted addresses are placed in a prefetch queue.]

Joseph and Grunwald, ISCA 1997
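To make the table concrete, the sketch below builds a first-order Markov model from the miss address stream above and returns up to four predicted successors per miss address. It is an illustration under assumptions: addresses are the symbols 'A' to 'Z', predictions are ranked by transition count with ties broken alphabetically, and the names are hypothetical. A hardware table as in the cited paper may order and replace predictions differently.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define NUM_ADDRS 26  /* assumption: miss addresses are the symbols 'A'..'Z' */
#define MAX_PREDS 4   /* up to four predictions per row, as in the slide */

typedef struct {
    unsigned count[NUM_ADDRS][NUM_ADDRS]; /* count[x][y]: times miss y followed miss x */
} MarkovTable;

/* Build transition counts from a string of consecutive miss addresses. */
void markov_train(MarkovTable *m, const char *misses)
{
    assert(m != NULL && misses != NULL);
    memset(m, 0, sizeof *m);
    for (size_t i = 0; misses[i] != '\0' && misses[i + 1] != '\0'; i++) {
        int from = misses[i] - 'A';
        int to = misses[i + 1] - 'A';
        assert(from >= 0 && from < NUM_ADDRS && to >= 0 && to < NUM_ADDRS);
        m->count[from][to]++;
    }
}

/* Write up to MAX_PREDS successors of `miss`, most frequent first.
 * Returns the number of predictions written. */
size_t markov_predict(const MarkovTable *m, char miss, char preds[MAX_PREDS])
{
    assert(m != NULL && preds != NULL);
    int from = miss - 'A';
    assert(from >= 0 && from < NUM_ADDRS);

    bool used[NUM_ADDRS] = { false };
    size_t n = 0;
    while (n < MAX_PREDS) {
        int best = -1;
        for (int to = 0; to < NUM_ADDRS; to++) {
            if (used[to] || m->count[from][to] == 0)
                continue;
            if (best < 0 || m->count[from][to] > m->count[from][best])
                best = to;
        }
        if (best < 0)
            break;              /* no more observed successors */
        used[best] = true;
        preds[n++] = (char)('A' + best);
    }
    return n;
}
```

Trained on `"ABCDCEACFFEAABCDEABCDC"`, the model records A→B three times, C→D three times, and D→C twice, so a miss on A predicts B first and a miss on C predicts D first.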

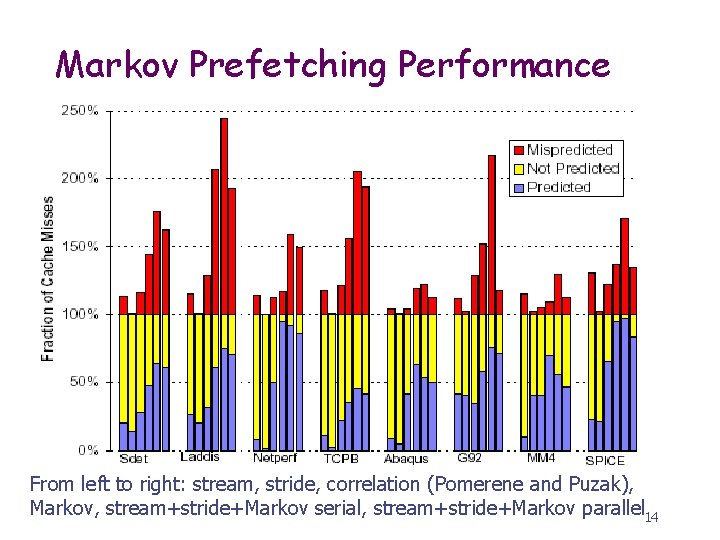

Markov Prefetching Performance

From left to right: number of addresses in table

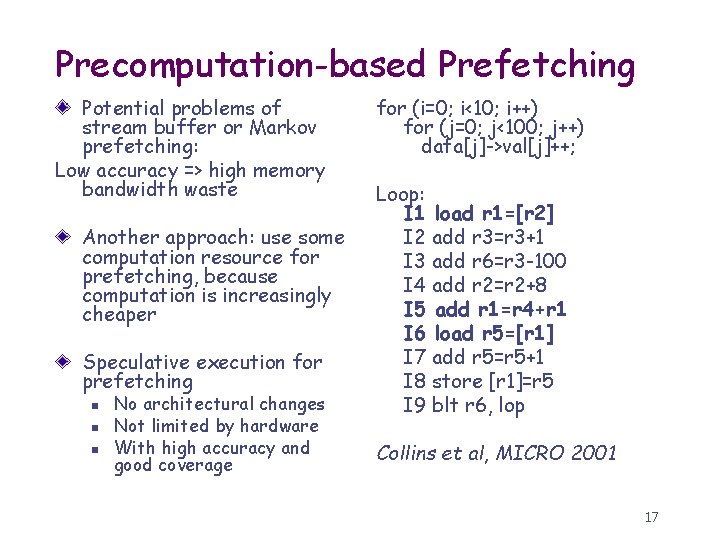

Markov Prefetching Performance

From left to right: stream, stride, correlation (Pomerene and Puzak), Markov, stream+stride+Markov serial, stream+stride+Markov parallel

Predictor-directed Stream Buffer

Cons of existing approaches:

- Stride prefetching (using an updated stream buffer): only useful for strided accesses; suffers interference from non-stride accesses
- Markov prefetching: works for general access patterns but requires large history storage (megabytes)

PSB: combining the two methods

- To improve the coverage of the stream buffer; and
- To keep the required storage low (several kilobytes)

Sherwood et al., MICRO 2000

Predictor-directed Stream Buffer

- The Markov prediction table filters out irregular address transitions (reducing stream buffer thrashing)
- The stream buffer filters out addresses used to train the Markov prediction table (reducing storage)

Which prefetching method is used on each address?

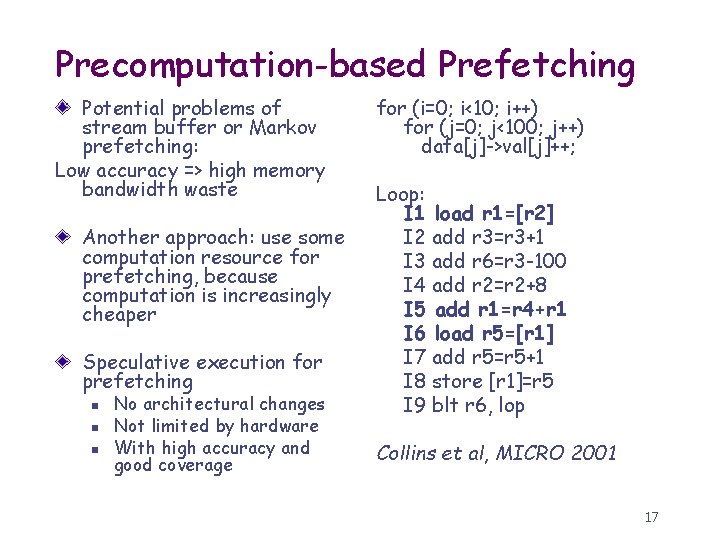

Precomputation-based Prefetching

Potential problems of stream buffer or Markov prefetching: low accuracy => high memory bandwidth waste

Another approach: use some computation resources for prefetching, because computation is increasingly cheap

Speculative execution for prefetching:

- No architectural changes
- Not limited by hardware
- High accuracy and good coverage

`for (i=0; i<10; i++) for (j=0; j<100; j++) data[j]->val[j]++;`

Loop:

| Label | Instruction |
|---|---|
| I1 | `load r1=[r2]` |
| I2 | `add r3=r3+1` |
| I3 | `add r6=r3-100` |
| I4 | `add r2=r2+8` |
| I5 | `add r1=r4+r1` |
| I6 | `load r5=[r1]` |
| I7 | `add r5=r5+1` |
| I8 | `store [r1]=r5` |
| I9 | `blt r6, Loop` |

Collins et al., MICRO 2001
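Precomputation only needs the instructions that produce the address of the problematic load. The sketch below shows one way such a slice could be extracted from the loop above by walking register dependences backward from the second load (I6). It is a hedged illustration, not the mechanism of the cited paper: it tracks register dependences only, ignores memory dependences, and walks the loop body just twice to pick up loop-carried producers. The encoding `Inst` and the function `backward_slice` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_REGS 8
#define NO_REG   (-1)

typedef struct {
    int dest;    /* register written, or NO_REG */
    int src[2];  /* registers read, NO_REG when unused */
} Inst;

/* Loop body I1..I9 from the slide; index 0 is I1. */
const Inst loop_body[9] = {
    { 1,      { 2, NO_REG } },  /* I1 load  r1=[r2]   */
    { 3,      { 3, NO_REG } },  /* I2 add   r3=r3+1   */
    { 6,      { 3, NO_REG } },  /* I3 add   r6=r3-100 */
    { 2,      { 2, NO_REG } },  /* I4 add   r2=r2+8   */
    { 1,      { 4, 1 } },       /* I5 add   r1=r4+r1  */
    { 5,      { 1, NO_REG } },  /* I6 load  r5=[r1]   */
    { 5,      { 5, NO_REG } },  /* I7 add   r5=r5+1   */
    { NO_REG, { 1, 5 } },       /* I8 store [r1]=r5   */
    { NO_REG, { 6, NO_REG } },  /* I9 blt   r6, Loop  */
};

/* Mark a register as needed by the slice. */
static void need_sources(const Inst *in, bool needed[NUM_REGS])
{
    for (int k = 0; k < 2; k++) {
        if (in->src[k] != NO_REG) {
            assert(in->src[k] >= 0 && in->src[k] < NUM_REGS);
            needed[in->src[k]] = true;
        }
    }
}

/* Mark in_slice[i] for every instruction that feeds body[target] through
 * registers, walking backward around the loop for two trips. */
void backward_slice(const Inst *body, size_t n, size_t target, bool in_slice[])
{
    assert(body != NULL && in_slice != NULL && target < n);
    bool needed[NUM_REGS] = { false };

    for (size_t i = 0; i < n; i++)
        in_slice[i] = false;
    in_slice[target] = true;
    need_sources(&body[target], needed);

    size_t i = target;
    for (size_t step = 0; step < 2 * n; step++) {
        i = (i + n - 1) % n;               /* previous instruction, wrapping */
        int d = body[i].dest;
        if (d != NO_REG && needed[d]) {
            in_slice[i] = true;            /* most recent producer of d */
            needed[d] = false;
            need_sources(&body[i], needed);
        }
    }
}
```

Calling `backward_slice(loop_body, 9, 5, slice)` marks I1, I4, I5, and I6: the first load, the pointer increment that feeds it in the next iteration, the address add, and the target load. The counter and store instructions are left out, which is why a slice like this can run ahead of the main computation.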

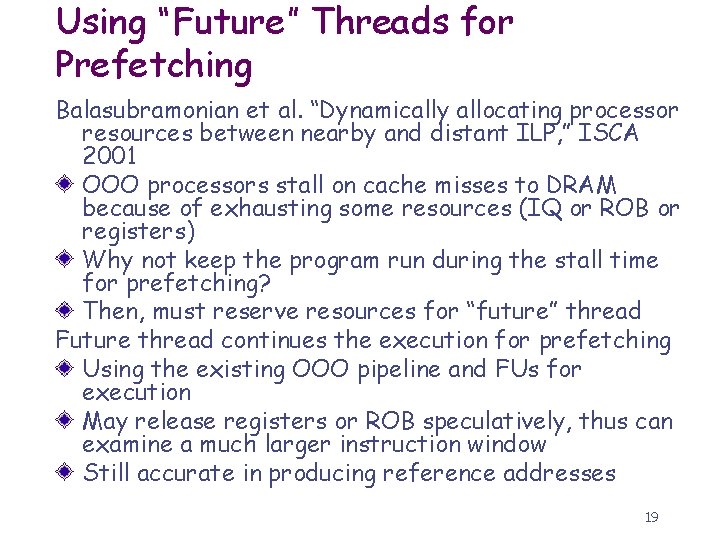

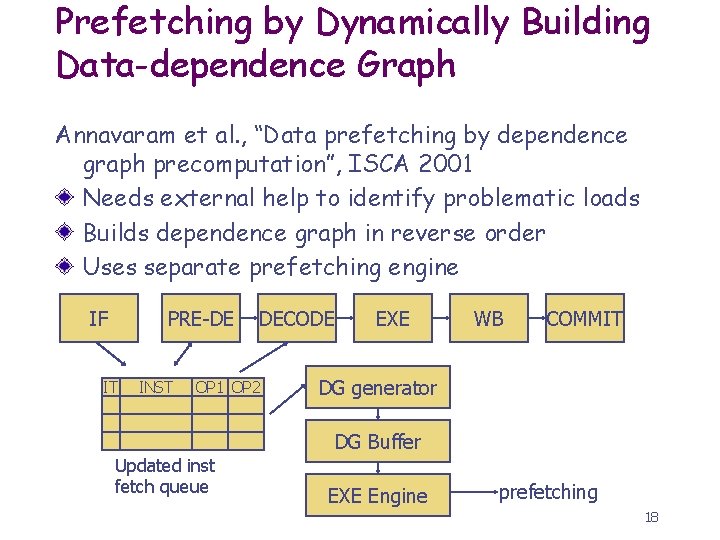

Prefetching by Dynamically Building Data-dependence Graph

Annavaram et al., “Data prefetching by dependence graph precomputation”, ISCA 2001

- Needs external help to identify problematic loads
- Builds the dependence graph in reverse order
- Uses a separate prefetching engine

[Figure: processor pipeline from IF through EXE, WB, and COMMIT, augmented with a DG generator, a DG buffer, an updated instruction fetch queue, and a prefetching EXE engine.]

Using “Future” Threads for Prefetching

Balasubramonian et al., “Dynamically allocating processor resources between nearby and distant ILP,” ISCA 2001

- OOO processors stall on cache misses to DRAM because some resource (IQ, ROB, or registers) is exhausted
- Why not keep the program running during the stall time for prefetching?
- Then, resources must be reserved for the “future” thread
- The future thread continues execution for prefetching
  - Uses the existing OOO pipeline and FUs for execution
  - May release registers or ROB entries speculatively, and thus can examine a much larger instruction window
  - Still accurate in producing reference addresses

Precomputation with SMT Supporting Speculative Threads

Collins et al., “Speculative precomputation: long-range prefetching of delinquent loads,” ISCA 2001

- Precomputation is done by an explicit speculative thread (p-thread)
- The code of p-threads may be constructed by the compiler or by hardware
- Main thread execution spawns p-threads on triggers (e.g. when a PC is encountered)
  - The main thread provides some register values and the initial PC for the p-thread
  - A p-thread may trigger another p-thread for further prefetching

For more compiler issues, see Luk, “Tolerating Memory Latency through Software-Controlled Pre-Execution in Simultaneous Multithreading Processors”, ISCA 2001

Summary of Advanced Prefetching

- Actively studied because of the increasing CPU-memory speed gap
- Improves cache performance beyond the limit of cache size
- Precomputation may be limited in prefetching distance (how good is the timeliness?)
- Note that there is no perfect cache/prefetching solution, e.g. `while (1) { myload(addr); addr = myrandom() + addr; }`
- How to design complexity-effective memory systems for future processors?