Lecture 21 Algorithms for Reference Resolution CS 4705

Issues
• Which features should we make use of?
• How should we order them? I.e., which override which?
• What should appear in our discourse model? I.e., what types of information do we need to keep track of?
• How should we evaluate?

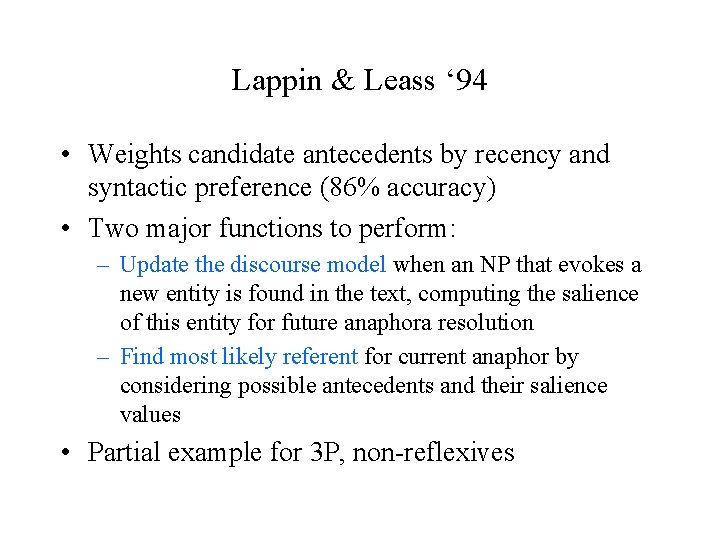

Lappin & Leass '94
• Weights candidate antecedents by recency and syntactic preference (86% accuracy)
• Two major functions to perform:
  – Update the discourse model when an NP that evokes a new entity is found in the text, computing the salience of this entity for future anaphora resolution
  – Find the most likely referent for the current anaphor by considering possible antecedents and their salience values
• Partial example for third-person, non-reflexive pronouns

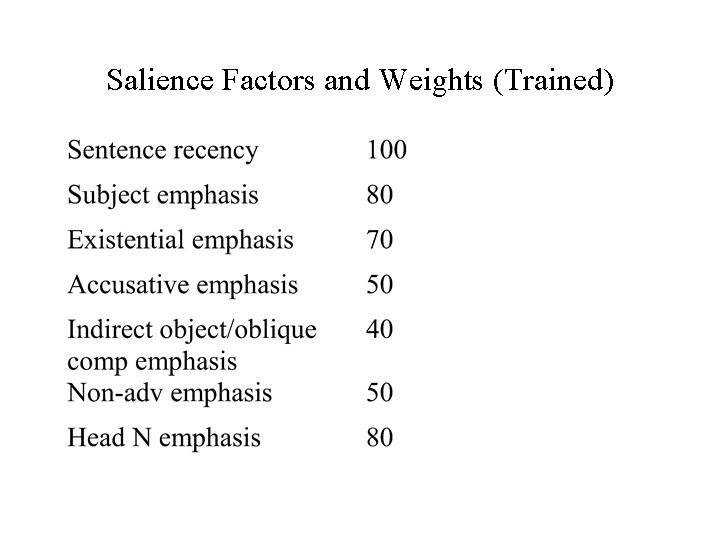

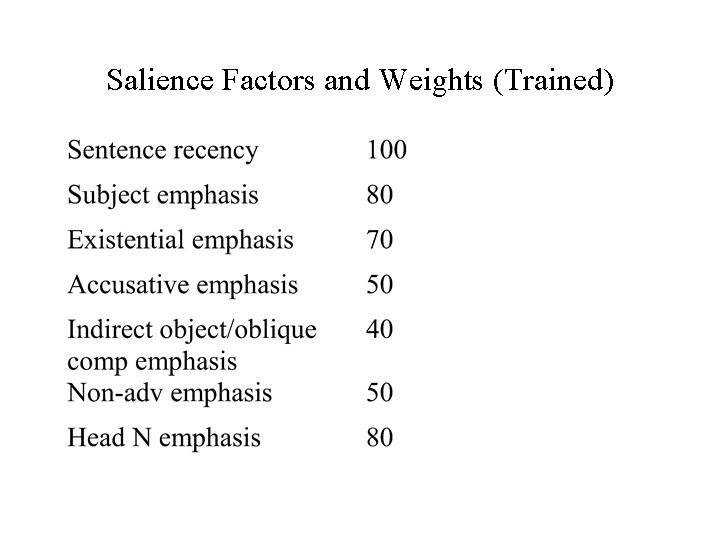

Salience Factors and Weights (Trained)

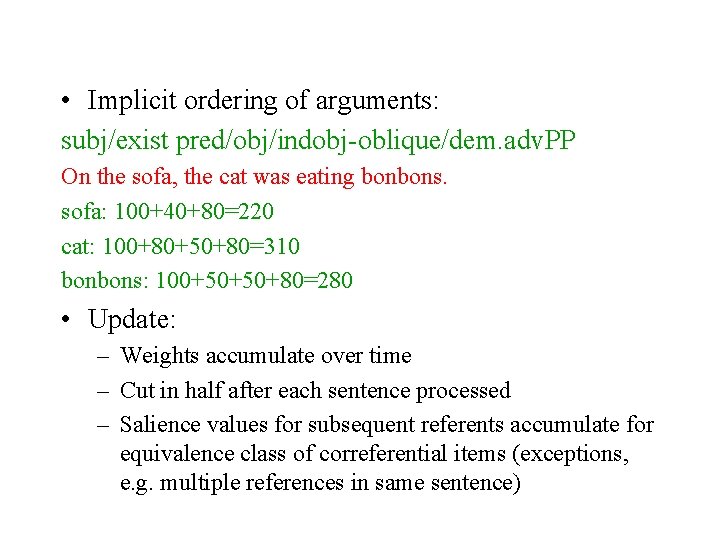

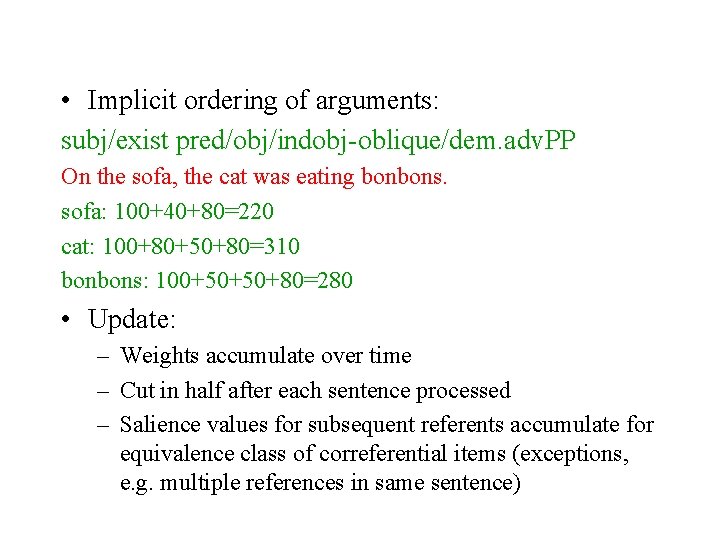

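• Implicit ordering of arguments: subject > existential predicate nominal > object > indirect object/oblique > demarcated adverbial PP
On the sofa, the cat was eating bonbons.
  sofa: 100 (recency) + 40 (oblique) + 80 (head noun) = 220
  cat: 100 (recency) + 80 (subject) + 50 (non-adverbial) + 80 (head noun) = 310
  bonbons: 100 (recency) + 50 (object) + 50 (non-adverbial) + 80 (head noun) = 280
• Update:
  – Weights accumulate over time
  – Values are cut in half after each sentence is processed
  – Salience values for subsequent referents accumulate for the equivalence class of coreferential items (with exceptions, e.g., multiple references in the same sentence)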
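The update step can be sketched in Python. This is a minimal sketch, not Lappin & Leass's implementation: it assumes the factor weights reported in their 1994 paper (the values used in the arithmetic above, plus 70 for existential emphasis), factors labeled by hand instead of by a parser, and a hypothetical `DiscourseModel` class. The same-sentence exception is not modeled.

```python
from __future__ import annotations

# Salience factor weights as reported by Lappin & Leass (1994).
WEIGHTS = {
    "recency": 100,             # mentioned in the current sentence
    "subject": 80,
    "existential": 70,          # predicate nominal in an existential ("there is ...")
    "object": 50,               # direct (accusative) object
    "indirect_or_oblique": 40,  # indirect object or oblique complement
    "non_adverbial": 50,        # not contained in an adverbial PP
    "head_noun": 80,            # not embedded inside another NP
}


class DiscourseModel:
    """Hypothetical helper: one salience value per entity (equivalence class)."""

    def __init__(self) -> None:
        self.salience: dict[str, float] = {}

    def start_sentence(self) -> None:
        # Every existing value is cut in half at a sentence boundary.
        for entity in self.salience:
            self.salience[entity] /= 2

    def mention(self, entity: str, *factors: str) -> None:
        # A mention adds its factor weights to the entity's running total.
        # Simplification: every listed factor is counted on every call, so the
        # exception for several mentions in one sentence is not handled.
        gain = sum(WEIGHTS[f] for f in factors)
        self.salience[entity] = self.salience.get(entity, 0) + gain


dm = DiscourseModel()
dm.start_sentence()  # "On the sofa, the cat was eating bonbons."
dm.mention("sofa", "recency", "indirect_or_oblique", "head_noun")
dm.mention("cat", "recency", "subject", "non_adverbial", "head_noun")
dm.mention("bonbons", "recency", "object", "non_adverbial", "head_noun")
print(dm.salience)  # {'sofa': 220, 'cat': 310, 'bonbons': 280}

dm.start_sentence()  # "The bonbons were clearly very tasty."
dm.mention("bonbons", "recency", "subject", "non_adverbial", "head_noun")
print(dm.salience)  # {'sofa': 110.0, 'cat': 155.0, 'bonbons': 450.0}
```

Keeping a single running total per entity is what lets later mentions of "the bonbons" inherit the salience built up by earlier ones.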

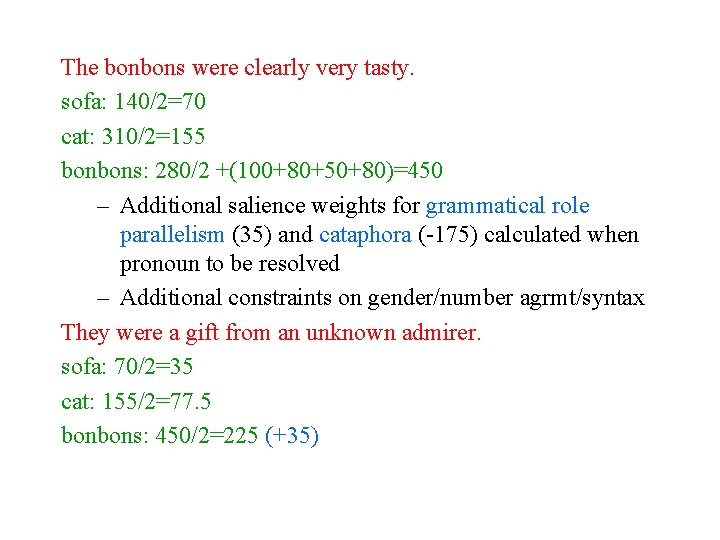

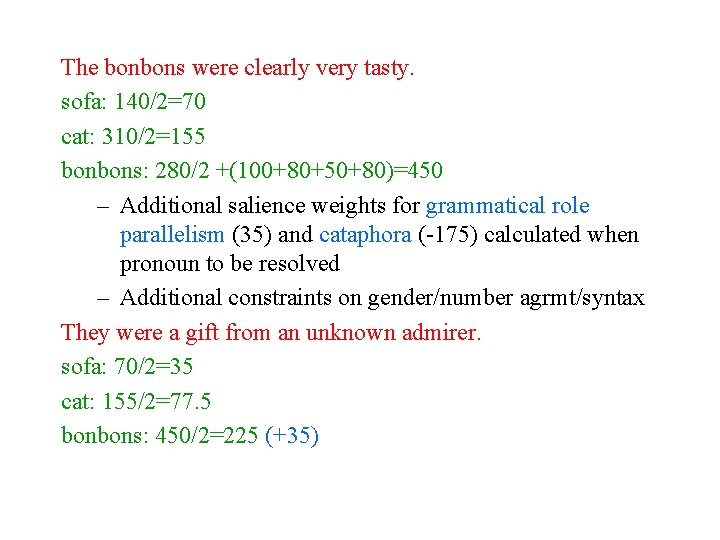

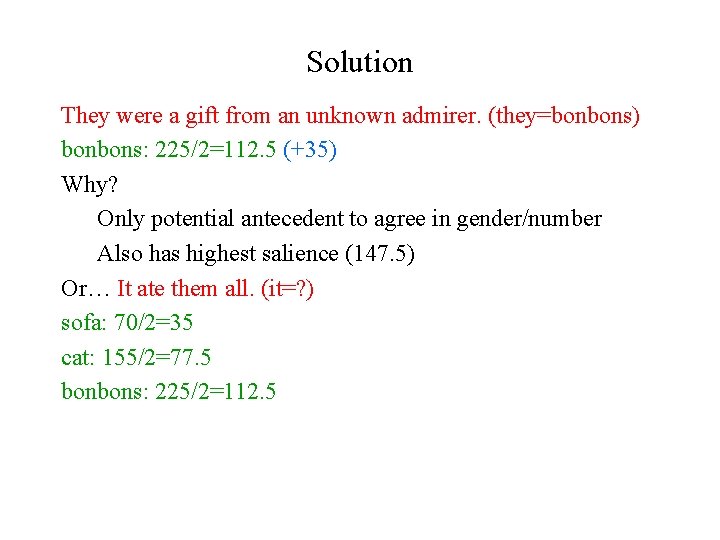

The bonbons were clearly very tasty. sofa: 140/2=70 cat: 310/2=155 bonbons: 280/2 +(100+80+50+80)=450 – Additional salience weights for grammatical role parallelism (35) and cataphora (-175) calculated when pronoun to be resolved – Additional constraints on gender/number agrmt/syntax They were a gift from an unknown admirer. sofa: 70/2=35 cat: 155/2=77. 5 bonbons: 450/2=225 (+35)

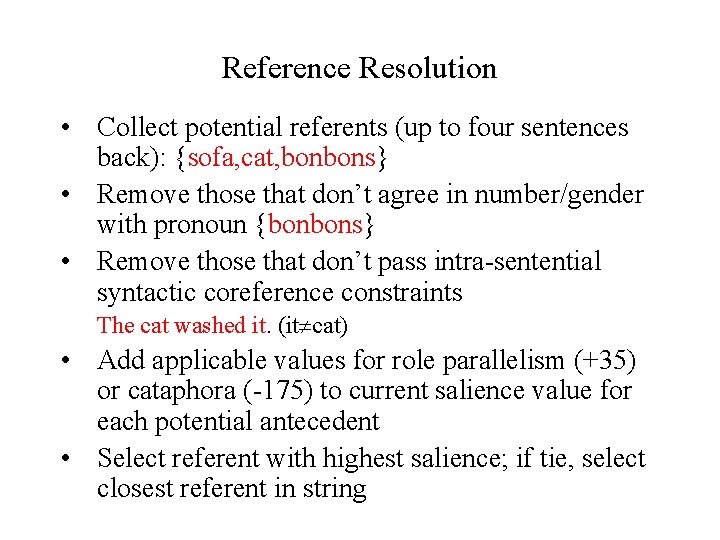

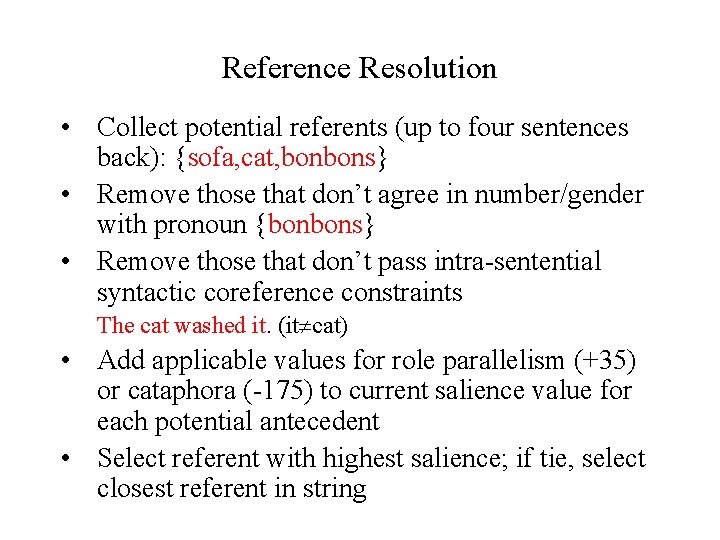

Reference Resolution • Collect potential referents (up to four sentences back): {sofa, cat, bonbons} • Remove those that don’t agree in number/gender with pronoun {bonbons} • Remove those that don’t pass intra-sentential syntactic coreference constraints The cat washed it. (it cat) • Add applicable values for role parallelism (+35) or cataphora (-175) to current salience value for each potential antecedent • Select referent with highest salience; if tie, select closest referent in string

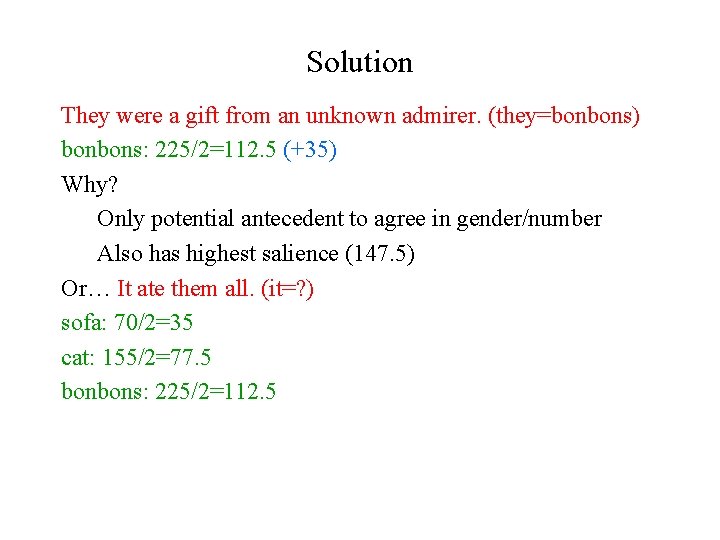

Solution They were a gift from an unknown admirer. (they=bonbons) bonbons: 225/2=112. 5 (+35) Why? Only potential antecedent to agree in gender/number Also has highest salience (147. 5) Or… It ate them all. (it=? ) sofa: 70/2=35 cat: 155/2=77. 5 bonbons: 225/2=112. 5
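The resolution procedure can be sketched in Python as follows. This is a minimal sketch assuming that agreement features, string distances, and syntactically blocked candidates are supplied by hand (no parser); `Candidate` and `resolve` are hypothetical names. The values for cat and bonbons are the example's third-sentence values, and sofa is its first-sentence 220 halved twice.

```python
from __future__ import annotations

from dataclasses import dataclass

PARALLELISM = 35   # candidate has the same grammatical role as the pronoun
CATAPHORA = -175   # candidate follows the pronoun


@dataclass
class Candidate:
    name: str
    salience: float        # current value, after sentence-boundary halving
    number: str            # "sg" or "pl"
    gender: str            # e.g. "neut", "masc", "fem"
    distance: int          # position back from the pronoun (smaller = closer)
    same_role: bool = False
    cataphoric: bool = False


def resolve(number: str, genders: set[str], candidates: list[Candidate],
            blocked: frozenset[str] = frozenset()) -> tuple[str, float] | None:
    """Pick the antecedent for one pronoun. `blocked` holds candidates ruled out
    by intra-sentential syntactic constraints (supplied by hand here)."""
    # Filter on number/gender agreement and syntactic constraints.
    viable = [c for c in candidates
              if c.number == number and c.gender in genders and c.name not in blocked]
    if not viable:
        return None

    def score(c: Candidate) -> float:
        # Parallelism and cataphora are added only at resolution time.
        return (c.salience + (PARALLELISM if c.same_role else 0)
                + (CATAPHORA if c.cataphoric else 0))

    # Highest salience wins; on a tie, the closest candidate wins.
    best = max(viable, key=lambda c: (score(c), -c.distance))
    return best.name, score(best)


# "They were a gift from an unknown admirer."
candidates = [
    Candidate("sofa", 220 / 4, "sg", "neut", distance=3),  # 220, halved twice
    Candidate("cat", 77.5, "sg", "neut", distance=2),
    Candidate("bonbons", 225.0, "pl", "neut", distance=1, same_role=True),
]
print(resolve("pl", {"neut", "masc", "fem"}, candidates))  # ('bonbons', 260.0)
```

Parallelism and cataphora are applied inside `score` rather than stored in the discourse model because they depend on the particular pronoun being resolved.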

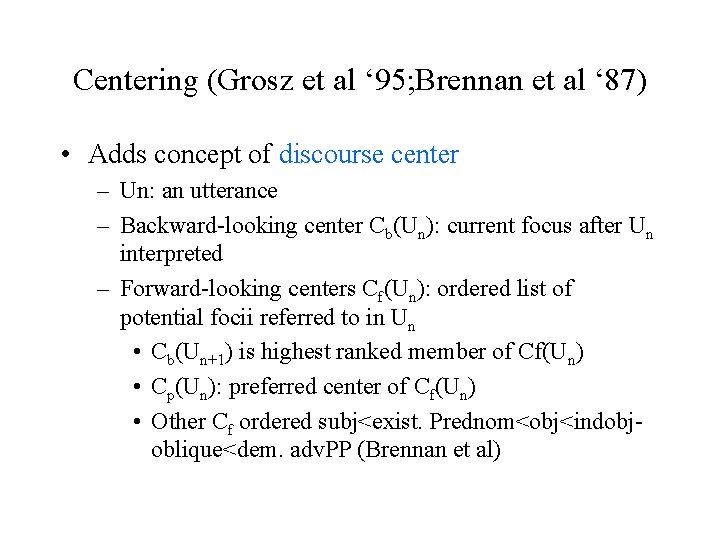

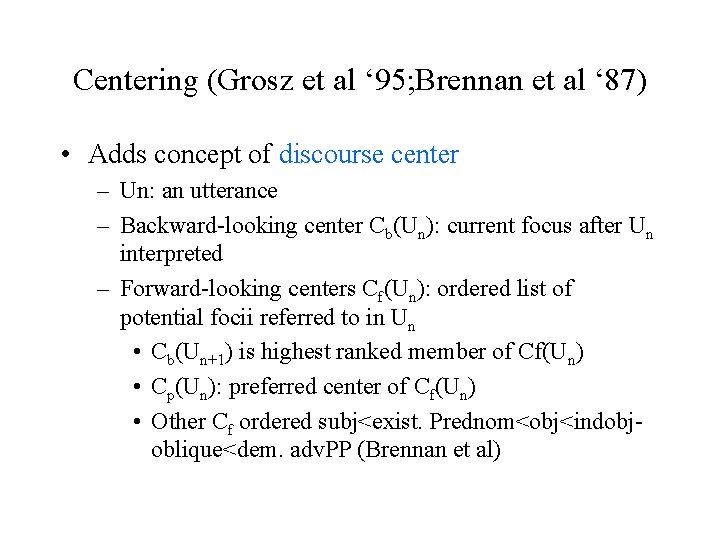

Centering (Grosz et al. '95; Brennan et al. '87)
• Adds the concept of a discourse center
  – Un: an utterance
  – Backward-looking center Cb(Un): the current focus after Un is interpreted
  – Forward-looking centers Cf(Un): ordered list of potential foci referred to in Un
• Cb(Un+1) is the highest-ranked member of Cf(Un) that is realized in Un+1
• Cp(Un): the preferred center, i.e., the highest-ranked member of Cf(Un)
• Cf is ordered by grammatical role: subject > existential predicate nominal > object > indirect object/oblique > demarcated adverbial PP (Brennan et al.)
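Ordering an utterance's mentions into Cf(Un) and reading off Cp(Un) can be sketched in Python. This is a minimal sketch that assumes grammatical roles are already labeled by hand; the role names and the helper functions are hypothetical, and treating "On the sofa" as a demarcated adverbial PP is an assumption for the example.

```python
from __future__ import annotations

# Grammatical-role ranking for Cf (Brennan et al.), highest first.
ROLE_RANK = ["subject", "existential_prednom", "object",
             "indirect_or_oblique", "demarcated_adv_pp"]


def forward_centers(mentions: list[tuple[str, str]]) -> list[str]:
    """Order (entity, role) mentions of one utterance into Cf(Un).
    sorted() is stable, so mentions with equal rank keep their textual order."""
    ranked = sorted(mentions, key=lambda m: ROLE_RANK.index(m[1]))
    return [entity for entity, _ in ranked]


def preferred_center(cf: list[str]) -> str | None:
    """Cp(Un): the highest-ranked member of Cf(Un)."""
    return cf[0] if cf else None


# "On the sofa, the cat was eating bonbons." (mentions in textual order)
cf = forward_centers([("sofa", "demarcated_adv_pp"), ("cat", "subject"),
                      ("bonbons", "object")])
print(cf, preferred_center(cf))  # ['cat', 'bonbons', 'sofa'] cat
```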

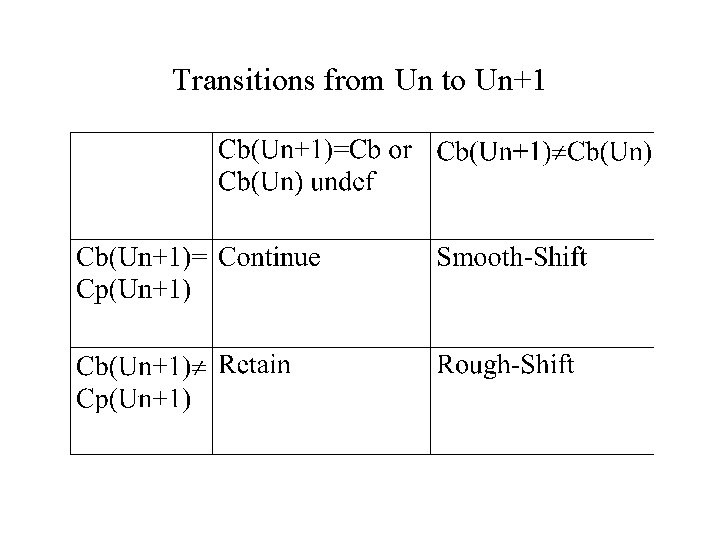

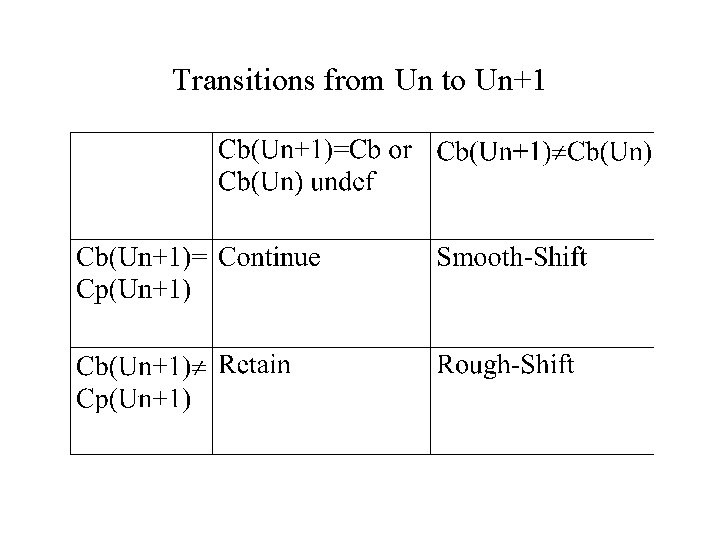

Transitions from Un to Un+1

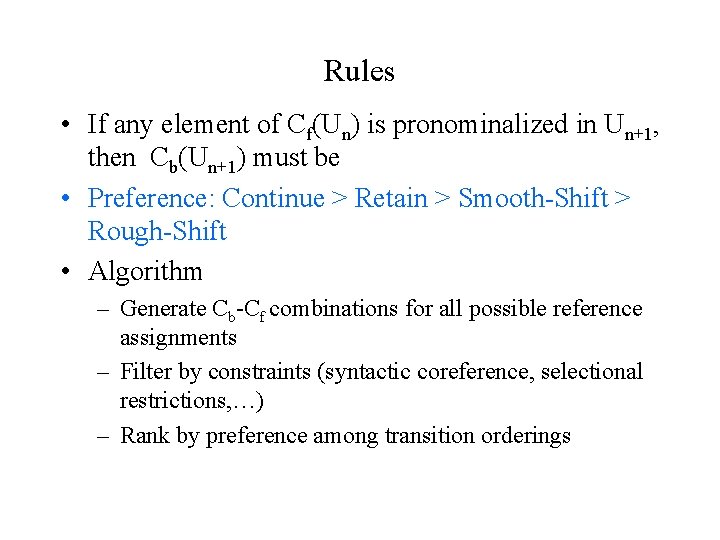

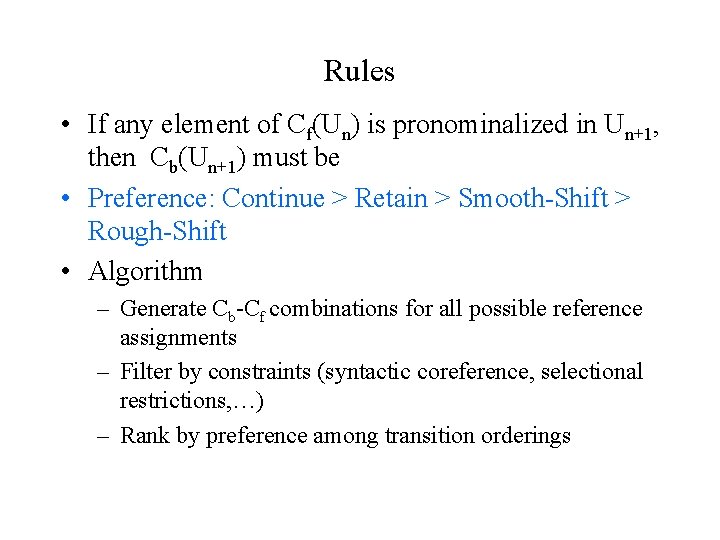

Rules
• If any element of Cf(Un) is pronominalized in Un+1, then Cb(Un+1) must be pronominalized as well
• Preference: Continue > Retain > Smooth-Shift > Rough-Shift
• Algorithm
  – Generate Cb-Cf combinations for all possible reference assignments
  – Filter by constraints (syntactic coreference, selectional restrictions, …)
  – Rank by preference among transition orderings
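The three algorithm steps can be sketched in Python. This is a minimal sketch, assuming each utterance's mentions are already listed in grammatical-role order and candidate referents are supplied by hand. The constraint filter is reduced to a hypothetical `distinct` check, and the pronominalization rule (Rule 1) is not enforced; all function names are hypothetical.

```python
from __future__ import annotations

from itertools import product
from typing import Callable, Optional, Sequence

PREFERENCE = ["Continue", "Retain", "Smooth-Shift", "Rough-Shift"]


def backward_center(prev_cf: Sequence[str], cur_cf: Sequence[str]) -> Optional[str]:
    """Cb(Un+1): highest-ranked member of Cf(Un) that is realized in Un+1."""
    return next((e for e in prev_cf if e in cur_cf), None)


def transition(prev_cb: Optional[str], cur_cb: Optional[str], cur_cp: str) -> str:
    # As in the example slides, an undefined Cb(Un) counts as matching Cb(Un+1).
    same_cb = prev_cb is None or cur_cb == prev_cb
    if same_cb:
        return "Continue" if cur_cb == cur_cp else "Retain"
    return "Smooth-Shift" if cur_cb == cur_cp else "Rough-Shift"


def distinct(assignment: tuple[str, ...]) -> bool:
    """Hypothetical stand-in for the constraint filter: no two mentions corefer."""
    return len(set(assignment)) == len(assignment)


def rank_assignments(prev_cf: Sequence[str], prev_cb: Optional[str],
                     readings: Sequence[Sequence[str]],
                     allowed: Callable[[tuple[str, ...]], bool] = distinct,
                     ) -> list[tuple[str, tuple[str, ...]]]:
    """readings[i] lists possible referents of the i-th mention of Un+1, with the
    mentions already in grammatical-role order, so each assignment is Cf(Un+1)."""
    results = []
    for assignment in product(*readings):   # 1. every reference assignment
        if not allowed(assignment):         # 2. filter by constraints
            continue
        cb = backward_center(prev_cf, assignment)
        results.append((transition(prev_cb, cb, assignment[0]), assignment))
    # 3. rank by transition preference; sorted() keeps ties in generation order
    return sorted(results, key=lambda r: PREFERENCE.index(r[0]))


# U3 "He ate it." after U2 "He showed it to Harry." (it = mouse in U2)
print(rank_assignments(["George", "mouse", "Harry"], "George",
                       [["George", "Harry"], ["mouse"]]))
# [('Continue', ('George', 'mouse')), ('Rough-Shift', ('Harry', 'mouse'))]
```

Because the sort is stable, assignments with the same transition type stay in generation order; this is one arbitrary way of handling ties, which the transition ranking alone does not resolve.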

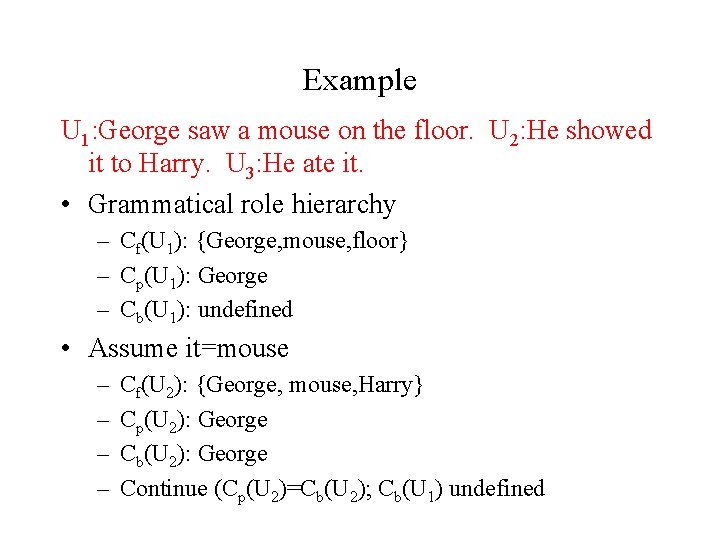

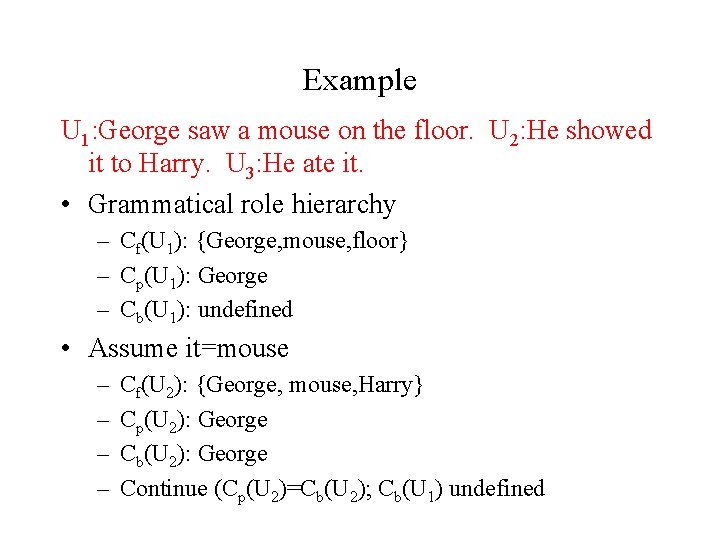

Example
U1: George saw a mouse on the floor.
U2: He showed it to Harry.
U3: He ate it.
• Grammatical role hierarchy
  – Cf(U1): {George, mouse, floor}
  – Cp(U1): George
  – Cb(U1): undefined
• Assume it = mouse
  – Cf(U2): {George, mouse, Harry}
  – Cp(U2): George
  – Cb(U2): George
  – Continue (Cp(U2) = Cb(U2); Cb(U1) undefined)

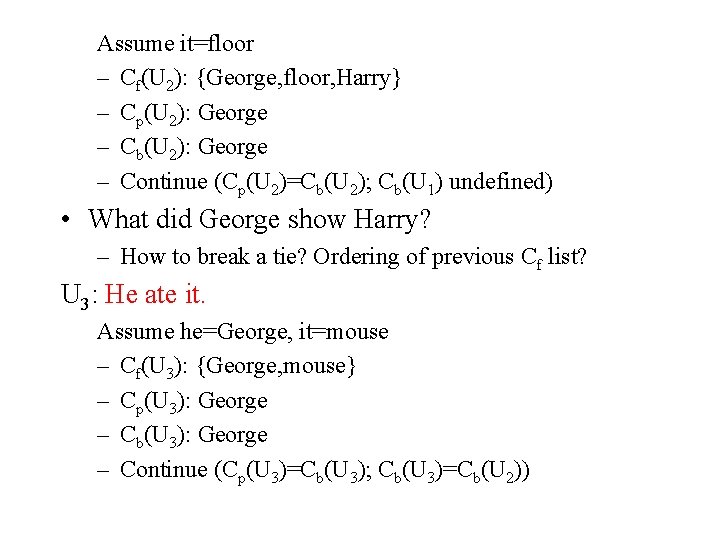

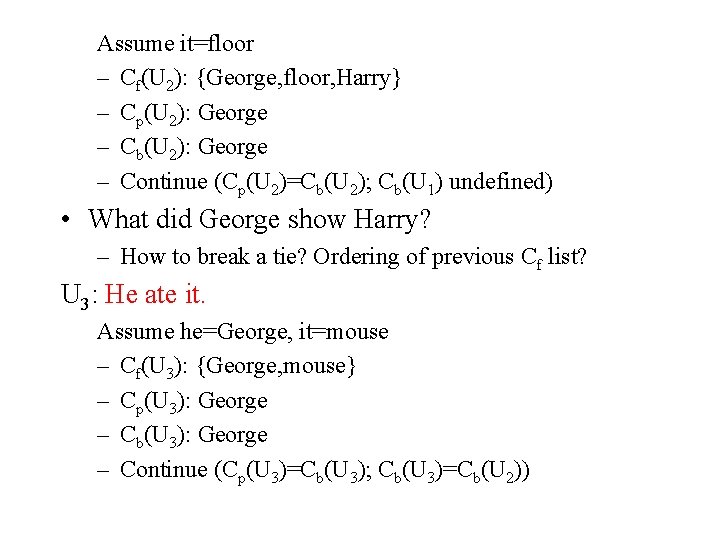

• Assume it = floor
  – Cf(U2): {George, floor, Harry}
  – Cp(U2): George
  – Cb(U2): George
  – Continue (Cp(U2) = Cb(U2); Cb(U1) undefined)
• What did George show Harry?
  – How to break a tie? Ordering of previous Cf list?
U3: He ate it.
• Assume he = George, it = mouse
  – Cf(U3): {George, mouse}
  – Cp(U3): George
  – Cb(U3): George
  – Continue (Cp(U3) = Cb(U3); Cb(U3) = Cb(U2))

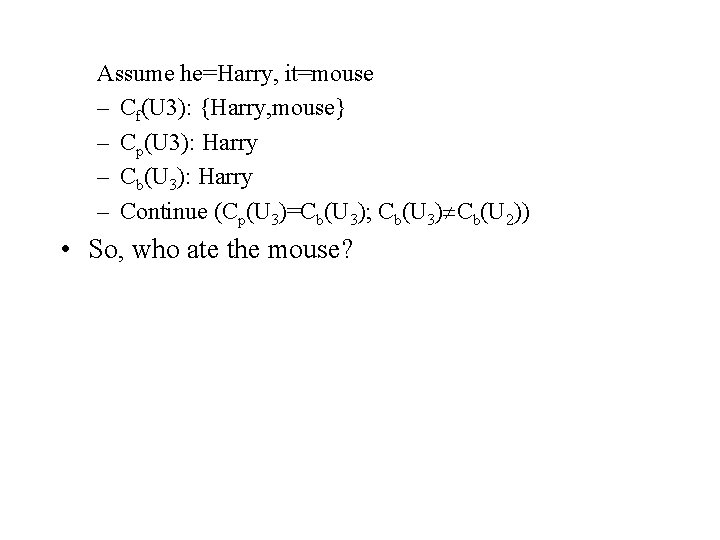

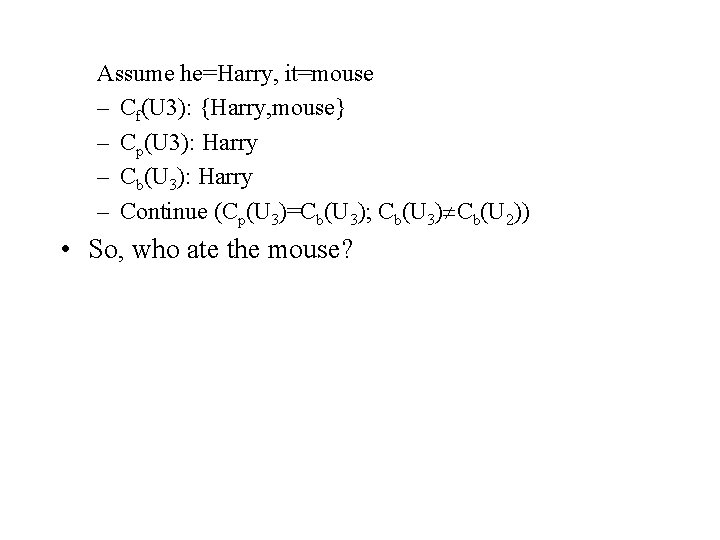

• Assume he = Harry, it = mouse
  – Cf(U3): {Harry, mouse}
  – Cp(U3): Harry
  – Cb(U3): mouse (the highest-ranked member of Cf(U2) realized in U3)
  – Rough-Shift (Cp(U3) ≠ Cb(U3); Cb(U3) ≠ Cb(U2))
• So, who ate the mouse?

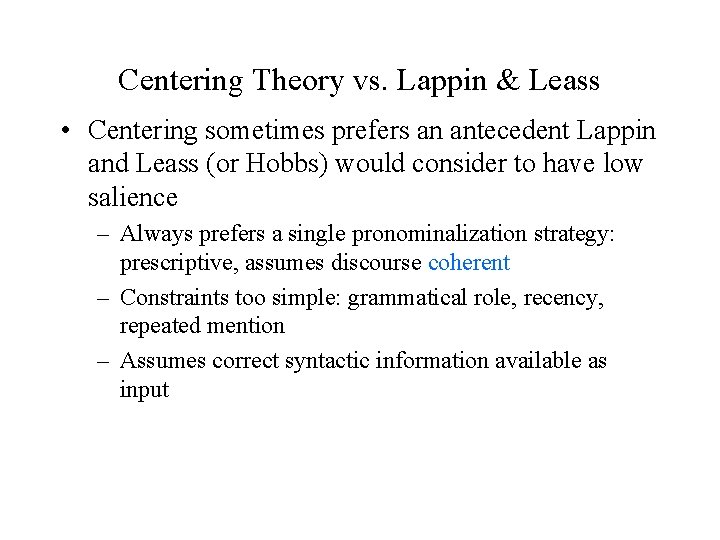

Centering Theory vs. Lappin & Leass
• Centering sometimes prefers an antecedent that Lappin and Leass (or Hobbs) would consider to have low salience
  – Always prefers a single pronominalization strategy: prescriptive, assumes the discourse is coherent
  – Constraints are too simple: grammatical role, recency, repeated mention
  – Assumes correct syntactic information is available as input

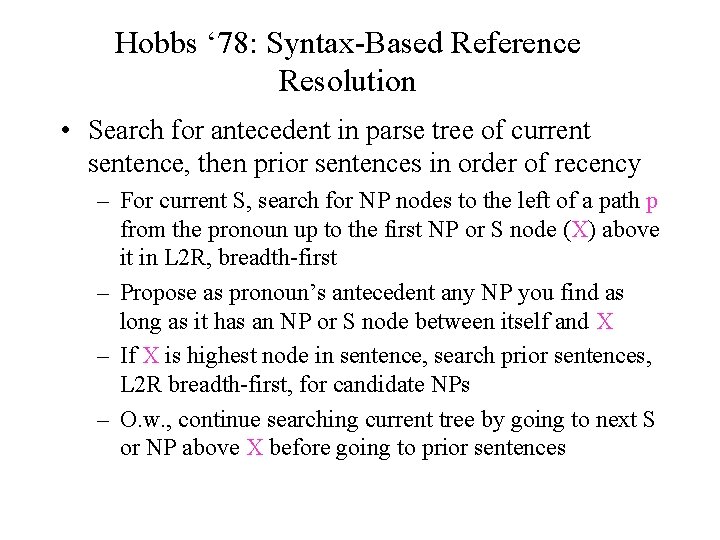

Hobbs '78: Syntax-Based Reference Resolution
• Search for an antecedent in the parse tree of the current sentence, then in prior sentences in order of recency
  – For the current S, search for NP nodes to the left of a path p from the pronoun up to the first NP or S node (X) above it, left to right, breadth-first
  – Propose as the pronoun's antecedent any NP you find, as long as it has an NP or S node between itself and X
  – If X is the highest node in the sentence, search prior sentences, left to right, breadth-first, for candidate NPs
  – Otherwise, continue searching the current tree by going to the next S or NP above X before going to prior sentences
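The search order above can be sketched in Python. This is a simplified sketch, not Hobbs' full algorithm: it assumes hand-built parse trees (a hypothetical `Node` type) and does no agreement filtering. In the usual nine-step presentation of Hobbs' algorithm, it covers steps 1 to 5 and 7, and it omits step 6 (proposing X itself) and step 8 (searching to the right of the path). The sentence "A dog licked it." is a made-up example.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field
from typing import Iterator


@dataclass(eq=False)
class Node:
    """Hypothetical minimal parse-tree node; leaves carry a word."""
    label: str
    children: list[Node] = field(default_factory=list)
    word: str | None = None
    parent: Node | None = field(default=None, repr=False)

    def __post_init__(self) -> None:
        for child in self.children:
            child.parent = self

    def text(self) -> str:
        return self.word if self.word is not None else " ".join(c.text() for c in self.children)


def leaf(label: str, word: str) -> Node:
    return Node(label, word=word)


def climb(node: Node, path: list[Node]) -> Node:
    """Go up from `node` to the first NP or S above it, prepending traversed nodes to `path`."""
    path.insert(0, node)
    x = node.parent
    while x.label not in ("NP", "S"):
        path.insert(0, x)
        x = x.parent
    return x


def left_of_path(x: Node, path: list[Node], need_barrier: bool) -> Iterator[Node]:
    """Left-to-right, breadth-first search of the branches below X that lie left of
    path p (nodes from X's child down to the pronoun's NP). With need_barrier, an NP
    is proposed only if an NP or S node lies between it and X."""
    on_path = {id(n) for n in path}
    queue = deque([(x, False)])  # (node, NP/S node strictly between node and X?)
    while queue:
        node, barrier = queue.popleft()
        if node is not x and id(node) not in on_path:
            if node.label == "NP" and (barrier or not need_barrier):
                yield node
            below = barrier or node.label in ("NP", "S")
            queue.extend((c, below) for c in node.children)
        elif node is not path[-1]:  # never search inside the pronoun's own NP
            below = node is not x and (barrier or node.label in ("NP", "S"))
            for c in node.children:  # stop at the path: skip branches to its right
                queue.append((c, below))
                if id(c) in on_path:
                    break


def hobbs_candidates(pronoun_np: Node, root: Node, prior: list[Node]) -> Iterator[Node]:
    """Propose antecedent NPs in search order; `prior` lists earlier sentences, most recent first."""
    path: list[Node] = []
    x = climb(pronoun_np, path)                               # steps 1-2
    yield from left_of_path(x, path, need_barrier=True)       # step 3
    while x is not root:                                      # step 4: X is not the highest S
        x = climb(x, path)                                    # step 5
        yield from left_of_path(x, path, need_barrier=False)  # step 7
    for tree in prior:                                        # step 4: prior sentences
        queue = deque([tree])
        while queue:
            node = queue.popleft()
            if node.label == "NP":
                yield node
            queue.extend(node.children)


# Prior sentence: "On the sofa, the cat was eating bonbons." (simplified parse)
s1 = Node("S", [
    Node("PP", [leaf("P", "on"), Node("NP", [leaf("DT", "the"), leaf("N", "sofa")])]),
    Node("NP", [leaf("DT", "the"), leaf("N", "cat")]),
    Node("VP", [leaf("V", "was eating"), Node("NP", [leaf("N", "bonbons")])]),
])
# Current sentence: "A dog licked it."
it = Node("NP", [leaf("PRP", "it")])
s2 = Node("S", [Node("NP", [leaf("DT", "a"), leaf("N", "dog")]),
                Node("VP", [leaf("V", "licked"), it])])
print([n.text() for n in hobbs_candidates(it, s2, [s1])])  # ['the cat', 'the sofa', 'bonbons']
```

"a dog" is never proposed: no NP or S node lies between it and X, which is how the search enforces the same-clause restriction that rules out it = cat in "The cat washed it." A real system would then apply agreement checks to the proposed NPs in order.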

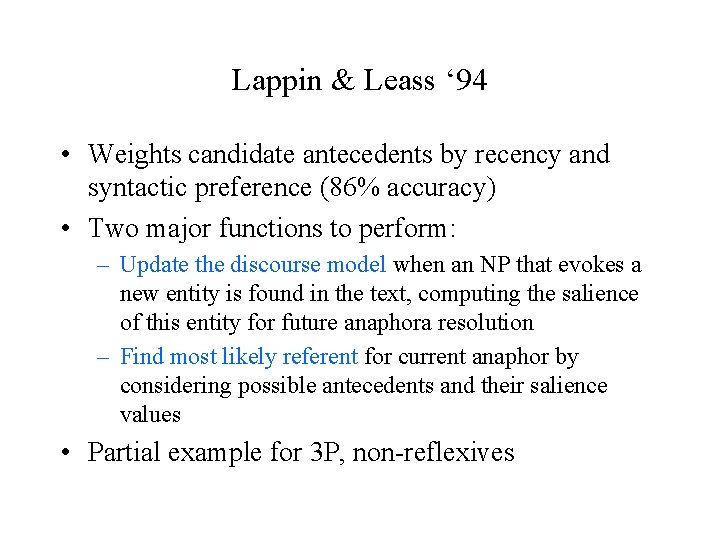

Evaluation
• Centering is only now being specified enough to be tested automatically on real data
  – Specifying the Parameters of Centering Theory: A Corpus-Based Evaluation using Text from Application-Oriented Domains (Poesio et al., ACL 2000)
• Walker '89: manual comparison of Centering vs. Hobbs '78
  – Only 281 examples from 3 genres
  – Assumed correct features given as input to each
  – Centering 77.6% vs. Hobbs 81.8%
• Lappin and Leass: 86% accuracy on a test set from computer training manuals