Lecture 20 & 21: Improving Cache Performance (Mary Jane Irwin)

- Slides: 54

Lecture 20 & 21. Improving Cache Performance

Mary Jane Irwin (www.cse.psu.edu/~mji)

[Adapted from Computer Organization and Design, Patterson & Hennessy, © 2005]

Improving Cache Performance. 1

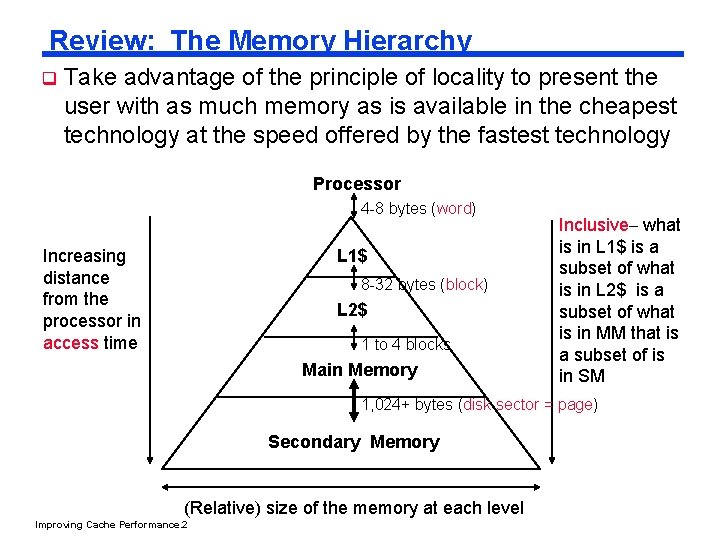

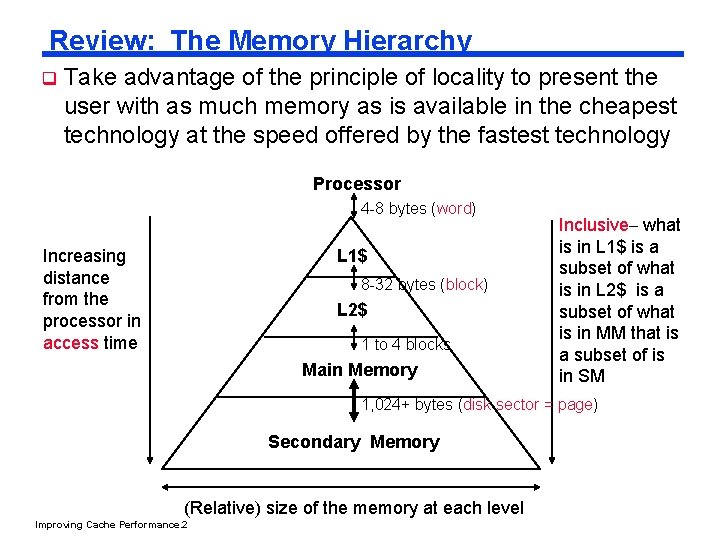

Review: The Memory Hierarchy

- Take advantage of the principle of locality to present the user with as much memory as is available in the cheapest technology at the speed offered by the fastest technology.
- Access time and the (relative) size of the memory at each level both increase with distance from the processor. Transfer units between levels:
  - Processor to L1$: 4-8 bytes (word)
  - L1$ to L2$: 8-32 bytes (block)
  - L2$ to Main Memory: 1 to 4 blocks
  - Main Memory to Secondary Memory: 1,024+ bytes (disk sector = page)
- Inclusive: what is in L1$ is a subset of what is in L2$, which is a subset of what is in main memory (MM), which is a subset of what is in secondary memory (SM).

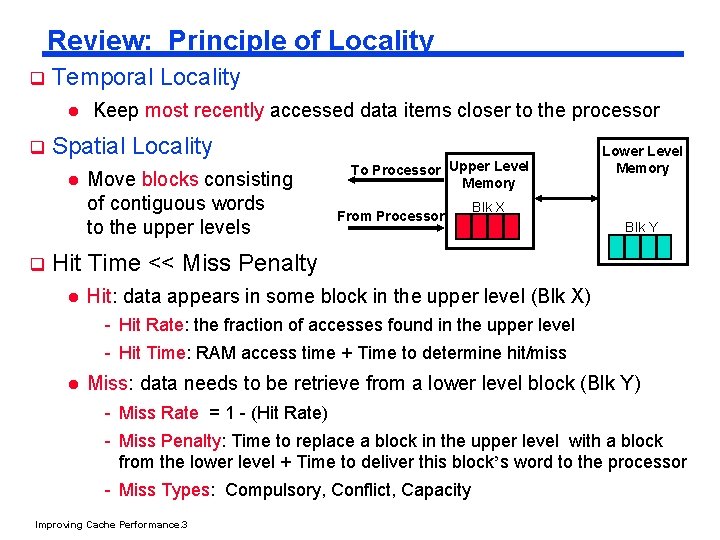

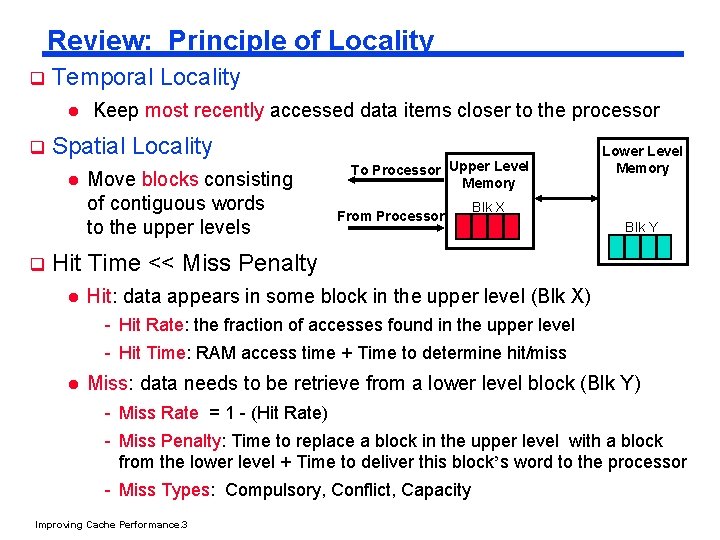

Review: Principle of Locality

- Temporal Locality
  - Keep the most recently accessed data items closer to the processor
- Spatial Locality
  - Move blocks consisting of contiguous words to the upper levels
- [Figure: the processor reads from and writes to upper-level memory (Blk X), which exchanges blocks with lower-level memory (Blk Y)]
- Hit Time << Miss Penalty
  - Hit: data appears in some block in the upper level (Blk X)
    - Hit Rate: the fraction of accesses found in the upper level
    - Hit Time: RAM access time + time to determine hit/miss
  - Miss: data needs to be retrieved from a lower-level block (Blk Y)
    - Miss Rate = 1 - (Hit Rate)
    - Miss Penalty: time to replace a block in the upper level with a block from the lower level + time to deliver this block's word to the processor
    - Miss Types: Compulsory, Conflict, Capacity

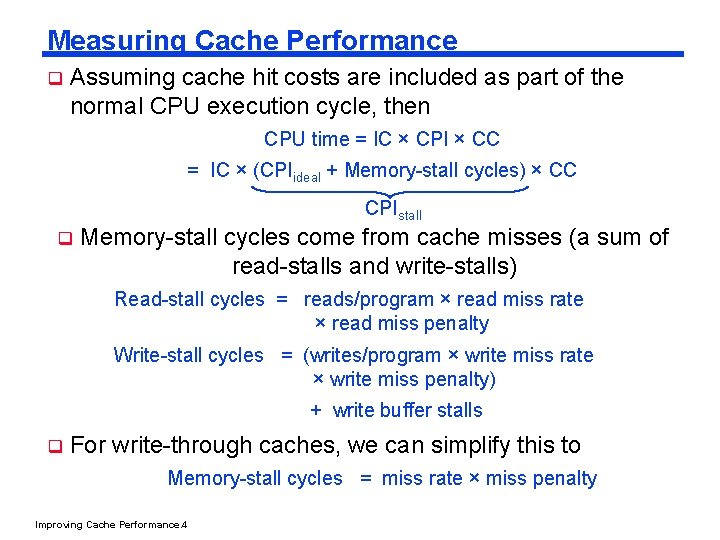

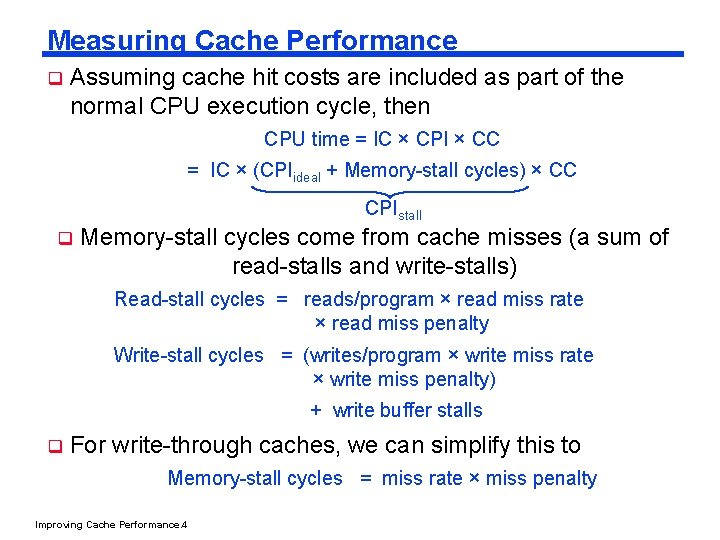

Measuring Cache Performance

- Assuming cache hit costs are included as part of the normal CPU execution cycle, then
  - CPU time = IC × CPI × CC = IC × (CPIideal + Memory-stall cycles) × CC
  - where CPIstall = CPIideal + Memory-stall cycles
- Memory-stall cycles come from cache misses (a sum of read-stalls and write-stalls)
  - Read-stall cycles = reads/program × read miss rate × read miss penalty
  - Write-stall cycles = (writes/program × write miss rate × write miss penalty) + write buffer stalls
- For write-through caches, we can simplify this to
  - Memory-stall cycles = miss rate × miss penalty

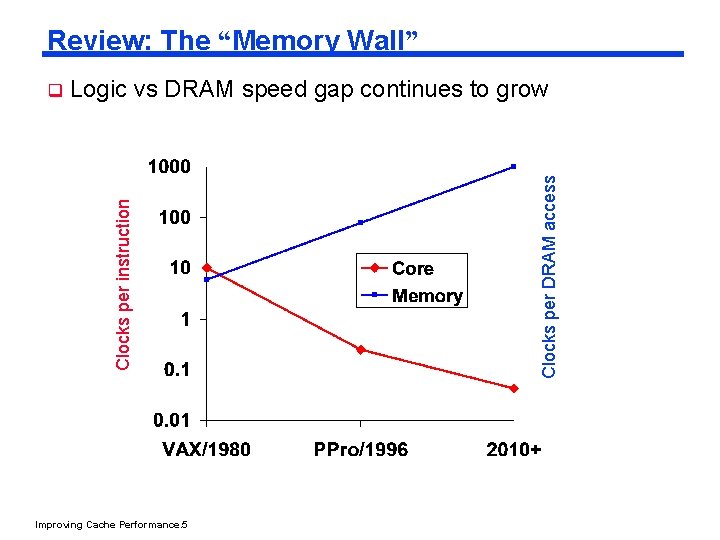

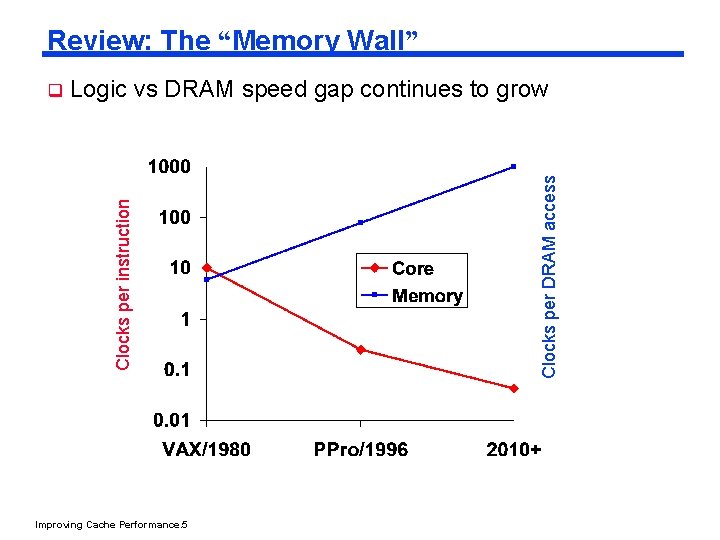

Review: The "Memory Wall"

- The logic vs DRAM speed gap continues to grow

[Figure: clocks per instruction compared with clocks per DRAM access]

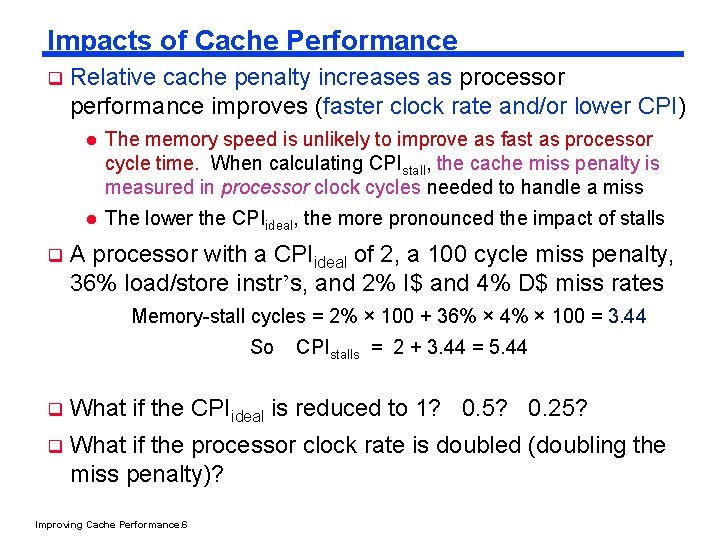

Impacts of Cache Performance

- Relative cache penalty increases as processor performance improves (faster clock rate and/or lower CPI)
  - Memory speed is unlikely to improve as fast as processor cycle time. When calculating CPIstall, the cache miss penalty is measured in the processor clock cycles needed to handle a miss
  - The lower the CPIideal, the more pronounced the impact of stalls
- A processor with a CPIideal of 2, a 100 cycle miss penalty, 36% load/store instructions, and 2% I$ and 4% D$ miss rates:
  - Memory-stall cycles = 2% × 100 + 36% × 4% × 100 = 3.44
  - So CPIstall = 2 + 3.44 = 5.44
- What if the CPIideal is reduced to 1? 0.5? 0.25?
- What if the processor clock rate is doubled (doubling the miss penalty)?

- For an ideal CPI of 1, CPIstall = 4.44, and the share of execution time spent on memory stalls rises from 3.44/5.44 = 63% to 3.44/4.44 = 77%.
- For a miss penalty of 200, memory-stall cycles = 2% × 200 + 36% × 4% × 200 = 6.88, so CPIstall = 8.88.
- This assumes that hit time is not a factor in determining cache performance. A larger cache would have a longer access time (even if it has a lower miss rate), meaning either a slower clock cycle or more pipeline stages for memory access.
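The arithmetic on these two slides can be checked with a short script. This is only a sketch: the function name `cpi_with_stalls` and its parameter names are made up for illustration, and the model is the simple one used on the slides (every instruction is fetched once, only loads and stores touch the D$).

```python
def cpi_with_stalls(cpi_ideal, i_miss_rate, d_miss_rate, load_store_frac, miss_penalty):
    """Return CPIstall = CPIideal + memory-stall cycles per instruction."""
    # Every instruction is fetched, so I$ misses apply to all instructions.
    i_stalls = i_miss_rate * miss_penalty
    # Only loads and stores access the D$.
    d_stalls = load_store_frac * d_miss_rate * miss_penalty
    return cpi_ideal + i_stalls + d_stalls


if __name__ == "__main__":
    # Sweep the ideal CPI values asked about on the slide (100-cycle penalty).
    for cpi_ideal in (2.0, 1.0, 0.5, 0.25):
        cpi = cpi_with_stalls(cpi_ideal, 0.02, 0.04, 0.36, 100)
        stall_share = (cpi - cpi_ideal) / cpi
        print(f"CPIideal={cpi_ideal:<5} CPIstall={cpi:.2f} stall share={stall_share:.0%}")
```

The stall cycles stay fixed at 3.44 per instruction, so their share of execution time grows as CPIideal shrinks.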

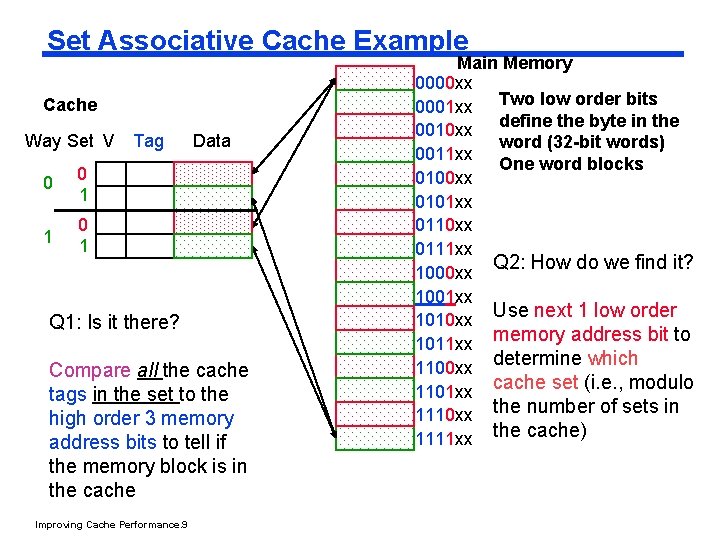

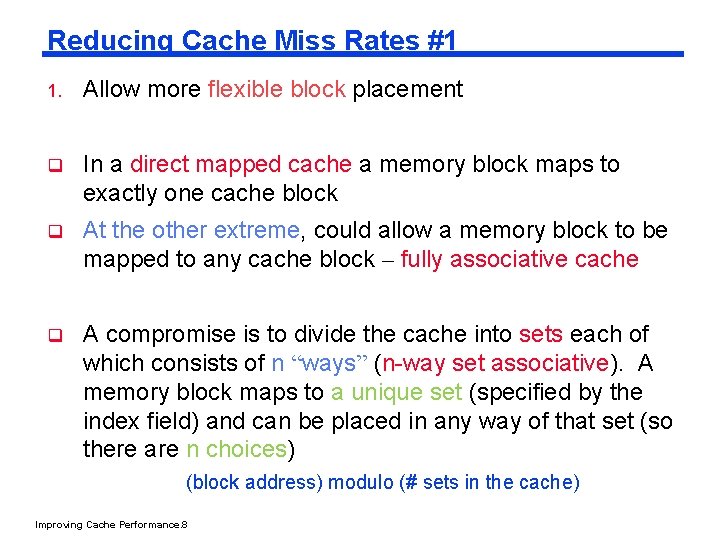

Reducing Cache Miss Rates #1

1. Allow more flexible block placement

- In a direct mapped cache a memory block maps to exactly one cache block
- At the other extreme, could allow a memory block to be mapped to any cache block: a fully associative cache
- A compromise is to divide the cache into sets, each of which consists of n "ways" (n-way set associative). A memory block maps to a unique set (specified by the index field) and can be placed in any way of that set (so there are n choices)
  - set index = (block address) modulo (# sets in the cache)

Set Associative Cache Example

[Figure: a 2-way set associative cache with two sets (ways 0-1, sets 0-1; each entry holds V, Tag, Data) beside a 16-word main memory with addresses 0000xx through 1111xx]

- One-word blocks; the two low-order bits define the byte in the word (32-bit words)
- Q1: Is it there? Compare all the cache tags in the set to the high-order 3 memory address bits to tell if the memory block is in the cache
- Q2: How do we find it? Use the next 1 low-order memory address bit to determine which cache set (i.e., modulo the number of sets in the cache)

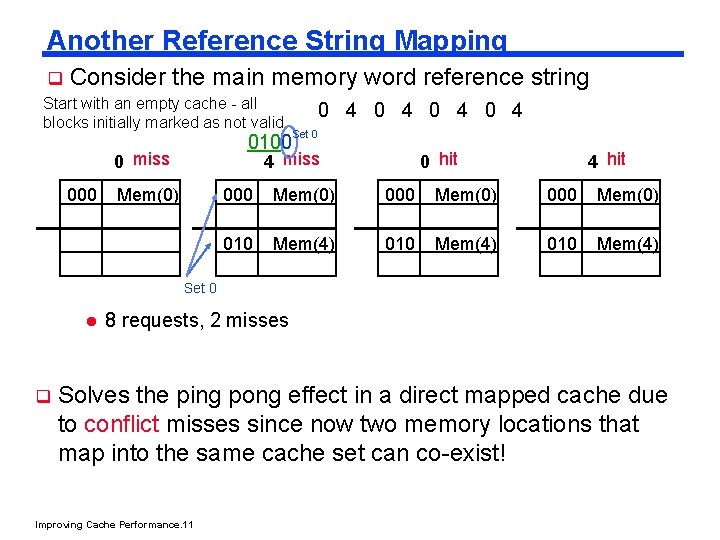

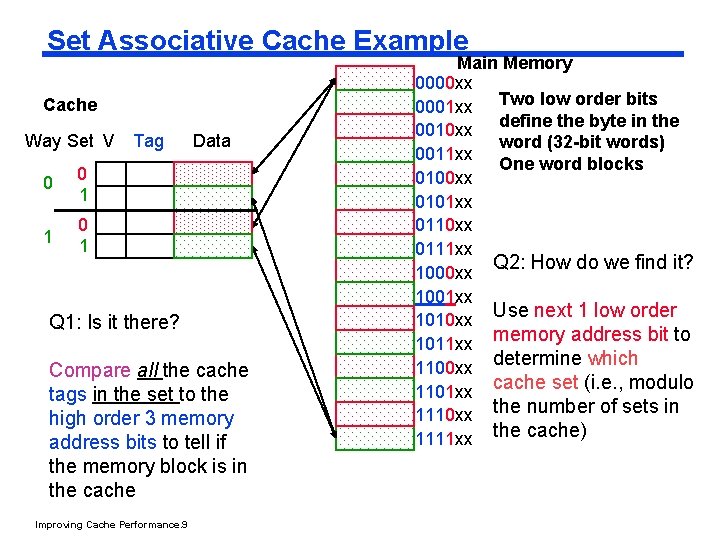

Another Reference String Mapping

- Consider the main memory word reference string 0 4 0 4 0 4 0 4
- Start with an empty cache, with all blocks initially marked as not valid

| Access | Result | Set 0 contents after the access |
|---|---|---|
| 0 | miss | 000 Mem(0) |
| 4 | miss | 000 Mem(0), 010 Mem(4) |
| 0 | hit | 000 Mem(0), 010 Mem(4) |
| 4 | hit | 000 Mem(0), 010 Mem(4) |

- 8 requests, 2 misses
- Solves the ping-pong effect in a direct mapped cache due to conflict misses, since now two memory locations that map into the same cache set can co-exist!
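The reference-string behaviour can be reproduced with a tiny simulator. This is a sketch under stated assumptions: one-word blocks, LRU replacement, and a hypothetical helper `count_misses` that tracks only tags (no data). Setting `ways=1` gives a direct mapped cache for comparison.

```python
def count_misses(block_addresses, num_blocks, ways):
    """Count misses in a cache of num_blocks one-word blocks with LRU replacement."""
    num_sets = num_blocks // ways
    # Each set is a list of tags ordered from least to most recently used.
    sets = [[] for _ in range(num_sets)]
    misses = 0
    for addr in block_addresses:
        index = addr % num_sets   # (block address) modulo (# sets in the cache)
        tag = addr // num_sets    # remaining high-order address bits
        lines = sets[index]
        if tag in lines:
            lines.remove(tag)     # hit: re-appended below as most recently used
        else:
            misses += 1
            if len(lines) == ways:
                lines.pop(0)      # set is full: evict the least recently used tag
        lines.append(tag)
    return misses


refs = [0, 4, 0, 4, 0, 4, 0, 4]
print(count_misses(refs, num_blocks=4, ways=1))  # direct mapped: prints 8
print(count_misses(refs, num_blocks=4, ways=2))  # 2-way set associative: prints 2
```

With four one-word blocks, words 0 and 4 collide in the same direct mapped block and evict each other on every access; in the 2-way organization they share set 0 and both stay resident.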

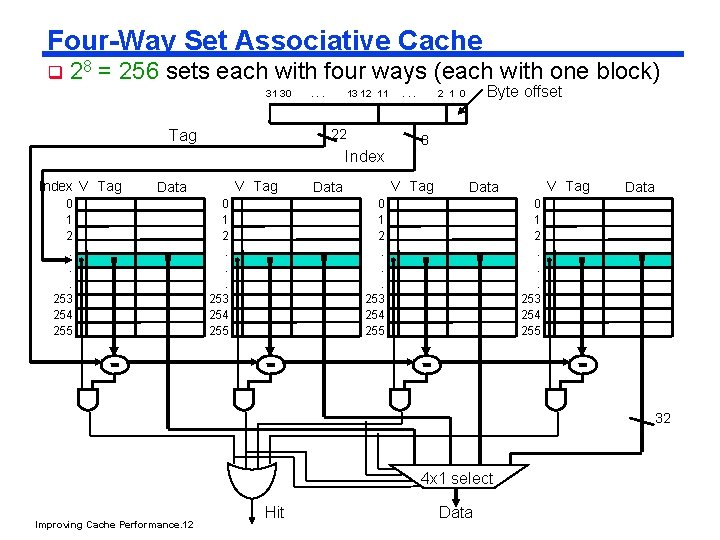

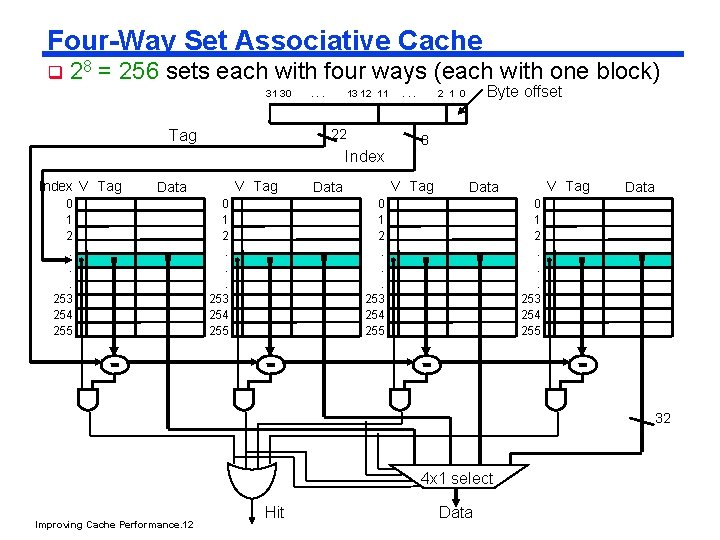

Four-Way Set Associative Cache

- 2^8 = 256 sets, each with four ways (each with one block)

[Figure: the 32-bit address is split into a 22-bit tag, an 8-bit index, and a 2-bit byte offset. The index selects one of sets 0-255 in each of the four ways (V, Tag, Data); the four tag comparisons drive a 4x1 select that produces Hit and the 32-bit Data]
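As a sketch of how the hardware slices the address for this cache, the snippet below splits a 32-bit byte address into the 22-bit tag, 8-bit index, and 2-bit byte offset. The function name `split_address` and the sample address are illustrative assumptions, not part of the slides.

```python
BYTE_OFFSET_BITS = 2   # 4-byte words
INDEX_BITS = 8         # 2**8 = 256 sets


def split_address(addr):
    """Split a 32-bit byte address into (tag, set index, byte offset)."""
    byte_offset = addr & ((1 << BYTE_OFFSET_BITS) - 1)
    index = (addr >> BYTE_OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (BYTE_OFFSET_BITS + INDEX_BITS)   # the remaining 22 bits
    return tag, index, byte_offset


tag, index, offset = split_address(0x12345678)
print(hex(tag), index, offset)
```

The index picks the set; the tag is then compared against all four ways of that set in parallel.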

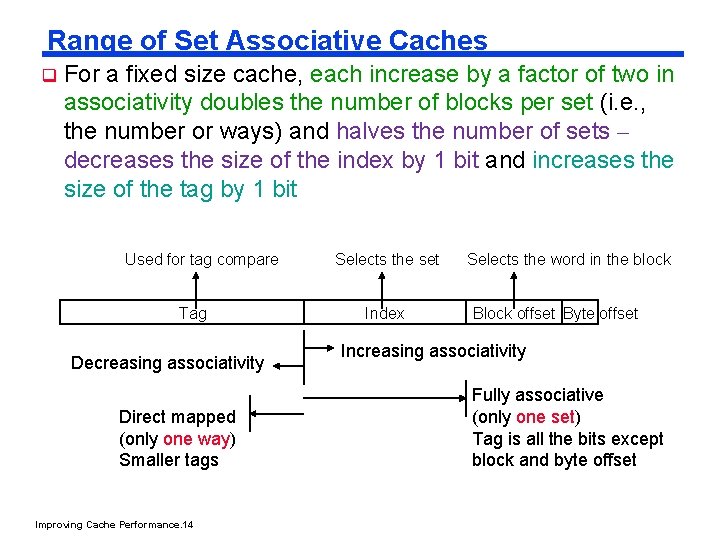

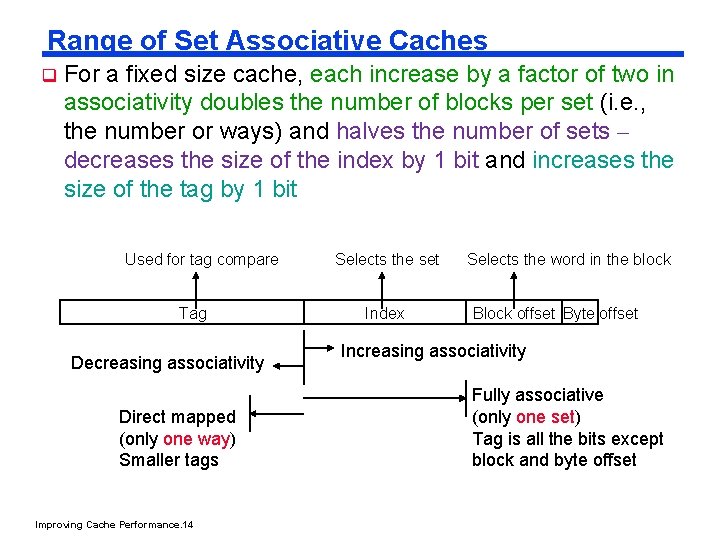

Range of Set Associative Caches

- For a fixed size cache, each increase by a factor of two in associativity doubles the number of blocks per set (i.e., the number of ways) and halves the number of sets: it decreases the size of the index by 1 bit and increases the size of the tag by 1 bit
- Address fields, from high-order to low-order bits:
  - Tag: used for tag compare
  - Index: selects the set
  - Block offset: selects the word in the block
  - Byte offset
- Decreasing associativity leads toward direct mapped (only one way), with smaller tags
- Increasing associativity leads toward fully associative (only one set), where the tag is all the bits except the block and byte offsets

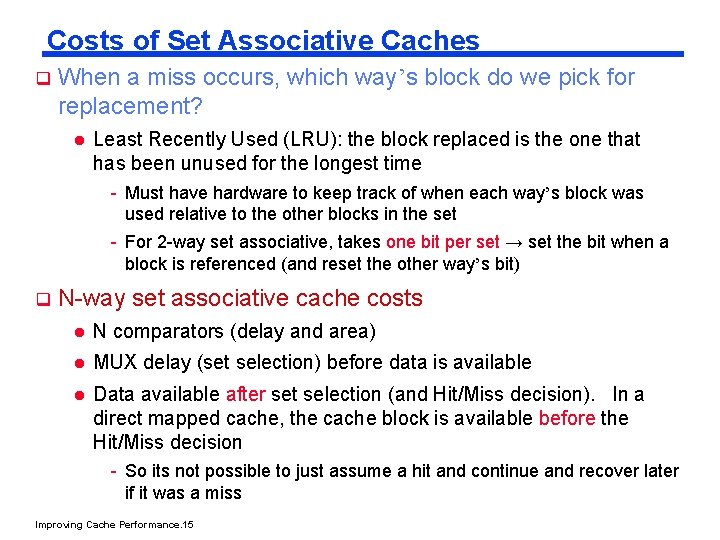

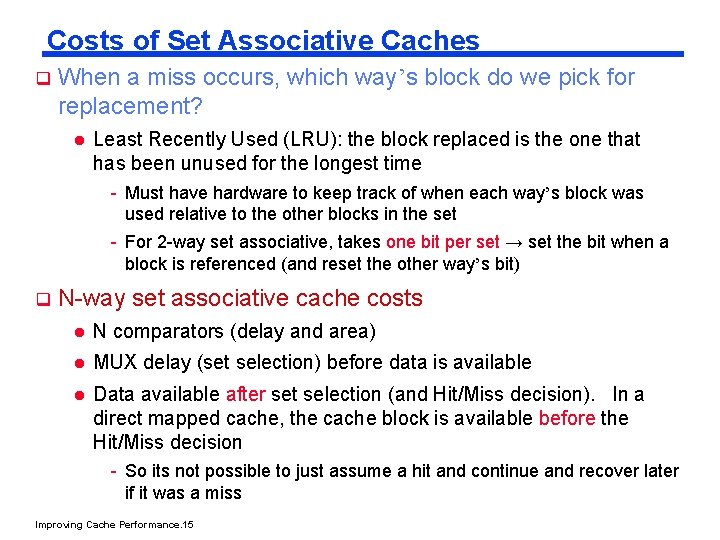

Costs of Set Associative Caches

- When a miss occurs, which way's block do we pick for replacement?
  - Least Recently Used (LRU): the block replaced is the one that has been unused for the longest time
    - Must have hardware to keep track of when each way's block was used relative to the other blocks in the set
    - For 2-way set associative, takes one bit per set: set the bit when a block is referenced (and reset the other way's bit)
- N-way set associative cache costs
  - N comparators (delay and area)
  - MUX delay (set selection) before data is available
  - Data is available only after set selection (and the Hit/Miss decision). In a direct mapped cache, the cache block is available before the Hit/Miss decision
    - So in a set associative cache it's not possible to just assume a hit, continue, and recover later if it was a miss

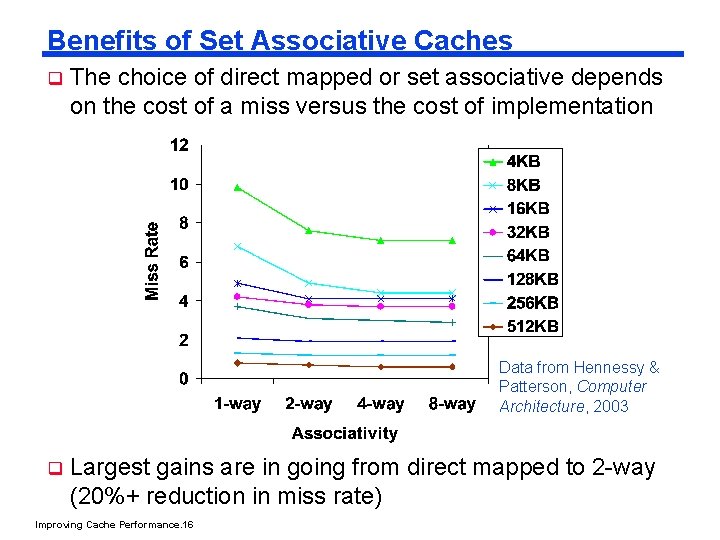

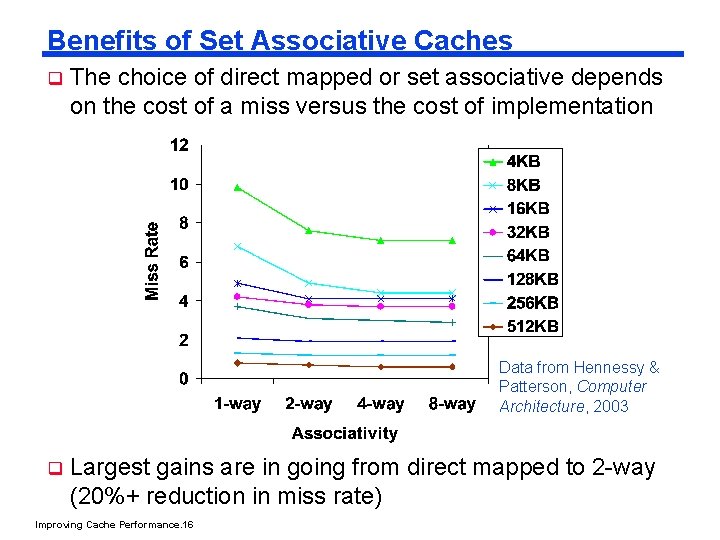

Benefits of Set Associative Caches

- The choice of direct mapped or set associative depends on the cost of a miss versus the cost of implementation
- Largest gains are in going from direct mapped to 2-way (20%+ reduction in miss rate)

[Figure: data from Hennessy & Patterson, Computer Architecture, 2003]

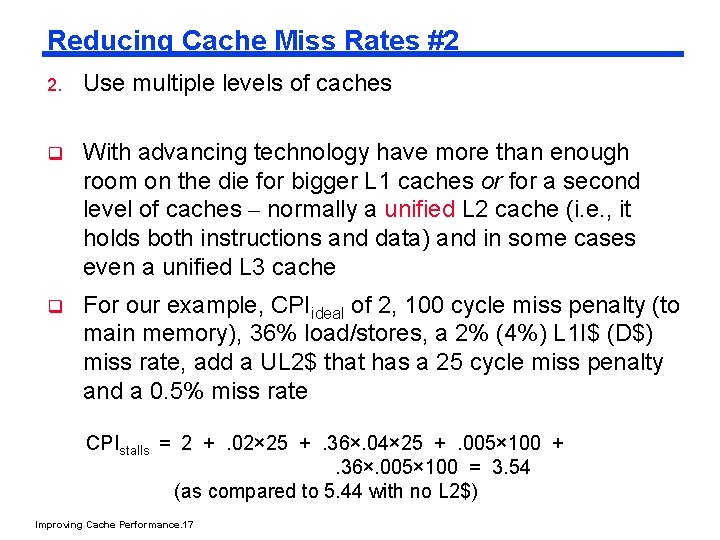

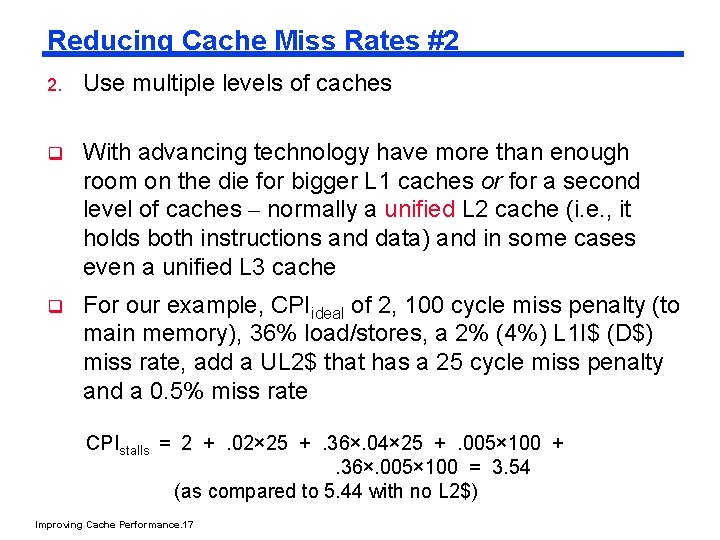

Reducing Cache Miss Rates #2

2. Use multiple levels of caches

- With advancing technology, there is more than enough room on the die for bigger L1 caches or for a second level of caches, normally a unified L2 cache (i.e., it holds both instructions and data) and in some cases even a unified L3 cache
- For our example (CPIideal of 2, 100 cycle miss penalty to main memory, 36% load/stores, a 2% (4%) L1 I$ (D$) miss rate), add a unified L2$ (UL2$) so that an L1 miss costs 25 cycles, with a 0.5% UL2$ miss rate
  - CPIstall = 2 + .02 × 25 + .36 × .04 × 25 + .005 × 100 + .36 × .005 × 100 = 3.54 (as compared to 5.44 with no L2$)
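Written out term by term, the two-level calculation looks as follows. The grouping labels are added here for readability and assume, as the slide's arithmetic does, that the 0.5% UL2$ miss rate applies per instruction fetch and per data access.

$$
\begin{aligned}
\text{CPI}_{\text{stall}} &= \text{CPI}_{\text{ideal}}
 + \underbrace{0.02 \times 25}_{\text{L1 I\$ misses}}
 + \underbrace{0.36 \times 0.04 \times 25}_{\text{L1 D\$ misses}}
 + \underbrace{0.005 \times 100}_{\text{UL2\$ misses, fetches}}
 + \underbrace{0.36 \times 0.005 \times 100}_{\text{UL2\$ misses, data}} \\
 &= 2 + 0.50 + 0.36 + 0.50 + 0.18 = 3.54
\end{aligned}
$$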

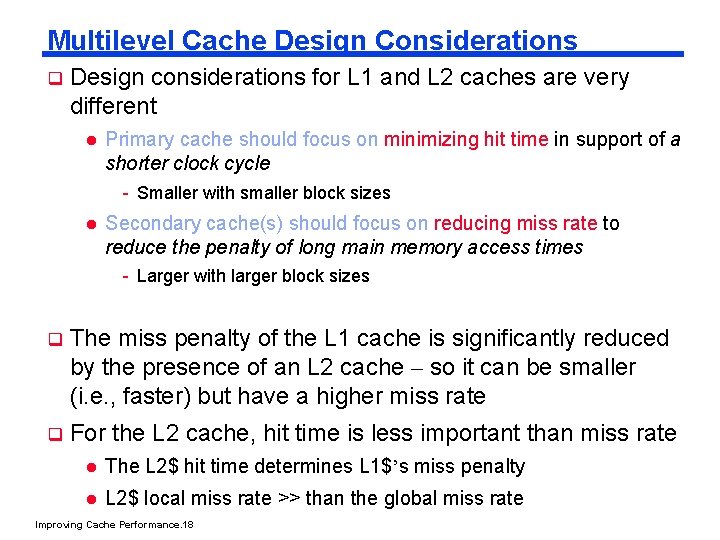

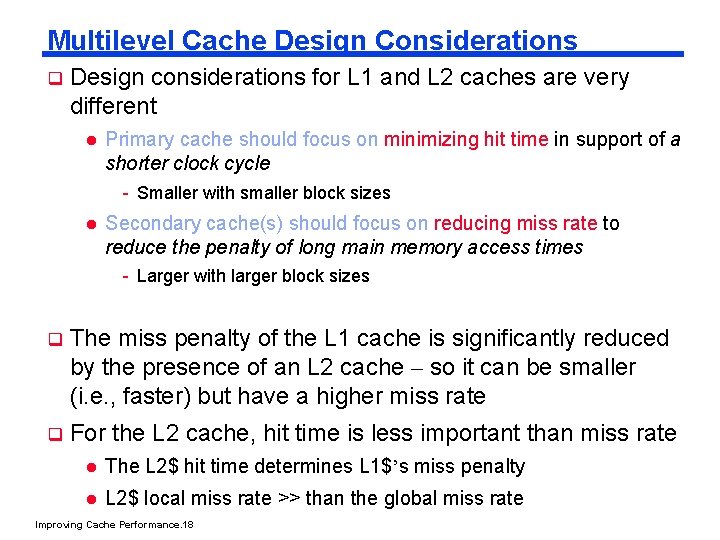

Multilevel Cache Design Considerations

- Design considerations for L1 and L2 caches are very different
  - Primary cache should focus on minimizing hit time in support of a shorter clock cycle
    - Smaller, with smaller block sizes
  - Secondary cache(s) should focus on reducing miss rate to reduce the penalty of long main memory access times
    - Larger, with larger block sizes
- The miss penalty of the L1 cache is significantly reduced by the presence of an L2 cache, so it can be smaller (i.e., faster) but have a higher miss rate
- For the L2 cache, hit time is less important than miss rate
  - The L2$ hit time determines L1$'s miss penalty
  - L2$ local miss rate >> the global miss rate

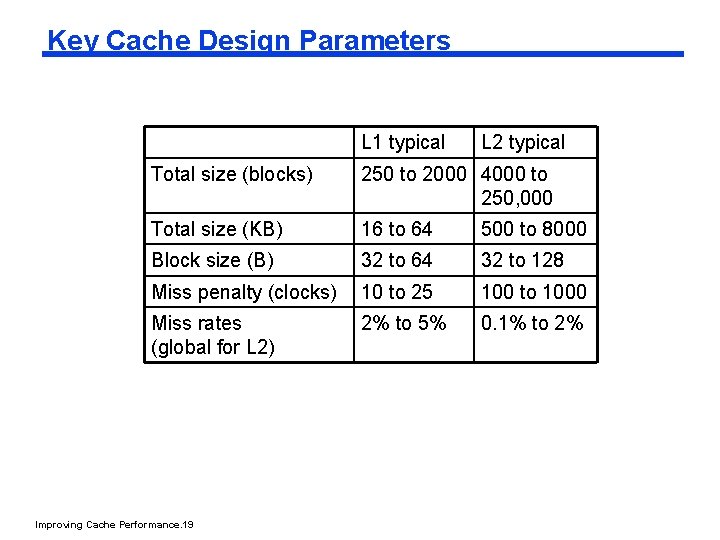

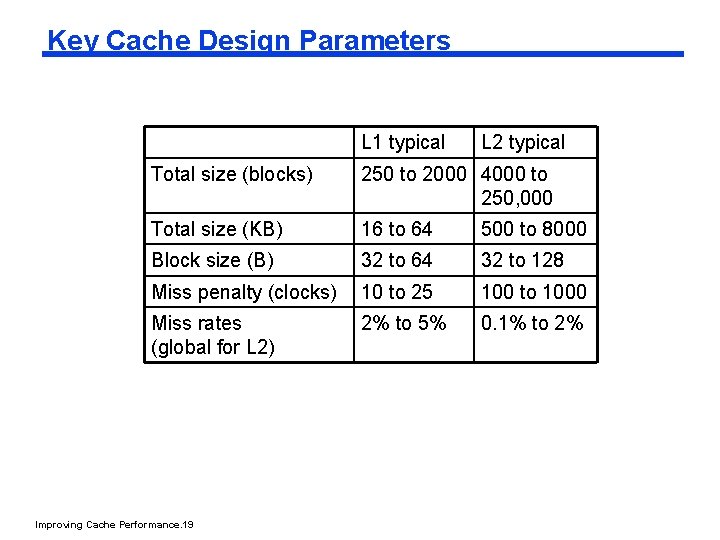

Key Cache Design Parameters

| | L1 typical | L2 typical |
|---|---|---|
| Total size (blocks) | 250 to 2000 | 4000 to 250,000 |
| Total size (KB) | 16 to 64 | 500 to 8000 |
| Block size (B) | 32 to 64 | 32 to 128 |
| Miss penalty (clocks) | 10 to 25 | 100 to 1000 |
| Miss rates (global for L2) | 2% to 5% | 0.1% to 2% |

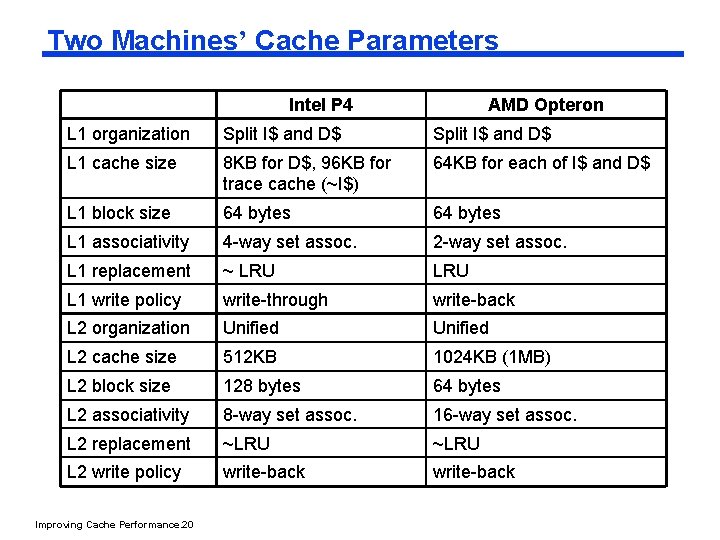

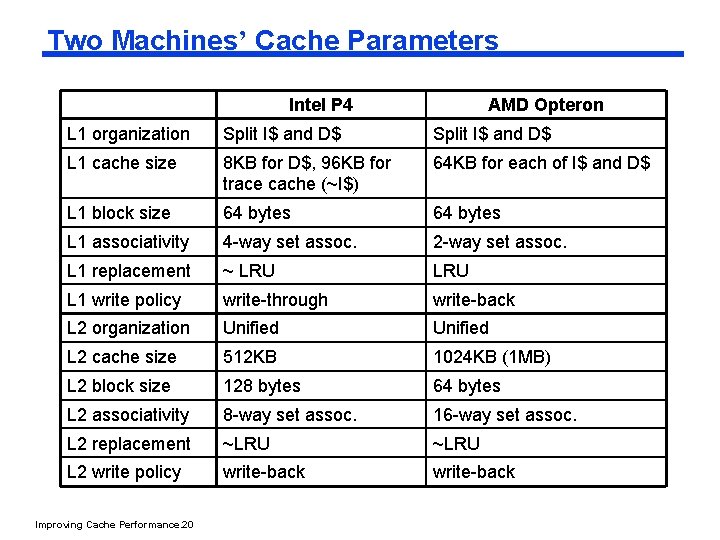

Two Machines' Cache Parameters

| | Intel P4 | AMD Opteron |
|---|---|---|
| L1 organization | Split I$ and D$ | Split I$ and D$ |
| L1 cache size | 8 KB for D$, 96 KB for trace cache (~I$) | 64 KB for each of I$ and D$ |
| L1 block size | 64 bytes | 64 bytes |
| L1 associativity | 4-way set assoc. | 2-way set assoc. |
| L1 replacement | ~LRU | ~LRU |
| L1 write policy | write-through | write-back |
| L2 organization | Unified | Unified |
| L2 cache size | 512 KB | 1024 KB (1 MB) |
| L2 block size | 128 bytes | 64 bytes |
| L2 associativity | 8-way set assoc. | 16-way set assoc. |
| L2 replacement | ~LRU | ~LRU |
| L2 write policy | write-back | write-back |

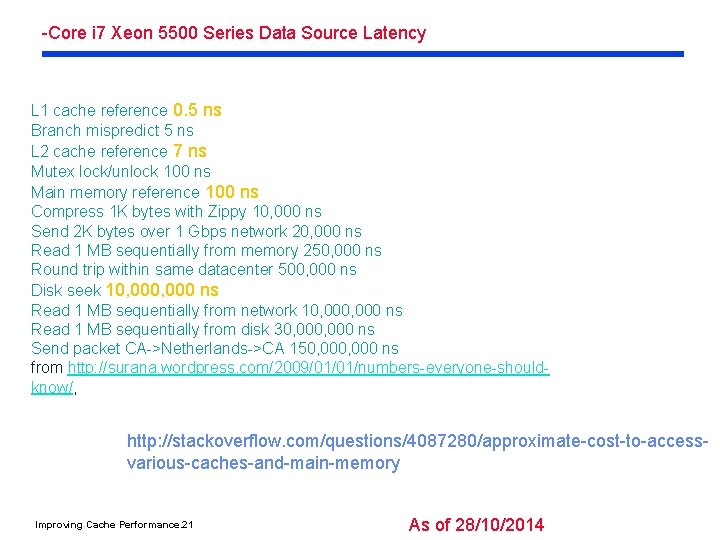

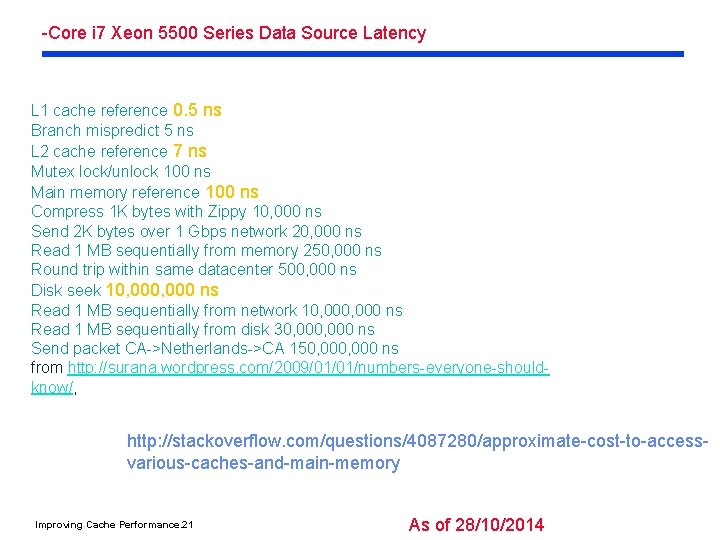

Core i7 Xeon 5500 Series Data Source Latency

| Operation | Latency |
|---|---|
| L1 cache reference | 0.5 ns |
| Branch mispredict | 5 ns |
| L2 cache reference | 7 ns |
| Mutex lock/unlock | 100 ns |
| Main memory reference | 100 ns |
| Compress 1K bytes with Zippy | 10,000 ns |
| Send 2K bytes over 1 Gbps network | 20,000 ns |
| Read 1 MB sequentially from memory | 250,000 ns |
| Round trip within same datacenter | 500,000 ns |
| Disk seek | 10,000,000 ns |
| Read 1 MB sequentially from network | 10,000,000 ns |
| Read 1 MB sequentially from disk | 30,000,000 ns |
| Send packet CA->Netherlands->CA | 150,000,000 ns |

From http://surana.wordpress.com/2009/01/01/numbers-everyone-should-know/ and http://stackoverflow.com/questions/4087280/approximate-cost-to-access-various-caches-and-main-memory (as of 28/10/2014)

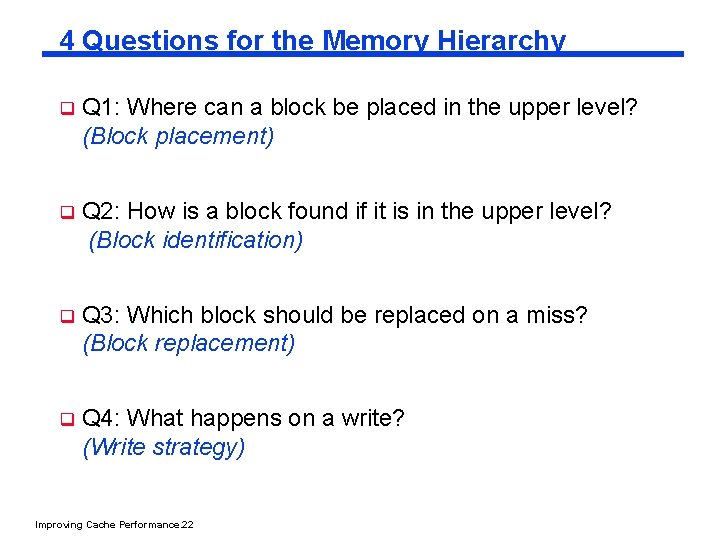

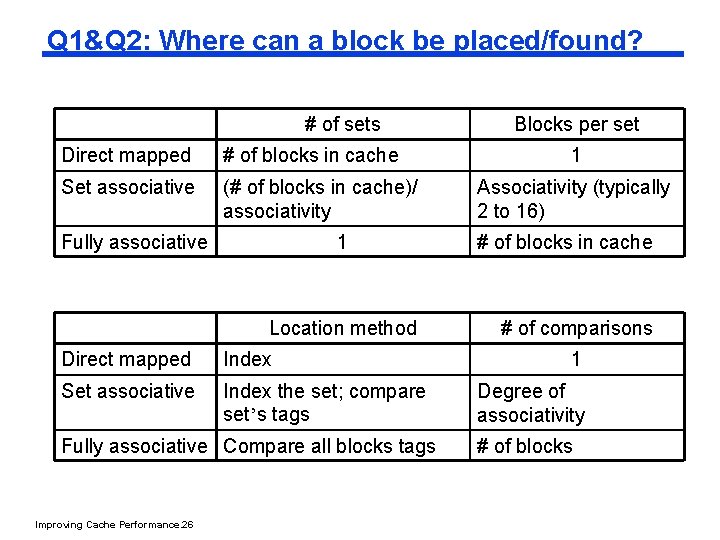

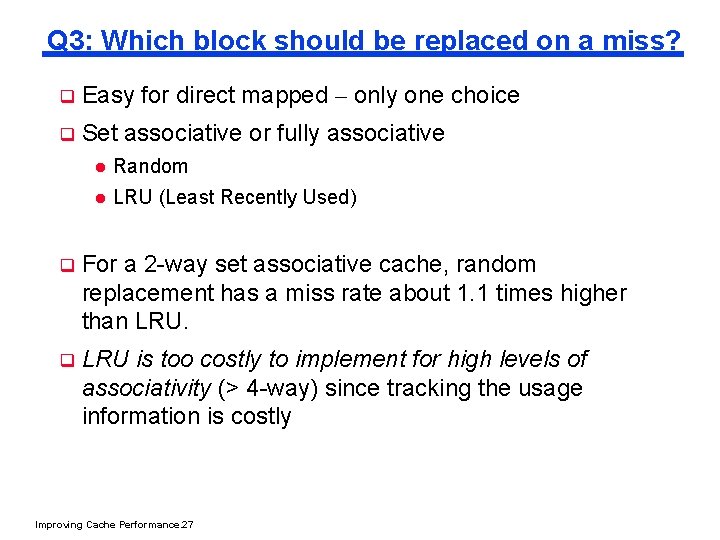

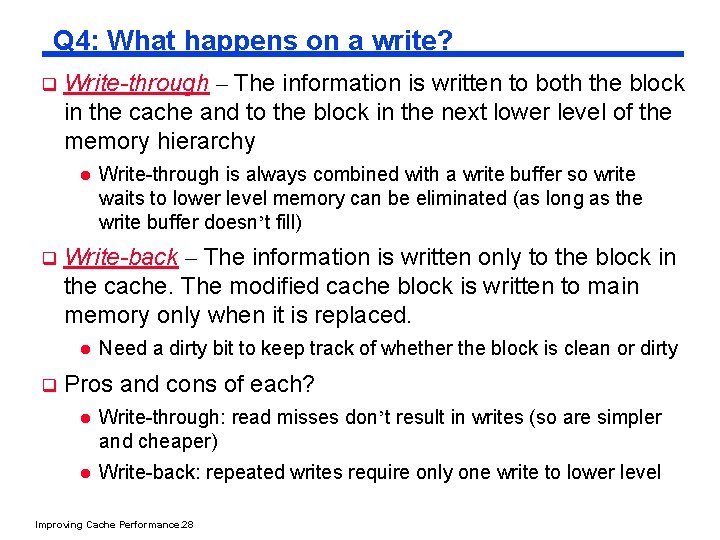

4 Questions for the Memory Hierarchy

- Q1: Where can a block be placed in the upper level? (Block placement)
- Q2: How is a block found if it is in the upper level? (Block identification)
- Q3: Which block should be replaced on a miss? (Block replacement)
- Q4: What happens on a write? (Write strategy)

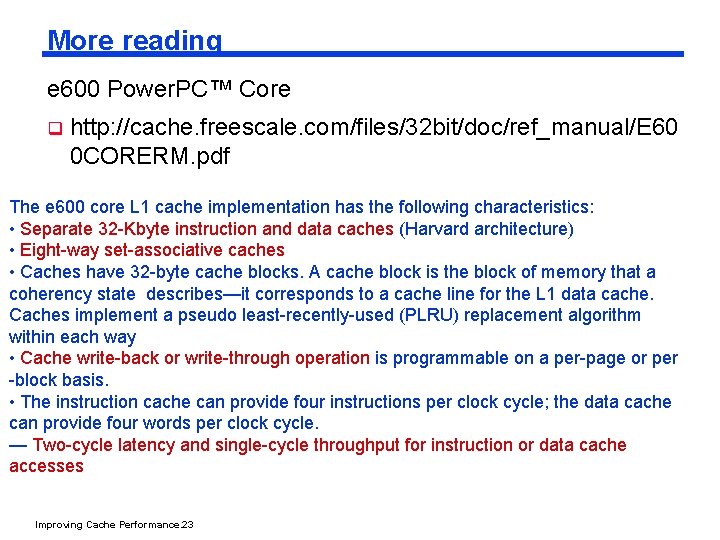

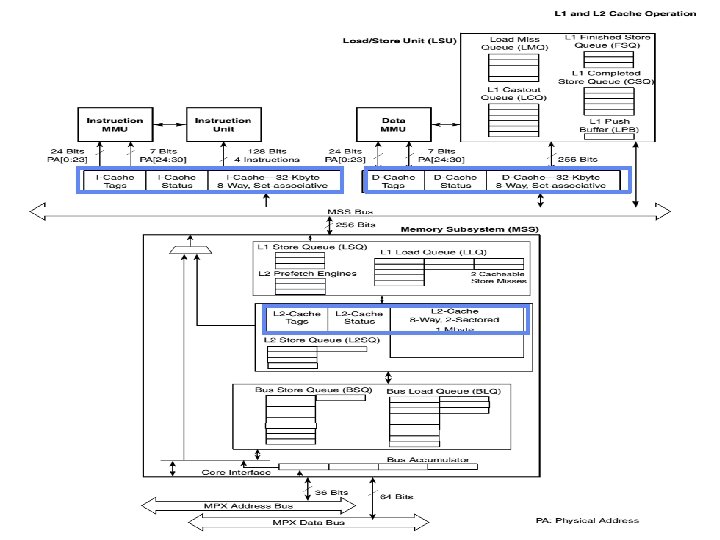

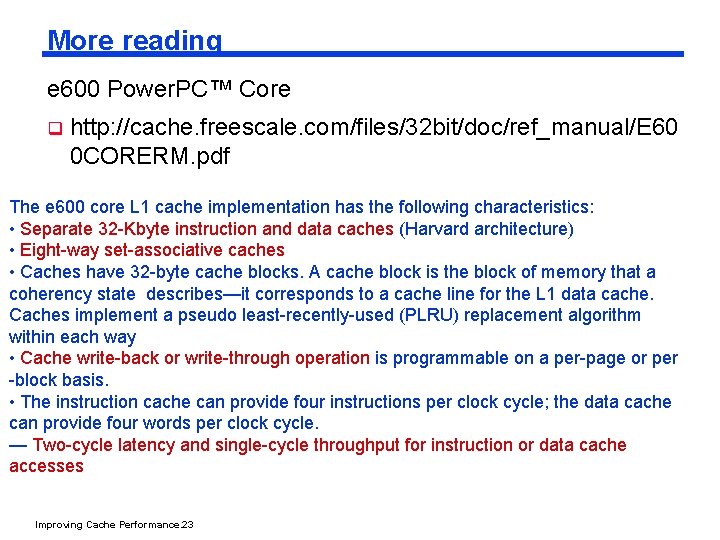

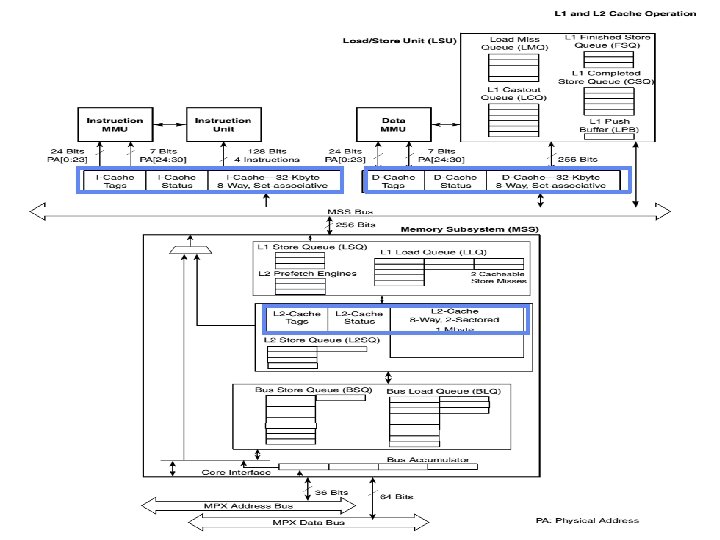

More reading: e600 PowerPC™ Core

- http://cache.freescale.com/files/32bit/doc/ref_manual/E600CORERM.pdf

The e600 core L1 cache implementation has the following characteristics:

- Separate 32-Kbyte instruction and data caches (Harvard architecture)
- Eight-way set-associative caches
- Caches have 32-byte cache blocks. A cache block is the block of memory that a coherency state describes; it corresponds to a cache line for the L1 data cache.
- Caches implement a pseudo least-recently-used (PLRU) replacement algorithm within each way
- Cache write-back or write-through operation is programmable on a per-page or per-block basis.
- The instruction cache can provide four instructions per clock cycle; the data cache can provide four words per clock cycle.
  - Two-cycle latency and single-cycle throughput for instruction or data cache accesses

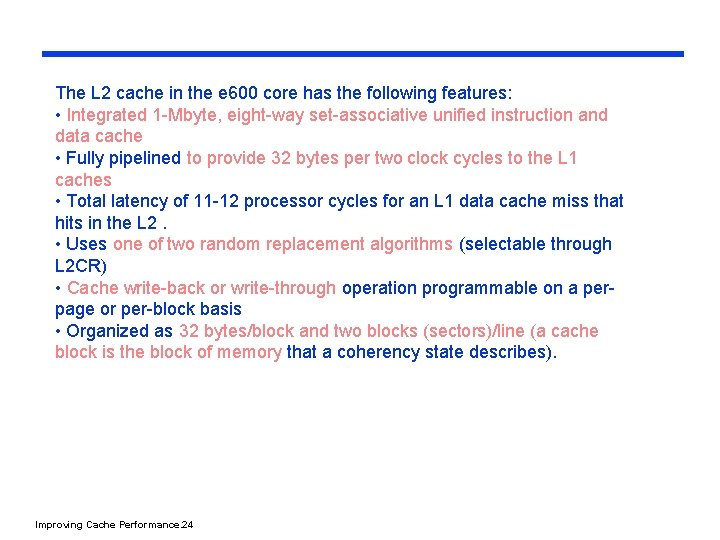

The L2 cache in the e600 core has the following features:

- Integrated 1-Mbyte, eight-way set-associative unified instruction and data cache
- Fully pipelined to provide 32 bytes per two clock cycles to the L1 caches
- Total latency of 11-12 processor cycles for an L1 data cache miss that hits in the L2
- Uses one of two random replacement algorithms (selectable through L2CR)
- Cache write-back or write-through operation programmable on a per-page or per-block basis
- Organized as 32 bytes/block and two blocks (sectors)/line (a cache block is the block of memory that a coherency state describes)


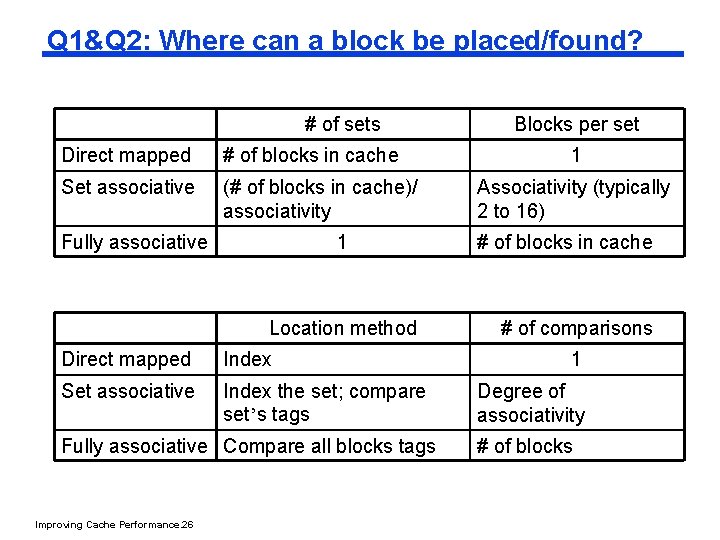

Q1 & Q2: Where can a block be placed/found?

| | # of sets | Blocks per set |
|---|---|---|
| Direct mapped | # of blocks in cache | 1 |
| Set associative | (# of blocks in cache) / associativity | Associativity (typically 2 to 16) |
| Fully associative | 1 | # of blocks in cache |

| | Location method | # of comparisons |
|---|---|---|
| Direct mapped | Index | 1 |
| Set associative | Index the set; compare set's tags | Degree of associativity |
| Fully associative | Compare all blocks' tags | # of blocks |

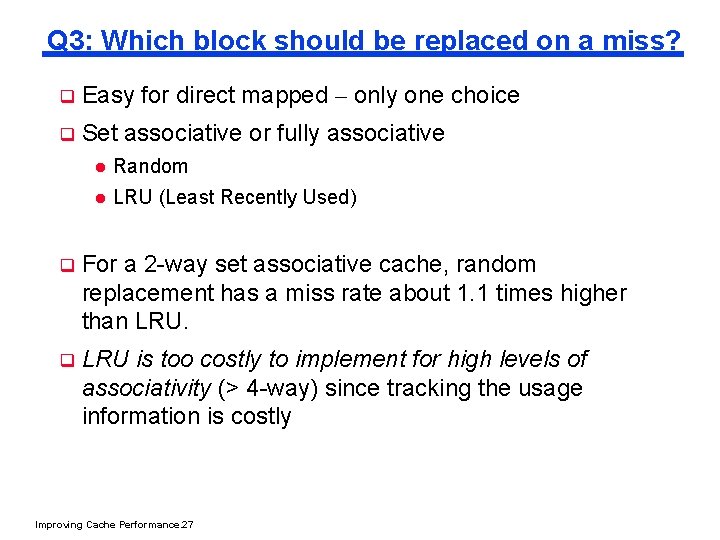

Q3: Which block should be replaced on a miss?

- Easy for direct mapped: only one choice
- Set associative or fully associative
  - Random
  - LRU (Least Recently Used)
- For a 2-way set associative cache, random replacement has a miss rate about 1.1 times higher than LRU.
- LRU is too costly to implement for high levels of associativity (> 4-way) since tracking the usage information is costly

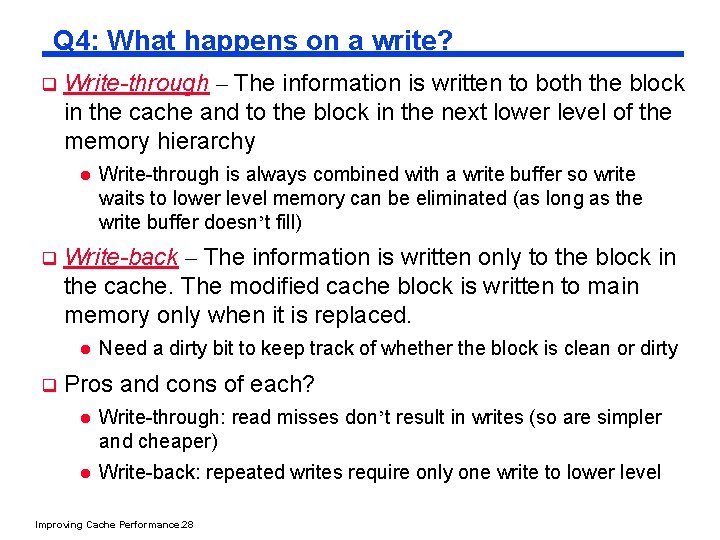

Q4: What happens on a write?

- Write-through: the information is written to both the block in the cache and to the block in the next lower level of the memory hierarchy
  - Write-through is always combined with a write buffer so that waits for writes to lower-level memory can be eliminated (as long as the write buffer doesn't fill)
- Write-back: the information is written only to the block in the cache. The modified cache block is written to main memory only when it is replaced.
  - Need a dirty bit to keep track of whether the block is clean or dirty
- Pros and cons of each?
  - Write-through: read misses don't result in writes (so are simpler and cheaper)
  - Write-back: repeated writes require only one write to the lower level
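The difference in lower-level write traffic can be sketched with a deliberately tiny model. The assumptions here are not from the slides: every store hits in the cache, all blocks stay resident, and each dirty block is replaced exactly once at the end. The function name `lower_level_writes` is hypothetical.

```python
def lower_level_writes(store_blocks, policy):
    """Count writes sent to the next lower level for a sequence of stores.

    store_blocks lists the block address touched by each store.
    """
    if policy == "write-through":
        # Every store is also written to the lower level (via the write buffer).
        return len(store_blocks)
    if policy == "write-back":
        # The dirty bit is set on the first store to a block; the block is
        # written back once, when it is eventually replaced.
        dirty_blocks = set(store_blocks)
        return len(dirty_blocks)
    raise ValueError(f"unknown policy: {policy}")


stores = [0, 0, 0, 1, 0, 1]   # six stores that touch only two blocks
print(lower_level_writes(stores, "write-through"))  # prints 6
print(lower_level_writes(stores, "write-back"))     # prints 2
```

Repeated stores to the same block are where write-back saves traffic; with no reuse the two policies send the same number of writes in this model.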

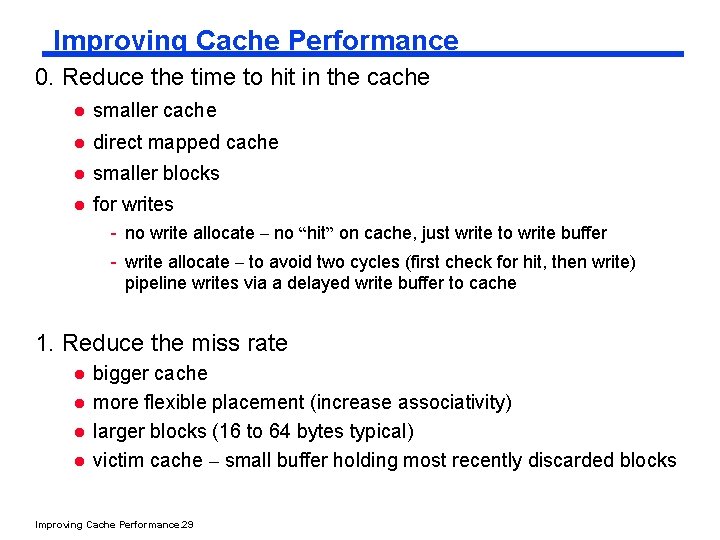

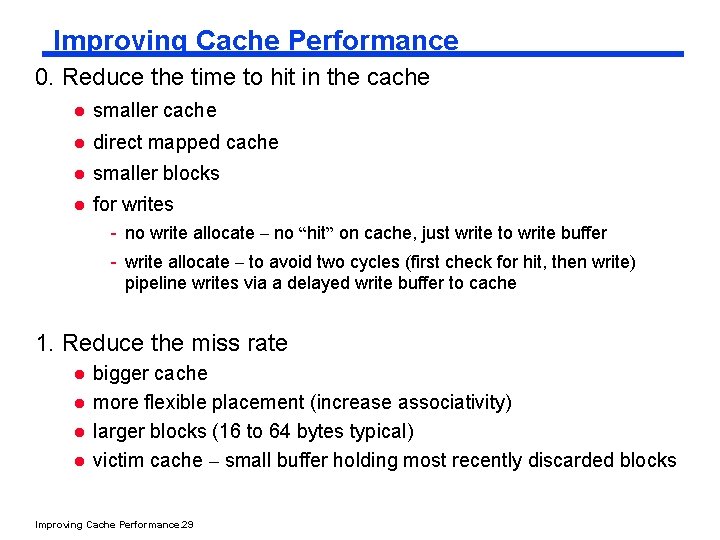

Improving Cache Performance

0. Reduce the time to hit in the cache

- smaller cache
- direct mapped cache
- smaller blocks
- for writes
  - no write allocate: no "hit" on cache, just write to write buffer
  - write allocate: to avoid two cycles (first check for hit, then write), pipeline writes via a delayed write buffer to cache

1. Reduce the miss rate

- bigger cache
- more flexible placement (increase associativity)
- larger blocks (16 to 64 bytes typical)
- victim cache: small buffer holding most recently discarded blocks

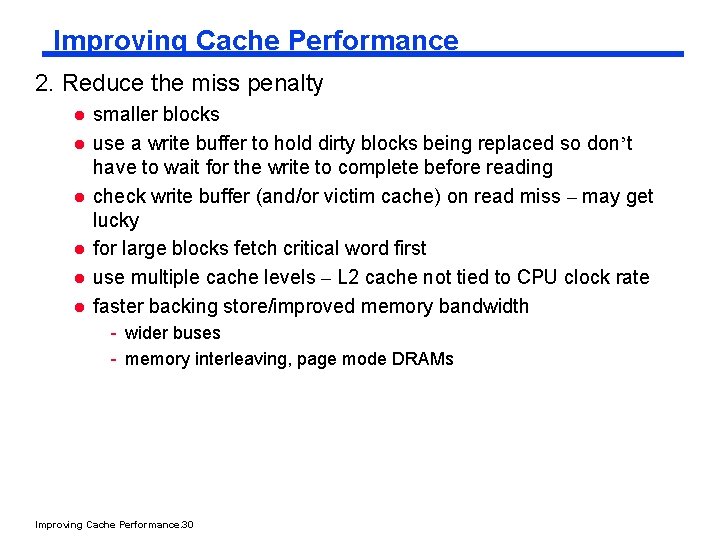

Improving Cache Performance

2. Reduce the miss penalty

- smaller blocks
- use a write buffer to hold dirty blocks being replaced, so there is no need to wait for the write to complete before reading
- check write buffer (and/or victim cache) on read miss: may get lucky
- for large blocks, fetch critical word first
- use multiple cache levels: L2 cache not tied to CPU clock rate
- faster backing store/improved memory bandwidth
  - wider buses
  - memory interleaving, page mode DRAMs

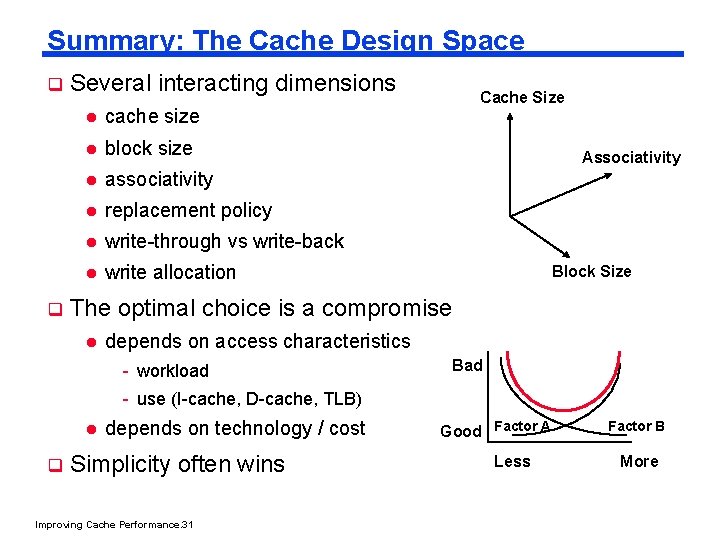

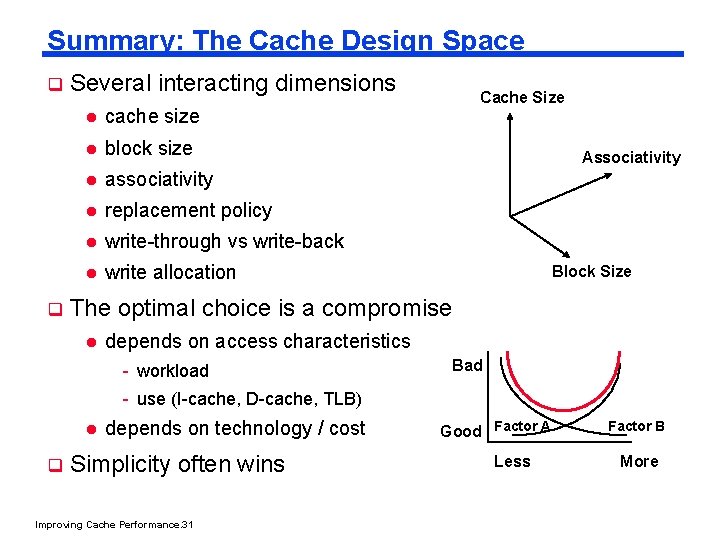

Summary: The Cache Design Space

- Several interacting dimensions
  - cache size
  - block size
  - associativity
  - replacement policy
  - write-through vs write-back
  - write allocation
- The optimal choice is a compromise
  - depends on access characteristics
    - workload
    - use (I-cache, D-cache, TLB)
  - depends on technology / cost
- Simplicity often wins

[Figure: design space with axes Cache Size, Associativity, and Block Size; a Good-to-Bad curve plotted against Less-to-More of Factor A and Factor B]

Next Lecture

- Next lecture
  - Reading assignment: PH 7.4

Readings

- http://www.extremetech.com/extreme/188776-how-l1-and-l2-cpu-caches-work-and-why-theyre-an-essential-part-of-modern-chips

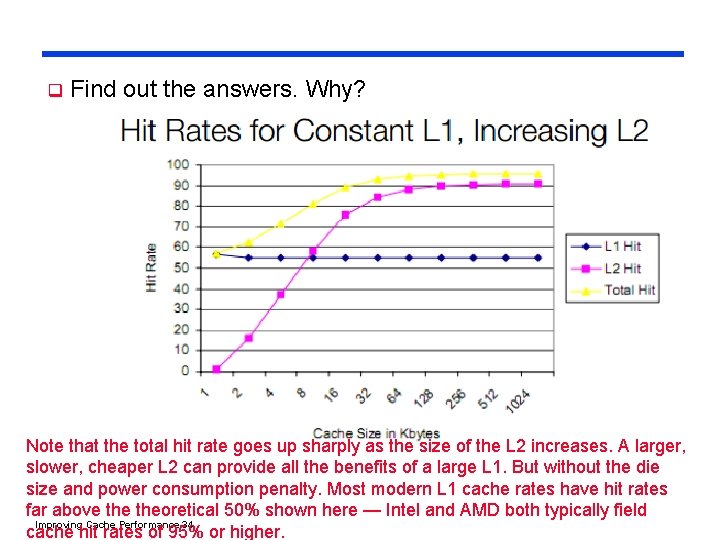

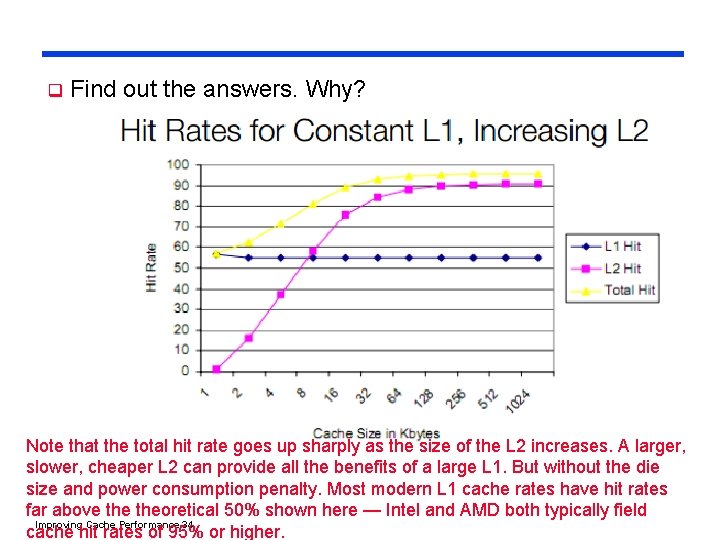

- Find out the answers. Why?

Note that the total hit rate goes up sharply as the size of the L2 increases. A larger, slower, cheaper L2 can provide all the benefits of a large L1, but without the die size and power consumption penalty. Most modern L1 caches have hit rates far above the theoretical 50% shown here; Intel and AMD both typically field cache hit rates of 95% or higher.

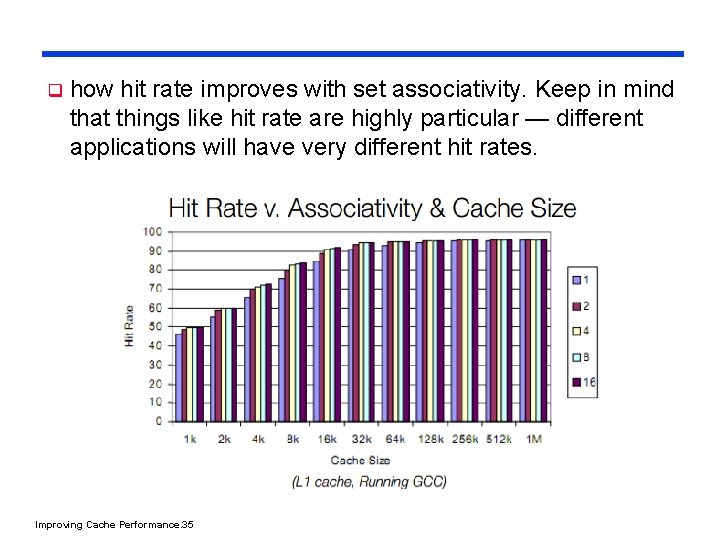

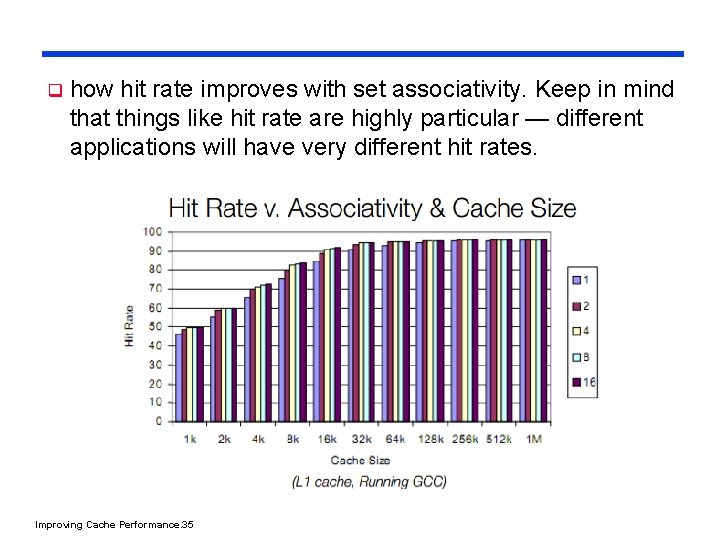

- These charts show how hit rate improves with set associativity. Keep in mind that things like hit rate are highly particular: different applications will have very different hit rates.

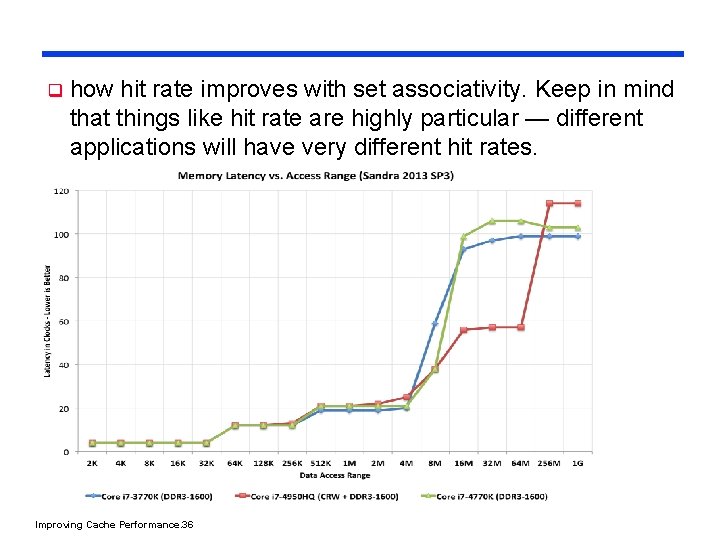

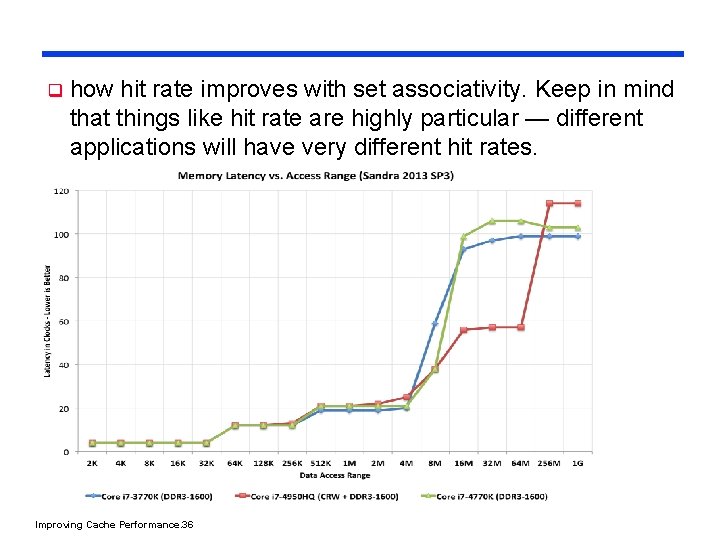


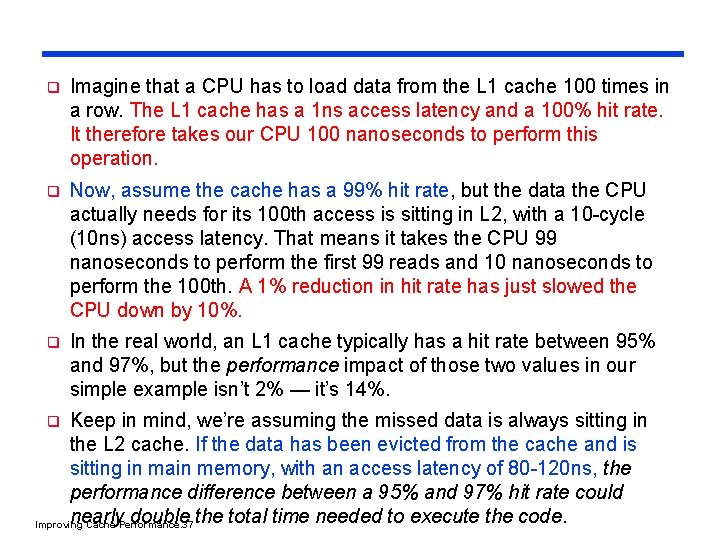

- Imagine that a CPU has to load data from the L1 cache 100 times in a row. The L1 cache has a 1 ns access latency and a 100% hit rate. It therefore takes our CPU 100 nanoseconds to perform this operation.
- Now, assume the cache has a 99% hit rate, but the data the CPU actually needs for its 100th access is sitting in L2, with a 10-cycle (10 ns) access latency. That means it takes the CPU 99 nanoseconds to perform the first 99 reads and 10 nanoseconds to perform the 100th, for 109 ns in total. A 1% reduction in hit rate has just slowed the CPU down by roughly 9%.
- In the real world, an L1 cache typically has a hit rate between 95% and 97%, but the performance impact of those two values in our simple example isn't 2%; it's 14% (145 ns versus 127 ns). Keep in mind, we're assuming the missed data is always sitting in the L2 cache. If the data has been evicted from the cache and is sitting in main memory, with an access latency of 80-120 ns, the performance difference between a 95% and a 97% hit rate could nearly double the total time needed to execute the code.
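The numbers in this example follow from a one-line timing model, sketched below. The function name `total_time_ns` is an assumption for illustration; as in the text, a miss simply costs the L2 latency instead of the L1 latency.

```python
def total_time_ns(accesses, hit_rate, hit_ns, miss_ns):
    """Total time for a run of loads where hits cost hit_ns and misses cost miss_ns."""
    hits = round(accesses * hit_rate)
    misses = accesses - hits
    return hits * hit_ns + misses * miss_ns


if __name__ == "__main__":
    for rate in (1.00, 0.99, 0.97, 0.95):
        print(f"hit rate {rate:.0%}: {total_time_ns(100, rate, 1, 10)} ns")
    # Relative slowdown of a 95% hit rate versus a 97% hit rate (L2 at 10 ns).
    print(total_time_ns(100, 0.95, 1, 10) / total_time_ns(100, 0.97, 1, 10))
```

Swapping `miss_ns` for a main-memory latency shows how quickly the gap widens when misses go past the L2.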

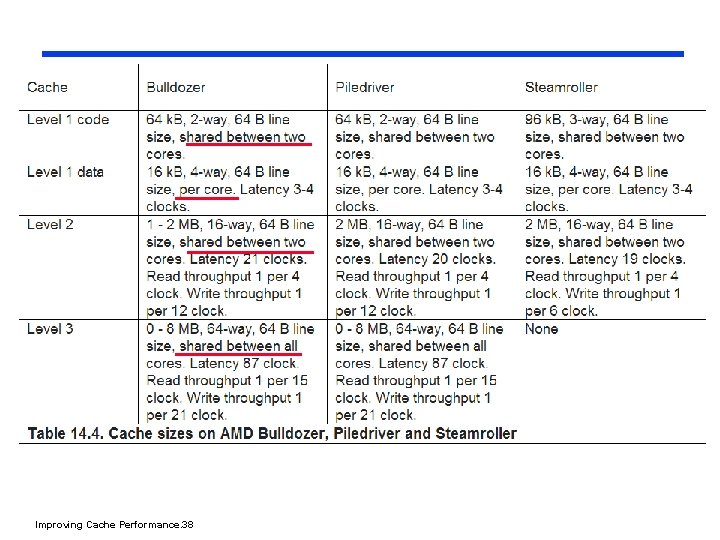


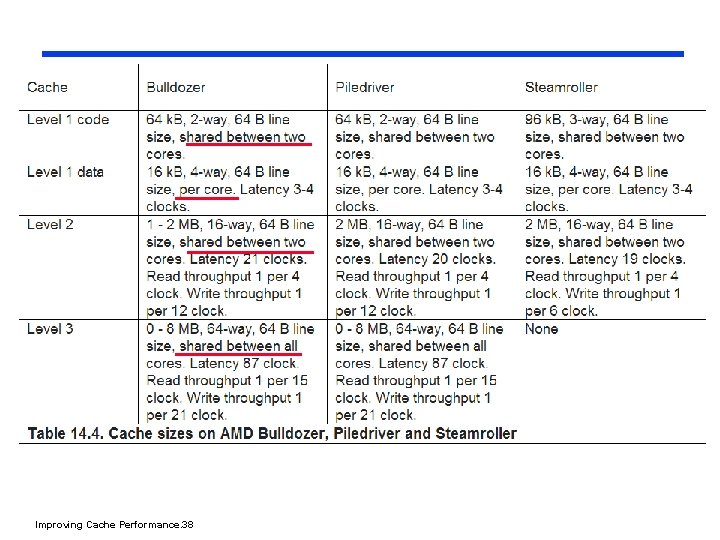

- NOTE: A cache is contended when two different threads are writing and overwriting data in the same memory space. It hurts the performance of both threads: each core is forced to spend time writing its own preferred data into the L1, only for the other core to promptly overwrite that information. Steamroller still gets whacked by this problem, even though AMD increased the L1 code cache to 96 KB and made it three-way associative instead of two.

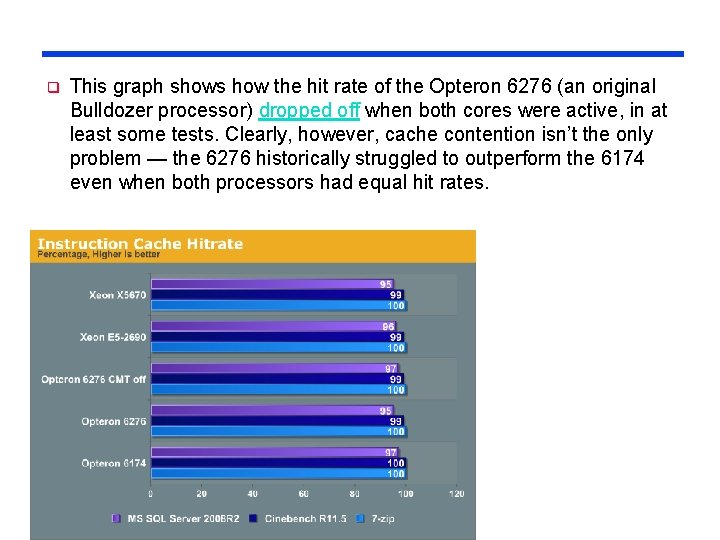

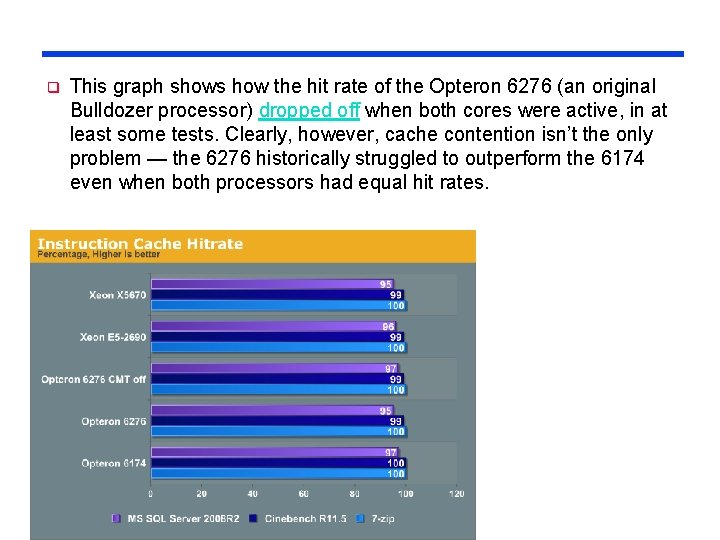

- This graph shows how the hit rate of the Opteron 6276 (an original Bulldozer processor) dropped off when both cores were active, in at least some tests. Clearly, however, cache contention isn't the only problem: the 6276 historically struggled to outperform the 6174 even when both processors had equal hit rates.

The difference between L2 and L3 cache

- http://www.extremetech.com/computing/55662-top-tip-difference-between-l2-and-l3-cache

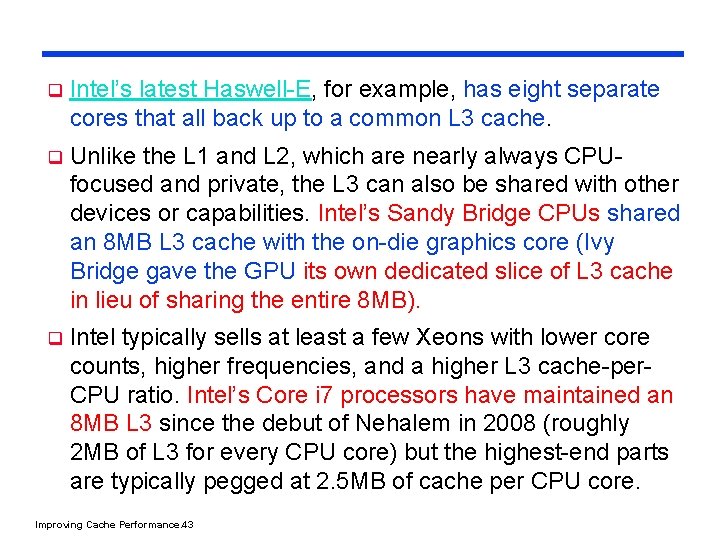

- At its simplest level, an L3 cache is just a larger, slower version of the L2 cache. Back when most chips were single-core processors, this was generally true. The first L3 caches were actually built on the motherboard itself, connected to the CPU via the backside bus. When AMD launched its K6-III processor family, many existing K6/K6-2 motherboards could accept a K6-III as well. Typically these boards had 512 KB to 2 MB of L2 cache; when a K6-III, with its integrated L2 cache, was inserted, these slower, motherboard-based caches became L3 instead.
- Newer chips, like Intel's Nehalem and AMD's K10 (Barcelona), used L3 as more than just a larger, slower backstop for L2.
- L1 and L2 caches tend to be private and dedicated to the needs of each particular core. (AMD's Bulldozer design is an exception to this: Bulldozer, Piledriver, and Steamroller all share a common L1 instruction cache between the two cores in each module.)

- Intel's latest Haswell-E, for example, has eight separate cores that all back up to a common L3 cache.
- Unlike the L1 and L2, which are nearly always CPU-focused and private, the L3 can also be shared with other devices or capabilities. Intel's Sandy Bridge CPUs shared an 8 MB L3 cache with the on-die graphics core (Ivy Bridge gave the GPU its own dedicated slice of L3 cache in lieu of sharing the entire 8 MB).
- Intel typically sells at least a few Xeons with lower core counts, higher frequencies, and a higher L3 cache-per-CPU ratio. Intel's Core i7 processors have maintained an 8 MB L3 since the debut of Nehalem in 2008 (roughly 2 MB of L3 for every CPU core), but the highest-end parts are typically pegged at 2.5 MB of cache per CPU core.

Cache Performance computation

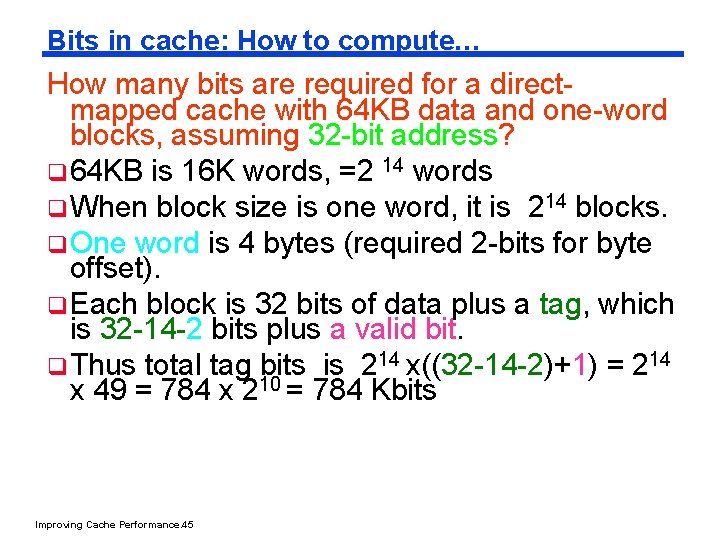

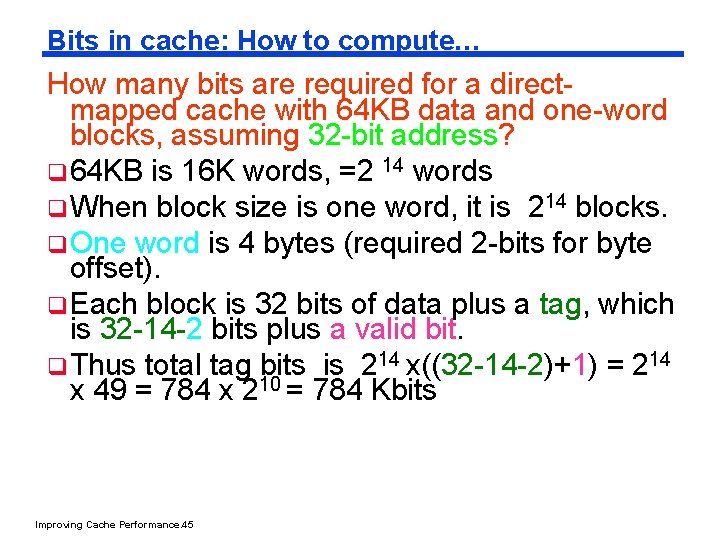

Bits in cache: How to compute…

How many bits are required for a direct-mapped cache with 64 KB of data and one-word blocks, assuming a 32-bit address?

- 64 KB is 16 K words = 2^14 words
- When the block size is one word, that is 2^14 blocks.
- One word is 4 bytes (requires 2 bits for the byte offset).
- Each block is 32 bits of data plus a tag, which is 32 - 14 - 2 = 16 bits, plus a valid bit.
- Thus the total number of bits is 2^14 × (32 + (32 - 14 - 2) + 1) = 2^14 × 49 = 784 × 2^10 = 784 Kbits
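The same count can be written as a small function, sketched here with hypothetical names (`direct_mapped_total_bits`, `log2_exact`). It assumes power-of-two sizes and one valid bit per block, as in the example.

```python
def log2_exact(n):
    """Return log2(n) for a power of two n."""
    assert n > 0 and (n & (n - 1)) == 0, "expected a power of two"
    return n.bit_length() - 1


def direct_mapped_total_bits(data_bytes, words_per_block, addr_bits=32, word_bytes=4):
    """Total storage bits (data + tag + valid) of a direct-mapped cache."""
    block_bytes = words_per_block * word_bytes
    num_blocks = data_bytes // block_bytes
    index_bits = log2_exact(num_blocks)
    offset_bits = log2_exact(block_bytes)           # block offset + byte offset
    tag_bits = addr_bits - index_bits - offset_bits
    bits_per_block = block_bytes * 8 + tag_bits + 1  # data + tag + valid bit
    return num_blocks * bits_per_block


total = direct_mapped_total_bits(64 * 1024, words_per_block=1)
print(total // 1024, "Kbits")  # prints: 784 Kbits
```

The 64 KB of data alone is 512 Kbits, so tags and valid bits add roughly 53% on top for one-word blocks.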

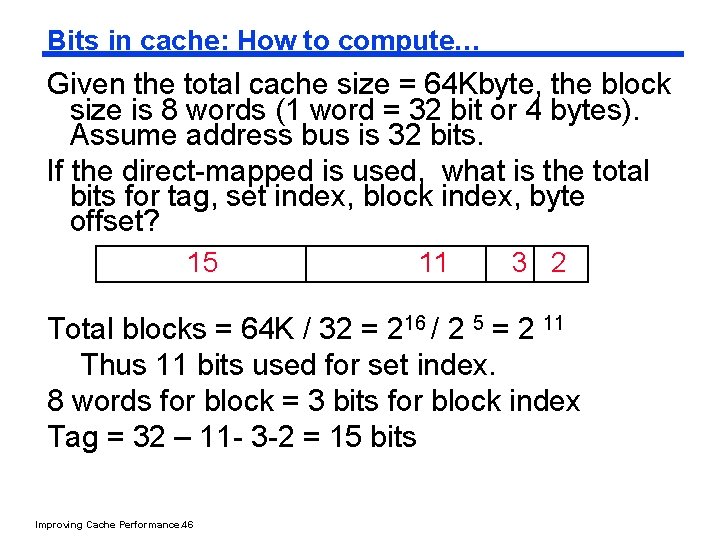

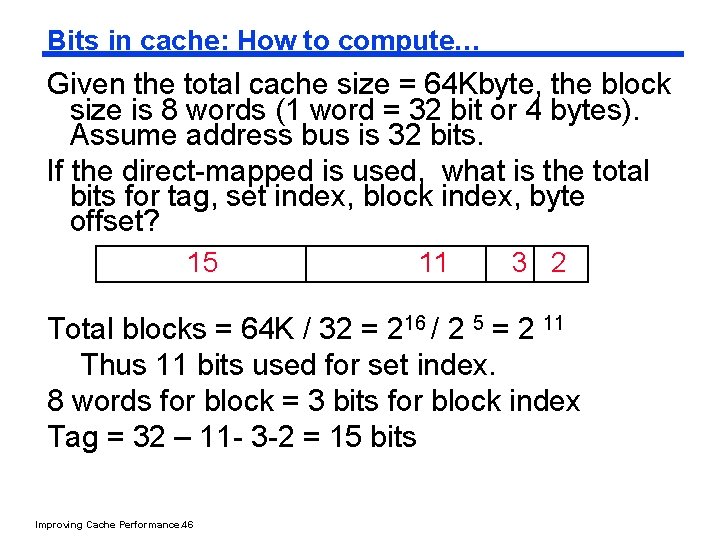

Bits in cache: How to compute…

Given a total cache size of 64 Kbytes and a block size of 8 words (1 word = 32 bits or 4 bytes), assume the address bus is 32 bits. If a direct-mapped cache is used, how many bits are needed for the tag, set index, block index, and byte offset?

| Tag | Set index | Block index | Byte offset |
|---|---|---|---|
| 16 | 11 | 3 | 2 |

- Total blocks = 64 K / 32 = 2^16 / 2^5 = 2^11, thus 11 bits are used for the set index.
- 8 words per block = 3 bits for the block index
- Tag = 32 - 11 - 3 - 2 = 16 bits

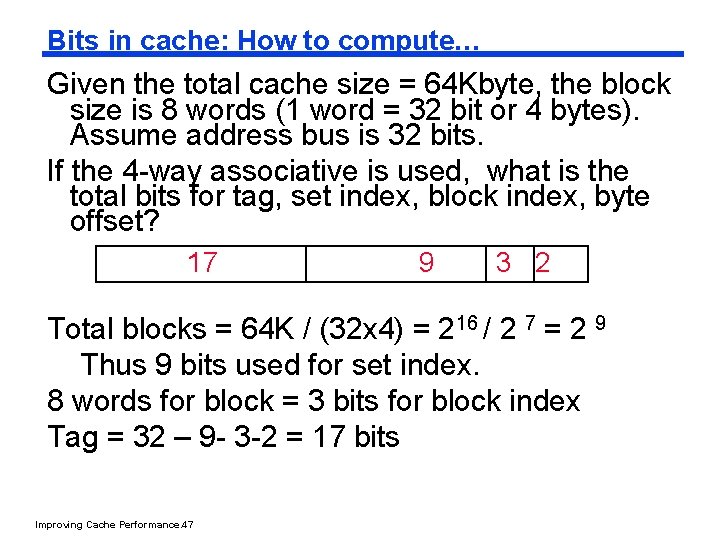

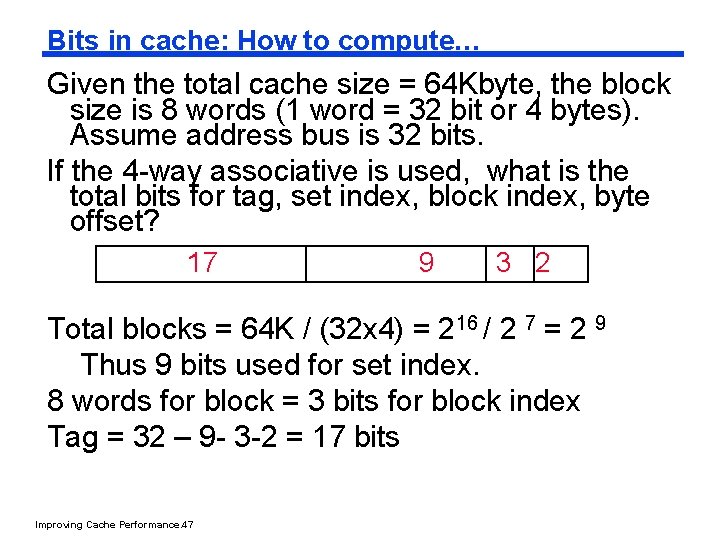

Bits in cache: How to compute…

Given a total cache size of 64 Kbytes and a block size of 8 words (1 word = 32 bits or 4 bytes), assume the address bus is 32 bits. If a 4-way set associative cache is used, how many bits are needed for the tag, set index, block index, and byte offset?

| Tag | Set index | Block index | Byte offset |
|---|---|---|---|
| 18 | 9 | 3 | 2 |

- Total sets = 64 K / (32 × 4) = 2^16 / 2^7 = 2^9, thus 9 bits are used for the set index.
- 8 words per block = 3 bits for the block index
- Tag = 32 - 9 - 3 - 2 = 18 bits
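Both field-width exercises follow the same recipe, which can be sketched as one function. The name `field_widths` and its parameters are illustrative assumptions; sizes are assumed to be powers of two.

```python
def field_widths(cache_bytes, block_bytes, ways, addr_bits=32, word_bytes=4):
    """Return (tag, set index, block index, byte offset) widths in bits."""
    num_sets = cache_bytes // (block_bytes * ways)
    index_bits = num_sets.bit_length() - 1                    # log2(# sets)
    block_index_bits = (block_bytes // word_bytes).bit_length() - 1
    byte_offset_bits = word_bytes.bit_length() - 1
    tag_bits = addr_bits - index_bits - block_index_bits - byte_offset_bits
    return tag_bits, index_bits, block_index_bits, byte_offset_bits


# 64 KB cache with 8-word (32-byte) blocks
print(field_widths(64 * 1024, 32, ways=1))  # direct mapped: prints (16, 11, 3, 2)
print(field_widths(64 * 1024, 32, ways=4))  # 4-way: prints (18, 9, 3, 2)
```

In each case the four widths sum to the 32 address bits, which is a quick sanity check on a hand calculation.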

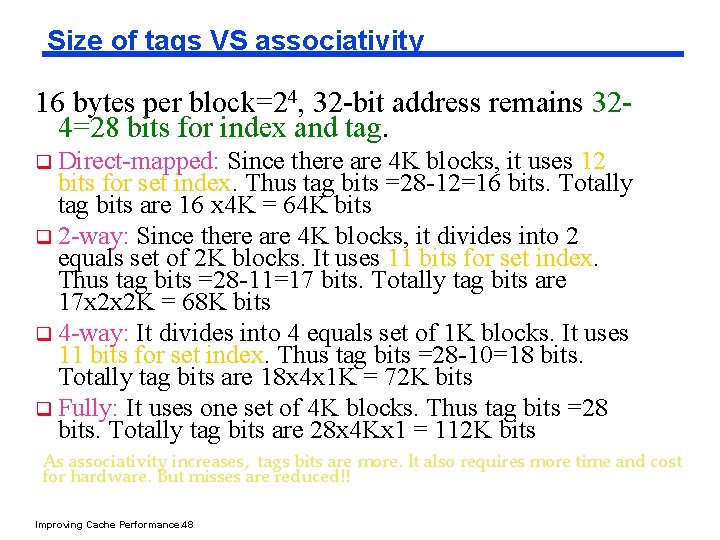

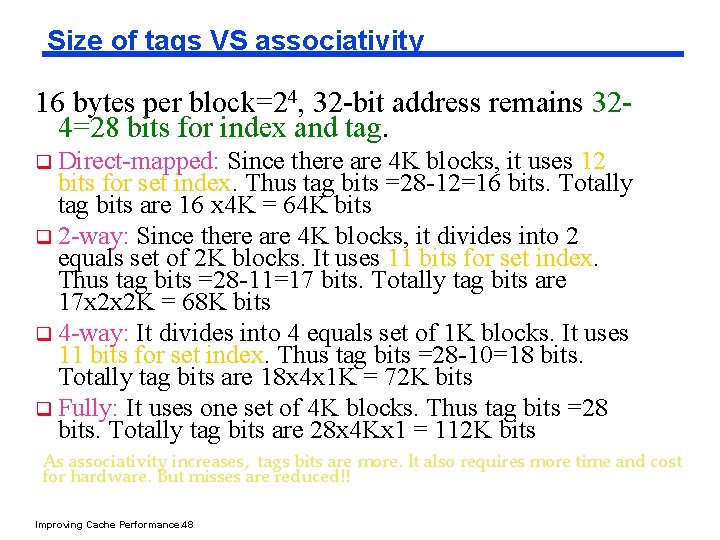

Size of tags vs associativity

With 16 bytes per block (2^4), a 32-bit address leaves 32 - 4 = 28 bits for the index and tag. The cache holds 4K blocks.

- Direct-mapped: 4K sets of one block each, so it uses 12 bits for the set index. Thus tag bits = 28 - 12 = 16 bits. Total tag bits are 16 × 4K = 64 Kbits
- 2-way: the 4K blocks are divided into 2K sets of 2 blocks each, so it uses 11 bits for the set index. Thus tag bits = 28 - 11 = 17 bits. Total tag bits are 17 × 2 × 2K = 68 Kbits
- 4-way: the 4K blocks are divided into 1K sets of 4 blocks each, so it uses 10 bits for the set index. Thus tag bits = 28 - 10 = 18 bits. Total tag bits are 18 × 4 × 1K = 72 Kbits
- Fully associative: one set of 4K blocks, so there is no index. Thus tag bits = 28 bits. Total tag bits are 28 × 4K × 1 = 112 Kbits

As associativity increases, more tag bits are needed. It also requires more time and hardware cost. But misses are reduced!
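The four cases are instances of one general expression. As a sketch, for an $n$-way organization of the same $4\text{K} = 2^{12}$ blocks (the symbol $n$ is introduced here for illustration):

$$
\begin{aligned}
\text{index bits} &= \log_2\!\left(\frac{2^{12}}{n}\right) = 12 - \log_2 n \\
\text{tag bits} &= 28 - (12 - \log_2 n) = 16 + \log_2 n \\
\text{total tag bits} &= 2^{12} \times (16 + \log_2 n)
\end{aligned}
$$

Substituting $n = 1, 2, 4, 2^{12}$ gives 64 K, 68 K, 72 K, and 112 K bits respectively, so each doubling of associativity adds one tag bit per block.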

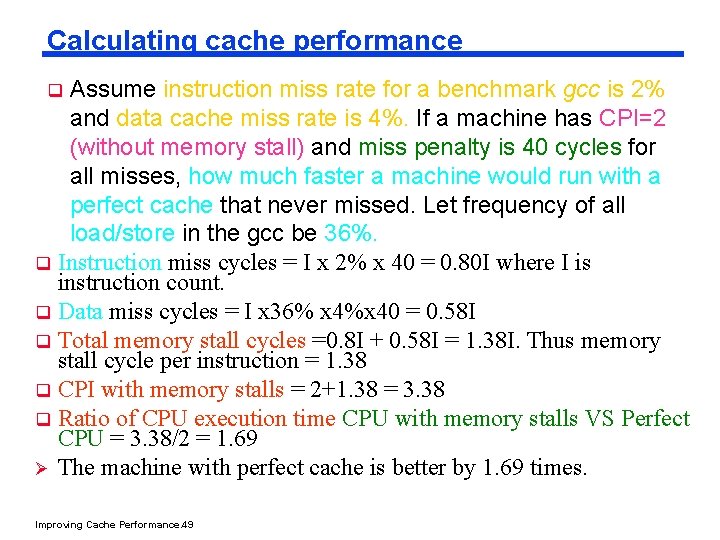

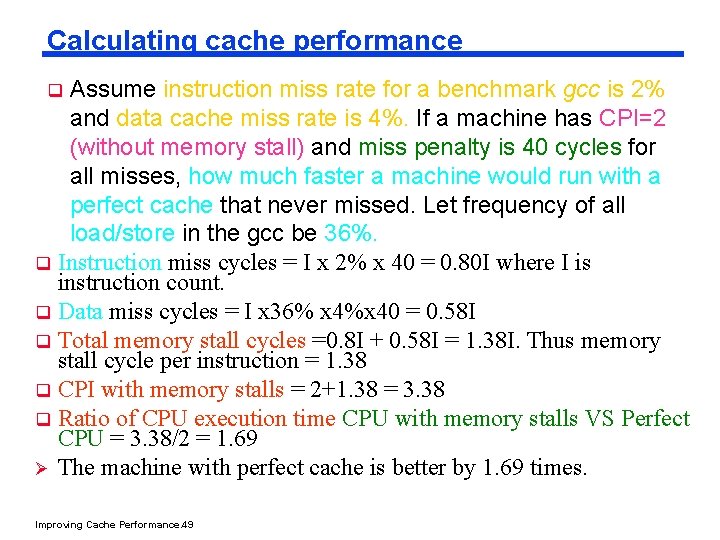

Calculating cache performance

Assume the instruction miss rate for the benchmark gcc is 2% and the data cache miss rate is 4%. If a machine has a CPI of 2 (without memory stalls) and the miss penalty is 40 cycles for all misses, how much faster would the machine run with a perfect cache that never missed? Let the frequency of all loads and stores in gcc be 36%.

- Instruction miss cycles = I × 2% × 40 = 0.80 I, where I is the instruction count.
- Data miss cycles = I × 36% × 4% × 40 = 0.58 I
- Total memory stall cycles = 0.80 I + 0.58 I = 1.38 I. Thus memory stall cycles per instruction = 1.38
- CPI with memory stalls = 2 + 1.38 = 3.38
- Ratio of CPU execution time, CPU with memory stalls vs perfect CPU = 3.38/2 = 1.69
  - The machine with a perfect cache is faster by a factor of 1.69.

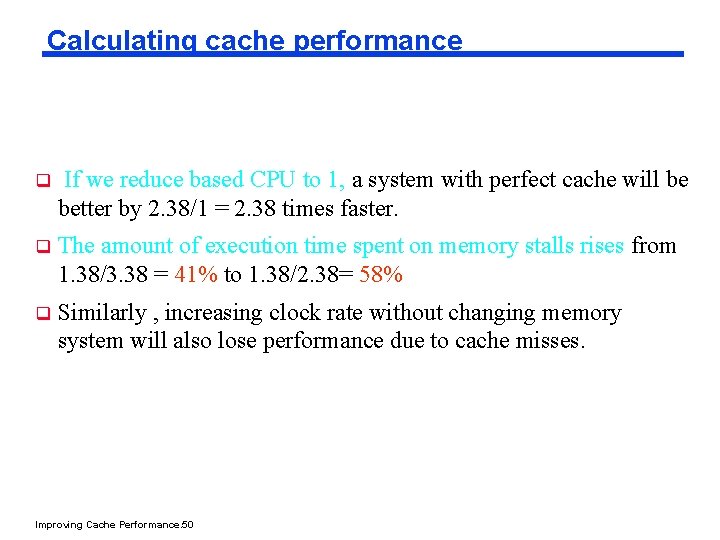

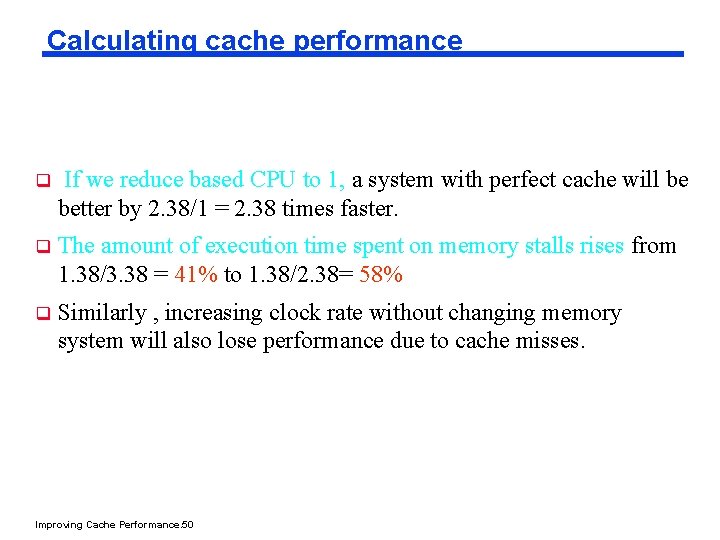

Calculating cache performance

- If we reduce the base CPI to 1, a system with a perfect cache will be 2.38/1 = 2.38 times faster.
- The amount of execution time spent on memory stalls rises from 1.38/3.38 = 41% to 1.38/2.38 = 58%
- Similarly, increasing the clock rate without changing the memory system will also lose performance due to cache misses.

Cache performance with increased clock rate

- In the previous example, suppose we double the clock rate and assume the absolute time to handle a cache miss is unchanged. How much faster will the machine be with the faster clock, assuming the same miss rates as in the previous example?

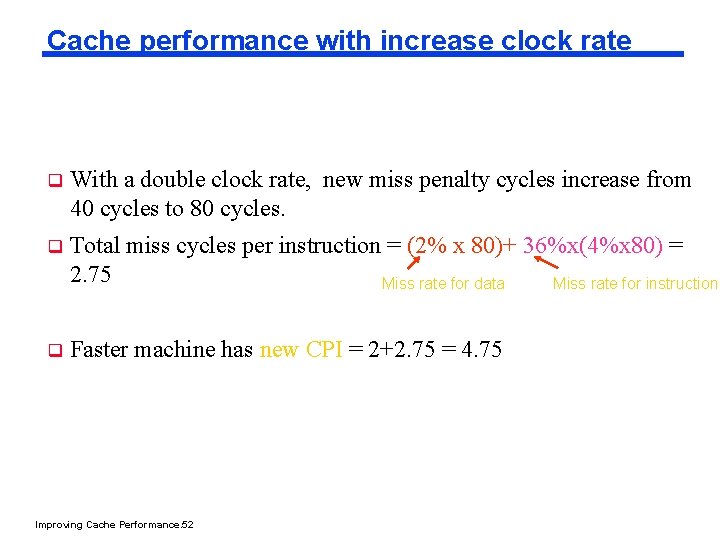

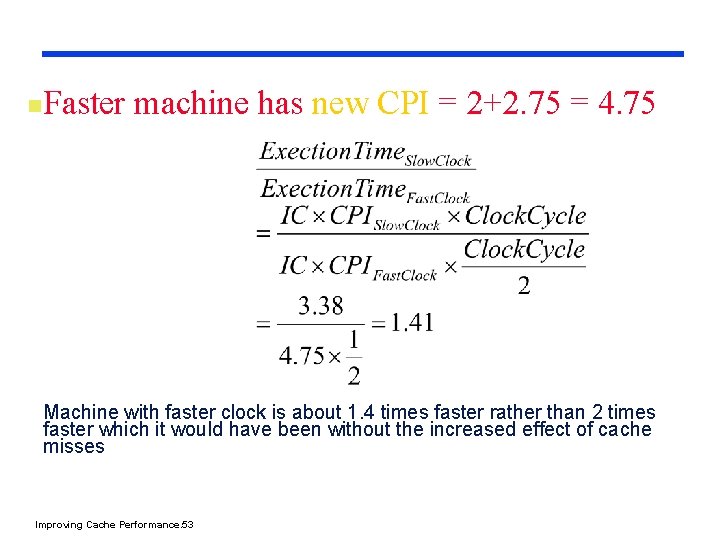

Cache performance with increased clock rate

- With a doubled clock rate, the miss penalty increases from 40 cycles to 80 cycles.
- Total miss cycles per instruction = (2% × 80) + 36% × (4% × 80) = 2.75, where 2% is the instruction miss rate and 4% is the data miss rate
- The faster machine has a new CPI = 2 + 2.75 = 4.75

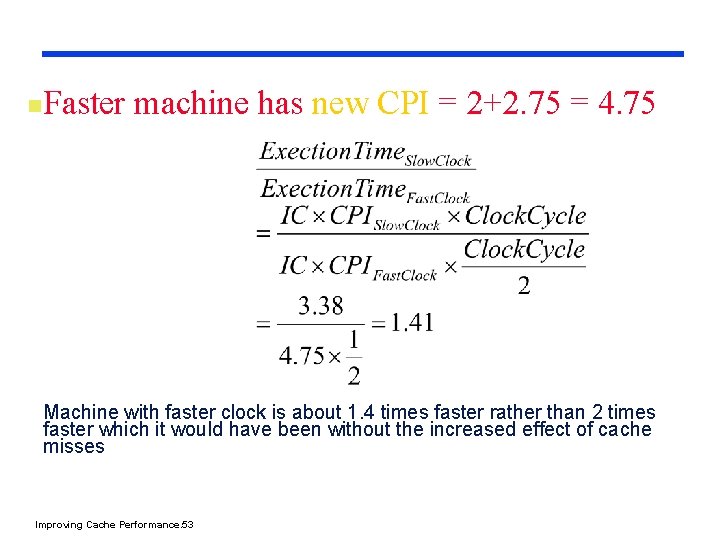

- The faster machine has a new CPI = 2 + 2.75 = 4.75
- The machine with the faster clock is only about 1.4 times faster, rather than the 2 times faster it would have been without the increased effect of cache misses
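The 1.4 figure comes from comparing time per instruction (CPI × cycle time) before and after the clock change. The sketch below generalizes that comparison to any clock multiplier; the function name `speedup_with_faster_clock` and its defaults (taken from the gcc example) are assumptions for illustration.

```python
def speedup_with_faster_clock(clock_factor, cpi_base=2.0, i_miss=0.02, d_miss=0.04,
                              load_store_frac=0.36, penalty_cycles=40):
    """Speedup when the clock runs clock_factor times faster but the absolute
    time to handle a miss is unchanged (so the penalty grows in cycles)."""
    misses_per_instr = i_miss + load_store_frac * d_miss
    old_cpi = cpi_base + misses_per_instr * penalty_cycles
    new_cpi = cpi_base + misses_per_instr * penalty_cycles * clock_factor
    # Time per instruction = CPI x cycle time; the new cycle is 1/clock_factor as long.
    return old_cpi / (new_cpi / clock_factor)


if __name__ == "__main__":
    for factor in (1, 2, 4):
        print(f"{factor}x clock -> {speedup_with_faster_clock(factor):.2f}x faster")
```

Under this model the returns diminish quickly: quadrupling the clock yields less than a 2x speedup, because the stall time in nanoseconds does not shrink.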

Summary of both examples

If a machine improves both clock rate and CPI, both effects apply:

- The lower the CPI, the greater the relative impact of stall cycles.
- The cache miss penalty is measured in CPU clock cycles, so a higher clock rate leads to a larger miss penalty.

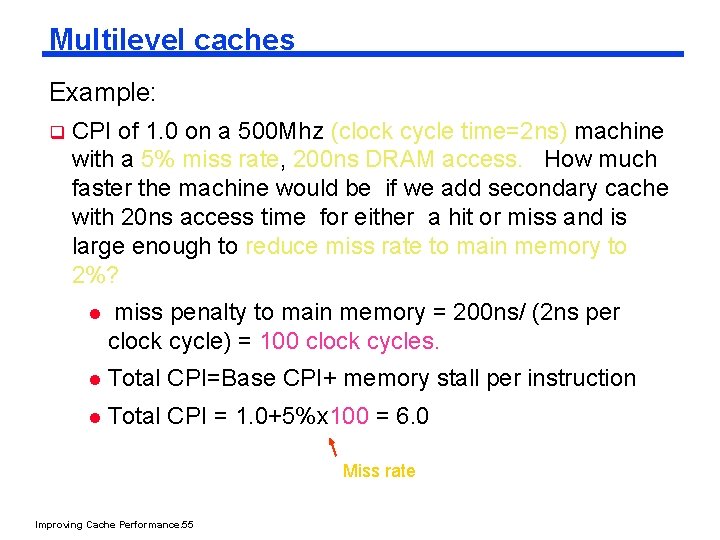

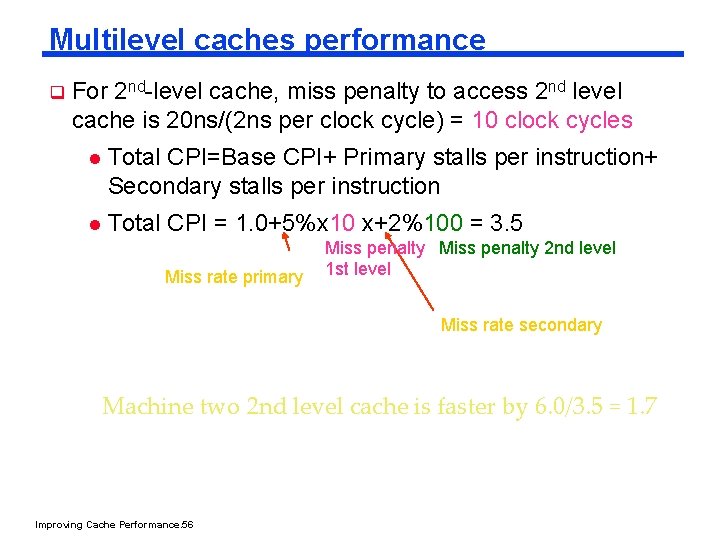

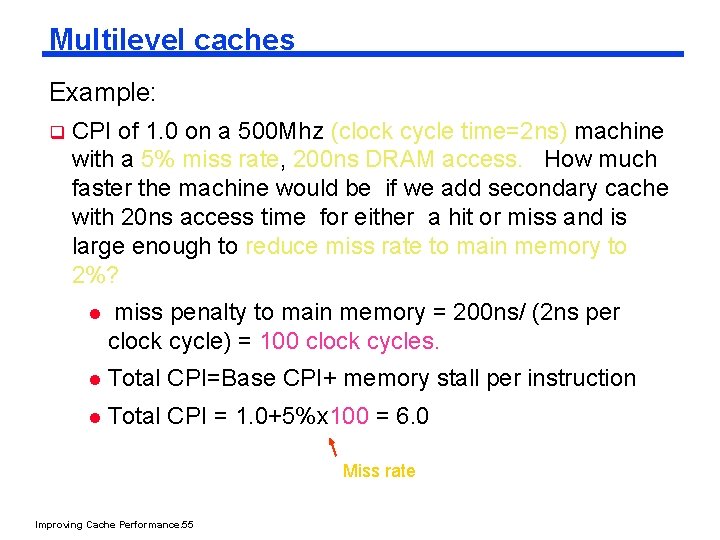

Multilevel caches

Example:

- A machine has a CPI of 1.0 at 500 MHz (clock cycle time = 2 ns), a 5% miss rate, and a 200 ns DRAM access time. How much faster would the machine be if we add a secondary cache that has a 20 ns access time for either a hit or a miss and is large enough to reduce the miss rate to main memory to 2%?
  - Miss penalty to main memory = 200 ns / (2 ns per clock cycle) = 100 clock cycles.
  - Total CPI = Base CPI + memory stall cycles per instruction
  - Total CPI = 1.0 + 5% × 100 = 6.0, where 5% is the miss rate

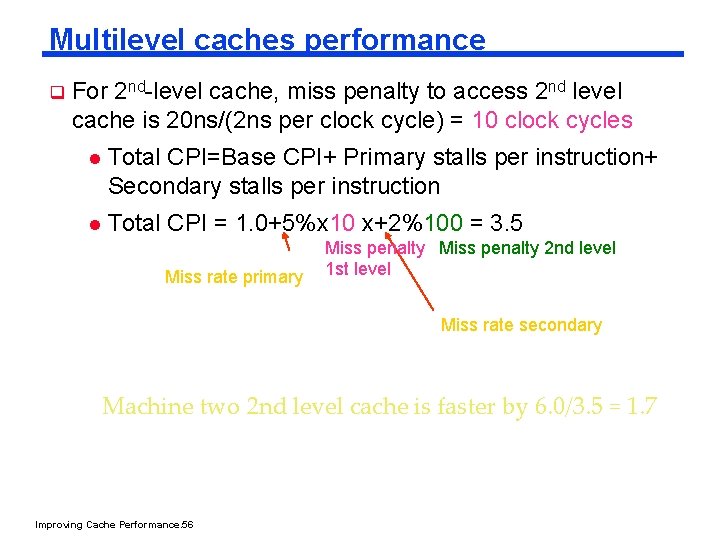

Multilevel caches performance

- For the 2nd-level cache, the miss penalty to access the 2nd-level cache is 20 ns / (2 ns per clock cycle) = 10 clock cycles
  - Total CPI = Base CPI + primary stalls per instruction + secondary stalls per instruction
  - Total CPI = 1.0 + 5% × 10 + 2% × 100 = 3.5, where 5% is the primary miss rate, 10 is the miss penalty to the 2nd level, 2% is the secondary miss rate, and 100 is the miss penalty to main memory
- The machine with the 2nd-level cache is faster by 6.0/3.5 = 1.7
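The example can be replayed from the raw nanosecond figures, converting each latency into clock cycles first. This is a sketch: the function names are hypothetical, and the miss rates are treated as misses per instruction, as the slide's arithmetic does.

```python
def cpi_one_level(base_cpi, cycle_ns, miss_rate, dram_ns):
    """CPI with a single cache level backed directly by DRAM."""
    return base_cpi + miss_rate * (dram_ns / cycle_ns)


def cpi_two_level(base_cpi, cycle_ns, l1_miss_rate, l2_access_ns,
                  global_miss_rate, dram_ns):
    """CPI with a secondary cache between the primary cache and DRAM."""
    l2_penalty_cycles = l2_access_ns / cycle_ns    # paid on every primary miss
    dram_penalty_cycles = dram_ns / cycle_ns       # paid on misses that reach DRAM
    return (base_cpi
            + l1_miss_rate * l2_penalty_cycles
            + global_miss_rate * dram_penalty_cycles)


if __name__ == "__main__":
    without_l2 = cpi_one_level(1.0, 2, 0.05, 200)
    with_l2 = cpi_two_level(1.0, 2, 0.05, 20, 0.02, 200)
    print(f"CPI without L2: {without_l2:.1f}")
    print(f"CPI with L2:    {with_l2:.1f}")
    print(f"Speedup:        {without_l2 / with_l2:.2f}")
```

Keeping the inputs in nanoseconds makes it easy to rerun the comparison for a different clock rate, since only `cycle_ns` changes.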