Lecture 2 Multilayer perceptron networks Practical deep learning

- Slides: 12


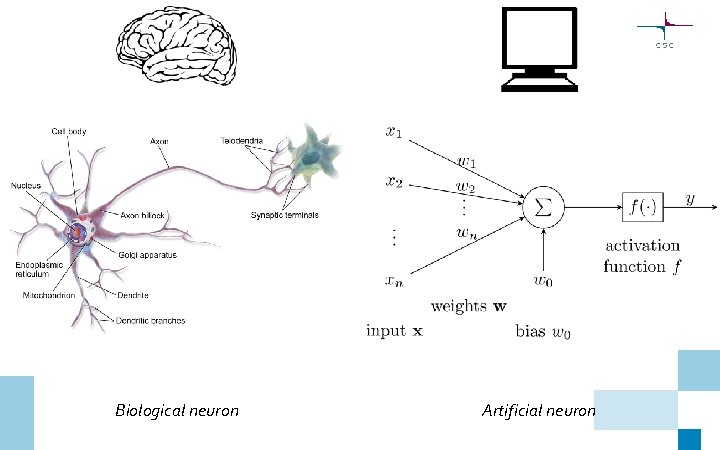

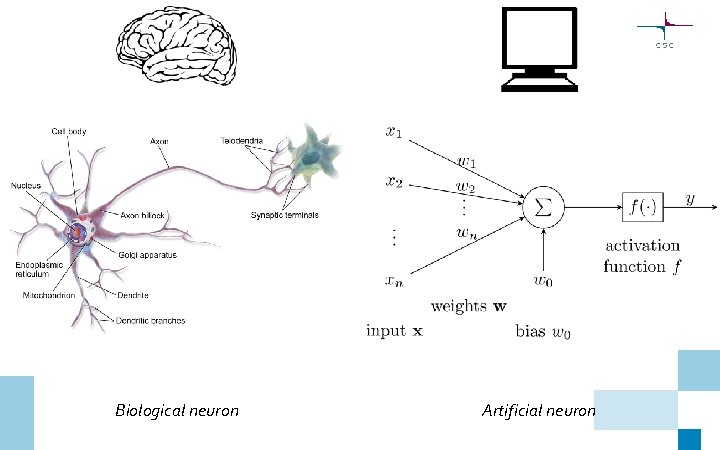

Biological neuron Artificial neuron

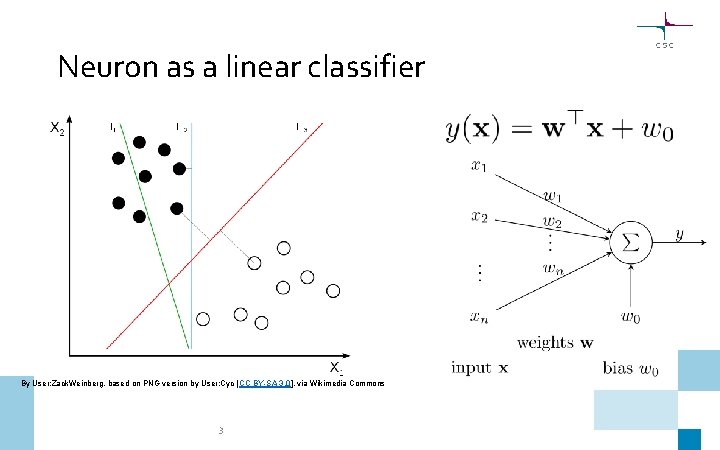

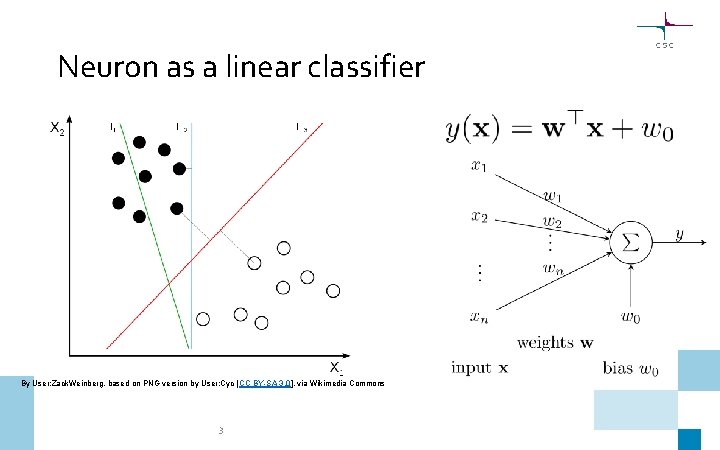

Neuron as a linear classifier

Image: By User:ZackWeinberg, based on PNG version by User:Cyc [CC BY-SA 3.0], via Wikimedia Commons

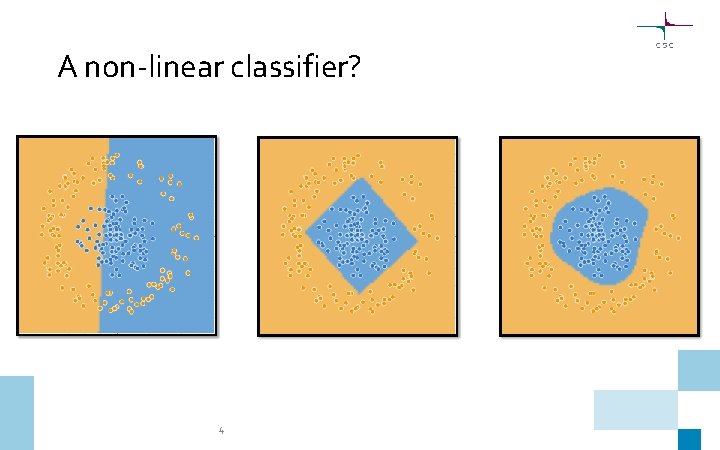

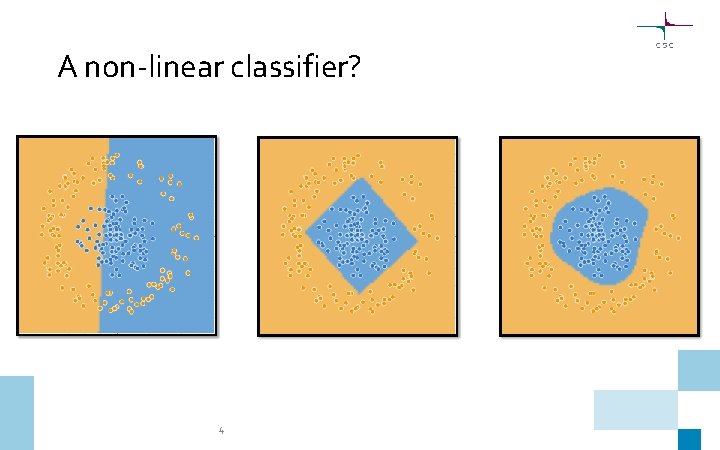

A non-linear classifier?

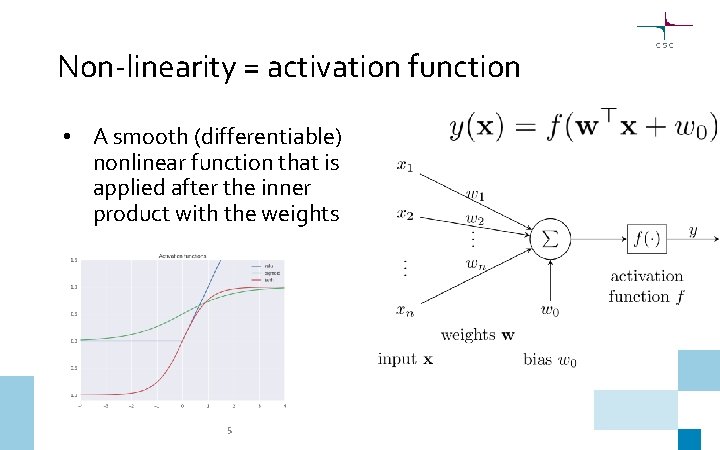

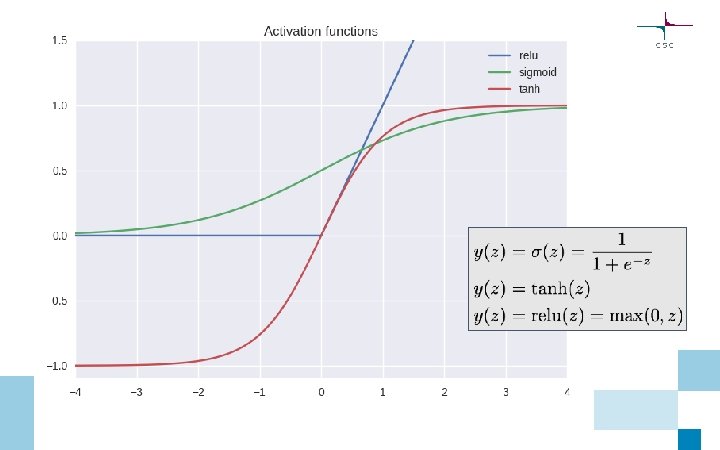

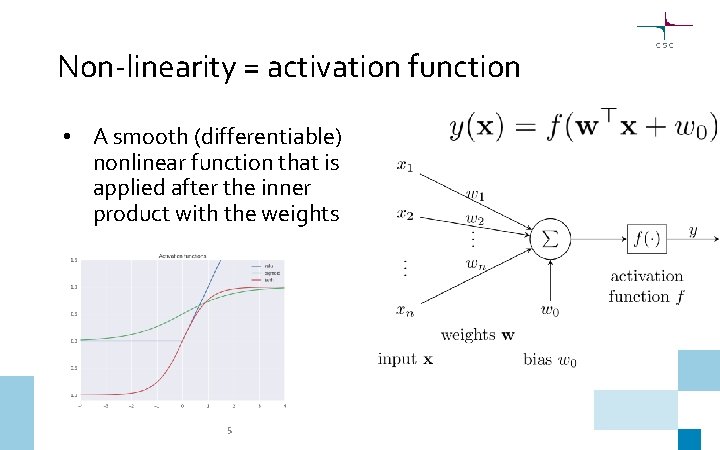

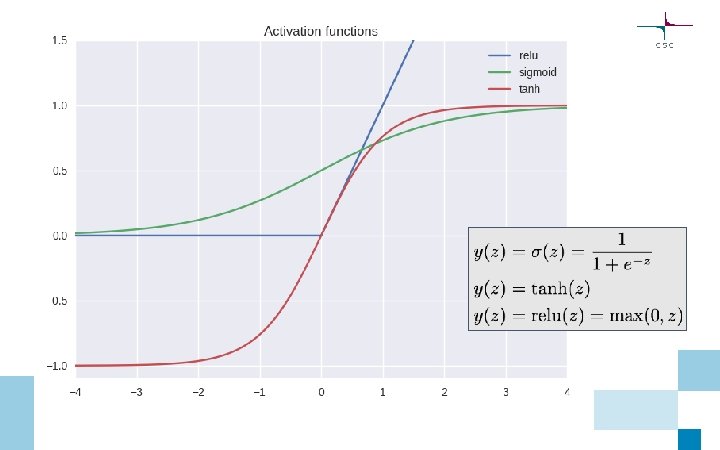

Non-linearity = activation function

• A smooth (differentiable) non-linear function that is applied after the inner product with the weights
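To make the order of operations concrete, here is a minimal sketch in plain Python of a single neuron: the inner product of inputs and weights is computed first, and the activation function is applied to the result. The helper name `neuron` and the example values are assumptions chosen for illustration, not taken from the slides.

```python
import math


def relu(z):
    # ReLU: passes positive values through, clips negatives to 0.
    return max(0.0, z)


def sigmoid(z):
    # Logistic sigmoid: squashes any real value into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """Inner product of inputs and weights plus bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical example values.
x = [1.0, -2.0, 0.5]
w = [0.4, 0.3, -0.2]
b = 0.1

# Same pre-activation value, three different non-linearities.
print("relu:   ", neuron(x, w, b, relu))
print("sigmoid:", neuron(x, w, b, sigmoid))
print("tanh:   ", neuron(x, w, b, math.tanh))
```

The pre-activation value here is negative (about -0.2), so ReLU outputs 0 while sigmoid and tanh still produce non-zero values.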

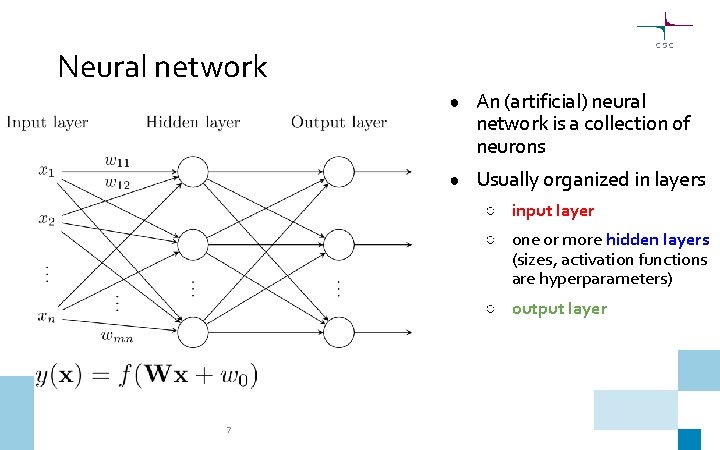

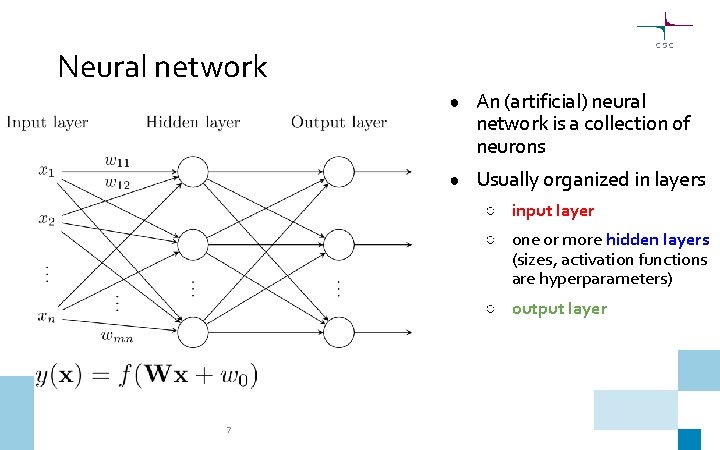

Neural network

● An (artificial) neural network is a collection of neurons
● Usually organized in layers
  ○ input layer
  ○ one or more hidden layers (sizes and activation functions are hyperparameters)
  ○ output layer

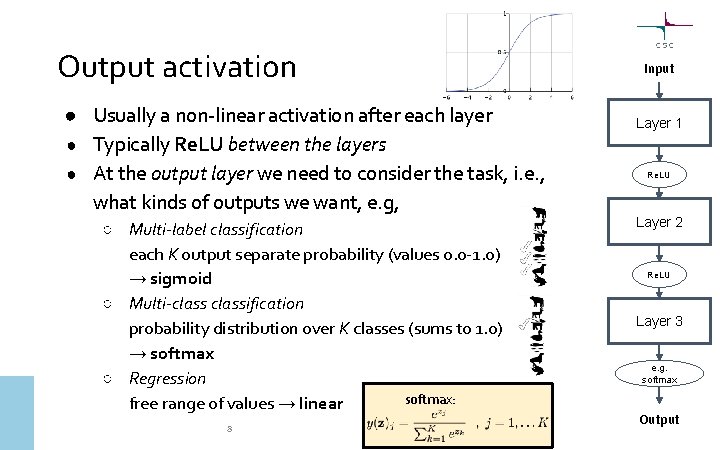

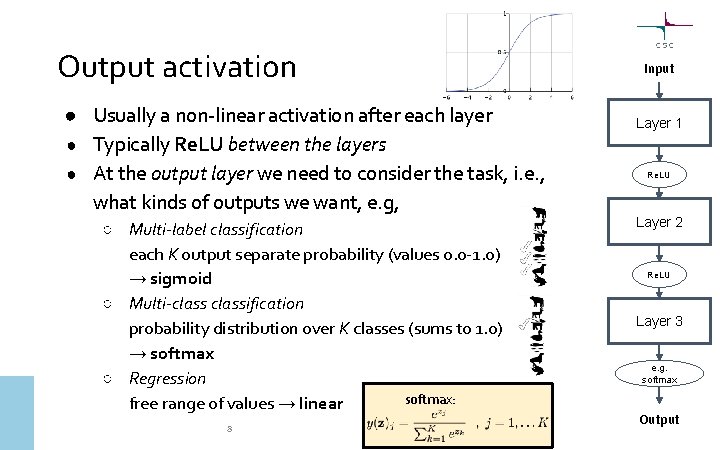

Output activation

● Usually a non-linear activation after each layer
● Typically ReLU between the layers
● At the output layer we need to consider the task, i.e., what kinds of outputs we want, e.g.,
  ○ Multi-label classification: each of the K outputs is a separate probability (values 0.0-1.0) → sigmoid
  ○ Multi-class classification: a probability distribution over K classes (sums to 1.0) → softmax
  ○ Regression: free range of values → linear

Diagram: Input → Layer 1 → ReLU → Layer 2 → ReLU → Layer 3 → e.g. softmax → Output
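The three output choices can be compared on the same raw output values. The following is a small sketch in plain Python under the assumption of K = 3 outputs; the example values and variable names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs of the last layer (K = 3).
raw_outputs = [2.0, 1.0, -1.0]

# Multi-label: each output is an independent probability in (0, 1).
multi_label = [sigmoid(z) for z in raw_outputs]

# Multi-class: one probability distribution over the K classes.
multi_class = softmax(raw_outputs)

# Regression: linear output, values are passed through unchanged.
regression = list(raw_outputs)

print("sigmoid:", multi_label, "sum =", sum(multi_label))
print("softmax:", multi_class, "sum =", sum(multi_class))
print("linear: ", regression)
```

Note that the sigmoid outputs do not need to sum to 1, because each label is decided independently, whereas the softmax outputs always do.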

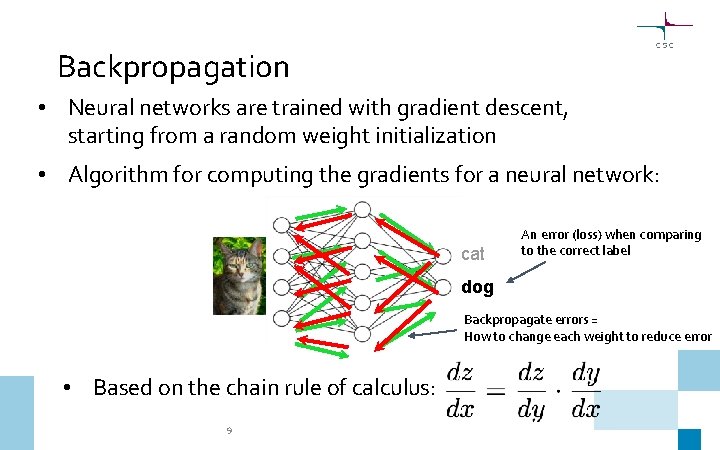

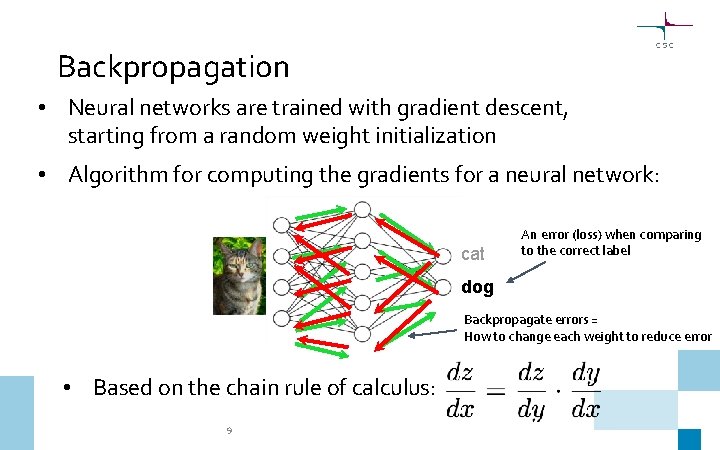

Backpropagation

• Neural networks are trained with gradient descent, starting from a random weight initialization
• Backpropagation is the algorithm for computing the gradients for a neural network:
  ○ An error (loss) is computed by comparing the network output to the correct label (diagram example: cat vs. dog)
  ○ Backpropagate errors = how to change each weight to reduce the error
• Based on the chain rule of calculus
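As an illustration of the chain rule at work, the sketch below backpropagates through a tiny two-weight network in plain Python and checks the result against a numerical gradient. The network shape (one tanh hidden unit, a linear output, squared error), all values, and all function names are assumptions made for this example; real networks apply the same idea layer by layer.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden unit
    y = w2 * h             # linear output
    return h, y


def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backprop(w1, w2, x, target):
    """Gradients of the loss w.r.t. w1 and w2 via the chain rule."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target                 # derivative of the squared error
    dL_dw2 = dL_dy * h                 # y = w2 * h
    dL_dh = dL_dy * w2                 # error propagated back to the hidden unit
    dL_dw1 = dL_dh * (1 - h ** 2) * x  # derivative of tanh is 1 - tanh^2
    return dL_dw1, dL_dw2


# Hypothetical starting point.
w1, w2, x, target = 0.8, -0.5, 1.5, 1.0
grad_w1, grad_w2 = backprop(w1, w2, x, target)

# Numerical check with central differences.
eps = 1e-6
num_w1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
num_w2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)

# One gradient descent step.
learning_rate = 0.1
new_w1 = w1 - learning_rate * grad_w1
new_w2 = w2 - learning_rate * grad_w2

print("analytic:", grad_w1, grad_w2)
print("numeric: ", num_w1, num_w2)
print("loss before:", loss(w1, w2, x, target))
print("loss after: ", loss(new_w1, new_w2, x, target))
```

The analytic and numerical gradients should agree closely, and the loss after the update step should be lower than before.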

Multilayer perceptron (MLP) / Dense network

• Classic feedforward neural network
• Densely connected: every unit is connected to all outputs of the previous layer
• In tf.keras: layers.Dense(units, activation=None), or equivalently layers.Dense(units) followed by layers.Activation(activation)

(Diagram output labels: cat, dog, fish)
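What a densely connected layer computes can be written out by hand. The sketch below is a plain-Python stand-in, not the tf.keras implementation: the function `dense` and the weight values are hypothetical, and the weight matrix is laid out as (inputs × units) so that each output is the activation of the inner product of the input with one column plus a bias.

```python
def relu(z):
    return max(0.0, z)


def dense(inputs, kernel, bias, activation=None):
    """output[j] = activation(sum_i inputs[i] * kernel[i][j] + bias[j])"""
    outputs = []
    for j in range(len(bias)):
        z = sum(inputs[i] * kernel[i][j] for i in range(len(inputs))) + bias[j]
        outputs.append(activation(z) if activation else z)
    return outputs


# Hypothetical layer: 2 inputs, 3 units.
x = [1.0, 2.0]
kernel = [[0.5, -1.0, 0.0],
          [0.25, 0.5, -1.0]]
bias = [0.0, 0.0, 1.0]

# Activation applied inside the layer ...
hidden = dense(x, kernel, bias, activation=relu)

# ... or as a separate step after a layer without activation.
hidden_two_step = [relu(z) for z in dense(x, kernel, bias)]

print(hidden)
print(hidden_two_step)
```

Both variants give the same result, which mirrors the two equivalent ways of writing the layer listed above.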

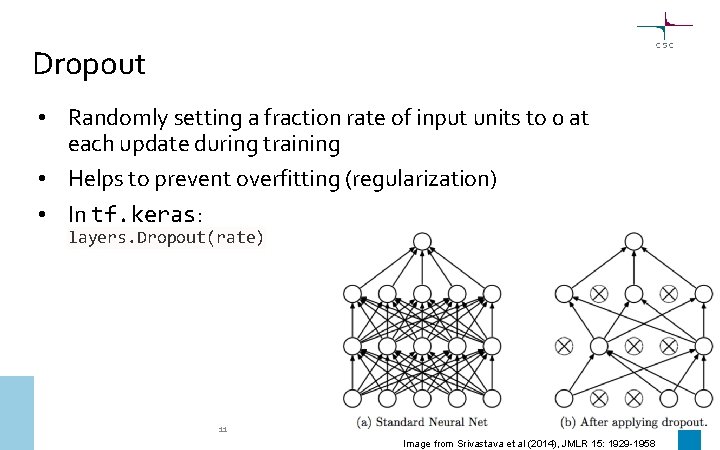

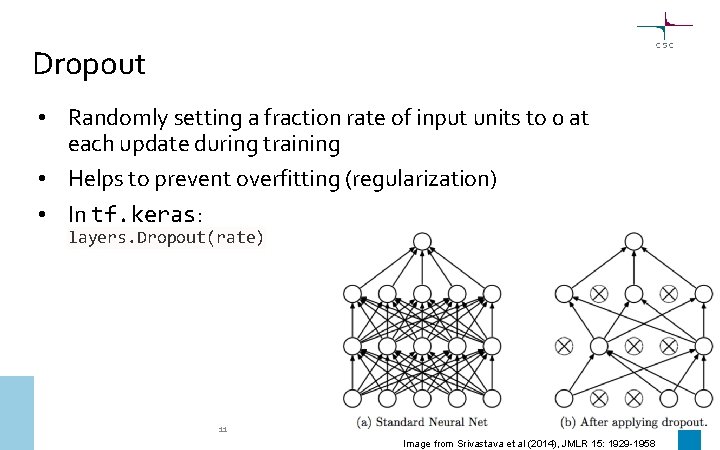

Dropout

• Randomly sets a fraction (rate) of the input units to 0 at each update during training
• Helps to prevent overfitting (regularization)
• In tf.keras: layers.Dropout(rate)

Image from Srivastava et al. (2014), JMLR 15: 1929-1958
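The effect of the rate parameter can be sketched in a few lines of plain Python. This is an illustrative stand-in rather than the tf.keras implementation: the function `dropout` and its `training` and `rng` parameters are assumptions for this example. It follows the common "inverted dropout" convention, in which the units that are kept are scaled by 1 / (1 - rate) during training so that the expected sum of the activations stays the same, and nothing is dropped outside training.

```python
import random


def dropout(inputs, rate, training, rng):
    """Zero each input with probability `rate` while training."""
    if not training or rate == 0.0:
        return list(inputs)
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else x * keep_scale for x in inputs]


rng = random.Random(0)      # fixed seed so the run is repeatable
activations = [1.0] * 1000  # hypothetical layer outputs

dropped = dropout(activations, rate=0.5, training=True, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
print("fraction set to 0:", zero_fraction)

# At inference time the inputs pass through unchanged.
unchanged = dropout(activations, rate=0.5, training=False, rng=rng)
print("unchanged at inference:", unchanged == activations)
```

A different random subset of units is dropped on every call, which is what "at each update" refers to.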

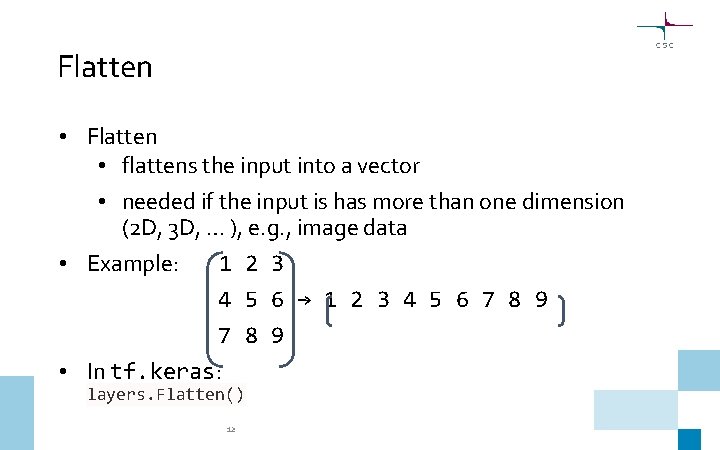

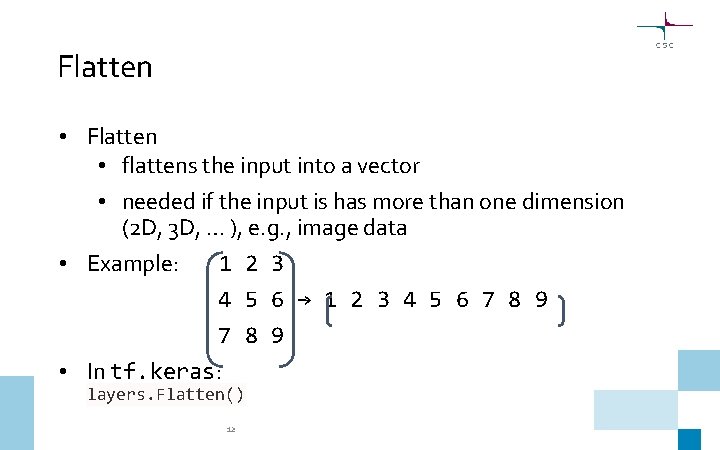

Flatten

• Flattens the input into a vector
• Needed if the input has more than one dimension (2D, 3D, …), e.g., image data
• Example: [[1, 2, 3], [4, 5, 6], [7, 8, 9]] → [1, 2, 3, 4, 5, 6, 7, 8, 9]
• In tf.keras: layers.Flatten()
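Flattening can be sketched on nested Python lists. The helper `flatten` below is a hypothetical stand-in for illustration, and the 3 × 3 input is an assumed example; in tf.keras the layer works on tensors and keeps the batch dimension, flattening each sample separately.

```python
def flatten(nested):
    """Recursively unroll nested lists into one flat list, row by row."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


# A 2D input, e.g. a tiny 3 x 3 single-channel image.
image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
flat = flatten(image)
print(flat)

# The same helper handles 3D inputs, e.g. 2 x 2 pixels with 2 channels.
volume = [[[1, 2], [3, 4]],
          [[5, 6], [7, 8]]]
print(flatten(volume))
```

The resulting vector can then be fed to a densely connected layer, which expects one value per input unit.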