Lecture 2: Lexical Analysis (Joey Paquet, 2000, 2002)



Part I: Building a Lexical Analyzer

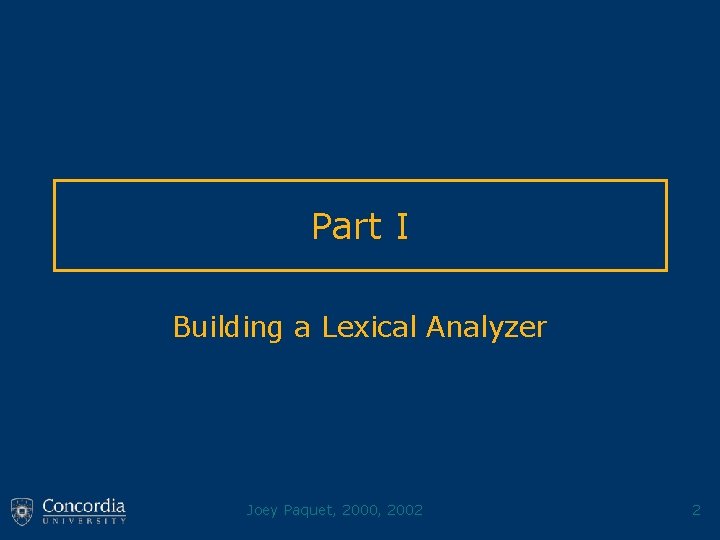

Roles of the Scanner

- Removal of comments
  - Comments are not part of the program's meaning
  - Multiple-line comments?
  - Nested comments?
- Case conversion
  - Is the lexical definition case sensitive?
    - For identifiers
    - For keywords
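The questions about multiple-line and nested comments can be made concrete with a small sketch. It assumes Pascal-style `(* ... *)` delimiters (the ones used in the example language later in this lecture) and assumes that nesting is allowed; `strip_comments` is a hypothetical name, and a real scanner would normally skip comments while tokenizing rather than in a separate pass.

```python
def strip_comments(src: str) -> str:
    """Remove (* ... *) comments, allowing them to span lines and to nest."""
    out = []
    depth = 0  # current comment nesting level
    i = 0
    while i < len(src):
        pair = src[i:i + 2]
        if pair == "(*":
            depth += 1
            i += 2
        elif pair == "*)" and depth > 0:
            depth -= 1
            i += 2
        else:
            if depth == 0:
                out.append(src[i])  # keep only characters outside comments
            i += 1
    if depth > 0:
        raise ValueError("unterminated comment")
    return "".join(out)
```

With a depth counter, `a := 1 (* outer (* inner *) still outer *) + 2` becomes `a := 1  + 2`; without nesting support, the first `*)` would end the comment and leave `still outer *)` in the program.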

Roles of the Scanner

- Removal of white space
  - Blanks, tabs, carriage returns and line feeds
  - Is it possible to identify tokens in a program without spaces?
- Interpretation of compiler directives
  - #include, #ifdef, #ifndef and #define are directives that "redirect the input" of the compiler
  - May be done by a precompiler

Roles of the Scanner

- Communication with the symbol table
  - A symbol table entry is created when an identifier is encountered
  - The lexical analyzer cannot fill in complete entries: attributes such as an identifier's type are only known to later phases
- Preparation of the output listing
  - Output the analyzed code
  - Output error messages and warnings
  - Output a table of summary data
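A minimal sketch of the scanner's side of symbol-table communication, assuming a dictionary keyed by identifier name. `SymbolTable`, `SymbolEntry` and `enter` are hypothetical names; the point is that the scanner can only create a partial entry (the name and where it appears), leaving attributes such as the type to later phases.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SymbolEntry:
    name: str                    # known to the scanner
    type: Optional[str] = None   # unknown to the scanner; filled in by later phases
    lines: List[int] = field(default_factory=list)  # where the name appears


class SymbolTable:
    def __init__(self) -> None:
        self._entries: Dict[str, SymbolEntry] = {}

    def enter(self, name: str, line: int) -> SymbolEntry:
        """Called by the scanner for each identifier: find or create its entry."""
        entry = self._entries.get(name)
        if entry is None:
            entry = SymbolEntry(name)  # partial entry: only the name is known
            self._entries[name] = entry
        entry.lines.append(line)
        return entry

    def __contains__(self, name: str) -> bool:
        return name in self._entries
```

Returning the entry lets the scanner attach a reference to it in the `id` token, so the parser and semantic analyzer can complete the same entry later.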

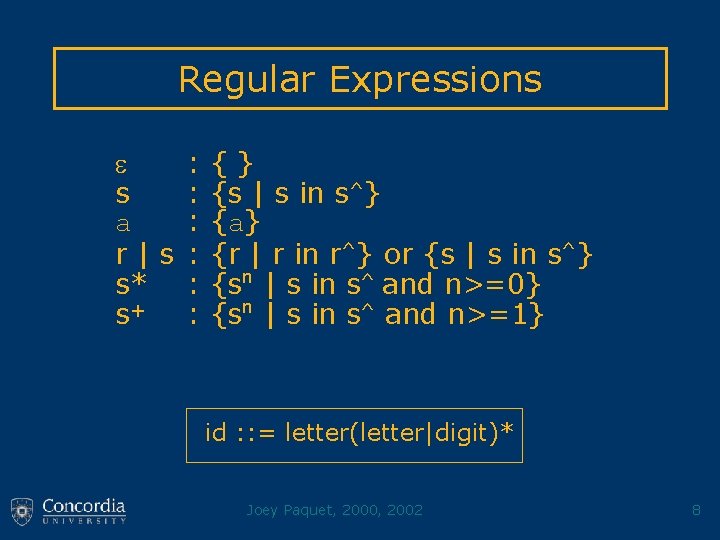

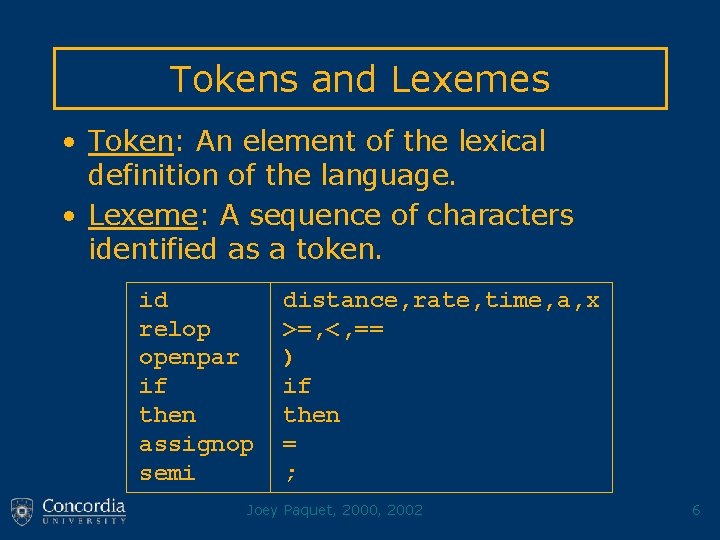

Tokens and Lexemes

- Token: an element of the lexical definition of the language.
- Lexeme: a sequence of characters identified as a token.

| Token | Lexemes |
|---|---|
| id | distance, rate, time, a, x |
| relop | >=, <, == |
| openpar | ( |
| if | if |
| then | then |
| assignop | = |
| semi | ; |
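The token/lexeme distinction can be illustrated with a regex-based tokenizer that returns (token, lexeme) pairs. This is a sketch only: the regular expressions, the `closepar` token and the `tokenize` function are assumptions, and reserved words are first recognized as identifiers and then reclassified.

```python
import re

KEYWORDS = {"if", "then"}
# Assumed patterns for the token classes in the table (closepar is an addition).
TOKEN_SPEC = [
    ("id", r"[A-Za-z][A-Za-z0-9]*"),
    ("relop", r">=|<=|==|<|>"),
    ("assignop", r"="),
    ("openpar", r"\("),
    ("closepar", r"\)"),
    ("semi", r";"),
    ("ws", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{rx})" for name, rx in TOKEN_SPEC))


def tokenize(src):
    """Return (token, lexeme) pairs for src."""
    pairs, pos = [], 0
    while pos < len(src):
        m = MASTER.match(src, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {src[pos]!r} at {pos}")
        token, lexeme = m.lastgroup, m.group()
        if token == "id" and lexeme in KEYWORDS:
            token = lexeme                  # a reserved word is its own token
        if token != "ws":                   # white space is removed
            pairs.append((token, lexeme))
        pos = m.end()
    return pairs
```

For `if (distance >= rate) then x = a;` this yields pairs such as `("id", "distance")` and `("relop", ">=")`: many lexemes map to the token `id`, while `if` and `then` each have a single lexeme. Because `relop` is listed before `assignop`, `==` is matched as one relational operator rather than two assignments.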

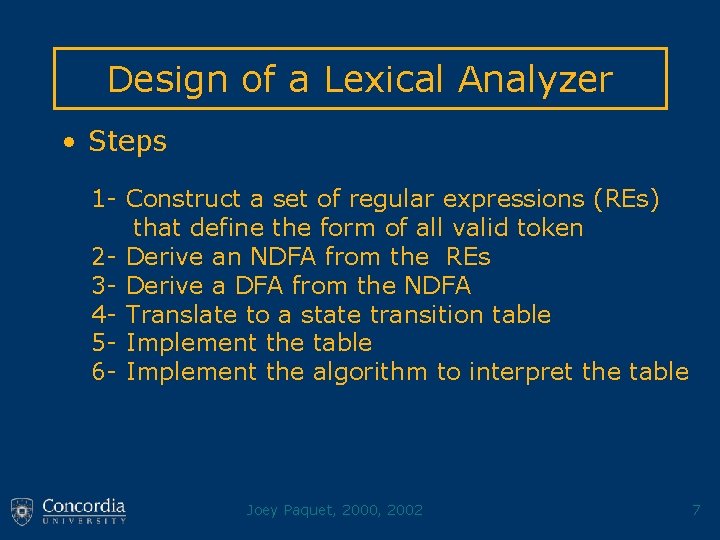

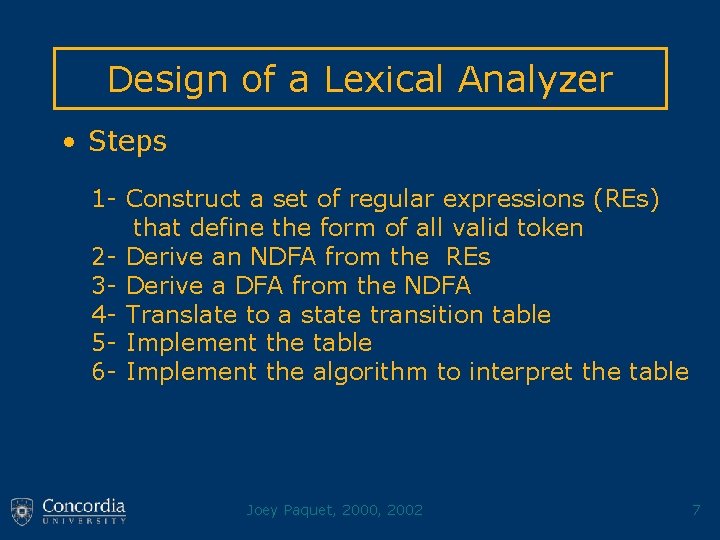

Design of a Lexical Analyzer

Steps:

1. Construct a set of regular expressions (REs) that define the form of all valid tokens
2. Derive an NDFA from the REs
3. Derive a DFA from the NDFA
4. Translate to a state transition table
5. Implement the table
6. Implement the algorithm to interpret the table

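Regular Expressions

- $\varepsilon$ : $\{\varepsilon\}$
- $s$ : $\{s \mid s \in \hat{s}\}$
- $a$ : $\{a\}$
- $r \mid s$ : $\{r \mid r \in \hat{r}\} \cup \{s \mid s \in \hat{s}\}$
- $s^*$ : $\{s^n \mid s \in \hat{s},\ n \ge 0\}$
- $s^+$ : $\{s^n \mid s \in \hat{s},\ n \ge 1\}$
- `id ::= letter (letter | digit)*`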
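Python's `re` module can check membership in the language of the `id` expression. The sketch assumes `letter` is `[A-Za-z]` and `digit` is `[0-9]`; `ID` and `is_id` are hypothetical names. Union, star and plus map directly onto the regex operators `|`, `*` and `+`.

```python
import re

# Assumed character classes: letter = [A-Za-z], digit = [0-9].
ID = re.compile(r"[A-Za-z][A-Za-z0-9]*")   # id ::= letter (letter | digit)*


def is_id(s: str) -> bool:
    # fullmatch: the whole string must be in the language of id, not just a prefix
    return ID.fullmatch(s) is not None
```

`re.fullmatch` is used rather than `re.match` because `re.match` would also accept strings that merely start with an identifier, such as `x1+`. The difference between $s^*$ and $s^+$ shows up on the empty string: `re.fullmatch("a*", "")` succeeds, while `re.fullmatch("a+", "")` returns `None`.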

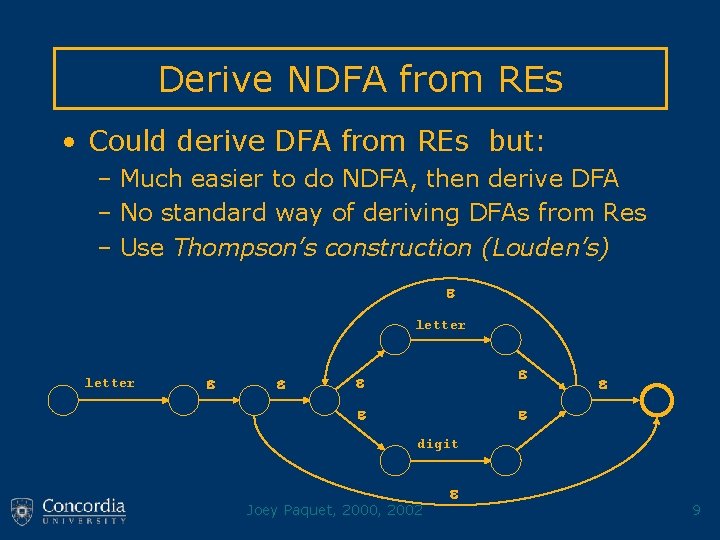

Derive NDFA from REs

- Could derive a DFA directly from the REs, but:
  - It is much easier to build an NDFA first, then derive the DFA
  - There is no standard way of deriving DFAs from REs
- Use Thompson's construction (Louden's)

[Figure: NDFA for id built by Thompson's construction, with letter and digit transitions]
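A compact sketch of Thompson's construction for the three operators needed by `id` (concatenation, union and star), plus an NDFA simulator that follows ε-edges. It works on character classes (`letter`, `digit`) rather than raw characters, and all names (`Fragment`, `symbol`, `concat`, `union`, `star`, `accepts`) are hypothetical. Here concatenation links the two fragments with an ε-edge; some presentations merge the two states instead.

```python
from itertools import count

EPS = None              # label used for epsilon edges
_new_state = count()    # source of fresh state numbers


class Fragment:
    """A Thompson NDFA fragment: one start state, one accept state, labelled edges."""
    def __init__(self, start, accept, edges):
        self.start, self.accept, self.edges = start, accept, edges


def symbol(label):
    s, f = next(_new_state), next(_new_state)
    return Fragment(s, f, [(s, label, f)])


def concat(a, b):
    # a's accept state is linked to b's start state by an epsilon edge
    return Fragment(a.start, b.accept, a.edges + b.edges + [(a.accept, EPS, b.start)])


def union(a, b):
    s, f = next(_new_state), next(_new_state)
    return Fragment(s, f, a.edges + b.edges + [
        (s, EPS, a.start), (s, EPS, b.start), (a.accept, EPS, f), (b.accept, EPS, f)])


def star(a):
    s, f = next(_new_state), next(_new_state)
    return Fragment(s, f, a.edges + [
        (s, EPS, a.start), (s, EPS, f), (a.accept, EPS, a.start), (a.accept, EPS, f)])


def eps_closure(nfa, states):
    stack, seen = list(states), set(states)
    while stack:
        q = stack.pop()
        for src, label, dst in nfa.edges:
            if src == q and label is EPS and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


def accepts(nfa, classes):
    """Simulate the NDFA on a sequence of character classes."""
    current = eps_closure(nfa, {nfa.start})
    for c in classes:
        moved = {dst for src, label, dst in nfa.edges if src in current and label == c}
        current = eps_closure(nfa, moved)
    return nfa.accept in current


def char_class(ch):
    if ch.isascii() and ch.isalpha():
        return "letter"
    return "digit" if ch.isascii() and ch.isdigit() else "other"


# id ::= letter (letter | digit)*
ID_NFA = concat(symbol("letter"), star(union(symbol("letter"), symbol("digit"))))
```

For example, `accepts(ID_NFA, map(char_class, "rate1"))` is true and `accepts(ID_NFA, map(char_class, "1rate"))` is false. Simulating the NDFA means tracking a set of states at every step, which is exactly what the subset construction precomputes.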

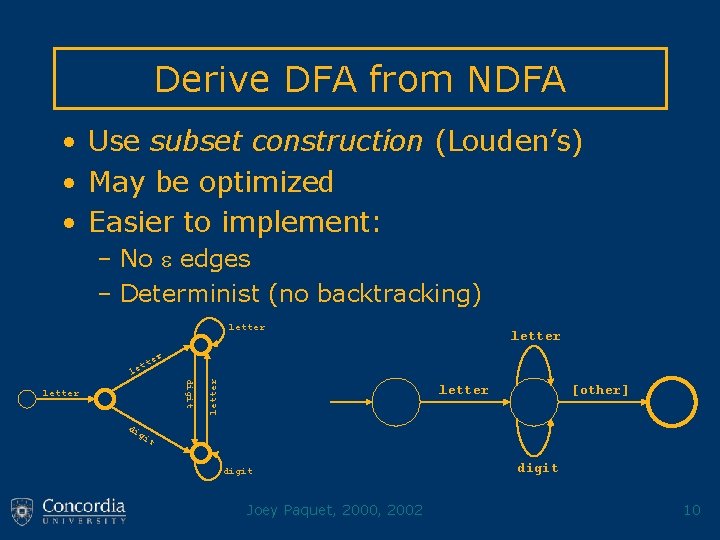

Derive DFA from NDFA

- Use subset construction (Louden's)
- May be optimized (state minimization)
- Easier to implement:
  - No ε-edges
  - Deterministic (no backtracking)

[Figure: DFA for id, with letter, digit and [other] transitions]
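The subset construction can be sketched on a hand-numbered NDFA for `id` (states 0 to 9, with ε-edges laid out the way Thompson's construction produces them; the numbering is an assumption). Each DFA state is the ε-closure of a set of NDFA states, and an empty target set means "no edge".

```python
from collections import deque

EPS = None
# Hand-numbered Thompson-style NDFA for id ::= letter (letter | digit)* (assumed layout):
# 0 is the start state, 9 the accepting state.
NFA_EDGES = [
    (0, "letter", 1), (1, EPS, 2),       # letter, then enter the star
    (2, EPS, 3), (2, EPS, 9),            # loop body or exit
    (3, EPS, 4), (3, EPS, 6),            # union: letter | digit
    (4, "letter", 5), (6, "digit", 7),
    (5, EPS, 8), (7, EPS, 8),
    (8, EPS, 3), (8, EPS, 9),            # repeat or exit
]
NFA_START, NFA_ACCEPT = 0, 9
ALPHABET = ("letter", "digit")


def eps_closure(states):
    stack, seen = list(states), set(states)
    while stack:
        q = stack.pop()
        for src, label, dst in NFA_EDGES:
            if src == q and label is EPS and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return frozenset(seen)


def move(states, symbol):
    return {dst for src, label, dst in NFA_EDGES if src in states and label == symbol}


def subset_construction():
    """Each DFA state is the set of NDFA states the NDFA could be in."""
    start = eps_closure({NFA_START})
    dfa = {}                     # DFA state -> {symbol: DFA state}
    work = deque([start])
    while work:
        d = work.popleft()
        if d in dfa:
            continue
        dfa[d] = {}
        for a in ALPHABET:
            target = eps_closure(move(d, a))
            if target:           # an empty set means "no edge" (error entry)
                dfa[d][a] = target
                work.append(target)
    accepting = {d for d in dfa if NFA_ACCEPT in d}
    return start, dfa, accepting
```

On this NDFA the construction yields four DFA states, three of them accepting. Those three accepting states have identical outgoing transitions, so minimizing the DFA merges them into one, which is the kind of optimization the slide mentions.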

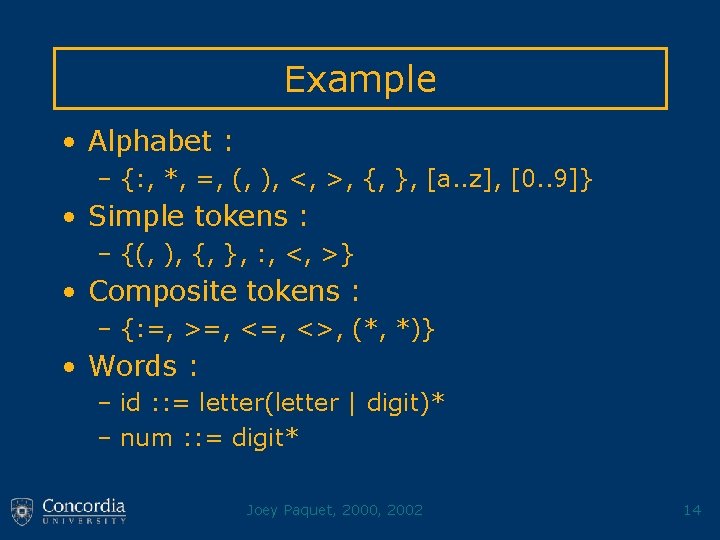

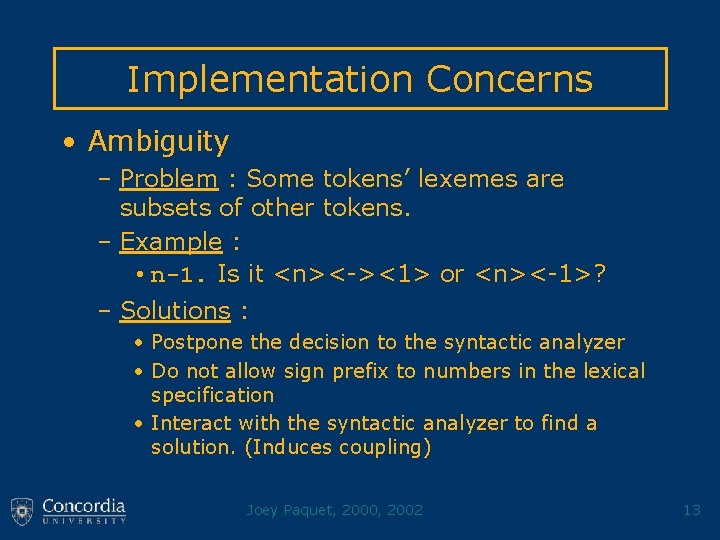

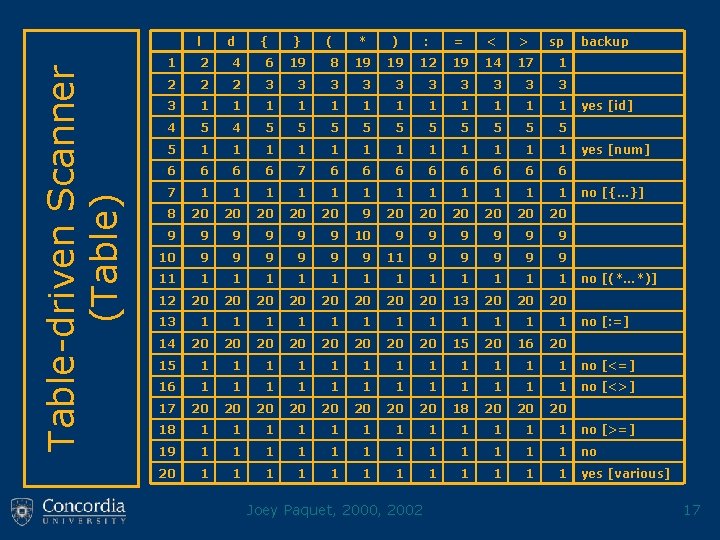

![Generate State Transition Table](https://slidetodoc.com/presentation_image_h2/958efb69f0a75096919c8afde796a438/image-11.jpg)

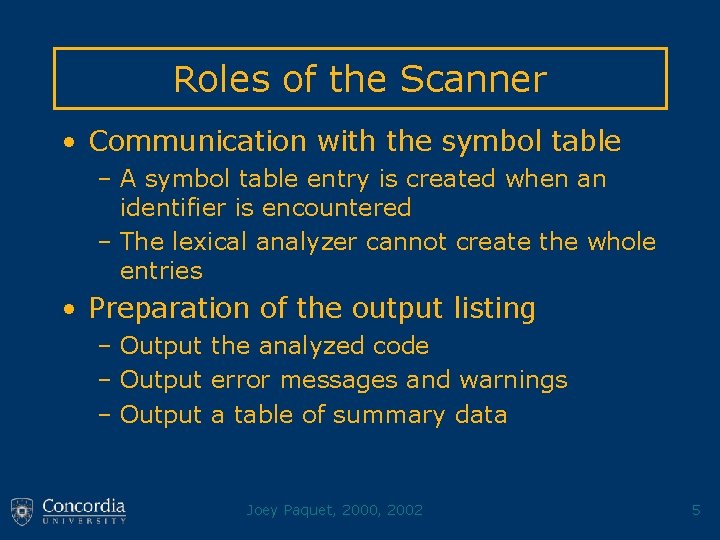

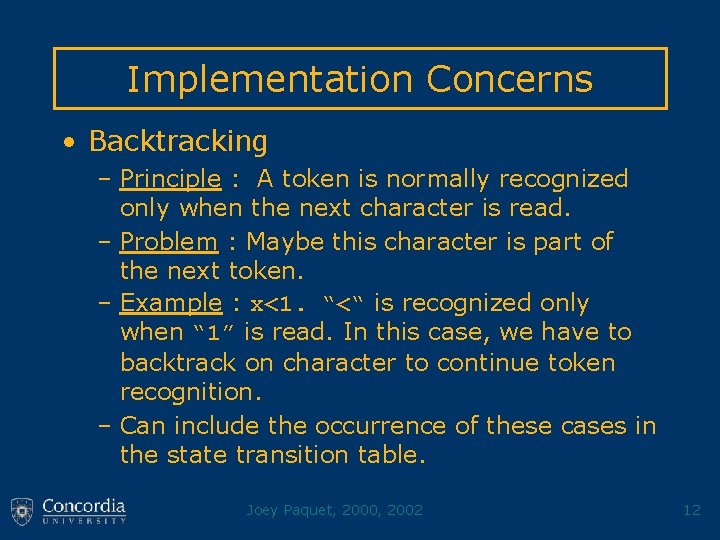

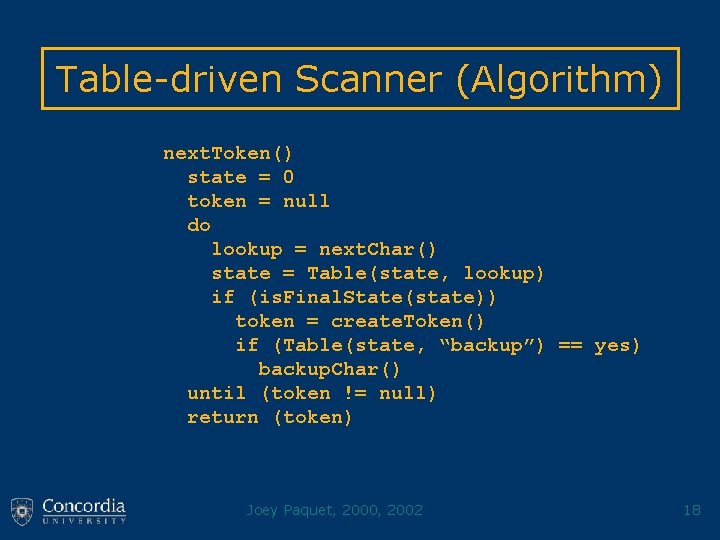

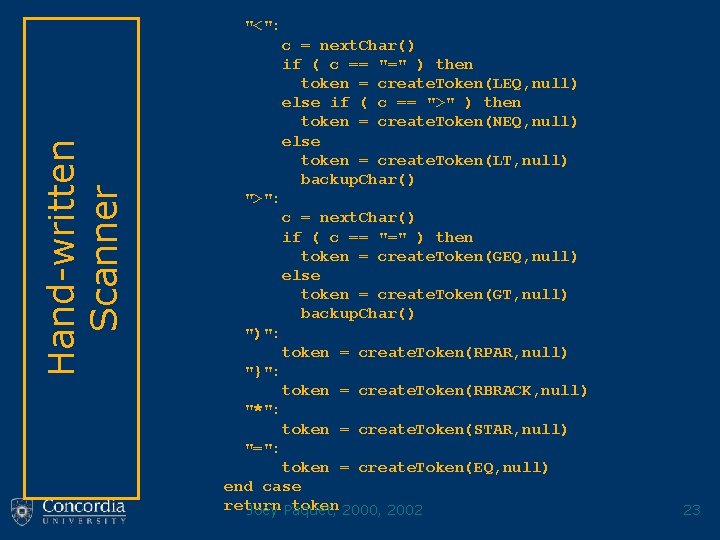

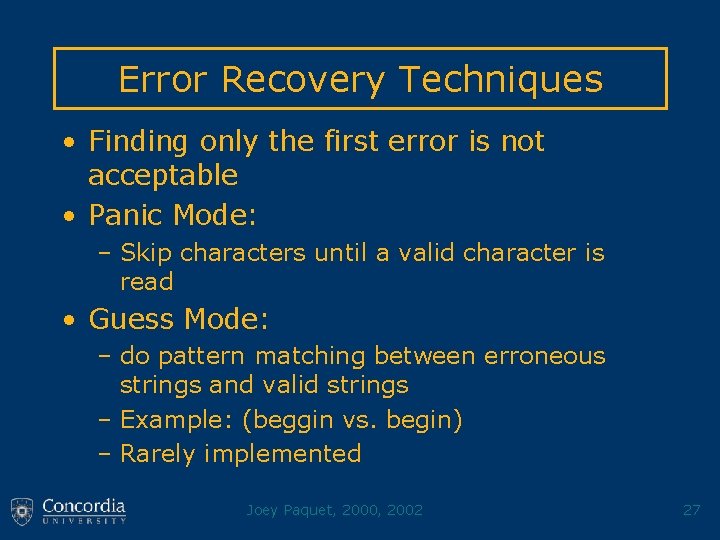

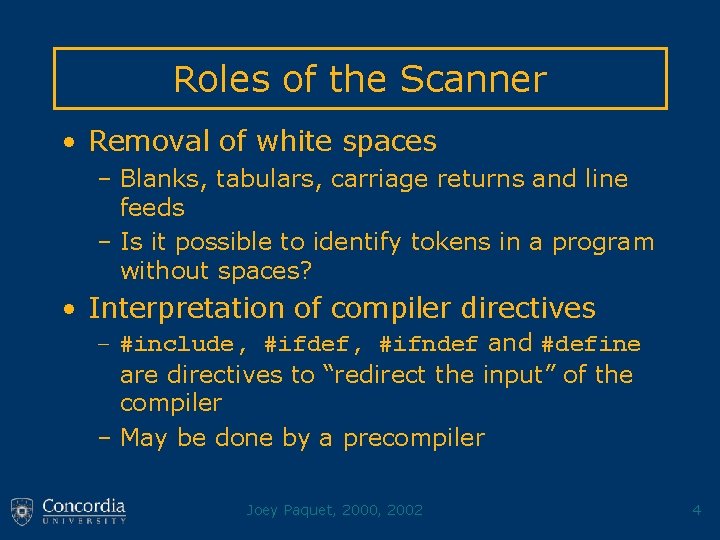

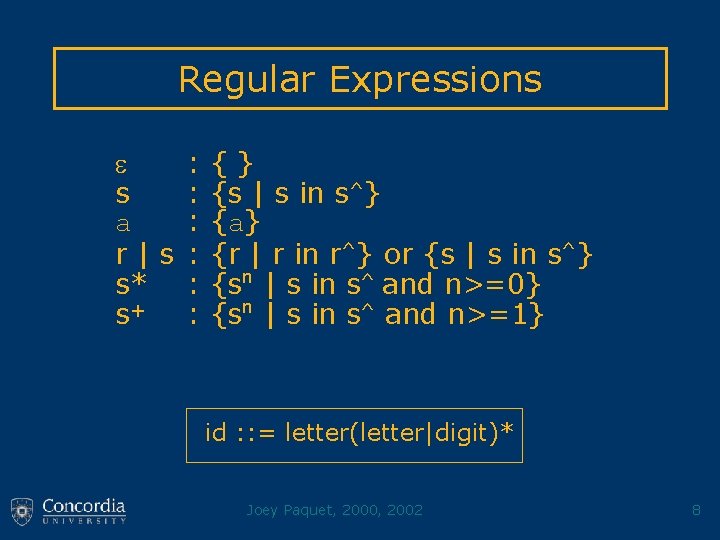

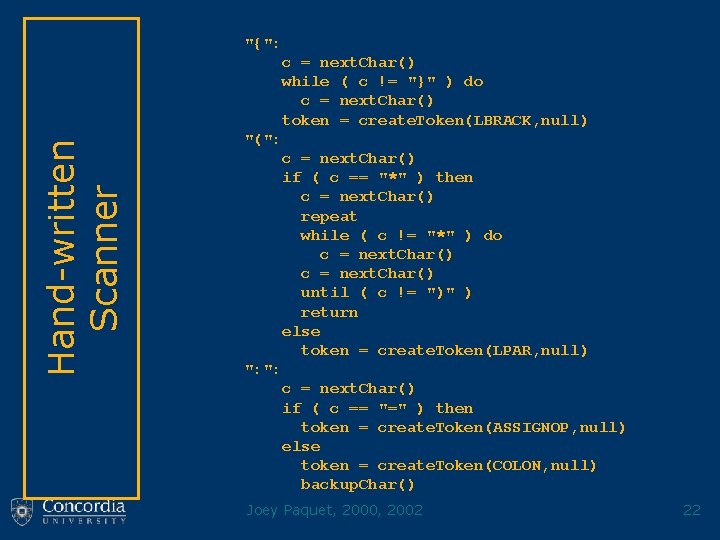

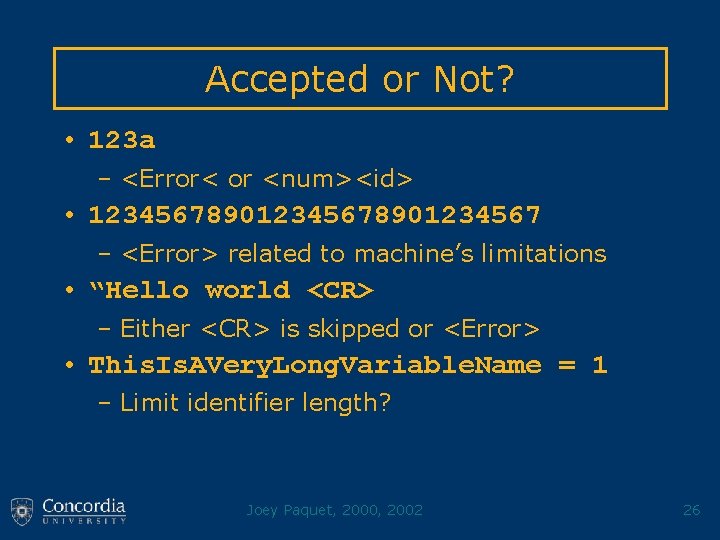

Generate State Transition Table

| State | letter | digit | [other] | Final |
|---|---|---|---|---|
| 0 | 1 | | | N |
| 1 | 1 | 1 | 2 | N |
| 2 | | | | Y |
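The state transition table maps directly onto a dictionary. This sketch assumes that blank cells mean "error" and treats end of input as [other]; `TABLE`, `FINAL`, `char_class` and `scan_id` are hypothetical names. Because state 2 is entered on the character that follows the identifier, the scanner backs up one position before returning the lexeme.

```python
# Rows = states, columns = character classes; None marks a blank (error) cell.
TABLE = {
    0: {"letter": 1, "digit": None, "other": None},
    1: {"letter": 1, "digit": 1, "other": 2},
    2: {"letter": None, "digit": None, "other": None},
}
FINAL = {0: False, 1: False, 2: True}


def char_class(ch):
    if len(ch) == 1 and ch.isascii() and ch.isalpha():
        return "letter"
    if len(ch) == 1 and ch in "0123456789":
        return "digit"
    return "other"          # includes end of input, passed as ""


def scan_id(src, pos=0):
    """Return (lexeme, next_pos) for an id starting at pos, or None on error."""
    state, i = 0, pos
    while True:
        ch = src[i] if i < len(src) else ""
        state = TABLE[state][char_class(ch)]
        if state is None:
            return None
        i += 1
        if FINAL[state]:
            # state 2 is reached on [other]: that character starts the next
            # token, so back up one position before cutting the lexeme
            i -= 1
            return src[pos:i], i
```

For instance, `scan_id("rate1 + x")` returns `("rate1", 5)`, leaving position 5 (the blank) for the next token.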

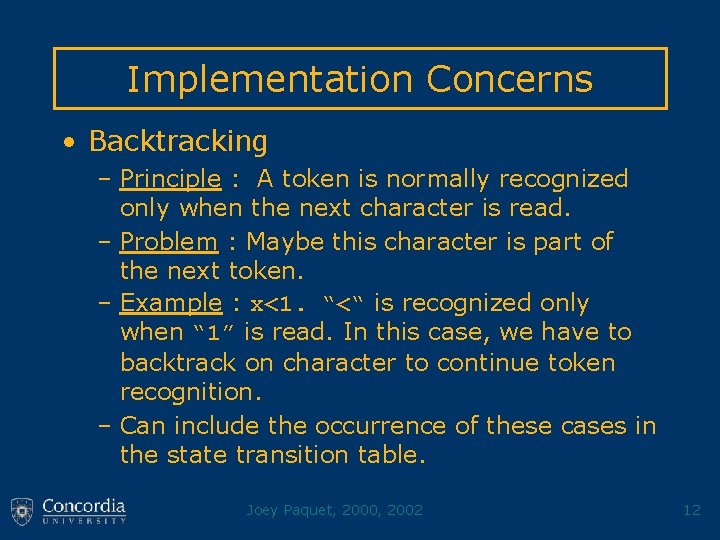

Implementation Concerns

- Backtracking
  - Principle: a token is normally recognized only when the next character is read.
  - Problem: this character may be part of the next token.
  - Example: in `x<1`, "<" is recognized only when "1" is read. In this case, we have to back up one character to continue token recognition.
  - The occurrence of these cases can be included in the state transition table.
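The `x<1` example can be sketched with an input buffer that supports one-character backup. `InputBuffer`, `next_char`, `backup_char` and `after_less_than` are hypothetical names, loosely modeled on the `nextChar()` and `backupChar()` functions used later in this lecture.

```python
class InputBuffer:
    """Character source with one-character backup (a hypothetical helper)."""

    def __init__(self, text):
        self.text, self.pos = text, 0

    def next_char(self):
        ch = self.text[self.pos] if self.pos < len(self.text) else ""  # "" = end of input
        self.pos += 1
        return ch

    def backup_char(self):
        self.pos -= 1


def after_less_than(buf):
    """Called once '<' has been read: decide between '<' and '<='."""
    c = buf.next_char()          # the lookahead that makes the decision possible
    if c == "=":
        return "<="
    buf.backup_char()            # c belongs to the next token: back up
    return "<"
```

On `x<1`, reading `1` settles that the token is `<`; after the backup, the next call to `next_char()` returns `1` again, so the number is not lost.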

Implementation Concerns

- Ambiguity
  - Problem: the lexeme of one token can be a prefix of another token's lexeme.
  - Example: is `n-1` read as `<n><-><1>` or as `<n><-1>`?
  - Solutions:
    - Postpone the decision to the syntactic analyzer
    - Do not allow a sign prefix on numbers in the lexical specification
    - Interact with the syntactic analyzer to find a solution (induces coupling)
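The `n-1` ambiguity can be reproduced with two assumed lexical specifications, one that allows a sign prefix on numbers and one that does not. The patterns and the `tokenize` function are illustrative assumptions; Python's regex alternation takes the first alternative that matches, so listing `num` before the lone `-` makes the longer lexeme win.

```python
import re


def tokenize(src, signed_numbers):
    """Split src into lexemes; signed_numbers selects one of two assumed specs."""
    num = r"-?[0-9]+" if signed_numbers else r"[0-9]+"
    # Alternatives are tried left to right, so num is tried before the lone '-'.
    pattern = re.compile(rf"(?P<num>{num})|(?P<id>[a-z]+)|(?P<minus>-)|(?P<ws>\s+)")
    lexemes, pos = [], 0
    while pos < len(src):
        m = pattern.match(src, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {src[pos]!r}")
        if m.lastgroup != "ws":
            lexemes.append(m.group())
        pos = m.end()
    return lexemes
```

With the signed specification, the result also depends on spacing: `n - 1` yields `n`, `-`, `1`, while `n-1` yields `n`, `-1`. Dropping the sign prefix from numbers makes both inputs scan the same way and leaves unary minus to the parser.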

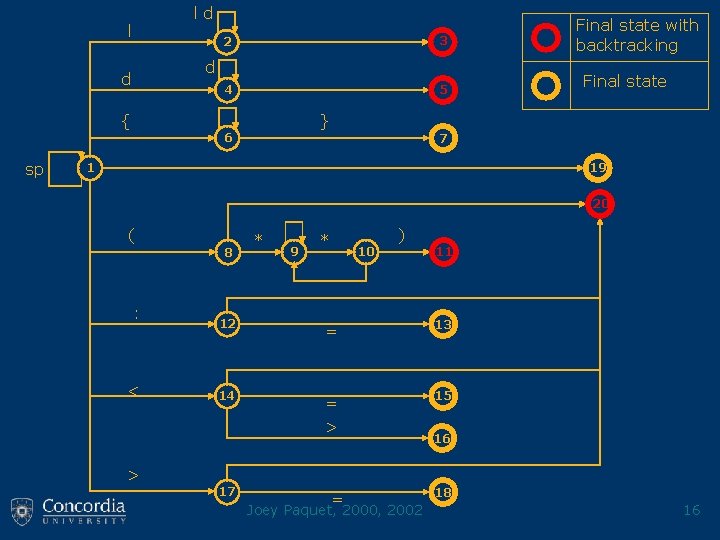

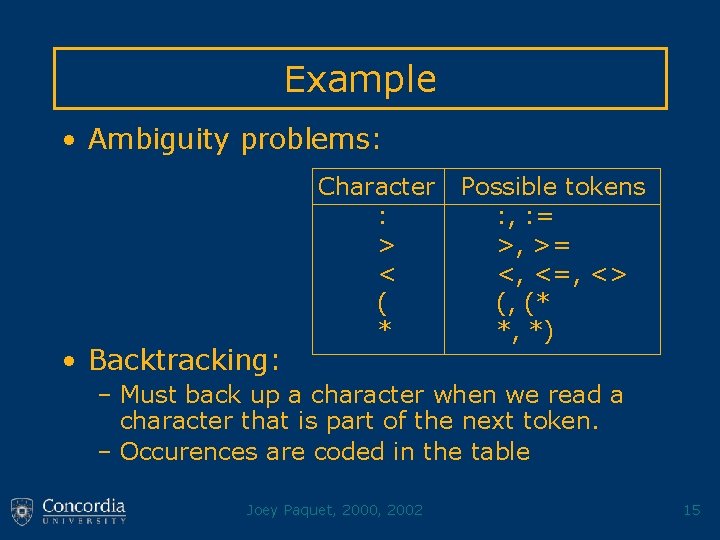

Example

- Alphabet: {:, *, =, (, ), <, >, {, }, [a..z], [0..9]}
- Simple tokens: {(, ), {, }, :, <, >}
- Composite tokens: {:=, >=, <=, <>, (*, *)}
- Words:
  - `id ::= letter(letter | digit)*`
  - `num ::= digit+`

Example

Ambiguity problems:

| Character | Possible tokens |
|---|---|
| `:` | `:`, `:=` |
| `>` | `>`, `>=` |
| `<` | `<`, `<=`, `<>` |
| `(` | `(`, `(*` |
| `*` | `*`, `*)` |

Backtracking:

- Must back up one character when we read a character that is part of the next token.
- These occurrences are coded in the table.
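The table of possible tokens translates into a one-character lookahead rule: read the next character, keep it if it completes a two-character token, otherwise leave it for the next token. `COMPOSITES` and `scan_operator` are hypothetical names; because the sketch works on an index, "backing up" amounts to not advancing past the lookahead character.

```python
# Two-character tokens each first character can start (from the table above).
COMPOSITES = {
    ":": {":="},
    ">": {">="},
    "<": {"<=", "<>"},
    "(": {"(*"},
    "*": {"*)"},
}


def scan_operator(src, pos):
    """Return (lexeme, next_pos) for the operator starting at src[pos]."""
    first = src[pos]
    pair = src[pos:pos + 2]              # one character of lookahead
    if pair in COMPOSITES.get(first, set()):
        return pair, pos + 2
    # Lookahead did not extend the token: it is not consumed (the backup case).
    return first, pos + 1
```

For example, `scan_operator(":=", 0)` returns `(":=", 2)`, while `scan_operator(":x", 0)` returns `(":", 1)` and leaves `x` for the next token.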

[Figure: DFA for the example language (states 1 to 20), with a legend distinguishing final states from final states with backtracking]

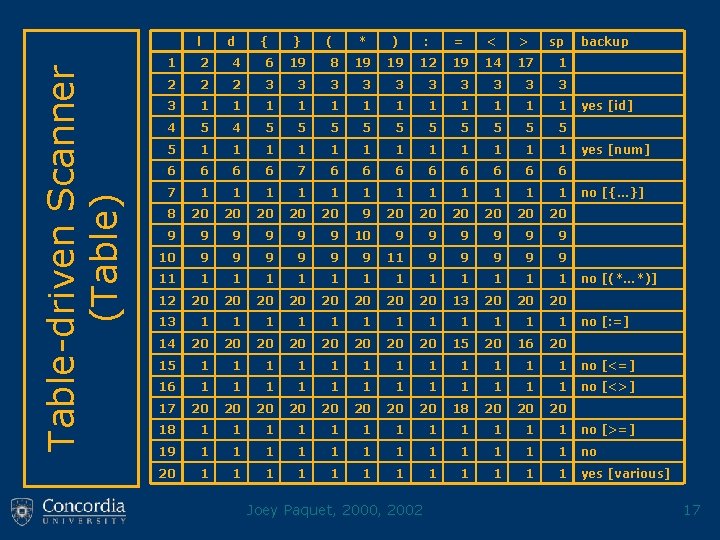

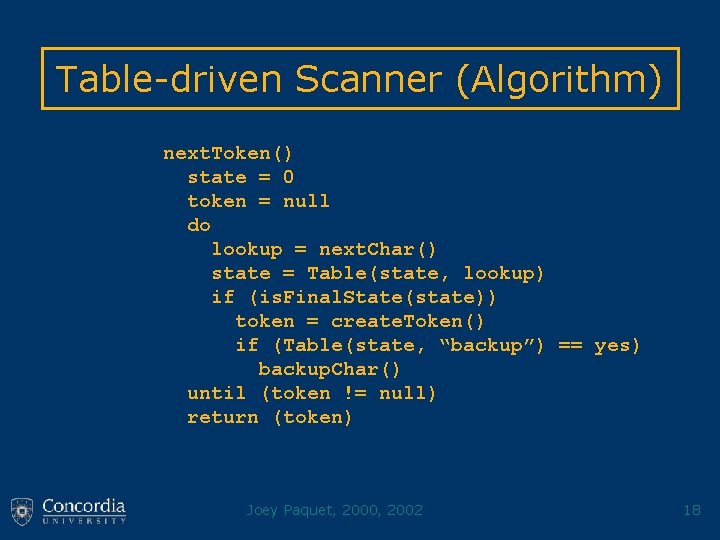

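Table-driven Scanner (Table)

| State | Transitions | Backup | Token |
|---|---|---|---|
| 1 | l→2, d→4, {→6, }→19, (→8, *→19, )→19, :→12, =→19, <→14, >→17, sp→1 | | |
| 2 | l→2, d→2, other→3 | | |
| 3 | final | yes | id |
| 4 | d→4, other→5 | | |
| 5 | final | yes | num |
| 6 | }→7, other→6 | | |
| 7 | final | no | `{…}` |
| 8 | *→9, other→20 | | |
| 9 | *→10, other→9 | | |
| 10 | )→11, other→9 | | |
| 11 | final | no | `(*…*)` |
| 12 | =→13, other→20 | | |
| 13 | final | no | `:=` |
| 14 | =→15, >→16, other→20 | | |
| 15 | final | no | `<=` |
| 16 | final | no | `<>` |
| 17 | =→18, other→20 | | |
| 18 | final | no | `>=` |
| 19 | final | no | `}`, `*`, `)`, `=` |
| 20 | final | yes | various |

l = letter, d = digit, sp = space; other = any character without its own entry in that row. State 1 has no other entry, so any other character is an error. After a final state, the scanner restarts in state 1.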

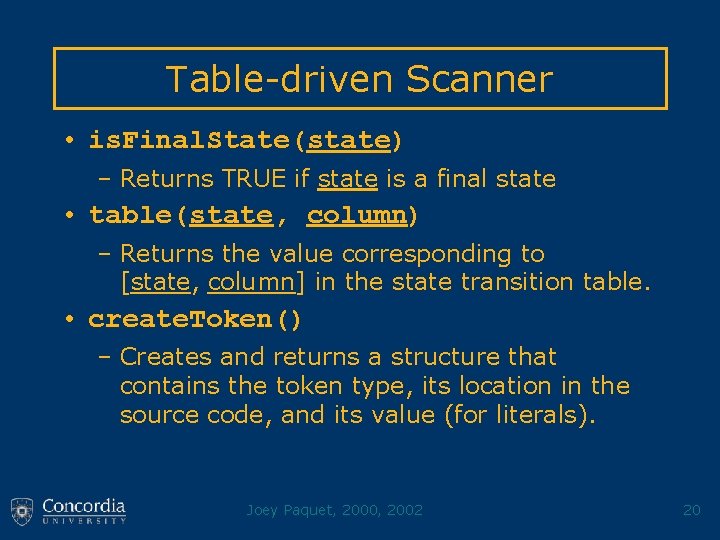

Table-driven Scanner (Algorithm)
nextToken()
  state = 1
  token = null
  do
    lookup = nextChar()
    state = Table(state, lookup)
    if (isFinalState(state))
      token = createToken()
      if (Table(state, "backup") == yes)
        backupChar()
  until (token != null)
  return (token)

Table-driven Scanner

- nextToken()
  - Extracts the next token from the program (called by the syntactic analyzer)
- nextChar()
  - Reads the next character (except spaces) from the input program
- backupChar()
  - Backs up one character in the input file

Table-driven Scanner

- isFinalState(state)
  - Returns TRUE if state is a final state
- table(state, column)
  - Returns the value at [state, column] in the state transition table
- createToken()
  - Creates and returns a structure that contains the token type, its location in the source code, and its value (for literals)
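A Python sketch of the table-driven scanner for the example language. It encodes the transition table as dictionaries with an `other` default column, and `next_token` follows the `nextToken()` loop: read a character, look up the next state, and on a final state create the token, backing up if the table says so. All names are hypothetical; comment tokens (states 7 and 11) are dropped by `tokenize`, end of input is treated as `other`, and the `*` entry for state 10 is an assumption.

```python
# Transition table for the example language; "other" is the default column.
T = {
    1: {"l": 2, "d": 4, "{": 6, "}": 19, "(": 8, "*": 19, ")": 19,
        ":": 12, "=": 19, "<": 14, ">": 17, "sp": 1},
    2: {"l": 2, "d": 2, "other": 3},
    4: {"d": 4, "other": 5},
    6: {"}": 7, "other": 6},
    8: {"*": 9, "other": 20},
    9: {"*": 10, "other": 9},
    10: {")": 11, "*": 10, "other": 9},  # assumed: '*' stays in 10 so "**)" closes
    12: {"=": 13, "other": 20},
    14: {"=": 15, ">": 16, "other": 20},
    17: {"=": 18, "other": 20},
}
# Final states: (backup?, token type); None means the lexeme itself is the type.
FINAL = {3: (True, "id"), 5: (True, "num"), 7: (False, "comment"),
         11: (False, "comment"), 13: (False, ":="), 15: (False, "<="),
         16: (False, "<>"), 18: (False, ">="), 19: (False, None), 20: (True, None)}


def column(ch):
    if ch == "":
        return "other"                   # end of input
    if ch.isascii() and ch.isalpha():
        return "l"
    if ch in "0123456789":
        return "d"
    if ch in " \t\r\n":
        return "sp"
    return ch if ch in "{}(*):=<>" else "other"


def next_token(src, pos):
    """Return (type, lexeme, next_pos), or None at end of input."""
    state, start = 1, pos
    while True:
        ch = src[pos] if pos < len(src) else ""
        if state == 1 and ch == "":
            return None
        row = T[state]
        nxt = row.get(column(ch), row.get("other"))
        if nxt is None or (ch == "" and nxt not in FINAL):
            raise SyntaxError(f"unexpected character {ch!r} at position {pos}")
        pos += 1
        if nxt == 1:
            start = pos                  # skipped a space
        state = nxt
        if state in FINAL:
            backup, kind = FINAL[state]
            if backup:
                pos -= 1                 # backupChar(): char belongs to next token
            lexeme = src[start:pos]
            return (kind or lexeme), lexeme, pos


def tokenize(src):
    tokens, pos = [], 0
    while True:
        result = next_token(src, pos)
        if result is None:
            return tokens
        kind, lexeme, pos = result
        if kind != "comment":            # comments are removed by the scanner
            tokens.append((kind, lexeme))
```

The driver never mentions a specific token: changing the language means changing `T` and `FINAL`, which is the adaptability argued for table-driven scanners.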

![Hand-written Scanner](https://slidetodoc.com/presentation_image_h2/958efb69f0a75096919c8afde796a438/image-21.jpg)

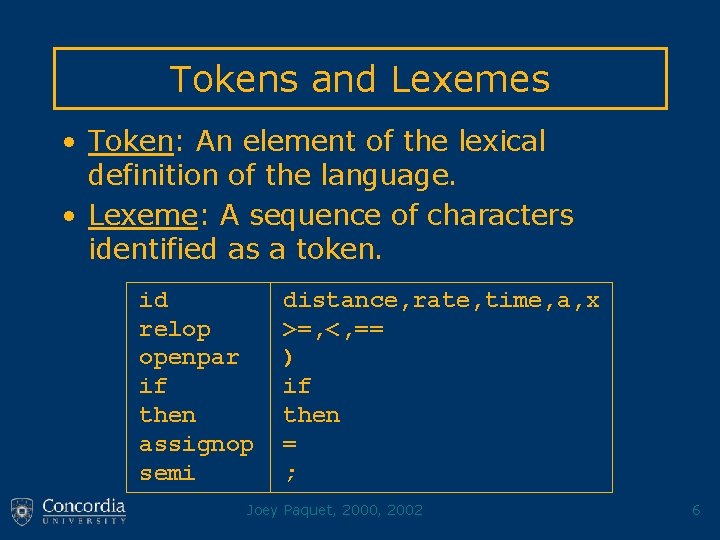

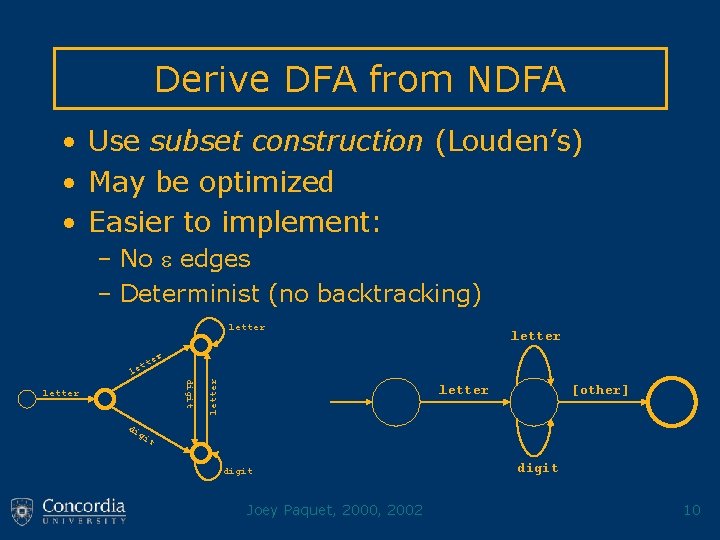

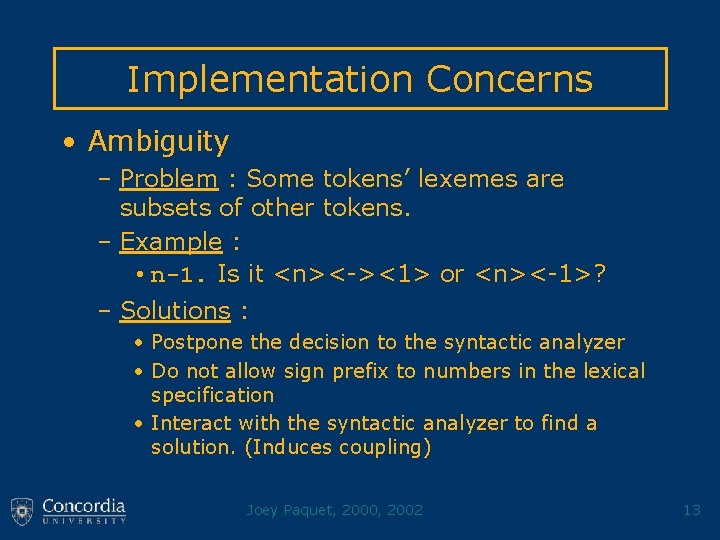

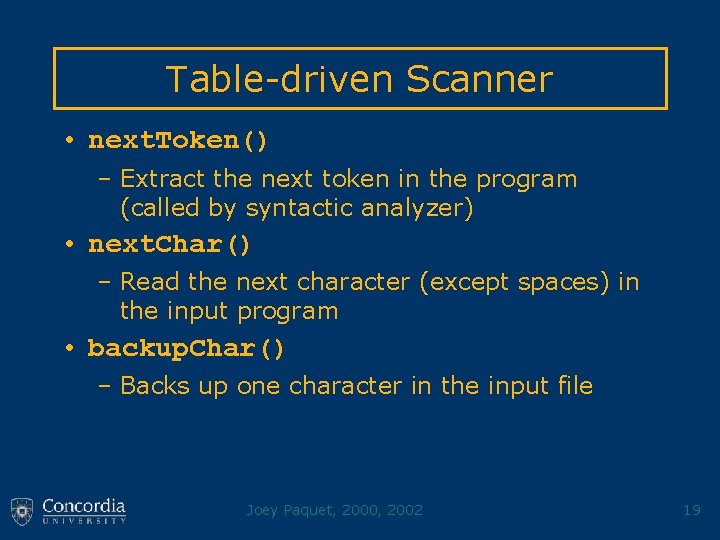

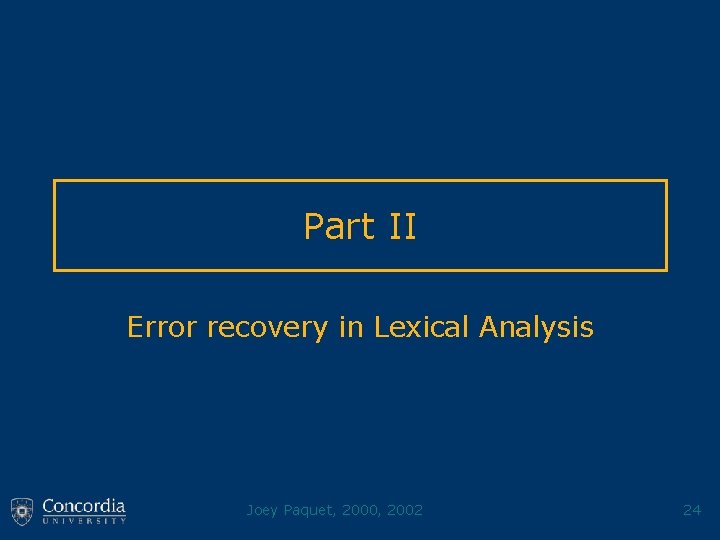

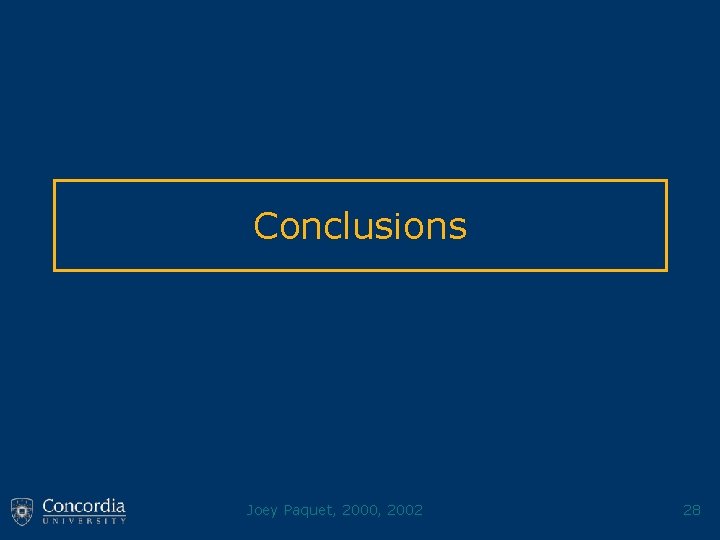

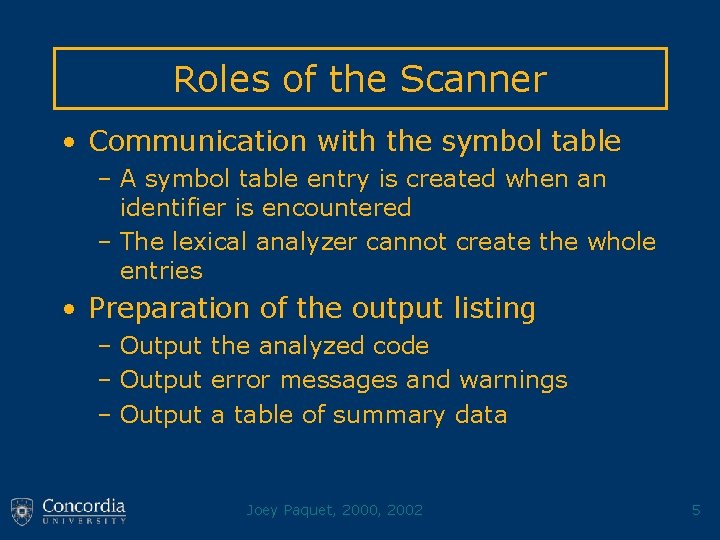

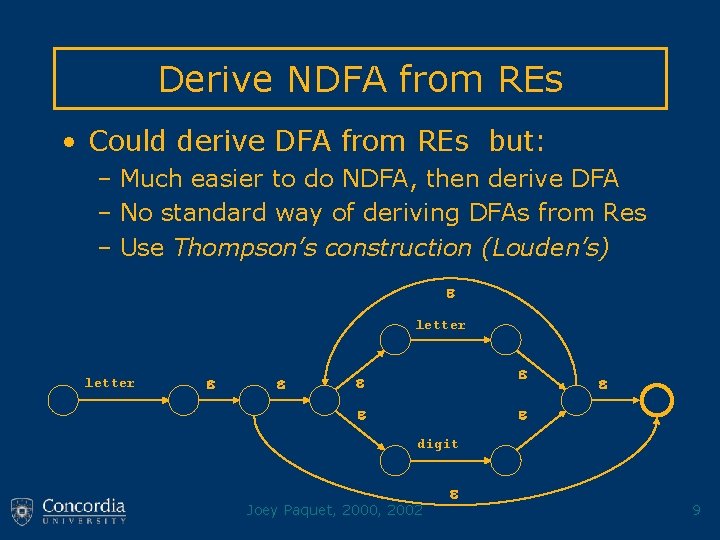

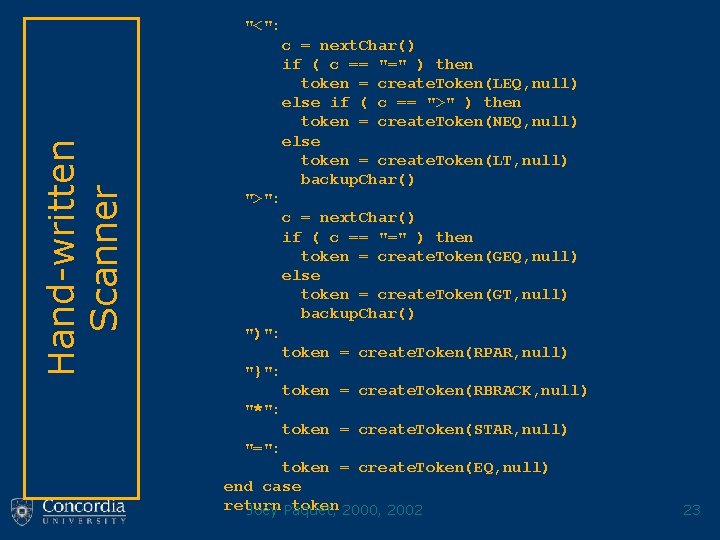

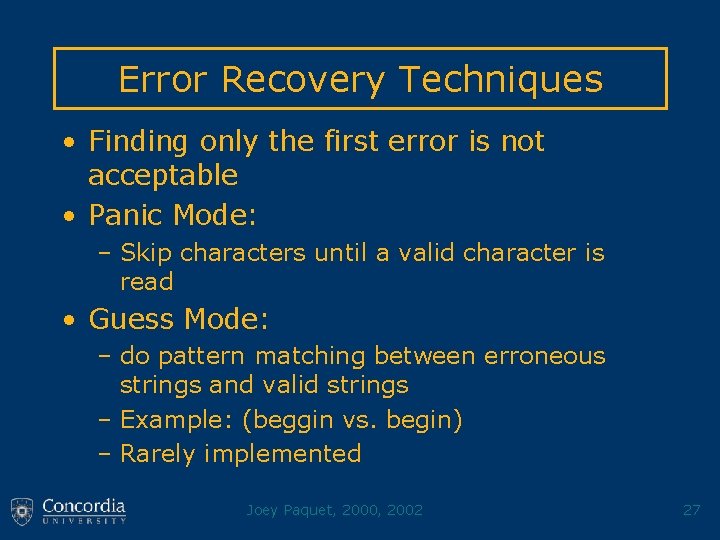

Hand-written Scanner
nextToken()
  c = nextChar()
  case (c) of
    "[a..z], [A..Z]":
      c = nextChar()
      while (c in {[a..z], [A..Z], [0..9]}) do
        s = makeUpString()
        c = nextChar()
      if (isReservedWord(s)) then
        token = createToken(RESWORD, null)
      else
        token = createToken(ID, s)
      backupChar()
    "[0..9]":
      c = nextChar()
      while (c in [0..9]) do
        v = makeUpValue()
        c = nextChar()
      token = createToken(NUM, v)
      backupChar()

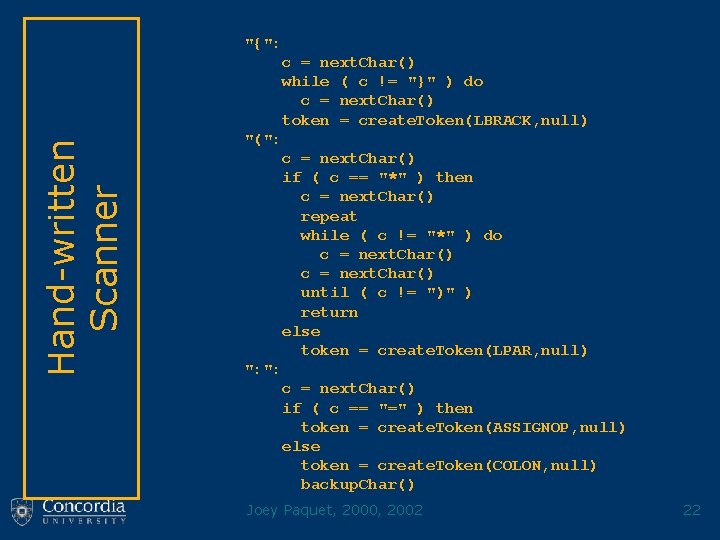

Hand-written Scanner
    "{":
      c = nextChar()
      while (c != "}") do
        c = nextChar()
      token = createToken(LBRACK, null)
    "(":
      c = nextChar()
      if (c == "*") then
        c = nextChar()
        repeat
          while (c != "*") do
            c = nextChar()
          c = nextChar()
        until (c == ")")
        return nextToken()
      else
        token = createToken(LPAR, null)
        backupChar()
    ":":
      c = nextChar()
      if (c == "=") then
        token = createToken(ASSIGNOP, null)
      else
        token = createToken(COLON, null)
        backupChar()

Hand-written Scanner
    "<":
      c = nextChar()
      if (c == "=") then
        token = createToken(LEQ, null)
      else if (c == ">") then
        token = createToken(NEQ, null)
      else
        token = createToken(LT, null)
        backupChar()
    ">":
      c = nextChar()
      if (c == "=") then
        token = createToken(GEQ, null)
      else
        token = createToken(GT, null)
        backupChar()
    ")": token = createToken(RPAR, null)
    "}": token = createToken(RBRACK, null)
    "*": token = createToken(STAR, null)
    "=": token = createToken(EQ, null)
  end case
  return token
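The hand-written scanner translates almost case for case into Python. This sketch assumes a hypothetical `RESERVED` set, keeps the reserved word as the token value (the slide passes `null`), and accumulates identifier and number text directly instead of calling `makeUpString()` and `makeUpValue()`. Following the slide, `{...}` is reported as a single `LBRACK` token while `(* ... *)` is skipped.

```python
RESERVED = {"if", "then", "else", "begin", "end"}   # hypothetical reserved words
SINGLE = {")": "RPAR", "}": "RBRACK", "*": "STAR", "=": "EQ"}


class Scanner:
    def __init__(self, src):
        self.src, self.pos = src, 0

    def next_char(self):
        ch = self.src[self.pos] if self.pos < len(self.src) else ""  # "" = end of input
        self.pos += 1
        return ch

    def backup_char(self):
        self.pos -= 1

    def next_token(self):
        c = self.next_char()
        while c in (" ", "\t", "\r", "\n"):        # skip white space
            c = self.next_char()
        if c == "":
            return None
        if c.isascii() and c.isalpha():            # identifier or reserved word
            s = c
            c = self.next_char()
            while c != "" and c.isascii() and c.isalnum():
                s += c
                c = self.next_char()
            self.backup_char()
            return ("RESWORD", s) if s in RESERVED else ("ID", s)
        if c in "0123456789":                      # number: accumulate its value
            v = int(c)
            c = self.next_char()
            while c != "" and c in "0123456789":
                v = v * 10 + int(c)
                c = self.next_char()
            self.backup_char()
            return ("NUM", v)
        if c == "{":                               # {...} reported as one token
            while c not in ("}", ""):
                c = self.next_char()
            if c == "":
                raise SyntaxError("unterminated { comment")
            return ("LBRACK", None)
        if c == "(":
            c = self.next_char()
            if c != "*":
                self.backup_char()
                return ("LPAR", None)
            c = self.next_char()
            while True:                            # skip (* ... *)
                while c not in ("*", ""):
                    c = self.next_char()
                if c == "":
                    raise SyntaxError("unterminated (* comment")
                c = self.next_char()
                if c == ")":
                    return self.next_token()       # a comment yields no token
        if c == ":":
            if self.next_char() == "=":
                return ("ASSIGNOP", None)
            self.backup_char()
            return ("COLON", None)
        if c == "<":
            c = self.next_char()
            if c == "=":
                return ("LEQ", None)
            if c == ">":
                return ("NEQ", None)
            self.backup_char()
            return ("LT", None)
        if c == ">":
            if self.next_char() == "=":
                return ("GEQ", None)
            self.backup_char()
            return ("GT", None)
        if c in SINGLE:
            return (SINGLE[c], None)
        raise SyntaxError(f"invalid character {c!r}")


def tokens(src):
    scanner, out = Scanner(src), []
    tok = scanner.next_token()
    while tok is not None:
        out.append(tok)
        tok = scanner.next_token()
    return out
```

For example, `tokens("(a")` gives `LPAR` followed by `ID`: the backup in the `(` case returns the `a` to the input.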

Part II: Error Recovery in Lexical Analysis

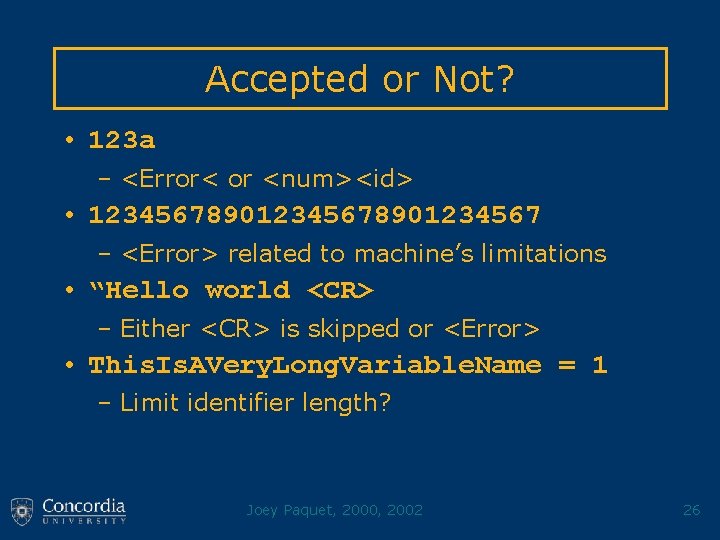

Possible Lexical Errors

- Depends on the accepted conventions:
  - Invalid character
  - Letter not allowed to terminate a number
  - Numerical overflow
  - Identifier too long
  - End of line before end of string
- Are these lexical errors?

Accepted or Not?

- 123a
  - `<Error>` or `<num><id>`
- 12345678901234567
  - `<Error>` related to the machine's limitations
- `"Hello world<CR>`
  - Either `<CR>` is skipped or `<Error>`
- ThisIsAVeryLongVariableName = 1
  - Limit identifier length?
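Whether `123a` and `12345678901234567` are errors is a matter of convention. A minimal sketch, assuming a 32-bit signed integer limit and a hypothetical `strict` flag that chooses between the `<Error>` and `<num><id>` readings of `123a`; `scan_number` and `LexicalError` are illustrative names.

```python
MAX_INT = 2**31 - 1   # assumed machine limit (32-bit signed integers)


class LexicalError(Exception):
    pass


def scan_number(src, pos, strict):
    """Scan the digits at src[pos:]; assumes src[pos] is a digit.

    strict=True treats a letter right after the digits (e.g. "123a") as an error;
    strict=False lets the letter start the next token, giving <num><id>.
    """
    end = pos
    while end < len(src) and src[end] in "0123456789":
        end += 1
    if strict and end < len(src) and src[end].isalpha():
        raise LexicalError(f"letter terminates number: {src[pos:end + 1]!r}")
    value = int(src[pos:end])
    if value > MAX_INT:
        raise LexicalError(f"numerical overflow: {src[pos:end]}")
    return value, end
```

The overflow check depends on the target machine rather than on the language, which is why the slide ties that error to the machine's limitations.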

Error Recovery Techniques

- Finding only the first error is not acceptable
- Panic mode:
  - Skip characters until a valid character is read
- Guess mode:
  - Do pattern matching between erroneous strings and valid strings
  - Example: beggin vs. begin
  - Rarely implemented
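Both recovery techniques fit in a few lines. Panic mode below skips each run of characters outside an assumed alphabet and records one error per run, so scanning can continue past the first error. Guess mode uses `difflib.get_close_matches` from Python's standard library as a stand-in for "pattern matching", suggesting the nearest keyword from a hypothetical keyword list.

```python
import difflib

KEYWORDS = ["begin", "end", "if", "then"]                          # hypothetical keyword list
VALID = set("abcdefghijklmnopqrstuvwxyz0123456789:*=()<>{} \t\n")  # assumed alphabet


def panic_mode(src):
    """Skip runs of invalid characters, recording one error per run, and go on."""
    kept, errors, i = [], [], 0
    while i < len(src):
        if src[i] in VALID:
            kept.append(src[i])
            i += 1
        else:
            start = i
            while i < len(src) and src[i] not in VALID:
                i += 1                     # skip until a valid character is read
            errors.append((start, src[start:i]))
    return "".join(kept), errors


def guess_keyword(word):
    """Guess mode: suggest the closest keyword, or None if nothing is close."""
    matches = difflib.get_close_matches(word, KEYWORDS, n=1)  # default cutoff 0.6
    return matches[0] if matches else None
```

Here `guess_keyword("beggin")` suggests `begin`. The cutoff is a tuning choice: set too low, it "corrects" ordinary identifiers into keywords, which is one reason guess mode is rarely implemented.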

Conclusions

Possible Implementations

- Lexical analyzer generator (e.g., Lex)
  - Pros: safe, quick
  - Cons: must learn the software; unable to handle unusual situations
- Table-driven lexical analyzer
  - Pros: general and adaptable method; the same function can be used for all table-driven lexical analyzers
  - Cons: building the transition table can be tedious and error-prone

Possible Implementations

- Hand-written
  - Pros: can be optimized, can handle any unusual situation, easy to build for most languages
  - Cons: error-prone, not adaptable or maintainable

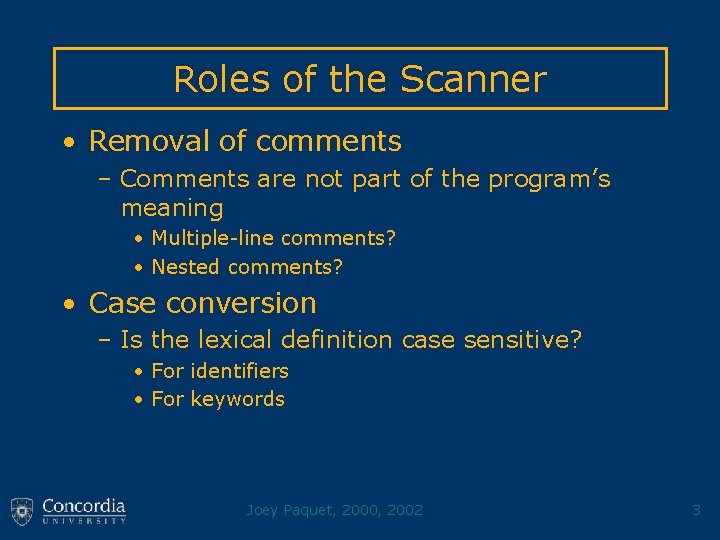

Lexical Analyzer's Modularity

- Why should the lexical analyzer and the syntactic analyzer be separated?
  - Modularity/maintainability: the system is more modular, and thus more maintainable
  - Efficiency: modularity means task specialization, which makes optimization easier
  - Reusability: the whole lexical analyzer can be changed without changing other parts