Learning with Perceptrons and Neural Networks Artificial Intelligence

- Slides: 28

Learning with Perceptrons and Neural Networks Artificial Intelligence CMSC 25000 February 14, 2002

Agenda

- Neural networks:
  - Biological analogy
- Perceptrons: single-layer networks
- Perceptron training: perceptron convergence theorem
- Perceptron limitations
- Neural networks: multilayer perceptrons
- Neural net training: backpropagation
- Strengths and limitations
- Conclusions

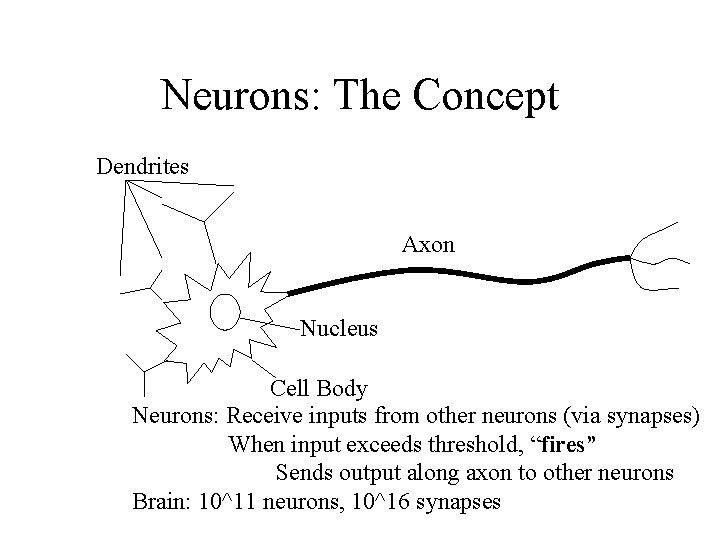

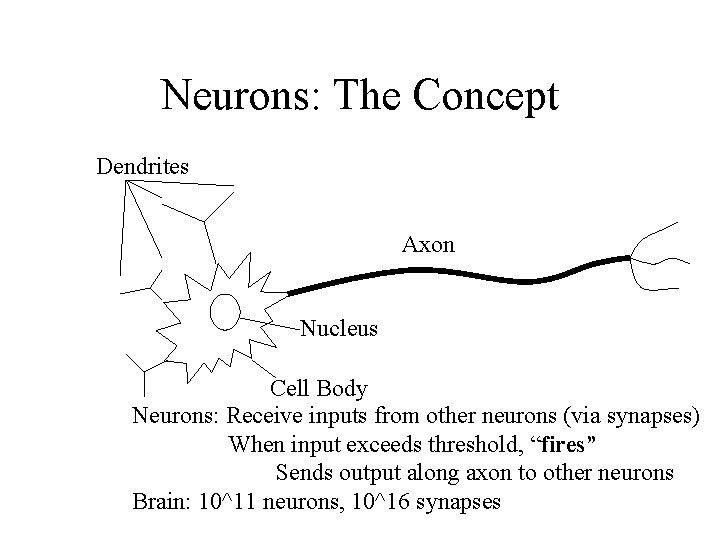

Neurons: The Concept

(figure labels: dendrites, axon, nucleus, cell body)

Neurons:

- Receive inputs from other neurons (via synapses)
- "Fire" when the input exceeds a threshold
- Send output along the axon to other neurons

Brain: 10^11 neurons, 10^16 synapses

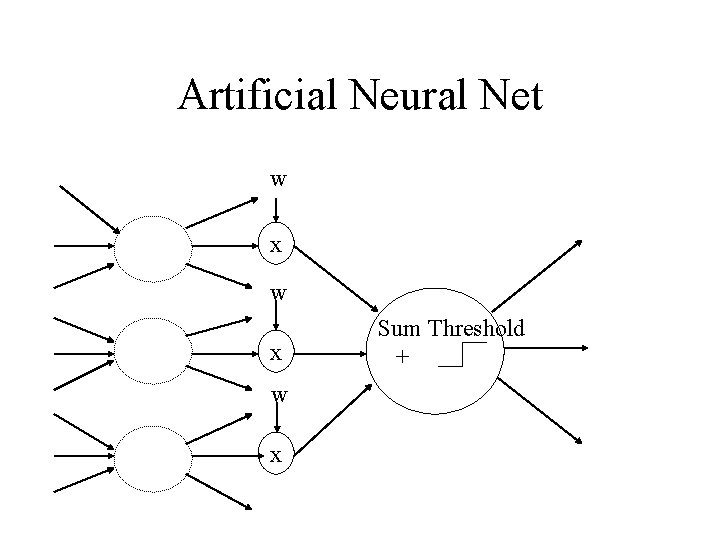

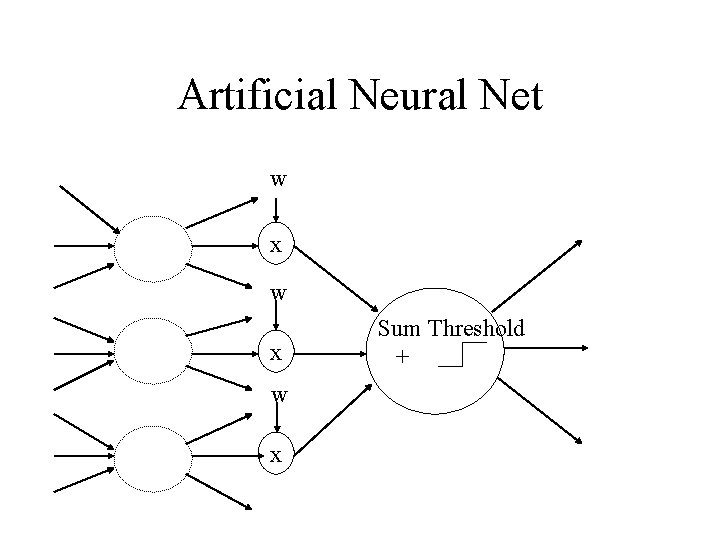

Artificial Neural Nets

- Simulated neuron:
  - Node connected to other nodes via links
    - Link = axon + synapse + dendrite
    - Each link has an associated weight (like a synapse), which multiplies the output of the sending node
  - Node combines its inputs via an activation function
    - E.g. the sum of weighted inputs passed through a threshold
- Simpler than real neuronal processes

Artificial Neural Net (figure: each input x is multiplied by its weight w; the products are summed and the sum is passed through a threshold)
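The simulated neuron described above can be sketched in a few lines of Python. This is a minimal illustration, not code from the lecture; the function name `neuron_output` and the example weights and threshold are assumptions chosen for the demonstration.

```python
def neuron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    # Each link multiplies the incoming value by its weight.
    total = sum(w * x for w, x in zip(weights, inputs))
    # The node "fires" only when the combined input exceeds the threshold.
    return 1 if total > threshold else 0


# Hypothetical weights: both inputs must be active for the node to fire.
both_on = neuron_output([1, 1], [0.6, 0.6], threshold=1.0)
one_on = neuron_output([1, 0], [0.6, 0.6], threshold=1.0)
print(both_on, one_on)
```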

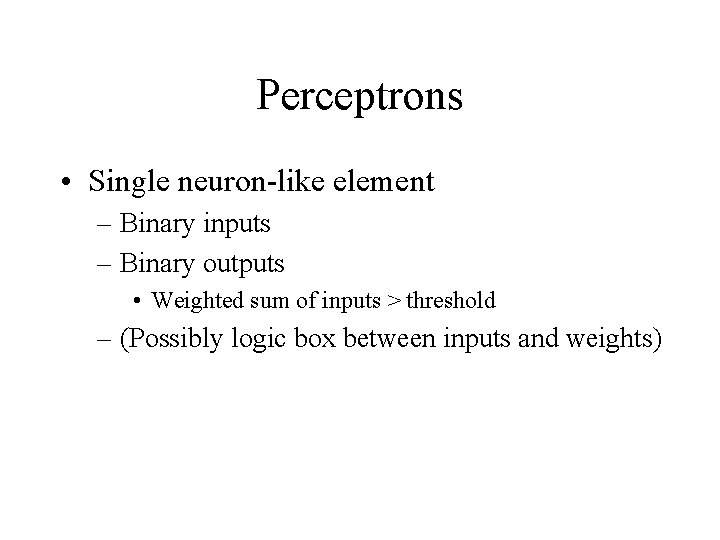

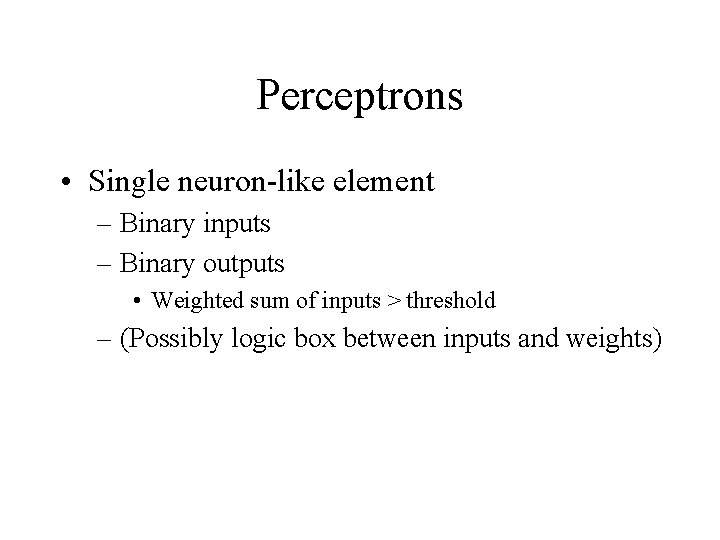

Perceptrons

- Single neuron-like element
  - Binary inputs
  - Binary outputs
- Output is 1 when the weighted sum of inputs > threshold
  - (Possibly with a logic box between inputs and weights)

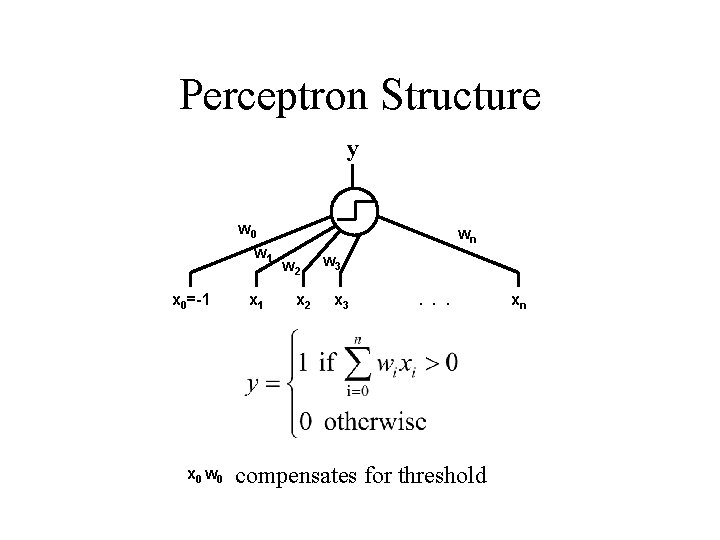

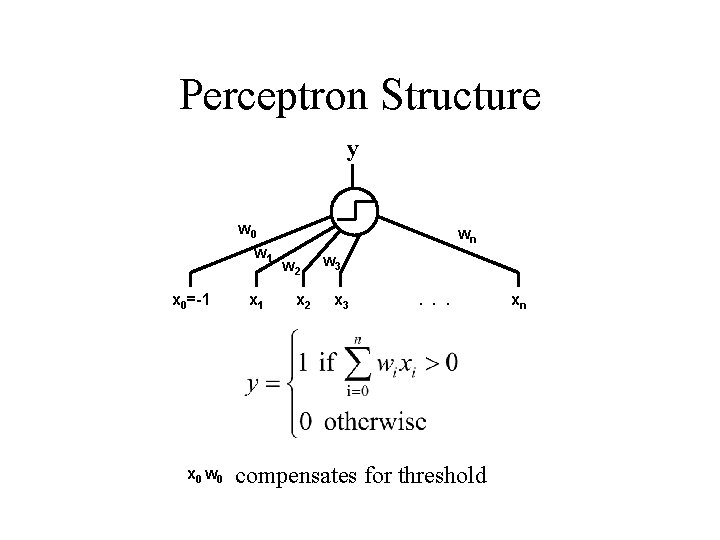

Perceptron Structure (figure: inputs x_1, x_2, x_3, ..., x_n with weights w_1, w_2, w_3, ..., w_n feed the output y; an extra input x_0 = -1 with weight w_0 compensates for the threshold)

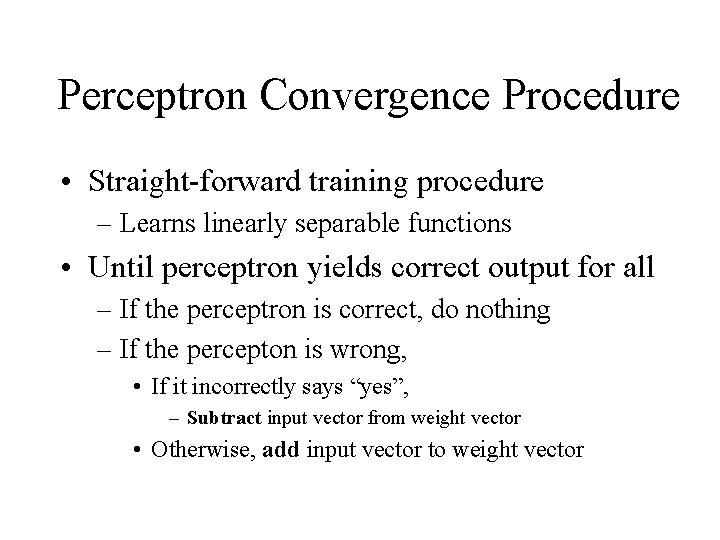

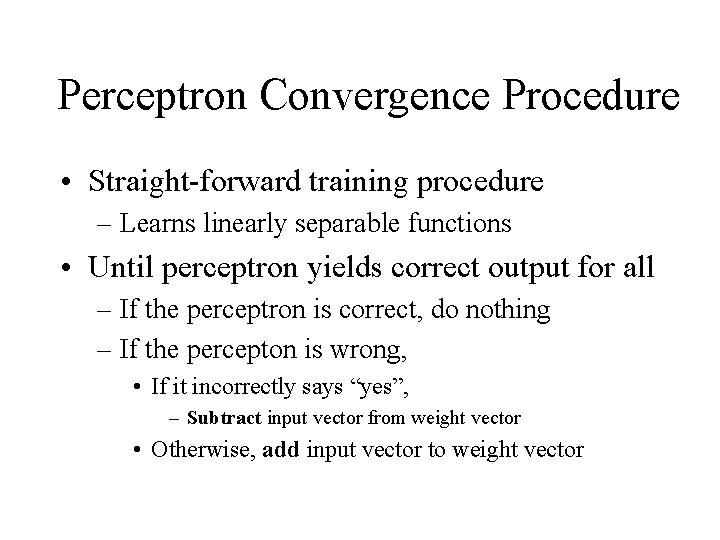

Perceptron Convergence Procedure

- Straightforward training procedure
  - Learns linearly separable functions
- Repeat until the perceptron yields the correct output for all training samples:
  - If the perceptron is correct, do nothing
  - If the perceptron is wrong:
    - If it incorrectly says "yes", subtract the input vector from the weight vector
    - Otherwise, add the input vector to the weight vector

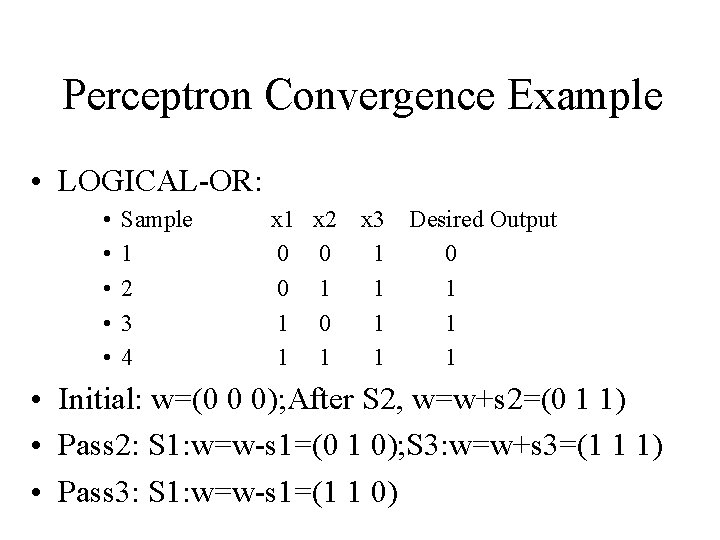

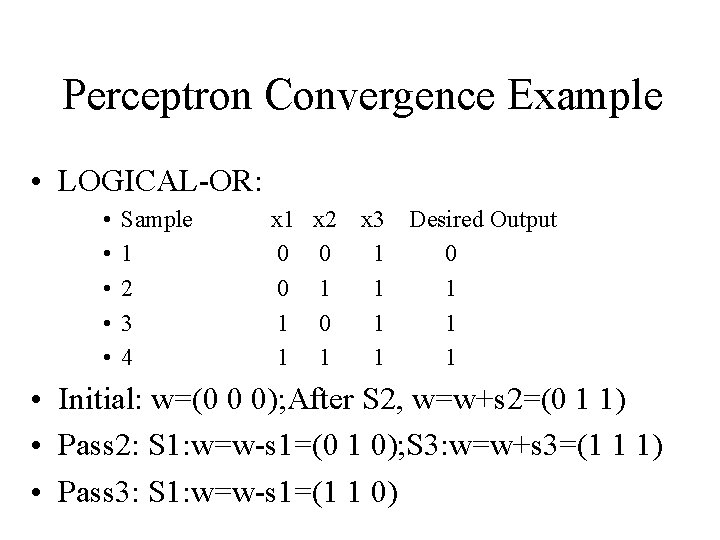

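Perceptron Convergence Example

LOGICAL-OR (x3 is a constant input of 1):

| Sample | x1 | x2 | x3 | Desired output |
|--------|----|----|----|----------------|
| 1      | 0  | 0  | 1  | 0              |
| 2      | 0  | 1  | 1  | 1              |
| 3      | 1  | 0  | 1  | 1              |
| 4      | 1  | 1  | 1  | 1              |

- Initial: w = (0 0 0); after S2, w = w + s2 = (0 1 1)
- Pass 2: S1: w = w - s1 = (0 1 0); S3: w = w + s3 = (1 1 1)
- Pass 3: S1: w = w - s1 = (1 1 0)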
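The training procedure and the LOGICAL-OR trace can be reproduced with a short Python sketch. It assumes the perceptron says "yes" when the weighted sum is strictly greater than 0 and that the third input is a constant 1, which is consistent with the weight updates listed in the example. The names `predict`, `train_perceptron`, and `max_passes` are illustrative, not from the lecture.

```python
def predict(w, x):
    """Perceptron output: 1 if the weighted sum is > 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0


def train_perceptron(samples, n_inputs, max_passes=100):
    """Repeat passes over the samples until one pass makes no correction."""
    w = [0] * n_inputs
    for _ in range(max_passes):
        changed = False
        for x, desired in samples:
            out = predict(w, x)
            if out == desired:
                continue  # correct: do nothing
            if out == 1:
                # Incorrectly said "yes": subtract the input vector.
                w = [wi - xi for wi, xi in zip(w, x)]
            else:
                # Incorrectly said "no": add the input vector.
                w = [wi + xi for wi, xi in zip(w, x)]
            changed = True
        if not changed:
            return w
    return None  # no separating weights found within max_passes


# LOGICAL-OR samples: (x1, x2, x3) with x3 fixed at 1, and the desired output.
OR_SAMPLES = [((0, 0, 1), 0), ((0, 1, 1), 1), ((1, 0, 1), 1), ((1, 1, 1), 1)]
weights = train_perceptron(OR_SAMPLES, 3)
print(weights)  # [1, 1, 0]
```

The run makes the same three corrections as the trace and stops on the fourth pass, when every sample is classified correctly.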

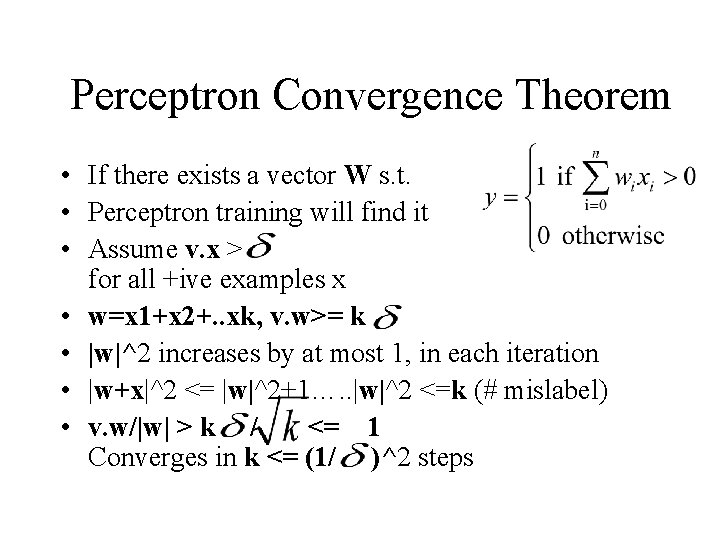

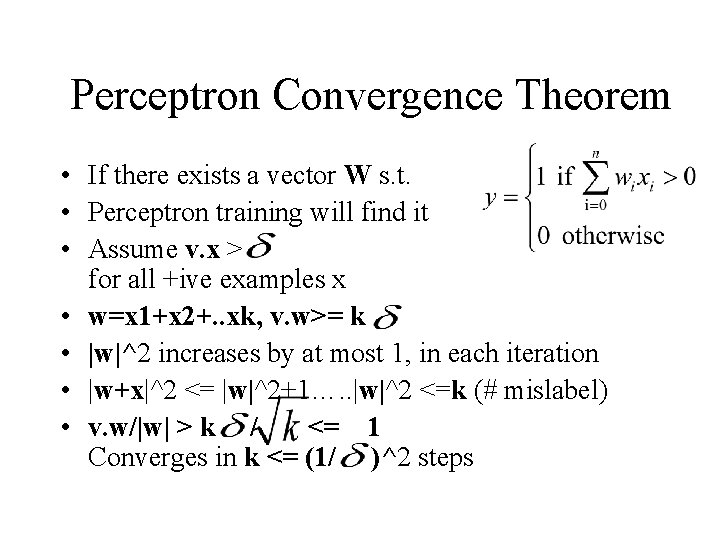

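Perceptron Convergence Theorem

- If there exists a weight vector $W$ that classifies every training example correctly, perceptron training will find one
- Assume a unit vector $v$ with $v \cdot x > \delta$ for all positive examples $x$, where $|x| \le 1$
- After $k$ corrections, $w = x_1 + x_2 + \dots + x_k$, so $v \cdot w \ge k\delta$
- $|w|^2$ increases by at most 1 in each iteration: $|w + x|^2 \le |w|^2 + 1$, so $|w|^2 \le k$ (the number of mislabeled examples)
- $\frac{v \cdot w}{|w|} \ge \frac{k\delta}{\sqrt{k}}$, and this ratio is $\le 1$, so training converges in $k \le (1/\delta)^2$ steps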

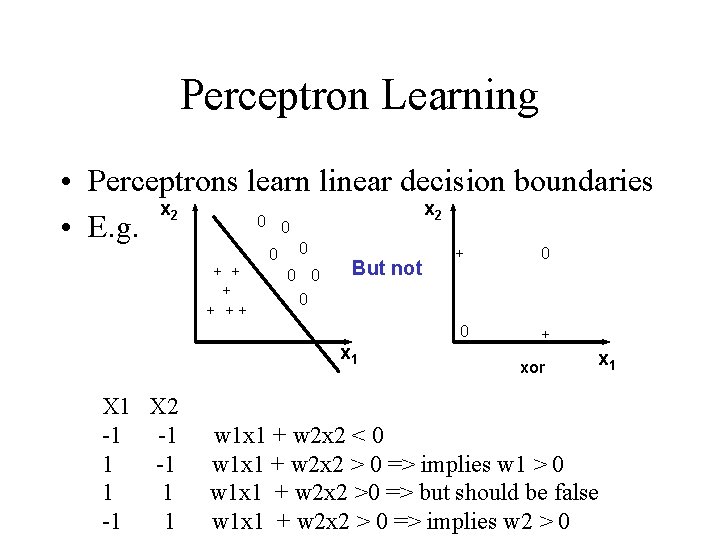

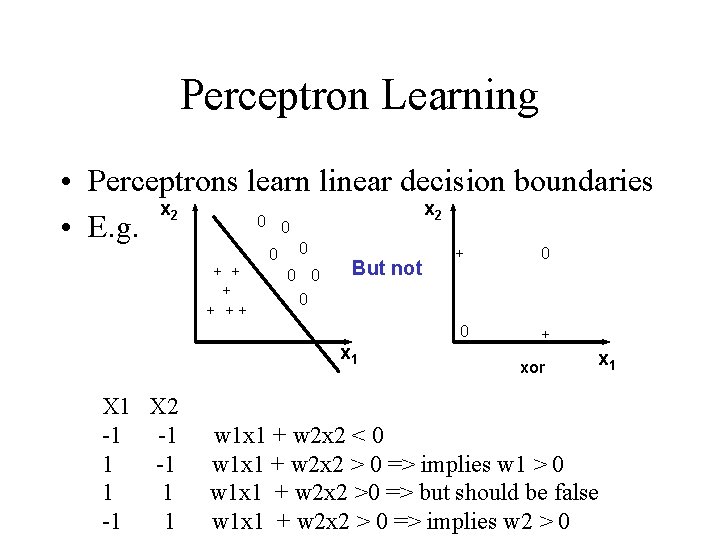

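Perceptron Learning

- Perceptrons learn linear decision boundaries (figure: + and 0 points in the x1-x2 plane, separated by a line)
- But not XOR (figure: the four XOR points in the x1-x2 plane). A unit that outputs true when $w_1 x_1 + w_2 x_2 > 0$ would need:
  - (0, 0) false: $w_1 x_1 + w_2 x_2 \le 0$, which holds
  - (1, 0) true: $w_1 x_1 + w_2 x_2 > 0$ => implies $w_1 > 0$
  - (0, 1) true: $w_1 x_1 + w_2 x_2 > 0$ => implies $w_2 > 0$
  - (1, 1): $w_1 > 0$ and $w_2 > 0$ give $w_1 x_1 + w_2 x_2 > 0$ => but should be false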
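The XOR limitation can be probed empirically with a brute-force search. The sketch below tries every combination of two weights and a threshold on a coarse grid and reports whether any of them reproduces a given truth table. The grid and the helper name `separable_on_grid` are assumptions made for illustration; a failed search on a finite grid is a demonstration, not a proof.

```python
from itertools import product


def separable_on_grid(truth_table, grid):
    """Return some (w1, w2, threshold) on the grid that matches the table, or None."""
    for w1, w2, t in product(grid, repeat=3):
        if all(
            (1 if w1 * x1 + w2 * x2 > t else 0) == y
            for (x1, x2), y in truth_table.items()
        ):
            return (w1, w2, t)
    return None


# Candidate values from -3.0 to 3.0 in steps of 0.5 (an arbitrary choice).
GRID = [i / 2 for i in range(-6, 7)]
OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

or_solution = separable_on_grid(OR_TABLE, GRID)
xor_solution = separable_on_grid(XOR_TABLE, GRID)
print(or_solution is not None, xor_solution)  # True None
```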

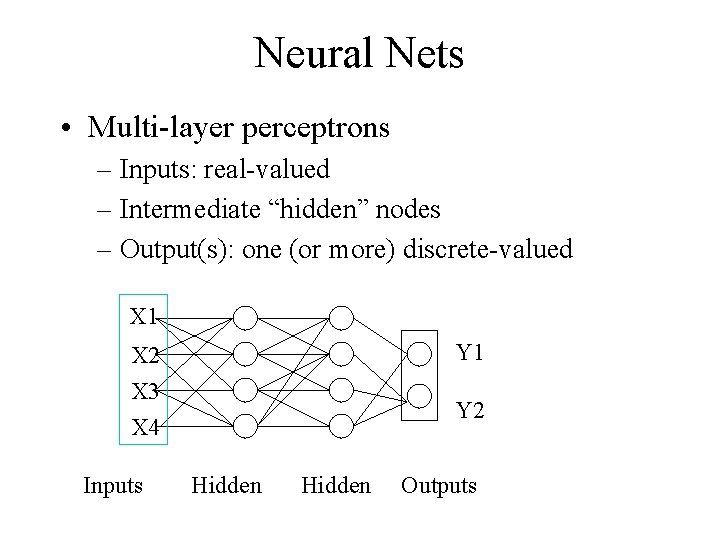

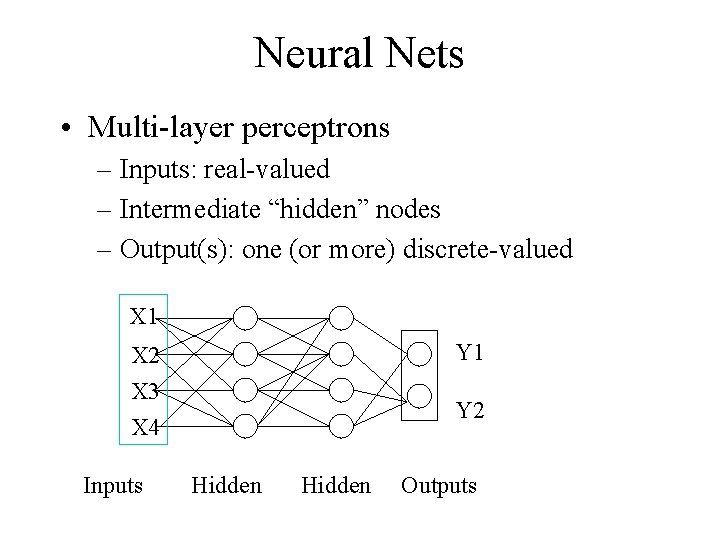

Neural Nets

- Multi-layer perceptrons
  - Inputs: real-valued
  - Intermediate "hidden" nodes
  - Output(s): one (or more) discrete-valued

(figure: inputs X1, X2, X3, X4 feed a layer of hidden nodes, which feeds outputs Y1 and Y2)

Neural Nets

- Pro: more general than perceptrons
  - Not restricted to linear discriminants
  - Multiple outputs: one classification each
- Con: no simple, guaranteed training procedure
  - Use a greedy, hill-climbing procedure to train
  - "Gradient descent", "backpropagation"

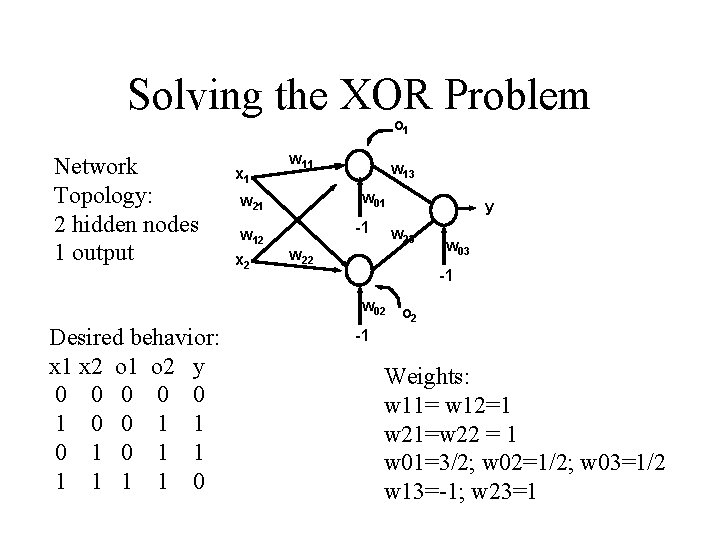

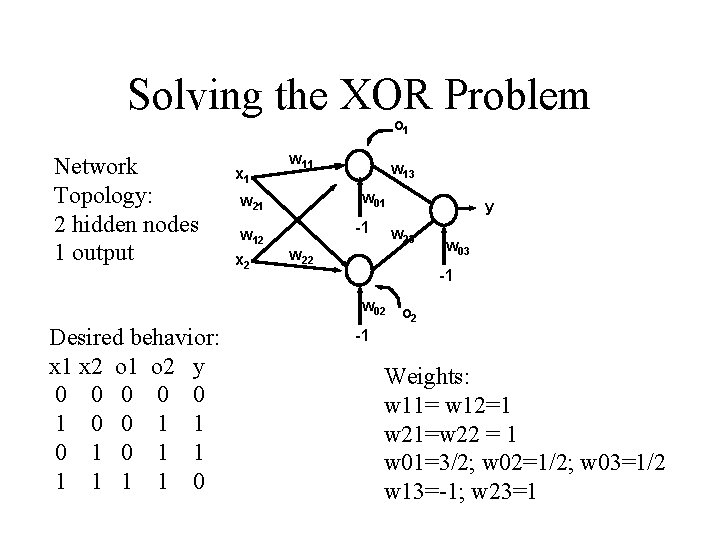

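Solving the XOR Problem

Network topology: 2 hidden nodes (o1, o2) and 1 output (y). Each node also receives a constant input of -1 whose weight (w01, w02, w03) acts as its threshold.

Desired behavior (the o1 and o2 columns follow from the weights below with a step threshold):

| x1 | x2 | o1 | o2 | y |
|----|----|----|----|---|
| 0  | 0  | 0  | 0  | 0 |
| 0  | 1  | 0  | 1  | 1 |
| 1  | 0  | 0  | 1  | 1 |
| 1  | 1  | 1  | 1  | 0 |

Weights:

- w11 = w12 = 1
- w21 = w22 = 1
- w01 = 3/2; w02 = 1/2; w03 = 1/2
- w13 = -1; w23 = 1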
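The weights on this slide can be checked directly. The sketch below assumes a step threshold at 0 and reads w_ij as the weight on the link from input or node i to node j, with w_0j applied to a constant -1 input. Under that reading o1 behaves like AND, o2 like OR, and y is "OR but not AND". The function names are illustrative.

```python
def step(total):
    """Step threshold: fire when the net input is positive."""
    return 1 if total > 0 else 0


def xor_net(x1, x2):
    """Evaluate the 2-2-1 network with the weights given on the slide."""
    o1 = step(1 * x1 + 1 * x2 - 3 / 2)   # w11 = w21 = 1, w01 = 3/2
    o2 = step(1 * x1 + 1 * x2 - 1 / 2)   # w12 = w22 = 1, w02 = 1/2
    y = step(-1 * o1 + 1 * o2 - 1 / 2)   # w13 = -1, w23 = 1, w03 = 1/2
    return o1, o2, y


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, xor_net(x1, x2))
```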

Backpropagation

- Greedy, hill-climbing procedure
  - Weights are the parameters to change
  - Original hill-climbing changes one parameter per step
    - Slow
  - If the function is smooth, change all parameters per step
    - Gradient descent
  - Backpropagation: computes the current output, then works backward to correct the error

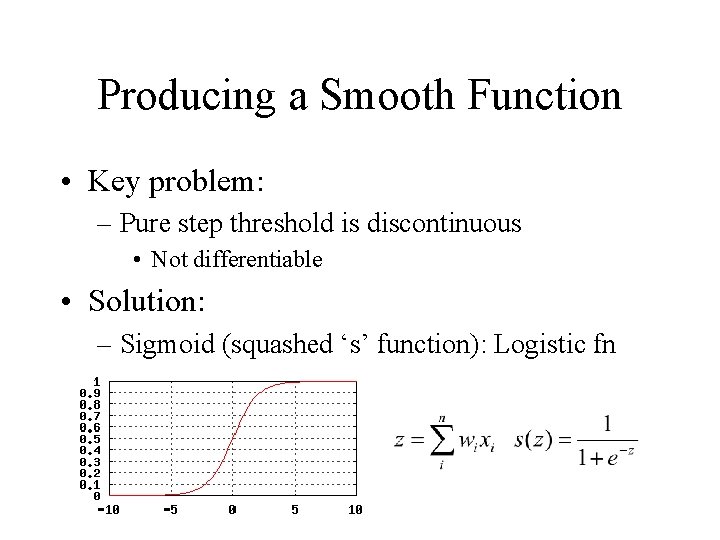

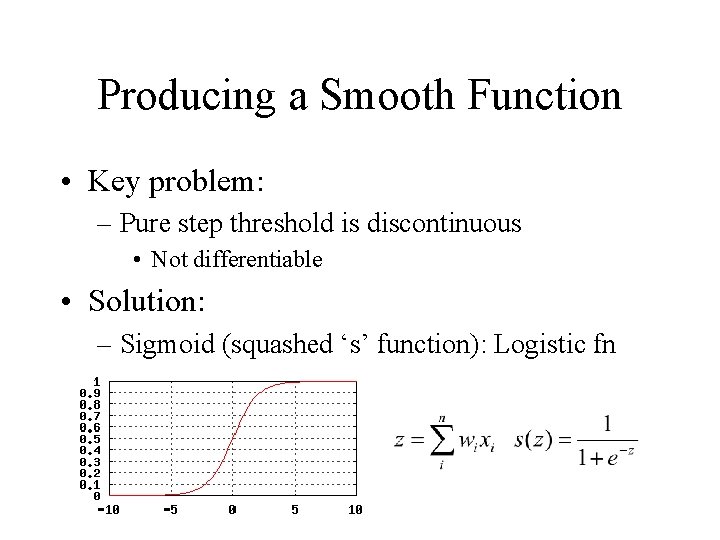

Producing a Smooth Function

- Key problem:
  - A pure step threshold is discontinuous
    - Not differentiable
- Solution:
  - Sigmoid (squashed "s" function): the logistic function
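A minimal sketch of the logistic function, assuming the usual form s(z) = 1 / (1 + e^(-z)), shows how it replaces the hard step with a smooth transition. The function name and the sample points are chosen for illustration, and this simple form is only meant for moderate values of z.

```python
import math


def sigmoid(z):
    """Logistic function: a smooth, differentiable stand-in for the step threshold."""
    return 1.0 / (1.0 + math.exp(-z))


# Far from 0 the output saturates near 0 or 1; near 0 it changes gradually.
for z in (-10, -1, 0, 1, 10):
    print(z, round(sigmoid(z), 4))
```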

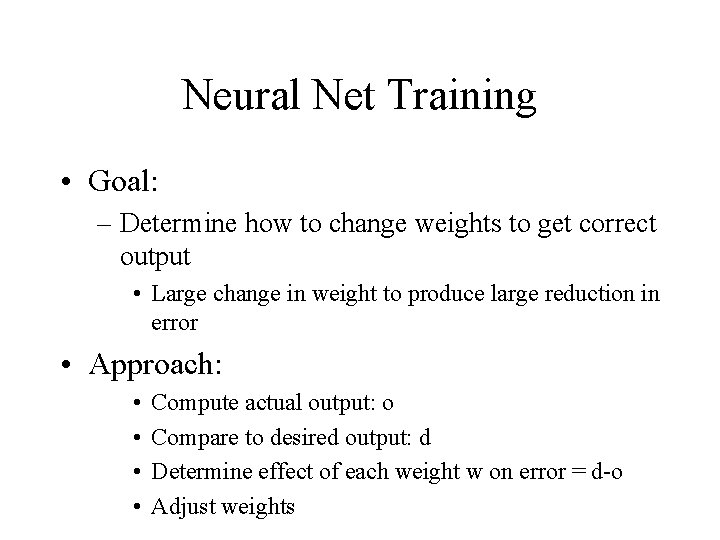

Neural Net Training

- Goal:
  - Determine how to change weights to get the correct output
    - Make a large change in a weight where it produces a large reduction in error
- Approach:
  - Compute actual output: o
  - Compare to desired output: d
  - Determine the effect of each weight w on the error = d - o
  - Adjust weights

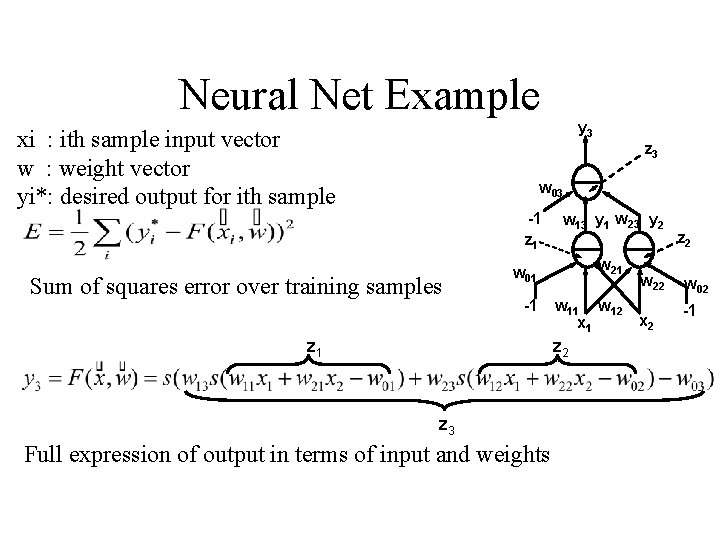

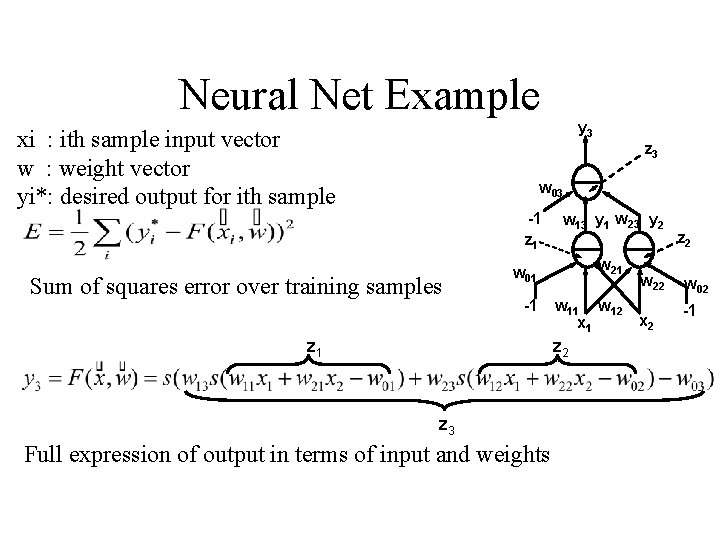

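Neural Net Example

- x_i: ith sample input vector
- w: weight vector
- y_i*: desired output for ith sample
- Sum of squares error over training samples (equation in slide image)
- Full expression of output in terms of input and weights (equation in slide image)

(figure: a 2-2-1 network; inputs x1 and x2 feed hidden nodes with net inputs z1, z2 and outputs y1, y2 through weights w11, w21, w12, w22, with thresholds w01, w02 on constant -1 inputs; y1 and y2 feed the output node with net input z3 and output y3 through weights w13, w23 and threshold w03)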
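Since the two equations on this slide survive only as images, the following Python sketch is a reconstruction under stated assumptions rather than the slide's exact formulas: each node applies a sigmoid to its net input, thresholds are weights on a constant -1 input, and the error is half the sum of squared differences (the factor 1/2 is a common convention assumed here). The dictionary keys mirror the weight names in the figure.

```python
import math


def s(z):
    """Sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x1, x2, w):
    """Output y3 of the 2-2-1 network for one input, given a weight dictionary."""
    z1 = w["w11"] * x1 + w["w21"] * x2 - w["w01"]
    y1 = s(z1)
    z2 = w["w12"] * x1 + w["w22"] * x2 - w["w02"]
    y2 = s(z2)
    z3 = w["w13"] * y1 + w["w23"] * y2 - w["w03"]
    return s(z3)


def sum_squares_error(samples, w):
    """Half the sum of squared errors over all (input, desired output) samples."""
    return 0.5 * sum((d - forward(x1, x2, w)) ** 2 for (x1, x2), d in samples)


XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
zero_weights = {name: 0.0 for name in
                ("w11", "w21", "w01", "w12", "w22", "w02", "w13", "w23", "w03")}
print(sum_squares_error(XOR_SAMPLES, zero_weights))  # 0.5
```

With all weights at zero every output is 0.5, so each of the four samples contributes a squared error of 0.25.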

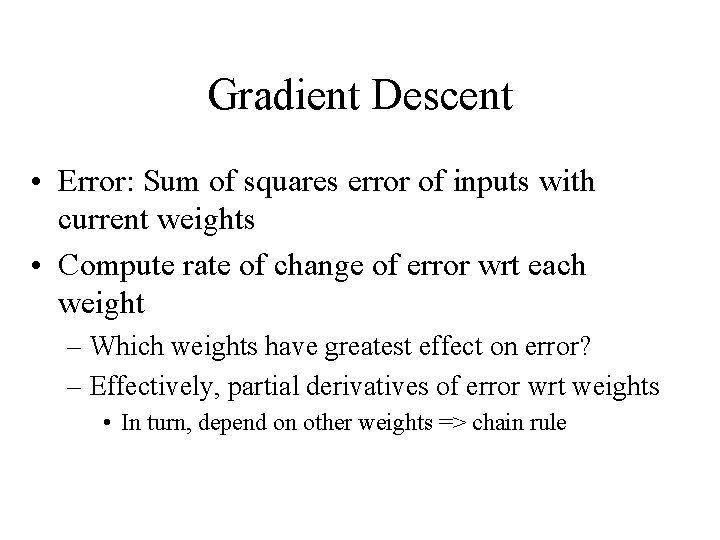

Gradient Descent

- Error: sum of squares error of inputs with current weights
- Compute the rate of change of the error with respect to each weight
  - Which weights have the greatest effect on the error?
  - Effectively, partial derivatives of the error with respect to the weights
    - These in turn depend on other weights => chain rule

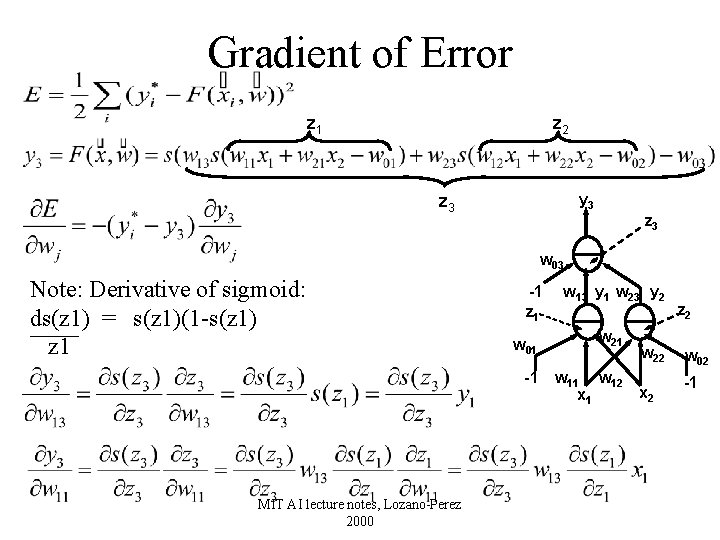

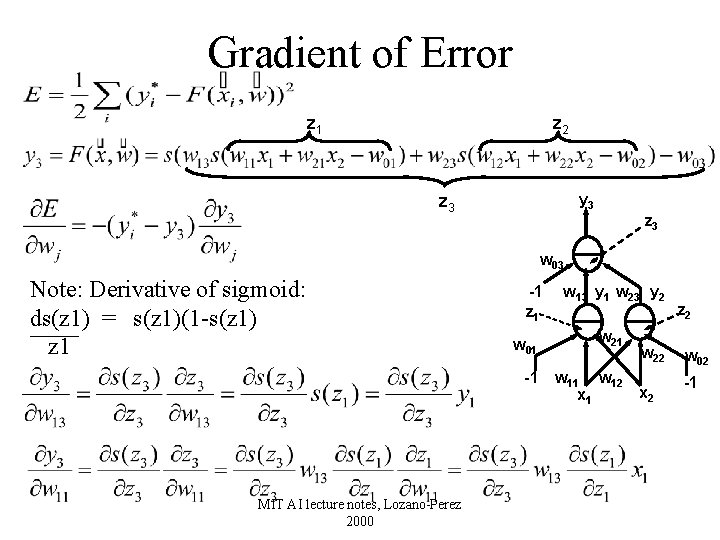

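Gradient of Error

(figure: the 2-2-1 network with net inputs z1, z2, z3, outputs y1, y2, y3, weights w11, w21, w12, w22, w13, w23, and thresholds w01, w02, w03 on constant -1 inputs)

Note: derivative of the sigmoid: $\frac{ds(z_1)}{dz_1} = s(z_1)\,(1 - s(z_1))$

Source: MIT AI lecture notes, Lozano-Perez 2000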
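The sigmoid derivative noted above, and its use in the chain rule, can be worked out as follows. This is a sketch under assumptions not stated in the surviving slide text: the sigmoid is $s(z) = 1/(1 + e^{-z})$, the single-sample error is $E = \tfrac{1}{2}(y^* - y_3)^2$, and the net inputs are $z_3 = w_{13} y_1 + w_{23} y_2 - w_{03}$ and $z_1 = w_{11} x_1 + w_{21} x_2 - w_{01}$.

$$
\frac{ds(z)}{dz} = \frac{e^{-z}}{(1 + e^{-z})^2}
= \frac{1}{1 + e^{-z}} \cdot \frac{e^{-z}}{1 + e^{-z}}
= s(z)\,(1 - s(z))
$$

For a weight into the output node:

$$
\frac{\partial E}{\partial w_{13}}
= \frac{\partial E}{\partial y_3}\,\frac{\partial y_3}{\partial z_3}\,\frac{\partial z_3}{\partial w_{13}}
= -(y^* - y_3)\; y_3 (1 - y_3)\; y_1
$$

For a weight into a hidden node, the chain passes through $w_{13}$ as well:

$$
\frac{\partial E}{\partial w_{11}}
= -(y^* - y_3)\; y_3 (1 - y_3)\; w_{13}\; y_1 (1 - y_1)\; x_1
$$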

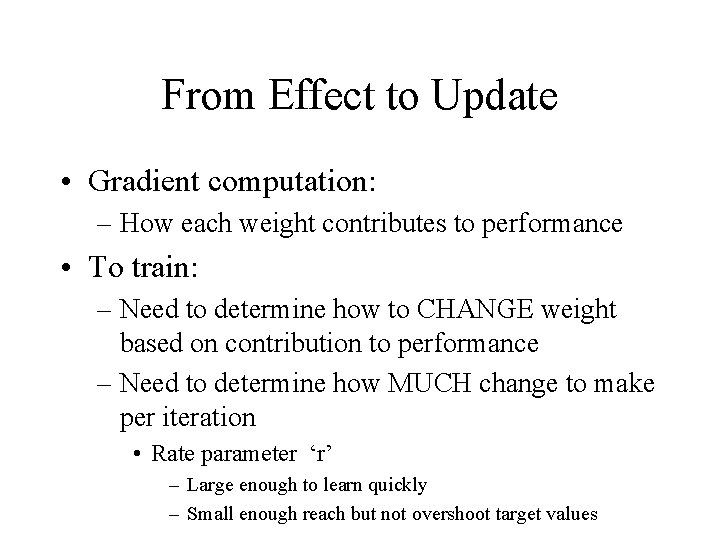

From Effect to Update

- Gradient computation:
  - How each weight contributes to performance
- To train:
  - Need to determine how to CHANGE each weight based on its contribution to performance
  - Need to determine how MUCH change to make per iteration
    - Rate parameter "r"
      - Large enough to learn quickly
      - Small enough to reach but not overshoot target values
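The trade-off in choosing the rate parameter can be seen on a toy problem. The sketch below is not from the lecture: it minimizes a hypothetical one-weight error E(w) = (w - 3)^2, whose gradient is 2(w - 3), and compares a rate that is too small, one that works, and one that overshoots.

```python
def descend(r, steps=50, w=0.0):
    """Run gradient descent on the toy error E(w) = (w - 3)**2 with rate r."""
    for _ in range(steps):
        gradient = 2.0 * (w - 3.0)
        w -= r * gradient  # move against the gradient, scaled by the rate
    return w


slow = descend(0.001)   # barely moves toward the minimum at w = 3
good = descend(0.1)     # converges close to 3
diverged = descend(1.1)  # each step overshoots by more than it corrects
print(slow, good, diverged)
```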

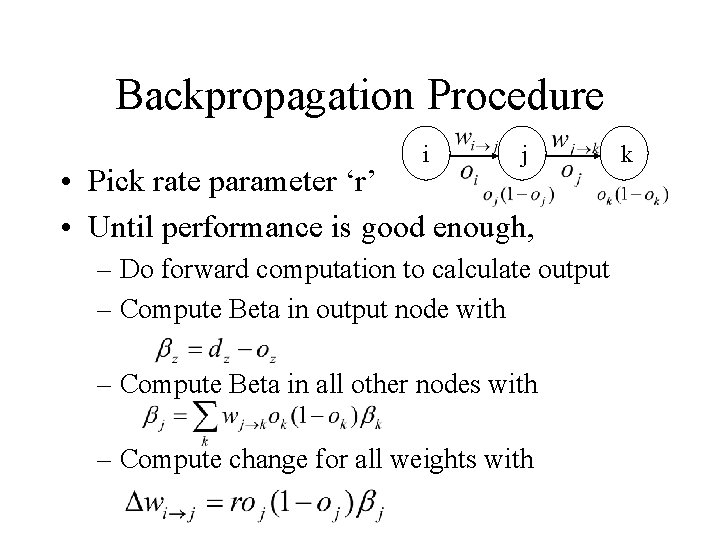

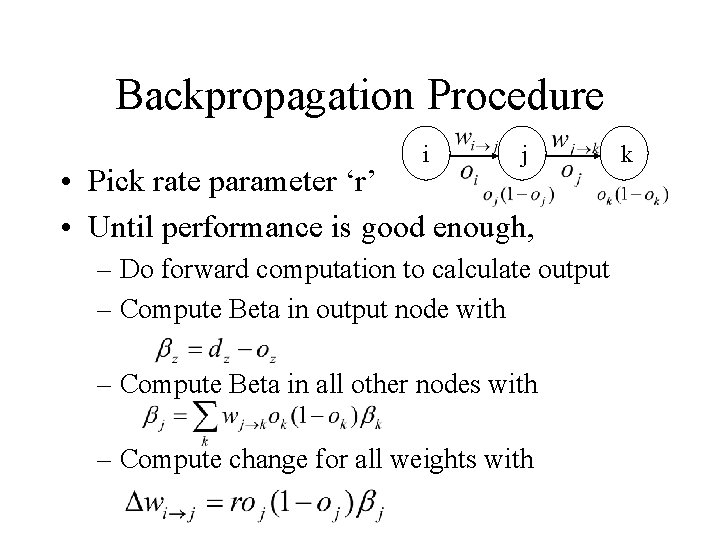

Backpropagation Procedure

- Pick rate parameter "r"
- Until performance is good enough:
  - Do forward computation to calculate the output
  - Compute Beta in the output node (formula in slide image)
  - Compute Beta in all other nodes (formula in slide image)
  - Compute the change for all weights (formula in slide image)

(figure: nodes i, j, k in successive layers)
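The Beta formulas on this slide are not recoverable from the text, so the sketch below substitutes the standard form for sigmoid units with a squared error, which is an assumption: Beta at the output is d - o, Beta at a hidden node j is the sum over successors k of w_jk * o_k * (1 - o_k) * Beta_k, and the change for the weight on link i -> j is r * o_i * o_j * (1 - o_j) * Beta_j. It is applied to the 2-2-1 network from the earlier example; the starting weights, the rate, and the single training sample are hypothetical.

```python
import math


def s(z):
    """Sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def backprop_step(w, x1, x2, d, r):
    """One forward/backward pass; returns (updated weights, output before the update)."""
    # Forward computation (thresholds are weights on a constant -1 input).
    y1 = s(w["w11"] * x1 + w["w21"] * x2 - w["w01"])
    y2 = s(w["w12"] * x1 + w["w22"] * x2 - w["w02"])
    y3 = s(w["w13"] * y1 + w["w23"] * y2 - w["w03"])
    # Beta at the output node, scaled by the sigmoid derivative.
    g3 = y3 * (1 - y3) * (d - y3)
    # Beta at each hidden node is propagated back through its outgoing weight.
    g1 = y1 * (1 - y1) * (w["w13"] * g3)
    g2 = y2 * (1 - y2) * (w["w23"] * g3)
    # Weight change = rate * (value arriving on the link) * scaled Beta.
    new = dict(w)
    for name, link_input, g in (
        ("w13", y1, g3), ("w23", y2, g3), ("w03", -1, g3),
        ("w11", x1, g1), ("w21", x2, g1), ("w01", -1, g1),
        ("w12", x1, g2), ("w22", x2, g2), ("w02", -1, g2),
    ):
        new[name] += r * link_input * g
    return new, y3


# Hypothetical starting weights and a single sample (1, 0) -> 1.
weights = {"w11": 0.1, "w21": -0.2, "w01": 0.1, "w12": 0.3, "w22": 0.2,
           "w02": -0.1, "w13": 0.2, "w23": -0.3, "w03": 0.1}
weights, first_output = backprop_step(weights, 1, 0, 1, r=0.5)
_, second_output = backprop_step(weights, 1, 0, 1, r=0.5)

# Repeat until the output is within 0.1 of the target (or a step cap is hit).
output, steps = second_output, 0
while abs(1 - output) > 0.1 and steps < 10000:
    weights, output = backprop_step(weights, 1, 0, 1, r=0.5)
    steps += 1
print(first_output, second_output, output, steps)
```

Training on one sample only shows the mechanics; a real run would cycle through all training samples.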

Backpropagation Observations

- Procedure is (relatively) efficient
  - All computations are local
    - Use inputs and outputs of the current node
- What is "good enough"?
  - Rarely reach target (0 or 1) outputs
    - Typically, train until within 0.1 of target

Neural Net Summary

- Training:
  - Backpropagation procedure
    - Gradient descent strategy (usual problems)
- Prediction:
  - Compute outputs based on input vector and weights
- Pros: very general, fast prediction
- Cons: training can be VERY slow (1000s of epochs), overfitting

Training Strategies

- Online training:
  - Update weights after each sample
- Offline (batch) training:
  - Compute error over all samples
    - Then update weights
- Online training is "noisy"
  - Sensitive to individual instances
  - However, may escape local minima
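The difference between the two strategies is only in when the weight is updated. The sketch below illustrates this on a deliberately tiny case that is not from the lecture: a single linear unit y = w * x with a squared error, three hypothetical samples generated by w = 2, and an assumed rate of 0.05.

```python
def online_epoch(w, samples, r):
    """Online training: update the weight after each individual sample."""
    for x, d in samples:
        w += r * (d - w * x) * x
    return w


def batch_epoch(w, samples, r):
    """Batch training: accumulate the error term over all samples, then update once."""
    total = sum((d - w * x) * x for x, d in samples)
    return w + r * total


SAMPLES = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated by w = 2
w_online = online_epoch(0.0, SAMPLES, r=0.05)
w_batch = batch_epoch(0.0, SAMPLES, r=0.05)
print(w_online, w_batch)  # the two strategies take different first steps

# Both approach w = 2 when the epochs are repeated.
for _ in range(30):
    w_online = online_epoch(w_online, SAMPLES, r=0.05)
    w_batch = batch_epoch(w_batch, SAMPLES, r=0.05)
print(w_online, w_batch)
```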

Training Strategy

- To avoid overfitting:
  - Split data into training, validation, and test sets
  - Also, avoid excess weights (use fewer weights than samples)
- Initialize with small random weights
  - Small changes have a noticeable effect
- Use offline training
  - Until the validation set error reaches its minimum
- Evaluate on the test set
  - No more weight changes
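The data split and the "train until validation minimum" rule can be sketched as below. The 60/20/20 proportions, the fixed seed, the helper names, and the list of validation errors are all assumptions made for illustration; the lecture does not specify them.

```python
import random


def split_data(samples, seed=0):
    """Shuffle and split into training, validation, and test sets (60/20/20, assumed)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 6 // 10
    n_val = len(shuffled) * 2 // 10
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, validation, test


def best_epoch(validation_errors):
    """Index of the epoch with the lowest validation error: keep the weights from there."""
    return min(range(len(validation_errors)), key=validation_errors.__getitem__)


train, validation, test = split_data(range(10))
print(len(train), len(validation), len(test))  # 6 2 2

# Hypothetical validation errors per epoch: they fall, then rise as overfitting begins.
stop_at = best_epoch([0.9, 0.5, 0.3, 0.35, 0.6])
print(stop_at)  # 2
```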

Classification

- Neural networks are best for classification tasks
  - Single output -> binary classifier
  - Multiple outputs -> multiway classification
    - Applied successfully to learning pronunciation
  - Sigmoid pushes toward binary classification
    - Not good for regression

Neural Net Conclusions

- Simulation based on neurons in the brain
- Perceptrons (single neuron)
  - Guaranteed to find a linear discriminant
    - IF one exists -> the XOR problem
- Neural nets (multi-layer perceptrons)
  - Very general
  - Backpropagation training procedure
    - Gradient descent: local minima, overfitting issues