Learning SQL with a Computerized Tutor (Centered on SQL-Tutor)

- Slides: 20

Learning SQL with a Computerized Tutor (Centered on SQL-Tutor)

Antonija Mitrovic (University of Canterbury)

Presented by Danielle H. Lee

Agenda

- Problems in learning SQL
- Purpose of the SQL-Tutor system
- Architecture of SQL-Tutor
- Evaluation of SQL-Tutor

Problems in learning SQL

- The burden of having to memorize database schemas (leading to incorrect table or attribute names)
- Misconceptions in students' understanding of the elements of SQL and of the relational data model in general
- Learning SQL directly by working with a DBMS is not easy
  - Feedback from an RDBMS is inadequate
    - Example (in Ingres): "E_USOB 63 line 1, the columns in the SELECT clause must be contained in the GROUP BY clause."
  - An RDBMS cannot deal with semantic errors
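To make the last point concrete, here is a minimal sketch using Python's built-in `sqlite3` module. SQLite stands in for the RDBMS, and the `student`/`enrolment` schema and data are hypothetical. A query with a semantic error (a missing join condition) is syntactically valid, so the DBMS runs it without any complaint and simply returns the wrong answer.

```python
import sqlite3


def run(conn, sql):
    """Execute a query and return its rows sorted, so results are comparable."""
    return sorted(conn.execute(sql).fetchall())


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE enrolment (student_id INTEGER, course TEXT);
    INSERT INTO student VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cy');
    INSERT INTO enrolment VALUES (1, 'DB101'), (2, 'AI200');
""")

# Intended question: names of students enrolled in DB101.
correct = run(conn, """
    SELECT s.name FROM student s JOIN enrolment e ON e.student_id = s.id
    WHERE e.course = 'DB101'
""")

# Semantic error: the join condition is missing, so every student is paired
# with the DB101 enrolment row. No error is raised; the answer is just wrong.
wrong = run(conn, """
    SELECT s.name FROM student s, enrolment e
    WHERE e.course = 'DB101'
""")

print(correct)  # [('Ann',)]
print(wrong)    # [('Ann',), ('Bob',), ('Cy',)]
```

A tutor that knows the intended question can flag the missing join. The DBMS cannot, because both queries are legal SQL.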

Research by the University of Canterbury

- Database.Place: a web portal for database-related lectures
- Constraint-based tutors:
  - SQL-Tutor: teaches the SQL database query language
  - NORMIT: a data normalization tutor
  - ER-Tutor: teaches database design using the Entity-Relationship data model

Automated Tutoring System

- Developed at the School of Computing, Dublin City University, for an online course named "Introduction to Databases"
- Aims to provide a certain level of advice and guidance through feedback, assessment, and personalized guidance
- Content is limited to the SQL SELECT statement
  - The most fundamental SQL statement
  - Simple, but capable of becoming quite complex
- Consists of a correction model and a pedagogical model
  - Correction model: a multi-level error categorization scheme covering three aspects (FROM, WHERE, SELECT)
  - Pedagogical model: analyses the information stored from the student's answers and provides feedback, assessment, and guidance
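The slide does not describe how the correction model works internally. The following is a hypothetical sketch of only the clause dimension of such a scheme. It splits a flat `SELECT ... FROM ... [WHERE ...]` query into its three clauses and reports the clauses in which the student's answer differs from a reference answer. The helper names `split_clauses` and `categorize_errors` are made up for illustration, and the textual comparison is deliberately naive (for example, `a>1` and `a > 1` count as different).

```python
import re

CLAUSES = ("SELECT", "FROM", "WHERE")

# Flat queries only: no subqueries, GROUP BY, or ORDER BY.
_PATTERN = re.compile(
    r"SELECT\s+(?P<SELECT>.*?)\s+FROM\s+(?P<FROM>.*?)(?:\s+WHERE\s+(?P<WHERE>.*))?$",
    re.IGNORECASE | re.DOTALL,
)


def split_clauses(query):
    """Hypothetical helper: map each clause name to its lower-cased,
    whitespace-normalized text ('' if the clause is absent)."""
    m = _PATTERN.match(query.strip().rstrip(";"))
    if m is None:
        raise ValueError("not a simple SELECT query")
    return {c: " ".join((m.group(c) or "").lower().split()) for c in CLAUSES}


def categorize_errors(student, reference):
    """Return the clauses, in FROM, WHERE, SELECT order, where the student's
    query differs textually from the reference query."""
    s, r = split_clauses(student), split_clauses(reference)
    return [c for c in ("FROM", "WHERE", "SELECT") if s[c] != r[c]]


print(categorize_errors("SELECT title FROM movie WHERE year > 2000",
                        "SELECT title FROM movie WHERE year >= 2000"))
print(categorize_errors("select Title from Movies", "SELECT title FROM movie"))
```

The first call reports `['WHERE']` and the second `['FROM']`. A real correction model would need a parser and semantic comparison rather than string equality.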

Purpose of the project

- A personalized ITS for database courses
  - A personalized tutoring system for learning SQL
  - To adapt SQL-Tutor technology for use with a different audience and to explore ways to maximize the educational value for every student
  - To explore personalized guidance technology based on the ideas of adaptive hypermedia

Purpose of the SQL-Tutor system

- To explore and extend constraint-based modeling
- A problem-solving environment intended to complement classroom instruction
  - Problem sets with nine levels of complexity, defined by a human expert
  - Each student has an assigned educational level, which is updated by observing the student's behavior
    - Novice, intermediate, or experienced
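The slide does not say how the level is updated. The sketch below is a purely hypothetical rule showing one way such an update could work. It looks at the success rate over the last few problems, promotes or demotes the student, and maps each level to a band of the nine complexity levels. The window size, thresholds, and level-to-complexity bands are all invented for illustration.

```python
from dataclasses import dataclass, field

LEVELS = ("novice", "intermediate", "experienced")

# Hypothetical mapping from student level to problem complexity (1-9).
COMPLEXITY_RANGE = {
    "novice": range(1, 4),
    "intermediate": range(4, 7),
    "experienced": range(7, 10),
}


@dataclass
class Student:
    level: str = "novice"
    # True = problem solved without violating any constraint (assumption).
    history: list = field(default_factory=list)

    def record(self, solved, window=5, promote_at=0.8, demote_at=0.3):
        """Record one attempt; once `window` attempts are available at the
        current level, promote or demote based on the recent success rate.
        All thresholds are hypothetical."""
        self.history.append(solved)
        recent = self.history[-window:]
        if len(recent) < window:
            return self.level
        rate = sum(recent) / window
        i = LEVELS.index(self.level)
        if rate >= promote_at and i < len(LEVELS) - 1:
            self.level = LEVELS[i + 1]
            self.history.clear()  # start a fresh window at the new level
        elif rate <= demote_at and i > 0:
            self.level = LEVELS[i - 1]
            self.history.clear()
        return self.level

    def allowed_complexity(self):
        """Complexity levels of problems suitable for the current level."""
        return COMPLEXITY_RANGE[self.level]
```

For example, five successful attempts in a row move a new student from `novice` to `intermediate`, after which problems of complexity 4 to 6 become eligible.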

System demo

- http://ictg.cosc.canterbury.ac.nz:8000/sqltutor/login

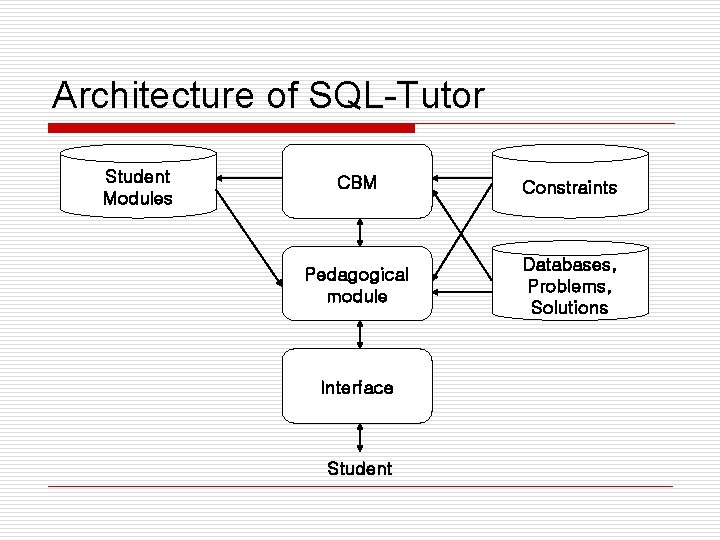

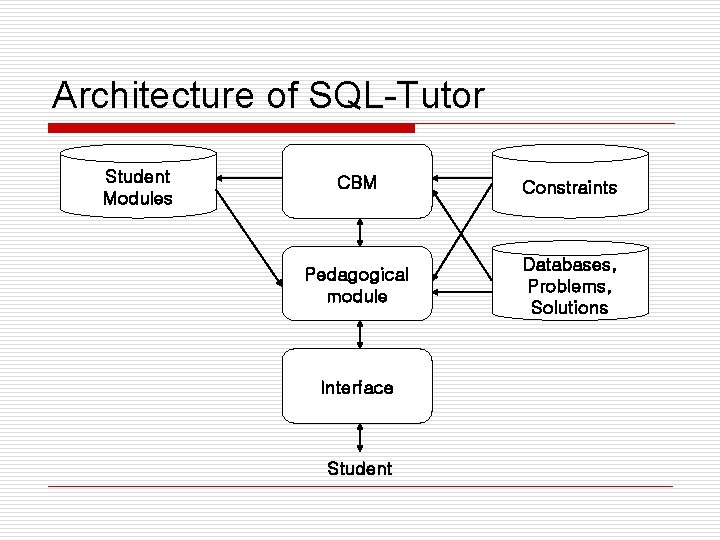

Architecture of SQL-Tutor

(Diagram components: Student Modules, CBM, Constraints, Pedagogical module, Databases/Problems/Solutions, Interface, Student)

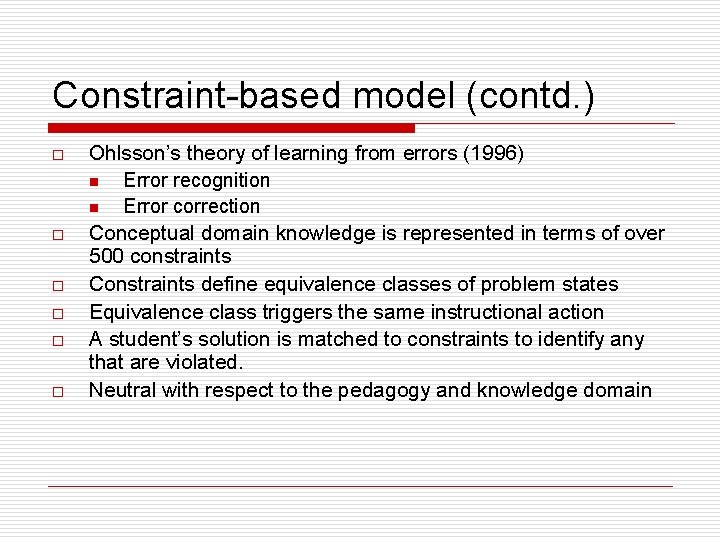

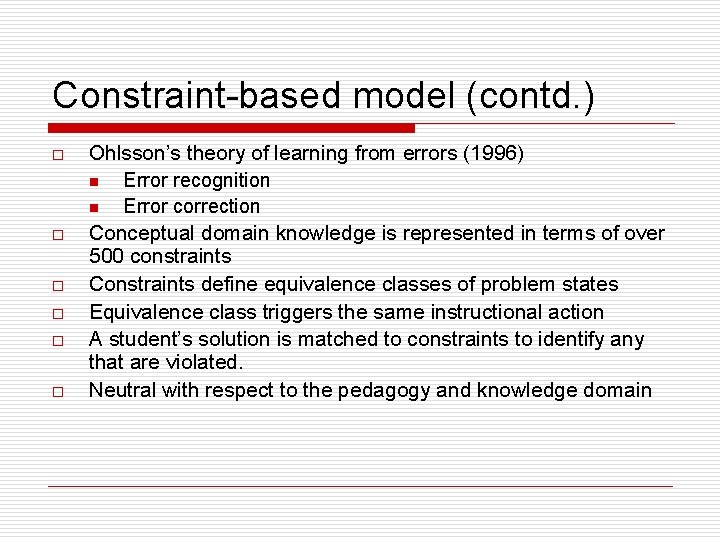

Constraint-based model (contd.)

- Based on Ohlsson's theory of learning from errors (1996)
  - Error recognition
  - Error correction
- Conceptual domain knowledge is represented by over 500 constraints
  - Constraints define equivalence classes of problem states
  - Each equivalence class triggers the same instructional action
- A student's solution is matched against the constraints to identify any that are violated
- The approach is neutral with respect to both pedagogy and the knowledge domain
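In Ohlsson's formulation, each constraint pairs a relevance condition with a satisfaction condition. A solution violates a constraint when the constraint is relevant but not satisfied. The sketch below shows this matching step. The clause-dictionary representation of a solution, the two example constraints, their numbers (101, 102), and their crude string tests are all hypothetical and are not taken from SQL-Tutor's constraint base.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical representation: clause name -> clause text ("" or missing if absent).
Solution = Dict[str, str]


@dataclass(frozen=True)
class Constraint:
    number: int                            # made-up numbers, not SQL-Tutor's
    message: str                           # instructional message
    relevant: Callable[[Solution], bool]   # relevance condition
    satisfied: Callable[[Solution], bool]  # satisfaction condition
    clause: str                            # clause the feedback refers to


def get_clause(ss: Solution, name: str) -> str:
    """Return the normalized text of a clause, or '' if it is missing."""
    return ss.get(name, "").strip().lower()


CONSTRAINTS: List[Constraint] = [
    Constraint(
        101,
        "You use more than one table in FROM; specify the join condition in WHERE.",
        relevant=lambda ss: "," in get_clause(ss, "FROM"),
        satisfied=lambda ss: get_clause(ss, "WHERE") != "",  # crude check
        clause="WHERE",
    ),
    Constraint(
        102,
        "With GROUP BY, list the attributes/aggregates explicitly instead of *.",
        relevant=lambda ss: get_clause(ss, "GROUP BY") != "",
        satisfied=lambda ss: get_clause(ss, "SELECT") != "*",
        clause="SELECT",
    ),
]


def violated(constraints: List[Constraint], ss: Solution) -> List[Constraint]:
    """Constraints that are relevant to the solution but not satisfied;
    irrelevant constraints are ignored."""
    return [c for c in constraints if c.relevant(ss) and not c.satisfied(ss)]


ss = {"SELECT": "name", "FROM": "student, enrolment", "WHERE": ""}
for c in violated(CONSTRAINTS, ss):
    print(c.number, c.clause, c.message)
```

This illustrates the equivalence-class idea. Every solution that lists several tables without a WHERE clause violates constraint 101 and receives the same message, regardless of which tables or attributes it mentions.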

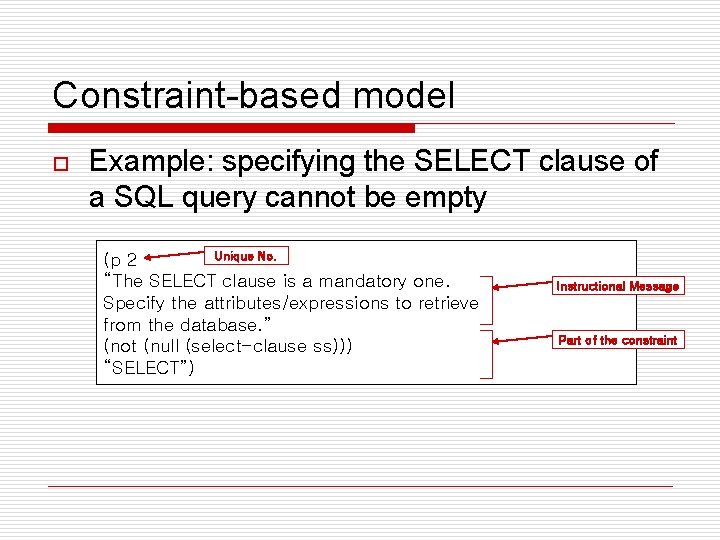

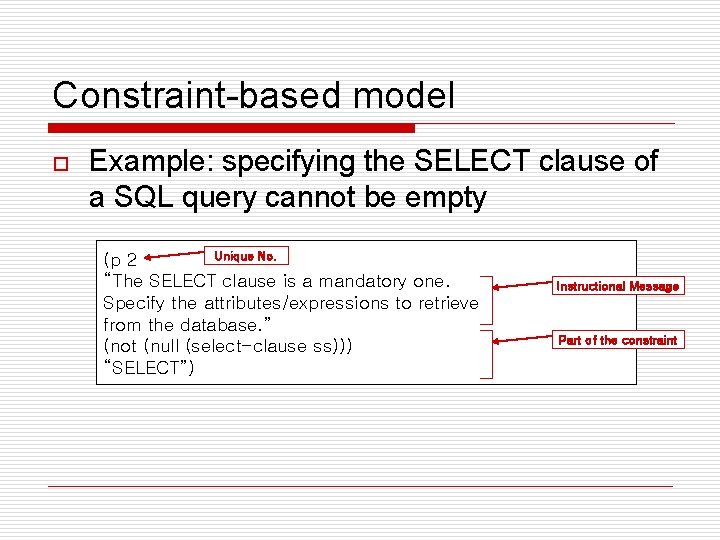

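Constraint-based model

- Example: a constraint specifying that the SELECT clause of an SQL query cannot be empty:
  `(p 2 "The SELECT clause is a mandatory one. Specify the attributes/expressions to retrieve from the database." (not (null (select-clause ss))) "SELECT")`
- Callouts on the slide mark the parts of the constraint: its unique number (`2`), its instructional message (the quoted string), and the part of the query it relates to (`"SELECT"`). The condition `(not (null (select-clause ss)))` must hold for the student's solution `ss`.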
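For readers less used to Lisp notation, here is a hypothetical Python transcription of the constraint above. It keeps the same four parts: number, instructional message, condition on the student solution `ss`, and clause. Representing `ss` as a clause-to-text dictionary, and the names `SimpleConstraint` and `feedback`, are assumptions made only for this sketch.

```python
from typing import Callable, Dict, NamedTuple, Optional


class SimpleConstraint(NamedTuple):
    number: int
    message: str
    condition: Callable[[Dict[str, str]], bool]  # must hold for solution ss
    clause: str


P2 = SimpleConstraint(
    number=2,
    message=("The SELECT clause is a mandatory one. Specify the "
             "attributes/expressions to retrieve from the database."),
    # Python counterpart of (not (null (select-clause ss))):
    # the SELECT clause must be present and non-blank.
    condition=lambda ss: bool(ss.get("SELECT", "").strip()),
    clause="SELECT",
)


def feedback(constraint: SimpleConstraint, ss: Dict[str, str]) -> Optional[str]:
    """Return the clause-tagged instructional message if the constraint is
    violated by the student solution ss, otherwise None."""
    if constraint.condition(ss):
        return None
    return f"[{constraint.clause}] {constraint.message}"


print(feedback(P2, {"SELECT": "", "FROM": "movie"}))
```

A solution with a non-empty SELECT clause yields `None`. An empty or missing one yields the message tagged with its clause.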

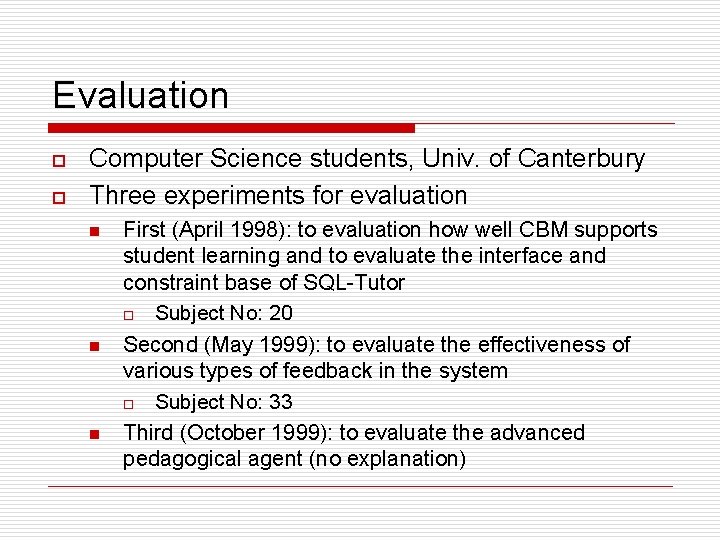

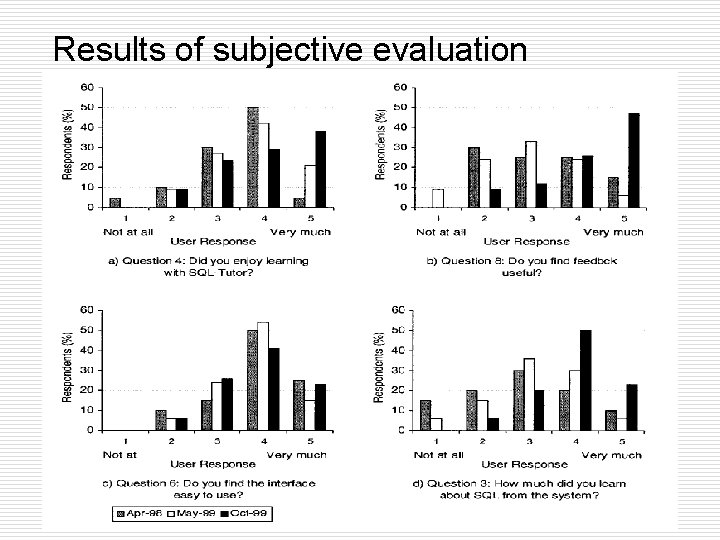

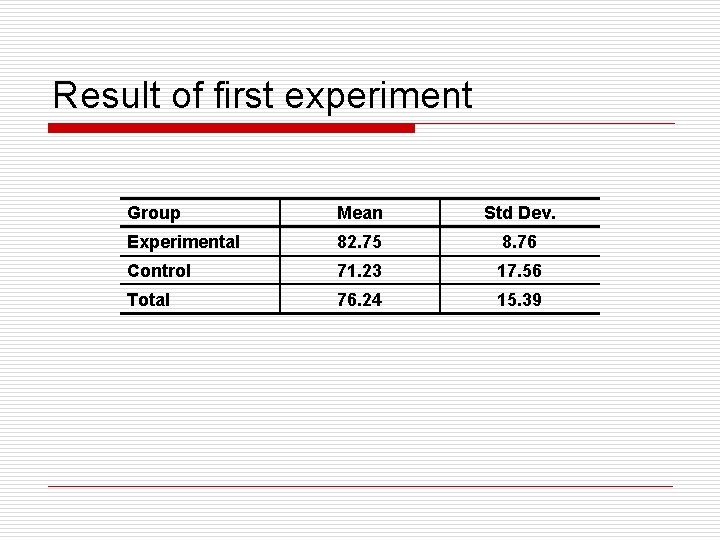

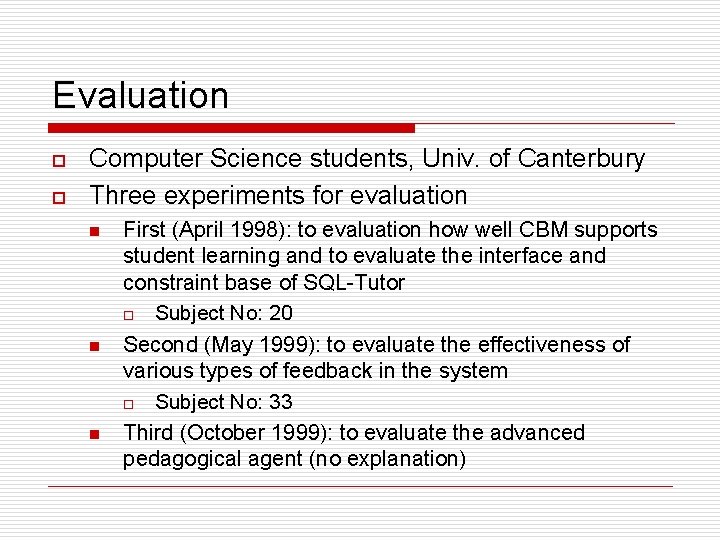

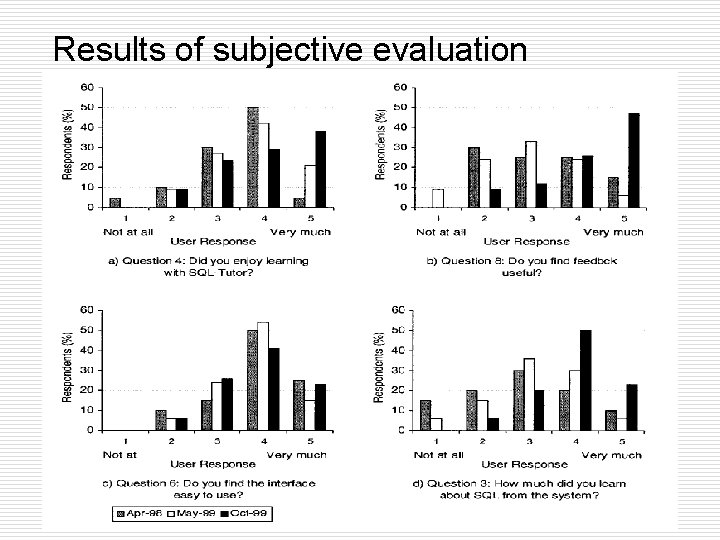

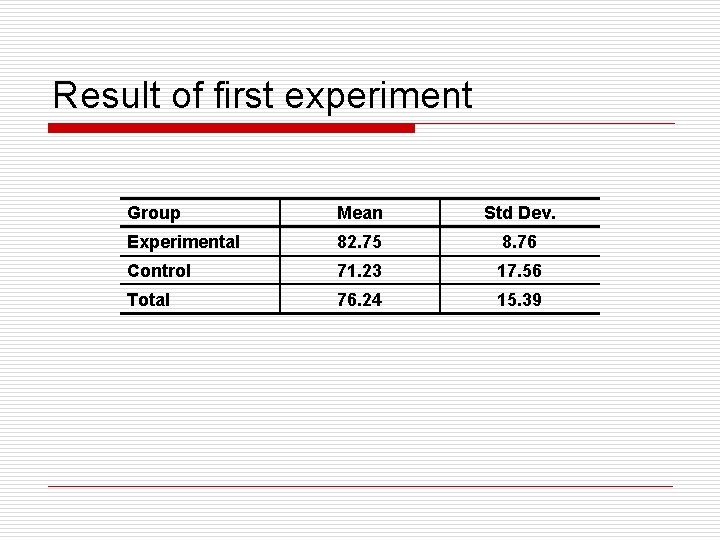

Evaluation

- Subjects: Computer Science students, University of Canterbury
- Three evaluation experiments
  - First (April 1998): to evaluate how well CBM supports student learning, and to evaluate the interface and constraint base of SQL-Tutor
    - Number of subjects: 20
  - Second (May 1999): to evaluate the effectiveness of the various types of feedback in the system
    - Number of subjects: 33
  - Third (October 1999): to evaluate the advanced pedagogical agent (no explanation given)

Results of subjective evaluation

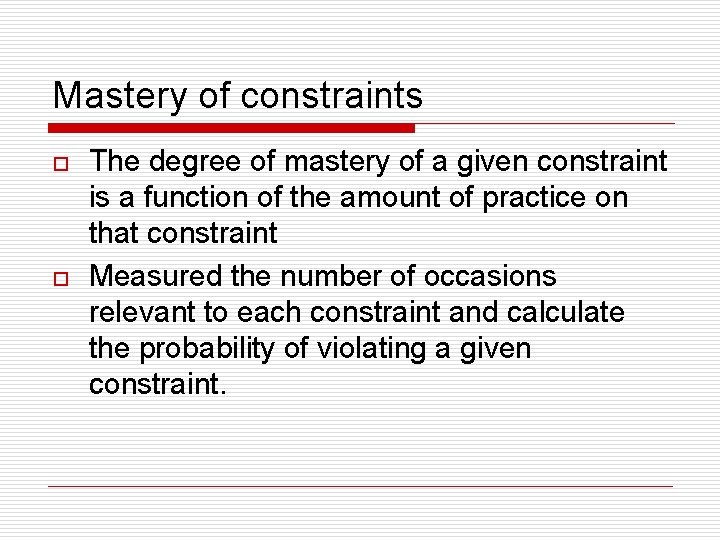

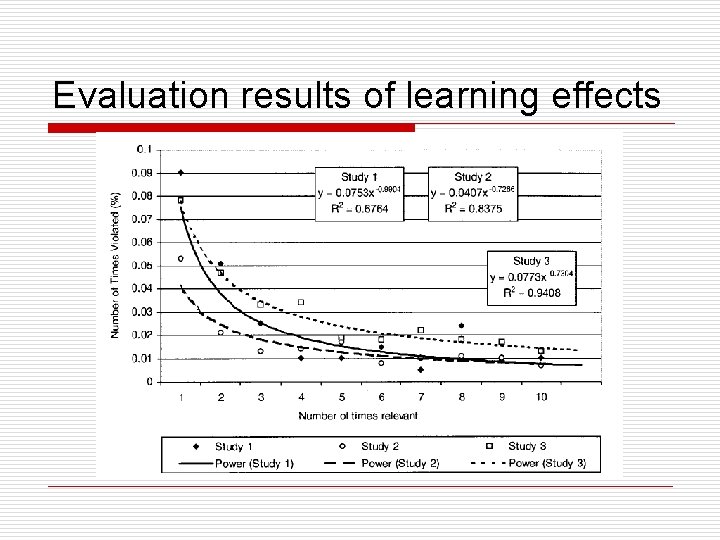

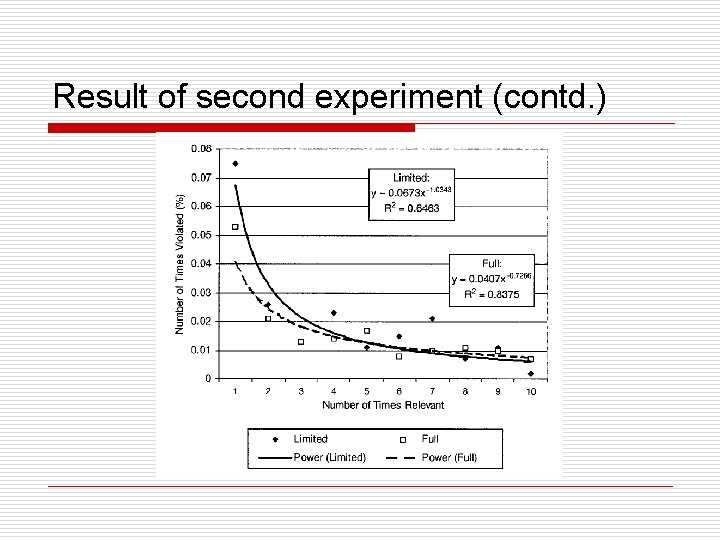

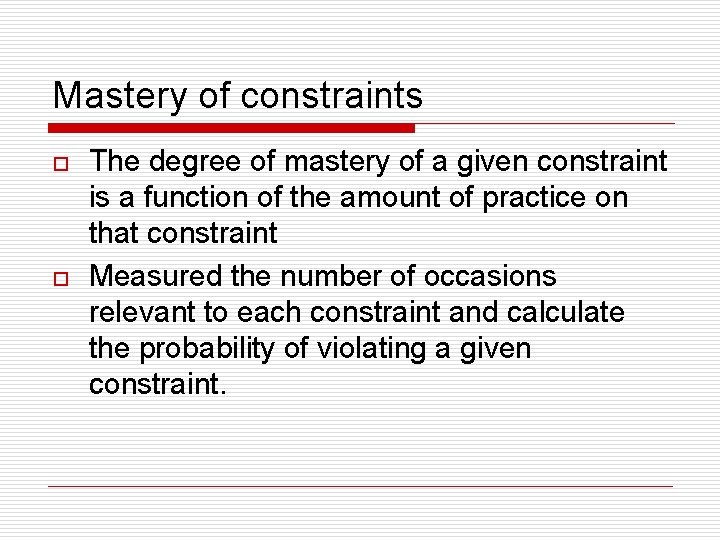

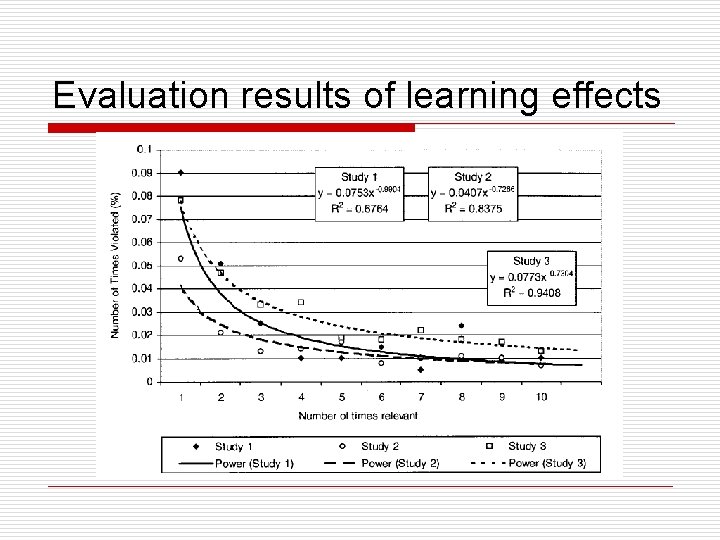

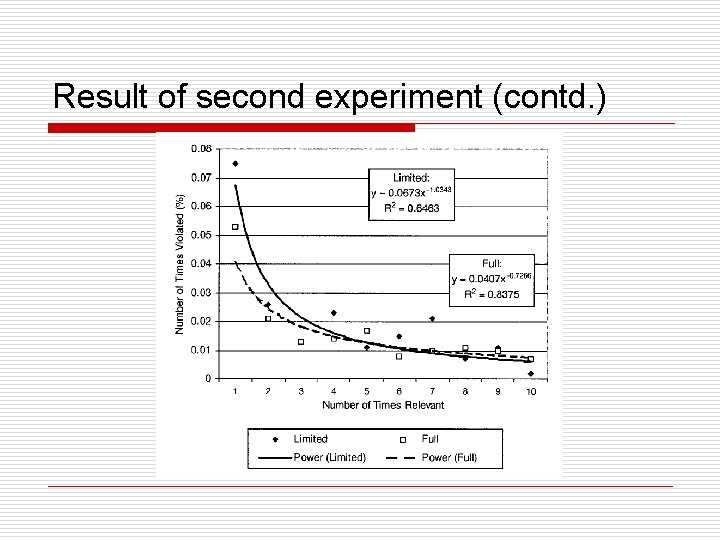

Mastery of constraints

- The degree of mastery of a given constraint is a function of the amount of practice on that constraint
- For each constraint, the occasions on which it was relevant were counted, and the probability of violating the constraint was calculated
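The slide does not give the exact computation. One plausible way to estimate the probability of violating a constraint on its n-th occasion of use is sketched below. It assumes each (student, constraint) pair's history is stored as a sequence of outcomes, ordered by occasion, with `True` meaning the constraint was violated. The function name and data layout are hypothetical.

```python
from collections import defaultdict


def violation_curve(histories, max_occasion=None):
    """histories: iterable of boolean sequences, one per (student, constraint)
    pair, in the order the constraint was relevant (True = violated).
    Returns a list whose entry n-1 is the proportion of pairs that had an
    n-th occasion and violated the constraint on it."""
    violated = defaultdict(int)
    seen = defaultdict(int)
    for seq in histories:
        for n, v in enumerate(seq, start=1):
            seen[n] += 1
            violated[n] += bool(v)
    last = max(seen) if seen else 0
    if max_occasion is not None:
        last = min(last, max_occasion)
    return [violated[n] / seen[n] for n in range(1, last + 1)]


histories = [
    [True, False, False],  # pair 1
    [True, True, False],   # pair 2
    [False, False],        # pair 3 (only two occasions)
]
print(violation_curve(histories))
```

Here the curve is 2/3, 1/3, 0.0. A falling curve of this kind is what "mastery as a function of practice" predicts.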

Evaluation results of learning effects

Result of first experiment

| Group | Mean | Std. dev. |
|---|---|---|
| Experimental | 82.75 | 8.76 |
| Control | 71.23 | 17.56 |
| Total | 76.24 | 15.39 |

Kinds of feedback

- Positive/negative feedback
- Error flag
- Hint
- All errors
- Partial solution
- Complete solution
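The slide only names the six kinds of feedback. The sketch below is our hypothetical reading of what each level might display, given the violated constraints as (clause, message) pairs and an ideal solution as a clause-to-text dictionary. Both data layouts and the exact wording of the messages are assumptions, not SQL-Tutor's actual output.

```python
from enum import IntEnum


class Feedback(IntEnum):
    POSITIVE_NEGATIVE = 1
    ERROR_FLAG = 2
    HINT = 3
    ALL_ERRORS = 4
    PARTIAL_SOLUTION = 5
    COMPLETE_SOLUTION = 6


def render(level, violations, ideal):
    """violations: list of (clause, message) for violated constraints, in order.
    ideal: dict mapping clause name -> text of the ideal solution.
    Returns the text shown at the given level (hypothetical interpretation)."""
    if not violations:
        return "Correct!"
    if level == Feedback.POSITIVE_NEGATIVE:
        return "There are errors in your solution."
    first_clause, first_msg = violations[0]
    if level == Feedback.ERROR_FLAG:
        return f"There is an error in the {first_clause} clause."
    if level == Feedback.HINT:
        return first_msg
    if level == Feedback.ALL_ERRORS:
        return "\n".join(msg for _, msg in violations)
    if level == Feedback.PARTIAL_SOLUTION:
        return f"{first_clause} {ideal[first_clause]}"
    # COMPLETE_SOLUTION: every non-empty clause of the ideal solution.
    return "\n".join(f"{c} {text}" for c, text in ideal.items() if text)


violations = [("WHERE", "Check the comparison operator."),
              ("SELECT", "An attribute is missing.")]
ideal = {"SELECT": "title", "FROM": "movie", "WHERE": "year >= 2000"}
for level in Feedback:
    print(level.name, "->", render(level, violations, ideal))
```

Ordering the levels as an `IntEnum` makes it easy to express policies such as "raise the level by one after each incorrect submission". Whether SQL-Tutor used such a policy is not stated on the slide.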

Result of second experiment (contd.)

Result of second experiment

- CBM-based general feedback is superior to offering the correct solution
- Among the six kinds of feedback, the initial learning rate is highest for all errors (0.44) and error flag (0.40), followed by positive/negative (0.29) and hint (0.26)
- The learning rates for partial solution (0.15) and complete solution (0.13) are low
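The slide does not state how the learning rates were obtained. Assuming each rate is the exponent b of a power curve P(n) = A · n^(-b), fitted to the probability of violating a constraint on the n-th occasion, the fit can be sketched as ordinary least squares on log P = log A - b log n. The function name is hypothetical, and the synthetic data below only checks the fitting code. It is not experimental data.

```python
import math


def fit_power_curve(probabilities):
    """Fit P(n) = A * n**(-b) to probabilities for occasions n = 1, 2, ...
    by least squares on log P = log A - b * log n.
    Occasions with zero probability are skipped (log undefined).
    Returns (A, b); larger b means faster learning."""
    pts = [(math.log(n), math.log(p))
           for n, p in enumerate(probabilities, start=1) if p > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive points")
    mx = sum(x for x, _ in pts) / len(pts)
    my = sum(y for _, y in pts) / len(pts)
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    slope = sxy / sxx
    return math.exp(my - slope * mx), -slope


# Synthetic check: a curve generated with b = 0.44 is recovered exactly.
synthetic = [0.3 * n ** -0.44 for n in range(1, 11)]
A, b = fit_power_curve(synthetic)
print(round(A, 3), round(b, 3))
```

Under this assumption, a higher exponent means the probability of violating constraints drops faster with practice. That is how a rate of 0.44 (all errors) compares with 0.13 (complete solution).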

Thank you