Learning Semantics-aware Distance Map with Semantics Layering Network

- Slides: 17

Learning Semantics-aware Distance Map with Semantics Layering Network for Amodal Instance Segmentation

ACM MM 2019 Poster

Ziheng Zhang∗, Anpei Chen∗, Ling Xie, Jingyi Yu, Shenghua Gao†

ShanghaiTech University

2019/11/15 Zhixuan Li

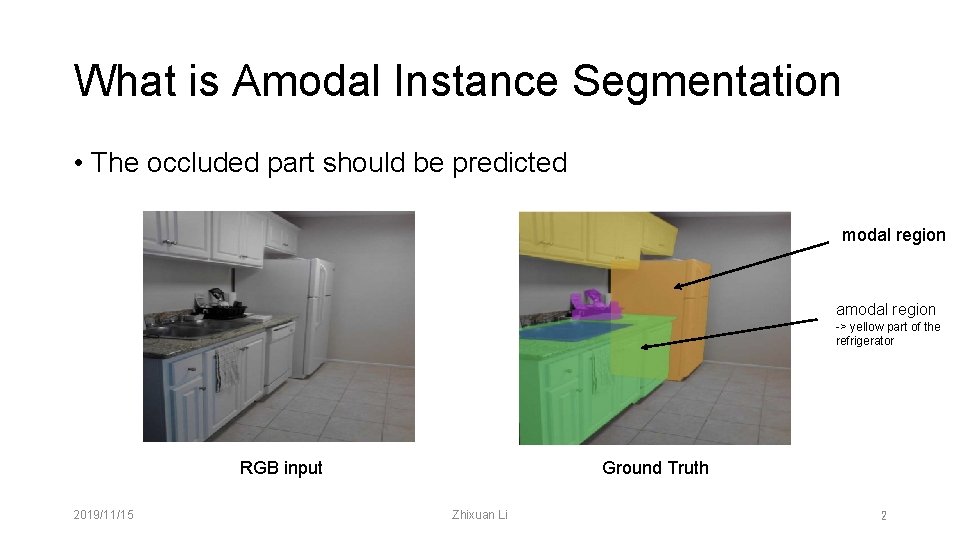

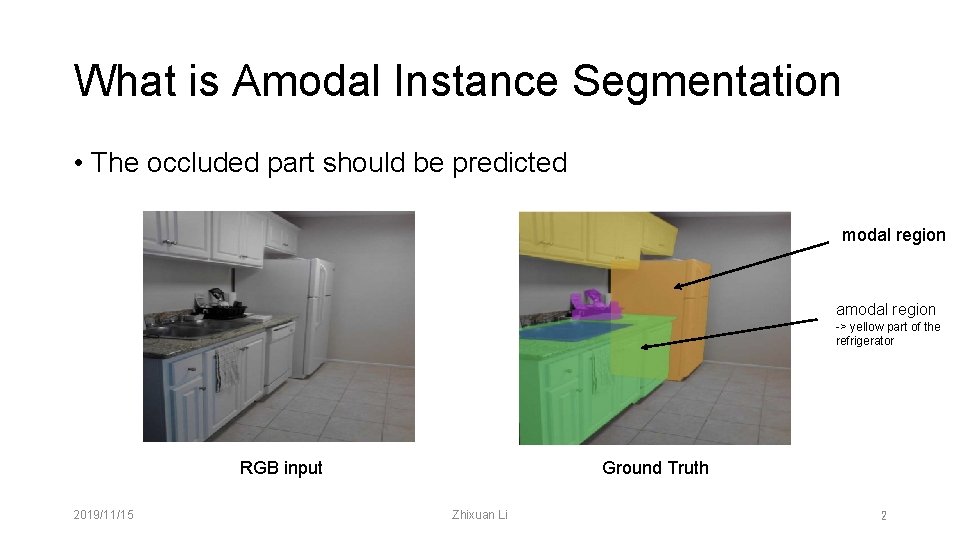

What is Amodal Instance Segmentation?

- The occluded part should be predicted
- Figure labels: modal region; amodal region -> yellow part of the refrigerator
- Figure panels: RGB input, Ground Truth

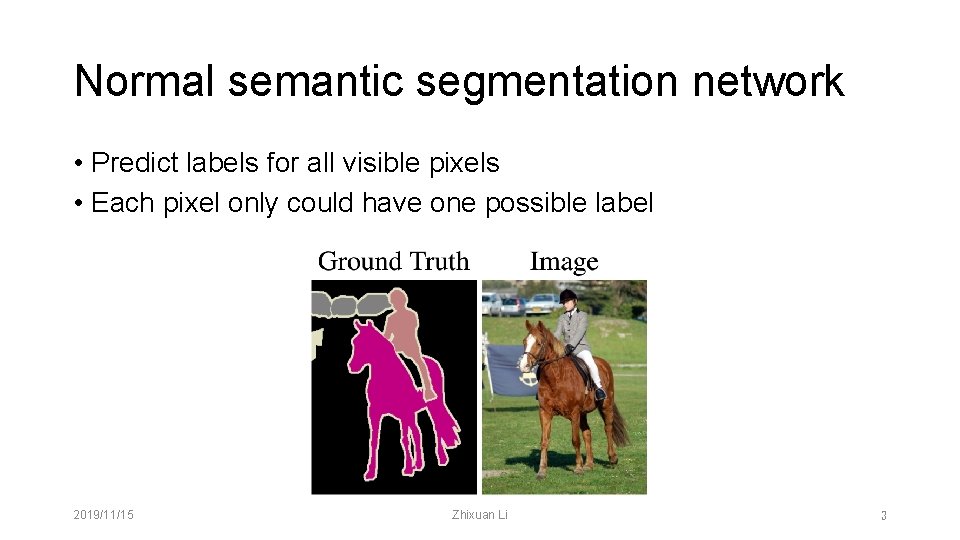

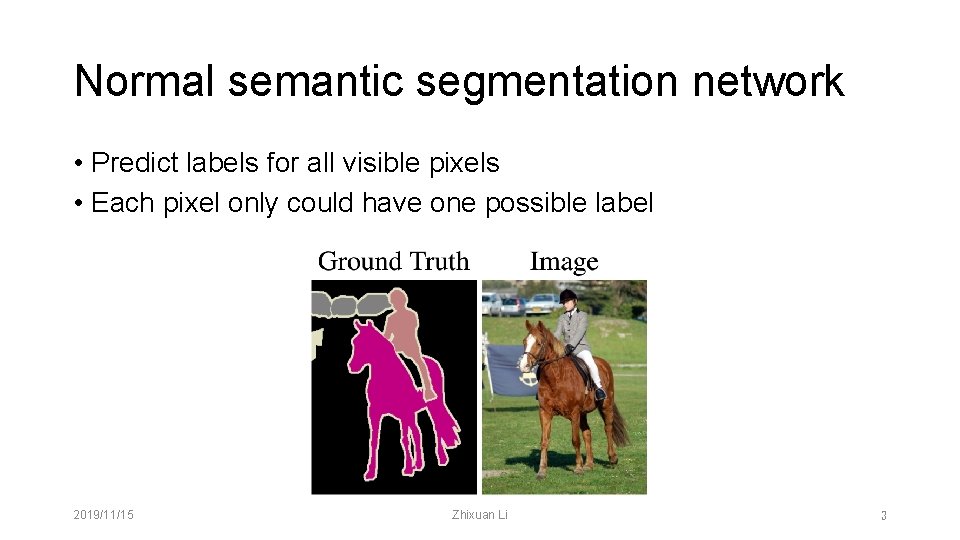

Normal semantic segmentation network

- Predicts labels for all visible pixels
- Each pixel can have only one label

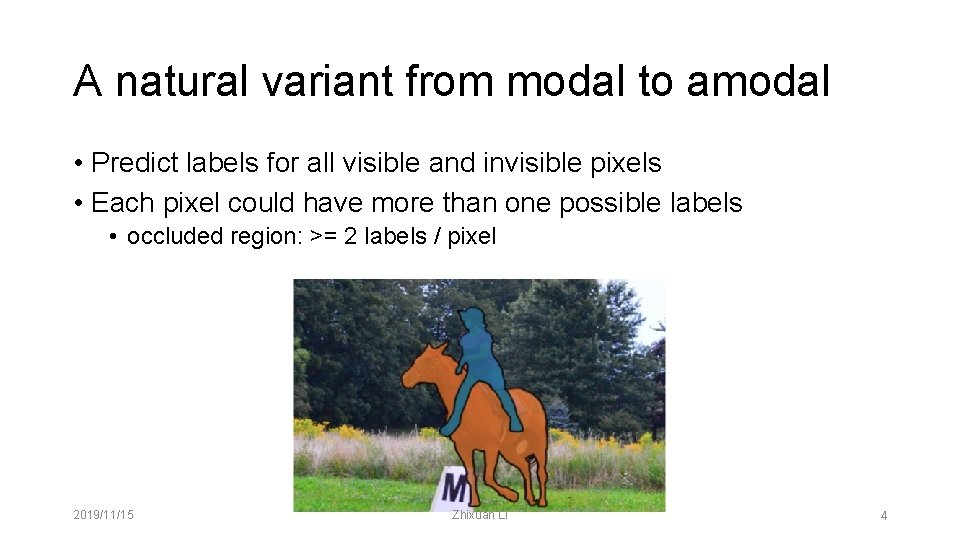

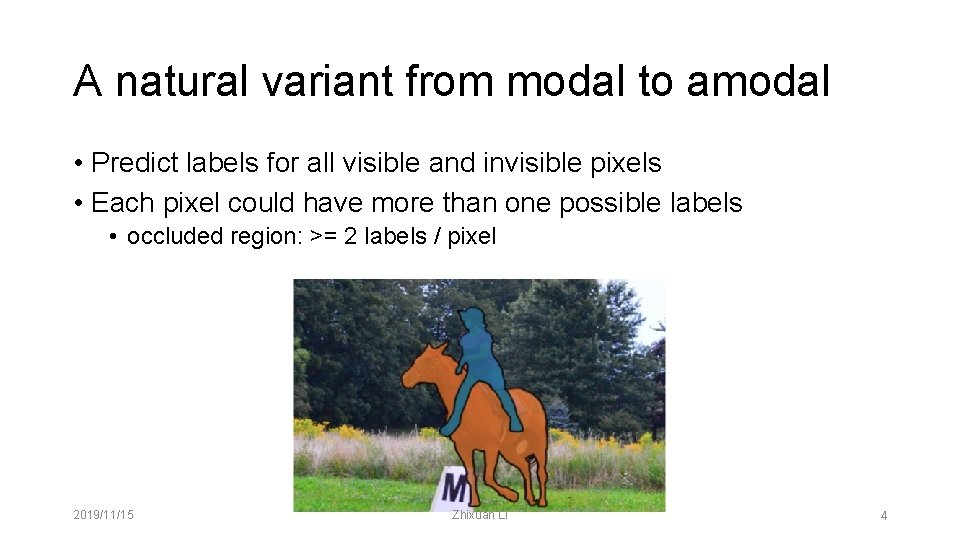

A natural variant from modal to amodal

- Predict labels for all visible and invisible pixels
- Each pixel can have more than one label
- Occluded region: >= 2 labels per pixel

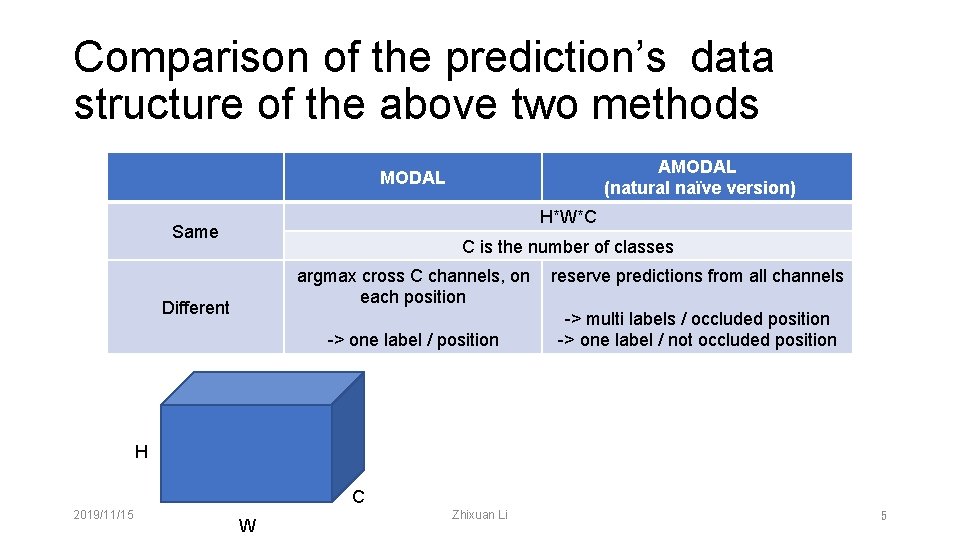

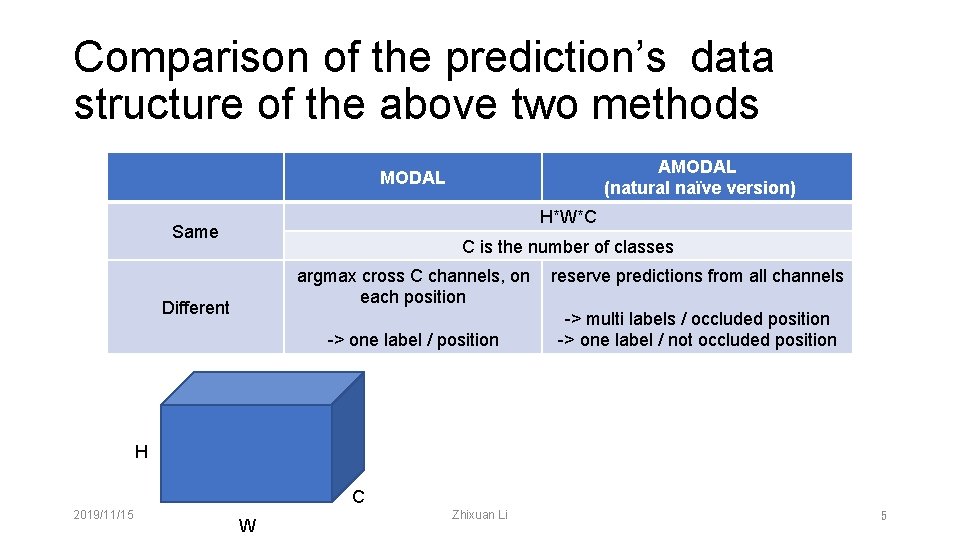

Comparison of the prediction data structures of the two methods above

| | MODAL | AMODAL (natural naïve version) |
| --- | --- | --- |
| Same | H*W*C, where C is the number of classes | H*W*C, where C is the number of classes |
| Different | argmax across the C channels at each position -> one label per position | keep predictions from all channels -> multiple labels per occluded position, one label per non-occluded position |

Figure axes: H, W, C
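The difference between the two decodings can be sketched on a toy example. This is only an illustration, not the paper's code: the function names, the assumption that scores are per-class probabilities, and the 0.5 threshold are all hypothetical choices made for the sketch.

```python
# Toy sketch. Assumptions (not from the slides): scores are per-class
# probabilities in [0, 1], and 0.5 is a hypothetical keep-threshold.

def modal_labels(scores):
    """scores: H x W x C nested lists -> H x W map, one class index per pixel."""
    return [[max(range(len(px)), key=lambda c: px[c]) for px in row]
            for row in scores]

def amodal_labels(scores, threshold=0.5):
    """Keep every channel above the threshold -> H x W map of class-index lists."""
    return [[[c for c, s in enumerate(px) if s >= threshold] for px in row]
            for row in scores]

# 1 x 2 image with C = 3 classes.
# Pixel 0 is not occluded; pixel 1 is an occluded position where
# classes 0 and 2 overlap.
scores = [[[0.1, 0.9, 0.2], [0.8, 0.1, 0.7]]]

print(modal_labels(scores))   # [[1, 0]]
print(amodal_labels(scores))  # [[[1], [0, 2]]]
```

The modal decoding discards class 2 at the second pixel, while the naïve amodal decoding keeps both overlapping labels.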

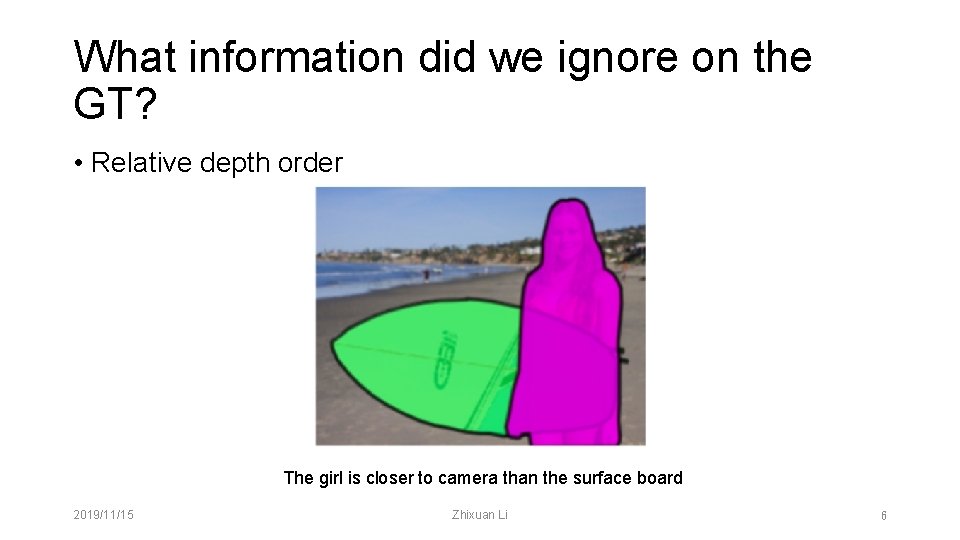

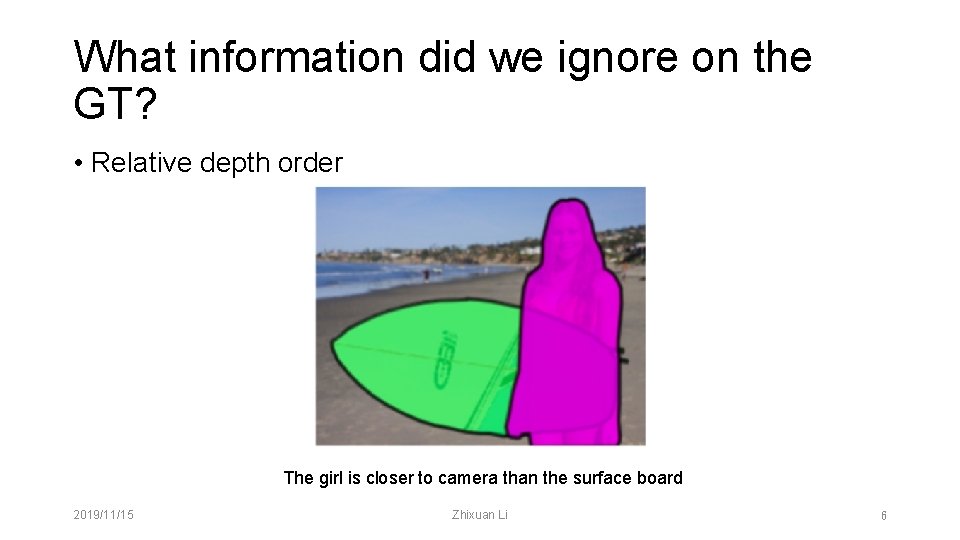

What information did we ignore in the GT?

- Relative depth order: the girl is closer to the camera than the surfboard

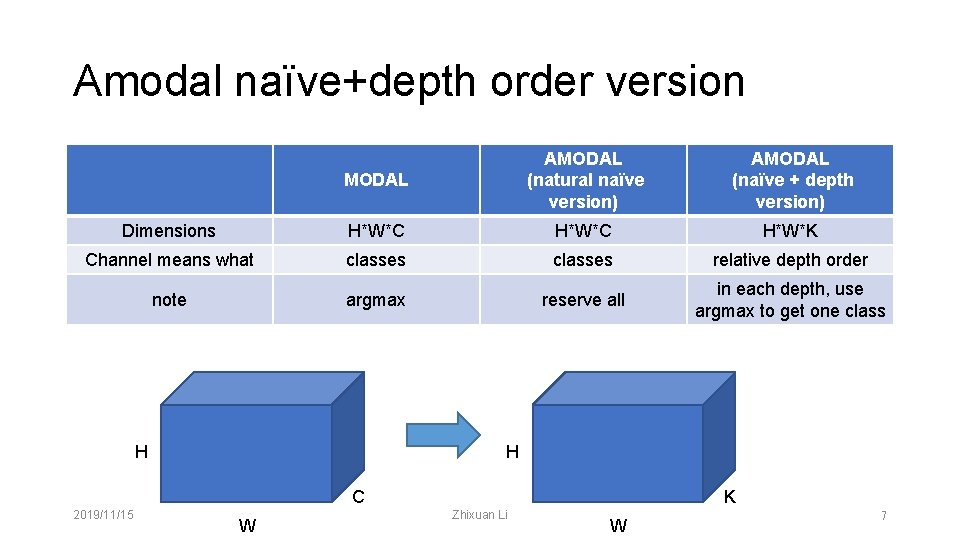

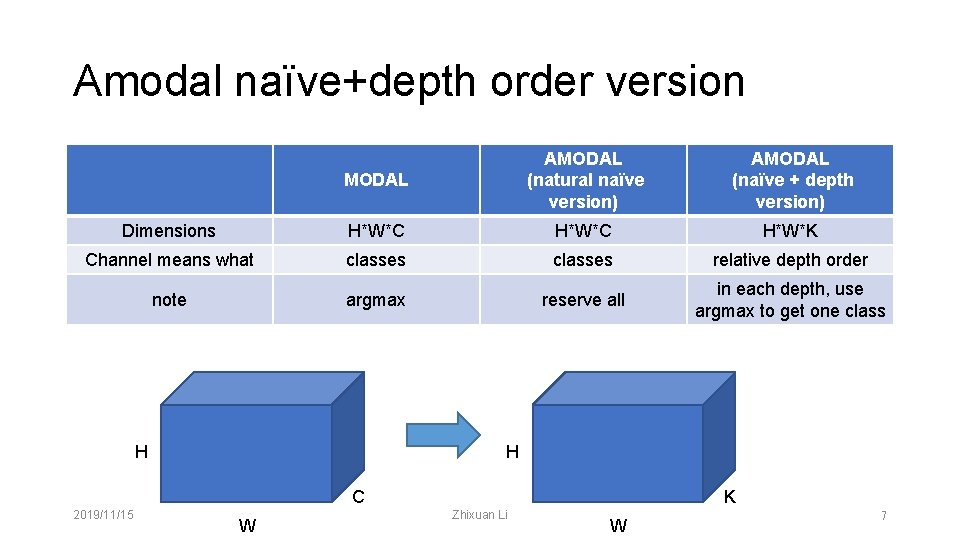

Amodal naïve + depth order version

| | MODAL | AMODAL (natural naïve version) | AMODAL (naïve + depth version) |
| --- | --- | --- | --- |
| Dimensions | H*W*C | H*W*C | H*W*K |
| Channel meaning | classes | classes | relative depth order |
| Note | argmax | keep all | at each depth, use argmax to get one class |

Figure axes: H, W, C (naïve version) and H, W, K (depth version)
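One possible reading of the naïve + depth version is that each of the K depth layers carries its own class scores, and an argmax inside each layer yields the H*W*K label map. The sketch below follows that reading; the H x W x K x C score layout and the function name are assumptions made for illustration, not details given on the slide.

```python
# Toy sketch. Assumption (not from the slides): the network produces
# class scores per depth layer, laid out as H x W x K x C nested lists.

def layered_labels(scores):
    """scores: H x W x K x C -> H x W x K map with one class index per depth layer."""
    def argmax(values):
        return max(range(len(values)), key=lambda i: values[i])
    return [[[argmax(layer) for layer in px] for px in row] for row in scores]

# 1 x 1 image, K = 2 depth layers, C = 3 classes.
# The nearest layer favors class 1, the layer behind it favors class 2.
scores = [[[[0.1, 0.8, 0.1], [0.2, 0.1, 0.7]]]]

print(layered_labels(scores))  # [[[1, 2]]]
```

Each pixel then holds an ordered list of K labels, so the channel index encodes relative depth order rather than class.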

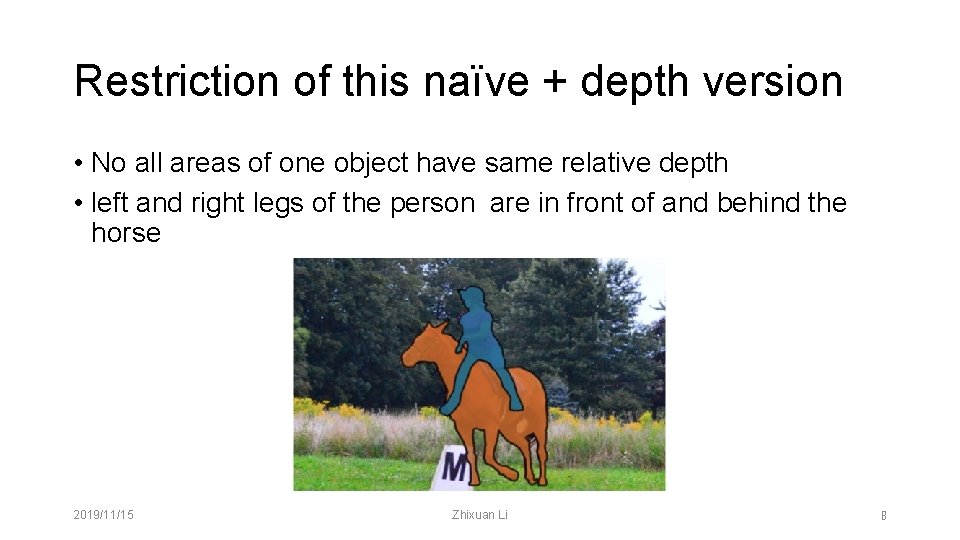

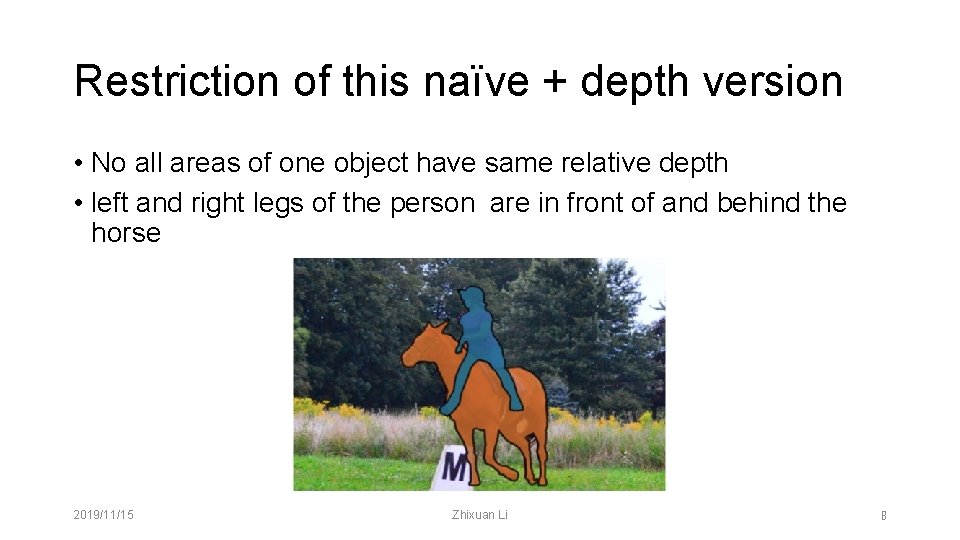

Restriction of this naïve + depth version

- Not all areas of one object have the same relative depth
- Example: the left and right legs of the person are in front of and behind the horse, respectively

SLN from this paper

- Different pixels have different depths

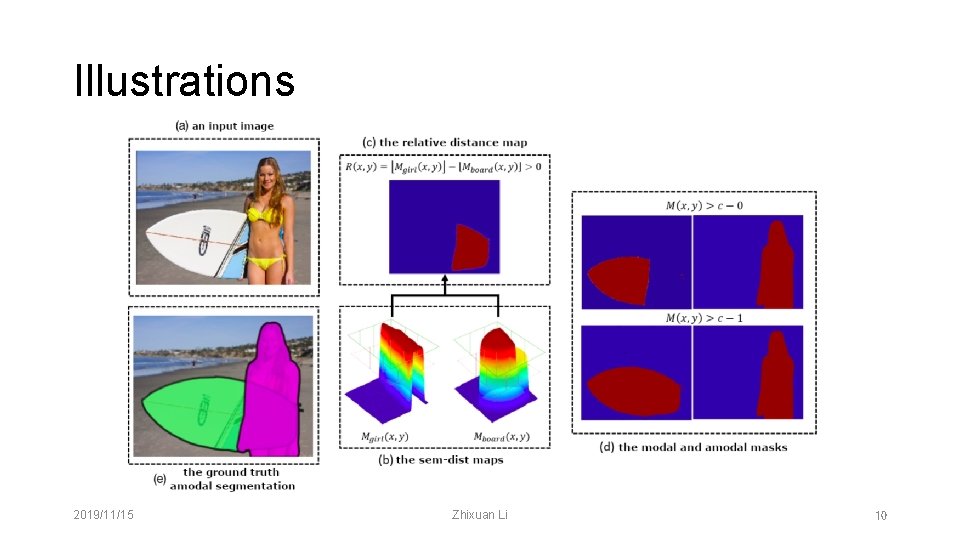

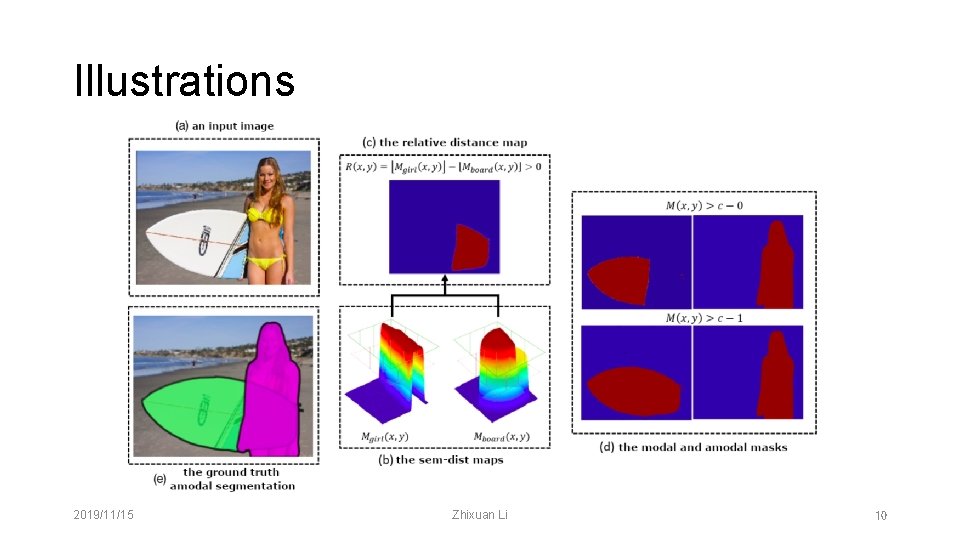

Illustrations

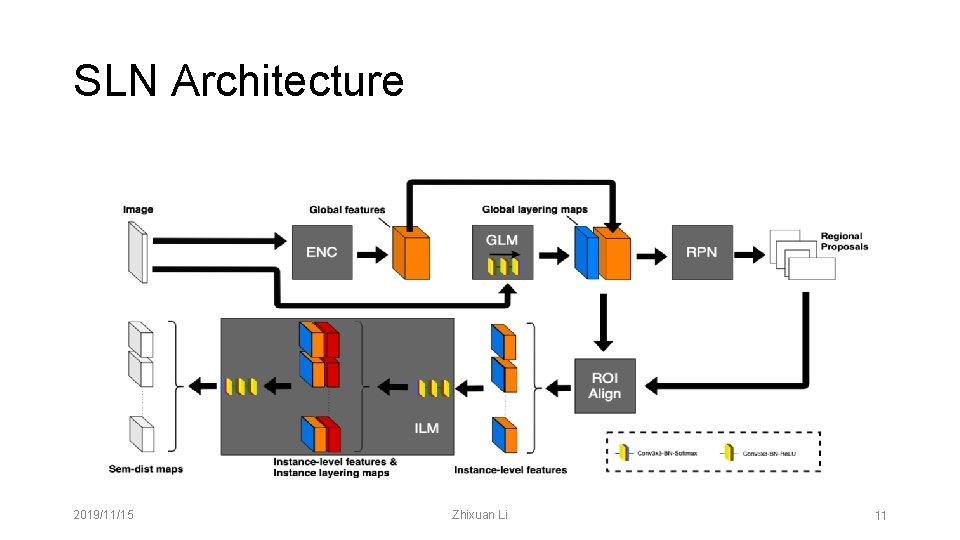

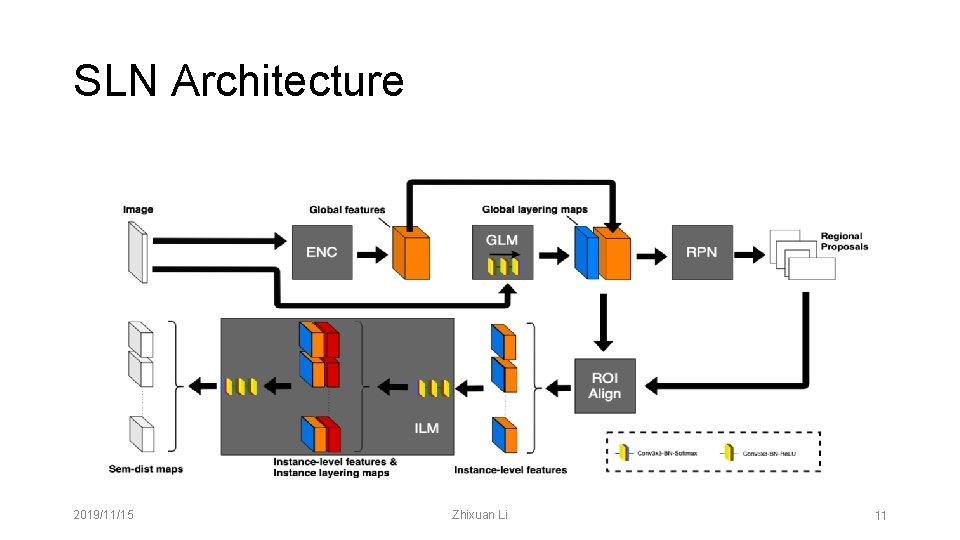

SLN Architecture

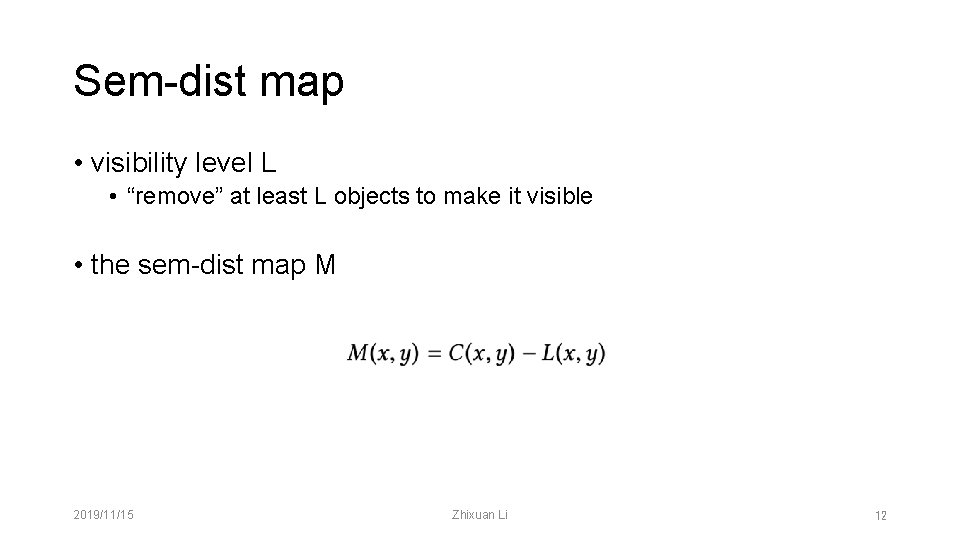

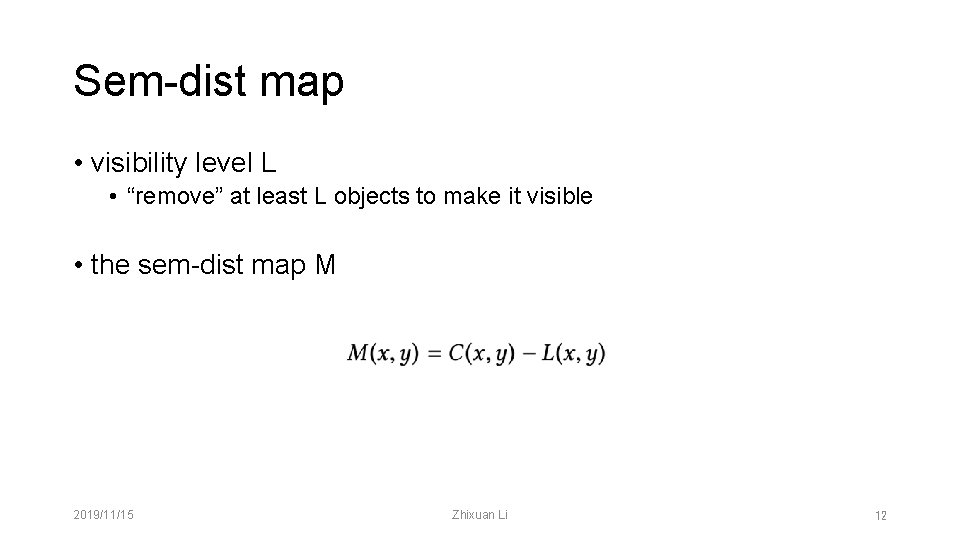

Sem-dist map

- Visibility level L
- "Remove" at least L objects to make it visible
- The sem-dist map M
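The visibility level can be illustrated with a small sketch that counts, for each pixel of an object, how many nearer objects cover it. This is a simplification under loudly stated assumptions: it takes amodal masks in a single global front-to-back order (which, as noted for the naïve + depth version, does not hold for every scene), and it does not reproduce the paper's formula for the sem-dist map M. The function name is hypothetical.

```python
# Toy sketch. Assumptions (not from the slides): binary amodal masks are
# given in one global front-to-back order, and the visibility level is
# counted per pixel of each object.

def visibility_levels(masks):
    """masks: list of H x W binary masks, ordered front to back.

    Returns one H x W map per object: the number of nearer objects covering
    the pixel (0 = already visible), or None outside the object.
    """
    levels = []
    for i, mask in enumerate(masks):
        obj = []
        for y, row in enumerate(mask):
            obj.append([
                sum(masks[j][y][x] for j in range(i)) if inside else None
                for x, inside in enumerate(row)
            ])
        levels.append(obj)
    return levels

front = [[1, 1, 0]]  # nearer object covers columns 0 and 1
back = [[0, 1, 1]]   # farther object covers columns 1 and 2

print(visibility_levels([front, back]))
# [[[0, 0, None]], [[None, 1, 0]]]
```

In the toy scene, the middle pixel of the farther object has level 1 because one object has to be removed before it becomes visible.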

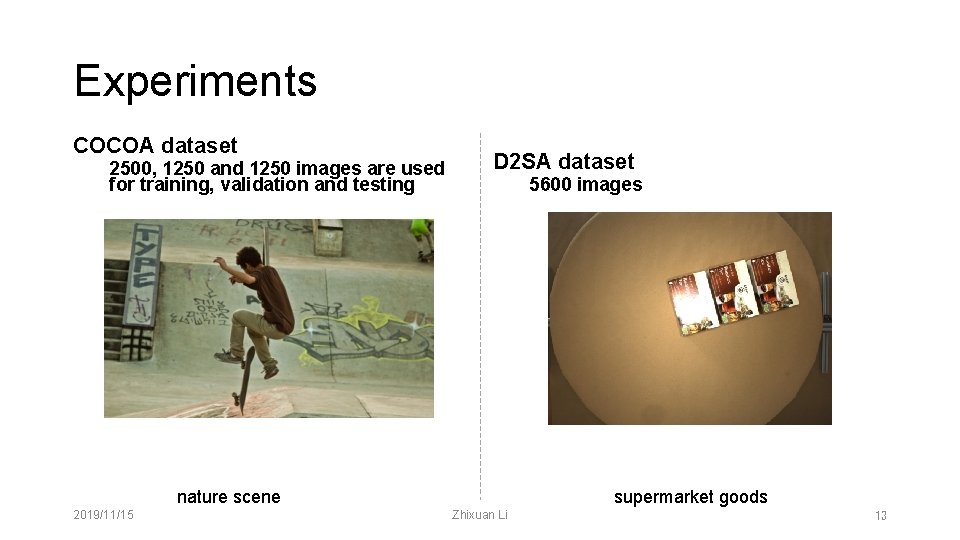

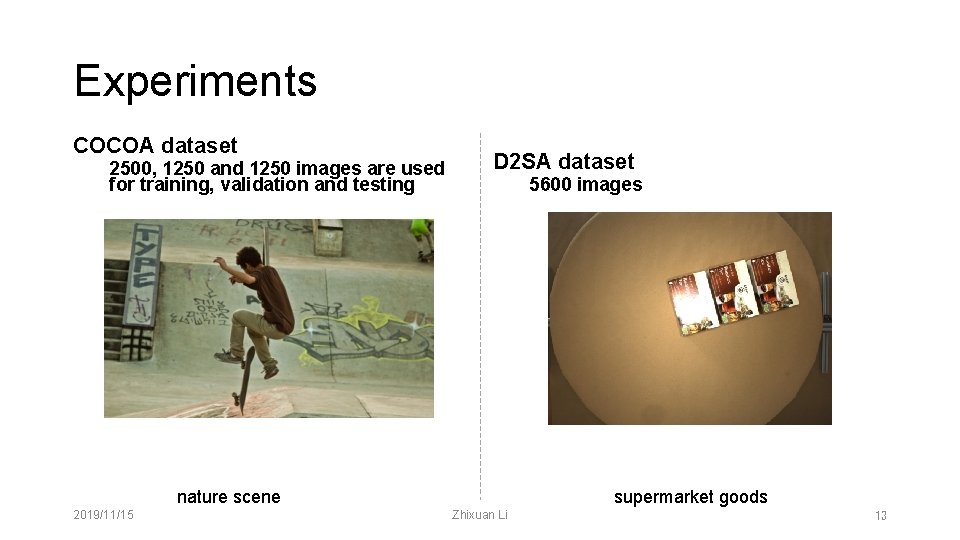

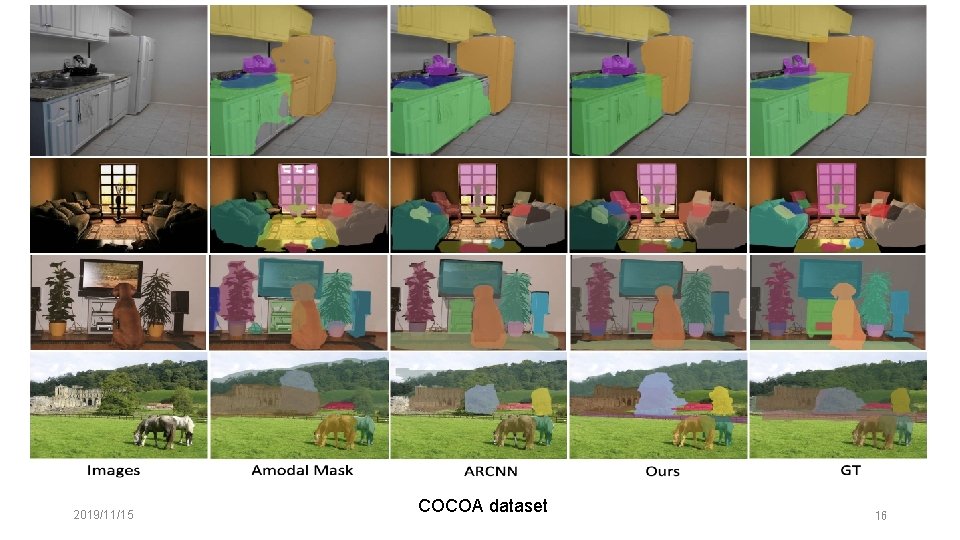

Experiments

- COCOA dataset (natural scenes): 2500, 1250 and 1250 images are used for training, validation and testing
- D2SA dataset (supermarket goods): 5600 images

Metrics for amodal segmentation

- Mean average precision (AP) and mean average recall (AR)
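As a rough illustration of what a recall-style metric measures, the sketch below computes mask IoU and the recall of the top-k predicted segments at a single IoU threshold. It assumes a COCO-style evaluation and simplifies it heavily: COCO averages over IoU thresholds from 0.50 to 0.95 and matches predictions to ground truth one-to-one, whereas this sketch uses one threshold and lets any prediction count as a match. All names are hypothetical.

```python
# Toy sketch. Assumptions (not from the slides): masks are sets of (y, x)
# pixels, predictions are sorted by confidence, and a single IoU threshold
# stands in for the averaged thresholds used in COCO-style evaluation.

def mask_iou(a, b):
    """Intersection over union of two binary masks given as pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0

def recall_at_k(gt_masks, pred_masks, k, iou_thr=0.5):
    """Fraction of ground-truth masks matched by any of the first k predictions."""
    top = pred_masks[:k]
    hits = sum(any(mask_iou(g, p) >= iou_thr for p in top) for g in gt_masks)
    return hits / len(gt_masks) if gt_masks else 0.0

gt = [{(0, 0), (0, 1)}, {(1, 0), (1, 1)}]
pred = [{(0, 0), (0, 1), (0, 2)}, {(1, 1)}]

print(recall_at_k(gt, pred, k=1))  # 0.5
print(recall_at_k(gt, pred, k=2))  # 1.0
```

Allowing more segments per image can only keep or raise recall, which is why recall is reported at several segment budgets.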

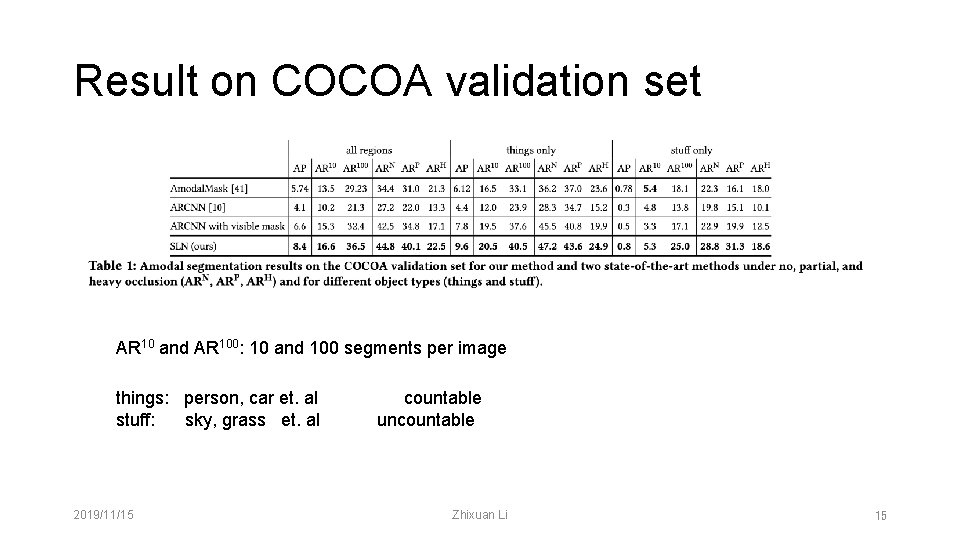

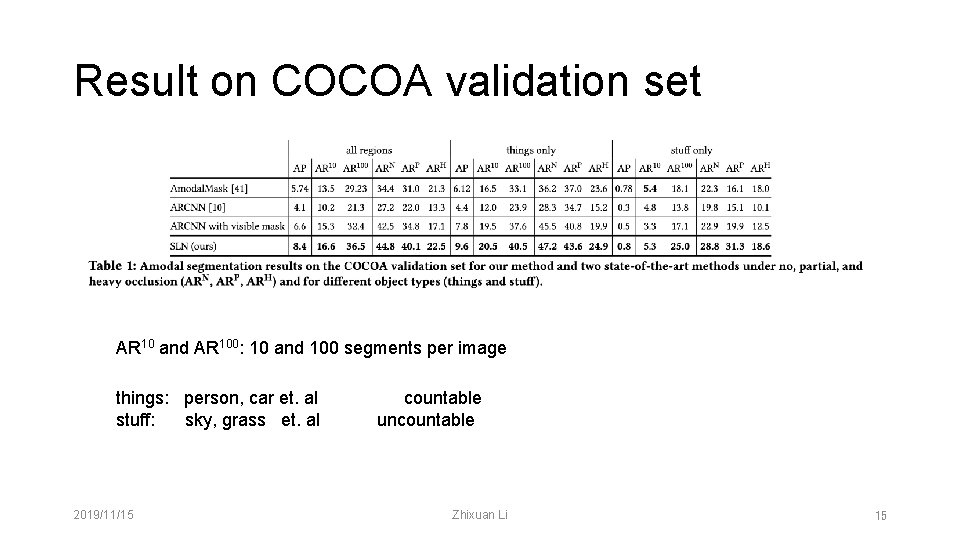

Results on COCOA validation set

- AR10 and AR100: recall with 10 and 100 segments per image
- Things (countable): person, car, etc.
- Stuff (uncountable): sky, grass, etc.

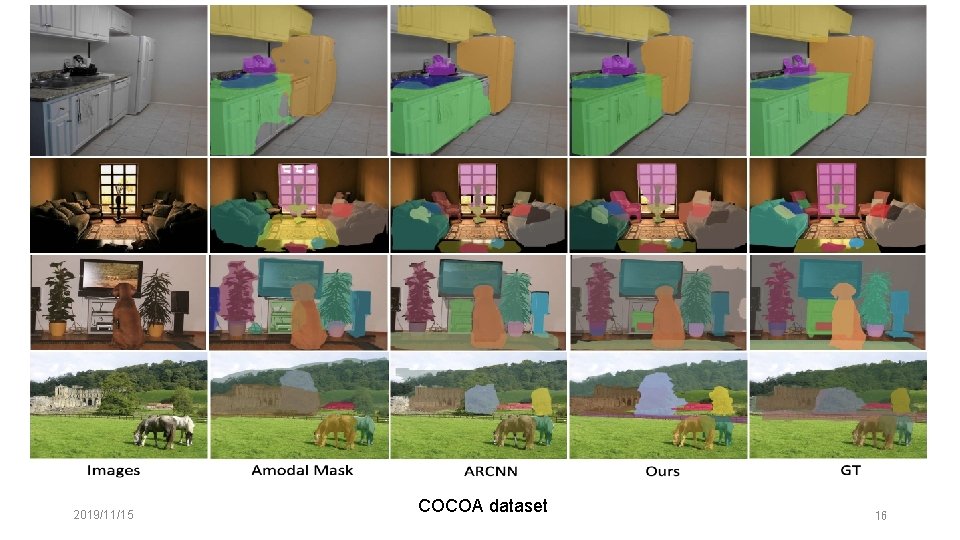

COCOA dataset

Conclusions

- SOTA on the COCOA and D2SA datasets
- Good baseline code