Learning Procedural Planning Knowledge in Complex Environments

- Slides: 21

Learning Procedural Planning Knowledge in Complex Environments
Douglas Pearson, douglas.pearson@threepenny.net, March 2004

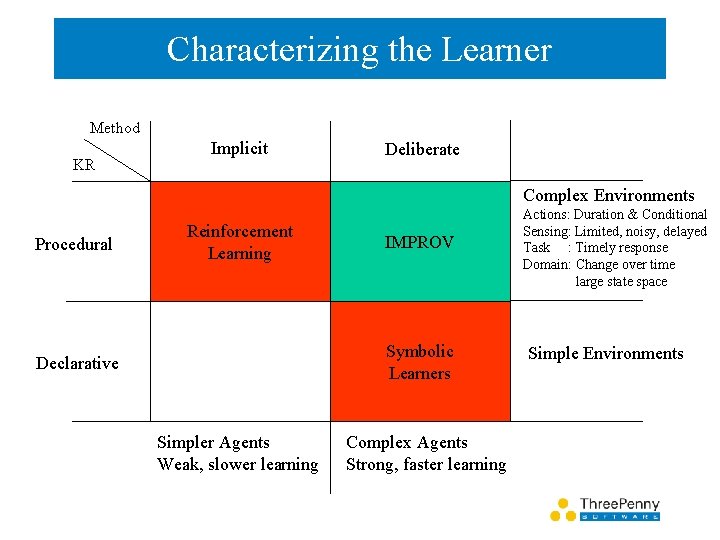

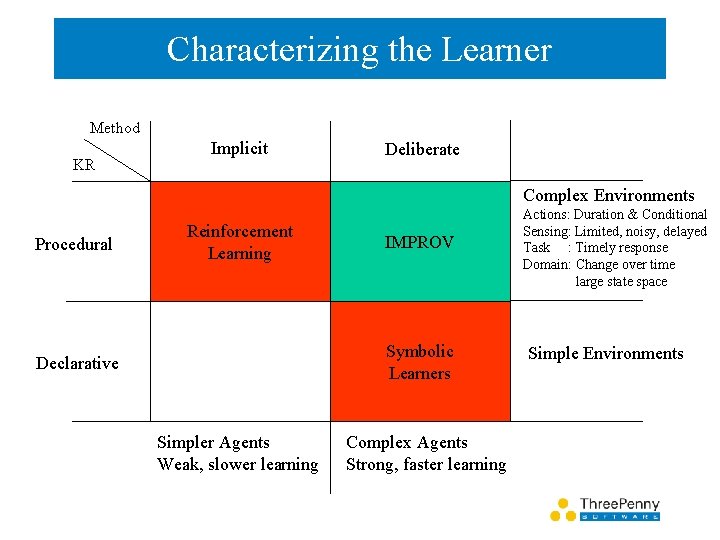

Characterizing the Learner

| KR \ Method | Implicit | Deliberate |
|---|---|---|
| Procedural | Reinforcement Learning | IMPROV |
| Declarative | | Symbolic Learners |

• Simpler agents: weak, slower learning. Complex agents: strong, faster learning.
• Complex environments (as opposed to simple environments):
  – Actions: duration and conditionals
  – Sensing: limited, noisy, delayed
  – Task: timely response
  – Domain: changes over time; large state space

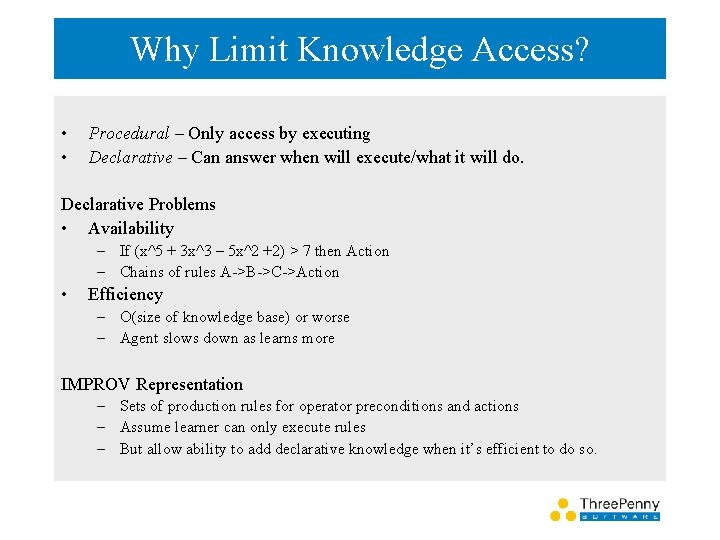

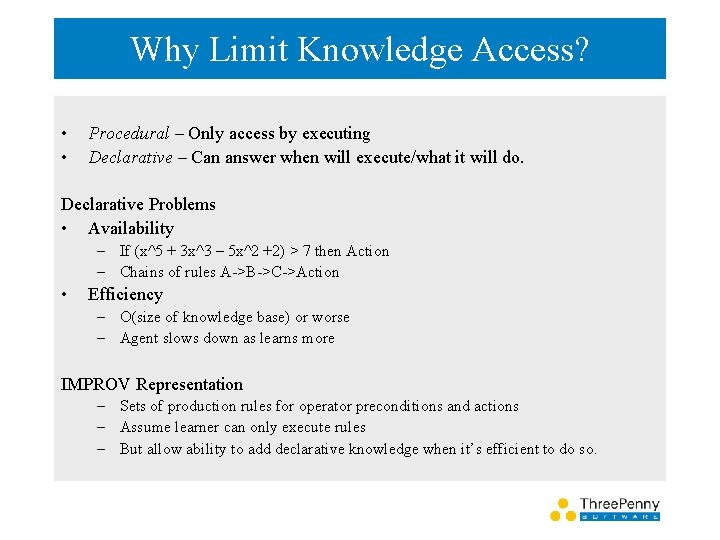

Why Limit Knowledge Access?
• Procedural: knowledge can only be accessed by executing it.
• Declarative: the agent can answer when a rule will execute and what it will do.
Declarative problems:
• Availability
  – If (x^5 + 3x^3 – 5x^2 + 2) > 7 then Action
  – Chains of rules: A -> B -> C -> Action
• Efficiency
  – O(size of knowledge base) or worse
  – The agent slows down as it learns more
IMPROV representation:
  – Sets of production rules for operator preconditions and actions
  – Assume the learner can only execute rules
  – But allow declarative knowledge to be added when it is efficient to do so.
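The procedural assumption can be illustrated with a minimal sketch, assuming rules are opaque Python callables (the names `ProceduralRule` and `run_rules` are hypothetical, not IMPROV's API). The only operation offered is execution, so the agent can find out whether Action fires for a given state but cannot inspect the polynomial or back-chain through A -> B -> C.

```python
from typing import Callable, Dict, Iterable, Optional, Set

# A condition sees the agent's state features and the facts asserted so far.
Condition = Callable[[Dict[str, float], Set[str]], bool]


class ProceduralRule:
    """Hypothetical production rule that can only be executed, never inspected."""

    def __init__(self, condition: Condition, asserts: str) -> None:
        self._condition = condition  # opaque: nothing exposes what it tests
        self._asserts = asserts

    def execute(self, state: Dict[str, float], facts: Set[str]) -> Optional[str]:
        """Run the rule; return the fact it asserts if it fires, else None."""
        return self._asserts if self._condition(state, facts) else None


def run_rules(rules: Iterable[ProceduralRule], state: Dict[str, float],
              facts: Iterable[str] = ()) -> Set[str]:
    """Execute rules repeatedly until no new fact is asserted (quiescence)."""
    rule_list = list(rules)
    known: Set[str] = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rule_list:
            fact = rule.execute(state, known)
            if fact is not None and fact not in known:
                known.add(fact)
                changed = True
    return known


# Hypothetical rules mirroring the slide's two "availability" examples.
polynomial_rule = ProceduralRule(
    lambda s, f: s["x"] ** 5 + 3 * s["x"] ** 3 - 5 * s["x"] ** 2 + 2 > 7, "Action"
)
chain_rules = [
    ProceduralRule(lambda s, f: "A" in f, "B"),
    ProceduralRule(lambda s, f: "B" in f, "C"),
    ProceduralRule(lambda s, f: "C" in f, "Action"),
]
```

For example, `run_rules([polynomial_rule], {"x": 2.0})` asserts Action while `x = 1.0` does not; answering "for which x?" would need declarative access to the condition.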

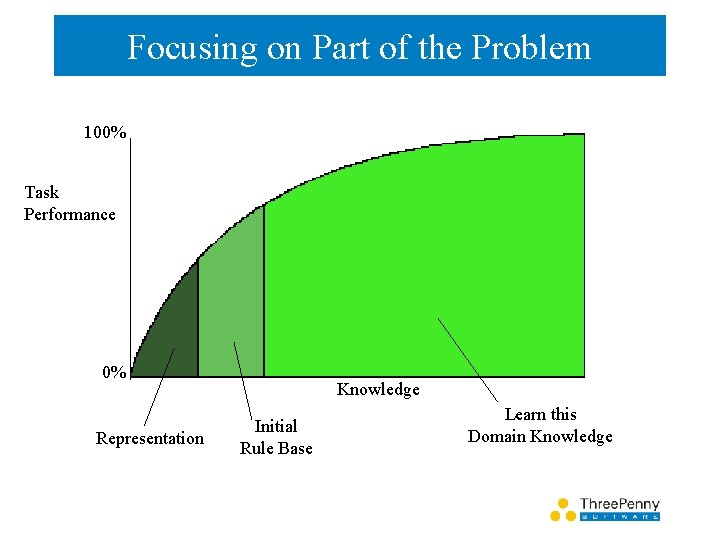

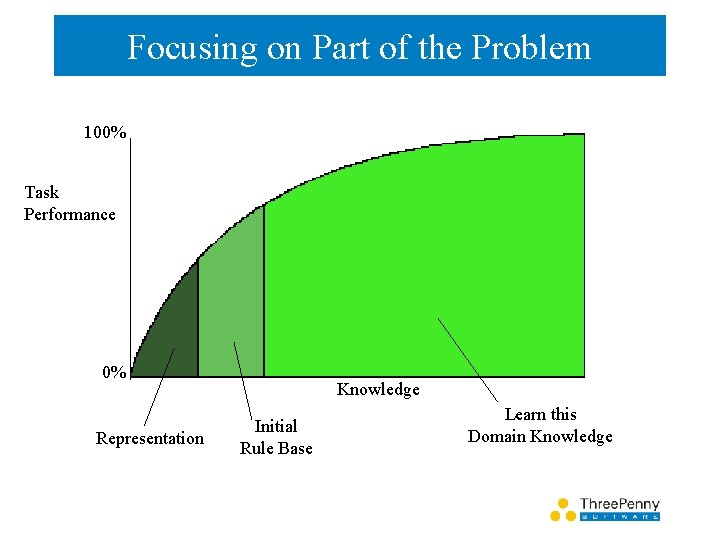

Focusing on Part of the Problem
[Figure: task performance (0% to 100%) against knowledge: representation, initial rule base, domain knowledge; "Learn this" marks the domain knowledge.]

The Problem
• Cast the learning problem as:
  – Error detection (incomplete or incorrect knowledge)
  – Error correction (fixing or adding knowledge)
• But with only limited, procedural access to that knowledge.
• The aim is to support learning in complex, scalable agents and environments.

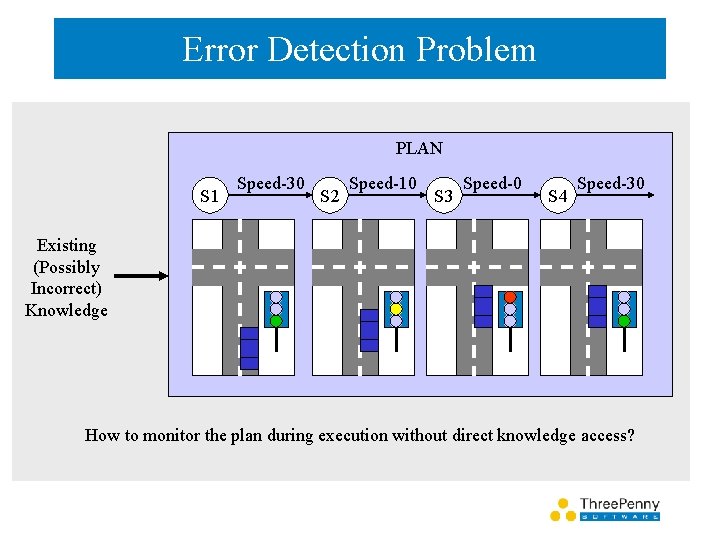

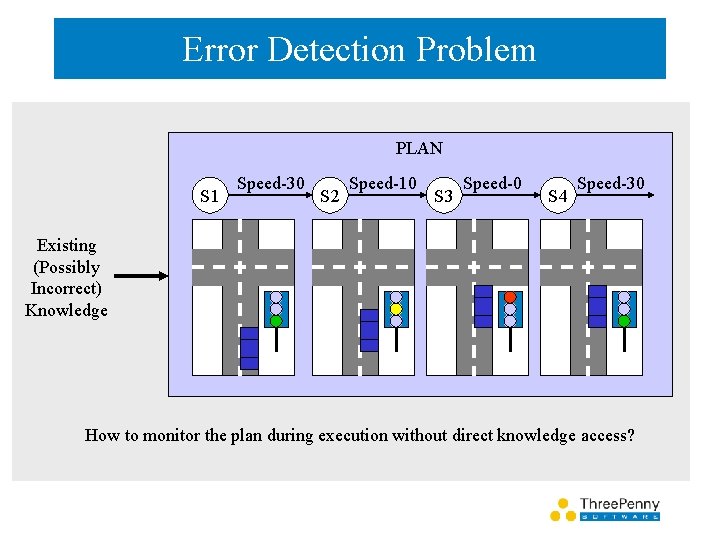

Error Detection Problem
Plan: S1 -> Speed-30 -> S2 -> Speed-10 -> S3 -> Speed-0 -> S4 -> Speed-30
The plan is built from existing (possibly incorrect) knowledge. How can the plan be monitored during execution without direct access to that knowledge?

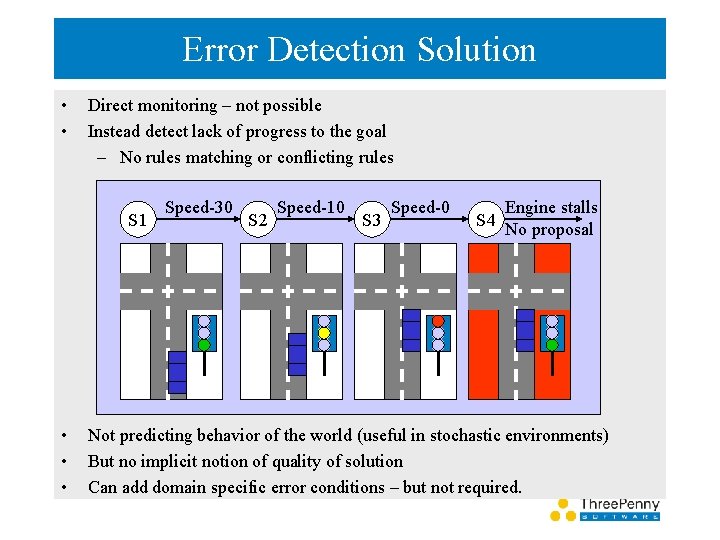

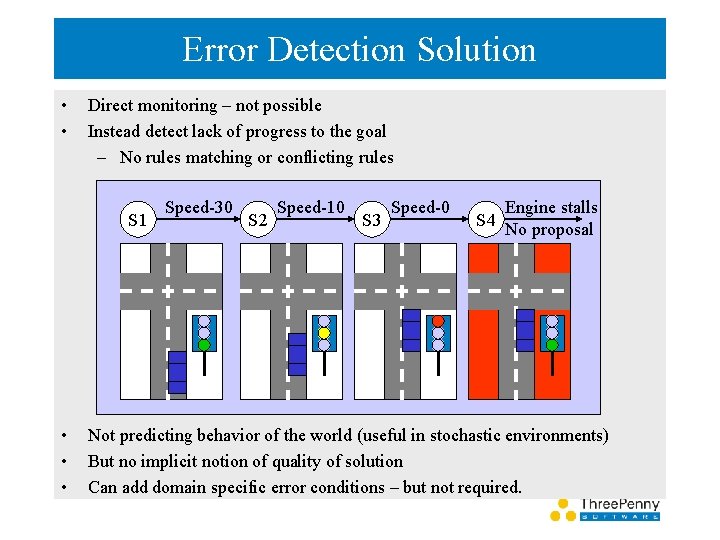

Error Detection Solution
• Direct monitoring is not possible.
• Instead, detect a lack of progress toward the goal:
  – No rules match, or rules conflict.
  – Example: S1 -> Speed-30 -> S2 -> Speed-10 -> S3 -> Speed-0 -> S4: the engine stalls and no operator is proposed.
• The method does not predict the behavior of the world (useful in stochastic environments).
• But it has no implicit notion of the quality of a solution.
• Domain-specific error conditions can be added, but are not required.
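Detecting a lack of progress needs only execution of the proposal rules, as in this minimal sketch. It assumes each proposal rule is a callable returning an operator name or None, and it treats "conflict" as more than one distinct operator being proposed with no preference between them; the function name `check_progress` and the driving rules are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]
# Hypothetical proposal rule: returns an operator name if it matches, else None.
ProposalRule = Callable[[State], Optional[str]]


def check_progress(state: State, rules: List[ProposalRule]) -> Tuple[str, List[str]]:
    """Classify the decision at `state` by executing the proposal rules only."""
    proposed = sorted({op for op in (rule(state) for rule in rules) if op is not None})
    if not proposed:
        return "no-proposal", proposed  # impasse: nothing matches
    if len(proposed) > 1:
        return "conflict", proposed  # assumption: >1 distinct operator, no preference
    return "ok", proposed


# Hypothetical driving rules: each proposes the next speed only while the engine runs.
driving_rules: List[ProposalRule] = [
    lambda s: "Speed-30" if s["engine"] == "running" and s["speed"] == 0 else None,
    lambda s: "Speed-10" if s["engine"] == "running" and s["speed"] == 30 else None,
    lambda s: "Speed-0" if s["engine"] == "running" and s["speed"] == 10 else None,
]
```

At S4 the engine has stalled, so `check_progress({"engine": "stalled", "speed": 0}, driving_rules)` reports `"no-proposal"` without any model of how the world should have behaved.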

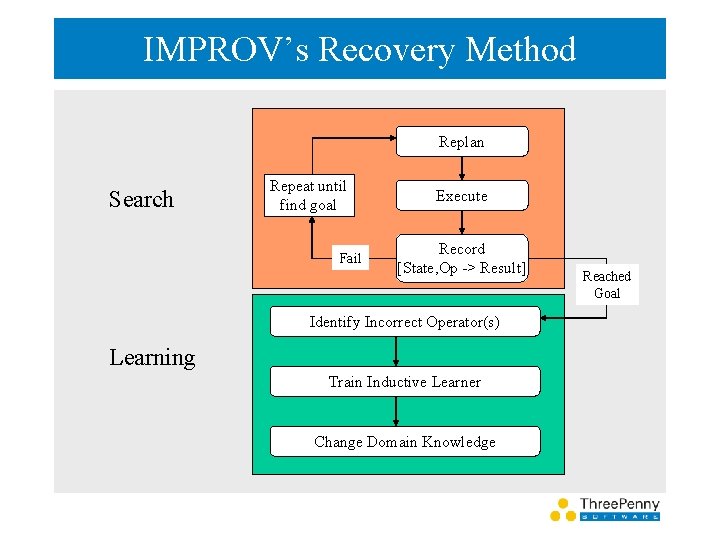

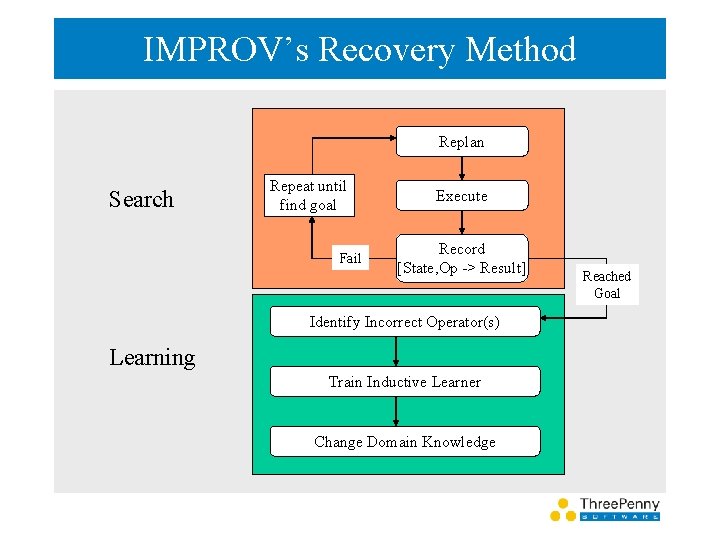

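IMPROV’s Recovery Method
• Replan: search for a new plan.
• Execute the plan, recording each [State, Op -> Result]. If execution fails, replan. Repeat until the goal is found.
• Learning (once the goal is reached): identify the incorrect operator(s), train the inductive learner, and change the domain knowledge.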
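The replan/execute/learn cycle can be sketched as a loop over caller-supplied functions. This is a minimal sketch, assuming hypothetical `plan_fn`, `execute_fn` (returning the next state, or None on a detected failure), `is_goal`, and `learn_fn` callables; it is not IMPROV's actual implementation.

```python
from typing import Any, Callable, List, Optional, Tuple

# One record per executed step: [State, Op -> Result]; Result is None on failure.
Step = Tuple[Any, str, Optional[Any]]


def recover(
    state: Any,
    plan_fn: Callable[[Any], List[str]],
    execute_fn: Callable[[Any, str], Optional[Any]],
    is_goal: Callable[[Any], bool],
    learn_fn: Callable[[List[Step]], None],
    max_attempts: int = 10,
) -> Tuple[bool, List[Step]]:
    """Replan and execute until the goal is reached, then learn from the whole trace."""
    trace: List[Step] = []
    if is_goal(state):
        return True, trace
    for _ in range(max_attempts):
        for op in plan_fn(state):  # Replan: search with the current knowledge
            result = execute_fn(state, op)
            trace.append((state, op, result))  # Record [State, Op -> Result]
            if result is None:  # Fail: abandon this plan and replan
                break
            state = result
            if is_goal(state):
                # Learning waits until the goal is reached, so credit assignment
                # can compare the failed steps with the successful ones.
                learn_fn(trace)
                return True, trace
    return False, trace
```

Keeping the failed steps in the trace is the design choice that lets the later credit-assignment step compare what went wrong with what eventually worked.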

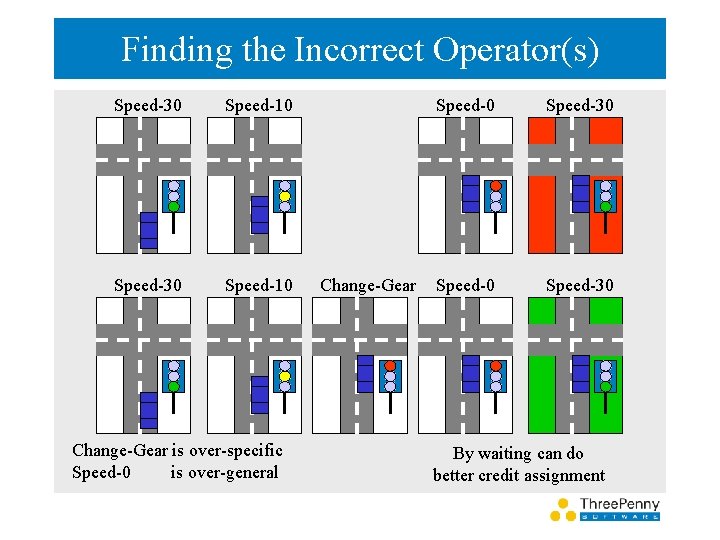

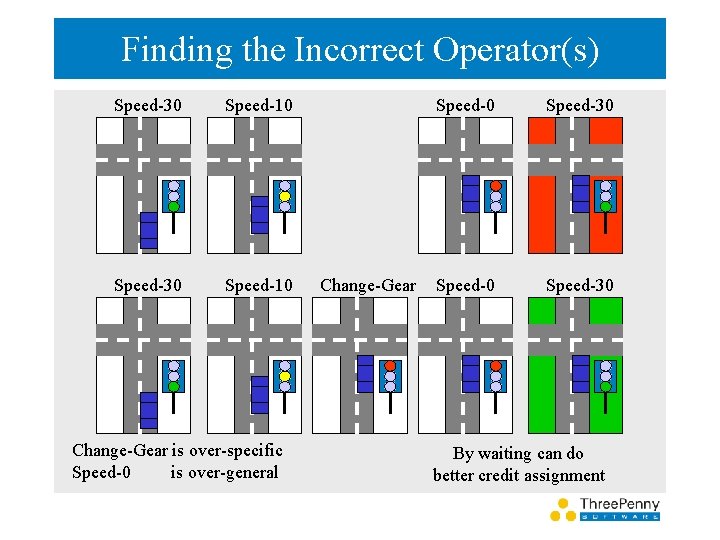

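Finding the Incorrect Operator(s)
Failed plan: Speed-30, Speed-10, Speed-0
Successful plan: Speed-30, Speed-10, Change-Gear, Speed-0, Speed-30
• Change-Gear is over-specific (it should have been proposed, but was not).
• Speed-0 is over-general (it was proposed where it should not have been).
• By waiting until the goal is reached, the learner can do better credit assignment.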
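A simplified form of this credit assignment compares the failed and successful operator sequences and blames the first point of divergence. The sketch assumes both sequences start from the same state and pass through the same states until they diverge; `assign_blame` is a hypothetical name, and the real method may weigh more evidence than one divergence point.

```python
from typing import List, Optional, Tuple


def assign_blame(failed: List[str], succeeded: List[str]) -> Optional[Tuple[str, str]]:
    """Return (over_general, over_specific) at the first point where the plans diverge."""
    for chosen, correct in zip(failed, succeeded):
        if chosen != correct:
            # `chosen` was proposed where it should not have been: over-general.
            # `correct` was needed but not proposed: over-specific.
            return chosen, correct
    return None  # no divergence within the shared prefix


failed_plan = ["Speed-30", "Speed-10", "Speed-0"]
successful_plan = ["Speed-30", "Speed-10", "Change-Gear", "Speed-0", "Speed-30"]
```

For the slide's plans, `assign_blame(failed_plan, successful_plan)` returns `("Speed-0", "Change-Gear")`.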

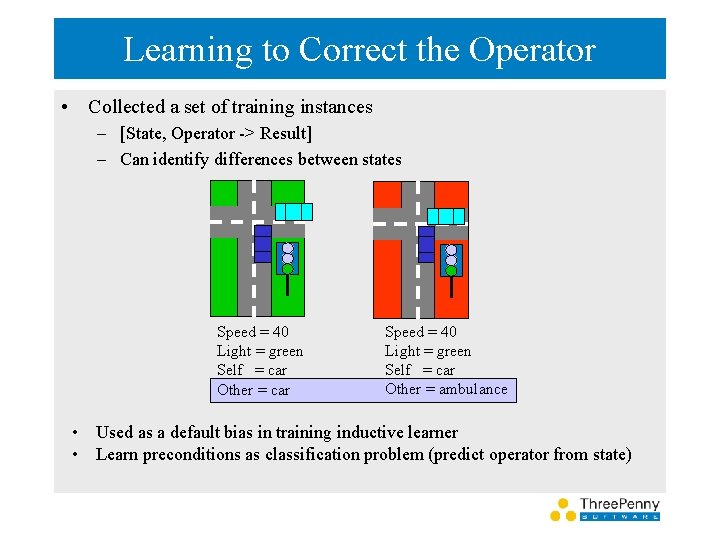

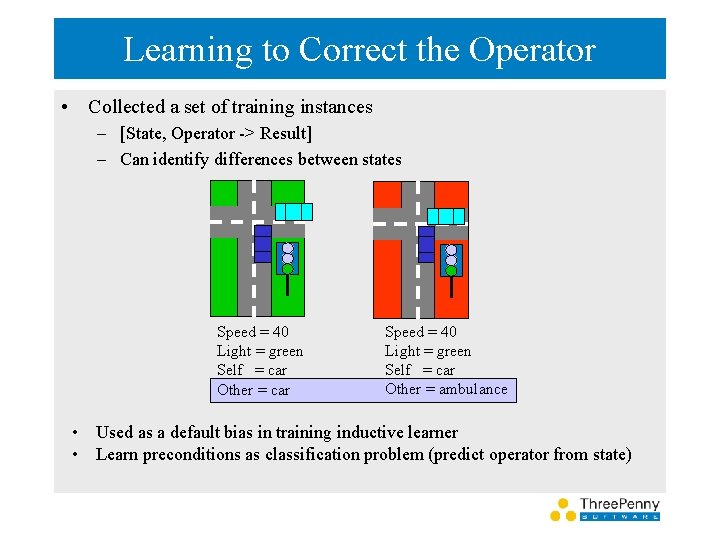

Learning to Correct the Operator
• Collect a set of training instances, each of the form [State, Operator -> Result].
  – The learner can identify differences between states, e.g.:
    Speed = 40, Light = green, Self = car, Other = car
    Speed = 40, Light = green, Self = car, Other = ambulance
• These differences are used as a default bias when training the inductive learner.
• Learn preconditions as a classification problem (predict the operator from the state).
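The "differences as a default bias" idea can be illustrated with a toy learner that only tests the features distinguishing a state where the operator worked from one where it failed. This is a minimal sketch under that assumption; `state_differences` and `learn_precondition` are hypothetical names, and a real inductive learner would train on many instances.

```python
from typing import Callable, Dict, Tuple

State = Dict[str, object]


def state_differences(a: State, b: State) -> Dict[str, Tuple[object, object]]:
    """Features whose values differ between two states (missing keys count as None)."""
    return {k: (a.get(k), b.get(k)) for k in sorted(a.keys() | b.keys()) if a.get(k) != b.get(k)}


def learn_precondition(positive: State, negative: State) -> Callable[[State], bool]:
    """Toy stand-in for the inductive learner: test only the differing features.

    `positive` is a state where the operator worked, `negative` one where it failed.
    """
    required = {k: pos for k, (pos, _neg) in state_differences(positive, negative).items()}
    return lambda state: all(state.get(k) == v for k, v in required.items())


car = {"Speed": 40, "Light": "green", "Self": "car", "Other": "car"}
ambulance = {**car, "Other": "ambulance"}
```

Here the only difference is `Other`, so the learned precondition ignores `Speed` and `Light` and generalizes to, say, `Speed = 20`.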

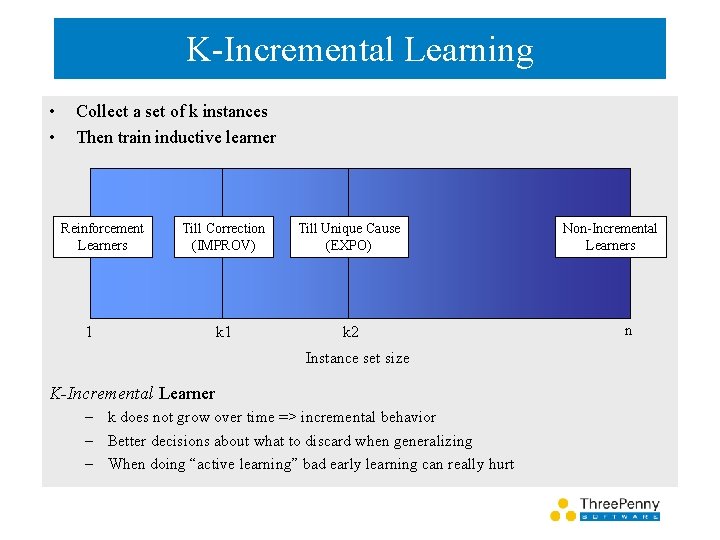

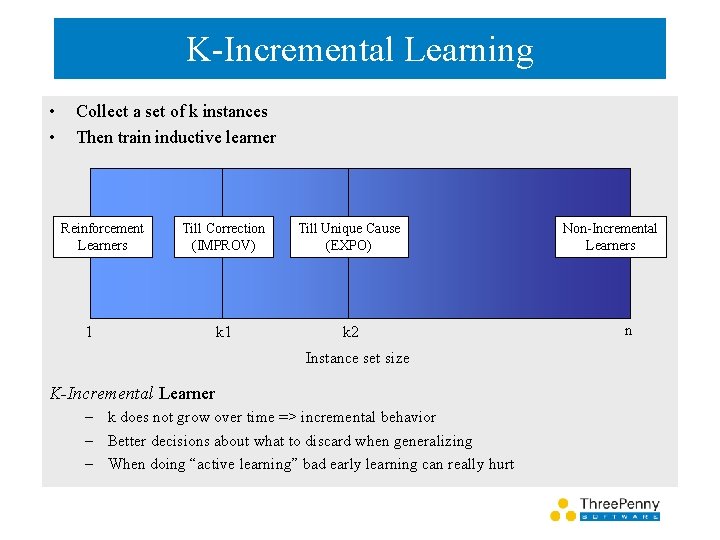

K-Incremental Learning
• Collect a set of k instances, then train the inductive learner.
[Figure: instance set size, from k = 1 (reinforcement learners) through k1, "till correction" (IMPROV), and k2, "till unique cause" (EXPO), to k = n (non-incremental learners).]
• K-incremental learner:
  – k does not grow over time, so the behavior is incremental.
  – Better decisions about what to discard when generalizing.
  – When doing "active learning", bad early learning can really hurt.
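The buffering behavior can be sketched as a small class, assuming a hypothetical `train` callback that receives one batch at a time. A fixed `k` is used here for simplicity; IMPROV's "till correction" policy would instead call `flush()` when a correction is found.

```python
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class KIncrementalLearner(Generic[T]):
    """Buffer at most k instances, train on them, then discard them."""

    def __init__(self, k: int, train: Callable[[List[T]], None]) -> None:
        if k < 1:
            raise ValueError("k must be at least 1")
        self.k = k
        self._train = train
        self._buffer: List[T] = []

    def observe(self, instance: T) -> None:
        self._buffer.append(instance)
        if len(self._buffer) >= self.k:
            self.flush()

    def flush(self) -> None:
        """Train on the buffered instances; memory stays bounded by k."""
        if self._buffer:
            self._train(list(self._buffer))
            self._buffer.clear()
```

With `k = 1` this degenerates to fully incremental updates; letting `k` equal the total number of instances gives a non-incremental learner.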

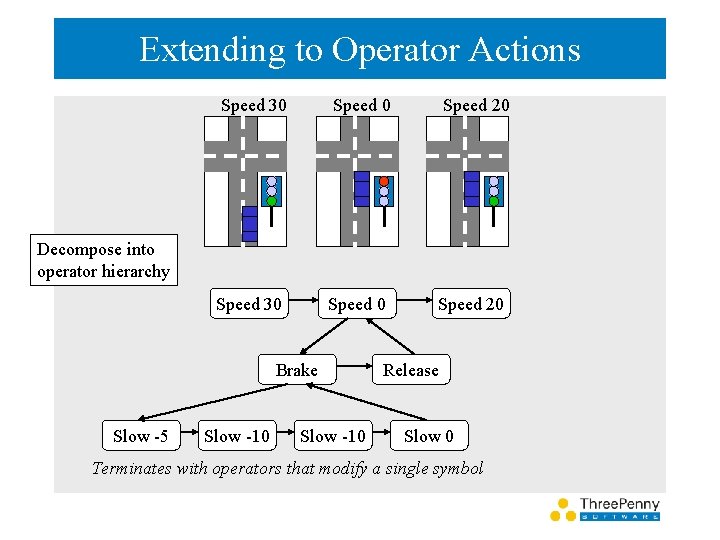

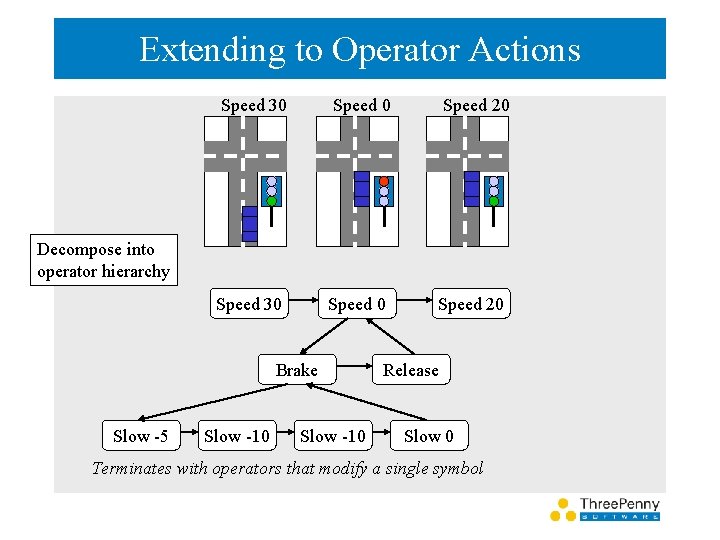

Extending to Operator Actions
• Example: Speed 30 -> Speed 20.
• Decompose the action into an operator hierarchy: Brake (Slow -5, Slow -10), then Release (Slow 0).
• The decomposition terminates with operators that modify a single symbol.
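The hierarchy can be modeled as a tree whose leaves each change exactly one symbol. This sketch assumes the grouping Brake = (Slow -5, Slow -10) and Release = (Slow 0), and the `Operator` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Operator:
    name: str
    effect: Optional[Tuple[str, int]] = None  # leaves only: (symbol, new value)
    children: List["Operator"] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Decomposition bottoms out in operators that modify a single symbol.
        if not self.children and self.effect is None:
            raise ValueError(f"leaf operator {self.name!r} must modify one symbol")

    def leaves(self) -> List["Operator"]:
        """Primitive operators in execution order (depth-first, left to right)."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]

    def execute(self, state: Dict[str, int]) -> Dict[str, int]:
        """Apply each leaf's single-symbol effect in order to a copy of the state."""
        new_state = dict(state)
        for leaf in self.leaves():
            assert leaf.effect is not None
            symbol, value = leaf.effect
            new_state[symbol] = value
        return new_state


brake = Operator("Brake", children=[Operator("Slow -5", ("slow", -5)),
                                    Operator("Slow -10", ("slow", -10))])
release = Operator("Release", children=[Operator("Slow 0", ("slow", 0))])
slow_down = Operator("Speed 30 -> Speed 20", children=[brake, release])
```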

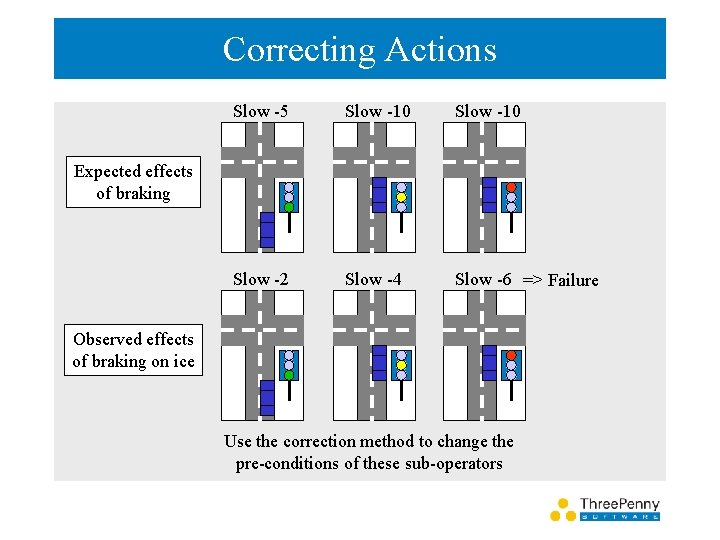

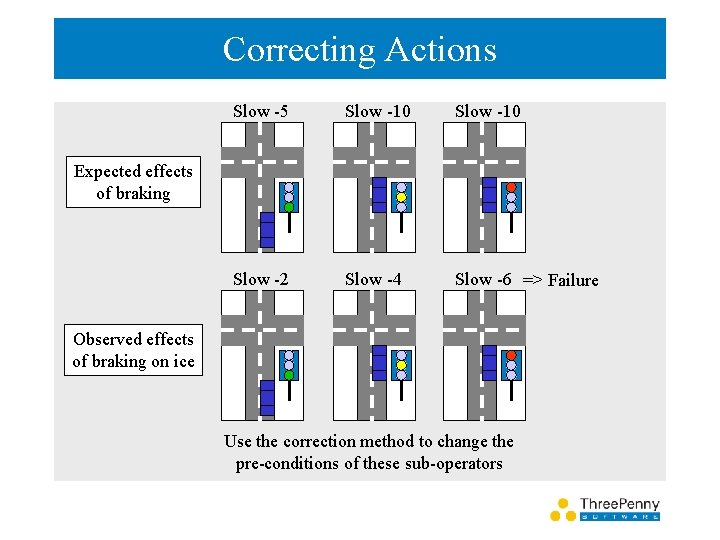

Correcting Actions
• Expected effects of braking: Slow -5, Slow -10
• Observed effects of braking on ice: Slow -2, Slow -4, Slow -6 => Failure
• Use the correction method to change the preconditions of these sub-operators.
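Spotting where the observed effects depart from the expected ones is a sequence comparison. A minimal sketch, assuming effects are recorded as plain integers in order; `first_effect_mismatch` is a hypothetical helper.

```python
from itertools import zip_longest
from typing import List, Optional, Tuple


def first_effect_mismatch(
    expected: List[int], observed: List[int]
) -> Optional[Tuple[int, Optional[int], Optional[int]]]:
    """Return (step, expected, observed) at the first differing step, or None."""
    for step, (want, got) in enumerate(zip_longest(expected, observed)):
        if want != got:
            return step, want, got
    return None


expected_braking = [-5, -10]
observed_on_ice = [-2, -4, -6]
```

For braking on ice the mismatch appears at the very first sub-operator, `(0, -5, -2)`, which points the correction method at Slow -5 and Slow -2.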

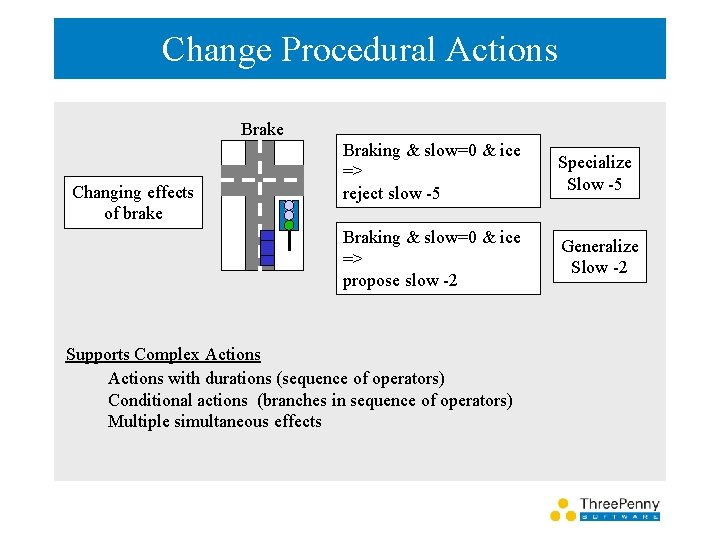

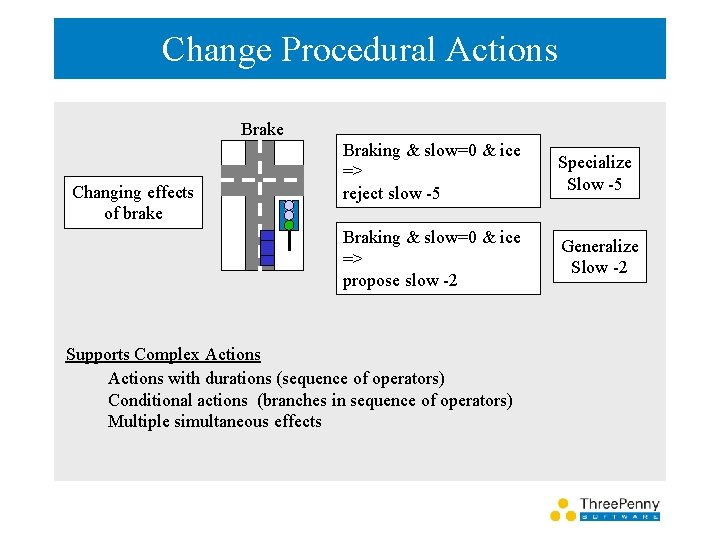

Change Procedural Actions
Changing the effects of Brake:
• Specialize Slow -5: Braking & slow=0 & ice => reject Slow -5
• Generalize Slow -2: Braking & slow=0 & ice => propose Slow -2
Supports complex actions:
• Actions with durations (sequences of operators)
• Conditional actions (branches in a sequence of operators)
• Multiple simultaneous effects
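The two corrections map naturally onto adding a reject condition (specialize) or a new proposal condition (generalize). This is a minimal sketch, assuming rules hold lists of conjunctive condition dictionaries; `ProposalRule`, `specialize`, and `generalize` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Conditions = Dict[str, object]


def _matches(state: Conditions, conds: Conditions) -> bool:
    return all(state.get(k) == v for k, v in conds.items())


@dataclass
class ProposalRule:
    operator: str
    proposals: List[Conditions] = field(default_factory=list)   # any match proposes
    rejections: List[Conditions] = field(default_factory=list)  # any match rejects

    def proposes(self, state: Conditions) -> bool:
        return (any(_matches(state, c) for c in self.proposals)
                and not any(_matches(state, c) for c in self.rejections))

    def specialize(self, reject_when: Conditions) -> None:
        """Fire in fewer states: reject the operator when these conditions hold."""
        self.rejections.append(dict(reject_when))

    def generalize(self, propose_when: Conditions) -> None:
        """Fire in more states: also propose the operator when these conditions hold."""
        self.proposals.append(dict(propose_when))


icy = {"braking": True, "slow": 0, "ice": True}
dry = {"braking": True, "slow": 0, "ice": False}
slow_5 = ProposalRule("Slow -5", proposals=[{"braking": True, "slow": 0}])
slow_2 = ProposalRule("Slow -2")
```

Calling `slow_5.specialize(icy)` and `slow_2.generalize(icy)` reproduces the slide's two rules: on ice only Slow -2 is proposed, while dry braking still proposes Slow -5.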

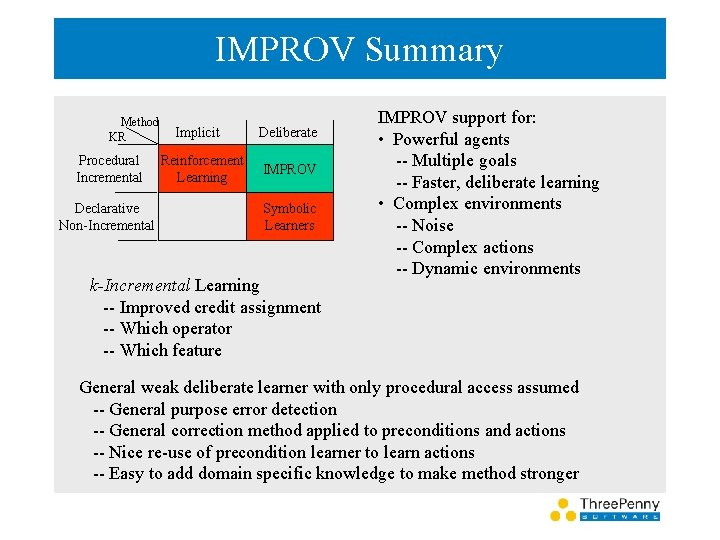

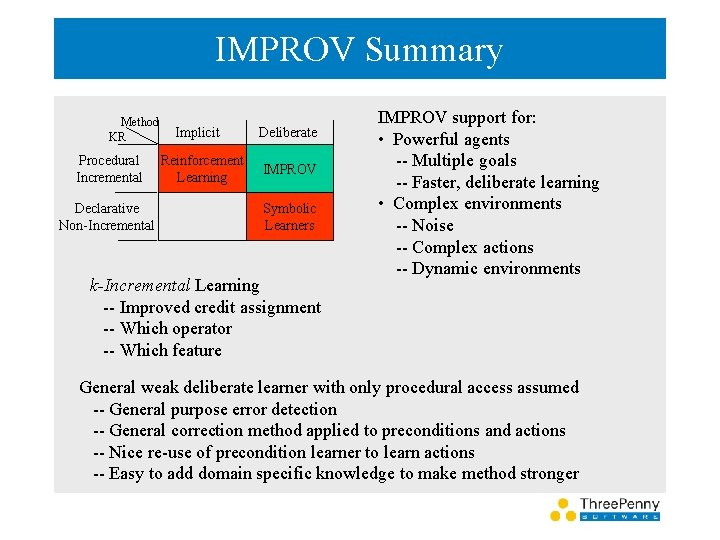

IMPROV Summary
• Positioning: KR (procedural vs. declarative), method (implicit vs. deliberate), and learning regime (incremental vs. non-incremental). Reinforcement learning is implicit and procedural, symbolic learners are deliberate and declarative, and IMPROV is deliberate and procedural.
• k-incremental learning
• Improved credit assignment:
  – Which operator
  – Which feature
• IMPROV supports:
  – Powerful agents: multiple goals; faster, deliberate learning
  – Complex environments: noise, complex actions, dynamic environments
• A general, weak, deliberate learner that assumes only procedural access:
  – General-purpose error detection
  – A general correction method applied to both preconditions and actions
  – Nice re-use of the precondition learner to learn actions
  – Easy to add domain-specific knowledge to make the method stronger

Redux: Diagram-based Example-driven Knowledge Acquisition
Douglas Pearson, douglas.pearson@threepenny.net, March 2004

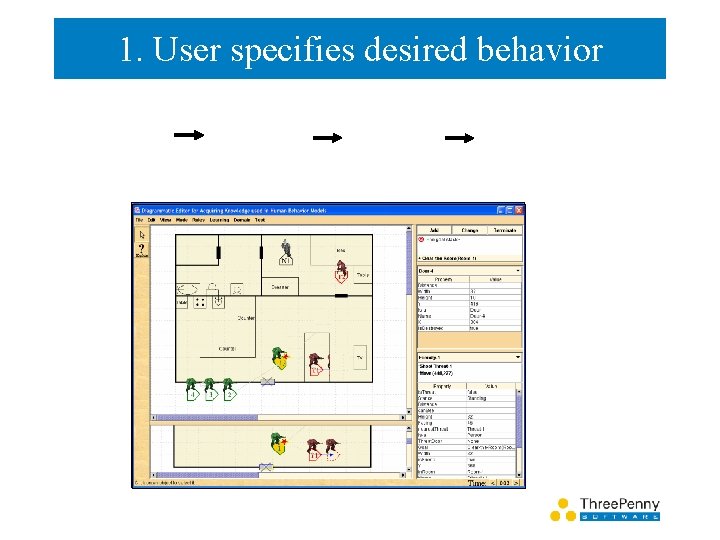

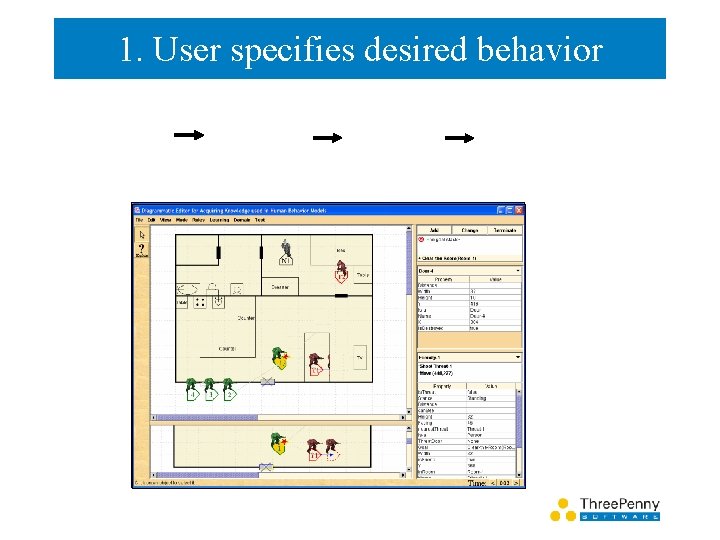

1. User specifies desired behavior

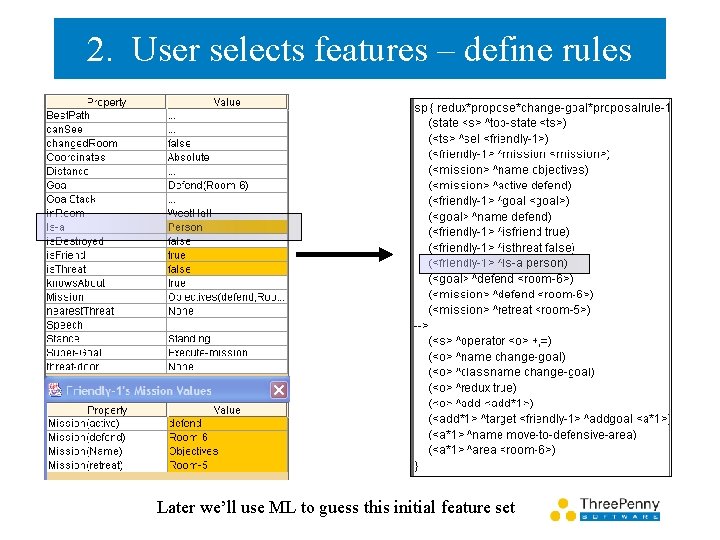

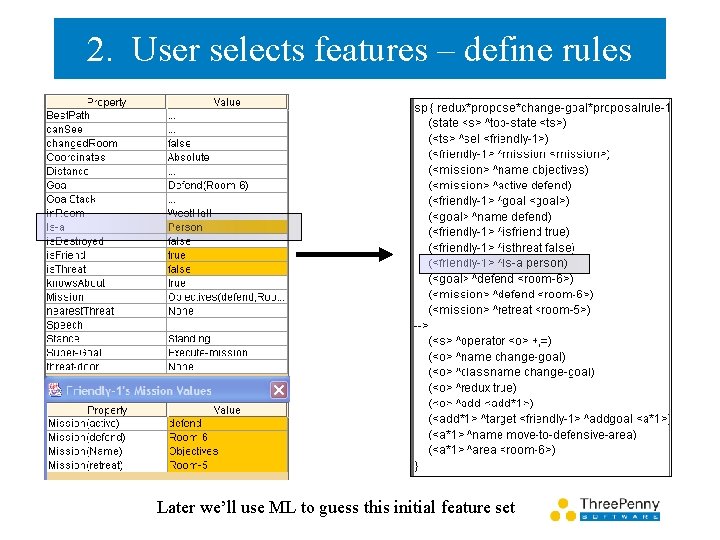

2. User selects features to define rules. Later, we’ll use ML to guess this initial feature set.

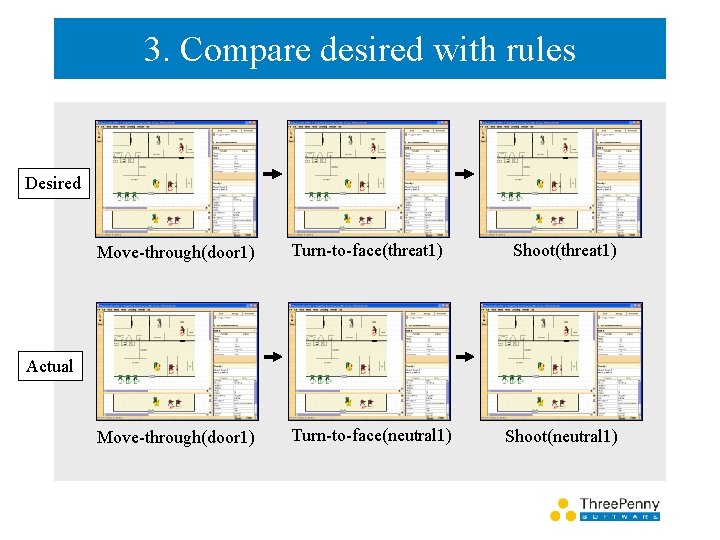

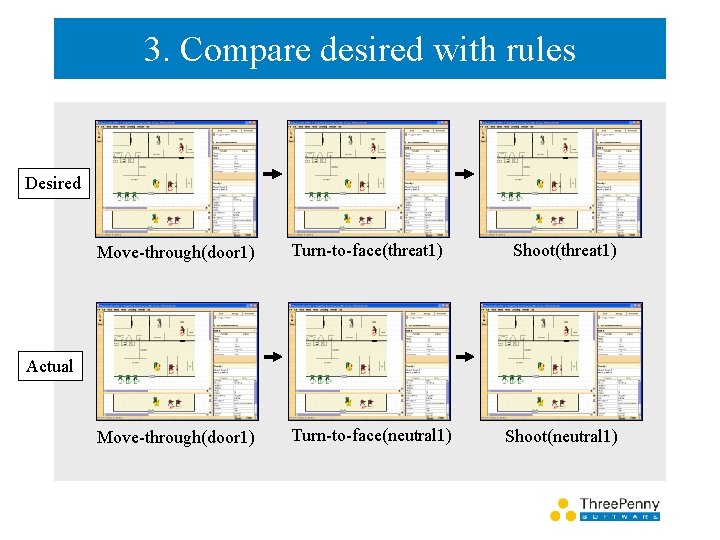

3. Compare desired behavior with the rules
Desired: Move-through(door 1), Turn-to-face(threat 1), Shoot(threat 1)
Actual: Move-through(door 1), Turn-to-face(neutral 1), Shoot(neutral 1)
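The comparison step amounts to aligning the desired and actual action sequences and reporting every step that differs. A minimal sketch, assuming both behaviors are available as lists of action strings; `compare_behavior` is a hypothetical name.

```python
from itertools import zip_longest
from typing import List, Optional, Tuple


def compare_behavior(
    desired: List[str], actual: List[str]
) -> List[Tuple[int, Optional[str], Optional[str]]]:
    """Return (step, desired action, actual action) for every step that differs."""
    return [
        (step, want, got)
        for step, (want, got) in enumerate(zip_longest(desired, actual))
        if want != got
    ]


desired = ["Move-through(door 1)", "Turn-to-face(threat 1)", "Shoot(threat 1)"]
actual = ["Move-through(door 1)", "Turn-to-face(neutral 1)", "Shoot(neutral 1)"]
```

Here the behaviors first diverge at step 1; one plausible reading is that the Turn-to-face rule does not distinguish threats from neutrals.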

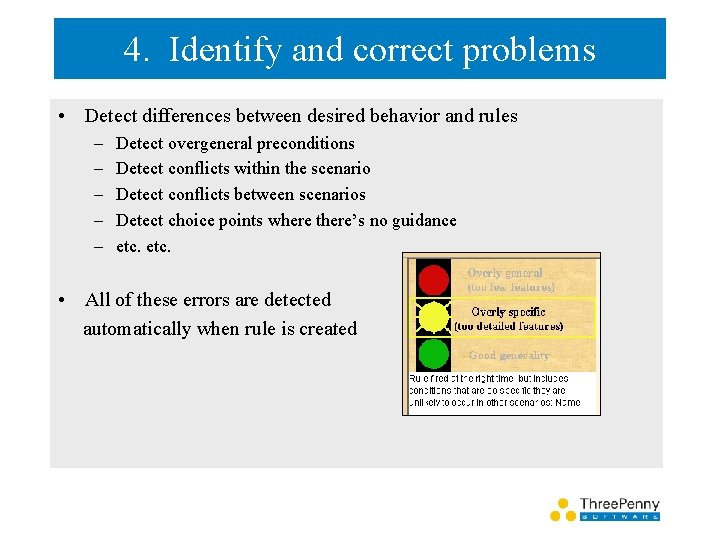

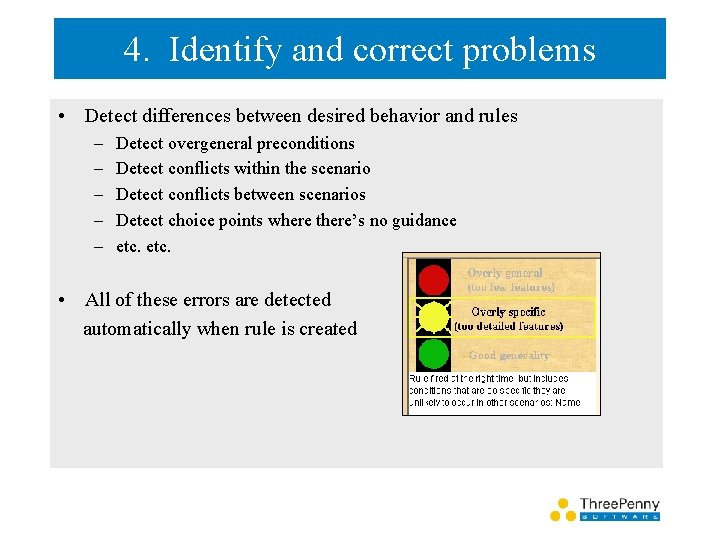

4. Identify and correct problems
• Detect differences between the desired behavior and the rules:
  – Detect overgeneral preconditions
  – Detect conflicts within a scenario
  – Detect conflicts between scenarios
  – Detect choice points where there is no guidance
  – etc.
• All of these errors are detected automatically when a rule is created.
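Conflicts between scenarios can be sketched as grouping examples by their selected features and flagging any feature set mapped to more than one desired action. This assumes each example is reduced to the user-selected features plus the desired action; `find_conflicts` and the feature names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Set, Tuple

# (user-selected features of a situation, desired action) from one scenario.
Example = Tuple[Dict[str, str], str]
FeatureKey = FrozenSet[Tuple[str, str]]


def find_conflicts(examples: List[Example]) -> Dict[FeatureKey, Set[str]]:
    """Feature sets that the examples map to more than one desired action."""
    actions: Dict[FeatureKey, Set[str]] = defaultdict(set)
    for features, action in examples:
        actions[frozenset(features.items())].add(action)
    return {feats: acts for feats, acts in actions.items() if len(acts) > 1}


scenario_1 = [({"target": "visible"}, "Shoot")]
scenario_2 = [({"target": "visible"}, "Hold-fire")]
```

A conflict here suggests the selected features are too few; adding a feature such as the target's type separates the two cases.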

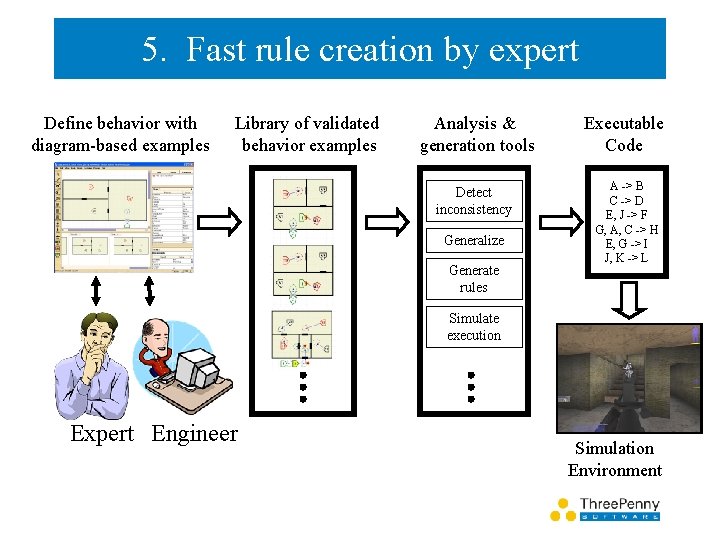

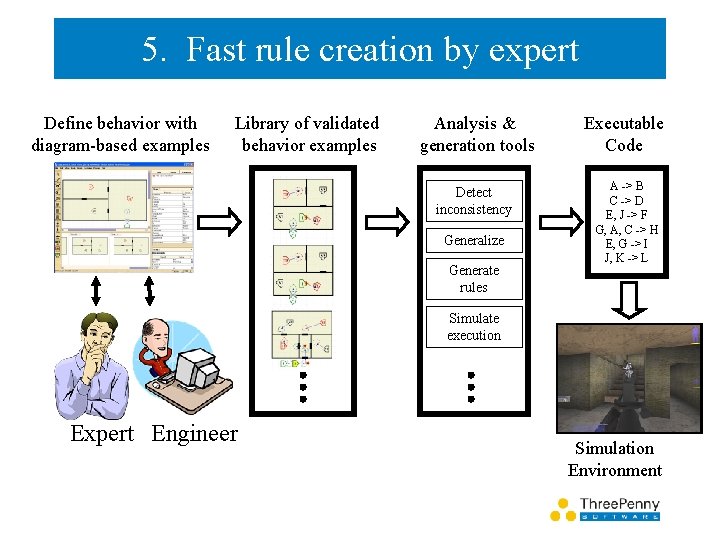

5. Fast rule creation by expert
• The expert defines behavior with diagram-based examples.
• Library of validated behavior examples.
• Analysis and generation tools: detect inconsistency, generalize, generate rules, simulate execution.
• Output: executable code, e.g. rules such as A -> B; C -> D; E, J -> F; G, A, C -> H; E, G -> I; J, K -> L.
• Participants: expert, engineer, simulation environment.
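The "simulate execution" step can be illustrated by forward chaining over the example rules from the slide. This is a minimal sketch, assuming rules are (set of conditions, conclusion) pairs over symbolic facts; `simulate` and `RULES` are hypothetical names, not the tool's API.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]

# The example rules from the slide.
RULES: List[Rule] = [
    (frozenset({"A"}), "B"),
    (frozenset({"C"}), "D"),
    (frozenset({"E", "J"}), "F"),
    (frozenset({"G", "A", "C"}), "H"),
    (frozenset({"E", "G"}), "I"),
    (frozenset({"J", "K"}), "L"),
]


def simulate(facts: Iterable[str], rules: List[Rule] = RULES) -> Set[str]:
    """Forward-chain: fire every rule whose conditions hold until nothing changes."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known
```

Starting from A, C, E, and G, the rules derive B, D, H, and I; F and L never fire because J is absent.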