Learning Mediation Strategies in Heterogeneous Multiagent Systems: Application to Adaptive Services

- Slides: 17

Learning Mediation Strategies in Heterogeneous Multiagent Systems: Application to Adaptive Services

Romaric CHARTON, MAIA Team

UMASS Workshop, Wednesday 23rd June 2004

Presentation Overview

- Research and application fields
- Heterogeneous Multiagent Systems (h-MAS)
- Typical example of interaction
- Markov Decision Process based mediation
- Experiments
- Work in progress

Research Fields

Domain: heterogeneous Multiagent Systems (h-MAS)
- Learning the behaviours of agents that interact with human beings
- Organization of agents of different natures

Approach:
- Inspiration from the Agent-Group-Role model (Gutknecht and Ferber 1998)
- Dealing with real applications:
  - dynamic environments
  - uncertainty
  - incomplete knowledge

Use of stochastic models (MDP) + reinforcement learning

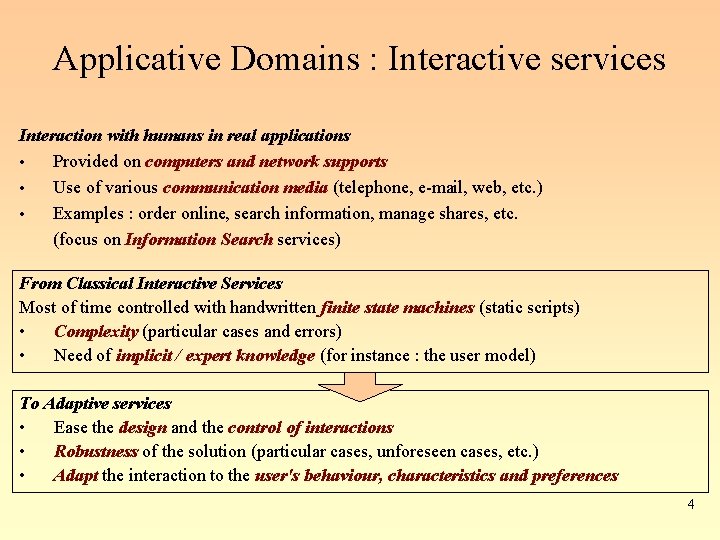

Application Domains: Interactive Services

Interaction with humans in real applications:
- Provided on computers and network platforms
- Use of various communication media (telephone, e-mail, web, etc.)
- Examples: ordering online, searching for information, managing shares, etc. (focus on information search services)

From classical interactive services, most of the time controlled with handwritten finite state machines (static scripts):
- Complexity (particular cases and errors)
- Need for implicit / expert knowledge (for instance, the user model)

To adaptive services:
- Ease the design and control of interactions
- Robustness of the solution (particular cases, unforeseen cases, etc.)
- Adapt the interaction to the user's behaviour, characteristics and preferences

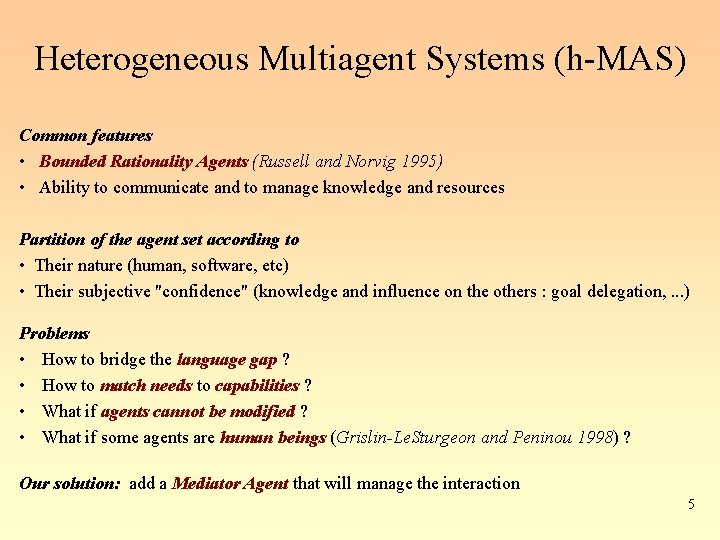

Heterogeneous Multiagent Systems (h-MAS)

Common features:
- Agents with bounded rationality (Russell and Norvig 1995)
- Ability to communicate and to manage knowledge and resources

Partition of the agent set according to:
- their nature (human, software, etc.)
- their subjective "confidence" (knowledge and influence on the others: goal delegation, ...)

Problems:
- How to bridge the language gap?
- How to match needs to capabilities?
- What if agents cannot be modified?
- What if some agents are human beings (Grislin-Le Sturgeon and Peninou 1998)?

Our solution: add a mediator agent that manages the interaction.

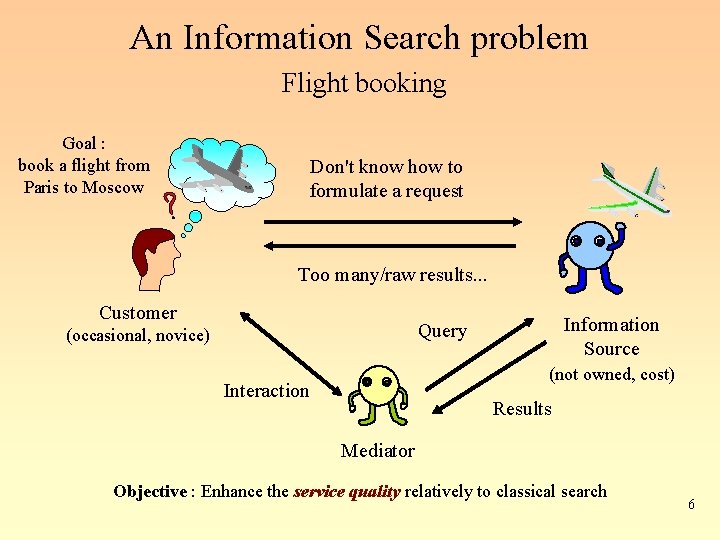

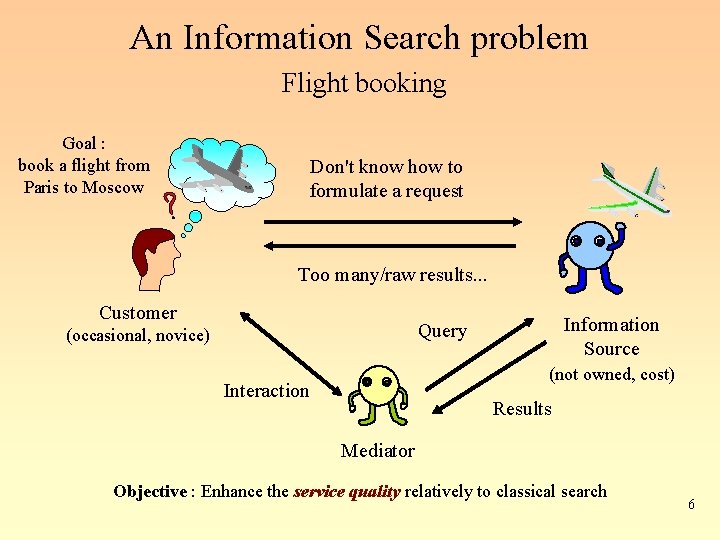

An Information Search Problem: Flight Booking

Goal: book a flight from Paris to Moscow.
- Customer (occasional, novice): does not know how to formulate a request
- Information source (not owned, has a cost): returns too many / raw results...

[Figure: the Mediator sits between the Customer (interaction) and the Information Source (query / results).]

Objective: enhance the service quality relative to classical search.

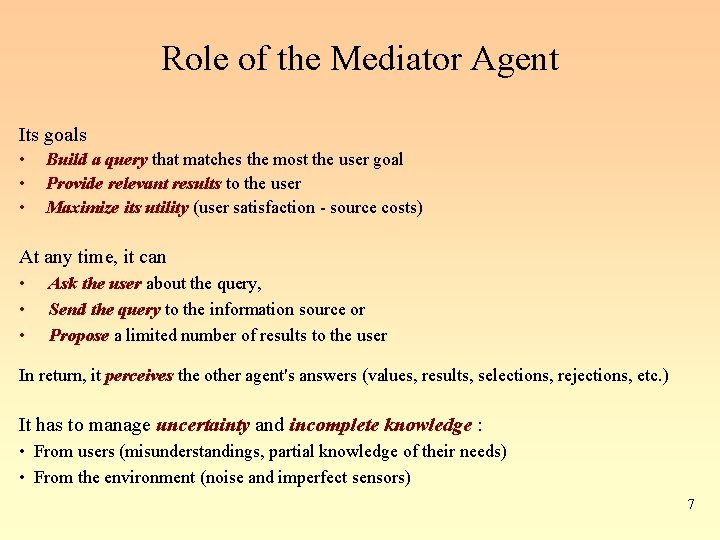

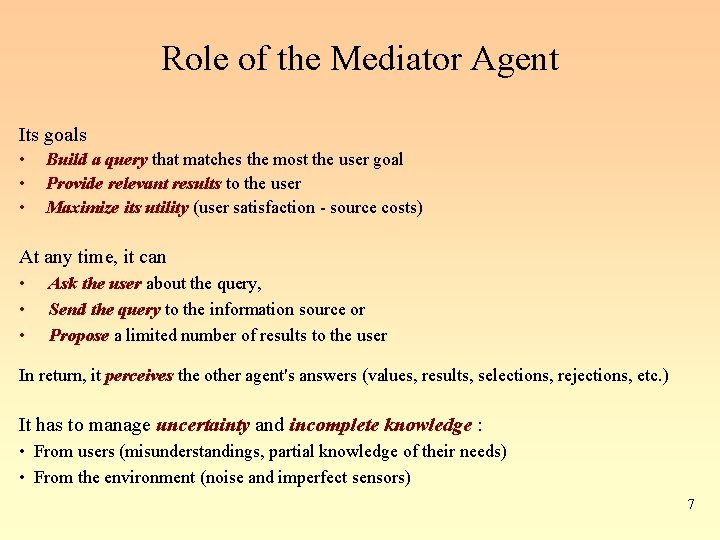

Role of the Mediator Agent

Its goals:
- Build the query that best matches the user's goal
- Provide relevant results to the user
- Maximize its utility (user satisfaction - source costs)

At any time, it can:
- ask the user about the query,
- send the query to the information source, or
- propose a limited number of results to the user.

In return, it perceives the other agents' answers (values, results, selections, rejections, etc.).

It has to manage uncertainty and incomplete knowledge:
- from users (misunderstandings, partial knowledge of their own needs)
- from the environment (noise and imperfect sensors)

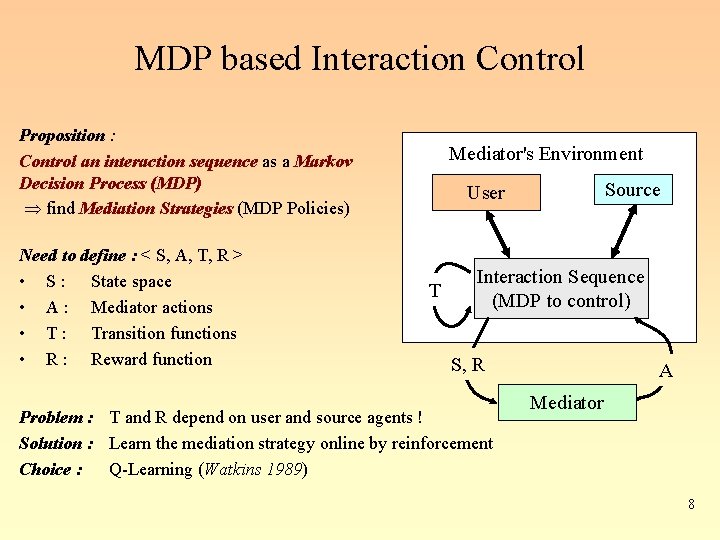

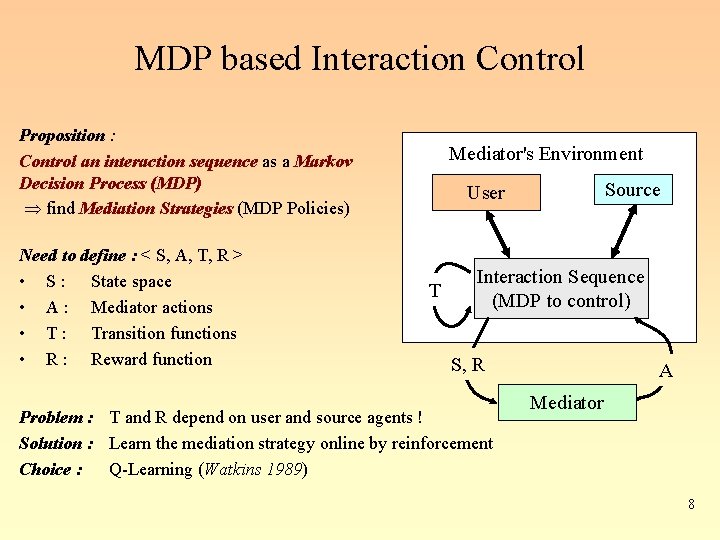

MDP-Based Interaction Control

Proposition: control an interaction sequence as a Markov Decision Process (MDP), and find mediation strategies as MDP policies.

This requires defining $\langle S, A, T, R \rangle$:
- $S$: state space
- $A$: mediator actions
- $T$: transition function
- $R$: reward function

[Figure: the Mediator applies actions $A$ to its environment (User and Source); the interaction sequence, i.e. the MDP to control, evolves according to $T$ and returns states $S$ and rewards $R$.]

Problem: $T$ and $R$ depend on the user and source agents!

Solution: learn the mediation strategy online by reinforcement.

Choice: Q-Learning (Watkins 1989).
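Q-Learning is the learning method named on the slide. Below is a minimal tabular sketch of the standard one-step update, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$, with epsilon-greedy exploration. The class name `QLearner`, the toy two-state environment `toy_step`, and the values of `alpha`, `gamma` and `epsilon` are all hypothetical illustrations. None of them come from the mediator prototype. In the real system, the states would be the abstract interaction states, and the rewards would come from the user and the source.

```python
import random
from collections import defaultdict


class QLearner:
    """Tabular one-step Q-learning (Watkins 1989) with epsilon-greedy exploration.

    Hypothetical sketch: states and actions may be any hashable values;
    alpha, gamma and epsilon are illustrative defaults, not values from the talk.
    """

    def __init__(self, actions, alpha=0.1, gamma=0.95, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q = defaultdict(float)  # (state, action) -> value estimate, 0.0 if unseen
        self.rng = random.Random(seed)

    def best_action(self, state):
        # Greedy choice; ties are broken by the order of self.actions.
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def choose_action(self, state):
        # Explore with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.best_action(state)

    def update(self, state, action, reward, next_state, terminal):
        # Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
        target = reward
        if not terminal:
            target += self.gamma * max(self.q[(next_state, a)] for a in self.actions)
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


def toy_step(state, action):
    """Hypothetical stand-in for user + source: ask once, then propose results."""
    if state == "start":
        return ("ready", 0.0, False) if action == "ask" else ("end", -1.0, True)
    return ("end", 10.0, True) if action == "propose" else ("ready", -1.0, False)


agent = QLearner(["ask", "propose"], epsilon=0.2, seed=0)
for _episode in range(500):
    state, done = "start", False
    while not done:
        action = agent.choose_action(state)
        next_state, reward, done = toy_step(state, action)
        agent.update(state, action, reward, next_state, done)
        state = next_state

print(agent.best_action("start"), agent.best_action("ready"))  # ask propose
```

The learner never needs $T$ or $R$ explicitly. It only sees sampled transitions, and this is why Q-Learning fits the problem stated on the slide: $T$ and $R$ depend on agents the mediator cannot model in advance.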

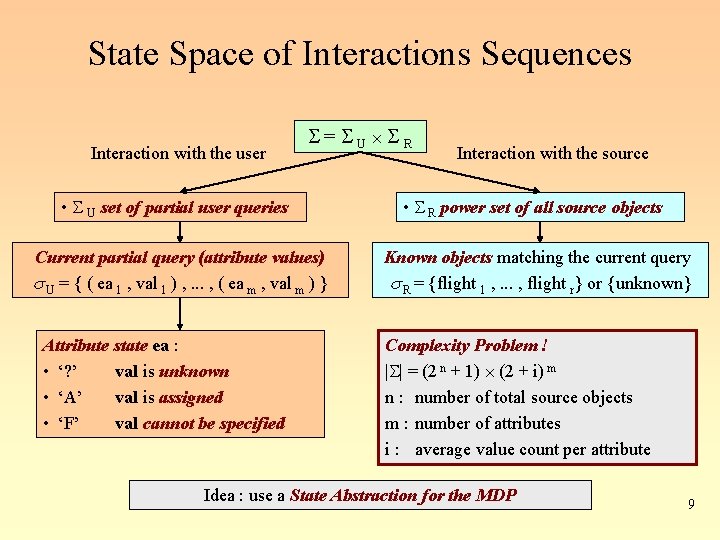

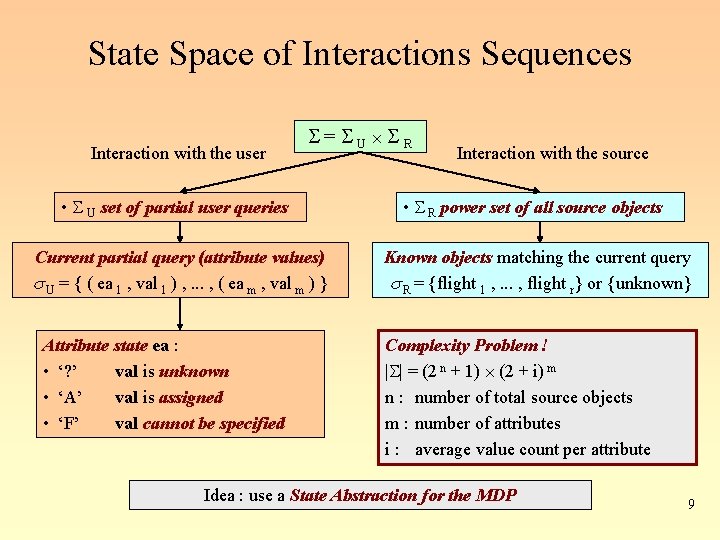

State Space of Interaction Sequences

The full state space is $U \times R$. $U$ covers the interaction with the user, and $R$ covers the interaction with the source:
- $U$: set of partial user queries
- $R$: power set of all source objects

Current partial query (attribute values): $s_U = \{(ea_1, val_1), \dots, (ea_m, val_m)\}$

Known objects matching the current query: $s_R = \{\mathit{flight}_1, \dots, \mathit{flight}_r\}$ or $\{\text{unknown}\}$

Attribute state $ea$:
- '?': $val$ is unknown
- 'A': $val$ is assigned
- 'F': $val$ cannot be specified

Complexity problem!

$$|U \times R| = (2^n + 1)\,(2 + i)^m$$

Here $n$ is the total number of source objects, $m$ is the number of attributes, and $i$ is the average number of values per attribute. The result part is either one of the $2^n$ subsets of source objects or unknown. Each attribute is either '?', 'F', or 'A' with one of $i$ values.

Idea: use a state abstraction for the MDP.
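The size formula can be checked by brute force on a tiny instance. The hypothetical sketch below encodes a concrete state as a pair $(s_U, s_R)$:
- $s_U$ is a tuple of (attribute state, value) pairs.
- $s_R$ is either a frozenset of source objects or the marker `"unknown"`.

It assumes that every attribute has exactly $i$ possible values, so the formula is exact rather than an estimate based on an average. The function names and the toy flights and cities are invented for the example.

```python
from itertools import combinations, product


def concrete_state_count(n, m, i):
    """(2^n + 1) * (2 + i)^m: result sets (any subset, or unknown) times partial queries."""
    return (2 ** n + 1) * (2 + i) ** m


def enumerate_concrete_states(objects, domains):
    """List every (s_U, s_R) pair for the given source objects and attribute domains."""
    # Per attribute: '?' (unknown), 'F' (cannot be specified) or 'A' with one concrete value.
    per_attribute = [[("?", None), ("F", None)] + [("A", v) for v in dom] for dom in domains]
    user_parts = list(product(*per_attribute))
    # Known matching objects: any subset of the source objects, or 'unknown'.
    subsets = [frozenset(c) for k in range(len(objects) + 1) for c in combinations(objects, k)]
    result_parts = subsets + ["unknown"]
    return [(u, r) for u in user_parts for r in result_parts]


flights = ["flight1", "flight2"]
domains = [["Paris", "Lyon"], ["Moscow", "Kiev"]]  # hypothetical departure / arrival cities
states = enumerate_concrete_states(flights, domains)
print(len(states), concrete_state_count(n=2, m=2, i=2))  # 80 80
```

Even a modest source makes this explode. With $n = 100$ flights, the factor $2^{100} + 1$ alone exceeds $10^{30}$.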

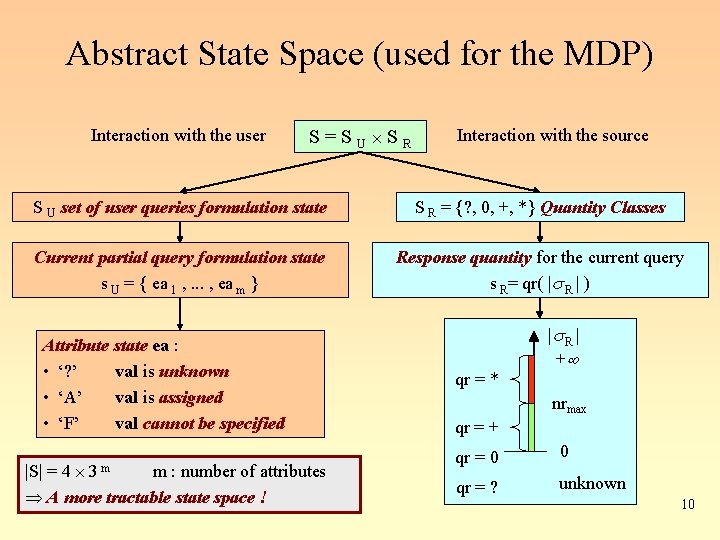

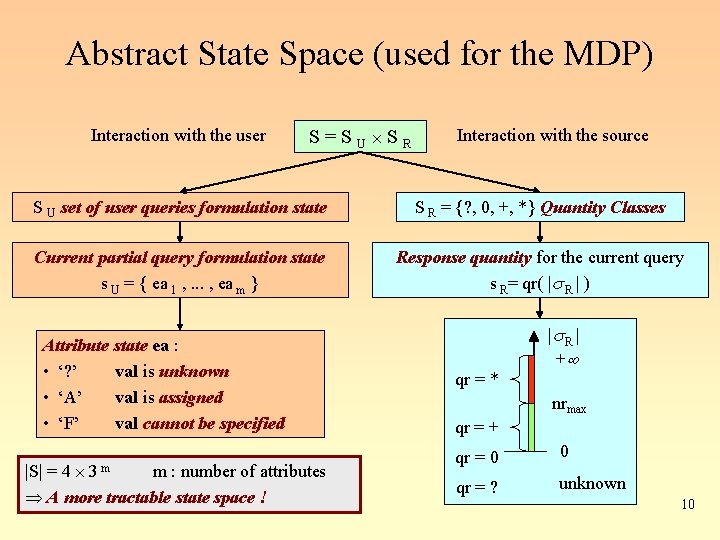

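Abstract State Space (used for the MDP)

$S = S_U \times S_R$. $S_U$ covers the interaction with the user, and $S_R$ covers the interaction with the source:
- $S_U$: set of user query formulation states
- $S_R = \{?, 0, +, *\}$: quantity classes

Current partial query formulation state: $s_U = \{ea_1, \dots, ea_m\}$. Each attribute state $ea$ is '?' (value unknown), 'A' (value assigned) or 'F' (value cannot be specified).

Response quantity for the current query: $s_R = qr(|s_R|)$. It is computed from the number of known objects matching the current query:

$$qr = \begin{cases} ? & \text{if the matching objects are unknown} \\ 0 & \text{if } |s_R| = 0 \\ + & \text{if } 0 < |s_R| \le nr_{max} \\ * & \text{if } |s_R| > nr_{max} \end{cases}$$

A more tractable state space!

$$|S| = 4 \cdot 3^m \qquad (m\text{: number of attributes})$$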
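The abstraction keeps only the attribute states and a coarse class for the result count. The minimal sketch below assumes the following encoding:
- A concrete state is a tuple of (attribute state, value) pairs.
- It also holds either a frozenset of matching objects or the marker `"unknown"`.

It also assumes that exactly $nr_{max}$ results still count as '+', since the slide does not settle that boundary. The function names are hypothetical.

```python
from itertools import product


def quantity_class(n_results, nr_max):
    """qr: map the number of known matching objects to '?', '0', '+' or '*'."""
    if n_results is None:       # the source has not answered the current query yet
        return "?"
    if n_results == 0:
        return "0"
    if n_results <= nr_max:     # assumption: exactly nr_max results still counts as '+'
        return "+"
    return "*"                  # too many results to propose to the user


def abstract_state(user_part, result_part, nr_max):
    """Map a concrete (s_U, s_R) to the abstract (s_U, qr(|s_R|))."""
    s_u = tuple(status for status, _value in user_part)  # drop values, keep '?', 'A', 'F'
    n = None if result_part == "unknown" else len(result_part)
    return s_u, quantity_class(n, nr_max)


def all_abstract_states(m):
    """Enumerate S = S_U x S_R for m attributes: 3^m * 4 states."""
    return list(product(product("?AF", repeat=m), "?0+*"))


query = (("A", "Paris"), ("A", "Moscow"), ("?", None))  # flight class not yet asked
print(abstract_state(query, frozenset({"flight1", "flight2", "flight3"}), nr_max=5))
# (('A', 'A', '?'), '+')
print(len(all_abstract_states(3)))  # 108
```

Dropping the attribute values and the identity of the matching objects is what makes the state count independent of $n$ and $i$.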

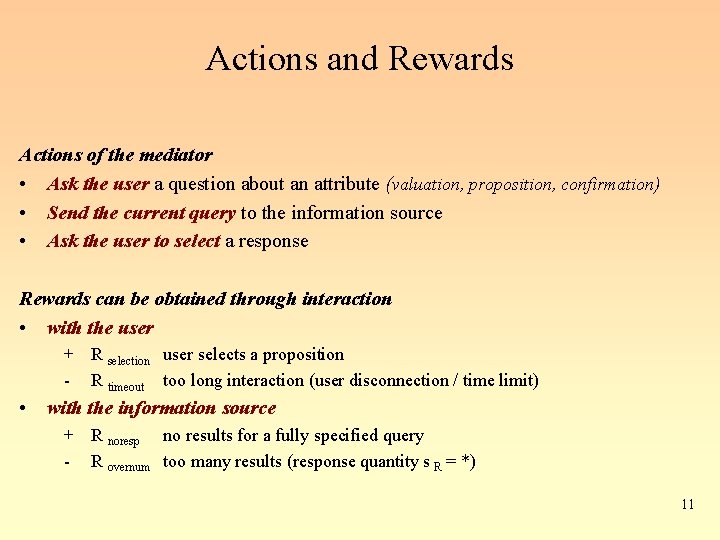

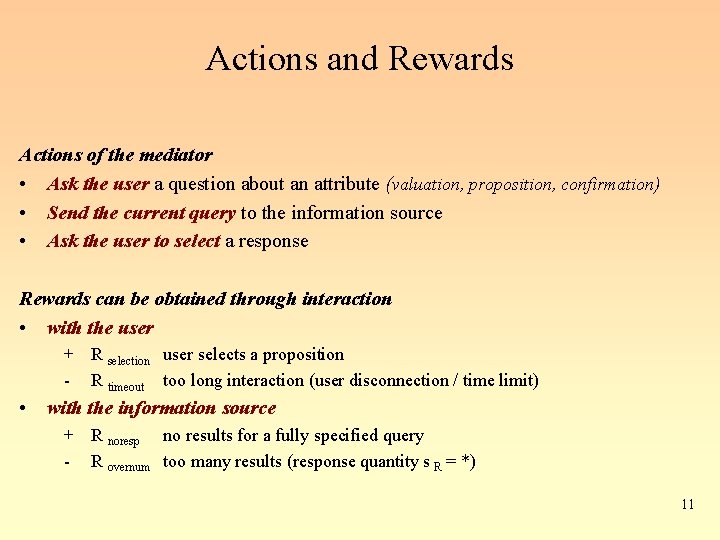

Actions and Rewards

Actions of the mediator:
- Ask the user a question about an attribute (valuation, proposition, confirmation)
- Send the current query to the information source
- Ask the user to select a response

Rewards can be obtained through interaction:
- with the user:
  - $+R_{selection}$: the user selects a proposition
  - $-R_{timeout}$: the interaction is too long (user disconnection / time limit)
- with the information source:
  - $+R_{noresp}$: no results for a fully specified query
  - $-R_{overnum}$: too many results (response quantity $s_R = *$)
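The action count used in the experiments ($3m + 2$) follows from this list. Each attribute gets three question types, and two more actions are added: sending the query and asking for a selection. Below is a hypothetical sketch of the action set and of an event-to-reward table. The slide gives only the sign of each reward, so the magnitudes and event names here are illustrative assumptions.

```python
# Hypothetical reward magnitudes: the slide only fixes their signs.
R_SELECTION = 10.0
R_TIMEOUT = 5.0
R_NORESP = 2.0
R_OVERNUM = 1.0

# Hypothetical event names mapped to signed rewards.
REWARDS = {
    "user_selects_proposition": +R_SELECTION,  # from the user
    "timeout": -R_TIMEOUT,                     # user disconnection / time limit
    "no_results_for_full_query": +R_NORESP,    # from the information source
    "too_many_results": -R_OVERNUM,            # response quantity s_R = '*'
}


def mediator_actions(attributes):
    """Three question types per attribute, then send-query and ask-for-selection."""
    actions = [("ask", kind, attr)
               for attr in attributes
               for kind in ("valuation", "proposition", "confirmation")]
    actions.append(("send_query",))
    actions.append(("ask_selection",))
    return actions


def reward(event):
    """Signed reward for an interaction event; any other event yields 0."""
    return REWARDS.get(event, 0.0)


attrs = ["departure", "arrival", "class"]
print(len(mediator_actions(attrs)))  # 11 = 3 * 3 + 2
print(reward("timeout"), reward("user_selects_proposition"))  # -5.0 10.0
```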

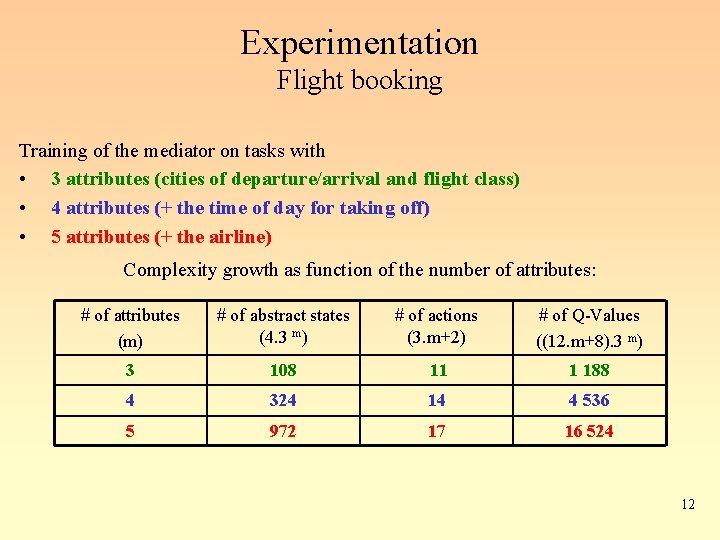

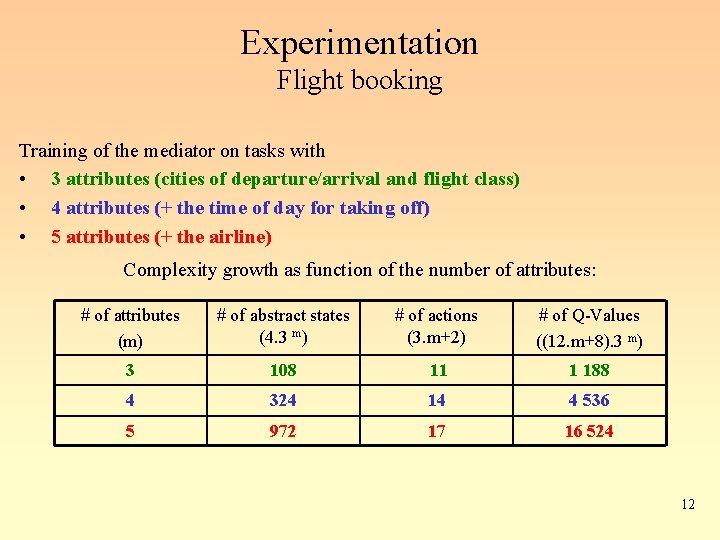

Experimentation: Flight Booking

Training of the mediator on tasks with:
- 3 attributes (departure/arrival cities and flight class)
- 4 attributes (+ the time of day for take-off)
- 5 attributes (+ the airline)

Complexity growth as a function of the number of attributes:

| # of attributes ($m$) | # of abstract states ($4 \cdot 3^m$) | # of actions ($3m + 2$) | # of Q-values ($(12m + 8) \cdot 3^m$) |
|---|---|---|---|
| 3 | 108 | 11 | 1,188 |
| 4 | 324 | 14 | 4,536 |
| 5 | 972 | 17 | 16,524 |
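The table entries follow from the abstract state count $4 \cdot 3^m$ and the action count $3m + 2$. Their product gives $(4 \cdot 3^m)(3m + 2) = (12m + 8) \cdot 3^m$ Q-values. A short check that reproduces the table (the function name is hypothetical):

```python
def mdp_sizes(m):
    """Abstract states, actions and Q-table entries for m attributes."""
    states = 4 * 3 ** m          # 4 quantity classes x 3 states per attribute
    actions = 3 * m + 2          # 3 question types per attribute + 2 other actions
    q_values = states * actions  # equals (12m + 8) * 3^m
    return states, actions, q_values


for m in (3, 4, 5):
    print(m, *mdp_sizes(m))
# 3 108 11 1188
# 4 324 14 4536
# 5 972 17 16524
```

Each added attribute roughly triples the Q-table. This is consistent with the slower convergence reported for 5 attributes.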

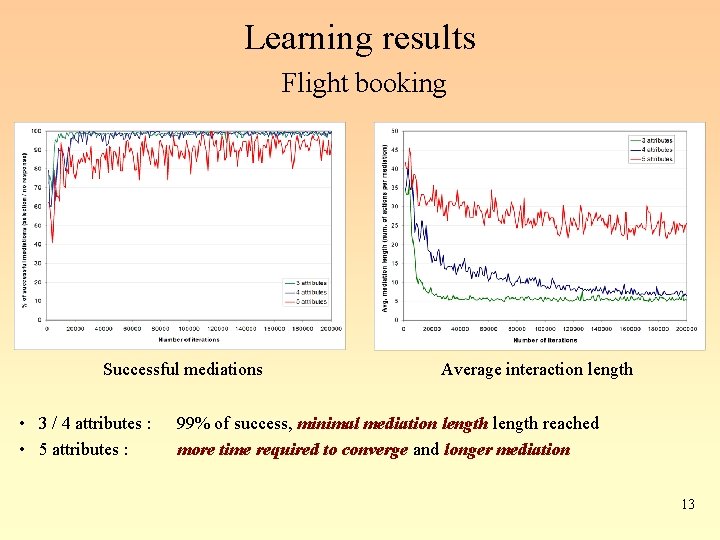

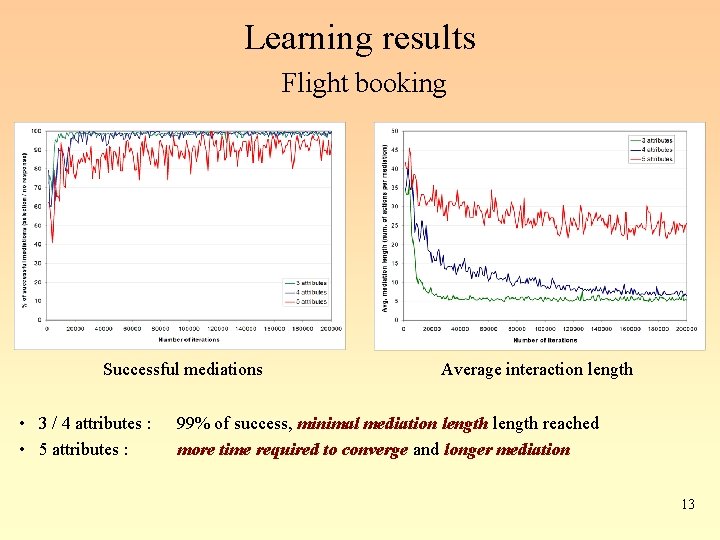

Learning Results: Flight Booking

[Figures: successful mediations and average interaction length during learning]
- 3 / 4 attributes: 99% success, minimal mediation length reached
- 5 attributes: more time required to converge, and longer mediations

Conclusion

Mediation strategies in h-MAS:
- Reinforcement learning of mediation strategies is possible
- Answers users' needs (those of the majority, but also particular ones, through profiles)

Software model:
- Towards "user-oriented" design (utility based on user satisfaction)
- Implementation of a mediator prototype

Limits:
- Limited richness of the learning, due to the simulated answer generator
- The user is at most partially observable
- Performance degrades on more complex tasks

Current Work

Dealing with partial observation:
- Challenge: get rid of the ad-hoc state space abstraction
- Key question: "What must be kept in / from the interaction history?"
- Study of memory-based approaches:
  - HQ-Learning (Wiering and Schmidhuber 1997)
  - U-Trees (McCallum 1995)
  - ...

Dealing with structured tasks:
- Challenge: reduced state space complexity, better guidance... and service composition?
- Main idea: exploit or discover the task structure (sub-tasks, dependencies, etc.)
- Hierarchical models are promising:
  - MAXQ (Dietterich 2000) / HEXQ (Hengst 2002)
  - HAM (Parr 1998) / PHAM (Andre and Russell 2000)
  - H-MDP and H-POMDP
  - ...

References

- (Andre and Russell 2000) Andre D. and Russell S. J., Programmable Reinforcement Learning Agents. In NIPS, 2000.
- (Dietterich 2000) Dietterich T. G., An Overview of MAXQ Hierarchical Reinforcement Learning. In SARA, 2000.
- (Ferber 1995) Ferber J., Les Systèmes Multi-Agents. Vers une intelligence collective [Multi-Agent Systems: Towards a Collective Intelligence]. Interéditions, 1995.
- (Gutknecht and Ferber 1998) Gutknecht O. and Ferber J., Un méta-modèle organisationnel pour l'analyse, la conception et l'exécution de systèmes multi-agents [An organizational meta-model for the analysis, design and execution of multi-agent systems]. In JFIADSMA'98, p. 267, 1998.
- (Grislin-Le Sturgeon and Peninou 1998) Grislin-Le Sturgeon E. and Péninou A., Les interactions Homme-SMA : réflexions et problématiques de conception [Human-MAS interactions: reflections and design issues]. In Systèmes Multi-Agents de l'interaction à la Socialité [Multi-Agent Systems from Interaction to Sociality], JFIADSMA'98, Hermès, pp. 133-145, 1998.
- (Hengst 2002) Hengst B., Discovering Hierarchy in Reinforcement Learning with HEXQ. In ICML, pp. 243-250, Sydney, Australia, 2002.
- (McCallum 1995) McCallum A. K., Reinforcement Learning with Selective Perception and Hidden State. Ph.D. Thesis, University of Rochester, New York, 1995.
- (Parr 1998) Parr R. E., Hierarchical Control and Learning for Markov Decision Processes. Ph.D. Thesis, University of California, Berkeley, 1998.
- (Russell and Norvig 1995) Russell S. and Norvig P., Artificial Intelligence: A Modern Approach (The Intelligent Agent Book). Prentice Hall, 1995.
- (Watkins 1989) Watkins C., Learning from Delayed Rewards. Ph.D. Thesis, King's College, University of Cambridge, England, 1989.
- (Wiering and Schmidhuber 1997) Wiering M. and Schmidhuber J., HQ-Learning. Adaptive Behavior 6(2), 1997.

Thank you for your attention. Any questions?