Learning and Conditioning

- Slides: 21

Learning: any relatively permanent change in behavior that is based upon experience.

Behaviorists: psychologists who insist that psychologists should study only observable, measurable behaviors, not mental processes.

I. The Assumptions of Behaviorism
A. Behaviorists are deterministic.
B. Behaviorists believe that mental explanations are ineffective.
C. Behaviorists believe that the environment plays a powerful role in molding behavior.

II. Two Key Types of Behaviorists
A. Radical Behaviorists: believe that internal states are caused by events in the environment, or by genetics.
B. Methodological Behaviorists: study only events that they can measure and observe, BUT they sometimes use those observations to make inferences about internal events.
   1) Intervening Variable: something that cannot be directly observed yet links a variety of procedures to a variety of possible responses.

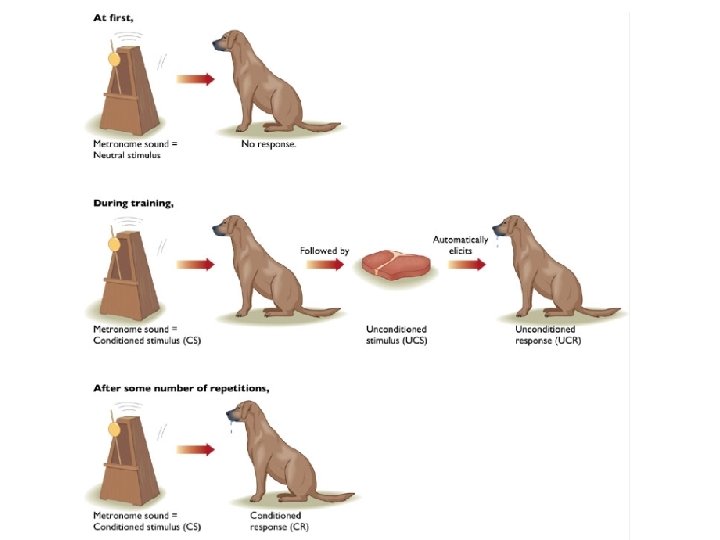

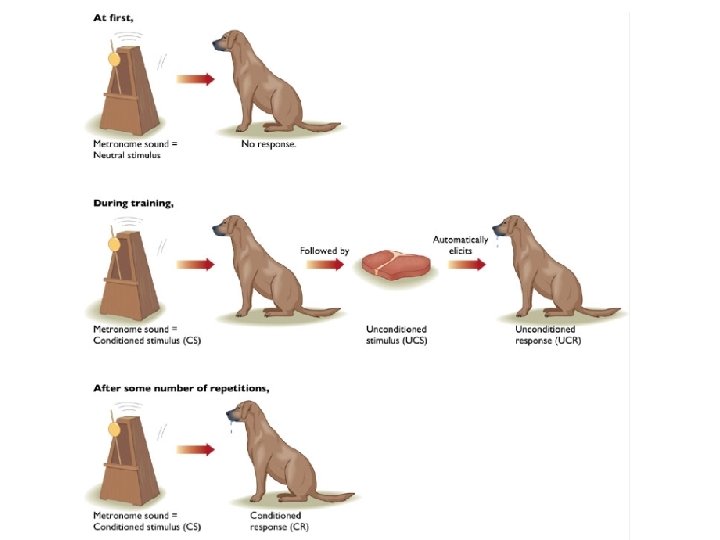

III. Pavlov and Classical Conditioning
A. Classical Conditioning: learning based on association of a stimulus that does not ordinarily elicit a particular response with another stimulus that does elicit the response. Applies to involuntary responses.
   1) Classical Conditioning Terminology
   Unconditioned Stimulus (UCS): an event that consistently and automatically elicits an unconditioned response. (Food)
   Unconditioned Response (UCR): an action that the unconditioned stimulus automatically elicits. (Salivation)
   Conditioned Stimulus (CS): formerly the neutral stimulus; having been paired with the unconditioned stimulus, it elicits the same response. (Bell) That response depends upon its consistent pairing with the UCS.
   Conditioned Response (CR): the response elicited by the conditioned stimulus due to the training. (Salivation) Usually it closely resembles the UCR.

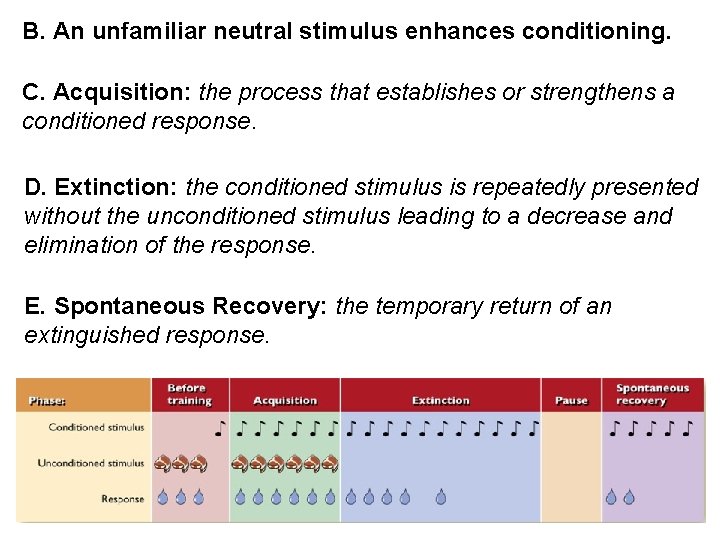

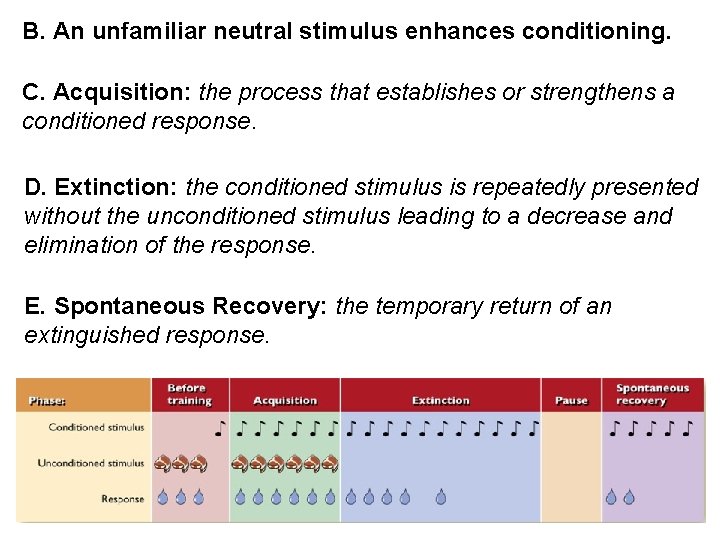

B. An unfamiliar neutral stimulus enhances conditioning.
C. Acquisition: the process that establishes or strengthens a conditioned response.
D. Extinction: the conditioned stimulus is repeatedly presented without the unconditioned stimulus, leading to a decrease in, and eventual elimination of, the response.
E. Spontaneous Recovery: the temporary return of an extinguished response.

F. Simultaneous conditioning: the conditioned stimulus and the unconditioned stimulus are presented at the same time.
G. Compound conditioning: two or more conditioned stimuli are presented together with the unconditioned stimulus.
H. Stimulus generalization: the extension of a conditioned response from the training stimulus to similar stimuli.
I. Stimulus discrimination: the process of learning to respond differently to two stimuli because they produce two different outcomes.
J. Drug Tolerance and Classical Conditioning
K. Pavlov believed that after enough training, the CS became the UCS, rather than simply a signal that the UCS was coming. Later research determined that this is NOT the case; rather, the CS does indeed become a signal that the UCS is coming.

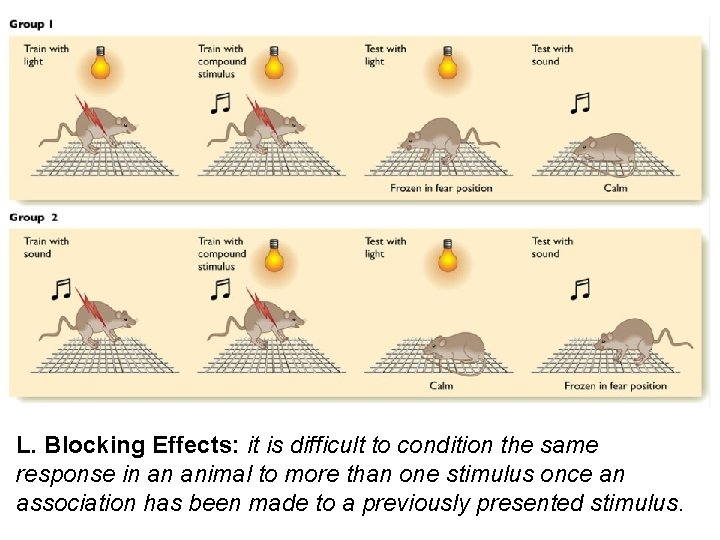

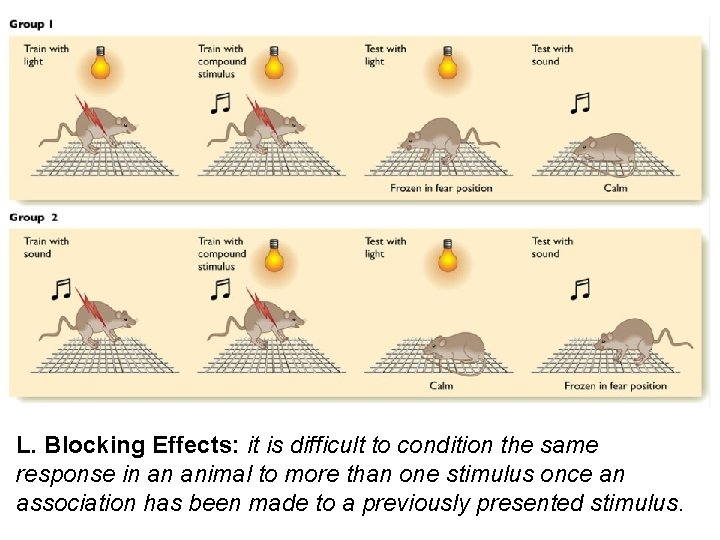

L. Blocking Effects: it is difficult to condition the same response in an animal to more than one stimulus once an association has been made to a previously presented stimulus.
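Acquisition, extinction, and blocking can be illustrated with a small simulation. The sketch below uses the Rescorla-Wagner learning rule, which is not part of these slides and is brought in here only as one common way to model conditioning. The function name `rescorla_wagner`, the learning rate `alpha=0.3`, and the trial counts are illustrative assumptions, not values from the lecture.

```python
def rescorla_wagner(trials, alpha=0.3, lam_max=1.0):
    """Track the associative strength of each cue across trials.

    trials:  list of (cues_present, ucs_present) pairs, e.g. ({"bell"}, True).
    alpha:   assumed learning rate (hypothetical value).
    lam_max: maximum strength the UCS can support.
    Returns a list with one snapshot of all cue strengths per trial.
    """
    strength = {}
    history = []
    for cues, ucs_present in trials:
        target = lam_max if ucs_present else 0.0
        # The prediction is the summed strength of every cue on this trial.
        prediction = sum(strength.get(cue, 0.0) for cue in cues)
        error = target - prediction
        # Every cue that is present shares the same prediction error.
        for cue in cues:
            strength[cue] = strength.get(cue, 0.0) + alpha * error
        history.append(dict(strength))
    return history


# Acquisition (bell + food) followed by extinction (bell alone).
history = rescorla_wagner([({"bell"}, True)] * 20 + [({"bell"}, False)] * 20)
after_acquisition = history[19]["bell"]
after_extinction = history[39]["bell"]

# Blocking: the bell is trained first, then bell + light are paired with food.
blocking = rescorla_wagner(
    [({"bell"}, True)] * 20 + [({"bell", "light"}, True)] * 20
)
# Control: bell + light are paired with food from the start.
control = rescorla_wagner([({"bell", "light"}, True)] * 20)
blocked_light = blocking[-1]["light"]
control_light = control[-1]["light"]

print(f"bell after acquisition: {after_acquisition:.3f}")
print(f"bell after extinction:  {after_extinction:.3f}")
print(f"light when blocked:     {blocked_light:.3f}")
print(f"light in control group: {control_light:.3f}")
```

In this sketch the light gains almost no strength in the blocking group because the bell already predicts the food, so there is little prediction error left to learn from. The simple rule does not reproduce spontaneous recovery, so it should be read as a partial illustration only.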

Behaviorism: Classical Conditioning
• John Watson: Conditioning of Fear
• Infant ‘Little Albert’
  – 1. Albert liked the furry rat
  – 2. Rat presented with loud CRASH!
  – 3. Albert cried because of noise
  – 4. Eventually, sight of rat made Albert cry

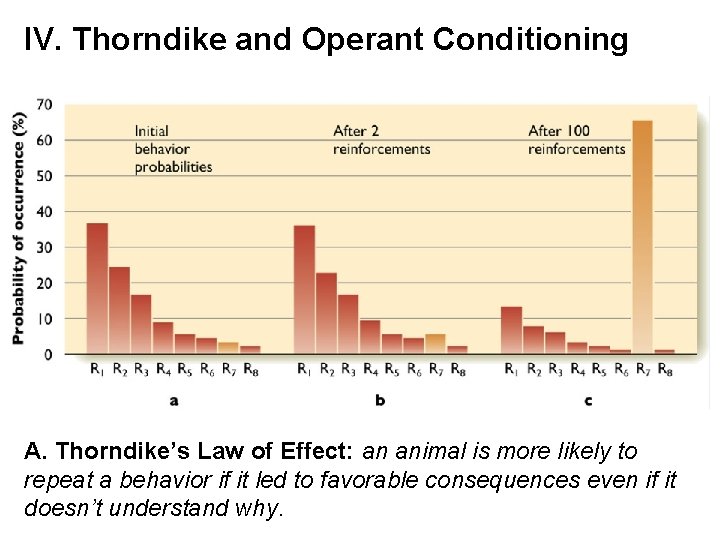

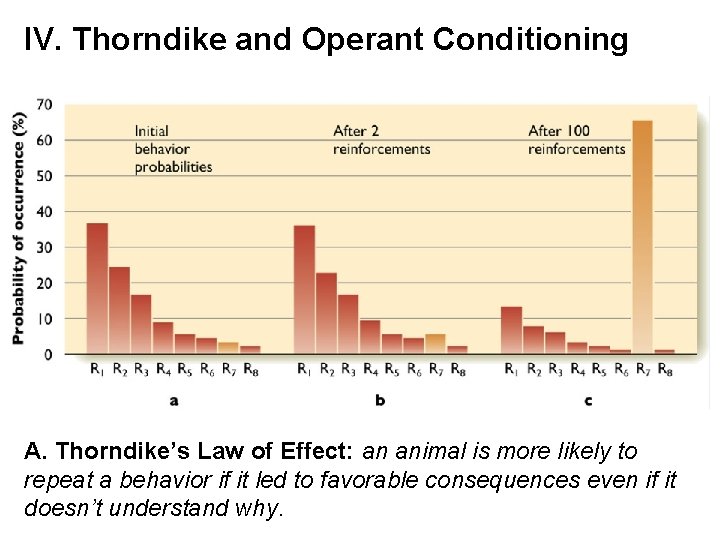

IV. Thorndike and Operant Conditioning
A. Thorndike’s Law of Effect: an animal is more likely to repeat a behavior if it led to favorable consequences even if it doesn’t understand why.

B. Operant Conditioning: learning based on association of behavior with its consequences. The individual learns from the consequences of “operating” in the environment. Applies to voluntary responses.
C. Forms of Operant Conditioning
   1) Reinforcer: an event that follows a response and increases the future probability of that recent response.
   Primary Reinforcers: are reinforcing because of their own properties. (Food)
   Secondary Reinforcers: are reinforcing because of previous experiences. (Money) “I’ve learned through experience that I can exchange money for food.”
   2) Punishment is an event that decreases the probability of a response. (Pain)

Operant Conditioning: Reinforcement
• Increases likelihood of a behavior recurring
  – Positive: Giving a reward
    • Candy for finishing a task
  – Negative: Removing something aversive
    • No chores for getting an A+ on homework

Operant Conditioning: Punishment
• Decreases likelihood of a behavior recurring
  – Positive: Adding something aversive
    • Extra chores
  – Negative: Removing something pleasant
    • Taking away car keys
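The two slides above form a two-by-two grid: a stimulus is either added or removed, and the behavior becomes either more or less likely. A minimal sketch of that grid follows. The function name `classify_consequence` and its string arguments are hypothetical, chosen only for illustration.

```python
def classify_consequence(stimulus_change, behavior_change):
    """Name the operant procedure from two observations.

    stimulus_change: "added" or "removed" (what happens after the response).
    behavior_change: "increases" or "decreases" (future likelihood of the response).
    """
    # "Positive" and "negative" describe adding or removing a stimulus,
    # not whether the outcome is pleasant.
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behavior_change]
    return f"{sign} {kind}"


# The four examples from the slides.
examples = {
    "candy for finishing a task": classify_consequence("added", "increases"),
    "no chores for an A+": classify_consequence("removed", "increases"),
    "extra chores": classify_consequence("added", "decreases"),
    "taking away car keys": classify_consequence("removed", "decreases"),
}

for example, label in examples.items():
    print(f"{example}: {label}")
```

The sketch makes one point explicit: negative reinforcement still increases behavior, so it is not a synonym for punishment.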

D. Extinction: occurs if responses stop producing reinforcements.
E. Stimulus Generalization: occurs when a new stimulus is similar to the original reinforced stimulus. The more similar the new stimulus is to the old, the more strongly the subject is likely to respond.
F. Discriminative Stimulus: a stimulus that indicates which response is appropriate or inappropriate.

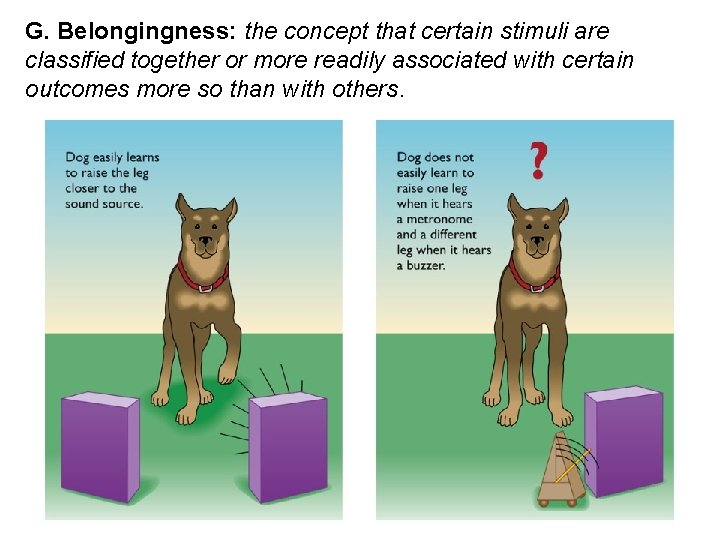

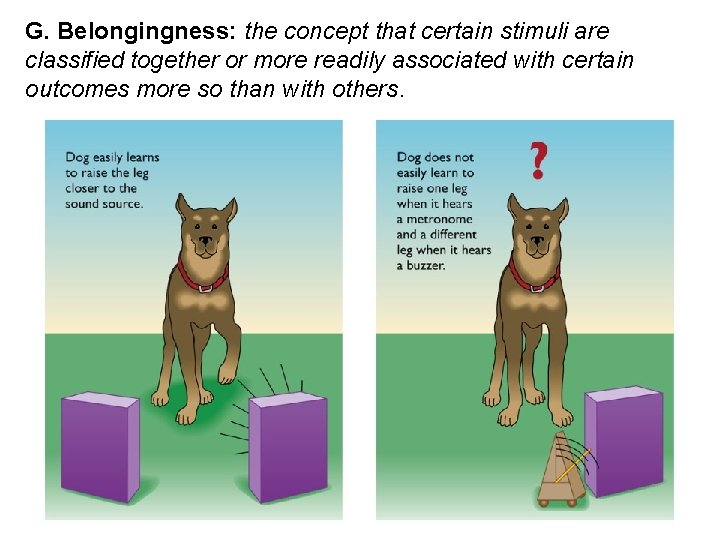

G. Belongingness: the concept that certain stimuli belong together, or are more readily associated with certain outcomes than with others.

V. Skinner and the Shaping of Behavior
A. Shaping: establishes a new response by reinforcing successive approximations to it.
B. Schedule of Reinforcement: a set of rules or procedures for delivery of reinforcement.
C. Continuous Reinforcement: provides reinforcement every time a response occurs.
D. Intermittent Reinforcement: sometimes a particular response is reinforced and other times it is not.
   1) Ratio: when the delivery of reinforcement depends on the number of responses given by the individual.
   2) Interval: when delivery of reinforcement depends on the amount of time that has passed since the last reinforcement.

E. Four Subcategories of Intermittent Reinforcement
   1) Fixed-Ratio Schedule: provides reinforcement only after a certain “fixed” number of correct responses have been made.
   2) Variable-Ratio Schedule: provides reinforcement after a variable number of correct responses.
   3) Fixed-Interval Schedule: provides reinforcement for the first response made after a specific time interval.
   4) Variable-Interval Schedule: provides reinforcement after a variable amount of time has elapsed.

Extinction of responses tends to take longer when an individual has been on an intermittent schedule, especially one that is variable, rather than a continuous schedule. One explanation for this difference is that on an intermittent schedule, the lack of reinforcement does not signify the complete ending of all reinforcements. It’s harder to tell when your experiences with reinforcement are truly over.
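The four schedules differ only in what triggers the next reinforcement: a count of responses or an amount of elapsed time, either fixed or variable. The sketch below simulates which responses would be reinforced under each one. The function names, the seeded random generator, and the choice to draw variable requirements uniformly around the stated mean are all assumptions made for illustration.

```python
import random


def ratio_schedule(n_responses, ratio, variable=False, rng=None):
    """Return the 1-based response numbers that earn reinforcement."""
    if rng is None:
        rng = random.Random(0)  # seeded so the sketch is repeatable

    def next_requirement():
        # Variable ratio: the requirement varies around `ratio` (assumed uniform).
        return rng.randint(1, 2 * ratio - 1) if variable else ratio

    reinforced, count, required = [], 0, next_requirement()
    for response in range(1, n_responses + 1):
        count += 1
        if count >= required:
            reinforced.append(response)
            count, required = 0, next_requirement()
    return reinforced


def interval_schedule(response_times, interval, variable=False, rng=None):
    """Return the times of the responses that earn reinforcement."""
    if rng is None:
        rng = random.Random(0)

    def next_wait():
        # Variable interval: the wait varies around `interval` (assumed uniform).
        return rng.uniform(0, 2 * interval) if variable else interval

    reinforced, available_at = [], next_wait()
    for t in sorted(response_times):
        # Only the first response after the wait has elapsed is reinforced;
        # the clock then restarts from that reinforcement.
        if t >= available_at:
            reinforced.append(t)
            available_at = t + next_wait()
    return reinforced


# One response per second in the interval examples.
fixed_ratio = ratio_schedule(20, ratio=5)
variable_ratio = ratio_schedule(100, ratio=5, variable=True)
fixed_interval = interval_schedule(range(1, 31), interval=10)
variable_interval = interval_schedule(range(1, 101), interval=10, variable=True)

print("fixed ratio:   ", fixed_ratio)
print("fixed interval:", fixed_interval)
print("variable ratio (first five):   ", variable_ratio[:5])
print("variable interval (first five):", variable_interval[:5])
```

Under the fixed schedules the reinforced responses fall at regular, predictable points, while under the variable schedules they do not. That unpredictability is consistent with the explanation above for why extinction is slower after variable schedules.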

VI. Applications of Operant Conditioning
A. Animal Training
B. Breaking Bad Habits
   1) Behavior Modification: the clinician determines which reinforcers sustain an undesirable behavior and then tries to change the behavior by reducing the opportunities for reinforcement of the unwanted behavior and providing reinforcers for a more acceptable behavior.

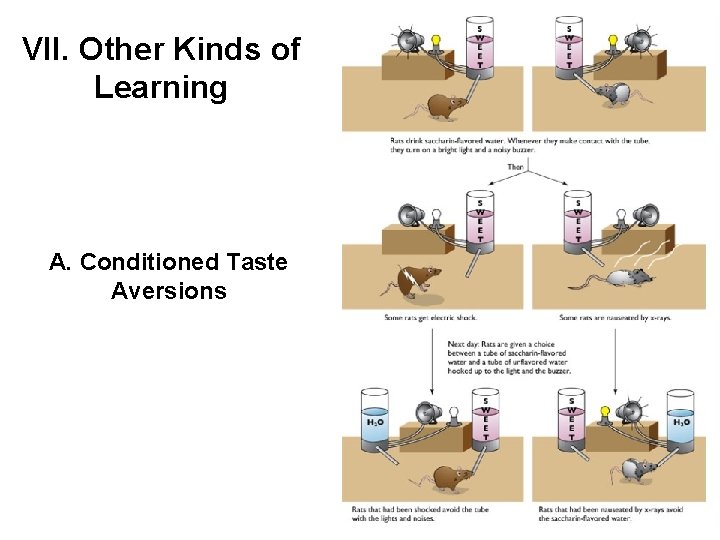

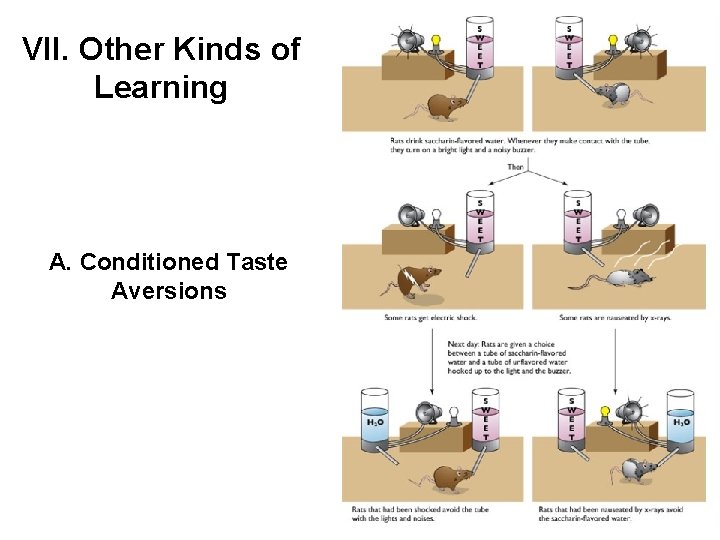

VII. Other Kinds of Learning
A. Conditioned Taste Aversions

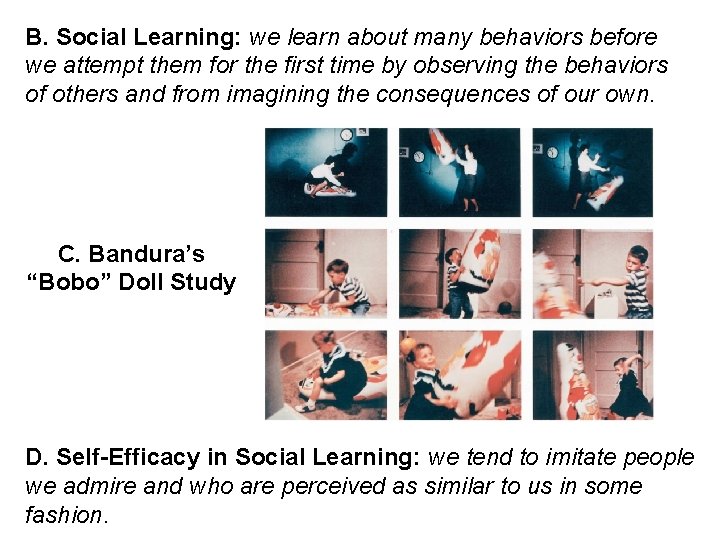

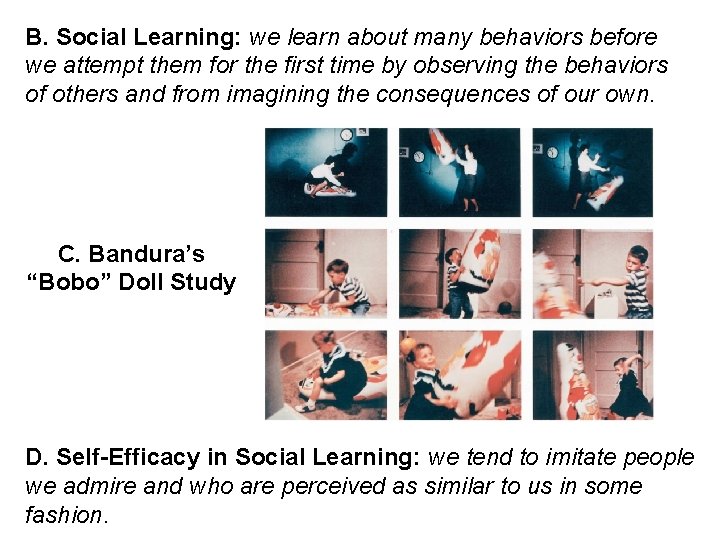

B. Social Learning: we learn about many behaviors before we attempt them for the first time by observing the behaviors of others and by imagining the consequences of our own behavior.
C. Bandura’s “Bobo” Doll Study
D. Self-Efficacy in Social Learning: we tend to imitate people we admire and who are perceived as similar to us in some fashion, provided we believe we can perform the behavior successfully ourselves (self-efficacy).