Learned Convolutional Sparse Coding Hillel Sreter Raja Giryes

- Slides: 32

Learned Convolutional Sparse Coding Hillel Sreter & Raja Giryes ICASSP 2018

Outline
● Motivation and applications
● Brief sparse coding background
● Approximate sparse coding (ASC)
● Convolutional sparse coding (CSC)
● Approximate convolutional sparse coding (ACSC)

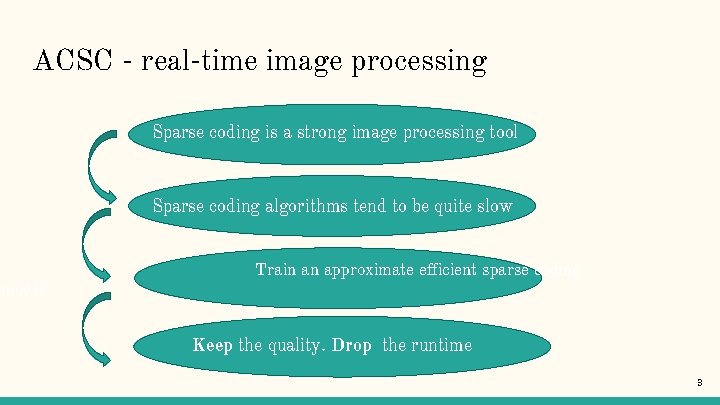

ACSC - real-time image processing
● Sparse coding is a strong image processing tool.
● Sparse coding algorithms tend to be quite slow.
● Train an approximate, efficient sparse coding model.
● Keep the quality. Drop the runtime.

ACSC - advantages
● Sparse coding has been shown to work well for image processing tasks. Yet it tends to be slow: it requires multiple minutes on a CPU.
● Approximate convolutional sparse coding cuts the runtime to a fraction while maintaining the sparse coding performance: it requires less than a second on a CPU.

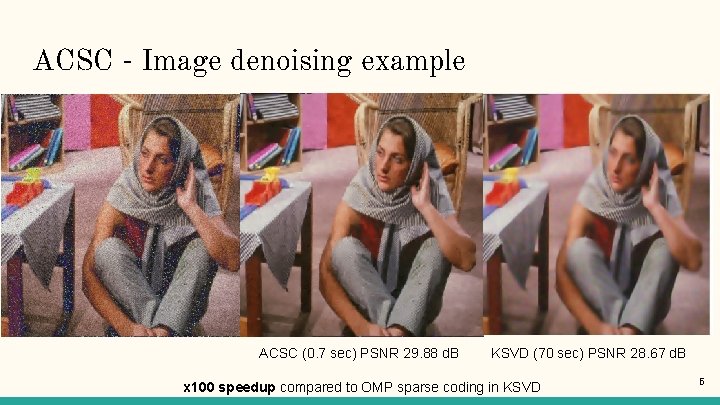

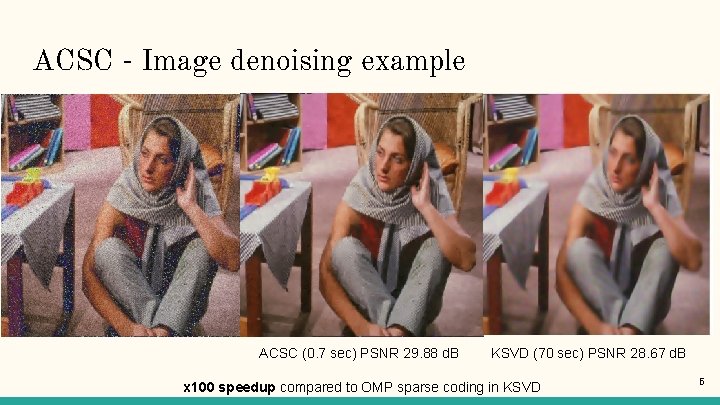

ACSC - Image denoising example
● ACSC (0.7 sec): PSNR 29.88 dB
● KSVD (70 sec): PSNR 28.67 dB
● x100 speedup compared to OMP sparse coding in KSVD

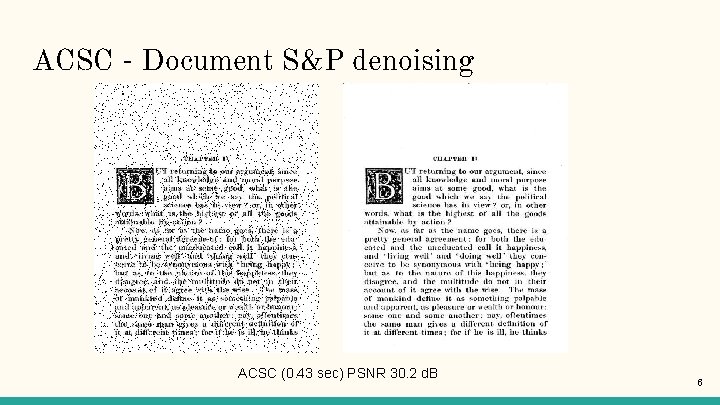

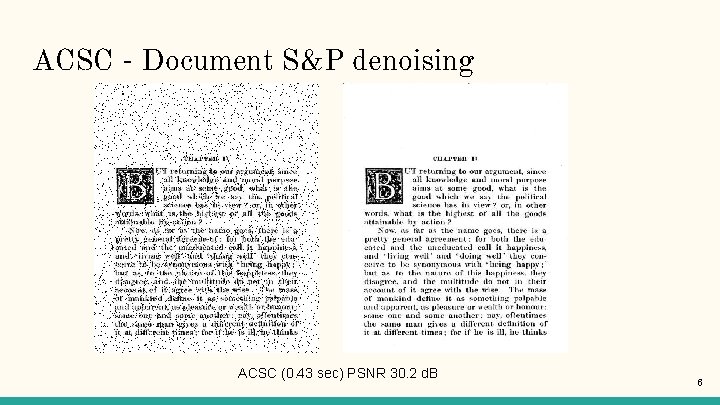
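The PSNR figures quoted on the result slides follow the usual definition, 10·log10(peak² / MSE). A minimal sketch of that computation is given here for reference; the function name `psnr` and the 8-bit peak value of 255 are assumptions for illustration, not details taken from the slides.

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit images)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

# Toy check: a constant error of 10 grey levels gives MSE = 100.
clean = np.full((8, 8), 100.0)
noisy = clean + 10.0
value = psnr(clean, noisy)
```

The speedup figure on the slide is the plain ratio of the two runtimes, 70 sec / 0.7 sec = 100.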

ACSC - Document S&P denoising
● ACSC (0.43 sec): PSNR 30.2 dB

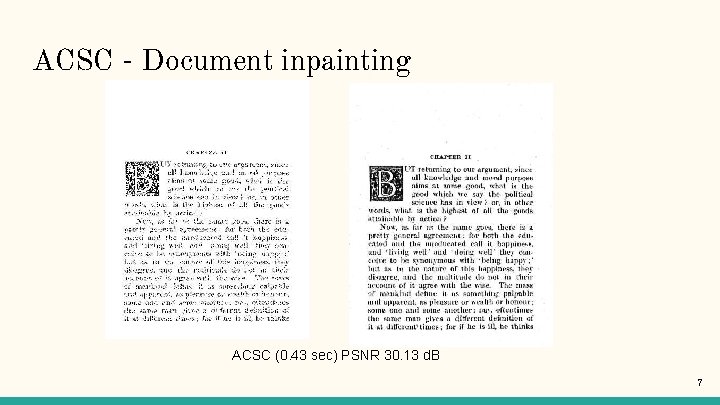

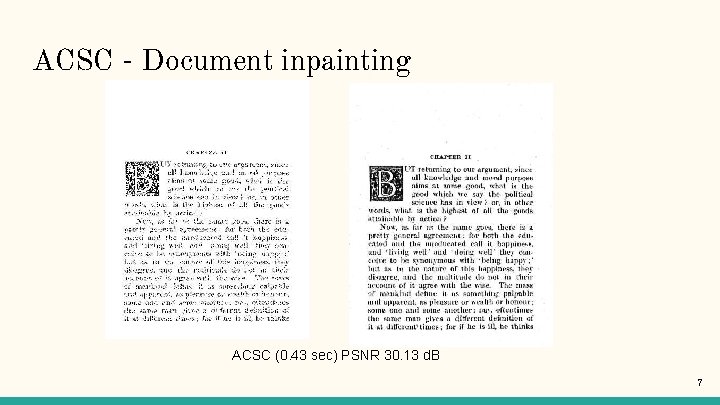

ACSC - Document inpainting
● ACSC (0.43 sec): PSNR 30.13 dB

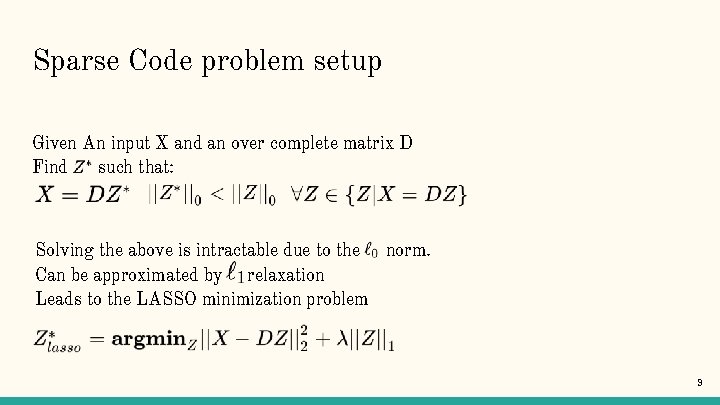

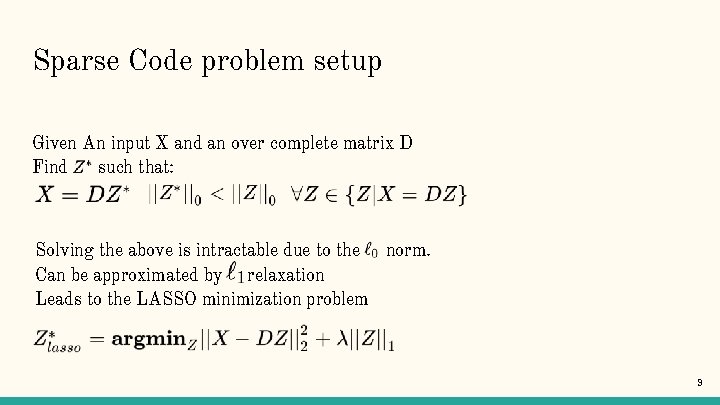


Sparse Code problem setup
● Given an input X and an overcomplete matrix D, find a sparse code that represents X over D.
● Solving this exactly is intractable due to the ℓ0 norm.
● It can be approximated by an ℓ1 relaxation.
● This leads to the LASSO minimization problem.

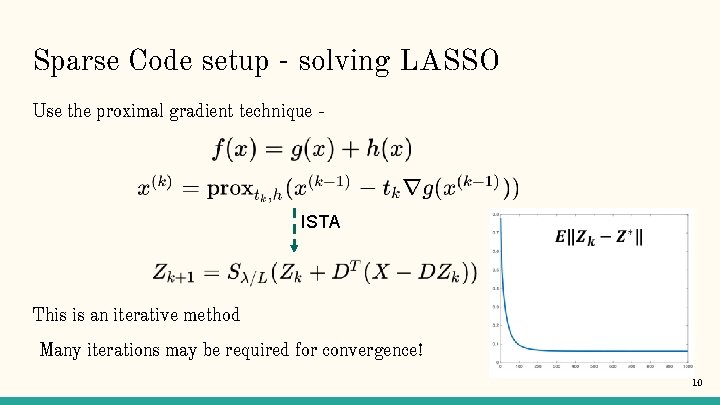

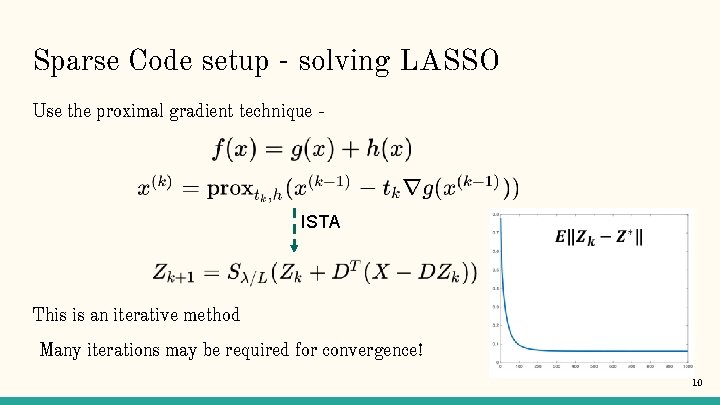
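For reference, the textbook form of these two problems can be written as follows. This is a sketch in standard notation and may differ from the slide's exact formulation: the code vector $z$, the weight $\lambda$, and the equality constraint are assumptions made here.

```latex
% Exact sparse coding (intractable because of the \ell_0 "norm"):
\min_{z} \; \|z\|_0 \quad \text{s.t.} \quad X = Dz

% \ell_1 relaxation in Lagrangian form (LASSO):
\min_{z} \; \tfrac{1}{2}\,\|X - Dz\|_2^2 + \lambda\,\|z\|_1
```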

Sparse Code setup - solving LASSO
● Use the proximal gradient technique: ISTA.
● This is an iterative method.
● Many iterations may be required for convergence!

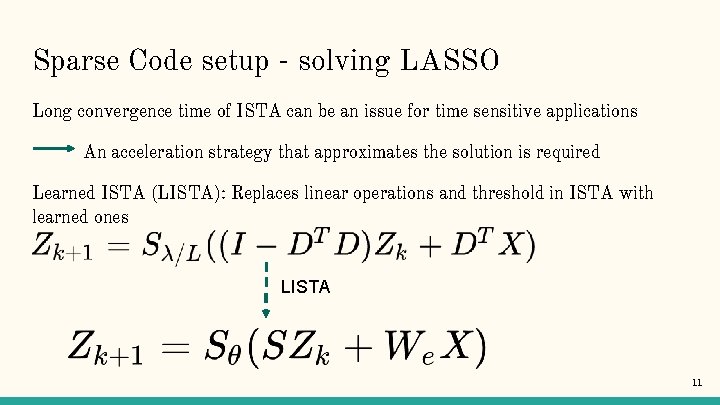

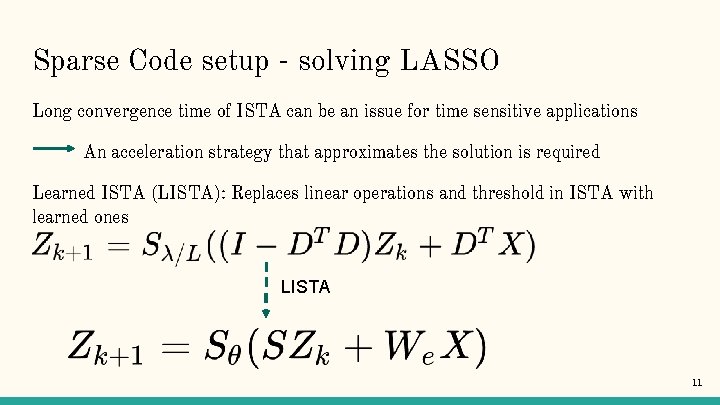
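A minimal NumPy sketch of ISTA for the LASSO objective 0.5·||x − Dz||² + λ||z||₁ may help make the iteration concrete. The function names, the step size 1/L with L = ||D||₂², and the toy data are assumptions chosen for illustration, not the authors' code.

```python
import numpy as np

def soft_threshold(v, theta):
    """Proximal operator of theta * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def lasso_objective(x, D, z, lam):
    return 0.5 * np.sum((x - D @ z) ** 2) + lam * np.sum(np.abs(z))

def ista(x, D, lam, n_iter=200):
    """Minimize 0.5*||x - D z||^2 + lam*||z||_1 by proximal gradient steps."""
    L = np.linalg.norm(D, 2) ** 2          # Lipschitz constant of the gradient
    z = np.zeros(D.shape[1])
    history = []
    for _ in range(n_iter):
        grad = D.T @ (D @ z - x)           # gradient of the quadratic term
        z = soft_threshold(z - grad / L, lam / L)
        history.append(lasso_objective(x, D, z, lam))
    return z, history

# Toy problem: 3-sparse code over a random overcomplete dictionary.
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))
D /= np.linalg.norm(D, axis=0)             # unit-norm atoms
z_true = np.zeros(50)
z_true[[3, 17, 41]] = [1.5, -2.0, 1.0]
x = D @ z_true
z_hat, history = ista(x, D, lam=0.05)
```

With this step size the objective never increases from one iteration to the next, but the decrease per step can be small, which is why many iterations may be needed.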

Sparse Code setup - solving LASSO
● The long convergence time of ISTA can be an issue for time-sensitive applications.
● An acceleration strategy that approximates the solution is required.
● Learned ISTA (LISTA): replaces the linear operations and threshold in ISTA with learned ones.

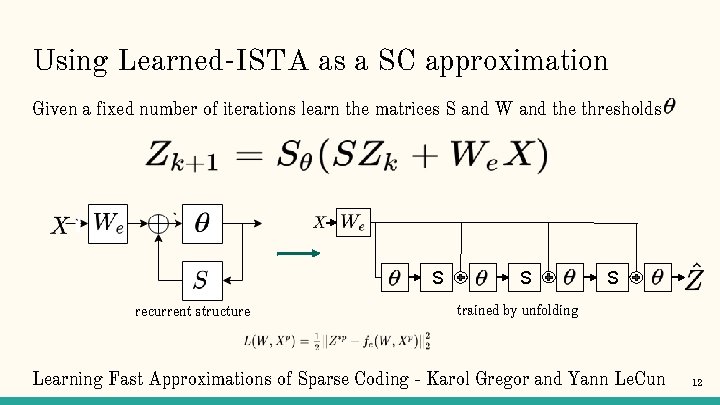

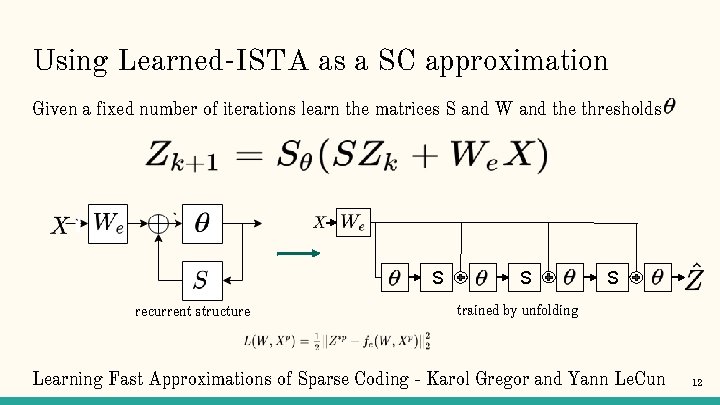

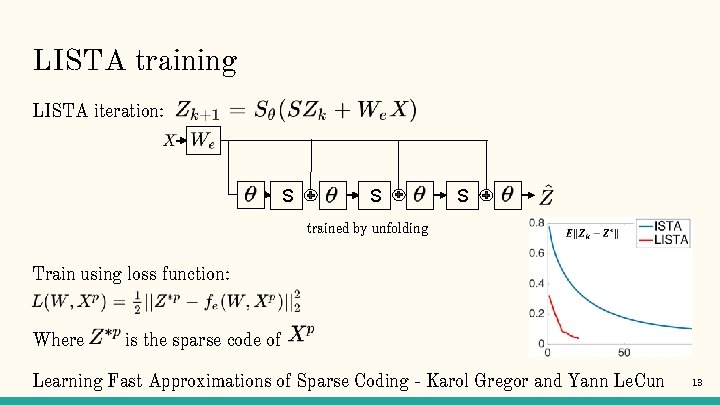

Using Learned-ISTA as a SC approximation
● Given a fixed number of iterations, learn the matrices S and W and the thresholds.
● Recurrent structure, trained by unfolding.
Karol Gregor and Yann LeCun, "Learning Fast Approximations of Sparse Coding"

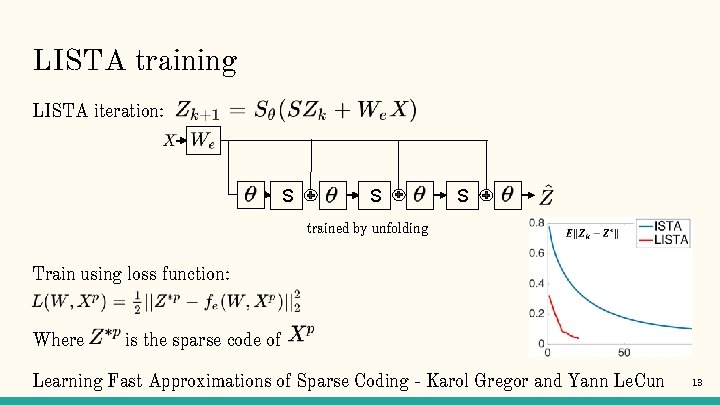

LISTA training
● The LISTA iteration is trained by unfolding.
● Train using a loss function that compares the network output with the sparse code of the input.
Karol Gregor and Yann LeCun, "Learning Fast Approximations of Sparse Coding"
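As a sketch of the unfolded structure, the LISTA forward pass from Gregor and LeCun is commonly written as z ← h_θ(Wx + Sz), with a squared-error loss against a reference sparse code. The NumPy version below shows the forward pass only (no training); the function names and the ISTA-based initialization are assumptions for illustration.

```python
import numpy as np

def soft_threshold(v, theta):
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def lista_forward(x, W, S, theta, n_steps):
    """Unfolded LISTA encoder: z <- h_theta(W x + S z), repeated n_steps times."""
    b = W @ x                          # computed once, reused at every step
    z = soft_threshold(b, theta)       # first step starts from z = 0
    for _ in range(n_steps - 1):
        z = soft_threshold(b + S @ z, theta)
    return z

def ista_init(D, lam):
    """Untrained parameters that make LISTA reproduce plain ISTA."""
    L = np.linalg.norm(D, 2) ** 2
    W = D.T / L
    S = np.eye(D.shape[1]) - (D.T @ D) / L
    return W, S, lam / L

def code_loss(z_pred, z_star):
    """Squared error between the predicted code and a reference sparse code."""
    return 0.5 * np.sum((z_pred - z_star) ** 2)

rng = np.random.default_rng(0)
D = rng.standard_normal((16, 32))
D /= np.linalg.norm(D, axis=0)
x = rng.standard_normal(16)
W, S, theta = ista_init(D, lam=0.1)
z3 = lista_forward(x, W, S, theta, n_steps=3)
```

With this initialization, three unfolded steps equal three ISTA iterations started from zero; training would then adjust W, S and theta so that the same three steps land closer to the converged sparse code.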

LISTA disadvantages
LISTA is a patch based method. Therefore, we have:
● Loss of spatial information.
● Inefficiency for large patches.
● Image properties not incorporated (i.e. translation invariance).
Solution: use a convolutional structure.

Approximate Convolutional Sparse Coding (ACSC)
Learn a convolutional sparse coding of the whole image instead of patches.
A global algorithm:
● The image is processed as a whole.
● Efficient.
● Being convolution based, it inherently incorporates image properties.
This overcomes the disadvantages of LISTA being a patch based method:
● Loss of spatial information.
● Inefficient for large patches.
● Image properties not incorporated (i.e. translation invariance).

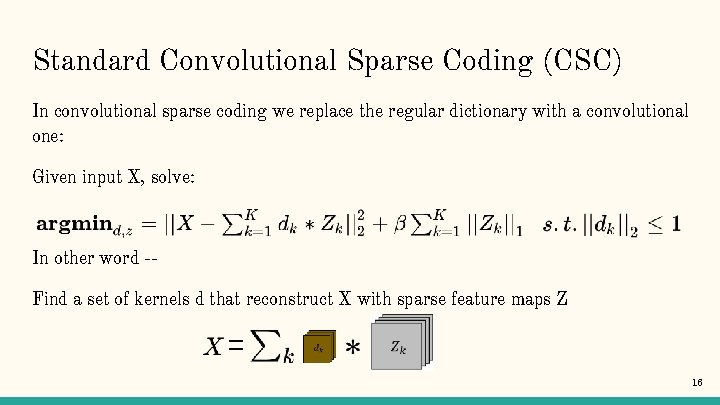

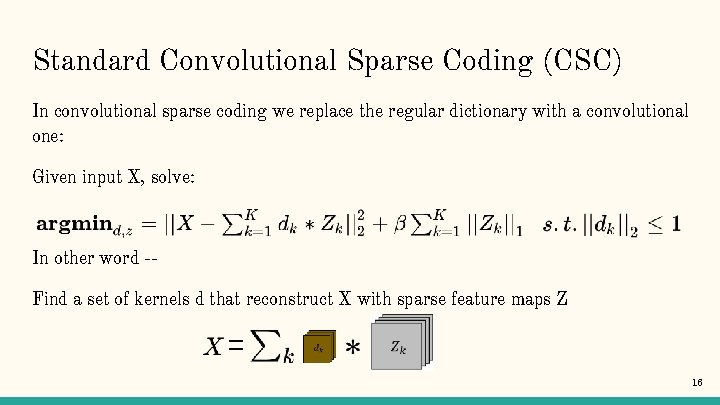

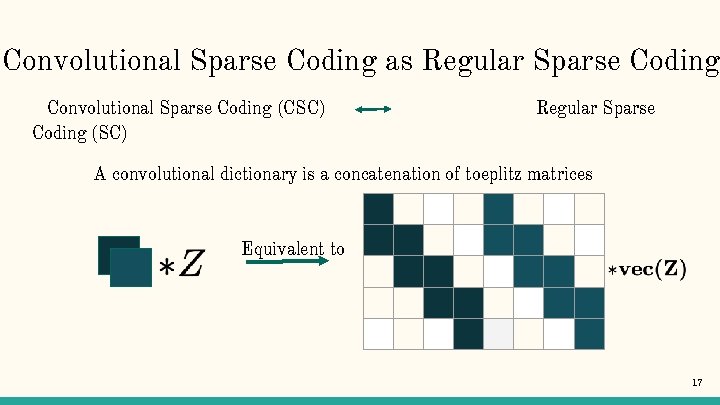

Standard Convolutional Sparse Coding (CSC)
In convolutional sparse coding we replace the regular dictionary with a convolutional one. Given an input X, solve the CSC problem. In other words, find a set of kernels d that reconstruct X with sparse feature maps Z.

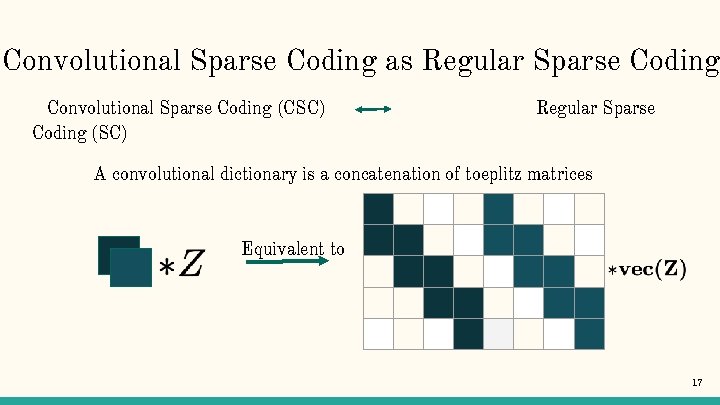

Convolutional Sparse Coding as Regular Sparse Coding
● Convolutional Sparse Coding (CSC) is equivalent to Regular Sparse Coding (SC).
● A convolutional dictionary is a concatenation of Toeplitz matrices.

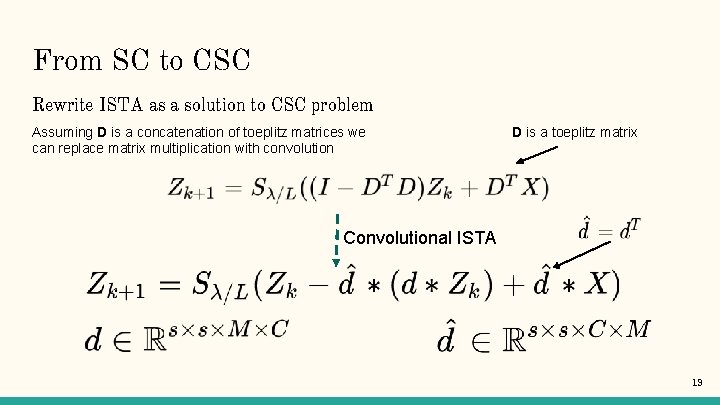

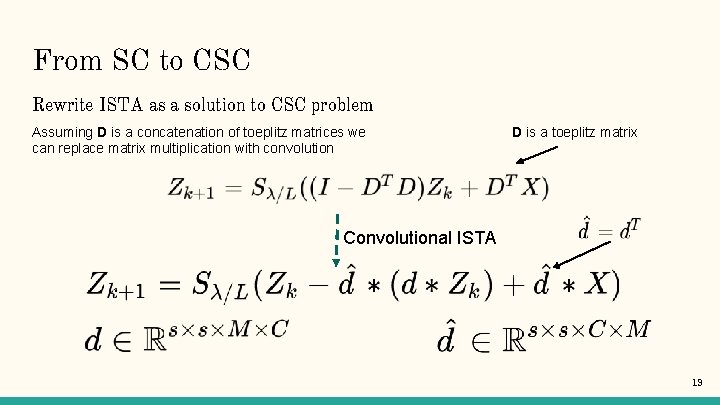
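The equivalence can be checked numerically. The sketch below assumes 1-D signals and full convolution for brevity (the slides work with images), and the helper `conv_matrix` is a hypothetical name introduced here: it builds the Toeplitz matrix of each kernel, concatenates the matrices into one dictionary D, and compares D z with the sum of convolutions.

```python
import numpy as np

def conv_matrix(kernel, n):
    """Toeplitz matrix T with T @ z == np.convolve(kernel, z) for len(z) == n."""
    k = len(kernel)
    T = np.zeros((n + k - 1, n))
    for j in range(n):
        T[j:j + k, j] = kernel         # each column is a shifted copy of the kernel
    return T

rng = np.random.default_rng(0)
kernels = [rng.standard_normal(5) for _ in range(3)]   # three 1-D filters d_m
maps = [rng.standard_normal(12) for _ in range(3)]     # feature maps z_m

# Convolutional form: x = sum_m d_m * z_m
x_conv = sum(np.convolve(d, z) for d, z in zip(kernels, maps))

# Matrix form: D = [T_1 T_2 T_3], z = stacked feature maps
D = np.hstack([conv_matrix(d, 12) for d in kernels])
z = np.concatenate(maps)
x_mat = D @ z

print(np.allclose(x_conv, x_mat))
```

The matrix D is never needed in practice; it only shows that every result for regular sparse coding carries over once matrix products are replaced by convolutions.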

From SC to CSC
● Rewrite ISTA as a solution to the CSC problem.
● Assuming D is a concatenation of Toeplitz matrices, we can replace matrix multiplication with convolution.
● This gives Convolutional ISTA.

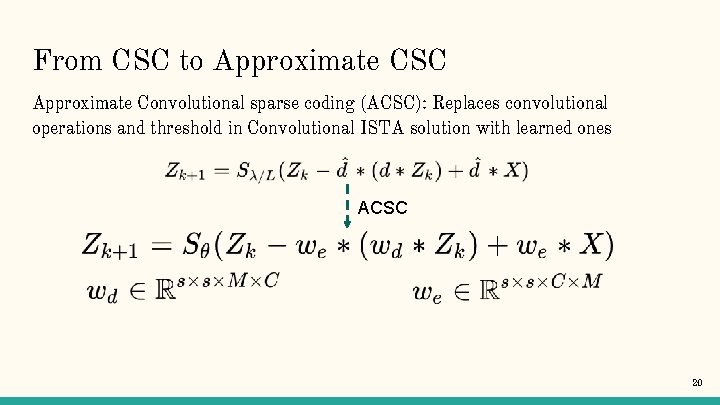

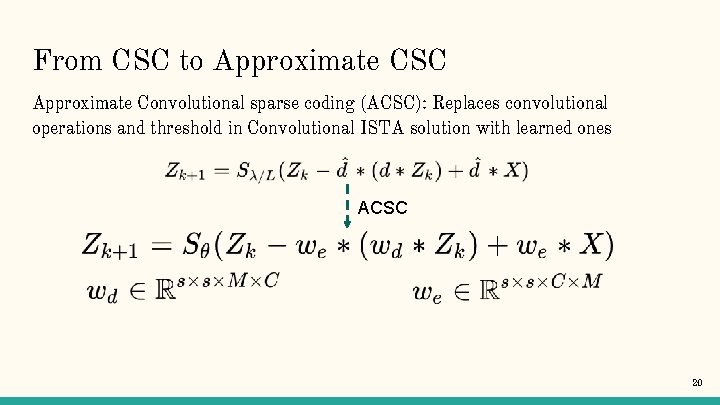
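A minimal sketch of convolutional ISTA, assuming 1-D signals and full convolution for brevity. Multiplication by D becomes a sum of convolutions, and multiplication by Dᵀ becomes a correlation with each kernel. The function names and the step-size bound (sum of squared ℓ1 norms of the kernels, which upper-bounds ||D||₂²) are choices made here, not taken from the slides.

```python
import numpy as np

def soft_threshold(v, theta):
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def synthesize(kernels, maps):
    """Apply D: x_hat = sum_m d_m * z_m (full 1-D convolution)."""
    return sum(np.convolve(d, z) for d, z in zip(kernels, maps))

def analyze(kernels, r):
    """Apply D^T to a residual: correlate it with every kernel."""
    return [np.correlate(r, d, mode="valid") for d in kernels]

def conv_ista(x, kernels, lam, n_iter=100):
    k = len(kernels[0])
    n = len(x) - k + 1
    # Upper bound on ||D||_2^2 via Young's inequality: sum_m ||d_m||_1^2
    L = sum(np.sum(np.abs(d)) ** 2 for d in kernels)
    maps = [np.zeros(n) for _ in kernels]
    objective = []
    for _ in range(n_iter):
        grads = analyze(kernels, x - synthesize(kernels, maps))
        maps = [soft_threshold(z + g / L, lam / L) for z, g in zip(maps, grads)]
        res = x - synthesize(kernels, maps)
        l1 = sum(np.sum(np.abs(z)) for z in maps)
        objective.append(0.5 * np.sum(res ** 2) + lam * l1)
    return maps, objective

rng = np.random.default_rng(1)
kernels = [rng.standard_normal(5) for _ in range(4)]
true_maps = [np.zeros(30) for _ in kernels]
true_maps[0][4], true_maps[2][20] = 1.0, -1.5
x = synthesize(kernels, true_maps)
maps, objective = conv_ista(x, kernels, lam=0.05)
```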

From CSC to Approximate CSC
Approximate convolutional sparse coding (ACSC): replaces the convolutional operations and threshold in the Convolutional ISTA solution with learned ones.

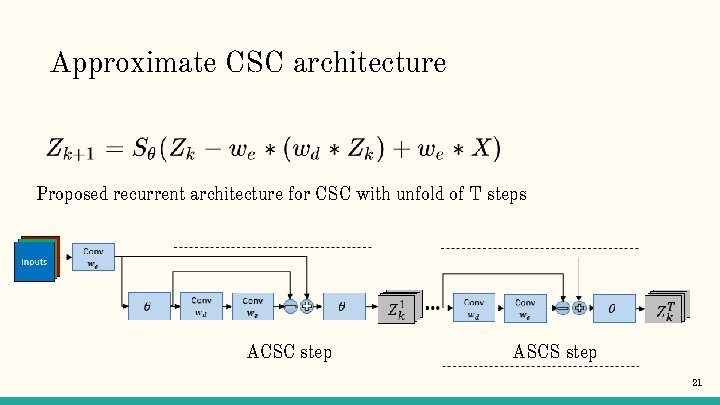

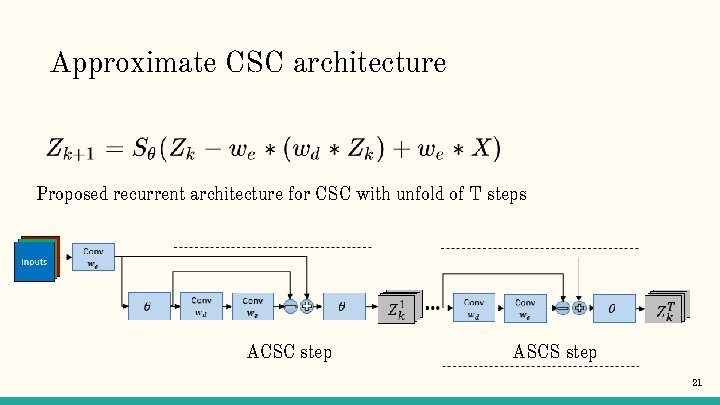

Approximate CSC architecture
Proposed recurrent architecture for CSC, unfolded for T steps. Each unfolded block is one ACSC step.

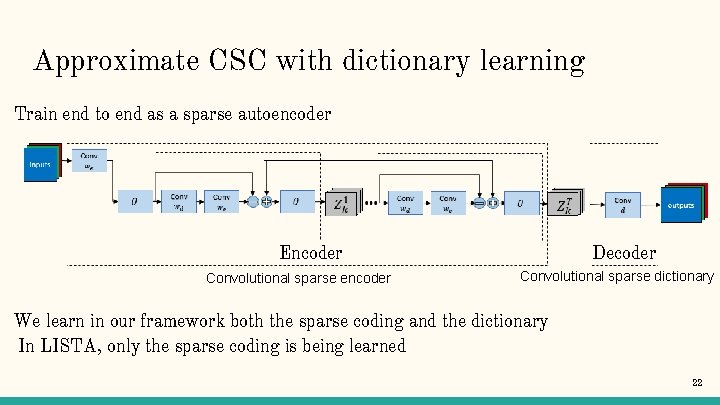

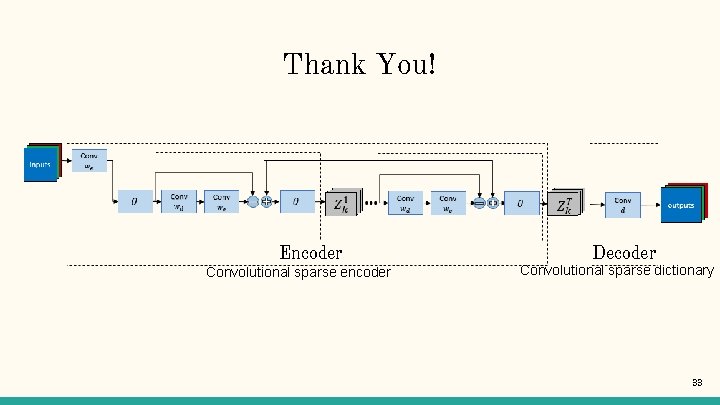

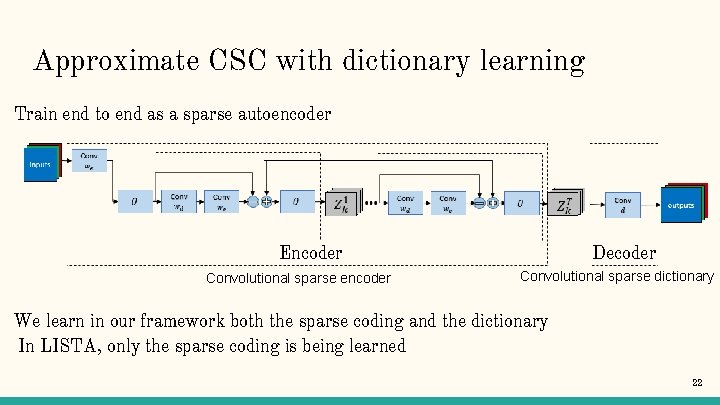

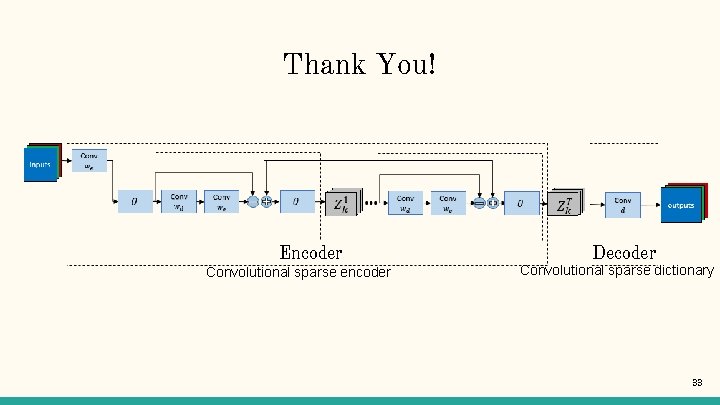

Approximate CSC with dictionary learning
● Train end to end as a sparse autoencoder.
● Encoder: convolutional sparse encoder.
● Decoder: convolutional sparse dictionary.
● Our framework learns both the sparse coding and the dictionary.
● In LISTA, only the sparse coding is learned.

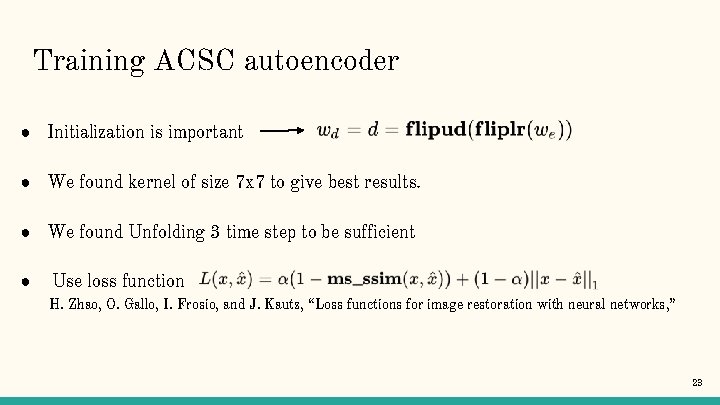

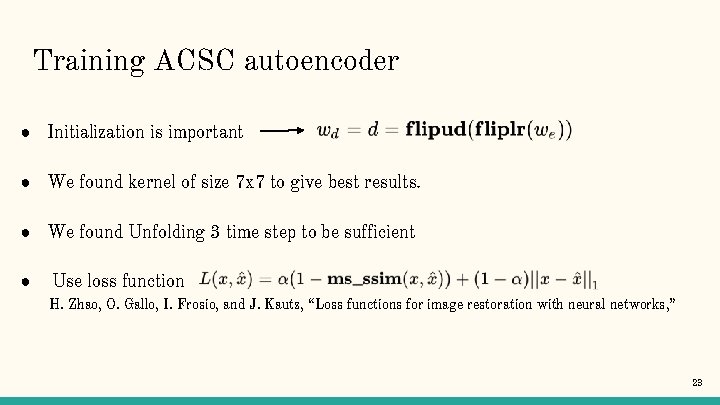
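The encoder/decoder pair can be sketched as a forward pass. The code assumes that one ACSC step has the convolutional-ISTA form z ← soft_θ(z + w_e ⋆ (x − Σ_m w_d[m] * z[m])), with the filters `w_e`, `w_d` and the thresholds `theta` as the learnable parameters. That form, the 1-D signals, and all names are assumptions for illustration; training (backpropagation through the unfolded steps) is not shown.

```python
import numpy as np

def soft_threshold(v, theta):
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def acsc_encoder(x, w_e, w_d, theta, n_steps=3):
    """Unfolded convolutional encoder with (hypothetically) learned filters."""
    n_filters, k = w_d.shape
    z = np.zeros((n_filters, len(x) - k + 1))
    for _ in range(n_steps):
        recon = sum(np.convolve(w_d[m], z[m]) for m in range(n_filters))
        r = x - recon                                   # current residual
        update = np.stack([np.correlate(r, w_e[m], mode="valid")
                           for m in range(n_filters)])
        z = soft_threshold(z + update, theta[:, None])  # one threshold per filter
    return z

def decoder(z, d):
    """Convolutional dictionary: x_hat = sum_m d[m] * z[m]."""
    return sum(np.convolve(d[m], z[m]) for m in range(d.shape[0]))

# Initialize from a dictionary so that the encoder starts out as convolutional ISTA.
rng = np.random.default_rng(0)
d = rng.standard_normal((4, 7))                 # 4 filters of length 7
L = np.sum(np.abs(d).sum(axis=1) ** 2)          # step-size bound
w_e, w_d = d / L, d.copy()
theta = np.full(4, 0.05 / L)
x = rng.standard_normal(64)
z = acsc_encoder(x, w_e, w_d, theta, n_steps=3)
x_hat = decoder(z, d)
```

In an end-to-end setup, a reconstruction loss between `x_hat` and the target signal would drive updates to both the encoder parameters and the decoder dictionary `d`, which is the difference from LISTA noted on the slide.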

Training ACSC autoencoder
● Initialization is important.
● We found kernels of size 7 x 7 to give the best results.
● We found unfolding 3 time steps to be sufficient.
● Use the loss function from H. Zhao, O. Gallo, I. Frosio, and J. Kautz, "Loss functions for image restoration with neural networks."

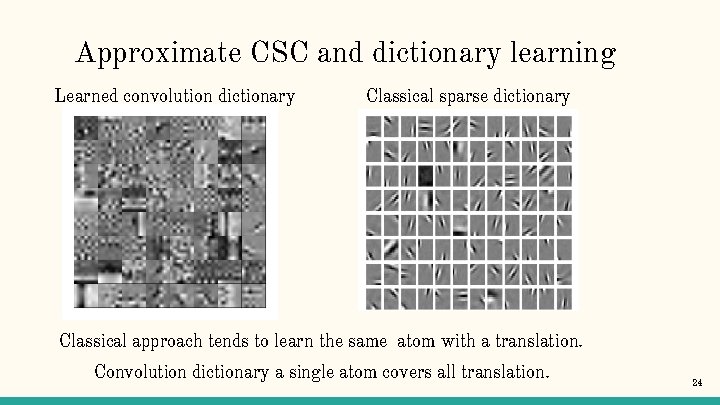

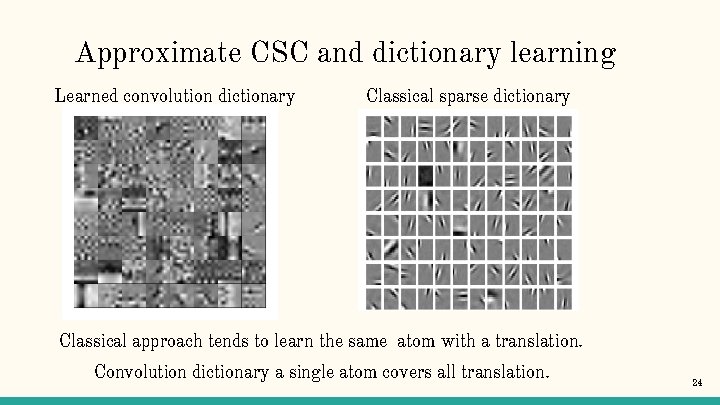

Approximate CSC and dictionary learning
Figure panels: learned convolutional dictionary; classical sparse dictionary.
● The classical approach tends to learn the same atom at several translations.
● In a convolutional dictionary, a single atom covers all translations.

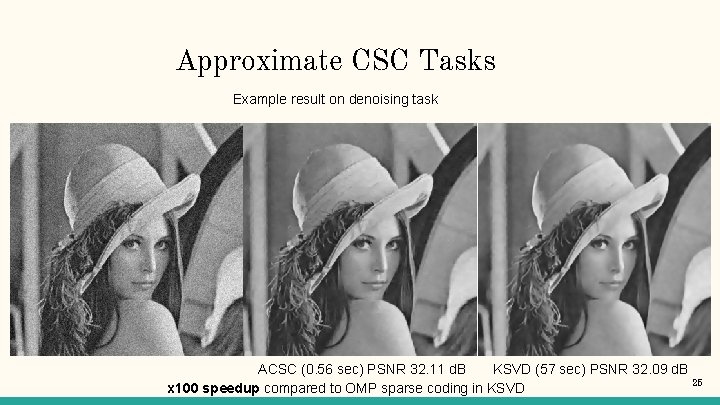

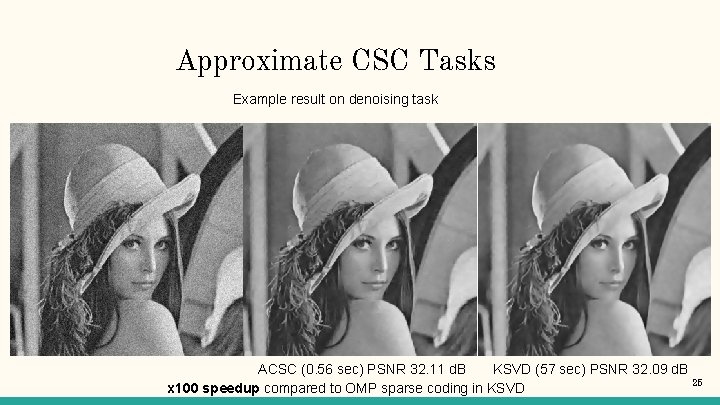

Approximate CSC Tasks
Example result on denoising task
● ACSC (0.56 sec): PSNR 32.11 dB
● KSVD (57 sec): PSNR 32.09 dB
● x100 speedup compared to OMP sparse coding in KSVD

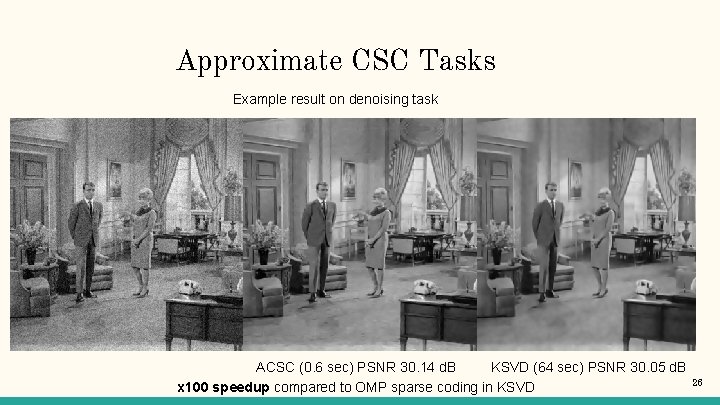

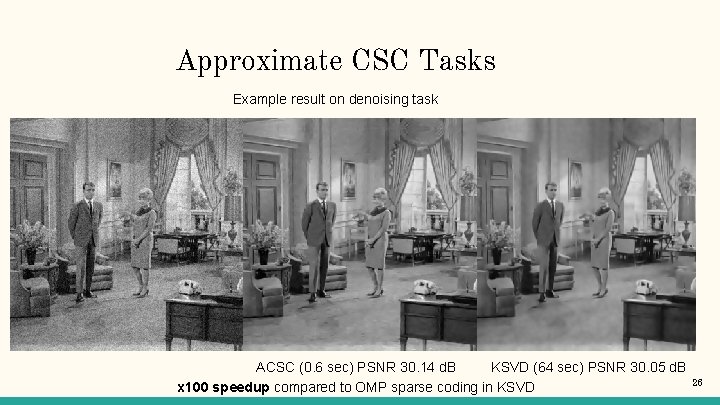

Approximate CSC Tasks
Example result on denoising task
● ACSC (0.6 sec): PSNR 30.14 dB
● KSVD (64 sec): PSNR 30.05 dB
● x100 speedup compared to OMP sparse coding in KSVD

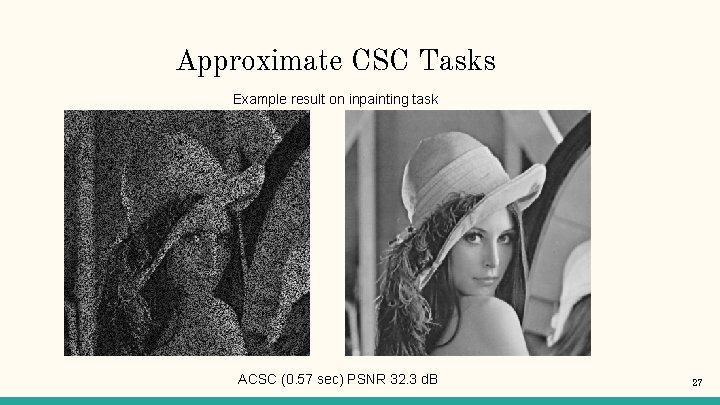

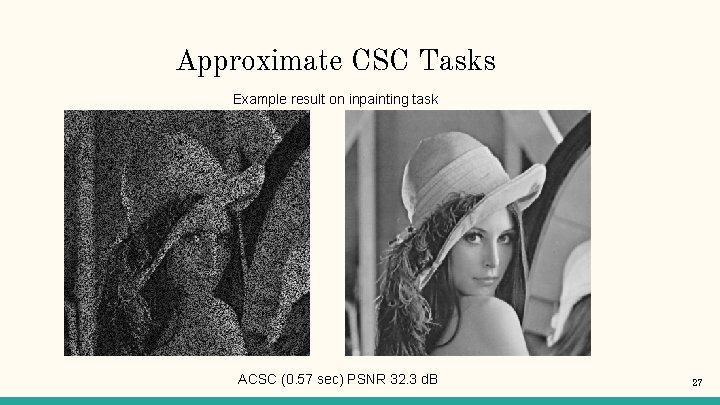

Approximate CSC Tasks
Example result on inpainting task
● ACSC (0.57 sec): PSNR 32.3 dB

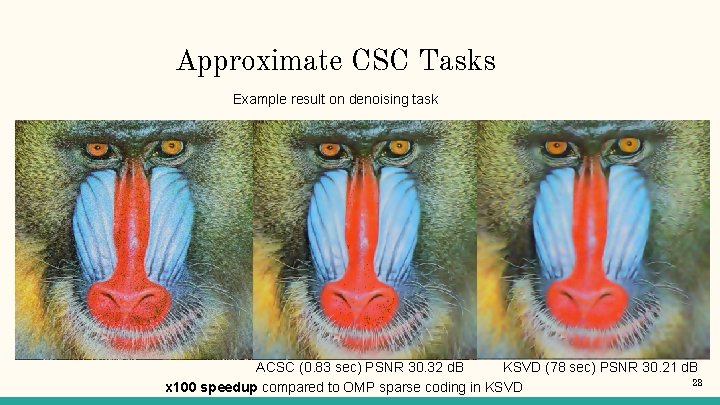

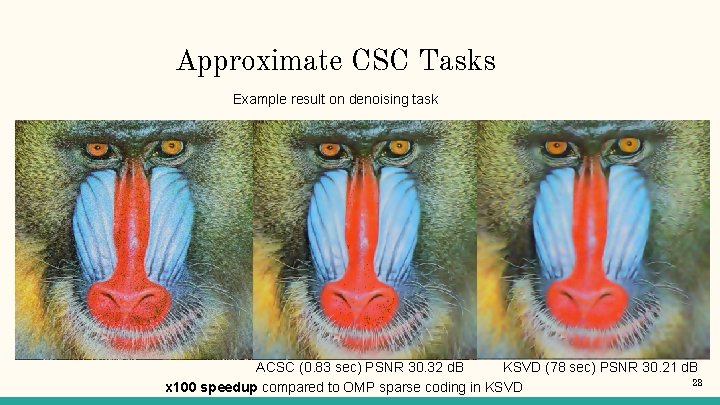

Approximate CSC Tasks
Example result on denoising task
● ACSC (0.83 sec): PSNR 30.32 dB
● KSVD (78 sec): PSNR 30.21 dB
● x100 speedup compared to OMP sparse coding in KSVD

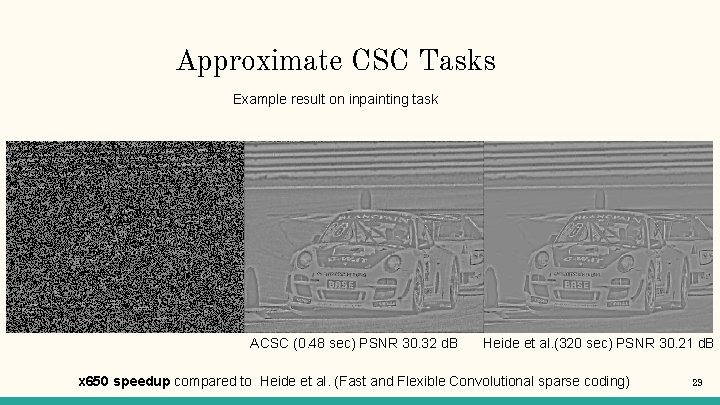

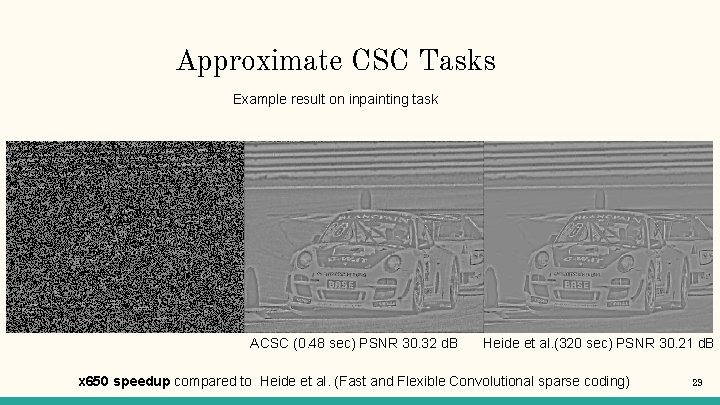

Approximate CSC Tasks
Example result on inpainting task
● ACSC (0.48 sec): PSNR 30.32 dB
● Heide et al. (320 sec): PSNR 30.21 dB
● x650 speedup compared to Heide et al. (Fast and Flexible Convolutional Sparse Coding)

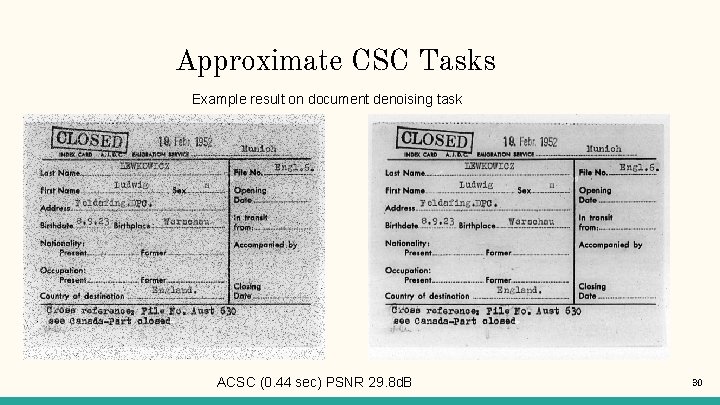

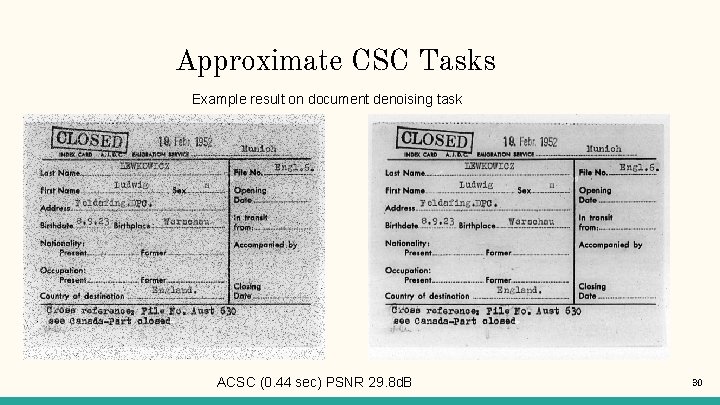

Approximate CSC Tasks
Example result on document denoising task
● ACSC (0.44 sec): PSNR 29.8 dB

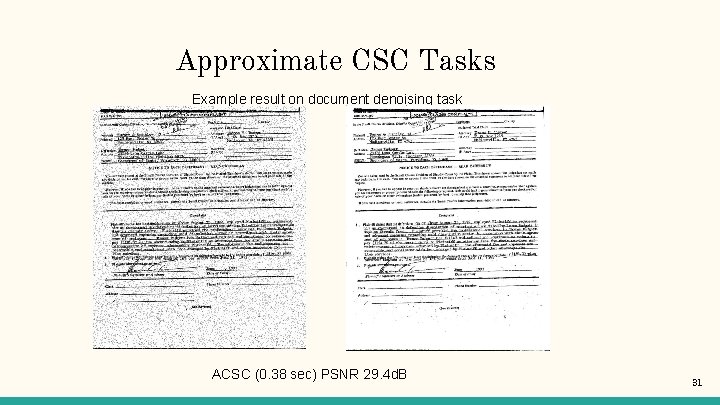

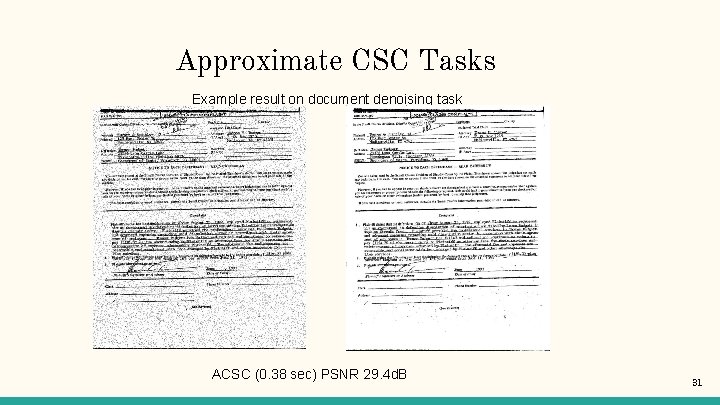

Approximate CSC Tasks
Example result on document denoising task
● ACSC (0.38 sec): PSNR 29.4 dB

Conclusion
● LISTA is good for accelerating sparse coding for patches.
● Yet, it is slow when working on images as a whole.
● Our proposed learned CSC is good for processing the whole image.
● It provides performance comparable to other sparse coding based techniques on images, with up to x100 speedup.

Thank You!