Layerwise Performance Bottleneck Analysis of Deep Neural Networks

Hengyu Zhao, Colin Weinshenker*, Mohamed Ibrahim*, Adwait Jog*, Jishen Zhao
University of California, Santa Cruz; *The College of William and Mary
The First International Workshop on Architectures for Intelligent Machines


Training

Inference
• This work focuses on training.

ImageNet Large Scale Visual Recognition Challenge: recent winners
• AlexNet: 8 layers (2012)
• VGG: 16 or 19 layers (2014)
• GoogLeNet: 22 layers (2014)
• ResNet: 152 layers (2015)
Modern neural networks go deeper!

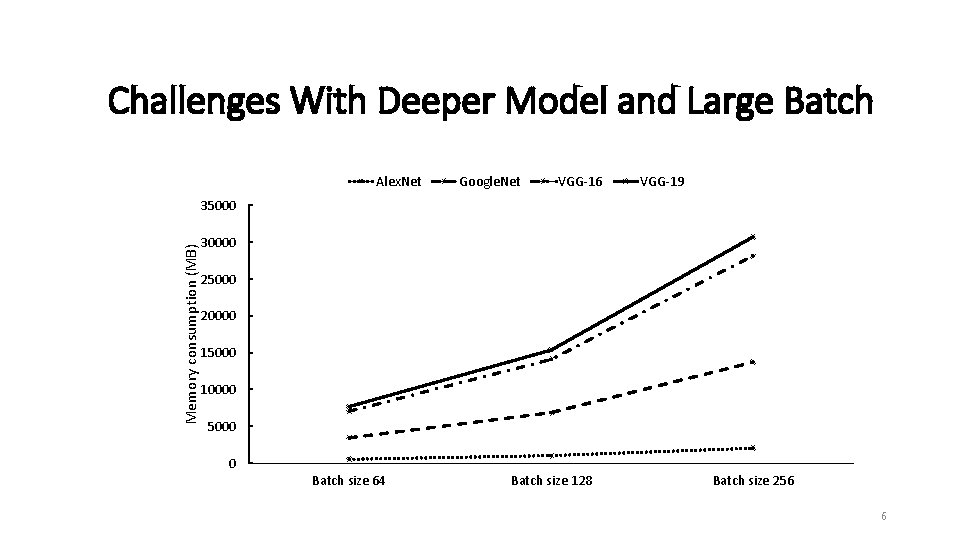

Challenges with Deeper Models and Large Batches
[Figure: memory consumption (MB) of AlexNet, GoogLeNet, VGG-16, and VGG-19 at batch sizes 64, 128, and 256.]
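To see why batch size drives memory use, the sketch below estimates only the activation (feature-map) memory that training must keep for backpropagation. The layer shapes are assumptions loosely modeled on AlexNet's first three convolutional layers; weights, gradients, and framework workspace are ignored, so real totals are larger.

```python
# Sketch: activation memory kept for the backward pass grows linearly with batch size.
# Assumed (C, H, W) output shapes, loosely modeled on AlexNet's CONV 1 to CONV 3.
FEATURE_MAPS = [(96, 55, 55), (256, 27, 27), (384, 13, 13)]
BYTES_PER_ELEM = 4  # assumes 32-bit floats


def activation_bytes(batch_size, feature_maps=FEATURE_MAPS, bytes_per_elem=BYTES_PER_ELEM):
    """Bytes needed to store every listed layer's output for one mini-batch."""
    per_sample = sum(c * h * w for c, h, w in feature_maps)
    return batch_size * per_sample * bytes_per_elem


if __name__ == "__main__":
    for batch in (64, 128, 256):
        print(f"batch {batch}: {activation_bytes(batch) / 2**20:.1f} MiB")
```

Doubling the batch doubles this term, which is one reason deeper models at large batch sizes quickly exhaust GPU memory.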

Problem
Larger models need more powerful new architectures!

Motivation
To build more powerful systems, we need to address the compute and memory bottlenecks in deep learning.

Our Work
• We build a layer-wise model for training VGG-16 and AlexNet on GPUs.
• We identify GPU performance bottlenecks in compute and cache resources by characterizing the performance and data access behaviors of the AlexNet and VGG-16 models layer by layer.
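As a rough illustration of the layer-wise idea, the sketch below times each layer of a toy pipeline on the CPU and reports each layer's share of the total, similar in spirit to the normalized execution-time plots. The layer functions are hypothetical stand-ins; GPU kernels run asynchronously, so timing real GPU layers would need device synchronization or a GPU profiler.

```python
import time
from typing import Callable, Dict, List, Sequence, Tuple

# A "layer" here is just a named function: a hypothetical stand-in for real GPU kernels.
Layer = Tuple[str, Callable[[Sequence[float]], List[float]]]


def profile_layers(layers: List[Layer], x: Sequence[float], repeats: int = 3) -> Dict[str, float]:
    """Run the layers in order and record each layer's best wall-clock time in seconds."""
    timings: Dict[str, float] = {}
    for name, fn in layers:
        best = float("inf")
        out: List[float] = []
        for _ in range(repeats):
            start = time.perf_counter()
            out = fn(x)
            best = min(best, time.perf_counter() - start)
        timings[name] = best
        x = out  # the next layer consumes this layer's output
    return timings


def normalize(timings: Dict[str, float]) -> Dict[str, float]:
    """Express each layer's time as a percentage of the total."""
    total = sum(timings.values())
    if total == 0:
        return {name: 0.0 for name in timings}
    return {name: 100.0 * t / total for name, t in timings.items()}


if __name__ == "__main__":
    toy_layers: List[Layer] = [
        ("scale", lambda v: [2.0 * a for a in v]),
        ("relu", lambda v: [max(0.0, a) for a in v]),
    ]
    shares = normalize(profile_layers(toy_layers, [float(i - 5000) for i in range(10000)]))
    for name, pct in shares.items():
        print(f"{name}: {pct:.1f}%")
```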

Background

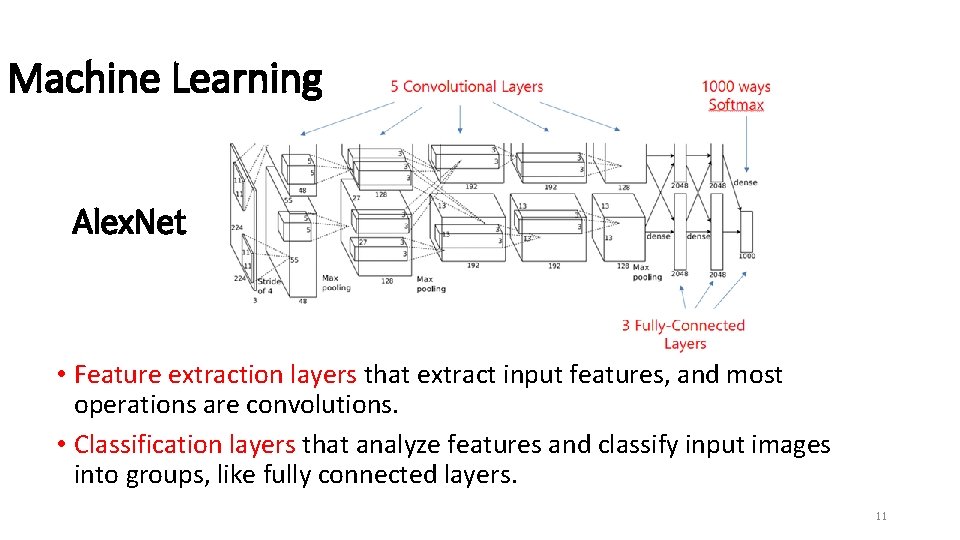

Machine Learning: AlexNet
• Feature extraction layers extract input features; most of their operations are convolutions.
• Classification layers, such as fully connected layers, analyze the features and classify input images into categories.
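The feature extraction layers progressively shrink the spatial size of the feature maps before the classification layers take over. The standard output-size formula for a convolution or pooling window is sketched below; the layer parameters are assumptions based on the widely used Caffe AlexNet configuration (227×227 input), not values taken from these slides.

```python
def conv_output_size(in_size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window along one dimension."""
    return (in_size + 2 * padding - kernel) // stride + 1


if __name__ == "__main__":
    # Assumed AlexNet-style front end: 227x227 input, 11x11 conv with stride 4,
    # then 3x3 max pooling with stride 2, then a 5x5 conv with padding 2.
    size = conv_output_size(227, kernel=11, stride=4)
    print("CONV 1 output:", size)
    size = conv_output_size(size, kernel=3, stride=2)
    print("after pooling:", size)
    size = conv_output_size(size, kernel=5, padding=2)
    print("CONV 2 output:", size)
```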

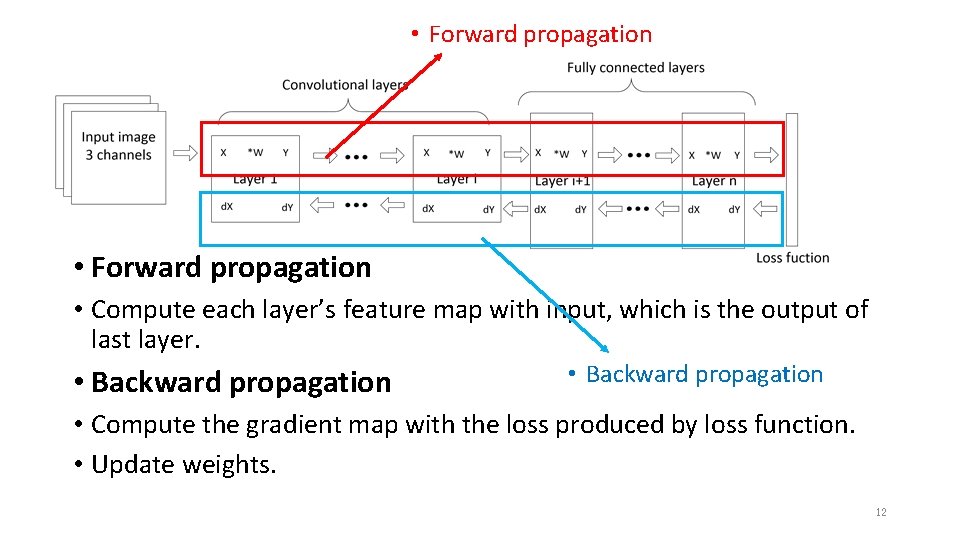

• Forward propagation
  • Compute each layer's feature map from its input, which is the output of the previous layer.
• Backward propagation
  • Compute the gradient maps from the loss produced by the loss function.
  • Update the weights.
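These steps can be illustrated on a single fully connected layer. The minimal pure-Python sketch below assumes a squared-error loss and plain gradient descent, both chosen only for illustration. Note that backward propagation computes two products (the input gradient and the weight gradient) where forward propagation computes one.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def fc_forward(W: Matrix, b: Vector, x: Vector) -> Vector:
    """y[i] = sum_j W[i][j] * x[j] + b[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def fc_backward(W: Matrix, x: Vector, grad_y: Vector) -> Tuple[Vector, Matrix, Vector]:
    """Gradients of the loss with respect to the input, weights, and bias."""
    # dL/dx[j] = sum_i W[i][j] * dL/dy[i]  (passed on to the previous layer)
    grad_x = [sum(W[i][j] * grad_y[i] for i in range(len(W))) for j in range(len(x))]
    # dL/dW[i][j] = dL/dy[i] * x[j]  and  dL/db[i] = dL/dy[i]
    grad_W = [[gi * xj for xj in x] for gi in grad_y]
    grad_b = list(grad_y)
    return grad_x, grad_W, grad_b


def sgd_update(W: Matrix, b: Vector, grad_W: Matrix, grad_b: Vector, lr: float) -> Tuple[Matrix, Vector]:
    """Plain gradient-descent weight update."""
    W_new = [[w - lr * g for w, g in zip(w_row, g_row)] for w_row, g_row in zip(W, grad_W)]
    b_new = [bi - lr * gi for bi, gi in zip(b, grad_b)]
    return W_new, b_new


def squared_error(y: Vector, target: Vector) -> Tuple[float, Vector]:
    """Loss 0.5 * sum((y - t)^2) and its gradient dL/dy = y - t."""
    diff = [yi - ti for yi, ti in zip(y, target)]
    return 0.5 * sum(d * d for d in diff), diff


if __name__ == "__main__":
    W, b, x, target = [[1.0, 2.0]], [0.0], [1.0, 1.0], [0.0]
    for step in range(3):
        y = fc_forward(W, b, x)                          # forward propagation
        loss, grad_y = squared_error(y, target)          # loss function
        _, grad_W, grad_b = fc_backward(W, x, grad_y)    # backward propagation
        W, b = sgd_update(W, b, grad_W, grad_b, lr=0.1)  # weight update
        print(f"step {step}: loss = {loss:.4f}")
```

With a single layer the input gradient is unused; in a deeper network it becomes the `grad_y` of the layer before.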

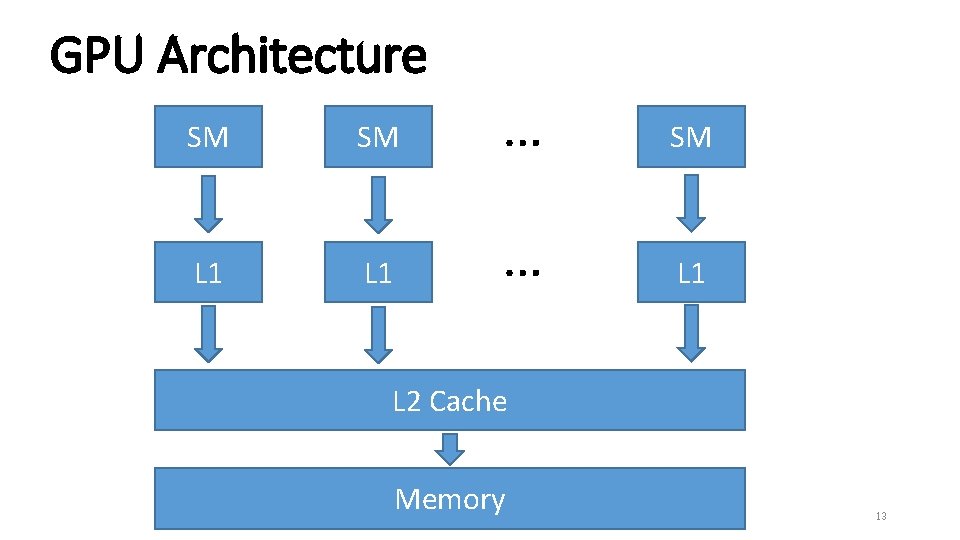

GPU Architecture
[Figure: multiple streaming multiprocessors (SMs), each with a private L1 cache, sharing an L2 cache backed by memory.]

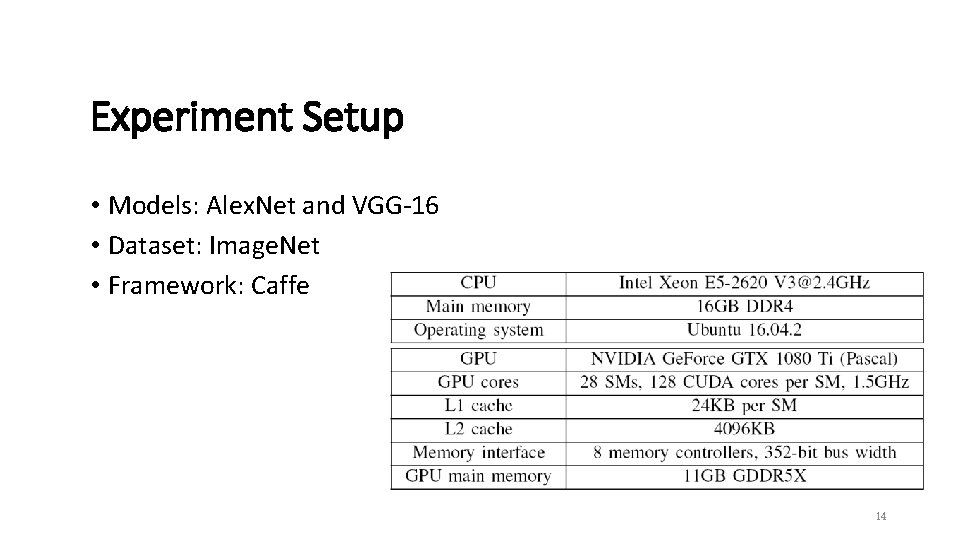

Experiment Setup
• Models: AlexNet and VGG-16
• Dataset: ImageNet
• Framework: Caffe

Real Machine Characterization
• Execution time and instructions
• L1 cache
• L2 cache
• Memory

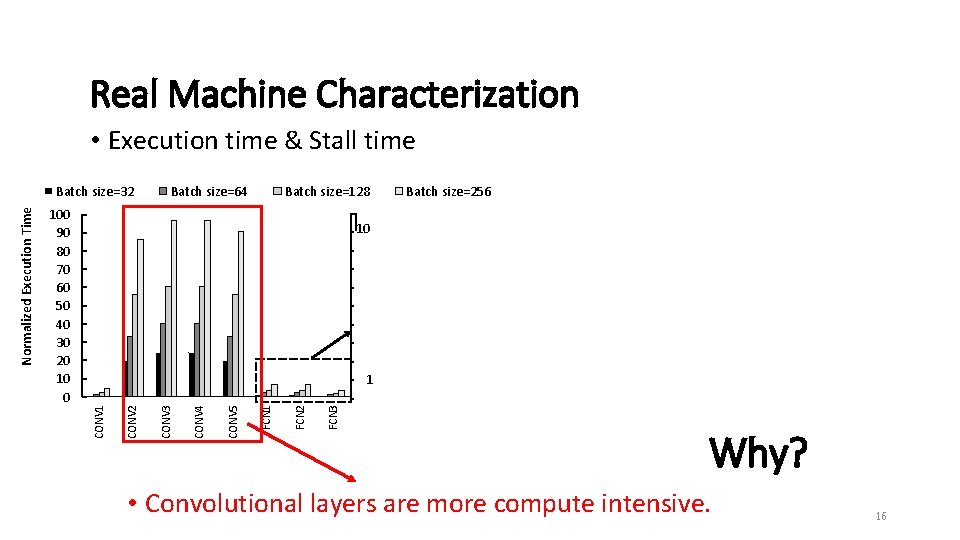

Real Machine Characterization
• Execution time and stall time
[Figure: normalized execution time of each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• Why? Convolutional layers are more compute-intensive.
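One way to make "more compute-intensive" concrete is arithmetic intensity: floating-point operations per byte of data moved. The sketch below computes an idealized intensity (each tensor read or written exactly once) for a convolutional layer and a fully connected layer. The shapes are assumptions loosely modeled on AlexNet (grouped convolution ignored), and real kernels move more data than this lower bound.

```python
BYTES_PER_ELEM = 4  # assumes 32-bit floats


def conv_intensity(n, c, h, w, k, r, s, stride=1, pad=0):
    """FLOPs per byte of a direct convolution, assuming each tensor crosses memory once."""
    p = (h + 2 * pad - r) // stride + 1
    q = (w + 2 * pad - s) // stride + 1
    flops = 2 * n * k * c * r * s * p * q  # one multiply and one add per multiply-accumulate
    elems = n * c * h * w + k * c * r * s + n * k * p * q  # input + weights + output
    return flops / (elems * BYTES_PER_ELEM)


def fc_intensity(n, in_features, out_features):
    """FLOPs per byte of a fully connected layer under the same assumption."""
    flops = 2 * n * in_features * out_features
    elems = n * in_features + in_features * out_features + n * out_features
    return flops / (elems * BYTES_PER_ELEM)


if __name__ == "__main__":
    # Assumed shapes loosely modeled on AlexNet CONV 2 and FCN 1.
    for batch in (32, 256):
        conv = conv_intensity(batch, c=96, h=27, w=27, k=256, r=5, s=5, pad=2)
        fc = fc_intensity(batch, in_features=9216, out_features=4096)
        print(f"batch {batch}: CONV {conv:.0f} FLOP/B, FCN {fc:.1f} FLOP/B")
```

The convolution reuses each weight across every output position, so its intensity is far higher; the fully connected layer's intensity is dominated by its large weight matrix and rises only as the batch grows.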

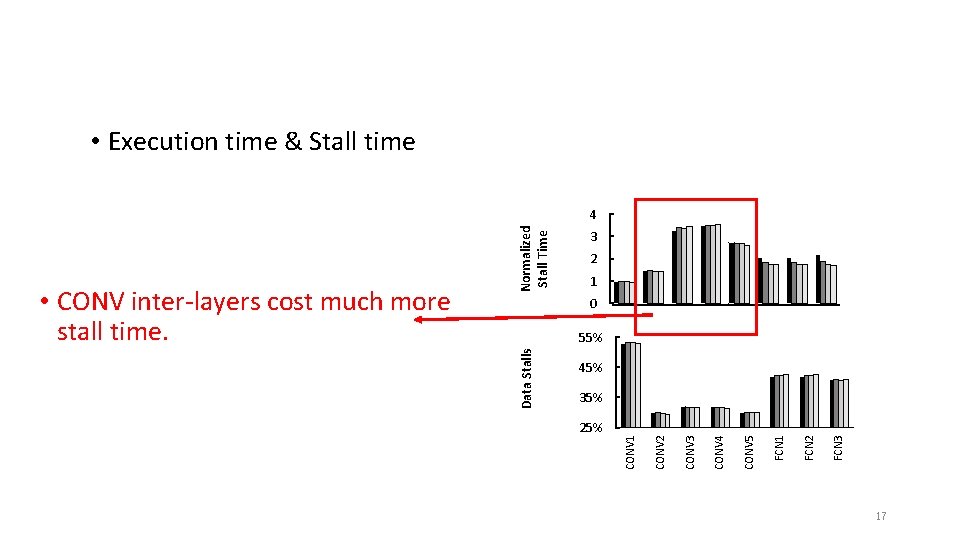

• Execution time and stall time
[Figure: normalized stall time, including data stalls, of each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3).]
• CONV inter-layers (the convolutional layers after the input layer, CONV 1) incur much more stall time.

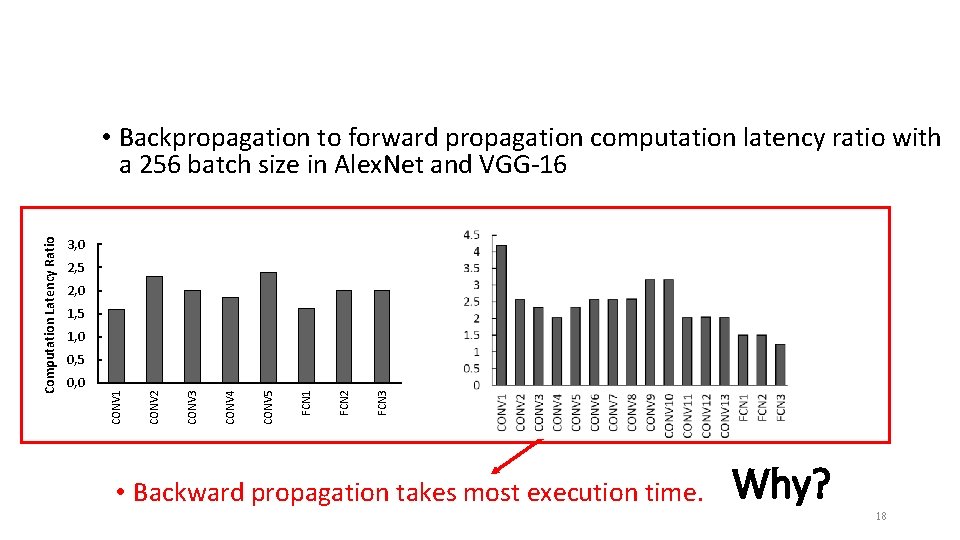

• Computation latency ratio
[Figure: ratio of backward-propagation to forward-propagation computation latency for each layer (CONV 1 to CONV 5, FCN 1 to FCN 3) in AlexNet and VGG-16 at batch size 256.]
• Backward propagation takes most of the execution time. Why?
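One common first-order explanation for the longer backward pass, offered here as an assumption rather than as the authors' analysis, is FLOP counting. If a layer is lowered to a matrix product (as Caffe does for convolution via im2col), forward propagation needs one product while backward propagation needs two: one for the weight gradient and one for the input gradient. The first layer can skip the input gradient because nothing upstream needs it. Measured latency ratios can differ from this count because of memory behavior.

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """FLOPs of an (m x k) @ (k x n) matrix product."""
    return 2 * m * n * k


def fwd_bwd_flops(m: int, n: int, k: int, needs_input_grad: bool = True):
    """Forward/backward FLOPs of a layer lowered to Y = X @ W, with X: (m x k), W: (k x n)."""
    forward = gemm_flops(m, n, k)        # Y  = X @ W
    backward = gemm_flops(k, n, m)       # dW = X^T @ dY
    if needs_input_grad:
        backward += gemm_flops(m, k, n)  # dX = dY @ W^T
    return forward, backward


if __name__ == "__main__":
    # Assumed FCN 1-like layer at batch size 256: 9216 inputs, 4096 outputs.
    fwd, bwd = fwd_bwd_flops(m=256, n=4096, k=9216)
    print("inner layer ratio:", bwd / fwd)
    fwd, bwd = fwd_bwd_flops(m=256, n=4096, k=9216, needs_input_grad=False)
    print("first layer ratio:", bwd / fwd)
```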

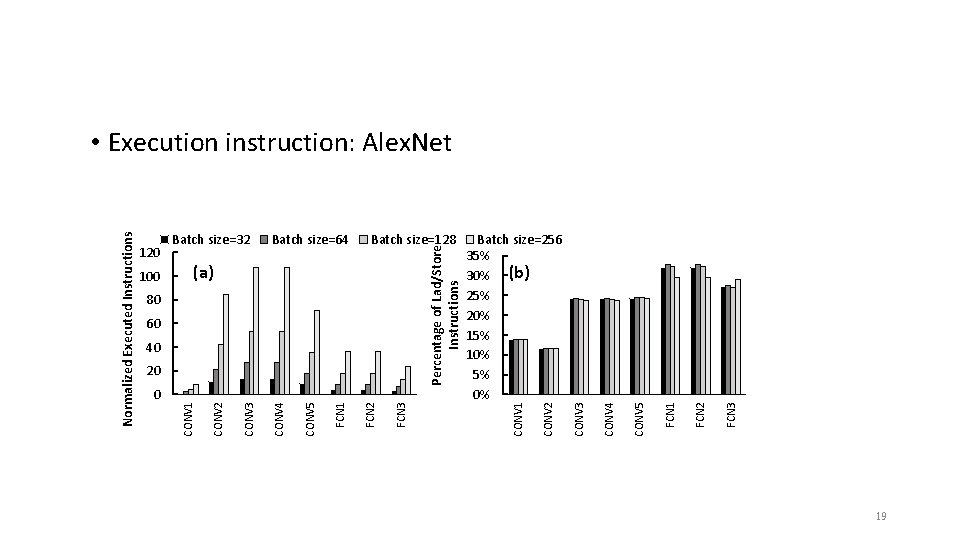

• Executed instructions: AlexNet
[Figure: normalized executed instructions and percentage of load/store instructions for each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]

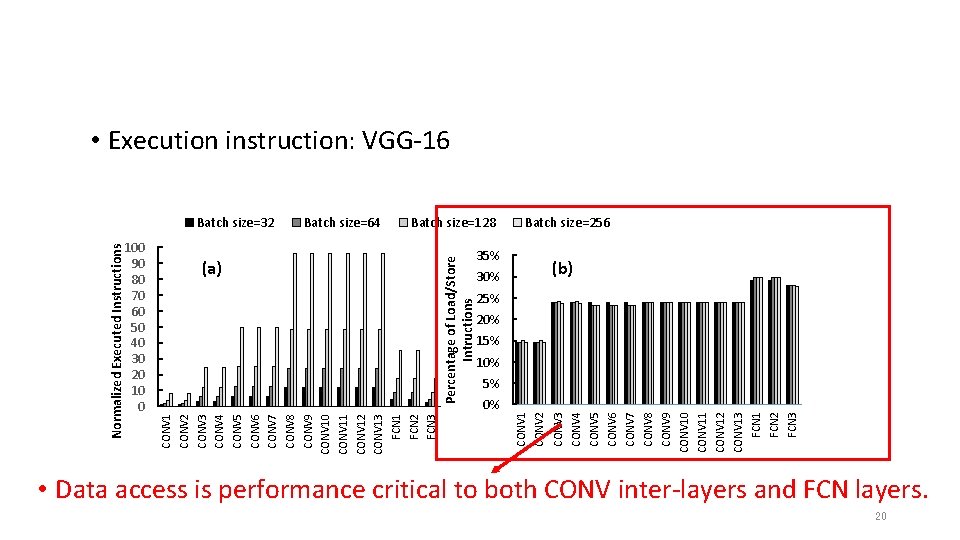

• Executed instructions: VGG-16
[Figure: normalized executed instructions and percentage of load/store instructions for each VGG-16 layer (CONV 1 to CONV 13, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• Data access is performance-critical for both CONV inter-layers and FCN layers.
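Both instruction panels can be derived from a per-layer instruction mix. The sketch assumes a hypothetical dictionary of counts per instruction class (for example, exported from a GPU profiler) and normalizes totals to the largest layer; that normalization is an assumption, and the counts are made up for illustration, not the authors' data.

```python
from typing import Dict


def summarize_mix(per_layer: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, float]]:
    """Per-layer normalized instruction count (largest layer = 100) and load/store share (%)."""
    totals = {layer: sum(mix.values()) for layer, mix in per_layer.items()}
    peak = max(totals.values())
    summary = {}
    for layer, mix in per_layer.items():
        mem = mix.get("load", 0) + mix.get("store", 0)
        summary[layer] = {
            "normalized_instructions": 100.0 * totals[layer] / peak if peak else 0.0,
            "load_store_pct": 100.0 * mem / totals[layer] if totals[layer] else 0.0,
        }
    return summary


if __name__ == "__main__":
    # Hypothetical instruction counts, for illustration only (not measured data).
    counts = {
        "CONV 2": {"fp": 700, "int": 150, "load": 100, "store": 50},
        "FCN 1": {"fp": 300, "int": 50, "load": 120, "store": 30},
    }
    for layer, stats in summarize_mix(counts).items():
        print(layer, stats)
```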

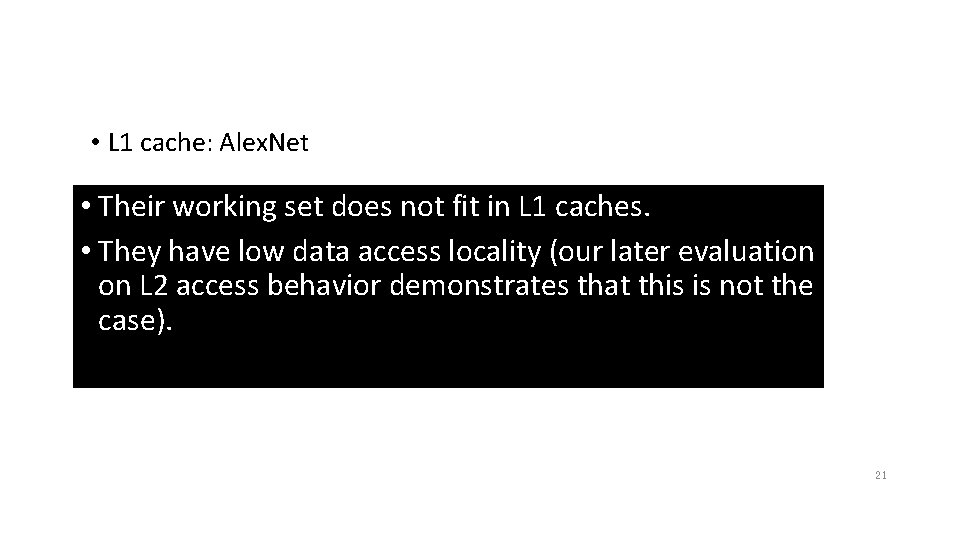

• L1 cache: AlexNet
[Figure: L1 cache throughput (GB/s) and L1 cache hit rate of each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• Possible reasons why the CONV inter-layers make poor use of the L1 caches:
  • Their working set does not fit in the L1 caches.
  • They have low data access locality (our later evaluation of L2 access behavior demonstrates that this is not the case).
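The first reason can be illustrated with a toy cache model. The sketch assumes a fully associative LRU cache with 128-byte lines (real GPU L1 caches are set-associative and differ in detail) and replays a layer that sweeps over its working set several times. The 24 KB L1 and 4 MB L2 capacities are the ones quoted in these slides; each capacity is evaluated independently on the same trace, not as a two-level hierarchy.

```python
from collections import OrderedDict
from typing import List

LINE_BYTES = 128  # assumed cache-line size


def lru_hit_rate(addresses: List[int], capacity_bytes: int, line_bytes: int = LINE_BYTES) -> float:
    """Hit rate of a fully associative LRU cache for a stream of byte addresses."""
    capacity_lines = capacity_bytes // line_bytes
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        line = addr // line_bytes
        if line in cache:
            hits += 1
            cache.move_to_end(line)  # mark as most recently used
        else:
            cache[line] = None
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses) if addresses else 0.0


def repeated_sweep(working_set_bytes: int, passes: int = 4) -> List[int]:
    """Touch every line of the working set once per pass, `passes` times."""
    one_pass = list(range(0, working_set_bytes, LINE_BYTES))
    return one_pass * passes


if __name__ == "__main__":
    L1, L2 = 24 * 1024, 4 * 1024 * 1024
    for ws_kb in (16, 64):
        trace = repeated_sweep(ws_kb * 1024)
        print(f"{ws_kb} KB working set: L1 {lru_hit_rate(trace, L1):.2f}, "
              f"L2 {lru_hit_rate(trace, L2):.2f}")
```

When the working set fits, every sweep after the first hits. When it exceeds capacity, LRU evicts each line just before it is reused, so the hit rate collapses to zero even though the trace has plenty of reuse, which a larger cache then captures.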

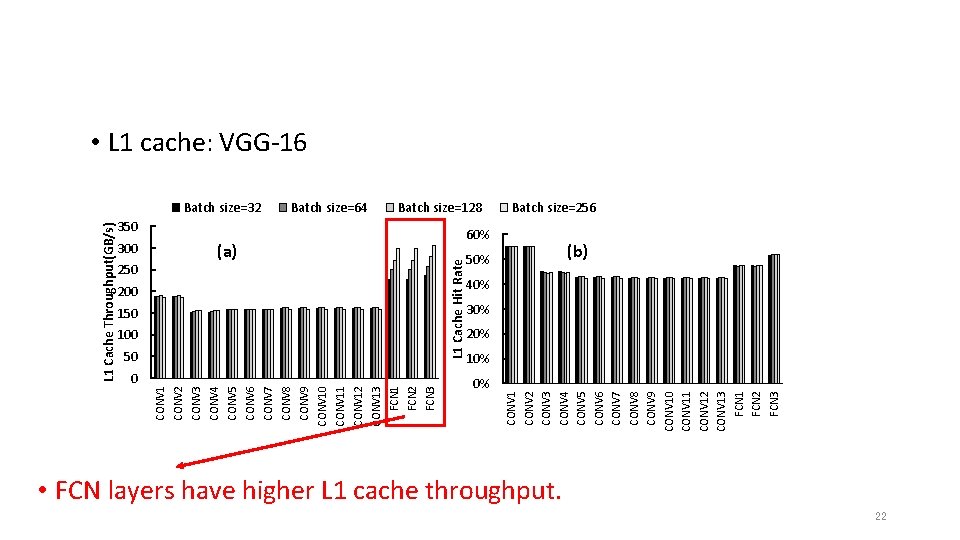

• L1 cache: VGG-16
[Figure: L1 cache throughput (GB/s) and L1 cache hit rate of each VGG-16 layer (CONV 1 to CONV 13, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• FCN layers have higher L1 cache throughput.

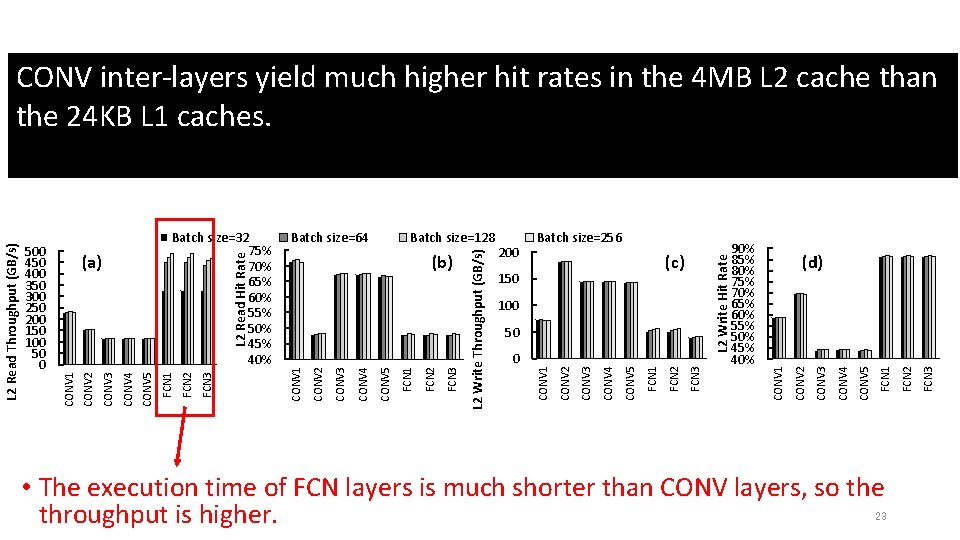

• L2 cache: AlexNet
[Figure: L2 read throughput (GB/s), L2 read hit rate, L2 write throughput (GB/s), and L2 write hit rate of each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• CONV inter-layers achieve much higher hit rates in the 4 MB L2 cache than in the 24 KB L1 caches.
• The execution time of FCN layers is much shorter than that of CONV layers, so their throughput is higher.
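The throughput point is simple arithmetic: throughput is bytes moved divided by execution time, so for a given amount of data, a shorter execution time yields a higher throughput. The numbers below are hypothetical and chosen only to show the effect.

```python
def throughput_gb_per_s(bytes_moved: float, seconds: float) -> float:
    """Average throughput in GB/s (decimal gigabytes)."""
    if seconds <= 0:
        raise ValueError("execution time must be positive")
    return bytes_moved / seconds / 1e9


if __name__ == "__main__":
    # Hypothetical numbers: both layers move 2 GB, but the FCN layer finishes 10x sooner.
    conv = throughput_gb_per_s(2e9, seconds=0.050)
    fcn = throughput_gb_per_s(2e9, seconds=0.005)
    print(f"CONV: {conv:.0f} GB/s, FCN: {fcn:.0f} GB/s")
```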

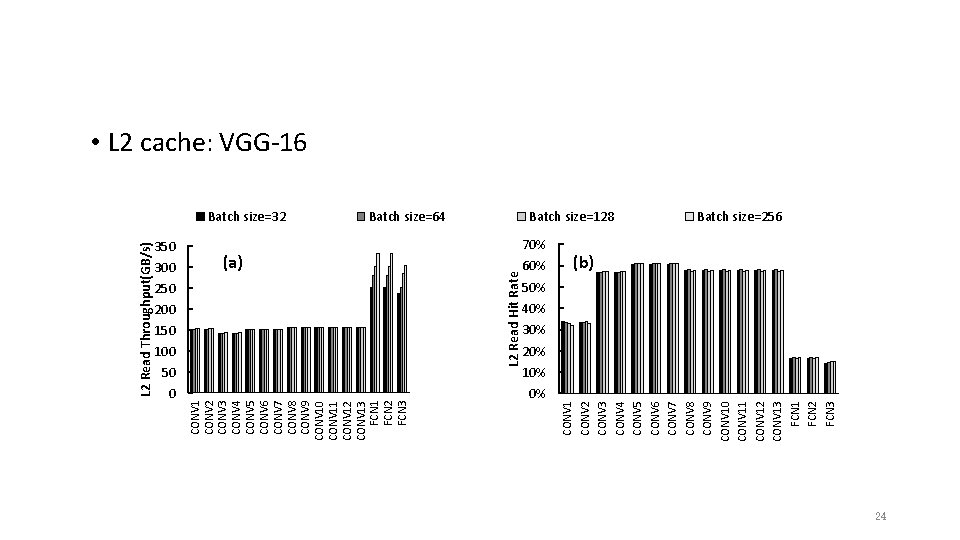

• L2 cache: VGG-16
[Figure: L2 read throughput (GB/s) and L2 read hit rate of each VGG-16 layer (CONV 1 to CONV 13, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]

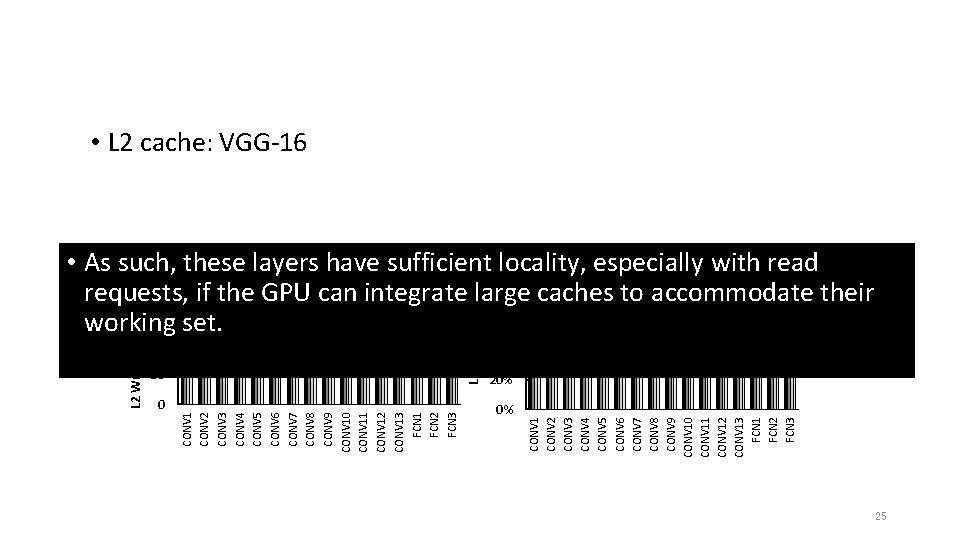

• L2 cache: VGG-16
[Figure: L2 write throughput (GB/s) and L2 write hit rate of each VGG-16 layer (CONV 1 to CONV 13, FCN 1 to FCN 3).]
• As such, these layers have sufficient locality, especially for read requests, to benefit from large caches if the GPU can integrate caches big enough to accommodate their working set.

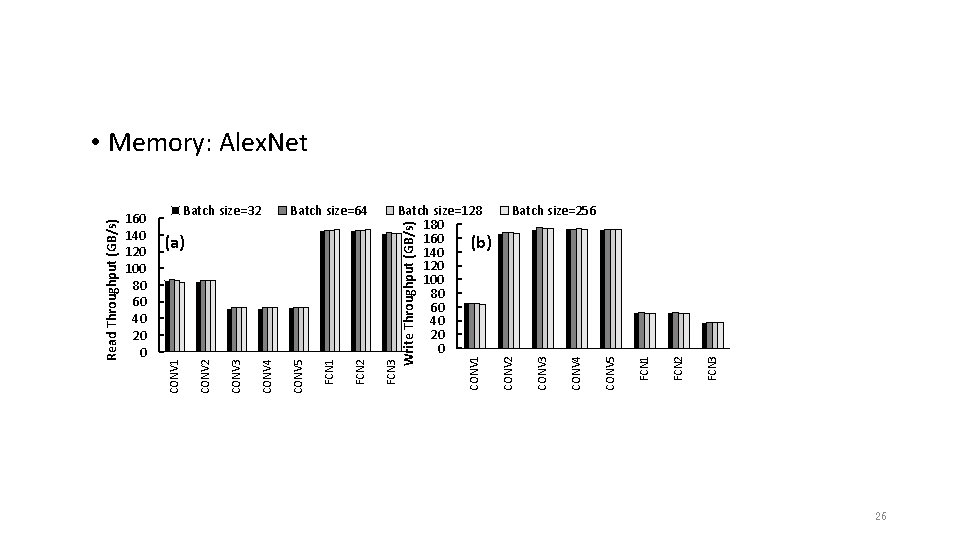

• Memory: AlexNet
[Figure: memory read throughput (GB/s) and memory write throughput (GB/s) of each AlexNet layer (CONV 1 to CONV 5, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]

• Memory: VGG-16
[Figure: memory read throughput (GB/s) and memory write throughput (GB/s) of each VGG-16 layer (CONV 1 to CONV 13, FCN 1 to FCN 3) at batch sizes 32, 64, 128, and 256.]
• CONV inter-layers have much higher memory write throughput because they have lower L2 write hit rates.
• FCN layers have much higher memory read throughput because they have lower L2 read hit rates.
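Both observations follow from a first-order model in which every L2 miss becomes DRAM traffic: for a fixed number of L2 requests, a lower hit rate means more memory traffic and, over the same execution time, higher memory throughput. The sketch assumes one 32-byte transfer per miss and ignores write-back buffering, compression, and prefetching; the request counts and timings are hypothetical.

```python
SECTOR_BYTES = 32  # assumed granularity of L2-to-DRAM transfers


def dram_traffic_bytes(l2_requests: int, l2_hit_rate: float, sector_bytes: int = SECTOR_BYTES) -> int:
    """Bytes sent to or fetched from DRAM, assuming each L2 miss moves one sector."""
    if not 0.0 <= l2_hit_rate <= 1.0:
        raise ValueError("hit rate must be in [0, 1]")
    misses = round(l2_requests * (1.0 - l2_hit_rate))
    return misses * sector_bytes


if __name__ == "__main__":
    # Hypothetical layer: 10 million L2 write requests over 20 ms.
    requests, seconds = 10_000_000, 0.020
    for hit_rate in (0.9, 0.5):
        traffic = dram_traffic_bytes(requests, hit_rate)
        print(f"L2 write hit rate {hit_rate:.0%}: {traffic / seconds / 1e9:.1f} GB/s to DRAM")
```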

Conclusion
• The execution time of the convolutional inter-layers dominates the total execution time. In particular, backpropagation through these inter-layers takes significantly longer than forward propagation.
• The working set of the convolutional inter-layers does not fit in the L1 cache, while the convolutional input layer can exploit the L1 cache effectively.
• The interconnect network can also be a performance bottleneck that substantially increases GPU memory bandwidth demand.

Thank you.
