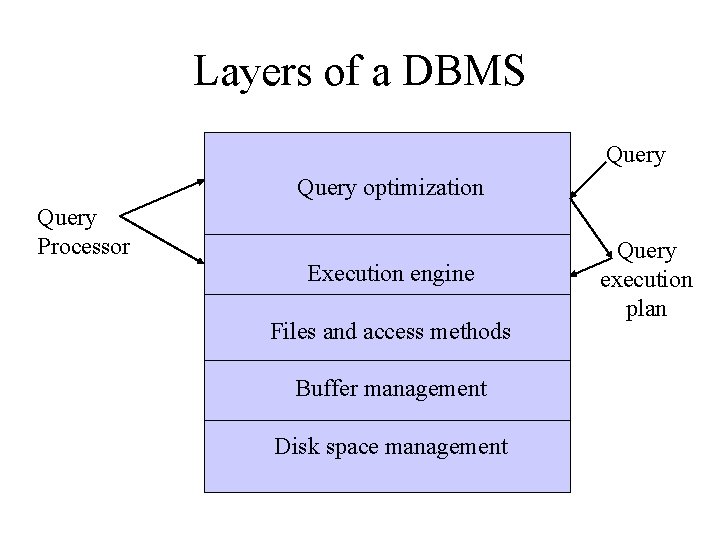

Layers of a DBMS
• Query processor
  – Query optimization (produces the query execution plan)
  – Execution engine (runs the query execution plan)
• Files and access methods
• Buffer management
• Disk space management

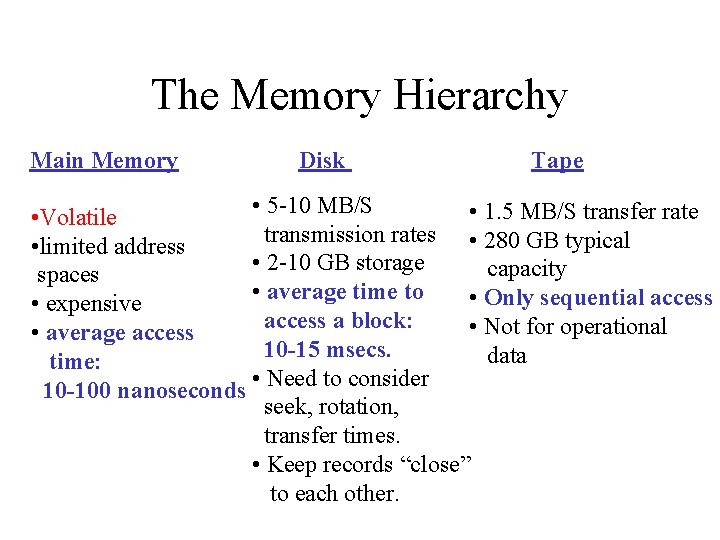

The Memory Hierarchy

Main Memory
• Volatile
• Limited address spaces
• Expensive
• Average access time: 10-100 nanoseconds

Disk
• 5-10 MB/s transmission rates
• 2-10 GB storage
• Average time to access a block: 10-15 msecs
• Need to consider seek, rotation, transfer times
• Keep records "close" to each other

Tape
• 1.5 MB/s transfer rate
• 280 GB typical capacity
• Only sequential access
• Not for operational data

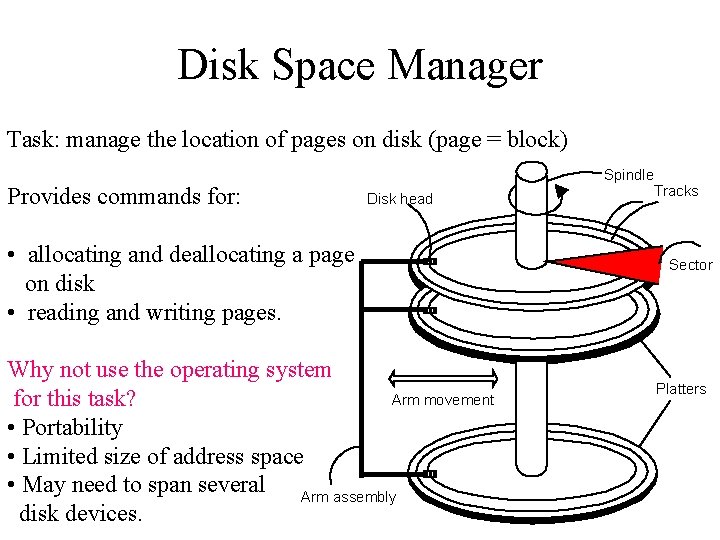

Disk Space Manager
Task: manage the location of pages on disk (page = block).
Provides commands for:
• allocating and deallocating a page on disk
• reading and writing pages.
Why not use the operating system for this task?
• Portability
• Limited size of address space
• May need to span several disk devices.
[Figure: disk anatomy, showing the disk head, arm assembly, arm movement, spindle, platters, tracks, and sectors]

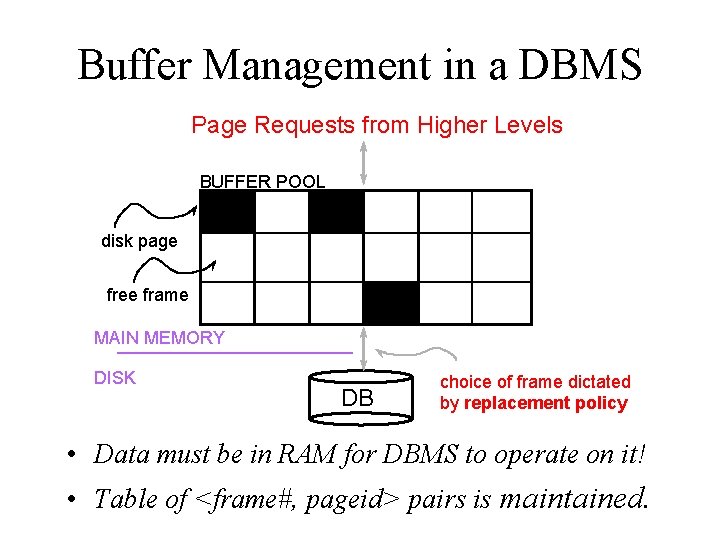

Buffer Management in a DBMS
[Figure: page requests from higher levels arrive at the buffer pool in main memory; disk pages are read from the DB on disk into free frames; the choice of frame is dictated by the replacement policy]
• Data must be in RAM for DBMS to operate on it!
• Table of <frame#, pageid> pairs is maintained.

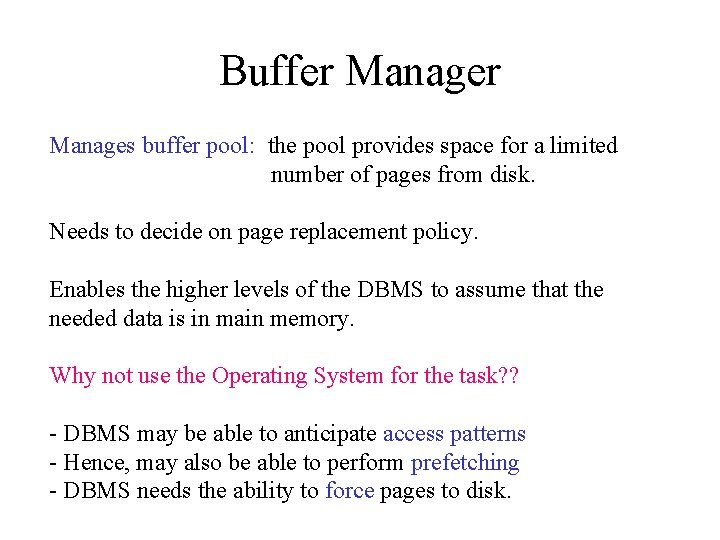

Buffer Manager
• Manages the buffer pool: the pool provides space for a limited number of pages from disk.
• Needs to decide on a page replacement policy.
• Enables the higher levels of the DBMS to assume that the needed data is in main memory.
Why not use the operating system for this task?
– DBMS may be able to anticipate access patterns
– Hence, may also be able to perform prefetching
– DBMS needs the ability to force pages to disk.
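
The responsibilities above can be sketched in a few lines. This is a minimal illustration, not how any particular DBMS does it: it assumes an LRU replacement policy, models the disk as a plain dictionary, and the names `BufferPool`, `pin`, `unpin`, and `force` are hypothetical.

```python
from collections import OrderedDict


class BufferPool:
    """Toy buffer manager: fixed number of frames, LRU replacement, pin counts."""

    def __init__(self, num_frames, disk):
        self.num_frames = num_frames
        self.disk = disk              # hypothetical disk: dict of page_id -> contents
        self.frames = OrderedDict()   # page_id -> [contents, pin_count, dirty], oldest first

    def pin(self, page_id):
        """Bring the page into the pool if needed, pin it, and return its contents."""
        if page_id in self.frames:
            self.frames.move_to_end(page_id)      # now the most recently used
        else:
            if len(self.frames) >= self.num_frames:
                self._evict()
            self.frames[page_id] = [self.disk[page_id], 0, False]
        frame = self.frames[page_id]
        frame[1] += 1
        return frame[0]

    def unpin(self, page_id, new_contents=None):
        """Release one pin; passing new_contents marks the page dirty."""
        frame = self.frames[page_id]
        frame[1] -= 1
        if new_contents is not None:
            frame[0], frame[2] = new_contents, True

    def force(self, page_id):
        """Write a dirty page back to disk right away."""
        frame = self.frames[page_id]
        if frame[2]:
            self.disk[page_id] = frame[0]
            frame[2] = False

    def _evict(self):
        """Free one frame: the least recently used page that nobody has pinned."""
        victim = next((pid for pid, f in self.frames.items() if f[1] == 0), None)
        if victim is None:
            raise RuntimeError("all frames are pinned")
        self.force(victim)            # dirty pages are written before being dropped
        del self.frames[victim]
```

The `frames` dictionary plays the role of the <frame#, pageid> table, and `force` is the hook an operating-system page cache would not give the DBMS.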

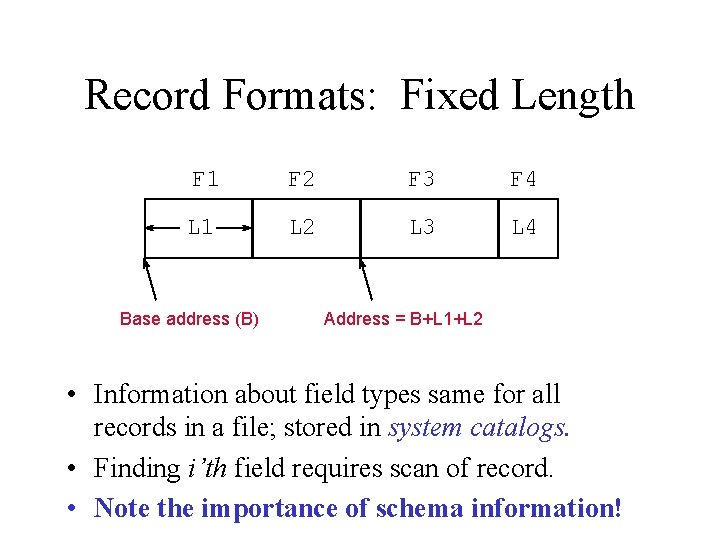

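Record Formats: Fixed Length
[Figure: a record with fields F1, F2, F3, F4 of lengths L1, L2, L3, L4, starting at base address B; the address of F3 is B + L1 + L2]
• Information about field types is the same for all records in a file; stored in system catalogs.
• Finding the i'th field does not require a scan of the record: its address is the base address plus the lengths of the preceding fields.
• Note the importance of schema information!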
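
The address computation can be sketched as follows. The schema, the field names, and the little-endian packing are assumptions made up for the illustration; only the rule "offset = sum of the preceding field lengths" comes from the slide.

```python
import struct

# Hypothetical catalog entry for one file: (field name, struct format, length in bytes).
SCHEMA = [("id", "i", 4), ("age", "h", 2), ("salary", "d", 8), ("dept", "4s", 4)]


def field_offset(schema, i):
    """Offset of field i from the record's base address: L1 + ... + L(i)."""
    return sum(length for _, _, length in schema[:i])


def read_field(record, schema, i):
    """Decode field i directly, without looking at any other field's bytes."""
    _, fmt, length = schema[i]
    start = field_offset(schema, i)
    (value,) = struct.unpack("<" + fmt, record[start:start + length])
    return value


# One 18-byte record laid out according to SCHEMA ("<" means no padding).
record = struct.pack("<ihd4s", 7, 30, 5000.0, b"eng ")
```

Here `field_offset(SCHEMA, 2)` is 4 + 2 = 6, so the salary is read from bytes 6 to 13 without touching the first two fields.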

Files of Records
• Page or block is OK when doing I/O, but higher levels of DBMS operate on records, and files of records.
• FILE: A collection of pages, each containing a collection of records. Must support:
  – insert/delete/modify record
  – read a particular record (specified using record id)
  – scan all records (possibly with some conditions on the records to be retrieved)

File Organizations
– Heap files: Suitable when typical access is a file scan retrieving all records.
– Sorted files: Best if records must be retrieved in some order, or only a "range" of records is needed.
– Hashed files: Good for equality selections.
  • File is a collection of buckets. Bucket = primary page plus zero or more overflow pages.
  • Hashing function h: h(r) = bucket in which record r belongs. h looks at only some of the fields of r, called the search fields.

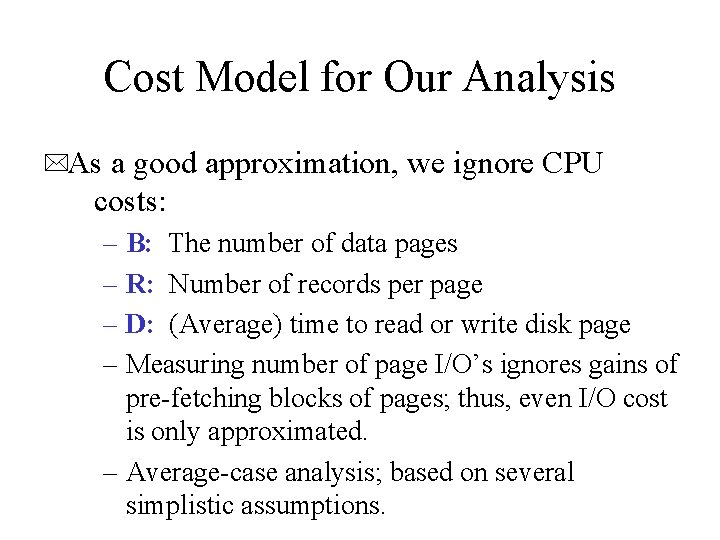

Sorting
• Illustrates the difference in algorithm design when your data is not in main memory:
  – Problem: sort 1 GB of data with 1 MB of RAM.
• Arises in many places in database systems:
  – Data requested in sorted order (ORDER BY)
  – Needed for grouping operations
  – First step in sort-merge join algorithm
  – Duplicate removal
  – Bulk loading of B+-tree indexes.

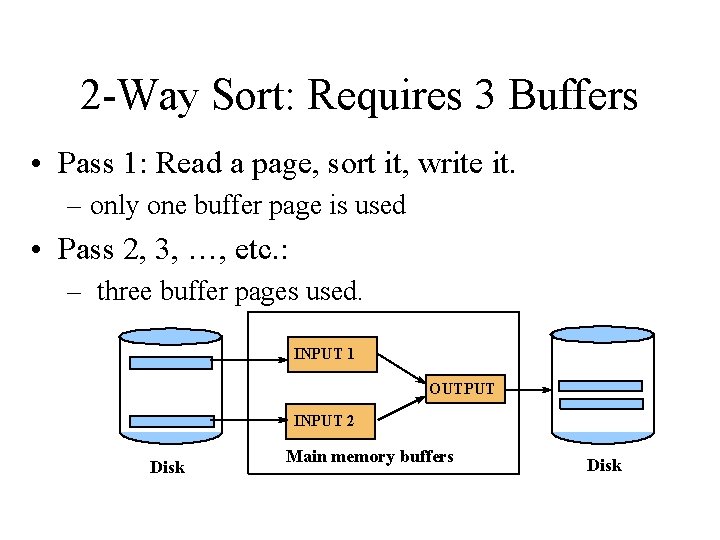

2-Way Sort: Requires 3 Buffers
• Pass 0: Read a page, sort it, write it.
  – only one buffer page is used
• Pass 1, 2, …, etc.:
  – three buffer pages used.
[Figure: two input buffers (INPUT 1, INPUT 2) and one OUTPUT buffer in main memory, reading runs from disk and writing the merged run back to disk]

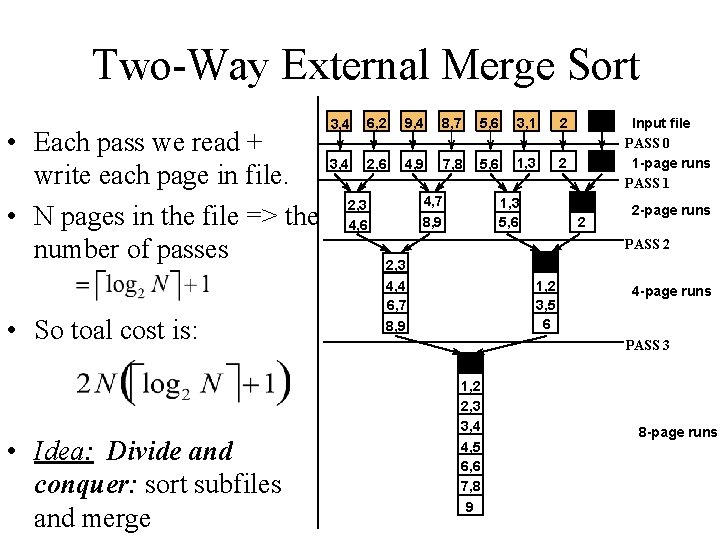

Two-Way External Merge Sort
• Each pass we read + write each page in file.
• N pages in the file => the number of passes is $\lceil \log_2 N \rceil + 1$.
• So total cost is: $2N(\lceil \log_2 N \rceil + 1)$.
• Idea: Divide and conquer: sort subfiles and merge.

Example (7-page file; "|" separates pages in the input file and runs in each pass):
Input file: 3,4 | 6,2 | 9,4 | 8,7 | 5,6 | 3,1 | 2
PASS 0 (1-page runs): 3,4 | 2,6 | 4,9 | 7,8 | 5,6 | 1,3 | 2
PASS 1 (2-page runs): 2,3 4,6 | 4,7 8,9 | 1,3 5,6 | 2
PASS 2 (4-page runs): 2,3 4,4 6,7 8,9 | 1,2 3,5 6
PASS 3 (8-page runs): 1,2 2,3 3,4 4,5 6,6 7,8 9
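
The passes in this example can be replayed with a short sketch. It keeps everything in Python lists, so it only mirrors the pass structure of the algorithm and performs no real disk I/O; the function names are made up for the illustration.

```python
def merge_two_runs(left, right):
    """Merge two sorted runs into one sorted run (the 3-buffer merge step)."""
    out, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            out.append(left[i])
            i += 1
        else:
            out.append(right[j])
            j += 1
    return out + left[i:] + right[j:]


def two_way_external_sort(pages):
    """pages: list of pages, each a list of records.

    Returns (sorted records, number of passes).
    """
    runs = [sorted(page) for page in pages]   # pass 0: sort each page on its own
    passes = 1
    while len(runs) > 1:                      # passes 1, 2, ...: merge runs pairwise
        merged = []
        for k in range(0, len(runs), 2):
            if k + 1 < len(runs):
                merged.append(merge_two_runs(runs[k], runs[k + 1]))
            else:
                merged.append(runs[k])        # odd run out is carried to the next pass
        runs = merged
        passes += 1
    return (runs[0] if runs else []), passes


input_file = [[3, 4], [6, 2], [9, 4], [8, 7], [5, 6], [3, 1], [2]]
result, passes = two_way_external_sort(input_file)
```

For the 7-page input file, `passes` comes out as 4 (passes 0 through 3), and the cost formula gives 2 * 7 * 4 = 56 page I/Os.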

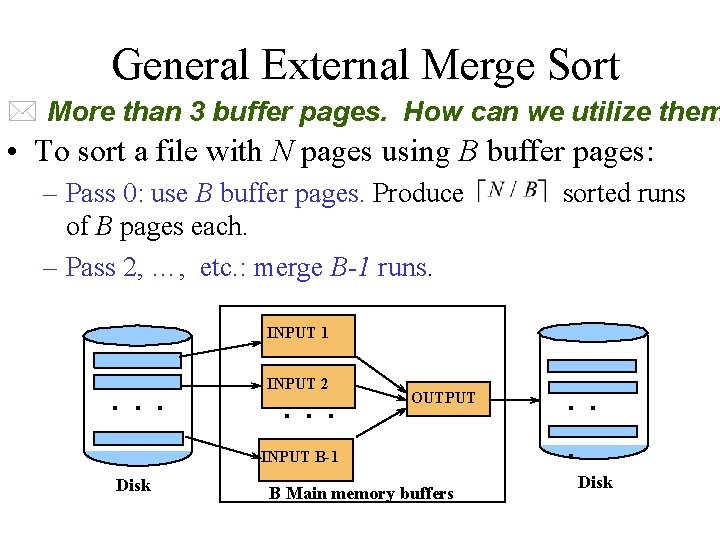

General External Merge Sort
• More than 3 buffer pages. How can we utilize them?
• To sort a file with N pages using B buffer pages:
  – Pass 0: use B buffer pages. Produce $\lceil N/B \rceil$ sorted runs of B pages each.
  – Pass 1, 2, …, etc.: merge B-1 runs.
[Figure: B main memory buffers between disk and disk: B-1 input buffers (INPUT 1, INPUT 2, …, INPUT B-1) and one OUTPUT buffer]
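
A minimal in-memory sketch of the general scheme follows. It assumes runs fit in Python lists and uses the standard library's `heapq.merge` for the (B-1)-way merge; a real implementation would stream one page per input buffer instead. The function name and parameters are hypothetical.

```python
import heapq


def external_merge_sort(pages, num_buffers):
    """Sort a file (list of pages) using num_buffers buffer pages.

    Returns (sorted records, number of passes).
    """
    if num_buffers < 3:
        raise ValueError("need at least 3 buffer pages")

    # Pass 0: fill all B buffers, sort in memory, write one run of B pages.
    runs = []
    for start in range(0, len(pages), num_buffers):
        chunk = [rec for page in pages[start:start + num_buffers] for rec in page]
        runs.append(sorted(chunk))
    passes = 1

    # Later passes: B-1 input buffers feed one output buffer.
    fan_in = num_buffers - 1
    while len(runs) > 1:
        runs = [list(heapq.merge(*runs[k:k + fan_in]))
                for k in range(0, len(runs), fan_in)]
        passes += 1
    return (runs[0] if runs else []), passes
```

With more buffers, pass 0 produces fewer and longer runs, and every later pass shrinks the number of runs by a factor of B-1 instead of 2.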

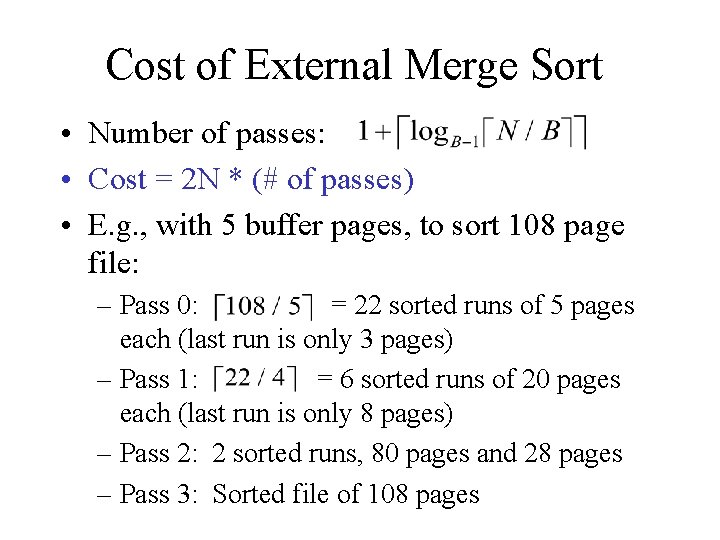

Cost of External Merge Sort
• Number of passes: $1 + \lceil \log_{B-1} \lceil N/B \rceil \rceil$
• Cost = 2N * (# of passes)
• E.g., with 5 buffer pages, to sort 108 page file:
  – Pass 0: $\lceil 108/5 \rceil$ = 22 sorted runs of 5 pages each (last run is only 3 pages)
  – Pass 1: $\lceil 22/4 \rceil$ = 6 sorted runs of 20 pages each (last run is only 8 pages)
  – Pass 2: 2 sorted runs, 80 pages and 28 pages
  – Pass 3: Sorted file of 108 pages
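
The arithmetic in this example can be checked with a small sketch. It counts runs with integer ceiling division rather than evaluating the logarithm, which avoids floating-point rounding; the function names are made up for the illustration.

```python
def runs_per_pass(num_pages, num_buffers):
    """Number of sorted runs left after each pass, starting with pass 0."""
    if num_buffers < 3:
        raise ValueError("need at least 3 buffer pages")
    runs = -(-num_pages // num_buffers)        # ceil(N / B) runs after pass 0
    counts = [runs]
    while runs > 1:
        runs = -(-runs // (num_buffers - 1))   # each pass merges B-1 runs at a time
        counts.append(runs)
    return counts


def external_sort_cost(num_pages, num_buffers):
    """Total page I/Os: every pass reads and writes every page once."""
    return 2 * num_pages * len(runs_per_pass(num_pages, num_buffers))
```

For N = 108 and B = 5, `runs_per_pass` yields 22, 6, 2, 1, which is four passes and 2 * 108 * 4 = 864 page I/Os.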

Number of Passes of External Sort

Cost Model for Our Analysis
As a good approximation, we ignore CPU costs:
– B: The number of data pages
– R: Number of records per page
– D: (Average) time to read or write disk page
– Measuring number of page I/O's ignores gains of pre-fetching blocks of pages; thus, even I/O cost is only approximated.
– Average-case analysis; based on several simplistic assumptions.

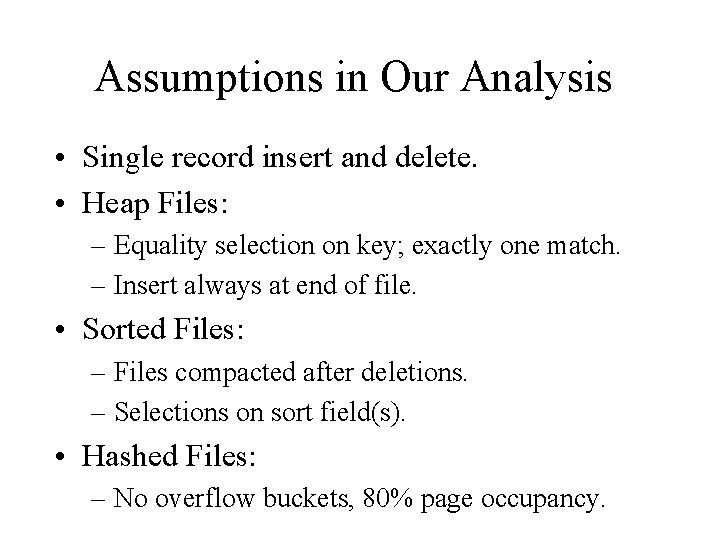

Assumptions in Our Analysis
• Single record insert and delete.
• Heap Files:
  – Equality selection on key; exactly one match.
  – Insert always at end of file.
• Sorted Files:
  – Files compacted after deletions.
  – Selections on sort field(s).
• Hashed Files:
  – No overflow buckets, 80% page occupancy.

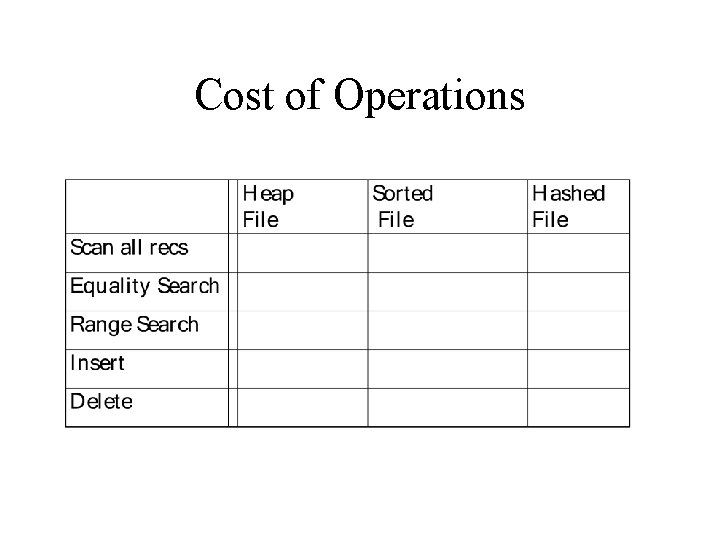

Cost of Operations
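
A rough sketch of how the cost model and the assumptions above turn into per-operation estimates. The formulas are the usual textbook approximations for these three organizations and are an assumption here, not values taken from this slide; `matching_pages` is a hypothetical parameter for the number of pages holding range matches.

```python
import math


def operation_costs(B, D, matching_pages=1):
    """Approximate I/O cost of each operation, per file organization.

    B = number of data pages, D = average time to read or write one page.
    Assumed formulas (not from the slide): a heap equality search reads half the
    file on average, a sorted file is binary-searched, and a hashed file is kept
    at 80% occupancy, so it spans 1.25 * B pages.
    """
    search_sorted = D * math.log2(B)
    return {
        "heap": {
            "scan": B * D,
            "equality": 0.5 * B * D,
            "range": B * D,
            "insert": 2 * D,                   # read the last page, write it back
            "delete": 0.5 * B * D + D,         # search, then write the page
        },
        "sorted": {
            "scan": B * D,
            "equality": search_sorted,
            "range": search_sorted + D * matching_pages,
            "insert": search_sorted + B * D,   # shift half the file: read + write
            "delete": search_sorted + B * D,   # compact after the deletion
        },
        "hashed": {
            "scan": 1.25 * B * D,
            "equality": D,
            "range": 1.25 * B * D,             # hashing does not help with ranges
            "insert": 2 * D,
            "delete": 2 * D,
        },
    }


costs = operation_costs(B=1024, D=1.0)
```

With B = 1024 and D = 1, an equality search costs about 512 on a heap file, 10 on a sorted file, and 1 on a hashed file, which is the trend the comparison is meant to show.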

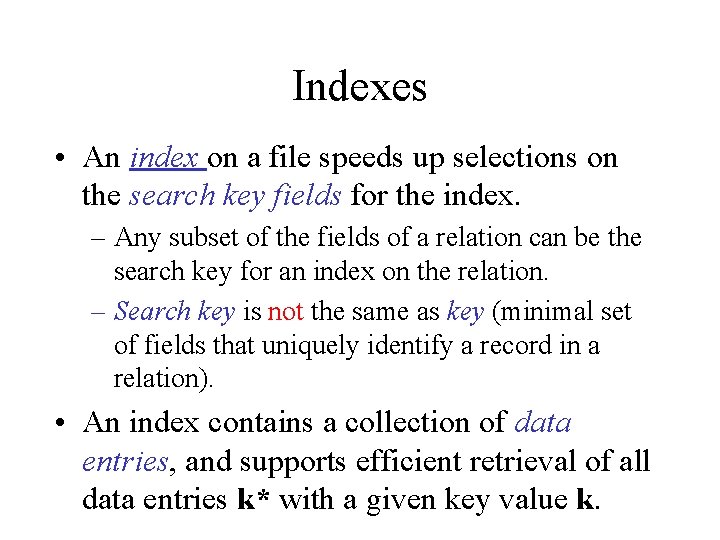

Indexes
• An index on a file speeds up selections on the search key fields for the index.
  – Any subset of the fields of a relation can be the search key for an index on the relation.
  – Search key is not the same as key (minimal set of fields that uniquely identify a record in a relation).
• An index contains a collection of data entries, and supports efficient retrieval of all data entries k* with a given key value k.

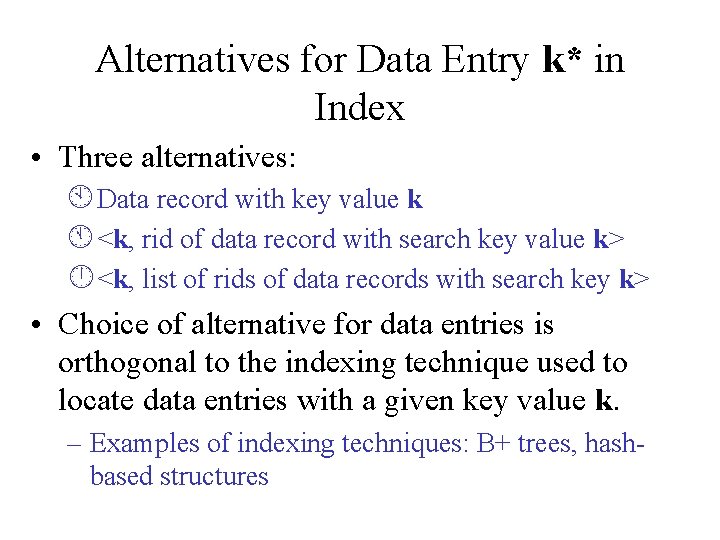

Alternatives for Data Entry k* in Index
• Three alternatives:
  1. Data record with key value k
  2. <k, rid of data record with search key value k>
  3. <k, list of rids of data records with search key k>
• Choice of alternative for data entries is orthogonal to the indexing technique used to locate data entries with a given key value k.
  – Examples of indexing techniques: B+ trees, hash-based structures

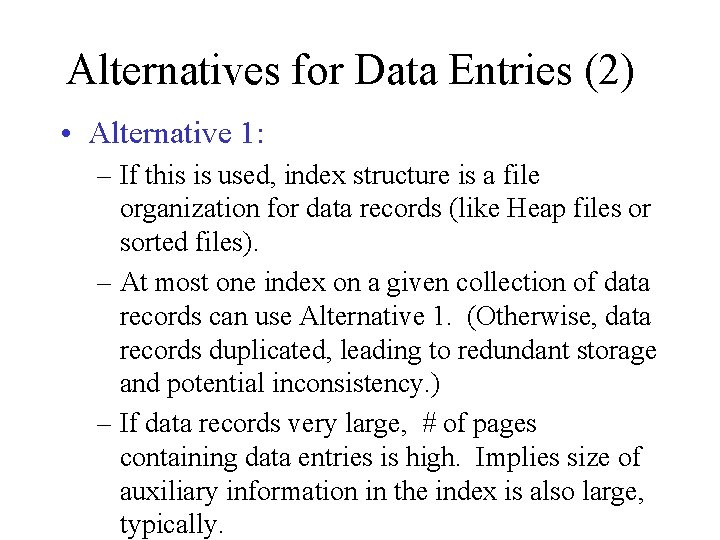

Alternatives for Data Entries (2)
• Alternative 1:
  – If this is used, index structure is a file organization for data records (like heap files or sorted files).
  – At most one index on a given collection of data records can use Alternative 1. (Otherwise, data records are duplicated, leading to redundant storage and potential inconsistency.)
  – If data records are very large, # of pages containing data entries is high. Implies size of auxiliary information in the index is also large, typically.

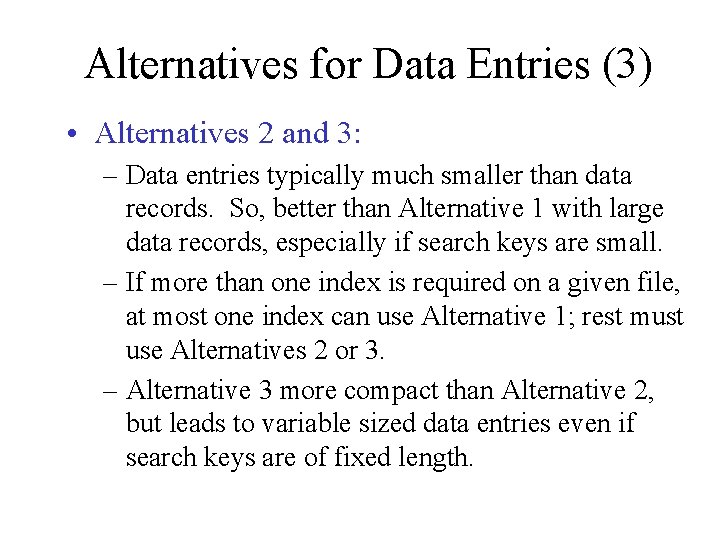

Alternatives for Data Entries (3)
• Alternatives 2 and 3:
  – Data entries typically much smaller than data records. So, better than Alternative 1 with large data records, especially if search keys are small.
  – If more than one index is required on a given file, at most one index can use Alternative 1; rest must use Alternatives 2 or 3.
  – Alternative 3 is more compact than Alternative 2, but leads to variable-sized data entries even if search keys are of fixed length.

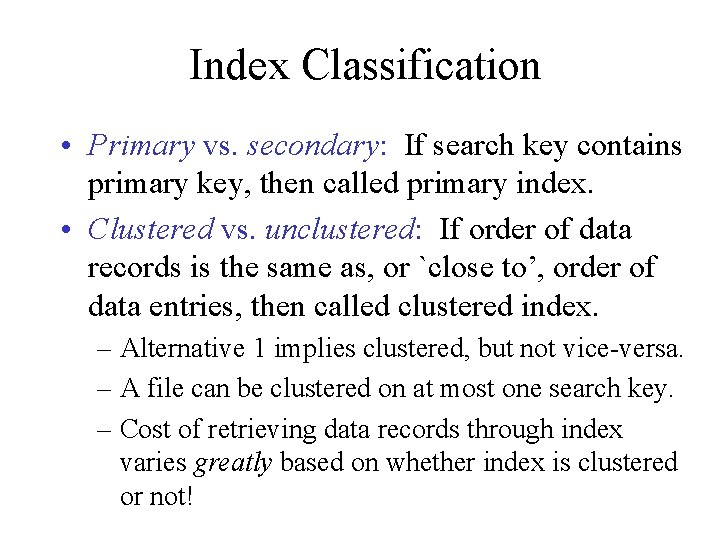

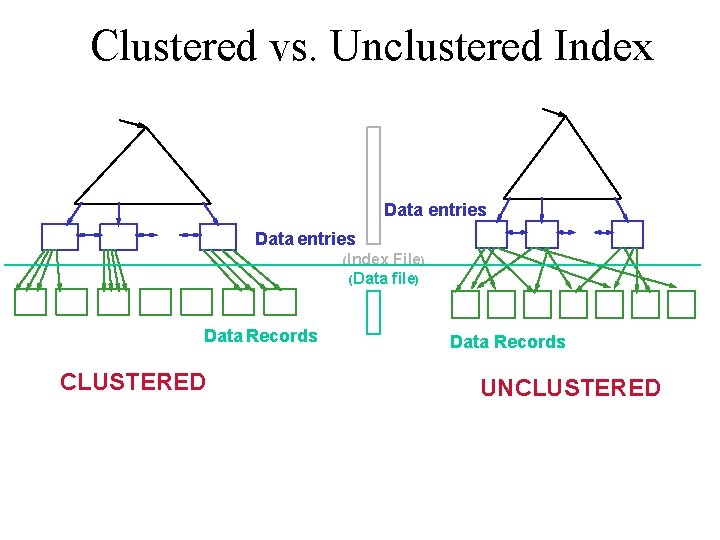

Index Classification
• Primary vs. secondary: If search key contains primary key, then called primary index.
• Clustered vs. unclustered: If order of data records is the same as, or "close to", order of data entries, then called clustered index.
  – Alternative 1 implies clustered, but not vice-versa.
  – A file can be clustered on at most one search key.
  – Cost of retrieving data records through index varies greatly based on whether index is clustered or not!

Clustered vs. Unclustered Index
[Figure: data entries in the index file pointing to data records in the data file, shown once for the CLUSTERED case and once for the UNCLUSTERED case]

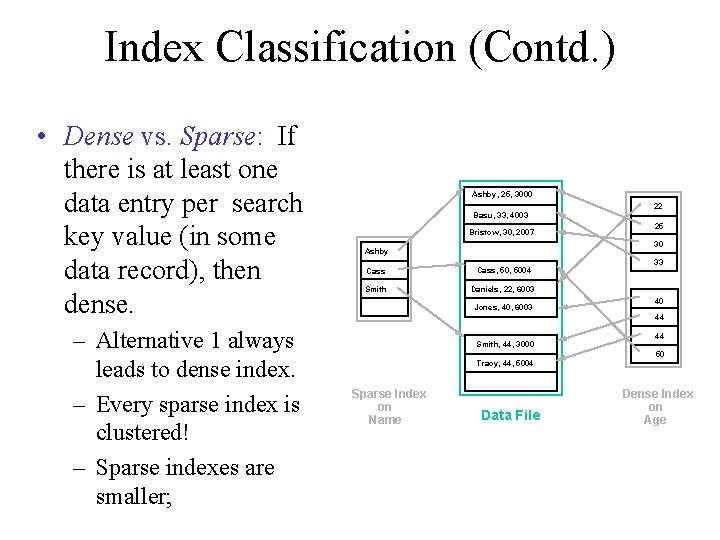

Index Classification (Contd.)
• Dense vs. Sparse: If there is at least one data entry per search key value (in some data record), then dense.
  – Alternative 1 always leads to dense index.
  – Every sparse index is clustered!
  – Sparse indexes are smaller.

Example:
Data file (sorted by name):
  Ashby, 25, 3000
  Basu, 33, 4003
  Bristow, 30, 2007
  Cass, 50, 5004
  Daniels, 22, 6003
  Jones, 40, 6003
  Smith, 44, 3000
  Tracy, 44, 5004
Sparse index on Name: Ashby, Cass, Smith
Dense index on Age: 22, 25, 30, 33, 40, 44, 44, 50
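
The two kinds of index in this example can be sketched as follows. The page size of three records is an assumption chosen so that the sparse index comes out as Ashby, Cass, Smith, and Cass's age of 50 is inferred from the dense index on age; the helper names are made up.

```python
from bisect import bisect_right

PAGE_SIZE = 3  # assumed number of records per page

# Data file, sorted by name (so an index on name can be sparse).
records = [
    ("Ashby", 25, 3000), ("Basu", 33, 4003), ("Bristow", 30, 2007),
    ("Cass", 50, 5004), ("Daniels", 22, 6003), ("Jones", 40, 6003),
    ("Smith", 44, 3000), ("Tracy", 44, 5004),
]
pages = [records[i:i + PAGE_SIZE] for i in range(0, len(records), PAGE_SIZE)]

# Sparse index: one entry per page, holding the first name on that page.
sparse_on_name = [page[0][0] for page in pages]

# Dense index: one entry per record, as (age, (page number, slot number)).
dense_on_age = sorted(
    (age, (p, s))
    for p, page in enumerate(pages)
    for s, (_, age, _) in enumerate(page)
)


def lookup_by_name(name):
    """Use the sparse index to pick the page, then search inside that page."""
    p = bisect_right(sparse_on_name, name) - 1
    if p < 0:
        return None
    return next((rec for rec in pages[p] if rec[0] == name), None)


def lookup_by_age(age):
    """Use the dense index: every matching record has its own entry."""
    return [pages[p][s] for a, (p, s) in dense_on_age if a == age]
```

The sparse lookup only works because the file is sorted on name, which is why every sparse index is clustered. The dense index on age works even though the file is not sorted by age.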

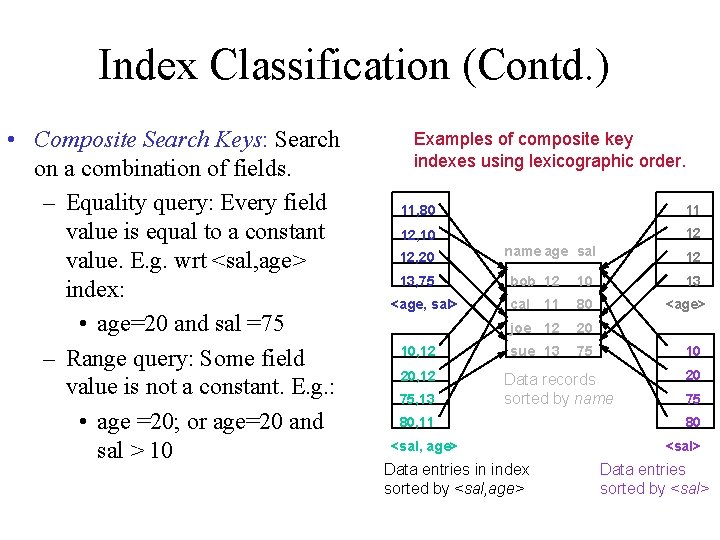

Index Classification (Contd.)
• Composite Search Keys: Search on a combination of fields.
  – Equality query: Every field value is equal to a constant value. E.g. wrt <sal, age> index:
    • age=20 and sal=75
  – Range query: Some field value is not a constant. E.g.:
    • age=20; or age=20 and sal > 10

Examples of composite key indexes using lexicographic order.
Data records sorted by name (name, age, sal): bob 12 10; cal 11 80; joe 12 20; sue 13 75
Data entries in index sorted by <age, sal>: 11,80; 12,10; 12,20; 13,75
Data entries sorted by <age>: 11; 12; 12; 13
Data entries in index sorted by <sal, age>: 10,12; 20,12; 75,13; 80,11
Data entries sorted by <sal>: 10; 20; 75; 80
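
Lexicographic ordering of composite keys maps directly onto tuple comparison. The sketch below rebuilds the <age, sal> and <sal, age> orderings from the four example records and answers an equality query and a prefix range query by binary search; the helper names are hypothetical.

```python
from bisect import bisect_left, bisect_right

records = [("bob", 12, 10), ("cal", 11, 80), ("joe", 12, 20), ("sue", 13, 75)]


def build_index(key_of):
    """Return parallel lists (keys, names), sorted by the composite key."""
    entries = sorted((key_of(rec), rec[0]) for rec in records)
    return [k for k, _ in entries], [n for _, n in entries]


age_sal_keys, age_sal_names = build_index(lambda r: (r[1], r[2]))
sal_age_keys, sal_age_names = build_index(lambda r: (r[2], r[1]))


def key_range(keys, names, low, high):
    """Names of entries whose key lies in [low, high] in lexicographic order."""
    return names[bisect_left(keys, low):bisect_right(keys, high)]


# Equality query on both fields: age = 12 and sal = 20.
exact = key_range(age_sal_keys, age_sal_names, (12, 20), (12, 20))

# Range query on the <age, sal> index: age = 12, any sal.
# (12,) sorts before every (12, sal), and (12, inf) sorts after all of them.
age_12 = key_range(age_sal_keys, age_sal_names, (12,), (12, float("inf")))
```

A query on age alone is a contiguous range in the <age, sal> index. In the <sal, age> index the same query is not a key range in general, because entries are ordered by sal first.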

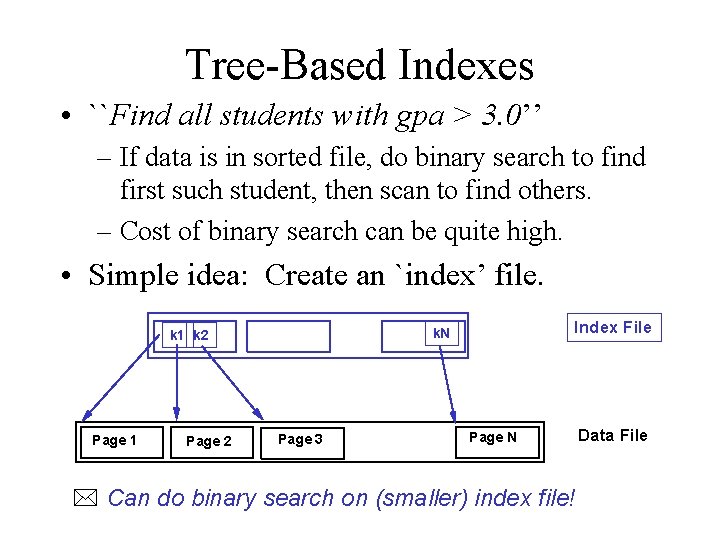

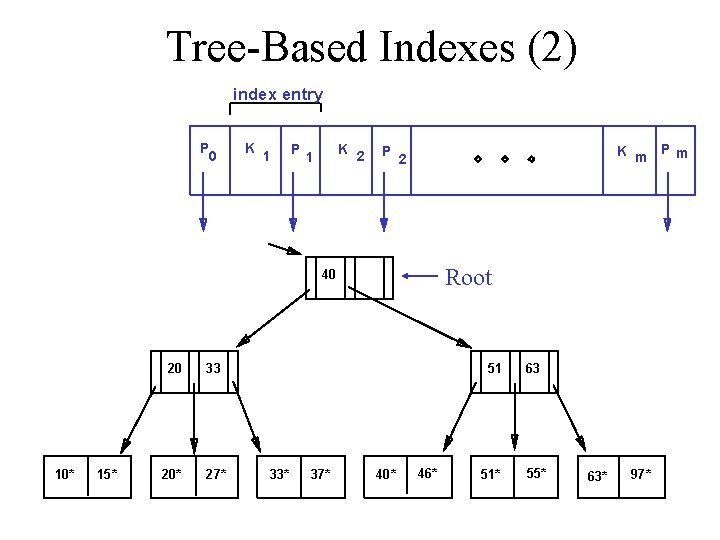

Tree-Based Indexes
• "Find all students with gpa > 3.0"
  – If data is in sorted file, do binary search to find first such student, then scan to find others.
  – Cost of binary search can be quite high.
• Simple idea: Create an "index" file.
[Figure: an index file with keys k1, k2, …, kN pointing to Page 1, Page 2, Page 3, …, Page N of the data file]
• Can do binary search on (smaller) index file!

Tree-Based Indexes (2)
Index entry layout: P0 | K1 | P1 | K2 | P2 | … | Km | Pm
Example tree:
Root: 40
Index level: [20, 33] [51, 63]
Leaves: 10* 15* | 20* 27* | 33* 37* | 40* 46* | 51* 55* | 63* 97*

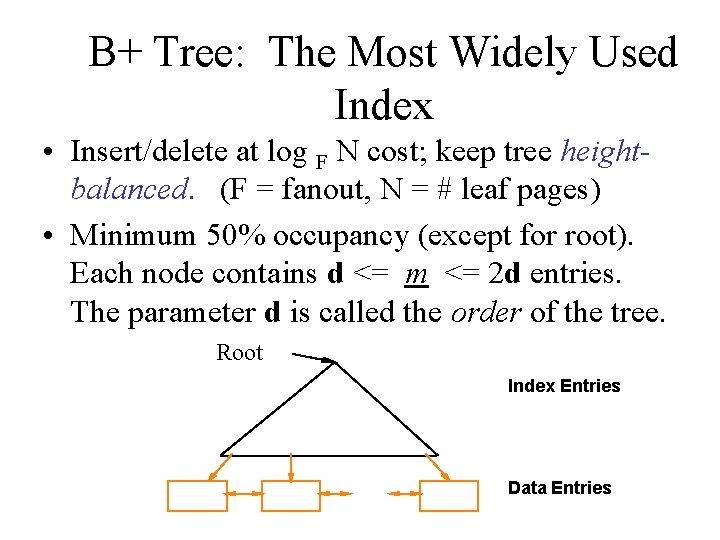

B+ Tree: The Most Widely Used Index
• Insert/delete at $\log_F N$ cost; keep tree height-balanced. (F = fanout, N = # leaf pages)
• Minimum 50% occupancy (except for root). Each node contains d <= m <= 2d entries. The parameter d is called the order of the tree.
[Figure: a tree with the root and index entries in the upper levels and the data entries in the leaf level]

Example B+ Tree
• Search begins at root, and key comparisons direct it to a leaf.
• Search for 5*, 15*, all data entries >= 24* ...
Root: 13 17 24 30
Leaves: 2* 3* 5* 7* | 14* 16* | 19* 20* 22* | 24* 27* 29* | 33* 34* 38* 39*
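
The three searches mentioned on this slide can be traced with a small sketch of the example tree. The `Leaf` and `Index` classes and the function names are made up for the illustration, and the sibling pointer between leaves is an assumption used to run the range scan.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Optional


@dataclass
class Leaf:
    keys: list
    next: Optional["Leaf"] = None   # assumed sibling pointer for range scans


@dataclass
class Index:
    keys: list
    children: list                  # len(children) == len(keys) + 1


def find_leaf(node, key):
    """Follow key comparisons from the root down to the leaf that may hold key."""
    while isinstance(node, Index):
        node = node.children[bisect_right(node.keys, key)]
    return node


def search(root, key):
    return key in find_leaf(root, key).keys


def range_from(root, low):
    """All data entries >= low: find the first leaf, then walk the leaf chain."""
    leaf, out = find_leaf(root, low), []
    while leaf is not None:
        out.extend(k for k in leaf.keys if k >= low)
        leaf = leaf.next
    return out


# The example tree from the slide.
leaves = [Leaf([2, 3, 5, 7]), Leaf([14, 16]), Leaf([19, 20, 22]),
          Leaf([24, 27, 29]), Leaf([33, 34, 38, 39])]
for left, right in zip(leaves, leaves[1:]):
    left.next = right
root = Index([13, 17, 24, 30], leaves)
```

Searching for 5 lands in the first leaf and succeeds, searching for 15 lands in the leaf holding 14* and 16* and fails, and the scan for entries >= 24 starts in the fourth leaf and follows the chain to the end.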

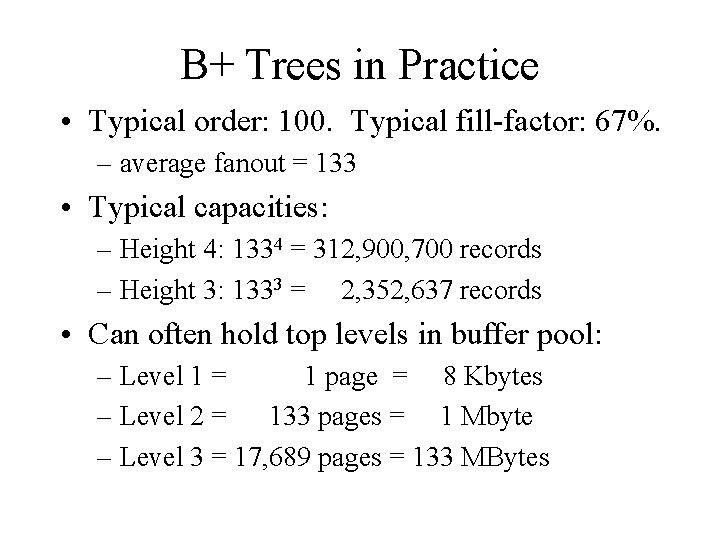

B+ Trees in Practice
• Typical order: 100. Typical fill-factor: 67%.
  – average fanout = 133
• Typical capacities:
  – Height 4: $133^4$ ≈ 312,900,700 records
  – Height 3: $133^3$ = 2,352,637 records
• Can often hold top levels in buffer pool:
  – Level 1 = 1 page = 8 Kbytes
  – Level 2 = 133 pages = 1 Mbyte
  – Level 3 = 17,689 pages = 133 MBytes
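
These figures follow from the fanout and the page size, as the sketch below shows. The fanout of 133 and the 8 KB page are taken from the slide; the function names are made up.

```python
FANOUT = 133    # average fanout from the slide
PAGE_KB = 8     # page size from the slide


def capacity(height):
    """Number of records reachable through a tree of the given height."""
    return FANOUT ** height


def level_pages(level):
    """Number of pages on a level, counting the root as level 1."""
    return FANOUT ** (level - 1)


def level_kbytes(level):
    """Buffer pool space needed to hold one whole level."""
    return level_pages(level) * PAGE_KB
```

The exact values are 133^3 = 2,352,637 and 133^4 = 312,900,721. Level 3 comes to 17,689 pages or 141,512 KB, roughly 138 MB at 1024 KB per MB; the slide's 133 MBytes treats level 2 as a round 1 MB.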

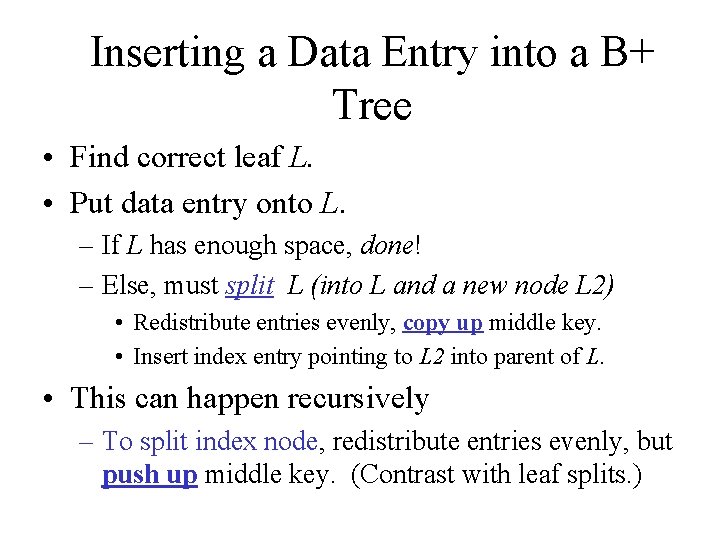

Inserting a Data Entry into a B+ Tree
• Find correct leaf L.
• Put data entry onto L.
  – If L has enough space, done!
  – Else, must split L (into L and a new node L2)
    • Redistribute entries evenly, copy up middle key.
    • Insert index entry pointing to L2 into parent of L.
• This can happen recursively
  – To split index node, redistribute entries evenly, but push up middle key. (Contrast with leaf splits.)

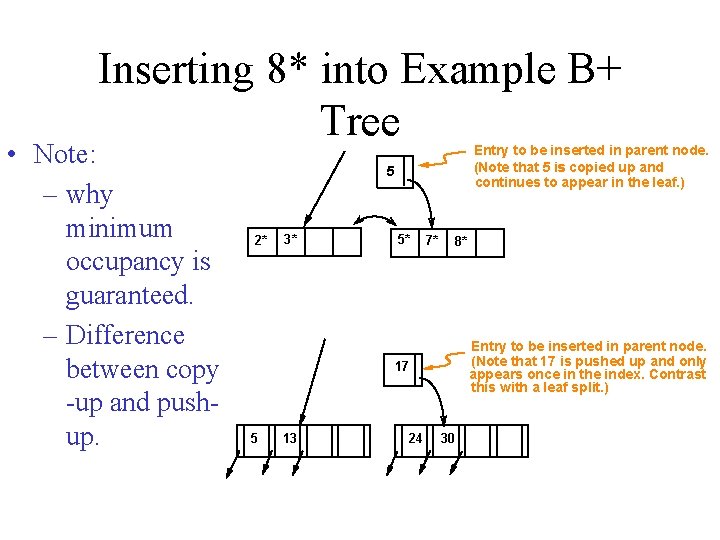

Inserting 8* into Example B+ Tree
• Note:
  – why minimum occupancy is guaranteed.
  – Difference between copy-up and push-up.
Leaf split: 2* 3* | 5* 7* 8*, with 5 as the entry to be inserted in the parent node. (Note that 5 is copied up and continues to appear in the leaf.)
Index split: 5 13 | 24 30, with 17 as the entry to be inserted in the parent node. (Note that 17 is pushed up and only appears once in the index. Contrast this with a leaf split.)
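
The difference between copy-up and push-up fits in two small functions. This sketch works on bare key lists and leaves out child pointers and the surrounding tree, so it illustrates only the split rule; the function names are made up.

```python
def split_leaf(entries):
    """Split an overfull leaf holding 2d+1 sorted entries.

    The middle key is COPIED up: it goes to the parent and also stays in the
    new right leaf, because leaves must keep every data entry.
    """
    mid = len(entries) // 2
    left, right = entries[:mid], entries[mid:]
    return left, right[0], right


def split_index(keys):
    """Split an overfull index node holding 2d+1 sorted keys.

    The middle key is PUSHED up: it moves to the parent and appears in neither
    half, because index keys only direct the search.
    """
    mid = len(keys) // 2
    return keys[:mid], keys[mid], keys[mid + 1:]


# Inserting 8* overfills the leaf 2* 3* 5* 7* (order d = 2).
leaf_left, copied_up, leaf_right = split_leaf([2, 3, 5, 7, 8])

# Adding 5 to the root 13 17 24 30 overfills it in turn.
index_left, pushed_up, index_right = split_index([5, 13, 17, 24, 30])
```

Both halves of either split hold at least d entries, which is why minimum occupancy is guaranteed after a split.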

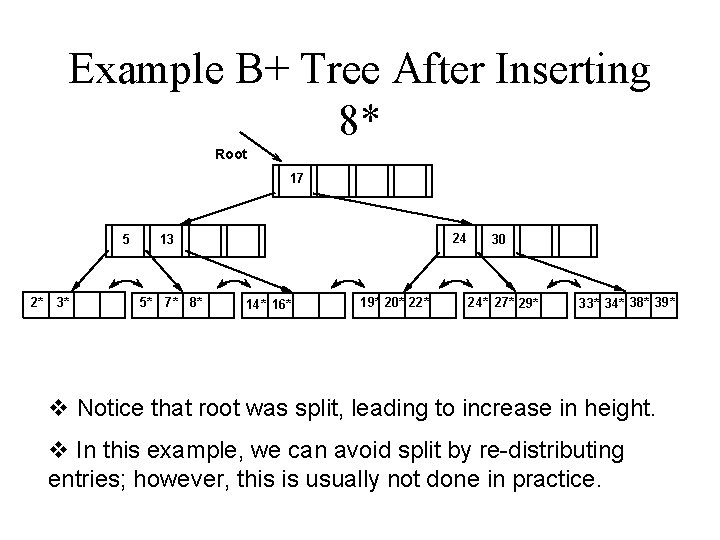

Example B+ Tree After Inserting 8*
Root: 17
Index level: [5, 13] [24, 30]
Leaves: 2* 3* | 5* 7* 8* | 14* 16* | 19* 20* 22* | 24* 27* 29* | 33* 34* 38* 39*
• Notice that root was split, leading to increase in height.
• In this example, we can avoid split by re-distributing entries; however, this is usually not done in practice.

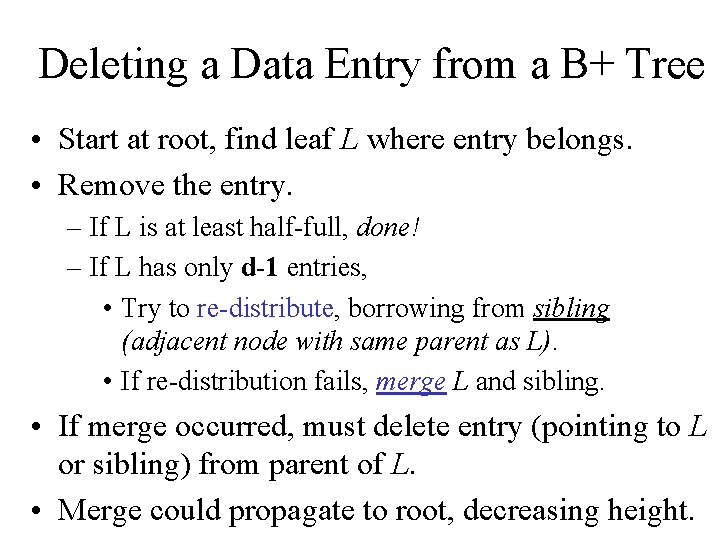

Deleting a Data Entry from a B+ Tree
• Start at root, find leaf L where entry belongs.
• Remove the entry.
  – If L is at least half-full, done!
  – If L has only d-1 entries,
    • Try to re-distribute, borrowing from sibling (adjacent node with same parent as L).
    • If re-distribution fails, merge L and sibling.
• If merge occurred, must delete entry (pointing to L or sibling) from parent of L.
• Merge could propagate to root, decreasing height.

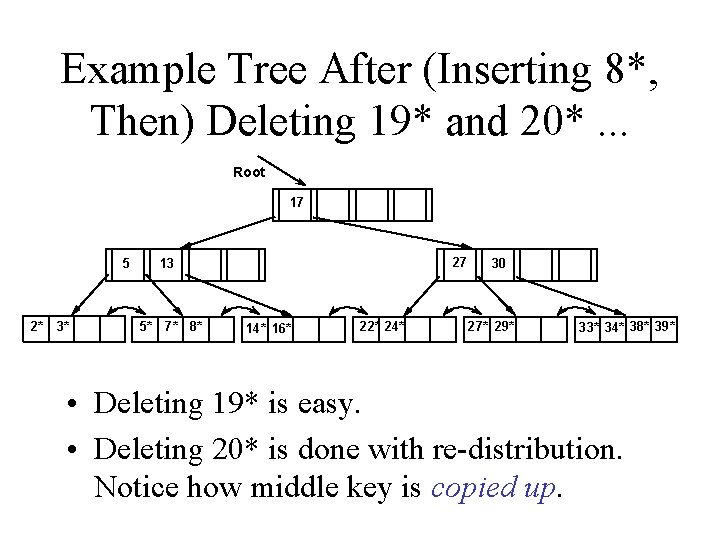

Example Tree After (Inserting 8*, Then) Deleting 19* and 20* ...
Root: 17
Index level: [5, 13] [27, 30]
Leaves: 2* 3* | 5* 7* 8* | 14* 16* | 22* 24* | 27* 29* | 33* 34* 38* 39*
• Deleting 19* is easy.
• Deleting 20* is done with re-distribution. Notice how middle key is copied up.

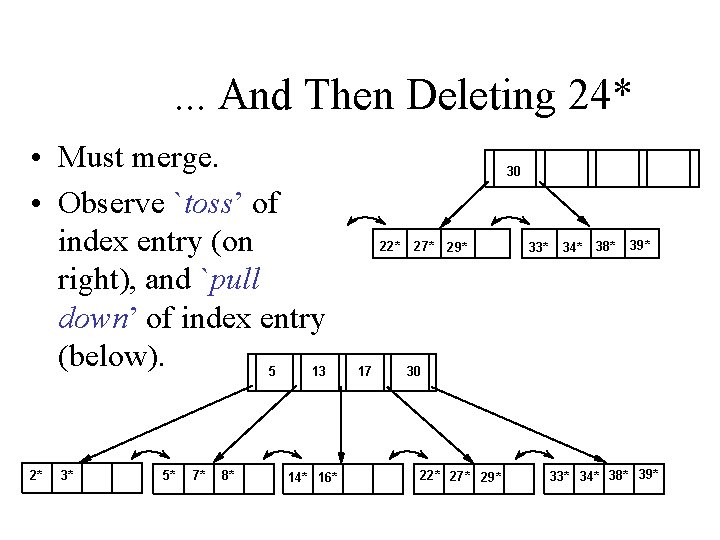

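... And Then Deleting 24*
• Must merge.
• Observe "toss" of index entry (on right), and "pull down" of index entry (below).
On right (after the leaf merge): index node [30] over leaves 22* 27* 29* | 33* 34* 38* 39*
Below (after the index merge): Root: 5 13 17 30; Leaves: 2* 3* | 5* 7* 8* | 14* 16* | 22* 27* 29* | 33* 34* 38* 39*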
